├── .gitignore
├── Makefile
├── README.md
├── build.sbt
├── data
│   ├── README.md
│   └── botswana-training-set.json
├── docker
│   ├── Dockerfile
│   ├── log4j.properties
│   └── task.sh
├── project
│   ├── Environment.scala
│   ├── Version.scala
│   ├── build.properties
│   └── plugins.sbt
├── sbt
├── src
│   └── main
│       ├── resources
│       │   ├── log4j.properties
│       │   ├── ne_50m_admin_0_countries.cpg
│       │   ├── ne_50m_admin_0_countries.dbf
│       │   ├── ne_50m_admin_0_countries.prj
│       │   ├── ne_50m_admin_0_countries.shp
│       │   └── ne_50m_admin_0_countries.shx
│       └── scala
│           ├── CountryGeometry.scala
│           ├── FootprintGenerator.scala
│           ├── LabeledPredictApp.scala
│           ├── LabeledTrainApp.scala
│           ├── MBTiles.scala
│           ├── OSM.scala
│           ├── Output.scala
│           ├── Predict.scala
│           ├── Train.scala
│           ├── Utils.scala
│           └── WorldPop.scala
└── view
    ├── africa.geojson
    └── index.html

/.gitignore:

--------------------------------------------------------------------------------

1 | # Project generated files #

2 |

3 | metastore_db

4 | third_party_sources

5 | derby.log

6 |

7 | # Test Data #

8 | src/test-data

9 |

10 | # AWS #

11 |

12 | *.pem

13 |

14 | # Operating System Files #

15 |

16 | *.DS_Store

17 | Thumbs.db

18 |

19 | # Build Files #

20 |

21 | bin

22 | target

23 | build/

24 | .gradle

25 |

26 | # Eclipse Project Files #

27 |

28 | .classpath

29 | .project

30 | .settings

31 |

32 | # Vagrant

33 |

34 | .vagrant

35 |

36 | # Terraform

37 | deployment/terraform/.terraform

38 | deployment/terraform/terraform.tfvars

39 | .terraform.tfstate.lock.info

40 | *.tfvars

41 | *.tfstate

42 | *.tfplan

43 | *.tfstate.backup

44 | .terraform

45 |

46 | # Node and Webpack

47 | node_modules/

48 | npm-debug.log

49 | vendor.bundle.js

50 | dist/

51 |

52 |

53 | # IntelliJ IDEA Files #

54 |

55 | *.iml

56 | *.ipr

57 | *.iws

58 | *.idea

59 |

60 | # macOS

61 | .DS_Store

62 |

63 | # Emacs #

64 |

65 | .ensime

66 | \#*#

67 | *~

68 | .#*

69 |

70 | *.jar

71 |

72 | derby.log

73 |

--------------------------------------------------------------------------------

/Makefile:

--------------------------------------------------------------------------------

1 | WORKDIR := /hot-osm

2 |

3 | rwildcard=$(foreach d,$(wildcard $1*),$(call rwildcard,$d/,$2) $(filter $(subst *,%,$2),$d))

4 |

5 | SCALA_SRC := $(call rwildcard, src/, *.scala)

6 | SCALA_BLD := $(wildcard project/) build.sbt

7 | ASSEMBLY_JAR := target/scala-2.11/hot-osm-population-assembly.jar

8 | ECR_REPO := 670261699094.dkr.ecr.us-east-1.amazonaws.com/hotosm-population:latest

9 |

10 | .PHONY: train predict docker push-ecr

11 |

12 | ${ASSEMBLY_JAR}: ${SCALA_SRC} ${SCALA_BLD}

13 | ./sbt assembly

14 |

15 | docker/hot-osm-population-assembly.jar: ${ASSEMBLY_JAR}

16 | cp $< $@

17 |

18 | docker: docker/hot-osm-population-assembly.jar

19 | docker build docker -t hotosm-population

20 |

21 | push-ecr:

22 | docker tag hotosm-population:latest ${ECR_REPO}

23 | docker push ${ECR_REPO}

24 |

25 | train: ${ASSEMBLY_JAR}

26 | spark-submit --master "local[*]" --driver-memory 4G \

27 | --class com.azavea.hotosmpopulation.LabeledTrainApp \

28 | target/scala-2.11/hot-osm-population-assembly.jar \

29 | --country botswana \

30 | --worldpop file:${WORKDIR}/WorldPop/BWA15v4.tif \

31 | --qatiles ${WORKDIR}/mbtiles/botswana.mbtiles \

32 | --training ${CURDIR}/data/botswana-training-set.json \

33 | --model ${WORKDIR}/models/botswana-regression

34 |

35 | predict: ${ASSEMBLY_JAR}

36 | spark-submit --master "local[*]" --driver-memory 4G \

37 | --class com.azavea.hotosmpopulation.LabeledPredictApp \

38 | target/scala-2.11/hot-osm-population-assembly.jar \

39 | --country botswana \

40 | --worldpop file:${WORKDIR}/WorldPop/BWA15v4.tif \

41 | --qatiles ${WORKDIR}/mbtiles/botswana.mbtiles \

42 | --model ${WORKDIR}/models/botswana-regression \

43 | --output ${WORKDIR}/botswana.json

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # HOTOSM WorldPop vs OSM Coverage

2 |

3 | This project trains a model of population from the WorldPop raster vs. OSM building footprints from MapBox QA tiles in order to generate estimates of completeness for OSM building coverage.

4 |

5 | ## Building

6 |

7 | This project uses [Apache Spark](https://spark.apache.org/) and is expected to be run through the [`spark-submit`](https://spark.apache.org/docs/latest/submitting-applications.html) command line tool.

8 |

9 | In order to generate the assembly `.jar` file required for this tool the following command is used:

10 |

11 | ```sh

12 | ./sbt assembly

13 | ```

14 |

15 | This will bootstrap the Scala Build Tool and build the assembly; it requires only `java` version `1.8` to be available.

16 |

17 | ## Running

18 |

19 | The project defines multiple main classes: one for training a model of OSM based on the WorldPop raster and one for predicting OSM coverage based on a trained model. They can be called through `spark-submit` as follows:

20 |

21 | ```sh

22 | spark-submit --master "local[*]" --driver-memory 4G \

23 | --class com.azavea.hotosmpopulation.TrainApp \

24 | target/scala-2.11/hot-osm-population-assembly.jar \

25 | --country botswana \

26 | --worldpop file:/hot-osm/WorldPop/BWA15v4.tif \

27 | --qatiles /hot-osm/mbtiles/botswana.mbtiles \

28 | --model /hot-osm/models/botswana-regression

29 |

30 | spark-submit --master "local[*]" --driver-memory 4G \

31 | --class com.azavea.hotosmpopulation.PredictApp \

32 | target/scala-2.11/hot-osm-population-assembly.jar \

33 | --country botswana \

34 | --worldpop file:/hot-osm/WorldPop/BWA15v4.tif \

35 | --qatiles /hot-osm/mbtiles/botswana.mbtiles \

36 | --model /hot-osm/models/botswana-regression \

37 | --output /hot-osm/botswana.json

38 | ```

39 |

40 | The arguments appearing before `hot-osm-population-assembly.jar` are passed to the `spark-submit` command.

41 | The arguments appearing after the JAR are specific to the application:

42 |

43 | - `country`: `ADM0_A3` country code or name, used to look up the country boundary.

44 | - `worldpop`: URI of the WorldPop raster; may use the `file:/` or `s3://` scheme.

45 | - `qatiles`: Path to the MapBox QA `.mbtiles` file for the country; must be local.

46 | - `model`: Path to the directory where the model is saved or loaded; must be local.

47 | - `output`: Path where the prediction JSON output is written; must be local.

48 |

49 |

50 | For development, the train and predict commands are scripted through the `Makefile`:

51 |

52 | ```sh

53 | make train WORKDIR=/hot-osm

54 | make predict WORKDIR=/hot-osm

55 | ```

56 |

57 | ## `prediction.json`

58 | Because WorldPop and OSM have radically different resolutions, they need to be aggregated to a common resolution for a comparison to be valid. Specifically, WorldPop identifies population centers and spreads the estimated population for each center evenly over its pixels, whereas OSM building footprints are highly detailed and the covered area can vary from pixel to pixel on a 100m per pixel raster.

59 |

60 | Experimentally we see that aggregating the results per tile at zoom level 12 on the WebMercator TMS layout produces a visually useful relationship.

61 |

62 | The output of model prediction is saved as JSON where a record exists for every zoom level 12 TMS tile covering the country.

63 | The key is `"{zoom}/{column}/{row}"` following TMS endpoint convention.

64 |

65 | ```json

66 | {

67 | "12/2337/2337": {

68 | "index": 1.5921462086435942,

69 | "actual": {

70 | "pop_sum": 14.647022571414709,

71 | "osm_sum": 145.48814392089844,

72 | "osm_avg": 72.74407196044922

73 | },

74 | "prediction": {

75 | "osm_sum": 45872.07371520996,

76 | "osm_avg": 45.68931644941231

77 | }

78 | }

79 | }

80 | ```

81 |

82 | Units are:

83 | `pop`: estimated population

84 | `osm`: square meters of building footprint

85 |

86 | Why `_sum` and `_avg`? While visually the results are aggregated to one result per zoom 12 tile, training and prediction happen at 16x16 pixels per zoom 12 tile.

87 | At this cell size there is enough smoothing between WorldPop and OSM to start building regressions.

88 |

89 | `index` is computed as `(actual - predicted) / predicted`. It will:

90 | - show negative for areas with low OSM coverage for WorldPop population coverage

91 | - show positive for areas with OSM coverage greater than WorldPop population coverage

92 | - stay close to `0` where the ratio of OSM/WorldPop coverage is average
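
A minimal sketch of the index, using the sign convention the bullets above describe (negative where OSM has less building area than the model expects). `CompletenessIndex` and `index` are hypothetical names for illustration, not taken from the project sources, and returning `None` for a zero prediction is an assumption:

```scala
// Hypothetical helper; not part of the project sources.
object CompletenessIndex {
  /** Relative difference between actual and predicted building area.
    * Returns None when the prediction is zero, because the ratio is undefined. */
  def index(actual: Double, predicted: Double): Option[Double] =
    if (predicted == 0.0) None
    else Some((actual - predicted) / predicted)
}
```

For example, `CompletenessIndex.index(50.0, 100.0)` yields `Some(-0.5)`: the tile holds half the building area the model expects.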

93 |

94 | ## Docker

95 | Docker images suitable for AWS Batch can be built and pushed to ECR using:

96 |

97 | ```sh

98 | make docker

99 | aws ecr get-login --no-include-email --region us-east-1 --profile hotosm

100 | docker login -u AWS ... # copied from output of above command

101 | make push-ecr ECR_REPO=670261699094.dkr.ecr.us-east-1.amazonaws.com/hotosm-population:latest

102 | ```

103 |

104 | The `ENTRYPOINT` for docker images is `docker/task.sh` which handles the setup for the job.

105 | Note that `task.sh` uses positional arguments, and all file references may use the `s3://` scheme.

106 |

107 | The three arguments are required, in order:

108 |

109 | - `COMMAND`: `train` or `predict`

110 | - `COUNTRY`: Country name to download mbtiles

111 | - `WORLDPOP`: Name of WorldPop tiff or S3 URI to WorldPop tiff

112 |

113 | The container may be run locally with:

114 |

115 | ```sh

116 | docker run -it --rm -v ~/.aws:/root/.aws hotosm-population predict botswana s3://bucket/WorldPop/BWA15v4.tif

117 | # OR

118 | docker run -it --rm -v ~/.aws:/root/.aws hotosm-population predict botswana BWA15v4.tif

119 | ```

120 |

121 | ## Methodology

122 |

123 | Our core problem is to estimate the completeness of Open Street Map coverage of building footprints in areas where the map is known to be incomplete.

124 | In order to produce that expectation we need to correlate OSM building footprints with another data set.

125 | We assume population to be the driving factor for building construction so we use [WorldPop](http://www.worldpop.org.uk/) as the independent variable.

126 | Thus we're attempting to derive a relationship between population and building area used by that population.

127 |

128 | ### OpenStreetMap

129 |

130 | OSM geometries are sourced from [MapBox OSM QA tiles](https://osmlab.github.io/osm-qa-tiles/).

131 | They are rasterized to a layer with `CellSize(38.2185,38.2185)` in Web Mercator projection, `EPSG:3857`.

132 | This resolution corresponds to TMS zoom level 15 with 256x256 pixel tiles.

133 | The cell value is the area of the building footprint that intersects pixel footprint in square meters.

134 | If multiple buildings overlap a single pixel their footprints are combined.
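
The link between a zoom level and a cell size can be checked with the standard Web Mercator formula. The sketch below is illustrative only; `WebMercatorResolution` and `cellSize` are hypothetical names, not project code. Under this formula a 38.2185 m cell matches zoom 12 with 256-pixel tiles, which is the same ground size as zoom 15 with 32-pixel tiles, so it is worth confirming which tile width a quoted zoom level assumes:

```scala
// Hypothetical helper; not part of the project sources.
object WebMercatorResolution {
  // Equatorial circumference of the sphere used by EPSG:3857, in meters.
  val Circumference: Double = 2 * math.Pi * 6378137.0

  /** Ground size of one pixel (meters, at the equator) for a zoom level and tile width. */
  def cellSize(zoom: Int, tilePixels: Int = 256): Double =
    Circumference / (tilePixels.toDouble * (1L << zoom))
}
```

Calling `WebMercatorResolution.cellSize(12)` and `WebMercatorResolution.cellSize(15, 32)` gives the same value, while `cellSize(15)` with 256-pixel tiles is eight times finer.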

135 |

136 | At this raster resolution resulting pixels cover buildings with sufficient precision:

137 |

138 | | Satellite | Rasterized Buildings |

139 | | ------------- |:--------------------:|

140 | | |

| |

141 |

142 |

143 | ### WorldPop

144 |

145 | WorldPop raster is provided in `EPSG:4326` with `CellSize(0.0008333, 0.0008333)`, this is close to 100m at equator.

146 | Because we're going to be predicting and reporting in `EPSG:3857` we reproject the raster using `SUM` resample method, aggregating population density values.

147 |

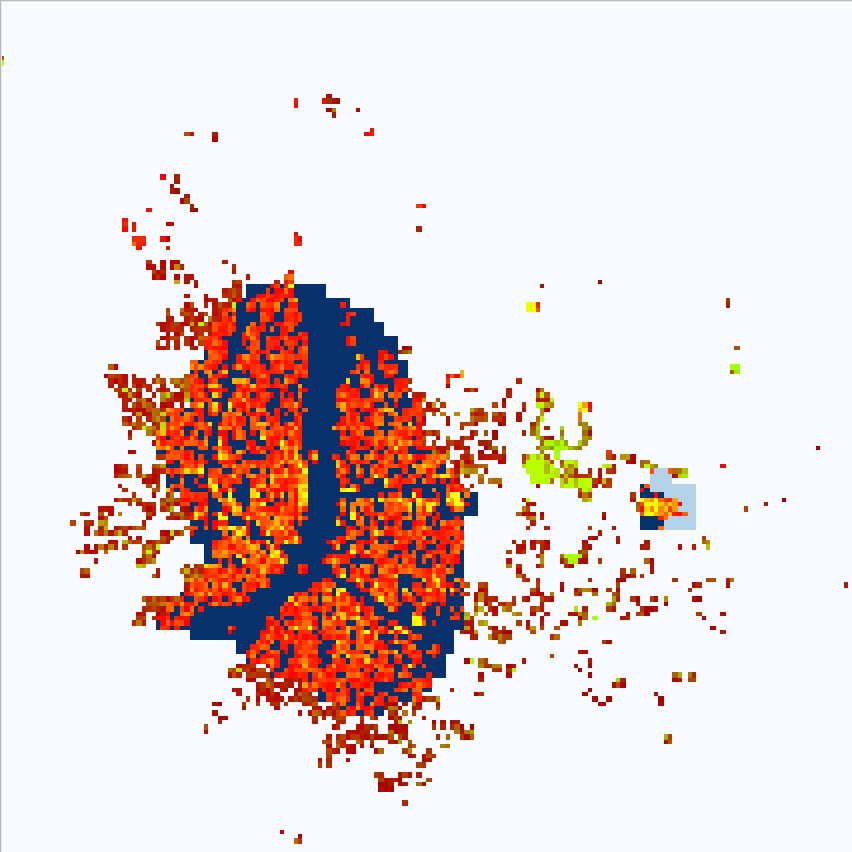

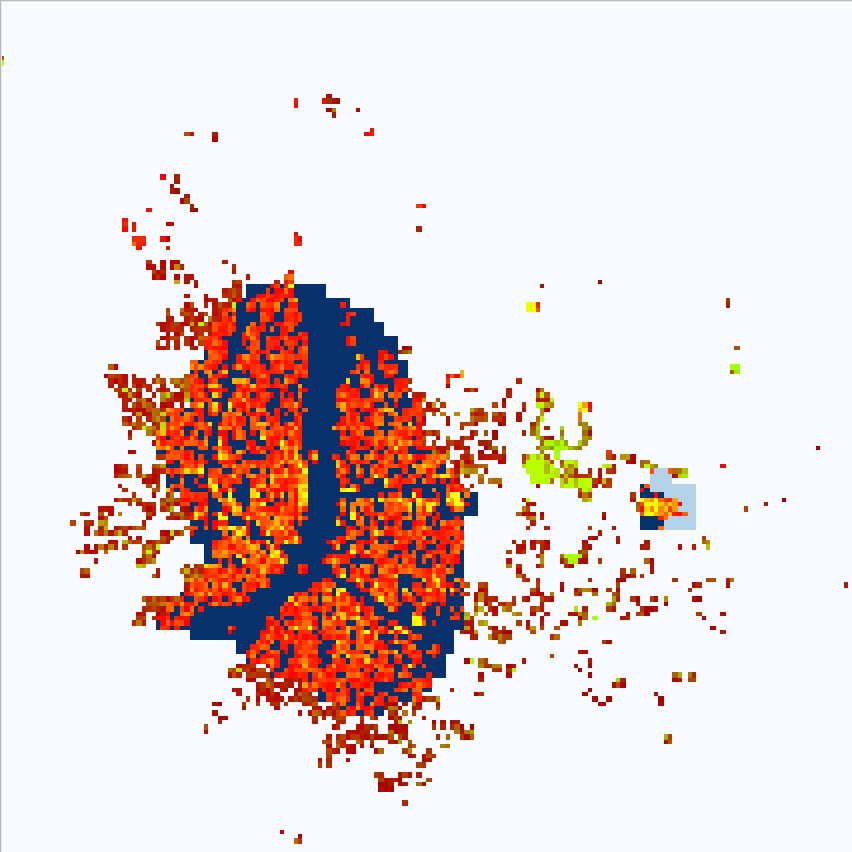

148 | Comparing WorldPop to OSM for Nata, Batswana reveals a problem:

149 |

150 | | WorldPop | WorldPop + OSM | OSM + Satellite |

151 | | ------------- |:--------------:|:---------------:|

152 | |

|

141 |

142 |

143 | ### WorldPop

144 |

145 | WorldPop raster is provided in `EPSG:4326` with `CellSize(0.0008333, 0.0008333)`, this is close to 100m at equator.

146 | Because we're going to be predicting and reporting in `EPSG:3857` we reproject the raster using `SUM` resample method, aggregating population density values.

147 |

148 | Comparing WorldPop to OSM for Nata, Batswana reveals a problem:

149 |

150 | | WorldPop | WorldPop + OSM | OSM + Satellite |

151 | | ------------- |:--------------:|:---------------:|

152 | | |

| |

| |

153 |

154 | Even though WorldPop raster resolution is ~100m the population density is spread evenly at `8.2 ppl/pixel` for the blue area.

155 | This makes it difficult to find relation between individual pixel of population and building area, which ranges from 26 to 982 in this area alone.

156 | The conclusion is that we must aggregate both population and building area to the point where they share resolution and aggregate trend may emerge.

157 |

158 | Plotting a sample of population vs building area we see this as stacks of multiple area values for single population area:

159 |

|

153 |

154 | Even though WorldPop raster resolution is ~100m the population density is spread evenly at `8.2 ppl/pixel` for the blue area.

155 | This makes it difficult to find relation between individual pixel of population and building area, which ranges from 26 to 982 in this area alone.

156 | The conclusion is that we must aggregate both population and building area to the point where they share resolution and aggregate trend may emerge.

157 |

158 | Plotting a sample of population vs building area we see this as stacks of multiple area values for single population area:

159 |  160 |

161 | Its until we reduce aggregate all values at TMS zoom 17 that a clear trend emerges:

162 |

160 |

161 | Its until we reduce aggregate all values at TMS zoom 17 that a clear trend emerges:

162 |  163 |

164 | Finding the line of best fit gives us 6.5 square meters of building footprint per person in Botswana with an R-squared value of `0.88069`.

165 | Aggregating to larger areas does not significantly change the fit or the slope of the line.
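
A zero-intercept fit of this kind has a closed form, sketched below. This is plain Scala for illustration, not the Spark `LinearRegressionModel` the project uses; `OriginRegression` and `slope` are hypothetical names, and fixing the intercept at zero follows the workflow description rather than anything verified in the code:

```scala
// Hypothetical helper; not part of the project sources.
object OriginRegression {
  /** Least-squares slope for y = slope * x, with the intercept fixed at zero. */
  def slope(xs: Seq[Double], ys: Seq[Double]): Double = {
    require(xs.length == ys.length && xs.nonEmpty, "need paired, non-empty samples")
    val sxy = xs.zip(ys).map { case (x, y) => x * y }.sum // sum of x * y
    val sxx = xs.map(x => x * x).sum                      // sum of x squared
    require(sxx > 0.0, "all x values are zero")
    sxy / sxx
  }
}
```

Here `xs` would hold aggregated population per cell and `ys` the aggregated building area, so the slope reads as square meters of footprint per person.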

166 |

167 | ### Data Quality

168 |

169 | The following data quality issues have been encountered and considered:

170 |

171 | Estimates of WorldPop population depend on administrative census data. This data varies in quality and recency from country to country.

172 | To avoid training on data from various sources, we fit a model per country, where sources are most likely to be consistent, rather than for larger areas.

173 |

174 | WorldPop and OSM are updated at different intervals and do not share coverage. This leads to the following problems:

175 |

176 | - Regions in WorldPop showing population that are not covered by OSM

177 | - Regions in OSM showing building footprints not covered by WorldPop

178 | - Incomplete OSM coverage per Region

179 |

180 | To address the above concerns, we mark regions where the OSM/WorldPop relation appears optimal. This allows us to compare other areas and reveal the above problems using the derived model. For further discussion and examples, see the [training set](azavea/hot-osm-population/blob/master/data/README.md) documentation.

181 |

182 | ### Workflow

183 |

184 | The overall workflow is as follows:

185 |

186 | **Preparation**

187 |

188 | - Read WorldPop raster for country

189 | - Reproject WorldPop to `EPSG:3857` TMS Level 15 at `256x256` pixel tiles.

190 | - Rasterize OSM building footprints to `EPSG:3857` TMS Level 15 at `256x256` pixel tiles.

191 | - Aggregate both population and building area to `4x4` tile using `SUM` resample method.
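
The `SUM` aggregation step can be illustrated on a plain grid. The sketch below is an assumption-laden illustration, not the project's GeoTrellis code: `BlockSum` and `aggregate` are hypothetical names, and the block size is left as a parameter because the text does not say whether `4x4` refers to the block or to the resulting grid:

```scala
// Hypothetical helper; not part of the project sources.
object BlockSum {
  /** Sums non-overlapping factor x factor blocks of a rectangular grid.
    * Row and column counts must be divisible by `factor`. */
  def aggregate(grid: Array[Array[Double]], factor: Int): Array[Array[Double]] = {
    require(factor > 0 && grid.nonEmpty, "need a positive factor and a non-empty grid")
    val rows = grid.length
    val cols = grid(0).length
    require(grid.forall(_.length == cols), "grid must be rectangular")
    require(rows % factor == 0 && cols % factor == 0, "dimensions must divide evenly")
    Array.tabulate(rows / factor, cols / factor) { (r, c) =>
      var sum = 0.0
      // Accumulate every source cell that falls inside output cell (r, c).
      for (dr <- 0 until factor; dc <- 0 until factor)
        sum += grid(r * factor + dr)(c * factor + dc)
      sum
    }
  }
}
```

Summing rather than averaging keeps totals intact: the population and the building area of the whole grid are the same before and after aggregation.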

192 |

193 | **Training**

194 |

195 | - Mask WorldPop and OSM raster by training set polygons.

196 | - Set all OSM `NODATA` cell values to `0.0`.

197 | - Fit and save `LinearRegressionModel` to population vs. building area.

198 | - Fix y-intercept at `0.0`.

199 |

200 | **Prediction**

201 | - Apply `LinearRegressionModel` to get expected building area.

202 | - Sum all `4x4` pixels in a tile.

203 | - Report

204 |   - Total population

205 |   - Actual building area

206 |   - Expected building area

207 |   - Completeness index as `(actual area - expected area) / expected area`

208 |

209 | Because the model is derived from well-labeled areas, we expect it to be stable in describing the usage of building space per country.

210 | This enables us to re-run the prediction process with updated OSM input and track changes in OSM coverage.

211 |

212 |

213 |

--------------------------------------------------------------------------------

/build.sbt:

--------------------------------------------------------------------------------

1 | name := "hotosmpopulation"

2 |

3 | version := "0.0.1"

4 |

5 | description := "Estimate OSM coverage from World Population raster"

6 |

7 | organization := "com.azavea"

8 |

9 | organizationName := "Azavea"

10 |

11 | scalaVersion in ThisBuild := Version.scala

12 |

13 | val common = Seq(

14 | resolvers ++= Seq(

15 | "locationtech-releases" at "https://repo.locationtech.org/content/groups/releases",

16 | //"locationtech-snapshots" at "https://repo.locationtech.org/content/groups/snapshots",

17 | Resolver.bintrayRepo("azavea", "maven"),

18 | Resolver.bintrayRepo("s22s", "maven"),

19 | "Geotools" at "http://download.osgeo.org/webdav/geotools/"

20 | ),

21 |

22 | scalacOptions := Seq(

23 | "-deprecation",

24 | "-Ypartial-unification",

25 | "-Ywarn-value-discard",

26 | "-Ywarn-dead-code",

27 | "-Ywarn-numeric-widen"

28 | ),

29 |

30 | scalacOptions in (Compile, doc) += "-groups",

31 |

32 | libraryDependencies ++= Seq(

33 | //"io.astraea" %% "raster-frames" % "0.6.2-SNAPSHOT",

34 | "io.astraea" %% "raster-frames" % "0.6.1",

35 | "org.geotools" % "gt-shapefile" % Version.geotools,

36 | // This is one finicky dependency. Being explicit in hopes it will stop hurting Travis.

37 | "javax.media" % "jai_core" % "1.1.3" from "http://download.osgeo.org/webdav/geotools/javax/media/jai_core/1.1.3/jai_core-1.1.3.jar",

38 | "org.apache.spark" %% "spark-hive" % Version.spark % Provided,

39 | "org.apache.spark" %% "spark-core" % Version.spark % Provided,

40 | "org.apache.spark" %% "spark-sql" % Version.spark % Provided,

41 | "org.apache.spark" %% "spark-mllib" % Version.spark % Provided,

42 | "org.locationtech.geotrellis" %% "geotrellis-proj4" % Version.geotrellis,

43 | "org.locationtech.geotrellis" %% "geotrellis-vector" % Version.geotrellis,

44 | "org.locationtech.geotrellis" %% "geotrellis-raster" % Version.geotrellis,

45 | "org.locationtech.geotrellis" %% "geotrellis-shapefile" % Version.geotrellis,

46 | "org.locationtech.geotrellis" %% "geotrellis-spark" % Version.geotrellis,

47 | "org.locationtech.geotrellis" %% "geotrellis-util" % Version.geotrellis,

48 | "org.locationtech.geotrellis" %% "geotrellis-s3" % Version.geotrellis,

49 | "org.locationtech.geotrellis" %% "geotrellis-vectortile" % Version.geotrellis,

50 | "com.amazonaws" % "aws-java-sdk-s3" % "1.11.143",

51 | "org.scalatest" %% "scalatest" % "3.0.1" % Test,

52 | "org.spire-math" %% "spire" % Version.spire,

53 | "org.typelevel" %% "cats-core" % "1.0.0-RC1",

54 | "com.monovore" %% "decline" % "0.4.0-RC1",

55 | "org.tpolecat" %% "doobie-core" % "0.5.2",

56 | "org.xerial" % "sqlite-jdbc" % "3.21.0"

57 | ),

58 |

59 | parallelExecution in Test := false

60 | )

61 |

62 | fork in console := true

63 | javaOptions ++= Seq("-Xmx8G", "-XX:+UseParallelGC")

64 |

65 | val release = Seq(

66 | licenses += ("Apache-2.0", url("http://apache.org/licenses/LICENSE-2.0"))

67 | )

68 |

69 | assemblyJarName in assembly := "hot-osm-population-assembly.jar"

70 |

71 | val MetaInfDiscardRx = """^META-INF(.+)\.(SF|RSA|MF)$""".r

72 |

73 | assemblyMergeStrategy in assembly := {

74 | case s if s.startsWith("META-INF/services") => MergeStrategy.concat

75 | case "reference.conf" | "application.conf" => MergeStrategy.concat

76 | case MetaInfDiscardRx(_*) => MergeStrategy.discard

77 | case _ => MergeStrategy.first

78 | }

79 |

80 | lazy val root = Project("hot-osm-population", file(".")).

81 | settings(common, release).

82 | settings(

83 | initialCommands in console :=

84 | """

85 | |import geotrellis.proj4._

86 | |import geotrellis.raster._

87 | |import geotrellis.raster.resample._

88 | |import geotrellis.spark._

89 | |import geotrellis.spark.tiling._

90 | |import geotrellis.vector._

91 | |import com.azavea.hotosmpopulation._

92 | |import com.azavea.hotosmpopulation.Utils._

93 | |import astraea.spark.rasterframes._

94 | |import astraea.spark.rasterframes.ml.TileExploder

95 | |import org.apache.spark.sql._

96 | |import org.apache.spark.sql.functions._

97 | |import org.apache.spark.ml.regression._

98 | |import org.apache.spark.storage.StorageLevel

99 | |import geotrellis.spark._

100 | |

101 | |implicit val spark: SparkSession = SparkSession.builder().

102 | | master("local[8]").appName("RasterFrames").

103 | | config("spark.ui.enabled", "true").

104 | | config("spark.driver.maxResultSize", "2G").

105 | | getOrCreate().

106 | | withRasterFrames

107 | |

108 | |import spark.implicits._

109 | """.stripMargin

110 | )

111 |

--------------------------------------------------------------------------------

/data/README.md:

--------------------------------------------------------------------------------

1 | # Training Data

2 |

3 | We aim to derive a relationship between population density in the WorldPop raster `(population / pixel)` and Open Street Map building footprint coverage `(square meters / pixel)`.

4 | This relationship can break down because OSM and WorldPop have different biases and different update intervals.

5 |

6 | In order to avoid training on regions that have this problem we need to identify training regions.

7 | The training sets are consumed as GeoJSON `FeatureCollection` of `MultiPolygon`s in `epsg:3857` projection.
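
Polygons drawn in longitude/latitude need to be expressed in `epsg:3857` meters before they can be used. Below is a minimal sketch of the spherical Web Mercator forward projection, assuming degrees as input; `LonLatToWebMercator` and `fromLonLat` are hypothetical names, not project code, and in practice a GIS tool such as QGIS can reproject on export:

```scala
// Hypothetical helper; not part of the project sources.
object LonLatToWebMercator {
  // Sphere radius used by EPSG:3857, in meters.
  val EarthRadius: Double = 6378137.0

  /** Converts longitude/latitude in degrees to EPSG:3857 (x, y) in meters. */
  def fromLonLat(lon: Double, lat: Double): (Double, Double) = {
    val x = EarthRadius * math.toRadians(lon)
    val y = EarthRadius * math.log(math.tan(math.Pi / 4 + math.toRadians(lat) / 2))
    (x, y)
  }
}
```

Coordinates in the southern hemisphere come out with a negative `y`, which matches the negative second ordinate seen throughout the Botswana training set.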

8 |

9 | ## Generation

10 |

11 | A good technique for generating a training set is to use QGIS with the following layers:

12 |

13 | - WorldPop Raster

14 | - Rasterized OSM Building Footprints or OSM WMS with building footprints

15 | - MapBox Satellite Streets WMS layer

16 |

17 | The following are examples of areas labeled for training:

18 |

19 | **Legend**:

20 | - `WorldPop`: light-blue to deep-blue

21 | - `OSM Building`: green/orange/yellow

22 | - `Training Area`: green polygon

23 |

24 | ### Good OSM/WorldPop Coverage

25 | WorldPop has detected a high population density area and OSM building coverage looks complete.

26 |

27 |

28 | Visually verify with the MapBox Satellite Streets layer that OSM coverage is good.

29 |

30 |

31 | Label the city and surrounding area as a training area.

32 |

33 |

34 | ### OSM newer than WorldPop raster

35 | OSM has building footprints, but WorldPop shows no change in population density.

36 |

37 |

38 | Satellite imagery verifies that something really is there.

39 |

40 |

41 | Do not label this area: in our training set it would indicate that no population implies building coverage.

42 |

43 | ### Partial OSM Coverage over WorldPop

44 | Here we have a high population density area that is partially mapped.

45 |

46 |

47 | Satellite imagery shows lots of unlabelled buildings.

48 |

49 |

50 | Draw the training area around well-labeled blocks of the city to capture an example of a dense urban region.

51 |

52 | ### OSM Coverage in Low density Area, WorldPop mislabels farms

53 | Here we have an example of a low-density area.

54 |

55 |

56 | WorldPop labelled the surrounding farmland at the same density as the city center.

57 |

58 |

59 | Label the city and surrounding areas.

60 |

61 |

62 |

63 |
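Because training polygons are stored in `EPSG:3857` metres rather than degrees, it can be hard to tell at a glance whether a polygon sits where it was meant to. The sketch below converts between Web Mercator and longitude/latitude using the standard spherical formulas; the function names are illustrative and not part of this repository. The sample point is the first vertex of the first feature in `data/botswana-training-set.json`, which should fall inside Botswana (roughly 20 to 29.4 degrees east, 17.8 to 26.9 degrees south).

```python
import math

EARTH_RADIUS_M = 6378137.0  # sphere radius used by EPSG:3857


def web_mercator_to_lonlat(x, y):
    """Convert EPSG:3857 metres to (longitude, latitude) in degrees."""
    lon = math.degrees(x / EARTH_RADIUS_M)
    lat = math.degrees(math.atan(math.sinh(y / EARTH_RADIUS_M)))
    return lon, lat


def lonlat_to_web_mercator(lon, lat):
    """Convert (longitude, latitude) in degrees to EPSG:3857 metres."""
    x = EARTH_RADIUS_M * math.radians(lon)
    y = EARTH_RADIUS_M * math.asinh(math.tan(math.radians(lat)))
    return x, y


# First vertex of the first feature in data/botswana-training-set.json.
x, y = 2965856.483420270495117, -2551922.143000584561378
lon, lat = web_mercator_to_lonlat(x, y)
print("lon=%.4f lat=%.4f" % (lon, lat))
```

If a vertex converts to a location far outside the country being labeled, the layer was most likely exported from QGIS in a different CRS than `EPSG:3857`.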

--------------------------------------------------------------------------------

/data/botswana-training-set.json:

--------------------------------------------------------------------------------

1 | {

2 | "type": "FeatureCollection",

3 | "name": "worldpop-osm-botswana-training",

4 | "crs": { "type": "name", "properties": { "name": "urn:ogc:def:crs:EPSG::3857" } },

5 | "features": [

6 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2965856.483420270495117, -2551922.143000584561378 ], [ 2979284.343173592351377, -2551568.061506075318903 ], [ 2986011.891569272615016, -2564778.024955083616078 ], [ 2978739.602412808220834, -2568809.106584883760661 ], [ 2965257.268583408091217, -2567120.410226454026997 ], [ 2964058.83890968374908, -2557396.787646462209523 ], [ 2965856.483420270495117, -2551922.143000584561378 ] ], [ [ 2973688.834171415306628, -2557723.63210293231532 ], [ 2973753.522136758547276, -2557066.538560236804187 ], [ 2975323.056453767698258, -2557144.845044599846005 ], [ 2975510.311090286355466, -2557223.151528961956501 ], [ 2976211.664819795638323, -2557413.810795236378908 ], [ 2976065.26574033498764, -2557948.337666755542159 ], [ 2975544.357387835625559, -2557941.528407245874405 ], [ 2975367.316640581004322, -2558486.269168030004948 ], [ 2974298.262897542212158, -2558503.292316804174334 ], [ 2973205.376746219582856, -2558390.939534892793745 ], [ 2973317.729528131429106, -2558138.996933029964566 ], [ 2973770.545285532716662, -2557832.580255088862032 ], [ 2973688.834171415306628, -2557723.63210293231532 ] ], [ [ 2976119.739816414192319, -2556664.79224915895611 ], [ 2976259.329636364709586, -2556899.711702247150242 ], [ 2976582.769463080447167, -2556903.116332001984119 ], [ 2976667.885206952691078, -2556399.231128277257085 ], [ 2976211.664819796103984, -2556273.259827345609665 ], [ 2976119.739816414192319, -2556664.79224915895611 ] ], [ [ 2972146.536892445757985, -2556692.02928719855845 ], [ 2973511.793424160219729, -2556664.792249159421772 ], [ 2973504.984164650551975, -2556937.162629551254213 ], [ 2973144.093410631176084, -2557127.82189582567662 ], [ 2972980.671182395890355, -2557216.342269453220069 ], [ 2972071.635037838015705, -2557284.434864551294595 ], [ 2972146.536892445757985, -2556692.02928719855845 ] ], [ [ 2970961.725737741217017, -2557577.233023472130299 ], [ 
2971605.20076141692698, -2557801.938587295357138 ], [ 2971550.726685338653624, -2557944.933037001173943 ], [ 2971155.789633770473301, -2558121.973784256260842 ], [ 2970709.783135878387839, -2557822.366365824826062 ], [ 2970961.725737741217017, -2557577.233023472130299 ] ], [ [ 2969639.878235401585698, -2557274.220975285861641 ], [ 2969730.100923906546086, -2557623.195525163318962 ], [ 2969961.61574723944068, -2557618.088580530602485 ], [ 2970097.800937435589731, -2557362.741348913405091 ], [ 2969757.337961945682764, -2557165.272823129314929 ], [ 2969639.878235401585698, -2557274.220975285861641 ] ], [ [ 2975701.821514002047479, -2562912.287849399726838 ], [ 2976280.608572335448116, -2562980.380444497801363 ], [ 2976471.267838609870523, -2562789.721178223378956 ], [ 2976675.545623903628439, -2562789.721178223378956 ], [ 2976593.834509786218405, -2563211.895267831161618 ], [ 2976518.932655178476125, -2563375.317496066447347 ], [ 2976675.545623903628439, -2563484.265648222994059 ], [ 2976730.019699982367456, -2565629.18239381024614 ], [ 2975531.590026257093996, -2564131.145301654469222 ], [ 2975681.393735473044217, -2563817.919364203233272 ], [ 2975470.306690669152886, -2563470.647129203658551 ], [ 2975626.919659394305199, -2563286.797122438903898 ], [ 2975953.764115864876658, -2563205.086008321493864 ], [ 2975701.821514002047479, -2562912.287849399726838 ] ], [ [ 2973761.182553709018975, -2563443.410091164521873 ], [ 2974823.427037238143384, -2563123.374894203618169 ], [ 2975320.502981453202665, -2563538.739724301733077 ], [ 2974966.42148694396019, -2563743.017509595490992 ], [ 2975300.075202924199402, -2564519.27309371298179 ], [ 2974755.334442140068859, -2564383.087903516832739 ], [ 2974632.767770963720977, -2564492.036055673845112 ], [ 2973761.182553709018975, -2563443.410091164521873 ] ], [ [ 2976689.164142923429608, -2563279.987862929236144 ], [ 2977002.390080374199897, -2563320.843419988173991 ], [ 2977070.482675472274423, -2563443.410091164521873 ], [ 
2977431.373429491650313, -2563538.739724301733077 ], [ 2977451.801208021119237, -2564614.602726850192994 ], [ 2978051.016044883523136, -2563981.341592438519001 ], [ 2977935.258633216843009, -2562782.911918713711202 ], [ 2977090.910454001743346, -2562823.76747577264905 ], [ 2976873.014149688184261, -2562428.830424204003066 ], [ 2976689.164142923429608, -2563279.987862929236144 ] ], [ [ 2975184.317791257519275, -2562347.119310086593032 ], [ 2975586.064102335833013, -2562755.674880674574524 ], [ 2976205.706717727705836, -2562612.680430968757719 ], [ 2976471.267838609870523, -2562490.113759792409837 ], [ 2976348.701167433522642, -2562299.45449351798743 ], [ 2975667.775216453243047, -2562210.934119890443981 ], [ 2975184.317791257519275, -2562347.119310086593032 ] ], [ [ 2979573.736702762544155, -2563891.118903934024274 ], [ 2979696.303373938892037, -2564080.075855331029743 ], [ 2979682.684854919556528, -2564248.605028198566288 ], [ 2979641.829297860618681, -2564357.553180355113 ], [ 2979689.494114429224283, -2564721.848564129788429 ], [ 2979650.340872247703373, -2564842.712920428719372 ], [ 2979240.082986782304943, -2564541.403187119867653 ], [ 2979427.337623301893473, -2564189.024007487576455 ], [ 2979292.85474798316136, -2564129.442986777052283 ], [ 2979573.736702762544155, -2563891.118903934024274 ] ] ] ] } },

7 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2982285.52430254034698, -2540058.710619629360735 ], [ 2985717.391095479950309, -2540821.347684727050364 ], [ 2985499.494791166391224, -2546268.755292567890137 ], [ 2981386.7020472465083, -2546500.270115900784731 ], [ 2977764.175988032482564, -2544757.099681391846389 ], [ 2980269.983487639110535, -2540508.121747276280075 ], [ 2980304.242574549280107, -2540489.396283620968461 ], [ 2982285.52430254034698, -2540058.710619629360735 ] ], [ [ 2981648.007380935363472, -2543798.696405387017876 ], [ 2982136.57175076380372, -2543905.942242666613311 ], [ 2982037.83748787175864, -2544307.688553744927049 ], [ 2981799.513405028730631, -2544278.749200827907771 ], [ 2981571.403211450204253, -2544464.301522470079362 ], [ 2981290.521256670821458, -2544181.717252813279629 ], [ 2981096.457360641565174, -2543883.812149259727448 ], [ 2981648.007380935363472, -2543798.696405387017876 ] ] ] ] } },

8 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2916059.73025692300871, -2523071.736036244314164 ], [ 2932510.901232626289129, -2527810.980655072722584 ], [ 2933545.908678117208183, -2552487.737118627410382 ], [ 2911756.278246722649783, -2549600.611086467746645 ], [ 2906254.39656279515475, -2534402.343860569875687 ], [ 2907561.774388678837568, -2522635.943427616264671 ], [ 2916059.73025692300871, -2523071.736036244314164 ] ] ] ] } },

9 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2601174.166961751878262, -2267803.772518157958984 ], [ 2600670.558589624240994, -2279084.600053811911494 ], [ 2608879.375055301003158, -2280192.53847249224782 ], [ 2624049.485501368995756, -2261508.95763139706105 ], [ 2619316.990628585685045, -2259537.918540618382394 ], [ 2607872.158311046194285, -2260098.564424608834088 ], [ 2601174.166961751878262, -2267803.772518157958984 ] ] ] ] } },

10 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2845092.974974212702364, -2434257.324267356656492 ], [ 2853481.934443594887853, -2435533.795591179281473 ], [ 2853824.340952549595386, -2446873.493505374528468 ], [ 2847006.42311248742044, -2447510.470319826621562 ], [ 2841044.521544810850173, -2440521.349225286860019 ], [ 2845092.974974212702364, -2434257.324267356656492 ] ] ] ] } },

11 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2411073.118736115749925, -2471000.188248161226511 ], [ 2416074.644180927425623, -2475153.079411723185331 ], [ 2414431.543764039874077, -2481328.248011454474181 ], [ 2405999.369097155053169, -2481237.967768768314272 ], [ 2402893.728748752269894, -2473040.521732867695391 ], [ 2406757.723135718610138, -2470368.226549358572811 ], [ 2411073.118736115749925, -2471000.188248161226511 ] ] ] ] } },

12 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2534183.777684851083905, -2706992.825551418587565 ], [ 2546124.341757350601256, -2706539.723789738956839 ], [ 2546230.953936569392681, -2721225.551477121189237 ], [ 2529252.964395984075963, -2721198.89843231625855 ], [ 2528959.780903132166713, -2709471.558718254324049 ], [ 2534183.777684851083905, -2706992.825551418587565 ] ] ] ] } },

13 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2439812.009292653296143, -2670911.266147072892636 ], [ 2493970.996335779316723, -2673363.346269104164094 ], [ 2491732.140572185162455, -2701722.185941291972995 ], [ 2439598.784934215713292, -2699376.717998479492962 ], [ 2439812.009292653296143, -2670911.266147072892636 ] ] ] ] } },

14 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 3161515.205022294074297, -2501604.432672 ], [ 3170052.649105143733323, -2505013.268259037286043 ], [ 3170175.490027199033648, -2514533.439718329813331 ], [ 3160993.1311035589315, -2514410.598796274513006 ], [ 3156048.783990829717368, -2509189.859608920291066 ], [ 3157338.613672411069274, -2503846.279499511234462 ], [ 3161515.205022294074297, -2501604.432672 ] ], [ [ 3163480.899698857218027, -2510004.160564889200032 ], [ 3163976.102165893185884, -2510098.210645837709308 ], [ 3163876.293916723225266, -2510514.718147182371467 ], [ 3163417.559848422184587, -2510510.879368368070573 ], [ 3163480.899698857218027, -2510004.160564889200032 ] ] ] ] } },

15 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 3183837.943750750739127, -2516677.87753343814984 ], [ 3187584.591873444616795, -2518535.846479528117925 ], [ 3187077.873069965280592, -2522973.474788784515113 ], [ 3180398.397933195345104, -2523894.781704201363027 ], [ 3178325.457373508252203, -2519134.695974549278617 ], [ 3180275.557011140044779, -2515802.635963792447001 ], [ 3183837.943750750739127, -2516677.87753343814984 ] ] ] ] } },

16 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 3196841.806983976159245, -2487779.550619865767658 ], [ 3200818.781835524830967, -2488823.698457337915897 ], [ 3201463.696676316671073, -2493783.400685331318527 ], [ 3198807.261736865155399, -2496332.349817984271795 ], [ 3193924.33508515637368, -2494858.258753317408264 ], [ 3194062.531122468877584, -2489161.510992990806699 ], [ 3196841.806983976159245, -2487779.550619865767658 ] ] ] ] } },

17 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 3100771.56868416024372, -2498372.66075775353238 ], [ 3109124.751383941620588, -2501259.422426060307771 ], [ 3108080.603546469006687, -2514956.18523526052013 ], [ 3097700.54563277028501, -2518150.049208706244826 ], [ 3088426.056017571594566, -2510165.389275092165917 ], [ 3094875.204425490926951, -2501935.047497366089374 ], [ 3100771.56868416024372, -2498372.66075775353238 ] ], [ [ 3097940.46930865989998, -2509931.223767423070967 ], [ 3098178.473595142364502, -2510111.646371692419052 ], [ 3098040.277557829860598, -2510388.03844631742686 ], [ 3097809.950828975532204, -2510242.164851376321167 ], [ 3097940.46930865989998, -2509931.223767423070967 ] ] ] ] } },

18 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 3084003.78282356960699, -2500952.320120921358466 ], [ 3088794.578783738426864, -2503409.138562033418566 ], [ 3096902.079639408737421, -2496652.887848975136876 ], [ 3092725.488289517816156, -2489466.693908722139895 ], [ 3086829.124030848965049, -2490633.682668250519782 ], [ 3082529.691758902277797, -2495731.5809335578233 ], [ 3084003.78282356960699, -2500952.320120921358466 ] ] ] ] } },

19 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 3038289.643880755640566, -2377125.833423872012645 ], [ 3041443.288716884795576, -2377198.331006311811507 ], [ 3043890.08212422626093, -2385263.687052733730525 ], [ 3039395.232012961991131, -2386061.160459571052343 ], [ 3036150.965198783203959, -2381892.549469285644591 ], [ 3036585.950693421531469, -2377687.689687780104578 ], [ 3036984.687396840192378, -2377343.32617119140923 ], [ 3038289.643880755640566, -2377125.833423872012645 ] ] ] ] } },

20 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 3022550.871842978987843, -2364493.129683748353273 ], [ 3028241.932064499240369, -2373337.834741397760808 ], [ 3029111.903053775895387, -2378630.158259498886764 ], [ 3034802.963275296147913, -2378013.928808761294931 ], [ 3035854.178220672532916, -2373772.820236036088318 ], [ 3025885.760635207407176, -2363514.412320811767131 ], [ 3022550.871842978987843, -2364493.129683748353273 ] ], [ [ 3033292.408178681973368, -2374198.743532870896161 ], [ 3033625.443948014639318, -2374660.91562092397362 ], [ 3034085.350486617069691, -2374758.334247327409685 ], [ 3033953.948618444614112, -2374026.561774576548487 ], [ 3033292.408178681973368, -2374198.743532870896161 ] ] ] ] } },

21 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 3013611.580095744226128, -2376237.738038988783956 ], [ 3016728.976140653248876, -2380678.214963423088193 ], [ 3017109.588448462076485, -2385263.687052737455815 ], [ 3016583.980975773651153, -2387583.609690809156746 ], [ 3013158.470205495599657, -2387058.002218120731413 ], [ 3013085.972623055800796, -2383414.998700523748994 ], [ 3010349.188885955605656, -2377615.192105344031006 ], [ 3013611.580095744226128, -2376237.738038988783956 ] ] ] ] } },

22 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 3054369.363461487460881, -2405167.200034572277218 ], [ 3066202.694070590194315, -2401995.379458936396986 ], [ 3073074.971984467934817, -2414113.360632519703358 ], [ 3069699.829577060416341, -2422449.555735152214766 ], [ 3058151.149532437790185, -2422042.912071609403938 ], [ 3052336.14514377200976, -2415780.599653046112508 ], [ 3054369.363461487460881, -2405167.200034572277218 ] ] ] ] } },

23 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2841763.011948804371059, -2830685.134199555963278 ], [ 2848354.095193604473025, -2832144.904314175713807 ], [ 2848420.448380632791668, -2837674.336566525045782 ], [ 2845080.671300213783979, -2841434.350498122628778 ], [ 2838378.999410366639495, -2841257.408666047267616 ], [ 2838246.293036310467869, -2832012.197940119542181 ], [ 2841763.011948804371059, -2830685.134199555963278 ] ] ] ] } },

24 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2493423.628266260493547, -2997997.381109898909926 ], [ 2498697.380643345415592, -2999344.932979122269899 ], [ 2497699.194073550403118, -3004053.046299989801 ], [ 2493789.630008518695831, -3008145.611236150376499 ], [ 2489846.793057827744633, -3008511.612978408578783 ], [ 2488482.604745774064213, -3004352.502270928584039 ], [ 2490944.798284602351487, -2998662.83882309589535 ], [ 2493423.628266260493547, -2997997.381109898909926 ] ] ] ] } },

25 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 3063645.909082676749676, -2632047.799201021436602 ], [ 3066936.923470875713974, -2633550.906734093092382 ], [ 3066857.812548082787544, -2638028.584964191075414 ], [ 3063345.287576062604785, -2638756.405453888699412 ], [ 3060671.338385650888085, -2635354.635773779358715 ], [ 3061541.558536376338452, -2633044.596828216686845 ], [ 3063645.909082676749676, -2632047.799201021436602 ] ] ] ] } },

26 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 3160784.360377764329314, -2557538.978758722078055 ], [ 3164136.944197920616716, -2560629.64196792896837 ], [ 3164556.017175440210849, -2564488.605635921470821 ], [ 3159911.291674598585814, -2564453.682887794915587 ], [ 3157222.240068847779185, -2562113.858763310592622 ], [ 3158147.692894203588367, -2558726.352195027749985 ], [ 3160784.360377764329314, -2557538.978758722078055 ] ] ] ] } },

27 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 3198911.053374506067485, -2541862.432366678956896 ], [ 3202442.87716456875205, -2542154.538093676790595 ], [ 3201812.194344914983958, -2546290.489637303166091 ], [ 3198844.665709279011935, -2545832.414747238624841 ], [ 3197443.885972994845361, -2544378.524878772906959 ], [ 3197463.802272562868893, -2542134.621794108767062 ], [ 3198911.053374506067485, -2541862.432366678956896 ] ] ] ] } },

28 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2538226.887632878497243, -2442453.110894021112472 ], [ 2553538.175636132247746, -2444072.129262945149094 ], [ 2551641.611261107027531, -2454942.681168578565121 ], [ 2546599.525483600329608, -2463037.773013199213892 ], [ 2527818.912404080387205, -2453508.693470388650894 ], [ 2531010.691474245395511, -2443054.460573907010257 ], [ 2538226.887632878497243, -2442453.110894021112472 ] ] ] ] } },

29 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2776630.827958059497178, -2560800.975796493701637 ], [ 2802396.926108822692186, -2561131.310388170182705 ], [ 2804131.182715123984963, -2585576.07017222745344 ], [ 2776300.493366383481771, -2584089.564509683288634 ], [ 2776630.827958059497178, -2560800.975796493701637 ] ] ] ] } },

30 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2716984.788248481694609, -2157792.773951224517077 ], [ 2740521.127905428875238, -2159650.90602940460667 ], [ 2736639.696453230455518, -2171130.033090161159635 ], [ 2732056.303993719629943, -2174557.254478804301471 ], [ 2715746.033529695123434, -2172905.581520422361791 ], [ 2709676.135407640133053, -2168074.438117153942585 ], [ 2716984.788248481694609, -2157792.773951224517077 ] ] ] ] } },

31 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2375882.144672478083521, -2931101.346225754823536 ], [ 2431003.178133730776608, -2929611.588564639911056 ], [ 2429699.640180255286396, -2976725.174597399774939 ], [ 2376813.24321067519486, -2973745.659275169949979 ], [ 2375882.144672478083521, -2931101.346225754823536 ] ] ] ] } },

32 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2889275.635200482793152, -2833614.01044009020552 ], [ 2891516.602107145823538, -2832107.371672333683819 ], [ 2893757.569013809319586, -2833651.992930033709854 ], [ 2892516.807675656862557, -2836943.808725132141262 ], [ 2888566.62872153846547, -2837779.423503887839615 ], [ 2887693.031452839262784, -2836399.393035943154246 ], [ 2888971.775280935224146, -2835019.362567998003215 ], [ 2889275.635200482793152, -2833614.01044009020552 ] ] ] ] } },

33 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2886181.64487383980304, -2828616.147805031854659 ], [ 2887238.824177266098559, -2827546.30767162470147 ], [ 2887802.231111427303404, -2827552.638086615130305 ], [ 2888099.760615983977914, -2827748.880951323080808 ], [ 2887751.587791502475739, -2828343.93996043689549 ], [ 2888694.819625098258257, -2828983.311874485109001 ], [ 2888479.585515418555588, -2829420.110508834943175 ], [ 2888973.357884683646262, -2829413.780093844048679 ], [ 2888979.688299674075097, -2830053.152007892262191 ], [ 2888239.029745776671916, -2831072.348821375053376 ], [ 2886244.949023745488375, -2831198.957121186424047 ], [ 2886181.64487383980304, -2828616.147805031854659 ] ] ] ] } },

34 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2877654.844879339449108, -2856421.823156622238457 ], [ 2881566.288149664178491, -2856381.078955889679492 ], [ 2883073.82357676839456, -2862309.360162475612015 ], [ 2882136.70695992000401, -2865385.547317782882601 ], [ 2877002.937667618971318, -2865752.245124375913292 ], [ 2876329.385099257342517, -2859179.696243707556278 ], [ 2877654.844879339449108, -2856421.823156622238457 ] ] ] ] } },

35 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ ] } },

36 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2828607.808978485874832, -2791212.521765633951873 ], [ 2850728.527659215033054, -2791212.521765633951873 ], [ 2857167.777960111852735, -2800075.960415103938431 ], [ 2855652.660242253914475, -2812992.338959844782948 ], [ 2840387.849234832916409, -2809318.17849403899163 ], [ 2828380.541320807300508, -2810075.737352967727929 ], [ 2826448.766230538021773, -2797500.260294745210558 ], [ 2826448.766230538021773, -2797500.260294745210558 ], [ 2828607.808978485874832, -2791212.521765633951873 ] ], [ [ 2842040.34156021848321, -2798743.579628798179328 ], [ 2841852.400435372255743, -2798943.645987505093217 ], [ 2841973.652773982845247, -2799149.774963143281639 ], [ 2842240.407918925862759, -2799058.83570918533951 ], [ 2842216.157451203558594, -2798816.331031964160502 ], [ 2842040.34156021848321, -2798743.579628798179328 ] ], [ [ 2841773.586415275465697, -2799374.091789572499692 ], [ 2843998.566828777547926, -2799392.279640363994986 ], [ 2844131.944401248823851, -2799604.471232932060957 ], [ 2844077.380848874337971, -2800083.417970443610102 ], [ 2843422.618220377713442, -2800083.417970443610102 ], [ 2843465.056538891512901, -2800719.992748148273677 ], [ 2842458.662128424737602, -2800428.98713548341766 ], [ 2842428.349043772090226, -2800004.603950346820056 ], [ 2842010.028475565835834, -2799992.478716485667974 ], [ 2841737.21071369247511, -2799592.345999071374536 ], [ 2841773.586415275465697, -2799374.091789572499692 ] ], [ [ 2841719.022862900979817, -2800586.615175676997751 ], [ 2842185.844366550911218, -2800683.61704656528309 ], [ 2842294.971471300348639, -2801023.123594674747437 ], [ 2842216.157451203558594, -2801320.191824270412326 ], [ 2841573.520056568086147, -2801271.690888825803995 ], [ 2841367.391080930363387, -2801126.188082493375987 ], [ 2841634.146225873380899, -2801059.499296257738024 ], [ 2841506.831270332448184, -2800695.742280426435173 ], [ 
2841719.022862900979817, -2800586.615175676997751 ] ], [ [ 2841891.428531862795353, -2797540.503820742946118 ], [ 2842859.123237589839846, -2797639.122389479540288 ], [ 2842852.959577043540776, -2798212.342820260673761 ], [ 2842729.686366123147309, -2798267.815765174571425 ], [ 2842261.248164624907076, -2798070.578627701848745 ], [ 2842279.739146262872964, -2798428.07093937182799 ], [ 2841940.737816231325269, -2798563.671471384353936 ], [ 2841466.135954186785966, -2798070.578627701848745 ], [ 2841891.428531862795353, -2797540.503820742946118 ] ], [ [ 2850134.554054611828178, -2804323.61225165007636 ], [ 2850821.802205494605005, -2804126.375114176888019 ], [ 2850932.748095323331654, -2804859.850719154812396 ], [ 2850263.990926078520715, -2804850.605228335596621 ], [ 2850134.554054611828178, -2804323.61225165007636 ] ], [ [ 2849091.35450719576329, -2797861.014169132802635 ], [ 2849273.182493303902447, -2798406.498127456754446 ], [ 2850071.376534015405923, -2798366.434333907440305 ], [ 2849904.957699272315949, -2797608.30408674525097 ], [ 2849091.35450719576329, -2797861.014169132802635 ] ], [ [ 2842936.35577330365777, -2794098.239966588094831 ], [ 2842957.039377513807267, -2794573.962863414548337 ], [ 2843319.002451186068356, -2794789.417073933873326 ], [ 2843377.605996447149664, -2794468.821208681445569 ], [ 2843013.919289090670645, -2794079.279996062163264 ], [ 2842936.35577330365777, -2794098.239966588094831 ] ], [ [ 2842069.368030174169689, -2794330.930513948667794 ], [ 2842636.443512260913849, -2794365.403187632095069 ], [ 2842622.654442787636071, -2794865.256956036668271 ], [ 2842212.429625958669931, -2794896.282362351659685 ], [ 2841841.848383865784854, -2794842.849718142766505 ], [ 2842069.368030174169689, -2794330.930513948667794 ] ] ] ] } },

37 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2777492.319082696922123, -2740785.902502064127475 ], [ 2774665.254599796142429, -2741806.647908278740942 ], [ 2775455.831924217753112, -2744988.971821773331612 ], [ 2778448.016987535171211, -2744948.942590157035738 ], [ 2780879.792808224447072, -2742567.203308987896889 ], [ 2779088.484693395439535, -2739815.193635368719697 ], [ 2777492.319082696922123, -2740785.902502064127475 ] ] ] ] } },

38 | { "type": "Feature", "properties": { }, "geometry": { "type": "MultiPolygon", "coordinates": [ [ [ [ 2814844.973892420995981, -2712351.681407482363284 ], [ 2817009.158287934027612, -2711425.376802399288863 ], [ 2819872.281612737104297, -2715021.122860313393176 ], [ 2818339.668538871686906, -2716738.996855195611715 ], [ 2815754.436595593579113, -2714709.547674967441708 ], [ 2814844.973892420995981, -2712351.681407482363284 ] ] ] ] } }

39 | ]

40 | }

41 |

--------------------------------------------------------------------------------

/docker/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM openjdk:8-jre

2 |

3 | RUN apt-get update && apt-get install -y libnss3 curl python python-pip && pip install --upgrade awscli && apt-get clean

4 | RUN mkdir /task

5 | WORKDIR /task

6 |

7 | ENV SPARK_HOME /opt/spark

8 | RUN mkdir -p /opt/spark

9 | RUN curl http://www.trieuvan.com/apache/spark/spark-2.2.1/spark-2.2.1-bin-hadoop2.7.tgz \

10 | | tar --strip-components=1 -xzC /opt/spark

11 | COPY log4j.properties /opt/spark/conf

12 |

13 | COPY hot-osm-population-assembly.jar /

14 | COPY task.sh /

15 |

16 | ENTRYPOINT ["/task.sh"]

--------------------------------------------------------------------------------

/docker/log4j.properties:

--------------------------------------------------------------------------------

1 | # Set everything to be logged to the console

2 | log4j.rootCategory=ERROR, console

3 | log4j.appender.console=org.apache.log4j.ConsoleAppender

4 | log4j.appender.console.target=System.err

5 | log4j.appender.console.layout=org.apache.log4j.PatternLayout

6 | log4j.appender.console.layout.ConversionPattern=%d{yy/MM/dd HH:mm:ss} %p %c{1}: %m%n

7 |

8 | # Set the default spark-shell log level to WARN. When running the spark-shell, the

9 | # log level for this class is used to overwrite the root logger's log level, so that

10 | # the user can have different defaults for the shell and regular Spark apps.

11 | log4j.logger.org.apache.spark.repl.Main=WARN

12 |

13 | # Settings to quiet third party logs that are too verbose

14 | log4j.logger.org.spark_project.jetty=WARN

15 | log4j.logger.org.spark_project.jetty.util.component.AbstractLifeCycle=ERROR

16 | log4j.logger.org.apache.spark.repl.SparkIMain$exprTyper=INFO

17 | log4j.logger.org.apache.spark.repl.SparkILoop$SparkILoopInterpreter=INFO

18 | log4j.logger.org.apache.parquet=ERROR

19 | log4j.logger.parquet=ERROR

20 |

21 | # SPARK-9183: Settings to avoid annoying messages when looking up nonexistent UDFs in SparkSQL with Hive support

22 | log4j.logger.org.apache.hadoop.hive.metastore.RetryingHMSHandler=FATAL

23 | log4j.logger.org.apache.hadoop.hive.ql.exec.FunctionRegistry=ERROR

24 |

--------------------------------------------------------------------------------

/docker/task.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | set -x # Show debug output

4 | set -e # Fail script on any command error

5 |

6 | BUCKET=${BUCKET:-hotosm-population}

7 | COMMAND=$1

8 | COUNTRY=$2

9 | WORLDPOP=$3

10 |

11 | if [[ ${WORLDPOP} == s3* ]]; then

12 | WORLDPOP_URI=${WORLDPOP}

13 | else

14 | WORLDPOP_URI=s3://${BUCKET}/WorldPop/${WORLDPOP}

15 | fi

16 | OSM_QA_URI=https://s3.amazonaws.com/mapbox/osm-qa-tiles-production/latest.country/${COUNTRY}.mbtiles.gz

17 | MODEL_URI=s3://${BUCKET}/models/${COUNTRY}-regression/

18 | OUTPUT_URI=s3://${BUCKET}/predict/${COUNTRY}.json

19 | TRAINING_URI=s3://${BUCKET}/training/${COUNTRY}.json

20 |

21 | curl -o - ${OSM_QA_URI} | gunzip > /task/${COUNTRY}.mbtiles

22 |

23 | JAR=/hot-osm-population-assembly.jar

24 |

25 | shopt -s nocasematch

26 | case ${COMMAND} in

27 | TRAIN)

28 | aws s3 cp ${TRAINING_URI} /task/training-set.json

29 |

30 | /opt/spark/bin/spark-submit --master "local[*]" --driver-memory 7G \

31 | --class com.azavea.hotosmpopulation.LabeledTrainApp ${JAR} \

32 | --country ${COUNTRY} \

33 | --worldpop ${WORLDPOP_URI} \

34 | --training /task/training-set.json \

35 | --model /task/model \

36 | --qatiles /task/${COUNTRY}.mbtiles

37 |

38 | aws s3 sync /task/model ${MODEL_URI}

39 | ;;

40 | PREDICT)

41 | aws s3 sync ${MODEL_URI} /task/model/

42 |

43 | /opt/spark/bin/spark-submit --master "local[*]" --driver-memory 7G \

44 | --class com.azavea.hotosmpopulation.LabeledPredictApp ${JAR} \

45 | --country ${COUNTRY} \

46 | --worldpop ${WORLDPOP_URI} \

47 | --qatiles /task/${COUNTRY}.mbtiles \

48 | --model /task/model/ \

49 | --output /task/prediction.json

50 |

51 | aws s3 cp /task/prediction.json ${OUTPUT_URI}

52 | ;;

53 | esac

--------------------------------------------------------------------------------

/project/Environment.scala:

--------------------------------------------------------------------------------

1 | import scala.util.Properties

2 |

3 | object Environment {

4 | def either(environmentVariable: String, default: String): String =

5 | Properties.envOrElse(environmentVariable, default)

6 |

7 | lazy val hadoopVersion = either("SPARK_HADOOP_VERSION", "2.8.0")

8 | lazy val sparkVersion = either("SPARK_VERSION", "2.2.0")

9 | }

10 |

--------------------------------------------------------------------------------

/project/Version.scala:

--------------------------------------------------------------------------------

1 | object Version {

2 | val geotrellis = "2.0.0-SNAPSHOT"

3 | val scala = "2.11.12"

4 | val geotools = "17.1"

5 | val spire = "0.13.0"

6 | lazy val hadoop = "2.7.4"

7 | lazy val spark = "2.2.1"

8 | }

9 |

--------------------------------------------------------------------------------

/project/build.properties:

--------------------------------------------------------------------------------

1 | sbt.version=0.13.17

2 |

--------------------------------------------------------------------------------

/project/plugins.sbt:

--------------------------------------------------------------------------------

1 | addSbtPlugin("pl.project13.scala" % "sbt-jmh" % "0.2.27")

2 | addSbtPlugin("com.eed3si9n" % "sbt-assembly" % "0.14.5")

3 |

--------------------------------------------------------------------------------

/sbt:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 | #

3 | # A more capable sbt runner, coincidentally also called sbt.

4 | # Author: Paul Phillips

5 |

6 | set -o pipefail

7 |

8 | declare -r sbt_release_version="0.13.16"

9 | declare -r sbt_unreleased_version="0.13.16"

10 |

11 | declare -r latest_213="2.13.0-M2"

12 | declare -r latest_212="2.12.4"

13 | declare -r latest_211="2.11.12"

14 | declare -r latest_210="2.10.7"

15 | declare -r latest_29="2.9.3"

16 | declare -r latest_28="2.8.2"

17 |

18 | declare -r buildProps="project/build.properties"

19 |

20 | declare -r sbt_launch_ivy_release_repo="http://repo.typesafe.com/typesafe/ivy-releases"

21 | declare -r sbt_launch_ivy_snapshot_repo="https://repo.scala-sbt.org/scalasbt/ivy-snapshots"

22 | declare -r sbt_launch_mvn_release_repo="http://repo.scala-sbt.org/scalasbt/maven-releases"

23 | declare -r sbt_launch_mvn_snapshot_repo="http://repo.scala-sbt.org/scalasbt/maven-snapshots"

24 |

25 | declare -r default_jvm_opts_common="-Xms512m -Xmx1536m -Xss2m"

26 | declare -r noshare_opts="-Dsbt.global.base=project/.sbtboot -Dsbt.boot.directory=project/.boot -Dsbt.ivy.home=project/.ivy"

27 |

28 | declare sbt_jar sbt_dir sbt_create sbt_version sbt_script sbt_new

29 | declare sbt_explicit_version

30 | declare verbose noshare batch trace_level

31 | declare debugUs

32 |

33 | declare java_cmd="java"

34 | declare sbt_launch_dir="$HOME/.sbt/launchers"

35 | declare sbt_launch_repo

36 |

37 | # pull -J and -D options to give to java.

38 | declare -a java_args scalac_args sbt_commands residual_args

39 |

40 | # args to jvm/sbt via files or environment variables

41 | declare -a extra_jvm_opts extra_sbt_opts

42 |

43 | echoerr () { echo >&2 "$@"; }

44 | vlog () { [[ -n "$verbose" ]] && echoerr "$@"; }

45 | die () { echoerr "Aborting: $*" ; exit 1; }

46 |

47 | setTrapExit () {

48 | # save stty and trap exit, to ensure echo is re-enabled if we are interrupted.

49 | export SBT_STTY="$(stty -g 2>/dev/null)"

50 |

51 | # restore stty settings (echo in particular)

52 | onSbtRunnerExit() {

53 | [ -t 0 ] || return

54 | vlog ""

55 | vlog "restoring stty: $SBT_STTY"

56 | stty "$SBT_STTY"

57 | }

58 |

59 | vlog "saving stty: $SBT_STTY"

60 | trap onSbtRunnerExit EXIT

61 | }

62 |

63 | # this seems to cover the bases on OSX, and someone will

64 | # have to tell me about the others.

65 | get_script_path () {

66 | local path="$1"

67 | [[ -L "$path" ]] || { echo "$path" ; return; }

68 |

69 | local target="$(readlink "$path")"

70 | if [[ "${target:0:1}" == "/" ]]; then

71 | echo "$target"

72 | else

73 | echo "${path%/*}/$target"

74 | fi

75 | }

76 |

77 | declare -r script_path="$(get_script_path "$BASH_SOURCE")"

78 | declare -r script_name="${script_path##*/}"

79 |

80 | init_default_option_file () {

81 | local overriding_var="${!1}"

82 | local default_file="$2"

83 | if [[ ! -r "$default_file" && "$overriding_var" =~ ^@(.*)$ ]]; then

84 | local envvar_file="${BASH_REMATCH[1]}"

85 | if [[ -r "$envvar_file" ]]; then

86 | default_file="$envvar_file"

87 | fi

88 | fi

89 | echo "$default_file"

90 | }

91 |

92 | declare sbt_opts_file="$(init_default_option_file SBT_OPTS .sbtopts)"

93 | declare jvm_opts_file="$(init_default_option_file JVM_OPTS .jvmopts)"

94 |

95 | build_props_sbt () {

96 | [[ -r "$buildProps" ]] && \

97 | grep '^sbt\.version' "$buildProps" | tr '=\r' ' ' | awk '{ print $2; }'

98 | }

99 |

100 | update_build_props_sbt () {

101 | local ver="$1"

102 | local old="$(build_props_sbt)"

103 |

104 | [[ -r "$buildProps" ]] && [[ "$ver" != "$old" ]] && {

105 | perl -pi -e "s/^sbt\.version\b.*\$/sbt.version=${ver}/" "$buildProps"

106 | grep -q '^sbt\.version[ =]' "$buildProps" || printf "\nsbt.version=%s\n" "$ver" >> "$buildProps"

107 |

108 | vlog "!!!"

109 | vlog "!!! Updated file $buildProps setting sbt.version to: $ver"

110 | vlog "!!! Previous value was: $old"

111 | vlog "!!!"

112 | }

113 | }

114 |

115 | set_sbt_version () {

116 | sbt_version="${sbt_explicit_version:-$(build_props_sbt)}"

117 | [[ -n "$sbt_version" ]] || sbt_version=$sbt_release_version

118 | export sbt_version

119 | }

120 |

121 | url_base () {

122 | local version="$1"

123 |

124 | case "$version" in

125 | 0.7.*) echo "http://simple-build-tool.googlecode.com" ;;

126 | 0.10.* ) echo "$sbt_launch_ivy_release_repo" ;;

127 | 0.11.[12]) echo "$sbt_launch_ivy_release_repo" ;;

128 | 0.*-[0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9]-[0-9][0-9][0-9][0-9][0-9][0-9]) # ie "*-yyyymmdd-hhMMss"

129 | echo "$sbt_launch_ivy_snapshot_repo" ;;

130 | 0.*) echo "$sbt_launch_ivy_release_repo" ;;

131 | *-[0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9]-[0-9][0-9][0-9][0-9][0-9][0-9]) # ie "*-yyyymmdd-hhMMss"

132 | echo "$sbt_launch_mvn_snapshot_repo" ;;

133 | *) echo "$sbt_launch_mvn_release_repo" ;;

134 | esac

135 | }

136 |

137 | make_url () {

138 | local version="$1"

139 |

140 | local base="${sbt_launch_repo:-$(url_base "$version")}"

141 |

142 | case "$version" in

143 | 0.7.*) echo "$base/files/sbt-launch-0.7.7.jar" ;;

144 | 0.10.* ) echo "$base/org.scala-tools.sbt/sbt-launch/$version/sbt-launch.jar" ;;

145 | 0.11.[12]) echo "$base/org.scala-tools.sbt/sbt-launch/$version/sbt-launch.jar" ;;

146 | 0.*) echo "$base/org.scala-sbt/sbt-launch/$version/sbt-launch.jar" ;;

147 | *) echo "$base/org/scala-sbt/sbt-launch/$version/sbt-launch.jar" ;;

148 | esac

149 | }

150 |

151 | addJava () { vlog "[addJava] arg = '$1'" ; java_args+=("$1"); }

152 | addSbt () { vlog "[addSbt] arg = '$1'" ; sbt_commands+=("$1"); }

153 | addScalac () { vlog "[addScalac] arg = '$1'" ; scalac_args+=("$1"); }

154 | addResidual () { vlog "[residual] arg = '$1'" ; residual_args+=("$1"); }

155 |

156 | addResolver () { addSbt "set resolvers += $1"; }

157 | addDebugger () { addJava "-Xdebug" ; addJava "-Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=$1"; }

158 | setThisBuild () {

159 | vlog "[addBuild] args = '$*'"

160 | local key="$1" && shift

161 | addSbt "set $key in ThisBuild := $*"

162 | }

163 | setScalaVersion () {

164 | [[ "$1" == *"-SNAPSHOT" ]] && addResolver 'Resolver.sonatypeRepo("snapshots")'

165 | addSbt "++ $1"

166 | }

167 | setJavaHome () {

168 | java_cmd="$1/bin/java"

169 | setThisBuild javaHome "_root_.scala.Some(file(\"$1\"))"

170 | export JAVA_HOME="$1"

171 | export JDK_HOME="$1"

172 | export PATH="$JAVA_HOME/bin:$PATH"

173 | }

174 |

175 | getJavaVersion() { "$1" -version 2>&1 | grep -E -e '(java|openjdk) version' | awk '{ print $3 }' | tr -d \"; }

176 |

177 | checkJava() {

178 | # Warn if there is a Java version mismatch between PATH and JAVA_HOME/JDK_HOME

179 |

180 | [[ -n "$JAVA_HOME" && -e "$JAVA_HOME/bin/java" ]] && java="$JAVA_HOME/bin/java"

181 | [[ -n "$JDK_HOME" && -e "$JDK_HOME/lib/tools.jar" ]] && java="$JDK_HOME/bin/java"

182 |

183 | if [[ -n "$java" ]]; then

184 | pathJavaVersion=$(getJavaVersion java)

185 | homeJavaVersion=$(getJavaVersion "$java")

186 | if [[ "$pathJavaVersion" != "$homeJavaVersion" ]]; then

187 | echoerr "Warning: Java version mismatch between PATH and JAVA_HOME/JDK_HOME, sbt will use the one in PATH"

188 | echoerr " Either: fix your PATH, remove JAVA_HOME/JDK_HOME or use -java-home"

189 | echoerr " java version from PATH: $pathJavaVersion"

190 | echoerr " java version from JAVA_HOME/JDK_HOME: $homeJavaVersion"

191 | fi

192 | fi

193 | }

194 |

195 | java_version () {

196 | local version=$(getJavaVersion "$java_cmd")

197 | vlog "Detected Java version: $version"

198 | version="${version#1.}"; echo "${version%%[.-]*}" # major version: 1.8.0_151 -> 8, 11.0.2 -> 11

199 | }

200 |

201 | # MaxPermSize critical on pre-8 JVMs but incurs noisy warning on 8+

202 | default_jvm_opts () {

203 | local v="$(java_version)"

204 | if [[ $v -ge 8 ]]; then

205 | echo "$default_jvm_opts_common"

206 | else

207 | echo "-XX:MaxPermSize=384m $default_jvm_opts_common"

208 | fi

209 | }

210 |

211 | build_props_scala () {

212 | if [[ -r "$buildProps" ]]; then

213 | versionLine="$(grep '^build.scala.versions' "$buildProps")"

214 | versionString="${versionLine##build.scala.versions=}"

215 | echo "${versionString%% .*}"

216 | fi

217 | }

218 |

219 | execRunner () {

220 | # print the arguments one to a line, quoting any containing spaces

221 | vlog "# Executing command line:" && {

222 | for arg; do

223 | if [[ -n "$arg" ]]; then

224 | if printf "%s\n" "$arg" | grep -q ' '; then

225 | printf >&2 "\"%s\"\n" "$arg"

226 | else

227 | printf >&2 "%s\n" "$arg"

228 | fi

229 | fi

230 | done

231 | vlog ""

232 | }

233 |

234 | setTrapExit

235 |

236 | if [[ -n "$batch" ]]; then

237 | "$@" < /dev/null

238 | else

239 | "$@"

240 | fi

241 | }

242 |

243 | jar_url () { make_url "$1"; }

244 |

245 | is_cygwin () [[ "$(uname -a)" == "CYGWIN"* ]]

246 |

247 | jar_file () {

248 | is_cygwin \

249 | && echo "$(cygpath -w "$sbt_launch_dir/$1/sbt-launch.jar")" \

250 | || echo "$sbt_launch_dir/$1/sbt-launch.jar"

251 | }

252 |

253 | download_url () {

254 | local url="$1"

255 | local jar="$2"

256 |