26 |

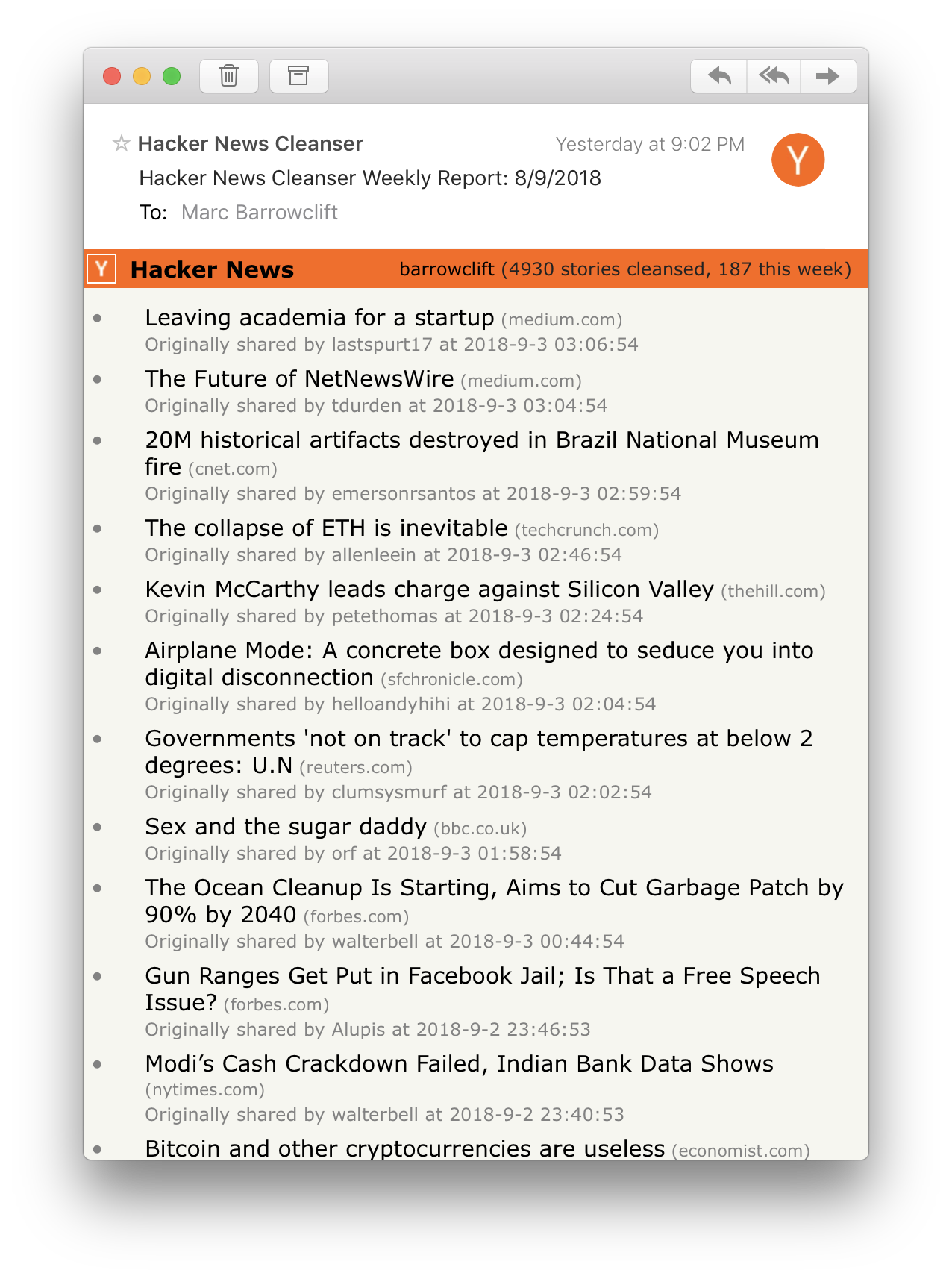

27 | While I do wish for my personal Hacker News page to be more focused, I also still want to have the ability to see the hidden articles and read them if I so choose. No amount of filter tweaking will prevent the occasional salient article or discussion from getting caught when I would have wished to still see it. Additionally, I do still feel it's important to have a finger on the heartbeat of Hacker News as a whole, only in a separate view and on my own time.

28 |

29 | To achieve this, the Hacker News Cleanser supports sending out email reports styled to look just like the Hacker News homepage containing all cleansed articles since the last email report. This requires setting up a Gmail "service" account to send emails through or using an existing Gmail account (not recommended).

30 |

31 | Personally, I have the Cleanser set to send an email report of cleansed articles every week, but it can easily be set to daily, bi-weekly, or any other kind of day-length frequency.
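
As a rough illustration of what a day-length frequency could mean in practice, the sketch below decides whether a report is due. The function name `report_is_due` and its parameters are hypothetical; they are not taken from the Cleanser's own code or configuration.

```python
from datetime import datetime, timedelta
from typing import Optional


def report_is_due(last_sent: Optional[datetime], now: datetime, frequency_in_days: int) -> bool:
    """Return True when at least `frequency_in_days` days have passed since the last report."""
    if frequency_in_days < 1:
        raise ValueError("frequency_in_days must be at least 1")
    if last_sent is None:
        # Assumption: with no previous report on record, one is sent right away
        return True
    return now - last_sent >= timedelta(days=frequency_in_days)


last = datetime(2024, 7, 1, 9, 0)
print(report_is_due(last, datetime(2024, 7, 7, 9, 0), 7))  # six days elapsed
print(report_is_due(last, datetime(2024, 7, 8, 9, 0), 7))  # seven days elapsed
```

Under this sketch, a weekly report corresponds to a frequency of 7, a daily report to 1, and a bi-weekly report to 14.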

32 |

33 | ## Why Not A Browser Extension?

34 |

35 | My portable operating system of choice is iOS, and since Safari on iOS [does not support traditional browser extensions](https://apple.stackexchange.com/a/321213), this would mean my phone (where easily the majority of my browsing and reading occurs) would not benefit.

36 |

37 | Additionally, there are many other different browsers out there, and I have a better chance of serving the needs of other similarly-minded Hacker News readers regardless of their preferred browser or platform by focusing on just one product that's independent of the browser landscape.

38 |

39 | # Setup

40 |

41 | ## Installation

42 |

43 | See [INSTALL.md](https://github.com/barrowclift/hacker-news-cleanser/blob/master/INSTALL.md).

44 |

45 | ## Starting and Stopping

46 |

47 | Starting and stopping the Hacker News Cleanser is as simple as running `admin/start.sh` or `admin/stop.sh`, respectively.

48 |

49 | If you wish to start or stop specific components of the service, use their individual, respective start/stop scripts (not recommended).

50 |

51 | ## Adding Items To Filter

52 |

53 | You can filter articles by site, title, and username. To add a new filter item, run `admin/addBlacklistItem.py`; its usage string explains the available options.
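
The script's help text distinguishes title "text" filters (substring matches) from title "keyword" filters (whole-word matches). The sketch below illustrates that difference only. The function names are hypothetical, the Cleanser's actual matching code is not shown here, and treating keywords as case-insensitive is an assumption.

```python
import re


def matches_text(title: str, text: str) -> bool:
    """Substring match: the text may appear anywhere, even inside a longer word."""
    return text.lower() in title.lower()


def matches_keyword(title: str, keyword: str) -> bool:
    """Whole-word match: the keyword must not be part of a longer word."""
    # Lookarounds are used instead of \b so keywords that start or end
    # with punctuation still behave sensibly
    pattern = r"(?<!\w)" + re.escape(keyword) + r"(?!\w)"
    # Assumption: keyword matching ignores case, like the "gpt" text example
    return re.search(pattern, title, flags=re.IGNORECASE) is not None


print(matches_text("Show HN: GPT3.5 prompt tips", "gpt"))
print(matches_keyword("Learning the trumpet at 40", "trump"))
```

For instance, `admin/addBlacklistItem.py --site newyorker.com --keyword trump` would add one site filter and one title keyword filter in a single call.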

54 |

55 | # License

56 |

57 | The Hacker News Cleanser is open source, licensed under the MIT License.

58 |

59 | See [LICENSE](https://github.com/barrowclift/hacker-news-cleanser/blob/master/LICENSE) for more.

60 |

--------------------------------------------------------------------------------

/admin/addBlacklistItem.py:

--------------------------------------------------------------------------------

#!/usr/bin/env python3
#
# Adds the specified items to the appropriate blacklist collection

import sys
import argparse
import pymongo

parser = argparse.ArgumentParser(description="Adds the specified items to the appropriate Mongo blacklist collection")
parser.add_argument("-t", "--text", required=False, nargs='+', action="store", dest="text", metavar='string', help="Blacklist stories if their title contains this text anywhere. This will block words that contain this text as a substring (e.g. the text \"gpt\" will match stories containing \"GPT\", \"GPT3.5\", etc.)")
parser.add_argument("-k", "--keyword", required=False, nargs='+', action="store", dest="keyword", metavar='string', help="Blacklist stories if their title contains this exact string. This will not block words that contain the keyword as a substring (e.g. the keyword \"trump\" will not match stories containing the word \"trumpet\")")
parser.add_argument("-r", "--regex", required=False, nargs='+', action="store", dest="regex", metavar='string', help="Blacklist stories if their title matches this regex. Regex must be JavaScript flavored")
parser.add_argument("-s", "--site", required=False, nargs='+', action="store", dest="site", metavar='string', help="Blacklist stories if they're from this source (e.g. \"newyorker.com\")")
parser.add_argument("-u", "--user", required=False, nargs='+', action="store", dest="user", metavar='string', help="Blacklist all stories added by a particular user")
args = parser.parse_args()

if not args.text and not args.keyword and not args.regex and not args.user and not args.site:
    print("You must specify at least one item to blacklist\n")
    parser.print_help()
    sys.exit(1)

client = pymongo.MongoClient()
db = client["hackerNewsCleanserDb"]

# Text, keyword, and regex filters all live in the same titles collection,
# distinguished by their "type" field
if args.text or args.keyword or args.regex:
    blacklistedTitlesCollection = db["blacklistedTitles"]

    if args.text:
        textToAdd = []
        for text in args.text:
            exists = blacklistedTitlesCollection.count_documents({"text": text}) != 0
            if exists:
                print("Title text \"{}\" is already blacklisted".format(text))
            else:
                textDocument = {
                    "text": text,
                    "type": "text"
                }
                textToAdd.append(textDocument)
        if textToAdd:
            blacklistedTitlesCollection.insert_many(textToAdd)
    if args.keyword:
        keywordsToAdd = []
        for keyword in args.keyword:
            exists = blacklistedTitlesCollection.count_documents({"keyword": keyword}) != 0
            if exists:
                print("Title keyword \"{}\" is already blacklisted".format(keyword))
            else:
                keywordDocument = {
                    "keyword": keyword,
                    "type": "keyword"
                }
                keywordsToAdd.append(keywordDocument)
        if keywordsToAdd:
            blacklistedTitlesCollection.insert_many(keywordsToAdd)
    if args.regex:
        regexsToAdd = []
        for r in args.regex:
            exists = blacklistedTitlesCollection.count_documents({"regex": r}) != 0
            if exists:
                print("Title regex \"{}\" is already blacklisted".format(r))
            else:
                regexDocument = {
                    "regex": r,
                    "type": "regex"
                }
                regexsToAdd.append(regexDocument)
        if regexsToAdd:
            blacklistedTitlesCollection.insert_many(regexsToAdd)

if args.site:
    blacklistedSitesCollection = db["blacklistedSites"]
    sitesToAdd = []
    for site in args.site:
        exists = blacklistedSitesCollection.count_documents({"site": site}) != 0
        if exists:
            print("Site \"{}\" is already blacklisted".format(site))
        else:
            siteDocument = {
                "site": site
            }
            sitesToAdd.append(siteDocument)
    if sitesToAdd:
        blacklistedSitesCollection.insert_many(sitesToAdd)

if args.user:
    blacklistedUsersCollection = db["blacklistedUsers"]
    usersToAdd = []
    for user in args.user:
        exists = blacklistedUsersCollection.count_documents({"user": user}) != 0
        if exists:
            print("User \"{}\" is already blacklisted".format(user))
        else:
            userDocument = {
                "user": user
            }
            usersToAdd.append(userDocument)
    if usersToAdd:
        blacklistedUsersCollection.insert_many(usersToAdd)


--------------------------------------------------------------------------------

/admin/clean.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | # Confirm if they really want to clean MongoDB's data store

7 | echo "You are about to clean all Hacker News Cleanser data and logs. This CANNOT be undone."

8 | read -p "Are you absolutely sure you want to proceed? (y/n): " -r

9 | if [[ $REPLY =~ ^[Yy]$ ]]

10 | then

11 | "${ADMIN_DIR}"/cleanMongoDb.sh 1

12 | "${ADMIN_DIR}"/cleanLogs.sh

13 | echo -e "\n${GREEN}All Hacker News Cleanser data & logs deleted${RESET}"

14 | fi

15 |

--------------------------------------------------------------------------------

/admin/cleanLogs.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | rm "${LOGS_DIR}"/mongodb.log 2> /dev/null

7 | rm "${LOGS_DIR}"/server.log 2> /dev/null

8 |

9 | echo "Log directory cleaned"

--------------------------------------------------------------------------------

/admin/cleanMongoDb.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | MONGODB_NOHUP_RUNNING=$(ps -ef | grep "mongod --dbpath ""$MONGO_DB" | grep -v grep)

7 | HAS_SERVICE_COMMAND=$(command -v service)

8 | HAS_BREW_COMMAND=$(command -v brew)

9 | if [ -n "$HAS_SERVICE_COMMAND" ]; then

10 | HAS_MONGODB_INITD_SERVICE=$(ls /etc/init.d/mongod 2>/dev/null)

11 | HAS_MONGODB_SYSTEMCTL_SERVICE=$(ls /usr/lib/systemd/system/mongod.service 2>/dev/null)

12 | if [ -n "$HAS_MONGODB_INITD_SERVICE" ]; then

13 | MONGODB_SERVICE_RUNNING=$(service mongod status | grep 'is running\|active (running)')

14 | elif [ -n "$HAS_MONGODB_SYSTEMCTL_SERVICE" ]; then

15 | MONGODB_SERVICE_RUNNING=$(systemctl status mongod | grep 'is running\|active (running)')

16 | fi

17 | elif [ -n "$HAS_BREW_COMMAND" ]; then

18 | HAS_MONGODB_TAPPED=$(brew services list | grep "mongodb-community" 2>/dev/null)

19 | if [ -n "$HAS_MONGODB_TAPPED" ]; then

20 | MONGODB_SERVICE_RUNNING=$(brew services info mongodb-community | grep "PID" 2>/dev/null)

21 | else

22 | echo -e "${RED}You don't seem to have MongoDB available through brew or systemctl${RESET}"

23 | exit 1

24 | fi

25 | fi

26 |

27 | # MongoDB may not be currently running. If that's the case, we want to briefly start

28 | # it for the cleaning process, then immediately turn it off again

29 | START_THEN_STOP=0

30 | if [ -z "$MONGODB_NOHUP_RUNNING" ] && [ -z "$MONGODB_SERVICE_RUNNING" ]; then

31 | START_THEN_STOP=1

32 | fi

33 |

34 | function cleanMongoDb {

35 | if [ $START_THEN_STOP -eq 1 ]; then

36 | "${ADMIN_DIR}"/startMongoDb.sh

37 | starterProcess=$!

38 | wait $starterProcess

39 | fi

40 |

41 | node "${ADMIN_DIR}"/mongoCleaner.js > "${LOGS_DIR}"/clean-mongodb.log 2>&1 &

42 | cleanerProcess=$!

43 | wait $cleanerProcess

44 |

45 | echo "Hacker News Cleanser database deleted"

46 |

47 | if [ $START_THEN_STOP -eq 1 ]; then

48 | "${ADMIN_DIR}"/stopMongoDb.sh

49 | fi

50 | }

51 |

52 | if [[ $1 == 1 ]] # If 1 is provided, skip safety prompt and just do it.

53 | then

54 | cleanMongoDb

55 | else

56 | # Confirm if they really want to clean MongoDB's data store

57 | echo "You are about to drop all collections in Hacker News Cleanser's database, this CANNOT be undone."

58 | read -p "Are you absolutely sure you want to proceed? (y/n): " -r

59 | if [[ $REPLY =~ ^[Yy]$ ]]

60 | then

61 | cleanMongoDb

62 | fi

63 | fi

64 |

65 | exit 0

--------------------------------------------------------------------------------

/admin/init.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export RED='\033[0;31m'

4 | export RESET='\033[0m'

5 | export YELLOW='\033[0;33m'

6 | export GREEN='\033[0;32m'

7 |

8 | export REPO=$(dirname "${ADMIN_DIR}")

9 | export SERVER_DIR="${REPO}"/server

10 | export LOGS_DIR="${REPO}"/logs

11 | export MONGO_DB=set/me/somewhere

12 |

13 | export USE_PM2=true

--------------------------------------------------------------------------------

/admin/mongoCleaner.js:

--------------------------------------------------------------------------------

1 | "use strict";

2 |

3 | // DEPENDENCIES

4 | // ------------

5 | // External

6 | import path from "path";

7 | import url from "url";

8 | // Local

9 | const FILENAME = url.fileURLToPath(import.meta.url);

10 | const CLEANSER_ROOT_DIRECTORY_PATH = path.join(path.dirname(FILENAME), "../");

11 | const PropertyManager = await import (path.join(CLEANSER_ROOT_DIRECTORY_PATH, "server/PropertyManager.js"));

12 | const MongoClient = await import (path.join(CLEANSER_ROOT_DIRECTORY_PATH, "server/MongoClient.js"));

13 |

14 |

15 | // CONSTANTS

16 | // ---------

17 | const PROPERTIES_FILE_NAME = path.join(CLEANSER_ROOT_DIRECTORY_PATH, "server/cleanser.properties");

18 |

19 |

20 | // GLOBALS

21 | // -------

22 | var propertyManager = null;

23 |

24 |

25 | async function cleanDbAndClose() {

26 | var mongoClient = new MongoClient.default(propertyManager);

27 | await mongoClient.connect();

28 | await mongoClient.dropCollectionBlacklistedTitles();

29 | await mongoClient.dropCollectionBlacklistedSites();

30 | await mongoClient.dropCollectionBlacklistedUsers();

31 | await mongoClient.dropCollectionCleansedItems();

32 | await mongoClient.dropCollectionWeeklyReportsLog();

33 | await mongoClient.close();

34 | }

35 |

36 | async function main() {

37 | propertyManager = new PropertyManager.default();

38 | await propertyManager.load(PROPERTIES_FILE_NAME);

39 |

40 | await cleanDbAndClose();

41 | }

42 |

43 | main();

--------------------------------------------------------------------------------

/admin/printUserStatistics.py:

--------------------------------------------------------------------------------

#!/usr/bin/env python3
#
# Prints the ten users with the most cleansed stories who are not yet blacklisted

import operator
from pymongo import MongoClient

client = MongoClient("localhost:27017")
db = client.hackerNewsCleanserDb

# Count cleansed stories per submitting user
distribution = {}
cursor = db.cleansedItems.find()
for cleansedItem in cursor:
    user = cleansedItem["user"]
    if user in distribution:
        distribution[user] += 1
    else:
        distribution[user] = 1

# Skip users who are already blacklisted
cursor = db.blacklistedUsers.find()
for blacklistedUser in cursor:
    user = blacklistedUser["user"]
    if user in distribution:
        distribution.pop(user, None)

# Print the top entries, highest count first, as "user:count"
sortedDistribution = sorted(distribution.items(), key=operator.itemgetter(1), reverse=True)
maxPrint = 10
count = 0
for entry in sortedDistribution:
    print("%s:%d" % (entry[0], entry[1]))
    count += 1
    if count >= maxPrint:
        break


--------------------------------------------------------------------------------

/admin/start.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | MONGODB_NOHUP_RUNNING=$(ps -ef | grep "mongod --dbpath ""$MONGO_DB" | grep -v grep)

7 | HAS_SERVICE_COMMAND=$(command -v service)

8 | HAS_BREW_COMMAND=$(command -v brew)

9 | if [ -n "$HAS_SERVICE_COMMAND" ]; then

10 | HAS_MONGODB_INITD_SERVICE=$(ls /etc/init.d/mongod 2>/dev/null)

11 | HAS_MONGODB_SYSTEMCTL_SERVICE=$(ls /usr/lib/systemd/system/mongod.service 2>/dev/null)

12 | if [ -n "$HAS_MONGODB_INITD_SERVICE" ]; then

13 | MONGODB_SERVICE_RUNNING=$(service mongod status | grep 'is running\|active (running)')

14 | elif [ -n "$HAS_MONGODB_SYSTEMCTL_SERVICE" ]; then

15 | MONGODB_SERVICE_RUNNING=$(systemctl status mongod | grep 'is running\|active (running)')

16 | fi

17 | elif [ -n "$HAS_BREW_COMMAND" ]; then

18 | HAS_MONGODB_TAPPED=$(brew services list | grep "mongodb-community" 2>/dev/null)

19 | if [ -n "$HAS_MONGODB_TAPPED" ]; then

20 | MONGODB_SERVICE_RUNNING=$(brew services info mongodb-community | grep "PID" 2>/dev/null)

21 | else

22 | echo -e "${RED}You don't seem to have MongoDB available through brew or systemctl${RESET}"

23 | exit 1

24 | fi

25 | fi

26 | SERVER_RUNNING=$(ps -ef | grep "node ""${SERVER_DIR}" | grep -v grep)

27 |

28 | # If all necessary ingredients are running successfully, nothing to do

29 | if [ -n "$SERVER_RUNNING" ] && { [ -n "$MONGODB_NOHUP_RUNNING" ] || [ -n "$MONGODB_SERVICE_RUNNING" ]; }; then

30 | echo -e "${GREEN}Hacker News Cleanser is already running${RESET}"

31 | exit 0

32 | fi

33 |

34 | "${ADMIN_DIR}"/cleanLogs.sh

35 |

36 | echo "Starting Hacker News Cleanser..."

37 |

38 | "${ADMIN_DIR}"/startMongoDb.sh

39 | result=$!

40 | wait $result

41 |

42 | "${ADMIN_DIR}"/startServer.sh

43 | SUCCESS=$?

44 |

45 | if [ $SUCCESS -eq 0 ]; then

46 | echo -e "${GREEN}All started successfully${RESET}"

47 | exit 0

48 | else

49 | echo -e "${RED}Hacker News Cleanser failed to start${RESET}"

50 | exit 1

51 | fi

52 |

--------------------------------------------------------------------------------

/admin/startMongoDb.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | MONGODB_NOHUP_RUNNING=$(ps -ef | grep "mongod --dbpath ""$MONGO_DB" | grep -v grep)

7 | HAS_SERVICE_COMMAND=$(command -v service)

8 | HAS_BREW_COMMAND=$(command -v brew)

9 | if [ -n "$HAS_SERVICE_COMMAND" ]; then

10 | HAS_MONGODB_INITD_SERVICE=$(ls /etc/init.d/mongod 2>/dev/null)

11 | HAS_MONGODB_SYSTEMCTL_SERVICE=$(ls /usr/lib/systemd/system/mongod.service 2>/dev/null)

12 | if [ -n "$HAS_MONGODB_INITD_SERVICE" ]; then

13 | MONGODB_SERVICE_RUNNING=$(service mongod status | grep 'is running\|active (running)')

14 | elif [ -n "$HAS_MONGODB_SYSTEMCTL_SERVICE" ]; then

15 | MONGODB_SERVICE_RUNNING=$(systemctl status mongod | grep 'is running\|active (running)')

16 | fi

17 | elif [ -n "$HAS_BREW_COMMAND" ]; then

18 | HAS_MONGODB_TAPPED=$(brew services list | grep "mongodb-community" 2>/dev/null)

19 | if [ -n "$HAS_MONGODB_TAPPED" ]; then

20 | MONGODB_SERVICE_RUNNING=$(brew services info mongodb-community | grep "PID" 2>/dev/null)

21 | else

22 | echo -e "${RED}You don't seem to have MongoDB available through brew or systemctl${RESET}"

23 | exit 1

24 | fi

25 | fi

26 |

27 | # If neither way of running MongoDB is running, start MongoDB

28 | if [ -z "$MONGODB_NOHUP_RUNNING" ] && [ -z "$MONGODB_SERVICE_RUNNING" ]; then

29 | if [ ! -d "$MONGO_DB" ]; then

30 | mkdir -p "$MONGO_DB"

31 | fi

32 | if [ ! -d "$LOGS_DIR" ]; then

33 | mkdir "$LOGS_DIR"

34 | fi

35 |

36 | rm "$LOGS_DIR"/mongodb.log 2> /dev/null

37 |

38 | if [ -n "$HAS_MONGODB_INITD_SERVICE" ]; then

39 | sudo service mongod start

40 | SUCCESS=$?

41 | elif [ -n "$HAS_MONGODB_SYSTEMCTL_SERVICE" ]; then

42 | sudo systemctl start mongod

43 | SUCCESS=$?

44 | elif [ -n "$HAS_MONGODB_TAPPED" ]; then

45 | brew services start mongodb-community

46 | SUCCESS=$?

47 | else

48 | nohup mongod --dbpath "$MONGO_DB" --bind_ip 127.0.0.1 > "$LOGS_DIR"/mongodb.log 2>&1 &

49 | SUCCESS=$?

50 | fi

51 |

52 | if [ $SUCCESS -eq 0 ]; then

53 | echo -e "${GREEN}MongoDB started${RESET}"

54 | exit 0

55 | else

56 | echo -e "${RED}MongoDB failed to start${RESET}"

57 | exit 1

58 | fi

59 | else

60 | echo "MongoDB already running"

61 | exit 0

62 | fi

63 |

--------------------------------------------------------------------------------

/admin/startServer.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | if [ ! -d "$LOGS_DIR" ]; then

7 | mkdir "$LOGS_DIR";

8 | fi

9 |

10 | SERVER_RUNNING=$(ps -ef | grep "node ""${SERVER_DIR}" | grep -v grep)

11 |

12 | if [ -n "$SERVER_RUNNING" ]; then

13 | echo -e "Server already running"

14 | else

15 | if [ "$USE_PM2" = true ] ; then

16 | pm2 --log "${LOGS_DIR}"/server.log --name hackerNewsCleanser --silent start "${SERVER_DIR}"/main.js

17 | else

18 | nohup node "${SERVER_DIR}"/main.js > "$LOGS_DIR"/server.log 2>&1 &

19 | fi

20 |

21 | SUCCESS=$?

22 | if [ $SUCCESS -eq 0 ]; then

23 | echo -e "${GREEN}Server started${RESET}"

24 | exit 0

25 | else

26 | echo -e "${RED}Server failed to start${RESET}"

27 | exit 1

28 | fi

29 | fi

30 |

--------------------------------------------------------------------------------

/admin/stop.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | MONGODB_NOHUP_RUNNING=$(ps -ef | grep "mongod --dbpath ""$MONGO_DB" | grep -v grep)

7 | HAS_SERVICE_COMMAND=$(command -v service)

8 | HAS_BREW_COMMAND=$(command -v brew)

9 | if [ -n "$HAS_SERVICE_COMMAND" ]; then

10 | HAS_MONGODB_INITD_SERVICE=$(ls /etc/init.d/mongod 2>/dev/null)

11 | HAS_MONGODB_SYSTEMCTL_SERVICE=$(ls /usr/lib/systemd/system/mongod.service 2>/dev/null)

12 | if [ -n "$HAS_MONGODB_INITD_SERVICE" ]; then

13 | MONGODB_SERVICE_RUNNING=$(service mongod status | grep 'is running\|active (running)')

14 | elif [ -n "$HAS_MONGODB_SYSTEMCTL_SERVICE" ]; then

15 | MONGODB_SERVICE_RUNNING=$(systemctl status mongod | grep 'is running\|active (running)')

16 | fi

17 | elif [ -n "$HAS_BREW_COMMAND" ]; then

18 | HAS_MONGODB_TAPPED=$(brew services list | grep "mongodb-community" 2>/dev/null)

19 | if [ -n "$HAS_MONGODB_TAPPED" ]; then

20 | MONGODB_SERVICE_RUNNING=$(brew services info mongodb-community | grep "PID" 2>/dev/null)

21 | else

22 | echo -e "${RED}You don't seem to have MongoDB available through brew or systemctl${RESET}"

23 | exit 1

24 | fi

25 | fi

26 | SERVER_RUNNING=$(ps -ef | grep "node ""${SERVER_DIR}" | grep -v grep)

27 |

28 | # If all necessary ingredients aren't running, then there's nothing to stop

29 | if [ -z "$SERVER_RUNNING" ] && { [ -z "$MONGODB_NOHUP_RUNNING" ] && [ -z "$MONGODB_SERVICE_RUNNING" ]; }; then

30 | echo "Hacker News Cleanser is not running"

31 | exit 0

32 | fi

33 |

34 | echo "Stopping Hacker News Cleanser..."

35 |

36 | # Ask to Stop MongoDB

37 | if [ -n "$MONGODB_NOHUP_RUNNING" ] || [ -n "$MONGODB_SERVICE_RUNNING" ]; then

38 | read -p "Do you want to stop MongoDB? (y/n): " -r

39 | if [[ $REPLY =~ ^[Yy]$ ]]; then

40 | read -p "Do you also want to clean MongoDB? (y/n): " -r

41 | if [[ $REPLY =~ ^[Yy]$ ]]

42 | then

43 | echo "Cleaning MongoDB..."

44 | "${ADMIN_DIR}"/cleanMongoDb.sh 1

45 | result=$!

46 | wait $result

47 | fi

48 |

49 | echo "Stopping MongoDB..."

50 | "${ADMIN_DIR}"/stopMongoDb.sh

51 | elif [ -z "$SERVER_RUNNING" ]; then

52 | echo "Server is not running"

53 | exit 0

54 | fi

55 | else

56 | echo "MongoDB is not running"

57 | fi

58 | if [ -n "$SERVER_RUNNING" ]; then

59 | "${ADMIN_DIR}"/stopServer.sh

60 | else

61 | echo "Server is not running"

62 | fi

63 |

64 | echo -e "${GREEN}Hacker News Cleanser has been stopped${RESET}"

--------------------------------------------------------------------------------

/admin/stopMongoDb.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | MONGODB_NOHUP_RUNNING=$(ps -ef | grep "mongod --dbpath ""$MONGO_DB" | grep -v grep)

7 | HAS_SERVICE_COMMAND=$(command -v service)

8 | HAS_BREW_COMMAND=$(command -v brew)

9 | if [ -n "$HAS_SERVICE_COMMAND" ]; then

10 | HAS_MONGODB_INITD_SERVICE=$(ls /etc/init.d/mongod 2>/dev/null)

11 | HAS_MONGODB_SYSTEMCTL_SERVICE=$(ls /usr/lib/systemd/system/mongod.service 2>/dev/null)

12 | if [ -n "$HAS_MONGODB_INITD_SERVICE" ]; then

13 | MONGODB_SERVICE_RUNNING=$(service mongod status | grep 'is running\|active (running)')

14 | elif [ -n "$HAS_MONGODB_SYSTEMCTL_SERVICE" ]; then

15 | MONGODB_SERVICE_RUNNING=$(systemctl status mongod | grep 'is running\|active (running)')

16 | fi

17 | elif [ -n "$HAS_BREW_COMMAND" ]; then

18 | HAS_MONGODB_TAPPED=$(brew services list | grep "mongodb-community" 2>/dev/null)

19 | if [ -n "$HAS_MONGODB_TAPPED" ]; then

20 | MONGODB_SERVICE_RUNNING=$(brew services info mongodb-community | grep "PID" 2>/dev/null)

21 | else

22 | echo -e "${RED}You don't seem to have MongoDB available through brew or systemctl${RESET}"

23 | exit 1

24 | fi

25 | fi

26 |

27 | # If either way of running MongoDB is active, stop MongoDB

28 | if [ -n "$MONGODB_NOHUP_RUNNING" ] || [ -n "$MONGODB_SERVICE_RUNNING" ]; then

29 | if [ -n "$HAS_MONGODB_INITD_SERVICE" ]; then

30 | sudo service mongod stop

31 | SUCCESS=$?

32 | elif [ -n "$HAS_MONGODB_SYSTEMCTL_SERVICE" ]; then

33 | sudo systemctl stop mongod

34 | SUCCESS=$?

35 | elif [ -n "$HAS_MONGODB_TAPPED" ]; then

36 | brew services stop mongodb-community

37 | SUCCESS=$?

38 | else

39 | ps -ef | grep "mongod --dbpath $MONGO_DB" | grep -v grep | awk '{print $2}' | xargs kill

40 | SUCCESS=$?

41 | fi

42 |

43 | if [ $SUCCESS -eq 0 ]; then

44 | echo -e "${GREEN}MongoDB stopped${RESET}"

45 | exit 0

46 | else

47 | echo -e "${RED}MongoDB failed to stop${RESET}"

48 | exit 1

49 | fi

50 | else

51 | echo "MongoDB is not running"

52 | exit 0

53 | fi

54 |

--------------------------------------------------------------------------------

/admin/stopServer.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | SERVER_RUNNING=$(ps -ef | grep "node ""${SERVER_DIR}" | grep -v grep)

7 |

8 | if [ -n "$SERVER_RUNNING" ]; then

9 | if [ "$USE_PM2" = true ] ; then

10 | pm2 --silent stop hackerNewsCleanser

11 | else

12 | ps -ef | grep "node ""${SERVER_DIR}""/main.js" | grep -v grep | awk '{print $2}' | xargs kill -9

13 | fi

14 |

15 | SUCCESS=$?

16 | if [ $SUCCESS -eq 0 ]; then

17 | echo -e "${GREEN}Server stopped${RESET}"

18 | exit 0

19 | else

20 | echo -e "${RED}Server failed to stop${RESET}"

21 | exit 1

22 | fi

23 | else

24 | echo "Server is not running"

25 | exit 0

26 | fi

27 |

--------------------------------------------------------------------------------

/package.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "Hacker News Cleanser",

3 | "version": "2.8",

4 | "type": "module",

5 | "description": "Taking out the trash, one New Yorker post at a time",

6 | "main": "server/main.js",

7 | "author": "Marc Barrowclift",

8 | "license": "MIT",

9 | "repository": {

10 | "type": "git",

11 | "url": "https://github.com/barrowclift/hacker-news-cleanser.git"

12 | },

13 | "dependencies": {

14 | "got": "^14.4.1",

15 | "jsdom": "^24.1.0",

16 | "mongodb": "^6.7.0",

17 | "nodemailer": "^6.9.14",

18 | "properties": "^1.2.1",

19 | "tough-cookie": "^4.1.4"

20 | }

21 | }

22 |

--------------------------------------------------------------------------------

/references/home-feed.html:

--------------------------------------------------------------------------------

26 |

27 | While I do wish for my personal Hacker News page to be more focused, I also still want to have the ability to see the hidden articles and read them if I so choose. No amount of filter tweaking will prevent the occasional salient article or discussion from getting caught when I would have wished to still see it. Additionally, I do still feel it's important to have a finger on the heartbeat of Hacker News as a whole, only in a separate view and on my own time.

28 |

29 | To achieve this, the Hacker News Cleanser supports sending out email reports styled to look just like the Hacker News homepage containing all cleansed articles since the last email report. This requires setting up a Gmail "service" account to send emails through or using an existing Gmail account (not recommended).

30 |

31 | Personally, I have the Cleanser set to send an email report of cleansed articles every week, but it can easily be set to daily, bi-weekly, or any other kind of day-length frequency.

32 |

33 | ## Why Not A Browser Extension?

34 |

35 | My portable operating system of choice is iOS, and since Safari on iOS [does not support traditional browser extensions](https://apple.stackexchange.com/a/321213), this would mean my phone (where easily the majority of my browsing and reading occurs) would not benefit.

36 |

37 | Additionally, there are many other different browsers out there, and I have a better chance of serving the needs of other similarly-minded Hacker News readers regardless of their preferred browser or platform by focusing on just one product that's independent of the browser landscape.

38 |

39 | # Setup

40 |

41 | ## Installation

42 |

43 | See [INSTALL.md](https://github.com/barrowclift/hacker-news-cleanser/blob/master/INSTALL.md).

44 |

45 | ## Starting and Stopping

46 |

47 | Starting and stopping the Hacker News Cleanser is as simple as running `admin/start.sh` or `admin/stop.sh`, respectively.

48 |

49 | If you wish to start or stop specific components of the service, use their individual, respective start/stop scripts (not recommended).

50 |

51 | ## Adding Items To Filter

52 |

53 | You can filter articles by site, title, and username. To a new filter item, execute `admin/addBlacklistItem.py`, and the usage string will explain the rest.

54 |

55 | # License

56 |

57 | The Hacker News Cleanser is open source, licensed under the MIT License.

58 |

59 | See [LICENSE](https://github.com/barrowclift/hacker-news-cleanser/blob/master/LICENSE) for more.

60 |

--------------------------------------------------------------------------------

/admin/addBlacklistItem.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | #

3 | # Adds the specified items to the appropriate blacklist collection

4 |

5 | import sys

6 | import argparse

7 | import pymongo

8 |

9 | parser = argparse.ArgumentParser(description="Adds the specified items to the appropriate Mongo blacklist collection")

10 | parser.add_argument("-t", "--text", required=False, nargs='+', action="store", dest="text", metavar='string', help="Blacklist stories if their title contains this text anywhere. This will block words that contain this text as a substring (e.g. the text \"gpt\" will match stories containing \"GPT\", \"GPT3.5\", etc.)")

11 | parser.add_argument("-k", "--keyword", required=False, nargs='+', action="store", dest="keyword", metavar='string', help="Blacklist stories if their title contains this exact string. This will not block words that contain the keyword as a substring (e.g. the keyword \"trump\" will not match stories containing the word \"trumpet\")")

12 | parser.add_argument("-r", "--regex", required=False, nargs='+', action="store", dest="regex", metavar='string', help="Blacklist stories if their title matches this regex. Regex must be Javascript flavored")

13 | parser.add_argument("-s", "--site", required=False, nargs='+', action="store", dest="site", metavar='string', help="Blacklist stories if they're from this source (e.g. \"newyorker.com\")")

14 | parser.add_argument("-u", "--user", required=False, nargs='+', action="store", dest="user", metavar='string', help="Blacklist all stories added by a particular user")

15 | args = parser.parse_args()

16 |

17 | if not args.text and not args.keyword and not args.regex and not args.user and not args.site:

18 | print("You must at least one item to blacklist\n")

19 | parser.print_help()

20 | sys.exit(1)

21 |

22 | client = pymongo.MongoClient()

23 | db = client["hackerNewsCleanserDb"]

24 | if args.text or args.keyword or args.regex:

25 | blacklistedTitlesCollection = db["blacklistedTitles"]

26 |

27 | if args.text:

28 | textToAdd = []

29 | for text in args.text:

30 | exists = blacklistedTitlesCollection.count_documents({"text":text}) != 0

31 | if exists:

32 | print("Title text \"{}\" is already blacklisted".format(text))

33 | else:

34 | textDocument = {

35 | "text": text,

36 | "type": "text"

37 | }

38 | textToAdd.append(textDocument)

39 | if textToAdd:

40 | blacklistedTitlesCollection.insert_many(textToAdd)

41 | if args.keyword:

42 | keywordsToAdd = []

43 | for keyword in args.keyword:

44 | exists = blacklistedTitlesCollection.count_documents({"keyword":keyword}) != 0

45 | if exists:

46 | print("Title keyword \"{}\" is already blacklisted".format(keyword))

47 | else:

48 | keywordDocument = {

49 | "keyword": keyword,

50 | "type": "keyword"

51 | }

52 | keywordsToAdd.append(keywordDocument)

53 | if keywordsToAdd:

54 | blacklistedTitlesCollection.insert_many(keywordsToAdd)

55 | if args.regex:

56 | regexsToAdd = []

57 | for r in args.regex:

58 | exists = blacklistedTitlesCollection.count_documents({"regex":r}) != 0

59 | if exists:

60 | print("Title regex \"{}\" is already blacklisted".format(r))

61 | else:

62 | regexDocument = {

63 | "regex": r,

64 | "type": "regex"

65 | }

66 | regexsToAdd.append(regexDocument)

67 | if regexsToAdd:

68 | blacklistedTitlesCollection.insert_many(regexsToAdd)

69 | if args.site:

70 | blacklistedSitesCollection = db["blacklistedSites"]

71 | sitesToAdd = []

72 | for site in args.site:

73 | exists = blacklistedSitesCollection.count_documents({"site":site}) != 0

74 | if exists:

75 | print("Site \"{}\" is already blacklisted".format(site))

76 | else:

77 | siteDocument = {

78 | "site": site

79 | }

80 | sitesToAdd.append(siteDocument)

81 | if sitesToAdd:

82 | blacklistedSitesCollection.insert_many(sitesToAdd)

83 | if args.user:

84 | blacklistedUsersCollection = db["blacklistedUsers"]

85 | usersToAdd = []

86 | for user in args.user:

87 | exists = blacklistedUsersCollection.count_documents({"user":user}) != 0

88 | if exists:

89 | print("User \"{}\" is already blacklisted".format(user))

90 | else:

91 | userDocument = {

92 | "user": user

93 | }

94 | usersToAdd.append(userDocument)

95 | if usersToAdd:

96 | blacklistedUsersCollection.insert_many(usersToAdd)

97 |
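
The `--text` and `--keyword` help strings above describe two different matching rules for story titles. The sketch below illustrates that difference in plain Python. It is not the Cleanser's actual matching code (which lives in the server and is not shown here): the function names `matches_text` and `matches_keyword` are hypothetical, and case-insensitive matching for keywords is an assumption, since the help text only confirms it for `--text`.

```python
import re


def matches_text(title: str, text: str) -> bool:
    """Substring match, ignoring case: "gpt" matches "GPT3.5"."""
    return text.lower() in title.lower()


def matches_keyword(title: str, keyword: str) -> bool:
    """Whole-word match: "trump" does not match "trumpet".

    Case-insensitivity here is an assumption, not confirmed by the source.
    """
    # Require that the keyword is not directly preceded or followed by a word character.
    pattern = r"(?<!\w)" + re.escape(keyword) + r"(?!\w)"
    return re.search(pattern, title, flags=re.IGNORECASE) is not None
```

Under these assumptions, `matches_text("OpenAI ships GPT3.5 update", "gpt")` is true, while `matches_keyword("Learning the trumpet at 40", "trump")` is false.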

--------------------------------------------------------------------------------

/admin/clean.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | # Confirm if they really want to clean MongoDB's data store

7 | echo "You are about to clean all Hacker News Cleanser data and logs. This CANNOT be undone."

8 | read -p "Are you absolutely sure you want to proceed? (y/n): " -r

9 | if [[ $REPLY =~ ^[Yy]$ ]]

10 | then

11 | "${ADMIN_DIR}"/cleanMongoDb.sh 1

12 | "${ADMIN_DIR}"/cleanLogs.sh

13 | echo -e "\n${GREEN}All Hacker News Cleanser data & logs deleted${RESET}"

14 | fi

15 |

--------------------------------------------------------------------------------

/admin/cleanLogs.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | rm "${LOGS_DIR}"/mongodb.log 2> /dev/null

7 | rm "${LOGS_DIR}"/server.log 2> /dev/null

8 |

9 | echo "Log directory cleaned"

--------------------------------------------------------------------------------

/admin/cleanMongoDb.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | MONGODB_NOHUP_RUNNING=$(ps -ef | grep "mongod --dbpath ""$MONGO_DB" | grep -v grep)

7 | HAS_SERVICE_COMMAND=$(command -v service)

8 | HAS_BREW_COMMAND=$(command -v brew)

9 | if [ -n "$HAS_SERVICE_COMMAND" ]; then

10 | HAS_MONGODB_INITD_SERVICE=$(ls /etc/init.d/mongod 2>/dev/null)

11 | HAS_MONGODB_SYSTEMCTL_SERVICE=$(ls /usr/lib/systemd/system/mongod.service 2>/dev/null)

12 | if [ -n "$HAS_MONGODB_INITD_SERVICE" ]; then

13 | MONGODB_SERVICE_RUNNING=$(service mongod status | grep 'is running\|active (running)')

14 | elif [ -n "$HAS_MONGODB_SYSTEMCTL_SERVICE" ]; then

15 | MONGODB_SERVICE_RUNNING=$(systemctl status mongod | grep 'is running\|active (running)')

16 | fi

17 | elif [ -n "$HAS_BREW_COMMAND" ]; then

18 | HAS_MONGODB_TAPPED=$(brew services list | grep "mongodb-community" 2>/dev/null)

19 | if [ -n "$HAS_MONGODB_TAPPED" ]; then

20 | MONGODB_SERVICE_RUNNING=$(brew services info mongodb-community | grep "PID" 2>/dev/null)

21 | else

22 | echo -e "${RED}You don't seem to have MongoDB available through brew or systemctl${RESET}"

23 | exit 1

24 | fi

25 | fi

26 |

27 | # MongoDB may not be currently running. If that's the case, we want to briefly start

28 | # it for the cleaning process, then immediately turn it off again

29 | START_THEN_STOP=0

30 | if [ -z "$MONGODB_NOHUP_RUNNING" ] && [ -z "$MONGODB_SERVICE_RUNNING" ]; then

31 | START_THEN_STOP=1

32 | fi

33 |

34 | function cleanMongoDb {

35 | if [ $START_THEN_STOP -eq 1 ]; then

36 | "${ADMIN_DIR}"/startMongoDb.sh

37 | starterProcess=$!

38 | wait $starterProcess

39 | fi

40 |

41 | node "${ADMIN_DIR}"/mongoCleaner.js > "${LOGS_DIR}"/clean-mongodb.log 2>&1 &

42 | cleanerProcess=$!

43 | wait $cleanerProcess

44 |

45 | echo "Hacker News Cleanser database deleted"

46 |

47 | if [ $START_THEN_STOP -eq 1 ]; then

48 | "${ADMIN_DIR}"/stopMongoDb.sh

49 | fi

50 | }

51 |

52 | if [[ $1 == 1 ]] # If 1 is provided, skip safety prompt and just do it.

53 | then

54 | cleanMongoDb

55 | else

56 | # Confirm if they really want to clean MongoDB's data store

57 | echo "You are about to drop all collections in Hacker News Cleanser's database. This CANNOT be undone."

58 | read -p "Are you absolutely sure you want to proceed? (y/n): " -r

59 | if [[ $REPLY =~ ^[Yy]$ ]]

60 | then

61 | cleanMongoDb

62 | fi

63 | fi

64 |

65 | exit 0

--------------------------------------------------------------------------------

/admin/init.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export RED='\033[0;31m'

4 | export RESET='\033[0m'

5 | export YELLOW='\033[0;33m'

6 | export GREEN='\033[0;32m'

7 |

8 | export REPO=$(dirname "${ADMIN_DIR}")

9 | export SERVER_DIR="${REPO}"/server

10 | export LOGS_DIR="${REPO}"/logs

11 | export MONGO_DB=set/me/somewhere

12 |

13 | export USE_PM2=true

--------------------------------------------------------------------------------

/admin/mongoCleaner.js:

--------------------------------------------------------------------------------

1 | "use strict";

2 |

3 | // DEPENDENCIES

4 | // ------------

5 | // External

6 | import path from "path";

7 | import url from "url";

8 | // Local

9 | const FILENAME = url.fileURLToPath(import.meta.url);

10 | const CLEANSER_ROOT_DIRECTORY_PATH = path.join(path.dirname(FILENAME), "../");

11 | const PropertyManager = await import (path.join(CLEANSER_ROOT_DIRECTORY_PATH, "server/PropertyManager.js"));

12 | const MongoClient = await import (path.join(CLEANSER_ROOT_DIRECTORY_PATH, "server/MongoClient.js"));

13 |

14 |

15 | // CONSTANTS

16 | // ---------

17 | const PROPERTIES_FILE_NAME = path.join(CLEANSER_ROOT_DIRECTORY_PATH, "server/cleanser.properties");

18 |

19 |

20 | // GLOBALS

21 | // -------

22 | var propertyManager = null;

23 |

24 |

25 | async function cleanDbAndClose() {

26 | var mongoClient = new MongoClient.default(propertyManager);

27 | await mongoClient.connect();

28 | await mongoClient.dropCollectionBlacklistedTitles();

29 | await mongoClient.dropCollectionBlacklistedSites();

30 | await mongoClient.dropCollectionBlacklistedUsers();

31 | await mongoClient.dropCollectionCleansedItems();

32 | await mongoClient.dropCollectionWeeklyReportsLog();

33 | await mongoClient.close();

34 | }

35 |

36 | async function main() {

37 | propertyManager = new PropertyManager.default();

38 | await propertyManager.load(PROPERTIES_FILE_NAME);

39 |

40 | await cleanDbAndClose();

41 | }

42 |

43 | main();

--------------------------------------------------------------------------------

/admin/printUserStatistics.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 |

3 | import sys

4 | import operator

5 | from pymongo import MongoClient

6 |

7 | client = MongoClient("localhost:27017")

8 | db = client.hackerNewsCleanserDb

9 |

10 | distribution = {}

11 | cursor = db.cleansedItems.find()

12 | for cleansedItem in cursor:

13 | user = cleansedItem["user"]

14 | if user in distribution:

15 | distribution[user] += 1

16 | else:

17 | distribution[user] = 1

18 |

19 | cursor = db.blacklistedUsers.find()

20 | for blacklistedUser in cursor:

21 | user = blacklistedUser["user"]

22 | if user in distribution:

23 | distribution.pop(user, None)

24 |

25 | sortedDistribution = sorted(distribution.items(), key=operator.itemgetter(1), reverse=True)

26 | maxPrint = 10

27 | count = 0

28 | for entry in sortedDistribution:

29 | print("%s:%d" % (entry[0], entry[1]))

30 | count += 1

31 | if count >= maxPrint:

32 | break

33 |
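
The tally in `printUserStatistics.py` can also be expressed with `collections.Counter`. The sketch below is an alternative formulation, not the script's own code: the function name `top_unblocked_users` is hypothetical, and it takes plain Python lists in place of the MongoDB cursors so that it runs without a database.

```python
from collections import Counter


def top_unblocked_users(cleansed_items, blacklisted_users, limit=10):
    """Return (user, count) pairs for the users with the most cleansed
    stories, skipping users who are already blacklisted."""
    # Count how many cleansed stories each user submitted.
    counts = Counter(item["user"] for item in cleansed_items)
    # Already-blacklisted users are not interesting candidates.
    for user in blacklisted_users:
        counts.pop(user, None)
    # most_common sorts by count, highest first.
    return counts.most_common(limit)
```

With the real collections, this could be called as `top_unblocked_users(db.cleansedItems.find(), [u["user"] for u in db.blacklistedUsers.find()])`, assuming the same document shapes the script above relies on.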

--------------------------------------------------------------------------------

/admin/start.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | MONGODB_NOHUP_RUNNING=$(ps -ef | grep "mongod --dbpath ""$MONGO_DB" | grep -v grep)

7 | HAS_SERVICE_COMMAND=$(command -v service)

8 | HAS_BREW_COMMAND=$(command -v brew)

9 | if [ -n "$HAS_SERVICE_COMMAND" ]; then

10 | HAS_MONGODB_INITD_SERVICE=$(ls /etc/init.d/mongod 2>/dev/null)

11 | HAS_MONGODB_SYSTEMCTL_SERVICE=$(ls /usr/lib/systemd/system/mongod.service 2>/dev/null)

12 | if [ -n "$HAS_MONGODB_INITD_SERVICE" ]; then

13 | MONGODB_SERVICE_RUNNING=$(service mongod status | grep 'is running\|active (running)')

14 | elif [ -n "$HAS_MONGODB_SYSTEMCTL_SERVICE" ]; then

15 | MONGODB_SERVICE_RUNNING=$(systemctl status mongod | grep 'is running\|active (running)')

16 | fi

17 | elif [ -n "$HAS_BREW_COMMAND" ]; then

18 | HAS_MONGODB_TAPPED=$(brew services list | grep "mongodb-community" 2>/dev/null)

19 | if [ -n "$HAS_MONGODB_TAPPED" ]; then

20 | MONGODB_SERVICE_RUNNING=$(brew services info mongodb-community | grep "PID" 2>/dev/null)

21 | else

22 | echo -e "${RED}You don't seem to have MongoDB available through brew or systemctl${RESET}"

23 | exit 1

24 | fi

25 | fi

26 | SERVER_RUNNING=$(ps -ef | grep "node ""${SERVER_DIR}" | grep -v grep)

27 |

28 | # If all necessary ingredients are running successfully, nothing to do

29 | if [ -n "$SERVER_RUNNING" ] && { [ -n "$MONGODB_NOHUP_RUNNING" ] || [ -n "$MONGODB_SERVICE_RUNNING" ]; }; then

30 | echo -e "${GREEN}Hacker News Cleanser is already running${RESET}"

31 | exit 0

32 | fi

33 |

34 | "${ADMIN_DIR}"/cleanLogs.sh

35 |

36 | echo "Starting Hacker News Cleanser..."

37 |

38 | "${ADMIN_DIR}"/startMongoDb.sh

39 | result=$!

40 | wait $result

41 |

42 | "${ADMIN_DIR}"/startServer.sh

43 | SUCCESS=$?

44 |

45 | if [ $SUCCESS -eq 0 ]; then

46 | echo -e "${GREEN}All started successfully${RESET}"

47 | exit 0

48 | else

49 | echo -e "${RED}Hacker News Cleanser failed to start${RESET}"

50 | exit 1

51 | fi

52 |

--------------------------------------------------------------------------------

/admin/startMongoDb.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | MONGODB_NOHUP_RUNNING=$(ps -ef | grep "mongod --dbpath ""$MONGO_DB" | grep -v grep)

7 | HAS_SERVICE_COMMAND=$(command -v service)

8 | HAS_BREW_COMMAND=$(command -v brew)

9 | if [ -n "$HAS_SERVICE_COMMAND" ]; then

10 | HAS_MONGODB_INITD_SERVICE=$(ls /etc/init.d/mongod 2>/dev/null)

11 | HAS_MONGODB_SYSTEMCTL_SERVICE=$(ls /usr/lib/systemd/system/mongod.service 2>/dev/null)

12 | if [ -n "$HAS_MONGODB_INITD_SERVICE" ]; then

13 | MONGODB_SERVICE_RUNNING=$(service mongod status | grep 'is running\|active (running)')

14 | elif [ -n "$HAS_MONGODB_SYSTEMCTL_SERVICE" ]; then

15 | MONGODB_SERVICE_RUNNING=$(systemctl status mongod | grep 'is running\|active (running)')

16 | fi

17 | elif [ -n "$HAS_BREW_COMMAND" ]; then

18 | HAS_MONGODB_TAPPED=$(brew services list | grep "mongodb-community" 2>/dev/null)

19 | if [ -n "$HAS_MONGODB_TAPPED" ]; then

20 | MONGODB_SERVICE_RUNNING=$(brew services info mongodb-community | grep "PID" 2>/dev/null)

21 | else

22 | echo -e "${RED}You don't seem to have MongoDB available through brew or systemctl${RESET}"

23 | exit 1

24 | fi

25 | fi

26 |

27 | # If MongoDB is running neither under nohup nor as a service, start it

28 | if [ -z "$MONGODB_NOHUP_RUNNING" ] && [ -z "$MONGODB_SERVICE_RUNNING" ]; then

29 | if [ ! -d "$MONGO_DB" ]; then

30 | mkdir -p "$MONGO_DB"

31 | fi

32 | if [ ! -d "$LOGS_DIR" ]; then

33 | mkdir "$LOGS_DIR"

34 | fi

35 |

36 | rm "$LOGS_DIR"/mongodb.log 2> /dev/null

37 |

38 | if [ -n "$HAS_MONGODB_INITD_SERVICE" ]; then

39 | sudo service mongod start

40 | SUCCESS=$?

41 | elif [ -n "$HAS_MONGODB_SYSTEMCTL_SERVICE" ]; then

42 | sudo systemctl start mongod

43 | SUCCESS=$?

44 | elif [ -n "$HAS_MONGODB_TAPPED" ]; then

45 | brew services start mongodb-community

46 | SUCCESS=$?

47 | else

48 | nohup mongod --dbpath "$MONGO_DB" --bind_ip 127.0.0.1 > "$LOGS_DIR"/mongodb.log 2>&1 &

49 | SUCCESS=$?

50 | fi

51 |

52 | if [ $SUCCESS -eq 0 ]; then

53 | echo -e "${GREEN}MongoDB started${RESET}"

54 | exit 0

55 | else

56 | echo -e "${RED}MongoDB failed to start${RESET}"

57 | exit 1

58 | fi

59 | else

60 | echo "MongoDB already running"

61 | exit 0

62 | fi

63 |

--------------------------------------------------------------------------------

/admin/startServer.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | if [ ! -d "$LOGS_DIR" ]; then

7 | mkdir "$LOGS_DIR";

8 | fi

9 |

10 | SERVER_RUNNING=$(ps -ef | grep "node ""${SERVER_DIR}" | grep -v grep)

11 |

12 | if [ -n "$SERVER_RUNNING" ]; then

13 | echo -e "Server already running"

14 | else

15 | if [ "$USE_PM2" = true ] ; then

16 | pm2 --log "${LOGS_DIR}"/server.log --name hackerNewsCleanser --silent start "${SERVER_DIR}"/main.js

17 | else

18 | nohup node "${SERVER_DIR}"/main.js > "$LOGS_DIR"/server.log 2>&1 &

19 | fi

20 |

21 | SUCCESS=$?

22 | if [ $SUCCESS -eq 0 ]; then

23 | echo -e "${GREEN}Server started${RESET}"

24 | exit 0

25 | else

26 | echo -e "${RED}Server failed to start${RESET}"

27 | exit 1

28 | fi

29 | fi

30 |

--------------------------------------------------------------------------------

/admin/stop.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | MONGODB_NOHUP_RUNNING=$(ps -ef | grep "mongod --dbpath ""$MONGO_DB" | grep -v grep)

7 | HAS_SERVICE_COMMAND=$(command -v service)

8 | HAS_BREW_COMMAND=$(command -v brew)

9 | if [ -n "$HAS_SERVICE_COMMAND" ]; then

10 | HAS_MONGODB_INITD_SERVICE=$(ls /etc/init.d/mongod 2>/dev/null)

11 | HAS_MONGODB_SYSTEMCTL_SERVICE=$(ls /usr/lib/systemd/system/mongod.service 2>/dev/null)

12 | if [ -n "$HAS_MONGODB_INITD_SERVICE" ]; then

13 | MONGODB_SERVICE_RUNNING=$(service mongod status | grep 'is running\|active (running)')

14 | elif [ -n "$HAS_MONGODB_SYSTEMCTL_SERVICE" ]; then

15 | MONGODB_SERVICE_RUNNING=$(systemctl status mongod | grep 'is running\|active (running)')

16 | fi

17 | elif [ -n "$HAS_BREW_COMMAND" ]; then

18 | HAS_MONGODB_TAPPED=$(brew services list | grep "mongodb-community" 2>/dev/null)

19 | if [ -n "$HAS_MONGODB_TAPPED" ]; then

20 | MONGODB_SERVICE_RUNNING=$(brew services info mongodb-community | grep "PID" 2>/dev/null)

21 | else

22 | echo -e "${RED}You don't seem to have MongoDB available through brew or systemctl${RESET}"

23 | exit 1

24 | fi

25 | fi

26 | SERVER_RUNNING=$(ps -ef | grep "node ""${SERVER_DIR}" | grep -v grep)

27 |

28 | # If none of the necessary components are running, there's nothing to stop

29 | if [ -z "$SERVER_RUNNING" ] && { [ -z "$MONGODB_NOHUP_RUNNING" ] && [ -z "$MONGODB_SERVICE_RUNNING" ]; }; then

30 | echo "Hacker News Cleanser is not running"

31 | exit 0

32 | fi

33 |

34 | echo "Stopping Hacker News Cleanser..."

35 |

36 | # Ask to Stop MongoDB

37 | if [ -n "$MONGODB_NOHUP_RUNNING" ] || [ -n "$MONGODB_SERVICE_RUNNING" ]; then

38 | read -p "Do you want to stop MongoDB? (y/n): " -r

39 | if [[ $REPLY =~ ^[Yy]$ ]]; then

40 | read -p "Do you also want to clean MongoDB? (y/n): " -r

41 | if [[ $REPLY =~ ^[Yy]$ ]]

42 | then

43 | echo "Cleaning MongoDB..."

44 | "${ADMIN_DIR}"/cleanMongoDb.sh 1

45 | result=$!

46 | wait $result

47 | fi

48 |

49 | echo "Stopping MongoDB..."

50 | "${ADMIN_DIR}"/stopMongoDb.sh

51 | elif [ -z "$SERVER_RUNNING" ]; then

52 | echo "Server is not running"

53 | exit 0

54 | fi

55 | else

56 | echo "MongoDB is not running"

57 | fi

58 | if [ -n "$SERVER_RUNNING" ]; then

59 | "${ADMIN_DIR}"/stopServer.sh

60 | else

61 | echo "Server is not running"

62 | fi

63 |

64 | echo -e "${GREEN}Hacker News Cleanser has been stopped${RESET}"

--------------------------------------------------------------------------------

/admin/stopMongoDb.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | MONGODB_NOHUP_RUNNING=$(ps -ef | grep "mongod --dbpath ""$MONGO_DB" | grep -v grep)

7 | HAS_SERVICE_COMMAND=$(command -v service)

8 | HAS_BREW_COMMAND=$(command -v brew)

9 | if [ -n "$HAS_SERVICE_COMMAND" ]; then

10 | HAS_MONGODB_INITD_SERVICE=$(ls /etc/init.d/mongod 2>/dev/null)

11 | HAS_MONGODB_SYSTEMCTL_SERVICE=$(ls /usr/lib/systemd/system/mongod.service 2>/dev/null)

12 | if [ -n "$HAS_MONGODB_INITD_SERVICE" ]; then

13 | MONGODB_SERVICE_RUNNING=$(service mongod status | grep 'is running\|active (running)')

14 | elif [ -n "$HAS_MONGODB_SYSTEMCTL_SERVICE" ]; then

15 | MONGODB_SERVICE_RUNNING=$(systemctl status mongod | grep 'is running\|active (running)')

16 | fi

17 | elif [ -n "$HAS_BREW_COMMAND" ]; then

18 | HAS_MONGODB_TAPPED=$(brew services list | grep "mongodb-community" 2>/dev/null)

19 | if [ -n "$HAS_MONGODB_TAPPED" ]; then

20 | MONGODB_SERVICE_RUNNING=$(brew services info mongodb-community | grep "PID" 2>/dev/null)

21 | else

22 | echo -e "${RED}You don't seem to have MongoDB available through brew or systemctl${RESET}"

23 | exit 1

24 | fi

25 | fi

26 |

27 | # If either way of running MongoDB is active, stop MongoDB

28 | if [ -n "$MONGODB_NOHUP_RUNNING" ] || [ -n "$MONGODB_SERVICE_RUNNING" ]; then

29 | if [ -n "$HAS_MONGODB_INITD_SERVICE" ]; then

30 | sudo service mongod stop

31 | SUCCESS=$?

32 | elif [ -n "$HAS_MONGODB_SYSTEMCTL_SERVICE" ]; then

33 | sudo systemctl stop mongod

34 | SUCCESS=$?

35 | elif [ -n "$HAS_MONGODB_TAPPED" ]; then

36 | brew services stop mongodb-community

37 | SUCCESS=$?

38 | else

39 | ps -ef | grep "mongod --dbpath $MONGO_DB" | grep -v grep | awk '{print $2}' | xargs kill -9

40 | SUCCESS=$?

41 | fi

42 |

43 | if [ $SUCCESS -eq 0 ]; then

44 | echo -e "${GREEN}MongoDB stopped${RESET}"

45 | exit 0

46 | else

47 | echo -e "${RED}MongoDB failed to stop${RESET}"

48 | exit 1

49 | fi

50 | else

51 | echo "MongoDB is not running"

52 | exit 0

53 | fi

54 |

--------------------------------------------------------------------------------

/admin/stopServer.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | export ADMIN_DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null && pwd )"

4 | source "${ADMIN_DIR}"/init.sh

5 |

6 | SERVER_RUNNING=$(ps -ef | grep "node ""${SERVER_DIR}" | grep -v grep)

7 |

8 | if [ -n "$SERVER_RUNNING" ]; then

9 | if [ "$USE_PM2" = true ] ; then

10 | pm2 --silent stop hackerNewsCleanser

11 | else

12 | ps -ef | grep "node ""${SERVER_DIR}""/main.js" | grep -v grep | awk '{print $2}' | xargs kill -9

13 | fi

14 |

15 | SUCCESS=$?

16 | if [ $SUCCESS -eq 0 ]; then

17 | echo -e "${GREEN}Server stopped${RESET}"

18 | exit 0

19 | else

20 | echo -e "${RED}Server failed to stop${RESET}"

21 | exit 1

22 | fi

23 | else

24 | echo "Server is not running"

25 | exit 0

26 | fi

27 |

--------------------------------------------------------------------------------

/package.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "Hacker News Cleanser",

3 | "version": "2.8",

4 | "type": "module",

5 | "description": "Taking out the trash, one New Yorker post at a time",

6 | "main": "server/main.js",

7 | "author": "Marc Barrowclift",

8 | "license": "MIT",

9 | "repository": {

10 | "type": "git",

11 | "url": "https://github.com/barrowclift/hacker-news-cleanser.git"

12 | },

13 | "dependencies": {

14 | "got": "^14.4.1",

15 | "jsdom": "^24.1.0",

16 | "mongodb": "^6.7.0",

17 | "nodemailer": "^6.9.14",

18 | "properties": "^1.2.1",

19 | "tough-cookie": "^4.1.4"

20 | }

21 | }

22 |

--------------------------------------------------------------------------------

/references/home-feed.html:

--------------------------------------------------------------------------------
