├── README.md

├── data

└── scripts

│ ├── eval_subroot.py

│ ├── make_dp_dataset.py

│ ├── make_subroot_dataset.py

│ ├── make_wsd_pred.py

│ ├── mergy_subroot_feature.py

│ ├── recover_dataset.py

│ ├── subroot_decomposition.py

│ └── subroot_replace_unk.py

├── node2vec

└── train.py

├── onmt

├── Highway.py

├── Loss.py

├── ModelConstructor.py

├── Models.py

├── Optim.py

├── SubwordElmo.py

├── Trainer.py

├── Utils.py

├── __init__.py

├── __init__.pyc

├── io

│ ├── AudioDataset.py

│ ├── DatasetBase.py

│ ├── IO.py

│ ├── IO.pyc

│ ├── ImageDataset.py

│ ├── TextDataset.py

│ ├── __init__.py

│ ├── __init__.pyc

│ └── __pycache__

│ │ ├── AudioDataset.cpython-36.pyc

│ │ ├── DatasetBase.cpython-36.pyc

│ │ ├── IO.cpython-36.pyc

│ │ ├── ImageDataset.cpython-36.pyc

│ │ ├── TextDataset.cpython-36.pyc

│ │ └── __init__.cpython-36.pyc

├── modules

│ ├── AudioEncoder.py

│ ├── Conv2Conv.py

│ ├── ConvMultiStepAttention.py

│ ├── CopyGenerator.py

│ ├── Embeddings.py

│ ├── Gate.py

│ ├── GlobalAttention.py

│ ├── ImageEncoder.py

│ ├── MultiHeadedAttn.py

│ ├── SRU.py

│ ├── StackedRNN.py

│ ├── StructuredAttention.py

│ ├── Transformer.py

│ ├── UtilClass.py

│ ├── WeightNorm.py

│ ├── __init__.py

│ └── __pycache__

│ │ ├── AudioEncoder.cpython-36.pyc

│ │ ├── Conv2Conv.cpython-36.pyc

│ │ ├── ConvMultiStepAttention.cpython-36.pyc

│ │ ├── CopyGenerator.cpython-36.pyc

│ │ ├── Embeddings.cpython-36.pyc

│ │ ├── Gate.cpython-36.pyc

│ │ ├── GlobalAttention.cpython-36.pyc

│ │ ├── ImageEncoder.cpython-36.pyc

│ │ ├── MultiHeadedAttn.cpython-36.pyc

│ │ ├── SRU.cpython-36.pyc

│ │ ├── StackedRNN.cpython-36.pyc

│ │ ├── StructuredAttention.cpython-36.pyc

│ │ ├── Transformer.cpython-36.pyc

│ │ ├── UtilClass.cpython-36.pyc

│ │ ├── WeightNorm.cpython-36.pyc

│ │ └── __init__.cpython-36.pyc

├── opts.py

└── translate

│ ├── Beam.py

│ ├── Penalties.py

│ ├── Translation.py

│ ├── TranslationServer.py

│ ├── Translator.py

│ ├── __init__.py

│ └── __pycache__

│ ├── Beam.cpython-36.pyc

│ ├── Penalties.cpython-36.pyc

│ ├── Translation.cpython-36.pyc

│ ├── TranslationServer.cpython-36.pyc

│ ├── Translator.cpython-36.pyc

│ └── __init__.cpython-36.pyc

├── preprocess.py

├── requirements.opt.txt

├── requirements.txt

├── resources

└── seq2seq4dp.pdf

├── screenshots

└── seq2seq_model.png

├── server.py

├── setup.py

├── subroot

├── README.md

├── RUN.md

├── dnn_pytorch

│ ├── dnn_utils.py

│ ├── generate_features.py

│ ├── loader.py

│ ├── nn.py

│ ├── tag.py

│ ├── train.py

│ └── utils.py

└── subroot

│ ├── preprocess.py

│ ├── stat.py

│ ├── test.py

│ └── train.py

├── tools

├── README.md

├── apply_bpe.py

├── average_models.py

├── bpe_pipeline.sh

├── detokenize.perl

├── embeddings_to_torch.py

├── extract_embeddings.py

├── learn_bpe.py

├── multi-bleu-detok.perl

├── multi-bleu.perl

├── nonbreaking_prefixes

│ ├── README.txt

│ ├── nonbreaking_prefix.ca

│ ├── nonbreaking_prefix.cs

│ ├── nonbreaking_prefix.de

│ ├── nonbreaking_prefix.el

│ ├── nonbreaking_prefix.en

│ ├── nonbreaking_prefix.es

│ ├── nonbreaking_prefix.fi

│ ├── nonbreaking_prefix.fr

│ ├── nonbreaking_prefix.ga

│ ├── nonbreaking_prefix.hu

│ ├── nonbreaking_prefix.is

│ ├── nonbreaking_prefix.it

│ ├── nonbreaking_prefix.lt

│ ├── nonbreaking_prefix.lv

│ ├── nonbreaking_prefix.nl

│ ├── nonbreaking_prefix.pl

│ ├── nonbreaking_prefix.ro

│ ├── nonbreaking_prefix.ru

│ ├── nonbreaking_prefix.sk

│ ├── nonbreaking_prefix.sl

│ ├── nonbreaking_prefix.sv

│ ├── nonbreaking_prefix.ta

│ ├── nonbreaking_prefix.yue

│ └── nonbreaking_prefix.zh

├── release_model.py

├── test_rouge.py

└── tokenizer.perl

├── train.py

└── translate.py

/README.md:

--------------------------------------------------------------------------------

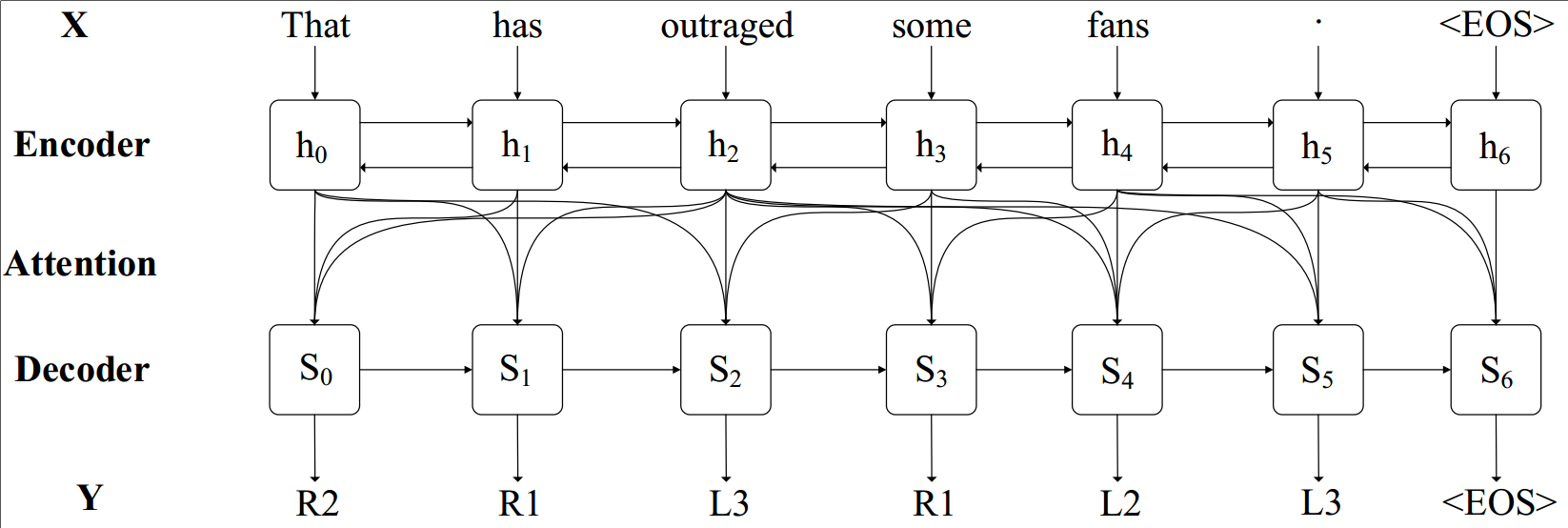

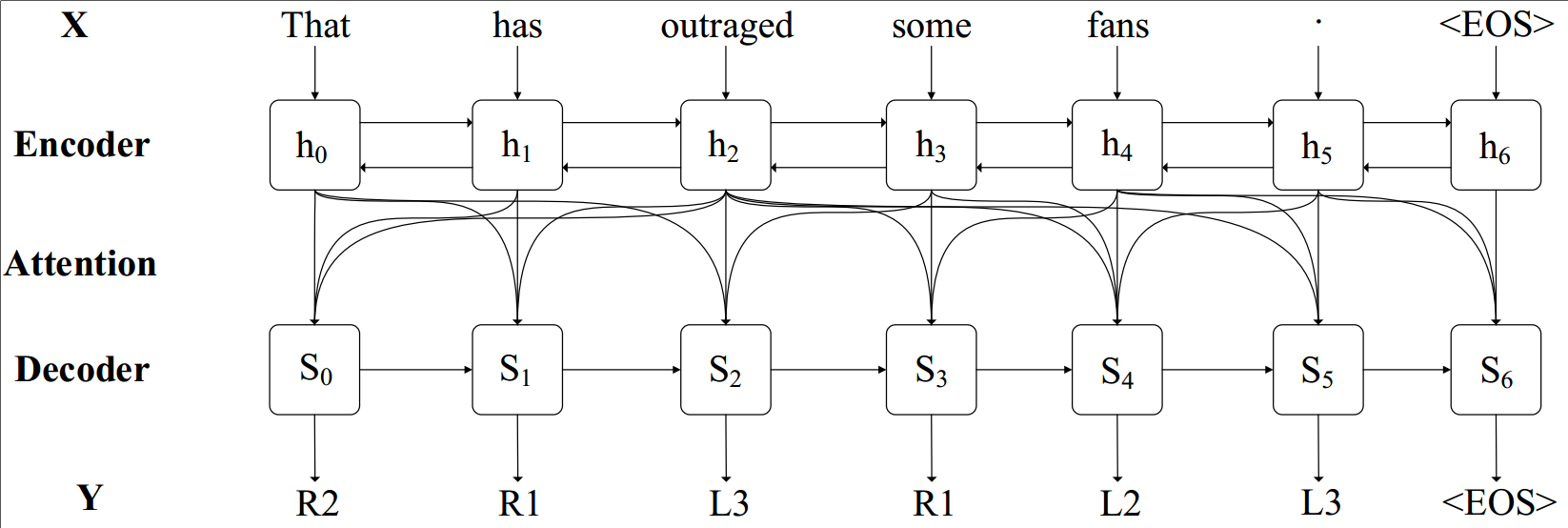

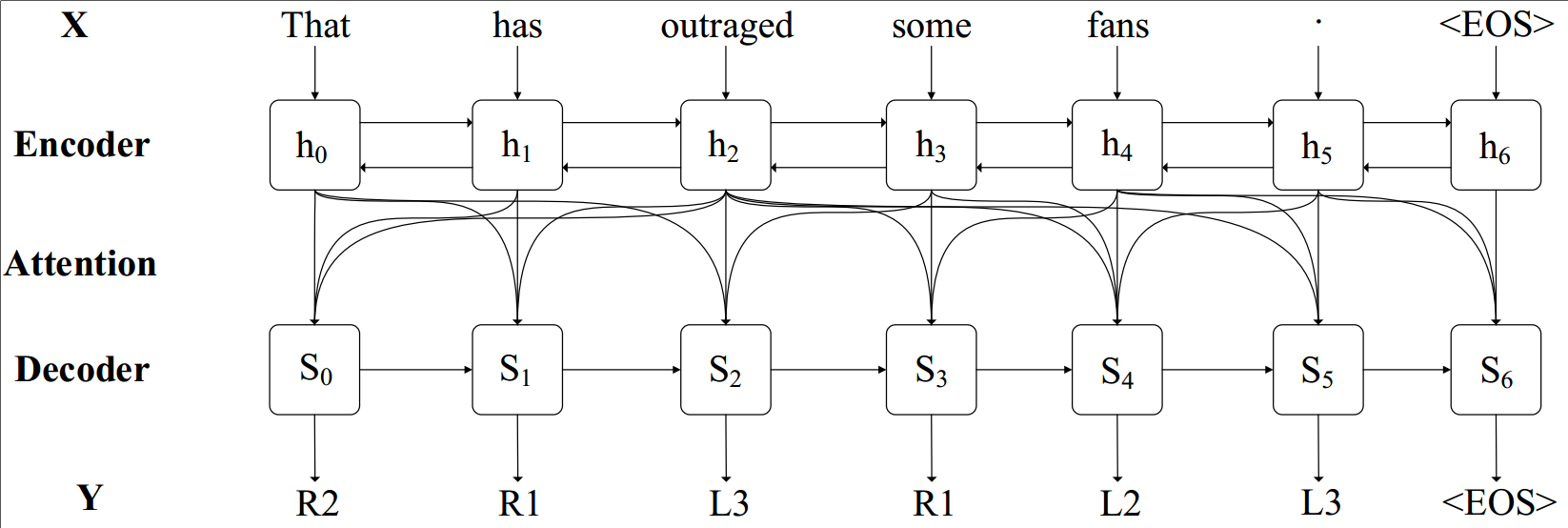

1 | # Sequence to sequence model for dependency parsing based on OpenNMT-py

2 |

3 | This is a Seq2seq model implemented based on [OpenNMT-py](http://opennmt.net/OpenNMT-py/). It is designed to be presents a seq2seq dependency parser by directly predicting the relative position of head for each given presents a seq2seq dependency parser by directly predicting the relative position of head for each given word, which therefore results in a truly end-to-end seq2seq dependency parser for the first time.word, which therefore results in a truly end-to-end seq2seq dependency parser.

4 |

5 | Enjoying the advantage of seq2seq modeling, we enrich a series of embedding enhancement, including firstly introduced subword and node2vec augmentation. Meanwhile, we propose a beam search decoder with tree constraint and subroot decomposition over the sequence to furthermore enhance our seq2seq parser.

6 |

7 | The framework of the proposed seq2seq model:

8 |  9 |

10 | ## Requirements

11 |

12 | ```bash

13 | pip install -r requirements.txt

14 | ```

15 | this project is tested on pytorch 0.3.1, the other version may need some modification.

16 |

17 | ## Quickstart

18 |

19 | ### Step 1: Convert the dependency parsing dataset

20 |

21 | ```bash

22 | python data/scripts/make_dp_dataset.py

23 | ```

24 |

25 |

26 | ### Step 2: Preprocessing the data

27 |

28 | ```bash

29 | python preprocess.py -train_src data/input/dp/src_ptb_sd_train.input -train_tgt data/input/dp/tgt_ptb_sd_train.input -valid_src data/input/dp/src_ptb_sd_dev.input -valid_tgt data/input/dp/tgt_ptb_sd_dev.input -save_data data/temp/dp/dp

30 | ```

31 | We will be working with some example data in `data/` folder.

32 |

33 | The data consists of parallel source (`src`) and target (`tgt`) data containing one sentence per line with tokens separated by a space:

34 |

35 | * `src-train.txt`

36 | * `tgt-train.txt`

37 | * `src-val.txt`

38 | * `tgt-val.txt`

39 |

40 | Validation files are required and used to evaluate the convergence of the training. It usually contains no more than 5000 sentences.

41 |

42 |

43 | After running the preprocessing, the following files are generated:

44 |

45 | * `dp.train.pt`: serialized PyTorch file containing training data

46 | * `dp.valid.pt`: serialized PyTorch file containing validation data

47 | * `dp.vocab.pt`: serialized PyTorch file containing vocabulary data

48 |

49 |

50 | Internally the system never touches the words themselves, but uses these indices.

51 |

52 | ### Step 2: Make the pretrain embedding

53 |

54 | ```bash

55 | python tools/embeddings_to_torch.py -emb_file_enc data/pretrain/glove.6B.100d.txt -dict_file data/temp/dp/dp.vocab.pt -output_file data/temp/dp/en_embeddings -type GloVe

56 | ```

57 |

58 |

59 | ### Step 3: Train the model

60 |

61 | ```bash

62 | python train.py -save_model data/model/dp/dp -batch_size 64 -enc_layers 4 -dec_layers 2 -rnn_size 800 -word_vec_size 100 -feat_vec_size 100 -pre_word_vecs_enc data/temp/dp/en_embeddings.enc.pt -data data/temp/dp/dp -encoder_type brnn -gpuid 0 -position_encoding -bridge -global_attention mlp -optim adam -learning_rate 0.001 -tensorboard -tensorboard_log_dir logs -elmo -elmo_size 500 -elmo_options data/pretrain/elmo_2x4096_512_2048cnn_2xhighway_options.json -elmo_weight data/pretrain/elmo_2x4096_512_2048cnn_2xhighway_weights.hdf5 -subword_elmo -subword_elmo_size 500 -subword_elmo_options data/pretrain/subword_elmo_options.json -subword_weight data/pretrain/en.wiki.bpe.op10000.d50.w2v.txt -subword_spm_model data/pretrain/en.wiki.bpe.op10000.model

63 | ```

64 |

65 | - elmo_2x4096_512_2048cnn_2xhighway_options.json

66 | - elmo_2x4096_512_2048cnn_2xhighway_weights.hdf5

67 | - subword_elmo_options.json

68 | - en.wiki.bpe.op10000.d50.w2v.txt

69 | - en.wiki.bpe.op10000.model

70 |

71 | You can download these files from [here](https://drive.google.com/drive/folders/1ug6ab14fpM22ed_vomOTjjUB8Awh66VM?usp=sharing).

72 |

73 |

74 | ### Step 3: Translate

75 |

76 | ```bash

77 | python translate.py -model data/model/dp/xxx.pt -src data/input/dp/src_ptb_sd_test.input -tgt data/input/dp/tgt_ptb_sd_test.input -output data/results/dp/tgt_ptb_sd_test.pred -replace_unk -verbose -gpu 0 -beam_size 64 -constraint_length 8 -alpha_c 0.8 -alpha_p 0.8

78 | ```

79 |

80 | Now you have a model which you can use to predict on new data. We do this by running beam search where `constraint_length`, `alpha_c`, `alpha_p` are parameters used in tree constraints.

81 |

82 | # Notes

83 | You can refer to our paper for more details. Thank you!

84 |

85 | ## Citation

86 |

87 | [Seq2seq Dependency Parsing](./resources/seq2seq4dp.pdf)

88 |

89 | ```

90 | @inproceedings{li2018seq2seq,

91 | title={Seq2seq dependency parsing},

92 | author={Li, Zuchao and He, Shexia and Zhao, Hai},

93 | booktitle={Proceedings of the 27th International Conference on Computational Linguistics (COLING 2018)},

94 | year={2018}

95 | }

96 | ```

97 |

--------------------------------------------------------------------------------

/data/scripts/eval_subroot.py:

--------------------------------------------------------------------------------

1 | import os

2 | from collections import Counter

3 |

4 | def load_data(path):

5 | with open(path, 'r') as f:

6 | data = f.readlines()

7 |

8 | data = [line.strip().split() for line in data if len(line.strip())>0]

9 |

10 | return data

11 |

12 |

13 | def f1(target, predict):

14 | TP = 0

15 | TN = 0

16 | FP = 0

17 | FN = 0

18 | total = 0

19 | correct = 0

20 | assert len(target) == len(predict)

21 | for i in range(len(target)):

22 | assert len(target[i]) == len(predict[i])

23 | for j in range(len(target[i])):

24 | total += 1

25 | if target[i][j] == predict[i][j]:

26 | correct += 1

27 | assert predict[i][j] == '0' or predict[i][j] == '1'

28 | if target[i][j] == '1' and target[i][j] == predict[i][j]:

29 | TP += 1

30 | if target[i][j] == '0' and target[i][j] == predict[i][j]:

31 | TN += 1

32 | if target[i][j] == '0' and target[i][j] != predict[i][j]:

33 | FP += 1

34 | if target[i][j] == '1' and target[i][j] != predict[i][j]:

35 | FN += 1

36 | P = TP / (TP + FP)

37 | R = TP / (TP + FN)

38 | F1 = 2 * P * R / (P + R)

39 |

40 | print('eval Acc:{:.2f} P:{:.2f} R:{:.2f} F1:{:.2f}'.format(correct/total*100, P * 100, R * 100, F1 * 100))

41 |

42 | if __name__ == '__main__':

43 | f1(load_data(os.path.join(os.path.dirname(__file__), '../input/subroot/tgt_ptb_sd_subroot_train.input')),

44 | load_data(os.path.join(os.path.dirname(__file__), '../results/subroot/tgt_ptb_sd_subroot_train.pred')))

45 |

46 | f1(load_data(os.path.join(os.path.dirname(__file__), '../input/subroot/tgt_ptb_sd_subroot_dev.input')),

47 | load_data(os.path.join(os.path.dirname(__file__), '../results/subroot/tgt_ptb_sd_subroot_dev.pred')))

48 |

49 | f1(load_data(os.path.join(os.path.dirname(__file__), '../input/subroot/tgt_ptb_sd_subroot_test.input')),

50 | load_data(os.path.join(os.path.dirname(__file__), '../results/subroot/tgt_ptb_sd_subroot_test.pred')))

51 |

--------------------------------------------------------------------------------

/data/scripts/make_dp_dataset.py:

--------------------------------------------------------------------------------

1 | import os

2 | import tqdm

3 |

4 | # def is_scientific_notation(s):

5 | # s = str(s)

6 | # if s.count(',')>=1:

7 | # sl = s.split(',')

8 | # for item in sl:

9 | # if not item.isdigit():

10 | # return False

11 | # return True

12 | # return False

13 |

14 | # def is_float(s):

15 | # s = str(s)

16 | # if s.count('.')==1:

17 | # sl = s.split('.')

18 | # left = sl[0]

19 | # right = sl[1]

20 | # if left.startswith('-') and left.count('-')==1 and right.isdigit():

21 | # lleft = left.split('-')[1]

22 | # if lleft.isdigit() or is_scientific_notation(lleft):

23 | # return True

24 | # elif (left.isdigit() or is_scientific_notation(left)) and right.isdigit():

25 | # return True

26 | # return False

27 |

28 | # def is_fraction(s):

29 | # s = str(s)

30 | # if s.count('\/')==1:

31 | # sl = s.split('\/')

32 | # if len(sl)== 2 and sl[0].isdigit() and sl[1].isdigit():

33 | # return True

34 | # if s.count('/')==1:

35 | # sl = s.split('/')

36 | # if len(sl)== 2 and sl[0].isdigit() and sl[1].isdigit():

37 | # return True

38 | # if s[-1]=='%' and len(s)>1:

39 | # return True

40 | # return False

41 |

42 | # def is_number(s):

43 | # s = str(s)

44 | # if s.isdigit() or is_float(s) or is_fraction(s) or is_scientific_notation(s):

45 | # return True

46 | # else:

47 | # return False

48 |

49 | def make_input(file_name, src_path, tgt_path):

50 | with open(file_name, 'r') as f:

51 | data = f.readlines()

52 |

53 | origin_data = []

54 | sentence = []

55 |

56 | for i in range(len(data)):

57 | if len(data[i].strip()) > 0:

58 | sentence.append(data[i].strip().split('\t'))

59 | else:

60 | origin_data.append(sentence)

61 | sentence = []

62 |

63 | if len(sentence) > 0:

64 | origin_data.append(sentence)

65 |

66 | src_data = []

67 | tgt_data = []

68 | for sentence in origin_data:

69 | src_line = []

70 | tgt_line = []

71 | for line in sentence:

72 | dep_ind = int(line[0])

73 | head_ind = int(line[6])

74 | if dep_ind > head_ind:

75 | tag = 'L' + str(abs(dep_ind - head_ind))

76 | else:

77 | tag = 'R' + str(abs(dep_ind - head_ind))

78 | # word = ''.join([c if not c.isdigit() else '0' for c in line[1].lower()])

79 | is_number = False

80 | word = line[1].lower()

81 | for c in word:

82 | if c.isdigit():

83 | is_number = True

84 | break

85 | if is_number:

86 | word = 'number'

87 | src_line.append([word, line[4]])

88 | tgt_line.append(tag)

89 | if len(src_line) >= 1:

90 | src_data.append(src_line)

91 | tgt_data.append(tgt_line)

92 |

93 | with open(src_path, 'w') as f:

94 | for line in src_data:

95 | f.write(' '.join(['|'.join(item) for item in line]))

96 | f.write('\n')

97 |

98 |

99 | with open(tgt_path, 'w') as f:

100 | for line in tgt_data:

101 | f.write(' '.join(line))

102 | f.write('\n')

103 |

104 | if __name__ == '__main__':

105 | train_file = os.path.join(os.path.dirname(__file__), '../ptb-sd/train_pro_wsd.conll')

106 | dev_file = os.path.join(os.path.dirname(__file__), '../ptb-sd/dev_pro.conll')

107 | test_file = os.path.join(os.path.dirname(__file__), '../ptb-sd/test_pro.conll')

108 |

109 | make_input(train_file, os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_train.input'),

110 | os.path.join(os.path.dirname(__file__), '../input/dp/tgt_ptb_sd_train.input'))

111 | make_input(dev_file, os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_dev.input'),

112 | os.path.join(os.path.dirname(__file__), '../input/dp/tgt_ptb_sd_dev.input'))

113 | make_input(test_file, os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_test.input'),

114 | os.path.join(os.path.dirname(__file__), '../input/dp/tgt_ptb_sd_test.input'))

115 |

--------------------------------------------------------------------------------

/data/scripts/make_subroot_dataset.py:

--------------------------------------------------------------------------------

1 | import os

2 | import tqdm

3 |

4 | def make_input(file_name, src_path, tgt_path):

5 | with open(file_name, 'r') as f:

6 | data = f.readlines()

7 |

8 | origin_data = []

9 | sentence = []

10 |

11 | for i in range(len(data)):

12 | if len(data[i].strip()) > 0:

13 | sentence.append(data[i].strip().split('\t'))

14 | else:

15 | origin_data.append(sentence)

16 | sentence = []

17 |

18 | if len(sentence) > 0:

19 | origin_data.append(sentence)

20 |

21 | src_data = []

22 | tgt_data = []

23 | for sentence in origin_data:

24 | src_line = []

25 | tgt_line = []

26 | for line in sentence:

27 | dep_ind = int(line[0])

28 | head_ind = int(line[6])

29 | if head_ind == 0:

30 | tag = '1'

31 | else:

32 | tag = '0'

33 | # word = ''.join([c if not c.isdigit() else '0' for c in line[1].lower()])

34 | is_number = False

35 | word = line[1].lower()

36 | for c in word:

37 | if c.isdigit():

38 | is_number = True

39 | break

40 | if is_number:

41 | word = 'number'

42 | src_line.append([word, line[4]])

43 | tgt_line.append(tag)

44 | if len(src_line) > 1:

45 | src_data.append(src_line)

46 | tgt_data.append(tgt_line)

47 |

48 | with open(src_path, 'w') as f:

49 | for line in src_data:

50 | f.write(' '.join(['|'.join(item) for item in line]))

51 | f.write('\n')

52 |

53 |

54 | with open(tgt_path, 'w') as f:

55 | for line in tgt_data:

56 | f.write(' '.join(line))

57 | f.write('\n')

58 |

59 | if __name__ == '__main__':

60 | train_file = os.path.join(os.path.dirname(__file__), '../ptb-sd/train_pro_wsd.conll')

61 | dev_file = os.path.join(os.path.dirname(__file__), '../ptb-sd/dev_pro.conll')

62 | test_file = os.path.join(os.path.dirname(__file__), '../ptb-sd/test_pro.conll')

63 |

64 | make_input(train_file,

65 | os.path.join(os.path.dirname(__file__), '../input/subroot/src_ptb_sd_subroot_train.input'),

66 | os.path.join(os.path.dirname(__file__), '../input/subroot/tgt_ptb_sd_subroot_train.input'))

67 |

68 | make_input(dev_file,

69 | os.path.join(os.path.dirname(__file__), '../input/subroot/src_ptb_sd_subroot_dev.input'),

70 | os.path.join(os.path.dirname(__file__), '../input/subroot/tgt_ptb_sd_subroot_dev.input'))

71 |

72 | make_input(test_file,

73 | os.path.join(os.path.dirname(__file__), '../input/subroot/src_ptb_sd_subroot_test.input'),

74 | os.path.join(os.path.dirname(__file__), '../input/subroot/tgt_ptb_sd_subroot_test.input'))

75 |

--------------------------------------------------------------------------------

/data/scripts/make_wsd_pred.py:

--------------------------------------------------------------------------------

1 | import os

2 |

3 | def make_wsd_pred(pred_file, map_file, output_file):

4 | with open(pred_file, 'r') as f:

5 | pred_data = f.readlines()

6 |

7 | pred_data = [line.split() for line in pred_data if len(line.strip())>0]

8 |

9 |

10 | with open(map_file, 'r') as f:

11 | map_data = f.readlines()

12 |

13 | map_data = [line.strip() for line in map_data if len(line.strip())>0]

14 |

15 | output_data = []

16 |

17 | assert len(map_data) == len(pred_data)

18 |

19 | sent_len = len(map_data)

20 | sent_line = []

21 | for i in range(sent_len):

22 | if len(sent_line) == 0:

23 | sent_line = pred_data[i]

24 | else:

25 | if map_data[i] == map_data[i-1]:

26 | sent_line[-1] = ''

27 | sent_line += pred_data[i][1:]

28 | else:

29 | output_data.append(sent_line)

30 | sent_line = pred_data[i]

31 |

32 | if len(sent_line)>0:

33 | output_data.append(sent_line)

34 |

35 | with open(output_file, 'w') as f:

36 | for i in range(len(output_data)):

37 | for j in range(len(output_data[i])):

38 | if output_data[i][j] == '':

39 | output_data[i][j] = 'L'+str(j+1)

40 | f.write(' '.join(output_data[i]))

41 | f.write('\n')

42 |

43 |

44 | if __name__ == '__main__':

45 | # make_wsd_pred(os.path.join(os.path.dirname(__file__), '../results/dp/tgt_ptb_sd_dev_wsd_30.pred'),

46 | # os.path.join(os.path.dirname(__file__), '../input/dp/tgt_ptb_sd_dev_wsd_30_map.input'))

47 |

48 | make_wsd_pred(os.path.join(os.path.dirname(__file__), '../results/dp/tgt_ptb_sd_test_wsd_40.pred'),

49 | os.path.join(os.path.dirname(__file__), '../input/dp/tgt_ptb_sd_test_wsd_40_map.input'),

50 | os.path.join(os.path.dirname(__file__), '../results/dp/tgt_ptb_sd_test_wsd_40_org.pred'))

51 |

52 |

--------------------------------------------------------------------------------

/data/scripts/mergy_subroot_feature.py:

--------------------------------------------------------------------------------

1 | # we merge the golden subroot feature into train dataset for training

2 | # and we use the predict subroot (by BiLSTM+CRF) feature into dev/train dataset

3 |

4 | import os

5 |

6 | def merge_train(input_file, origin_file, output_file):

7 | with open(input_file, 'r') as f:

8 | input_data = f.readlines()

9 |

10 | input_data = [line.split() for line in input_data if len(line.strip())>0]

11 |

12 | with open(origin_file, 'r') as f:

13 | data = f.readlines()

14 |

15 | origin_data = []

16 | sentence = []

17 |

18 | for i in range(len(data)):

19 | if len(data[i].strip()) > 0:

20 | sentence.append(data[i].strip().split('\t'))

21 | else:

22 | origin_data.append(sentence)

23 | sentence = []

24 |

25 | if len(sentence) > 0:

26 | origin_data.append(sentence)

27 |

28 | assert len(input_data) == len(origin_data)

29 |

30 | with open(output_file, 'w') as f:

31 | for i in range(len(input_data)):

32 | assert len(input_data[i]) == len(origin_data[i])

33 | line = []

34 | for j in range(len(input_data[i])):

35 | if int(origin_data[i][j][6]) == 0:

36 | line.append(input_data[i][j]+'|1')

37 | else:

38 | line.append(input_data[i][j]+'|0')

39 | f.write(' '.join(line))

40 | f.write('\n')

41 |

42 |

43 | def merge_pred(input_file, subroot_pred_file, output_file):

44 | with open(input_file, 'r') as f:

45 | input_data = f.readlines()

46 |

47 | input_data = [line.split() for line in input_data if len(line.strip())>0]

48 |

49 | with open(subroot_pred_file, 'r') as f:

50 | data = f.readlines()

51 |

52 | pred_data = []

53 | sentence = []

54 |

55 | for i in range(len(data)):

56 | if len(data[i].strip()) > 0:

57 | sentence.append(data[i].strip().split('\t'))

58 | else:

59 | pred_data.append(sentence)

60 | sentence = []

61 |

62 | if len(sentence) > 0:

63 | pred_data.append(sentence)

64 |

65 | assert len(input_data) == len(pred_data)

66 |

67 | with open(output_file, 'w') as f:

68 | for i in range(len(input_data)):

69 | assert len(input_data[i]) == len(pred_data[i])

70 | line = []

71 | for j in range(len(input_data[i])):

72 | line.append(input_data[i][j]+'|'+pred_data[i][j][1])

73 | f.write(' '.join(line))

74 | f.write('\n')

75 |

76 |

77 | if __name__ == '__main__':

78 | merge_train(os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_train.input'),

79 | os.path.join(os.path.dirname(__file__), '../ptb-sd/train_pro.conll'),

80 | os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_train_ws.input'))

81 |

82 | merge_pred(os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_dev.input'),

83 | os.path.join(os.path.dirname(__file__), '../../subroot/result/dev_predicate_95.94.pred'),

84 | os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_dev_ws.input'))

85 |

86 | merge_pred(os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_test.input'),

87 | os.path.join(os.path.dirname(__file__), '../../subroot/result/test_predicate_95.16.pred'),

88 | os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_test_ws.input'))

--------------------------------------------------------------------------------

/data/scripts/recover_dataset.py:

--------------------------------------------------------------------------------

1 | import os

2 |

3 |

4 | def recover_data(file_name, pred_data, output_path):

5 | with open(file_name, 'r') as f:

6 | data = f.readlines()

7 |

8 |

9 | golden_data = []

10 | sentence = []

11 |

12 | for i in range(len(data)):

13 | if len(data[i].strip()) > 0:

14 | sentence.append(data[i].strip().split('\t'))

15 | else:

16 | golden_data.append(sentence)

17 | sentence = []

18 |

19 | if len(sentence) > 0:

20 | golden_data.append(sentence)

21 |

22 | with open(pred_data, 'r') as f:

23 | data = f.readlines()

24 |

25 | pred_data = [item.strip().split() for item in data if len(item.strip()) > 0]

26 |

27 | pred_index = 0

28 | for i in range(len(golden_data)):

29 | predicate_idx = 0

30 | for j in range(len(golden_data[i])):

31 | if golden_data[i][j][12] == 'Y':

32 | predicate_idx += 1

33 | for k in range(len(golden_data[i])):

34 | golden_data[i][k][13 + predicate_idx] = pred_data[pred_index][k]

35 | pred_index += 1

36 |

37 | with open(output_path, 'w') as f:

38 | for sentence in golden_data:

39 | for line in sentence:

40 | f.write('\t'.join(line))

41 | f.write('\n')

42 | f.write('\n')

43 |

44 | if __name__ == '__main__':

45 | recover_data(os.path.join(os.path.dirname(__file__), 'conll09-english/conll09_test.dataset'),

46 | os.path.join(os.path.dirname(__file__), 'tgt_conll09_en_test.pred'),

47 | os.path.join(os.path.dirname(__file__), 'conll09_en_test.dataset.pred'))

48 |

--------------------------------------------------------------------------------

/data/scripts/subroot_replace_unk.py:

--------------------------------------------------------------------------------

1 | import os

2 |

3 | def replace_unk(input_file):

4 | with open(input_file, 'r') as f:

5 | input_data = f.readlines()

6 |

7 | input_data = [line.split() for line in input_data if len(line.strip())>0]

8 |

9 | with open(input_file, 'w') as f:

10 | for i in range(len(input_data)):

11 | line = []

12 | for j in range(len(input_data[i])):

13 | if input_data[i][j] == '0' or input_data[i][j]=='1':

14 | line.append(input_data[i][j])

15 | else:

16 | line.append('0')

17 | f.write(' '.join(line))

18 | f.write('\n')

19 |

20 |

21 | if __name__ == '__main__':

22 | replace_unk(os.path.join(os.path.dirname(__file__), '../results/subroot/tgt_ptb_sd_subroot_train.pred'))

23 |

24 | replace_unk(os.path.join(os.path.dirname(__file__), '../results/subroot/tgt_ptb_sd_subroot_dev.pred'))

25 |

26 | replace_unk(os.path.join(os.path.dirname(__file__), '../results/subroot/tgt_ptb_sd_subroot_test.pred'))

--------------------------------------------------------------------------------

/node2vec/train.py:

--------------------------------------------------------------------------------

1 | import networkx as nx

2 | from node2vec import Node2Vec

3 |

4 | # FILES

5 | EMBEDDING_FILENAME = './node2vec_en.emb'

6 | EMBEDDING_MODEL_FILENAME = './node2vec_en.model'

7 |

8 | # Create a graph

9 | # graph = nx.fast_gnp_random_graph(n=100, p=0.5)

10 | graph = nx.Graph()

11 |

12 | raw_train_file = '../data/ptb-sd/train_pro.conll'

13 |

14 | with open(raw_train_file, 'r') as f:

15 | data = f.readlines()

16 |

17 | # read data

18 | train_data = []

19 | sentence = []

20 | for line in data:

21 | if len(line.strip()) > 0:

22 | line = line.strip().split('\t')

23 | sentence.append(line)

24 | else:

25 | train_data.append(sentence)

26 | sentence = []

27 | if len(sentence)>0:

28 | train_data.append(sentence)

29 | sentence = []

30 |

31 | for sentence in train_data:

32 | for line in sentence:

33 | head_idx = int(line[6])-1

34 | if head_idx == -1:

35 | is_number = False

36 | word = line[1].lower()

37 | for c in word:

38 | if c.isdigit():

39 | is_number = True

40 | break

41 | if is_number:

42 | word = 'number'

43 | graph.add_edge('', word, weight=1)

44 | else:

45 | hw = sentence[head_idx][1].lower()

46 | is_number = False

47 | for c in hw:

48 | if c.isdigit():

49 | is_number = True

50 | break

51 | if is_number:

52 | hw = 'number'

53 | w = line[1].lower()

54 | is_number = False

55 | for c in w:

56 | if c.isdigit():

57 | is_number = True

58 | break

59 | if is_number:

60 | w = 'number'

61 | graph.add_edge(hw, w, weight=0.5)

62 |

63 | # Precompute probabilities and generate walks

64 | node2vec = Node2Vec(graph, dimensions=100, walk_length=100, num_walks=18, workers=1)

65 |

66 | # Embed

67 | model = node2vec.fit(window=16, min_count=1, batch_words=64) # Any keywords acceptable by gensim.Word2Vec can be passed, `diemnsions` and `workers` are automatically passed (from the Node2Vec constructor)

68 |

69 | # Look for most similar nodes

70 | model.wv.most_similar('') # Output node names are always strings

71 |

72 | # Save embeddings for later use

73 | model.wv.save_word2vec_format(EMBEDDING_FILENAME)

74 |

75 | # Save model for later use

76 | model.save(EMBEDDING_MODEL_FILENAME)

--------------------------------------------------------------------------------

/onmt/Highway.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn as nn

3 |

4 |

5 | class HighwayMLP(nn.Module):

6 |

7 | def __init__(self,

8 | input_size,

9 | gate_bias=-2,

10 | activation_function=nn.functional.relu,

11 | gate_activation=nn.functional.softmax):

12 |

13 | super(HighwayMLP, self).__init__()

14 |

15 | self.activation_function = activation_function

16 | self.gate_activation = gate_activation

17 |

18 | self.normal_layer = nn.Linear(input_size, input_size)

19 |

20 | self.gate_layer = nn.Linear(input_size, input_size)

21 | self.gate_layer.bias.data.fill_(gate_bias)

22 |

23 | def forward(self, x):

24 |

25 | normal_layer_result = self.activation_function(self.normal_layer(x))

26 | gate_layer_result = self.gate_activation(self.gate_layer(x),dim=0)

27 |

28 | multiplyed_gate_and_normal = torch.mul(normal_layer_result, gate_layer_result)

29 | multiplyed_gate_and_input = torch.mul((1 - gate_layer_result), x)

30 |

31 | return torch.add(multiplyed_gate_and_normal,

32 | multiplyed_gate_and_input)

33 |

34 |

35 | class HighwayCNN(nn.Module):

36 | def __init__(self,

37 | input_size,

38 | gate_bias=-1,

39 | activation_function=nn.functional.relu,

40 | gate_activation=nn.functional.softmax):

41 |

42 | super(HighwayCNN, self).__init__()

43 |

44 | self.activation_function = activation_function

45 | self.gate_activation = gate_activation

46 |

47 | self.normal_layer = nn.Linear(input_size, input_size)

48 |

49 | self.gate_layer = nn.Linear(input_size, input_size)

50 | self.gate_layer.bias.data.fill_(gate_bias)

51 |

52 | def forward(self, x):

53 |

54 | normal_layer_result = self.activation_function(self.normal_layer(x))

55 | gate_layer_result = self.gate_activation(self.gate_layer(x))

56 |

57 | multiplyed_gate_and_normal = torch.mul(normal_layer_result, gate_layer_result)

58 | multiplyed_gate_and_input = torch.mul((1 - gate_layer_result), x)

59 |

60 | return torch.add(multiplyed_gate_and_normal,

61 | multiplyed_gate_and_input)

--------------------------------------------------------------------------------

/onmt/Optim.py:

--------------------------------------------------------------------------------

1 | import torch.optim as optim

2 | from torch.nn.utils import clip_grad_norm

3 |

4 |

5 | class MultipleOptimizer(object):

6 | def __init__(self, op):

7 | self.optimizers = op

8 |

9 | def zero_grad(self):

10 | for op in self.optimizers:

11 | op.zero_grad()

12 |

13 | def step(self):

14 | for op in self.optimizers:

15 | op.step()

16 |

17 |

18 | class Optim(object):

19 | """

20 | Controller class for optimization. Mostly a thin

21 | wrapper for `optim`, but also useful for implementing

22 | rate scheduling beyond what is currently available.

23 | Also implements necessary methods for training RNNs such

24 | as grad manipulations.

25 |

26 | Args:

27 | method (:obj:`str`): one of [sgd, adagrad, adadelta, adam]

28 | lr (float): learning rate

29 | lr_decay (float, optional): learning rate decay multiplier

30 | start_decay_at (int, optional): epoch to start learning rate decay

31 | beta1, beta2 (float, optional): parameters for adam

32 | adagrad_accum (float, optional): initialization parameter for adagrad

33 | decay_method (str, option): custom decay options

34 | warmup_steps (int, option): parameter for `noam` decay

35 | model_size (int, option): parameter for `noam` decay

36 | """

37 | # We use the default parameters for Adam that are suggested by

38 | # the original paper https://arxiv.org/pdf/1412.6980.pdf

39 | # These values are also used by other established implementations,

40 | # e.g. https://www.tensorflow.org/api_docs/python/tf/train/AdamOptimizer

41 | # https://keras.io/optimizers/

42 | # Recently there are slightly different values used in the paper

43 | # "Attention is all you need"

44 | # https://arxiv.org/pdf/1706.03762.pdf, particularly the value beta2=0.98

45 | # was used there however, beta2=0.999 is still arguably the more

46 | # established value, so we use that here as well

47 | def __init__(self, method, lr, max_grad_norm,

48 | lr_decay=1, start_decay_at=None,

49 | beta1=0.9, beta2=0.999,

50 | adagrad_accum=0.0,

51 | decay_method=None,

52 | warmup_steps=4000,

53 | model_size=None):

54 | self.last_ppl = None

55 | self.lr = lr

56 | self.original_lr = lr

57 | self.max_grad_norm = max_grad_norm

58 | self.method = method

59 | self.lr_decay = lr_decay

60 | self.start_decay_at = start_decay_at

61 | self.start_decay = False

62 | self._step = 0

63 | self.betas = [beta1, beta2]

64 | self.adagrad_accum = adagrad_accum

65 | self.decay_method = decay_method

66 | self.warmup_steps = warmup_steps

67 | self.model_size = model_size

68 |

69 | def set_parameters(self, params):

70 | self.params = []

71 | self.sparse_params = []

72 | for k, p in params:

73 | if p.requires_grad:

74 | if self.method != 'sparseadam' or "embed" not in k:

75 | self.params.append(p)

76 | else:

77 | self.sparse_params.append(p)

78 | if self.method == 'sgd':

79 | self.optimizer = optim.SGD(self.params, lr=self.lr)

80 | elif self.method == 'adagrad':

81 | self.optimizer = optim.Adagrad(self.params, lr=self.lr)

82 | for group in self.optimizer.param_groups:

83 | for p in group['params']:

84 | self.optimizer.state[p]['sum'] = self.optimizer\

85 | .state[p]['sum'].fill_(self.adagrad_accum)

86 | elif self.method == 'adadelta':

87 | self.optimizer = optim.Adadelta(self.params, lr=self.lr)

88 | elif self.method == 'adam':

89 | self.optimizer = optim.Adam(self.params, lr=self.lr,

90 | betas=self.betas, eps=1e-9)

91 | elif self.method == 'sparseadam':

92 | self.optimizer = MultipleOptimizer(

93 | [optim.Adam(self.params, lr=self.lr,

94 | betas=self.betas, eps=1e-8),

95 | optim.SparseAdam(self.sparse_params, lr=self.lr,

96 | betas=self.betas, eps=1e-8)])

97 | else:

98 | raise RuntimeError("Invalid optim method: " + self.method)

99 |

100 | def _set_rate(self, lr):

101 | self.lr = lr

102 | if self.method != 'sparseadam':

103 | self.optimizer.param_groups[0]['lr'] = self.lr

104 | else:

105 | for op in self.optimizer.optimizers:

106 | op.param_groups[0]['lr'] = self.lr

107 |

108 | def step(self):

109 | """Update the model parameters based on current gradients.

110 |

111 | Optionally, will employ gradient modification or update learning

112 | rate.

113 | """

114 | self._step += 1

115 |

116 | # Decay method used in tensor2tensor.

117 | if self.decay_method == "noam":

118 | self._set_rate(

119 | self.original_lr *

120 | (self.model_size ** (-0.5) *

121 | min(self._step ** (-0.5),

122 | self._step * self.warmup_steps**(-1.5))))

123 |

124 | if self.max_grad_norm:

125 | clip_grad_norm(self.params, self.max_grad_norm)

126 | self.optimizer.step()

127 |

128 | def update_learning_rate(self, ppl, epoch):

129 | """

130 | Decay learning rate if val perf does not improve

131 | or we hit the start_decay_at limit.

132 | """

133 |

134 | if self.start_decay_at is not None and epoch >= self.start_decay_at:

135 | self.start_decay = True

136 | if self.last_ppl is not None and ppl > self.last_ppl:

137 | self.start_decay = True

138 |

139 | if self.start_decay:

140 | self.lr = self.lr * self.lr_decay

141 | print("Decaying learning rate to %g" % self.lr)

142 |

143 | self.last_ppl = ppl

144 | if self.method != 'sparseadam':

145 | self.optimizer.param_groups[0]['lr'] = self.lr

146 |

--------------------------------------------------------------------------------

/onmt/Utils.py:

--------------------------------------------------------------------------------

1 | import torch

2 |

3 |

4 | def aeq(*args):

5 | """

6 | Assert all arguments have the same value

7 | """

8 | arguments = (arg for arg in args)

9 | first = next(arguments)

10 | assert all(arg == first for arg in arguments), \

11 | "Not all arguments have the same value: " + str(args)

12 |

13 |

14 | def sequence_mask(lengths, max_len=None):

15 | """

16 | Creates a boolean mask from sequence lengths.

17 | """

18 | batch_size = lengths.numel()

19 | max_len = max_len or lengths.max()

20 | return (torch.arange(0, max_len)

21 | .type_as(lengths)

22 | .repeat(batch_size, 1)

23 | .lt(lengths.unsqueeze(1)))

24 |

25 |

26 | def use_gpu(opt):

27 | return (hasattr(opt, 'gpuid') and len(opt.gpuid) > 0) or \

28 | (hasattr(opt, 'gpu') and opt.gpu > -1)

29 |

--------------------------------------------------------------------------------

/onmt/__init__.py:

--------------------------------------------------------------------------------

1 | import onmt.io

2 | import onmt.Models

3 | import onmt.Loss

4 | import onmt.translate

5 | import onmt.opts

6 | from onmt.Trainer import Trainer, Statistics

7 | from onmt.Optim import Optim

8 |

9 | # For flake8 compatibility

10 | __all__ = [onmt.Loss, onmt.Models, onmt.opts,

11 | Trainer, Optim, Statistics, onmt.io, onmt.translate]

12 |

--------------------------------------------------------------------------------

/onmt/__init__.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/bcmi220/seq2seq_parser/4143c2f9b3164c0fe8b8374f6bcca747184193d9/onmt/__init__.pyc

--------------------------------------------------------------------------------

/onmt/io/DatasetBase.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | from itertools import chain

4 | import torchtext

5 |

6 |

7 | PAD_WORD = ''

8 | UNK_WORD = ''

9 | UNK = 0

10 | BOS_WORD = '

9 |

10 | ## Requirements

11 |

12 | ```bash

13 | pip install -r requirements.txt

14 | ```

15 | this project is tested on pytorch 0.3.1, the other version may need some modification.

16 |

17 | ## Quickstart

18 |

19 | ### Step 1: Convert the dependency parsing dataset

20 |

21 | ```bash

22 | python data/scripts/make_dp_dataset.py

23 | ```

24 |

25 |

26 | ### Step 2: Preprocessing the data

27 |

28 | ```bash

29 | python preprocess.py -train_src data/input/dp/src_ptb_sd_train.input -train_tgt data/input/dp/tgt_ptb_sd_train.input -valid_src data/input/dp/src_ptb_sd_dev.input -valid_tgt data/input/dp/tgt_ptb_sd_dev.input -save_data data/temp/dp/dp

30 | ```

31 | We will be working with some example data in `data/` folder.

32 |

33 | The data consists of parallel source (`src`) and target (`tgt`) data containing one sentence per line with tokens separated by a space:

34 |

35 | * `src-train.txt`

36 | * `tgt-train.txt`

37 | * `src-val.txt`

38 | * `tgt-val.txt`

39 |

40 | Validation files are required and used to evaluate the convergence of the training. It usually contains no more than 5000 sentences.

41 |

42 |

43 | After running the preprocessing, the following files are generated:

44 |

45 | * `dp.train.pt`: serialized PyTorch file containing training data

46 | * `dp.valid.pt`: serialized PyTorch file containing validation data

47 | * `dp.vocab.pt`: serialized PyTorch file containing vocabulary data

48 |

49 |

50 | Internally the system never touches the words themselves, but uses these indices.

51 |

52 | ### Step 2: Make the pretrain embedding

53 |

54 | ```bash

55 | python tools/embeddings_to_torch.py -emb_file_enc data/pretrain/glove.6B.100d.txt -dict_file data/temp/dp/dp.vocab.pt -output_file data/temp/dp/en_embeddings -type GloVe

56 | ```

57 |

58 |

59 | ### Step 3: Train the model

60 |

61 | ```bash

62 | python train.py -save_model data/model/dp/dp -batch_size 64 -enc_layers 4 -dec_layers 2 -rnn_size 800 -word_vec_size 100 -feat_vec_size 100 -pre_word_vecs_enc data/temp/dp/en_embeddings.enc.pt -data data/temp/dp/dp -encoder_type brnn -gpuid 0 -position_encoding -bridge -global_attention mlp -optim adam -learning_rate 0.001 -tensorboard -tensorboard_log_dir logs -elmo -elmo_size 500 -elmo_options data/pretrain/elmo_2x4096_512_2048cnn_2xhighway_options.json -elmo_weight data/pretrain/elmo_2x4096_512_2048cnn_2xhighway_weights.hdf5 -subword_elmo -subword_elmo_size 500 -subword_elmo_options data/pretrain/subword_elmo_options.json -subword_weight data/pretrain/en.wiki.bpe.op10000.d50.w2v.txt -subword_spm_model data/pretrain/en.wiki.bpe.op10000.model

63 | ```

64 |

65 | - elmo_2x4096_512_2048cnn_2xhighway_options.json

66 | - elmo_2x4096_512_2048cnn_2xhighway_weights.hdf5

67 | - subword_elmo_options.json

68 | - en.wiki.bpe.op10000.d50.w2v.txt

69 | - en.wiki.bpe.op10000.model

70 |

71 | You can download these files from [here](https://drive.google.com/drive/folders/1ug6ab14fpM22ed_vomOTjjUB8Awh66VM?usp=sharing).

72 |

73 |

74 | ### Step 3: Translate

75 |

76 | ```bash

77 | python translate.py -model data/model/dp/xxx.pt -src data/input/dp/src_ptb_sd_test.input -tgt data/input/dp/tgt_ptb_sd_test.input -output data/results/dp/tgt_ptb_sd_test.pred -replace_unk -verbose -gpu 0 -beam_size 64 -constraint_length 8 -alpha_c 0.8 -alpha_p 0.8

78 | ```

79 |

80 | Now you have a model which you can use to predict on new data. We do this by running beam search where `constraint_length`, `alpha_c`, `alpha_p` are parameters used in tree constraints.

81 |

82 | # Notes

83 | You can refer to our paper for more details. Thank you!

84 |

85 | ## Citation

86 |

87 | [Seq2seq Dependency Parsing](./resources/seq2seq4dp.pdf)

88 |

89 | ```

90 | @inproceedings{li2018seq2seq,

91 | title={Seq2seq dependency parsing},

92 | author={Li, Zuchao and He, Shexia and Zhao, Hai},

93 | booktitle={Proceedings of the 27th International Conference on Computational Linguistics (COLING 2018)},

94 | year={2018}

95 | }

96 | ```

97 |

--------------------------------------------------------------------------------

/data/scripts/eval_subroot.py:

--------------------------------------------------------------------------------

1 | import os

2 | from collections import Counter

3 |

4 | def load_data(path):

5 | with open(path, 'r') as f:

6 | data = f.readlines()

7 |

8 | data = [line.strip().split() for line in data if len(line.strip())>0]

9 |

10 | return data

11 |

12 |

13 | def f1(target, predict):

14 | TP = 0

15 | TN = 0

16 | FP = 0

17 | FN = 0

18 | total = 0

19 | correct = 0

20 | assert len(target) == len(predict)

21 | for i in range(len(target)):

22 | assert len(target[i]) == len(predict[i])

23 | for j in range(len(target[i])):

24 | total += 1

25 | if target[i][j] == predict[i][j]:

26 | correct += 1

27 | assert predict[i][j] == '0' or predict[i][j] == '1'

28 | if target[i][j] == '1' and target[i][j] == predict[i][j]:

29 | TP += 1

30 | if target[i][j] == '0' and target[i][j] == predict[i][j]:

31 | TN += 1

32 | if target[i][j] == '0' and target[i][j] != predict[i][j]:

33 | FP += 1

34 | if target[i][j] == '1' and target[i][j] != predict[i][j]:

35 | FN += 1

36 | P = TP / (TP + FP)

37 | R = TP / (TP + FN)

38 | F1 = 2 * P * R / (P + R)

39 |

40 | print('eval Acc:{:.2f} P:{:.2f} R:{:.2f} F1:{:.2f}'.format(correct/total*100, P * 100, R * 100, F1 * 100))

41 |

42 | if __name__ == '__main__':

43 | f1(load_data(os.path.join(os.path.dirname(__file__), '../input/subroot/tgt_ptb_sd_subroot_train.input')),

44 | load_data(os.path.join(os.path.dirname(__file__), '../results/subroot/tgt_ptb_sd_subroot_train.pred')))

45 |

46 | f1(load_data(os.path.join(os.path.dirname(__file__), '../input/subroot/tgt_ptb_sd_subroot_dev.input')),

47 | load_data(os.path.join(os.path.dirname(__file__), '../results/subroot/tgt_ptb_sd_subroot_dev.pred')))

48 |

49 | f1(load_data(os.path.join(os.path.dirname(__file__), '../input/subroot/tgt_ptb_sd_subroot_test.input')),

50 | load_data(os.path.join(os.path.dirname(__file__), '../results/subroot/tgt_ptb_sd_subroot_test.pred')))

51 |

--------------------------------------------------------------------------------

/data/scripts/make_dp_dataset.py:

--------------------------------------------------------------------------------

1 | import os

2 | import tqdm

3 |

4 | # def is_scientific_notation(s):

5 | # s = str(s)

6 | # if s.count(',')>=1:

7 | # sl = s.split(',')

8 | # for item in sl:

9 | # if not item.isdigit():

10 | # return False

11 | # return True

12 | # return False

13 |

14 | # def is_float(s):

15 | # s = str(s)

16 | # if s.count('.')==1:

17 | # sl = s.split('.')

18 | # left = sl[0]

19 | # right = sl[1]

20 | # if left.startswith('-') and left.count('-')==1 and right.isdigit():

21 | # lleft = left.split('-')[1]

22 | # if lleft.isdigit() or is_scientific_notation(lleft):

23 | # return True

24 | # elif (left.isdigit() or is_scientific_notation(left)) and right.isdigit():

25 | # return True

26 | # return False

27 |

28 | # def is_fraction(s):

29 | # s = str(s)

30 | # if s.count('\/')==1:

31 | # sl = s.split('\/')

32 | # if len(sl)== 2 and sl[0].isdigit() and sl[1].isdigit():

33 | # return True

34 | # if s.count('/')==1:

35 | # sl = s.split('/')

36 | # if len(sl)== 2 and sl[0].isdigit() and sl[1].isdigit():

37 | # return True

38 | # if s[-1]=='%' and len(s)>1:

39 | # return True

40 | # return False

41 |

42 | # def is_number(s):

43 | # s = str(s)

44 | # if s.isdigit() or is_float(s) or is_fraction(s) or is_scientific_notation(s):

45 | # return True

46 | # else:

47 | # return False

48 |

49 | def make_input(file_name, src_path, tgt_path):

50 | with open(file_name, 'r') as f:

51 | data = f.readlines()

52 |

53 | origin_data = []

54 | sentence = []

55 |

56 | for i in range(len(data)):

57 | if len(data[i].strip()) > 0:

58 | sentence.append(data[i].strip().split('\t'))

59 | else:

60 | origin_data.append(sentence)

61 | sentence = []

62 |

63 | if len(sentence) > 0:

64 | origin_data.append(sentence)

65 |

66 | src_data = []

67 | tgt_data = []

68 | for sentence in origin_data:

69 | src_line = []

70 | tgt_line = []

71 | for line in sentence:

72 | dep_ind = int(line[0])

73 | head_ind = int(line[6])

74 | if dep_ind > head_ind:

75 | tag = 'L' + str(abs(dep_ind - head_ind))

76 | else:

77 | tag = 'R' + str(abs(dep_ind - head_ind))

78 | # word = ''.join([c if not c.isdigit() else '0' for c in line[1].lower()])

79 | is_number = False

80 | word = line[1].lower()

81 | for c in word:

82 | if c.isdigit():

83 | is_number = True

84 | break

85 | if is_number:

86 | word = 'number'

87 | src_line.append([word, line[4]])

88 | tgt_line.append(tag)

89 | if len(src_line) >= 1:

90 | src_data.append(src_line)

91 | tgt_data.append(tgt_line)

92 |

93 | with open(src_path, 'w') as f:

94 | for line in src_data:

95 | f.write(' '.join(['|'.join(item) for item in line]))

96 | f.write('\n')

97 |

98 |

99 | with open(tgt_path, 'w') as f:

100 | for line in tgt_data:

101 | f.write(' '.join(line))

102 | f.write('\n')

103 |

104 | if __name__ == '__main__':

105 | train_file = os.path.join(os.path.dirname(__file__), '../ptb-sd/train_pro_wsd.conll')

106 | dev_file = os.path.join(os.path.dirname(__file__), '../ptb-sd/dev_pro.conll')

107 | test_file = os.path.join(os.path.dirname(__file__), '../ptb-sd/test_pro.conll')

108 |

109 | make_input(train_file, os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_train.input'),

110 | os.path.join(os.path.dirname(__file__), '../input/dp/tgt_ptb_sd_train.input'))

111 | make_input(dev_file, os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_dev.input'),

112 | os.path.join(os.path.dirname(__file__), '../input/dp/tgt_ptb_sd_dev.input'))

113 | make_input(test_file, os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_test.input'),

114 | os.path.join(os.path.dirname(__file__), '../input/dp/tgt_ptb_sd_test.input'))

115 |

--------------------------------------------------------------------------------

/data/scripts/make_subroot_dataset.py:

--------------------------------------------------------------------------------

1 | import os

2 | import tqdm

3 |

4 | def make_input(file_name, src_path, tgt_path):

5 | with open(file_name, 'r') as f:

6 | data = f.readlines()

7 |

8 | origin_data = []

9 | sentence = []

10 |

11 | for i in range(len(data)):

12 | if len(data[i].strip()) > 0:

13 | sentence.append(data[i].strip().split('\t'))

14 | else:

15 | origin_data.append(sentence)

16 | sentence = []

17 |

18 | if len(sentence) > 0:

19 | origin_data.append(sentence)

20 |

21 | src_data = []

22 | tgt_data = []

23 | for sentence in origin_data:

24 | src_line = []

25 | tgt_line = []

26 | for line in sentence:

27 | dep_ind = int(line[0])

28 | head_ind = int(line[6])

29 | if head_ind == 0:

30 | tag = '1'

31 | else:

32 | tag = '0'

33 | # word = ''.join([c if not c.isdigit() else '0' for c in line[1].lower()])

34 | is_number = False

35 | word = line[1].lower()

36 | for c in word:

37 | if c.isdigit():

38 | is_number = True

39 | break

40 | if is_number:

41 | word = 'number'

42 | src_line.append([word, line[4]])

43 | tgt_line.append(tag)

44 | if len(src_line) > 1:

45 | src_data.append(src_line)

46 | tgt_data.append(tgt_line)

47 |

48 | with open(src_path, 'w') as f:

49 | for line in src_data:

50 | f.write(' '.join(['|'.join(item) for item in line]))

51 | f.write('\n')

52 |

53 |

54 | with open(tgt_path, 'w') as f:

55 | for line in tgt_data:

56 | f.write(' '.join(line))

57 | f.write('\n')

58 |

59 | if __name__ == '__main__':

60 | train_file = os.path.join(os.path.dirname(__file__), '../ptb-sd/train_pro_wsd.conll')

61 | dev_file = os.path.join(os.path.dirname(__file__), '../ptb-sd/dev_pro.conll')

62 | test_file = os.path.join(os.path.dirname(__file__), '../ptb-sd/test_pro.conll')

63 |

64 | make_input(train_file,

65 | os.path.join(os.path.dirname(__file__), '../input/subroot/src_ptb_sd_subroot_train.input'),

66 | os.path.join(os.path.dirname(__file__), '../input/subroot/tgt_ptb_sd_subroot_train.input'))

67 |

68 | make_input(dev_file,

69 | os.path.join(os.path.dirname(__file__), '../input/subroot/src_ptb_sd_subroot_dev.input'),

70 | os.path.join(os.path.dirname(__file__), '../input/subroot/tgt_ptb_sd_subroot_dev.input'))

71 |

72 | make_input(test_file,

73 | os.path.join(os.path.dirname(__file__), '../input/subroot/src_ptb_sd_subroot_test.input'),

74 | os.path.join(os.path.dirname(__file__), '../input/subroot/tgt_ptb_sd_subroot_test.input'))

75 |

--------------------------------------------------------------------------------

/data/scripts/make_wsd_pred.py:

--------------------------------------------------------------------------------

1 | import os

2 |

3 | def make_wsd_pred(pred_file, map_file, output_file):

4 | with open(pred_file, 'r') as f:

5 | pred_data = f.readlines()

6 |

7 | pred_data = [line.split() for line in pred_data if len(line.strip())>0]

8 |

9 |

10 | with open(map_file, 'r') as f:

11 | map_data = f.readlines()

12 |

13 | map_data = [line.strip() for line in map_data if len(line.strip())>0]

14 |

15 | output_data = []

16 |

17 | assert len(map_data) == len(pred_data)

18 |

19 | sent_len = len(map_data)

20 | sent_line = []

21 | for i in range(sent_len):

22 | if len(sent_line) == 0:

23 | sent_line = pred_data[i]

24 | else:

25 | if map_data[i] == map_data[i-1]:

26 | sent_line[-1] = ''

27 | sent_line += pred_data[i][1:]

28 | else:

29 | output_data.append(sent_line)

30 | sent_line = pred_data[i]

31 |

32 | if len(sent_line)>0:

33 | output_data.append(sent_line)

34 |

35 | with open(output_file, 'w') as f:

36 | for i in range(len(output_data)):

37 | for j in range(len(output_data[i])):

38 | if output_data[i][j] == '':

39 | output_data[i][j] = 'L'+str(j+1)

40 | f.write(' '.join(output_data[i]))

41 | f.write('\n')

42 |

43 |

44 | if __name__ == '__main__':

45 | # make_wsd_pred(os.path.join(os.path.dirname(__file__), '../results/dp/tgt_ptb_sd_dev_wsd_30.pred'),

46 | # os.path.join(os.path.dirname(__file__), '../input/dp/tgt_ptb_sd_dev_wsd_30_map.input'))

47 |

48 | make_wsd_pred(os.path.join(os.path.dirname(__file__), '../results/dp/tgt_ptb_sd_test_wsd_40.pred'),

49 | os.path.join(os.path.dirname(__file__), '../input/dp/tgt_ptb_sd_test_wsd_40_map.input'),

50 | os.path.join(os.path.dirname(__file__), '../results/dp/tgt_ptb_sd_test_wsd_40_org.pred'))

51 |

52 |

--------------------------------------------------------------------------------

/data/scripts/mergy_subroot_feature.py:

--------------------------------------------------------------------------------

1 | # we merge the golden subroot feature into train dataset for training

2 | # and we use the predict subroot (by BiLSTM+CRF) feature into dev/train dataset

3 |

4 | import os

5 |

6 | def merge_train(input_file, origin_file, output_file):

7 | with open(input_file, 'r') as f:

8 | input_data = f.readlines()

9 |

10 | input_data = [line.split() for line in input_data if len(line.strip())>0]

11 |

12 | with open(origin_file, 'r') as f:

13 | data = f.readlines()

14 |

15 | origin_data = []

16 | sentence = []

17 |

18 | for i in range(len(data)):

19 | if len(data[i].strip()) > 0:

20 | sentence.append(data[i].strip().split('\t'))

21 | else:

22 | origin_data.append(sentence)

23 | sentence = []

24 |

25 | if len(sentence) > 0:

26 | origin_data.append(sentence)

27 |

28 | assert len(input_data) == len(origin_data)

29 |

30 | with open(output_file, 'w') as f:

31 | for i in range(len(input_data)):

32 | assert len(input_data[i]) == len(origin_data[i])

33 | line = []

34 | for j in range(len(input_data[i])):

35 | if int(origin_data[i][j][6]) == 0:

36 | line.append(input_data[i][j]+'|1')

37 | else:

38 | line.append(input_data[i][j]+'|0')

39 | f.write(' '.join(line))

40 | f.write('\n')

41 |

42 |

43 | def merge_pred(input_file, subroot_pred_file, output_file):

44 | with open(input_file, 'r') as f:

45 | input_data = f.readlines()

46 |

47 | input_data = [line.split() for line in input_data if len(line.strip())>0]

48 |

49 | with open(subroot_pred_file, 'r') as f:

50 | data = f.readlines()

51 |

52 | pred_data = []

53 | sentence = []

54 |

55 | for i in range(len(data)):

56 | if len(data[i].strip()) > 0:

57 | sentence.append(data[i].strip().split('\t'))

58 | else:

59 | pred_data.append(sentence)

60 | sentence = []

61 |

62 | if len(sentence) > 0:

63 | pred_data.append(sentence)

64 |

65 | assert len(input_data) == len(pred_data)

66 |

67 | with open(output_file, 'w') as f:

68 | for i in range(len(input_data)):

69 | assert len(input_data[i]) == len(pred_data[i])

70 | line = []

71 | for j in range(len(input_data[i])):

72 | line.append(input_data[i][j]+'|'+pred_data[i][j][1])

73 | f.write(' '.join(line))

74 | f.write('\n')

75 |

76 |

77 | if __name__ == '__main__':

78 | merge_train(os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_train.input'),

79 | os.path.join(os.path.dirname(__file__), '../ptb-sd/train_pro.conll'),

80 | os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_train_ws.input'))

81 |

82 | merge_pred(os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_dev.input'),

83 | os.path.join(os.path.dirname(__file__), '../../subroot/result/dev_predicate_95.94.pred'),

84 | os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_dev_ws.input'))

85 |

86 | merge_pred(os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_test.input'),

87 | os.path.join(os.path.dirname(__file__), '../../subroot/result/test_predicate_95.16.pred'),

88 | os.path.join(os.path.dirname(__file__), '../input/dp/src_ptb_sd_test_ws.input'))

--------------------------------------------------------------------------------

/data/scripts/recover_dataset.py:

--------------------------------------------------------------------------------

1 | import os

2 |

3 |

4 | def recover_data(file_name, pred_data, output_path):

5 | with open(file_name, 'r') as f:

6 | data = f.readlines()

7 |

8 |

9 | golden_data = []

10 | sentence = []

11 |

12 | for i in range(len(data)):

13 | if len(data[i].strip()) > 0:

14 | sentence.append(data[i].strip().split('\t'))

15 | else:

16 | golden_data.append(sentence)

17 | sentence = []

18 |

19 | if len(sentence) > 0:

20 | golden_data.append(sentence)

21 |

22 | with open(pred_data, 'r') as f:

23 | data = f.readlines()

24 |

25 | pred_data = [item.strip().split() for item in data if len(item.strip()) > 0]

26 |

27 | pred_index = 0

28 | for i in range(len(golden_data)):

29 | predicate_idx = 0

30 | for j in range(len(golden_data[i])):

31 | if golden_data[i][j][12] == 'Y':

32 | predicate_idx += 1

33 | for k in range(len(golden_data[i])):

34 | golden_data[i][k][13 + predicate_idx] = pred_data[pred_index][k]

35 | pred_index += 1

36 |

37 | with open(output_path, 'w') as f:

38 | for sentence in golden_data:

39 | for line in sentence:

40 | f.write('\t'.join(line))

41 | f.write('\n')

42 | f.write('\n')

43 |

44 | if __name__ == '__main__':

45 | recover_data(os.path.join(os.path.dirname(__file__), 'conll09-english/conll09_test.dataset'),

46 | os.path.join(os.path.dirname(__file__), 'tgt_conll09_en_test.pred'),

47 | os.path.join(os.path.dirname(__file__), 'conll09_en_test.dataset.pred'))

48 |

--------------------------------------------------------------------------------

/data/scripts/subroot_replace_unk.py:

--------------------------------------------------------------------------------

1 | import os

2 |

3 | def replace_unk(input_file):

4 | with open(input_file, 'r') as f:

5 | input_data = f.readlines()

6 |

7 | input_data = [line.split() for line in input_data if len(line.strip())>0]

8 |

9 | with open(input_file, 'w') as f:

10 | for i in range(len(input_data)):

11 | line = []

12 | for j in range(len(input_data[i])):

13 | if input_data[i][j] == '0' or input_data[i][j]=='1':

14 | line.append(input_data[i][j])

15 | else:

16 | line.append('0')

17 | f.write(' '.join(line))

18 | f.write('\n')

19 |

20 |

21 | if __name__ == '__main__':

22 | replace_unk(os.path.join(os.path.dirname(__file__), '../results/subroot/tgt_ptb_sd_subroot_train.pred'))

23 |

24 | replace_unk(os.path.join(os.path.dirname(__file__), '../results/subroot/tgt_ptb_sd_subroot_dev.pred'))

25 |

26 | replace_unk(os.path.join(os.path.dirname(__file__), '../results/subroot/tgt_ptb_sd_subroot_test.pred'))

--------------------------------------------------------------------------------

/node2vec/train.py:

--------------------------------------------------------------------------------

1 | import networkx as nx

2 | from node2vec import Node2Vec

3 |

4 | # FILES

5 | EMBEDDING_FILENAME = './node2vec_en.emb'

6 | EMBEDDING_MODEL_FILENAME = './node2vec_en.model'

7 |

8 | # Create a graph

9 | # graph = nx.fast_gnp_random_graph(n=100, p=0.5)

10 | graph = nx.Graph()

11 |

12 | raw_train_file = '../data/ptb-sd/train_pro.conll'

13 |

14 | with open(raw_train_file, 'r') as f:

15 | data = f.readlines()

16 |

17 | # read data

18 | train_data = []

19 | sentence = []

20 | for line in data:

21 | if len(line.strip()) > 0:

22 | line = line.strip().split('\t')

23 | sentence.append(line)

24 | else:

25 | train_data.append(sentence)

26 | sentence = []

27 | if len(sentence)>0:

28 | train_data.append(sentence)

29 | sentence = []

30 |

31 | for sentence in train_data:

32 | for line in sentence:

33 | head_idx = int(line[6])-1

34 | if head_idx == -1:

35 | is_number = False

36 | word = line[1].lower()

37 | for c in word:

38 | if c.isdigit():

39 | is_number = True

40 | break

41 | if is_number:

42 | word = 'number'

43 | graph.add_edge('', word, weight=1)

44 | else:

45 | hw = sentence[head_idx][1].lower()

46 | is_number = False

47 | for c in hw:

48 | if c.isdigit():

49 | is_number = True

50 | break

51 | if is_number:

52 | hw = 'number'

53 | w = line[1].lower()

54 | is_number = False

55 | for c in w:

56 | if c.isdigit():

57 | is_number = True

58 | break

59 | if is_number:

60 | w = 'number'

61 | graph.add_edge(hw, w, weight=0.5)

62 |

63 | # Precompute probabilities and generate walks

64 | node2vec = Node2Vec(graph, dimensions=100, walk_length=100, num_walks=18, workers=1)

65 |

66 | # Embed

67 | model = node2vec.fit(window=16, min_count=1, batch_words=64) # Any keywords acceptable by gensim.Word2Vec can be passed, `diemnsions` and `workers` are automatically passed (from the Node2Vec constructor)

68 |

69 | # Look for most similar nodes

70 | model.wv.most_similar('') # Output node names are always strings

71 |

72 | # Save embeddings for later use

73 | model.wv.save_word2vec_format(EMBEDDING_FILENAME)

74 |

75 | # Save model for later use

76 | model.save(EMBEDDING_MODEL_FILENAME)

--------------------------------------------------------------------------------

/onmt/Highway.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torch.nn as nn

3 |

4 |

5 | class HighwayMLP(nn.Module):

6 |

7 | def __init__(self,

8 | input_size,

9 | gate_bias=-2,

10 | activation_function=nn.functional.relu,

11 | gate_activation=nn.functional.softmax):

12 |

13 | super(HighwayMLP, self).__init__()

14 |

15 | self.activation_function = activation_function

16 | self.gate_activation = gate_activation

17 |

18 | self.normal_layer = nn.Linear(input_size, input_size)

19 |

20 | self.gate_layer = nn.Linear(input_size, input_size)

21 | self.gate_layer.bias.data.fill_(gate_bias)

22 |

23 | def forward(self, x):

24 |

25 | normal_layer_result = self.activation_function(self.normal_layer(x))

26 | gate_layer_result = self.gate_activation(self.gate_layer(x),dim=0)

27 |

28 | multiplyed_gate_and_normal = torch.mul(normal_layer_result, gate_layer_result)

29 | multiplyed_gate_and_input = torch.mul((1 - gate_layer_result), x)

30 |

31 | return torch.add(multiplyed_gate_and_normal,

32 | multiplyed_gate_and_input)

33 |

34 |

35 | class HighwayCNN(nn.Module):

36 | def __init__(self,

37 | input_size,

38 | gate_bias=-1,

39 | activation_function=nn.functional.relu,

40 | gate_activation=nn.functional.softmax):

41 |

42 | super(HighwayCNN, self).__init__()

43 |

44 | self.activation_function = activation_function

45 | self.gate_activation = gate_activation

46 |

47 | self.normal_layer = nn.Linear(input_size, input_size)

48 |

49 | self.gate_layer = nn.Linear(input_size, input_size)

50 | self.gate_layer.bias.data.fill_(gate_bias)

51 |

52 | def forward(self, x):

53 |

54 | normal_layer_result = self.activation_function(self.normal_layer(x))

55 | gate_layer_result = self.gate_activation(self.gate_layer(x))

56 |

57 | multiplyed_gate_and_normal = torch.mul(normal_layer_result, gate_layer_result)

58 | multiplyed_gate_and_input = torch.mul((1 - gate_layer_result), x)

59 |

60 | return torch.add(multiplyed_gate_and_normal,

61 | multiplyed_gate_and_input)

--------------------------------------------------------------------------------

/onmt/Optim.py:

--------------------------------------------------------------------------------

1 | import torch.optim as optim

2 | from torch.nn.utils import clip_grad_norm

3 |

4 |

5 | class MultipleOptimizer(object):

6 | def __init__(self, op):

7 | self.optimizers = op

8 |

9 | def zero_grad(self):

10 | for op in self.optimizers:

11 | op.zero_grad()

12 |

13 | def step(self):

14 | for op in self.optimizers:

15 | op.step()

16 |

17 |

18 | class Optim(object):

19 | """

20 | Controller class for optimization. Mostly a thin

21 | wrapper for `optim`, but also useful for implementing

22 | rate scheduling beyond what is currently available.

23 | Also implements necessary methods for training RNNs such

24 | as grad manipulations.

25 |

26 | Args:

27 | method (:obj:`str`): one of [sgd, adagrad, adadelta, adam]

28 | lr (float): learning rate

29 | lr_decay (float, optional): learning rate decay multiplier

30 | start_decay_at (int, optional): epoch to start learning rate decay

31 | beta1, beta2 (float, optional): parameters for adam

32 | adagrad_accum (float, optional): initialization parameter for adagrad

33 | decay_method (str, option): custom decay options

34 | warmup_steps (int, option): parameter for `noam` decay

35 | model_size (int, option): parameter for `noam` decay

36 | """

37 | # We use the default parameters for Adam that are suggested by

38 | # the original paper https://arxiv.org/pdf/1412.6980.pdf

39 | # These values are also used by other established implementations,

40 | # e.g. https://www.tensorflow.org/api_docs/python/tf/train/AdamOptimizer

41 | # https://keras.io/optimizers/

42 | # Recently there are slightly different values used in the paper

43 | # "Attention is all you need"

44 | # https://arxiv.org/pdf/1706.03762.pdf, particularly the value beta2=0.98

45 | # was used there however, beta2=0.999 is still arguably the more

46 | # established value, so we use that here as well

47 | def __init__(self, method, lr, max_grad_norm,

48 | lr_decay=1, start_decay_at=None,

49 | beta1=0.9, beta2=0.999,

50 | adagrad_accum=0.0,

51 | decay_method=None,

52 | warmup_steps=4000,

53 | model_size=None):

54 | self.last_ppl = None

55 | self.lr = lr

56 | self.original_lr = lr

57 | self.max_grad_norm = max_grad_norm

58 | self.method = method

59 | self.lr_decay = lr_decay

60 | self.start_decay_at = start_decay_at

61 | self.start_decay = False

62 | self._step = 0

63 | self.betas = [beta1, beta2]

64 | self.adagrad_accum = adagrad_accum

65 | self.decay_method = decay_method

66 | self.warmup_steps = warmup_steps

67 | self.model_size = model_size

68 |

69 | def set_parameters(self, params):

70 | self.params = []

71 | self.sparse_params = []

72 | for k, p in params:

73 | if p.requires_grad:

74 | if self.method != 'sparseadam' or "embed" not in k:

75 | self.params.append(p)

76 | else:

77 | self.sparse_params.append(p)

78 | if self.method == 'sgd':

79 | self.optimizer = optim.SGD(self.params, lr=self.lr)

80 | elif self.method == 'adagrad':

81 | self.optimizer = optim.Adagrad(self.params, lr=self.lr)

82 | for group in self.optimizer.param_groups:

83 | for p in group['params']:

84 | self.optimizer.state[p]['sum'] = self.optimizer\

85 | .state[p]['sum'].fill_(self.adagrad_accum)

86 | elif self.method == 'adadelta':

87 | self.optimizer = optim.Adadelta(self.params, lr=self.lr)

88 | elif self.method == 'adam':

89 | self.optimizer = optim.Adam(self.params, lr=self.lr,

90 | betas=self.betas, eps=1e-9)

91 | elif self.method == 'sparseadam':

92 | self.optimizer = MultipleOptimizer(

93 | [optim.Adam(self.params, lr=self.lr,

94 | betas=self.betas, eps=1e-8),

95 | optim.SparseAdam(self.sparse_params, lr=self.lr,

96 | betas=self.betas, eps=1e-8)])

97 | else:

98 | raise RuntimeError("Invalid optim method: " + self.method)

99 |

100 | def _set_rate(self, lr):

101 | self.lr = lr

102 | if self.method != 'sparseadam':

103 | self.optimizer.param_groups[0]['lr'] = self.lr

104 | else:

105 | for op in self.optimizer.optimizers:

106 | op.param_groups[0]['lr'] = self.lr

107 |

108 | def step(self):

109 | """Update the model parameters based on current gradients.

110 |

111 | Optionally, will employ gradient modification or update learning

112 | rate.

113 | """

114 | self._step += 1

115 |

116 | # Decay method used in tensor2tensor.

117 | if self.decay_method == "noam":

118 | self._set_rate(

119 | self.original_lr *

120 | (self.model_size ** (-0.5) *

121 | min(self._step ** (-0.5),

122 | self._step * self.warmup_steps**(-1.5))))

123 |

124 | if self.max_grad_norm:

125 | clip_grad_norm(self.params, self.max_grad_norm)

126 | self.optimizer.step()

127 |

128 | def update_learning_rate(self, ppl, epoch):

129 | """

130 | Decay learning rate if val perf does not improve

131 | or we hit the start_decay_at limit.

132 | """

133 |

134 | if self.start_decay_at is not None and epoch >= self.start_decay_at:

135 | self.start_decay = True

136 | if self.last_ppl is not None and ppl > self.last_ppl:

137 | self.start_decay = True

138 |

139 | if self.start_decay:

140 | self.lr = self.lr * self.lr_decay

141 | print("Decaying learning rate to %g" % self.lr)

142 |

143 | self.last_ppl = ppl

144 | if self.method != 'sparseadam':

145 | self.optimizer.param_groups[0]['lr'] = self.lr

146 |

--------------------------------------------------------------------------------

/onmt/Utils.py:

--------------------------------------------------------------------------------

1 | import torch

2 |

3 |

4 | def aeq(*args):

5 | """

6 | Assert all arguments have the same value

7 | """

8 | arguments = (arg for arg in args)

9 | first = next(arguments)

10 | assert all(arg == first for arg in arguments), \

11 | "Not all arguments have the same value: " + str(args)

12 |

13 |

14 | def sequence_mask(lengths, max_len=None):

15 | """

16 | Creates a boolean mask from sequence lengths.

17 | """

18 | batch_size = lengths.numel()

19 | max_len = max_len or lengths.max()

20 | return (torch.arange(0, max_len)

21 | .type_as(lengths)

22 | .repeat(batch_size, 1)

23 | .lt(lengths.unsqueeze(1)))

24 |

25 |

26 | def use_gpu(opt):

27 | return (hasattr(opt, 'gpuid') and len(opt.gpuid) > 0) or \

28 | (hasattr(opt, 'gpu') and opt.gpu > -1)

29 |

--------------------------------------------------------------------------------

/onmt/__init__.py:

--------------------------------------------------------------------------------

1 | import onmt.io

2 | import onmt.Models

3 | import onmt.Loss

4 | import onmt.translate

5 | import onmt.opts