├── .gitignore
├── README.md
├── README
│   └── Logo.png
├── license
│   ├── CHOSUNTRUCK_LICENSE
│   ├── PYUSERINPUT_LICENSE.txt
│   ├── TENSORBOX_LICENSE.txt
│   └── TENSORFLOW_LICENSE.txt
├── linux
│   ├── Makefile
│   ├── src
│   │   ├── IPM.cc
│   │   ├── IPM.h
│   │   ├── ets2_self_driving.h
│   │   ├── getScreen_linux.cc
│   │   ├── getScreen_linux.h
│   │   ├── linefinder.cc
│   │   ├── main2.cc
│   │   ├── uinput.c
│   │   └── uinput.h
│   └── tensorbox
│       ├── Car_detection.py
│       ├── data
│       │   └── inception_v1.ckpt
│       ├── license
│       │   ├── TENSORBOX_LICENSE.txt
│       │   └── TENSORFLOW_LICENSE.txt
│       ├── output
│       │   └── overfeat_rezoom_2017_02_09_13.28
│       │       ├── checkpoint
│       │       ├── hypes.json
│       │       ├── rpc-save.ckpt-100000.val_overlap0.5.txt
│       │       ├── save.ckpt-100000.data-00000-of-00001
│       │       ├── save.ckpt-100000.gt_val.json
│       │       ├── save.ckpt-100000.index
│       │       └── save.ckpt-100000.val.json
│       ├── pymouse
│       │   ├── __init__.py
│       │   ├── base.py
│       │   ├── java_.py
│       │   ├── mac.py
│       │   ├── mir.py
│       │   ├── wayland.py
│       │   ├── windows.py
│       │   └── x11.py
│       ├── train.py
│       └── utils
│           ├── Makefile
│           ├── __init__.py
│           ├── annolist
│           │   ├── AnnoList_pb2.py
│           │   ├── AnnotationLib.py
│           │   ├── LICENSE_FOR_THIS_FOLDER
│           │   ├── MatPlotter.py
│           │   ├── PalLib.py
│           │   ├── __init__.py
│           │   ├── doRPC.py
│           │   ├── ma_utils.py
│           │   └── plotSimple.py
│           ├── data_utils.py
│           ├── googlenet_load.py
│           ├── hungarian
│           │   ├── hungarian.cc
│           │   ├── hungarian.cpp
│           │   └── hungarian.hpp
│           ├── rect.py
│           ├── slim_nets
│           │   ├── __init__.py
│           │   ├── inception_v1.py
│           │   ├── resnet_utils.py
│           │   └── resnet_v1.py
│           ├── stitch_rects.cpp
│           ├── stitch_rects.hpp
│           ├── stitch_wrapper.py
│           ├── stitch_wrapper.pyx
│           └── train_utils.py
└── windows
    └── src
        ├── IPM.cpp
        ├── IPM.h
        ├── ets2_self_driving.h
        ├── guassian_filter.cpp
        ├── hwnd2mat.cpp
        ├── linefinder.cpp
        └── main.cpp

/.gitignore:

--------------------------------------------------------------------------------

1 | # Created by https://www.gitignore.io/api/c,c++,cuda,opencv,visualstudio,windows

2 |

3 | ### C ###

4 | # Object files

5 | *.o

6 | *.ko

7 | *.obj

8 | *.elf

9 |

10 | # Precompiled Headers

11 | *.gch

12 | *.pch

13 |

14 | # Libraries

15 | *.lib

16 | *.a

17 | *.la

18 | *.lo

19 |

20 | # Shared objects (inc. Windows DLLs)

21 | *.dll

22 | *.so

23 | *.so.*

24 | *.dylib

25 |

26 | # Executables

27 | *.exe

28 | *.out

29 | *.app

30 | *.i*86

31 | *.x86_64

32 | *.hex

33 |

34 | # Debug files

35 | *.dSYM/

36 | *.su

37 |

38 |

39 | ### C++ ###

40 | # Compiled Object files

41 | *.slo

42 | *.lo

43 | *.o

44 | *.obj

45 |

46 | # Precompiled Headers

47 | *.gch

48 | *.pch

49 |

50 | # Compiled Dynamic libraries

51 | *.so

52 | *.dylib

53 | *.dll

54 |

55 | # Fortran module files

56 | *.mod

57 | *.smod

58 |

59 | # Compiled Static libraries

60 | *.lai

61 | *.la

62 | *.a

63 | *.lib

64 |

65 | # Executables

66 | *.exe

67 | *.out

68 | *.app

69 |

70 |

71 | ### CUDA ###

72 | *.i

73 | *.ii

74 | *.gpu

75 | *.ptx

76 | *.cubin

77 | *.fatbin

78 |

79 |

80 | ### OpenCV ###

81 | #OpenCV for Mac and Linux

82 | #build and release folders

83 | */CMakeFiles

84 | */CMakeCache.txt

85 | */cmake_install.cmake

86 | .DS_Store

87 |

88 |

89 | ### VisualStudio ###

90 | ## Ignore Visual Studio temporary files, build results, and

91 | ## files generated by popular Visual Studio add-ons.

92 |

93 | # User-specific files

94 | *.suo

95 | *.user

96 | *.userosscache

97 | *.sln.docstates

98 |

99 | # User-specific files (MonoDevelop/Xamarin Studio)

100 | *.userprefs

101 |

102 | # Build results

103 | [Dd]ebug/

104 | [Dd]ebugPublic/

105 | [Rr]elease/

106 | [Rr]eleases/

107 | x64/

108 | x86/

109 | bld/

110 | [Bb]in/

111 | [Oo]bj/

112 | [Ll]og/

113 |

114 | # Visual Studio 2015 cache/options directory

115 | .vs/

116 | # Uncomment if you have tasks that create the project's static files in wwwroot

117 | #wwwroot/

118 |

119 | # MSTest test Results

120 | [Tt]est[Rr]esult*/

121 | [Bb]uild[Ll]og.*

122 |

123 | # NUNIT

124 | *.VisualState.xml

125 | TestResult.xml

126 |

127 | # Build Results of an ATL Project

128 | [Dd]ebugPS/

129 | [Rr]eleasePS/

130 | dlldata.c

131 |

132 | # DNX

133 | project.lock.json

134 | project.fragment.lock.json

135 | artifacts/

136 |

137 | *_i.c

138 | *_p.c

139 | *_i.h

140 | *.ilk

141 | *.meta

142 | *.obj

143 | *.pch

144 | *.pdb

145 | *.pgc

146 | *.pgd

147 | *.rsp

148 | *.sbr

149 | *.tlb

150 | *.tli

151 | *.tlh

152 | *.tmp

153 | *.tmp_proj

154 | *.log

155 | *.vspscc

156 | *.vssscc

157 | .builds

158 | *.pidb

159 | *.svclog

160 | *.scc

161 |

162 | # Chutzpah Test files

163 | _Chutzpah*

164 |

165 | # Visual C++ cache files

166 | ipch/

167 | *.aps

168 | *.ncb

169 | *.opendb

170 | *.opensdf

171 | *.sdf

172 | *.cachefile

173 | *.VC.db

174 | *.VC.VC.opendb

175 |

176 | # Visual Studio profiler

177 | *.psess

178 | *.vsp

179 | *.vspx

180 | *.sap

181 |

182 | # TFS 2012 Local Workspace

183 | $tf/

184 |

185 | # Guidance Automation Toolkit

186 | *.gpState

187 |

188 | # ReSharper is a .NET coding add-in

189 | _ReSharper*/

190 | *.[Rr]e[Ss]harper

191 | *.DotSettings.user

192 |

193 | # JustCode is a .NET coding add-in

194 | .JustCode

195 |

196 | # TeamCity is a build add-in

197 | _TeamCity*

198 |

199 | # DotCover is a Code Coverage Tool

200 | *.dotCover

201 |

202 | # NCrunch

203 | _NCrunch_*

204 | .*crunch*.local.xml

205 | nCrunchTemp_*

206 |

207 | # MightyMoose

208 | *.mm.*

209 | AutoTest.Net/

210 |

211 | # Web workbench (sass)

212 | .sass-cache/

213 |

214 | # Installshield output folder

215 | [Ee]xpress/

216 |

217 | # DocProject is a documentation generator add-in

218 | DocProject/buildhelp/

219 | DocProject/Help/*.HxT

220 | DocProject/Help/*.HxC

221 | DocProject/Help/*.hhc

222 | DocProject/Help/*.hhk

223 | DocProject/Help/*.hhp

224 | DocProject/Help/Html2

225 | DocProject/Help/html

226 |

227 | # Click-Once directory

228 | publish/

229 |

230 | # Publish Web Output

231 | *.[Pp]ublish.xml

232 | *.azurePubxml

233 | # TODO: Comment the next line if you want to checkin your web deploy settings

234 | # but database connection strings (with potential passwords) will be unencrypted

235 | *.pubxml

236 | *.publishproj

237 |

238 | # Microsoft Azure Web App publish settings. Comment the next line if you want to

239 | # checkin your Azure Web App publish settings, but sensitive information contained

240 | # in these scripts will be unencrypted

241 | PublishScripts/

242 |

243 | # NuGet Packages

244 | *.nupkg

245 | # The packages folder can be ignored because of Package Restore

246 | **/packages/*

247 | # except build/, which is used as an MSBuild target.

248 | !**/packages/build/

249 | # Uncomment if necessary however generally it will be regenerated when needed

250 | #!**/packages/repositories.config

251 | # NuGet v3's project.json files produces more ignoreable files

252 | *.nuget.props

253 | *.nuget.targets

254 |

255 | # Microsoft Azure Build Output

256 | csx/

257 | *.build.csdef

258 |

259 | # Microsoft Azure Emulator

260 | ecf/

261 | rcf/

262 |

263 | # Windows Store app package directories and files

264 | AppPackages/

265 | BundleArtifacts/

266 | Package.StoreAssociation.xml

267 | _pkginfo.txt

268 |

269 | # Visual Studio cache files

270 | # files ending in .cache can be ignored

271 | *.[Cc]ache

272 | # but keep track of directories ending in .cache

273 | !*.[Cc]ache/

274 |

275 | # Others

276 | ClientBin/

277 | ~$*

278 | *~

279 | *.dbmdl

280 | *.dbproj.schemaview

281 | *.pfx

282 | *.publishsettings

283 | node_modules/

284 | orleans.codegen.cs

285 |

286 | # Since there are multiple workflows, uncomment next line to ignore bower_components

287 | # (https://github.com/github/gitignore/pull/1529#issuecomment-104372622)

288 | #bower_components/

289 |

290 | # RIA/Silverlight projects

291 | Generated_Code/

292 |

293 | # Backup & report files from converting an old project file

294 | # to a newer Visual Studio version. Backup files are not needed,

295 | # because we have git ;-)

296 | _UpgradeReport_Files/

297 | Backup*/

298 | UpgradeLog*.XML

299 | UpgradeLog*.htm

300 |

301 | # SQL Server files

302 | *.mdf

303 | *.ldf

304 |

305 | # Business Intelligence projects

306 | *.rdl.data

307 | *.bim.layout

308 | *.bim_*.settings

309 |

310 | # Microsoft Fakes

311 | FakesAssemblies/

312 |

313 | # GhostDoc plugin setting file

314 | *.GhostDoc.xml

315 |

316 | # Node.js Tools for Visual Studio

317 | .ntvs_analysis.dat

318 |

319 | # Visual Studio 6 build log

320 | *.plg

321 |

322 | # Visual Studio 6 workspace options file

323 | *.opt

324 |

325 | # Visual Studio LightSwitch build output

326 | **/*.HTMLClient/GeneratedArtifacts

327 | **/*.DesktopClient/GeneratedArtifacts

328 | **/*.DesktopClient/ModelManifest.xml

329 | **/*.Server/GeneratedArtifacts

330 | **/*.Server/ModelManifest.xml

331 | _Pvt_Extensions

332 |

333 | # Paket dependency manager

334 | .paket/paket.exe

335 | paket-files/

336 |

337 | # FAKE - F# Make

338 | .fake/

339 |

340 | # JetBrains Rider

341 | .idea/

342 | *.sln.iml

343 |

344 |

345 | ### Windows ###

346 | # Windows image file caches

347 | Thumbs.db

348 | ehthumbs.db

349 |

350 | # Folder config file

351 | Desktop.ini

352 |

353 | # Recycle Bin used on file shares

354 | $RECYCLE.BIN/

355 |

356 | # Windows Installer files

357 | *.cab

358 | *.msi

359 | *.msm

360 | *.msp

361 |

362 | # Windows shortcuts

363 | *.lnk

364 |

365 | # Generated files

366 | ChosunTruck

367 |

368 | # Build files

369 | build/

370 |

371 | # pyc files

372 | *.pyc

373 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # ChosunTruck

2 |

3 | ## Introduction

4 | ChosunTruck is an autonomous driving solution for [Euro Truck Simulator 2](https://eurotrucksimulator2.com/).

5 | Autonomous driving technology has recently attracted a great deal of attention, so we have been studying the technology behind it.

6 | We are developing it in a simulated environment, Euro Truck Simulator 2, which lets us study the technology with vehicles.

7 | We chose Euro Truck Simulator 2 because it provides a good test environment that closely resembles real roads.

8 |

9 | ## Features

10 | * You can drive a vehicle without controlling it yourself.

11 | * You can understand the principles of autonomous driving.

12 | * (Experimental/Linux only) You can detect where other vehicles are.

13 |

14 | ## How To Run It

15 | ### Windows

16 |

17 | #### Dependencies

18 | - OS: Windows 7, 10 (64bit)

19 |

20 | - IDE: Visual Studio 2013, 2015

21 |

22 | - OpenCV version: >= 3.1

23 |

24 | - [CUDA Toolkit 7.5](https://developer.nvidia.com/cuda-75-downloads-archive) (Note: Choose the ADVANCED INSTALLATION and install ONLY the Toolkit and the Visual Studio Integration. Do NOT install the drivers or the other components the installer would normally add. Once it is installed, your project properties should look like this: https://i.imgur.com/e7IRtjy.png)

25 |

26 | - If you have a problem during installation, look at our [Windows Installation wiki page](https://github.com/bethesirius/ChosunTruck/wiki/Windows-Installation)

27 |

28 | #### Required to allow input to work in Windows:

29 | - **Go to C:\Users\YOURUSERNAME\Documents\Euro Truck Simulator 2\profiles and edit controls.sii from**

30 | ```

31 | config_lines[0]: "device keyboard `di8.keyboard`"

32 | config_lines[1]: "device mouse `fusion.mouse`"

33 | ```

34 | to

35 | ```

36 | config_lines[0]: "device keyboard `sys.keyboard`"

37 | config_lines[1]: "device mouse `sys.mouse`"

38 | ```

39 | (thanks Komat!)

40 | - **While you are in controls.sii, make sure your sensitivity is set to:**

41 | ```

42 | config_lines[33]: "constant c_rsteersens 0.775000"

43 | config_lines[34]: "constant c_asteersens 4.650000"

44 | ```
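You may prefer to script the `controls.sii` device change above instead of editing the file by hand. The following is a minimal C++ sketch of that. It assumes `controls.sii` is stored as plain text, as the `config_lines` shown above suggest. The helper names `useSystemInputDevices` and `patchControlsFile` are hypothetical; they are not part of ChosunTruck. Back up the file first. The sketch does not touch the sensitivity lines, so check those by hand, and still set the file to read-only afterwards.

```cpp
#include <cassert>
#include <fstream>
#include <sstream>
#include <string>

// Replace every occurrence of `from` with `to` in `text`.
static void replaceAll(std::string& text, const std::string& from, const std::string& to)
{
    assert(!from.empty() && "an empty pattern would loop forever");
    for (std::size_t pos = text.find(from); pos != std::string::npos;
         pos = text.find(from, pos + to.size()))
        text.replace(pos, from.size(), to);
}

// Hypothetical helper: swap the DirectInput keyboard and fusion mouse devices
// for the system devices, as the README asks for in controls.sii.
std::string useSystemInputDevices(std::string sii)
{
    replaceAll(sii, "`di8.keyboard`", "`sys.keyboard`");
    replaceAll(sii, "`fusion.mouse`", "`sys.mouse`");
    return sii;
}

// Hypothetical helper: read, patch, and rewrite a plain-text controls.sii.
// Returns false if the file cannot be read or written.
bool patchControlsFile(const std::string& path)
{
    std::ifstream in(path, std::ios::binary);
    if (!in) return false;
    std::stringstream buf;
    buf << in.rdbuf();
    in.close();

    std::ofstream out(path, std::ios::binary | std::ios::trunc);
    if (!out) return false;
    out << useSystemInputDevices(buf.str());
    return static_cast<bool>(out);
}
```

Call `patchControlsFile` with the full path to your profile's `controls.sii`. Running it twice is harmless, because the replaced tokens no longer match after the first run.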

45 | #### Then:

46 | - Set controls.sii to read-only

47 | - Open the Visual Studio project and build it.

48 | - Run ETS2 in windowed mode and set its resolution to 1024 * 768. (It works properly with a 1920 * 1080 screen resolution and ETS2 in 1024 * 768 windowed mode.)

49 |

50 | ### Linux

51 | #### Dependencies

52 | - OS: Ubuntu 16.04 LTS

53 |

54 | - [OpenCV version: >= 3.1](http://embedonix.com/articles/image-processing/installing-opencv-3-1-0-on-ubuntu/)

55 |

56 | - (Optional) TensorFlow version: >= 0.12.1

57 |

58 | #### Build the source code with the following command (inside the linux directory):

59 | ```

60 | make

61 | ```

62 | #### If you want the car detection function, also run:

63 | ```

64 | make Drive

65 | ```

66 | #### Then:

67 | - Run ETS2 in windowed mode and set its resolution to 1024 * 768. (It works properly with a 1920 * 1080 screen resolution and ETS2 in 1024 * 768 windowed mode.)

68 | - The program cannot find the ETS2 window automatically, so move the ETS2 window to the bottom-right corner of the screen.

69 | - In the ETS2 options, set the controls to 'Keyboard + Mouse Steering', 'left click' to accelerate, and 'right click' to brake.

70 | - Go to a highway and set the truck's speed to 40 to 60 km/h. (I recommend turning on cruise control to set the speed easily.)

71 | - Run this program!
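The program does not locate the ETS2 window itself, so it must rely on the window being in a known place on screen. The arithmetic behind the bottom-right placement can be sketched as follows. This is an illustration only: `Rect` and `bottomRightCaptureRect` are hypothetical names, the real capture code in getScreen_linux.cc is not shown here, and window decorations such as the title bar are ignored.

```cpp
#include <cassert>

// Screen-space rectangle in pixels: top-left corner plus size.
struct Rect { int x, y, width, height; };

// Hypothetical helper: where a winW x winH window sits when it is pushed into
// the bottom-right corner of a screenW x screenH desktop.
// Window borders and the title bar are not accounted for in this sketch.
Rect bottomRightCaptureRect(int screenW, int screenH, int winW, int winH)
{
    assert(winW <= screenW && winH <= screenH && "window must fit on screen");
    return { screenW - winW, screenH - winH, winW, winH };
}
```

With the recommended setup (a 1920 * 1080 screen and a 1024 * 768 window), the window's top-left corner lands at (896, 312).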

72 |

73 | #### To enable car detection mode, add -D or --Car_Detection.

74 | ```

75 | ./ChosunTruck [-D|--Car_Detection]

76 | ```
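How main2.cc parses its arguments is not shown here. The following is therefore only a hypothetical sketch of how a program could recognize the two documented spellings of the car-detection switch, `-D` and `--Car_Detection`. The function name `carDetectionRequested` is an assumption, and matching in this sketch is exact and case-sensitive.

```cpp
#include <cassert>
#include <cstring>

// Hypothetical helper: true if -D or --Car_Detection appears among the
// command-line arguments. argv[0] (the program name) is skipped.
bool carDetectionRequested(int argc, const char* const argv[])
{
    assert((argv != nullptr || argc == 0) && "argv must be valid when argc > 0");
    for (int i = 1; i < argc; ++i)
        if (std::strcmp(argv[i], "-D") == 0 ||
            std::strcmp(argv[i], "--Car_Detection") == 0)
            return true;
    return false;
}
```

Inside `main(int argc, char** argv)` it could be called as `carDetectionRequested(argc, argv)`, because `char**` converts implicitly to `const char* const*`.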

77 | ## Troubleshooting

78 | See [our wiki page](https://github.com/bethesirius/ChosunTruck/wiki/Troubleshooting).

79 |

80 | If you have problems running this project, refer to the demo videos below, or [open an issue to contact our team](https://github.com/bethesirius/ChosunTruck/issues).

81 |

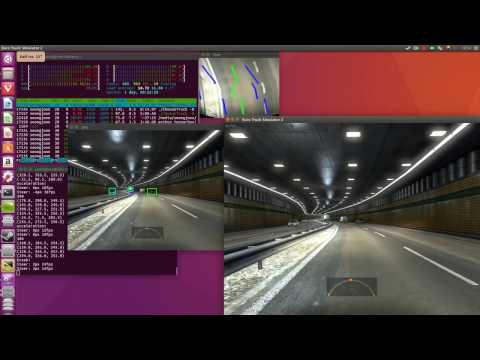

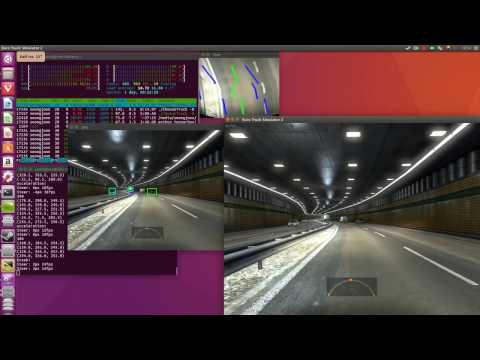

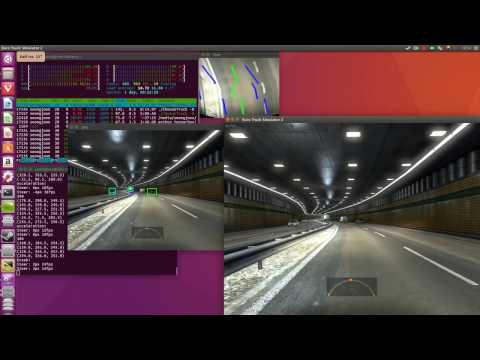

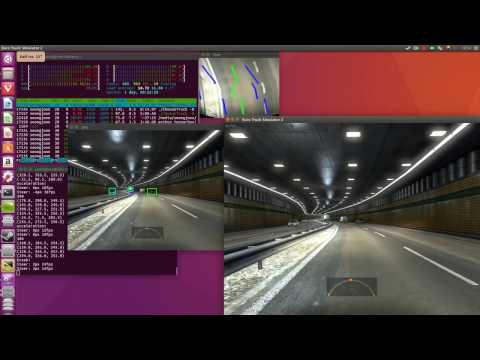

82 | ## Demo Video

83 | Lane Detection (YouTube links)

84 |

85 | [Lane detection demo 1](http://www.youtube.com/watch?v=vF7J_uC045Q)

86 | [Lane detection demo 2](http://www.youtube.com/watch?v=qb99czlIklA)

87 |

88 | Lane Detection + Vehicle Detection (YouTube link)

89 |

90 | [Lane detection + vehicle detection demo](http://www.youtube.com/watch?v=w6H2eGEvzvw)

91 |

92 | ## Todo

93 | * Replace TensorBox with YOLOv2 for better detection performance.

94 | * The information available from the in-game screen is limited. Read the ETS2 process memory to collect more driving-environment data.

95 |

96 | ## Founders

97 | - Chiwan Song, chi3236@gmail.com

98 |

99 | - JaeCheol Sim, simjaecheol@naver.com

100 |

101 | - Seongjoon Chu, hs4393@gmail.com

102 |

103 | ## Contributors

104 | - [zappybiby](https://github.com/zappybiby)

105 |

106 | ## How To Contribute

107 | Anyone who is interested in this project is welcome! Just fork it and send pull requests!

108 |

109 | ## License

110 | ChosunTruck, Euro Truck Simulator 2 auto driving solution

111 | Copyright (C) 2017 chi3236, bethesirius, uoyssim

112 |

113 | This program is free software: you can redistribute it and/or modify

114 | it under the terms of the GNU General Public License as published by

115 | the Free Software Foundation, either version 3 of the License, or

116 | (at your option) any later version.

117 |

118 | This program is distributed in the hope that it will be useful,

119 | but WITHOUT ANY WARRANTY; without even the implied warranty of

120 | MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

121 | GNU General Public License for more details.

122 |

123 | You should have received a copy of the GNU General Public License

124 | along with this program. If not, see .

125 |

--------------------------------------------------------------------------------

/README/Logo.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/bethesirius/ChosunTruck/889644385ce57f971ec2921f006fbb0a167e6f1e/README/Logo.png

--------------------------------------------------------------------------------

/license/TENSORBOX_LICENSE.txt:

--------------------------------------------------------------------------------

1 | Copyright (c) 2016 "The Contributors"

2 |

3 |

4 | Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

5 |

6 | The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

7 |

8 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

9 |

--------------------------------------------------------------------------------

/license/TENSORFLOW_LICENSE.txt:

--------------------------------------------------------------------------------

1 | Copyright 2015 The TensorFlow Authors. All rights reserved.

2 |

3 | Apache License

4 | Version 2.0, January 2004

5 | http://www.apache.org/licenses/

6 |

7 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

8 |

9 | 1. Definitions.

10 |

11 | "License" shall mean the terms and conditions for use, reproduction,

12 | and distribution as defined by Sections 1 through 9 of this document.

13 |

14 | "Licensor" shall mean the copyright owner or entity authorized by

15 | the copyright owner that is granting the License.

16 |

17 | "Legal Entity" shall mean the union of the acting entity and all

18 | other entities that control, are controlled by, or are under common

19 | control with that entity. For the purposes of this definition,

20 | "control" means (i) the power, direct or indirect, to cause the

21 | direction or management of such entity, whether by contract or

22 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

23 | outstanding shares, or (iii) beneficial ownership of such entity.

24 |

25 | "You" (or "Your") shall mean an individual or Legal Entity

26 | exercising permissions granted by this License.

27 |

28 | "Source" form shall mean the preferred form for making modifications,

29 | including but not limited to software source code, documentation

30 | source, and configuration files.

31 |

32 | "Object" form shall mean any form resulting from mechanical

33 | transformation or translation of a Source form, including but

34 | not limited to compiled object code, generated documentation,

35 | and conversions to other media types.

36 |

37 | "Work" shall mean the work of authorship, whether in Source or

38 | Object form, made available under the License, as indicated by a

39 | copyright notice that is included in or attached to the work

40 | (an example is provided in the Appendix below).

41 |

42 | "Derivative Works" shall mean any work, whether in Source or Object

43 | form, that is based on (or derived from) the Work and for which the

44 | editorial revisions, annotations, elaborations, or other modifications

45 | represent, as a whole, an original work of authorship. For the purposes

46 | of this License, Derivative Works shall not include works that remain

47 | separable from, or merely link (or bind by name) to the interfaces of,

48 | the Work and Derivative Works thereof.

49 |

50 | "Contribution" shall mean any work of authorship, including

51 | the original version of the Work and any modifications or additions

52 | to that Work or Derivative Works thereof, that is intentionally

53 | submitted to Licensor for inclusion in the Work by the copyright owner

54 | or by an individual or Legal Entity authorized to submit on behalf of

55 | the copyright owner. For the purposes of this definition, "submitted"

56 | means any form of electronic, verbal, or written communication sent

57 | to the Licensor or its representatives, including but not limited to

58 | communication on electronic mailing lists, source code control systems,

59 | and issue tracking systems that are managed by, or on behalf of, the

60 | Licensor for the purpose of discussing and improving the Work, but

61 | excluding communication that is conspicuously marked or otherwise

62 | designated in writing by the copyright owner as "Not a Contribution."

63 |

64 | "Contributor" shall mean Licensor and any individual or Legal Entity

65 | on behalf of whom a Contribution has been received by Licensor and

66 | subsequently incorporated within the Work.

67 |

68 | 2. Grant of Copyright License. Subject to the terms and conditions of

69 | this License, each Contributor hereby grants to You a perpetual,

70 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

71 | copyright license to reproduce, prepare Derivative Works of,

72 | publicly display, publicly perform, sublicense, and distribute the

73 | Work and such Derivative Works in Source or Object form.

74 |

75 | 3. Grant of Patent License. Subject to the terms and conditions of

76 | this License, each Contributor hereby grants to You a perpetual,

77 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

78 | (except as stated in this section) patent license to make, have made,

79 | use, offer to sell, sell, import, and otherwise transfer the Work,

80 | where such license applies only to those patent claims licensable

81 | by such Contributor that are necessarily infringed by their

82 | Contribution(s) alone or by combination of their Contribution(s)

83 | with the Work to which such Contribution(s) was submitted. If You

84 | institute patent litigation against any entity (including a

85 | cross-claim or counterclaim in a lawsuit) alleging that the Work

86 | or a Contribution incorporated within the Work constitutes direct

87 | or contributory patent infringement, then any patent licenses

88 | granted to You under this License for that Work shall terminate

89 | as of the date such litigation is filed.

90 |

91 | 4. Redistribution. You may reproduce and distribute copies of the

92 | Work or Derivative Works thereof in any medium, with or without

93 | modifications, and in Source or Object form, provided that You

94 | meet the following conditions:

95 |

96 | (a) You must give any other recipients of the Work or

97 | Derivative Works a copy of this License; and

98 |

99 | (b) You must cause any modified files to carry prominent notices

100 | stating that You changed the files; and

101 |

102 | (c) You must retain, in the Source form of any Derivative Works

103 | that You distribute, all copyright, patent, trademark, and

104 | attribution notices from the Source form of the Work,

105 | excluding those notices that do not pertain to any part of

106 | the Derivative Works; and

107 |

108 | (d) If the Work includes a "NOTICE" text file as part of its

109 | distribution, then any Derivative Works that You distribute must

110 | include a readable copy of the attribution notices contained

111 | within such NOTICE file, excluding those notices that do not

112 | pertain to any part of the Derivative Works, in at least one

113 | of the following places: within a NOTICE text file distributed

114 | as part of the Derivative Works; within the Source form or

115 | documentation, if provided along with the Derivative Works; or,

116 | within a display generated by the Derivative Works, if and

117 | wherever such third-party notices normally appear. The contents

118 | of the NOTICE file are for informational purposes only and

119 | do not modify the License. You may add Your own attribution

120 | notices within Derivative Works that You distribute, alongside

121 | or as an addendum to the NOTICE text from the Work, provided

122 | that such additional attribution notices cannot be construed

123 | as modifying the License.

124 |

125 | You may add Your own copyright statement to Your modifications and

126 | may provide additional or different license terms and conditions

127 | for use, reproduction, or distribution of Your modifications, or

128 | for any such Derivative Works as a whole, provided Your use,

129 | reproduction, and distribution of the Work otherwise complies with

130 | the conditions stated in this License.

131 |

132 | 5. Submission of Contributions. Unless You explicitly state otherwise,

133 | any Contribution intentionally submitted for inclusion in the Work

134 | by You to the Licensor shall be under the terms and conditions of

135 | this License, without any additional terms or conditions.

136 | Notwithstanding the above, nothing herein shall supersede or modify

137 | the terms of any separate license agreement you may have executed

138 | with Licensor regarding such Contributions.

139 |

140 | 6. Trademarks. This License does not grant permission to use the trade

141 | names, trademarks, service marks, or product names of the Licensor,

142 | except as required for reasonable and customary use in describing the

143 | origin of the Work and reproducing the content of the NOTICE file.

144 |

145 | 7. Disclaimer of Warranty. Unless required by applicable law or

146 | agreed to in writing, Licensor provides the Work (and each

147 | Contributor provides its Contributions) on an "AS IS" BASIS,

148 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

149 | implied, including, without limitation, any warranties or conditions

150 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

151 | PARTICULAR PURPOSE. You are solely responsible for determining the

152 | appropriateness of using or redistributing the Work and assume any

153 | risks associated with Your exercise of permissions under this License.

154 |

155 | 8. Limitation of Liability. In no event and under no legal theory,

156 | whether in tort (including negligence), contract, or otherwise,

157 | unless required by applicable law (such as deliberate and grossly

158 | negligent acts) or agreed to in writing, shall any Contributor be

159 | liable to You for damages, including any direct, indirect, special,

160 | incidental, or consequential damages of any character arising as a

161 | result of this License or out of the use or inability to use the

162 | Work (including but not limited to damages for loss of goodwill,

163 | work stoppage, computer failure or malfunction, or any and all

164 | other commercial damages or losses), even if such Contributor

165 | has been advised of the possibility of such damages.

166 |

167 | 9. Accepting Warranty or Additional Liability. While redistributing

168 | the Work or Derivative Works thereof, You may choose to offer,

169 | and charge a fee for, acceptance of support, warranty, indemnity,

170 | or other liability obligations and/or rights consistent with this

171 | License. However, in accepting such obligations, You may act only

172 | on Your own behalf and on Your sole responsibility, not on behalf

173 | of any other Contributor, and only if You agree to indemnify,

174 | defend, and hold each Contributor harmless for any liability

175 | incurred by, or claims asserted against, such Contributor by reason

176 | of your accepting any such warranty or additional liability.

177 |

178 | END OF TERMS AND CONDITIONS

179 |

180 | APPENDIX: How to apply the Apache License to your work.

181 |

182 | To apply the Apache License to your work, attach the following

183 | boilerplate notice, with the fields enclosed by brackets "[]"

184 | replaced with your own identifying information. (Don't include

185 | the brackets!) The text should be enclosed in the appropriate

186 | comment syntax for the file format. We also recommend that a

187 | file or class name and description of purpose be included on the

188 | same "printed page" as the copyright notice for easier

189 | identification within third-party archives.

190 |

191 | Copyright 2015, The TensorFlow Authors.

192 |

193 | Licensed under the Apache License, Version 2.0 (the "License");

194 | you may not use this file except in compliance with the License.

195 | You may obtain a copy of the License at

196 |

197 | http://www.apache.org/licenses/LICENSE-2.0

198 |

199 | Unless required by applicable law or agreed to in writing, software

200 | distributed under the License is distributed on an "AS IS" BASIS,

201 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

202 | See the License for the specific language governing permissions and

203 | limitations under the License.

204 |

--------------------------------------------------------------------------------

/linux/Makefile:

--------------------------------------------------------------------------------

1 | OUT_O_DIR = build

2 | TENSORBOX_UTILS_DIR = tensorbox/utils

3 |

4 | OBJS = $(OUT_O_DIR)/getScreen_linux.o $(OUT_O_DIR)/main2.o $(OUT_O_DIR)/IPM.o $(OUT_O_DIR)/linefinder.o $(OUT_O_DIR)/uinput.o

5 |

6 | CC = gcc

7 | CFLAGS = -std=c11 -Wall -O3 -march=native

8 | CPP = g++

9 | CPPFLAGS = `pkg-config opencv --cflags --libs` -std=c++11 -lX11 -Wall -fopenmp -O3 -march=native

10 |

11 | TARGET = ChosunTruck

12 |

13 | $(TARGET) : $(OBJS)

14 | 	$(CPP) $(OBJS) $(CPPFLAGS) -o $@

15 |

16 | $(OUT_O_DIR)/main2.o : src/main2.cc

17 | 	mkdir -p $(@D)

18 | 	$(CPP) -c $< $(CPPFLAGS) -o $@

19 | $(OUT_O_DIR)/getScreen_linux.o : src/getScreen_linux.cc

20 | 	mkdir -p $(@D)

21 | 	$(CPP) -c $< $(CPPFLAGS) -o $@

22 | $(OUT_O_DIR)/IPM.o : src/IPM.cc

23 | 	mkdir -p $(@D)

24 | 	$(CPP) -c $< $(CPPFLAGS) -o $@

25 | $(OUT_O_DIR)/linefinder.o : src/linefinder.cc

26 | 	mkdir -p $(@D)

27 | 	$(CPP) -c $< $(CPPFLAGS) -o $@

28 | $(OUT_O_DIR)/uinput.o : src/uinput.c

29 | 	mkdir -p $(@D)

30 | 	$(CC) -c $< $(CFLAGS) -o $@

31 |

32 | clean :

33 | 	rm -f $(OBJS) ./$(TARGET)

34 |

35 | .PHONY: Drive clean hungarian

36 |

37 | Drive:

38 | 	pip install runcython

39 | 	makecython++ $(TENSORBOX_UTILS_DIR)/stitch_wrapper.pyx "" "$(TENSORBOX_UTILS_DIR)/stitch_rects.cpp $(TENSORBOX_UTILS_DIR)/hungarian/hungarian.cpp"

40 |

41 | hungarian: $(TENSORBOX_UTILS_DIR)/hungarian/hungarian.so

42 |

43 | $(TENSORBOX_UTILS_DIR)/hungarian/hungarian.so:

44 | 	cd $(TENSORBOX_UTILS_DIR)/hungarian && \

45 | 	TF_INC=$$(python -c 'import tensorflow as tf; print(tf.sysconfig.get_include())') && \

46 | 	if [ `uname` = Darwin ];\

47 | 	then g++ -std=c++11 -shared hungarian.cc -o hungarian.so -fPIC -I $$TF_INC -D_GLIBCXX_USE_CXX11_ABI=0;\

48 | 	else g++ -std=c++11 -shared hungarian.cc -o hungarian.so -fPIC -I $$TF_INC; fi

49 |

50 |

51 |

--------------------------------------------------------------------------------
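In linux/src/IPM.cc, the constructor uses OpenCV's `getPerspectiveTransform( m_origPoints, m_dstPoints )` to compute the homography `m_H` from exactly four point pairs. `applyHomography( const Point2d& )` then forwards to an `applyHomography( _point, m_H )` overload, whose body lies outside this excerpt.

The sketch below is a dependency-free illustration of what those two steps do. It solves the 8x8 linear system for a homography whose bottom-right entry is fixed to 1, then maps a point in homogeneous coordinates. `Pt`, `Mat3`, `perspectiveFromFourPoints` and `applyHomographyToPoint` are hypothetical stand-ins, not OpenCV or ChosunTruck API. The sketch does no coordinate normalization, so it is meant for understanding rather than production use.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <stdexcept>
#include <utility>

struct Pt { double x, y; };                          // stand-in for cv::Point2d
using Mat3 = std::array<std::array<double, 3>, 3>;   // row-major 3x3 matrix

// Solve for H (with H[2][2] = 1) such that H maps src[i] to dst[i], i = 0..3.
// Each pair (x, y) -> (u, v) gives two linear equations in h0..h7:
//   h0 x + h1 y + h2 - h6 x u - h7 y u = u
//   h3 x + h4 y + h5 - h6 x v - h7 y v = v
Mat3 perspectiveFromFourPoints(const std::array<Pt, 4>& src, const std::array<Pt, 4>& dst)
{
    double A[8][9] = {};  // augmented matrix [coefficients | right-hand side]
    for (int i = 0; i < 4; ++i) {
        const double x = src[i].x, y = src[i].y, u = dst[i].x, v = dst[i].y;
        const double r0[9] = { x, y, 1, 0, 0, 0, -x * u, -y * u, u };
        const double r1[9] = { 0, 0, 0, x, y, 1, -x * v, -y * v, v };
        for (int c = 0; c < 9; ++c) { A[2 * i][c] = r0[c]; A[2 * i + 1][c] = r1[c]; }
    }
    // Gaussian elimination with partial pivoting.
    for (int col = 0; col < 8; ++col) {
        int pivot = col;
        for (int r = col + 1; r < 8; ++r)
            if (std::fabs(A[r][col]) > std::fabs(A[pivot][col])) pivot = r;
        if (std::fabs(A[pivot][col]) < 1e-12)
            throw std::runtime_error("degenerate points (three are collinear?)");
        if (pivot != col)
            for (int c = 0; c < 9; ++c) std::swap(A[pivot][c], A[col][c]);
        for (int r = col + 1; r < 8; ++r) {
            const double f = A[r][col] / A[col][col];
            for (int c = col; c < 9; ++c) A[r][c] -= f * A[col][c];
        }
    }
    // Back substitution.
    double h[8] = {};
    for (int r = 7; r >= 0; --r) {
        double s = A[r][8];
        for (int c = r + 1; c < 8; ++c) s -= A[r][c] * h[c];
        h[r] = s / A[r][r];
    }
    return Mat3{{ {h[0], h[1], h[2]}, {h[3], h[4], h[5]}, {h[6], h[7], 1.0} }};
}

// Map a point through H: multiply in homogeneous coordinates, then divide by w.
Pt applyHomographyToPoint(const Pt& p, const Mat3& H)
{
    const double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    assert(std::fabs(w) > 1e-12 && "point maps to infinity");
    return { (H[0][0] * p.x + H[0][1] * p.y + H[0][2]) / w,
             (H[1][0] * p.x + H[1][1] * p.y + H[1][2]) / w };
}
```

Mapping the four source points through the result should return the four destination points, up to floating-point rounding. A trapezoid of road in the camera view mapped to a rectangle is the typical inverse-perspective-mapping setup.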

/linux/src/IPM.cc:

--------------------------------------------------------------------------------

1 | #include "IPM.h"

2 |

3 | using namespace cv;

4 | using namespace std;

5 |

6 | // Public

7 | IPM::IPM( const cv::Size& _origSize, const cv::Size& _dstSize, const std::vector<cv::Point2f>& _origPoints, const std::vector<cv::Point2f>& _dstPoints )

8 | : m_origSize(_origSize), m_dstSize(_dstSize), m_origPoints(_origPoints), m_dstPoints(_dstPoints)

9 | {

10 | assert( m_origPoints.size() == 4 && m_dstPoints.size() == 4 && "Orig. points and Dst. points must be vectors of 4 points" );

11 | m_H = getPerspectiveTransform( m_origPoints, m_dstPoints );

12 | m_H_inv = m_H.inv();

13 |

14 | createMaps();

15 | }

16 |

17 | void IPM::setIPM( const cv::Size& _origSize, const cv::Size& _dstSize, const std::vector<cv::Point2f>& _origPoints, const std::vector<cv::Point2f>& _dstPoints )

18 | {

19 | m_origSize = _origSize;

20 | m_dstSize = _dstSize;

21 | m_origPoints = _origPoints;

22 | m_dstPoints = _dstPoints;

23 | assert( m_origPoints.size() == 4 && m_dstPoints.size() == 4 && "Orig. points and Dst. points must be vectors of 4 points" );

24 | m_H = getPerspectiveTransform( m_origPoints, m_dstPoints );

25 | m_H_inv = m_H.inv();

26 |

27 | createMaps();

28 | }

29 | void IPM::drawPoints( const std::vector<cv::Point2f>& _points, cv::Mat& _img ) const

30 | {

31 | assert(_points.size() == 4);

32 |

33 | line(_img, Point(static_cast<int>(_points[0].x), static_cast<int>(_points[0].y)), Point(static_cast<int>(_points[3].x), static_cast<int>(_points[3].y)), CV_RGB( 205,205,0), 2);

34 | line(_img, Point(static_cast<int>(_points[2].x), static_cast<int>(_points[2].y)), Point(static_cast<int>(_points[3].x), static_cast<int>(_points[3].y)), CV_RGB( 205,205,0), 2);

35 | line(_img, Point(static_cast<int>(_points[0].x), static_cast<int>(_points[0].y)), Point(static_cast<int>(_points[1].x), static_cast<int>(_points[1].y)), CV_RGB( 205,205,0), 2);

36 | line(_img, Point(static_cast<int>(_points[2].x), static_cast<int>(_points[2].y)), Point(static_cast<int>(_points[1].x), static_cast<int>(_points[1].y)), CV_RGB( 205,205,0), 2);

37 | for(size_t i=0; i<_points.size(); i++)

38 | {

39 | circle(_img, Point(static_cast<int>(_points[i].x), static_cast<int>(_points[i].y)), 2, CV_RGB(238,238,0), -1);

40 | circle(_img, Point(static_cast<int>(_points[i].x), static_cast<int>(_points[i].y)), 5, CV_RGB(255,255,255), 2);

41 | }

42 | }

43 | void IPM::getPoints(vector<Point2f>& _origPts, vector<Point2f>& _ipmPts)

44 | {

45 | _origPts = m_origPoints;

46 | _ipmPts = m_dstPoints;

47 | }

48 | void IPM::applyHomography(const Mat& _inputImg, Mat& _dstImg, int _borderMode)

49 | {

50 | // Generate IPM image from src

51 | remap(_inputImg, _dstImg, m_mapX, m_mapY, INTER_LINEAR, _borderMode);//, BORDER_CONSTANT, Scalar(0,0,0,0));

52 | }

53 | void IPM::applyHomographyInv(const Mat& _inputImg, Mat& _dstImg, int _borderMode)

54 | {

55 | // Warp an IPM (bird's-eye) image back to the original perspective using the inverse maps

56 | remap(_inputImg, _dstImg, m_invMapX, m_invMapY, INTER_LINEAR, _borderMode);//, BORDER_CONSTANT, Scalar(0,0,0,0));

57 | }

58 | Point2d IPM::applyHomography( const Point2d& _point )

59 | {

60 | return applyHomography( _point, m_H );

61 | }

62 | Point2d IPM::applyHomographyInv( const Point2d& _point )

63 | {

64 | return applyHomography( _point, m_H_inv );

65 | }

66 | Point2d IPM::applyHomography( const Point2d& _point, const Mat& _H )

67 | {

68 | Point2d ret = Point2d( -1, -1 );

69 |

70 | const double u = _H.at<double>(0,0) * _point.x + _H.at<double>(0,1) * _point.y + _H.at<double>(0,2);

71 | const double v = _H.at<double>(1,0) * _point.x + _H.at<double>(1,1) * _point.y + _H.at<double>(1,2);

72 | const double s = _H.at<double>(2,0) * _point.x + _H.at<double>(2,1) * _point.y + _H.at<double>(2,2);

73 | if ( s != 0 )

74 | {

75 | ret.x = ( u / s );

76 | ret.y = ( v / s );

77 | }

78 | return ret;

79 | }

80 | Point3d IPM::applyHomography( const Point3d& _point )

81 | {

82 | return applyHomography( _point, m_H );

83 | }

84 | Point3d IPM::applyHomographyInv( const Point3d& _point )

85 | {

86 | return applyHomography( _point, m_H_inv );

87 | }

88 | Point3d IPM::applyHomography( const Point3d& _point, const cv::Mat& _H )

89 | {

90 | Point3d ret = Point3d( -1, -1, 1 );

91 |

92 | const double u = _H.at<double>(0,0) * _point.x + _H.at<double>(0,1) * _point.y + _H.at<double>(0,2) * _point.z;

93 | const double v = _H.at<double>(1,0) * _point.x + _H.at<double>(1,1) * _point.y + _H.at<double>(1,2) * _point.z;

94 | const double s = _H.at<double>(2,0) * _point.x + _H.at<double>(2,1) * _point.y + _H.at<double>(2,2) * _point.z;

95 | if ( s != 0 )

96 | {

97 | ret.x = ( u / s );

98 | ret.y = ( v / s );

99 | }

100 | else

101 | ret.z = 0;

102 | return ret;

103 | }

104 |

105 | // Private

106 | void IPM::createMaps()

107 | {

108 | // Create remap images

109 | m_mapX.create(m_dstSize, CV_32F);

110 | m_mapY.create(m_dstSize, CV_32F);

111 | //#pragma omp parallel for schedule(dynamic)

112 | for( int j = 0; j < m_dstSize.height; ++j )

113 | {

114 | float* ptRowX = m_mapX.ptr<float>(j);

115 | float* ptRowY = m_mapY.ptr<float>(j);

116 | //#pragma omp parallel for schedule(dynamic)

117 | for( int i = 0; i < m_dstSize.width; ++i )

118 | {

119 | Point2f pt = applyHomography( Point2f( static_cast<float>(i), static_cast<float>(j) ), m_H_inv );

120 | ptRowX[i] = pt.x;

121 | ptRowY[i] = pt.y;

122 | }

123 | }

124 |

125 | m_invMapX.create(m_origSize, CV_32F);

126 | m_invMapY.create(m_origSize, CV_32F);

127 |

128 | //#pragma omp parallel for schedule(dynamic)

129 | for( int j = 0; j < m_origSize.height; ++j )

130 | {

131 | float* ptRowX = m_invMapX.ptr<float>(j);

132 | float* ptRowY = m_invMapY.ptr<float>(j);

133 | //#pragma omp parallel for schedule(dynamic)

134 | for( int i = 0; i < m_origSize.width; ++i )

135 | {

136 | Point2f pt = applyHomography( Point2f( static_cast<float>(i), static_cast<float>(j) ), m_H );

137 | ptRowX[i] = pt.x;

138 | ptRowY[i] = pt.y;

139 | }

140 | }

141 | }

142 |
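IPM stores the homography as a 3x3 `CV_64F` matrix and maps a point by lifting it to homogeneous coordinates, multiplying by `H`, and dividing by the third component. `createMaps()` fills `m_mapX`/`m_mapY` by pushing every destination (IPM) pixel through `H_inv`, which is the backward mapping `cv::remap` expects (`dst(x, y) = src(mapX(x, y), mapY(x, y))`), and fills `m_invMapX`/`m_invMapY` by pushing every original pixel through `H`. The sketch below reproduces only the point arithmetic with the standard library so it can be checked without OpenCV; `Mat3`, `Pt`, `applyH`, and `inverse3` are hypothetical names, not part of this repository.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical stand-ins: Mat3 plays the role of the 3x3 CV_64F cv::Mat,
// Pt the role of cv::Point2d.
using Mat3 = std::array<std::array<double, 3>, 3>;
struct Pt { double x, y; };

// Same arithmetic as IPM::applyHomography(const Point2d&, const Mat&):
// lift (x, y) to (x, y, 1), multiply by H, divide by the third component.
Pt applyH(const Mat3& H, Pt p) {
    const double u = H[0][0] * p.x + H[0][1] * p.y + H[0][2];
    const double v = H[1][0] * p.x + H[1][1] * p.y + H[1][2];
    const double s = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    if (s == 0) return {-1, -1};  // point at infinity; IPM also returns (-1, -1)
    return {u / s, v / s};
}

// Inverse of a 3x3 matrix via the adjugate (transposed cofactors) divided by
// the determinant; plays the role of m_H.inv(). Assumes det != 0.
Mat3 inverse3(const Mat3& m) {
    const double a = m[0][0], b = m[0][1], c = m[0][2];
    const double d = m[1][0], e = m[1][1], f = m[1][2];
    const double g = m[2][0], h = m[2][1], i = m[2][2];
    const double c00 = e * i - f * h, c01 = -(d * i - f * g), c02 = d * h - e * g;
    const double det = a * c00 + b * c01 + c * c02;
    Mat3 r{};
    r[0][0] = c00 / det; r[0][1] = -(b * i - c * h) / det; r[0][2] = (b * f - c * e) / det;
    r[1][0] = c01 / det; r[1][1] = (a * i - c * g) / det;  r[1][2] = -(a * f - c * d) / det;
    r[2][0] = c02 / det; r[2][1] = -(a * h - b * g) / det; r[2][2] = (a * e - b * d) / det;
    return r;
}
```

Mapping a point with `H` and then with `inverse3(H)` should return the original point up to rounding, which is the property that lets the forward maps (built from `H_inv`) and the inverse maps (built from `H`) undo each other.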

--------------------------------------------------------------------------------

/linux/src/IPM.h:

--------------------------------------------------------------------------------

1 | #ifndef __IPM_H__

2 | #define __IPM_H__

3 |

4 | #include

5 | #include

6 | #include

7 | #include

8 |

9 | #include

10 |

11 | class IPM

12 | {

13 | public:

14 | IPM( const cv::Size& _origSize, const cv::Size& _dstSize,

15 | const std::vector<cv::Point2f>& _origPoints, const std::vector<cv::Point2f>& _dstPoints );

16 |

17 | IPM() {}

18 | // Apply IPM on points

19 | cv::Point2d applyHomography(const cv::Point2d& _point, const cv::Mat& _H);

20 | cv::Point3d applyHomography( const cv::Point3d& _point, const cv::Mat& _H);

21 | cv::Point2d applyHomography(const cv::Point2d& _point);

22 | cv::Point3d applyHomography( const cv::Point3d& _point);

23 | cv::Point2d applyHomographyInv(const cv::Point2d& _point);

24 | cv::Point3d applyHomographyInv( const cv::Point3d& _point);

25 | void applyHomography( const cv::Mat& _origBGR, cv::Mat& _ipmBGR, int borderMode = cv::BORDER_CONSTANT);

26 | void applyHomographyInv( const cv::Mat& _ipmBGR, cv::Mat& _origBGR, int borderMode = cv::BORDER_CONSTANT);

27 |

28 | // Getters

29 | cv::Mat getH() const { return m_H; }

30 | cv::Mat getHinv() const { return m_H_inv; }

31 | void getPoints(std::vector<cv::Point2f>& _origPts, std::vector<cv::Point2f>& _ipmPts);

32 |

33 | // Draw

34 | void drawPoints( const std::vector<cv::Point2f>& _points, cv::Mat& _img ) const;

35 |

36 | void setIPM( const cv::Size& _origSize, const cv::Size& _dstSize,

37 | const std::vector<cv::Point2f>& _origPoints, const std::vector<cv::Point2f>& _dstPoints );

38 | private:

39 | void createMaps();

40 |

41 | // Sizes

42 | cv::Size m_origSize;

43 | cv::Size m_dstSize;

44 |

45 | // Points

46 | std::vector<cv::Point2f> m_origPoints;

47 | std::vector<cv::Point2f> m_dstPoints;

48 |

49 | // Homography

50 | cv::Mat m_H;

51 | cv::Mat m_H_inv;

52 |

53 | // Maps

54 | cv::Mat m_mapX, m_mapY;

55 | cv::Mat m_invMapX, m_invMapY;

56 | };

57 |

58 | #endif /*__IPM_H__*/

59 |

--------------------------------------------------------------------------------

/linux/src/ets2_self_driving.h:

--------------------------------------------------------------------------------

1 | #ifndef __ets_self_driving_h__

2 | #define __ets_self_driving_h__

3 |

4 | #include

5 | #include

6 | //#include

7 | #include

8 | #include

9 | #include

10 | #include

11 | //#include

12 | //#include

13 | //#include

14 | //#include

15 | #include

16 | #include

17 | #include

18 |

19 | #define PI 3.1415926

20 |

21 |

22 | using namespace cv;

23 | using namespace std;

24 |

25 | class LineFinder{

26 |

27 | private:

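28 | cv::Mat image; // original image

29 | std::vector<cv::Vec4i> lines; // detected segments, each stored as its start and end points

30 | double deltaRho;

31 | double deltaTheta; // accumulator resolution parameters

32 | int minVote; // minimum number of votes a line must receive before it is considered

33 | double minLength; // minimum line length

34 | double maxGap; // maximum gap allowed along a line

35 |

36 | public:

37 | LineFinder() : deltaRho(1), deltaTheta(PI / 180), minVote(50), minLength(50), maxGap(10) {}

38 | // Default accumulator resolution: 1 pixel and 1 degree

39 | // Defaults: at least 50 votes, minimum length 50 px, maximum gap 10 px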

40 | void setAccResolution(double dRho, double dTheta);

41 | void setMinVote(int minv);

42 | void setLineLengthAndGap(double length, double gap);

43 | std::vector<cv::Vec4i> findLines(cv::Mat& binary);

44 | void drawDetectedLines(cv::Mat &image, cv::Scalar color = cv::Scalar(112, 112, 0));

45 | };

46 | //Mat hwnd2mat(HWND hwnd);

47 | void cudaf();

48 |

49 | #endif

50 |

--------------------------------------------------------------------------------

/linux/src/getScreen_linux.cc:

--------------------------------------------------------------------------------

1 | #include

2 | #include

3 | #include

4 | #include

5 | #include

6 |

7 | void ImageFromDisplay(std::vector<std::uint8_t>& Pixels, int& Width, int& Height, int& BitsPerPixel)

8 | {

9 | Display* display = XOpenDisplay(nullptr);

10 | Window root = DefaultRootWindow(display);

11 |

12 | XWindowAttributes attributes = {0};

13 | XGetWindowAttributes(display, root, &attributes);

14 |

15 | Width = attributes.width;

16 | Height = attributes.height;

17 |

18 | XImage* img = XGetImage(display, root, 0, 0 , Width, Height, AllPlanes, ZPixmap);

19 | BitsPerPixel = img->bits_per_pixel;

20 | Pixels.resize(Width * Height * 4);

21 |

22 | memcpy(&Pixels[0], img->data, Pixels.size());

23 |

24 | XDestroyImage(img);

25 | XCloseDisplay(display);

26 | }

27 |
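`ImageFromDisplay` sizes `Pixels` as `Width * Height * 4`, so it assumes 32 bits per pixel and no row padding (`bytes_per_line == Width * 4`); it does not check `img->bytes_per_line` or `img->byte_order`. On common little-endian 24/32-bit TrueColor X servers the bytes are typically ordered B, G, R, padding, but that is an assumption, not something the code verifies. main2.cc then drops the 4th byte with `cv::cvtColor(..., CV_RGBA2RGB)` before copying the frame into shared memory. The standard-library sketch below shows that repacking step; `dropFourthByte` is a hypothetical helper, not part of this repository.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical helper. Assumes a tightly packed buffer of 4 bytes per pixel
// (the layout ImageFromDisplay assumes) and keeps bytes 0..2 of every pixel,
// which is what cv::cvtColor(..., CV_RGBA2RGB) does regardless of channel names.
std::vector<std::uint8_t> dropFourthByte(const std::vector<std::uint8_t>& src,
                                         int width, int height) {
    const std::size_t pixels =
        static_cast<std::size_t>(width) * static_cast<std::size_t>(height);
    if (src.size() < pixels * 4) {
        throw std::invalid_argument("source buffer smaller than width * height * 4");
    }
    std::vector<std::uint8_t> dst(pixels * 3);
    for (std::size_t p = 0; p < pixels; ++p) {
        for (std::size_t c = 0; c < 3; ++c) {
            dst[3 * p + c] = src[4 * p + c];  // skip byte 3 (padding/alpha)
        }
    }
    return dst;
}
```

For the 1024x768 region main2.cc crops, the repacked frame is 1024 * 768 * 3 = 2,359,296 bytes, which matches the `size = 768, 1024, 3` reshape in Car_detection.py.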

--------------------------------------------------------------------------------

/linux/src/getScreen_linux.h:

--------------------------------------------------------------------------------

1 | #include

2 | #include

3 | #include

4 | #include

5 | #include

6 |

7 | void ImageFromDisplay(std::vector<std::uint8_t>& Pixels, int& Width, int& Height, int& BitsPerPixel);

8 |

--------------------------------------------------------------------------------

/linux/src/linefinder.cc:

--------------------------------------------------------------------------------

1 | #include "ets2_self_driving.h"

2 | #include

3 | #include

4 | //#include

5 | #include

6 | #include

7 | #include

8 | #include

9 | //#include

10 | //#include

11 | //#include

12 | #include

13 | #include

14 |

15 | #define PI 3.1415926

16 |

17 | cv::Point prev_point;

18 |

19 | using namespace cv;

20 | using namespace std;

21 |

22 | // Setter methods

23 | // Set the accumulator resolution

24 | void LineFinder::setAccResolution(double dRho, double dTheta) {

25 | deltaRho = dRho;

26 | deltaTheta = dTheta;

27 | }

28 |

29 | // Set the minimum number of votes

30 | void LineFinder::setMinVote(int minv) {

31 | minVote = minv;

32 | }

33 |

34 | // Set the minimum line length and the maximum gap

35 | void LineFinder::setLineLengthAndGap(double length, double gap) {

36 | minLength = length;

37 | maxGap = gap;

38 | }

39 |

40 | // Detect line segments with the Hough transform

41 | // (probabilistic Hough transform, cv::HoughLinesP)

42 | std::vector<cv::Vec4i> LineFinder::findLines(cv::Mat& binary) {

43 | UMat gpuBinary = binary.getUMat(ACCESS_RW);

44 | lines.clear();

45 | cv::HoughLinesP(gpuBinary, lines, deltaRho, deltaTheta, minVote, minLength, maxGap);

46 | return lines;

47 | } // Returns a vector of cv::Vec4i holding the start and end point coordinates of each detected segment.

48 |

49 | // Draw the segments detected by findLines()

50 | // onto the given image

51 | void LineFinder::drawDetectedLines(cv::Mat &image, cv::Scalar color) {

52 |

53 | UMat gpuImage = image.getUMat(ACCESS_RW);

54 | // Draw each segment

55 | std::vector<cv::Vec4i>::const_iterator it2 = lines.begin();

56 | cv::Point endPoint;

57 |

58 | while (it2 != lines.end()) {

59 | cv::Point startPoint((*it2)[0], (*it2)[1]);

60 | endPoint = cv::Point((*it2)[2], (*it2)[3]);

61 | cv::line(gpuImage, startPoint, endPoint, color, 3);

62 | ++it2;

63 | }

64 | }

65 |
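`drawDetectedLines` paints each segment with `Scalar(112, 112, 0)`; on the single-channel `contours` image only the first component is used, so lane pixels end up with value 112. main2.cc then scans rows of the 320x240 image from the bottom up and, for each row, searches up to 150 px right and left of column 160 for the first pixel equal to 112; rows with a hit on both sides contribute the midpoint of the two hits, and the steering offset (`diff`) is the mean midpoint minus 160. The sketch below restates that scan with the standard library, starting from the last valid row (`height - 1`) and bounds-checking each column; `Gray` and `laneOffset` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the single-channel cv::Mat "contours".
struct Gray {
    int width;
    int height;
    std::vector<std::uint8_t> data;  // row-major, width * height bytes
    std::uint8_t at(int row, int col) const {
        return data[static_cast<std::size_t>(row) * width + col];
    }
};

// Mean lane-centre column minus `center`, over rows (height-1 .. topRow+1)
// where a `marker` pixel is found within `maxSearch` px on both sides.
// Returns 0 when no row qualifies (main2.cc skips steering in that case).
int laneOffset(const Gray& img, int center, int maxSearch, std::uint8_t marker, int topRow) {
    long sum = 0;
    int count = 0;
    for (int row = img.height - 1; row > topRow; --row) {
        int right = -1, left = -1;
        for (int j = 0; j < maxSearch; ++j) {
            if (right == -1 && center + j < img.width && img.at(row, center + j) == marker) right = j;
            if (left == -1 && center - j >= 0 && img.at(row, center - j) == marker) left = j;
        }
        if (left != -1 && right != -1) {
            sum += (right - left + 2 * center) / 2;  // midpoint between the two hits
            ++count;
        }
    }
    return count ? static_cast<int>(sum / count) - center : 0;
}
```

With markings at columns 140 and 170 on every row, each row's midpoint is 155, so the sketch reports an offset of -5 (steer left).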

--------------------------------------------------------------------------------

/linux/src/main2.cc:

--------------------------------------------------------------------------------

1 | #include

2 | #include

3 | #include

4 | #include

5 | #include

6 | #include

7 |

8 | #include

9 | #include

10 | #include

11 | #include

12 | #include

13 | #include

14 | #include

15 | #include

16 | #include

17 | #include

18 | #include

19 | #include

20 | #include

21 | #include

22 | #include

23 | #include "ets2_self_driving.h"

24 | #include "IPM.h"

25 | #include "getScreen_linux.h"

26 | #include "uinput.c"

27 |

28 | #define PI 3.1415926

29 |

30 | using namespace cv;

31 | using namespace std;

32 |

33 | void input(int, int, int);

34 | string exec(const char* cmd);

35 | int counter = 0;

36 |

37 | int main(int argc, char** argv) {

38 | if(-1 == setUinput()) {

39 | return -1;

40 | }

41 | bool detection = false;

42 | bool python_on = false;

43 | int width = 1024, height = 768;

44 | double IPM_BOTTOM_RIGHT = width+400;

45 | double IPM_BOTTOM_LEFT = -400;

46 | double IPM_RIGHT = width/2+100;

47 | double IPM_LEFT = width/2-100;

48 | int IPM_diff = 0;

49 | if(argc == 2){

50 | if(strcmp(argv[1], "--Car_Detection") == 0){

51 | detection = true;

52 | cout<<"Car Detection Enabled"<(shmat(shmid, NULL, 0))) == (char *) -1)

90 | {

91 | printf("Error attaching shared memory id");

92 | exit(1);

93 | }

94 |

95 | int curve;

96 | key = 123464;

97 | if ((curve = shmget(key, 4, IPC_CREAT | 0666)) < 0)

98 | {

99 | printf("Error getting shared memory curve");

100 | exit(1);

101 | }

102 | // Attached shared memory

103 | if ((shared_curve = static_cast<char*>(shmat(curve, NULL, 0))) == (char *) -1)

104 | {

105 | printf("Error attaching shared memory curve");

106 | exit(1);

107 | }

108 | }

109 | }

110 | int move_mouse_pixel = 0;

111 | while (true) {

112 | auto begin = chrono::high_resolution_clock::now();

113 | // ETS2

114 | Mat image, sendImg, outputImg;

115 | int Width = 1024;

116 | int Height = 768;

117 | int Bpp = 0;

118 | std::vector<std::uint8_t> Pixels;

119 |

120 | ImageFromDisplay(Pixels, Width, Height, Bpp);

121 |

122 | Mat img = Mat(Height, Width, Bpp > 24 ? CV_8UC4 : CV_8UC3, &Pixels[0]); //Mat(Size(Height, Width), Bpp > 24 ? CV_8UC4 : CV_8UC3, &Pixels[0]);

123 | cv::Rect myROI(896, 312, 1024, 768);

124 | image = img(myROI);

125 |

126 | //------------------------

127 | //cv::cvtColor(image, sendImg, CV_YUV2RGBA_NV21);

128 | cv::cvtColor(image, sendImg, CV_RGBA2RGB);

129 | //printf("%d\n", sendImg.dataend - sendImg.datastart);

130 |

131 | if(detection == true) {

132 | memcpy(shared_memory, sendImg.datastart, sendImg.dataend - sendImg.datastart);

133 | }

134 | //

135 |

136 | // Mat to GpuMat

137 | //cuda::GpuMat imageGPU;

138 | //imageGPU.upload(image);

139 |

140 | //medianBlur(image, image, 3);

141 | //cv::cuda::bilateralFilter(imageGPU, imageGPU, );

142 |

143 | IPM ipm;

144 | vector<Point2f> origPoints;

145 | vector<Point2f> dstPoints;

146 | // The 4-points at the input image

147 |

148 | origPoints.push_back(Point2f(IPM_BOTTOM_LEFT, (height-50)));

149 | origPoints.push_back(Point2f(IPM_BOTTOM_RIGHT, height-50));

150 | origPoints.push_back(Point2f(IPM_RIGHT, height/2+30));

151 | origPoints.push_back(Point2f(IPM_LEFT, height/2+30));

152 |

153 | // The 4-points correspondences in the destination image

154 |

155 | dstPoints.push_back(Point2f(0, height));

156 | dstPoints.push_back(Point2f(width, height));

157 | dstPoints.push_back(Point2f(width, 0));

158 | dstPoints.push_back(Point2f(0, 0));

159 |

160 | // IPM object

161 | ipm.setIPM(Size(width, height), Size(width, height), origPoints, dstPoints);

162 |

163 | // Process

164 | //clock_t begin = clock();

165 | ipm.applyHomography(image, outputImg);

166 | //clock_t end = clock();

167 | //double elapsed_secs = double(end - begin) / CLOCKS_PER_SEC;

168 | //printf("%.2f (ms)\r", 1000 * elapsed_secs);

169 | //ipm.drawPoints(origPoints, image);

170 |

171 | //Mat row = outputImg.row[0];

172 | cv::UMat gray;

173 | cv::UMat blur;

174 | cv::UMat sobel;

175 | cv::Mat contours;

176 | LineFinder ld; // 인스턴스 생성

177 |

178 | cv::resize(outputImg, outputImg, cv::Size(320, 240));

179 | cv::cvtColor(outputImg, gray, COLOR_RGB2GRAY);

180 | cv::blur(gray, blur, cv::Size(10, 10));

181 | cv::Sobel(blur, sobel, blur.depth(), 1, 0, 3, 0.5, 127);

182 | cv::threshold(sobel, contours, 145, 255, CV_THRESH_BINARY);

183 | //Thinning(contours, contours.rows, contours.cols);

184 | //cv::Canny(gray, contours, 125, 350);

185 |

186 |

187 | // 확률적 허프변환 파라미터 설정하기

188 |

189 | ld.setLineLengthAndGap(20, 120);

190 | ld.setMinVote(55);

191 |

192 | std::vector<cv::Vec4i> li = ld.findLines(contours);

193 | ld.drawDetectedLines(contours);

194 |

195 | ////////////////////////////////////////////

196 | // Autonomous driving

197 |

198 | int bottom_center = 160;

199 | int sum_centerline = 0;

200 | int count_centerline = 0;

201 | int first_centerline = 0;

202 | int last_centerline = 0;

203 | double avr_center_to_left = 0;

204 | double avr_center_to_right = 0;

205 |

206 | //#pragma omp parallel for

207 | for(int i=239; i>30; i--){ // contours has 240 rows (indices 0-239)

208 | double center_to_right = -1;

209 | double center_to_left = -1;

210 |

211 | for (int j=0;j<150;j++) {

212 | if (contours.at<uchar>(i, bottom_center+j) == 112 && center_to_right == -1) {

213 | center_to_right = j;

214 | }

215 | if (contours.at<uchar>(i, bottom_center-j) == 112 && center_to_left == -1) {

216 | center_to_left = j;

217 | }

218 | }

219 | if(center_to_left!=-1 && center_to_right!=-1){

220 | int centerline = (center_to_right - center_to_left +2*bottom_center)/2;

221 | if (first_centerline == 0 ) {

222 | first_centerline = centerline;

223 | }

224 | //cv::circle(outputImg, Point(centerline, i), 1, Scalar(30, 255, 30) , 3);

225 | //cv::circle(outputImg, Point(centerline + center_to_right+20, i), 1, Scalar(255, 30, 30) , 3);

226 | //cv::circle(outputImg, Point(centerline - center_to_left+10, i), 1, Scalar(255, 30, 30) , 3);

227 | sum_centerline += centerline;

228 | avr_center_to_left = (avr_center_to_left * count_centerline + center_to_left)/(count_centerline+1);

229 | avr_center_to_right = (avr_center_to_right * count_centerline + center_to_right)/(count_centerline+1);

230 | last_centerline = centerline;

231 | count_centerline++;

232 | } else {

233 | }

234 | }

235 |

236 | // Controller input

237 | int diff = 0;

238 | if (count_centerline!=0) {

239 | diff = sum_centerline/count_centerline - bottom_center;

240 | int degree = atan2 (last_centerline - first_centerline, count_centerline) * 180 / PI;

241 | //diff = (90 - degree);

242 |

243 | move_mouse_pixel = 0 - counter + diff;

244 | cout << "Steer: "<< move_mouse_pixel << "px ";

245 | //goDirection(move_mouse_pixel);

246 | if(move_mouse_pixel == 0){

247 | if(IPM_diff > 0){

248 | IPM_RIGHT -= 1;

249 | IPM_LEFT -= 1;

250 | IPM_diff -= 1;

251 | }

252 | else if(IPM_diff < 0){

253 | IPM_RIGHT += 1;

254 | IPM_LEFT += 1;

255 | IPM_diff += 1;

256 | }

257 | else{

258 | IPM_RIGHT = width/2+100;

259 | IPM_LEFT = width/2-100;

260 | IPM_diff = 0;

261 | }

262 | }

263 | else{

264 | if (IPM_diff >= -30 && IPM_diff <= 30){

265 | IPM_RIGHT += move_mouse_pixel;

266 | IPM_LEFT += move_mouse_pixel;

267 | if(move_mouse_pixel > 0){

268 | IPM_diff++;

269 | }

270 | else{

271 | IPM_diff--;

272 | }

273 | }

274 | }

275 | moveMouse(move_mouse_pixel);

276 | int road_curve = (int)((sum_centerline/count_centerline-bottom_center));

277 |

278 | if(detection == true) {

279 | memcpy(shared_curve, &road_curve, sizeof(int));

280 | }

281 |

282 | counter = diff;/*

283 |

284 |

285 | if (abs(move_mouse_pixel)< 5) {

286 | goDirection(0 - counter/3);

287 | counter -= counter/3;

288 | } else if (abs(move_mouse_pixel) < 4) {

289 | goDirection(0 - counter/2);

290 | counter -= counter/2;

291 | } else if (abs (move_mouse_pixel) < 2) {

292 | goDirection(0 - counter);

293 | counter = 0;

294 | } else {

295 | goDirection(move_mouse_pixel);

296 | counter += move_mouse_pixel;

297 | }

298 | */

299 |

300 | } else {}

301 |

302 |

303 | ////////////////////////////////////////////

304 |

305 | //cv::cvtColor(contours, contours, COLOR_GRAY2RGB);

306 | imshow("Road", outputImg);

307 | imshow("Lines", contours);

308 | moveWindow("Lines", 200, 400);

309 | moveWindow("Road", 530, 400);

310 | waitKey(1);

311 |

312 | auto end = chrono::high_resolution_clock::now();

313 | auto dur = end - begin;

314 | auto ms = std::chrono::duration_cast<std::chrono::milliseconds>(dur).count();

315 | ms++;

316 | //cout << 1000 / ms << "fps avr:" << 1000 / (sum / (++i)) << endl;

317 | cout << 1000 / ms << "fps" << endl;

318 |

319 | }

320 | return 0;

321 | }

322 |

323 |

324 |

325 |

326 | std::string exec(const char* cmd) {

327 | std::array<char, 128> buffer;

328 | std::string result;

329 | std::shared_ptr<FILE> pipe(popen(cmd, "r"), pclose);

330 | if (!pipe)

331 | throw std::runtime_error("popen() failed!");

332 | // fgets returns NULL at end of file or on error, so it can drive the loop directly

333 | while (fgets(buffer.data(), static_cast<int>(buffer.size()), pipe.get()) != NULL) {

334 | result += buffer.data();

335 | }

336 | return result;

337 | }

338 |
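The edge stage in main2.cc calls `cv::Sobel(blur, sobel, blur.depth(), 1, 0, 3, 0.5, 127)` and then `cv::threshold(sobel, contours, 145, 255, CV_THRESH_BINARY)`. Because the output depth is 8-bit, each response is stored as `0.5 * Gx + 127`, rounded and saturated to [0, 255]; the threshold keeps only values above 145, i.e. horizontal gradients `Gx >= 37`. Negative gradients (bright to dark, left to right) can never pass, so a bright lane marking contributes only its left edge. The per-pixel sketch below shows that arithmetic with the standard library only; `Patch`, `sobelX`, and `edgePixel` are hypothetical names, and it ignores image borders and the preceding 10x10 box blur.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>

using Patch = std::array<std::array<int, 3>, 3>;  // 3x3 neighbourhood, [row][col]

// 3x3 Sobel response for dx = 1, dy = 0: right column minus left column,
// weighted 1, 2, 1 from top to bottom.
int sobelX(const Patch& p) {
    return (p[0][2] - p[0][0]) + 2 * (p[1][2] - p[1][0]) + (p[2][2] - p[2][0]);
}

// One output pixel of Sobel(scale = 0.5, delta = 127) into an 8-bit image,
// followed by threshold(145, 255, THRESH_BINARY).
std::uint8_t edgePixel(const Patch& p) {
    // std::lrint rounds to nearest (ties to even) in the default rounding mode.
    const long scaled = std::lrint(0.5 * sobelX(p) + 127.0);
    const long saturated = std::min(255L, std::max(0L, scaled));
    return saturated > 145 ? 255 : 0;
}
```

A flat patch maps to 127 and is discarded, which is why the `delta` of 127 acts as a zero level and the threshold of 145 sets the minimum edge strength.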

--------------------------------------------------------------------------------

/linux/src/uinput.c:

--------------------------------------------------------------------------------

1 | #include

2 | #include "uinput.h"

3 |

4 | void setEventAndWrite(__u16 type, __u16 code, __s32 value)

5 | {

6 | ev.type=type;

7 | ev.code=code;

8 | ev.value=value;

9 | if(write(fd, &ev, sizeof(struct input_event)) < 0)

10 | die("error: write");

11 | }

12 |

13 | int setUinput() {

14 | fd = open("/dev/uinput", O_WRONLY | O_NONBLOCK);

15 | if(fd < 0) {

16 | fd=open("/dev/input/uinput",O_WRONLY|O_NONBLOCK);

17 | if(fd<0){

18 | die("error: Can't open a uinput file. See issue #17 (https://github.com/bethesirius/ChosunTruck/issues/17)");

19 | return -1;

20 | }

21 | }

22 | if(ioctl(fd, UI_SET_EVBIT, EV_KEY) < 0) {

23 | die("error: ioctl");

24 | return -1;

25 | }

26 | if(ioctl(fd, UI_SET_KEYBIT, BTN_LEFT) < 0) {

27 | die("error: ioctl");

28 | return -1;

29 | }

30 | if(ioctl(fd, UI_SET_KEYBIT, KEY_TAB) < 0) {

31 | die("error: ioctl");

32 | return -1;

33 | }

34 | if(ioctl(fd, UI_SET_KEYBIT, KEY_ENTER) < 0) {

35 | die("error: ioctl");

36 | return -1;

37 | }

38 | if(ioctl(fd, UI_SET_KEYBIT, KEY_LEFTSHIFT) < 0) {

39 | die("error: ioctl");

40 | return -1;

41 | }

42 | if(ioctl(fd, UI_SET_EVBIT, EV_REL) < 0) {

43 | die("error: ioctl");

44 | return -1;

45 | }

46 | if(ioctl(fd, UI_SET_RELBIT, REL_X) < 0) {

47 | die("error: ioctl");

48 | return -1;

49 | }

50 | if(ioctl(fd, UI_SET_RELBIT, REL_Y) < 0) {

51 | die("error: ioctl");

52 | return -1;

53 | }

54 |

55 | memset(&uidev, 0, sizeof(uidev));

56 | snprintf(uidev.name, UINPUT_MAX_NAME_SIZE, "uinput-virtualMouse");

57 | uidev.id.bustype = BUS_USB;

58 | uidev.id.vendor = 0x1;

59 | uidev.id.product = 0x1;

60 | uidev.id.version = 1;

61 |

62 | if(write(fd, &uidev, sizeof(uidev)) < 0) {

63 | die("error: write");

64 | return -1;

65 | }

66 | if(ioctl(fd, UI_DEV_CREATE) < 0) {

67 | die("error: ioctl");

68 | return -1;

69 | }

70 |

71 | memset(&ev, 0, sizeof(struct input_event));

72 | setEventAndWrite(EV_REL,REL_X,1);

73 | setEventAndWrite(EV_REL,REL_Y,1);

74 | setEventAndWrite(EV_SYN,0,0);

75 | return 0;

76 | }

77 |

78 | void moveMouse(int x) {

79 | int dx=x;

80 | setEventAndWrite(EV_REL,REL_X,dx);

81 | setEventAndWrite(EV_SYN,0,0);

82 | }

83 |

84 |

85 |
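`moveMouse(dx)` emits a relative-motion event (`EV_REL`/`REL_X` with value `dx`) followed by an `EV_SYN` report, which marks the motion as complete; the project relies on the game interpreting that horizontal mouse motion as steering. The sketch below builds the same two events from `<linux/input.h>` without opening `/dev/uinput`, so the sequence can be inspected in isolation; `relativeMoveEvents` is a hypothetical helper, not part of this repository.

```cpp
#include <cassert>
#include <linux/input.h>
#include <vector>

// Builds the event pair moveMouse(dx) writes, without touching any device.
std::vector<input_event> relativeMoveEvents(int dx) {
    input_event move{};       // value-initialised, so the timestamp is zero
    move.type = EV_REL;
    move.code = REL_X;
    move.value = dx;

    input_event sync{};
    sync.type = EV_SYN;
    sync.code = SYN_REPORT;   // SYN_REPORT is 0, the code moveMouse passes
    sync.value = 0;
    return {move, sync};
}
```

With an open uinput descriptor, the pair could also be sent in a single call, `write(fd, events.data(), events.size() * sizeof(input_event))`, since the uinput driver accepts a buffer of several events per write; treat that as an untested alternative to the two separate writes used here.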

--------------------------------------------------------------------------------

/linux/src/uinput.h:

--------------------------------------------------------------------------------

1 | #include

2 | #include

3 | #include

4 | #include

5 | #include

6 | #include

7 | #include

8 | #include

9 | #include

10 | #include

11 | #include

12 |

13 | #define die(str, args...) do { \

14 | perror(str); \

15 | exit(EXIT_FAILURE); \

16 | } while(0)

17 |

18 | static int fd;//uinput fd

19 | static struct uinput_user_dev uidev;

20 | static struct input_event ev;

21 |

22 | int setUinput();

23 | void moveMouse(int);

24 |

25 |

--------------------------------------------------------------------------------

/linux/tensorbox/Car_detection.py:

--------------------------------------------------------------------------------

1 | import tensorflow as tf

2 | import os

3 | import json

4 | import subprocess

5 | import sysv_ipc

6 | import struct

7 |

8 | from scipy.misc import imread, imresize

9 | from scipy import misc

10 |

11 | from train import build_forward

12 | from utils.annolist import AnnotationLib as al

13 | from utils.train_utils import add_rectangles, rescale_boxes

14 | from pymouse import PyMouse

15 |

16 | import cv2

17 | import argparse

18 | import time

19 | import numpy as np

20 |

21 | def get_image_dir(args):

22 | weights_iteration = int(args.weights.split('-')[-1])

23 | expname = '_' + args.expname if args.expname else ''

24 | image_dir = '%s/images_%s_%d%s' % (os.path.dirname(args.weights), os.path.basename(args.test_boxes)[:-5], weights_iteration, expname)

25 | return image_dir

26 |

27 | def get_results(args, H):

28 | tf.reset_default_graph()

29 | x_in = tf.placeholder(tf.float32, name='x_in', shape=[H['image_height'], H['image_width'], 3])

30 | if H['use_rezoom']:

31 | pred_boxes, pred_logits, pred_confidences, pred_confs_deltas, pred_boxes_deltas = build_forward(H, tf.expand_dims(x_in, 0), 'test', reuse=None)

32 | grid_area = H['grid_height'] * H['grid_width']

33 | pred_confidences = tf.reshape(tf.nn.softmax(tf.reshape(pred_confs_deltas, [grid_area * H['rnn_len'], 2])), [grid_area, H['rnn_len'], 2])

34 | if H['reregress']:

35 | pred_boxes = pred_boxes + pred_boxes_deltas

36 | else:

37 | pred_boxes, pred_logits, pred_confidences = build_forward(H, tf.expand_dims(x_in, 0), 'test', reuse=None)

38 | saver = tf.train.Saver()

39 | with tf.Session() as sess:

40 | sess.run(tf.global_variables_initializer())

41 | saver.restore(sess, args.weights)

42 |

43 | pred_annolist = al.AnnoList()

44 |

45 | data_dir = os.path.dirname(args.test_boxes)

46 | image_dir = get_image_dir(args)

47 | subprocess.call('mkdir -p %s' % image_dir, shell=True)

48 |

49 | memory = sysv_ipc.SharedMemory(123463)

50 | memory2 = sysv_ipc.SharedMemory(123464)

51 | size = 768, 1024, 3

52 |

53 | pedal = PyMouse()

54 | pedal.press(1)

55 | road_center = 320

56 | while True:

57 | cv2.waitKey(1)

58 | frameCount = bytearray(memory.read())

59 | curve = bytearray(memory2.read())

60 | curve = str(struct.unpack('i',curve)[0])

61 | m = np.array(frameCount, dtype=np.uint8)

62 | orig_img = m.reshape(size)

63 |

64 | img = imresize(orig_img, (H["image_height"], H["image_width"]), interp='cubic')

65 | feed = {x_in: img}

66 | (np_pred_boxes, np_pred_confidences) = sess.run([pred_boxes, pred_confidences], feed_dict=feed)

67 | pred_anno = al.Annotation()

68 |

69 | new_img, rects = add_rectangles(H, [img], np_pred_confidences, np_pred_boxes,

70 | use_stitching=True, rnn_len=H['rnn_len'], min_conf=args.min_conf, tau=args.tau, show_suppressed=args.show_suppressed)

71 | flag = 0

72 | road_center = 320 + int(curve)

73 | print(road_center)

74 | for rect in rects:

75 | print(rect.x1, rect.x2, rect.y2)

76 | if (rect.x1 < road_center and rect.x2 > road_center and rect.y2 > 200) and (rect.x2 - rect.x1 > 30):

77 | flag = 1

78 |

79 | if flag is 1:

80 | pedal.press(2)

81 | print("break!")

82 | else:

83 | pedal.release(2)

84 | pedal.press(1)

85 | print("acceleration!")

86 |

87 | pred_anno.rects = rects

88 | pred_anno.imagePath = os.path.abspath(data_dir)

89 | pred_anno = rescale_boxes((H["image_height"], H["image_width"]), pred_anno, orig_img.shape[0], orig_img.shape[1])

90 | pred_annolist.append(pred_anno)

91 |

92 | cv2.imshow('.jpg', new_img)

93 |

94 | return None

95 |

96 | def main():

97 | parser = argparse.ArgumentParser()

98 | parser.add_argument('--weights', default='tensorbox/output/overfeat_rezoom_2017_02_09_13.28/save.ckpt-100000')

99 | parser.add_argument('--expname', default='')

100 | parser.add_argument('--test_boxes', default='default')

101 | parser.add_argument('--gpu', default=0)

102 | parser.add_argument('--logdir', default='output')

103 | parser.add_argument('--iou_threshold', default=0.5, type=float)

104 | parser.add_argument('--tau', default=0.25, type=float)

105 | parser.add_argument('--min_conf', default=0.2, type=float)

106 | parser.add_argument('--show_suppressed', default=True, type=lambda s: s.lower() in ('1', 'true', 'yes'))

107 | args = parser.parse_args()

108 | os.environ['CUDA_VISIBLE_DEVICES'] = str(args.gpu)

109 | hypes_file = '%s/hypes.json' % os.path.dirname(args.weights)

110 | with open(hypes_file, 'r') as f:

111 | H = json.load(f)

112 | expname = args.expname + '_' if args.expname else ''

113 | pred_boxes = '%s.%s%s' % (args.weights, expname, os.path.basename(args.test_boxes))

114 |

115 | get_results(args, H)

116 |

117 | if __name__ == '__main__':

118 | main()

119 |
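Car_detection.py brakes when any detected box spans the lane centre `320 + curve` (where `curve` is the `road_curve` value main2.cc writes to the shared-memory segment with key 123464), its bottom edge `y2` lies below row 200, and it is wider than 30 px; otherwise it accelerates. The C++ sketch below restates that predicate so the thresholds can be tested in isolation; `Box` and `shouldBrake` are hypothetical names, and the numbers are copied from the script rather than derived.

```cpp
#include <cassert>
#include <vector>

// Hypothetical box type holding the fields the script reads from each rect.
struct Box {
    double x1, x2, y2;
};

// True if any box straddles the lane centre, reaches below row 200,
// and is wider than 30 px (the same three tests as get_results()).
bool shouldBrake(const std::vector<Box>& boxes, int curve) {
    const double roadCenter = 320 + curve;
    for (const Box& b : boxes) {
        const bool straddles = b.x1 < roadCenter && b.x2 > roadCenter;
        if (straddles && b.y2 > 200 && (b.x2 - b.x1) > 30) return true;
    }
    return false;
}
```

Shifting the centre by `curve` is what lets a car ahead on a bend still trigger braking even when it is not in the middle of the frame.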

--------------------------------------------------------------------------------

/linux/tensorbox/data/inception_v1.ckpt:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/bethesirius/ChosunTruck/889644385ce57f971ec2921f006fbb0a167e6f1e/linux/tensorbox/data/inception_v1.ckpt

--------------------------------------------------------------------------------

/linux/tensorbox/license/TENSORBOX_LICENSE.txt:

--------------------------------------------------------------------------------

1 | Copyright (c) 2016 "The Contributors"

2 |

3 |

4 | Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

5 |

6 | The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

7 |

8 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

9 |

--------------------------------------------------------------------------------

/linux/tensorbox/license/TENSORFLOW_LICENSE.txt:

--------------------------------------------------------------------------------

1 | Copyright 2015 The TensorFlow Authors. All rights reserved.

2 |

3 | Apache License

4 | Version 2.0, January 2004

5 | http://www.apache.org/licenses/

6 |

7 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

8 |

9 | 1. Definitions.

10 |

11 | "License" shall mean the terms and conditions for use, reproduction,

12 | and distribution as defined by Sections 1 through 9 of this document.

13 |

14 | "Licensor" shall mean the copyright owner or entity authorized by

15 | the copyright owner that is granting the License.

16 |

17 | "Legal Entity" shall mean the union of the acting entity and all

18 | other entities that control, are controlled by, or are under common

19 | control with that entity. For the purposes of this definition,

20 | "control" means (i) the power, direct or indirect, to cause the

21 | direction or management of such entity, whether by contract or

22 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

23 | outstanding shares, or (iii) beneficial ownership of such entity.

24 |

25 | "You" (or "Your") shall mean an individual or Legal Entity

26 | exercising permissions granted by this License.

27 |

28 | "Source" form shall mean the preferred form for making modifications,

29 | including but not limited to software source code, documentation

30 | source, and configuration files.

31 |

32 | "Object" form shall mean any form resulting from mechanical

33 | transformation or translation of a Source form, including but

34 | not limited to compiled object code, generated documentation,

35 | and conversions to other media types.

36 |

37 | "Work" shall mean the work of authorship, whether in Source or

38 | Object form, made available under the License, as indicated by a

39 | copyright notice that is included in or attached to the work

40 | (an example is provided in the Appendix below).

41 |

42 | "Derivative Works" shall mean any work, whether in Source or Object

43 | form, that is based on (or derived from) the Work and for which the

44 | editorial revisions, annotations, elaborations, or other modifications

45 | represent, as a whole, an original work of authorship. For the purposes

46 | of this License, Derivative Works shall not include works that remain

47 | separable from, or merely link (or bind by name) to the interfaces of,

48 | the Work and Derivative Works thereof.

49 |

50 | "Contribution" shall mean any work of authorship, including

51 | the original version of the Work and any modifications or additions

52 | to that Work or Derivative Works thereof, that is intentionally

53 | submitted to Licensor for inclusion in the Work by the copyright owner

54 | or by an individual or Legal Entity authorized to submit on behalf of

55 | the copyright owner. For the purposes of this definition, "submitted"

56 | means any form of electronic, verbal, or written communication sent

57 | to the Licensor or its representatives, including but not limited to

58 | communication on electronic mailing lists, source code control systems,

59 | and issue tracking systems that are managed by, or on behalf of, the

60 | Licensor for the purpose of discussing and improving the Work, but

61 | excluding communication that is conspicuously marked or otherwise

62 | designated in writing by the copyright owner as "Not a Contribution."

63 |

64 | "Contributor" shall mean Licensor and any individual or Legal Entity

65 | on behalf of whom a Contribution has been received by Licensor and

66 | subsequently incorporated within the Work.

67 |

68 | 2. Grant of Copyright License. Subject to the terms and conditions of

69 | this License, each Contributor hereby grants to You a perpetual,

70 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

71 | copyright license to reproduce, prepare Derivative Works of,

72 | publicly display, publicly perform, sublicense, and distribute the

73 | Work and such Derivative Works in Source or Object form.

74 |

75 | 3. Grant of Patent License. Subject to the terms and conditions of

76 | this License, each Contributor hereby grants to You a perpetual,

77 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

78 | (except as stated in this section) patent license to make, have made,

79 | use, offer to sell, sell, import, and otherwise transfer the Work,

80 | where such license applies only to those patent claims licensable

81 | by such Contributor that are necessarily infringed by their

82 | Contribution(s) alone or by combination of their Contribution(s)

83 | with the Work to which such Contribution(s) was submitted. If You

84 | institute patent litigation against any entity (including a

85 | cross-claim or counterclaim in a lawsuit) alleging that the Work

86 | or a Contribution incorporated within the Work constitutes direct

87 | or contributory patent infringement, then any patent licenses

88 | granted to You under this License for that Work shall terminate

89 | as of the date such litigation is filed.

90 |

91 | 4. Redistribution. You may reproduce and distribute copies of the

92 | Work or Derivative Works thereof in any medium, with or without

93 | modifications, and in Source or Object form, provided that You

94 | meet the following conditions:

95 |

96 | (a) You must give any other recipients of the Work or

97 | Derivative Works a copy of this License; and

98 |

99 | (b) You must cause any modified files to carry prominent notices

100 | stating that You changed the files; and

101 |

102 | (c) You must retain, in the Source form of any Derivative Works

103 | that You distribute, all copyright, patent, trademark, and

104 | attribution notices from the Source form of the Work,

105 | excluding those notices that do not pertain to any part of

106 | the Derivative Works; and

107 |

108 | (d) If the Work includes a "NOTICE" text file as part of its

109 | distribution, then any Derivative Works that You distribute must

110 | include a readable copy of the attribution notices contained

111 | within such NOTICE file, excluding those notices that do not

112 | pertain to any part of the Derivative Works, in at least one

113 | of the following places: within a NOTICE text file distributed

114 | as part of the Derivative Works; within the Source form or

115 | documentation, if provided along with the Derivative Works; or,

116 | within a display generated by the Derivative Works, if and

117 | wherever such third-party notices normally appear. The contents

118 | of the NOTICE file are for informational purposes only and

119 | do not modify the License. You may add Your own attribution

120 | notices within Derivative Works that You distribute, alongside

121 | or as an addendum to the NOTICE text from the Work, provided

122 | that such additional attribution notices cannot be construed

123 | as modifying the License.

124 |

125 | You may add Your own copyright statement to Your modifications and

126 | may provide additional or different license terms and conditions

127 | for use, reproduction, or distribution of Your modifications, or

128 | for any such Derivative Works as a whole, provided Your use,

129 | reproduction, and distribution of the Work otherwise complies with

130 | the conditions stated in this License.

131 |