├── .gitignore
├── LICENSE
├── README.md
├── Todoist.py
├── config.py
├── docker-example
│   ├── Dockerfile-librelinkup
│   ├── README.md
│   ├── config.py
│   ├── librelinkup.py
│   └── requirements.txt
├── edsm.py
├── exist.py
├── fitbit.py
├── foursquare.py
├── fshub.py
├── github.py
├── google-play.py
├── grafana
│   ├── edsm.json
│   ├── exist.json
│   ├── fitbit.json
│   ├── foursquare.json
│   ├── fshub.json
│   ├── gaming.json
│   ├── github.json
│   ├── instagram.json
│   ├── rescuetime.json
│   ├── todoist.json
│   └── trakt.json
├── instagram.py
├── k8s-example
│   ├── README.md
│   ├── cronjob-example.yaml
│   ├── external-secret-example.yaml
│   └── secret-example.yaml
├── librelinkup.py
├── nintendo-switch.py
├── onetouchreveal.py
├── psn.py
├── requirements.txt
├── rescuetime-games.py
├── rescuetime.py
├── retroachievements.py
├── retroarch_emulationstation.py
├── retropie
│   ├── influx-onend.sh
│   ├── influx-onstart.sh
│   └── influx-retropie.py
├── screenshots
│   ├── grafana-edsm.png
│   ├── grafana-exist.png
│   ├── grafana-fitbit.png
│   ├── grafana-foursquare.png
│   ├── grafana-fshub.png
│   ├── grafana-gaming.png
│   ├── grafana-github.png
│   ├── grafana-instagram.png
│   ├── grafana-rescuetime.png
│   ├── grafana-todoist.png
│   └── grafana-trakt.png
├── stadia.py
├── steam.py
├── trakt-tv.py
└── xbox.py

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | pip-wheel-metadata/

24 | share/python-wheels/

25 | *.egg-info/

26 | .installed.cfg

27 | *.egg

28 | MANIFEST

29 |

30 | # PyInstaller

31 | # Usually these files are written by a python script from a template

32 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

33 | *.manifest

34 | *.spec

35 |

36 | # Installer logs

37 | pip-log.txt

38 | pip-delete-this-directory.txt

39 |

40 | # Unit test / coverage reports

41 | htmlcov/

42 | .tox/

43 | .nox/

44 | .coverage

45 | .coverage.*

46 | .cache

47 | nosetests.xml

48 | coverage.xml

49 | *.cover

50 | *.py,cover

51 | .hypothesis/

52 | .pytest_cache/

53 |

54 | # Translations

55 | *.mo

56 | *.pot

57 |

58 | # Django stuff:

59 | *.log

60 | local_settings.py

61 | db.sqlite3

62 | db.sqlite3-journal

63 |

64 | # Flask stuff:

65 | instance/

66 | .webassets-cache

67 |

68 | # Scrapy stuff:

69 | .scrapy

70 |

71 | # Sphinx documentation

72 | docs/_build/

73 |

74 | # PyBuilder

75 | target/

76 |

77 | # Jupyter Notebook

78 | .ipynb_checkpoints

79 |

80 | # IPython

81 | profile_default/

82 | ipython_config.py

83 |

84 | # pyenv

85 | .python-version

86 |

87 | # pipenv

88 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

89 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

90 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

91 | # install all needed dependencies.

92 | #Pipfile.lock

93 |

94 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

95 | __pypackages__/

96 |

97 | # Celery stuff

98 | celerybeat-schedule

99 | celerybeat.pid

100 |

101 | # SageMath parsed files

102 | *.sage.py

103 |

104 | # Environments

105 | .env

106 | .venv

107 | env/

108 | venv/

109 | ENV/

110 | env.bak/

111 | venv.bak/

112 |

113 | # Spyder project settings

114 | .spyderproject

115 | .spyproject

116 |

117 | # Rope project settings

118 | .ropeproject

119 |

120 | # mkdocs documentation

121 | /site

122 |

123 | # mypy

124 | .mypy_cache/

125 | .dmypy.json

126 | dmypy.json

127 |

128 | # Pyre type checker

129 | .pyre/

130 |

131 | .DS_Store

132 | .trakt.json

133 | .fitbit-refreshtoken

134 | *.sqlite

135 | *.code-workspace

136 | .vscode

137 | .librelinkup-authtoken

138 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "[]"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright [yyyy] [name of copyright owner]

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Personal-InfluxDB

2 |

3 | Import personal data from various APIs into InfluxDB

4 |

5 | ## Configuration

6 |

7 | Open `config.py` and set your API credentials and InfluxDB server configuration at the top of the file

8 |

9 | * __RescueTime__: Register for an API key at https://www.rescuetime.com/anapi/manage

10 | * __Foursquare__: Register an app at https://foursquare.com/developers/ and generate an OAuth2 access token

11 | * __Fitbit__: Register a "Personal" app at https://dev.fitbit.com/ and generate an OAuth2 access token

12 | * __Steam__: Register for an API key at https://steamcommunity.com/dev/apikey and look up your SteamID at https://steamidfinder.com/ (use the `steamID64 (Dec)` value)

13 | * __Nintendo Switch__: You'll need to set up [mitmproxy](https://mitmproxy.org/) and intercept the Nintendo Switch Parent Controls app on an iOS or Android device to grab your authentication tokens and device IDs

14 | * __Xbox Live__: Register a profile at https://www.trueachievements.com/ and link it to your Xbox account. You can get your ID number by clicking your "TrueAchievement Points" score on your profile and looking at the leaderboard URL; your ID is the value of the `findgamerid` parameter.

15 | * __Google Play Games__: Download your Google Play Games archive from https://takeout.google.com/ and extract it in the same folder as the script

16 | * __Todoist__: *Access to the API requires a Todoist Premium subscription.* Create an app at https://developer.todoist.com/appconsole.html and generate a test token

17 | * __GitHub__: Create a personal access token at https://github.com/settings/tokens

18 | * __Trakt.tv__: Register for an API key at https://trakt.tv/oauth/applications and generate an OAuth2 access token. You'll also need to create an API key at https://www.themoviedb.org/settings/api to download movie / show posters

19 | * __EDSM__: Generate an API key at https://www.edsm.net/en/settings/api

20 | * __Exist__: Register an app at https://exist.io/account/apps/

21 | * __RetroPie__: Place the shell files and Python script into user `pi`'s home directory. Create or edit `/opt/retropie/configs/all/runcommand-onstart.sh` and append the line `bash "/home/pi/influx-onstart.sh" "$@"`. Create or edit `/opt/retropie/configs/all/runcommand-onend.sh` and append the line `bash "/home/pi/influx-onend.sh" "$@"`

22 | * __FsHub.io__: Generate a personal access token at https://fshub.io/settings/integrations and set your pilot ID to the number in your "Personal Dashboard" URL

23 | * __Stadia__: Link your Stadia account to [Exophase](https://www.exophase.com/) and then set your Exophase username and Stadia nickname

24 | * __PSN__: Link your PSN account to [Exophase](https://www.exophase.com/) and then set your Exophase username and PSN nickname

25 | * __LibreLinkUp__: Open the Freestyle Libre app and choose `Connected Apps` from the menu, then send yourself an invite to `LibreLinkUp`. Install the `LibreLinkUp` app on your phone and accept the invitation, then set your username and password in the configuration file.

26 |

27 | ## Usage

28 |

29 | Check your Python version and make sure version 3.7 or newer is installed on your system:

30 |

31 | ```shell

32 | $ python3 --version

33 | ```

34 |

35 | Install required python3 modules:

36 |

37 | ```shell

38 | $ pip3 install pytz influxdb requests requests-cache instaloader trakt.py publicsuffix2 colorlog bs4

39 | ```

40 |

41 | Run each Python script from the terminal and it will insert the most recent data into InfluxDB.

42 |

43 | ## Notes

44 |

45 | * Each script is designed to write to its own InfluxDB database. Using the same database name between scripts can lead to data being unexpectedly overwritten or deleted.

46 | * RescueTime provides data each hour, so scheduling the script as an hourly cron job is recommended.

47 | * Steam provides the recent playtime over 2 weeks, so the first set of data inserted will contain 2 weeks of time. New data going forward will be more accurate as the script will calculate the time since the last run.

48 | * Google Play doesn't provide total play time, only achievements and last played timestamps

49 | * Instagram can take a very long time to download, so by default it will only fetch the 10 most recent posts. Set `MAX_POSTS` to `0` to download everything.

50 | * Access to the Todoist API requires a premium subscription

51 |
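The Steam note above says that the first run stores the two-week figure and later runs store only the time played since the previous run. A minimal sketch of that calculation, assuming the script remembers the last cumulative total per game; the function and parameter names are hypothetical and are not taken from `steam.py`:

```python
from typing import Optional


def minutes_to_record(current_total: int, recent_two_weeks: int,
                      previous_total: Optional[int]) -> int:
    """Return the playtime (in minutes) to store for this run.

    previous_total is the cumulative total seen on the last run, or None
    when the game has never been recorded before (an assumed convention).
    """
    if previous_total is None:
        # First run: only the two-week figure is available.
        return recent_two_weeks
    # Later runs: the difference between cumulative totals, never negative.
    return max(current_total - previous_total, 0)


first_run = minutes_to_record(1200, 300, None)
later_run = minutes_to_record(1260, 330, 1200)
```

Clamping at zero is a defensive choice for the case where a reported total goes down between runs.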

52 | ## Grafana Dashboards

53 |

54 | The [grafana](grafana/) folder contains json files for various example dashboards.

55 | Most dashboards require the `grafana-piechart-panel` plugin, and the Foursquare panel also requires the panodata `grafana-map-panel` plugin:

56 |

57 | ```shell

58 | $ grafana-cli plugins install grafana-piechart-panel

59 | $ grafana-cli --pluginUrl https://github.com/panodata/grafana-map-panel/releases/download/0.15.0/grafana-map-panel-0.15.0.zip plugins install grafana-map-panel

60 | ```

61 |

62 | ### RescueTime dashboard

63 |

64 |

65 |

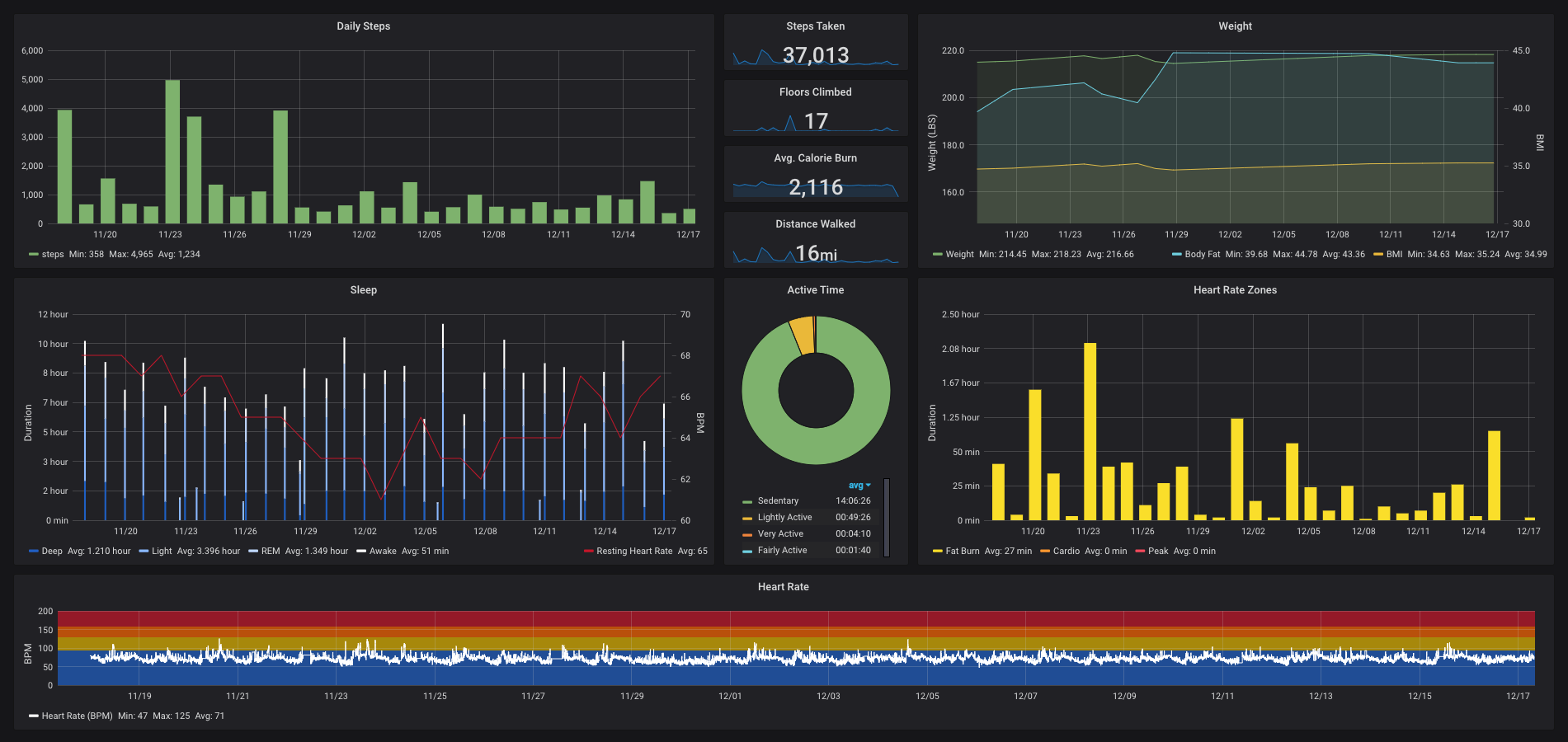

66 | ### Fitbit dashboard

67 |

68 |

69 |

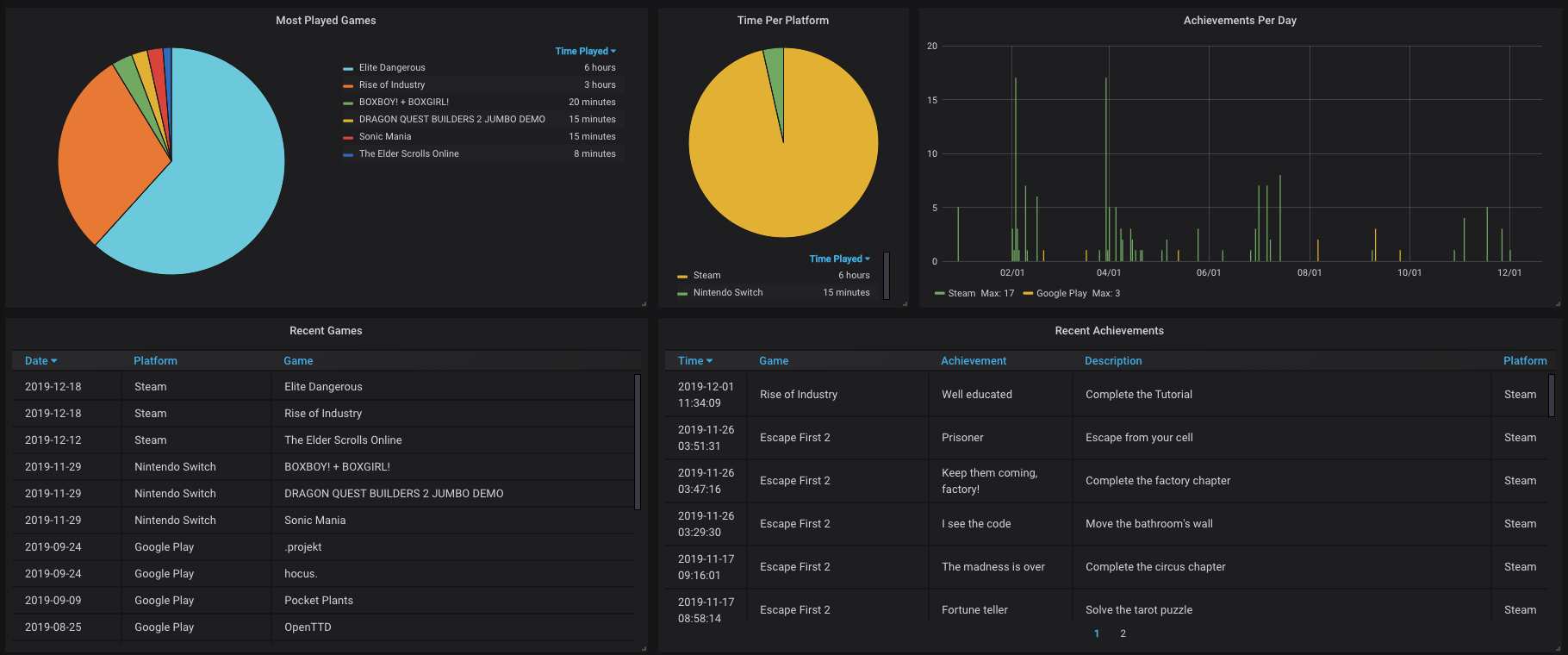

70 | ### Gaming dashboard

71 |

72 |

73 |

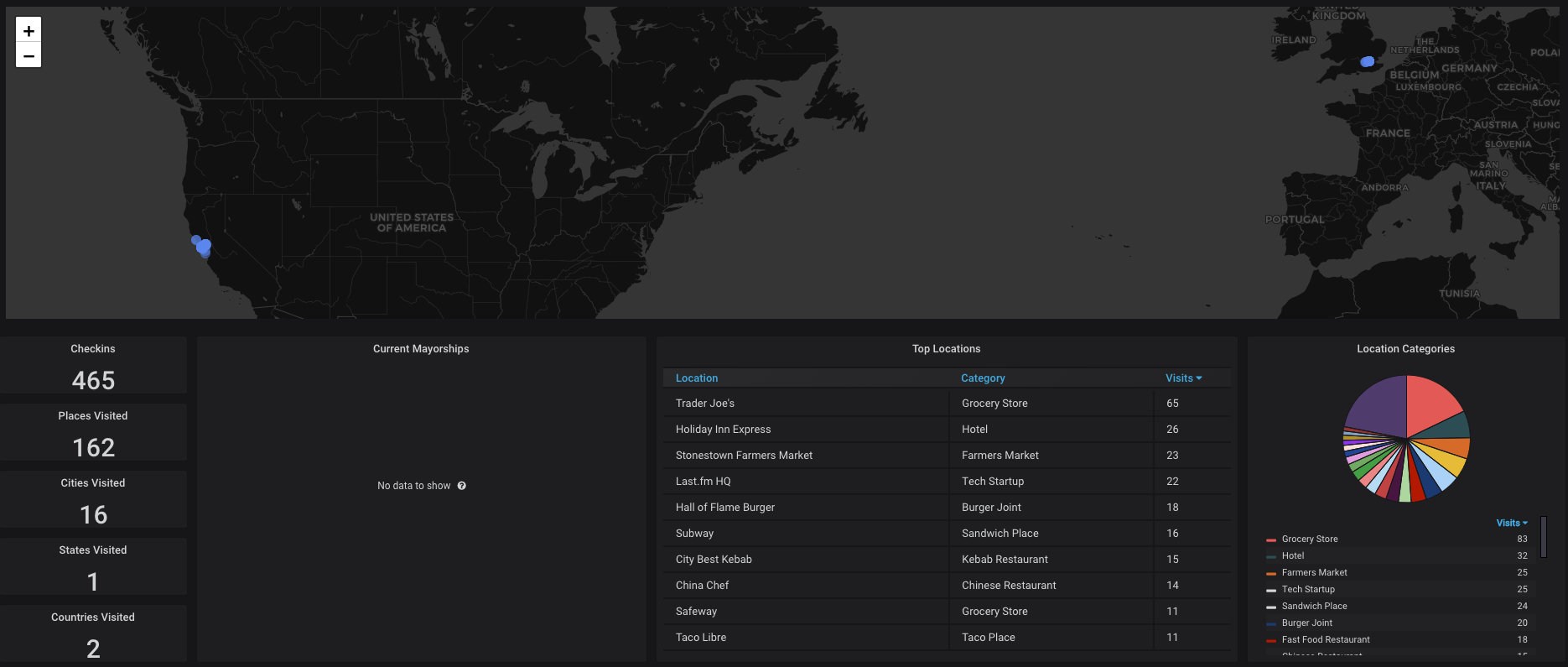

74 | ### Foursquare dashboard

75 |

76 |

77 |

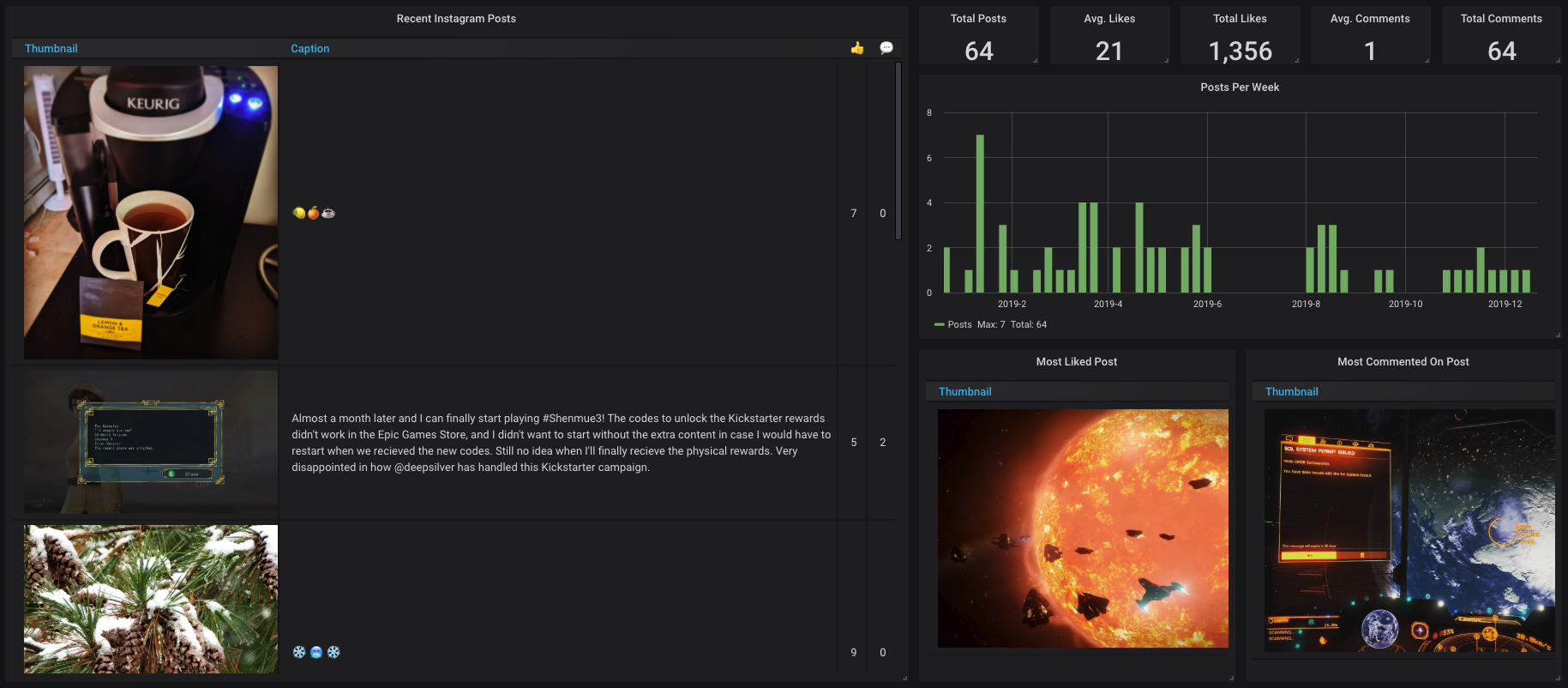

78 | ### Instagram dashboard

79 |

80 |

81 |

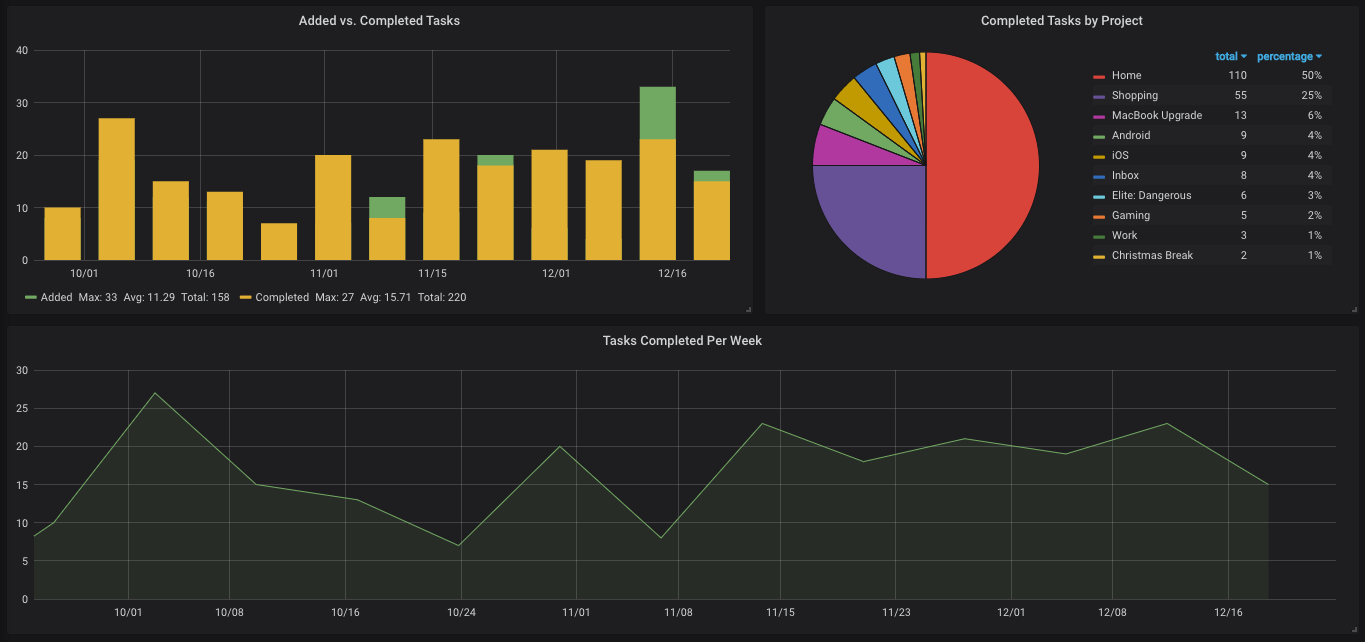

82 | ### Todoist dashboard

83 |

84 |

85 |

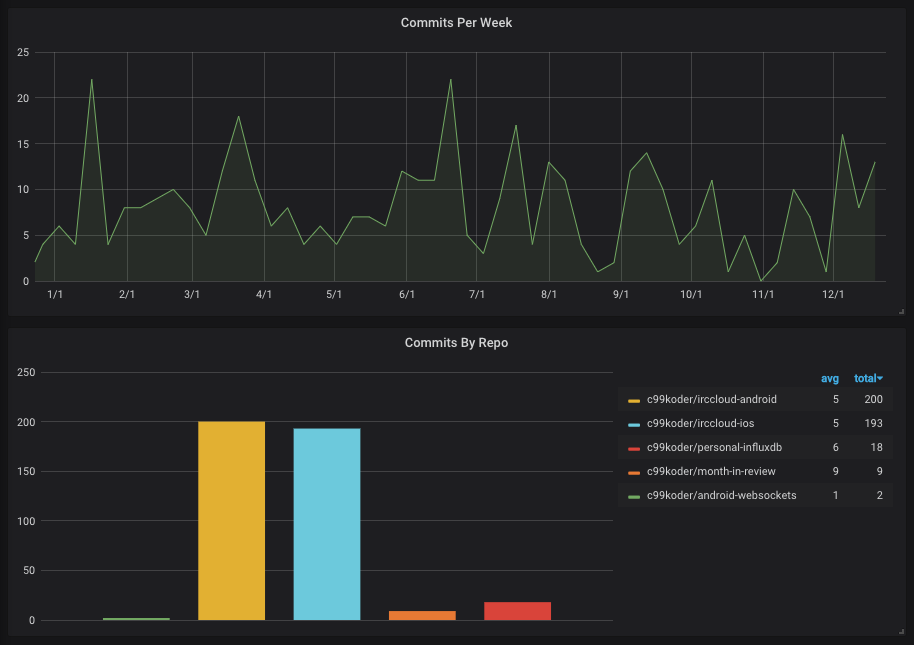

86 | ### GitHub dashboard

87 |

88 |

89 |

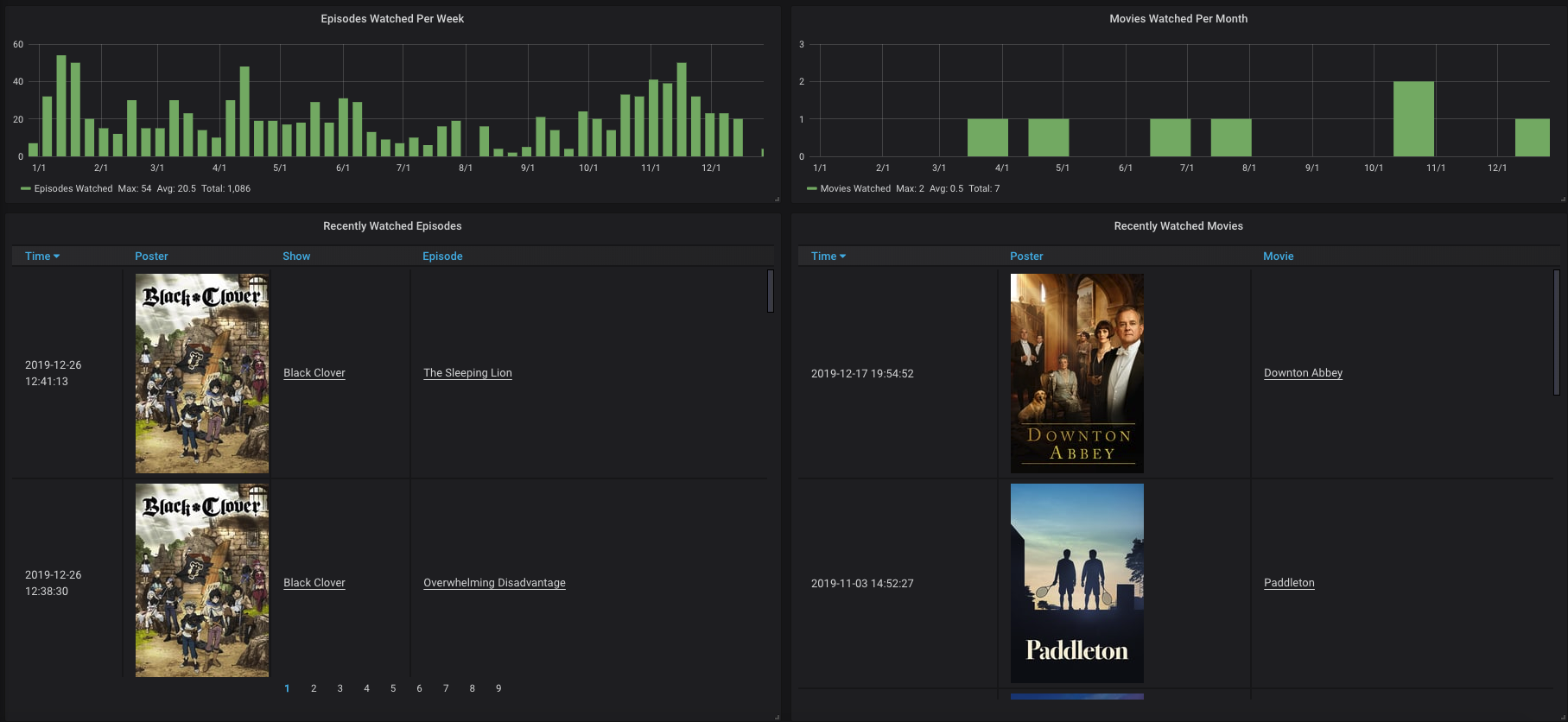

90 | ### Trakt.tv dashboard

91 |

92 |

93 |

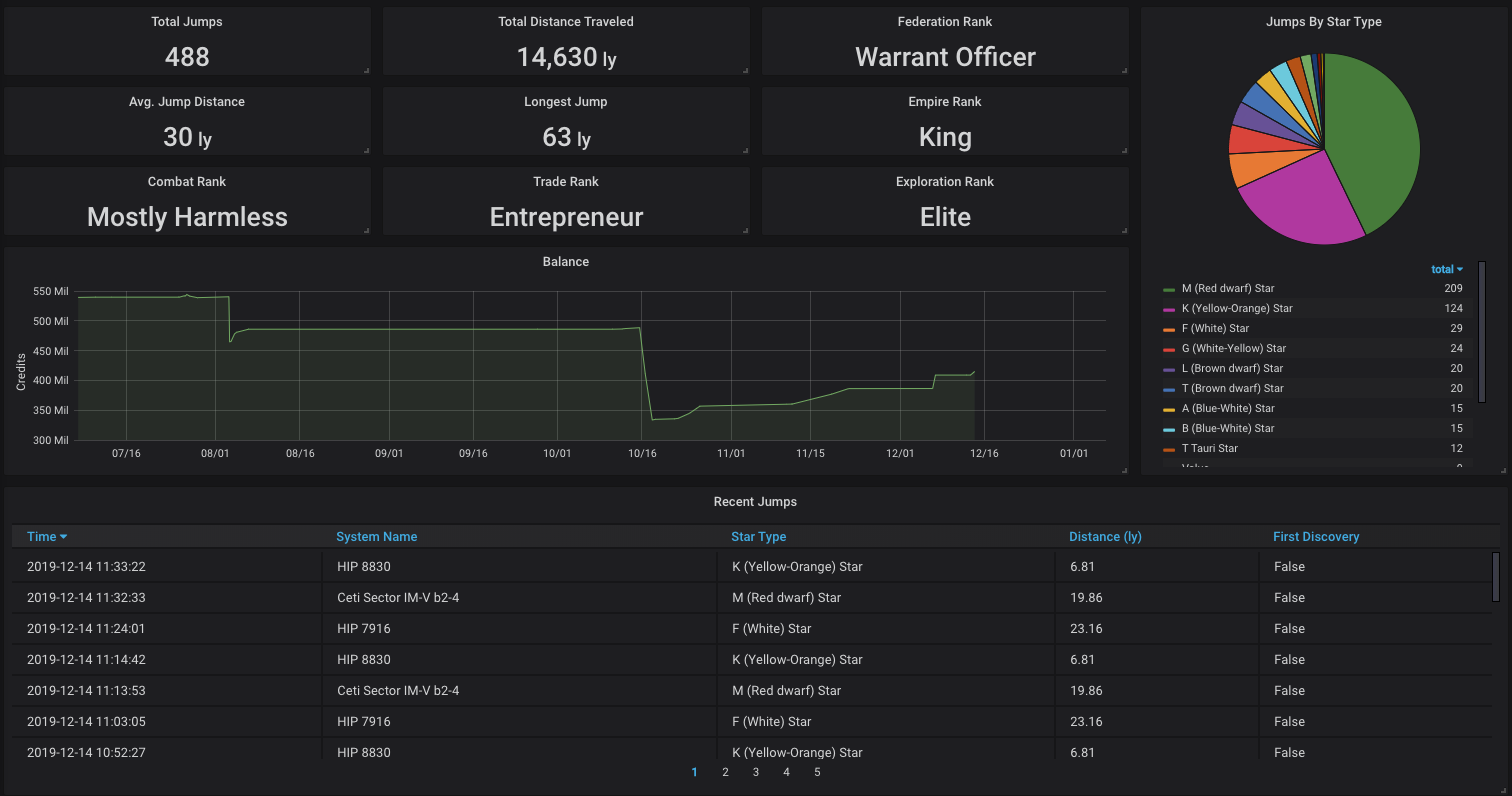

94 | ### EDSM dashboard

95 |

96 |

97 |

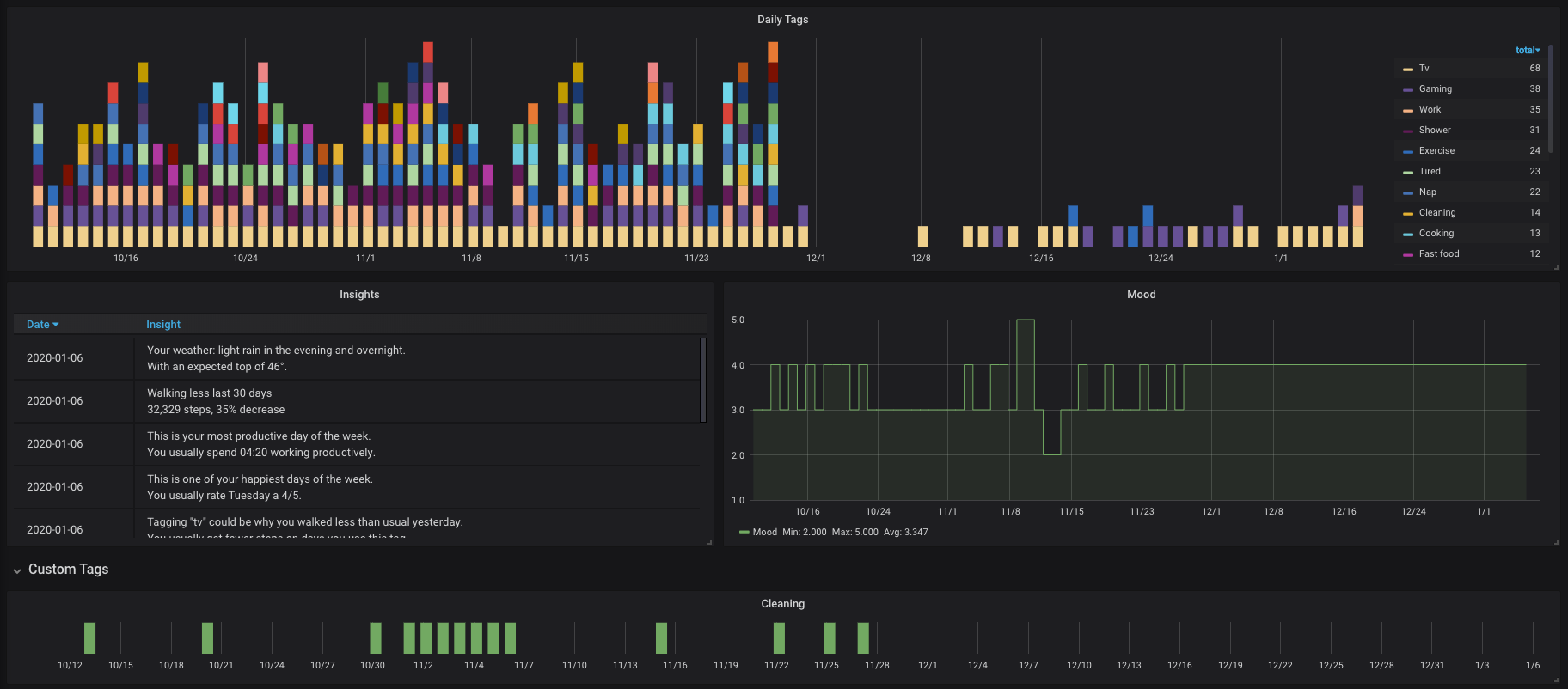

98 | ### Exist dashboard

99 |

100 |

101 |

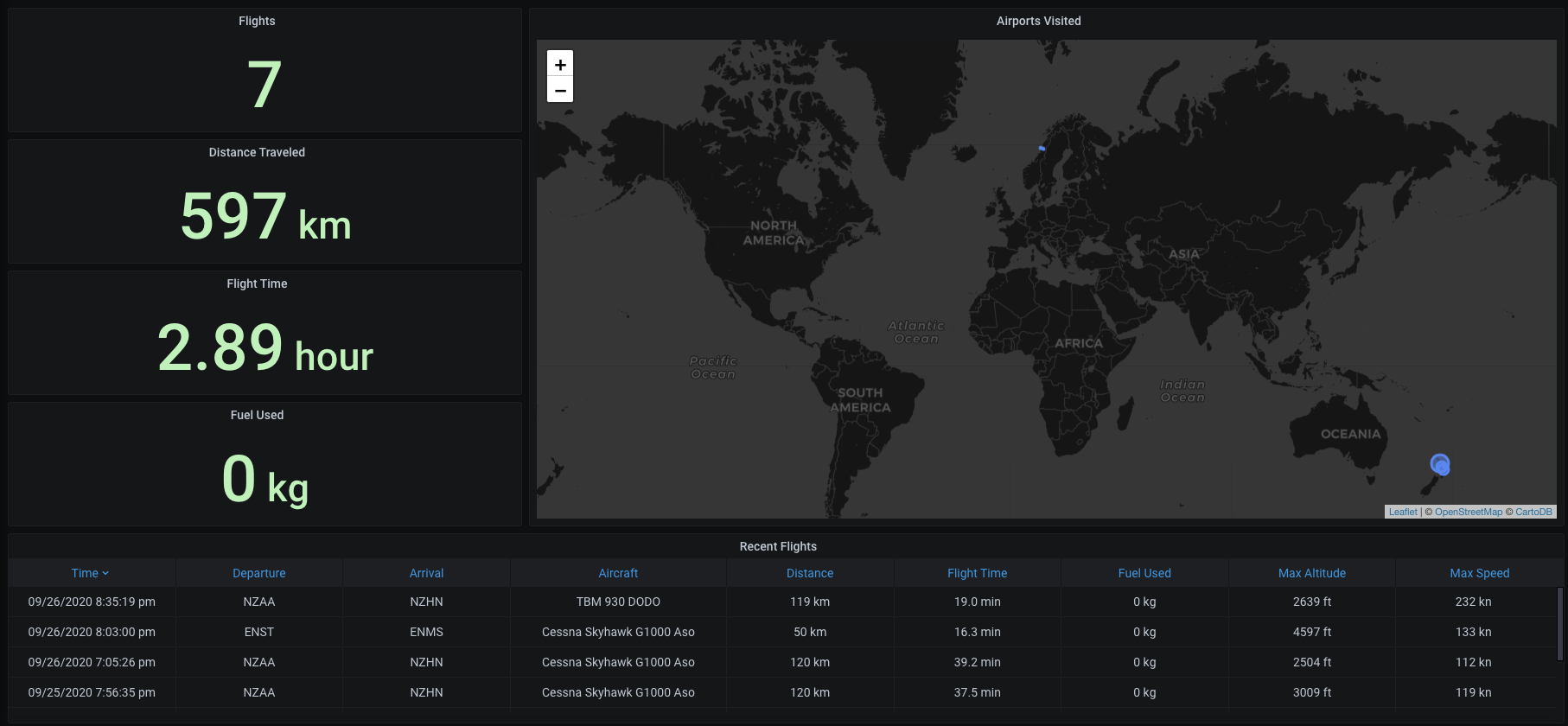

102 | ### FsHub.io dashboard

103 |

104 |

105 |

106 | # License

107 |

108 | Copyright (C) 2022 Sam Steele. Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at

109 |

110 | http://www.apache.org/licenses/LICENSE-2.0

111 |

112 | Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

113 |

--------------------------------------------------------------------------------

/Todoist.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/python3

2 | # Copyright 2022 Sam Steele

3 | #

4 | # Licensed under the Apache License, Version 2.0 (the "License");

5 | # you may not use this file except in compliance with the License.

6 | # You may obtain a copy of the License at

7 | #

8 | # http://www.apache.org/licenses/LICENSE-2.0

9 | #

10 | # Unless required by applicable law or agreed to in writing, software

11 | # distributed under the License is distributed on an "AS IS" BASIS,

12 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | # See the License for the specific language governing permissions and

14 | # limitations under the License.

15 |

16 | import requests, sys
17 | from config import *  # provides logging, connect(), write_points() and the TODOIST_* settings
18 |
19 | if not TODOIST_ACCESS_TOKEN:
20 |     logging.error("TODOIST_ACCESS_TOKEN not set in config.py")
21 |     sys.exit(1)
22 |
23 | points = []
24 |
25 | def get_project(project_id):
26 |     try:
27 |         response = requests.get('https://api.todoist.com/sync/v9/projects/get',
28 |             params={'project_id': project_id},
29 |             headers={'Authorization': f'Bearer {TODOIST_ACCESS_TOKEN}'})
30 |         response.raise_for_status()
31 |     except requests.exceptions.HTTPError as err:
32 |         if err.response.status_code == 404:
33 |             logging.warning("Project ID not found: %s", project_id)
34 |             return None
35 |         else:
36 |             logging.error("HTTP request failed: %s", err)
37 |             sys.exit(1)
38 |
39 |     return response.json()
40 |
41 | def get_activity(page):
42 |     events = []
43 |     count = -1  # total number of events; unknown until the first response
44 |     offset = 0
45 |     while count == -1 or len(events) < count:
46 |         logging.debug("Fetching page %s offset %s", page, offset)
47 |         try:
48 |             response = requests.get('https://api.todoist.com/sync/v9/activity/get',
49 |                 params={'page': page, 'offset': offset, 'limit': 100},
50 |                 headers={'Authorization': f'Bearer {TODOIST_ACCESS_TOKEN}'})
51 |             response.raise_for_status()
52 |         except requests.exceptions.HTTPError as err:
53 |             logging.error("HTTP request failed: %s", err)
54 |             sys.exit(1)
55 |
56 |         activity = response.json()
57 |         events.extend(activity['events'])
58 |         count = activity['count']
59 |         offset = len(events)  # advance past the events already fetched
60 |     logging.info("Got %s items from Todoist", len(events))
61 |
62 |     return events
63 |
64 | connect(TODOIST_DATABASE)
65 |
66 | page = 0
67 | activity = get_activity(page)
68 | projects = {}  # cache of project details keyed by project ID
69 | for event in activity:
70 |     if event['object_type'] == 'item':
71 |         if event['event_type'] == 'added' or event['event_type'] == 'completed':
72 |             project = None
73 |             try:
74 |                 if event['parent_project_id'] in projects:
75 |                     project = projects[event['parent_project_id']]
76 |                 else:
77 |                     project = get_project(event['parent_project_id'])
78 |                     projects[event['parent_project_id']] = project
79 |             except AttributeError as err:
80 |                 logging.warning("Unable to fetch name for project ID %s", event['parent_project_id'])
81 |
82 |             if project is not None:
83 |                 points.append({
84 |                     "measurement": event['event_type'],
85 |                     "time": event['event_date'],
86 |                     "tags": {
87 |                         "item_id": event['id'],
88 |                         "project_id": event['parent_project_id'],
89 |                         "project_name": project['project']['name'],
90 |                     },
91 |                     "fields": {
92 |                         "content": event['extra_data']['content']
93 |                     }
94 |                 })
95 |
96 | write_points(points)

97 |

--------------------------------------------------------------------------------
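`get_activity` in Todoist.py pages through the activity log with `limit` and `offset` until the number of collected events reaches the reported `count`. That pattern can be sketched on its own, separate from the HTTP call. The names `fetch_all_events` and `fake_fetch` below are hypothetical, and the in-memory list stands in for the Todoist API purely for illustration:

```python
from typing import Callable, Dict, List


def fetch_all_events(fetch_page: Callable[[int, int], Dict],
                     limit: int = 100) -> List[dict]:
    """Collect every event by advancing the offset until `count` is reached."""
    events: List[dict] = []
    count = -1  # total reported by the server; unknown until the first response
    while count == -1 or len(events) < count:
        # The offset is the number of events already collected.
        batch = fetch_page(len(events), limit)
        count = batch["count"]
        if not batch["events"]:
            break  # avoid looping forever if the server returns nothing
        events.extend(batch["events"])
    return events


# Hypothetical in-memory stand-in for the HTTP request.
_FAKE_LOG = [{"id": i} for i in range(250)]


def fake_fetch(offset: int, limit: int) -> Dict:
    return {"count": len(_FAKE_LOG), "events": _FAKE_LOG[offset:offset + limit]}


all_events = fetch_all_events(fake_fetch)
```

The early `break` on an empty page is an added safeguard, not something the script itself does.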

/config.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/python3

2 | # Copyright 2022 Sam Steele

3 | #

4 | # Licensed under the Apache License, Version 2.0 (the "License");

5 | # you may not use this file except in compliance with the License.

6 | # You may obtain a copy of the License at

7 | #

8 | # http://www.apache.org/licenses/LICENSE-2.0

9 | #

10 | # Unless required by applicable law or agreed to in writing, software

11 | # distributed under the License is distributed on an "AS IS" BASIS,

12 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | # See the License for the specific language governing permissions and

14 | # limitations under the License.

15 |

16 | import sys, logging, colorlog, pytz

17 | from influxdb import InfluxDBClient

18 | from influxdb.exceptions import InfluxDBClientError

19 |

20 | LOCAL_TIMEZONE = pytz.timezone('America/New_York')

21 |

22 | # InfluxDB Configuration

23 | INFLUXDB_HOST = 'localhost'

24 | INFLUXDB_PORT = 8086

25 | INFLUXDB_USERNAME = 'root'

26 | INFLUXDB_PASSWORD = 'root'

27 | INFLUXDB_CHUNK_SIZE = 50 # How many points to send per request

28 |

29 | # Shared gaming database

30 | GAMING_DATABASE = 'gaming'

31 |

32 | # EDSM configuration

33 | EDSM_API_KEY = ''

34 | EDSM_COMMANDER_NAME = ''

35 | EDSM_DATABASE = 'edsm'

36 |

37 | # Exist.io configuration

38 | EXIST_ACCESS_TOKEN = ''

39 | EXIST_USERNAME = ''

40 | EXIST_DATABASE = 'exist'

41 | EXIST_USE_FITBIT = True

42 | EXIST_USE_TRAKT = True

43 | EXIST_USE_GAMING = True

44 | EXIST_USE_RESCUETIME = False

45 |

46 | # Fitbit configuration

47 | FITBIT_LANGUAGE = 'en_US'

48 | FITBIT_CLIENT_ID = ''

49 | FITBIT_CLIENT_SECRET = ''

50 | FITBIT_ACCESS_TOKEN = ''

51 | FITBIT_INITIAL_CODE = ''

52 | FITBIT_REDIRECT_URI = 'https://www.example.net'

53 | FITBIT_DATABASE = 'fitbit'

54 |

55 | # Foursquare configuration

56 | FOURSQUARE_ACCESS_TOKEN = ''

57 | FOURSQUARE_DATABASE = 'foursquare'

58 |

59 | # FSHub configuration

60 | FSHUB_API_KEY = ''

61 | FSHUB_PILOT_ID = ''

62 | FSHUB_DATABASE = 'fshub'

63 |

64 | # GitHub configuration

65 | GITHUB_API_KEY = ''

66 | GITHUB_USERNAME = ''

67 | GITHUB_DATABASE = 'github'

68 |

69 | # Instagram configuration

70 | INSTAGRAM_PROFILE = ''

71 | INSTAGRAM_DATABASE = 'instagram'

72 | INSTAGRAM_MAX_POSTS = 10 #set to 0 to download all posts

73 |

74 | # Freestyle LibreLinkUp configuration

75 | LIBRELINKUP_USERNAME = ''

76 | LIBRELINKUP_PASSWORD = ''

77 | LIBRELINKUP_DATABASE = 'glucose'

78 | LIBRELINKUP_URL = 'https://api-us.libreview.io'

79 | LIBRELINKUP_VERSION = '4.7.0'

80 | LIBRELINKUP_PRODUCT = 'llu.ios'

81 |

82 | # Nintendo Switch configuration

83 | NS_DEVICE_ID = ''

84 | NS_SMART_DEVICE_ID = ''

85 | NS_SESSION_TOKEN = ''

86 | NS_CLIENT_ID = ''

87 | # These occasionally need to be updated when Nintendo changes the minimum allowed version

88 | NS_INTERNAL_VERSION = '321'

89 | NS_DISPLAY_VERSION = '1.17.0'

90 | NS_OS_VERSION = '15.2'

91 | NS_DATABASE = GAMING_DATABASE

92 |

93 | # OneTouch Reveal configuration

94 | ONETOUCH_USERNAME = ''

95 | ONETOUCH_PASSWORD = ''

96 | ONETOUCH_URL = 'https://app.onetouchreveal.com'

97 | ONETOUCH_DATABASE = 'glucose'

98 |

99 | # RescueTime configuration

100 | RESCUETIME_API_KEY = ''

101 | RESCUETIME_DATABASE = 'rescuetime'

102 |

103 | # RetroAchievements configuration

104 | RA_API_KEY = ''

105 | RA_USERNAME = ''

106 | RA_DATABASE = GAMING_DATABASE

107 |

108 | # RetroArch configuration

109 | RETROARCH_LOGS = '/home/ark/.config/retroarch/playlists/logs/'

110 | EMULATIONSTATION_ROMS = '/roms'

111 | RETROARCH_IMAGE_WEB_PREFIX = 'https://example.net/retroarch_images/'

112 |

113 | # Exophase configuration for Stadia and PSN

114 | EXOPHASE_NAME = ''

115 |

116 | # Stadia configuration

117 | STADIA_NAME = ''

118 | STADIA_DATABASE = GAMING_DATABASE

119 |

120 | # PSN configuration

121 | PSN_NAME = ''

122 | PSN_DATABASE = GAMING_DATABASE

123 |

124 | # Steam configuration

125 | STEAM_API_KEY = ''

126 | STEAM_ID = ''

127 | STEAM_USERNAME = ''

128 | STEAM_LANGUAGE = 'en'

129 | STEAM_DATABASE = GAMING_DATABASE

130 |

131 | # Todoist configuration

132 | TODOIST_ACCESS_TOKEN = ''

133 | TODOIST_DATABASE = 'todoist'

134 |

135 | # Trakt.tv configuration

136 | TRAKT_CLIENT_ID = ''

137 | TRAKT_CLIENT_SECRET = ''

138 | TRAKT_OAUTH_CODE = ''

139 | TMDB_API_KEY = ''

140 | TMDB_IMAGE_BASE = 'https://image.tmdb.org/t/p/'

141 | TRAKT_DATABASE = 'trakt'

142 |

143 | # Xbox configuration

144 | XBOX_GAMERTAG = ''

145 | TRUE_ACHIEVEMENTS_ID = ''

146 | XBOX_DATABASE = GAMING_DATABASE

147 |

148 | # Logging configuration

149 | LOG_LEVEL = logging.INFO

150 | LOG_FORMAT = '%(asctime)s %(log_color)s%(message)s'

151 | LOG_COLORS = {

152 | 'WARNING': 'yellow',

153 | 'ERROR': 'red',

154 | 'CRITICAL': 'red',

155 | }

156 |

157 | def connect(db):

158 | global client

159 | try:

160 | logging.info("Connecting to %s:%s", INFLUXDB_HOST, INFLUXDB_PORT)

161 | client = InfluxDBClient(host=INFLUXDB_HOST, port=INFLUXDB_PORT, username=INFLUXDB_USERNAME, password=INFLUXDB_PASSWORD)

162 | client.create_database(db)

163 | client.switch_database(db)

164 | except InfluxDBClientError as err:

165 | logging.error("InfluxDB connection failed: %s", err)

166 | sys.exit(1)

167 | return client

168 |

169 | def write_points(points):

170 | total = len(points)

171 | global client

172 | try:

173 | start = 0

174 | end = INFLUXDB_CHUNK_SIZE

175 | while start < len(points):

176 | if end > len(points):

177 | end = len(points)

178 |

179 | client.write_points(points[start:end])

180 | logging.debug(f"Wrote {end} / {total} points")

181 |

182 | start = end

183 | end = end + INFLUXDB_CHUNK_SIZE

184 | except InfluxDBClientError as err:

185 | logging.error("Unable to write points to InfluxDB: %s", err)

186 | sys.exit(1)

187 |

188 | logging.info("Successfully wrote %s data points to InfluxDB", total)

189 |

190 | client = None

191 |

192 | if sys.stdout.isatty():

193 | colorlog.basicConfig(level=LOG_LEVEL, format=LOG_FORMAT, log_colors=LOG_COLORS, stream=sys.stdout)

194 | else:

195 | logging.basicConfig(level=LOG_LEVEL, format=LOG_FORMAT.replace('%(log_color)s', ''), stream=sys.stdout)

196 |

197 | def handle_exception(exc_type, exc_value, exc_traceback):

198 | if issubclass(exc_type, KeyboardInterrupt):

199 | sys.__excepthook__(exc_type, exc_value, exc_traceback)

200 | return

201 |

202 | logging.critical("Uncaught exception:", exc_info=(exc_type, exc_value, exc_traceback))

203 |

204 | sys.excepthook = handle_exception

--------------------------------------------------------------------------------

/docker-example/Dockerfile-librelinkup:

--------------------------------------------------------------------------------

1 | FROM python:3.11.4-alpine3.18

2 |

3 | LABEL maintainer="Evan Richardson (evanrich81[at]gmail.com)"

4 |

5 | # Set the working directory to /app

6 | WORKDIR /app

7 |

8 | # Copy the requirements.txt file into the container at /app

9 | COPY requirements.txt /app/

10 |

11 | # Install the Python dependencies specified in requirements.txt

12 | RUN pip install --no-cache-dir -r requirements.txt

13 |

14 | # Copy the librelinkup.py and config.py files to the root directory

15 | COPY librelinkup.py /

16 | COPY config.py /

17 |

18 | ENV INFLUXDB_HOST="default_host"

19 | ENV INFLUXDB_PORT="default_port"

20 | ENV INFLUXDB_USER="default_username"

21 | ENV INFLUXDB_PASSWORD="default_password"

22 | ENV LIBRELINKUP_DATABASE="database"

23 | ENV LIBRELINKUP_USERNAME="librelinkup_username"

24 | ENV LIBRELINKUP_PASSWORD="librelinkup_password"

25 |

26 | # Run the Python script librelinkup.py

27 | CMD ["python", "/librelinkup.py"]

--------------------------------------------------------------------------------

/docker-example/README.md:

--------------------------------------------------------------------------------

1 | ## Dockerfile

2 | You can customize this however you want, and likely will. I wrote it quick and dirty to expose the parameters that the librelinkup and config Python files need in order to work. Add or delete parameters as necessary.

3 |

4 | Breaking the file down:

5 |

6 | `FROM python:3.11.4-alpine3.18`

7 | ###### This defines the base image to use. I wanted to keep things nice and tidy, so I used the Python 3.11 image based on Alpine 3.18. You can use whatever you want.

8 |

9 | `LABEL maintainer="Evan Richardson (evanrich81[at]gmail.com)"`

10 | ###### **The maintainer of the image. I posted this to Docker Hub, so I put my name and email on there; please don't spam me. You can change it to whatever you want.**

11 |

12 | `WORKDIR /app`

13 | ###### This sets the working directory for the container to `/app`

14 |

15 | `COPY requirements.txt /app/`

16 | ###### Self-explanatory: copies `requirements.txt` into `/app`.

17 |

18 | `RUN pip install --no-cache-dir -r requirements.txt`

19 | ###### Installs the requirements, leaving no cache behind (smaller final image)

20 |

21 | `COPY librelinkup.py /`

22 | `COPY config.py /`

23 | ###### These lines copy the Python files to the root directory (they could go in `/app` if you wanted). They could also be consolidated into one line for one less layer, but it's not the end of the world.

24 |

25 |

26 | `ENV INFLUXDB_HOST="default_host"`

27 | `ENV INFLUXDB_PORT="default_port"`

28 | `ENV INFLUXDB_USER="default_username"`

29 | `ENV INFLUXDB_PASSWORD="default_password"`

30 | `ENV LIBRELINKUP_DATABASE="database"`

31 | `ENV LIBRELINKUP_USERNAME="librelinkup_username"`

32 | `ENV LIBRELINKUP_PASSWORD="librelinkup_password"`

33 | ###### This block defines the environment variables that will be available to the container. Set these to whatever you want.

34 |

35 | `CMD ["python", "/librelinkup.py"]`

36 | ###### And finally, the run line. This runs the selected Python file when the container starts.

37 |

38 |

39 | ## Running the container

40 | If you use the CronJob in the `k8s-example` folder, it will add the environment variables automatically. If not, run this image in a way similar to:

41 | `docker run -e VAR1=VALUE1 -e VAR2=VALUE2 evanrich/libre2influx:latest`
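Because every setting arrives through `-e` flags, a forgotten flag only shows up when the script fails later on. The following is a minimal sketch of a pre-flight check, assuming the variable names from the `ENV` lines in the Dockerfile. `missing_env` and `REQUIRED_VARS` are hypothetical names for illustration and are not part of this repo.

```python
import os

# Variable names taken from the ENV lines in the Dockerfile.
# Which ones count as "required" is an assumption made for this sketch.
REQUIRED_VARS = [
    "INFLUXDB_HOST",
    "LIBRELINKUP_DATABASE",
    "LIBRELINKUP_USERNAME",
    "LIBRELINKUP_PASSWORD",
]


def missing_env(names, environ=None):
    """Return the names that are unset or empty in the given environment."""
    env = os.environ if environ is None else environ
    return [name for name in names if not env.get(name)]


if __name__ == "__main__":
    missing = missing_env(REQUIRED_VARS)
    if missing:
        print("Missing environment variables: " + ", ".join(missing))
    else:
        print("All required variables are set")
```

Passing a plain dict as `environ` makes the helper easy to try out without touching the real environment.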

--------------------------------------------------------------------------------

/docker-example/config.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/python3

2 | # Copyright 2022 Sam Steele

3 | #

4 | # Licensed under the Apache License, Version 2.0 (the "License");

5 | # you may not use this file except in compliance with the License.

6 | # You may obtain a copy of the License at

7 | #

8 | # http://www.apache.org/licenses/LICENSE-2.0

9 | #

10 | # Unless required by applicable law or agreed to in writing, software

11 | # distributed under the License is distributed on an "AS IS" BASIS,

12 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | # See the License for the specific language governing permissions and

14 | # limitations under the License.

15 |

16 | import sys, logging, colorlog, pytz, os

17 | from influxdb import InfluxDBClient

18 | from influxdb.exceptions import InfluxDBClientError

19 |

20 | TIMEZONE = os.getenv('TZ', 'America/Los_Angeles')

21 | LOCAL_TIMEZONE = pytz.timezone(TIMEZONE)

22 |

23 | # InfluxDB Configuration

24 | INFLUXDB_HOST = os.getenv('INFLUXDB_HOST')

25 | INFLUXDB_PORT = os.getenv('INFLUXDB_PORT', 8086)

26 | INFLUXDB_USERNAME = os.getenv('INFLUXDB_USER', 'admin')

27 | INFLUXDB_PASSWORD = os.getenv('INFLUXDB_PASSWORD', 'admin')

28 | INFLUXDB_CHUNK_SIZE = 50 # How many points to send per request

29 |

30 | # Freestyle LibreLinkUp configuration

31 | LIBRELINKUP_USERNAME = os.getenv('LIBRELINKUP_USERNAME')

32 | LIBRELINKUP_PASSWORD = os.getenv('LIBRELINKUP_PASSWORD')

33 | LIBRELINKUP_DATABASE = os.getenv('LIBRELINKUP_DATABASE')

34 | LIBRELINKUP_URL = 'https://api-us.libreview.io'

35 | LIBRELINKUP_VERSION = '4.7.0'

36 | LIBRELINKUP_PRODUCT = 'llu.ios'

37 |

38 | # Logging configuration

39 | LOG_LEVEL = logging.INFO

40 | LOG_FORMAT = '%(asctime)s %(log_color)s%(message)s'

41 | LOG_COLORS = {

42 | 'WARNING': 'yellow',

43 | 'ERROR': 'red',

44 | 'CRITICAL': 'red',

45 | }

46 |

47 | def connect(db):

48 | global client

49 | try:

50 | logging.info("Connecting to %s:%s", INFLUXDB_HOST, INFLUXDB_PORT)

51 | client = InfluxDBClient(host=INFLUXDB_HOST, port=INFLUXDB_PORT, username=INFLUXDB_USERNAME, password=INFLUXDB_PASSWORD)

52 | client.create_database(db)

53 | client.switch_database(db)

54 | except InfluxDBClientError as err:

55 | logging.error("InfluxDB connection failed: %s", err)

56 | sys.exit(1)

57 | return client

58 |

59 | def write_points(points):

60 | total = len(points)

61 | global client

62 | try:

63 | start = 0

64 | end = INFLUXDB_CHUNK_SIZE

65 | while start < len(points):

66 | if end > len(points):

67 | end = len(points)

68 |

69 | client.write_points(points[start:end])

70 | logging.debug(f"Wrote {end} / {total} points")

71 |

72 | start = end

73 | end = end + INFLUXDB_CHUNK_SIZE

74 | except InfluxDBClientError as err:

75 | logging.error("Unable to write points to InfluxDB: %s", err)

76 | sys.exit(1)

77 |

78 | logging.info("Successfully wrote %s data points to InfluxDB", total)

79 |

80 | client = None

81 |

82 | if sys.stdout.isatty():

83 | colorlog.basicConfig(level=LOG_LEVEL, format=LOG_FORMAT, log_colors=LOG_COLORS, stream=sys.stdout)

84 | else:

85 | logging.basicConfig(level=LOG_LEVEL, format=LOG_FORMAT.replace('%(log_color)s', ''), stream=sys.stdout)

86 |

87 | def handle_exception(exc_type, exc_value, exc_traceback):

88 | if issubclass(exc_type, KeyboardInterrupt):

89 | sys.__excepthook__(exc_type, exc_value, exc_traceback)

90 | return

91 |

92 | logging.critical("Uncaught exception:", exc_info=(exc_type, exc_value, exc_traceback))

93 |

94 | sys.excepthook = handle_exception

--------------------------------------------------------------------------------

/docker-example/librelinkup.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/python3

2 | # Copyright 2022 Sam Steele

3 | #

4 | # Licensed under the Apache License, Version 2.0 (the "License");

5 | # you may not use this file except in compliance with the License.

6 | # You may obtain a copy of the License at

7 | #

8 | # http://www.apache.org/licenses/LICENSE-2.0

9 | #

10 | # Unless required by applicable law or agreed to in writing, software

11 | # distributed under the License is distributed on an "AS IS" BASIS,

12 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | # See the License for the specific language governing permissions and

14 | # limitations under the License.

15 |

16 | import requests, sys, os, json, time

17 | from datetime import datetime

18 | from config import *

19 |

20 | def append_reading(points, data, reading):

21 | time = datetime.strptime(reading['FactoryTimestamp'] + '+00:00', '%m/%d/%Y %I:%M:%S %p%z')

22 | points.append({

23 | "measurement": "glucose",

24 | "time": time,

25 | "tags": {

26 | "deviceType": "Libre",

27 | "deviceSerialNumber": data['data']['connection']['sensor']['sn'],

28 | },

29 | "fields": {

30 | "value": int(reading['ValueInMgPerDl']),

31 | "units": 'mg/dL',

32 | }

33 | })

34 |

35 | if not LIBRELINKUP_USERNAME:

36 | logging.error("LIBRELINKUP_USERNAME environment variable not set")

37 | sys.exit(1)

38 |

39 | points = []

40 |

41 | connect(LIBRELINKUP_DATABASE)

42 |

43 | LIBRELINKUP_HEADERS = {

44 | "version": LIBRELINKUP_VERSION,

45 | "product": LIBRELINKUP_PRODUCT,

46 | }

47 |

48 | LIBRELINKUP_TOKEN = None

49 | script_dir = os.path.dirname(__file__)

50 | auth_token_path = os.path.join(script_dir, '.librelinkup-authtoken')

51 | if os.path.isfile(auth_token_path):

52 | with open(auth_token_path) as json_file:

53 | auth = json.load(json_file)

54 | if auth['expires'] > time.time():

55 | LIBRELINKUP_TOKEN = auth['token']

56 | logging.info("Using cached authTicket, expiration: %s", datetime.fromtimestamp(auth['expires']).isoformat())

57 |

58 | if LIBRELINKUP_TOKEN is None:

59 | logging.info("Auth ticket not found or expired, requesting a new one")

60 | try:

61 | response = requests.post(f'{LIBRELINKUP_URL}/llu/auth/login',

62 | headers=LIBRELINKUP_HEADERS, json = {'email': LIBRELINKUP_USERNAME, 'password': LIBRELINKUP_PASSWORD})

63 | response.raise_for_status()

64 | except requests.exceptions.HTTPError as err:

65 | logging.error("HTTP request failed: %s", err)

66 | sys.exit(1)

67 |

68 | data = response.json()

69 | if 'authTicket' not in data['data']:

70 | logging.error("Authentication failed")

71 | sys.exit(1)

72 |

73 | with open(auth_token_path, 'w') as outfile:

74 | json.dump(data['data']['authTicket'], outfile)

75 |

76 | LIBRELINKUP_TOKEN = data['data']['authTicket']['token']

77 |

78 | LIBRELINKUP_HEADERS['Authorization'] = 'Bearer ' + LIBRELINKUP_TOKEN

79 |

80 | try:

81 | response = requests.get(f'{LIBRELINKUP_URL}/llu/connections', headers=LIBRELINKUP_HEADERS)

82 | response.raise_for_status()

83 | except requests.exceptions.HTTPError as err:

84 | logging.error("HTTP request failed: %s", err)

85 | sys.exit(1)

86 |

87 | connections = response.json()

88 | if 'data' not in connections or len(connections['data']) < 1:

89 | logging.error("No connections configured. Accept an invitation in the mobile app first.")

90 | sys.exit(1)

91 |

92 | logging.info("Using connection %s: %s %s", connections['data'][0]['patientId'], connections['data'][0]['firstName'], connections['data'][0]['lastName'])

93 |

94 | try:

95 | response = requests.get(f'{LIBRELINKUP_URL}/llu/connections/{connections["data"][0]["patientId"]}/graph', headers=LIBRELINKUP_HEADERS)

96 | response.raise_for_status()

97 | except requests.exceptions.HTTPError as err:

98 | logging.error("HTTP request failed: %s", err)

99 | sys.exit(1)

100 |

101 | data = response.json()

102 | append_reading(points, data, data['data']['connection']['glucoseMeasurement'])

103 |

104 | if len(data['data']['graphData']) > 0:

105 | for reading in data['data']['graphData']:

106 | append_reading(points, data, reading)

107 |

108 | write_points(points)

--------------------------------------------------------------------------------

/docker-example/requirements.txt:

--------------------------------------------------------------------------------

1 | influxdb==5.3.1

2 | requests==2.25.1

3 | colorlog==6.7.0

4 | pytz==2023.3

--------------------------------------------------------------------------------

/edsm.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/python3

2 | # Copyright 2022 Sam Steele

3 | #

4 | # Licensed under the Apache License, Version 2.0 (the "License");

5 | # you may not use this file except in compliance with the License.

6 | # You may obtain a copy of the License at

7 | #

8 | # http://www.apache.org/licenses/LICENSE-2.0

9 | #

10 | # Unless required by applicable law or agreed to in writing, software

11 | # distributed under the License is distributed on an "AS IS" BASIS,

12 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | # See the License for the specific language governing permissions and

14 | # limitations under the License.

15 |

16 | import requests, requests_cache, sys, math, logging

17 | from datetime import datetime, date

18 | from config import *

19 |

20 | if not EDSM_API_KEY:

21 | logging.error("EDSM_API_KEY not set in config.py")

22 | sys.exit(1)

23 |

24 | points = []

25 | last = None

26 |

27 | def add_rank(data, activity):

28 | global points

29 | points.append({

30 | "measurement": "rank",

31 | "time": date.today().isoformat() + "T00:00:00",

32 | "tags": {

33 | "commander": EDSM_COMMANDER_NAME,

34 | "activity": activity

35 | },

36 | "fields": {

37 | "value": data['ranks'][activity],

38 | "progress": data['progress'][activity],

39 | "name": data['ranksVerbose'][activity]

40 | }

41 | })

42 |

43 | def fetch_system(name):

44 | try:

45 | response = requests.get('https://www.edsm.net/api-v1/system',

46 | params={'systemName':name, 'showCoordinates':1, 'showPrimaryStar':1, 'apiKey':EDSM_API_KEY})

47 | response.raise_for_status()

48 | except requests.exceptions.HTTPError as err:

49 | logging.error("HTTP request failed: %s", err)

50 | sys.exit(1)

51 |

52 | return response.json()

53 |

54 | def distance(system1, system2):

55 | s1 = fetch_system(system1)

56 | s2 = fetch_system(system2)

57 |

58 | dx = float(s1['coords']['x']) - float(s2['coords']['x'])

59 | dy = float(s1['coords']['y']) - float(s2['coords']['y'])

60 | dz = float(s1['coords']['z']) - float(s2['coords']['z'])

61 |

62 | return math.sqrt(dx*dx + dy*dy + dz*dz)

63 |

64 | def add_jump(src, dst):

65 | global points

66 | system = fetch_system(dst['system'])

67 | if 'type' in system['primaryStar']:

68 | points.append({

69 | "measurement": "jump",

70 | "time": datetime.fromisoformat(dst['date']).isoformat(),

71 | "tags": {

72 | "commander": EDSM_COMMANDER_NAME,

73 | "system": dst['system'],

74 | "firstDiscover": dst['firstDiscover'],

75 | "primaryStarType": system['primaryStar']['type']

76 | },

77 | "fields": {

78 | "distance": distance(src['system'], dst['system']),

79 | "x": float(system['coords']['x']),

80 | "y": float(system['coords']['y']),

81 | "z": float(system['coords']['z'])

82 | }

83 | })

84 | else:

85 | points.append({

86 | "measurement": "jump",

87 | "time": datetime.fromisoformat(dst['date']).isoformat(),

88 | "tags": {

89 | "commander": EDSM_COMMANDER_NAME,

90 | "system": dst['system'],

91 | "firstDiscover": dst['firstDiscover']

92 | },

93 | "fields": {

94 | "distance": distance(src['system'], dst['system']),

95 | "x": float(system['coords']['x']),

96 | "y": float(system['coords']['y']),

97 | "z": float(system['coords']['z'])

98 | }

99 | })

100 |

101 | def fetch_jumps(time):

102 | global last

103 | try:

104 | response = requests.get('https://www.edsm.net/api-logs-v1/get-logs',

105 | params={'commanderName':EDSM_COMMANDER_NAME, 'apiKey':EDSM_API_KEY, 'endDateTime':time})

106 | response.raise_for_status()

107 | except requests.exceptions.HTTPError as err:

108 | logging.error("HTTP request failed: %s", err)

109 | sys.exit(1)

110 |

111 | data = response.json()

112 | logging.info("Got %s jumps from EDSM", len(data['logs']))

113 |

114 | for jump in data['logs']:

115 | if last is not None:

116 | add_jump(jump, last)

117 | last = jump

118 |

119 | return data

120 |

121 | connect(EDSM_DATABASE)

122 |

123 | try:

124 | response = requests.get('https://www.edsm.net/api-commander-v1/get-credits',

125 | params={'commanderName':EDSM_COMMANDER_NAME, 'apiKey':EDSM_API_KEY})

126 | response.raise_for_status()

127 | except requests.exceptions.HTTPError as err:

128 | logging.error("HTTP request failed: %s", err)

129 | sys.exit(1)

130 |

131 | data = response.json()

132 | if 'credits' not in data:

133 | logging.error("Unable to fetch data from EDSM: %s", data['msg'])

134 | sys.exit(1)

135 |

136 | logging.info("Got credits from EDSM")

137 |

138 | for credits in data['credits']:

139 | points.append({

140 | "measurement": "credits",

141 | "time": datetime.fromisoformat(credits['date']).isoformat(),

142 | "tags": {

143 | "commander": EDSM_COMMANDER_NAME

144 | },

145 | "fields": {

146 | "value": credits['balance']

147 | }

148 | })

149 |

150 | try:

151 | response = requests.get('https://www.edsm.net/api-commander-v1/get-ranks',

152 | params={'commanderName':EDSM_COMMANDER_NAME, 'apiKey':EDSM_API_KEY})

153 | response.raise_for_status()

154 | except requests.exceptions.HTTPError as err:

155 | logging.error("HTTP request failed: %s", err)

156 | sys.exit(1)

157 |

158 | data = response.json()

159 | logging.info("Got ranks from EDSM")

160 | add_rank(data, "Combat")

161 | add_rank(data, "Trade")

162 | add_rank(data, "Explore")

163 | add_rank(data, "CQC")

164 | add_rank(data, "Federation")

165 | add_rank(data, "Empire")

166 | add_rank(data, "Soldier")

167 | add_rank(data, "Exobiologist")

168 |

169 | requests_cache.install_cache('edsm')

170 | data = fetch_jumps(date.today().isoformat() + " 00:00:00")

171 | if len(data['logs']) > 0:

172 | data = fetch_jumps(data['startDateTime'])

173 | while len(data['logs']) == 0:

174 | data = fetch_jumps(data['startDateTime'])

175 |

176 | write_points(points)

177 |

--------------------------------------------------------------------------------

/exist.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/python3

2 | # Copyright 2022 Sam Steele

3 | #

4 | # Licensed under the Apache License, Version 2.0 (the "License");

5 | # you may not use this file except in compliance with the License.

6 | # You may obtain a copy of the License at

7 | #

8 | # http://www.apache.org/licenses/LICENSE-2.0

9 | #

10 | # Unless required by applicable law or agreed to in writing, software

11 | # distributed under the License is distributed on an "AS IS" BASIS,

12 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | # See the License for the specific language governing permissions and

14 | # limitations under the License.

15 |

16 | import requests, sys, logging

17 | from datetime import date, datetime, time, timedelta

18 | from publicsuffix2 import PublicSuffixList

19 | from config import *

20 |

21 | if not EXIST_ACCESS_TOKEN:

22 | logging.error("EXIST_ACCESS_TOKEN not set in config.py")

23 | sys.exit(1)

24 |

25 | points = []

26 | start_time = str(int(LOCAL_TIMEZONE.localize(datetime.combine(date.today(), time(0,0)) - timedelta(days=7)).astimezone(pytz.utc).timestamp()) * 1000) + 'ms'

27 |

28 | def append_tags(tags):

29 | try:

30 | response = requests.post('https://exist.io/api/1/attributes/custom/append/',

31 | headers={'Authorization':f'Bearer {EXIST_ACCESS_TOKEN}'},

32 | json=tags)

33 | response.raise_for_status()

34 | except requests.exceptions.HTTPError as err:

35 | logging.error("HTTP request failed: %s", err)

36 | sys.exit(1)

37 |

38 | result = response.json()

39 | if len(result['failed']) > 0:

40 | logging.error("Request failed: %s", result['failed'])

41 | sys.exit(1)

42 |

43 | if len(result['success']) > 0:

44 | logging.info("Successfully sent %s tags", len(result['success']))

45 |

46 | def acquire_attributes(attributes):

47 | try:

48 | response = requests.post('https://exist.io/api/1/attributes/acquire/',

49 | headers={'Authorization':f'Bearer {EXIST_ACCESS_TOKEN}'},

50 | json=attributes)

51 | response.raise_for_status()

52 | except requests.exceptions.HTTPError as err:

53 | logging.error("HTTP request failed: %s", err)

54 | sys.exit(1)

55 |

56 | result = response.json()

57 | if len(result['failed']) > 0:

58 | logging.error("Request failed: %s", result['failed'])

59 | sys.exit(1)

60 |

61 | def post_attributes(values):

62 | try:

63 | response = requests.post('https://exist.io/api/1/attributes/update/',

64 | headers={'Authorization':f'Bearer {EXIST_ACCESS_TOKEN}'},

65 | json=values)

66 | response.raise_for_status()

67 | except requests.exceptions.HTTPError as err:

68 | logging.error("HTTP request failed: %s", err)

69 | sys.exit(1)

70 |

71 | result = response.json()

72 | if len(result['failed']) > 0:

73 | logging.error("Request failed: %s", result['failed'])

74 | sys.exit(1)

75 |

76 | if len(result['success']) > 0:

77 | logging.info("Successfully sent %s attributes", len(result['success']))

78 |

79 | client = connect(EXIST_DATABASE)

80 |

81 | acquire_attributes([{"name":"gaming_min", "active":True}, {"name":"tv_min", "active":True}])

82 |

83 | try:

84 | response = requests.get('https://exist.io/api/1/users/' + EXIST_USERNAME + '/insights/',

85 | headers={'Authorization':f'Bearer {EXIST_ACCESS_TOKEN}'})

86 | response.raise_for_status()

87 | except requests.exceptions.HTTPError as err:

88 | logging.error("HTTP request failed: %s", err)

89 | sys.exit(1)

90 |

91 | data = response.json()

92 | logging.info("Got %s insights from exist.io", len(data['results']))

93 |

94 | for insight in data['results']:

95 | if insight['target_date'] is None:

96 | date = datetime.fromisoformat(insight['created'].strip('Z')).strftime('%Y-%m-%d')

97 | else:

98 | date = insight['target_date']

99 | points.append({

100 | "measurement": "insight",

101 | "time": date + "T00:00:00",

102 | "tags": {

103 | "type": insight['type']['name'],

104 | "attribute": insight['type']['attribute']['label'],

105 | "group": insight['type']['attribute']['group']['label'],

106 | },

107 | "fields": {

108 | "html": insight['html'].replace("\n", "").replace("\r", ""),

109 | "text": insight['text']

110 | }

111 | })

112 |

113 | try:

114 | response = requests.get('https://exist.io/api/1/users/' + EXIST_USERNAME + '/attributes/?limit=7&groups=custom,mood',

115 | headers={'Authorization':f'Bearer {EXIST_ACCESS_TOKEN}'})

116 | response.raise_for_status()

117 | except requests.exceptions.HTTPError as err:

118 | logging.error("HTTP request failed: %s", err)

119 | sys.exit(1)

120 |

121 | data = response.json()

122 | logging.info("Got attributes from exist.io")

123 |

124 | for result in data:

125 | for value in result['values']:

126 | if value['value'] and result['attribute'] != 'custom':

127 | if result['group']['name'] == 'custom':

128 | points.append({

129 | "measurement": result['group']['name'],

130 | "time": value['date'] + "T00:00:00",

131 | "tags": {

132 | "tag": result['label']

133 | },

134 | "fields": {

135 | "value": value['value']

136 | }

137 | })

138 | else:

139 | points.append({

140 | "measurement": result['attribute'],

141 | "time": value['date'] + "T00:00:00",

142 | "fields": {

143 | "value": value['value']

144 | }

145 | })

146 |

147 | write_points(points)

148 |

149 | values = []

150 | tags = []

151 | if FITBIT_DATABASE and EXIST_USE_FITBIT:

152 | client.switch_database(FITBIT_DATABASE)

153 | durations = client.query(f'SELECT "duration" FROM "activity" WHERE (activityName = \'Meditating\' OR activityName = \'Meditation\') AND time >= {start_time}')

154 | for duration in list(durations.get_points()):

155 | if duration['duration'] > 0:

156 | date = datetime.fromisoformat(duration['time'].strip('Z') + "+00:00").astimezone(LOCAL_TIMEZONE).strftime('%Y-%m-%d')

157 | tags.append({'date': date, 'value': 'meditation'})

158 |

159 | durations = client.query(f'SELECT "duration","activityName" FROM "activity" WHERE activityName != \'Meditating\' AND activityName != \'Meditation\' AND time >= {start_time}')

160 | for duration in list(durations.get_points()):

161 | if duration['duration'] > 0:

162 | date = datetime.fromisoformat(duration['time'].strip('Z') + "+00:00").astimezone(LOCAL_TIMEZONE).strftime('%Y-%m-%d')

163 | tags.append({'date': date, 'value': 'exercise'})

164 | tags.append({'date': date, 'value': duration['activityName'].lower().replace(" ", "_")})

165 |

166 | if TRAKT_DATABASE and EXIST_USE_TRAKT:

167 | totals = {}

168 | client.switch_database(TRAKT_DATABASE)

169 | durations = client.query(f'SELECT "duration" FROM "watch" WHERE time >= {start_time}')

170 | for duration in list(durations.get_points()):

171 | date = datetime.fromisoformat(duration['time'].strip('Z') + "+00:00").astimezone(LOCAL_TIMEZONE).strftime('%Y-%m-%d')

172 | if date in totals:

173 | totals[date] = totals[date] + duration['duration']

174 | else:

175 | totals[date] = duration['duration']

176 |

177 | for date in totals:

178 | values.append({'date': date, 'name': 'tv_min', 'value': int(totals[date])})

179 | tags.append({'date': date, 'value': 'tv'})

180 |

181 | if GAMING_DATABASE and EXIST_USE_GAMING:

182 | totals = {}

183 | client.switch_database(GAMING_DATABASE)

184 | durations = client.query(f'SELECT "value" FROM "time" WHERE "value" > 0 AND time >= {start_time}')

185 | for duration in list(durations.get_points()):

186 | date = datetime.fromisoformat(duration['time'].strip('Z') + "+00:00").astimezone(LOCAL_TIMEZONE).strftime('%Y-%m-%d')

187 | if date in totals:

188 | totals[date] = totals[date] + duration['value']

189 | else:

190 | totals[date] = duration['value']

191 |

192 | for date in totals:

193 | values.append({'date': date, 'name': 'gaming_min', 'value': int(totals[date] / 60)})

194 | tags.append({'date': date, 'value': 'gaming'})

195 | elif RESCUETIME_DATABASE and EXIST_USE_RESCUETIME:

196 | psl = PublicSuffixList()

197 | totals = {}

198 | client.switch_database(RESCUETIME_DATABASE)

199 | durations = client.query(f'SELECT "duration","activity" FROM "activity" WHERE category = \'Games\' AND activity != \'Steam\' AND activity != \'steamwebhelper\' AND activity != \'origin\' AND activity != \'mixedrealityportal\' AND activity != \'holoshellapp\' AND activity != \'vrmonitor\' AND activity != \'vrserver\' AND activity != \'oculusclient\' AND activity != \'vive\' AND activity != \'obs64\' AND time >= {start_time}')

200 | for duration in list(durations.get_points()):

201 | date = datetime.fromisoformat(duration['time'].strip('Z') + "+00:00").astimezone(LOCAL_TIMEZONE).strftime('%Y-%m-%d')

202 | if psl.get_public_suffix(duration['activity'], strict=True) is None:

203 | if date in totals:

204 | totals[date] = totals[date] + duration['duration']

205 | else:

206 | totals[date] = duration['duration']

207 |

208 | for date in totals:

209 | values.append({'date': date, 'name': 'gaming_min', 'value': int(totals[date] / 60)})

210 | tags.append({'date': date, 'value': 'gaming'})

211 |

212 | if len(tags) > 0:

213 | append_tags(tags)

214 |

215 | if len(values) > 0:

216 | post_attributes(values)

217 |

--------------------------------------------------------------------------------

/fitbit.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/python3

2 | # Copyright 2022 Sam Steele

3 | #

4 | # Licensed under the Apache License, Version 2.0 (the "License");

5 | # you may not use this file except in compliance with the License.

6 | # You may obtain a copy of the License at

7 | #

8 | # http://www.apache.org/licenses/LICENSE-2.0

9 | #

10 | # Unless required by applicable law or agreed to in writing, software

11 | # distributed under the License is distributed on an "AS IS" BASIS,

12 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | # See the License for the specific language governing permissions and

14 | # limitations under the License.

15 |

16 | import requests, sys, os, pytz

17 | from datetime import datetime, date, timedelta

18 | from config import *

19 |

20 | if not FITBIT_CLIENT_ID or not FITBIT_CLIENT_SECRET:

21 | logging.error("FITBIT_CLIENT_ID or FITBIT_CLIENT_SECRET not set in config.py")

22 | sys.exit(1)

23 | points = []

24 |

25 | def fetch_data(category, type):

26 | try:

27 | response = requests.get(f'https://api.fitbit.com/1/user/-/{category}/{type}/date/today/1d.json',

28 | headers={'Authorization': f'Bearer {FITBIT_ACCESS_TOKEN}', 'Accept-Language': FITBIT_LANGUAGE})

29 | response.raise_for_status()

30 | except requests.exceptions.HTTPError as err:

31 | logging.error("HTTP request failed: %s", err)

32 | sys.exit(1)

33 |

34 | data = response.json()

35 | logging.info(f"Got {type} from Fitbit")

36 |

37 | for day in data[category.replace('/', '-') + '-' + type]:

38 | points.append({

39 | "measurement": type,

40 | "time": LOCAL_TIMEZONE.localize(datetime.fromisoformat(day['dateTime'])).astimezone(pytz.utc).isoformat(),

41 | "fields": {

42 | "value": float(day['value'])

43 | }

44 | })

45 |

46 | def fetch_heartrate(date):

47 | try:

48 | response = requests.get(f'https://api.fitbit.com/1/user/-/activities/heart/date/{date}/1d/1min.json',

49 | headers={'Authorization': f'Bearer {FITBIT_ACCESS_TOKEN}', 'Accept-Language': FITBIT_LANGUAGE})

50 | response.raise_for_status()

51 | except requests.exceptions.HTTPError as err:

52 | logging.error("HTTP request failed: %s", err)

53 | sys.exit(1)

54 |

55 | data = response.json()

56 | logging.info("Got heartrates from Fitbit")

57 |

58 | for day in data['activities-heart']:

59 | if 'restingHeartRate' in day['value']:

60 | points.append({

61 | "measurement": "restingHeartRate",

62 | "time": datetime.fromisoformat(day['dateTime']),

63 | "fields": {

64 | "value": float(day['value']['restingHeartRate'])

65 | }

66 | })

67 |

68 | if 'heartRateZones' in day['value']:

69 | for zone in day['value']['heartRateZones']:

70 | if 'caloriesOut' in zone and 'min' in zone and 'max' in zone and 'minutes' in zone:

71 | points.append({

72 | "measurement": "heartRateZones",

73 | "time": datetime.fromisoformat(day['dateTime']),

74 | "tags": {

75 | "zone": zone['name']

76 | },

77 | "fields": {

78 | "caloriesOut": float(zone['caloriesOut']),

79 | "min": float(zone['min']),

80 | "max": float(zone['max']),

81 | "minutes": float(zone['minutes'])

82 | }

83 | })

84 | elif 'min' in zone and 'max' in zone and 'minutes' in zone:

85 | points.append({

86 | "measurement": "heartRateZones",

87 | "time": datetime.fromisoformat(day['dateTime']),

88 | "tags": {

89 | "zone": zone['name']

90 | },

91 | "fields": {

92 | "min": float(zone['min']),

93 | "max": float(zone['max']),

94 | "minutes": float(zone['minutes'])

95 | }

96 | })

97 |

98 | if 'activities-heart-intraday' in data:

99 | for value in data['activities-heart-intraday']['dataset']:

100 | time = datetime.fromisoformat(date + "T" + value['time'])

101 | utc_time = LOCAL_TIMEZONE.localize(time).astimezone(pytz.utc).isoformat()

102 | points.append({

103 | "measurement": "heartrate",

104 | "time": utc_time,

105 | "fields": {

106 | "value": float(value['value'])

107 | }

108 | })

109 |

def process_levels(levels):
    for level in levels:
        # Map classic sleep level names onto the stage names used elsewhere.
        # Named level_type rather than type to avoid shadowing the builtin.
        # Note: the mapped name is not attached to the point written below.
        level_type = level['level']
        if level_type == "asleep":
            level_type = "light"
        if level_type == "restless":
            level_type = "rem"
        if level_type == "awake":
            level_type = "wake"

        time = datetime.fromisoformat(level['dateTime'])
        utc_time = LOCAL_TIMEZONE.localize(time).astimezone(pytz.utc).isoformat()
        points.append({
            "measurement": "sleep_levels",
            "time": utc_time,
            "fields": {
                "seconds": int(level['seconds'])
            }
        })

def fetch_activities(date):
    try:
        response = requests.get('https://api.fitbit.com/1/user/-/activities/list.json',
            headers={'Authorization': f'Bearer {FITBIT_ACCESS_TOKEN}', 'Accept-Language': FITBIT_LANGUAGE},
            params={'beforeDate': date, 'sort': 'desc', 'limit': 10, 'offset': 0})
        response.raise_for_status()
    except requests.exceptions.HTTPError as err:
        logging.error("HTTP request failed: %s", err)
        sys.exit(1)

    data = response.json()
    logging.info("Got activities from Fitbit")

    for activity in data['activities']:
        fields = {}

        if 'activeDuration' in activity:
            fields['activeDuration'] = int(activity['activeDuration'])
        if 'averageHeartRate' in activity:
            fields['averageHeartRate'] = int(activity['averageHeartRate'])
        if 'calories' in activity:
            fields['calories'] = int(activity['calories'])
        if 'duration' in activity:
            fields['duration'] = int(activity['duration'])
        if 'distance' in activity:
            fields['distance'] = float(activity['distance'])
            fields['distanceUnit'] = activity['distanceUnit']
        if 'pace' in activity:
            fields['pace'] = float(activity['pace'])
        if 'speed' in activity:
            fields['speed'] = float(activity['speed'])
        if 'elevationGain' in activity:
            fields['elevationGain'] = int(activity['elevationGain'])
        if 'steps' in activity:
            fields['steps'] = int(activity['steps'])

        for level in activity['activityLevel']:
            if level['name'] == 'sedentary':
                fields[level['name'] + "Minutes"] = int(level['minutes'])
            else:
                fields[level['name'] + "ActiveMinutes"] = int(level['minutes'])

        time = datetime.fromisoformat(activity['startTime'].strip("Z"))
        utc_time = time.astimezone(pytz.utc).isoformat()
        points.append({
            "measurement": "activity",
            "time": utc_time,
            "tags": {
                "activityName": activity['activityName']
            },
            "fields": fields
        })

connect(FITBIT_DATABASE)

if not FITBIT_ACCESS_TOKEN:
    # No access token configured: request one via OAuth2,
    # preferring a previously stored refresh token if one exists.
    script_dir = os.path.dirname(__file__)
    refresh_token_path = os.path.join(script_dir, '.fitbit-refreshtoken')
    if os.path.isfile(refresh_token_path):
        with open(refresh_token_path, "r") as f:
            token = f.read().strip()
        response = requests.post('https://api.fitbit.com/oauth2/token',
            data={
                "client_id": FITBIT_CLIENT_ID,
                "grant_type": "refresh_token",
                "redirect_uri": FITBIT_REDIRECT_URI,
                "refresh_token": token
            }, auth=(FITBIT_CLIENT_ID, FITBIT_CLIENT_SECRET))
    else:
        # No stored refresh token: exchange the initial authorization code instead.
        response = requests.post('https://api.fitbit.com/oauth2/token',
            data={
                "client_id": FITBIT_CLIENT_ID,
                "grant_type": "authorization_code",
                "redirect_uri": FITBIT_REDIRECT_URI,
                "code": FITBIT_INITIAL_CODE
            }, auth=(FITBIT_CLIENT_ID, FITBIT_CLIENT_SECRET))

    response.raise_for_status()

    # Named token_data rather than json so the standard json module is not shadowed.
    token_data = response.json()
    FITBIT_ACCESS_TOKEN = token_data['access_token']
    refresh_token = token_data['refresh_token']
    # Persist the new refresh token for the next run.
    with open(refresh_token_path, "w") as f:
        f.write(refresh_token)

try:
    response = requests.get('https://api.fitbit.com/1/user/-/devices.json',
        headers={'Authorization': f'Bearer {FITBIT_ACCESS_TOKEN}', 'Accept-Language': FITBIT_LANGUAGE})
    response.raise_for_status()
except requests.exceptions.HTTPError as err:
    logging.error("HTTP request failed: %s", err)
    sys.exit(1)

data = response.json()
logging.info("Got devices from Fitbit")

for device in data:
    points.append({
        "measurement": "deviceBatteryLevel",
        "time": LOCAL_TIMEZONE.localize(datetime.fromisoformat(device['lastSyncTime'])).astimezone(pytz.utc).isoformat(),
        "tags": {
            "id": device['id'],
            "deviceVersion": device['deviceVersion'],
            "type": device['type']
        },
        "fields": {
            "value": float(device['batteryLevel'])
        }
    })

end = date.today()
start = end - timedelta(days=1)

try:
    response = requests.get(f'https://api.fitbit.com/1.2/user/-/sleep/date/{start.isoformat()}/{end.isoformat()}.json',
        headers={'Authorization': f'Bearer {FITBIT_ACCESS_TOKEN}', 'Accept-Language': FITBIT_LANGUAGE})
    response.raise_for_status()
except requests.exceptions.HTTPError as err:
    logging.error("HTTP request failed: %s", err)
    sys.exit(1)

data = response.json()
logging.info("Got sleep sessions from Fitbit")

for day in data['sleep']:
    time = datetime.fromisoformat(day['startTime'])
    utc_time = LOCAL_TIMEZONE.localize(time).astimezone(pytz.utc).isoformat()
    if day['type'] == 'stages':
        points.append({
            "measurement": "sleep",
            "time": utc_time,
            "fields": {
                "duration": int(day['duration']),
                "efficiency": int(day['efficiency']),
                "is_main_sleep": bool(day['isMainSleep']),
                "minutes_asleep": int(day['minutesAsleep']),
                "minutes_awake": int(day['minutesAwake']),
                "time_in_bed": int(day['timeInBed']),
                "minutes_deep": int(day['levels']['summary']['deep']['minutes']),
                "minutes_light": int(day['levels']['summary']['light']['minutes']),
                "minutes_rem": int(day['levels']['summary']['rem']['minutes']),
                "minutes_wake": int(day['levels']['summary']['wake']['minutes']),
            }
        })
    else:
        points.append({
            "measurement": "sleep",
            "time": utc_time,
            "fields": {
                "duration": int(day['duration']),
                "efficiency": int(day['efficiency']),
                "is_main_sleep": bool(day['isMainSleep']),
                "minutes_asleep": int(day['minutesAsleep']),
                "minutes_awake": int(day['minutesAwake']),