├── README.md

├── conv_modules.py

├── data

├── KITTI.py

├── __pycache__

│ ├── Ego4D.cpython-310.pyc

│ ├── KITTI.cpython-310.pyc

│ ├── KITTI.cpython-39.pyc

│ ├── MultiFrameShapenet.cpython-310.pyc

│ ├── co3d.cpython-310.pyc

│ ├── co3d.cpython-39.pyc

│ ├── co3dv1.cpython-310.pyc

│ ├── realestate10k_dataio.cpython-310.pyc

│ └── realestate10k_dataio.cpython-39.pyc

├── co3d.py

└── realestate10k_dataio.py

├── demo.py

├── eval.py

├── geometry.py

├── mlp_modules.py

├── models.py

├── renderer.py

├── run.py

├── train.py

├── vis_scripts.py

└── wandb_logging.py

/README.md:

--------------------------------------------------------------------------------

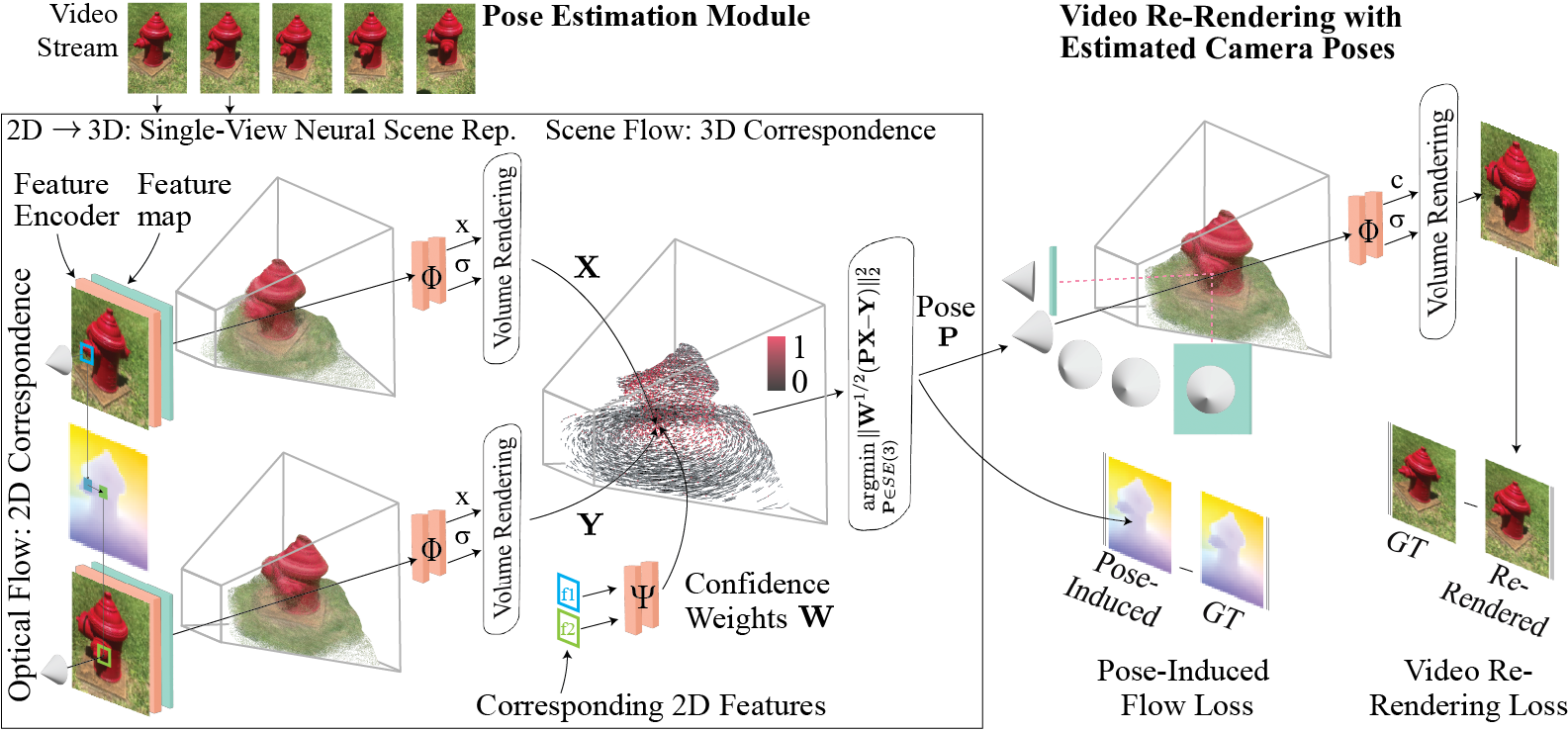

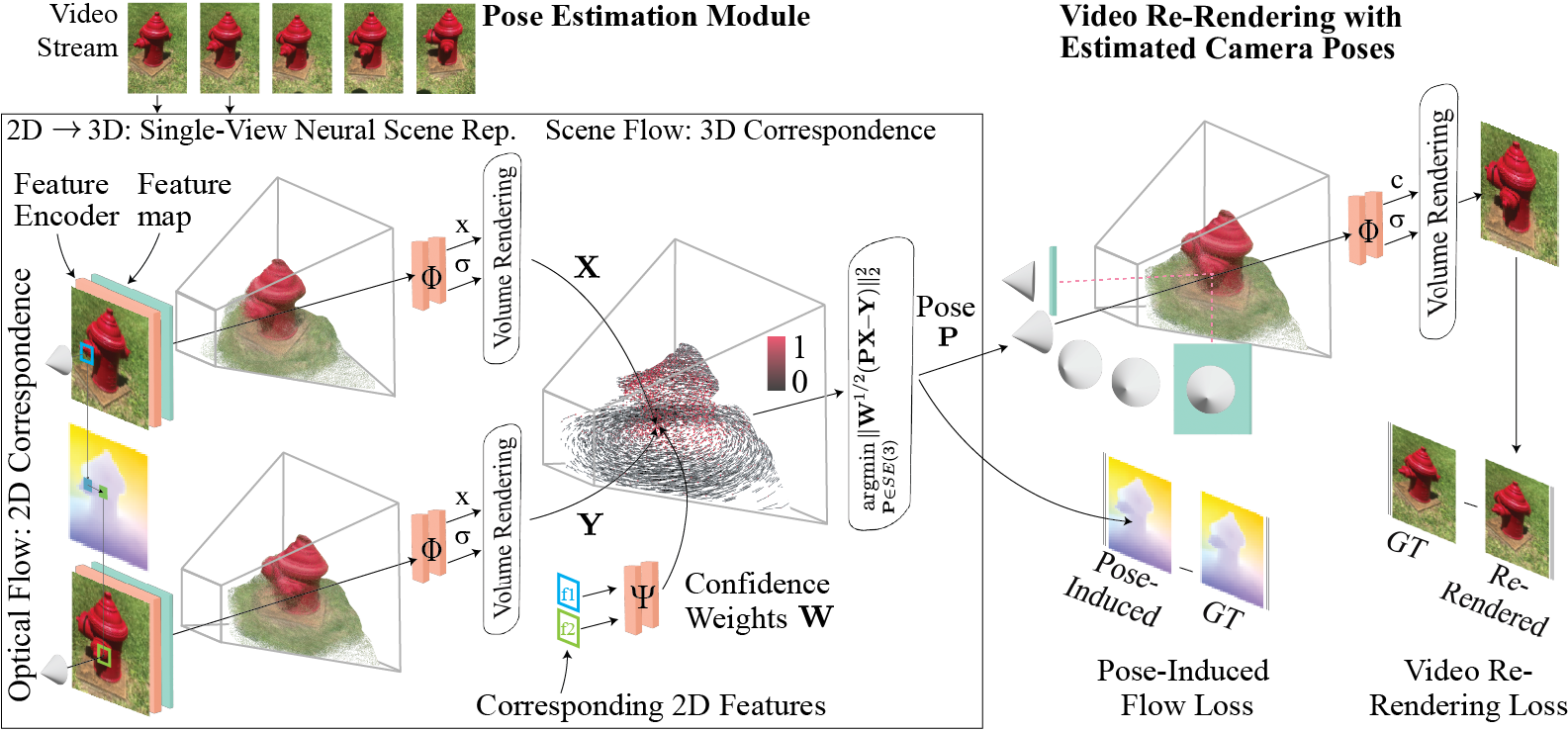

1 | # FlowCam: Training Generalizable 3D Radiance Fields without Camera Poses via Pixel-Aligned Scene Flow

2 | ### [Project Page](https://cameronosmith.github.io/flowcam) | [Paper](https://arxiv.org/abs/2306.00180) | [Pretrained Models](https://drive.google.com/drive/folders/1t7vmvBg9OAo4S8I2zjwfqhL656H1r2JP?usp=sharing)

3 |

4 | [Cameron Smith](https://cameronosmith.github.io/),

5 | [Yilun Du](https://yilundu.github.io/),

6 | [Ayush Tewari](https://ayushtewari.com),

7 | [Vincent Sitzmann](https://vsitzmann.github.io/)

8 |

9 | MIT

10 |

11 | This is the official implementation of the paper "FlowCam: Training Generalizable 3D Radiance Fields without Camera Poses via Pixel-Aligned Scene Flow".

12 |

13 |  14 |

15 | ## High-Level structure

16 | The code is organized as follows:

17 | * models.py contains the model definition

18 | * run.py contains a generic argument parser which creates the model and dataloaders for both training and evaluation

19 | * train.py and eval.py contains train and evaluation loops

20 | * mlp_modules.py and conv_modules.py contain common MLP and CNN blocks

21 | * vis_scripts.py contains plotting and wandb logging code

22 | * renderer.py implements volume rendering helper functions

23 | * geometry.py implements various geometric operations (projections, 3D lifting, rigid transforms, etc.)

24 | * data contains a list of dataset scripts

25 | * demo.py contains a script to run our model on any image directory for pose estimates. See the file header for an example on running it.

26 |

27 | ## Reproducing experiments

28 |

29 | See `python run.py --help` for a list of command line arguments.

30 | An example training command for CO3D-Hydrants is `python train.py --dataset hydrant --vid_len 8 --batch_size 2 --online --name hydrants_flowcam --n_skip 1 2.`

31 | Similarly, replace `--dataset hydrants` with any of `[realestate,kitti,10cat]` for training on RealEstate10K, KITTI, or CO3D-10Category.

32 |

33 | Example training commands for each dataset are listed below:

34 | `python train.py --dataset hydrant --vid_len 8 --batch_size 2 --online --name hydrant_flowcam --n_skip 1 2`

35 | `python train.py --dataset 10cat --vid_len 8 --batch_size 2 --online --name 10cat_flowcam --n_skip 1`

36 | `python train.py --dataset realestate --vid_len 8 --batch_size 2 --online --name realestate_flowcam --n_skip 9`

37 | `python train.py --dataset kitti --vid_len 8 --batch_size 2 --online --name kitti_flowcam --n_skip 0`

38 |

39 | Use the `--online` flag for summaries to be logged to your wandb account or omit it otherwise.

40 |

41 | ## Environment variables

42 |

43 | We use environment variables to set the dataset and logging paths, though you can easily hardcode the paths in each respective dataset script. Specifically, we use the environment variables `CO3D_ROOT, RE10K_IMG_ROOT, RE10K_POSE_ROOT, KITTI_ROOT, and LOGDIR`. For instance, you can add the line `export CO3D_ROOT="/nobackup/projects/public/facebook-co3dv2"` to your `.bashrc`.

44 |

45 | ## Data

46 |

47 | The KITTI dataset we use can be downloaded here: https://www.cvlibs.net/datasets/kitti/raw_data.php

48 |

49 | Instructions for downloading the RealEstate10K dataset can be found here: https://github.com/yilundu/cross_attention_renderer/blob/master/data_download/README.md

50 |

51 | We use the V2 version of the CO3D dataset, which can be downloaded here: https://github.com/facebookresearch/co3d

52 |

53 | ## Using FlowCam to estimate poses for your own scenes

54 |

55 | You can query FlowCam for any set of images using the script in `demo.py` and specifying the rgb_path, intrinsics (fx,fy,cx,cy), the pretrained checkpoint, whether to render out the reconstructed images or not (slower but illustrates how accurate the geometry is estimated by the model), and the image resolution to resize to in preprocessing (should be around 128 width to avoid memory issues).

56 | For example: `python demo.py --demo_rgb /nobackup/projects/public/facebook-co3dv2/hydrant/615_99120_197713/images --intrinsics 1.7671e+03,3.1427e+03,5.3550e+02,9.5150e+02 -c pretrained_models/co3d_hydrant.pt --render_imgs --low_res 144 128`. The script will write the poses, a rendered pose plot, and re-rendered rgb and depth (if requested) to the folder `demo_output`.

57 | The RealEstate10K pretrained (`pretrained_models/re10k.pt`) model probably has the most general prior to use for your own scenes. We are planning on training and releasing a model on all datasets for a more general prior, so stay tuned for that.

58 |

59 | ### Coordinate and camera parameter conventions

60 | This code uses an "OpenCV" style camera coordinate system, where the Y-axis points downwards (the up-vector points in the negative Y-direction), the X-axis points right, and the Z-axis points into the image plane.

61 |

62 | ### Citation

63 | If you find our work useful in your research, please cite:

64 | ```

65 | @misc{smith2023flowcam,

66 | title={FlowCam: Training Generalizable 3D Radiance Fields without Camera Poses via Pixel-Aligned Scene Flow},

67 | author={Cameron Smith and Yilun Du and Ayush Tewari and Vincent Sitzmann},

68 | year={2023},

69 | eprint={2306.00180},

70 | archivePrefix={arXiv},

71 | primaryClass={cs.CV}

72 | }

73 | ```

74 |

75 | ### Contact

76 | If you have any questions, please email Cameron Smith at omid.smith.cameron@gmail.com or open an issue.

77 |

--------------------------------------------------------------------------------

/conv_modules.py:

--------------------------------------------------------------------------------

1 | import torch, torchvision

2 | from torch import nn

3 | import functools

4 | from einops import rearrange, repeat

5 | from torch.nn import functional as F

6 | import numpy as np

7 |

8 | def get_norm_layer(norm_type="instance", group_norm_groups=32):

9 | """Return a normalization layer

10 | Parameters:

11 | norm_type (str) -- the name of the normalization layer: batch | instance | none

12 | For BatchNorm, we use learnable affine parameters and track running statistics (mean/stddev).

13 | For InstanceNorm, we do not use learnable affine parameters. We do not track running statistics.

14 | """

15 | if norm_type == "batch":

16 | norm_layer = functools.partial(

17 | nn.BatchNorm2d, affine=True, track_running_stats=True

18 | )

19 | elif norm_type == "instance":

20 | norm_layer = functools.partial(

21 | nn.InstanceNorm2d, affine=False, track_running_stats=False

22 | )

23 | elif norm_type == "group":

24 | norm_layer = functools.partial(nn.GroupNorm, group_norm_groups)

25 | elif norm_type == "none":

26 | norm_layer = None

27 | else:

28 | raise NotImplementedError("normalization layer [%s] is not found" % norm_type)

29 | return norm_layer

30 |

31 |

32 | class PixelNeRFEncoder(nn.Module):

33 | def __init__(

34 | self,

35 | backbone="resnet34",

36 | pretrained=True,

37 | num_layers=4,

38 | index_interp="bilinear",

39 | index_padding="border",

40 | upsample_interp="bilinear",

41 | feature_scale=1.0,

42 | use_first_pool=True,

43 | norm_type="batch",

44 | in_ch=3,

45 | ):

46 | super().__init__()

47 |

48 | self.use_custom_resnet = backbone == "custom"

49 | self.feature_scale = feature_scale

50 | self.use_first_pool = use_first_pool

51 | norm_layer = get_norm_layer(norm_type)

52 |

53 | print("Using torchvision", backbone, "encoder")

54 | self.model = getattr(torchvision.models, backbone)(

55 | pretrained=pretrained, norm_layer=norm_layer

56 | )

57 |

58 | if in_ch != 3:

59 | self.model.conv1 = nn.Conv2d(

60 | in_ch,

61 | self.model.conv1.weight.shape[0],

62 | self.model.conv1.kernel_size,

63 | self.model.conv1.stride,

64 | self.model.conv1.padding,

65 | padding_mode=self.model.conv1.padding_mode,

66 | )

67 |

68 | # Following 2 lines need to be uncommented for older configs

69 | self.model.fc = nn.Sequential()

70 | self.model.avgpool = nn.Sequential()

71 | self.latent_size = [0, 64, 128, 256, 512, 1024][num_layers]

72 |

73 | self.num_layers = num_layers

74 | self.index_interp = index_interp

75 | self.index_padding = index_padding

76 | self.upsample_interp = upsample_interp

77 | self.register_buffer("latent", torch.empty(1, 1, 1, 1), persistent=False)

78 | self.register_buffer(

79 | "latent_scaling", torch.empty(2, dtype=torch.float32), persistent=False

80 | )

81 |

82 | self.out = nn.Sequential(

83 | nn.Conv2d(self.latent_size, 512, 1),

84 | )

85 |

86 | def forward(self, x, custom_size=None):

87 |

88 |

89 | if len(x.shape)>4: return self(x.flatten(0,1),custom_size).unflatten(0,x.shape[:2])

90 |

91 | if self.feature_scale != 1.0:

92 | x = F.interpolate(

93 | x,

94 | scale_factor=self.feature_scale,

95 | mode="bilinear" if self.feature_scale > 1.0 else "area",

96 | align_corners=True if self.feature_scale > 1.0 else None,

97 | recompute_scale_factor=True,

98 | )

99 | x = x.to(device=self.latent.device)

100 |

101 | if self.use_custom_resnet:

102 | self.latent = self.model(x)

103 | else:

104 | x = self.model.conv1(x)

105 | x = self.model.bn1(x)

106 | x = self.model.relu(x)

107 |

108 | latents = [x]

109 | if self.num_layers > 1:

110 | if self.use_first_pool:

111 | x = self.model.maxpool(x)

112 | x = self.model.layer1(x)

113 | latents.append(x)

114 | if self.num_layers > 2:

115 | x = self.model.layer2(x)

116 | latents.append(x)

117 | if self.num_layers > 3:

118 | x = self.model.layer3(x)

119 | latents.append(x)

120 | if self.num_layers > 4:

121 | x = self.model.layer4(x)

122 | latents.append(x)

123 |

124 | self.latents = latents

125 | align_corners = None if self.index_interp == "nearest " else True

126 | latent_sz = latents[0].shape[-2:]

127 | for i in range(len(latents)):

128 | latents[i] = F.interpolate(

129 | latents[i],

130 | latent_sz if custom_size is None else custom_size,

131 | mode=self.upsample_interp,

132 | align_corners=align_corners,

133 | )

134 | self.latent = torch.cat(latents, dim=1)

135 | self.latent_scaling[0] = self.latent.shape[-1]

136 | self.latent_scaling[1] = self.latent.shape[-2]

137 | self.latent_scaling = self.latent_scaling / (self.latent_scaling - 1) * 2.0

138 | return self.out(self.latent)

139 |

--------------------------------------------------------------------------------

/data/KITTI.py:

--------------------------------------------------------------------------------

1 | import os

2 | import multiprocessing as mp

3 | import torch.nn.functional as F

4 | import torch

5 | import random

6 | import imageio

7 | import numpy as np

8 | from glob import glob

9 | from collections import defaultdict

10 | from pdb import set_trace as pdb

11 | from itertools import combinations

12 | from random import choice

13 | import matplotlib.pyplot as plt

14 |

15 | from torchvision import transforms

16 | from einops import rearrange, repeat

17 |

18 | import sys

19 |

20 | # Geometry functions below used for calculating depth, ignore

21 | def glob_imgs(path):

22 | imgs = []

23 | for ext in ["*.png", "*.jpg", "*.JPEG", "*.JPG"]:

24 | imgs.extend(glob(os.path.join(path, ext)))

25 | return imgs

26 |

27 |

28 | def pick(list, item_idcs):

29 | if not list:

30 | return list

31 | return [list[i] for i in item_idcs]

32 |

33 |

34 | def parse_intrinsics(intrinsics):

35 | fx = intrinsics[..., 0, :1]

36 | fy = intrinsics[..., 1, 1:2]

37 | cx = intrinsics[..., 0, 2:3]

38 | cy = intrinsics[..., 1, 2:3]

39 | return fx, fy, cx, cy

40 |

41 |

42 | hom = lambda x, i=-1: torch.cat((x, torch.ones_like(x.unbind(i)[0].unsqueeze(i))), i)

43 | ch_sec = lambda x: rearrange(x,"... c x y -> ... (x y) c")

44 |

45 | def expand_as(x, y):

46 | if len(x.shape) == len(y.shape):

47 | return x

48 |

49 | for i in range(len(y.shape) - len(x.shape)):

50 | x = x.unsqueeze(-1)

51 |

52 | return x

53 |

54 |

55 | def lift(x, y, z, intrinsics, homogeneous=False):

56 | """

57 |

58 | :param self:

59 | :param x: Shape (batch_size, num_points)

60 | :param y:

61 | :param z:

62 | :param intrinsics:

63 | :return:

64 | """

65 | fx, fy, cx, cy = parse_intrinsics(intrinsics)

66 |

67 | x_lift = (x - expand_as(cx, x)) / expand_as(fx, x) * z

68 | y_lift = (y - expand_as(cy, y)) / expand_as(fy, y) * z

69 |

70 | if homogeneous:

71 | return torch.stack((x_lift, y_lift, z, torch.ones_like(z).to(x.device)), dim=-1)

72 | else:

73 | return torch.stack((x_lift, y_lift, z), dim=-1)

74 |

75 |

76 | def world_from_xy_depth(xy, depth, cam2world, intrinsics):

77 | batch_size, *_ = cam2world.shape

78 |

79 | x_cam = xy[..., 0]

80 | y_cam = xy[..., 1]

81 | z_cam = depth

82 |

83 | pixel_points_cam = lift(

84 | x_cam, y_cam, z_cam, intrinsics=intrinsics, homogeneous=True

85 | )

86 | world_coords = torch.einsum("b...ij,b...kj->b...ki", cam2world, pixel_points_cam)[

87 | ..., :3

88 | ]

89 |

90 | return world_coords

91 |

92 |

93 | def get_ray_directions(xy, cam2world, intrinsics, normalize=True):

94 | z_cam = torch.ones(xy.shape[:-1]).to(xy.device)

95 | pixel_points = world_from_xy_depth(

96 | xy, z_cam, intrinsics=intrinsics, cam2world=cam2world

97 | ) # (batch, num_samples, 3)

98 |

99 | cam_pos = cam2world[..., :3, 3]

100 | ray_dirs = pixel_points - cam_pos[..., None, :] # (batch, num_samples, 3)

101 | if normalize:

102 | ray_dirs = F.normalize(ray_dirs, dim=-1)

103 | return ray_dirs

104 |

105 |

106 | class SceneInstanceDataset(torch.utils.data.Dataset):

107 | """This creates a dataset class for a single object instance (such as a single car)."""

108 |

109 | def __init__(

110 | self,

111 | instance_idx,

112 | instance_dir,

113 | specific_observation_idcs=None,

114 | input_img_sidelength=None,

115 | img_sidelength=None,

116 | num_images=None,

117 | cache=None,

118 | raft=None,

119 | low_res=(64,208),

120 | ):

121 | self.instance_idx = instance_idx

122 | self.img_sidelength = img_sidelength

123 | self.input_img_sidelength = input_img_sidelength

124 | self.instance_dir = instance_dir

125 | self.cache = {}

126 |

127 | self.low_res=low_res

128 |

129 | pose_dir = os.path.join(instance_dir, "pose")

130 | color_dir = os.path.join(instance_dir, "image")

131 |

132 | import pykitti

133 |

134 | drive = self.instance_dir.strip("/").split("/")[-1].split("_")[-2]

135 | date = self.instance_dir.strip("/").split("/")[-2]

136 | self.kitti_raw = pykitti.raw(

137 | "/".join(self.instance_dir.rstrip("/").split("/")[:-2]), date, drive

138 | )

139 | self.num_img = len(

140 | os.listdir(

141 | os.path.join(self.instance_dir, self.instance_dir, "image_02/data")

142 | )

143 | )

144 |

145 | self.color_paths = sorted(glob_imgs(color_dir))

146 | self.pose_paths = sorted(glob(os.path.join(pose_dir, "*.txt")))

147 | self.instance_name = os.path.basename(os.path.dirname(self.instance_dir))

148 |

149 | if specific_observation_idcs is not None:

150 | self.color_paths = pick(self.color_paths, specific_observation_idcs)

151 | self.pose_paths = pick(self.pose_paths, specific_observation_idcs)

152 | elif num_images is not None:

153 | idcs = np.linspace(

154 | 0, stop=len(self.color_paths), num=num_images, endpoint=False, dtype=int

155 | )

156 | self.color_paths = pick(self.color_paths, idcs)

157 | self.pose_paths = pick(self.pose_paths, idcs)

158 |

159 | def set_img_sidelength(self, new_img_sidelength):

160 | """For multi-resolution training: Updates the image sidelength with whichimages are loaded."""

161 | self.img_sidelength = new_img_sidelength

162 |

163 | def __len__(self):

164 | return self.num_img

165 |

166 | def __getitem__(self, idx, context=False, input_context=True):

167 | # print("trgt load")

168 |

169 | rgb = transforms.ToTensor()(self.kitti_raw.get_cam2(idx)) * 2 - 1

170 |

171 | K = torch.from_numpy(self.kitti_raw.calib.K_cam2.copy())

172 | cam2imu = torch.from_numpy(self.kitti_raw.calib.T_cam2_imu).inverse()

173 | imu2world = torch.from_numpy(self.kitti_raw.oxts[idx].T_w_imu)

174 | cam2world = (imu2world @ cam2imu).float()

175 |

176 | uv = np.mgrid[0 : rgb.size(1), 0 : rgb.size(2)].astype(float).transpose(1, 2, 0)

177 | uv = torch.from_numpy(np.flip(uv, axis=-1).copy()).long()

178 |

179 | # Downsample

180 | h, w = rgb.shape[-2:]

181 |

182 | K = torch.stack((K[0] / w, K[1] / h, K[2])) #normalize intrinsics to be resolution independent

183 |

184 | scale = 2;

185 | lowh, loww = int(64 * scale), int(208 * scale)

186 | med_rgb = F.interpolate( rgb[None], (lowh, loww), mode="bilinear", align_corners=True)[0]

187 | scale = 3;

188 | lowh, loww = int(64 * scale), int(208 * scale)

189 | large_rgb = F.interpolate( rgb[None], (lowh, loww), mode="bilinear", align_corners=True)[0]

190 | uv_large = np.mgrid[0:lowh, 0:loww].astype(float).transpose(1, 2, 0)

191 | uv_large = torch.from_numpy(np.flip(uv_large, axis=-1).copy()).long()

192 | uv_large = uv_large / torch.tensor([loww, lowh]) # uv in [0,1]

193 |

194 | #scale = 1;

195 | lowh, loww = self.low_res#int(64 * scale), int(208 * scale)

196 | rgb = F.interpolate(

197 | rgb[None], (lowh, loww), mode="bilinear", align_corners=True

198 | )[0]

199 | uv = np.mgrid[0:lowh, 0:loww].astype(float).transpose(1, 2, 0)

200 | uv = torch.from_numpy(np.flip(uv, axis=-1).copy()).long()

201 | uv = uv / torch.tensor([loww, lowh]) # uv in [0,1]

202 |

203 | tmp = torch.eye(4)

204 | tmp[:3, :3] = K

205 | K = tmp

206 |

207 | sample = {

208 | "instance_name": self.instance_name,

209 | "instance_idx": torch.Tensor([self.instance_idx]).squeeze().long(),

210 | "cam2world": cam2world,

211 | "img_idx": torch.Tensor([idx]).squeeze().long(),

212 | "img_id": "%s_%02d_%02d" % (self.instance_name, self.instance_idx, idx),

213 | "rgb": rgb,

214 | "large_rgb": large_rgb,

215 | "med_rgb": med_rgb,

216 | "intrinsics": K.float(),

217 | "uv": uv,

218 | "uv_large": uv_large,

219 | }

220 |

221 | return sample

222 |

223 |

224 | def get_instance_datasets(

225 | root,

226 | max_num_instances=None,

227 | specific_observation_idcs=None,

228 | cache=None,

229 | sidelen=None,

230 | max_observations_per_instance=None,

231 | ):

232 | instance_dirs = sorted(glob(os.path.join(root, "*/")))

233 | assert len(instance_dirs) != 0, f"No objects in the directory {root}"

234 |

235 | if max_num_instances != None:

236 | instance_dirs = instance_dirs[:max_num_instances]

237 |

238 | all_instances = [

239 | SceneInstanceDataset(

240 | instance_idx=idx,

241 | instance_dir=dir,

242 | specific_observation_idcs=specific_observation_idcs,

243 | img_sidelength=sidelen,

244 | cache=cache,

245 | num_images=max_observations_per_instance,

246 | )

247 | for idx, dir in enumerate(instance_dirs)

248 | ]

249 | return all_instances

250 |

251 |

252 | class KittiDataset(torch.utils.data.Dataset):

253 | """Dataset for a class of objects, where each datapoint is a SceneInstanceDataset."""

254 |

255 | def __init__(

256 | self,

257 | num_context=2,

258 | num_trgt=1,

259 | vary_context_number=False,

260 | query_sparsity=None,

261 | img_sidelength=None,

262 | input_img_sidelength=None,

263 | max_num_instances=None,

264 | max_observations_per_instance=None,

265 | specific_observation_idcs=None,

266 | val=False,

267 | test_context_idcs=None,

268 | context_is_last=False,

269 | context_is_first=False,

270 | cache=None,

271 | video=True,

272 | low_res=(64,208),

273 | n_skip=0,

274 | ):

275 |

276 | max_num_instances = None

277 |

278 | root_dir = os.environ['KITTI_ROOT']

279 |

280 | basedirs = list(

281 | filter(lambda x: "20" in x and "zip" not in x, os.listdir(root_dir))

282 | )

283 | drive_paths = []

284 | for basedir in basedirs:

285 | dirs = list(

286 | filter(

287 | lambda x: "txt" not in x,

288 | os.listdir(os.path.join(root_dir, basedir)),

289 | )

290 | )

291 | drive_paths += [

292 | os.path.abspath(os.path.join(root_dir, basedir, dir_)) for dir_ in dirs

293 | ]

294 | self.instance_dirs = sorted(drive_paths)

295 |

296 | if type(n_skip)==type([]):n_skip=n_skip[0]

297 | self.n_skip = n_skip+1

298 | self.num_context = num_context

299 | self.num_trgt = num_trgt

300 | self.query_sparsity = query_sparsity

301 | self.img_sidelength = img_sidelength

302 | self.vary_context_number = vary_context_number

303 | self.cache = {}

304 | self.test = val

305 | self.test_context_idcs = test_context_idcs

306 | self.context_is_last = context_is_last

307 | self.context_is_first = context_is_first

308 |

309 | print(f"Root dir {root_dir}, {len(self.instance_dirs)} instances")

310 |

311 | assert len(self.instance_dirs) != 0, "No objects in the data directory"

312 |

313 | self.max_num_instances = max_num_instances

314 | if max_num_instances == 1:

315 | self.instance_dirs = [

316 | x for x in self.instance_dirs if "2011_09_26_drive_0027_sync" in x

317 | ]

318 | print("note testing single dir") # testing dir

319 |

320 | self.all_instances = [

321 | SceneInstanceDataset(

322 | instance_idx=idx,

323 | instance_dir=dir,

324 | specific_observation_idcs=specific_observation_idcs,

325 | img_sidelength=img_sidelength,

326 | input_img_sidelength=input_img_sidelength,

327 | num_images=max_observations_per_instance,

328 | cache=cache,

329 | low_res=low_res,

330 | )

331 | for idx, dir in enumerate(self.instance_dirs)

332 | ]

333 | self.all_instances = [x for x in self.all_instances if len(x) > 40]

334 | if max_num_instances is not None:

335 | self.all_instances = self.all_instances[:max_num_instances]

336 |

337 | test_idcs = list(range(len(self.all_instances)))[::8]

338 | self.all_instances = [x for i,x in enumerate(self.all_instances) if (i in test_idcs and val) or (i not in test_idcs and not val)]

339 | print("validation: ",val,len(self.all_instances))

340 |

341 | self.num_per_instance_observations = [len(obj) for obj in self.all_instances]

342 | self.num_instances = len(self.all_instances)

343 |

344 | self.instance_img_pairs = []

345 | for i,instance_dir in enumerate(self.all_instances):

346 | for j in range(len(instance_dir)-n_skip*(num_trgt+1)):

347 | self.instance_img_pairs.append((i,j))

348 |

349 | def sparsify(self, dict, sparsity):

350 | new_dict = {}

351 | if sparsity is None:

352 | return dict

353 | else:

354 | # Sample upper_limit pixel idcs at random.

355 | rand_idcs = np.random.choice(

356 | self.img_sidelength ** 2, size=sparsity, replace=False

357 | )

358 | for key in ["rgb", "uv"]:

359 | new_dict[key] = dict[key][rand_idcs]

360 |

361 | for key, v in dict.items():

362 | if key not in ["rgb", "uv"]:

363 | new_dict[key] = dict[key]

364 |

365 | return new_dict

366 |

367 | def set_img_sidelength(self, new_img_sidelength):

368 | """For multi-resolution training: Updates the image sidelength with which images are loaded."""

369 | self.img_sidelength = new_img_sidelength

370 | for instance in self.all_instances:

371 | instance.set_img_sidelength(new_img_sidelength)

372 |

373 | def __len__(self):

374 | return len(self.instance_img_pairs)

375 |

376 | def get_instance_idx(self, idx):

377 | if self.test:

378 | obj_idx = 0

379 | while idx >= 0:

380 | idx -= self.num_per_instance_observations[obj_idx]

381 | obj_idx += 1

382 | return (

383 | obj_idx - 1,

384 | int(idx + self.num_per_instance_observations[obj_idx - 1]),

385 | )

386 | else:

387 | return np.random.randint(self.num_instances), 0

388 |

389 | def collate_fn(self, batch_list):

390 | keys = batch_list[0].keys()

391 | result = defaultdict(list)

392 |

393 | for entry in batch_list:

394 | # make them all into a new dict

395 | for key in keys:

396 | result[key].append(entry[key])

397 |

398 | for key in keys:

399 | try:

400 | result[key] = torch.stack(result[key], dim=0)

401 | except:

402 | continue

403 |

404 | return result

405 |

406 | def getframe(self, obj_idx, x):

407 | return (

408 | self.all_instances[obj_idx].__getitem__(

409 | x, context=True, input_context=True

410 | ),

411 | x,

412 | )

413 |

414 | def __getitem__(self, idx, sceneidx=None):

415 |

416 | context = []

417 | trgt = []

418 | post_input = []

419 | #obj_idx,det_idx= np.random.randint(self.num_instances), 0

420 | of=0

421 |

422 | obj_idx, i = self.instance_img_pairs[idx]

423 |

424 | if of: obj_idx = 0

425 |

426 | if sceneidx is not None:

427 | obj_idx, det_idx = sceneidx[0], sceneidx[0]

428 |

429 | if len(self.all_instances[obj_idx])<=i+self.num_trgt*self.n_skip:

430 | i=0

431 | if sceneidx is not None:

432 | i=sceneidx[1]

433 | for _ in range(self.num_trgt):

434 | if sceneidx is not None:

435 | print(i)

436 | i += self.n_skip

437 | sample = self.all_instances[obj_idx].__getitem__(

438 | i, context=True, input_context=True

439 | )

440 | post_input.append(sample)

441 | post_input[-1]["mask"] = torch.Tensor([1.0])

442 | sub_sample = self.sparsify(sample, self.query_sparsity)

443 | trgt.append(sub_sample)

444 |

445 | post_input = self.collate_fn(post_input)

446 | trgt = self.collate_fn(trgt)

447 |

448 | out_dict = {"query": trgt, "post_input": post_input, "context": None}, trgt

449 |

450 | imgs = trgt["rgb"]

451 | imgs_large = (trgt["large_rgb"]*.5+.5)*255

452 | imgs_med = (trgt["large_rgb"]*.5+.5)*255

453 | Ks = trgt["intrinsics"][:,:3,:3]

454 | uv = trgt["uv"].flatten(1,2)

455 |

456 | #imgs large in [0,255],

457 | #imgs in [-1,1],

458 | #gt_rgb in [0,1],

459 | model_input = {

460 | "trgt_rgb": imgs[1:],

461 | "ctxt_rgb": imgs[:-1],

462 | "trgt_rgb_large": imgs_large[1:],

463 | "ctxt_rgb_large": imgs_large[:-1],

464 | "trgt_rgb_med": imgs_med[1:],

465 | "ctxt_rgb_med": imgs_med[:-1],

466 | "intrinsics": Ks[1:],

467 | "x_pix": uv[1:],

468 | "trgt_c2w": trgt["cam2world"][1:],

469 | "ctxt_c2w": trgt["cam2world"][:-1],

470 | }

471 | gt = {

472 | "trgt_rgb": ch_sec(imgs[1:])*.5+.5,

473 | "ctxt_rgb": ch_sec(imgs[:-1])*.5+.5,

474 | "intrinsics": Ks[1:],

475 | "x_pix": uv[1:],

476 | }

477 | return model_input,gt

478 |

--------------------------------------------------------------------------------

/data/__pycache__/Ego4D.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cameronosmith/FlowCam/aff310543a858df2ddbf4c6ac6f01372e2f4562e/data/__pycache__/Ego4D.cpython-310.pyc

--------------------------------------------------------------------------------

/data/__pycache__/KITTI.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cameronosmith/FlowCam/aff310543a858df2ddbf4c6ac6f01372e2f4562e/data/__pycache__/KITTI.cpython-310.pyc

--------------------------------------------------------------------------------

/data/__pycache__/KITTI.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cameronosmith/FlowCam/aff310543a858df2ddbf4c6ac6f01372e2f4562e/data/__pycache__/KITTI.cpython-39.pyc

--------------------------------------------------------------------------------

/data/__pycache__/MultiFrameShapenet.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cameronosmith/FlowCam/aff310543a858df2ddbf4c6ac6f01372e2f4562e/data/__pycache__/MultiFrameShapenet.cpython-310.pyc

--------------------------------------------------------------------------------

/data/__pycache__/co3d.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cameronosmith/FlowCam/aff310543a858df2ddbf4c6ac6f01372e2f4562e/data/__pycache__/co3d.cpython-310.pyc

--------------------------------------------------------------------------------

/data/__pycache__/co3d.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cameronosmith/FlowCam/aff310543a858df2ddbf4c6ac6f01372e2f4562e/data/__pycache__/co3d.cpython-39.pyc

--------------------------------------------------------------------------------

/data/__pycache__/co3dv1.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cameronosmith/FlowCam/aff310543a858df2ddbf4c6ac6f01372e2f4562e/data/__pycache__/co3dv1.cpython-310.pyc

--------------------------------------------------------------------------------

/data/__pycache__/realestate10k_dataio.cpython-310.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cameronosmith/FlowCam/aff310543a858df2ddbf4c6ac6f01372e2f4562e/data/__pycache__/realestate10k_dataio.cpython-310.pyc

--------------------------------------------------------------------------------

/data/__pycache__/realestate10k_dataio.cpython-39.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cameronosmith/FlowCam/aff310543a858df2ddbf4c6ac6f01372e2f4562e/data/__pycache__/realestate10k_dataio.cpython-39.pyc

--------------------------------------------------------------------------------

/data/co3d.py:

--------------------------------------------------------------------------------

1 | # note for davis dataloader later: temporally consistent depth estimator: https://github.com/yu-li/TCMonoDepth

2 | # note for cool idea of not even downloading data and just streaming from youtube:https://gist.github.com/Mxhmovd/41e7690114e7ddad8bcd761a76272cc3

3 | import matplotlib.pyplot as plt;

4 | import cv2

5 | import os

6 | import multiprocessing as mp

7 | import torch.nn.functional as F

8 | import torch

9 | import random

10 | import imageio

11 | import numpy as np

12 | from glob import glob

13 | from collections import defaultdict

14 | from pdb import set_trace as pdb

15 | from itertools import combinations

16 | from random import choice

17 | import matplotlib.pyplot as plt

18 | import imageio.v3 as iio

19 |

20 | from torchvision import transforms

21 |

22 | import sys

23 |

24 | from glob import glob

25 | import os

26 | import gzip

27 | import json

28 | import numpy as np

29 |

30 | from PIL import Image

31 | def _load_16big_png_depth(depth_png) -> np.ndarray:

32 | with Image.open(depth_png) as depth_pil:

33 | # the image is stored with 16-bit depth but PIL reads it as I (32 bit).

34 | # we cast it to uint16, then reinterpret as float16, then cast to float32

35 | depth = (

36 | np.frombuffer(np.array(depth_pil, dtype=np.uint16), dtype=np.float16)

37 | .astype(np.float32)

38 | .reshape((depth_pil.size[1], depth_pil.size[0]))

39 | )

40 | return depth

41 | def _load_depth(path, scale_adjustment) -> np.ndarray:

42 | d = _load_16big_png_depth(path) * scale_adjustment

43 | d[~np.isfinite(d)] = 0.0

44 | return d[None] # fake feature channel

45 |

46 | # Geometry functions below used for calculating depth, ignore

47 | def glob_imgs(path):

48 | imgs = []

49 | for ext in ["*.png", "*.jpg", "*.JPEG", "*.JPG"]:

50 | imgs.extend(glob(os.path.join(path, ext)))

51 | return imgs

52 |

53 |

54 | def pick(list, item_idcs):

55 | if not list:

56 | return list

57 | return [list[i] for i in item_idcs]

58 |

59 |

60 | def parse_intrinsics(intrinsics):

61 | fx = intrinsics[..., 0, :1]

62 | fy = intrinsics[..., 1, 1:2]

63 | cx = intrinsics[..., 0, 2:3]

64 | cy = intrinsics[..., 1, 2:3]

65 | return fx, fy, cx, cy

66 |

67 |

68 | from einops import rearrange, repeat

69 | ch_sec = lambda x: rearrange(x,"... c x y -> ... (x y) c")

70 | hom = lambda x, i=-1: torch.cat((x, torch.ones_like(x.unbind(i)[0].unsqueeze(i))), i)

71 |

72 |

73 | def expand_as(x, y):

74 | if len(x.shape) == len(y.shape):

75 | return x

76 |

77 | for i in range(len(y.shape) - len(x.shape)):

78 | x = x.unsqueeze(-1)

79 |

80 | return x

81 |

82 |

83 | def lift(x, y, z, intrinsics, homogeneous=False):

84 | """

85 |

86 | :param self:

87 | :param x: Shape (batch_size, num_points)

88 | :param y:

89 | :param z:

90 | :param intrinsics:

91 | :return:

92 | """

93 | fx, fy, cx, cy = parse_intrinsics(intrinsics)

94 |

95 | x_lift = (x - expand_as(cx, x)) / expand_as(fx, x) * z

96 | y_lift = (y - expand_as(cy, y)) / expand_as(fy, y) * z

97 |

98 | if homogeneous:

99 | return torch.stack((x_lift, y_lift, z, torch.ones_like(z).to(x.device)), dim=-1)

100 | else:

101 | return torch.stack((x_lift, y_lift, z), dim=-1)

102 |

103 |

104 | def world_from_xy_depth(xy, depth, cam2world, intrinsics):

105 | batch_size, *_ = cam2world.shape

106 |

107 | x_cam = xy[..., 0]

108 | y_cam = xy[..., 1]

109 | z_cam = depth

110 |

111 | pixel_points_cam = lift(

112 | x_cam, y_cam, z_cam, intrinsics=intrinsics, homogeneous=True

113 | )

114 | world_coords = torch.einsum("b...ij,b...kj->b...ki", cam2world, pixel_points_cam)[

115 | ..., :3

116 | ]

117 |

118 | return world_coords

119 |

120 |

121 | def get_ray_directions(xy, cam2world, intrinsics, normalize=True):

122 | z_cam = torch.ones(xy.shape[:-1]).to(xy.device)

123 | pixel_points = world_from_xy_depth(

124 | xy, z_cam, intrinsics=intrinsics, cam2world=cam2world

125 | ) # (batch, num_samples, 3)

126 |

127 | cam_pos = cam2world[..., :3, 3]

128 | ray_dirs = pixel_points - cam_pos[..., None, :] # (batch, num_samples, 3)

129 | if normalize:

130 | ray_dirs = F.normalize(ray_dirs, dim=-1)

131 | return ray_dirs

132 |

133 | from PIL import Image

134 | def _load_16big_png_depth(depth_png) -> np.ndarray:

135 | with Image.open(depth_png) as depth_pil:

136 | # the image is stored with 16-bit depth but PIL reads it as I (32 bit).

137 | # we cast it to uint16, then reinterpret as float16, then cast to float32

138 | depth = (

139 | np.frombuffer(np.array(depth_pil, dtype=np.uint16), dtype=np.float16)

140 | .astype(np.float32)

141 | .reshape((depth_pil.size[1], depth_pil.size[0]))

142 | )

143 | return depth

144 | def _load_depth(path, scale_adjustment) -> np.ndarray:

145 | d = _load_16big_png_depth(path) * scale_adjustment

146 | d[~np.isfinite(d)] = 0.0

147 | return d[None] # fake feature channel

148 |

149 | # NOTE currently using CO3D V1 because they switch to NDC cameras in 2. TODO is to make conversion code (different intrinsics), verify pointclouds, and switch.

150 |

151 | class Co3DNoCams(torch.utils.data.Dataset):

152 | """Dataset for a class of objects, where each datapoint is a SceneInstanceDataset."""

153 |

154 | def __init__(

155 | self,

156 | num_context=2,

157 | n_skip=1,

158 | num_trgt=1,

159 | low_res=(128,144),

160 | depth_scale=1,#1.8/5,

161 | val=False,

162 | num_cat=1000,

163 | overfit=False,

164 | category=None,

165 | use_mask=False,

166 | use_v1=True,

167 | # delete below, not used

168 | vary_context_number=False,

169 | query_sparsity=None,

170 | img_sidelength=None,

171 | input_img_sidelength=None,

172 | max_num_instances=None,

173 | max_observations_per_instance=None,

174 | specific_observation_idcs=None,

175 | test=False,

176 | test_context_idcs=None,

177 | context_is_last=False,

178 | context_is_first=False,

179 | cache=None,

180 | video=True,

181 | ):

182 |

183 | if num_cat is None: num_cat=1000

184 |

185 | self.n_trgt=num_trgt

186 | self.use_mask=use_mask

187 | self.depth_scale=depth_scale

188 | self.of=overfit

189 | self.val=val

190 |

191 | self.num_skip=n_skip

192 | self.low_res=low_res

193 | max_num_instances = None

194 |

195 | self.base_path=os.environ['CO3D_ROOT']

196 | print(self.base_path)

197 |

198 | # Get sequences!

199 | from collections import defaultdict

200 | sequences = defaultdict(list)

201 | self.total_num_data=0

202 | self.all_frame_names=[]

203 | all_cats = [ "hydrant","teddybear","apple", "ball", "bench", "cake", "donut", "plant", "suitcase", "vase","backpack", "banana", "baseballbat", "baseballglove", "bicycle", "book", "bottle", "bowl", "broccoli", "car", "carrot", "cellphone", "chair", "couch", "cup", "frisbee", "hairdryer", "handbag", "hotdog", "keyboard", "kite", "laptop", "microwave", "motorcycle", "mouse", "orange", "parkingmeter", "pizza", "remote", "sandwich", "skateboard", "stopsign", "toaster", "toilet", "toybus", "toyplane", "toytrain", "toytruck", "tv", "umbrella", "wineglass", ]

204 |

205 | for cat in (all_cats[:num_cat]) if category is None else [category]:

206 | print(cat)

207 | dataset = json.loads(gzip.GzipFile(os.path.join(self.base_path,cat,"frame_annotations.jgz"),"rb").read().decode("utf8"))

208 | val_amt = int(len(dataset)*.03)

209 | dataset = dataset[:-val_amt] if not val else dataset[-val_amt:]

210 | self.total_num_data+=len(dataset)

211 | for i,data in enumerate(dataset):

212 | self.all_frame_names.append((data["sequence_name"],data["frame_number"]))

213 | sequences[data["sequence_name"]].append(data)

214 |

215 | sorted_seq={}

216 | for k,v in sequences.items():

217 | sorted_seq[k]=sorted(sequences[k],key=lambda x:x["frame_number"])

218 | #for k,v in sequences.items(): sequences[k]=v[:-(max(self.num_skip) if type(self.num_skip)==list else self.num_skip)*self.n_trgt]

219 | self.seqs = sorted_seq

220 |

221 | print("done with dataloader init")

222 |

223 | def sparsify(self, dict, sparsity):

224 | new_dict = {}

225 | if sparsity is None:

226 | return dict

227 | else:

228 | # Sample upper_limit pixel idcs at random.

229 | rand_idcs = np.random.choice(

230 | self.img_sidelength ** 2, size=sparsity, replace=False

231 | )

232 | for key in ["rgb", "uv"]:

233 | new_dict[key] = dict[key][rand_idcs]

234 |

235 | for key, v in dict.items():

236 | if key not in ["rgb", "uv"]:

237 | new_dict[key] = dict[key]

238 |

239 | return new_dict

240 |

241 | def set_img_sidelength(self, new_img_sidelength):

242 | """For multi-resolution training: Updates the image sidelength with which images are loaded."""

243 | self.img_sidelength = new_img_sidelength

244 | for instance in self.all_instances:

245 | instance.set_img_sidelength(new_img_sidelength)

246 |

247 | def __len__(self):

248 | return self.total_num_data

249 |

250 | def collate_fn(self, batch_list):

251 | keys = batch_list[0].keys()

252 | result = defaultdict(list)

253 |

254 | for entry in batch_list:

255 | # make them all into a new dict

256 | for key in keys:

257 | result[key].append(entry[key])

258 |

259 | for key in keys:

260 | try:

261 | result[key] = torch.stack(result[key], dim=0)

262 | except:

263 | continue

264 |

265 | return result

266 |

267 | def __getitem__(self, idx,seq_query=None):

268 |

269 | context = []

270 | trgt = []

271 | post_input = []

272 |

273 | n_skip = (random.choice(self.num_skip) if type(self.num_skip)==list else self.num_skip) + 1

274 |

275 | if seq_query is None:

276 | try:

277 | seq_name,frame_idx=self.all_frame_names[idx]

278 | except:

279 | print(f"Out of bounds erorr at {idx}. Investigate.")

280 | return self[-2*n_skip*self.n_trgt if self.val else np.random.randint(len(self))]

281 |

282 | if seq_query is not None:

283 | frame_idx=idx

284 | seq_name = list(self.seqs.keys())[seq_query]

285 | all_frames= self.seqs[seq_name]

286 | else:

287 | all_frames=self.seqs[seq_name] if not self.of else self.seqs[random.choice(list(self.seqs.keys())[:int(self.of)])]

288 |

289 | if len(all_frames)<=self.n_trgt*n_skip or frame_idx >= (len(all_frames)-self.n_trgt*n_skip):

290 | frame_idx=0

291 | if len(all_frames)<=self.n_trgt*n_skip or frame_idx >= (len(all_frames)-self.n_trgt*n_skip):

292 | if len(all_frames)<=self.n_trgt*n_skip:

293 | print(len(all_frames) ," frames < ",self.n_trgt*n_skip," queries")

294 | print("returning low/high")

295 | return self[-2*n_skip*self.n_trgt if self.val else np.random.randint(len(self))]

296 | start_idx = frame_idx

297 |

298 | if self.of and 1: start_idx=0

299 |

300 | frames = all_frames[start_idx:start_idx+self.n_trgt*n_skip:n_skip]

301 | if np.random.rand()<.5 and not self.of and not self.val: frames=frames[::-1]

302 |

303 | paths = [os.path.join(self.base_path,x["image"]["path"]) for x in frames]

304 | for path in paths:

305 | if not os.path.exists(path):

306 | print("path missing")

307 | return self[np.random.randint(len(self))]

308 |

309 | #masks=[torch.from_numpy(plt.imread(os.path.join(self.base_path,x["mask"]["path"]))) for x in frames]

310 | imgs=[torch.from_numpy(plt.imread(path)) for path in paths]

311 |

312 | Ks=[]

313 | c2ws=[]

314 | depths=[]

315 | for data in frames:

316 |

317 | #depths.append(torch.from_numpy(_load_depth(os.path.join(self.base_path,data["depth"]["path"]), data["depth"]["scale_adjustment"])[0])) # commenting out since slow to load; uncomment when needed

318 |

319 | # Below pose processing taken from co3d github issue

320 | p = data["viewpoint"]["principal_point"]

321 | f = data["viewpoint"]["focal_length"]

322 | h, w = data["image"]["size"]

323 | K = np.eye(3)

324 | s = (min(h, w)) / 2

325 | K[0, 0] = f[0] * (w) / 2

326 | K[1, 1] = f[1] * (h) / 2

327 | K[0, 2] = -p[0] * s + (w) / 2

328 | K[1, 2] = -p[1] * s + (h) / 2

329 |

330 | # Normalize intrinsics to [-1,1]

331 | #print(K)

332 | raw_K=[torch.from_numpy(K).clone(),[h,w]]

333 | K[:2] /= torch.tensor([w, h])[:, None]

334 | Ks.append(torch.from_numpy(K).float())

335 |

336 | R = np.asarray(data["viewpoint"]["R"]).T # note the transpose here

337 | T = np.asarray(data["viewpoint"]["T"]) * self.depth_scale

338 | pose = np.concatenate([R,T[:,None]],1)

339 | pose = torch.from_numpy( np.diag([-1,-1,1]).astype(np.float32) @ pose )# flip the direction of x,y axis

340 | tmp=torch.eye(4)

341 | tmp[:3,:4]=pose

342 | c2ws.append(tmp.inverse())

343 |

344 | Ks=torch.stack(Ks)

345 | c2w=torch.stack(c2ws).float()

346 |

347 | no_mask=0

348 | if no_mask:

349 | masks=[x*0+1 for x in masks]

350 |

351 | low_res=self.low_res#(128,144)#(108,144)

352 | minx,miny=min([x.size(0) for x in imgs]),min([x.size(1) for x in imgs])

353 |

354 | imgs=[x[:minx,:miny].float() for x in imgs]

355 |

356 | if self.use_mask: # mask images and depths

357 | imgs = [x*y.unsqueeze(-1)+(255*(1-y).unsqueeze(-1)) for x,y in zip(imgs,masks)]

358 | depths = [x*y for x,y in zip(depths,masks)]

359 |

360 | large_scale=2

361 | imgs_large = F.interpolate(torch.stack([x.permute(2,0,1) for x in imgs]),(int(256*large_scale),int(288*large_scale)),antialias=True,mode="bilinear")

362 | imgs_med = F.interpolate(torch.stack([x.permute(2,0,1) for x in imgs]),(int(256),int(288)),antialias=True,mode="bilinear")

363 | imgs = F.interpolate(torch.stack([x.permute(2,0,1) for x in imgs]),low_res,antialias=True,mode="bilinear")

364 |

365 | if self.use_mask:

366 | imgs = imgs*masks[:,None]+255*(1-masks[:,None])

367 |

368 | imgs = imgs/255 * 2 - 1

369 |

370 | uv = np.mgrid[0:low_res[0], 0:low_res[1]].astype(float).transpose(1, 2, 0)

371 | uv = torch.from_numpy(np.flip(uv, axis=-1).copy()).long()

372 | uv = uv/ torch.tensor([low_res[1]-1, low_res[0]-1]) # uv in [0,1]

373 | uv = uv[None].expand(len(imgs),-1,-1,-1).flatten(1,2)

374 |

375 | model_input = {

376 | "trgt_rgb": imgs[1:],

377 | "ctxt_rgb": imgs[:-1],

378 | "trgt_rgb_large": imgs_large[1:],

379 | "ctxt_rgb_large": imgs_large[:-1],

380 | "trgt_rgb_med": imgs_med[1:],

381 | "ctxt_rgb_med": imgs_med[:-1],

382 | #"ctxt_depth": depths.squeeze(1)[:-1],

383 | #"trgt_depth": depths.squeeze(1)[1:],

384 | "intrinsics": Ks[1:],

385 | "trgt_c2w": c2w[1:],

386 | "ctxt_c2w": c2w[:-1],

387 | "x_pix": uv[1:],

388 | #"trgt_mask": masks[1:],

389 | #"ctxt_mask": masks[:-1],

390 | }

391 |

392 | gt = {

393 | #"paths": paths,

394 | #"raw_K": raw_K,

395 | #"seq_name": seq_name,

396 | "trgt_rgb": ch_sec(imgs[1:])*.5+.5,

397 | "ctxt_rgb": ch_sec(imgs[:-1])*.5+.5,

398 | #"ctxt_depth": depths.squeeze(1)[:-1].flatten(1,2).unsqueeze(-1),

399 | #"trgt_depth": depths.squeeze(1)[1:].flatten(1,2).unsqueeze(-1),

400 | "intrinsics": Ks[1:],

401 | "x_pix": uv[1:],

402 | #"seq_name": [seq_name],

403 | #"trgt_mask": masks[1:].flatten(1,2).unsqueeze(-1),

404 | #"ctxt_mask": masks[:-1].flatten(1,2).unsqueeze(-1),

405 | }

406 |

407 | return model_input,gt

408 |

--------------------------------------------------------------------------------

/data/realestate10k_dataio.py:

--------------------------------------------------------------------------------

1 | import random

2 | from torch.nn import functional as F

3 | import os

4 | import torch

5 | import numpy as np

6 | from glob import glob

7 | import json

8 | from collections import defaultdict

9 | import os.path as osp

10 | from imageio import imread

11 | from torch.utils.data import Dataset

12 | from pathlib import Path

13 | import cv2

14 | from tqdm import tqdm

15 | from scipy.io import loadmat

16 |

17 | import functools

18 | import cv2

19 | import numpy as np

20 | import imageio

21 | from glob import glob

22 | import os

23 | import shutil

24 | import io

25 |

26 | not_of=1

27 |

28 | def load_rgb(path, sidelength=None):

29 | img = imageio.imread(path)[:, :, :3]

30 | img = skimage.img_as_float32(img)

31 |

32 | img = square_crop_img(img)

33 |

34 | if sidelength is not None:

35 | img = cv2.resize(img, (sidelength, sidelength), interpolation=cv2.INTER_NEAREST)

36 |

37 | img -= 0.5

38 | img *= 2.

39 | return img

40 |

41 | def load_depth(path, sidelength=None):

42 | img = cv2.imread(path, cv2.IMREAD_UNCHANGED).astype(np.float32)

43 |

44 | if sidelength is not None:

45 | img = cv2.resize(img, (sidelength, sidelength), interpolation=cv2.INTER_NEAREST)

46 |

47 | img *= 1e-4

48 |

49 | if len(img.shape) == 3:

50 | img = img[:, :, :1]

51 | img = img.transpose(2, 0, 1)

52 | else:

53 | img = img[None, :, :]

54 | return img

55 |

56 |

57 | def load_pose(filename):

58 | lines = open(filename).read().splitlines()

59 | if len(lines) == 1:

60 | pose = np.zeros((4, 4), dtype=np.float32)

61 | for i in range(16):

62 | pose[i // 4, i % 4] = lines[0].split(" ")[i]

63 | return pose.squeeze()

64 | else:

65 | lines = [[x[0], x[1], x[2], x[3]] for x in (x.split(" ") for x in lines[:4])]

66 | return np.asarray(lines).astype(np.float32).squeeze()

67 |

68 |

69 | def load_numpy_hdf5(instance_ds, key):

70 | rgb_ds = instance_ds['rgb']

71 | raw = rgb_ds[key][...]

72 | s = raw.tostring()

73 | f = io.BytesIO(s)

74 |

75 | img = imageio.imread(f)[:, :, :3]

76 | img = skimage.img_as_float32(img)

77 |

78 | img = square_crop_img(img)

79 |

80 | img -= 0.5

81 | img *= 2.

82 |

83 | return img

84 |

85 |

86 | def load_rgb_hdf5(instance_ds, key, sidelength=None):

87 | rgb_ds = instance_ds['rgb']

88 | raw = rgb_ds[key][...]

89 | s = raw.tostring()

90 | f = io.BytesIO(s)

91 |

92 | img = imageio.imread(f)[:, :, :3]

93 | img = skimage.img_as_float32(img)

94 |

95 | img = square_crop_img(img)

96 |

97 | if sidelength is not None:

98 | img = cv2.resize(img, (sidelength, sidelength), interpolation=cv2.INTER_AREA)

99 |

100 | img -= 0.5

101 | img *= 2.

102 |

103 | return img

104 |

105 |

106 | def load_pose_hdf5(instance_ds, key):

107 | pose_ds = instance_ds['pose']

108 | raw = pose_ds[key][...]

109 | ba = bytearray(raw)

110 | s = ba.decode('ascii')

111 |

112 | lines = s.splitlines()

113 |

114 | if len(lines) == 1:

115 | pose = np.zeros((4, 4), dtype=np.float32)

116 | for i in range(16):

117 | pose[i // 4, i % 4] = lines[0].split(" ")[i]

118 | # processed_pose = pose.squeeze()

119 | return pose.squeeze()

120 | else:

121 | lines = [[x[0], x[1], x[2], x[3]] for x in (x.split(" ") for x in lines[:4])]

122 | return np.asarray(lines).astype(np.float32).squeeze()

123 |

124 |

125 | def cond_mkdir(path):

126 | if not os.path.exists(path):

127 | os.makedirs(path)

128 |

129 |

130 | def square_crop_img(img):

131 | min_dim = np.amin(img.shape[:2])

132 | center_coord = np.array(img.shape[:2]) // 2

133 | img = img[center_coord[0] - min_dim // 2:center_coord[0] + min_dim // 2,

134 | center_coord[1] - min_dim // 2:center_coord[1] + min_dim // 2]

135 | return img

136 |

137 |

138 | def glob_imgs(path):

139 | imgs = []

140 | for ext in ['*.png', '*.jpg', '*.JPEG', '*.JPG']:

141 | imgs.extend(glob(os.path.join(path, ext)))

142 | return imgs

143 |

144 | def augment(rgb, intrinsics, c2w_mat):

145 |

146 | # Horizontal Flip with 50% Probability

147 | if np.random.uniform(0, 1) < 0.5:

148 | rgb = rgb[:, ::-1, :]

149 | tf_flip = np.array([[-1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]])

150 | c2w_mat = c2w_mat @ tf_flip

151 |

152 | # Crop by aspect ratio

153 | if np.random.uniform(0, 1) < 0.5:

154 | py = np.random.randint(1, 32)

155 | rgb = rgb[py:-py, :, :]

156 | else:

157 | py = 0

158 |

159 | if np.random.uniform(0, 1) < 0.5:

160 | px = np.random.randint(1, 32)

161 | rgb = rgb[:, px:-px, :]

162 | else:

163 | px = 0

164 |

165 | H, W, _ = rgb.shape

166 | rgb = cv2.resize(rgb, (256, 256))

167 | xscale = 256 / W

168 | yscale = 256 / H

169 |

170 | intrinsics[0, 0] = intrinsics[0, 0] * xscale

171 | intrinsics[1, 1] = intrinsics[1, 1] * yscale

172 |

173 | return rgb, intrinsics, c2w_mat

174 |

175 | class Camera(object):

176 | def __init__(self, entry):

177 | fx, fy, cx, cy = entry[1:5]

178 | self.intrinsics = np.array([[fx, 0, cx, 0],

179 | [0, fy, cy, 0],

180 | [0, 0, 1, 0],

181 | [0, 0, 0, 1]])

182 | w2c_mat = np.array(entry[7:]).reshape(3, 4)

183 | w2c_mat_4x4 = np.eye(4)

184 | w2c_mat_4x4[:3, :] = w2c_mat

185 | self.w2c_mat = w2c_mat_4x4

186 | self.c2w_mat = np.linalg.inv(w2c_mat_4x4)

187 |

188 |

189 | def unnormalize_intrinsics(intrinsics, h, w):

190 | intrinsics = intrinsics.copy()

191 | intrinsics[0] *= w

192 | intrinsics[1] *= h

193 | return intrinsics

194 |

195 |

196 | def parse_pose_file(file):

197 | f = open(file, 'r')

198 | cam_params = {}

199 | for i, line in enumerate(f):

200 | if i == 0:

201 | continue

202 | entry = [float(x) for x in line.split()]

203 | id = int(entry[0])

204 | cam_params[id] = Camera(entry)

205 | return cam_params

206 |

207 |

208 | def parse_pose(pose, timestep):

209 | timesteps = pose[:, :1]

210 | timesteps = np.around(timesteps)

211 | mask = (timesteps == timestep)[:, 0]

212 | pose_entry = pose[mask][0]

213 | camera = Camera(pose_entry)

214 |

215 | return camera

216 |

217 |

218 | def get_camera_pose(scene_path, all_pose_dir, uv, views=1):

219 | npz_files = sorted(scene_path.glob("*.npz"))

220 | npz_file = npz_files[0]

221 | data = np.load(npz_file)

222 | all_pose_dir = Path(all_pose_dir)

223 |

224 | rgb_files = list(data.keys())

225 |

226 | timestamps = [int(rgb_file.split('.')[0]) for rgb_file in rgb_files]

227 | sorted_ids = np.argsort(timestamps)

228 |

229 | rgb_files = np.array(rgb_files)[sorted_ids]

230 | timestamps = np.array(timestamps)[sorted_ids]

231 |

232 | camera_file = all_pose_dir / (str(scene_path.name) + '.txt')

233 | cam_params = parse_pose_file(camera_file)

234 | # H, W, _ = data[rgb_files[0]].shape

235 |

236 | # Weird cropping of images

237 | H, W = 256, 456

238 |

239 | xscale = W / min(H, W)

240 | yscale = H / min(H, W)

241 |

242 |

243 | query = {}

244 | context = {}

245 |

246 | render_frame = min(128, rgb_files.shape[0])

247 |

248 | query_intrinsics = []

249 | query_c2w = []

250 | query_rgbs = []

251 | for i in range(1, render_frame):

252 | rgb = data[rgb_files[i]]

253 | timestep = timestamps[i]

254 |

255 | # rgb = cv2.resize(rgb, (W, H))

256 | intrinsics = unnormalize_intrinsics(cam_params[timestep].intrinsics, H, W)

257 |

258 | intrinsics[0, 2] = intrinsics[0, 2] / xscale

259 | intrinsics[1, 2] = intrinsics[1, 2] / yscale

260 | rgb = rgb.astype(np.float32) / 127.5 - 1

261 |

262 | query_intrinsics.append(intrinsics)

263 | query_c2w.append(cam_params[timestep].c2w_mat)

264 | query_rgbs.append(rgb)

265 |

266 | context_intrinsics = []

267 | context_c2w = []

268 | context_rgbs = []

269 |

270 | if views == 1:

271 | render_ids = [0]

272 | elif views == 2:

273 | render_ids = [0, min(len(rgb_files) - 1, 128)]

274 | else:

275 | assert False

276 |

277 | for i in render_ids:

278 | rgb = data[rgb_files[i]]

279 | timestep = timestamps[i]

280 | # print("render: ", i)

281 | # rgb = cv2.resize(rgb, (W, H))

282 | intrinsics = unnormalize_intrinsics(cam_params[timestep].intrinsics, H, W)

283 | intrinsics[0, 2] = intrinsics[0, 2] / xscale

284 | intrinsics[1, 2] = intrinsics[1, 2] / yscale

285 |

286 | rgb = rgb.astype(np.float32) / 127.5 - 1

287 |

288 | context_intrinsics.append(intrinsics)

289 | context_c2w.append(cam_params[timestep].c2w_mat)

290 | context_rgbs.append(rgb)

291 |

292 | query = {'rgb': torch.Tensor(query_rgbs)[None].float(),

293 | 'cam2world': torch.Tensor(query_c2w)[None].float(),

294 | 'intrinsics': torch.Tensor(query_intrinsics)[None].float(),

295 | 'uv': uv.view(-1, 2)[None, None].expand(1, render_frame - 1, -1, -1)}

296 | ctxt = {'rgb': torch.Tensor(context_rgbs)[None].float(),

297 | 'cam2world': torch.Tensor(context_c2w)[None].float(),

298 | 'intrinsics': torch.Tensor(context_intrinsics)[None].float()}

299 |

300 | return {'query': query, 'context': ctxt}

301 |

302 | class RealEstate10k():

303 | def __init__(self, img_root=None, pose_root=None,

304 | num_ctxt_views=2, num_query_views=2, query_sparsity=None,imsl=256,

305 | max_num_scenes=None, square_crop=True, augment=False, lpips=False, dual_view=False, val=False,n_skip=12):

306 |

307 | self.n_skip =n_skip[0] if type(n_skip)==type([]) else n_skip

308 | print(self.n_skip,"n_skip")

309 | self.val = val

310 | if img_root is None: img_root = os.path.join(os.environ['RE10K_IMG_ROOT'],"test" if val else "train")

311 | if pose_root is None: pose_root = os.path.join(os.environ['RE10K_POSE_ROOT'],"test" if val else "train")

312 | print("Loading RealEstate10k...")

313 | self.num_ctxt_views = num_ctxt_views

314 | self.num_query_views = num_query_views

315 | self.query_sparsity = query_sparsity

316 | self.dual_view = dual_view

317 |

318 | self.imsl=imsl

319 |

320 | all_im_dir = Path(img_root)

321 | #self.all_pose_dir = Path(pose_root)

322 | self.all_pose = loadmat(pose_root)

323 | self.lpips = lpips

324 |

325 | self.all_scenes = sorted(all_im_dir.glob('*/'))

326 |

327 | dummy_img_path = str(next(self.all_scenes[0].glob("*.npz")))

328 |

329 | if max_num_scenes:

330 | self.all_scenes = list(self.all_scenes)[:max_num_scenes]

331 |

332 | data = np.load(dummy_img_path)

333 | key = list(data.keys())[0]

334 | im = data[key]

335 |

336 | H, W = im.shape[:2]

337 | H, W = 256, 455

338 | self.H, self.W = H, W

339 | self.augment = augment

340 |

341 | self.square_crop = square_crop

342 | # Downsample to be 256 x 256 image

343 | # self.H, self.W = 256, 455

344 |

345 | xscale = W / min(H, W)

346 | yscale = H / min(H, W)

347 |

348 | dim = min(H, W)

349 |

350 | self.xscale = xscale

351 | self.yscale = yscale

352 |

353 | # For now the images are already square cropped

354 | self.H = 256

355 | self.W = 455

356 |

357 | print(f"Resolution is {H}, {W}.")

358 |

359 | if self.square_crop:

360 | i, j = torch.meshgrid(torch.arange(0, self.imsl), torch.arange(0, self.imsl))

361 | else:

362 | i, j = torch.meshgrid(torch.arange(0, W), torch.arange(0, H))

363 |

364 | self.uv = torch.stack([i.float(), j.float()], dim=-1).permute(1, 0, 2)

365 |

366 | # if self.square_crop:

367 | # self.uv = data_util.square_crop_img(self.uv)

368 |

369 | self.uv = self.uv[None].permute(0, -1, 1, 2).permute(0, 2, 3, 1)

370 | self.uv = self.uv.reshape(-1, 2)

371 |

372 | self.scene_path_list = list(Path(img_root).glob("*/"))

373 |

374 | def __len__(self):

375 | return len(self.all_scenes)

376 |

377 | def __getitem__(self, idx,scene_query=None):

378 | idx = idx if not_of else 0

379 | scene_path = self.all_scenes[idx if scene_query is None else scene_query]

380 | npz_files = sorted(scene_path.glob("*.npz"))

381 |

382 | name = scene_path.name

383 |

384 | def get_another():

385 | if self.val:

386 | return self[idx-1 if idx >200 else idx+1]

387 | return self.__getitem__(random.randint(0, len(self.all_scenes) - 1))

388 |

389 | if name not in self.all_pose: return get_another()

390 |

391 | pose = self.all_pose[name]

392 |

393 | if len(npz_files) == 0:

394 | print("npz get another")

395 | return get_another()

396 |

397 | npz_file = npz_files[0]

398 | try:

399 | data = np.load(npz_file)

400 | except:

401 | print("npz load error get another")

402 | return get_another()

403 |

404 | rgb_files = list(data.keys())

405 | window_size = 128

406 |

407 | if len(rgb_files) <= 20:

408 | print("<20 rgbs error get another")

409 | return get_another()

410 |

411 | timestamps = [int(rgb_file.split('.')[0]) for rgb_file in rgb_files]

412 | sorted_ids = np.argsort(timestamps)

413 |

414 | rgb_files = np.array(rgb_files)[sorted_ids]

415 | timestamps = np.array(timestamps)[sorted_ids]

416 |

417 | assert (timestamps == sorted(timestamps)).all()

418 | num_frames = len(rgb_files)

419 | left_bound = 0

420 | right_bound = num_frames - 1

421 | candidate_ids = np.arange(left_bound, right_bound)

422 |

423 | # remove windows between frame -32 to 32

424 | nframe = 1

425 | nframe_view = 140 if self.val else 92

426 |

427 | id_feats = []

428 |

429 | n_skip=self.n_skip

430 |

431 | id_feat = np.array(id_feats)

432 | low = 0

433 | high = num_frames-1-n_skip*self.num_query_views

434 |

435 | if high <= low:

436 | n_skip = int(num_frames//(self.num_query_views+1))

437 | high = num_frames-1-n_skip*self.num_query_views

438 | print("high ... (x y) c")

18 |

19 | # A quick dummy dataset for the demo rgb folder

20 | class SingleVid(Dataset):

21 |

22 | # If specified here, intrinsics should be a 4-element array of [fx,fy,cx,cy] at input image resolution

23 | def __init__(self, img_dir,intrinsics=None,n_trgt=6,num_skip=0,low_res=None,hi_res=None):

24 | self.low_res,self.intrinsics,self.n_trgt,self.num_skip,self.hi_res=low_res,intrinsics,n_trgt,num_skip,hi_res

25 | if self.hi_res is None:self.hi_res=[x*2 for x in self.low_res]

26 | self.hi_res = [(x+x%64) for x in self.hi_res]

27 |

28 | self.img_paths = glob(img_dir + '/*.png') + glob(img_dir + '/*.jpg')

29 | self.img_paths.sort()

30 |

31 | def __len__(self):

32 | return len(self.img_paths)-(1+self.n_trgt)*(1+self.num_skip)

33 |

34 | def __getitem__(self, idx):

35 |

36 | n_skip=self.num_skip+1

37 | paths = self.img_paths[idx:idx+self.n_trgt*n_skip:n_skip]

38 | imgs=torch.stack([torch.from_numpy(plt.imread(path)).permute(2,0,1) for path in paths]).float()

39 |

40 | imgs_large = F.interpolate(imgs,self.hi_res,antialias=True,mode="bilinear")

41 | frames = F.interpolate(imgs,self.low_res)

42 |

43 | frames = frames/255 * 2 - 1

44 |

45 | uv = np.mgrid[0:self.low_res[0], 0:self.low_res[1]].astype(float).transpose(1, 2, 0)

46 | uv = torch.from_numpy(np.flip(uv, axis=-1).copy()).long()

47 | uv = uv/ torch.tensor([self.low_res[1], self.low_res[0]]) # uv in [0,1]

48 | uv = uv[None].expand(len(frames),-1,-1,-1).flatten(1,2)

49 |

50 | #imgs large values in [0,255], imgs in [-1,1], gt_rgb in [0,1],

51 |

52 | model_input = {

53 | "trgt_rgb": frames[1:],

54 | "ctxt_rgb": frames[:-1],

55 | "trgt_rgb_large": imgs_large[1:],

56 | "ctxt_rgb_large": imgs_large[:-1],

57 | "x_pix": uv[1:],

58 | }

59 | gt = {

60 | "trgt_rgb": ch_sec(frames[1:])*.5+.5,

61 | "ctxt_rgb": ch_sec(frames[:-1])*.5+.5,

62 | "x_pix": uv[1:],

63 | }

64 |

65 | if self.intrinsics is not None:

66 | K = torch.eye(3)

67 | K[0,0],K[1,1],K[0,2],K[1,2]=[float(x) for x in self.intrinsics.strip().split(",")]

68 | h,w=imgs[0].shape[-2:]

69 | K[:2] /= torch.tensor([w, h])[:, None]

70 | model_input["intrinsics"] = K[None].expand(self.n_trgt-1,-1,-1)

71 |

72 | return model_input,gt

73 |

74 | dataset=SingleVid(args.demo_rgb,args.intrinsics,args.vid_len,args.n_skip,args.low_res)

75 |

76 | all_poses = torch.tensor([]).cuda()

77 | all_render_rgb=torch.tensor([]).cuda()

78 | all_render_depth=torch.tensor([])

79 | for seq_i in range(len(dataset)//(dataset.n_trgt)):

80 | print(seq_i*(dataset.n_trgt),"/",len(dataset))

81 | model_input = {k:to_gpu(v)[None] for k,v in dataset.__getitem__(seq_i*(dataset.n_trgt-1))[0].items()}

82 | with torch.no_grad(): out = (model.forward if not args.render_imgs else model.render_full_img)(model_input)

83 | curr_transfs = out["poses"][0]

84 | if len(all_poses): curr_transfs = all_poses[[-1]] @ curr_transfs # integrate poses

85 | all_poses = torch.cat((all_poses,curr_transfs))

86 | all_render_rgb = torch.cat((all_render_rgb,out["rgb"][0]))

87 | all_render_depth = torch.cat((all_render_depth,out["depth"][0]))

88 |

89 | out_dir="demo_output/"+args.demo_rgb.replace("/","_")

90 | os.makedirs(out_dir,exist_ok=True)

91 | fig = plt.figure()

92 | ax = fig.add_subplot(111, projection='3d')

93 | ax.plot(*all_poses[:,:3,-1].T.cpu().numpy())

94 | ax.xaxis.set_tick_params(labelbottom=False);ax.yaxis.set_tick_params(labelleft=False);ax.zaxis.set_tick_params(labelleft=False)

95 | ax.view_init(elev=10., azim=45)

96 | plt.tight_layout()

97 | fp = os.path.join(out_dir,f"pose_plot.png");plt.savefig(fp,bbox_inches='tight');plt.close()

98 |

99 | fp = os.path.join(out_dir,f"poses.npy");np.save(fp,all_poses.cpu())

100 | if args.render_imgs:

101 | out_dir=os.path.join(out_dir,"renders")

102 | os.makedirs(out_dir,exist_ok=True)

103 | for i,(rgb,depth) in enumerate(zip(all_render_rgb.unflatten(1,model_input["trgt_rgb"].shape[-2:]),all_render_depth.unflatten(1,model_input["trgt_rgb"].shape[-2:]))):

104 | plt.imsave(os.path.join(out_dir,"render_rgb_%04d.png"%i),rgb.clip(0,1).cpu().numpy())

105 | plt.imsave(os.path.join(out_dir,"render_depth_%04d.png"%i),depth.clip(0,1).cpu().numpy())

106 |

107 |

--------------------------------------------------------------------------------

/eval.py:

--------------------------------------------------------------------------------

1 | from run import *

2 |

3 | # Evaluation script

4 | import piqa,lpips

5 | from torchvision.utils import make_grid

6 | import matplotlib.pyplot as plt

7 | loss_fn_vgg = lpips.LPIPS(net='vgg').cuda()

8 | lpips,psnr,ate=0,0,0

9 |

10 | eval_dir = save_dir+"/"+args.name+datetime.datetime.now().strftime("%b%d%Y_")+str(random.randint(0,1e3))

11 | try: os.mkdir(eval_dir)

12 | except: pass

13 | torch.set_grad_enabled(False)

14 |

15 | model.n_samples=128

16 |

17 | val_dataset = get_dataset(val=True,)

18 |

19 | for eval_idx,eval_dataset_idx in enumerate(tqdm(torch.linspace(0,len(val_dataset)-1,min(args.n_eval,len(val_dataset))).int())):

20 | model_input,ground_truth = val_dataset[eval_dataset_idx]

21 |

22 | for x in (model_input,ground_truth):

23 | for k,v in x.items(): x[k] = v[None].cuda() # collate

24 |

25 | model_out = model.render_full_img(model_input)

26 |

27 | # remove last frame since used as ctxt when n_ctxt=2

28 | rgb_est,rgb_gt = [rearrange(img[:,:-1].clip(0,1),"b trgt (x y) c -> (b trgt) c x y",x=model_input["trgt_rgb"].size(-2))

29 | for img in (model_out["fine_rgb" if "fine_rgb" in model_out else "rgb"],ground_truth["trgt_rgb"])]

30 | depth_est = rearrange(model_out["depth"][:,:-1],"b trgt (x y) c -> (b trgt) c x y",x=model_input["trgt_rgb"].size(-2))

31 |

32 | psnr += piqa.PSNR()(rgb_est.clip(0,1).contiguous(),rgb_gt.clip(0,1).contiguous())

33 | lpips += loss_fn_vgg(rgb_est*2-1,rgb_gt*2-1).mean()

34 |

35 | print(args.save_imgs)

36 | if args.save_imgs:

37 | fp = os.path.join(eval_dir,f"{eval_idx}_est.png");plt.imsave(fp,make_grid(rgb_est).permute(1,2,0).clip(0,1).cpu().numpy())

38 | if depth_est.size(1)==3: fp = os.path.join(eval_dir,f"{eval_idx}_depth.png");plt.imsave(fp,make_grid(depth_est).clip(0,1).permute(1,2,0).cpu().numpy())

39 | fp = os.path.join(eval_dir,f"{eval_idx}_gt.png");plt.imsave(fp,make_grid(rgb_gt).permute(1,2,0).cpu().numpy())

40 | print(fp)

41 |

42 |

43 | if args.save_imgs and args.save_ind: # save individual images separately

44 | eval_idx_dir = os.path.join(eval_dir,f"dir_{eval_idx}")

45 |

46 | try: os.mkdir(eval_idx_dir)

47 | except: pass

48 | ctxt_rgbs = torch.cat((model_input["ctxt_rgb"][:,0],model_input["trgt_rgb"][:,model_input["trgt_rgb"].size(1)//2],model_input["trgt_rgb"][:,-1]))*.5+.5

49 | fp = os.path.join(eval_idx_dir,f"ctxt0.png");plt.imsave(fp,ctxt_rgbs[0].clip(0,1).permute(1,2,0).cpu().numpy())

50 | fp = os.path.join(eval_idx_dir,f"ctxt1.png");plt.imsave(fp,ctxt_rgbs[1].clip(0,1).permute(1,2,0).cpu().numpy())

51 | fp = os.path.join(eval_idx_dir,f"ctxt2.png");plt.imsave(fp,ctxt_rgbs[2].clip(0,1).permute(1,2,0).cpu().numpy())

52 | for i,(rgb_est,rgb_gt,depth) in enumerate(zip(rgb_est,rgb_gt,depth_est)):

53 | fp = os.path.join(eval_idx_dir,f"{i}_est.png");plt.imsave(fp,rgb_est.clip(0,1).permute(1,2,0).cpu().numpy())

54 | print(fp)

55 | fp = os.path.join(eval_idx_dir,f"{i}_gt.png");plt.imsave(fp,rgb_gt.clip(0,1).permute(1,2,0).cpu().numpy())

56 | if depth_est.size(1)==3: fp = os.path.join(eval_idx_dir,f"{i}_depth.png");plt.imsave(fp,depth.permute(1,2,0).cpu().clip(1e-4,1-1e-4).numpy())

57 |

58 | # Pose plotting/evaluation

59 | if "poses" in model_out:

60 | import scipy.spatial

61 | pose_est,pose_gt = model_out["poses"][0][:,:3,-1].cpu(),model_input["trgt_c2w"][0][:,:3,-1].cpu()

62 | pose_gt,pose_est,_ = scipy.spatial.procrustes(pose_gt.numpy(),pose_est.numpy())

63 | ate += ((pose_est-pose_gt)**2).mean()

64 | if args.save_imgs:

65 | fig = plt.figure()

66 | ax = fig.add_subplot(111, projection='3d')

67 | ax.plot(*pose_gt.T)

68 | ax.plot(*pose_est.T)

69 | ax.xaxis.set_tick_params(labelbottom=False)

70 | ax.yaxis.set_tick_params(labelleft=False)

71 | ax.zaxis.set_tick_params(labelleft=False)

72 | ax.view_init(elev=10., azim=45)

73 | plt.tight_layout()

74 | fp = os.path.join(eval_dir,f"{eval_idx}_pose_plot.png");plt.savefig(fp,bbox_inches='tight');plt.close()

75 | if args.save_ind:

76 | for i in range(len(pose_est)):

77 | fig = plt.figure()

78 | ax = fig.add_subplot(111, projection='3d')

79 | ax.plot(*pose_gt.T,color="black")

80 | ax.plot(*pose_est.T,alpha=0)

81 | ax.plot(*pose_est[:i].T,alpha=1,color="red")

82 | ax.xaxis.set_tick_params(labelbottom=False)

83 | ax.yaxis.set_tick_params(labelleft=False)

84 | ax.zaxis.set_tick_params(labelleft=False)

85 | ax.view_init(elev=10., azim=45)

86 | plt.tight_layout()

87 | fp = os.path.join(eval_idx_dir,f"pose_{i}.png"); plt.savefig(fp,bbox_inches='tight');plt.close()

88 |

89 | print(f"psnr {psnr/(1+eval_idx)}, lpips {lpips/(1+eval_idx)}, ate {(ate/(1+eval_idx))**.5}, eval_idx {eval_idx}", flush=True)

90 |

91 |

--------------------------------------------------------------------------------

/geometry.py:

--------------------------------------------------------------------------------

1 | """Multi-view geometry & proejction code.."""

2 | import torch

3 | from einops import rearrange, repeat

4 | from torch.nn import functional as F

5 | import numpy as np

6 |

7 | def d6_to_rotmat(d6):

8 | a1, a2 = d6[..., :3], d6[..., 3:]

9 | b1 = F.normalize(a1, dim=-1)

10 | b2 = a2 - (b1 * a2).sum(-1, keepdim=True) * b1

11 | b2 = F.normalize(b2, dim=-1)

12 | b3 = torch.cross(b1, b2, dim=-1)

13 | return torch.stack((b1, b2, b3), dim=-2)

14 |

15 | def time_interp_poses(pose_inp,time_i,n_trgt,eye_pts):

16 | i,j = max(0,int(time_i*(n_trgt-1))-1),int(time_i*(n_trgt-1))

17 | pose_interp = camera_interp(*pose_inp[:,[i,j]].unbind(1),time_i)

18 | if i==j: pose_interp=pose_inp[:,0]

19 | pose_interp = repeat(pose_interp,"b x y -> b trgt x y",trgt=n_trgt)

20 | return pose_interp

21 | eye_pts = torch.cat((eye_pts,torch.ones_like(eye_pts[...,[0]])),-1)

22 | query_pts = torch.einsum("bcij,bcdkj->bcdki",pose_interp,eye_pts)[...,:3]

23 | return query_pts

24 |

25 | def pixel_aligned_features(

26 | coords_3d_world, cam2world, intrinsics, img_features, interp="bilinear",padding_mode="border",

27 | ):

28 | # Args:

29 | # coords_3d_world: shape (b, n, 3)

30 | # cam2world: camera pose of shape (..., 4, 4)

31 |

32 | # project 3d points to 2D

33 | c3d_world_hom = homogenize_points(coords_3d_world)

34 | c3d_cam_hom = transform_world2cam(c3d_world_hom, cam2world)

35 | c2d_cam, depth = project(c3d_cam_hom, intrinsics.unsqueeze(1))

36 |

37 | # now between 0 and 1. Map to -1 and 1

38 | c2d_norm = (c2d_cam - 0.5) * 2

39 | c2d_norm = rearrange(c2d_norm, "b n ch -> b n () ch")

40 | c2d_norm = c2d_norm[..., :2]

41 |

42 | # grid_sample

43 | feats = F.grid_sample(

44 | img_features, c2d_norm, align_corners=True, padding_mode=padding_mode, mode=interp

45 | )

46 | feats = feats.squeeze(-1) # b ch n

47 |

48 | feats = rearrange(feats, "b ch n -> b n ch")

49 | return feats, c3d_cam_hom[..., :3], c2d_cam

50 |

51 | # https://gist.github.com/mkocabas/54ea2ff3b03260e3fedf8ad22536f427

52 | def procrustes(S1, S2,weights=None):

53 |

54 | if len(S1.shape)==4:

55 | out = procrustes(S1.flatten(0,1),S2.flatten(0,1),weights.flatten(0,1) if weights is not None else None)

56 | return out[0],out[1].unflatten(0,S1.shape[:2])

57 | '''

58 | Computes a similarity transform (sR, t) that takes

59 | a set of 3D points S1 (BxNx3) closest to a set of 3D points, S2,