();

    Core.split(matBGRA, channels);
    matB = channels.get(0);
    matG = channels.get(1);
    matR = channels.get(2);
    matA = channels.get(3);
  }

  /**
   * Set OpenCV to do image processing on the grayscale version
   * of the loaded image.
   */
  public void useGray(){
    useColor = false;
  }

  /**
   * Check whether OpenCV is currently using the color version of the image
   * or the grayscale version.
   *
   * @return
   *   true if OpenCV is currently using the color version of the image.
   */
  public boolean getUseColor(){
    return useColor;
  }

  /**
   * Return the Mat that image-processing calls operate on: the ROI if one
   * is set, otherwise the color (BGRA) or the grayscale Mat.
   */
  private Mat getCurrentMat(){
    if(useROI){
      return matROI;
    } else if(useColor){
      return matBGRA;
    } else {
      return matGray;
    }
  }

  /**
   * Initialize OpenCV with a width and height.
   * You will need to load an image in before processing.
   * See copy(PImage img).
   *
   * @param theParent
   *   A PApplet representing the user sketch, i.e. "this"
   * @param width
   *   Width of the working images, in pixels
   * @param height
   *   Height of the working images, in pixels
   */
  public OpenCV(PApplet theParent, int width, int height) {
    initNative();
    parent = theParent;
    init(width, height);
  }
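
  // Minimal usage sketch (hypothetical file name; assumes this library is
  // imported into a Processing sketch and that "test.jpg" in the sketch's data
  // folder is 640x480, matching the constructor, because loadImage() copies
  // pixels into working Mats of that fixed size):
  //
  //   OpenCV opencv;
  //
  //   void setup() {
  //     size(640, 480);
  //     opencv = new OpenCV(this, 640, 480);
  //     opencv.loadImage("test.jpg");
  //     opencv.gray();         // work on the grayscale version
  //     opencv.threshold(80);  // binary image: 255 above 80, 0 otherwise
  //   }
  //
  //   void draw() {
  //     image(opencv.getOutput(), 0, 0);
  //   }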

  private void init(int w, int h){
    width = w;
    height = h;
    welcome();
    setupWorkingImages();
    setupFlow();

    // Allocate the single-channel per-channel and grayscale Mats,
    // plus the 4-channel color (BGRA) Mat.
    matR = new Mat(height, width, CvType.CV_8UC1);
    matG = new Mat(height, width, CvType.CV_8UC1);
    matB = new Mat(height, width, CvType.CV_8UC1);
    matA = new Mat(height, width, CvType.CV_8UC1);
    matGray = new Mat(height, width, CvType.CV_8UC1);

    matBGRA = new Mat(height, width, CvType.CV_8UC4);
  }

  private void setupFlow(){
    flow = new Flow(parent);
  }

  private void setupWorkingImages(){
    outputImage = parent.createImage(width, height, PConstants.ARGB);
  }

  /**
   * Return the directory that contains opencv_processing.jar, so that data
   * bundled with the library (e.g. cascade files) can be located.
   * Returns "" if the location cannot be determined.
   */
  private String getLibPath() {
    URL url = this.getClass().getResource("OpenCV.class");
    if (url != null) {
      // Convert the URL to a string, replacing "%20" with spaces.
      String path = url.toString().replace("%20", " ");
      int n0 = path.indexOf('/');
      int n1 = path.indexOf("opencv_processing.jar");

      if (PApplet.platform == PConstants.WINDOWS) {
        // On Windows the string starts with "jar:file:/C:/...",
        // so the "/" before the drive letter is skipped as well.
        n0++;
      }

      if ((-1 < n0) && (-1 < n1)) {
        return path.substring(n0, n1);
      } else {
        return "";
      }
    }
    return "";
  }

  private void initNative(){
    // Load the native OpenCV library only once.
    if(nativeLoaded) return;
    Loader.load(opencv_java.class);
    nativeLoaded = true;
  }

  /**
   * Load a cascade file for face or object detection.
   * Expects one of:
   *
   *   OpenCV.CASCADE_FRONTALFACE
   *   OpenCV.CASCADE_PEDESTRIANS
   *   OpenCV.CASCADE_EYE
   *   OpenCV.CASCADE_CLOCK
   *   OpenCV.CASCADE_NOSE
   *   OpenCV.CASCADE_MOUTH
   *   OpenCV.CASCADE_UPPERBODY
   *   OpenCV.CASCADE_LOWERBODY
   *   OpenCV.CASCADE_FULLBODY
   *   OpenCV.CASCADE_RIGHT_EAR
   *   OpenCV.CASCADE_PROFILEFACE
   *
   * To pass your own cascade file, provide an absolute path and a second
   * argument of true:
   *
   *   opencv.loadCascade("/path/to/my/custom/cascade.xml", true)
   *
   * (NB: the ant build scripts copy the data folder outside of the
   * jar so that this will work.)
   *
   * @param cascadeFileName
   *   The name of the cascade file to be loaded from within OpenCV for Processing.
   *   Must be one of the constants provided by this library.
   */
  public void loadCascade(String cascadeFileName){
    // Localize the path to the cascade file so it points at the library's data folder.
    String relativePath = "cascade-files/" + cascadeFileName;
    String cascadePath = getLibPath() + relativePath;

    PApplet.println("Load cascade from: " + cascadePath);

    classifier = new CascadeClassifier(cascadePath);

    if(classifier.empty()){
      PApplet.println("Cascade failed to load"); // raise exception here?
    } else {
      PApplet.println("Cascade loaded: " + cascadeFileName);
    }
  }

  /**
   * Load a cascade file for face or object detection.
   * If absolute is true, cascadeFilePath must be an
   * absolute path to a cascade XML file. If it is false,
   * cascadeFilePath must be one of the options provided
   * by OpenCV for Processing, as in the single-argument
   * version of this function.
   *
   * @param cascadeFilePath
   *   Either an absolute path to a cascade XML file or
   *   one of the constants provided by this library.
   * @param absolute
   *   Whether or not cascadeFilePath is an absolute path to an XML file.
   */
  public void loadCascade(String cascadeFilePath, boolean absolute){
    if(absolute){
      classifier = new CascadeClassifier(cascadeFilePath);

      if(classifier.empty()){
        PApplet.println("Cascade failed to load"); // raise exception here?
      } else {
        PApplet.println("Cascade loaded from absolute path: " + cascadeFilePath);
      }
    } else {
      loadCascade(cascadeFilePath);
    }
  }

  /**
   * Convert an array of OpenCV Rect objects into
   * an array of java.awt.Rectangle objects.
   * Especially useful when working with the results of
   * CascadeClassifier.detectMultiScale().
   *
   * @param rects
   *   The OpenCV Rects to convert
   * @return
   *   A Rectangle[] of java.awt.Rectangle
   */
  public static Rectangle[] toProcessing(Rect[] rects){
    Rectangle[] results = new Rectangle[rects.length];
    for(int i = 0; i < rects.length; i++){
      results[i] = new Rectangle(rects[i].x, rects[i].y, rects[i].width, rects[i].height);
    }
    return results;
  }

  /**
   * Detect objects using the cascade classifier. loadCascade() must already
   * have been called to set up the classifier. See the OpenCV documentation
   * for details on the arguments: http://docs.opencv.org/java/org/opencv/objdetect/CascadeClassifier.html#detectMultiScale(org.opencv.core.Mat, org.opencv.core.MatOfRect, double, int, int, org.opencv.core.Size, org.opencv.core.Size)
   *
   * A simpler version of detect() that doesn't need these arguments is also available.
   *
   * @param scaleFactor
   *   How much the image size is reduced at each image scale (e.g. 1.1).
   * @param minNeighbors
   *   How many neighboring candidate detections a rectangle needs in order to be kept.
   * @param flags
   *   Passed through to detectMultiScale(); not used by newer cascade formats.
   * @param minSize
   *   Minimum object size in pixels (used for both width and height).
   * @param maxSize
   *   Maximum object size in pixels (used for both width and height).
   * @return
   *   An array of java.awt.Rectangle objects with the location, width, and height of each detected object.
   */
  public Rectangle[] detect(double scaleFactor, int minNeighbors, int flags, int minSize, int maxSize){
    Size minS = new Size(minSize, minSize);
    Size maxS = new Size(maxSize, maxSize);

    MatOfRect detections = new MatOfRect();
    classifier.detectMultiScale(getCurrentMat(), detections, scaleFactor, minNeighbors, flags, minS, maxS);

    return OpenCV.toProcessing(detections.toArray());
  }

  /**
   * Detect objects using the cascade classifier with OpenCV's default
   * detectMultiScale() parameters. loadCascade() must already
   * have been called to set up the classifier.
   *
   * @return
   *   An array of java.awt.Rectangle objects with the location, width, and height of each detected object.
   */
  public Rectangle[] detect(){
    MatOfRect detections = new MatOfRect();
    classifier.detectMultiScale(getCurrentMat(), detections);

    return OpenCV.toProcessing(detections.toArray());
  }
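
  // Face-detection sketch (hypothetical; assumes "faces.jpg" is a 640x480
  // image in the sketch's data folder). detect() runs on the current Mat, so
  // the image is converted to gray first. The five-argument call uses
  // illustrative parameter values, not recommended defaults.
  //
  //   import java.awt.Rectangle;
  //
  //   OpenCV opencv;
  //   Rectangle[] faces;
  //
  //   void setup() {
  //     size(640, 480);
  //     opencv = new OpenCV(this, 640, 480);
  //     opencv.loadImage("faces.jpg");
  //     opencv.gray();
  //     opencv.loadCascade(OpenCV.CASCADE_FRONTALFACE);
  //     faces = opencv.detect();
  //     // or, with explicit parameters:
  //     // faces = opencv.detect(1.1, 3, 0, 30, 300);
  //   }
  //
  //   void draw() {
  //     image(opencv.getInput(), 0, 0);  // the original color image
  //     noFill();
  //     stroke(0, 255, 0);
  //     for (Rectangle r : faces) {
  //       rect(r.x, r.y, r.width, r.height);
  //     }
  //   }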

  /**
   * Set up background subtraction. After calling this function,
   * updateBackground() must be called with each new frame
   * you want to add to the running background subtraction calculation.
   *
   * For details on the arguments, see:
   * http://docs.opencv.org/java/org/opencv/video/BackgroundSubtractorMOG.html#BackgroundSubtractorMOG(int, int, double)
   *
   * @param history
   *   Length of the history, in frames.
   * @param nMixtures
   *   Number of Gaussian mixtures used to model each pixel.
   * @param backgroundRatio
   *   Background ratio of the mixture model.
   */
  public void startBackgroundSubtraction(int history, int nMixtures, double backgroundRatio){
    backgroundSubtractor = createBackgroundSubtractorMOG(history, nMixtures, backgroundRatio);
  }

  /**
   * Update the running background for background subtraction based on
   * the current image loaded into OpenCV. startBackgroundSubtraction()
   * must have been called before this to set up the background subtractor.
   *
   * The resulting foreground mask replaces the grayscale image, and OpenCV
   * switches to grayscale mode (see setGray()). A fixed learning rate of
   * 0.05 is used.
   */
  public void updateBackground(){
    Mat foreground = imitate(getCurrentMat());
    backgroundSubtractor.apply(getCurrentMat(), foreground, 0.05);
    setGray(foreground);
  }
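
  // Background-subtraction sketch (hypothetical; assumes the Processing Video
  // library's Capture class and a 640x480 camera). Each new frame is loaded,
  // converted to gray, and added to the running background model; the
  // foreground mask is then cleaned up with a dilate/erode pass. The arguments
  // to startBackgroundSubtraction() are illustrative values only.
  //
  //   import processing.video.*;
  //
  //   Capture cam;
  //   OpenCV opencv;
  //
  //   void setup() {
  //     size(640, 480);
  //     cam = new Capture(this, 640, 480);
  //     cam.start();
  //     opencv = new OpenCV(this, 640, 480);
  //     opencv.startBackgroundSubtraction(5, 3, 0.5);
  //   }
  //
  //   void draw() {
  //     if (!cam.available()) return;  // keep the last frame on screen
  //     cam.read();
  //     opencv.loadImage(cam);
  //     opencv.gray();                 // model a consistent single-channel image
  //     opencv.updateBackground();     // foreground mask becomes the gray image
  //     opencv.dilate();
  //     opencv.erode();
  //     image(opencv.getOutput(), 0, 0);
  //   }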

  /**
   * Calculate the optical flow of the current image relative
   * to a running series of images (typically frames from video).
   * Optical flow is useful for detecting which parts of the image
   * are moving and in what direction.
   */
  public void calculateOpticalFlow(){
    flow.calculateOpticalFlow(getCurrentMat());
  }

  /**
   * Get the total optical flow within a region of the image, specified by
   * its top-left corner (x, y), width w, and height h.
   * Be sure to call calculateOpticalFlow() first.
   */
  public PVector getTotalFlowInRegion(int x, int y, int w, int h) {
    return flow.getTotalFlowInRegion(x, y, w, h);
  }

  /**
   * Get the average optical flow within a region of the image, specified by
   * its top-left corner (x, y), width w, and height h.
   * Be sure to call calculateOpticalFlow() first.
   */
  public PVector getAverageFlowInRegion(int x, int y, int w, int h) {
    return flow.getAverageFlowInRegion(x, y, w, h);
  }

  /**
   * Get the total optical flow for the entire image.
   * Be sure to call calculateOpticalFlow() first.
   */
  public PVector getTotalFlow() {
    return flow.getTotalFlow();
  }

  /**
   * Get the average optical flow for the entire image.
   * Be sure to call calculateOpticalFlow() first.
   */
  public PVector getAverageFlow() {
    return flow.getAverageFlow();
  }

  /**
   * Get the optical flow at a single point in the image.
   * Be sure to call calculateOpticalFlow() first.
   */
  public PVector getFlowAt(int x, int y){
    return flow.getFlowAt(x, y);
  }

  /**
   * Draw the optical flow.
   * Be sure to call calculateOpticalFlow() first.
   */
  public void drawOpticalFlow(){
    flow.draw();
  }
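
  // Optical-flow sketch (hypothetical; assumes the Processing Video library's
  // Capture class and a 640x480 camera). The frame is converted to gray first,
  // on the assumption that the Flow class (not shown here) works on a
  // single-channel image.
  //
  //   import processing.video.*;
  //
  //   Capture cam;
  //   OpenCV opencv;
  //
  //   void setup() {
  //     size(640, 480);
  //     cam = new Capture(this, 640, 480);
  //     cam.start();
  //     opencv = new OpenCV(this, 640, 480);
  //   }
  //
  //   void draw() {
  //     if (!cam.available()) return;
  //     cam.read();
  //     opencv.loadImage(cam);
  //     opencv.gray();
  //     opencv.calculateOpticalFlow();
  //
  //     image(cam, 0, 0);
  //     stroke(255, 0, 0);
  //     opencv.drawOpticalFlow();
  //
  //     PVector avg = opencv.getAverageFlow();
  //     println("average flow: " + avg.x + ", " + avg.y);
  //   }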

  /**
   * Flip the current image.
   *
   * @param direction
   *   One of: OpenCV.HORIZONTAL, OpenCV.VERTICAL, or OpenCV.BOTH
   */
  public void flip(int direction){
    Core.flip(getCurrentMat(), getCurrentMat(), direction);
  }

  /**
   * Adjust the contrast of the image. Works on color or black and white images.
   * Each color channel (not alpha) is multiplied by amt, and the results are
   * clipped to 0-255.
   *
   * @param amt
   *   Amount of contrast to apply. 0-1.0 reduces contrast. Above 1.0 increases contrast.
   */
  public void contrast(float amt){
    Scalar modifier;
    if(useColor){
      modifier = new Scalar(amt, amt, amt, 1);
    } else {
      modifier = new Scalar(amt);
    }

    Core.multiply(getCurrentMat(), modifier, getCurrentMat());
  }

  /**
   * Get the x-y location of the maximum value in the current image.
   * Only works on single-channel (grayscale) images.
   *
   * @return
   *   A PVector with the location of the maximum value.
   */
  public PVector max(){
    MinMaxLocResult r = Core.minMaxLoc(getCurrentMat());
    return OpenCV.pointToPVector(r.maxLoc);
  }

  /**
   * Get the x-y location of the minimum value in the current image.
   * Only works on single-channel (grayscale) images.
   *
   * @return
   *   A PVector with the location of the minimum value.
   */
  public PVector min(){
    MinMaxLocResult r = Core.minMaxLoc(getCurrentMat());
    return OpenCV.pointToPVector(r.minLoc);
  }

  /**
   * Helper function to convert an OpenCV Point into a Processing PVector.
   *
   * @param p
   *   A Point
   * @return
   *   A PVector
   */
  public static PVector pointToPVector(Point p){
    return new PVector((float)p.x, (float)p.y);
  }

  /**
   * Adjust the brightness of the image. Works on color or black and white images.
   * amt is added to each color channel (the alpha channel is left unchanged),
   * and the results are clipped to 0-255.
   *
   * @param amt
   *   The amount to brighten the image. Ranges from -255 to 255.
   */
  public void brightness(int amt){
    Scalar modifier;
    if(useColor){
      // Add nothing to the alpha channel so transparency is unchanged.
      modifier = new Scalar(amt, amt, amt, 0);
    } else {
      modifier = new Scalar(amt);
    }

    Core.add(getCurrentMat(), modifier, getCurrentMat());
  }
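
  // Worked example of the saturating 8-bit arithmetic used by contrast() and
  // brightness() (illustrative pixel values; results are clipped to 0-255):
  //
  //   contrast(1.5):    100 -> 150    200 -> 255  (300 clipped)
  //   contrast(0.5):    100 -> 50     200 -> 100
  //   brightness(50):   100 -> 150    220 -> 255  (270 clipped)
  //   brightness(-50):  100 -> 50      30 -> 0    (-20 clipped)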

  /**
   * Helper to create a new OpenCV Mat whose size, channels, and
   * bit depth match an existing Mat. Pixel data is not copied;
   * the new Mat's contents are uninitialized.
   *
   * @param m
   *   The Mat to match
   * @return
   *   A new Mat
   */
  public static Mat imitate(Mat m){
    return new Mat(m.height(), m.width(), m.type());
  }

  /**
   * Calculate the absolute difference between the current image
   * loaded into OpenCV and a second image. The result is stored
   * in the loaded image in OpenCV. Works on both color and grayscale
   * images. The second image must have the same dimensions as the
   * working image.
   *
   * @param img
   *   A PImage to diff against.
   */
  public void diff(PImage img){
    // Copy the PImage into a Mat shaped like the color Mat, then reorder
    // its ARGB channels to BGRA so both branches see OpenCV's channel order.
    Mat imgMat = imitate(getColor());
    toCv(img, imgMat);
    ARGBtoBGRA(imgMat, imgMat);

    Mat dst = imitate(getCurrentMat());

    if(useColor){
      Core.absdiff(getCurrentMat(), imgMat, dst);
    } else {
      Core.absdiff(getCurrentMat(), OpenCV.gray(imgMat), dst);
    }

    dst.assignTo(getCurrentMat());
  }

  /**
   * A helper function that diffs two Mats using absdiff.
   * Places the result back into mat1.
   *
   * @param mat1
   *   The first Mat; also receives the result
   * @param mat2
   *   The Mat to diff against
   */
  public static void diff(Mat mat1, Mat mat2){
    Mat dst = imitate(mat1);
    Core.absdiff(mat1, mat2, dst);
    dst.assignTo(mat1);
  }

  /**
   * Apply a global threshold to an image. Produces a binary image
   * with white (255) pixels where the original image was above the threshold
   * and black (0) where it was at or below it.
   *
   * @param threshold
   *   An int from 0-255.
   */
  public void threshold(int threshold){
    Imgproc.threshold(getCurrentMat(), getCurrentMat(), threshold, 255, Imgproc.THRESH_BINARY);
  }

  /**
   * Apply a global threshold to the image. The threshold is determined by Otsu's method, which
   * chooses the level that minimizes the combined (weighted) variance of the pixel values in the
   * resulting black and white classes. Otsu's method requires a single-channel (grayscale) image.
   *
   * See: https://en.wikipedia.org/wiki/Otsu's_method
   */
  public void threshold() {
    Imgproc.threshold(getCurrentMat(), getCurrentMat(), 0, 255, Imgproc.THRESH_BINARY | Imgproc.THRESH_OTSU);
  }

  /**
   * Apply an adaptive threshold to an image. Produces a binary image
   * with white pixels where the original image was above a local threshold
   * and black elsewhere. The threshold for each pixel is a Gaussian-weighted
   * mean of its blockSize x blockSize neighborhood, minus c.
   * Only works on grayscale images.
   *
   * See:
   * http://docs.opencv.org/java/org/opencv/imgproc/Imgproc.html#adaptiveThreshold(org.opencv.core.Mat, org.opencv.core.Mat, double, int, int, int, double)
   *
   * @param blockSize
   *   The size of the pixel neighborhood to use. Must be odd and greater than 1.
   * @param c
   *   A constant subtracted from the weighted mean of each neighborhood.
   */
  public void adaptiveThreshold(int blockSize, int c){
    try{
      Imgproc.adaptiveThreshold(getCurrentMat(), getCurrentMat(), 255, Imgproc.ADAPTIVE_THRESH_GAUSSIAN_C, Imgproc.THRESH_BINARY, blockSize, c);
    } catch(CvException e){
      PApplet.println("ERROR: adaptiveThreshold function only works on gray images.");
    }
  }

  /**
   * Equalize the histogram of the image. This spreads the image's intensity
   * values over the full 0-255 range, increasing contrast. Only works on
   * grayscale images.
   *
   * See: http://docs.opencv.org/java/org/opencv/imgproc/Imgproc.html#equalizeHist(org.opencv.core.Mat, org.opencv.core.Mat)
   */
  public void equalizeHistogram(){
    try{
      Imgproc.equalizeHist(getCurrentMat(), getCurrentMat());
    } catch(CvException e){
      PApplet.println("ERROR: equalizeHistogram only works on a gray image.");
    }
  }

  /**
   * Invert the image: each 8-bit value v becomes 255 - v.
   * On color images this also inverts the alpha channel.
   * See: http://docs.opencv.org/java/org/opencv/core/Core.html#bitwise_not(org.opencv.core.Mat, org.opencv.core.Mat)
   */
  public void invert(){
    Core.bitwise_not(getCurrentMat(), getCurrentMat());
  }

  /**
   * Dilate the image. Dilation is a morphological operation (i.e. it affects the shape) often used to
   * close holes in contours. It expands white areas of the image. A 3x3 rectangular kernel is used.
   *
   * See:
   * http://docs.opencv.org/java/org/opencv/imgproc/Imgproc.html#dilate(org.opencv.core.Mat, org.opencv.core.Mat, org.opencv.core.Mat)
   */
  public void dilate(){
    Imgproc.dilate(getCurrentMat(), getCurrentMat(), new Mat());
  }

  /**
   * Erode the image. Erosion is a morphological operation (i.e. it affects the shape) often used to
   * remove small specks of noise or to separate objects that touch. It contracts white areas of the
   * image. A 3x3 rectangular kernel is used.
   *
   * See:
   * http://docs.opencv.org/java/org/opencv/imgproc/Imgproc.html#erode(org.opencv.core.Mat, org.opencv.core.Mat, org.opencv.core.Mat)
   */
  public void erode(){
    Imgproc.erode(getCurrentMat(), getCurrentMat(), new Mat());
  }

  /**
   * Apply a morphological operation (e.g. opening, closing) to the image with a given kernel element.
   *
   * See:
   * http://docs.opencv.org/doc/tutorials/imgproc/opening_closing_hats/opening_closing_hats.html
   *
   * @param operation
   *   The morphological operation to apply: Imgproc.MORPH_CLOSE, MORPH_OPEN,
   *   MORPH_TOPHAT, MORPH_BLACKHAT, or MORPH_GRADIENT.
   * @param kernelElement
   *   The shape to apply the operation with: Imgproc.MORPH_RECT, MORPH_CROSS, or MORPH_ELLIPSE.
   * @param width
   *   Width of the shape.
   * @param height
   *   Height of the shape.
   */
  public void morphX(int operation, int kernelElement, int width, int height) {
    Mat kernel = Imgproc.getStructuringElement(kernelElement, new Size(width, height));
    Imgproc.morphologyEx(getCurrentMat(), getCurrentMat(), operation, kernel);
  }

  /**
   * Close the image (dilate, then erode) with an elliptical kernel of a given size.
   *
   * See:
   * http://docs.opencv.org/doc/tutorials/imgproc/opening_closing_hats/opening_closing_hats.html#closing
   *
   * @param size
   *   Width and height of the elliptical kernel, in pixels.
   */
  public void close(int size) {
    Mat kernel = Imgproc.getStructuringElement(Imgproc.MORPH_ELLIPSE, new Size(size, size));
    Imgproc.morphologyEx(getCurrentMat(), getCurrentMat(), Imgproc.MORPH_CLOSE, kernel);
  }

  /**
   * Open the image (erode, then dilate) with an elliptical kernel of a given size.
   *
   * See:
   * http://docs.opencv.org/doc/tutorials/imgproc/opening_closing_hats/opening_closing_hats.html#opening
   *
   * @param size
   *   Width and height of the elliptical kernel, in pixels.
   */
  public void open(int size) {
    Mat kernel = Imgproc.getStructuringElement(Imgproc.MORPH_ELLIPSE, new Size(size, size));
    Imgproc.morphologyEx(getCurrentMat(), getCurrentMat(), Imgproc.MORPH_OPEN, kernel);
  }

  /**
   * Blur an image symmetrically with a normalized box filter.
   *
   * @param blurSize
   *   int - the width and height of the box filter, in pixels.
   */
  public void blur(int blurSize){
    Imgproc.blur(getCurrentMat(), getCurrentMat(), new Size(blurSize, blurSize));
  }

  /**
   * Blur an image asymmetrically, by a different number of pixels in the x- and y-directions.
   *
   * @param blurW
   *   Amount to blur in the x-direction
   * @param blurH
   *   Amount to blur in the y-direction
   */
  public void blur(int blurW, int blurH){
    Imgproc.blur(getCurrentMat(), getCurrentMat(), new Size(blurW, blurH));
  }

  /**
   * Find edges in the image using Canny edge detection.
   * The result is a binary edge image.
   *
   * @param lowThreshold
   *   First threshold for the hysteresis procedure.
   * @param highThreshold
   *   Second threshold for the hysteresis procedure.
   */
  public void findCannyEdges(int lowThreshold, int highThreshold){
    Imgproc.Canny(getCurrentMat(), getCurrentMat(), lowThreshold, highThreshold);
  }

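  /**
   * Find edges using the Sobel operator. The derivative is computed in
   * 32-bit float and converted back to the current image's type, so
   * negative gradient values are clipped to 0.
   *
   * @param dx
   *   Order of the derivative in x.
   * @param dy
   *   Order of the derivative in y.
   */
  public void findSobelEdges(int dx, int dy){
    Mat sobeled = new Mat(getCurrentMat().height(), getCurrentMat().width(), CvType.CV_32F);
    Imgproc.Sobel(getCurrentMat(), sobeled, CvType.CV_32F, dx, dy);
    sobeled.convertTo(getCurrentMat(), getCurrentMat().type());
  }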

  /**
   * Find edges using the Scharr operator.
   *
   * @param direction
   *   One of: OpenCV.HORIZONTAL, OpenCV.VERTICAL, or OpenCV.BOTH.
   *   BOTH adds the horizontal and vertical results together.
   */
  public void findScharrEdges(int direction){
    if(direction == HORIZONTAL){
      Imgproc.Scharr(getCurrentMat(), getCurrentMat(), -1, 1, 0);
    }

    if(direction == VERTICAL){
      Imgproc.Scharr(getCurrentMat(), getCurrentMat(), -1, 0, 1);
    }

    if(direction == BOTH){
      Mat hMat = imitate(getCurrentMat());
      Mat vMat = imitate(getCurrentMat());
      Imgproc.Scharr(getCurrentMat(), hMat, -1, 1, 0);
      Imgproc.Scharr(getCurrentMat(), vMat, -1, 0, 1);
      Core.add(vMat, hMat, getCurrentMat());
    }
  }

  /**
   * Find all contours in the current image, including holes, without sorting.
   * Equivalent to findContours(true, false).
   */
  public ArrayList<Contour> findContours(){
    return findContours(true, false);
  }

  /**
   * Find contours in the current (grayscale, typically binary) image.
   *
   * @param findHoles
   *   If true, all contours are retrieved (RETR_LIST), including holes;
   *   if false, only the outermost contours are retrieved (RETR_EXTERNAL).
   * @param sort
   *   If true, the result is sorted with ContourComparator.
   * @return
   *   An ArrayList of Contour objects.
   */
  public ArrayList<Contour> findContours(boolean findHoles, boolean sort){
    ArrayList<Contour> result = new ArrayList<Contour>();

    ArrayList<MatOfPoint> contourMat = new ArrayList<MatOfPoint>();
    try{
      int contourFindingMode = (findHoles ? Imgproc.RETR_LIST : Imgproc.RETR_EXTERNAL);
      Imgproc.findContours(getCurrentMat(), contourMat, new Mat(), contourFindingMode, Imgproc.CHAIN_APPROX_NONE);
    } catch(CvException e){
      PApplet.println("ERROR: findContours only works with a gray image.");
    }

    for (MatOfPoint c : contourMat) {
      result.add(new Contour(parent, c));
    }

    if(sort){
      Collections.sort(result, new ContourComparator());
    }

    return result;
  }
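
  // Contour-finding sketch (hypothetical file name "shapes.png", assumed to
  // match the constructor's 640x480 size). The image is converted to gray and
  // binarized with Otsu's method first, since findContours() expects a
  // single-channel image.
  //
  //   OpenCV opencv;
  //
  //   void setup() {
  //     size(640, 480);
  //     opencv = new OpenCV(this, 640, 480);
  //     opencv.loadImage("shapes.png");
  //     opencv.gray();
  //     opencv.threshold();  // Otsu's method picks the threshold
  //     // Outer contours only (no holes), sorted with ContourComparator.
  //     ArrayList<Contour> contours = opencv.findContours(false, true);
  //     println("found " + contours.size() + " outer contours");
  //   }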

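  /**
   * Find line segments in the current (typically edge-detected) image using the
   * probabilistic Hough transform, with a distance resolution of 1 pixel and an
   * angle resolution of 1 degree.
   *
   * @param threshold
   *   Minimum number of votes a line needs in order to be returned.
   * @param minLineLength
   *   Line segments shorter than this are rejected.
   * @param maxLineGap
   *   Maximum gap between points on the same line for them to be linked.
   * @return
   *   An ArrayList of Line objects.
   */
  public ArrayList<Line> findLines(int threshold, double minLineLength, double maxLineGap){
    ArrayList<Line> result = new ArrayList<Line>();

    Mat lineMat = new Mat();
    Imgproc.HoughLinesP(getCurrentMat(), lineMat, 1, PConstants.PI/180.0, threshold, minLineLength, maxLineGap);

    // Each element holds one segment as (x1, y1, x2, y2). Depending on the
    // OpenCV version the result is a 1xN or an Nx1 Mat, so visit every element.
    for (int row = 0; row < lineMat.rows(); row++) {
      for (int col = 0; col < lineMat.cols(); col++) {
        double[] coords = lineMat.get(row, col);
        result.add(new Line(coords[0], coords[1], coords[2], coords[3]));
      }
    }

    return result;
  }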

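  /**
   * Find the inner corners of a chessboard calibration pattern.
   * Whether the full pattern was found is not reported.
   *
   * @param patternWidth
   *   Number of inner corners per chessboard row.
   * @param patternHeight
   *   Number of inner corners per chessboard column.
   * @return
   *   An ArrayList of PVectors with the corner locations.
   */
  public ArrayList<PVector> findChessboardCorners(int patternWidth, int patternHeight){
    MatOfPoint2f corners = new MatOfPoint2f();
    Calib3d.findChessboardCorners(getCurrentMat(), new Size(patternWidth, patternHeight), corners);
    return matToPVectors(corners);
  }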

  /**
   * Calculate a normalized histogram of a Mat. Values are scaled with
   * Core.normalize() to unit L2 norm, which keeps every bin within 0.0-1.0.
   * Use the three-argument version and pass false to keep the values unnormalized.
   *
   * @param mat
   *   The Mat from which to calculate the histogram. Get this from getGray(), getR(), getG(), getB(), etc.
   * @param numBins
   *   The number of bins into which the histogram should be divided.
   * @return
   *   A Histogram object that you can call draw() on.
   */
  public Histogram findHistogram(Mat mat, int numBins){
    return findHistogram(mat, numBins, true);
  }

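  /**
   * Calculate the histogram of the first channel of a Mat over the value range 0-256.
   *
   * @param mat
   *   The Mat from which to calculate the histogram. Get this from getGray(), getR(), getG(), getB(), etc.
   * @param numBins
   *   The number of bins into which the histogram should be divided.
   * @param normalize
   *   If true, scale the histogram to unit L2 norm with Core.normalize().
   * @return
   *   A Histogram object that you can call draw() on.
   */
  public Histogram findHistogram(Mat mat, int numBins, boolean normalize){
    MatOfInt channels = new MatOfInt(0);
    MatOfInt histSize = new MatOfInt(numBins);
    float[] r = {0f, 256f};
    MatOfFloat ranges = new MatOfFloat(r);
    Mat hist = new Mat();

    ArrayList<Mat> images = new ArrayList<Mat>();
    images.add(mat);

    Imgproc.calcHist(images, channels, new Mat(), hist, histSize, ranges);

    if(normalize){
      Core.normalize(hist, hist);
    }

    return new Histogram(parent, hist);
  }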
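
  // Histogram sketch (hypothetical file name "scene.jpg", assumed to match the
  // constructor's size). With 256 bins over the range 0-256, each bin covers
  // exactly one gray level; the unnormalized version holds raw pixel counts,
  // so its bins sum to the number of pixels in the image.
  //
  //   opencv.loadImage("scene.jpg");
  //   opencv.gray();
  //   Histogram grayHist = opencv.findHistogram(opencv.getGray(), 256);        // unit L2 norm
  //   Histogram rawHist = opencv.findHistogram(opencv.getGray(), 256, false);  // pixel counts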

  /**
   * Filter the image for values between a lower and upper bound (inclusive).
   * Converts the current image into a binary image with white where pixel
   * values were within bounds and black elsewhere. Intended for grayscale images.
   *
   * @param lowerBound
   *   The lowest value that counts as within bounds.
   * @param upperBound
   *   The highest value that counts as within bounds.
   */
  public void inRange(int lowerBound, int upperBound){
    Core.inRange(getCurrentMat(), new Scalar(lowerBound), new Scalar(upperBound), getCurrentMat());
  }

  /**
   * Convert a BGRA Mat to grayscale.
   *
   * @param src
   *   A Mat of type 8UC4 with channels arranged as BGRA.
   * @return
   *   A new Mat of type 8UC1 in grayscale.
   */
  public static Mat gray(Mat src){
    Mat result = new Mat(src.height(), src.width(), CvType.CV_8UC1);
    Imgproc.cvtColor(src, result, Imgproc.COLOR_BGRA2GRAY);

    return result;
  }

  /**
   * Convert the loaded color image to grayscale and switch OpenCV
   * to grayscale mode (see useGray()).
   */
  public void gray(){
    matGray = gray(matBGRA);
    useGray();
  }

  /**
   * Set a Region of Interest within the image. Subsequent image processing
   * functions will apply to this ROI rather than the full image.
   * The full image will still be included in the output.
   *
   * @param x
   *   x-coordinate of the ROI's top-left corner
   * @param y
   *   y-coordinate of the ROI's top-left corner
   * @param w
   *   Width of the ROI
   * @param h
   *   Height of the ROI
   * @return
   *   false if the requested ROI exceeds the bounds of the working image;
   *   true if the ROI was successfully set.
   */
  public boolean setROI(int x, int y, int w, int h){
    if(x < 0 ||
       x + w > width ||
       y < 0 ||
       y + h > height){
      return false;
    } else {
      roiWidth = w;
      roiHeight = h;

      // matROI is a view that shares pixel data with the full-size Mat.
      if(useColor){
        nonROImat = matBGRA;
        matROI = new Mat(matBGRA, new Rect(x, y, w, h));
      } else {
        nonROImat = matGray;
        matROI = new Mat(matGray, new Rect(x, y, w, h));
      }
      useROI = true;

      return true;
    }
  }

  /**
   * Release the Region of Interest so that processing applies to the full image again.
   */
  public void releaseROI(){
    useROI = false;
  }
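
  // ROI sketch (hypothetical file name "scene.jpg", assumed to be 640x480).
  // Because the ROI Mat is created as a view into the full-size Mat, blurring
  // it changes only that rectangle of the full-size output.
  //
  //   opencv.loadImage("scene.jpg");
  //   if (opencv.setROI(100, 100, 200, 150)) {
  //     opencv.blur(9);        // only the 200x150 region is blurred
  //     opencv.releaseROI();   // later calls affect the whole image again
  //   }
  //   image(opencv.getOutput(), 0, 0);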

  /**
   * Load an image from a path.
   *
   * @param imgPath
   *   String with the path to the image
   */
  public void loadImage(String imgPath){
    loadImage(parent.loadImage(imgPath));
  }

  // NOTE: We're not handling the signed/unsigned
  // conversion. Is that an issue?

  /**
   * Load a PImage into OpenCV. The image should have the same width and
   * height that this OpenCV object was created with.
   *
   * @param img
   *   The PImage to load.
   */
  public void loadImage(PImage img){
    // FIXME: is there a better way to hold onto
    // this?
    inputImage = img;

    toCv(img, matBGRA);
    ARGBtoBGRA(matBGRA, matBGRA);
    populateBGRA();

    if(useColor){
      useColor(this.colorSpace);
    } else {
      gray();
    }
  }

  /**
   * Reorder the channels of a 4-channel Mat from ARGB (as produced by toCv())
   * to BGRA (the order OpenCV's color conversions expect).
   *
   * @param argb
   *   The source Mat, with channels arranged as ARGB.
   * @param bgra
   *   The destination Mat; may be the same object as argb.
   */
  public static void ARGBtoBGRA(Mat argb, Mat bgra){
    ArrayList<Mat> channels = new ArrayList<Mat>();
    Core.split(argb, channels);

    // Starts as ARGB; reverse the channel order to make BGRA.
    ArrayList<Mat> reordered = new ArrayList<Mat>();
    reordered.add(channels.get(3));
    reordered.add(channels.get(2));
    reordered.add(channels.get(1));
    reordered.add(channels.get(0));

    Core.merge(reordered, bgra);
  }

  /**
   * Return the number of pixels in the working image (width * height).
   */
  public int getSize(){
    return width * height;
  }

  /**
   * Convert a 4-channel OpenCV Mat into pixels that can be
   * copied into a 4-channel ARGB PImage's pixel array.
   *
   * @param m
   *   An 8UC4 Mat with channels arranged as BGRA
   * @return
   *   An int[] formatted to be the pixels of a PImage
   */
  public int[] matToARGBPixels(Mat m){
    int pImageChannels = 4;
    int numPixels = m.width()*m.height();
    int[] intPixels = new int[numPixels];
    byte[] matPixels = new byte[numPixels*pImageChannels];

    m.get(0, 0, matPixels);
    // Read each B, G, R, A byte group as a little-endian int, which yields 0xAARRGGBB.
    ByteBuffer.wrap(matPixels).order(ByteOrder.LITTLE_ENDIAN).asIntBuffer().get(intPixels);
    return intPixels;
  }

  /**
   * Convert an OpenCV Mat object into a PImage
   * to be used in other Processing code.
   * Copies the Mat's pixel data into the PImage's pixel array,
   * which is expensive.
   *
   * (Mainly used internally by OpenCV. Inspired by toOf()
   * from Kyle McDonald's ofxCv.)
   *
   * @param m
   *   A Mat you want converted
   * @param img
   *   The PImage you want the Mat converted into.
   */
  public void toPImage(Mat m, PImage img){
    img.loadPixels();

    if(m.channels() == 3){
      // Add an opaque alpha channel.
      Mat m2 = new Mat();
      Imgproc.cvtColor(m, m2, Imgproc.COLOR_RGB2RGBA);
      img.pixels = matToARGBPixels(m2);
    } else if(m.channels() == 1){
      // Replicate the gray value into each color channel and add opaque alpha.
      Mat m2 = new Mat();
      Imgproc.cvtColor(m, m2, Imgproc.COLOR_GRAY2RGBA);
      img.pixels = matToARGBPixels(m2);
    } else if(m.channels() == 4){
      img.pixels = matToARGBPixels(m);
    }

    img.updatePixels();
  }

  /**
   * Convert a Processing PImage to an OpenCV Mat. The Mat receives the
   * pixels with channels arranged as ARGB; use ARGBtoBGRA() to reorder
   * them for OpenCV.
   * (Inspired by Kyle McDonald's ofxCv's toCv())
   *
   * @param img
   *   The PImage to convert.
   * @param m
   *   The 8UC4 Mat to receive the image data; it must have the same dimensions as img.
   */
  public static void toCv(PImage img, Mat m){
    BufferedImage image = (BufferedImage)img.getNative();
    int[] matPixels = ((DataBufferInt)image.getRaster().getDataBuffer()).getData();

    // A ByteBuffer is big-endian by default, so each 0xAARRGGBB int
    // is written as the bytes A, R, G, B.
    ByteBuffer bb = ByteBuffer.allocate(matPixels.length * 4);
    IntBuffer ib = bb.asIntBuffer();
    ib.put(matPixels);

    byte[] bvals = bb.array();

    m.put(0, 0, bvals);
  }
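
  // Worked byte-order example (illustrative pixel value): a Processing pixel
  // 0x80FF4010 (A=0x80, R=0xFF, G=0x40, B=0x10) is written by toCv() as the
  // bytes 0x80, 0xFF, 0x40, 0x10 (channels A, R, G, B), because a ByteBuffer
  // is big-endian by default. ARGBtoBGRA() reverses them to 0x10, 0x40, 0xFF,
  // 0x80 (B, G, R, A). matToARGBPixels() reads those four bytes as a
  // little-endian int, giving 0x80FF4010 again, so the round trip is lossless.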

  /**
   * Convert the points in a MatOfPoint into an ArrayList of PVectors.
   */
  public static ArrayList<PVector> matToPVectors(MatOfPoint mat){
    ArrayList<PVector> result = new ArrayList<PVector>();
    Point[] points = mat.toArray();
    for(int i = 0; i < points.length; i++){
      result.add(new PVector((float)points[i].x, (float)points[i].y));
    }

    return result;
  }

  /**
   * Convert the points in a MatOfPoint2f into an ArrayList of PVectors.
   */
  public static ArrayList<PVector> matToPVectors(MatOfPoint2f mat){
    ArrayList<PVector> result = new ArrayList<PVector>();
    Point[] points = mat.toArray();
    for(int i = 0; i < points.length; i++){
      result.add(new PVector((float)points[i].x, (float)points[i].y));
    }

    return result;
  }

1260 |

1261 | public String matToS(Mat mat){

1262 | return CvType.typeToString(mat.type());

1263 | }

1264 |

1265 | public PImage getInput(){

1266 | return inputImage;

1267 | }

1268 |

1269 | public PImage getOutput(){

1270 | if(useColor){

1271 | toPImage(matBGRA, outputImage);

1272 | } else {

1273 | toPImage(matGray, outputImage);

1274 | }

1275 |

1276 | return outputImage;

1277 | }

1278 |

1279 | public PImage getSnapshot(){

1280 | PImage result;

1281 |

1282 | if(useROI){

1283 | result = getSnapshot(matROI);

1284 | } else {

1285 | if(useColor){

1286 | if(colorSpace == PApplet.HSB){

1287 | result = getSnapshot(matHSV);

1288 | } else {

1289 | result = getSnapshot(matBGRA);

1290 | }

1291 | } else {

1292 | result = getSnapshot(matGray);

1293 | }

1294 | }

1295 | return result;

1296 | }

1297 |

1298 | public PImage getSnapshot(Mat m){

1299 | PImage result = parent.createImage(m.width(), m.height(), PApplet.ARGB);

1300 | toPImage(m, result);

1301 | return result;

1302 | }

1303 |

1304 | public Mat getR(){

1305 | return matR;

1306 | }

1307 |

1308 | public Mat getG(){

1309 | return matG;

1310 | }

1311 |

1312 | public Mat getB(){

1313 | return matB;

1314 | }

1315 |

1316 | public Mat getA(){

1317 | return matA;

1318 | }

1319 |

1320 | public Mat getH(){

1321 | return matH;

1322 | }

1323 |

1324 | public Mat getS(){

1325 | return matS;

1326 | }

1327 |

1328 | public Mat getV(){

1329 | return matV;

1330 | }

1331 |

1332 | public Mat getGray(){

1333 | return matGray;

1334 | }

1335 |

1336 | public void setGray(Mat m){

1337 | matGray = m;

1338 | useColor = false;

1339 | }

1340 |

1341 | public void setColor(Mat m){

1342 | matBGRA = m;

1343 | useColor = true;

1344 | }

1345 |

1346 | public Mat getColor(){

1347 | return matBGRA;

1348 | }

1349 |

1350 | public Mat getROI(){

1351 | return matROI;

1352 | }

1353 |

1354 | private void welcome() {

1355 | System.out.println("Java OpenCV " + Core.VERSION);

1356 | }

1357 |

1358 | /**

1359 | * return the version of the library.

1360 | *

1361 | * @return String

1362 | */

1363 | public static String version() {

1364 | return VERSION;

1365 | }

1366 | }

1367 |

1368 |

--------------------------------------------------------------------------------

/src/test/java/AlphaChannelTest.java:

--------------------------------------------------------------------------------

import processing.core.PApplet;
import processing.core.PImage;

import gab.opencv.*;
import org.opencv.core.*;

public class AlphaChannelTest extends PApplet {

    public static void main(String... args) {
        AlphaChannelTest sketch = new AlphaChannelTest();
        sketch.runSketch();
    }

    public void settings() {
        size(800, 300);
    }

    OpenCV opencv;

    PImage input, output;

    public void setup() {
        opencv = new OpenCV(this, 0, 0);
        input = createImage(400, 300, ARGB);

        // fill red (note: input is replaced by the downloaded image below)
        for (int y = 0; y < input.height; y++) {
            for (int x = 0; x < input.width; x++) {
                input.set(x, y, color(255, 0, 0));
            }
        }

        input = loadImage("https://github.com/pjreddie/darknet/raw/master/data/dog.jpg");
        input.resize(400, 300);

        Mat mat = new Mat(input.height, input.width, CvType.CV_8UC4);
        OpenCV.toCv(input, mat);

        output = createImage(input.width, input.height, RGB);
        opencv.toPImage(mat, output);

        println("first pixel of input: " + binary(input.pixels[0]));
        println("first pixel of mat:");
        println(mat.get(0, 0));
        println("first pixel of output :" + binary(output.pixels[0]));
    }

    public void draw() {
        image(input, 0, 0);
        image(output, input.width, 0);
    }
}

--------------------------------------------------------------------------------

/src/test/java/GrabImageTest.java:

--------------------------------------------------------------------------------

import gab.opencv.OpenCV;
import processing.core.PApplet;
import processing.core.PImage;

public class GrabImageTest extends PApplet {

    public static void main(String... args) {
        GrabImageTest sketch = new GrabImageTest();
        sketch.runSketch();
    }

    public void settings() {
        size(640, 480);
    }

    PImage testImage;
    OpenCV opencv;

    public void setup() {
        testImage = loadImage(sketchPath("examples/BrightestPoint/robot_light.jpg"));
        opencv = new OpenCV(this, testImage.width, testImage.height);
        noLoop();
    }

    public void draw() {
        opencv.useColor();
        opencv.loadImage(testImage);
        PImage result = opencv.getSnapshot();
        image(result, 0, 0);
    }
}

--------------------------------------------------------------------------------

/src/test/java/OpticalFlowTest.java:

--------------------------------------------------------------------------------

import gab.opencv.OpenCV;
import processing.core.PApplet;
import processing.core.PImage;

public class OpticalFlowTest extends PApplet {

    public static void main(String... args) {
        OpticalFlowTest sketch = new OpticalFlowTest();
        sketch.runSketch();
    }

    public void settings() {
        size(640, 480);
    }

    PImage testImage;
    OpenCV opencv;

    public void setup() {
        testImage = loadImage(sketchPath("examples/BrightestPoint/robot_light.jpg"));
        opencv = new OpenCV(this, testImage.width, testImage.height);
    }

    public void draw() {
        opencv.loadImage(testImage);
        opencv.calculateOpticalFlow();
        println("Optical Flow was running");
    }
}

--------------------------------------------------------------------------------

Code: [FaceDetection.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/FaceDetection/FaceDetection.pde)

#### BrightnessContrast

Adjust the brightness and contrast of color and gray images.


Code: [BrightnessContrast.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/BrightnessContrast/BrightnessContrast.pde)

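Brightness and contrast can both be expressed as one linear map per pixel, `out = alpha * in + beta`, clamped to the 8-bit range: `beta` shifts brightness and `alpha` scales contrast. Below is a minimal plain-Java sketch, assuming a grayscale image stored as an `int[]` of values 0 to 255; `BrightnessContrastSketch` is a hypothetical helper for illustration, not part of the library or of the example sketch.

```java
/** Minimal sketch of linear brightness/contrast: out = clamp(alpha * in + beta). */
final class BrightnessContrastSketch {

    private BrightnessContrastSketch() {}

    /** Rounds and clamps a value into the 8-bit range [0, 255]. */
    static int clamp8(double v) {
        return (int) Math.max(0, Math.min(255, Math.round(v)));
    }

    /**
     * Applies contrast (alpha) and brightness (beta) to every gray value.
     * Returns a new array; the input is left unchanged.
     */
    static int[] adjust(int[] gray, double alpha, double beta) {
        int[] out = new int[gray.length];
        for (int i = 0; i < gray.length; i++) {
            out[i] = clamp8(alpha * gray[i] + beta);
        }
        return out;
    }
}
```

For instance, `adjust(pixels, 1.0, 40)` brightens every pixel by 40 levels, and values that would exceed 255 saturate at 255 instead of wrapping around.
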

#### FilterImages

Basic filtering operations on images: threshold, blur, and adaptive thresholds.


86 | Code: [FilterImages.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/FilterImages/FilterImages.pde)

87 |

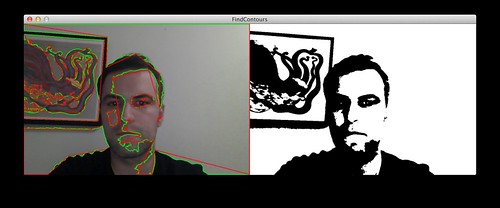
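The two thresholding styles differ in what each pixel is compared against: a global threshold uses one fixed value for the whole image, while an adaptive threshold compares each pixel with a statistic of its own neighborhood, which copes better with uneven lighting. Below is a minimal plain-Java sketch, assuming a row-major grayscale `int[]` with values 0 to 255. `ThresholdSketch` is hypothetical; its adaptive variant uses the local mean minus a constant `c`, and its border handling (averaging only in-bounds neighbors) may differ from OpenCV's `Imgproc.adaptiveThreshold`.

```java
/** Minimal sketches of a global binary threshold and a mean-based adaptive threshold. */
final class ThresholdSketch {

    private ThresholdSketch() {}

    /** Global threshold: 255 where value > t, otherwise 0. */
    static int[] threshold(int[] gray, int t) {
        int[] out = new int[gray.length];
        for (int i = 0; i < gray.length; i++) {
            out[i] = gray[i] > t ? 255 : 0;
        }
        return out;
    }

    /**
     * Adaptive threshold: each pixel is compared with the mean of its
     * (2 * radius + 1) x (2 * radius + 1) neighborhood minus c.
     * Near the edges only in-bounds neighbors are averaged.
     */
    static int[] adaptiveMean(int[] gray, int w, int h, int radius, int c) {
        int[] out = new int[gray.length];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int sum = 0, count = 0;
                for (int dy = -radius; dy <= radius; dy++) {
                    for (int dx = -radius; dx <= radius; dx++) {
                        int nx = x + dx, ny = y + dy;
                        if (nx >= 0 && nx < w && ny >= 0 && ny < h) {
                            sum += gray[ny * w + nx];
                            count++;
                        }
                    }
                }
                double localMean = (double) sum / count;
                out[y * w + x] = gray[y * w + x] > localMean - c ? 255 : 0;
            }
        }
        return out;
    }
}
```
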

#### FindContours

Find contours in images and calculate polygon approximations of the contours (i.e., polygons made of straight line segments that closely follow each contour).


Code: [FindContours.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/FindContours/FindContours.pde)

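Polygon approximation of a contour is commonly done with the Ramer-Douglas-Peucker algorithm (OpenCV's `approxPolyDP` documents using Douglas-Peucker). The algorithm keeps the two endpoints, finds the point farthest from the line between them, and recurses on both halves only if that distance exceeds a tolerance `epsilon`. Below is a minimal plain-Java sketch for an open polyline of `double[] {x, y}` points. `PolySimplifySketch` is a hypothetical helper; real contours are closed curves, which this sketch does not special-case.

```java
import java.util.ArrayList;
import java.util.List;

/** Minimal Ramer-Douglas-Peucker sketch for an open polyline of {x, y} points. */
final class PolySimplifySketch {

    private PolySimplifySketch() {}

    /** Perpendicular distance from p to the infinite line through a and b. */
    static double distanceToLine(double[] p, double[] a, double[] b) {
        double dx = b[0] - a[0], dy = b[1] - a[1];
        double len = Math.hypot(dx, dy);
        if (len == 0) {
            return Math.hypot(p[0] - a[0], p[1] - a[1]); // a and b coincide
        }
        return Math.abs(dy * p[0] - dx * p[1] + b[0] * a[1] - b[1] * a[0]) / len;
    }

    /** Returns a simplified copy whose dropped points lie within epsilon (>= 0) of the kept segments. */
    static List<double[]> simplify(List<double[]> pts, double epsilon) {
        if (pts.size() < 3) {
            return new ArrayList<>(pts);
        }
        int last = pts.size() - 1;
        int index = -1;
        double maxDist = 0;
        for (int i = 1; i < last; i++) {
            double d = distanceToLine(pts.get(i), pts.get(0), pts.get(last));
            if (d > maxDist) {
                maxDist = d;
                index = i;
            }
        }
        List<double[]> result = new ArrayList<>();
        if (index >= 0 && maxDist > epsilon) {
            // Keep the farthest point and simplify both halves recursively.
            List<double[]> left = simplify(pts.subList(0, index + 1), epsilon);
            List<double[]> right = simplify(pts.subList(index, pts.size()), epsilon);
            result.addAll(left.subList(0, left.size() - 1)); // avoid duplicating the split point
            result.addAll(right);
        } else {
            // All intermediate points are close enough: keep only the endpoints.
            result.add(pts.get(0));
            result.add(pts.get(last));
        }
        return result;
    }
}
```
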

#### FindEdges

Three different edge-detection techniques: Canny, Scharr, and Sobel.


Code: [FindEdges.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/FindEdges/FindEdges.pde)

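Of the three techniques, Sobel is the simplest to show. Two 3x3 kernels estimate the horizontal and vertical intensity gradients, and their combined magnitude is large where brightness changes sharply. Scharr uses the same structure with different kernel weights, and Canny adds further steps (smoothing, non-maximum suppression, and hysteresis thresholding). Below is a minimal plain-Java sketch, assuming a row-major grayscale `int[]`; `SobelSketch` is hypothetical and simply leaves border pixels at 0.

```java
/** Minimal Sobel sketch: gradient magnitude of a grayscale image (border pixels left at 0). */
final class SobelSketch {

    private SobelSketch() {}

    static final int[][] KX = { {-1, 0, 1}, {-2, 0, 2}, {-1, 0, 1} };
    static final int[][] KY = { {-1, -2, -1}, {0, 0, 0}, {1, 2, 1} };

    /** Returns sqrt(gx^2 + gy^2), clamped to 255, for every interior pixel. */
    static int[] magnitude(int[] gray, int w, int h) {
        int[] out = new int[gray.length];
        for (int y = 1; y < h - 1; y++) {
            for (int x = 1; x < w - 1; x++) {
                int gx = 0, gy = 0;
                for (int ky = -1; ky <= 1; ky++) {
                    for (int kx = -1; kx <= 1; kx++) {
                        int v = gray[(y + ky) * w + (x + kx)];
                        gx += KX[ky + 1][kx + 1] * v;
                        gy += KY[ky + 1][kx + 1] * v;
                    }
                }
                out[y * w + x] = (int) Math.min(255, Math.round(Math.sqrt(gx * gx + gy * gy)));
            }
        }
        return out;
    }
}
```
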

#### FindLines

Find straight lines in the image using Hough line detection.


Code: [HoughLineDetection.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/HoughLineDetection/HoughLineDetection.pde)

#### BrightestPoint

Find the brightest point in an image.


Code: [BrightestPoint.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/BrightestPoint/BrightestPoint.pde)

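Finding the brightest point amounts to a scan for the maximum value and its position, which is what OpenCV's `Core.minMaxLoc` reports for a single-channel `Mat`. Below is a minimal plain-Java sketch, assuming a row-major grayscale `int[]` of width `w`; `BrightestPointSketch` is a hypothetical helper, and on ties it returns the first maximum found in row-major order.

```java
/** Minimal sketch of locating the brightest pixel (first occurrence wins on ties). */
final class BrightestPointSketch {

    private BrightestPointSketch() {}

    /** Returns {x, y} of the maximum value in a row-major grayscale image of width w. */
    static int[] maxLocation(int[] gray, int w) {
        int best = 0;
        for (int i = 1; i < gray.length; i++) {
            if (gray[i] > gray[best]) {
                best = i;
            }
        }
        return new int[] { best % w, best / w };
    }
}
```
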

#### RegionOfInterest

Assign a sub-section of the image (a Region of Interest) to be processed. A video of this example in action: [Region of Interest demo on Vimeo](https://vimeo.com/69009345).


Code: [RegionOfInterest.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/RegionOfInterest/RegionOfInterest.pde)

#### ImageDiff

Find the difference between two images in order to subtract the background or detect a new object in a scene.


Code: [ImageDiff.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/ImageDiff/ImageDiff.pde)

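Image differencing compares a reference frame (for example, an empty background) with the current frame pixel by pixel; large absolute differences mark places where something changed. OpenCV offers the per-pixel step as `Core.absdiff`. Below is a minimal plain-Java sketch, assuming two equally sized grayscale `int[]` images; `ImageDiffSketch` and its threshold parameter `t` are hypothetical.

```java
/** Minimal sketch of image differencing: |a - b| per pixel, then a binary threshold. */
final class ImageDiffSketch {

    private ImageDiffSketch() {}

    /** Per-pixel absolute difference of two equally sized grayscale images. */
    static int[] absDiff(int[] a, int[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("images must have the same size");
        }
        int[] out = new int[a.length];
        for (int i = 0; i < a.length; i++) {
            out[i] = Math.abs(a[i] - b[i]);
        }
        return out;
    }

    /** Marks pixels whose difference exceeds t as changed (255); all others become 0. */
    static int[] changedMask(int[] background, int[] frame, int t) {
        int[] diff = absDiff(background, frame);
        for (int i = 0; i < diff.length; i++) {
            diff[i] = diff[i] > t ? 255 : 0;
        }
        return diff;
    }
}
```
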

#### DilationAndErosion

Thin (erode) and expand (dilate) the shapes in an image, for example to close holes. These are known as "morphological" operations.


Code: [DilationAndErosion.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/DilationAndErosion/DilationAndErosion.pde)

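Both operations slide a small neighborhood over a binary image. Dilation turns a pixel on if any neighbor is on (shapes grow); erosion keeps it on only if all neighbors are on (shapes shrink). Running dilation and then erosion closes small holes while roughly restoring the original outline. Below is a minimal plain-Java sketch for 0/255 images with a 3x3 square neighborhood, assuming a row-major `int[]`. `MorphSketch` is hypothetical (OpenCV's own calls are `Imgproc.dilate` and `Imgproc.erode`), and out-of-bounds neighbors are simply ignored.

```java
/** Minimal sketch of 3x3 binary erosion and dilation on 0/255 images. */
final class MorphSketch {

    private MorphSketch() {}

    /**
     * Dilation sets a pixel to 255 if any in-bounds 3x3 neighbor is 255;
     * erosion sets it to 255 only if all in-bounds neighbors are 255.
     */
    private static int[] apply(int[] img, int w, int h, boolean dilate) {
        int[] out = new int[img.length];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                boolean any = false, all = true;
                for (int dy = -1; dy <= 1; dy++) {
                    for (int dx = -1; dx <= 1; dx++) {
                        int nx = x + dx, ny = y + dy;
                        if (nx < 0 || nx >= w || ny < 0 || ny >= h) continue;
                        boolean on = img[ny * w + nx] == 255;
                        any |= on;
                        all &= on;
                    }
                }
                out[y * w + x] = (dilate ? any : all) ? 255 : 0;
            }
        }
        return out;
    }

    static int[] dilate(int[] img, int w, int h) { return apply(img, w, h, true); }

    static int[] erode(int[] img, int w, int h) { return apply(img, w, h, false); }
}
```
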

#### BackgroundSubtraction

Detect moving objects in a scene. Use background subtraction to distinguish background from foreground and contour tracking to track the foreground objects.


Code: [BackgroundSubtraction.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/BackgroundSubtraction/BackgroundSubtraction.pde)

#### WorkingWithColorImages

Demonstrates what you can do with color images in OpenCV (threshold, blur, etc.) and what you can't (many other operations).


Code: [WorkingWithColorImages.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/WorkingWithColorImages/WorkingWithColorImages.pde)

#### ColorChannels

Separate a color image into its red, green, and blue channels, or its hue, saturation, and value channels, in order to work with each channel individually.


Code: [ColorChannels.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/ColorChannels/ColorChannels.pde)

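A Processing `PImage` stores each pixel as one packed `int` in ARGB order (alpha in the top byte, then red, green, blue), whereas OpenCV keeps color channels in B, G, R(, A) order. Splitting packed pixels is therefore a matter of bit shifts and masks. Below is a minimal plain-Java sketch of splitting and re-packing; `ChannelSplitSketch` is a hypothetical helper, and hue/saturation/value channels would additionally require a color-space conversion.

```java
/** Minimal sketch of splitting packed ARGB pixels (as in a Processing PImage) into channel planes. */
final class ChannelSplitSketch {

    private ChannelSplitSketch() {}

    /** Returns {alpha, red, green, blue} planes, each holding values 0-255. */
    static int[][] split(int[] argb) {
        int[][] planes = new int[4][argb.length];
        for (int i = 0; i < argb.length; i++) {
            int p = argb[i];
            planes[0][i] = (p >>> 24) & 0xFF; // alpha
            planes[1][i] = (p >> 16) & 0xFF;  // red
            planes[2][i] = (p >> 8) & 0xFF;   // green
            planes[3][i] = p & 0xFF;          // blue
        }
        return planes;
    }

    /** Packs the four planes back into ARGB ints. */
    static int[] merge(int[][] planes) {
        int n = planes[0].length;
        int[] argb = new int[n];
        for (int i = 0; i < n; i++) {
            argb[i] = (planes[0][i] << 24) | (planes[1][i] << 16) | (planes[2][i] << 8) | planes[3][i];
        }
        return argb;
    }
}
```
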

#### FindHistogram

Demonstrates use of the findHistogram() function and the Histogram class to get and draw histograms for grayscale and individual color channels.


Code: [FindHistogram.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/FindHistogram/FindHistogram.pde)

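A grayscale histogram counts how many pixels take each of the 256 possible 8-bit values; to draw it, the counts are usually scaled so the tallest bar fits a chosen height. Below is a minimal plain-Java sketch, assuming values are already in the 0 to 255 range; `HistogramSketch` is hypothetical and is not the library's `Histogram` class.

```java
/** Minimal sketch of a 256-bin histogram for 8-bit values, plus scaling for drawing. */
final class HistogramSketch {

    private HistogramSketch() {}

    /** Counts how many pixels have each value 0-255 (values outside that range would throw). */
    static int[] histogram(int[] gray) {
        int[] bins = new int[256];
        for (int v : gray) {
            bins[v]++;
        }
        return bins;
    }

    /** Scales bin counts so the tallest bin maps to maxHeight (e.g. pixels on screen). */
    static int[] scaleToHeight(int[] bins, int maxHeight) {
        int peak = 0;
        for (int c : bins) peak = Math.max(peak, c);
        int[] heights = new int[bins.length];
        if (peak == 0) return heights;
        for (int i = 0; i < bins.length; i++) {
            heights[i] = (int) ((long) bins[i] * maxHeight / peak);
        }
        return heights;
    }
}
```
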

#### HueRangeSelection

Detect objects based on their color. Demonstrates the use of HSV color space as well as range-based image filtering.


Code: [HueRangeSelection.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/HueRangeSelection/HueRangeSelection.pde)

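Hue-based selection converts each pixel to HSV and keeps those whose hue falls inside a chosen range. For 8-bit images OpenCV stores hue as degrees divided by 2 (0 to 179), so the sketch reports hue on that scale. Below is a minimal plain-Java sketch, assuming packed RGB `int` pixels. `HueRangeSketch` is hypothetical; ranges that wrap around red (e.g. 170 to 10) are not handled, and gray pixels (undefined hue) are reported as hue 0, so a fuller version would also threshold saturation and value.

```java
/** Minimal sketch of hue-range selection: keep pixels whose hue lies in [lo, hi]. */
final class HueRangeSketch {

    private HueRangeSketch() {}

    /** Hue of a packed RGB pixel on OpenCV's 8-bit scale (0-179, i.e. degrees / 2). */
    static int hue180(int rgb) {
        int r = (rgb >> 16) & 0xFF, g = (rgb >> 8) & 0xFF, b = rgb & 0xFF;
        int max = Math.max(r, Math.max(g, b));
        int min = Math.min(r, Math.min(g, b));
        if (max == min) return 0; // gray: hue is undefined, report 0
        double d = max - min;
        double deg;
        if (max == r) {
            deg = 60 * (((g - b) / d) % 6);
        } else if (max == g) {
            deg = 60 * ((b - r) / d + 2);
        } else {
            deg = 60 * ((r - g) / d + 4);
        }
        if (deg < 0) deg += 360;
        return (int) Math.round(deg / 2) % 180;
    }

    /** Binary mask: 255 where lo <= hue <= hi, otherwise 0 (no wrap-around handling). */
    static int[] inHueRange(int[] rgbPixels, int lo, int hi) {
        int[] mask = new int[rgbPixels.length];
        for (int i = 0; i < rgbPixels.length; i++) {
            int h = hue180(rgbPixels[i]);
            mask[i] = (h >= lo && h <= hi) ? 255 : 0;
        }
        return mask;
    }
}
```
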

#### CalibrationDemo (in progress)

An example of the process involved in calibrating a camera. Currently only detects the corners in a chessboard pattern.


Code: [CalibrationDemo.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/CalibrationDemo/CalibrationDemo.pde)

#### HistogramSkinDetection

A more advanced example. Detects skin in an image based on whether pixel colors fall within a region of color space. Warning: uses un-wrapped OpenCV objects and functions.


Code: [HistogramSkinDetection.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/HistogramSkinDetection/HistogramSkinDetection.pde)

#### DepthFromStereo

An advanced example. Calculates depth information from a pair of stereo images. Warning: uses un-wrapped OpenCV objects and functions.


Code: [DepthFromStereo.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/DepthFromStereo/DepthFromStereo.pde)

#### WarpPerspective (in progress)

Un-distort an object that's in perspective. Coming to the real API soon.


Code: [WarpPerspective.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/WarpPerspective/WarpPerspective.pde)

#### MarkerDetection

An in-depth, advanced example: detect a CV marker in an image, warp its perspective, and decode the number stored in the marker. The code involves many steps and uses many un-wrapped OpenCV objects and functions.


Code: [MarkerDetection.pde](https://github.com/atduskgreg/opencv-processing/blob/master/examples/MarkerDetection/MarkerDetection.pde)

#### MorphologyOperations

Open and close an image, or do more complicated morphological transformations.


Code: [MorphologyOperations.pde](examples/MorphologyOperations/MorphologyOperations.pde)

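Opening and closing are compositions of the two basic operations. Opening is erosion followed by dilation and removes bright specks smaller than the neighborhood; closing is dilation followed by erosion and fills small dark holes. On grayscale images, erosion and dilation become local minimum and maximum filters. Below is a minimal plain-Java sketch with a 3x3 square neighborhood, assuming a row-major `int[]`. `OpenCloseSketch` is hypothetical; OpenCV exposes these operations via `Imgproc.morphologyEx` with `MORPH_OPEN` / `MORPH_CLOSE`.

```java
/** Minimal sketch of grayscale opening and closing built from 3x3 min/max filters. */
final class OpenCloseSketch {

    private OpenCloseSketch() {}

    /** 3x3 local minimum (useMax = false) or maximum (useMax = true), in-bounds neighbors only. */
    static int[] filter(int[] img, int w, int h, boolean useMax) {
        int[] out = new int[img.length];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int best = useMax ? Integer.MIN_VALUE : Integer.MAX_VALUE;
                for (int dy = -1; dy <= 1; dy++) {
                    for (int dx = -1; dx <= 1; dx++) {
                        int nx = x + dx, ny = y + dy;
                        if (nx < 0 || nx >= w || ny < 0 || ny >= h) continue;
                        int v = img[ny * w + nx];
                        best = useMax ? Math.max(best, v) : Math.min(best, v);
                    }
                }
                out[y * w + x] = best;
            }
        }
        return out;
    }

    /** Opening = erosion (min) then dilation (max): removes bright specks smaller than the kernel. */
    static int[] open(int[] img, int w, int h) {
        return filter(filter(img, w, h, false), w, h, true);
    }

    /** Closing = dilation (max) then erosion (min): fills dark holes smaller than the kernel. */
    static int[] close(int[] img, int w, int h) {
        return filter(filter(img, w, h, true), w, h, false);
    }
}
```
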

--------------------------------------------------------------------------------

/build.gradle:

--------------------------------------------------------------------------------

plugins {
    id 'java-library'
    id 'org.bytedeco.gradle-javacpp-platform' version "1.5.10"
}

group 'gab.opencv'
version '0.8.0'

def javaCvVersion = '1.5.10'

// We can set this on the command line too this way: -PjavacppPlatform=linux-x86_64,macosx-x86_64,windows-x86_64,etc
ext {
    javacppPlatform = 'linux-x86_64,macosx-x86_64,macosx-arm64,windows-x86_64,linux-armhf,linux-arm64' // defaults to Loader.getPlatform()
}

sourceCompatibility = 1.8

repositories {
    mavenCentral()
    maven { url 'https://jitpack.io' }
}

configurations {
    jar.archiveName = outputName + '.jar'
}

javadoc {
    source = sourceSets.main.allJava
}

dependencies {
    // compile
    testImplementation group: 'junit', name: 'junit', version: '4.13.1'

    // opencv
    implementation group: 'org.bytedeco', name: 'opencv-platform', version: "4.9.0-$javaCvVersion"
    implementation group: 'org.bytedeco', name: 'openblas-platform', version: "0.3.26-$javaCvVersion"

    // processing
    implementation fileTree(include: ["core.jar", "jogl-all-main.jar", "gluegen-rt-main.jar"], dir: 'core-libs')
}

task fatJar(type: Jar) {
    archiveFileName = "$outputName-complete.jar"
    duplicatesStrategy = DuplicatesStrategy.EXCLUDE
    dependsOn configurations.runtimeClasspath
    from {
        (configurations.runtimeClasspath).filter( {! (it.name =~ /core.jar/ ||
                it.name =~ /jogl-all-main.jar/ ||
                it.name =~ /gluegen-rt-main.jar/)}).collect {
            it.isDirectory() ? it : zipTree(it)
        }
    }
    with jar
}

// add processing library support
apply from: "processing-library.gradle"

--------------------------------------------------------------------------------

/core-libs/core.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cansik/opencv-processing/97c9ab8119c06ce9a4f1a141baa18eafd0d12ccb/core-libs/core.jar

--------------------------------------------------------------------------------

/core-libs/gluegen-rt.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cansik/opencv-processing/97c9ab8119c06ce9a4f1a141baa18eafd0d12ccb/core-libs/gluegen-rt.jar

--------------------------------------------------------------------------------

/core-libs/jogl-all.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cansik/opencv-processing/97c9ab8119c06ce9a4f1a141baa18eafd0d12ccb/core-libs/jogl-all.jar

--------------------------------------------------------------------------------

/data/cascade-files/haarcascade_mcs_leftear.xml:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cansik/opencv-processing/97c9ab8119c06ce9a4f1a141baa18eafd0d12ccb/data/cascade-files/haarcascade_mcs_leftear.xml

--------------------------------------------------------------------------------

/data/cascade-files/haarcascade_mcs_rightear.xml:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cansik/opencv-processing/97c9ab8119c06ce9a4f1a141baa18eafd0d12ccb/data/cascade-files/haarcascade_mcs_rightear.xml

--------------------------------------------------------------------------------

/examples/BackgroundSubtraction/BackgroundSubtraction.pde:

--------------------------------------------------------------------------------

import gab.opencv.*;
import processing.video.*;

Movie video;
OpenCV opencv;

void setup() {
  size(720, 480);
  video = new Movie(this, "street.mov");
  opencv = new OpenCV(this, 720, 480);

  opencv.startBackgroundSubtraction(5, 3, 0.5);

  video.loop();
  video.play();
}

void draw() {
  image(video, 0, 0);

  if (video.width == 0 || video.height == 0)
    return;

  opencv.loadImage(video);
  opencv.updateBackground();

  opencv.dilate();
  opencv.erode();

  noFill();
  stroke(255, 0, 0);
  strokeWeight(3);
  for (Contour contour : opencv.findContours()) {
    contour.draw();
  }
}

void movieEvent(Movie m) {
  m.read();
}

--------------------------------------------------------------------------------

/examples/BackgroundSubtraction/data/street.mov:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cansik/opencv-processing/97c9ab8119c06ce9a4f1a141baa18eafd0d12ccb/examples/BackgroundSubtraction/data/street.mov

--------------------------------------------------------------------------------

/examples/BrightestPoint/BrightestPoint.pde:

--------------------------------------------------------------------------------

import gab.opencv.*;

OpenCV opencv;

void setup() {
  // size() should be the first call in setup()
  size(800, 533);
  PImage src = loadImage("robot_light.jpg");
  src.resize(800, 0);

  opencv = new OpenCV(this, src);
}

void draw() {
  image(opencv.getOutput(), 0, 0);
  PVector loc = opencv.max();

  stroke(255, 0, 0);
  strokeWeight(4);
  noFill();
  ellipse(loc.x, loc.y, 10, 10);
}

--------------------------------------------------------------------------------

/examples/BrightestPoint/robot_light.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cansik/opencv-processing/97c9ab8119c06ce9a4f1a141baa18eafd0d12ccb/examples/BrightestPoint/robot_light.jpg

--------------------------------------------------------------------------------

/examples/BrightnessContrast/BrightnessContrast.pde:

--------------------------------------------------------------------------------

import gab.opencv.*;

PImage img;
OpenCV opencv;

void setup(){
  // size() should be the first call in setup()
  size(1080, 720);
  img = loadImage("test.jpg");
  opencv = new OpenCV(this, img);
}

void draw(){
  opencv.loadImage(img);
  opencv.brightness((int)map(mouseX, 0, width, -255, 255));
  image(opencv.getOutput(),0,0);
}

--------------------------------------------------------------------------------

/examples/BrightnessContrast/test.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cansik/opencv-processing/97c9ab8119c06ce9a4f1a141baa18eafd0d12ccb/examples/BrightnessContrast/test.jpg

--------------------------------------------------------------------------------

/examples/CalibrationDemo/CalibrationDemo.pde:

--------------------------------------------------------------------------------

import gab.opencv.*;

PImage src;
ArrayList
