9 |
10 | © 2019-2020 Nanyang Technological University, Singapore
11 |
12 |
13 | );
14 | }
15 |
--------------------------------------------------------------------------------
/modelci/types/vo/__init__.py:
--------------------------------------------------------------------------------
1 | #!/usr/bin/env python
2 | # -*- coding: utf-8 -*-
3 | """
4 | Author: USER
5 | Email: yli056@e.ntu.edu.sg
6 | Date: 6/13/2020
7 | """
8 | from .model_vo import (
9 |     ModelInputFormat, Framework, Engine, Status, IOShapeVO, InfoTupleVO, ProfileMemoryVO, ProfileLatencyVO,
10 |     ProfileThroughputVO, DynamicResultVO, ProfileResultVO, ModelListOut, ModelDetailOut
11 | )
12 |
13 | __all__ = [_s for _s in dir() if not _s.startswith('_')]
14 |
--------------------------------------------------------------------------------
/example/resnet50_explicit_path.yml:
--------------------------------------------------------------------------------
1 | weight: "~/.modelci/ResNet50/PyTorch-PYTORCH/Image_Classification/1.pth"
2 | architecture: ResNet50
3 | framework: PyTorch
4 | engine: PYTORCH
5 | version: 1
6 | dataset: ImageNet
7 | task: Image_Classification
8 | metric:
9 |   acc: 0.76
10 | inputs:
11 |   - name: "input"
12 |     shape: [ -1, 3, 224, 224 ]
13 |     dtype: TYPE_FP32
14 | outputs:
15 |   - name: "output"
16 |     shape: [ -1, 1000 ]
17 |     dtype: TYPE_FP32
18 | convert: true
19 |
--------------------------------------------------------------------------------
/modelci/types/bo/__init__.py:
--------------------------------------------------------------------------------
1 | from .dynamic_profile_result_bo import DynamicProfileResultBO, ProfileLatency, ProfileMemory, ProfileThroughput
2 | from .model_bo import ModelBO
3 | from .model_objects import DataType, Task, Metric, ModelStatus, Framework, Engine, Status, ModelVersion, IOShape, InfoTuple, Weight
4 | from .profile_result_bo import ProfileResultBO
5 | from .static_profile_result_bo import StaticProfileResultBO
6 |
7 | __all__ = [_s for _s in dir() if not _s.startswith('_')]
8 |
--------------------------------------------------------------------------------
/frontend/src/config.ts:
--------------------------------------------------------------------------------
1 | // FIXME: unable to get environment variables from process.env
2 | const host = process.env.REACT_APP_BACKEND_URL || 'http://localhost:8000'
3 |
4 | export default {
5 |   default: {
6 |     modelURL: `${host}/api/v1/model`,
7 |     visualizerURL: `${host}/api/v1/visualizer`,
8 |     structureURL: `${host}/api/exp/structure`,
9 |     structureRefractorURL: `${host}/api/exp/cv-tuner/finetune`, // temp url
10 |     trainerURL: `${host}/api/exp/train`
11 |   }
12 | }
13 |
--------------------------------------------------------------------------------
/frontend/src/pages/Dashboard/components/Pannel/index.tsx:
--------------------------------------------------------------------------------
1 | import * as React from 'react';
2 | import styles from 'index.module.scss';
3 |
4 | const Pannel = () => {
5 |   return (
6 |

7 |

13 | );

14 | };

15 |

16 | export default Pannel;

17 |

--------------------------------------------------------------------------------

/modelci/controller/__init__.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 | """

4 | Author: Li Yuanming

5 | Email: yli056@e.ntu.edu.sg

6 | Date: 6/29/2020

7 | """

8 | import atexit

9 |

10 | from modelci.controller.executor import JobExecutor

11 |

12 | job_executor = JobExecutor()

13 | job_executor.start()

14 |

15 |

16 | @atexit.register

17 | def terminate_controllers():

18 | job_executor.join()

19 | print('Exiting job executor.')

20 |

21 |

22 | __all__ = ['job_executor']

23 |
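The module above starts a background executor at import time and joins it when the interpreter exits. A minimal sketch of that pattern follows, assuming `JobExecutor` behaves like a `threading.Thread` that consumes queued jobs; `SketchExecutor`, its `submit` method, and the stop sentinel are hypothetical stand-ins and not the project's implementation.

```python
import atexit
import queue
import threading


class SketchExecutor(threading.Thread):
    """Hypothetical stand-in for JobExecutor: runs queued callables on a background thread."""

    _STOP = object()  # sentinel that tells the loop to finish

    def __init__(self):
        # daemon=True so interpreter shutdown is not blocked by the worker loop itself
        super().__init__(daemon=True)
        self.jobs = queue.Queue()
        self.results = []

    def submit(self, func):
        """Queue a zero-argument callable for execution."""
        self.jobs.put(func)

    def run(self):
        while True:
            job = self.jobs.get()
            if job is self._STOP:
                break
            self.results.append(job())

    def join(self, timeout=None):
        # Ask the loop to stop after the jobs already queued, then wait for the thread.
        self.jobs.put(self._STOP)
        super().join(timeout)


executor = SketchExecutor()
executor.start()


@atexit.register
def terminate():
    # Mirrors terminate_controllers(): drain and stop the executor at exit.
    executor.join()
```

Calling `executor.submit(...)` anywhere after import queues work; the registered hook only has to stop the loop cleanly.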

--------------------------------------------------------------------------------

/frontend/build.json:

--------------------------------------------------------------------------------

1 | {

2 | "plugins": [

3 | [

4 | "build-plugin-fusion",

5 | {

6 | "themePackage": "@alifd/theme-design-pro"

7 | }

8 | ],

9 | [

10 | "build-plugin-moment-locales",

11 | {

12 | "locales": [

13 | "zh-cn"

14 | ]

15 | }

16 | ],

17 | [

18 | "build-plugin-antd",

19 | {

20 | "themeConfig": {

21 | "primary-color": "#1890ff"

22 | }

23 | }

24 | ]

25 | ]

26 | }

27 |

--------------------------------------------------------------------------------

/modelci/persistence/exceptions.py:

--------------------------------------------------------------------------------

1 | class ServiceException(Exception):

2 | def __init__(self, message):

3 | self.message = message

4 |

5 | def __str__(self):

6 | return repr(self.message)

7 |

8 |

9 | class DoesNotExistException(ServiceException):

10 | def __init__(self, message):

11 | super().__init__(message=message)

12 |

13 |

14 | class BadRequestValueException(ServiceException):

15 | def __init__(self, message):

16 | super().__init__(message=message)

17 |
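Because both concrete exceptions derive from `ServiceException`, a caller can catch the base class once and still tell the cases apart by type. The sketch below repeats the hierarchy so that it runs on its own; `find_model` and `describe` are hypothetical helpers introduced only for illustration.

```python
class ServiceException(Exception):
    def __init__(self, message):
        self.message = message

    def __str__(self):
        return repr(self.message)


class DoesNotExistException(ServiceException):
    pass


class BadRequestValueException(ServiceException):
    pass


def find_model(models, model_id):
    """Hypothetical lookup helper that raises the service exceptions."""
    if not isinstance(model_id, str):
        raise BadRequestValueException('model_id must be a string')
    if model_id not in models:
        raise DoesNotExistException(f'Model ID {model_id} does not exist')
    return models[model_id]


def describe(models, model_id):
    """Return the model, or a short error string built from the exception type and message."""
    try:
        return find_model(models, model_id)
    except ServiceException as e:
        # __str__ returns repr(message), so the message appears quoted.
        return f'{type(e).__name__}: {e}'
```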

--------------------------------------------------------------------------------

/frontend/public/index.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

9 |

10 |

11 |

12 | Welcome to ModelCI!

8 |
9 | This is an awesome project, enjoy it!
10 |
11 |
12 |

14 |

15 | {image &&  }

16 | {text}

17 |

18 |

19 | );

20 | }

21 |

--------------------------------------------------------------------------------

/example/sample_k8s_deployment.conf:

--------------------------------------------------------------------------------

1 | [remote_storage]

2 | # configuration for pulling models from cloud storage.

3 | storage_type = S3

4 | aws_access_key_id = sample-id

5 | aws_secret_access_key = sample-key

6 | bucket_name = sample-bucket

7 | remote_model_path = models/bidaf-9

8 |

9 | [model]

10 | # local model path for storing model after pulling it from cloud

11 | local_model_dir = /models

12 | local_model_name = bidaf-9

13 |

14 | [deployment]

15 | # deployment detailed configuration

16 | name = sample-deployment

17 | namespace = default

18 | replicas = 1

19 | engine = ONNX

20 | device = cpu

21 | batch_size = 16

22 |
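The sample file uses INI syntax, so it can be inspected with Python's standard `configparser`. The sketch below is only an illustration of reading the `[model]` and `[deployment]` sections; whether the project's own `render` function parses the file this way is an assumption, and `load_deployment` is a hypothetical helper.

```python
import configparser

# Trimmed copy of the sample configuration (credentials omitted).
SAMPLE = """
[remote_storage]
storage_type = S3
bucket_name = sample-bucket
remote_model_path = models/bidaf-9

[model]
local_model_dir = /models
local_model_name = bidaf-9

[deployment]
name = sample-deployment
namespace = default
replicas = 1
engine = ONNX
device = cpu
batch_size = 16
"""


def load_deployment(text):
    """Parse the INI text and return the deployment settings as typed values."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    model = parser['model']
    deployment = parser['deployment']
    return {
        'name': deployment['name'],
        'namespace': deployment['namespace'],
        'replicas': deployment.getint('replicas'),
        'batch_size': deployment.getint('batch_size'),
        'engine': deployment['engine'],
        'device': deployment['device'],
        'model_path': model['local_model_dir'] + '/' + model['local_model_name'],
    }


settings = load_deployment(SAMPLE)
```

Integer fields are read with `getint` so that a malformed `replicas` or `batch_size` fails at load time rather than during deployment.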

--------------------------------------------------------------------------------

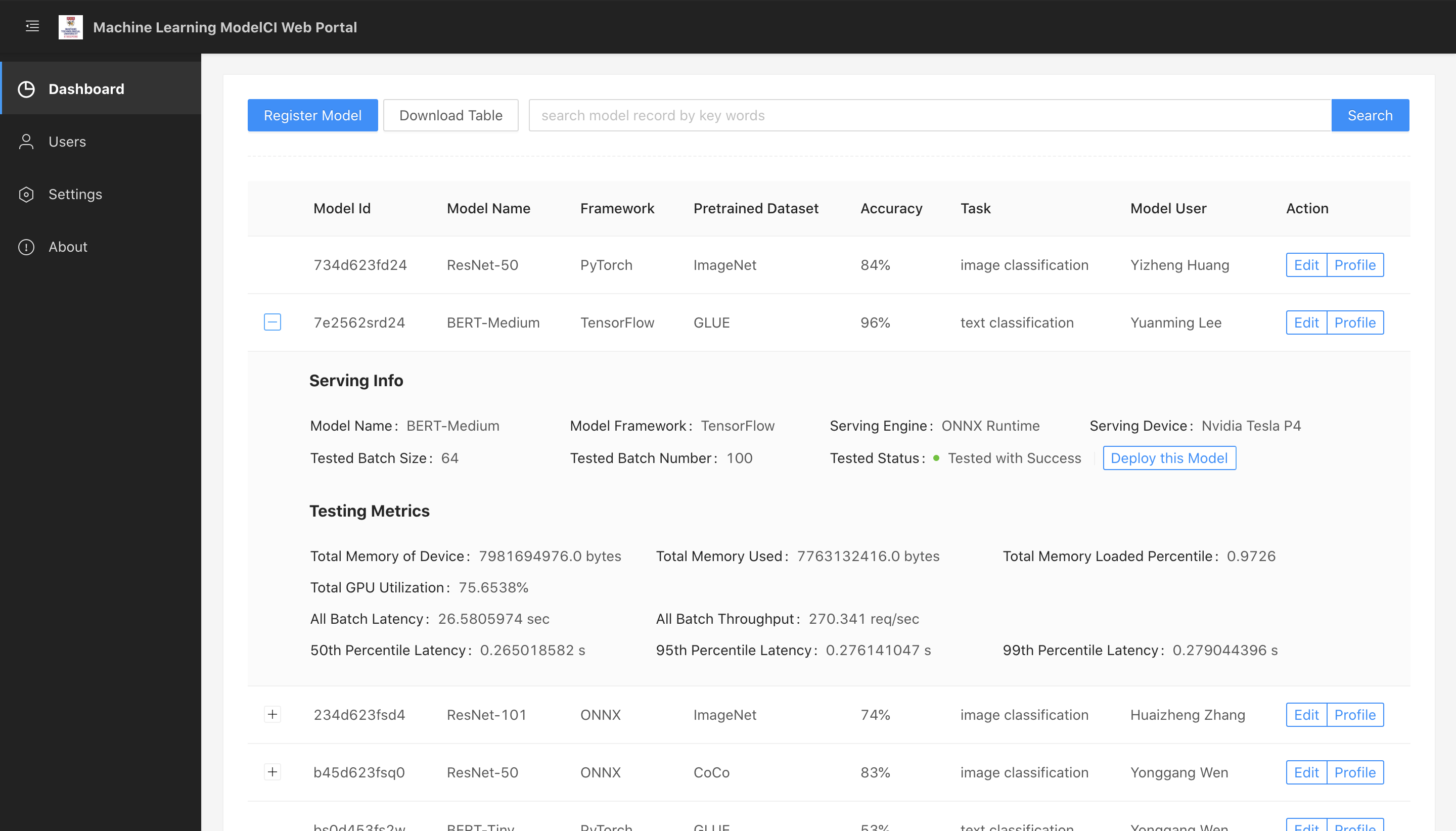

/frontend/README.md:

--------------------------------------------------------------------------------

1 | # Web Portal of ML Model CI

2 |

3 | ## Quick Start

4 |

5 | Before getting started with the web application, you need to connect it to your backend APIs (MLModelCI services). The default address is http://localhost:8000/api/v1/model. To connect to your own MLModelCI service, modify the address in `src/config.ts` before starting.

6 |

7 | ```shell script

8 | npm install

9 | npm start

10 | ```

11 |

12 | ## Screenshots

13 |

14 | ### Dashboard

15 |

16 |

17 |

18 | ### Model visualization

19 |

20 |

21 |

--------------------------------------------------------------------------------

/modelci/experimental/model/common.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 | """

4 | Author: Li Yuanming

5 | Email: yli056@e.ntu.edu.sg

6 | Date: 2/1/2021

7 |

8 | Common Pydantic Class

9 | """

10 | from bson import ObjectId

11 |

12 |

13 | class ObjectIdStr(str):

14 | @classmethod

15 | def __get_validators__(cls):

16 | yield cls.validate

17 |

18 | @classmethod

19 | def validate(cls, v):

20 | if isinstance(v, str):

21 | v = ObjectId(v)

22 | if not isinstance(v, ObjectId):

23 | raise ValueError("Not a valid ObjectId")

24 | return str(v)

25 |
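`ObjectIdStr.validate` accepts a string, lets `bson.ObjectId` check it, and returns the string form. The sketch below illustrates the same accept-or-raise contract without requiring `bson`: it swaps in a regular-expression check for the 24-character hexadecimal form, which is only one of the inputs `ObjectId` accepts. `validate_object_id` is a hypothetical name, and this is not the project's validator.

```python
import re

# 24 hexadecimal characters: the string form of a 12-byte ObjectId.
_HEX24 = re.compile(r'[0-9a-fA-F]{24}')


def validate_object_id(value):
    """Return the normalised (lowercase) ID, or raise ValueError like the validator above."""
    if not isinstance(value, str) or not _HEX24.fullmatch(value):
        raise ValueError('Not a valid ObjectId')
    # Lowercasing is a choice made by this sketch.
    return value.lower()
```

For example, `validate_object_id('5F2B1C0E9D3A4B5C6D7E8F90')` returns the lowercase ID, while a short or non-hexadecimal string raises `ValueError`.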

--------------------------------------------------------------------------------

/modelci/types/do/profile_result_do.py:

--------------------------------------------------------------------------------

1 | from mongoengine import EmbeddedDocument

2 | from mongoengine.fields import EmbeddedDocumentField, ListField

3 |

4 | from .dynamic_profile_result_do import DynamicProfileResultDO

5 | from .static_profile_result_do import StaticProfileResultDO

6 |

7 |

8 | class ProfileResultDO(EmbeddedDocument):

9 | """

10 | Profiling result plain object.

11 | """

12 | # Static profile result

13 | static_profile_result = EmbeddedDocumentField(StaticProfileResultDO)

14 | # Dynamic profile result

15 | dynamic_profile_results = ListField(EmbeddedDocumentField(DynamicProfileResultDO))

16 |

--------------------------------------------------------------------------------

/modelci/app/v1/api.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 | """

4 | Author: Li Yuanming

5 | Email: yli056@e.ntu.edu.sg

6 | Date: 6/20/2020

7 | """

8 | from fastapi import APIRouter

9 |

10 | from modelci.app.v1.endpoints import model

11 | from modelci.app.v1.endpoints import visualizer

12 | from modelci.app.v1.endpoints import profiler

13 |

14 | api_router = APIRouter()

15 | api_router.include_router(model.router, prefix='/model', tags=['model'])

16 | api_router.include_router(visualizer.router, prefix='/visualizer', tags=['visualizer'])

17 | api_router.include_router(profiler.router, prefix='/profiler', tags=['profiler'])

--------------------------------------------------------------------------------

/modelci/app/experimental/api.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | # -*- coding: utf-8 -*-

3 | """

4 | Author: Li Yuanming

5 | Email: yli056@e.ntu.edu.sg

6 | Date: 6/20/2020

7 | """

8 | from fastapi import APIRouter

9 |

10 | from modelci.app.experimental.endpoints import cv_tuner

11 | from modelci.app.experimental.endpoints import model_structure, trainer

12 |

13 | api_router = APIRouter()

14 | api_router.include_router(cv_tuner.router, prefix='/cv-tuner', tags=['[*exp] cv-tuner'])

15 | api_router.include_router(model_structure.router, prefix='/structure', tags=['[*exp] structure'])

16 | api_router.include_router(trainer.router, prefix='/train', tags=['[*exp] train'])

17 |

--------------------------------------------------------------------------------

/modelci/cli/modelps.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) NTU_CAP 2021. All Rights Reserved.

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at:

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express

12 | # or implied. See the License for the specific language governing

13 | # permissions and limitations under the License.

--------------------------------------------------------------------------------

/ops/start_node_exporter.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | VERSION=0.18.1

4 | DIR_NAME="node_exporter-${VERSION}.linux-amd64"

5 | FILENAME="${DIR_NAME}.tar.gz"

6 |

7 | # create temporary directory

8 | mkdir -p ~/tmp && cd ~/tmp || exit 1

9 | mkdir -p node_exporter && cd node_exporter || exit 1

10 |

11 |

12 | # download and unzip

13 | if [ -d "${DIR_NAME}" ]; then

14 | echo "${DIR_NAME} has been downloaded"

15 | else

16 | echo "Start to download ${DIR_NAME}"

17 | wget "https://github.com/prometheus/node_exporter/releases/download/v${VERSION}/${FILENAME}"

18 | tar xvfz "${FILENAME}"

19 | fi

20 |

21 | # install

22 | cd "${DIR_NAME}" || exit 1

23 | ./node_exporter &

24 |

--------------------------------------------------------------------------------

/modelci/types/proto/service.proto:

--------------------------------------------------------------------------------

1 | syntax = "proto3";

2 |

3 | service Predict {

4 | // Inference

5 | rpc Infer (InferRequest) returns (InferResponse) {

6 | }

7 |

8 | // Stream Interface

9 | rpc StreamInfer (stream InferRequest) returns (stream InferResponse) {

10 | }

11 | }

12 |

13 | message InferRequest {

14 | // Model name

15 | string model_name = 1;

16 |

17 | // Meta data

18 | string meta = 2;

19 |

20 | // List of bytes (etc. encoded frame)

21 | repeated bytes raw_input = 3;

22 | }

23 |

24 | message InferResponse {

25 | // Json as string

26 | string json = 1;

27 | // Meta data

28 | string meta = 2;

29 | }

30 |

--------------------------------------------------------------------------------

/modelci/hub/deployer/pytorch/proto/service.proto:

--------------------------------------------------------------------------------

1 | syntax = "proto3";

2 |

3 | service Predict {

4 | // Inference

5 | rpc Infer (InferRequest) returns (InferResponse) {

6 | }

7 |

8 | // Stream Interface

9 | rpc StreamInfer (stream InferRequest) returns (stream InferResponse) {

10 | }

11 | }

12 |

13 | message InferRequest {

14 | // Model name

15 | string model_name = 1;

16 |

17 | // Meta data

18 | string meta = 2;

19 |

20 | // List of bytes (e.g. encoded frame)

21 | repeated bytes raw_input = 3;

22 | }

23 |

24 | message InferResponse {

25 | // Json as string

26 | string json = 1;

27 | // Meta data

28 | string meta = 2;

29 | }

--------------------------------------------------------------------------------

/modelci/hub/deployer/onnxs/proto/service.proto:

--------------------------------------------------------------------------------

1 | syntax = "proto3";

2 |

3 | service Predict {

4 | // Inference

5 | rpc Infer (InferRequest) returns (InferResponse) {

6 | }

7 |

8 | // Stream Interface

9 | rpc StreamInfer (stream InferRequest) returns (stream InferResponse) {

10 | }

11 | }

12 |

13 | message InferRequest {

14 | // Model name

15 | string model_name = 1;

16 |

17 | // Meta data

18 | string meta = 2;

19 |

20 | // List of bytes (e.g. encoded frame)

21 | repeated bytes raw_input = 3;

22 | }

23 |

24 | message InferResponse {

25 | // Json as string

26 | string json = 1;

27 | // Meta data

28 | string meta = 2;

29 | }

30 |

--------------------------------------------------------------------------------

/modelci/app/v1/endpoints/profiler.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/python3

2 | # -*- coding: utf-8 -*-

3 | """

4 | Author: Xing Di

5 | Date: 2021/1/15

6 |

7 | """

8 | from fastapi import APIRouter, HTTPException

9 | from modelci.persistence.service_ import exists_by_id, profile_model

10 |

11 | router = APIRouter()

12 |

13 |

14 | @router.get('/{model_id}', status_code=201)

15 | def profile(model_id: str, device: str = 'cuda', batch_size: int = 1):

16 | if not exists_by_id(model_id):

17 | raise HTTPException(

18 | status_code=404,

19 | detail=f'Model ID {model_id} does not exist. You may change the ID',

20 | )

21 | profile_result = profile_model(model_id, device, batch_size)

22 | return profile_result

23 |
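The endpoint takes the model ID as a path parameter and `device` and `batch_size` as query parameters. The sketch below only builds the request URL. It assumes the v1 router is mounted under `/api/v1` on `http://localhost:8000`, as the frontend configuration suggests for the model endpoint; `profiler_url` is a hypothetical helper, not part of the project.

```python
from urllib.parse import quote, urlencode


def profiler_url(model_id, device='cuda', batch_size=1, host='http://localhost:8000'):
    """Build the GET URL for profiling one model (mount point is an assumption)."""
    query = urlencode({'device': device, 'batch_size': batch_size})
    # quote() escapes any character in the ID that is not URL-safe.
    return f'{host}/api/v1/profiler/{quote(model_id, safe="")}?{query}'
```

The defaults mirror the endpoint signature, so `profiler_url(model_id)` requests a CUDA profile with batch size 1.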

--------------------------------------------------------------------------------

/CHANGELOG.md:

--------------------------------------------------------------------------------

1 |

2 | # Change Log

3 | All notable changes to this project will be documented in this file.

4 |

5 | ## \[Unreleased\] - 2021-01-20

6 |

7 | ### Added

8 | - PyTorch model visualization

9 |

10 |

11 | ## \[Unreleased\] - 2020-11-01

12 |

13 | Here we write upgrading notes for brands. It's a team effort to make them as

14 | straightforward as possible.

15 |

16 | ### Added

17 | - PyTorch converter from XGBoost, LightGBM, scikit-learn, and ONNX models.

18 | - ONNX converter from XGBoost, LightGBM, scikit-learn, and Keras models.

19 | - Part of CI tests for PyTorch converters and ONNX converters.

20 |

21 | ### Changed

22 | - Trimmed the required packages to only those used by `import` statements.

23 |

24 | ### Fixed

25 |

26 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | betterproto>=1.2.3

2 | click==7.1.2

3 | docker>=4.2.0

4 | fastapi<=0.61.2,>=0.58.0

5 | GPUtil==1.4.0

6 | grpcio>=1.27.2

7 | humanize>=3.0.1

8 | hummingbird-ml>=0.0.6

9 | lightgbm==2.3.0

10 | mongoengine>=0.19.1

11 | numpy<1.19.0,>=1.16.0

12 | onnx==1.6.0

13 | opencv-python>=3.1.0

14 | onnxconverter-common>=1.6.0

15 | onnxmltools>=1.6.0

16 | onnxruntime==1.2.0

17 | py-cpuinfo>=6.0.0

18 | pydantic<2.0.0,>=0.32.2

19 | pymongo==3.11.2

20 | pytest>=5.4.1

21 | pytest-env==0.6.2

22 | PyYAML==5.3.1

23 | rich==9.1.0

24 | scikit-learn==0.23.2

25 | starlette<=0.13.6,>=0.13.4

26 | typer>=0.3.2

27 | typer-cli>=0.0.11

28 | toolz==0.10.0

29 | tqdm==4.55.1

30 | uvicorn>=0.11.5

31 | xgboost==1.2.0

32 | Jinja2==2.11.2

33 | python-multipart==0.0.5

34 | pydantic[dotenv]>=0.10.4

--------------------------------------------------------------------------------

/modelci/types/do/static_profile_result_do.py:

--------------------------------------------------------------------------------

1 | from mongoengine import EmbeddedDocument

2 | from mongoengine.fields import IntField, LongField

3 |

4 |

5 | class StaticProfileResultDO(EmbeddedDocument):

6 | """

7 | Static profiling result plain object

8 | """

9 |

10 | # Number of parameters of this model

11 | parameters = IntField(required=True)

12 | # Floating point operations

13 | flops = LongField(required=True)

14 | # Memory consumption in bytes needed to load this model into GPU or CPU

15 | memory = LongField(required=True)

16 | # Memory read in bytes

17 | mread = LongField(required=True)

18 | # Memory write in bytes

19 | mwrite = LongField(required=True)

20 | # Memory read/write in bytes

21 | mrw = LongField(required=True)

22 |

--------------------------------------------------------------------------------

/scripts/install.trtis_client.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 |

3 | VERSION=1.8.0

4 | UBUNTU_VERSION=$(lsb_release -sr | tr -d '.')

5 | FILENAME=v"${VERSION}"_ubuntu"${UBUNTU_VERSION}".clients.tar.gz

6 |

7 | function download_file_and_un_tar() {

8 | wget https://github.com/NVIDIA/triton-inference-server/releases/download/v"${VERSION}"/"${FILENAME}"

9 | tar xzf "${FILENAME}"

10 | }

11 |

12 | mkdir -p ~/tmp

13 | cd ~/tmp || return 1

14 | mkdir -p tensorrtserver && cd tensorrtserver || return 1

15 |

16 | # get package

17 | if [ -f "${FILENAME}" ] ; then

18 | echo "Already downloaded at ${FILENAME}"

19 | tar xzf "${FILENAME}" || download_file_and_un_tar

20 | else

21 | download_file_and_un_tar

22 | fi

23 |

24 | # install

25 | pip install python/tensorrtserver-${VERSION}-py2.py3-none-linux_x86_64.whl

26 |

--------------------------------------------------------------------------------

/modelci/data_engine/postprocessor/image_classification.py:

--------------------------------------------------------------------------------

1 | def postprocess(results, filenames, batch_size):

2 | """

3 | Post-process results to show classifications.

4 | """

5 | if len(results) != 1:

6 | raise Exception("expected 1 result, got {}".format(len(results)))

7 |

8 | batched_result = list(results.values())[0]

9 | if len(batched_result) != batch_size:

10 | raise Exception("expected {} results, got {}".format(batch_size, len(batched_result)))

11 | if len(filenames) != batch_size:

12 | raise Exception("expected {} filenames, got {}".format(batch_size, len(filenames)))

13 |

14 | for (index, result) in enumerate(batched_result):

15 | print("Image '{}':".format(filenames[index]))

16 | for cls in result:

17 | print(" {} ({}) = {}".format(cls[0], cls[2], cls[1]))

18 |
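The indexing in `postprocess` implies that `results` is a mapping with a single output whose value holds one list per image, and that each class entry is ordered as (class index, score, label). The sketch below restates the function with its indentation restored and calls it on made-up data; the output name, file names, and scores are illustrative assumptions only.

```python
def postprocess(results, filenames, batch_size):
    """Post-process results to show classifications."""
    if len(results) != 1:
        raise Exception("expected 1 result, got {}".format(len(results)))

    batched_result = list(results.values())[0]
    if len(batched_result) != batch_size:
        raise Exception("expected {} results, got {}".format(batch_size, len(batched_result)))
    if len(filenames) != batch_size:
        raise Exception("expected {} filenames, got {}".format(batch_size, len(filenames)))

    for (index, result) in enumerate(batched_result):
        print("Image '{}':".format(filenames[index]))
        for cls in result:
            # cls is assumed to be (class index, score, label)
            print(" {} ({}) = {}".format(cls[0], cls[2], cls[1]))


# Made-up batch of two images with their top classes.
sample_results = {
    'output': [
        [(281, 0.92, 'tabby'), (285, 0.05, 'Egyptian cat')],
        [(207, 0.88, 'golden retriever')],
    ]
}
postprocess(sample_results, ['cat.jpg', 'dog.jpg'], batch_size=2)
```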

--------------------------------------------------------------------------------

/.github/PULL_REQUEST_TEMPLATE.md:

--------------------------------------------------------------------------------

1 |

4 |

5 | Closes #

6 |

7 |

10 |

11 | #### What has been done to verify that this works as intended?

12 |

13 | #### Why is this the best possible solution? Were any other approaches considered?

14 |

15 | #### How does this change affect users? Describe intentional changes to behavior and behavior that could have accidentally been affected by code changes. In other words, what are the regression risks?

16 |

17 | #### Does this change require updates to documentation?

18 |

19 | #### Before submitting this PR, please make sure you have:

20 |

21 | - [ ] run `python -m pytest tests/` and confirmed all checks still pass.

22 | - [ ] verified that any code or assets from external sources are properly credited.

23 |

--------------------------------------------------------------------------------

/frontend/src/pages/ModelRegister/components/CustomUpload/index.tsx:

--------------------------------------------------------------------------------

1 | import React from 'react';

2 | import { Upload } from 'antd';

3 | import { InboxOutlined } from '@ant-design/icons';

4 |

5 | const { Dragger } = Upload;

6 |

7 | export default function CustomUpload(props) {

8 | return (

9 |

25 |

Click or drag file to this area to upload

28 |
29 | Support for a single upload
30 |

31 |-

6 |

7 |

- Serve by name
8 |
9 | Serve a ResNet50 model built with the TensorFlow framework and served by TensorRT, on CUDA device 1:
10 | ```shell script
11 | python serving.py name --m ResNet50 -f tensorflow -e trt --device cuda:1
12 | ```
13 |
14 |
15 |
16 |

- Serve by task
17 |
18 | Serve a model that performs the image classification task on CPU:
19 | ```shell script
20 | python serving.py task --task 'image classification' --device cpu
21 | ```
22 |
23 |
24 |
25 |

-

29 |

- Get pre-trained PyTorch model
30 |
31 | We assume you have set up MongoDB and all environment variables as described in [install](/README.md#installation).
32 | See `modelci/hub/init_data.py`.
33 | For example, run:
34 | ```shell script
35 | python modelci/init_data.py --model resnet50 --framework pytorch
36 | ```
37 | Models will be saved in the `~/.modelci/ResNet50/pytorch-torchscript/` directory.
38 | **Note**: You do not need to rerun the above code if you have done so for [TorchScript](/modelci/hub/deployer/pytorch).
39 |
40 |
41 |

- Deploy model
42 |
43 | CPU version:
44 | ```shell script
45 | sh deploy_model_cpu.sh {MODEL_NAME} {REST_API_PORT}
46 | ```
47 | GPU version:
48 | ```shell script
49 | sh deploy_model_gpu.sh {MODEL_NAME} {REST_API_PORT}
50 | ```
51 |
52 |
53 |

-

29 |

- Get pre-trained PyTorch model
30 |
31 | We assume you have set up MongoDB and all environment variables as described in [install](/README.md#installation).
32 | See `modelci/hub/init_data.py`.
33 | For example, run:
34 | ```shell script
35 | python modelci/init_data.py --model resnet50 --framework pytorch
36 | ```
37 | Models will be saved in the `~/.modelci/ResNet50/pytorch-torchscript/` directory.
38 | **Note**: You do not need to rerun the above code if you have done so for [ONNX](/modelci/hub/deployer/onnxs).
39 |
40 |
41 |

- Deploy model
42 |
43 | CPU version:
44 | ```shell script
45 | sh deploy_model_cpu.sh {MODEL_NAME} {REST_API_PORT}
46 | ```
47 | GPU version:
48 | ```shell script
49 | sh deploy_model_gpu.sh {MODEL_NAME} {REST_API_PORT}
50 | ```
51 |
52 |
53 |

-

6 |

7 |

- Generate a config file with the environment variables required by the deployment file.
8 |
9 | ```
10 | [remote_storage]
11 | # configuration for pulling models from cloud storage.
12 | storage_type = S3
13 | aws_access_key_id = sample-id
14 | aws_secret_access_key = sample-key
15 | bucket_name = sample-bucket
16 | remote_model_path = models/bidaf-9
17 |
18 | [model]
19 | # local model path for storing model after pulling it from cloud
20 | local_model_dir = /models
21 | local_model_name = bidaf-9
22 |
23 | [deployment]
24 | # deployment detailed configuration
25 | name = sample-deployment
26 | namespace = default
27 | replicas = 1
28 | engine = ONNX
29 | device = cpu
30 | batch_size = 16
31 | ```
32 |
33 | `[remote_storage]` defines variables for pulling the model from cloud storage. Currently only S3 buckets are supported.
34 |
35 | `[model]` defines variables for the model path inside containers.
36 |
37 | `[deployment]` defines variables for serving the model as a cloud service.
38 |
39 |
40 |
41 |

- Generate the deployment file at the desired output path.
42 |
43 | ```
44 | from modelci.hub.deployer.k8s.dispatcher import render
45 |
46 | render(
47 |     configuration='example/sample_k8s_deployment.conf',
48 |     output_file_path='example/output.yaml'
49 | )
50 | ```
51 |
52 |
53 |
54 |

- Deploy the service into your k8s cluster
55 |
56 |
57 |

-

9 |

- Get pre-trained Keras model
10 |
11 | We assume you have set up MongoDB and all environment variables as described in [install](/README.md#installation).
12 | See `modelci/hub/init_data.py`.
13 | For example, run:
14 | ```shell script
15 | python modelci/hub/init_data.py export --model resnet50 --framework tensorflow
16 | ```
17 | Models will be saved in the `~/.modelci/ResNet50/tensorflow-tfs/` directory.
18 | **Note**: You do not need to rerun the above code if you have done so for [TensorRT](/modelci/hub/deployer/trt).
19 |
20 |
21 |

- Deploy model
22 |
23 | ```shell script
24 | sh deploy_model_cpu.sh {MODEL_NAME} {GRPC_PORT} {REST_API_PORT}
25 | ```
26 | Or on a GPU:
27 | ```shell script
28 | sh deploy_model_gpu.sh {MODEL_NAME} {GRPC_PORT} {REST_API_PORT}
29 | ```
30 | You may check the deployed model using `saved_model_cli` from https://www.tensorflow.org/guide/saved_model
31 | ```shell script
32 | saved_model_cli show --dir {PATH_TO_SAVED_MODEL}/{MODEL_NAME}/{MODEL_VARIANT}/{MODEL_VERSION} --all
33 | ```
34 |
35 |
36 |

- Testing
37 |
38 | ```shell script
39 | # 4.1. MAKE REST REQUEST
40 | python rest_request.py --model {MODEL_NAME} --port {PORT}
41 | # 4.2. MAKE GRPC REQUEST
42 | python grpc_request.py --model {MODEL_NAME} --input_name {INPUT_NAME}
43 | ```
44 |
45 |
46 |