├── LICENSE
├── README.md
├── bootstrap
│   └── bootstrap.secret.yaml
├── calico
│   ├── calico-ipv6.yaml
│   ├── calico-typha.yaml
│   └── calico.yaml
├── cilium-test
│   ├── connectivity-check.yaml
│   └── monitoring-example.yaml
├── dashboard
│   └── dashboard-user.yaml
├── doc
│   ├── Enable-implement-IPv4-IPv6.md
│   ├── Kubernetes_docker.md
│   ├── Minikube_init.md
│   ├── Upgrade_Kubernetes.md
│   ├── kube-proxy_permissions.md
│   ├── kubeadm-install-IPV6-IPV4.md
│   ├── kubeadm-install-V1.32.md
│   ├── kubeadm-install.md
│   ├── kubernetes_install_cilium.md
│   ├── v1.21.13-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md
│   ├── v1.22.10-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md
│   ├── v1.23.3-CentOS-binary-install.md
│   ├── v1.23.4-CentOS-binary-install.md
│   ├── v1.23.5-CentOS-binary-install.md
│   ├── v1.23.6-CentOS-binary-install.md
│   ├── v1.23.7-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md
│   ├── v1.24.0-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md
│   ├── v1.24.0-CentOS-binary-install-IPv6-IPv4.md
│   ├── v1.24.1-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md
│   ├── v1.24.1-CentOS-binary-install-IPv6-IPv4.md
│   ├── v1.24.1-Ubuntu-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md
│   ├── v1.24.2-CentOS-binary-install-IPv6-IPv4.md
│   ├── v1.24.3-CentOS-binary-install-IPv6-IPv4.md
│   ├── v1.25.0-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md
│   ├── v1.25.0-CentOS-binary-install-IPv6-IPv4.md
│   ├── v1.25.4-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md
│   ├── v1.26.0-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md
│   ├── v1.26.1-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md
│   ├── v1.27.1-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md
│   ├── v1.27.3-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md
│   ├── v1.28.0-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md
│   ├── v1.28.3-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md
│   ├── v1.29.2-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md
│   ├── v1.30.1-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md
│   ├── v1.30.2-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md
│   ├── v1.31.1-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md
│   └── v1.32.0-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md
├── images
│   ├── 1.jpg
│   ├── 2.jpg
│   └── 3.jpg
├── ingress-yaml
│   ├── backend.yaml
│   ├── deploy.yaml
│   └── ingress-demo-app.yaml
├── metrics-server
│   ├── high-availability.yaml
│   └── metrics-server-components.yaml
├── pki
│   ├── admin-csr.json
│   ├── apiserver-csr.json
│   ├── ca-config.json
│   ├── ca-csr.json
│   ├── etcd-ca-csr.json
│   ├── etcd-csr.json
│   ├── front-proxy-ca-csr.json
│   ├── front-proxy-client-csr.json
│   ├── kube-proxy-csr.json
│   ├── kubelet-csr.json
│   ├── manager-csr.json
│   └── scheduler-csr.json
├── shell
│   ├── download.sh
│   └── update_k8s.sh
└── yaml
    ├── PHP-Nginx-Deployment-ConfMap-Service.yaml
    ├── admin.yaml
    ├── calico.yaml
    ├── cby.yaml
    ├── connectivity-check.yaml
    ├── dashboard-user.yaml
    ├── deploy.yaml
    ├── metrics-server-components.yaml
    ├── monitoring-example.yaml
    ├── mysql-ha-read-write-separation.yaml
    ├── nfs-storage.yaml
    └── vscode.yaml

/README.md:

--------------------------------------------------------------------------------

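1 | # Kubernetes (k8s) High-Availability Binary Installation
2 |
3 | [Kubernetes](https://github.com/cby-chen/Kubernetes) Open source takes real effort. If this project helps you, please give it a star. Thank you 🌹
4 |
5 | If GitHub is slow or unreachable, use the Gitee mirror hosted in China: https://gitee.com/cby-inc/Kubernetes
6 |
7 | ## ⚠️ In mainland China, Docker Hub and other image registries are now completely blocked by the GFW!!!
8 | ## ⚠️ Images can no longer be pulled directly and must be obtained by other means!!!
9 |
10 | # 1. Before You Start
11 |
12 | Open the document and use find-and-replace to substitute your own host IPs throughout.
13 |
14 | Friends, there is no need to put yourself through a manual deployment.
15 |
16 | Use an automated deployment whenever you can. Recommended: https://github.com/easzlab/kubeasz
17 |
18 | You can also use [kubeadm-install-V1.32.md](./doc/kubeadm-install-V1.32.md)
19 |
20 | # 2. Introduction
21 |
22 | I use IPv6 so that the cluster can be reached from the public internet, which is why I configured static IPv6 addresses.
23 | If you have no IPv6 network, or do not want to use IPv6, simply do not assign IPv6 addresses to the hosts.
24 | Skipping IPv6 does not affect the later steps, and the cluster still supports IPv6, which leaves room to enable it later.
25 | If you do not want IPv6, just leave it off the network interfaces. Do not delete or modify any IPv6-related settings, or things will break.
26 | If there is no IPv6 on your network, Calico must use the IPv4 YAML manifest.
27 | I will try to publish documents for new versions as soon as they are released. Updates are posted on GitHub.
28 |
29 | The documents for the latest versions cover IPv6 in the most detail.
30 |
31 | Do not delete the IPv6-related settings!!!
32 |
33 | Do not delete the IPv6-related settings!!!
34 |
35 | Do not delete the IPv6-related settings!!!
36 |
37 | # 3. Versions Currently Documented
38 |
39 | - 1.32.x
40 | - 1.31.x
41 | - 1.30.x
42 | - 1.29.x
43 | - 1.28.x
44 | - 1.27.x
45 | - 1.26.x
46 | - 1.25.x
47 | - 1.24.x
48 | - 1.23.x
49 | - 1.22.x
50 | - 1.21.x
51 |
52 | Each document applies to a whole minor release. For example, the 1.26.0 guide can install any 1.26.x version, as long as you download the matching packages during installation.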

53 |
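Switching to another patch release of the same minor line therefore mostly means fetching different tarballs. The sketch below is a minimal example. It assumes the official `dl.k8s.io` download host and the `kubernetes-server-linux-<arch>` server tarball. `k8s_server_url` and `same_minor` are hypothetical helper names and are not part of these guides.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of the original guides).

# k8s_server_url VERSION [ARCH]: print the official server tarball URL
# for a release (default architecture: amd64).
k8s_server_url() {
  local version="$1" arch="${2:-amd64}"
  echo "https://dl.k8s.io/${version}/kubernetes-server-linux-${arch}.tar.gz"
}

# same_minor GUIDE_VERSION TARGET_VERSION: succeed if both versions share
# vMAJOR.MINOR (only the last ".patch" component is stripped).
same_minor() {
  local guide="${1%.*}" target="${2%.*}"
  [ "$guide" = "$target" ]
}

# Example: the 1.26.0 guide used to install 1.26.3.
if same_minor v1.26.0 v1.26.3; then
  echo "wget $(k8s_server_url v1.26.3)"
fi
```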

54 | # 4. Project Links
55 |
56 | Manual installation project:
57 | https://github.com/cby-chen/Kubernetes
58 |
59 | Script-based projects (no longer maintained):
60 | https://github.com/cby-chen/Binary_installation_of_Kubernetes
61 | https://github.com/cby-chen/kube_ansible

62 |

63 | # 5. Documentation
64 |
65 | ### Latest documents
66 | - [kubeadm-install-V1.32.md](./doc/kubeadm-install-V1.32.md)
67 | - [v1.32.0-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md](./doc/v1.32.0-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md)
68 |
69 | ## Installation documents
70 | ### 1.32.x

71 | - [v1.32.0-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md](./doc/v1.32.0-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md)

72 |

73 | ### 1.31.x

74 | - [v1.31.1-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md](./doc/v1.31.1-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md)

75 |

76 | ### 1.30.x

77 | - [v1.30.1-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md](./doc/v1.30.1-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md)

78 | - [v1.30.2-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md](./doc/v1.30.2-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md)

79 |

80 | ### 1.29.x

81 | - [v1.29.2-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md](./doc/v1.29.2-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md)

82 |

83 | ### 1.28.x

84 | - [v1.28.0-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md](./doc/v1.28.0-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md)

85 | - [v1.28.3-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md](./doc/v1.28.3-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md)

86 |

87 | ### 1.27.x

88 | - [v1.27.1-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md](./doc/v1.27.1-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md)

89 | - [v1.27.3-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md](./doc/v1.27.3-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md)

90 |

91 | ### 1.26.x

92 | - [v1.26.0-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md](./doc/v1.26.0-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md)

93 | - [v1.26.1-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md](./doc/v1.26.1-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md)

94 |

95 | ### 1.25.x

96 | - [v1.25.0-CentOS-binary-install-IPv6-IPv4.md](./doc/v1.25.0-CentOS-binary-install-IPv6-IPv4.md)

97 | - [v1.25.4-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md](./doc/v1.25.4-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md)

98 |

99 | ### 1.24.x

100 | - [v1.24.0-CentOS-binary-install-IPv6-IPv4.md](./doc/v1.24.0-CentOS-binary-install-IPv6-IPv4.md)

101 | - [v1.24.1-CentOS-binary-install-IPv6-IPv4.md](./doc/v1.24.1-CentOS-binary-install-IPv6-IPv4.md)

102 | - [v1.24.2-CentOS-binary-install-IPv6-IPv4.md](./doc/v1.24.2-CentOS-binary-install-IPv6-IPv4.md)

103 | - [v1.24.3-CentOS-binary-install-IPv6-IPv4.md](./doc/v1.24.3-CentOS-binary-install-IPv6-IPv4.md)

104 |

105 | ### 1.23.x

106 | - [v1.23.3-CentOS-binary-install](./doc/v1.23.3-CentOS-binary-install.md)

107 | - [v1.23.4-CentOS-binary-install](./doc/v1.23.4-CentOS-binary-install.md)

108 | - [v1.23.5-CentOS-binary-install](./doc/v1.23.5-CentOS-binary-install.md)

109 | - [v1.23.6-CentOS-binary-install](./doc/v1.23.6-CentOS-binary-install.md)

110 |

111 | ### 1.22.x

112 | - [v1.22.10-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md](./doc/v1.22.10-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md)

113 |

114 | ### 1.21.x

115 | - [v1.21.13-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md](./doc/v1.21.13-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md)

116 |

117 | ## Other documents
118 | - Fix the overly broad permissions of the kube-proxy certificate [kube-proxy_permissions.md](./doc/kube-proxy_permissions.md)
119 | - Initialize an IPv4/IPv6 cluster with kubeadm [kubeadm-install-IPV6-IPV4.md](./doc/kubeadm-install-IPV6-IPV4.md)
120 | - Enable IPv6 on an IPv4 cluster (reverse the steps to disable it) [Enable-implement-IPv4-IPv6.md](./doc/Enable-implement-IPv4-IPv6.md)
121 | - Upgrade a Kubernetes cluster [Upgrade_Kubernetes.md](./doc/Upgrade_Kubernetes.md)
122 | - Initialize a cluster with Minikube [Minikube_init.md](./doc/Minikube_init.md)
123 | - Use Docker as the container runtime on Kubernetes 1.24/1.25 clusters [Kubernetes_docker](./doc/Kubernetes_docker.md)
124 | - Install Cilium on Kubernetes [kubernetes_install_cilium](./doc/kubernetes_install_cilium.md)
125 | - Binary installation documents for every version

126 |

127 | # 6. Installation Packages
128 |
129 | - 123pan cloud drive: https://www.123pan.com/s/Z8ArVv-PG60d
130 | - Quark cloud drive: https://pan.quark.cn/s/8d8525a12895

131 |

132 | - https://github.com/cby-chen/Kubernetes/releases

133 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.22.10/kubernetes-v1.22.10.tar

134 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.21.13/kubernetes-v1.21.13.tar

135 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/cby/Kubernetes.tar

136 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.23.4/kubernetes-v1.23.4.tar

137 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.23.5/kubernetes-v1.23.5.tar

138 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.23.6/kubernetes-v1.23.6.tar

139 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.23.7/kubernetes-v1.23.7.tar

140 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.24.0/kubernetes-v1.24.0.tar

141 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.24.1/kubernetes-v1.24.1.tar

142 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.24.2/kubernetes-v1.24.2.tar

143 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.24.3/kubernetes-v1.24.3.tar

144 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.25.0/kubernetes-v1.25.0.tar

145 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.25.4/kubernetes-v1.25.4.tar

146 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.26.0/kubernetes-v1.26.0.tar

147 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.26.1/kubernetes-v1.26.1.tar

148 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.27.1/kubernetes-v1.27.1.tar

149 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.27.3/kubernetes-v1.27.3.tar

150 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.28.0/kubernetes-v1.28.0.tar

151 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.28.3/kubernetes-v1.28.3.tar

152 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.29.2/kubernetes-v1.29.2.tar

153 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.30.1/kubernetes-v1.30.1.tar

154 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.30.2/kubernetes-v1.30.2.tar

155 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.31.1/kubernetes-v1.31.1.tar

156 | - wget https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download/v1.32.0/kubernetes-v1.32.0.tar
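Every entry above repeats the version twice, once in the release tag and once in the file name, so the two can drift apart when a line is copied. The sketch below derives both from a single version string. The `bundle_url` helper is hypothetical. The `cby` tag (`Kubernetes.tar`) does not follow this pattern and is not covered.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the mirrored release-bundle URL from one
# version string, so the tag and the tarball name always agree.
MIRROR_PREFIX="https://mirrors.chenby.cn/https://github.com/cby-chen/Kubernetes/releases/download"

# bundle_url VERSION (e.g. v1.32.0)
bundle_url() {
  local version="$1"
  echo "${MIRROR_PREFIX}/${version}/kubernetes-${version}.tar"
}

# Print the URL; to actually download the offline bundle run:
#   wget "$(bundle_url v1.32.0)"
bundle_url v1.32.0
```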

157 |

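158 | *Note: when I wrote the 1.23.3 document I did not expect further updates, so its file names do not follow the later naming scheme.
159 |
160 |
161 | # 7. Common Issues
162 |
163 | - Make sure the hostnames and IP addresses in the hosts file correspond to each other
164 |
165 | - In section 7.2 of the installation guide, do not forget to run `kubectl create -f bootstrap.secret.yaml`
166 |
167 | - If something breaks after a server reboot, check whether kubelet is healthy with `systemctl status kubelet.service`
168 |
169 | - On CentOS 7, runc and libseccomp must be upgraded
170 | For details see https://github.com/cby-chen/Kubernetes/blob/main/doc/v1.25.0-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves.md#9%E5%AE%89%E8%A3%85%E7%BD%91%E7%BB%9C%E6%8F%92%E4%BB%B6
171 |
172 | - kubelet may fail during installation because it cannot parse the `--node-labels` flag. The fix:
173 | replace `--node-labels=node.kubernetes.io/node=''` with `--node-labels=node.kubernetes.io/node=`, i.e. delete the `''`.
174 |
175 | - If IPv6 access does not work, add the `--node-ip=` flag to the kubelet service. If the address is obtained dynamically, the flag must be updated whenever the address changes. For details see https://github.com/cby-chen/Kubernetes/blob/main/doc/v1.28.3-CentOS-binary-install-IPv6-IPv4-Three-Masters-Two-Slaves-Offline.md#82kubelet%E9%85%8D%E7%BD%AE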

176 |
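The `--node-labels` fix listed above can be applied in place with `sed`. The sketch below assumes the kubelet unit lives at `/usr/lib/systemd/system/kubelet.service`, as in these guides. `fix_node_labels` is a hypothetical helper, and it keeps a `.bak` copy of the unit.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove the trailing '' from the --node-labels value.
# Usage: fix_node_labels [UNIT_FILE]
fix_node_labels() {
  local unit="${1:-/usr/lib/systemd/system/kubelet.service}"
  # -i.bak edits the file in place and keeps the original as UNIT_FILE.bak
  sed -i.bak "s|--node-labels=node.kubernetes.io/node=''|--node-labels=node.kubernetes.io/node=|" "$unit"
}

# After editing the unit, reload systemd and restart kubelet:
#   fix_node_labels && systemctl daemon-reload && systemctl restart kubelet
#   systemctl status kubelet.service
```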

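177 | # 8. Other
178 |
179 | ## Recommended production configuration
180 |
181 | ### Master nodes:
182 | - Three nodes for high availability (required)
183 | - 0-100 nodes: 8 cores, 16 GB+
184 | - 100-250 nodes: 8 cores, 32 GB+
185 | - 250-500 nodes: 16 cores, 32 GB+
186 |
187 | ### etcd nodes:
188 | - Three nodes for high availability (required). Where possible, the storage partition must be on high-performance SSDs. Without SSDs, at least use a fast dedicated disk
189 | - 0-50 nodes: 2 cores, 8 GB+, 50 GB SSD storage
190 | - 50-250 nodes: 4 cores, 16 GB+, 150 GB SSD storage
191 | - 250-1000 nodes: 8 cores, 32 GB+, 250 GB SSD storage
192 |
193 | ### Worker nodes:
194 | - No special requirements, except that the Docker data partition and the system partition must be separate and must not share a disk. Use a system partition of 100 GB+ and a Docker data partition of 200 GB+. Use SSDs if possible, kept separate from the system disk
195 |
196 | ### Other:
197 | - For smaller clusters, etcd and the masters can share the same hosts,
198 | - i.e. each master node runs both the k8s components and etcd, but etcd's data directory must be on its own disk, and that disk must be an SSD.
199 | - Co-locating them requires correspondingly more host resources. For production, I recommend giving the master nodes ample resources from the start;
200 | - this is not the place to save money. Use machines with 16 cores and 32 GB or 64 GB of memory, so the masters will not need more resources as the cluster grows, which reduces risk.
201 | - The system partition of master and etcd nodes only needs 100 GB.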

202 |

203 | ### Add me as a friend
204 |
205 |
206 | ### Donate
207 |
208 |

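209 | - Reading the documents on [Kubernetes](https://github.com/cby-chen/Kubernetes) is recommended. More documents will be added over time
210 | - Xiao Chen's websites:
211 |
212 | > https://www.oiox.cn/
213 | >
214 | > https://www.oiox.cn/index.php/start-page.html
215 | >
216 | > **CSDN, GitHub, 51CTO, Zhihu, OSChina, SegmentFault, Juejin, Jianshu, Huawei Cloud, Alibaba Cloud, Tencent Cloud, Bilibili, Toutiao, Sina Weibo, personal blog**
217 | >
218 | > **Search for 《小陈运维》 (Xiao Chen Ops) on any platform**
219 | >
220 | > **Articles are published mainly on the WeChat official account**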

221 |

222 |

223 | ## Stargazers over time

224 |

225 | [Stargazers over time](https://starchart.cc/cby-chen/Kubernetes)

226 |

--------------------------------------------------------------------------------

/bootstrap/bootstrap.secret.yaml:

--------------------------------------------------------------------------------

1 | apiVersion: v1
2 | kind: Secret
3 | metadata:
4 |   name: bootstrap-token-c8ad9c
5 |   namespace: kube-system
6 | type: bootstrap.kubernetes.io/token
7 | stringData:
8 |   description: "The default bootstrap token generated by 'kubelet '."
9 |   token-id: c8ad9c
10 |   token-secret: 2e4d610cf3e7426e
11 |   usage-bootstrap-authentication: "true"
12 |   usage-bootstrap-signing: "true"
13 |   auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress
14 |
15 | ---
16 | apiVersion: rbac.authorization.k8s.io/v1
17 | kind: ClusterRoleBinding
18 | metadata:
19 |   name: kubelet-bootstrap
20 | roleRef:
21 |   apiGroup: rbac.authorization.k8s.io
22 |   kind: ClusterRole
23 |   name: system:node-bootstrapper
24 | subjects:
25 |   - apiGroup: rbac.authorization.k8s.io
26 |     kind: Group
27 |     name: system:bootstrappers:default-node-token
28 | ---
29 | apiVersion: rbac.authorization.k8s.io/v1
30 | kind: ClusterRoleBinding
31 | metadata:
32 |   name: node-autoapprove-bootstrap
33 | roleRef:
34 |   apiGroup: rbac.authorization.k8s.io
35 |   kind: ClusterRole
36 |   name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
37 | subjects:
38 |   - apiGroup: rbac.authorization.k8s.io
39 |     kind: Group
40 |     name: system:bootstrappers:default-node-token
41 | ---
42 | apiVersion: rbac.authorization.k8s.io/v1
43 | kind: ClusterRoleBinding
44 | metadata:
45 |   name: node-autoapprove-certificate-rotation
46 | roleRef:
47 |   apiGroup: rbac.authorization.k8s.io
48 |   kind: ClusterRole
49 |   name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
50 | subjects:
51 |   - apiGroup: rbac.authorization.k8s.io
52 |     kind: Group
53 |     name: system:nodes
54 | ---
55 | apiVersion: rbac.authorization.k8s.io/v1
56 | kind: ClusterRole
57 | metadata:
58 |   annotations:
59 |     rbac.authorization.kubernetes.io/autoupdate: "true"
60 |   labels:
61 |     kubernetes.io/bootstrapping: rbac-defaults
62 |   name: system:kube-apiserver-to-kubelet
63 | rules:
64 |   - apiGroups:
65 |       - ""
66 |     resources:
67 |       - nodes/proxy
68 |       - nodes/stats
69 |       - nodes/log
70 |       - nodes/spec
71 |       - nodes/metrics
72 |     verbs:
73 |       - "*"
74 | ---
75 | apiVersion: rbac.authorization.k8s.io/v1
76 | kind: ClusterRoleBinding
77 | metadata:
78 |   name: system:kube-apiserver
79 |   namespace: ""
80 | roleRef:
81 |   apiGroup: rbac.authorization.k8s.io
82 |   kind: ClusterRole
83 |   name: system:kube-apiserver-to-kubelet
84 | subjects:
85 |   - apiGroup: rbac.authorization.k8s.io
86 |     kind: User
87 |     name: kube-apiserver
88 |

--------------------------------------------------------------------------------
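In the Secret above, `token-id` and `token-secret` together form the bootstrap token `c8ad9c.2e4d610cf3e7426e`, and the Secret name must be `bootstrap-token-<token-id>`. Bootstrap tokens must match `[a-z0-9]{6}.[a-z0-9]{16}`. If you replace the sample token, the kubelet's bootstrap kubeconfig has to carry the same new value. The sketch below uses hypothetical helpers to generate a token and check its format.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for bootstrap tokens of the form <token-id>.<token-secret>.

# gen_bootstrap_token: print a random token such as 1a2b3c.0123456789abcdef
gen_bootstrap_token() {
  local hex
  # 11 random bytes -> 22 lowercase hex characters (6 for the id, 16 for the secret)
  hex=$(head -c 11 /dev/urandom | od -An -tx1 | tr -d ' \n')
  echo "${hex:0:6}.${hex:6:16}"
}

# is_valid_bootstrap_token TOKEN: succeed if TOKEN matches [a-z0-9]{6}.[a-z0-9]{16}
is_valid_bootstrap_token() {
  [[ "$1" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# secret_name_for TOKEN: the Secret must be named bootstrap-token-<token-id>
secret_name_for() {
  echo "bootstrap-token-${1%%.*}"
}

token=$(gen_bootstrap_token)
echo "token=${token} secret=$(secret_name_for "$token")"
```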

/dashboard/dashboard-user.yaml:

--------------------------------------------------------------------------------

1 | apiVersion: v1
2 | kind: ServiceAccount
3 | metadata:
4 |   name: admin-user
5 |   namespace: kube-system
6 | ---
7 | apiVersion: rbac.authorization.k8s.io/v1
8 | kind: ClusterRoleBinding
9 | metadata:
10 |   name: admin-user
11 | roleRef:
12 |   apiGroup: rbac.authorization.k8s.io
13 |   kind: ClusterRole
14 |   name: cluster-admin
15 | subjects:
16 |   - kind: ServiceAccount
17 |     name: admin-user
18 |     namespace: kube-system
19 |

--------------------------------------------------------------------------------

/doc/Enable-implement-IPv4-IPv6.md:

--------------------------------------------------------------------------------

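1 | Background
2 | ==========
3 |
4 | The IPv4 address pool has been exhausted, and networks worldwide are moving toward IPv6, with IPv6 infrastructure being built out heavily. Because IPv6 can give every device its own public IP address, addresses are abundant.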

5 |

6 |

7 | Configure hosts
8 | ===============

9 |

10 | ```shell

11 | [root@k8s-master01 ~]# vim /etc/hosts

12 | [root@k8s-master01 ~]# cat /etc/hosts

13 | 127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

14 | ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

15 | 2408:8207:78ce:7561::10 k8s-master01

16 | 2408:8207:78ce:7561::20 k8s-master02

17 | 2408:8207:78ce:7561::30 k8s-master03

18 | 2408:8207:78ce:7561::40 k8s-node01

19 | 2408:8207:78ce:7561::50 k8s-node02

20 | 2408:8207:78ce:7561::60 k8s-node03

21 | 2408:8207:78ce:7561::70 k8s-node04

22 | 2408:8207:78ce:7561::80 k8s-node05

23 |

24 | 10.0.0.81 k8s-master01

25 | 10.0.0.82 k8s-master02

26 | 10.0.0.83 k8s-master03

27 | 10.0.0.84 k8s-node01

28 | 10.0.0.85 k8s-node02

29 | 10.0.0.86 k8s-node03

30 | 10.0.0.87 k8s-node04

31 | 10.0.0.88 k8s-node05

32 | 10.0.0.80 lb01

33 | 10.0.0.90 lb02

34 | 10.0.0.99 lb-vip

35 |

36 | [root@k8s-master01 ~]#

37 |

38 | ```
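Every hostname in this layout should resolve to exactly one IPv4 and one IPv6 address. `check_hosts` in the sketch below is a hypothetical helper. The `lb01`, `lb02` and `lb-vip` names only have IPv4 entries here, so pass just the Kubernetes node names.

```shell
#!/usr/bin/env bash
# Hypothetical check: each given hostname must appear exactly once with an
# IPv4 address and exactly once with an IPv6 address in the hosts file.
# Usage: check_hosts HOSTS_FILE NAME...
check_hosts() {
  local file="$1"; shift
  local name v4 v6 rc=0
  for name in "$@"; do
    # Count lines whose last field is the hostname, split by address family
    v4=$(awk -v n="$name" '$NF == n && $1 ~ /^[0-9.]+$/' "$file" | wc -l)
    v6=$(awk -v n="$name" '$NF == n && $1 ~ /:/' "$file" | wc -l)
    if [ "$v4" -ne 1 ] || [ "$v6" -ne 1 ]; then
      echo "${name}: ${v4} IPv4 / ${v6} IPv6 entries" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Example:
#   check_hosts /etc/hosts k8s-master01 k8s-master02 k8s-master03 k8s-node01 k8s-node02
```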

39 |

40 | Configure IPv6 addresses
41 | ========================

42 |

43 | ```shell

44 | [root@k8s-master01 ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens160

45 | [root@k8s-master01 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens160

46 | TYPE=Ethernet

47 | PROXY_METHOD=none

48 | BROWSER_ONLY=no

49 | BOOTPROTO=none

50 | DEFROUTE=yes

51 | IPV4_FAILURE_FATAL=no

52 | IPV6INIT=yes

53 | IPV6_AUTOCONF=no

54 | IPV6ADDR=2408:8207:78ce:7561::10/64

55 | IPV6_DEFAULTGW=2408:8207:78ce:7561::1

56 | IPV6_DEFROUTE=yes

57 | IPV6_FAILURE_FATAL=no

58 | NAME=ens160

59 | UUID=56ca7c8c-21c6-484f-acbd-349111b3ddb5

60 | DEVICE=ens160

61 | ONBOOT=yes

62 | IPADDR=10.0.0.81

63 | PREFIX=24

64 | GATEWAY=10.0.0.1

65 | DNS1=8.8.8.8

66 | DNS2=2408:8000:1010:1::8

67 | [root@k8s-master01 ~]#

68 |

69 | ```

70 |

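71 | Note: every host must be configured with a static IPv6 address! Otherwise IPv6 will break once IPv6 packet forwarding is enabled in the kernel. With forwarding on, the kernel ignores router advertisements by default, so SLAAC addresses and the default route are no longer learned.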

72 |
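Before turning on forwarding, you can confirm that an interface file really requests a static IPv6 address. The sketch below assumes the CentOS `network-scripts` format shown above, with unquoted values. `has_static_ipv6` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical check: does an ifcfg file configure a static IPv6 address?
# Requires IPV6INIT=yes, IPV6_AUTOCONF=no and an IPV6ADDR with a prefix length.
# Usage: has_static_ipv6 IFCFG_FILE
has_static_ipv6() {
  local file="$1"
  grep -qx 'IPV6INIT=yes' "$file" &&
    grep -qx 'IPV6_AUTOCONF=no' "$file" &&
    grep -Eq '^IPV6ADDR=[0-9a-fA-F:]+/[0-9]+$' "$file"
}

# Example:
#   has_static_ipv6 /etc/sysconfig/network-scripts/ifcfg-ens160 && echo "static IPv6 configured"
#   ip -6 addr show dev ens160 scope global   # the address should be listed here
```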

73 | Enable IPv6 with sysctl parameters
74 | ==============

75 |

76 | ```shell

77 | [root@k8s-master01 ~]# vim /etc/sysctl.d/k8s.conf

78 | [root@k8s-master01 ~]# cat /etc/sysctl.d/k8s.conf

79 | net.ipv4.ip_forward = 1

80 | net.bridge.bridge-nf-call-iptables = 1

81 | fs.may_detach_mounts = 1

82 | vm.overcommit_memory=1

83 | vm.panic_on_oom=0

84 | fs.inotify.max_user_watches=89100

85 | fs.file-max=52706963

86 | fs.nr_open=52706963

87 | net.netfilter.nf_conntrack_max=2310720

88 |

89 |

90 | net.ipv4.tcp_keepalive_time = 600

91 | net.ipv4.tcp_keepalive_probes = 3

92 | net.ipv4.tcp_keepalive_intvl =15

93 | net.ipv4.tcp_max_tw_buckets = 36000

94 | net.ipv4.tcp_tw_reuse = 1

95 | net.ipv4.tcp_max_orphans = 327680

96 | net.ipv4.tcp_orphan_retries = 3

97 | net.ipv4.tcp_syncookies = 1

98 | net.ipv4.tcp_max_syn_backlog = 16384

99 | net.ipv4.ip_conntrack_max = 65536

100 | net.ipv4.tcp_max_syn_backlog = 16384

101 | net.ipv4.tcp_timestamps = 0

102 | net.core.somaxconn = 16384

103 |

104 |

105 | net.ipv6.conf.all.disable_ipv6 = 0

106 | net.ipv6.conf.default.disable_ipv6 = 0

107 | net.ipv6.conf.lo.disable_ipv6 = 0

108 | net.ipv6.conf.all.forwarding = 1

109 |

110 | [root@k8s-master01 ~]#

111 | [root@k8s-master01 ~]# reboot

112 |

113 | ```
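Instead of rebooting, you can run `sysctl --system`, which reloads every file under `/etc/sysctl.d/`. The sketch below reads a key back from the file so it can be compared with the live kernel value from `sysctl -n`. The `conf_value` helper is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value a sysctl.d file assigns to KEY.
# Spaces around "=" are ignored; if KEY appears twice, the last assignment wins.
# Usage: conf_value FILE KEY
conf_value() {
  awk -F= -v k="$2" '
    { key = $1; gsub(/[ \t]/, "", key) }
    key == k { val = $2; gsub(/^[ \t]+|[ \t]+$/, "", val) }
    END { print val }' "$1"
}

# Example: reload all sysctl.d files, then compare file and kernel values
#   sysctl --system
#   for k in net.ipv6.conf.all.disable_ipv6 net.ipv6.conf.default.disable_ipv6; do
#     echo "$k file=$(conf_value /etc/sysctl.d/k8s.conf "$k") live=$(sysctl -n "$k")"
#   done
```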

114 |

115 | Test public IPv6 access
116 | =======================

117 |

118 | ```shell

119 | [root@k8s-master01 ~]# ping www.chenby.cn -6

120 | PING www.chenby.cn(2408:871a:5100:119:1d:: (2408:871a:5100:119:1d::)) 56 data bytes

121 | 64 bytes from 2408:871a:5100:119:1d:: (2408:871a:5100:119:1d::): icmp_seq=1 ttl=53 time=10.6 ms

122 | 64 bytes from 2408:871a:5100:119:1d:: (2408:871a:5100:119:1d::): icmp_seq=2 ttl=53 time=9.94 ms

123 | ^C

124 | --- www.chenby.cn ping statistics ---

125 | 2 packets transmitted, 2 received, 0% packet loss, time 1002ms

126 | rtt min/avg/max/mdev = 9.937/10.269/10.602/0.347 ms

127 | [root@k8s-master01 ~]#

128 |

129 | ```

130 |

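131 | Modify the following kube-apiserver settings
132 | ====================
133 | Note: the `IPv6DualStack` feature gate has been enabled by default since v1.21, reached GA in v1.23 and was removed in v1.25. On v1.25 or later, drop every `--feature-gates=IPv6DualStack=true` flag in this document, because the components refuse to start with an unrecognized feature gate.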

134 | ```shell

135 | --service-cluster-ip-range=10.96.0.0/12,fd00:1111::/112

136 | --feature-gates=IPv6DualStack=true

137 |

138 | [root@k8s-master01 ~]# vim /usr/lib/systemd/system/kube-apiserver.service

139 | [root@k8s-master01 ~]# cat /usr/lib/systemd/system/kube-apiserver.service

140 |

141 | [Unit]

142 | Description=Kubernetes API Server

143 | Documentation=https://github.com/kubernetes/kubernetes

144 | After=network.target

145 |

146 | [Service]

147 | ExecStart=/usr/local/bin/kube-apiserver \

148 | --v=2 \

149 | --logtostderr=true \

150 | --allow-privileged=true \

151 | --bind-address=0.0.0.0 \

152 | --secure-port=6443 \

153 | --insecure-port=0 \

154 | --advertise-address=192.168.1.81 \

155 | --service-cluster-ip-range=10.96.0.0/12,fd00:1111::/112 \

156 | --feature-gates=IPv6DualStack=true \

157 | --service-node-port-range=30000-32767 \

158 | --etcd-servers=https://192.168.1.81:2379,https://192.168.1.82:2379,https://192.168.1.83:2379 \

159 | --etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

160 | --etcd-certfile=/etc/etcd/ssl/etcd.pem \

161 | --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

162 | --client-ca-file=/etc/kubernetes/pki/ca.pem \

163 | --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

164 | --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

165 | --kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

166 | --kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

167 | --service-account-key-file=/etc/kubernetes/pki/sa.pub \

168 | --service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

169 | --service-account-issuer=https://kubernetes.default.svc.cluster.local \

170 | --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

171 | --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

172 | --authorization-mode=Node,RBAC \

173 | --enable-bootstrap-token-auth=true \

174 | --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

175 | --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

176 | --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

177 | --requestheader-allowed-names=aggregator \

178 | --requestheader-group-headers=X-Remote-Group \

179 | --requestheader-extra-headers-prefix=X-Remote-Extra- \

180 | --requestheader-username-headers=X-Remote-User \

181 | --enable-aggregator-routing=true

182 | # --token-auth-file=/etc/kubernetes/token.csv

183 |

184 | Restart=on-failure

185 | RestartSec=10s

186 | LimitNOFILE=65535

187 |

188 | [Install]

189 | WantedBy=multi-user.target

190 |

191 | ```
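For dual-stack, `--service-cluster-ip-range` must list exactly one IPv4 range and one IPv6 range, and kube-apiserver and kube-controller-manager should receive the same value. The sketch below is a minimal format check. `is_dual_stack_pair` is hypothetical, and it only looks at address families, not at prefix lengths or overlaps.

```shell
#!/usr/bin/env bash
# Hypothetical check: a dual-stack CIDR flag value must hold exactly two
# comma-separated ranges, one IPv4 and one IPv6 (in either order).
# Usage: is_dual_stack_pair "10.96.0.0/12,fd00:1111::/112"
is_dual_stack_pair() {
  local IFS=',' v4=0 v6=0 cidr
  local -a parts
  read -r -a parts <<< "$1"
  [ "${#parts[@]}" -eq 2 ] || return 1
  for cidr in "${parts[@]}"; do
    case "$cidr" in
      *:*/*)     v6=$((v6 + 1)) ;;   # IPv6 ranges contain ':'
      *.*.*.*/*) v4=$((v4 + 1)) ;;   # IPv4 dotted quad
      *)         return 1 ;;
    esac
  done
  [ "$v4" -eq 1 ] && [ "$v6" -eq 1 ]
}

# Example: read the value from the unit file and check it
#   flag=$(grep -o -- '--service-cluster-ip-range=[^ ]*' /usr/lib/systemd/system/kube-apiserver.service | cut -d= -f2)
#   is_dual_stack_pair "$flag" && echo "dual-stack service range OK"
```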

192 |

193 | Modify the following kube-controller-manager settings
194 | ====================

195 |

196 | ```shell

197 | --feature-gates=IPv6DualStack=true

198 | --service-cluster-ip-range=10.96.0.0/12,fd00:1111::/112

199 | --cluster-cidr=172.16.0.0/12,fc00:2222::/112

200 | --node-cidr-mask-size-ipv4=24

201 | --node-cidr-mask-size-ipv6=120

202 |

203 | [root@k8s-master01 ~]# vim /usr/lib/systemd/system/kube-controller-manager.service

204 | [root@k8s-master01 ~]# cat /usr/lib/systemd/system/kube-controller-manager.service

205 | [Unit]

206 | Description=Kubernetes Controller Manager

207 | Documentation=https://github.com/kubernetes/kubernetes

208 | After=network.target

209 |

210 | [Service]

211 | ExecStart=/usr/local/bin/kube-controller-manager \

212 | --v=2 \

213 | --logtostderr=true \

214 | --address=127.0.0.1 \

215 | --root-ca-file=/etc/kubernetes/pki/ca.pem \

216 | --cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \

217 | --cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \

218 | --service-account-private-key-file=/etc/kubernetes/pki/sa.key \

219 | --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \

220 | --leader-elect=true \

221 | --use-service-account-credentials=true \

222 | --node-monitor-grace-period=40s \

223 | --node-monitor-period=5s \

224 | --pod-eviction-timeout=2m0s \

225 | --controllers=*,bootstrapsigner,tokencleaner \

226 | --allocate-node-cidrs=true \

227 | --feature-gates=IPv6DualStack=true \

228 | --service-cluster-ip-range=10.96.0.0/12,fd00:1111::/112 \

229 | --cluster-cidr=172.16.0.0/12,fc00:2222::/112 \

230 | --node-cidr-mask-size-ipv4=24 \

231 | --node-cidr-mask-size-ipv6=120 \

232 | --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem

233 |

234 | Restart=always

235 | RestartSec=10s

236 |

237 | [Install]

238 | WantedBy=multi-user.target

239 |

240 | ```
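The node mask sizes decide how many nodes the cluster CIDRs can serve. A /12 split into /24s yields 2^(24-12) subnets, and a /112 split into /120s yields 2^(120-112). The worked shell arithmetic below shows this; the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Worked arithmetic for the controller-manager settings above.
# subnet_count CLUSTER_PREFIX NODE_MASK -> 2^(NODE_MASK - CLUSTER_PREFIX) node subnets
subnet_count() { echo $(( 1 << ($2 - $1) )); }
# subnet_size ADDR_BITS NODE_MASK -> 2^(ADDR_BITS - NODE_MASK) addresses per node subnet
subnet_size() { echo $(( 1 << ($1 - $2) )); }

# --cluster-cidr=172.16.0.0/12 with --node-cidr-mask-size-ipv4=24
echo "IPv4: $(subnet_count 12 24) nodes, $(subnet_size 32 24) addresses per node"
# prints: IPv4: 4096 nodes, 256 addresses per node

# --cluster-cidr=fc00:2222::/112 with --node-cidr-mask-size-ipv6=120
echo "IPv6: $(subnet_count 112 120) nodes, $(subnet_size 128 120) addresses per node"
# prints: IPv6: 256 nodes, 256 addresses per node
```

With these settings, the IPv6 range (not the IPv4 one) limits the cluster to 256 nodes with pod subnets, since the range allocator assigns each dual-stack node one subnet per family. If you expect more nodes, widening the IPv6 `--cluster-cidr` is the lever to look at.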

241 |

242 | Modify the following kubelet settings
243 | =============

244 |

245 | ```shell

246 | --feature-gates=IPv6DualStack=true

247 |

248 | [root@k8s-master01 ~]# vim /usr/lib/systemd/system/kubelet.service

249 | [root@k8s-master01 ~]# cat /usr/lib/systemd/system/kubelet.service

250 | [Unit]

251 | Description=Kubernetes Kubelet

252 | Documentation=https://github.com/kubernetes/kubernetes

253 | After=docker.service

254 | Requires=docker.service

255 |

256 | [Service]

257 | ExecStart=/usr/local/bin/kubelet \

258 | --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig \

259 | --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \

260 | --config=/etc/kubernetes/kubelet-conf.yml \

261 | --network-plugin=cni \

262 | --cni-conf-dir=/etc/cni/net.d \

263 | --cni-bin-dir=/opt/cni/bin \

264 | --container-runtime=remote \

265 | --runtime-request-timeout=15m \

266 | --container-runtime-endpoint=unix:///run/containerd/containerd.sock \

267 | --cgroup-driver=systemd \

268 | --node-labels=node.kubernetes.io/node= \

269 | --feature-gates=IPv6DualStack=true

270 |

271 | Restart=always

272 | StartLimitInterval=0

273 | RestartSec=10

274 |

275 | [Install]

276 | WantedBy=multi-user.target

277 |

278 | ```
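On a bare-metal dual-stack node, kubelet's `--node-ip` accepts a comma-separated IPv4/IPv6 pair. This is the flag the project README points to when IPv6 access fails. The sketch below formats the pair with IPv4 first, matching the IPv4-first service range used here. `node_ip_flag` is hypothetical, and the `ip` commands in the comments assume the `ens160` interface from this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: format a dual-stack --node-ip value, IPv4 first.
# Usage: node_ip_flag IPV4 IPV6
node_ip_flag() {
  case "$1" in *:*|'') return 1 ;; esac      # first argument must be IPv4
  case "$2" in *:*) ;; *) return 1 ;; esac   # second argument must be IPv6
  echo "--node-ip=$1,$2"
}

# Example: take the first global address of each family from ens160
#   v4=$(ip -o -4 addr show dev ens160 scope global | awk 'NR==1 {split($4, a, "/"); print a[1]}')
#   v6=$(ip -o -6 addr show dev ens160 scope global | awk 'NR==1 {split($4, a, "/"); print a[1]}')
#   node_ip_flag "$v4" "$v6"   # then add the printed flag to ExecStart in kubelet.service
```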

279 |

280 | Modify the following kube-proxy settings
281 | ====================
282 |
283 | ```shell
284 | # Change the following setting

285 | clusterCIDR: 172.16.0.0/12,fc00:2222::/112

286 |

287 | [root@k8s-master01 ~]# vim /etc/kubernetes/kube-proxy.yaml

288 | [root@k8s-master01 ~]# cat /etc/kubernetes/kube-proxy.yaml

289 | apiVersion: kubeproxy.config.k8s.io/v1alpha1
290 | bindAddress: 0.0.0.0
291 | clientConnection:
292 |   acceptContentTypes: ""
293 |   burst: 10
294 |   contentType: application/vnd.kubernetes.protobuf
295 |   kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
296 |   qps: 5
297 | clusterCIDR: 172.16.0.0/12,fc00:2222::/112
298 | configSyncPeriod: 15m0s
299 | conntrack:
300 |   max: null
301 |   maxPerCore: 32768
302 |   min: 131072
303 |   tcpCloseWaitTimeout: 1h0m0s
304 |   tcpEstablishedTimeout: 24h0m0s
305 | enableProfiling: false
306 | healthzBindAddress: 0.0.0.0:10256
307 | hostnameOverride: ""
308 | iptables:
309 |   masqueradeAll: false
310 |   masqueradeBit: 14
311 |   minSyncPeriod: 0s
312 |   syncPeriod: 30s
313 | ipvs:
314 |   masqueradeAll: true
315 |   minSyncPeriod: 5s
316 |   scheduler: "rr"
317 |   syncPeriod: 30s
318 | kind: KubeProxyConfiguration
319 | metricsBindAddress: 127.0.0.1:10249
320 | mode: "ipvs"
321 | nodePortAddresses: null
322 | oomScoreAdj: -999
323 | portRange: ""
324 | udpIdleTimeout: 250ms

325 | [root@k8s-master01 ~]#

326 |

327 | ```
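kube-proxy's `clusterCIDR` should be the same value as the `--cluster-cidr` given to kube-controller-manager. The sketch below is a minimal consistency check. `cidrs_match` is hypothetical. It does a plain string comparison, so the order of the two ranges must match as well.

```shell
#!/usr/bin/env bash
# Hypothetical check: kube-proxy clusterCIDR must equal the controller-manager
# --cluster-cidr flag (exact string comparison).
# Usage: cidrs_match KUBE_PROXY_YAML CONTROLLER_MANAGER_UNIT
cidrs_match() {
  local proxy cm
  proxy=$(awk '$1 == "clusterCIDR:" { print $2 }' "$1")
  cm=$(grep -o -- '--cluster-cidr=[^ ]*' "$2" | head -n 1 | cut -d= -f2)
  echo "kube-proxy: ${proxy:-<unset>}  controller-manager: ${cm:-<unset>}"
  [ -n "$proxy" ] && [ "$proxy" = "$cm" ]
}

# Example:
#   cidrs_match /etc/kubernetes/kube-proxy.yaml /usr/lib/systemd/system/kube-controller-manager.service
```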

328 |

329 | Modify the following Calico settings
330 | ============
331 |
332 | ```shell
333 | # vim calico.yaml
334 | # In the calico-config ConfigMap:
335 | "ipam": {
336 |     "type": "calico-ipam",
337 |     "assign_ipv4": "true",
338 |     "assign_ipv6": "true"
339 | },
340 | - name: IP
341 |   value: "autodetect"
342 |
343 | - name: IP6
344 |   value: "autodetect"
345 |
346 | - name: CALICO_IPV4POOL_CIDR
347 |   value: "172.16.0.0/12"
348 |
349 | - name: CALICO_IPV6POOL_CIDR
350 |   value: "fc00::/48"
351 |
352 | - name: FELIX_IPV6SUPPORT
353 |   value: "true"

354 | # kubectl apply -f calico.yaml

355 |

356 | ```
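The Calico IPv4 pool is normally kept inside the controller-manager `--cluster-cidr` (here both are `172.16.0.0/12`). The sketch below is a minimal, IPv4-only containment check; `ip4_to_int` and `cidr4_within` are hypothetical helpers. As written above, the IPv6 pool `fc00::/48` and the IPv6 `--cluster-cidr` `fc00:2222::/112` do not overlap, so check which range you intend before applying the manifest.

```shell
#!/usr/bin/env bash
# Hypothetical IPv4-only check: is INNER fully contained in OUTER?

# ip4_to_int A.B.C.D -> 32-bit integer
ip4_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr4_within INNER_CIDR OUTER_CIDR
cidr4_within() {
  local inner_ip=${1%/*} inner_len=${1#*/} outer_ip=${2%/*} outer_len=${2#*/}
  # A wider prefix can never fit inside a narrower one
  [ "$inner_len" -ge "$outer_len" ] || return 1
  # Network mask of the outer range, kept to 32 bits
  local mask=$(( (0xFFFFFFFF << (32 - outer_len)) & 0xFFFFFFFF ))
  [ $(( $(ip4_to_int "$inner_ip") & mask )) -eq $(( $(ip4_to_int "$outer_ip") & mask )) ]
}

cidr4_within 172.16.0.0/12 172.16.0.0/12 && echo "Calico IPv4 pool is inside cluster-cidr"
```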

357 |

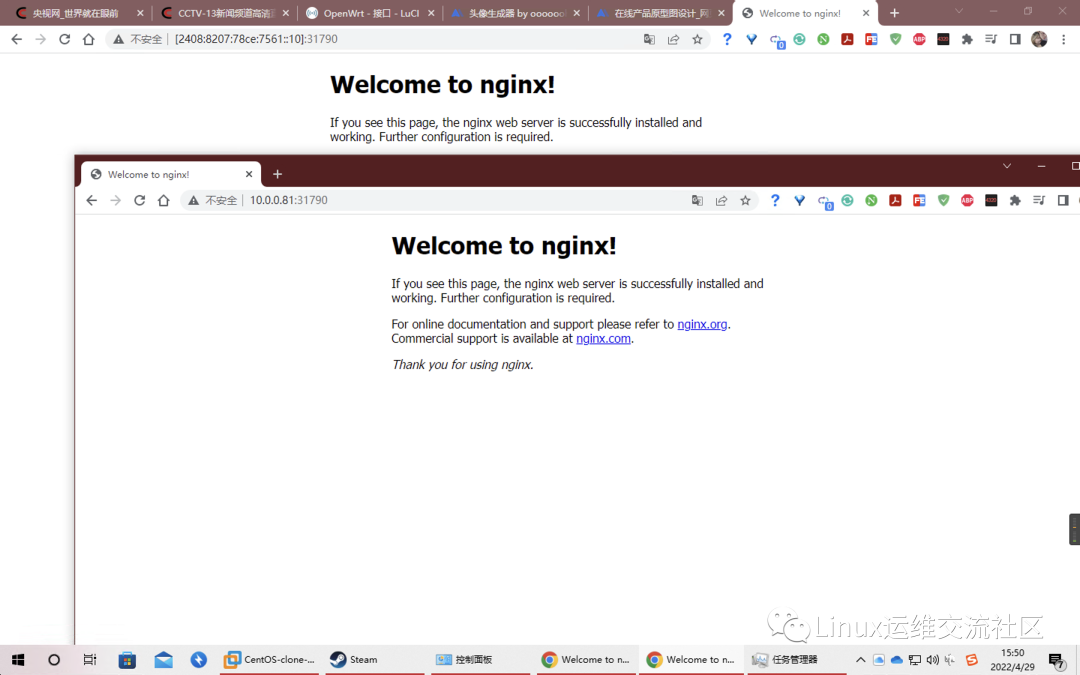

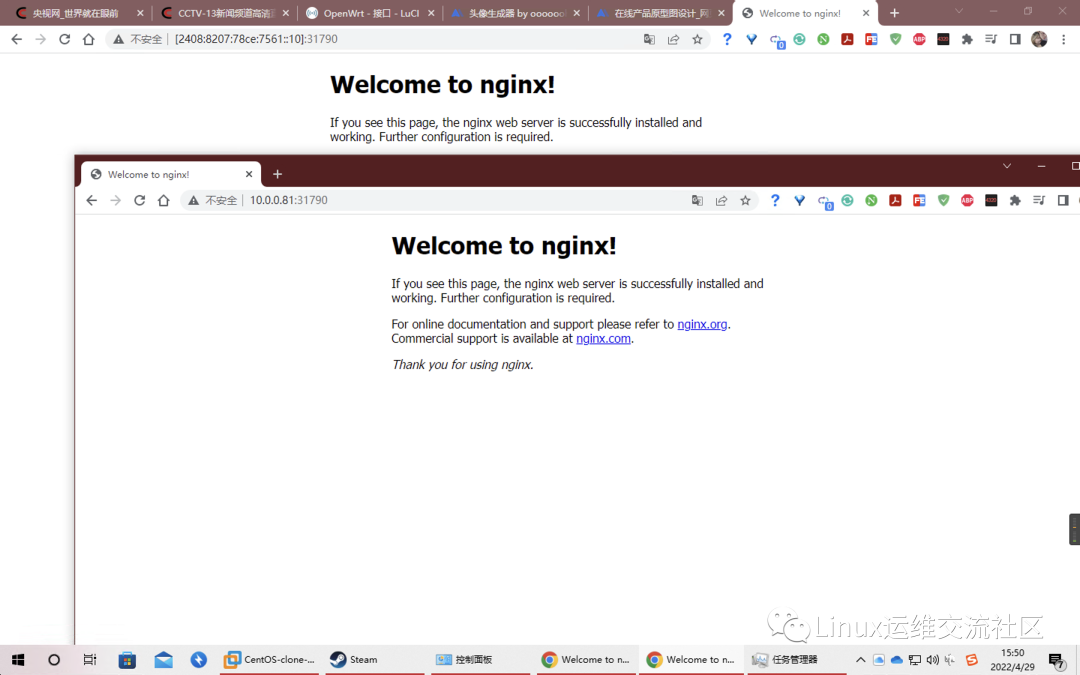

358 | Test
359 | ====
360 |
361 | ```shell
362 | # Deploy a test application
363 | [root@k8s-master01 ~]# cat cby.yaml
364 | apiVersion: apps/v1
365 | kind: Deployment
366 | metadata:
367 |   name: chenby
368 | spec:
369 |   replicas: 3
370 |   selector:
371 |     matchLabels:
372 |       app: chenby
373 |   template:
374 |     metadata:
375 |       labels:
376 |         app: chenby
377 |     spec:
378 |       containers:
379 |       - name: chenby
380 |         image: nginx
381 |         resources:
382 |           limits:
383 |             memory: "128Mi"
384 |             cpu: "500m"
385 |         ports:
386 |         - containerPort: 80
387 |
388 | ---
389 | apiVersion: v1
390 | kind: Service
391 | metadata:
392 |   name: chenby
393 | spec:
394 |   ipFamilyPolicy: PreferDualStack
395 |   ipFamilies:
396 |   - IPv6
397 |   - IPv4
398 |   type: NodePort
399 |   selector:
400 |     app: chenby
401 |   ports:
402 |   - port: 80
403 |     targetPort: 80

404 | [root@k8s-master01 ~]# kubectl apply -f cby.yaml

405 |

406 | # Check the Service and its NodePort
407 | [root@k8s-master01 ~]# kubectl get svc
408 | NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
409 | chenby       NodePort    fd00::d80a   <none>        80:31535/TCP   54s
410 | kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP        22h

411 | [root@k8s-master01 ~]#

412 |

413 | # Access through the cluster-internal Service IP

414 | [root@k8s-master01 ~]# curl -I http://[fd00::d80a]

415 | HTTP/1.1 200 OK

416 | Server: nginx/1.21.6

417 | Date: Fri, 29 Apr 2022 07:29:28 GMT

418 | Content-Type: text/html

419 | Content-Length: 615

420 | Last-Modified: Tue, 25 Jan 2022 15:03:52 GMT

421 | Connection: keep-alive

422 | ETag: "61f01158-267"

423 | Accept-Ranges: bytes

424 |

425 | [root@k8s-master01 ~]#

426 |

427 | # Access through the node's public address and the NodePort

428 | [root@k8s-master01 ~]# curl -I http://[2408:8207:78ce:7561::10]:31535

429 | HTTP/1.1 200 OK

430 | Server: nginx/1.21.6

431 | Date: Fri, 29 Apr 2022 07:25:16 GMT

432 | Content-Type: text/html

433 | Content-Length: 615

434 | Last-Modified: Tue, 25 Jan 2022 15:03:52 GMT

435 | Connection: keep-alive

436 | ETag: "61f01158-267"

437 | Accept-Ranges: bytes

438 |

439 | [root@k8s-master01 ~]#

440 | # Access the Service through the node's IPv4 address and the NodePort

441 | [root@k8s-master01 ~]# curl -I http://10.0.0.81:31535

442 | HTTP/1.1 200 OK

443 | Server: nginx/1.21.6

444 | Date: Fri, 29 Apr 2022 07:26:16 GMT

445 | Content-Type: text/html

446 | Content-Length: 615

447 | Last-Modified: Tue, 25 Jan 2022 15:03:52 GMT

448 | Connection: keep-alive

449 | ETag: "61f01158-267"

450 | Accept-Ranges: bytes

451 |

452 | [root@k8s-master01 ~]#

453 |

454 | ```
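The curl checks above put IPv6 addresses in square brackets, because URL syntax requires brackets around an IPv6 literal. IPv4 addresses are used without brackets. The sketch below is a small helper that builds the URL correctly for either family. `nodeport_url` is a hypothetical name. The addresses and port in the usage lines are the example values from the transcript above.

```shell
#!/usr/bin/env bash
# Build an http:// URL for a host and an optional port, bracketing IPv6 literals.
# nodeport_url is a hypothetical helper, not part of kubectl or curl.
nodeport_url() {
  local host=$1 port=${2:-}
  case $host in
    \[*\]) ;;                # already bracketed: leave as-is
    *:*) host="[$host]" ;;   # bare IPv6 literal: add brackets
  esac
  if [ -n "$port" ]; then
    printf 'http://%s:%s\n' "$host" "$port"
  else
    printf 'http://%s\n' "$host"
  fi
}

# Usage (requires network access to the cluster):
# curl -I "$(nodeport_url fd00::d80a)"
# curl -I "$(nodeport_url 2408:8207:78ce:7561::10 31535)"
# curl -I "$(nodeport_url 10.0.0.81 31535)"
```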

455 |

456 |

457 |

458 |

459 |

460 |

461 |

462 |

463 |

464 |

465 |

466 | > **About**

467 | >

468 | > https://www.oiox.cn/

469 | >

470 | > https://www.oiox.cn/index.php/start-page.html

471 | >

472 | > **CSDN, GitHub, Zhihu, OSChina, SegmentFault, Juejin, Jianshu, Huawei Cloud, Alibaba Cloud, Tencent Cloud, Bilibili, Toutiao, Sina Weibo, personal blog**

473 | >

474 | > **Search for 《小陈运维》 on any of these platforms**

475 | >

476 | > **Articles are published mainly on the WeChat official account 《Linux运维交流社区》**

477 |

--------------------------------------------------------------------------------

/doc/Kubernetes_docker.md:

--------------------------------------------------------------------------------

1 | ## Using Docker as the container runtime in Kubernetes 1.24 and 1.25 clusters

2 |

3 | ### Background

4 |

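5 | Starting with Kubernetes 1.24, the kubelet's built-in Docker support (dockershim) has been removed, so Docker can no longer be used as the container runtime out of the box. To keep using Docker, install cri-dockerd. cri-dockerd exposes Docker Engine through the Container Runtime Interface (CRI), which lets the kubelet use it as its container runtime.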

6 |

7 |

8 |

9 | ### Check the current container runtime

10 |

11 | ```shell

12 | # Check the container runtime of a specific node

13 | kubectl describe node k8s-node05 | grep Container

14 | Container Runtime Version: containerd://1.6.8

15 |

16 | # Check the container runtime of all nodes

17 | kubectl describe node | grep Container

18 | Container Runtime Version: containerd://1.6.8

19 | Container Runtime Version: containerd://1.6.8

20 | Container Runtime Version: containerd://1.6.8

21 | Container Runtime Version: containerd://1.6.8

22 | Container Runtime Version: containerd://1.6.8

23 | Container Runtime Version: containerd://1.6.8

24 | Container Runtime Version: containerd://1.6.8

25 | Container Runtime Version: containerd://1.6.8

26 | ```
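On a cluster with many nodes, the grep output above is easier to read once it is tallied. The sketch below offers two helpers. `list_node_runtimes` uses kubectl's standard `custom-columns` output on the node field `.status.nodeInfo.containerRuntimeVersion`. `tally_runtimes` is a hypothetical helper that counts runtimes in the `Container Runtime Version:` lines printed above.

```shell
#!/usr/bin/env bash
# Print one "NAME RUNTIME" line per node directly from the API server.
list_node_runtimes() {
  kubectl get nodes --no-headers \
    -o custom-columns=NAME:.metadata.name,RUNTIME:.status.nodeInfo.containerRuntimeVersion
}

# Read "Container Runtime Version: <runtime>://<version>" lines on stdin
# and print "<runtime> <count>", sorted by runtime name.
tally_runtimes() {
  awk -F'://' '/Container Runtime Version:/ {
      n = split($1, f, " ")   # the last word before "://" is the runtime name
      count[f[n]]++
    }
    END { for (r in count) print r, count[r] }' | sort
}

# Usage: kubectl describe node | grep Container | tally_runtimes
```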

27 |

28 |

29 |

30 | ### Install Docker

31 |

32 | ```shell

33 | # Update package metadata

34 | yum update

35 | # Install prerequisites

36 | yum install -y yum-utils device-mapper-persistent-data lvm2

37 |

38 | # Add the Docker CE repository (Tsinghua mirror)

39 | sudo yum-config-manager \

40 | --add-repo \

41 | https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/centos/docker-ce.repo

42 |

43 | # Update metadata and install Docker

44 | yum update

45 | yum install docker-ce docker-ce-cli containerd.io

46 |

47 |

48 | # Configure a registry mirror and the systemd cgroup driver

49 | sudo mkdir -p /etc/docker

50 | sudo tee /etc/docker/daemon.json <<-'EOF'

51 | {

52 | "registry-mirrors": ["https://hub-mirror.c.163.com"],

53 | "exec-opts": ["native.cgroupdriver=systemd"]

54 | }

55 | EOF

56 | sudo systemctl daemon-reload

57 | sudo systemctl enable docker && sudo systemctl restart docker

58 | ```
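The kubelet and the container runtime should use the same cgroup driver. The daemon.json above sets Docker's driver to systemd. The sketch below checks the driver before the kubelet is switched over. `docker info --format '{{.CgroupDriver}}'` is a standard Docker CLI query. `daemon_json_uses_systemd` is a hypothetical helper that only inspects the config file.

```shell
#!/usr/bin/env bash
# Succeed if the given daemon.json requests the systemd cgroup driver.
# daemon_json_uses_systemd is a hypothetical helper; it only greps the file.
daemon_json_uses_systemd() {
  local file=${1:-/etc/docker/daemon.json}
  [ -r "$file" ] && grep -q '"native.cgroupdriver=systemd"' "$file"
}

# Ask the running Docker daemon which driver it actually uses (expect "systemd").
running_cgroup_driver() {
  docker info --format '{{.CgroupDriver}}'
}

# Usage:
# daemon_json_uses_systemd && [ "$(running_cgroup_driver)" = systemd ] && echo "cgroup driver OK"
```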

59 |

60 |

61 |

62 | ### Install cri-dockerd

63 |

64 | ```shell

65 | # Kubernetes 1.24 and later no longer support Docker directly, so install cri-dockerd

66 | # Download cri-dockerd

67 | wget https://mirrors.chenby.cn/https://github.com/Mirantis/cri-dockerd/releases/download/v0.2.5/cri-dockerd-0.2.5.amd64.tgz

68 |

69 | # Extract cri-dockerd and install the binary

70 | tar xvf cri-dockerd-0.2.5.amd64.tgz

71 | cp cri-dockerd/cri-dockerd /usr/bin/

72 |

73 | # Write the systemd unit files

74 | cat > /usr/lib/systemd/system/cri-docker.service < /usr/lib/systemd/system/cri-docker.socket < /usr/lib/systemd/system/kubelet.service << EOF

133 |

134 | [Unit]

135 | Description=Kubernetes Kubelet

136 | Documentation=https://github.com/kubernetes/kubernetes

137 | After=containerd.service

138 | Requires=containerd.service

139 |

140 | [Service]

141 | ExecStart=/usr/local/bin/kubelet \\

142 | --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig \\

143 | --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \\

144 | --config=/etc/kubernetes/kubelet-conf.yml \\

145 | --container-runtime-endpoint=unix:///run/cri-dockerd.sock \\

146 | --node-labels=node.kubernetes.io/node=

147 |

148 | [Install]

149 | WantedBy=multi-user.target

150 | EOF

151 |

152 |

153 | # For 1.24: configure the kubelet service on all Kubernetes nodes

154 | cat > /usr/lib/systemd/system/kubelet.service << EOF

155 |

156 | [Unit]

157 | Description=Kubernetes Kubelet

158 | Documentation=https://github.com/kubernetes/kubernetes

159 | After=containerd.service

160 | Requires=containerd.service

161 |

162 | [Service]

163 | ExecStart=/usr/local/bin/kubelet \\

164 | --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig \\

165 | --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \\

166 | --config=/etc/kubernetes/kubelet-conf.yml \\

167 | --container-runtime=remote \\

168 | --runtime-request-timeout=15m \\

169 | --container-runtime-endpoint=unix:///run/cri-dockerd.sock \\

170 | --cgroup-driver=systemd \\

171 | --node-labels=node.kubernetes.io/node= \\

172 | --feature-gates=IPv6DualStack=true

173 |

174 | [Install]

175 | WantedBy=multi-user.target

176 | EOF

177 |

178 |

179 |

180 | # Reload systemd units, then restart and enable the kubelet

181 | systemctl daemon-reload

182 | systemctl restart kubelet

183 | systemctl enable --now kubelet

184 | ```
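After you edit the unit, confirm that the kubelet really points at the cri-dockerd socket. The sketch below extracts that setting. `runtime_endpoint` is a hypothetical helper. It reads a unit file, or the `systemctl cat kubelet` output on stdin, and prints the `--container-runtime-endpoint` value.

```shell
#!/usr/bin/env bash
# Print the value of --container-runtime-endpoint from a kubelet unit file.
# Reads the file given as $1, or stdin when no argument is passed.
runtime_endpoint() {
  grep -o -- '--container-runtime-endpoint=[^ \\]*' "${1:-/dev/stdin}" \
    | head -n 1 | cut -d= -f2-
}

# Usage on a node:
# systemctl cat kubelet | runtime_endpoint    # expect unix:///run/cri-dockerd.sock
# test -S /run/cri-dockerd.sock && echo "cri-dockerd socket present"
```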

185 |

186 |

187 |

188 | ### Verify

189 |

190 | ```shell

191 | # Check the container runtime of a specific node

192 | kubectl describe node k8s-node05 | grep Container

193 | Container Runtime Version: docker://20.10.17

194 |

195 | # Check the container runtime of all nodes

196 | kubectl describe node | grep Container

197 | Container Runtime Version: containerd://1.6.8

198 | Container Runtime Version: containerd://1.6.8

199 | Container Runtime Version: containerd://1.6.8

200 | Container Runtime Version: containerd://1.6.8

201 | Container Runtime Version: containerd://1.6.8

202 | Container Runtime Version: containerd://1.6.8

203 | Container Runtime Version: containerd://1.6.8

204 | Container Runtime Version: docker://20.10.17

205 | ```

206 |

207 |

208 |

209 |


--------------------------------------------------------------------------------

/doc/Minikube_init.md:

--------------------------------------------------------------------------------

1 | ## Install Minikube and start a Kubernetes environment

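2 | Minikube is a lightweight Kubernetes implementation. It creates a VM on your local machine (or, with the Docker driver used below, a container) and deploys a simple cluster with only one node. Minikube is available for Linux, macOS, and Windows. The Minikube CLI provides the basic operations for working with the cluster, including start, stop, status, and delete.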

3 |

4 | ### Install Docker

5 |

6 | ```shell

7 | # Update the package index

8 | sudo apt-get update

9 |

10 | # Install prerequisites

11 | sudo apt-get install ca-certificates curl gnupg lsb-release

12 |

13 | # Create the keyring directory

14 | sudo mkdir -p /etc/apt/keyrings

15 |

16 | # Import Docker's GPG key

17 | curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

18 |

19 | # Add the Docker repository

20 | echo \

21 | "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \

22 | $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

23 |

24 | # Switch the repository to the USTC mirror in mainland China

25 | sudo sed -i s#download.docker.com#mirrors.ustc.edu.cn/docker-ce#g /etc/apt/sources.list.d/docker.list

26 |

27 | # Update the package index and install Docker

28 | sudo apt-get update

29 | sudo apt-get install docker-ce docker-ce-cli containerd.io docker-compose-plugin

30 |

31 | # Configure a registry mirror and the systemd cgroup driver

32 | sudo mkdir -p /etc/docker

33 | sudo tee /etc/docker/daemon.json <<-'EOF'

34 | {

35 | "registry-mirrors": ["https://hub-mirror.c.163.com"],

36 | "exec-opts": ["native.cgroupdriver=systemd"]

37 | }

38 | EOF

39 | sudo systemctl daemon-reload

40 | sudo systemctl restart docker

41 | ```

42 |

43 | ### Install cri-dockerd

44 | ```shell

45 | # Kubernetes 1.24 and later no longer support Docker directly, so install cri-dockerd

46 | # Download cri-dockerd

47 | wget https://mirrors.chenby.cn/https://github.com/Mirantis/cri-dockerd/releases/download/v0.2.5/cri-dockerd-0.2.5.amd64.tgz

48 |

49 | # Extract cri-dockerd and install the binary

50 | tar xvf cri-dockerd-0.2.5.amd64.tgz

51 | cp cri-dockerd/cri-dockerd /usr/bin/

52 |

53 | # Write the systemd unit files

54 | cat > /usr/lib/systemd/system/cri-docker.service < /usr/lib/systemd/system/cri-docker.socket < registry.cn-hangzhou.aliyun...: 386.60 MiB / 386.61 MiB 100.00% 1.37 Mi

146 | > registry.cn-hangzhou.aliyun...: 0 B [____________________] ?% ? p/s 4m9s

147 | * Creating docker container (CPUs=2, Memory=2200MB) ...

148 | * Preparing Kubernetes v1.24.3 on containerd 1.6.6 ...

149 | > kubelet.sha256: 64 B / 64 B [-------------------------] 100.00% ? p/s 0s

150 | > kubectl.sha256: 64 B / 64 B [-------------------------] 100.00% ? p/s 0s

151 | > kubeadm.sha256: 64 B / 64 B [-------------------------] 100.00% ? p/s 0s

152 | > kubeadm: 42.32 MiB / 42.32 MiB [--------------] 100.00% 1.36 MiB p/s 31s

153 | > kubectl: 43.59 MiB / 43.59 MiB [--------------] 100.00% 1.02 MiB p/s 43s

154 | > kubelet: 110.64 MiB / 110.64 MiB [----------] 100.00% 1.36 MiB p/s 1m22s

155 | - Generating certificates and keys ...

156 | - Booting up control plane ...

157 | - Configuring RBAC rules ...

158 | * Configuring CNI (Container Networking Interface) ...

159 | * Verifying Kubernetes components...

160 | - Using image registry.cn-hangzhou.aliyuncs.com/google_containers/storage-provisioner:v5

161 | * Enabled addons: storage-provisioner, default-storageclass

162 | * Done! kubectl is now configured to use "minikube" cluster and "default" namespace by default

163 | root@cby:~#

164 | ```

165 |

166 | ### Verify

167 | ```shell

168 | root@cby:~# kubectl get node

169 | NAME STATUS ROLES AGE VERSION

170 | minikube Ready control-plane 43s v1.24.3

171 | root@cby:~#

172 | root@cby:~# kubectl get pod -A

173 | NAMESPACE NAME READY STATUS RESTARTS AGE

174 | kube-system coredns-7f74c56694-znvr4 1/1 Running 0 31s

175 | kube-system etcd-minikube 1/1 Running 0 43s

176 | kube-system kindnet-nt8nf 1/1 Running 0 31s

177 | kube-system kube-apiserver-minikube 1/1 Running 0 43s

178 | kube-system kube-controller-manager-minikube 1/1 Running 0 43s

179 | kube-system kube-proxy-ztq87 1/1 Running 0 31s

180 | kube-system kube-scheduler-minikube 1/1 Running 0 43s

181 | kube-system storage-provisioner 1/1 Running 0 41s

182 | root@cby:~#

183 | ```
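On larger clusters, scanning `kubectl get pod -A` by eye gets tedious. The sketch below prints only the pods whose STATUS is not Running, or whose READY count is incomplete. `not_ready_pods` is a hypothetical helper that reads the default `kubectl get pod -A` table on stdin. Pods of finished Jobs (status Completed) are listed as well.

```shell
#!/usr/bin/env bash
# Read `kubectl get pod -A` output on stdin and print "NAMESPACE/NAME STATUS READY"
# for every pod that is not Running or not fully ready. Prints nothing if all are fine.
not_ready_pods() {
  awk 'NR > 1 {
         split($3, r, "/")   # READY column, e.g. "1/1"
         if ($4 != "Running" || r[1] != r[2]) print $1 "/" $2, $4, $3
       }'
}

# Usage: kubectl get pod -A | not_ready_pods
```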

184 |

185 | ### Appendix

186 |

187 | ```shell

188 | # If startup fails, delete the cluster and its local state, then start again

189 | minikube delete

190 | rm -rf ~/.minikube/

191 | ```

192 |

193 |

194 |

195 |


207 |

--------------------------------------------------------------------------------

/doc/Upgrade_Kubernetes.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | ## Upgrading a binary-installed Kubernetes cluster

4 |

5 |

6 |

7 | ### Background

8 |

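9 | Because of limited time, I can't always publish a new document right after each patch release. If you need to upgrade your cluster, you can follow this document and upgrade the nodes one at a time. For minor-version upgrades (for example 1.23 to 1.24), please keep following my GitHub repository, where new documents will continue to be published. Thank you all for your continued support.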

10 |

11 | This document is based on my binary-installation repository: https://github.com/cby-chen/Kubernetes

12 |

13 |

14 |

15 | ### Preparation

16 |

17 | #### Check the current version

18 |

19 | ```shell

20 | [root@k8s-master01 ~]# kubectl get node

21 | NAME STATUS ROLES AGE VERSION

22 | k8s-master01 Ready <none> 57d v1.23.6

23 | k8s-master02 Ready <none> 57d v1.23.6

24 | k8s-master03 Ready <none> 57d v1.23.6

25 | k8s-node01 Ready <none> 57d v1.23.6

26 | k8s-node02 Ready <none> 57d v1.23.6

27 | [root@k8s-master01 ~]#

28 | ```

29 |

30 |

31 |

32 | #### Hostnames and IP addresses

33 |

34 | ```shell

35 | [root@k8s-master01 ~]# cat /etc/hosts | grep k8s

36 | 192.168.1.230 k8s-master01

37 | 192.168.1.231 k8s-master02

38 | 192.168.1.232 k8s-master03

39 | 192.168.1.233 k8s-node01

40 | 192.168.1.234 k8s-node02

41 | [root@k8s-master01 ~]#

42 | ```

43 |

44 |

45 |

46 | #### Download the binary package

47 |

48 | ```shell

49 | [root@k8s-master01 ~]# wget https://dl.k8s.io/v1.23.9/kubernetes-server-linux-amd64.tar.gz

50 | [root@k8s-master01 ~]#

51 | ```

52 |

53 |

54 |

55 | #### Extract the binary package

56 |

57 | ```shell

58 | [root@k8s-master01 ~]# tar xf kubernetes-server-linux-amd64.tar.gz

59 | [root@k8s-master01 ~]#

60 | ```

61 |

62 |

63 |

64 | ### Upgrade the masters

65 |

66 | #### Upgrade the client (kubectl) on the three master nodes

67 |

68 | ```shell

69 | [root@k8s-master01 ~]# scp kubernetes/server/bin/kubectl root@192.168.1.230:/usr/local/bin/

70 | [root@k8s-master01 ~]#

71 | [root@k8s-master01 ~]# scp kubernetes/server/bin/kubectl root@192.168.1.231:/usr/local/bin/

72 | [root@k8s-master01 ~]#

73 | [root@k8s-master01 ~]# scp kubernetes/server/bin/kubectl root@192.168.1.232:/usr/local/bin/

74 | [root@k8s-master01 ~]#

75 | ```

76 |

77 |

78 |

79 | #### Upgrade kube-apiserver on the three master nodes (shown for 192.168.1.230; repeat for .231 and .232)

80 |

81 | ```shell

82 | [root@k8s-master01 ~]# ssh root@192.168.1.230 "systemctl stop kube-apiserver"

83 | [root@k8s-master01 ~]#

84 | [root@k8s-master01 ~]# scp kubernetes/server/bin/kube-apiserver root@192.168.1.230:/usr/local/bin/

85 | [root@k8s-master01 ~]#

86 | [root@k8s-master01 ~]# ssh root@192.168.1.230 "systemctl start kube-apiserver"

87 | [root@k8s-master01 ~]#

88 | [root@k8s-master01 ~]# kube-apiserver --version

89 | Kubernetes v1.23.9

90 | [root@k8s-master01 ~]#

91 | ```

92 |

93 |

94 |

95 | #### Upgrade kube-controller-manager on the three master nodes (shown for 192.168.1.230; repeat for .231 and .232)

96 |

97 | ```shell

98 | [root@k8s-master01 ~]# ssh root@192.168.1.230 "systemctl stop kube-controller-manager"

99 | [root@k8s-master01 ~]#

100 | [root@k8s-master01 ~]# scp kubernetes/server/bin/kube-controller-manager root@192.168.1.230:/usr/local/bin/

101 | [root@k8s-master01 ~]#

102 | [root@k8s-master01 ~]# ssh root@192.168.1.230 "systemctl start kube-controller-manager"

103 | [root@k8s-master01 ~]#

104 | ```

105 |

106 |

107 |

108 | #### Upgrade kube-scheduler on the three master nodes (shown for 192.168.1.230; repeat for .231 and .232)

109 |

110 | ```shell

111 | [root@k8s-master01 ~]# ssh root@192.168.1.230 "systemctl stop kube-scheduler"

112 | [root@k8s-master01 ~]#

113 | [root@k8s-master01 ~]# scp kubernetes/server/bin/kube-scheduler root@192.168.1.230:/usr/local/bin/

114 | [root@k8s-master01 ~]#

115 | [root@k8s-master01 ~]# ssh root@192.168.1.230 "systemctl start kube-scheduler"

116 | [root@k8s-master01 ~]#

117 | ```
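The three master subsections repeat the same stop, copy and start sequence on each host. The sketch below loops over the masters instead. `upgrade_component` and the `DRY_RUN` switch are hypothetical. The host list comes from /etc/hosts above, and the script assumes passwordless root SSH, as in the transcript. With `DRY_RUN=1` it only prints the commands.

```shell
#!/usr/bin/env bash
# Stop, replace, and restart one binary component on each host, one host at a time.
# Hypothetical helper: set DRY_RUN=1 to print the commands instead of running them.
upgrade_component() {
  local component=$1; shift
  local bin=kubernetes/server/bin/$component host
  for host in "$@"; do
    run ssh "root@$host" "systemctl stop $component" || return 1
    run scp "$bin" "root@$host:/usr/local/bin/" || return 1
    run ssh "root@$host" "systemctl start $component" || return 1
  done
}

# Execute a command, or just echo it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

MASTERS="192.168.1.230 192.168.1.231 192.168.1.232"
# Usage (word-splitting of $MASTERS is intentional):
# for c in kube-apiserver kube-controller-manager kube-scheduler; do
#   upgrade_component "$c" $MASTERS
# done
```

Going host by host keeps the other two masters serving while one is restarted.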

118 |

119 |

120 |

121 | ### Upgrade the workers

122 |

123 | #### Upgrade kubelet on every machine (shown for 192.168.1.230; repeat for each node)

124 |

125 | ```shell

126 | [root@k8s-master01 ~]# ssh root@192.168.1.230 "systemctl stop kubelet"

127 | [root@k8s-master01 ~]#

128 | [root@k8s-master01 ~]# scp kubernetes/server/bin/kubelet root@192.168.1.230:/usr/local/bin/

129 | [root@k8s-master01 ~]#

130 | [root@k8s-master01 ~]# ssh root@192.168.1.230 "systemctl start kubelet"

131 | [root@k8s-master01 ~]#

132 | [root@k8s-master01 ~]# ssh root@192.168.1.230 "kubelet --version"

133 | Kubernetes v1.23.9

134 | [root@k8s-master01 ~]#

135 | ```

136 |

137 |

138 |

139 | #### Upgrade kube-proxy on every machine (shown for 192.168.1.230; repeat for each node)

140 |

141 | ```shell

142 | [root@k8s-master01 ~]# ssh root@192.168.1.230 "systemctl stop kube-proxy"

143 | [root@k8s-master01 ~]#

144 | [root@k8s-master01 ~]# scp kubernetes/server/bin/kube-proxy root@192.168.1.230:/usr/local/bin/

145 | [root@k8s-master01 ~]#

146 | [root@k8s-master01 ~]# ssh root@192.168.1.230 "systemctl start kube-proxy"

147 | [root@k8s-master01 ~]#

148 | ```

149 |

150 |

151 |

152 | ### Verify

153 |

154 | ```shell

155 | [root@k8s-master01 ~]# kubectl get node

156 | NAME STATUS ROLES AGE VERSION

157 | k8s-master01 Ready <none> 57d v1.23.9

158 | k8s-master02 Ready <none> 57d v1.23.9

159 | k8s-master03 Ready <none> 57d v1.23.9

160 | k8s-node01 Ready <none> 57d v1.23.9

161 | k8s-node02 Ready <none> 57d v1.23.9

162 | [root@k8s-master01 ~]#

163 | [root@k8s-master01 ~]# kubectl version

164 | Client Version: version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.9", GitCommit:"c1de2d70269039fe55efb98e737d9a29f9155246", GitTreeState:"clean", BuildDate:"2022-07-13T14:26:51Z", GoVersion:"go1.17.11", Compiler:"gc", Platform:"linux/amd64"}

165 | Server Version: version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.9", GitCommit:"c1de2d70269039fe55efb98e737d9a29f9155246", GitTreeState:"clean", BuildDate:"2022-07-13T14:19:57Z", GoVersion:"go1.17.11", Compiler:"gc", Platform:"linux/amd64"}

166 | [root@k8s-master01 ~]#

167 | ```
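Nodes are upgraded one at a time, so it is easy to miss one. A quick check that every node reports the target version catches that. In the sketch below, `nodes_not_at_version` is a hypothetical helper. It reads `kubectl get node` output on stdin and takes VERSION from the last column.

```shell
#!/usr/bin/env bash
# Print the names of nodes whose VERSION (last column) differs from $1.
nodes_not_at_version() {
  local want=$1
  awk -v want="$want" 'NR > 1 && $NF != want { print $1 }'
}

# Usage: kubectl get node | nodes_not_at_version v1.23.9   # no output means every node is upgraded
```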

168 |

169 |

170 |


182 |

--------------------------------------------------------------------------------

/doc/kube-proxy_permissions.md:

--------------------------------------------------------------------------------

1 | # Fixing the excessive permissions of the kube-proxy certificate

2 |

3 |

4 |

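5 | Previously, the kube-proxy service used the cluster admin certificate. That certificate grants far more permissions than kube-proxy needs, which is insecure. This issue will be fixed in the documents from now on.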

6 |

7 | Follow https://github.com/cby-chen/Kubernetes for updates.

8 |

9 |

10 |

11 | ## Create the certificate signing configuration files

12 |

13 | ```shell

14 | # For details, see: https://github.com/cby-chen/Kubernetes#23%E5%88%9B%E5%BB%BA%E8%AF%81%E4%B9%A6%E7%9B%B8%E5%85%B3%E6%96%87%E4%BB%B6

15 |

16 | cat > ca-config.json << EOF

17 | {

18 | "signing": {

19 | "default": {

20 | "expiry": "876000h"

21 | },

22 | "profiles": {

23 | "kubernetes": {

24 | "usages": [

25 | "signing",

26 | "key encipherment",

27 | "server auth",

28 | "client auth"

29 | ],

30 | "expiry": "876000h"

31 | }

32 | }

33 | }

34 | }

35 | EOF

36 |

37 | cat > kube-proxy-csr.json << EOF

38 | {

39 | "CN": "system:kube-proxy",

40 | "key": {

41 | "algo": "rsa",

42 | "size": 2048

43 | },

44 | "names": [

45 | {

46 | "C": "CN",

47 | "ST": "Beijing",

48 | "L": "Beijing",

49 | "O": "system:kube-proxy",

50 | "OU": "Kubernetes-manual"

51 | }

52 | ]

53 | }

54 | EOF

55 | ```

56 |

57 |

58 |

59 | ## Generate the kube-proxy certificate and private key (signed by the cluster CA)

60 |

61 | ```shell

62 | cfssl gencert \

63 | -ca=/etc/kubernetes/pki/ca.pem \

64 | -ca-key=/etc/kubernetes/pki/ca-key.pem \

65 | -config=ca-config.json \

66 | -profile=kubernetes \

67 | kube-proxy-csr.json | cfssljson -bare /etc/kubernetes/pki/kube-proxy

68 |

69 |

70 |

71 |

72 | ll /etc/kubernetes/pki/kube-proxy*

73 | -rw-r--r-- 1 root root 1045 May 26 10:21 /etc/kubernetes/pki/kube-proxy.csr

74 | -rw------- 1 root root 1675 May 26 10:21 /etc/kubernetes/pki/kube-proxy-key.pem

75 | -rw-r--r-- 1 root root 1464 May 26 10:21 /etc/kubernetes/pki/kube-proxy.pem

76 | ```
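The built-in RBAC binding only applies if the certificate subject is correct, so inspect the generated certificate before using it. The sketch below uses the standard `openssl x509 -noout -subject` command with the RFC 2253 name format. `cert_has_cn` is a hypothetical wrapper. It does a substring match on `CN=<value>`, so it is a quick sanity check rather than a strict parser.

```shell
#!/usr/bin/env bash
# Succeed if the certificate's subject contains CN=<expected CN>.
# RFC 2253 output avoids depending on OpenSSL's default "CN = x" spacing.
cert_has_cn() {
  local cert=$1 cn=$2
  openssl x509 -in "$cert" -noout -subject -nameopt RFC2253 \
    | grep -q -F "CN=$cn"
}

# Usage:
# cert_has_cn /etc/kubernetes/pki/kube-proxy.pem system:kube-proxy && echo "CN OK"
# openssl x509 -in /etc/kubernetes/pki/kube-proxy.pem -noout -subject -enddate
```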

77 |

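78 | --embed-certs is set to true for both the cluster parameters and the client credentials. As a result, the contents of the files referenced by certificate-authority, client-certificate, and client-key are written into the generated kube-proxy.kubeconfig instead of their file paths.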

79 |

80 | The CN in kube-proxy.pem is system:kube-proxy. The ClusterRoleBinding system:node-proxier, which kube-apiserver creates by default, binds the user system:kube-proxy to the ClusterRole system:node-proxier. That role grants access to the resources the kube-proxy component requires.

81 |

82 |

83 |

84 | ## Create the kubeconfig file

85 |

86 | ```shell

87 | kubectl config set-cluster kubernetes \

88 | --certificate-authority=/etc/kubernetes/pki/ca.pem \

89 | --embed-certs=true \

90 | --server=https://10.0.0.89:8443 \

91 | --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

92 |

93 | kubectl config set-credentials kube-proxy \

94 | --client-certificate=/etc/kubernetes/pki/kube-proxy.pem \

95 | --client-key=/etc/kubernetes/pki/kube-proxy-key.pem \

96 | --embed-certs=true \

97 | --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

98 |

99 | kubectl config set-context kube-proxy@kubernetes \

100 | --cluster=kubernetes \

101 | --user=kube-proxy \

102 | --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

103 |

104 | kubectl config use-context kube-proxy@kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

105 | ```
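Because `--embed-certs=true` was used, the kubeconfig should carry the certificate data inline under the `*-data` keys, not as file paths. The sketch below checks that. `kubeconfig_is_embedded` is a hypothetical helper that greps for the standard kubeconfig key names.

```shell
#!/usr/bin/env bash
# Succeed if a kubeconfig embeds the CA, client certificate, and client key inline
# and does not reference any of them by file path.
kubeconfig_is_embedded() {
  local cfg=$1 key
  for key in certificate-authority-data client-certificate-data client-key-data; do
    grep -q "^[[:space:]]*$key:" "$cfg" || return 1
  done
  ! grep -Eq '^[[:space:]]*(certificate-authority|client-certificate|client-key):' "$cfg"
}

# Usage:
# kubeconfig_is_embedded /etc/kubernetes/kube-proxy.kubeconfig && echo "certs embedded"
```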

106 |

107 |

108 |

109 | ## With the new kubeconfig, listing pods is forbidden

110 |

111 | ```shell

112 | [cby@k8s-master01 ~]$ kubectl get pod

113 | Error from server (Forbidden): pods is forbidden: User "system:kube-proxy" cannot list resource "pods" in API group "" in the namespace "default"

114 | [cby@k8s-master01 ~]$

115 | ```

116 |

117 |

118 |

119 | ## Listing nodes is still allowed

120 |

121 | ```shell

122 | [cby@k8s-master01 ~]$ kubectl get node

123 | NAME STATUS ROLES AGE VERSION

124 | k8s-master01 Ready <none> 2d21h v1.24.0

125 | k8s-master02 Ready <none> 2d21h v1.24.0

126 | k8s-master03 Ready <none> 2d21h v1.24.0

127 | k8s-node01 Ready <none> 2d21h v1.24.0

128 | k8s-node02 Ready <none> 2d21h v1.24.0

129 | [cby@k8s-master01 ~]$

130 |

131 | ```

132 |

133 |

134 |

135 | ## Distribute the new kubeconfig and restart kube-proxy on every node

136 |

137 | ```shell

138 | for NODE in k8s-master02 k8s-master03; do scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig; done

139 |

140 | for NODE in k8s-node01 k8s-node02; do scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig; done

141 |

142 | [root@k8s-master01 ~]# cat /etc/kubernetes/kube-proxy.yaml

143 | apiVersion: kubeproxy.config.k8s.io/v1alpha1

144 | bindAddress: 0.0.0.0

145 | clientConnection:

146 |   acceptContentTypes: ""

147 |   burst: 10

148 |   contentType: application/vnd.kubernetes.protobuf

149 |   kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig

150 |   qps: 5

151 | clusterCIDR: 172.16.0.0/12,fc00:2222::/112

152 | configSyncPeriod: 15m0s

153 | conntrack:

154 |   max: null

155 |   maxPerCore: 32768

156 |   min: 131072

157 |   tcpCloseWaitTimeout: 1h0m0s

158 |   tcpEstablishedTimeout: 24h0m0s

159 | enableProfiling: false

160 | healthzBindAddress: 0.0.0.0:10256

161 | hostnameOverride: ""

162 | iptables:

163 |   masqueradeAll: false

164 |   masqueradeBit: 14

165 |   minSyncPeriod: 0s

166 |   syncPeriod: 30s

167 | ipvs:

168 |   masqueradeAll: true

169 |   minSyncPeriod: 5s

170 |   scheduler: "rr"

171 |   syncPeriod: 30s

172 | kind: KubeProxyConfiguration

173 | metricsBindAddress: 127.0.0.1:10249

174 | mode: "ipvs"

175 | nodePortAddresses: null

176 | oomScoreAdj: -999

177 | portRange: ""

178 | udpIdleTimeout: 250ms

179 |

180 | [root@k8s-master01 ~]# systemctl restart kube-proxy

181 | ```
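The transcript restarts kube-proxy only on k8s-master01. Every node that received the new kubeconfig also needs a restart before the new credentials take effect. In the sketch below, `push_kubeconfig` and `DRY_RUN` are hypothetical. The node names come from the loops above, and passwordless root SSH is assumed.

```shell
#!/usr/bin/env bash
# Copy the kube-proxy kubeconfig to each node and restart kube-proxy there.
# Hypothetical helper: set DRY_RUN=1 to print the commands instead of running them.
push_kubeconfig() {
  local src=/etc/kubernetes/kube-proxy.kubeconfig node
  for node in "$@"; do
    run scp "$src" "$node:$src" || return 1
    run ssh "$node" "systemctl restart kube-proxy" || return 1
  done
}

# Execute a command, or just echo it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Usage, then restart locally on k8s-master01 as in the transcript:
# push_kubeconfig k8s-master02 k8s-master03 k8s-node01 k8s-node02
# systemctl restart kube-proxy
```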

182 |

183 |

184 |

185 |

186 |


198 |

--------------------------------------------------------------------------------

/doc/kubeadm-install-IPV6-IPV4.md:

--------------------------------------------------------------------------------

1 | Initializing an IPv4/IPv6 dual-stack cluster with kubeadm

2 | =======================

3 |

4 | Configure the YUM repository on CentOS

5 | =============

6 |

7 | ```shell

8 | cat <<'EOF' > /etc/yum.repos.d/kubernetes.repo

9 | [kubernetes]

10 | name=kubernetes

11 | baseurl=https://mirrors.ustc.edu.cn/kubernetes/yum/repos/kubernetes-el7-$basearch

12 | enabled=1

13 | EOF

14 | setenforce 0

15 | yum install -y kubelet kubeadm kubectl

16 |

17 | # To install an older version instead

18 | # yum install kubelet-1.16.9-0 kubeadm-1.16.9-0 kubectl-1.16.9-0

19 |

20 | systemctl enable kubelet && systemctl start kubelet

21 |

22 |

23 | # Set SELinux to permissive mode (effectively disabling it)

24 | sudo setenforce 0

25 | sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

26 | sudo systemctl enable --now kubelet

27 |

28 | ```

29 |

30 | Configure the APT repository on Ubuntu

31 | =============

32 |

33 | ```shell

34 | curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

35 | cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

36 | deb https://mirrors.ustc.edu.cn/kubernetes/apt kubernetes-xenial main

37 | EOF

38 | apt-get update

39 | apt-get install -y kubelet kubeadm kubectl

40 |

41 | # To install an older version instead

42 | # apt install kubelet=1.23.6-00 kubeadm=1.23.6-00 kubectl=1.23.6-00

43 |

44 | ```

45 |

46 | Configure containerd

47 | ============

48 |

49 | ```shell

50 | wget https://github.com/containerd/containerd/releases/download/v1.6.4/cri-containerd-cni-1.6.4-linux-amd64.tar.gz

51 |

52 | # Extract the archive into /

53 | tar -C / -xzf cri-containerd-cni-1.6.4-linux-amd64.tar.gz

54 |

55 | # Create the systemd service file

56 | cat > /etc/systemd/system/containerd.service < /etc/hosts < --discovery-token-ca-cert-hash sha256:2ade8c834a41cc1960993a600c89fa4bb86e3594f82e09bcd42633d4defbda0d

284 | [preflight] Running pre-flight checks

285 | [preflight] Reading configuration from the cluster...

286 | [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

287 | [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

288 | [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

289 | [kubelet-start] Starting the kubelet

290 | [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

291 |

292 | This node has joined the cluster:

293 | * Certificate signing request was sent to apiserver and a response was received.

294 | * The Kubelet was informed of the new secure connection details.

295 |

296 | Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

297 |

298 | root@k8s-node01:~#

299 |

300 |

301 | root@k8s-node02:~# kubeadm join 10.0.0.21:6443 --token qf3z22.qwtqieutbkik6dy4 \

302 | > --discovery-token-ca-cert-hash sha256:2ade8c834a41cc1960993a600c89fa4bb86e3594f82e09bcd42633d4defbda0d

303 | [preflight] Running pre-flight checks

304 | [preflight] Reading configuration from the cluster...

305 | [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

306 | [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

307 | [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

308 | [kubelet-start] Starting the kubelet

309 | [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

310 |

311 | This node has joined the cluster:

312 | * Certificate signing request was sent to apiserver and a response was received.

313 | * The Kubelet was informed of the new secure connection details.

314 |

315 | Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

316 |

317 | root@k8s-node02:~#

318 |

319 | ```

320 |

321 | Check the cluster

322 | ====

323 |

324 | ```shell

325 | root@k8s-master01:~# kubectl get node

326 | NAME STATUS ROLES AGE VERSION

327 | k8s-master01 Ready control-plane 111s v1.24.0

328 | k8s-node01 Ready <none> 82s v1.24.0

329 | k8s-node02 Ready <none> 92s v1.24.0

330 | root@k8s-master01:~#

331 | root@k8s-master01:~#

332 | root@k8s-master01:~# kubectl get pod -A

333 | NAMESPACE NAME READY STATUS RESTARTS AGE

334 | kube-system coredns-bc77466fc-jxkpv 1/1 Running 0 83s

335 | kube-system coredns-bc77466fc-nrc9l 1/1 Running 0 83s

336 | kube-system etcd-k8s-master01 1/1 Running 0 87s

337 | kube-system kube-apiserver-k8s-master01 1/1 Running 0 89s

338 | kube-system kube-controller-manager-k8s-master01 1/1 Running 0 87s

339 | kube-system kube-proxy-2lgrn 1/1 Running 0 83s

340 | kube-system kube-proxy-69p9r 1/1 Running 0 47s

341 | kube-system kube-proxy-g58m2 1/1 Running 0 42s

342 | kube-system kube-scheduler-k8s-master01 1/1 Running 0 87s

343 | root@k8s-master01:~#

344 |

345 | ```

346 |

347 | Configure Calico

348 | ========

349 |

350 | ```shell

351 | wget https://raw.githubusercontent.com/cby-chen/Kubernetes/main/yaml/calico-ipv6.yaml

352 |

353 | # vim calico-ipv6.yaml

354 | # In the calico-config ConfigMap:

355 | "ipam": {

356 | "type": "calico-ipam",

357 | "assign_ipv4": "true",

358 | "assign_ipv6": "true"

359 | },

360 | - name: IP

361 | value: "autodetect"

362 |

363 | - name: IP6

364 | value: "autodetect"

365 |

366 | - name: CALICO_IPV4POOL_CIDR

367 | value: "172.16.0.0/12"

368 |

369 | - name: CALICO_IPV6POOL_CIDR

370 | value: "fc00::/48"

371 |

372 | - name: FELIX_IPV6SUPPORT

373 | value: "true"

374 |

375 | kubectl apply -f calico-ipv6.yaml

376 |

377 | ```
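`CALICO_IPV4POOL_CIDR` is normally set to the pod network CIDR the cluster was initialized with, or to a range inside it. The kube-proxy configuration elsewhere in this repository uses `172.16.0.0/12`. The bash sketch below checks the IPv4 half of that relationship. `cidr_contains` and `ip4_to_int` are hypothetical helpers, and IPv6 prefixes such as `fc00::/48` are not handled.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip4_to_int() {
  local IFS=.
  set -- $1   # split the address on "." into $1..$4
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if IPv4 CIDR $2 lies entirely inside IPv4 CIDR $1.
cidr_contains() {
  local outer_net=${1%/*} outer_len=${1#*/}
  local inner_net=${2%/*} inner_len=${2#*/}
  (( inner_len >= outer_len )) || return 1
  local mask=$(( outer_len == 0 ? 0 : (0xFFFFFFFF << (32 - outer_len)) & 0xFFFFFFFF ))
  (( ($(ip4_to_int "$outer_net") & mask) == ($(ip4_to_int "$inner_net") & mask) ))
}

# Usage: cidr_contains 172.16.0.0/12 172.16.0.0/12 && echo "Calico pool fits the pod CIDR"
```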

378 |

379 | Testing IPv6

380 | ======

381 |

382 | ```shell

383 | root@k8s-master01:~# cat cby.yaml

384 | apiVersion: apps/v1

385 | kind: Deployment

386 | metadata:

387 |   name: chenby

388 | spec:

389 |   replicas: 3

390 |   selector:

391 |     matchLabels:

392 |       app: chenby

393 |   template:

394 |     metadata:

395 |       labels:

396 |         app: chenby

397 |     spec:

398 |       containers:

399 |       - name: chenby

400 |         image: nginx

401 |         resources:

402 |           limits:

403 |             memory: "128Mi"

404 |             cpu: "500m"

405 |         ports:

406 |         - containerPort: 80

407 |

408 | ---

409 | apiVersion: v1

410 | kind: Service

411 | metadata:

412 |   name: chenby

413 | spec:

414 |   ipFamilyPolicy: PreferDualStack

415 |   ipFamilies:

416 |   - IPv6

417 |   - IPv4

418 |   type: NodePort

419 |   selector:

420 |     app: chenby

421 |   ports:

422 |   - port: 80

423 |     targetPort: 80

424 |

425 | kubectl apply -f cby.yaml

426 |

427 | root@k8s-master01:~# kubectl get pod

428 | NAME READY STATUS RESTARTS AGE

429 | chenby-57479d5997-6pfzg 1/1 Running 0 6m

430 | chenby-57479d5997-jjwpk 1/1 Running 0 6m

431 | chenby-57479d5997-pzrkc 1/1 Running 0 6m

432 |

433 | root@k8s-master01:~# kubectl get svc

434 | NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

435 | chenby NodePort fd00::f816 <none> 80:30265/TCP 6m7s

436 | kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 168m

437 |

438 | root@k8s-master01:~# curl -I http://[2408:8207:78ce:7561::21]:30265/

439 | HTTP/1.1 200 OK

440 | Server: nginx/1.21.6

441 | Date: Wed, 11 May 2022 07:01:43 GMT

442 | Content-Type: text/html

443 | Content-Length: 615

444 | Last-Modified: Tue, 25 Jan 2022 15:03:52 GMT

445 | Connection: keep-alive

446 | ETag: "61f01158-267"

447 | Accept-Ranges: bytes

448 |

449 | root@k8s-master01:~# curl -I http://10.0.0.21:30265/

450 | HTTP/1.1 200 OK

451 | Server: nginx/1.21.6

452 | Date: Wed, 11 May 2022 07:01:54 GMT

453 | Content-Type: text/html

454 | Content-Length: 615

455 | Last-Modified: Tue, 25 Jan 2022 15:03:52 GMT

456 | Connection: keep-alive

457 | ETag: "61f01158-267"

458 | Accept-Ranges: bytes

459 |

460 | ```

461 |


473 |

474 |

--------------------------------------------------------------------------------

/doc/kubernetes_install_cilium.md:

--------------------------------------------------------------------------------

1 | ## Installing Cilium on Kubernetes

2 |

3 | ### About Cilium

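4 | Cilium is open source software that transparently provides and secures network and API connectivity between application services. It works with applications deployed on Linux container management platforms such as Kubernetes, Docker, and Mesos.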

5 |

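6 | Cilium is based on a Linux kernel technology called BPF, which can dynamically insert powerful security, visibility, and network control logic inside the Linux kernel. Beyond traditional network-level security, the flexibility of BPF also enables security at the API and process level, securing communication to and within containers. Because BPF runs inside the Linux kernel, Cilium security policies can be applied and updated without any changes to application code or container configuration.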

7 |

8 |

9 | ### 1 Install Helm

10 |

11 | ```shell

12 | [root@k8s-master01 ~]# curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3

13 | [root@k8s-master01 ~]# chmod 700 get_helm.sh

14 | [root@k8s-master01 ~]# ./get_helm.sh

15 | ```

16 |

17 | ### 2 Install Cilium

18 |

19 | ```shell

20 | [root@k8s-master01 ~]# helm repo add cilium https://helm.cilium.io

21 | [root@k8s-master01 ~]# helm install cilium cilium/cilium --namespace kube-system --set hubble.relay.enabled=true --set hubble.ui.enabled=true --set prometheus.enabled=true --set operator.prometheus.enabled=true --set hubble.enabled=true --set hubble.metrics.enabled="{dns,drop,tcp,flow,port-distribution,icmp,http}"

22 |

23 | NAME: cilium

24 | LAST DEPLOYED: Sun Sep 11 00:04:30 2022

25 | NAMESPACE: kube-system

26 | STATUS: deployed

27 | REVISION: 1

28 | TEST SUITE: None

29 | NOTES:

30 | You have successfully installed Cilium with Hubble.

31 |

32 | Your release version is 1.12.1.

33 |

34 | For any further help, visit https://docs.cilium.io/en/v1.12/gettinghelp

35 | [root@k8s-master01 ~]#

36 | ```
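The long `--set` list above can also be kept in a Helm values file, which is easier to review and reuse with `helm upgrade`. The sketch below writes such a file. The file name `cilium-values.yaml` and the helper `write_cilium_values` are hypothetical. The keys mirror the `--set` flags used above one to one.

```shell
#!/usr/bin/env bash
# Write Helm values equivalent to the --set flags used in the install above.
write_cilium_values() {
  local out=${1:-cilium-values.yaml}
  cat > "$out" <<'EOF'
prometheus:
  enabled: true
operator:
  prometheus:
    enabled: true
hubble:
  enabled: true
  relay:
    enabled: true
  ui:
    enabled: true
  metrics:
    enabled:
      - dns
      - drop
      - tcp
      - flow
      - port-distribution
      - icmp
      - http
EOF
}

# Usage:
# write_cilium_values cilium-values.yaml
# helm upgrade --install cilium cilium/cilium --namespace kube-system -f cilium-values.yaml
```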

37 |

38 | ### 3 Check the Cilium pods

39 |

40 | ```shell

41 | [root@k8s-master01 ~]# kubectl get pod -A | grep cil

42 | kube-system cilium-gmr6c 1/1 Running 0 5m3s

43 | kube-system cilium-kzgdj 1/1 Running 0 5m3s

44 | kube-system cilium-operator-69b677f97c-6pw4k 1/1 Running 0 5m3s

45 | kube-system cilium-operator-69b677f97c-xzzdk 1/1 Running 0 5m3s

46 | kube-system cilium-q2rnr 1/1 Running 0 5m3s

47 | kube-system cilium-smx5v 1/1 Running 0 5m3s

48 | kube-system cilium-tdjq4 1/1 Running 0 5m3s

49 | [root@k8s-master01 ~]#

50 | ```

51 |

52 | ### 4 Download and deploy the Cilium monitoring dashboards

```shell
[root@k8s-master01 yaml]# wget https://raw.githubusercontent.com/cilium/cilium/1.12.1/examples/kubernetes/addons/prometheus/monitoring-example.yaml
[root@k8s-master01 yaml]#
[root@k8s-master01 yaml]# kubectl apply -f monitoring-example.yaml
namespace/cilium-monitoring created
serviceaccount/prometheus-k8s created
configmap/grafana-config created
configmap/grafana-cilium-dashboard created
configmap/grafana-cilium-operator-dashboard created
configmap/grafana-hubble-dashboard created
configmap/prometheus created
clusterrole.rbac.authorization.k8s.io/prometheus created
clusterrolebinding.rbac.authorization.k8s.io/prometheus created
service/grafana created
service/prometheus created
deployment.apps/grafana created
deployment.apps/prometheus created
[root@k8s-master01 yaml]#
```
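
The Grafana and Prometheus Deployments created by `monitoring-example.yaml` may take a while to pull their images. One way to block until both are serving is `kubectl wait` on the `Available` condition. The Deployment names `grafana` and `prometheus` come from the output above. The helper name and default timeout are assumptions.

```shell
# Hypothetical helper: wait until the Grafana and Prometheus Deployments
# report condition Available, or the timeout expires.
wait_monitoring() {
  local timeout="${1:-300s}"
  kubectl -n cilium-monitoring wait --for=condition=Available \
    deployment/grafana deployment/prometheus --timeout="$timeout"
}

# Usage:
# wait_monitoring 10m
```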

### 5 Download and deploy the connectivity test cases

```shell
[root@k8s-master01 yaml]# wget https://raw.githubusercontent.com/cilium/cilium/master/examples/kubernetes/connectivity-check/connectivity-check.yaml

# Point the external FQDN tests at baidu.cn instead of google.com
[root@k8s-master01 yaml]# sed -i "s#google.com#baidu.cn#g" connectivity-check.yaml

[root@k8s-master01 yaml]# kubectl apply -f connectivity-check.yaml
deployment.apps/echo-a created
deployment.apps/echo-b created
deployment.apps/echo-b-host created
deployment.apps/pod-to-a created
deployment.apps/pod-to-external-1111 created
deployment.apps/pod-to-a-denied-cnp created
deployment.apps/pod-to-a-allowed-cnp created
deployment.apps/pod-to-external-fqdn-allow-google-cnp created
deployment.apps/pod-to-b-multi-node-clusterip created
deployment.apps/pod-to-b-multi-node-headless created
deployment.apps/host-to-b-multi-node-clusterip created
deployment.apps/host-to-b-multi-node-headless created
deployment.apps/pod-to-b-multi-node-nodeport created
deployment.apps/pod-to-b-intra-node-nodeport created
service/echo-a created
service/echo-b created
service/echo-b-headless created
service/echo-b-host-headless created
ciliumnetworkpolicy.cilium.io/pod-to-a-denied-cnp created
ciliumnetworkpolicy.cilium.io/pod-to-a-allowed-cnp created
ciliumnetworkpolicy.cilium.io/pod-to-external-fqdn-allow-google-cnp created
[root@k8s-master01 yaml]#
```
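
The `sed` step rewrites every `google.com` in the manifest to `baidu.cn`. Presumably this is because google.com is not reachable from the author's network. Object names such as `pod-to-external-fqdn-allow-google-cnp` keep the word `google`; only the domain changes. A quick grep before `kubectl apply` can confirm that the substitution took effect. The helper `check_fqdn_rewrite` is hypothetical and assumes the manifest is in the current directory.

```shell
# Hypothetical helper: fail if google.com is still referenced; otherwise print
# how many lines now mention baidu.cn. -F treats the patterns as fixed strings.
check_fqdn_rewrite() {
  local file="${1:-connectivity-check.yaml}"
  if grep -qF 'google.com' "$file"; then
    echo "google.com still present in $file" >&2
    return 1
  fi
  grep -cF 'baidu.cn' "$file"
}

# Usage:
# check_fqdn_rewrite connectivity-check.yaml
```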

### 6 Check the pods

```shell
[root@k8s-master01 yaml]# kubectl get pod -A
NAMESPACE           NAME                                                     READY   STATUS    RESTARTS      AGE
cilium-monitoring   grafana-59957b9549-6zzqh                                 1/1     Running   0             10m
cilium-monitoring   prometheus-7c8c9684bb-4v9cl                              1/1     Running   0             10m
default             chenby-75b5d7fbfb-7zjsr                                  1/1     Running   0             27h
default             chenby-75b5d7fbfb-hbvr8                                  1/1     Running   0             27h
default             chenby-75b5d7fbfb-ppbzg                                  1/1     Running   0             27h
default             echo-a-6799dff547-pnx6w                                  1/1     Running   0             10m
default             echo-b-fc47b659c-4bdg9                                   1/1     Running   0             10m
default             echo-b-host-67fcfd59b7-28r9s                             1/1     Running   0             10m
default             host-to-b-multi-node-clusterip-69c57975d6-z4j2z          1/1     Running   0             10m
default             host-to-b-multi-node-headless-865899f7bb-frrmc           1/1     Running   0             10m
default             pod-to-a-allowed-cnp-5f9d7d4b9d-hcd8x                    1/1     Running   0             10m
default             pod-to-a-denied-cnp-65cc5ff97b-2rzb8                     1/1     Running   0             10m
default             pod-to-a-dfc64f564-p7xcn                                 1/1     Running   0             10m
default             pod-to-b-intra-node-nodeport-677868746b-trk2l            1/1     Running   0             10m
default             pod-to-b-multi-node-clusterip-76bbbc677b-knfq2           1/1     Running   0             10m
default             pod-to-b-multi-node-headless-698c6579fd-mmvd7            1/1     Running   0             10m
default             pod-to-b-multi-node-nodeport-5dc4b8cfd6-8dxmz            1/1     Running   0             10m
default             pod-to-external-1111-8459965778-pjt9b                    1/1     Running   0             10m
default             pod-to-external-fqdn-allow-google-cnp-64df9fb89b-l9l4q   1/1     Running   0             10m
kube-system         cilium-7rfj6                                             1/1     Running   0             56s
kube-system         cilium-d4cch                                             1/1     Running   0             56s
kube-system         cilium-h5x8r                                             1/1     Running   0             56s
kube-system         cilium-operator-5dbddb6dbf-flpl5                         1/1     Running   0             56s
kube-system         cilium-operator-5dbddb6dbf-gcznc                         1/1     Running   0             56s
kube-system         cilium-t2xlz                                             1/1     Running   0             56s
kube-system         cilium-z65z7                                             1/1     Running   0             56s
kube-system         coredns-665475b9f8-jkqn8                                 1/1     Running   1 (36h ago)   36h
kube-system         hubble-relay-59d8575-9pl9z                               1/1     Running   0             56s
kube-system         hubble-ui-64d4995d57-nsv9j                               2/2     Running   0             56s
kube-system         metrics-server-776f58c94b-c6zgs                          1/1     Running   1 (36h ago)   37h
[root@k8s-master01 yaml]#
```
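
With about thirty pods in the list, one that is not ready is easy to miss. The filter below reads `kubectl get pod -A` output and prints only the pods whose READY counts differ or whose STATUS is not `Running`. It is a hypothetical helper. It assumes the default column layout (NAMESPACE, NAME, READY, STATUS, ...). Pods of completed Jobs would also be reported.

```shell
# Hypothetical helper: read `kubectl get pod -A` output on stdin and print
# namespace/name for every pod that is not fully ready or not Running.
not_ready_pods() {
  awk 'NR > 1 {
    split($3, ready, "/")            # READY column, e.g. "1/2"
    if (ready[1] != ready[2] || $4 != "Running")
      print $1 "/" $2
  }'
}

# Usage:
# kubectl get pod -A | not_ready_pods
```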

### 7 Change the services to NodePort

```shell
[root@k8s-master01 yaml]# kubectl edit svc -n kube-system hubble-ui
service/hubble-ui edited
[root@k8s-master01 yaml]#
[root@k8s-master01 yaml]# kubectl edit svc -n cilium-monitoring grafana
service/grafana edited
[root@k8s-master01 yaml]#
[root@k8s-master01 yaml]# kubectl edit svc -n cilium-monitoring prometheus
service/prometheus edited
[root@k8s-master01 yaml]#

# In each editor session, set the Service type under spec to:
#   type: NodePort
```
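
`kubectl edit` opens an interactive editor for each Service. For scripted installs, `kubectl patch` can make the same change non-interactively. The `to_nodeport` helper below is hypothetical. Unless you set a port explicitly, Kubernetes assigns one from the cluster's NodePort range (30000-32767 by default).

```shell
# Hypothetical helper: switch a Service to type NodePort via a strategic merge patch.
to_nodeport() {
  local ns="$1" svc="$2"
  kubectl -n "$ns" patch svc "$svc" -p '{"spec":{"type":"NodePort"}}'
}

# Usage, equivalent to the three `kubectl edit` sessions above:
# to_nodeport kube-system hubble-ui
# to_nodeport cilium-monitoring grafana
# to_nodeport cilium-monitoring prometheus
```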

### 8 Check the ports

```shell
[root@k8s-master01 yaml]# kubectl get svc -A | grep monit