├── .gitignore
├── README.md
├── app
│   ├── app.js
│   └── content.js
├── assets
│   └── shaders
│       └── defaultEffect.js
├── gruntfile.js
├── index.html
├── package.json
├── renderer.js
├── src
│   ├── SIMD
│   │   └── buffer_simd.js
│   ├── buffer.js
│   ├── effect.js
│   ├── main.js
│   ├── math.js
│   ├── model.js
│   └── texture.js
└── style.css

/.gitignore:

--------------------------------------------------------------------------------

1 | *.o

2 | *.DS_Store

3 | node_modules/*

4 | _asmjs/*

5 | assets/models/*

6 | assets/scenes/*

7 | website/*

8 | media/*

9 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

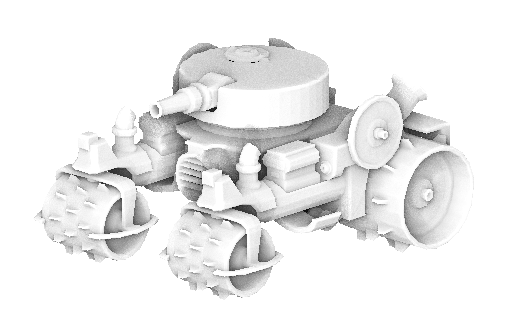

1 | # JS TinyRenderer

2 | A small software renderer written in JavaScript.

3 |

4 |

5 |

6 | This renderer is based on Dmitry's [TinyRenderer](https://github.com/ssloy/tinyrenderer), sharing its concepts and its goal of keeping

7 | the application small while retaining the core features of a software renderer. It currently fits in less than 8k of code, and I'll try to keep it that way. For technical details on the implementation, see [Scratchapixel's article on rasterization](https://www.scratchapixel.com/lessons/3d-basic-rendering/rasterization-practical-implementation/rasterization-stage).

8 |

9 | ## Current Features

10 |

11 | * Triangle drawing with scanline algorithm

12 | * 3D transformations and Z-buffer

13 | * Custom shader support with external scripts

14 | * Loading different content types (models, textures, shaders)

15 | * Screen-space effects (SSAO included as an example)
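As a rough illustration of custom shader support, an effect can be pictured as an object with `vertex` and `fragment` functions plus parameters copied onto it by key. The sketch below is an assumption-laden stand-in, not the shipped code: `FlatEffect` and its `tint` parameter are hypothetical, and only the general shape (a `setParameters` call, a `fragment` that returns `true` to keep the pixel) follows the renderer.

```javascript
// Hypothetical stand-in for the Effect base: copies every key of `params`
// onto the effect so the shader functions can read them as `this.<key>`.
function setParameters(params) {
  var self = this;
  Object.keys(params).forEach(function (key) { self[key] = params[key]; });
}

// A minimal flat-color effect (illustrative only, not part of the repo).
function FlatEffect() {}
FlatEffect.prototype = {
  setParameters: setParameters,

  // Map normalized [-1, 1] x/y coordinates to pixel coordinates.
  vertex: function (vs_in) {
    var p = vs_in[0];
    return [[
      Math.floor((p[0] / 2 + 0.5) * this.scr_w),
      Math.floor((p[1] / 2 + 0.5) * this.scr_h),
      p[2]
    ]];
  },

  // Write the output color; returning true tells the caller to keep the pixel.
  fragment: function (ps_in, color) {
    color[0] = this.tint[0];
    color[1] = this.tint[1];
    color[2] = this.tint[2];
    return true;
  }
};

var fx = new FlatEffect();
fx.setParameters({ scr_w: 640, scr_h: 480, tint: [255, 128, 0] });

var out = fx.vertex([[0, 0, 0.5]]);   // screen-space position of the origin
var color = [0, 0, 0];
var keep = fx.fragment([], color);    // fills `color` with the tint
```

Because parameters are plain object keys, a new shader input only needs a new key in the `setParameters` call; no registration step is assumed here.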

16 |

17 | ## Implementation

18 |

19 | ### Renderer

20 | * Programmable pipeline using Effect objects with vertex and fragment operations

21 | * Effect parameters are mapped from object keys

22 | * Math library with common vector, matrix, and quaternion operations

23 | * Triangle rasterization algorithm

24 | * Edge function for testing points that lie inside a triangle

25 | * Barycentric coordinates for texel, depth and color interpolation

26 | * Z-buffer for depth checking

27 | * Float32 typed array for storing model coordinates provides faster performance

28 | * Uint8 and Uint32 arrays for framebuffer storage

29 | * OBJ model parser that supports vertex, texture, and normal coordinates

30 | * Texture loader and nearest-neighbor sampler using an "off-screen" Canvas image
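The edge function and barycentric interpolation listed above can be sketched in a few lines. This is a minimal, self-contained version under my own naming (`edgeFunction`, `barycentric`, and `interpolate` are hypothetical helpers, not the renderer's functions); it divides by the signed area, so it works for either winding order.

```javascript
// Signed area (times two) of the triangle (a, b, p).
// Its sign tells on which side of the edge a->b the point p lies.
function edgeFunction(a, b, p) {
  return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]);
}

// Returns the normalized barycentric weights of p, or null if p is outside
// the triangle (or the triangle is degenerate).
function barycentric(v0, v1, v2, p) {
  var area2 = edgeFunction(v0, v1, v2);
  if (area2 === 0) return null;

  var w0 = edgeFunction(v1, v2, p) / area2;
  var w1 = edgeFunction(v2, v0, p) / area2;
  var w2 = edgeFunction(v0, v1, p) / area2;

  if (w0 < 0 || w1 < 0 || w2 < 0) return null;
  return [w0, w1, w2];
}

// Interpolate a per-vertex attribute (depth, a color channel, a UV component).
function interpolate(weights, a0, a1, a2) {
  return weights[0] * a0 + weights[1] * a1 + weights[2] * a2;
}

var v0 = [0, 0], v1 = [4, 0], v2 = [0, 4];
var w = barycentric(v0, v1, v2, [1, 1]);   // point inside the triangle
var depth = interpolate(w, 10, 20, 30);    // depth at that point
var outside = barycentric(v0, v1, v2, [5, 5]);
```

A rasterizer would normally evaluate the three edge functions once per row and step them incrementally per pixel rather than recomputing them, which is the reason for using this formulation in the first place.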

31 | ### Boilerplate code

32 | * Mouse functions for rotating model

33 | * Content manager

34 | * Asynchronous loading for content

35 | * Currying as a generic loader for various types, e.g. `content.load('type')('path_to_content','handle')`
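To make the currying idea concrete, here is a small sketch of a loader that is first bound to a content type and then called with a path and a handle. The `loaders` table and the objects it returns are placeholders I made up for illustration; the real loaders fetch files asynchronously.

```javascript
// Hypothetical registry of loader functions keyed by content type.
// Each one just describes the request instead of actually fetching anything.
var loaders = {
  Model: function (path, handle) {
    return { type: 'Model', path: path, handle: handle };
  },
  Texture: function (path, handle) {
    return { type: 'Texture', path: path, handle: handle };
  }
};

// load(type) returns a function that is already bound to that content type.
function load(type) {
  var loader = loaders[type];
  if (!loader) throw new Error('Unknown content type: ' + type);

  return function (path, handle) {
    // A missing handle is normalized to null.
    return loader(path, handle === undefined ? null : handle);
  };
}

// Either call in one go, or keep the partially applied loader around.
var loadTexture = load('Texture');
var result = loadTexture('assets/diffuse.png', 'model_diff');
var model = load('Model')('assets/model.obj');
```

The benefit is mostly ergonomic: the type check happens once, and the returned function can be reused for many files of the same kind.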

36 |

37 | ## Overview

38 |

39 | JS TinyRenderer emulates the basic steps of a hardware renderer: reading geometric data from an array source, transforming triangle vertices to screen space, and rasterizing those triangles. It uses the HTML5 Canvas element as the backbuffer and renders an image using only the ImageData interface and its own pixel setting/getting functions. No built-in Canvas functions for drawing lines or shapes are used, and neither is WebGL.
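A sketch of how a backbuffer can be written one pixel at a time: a single `ArrayBuffer` is shared by a byte view (the layout ImageData expects) and a 32-bit view (one write per pixel). The 2x2 size and the `setPixel` name are just for illustration, and the packing order assumes a little-endian platform.

```javascript
var width = 2, height = 2;

// One ArrayBuffer, two views: bytes for the canvas, 32-bit words for fast writes.
var buf = new ArrayBuffer(width * height * 4);
var buf8 = new Uint8ClampedArray(buf);
var buf32 = new Uint32Array(buf);

// Pack an [r, g, b] color with full alpha into a single 32-bit word.
// Assumption: little-endian byte order, so the bytes land as R, G, B, A.
function setPixel(x, y, color) {
  buf32[y * width + x] =
    (color[0] & 255) |
    ((color[1] & 255) << 8) |
    ((color[2] & 255) << 16) |
    0xff000000;
}

setPixel(1, 0, [10, 20, 30]);

// In a browser the bytes would then be copied to the canvas, for example:
//   imageData.data.set(buf8);
//   ctx.putImageData(imageData, 0, 0);
```

Writing through the 32-bit view touches memory once per pixel instead of four times, which adds up quickly when every pixel goes through the CPU.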

40 |

41 | As this is a software renderer, the entire application is CPU bound, but there is a lot of potential to add more features. It is currently single-threaded; adding multithreading support might be difficult in JavaScript, but it's something I would still consider.

42 |

43 | ### Todo

44 | * Render to Texture

45 | * Perspective view camera

46 | * Anti-aliasing using edge detection

47 | * Support multiple materials

48 |

49 | ## Installing

50 |

51 | [Grunt](http://gruntjs.com/getting-started) is required to build the application.

52 | The `package.json` and Gruntfile already provide the build setup. Just run these commands in the repo folder:

53 |

54 | ```

55 | npm install grunt --save-dev

56 | npm install grunt-contrib-watch --save-dev

57 | npm install grunt-contrib-uglify --save-dev

58 | ```

59 |

60 | Run `grunt watch` to watch the source code for changes. Whenever a file is saved, the source files are bundled and minified with `uglify`.

61 |

--------------------------------------------------------------------------------

/app/app.js:

--------------------------------------------------------------------------------

1 | (function()

2 | {

3 | // Globals

4 | startProfile = null;

5 | frames = 0;

6 |

7 | // Application entry point

8 |

9 | App = (function()

10 | {

11 | // Internal variables

12 | var thetaX = m.PI;

13 | var thetaY = m.PI;

14 | var ctx = null;

15 | var ssaoToggle = doc.getElementById('ssao-toggle');

16 | var ssaoEnabled = false;

17 |

18 | // Mouse control

19 | var mouseDown = false;

20 | var mouseMoved = false;

21 | var lastMouseX = 0;

22 | var lastMouseY = 0;

23 |

24 | function App()

25 | {

26 | //this.renderer = null;

27 | this.init();

28 | }

29 |

30 | // Mouse event handling

31 |

32 | App.handleMouseEvents = function(canvas)

33 | {

34 | canvas.onmousedown = App.onMouseDown;

35 | document.onmouseup = App.onMouseUp;

36 | document.onmousemove = App.onMouseMove;

37 | }

38 |

39 | App.onMouseDown = function(event)

40 | {

41 | mouseDown = true;

42 | lastMouseX = event.clientX;

43 | lastMouseY = event.clientY;

44 | }

45 |

46 | App.onMouseUp = function(event)

47 | {

48 | mouseDown = false;

49 | }

50 |

51 | // Only detect mouse movement when button is pressed

52 |

53 | App.onMouseMove = function(event)

54 | {

55 | if (!mouseDown) return;

56 |

57 | var newX = event.clientX;

58 | var newY = event.clientY;

59 |

60 | thetaX += (newX - lastMouseX) / (m.PI * 90);

61 | thetaY += (newY - lastMouseY) / (m.PI * 60);

62 |

63 | lastMouseX = newX;

64 | lastMouseY = newY;

65 | }

66 |

67 | App.prototype =

68 | {

69 | init: function()

70 | {

71 | // Set canvas

72 | var canvas = doc.getElementById('render');

73 |

74 | content = new ContentManager();

75 |

76 | if (canvas.getContext)

77 | {

78 | content.load('Model')('assets/models/tank1/Tank1.obj', 'model');

79 | content.load('Texture')('assets/models/tank1/engine_diff_tex_small.png', 'model_diff');

80 | //content.load('Texture')('assets/models/testmodel/model_pose_nm.png', 'model_nrm');

81 |

82 | content.load('Effect')('assets/shaders/defaultEffect.js');

83 |

84 | // Call update after content is loaded

85 | content.finishedLoading(this.ready(canvas));

86 | }

87 | else

88 | console.error("Canvas context not supported!");

89 | },

90 |

91 | // Display render button

92 |

93 | ready: function(canvas)

94 | {

95 | var self = this;

96 | console.log('ready to render!');

97 |

98 | return function(content)

99 | {

100 | model = content.model;

101 |

102 | // Create texture and effects

103 | effect = new DefaultEffect();

104 | var texture = content.model_diff;

105 | var texture_nrm = content.model_nrm;

106 |

107 | // Set context

108 | ctx = canvas.getContext('2d');

109 | var el = doc.getElementById('render-start');

110 |

111 | buffer = new Buffer(ctx, canvas.width, canvas.height);

112 | self.renderer = new Renderer(content);

113 | App.handleMouseEvents(canvas);

114 |

115 | // Font setup

116 | ctx.fillStyle = '#888';

117 | ctx.font = '16px Helvetica';

118 |

119 | // Set shader parameters

120 | effect.setParameters({

121 | scr_w: buffer.w,

122 | scr_h: buffer.h,

123 | texture: texture,

124 | texture_nrm: texture_nrm

125 | });

126 |

127 | // Begin render button

128 | el.style.display = 'inline';

129 | el.disabled = false;

130 | el.value = "Render";

131 | el.onclick = function()

132 | {

133 | console.log('Begin render!');

134 | el.disabled = true;

135 | el.value = "Rendering";

136 | startProfile = new Date();

137 | self.update(self.renderer);

138 | }

139 |

140 | // Toggle SSAO button

141 | ssaoToggle.onclick = function()

142 | {

143 | //simdEnabled = !simdEnabled;

144 | ssaoEnabled = !ssaoEnabled;

145 | ssaoToggle.value = 'SSAO is ' +

146 | ((ssaoEnabled) ? 'on' : 'off');

147 | }

148 | }

149 | },

150 |

151 | // Update loop

152 |

153 | update: function(renderer)

154 | {

155 | var self = this;

156 |

157 | // Set up effect params

158 | start = new Date();

159 |

160 | var rotateX = Matrix.rotation(Quaternion.fromAxisAngle(1, 0, 0, thetaY));

161 | var rotateY = Matrix.rotation(Quaternion.fromAxisAngle(0, 1, 0, thetaX));

162 | var scale = Matrix.scale(1, 1, 1);

163 |

164 | var world = Matrix.mul(rotateX, rotateY);

165 |

166 | effect.setParameters({

167 | m_world: world

168 | });

169 |

170 | // Render

171 | buffer.clear(ssaoEnabled ? [255, 255, 255] : [5, 5, 5]);

172 | renderer.drawGeometry(buffer);

173 |

174 | if (ssaoEnabled) buffer.postProc();

175 | buffer.draw();

176 |

177 | // Update rotation angle

178 | //theta += m.max((0.001 * (new Date().getTime() - start.getTime())), 1/60);

179 |

180 | // Display stats

181 | var execTime = "Frame time: "+ (new Date().getTime() - start.getTime()) +" ms";

182 | var calls = "Pixels drawn/searched: "+ buffer.calls +'/'+ buffer.pixels;

183 |

184 | ctx.fillText(execTime, 4, buffer.h - 26);

185 | ctx.fillText(calls, 4, buffer.h - 8);

186 |

187 | // Reset stats

188 | buffer.calls = 0;

189 | buffer.pixels = 0;

190 |

191 | requestAnimationFrame(function() {

192 | self.update(renderer);

193 | });

194 | }

195 | }

196 |

197 | return App;

198 |

199 | })();

200 |

201 | // Start the application

202 | var app = new App();

203 |

204 | }).call(this);

--------------------------------------------------------------------------------

/app/content.js:

--------------------------------------------------------------------------------

1 |

2 | // Content pipeline functions

3 |

4 | ContentManager = (function()

5 | {

6 | function Content() { }

7 |

8 | // Content request data and content loaded callback

9 |

10 | var requestsCompleted = 0;

11 | var requestsToComplete = 0;

12 | var contentLoadedCallback;

13 |

14 | // Storage for content

15 |

16 | var collection = { }

17 |

18 | // General AJAX request function

19 |

20 | function request(file)

21 | {

22 | return new Promise(function(resolve, reject)

23 | {

24 | var xhr = new XMLHttpRequest();

25 |

26 | xhr.open("GET", file, true);

27 | xhr.onload = function() {

28 | if (xhr.status == 200) {

29 | console.log(xhr.response);

30 | resolve(xhr.response);

31 | }

32 | else {

33 | reject(Error(xhr.statusText));

34 | }

35 | }

36 | xhr.onerror = reject;

37 | xhr.send(null);

38 | });

39 | }

40 |

41 | function loadError(response)

42 | {

43 | console.error("request failed!");

44 | }

45 |

46 | // Add total completed content load requests

47 |

48 | function requestComplete()

49 | {

50 | requestsCompleted++;

51 |

52 | if (requestsCompleted == requestsToComplete)

53 | {

54 | console.info("All content is ready")

55 | contentLoadedCallback(collection);

56 | }

57 | };

58 |

59 | // Load OBJ model via AJAX

60 |

61 | function loadModel(file, modelname)

62 | {

63 | requestsToComplete++;

64 | var success = function(response)

65 | {

66 | if (modelname == null) return;

67 |

68 | var model = new Model();

69 | var lines = response.split('\n');

70 |

71 | Model.parseOBJ(lines, model);

72 |

73 | collection[modelname] = model;

74 | requestComplete();

75 | }

76 |

77 | return request(file).then(success, loadError);

78 | }

79 |

80 | // Load Texture image into off-screen canvas

81 |

82 | function loadTexture(file, texname)

83 | {

84 | requestsToComplete++;

85 | var success = function(response)

86 | {

87 | if (texname == null) return;

88 |

89 | var img = new Image();

90 | img.src = file;

91 | img.onload = function()

92 | {

93 | var texture = Texture.load(img);

94 | collection[texname] = texture;

95 | requestComplete();

96 | }

97 | }

98 |

99 | return request(file).then(success, loadError);

100 | }

101 |

102 | // Load an effect from external JS file

103 |

104 | function loadEffect(file, func)

105 | {

106 | requestsToComplete++;

107 | var effect = document.createElement('script');

108 | effect.src = file;

109 | effect.onload = function()

110 | {

111 | if (func != null) func();

112 | requestComplete();

113 | }

114 |

115 | document.head.appendChild(effect);

116 | }

117 |

118 | // Entry point for loading content

119 |

120 | Content.prototype =

121 | {

122 | // Just one public method, for loading all content

123 |

124 | load: function(contentType)

125 | {

126 | return function(file, func)

127 | {

128 | func = (typeof func !== 'undefined') ? func : null;

129 |

130 | switch (contentType)

131 | {

132 | case 'Model':

133 | return loadModel(file, func);

134 | break;

135 | case 'Texture':

136 | return loadTexture(file, func);

137 | break;

138 | case 'Effect':

139 | return loadEffect(file, func);

140 | break;

141 | }

142 | }

143 | },

144 |

145 | // Accessor to content

146 |

147 | contentCollection: function()

148 | {

149 | return collection;

150 | },

151 |

152 | // Set up content loaded callback

153 |

154 | finishedLoading: function(callback)

155 | {

156 | contentLoadedCallback = callback;

157 | }

158 | }

159 |

160 | return Content;

161 |

162 | })();

--------------------------------------------------------------------------------

/assets/shaders/defaultEffect.js:

--------------------------------------------------------------------------------

1 |

2 | // Default shader effect

3 |

4 | DefaultEffect = (function()

5 | {

6 | function DefaultEffect()

7 | {

8 | this.setParameters = Effect.prototype.setParameters;

9 | }

10 |

11 | // Conversion to screen space, vs_in contains vertex and normal info

12 |

13 | DefaultEffect.prototype =

14 | {

15 | cam: [0, 0, 1],

16 | l: [1, -0.15, 0],

17 |

18 | vertex: function(vs_in)

19 | {

20 | var world = [vs_in[0][0], vs_in[0][1], vs_in[0][2], 1];

21 | var uv = vs_in[1];

22 | var normal = vs_in[2];

23 | var ratio = this.scr_h / this.scr_w;

24 |

25 | var nx, ny, nz;

26 |

27 | // Rotate vertex and normal

28 | var mat_w = this.m_world;

29 | var rt = Matrix.mul(mat_w, world);

30 |

31 | // Transform vertex to screen space

32 |

33 | var x = m.floor((rt[0] / 2 + 0.5 / ratio) * this.scr_w * ratio);

34 | var y = m.floor((rt[1] / 2 + 0.75) * this.scr_h);

35 | var z = m.floor((rt[2] / 2 + 0.5) * 65536);

36 |

37 | return [[x, y, z], vs_in[1], normal, this.r];

38 | },

39 |

40 | fragment: function(ps_in, color)

41 | {

42 | var ambient = 0.65;

43 | var light = [];

44 | var spcolor = [0.2, 0.25, 0.35];

45 |

46 | // Sample diffuse and normal textures

47 | var t = this.texture.sample(null, ps_in[0]);

48 | var nt = ps_in[1];//this.texture_nrm.sample(null, ps_in[0]);

49 |

50 | // Set normal

51 | var nl = Vec3.normalize(this.l);

52 | var nnt = Vec3.normalize([nt[0], nt[1], nt[2]]);

53 | var intensity = m.max(Vec3.dot(nnt, nl), 0);

54 |

55 | // Phong reflection model (specular term from the reflected light vector)

56 | var view = this.cam;

57 | var r = Vec3.reflect(nl, nnt); // reflected light

58 | var spec = m.pow(m.max(Vec3.dot(r, view), 0), 10) * 10;

59 |

60 | light[0] = intensity + ambient + spec * spcolor[0];

61 | light[1] = intensity + ambient + spec * spcolor[1];

62 | light[2] = intensity + ambient + spec * spcolor[2];

63 |

64 | // Output color

65 | color[0] = m.min(t[0] * light[0], 255);

66 | color[1] = m.min(t[1] * light[1], 255);

67 | color[2] = m.min(t[2] * light[2], 255);

68 |

69 | return true;

70 | }

71 | }

72 |

73 | return DefaultEffect;

74 |

75 | })();

76 |

--------------------------------------------------------------------------------

/gruntfile.js:

--------------------------------------------------------------------------------

1 |

2 | module.exports = function(grunt)

3 | {

4 | grunt.loadNpmTasks('grunt-contrib-watch');

5 | grunt.loadNpmTasks('grunt-contrib-uglify');

6 |

7 | grunt.initConfig(

8 | {

9 | cwd: process.cwd(),

10 | pkg: grunt.file.readJSON('package.json'),

11 |

12 | uglify:

13 | {

14 | options: {

15 | banner: '/*! <%= pkg.name %> - ver. <%= pkg.version %> */\r\n',

16 | compress: { drop_console: true }

17 | },

18 |

19 | js: {

20 | files: {

21 | 'renderer.js': [

22 | 'src/*.js'

23 | ],

24 | 'website/renderer.js': [

25 | 'src/*.js'

26 | ]}

27 | }

28 | },

29 |

30 | watchfiles: {

31 | first: {

32 | files: ['src/*.js'],

33 | tasks: ['uglify']

34 | }

35 | }

36 | });

37 |

38 | grunt.renameTask('watch', 'watchfiles');

39 | grunt.registerTask('watch', ['watchfiles']);

40 | }

--------------------------------------------------------------------------------

/index.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 | JS TinyRenderer

7 |

8 |

9 |

10 |

11 |

JS TinyRenderer

12 |

20 |

...

22 |

23 |

24 |

25 |

26 |

--------------------------------------------------------------------------------

/package.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "JS-TinyRenderer",

3 | "version": "0.1.0",

4 | "devDependencies": {

5 | "grunt": "^0.4.5",

6 | "grunt-contrib-compress": "^1.0.0",

7 | "grunt-contrib-jshint": "^0.11.3",

8 | "grunt-contrib-uglify": "^0.11.1",

9 | "grunt-contrib-watch": "^1.0.0"

10 | }

11 | }

12 |

--------------------------------------------------------------------------------

/renderer.js:

--------------------------------------------------------------------------------

1 | /*! JS-TinyRenderer - ver. 0.1.0 */

2 | Buffer=function(){function a(a,b,c){this.ctx=a,this.w=b,this.h=c,this.calls=0,this.pixels=0,this.imgData=a.createImageData(this.w,this.h),this.buf=new ArrayBuffer(this.imgData.data.length),this.buf8=new Uint8ClampedArray(this.buf),this.buf32=new Uint32Array(this.buf),this.zbuf=new Uint32Array(this.imgData.data.length)}return a.prototype={clear:function(a){for(var b=0;b<=this.h;b++)for(var c=0;ci;i++)h+=b-m.atan(max_elevation_angle(this.zbuf,g,[f,e],[this.w,this.h],a[i]));h/=b*d;var j=this.buf32[g],k=(255&j)*h,l=(j>>8&255)*h,n=(j>>16&255)*h;this.set(g,[k,l,n]),this.calls++}}}},a}(),Effect=function(){function a(){}return a.prototype={vertex:function(a){},fragment:function(a,b){},setParameters:function(a){var b=this;Object.keys(a).map(function(c){b[c]=a[c]})}},a}(),function(){m=Math,doc=document,f32a=Float32Array,f64a=Float64Array,Renderer=function(){function a(){}return a.prototype={drawGeometry:function(a){for(var b=0;bd;d++)c.push(effect.vertex(model.vert(b,d)));this.drawTriangle(a,c,effect)}},drawTriangle:function(a,b,c){for(var d=[b[0][0],b[1][0],b[2][0]],e=[b[0][1],b[1][1],b[2][1]],f=[b[0][2],b[1][2],b[2][2]],g=[a.w+1,a.h+1],h=[-1,-1],i=0;ij;j++)g[j]=m.min(d[i][j],g[j]),h[j]=m.max(d[i][j],h[j]);if(!(g[0]>a.w||h[0]<0||g[1]>a.h||h[1]<0)){g[0]<0&&(g[0]=0),h[0]>a.w&&(h[0]=a.w);for(var k,l,n,o,p=[],q=[],r=d[0][1]-d[1][1],s=d[1][0]-d[0][0],t=d[1][1]-d[2][1],u=d[2][0]-d[1][0],v=d[2][1]-d[0][1],w=d[0][0]-d[2][0],x=d[1][1]-d[0][1],y=d[2][1]-d[1][1],z=1/(s*y-u*x),A=orient2d(d[1],d[2],g),B=orient2d(d[2],d[0],g),C=orient2d(d[0],d[1],g),D=[0,0,0],E=g[1];E++<=h[1];){for(var F=[A,B,C],G=g[0];G++<=h[0];){if(a.pixels++,(F[0]|F[1]|F[2])>0){q[0]=F[0]*z,q[1]=F[1]*z,q[2]=F[2]*z;for(var i=0,o=0;3>i;)o+=d[i][2]*q[i++];var H=E*a.w+G;if(a.zbuf[H]e;e++)c[e]=[Vec4.dot(a[e],[b[0][0],b[1][0],b[2][0],b[3][0]]),Vec4.dot(a[e],[b[0][1],b[1][1],b[2][1],b[3][1]]),Vec4.dot(a[e],[b[0][2],b[1][2],b[2][2],b[3][2]]),Vec4.dot(a[e],[b[0][3],b[1][3],b[2][3],b[3][3]])];return 
c},a.scale=function(a,b,c){return[[a,0,0,0],[0,b,0,0],[0,0,c,0],[0,0,0,1]]},a.rotation=function(a){var b=a[0],c=a[1],d=a[2],e=a[3];return[[1-2*c*c-2*d*d,2*b*c+2*d*e,2*b*d-2*c*e,0],[2*b*c-2*d*e,1-2*b*b-2*d*d,2*d*c+2*b*e,0],[2*b*d+2*c*e,2*d*c-2*b*e,1-2*b*b-2*c*c,0],[0,0,0,1]]},a}(),Quaternion=function(){function a(){}return a.fromEuler=function(a,b,c){var d,e,f,g,h,i;return g=m.sin(.5*a),d=m.cos(.5*a),h=m.sin(.5*b),e=m.cos(.5*b),i=m.sin(.5*c),f=m.cos(.5*c),[d*e*f+g*h*i,g*e*f-d*h*i,d*h*f+g*e*i,d*e*i-g*h*f]},a.fromAxisAngle=function(a,b,c,d){var e=m.sin(.5*d);return[a*e,b*e,c*e,m.cos(.5*d)]},a}(),Vec3=function(){function a(){}return a.dot=function(a,b){return a[0]*b[0]+a[1]*b[1]+a[2]*b[2]},a.cross=function(a,b){return[a[1]*b[2]-a[2]*b[1],a[2]*b[0]-a[0]*b[2],a[0]*b[1]-a[1]*b[0]]},a.reflect=function(b,c){var d=a.dot(b,c),e=[2*c[0]*d,2*c[1]*d,2*c[2]*d];return[e[0]-b[0],e[1]-b[1],e[2]-b[2]]},a.dist=function(a){for(var b=0,c=0;ch;h++){var i=.0035*h*d[0],j=[c[0]+e[0]*i,c[1]+e[1]*i];if(j[0]>d[0]||j[1]>d[1]||j[0]<0||j[1]<0)return f;var k=c[0]-j[0],l=c[1]-j[1],m=k*k+l*l;if(!(1>m)){var n=(0|j[1])*d[0]+(0|j[0]),o=.018*(a[n]-a[b]);o>f*m&&(f=o/m)}}return f},Model=function(){function a(){this.v=[],this.f=[],this.vn=[],this.vt=[]}return a.parseOBJ=function(a,b){for(var c=function(b){return a[b].split(" ").splice(1,3)},d=0;d0?model.vt[c[b][1]]:[0,0],f=model.vn.length>0?model.vn[c[b][2]]:[1,0,0];return[d,e,f]}},a}(),Texture=function(){function a(a,b){this.source=a,this.texData=b,this.sample=function(a,b){var c=b[0],d=b[1];0>c&&(c=1+c),0>d&&(d=1+d);var e=4*((this.texData.height-d*this.texData.height|0)*this.texData.width+c*this.texData.width|0);return[this.texData.data[e],this.texData.data[e+1],this.texData.data[e+2],this.texData.data[e+3]]}}return a.load=function(b){texCanvas=document.createElement("canvas"),ctx=texCanvas.getContext("2d"),texCanvas.width=b.width,texCanvas.height=b.height,ctx.drawImage(b,0,0),b.style.display="none";var c=ctx.getImageData(0,0,b.width,b.height);return new 
a(b.src,c)},a}();

--------------------------------------------------------------------------------

/src/SIMD/buffer_simd.js:

--------------------------------------------------------------------------------

1 |

2 | // Buffer drawing functions (SIMD extension)

3 |

4 | Buffer = (function (BufferSIMD)

5 | {

6 | function BufferSIMD() { };

7 |

8 | //Constructor for SIMD module

9 |

10 | BufferSIMD.prototype =

11 | {

12 | // Draw triangles from 2D points (SIMD enabled)

13 |

14 | drawTest: function()

15 | {

16 |

17 | },

18 | };

19 |

20 | return BufferSIMD;

21 |

22 | }(Buffer));

--------------------------------------------------------------------------------

/src/buffer.js:

--------------------------------------------------------------------------------

1 |

2 | // Buffer drawing functions

3 |

4 | Buffer = (function()

5 | {

6 | function Buffer(ctx, w, h)

7 | {

8 | this.ctx = ctx;

9 | this.w = w;

10 | this.h = h;

11 |

12 | this.calls = 0;

13 | this.pixels = 0;

14 |

15 | // create buffers for data manipulation

16 | this.imgData = ctx.createImageData(this.w, this.h);

17 |

18 | this.buf = new ArrayBuffer(this.imgData.data.length);

19 | this.buf8 = new Uint8ClampedArray(this.buf);

20 | this.buf32 = new Uint32Array(this.buf);

21 |

22 | // Z-buffer

23 | this.zbuf = new Uint32Array(this.imgData.data.length);

24 | }

25 |

26 | Buffer.prototype =

27 | {

28 | // Clear canvas

29 |

30 | clear: function(color)

31 | {

32 | for (var y = 0; y < this.h; y++)

33 | for (var x = 0; x < this.w; x++)

34 | {

35 | var index = y * this.w + x;

36 | this.set(index, color);

37 | this.zbuf[index] = 0;

38 | }

39 | },

40 |

41 | // Set a pixel

42 |

43 | set: function(index, color)

44 | {

45 | var c = (color[0] & 255) | ((color[1] & 255) << 8) | ((color[2] & 255) << 16);

46 | this.buf32[index] = c | 0xff000000;

47 | },

48 |

49 | // Get a pixel

50 | /*

51 | get: function(x, y)

52 | {

53 | return this.buf32[y * this.w + x];

54 | },

55 | */

56 | // Put image data on the canvas

57 |

58 | draw: function()

59 | {

60 | this.imgData.data.set(this.buf8);

61 | this.ctx.putImageData(this.imgData, 0, 0);

62 | },

63 |

64 | // Post-processing (temporary, mostly SSAO)

65 |

66 | postProc: function()

67 | {

68 | // Calculate ray vectors

69 | var rays = [];

70 | var pi2 = m.PI * .5;

71 |

72 | for (var a = 0; a < m.PI * 2-1e-4; a += m.PI * 0.1111)

73 | rays.push([m.sin(a), m.cos(a)]);

74 |

75 | var rlength = rays.length;

76 |

77 | for (var y = 0; y < this.h; y++)

78 | for (var x = 0; x < this.w; x++)

79 | {

80 | // Get buffer index

81 | var index = y * this.w + x;

82 | if (this.zbuf[index] < 1e-5) continue;

83 |

84 | var total = 0;

85 | for (var i = 0; i < rlength; i++)

86 | {

87 | total += pi2 - m.atan(max_elevation_angle(

88 | this.zbuf, index, [x, y], [this.w, this.h], rays[i]));

89 | }

90 | total /= pi2 * rlength;

91 | //if (total > 1) total = 1;

92 |

93 | var c = this.buf32[index];//this.get(x, y);

94 |

95 | var r = ((c) & 0xff) * total;

96 | var g = ((c >> 8) & 0xff) * total;

97 | var b = ((c >> 16) & 0xff) * total;

98 |

99 | this.set(index, [r, g, b]);

100 | this.calls++;

101 | };

102 | }

103 | }

104 |

105 | return Buffer;

106 |

107 | })();

--------------------------------------------------------------------------------

/src/effect.js:

--------------------------------------------------------------------------------

1 | // Effect object implementation

2 |

3 | Effect = (function()

4 | {

5 | function Effect() {}

6 |

7 | Effect.prototype =

8 | {

9 | // Vertex and fragment shaders

10 |

11 | vertex: function(vs_in) { },

12 |

13 | fragment: function(ps_in, color) { },

14 |

15 | // Map parameters to effect

16 |

17 | setParameters: function(params)

18 | {

19 | var self = this;

20 | Object.keys(params).map(function(key)

21 | {

22 | self[key] = params[key];

23 | });

24 | }

25 | }

26 |

27 | return Effect;

28 |

29 | })();

30 |

--------------------------------------------------------------------------------

/src/main.js:

--------------------------------------------------------------------------------

1 | (function()

2 | {

3 | // Shorthand

4 |

5 | m = Math;

6 | doc = document;

7 | f32a = Float32Array;

8 | f64a = Float64Array;

9 |

10 | //simdSupported = false;// typeof SIMD !== 'undefined'

11 | //simdEnabled = false;

12 |

13 | // Main function

14 |

15 | Renderer = (function()

16 | {

17 | function Renderer() { }

18 |

19 | Renderer.prototype =

20 | {

21 | // Draw model called in deferred request

22 |

23 | drawGeometry: function(buffer)

24 | {

25 | // Transform geometry to screen space

26 |

27 | for (var f = 0; f < model.f.length; f++)

28 | {

29 | var vs_out = [];

30 |

31 | for (var j = 0; j < 3; j++)

32 | vs_out.push(effect.vertex(model.vert(f, j)));

33 |

34 | this.drawTriangle(buffer, vs_out, effect);

35 | }

36 | },

37 |

38 | // Draw a triangle from screen space points

39 |

40 | drawTriangle: function(buf, verts, effect)

41 | {

42 | var pts = [verts[0][0], verts[1][0], verts[2][0]];

43 | var texUV = [verts[0][1], verts[1][1], verts[2][1]];

44 | var norm = [verts[0][2], verts[1][2], verts[2][2]];

45 |

46 | // Create bounding box

47 | var boxMin = [buf.w + 1, buf.h + 1], boxMax = [-1, -1];

48 |

49 | // Find X and Y dimensions for each

50 | for (var i = 0; i < pts.length; i++)

51 | for (var j = 0; j < 2; j++)

52 | {

53 | boxMin[j] = m.min(pts[i][j], boxMin[j]);

54 | boxMax[j] = m.max(pts[i][j], boxMax[j]);

55 | }

56 |

57 | // Skip triangles that don't appear on the screen

58 | if (boxMin[0] > buf.w || boxMax[0] < 0 || boxMin[1] > buf.h || boxMax[1] < 0)

59 | return;

60 |

61 | // Limit box dimensions to edges of the screen to avoid render glitches

62 | if (boxMin[0] < 0) boxMin[0] = 0;

63 | if (boxMax[0] > buf.w) boxMax[0] = buf.w;

64 |

65 | var uv = [];

66 | var bc = [];

67 |

68 | // Triangle setup

69 | var a01 = pts[0][1] - pts[1][1], b01 = pts[1][0] - pts[0][0];

70 | var a12 = pts[1][1] - pts[2][1], b12 = pts[2][0] - pts[1][0];

71 | var a20 = pts[2][1] - pts[0][1], b20 = pts[0][0] - pts[2][0];

72 |

73 | var c01 = pts[1][1] - pts[0][1];

74 | var c12 = pts[2][1] - pts[1][1];

75 |

76 | //| b01 b12 |

77 | //| c01 c12 |

78 |

79 | // Parallelogram area from determinant (inverse)

80 | var area2inv = 1 / ((b01 * c12) - (b12 * c01));

81 |

82 | // Get orientation to see where the triangle is facing

83 | var edge_w0 = orient2d(pts[1], pts[2], boxMin);

84 | var edge_w1 = orient2d(pts[2], pts[0], boxMin);

85 | var edge_w2 = orient2d(pts[0], pts[1], boxMin);

86 |

87 | var color = [0, 0, 0];

88 | var nx, ny, nz;

89 | var z;

90 |

91 | for (var py = boxMin[1]; py++ <= boxMax[1];)

92 | {

93 | // Coordinates at start of row

94 | var w = [edge_w0, edge_w1, edge_w2];

95 |

96 | for (var px = boxMin[0]; px++ <= boxMax[0];)

97 | {

98 | buf.pixels++;

99 |

100 | // Check if pixel is inside the triangle (no edge function is negative)

101 | if ((w[0] | w[1] | w[2]) > 0)

102 | {

103 | // Get normalized barycentric coordinates

104 | bc[0] = w[0] * area2inv;

105 | bc[1] = w[1] * area2inv;

106 | bc[2] = w[2] * area2inv;

107 |

108 | // Get pixel depth

109 | for (var i = 0, z = 0; i < 3;)

110 | z += pts[i][2] * bc[i++];

111 |

112 | // Get buffer index and run fragment shader

113 | var index = py * buf.w + px;

114 |

115 | if (buf.zbuf[index] < z)

116 | {

117 | var nx, ny, nz;

118 |

119 | // Calculate tex and normal coords

120 | uv[0] = bc[0] * texUV[0][0] + bc[1] * texUV[1][0] + bc[2] * texUV[2][0];

121 | uv[1] = bc[0] * texUV[0][1] + bc[1] * texUV[1][1] + bc[2] * texUV[2][1];

122 |

123 | nx = bc[0] * norm[0][0] + bc[1] * norm[1][0] + bc[2] * norm[2][0];

124 | ny = bc[0] * norm[0][1] + bc[1] * norm[1][1] + bc[2] * norm[2][1];

125 | nz = bc[0] * norm[0][2] + bc[1] * norm[1][2] + bc[2] * norm[2][2];

126 |

127 | if (effect.fragment([uv, [nx, ny, nz]], color))

128 | {

129 | buf.zbuf[index] = z;

130 | buf.set(index, color);

131 | buf.calls++;

132 | }

133 | }

134 | }

135 |

136 | // Step right

137 | w[0] += a12;

138 | w[1] += a20;

139 | w[2] += a01;

140 | }

141 |

142 | // One row step

143 | edge_w0 += b12;

144 | edge_w1 += b20;

145 | edge_w2 += b01;

146 | }

147 | }

148 | }

149 |

150 | return Renderer;

151 |

152 | })();

153 |

154 | })();

155 |

--------------------------------------------------------------------------------

/src/math.js:

--------------------------------------------------------------------------------

1 |

2 | // Matrix functions

3 |

4 | Matrix = (function()

5 | {

6 | function Matrix() {}

7 |

8 | Matrix.identity = function()

9 | {

10 | return [

11 | [1, 0, 0, 0],

12 | [0, 1, 0, 0],

13 | [0, 0, 1, 0],

14 | [0, 0, 0, 1]

15 | ];

16 | }

17 |

18 | Matrix.mul = function(lh, r)

19 | {

20 | var mat = [];

21 |

22 | // If rhs is a vector instead of matrix

23 | if (!Array.isArray(r[0]))

24 | {

25 | var vec = [

26 | lh[0][0] * r[0] + lh[0][1] * r[1] + lh[0][2] * r[2] + lh[0][3] * r[3],

27 | lh[1][0] * r[0] + lh[1][1] * r[1] + lh[1][2] * r[2] + lh[1][3] * r[3],

28 | lh[2][0] * r[0] + lh[2][1] * r[1] + lh[2][2] * r[2] + lh[2][3] * r[3]

29 | ];

30 | //console.log(r[0][3]);

31 | return vec;

32 | }

33 |

34 | for (var i = 0; i < 4; i++)

35 | mat[i] = [

36 | Vec4.dot(lh[i], [r[0][0], r[1][0], r[2][0], r[3][0]]),

37 | Vec4.dot(lh[i], [r[0][1], r[1][1], r[2][1], r[3][1]]),

38 | Vec4.dot(lh[i], [r[0][2], r[1][2], r[2][2], r[3][2]]),

39 | Vec4.dot(lh[i], [r[0][3], r[1][3], r[2][3], r[3][3]])

40 | ];

41 |

42 | return mat;

43 | }

44 |

45 | Matrix.scale = function(x, y, z)

46 | {

47 | return [

48 | [x, 0, 0, 0],

49 | [0, y, 0, 0],

50 | [0, 0, z, 0],

51 | [0, 0, 0, 1]

52 | ];

53 | }

54 |

55 | Matrix.rotation = function(q)

56 | {

57 | var x = q[0], y = q[1], z = q[2], w = q[3];

58 |

59 | return [

60 | [1 - 2*y*y - 2*z*z, 2*x*y + 2*z*w, 2*x*z - 2*y*w, 0],

61 | [2*x*y - 2*z*w, 1 - 2*x*x - 2*z*z, 2*z*y + 2*x*w, 0],

62 | [2*x*z + 2*y*w, 2*z*y - 2*x*w, 1 - 2*x*x - 2*y*y, 0],

63 | [0, 0, 0, 1]

64 | ];

65 | }

66 |

67 | // Camera lookat with three 3D vectors

68 | /*

69 | Matrix.view = function(eye, lookat, up)

70 | {

71 | var forward = Vec3.normalize([eye[0] - lookat[0],

72 | eye[1] - lookat[1], eye[2] - lookat[2]]);

73 |

74 | var right = Vec3.normalize(Vec3.cross(up, forward));

75 | var up = Vec3.normalize(Vec3.cross(forward, right));

76 |

77 | var view = Matrix.identity();

78 |

79 | for (var i = 0; i < 3; i++)

80 | {

81 | view[0][i] = right[i];

82 | view[1][i] = up[i];

83 | view[2][i] = forward[i];

84 | }

85 |

86 | view[3][0] = -Vec3.dot(right, eye);

87 | view[3][1] = -Vec3.dot(up, eye);

88 | view[3][2] = -Vec3.dot(forward, eye);

89 |

90 | return view;

91 | }

92 | */

93 | return Matrix;

94 |

95 | })();

96 |

97 | // Quaternion functions

98 |

99 | Quaternion = (function()

100 | {

101 | function Quaternion() {}

102 |

103 | Quaternion.fromEuler = function(x, y, z)

104 | {

105 | var cx, cy, cz, sx, sy, sz;

106 | sx = m.sin(x * 0.5);

107 | cx = m.cos(x * 0.5);

108 | sy = m.sin(y * 0.5);

109 | cy = m.cos(y * 0.5);

110 | sz = m.sin(z * 0.5);

111 | cz = m.cos(z * 0.5);

112 |

113 | return [

114 | sx * cy * cz - cx * sy * sz,

115 | cx * sy * cz + sx * cy * sz,

116 | cx * cy * sz - sx * sy * cz,

117 | cx * cy * cz + sx * sy * sz // w last, matching fromAxisAngle and Matrix.rotation

118 | ];

119 | }

120 |

121 | Quaternion.fromAxisAngle = function(x, y, z, angle)

122 | {

123 | var s = m.sin(angle * .5);

124 |

125 | return [

126 | x * s,

127 | y * s,

128 | z * s,

129 | m.cos(angle * .5)

130 | ];

131 | }

132 |

133 | return Quaternion;

134 |

135 | })();

136 |

137 | // 3D Vector functions

138 |

139 | Vec3 = (function()

140 | {

141 | function Vec3() {}

142 |

143 | Vec3.dot = function(a, b)

144 | {

145 | return (a[0] * b[0]) + (a[1] * b[1]) + (a[2] * b[2]);

146 | }

147 |

148 | Vec3.cross = function(a, b)

149 | {

150 | // (a.x, a.y, a.z) x (b.x, b.y, b.z)

151 | return [

152 | a[1] * b[2] - a[2] * b[1],

153 | a[2] * b[0] - a[0] * b[2],

154 | a[0] * b[1] - a[1] * b[0]

155 | ];

156 | }

157 |

158 | Vec3.reflect = function(l, n)

159 | {

160 | var ldn = Vec3.dot(l, n);

161 | var proj = [2 * n[0] * ldn, 2 * n[1] * ldn, 2 * n[2] * ldn];

162 |

163 | return [proj[0] - l[0], proj[1] - l[1], proj[2] - l[2]];

164 | }

165 |

166 | Vec3.dist = function(v)

167 | {

168 | var sum = 0;

169 | for (var i = 0; i < v.length; i++)

170 | sum += (v[i] * v[i]);

171 |

172 | return m.sqrt(sum);

173 | }

174 |

175 | Vec3.normalize = function(v)

176 | {

177 | var dist1 = 1 / Vec3.dist(v);

178 | return [v[0] * dist1, v[1] * dist1, v[2] * dist1];

179 | }

180 |

181 | return Vec3;

182 |

183 | })();

184 |

185 | // 4D Vector functions

186 |

187 | Vec4 = (function()

188 | {

189 | function Vec4() { }

190 |

191 | Vec4.dot = function(a, b)

192 | {

193 | return (a[0] * b[0]) + (a[1] * b[1]) + (a[2] * b[2]) + (a[3] * b[3]);

194 | }

195 |

196 | return Vec4;

197 |

198 | })();

199 |

200 | // Orientation on a side

201 |

202 | orient2d = function(p1, p2, b)

203 | {

204 | return (p2[0]-p1[0]) * (b[1]-p1[1]) - (p2[1]-p1[1]) * (b[0]-p1[0]);

205 | }

206 |

207 | // Get the max elevation angle from a point in the z-buffer (as a heightmap)

208 |

209 | max_elevation_angle = function(zbuf, index, p, dims, ray)

210 | {

211 | var maxangle = 0;

212 | var steps = 7;

213 |

214 | for (var t = 0; t < steps; t++)

215 | {

216 | var v = t * .0035 * dims[0];

217 | // Current position of the ray traveled, and check for out of bounds

218 | var cur = [p[0] + ray[0] * v, p[1] + ray[1] * v];

219 |

220 | // Return if the ray exceeds bounds

221 | if (cur[0] >= dims[0] || cur[1] >= dims[1] || cur[0] < 0 || cur[1] < 0)

222 | return maxangle;

223 |

224 | var dx = p[0] - cur[0];

225 | var dy = p[1] - cur[1];

226 | var dist2 = (dx * dx) + (dy * dy);

227 | if (dist2 < 1) continue;

228 |

229 | // buffer index

230 | var curIndex = ((cur[1]|0) * dims[0]) + (cur[0]|0);

231 | var angle = ((zbuf[curIndex] - zbuf[index]) * 0.018); // depth scale, roughly 1/55

232 | //var angle = elevation / distance;

233 |

234 | if (maxangle * dist2 < angle) maxangle = angle / dist2;

235 | }

236 |

237 | return maxangle;

238 | }

--------------------------------------------------------------------------------
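
The quaternion and matrix helpers above are meant to be chained: build a quaternion, convert it to a 4x4 matrix, then multiply a homogeneous point by it. The following is a minimal standalone sketch of that chain, not the project's own code path. The names `quatFromAxisAngle`, `rotationFromQuat`, and `transformPoint` are hypothetical stand-ins that copy the layout of `Quaternion.fromAxisAngle`, `Matrix.rotation`, and the vector branch of `Matrix.mul`, and `Math` is used directly instead of the `m` alias. Working through the arithmetic, this matrix layout combined with a column-vector product turns (1, 0, 0) into roughly (0, -1, 0) for a quarter turn about Z, so the rotation direction is worth checking against whatever convention the caller expects.

```javascript
// Hypothetical standalone helpers mirroring the layout used in src/math.js.

// Quaternion stored as [x, y, z, w]; the axis is assumed to be unit length.
function quatFromAxisAngle(x, y, z, angle) {
  var s = Math.sin(angle * 0.5);
  return [x * s, y * s, z * s, Math.cos(angle * 0.5)];
}

// Same element layout as Matrix.rotation in the listing above.
function rotationFromQuat(q) {
  var x = q[0], y = q[1], z = q[2], w = q[3];
  return [
    [1 - 2*y*y - 2*z*z, 2*x*y + 2*z*w,     2*x*z - 2*y*w,     0],
    [2*x*y - 2*z*w,     1 - 2*x*x - 2*z*z, 2*z*y + 2*x*w,     0],
    [2*x*z + 2*y*w,     2*z*y - 2*x*w,     1 - 2*x*x - 2*y*y, 0],
    [0, 0, 0, 1]
  ];
}

// Matrix times column vector, keeping only x, y, z (as the vector branch of Matrix.mul does).
function transformPoint(mat, p) {
  var out = [];
  for (var i = 0; i < 3; i++)
    out[i] = mat[i][0]*p[0] + mat[i][1]*p[1] + mat[i][2]*p[2] + mat[i][3]*p[3];
  return out;
}

var quarterTurnZ = quatFromAxisAngle(0, 0, 1, Math.PI / 2);
var rotated = transformPoint(rotationFromQuat(quarterTurnZ), [1, 0, 0, 1]);
console.log(rotated); // approximately [0, -1, 0]
```

If the opposite direction is wanted, transposing the upper 3x3 block or negating the angle gives the counter-clockwise result.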

/src/model.js:

--------------------------------------------------------------------------------

1 |

2 | // Model functions

3 |

4 | Model = (function()

5 | {

6 | // OBJ properties

7 |

8 | function Model()

9 | {

10 | this.v = [];

11 | this.f = [];

12 | this.vn = [];

13 | this.vt = [];

14 | }

15 |

16 | Model.parseOBJ = function(lines, model)

17 | {

18 | var splitLn = function(i) { return lines[i].split(' ').splice(1, 3); }

19 |

20 | // Read each line

21 | for (var i = 0; i < lines.length; i++)

22 | {

23 | // Find vertex positions

24 |

25 | var mtype = lines[i].substr(0, 2);

26 | var mdata = (mtype === 'v ') ? model.v :

27 | (mtype === 'vn') ? model.vn :

28 | (mtype === 'vt') ? model.vt : null;

29 |

30 | // Special case for parsing face indices

31 | if (lines[i].substr(0, 2) === 'f ')

32 | {

33 | var idx = splitLn(i);

34 | for (var j = 0; j < 3; j++)

35 | idx[j] = idx[j].split('/')

36 | .map(function(i) { return parseInt(i - 1) });

37 |

38 | model.f.push(idx);

39 | }

40 |

41 | // Otherwise, add vertex data as normal

42 | else if (mdata)

43 | mdata.push(new f32a(splitLn(i)));

44 | }

45 | }

46 |

47 | Model.prototype =

48 | {

49 | // Get vertex data from face

50 |

51 | vert: function(f_index, v)

52 | {

53 | var face = this.f[f_index];

54 | var vert = this.v[face[v][0]];

55 | var vt = (this.vt.length > 0) ? this.vt[face[v][1]] : [0, 0];

56 | var vn = (this.vn.length > 0) ? this.vn[face[v][2]] : [1, 0, 0];

57 |

58 | // Return vertex data

59 | return [vert, vt, vn];

60 | }

61 | }

62 |

63 | return Model;

64 |

65 | })();

66 |

--------------------------------------------------------------------------------
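
The face branch of `Model.parseOBJ` converts each `f` line into three index triplets (position / texture coordinate / normal), shifted from OBJ's 1-based numbering to 0-based array indices. A small standalone sketch of that step follows. `parseFaceLine` is a hypothetical helper, not part of the repository; it assumes triangulated faces, splits on any run of whitespace, and marks an empty slot (as in `1//3`) with -1. Negative (relative) OBJ indices are not handled.

```javascript
// Hypothetical helper: turn one OBJ face line into zero-based index triplets.
function parseFaceLine(line) {
  return line.trim().split(/\s+/).slice(1, 4).map(function (corner) {
    return corner.split('/').map(function (s) {
      // OBJ indices are 1-based; an empty slot (as in "1//3") becomes -1 here.
      return s === '' ? -1 : parseInt(s, 10) - 1;
    });
  });
}

var face = parseFaceLine('f 1/4/7 2/5/8 3/6/9');
console.log(JSON.stringify(face)); // [[0,3,6],[1,4,7],[2,5,8]]
```

Each inner array is laid out as `[v, vt, vn]`, which is the order `Model.prototype.vert` reads with `face[v][0]`, `face[v][1]`, and `face[v][2]`.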

/src/texture.js:

--------------------------------------------------------------------------------

1 | // Texture object

2 |

3 | Texture = (function()

4 | {

5 | function Texture(src, data)

6 | {

7 | this.source = src;

8 | this.texData = data;

9 |

10 | this.sample = function(state, uv)

11 | {

12 | var u = uv[0];

13 | var v = uv[1];

14 | if (u < 0) u = 1 + u;

15 | if (v < 0) v = 1 + v;

16 |

17 | // Get starting index of texture data sample

18 | var idx = ((this.texData.height -

19 | (v * this.texData.height)|0) * this.texData.width +

20 | (u * this.texData.width)|0) * 4;

21 |

22 | return [

23 | this.texData.data[idx],

24 | this.texData.data[idx + 1],

25 | this.texData.data[idx + 2],

26 | this.texData.data[idx + 3]

27 | ];

28 | }

29 | }

30 |

31 | // Create a new texture from an image

32 |

33 | Texture.load = function(img)

34 | {

35 | var texCanvas = document.createElement('canvas');

36 | var ctx = texCanvas.getContext('2d');

37 |

38 | texCanvas.width = img.width;

39 | texCanvas.height = img.height;

40 |

41 | ctx.drawImage(img, 0, 0);

42 | img.style.display = 'none';

43 |

44 | // Set the buffer data

45 | var texData = ctx.getImageData(0, 0, img.width, img.height);

46 |

47 | return new Texture(img.src, texData);

48 | }

49 |

50 | return Texture;

51 |

52 | })();

--------------------------------------------------------------------------------
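
`Texture.sample` maps a UV pair to a byte offset into the RGBA `ImageData` array, flipping V so that v = 1 is the top row. The sketch below shows the same mapping with clamping added, so that u = 1 or v = 0 cannot step outside the image. `texelOffset` is a hypothetical helper and the clamping is an assumption added for illustration; the listing above does not clamp.

```javascript
// Hypothetical helper: byte offset of the RGBA texel addressed by (u, v).
// Assumes u, v in [0, 1], 4 bytes per pixel, rows stored top to bottom.
function texelOffset(u, v, width, height) {
  // Clamp the column and row so edge coordinates stay inside the image.
  var x = Math.min(width - 1, Math.max(0, Math.floor(u * width)));
  var y = Math.min(height - 1, Math.max(0, Math.floor((1 - v) * height)));
  return (y * width + x) * 4;
}

console.log(texelOffset(0, 1, 4, 4));     // 0  (top-left texel)
console.log(texelOffset(0.5, 0.5, 4, 4)); // 40 (row 2, column 2)
console.log(texelOffset(1, 0, 4, 4));     // 60 (bottom-right texel after clamping)
```

The red, green, blue, and alpha bytes then sit at `offset`, `offset + 1`, `offset + 2`, and `offset + 3`.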

/style.css:

--------------------------------------------------------------------------------

1 |

2 | html, body {

3 | margin: 0px;

4 | padding: 0px;

5 | }

6 |

7 | body {

8 | font-family: Helvetica, Arial, sans-serif;

9 | }

10 |

11 | h1, h2, h3, h4 {

12 | margin: 10px 0px;

13 | color: #555;

14 | text-align: center;

15 | }

16 |

17 | canvas {

18 | margin: 0px auto;

19 | border: 1px solid #ccc;

20 | }

21 |

22 | .container-fluid {

23 | margin: 0px auto;

24 | width: 80%;

25 | }

26 |

27 | #top-info, .btn-container {

28 | text-align: center;

29 | }

30 |

31 | .btn-container {

32 | position: relative;

33 | overflow: auto;

34 | }

35 |

36 | .float-left {

37 | float: left;

38 | }

39 |

40 | .btn-container input[type='button'] {

41 | position: relative;

42 | padding: 4px;

43 | font-family: Helvetica, Arial, sans-serif;

44 | font-size: 16px;

45 | width: 150px;

46 | border: 0px;

47 | background: #ddd;

48 | }

49 |

50 | input[type='button']:active{

51 | background: #aaa;

52 | }

53 |

54 | input[type='button']:disabled {

55 | color: #999;

56 | }

57 |

58 | .midblue { color: #0077ff; }

59 |

--------------------------------------------------------------------------------