├── .gitignore
├── .travis.yml
├── README.md
├── docs
│   ├── Makefile
│   ├── images
│   │   ├── convolution_filter.png
│   │   ├── dropout.png
│   │   ├── generalization_error.png
│   │   ├── laplacian_filter.png
│   │   ├── max_pooling.png
│   │   └── mlp.png
│   ├── requirements.txt
│   ├── source
│   │   ├── _ext
│   │   │   └── show_on_colaboratory.py
│   │   ├── _static
│   │   │   └── colaboratory.png
│   │   ├── _templates
│   │   │   └── searchbox.html
│   │   ├── begginers_hands_on.rst
│   │   ├── conf.py
│   │   ├── index.rst
│   │   ├── official_examples.rst
│   │   ├── other_examples.rst
│   │   └── other_hands_on.rst
│   └── source_ja
│       ├── _ext
│       │   └── show_on_colaboratory.py
│       ├── _static
│       ├── _templates
│       ├── begginers_hands_on.rst
│       ├── conf.py
│       ├── index.rst
│       ├── official_examples.rst
│       ├── other_examples.rst
│       └── other_hands_on.rst
├── example
│   ├── chainer
│   │   ├── OfficialTutorial
│   │   │   └── 02_1_How_to_Write_a_New_Network.ipynb
│   │   └── mnist
│   │       ├── Chainer_MNIST_Example_en.ipynb
│   │       └── Chainer_MNIST_Example_ja.ipynb
│   └── cupy
│       └── prml
│           ├── LICENSE
│           └── ch01_Introduction_ipynb.ipynb
├── hands_on_en
│   ├── chainer
│   │   ├── begginers_hands_on
│   │   │   ├── 01_Write_the_training_loop.ipynb
│   │   │   ├── 02_Try_Trainer_class.ipynb
│   │   │   ├── 03_Write_your_own_network.ipynb
│   │   │   └── 04_Write_your_own_dataset_class.ipynb
│   │   ├── chainer.ipynb
│   │   └── classify_anime_characters.ipynb
│   ├── chainercv
│   │   └── object_detection_tutorial.ipynb
│   └── chainerrl
│       ├── README.md
│       └── quickstart.ipynb
├── hands_on_ja
│   ├── chainer
│   │   ├── begginers_hands_on
│   │   │   ├── 00_How_to_use_chainer_on_colaboratory.ipynb
│   │   │   ├── 01_Chainer_basic_tutorial.ipynb
│   │   │   ├── 02_Chainer_kaggle_tutorial.ipynb
│   │   │   ├── 11_Write_the_training_loop.ipynb
│   │   │   ├── 12_Try_Trainer_class.ipynb
│   │   │   ├── 13_Write_your_own_network.ipynb
│   │   │   └── 14_Write_your_own_dataset_class.ipynb
│   │   ├── chainer.ipynb
│   │   ├── chainer_tutorial_book.ipynb
│   │   └── classify_anime_characters.ipynb
│   └── chainerrl
│       ├── README.md
│       ├── atari_sample.ipynb
│       └── quickstart.ipynb
├── official_example_en
│   ├── dcgan.ipynb
│   ├── sentiment.ipynb
│   └── word2vec.ipynb
├── official_example_ja
│   ├── sentiment.ipynb
│   ├── wavenet.ipynb
│   └── word2vec.ipynb
└── requirements.txt

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | env/

12 | build/

13 | develop-eggs/

14 | dist/

15 | downloads/

16 | eggs/

17 | .eggs/

18 | lib/

19 | lib64/

20 | parts/

21 | sdist/

22 | var/

23 | wheels/

24 | *.egg-info/

25 | .installed.cfg

26 | *.egg

27 |

28 | # PyInstaller

29 | # Usually these files are written by a python script from a template

30 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

31 | *.manifest

32 | *.spec

33 |

34 | # Installer logs

35 | pip-log.txt

36 | pip-delete-this-directory.txt

37 |

38 | # Unit test / coverage reports

39 | htmlcov/

40 | .tox/

41 | .coverage

42 | .coverage.*

43 | .cache

44 | nosetests.xml

45 | coverage.xml

46 | *.cover

47 | .hypothesis/

48 |

49 | # Translations

50 | *.mo

51 | *.pot

52 |

53 | # Django stuff:

54 | *.log

55 | local_settings.py

56 |

57 | # Flask stuff:

58 | instance/

59 | .webassets-cache

60 |

61 | # Scrapy stuff:

62 | .scrapy

63 |

64 | # Sphinx documentation

65 | docs/_build/

66 |

67 | # PyBuilder

68 | target/

69 |

70 | # Jupyter Notebook

71 | .ipynb_checkpoints

72 |

73 | # pyenv

74 | .python-version

75 |

76 | # celery beat schedule file

77 | celerybeat-schedule

78 |

79 | # SageMath parsed files

80 | *.sage.py

81 |

82 | # dotenv

83 | .env

84 |

85 | # virtualenv

86 | .venv

87 | venv/

88 | ENV/

89 |

90 | # Spyder project settings

91 | .spyderproject

92 | .spyproject

93 |

94 | # Rope project settings

95 | .ropeproject

96 |

97 | # mkdocs documentation

98 | /site

99 |

100 | # mypy

101 | .mypy_cache/

102 |

103 | # Mac

104 | .DS_Store

105 |

106 | # for this repo

107 | notebook/

108 | notebook_ja/

109 |

--------------------------------------------------------------------------------

/.travis.yml:

--------------------------------------------------------------------------------

1 | language: python

2 | python:

3 | - '3.6'

4 | before_install:

5 | - sudo apt-get install -y pandoc

6 | install: pip install -q -r requirements.txt

7 | script: make -C docs html

8 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # chainer-colab-notebook

2 |

3 | ## Overview

4 | You can quickly start Chainer hands-on with Colaboratory:

5 |

6 | - without setting up,

7 | - with GPU,

8 | - and without MONEY!

9 |

10 | ## Description

11 | The GitHub repository [chainer-community/chainer-colab-notebook](https://github.com/chainer-community/chainer-colab-notebook) is synchronized with [ReadTheDocs](https://chainer-colab-notebook.readthedocs.io/en/latest/).

12 | All the notebooks here can be run on Colaboratory.

13 |

14 |

15 | ## Requirement

16 | The only requirement is access to Colaboratory.

17 | See the [Colaboratory FAQ](https://research.google.com/colaboratory/faq.html) for details.

18 |

19 | ## Usage

20 | 1. Access [Chainer Colab Notebook](https://chainer-colab-notebook.readthedocs.io/en/latest/) on ReadTheDocs.

21 |

22 | 2. Find the notebook you want to start.

23 |

24 | 3. Click and open with Colaboratory.

25 |

26 | 4. Copy the notebook to your drive.

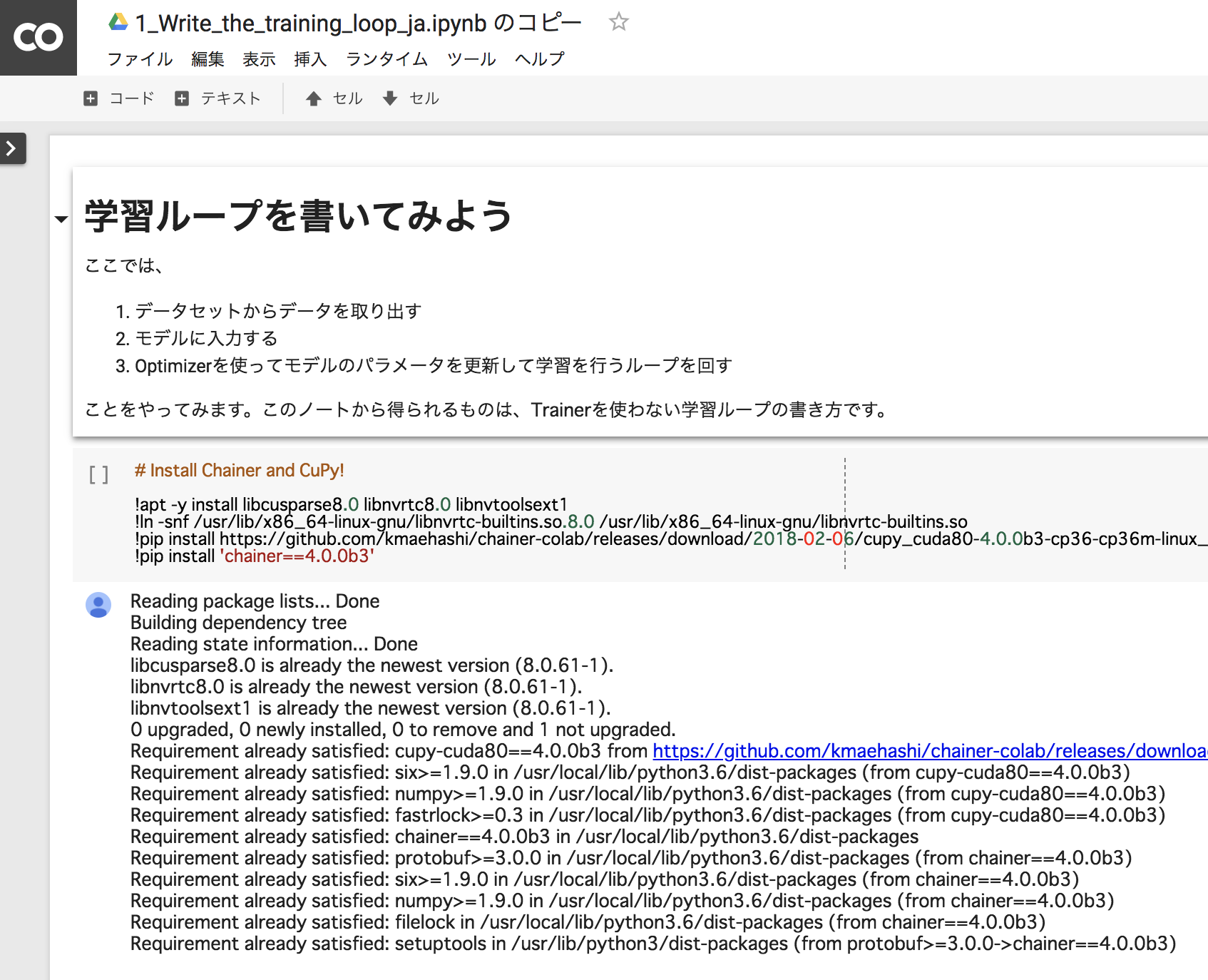

27 |  28 |

29 | 5. Just run the notebook!

30 |

28 |

29 | 5. Just run the notebook!

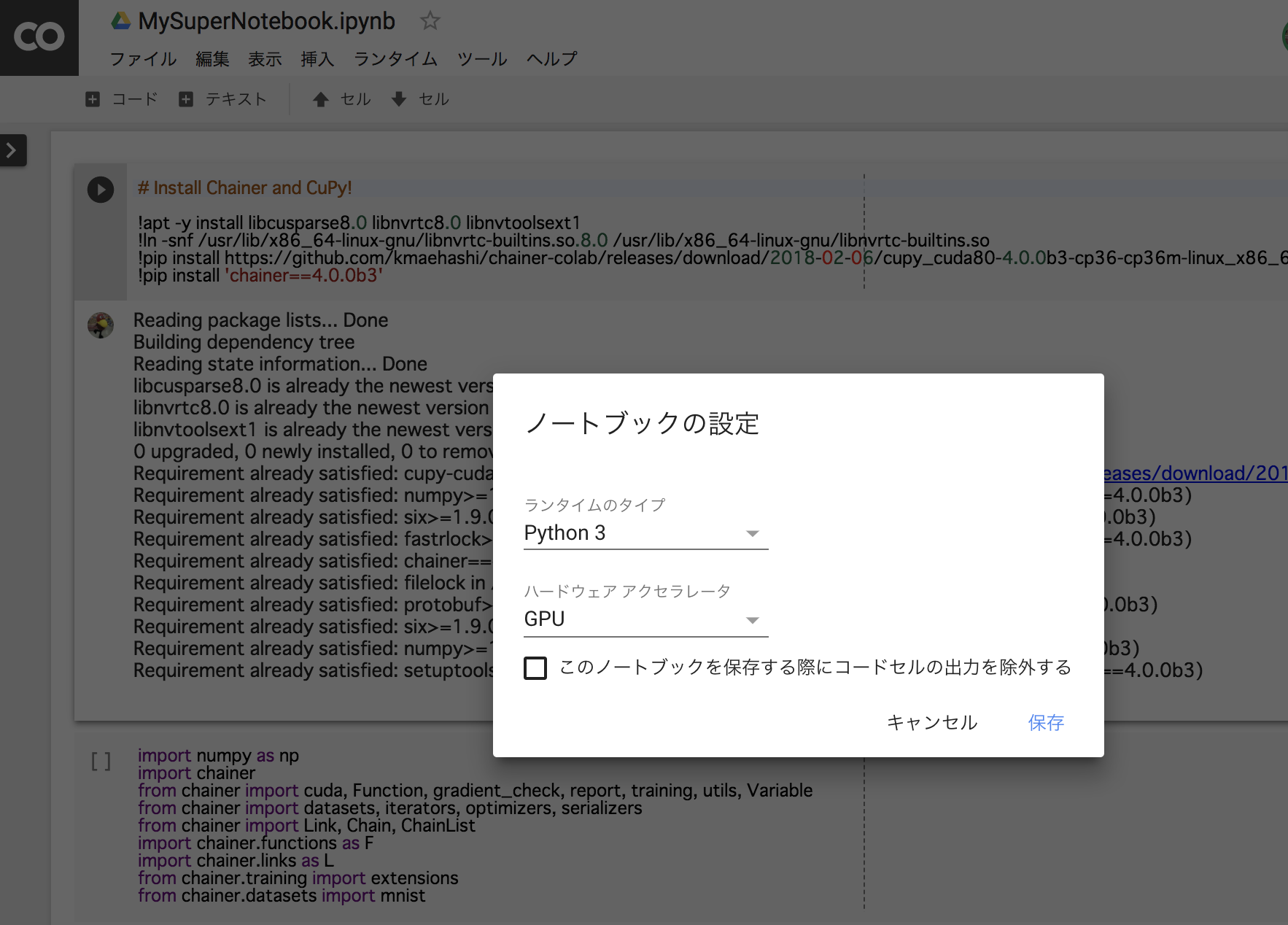

30 |  31 |

32 | ## Contribution

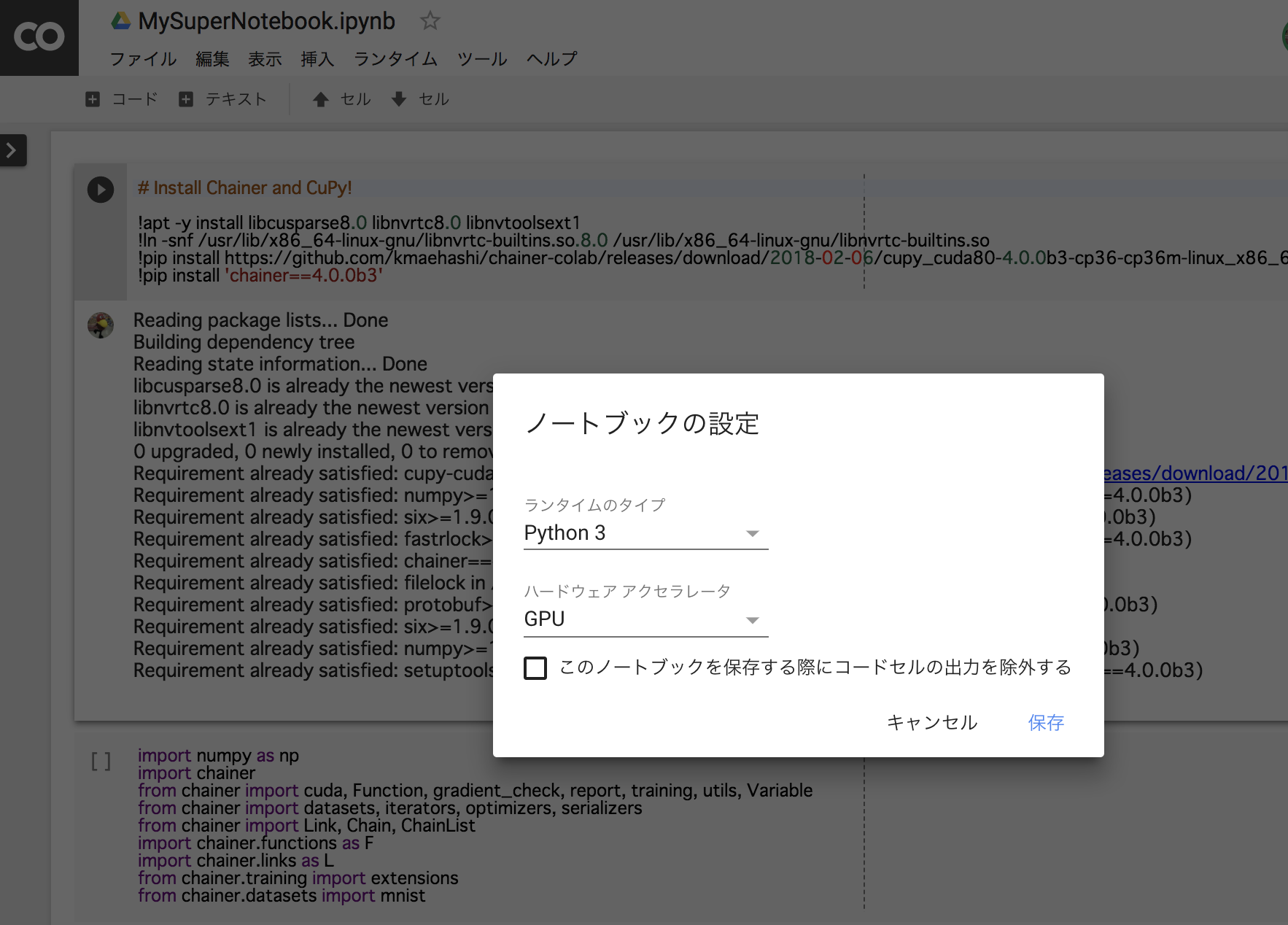

33 | 1. Create your notebook. Please use Python3 and GPU.

34 |

31 |

32 | ## Contribution

33 | 1. Create your notebook. Please use Python3 and GPU.

34 |  35 |

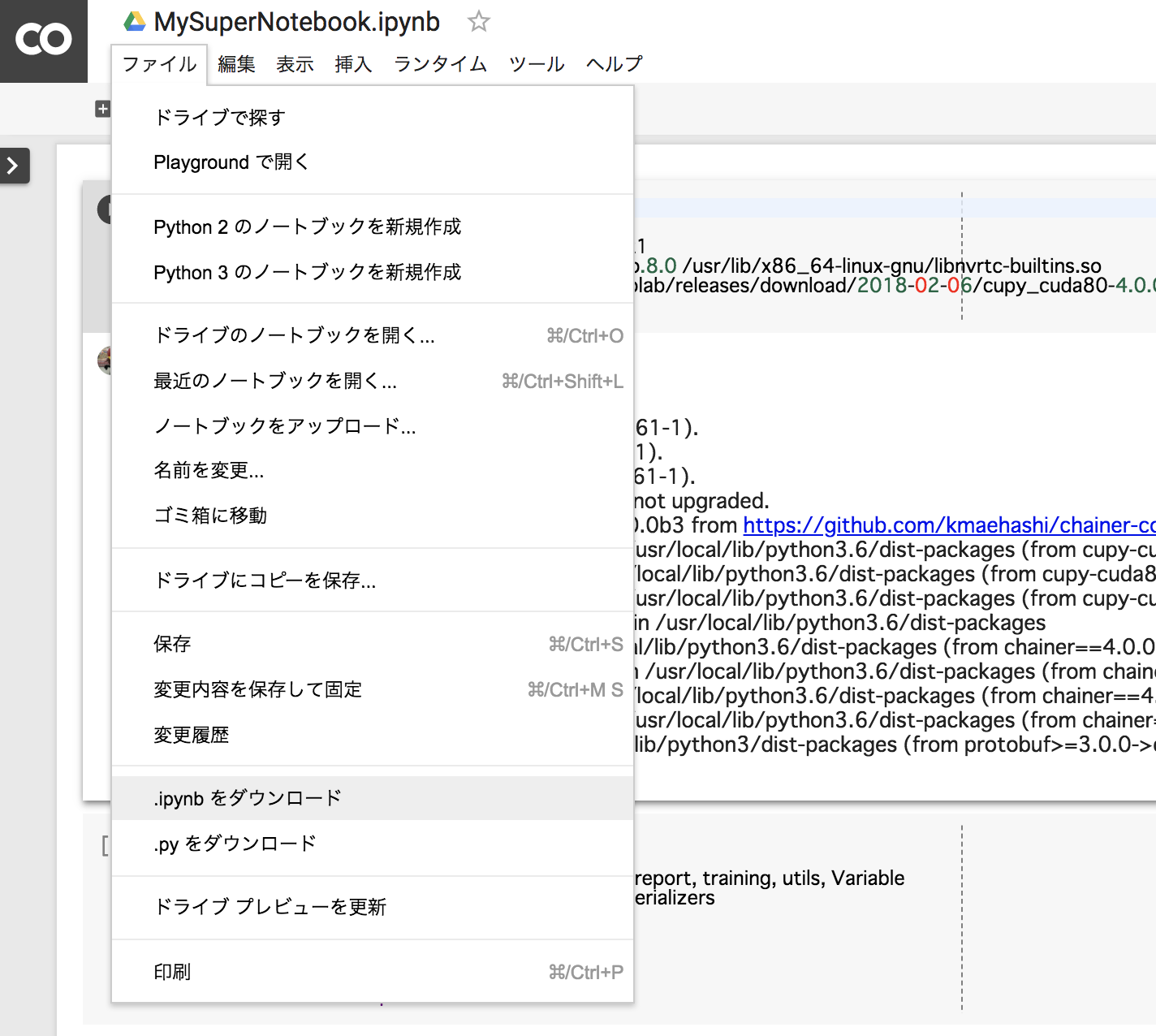

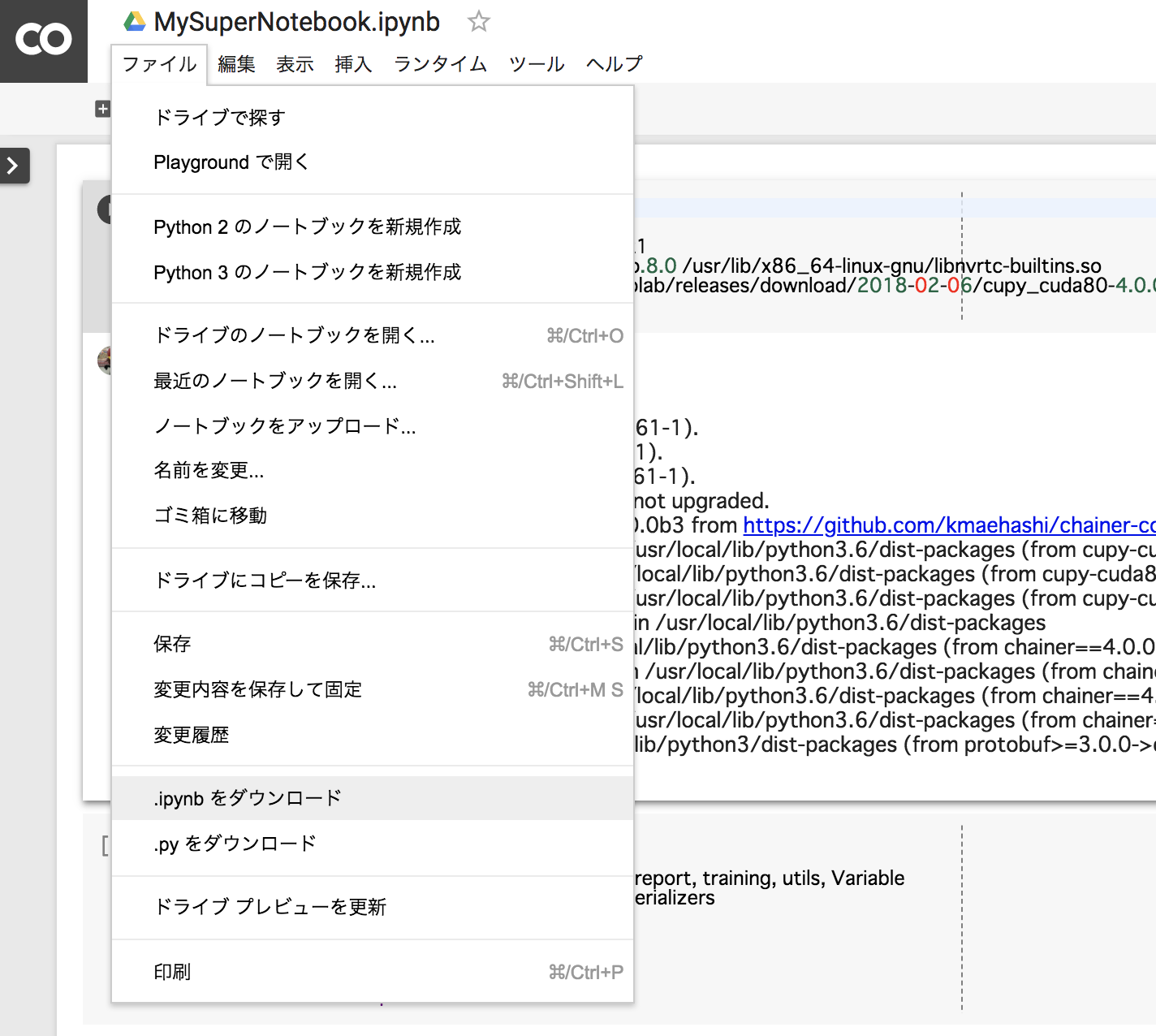

36 | 2. Download it as the jupyter notebook.

37 |

35 |

36 | 2. Download it as the jupyter notebook.

37 |  38 |

39 | 3. Push it to the github repository [chainer-community](https://github.com/chainer-community/chainer-colab-notebook) and make PR!

40 |

--------------------------------------------------------------------------------

/docs/Makefile:

--------------------------------------------------------------------------------

1 | # Minimal makefile for Sphinx documentation

2 | #

3 |

4 | # You can set these variables from the command line.

5 | SPHINXOPTS =

6 | SPHINXBUILD = sphinx-build

7 | SPHINXPROJ = ChainerColabNotebook

8 | SOURCEDIR = ./source

9 | BUILDDIR = _build

10 |

11 | # Put it first so that "make" without argument is like "make help".

12 | help:

13 | 	@$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

14 |

15 | .PHONY: help Makefile

16 |

17 | # Catch-all target: route all unknown targets to Sphinx using the new

18 | # "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).

19 | %: Makefile

20 | 	@$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

21 |

--------------------------------------------------------------------------------

/docs/images/convolution_filter.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/chainer-community/chainer-colab-notebook/7e8a134279d362859a6600d4c9ca9a2699d00338/docs/images/convolution_filter.png

--------------------------------------------------------------------------------

/docs/images/dropout.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/chainer-community/chainer-colab-notebook/7e8a134279d362859a6600d4c9ca9a2699d00338/docs/images/dropout.png

--------------------------------------------------------------------------------

/docs/images/generalization_error.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/chainer-community/chainer-colab-notebook/7e8a134279d362859a6600d4c9ca9a2699d00338/docs/images/generalization_error.png

--------------------------------------------------------------------------------

/docs/images/laplacian_filter.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/chainer-community/chainer-colab-notebook/7e8a134279d362859a6600d4c9ca9a2699d00338/docs/images/laplacian_filter.png

--------------------------------------------------------------------------------

/docs/images/max_pooling.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/chainer-community/chainer-colab-notebook/7e8a134279d362859a6600d4c9ca9a2699d00338/docs/images/max_pooling.png

--------------------------------------------------------------------------------

/docs/images/mlp.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/chainer-community/chainer-colab-notebook/7e8a134279d362859a6600d4c9ca9a2699d00338/docs/images/mlp.png

--------------------------------------------------------------------------------

/docs/requirements.txt:

--------------------------------------------------------------------------------

1 | nbsphinx

2 |

--------------------------------------------------------------------------------

/docs/source/_ext/show_on_colaboratory.py:

--------------------------------------------------------------------------------

1 | """

2 | Sphinx extension to add ReadTheDocs-style "Show on Colaboratory" links to the

3 | sidebar.

4 | Loosely based on https://github.com/astropy/astropy/pull/347

5 | """

6 |

7 | import os

8 | import warnings

9 |

10 |

11 | __licence__ = 'BSD (3 clause)'

12 |

13 |

14 | def get_colaboratory_url(path):

15 |     fromto = [('hands_on', 'hands_on_en'), ('official_example', 'official_example_en')]

16 |     for f, t in fromto:

17 |         if path.startswith(f):

18 |             path = path.replace(f, t, 1)

19 |     return 'https://colab.research.google.com/github/chainer-community/chainer-colab-notebook/blob/master/{path}'.format(

20 |         path=path)

21 |

22 |

23 | def html_page_context(app, pagename, templatename, context, doctree):

24 |     if templatename != 'page.html':

25 |         return

26 |

27 |     path = os.path.relpath(doctree.get('source'), app.builder.srcdir).replace('notebook/', '')

28 |     show_url = get_colaboratory_url(path)

29 |

30 |     context['show_on_colaboratory_url'] = show_url

31 |

32 |

33 | def setup(app):

34 |     app.connect('html-page-context', html_page_context)

35 |

--------------------------------------------------------------------------------
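The path mapping done by `get_colaboratory_url` in the listing above can be tried in isolation. The following is a minimal standalone sketch, not the extension itself: it reuses the function's logic as shown, the sample paths come from the repository tree, and the `lang` parameter is a hypothetical addition (not in the original) so that one function can stand in for both the English and the Japanese copy of the extension.

```python
BASE_URL = ('https://colab.research.google.com/github/'
            'chainer-community/chainer-colab-notebook/blob/master/{path}')


def get_colaboratory_url(path, lang='en'):
    """Map a docs-relative notebook path to its Colaboratory URL.

    `lang` is a hypothetical parameter introduced for this sketch only.
    """
    for prefix in ('hands_on', 'official_example'):
        if path.startswith(prefix):
            # Only the leading directory is renamed, e.g. hands_on -> hands_on_en.
            path = path.replace(prefix, '{}_{}'.format(prefix, lang), 1)
    return BASE_URL.format(path=path)


# Paths under hands_on/ and official_example/ get a language suffix;
# anything else (such as example/) is passed through unchanged.
print(get_colaboratory_url('hands_on/chainerrl/quickstart.ipynb'))
print(get_colaboratory_url('official_example/word2vec.ipynb', lang='ja'))
print(get_colaboratory_url('example/cupy/prml/ch01_Introduction_ipynb.ipynb'))
```

The suffix is needed because `conf.py` copies `hands_on_en` (or `hands_on_ja`) into `notebook/hands_on` before the build, so the docs-relative path no longer matches the path in the GitHub repository.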

/docs/source/_static/colaboratory.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/chainer-community/chainer-colab-notebook/7e8a134279d362859a6600d4c9ca9a2699d00338/docs/source/_static/colaboratory.png

--------------------------------------------------------------------------------

/docs/source/_templates/searchbox.html:

--------------------------------------------------------------------------------

1 | {%- if builder != 'singlehtml' %}

2 |

3 |

8 |

9 | {%- endif %}

10 | {%- if show_source and has_source and sourcename %}

11 | {%- if show_on_colaboratory_url %}

12 |

13 |  14 |

17 | {%- endif %}

18 | {%- endif %}

19 |

--------------------------------------------------------------------------------

/docs/source/begginers_hands_on.rst:

--------------------------------------------------------------------------------

1 | Chainer Beginner's Hands-on

2 | ----------------------------

3 |

4 | .. toctree::

5 |    :glob:

6 |    :titlesonly:

7 |

8 |    notebook/hands_on/chainer/begginers_hands_on/*

9 |

--------------------------------------------------------------------------------

/docs/source/conf.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | # -*- coding: utf-8 -*-

3 | #

4 | # Chainer Colab Notebook documentation build configuration file, created by

5 | # sphinx-quickstart on Tue Jun 12 09:34:10 2018.

6 | #

7 | # This file is execfile()d with the current directory set to its

8 | # containing dir.

9 | #

10 | # Note that not all possible configuration values are present in this

11 | # autogenerated file.

12 | #

13 | # All configuration values have a default; values that are commented out

14 | # serve to show the default.

15 |

16 | # If extensions (or modules to document with autodoc) are in another directory,

17 | # add these directories to sys.path here. If the directory is relative to the

18 | # documentation root, use os.path.abspath to make it absolute, like shown here.

19 | #

20 | import os

21 | import sys

22 | sys.path.insert(0, os.path.abspath('_ext'))

23 |

24 |

25 | # COPY FILES

26 | import shutil

27 |

28 | try:

29 |     shutil.rmtree('notebook')

30 | except:

31 |     pass

32 | os.mkdir('notebook')

33 | shutil.copytree('../../hands_on_en', 'notebook/hands_on')

34 | shutil.copytree('../../official_example_en', 'notebook/official_example')

35 | shutil.copytree('../../example', 'notebook/example')

36 |

37 | # -- General configuration ------------------------------------------------

38 |

39 | # If your documentation needs a minimal Sphinx version, state it here.

40 | #

41 | # needs_sphinx = '1.0'

42 |

43 | # Add any Sphinx extension module names here, as strings. They can be

44 | # extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

45 | # ones.

46 | extensions = ['sphinx.ext.autodoc',

47 | 'sphinx.ext.doctest',

48 | 'sphinx.ext.intersphinx',

49 | 'sphinx.ext.mathjax',

50 | 'nbsphinx',

51 | 'show_on_colaboratory',]

52 |

53 | # Add any paths that contain templates here, relative to this directory.

54 | templates_path = ['_templates']

55 |

56 | # The suffix(es) of source filenames.

57 | # You can specify multiple suffix as a list of string:

58 | #

59 | # source_suffix = ['.rst', '.md']

60 | source_suffix = '.rst'

61 |

62 | # The master toctree document.

63 | master_doc = 'index'

64 |

65 | # General information about the project.

66 | project = 'Chainer Colab Notebook'

67 | copyright = '2018, Chainer User Group'

68 | author = 'Chainer User Group'

69 |

70 | # The version info for the project you're documenting, acts as replacement for

71 | # |version| and |release|, also used in various other places throughout the

72 | # built documents.

73 | #

74 | # The short X.Y version.

75 | version = '0.0'

76 | # The full version, including alpha/beta/rc tags.

77 | release = '0.0'

78 |

79 | # The language for content autogenerated by Sphinx. Refer to documentation

80 | # for a list of supported languages.

81 | #

82 | # This is also used if you do content translation via gettext catalogs.

83 | # Usually you set "language" from the command line for these cases.

84 | language = None

85 |

86 | # List of patterns, relative to source directory, that match files and

87 | # directories to ignore when looking for source files.

88 | # These patterns also affect html_static_path and html_extra_path.

89 | exclude_patterns = ['_build', 'Thumbs.db', '.DS_Store', '**.ipynb_checkpoints']

90 |

91 | # The name of the Pygments (syntax highlighting) style to use.

92 | pygments_style = 'sphinx'

93 |

94 | # If true, `todo` and `todoList` produce output, else they produce nothing.

95 | todo_include_todos = False

96 |

97 |

98 | # -- Options for HTML output ----------------------------------------------

99 |

100 | # The theme to use for HTML and HTML Help pages. See the documentation for

101 | # a list of builtin themes.

102 | #

103 | html_theme = 'sphinx_rtd_theme'

104 |

105 | # Theme options are theme-specific and customize the look and feel of a theme

106 | # further. For a list of options available for each theme, see the

107 | # documentation.

108 | #

109 | # html_theme_options = {}

110 |

111 | # Add any paths that contain custom static files (such as style sheets) here,

112 | # relative to this directory. They are copied after the builtin static files,

113 | # so a file named "default.css" will overwrite the builtin "default.css".

114 | html_static_path = ['_static']

115 |

116 |

117 | # -- Options for HTMLHelp output ------------------------------------------

118 |

119 | # Output file base name for HTML help builder.

120 | htmlhelp_basename = 'ChainerColabNotebookdoc'

121 |

122 |

123 | # -- Options for LaTeX output ---------------------------------------------

124 |

125 | latex_elements = {

126 | # The paper size ('letterpaper' or 'a4paper').

127 | #

128 | # 'papersize': 'letterpaper',

129 |

130 | # The font size ('10pt', '11pt' or '12pt').

131 | #

132 | # 'pointsize': '10pt',

133 |

134 | # Additional stuff for the LaTeX preamble.

135 | #

136 | # 'preamble': '',

137 |

138 | # Latex figure (float) alignment

139 | #

140 | # 'figure_align': 'htbp',

141 | }

142 |

143 | # Grouping the document tree into LaTeX files. List of tuples

144 | # (source start file, target name, title,

145 | # author, documentclass [howto, manual, or own class]).

146 | latex_documents = [

147 | (master_doc, 'ChainerColabNotebook.tex', 'Chainer Colab Notebook Documentation',

148 | 'Chainer User Group', 'manual'),

149 | ]

150 |

151 |

152 | # -- Options for manual page output ---------------------------------------

153 |

154 | # One entry per manual page. List of tuples

155 | # (source start file, name, description, authors, manual section).

156 | man_pages = [

157 | (master_doc, 'chainercolabnotebook', 'Chainer Colab Notebook Documentation',

158 | [author], 1)

159 | ]

160 |

161 |

162 | # -- Options for Texinfo output -------------------------------------------

163 |

164 | # Grouping the document tree into Texinfo files. List of tuples

165 | # (source start file, target name, title, author,

166 | # dir menu entry, description, category)

167 | texinfo_documents = [

168 | (master_doc, 'ChainerColabNotebook', 'Chainer Colab Notebook Documentation',

169 | author, 'ChainerColabNotebook', 'One line description of project.',

170 | 'Miscellaneous'),

171 | ]

172 |

173 |

174 |

175 |

176 | # Example configuration for intersphinx: refer to the Python standard library.

177 | intersphinx_mapping = {'https://docs.python.org/': None}

178 |

--------------------------------------------------------------------------------
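The "COPY FILES" step at the top of `conf.py` stages the language-specific notebook folders under `notebook/` before Sphinx runs. A minimal sketch of that staging step is shown below, under stated assumptions: the function name `stage_notebooks` and its parameters are hypothetical, and `shutil.rmtree(..., ignore_errors=True)` is swapped in for the original bare `try`/`except`. The demo runs against a throwaway directory rather than the real repository.

```python
import shutil
import tempfile
from pathlib import Path


def stage_notebooks(repo_root, source_dir, lang):
    """Recreate <source_dir>/notebook from the language-specific folders.

    Hypothetical helper; mirrors the copy step in conf.py.
    """
    root = Path(repo_root)
    target = Path(source_dir) / 'notebook'
    # Remove any previous staging directory; a missing directory is not an error.
    shutil.rmtree(target, ignore_errors=True)
    target.mkdir(parents=True)
    shutil.copytree(root / ('hands_on_' + lang), target / 'hands_on')
    shutil.copytree(root / ('official_example_' + lang),
                    target / 'official_example')
    shutil.copytree(root / 'example', target / 'example')
    return target


# Demo on a temporary tree that imitates the repository layout.
with tempfile.TemporaryDirectory() as tmp:
    repo = Path(tmp)
    for name in ('hands_on_en', 'official_example_en', 'example'):
        (repo / name).mkdir()
        (repo / name / 'demo.ipynb').write_text('{}')
    source = repo / 'docs' / 'source'
    source.mkdir(parents=True)
    staged = stage_notebooks(repo, source, 'en')
    print(sorted(p.name for p in staged.iterdir()))
```

Because the staging directory is rebuilt on every run, it is generated output, which is consistent with `notebook/` and `notebook_ja/` being listed in `.gitignore`.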

/docs/source/index.rst:

--------------------------------------------------------------------------------

1 | Chainer Colab Notebooks : An easy way to learn and use Deep Learning

2 | =====================================================================

3 |

4 | You can run any notebook on Colaboratory by clicking the

5 | "Show on Colaboratory" link on its page.

6 |

7 |

8 | .. toctree::

9 |    :maxdepth: 2

10 |    :caption: Hands-on

11 |

12 |    begginers_hands_on

13 |    other_hands_on

14 |

15 | .. toctree::

16 |    :maxdepth: 2

17 |    :caption: Examples

18 |

19 |    official_examples

20 |    other_examples

21 |

22 | Other Languages

23 | ================

24 |

25 | - `日本語 `_

26 |

27 | Links

28 | ======

29 |

30 | - `GitHub Issues `_

31 |

--------------------------------------------------------------------------------

/docs/source/official_examples.rst:

--------------------------------------------------------------------------------

1 | Official Example

2 | -----------------

3 |

4 | .. toctree::

5 |    :glob:

6 |    :titlesonly:

7 |

8 |    notebook/official_example/*

9 |

10 |

--------------------------------------------------------------------------------

/docs/source/other_examples.rst:

--------------------------------------------------------------------------------

1 | Other Examples

2 | ---------------

3 |

4 | CuPy

5 | ^^^^^

6 |

7 | .. toctree::

8 |    :glob:

9 |    :titlesonly:

10 |

11 |    notebook/example/cupy/prml/*

12 |

--------------------------------------------------------------------------------

/docs/source/other_hands_on.rst:

--------------------------------------------------------------------------------

1 | Other Hands-on

2 | ---------------

3 |

4 | Chainer

5 | ^^^^^^^^^^^

6 |

7 | .. toctree::

8 |    :glob:

9 |    :titlesonly:

10 |

11 |    notebook/hands_on/chainer/*

12 |

13 | Chainer RL

14 | ^^^^^^^^^^^

15 |

16 | .. toctree::

17 |    :glob:

18 |    :titlesonly:

19 |

20 |    notebook/hands_on/chainerrl/*

21 |

--------------------------------------------------------------------------------
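The `:glob:` toctrees above select notebooks by pattern, and `notebook/hands_on/chainer/*` is apparently meant to pick up only the notebooks directly under `chainer/`, leaving the `begginers_hands_on` subfolder to its own page. The sketch below approximates that behaviour under the assumption that `*` in a toctree glob does not cross a `/`. The helper `matches_toctree_glob` is hypothetical and is not Sphinx's implementation; it also ignores `**`. Document names are written without the `.ipynb` extension, as Sphinx docnames usually are.

```python
from fnmatch import fnmatchcase


def matches_toctree_glob(docname, pattern):
    """Approximate toctree glob matching, with `*` confined to one path segment.

    Hypothetical helper for illustration; does not support `**`.
    """
    doc_parts = docname.split('/')
    pat_parts = pattern.split('/')
    if len(doc_parts) != len(pat_parts):
        return False
    # Compare segment by segment so a wildcard never spans a slash.
    return all(fnmatchcase(d, p) for d, p in zip(doc_parts, pat_parts))


docnames = [
    'notebook/hands_on/chainer/chainer',
    'notebook/hands_on/chainer/classify_anime_characters',
    'notebook/hands_on/chainer/begginers_hands_on/01_Write_the_training_loop',
    'notebook/hands_on/chainerrl/quickstart',
]
pattern = 'notebook/hands_on/chainer/*'
for name in docnames:
    print(name, matches_toctree_glob(name, pattern))
```

Under this assumption only the first two names match, which is why the beginner notebooks are listed through the separate `notebook/hands_on/chainer/begginers_hands_on/*` pattern.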

/docs/source_ja/_ext/show_on_colaboratory.py:

--------------------------------------------------------------------------------

1 | """

2 | Sphinx extension to add ReadTheDocs-style "Show on Colaboratory" links to the

3 | sidebar.

4 | Loosely based on https://github.com/astropy/astropy/pull/347

5 | """

6 |

7 | import os

8 | import warnings

9 |

10 |

11 | __licence__ = 'BSD (3 clause)'

12 |

13 |

14 | def get_colaboratory_url(path):

15 |     fromto = [('hands_on', 'hands_on_ja'), ('official_example', 'official_example_ja')]

16 |     for f, t in fromto:

17 |         if path.startswith(f):

18 |             path = path.replace(f, t, 1)

19 |     return 'https://colab.research.google.com/github/chainer-community/chainer-colab-notebook/blob/master/{path}'.format(

20 |         path=path)

21 |

22 |

23 | def html_page_context(app, pagename, templatename, context, doctree):

24 |     if templatename != 'page.html':

25 |         return

26 |

27 |     path = os.path.relpath(doctree.get('source'), app.builder.srcdir).replace('notebook/', '')

28 |     show_url = get_colaboratory_url(path)

29 |

30 |     context['show_on_colaboratory_url'] = show_url

31 |

32 |

33 | def setup(app):

34 |     app.connect('html-page-context', html_page_context)

35 |

--------------------------------------------------------------------------------

/docs/source_ja/_static:

--------------------------------------------------------------------------------

1 | ../source/_static/

--------------------------------------------------------------------------------

/docs/source_ja/_templates:

--------------------------------------------------------------------------------

1 | ../source/_templates/

--------------------------------------------------------------------------------

/docs/source_ja/begginers_hands_on.rst:

--------------------------------------------------------------------------------

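1 | Chainer Beginner's Hands-on

2 | ============================

3 |

4 | Overview

5 | --------

6 |

7 | This is a free hands-on course that uses the deep learning framework

8 | `Chainer `_

9 | for exercises on Google's

10 | `Colaboratory `_

11 | environment.

12 | You learn how to solve problems in practice by modifying and running programs, and detailed explanations are added wherever questions are likely to arise. The aim is to make learning more efficient.

13 |

14 | Note: Colaboratory is a Jupyter notebook environment that runs entirely in the cloud. It requires no setup and is free to use.

15 |

16 | The course also aims at building models that can solve real problems, not merely deep learning models that run. For that reason, beyond just training a model, you practice rigorous machine learning methodology using training, validation, and test sets, and learn how to evaluate a model correctly.

17 |

18 | Goals for the whole course

19 | --------------------------

20 |

21 | - Explain the components a deep learning framework needs and how Chainer implements them

22 | - Practice the machine learning methods needed to evaluate models

23 | - Implement models that use basic networks such as CNNs and RNNs

24 | - Solve problems in applied fields such as image processing and natural language processing with Chainer

25 | - Build an application using a model you actually created

26 |

27 | Contents

28 | -----------------------

29 |

30 | .. toctree::

31 |    :glob:

32 |    :titlesonly:

33 |

34 |    notebook/hands_on/chainer/begginers_hands_on/*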

35 |

--------------------------------------------------------------------------------

/docs/source_ja/conf.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3

2 | # -*- coding: utf-8 -*-

3 | #

4 | # Chainer Colab Notebook documentation build configuration file, created by

5 | # sphinx-quickstart on Tue Jun 12 09:34:10 2018.

6 | #

7 | # This file is execfile()d with the current directory set to its

8 | # containing dir.

9 | #

10 | # Note that not all possible configuration values are present in this

11 | # autogenerated file.

12 | #

13 | # All configuration values have a default; values that are commented out

14 | # serve to show the default.

15 |

16 | # If extensions (or modules to document with autodoc) are in another directory,

17 | # add these directories to sys.path here. If the directory is relative to the

18 | # documentation root, use os.path.abspath to make it absolute, like shown here.

19 | #

20 | import os

21 | import sys

22 | sys.path.insert(0, os.path.abspath('_ext'))

23 |

24 |

25 | # COPY FILES

26 | import shutil

27 |

28 |

29 | try:

30 |     shutil.rmtree('notebook')

31 | except:

32 |     pass

33 | os.mkdir('notebook')

34 | shutil.copytree('../../hands_on_ja', 'notebook/hands_on')

35 | shutil.copytree('../../official_example_ja', 'notebook/official_example')

36 | shutil.copytree('../../example', 'notebook/example')

37 |

38 | # -- General configuration ------------------------------------------------

39 |

40 | # If your documentation needs a minimal Sphinx version, state it here.

41 | #

42 | # needs_sphinx = '1.0'

43 |

44 | # Add any Sphinx extension module names here, as strings. They can be

45 | # extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

46 | # ones.

47 | extensions = ['sphinx.ext.autodoc',

48 | 'sphinx.ext.doctest',

49 | 'sphinx.ext.intersphinx',

50 | 'sphinx.ext.mathjax',

51 | 'nbsphinx',

52 | 'show_on_colaboratory',]

53 |

54 | # Add any paths that contain templates here, relative to this directory.

55 | templates_path = ['_templates']

56 |

57 | # The suffix(es) of source filenames.

58 | # You can specify multiple suffix as a list of string:

59 | #

60 | # source_suffix = ['.rst', '.md']

61 | source_suffix = '.rst'

62 |

63 | # The master toctree document.

64 | master_doc = 'index'

65 |

66 | # General information about the project.

67 | project = 'Chainer Colab Notebook'

68 | copyright = '2018, Chainer User Group'

69 | author = 'Chainer User Group'

70 |

71 | # The version info for the project you're documenting, acts as replacement for

72 | # |version| and |release|, also used in various other places throughout the

73 | # built documents.

74 | #

75 | # The short X.Y version.

76 | version = '0.0'

77 | # The full version, including alpha/beta/rc tags.

78 | release = '0.0'

79 |

80 | # The language for content autogenerated by Sphinx. Refer to documentation

81 | # for a list of supported languages.

82 | #

83 | # This is also used if you do content translation via gettext catalogs.

84 | # Usually you set "language" from the command line for these cases.

85 | language = None

86 |

87 | # List of patterns, relative to source directory, that match files and

88 | # directories to ignore when looking for source files.

89 | # These patterns also affect html_static_path and html_extra_path

90 | exclude_patterns = ['_build', 'Thumbs.db', '.DS_Store', '**.ipynb_checkpoints']

91 |

92 | # The name of the Pygments (syntax highlighting) style to use.

93 | pygments_style = 'sphinx'

94 |

95 | # If true, `todo` and `todoList` produce output, else they produce nothing.

96 | todo_include_todos = False

97 |

98 |

99 | # -- Options for HTML output ----------------------------------------------

100 |

101 | # The theme to use for HTML and HTML Help pages. See the documentation for

102 | # a list of builtin themes.

103 | #

104 | html_theme = 'sphinx_rtd_theme'

105 |

106 | # Theme options are theme-specific and customize the look and feel of a theme

107 | # further. For a list of options available for each theme, see the

108 | # documentation.

109 | #

110 | # html_theme_options = {}

111 |

112 | # Add any paths that contain custom static files (such as style sheets) here,

113 | # relative to this directory. They are copied after the builtin static files,

114 | # so a file named "default.css" will overwrite the builtin "default.css".

115 | html_static_path = ['_static']

116 |

117 |

118 | # -- Options for HTMLHelp output ------------------------------------------

119 |

120 | # Output file base name for HTML help builder.

121 | htmlhelp_basename = 'ChainerColabNotebookdoc'

122 |

123 |

124 | # -- Options for LaTeX output ---------------------------------------------

125 |

126 | latex_elements = {

127 | # The paper size ('letterpaper' or 'a4paper').

128 | #

129 | # 'papersize': 'letterpaper',

130 |

131 | # The font size ('10pt', '11pt' or '12pt').

132 | #

133 | # 'pointsize': '10pt',

134 |

135 | # Additional stuff for the LaTeX preamble.

136 | #

137 | # 'preamble': '',

138 |

139 | # Latex figure (float) alignment

140 | #

141 | # 'figure_align': 'htbp',

142 | }

143 |

144 | # Grouping the document tree into LaTeX files. List of tuples

145 | # (source start file, target name, title,

146 | # author, documentclass [howto, manual, or own class]).

147 | latex_documents = [

148 | (master_doc, 'ChainerColabNotebook.tex', 'Chainer Colab Notebook Documentation',

149 | 'Chainer User Group', 'manual'),

150 | ]

151 |

152 |

153 | # -- Options for manual page output ---------------------------------------

154 |

155 | # One entry per manual page. List of tuples

156 | # (source start file, name, description, authors, manual section).

157 | man_pages = [

158 | (master_doc, 'chainercolabnotebook', 'Chainer Colab Notebook Documentation',

159 | [author], 1)

160 | ]

161 |

162 |

163 | # -- Options for Texinfo output -------------------------------------------

164 |

165 | # Grouping the document tree into Texinfo files. List of tuples

166 | # (source start file, target name, title, author,

167 | # dir menu entry, description, category)

168 | texinfo_documents = [

169 | (master_doc, 'ChainerColabNotebook', 'Chainer Colab Notebook Documentation',

170 | author, 'ChainerColabNotebook', 'One line description of project.',

171 | 'Miscellaneous'),

172 | ]

173 |

174 |

175 |

176 |

177 | # Example configuration for intersphinx: refer to the Python standard library.

178 | intersphinx_mapping = {'https://docs.python.org/': None}

179 |

--------------------------------------------------------------------------------

/docs/source_ja/index.rst:

--------------------------------------------------------------------------------

1 | Chainer Colab Notebooks: Hands-on Deep Learning Notebook Collection

2 | ======================================================================

3 |

4 | Click "Show on Colaboratory" on each page to run the notebook

5 | on Colaboratory right away.

6 |

7 | .. toctree::

8 | :maxdepth: 2

9 | :caption: Hands-on

10 |

11 | begginers_hands_on

12 | other_hands_on

13 |

14 | .. toctree::

15 | :maxdepth: 2

16 | :caption: Examples

17 |

18 | official_examples

19 | other_examples

20 |

21 | Other Languages

22 | ================

23 |

24 | - `English `_

25 |

26 | Links

27 | ======

28 |

29 | - `GitHub Issues `_

30 |

--------------------------------------------------------------------------------

/docs/source_ja/official_examples.rst:

--------------------------------------------------------------------------------

1 | ../source/official_examples.rst

--------------------------------------------------------------------------------

/docs/source_ja/other_examples.rst:

--------------------------------------------------------------------------------

1 | ../source/other_examples.rst

--------------------------------------------------------------------------------

/docs/source_ja/other_hands_on.rst:

--------------------------------------------------------------------------------

1 | ../source/other_hands_on.rst

--------------------------------------------------------------------------------

/example/chainer/OfficialTutorial/02_1_How_to_Write_a_New_Network.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "nbformat": 4,

3 | "nbformat_minor": 0,

4 | "metadata": {

5 | "colab": {

6 | "name": "02-1-How-to-Write-a-New-Network.ipynb",

7 | "version": "0.3.2",

8 | "views": {},

9 | "default_view": {},

10 | "provenance": [],

11 | "collapsed_sections": [],

12 | "toc_visible": true

13 | },

14 | "kernelspec": {

15 | "name": "python3",

16 | "display_name": "Python 3"

17 | },

18 | "accelerator": "GPU"

19 | },

20 | "cells": [

21 | {

22 | "metadata": {

23 | "id": "0IerUqWPN2YL",

24 | "colab_type": "text"

25 | },

26 | "cell_type": "markdown",

27 | "source": [

28 | "# How to Write a New Network\n",

29 | "\n",

30 | "## Precondition\n",

31 | "\n",

32 | "Install Chainer and CuPy."

33 | ]

34 | },

35 | {

36 | "metadata": {

37 | "id": "kizqNcZNNuYL",

38 | "colab_type": "code",

39 | "colab": {

40 | "autoexec": {

41 | "startup": false,

42 | "wait_interval": 0

43 | },

44 | "output_extras": [

45 | {

46 | "item_id": 21

47 | },

48 | {

49 | "item_id": 45

50 | }

51 | ],

52 | "base_uri": "https://localhost:8080/",

53 | "height": 972

54 | },

55 | "outputId": "de43cbeb-c415-4748-8dad-3f653a67f431",

56 | "executionInfo": {

57 | "status": "ok",

58 | "timestamp": 1520062255406,

59 | "user_tz": -540,

60 | "elapsed": 40721,

61 | "user": {

62 | "displayName": "Yasuki IKEUCHI",

63 | "photoUrl": "//lh6.googleusercontent.com/-QrqZkF-x2us/AAAAAAAAAAI/AAAAAAAAAAA/PzOMhw6mH3o/s50-c-k-no/photo.jpg",

64 | "userId": "110487681503898314699"

65 | }

66 | }

67 | },

68 | "cell_type": "code",

69 | "source": [

70 | "# Install Chainer and CuPy!\n",

71 | "\n",

72 | "!curl https://colab.chainer.org/install | sh -"

73 | ],

74 | "execution_count": 1,

75 | "outputs": [

76 | {

77 | "output_type": "stream",

78 | "text": [

79 | "Reading package lists... Done\n",

80 | "Building dependency tree \n",

81 | "Reading state information... Done\n",

82 | "The following NEW packages will be installed:\n",

83 | " libcusparse8.0 libnvrtc8.0 libnvtoolsext1\n",

84 | "0 upgraded, 3 newly installed, 0 to remove and 1 not upgraded.\n",

85 | "Need to get 28.9 MB of archives.\n",

86 | "After this operation, 71.6 MB of additional disk space will be used.\n",

87 | "Get:1 http://archive.ubuntu.com/ubuntu artful/multiverse amd64 libcusparse8.0 amd64 8.0.61-1 [22.6 MB]\n",

88 | "Get:2 http://archive.ubuntu.com/ubuntu artful/multiverse amd64 libnvrtc8.0 amd64 8.0.61-1 [6,225 kB]\n",

89 | "Get:3 http://archive.ubuntu.com/ubuntu artful/multiverse amd64 libnvtoolsext1 amd64 8.0.61-1 [32.2 kB]\n",

90 | "Fetched 28.9 MB in 1s (16.2 MB/s)\n",

91 | "\n",

92 | "\u001b7\u001b[0;23r\u001b8\u001b[1ASelecting previously unselected package libcusparse8.0:amd64.\n",

93 | "(Reading database ... 16669 files and directories currently installed.)\n",

94 | "Preparing to unpack .../libcusparse8.0_8.0.61-1_amd64.deb ...\n",

95 | "\u001b7\u001b[24;0f\u001b[42m\u001b[30mProgress: [ 0%]\u001b[49m\u001b[39m [..........................................................] \u001b8\u001b7\u001b[24;0f\u001b[42m\u001b[30mProgress: [ 6%]\u001b[49m\u001b[39m [###.......................................................] \u001b8Unpacking libcusparse8.0:amd64 (8.0.61-1) ...\n",

96 | "\u001b7\u001b[24;0f\u001b[42m\u001b[30mProgress: [ 12%]\u001b[49m\u001b[39m [#######...................................................] \u001b8\u001b7\u001b[24;0f\u001b[42m\u001b[30mProgress: [ 18%]\u001b[49m\u001b[39m [##########................................................] \u001b8Selecting previously unselected package libnvrtc8.0:amd64.\n",

97 | "Preparing to unpack .../libnvrtc8.0_8.0.61-1_amd64.deb ...\n",

98 | "\u001b7\u001b[24;0f\u001b[42m\u001b[30mProgress: [ 25%]\u001b[49m\u001b[39m [##############............................................] \u001b8Unpacking libnvrtc8.0:amd64 (8.0.61-1) ...\n",

99 | "\u001b7\u001b[24;0f\u001b[42m\u001b[30mProgress: [ 31%]\u001b[49m\u001b[39m [##################........................................] \u001b8\u001b7\u001b[24;0f\u001b[42m\u001b[30mProgress: [ 37%]\u001b[49m\u001b[39m [#####################.....................................] \u001b8Selecting previously unselected package libnvtoolsext1:amd64.\n",

100 | "Preparing to unpack .../libnvtoolsext1_8.0.61-1_amd64.deb ...\n",

101 | "\u001b7\u001b[24;0f\u001b[42m\u001b[30mProgress: [ 43%]\u001b[49m\u001b[39m [#########################.................................] \u001b8Unpacking libnvtoolsext1:amd64 (8.0.61-1) ...\n",

102 | "\u001b7\u001b[24;0f\u001b[42m\u001b[30mProgress: [ 50%]\u001b[49m\u001b[39m [#############################.............................] \u001b8\u001b7\u001b[24;0f\u001b[42m\u001b[30mProgress: [ 56%]\u001b[49m\u001b[39m [################################..........................] \u001b8Setting up libnvtoolsext1:amd64 (8.0.61-1) ...\n",

103 | "\u001b7\u001b[24;0f\u001b[42m\u001b[30mProgress: [ 62%]\u001b[49m\u001b[39m [####################################......................] \u001b8\u001b7\u001b[24;0f\u001b[42m\u001b[30mProgress: [ 68%]\u001b[49m\u001b[39m [#######################################...................] \u001b8Setting up libcusparse8.0:amd64 (8.0.61-1) ...\n",

104 | "\u001b7\u001b[24;0f\u001b[42m\u001b[30mProgress: [ 75%]\u001b[49m\u001b[39m [###########################################...............] \u001b8\u001b7\u001b[24;0f\u001b[42m\u001b[30mProgress: [ 81%]\u001b[49m\u001b[39m [###############################################...........] \u001b8Setting up libnvrtc8.0:amd64 (8.0.61-1) ...\n",

105 | "\u001b7\u001b[24;0f\u001b[42m\u001b[30mProgress: [ 87%]\u001b[49m\u001b[39m [##################################################........] \u001b8\u001b7\u001b[24;0f\u001b[42m\u001b[30mProgress: [ 93%]\u001b[49m\u001b[39m [######################################################....] \u001b8Processing triggers for libc-bin (2.26-0ubuntu2.1) ...\n",

106 | "\n",

107 | "\u001b7\u001b[0;24r\u001b8\u001b[1A\u001b[JCollecting cupy-cuda80==4.0.0b3 from https://github.com/kmaehashi/chainer-colab/releases/download/2018-02-06/cupy_cuda80-4.0.0b3-cp36-cp36m-linux_x86_64.whl\n",

108 | " Downloading https://github.com/kmaehashi/chainer-colab/releases/download/2018-02-06/cupy_cuda80-4.0.0b3-cp36-cp36m-linux_x86_64.whl (205.2MB)\n",

109 | "\u001b[K 13% |████▍ | 28.3MB 36.2MB/s eta 0:00:05"

110 | ],

111 | "name": "stdout"

112 | },

113 | {

114 | "output_type": "stream",

115 | "text": [

116 | "\u001b[K 100% |████████████████████████████████| 205.2MB 7.1kB/s \n",

117 | "\u001b[?25hRequirement already satisfied: six>=1.9.0 in /usr/local/lib/python3.6/dist-packages (from cupy-cuda80==4.0.0b3)\n",

118 | "Requirement already satisfied: numpy>=1.9.0 in /usr/local/lib/python3.6/dist-packages (from cupy-cuda80==4.0.0b3)\n",

119 | "Collecting fastrlock>=0.3 (from cupy-cuda80==4.0.0b3)\n",

120 | " Downloading fastrlock-0.3-cp36-cp36m-manylinux1_x86_64.whl (77kB)\n",

121 | "\u001b[K 100% |████████████████████████████████| 81kB 2.3MB/s \n",

122 | "\u001b[?25hInstalling collected packages: fastrlock, cupy-cuda80\n",

123 | "Successfully installed cupy-cuda80-4.0.0b3 fastrlock-0.3\n",

124 | "Collecting chainer==4.0.0b3\n",

125 | " Downloading chainer-4.0.0b3.tar.gz (366kB)\n",

126 | "\u001b[K 100% |████████████████████████████████| 368kB 2.3MB/s \n",

127 | "\u001b[?25hCollecting filelock (from chainer==4.0.0b3)\n",

128 | " Downloading filelock-3.0.4.tar.gz\n",

129 | "Requirement already satisfied: numpy>=1.9.0 in /usr/local/lib/python3.6/dist-packages (from chainer==4.0.0b3)\n",

130 | "Requirement already satisfied: protobuf>=3.0.0 in /usr/local/lib/python3.6/dist-packages (from chainer==4.0.0b3)\n",

131 | "Requirement already satisfied: six>=1.9.0 in /usr/local/lib/python3.6/dist-packages (from chainer==4.0.0b3)\n",

132 | "Requirement already satisfied: setuptools in /usr/lib/python3/dist-packages (from protobuf>=3.0.0->chainer==4.0.0b3)\n",

133 | "Building wheels for collected packages: chainer, filelock\n",

134 | " Running setup.py bdist_wheel for chainer ... \u001b[?25l-\b \b\\\b \b|\b \b/\b \bdone\n",

135 | "\u001b[?25h Stored in directory: /content/.cache/pip/wheels/ce/20/9f/4f7d6978c1a5f88bf2bb18d429998b85cf75cfe96315c7631b\n",

136 | " Running setup.py bdist_wheel for filelock ... \u001b[?25l-\b \bdone\n",

137 | "\u001b[?25h Stored in directory: /content/.cache/pip/wheels/5f/5e/8a/9f1eb481ffbfff95d5f550570c1dbeff3c1785c8383c12c62b\n",

138 | "Successfully built chainer filelock\n",

139 | "Installing collected packages: filelock, chainer\n",

140 | "Successfully installed chainer-4.0.0b3 filelock-3.0.4\n"

141 | ],

142 | "name": "stdout"

143 | }

144 | ]

145 | },

146 | {

147 | "metadata": {

148 | "id": "NgmVF6QhOLSK",

149 | "colab_type": "code",

150 | "colab": {

151 | "autoexec": {

152 | "startup": false,

153 | "wait_interval": 0

154 | },

155 | "output_extras": [

156 | {

157 | "item_id": 1

158 | }

159 | ],

160 | "base_uri": "https://localhost:8080/",

161 | "height": 71

162 | },

163 | "outputId": "3decdb4c-9734-43cd-8194-84432f907e40",

164 | "executionInfo": {

165 | "status": "ok",

166 | "timestamp": 1520062260652,

167 | "user_tz": -540,

168 | "elapsed": 5228,

169 | "user": {

170 | "displayName": "Yasuki IKEUCHI",

171 | "photoUrl": "//lh6.googleusercontent.com/-QrqZkF-x2us/AAAAAAAAAAI/AAAAAAAAAAA/PzOMhw6mH3o/s50-c-k-no/photo.jpg",

172 | "userId": "110487681503898314699"

173 | }

174 | }

175 | },

176 | "cell_type": "code",

177 | "source": [

178 | "import numpy as np\n",

179 | "import chainer\n",

180 | "from chainer import cuda, Function, gradient_check, report, training, utils, Variable\n",

181 | "from chainer import datasets, iterators, optimizers, serializers\n",

182 | "from chainer import Link, Chain, ChainList\n",

183 | "import chainer.functions as F\n",

184 | "import chainer.links as L\n",

185 | "from chainer.training import extensions"

186 | ],

187 | "execution_count": 2,

188 | "outputs": [

189 | {

190 | "output_type": "stream",

191 | "text": [

192 | "/usr/local/lib/python3.6/dist-packages/cupy/core/fusion.py:659: FutureWarning: cupy.core.fusion is experimental. The interface can change in the future.\n",

193 | " util.experimental('cupy.core.fusion')\n"

194 | ],

195 | "name": "stderr"

196 | }

197 | ]

198 | },

199 | {

200 | "metadata": {

201 | "id": "hYjsrZEJFpKE",

202 | "colab_type": "text"

203 | },

204 | "cell_type": "markdown",

205 | "source": [

206 | "## Convolutional Network for Visual Recognition Tasks\n",

207 | "\n",

208 | "In this section, you will learn how to write\n",

209 | "\n",

210 | "* A small convolutional network with a model class that inherits from `chainer.Chain`,\n",

211 | "* A large convolutional network that has several building block networks with `chainer.ChainList`.\n",

212 | "\n",

213 | "After reading this section, you will be able to:\n",

214 | "\n",

215 | "* Write your own original convolutional network in Chainer\n",

216 | "\n",

217 | "A convolutional network (ConvNet) consists mainly of convolutional layers.\n",

218 | "This type of network is commonly used for various visual recognition tasks,\n",

219 | "e.g., classifying hand-written digits or natural images into given object\n",

220 | "classes, detecting objects in an image, and labeling all pixels of an image\n",

221 | "with the object classes (semantic segmentation).\n",

222 | "\n",

223 | "In such tasks, a typical ConvNet takes a set of images whose shape is\n",

224 | "$(N, C, H, W)$, where\n",

225 | "\n",

226 | "- `N` denotes the number of images in a mini-batch,\n",

227 | "- `C` denotes the number of channels of those images,\n",

228 | "- `H` and `W` denote the height and width of those images,\n",

229 | "\n",

230 | "respectively. Then, it typically outputs a fixed-sized vector as membership\n",

231 | "probabilities over the target object classes. It can also output a set of\n",

232 | "feature maps whose size corresponds to the input image for a pixel\n",

233 | "labeling task, etc.\n"

234 | ]

235 | },

236 | {

237 | "metadata": {

238 | "id": "ltUfGA-JPGZg",

239 | "colab_type": "text"

240 | },

241 | "cell_type": "markdown",

242 | "source": [

243 | "Note\n",

244 | "\n",

245 | "> The example code below assumes that the required packages are already imported\n",

246 | "> (see the import cell near the top of this notebook)."

247 | ]

248 | },

249 | {

250 | "metadata": {

251 | "id": "KSgZ2XqzPRll",

252 | "colab_type": "text"

253 | },

254 | "cell_type": "markdown",

255 | "source": [

256 | "### LeNet5\n",

257 | "\n",

258 | "Here, let's start by defining LeNet5 [LeCun98] in Chainer.\n",

259 | "This is a ConvNet model that has 5 layers: 3 convolutional layers\n",

260 | "and 2 fully-connected layers. It was proposed in 1998 to classify\n",

261 | "hand-written digit images. In Chainer, the model can be written as follows:"

262 | ]

263 | },

264 | {

265 | "metadata": {

266 | "id": "04ZUqAdNFq9M",

267 | "colab_type": "code",

268 | "colab": {

269 | "autoexec": {

270 | "startup": false,

271 | "wait_interval": 0

272 | }

273 | }

274 | },

275 | "cell_type": "code",

276 | "source": [

277 | "class LeNet5(Chain):\n",

278 | "    def __init__(self):\n",

279 | "        super(LeNet5, self).__init__()\n",

280 | "        with self.init_scope():\n",

281 | "            self.conv1 = L.Convolution2D(\n",

282 | "                in_channels=1, out_channels=6, ksize=5, stride=1)\n",

283 | "            self.conv2 = L.Convolution2D(\n",

284 | "                in_channels=6, out_channels=16, ksize=5, stride=1)\n",

285 | "            self.conv3 = L.Convolution2D(\n",

286 | "                in_channels=16, out_channels=120, ksize=4, stride=1)\n",

287 | "            self.fc4 = L.Linear(None, 84)\n",

288 | "            self.fc5 = L.Linear(84, 10)\n",

289 | "\n",

290 | "    def __call__(self, x):\n",

291 | "        h = F.sigmoid(self.conv1(x))\n",

292 | "        h = F.max_pooling_2d(h, 2, 2)\n",

293 | "        h = F.sigmoid(self.conv2(h))\n",

294 | "        h = F.max_pooling_2d(h, 2, 2)\n",

295 | "        h = F.sigmoid(self.conv3(h))\n",

296 | "        h = F.sigmoid(self.fc4(h))\n",

297 | "        if chainer.config.train:\n",

298 | "            return self.fc5(h)\n",

299 | "        return F.softmax(self.fc5(h))"

300 | ],

301 | "execution_count": 0,

302 | "outputs": []

303 | },

304 | {

305 | "metadata": {

306 | "id": "0zcZTI-7Ob8T",

307 | "colab_type": "text"

308 | },

309 | "cell_type": "markdown",

310 | "source": [

311 | "A typical way to write your network is to create a new class that inherits from\n",

312 | "the `chainer.Chain` class. When defining your model in this way, typically,\n",

313 | "all the layers that have trainable parameters are registered to the model\n",

314 | "by assigning `chainer.Link` objects as attributes.\n",

315 | "\n",

316 | "The model class is instantiated before the forward and backward computations.\n",

317 | "To pass input images and label vectors simply by calling the model object\n",

318 | "like a function, `__call__` is usually defined in the model class.\n",

319 | "\n",

320 | "This method performs the forward computation of the model. Chainer applies\n",

321 | "its autograd system to any computational graph written with\n",

322 | "`chainer.FunctionNode`s and `chainer.Link`s (a\n",

323 | "`chainer.Link` actually calls a corresponding `chainer.FunctionNode`\n",

324 | "inside of it), so you don't need to explicitly write the code for backward\n",

325 | "computations in the model. Just prepare the data, then give it to the model.\n",

326 | "\n",

327 | "This works because the output `chainer.Variable` resulting from the\n",

328 | "forward computation has a `chainer.Variable.backward` method that performs\n",

329 | "autograd. In the above model, `__call__` has an `if` statement at the\n",

330 | "end to switch its behavior depending on Chainer's running mode, i.e., training mode or\n",

331 | "not. Chainer exposes the running mode as a global variable, `chainer.config.train`.\n",

332 | "\n",

333 | "In training mode, `__call__` returns the output value of the\n",

334 | "last layer as is so that the loss can be computed later; otherwise it returns a\n",

335 | "prediction result by calculating `chainer.functions.softmax`."

336 | ]

337 | },

338 | {

339 | "metadata": {

340 | "id": "zaRmPHh_PaJI",

341 | "colab_type": "text"

342 | },

343 | "cell_type": "markdown",

344 | "source": [

345 | "Note:\n",

346 | "\n",

347 | "> In Chainer v1, if a function or link behaved differently in\n",

348 | "> training and other modes, it was common that it held an attribute\n",

349 | "> that represented its running mode or was provided with the mode\n",

350 | "> from outside as an argument. In Chainer v2, it is recommended to use\n",

351 | "> the global configuration `chainer.config.train` to switch the running mode.\n"

352 | ]

353 | },

354 | {

355 | "metadata": {

356 | "id": "20wNAeRUPoQi",

357 | "colab_type": "text"

358 | },

359 | "cell_type": "markdown",

360 | "source": [

361 | "If you don't want to write ``conv1`` and the other layers more than once, you\n",

362 | "can also write the model this way:"

363 | ]

364 | },

365 | {

366 | "metadata": {

367 | "id": "uIBiqO44PfMW",

368 | "colab_type": "code",

369 | "colab": {

370 | "autoexec": {

371 | "startup": false,

372 | "wait_interval": 0

373 | }

374 | }

375 | },

376 | "cell_type": "code",

377 | "source": [

378 | "class LeNet5(Chain):\n",

379 | "    def __init__(self):\n",

380 | "        super(LeNet5, self).__init__()\n",

381 | "        net = [('conv1', L.Convolution2D(1, 6, 5, 1))]\n",

382 | "        net += [('_sigm1', F.sigmoid)]  # names starting with '_' are parameter-free functions\n",

383 | "        net += [('_mpool1', lambda h: F.max_pooling_2d(h, 2, 2))]\n",

384 | "        net += [('conv2', L.Convolution2D(6, 16, 5, 1))]\n",

385 | "        net += [('_sigm2', F.sigmoid)]\n",

386 | "        net += [('_mpool2', lambda h: F.max_pooling_2d(h, 2, 2))]\n",

387 | "        net += [('conv3', L.Convolution2D(16, 120, 4, 1))]\n",

388 | "        net += [('_sigm3', F.sigmoid)]\n",

389 | "        net += [('_mpool3', lambda h: F.max_pooling_2d(h, 2, 2))]\n",

390 | "        net += [('fc4', L.Linear(None, 84))]\n",

391 | "        net += [('_sigm4', F.sigmoid)]\n",

392 | "        net += [('fc5', L.Linear(84, 10))]\n",

393 | "        net += [('_sigm5', F.sigmoid)]\n",

394 | "        with self.init_scope():\n",

395 | "            for n in net:\n",

396 | "                if not n[0].startswith('_'):\n",

397 | "                    setattr(self, n[0], n[1])  # register only the links\n",

398 | "        self.forward = net\n",

399 | "\n",

400 | "    def __call__(self, x):\n",

401 | "        for n, f in self.forward:\n",

402 | "            if not n.startswith('_'):\n",

403 | "                x = getattr(self, n)(x)\n",

404 | "            else:\n",

405 | "                x = f(x)\n",

406 | "        if chainer.config.train:\n",

407 | "            return x\n",

408 | "        return F.softmax(x)"

409 | ],

410 | "execution_count": 0,

411 | "outputs": []

412 | },

413 | {

414 | "metadata": {

415 | "id": "coslyHOtQGxm",

416 | "colab_type": "text"

417 | },

418 | "cell_type": "markdown",

419 | "source": [

420 | "This code creates a list of all `chainer.Link`s and\n",

421 | "parameter-free functions from `chainer.functions` after calling its superclass's constructor.\n",

422 | "\n",

423 | "Then the elements of the list are registered to this model as\n",

424 | "trainable layers when the name of an element doesn't start with the `_`\n",

425 | "character. This convention can be freely replaced with many others because\n",

426 | "the names are only designed to make it easy to select the `chainer.Link`s from the\n",

427 | "list `net`. The functions don't have any trainable\n",

428 | "parameters, so we can't register them to the model, but we still want to use\n",

429 | "them for constructing the forward path. The list\n",

430 | "`net` is stored as an attribute `forward` so that it can be referred to in\n",

431 | "`__call__`. `__call__` retrieves all layers in the network\n",

432 | "from `self.forward` sequentially, regardless of the type of each object\n",

433 | "(a `chainer.Link` or a function), and gives the\n",

434 | "input variable or the intermediate output from the previous layer to the\n",

435 | "current layer. The last part of `__call__`, which switches its behavior\n",

436 | "depending on the training/inference mode, is the same as in the former way."

437 | ]

438 | },

439 | {

440 | "metadata": {

441 | "id": "3OtpUJ_cQP1Y",

442 | "colab_type": "text"

443 | },

444 | "cell_type": "markdown",

445 | "source": [

446 | "### Ways to calculate loss\n",

447 | "\n",

448 | "When you train the model with label vector ``t``, the loss should be calculated\n",

449 | "using the output from the model. There are also several ways to calculate the\n",

450 | "loss:"

451 | ]

452 | },

453 | {

454 | "metadata": {

455 | "id": "CCziSWwtQLY7",

456 | "colab_type": "code",

457 | "colab": {

458 | "autoexec": {

459 | "startup": false,

460 | "wait_interval": 0

461 | }

462 | }

463 | },

464 | "cell_type": "code",

465 | "source": [

466 | "def do():\n",

467 | "    model = LeNet5()\n",

468 | "\n",

469 | "    # Input data and label\n",

470 | "    x = np.random.rand(32, 1, 28, 28).astype(np.float32)\n",

471 | "    t = np.random.randint(0, 10, size=(32,)).astype(np.int32)\n",

472 | "\n",

473 | "    # Forward computation\n",

474 | "    y = model(x)\n",

475 | "\n",

476 | "    # Loss calculation\n",

477 | "    loss = F.softmax_cross_entropy(y, t)"

478 | ],

479 | "execution_count": 0,

480 | "outputs": []

481 | },

482 | {

483 | "metadata": {

484 | "id": "pAuoNPUjbmFL",

485 | "colab_type": "text"

486 | },

487 | "cell_type": "markdown",

488 | "source": [

489 | "This is a primitive way to calculate a loss value from the output of the model.\n",

490 | "\n",

491 | "On the other hand, the loss computation can be included in the model itself by\n",

492 | "wrapping the model object (a `chainer.Chain` or\n",

493 | "`chainer.ChainList` object) with a class that inherits from\n",

494 | "`chainer.Chain`. The outer `chainer.Chain` should take the\n",

495 | "model defined above and register it with `chainer.Chain.init_scope`.\n",

496 | "\n",

497 | "`chainer.Chain` itself\n",

498 | "inherits from `chainer.Link`, so a `chainer.Chain`\n",

499 | "can also be registered as a trainable `chainer.Link` to another\n",

500 | "`chainer.Chain`.\n",

501 | "\n",

502 | "In fact, there is already a `chainer.links.Classifier` class\n",

503 | "that can be used to wrap the model and include the loss\n",

504 | "computation as well.\n",

505 | "\n",

506 | "It can be used like this:\n"

507 | ]

508 | },

509 | {

510 | "metadata": {

511 | "id": "5AMnm8vJQVgy",

512 | "colab_type": "code",

513 | "colab": {

514 | "autoexec": {

515 | "startup": false,

516 | "wait_interval": 0

517 | }

518 | }

519 | },

520 | "cell_type": "code",

521 | "source": [

522 | "def do(x, t):\n",

523 | "    model = L.Classifier(LeNet5())\n",

524 | "\n",

525 | "    # Forward & loss calculation\n",

526 | "    loss = model(x, t)"

527 | ],

528 | "execution_count": 0,

529 | "outputs": []

530 | },

531 | {

532 | "metadata": {

533 | "id": "M7_DiDKzb9PH",

534 | "colab_type": "text"

535 | },

536 | "cell_type": "markdown",

537 | "source": [

538 | "This class takes a model object as an input argument and registers it as\n",

539 | "its `predictor` attribute, a trainable child link. As shown above, the returned\n",

540 | "object can then be called like a function in which we pass `x` and `t` as\n",

541 | "the input arguments and the resulting loss value (which we recall is a\n",

542 | "`chainer.Variable`) is returned.\n",

543 | "\n",

544 | "For details, see the documentation of `chainer.links.Classifier`\n",

545 | "and check the implementation by looking\n",

546 | "at the source.\n",

547 | "\n",

548 | "From the above examples, we can see that Chainer provides the flexibility to\n",

549 | "write our original network in many different ways. This flexibility is intended to\n",

550 | "make it intuitive for users to design new and complex models.\n",

551 | "\n"

552 | ]

553 | },

554 | {

555 | "metadata": {

556 | "id": "WOurFBsBcAWx",

557 | "colab_type": "text"

558 | },

559 | "cell_type": "markdown",

560 | "source": [

561 | "### VGG16\n",

562 | "\n",

563 | "Next, let's write some larger models in Chainer. When you write a large network\n",

564 | "consisting of several building block networks, `chainer.ChainList` is\n",

565 | "useful. First, let's see how to write a VGG16 [Simonyan14] model.\n"

566 | ]

567 | },

568 | {

569 | "metadata": {

570 | "id": "T_D2bGV9byTj",

571 | "colab_type": "code",

572 | "colab": {

573 | "autoexec": {

574 | "startup": false,

575 | "wait_interval": 0

576 | }

577 | }

578 | },

579 | "cell_type": "code",

580 | "source": [

581 | "class VGG16(chainer.ChainList):\n",

582 | "    def __init__(self):\n",

583 | "        super(VGG16, self).__init__(\n",

584 | "            VGGBlock(64),\n",

585 | "            VGGBlock(128),\n",

586 | "            VGGBlock(256, 3),\n",

587 | "            VGGBlock(512, 3),\n",

588 | "            VGGBlock(512, 3, True))\n",

589 | "\n",

590 | "    def __call__(self, x):\n",

591 | "        for f in self.children():\n",

592 | "            x = f(x)\n",

593 | "        if chainer.config.train:\n",

594 | "            return x\n",

595 | "        return F.softmax(x)\n",

596 | "\n",

597 | "\n",

598 | "class VGGBlock(chainer.Chain):\n",

599 | "    def __init__(self, n_channels, n_convs=2, fc=False):\n",

600 | "        w = chainer.initializers.HeNormal()\n",

601 | "        super(VGGBlock, self).__init__()\n",

602 | "        with self.init_scope():\n",

603 | "            self.conv1 = L.Convolution2D(None, n_channels, 3, 1, 1, initialW=w)\n",

604 | "            self.conv2 = L.Convolution2D(\n",

605 | "                n_channels, n_channels, 3, 1, 1, initialW=w)\n",

606 | "            if n_convs == 3:\n",

607 | "                self.conv3 = L.Convolution2D(\n",

608 | "                    n_channels, n_channels, 3, 1, 1, initialW=w)\n",

609 | "            if fc:\n",

610 | "                self.fc4 = L.Linear(None, 4096, initialW=w)\n",

611 | "                self.fc5 = L.Linear(4096, 4096, initialW=w)\n",

612 | "                self.fc6 = L.Linear(4096, 1000, initialW=w)\n",

613 | "\n",

614 | "        self.n_convs = n_convs\n",

615 | "        self.fc = fc\n",

616 | "\n",

617 | "    def __call__(self, x):\n",

618 | "        h = F.relu(self.conv1(x))\n",

619 | "        h = F.relu(self.conv2(h))\n",

620 | "        if self.n_convs == 3:\n",

621 | "            h = F.relu(self.conv3(h))\n",

622 | "        h = F.max_pooling_2d(h, 2, 2)\n",

623 | "        if self.fc:\n",

624 | "            h = F.dropout(F.relu(self.fc4(h)))\n",

625 | "            h = F.dropout(F.relu(self.fc5(h)))\n",

626 | "            h = self.fc6(h)\n",

627 | "        return h"

628 | ],

629 | "execution_count": 0,

630 | "outputs": []

631 | },

632 | {

633 | "metadata": {

634 | "id": "fCP9FOHQdAV6",

635 | "colab_type": "text"

636 | },

637 | "cell_type": "markdown",

638 | "source": [

639 | "That's it. VGG16 is a model which won the 1st place in\n",

640 | "[classification + localization task at ILSVRC 2014](http://www.image-net.org/challenges/LSVRC/2014/results#clsloc,\n",

641 | "and since then, has become one of the standard models for many different tasks\n",

642 | "as a pre-trained model.\n",

643 | "\n",

644 | "It has 16 weight layers, hence the name \"VGG-16\", but we can\n",

645 | "write this model without writing all layers independently. Since this model\n",

646 | "consists of several building blocks that have the same architecture, we can\n",

647 | "build the whole network by re-using the building block definition.\n",

648 | "\n",

649 | "Each block consists of 2 or 3 convolutional layers, each followed by an activation\n",

650 | "function (`chainer.functions.relu`), and then a\n",

651 | "`chainer.functions.max_pooling_2d` operation. This block is written as\n",

652 | "`VGGBlock` in the example code above. The whole network simply calls\n",

653 | "these blocks one after another in sequence.\n"

654 | ]

655 | },

656 | {

657 | "metadata": {

658 | "id": "oOyKzj-7c7zD",

659 | "colab_type": "code",

660 | "colab": {

661 | "autoexec": {

662 | "startup": false,

663 | "wait_interval": 0

664 | }

665 | }

666 | },

667 | "cell_type": "code",

668 | "source": [

669 | ""

670 | ],

671 | "execution_count": 0,

672 | "outputs": []

673 | },

674 | {

675 | "metadata": {

676 | "id": "TuwSx9lXdh0P",

677 | "colab_type": "text"

678 | },

679 | "cell_type": "markdown",

680 | "source": [

681 | "### ResNet152\n",

682 | "\n",

683 | "\n",

684 | "How about ResNet? ResNet [He16] came in the following year's ILSVRC. It is a\n",

685 | "much deeper model than VGG16, having up to 152 layers. This sounds super\n",

686 | "laborious to build, but it can be implemented in almost the same manner as VGG16.\n",

687 | "\n",

688 | "In other words, it's easy. One possible way to write ResNet-152 is:\n"

689 | ]

690 | },

691 | {

692 | "metadata": {

693 | "id": "QbPfVL_ydqA4",

694 | "colab_type": "code",

695 | "colab": {

696 | "autoexec": {

697 | "startup": false,

698 | "wait_interval": 0

699 | }

700 | }

701 | },

702 | "cell_type": "code",

703 | "source": [

704 | "class ResNet152(chainer.Chain):\n",

705 | "    def __init__(self, n_blocks=(3, 8, 36, 3)):\n",

706 | "        w = chainer.initializers.HeNormal()\n",

707 | "        super(ResNet152, self).__init__()\n",

708 | "        with self.init_scope():\n",

709 | "            self.conv1 = L.Convolution2D(None, 64, 7, 2, 3, initialW=w, nobias=True)\n",

710 | "            self.bn1 = L.BatchNormalization(64)\n",

711 | "            self.res2 = ResBlock(n_blocks[0], 64, 64, 256, 1)\n",

712 | "            self.res3 = ResBlock(n_blocks[1], 256, 128, 512)\n",

713 | "            self.res4 = ResBlock(n_blocks[2], 512, 256, 1024)\n",

714 | "            self.res5 = ResBlock(n_blocks[3], 1024, 512, 2048)\n",

715 | "            self.fc6 = L.Linear(2048, 1000)\n",

716 | "\n",

717 | "    def __call__(self, x):\n",

718 | "        h = self.bn1(self.conv1(x))\n",

719 | "        h = F.max_pooling_2d(F.relu(h), 2, 2)\n",

720 | "        h = self.res2(h)\n",

721 | "        h = self.res3(h)\n",

722 | "        h = self.res4(h)\n",

723 | "        h = self.res5(h)\n",

724 | "        h = F.average_pooling_2d(h, h.shape[2:], stride=1)\n",

725 | "        h = self.fc6(h)\n",

726 | "        if chainer.config.train:\n",

727 | "            return h\n",

728 | "        return F.softmax(h)\n",

729 | "\n",

730 | "\n",

731 | "class ResBlock(chainer.ChainList):\n",

732 | "    def __init__(self, n_layers, n_in, n_mid, n_out, stride=2):\n",

733 | "        super(ResBlock, self).__init__()\n",

734 | "        self.add_link(BottleNeck(n_in, n_mid, n_out, stride, True))\n",

735 | "        for _ in range(n_layers - 1):\n",

736 | "            self.add_link(BottleNeck(n_out, n_mid, n_out))\n",

737 | "\n",

738 | "    def __call__(self, x):\n",

739 | "        for f in self.children():\n",

740 | "            x = f(x)\n",

741 | "        return x\n",

742 | "\n",

743 | "\n",

744 | "class BottleNeck(chainer.Chain):\n",

745 | "    def __init__(self, n_in, n_mid, n_out, stride=1, proj=False):\n",

746 | "        w = chainer.initializers.HeNormal()\n",

747 | "        super(BottleNeck, self).__init__()\n",

748 | "        with self.init_scope():\n",

749 | "            self.conv1x1a = L.Convolution2D(\n",

750 | "                n_in, n_mid, 1, stride, 0, initialW=w, nobias=True)\n",

751 | "            self.conv3x3b = L.Convolution2D(\n",

752 | "                n_mid, n_mid, 3, 1, 1, initialW=w, nobias=True)\n",

753 | "            self.conv1x1c = L.Convolution2D(\n",

754 | "                n_mid, n_out, 1, 1, 0, initialW=w, nobias=True)\n",

755 | "            self.bn_a = L.BatchNormalization(n_mid)\n",

756 | "            self.bn_b = L.BatchNormalization(n_mid)\n",

757 | "            self.bn_c = L.BatchNormalization(n_out)\n",

758 | "            if proj:\n",

759 | "                self.conv1x1r = L.Convolution2D(\n",

760 | "                    n_in, n_out, 1, stride, 0, initialW=w, nobias=True)\n",

761 | "                self.bn_r = L.BatchNormalization(n_out)\n",

762 | "        self.proj = proj\n",

763 | "\n",

764 | "    def __call__(self, x):\n",

765 | "        h = F.relu(self.bn_a(self.conv1x1a(x)))\n",

766 | "        h = F.relu(self.bn_b(self.conv3x3b(h)))\n",

767 | "        h = self.bn_c(self.conv1x1c(h))\n",

768 | "        if self.proj:\n",

769 | "            x = self.bn_r(self.conv1x1r(x))\n",

770 | "        return F.relu(h + x)"

771 | ],

772 | "execution_count": 0,

773 | "outputs": []

774 | },

775 | {

776 | "metadata": {

777 | "id": "isqRzDSedxsh",

778 | "colab_type": "text"

779 | },

780 | "cell_type": "markdown",

781 | "source": [

782 | "In the `BottleNeck` class, when the `proj` argument supplied to the\n",

783 | "initializer is `True`, the shortcut branch applies a convolutional\n",

784 | "layer ``conv1x1r``, which extends the number of channels of the input ``x``\n",

785 | "to match the number of channels of the output of ``conv1x1c``,\n",

786 | "followed by a batch normalization layer, before the final ReLU layer.\n",

787 | "\n",

788 | "Writing the building block in this way improves the re-usability of a class.\n",

789 | "The flags switch not only the behavior of `__call__` but also the\n",

790 | "parameter registration. In this case, when `proj` is ``False``, the\n",

791 | "`BottleNeck` doesn't have `conv1x1r` and `bn_r` layers, so the memory\n",

792 | "usage would be efficient compared to the case when it registers both anyway and\n",