├── NER

├── metrics

│ ├── __init__.py

│ ├── functional

│ │ ├── __init__.py

│ │ └── query_span_f1.py

│ └── query_span_f1.py

├── models

│ ├── __init__.py

│ ├── query_ner_config.py

│ ├── classifier.py

│ └── bert_query_ner.py

├── ner2mrc

│ ├── __init__.py

│ ├── queries

│ │ ├── zh_msra.json

│ │ └── genia.json

│ ├── download.md

│ ├── genia2mrc.py

│ └── msra2mrc.py

├── utils

│ ├── __init__.py

│ ├── convert_tf2torch.sh

│ ├── radom_seed.py

│ ├── get_parser.py

│ └── bmes_decode.py

├── datasets

│ ├── __init__.py

│ ├── truncate_dataset.py

│ ├── collate_functions.py

│ ├── mrc_ner_dataset.py

│ ├── compute_acc.py

│ ├── compute_acc_linux.py

│ └── doc-paragraph-sentence-id

│ │ ├── mrc-ner.test-id

│ │ └── mrc-ner.dev-id

├── loss

│ ├── __init__.py

│ ├── adaptive_dice_loss.py

│ └── dice_loss.py

├── requirements.txt

├── scripts

│ └── reproduce

│ │ ├── zh_msra.sh

│ │ ├── ace04.sh

│ │ └── ace05.sh

├── parameters

├── evaluate.py

├── README.md

└── trainer.py

├── RE

├── requirements.txt

├── data

│ └── relation2id.txt

├── README.md

├── process_data.py

├── train_GRU.py

├── network.py

├── initial.py

└── test_GRU.py

├── data

└── annotation-guidelines.pdf

├── .gitignore

├── README.md

└── LICENSE

/NER/metrics/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/NER/models/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/NER/ner2mrc/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/NER/utils/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/NER/datasets/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/NER/metrics/functional/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/NER/loss/__init__.py:

--------------------------------------------------------------------------------

1 | from .dice_loss import DiceLoss

2 |

--------------------------------------------------------------------------------

/RE/requirements.txt:

--------------------------------------------------------------------------------

1 | tensorflow==1.15.4

2 | scikit_learn==0.23.2

3 | jieba==0.42.1

4 |

--------------------------------------------------------------------------------

/NER/requirements.txt:

--------------------------------------------------------------------------------

1 | torch

2 | pytorch-lightning==0.9.0

3 | tokenizers

4 | transformers

5 |

--------------------------------------------------------------------------------

/data/annotation-guidelines.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/changdejie/diaKG-code/HEAD/data/annotation-guidelines.pdf

--------------------------------------------------------------------------------

/NER/ner2mrc/queries/zh_msra.json:

--------------------------------------------------------------------------------

1 | {

2 | "NR": "人名和虚构的人物形象",

3 | "NS": "按照地理位置划分的国家,城市,乡镇,大洲",

4 | "NT": "组织包括公司,政府党派,学校,政府,新闻机构"

5 | }

--------------------------------------------------------------------------------

/NER/models/query_ner_config.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 |

3 |

4 | from transformers import BertConfig

5 |

6 |

7 | class BertQueryNerConfig(BertConfig):

8 | def __init__(self, **kwargs):

9 | super(BertQueryNerConfig, self).__init__(**kwargs)

10 | self.mrc_dropout = kwargs.get("mrc_dropout", 0.1)

11 |

--------------------------------------------------------------------------------

/NER/utils/convert_tf2torch.sh:

--------------------------------------------------------------------------------

1 | # convert tf model to pytorch format

2 |

3 | export BERT_BASE_DIR=/mnt/mrc/wwm_uncased_L-24_H-1024_A-16

4 |

5 | transformers-cli convert --model_type bert \

6 | --tf_checkpoint $BERT_BASE_DIR/model.ckpt \

7 | --config $BERT_BASE_DIR/config.json \

8 | --pytorch_dump_output $BERT_BASE_DIR/pytorch_model.bin

9 |

--------------------------------------------------------------------------------

/NER/ner2mrc/queries/genia.json:

--------------------------------------------------------------------------------

1 | {

2 | "DNA": "deoxyribonucleic acid",

3 | "RNA": "ribonucleic acid",

4 | "cell_line": "cell line",

5 | "cell_type": "cell type",

6 | "protein": "protein entities are limited to nitrogenous organic compounds and are parts of all living organisms, as structural components of body tissues such as muscle, hair, collagen and as enzymes and antibodies."

7 | }

8 |

--------------------------------------------------------------------------------

/RE/data/relation2id.txt:

--------------------------------------------------------------------------------

1 | Rel_Method_Drug 0

2 | Rel_Test_items_Disease 1

3 | Rel_Anatomy_Disease 2

4 | Rel_Drug_Disease 3

5 | Rel_SideEff_Disease 4

6 | Rel_Treatment_Disease 5

7 | Rel_Pathogenesis_Disease 6

8 | Rel_Frequency_Drug 7

9 | Rel_Test_Disease 8

10 | Rel_Operation_Disese 9

11 | Rel_Symptom_Disease 10

12 | Rel_Type_Disease 11

13 | Rel_Amount_Drug 12

14 | Rel_SideEff_Drug 13

15 | Rel_Reason_Disease 14

16 | Rel_Duration_Drug 15

--------------------------------------------------------------------------------

/NER/utils/radom_seed.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 |

3 |

4 | import numpy as np

5 | import torch

6 |

7 |

8 | def set_random_seed(seed: int):

9 | """set seeds for reproducibility"""

10 | np.random.seed(seed)

11 | torch.manual_seed(seed)

12 | torch.backends.cudnn.deterministic = True

13 | torch.backends.cudnn.benchmark = False

14 |

15 |

16 | if __name__ == '__main__':

17 | # without this line, x would be different in every execution.

18 | set_random_seed(0)

19 |

20 | x = np.random.random()

21 | print(x)

22 |

--------------------------------------------------------------------------------

/NER/ner2mrc/download.md:

--------------------------------------------------------------------------------

1 | ## Download Processed MRC-NER Datasets

2 | ZH:

3 | - [MSRA](https://drive.google.com/file/d/1bAoSJfT1IBdpbQWSrZPjQPPbAsDGlN2D/view?usp=sharing)

4 | - [OntoNotes4](https://drive.google.com/file/d/1CRVgZJDDGuj0O1NLK5DgujQBTLKyMR-g/view?usp=sharing)

5 |

6 | EN:

7 | - [CoNLL03](https://drive.google.com/file/d/1COt5bSHgwfl3oIZ6sCBVAenJKlfy3LI_/view?usp=sharing)

8 | - [ACE2004](https://drive.google.com/file/d/1zxLjecKK7CeLjxvPa-9QU9xsRJTVI5vb/view?usp=sharing)

9 | - [ACE2005](https://drive.google.com/file/d/1yxfwlrBmYIECqL_4K5xRve-pfBeIt58z/view?usp=sharing)

10 |

--------------------------------------------------------------------------------

/NER/datasets/truncate_dataset.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 |

3 | from torch.utils.data import Dataset

4 |

5 |

6 | class TruncateDataset(Dataset):

7 | """Truncate dataset to certain num"""

8 | def __init__(self, dataset: Dataset, max_num: int = 100):

9 | self.dataset = dataset

10 | self.max_num = min(max_num, len(self.dataset))

11 |

12 | def __len__(self):

13 | return self.max_num

14 |

15 | def __getitem__(self, item):

16 | return self.dataset[item]

17 |

18 | def __getattr__(self, item):

19 | """other dataset func"""

20 | return getattr(self.dataset, item)

21 |

--------------------------------------------------------------------------------

/NER/metrics/query_span_f1.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 |

3 |

4 | from pytorch_lightning.metrics.metric import TensorMetric

5 | from .functional.query_span_f1 import query_span_f1

6 |

7 |

8 | class QuerySpanF1(TensorMetric):

9 | """

10 | Query Span F1

11 | Args:

12 | flat: is flat-ner

13 | """

14 | def __init__(self, reduce_group=None, reduce_op=None, flat=False):

15 | super(QuerySpanF1, self).__init__(name="query_span_f1",

16 | reduce_group=reduce_group,

17 | reduce_op=reduce_op)

18 | self.flat = flat

19 |

20 | def forward(self, start_preds, end_preds, match_logits, start_label_mask, end_label_mask, match_labels):

21 | return query_span_f1(start_preds, end_preds, match_logits, start_label_mask, end_label_mask, match_labels,

22 | flat=self.flat)

23 |

--------------------------------------------------------------------------------

/NER/scripts/reproduce/zh_msra.sh:

--------------------------------------------------------------------------------

1 | export PYTHONPATH="$PWD"

2 | export TOKENIZERS_PARALLELISM=false

3 | DATA_DIR="/mnt/mrc/zh_msra"

4 | BERT_DIR="/mnt/mrc/chinese_roberta_wwm_large_ext_pytorch"

5 | SPAN_WEIGHT=0.1

6 | DROPOUT=0.2

7 | LR=8e-6

8 | MAXLEN=128

9 |

10 | OUTPUT_DIR="/mnt/mrc/train_logs/zh_msra/zh_msra_bertlarge_lr${LR}20200913_dropout${DROPOUT}_bsz16_maxlen${MAXLEN}"

11 |

12 | mkdir -p $OUTPUT_DIR

13 |

14 | python trainer.py \

15 | --chinese \

16 | --data_dir $DATA_DIR \

17 | --bert_config_dir $BERT_DIR \

18 | --max_length $MAXLEN \

19 | --batch_size 4 \

20 | --gpus="0,1,2,3" \

21 | --precision=16 \

22 | --progress_bar_refresh_rate 1 \

23 | --lr ${LR} \

24 | --distributed_backend=ddp \

25 | --val_check_interval 0.5 \

26 | --accumulate_grad_batches 1 \

27 | --default_root_dir $OUTPUT_DIR \

28 | --mrc_dropout $DROPOUT \

29 | --max_epochs 20 \

30 | --weight_span $SPAN_WEIGHT \

31 | --span_loss_candidates "pred_and_gold"

32 |

--------------------------------------------------------------------------------

/NER/scripts/reproduce/ace04.sh:

--------------------------------------------------------------------------------

1 | export PYTHONPATH="$PWD"

2 | DATA_DIR="/mnt/mrc/ace2004"

3 | BERT_DIR="/mnt/mrc/bert-large-uncased"

4 |

5 | BERT_DROPOUT=0.1

6 | MRC_DROPOUT=0.3

7 | LR=3e-5

8 | SPAN_WEIGHT=0.1

9 | WARMUP=0

10 | MAXLEN=128

11 | MAXNORM=1.0

12 |

13 | OUTPUT_DIR="/mnt/mrc/train_logs/ace2004/ace2004_20200915reproduce_lr${LR}_drop${MRC_DROPOUT}_norm${MAXNORM}_bsz32_hard_span_weight${SPAN_WEIGHT}_warmup${WARMUP}_maxlen${MAXLEN}_newtrunc_debug"

14 | mkdir -p $OUTPUT_DIR

15 | python trainer.py \

16 | --data_dir $DATA_DIR \

17 | --bert_config_dir $BERT_DIR \

18 | --max_length $MAXLEN \

19 | --batch_size 4 \

20 | --gpus="0,1,2,3" \

21 | --precision=16 \

22 | --progress_bar_refresh_rate 1 \

23 | --lr $LR \

24 | --distributed_backend=ddp \

25 | --val_check_interval 0.5 \

26 | --accumulate_grad_batches 2 \

27 | --default_root_dir $OUTPUT_DIR \

28 | --mrc_dropout $MRC_DROPOUT \

29 | --bert_dropout $BERT_DROPOUT \

30 | --max_epochs 20 \

31 | --span_loss_candidates "pred_and_gold" \

32 | --weight_span $SPAN_WEIGHT \

33 | --warmup_steps $WARMUP \

34 | --max_length $MAXLEN \

35 | --gradient_clip_val $MAXNORM

36 |

--------------------------------------------------------------------------------

/NER/scripts/reproduce/ace05.sh:

--------------------------------------------------------------------------------

1 | export PYTHONPATH="$PWD"

2 | DATA_DIR="/mnt/mrc/ace2005"

3 | BERT_DIR="/mnt/mrc/wwm_uncased_L-24_H-1024_A-16"

4 |

5 | BERT_DROPOUT=0.1

6 | MRC_DROPOUT=0.4

7 | LR=1e-5

8 | SPAN_WEIGHT=0.1

9 | WARMUP=0

10 | MAXLEN=128

11 | MAXNORM=1.0

12 |

13 | OUTPUT_DIR="/mnt/mrc/train_logs/ace2005/ace2005_20200917_wwmlarge_sgd_warm${WARMUP}lr${LR}_drop${MRC_DROPOUT}_norm${MAXNORM}_bsz32_gold_span_weight${SPAN_WEIGHT}_warmup${WARMUP}_maxlen${MAXLEN}"

14 | mkdir -p $OUTPUT_DIR

15 |

16 | python trainer.py \

17 | --data_dir $DATA_DIR \

18 | --bert_config_dir $BERT_DIR \

19 | --max_length $MAXLEN \

20 | --batch_size 8 \

21 | --gpus="0,1,2,3" \

22 | --precision=16 \

23 | --progress_bar_refresh_rate 1 \

24 | --lr $LR \

25 | --distributed_backend=ddp \

26 | --val_check_interval 0.25 \

27 | --accumulate_grad_batches 1 \

28 | --default_root_dir $OUTPUT_DIR \

29 | --mrc_dropout $MRC_DROPOUT \

30 | --bert_dropout $BERT_DROPOUT \

31 | --max_epochs 20 \

32 | --span_loss_candidates "pred_and_gold" \

33 | --weight_span $SPAN_WEIGHT \

34 | --warmup_steps $WARMUP \

35 | --max_length $MAXLEN \

36 | --gradient_clip_val $MAXNORM \

37 | --optimizer "adamw"

38 |

--------------------------------------------------------------------------------

/NER/models/classifier.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 |

3 |

4 | import torch.nn as nn

5 | from torch.nn import functional as F

6 |

7 |

8 | class SingleLinearClassifier(nn.Module):

9 | def __init__(self, hidden_size, num_label):

10 | super(SingleLinearClassifier, self).__init__()

11 | self.num_label = num_label

12 | self.classifier = nn.Linear(hidden_size, num_label)

13 |

14 | def forward(self, input_features):

15 | features_output = self.classifier(input_features)

16 | return features_output

17 |

18 |

19 | class MultiNonLinearClassifier(nn.Module):

20 | def __init__(self, hidden_size, num_label, dropout_rate):

21 | super(MultiNonLinearClassifier, self).__init__()

22 | self.num_label = num_label

23 | self.classifier1 = nn.Linear(hidden_size, hidden_size)

24 | self.classifier2 = nn.Linear(hidden_size, num_label)

25 | self.dropout = nn.Dropout(dropout_rate)

26 |

27 | def forward(self, input_features):

28 | features_output1 = self.classifier1(input_features)

29 | # features_output1 = F.relu(features_output1)

30 | features_output1 = F.gelu(features_output1)

31 | features_output1 = self.dropout(features_output1)

32 | features_output2 = self.classifier2(features_output1)

33 | return features_output2

34 |

--------------------------------------------------------------------------------

/NER/utils/get_parser.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 |

3 |

4 | import argparse

5 |

6 |

7 | def get_parser() -> argparse.ArgumentParser:

8 | """

9 | return basic arg parser

10 | """

11 | parser = argparse.ArgumentParser(description="Training")

12 |

13 | parser.add_argument("--data_dir", type=str, required=False, default="E:\\data\\nested",help="data dir")

14 | parser.add_argument("--bert_config_dir", type=str, required=False, default="E:\data\chinese_roberta_wwm_large_ext_pytorch",help="bert config dir")

15 | parser.add_argument("--pretrained_checkpoint", default="", type=str, help="pretrained checkpoint path")

16 | parser.add_argument("--max_length", type=int, default=128, help="max length of dataset")

17 | parser.add_argument("--batch_size", type=int, default=2, help="batch size")

18 | parser.add_argument("--lr", type=float, default=2e-5, help="learning rate")

19 | parser.add_argument("--workers", type=int, default=0, help="num workers for dataloader")

20 | parser.add_argument("--weight_decay", default=0.01, type=float,

21 | help="Weight decay if we apply some.")

22 | parser.add_argument("--warmup_steps", default=0, type=int,

23 | help="warmup steps used for scheduler.")

24 | parser.add_argument("--adam_epsilon", default=1e-8, type=float,

25 | help="Epsilon for Adam optimizer.")

26 |

27 | return parser

28 |

--------------------------------------------------------------------------------

/NER/datasets/collate_functions.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 |

3 | import torch

4 | from typing import List

5 |

6 |

7 | def collate_to_max_length(batch: List[List[torch.Tensor]]) -> List[torch.Tensor]:

8 | """

9 | pad to maximum length of this batch

10 | Args:

11 | batch: a batch of samples, each contains a list of field data(Tensor):

12 | tokens, token_type_ids, start_labels, end_labels, start_label_mask, end_label_mask, match_labels, sample_idx, label_idx

13 | Returns:

14 | output: list of field batched data, which shape is [batch, max_length]

15 | """

16 | batch_size = len(batch)

17 | max_length = max(x[0].shape[0] for x in batch)

18 | output = []

19 |

20 | for field_idx in range(6):

21 | pad_output = torch.full([batch_size, max_length], 0, dtype=batch[0][field_idx].dtype)

22 | for sample_idx in range(batch_size):

23 | data = batch[sample_idx][field_idx]

24 | pad_output[sample_idx][: data.shape[0]] = data

25 | output.append(pad_output)

26 |

27 | pad_match_labels = torch.zeros([batch_size, max_length, max_length], dtype=torch.long)

28 | for sample_idx in range(batch_size):

29 | data = batch[sample_idx][6]

30 | pad_match_labels[sample_idx, : data.shape[1], : data.shape[1]] = data

31 | output.append(pad_match_labels)

32 |

33 | output.append(torch.stack([x[-2] for x in batch]))

34 | output.append(torch.stack([x[-1] for x in batch]))

35 |

36 | return output

37 |

--------------------------------------------------------------------------------

/NER/parameters:

--------------------------------------------------------------------------------

1 | accumulate_grad_batches: 1

2 | adam_epsilon: 1.0e-08

3 | amp_backend: native

4 | amp_level: O2

5 | auto_lr_find: false

6 | auto_scale_batch_size: false

7 | auto_select_gpus: false

8 | batch_size: 32

9 | benchmark: false

10 | bert_config_dir: chinese_roberta_wwm_large_ext_pytorch

11 | bert_dropout: 0.1

12 | check_val_every_n_epoch: 1

13 | checkpoint_callback: true

14 | chinese: false

15 | data_dir: nested

16 | default_root_dir: null

17 | deterministic: false

18 | dice_smooth: 1.0e-08

19 | distributed_backend: null

20 | early_stop_callback: false

21 | fast_dev_run: false

22 | final_div_factor: 10000.0

23 | flat: false

24 | gradient_clip_val: 0

25 | limit_test_batches: 1.0

26 | limit_train_batches: 1.0

27 | limit_val_batches: 1.0

28 | log_gpu_memory: null

29 | log_save_interval: 100

30 | logger: true

31 | loss_type: bce

32 | lr: 2.0e-05

33 | gpus: 0

34 | max_epochs: 10

35 | max_length: 128

36 | max_steps: null

37 | min_epochs: 1

38 | min_steps: null

39 | mrc_dropout: 0.1

40 | num_nodes: 1

41 | num_processes: 1

42 | num_sanity_val_steps: 2

43 | optimizer: adamw

44 | overfit_batches: 0.0

45 | overfit_pct: null

46 | precision: 32

47 | prepare_data_per_node: true

48 | pretrained_checkpoint: ''

49 | process_position: 0

50 | profiler: null

51 | progress_bar_refresh_rate: 1

52 | reload_dataloaders_every_epoch: false

53 | replace_sampler_ddp: true

54 | resume_from_checkpoint: null

55 | row_log_interval: 50

56 | span_loss_candidates: all

57 | sync_batchnorm: false

58 | terminate_on_nan: false

59 | test_percent_check: null

60 | track_grad_norm: -1

61 | train_percent_check: null

62 | truncated_bptt_steps: null

63 | val_check_interval: 1.0

64 | val_percent_check: null

65 | warmup_steps: 0

66 | weight_decay: 0.01

67 | weight_end: 1.0

68 | weight_span: 1.0

69 | weight_start: 1.0

70 | weights_save_path: null

71 | weights_summary: top

72 | workers: 0

73 |

--------------------------------------------------------------------------------

/NER/ner2mrc/genia2mrc.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 |

3 |

4 | import os

5 | from utils.bmes_decode import bmes_decode

6 | import json

7 |

8 |

9 | def convert_file(input_file, output_file, tag2query_file):

10 | """

11 | Convert GENIA(xiaoya) data to MRC format

12 | """

13 | all_data = json.load(open(input_file))

14 | tag2query = json.load(open(tag2query_file))

15 |

16 | output = []

17 | origin_count = 0

18 | new_count = 0

19 |

20 | for data in all_data:

21 | origin_count += 1

22 | context = data["context"]

23 | label2positions = data["label"]

24 | for tag_idx, (tag, query) in enumerate(tag2query.items()):

25 | positions = label2positions.get(tag, [])

26 | mrc_sample = {

27 | "context": context,

28 | "query": query,

29 | "start_position": [int(x.split(";")[0]) for x in positions],

30 | "end_position": [int(x.split(";")[1]) for x in positions],

31 | "qas_id": f"{origin_count}.{tag_idx}"

32 | }

33 | output.append(mrc_sample)

34 | new_count += 1

35 |

36 | json.dump(output, open(output_file, "w"), ensure_ascii=False, indent=2)

37 | print(f"Convert {origin_count} samples to {new_count} samples and save to {output_file}")

38 |

39 |

40 | def main():

41 | genia_raw_dir = "/mnt/mrc/genia/genia_raw"

42 | genia_mrc_dir = "/mnt/mrc/genia/genia_raw/mrc_format"

43 | tag2query_file = "queries/genia.json"

44 | os.makedirs(genia_mrc_dir, exist_ok=True)

45 | for phase in ["train", "dev", "test"]:

46 | old_file = os.path.join(genia_raw_dir, f"{phase}.genia.json")

47 | new_file = os.path.join(genia_mrc_dir, f"mrc-ner.{phase}")

48 | convert_file(old_file, new_file, tag2query_file)

49 |

50 |

51 | if __name__ == '__main__':

52 | main()

53 |

--------------------------------------------------------------------------------

/NER/evaluate.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 |

3 |

4 | import os

5 | from pytorch_lightning import Trainer

6 |

7 | from trainer import BertLabeling

8 |

9 |

10 | def evaluate(ckpt, hparams_file):

11 | """main"""

12 |

13 | trainer = Trainer(distributed_backend="ddp")

14 |

15 | model = BertLabeling.load_from_checkpoint(

16 | checkpoint_path=ckpt,

17 | hparams_file=hparams_file,

18 | map_location=None,

19 | batch_size=1,

20 | max_length=128,

21 | workers=0

22 | )

23 | trainer.test(model=model)

24 |

25 | if __name__ == '__main__':

26 | # ace04

27 | # HPARAMS = "/mnt/mrc/train_logs/ace2004/ace2004_20200911reproduce_epoch15_lr3e-5_drop0.3_norm1.0_bsz32_hard_span_weight0.1_warmup0_maxlen128_newtrunc_debug/lightning_logs/version_0/hparams.yaml"

28 | # CHECKPOINTS = "/mnt/mrc/train_logs/ace2004/ace2004_20200911reproduce_epoch15_lr3e-5_drop0.3_norm1.0_bsz32_hard_span_weight0.1_warmup0_maxlen128_newtrunc_debug/epoch=10_v0.ckpt"

29 | # DIR = "/mnt/mrc/train_logs/ace2004/ace2004_20200910_lr3e-5_drop0.3_bert0.1_bsz32_hard_loss_bce_weight_span0.05"

30 | # CHECKPOINTS = [os.path.join(DIR, x) for x in os.listdir(DIR)]

31 |

32 | # ace04-large

33 | # HPARAMS = "/mnt/mrc/train_logs/ace2004/ace2004_20200910reproduce_lr3e-5_drop0.3_norm1.0_bsz32_hard_span_weight0.1_warmup0_maxlen128_newtrunc_debug/lightning_logs/version_2/hparams.yaml"

34 | # CHECKPOINTS = "/mnt/mrc/train_logs/ace2004/ace2004_20200910reproduce_lr3e-5_drop0.3_norm1.0_bsz32_hard_span_weight0.1_warmup0_maxlen128_newtrunc_debug/epoch=10.ckpt"

35 |

36 | # ace05

37 | # HPARAMS = "/mnt/mrc/train_logs/ace2005/ace2005_20200911_lr3e-5_drop0.3_norm1.0_bsz32_hard_span_weight0.1_warmup0_maxlen128_newtrunc_debug/lightning_logs/version_0/hparams.yaml"

38 | # CHECKPOINTS = "/mnt/mrc/train_logs/ace2005/ace2005_20200911_lr3e-5_drop0.3_norm1.0_bsz32_hard_span_weight0.1_warmup0_maxlen128_newtrunc_debug/epoch=15.ckpt"

39 |

40 | # zh_msra

41 | CHECKPOINTS = "E:\\data\\modelNER\\version_7\\checkpoints\\epoch=2.ckpt"

42 | HPARAMS = "E:\\data\\modelNER\\version_7\\hparams.yaml"

43 |

44 | evaluate(ckpt=CHECKPOINTS, hparams_file=HPARAMS)

45 |

--------------------------------------------------------------------------------

/NER/ner2mrc/msra2mrc.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 |

3 |

4 | import os

5 | from utils.bmes_decode import bmes_decode

6 | import json

7 |

8 |

9 | def convert_file(input_file, output_file, tag2query_file):

10 | """

11 | Convert MSRA raw data to MRC format

12 | """

13 | origin_count = 0

14 | new_count = 0

15 | tag2query = json.load(open(tag2query_file))

16 | mrc_samples = []

17 | with open(input_file) as fin:

18 | for line in fin:

19 | line = line.strip()

20 | if not line:

21 | continue

22 | origin_count += 1

23 | src, labels = line.split("\t")

24 | tags = bmes_decode(char_label_list=[(char, label) for char, label in zip(src.split(), labels.split())])

25 | for label, query in tag2query.items():

26 | mrc_samples.append(

27 | {

28 | "context": src,

29 | "start_position": [tag.begin for tag in tags if tag.tag == label],

30 | "end_position": [tag.end-1 for tag in tags if tag.tag == label],

31 | "query": query

32 | }

33 | )

34 | new_count += 1

35 |

36 | json.dump(mrc_samples, open(output_file, "w"), ensure_ascii=False, sort_keys=True, indent=2)

37 | print(f"Convert {origin_count} samples to {new_count} samples and save to {output_file}")

38 |

39 |

40 | def main():

41 | # msra_raw_dir = "/mnt/mrc/zh_msra_yuxian"

42 | # msra_mrc_dir = "/mnt/mrc/zh_msra_yuxian/mrc_format"

43 | msra_raw_dir = "./queries/zh_msra"

44 | msra_mrc_dir = "/mnt/mrc/zh_msra/mrc_format"

45 | tag2query_file = "queries/zh_msra.json"

46 | os.makedirs(msra_mrc_dir, exist_ok=True)

47 | for phase in ["train", "dev", "test"]:

48 | old_file = os.path.join(msra_raw_dir, f"{phase}.tsv")

49 | new_file = os.path.join(msra_mrc_dir, f"mrc-ner.{phase}")

50 | # old_file = os.path.join(msra_raw_dir, f"mrc-ner.{phase}")

51 | # new_file = os.path.join(msra_mrc_dir, f"mrc-ner.{phase}")

52 | convert_file(old_file, new_file, tag2query_file)

53 |

54 |

55 | if __name__ == '__main__':

56 | main()

57 |

--------------------------------------------------------------------------------

/NER/utils/bmes_decode.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 |

3 |

4 | from typing import Tuple, List

5 |

6 |

7 | class Tag(object):

8 | def __init__(self, term, tag, begin, end):

9 | self.term = term

10 | self.tag = tag

11 | self.begin = begin

12 | self.end = end

13 |

14 | def to_tuple(self):

15 | return tuple([self.term, self.begin, self.end])

16 |

17 | def __str__(self):

18 | return str({key: value for key, value in self.__dict__.items()})

19 |

20 | def __repr__(self):

21 | return str({key: value for key, value in self.__dict__.items()})

22 |

23 |

24 | def bmes_decode(char_label_list: List[Tuple[str, str]]) -> List[Tag]:

25 | """

26 | decode inputs to tags

27 | Args:

28 | char_label_list: list of tuple (word, bmes-tag)

29 | Returns:

30 | tags

31 | Examples:

32 | >>> x = [("Hi", "O"), ("Beijing", "S-LOC")]

33 | >>> bmes_decode(x)

34 | [{'term': 'Beijing', 'tag': 'LOC', 'begin': 1, 'end': 2}]

35 | """

36 | idx = 0

37 | length = len(char_label_list)

38 | tags = []

39 | while idx < length:

40 | term, label = char_label_list[idx]

41 | current_label = label[0]

42 |

43 | # correct labels

44 | if current_label in ["M", "E"]:

45 | current_label = "B"

46 | if idx + 1 == length and current_label == "B":

47 | current_label = "S"

48 |

49 | # merge chars

50 | if current_label == "O":

51 | idx += 1

52 | continue

53 | if current_label == "S":

54 | tags.append(Tag(term, label[2:], idx, idx + 1))

55 | idx += 1

56 | continue

57 | if current_label == "B":

58 | end = idx + 1

59 | while end + 1 < length and char_label_list[end][1][0] == "M":

60 | end += 1

61 | if char_label_list[end][1][0] == "E": # end with E

62 | entity = "".join(char_label_list[i][0] for i in range(idx, end + 1))

63 | tags.append(Tag(entity, label[2:], idx, end + 1))

64 | idx = end + 1

65 | else: # end with M/B

66 | entity = "".join(char_label_list[i][0] for i in range(idx, end))

67 | tags.append(Tag(entity, label[2:], idx, end))

68 | idx = end

69 | continue

70 | else:

71 | raise Exception("Invalid Inputs")

72 | return tags

73 |

--------------------------------------------------------------------------------

/NER/README.md:

--------------------------------------------------------------------------------

1 | # A Unified MRC Framework for Named Entity Recognition

2 | The repository contains the code of the recent research advances in [Shannon.AI](http://www.shannonai.com).

3 |

4 | **A Unified MRC Framework for Named Entity Recognition**

5 | Xiaoya Li, Jingrong Feng, Yuxian Meng, Qinghong Han, Fei Wu and Jiwei Li

6 | In ACL 2020. [paper](https://arxiv.org/abs/1910.11476)

7 | If you find this repo helpful, please cite the following:

8 | ```latex

9 | @article{li2019unified,

10 | title={A Unified MRC Framework for Named Entity Recognition},

11 | author={Li, Xiaoya and Feng, Jingrong and Meng, Yuxian and Han, Qinghong and Wu, Fei and Li, Jiwei},

12 | journal={arXiv preprint arXiv:1910.11476},

13 | year={2019}

14 | }

15 | ```

16 | For any question, please feel free to post Github issues.

17 |

18 | ## Install Requirements

19 | `pip install -r requirements.txt`

20 |

21 | We build our project on [pytorch-lightning.](https://github.com/PyTorchLightning/pytorch-lightning)

22 | If you want to know more about the arguments used in our training scripts, please

23 | refer to [pytorch-lightning documentation.](https://pytorch-lightning.readthedocs.io/en/latest/)

24 |

25 | ## Prepare Datasets

26 | You can [download](./ner2mrc/download.md) our preprocessed MRC-NER datasets or

27 | write your own preprocess scripts. We provide `ner2mrc/mrsa2mrc.py` for reference.

28 |

29 | ## Prepare Models

30 | For English Datasets, we use [BERT-Large](https://github.com/google-research/bert)

31 |

32 | For Chinese Datasets, we use [RoBERTa-wwm-ext-large](https://github.com/ymcui/Chinese-BERT-wwm)

33 |

34 | ## Train

35 | The main training procedure is in `trainer.py`

36 |

37 | Examples to start training are in `scripts/reproduce`.

38 |

39 | Note that you may need to change `DATA_DIR`, `BERT_DIR`, `OUTPUT_DIR` to your own

40 | dataset path, bert model path and log path, respectively.

41 |

42 | ## Evaluate

43 | `trainer.py` will automatically evaluate on dev set every `val_check_interval` epochs,

44 | and save the topk checkpoints to `default_root_dir`.

45 |

46 | To evaluate them, use `evaluate.py`

47 |

48 | # 模型经改造后使用如下

49 | ## 训练模型

50 | ```

51 | 训练的时候依据自己的机器情况设置参数,参数过大容易导致显存溢出

52 | python trainer.py --data_dir entity_type_data --bert_config models/chinese_roberta_wwm_large_ext_pytorch --batch_size 16 --max_epochs 10 --gpus 1

53 | ```

54 | ## 评估模型

55 | ```

56 | 需要修改evaluate里面新模型的路径

57 | python evaluate.py

58 |

59 | ```

60 | ## 统计详细不同实体信息

61 | ```

62 | datasets/compute_acc_linux.py or datasets/compute_acc.py

63 |

64 | ```

65 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | .idea/

21 | sdist/

22 | var/

23 | wheels/

24 | pip-wheel-metadata/

25 | share/python-wheels/

26 | *.egg-info/

27 | .installed.cfg

28 | *.egg

29 | MANIFEST

30 |

31 | # PyInstaller

32 | # Usually these files are written by a python script from a template

33 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

34 | *.manifest

35 | *.spec

36 |

37 | # Installer logs

38 | pip-log.txt

39 | pip-delete-this-directory.txt

40 |

41 | # Unit test / coverage reports

42 | htmlcov/

43 | .tox/

44 | .nox/

45 | .coverage

46 | .coverage.*

47 | .cache

48 | nosetests.xml

49 | coverage.xml

50 | *.cover

51 | *.py,cover

52 | .hypothesis/

53 | .pytest_cache/

54 |

55 | # Translations

56 | *.mo

57 | *.pot

58 |

59 | # Django stuff:

60 | *.log

61 | local_settings.py

62 | db.sqlite3

63 | db.sqlite3-journal

64 |

65 | # Flask stuff:

66 | instance/

67 | .webassets-cache

68 |

69 | # Scrapy stuff:

70 | .scrapy

71 |

72 | # Sphinx documentation

73 | docs/_build/

74 |

75 | # PyBuilder

76 | target/

77 |

78 | # Jupyter Notebook

79 | .ipynb_checkpoints

80 |

81 | # IPython

82 | profile_default/

83 | ipython_config.py

84 |

85 | # pyenv

86 | .python-version

87 |

88 | # pipenv

89 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

90 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

91 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

92 | # install all needed dependencies.

93 | #Pipfile.lock

94 |

95 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

96 | __pypackages__/

97 |

98 | # Celery stuff

99 | celerybeat-schedule

100 | celerybeat.pid

101 |

102 | # SageMath parsed files

103 | *.sage.py

104 |

105 | # Environments

106 | .env

107 | .venv

108 | env/

109 | venv/

110 | ENV/

111 | env.bak/

112 | venv.bak/

113 |

114 | # Spyder project settings

115 | .spyderproject

116 | .spyproject

117 |

118 | # Rope project settings

119 | .ropeproject

120 |

121 | # mkdocs documentation

122 | /site

123 |

124 | # mypy

125 | .mypy_cache/

126 | .dmypy.json

127 | dmypy.json

128 |

129 | # Pyre type checker

130 | .pyre/

131 |

132 | # mac

133 | .DS_Store

134 |

--------------------------------------------------------------------------------

/NER/models/bert_query_ner.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 |

3 |

4 | import torch

5 | import torch.nn as nn

6 | from transformers import BertModel, BertPreTrainedModel

7 |

8 | from models.classifier import MultiNonLinearClassifier, SingleLinearClassifier

9 |

10 |

11 | class BertQueryNER(BertPreTrainedModel):

12 | def __init__(self, config):

13 | super(BertQueryNER, self).__init__(config)

14 | self.bert = BertModel(config)

15 |

16 | # self.start_outputs = nn.Linear(config.hidden_size, 2)

17 | # self.end_outputs = nn.Linear(config.hidden_size, 2)

18 | self.start_outputs = nn.Linear(config.hidden_size, 1)

19 | self.end_outputs = nn.Linear(config.hidden_size, 1)

20 | self.span_embedding = MultiNonLinearClassifier(config.hidden_size * 2, 1, config.mrc_dropout)

21 | # self.span_embedding = SingleLinearClassifier(config.hidden_size * 2, 1)

22 |

23 | self.hidden_size = config.hidden_size

24 |

25 | self.init_weights()

26 |

27 | def forward(self, input_ids, token_type_ids=None, attention_mask=None):

28 | """

29 | Args:

30 | input_ids: bert input tokens, tensor of shape [seq_len]

31 | token_type_ids: 0 for query, 1 for context, tensor of shape [seq_len]

32 | attention_mask: attention mask, tensor of shape [seq_len]

33 | Returns:

34 | start_logits: start/non-start probs of shape [seq_len]

35 | end_logits: end/non-end probs of shape [seq_len]

36 | match_logits: start-end-match probs of shape [seq_len, 1]

37 | """

38 |

39 | bert_outputs = self.bert(input_ids, token_type_ids=token_type_ids, attention_mask=attention_mask)

40 | sequence_heatmap = bert_outputs[0] # [batch, seq_len, hidden]

41 | batch_size, seq_len, hid_size = sequence_heatmap.size()

42 |

43 | start_logits = self.start_outputs(sequence_heatmap).squeeze(-1) # [batch, seq_len, 1]

44 | end_logits = self.end_outputs(sequence_heatmap).squeeze(-1) # [batch, seq_len, 1]

45 |

46 | # for every position $i$ in sequence, should concate $j$ to

47 | # predict if $i$ and $j$ are start_pos and end_pos for an entity.

48 | # [batch, seq_len, seq_len, hidden]

49 | start_extend = sequence_heatmap.unsqueeze(2).expand(-1, -1, seq_len, -1)

50 | # [batch, seq_len, seq_len, hidden]

51 | end_extend = sequence_heatmap.unsqueeze(1).expand(-1, seq_len, -1, -1)

52 | # [batch, seq_len, seq_len, hidden*2]

53 | span_matrix = torch.cat([start_extend, end_extend], 3)

54 | # [batch, seq_len, seq_len]

55 | span_logits = self.span_embedding(span_matrix).squeeze(-1)

56 |

57 | return start_logits, end_logits, span_logits

58 |

--------------------------------------------------------------------------------

/NER/loss/adaptive_dice_loss.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 |

3 |

4 | import torch

5 | import torch.nn as nn

6 | from torch import Tensor

7 | from typing import Optional

8 |

9 |

10 | class AdaptiveDiceLoss(nn.Module):

11 | """

12 | Dice coefficient for short, is an F1-oriented statistic used to gauge the similarity of two sets.

13 |

14 | Math Function:

15 | https://arxiv.org/abs/1911.02855.pdf

16 | adaptive_dice_loss(p, y) = 1 - numerator / denominator

17 | numerator = 2 * \sum_{1}^{t} (1 - p_i) ** alpha * p_i * y_i + smooth

18 | denominator = \sum_{1}^{t} (1 - p_i) ** alpha * p_i + \sum_{1} ^{t} y_i + smooth

19 |

20 | Args:

21 | alpha: alpha in math function

22 | smooth (float, optional): smooth in math function

23 | square_denominator (bool, optional): [True, False], specifies whether to square the denominator in the loss function.

24 | with_logits (bool, optional): [True, False], specifies whether the input tensor is normalized by Sigmoid/Softmax funcs.

25 | True: the loss combines a `sigmoid` layer and the `BCELoss` in one single class.

26 | False: the loss contains `BCELoss`.

27 | Shape:

28 | - input: (*)

29 | - target: (*)

30 | - mask: (*) 0,1 mask for the input sequence.

31 | - Output: Scalar loss

32 | Examples:

33 | >>> loss = AdaptiveDiceLoss()

34 | >>> input = torch.randn(3, 1, requires_grad=True)

35 | >>> target = torch.empty(3, dtype=torch.long).random_(5)

36 | >>> output = loss(input, target)

37 | >>> output.backward()

38 | """

39 | def __init__(self,

40 | alpha: float = 0.1,

41 | smooth: Optional[float] = 1e-8,

42 | square_denominator: Optional[bool] = False,

43 | with_logits: Optional[bool] = True,

44 | reduction: Optional[str] = "mean") -> None:

45 | super(AdaptiveDiceLoss, self).__init__()

46 |

47 | self.reduction = reduction

48 | self.with_logits = with_logits

49 | self.alpha = alpha

50 | self.smooth = smooth

51 | self.square_denominator = square_denominator

52 |

53 | def forward(self,

54 | input: Tensor,

55 | target: Tensor,

56 | mask: Optional[Tensor] = None) -> Tensor:

57 |

58 | flat_input = input.view(-1)

59 | flat_target = target.view(-1)

60 |

61 | if self.with_logits:

62 | flat_input = torch.sigmoid(flat_input)

63 |

64 | if mask is not None:

65 | mask = mask.view(-1).float()

66 | flat_input = flat_input * mask

67 | flat_target = flat_target * mask

68 |

69 | intersection = torch.sum((1-flat_input)**self.alpha * flat_input * flat_target, -1) + self.smooth

70 | denominator = torch.sum((1-flat_input)**self.alpha * flat_input) + flat_target.sum() + self.smooth

71 | return 1 - 2 * intersection / denominator

72 |

73 | def __str__(self):

74 | return f"Adaptive Dice Loss, smooth:{self.smooth}; alpha:{self.alpha}"

75 |

--------------------------------------------------------------------------------

/NER/loss/dice_loss.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 |

3 |

4 | import torch

5 | import torch.nn as nn

6 | from torch import Tensor

7 | from typing import Optional

8 |

9 |

10 | class DiceLoss(nn.Module):

11 | """

12 | Dice coefficient for short, is an F1-oriented statistic used to gauge the similarity of two sets.

13 | Given two sets A and B, the vanilla dice coefficient between them is given as follows:

14 | Dice(A, B) = 2 * True_Positive / (2 * True_Positive + False_Positive + False_Negative)

15 | = 2 * |A and B| / (|A| + |B|)

16 |

17 | Math Function:

18 | U-NET: https://arxiv.org/abs/1505.04597.pdf

19 | dice_loss(p, y) = 1 - numerator / denominator

20 | numerator = 2 * \sum_{1}^{t} p_i * y_i + smooth

21 | denominator = \sum_{1}^{t} p_i + \sum_{1} ^{t} y_i + smooth

22 | if square_denominator is True, the denominator is \sum_{1}^{t} (p_i ** 2) + \sum_{1} ^{t} (y_i ** 2) + smooth

23 | V-NET: https://arxiv.org/abs/1606.04797.pdf

24 | Args:

25 | smooth (float, optional): a manual smooth value for numerator and denominator.

26 | square_denominator (bool, optional): [True, False], specifies whether to square the denominator in the loss function.

27 | with_logits (bool, optional): [True, False], specifies whether the input tensor is normalized by Sigmoid/Softmax funcs.

28 | True: the loss combines a `sigmoid` layer and the `BCELoss` in one single class.

29 | False: the loss contains `BCELoss`.

30 | Shape:

31 | - input: (*)

32 | - target: (*)

33 | - mask: (*) 0,1 mask for the input sequence.

34 | - Output: Scalar loss

35 | Examples:

36 | >>> loss = DiceLoss()

37 | >>> input = torch.randn(3, 1, requires_grad=True)

38 | >>> target = torch.empty(3, dtype=torch.long).random_(5)

39 | >>> output = loss(input, target)

40 | >>> output.backward()

41 | """

42 | def __init__(self,

43 | smooth: Optional[float] = 1e-8,

44 | square_denominator: Optional[bool] = False,

45 | with_logits: Optional[bool] = True,

46 | reduction: Optional[str] = "mean") -> None:

47 | super(DiceLoss, self).__init__()

48 |

49 | self.reduction = reduction

50 | self.with_logits = with_logits

51 | self.smooth = smooth

52 | self.square_denominator = square_denominator

53 |

54 | def forward(self,

55 | input: Tensor,

56 | target: Tensor,

57 | mask: Optional[Tensor] = None) -> Tensor:

58 |

59 | flat_input = input.view(-1)

60 | flat_target = target.view(-1)

61 |

62 | if self.with_logits:

63 | flat_input = torch.sigmoid(flat_input)

64 |

65 | if mask is not None:

66 | mask = mask.view(-1).float()

67 | flat_input = flat_input * mask

68 | flat_target = flat_target * mask

69 |

70 | interection = torch.sum(flat_input * flat_target, -1)

71 | if not self.square_denominator:

72 | return 1 - ((2 * interection + self.smooth) /

73 | (flat_input.sum() + flat_target.sum() + self.smooth))

74 | else:

75 | return 1 - ((2 * interection + self.smooth) /

76 | (torch.sum(torch.square(flat_input,), -1) + torch.sum(torch.square(flat_target), -1) + self.smooth))

77 |

78 | def __str__(self):

79 | return f"Dice Loss smooth:{self.smooth}"

80 |

--------------------------------------------------------------------------------

/RE/README.md:

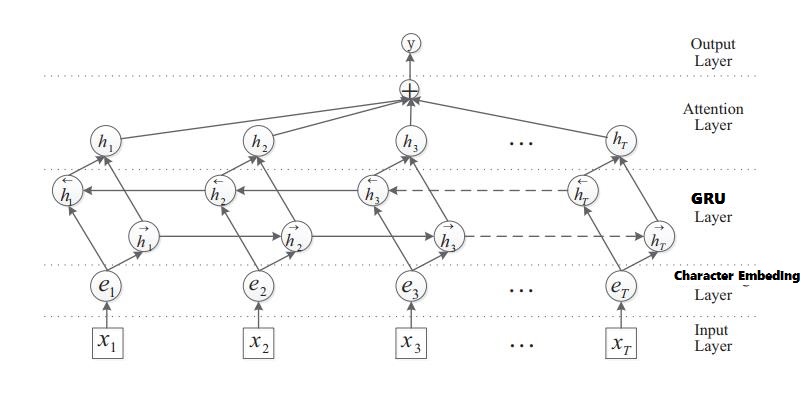

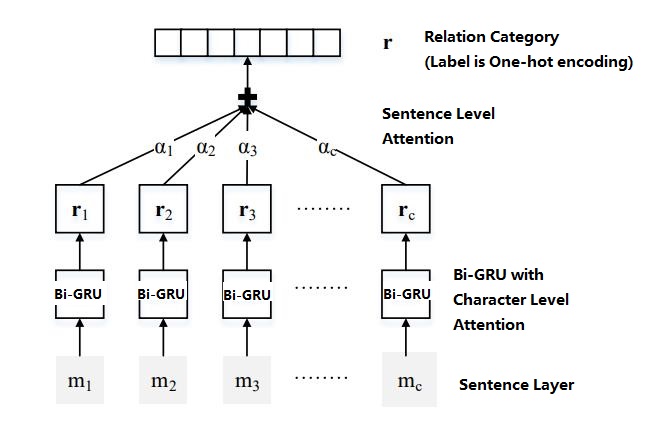

--------------------------------------------------------------------------------

1 |

2 | # Chinese Relation Extraction by biGRU with Character and Sentence Attentions

3 |

4 | ### [中文Blog](http://www.crownpku.com//2017/08/19/%E7%94%A8Bi-GRU%E5%92%8C%E5%AD%97%E5%90%91%E9%87%8F%E5%81%9A%E7%AB%AF%E5%88%B0%E7%AB%AF%E7%9A%84%E4%B8%AD%E6%96%87%E5%85%B3%E7%B3%BB%E6%8A%BD%E5%8F%96.html)

5 |

6 | Bi-directional GRU with Word and Sentence Dual Attentions for End-to End Relation Extraction

7 |

8 | Original Code in https://github.com/thunlp/TensorFlow-NRE, modified for Chinese.

9 |

10 | Original paper [Attention-Based Bidirectional Long Short-Term Memory Networks for Relation Classification](http://anthology.aclweb.org/P16-2034) and [Neural Relation Extraction with Selective Attention over Instances](http://aclweb.org/anthology/P16-1200)

11 |

12 |

13 |

14 |

15 |

16 |

17 | ## Requrements

18 |

19 | * Python (>=3.5)

20 |

21 | * TensorFlow (>=r1.0)

22 |

23 | * scikit-learn (>=0.18)

24 |

25 |

26 | ## Usage

27 |

28 |

29 | ### * Training:

30 |

31 | 1. Prepare data in origin_data/ , including relation types (relation2id.txt), training data (train.txt), testing data (test.txt) and Chinese word vectors (vec.txt).

32 |

33 | ```

34 | Current sample data includes the following 12 relationships:

35 | unknown, 父母, 夫妻, 师生, 兄弟姐妹, 合作, 情侣, 祖孙, 好友, 亲戚, 同门, 上下级

36 | ```

37 |

38 | 2. Organize data into npy files, which will be save at data/

39 | ```

40 | #python3 initial.py

41 | ```

42 |

43 | 3. Training, models will be save at model/

44 | ```

45 | #python3 train_GRU.py

46 | ```

47 |

48 |

49 | ### * Inference

50 |

51 | **If you have trained a new model, please remember to change the pathname in main_for_evaluation() and main() in test_GRU.py with your own model name.**

52 |

53 | ```

54 | #python3 test_GRU.py

55 | ```

56 |

57 | Program will ask for data input in the format of "name1 name2 sentence".

58 |

59 | We have pre-trained model in /model. To test the pre-trained model, simply initialize the data and run test_GRU.py:

60 |

61 | ```

62 | #python3 initial.py

63 | #python3 test_GRU.py

64 | ```

65 |

66 |

67 | ## Sample Results

68 |

69 | We make up some sentences and test the performance. The model gives good results, sometimes wrong but reasonable.

70 |

71 | More data is needed for better performance.

72 |

73 | ```

74 | INFO:tensorflow:Restoring parameters from ./model/ATT_GRU_model-9000

75 | reading word embedding data...

76 | reading relation to id

77 |

78 | 实体1: 李晓华

79 | 实体2: 王大牛

80 | 李晓华和她的丈夫王大牛前日一起去英国旅行了。

81 | 关系是:

82 | No.1: 夫妻, Probability is 0.996217

83 | No.2: 父母, Probability is 0.00193673

84 | No.3: 兄弟姐妹, Probability is 0.00128172

85 |

86 | 实体1: 李晓华

87 | 实体2: 王大牛

88 | 李晓华和她的高中同学王大牛两个人前日一起去英国旅行。

89 | 关系是:

90 | No.1: 好友, Probability is 0.526823

91 | No.2: 兄弟姐妹, Probability is 0.177491

92 | No.3: 夫妻, Probability is 0.132977

93 |

94 | 实体1: 李晓华

95 | 实体2: 王大牛

96 | 王大牛命令李晓华在周末前完成这份代码。

97 | 关系是:

98 | No.1: 上下级, Probability is 0.965674

99 | No.2: 亲戚, Probability is 0.0185355

100 | No.3: 父母, Probability is 0.00953698

101 |

102 | 实体1: 李晓华

103 | 实体2: 王大牛

104 | 王大牛非常疼爱他的孙女李晓华小朋友。

105 | 关系是:

106 | No.1: 祖孙, Probability is 0.785542

107 | No.2: 好友, Probability is 0.0829895

108 | No.3: 同门, Probability is 0.0728216

109 |

110 | 实体1: 李晓华

111 | 实体2: 王大牛

112 | 谈起曾经一起求学的日子,王大牛非常怀念他的师妹李晓华。

113 | 关系是:

114 | No.1: 师生, Probability is 0.735982

115 | No.2: 同门, Probability is 0.159495

116 | No.3: 兄弟姐妹, Probability is 0.0440367

117 |

118 | 实体1: 李晓华

119 | 实体2: 王大牛

120 | 王大牛对于他的学生李晓华做出的成果非常骄傲!

121 | 关系是:

122 | No.1: 师生, Probability is 0.994964

123 | No.2: 父母, Probability is 0.00460191

124 | No.3: 夫妻, Probability is 0.000108601

125 |

126 | 实体1: 李晓华

127 | 实体2: 王大牛

128 | 王大牛和李晓华是从小一起长大的好哥们

129 | 关系是:

130 | No.1: 兄弟姐妹, Probability is 0.852632

131 | No.2: 亲戚, Probability is 0.0477967

132 | No.3: 好友, Probability is 0.0433101

133 |

134 | 实体1: 李晓华

135 | 实体2: 王大牛

136 | 王大牛的表舅叫李晓华的二妈为大姐

137 | 关系是:

138 | No.1: 亲戚, Probability is 0.766272

139 | No.2: 父母, Probability is 0.162108

140 | No.3: 兄弟姐妹, Probability is 0.0623203

141 |

142 | 实体1: 李晓华

143 | 实体2: 王大牛

144 | 这篇论文是王大牛负责编程,李晓华负责写作的。

145 | 关系是:

146 | No.1: 合作, Probability is 0.907599

147 | No.2: unknown, Probability is 0.082604

148 | No.3: 上下级, Probability is 0.00730342

149 |

150 | 实体1: 李晓华

151 | 实体2: 王大牛

152 | 王大牛和李晓华为谁是论文的第一作者争得头破血流。

153 | 关系是:

154 | No.1: 合作, Probability is 0.819008

155 | No.2: 上下级, Probability is 0.116768

156 | No.3: 师生, Probability is 0.0448312

157 | ```

158 |

159 |

--------------------------------------------------------------------------------

/NER/metrics/functional/query_span_f1.py:

--------------------------------------------------------------------------------

1 | # encoding: utf-8

2 | import os

3 |

4 | import torch

5 | from tokenizers import BertWordPieceTokenizer

6 |

7 | from utils.bmes_decode import bmes_decode

8 |

9 | bert_path = "E:\data\chinese_roberta_wwm_large_ext_pytorch\\"

10 | json_path = "E:\\data\\nested\\mrc-ner.dev"

11 | is_chinese = True

12 |

13 | vocab_file = os.path.join(bert_path, "vocab.txt")

14 | tokenizer = BertWordPieceTokenizer(vocab_file)

15 |

16 |

17 | def query_span_f1(start_preds, end_preds, match_logits, start_label_mask, end_label_mask, match_labels, flat=False):

18 | """

19 | Compute span f1 according to query-based model output

20 | Args:

21 | start_preds: [bsz, seq_len]

22 | end_preds: [bsz, seq_len]

23 | match_logits: [bsz, seq_len, seq_len]

24 | start_label_mask: [bsz, seq_len]

25 | end_label_mask: [bsz, seq_len]

26 | match_labels: [bsz, seq_len, seq_len]

27 | flat: if True, decode as flat-ner

28 | Returns:

29 | span-f1 counts, tensor of shape [3]: tp, fp, fn

30 | """

31 | start_label_mask = start_label_mask.bool()

32 | end_label_mask = end_label_mask.bool()

33 | match_labels = match_labels.bool()

34 | bsz, seq_len = start_label_mask.size()

35 | # [bsz, seq_len, seq_len]

36 | match_preds = match_logits > 0

37 | # [bsz, seq_len]

38 | start_preds = start_preds.bool()

39 | # [bsz, seq_len]

40 | end_preds = end_preds.bool()

41 |

42 | match_preds = (match_preds

43 | & start_preds.unsqueeze(-1).expand(-1, -1, seq_len)

44 | & end_preds.unsqueeze(1).expand(-1, seq_len, -1))

45 | match_label_mask = (start_label_mask.unsqueeze(-1).expand(-1, -1, seq_len)

46 | & end_label_mask.unsqueeze(1).expand(-1, seq_len, -1))

47 | match_label_mask = torch.triu(match_label_mask, 0) # start should be less or equal to end

48 | match_preds = match_label_mask & match_preds

49 |

50 | tp = (match_labels & match_preds).long().sum()

51 | fp = (~match_labels & match_preds).long().sum()

52 | fn = (match_labels & ~match_preds).long().sum()

53 | return torch.stack([tp, fp, fn])

54 |

55 |

56 | def extract_flat_spans(start_pred, end_pred, match_pred, label_mask):

57 | """

58 | Extract flat-ner spans from start/end/match logits

59 | Args:

60 | start_pred: [seq_len], 1/True for start, 0/False for non-start

61 | end_pred: [seq_len, 2], 1/True for end, 0/False for non-end

62 | match_pred: [seq_len, seq_len], 1/True for match, 0/False for non-match

63 | label_mask: [seq_len], 1 for valid boundary.

64 | Returns:

65 | tags: list of tuple (start, end)

66 | Examples:

67 | >>> start_pred = [0, 1]

68 | >>> end_pred = [0, 1]

69 | >>> match_pred = [[0, 0], [0, 1]]

70 | >>> label_mask = [1, 1]

71 | >>> extract_flat_spans(start_pred, end_pred, match_pred, label_mask)

72 | [(1, 2)]

73 | """

74 | pseudo_tag = "TAG"

75 | pseudo_input = "a"

76 |

77 | bmes_labels = ["O"] * len(start_pred)

78 | start_positions = [idx for idx, tmp in enumerate(start_pred) if tmp and label_mask[idx]]

79 | end_positions = [idx for idx, tmp in enumerate(end_pred) if tmp and label_mask[idx]]

80 |

81 | for start_item in start_positions:

82 | bmes_labels[start_item] = f"B-{pseudo_tag}"

83 | for end_item in end_positions:

84 | bmes_labels[end_item] = f"E-{pseudo_tag}"

85 |

86 | for tmp_start in start_positions:

87 | tmp_end = [tmp for tmp in end_positions if tmp >= tmp_start]

88 | if len(tmp_end) == 0:

89 | continue

90 | else:

91 | tmp_end = min(tmp_end)

92 | if match_pred[tmp_start][tmp_end]:

93 | if tmp_start != tmp_end:

94 | for i in range(tmp_start + 1, tmp_end):

95 | bmes_labels[i] = f"M-{pseudo_tag}"

96 | else:

97 | bmes_labels[tmp_end] = f"S-{pseudo_tag}"

98 |

99 | tags = bmes_decode([(pseudo_input, label) for label in bmes_labels])

100 |

101 | return [(tag.begin, tag.end) for tag in tags]

102 |

103 |

104 | def remove_overlap(spans):

105 | """

106 | remove overlapped spans greedily for flat-ner

107 | Args:

108 | spans: list of tuple (start, end), which means [start, end] is a ner-span

109 | Returns:

110 | spans without overlap

111 | """

112 | output = []

113 | occupied = set()

114 | for start, end in spans:

115 | if any(x for x in range(start, end + 1)) in occupied:

116 | continue

117 | output.append((start, end))

118 | for x in range(start, end + 1):

119 | occupied.add(x)

120 | return output

121 |

--------------------------------------------------------------------------------

/RE/process_data.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 | # @Time : 2021/5/7 下午2:08

3 | # @Author : liuliping

4 | # @File : process_data.py.py

5 | # @description: 从原始数据抽取实体-关系

6 |

7 | import json

8 | import os

9 | import glob

10 | import copy

11 | import random

12 |

13 | total_count = {}

14 |

15 | def custom_RE(file_path, output_path):

16 | #

17 | with open(file_path, 'r', encoding='utf-8') as f:

18 | data = [json.loads(line.strip()) for line in f.readlines()]

19 |

20 | result = []

21 | relation_count = {}

22 | for sub in data: # 行

23 | ner_relation = {}

24 | relation_set = set()

25 | ner_data = sub['sd_result']['items']

26 | text = sub['text']

27 | segments = [i for i in range(len(text)) if text[i] in {'。', '?', '!', ';', ':', ','}]

28 | for ner in ner_data:

29 | ner_text = ner['meta']['text']

30 | start, end = ner['meta']['segment_range']

31 | ner_label = ner['labels']['Entity']

32 | relations = ner['labels'].get('Relation', [])

33 | for rel in relations:

34 | relation_set.add(rel)

35 |

36 | ner_relation[f'{ner_text}#{ner_label}#{start}#{end}'] = relations

37 |

38 | for rel in relation_set:

39 | tmp = []

40 | for ner, rels in ner_relation.items():

41 |

42 | if rel in rels and (len(tmp) == 0 or tmp[0].split('#')[1] != ner.split('#')[1]):

43 | tmp.append(ner)

44 |

45 | if len(tmp) == 2:

46 | ner_1 = tmp[0].split('#')

47 | ner_2 = tmp[1].split('#')

48 |

49 | index = [int(d) for d in ner_1[2:]] + [int(d) for d in ner_2[2:]]

50 |

51 | min_idx, max_idx = min(index), max(index)

52 |

53 | sub_text = text[:segments[0]] if segments and segments[0] >= max_idx else text[:]

54 |

55 | min_seg = 0

56 | max_seg = len(text)

57 | for i in range(len(segments) - 1):

58 | if segments[i] <= min_idx <= segments[i + 1]:

59 | min_seg = segments[i]

60 | break

61 | for i in range(len(segments) - 1):

62 | if segments[i] <= max_idx <= segments[i + 1]:

63 | max_seg = segments[i + 1]

64 | break

65 |

66 | if min_seg != 0 or max_seg != len(text):

67 | sub_text = text[min_seg + 1: max_seg]

68 | rel = rel.split('-'[0])[0].strip().replace('?', '')

69 | result.append([t.split('#')[0] for t in tmp] + [rel, sub_text])

70 | relation_count[rel] = relation_count.setdefault(rel, 0) + 1

71 | total_count[rel] = total_count.setdefault(rel, 0) + 1

72 | break

73 |

74 | with open(os.path.join(output_path, 'custom_RE_{}.txt'.format(os.path.split(file_path)[1].split('.')[0])),

75 | 'w', encoding='utf-8') as fw:

76 | fw.write('\n'.join(['\t'.join(t) for t in result]))

77 |

78 | print(os.path.basename(file_path), relation_count)

79 |

80 |

81 | if __name__ == '__main__':

82 | input_path = '../data/糖尿病标注数据4.28/' # 原始数据path

83 | output_path = 'data/custom_RE'

84 | os.makedirs('data/custom_RE/', exist_ok=True)

85 |

86 | files = glob.glob(f'{input_path}/*.txt')

87 | for fil in files:

88 | custom_RE(fil, output_path)

89 | print('total', total_count)

90 |

91 | relations = {}

92 | files = glob.glob(f'{output_path}/*.txt')

93 | for fil in files:

94 | with open(fil, 'r', encoding='utf-8') as f:

95 | data = f.readlines()

96 | for sub in data:

97 | l = sub.strip().split('\t')

98 | relations.setdefault(l[2], []).append(sub)

99 | # 拆分数据集 train:dev:test=6:2:2

100 | train_data, dev_data, test_data = [], [], []

101 | for rel, val in relations.items():

102 | print(rel, len(val))

103 | length = len(val)

104 | random.shuffle(val)

105 | train_data.extend(val[:length // 10 * 6])

106 | dev_data.extend(val[length // 10 * 6: length // 10 * 8])

107 | test_data.extend(val[length // 10 * 8:])

108 |

109 | with open('data/train.txt', 'w', encoding='utf-8') as fw:

110 | random.shuffle(train_data)

111 | fw.write(''.join(train_data))

112 |

113 | with open('data/dev.txt', 'w', encoding='utf-8') as fw:

114 | random.shuffle(dev_data)

115 | fw.write(''.join(dev_data))

116 |

117 | with open('data/test.txt', 'w', encoding='utf-8') as fw:

118 | random.shuffle(test_data)

119 | fw.write(''.join(test_data))

120 |

--------------------------------------------------------------------------------

/RE/train_GRU.py:

--------------------------------------------------------------------------------

1 | import tensorflow as tf

2 | import numpy as np

3 | import time

4 | import datetime

5 | import os

6 | import network

7 | # from tensorflow.contrib.tensorboard.plugins import projector

8 |

9 | FLAGS = tf.app.flags.FLAGS

10 |

11 | tf.app.flags.DEFINE_string('summary_dir', '.', 'path to store summary')

12 |

13 | import os

14 | os.environ["CUDA_VISIBLE_DEVICES"] = "1"

15 | # os.environ["CUDA_VISIBLE_DEVICES"] = "1"

16 |

17 | def main(_):

18 | # the path to save models

19 | save_path = './model'

20 | if not os.path.isdir(save_path):

21 | os.makedirs(save_path)

22 |

23 | print('reading wordembedding')

24 | wordembedding = np.load('./data/vec.npy', allow_pickle=True)

25 |

26 | print('reading training data')

27 | train_y = np.load('./data/train_y.npy', allow_pickle=True)

28 | train_word = np.load('./data/train_word.npy', allow_pickle=True)

29 | train_pos1 = np.load('./data/train_pos1.npy', allow_pickle=True)

30 | train_pos2 = np.load('./data/train_pos2.npy', allow_pickle=True)

31 |

32 | settings = network.Settings()

33 | settings.vocab_size = len(wordembedding)

34 | settings.num_classes = len(train_y[0])

35 |

36 | # big_num = settings.big_num

37 |

38 | with tf.Graph().as_default():

39 |

40 | sess = tf.Session()

41 | with sess.as_default():

42 |

43 | initializer = tf.contrib.layers.xavier_initializer()

44 | with tf.variable_scope("model", reuse=None, initializer=initializer):

45 | m = network.GRU(is_training=True, word_embeddings=wordembedding, settings=settings)

46 | global_step = tf.Variable(0, name="global_step", trainable=False)

47 | optimizer = tf.train.AdamOptimizer(0.0005)

48 |

49 | train_op = optimizer.minimize(m.final_loss, global_step=global_step)

50 | sess.run(tf.global_variables_initializer())

51 | saver = tf.train.Saver(max_to_keep=None)

52 |

53 | merged_summary = tf.summary.merge_all()

54 | summary_writer = tf.summary.FileWriter(FLAGS.summary_dir + '/train_loss', sess.graph)

55 |

56 | def train_step(word_batch, pos1_batch, pos2_batch, y_batch, big_num):

57 |

58 | feed_dict = {}

59 | total_shape = []

60 | total_num = 0

61 | total_word = []

62 | total_pos1 = []

63 | total_pos2 = []

64 | for i in range(len(word_batch)):

65 | total_shape.append(total_num)

66 | total_num += len(word_batch[i])

67 | for word in word_batch[i]:

68 | total_word.append(word)

69 | for pos1 in pos1_batch[i]:

70 | total_pos1.append(pos1)

71 | for pos2 in pos2_batch[i]:

72 | total_pos2.append(pos2)

73 | total_shape.append(total_num)

74 | total_shape = np.array(total_shape)

75 | total_word = np.array(total_word)

76 | total_pos1 = np.array(total_pos1)

77 | total_pos2 = np.array(total_pos2)

78 |

79 | feed_dict[m.total_shape] = total_shape

80 | feed_dict[m.input_word] = total_word

81 | feed_dict[m.input_pos1] = total_pos1

82 | feed_dict[m.input_pos2] = total_pos2

83 | feed_dict[m.input_y] = y_batch

84 |

85 | temp, step, loss, accuracy, summary, l2_loss, final_loss = sess.run(

86 | [train_op, global_step, m.total_loss, m.accuracy, merged_summary, m.l2_loss, m.final_loss],

87 | feed_dict)

88 | time_str = datetime.datetime.now().isoformat()

89 | accuracy = np.reshape(np.array(accuracy), (big_num))

90 | acc = np.mean(accuracy)

91 | summary_writer.add_summary(summary, step)

92 |

93 | if step % 50 == 0:

94 | tempstr = "{}: step {}, softmax_loss {:g}, acc {:g}".format(time_str, step, loss, acc)

95 | print(tempstr)

96 |

97 | for one_epoch in range(settings.num_epochs):

98 |

99 | temp_order = list(range(len(train_word)))

100 | np.random.shuffle(temp_order)

101 | for i in range(int(len(temp_order) / float(settings.big_num))):

102 |

103 | temp_word = []

104 | temp_pos1 = []

105 | temp_pos2 = []

106 | temp_y = []

107 |

108 | temp_input = temp_order[i * settings.big_num:(i + 1) * settings.big_num]

109 | for k in temp_input:

110 | temp_word.append(train_word[k])

111 | temp_pos1.append(train_pos1[k])

112 | temp_pos2.append(train_pos2[k])

113 | temp_y.append(train_y[k])

114 | num = 0

115 | for single_word in temp_word:

116 | num += len(single_word)

117 |

118 | if num > 1500:

119 | print('out of range')

120 | continue

121 |

122 | temp_word = np.array(temp_word)

123 | temp_pos1 = np.array(temp_pos1)

124 | temp_pos2 = np.array(temp_pos2)

125 | temp_y = np.array(temp_y)

126 |

127 | train_step(temp_word, temp_pos1, temp_pos2, temp_y, settings.big_num)

128 |

129 | current_step = tf.train.global_step(sess, global_step)

130 | if current_step > 8000 and current_step % 100 == 0:

131 | print('saving model')

132 | path = saver.save(sess, save_path + 'ATT_GRU_model', global_step=current_step)

133 | tempstr = 'have saved model to ' + path

134 | print(tempstr)

135 |

136 |

137 | if __name__ == "__main__":

138 | tf.app.run()

139 |

--------------------------------------------------------------------------------

/RE/network.py:

--------------------------------------------------------------------------------

1 | import tensorflow as tf

2 | import numpy as np

3 |

4 |

5 | class Settings(object):

6 | def __init__(self):

7 | self.vocab_size = 16691

8 | self.num_steps = 70

9 | self.num_epochs = 200

10 | self.num_classes = 16

11 | self.gru_size = 230

12 | self.keep_prob = 0.5

13 | self.num_layers = 1

14 | self.pos_size = 5

15 | self.pos_num = 123

16 | # the number of entity pairs of each batch during training or testing

17 | self.big_num = 50

18 |

19 |

20 | class GRU:

21 | def __init__(self, is_training, word_embeddings, settings):

22 |

23 | self.num_steps = num_steps = settings.num_steps

24 | self.vocab_size = vocab_size = settings.vocab_size

25 | self.num_classes = num_classes = settings.num_classes

26 | self.gru_size = gru_size = settings.gru_size

27 | self.big_num = big_num = settings.big_num

28 |

29 | self.input_word = tf.placeholder(dtype=tf.int32, shape=[None, num_steps], name='input_word')

30 | self.input_pos1 = tf.placeholder(dtype=tf.int32, shape=[None, num_steps], name='input_pos1')

31 | self.input_pos2 = tf.placeholder(dtype=tf.int32, shape=[None, num_steps], name='input_pos2')

32 | self.input_y = tf.placeholder(dtype=tf.float32, shape=[None, num_classes], name='input_y')

33 | self.total_shape = tf.placeholder(dtype=tf.int32, shape=[big_num + 1], name='total_shape')

34 | total_num = self.total_shape[-1]

35 |

36 | word_embedding = tf.get_variable(initializer=word_embeddings, name='word_embedding')

37 | pos1_embedding = tf.get_variable('pos1_embedding', [settings.pos_num, settings.pos_size])

38 | pos2_embedding = tf.get_variable('pos2_embedding', [settings.pos_num, settings.pos_size])

39 |

40 | attention_w = tf.get_variable('attention_omega', [gru_size, 1])

41 | sen_a = tf.get_variable('attention_A', [gru_size])

42 | sen_r = tf.get_variable('query_r', [gru_size, 1])

43 | relation_embedding = tf.get_variable('relation_embedding', [self.num_classes, gru_size])

44 | sen_d = tf.get_variable('bias_d', [self.num_classes])

45 |

46 | gru_cell_forward = tf.contrib.rnn.GRUCell(gru_size)

47 | gru_cell_backward = tf.contrib.rnn.GRUCell(gru_size)

48 |

49 | if is_training and settings.keep_prob < 1:

50 | gru_cell_forward = tf.contrib.rnn.DropoutWrapper(gru_cell_forward, output_keep_prob=settings.keep_prob)

51 | gru_cell_backward = tf.contrib.rnn.DropoutWrapper(gru_cell_backward, output_keep_prob=settings.keep_prob)

52 |

53 | cell_forward = tf.contrib.rnn.MultiRNNCell([gru_cell_forward] * settings.num_layers)

54 | cell_backward = tf.contrib.rnn.MultiRNNCell([gru_cell_backward] * settings.num_layers)

55 |

56 | sen_repre = []

57 | sen_alpha = []

58 | sen_s = []

59 | sen_out = []

60 | self.prob = []

61 | self.predictions = []

62 | self.loss = []

63 | self.accuracy = []

64 | self.total_loss = 0.0

65 |

66 | self._initial_state_forward = cell_forward.zero_state(total_num, tf.float32)

67 | self._initial_state_backward = cell_backward.zero_state(total_num, tf.float32)

68 |

69 | # embedding layer

70 | inputs_forward = tf.concat(axis=2, values=[tf.nn.embedding_lookup(word_embedding, self.input_word),

71 | tf.nn.embedding_lookup(pos1_embedding, self.input_pos1),

72 | tf.nn.embedding_lookup(pos2_embedding, self.input_pos2)])

73 | inputs_backward = tf.concat(axis=2,

74 | values=[tf.nn.embedding_lookup(word_embedding, tf.reverse(self.input_word, [1])),

75 | tf.nn.embedding_lookup(pos1_embedding, tf.reverse(self.input_pos1, [1])),

76 | tf.nn.embedding_lookup(pos2_embedding,

77 | tf.reverse(self.input_pos2, [1]))])

78 |

79 | outputs_forward = []

80 |

81 | state_forward = self._initial_state_forward

82 |

83 | # Bi-GRU layer

84 | with tf.variable_scope('GRU_FORWARD') as scope:

85 | for step in range(num_steps):

86 | if step > 0:

87 | scope.reuse_variables()

88 | (cell_output_forward, state_forward) = cell_forward(inputs_forward[:, step, :], state_forward)

89 | outputs_forward.append(cell_output_forward)

90 |

91 | outputs_backward = []

92 |

93 | state_backward = self._initial_state_backward

94 | with tf.variable_scope('GRU_BACKWARD') as scope:

95 | for step in range(num_steps):

96 | if step > 0:

97 | scope.reuse_variables()

98 | (cell_output_backward, state_backward) = cell_backward(inputs_backward[:, step, :], state_backward)

99 | outputs_backward.append(cell_output_backward)

100 |

101 | output_forward = tf.reshape(tf.concat(axis=1, values=outputs_forward), [total_num, num_steps, gru_size])

102 | output_backward = tf.reverse(

103 | tf.reshape(tf.concat(axis=1, values=outputs_backward), [total_num, num_steps, gru_size]),

104 | [1])

105 |

106 | # word-level attention layer

107 | output_h = tf.add(output_forward, output_backward)

108 | attention_r = tf.reshape(tf.matmul(tf.reshape(tf.nn.softmax(

109 | tf.reshape(tf.matmul(tf.reshape(tf.tanh(output_h), [total_num * num_steps, gru_size]), attention_w),

110 | [total_num, num_steps])), [total_num, 1, num_steps]), output_h), [total_num, gru_size])

111 |

112 | # sentence-level attention layer

113 | for i in range(big_num):

114 |

115 | sen_repre.append(tf.tanh(attention_r[self.total_shape[i]:self.total_shape[i + 1]]))

116 | batch_size = self.total_shape[i + 1] - self.total_shape[i]

117 |

118 | sen_alpha.append(

119 | tf.reshape(tf.nn.softmax(tf.reshape(tf.matmul(tf.multiply(sen_repre[i], sen_a), sen_r), [batch_size])),

120 | [1, batch_size]))

121 |

122 | sen_s.append(tf.reshape(tf.matmul(sen_alpha[i], sen_repre[i]), [gru_size, 1]))

123 | sen_out.append(tf.add(tf.reshape(tf.matmul(relation_embedding, sen_s[i]), [self.num_classes]), sen_d))

124 |

125 | self.prob.append(tf.nn.softmax(sen_out[i]))

126 |

127 | with tf.name_scope("output"):

128 | self.predictions.append(tf.argmax(self.prob[i], 0, name="predictions"))

129 |

130 | with tf.name_scope("loss"):

131 | self.loss.append(

132 | tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=sen_out[i], labels=self.input_y[i])))

133 | if i == 0:

134 | self.total_loss = self.loss[i]

135 | else:

136 | self.total_loss += self.loss[i]

137 |

138 | # tf.summary.scalar('loss',self.total_loss)

139 | # tf.scalar_summary(['loss'],[self.total_loss])

140 | with tf.name_scope("accuracy"):

141 | self.accuracy.append(

142 | tf.reduce_mean(tf.cast(tf.equal(self.predictions[i], tf.argmax(self.input_y[i], 0)), "float"),

143 | name="accuracy"))

144 |

145 | # tf.summary.scalar('loss',self.total_loss)

146 | tf.summary.scalar('loss', self.total_loss)

147 | # regularization

148 | self.l2_loss = tf.contrib.layers.apply_regularization(regularizer=tf.contrib.layers.l2_regularizer(0.0001),

149 | weights_list=tf.trainable_variables())

150 | self.final_loss = self.total_loss + self.l2_loss

151 | tf.summary.scalar('l2_loss', self.l2_loss)

152 | tf.summary.scalar('final_loss', self.final_loss)

153 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 | # DiaKG: an Annotated Diabetes Dataset for Medical Knowledge Graph Construction

3 |

4 | This is the source code of the DiaKG [paper](https://arxiv.org/abs/2105.15033).

5 |

6 | ## DataSet

7 |

8 | ### Overview

9 | The DiaKG dataset is derived from 41 diabetes guidelines and consensus, which are from authoritative Chinese journals including basic research, clinical research, drug usage, clinical cases, diagnosis and treatment methods, etc. The dataset covers the most extensive field of research content and hotspot in recent years. The annotation process is done by 2 seasoned endocrinologists and 6 M.D. candidates, and finally conduct a high-quality diabates database which contains 22,050 entities and 6,890 relations in total.

10 |

11 | ### Get the Data

12 | The codebase only provides some sample annotation files. If you want to download the fullset, please apply at [Tianchi Platform](https://tianchi.aliyun.com/dataset/dataDetail?dataId=88836).

13 |

14 | ### Data Format

15 | The dataset is exhibited as a hierachical structure with "document-paragraph-sentence" information. All the entities and sentences are labelled on the sentence level. Below is an example:

16 |

17 | ```

18 | {

19 | "doc_id": "1", // string, document id