├── .gitignore

├── Dockerfile.ds

├── LICENSE

├── README.md

├── README_zh-CN.md

├── __init__.py

├── app.py

├── config

├── easyanimate_image_normal_v1.yaml

├── easyanimate_video_long_sequence_v1.yaml

└── easyanimate_video_motion_module_v1.yaml

├── datasets

└── put datasets here.txt

├── easyanimate

├── __init__.py

├── data

│ ├── bucket_sampler.py

│ ├── dataset_image.py

│ ├── dataset_image_video.py

│ └── dataset_video.py

├── models

│ ├── attention.py

│ ├── autoencoder_magvit.py

│ ├── motion_module.py

│ ├── patch.py

│ ├── transformer2d.py

│ └── transformer3d.py

├── pipeline

│ ├── pipeline_easyanimate.py

│ ├── pipeline_easyanimate_inpaint.py

│ └── pipeline_pixart_magvit.py

├── ui

│ └── ui.py

└── utils

│ ├── IDDIM.py

│ ├── __init__.py

│ ├── diffusion_utils.py

│ ├── gaussian_diffusion.py

│ ├── lora_utils.py

│ ├── respace.py

│ └── utils.py

├── models

└── put models here.txt

├── nodes.py

├── predict_t2i.py

├── predict_t2v.py

├── requirements.txt

├── scripts

├── extra_motion_module.py

├── train_t2i.py

├── train_t2i.sh

├── train_t2i_lora.py

├── train_t2i_lora.sh

├── train_t2iv.py

├── train_t2iv.sh

├── train_t2v.py

├── train_t2v.sh

├── train_t2v_lora.py

└── train_t2v_lora.sh

├── wf.json

└── wf.png

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | models*

3 | output*

4 | samples*

5 | __pycache__/

6 | *.py[cod]

7 | *$py.class

8 |

9 | # C extensions

10 | *.so

11 |

12 | # Distribution / packaging

13 | .Python

14 | build/

15 | develop-eggs/

16 | dist/

17 | downloads/

18 | eggs/

19 | .eggs/

20 | lib/

21 | lib64/

22 | parts/

23 | sdist/

24 | var/

25 | wheels/

26 | share/python-wheels/

27 | *.egg-info/

28 | .installed.cfg

29 | *.egg

30 | MANIFEST

31 |

32 | # PyInstaller

33 | # Usually these files are written by a python script from a template

34 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

35 | *.manifest

36 | *.spec

37 |

38 | # Installer logs

39 | pip-log.txt

40 | pip-delete-this-directory.txt

41 |

42 | # Unit test / coverage reports

43 | htmlcov/

44 | .tox/

45 | .nox/

46 | .coverage

47 | .coverage.*

48 | .cache

49 | nosetests.xml

50 | coverage.xml

51 | *.cover

52 | *.py,cover

53 | .hypothesis/

54 | .pytest_cache/

55 | cover/

56 |

57 | # Translations

58 | *.mo

59 | *.pot

60 |

61 | # Django stuff:

62 | *.log

63 | local_settings.py

64 | db.sqlite3

65 | db.sqlite3-journal

66 |

67 | # Flask stuff:

68 | instance/

69 | .webassets-cache

70 |

71 | # Scrapy stuff:

72 | .scrapy

73 |

74 | # Sphinx documentation

75 | docs/_build/

76 |

77 | # PyBuilder

78 | .pybuilder/

79 | target/

80 |

81 | # Jupyter Notebook

82 | .ipynb_checkpoints

83 |

84 | # IPython

85 | profile_default/

86 | ipython_config.py

87 |

88 | # pyenv

89 | # For a library or package, you might want to ignore these files since the code is

90 | # intended to run in multiple environments; otherwise, check them in:

91 | # .python-version

92 |

93 | # pipenv

94 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

95 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

96 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

97 | # install all needed dependencies.

98 | #Pipfile.lock

99 |

100 | # poetry

101 | # Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

102 | # This is especially recommended for binary packages to ensure reproducibility, and is more

103 | # commonly ignored for libraries.

104 | # https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

105 | #poetry.lock

106 |

107 | # pdm

108 | # Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

109 | #pdm.lock

110 | # pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

111 | # in version control.

112 | # https://pdm.fming.dev/#use-with-ide

113 | .pdm.toml

114 |

115 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

116 | __pypackages__/

117 |

118 | # Celery stuff

119 | celerybeat-schedule

120 | celerybeat.pid

121 |

122 | # SageMath parsed files

123 | *.sage.py

124 |

125 | # Environments

126 | .env

127 | .venv

128 | env/

129 | venv/

130 | ENV/

131 | env.bak/

132 | venv.bak/

133 |

134 | # Spyder project settings

135 | .spyderproject

136 | .spyproject

137 |

138 | # Rope project settings

139 | .ropeproject

140 |

141 | # mkdocs documentation

142 | /site

143 |

144 | # mypy

145 | .mypy_cache/

146 | .dmypy.json

147 | dmypy.json

148 |

149 | # Pyre type checker

150 | .pyre/

151 |

152 | # pytype static type analyzer

153 | .pytype/

154 |

155 | # Cython debug symbols

156 | cython_debug/

157 |

158 | # PyCharm

159 | # JetBrains specific template is maintained in a separate JetBrains.gitignore that can

160 | # be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

161 | # and can be added to the global gitignore or merged into this file. For a more nuclear

162 | # option (not recommended) you can uncomment the following to ignore the entire idea folder.

163 | #.idea/

164 |

--------------------------------------------------------------------------------

/Dockerfile.ds:

--------------------------------------------------------------------------------

1 | FROM nvidia/cuda:11.8.0-devel-ubuntu22.04

2 | ENV DEBIAN_FRONTEND noninteractive

3 |

4 | RUN rm -r /etc/apt/sources.list.d/

5 |

6 | RUN apt-get update -y && apt-get install -y \

7 | libgl1 libglib2.0-0 google-perftools \

8 | sudo wget git git-lfs vim tig pkg-config libcairo2-dev \

9 | telnet curl net-tools iputils-ping wget jq \

10 | python3-pip python-is-python3 python3.10-venv tzdata lsof && \

11 | rm -rf /var/lib/apt/lists/*

12 | RUN pip3 install --upgrade pip -i https://mirrors.aliyun.com/pypi/simple/

13 |

14 | # add all extensions

15 | RUN apt-get update -y && apt-get install -y zip && \

16 | rm -rf /var/lib/apt/lists/*

17 | RUN pip install wandb tqdm GitPython==3.1.32 Pillow==9.5.0 setuptools --upgrade -i https://mirrors.aliyun.com/pypi/simple/

18 |

19 | # reinstall torch to keep compatible with xformers

20 | RUN pip uninstall -qy torch torchvision && \

21 | pip install torch==2.2.0 torchvision==0.17.0 torchaudio==2.2.0 --index-url https://download.pytorch.org/whl/cu118

22 | RUN pip uninstall -qy xfromers && pip install xformers==0.0.24 --index-url https://download.pytorch.org/whl/cu118

23 |

24 | # install requirements

25 | COPY ./requirements.txt /root/requirements.txt

26 | RUN pip install -r /root/requirements.txt -i https://mirrors.aliyun.com/pypi/simple/

27 | RUN rm -rf /root/requirements.txt

28 |

29 | ENV PYTHONUNBUFFERED 1

30 | ENV NVIDIA_DISABLE_REQUIRE 1

31 |

32 | WORKDIR /root/

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "[]"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright [yyyy] [name of copyright owner]

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # ComfyUI-EasyAnimate

2 |

3 | ## workflow

4 |

5 | [basic](https://github.com/chaojie/ComfyUI-EasyAnimate/blob/main/wf.json)

6 |

7 |  8 |

9 | ### 1、Model Weights

10 | | Name | Type | Storage Space | Url | Description |

11 | |--|--|--|--|--|

12 | | easyanimate_v1_mm.safetensors | Motion Module | 4.1GB | [download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Motion_Module/easyanimate_v1_mm.safetensors) | ComfyUI/models/checkpoints |

13 | | PixArt-XL-2-512x512.tar | Pixart | 11.4GB | [download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Diffusion_Transformer/PixArt-XL-2-512x512.tar)| ComfyUI/models/diffusers (tar -xvf PixArt-XL-2-512x512.tar) |

14 |

15 | ### 2、Optional Model Weights

16 | | Name | Type | Storage Space | Url | Description |

17 | |--|--|--|--|--|

18 | | easyanimate_portrait.safetensors | Checkpoint of Pixart | 2.3GB | [download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Personalized_Model/easyanimate_portrait.safetensors) | ComfyUI/models/checkpoints |

19 | | easyanimate_portrait_lora.safetensors | Lora of Pixart | 654.0MB | [download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Personalized_Model/easyanimate_portrait_lora.safetensors)| ComfyUI/models/checkpoints

20 |

21 | ## [EasyAnimate](https://github.com/aigc-apps/EasyAnimate)

22 |

--------------------------------------------------------------------------------

/README_zh-CN.md:

--------------------------------------------------------------------------------

1 | # EasyAnimate | 您的智能生成器。

2 | 😊 EasyAnimate是一个用于生成长视频和训练基于transformer的扩散生成器的repo。

3 |

4 | 😊 我们基于类SORA结构与DIT,使用transformer进行作为扩散器进行视频生成。为了保证良好的拓展性,我们基于motion module构建了EasyAnimate,未来我们也会尝试更多的训练方案一提高效果。

5 |

6 | 😊 Welcome!

7 |

8 | [English](./README.md) | 简体中文

9 |

10 | # 目录

11 | - [目录](#目录)

12 | - [简介](#简介)

13 | - [TODO List](#todo-list)

14 | - [Model zoo](#model-zoo)

15 | - [1、运动权重](#1运动权重)

16 | - [2、其他权重](#2其他权重)

17 | - [快速启动](#快速启动)

18 | - [1. 云使用: AliyunDSW/Docker](#1-云使用-aliyundswdocker)

19 | - [2. 本地安装: 环境检查/下载/安装](#2-本地安装-环境检查下载安装)

20 | - [如何使用](#如何使用)

21 | - [1. 生成](#1-生成)

22 | - [2. 模型训练](#2-模型训练)

23 | - [算法细节](#算法细节)

24 | - [参考文献](#参考文献)

25 | - [许可证](#许可证)

26 |

27 | # 简介

28 | EasyAnimate是一个基于transformer结构的pipeline,可用于生成AI动画、训练Diffusion Transformer的基线模型与Lora模型,我们支持从已经训练好的EasyAnimate模型直接进行预测,生成不同分辨率,6秒左右、fps12的视频(40 ~ 80帧, 未来会支持更长的视频),也支持用户训练自己的基线模型与Lora模型,进行一定的风格变换。

29 |

30 | 我们会逐渐支持从不同平台快速启动,请参阅 [快速启动](#快速启动)。

31 |

32 | 新特性:

33 | - 创建代码!现在支持 Windows 和 Linux。[ 2024.04.12 ]

34 |

35 | 这些是我们的生成结果:

36 |

37 | 我们的ui界面如下:

38 |

39 |

40 | # TODO List

41 | - 支持更大分辨率的文视频生成模型。

42 | - 支持基于magvit的文视频生成模型。

43 | - 支持视频inpaint模型。

44 |

45 | # Model zoo

46 | ### 1、运动权重

47 | | 名称 | 种类 | 存储空间 | 下载地址 | 描述 |

48 | |--|--|--|--|--|

49 | | easyanimate_v1_mm.safetensors | Motion Module | 4.1GB | [download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Motion_Module/easyanimate_v1_mm.safetensors) | Training with 80 frames and fps 12 |

50 |

51 | ### 2、其他权重

52 | | 名称 | 种类 | 存储空间 | 下载地址 | 描述 |

53 | |--|--|--|--|--|

54 | | PixArt-XL-2-512x512.tar | Pixart | 11.4GB | [download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Diffusion_Transformer/PixArt-XL-2-512x512.tar)| Pixart-Alpha official weights |

55 | | easyanimate_portrait.safetensors | Checkpoint of Pixart | 2.3GB | [download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Personalized_Model/easyanimate_portrait.safetensors) | Training with internal portrait datasets |

56 | | easyanimate_portrait_lora.safetensors | Lora of Pixart | 654.0MB | [download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Personalized_Model/easyanimate_portrait_lora.safetensors)| Training with internal portrait datasets |

57 |

58 |

59 | # 生成效果

60 | 在生成风景类animation时,采样器推荐使用DPM++和Euler A。在生成人像类animation时,采样器推荐使用Euler A和Euler。

61 |

62 | 有些时候Github无法正常显示大GIF,可以通过Download GIF下载到本地查看。

63 |

64 | 使用原始的pixart checkpoint进行预测。

65 |

66 | | Base Models | Sampler | Seed | Resolution (h x w x f) | Prompt | GenerationResult | Download |

67 | | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ |

68 | | PixArt | DPM++ | 43 | 512x512x80 | A soaring drone footage captures the majestic beauty of a coastal cliff, its red and yellow stratified rock faces rich in color and against the vibrant turquoise of the sea. Seabirds can be seen taking flight around the cliff\'s precipices. |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/1-cliff.gif) |

69 | | PixArt | DPM++ | 43 | 448x640x80 | The video captures the majestic beauty of a waterfall cascading down a cliff into a serene lake. The waterfall, with its powerful flow, is the central focus of the video. The surrounding landscape is lush and green, with trees and foliage adding to the natural beauty of the scene. |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/2-waterfall.gif) |

70 | | PixArt | DPM++ | 43 | 704x384x80 | A vibrant scene of a snowy mountain landscape. The sky is filled with a multitude of colorful hot air balloons, each floating at different heights, creating a dynamic and lively atmosphere. The balloons are scattered across the sky, some closer to the viewer, others further away, adding depth to the scene. |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/3-snowy.gif) |

71 | | PixArt | DPM++ | 43 | 448x640x64 | The vibrant beauty of a sunflower field. The sunflowers, with their bright yellow petals and dark brown centers, are in full bloom, creating a stunning contrast against the green leaves and stems. The sunflowers are arranged in neat rows, creating a sense of order and symmetry. |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/4-sunflower.gif) |

72 | | PixArt | DPM++ | 43 | 384x704x48 | A tranquil Vermont autumn, with leaves in vibrant colors of orange and red fluttering down a mountain stream. |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/5-autumn.gif) |

73 | | PixArt | DPM++ | 43 | 704x384x48 | A vibrant underwater scene. A group of blue fish, with yellow fins, are swimming around a coral reef. The coral reef is a mix of brown and green, providing a natural habitat for the fish. The water is a deep blue, indicating a depth of around 30 feet. The fish are swimming in a circular pattern around the coral reef, indicating a sense of motion and activity. The overall scene is a beautiful representation of marine life. |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/6-underwater.gif) |

74 | | PixArt | DPM++ | 43 | 576x448x48 | Pacific coast, carmel by the blue sea ocean and peaceful waves |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/7-coast.gif) |

75 | | PixArt | DPM++ | 43 | 576x448x80 | A snowy forest landscape with a dirt road running through it. The road is flanked by trees covered in snow, and the ground is also covered in snow. The sun is shining, creating a bright and serene atmosphere. The road appears to be empty, and there are no people or animals visible in the video. The style of the video is a natural landscape shot, with a focus on the beauty of the snowy forest and the peacefulness of the road. |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/8-forest.gif) |

76 | | PixArt | DPM++ | 43 | 640x448x64 | The dynamic movement of tall, wispy grasses swaying in the wind. The sky above is filled with clouds, creating a dramatic backdrop. The sunlight pierces through the clouds, casting a warm glow on the scene. The grasses are a mix of green and brown, indicating a change in seasons. The overall style of the video is naturalistic, capturing the beauty of the landscape in a realistic manner. The focus is on the grasses and their movement, with the sky serving as a secondary element. The video does not contain any human or animal elements. | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/9-grasses.gif) |

77 | | PixArt | DPM++ | 43 | 704x384x80 | A serene night scene in a forested area. The first frame shows a tranquil lake reflecting the star-filled sky above. The second frame reveals a beautiful sunset, casting a warm glow over the landscape. The third frame showcases the night sky, filled with stars and a vibrant Milky Way galaxy. The video is a time-lapse, capturing the transition from day to night, with the lake and forest serving as a constant backdrop. The style of the video is naturalistic, emphasizing the beauty of the night sky and the peacefulness of the forest. | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/10-night.gif) |

78 | | PixArt | DPM++ | 43 | 640x448x80 | Sunset over the sea. |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/11-sunset.gif) |

79 |

80 | 使用人像checkpoint进行预测。

81 |

82 | | Base Models | Sampler | Seed | Resolution (h x w x f) | Prompt | GenerationResult | Download |

83 | | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ |

84 | | Portrait | Euler A | 43 | 448x576x80 | 1girl, 3d, black hair, brown eyes, earrings, grey background, jewelry, lips, long hair, looking at viewer, photo \\(medium\\), realistic, red lips, solo |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/1-check.gif) |

85 | | Portrait | Euler A | 43 | 448x576x80 | 1girl, bare shoulders, blurry, brown eyes, dirty, dirty face, freckles, lips, long hair, looking at viewer, realistic, sleeveless, solo, upper body | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/2-check.gif) |

86 | | Portrait | Euler A | 43 | 512x512x64 | 1girl, black hair, brown eyes, earrings, grey background, jewelry, lips, looking at viewer, mole, mole under eye, neck tattoo, nose, ponytail, realistic, shirt, simple background, solo, tattoo | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/3-check.gif) |

87 | | Portrait | Euler A | 43 | 576x448x64 | 1girl, black hair, lips, looking at viewer, mole, mole under eye, mole under mouth, realistic, solo | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/5-check.gif) |

88 |

89 | 使用人像Lora进行预测。

90 |

91 | | Base Models | Sampler | Seed | Resolution (h x w x f) | Prompt | GenerationResult | Download |

92 | | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ |

93 | | Pixart + Lora | Euler A | 43 | 512x512x64 | 1girl, 3d, black hair, brown eyes, earrings, grey background, jewelry, lips, long hair, looking at viewer, photo \\(medium\\), realistic, red lips, solo | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/1-lora.gif) |

94 | | Pixart + Lora | Euler A | 43 | 512x512x64 | 1girl, bare shoulders, blurry, brown eyes, dirty, dirty face, freckles, lips, long hair, looking at viewer, mole, mole on breast, mole on neck, mole under eye, mole under mouth, realistic, sleeveless, solo, upper body | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/2-lora.gif) |

95 | | Pixart + Lora | Euler A | 43 | 512x512x64 | 1girl, black hair, lips, looking at viewer, mole, mole under eye, mole under mouth, realistic, solo | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/5-lora.gif) |

96 | | Pixart + Lora | Euler A | 43 | 512x512x80 | 1girl, bare shoulders, blurry, blurry background, blurry foreground, bokeh, brown eyes, christmas tree, closed mouth, collarbone, depth of field, earrings, jewelry, lips, long hair, looking at viewer, photo \\(medium\\), realistic, smile, solo | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/8-lora.gif) |

97 |

98 | # 快速启动

99 | ### 1. 云使用: AliyunDSW/Docker

100 | #### a. 通过阿里云 DSW

101 | 敬请期待。

102 |

103 | #### b. 通过docker

104 | 使用docker的情况下,请保证机器中已经正确安装显卡驱动与CUDA环境,然后以此执行以下命令:

105 | ```

106 | # 拉取镜像

107 | docker pull mybigpai-public-registry.cn-beijing.cr.aliyuncs.com/easycv/torch_cuda:easyanimate

108 |

109 | # 进入镜像

110 | docker run -it -p 7860:7860 --network host --gpus all --security-opt seccomp:unconfined --shm-size 200g mybigpai-public-registry.cn-beijing.cr.aliyuncs.com/easycv/torch_cuda:easyanimate

111 |

112 | # clone 代码

113 | git clone https://github.com/aigc-apps/EasyAnimate.git

114 |

115 | # 进入EasyAnimate文件夹

116 | cd EasyAnimate

117 |

118 | # 下载权重

119 | mkdir models/Diffusion_Transformer

120 | mkdir models/Motion_Module

121 | mkdir models/Personalized_Model

122 |

123 | wget https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Motion_Module/easyanimate_v1_mm.safetensors -O models/Motion_Module/easyanimate_v1_mm.safetensors

124 | wget https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Personalized_Model/easyanimate_portrait.safetensors -O models/Personalized_Model/easyanimate_portrait.safetensors

125 | wget https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Personalized_Model/easyanimate_portrait_lora.safetensors -O models/Personalized_Model/easyanimate_portrait_lora.safetensors

126 | wget https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Diffusion_Transformer/PixArt-XL-2-512x512.tar -O models/Diffusion_Transformer/PixArt-XL-2-512x512.tar

127 |

128 | cd models/Diffusion_Transformer/

129 | tar -xvf PixArt-XL-2-512x512.tar

130 | cd ../../

131 | ```

132 |

133 | ### 2. 本地安装: 环境检查/下载/安装

134 | #### a. 环境检查

135 | 我们已验证EasyAnimate可在以下环境中执行:

136 |

137 | Linux 的详细信息:

138 | - 操作系统 Ubuntu 20.04, CentOS

139 | - python: python3.10 & python3.11

140 | - pytorch: torch2.2.0

141 | - CUDA: 11.8

142 | - CUDNN: 8+

143 | - GPU: Nvidia-A10 24G & Nvidia-A100 40G & Nvidia-A100 80G

144 |

145 | 我们需要大约 60GB 的可用磁盘空间,请检查!

146 |

147 | #### b. 权重放置

148 | 我们最好将权重按照指定路径进行放置:

149 |

150 | ```

151 | 📦 models/

152 | ├── 📂 Diffusion_Transformer/

153 | │ └── 📂 PixArt-XL-2-512x512/

154 | ├── 📂 Motion_Module/

155 | │ └── 📄 easyanimate_v1_mm.safetensors

156 | ├── 📂 Motion_Module/

157 | │ ├── 📄 easyanimate_portrait.safetensors

158 | │ └── 📄 easyanimate_portrait_lora.safetensors

159 | ```

160 |

161 | # 如何使用

162 | ### 1. 生成

163 | #### a. 视频生成

164 | ##### i、运行python文件

165 | - 步骤1:下载对应权重放入models文件夹。

166 | - 步骤2:在predict_t2v.py文件中修改prompt、neg_prompt、guidance_scale和seed。

167 | - 步骤3:运行predict_t2v.py文件,等待生成结果,结果保存在samples/easyanimate-videos文件夹中。

168 | - 步骤4:如果想结合自己训练的其他backbone与Lora,则看情况修改predict_t2v.py中的predict_t2v.py和lora_path。

169 |

170 | ##### ii、通过ui界面

171 | - 步骤1:下载对应权重放入models文件夹。

172 | - 步骤2:运行app.py文件,进入gradio页面。

173 | - 步骤3:根据页面选择生成模型,填入prompt、neg_prompt、guidance_scale和seed等,点击生成,等待生成结果,结果保存在sample文件夹中。

174 |

175 | ### 2. 模型训练

176 | #### a、训练视频生成模型

177 | ##### i、基于webvid数据集

178 | 如果使用webvid数据集进行训练,则需要首先下载webvid的数据集。

179 |

180 | 您需要以这种格式排列webvid数据集。

181 | ```

182 | 📦 project/

183 | ├── 📂 datasets/

184 | │ ├── 📂 webvid/

185 | │ ├── 📂 videos/

186 | │ │ ├── 📄 00000001.mp4

187 | │ │ ├── 📄 00000002.mp4

188 | │ │ └── 📄 .....

189 | │ └── 📄 csv_of_webvid.csv

190 | ```

191 |

192 | 然后,进入scripts/train_t2v.sh进行设置。

193 | ```

194 | export DATASET_NAME="datasets/webvid/videos/"

195 | export DATASET_META_NAME="datasets/webvid/csv_of_webvid.csv"

196 |

197 | ...

198 |

199 | train_data_format="webvid"

200 | ```

201 |

202 | 最后运行scripts/train_t2v.sh。

203 | ```sh

204 | sh scripts/train_t2v.sh

205 | ```

206 |

207 | ##### ii、基于自建数据集

208 | 如果使用内部数据集进行训练,则需要首先格式化数据集。

209 |

210 | 您需要以这种格式排列数据集。

211 | ```

212 | 📦 project/

213 | ├── 📂 datasets/

214 | │ ├── 📂 internal_datasets/

215 | │ ├── 📂 videos/

216 | │ │ ├── 📄 00000001.mp4

217 | │ │ ├── 📄 00000002.mp4

218 | │ │ └── 📄 .....

219 | │ └── 📄 json_of_internal_datasets.json

220 | ```

221 |

222 | json_of_internal_datasets.json是一个标准的json文件,如下所示:

223 | ```json

224 | [

225 | {

226 | "file_path": "videos/00000001.mp4",

227 | "text": "A group of young men in suits and sunglasses are walking down a city street.",

228 | "type": "video"

229 | },

230 | {

231 | "file_path": "videos/00000002.mp4",

232 | "text": "A notepad with a drawing of a woman on it.",

233 | "type": "video"

234 | }

235 | .....

236 | ]

237 | ```

238 | json中的file_path需要设置为相对路径。

239 |

240 | 然后,进入scripts/train_t2v.sh进行设置。

241 | ```

242 | export DATASET_NAME="datasets/internal_datasets/"

243 | export DATASET_META_NAME="datasets/internal_datasets/json_of_internal_datasets.json"

244 |

245 | ...

246 |

247 | train_data_format="normal"

248 | ```

249 |

250 | 最后运行scripts/train_t2v.sh。

251 | ```sh

252 | sh scripts/train_t2v.sh

253 | ```

254 |

255 | #### b、训练基础文生图模型

256 | ##### i、基于diffusers格式

257 | 数据集的格式可以设置为diffusers格式。

258 |

259 | ```

260 | 📦 project/

261 | ├── 📂 datasets/

262 | │ ├── 📂 diffusers_datasets/

263 | │ ├── 📂 train/

264 | │ │ ├── 📄 00000001.jpg

265 | │ │ ├── 📄 00000002.jpg

266 | │ │ └── 📄 .....

267 | │ └── 📄 metadata.jsonl

268 | ```

269 |

270 | 然后,进入scripts/train_t2i.sh进行设置。

271 | ```

272 | export DATASET_NAME="datasets/diffusers_datasets/"

273 |

274 | ...

275 |

276 | train_data_format="diffusers"

277 | ```

278 |

279 | 最后运行scripts/train_t2i.sh。

280 | ```sh

281 | sh scripts/train_t2i.sh

282 | ```

283 | ##### ii、基于自建数据集

284 | 如果使用自建数据集进行训练,则需要首先格式化数据集。

285 |

286 | 您需要以这种格式排列数据集。

287 | ```

288 | 📦 project/

289 | ├── 📂 datasets/

290 | │ ├── 📂 internal_datasets/

291 | │ ├── 📂 train/

292 | │ │ ├── 📄 00000001.jpg

293 | │ │ ├── 📄 00000002.jpg

294 | │ │ └── 📄 .....

295 | │ └── 📄 json_of_internal_datasets.json

296 | ```

297 |

298 | json_of_internal_datasets.json是一个标准的json文件,如下所示:

299 | ```json

300 | [

301 | {

302 | "file_path": "train/00000001.jpg",

303 | "text": "A group of young men in suits and sunglasses are walking down a city street.",

304 | "type": "image"

305 | },

306 | {

307 | "file_path": "train/00000002.jpg",

308 | "text": "A notepad with a drawing of a woman on it.",

309 | "type": "image"

310 | }

311 | .....

312 | ]

313 | ```

314 | json中的file_path需要设置为相对路径。

315 |

316 | 然后,进入scripts/train_t2i.sh进行设置。

317 | ```

318 | export DATASET_NAME="datasets/internal_datasets/"

319 | export DATASET_META_NAME="datasets/internal_datasets/json_of_internal_datasets.json"

320 |

321 | ...

322 |

323 | train_data_format="normal"

324 | ```

325 |

326 | 最后运行scripts/train_t2i.sh。

327 | ```sh

328 | sh scripts/train_t2i.sh

329 | ```

330 |

331 | #### c、训练Lora模型

332 | ##### i、基于diffusers格式

333 | 数据集的格式可以设置为diffusers格式。

334 | ```

335 | 📦 project/

336 | ├── 📂 datasets/

337 | │ ├── 📂 diffusers_datasets/

338 | │ ├── 📂 train/

339 | │ │ ├── 📄 00000001.jpg

340 | │ │ ├── 📄 00000002.jpg

341 | │ │ └── 📄 .....

342 | │ └── 📄 metadata.jsonl

343 | ```

344 |

345 | 然后,进入scripts/train_lora.sh进行设置。

346 | ```

347 | export DATASET_NAME="datasets/diffusers_datasets/"

348 |

349 | ...

350 |

351 | train_data_format="diffusers"

352 | ```

353 |

354 | 最后运行scripts/train_lora.sh。

355 | ```sh

356 | sh scripts/train_lora.sh

357 | ```

358 |

359 | ##### ii、基于自建数据集

360 | 如果使用自建数据集进行训练,则需要首先格式化数据集。

361 |

362 | 您需要以这种格式排列数据集。

363 | ```

364 | 📦 project/

365 | ├── 📂 datasets/

366 | │ ├── 📂 internal_datasets/

367 | │ ├── 📂 train/

368 | │ │ ├── 📄 00000001.jpg

369 | │ │ ├── 📄 00000002.jpg

370 | │ │ └── 📄 .....

371 | │ └── 📄 json_of_internal_datasets.json

372 | ```

373 |

374 | json_of_internal_datasets.json是一个标准的json文件,如下所示:

375 | ```json

376 | [

377 | {

378 | "file_path": "train/00000001.jpg",

379 | "text": "A group of young men in suits and sunglasses are walking down a city street.",

380 | "type": "image"

381 | },

382 | {

383 | "file_path": "train/00000002.jpg",

384 | "text": "A notepad with a drawing of a woman on it.",

385 | "type": "image"

386 | }

387 | .....

388 | ]

389 | ```

390 | json中的file_path需要设置为相对路径。

391 |

392 | 然后,进入scripts/train_lora.sh进行设置。

393 | ```

394 | export DATASET_NAME="datasets/internal_datasets/"

395 | export DATASET_META_NAME="datasets/internal_datasets/json_of_internal_datasets.json"

396 |

397 | ...

398 |

399 | train_data_format="normal"

400 | ```

401 |

402 | 最后运行scripts/train_lora.sh。

403 | ```sh

404 | sh scripts/train_lora.sh

405 | ```

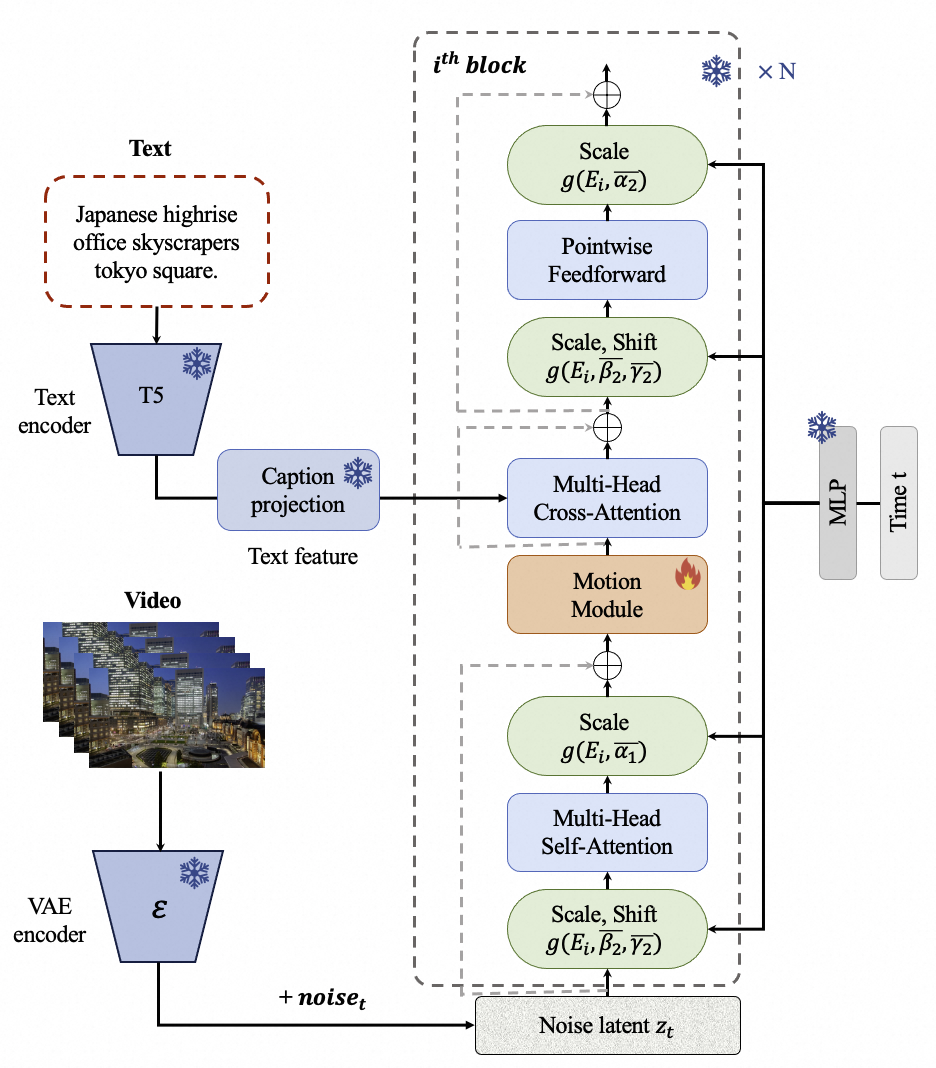

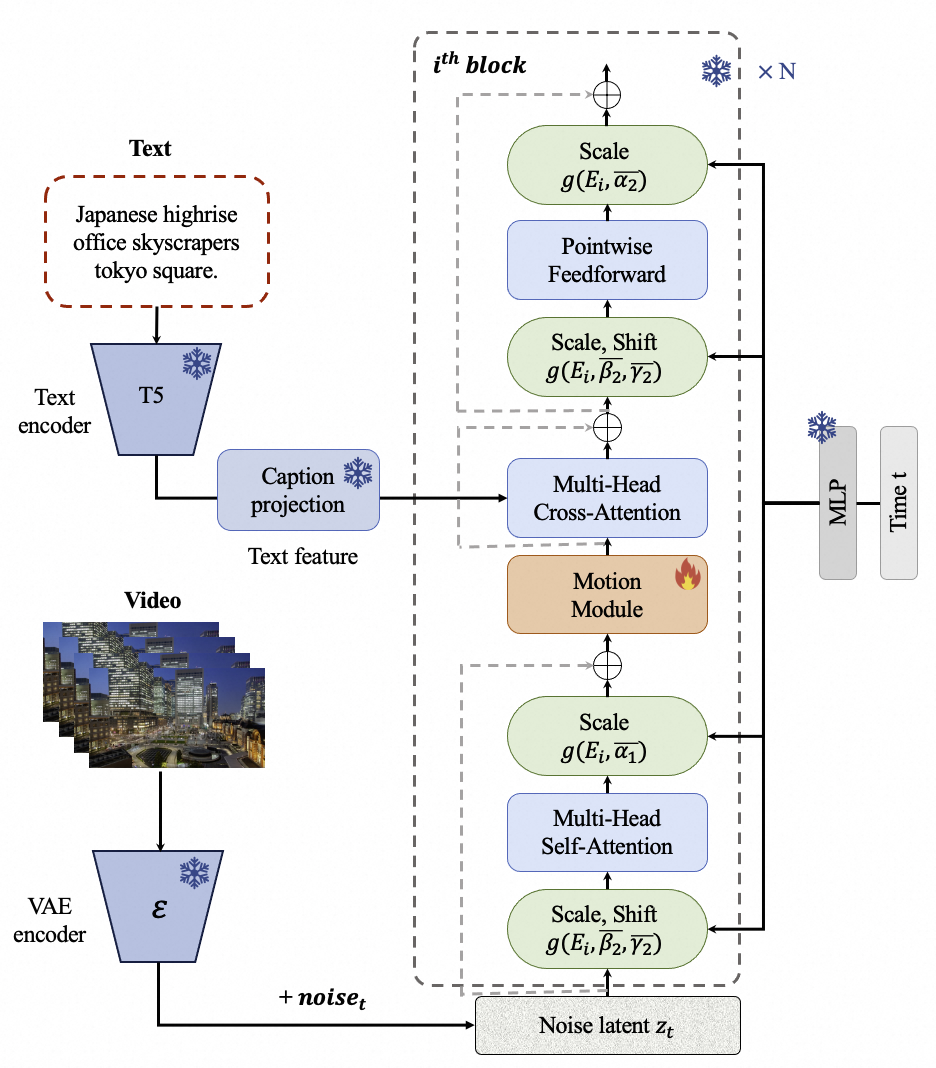

406 | # 算法细节

407 | 我们使用了[PixArt-alpha](https://github.com/PixArt-alpha/PixArt-alpha)作为基础模型,并在此基础上引入额外的运动模块(motion module)来将DiT模型从2D图像生成扩展到3D视频生成上来。其框架图如下:

408 |

409 |

410 |

411 |

8 |

9 | ### 1、Model Weights

10 | | Name | Type | Storage Space | Url | Description |

11 | |--|--|--|--|--|

12 | | easyanimate_v1_mm.safetensors | Motion Module | 4.1GB | [download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Motion_Module/easyanimate_v1_mm.safetensors) | ComfyUI/models/checkpoints |

13 | | PixArt-XL-2-512x512.tar | Pixart | 11.4GB | [download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Diffusion_Transformer/PixArt-XL-2-512x512.tar)| ComfyUI/models/diffusers (tar -xvf PixArt-XL-2-512x512.tar) |

14 |

15 | ### 2、Optional Model Weights

16 | | Name | Type | Storage Space | Url | Description |

17 | |--|--|--|--|--|

18 | | easyanimate_portrait.safetensors | Checkpoint of Pixart | 2.3GB | [download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Personalized_Model/easyanimate_portrait.safetensors) | ComfyUI/models/checkpoints |

19 | | easyanimate_portrait_lora.safetensors | Lora of Pixart | 654.0MB | [download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Personalized_Model/easyanimate_portrait_lora.safetensors)| ComfyUI/models/checkpoints

20 |

21 | ## [EasyAnimate](https://github.com/aigc-apps/EasyAnimate)

22 |

--------------------------------------------------------------------------------

/README_zh-CN.md:

--------------------------------------------------------------------------------

1 | # EasyAnimate | 您的智能生成器。

2 | 😊 EasyAnimate是一个用于生成长视频和训练基于transformer的扩散生成器的repo。

3 |

4 | 😊 我们基于类SORA结构与DIT,使用transformer进行作为扩散器进行视频生成。为了保证良好的拓展性,我们基于motion module构建了EasyAnimate,未来我们也会尝试更多的训练方案一提高效果。

5 |

6 | 😊 Welcome!

7 |

8 | [English](./README.md) | 简体中文

9 |

10 | # 目录

11 | - [目录](#目录)

12 | - [简介](#简介)

13 | - [TODO List](#todo-list)

14 | - [Model zoo](#model-zoo)

15 | - [1、运动权重](#1运动权重)

16 | - [2、其他权重](#2其他权重)

17 | - [快速启动](#快速启动)

18 | - [1. 云使用: AliyunDSW/Docker](#1-云使用-aliyundswdocker)

19 | - [2. 本地安装: 环境检查/下载/安装](#2-本地安装-环境检查下载安装)

20 | - [如何使用](#如何使用)

21 | - [1. 生成](#1-生成)

22 | - [2. 模型训练](#2-模型训练)

23 | - [算法细节](#算法细节)

24 | - [参考文献](#参考文献)

25 | - [许可证](#许可证)

26 |

27 | # 简介

28 | EasyAnimate是一个基于transformer结构的pipeline,可用于生成AI动画、训练Diffusion Transformer的基线模型与Lora模型,我们支持从已经训练好的EasyAnimate模型直接进行预测,生成不同分辨率,6秒左右、fps12的视频(40 ~ 80帧, 未来会支持更长的视频),也支持用户训练自己的基线模型与Lora模型,进行一定的风格变换。

29 |

30 | 我们会逐渐支持从不同平台快速启动,请参阅 [快速启动](#快速启动)。

31 |

32 | 新特性:

33 | - 创建代码!现在支持 Windows 和 Linux。[ 2024.04.12 ]

34 |

35 | 这些是我们的生成结果:

36 |

37 | 我们的ui界面如下:

38 |

39 |

40 | # TODO List

41 | - 支持更大分辨率的文视频生成模型。

42 | - 支持基于magvit的文视频生成模型。

43 | - 支持视频inpaint模型。

44 |

45 | # Model zoo

46 | ### 1、运动权重

47 | | 名称 | 种类 | 存储空间 | 下载地址 | 描述 |

48 | |--|--|--|--|--|

49 | | easyanimate_v1_mm.safetensors | Motion Module | 4.1GB | [download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Motion_Module/easyanimate_v1_mm.safetensors) | Training with 80 frames and fps 12 |

50 |

51 | ### 2、其他权重

52 | | 名称 | 种类 | 存储空间 | 下载地址 | 描述 |

53 | |--|--|--|--|--|

54 | | PixArt-XL-2-512x512.tar | Pixart | 11.4GB | [download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Diffusion_Transformer/PixArt-XL-2-512x512.tar)| Pixart-Alpha official weights |

55 | | easyanimate_portrait.safetensors | Checkpoint of Pixart | 2.3GB | [download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Personalized_Model/easyanimate_portrait.safetensors) | Training with internal portrait datasets |

56 | | easyanimate_portrait_lora.safetensors | Lora of Pixart | 654.0MB | [download](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Personalized_Model/easyanimate_portrait_lora.safetensors)| Training with internal portrait datasets |

57 |

58 |

59 | # 生成效果

60 | 在生成风景类animation时,采样器推荐使用DPM++和Euler A。在生成人像类animation时,采样器推荐使用Euler A和Euler。

61 |

62 | 有些时候Github无法正常显示大GIF,可以通过Download GIF下载到本地查看。

63 |

64 | 使用原始的pixart checkpoint进行预测。

65 |

66 | | Base Models | Sampler | Seed | Resolution (h x w x f) | Prompt | GenerationResult | Download |

67 | | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ |

68 | | PixArt | DPM++ | 43 | 512x512x80 | A soaring drone footage captures the majestic beauty of a coastal cliff, its red and yellow stratified rock faces rich in color and against the vibrant turquoise of the sea. Seabirds can be seen taking flight around the cliff\'s precipices. |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/1-cliff.gif) |

69 | | PixArt | DPM++ | 43 | 448x640x80 | The video captures the majestic beauty of a waterfall cascading down a cliff into a serene lake. The waterfall, with its powerful flow, is the central focus of the video. The surrounding landscape is lush and green, with trees and foliage adding to the natural beauty of the scene. |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/2-waterfall.gif) |

70 | | PixArt | DPM++ | 43 | 704x384x80 | A vibrant scene of a snowy mountain landscape. The sky is filled with a multitude of colorful hot air balloons, each floating at different heights, creating a dynamic and lively atmosphere. The balloons are scattered across the sky, some closer to the viewer, others further away, adding depth to the scene. |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/3-snowy.gif) |

71 | | PixArt | DPM++ | 43 | 448x640x64 | The vibrant beauty of a sunflower field. The sunflowers, with their bright yellow petals and dark brown centers, are in full bloom, creating a stunning contrast against the green leaves and stems. The sunflowers are arranged in neat rows, creating a sense of order and symmetry. |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/4-sunflower.gif) |

72 | | PixArt | DPM++ | 43 | 384x704x48 | A tranquil Vermont autumn, with leaves in vibrant colors of orange and red fluttering down a mountain stream. |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/5-autumn.gif) |

73 | | PixArt | DPM++ | 43 | 704x384x48 | A vibrant underwater scene. A group of blue fish, with yellow fins, are swimming around a coral reef. The coral reef is a mix of brown and green, providing a natural habitat for the fish. The water is a deep blue, indicating a depth of around 30 feet. The fish are swimming in a circular pattern around the coral reef, indicating a sense of motion and activity. The overall scene is a beautiful representation of marine life. |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/6-underwater.gif) |

74 | | PixArt | DPM++ | 43 | 576x448x48 | Pacific coast, carmel by the blue sea ocean and peaceful waves |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/7-coast.gif) |

75 | | PixArt | DPM++ | 43 | 576x448x80 | A snowy forest landscape with a dirt road running through it. The road is flanked by trees covered in snow, and the ground is also covered in snow. The sun is shining, creating a bright and serene atmosphere. The road appears to be empty, and there are no people or animals visible in the video. The style of the video is a natural landscape shot, with a focus on the beauty of the snowy forest and the peacefulness of the road. |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/8-forest.gif) |

76 | | PixArt | DPM++ | 43 | 640x448x64 | The dynamic movement of tall, wispy grasses swaying in the wind. The sky above is filled with clouds, creating a dramatic backdrop. The sunlight pierces through the clouds, casting a warm glow on the scene. The grasses are a mix of green and brown, indicating a change in seasons. The overall style of the video is naturalistic, capturing the beauty of the landscape in a realistic manner. The focus is on the grasses and their movement, with the sky serving as a secondary element. The video does not contain any human or animal elements. | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/9-grasses.gif) |

77 | | PixArt | DPM++ | 43 | 704x384x80 | A serene night scene in a forested area. The first frame shows a tranquil lake reflecting the star-filled sky above. The second frame reveals a beautiful sunset, casting a warm glow over the landscape. The third frame showcases the night sky, filled with stars and a vibrant Milky Way galaxy. The video is a time-lapse, capturing the transition from day to night, with the lake and forest serving as a constant backdrop. The style of the video is naturalistic, emphasizing the beauty of the night sky and the peacefulness of the forest. | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/10-night.gif) |

78 | | PixArt | DPM++ | 43 | 640x448x80 | Sunset over the sea. |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/11-sunset.gif) |

79 |

80 | 使用人像checkpoint进行预测。

81 |

82 | | Base Models | Sampler | Seed | Resolution (h x w x f) | Prompt | GenerationResult | Download |

83 | | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ |

84 | | Portrait | Euler A | 43 | 448x576x80 | 1girl, 3d, black hair, brown eyes, earrings, grey background, jewelry, lips, long hair, looking at viewer, photo \\(medium\\), realistic, red lips, solo |  | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/1-check.gif) |

85 | | Portrait | Euler A | 43 | 448x576x80 | 1girl, bare shoulders, blurry, brown eyes, dirty, dirty face, freckles, lips, long hair, looking at viewer, realistic, sleeveless, solo, upper body | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/2-check.gif) |

86 | | Portrait | Euler A | 43 | 512x512x64 | 1girl, black hair, brown eyes, earrings, grey background, jewelry, lips, looking at viewer, mole, mole under eye, neck tattoo, nose, ponytail, realistic, shirt, simple background, solo, tattoo | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/3-check.gif) |

87 | | Portrait | Euler A | 43 | 576x448x64 | 1girl, black hair, lips, looking at viewer, mole, mole under eye, mole under mouth, realistic, solo | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/5-check.gif) |

88 |

89 | 使用人像Lora进行预测。

90 |

91 | | Base Models | Sampler | Seed | Resolution (h x w x f) | Prompt | GenerationResult | Download |

92 | | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ |

93 | | Pixart + Lora | Euler A | 43 | 512x512x64 | 1girl, 3d, black hair, brown eyes, earrings, grey background, jewelry, lips, long hair, looking at viewer, photo \\(medium\\), realistic, red lips, solo | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/1-lora.gif) |

94 | | Pixart + Lora | Euler A | 43 | 512x512x64 | 1girl, bare shoulders, blurry, brown eyes, dirty, dirty face, freckles, lips, long hair, looking at viewer, mole, mole on breast, mole on neck, mole under eye, mole under mouth, realistic, sleeveless, solo, upper body | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/2-lora.gif) |

95 | | Pixart + Lora | Euler A | 43 | 512x512x64 | 1girl, black hair, lips, looking at viewer, mole, mole under eye, mole under mouth, realistic, solo | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/5-lora.gif) |

96 | | Pixart + Lora | Euler A | 43 | 512x512x80 | 1girl, bare shoulders, blurry, blurry background, blurry foreground, bokeh, brown eyes, christmas tree, closed mouth, collarbone, depth of field, earrings, jewelry, lips, long hair, looking at viewer, photo \\(medium\\), realistic, smile, solo | | [Download GIF](https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/asset/8-lora.gif) |

97 |

98 | # 快速启动

99 | ### 1. 云使用: AliyunDSW/Docker

100 | #### a. 通过阿里云 DSW

101 | 敬请期待。

102 |

103 | #### b. 通过docker

104 | 使用docker的情况下,请保证机器中已经正确安装显卡驱动与CUDA环境,然后以此执行以下命令:

105 | ```

106 | # 拉取镜像

107 | docker pull mybigpai-public-registry.cn-beijing.cr.aliyuncs.com/easycv/torch_cuda:easyanimate

108 |

109 | # 进入镜像

110 | docker run -it -p 7860:7860 --network host --gpus all --security-opt seccomp:unconfined --shm-size 200g mybigpai-public-registry.cn-beijing.cr.aliyuncs.com/easycv/torch_cuda:easyanimate

111 |

112 | # clone 代码

113 | git clone https://github.com/aigc-apps/EasyAnimate.git

114 |

115 | # 进入EasyAnimate文件夹

116 | cd EasyAnimate

117 |

118 | # 下载权重

119 | mkdir models/Diffusion_Transformer

120 | mkdir models/Motion_Module

121 | mkdir models/Personalized_Model

122 |

123 | wget https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Motion_Module/easyanimate_v1_mm.safetensors -O models/Motion_Module/easyanimate_v1_mm.safetensors

124 | wget https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Personalized_Model/easyanimate_portrait.safetensors -O models/Personalized_Model/easyanimate_portrait.safetensors

125 | wget https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Personalized_Model/easyanimate_portrait_lora.safetensors -O models/Personalized_Model/easyanimate_portrait_lora.safetensors

126 | wget https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/easyanimate/Diffusion_Transformer/PixArt-XL-2-512x512.tar -O models/Diffusion_Transformer/PixArt-XL-2-512x512.tar

127 |

128 | cd models/Diffusion_Transformer/

129 | tar -xvf PixArt-XL-2-512x512.tar

130 | cd ../../

131 | ```

132 |

133 | ### 2. 本地安装: 环境检查/下载/安装

134 | #### a. 环境检查

135 | 我们已验证EasyAnimate可在以下环境中执行:

136 |

137 | Linux 的详细信息:

138 | - 操作系统 Ubuntu 20.04, CentOS

139 | - python: python3.10 & python3.11

140 | - pytorch: torch2.2.0

141 | - CUDA: 11.8

142 | - CUDNN: 8+

143 | - GPU: Nvidia-A10 24G & Nvidia-A100 40G & Nvidia-A100 80G

144 |

145 | 我们需要大约 60GB 的可用磁盘空间,请检查!

146 |

147 | #### b. 权重放置

148 | 我们最好将权重按照指定路径进行放置:

149 |

150 | ```

151 | 📦 models/

152 | ├── 📂 Diffusion_Transformer/

153 | │ └── 📂 PixArt-XL-2-512x512/

154 | ├── 📂 Motion_Module/

155 | │ └── 📄 easyanimate_v1_mm.safetensors

156 | ├── 📂 Motion_Module/

157 | │ ├── 📄 easyanimate_portrait.safetensors

158 | │ └── 📄 easyanimate_portrait_lora.safetensors

159 | ```

160 |

161 | # 如何使用

162 | ### 1. 生成

163 | #### a. 视频生成

164 | ##### i、运行python文件

165 | - 步骤1:下载对应权重放入models文件夹。

166 | - 步骤2:在predict_t2v.py文件中修改prompt、neg_prompt、guidance_scale和seed。

167 | - 步骤3:运行predict_t2v.py文件,等待生成结果,结果保存在samples/easyanimate-videos文件夹中。

168 | - 步骤4:如果想结合自己训练的其他backbone与Lora,则看情况修改predict_t2v.py中的predict_t2v.py和lora_path。

169 |

170 | ##### ii、通过ui界面

171 | - 步骤1:下载对应权重放入models文件夹。

172 | - 步骤2:运行app.py文件,进入gradio页面。

173 | - 步骤3:根据页面选择生成模型,填入prompt、neg_prompt、guidance_scale和seed等,点击生成,等待生成结果,结果保存在sample文件夹中。

174 |

175 | ### 2. 模型训练

176 | #### a、训练视频生成模型

177 | ##### i、基于webvid数据集

178 | 如果使用webvid数据集进行训练,则需要首先下载webvid的数据集。

179 |

180 | 您需要以这种格式排列webvid数据集。

181 | ```

182 | 📦 project/

183 | ├── 📂 datasets/

184 | │ ├── 📂 webvid/

185 | │ ├── 📂 videos/

186 | │ │ ├── 📄 00000001.mp4

187 | │ │ ├── 📄 00000002.mp4

188 | │ │ └── 📄 .....

189 | │ └── 📄 csv_of_webvid.csv

190 | ```

191 |

192 | 然后,进入scripts/train_t2v.sh进行设置。

193 | ```

194 | export DATASET_NAME="datasets/webvid/videos/"

195 | export DATASET_META_NAME="datasets/webvid/csv_of_webvid.csv"

196 |

197 | ...

198 |

199 | train_data_format="webvid"

200 | ```

201 |

202 | 最后运行scripts/train_t2v.sh。

203 | ```sh

204 | sh scripts/train_t2v.sh

205 | ```

206 |

207 | ##### ii、基于自建数据集

208 | 如果使用内部数据集进行训练,则需要首先格式化数据集。

209 |

210 | 您需要以这种格式排列数据集。

211 | ```

212 | 📦 project/

213 | ├── 📂 datasets/

214 | │ ├── 📂 internal_datasets/

215 | │ ├── 📂 videos/

216 | │ │ ├── 📄 00000001.mp4

217 | │ │ ├── 📄 00000002.mp4

218 | │ │ └── 📄 .....

219 | │ └── 📄 json_of_internal_datasets.json

220 | ```

221 |

222 | json_of_internal_datasets.json是一个标准的json文件,如下所示:

223 | ```json

224 | [

225 | {

226 | "file_path": "videos/00000001.mp4",

227 | "text": "A group of young men in suits and sunglasses are walking down a city street.",

228 | "type": "video"

229 | },

230 | {

231 | "file_path": "videos/00000002.mp4",

232 | "text": "A notepad with a drawing of a woman on it.",

233 | "type": "video"

234 | }

235 | .....

236 | ]

237 | ```

238 | json中的file_path需要设置为相对路径。

239 |

240 | 然后,进入scripts/train_t2v.sh进行设置。

241 | ```

242 | export DATASET_NAME="datasets/internal_datasets/"

243 | export DATASET_META_NAME="datasets/internal_datasets/json_of_internal_datasets.json"

244 |

245 | ...

246 |

247 | train_data_format="normal"

248 | ```

249 |

250 | 最后运行scripts/train_t2v.sh。

251 | ```sh

252 | sh scripts/train_t2v.sh

253 | ```

254 |

255 | #### b、训练基础文生图模型

256 | ##### i、基于diffusers格式

257 | 数据集的格式可以设置为diffusers格式。

258 |

259 | ```

260 | 📦 project/

261 | ├── 📂 datasets/

262 | │ ├── 📂 diffusers_datasets/

263 | │ ├── 📂 train/

264 | │ │ ├── 📄 00000001.jpg

265 | │ │ ├── 📄 00000002.jpg

266 | │ │ └── 📄 .....

267 | │ └── 📄 metadata.jsonl

268 | ```

269 |

270 | 然后,进入scripts/train_t2i.sh进行设置。

271 | ```

272 | export DATASET_NAME="datasets/diffusers_datasets/"

273 |

274 | ...

275 |

276 | train_data_format="diffusers"

277 | ```

278 |

279 | 最后运行scripts/train_t2i.sh。

280 | ```sh

281 | sh scripts/train_t2i.sh

282 | ```

283 | ##### ii、基于自建数据集

284 | 如果使用自建数据集进行训练,则需要首先格式化数据集。

285 |

286 | 您需要以这种格式排列数据集。

287 | ```

288 | 📦 project/

289 | ├── 📂 datasets/

290 | │ ├── 📂 internal_datasets/

291 | │ ├── 📂 train/

292 | │ │ ├── 📄 00000001.jpg

293 | │ │ ├── 📄 00000002.jpg

294 | │ │ └── 📄 .....

295 | │ └── 📄 json_of_internal_datasets.json

296 | ```

297 |

298 | json_of_internal_datasets.json是一个标准的json文件,如下所示:

299 | ```json

300 | [

301 | {

302 | "file_path": "train/00000001.jpg",

303 | "text": "A group of young men in suits and sunglasses are walking down a city street.",

304 | "type": "image"

305 | },

306 | {

307 | "file_path": "train/00000002.jpg",

308 | "text": "A notepad with a drawing of a woman on it.",

309 | "type": "image"

310 | }

311 | .....

312 | ]

313 | ```

314 | json中的file_path需要设置为相对路径。

315 |

316 | 然后,进入scripts/train_t2i.sh进行设置。

317 | ```

318 | export DATASET_NAME="datasets/internal_datasets/"

319 | export DATASET_META_NAME="datasets/internal_datasets/json_of_internal_datasets.json"

320 |

321 | ...

322 |

323 | train_data_format="normal"

324 | ```

325 |

326 | 最后运行scripts/train_t2i.sh。

327 | ```sh

328 | sh scripts/train_t2i.sh

329 | ```

330 |

331 | #### c、训练Lora模型

332 | ##### i、基于diffusers格式

333 | 数据集的格式可以设置为diffusers格式。

334 | ```

335 | 📦 project/

336 | ├── 📂 datasets/

337 | │ ├── 📂 diffusers_datasets/

338 | │ ├── 📂 train/

339 | │ │ ├── 📄 00000001.jpg

340 | │ │ ├── 📄 00000002.jpg

341 | │ │ └── 📄 .....

342 | │ └── 📄 metadata.jsonl

343 | ```

344 |

345 | 然后,进入scripts/train_lora.sh进行设置。

346 | ```

347 | export DATASET_NAME="datasets/diffusers_datasets/"

348 |

349 | ...

350 |

351 | train_data_format="diffusers"

352 | ```

353 |

354 | 最后运行scripts/train_lora.sh。

355 | ```sh

356 | sh scripts/train_lora.sh

357 | ```

358 |

359 | ##### ii、基于自建数据集

360 | 如果使用自建数据集进行训练,则需要首先格式化数据集。

361 |

362 | 您需要以这种格式排列数据集。

363 | ```

364 | 📦 project/

365 | ├── 📂 datasets/

366 | │ ├── 📂 internal_datasets/

367 | │ ├── 📂 train/

368 | │ │ ├── 📄 00000001.jpg

369 | │ │ ├── 📄 00000002.jpg

370 | │ │ └── 📄 .....

371 | │ └── 📄 json_of_internal_datasets.json

372 | ```

373 |

374 | json_of_internal_datasets.json是一个标准的json文件,如下所示:

375 | ```json

376 | [

377 | {

378 | "file_path": "train/00000001.jpg",

379 | "text": "A group of young men in suits and sunglasses are walking down a city street.",

380 | "type": "image"

381 | },

382 | {

383 | "file_path": "train/00000002.jpg",

384 | "text": "A notepad with a drawing of a woman on it.",

385 | "type": "image"

386 | }

387 | .....

388 | ]

389 | ```

390 | json中的file_path需要设置为相对路径。

391 |

392 | 然后,进入scripts/train_lora.sh进行设置。

393 | ```

394 | export DATASET_NAME="datasets/internal_datasets/"

395 | export DATASET_META_NAME="datasets/internal_datasets/json_of_internal_datasets.json"

396 |

397 | ...

398 |

399 | train_data_format="normal"

400 | ```

401 |

402 | 最后运行scripts/train_lora.sh。

403 | ```sh

404 | sh scripts/train_lora.sh

405 | ```

406 | # 算法细节

407 | 我们使用了[PixArt-alpha](https://github.com/PixArt-alpha/PixArt-alpha)作为基础模型,并在此基础上引入额外的运动模块(motion module)来将DiT模型从2D图像生成扩展到3D视频生成上来。其框架图如下:

408 |

409 |

410 |

411 |  412 |

413 |

414 |

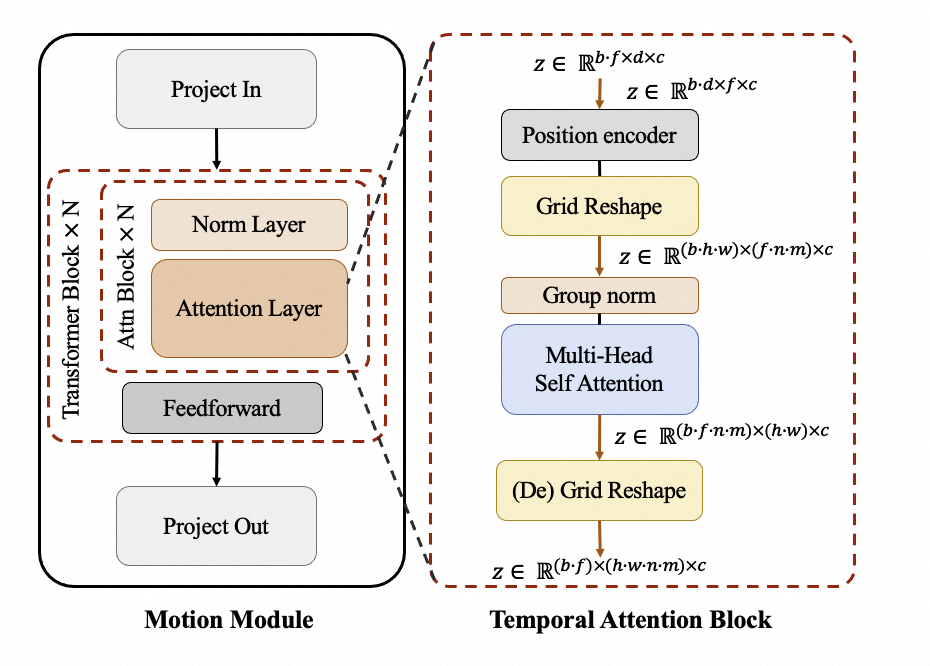

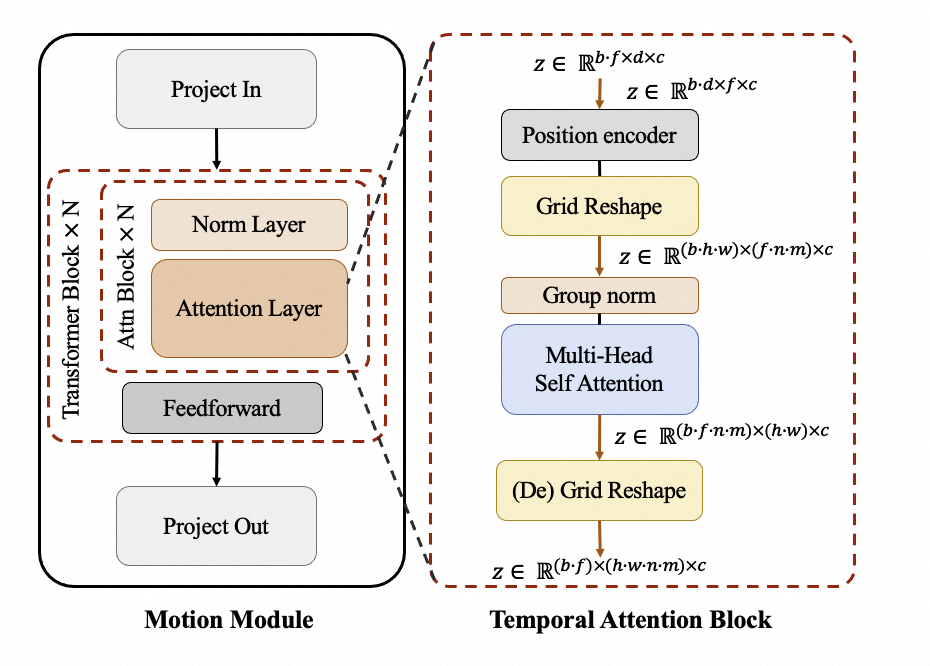

415 | 其中,Motion Module 用于捕捉时序维度的帧间关系,其结构如下:

416 |

417 |

418 |

419 |

412 |

413 |

414 |

415 | 其中,Motion Module 用于捕捉时序维度的帧间关系,其结构如下:

416 |

417 |

418 |

419 |  420 |

421 |

422 |

423 | 我们在时序维度上引入注意力机制来让模型学习时序信息,以进行连续视频帧的生成。同时,我们利用额外的网格计算(Grid Reshape),来扩大注意力机制的input token数目,从而更多地利用图像的空间信息以达到更好的生成效果。Motion Module 作为一个单独的模块,在推理时可以用在不同的DiT基线模型上。此外,EasyAnimate不仅支持了motion-module模块的训练,也支持了DiT基模型/LoRA模型的训练,以方便用户根据自身需要来完成自定义风格的模型训练,进而生成任意风格的视频。

424 |

425 |

426 | # 算法限制

427 | - 受

428 |

429 | # 参考文献

430 | - magvit: https://github.com/google-research/magvit

431 | - PixArt: https://github.com/PixArt-alpha/PixArt-alpha

432 | - Open-Sora-Plan: https://github.com/PKU-YuanGroup/Open-Sora-Plan

433 | - Open-Sora: https://github.com/hpcaitech/Open-Sora

434 | - Animatediff: https://github.com/guoyww/AnimateDiff

435 |

436 | # 许可证

437 | 本项目采用 [Apache License (Version 2.0)](https://github.com/modelscope/modelscope/blob/master/LICENSE).

438 |

--------------------------------------------------------------------------------

/__init__.py:

--------------------------------------------------------------------------------

1 | from .nodes import NODE_CLASS_MAPPINGS

2 |

3 | __all__ = ['NODE_CLASS_MAPPINGS']

--------------------------------------------------------------------------------

/app.py:

--------------------------------------------------------------------------------

1 | from easyanimate.ui.ui import ui

2 |

3 | if __name__ == "__main__":

4 | server_name = "0.0.0.0"

5 | demo = ui()

6 | demo.launch(server_name=server_name)

--------------------------------------------------------------------------------

/config/easyanimate_image_normal_v1.yaml:

--------------------------------------------------------------------------------

1 | noise_scheduler_kwargs:

2 | beta_start: 0.0001

3 | beta_end: 0.02

4 | beta_schedule: "linear"

5 | steps_offset: 1

6 |

7 | vae_kwargs:

8 | enable_magvit: false

--------------------------------------------------------------------------------

/config/easyanimate_video_long_sequence_v1.yaml:

--------------------------------------------------------------------------------

1 | transformer_additional_kwargs:

2 | patch_3d: false

3 | fake_3d: false

4 | basic_block_type: "selfattentiontemporal"

5 | time_position_encoding_before_transformer: true

6 |

7 | noise_scheduler_kwargs:

8 | beta_start: 0.0001

9 | beta_end: 0.02

10 | beta_schedule: "linear"

11 | steps_offset: 1

12 |

13 | vae_kwargs:

14 | enable_magvit: false

--------------------------------------------------------------------------------

/config/easyanimate_video_motion_module_v1.yaml:

--------------------------------------------------------------------------------

1 | transformer_additional_kwargs:

2 | patch_3d: false

3 | fake_3d: false

4 | basic_block_type: "motionmodule"

5 | time_position_encoding_before_transformer: false

6 | motion_module_type: "VanillaGrid"

7 |

8 | motion_module_kwargs:

9 | num_attention_heads: 8

10 | num_transformer_block: 1

11 | attention_block_types: [ "Temporal_Self", "Temporal_Self" ]

12 | temporal_position_encoding: true

13 | temporal_position_encoding_max_len: 4096

14 | temporal_attention_dim_div: 1

15 | block_size: 2

16 |

17 | noise_scheduler_kwargs:

18 | beta_start: 0.0001

19 | beta_end: 0.02

20 | beta_schedule: "linear"

21 | steps_offset: 1

22 |

23 | vae_kwargs:

24 | enable_magvit: false

--------------------------------------------------------------------------------

/datasets/put datasets here.txt:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/chaojie/ComfyUI-EasyAnimate/9bef69d1ceda9d300613488517af6cc66cf5c360/datasets/put datasets here.txt

--------------------------------------------------------------------------------

/easyanimate/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/chaojie/ComfyUI-EasyAnimate/9bef69d1ceda9d300613488517af6cc66cf5c360/easyanimate/__init__.py

--------------------------------------------------------------------------------

/easyanimate/data/bucket_sampler.py:

--------------------------------------------------------------------------------

1 | # Copyright (c) OpenMMLab. All rights reserved.

2 | import os

3 |

4 | import cv2

5 | import numpy as np

6 | from PIL import Image

7 | from torch.utils.data import BatchSampler, Dataset, Sampler

8 |

9 | ASPECT_RATIO_512 = {

10 | '0.25': [256.0, 1024.0], '0.26': [256.0, 992.0], '0.27': [256.0, 960.0], '0.28': [256.0, 928.0],

11 | '0.32': [288.0, 896.0], '0.33': [288.0, 864.0], '0.35': [288.0, 832.0], '0.4': [320.0, 800.0],

12 | '0.42': [320.0, 768.0], '0.48': [352.0, 736.0], '0.5': [352.0, 704.0], '0.52': [352.0, 672.0],

13 | '0.57': [384.0, 672.0], '0.6': [384.0, 640.0], '0.68': [416.0, 608.0], '0.72': [416.0, 576.0],

14 | '0.78': [448.0, 576.0], '0.82': [448.0, 544.0], '0.88': [480.0, 544.0], '0.94': [480.0, 512.0],

15 | '1.0': [512.0, 512.0], '1.07': [512.0, 480.0], '1.13': [544.0, 480.0], '1.21': [544.0, 448.0],

16 | '1.29': [576.0, 448.0], '1.38': [576.0, 416.0], '1.46': [608.0, 416.0], '1.67': [640.0, 384.0],

17 | '1.75': [672.0, 384.0], '2.0': [704.0, 352.0], '2.09': [736.0, 352.0], '2.4': [768.0, 320.0],

18 | '2.5': [800.0, 320.0], '2.89': [832.0, 288.0], '3.0': [864.0, 288.0], '3.11': [896.0, 288.0],

19 | '3.62': [928.0, 256.0], '3.75': [960.0, 256.0], '3.88': [992.0, 256.0], '4.0': [1024.0, 256.0]

20 | }

21 | ASPECT_RATIO_RANDOM_CROP_512 = {

22 | '0.42': [320.0, 768.0], '0.5': [352.0, 704.0],

23 | '0.57': [384.0, 672.0], '0.68': [416.0, 608.0], '0.78': [448.0, 576.0], '0.88': [480.0, 544.0],

24 | '0.94': [480.0, 512.0], '1.0': [512.0, 512.0], '1.07': [512.0, 480.0],

25 | '1.13': [544.0, 480.0], '1.29': [576.0, 448.0], '1.46': [608.0, 416.0], '1.75': [672.0, 384.0],

26 | '2.0': [704.0, 352.0], '2.4': [768.0, 320.0]

27 | }

28 | ASPECT_RATIO_RANDOM_CROP_PROB = [

29 | 1, 2,

30 | 4, 4, 4, 4,

31 | 8, 8, 8,

32 | 4, 4, 4, 4,

33 | 2, 1

34 | ]

35 | ASPECT_RATIO_RANDOM_CROP_PROB = np.array(ASPECT_RATIO_RANDOM_CROP_PROB) / sum(ASPECT_RATIO_RANDOM_CROP_PROB)

36 |

37 | def get_closest_ratio(height: float, width: float, ratios: dict = ASPECT_RATIO_512):

38 | aspect_ratio = height / width

39 | closest_ratio = min(ratios.keys(), key=lambda ratio: abs(float(ratio) - aspect_ratio))

40 | return ratios[closest_ratio], float(closest_ratio)

41 |

42 | def get_image_size_without_loading(path):

43 | with Image.open(path) as img:

44 | return img.size # (width, height)

45 |

46 | class AspectRatioBatchImageSampler(BatchSampler):

47 | """A sampler wrapper for grouping images with similar aspect ratio into a same batch.

48 |

49 | Args:

50 | sampler (Sampler): Base sampler.

51 | dataset (Dataset): Dataset providing data information.

52 | batch_size (int): Size of mini-batch.

53 | drop_last (bool): If ``True``, the sampler will drop the last batch if

54 | its size would be less than ``batch_size``.

55 | aspect_ratios (dict): The predefined aspect ratios.

56 | """

57 | def __init__(

58 | self,

59 | sampler: Sampler,

60 | dataset: Dataset,

61 | batch_size: int,

62 | train_folder: str = None,

63 | aspect_ratios: dict = ASPECT_RATIO_512,

64 | drop_last: bool = False,

65 | config=None,

66 | **kwargs

67 | ) -> None:

68 | if not isinstance(sampler, Sampler):

69 | raise TypeError('sampler should be an instance of ``Sampler``, '

70 | f'but got {sampler}')

71 | if not isinstance(batch_size, int) or batch_size <= 0:

72 | raise ValueError('batch_size should be a positive integer value, '

73 | f'but got batch_size={batch_size}')

74 | self.sampler = sampler

75 | self.dataset = dataset

76 | self.train_folder = train_folder

77 | self.batch_size = batch_size

78 | self.aspect_ratios = aspect_ratios

79 | self.drop_last = drop_last

80 | self.config = config

81 | # buckets for each aspect ratio

82 | self._aspect_ratio_buckets = {ratio: [] for ratio in aspect_ratios}

83 | # [str(k) for k, v in aspect_ratios]

84 | self.current_available_bucket_keys = list(aspect_ratios.keys())

85 |

86 | def __iter__(self):

87 | for idx in self.sampler:

88 | try:

89 | image_dict = self.dataset[idx]

90 |

91 | image_id, name = image_dict['file_path'], image_dict['text']

92 | if self.train_folder is None:

93 | image_dir = image_id

94 | else:

95 | image_dir = os.path.join(self.train_folder, image_id)

96 |

97 | width, height = get_image_size_without_loading(image_dir)

98 |

99 | ratio = height / width # self.dataset[idx]

100 | except Exception as e:

101 | print(e)

102 | continue

103 | # find the closest aspect ratio

104 | closest_ratio = min(self.aspect_ratios.keys(), key=lambda r: abs(float(r) - ratio))

105 | if closest_ratio not in self.current_available_bucket_keys:

106 | continue

107 | bucket = self._aspect_ratio_buckets[closest_ratio]

108 | bucket.append(idx)

109 | # yield a batch of indices in the same aspect ratio group

110 | if len(bucket) == self.batch_size:

111 | yield bucket[:]

112 | del bucket[:]

113 |