├── .idea

├── TIANCHI_Project.iml

├── misc.xml

└── modules.xml

├── Ad_Convert_prediction

├── README.md

├── data

│ ├── round1_ijcai_18_result_demo_20180301.txt

│ └── 数据说明.txt

├── doc

│ ├── paper

│ │ ├── Factorization Machines with libFM.pdf

│ │ ├── Factorization Machines--Steffen Rendle.pdf

│ │ ├── Field-aware Factorization Machines for CTR Prediction.pdf

│ │ ├── Field-aware Factorization Machines in a Real-world Online Advertising System-ind0438-juanA.pdf

│ │ ├── Recurrent Neural Networks with Top-k Gains for Session-based Recommendations.pdf

│ │ ├── SESSION-BASED RECOMMENDATIONS WITH RECURRENT NEURAL NETWORKS.pdf

│ │ ├── Wide & Deep Learning for Recommender Systems.pdf

│ │ ├── XGBoost A Scalable Tree Boosting System.pdf

│ │ ├── 【ECIR-16-FNN】Deep Learning over Multi-field Categorical Data--A Case Study on User Response Prediction.pdf

│ │ ├── 【IJCAI-17】-DeepFM-A Factorization-Machine based Neural Network for CTR Prediction.pdf

│ │ ├── 【NIPS-2017】lightgbm-a-highly-efficient-gradient-boosting-decision-tree.pdf

│ │ └── 【SIGIR-17】Neural Factorization Machines for r Sparse Predictive Analytics.pdf

│ └── 基于深度学习的搜索广告点击率预测方法研究.pdf

└── src

│ ├── Data_Preprocess.py

│ └── dnn_42.ipynb

├── Click_prediction

├── README.md

├── code

│ ├── Logloss.py

│ ├── blagging.py

│ ├── ctr

│ │ ├── Preprocess.py

│ │ ├── ctr.ipynb

│ │ └── ffm.py

│ ├── ctr_nn

│ │ ├── Main.py

│ │ ├── Models.py

│ │ ├── Utils.py

│ │ └── __init__.py

│ └── cvr

│ │ ├── 1.problem_setting.ipynb

│ │ ├── 2.Baseline_version.ipynb

│ │ ├── 3.feature_engineering_and_machine_learning.ipynb

│ │ └── README.md

├── data

│ ├── data.pdf

│ ├── data_description.pdf

│ ├── download.sh

│ └── tencent_数据说明

│ │ ├── Tencent_cvr_prediction.png

│ │ ├── data_dscr_4.png

│ │ ├── data_dscr_5.png

│ │ ├── 上下文特征.png

│ │ ├── 广告特征.png

│ │ └── 用户特征.png

├── doc

│ ├── 8课下课件-张伟楠.pdf

│ ├── Ad click prediction a view from the trenches.pdf

│ ├── ffm.txt

│ ├── fm.txt

│ └── 资料.txt

├── libffm

│ └── libffm

│ │ ├── COPYRIGHT

│ │ ├── Makefile

│ │ ├── Makefile.win

│ │ ├── README

│ │ ├── ffm-predict

│ │ ├── ffm-predict.cpp

│ │ ├── ffm-train

│ │ ├── ffm-train.cpp

│ │ ├── ffm.cpp

│ │ ├── ffm.h

│ │ ├── ffm.o

│ │ ├── timer.cpp

│ │ ├── timer.h

│ │ └── timer.o

├── libfm

│ └── libfm

│ │ ├── Makefile

│ │ ├── README.md

│ │ ├── bin

│ │ ├── convert

│ │ ├── fm_model

│ │ ├── libFM

│ │ └── transpose

│ │ ├── license.txt

│ │ ├── scripts

│ │ └── triple_format_to_libfm.pl

│ │ └── src

│ │ ├── fm_core

│ │ ├── fm_data.h

│ │ ├── fm_model.h

│ │ └── fm_sgd.h

│ │ ├── libfm

│ │ ├── Makefile

│ │ ├── libfm.cpp

│ │ ├── libfm.o

│ │ ├── src

│ │ │ ├── Data.h

│ │ │ ├── fm_learn.h

│ │ │ ├── fm_learn_mcmc.h

│ │ │ ├── fm_learn_mcmc_simultaneous.h

│ │ │ ├── fm_learn_sgd.h

│ │ │ ├── fm_learn_sgd_element.h

│ │ │ ├── fm_learn_sgd_element_adapt_reg.h

│ │ │ └── relation.h

│ │ └── tools

│ │ │ ├── convert.cpp

│ │ │ ├── convert.o

│ │ │ ├── transpose.cpp

│ │ │ └── transpose.o

│ │ └── util

│ │ ├── cmdline.h

│ │ ├── fmatrix.h

│ │ ├── matrix.h

│ │ ├── memory.h

│ │ ├── random.h

│ │ ├── rlog.h

│ │ ├── smatrix.h

│ │ └── util.h

└── output

│ ├── criteo.jpg

│ ├── facebook.png

│ ├── ffm_formula.png

│ ├── fm_format.png

│ ├── fm_formula.png

│ ├── fm_formula2.png

│ ├── loss.png

│ ├── model.png

│ ├── tensorboard.png

│ └── train_info.png

├── Coupon_Usage_Predict

└── readme.md

├── Loan_risk_prediction

├── README.md

├── code

│ ├── XGBoost models.ipynb

│ ├── Xgboost调优示例.py

│ └── data_preparation.ipynb

├── data

│ ├── Test_bCtAN1w.csv

│ ├── Train_nyOWmfK.csv

│ └── train_modified.csv

└── doc

│ ├── README.md

│ ├── 不得直视本王-解决方案.pdf

│ ├── 创新应用.docx

│ ├── 最优分箱.docx

│ └── 风控算法大赛解决方案.pdf

├── PPD_RiskControl

├── README.md

└── doc

│ └── 风控算法大赛解决方案.pdf

├── README.md

├── Shangjialiuliang_predict

├── README.md

├── data

│ ├── results

│ │ ├── result_2017-03-11_model.csv

│ │ ├── result_2017-03-11_special_day_weather_huopot.csv

│ │ ├── result_2017-03-16_.csv

│ │ ├── result_2017-03-16_fuse.csv

│ │ ├── result_2017-03-16_special_day.csv

│ │ ├── result_2017-03-16_special_day_weather.csv

│ │ └── result_2017-03-16_special_day_weather_huopot.csv

│ ├── shop_info_name2Id

│ │ ├── cate_1_name.csv

│ │ ├── cate_2_name.csv

│ │ ├── cate_3_name.csv

│ │ ├── city_name.csv

│ │ ├── shop_info.csv

│ │ └── shop_info_num.csv

│ ├── statistics

│ │ ├── all_mon_week3_mean_med_var_std.csv

│ │ ├── city_weather.csv

│ │ ├── count_user_pay.csv

│ │ ├── count_user_pay_avg.csv

│ │ ├── count_user_pay_avg_no_header.csv

│ │ ├── count_user_view.csv

│ │ ├── result_avg7_common_with_last_week.csv

│ │ ├── shop_info.txt

│ │ ├── shop_info_num.csv

│ │ ├── shopid_day_num.txt

│ │ ├── weather-10-11.csv

│ │ ├── weather-11-14.csv

│ │ └── weather_city.csv

│ ├── test_train

│ │ ├── 2017-03-16_test_off_x.csv

│ │ ├── 2017-03-16_test_off_y.csv

│ │ ├── 2017-03-16_test_on_x.csv

│ │ ├── 2017-03-16_train_off_x.csv

│ │ ├── 2017-03-16_train_off_y.csv

│ │ ├── 2017-03-16_train_on_x.csv

│ │ └── 2017-03-16_train_on_y.csv

│ ├── weekABCD

│ │ ├── A.csv

│ │ ├── B.csv

│ │ ├── C.csv

│ │ ├── D.csv

│ │ ├── week0.csv

│ │ ├── week1.csv

│ │ ├── week2.csv

│ │ ├── week3.csv

│ │ ├── week4.csv

│ │ ├── weekA.csv

│ │ ├── weekA1.csv

│ │ ├── weekA_view.csv

│ │ ├── weekB.csv

│ │ ├── weekB1.csv

│ │ ├── weekB_view.csv

│ │ ├── weekC.csv

│ │ ├── weekC1.csv

│ │ ├── weekC_view.csv

│ │ ├── weekD.csv

│ │ ├── weekD1.csv

│ │ ├── weekD_view.csv

│ │ ├── weekP.csv

│ │ ├── weekP2.csv

│ │ ├── weekZ.csv

│ │ └── weekZ1.csv

│ └── weekABCD_0123

│ │ ├── A0.csv

│ │ ├── A1.csv

│ │ ├── A2.csv

│ │ ├── A3.csv

│ │ ├── B0.csv

│ │ ├── B1.csv

│ │ ├── B2.csv

│ │ ├── B3.csv

│ │ ├── C0.csv

│ │ ├── C1.csv

│ │ ├── C2.csv

│ │ ├── C3.csv

│ │ ├── D0.csv

│ │ ├── D1.csv

│ │ ├── D2.csv

│ │ └── D3.csv

├── doc

│ └── 资料.txt

├── main

│ └── __init__.py

├── notebook

│ ├── Untitled.ipynb

│ └── a.txt

├── pictures

│ ├── cate_shop_number

│ │ ├── cate_1.csv

│ │ ├── cate_1.png

│ │ ├── cate_2.csv

│ │ ├── cate_2.png

│ │ ├── cate_3.csv

│ │ └── cate_3.png

│ └── city_shop_number

│ │ ├── 0-50.png

│ │ ├── 101-121.png

│ │ ├── 51-100.png

│ │ ├── all.png

│ │ └── city_shop_number.csv

└── run.py

├── Tencent_Social_Ads

├── README.md

├── data

│ └── 数据说明.txt

├── doc

│ ├── 各代码功能说明.txt

│ └── 模型介绍.txt

├── notebook

│ └── _1_preprocess_data.ipynb

├── run.sh

└── src

│ ├── Ad_Utils.py

│ ├── Feature_joint.py

│ ├── Gen_ID_click_vectors.py

│ ├── Gen_app_install_features.py

│ ├── Gen_global_sum_counts.py

│ ├── Gen_smooth_cvr.py

│ ├── Gen_tricks.py

│ ├── Gen_tricks_final.py

│ ├── Gen_user_click_features.py

│ ├── Preprocess_Data.py

│ ├── Smooth.py

│ ├── __init__.py

│ └── ffm.py

└── Zhihuijiaotong

├── README.md

├── code

├── Preprocess.py

├── Related_lagging.py

├── Utils.py

├── Xgboost_Model.py

└── __init__.py

└── doc

└── “数聚华夏 创享未来”中国数据创新行——智慧交通预测挑战赛 _ 赛题与数据.html

/.idea/TIANCHI_Project.iml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

--------------------------------------------------------------------------------

/.idea/misc.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 | ApexVCS

5 |

6 |

7 |

--------------------------------------------------------------------------------

/.idea/modules.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

--------------------------------------------------------------------------------

/Ad_Convert_prediction/README.md:

--------------------------------------------------------------------------------

1 | # TIANCHI_Project

2 | 天池大数据比赛总结

3 |

4 | 数据下载链接:

--------------------------------------------------------------------------------

/Ad_Convert_prediction/data/round1_ijcai_18_result_demo_20180301.txt:

--------------------------------------------------------------------------------

1 | instance_id predicted_score

2 | 2475218615076601065 0.9

3 | 398316874173557226 0.7

4 | 6586402638209028583 0.5

5 | 1040996105851528465 0.3

6 | 6316278569655873454 0.1

7 |

--------------------------------------------------------------------------------

/Ad_Convert_prediction/data/数据说明.txt:

--------------------------------------------------------------------------------

1 | 基础数据

2 | 字段 解释 特征重要性(1-5排列,数值越大越重要)

3 | instance_id 样本编号,Long

4 | is_trade 是否交易的标记位,Int类型;取值是0或者1,其中1

5 | 表示这条样本最终产生交易,0 表示没有交易

6 | item_id 广告商品编号,Long类型

7 | user_id 用户的编号,Long类型

8 | context_id 上下文信息的编号,Long类型

9 | shop_id 店铺的编号,Long类型

10 |

11 |

12 |

13 |

14 | 广告商品信息

15 | 字段 解释

16 | item_id 广告商品编号,Long类型

17 | item_category_list 广告商品的的类目列表,String类型;从根类目(最粗略的一级类目)向叶子类目

18 | (最精细的类目)依次排列,数据拼接格式为 "category_0;category_1;category_2",其中 category_1 是 category_0 的子类目,

19 | category_2 是 category_1 的子类目

20 | item_property_list 广告商品的属性列表,String类型;数据拼接格式为 "property_0;property_1;property_2",各个属性没有从属关系

21 | item_brand_id 广告商品的品牌编号,Long类型

22 | item_city_id 广告商品的城市编号,Long类型

23 | item_price_level 广告商品的价格等级,Int类型;取值从0开始,数值越大表示价格越高

24 | item_sales_level 广告商品的销量等级,Int类型;取值从0开始,数值越大表示销量越大

25 | item_collected_level 广告商品被收藏次数的等级,Int类型;取值从0开始,数值越大表示被收藏次数越大

26 | item_pv_level 广告商品被展示次数的等级,Int类型;取值从0开始,数值越大表示被展示次数越大

27 |

28 |

29 | 用户信息

30 | 字段 解释

31 | user_id 用户的编号,Long类型

32 | user_gender_id 用户的预测性别编号,Int类型;0表示女性用户,1表示男性用户,2表示家庭用户

33 | user_age_level 用户的预测年龄等级,Int类型;数值越大表示年龄越大

34 | user_occupation_id 用户的预测职业编号,Int类型

35 | user_star_level 用户的星级编号,Int类型;数值越大表示用户的星级越高

36 |

37 |

38 |

39 | 上下文信息

40 | 字段 解释

41 | context_id 上下文信息的编号,Long类型

42 | context_timestamp 广告商品的展示时间,Long类型;取值是以秒为单位的Unix时间戳,以1天为单位对时间戳进行了偏移

43 | context_page_id 广告商品的展示页面编号,Int类型;取值从1开始,依次增加;在一次搜索的展示结果中第一屏的编号为1,第二屏的编号为2

44 | predict_category_property 根据查询词预测的类目属性列表,String类型;数据拼接格式为 “category_A:property_A_1,property_A_2,property_A_3;category_B:-1;category_C:property_C_1,property_C_2” ,其中 category_A、category_B、category_C 是预测的三个类目;property_B 取值为-1,表示预测的第二个类目 category_B 没有对应的预测属性

45 |

46 |

47 |

48 | 店铺信息

49 | 字段 解释

50 | shop_id 店铺的编号,Long类型

51 | shop_review_num_level 店铺的评价数量等级,Int类型;取值从0开始,数值越大表示评价数量越多

52 | shop_review_positive_rate 店铺的好评率,Double类型;取值在0到1之间,数值越大表示好评率越高

53 | shop_star_level 店铺的星级编号,Int类型;取值从0开始,数值越大表示店铺的星级越高

54 | shop_score_service 店铺的服务态度评分,Double类型;取值在0到1之间,数值越大表示评分越高

55 | shop_score_delivery 店铺的物流服务评分,Double类型;取值在0到1之间,数值越大表示评分越高

56 | shop_score_description 店铺的描述相符评分,Double类型;取值在0到1之间,数值越大表示评分越高

--------------------------------------------------------------------------------

/Ad_Convert_prediction/doc/paper/Factorization Machines with libFM.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Ad_Convert_prediction/doc/paper/Factorization Machines with libFM.pdf

--------------------------------------------------------------------------------

/Ad_Convert_prediction/doc/paper/Factorization Machines--Steffen Rendle.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Ad_Convert_prediction/doc/paper/Factorization Machines--Steffen Rendle.pdf

--------------------------------------------------------------------------------

/Ad_Convert_prediction/doc/paper/Field-aware Factorization Machines for CTR Prediction.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Ad_Convert_prediction/doc/paper/Field-aware Factorization Machines for CTR Prediction.pdf

--------------------------------------------------------------------------------

/Ad_Convert_prediction/doc/paper/Field-aware Factorization Machines in a Real-world Online Advertising System-ind0438-juanA.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Ad_Convert_prediction/doc/paper/Field-aware Factorization Machines in a Real-world Online Advertising System-ind0438-juanA.pdf

--------------------------------------------------------------------------------

/Ad_Convert_prediction/doc/paper/Recurrent Neural Networks with Top-k Gains for Session-based Recommendations.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Ad_Convert_prediction/doc/paper/Recurrent Neural Networks with Top-k Gains for Session-based Recommendations.pdf

--------------------------------------------------------------------------------

/Ad_Convert_prediction/doc/paper/SESSION-BASED RECOMMENDATIONS WITH RECURRENT NEURAL NETWORKS.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Ad_Convert_prediction/doc/paper/SESSION-BASED RECOMMENDATIONS WITH RECURRENT NEURAL NETWORKS.pdf

--------------------------------------------------------------------------------

/Ad_Convert_prediction/doc/paper/Wide & Deep Learning for Recommender Systems.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Ad_Convert_prediction/doc/paper/Wide & Deep Learning for Recommender Systems.pdf

--------------------------------------------------------------------------------

/Ad_Convert_prediction/doc/paper/XGBoost A Scalable Tree Boosting System.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Ad_Convert_prediction/doc/paper/XGBoost A Scalable Tree Boosting System.pdf

--------------------------------------------------------------------------------

/Ad_Convert_prediction/doc/paper/【ECIR-16-FNN】Deep Learning over Multi-field Categorical Data--A Case Study on User Response Prediction.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Ad_Convert_prediction/doc/paper/【ECIR-16-FNN】Deep Learning over Multi-field Categorical Data--A Case Study on User Response Prediction.pdf

--------------------------------------------------------------------------------

/Ad_Convert_prediction/doc/paper/【IJCAI-17】-DeepFM-A Factorization-Machine based Neural Network for CTR Prediction.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Ad_Convert_prediction/doc/paper/【IJCAI-17】-DeepFM-A Factorization-Machine based Neural Network for CTR Prediction.pdf

--------------------------------------------------------------------------------

/Ad_Convert_prediction/doc/paper/【NIPS-2017】lightgbm-a-highly-efficient-gradient-boosting-decision-tree.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Ad_Convert_prediction/doc/paper/【NIPS-2017】lightgbm-a-highly-efficient-gradient-boosting-decision-tree.pdf

--------------------------------------------------------------------------------

/Ad_Convert_prediction/doc/paper/【SIGIR-17】Neural Factorization Machines for r Sparse Predictive Analytics.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Ad_Convert_prediction/doc/paper/【SIGIR-17】Neural Factorization Machines for r Sparse Predictive Analytics.pdf

--------------------------------------------------------------------------------

/Ad_Convert_prediction/doc/基于深度学习的搜索广告点击率预测方法研究.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Ad_Convert_prediction/doc/基于深度学习的搜索广告点击率预测方法研究.pdf

--------------------------------------------------------------------------------

/Ad_Convert_prediction/src/Data_Preprocess.py:

--------------------------------------------------------------------------------

1 | #-*- coding:utf-8 _*-

2 |

3 | """

4 | @version:

5 | @author: CharlesXu

6 | @license: Q_S_Y_Q

7 | @file: Data_Preprocess.py

8 | @time: 2018/3/2 13:15

9 | @desc: 阿里妈妈广告点击转化数据预处理

10 | """

11 |

12 | import pandas as pd

13 | import numpy as np

14 |

15 | # 读取数据

16 | test_set = pd.read_csv('E:\dataset\TIANCHI_ad\\test.txt',sep=' ')

17 | train_set = pd.read_csv('E:\dataset\TIANCHI_ad\\train.txt', sep=' ')

18 | # print(test_set.info())

19 | # print(train_set.info())

20 |

21 | train_set['dayofweek'] = (train_set['context_timestamp']/(60*60*24)).apply(np.floor) % 7

22 | train_set['hourofday'] = (train_set['context_timestamp']/(60*60)).apply(np.floor)%24

23 | train_set['minofday'] = (train_set['context_timestamp']/(60)).apply(np.floor)%(24*60)

24 |

25 |

26 | test_set['is_trade'] = -1

27 | test_set['dayofweek'] = (test_set['context_timestamp']/(60*60*24)).apply(np.floor)%7

28 | test_set['hourofday'] = (test_set['context_timestamp']/(60*60)).apply(np.floor)%24

29 | test_set['minofday'] = (test_set['context_timestamp']/(60)).apply(np.floor)%(24*60)

30 |

31 | print((train_set['context_timestamp']/(60*60*24)).apply(np.floor).max())

32 |

33 |

34 |

35 |

36 | # if __name__ == '__main__':

37 | # pass

--------------------------------------------------------------------------------

/Click_prediction/README.md:

--------------------------------------------------------------------------------

1 | # kaggle_criteo_ctr_challenge-

2 | This is a kaggle challenge project called Display Advertising Challenge by CriteoLabs at 2014.

3 | 这是2014年由CriteoLabs在kaggle上发起的广告点击率预估挑战项目。

4 | 使用TensorFlow1.0和Python 3.5开发。

5 |

6 | 代码详解请参见jupyter notebook和↓↓↓

7 |

8 | 博客:http://blog.csdn.net/chengcheng1394/article/details/78940565

9 |

10 | 知乎专栏:https://zhuanlan.zhihu.com/p/32500652

11 |

12 | 欢迎转发扩散 ^_^

13 |

14 | 本文使用GBDT、FM、FFM和神经网络构建了点击率预估模型。

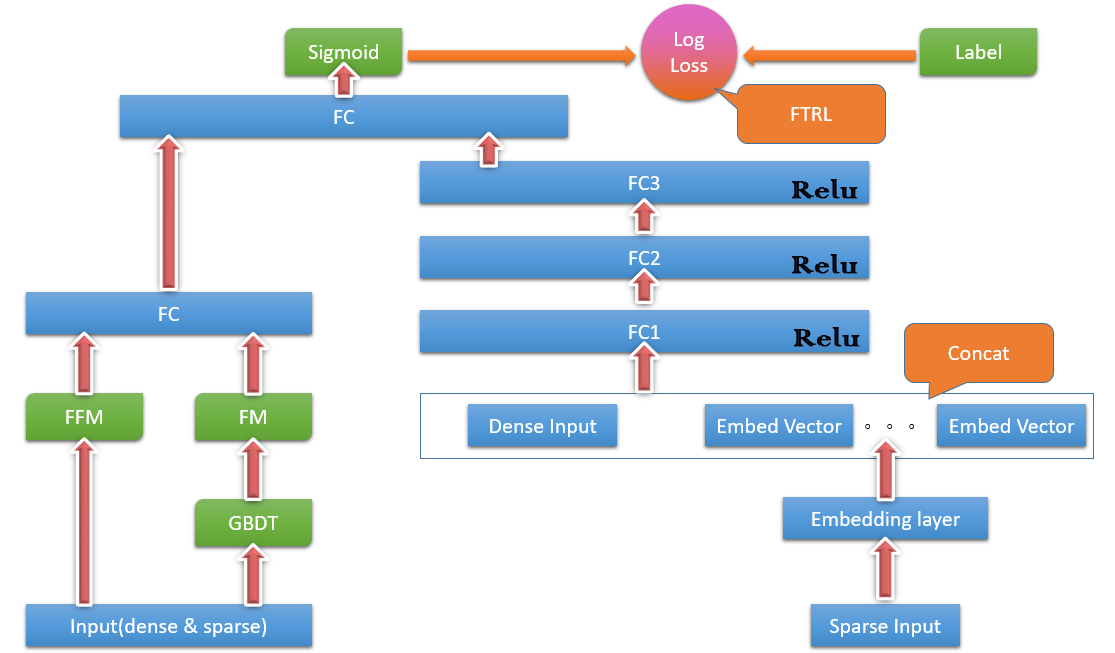

15 |

16 | ## 网络模型

17 |

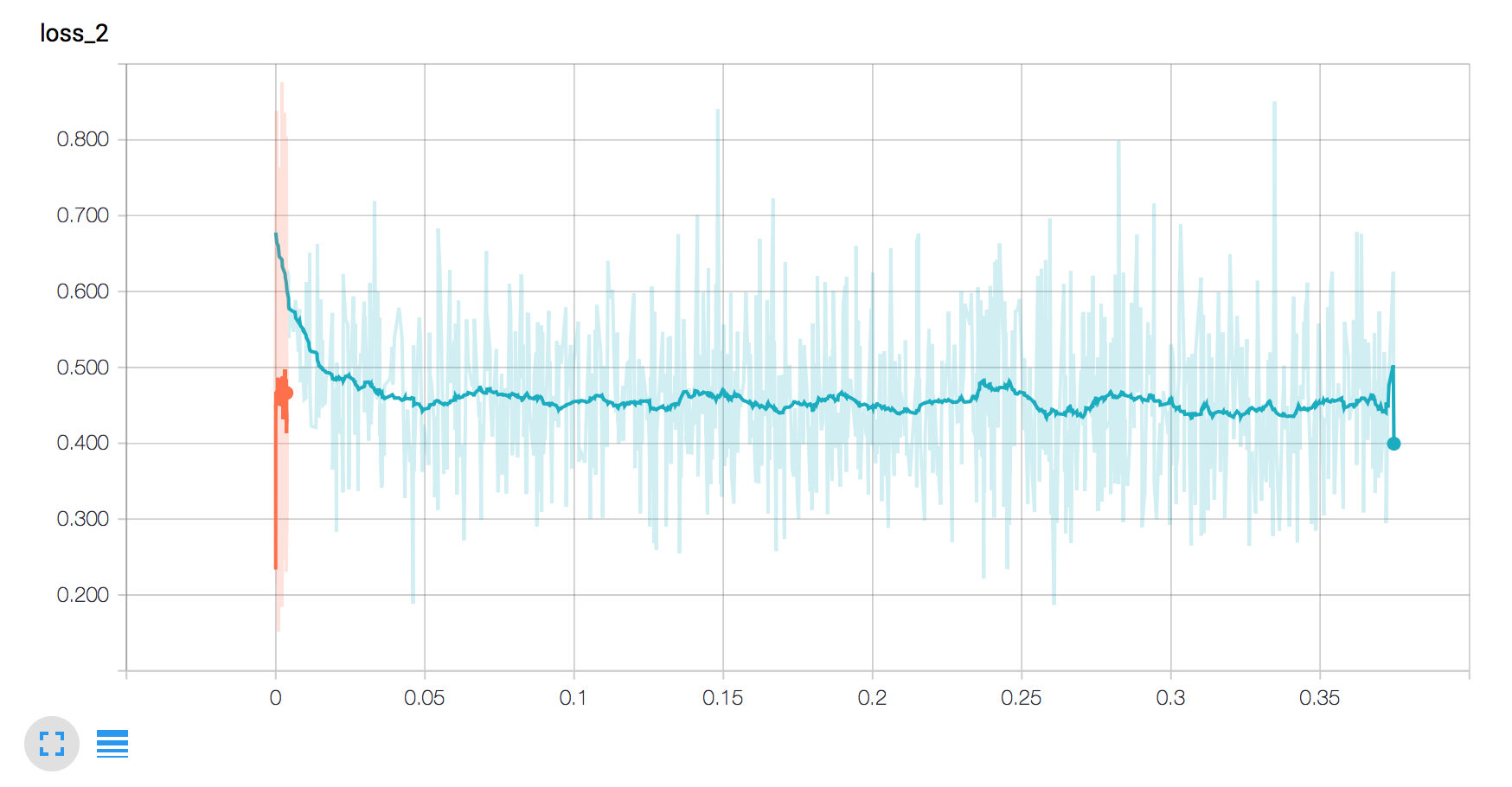

18 |

19 | ## LogLoss曲线

20 |

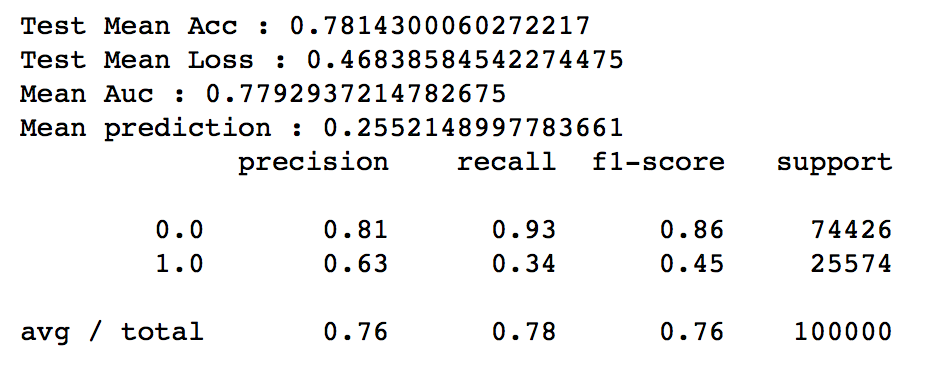

21 |

22 | ## 验证集上的训练信息

23 | - 平均准确率

24 | - 平均损失

25 | - 平均Auc

26 | - 预测的平均点击率

27 | - 精确率、召回率、F1 Score等信息

28 |

29 |

30 |

31 | 更多内容请参考代码,Enjoy!

32 |

--------------------------------------------------------------------------------

/Click_prediction/code/Logloss.py:

--------------------------------------------------------------------------------

1 | #-*- coding:utf-8 _*-

2 | """

3 | @author:charlesXu

4 | @file: Logloss.py

5 | @desc: 腾讯算法大赛logloss求法

6 | @time: 2018/03/04

7 | """

8 |

9 | import scipy as sp

10 |

11 | def logloss(act, pred):

12 | epsilon = 1e-15

13 | pred = sp.maximum(epsilon, pred)

14 | pred = sp.minimum(1-epsilon, pred)

15 | ll = sum(act * sp.log(pred) + sp.subtract(1, act) * sp.log(sp.subtract(1, pred)))

16 | ll = ll * - 1.0 / len(act)

17 | return ll

--------------------------------------------------------------------------------

/Click_prediction/code/ctr/Preprocess.py:

--------------------------------------------------------------------------------

1 | #-*- coding:utf-8 _*-

2 |

3 | """

4 | @version:

5 | @author: CharlesXu

6 | @license: Q_S_Y_Q

7 | @file: Preprocess.py

8 | @time: 2018/3/5 11:08

9 | @desc: 数据预处理

10 | """

11 |

12 | '''

13 | 生成神经网络的输入

14 | 生成ffm的输入

15 | 生成GBDT的输入

16 | '''

17 |

18 | continous_features = range(1, 14)

19 | categorial_features = range(14, 40)

20 |

21 |

22 |

23 |

24 |

25 | if __name__ == '__main__':

26 | pass

--------------------------------------------------------------------------------

/Click_prediction/code/ctr/ffm.py:

--------------------------------------------------------------------------------

1 | import subprocess,multiprocessing

2 | import os,time

3 | import pandas as pd

4 | import numpy as np

5 |

6 | class FFM:

7 | """libffm-1.21 Python Wrapper with libffm format data

8 |

9 | :Args:

10 | - reg_lambda: float, default: 2e-5

11 | regularization parameter

12 | - factor: int,default: 4

13 | number of latent factors

14 | - iteration: int, default: 15

15 | - learning_rate: float, default: 0.2

16 | - n_jobs: int, default: 1

17 | Number of parallel threads

18 | - verbose: int, default: 1

19 | - norm: bool, default: True

20 | instance-wise normalization

21 | """

22 | def __init__(self,reg_lambda=0.00002,factor=4,iteration=15,learning_rate=0.2,n_jobs=1,

23 | verbose=1,norm=True,):

24 | if n_jobs <=0 or n_jobs > multiprocessing.cpu_count():

25 | raise ValueError('n_jobs must be 1~{0}'.format(multiprocessing.cpu_count()))

26 |

27 | self.reg_lambda = reg_lambda

28 | self.factor = factor

29 | self.iteration = iteration

30 | self.learning_rate = learning_rate

31 | self.n_jobs = n_jobs

32 | self.verbose = verbose

33 | self.norm = norm

34 |

35 |

36 | self.cmd = ''

37 |

38 | self.output_name = 'ffm_result'+str(int(time.time()))# temp predict result file

39 |

40 |

41 |

42 |

43 | def fit(self,train_ffm_path,valid_ffm_path=None,model_path=None,auto_stop=False,):

44 | """ Train the FFM model with ffm-format data,

45 |

46 | :Args:

47 | - train_ffm_path: str

48 | - valid_ffm_path: str, default: None

49 | - model_path: str, default: None

50 | - auto_stop: bool, default: False

51 | stop at the iteration that achieves the best validation loss

52 | """

53 |

54 | if not os.path.exists(train_ffm_path):

55 | raise FileNotFoundError("file '{0}' not exists".format(train_ffm_path))

56 | self.train_ffm_path = train_ffm_path

57 | self.valid_ffm_path = valid_ffm_path

58 | self.model_path = None

59 | self.auto_stop = auto_stop

60 |

61 |

62 | cmd = 'ffm-train -l {l} -k {k} -t {t} -r {r} -s {s}'\

63 | .format(l=self.reg_lambda,k=self.factor,t=self.iteration,r=self.learning_rate,s=self.n_jobs)

64 | if self.valid_ffm_path is not None:

65 | cmd +=' -p {p}'.format(p=self.valid_ffm_path)

66 |

67 | if self.verbose == 0:

68 | cmd += ' --quiet'

69 | if not self.norm:

70 | cmd += ' --no-norm'

71 |

72 | if self.auto_stop:

73 | if self.valid_ffm_path is None:

74 | raise ValueError('Must specify valid_ffm_path when auto_stop = True')

75 | cmd += ' --auto-stop'

76 | cmd += ' {p}'.format(p=self.train_ffm_path)

77 | if not model_path is None:

78 | cmd +=' {p}'.format(p=model_path)

79 | self.model_path = model_path

80 | self.cmd = cmd

81 | print('Sending command...')

82 | popen = subprocess.Popen(cmd, stdout = subprocess.PIPE,shell=True)

83 | while True:

84 | output = str(popen.stdout.readline(),encoding='utf-8').strip('\n')

85 | if output.strip()=='':

86 | print('FFM training done')

87 | break

88 | print(output)

89 |

90 | def predict(self,test_ffm_path,model_path=None):

91 | """ Predict and return the probability of positive class.

92 |

93 | :Args:

94 | - test_ffm_path: str

95 | - model_path: str, default: None

96 | :returns:

97 | - pred_prob: np.array

98 | """

99 |

100 | cmd = "ffm-predict {t}".format(t=test_ffm_path)

101 | if model_path is None and self.model_path is None:

102 | raise ValueError('Must specify model_path')

103 | elif model_path is not None:

104 | self.model_path = model_path

105 | cmd +=" {0} {1}".format(self.model_path,self.output_name)

106 | self.cmd = cmd

107 | print('Sending command...')

108 | popen = subprocess.Popen(cmd, stdout = subprocess.PIPE,shell=True)

109 | while True:

110 | output = str(popen.stdout.readline(),encoding='utf-8').strip('\n')

111 | if output.strip()=='':

112 | print('FFM predicting done')

113 | break

114 | print(output)

115 |

116 | ans = pd.read_csv(self.output_name,names=['prob'])

117 | os.remove(self.output_name)

118 | return ans.prob.values

--------------------------------------------------------------------------------

/Click_prediction/code/ctr_nn/Main.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | from sklearn.metrics import roc_auc_score

3 |

4 | import Utils

5 | from Models import LR, FM, PNN1, PNN2, FNN, CCPM

6 |

7 | train_file = '../data/train.yx.txt'

8 | test_file = '../data/test.yx.txt'

9 | # fm_model_file = '../data/fm.model.txt'

10 |

11 | input_dim = Utils.INPUT_DIM

12 |

13 | train_data = Utils.read_data(train_file)

14 | train_data = Utils.shuffle(train_data)

15 | test_data = Utils.read_data(test_file)

16 |

17 | if train_data[1].ndim > 1:

18 | print ('label must be 1-dim')

19 | exit(0)

20 | print('read finish')

21 |

22 | train_size = train_data[0].shape[0]

23 | test_size = test_data[0].shape[0]

24 | num_feas = len(Utils.FIELD_SIZES)

25 |

26 | min_round = 1

27 | num_round = 1000

28 | early_stop_round = 50

29 | batch_size = 1024

30 |

31 | field_sizes = Utils.FIELD_SIZES

32 | field_offsets = Utils.FIELD_OFFSETS

33 |

34 |

35 | def train(model):

36 | history_score = []

37 | for i in range(num_round):

38 | fetches = [model.optimizer, model.loss]

39 | if batch_size > 0:

40 | ls = []

41 | for j in range(train_size / batch_size + 1):

42 | X_i, y_i = Utils.slice(train_data, j * batch_size, batch_size)

43 | _, l = model.run(fetches, X_i, y_i)

44 | ls.append(l)

45 | elif batch_size == -1:

46 | X_i, y_i = Utils.slice(train_data)

47 | _, l = model.run(fetches, X_i, y_i)

48 | ls = [l]

49 | train_preds = model.run(model.y_prob, Utils.slice(train_data)[0])

50 | test_preds = model.run(model.y_prob, Utils.slice(test_data)[0])

51 | train_score = roc_auc_score(train_data[1], train_preds)

52 | test_score = roc_auc_score(test_data[1], test_preds)

53 | print('[%d]\tloss (with l2 norm):%f\ttrain-auc: %f\teval-auc: %f' % (i, np.mean(ls), train_score, test_score))

54 | history_score.append(test_score)

55 | if i > min_round and i > early_stop_round:

56 | if np.argmax(history_score) == i - early_stop_round and history_score[-1] - history_score[

57 | -1 * early_stop_round] < 1e-5:

58 | print('early stop\nbest iteration:\n[%d]\teval-auc: %f' % (

59 | np.argmax(history_score), np.max(history_score)))

60 | break

61 |

62 |

63 | algo = 'pnn2'

64 |

65 | if algo == 'lr':

66 | lr_params = {

67 | 'input_dim': input_dim,

68 | 'opt_algo': 'gd',

69 | 'learning_rate': 0.01,

70 | 'l2_weight': 0,

71 | 'random_seed': 0

72 | }

73 |

74 | model = LR(**lr_params)

75 | elif algo == 'fm':

76 | fm_params = {

77 | 'input_dim': input_dim,

78 | 'factor_order': 10,

79 | 'opt_algo': 'gd',

80 | 'learning_rate': 0.1,

81 | 'l2_w': 0,

82 | 'l2_v': 0,

83 | }

84 |

85 | model = FM(**fm_params)

86 | elif algo == 'fnn':

87 | fnn_params = {

88 | 'layer_sizes': [field_sizes, 10, 1],

89 | 'layer_acts': ['tanh', 'none'],

90 | 'drop_out': [0, 0],

91 | 'opt_algo': 'gd',

92 | 'learning_rate': 0.1,

93 | 'layer_l2': [0, 0],

94 | 'random_seed': 0

95 | }

96 |

97 | model = FNN(**fnn_params)

98 | elif algo == 'ccpm':

99 | ccpm_params = {

100 | 'layer_sizes': [field_sizes, 10, 5, 3],

101 | 'layer_acts': ['tanh', 'tanh', 'none'],

102 | 'drop_out': [0, 0, 0],

103 | 'opt_algo': 'gd',

104 | 'learning_rate': 0.1,

105 | 'random_seed': 0

106 | }

107 |

108 | model = CCPM(**ccpm_params)

109 | elif algo == 'pnn1':

110 | pnn1_params = {

111 | 'layer_sizes': [field_sizes, 10, 1],

112 | 'layer_acts': ['tanh', 'none'],

113 | 'drop_out': [0, 0],

114 | 'opt_algo': 'gd',

115 | 'learning_rate': 0.1,

116 | 'layer_l2': [0, 0],

117 | 'kernel_l2': 0,

118 | 'random_seed': 0

119 | }

120 |

121 | model = PNN1(**pnn1_params)

122 | elif algo == 'pnn2':

123 | pnn2_params = {

124 | 'layer_sizes': [field_sizes, 10, 1],

125 | 'layer_acts': ['tanh', 'none'],

126 | 'drop_out': [0, 0],

127 | 'opt_algo': 'gd',

128 | 'learning_rate': 0.01,

129 | 'layer_l2': [0, 0],

130 | 'kernel_l2': 0,

131 | 'random_seed': 0

132 | }

133 |

134 | model = PNN2(**pnn2_params)

135 |

136 | if algo in {'fnn', 'ccpm', 'pnn1', 'pnn2'}:

137 | train_data = Utils.split_data(train_data)

138 | test_data = Utils.split_data(test_data)

139 |

140 | train(model)

141 |

142 | # X_i, y_i = utils.slice(train_data, 0, 100)

143 | # fetches = [model.tmp1, model.tmp2]

144 | # tmp1, tmp2 = model.run(fetches, X_i, y_i)

145 | # print tmp1.shape

146 | # print tmp2.shape

147 |

--------------------------------------------------------------------------------

/Click_prediction/code/ctr_nn/__init__.py:

--------------------------------------------------------------------------------

1 | #-*- coding:utf-8 _*-

2 |

3 | """

4 | @version:

5 | @author: CharlesXu

6 | @license: Q_S_Y_Q

7 | @file: __init__.py.py

8 | @time: 2018/3/6 18:51

9 | @desc:

10 | """

11 |

12 | if __name__ == '__main__':

13 | pass

--------------------------------------------------------------------------------

/Click_prediction/code/cvr/1.problem_setting.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "## 腾讯移动App广告转化率预估"

8 | ]

9 | },

10 | {

11 | "cell_type": "markdown",

12 | "metadata": {},

13 | "source": [

14 | ""

15 | ]

16 | },

17 | {

18 | "cell_type": "markdown",

19 | "metadata": {},

20 | "source": [

21 | "### 题目描述\n",

22 | "计算广告是互联网最重要的商业模式之一,广告投放效果通常通过曝光、点击和转化各环节来衡量,大多数广告系统受广告效果数据回流的限制只能通过曝光或点击作为投放效果的衡量标准开展优化。\n",

23 | "\n",

24 | "腾讯社交广告(`http://ads.tencent.com`)发挥特有的用户识别和转化跟踪数据能力,帮助广告主跟踪广告投放后的转化效果,基于广告转化数据训练转化率预估模型(pCVR,Predicted Conversion Rate),在广告排序中引入pCVR因子优化广告投放效果,提升ROI。\n",

25 | "\n",

26 | "本题目以移动App广告为研究对象,预测App广告点击后被激活的概率:pCVR=P(conversion=1 | Ad,User,Context),即给定广告、用户和上下文情况下广告被点击后发生激活的概率。"

27 | ]

28 | },

29 | {

30 | "cell_type": "markdown",

31 | "metadata": {},

32 | "source": [

33 | "### 训练数据\n",

34 | "从腾讯社交广告系统中某一连续两周的日志中按照推广中的App和用户维度随机采样。\n",

35 | "\n",

36 | "每一条训练样本即为一条广告点击日志(点击时间用clickTime表示),样本label取值0或1,其中0表示点击后没有发生转化,1表示点击后有发生转化,如果label为1,还会提供转化回流时间(conversionTime,定义详见“FAQ”)。给定特征集如下:"

37 | ]

38 | },

39 | {

40 | "cell_type": "markdown",

41 | "metadata": {},

42 | "source": [

43 | "\n",

44 | "\n",

45 | ""

46 | ]

47 | },

48 | {

49 | "cell_type": "markdown",

50 | "metadata": {},

51 | "source": [

52 | "特别的,出于数据安全的考虑,对于userID,appID,特征,以及时间字段,我们不提供原始数据,按照如下方式加密处理:"

53 | ]

54 | },

55 | {

56 | "cell_type": "markdown",

57 | "metadata": {},

58 | "source": [

59 | ""

60 | ]

61 | },

62 | {

63 | "cell_type": "markdown",

64 | "metadata": {},

65 | "source": [

66 | "#### 训练数据文件(train.csv)"

67 | ]

68 | },

69 | {

70 | "cell_type": "markdown",

71 | "metadata": {},

72 | "source": [

73 | "每行代表一个训练样本,各字段之间由逗号分隔,顺序依次为:“label,clickTime,conversionTime,creativeID,userID,positionID,connectionType,telecomsOperator”。\n",

74 | "\n",

75 | "当label=0时,conversionTime字段为空字符串。特别的,训练数据时间范围为第17天0点到第31天0点(定义详见下面的“补充说明”)。为了节省存储空间,用户、App、广告和广告位相关信息以独立文件提供(训练数据和测试数据共用),具体如下:"

76 | ]

77 | },

78 | {

79 | "cell_type": "markdown",

80 | "metadata": {},

81 | "source": [

82 | ""

83 | ]

84 | },

85 | {

86 | "cell_type": "markdown",

87 | "metadata": {},

88 | "source": [

89 | "注:若字段取值为0或空字符串均代表未知。(站点集合ID(sitesetID)为0并不表示未知,而是一个特定的站点集合。)"

90 | ]

91 | },

92 | {

93 | "cell_type": "markdown",

94 | "metadata": {},

95 | "source": [

96 | "### 测试数据\n",

97 | "从训练数据时段随后1天(即第31天)的广告日志中按照与训练数据同样的采样方式抽取得到,测试数据文件(test.csv)每行代表一个测试样本,各字段之间由逗号分隔,顺序依次为:“instanceID,-1,clickTime,creativeID,userID,positionID,connectionType,telecomsOperator”。其中,instanceID唯一标识一个样本,-1代表label占位使用,表示待预测。"

98 | ]

99 | },

100 | {

101 | "cell_type": "markdown",

102 | "metadata": {},

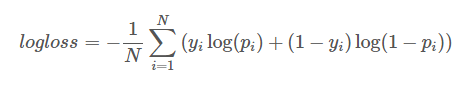

103 | "source": [

104 | "### 评估方式\n",

105 | "通过Logarithmic Loss评估(越小越好),公式如下:\n",

106 | "\n",

107 | "其中,N是测试样本总数,yi是二值变量,取值0或1,表示第i个样本的label,pi为模型预测第i个样本 label为1的概率。"

108 | ]

109 | },

110 | {

111 | "cell_type": "markdown",

112 | "metadata": {},

113 | "source": [

114 | "示例代码如下(Python语言):\n",

115 | "```python\n",

116 | "import scipy as sp\n",

117 | "def logloss(act, pred):\n",

118 | " epsilon = 1e-15\n",

119 | " pred = sp.maximum(epsilon, pred)\n",

120 | " pred = sp.minimum(1-epsilon, pred)\n",

121 | " ll = sum(act*sp.log(pred) + sp.subtract(1,act)*sp.log(sp.subtract(1,pred)))\n",

122 | " ll = ll * -1.0/len(act)\n",

123 | " return ll\n",

124 | "```"

125 | ]

126 | },

127 | {

128 | "cell_type": "markdown",

129 | "metadata": {},

130 | "source": [

131 | "### 提交格式\n",

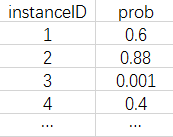

132 | "模型预估结果以zip压缩文件方式提交,内部文件名是submission.csv。每行代表一个测试样本,第一行为header,可以记录本文件相关关键信息,评测时会忽略,从第二行开始各字段之间由逗号分隔,顺序依次为:“instanceID, prob”,其中,instanceID唯一标识一个测试样本,必须升序排列,prob为模型预估的广告转化概率。示例如下:\n",

133 | ""

134 | ]

135 | }

136 | ],

137 | "metadata": {

138 | "kernelspec": {

139 | "display_name": "Python 2",

140 | "language": "python",

141 | "name": "python2"

142 | },

143 | "language_info": {

144 | "codemirror_mode": {

145 | "name": "ipython",

146 | "version": 2

147 | },

148 | "file_extension": ".py",

149 | "mimetype": "text/x-python",

150 | "name": "python",

151 | "nbconvert_exporter": "python",

152 | "pygments_lexer": "ipython2",

153 | "version": "2.7.12"

154 | }

155 | },

156 | "nbformat": 4,

157 | "nbformat_minor": 2

158 | }

159 |

--------------------------------------------------------------------------------

/Click_prediction/code/cvr/2.Baseline_version.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "## CVR预估基线版本"

8 | ]

9 | },

10 | {

11 | "cell_type": "markdown",

12 | "metadata": {},

13 | "source": [

14 | "### 2.1 基于AD统计的版本"

15 | ]

16 | },

17 | {

18 | "cell_type": "code",

19 | "execution_count": null,

20 | "metadata": {

21 | "collapsed": true

22 | },

23 | "outputs": [],

24 | "source": [

25 | "# -*- coding: utf-8 -*-\n",

26 | "\"\"\"\n",

27 | "baseline 1: history pCVR of creativeID/adID/camgaignID/advertiserID/appID/appPlatform\n",

28 | "\"\"\"\n",

29 | "\n",

30 | "import zipfile\n",

31 | "import numpy as np\n",

32 | "import pandas as pd\n",

33 | "\n",

34 | "# load data\n",

35 | "data_root = \"E:\\dataset\\pre\"\n",

36 | "dfTrain = pd.read_csv(\"%s/train.csv\"%data_root)\n",

37 | "dfTest = pd.read_csv(\"%s/test.csv\"%data_root)\n",

38 | "dfAd = pd.read_csv(\"%s/ad.csv\"%data_root)\n",

39 | "\n",

40 | "# process data\n",

41 | "dfTrain = pd.merge(dfTrain, dfAd, on=\"creativeID\")\n",

42 | "dfTest = pd.merge(dfTest, dfAd, on=\"creativeID\")\n",

43 | "y_train = dfTrain[\"label\"].values\n",

44 | "\n",

45 | "# model building\n",

46 | "key = \"appID\"\n",

47 | "dfCvr = dfTrain.groupby(key).apply(lambda df: np.mean(df[\"label\"])).reset_index()\n",

48 | "dfCvr.columns = [key, \"avg_cvr\"]\n",

49 | "dfTest = pd.merge(dfTest, dfCvr, how=\"left\", on=key)\n",

50 | "dfTest[\"avg_cvr\"].fillna(np.mean(dfTrain[\"label\"]), inplace=True)\n",

51 | "proba_test = dfTest[\"avg_cvr\"].values\n",

52 | "\n",

53 | "# submission\n",

54 | "df = pd.DataFrame({\"instanceID\": dfTest[\"instanceID\"].values, \"proba\": proba_test})\n",

55 | "df.sort_values(\"instanceID\", inplace=True)\n",

56 | "df.to_csv(\"submission.csv\", index=False)\n",

57 | "with zipfile.ZipFile(\"submission.zip\", \"w\") as fout:\n",

58 | " fout.write(\"submission.csv\", compress_type=zipfile.ZIP_DEFLATED)"

59 | ]

60 | },

61 | {

62 | "cell_type": "markdown",

63 | "metadata": {},

64 | "source": [

65 | "### 得分\n",

66 | "| Submission | 描述| 初赛A | 初赛B | 决赛A | 决赛B |\n",

67 | "| :------- | :-------: | :-------: | :-------: | :-------: | :-------: |\n",

68 | "| baseline 2.1 | ad 统计 | 0.10988 | - | - | - |"

69 | ]

70 | },

71 | {

72 | "cell_type": "markdown",

73 | "metadata": {},

74 | "source": [

75 | "### 2.2 AD+LR版本"

76 | ]

77 | },

78 | {

79 | "cell_type": "code",

80 | "execution_count": null,

81 | "metadata": {

82 | "collapsed": true

83 | },

84 | "outputs": [],

85 | "source": [

86 | "# -*- coding: utf-8 -*-\n",

87 | "\"\"\"\n",

88 | "baseline 2: ad.csv (creativeID/adID/camgaignID/advertiserID/appID/appPlatform) + lr\n",

89 | "\"\"\"\n",

90 | "\n",

91 | "import zipfile\n",

92 | "import pandas as pd\n",

93 | "from scipy import sparse\n",

94 | "from sklearn.preprocessing import OneHotEncoder\n",

95 | "from sklearn.linear_model import LogisticRegression\n",

96 | "\n",

97 | "# load data\n",

98 | "data_root = \"./data\"\n",

99 | "dfTrain = pd.read_csv(\"%s/train.csv\"%data_root)\n",

100 | "dfTest = pd.read_csv(\"%s/test.csv\"%data_root)\n",

101 | "dfAd = pd.read_csv(\"%s/ad.csv\"%data_root)\n",

102 | "\n",

103 | "# process data\n",

104 | "dfTrain = pd.merge(dfTrain, dfAd, on=\"creativeID\")\n",

105 | "dfTest = pd.merge(dfTest, dfAd, on=\"creativeID\")\n",

106 | "y_train = dfTrain[\"label\"].values\n",

107 | "\n",

108 | "# feature engineering/encoding\n",

109 | "enc = OneHotEncoder()\n",

110 | "feats = [\"creativeID\", \"adID\", \"camgaignID\", \"advertiserID\", \"appID\", \"appPlatform\"]\n",

111 | "for i,feat in enumerate(feats):\n",

112 | " x_train = enc.fit_transform(dfTrain[feat].values.reshape(-1, 1))\n",

113 | " x_test = enc.transform(dfTest[feat].values.reshape(-1, 1))\n",

114 | " if i == 0:\n",

115 | " X_train, X_test = x_train, x_test\n",

116 | " else:\n",

117 | " X_train, X_test = sparse.hstack((X_train, x_train)), sparse.hstack((X_test, x_test))\n",

118 | "\n",

119 | "# model training\n",

120 | "lr = LogisticRegression()\n",

121 | "lr.fit(X_train, y_train)\n",

122 | "proba_test = lr.predict_proba(X_test)[:,1]\n",

123 | "\n",

124 | "# submission\n",

125 | "df = pd.DataFrame({\"instanceID\": dfTest[\"instanceID\"].values, \"proba\": proba_test})\n",

126 | "df.sort_values(\"instanceID\", inplace=True)\n",

127 | "df.to_csv(\"submission.csv\", index=False)\n",

128 | "with zipfile.ZipFile(\"submission.zip\", \"w\") as fout:\n",

129 | " fout.write(\"submission.csv\", compress_type=zipfile.ZIP_DEFLATED)"

130 | ]

131 | },

132 | {

133 | "cell_type": "markdown",

134 | "metadata": {},

135 | "source": [

136 | "### 得分\n",

137 | "| Submission | 描述| 初赛A | 初赛B | 决赛A | 决赛B |\n",

138 | "| :------- | :-------: | :-------: | :-------: | :-------: | :-------: |\n",

139 | "| baseline 2.2 | ad + lr | 0.10743 | - | - | - |"

140 | ]

141 | }

142 | ],

143 | "metadata": {

144 | "kernelspec": {

145 | "display_name": "Python 2",

146 | "language": "python",

147 | "name": "python2"

148 | },

149 | "language_info": {

150 | "codemirror_mode": {

151 | "name": "ipython",

152 | "version": 2

153 | },

154 | "file_extension": ".py",

155 | "mimetype": "text/x-python",

156 | "name": "python",

157 | "nbconvert_exporter": "python",

158 | "pygments_lexer": "ipython2",

159 | "version": "2.7.12"

160 | }

161 | },

162 | "nbformat": 4,

163 | "nbformat_minor": 2

164 | }

165 |

--------------------------------------------------------------------------------

/Click_prediction/code/cvr/README.md:

--------------------------------------------------------------------------------

1 | # 第一届腾讯社交广告高校算法大赛-移动App广告转化率预估

2 | 赛题详情http://algo.tpai.qq.com/home/information/index.html

3 | 题目描述

4 | 根据从某社交广告系统连续两周的日志记录按照推广中的App和用户维度随机采样构造的数据,预测App广告点击后被激活的概率:pCVR=P(conversion=1 | Ad,User,Context),即给定广告、用户和上下文情况下广告被点击后发生激活的概率。

5 | # 运行环境

6 | - 操作系统 Ubuntu 14.04.4 LTS (GNU/Linux 4.2.0-27-generic x86_64)

7 | - 内存 128GB

8 | - CPU 32 Intel(R) Xeon(R) CPU E5-2620 v4 @ 2.10GHz

9 | - 显卡 TITAN X (Pascal) 12GB

10 | - 语言 Python3.6

11 | - Python依赖包

12 | 1. Keras==2.0.6

13 | 2. lightgbm==0.1

14 | 3. matplotlib==2.0.0

15 | 4. numpy==1.11.3

16 | 5. pandas==0.19.2

17 | 6. scikit-learn==0.18.1

18 | 7. scipy==0.18.1

19 | 8. tensorflow-gpu==1.2.1

20 | 9. tqdm==4.11.2

21 | 10. xgboost==0.6a2

22 | - 其他库

23 | LIBFFM v121

24 | # 运行说明

25 | 1. 将复赛数据文件`final.zip`放在根目录下

26 | 2. 在根目录下运行`sh run.sh`命令生成特征文件

27 | 3. 打开`./code/_4_*_model_*.ipynb`分别进行模型训练和预测,生成单模型提交结果,包括`lgb,xgb,ffm,mlp`

28 | 4. 打开`./code/_4_5_model_avg.ipynb`进行最终的加权平均并生成最终提交结果

29 | # 方案说明

30 |

31 | 1. 用户点击日志挖掘`_2_1_gen_user_click_features.py`

32 | 挖掘广告点击日志,从不同时间粒度(天,小时)和不同属性维度(点击的素材,广告,推广计划,广告主类型,广告位等)提取用户点击行为的统计特征。

33 | 2. 用户安装日志挖掘 `_2_2_gen_app_install_features.py`

34 | 根据用户历史APP安装记录日志,分析用户的安装偏好和APP的流行趋势,结合APP安装时间的信息提取APP的时间维度的描述向量。这里最后只用了一种特征。

35 | 3. 广告主转化回流上报机制分析`_2_4_gen_tricks.py`

36 | 不同的广告主具有不同的转化计算方式,如第一次点击算转化,最后一次点击算转化,安装时点击算转化,分析并构造相应描述特征,提升模型预测精度。

37 | 4. 广告转化率特征提取`_2_5_gen_smooth_cvr.py`

38 | 构造转化率特征,使用全局和滑动窗口等方式计算单特征转化率,组合特征转化率,使用均值填充,层级填充,贝叶斯平滑,拉普拉斯平滑等方式对转化率进行修正。

39 | 5. 广告描述向量特征提取`_2_6_gen_ID_click_vectors.py`

40 | 广告投放是有特定受众对象的,而特定的受众对象也可以描述广告的相关特性,使用不同的人口属性对广告ID和APPID进行向量表示,学习隐含的语义特征。

41 | 6. 建模预测

42 | 使用多种模型进行训练,包括LightGBM,XGBoost,FFM和神经网络,最后进行多模型加权融合提高最终模型性能。

43 |

44 | # 其他

45 | - 最终线上排名20,logloss 0.101763

46 | - 最终特征维度在110左右

47 | - 部分最终没有采用的特征代码依然保留

48 | - 由于我们团队的代码是3个人共同完成的,我这里整理的模型训练的部分可能和当时略有差异,但特征部分基本一致。

49 | - `deprecated`目录下为弃用的代码,包括一些原始代码和打算尝试的方法

--------------------------------------------------------------------------------

/Click_prediction/data/data.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Click_prediction/data/data.pdf

--------------------------------------------------------------------------------

/Click_prediction/data/data_description.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Click_prediction/data/data_description.pdf

--------------------------------------------------------------------------------

/Click_prediction/data/download.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | wget --no-check-certificate https://s3-eu-west-1.amazonaws.com/criteo-labs/dac.tar.gz

4 | tar zxf dac.tar.gz

5 | rm -f dac.tar.gz

6 |

7 | mkdir raw

8 | mv ./*.txt raw/

9 |

--------------------------------------------------------------------------------

/Click_prediction/data/tencent_数据说明/Tencent_cvr_prediction.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Click_prediction/data/tencent_数据说明/Tencent_cvr_prediction.png

--------------------------------------------------------------------------------

/Click_prediction/data/tencent_数据说明/data_dscr_4.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Click_prediction/data/tencent_数据说明/data_dscr_4.png

--------------------------------------------------------------------------------

/Click_prediction/data/tencent_数据说明/data_dscr_5.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Click_prediction/data/tencent_数据说明/data_dscr_5.png

--------------------------------------------------------------------------------

/Click_prediction/data/tencent_数据说明/上下文特征.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Click_prediction/data/tencent_数据说明/上下文特征.png

--------------------------------------------------------------------------------

/Click_prediction/data/tencent_数据说明/广告特征.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Click_prediction/data/tencent_数据说明/广告特征.png

--------------------------------------------------------------------------------

/Click_prediction/data/tencent_数据说明/用户特征.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Click_prediction/data/tencent_数据说明/用户特征.png

--------------------------------------------------------------------------------

/Click_prediction/doc/8课下课件-张伟楠.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Click_prediction/doc/8课下课件-张伟楠.pdf

--------------------------------------------------------------------------------

/Click_prediction/doc/Ad click prediction a view from the trenches.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Click_prediction/doc/Ad click prediction a view from the trenches.pdf

--------------------------------------------------------------------------------

/Click_prediction/doc/ffm.txt:

--------------------------------------------------------------------------------

1 | FFM应用

2 |

3 | 在计算广告领域,点击率CTR(click-through rate)和转化率CVR(conversion rate)是衡量广告流量的两个关键指标。

4 | 准确的估计CTR、CVR对于提高流量的价值,增加广告收入有重要的指导作用。

5 |

6 | 预估CTR/CVR,业界常用的方法有

7 | 人工特征工程 +

8 | LR(Logistic Regression)、

9 | GBDT(Gradient Boosting Decision Tree) +

10 | LR[1][2][3]、

11 | FM(Factorization Machine)[2][7]和

12 | FFM(Field-aware Factorization Machine)[9]模型。

13 | 在这些模型中,FM和FFM近年来表现突出,分别在由Criteo和Avazu举办的CTR预测竞赛中夺得冠军[4][5]。

14 |

15 |

16 |

--------------------------------------------------------------------------------

/Click_prediction/doc/fm.txt:

--------------------------------------------------------------------------------

1 | FM说明文档

2 |

3 | FM用来解决数据量大并且特征稀疏下的特征组合问题。先来看看公式(只考虑二阶多项式的情况):n代表样本的特征数量,xi是第i个特征的值,w0、wi、wij是模型参数。

4 |

5 |

6 |

--------------------------------------------------------------------------------

/Click_prediction/doc/资料.txt:

--------------------------------------------------------------------------------

1 | 参考博客:

2 | 点击率预估数据下载链接: wget --no-check-certificate https://s3-eu-west-1.amazonaws.com/criteo-labs/dac.tar.gz

3 | 点击率预估算法:FM与FFM详解: http://blog.csdn.net/jediael_lu/article/details/77772565

4 | Kaggle实战——点击率预估: http://blog.csdn.net/chengcheng1394/article/details/78940565

5 | 深入FFM原理与实践: http://blog.csdn.net/mmc2015/article/details/51760681

6 | 关于CTR预估的面试问题: http://blog.csdn.net/wanghai00/article/details/60466617

7 |

8 |

--------------------------------------------------------------------------------

/Click_prediction/libffm/libffm/COPYRIGHT:

--------------------------------------------------------------------------------

1 |

2 | Copyright (c) 2017 The LIBFFM Project.

3 | All rights reserved.

4 |

5 | Redistribution and use in source and binary forms, with or without

6 | modification, are permitted provided that the following conditions

7 | are met:

8 |

9 | 1. Redistributions of source code must retain the above copyright

10 | notice, this list of conditions and the following disclaimer.

11 |

12 | 2. Redistributions in binary form must reproduce the above copyright

13 | notice, this list of conditions and the following disclaimer in the

14 | documentation and/or other materials provided with the distribution.

15 |

16 | 3. Neither name of copyright holders nor the names of its contributors

17 | may be used to endorse or promote products derived from this software

18 | without specific prior written permission.

19 |

20 |

21 | THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

22 | ``AS IS'' AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

23 | LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR

24 | A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE REGENTS OR

25 | CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL,

26 | EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO,

27 | PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR

28 | PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF

29 | LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING

30 | NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS

31 | SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

32 |

--------------------------------------------------------------------------------

/Click_prediction/libffm/libffm/Makefile:

--------------------------------------------------------------------------------

1 | CXX = g++

2 | CXXFLAGS = -Wall -O3 -std=c++0x -march=native

3 |

4 | # comment the following flags if you do not want to SSE instructions

5 | DFLAG += -DUSESSE

6 |

7 | # comment the following flags if you do not want to use OpenMP

8 | #DFLAG += -DUSEOMP

9 | #CXXFLAGS += -fopenmp

10 |

11 | all: ffm-train ffm-predict

12 |

13 | ffm-train: ffm-train.cpp ffm.o timer.o

14 | $(CXX) $(CXXFLAGS) $(DFLAG) -o $@ $^

15 |

16 | ffm-predict: ffm-predict.cpp ffm.o timer.o

17 | $(CXX) $(CXXFLAGS) $(DFLAG) -o $@ $^

18 |

19 | ffm.o: ffm.cpp ffm.h timer.o

20 | $(CXX) $(CXXFLAGS) $(DFLAG) -c -o $@ $<

21 |

22 | timer.o: timer.cpp timer.h

23 | $(CXX) $(CXXFLAGS) $(DFLAG) -c -o $@ $<

24 |

25 | clean:

26 | rm -f ffm-train ffm-predict ffm.o timer.o

27 |

--------------------------------------------------------------------------------

/Click_prediction/libffm/libffm/Makefile.win:

--------------------------------------------------------------------------------

1 | CXX = cl.exe

2 | CFLAGS = /nologo /O2 /EHsc /D "_CRT_SECURE_NO_DEPRECATE" /D "USEOMP" /D "USESSE" /openmp

3 |

4 | TARGET = windows

5 |

6 | all: $(TARGET) $(TARGET)\ffm-train.exe $(TARGET)\ffm-predict.exe

7 |

8 | $(TARGET)\ffm-predict.exe: ffm.h ffm-predict.cpp ffm.obj timer.obj

9 | $(CXX) $(CFLAGS) ffm-predict.cpp ffm.obj timer.obj -Fe$(TARGET)\ffm-predict.exe

10 |

11 | $(TARGET)\ffm-train.exe: ffm.h ffm-train.cpp ffm.obj timer.obj

12 | $(CXX) $(CFLAGS) ffm-train.cpp ffm.obj timer.obj -Fe$(TARGET)\ffm-train.exe

13 |

14 | ffm.obj: ffm.cpp ffm.h

15 | $(CXX) $(CFLAGS) -c ffm.cpp

16 |

17 | timer.obj: timer.cpp timer.h

18 | $(CXX) $(CFLAGS) -c timer.cpp

19 |

20 | .PHONY: $(TARGET)

21 | $(TARGET):

22 | -mkdir $(TARGET)

23 |

24 | clean:

25 | -erase /Q *.obj *.exe $(TARGET)\.

26 | -rd $(TARGET)

27 |

--------------------------------------------------------------------------------

/Click_prediction/libffm/libffm/ffm-predict:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Click_prediction/libffm/libffm/ffm-predict

--------------------------------------------------------------------------------

/Click_prediction/libffm/libffm/ffm-predict.cpp:

--------------------------------------------------------------------------------

1 | #include

2 | #include

3 | #include

4 | #include

5 | #include

6 | #include

7 | #include

8 | #include

9 | #include

10 | #include

11 |

12 | #include "ffm.h"

13 |

14 | using namespace std;

15 | using namespace ffm;

16 |

17 | struct Option {

18 | string test_path, model_path, output_path, withoutY_flag;

19 | };

20 |

21 | string predict_help() {

22 | return string(

23 | "usage: ffm-predict test_file model_file output_file\n");

24 | }

25 |

26 | Option parse_option(int argc, char **argv) {

27 | vector args;

28 | for(int i = 0; i < argc; i++)

29 | args.push_back(string(argv[i]));

30 |

31 | if(argc == 1)

32 | throw invalid_argument(predict_help());

33 |

34 | Option option;

35 |

36 | if(argc != 4 && argc != 5)

37 | throw invalid_argument("cannot parse argument");

38 |

39 | option.test_path = string(args[1]);

40 | option.model_path = string(args[2]);

41 | option.output_path = string(args[3]);

42 | if(argc == 5){

43 | option.withoutY_flag = string(args[4]);

44 | } else {

45 | option.withoutY_flag = "";

46 | }

47 |

48 | return option;

49 | }

50 |

51 | void predict(string test_path, string model_path, string output_path) {

52 | int const kMaxLineSize = 1000000;

53 |

54 | FILE *f_in = fopen(test_path.c_str(), "r");

55 | ofstream f_out(output_path);

56 | ofstream f_out_t(output_path + ".logit");

57 | char line[kMaxLineSize];

58 |

59 | ffm_model model = ffm_load_model(model_path);

60 |

61 | ffm_double loss = 0;

62 | vector x;

63 | ffm_int i = 0;

64 |

65 | for(; fgets(line, kMaxLineSize, f_in) != nullptr; i++) {

66 | x.clear();

67 | char *y_char = strtok(line, " \t");

68 | ffm_float y = (atoi(y_char)>0)? 1.0f : -1.0f;

69 |

70 | while(true) {

71 | char *field_char = strtok(nullptr,":");

72 | char *idx_char = strtok(nullptr,":");

73 | char *value_char = strtok(nullptr," \t");

74 | if(field_char == nullptr || *field_char == '\n')

75 | break;

76 |

77 | ffm_node N;

78 | N.f = atoi(field_char);

79 | N.j = atoi(idx_char);

80 | N.v = atof(value_char);

81 |

82 | x.push_back(N);

83 | }

84 |

85 | ffm_float y_bar = ffm_predict(x.data(), x.data()+x.size(), model);

86 | ffm_float ret_t = ffm_get_wTx(x.data(), x.data()+x.size(), model);

87 | loss -= y==1? log(y_bar) : log(1-y_bar);

88 |

89 | f_out_t << ret_t << "\n";

90 | f_out << y_bar << "\n";

91 | }

92 |

93 | loss /= i;

94 |

95 | cout << "logloss = " << fixed << setprecision(5) << loss << endl;

96 |

97 | fclose(f_in);

98 | }

99 |

100 |

101 | void predict_withoutY(string test_path, string model_path, string output_path) {

102 | int const kMaxLineSize = 1000000;

103 |

104 | FILE *f_in = fopen(test_path.c_str(), "r");

105 | ofstream f_out(output_path);

106 | ofstream f_out_t(output_path + ".logit");

107 | char line[kMaxLineSize];

108 |

109 | ffm_model model = ffm_load_model(model_path);

110 |

111 | //ffm_double loss = 0;

112 | vector x;

113 | ffm_int i = 0;

114 |

115 | for(; fgets(line, kMaxLineSize, f_in) != nullptr; i++) {

116 | x.clear();

117 | //char *y_char = strtok(line, " \t");

118 | //ffm_float y = (atoi(y_char)>0)? 1.0f : -1.0f;

119 |

120 | char *field_char = strtok(line,":");

121 | char *idx_char = strtok(nullptr,":");

122 | char *value_char = strtok(nullptr," \t");

123 | if(field_char == nullptr || *field_char == '\n')

124 | continue;

125 |

126 | ffm_node N;

127 | N.f = atoi(field_char);

128 | N.j = atoi(idx_char);

129 | N.v = atof(value_char);

130 |

131 | x.push_back(N);

132 |

133 | while(true) {

134 | char *field_char = strtok(nullptr,":");

135 | char *idx_char = strtok(nullptr,":");

136 | char *value_char = strtok(nullptr," \t");

137 | if(field_char == nullptr || *field_char == '\n')

138 | break;

139 |

140 | ffm_node N;

141 | N.f = atoi(field_char);

142 | N.j = atoi(idx_char);

143 | N.v = atof(value_char);

144 |

145 | x.push_back(N);

146 | }

147 |

148 | ffm_float y_bar = ffm_predict(x.data(), x.data()+x.size(), model);

149 | ffm_float ret_t = ffm_get_wTx(x.data(), x.data()+x.size(), model);

150 | //loss -= y==1? log(y_bar) : log(1-y_bar);

151 |

152 | f_out_t << ret_t << "\n";

153 | f_out << y_bar << "\n";

154 | }

155 |

156 | //loss /= i;

157 |

158 | //cout << "logloss = " << fixed << setprecision(5) << loss << endl;

159 | cout << "done!" << endl;

160 |

161 | fclose(f_in);

162 | }

163 |

164 | int main(int argc, char **argv) {

165 | Option option;

166 | try {

167 | option = parse_option(argc, argv);

168 | } catch(invalid_argument const &e) {

169 | cout << e.what() << endl;

170 | return 1;

171 | }

172 |

173 | if(argc == 5 && option.withoutY_flag.compare("true") == 0){

174 | predict_withoutY(option.test_path, option.model_path, option.output_path);

175 | } else {

176 | predict(option.test_path, option.model_path, option.output_path);

177 | }

178 | return 0;

179 | }

180 |

--------------------------------------------------------------------------------

/Click_prediction/libffm/libffm/ffm-train:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Click_prediction/libffm/libffm/ffm-train

--------------------------------------------------------------------------------

/Click_prediction/libffm/libffm/ffm-train.cpp:

--------------------------------------------------------------------------------

1 | #pragma GCC diagnostic ignored "-Wunused-result"

2 | #include

3 | #include

4 | #include

5 | #include

6 | #include

7 | #include

8 | #include

9 |

10 | #include "ffm.h"

11 |

12 | #if defined USEOMP

13 | #include

14 | #endif

15 |

16 | using namespace std;

17 | using namespace ffm;

18 |

19 | string train_help() {

20 | return string(

21 | "usage: ffm-train [options] training_set_file [model_file]\n"

22 | "\n"

23 | "options:\n"

24 | "-l : set regularization parameter (default 0.00002)\n"

25 | "-k : set number of latent factors (default 4)\n"

26 | "-t : set number of iterations (default 15)\n"

27 | "-r : set learning rate (default 0.2)\n"

28 | "-s : set number of threads (default 1)\n"

29 | "-p : set path to the validation set\n"

30 | "--quiet: quiet mode (no output)\n"

31 | "--no-norm: disable instance-wise normalization\n"

32 | "--auto-stop: stop at the iteration that achieves the best validation loss (must be used with -p)\n");

33 | }

34 |

35 | struct Option {

36 | string tr_path;

37 | string va_path;

38 | string model_path;

39 | ffm_parameter param;

40 | bool quiet = false;

41 | ffm_int nr_threads = 1;

42 | };

43 |

44 | string basename(string path) {

45 | const char *ptr = strrchr(&*path.begin(), '/');

46 | if(!ptr)

47 | ptr = path.c_str();

48 | else

49 | ptr++;

50 | return string(ptr);

51 | }

52 |

53 | Option parse_option(int argc, char **argv) {

54 | vector args;

55 | for(int i = 0; i < argc; i++)

56 | args.push_back(string(argv[i]));

57 |

58 | if(argc == 1)

59 | throw invalid_argument(train_help());

60 |

61 | Option opt;

62 |

63 | ffm_int i = 1;

64 | for(; i < argc; i++) {

65 | if(args[i].compare("-t") == 0)

66 | {

67 | if(i == argc-1)

68 | throw invalid_argument("need to specify number of iterations after -t");

69 | i++;

70 | opt.param.nr_iters = atoi(args[i].c_str());

71 | if(opt.param.nr_iters <= 0)

72 | throw invalid_argument("number of iterations should be greater than zero");

73 | } else if(args[i].compare("-k") == 0) {

74 | if(i == argc-1)

75 | throw invalid_argument("need to specify number of factors after -k");

76 | i++;

77 | opt.param.k = atoi(args[i].c_str());

78 | if(opt.param.k <= 0)

79 | throw invalid_argument("number of factors should be greater than zero");

80 | } else if(args[i].compare("-r") == 0) {

81 | if(i == argc-1)

82 | throw invalid_argument("need to specify eta after -r");

83 | i++;

84 | opt.param.eta = atof(args[i].c_str());

85 | if(opt.param.eta <= 0)

86 | throw invalid_argument("learning rate should be greater than zero");

87 | } else if(args[i].compare("-l") == 0) {

88 | if(i == argc-1)

89 | throw invalid_argument("need to specify lambda after -l");

90 | i++;

91 | opt.param.lambda = atof(args[i].c_str());

92 | if(opt.param.lambda < 0)

93 | throw invalid_argument("regularization cost should not be smaller than zero");

94 | } else if(args[i].compare("-s") == 0) {

95 | if(i == argc-1)

96 | throw invalid_argument("need to specify number of threads after -s");

97 | i++;

98 | opt.nr_threads = atoi(args[i].c_str());

99 | if(opt.nr_threads <= 0)

100 | throw invalid_argument("number of threads should be greater than zero");

101 | } else if(args[i].compare("-p") == 0) {

102 | if(i == argc-1)

103 | throw invalid_argument("need to specify path after -p");

104 | i++;

105 | opt.va_path = args[i];

106 | } else if(args[i].compare("--no-norm") == 0) {

107 | opt.param.normalization = false;

108 | } else if(args[i].compare("--quiet") == 0) {

109 | opt.quiet = true;

110 | } else if(args[i].compare("--auto-stop") == 0) {

111 | opt.param.auto_stop = true;

112 | } else {

113 | break;

114 | }

115 | }

116 |

117 | if(i != argc-2 && i != argc-1)

118 | throw invalid_argument("cannot parse command\n");

119 |

120 | opt.tr_path = args[i];

121 | i++;

122 |

123 | if(i < argc) {

124 | opt.model_path = string(args[i]);

125 | } else if(i == argc) {

126 | opt.model_path = basename(opt.tr_path) + ".model";

127 | } else {

128 | throw invalid_argument("cannot parse argument");

129 | }

130 |

131 | return opt;

132 | }

133 |

134 | int train_on_disk(Option opt) {

135 | string tr_bin_path = basename(opt.tr_path) + ".bin";

136 | string va_bin_path = opt.va_path.empty()? "" : basename(opt.va_path) + ".bin";

137 |

138 | ffm_read_problem_to_disk(opt.tr_path, tr_bin_path);

139 | if(!opt.va_path.empty())

140 | ffm_read_problem_to_disk(opt.va_path, va_bin_path);

141 |

142 | ffm_model model = ffm_train_on_disk(tr_bin_path.c_str(), va_bin_path.c_str(), opt.param);

143 |

144 | ffm_save_model(model, opt.model_path);

145 |

146 | return 0;

147 | }

148 |

149 | int main(int argc, char **argv) {

150 | Option opt;

151 | try {

152 | opt = parse_option(argc, argv);

153 | } catch(invalid_argument &e) {

154 | cout << e.what() << endl;

155 | return 1;

156 | }

157 |

158 | if(opt.quiet)

159 | cout.setstate(ios_base::badbit);

160 |

161 | if(opt.param.auto_stop && opt.va_path.empty()) {

162 | cout << "To use auto-stop, you need to assign a validation set" << endl;

163 | return 1;

164 | }

165 |

166 | #if defined USEOMP

167 | omp_set_num_threads(opt.nr_threads);

168 | #endif

169 |

170 | train_on_disk(opt);

171 |

172 | return 0;

173 | }

174 |

--------------------------------------------------------------------------------

/Click_prediction/libffm/libffm/ffm.h:

--------------------------------------------------------------------------------

1 | #ifndef _LIBFFM_H

2 | #define _LIBFFM_H

3 |

4 | #include

5 |

6 | namespace ffm {

7 |

8 | using namespace std;

9 |

10 | typedef float ffm_float;

11 | typedef double ffm_double;

12 | typedef int ffm_int;

13 | typedef long long ffm_long;

14 |

15 | struct ffm_node {

16 | ffm_int f; // field index

17 | ffm_int j; // feature index

18 | ffm_float v; // value

19 | };

20 |

21 | struct ffm_model {

22 | ffm_int n; // number of features

23 | ffm_int m; // number of fields

24 | ffm_int k; // number of latent factors

25 | ffm_float *W = nullptr;

26 | bool normalization;

27 | ~ffm_model();

28 | };

29 |

30 | struct ffm_parameter {

31 | ffm_float eta = 0.2; // learning rate

32 | ffm_float lambda = 0.00002; // regularization parameter

33 | ffm_int nr_iters = 15;

34 | ffm_int k = 4; // number of latent factors

35 | bool normalization = true;

36 | bool auto_stop = false;

37 | };

38 |

39 | void ffm_read_problem_to_disk(string txt_path, string bin_path);

40 |

41 | void ffm_save_model(ffm_model &model, string path);

42 |

43 | ffm_model ffm_load_model(string path);

44 |

45 | ffm_model ffm_train_on_disk(string Tr_path, string Va_path, ffm_parameter param);

46 |

47 | ffm_float ffm_predict(ffm_node *begin, ffm_node *end, ffm_model &model);

48 |

49 | ffm_float ffm_get_wTx(ffm_node *begin, ffm_node *end, ffm_model &model);

50 |

51 | } // namespace ffm

52 |

53 | #endif // _LIBFFM_H

54 |

--------------------------------------------------------------------------------

/Click_prediction/libffm/libffm/ffm.o:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Click_prediction/libffm/libffm/ffm.o

--------------------------------------------------------------------------------

/Click_prediction/libffm/libffm/timer.cpp:

--------------------------------------------------------------------------------

1 | #include

2 | #include "timer.h"

3 |

4 | Timer::Timer()

5 | {

6 | reset();

7 | }

8 |

9 | void Timer::reset()

10 | {

11 | begin = std::chrono::high_resolution_clock::now();

12 | duration =

13 | std::chrono::duration_cast(begin-begin);

14 | }

15 |

16 | void Timer::tic()

17 | {

18 | begin = std::chrono::high_resolution_clock::now();

19 | }

20 |

21 | float Timer::toc()

22 | {

23 | duration += std::chrono::duration_cast

24 | (std::chrono::high_resolution_clock::now()-begin);

25 | return get();

26 | }

27 |

28 | float Timer::get()

29 | {

30 | return (float)duration.count() / 1000;

31 | }

32 |

--------------------------------------------------------------------------------

/Click_prediction/libffm/libffm/timer.h:

--------------------------------------------------------------------------------

1 | #include

2 |

3 | class Timer

4 | {

5 | public:

6 | Timer();

7 | void reset();

8 | void tic();

9 | float toc();

10 | float get();

11 | private:

12 | std::chrono::high_resolution_clock::time_point begin;

13 | std::chrono::milliseconds duration;

14 | };

15 |

--------------------------------------------------------------------------------

/Click_prediction/libffm/libffm/timer.o:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Click_prediction/libffm/libffm/timer.o

--------------------------------------------------------------------------------

/Click_prediction/libfm/libfm/Makefile:

--------------------------------------------------------------------------------

1 | all:

2 | cd src/libfm; make all

3 |

4 | libFM:

5 | cd src/libfm; make libFM

6 |

7 | clean:

8 | cd src/libfm; make clean

9 |

10 |

11 |

12 |

--------------------------------------------------------------------------------

/Click_prediction/libfm/libfm/README.md:

--------------------------------------------------------------------------------

1 | libFM

2 | =====

3 |

4 | Library for factorization machines

5 |

6 | web: http://www.libfm.org/

7 |

8 | forum: https://groups.google.com/forum/#!forum/libfm

9 |

10 | Factorization machines (FM) are a generic approach that allows to mimic most factorization models by feature engineering. This way, factorization machines combine the generality of feature engineering with the superiority of factorization models in estimating interactions between categorical variables of large domain. libFM is a software implementation for factorization machines that features stochastic gradient descent (SGD) and alternating least squares (ALS) optimization as well as Bayesian inference using Markov Chain Monte Carlo (MCMC).

11 |

12 | Compile

13 | =======

14 | libFM has been tested with the GNU compiler collection and GNU make. libFM and the tools can be compiled with

15 | > make all

16 |

17 | Usage

18 | =====

19 | Please see the [libFM 1.4.2 manual](http://www.libfm.org/libfm-1.42.manual.pdf) for details about how to use libFM. If you have questions, please visit the [forum](https://groups.google.com/forum/#!forum/libfm).

20 |

--------------------------------------------------------------------------------

/Click_prediction/libfm/libfm/bin/convert:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/charlesXu86/TIANCHI_Project/ad4598ebc8e5bf63b07185ddbc9418c9504e05ce/Click_prediction/libfm/libfm/bin/convert

--------------------------------------------------------------------------------

/Click_prediction/libfm/libfm/bin/fm_model:

--------------------------------------------------------------------------------

1 | #global bias W0

2 | -1.51913e-05

3 | #unary interactions Wj

4 | 0.0543242

5 | 0.0284947

6 | 0.0266538

7 | 0.016659

8 | 0.0125872

9 | 0.0107259

10 | 0.0118907

11 | 0.0157203

12 | 0.00839382

13 | 0.00971495

14 | 0.00612373

15 | 0.00524808

16 | 0.00285415

17 | 0.0008417

18 | -0.000239964

19 | -0.0086962

20 | 0.00883555

21 | -0.0162203

22 | -0.00296712

23 | 0.0212882

24 | 0.0188632

25 | -0.00632028

26 | -0.0134724

27 | 0.00510968

28 | -0.0100098

29 | 0.010746

30 | -0.0212505

31 | 0.0112133

32 | -0.00330014

33 | 0.0205507

34 | -0.0058263

35 | -0.00871744

36 | #pairwise interactions Vj,f

37 | -0.00700443 0.0115064 0.000250908 -0.0337887 0.00279776 0.0310548 -0.0307156 0.0202006

38 | -0.0181796 0.00783596 -0.00684362 -0.00773165 0.00437475 -0.00467768 0.00468273 -0.0114019