├── .gitignore

├── .vscode

├── launch.json

└── settings.json

├── LICENSE

├── README.md

├── checkpoint_configs

├── depthwise.yaml

├── fixed_channels_finetuned

│ ├── allen.yaml

│ ├── cp.yaml

│ └── hpa.yaml

├── hypernet.yaml

├── slice_param.yaml

├── target_param.yaml

└── template_mixing.yaml

├── config.py

├── configs

└── morphem70k

│ ├── allen_cfg.yaml

│ ├── attn_pooling

│ ├── none.yaml

│ ├── param1.yaml

│ ├── param2.yaml

│ ├── param3.yaml

│ ├── param4.yaml

│ └── param5.yaml

│ ├── cp_cfg.yaml

│ ├── data_chunk

│ ├── allen.yaml

│ ├── cp.yaml

│ ├── hpa.yaml

│ └── morphem70k.yaml

│ ├── dataset

│ ├── allen.yaml

│ ├── cp.yaml

│ ├── hpa.yaml

│ └── morphem70k_v2.yaml

│ ├── eval

│ └── default.yaml

│ ├── hardware

│ ├── default.yaml

│ └── four_workers.yaml

│ ├── hpa_cfg.yaml

│ ├── logging

│ ├── no.yaml

│ └── wandb.yaml

│ ├── model

│ ├── clip_resnet50.yaml

│ ├── clip_vit.yaml

│ ├── convnext_base.yaml

│ ├── convnext_base_miro.yaml

│ ├── convnext_shared_miro.yaml

│ ├── depthwiseconvnext.yaml

│ ├── depthwiseconvnext_miro.yaml

│ ├── dino_base.yaml

│ ├── hyperconvnext.yaml

│ ├── hyperconvnext_miro.yaml

│ ├── separate.yaml

│ ├── sliceparam.yaml

│ ├── sliceparam_miro.yaml

│ ├── template_mixing.yaml

│ ├── template_mixing_v2.yaml

│ └── template_mixing_v2_miro.yaml

│ ├── morphem70k_cfg.yaml

│ ├── optimizer

│ ├── adam.yaml

│ ├── adamw.yaml

│ └── sgd.yaml

│ ├── scheduler

│ ├── cosine.yaml

│ ├── multistep.yaml

│ └── none.yaml

│ └── train

│ └── random_instance.yaml

├── custom_log.py

├── datasets

├── __init__.py

├── cifar.py

├── compute_mean_std_morphem70k.py

├── dataset_utils.py

├── morphem70k.py

├── split_datasets.py

└── tps_transform.py

├── evaluate.ipynb

├── figs

├── 01-adaptive-models.png

└── 04-diagrams.png

├── helper_classes

├── __init__.py

├── best_result.py

├── channel_initialization.py

├── channel_pooling_type.py

├── datasplit.py

├── feature_pooling.py

├── first_layer_init.py

└── norm_type.py

├── lr_schedulers.py

├── main.py

├── metadata

└── morphem70k_v2.csv

├── models

├── __init__.py

├── channel_attention_pooling.py

├── convnext_base.py

├── convnext_base_miro.py

├── convnext_shared_miro.py

├── depthwise_convnext.py

├── depthwise_convnext_miro.py

├── hypernet.py

├── hypernet_convnext.py

├── hypernet_convnext_miro.py

├── loss_fn.py

├── model_utils.py

├── shared_convnext.py

├── slice_param_convnext.py

├── slice_param_convnext_miro.py

├── template_convnextv2.py

├── template_convnextv2_miro.py

└── template_mixing_convnext.py

├── optimizers.py

├── requirements.txt

├── ssltrainer.py

├── train_scripts.sh

├── trainer.py

└── utils.py

/.gitignore:

--------------------------------------------------------------------------------

1 |

2 | # Created by https://www.toptal.com/developers/gitignore/api/macos,pycharm,jupyternotebooks

3 | # Edit at https://www.toptal.com/developers/gitignore?templates=macos,pycharm,jupyternotebooks

4 | /data

5 | !/data/

6 | /data/*

7 | !/data/split/

8 | /logs

9 | /mlruns

10 | /notebooks

11 | /archives

12 | /store_result

13 | /checkpoints

14 | /multirun

15 | push_scc

16 | .env

17 | /outputs

18 | __pycache__/

19 | */__pycache__/

20 | .idea/

21 |

22 | ### JupyterNotebooks ###

23 |

24 | # gitignore template for Jupyter Notebooks

25 | # website: http://jupyter.org/

26 |

27 | .ipynb_checkpoints

28 | */.ipynb_checkpoints/*

29 |

30 | # IPython

31 | profile_default/

32 | ipython_config.py

33 |

34 | # Remove previous ipynb_checkpoints

35 | # git rm -r .ipynb_checkpoints/

36 |

37 | ### macOS ###

38 | # General

39 | .DS_Store

40 | .AppleDouble

41 | .LSOverride

42 |

43 | # Icon must end with two \r

44 | Icon

45 |

46 |

47 | # Thumbnails

48 | ._*

49 |

50 | # Files that might appear in the root of a volume

51 | .DocumentRevisions-V100

52 | .fseventsd

53 | .Spotlight-V100

54 | .TemporaryItems

55 | .Trashes

56 | .VolumeIcon.icns

57 | .com.apple.timemachine.donotpresent

58 |

59 | # Directories potentially created on remote AFP share

60 | .AppleDB

61 | .AppleDesktop

62 | Network Trash Folder

63 | Temporary Items

64 | .apdisk

65 |

66 | ### macOS Patch ###

67 | # iCloud generated files

68 | *.icloud

69 |

70 | ### PyCharm ###

71 | # Covers JetBrains IDEs: IntelliJ, RubyMine, PhpStorm, AppCode, PyCharm, CLion, Android Studio, WebStorm and Rider

72 | # Reference: https://intellij-support.jetbrains.com/hc/en-us/articles/206544839

73 |

74 | # User-specific stuff

75 | .idea/**/workspace.xml

76 | .idea/**/tasks.xml

77 | .idea/**/usage.statistics.xml

78 | .idea/**/dictionaries

79 | .idea/**/shelf

80 |

81 | # AWS User-specific

82 | .idea/**/aws.xml

83 |

84 | # Generated files

85 | .idea/**/contentModel.xml

86 |

87 | # Sensitive or high-churn files

88 | .idea/**/dataSources/

89 | .idea/**/dataSources.ids

90 | .idea/**/dataSources.local.xml

91 | .idea/**/sqlDataSources.xml

92 | .idea/**/dynamic.xml

93 | .idea/**/uiDesigner.xml

94 | .idea/**/dbnavigator.xml

95 |

96 | # Gradle

97 | .idea/**/gradle.xml

98 | .idea/**/libraries

99 |

100 | # Gradle and Maven with auto-import

101 | # When using Gradle or Maven with auto-import, you should exclude module files,

102 | # since they will be recreated, and may cause churn. Uncomment if using

103 | # auto-import.

104 | # .idea/artifacts

105 | # .idea/compiler.xml

106 | # .idea/jarRepositories.xml

107 | # .idea/modules.xml

108 | # .idea/*.iml

109 | # .idea/modules

110 | # *.iml

111 | # *.ipr

112 |

113 | # CMake

114 | cmake-build-*/

115 |

116 | # Mongo Explorer plugin

117 | .idea/**/mongoSettings.xml

118 |

119 | # File-based project format

120 | *.iws

121 |

122 | # IntelliJ

123 | out/

124 |

125 | # mpeltonen/sbt-idea plugin

126 | .idea_modules/

127 |

128 | # JIRA plugin

129 | atlassian-ide-plugin.xml

130 |

131 | # Cursive Clojure plugin

132 | .idea/replstate.xml

133 |

134 | # SonarLint plugin

135 | .idea/sonarlint/

136 |

137 | # Crashlytics plugin (for Android Studio and IntelliJ)

138 | com_crashlytics_export_strings.xml

139 | crashlytics.properties

140 | crashlytics-build.properties

141 | fabric.properties

142 |

143 | # Editor-based Rest Client

144 | .idea/httpRequests

145 |

146 | # Android studio 3.1+ serialized cache file

147 | .idea/caches/build_file_checksums.ser

148 |

149 | ### PyCharm Patch ###

150 | # Comment Reason: https://github.com/joeblau/gitignore.io/issues/186#issuecomment-215987721

151 |

152 | # *.iml

153 | # modules.xml

154 | # .idea/misc.xml

155 | # *.ipr

156 |

157 | # Sonarlint plugin

158 | # https://plugins.jetbrains.com/plugin/7973-sonarlint

159 | .idea/**/sonarlint/

160 |

161 | # SonarQube Plugin

162 | # https://plugins.jetbrains.com/plugin/7238-sonarqube-community-plugin

163 | .idea/**/sonarIssues.xml

164 |

165 | # Markdown Navigator plugin

166 | # https://plugins.jetbrains.com/plugin/7896-markdown-navigator-enhanced

167 | .idea/**/markdown-navigator.xml

168 | .idea/**/markdown-navigator-enh.xml

169 | .idea/**/markdown-navigator/

170 |

171 | # Cache file creation bug

172 | # See https://youtrack.jetbrains.com/issue/JBR-2257

173 | .idea/$CACHE_FILE$

174 |

175 | # CodeStream plugin

176 | # https://plugins.jetbrains.com/plugin/12206-codestream

177 | .idea/codestream.xml

178 |

179 | # End of https://www.toptal.com/developers/gitignore/api/macos,pycharm,jupyternotebooks

180 | /outputs/

181 | /wandb/

182 |

183 |

184 | ### VisualStudioCode ###

185 | .vscode/*

186 | !.vscode/settings.json

187 | !.vscode/tasks.json

188 | !.vscode/launch.json

189 | !.vscode/extensions.json

190 | !.vscode/*.code-snippets

191 |

192 | # Local History for Visual Studio Code

193 | .history/

194 |

195 | # Built Visual Studio Code Extensions

196 | *.vsix

197 |

198 | ### VisualStudioCode Patch ###

199 | # Ignore all local history of files

200 | .history

201 | .ionide

202 | __tmp_scripts

203 | # End of https://www.toptal.com/developers/gitignore/api/visualstudiocode,macos

204 |

--------------------------------------------------------------------------------

/.vscode/launch.json:

--------------------------------------------------------------------------------

1 | {

2 | // Use IntelliSense to learn about possible attributes.

3 | // Hover to view descriptions of existing attributes.

4 | // For more information, visit: https://go.microsoft.com/fwlink/?linkid=830387

5 | "version": "0.2.0",

6 | "configurations": [

7 |

8 | {

9 | "name": "Python: Remote Attach",

10 | "type": "python",

11 | "request": "attach",

12 | "connect": {

13 | "host": "localhost",

14 | "port": 5678

15 | },

16 | "justMyCode": true

17 | }

18 | ]

19 | }

--------------------------------------------------------------------------------

/.vscode/settings.json:

--------------------------------------------------------------------------------

1 | {

2 | "workbench.colorCustomizations": {

3 | "titleBar.activeBackground": "#261ac8"

4 | },

5 | "files.watcherExclude": {

6 | "**/checkpoints/**": true,

7 | "**/data/**": true,

8 | "**/multirun/**": true,

9 | "**/wandb/**": true,

10 | "**/logs/**": true,

11 | "**/snapshots/**": true,

12 | "../MorphEm/**": true

13 | },

14 | "files.exclude": {

15 | "**/.git": true,

16 | "**/.svn": true,

17 | "**/.hg": true,

18 | "**/CVS": true,

19 | "**/.DS_Store": true,

20 | "**/Thumbs.db": true,

21 | "**/__pycache__": true,

22 | "**/**/__pycache__": true,

23 | "**/wandb/**": true,

24 | "**/multirun/**": true,

25 | "**/checkpoints/**": true,

26 | "**/snapshots/**": true,

27 | },

28 | }

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2023 Chau Pham

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | A Pytorch implementation for channel-adaptive models in our [paper](https://arxiv.org/pdf/2310.19224.pdf). This code was tested using Pytorch 2.0 and Python 3.10.

2 |

3 |

4 | If you find our work useful, please consider citing:

5 |

6 | ```

7 | @InProceedings{ChenCHAMMI2023,

8 | author={Zitong Chen and Chau Pham and Siqi Wang and Michael Doron and Nikita Moshkov and Bryan A. Plummer and Juan C Caicedo},

9 | title={CHAMMI: A benchmark for channel-adaptive models in microscopy imaging},

10 | booktitle={Advances in Neural Information Processing Systems (NeurIPS) Track on Datasets and Benchmarks},

11 | year={2023}}

12 | ```

13 |

14 |

15 |

16 |

17 |

18 |

19 |

20 | # Setup

21 |

22 | 1/ Install Morphem Evaluation Benchmark package:

23 |

24 | https://github.com/broadinstitute/MorphEm

25 |

26 |

27 | 2/ Install required packages:

28 |

29 | `pip install -r requirements.txt`

30 |

31 |

32 | # Dataset

33 |

34 |

35 |

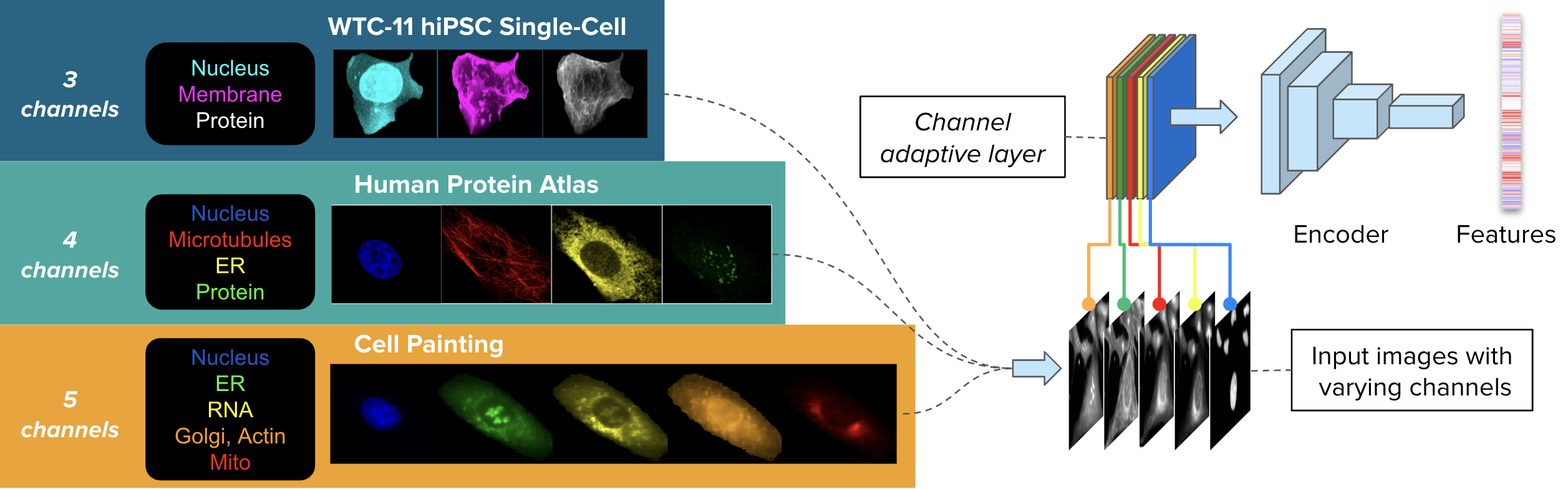

36 | CHAMMI consists of varying-channel images from three sources: WTC-11 hiPSC dataset (WTC-11, 3 channels), Human Protein Atlas (HPA, 4 channels), and Cell Painting datasets (CP, 5 channels).

37 |

38 | The dataset can be found at https://doi.org/10.5281/zenodo.7988357

39 |

40 | First, you need to download the dataset.

41 | Suppose the dataset folder is named `chammi_dataset`, and it is located inside the project folder.

42 |

43 | You need to modify the folder path in `configs/morphem70k/dataset/morphem70k_v2.yaml` and `configs/morphem70k/eval/default.yaml`.

44 | Specifically, set `root_dir` to `chammi_dataset` in both files.

45 |

46 |

47 | Then, copy `medadata/morphem70k_v2.csv` file to the `chammi_dataset` folder that you have just downloaded. You can use the following command:

48 |

49 | ```

50 | cp metadata/morphem70k_v2.csv chammi_dataset

51 | ```

52 |

53 | This particular file is simply a merged version of the metadata files (`enriched_meta.csv`) from three sub-datasets within your dataset folder. It will be utilized by `datasets/morphem70k.py` to load of the dataset.

54 |

55 |

56 | # Channel-adaptive Models

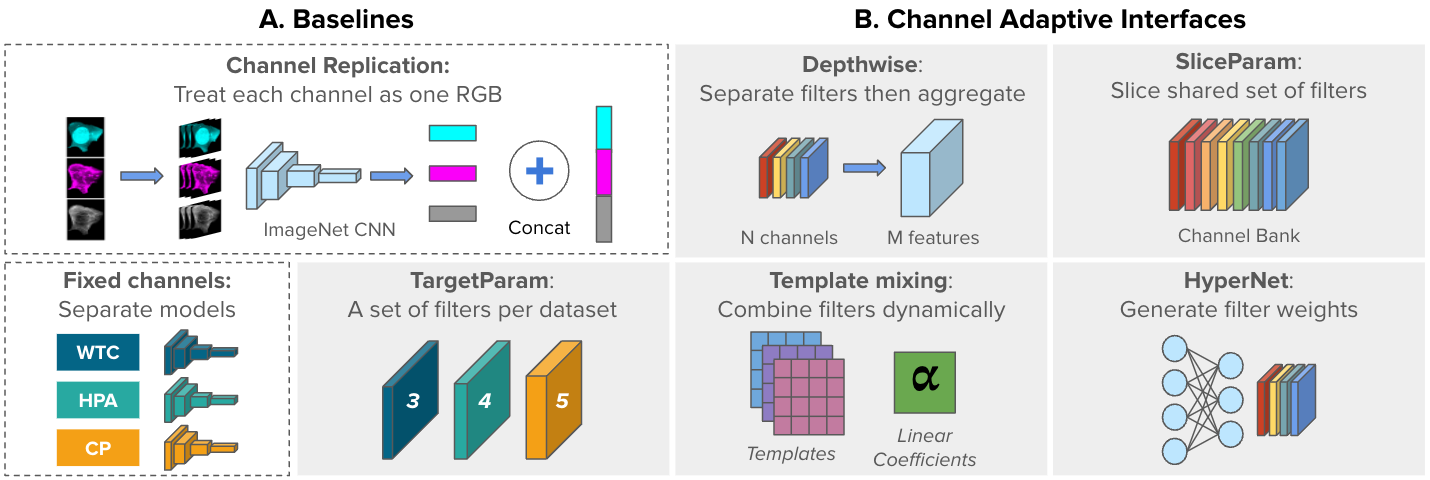

57 | Figure below demonstrates the baseline models.

58 | A) Two non-adaptive, baseline approaches: ChannelReplication and FixedChannels.

59 | B) Five channel-adaptive strategies to accommodate varying

60 | image inputs: Depthwise, SliceParam, TargetParam, TemplateMixing, and HyperNet (gray blocks). Adaptive interfaces are the first layer of a shared backbone network.

61 |

62 |

63 |

64 |

65 | # Training

66 |

67 | In this project, we use [Hydra](https://hydra.cc/) to manage configurations.

68 | To submit a job using Hydra, you need to specify the config file. Here are some key parameters:

69 |

70 | ```

71 | -m: multi-run mode (submit multiple runs with 1 job)

72 |

73 | -cp: config folder, all config files are in `configs/morphem70k`

74 |

75 | -cn: config file name (without .yaml extension)

76 | ```

77 |

78 | Parameters in the command lines will override the ones in the config file.

79 | For example, to train a SliceParam model:

80 |

81 | ```

82 | python main.py -m -cp configs/morphem70k -cn morphem70k_cfg model=sliceparam tag=slice ++optimizer.params.lr=0.0001 ++model.learnable_temp=True ++model.temperature=0.15 ++model.first_layer=pretrained_pad_dups ++model.slice_class_emb=True ++train.seed=725375

83 | ```

84 |

85 |

86 | To reproduce the results, please refer to [train_scripts.sh](https://github.com/chaudatascience/channel_adaptive_models/blob/main/train_scripts.sh).

87 |

88 | - **Add Wandb key**: If you would like to use Wandb to keep track of experiments, add your Wandb key to `.env` file:

89 |

90 | `echo WANDB_API_KEY=your_wandb_key >> .env`

91 |

92 | and, change `use_wandb` to `True` in `configs/morphem70k/logging/wandb.yaml`.

93 |

94 |

95 | # Checkpoints

96 |

97 | Our pre-trained models can be found at: https://drive.google.com/drive/folders/1_xVgzfdc6H9ar4T5bd1jTjNkrpTwkSlL?usp=drive_link

98 |

99 | Configs for the checkpoints are stored in [checkpoint_configs](https://github.com/chaudatascience/channel_adaptive_models/tree/main/checkpoint_configs) folder.

100 |

101 | A quick example of using the checkpoints for evaluation is provided in [evaluate.ipynb](https://github.com/chaudatascience/channel_adaptive_models/blob/main/evaluate.ipynb)

102 |

--------------------------------------------------------------------------------

/checkpoint_configs/depthwise.yaml:

--------------------------------------------------------------------------------

1 | train:

2 | batch_strategy: random_instance

3 | resume_train: false

4 | resume_model: depthwise.pt

5 | use_amp: false

6 | checkpoints: ../STORE/adaptive_interface/checkpoints

7 | save_model: no_save

8 | clip_grad_norm: null

9 | batch_size: 128

10 | num_epochs: 15

11 | verbose_batches: 50

12 | seed: 483112

13 | debug: false

14 | adaptive_interface_epochs: 0

15 | adaptive_interface_lr: null

16 | swa: false

17 | swad: false

18 | swa_lr: 0.05

19 | swa_start: 5

20 | miro: false

21 | miro_lr_mult: 10.0

22 | miro_ld: 0.01

23 | tps_prob: 0.0

24 | model:

25 | name: depthwiseconvnext

26 | pretrained: true

27 | pretrained_model_name: convnext_tiny.fb_in22k

28 | in_dim: null

29 | num_classes: null

30 | pooling: avg

31 | temperature: 0.07

32 | learnable_temp: false

33 | unfreeze_last_n_layers: -1

34 | unfreeze_first_layer: true

35 | first_layer: reinit_as_random

36 | reset_last_n_unfrozen_layers: false

37 | use_auto_rgn: false

38 | kernels_per_channel: 64

39 | pooling_channel_type: weighted_sum_random

40 | in_channel_names:

41 | - er

42 | - golgi

43 | - membrane

44 | - microtubules

45 | - mito

46 | - nucleus

47 | - protein

48 | - rna

49 | scheduler:

50 | name: cosine

51 | convert_to_batch: false

52 | params:

53 | t_initial: FILL_LATER

54 | lr_min: 1.0e-06

55 | cycle_mul: 1.0

56 | cycle_decay: 0.5

57 | cycle_limit: 1

58 | warmup_t: 3

59 | warmup_lr_init: 1.0e-05

60 | warmup_prefix: false

61 | t_in_epochs: true

62 | noise_range_t: null

63 | noise_pct: 0.67

64 | noise_std: 1.0

65 | noise_seed: 42

66 | k_decay: 1.0

67 | initialize: true

68 | optimizer:

69 | name: adamw

70 | params:

71 | lr: 0.0004

72 | betas:

73 | - 0.9

74 | - 0.999

75 | eps: 1.0e-08

76 | weight_decay: 5.0e-05

77 | amsgrad: false

78 | dataset:

79 | name: morphem70k

80 | img_size: 224

81 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2

82 | file_name: morphem70k_v2.csv

83 | data_chunk:

84 | chunks:

85 | - Allen:

86 | - nucleus

87 | - membrane

88 | - protein

89 | - HPA:

90 | - microtubules

91 | - protein

92 | - nucleus

93 | - er

94 | - CP:

95 | - nucleus

96 | - er

97 | - rna

98 | - golgi

99 | - mito

100 | logging:

101 | wandb:

102 | use_wandb: false

103 | log_freq: 10000

104 | num_images_to_log: 0

105 | project_name: null

106 | use_py_log: false

107 | scc_jobid: null

108 | hardware:

109 | num_workers: 3

110 | device: cuda

111 | multi_gpus: null

112 | eval:

113 | batch_size: null

114 | dest_dir: ../STORE/adaptive_interface/snapshots/{FOLDER_NAME}/results

115 | feature_dir: ../STORE/adaptive_interface/snapshots/{FOLDER_NAME}/features

116 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2/

117 | meta_csv_file: FILL_LATER

118 | classifiers:

119 | - knn

120 | - sgd

121 | classifier: PLACE_HOLDER

122 | feature_file: features.npy

123 | use_gpu: true

124 | knn_metric: PLACE_HOLDER

125 | knn_metrics:

126 | - l2

127 | - cosine

128 | clean_up: false

129 | umap: true

130 | only_eval_first_and_last: false

131 | attn_pooling: {}

132 | tag: depthwise

133 |

--------------------------------------------------------------------------------

/checkpoint_configs/fixed_channels_finetuned/allen.yaml:

--------------------------------------------------------------------------------

1 | train:

2 | batch_strategy: random_instance

3 | resume_train: false

4 | resume_model: fixed_channels_finetuned/allen.pt

5 | use_amp: false

6 | checkpoints: ../STORE/adaptive_interface/checkpoints

7 | save_model: no_save

8 | clip_grad_norm: null

9 | batch_size: 128

10 | num_epochs: 15

11 | verbose_batches: 50

12 | seed: 582814

13 | debug: false

14 | adaptive_interface_epochs: 0

15 | adaptive_interface_lr: null

16 | swa: false

17 | swad: false

18 | swa_lr: 0.05

19 | swa_start: 5

20 | miro: false

21 | miro_lr_mult: 10.0

22 | miro_ld: 0.01

23 | tps_prob: 0.0

24 | model:

25 | name: convnext_base

26 | pretrained: true

27 | pretrained_model_name: convnext_tiny.fb_in22k

28 | in_dim: null

29 | num_classes: null

30 | pooling: avg

31 | temperature: 0.3

32 | learnable_temp: false

33 | unfreeze_last_n_layers: -1

34 | unfreeze_first_layer: true

35 | first_layer: pretrained_pad_avg

36 | reset_last_n_unfrozen_layers: false

37 | use_auto_rgn: false

38 | scheduler:

39 | name: cosine

40 | convert_to_batch: false

41 | params:

42 | t_initial: FILL_LATER

43 | lr_min: 1.0e-06

44 | cycle_mul: 1.0

45 | cycle_decay: 0.5

46 | cycle_limit: 1

47 | warmup_t: 3

48 | warmup_lr_init: 1.0e-05

49 | warmup_prefix: false

50 | t_in_epochs: true

51 | noise_range_t: null

52 | noise_pct: 0.67

53 | noise_std: 1.0

54 | noise_seed: 42

55 | k_decay: 1.0

56 | initialize: true

57 | optimizer:

58 | name: adamw

59 | params:

60 | lr: 0.001

61 | betas:

62 | - 0.9

63 | - 0.999

64 | eps: 1.0e-08

65 | weight_decay: 5.0e-05

66 | amsgrad: false

67 | dataset:

68 | name: Allen

69 | img_size: 224

70 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2

71 | file_name: morphem70k_v2.csv

72 | data_chunk:

73 | chunks:

74 | - Allen:

75 | - nucleus

76 | - membrane

77 | - protein

78 | logging:

79 | wandb:

80 | use_wandb: false

81 | log_freq: 10000

82 | num_images_to_log: 0

83 | project_name: null

84 | use_py_log: false

85 | scc_jobid: null

86 | hardware:

87 | num_workers: 3

88 | device: cuda

89 | multi_gpus: null

90 | eval:

91 | batch_size: null

92 | dest_dir: ../STORE/adaptive_interface/snapshots/{FOLDER_NAME}/results

93 | feature_dir: ../STORE/adaptive_interface/snapshots/{FOLDER_NAME}/features

94 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2/

95 | meta_csv_file: FILL_LATER

96 | classifiers:

97 | - knn

98 | - sgd

99 | classifier: PLACE_HOLDER

100 | feature_file: features.npy

101 | use_gpu: true

102 | knn_metric: PLACE_HOLDER

103 | knn_metrics:

104 | - l2

105 | - cosine

106 | clean_up: false

107 | umap: true

108 | only_eval_first_and_last: false

109 | tag: allen

110 |

--------------------------------------------------------------------------------

/checkpoint_configs/fixed_channels_finetuned/cp.yaml:

--------------------------------------------------------------------------------

1 | train:

2 | batch_strategy: random_instance

3 | resume_train: false

4 | resume_model: fixed_channels_finetuned/cp.pt

5 | use_amp: false

6 | checkpoints: ../STORE/adaptive_interface/checkpoints

7 | save_model: no_save

8 | clip_grad_norm: null

9 | batch_size: 128

10 | num_epochs: 15

11 | verbose_batches: 50

12 | seed: 530400

13 | debug: false

14 | adaptive_interface_epochs: 0

15 | adaptive_interface_lr: null

16 | swa: false

17 | swad: false

18 | swa_lr: 0.05

19 | swa_start: 5

20 | miro: false

21 | miro_lr_mult: 10.0

22 | miro_ld: 0.01

23 | tps_prob: 0.0

24 | model:

25 | name: convnext_base

26 | pretrained: true

27 | pretrained_model_name: convnext_tiny.fb_in22k

28 | in_dim: null

29 | num_classes: null

30 | pooling: avg

31 | temperature: 0.3

32 | learnable_temp: false

33 | unfreeze_last_n_layers: -1

34 | unfreeze_first_layer: true

35 | first_layer: pretrained_pad_avg

36 | reset_last_n_unfrozen_layers: false

37 | use_auto_rgn: false

38 | scheduler:

39 | name: cosine

40 | convert_to_batch: false

41 | params:

42 | t_initial: FILL_LATER

43 | lr_min: 1.0e-06

44 | cycle_mul: 1.0

45 | cycle_decay: 0.5

46 | cycle_limit: 1

47 | warmup_t: 3

48 | warmup_lr_init: 1.0e-05

49 | warmup_prefix: false

50 | t_in_epochs: true

51 | noise_range_t: null

52 | noise_pct: 0.67

53 | noise_std: 1.0

54 | noise_seed: 42

55 | k_decay: 1.0

56 | initialize: true

57 | optimizer:

58 | name: adamw

59 | params:

60 | lr: 0.0001

61 | betas:

62 | - 0.9

63 | - 0.999

64 | eps: 1.0e-08

65 | weight_decay: 5.0e-05

66 | amsgrad: false

67 | dataset:

68 | name: CP

69 | img_size: 224

70 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2

71 | file_name: morphem70k_v2.csv

72 | data_chunk:

73 | chunks:

74 | - CP:

75 | - nucleus

76 | - er

77 | - rna

78 | - golgi

79 | - mito

80 | logging:

81 | wandb:

82 | use_wandb: false

83 | log_freq: 10000

84 | num_images_to_log: 0

85 | project_name: null

86 | use_py_log: false

87 | scc_jobid: null

88 | hardware:

89 | num_workers: 3

90 | device: cuda

91 | multi_gpus: null

92 | eval:

93 | batch_size: null

94 | dest_dir: ../STORE/adaptive_interface/snapshots/{FOLDER_NAME}/results

95 | feature_dir: ../STORE/adaptive_interface/snapshots/{FOLDER_NAME}/features

96 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2/

97 | meta_csv_file: FILL_LATER

98 | classifiers:

99 | - knn

100 | - sgd

101 | classifier: PLACE_HOLDER

102 | feature_file: features.npy

103 | use_gpu: true

104 | knn_metric: PLACE_HOLDER

105 | knn_metrics:

106 | - l2

107 | - cosine

108 | clean_up: false

109 | umap: true

110 | only_eval_first_and_last: false

111 | tag: cp

112 |

--------------------------------------------------------------------------------

/checkpoint_configs/fixed_channels_finetuned/hpa.yaml:

--------------------------------------------------------------------------------

1 | train:

2 | batch_strategy: random_instance

3 | resume_train: false

4 | resume_model: fixed_channels_finetuned/hpa.pt

5 | use_amp: false

6 | checkpoints: ../STORE/adaptive_interface/checkpoints

7 | save_model: no_save

8 | clip_grad_norm: null

9 | batch_size: 128

10 | num_epochs: 15

11 | verbose_batches: 50

12 | seed: 744395

13 | debug: false

14 | adaptive_interface_epochs: 0

15 | adaptive_interface_lr: null

16 | swa: false

17 | swad: false

18 | swa_lr: 0.05

19 | swa_start: 5

20 | miro: false

21 | miro_lr_mult: 10.0

22 | miro_ld: 0.01

23 | tps_prob: 0.0

24 | model:

25 | name: convnext_base

26 | pretrained: true

27 | pretrained_model_name: convnext_tiny.fb_in22k

28 | in_dim: null

29 | num_classes: null

30 | pooling: avg

31 | temperature: 0.3

32 | learnable_temp: false

33 | unfreeze_last_n_layers: -1

34 | unfreeze_first_layer: true

35 | first_layer: pretrained_pad_avg

36 | reset_last_n_unfrozen_layers: false

37 | use_auto_rgn: false

38 | scheduler:

39 | name: cosine

40 | convert_to_batch: false

41 | params:

42 | t_initial: FILL_LATER

43 | lr_min: 1.0e-06

44 | cycle_mul: 1.0

45 | cycle_decay: 0.5

46 | cycle_limit: 1

47 | warmup_t: 3

48 | warmup_lr_init: 1.0e-05

49 | warmup_prefix: false

50 | t_in_epochs: true

51 | noise_range_t: null

52 | noise_pct: 0.67

53 | noise_std: 1.0

54 | noise_seed: 42

55 | k_decay: 1.0

56 | initialize: true

57 | optimizer:

58 | name: adamw

59 | params:

60 | lr: 0.0001

61 | betas:

62 | - 0.9

63 | - 0.999

64 | eps: 1.0e-08

65 | weight_decay: 5.0e-05

66 | amsgrad: false

67 | dataset:

68 | name: HPA

69 | img_size: 224

70 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2

71 | file_name: morphem70k_v2.csv

72 | data_chunk:

73 | chunks:

74 | - HPA:

75 | - microtubules

76 | - protein

77 | - nucleus

78 | - er

79 | logging:

80 | wandb:

81 | use_wandb: false

82 | log_freq: 10000

83 | num_images_to_log: 0

84 | project_name: null

85 | use_py_log: false

86 | scc_jobid: null

87 | hardware:

88 | num_workers: 3

89 | device: cuda

90 | multi_gpus: null

91 | eval:

92 | batch_size: null

93 | dest_dir: ../STORE/adaptive_interface/snapshots/{FOLDER_NAME}/results

94 | feature_dir: ../STORE/adaptive_interface/snapshots/{FOLDER_NAME}/features

95 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2/

96 | meta_csv_file: FILL_LATER

97 | classifiers:

98 | - knn

99 | - sgd

100 | classifier: PLACE_HOLDER

101 | feature_file: features.npy

102 | use_gpu: true

103 | knn_metric: PLACE_HOLDER

104 | knn_metrics:

105 | - l2

106 | - cosine

107 | clean_up: false

108 | umap: true

109 | only_eval_first_and_last: false

110 | tag: hpa

111 |

--------------------------------------------------------------------------------

/checkpoint_configs/hypernet.yaml:

--------------------------------------------------------------------------------

1 | train:

2 | batch_strategy: random_instance

3 | resume_train: false

4 | resume_model: hypernet.pt

5 | use_amp: false

6 | checkpoints: ../STORE/adaptive_interface/checkpoints

7 | save_model: no_save

8 | clip_grad_norm: null

9 | batch_size: 128

10 | num_epochs: 15

11 | verbose_batches: 50

12 | seed: 125617

13 | debug: false

14 | adaptive_interface_epochs: 0

15 | adaptive_interface_lr: null

16 | swa: false

17 | swad: false

18 | swa_lr: 0.05

19 | swa_start: 5

20 | miro: false

21 | miro_lr_mult: 10.0

22 | miro_ld: 0.01

23 | tps_prob: 0.0

24 | model:

25 | name: hyperconvnext

26 | pretrained: true

27 | pretrained_model_name: convnext_tiny.fb_in22k

28 | in_dim: null

29 | num_classes: null

30 | pooling: avg

31 | temperature: 0.07

32 | learnable_temp: false

33 | unfreeze_last_n_layers: -1

34 | unfreeze_first_layer: true

35 | first_layer: reinit_as_random

36 | reset_last_n_unfrozen_layers: false

37 | use_auto_rgn: false

38 | z_dim: 128

39 | hidden_dim: 256

40 | in_channel_names:

41 | - er

42 | - golgi

43 | - membrane

44 | - microtubules

45 | - mito

46 | - nucleus

47 | - protein

48 | - rna

49 | separate_emb: true

50 | scheduler:

51 | name: cosine

52 | convert_to_batch: false

53 | params:

54 | t_initial: FILL_LATER

55 | lr_min: 1.0e-06

56 | cycle_mul: 1.0

57 | cycle_decay: 0.5

58 | cycle_limit: 1

59 | warmup_t: 3

60 | warmup_lr_init: 1.0e-05

61 | warmup_prefix: false

62 | t_in_epochs: true

63 | noise_range_t: null

64 | noise_pct: 0.67

65 | noise_std: 1.0

66 | noise_seed: 42

67 | k_decay: 1.0

68 | initialize: true

69 | optimizer:

70 | name: adamw

71 | params:

72 | lr: 0.0004

73 | betas:

74 | - 0.9

75 | - 0.999

76 | eps: 1.0e-08

77 | weight_decay: 5.0e-05

78 | amsgrad: false

79 | dataset:

80 | name: morphem70k

81 | img_size: 224

82 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2

83 | file_name: morphem70k_v2.csv

84 | data_chunk:

85 | chunks:

86 | - Allen:

87 | - nucleus

88 | - membrane

89 | - protein

90 | - HPA:

91 | - microtubules

92 | - protein

93 | - nucleus

94 | - er

95 | - CP:

96 | - nucleus

97 | - er

98 | - rna

99 | - golgi

100 | - mito

101 | logging:

102 | wandb:

103 | use_wandb: false

104 | log_freq: 10000

105 | num_images_to_log: 0

106 | project_name: null

107 | use_py_log: false

108 | scc_jobid: null

109 | hardware:

110 | num_workers: 3

111 | device: cuda

112 | multi_gpus: null

113 | eval:

114 | batch_size: null

115 | dest_dir: ../STORE/adaptive_interface/snapshots/{FOLDER_NAME}/results

116 | feature_dir: ../STORE/adaptive_interface/snapshots/{FOLDER_NAME}/features

117 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2/

118 | meta_csv_file: FILL_LATER

119 | classifiers:

120 | - knn

121 | - sgd

122 | classifier: PLACE_HOLDER

123 | feature_file: features.npy

124 | use_gpu: true

125 | knn_metric: PLACE_HOLDER

126 | knn_metrics:

127 | - l2

128 | - cosine

129 | clean_up: false

130 | umap: true

131 | only_eval_first_and_last: false

132 | attn_pooling: {}

133 | tag: hyper

134 |

--------------------------------------------------------------------------------

/checkpoint_configs/slice_param.yaml:

--------------------------------------------------------------------------------

1 | train:

2 | batch_strategy: random_instance

3 | resume_train: false

4 | resume_model: slice_param.pt

5 | use_amp: false

6 | checkpoints: ../STORE/adaptive_interface/checkpoints

7 | save_model: no_save

8 | clip_grad_norm: null

9 | batch_size: 128

10 | num_epochs: 15

11 | verbose_batches: 50

12 | seed: 725375

13 | debug: false

14 | adaptive_interface_epochs: 0

15 | adaptive_interface_lr: null

16 | swa: false

17 | swad: false

18 | swa_lr: 0.05

19 | swa_start: 5

20 | miro: false

21 | miro_lr_mult: 10.0

22 | miro_ld: 0.01

23 | tps_prob: 0.0

24 | model:

25 | name: sliceparamconvnext

26 | pretrained: true

27 | pretrained_model_name: convnext_tiny.fb_in22k

28 | in_dim: null

29 | num_classes: null

30 | pooling: avg

31 | temperature: 0.15

32 | learnable_temp: true

33 | unfreeze_last_n_layers: -1

34 | unfreeze_first_layer: true

35 | first_layer: pretrained_pad_dups

36 | reset_last_n_unfrozen_layers: false

37 | use_auto_rgn: false

38 | in_channel_names:

39 | - er

40 | - golgi

41 | - membrane

42 | - microtubules

43 | - mito

44 | - nucleus

45 | - protein

46 | - rna

47 | duplicate: false

48 | slice_class_emb: true

49 | scheduler:

50 | name: cosine

51 | convert_to_batch: false

52 | params:

53 | t_initial: FILL_LATER

54 | lr_min: 1.0e-06

55 | cycle_mul: 1.0

56 | cycle_decay: 0.5

57 | cycle_limit: 1

58 | warmup_t: 3

59 | warmup_lr_init: 1.0e-05

60 | warmup_prefix: false

61 | t_in_epochs: true

62 | noise_range_t: null

63 | noise_pct: 0.67

64 | noise_std: 1.0

65 | noise_seed: 42

66 | k_decay: 1.0

67 | initialize: true

68 | optimizer:

69 | name: adamw

70 | params:

71 | lr: 0.0001

72 | betas:

73 | - 0.9

74 | - 0.999

75 | eps: 1.0e-08

76 | weight_decay: 5.0e-05

77 | amsgrad: false

78 | dataset:

79 | name: morphem70k

80 | img_size: 224

81 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2

82 | file_name: morphem70k_v2.csv

83 | data_chunk:

84 | chunks:

85 | - Allen:

86 | - nucleus

87 | - membrane

88 | - protein

89 | - HPA:

90 | - microtubules

91 | - protein

92 | - nucleus

93 | - er

94 | - CP:

95 | - nucleus

96 | - er

97 | - rna

98 | - golgi

99 | - mito

100 | logging:

101 | wandb:

102 | use_wandb: false

103 | log_freq: 10000

104 | num_images_to_log: 0

105 | project_name: null

106 | use_py_log: false

107 | scc_jobid: null

108 | hardware:

109 | num_workers: 3

110 | device: cuda

111 | multi_gpus: null

112 | eval:

113 | batch_size: null

114 | dest_dir: ../STORE/adaptive_interface/snapshots/{FOLDER_NAME}/results

115 | feature_dir: ../STORE/adaptive_interface/snapshots/{FOLDER_NAME}/features

116 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2/

117 | meta_csv_file: FILL_LATER

118 | classifiers:

119 | - knn

120 | - sgd

121 | classifier: PLACE_HOLDER

122 | feature_file: features.npy

123 | use_gpu: true

124 | knn_metric: PLACE_HOLDER

125 | knn_metrics:

126 | - l2

127 | - cosine

128 | clean_up: false

129 | umap: true

130 | only_eval_first_and_last: false

131 | attn_pooling: {}

132 | tag: slice

133 |

--------------------------------------------------------------------------------

/checkpoint_configs/target_param.yaml:

--------------------------------------------------------------------------------

1 | train:

2 | batch_strategy: random_instance

3 | resume_train: false

4 | resume_model: target_param.pt

5 | use_amp: false

6 | checkpoints: ../STORE/adaptive_interface/checkpoints

7 | save_model: no_save

8 | clip_grad_norm: null

9 | batch_size: 128

10 | num_epochs: 15

11 | verbose_batches: 50

12 | seed: 505429

13 | debug: false

14 | adaptive_interface_epochs: 0

15 | adaptive_interface_lr: null

16 | swa: false

17 | swad: false

18 | swa_lr: 0.05

19 | swa_start: 5

20 | miro: false

21 | miro_lr_mult: 10.0

22 | miro_ld: 0.01

23 | tps_prob: 0.0

24 | model:

25 | name: shared_convnext

26 | pretrained: true

27 | pretrained_model_name: convnext_tiny.fb_in22k

28 | in_dim: null

29 | num_classes: null

30 | pooling: avg

31 | temperature: 0.07

32 | learnable_temp: true

33 | unfreeze_last_n_layers: -1

34 | unfreeze_first_layer: true

35 | first_layer: pretrained_pad_avg

36 | reset_last_n_unfrozen_layers: false

37 | use_auto_rgn: false

38 | scheduler:

39 | name: cosine

40 | convert_to_batch: false

41 | params:

42 | t_initial: FILL_LATER

43 | lr_min: 1.0e-06

44 | cycle_mul: 1.0

45 | cycle_decay: 0.5

46 | cycle_limit: 1

47 | warmup_t: 3

48 | warmup_lr_init: 1.0e-05

49 | warmup_prefix: false

50 | t_in_epochs: true

51 | noise_range_t: null

52 | noise_pct: 0.67

53 | noise_std: 1.0

54 | noise_seed: 42

55 | k_decay: 1.0

56 | initialize: true

57 | optimizer:

58 | name: adamw

59 | params:

60 | lr: 0.0002

61 | betas:

62 | - 0.9

63 | - 0.999

64 | eps: 1.0e-08

65 | weight_decay: 5.0e-05

66 | amsgrad: false

67 | dataset:

68 | name: morphem70k

69 | img_size: 224

70 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2

71 | file_name: morphem70k_v2.csv

72 | data_chunk:

73 | chunks:

74 | - Allen:

75 | - nucleus

76 | - membrane

77 | - protein

78 | - HPA:

79 | - microtubules

80 | - protein

81 | - nucleus

82 | - er

83 | - CP:

84 | - nucleus

85 | - er

86 | - rna

87 | - golgi

88 | - mito

89 | logging:

90 | wandb:

91 | use_wandb: false

92 | log_freq: 10000

93 | num_images_to_log: 0

94 | project_name: null

95 | use_py_log: false

96 | scc_jobid: null

97 | hardware:

98 | num_workers: 3

99 | device: cuda

100 | multi_gpus: null

101 | eval:

102 | batch_size: null

103 | dest_dir: ../STORE/adaptive_interface/snapshots/{FOLDER_NAME}/results

104 | feature_dir: ../STORE/adaptive_interface/snapshots/{FOLDER_NAME}/features

105 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2/

106 | meta_csv_file: FILL_LATER

107 | classifiers:

108 | - knn

109 | - sgd

110 | classifier: PLACE_HOLDER

111 | feature_file: features.npy

112 | use_gpu: true

113 | knn_metric: PLACE_HOLDER

114 | knn_metrics:

115 | - l2

116 | - cosine

117 | clean_up: false

118 | umap: true

119 | only_eval_first_and_last: false

120 | attn_pooling: {}

121 | tag: shared

122 |

--------------------------------------------------------------------------------

/checkpoint_configs/template_mixing.yaml:

--------------------------------------------------------------------------------

1 | train:

2 | batch_strategy: random_instance

3 | resume_train: false

4 | resume_model: template_mixing.pt

5 | use_amp: false

6 | checkpoints: ../STORE/adaptive_interface/checkpoints

7 | save_model: no_save

8 | clip_grad_norm: null

9 | batch_size: 128

10 | num_epochs: 15

11 | verbose_batches: 50

12 | seed: 451006

13 | debug: false

14 | adaptive_interface_epochs: 0

15 | adaptive_interface_lr: null

16 | swa: false

17 | swad: false

18 | swa_lr: 0.05

19 | swa_start: 5

20 | miro: false

21 | miro_lr_mult: 10.0

22 | miro_ld: 0.01

23 | tps_prob: 0.0

24 | model:

25 | name: templatemixingconvnext

26 | pretrained: true

27 | pretrained_model_name: convnext_tiny.fb_in22k

28 | in_dim: null

29 | num_classes: null

30 | pooling: avg

31 | temperature: 0.05

32 | learnable_temp: false

33 | unfreeze_last_n_layers: -1

34 | unfreeze_first_layer: true

35 | first_layer: reinit_as_random

36 | reset_last_n_unfrozen_layers: false

37 | use_auto_rgn: false

38 | in_channel_names:

39 | - er

40 | - golgi

41 | - membrane

42 | - microtubules

43 | - mito

44 | - nucleus

45 | - protein

46 | - rna

47 | num_templates: 128

48 | separate_coef: true

49 | scheduler:

50 | name: cosine

51 | convert_to_batch: false

52 | params:

53 | t_initial: FILL_LATER

54 | lr_min: 1.0e-06

55 | cycle_mul: 1.0

56 | cycle_decay: 0.5

57 | cycle_limit: 1

58 | warmup_t: 3

59 | warmup_lr_init: 1.0e-05

60 | warmup_prefix: false

61 | t_in_epochs: true

62 | noise_range_t: null

63 | noise_pct: 0.67

64 | noise_std: 1.0

65 | noise_seed: 42

66 | k_decay: 1.0

67 | initialize: true

68 | optimizer:

69 | name: adamw

70 | params:

71 | lr: 0.0001

72 | betas:

73 | - 0.9

74 | - 0.999

75 | eps: 1.0e-08

76 | weight_decay: 5.0e-05

77 | amsgrad: false

78 | dataset:

79 | name: morphem70k

80 | img_size: 224

81 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2

82 | file_name: morphem70k_v2.csv

83 | data_chunk:

84 | chunks:

85 | - Allen:

86 | - nucleus

87 | - membrane

88 | - protein

89 | - HPA:

90 | - microtubules

91 | - protein

92 | - nucleus

93 | - er

94 | - CP:

95 | - nucleus

96 | - er

97 | - rna

98 | - golgi

99 | - mito

100 | logging:

101 | wandb:

102 | use_wandb: false

103 | log_freq: 10000

104 | num_images_to_log: 0

105 | project_name: null

106 | use_py_log: false

107 | scc_jobid: null

108 | hardware:

109 | num_workers: 3

110 | device: cuda

111 | multi_gpus: null

112 | eval:

113 | batch_size: null

114 | dest_dir: ../STORE/adaptive_interface/snapshots/{FOLDER_NAME}/results

115 | feature_dir: ../STORE/adaptive_interface/snapshots/{FOLDER_NAME}/features

116 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2/

117 | meta_csv_file: FILL_LATER

118 | classifiers:

119 | - knn

120 | - sgd

121 | classifier: PLACE_HOLDER

122 | feature_file: features.npy

123 | use_gpu: true

124 | knn_metric: PLACE_HOLDER

125 | knn_metrics:

126 | - l2

127 | - cosine

128 | clean_up: false

129 | umap: true

130 | only_eval_first_and_last: false

131 | attn_pooling: {}

132 | tag: templ

133 |

--------------------------------------------------------------------------------

/config.py:

--------------------------------------------------------------------------------

1 | from __future__ import annotations

2 |

3 | from dataclasses import dataclass, asdict, field

4 | from typing import List, Dict, Optional

5 |

6 | from omegaconf import MISSING

7 |

8 | from helper_classes.channel_initialization import ChannelInitialization

9 | from helper_classes.feature_pooling import FeaturePooling

10 | from helper_classes.first_layer_init import FirstLayerInit

11 | from helper_classes.norm_type import NormType

12 | from helper_classes.channel_pooling_type import ChannelPoolingType

13 |

14 | # fmt: off

15 |

16 | @dataclass

17 | class OptimizerParams(Dict):

18 | pass

19 |

20 |

21 | @dataclass

22 | class Optimizer:

23 | name: str

24 | params: OptimizerParams

25 |

26 |

27 | @dataclass

28 | class SchedulerParams(Dict):

29 | pass

30 |

31 |

32 | @dataclass

33 | class Scheduler:

34 | name: str

35 | convert_to_batch: bool

36 | params: SchedulerParams

37 |

38 |

39 | @dataclass

40 | class Train:

41 | batch_strategy: None

42 | resume_train: bool

43 | resume_model: str

44 | use_amp: bool

45 | checkpoints: str

46 | clip_grad_norm: int

47 | batch_size: int

48 | num_epochs: int

49 | verbose_batches: int

50 | seed: int

51 | save_model: bool

52 | debug: Optional[bool] = False

53 | real_batch_size: Optional[int] = None

54 | compile_pytorch: Optional[bool] = False

55 | adaptive_interface_epochs: int = 0

56 | adaptive_interface_lr: Optional[float] = None

57 | swa: Optional[bool] = False

58 | swad: Optional[bool] = False

59 | swa_lr: Optional[float] = 0.05

60 | swa_start: Optional[int] = 5

61 |

62 | ## MIRO

63 | miro: Optional[bool] = False

64 | miro_lr_mult: Optional[float] = 10.0

65 | miro_ld: Optional[float] = 0.01 # 0.1

66 |

67 | ## TPS Transform (Augmentation)

68 | tps_prob: Optional[float] = 0.0

69 | ssl: Optional[bool] = False

70 | ssl_lambda: Optional[float] = 0.0

71 |

72 | @dataclass

73 | class Eval:

74 | batch_size: int

75 | dest_dir: str = "" ## where to save results

76 | feature_dir: str = "" ## where to save features for evaluation

77 | root_dir: str = "" ## folder that contains images and metadata

78 | classifiers: List[str] = field(default_factory=list) ## classifier to use

79 | classifier: str = "" ## placeholder for classifier

80 | feature_file: str = "" ## feature file to use

81 | use_gpu: bool = True ## use gpu for evaluation

82 | knn_metrics: List[str] = field(default_factory=list) ## "l2" or "cosine"

83 | knn_metric: str = "" ## should be "l2" or "cosine", placeholder

84 | meta_csv_file: str = "" ## metadata csv file

85 | clean_up: bool = True ## whether to delete the feature file after evaluation

86 | only_eval_first_and_last: bool = False ## whether to only evaluate first (off the shelf) and last (final fune-tuned) epochs

87 |

88 | @dataclass

89 | class AttentionPoolingParams:

90 | """

91 | param for ChannelAttentionPoolingLayer class.

92 | initialize all arguments in the class.

93 | """

94 |

95 | max_num_channels: int

96 | dim: int

97 | depth: int

98 | dim_head: int

99 | heads: int

100 | mlp_dim: int

101 | dropout: float

102 | use_cls_token: bool

103 | use_channel_tokens: bool

104 | init_channel_tokens: ChannelInitialization

105 |

106 |

107 | @dataclass

108 | class Model:

109 | name: str

110 | init_weights: bool

111 | in_dim: int = MISSING

112 | num_classes: int = MISSING ## Num of training classes

113 | freeze_other: Optional[bool] = None ## used in Shared Models

114 | in_channel_names: Optional[List[str]] = None ## used with Slice Param Models

115 | separate_norm: Optional[

116 | bool

117 | ] = None ## use a separate norm layer for each data chunk

118 | image_h_w: Optional[List[int]] = None ## used with layer norm

119 | norm_type: Optional[

120 | NormType

121 | ] = None # one of ["batch_norm", "norm_type", "instance_norm"]

122 | duplicate: Optional[

123 | bool

124 | ] = None # whether to only use the first param bank and duplicate for all the channels

125 | pooling_channel_type: Optional[ChannelPoolingType] = None

126 | kernels_per_channel: Optional[int] = None

127 | num_templates: Optional[int] = None # number of templates to use in template mixing

128 | separate_coef: Optional[bool] = None # whether to use a separate set of coefficients for each chunk

129 | coefs_init: Optional[bool] = None # whether to initialize the coefficients, used in templ mixing ver2

130 | freeze_coefs_epochs: Optional[int] = None # TODO: add this. Whether to freeze the coefficients for some first epoch, used in templ mixing ver2

131 | separate_emb: Optional[bool] = None # whether to use a separate embedding (hypernetwork) for each chunk

132 | z_dim: Optional[int] = None # dimension of the latent space, hypernetwork

133 | hidden_dim: Optional[int] = None # dimension of the hidden layer, hypernetwork

134 |

135 | ### ConvNet/CLIP-ResNet50 Params

136 | pretrained: Optional[bool] = None

137 | pretrained_model_name: Optional[str] = None

138 | pooling: Optional[FeaturePooling] = None # one of ["avg", "max", "avgmax", "none"]

139 | temperature: Optional[float] = None

140 | unfreeze_last_n_layers: Optional[int] = -1

141 | # -1: unfreeze all layers, 0: freeze all layers, 1: unfreeze last layer, etc.

142 | first_layer: Optional[FirstLayerInit] = None

143 | unfreeze_first_layer: Optional[bool] = True

144 | reset_last_n_unfrozen_layers: Optional[bool] = False

145 | use_auto_rgn: Optional[bool] = None # relative gradient norm, this supersedes the use of `unfreeze_vit_layers`

146 |

147 | ### CLIP ViT16Base

148 | unfreeze_vit_layers: Optional[List[str]] = None

149 |

150 | ## temperature in the loss

151 | learnable_temp: bool = False

152 |

153 | ## Slice Params

154 | slice_class_emb: Optional[bool] = False

155 |

156 |

157 | @dataclass

158 | class Dataset:

159 | name: str

160 | img_size: int = 224

161 | label_column: Optional[str] = None

162 | root_dir: str = ""

163 | file_name: str = ""

164 |

165 |

166 | @dataclass

167 | class Wandb:

168 | use_wandb: bool

169 | log_freq: int

170 | num_images_to_log: int

171 | log_imgs_every_n_epochs: int

172 | project_name: str

173 |

174 |

175 | @dataclass

176 | class Logging:

177 | wandb: Wandb

178 | use_py_log: bool

179 | scc_jobid: Optional[str] = None

180 |

181 |

182 | @dataclass

183 | class DataChunk:

184 | chunks: List[Dict[str, List[str]]]

185 |

186 | def __str__(self) -> str:

187 | channel_names = [list(c.keys())[0] for c in self.chunks]

188 | channel_values = [list(c.values())[0] for c in self.chunks]

189 |

190 | channels = zip(*(channel_names, channel_values))

191 | channels_str = "----".join(

192 | ["--".join([c[0], "_".join(c[1])]) for c in channels]

193 | )

194 | return channels_str

195 |

196 |

197 | @dataclass

198 | class Hardware:

199 | num_workers: int

200 | device: str

201 | multi_gpus: str

202 |

203 |

204 | @dataclass

205 | class MyConfig:

206 | train: Train

207 | eval: Eval

208 | optimizer: Optimizer

209 | scheduler: Scheduler

210 | model: Model

211 | dataset: Dataset

212 | data_chunk: DataChunk

213 | logging: Logging

214 | hardware: Hardware

215 | tag: str

216 | attn_pooling: Optional[AttentionPoolingParams] = None

217 |

--------------------------------------------------------------------------------

/configs/morphem70k/allen_cfg.yaml:

--------------------------------------------------------------------------------

1 | defaults:

2 | - train: ~

3 | - model: ~

4 | - scheduler: ~

5 | - optimizer: ~

6 | - dataset: ~

7 | - data_chunk: ~

8 | - logging: ~

9 | - hardware: ~

10 | - eval: ~

11 | - _self_

12 |

13 | tag: ~

14 |

15 | hydra:

16 | sweeper:

17 | params:

18 | train: random_instance

19 | data_chunk: allen

20 | model: convnext_base

21 | scheduler: cosine

22 | optimizer: adamw

23 | dataset: allen

24 | logging: wandb

25 | hardware: default

26 | eval: default

--------------------------------------------------------------------------------

/configs/morphem70k/attn_pooling/none.yaml:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/chaudatascience/channel_adaptive_models/b62f69ae5d7d2c49cba2c96f0c9d2eef0fe2ee42/configs/morphem70k/attn_pooling/none.yaml

--------------------------------------------------------------------------------

/configs/morphem70k/attn_pooling/param1.yaml:

--------------------------------------------------------------------------------

1 | max_num_channels: FILL_LATER_IN_CODE

2 | dim: FILL_LATER_IN_CODE

3 | depth: 1

4 | dim_head: 16

5 | heads: 4

6 | mlp_dim: 4

7 | dropout: 0.0

8 | use_cls_token: False

9 | use_channel_tokens: False

10 | init_channel_tokens: ~ # only used when use_channel_tokens=True

--------------------------------------------------------------------------------

/configs/morphem70k/attn_pooling/param2.yaml:

--------------------------------------------------------------------------------

1 | max_num_channels: FILL_LATER_IN_CODE

2 | dim: FILL_LATER_IN_CODE

3 | depth: 2

4 | dim_head: 16

5 | heads: 4

6 | mlp_dim: 4

7 | dropout: 0.05

8 | use_cls_token: False

9 | use_channel_tokens: True

10 | init_channel_tokens: random # only used when use_channel_tokens=True

--------------------------------------------------------------------------------

/configs/morphem70k/attn_pooling/param3.yaml:

--------------------------------------------------------------------------------

1 | max_num_channels: FILL_LATER_IN_CODE

2 | dim: FILL_LATER_IN_CODE

3 | depth: 4

4 | dim_head: 64

5 | heads: 8

6 | mlp_dim: 4

7 | dropout: 0.0

8 | use_cls_token: True

9 | use_channel_tokens: True

10 | init_channel_tokens: random # only used when use_channel_tokens=True

11 |

--------------------------------------------------------------------------------

/configs/morphem70k/attn_pooling/param4.yaml:

--------------------------------------------------------------------------------

1 | max_num_channels: FILL_LATER_IN_CODE

2 | dim: FILL_LATER_IN_CODE

3 | depth: 2

4 | dim_head: 32

5 | heads: 4

6 | mlp_dim: 4

7 | dropout: 0.1

8 | use_cls_token: False

9 | use_channel_tokens: True

10 | init_channel_tokens: random # only used when use_channel_tokens=True

--------------------------------------------------------------------------------

/configs/morphem70k/attn_pooling/param5.yaml:

--------------------------------------------------------------------------------

1 | max_num_channels: FILL_LATER_IN_CODE

2 | dim: FILL_LATER_IN_CODE

3 | depth: 1

4 | dim_head: 32

5 | heads: 4

6 | mlp_dim: 4

7 | dropout: 0.1

8 | use_cls_token: False

9 | use_channel_tokens: True

10 | init_channel_tokens: random # only used when use_channel_tokens=True

--------------------------------------------------------------------------------

/configs/morphem70k/cp_cfg.yaml:

--------------------------------------------------------------------------------

1 | defaults:

2 | - train: ~

3 | - model: ~

4 | - scheduler: ~

5 | - optimizer: ~

6 | - dataset: ~

7 | - data_chunk: ~

8 | - logging: ~

9 | - hardware: ~

10 | - eval: ~

11 | - _self_

12 |

13 | tag: ~

14 |

15 | hydra:

16 | sweeper:

17 | params:

18 | train: random_instance

19 | data_chunk: cp

20 | model: convnext_base

21 | scheduler: cosine

22 | optimizer: adamw

23 | dataset: cp

24 | logging: wandb

25 | hardware: default

26 | eval: default

--------------------------------------------------------------------------------

/configs/morphem70k/data_chunk/allen.yaml:

--------------------------------------------------------------------------------

1 | chunks:

2 | - Allen:

3 | - nucleus

4 | - membrane

5 | - protein

--------------------------------------------------------------------------------

/configs/morphem70k/data_chunk/cp.yaml:

--------------------------------------------------------------------------------

1 | chunks:

2 | - CP:

3 | - nucleus

4 | - er

5 | - rna

6 | - golgi

7 | - mito

--------------------------------------------------------------------------------

/configs/morphem70k/data_chunk/hpa.yaml:

--------------------------------------------------------------------------------

1 | chunks:

2 | - HPA:

3 | - microtubules

4 | - protein

5 | - nucleus

6 | - er

--------------------------------------------------------------------------------

/configs/morphem70k/data_chunk/morphem70k.yaml:

--------------------------------------------------------------------------------

1 | chunks:

2 | - Allen:

3 | - nucleus

4 | - membrane

5 | - protein

6 | - HPA:

7 | - microtubules

8 | - protein

9 | - nucleus

10 | - er

11 | - CP:

12 | - nucleus

13 | - er

14 | - rna

15 | - golgi

16 | - mito

--------------------------------------------------------------------------------

/configs/morphem70k/dataset/allen.yaml:

--------------------------------------------------------------------------------

1 | name: Allen ## 6 classes - We use this version

2 | img_size: 224 #374

3 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2

4 | file_name: morphem70k_v2.csv

--------------------------------------------------------------------------------

/configs/morphem70k/dataset/cp.yaml:

--------------------------------------------------------------------------------

1 | name: CP

2 | img_size: 224 # 160

3 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2

4 | file_name: morphem70k_v2.csv

--------------------------------------------------------------------------------

/configs/morphem70k/dataset/hpa.yaml:

--------------------------------------------------------------------------------

1 | name: HPA

2 | img_size: 224 # 512

3 | root_dir: /projectnb/morphem/data_70k/ver2/morphem_70k_version2

4 | file_name: morphem70k_v2.csv

--------------------------------------------------------------------------------

/configs/morphem70k/dataset/morphem70k_v2.yaml:

--------------------------------------------------------------------------------

1 | name: morphem70k

2 | img_size: 224

3 | root_dir: chammi_dataset

4 | file_name: morphem70k_v2.csv

--------------------------------------------------------------------------------

/configs/morphem70k/eval/default.yaml:

--------------------------------------------------------------------------------

1 | batch_size: ~ ## None to auto set

2 | dest_dir: snapshots/{FOLDER_NAME}/results

3 | feature_dir: snapshots/{FOLDER_NAME}/features

4 | root_dir: chammi_dataset

5 | meta_csv_file: FILL_LATER

6 | classifiers:

7 | - knn

8 | classifier: PLACE_HOLDER

9 | feature_file: features.npy

10 | use_gpu: True

11 | knn_metric: PLACE_HOLDER

12 | knn_metrics:

13 | - cosine

14 | clean_up: False

15 | umap: True

16 | only_eval_first_and_last: False

--------------------------------------------------------------------------------

/configs/morphem70k/hardware/default.yaml:

--------------------------------------------------------------------------------

1 | num_workers: 3

2 | device: cuda # "cuda:0" ## cuda whose idx is 0

3 | multi_gpus: ~ # "DataParallel" # {DistributedDataParallel, DataParallel, None}

4 |

--------------------------------------------------------------------------------

/configs/morphem70k/hardware/four_workers.yaml:

--------------------------------------------------------------------------------

1 | num_workers: 4

2 | device: cuda # "cuda:0" ## cuda whose idx is 0

3 | multi_gpus: ~ # "DataParallel" # {DistributedDataParallel, DataParallel, None}

4 |

--------------------------------------------------------------------------------

/configs/morphem70k/hpa_cfg.yaml:

--------------------------------------------------------------------------------

1 | defaults:

2 | - train: ~

3 | - model: ~

4 | - scheduler: ~

5 | - optimizer: ~

6 | - dataset: ~

7 | - data_chunk: ~

8 | - logging: ~

9 | - hardware: ~

10 | - eval: ~

11 | - _self_

12 |

13 | tag: ~

14 |

15 | hydra:

16 | sweeper:

17 | params:

18 | train: random_instance

19 | data_chunk: hpa

20 | model: convnext_base

21 | scheduler: cosine

22 | optimizer: adamw

23 | dataset: hpa

24 | logging: wandb

25 | hardware: default

26 | eval: default

--------------------------------------------------------------------------------

/configs/morphem70k/logging/no.yaml:

--------------------------------------------------------------------------------

1 | wandb:

2 | use_wandb: False

3 | log_freq: 10000

4 | num_images_to_log: 0 # set <= 0 to disable this

5 | project_name: ~ # set to ~ for auto setting

6 | use_py_log: False

7 | scc_jobid: ~

8 |

--------------------------------------------------------------------------------

/configs/morphem70k/logging/wandb.yaml:

--------------------------------------------------------------------------------

1 | wandb:

2 | use_wandb: False

3 | log_freq: 5000

4 | num_images_to_log: 0 # set <= 0 to disable this

5 | log_imgs_every_n_epochs: 0 # used when `num_images_to_log` > 0

6 | project_name: ~ # set to ~ for auto setting

7 | tag: ~

8 | use_py_log: False

9 | scc_jobid: ~

10 |

--------------------------------------------------------------------------------

/configs/morphem70k/model/clip_resnet50.yaml:

--------------------------------------------------------------------------------

1 | name: clip_based_model

2 | pretrained: True

3 | pretrained_model_name: RN50 ## convnext_tiny.fb_in22k_ft_in1k, convnext_small.in12k_ft_in1k

4 | in_dim: ~ # autofill later if None

5 | num_classes: ~ # autofill later if None

6 | pooling: "avg"

7 | temperature: 0.11111

8 | unfreeze_last_n_layers: -1

9 | unfreeze_first_layer: True

10 | first_layer: reinit_as_random

11 | reset_last_n_unfrozen_layers: False

12 | use_auto_rgn: False

--------------------------------------------------------------------------------

/configs/morphem70k/model/clip_vit.yaml:

--------------------------------------------------------------------------------

1 | name: clip_based_model

2 | pretrained: True

3 | pretrained_model_name: ViT-B/16 ## convnext_tiny.fb_in22k_ft_in1k, convnext_small.in12k_ft_in1k

4 | in_dim: ~ # autofill later if None

5 | num_classes: ~ # autofill later if None

6 | pooling: "avg"

7 | temperature: 0.11111

8 | first_layer: reinit_as_random

9 | unfreeze_vit_layers: ~

10 | use_auto_rgn: False

--------------------------------------------------------------------------------

/configs/morphem70k/model/convnext_base.yaml:

--------------------------------------------------------------------------------

1 | name: convnext_base

2 | pretrained: True

3 | pretrained_model_name: convnext_tiny.fb_in22k ## convnext_tiny.fb_in22k_ft_in1k, convnext_small.in12k_ft_in1k

4 | in_dim: ~ # autofill later if None

5 | num_classes: ~ # autofill later if None

6 | pooling: "avg"

7 | temperature: 0.11111

8 | learnable_temp: False

9 | unfreeze_last_n_layers: -1

10 | unfreeze_first_layer: True

11 | first_layer: reinit_as_random

12 | reset_last_n_unfrozen_layers: False

13 | use_auto_rgn: False

--------------------------------------------------------------------------------

/configs/morphem70k/model/convnext_base_miro.yaml:

--------------------------------------------------------------------------------

1 | name: convnext_base_miro

2 | pretrained: True

3 | pretrained_model_name: convnext_tiny.fb_in22k ## convnext_tiny.fb_in22k_ft_in1k, convnext_small.in12k_ft_in1k

4 | in_dim: ~ # autofill later if None

5 | num_classes: ~ # autofill later if None

6 | pooling: "avg"

7 | temperature: 0.11111

8 | learnable_temp: False

9 | unfreeze_last_n_layers: -1

10 | unfreeze_first_layer: True

11 | first_layer: reinit_as_random

12 | reset_last_n_unfrozen_layers: False

13 | use_auto_rgn: False

--------------------------------------------------------------------------------

/configs/morphem70k/model/convnext_shared_miro.yaml:

--------------------------------------------------------------------------------

1 | name: convnext_shared_miro

2 | pretrained: True

3 | pretrained_model_name: convnext_tiny.fb_in22k ## convnext_tiny.fb_in22k_ft_in1k, convnext_small.in12k_ft_in1k

4 | in_dim: ~ # autofill later if None

5 | num_classes: ~ # autofill later if None

6 | pooling: "avg"

7 | temperature: 0.11111

8 | learnable_temp: False

9 | unfreeze_last_n_layers: -1

10 | unfreeze_first_layer: True

11 | first_layer: pretrained_pad_avg

12 | reset_last_n_unfrozen_layers: False

13 | use_auto_rgn: False

--------------------------------------------------------------------------------

/configs/morphem70k/model/depthwiseconvnext.yaml:

--------------------------------------------------------------------------------

1 | name: depthwiseconvnext

2 | pretrained: True

3 | pretrained_model_name: convnext_tiny.fb_in22k ## convnext_tiny.fb_in22k_ft_in1k, convnext_small.in12k_ft_in1k

4 | in_dim: ~ # autofill later if None

5 | num_classes: ~ # autofill later if None

6 | pooling: "avg"

7 | temperature: 0.11111

8 | learnable_temp: False

9 | unfreeze_last_n_layers: -1

10 | unfreeze_first_layer: True

11 | first_layer: reinit_as_random

12 | reset_last_n_unfrozen_layers: False

13 | use_auto_rgn: False

14 | kernels_per_channel: FILL_LATER

15 | pooling_channel_type: FILL_LATER # choice(sum, avg, weighted_sum_random, weighted_sum_one, weighted_sum_random_no_softmax, weighted_sum_one_no_softmax, attention)

16 | in_channel_names: ['er','golgi','membrane','microtubules','mito', 'nucleus', 'protein', 'rna']

17 |

18 |

--------------------------------------------------------------------------------

/configs/morphem70k/model/depthwiseconvnext_miro.yaml:

--------------------------------------------------------------------------------

1 | name: depthwiseconvnext_miro

2 | pretrained: True

3 | pretrained_model_name: convnext_tiny.fb_in22k ## convnext_tiny.fb_in22k_ft_in1k, convnext_small.in12k_ft_in1k

4 | in_dim: ~ # autofill later if None

5 | num_classes: ~ # autofill later if None

6 | pooling: "avg"

7 | temperature: 0.11111

8 | learnable_temp: False

9 | unfreeze_last_n_layers: -1

10 | unfreeze_first_layer: True

11 | first_layer: reinit_as_random

12 | reset_last_n_unfrozen_layers: False

13 | use_auto_rgn: False

14 | kernels_per_channel: FILL_LATER

15 | pooling_channel_type: FILL_LATER # choice(sum, avg, weighted_sum_random, weighted_sum_one, weighted_sum_random_no_softmax, weighted_sum_one_no_softmax, attention)

16 | in_channel_names: ['er','golgi','membrane','microtubules','mito', 'nucleus', 'protein', 'rna']

17 |

18 |

--------------------------------------------------------------------------------

/configs/morphem70k/model/dino_base.yaml:

--------------------------------------------------------------------------------

1 | name: dino_base

2 | pretrained: True

3 | pretrained_model_name: dinov2_vits14 ## https://github.com/facebookresearch/dinov2

4 | in_dim: ~ # autofill later if None

5 | num_classes: ~ # autofill later if None

6 | pooling: "avg"

7 | temperature: 0.11111

8 | learnable_temp: False

9 | unfreeze_last_n_layers: -1

10 | unfreeze_first_layer: True

11 | first_layer: reinit_as_random

12 | reset_last_n_unfrozen_layers: False

13 | use_auto_rgn: False

--------------------------------------------------------------------------------

/configs/morphem70k/model/hyperconvnext.yaml:

--------------------------------------------------------------------------------

1 | name: hyperconvnext

2 | pretrained: True

3 | pretrained_model_name: convnext_tiny.fb_in22k ## convnext_tiny.fb_in22k_ft_in1k, convnext_small.in12k_ft_in1k

4 | in_dim: ~ # autofill later if None

5 | num_classes: ~ # autofill later if None

6 | pooling: "avg"

7 | temperature: 0.11111

8 | learnable_temp: False

9 | unfreeze_last_n_layers: -1

10 | unfreeze_first_layer: True

11 | first_layer: reinit_as_random

12 | reset_last_n_unfrozen_layers: False

13 | use_auto_rgn: False

14 | z_dim: FILL_LATER

15 | hidden_dim: FILL_LATER

16 | in_channel_names: ['er','golgi','membrane','microtubules','mito', 'nucleus', 'protein', 'rna']

17 | separate_emb: True

--------------------------------------------------------------------------------

/configs/morphem70k/model/hyperconvnext_miro.yaml:

--------------------------------------------------------------------------------

1 | name: hyperconvnext_miro

2 | pretrained: True

3 | pretrained_model_name: convnext_tiny.fb_in22k ## convnext_tiny.fb_in22k_ft_in1k, convnext_small.in12k_ft_in1k

4 | in_dim: ~ # autofill later if None

5 | num_classes: ~ # autofill later if None

6 | pooling: "avg"

7 | temperature: 0.11111

8 | learnable_temp: False

9 | unfreeze_last_n_layers: -1

10 | unfreeze_first_layer: True

11 | first_layer: reinit_as_random

12 | reset_last_n_unfrozen_layers: False

13 | use_auto_rgn: False

14 | z_dim: FILL_LATER

15 | hidden_dim: FILL_LATER

16 | in_channel_names: ['er','golgi','membrane','microtubules','mito', 'nucleus', 'protein', 'rna']

17 | separate_emb: True

--------------------------------------------------------------------------------

/configs/morphem70k/model/separate.yaml:

--------------------------------------------------------------------------------

1 | name: shared_convnext

2 | pretrained: True

3 | pretrained_model_name: convnext_tiny.fb_in22k ## convnext_tiny.fb_in22k_ft_in1k, convnext_small.in12k_ft_in1k

4 | in_dim: ~ # autofill later if None

5 | num_classes: ~ # autofill later if None

6 | pooling: "avg"

7 | temperature: 0.11111

8 | learnable_temp: False

9 | unfreeze_last_n_layers: -1

10 | unfreeze_first_layer: True

11 | first_layer: pretrained_pad_avg

12 | reset_last_n_unfrozen_layers: False

13 | use_auto_rgn: False

--------------------------------------------------------------------------------

/configs/morphem70k/model/sliceparam.yaml:

--------------------------------------------------------------------------------

1 | name: sliceparamconvnext

2 | pretrained: True

3 | pretrained_model_name: convnext_tiny.fb_in22k ## convnext_tiny.fb_in22k_ft_in1k, convnext_small.in12k_ft_in1k

4 | in_dim: ~ # autofill later if None

5 | num_classes: ~ # autofill later if None

6 | pooling: "avg"

7 | temperature: 0.11111

8 | learnable_temp: False

9 | unfreeze_last_n_layers: -1

10 | unfreeze_first_layer: True

11 | first_layer: reinit_as_random

12 | reset_last_n_unfrozen_layers: False

13 | use_auto_rgn: False

14 | in_channel_names: ['er','golgi','membrane','microtubules','mito', 'nucleus', 'protein', 'rna']

15 | duplicate: False

16 | slice_class_emb: False

--------------------------------------------------------------------------------

/configs/morphem70k/model/sliceparam_miro.yaml:

--------------------------------------------------------------------------------

1 | name: sliceparamconvnext_miro

2 | pretrained: True

3 | pretrained_model_name: convnext_tiny.fb_in22k ## convnext_tiny.fb_in22k_ft_in1k, convnext_small.in12k_ft_in1k

4 | in_dim: ~ # autofill later if None