├── .gitignore
├── README.md
├── _config.yml
├── aws
│   └── aws-volume-types.md
├── baking
│   ├── apple-pie.md
│   ├── banana-bread.md
│   ├── best-chocolate-chip-cookies.md
│   ├── great-pumpkin-pie.jpg
│   ├── great-pumpkin-pie.md
│   └── perfect-pie-crust.md
├── bash
│   ├── a-generalized-single-instance-script.md
│   ├── bash-shell-prompts.md
│   ├── dont-do-grep-wc-l.md
│   ├── groot.md
│   ├── md5sum-of-a-path.md
│   ├── pipefail.md
│   ├── prompt-statement-variables.md
│   └── ring-the-audio-bell.md
├── consul
│   ├── ingress-gateways.md
│   └── ingress-gateways.png
├── containers
│   └── non-privileged-containers-based-on-the-scratch-image.md
├── est
│   ├── approximate-with-powers.md
│   ├── ballpark-io-performance-figures.md
│   ├── calculating-iops.md
│   ├── littles-law.md
│   ├── pi-seconds-is-a-nanocentury.md
│   └── rule-of-72.md
├── gardening
│   └── cotyledon-leaves.md
├── git
│   └── global-git-ignore.md
├── go
│   ├── a-high-performance-go-cache.md
│   ├── check-whether-a-file-exists.md
│   ├── generating-hash-of-a-path.md
│   ├── opentelemetry-tracer.md
│   └── using-error-groups.md
├── history
│   ├── geological-eons-and-eras.md
│   └── the-late-bronze-age-collapse.md
├── kubernetes
│   └── installing-ssl-certs.md
├── linux
│   ├── create-cron-without-an-editor.md
│   ├── creating-a-linux-service-with-systemd.md
│   ├── lastlog.md
│   ├── socat.md
│   └── sparse-files.md
├── mac
│   ├── installing-flock-on-mac.md
│   └── osascript.md
├── misc
│   ├── buttons-1.jpg
│   ├── buttons-2.jpg
│   ├── buttons.md
│   ├── chestertons-fence.md
│   ├── convert-youtube-video-to-zoom-background.md
│   ├── hugo-quickstart.md
│   ├── new-gdocs.md
│   ├── new-gdocs.png
│   ├── voronoi-diagram.md
│   └── voronoi-diagram.png
├── software-architecture
│   ├── hexagonal-architecture.md
│   └── hexagonal-architecture.png
├── ssh
│   ├── break-out-of-a-stuck-session.md
│   └── exit-on-network-interruptions.md
├── team
│   ├── amazon-leadership-principles.md
│   ├── never-attribute-to-stupidity-that-which-is-adequately-explained-by-opportunity-cost.md
│   └── stay-out-of-the-critical-path.md
├── terraform
│   ├── custom-validation-rules.md
│   ├── plugin-basics.md
│   ├── provider-plugin-development.md
│   ├── rest-provider.md
│   └── time-provider.md
├── things-to-add.md
├── tls+ssl
│   ├── dissecting-an-ssl-cert.md
│   ├── use-vault-as-a-ca.md
│   └── use-vault-as-a-ca.png
└── vocabulary
    ├── sealioning.md
    └── sealioning.png

/.gitignore:

--------------------------------------------------------------------------------

1 | .vscode

2 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Today I Learned

2 |

3 | A place for me to write down all of the little things I learn every day. This serves largely as a way for me to remember little things I'd otherwise have to keep looking up.

4 |

5 | Many of these will be technical. Many of these will not.

6 |

7 | Behold my periodic learnings, and judge me for my ignorance.

8 |

9 | ## Tech and Team

10 |

11 | "Worky" things. Mostly (or entirely) technical and team leadership learnings.

12 |

13 | ### AWS

14 |

15 | * [Amazon EBS volume type details](aws/aws-volume-types.md) (21 March 2021)

16 |

17 | ### Bash

18 |

19 | * [Bash shell prompts](bash/bash-shell-prompts.md) (6 May 2020)

20 | * [Bash shell prompt statement variables](bash/prompt-statement-variables.md) (6 May 2020)

21 | * [Move to the root of a Git directory](bash/groot.md) (6 May 2020)

22 | * [One-liner to calculate the md5sum of a Linux path](bash/md5sum-of-a-path.md) (7 May 2020)

23 | * [Safer bash scripts with `set -euxo pipefail`](bash/pipefail.md) (14 May 2020)

24 | * [Ring the audio bell](bash/ring-the-audio-bell.md) (18 May 2020)

25 | * [Don't do `grep | wc -l`](bash/dont-do-grep-wc-l.md) (25 May 2020)

26 | * [A generalized single-instance bash script](bash/a-generalized-single-instance-script.md) (23 June 2020)

27 |

28 | ### Calculations and Estimations

29 |

30 | * [Approximate with powers](est/approximate-with-powers.md) (25 May 2020)

31 | * [Ballpark I/O performance figures](est/ballpark-io-performance-figures.md) (25 May 2020)

32 | * [Little's Law](est/littles-law.md) (25 May 2020)

33 | * [Rule of 72](est/rule-of-72.md) (25 May 2020)

34 | * [Calculating IOPS](est/calculating-iops.md) (21 June 2020)

35 | * [π seconds is a nanocentury](est/pi-seconds-is-a-nanocentury.md) (22 June 2020)

36 |

37 | ### Consul

38 |

39 | * [Ingress Gateways](consul/ingress-gateways.md) (18 June 2020)

40 |

41 | ### Containers

42 |

43 | * [Non-privileged containers based on the scratch image](containers/non-privileged-containers-based-on-the-scratch-image.md) (15 June 2020)

44 |

45 | ### Git

46 |

47 | * [Using core.excludesFile to define a global git ignore](git/global-git-ignore.md) (26 June 2020)

48 |

49 | ### Go

50 |

51 | * [Check whether a file exists](go/check-whether-a-file-exists.md) (27 April 2020)

52 | * [Generate a hash from files in a path](go/generating-hash-of-a-path.md) (27 April 2020)

53 | * [Ristretto: a high-performance Go cache](go/a-high-performance-go-cache.md) (13 June 2020)

54 | * [OpenTelemetry: Tracer](go/opentelemetry-tracer.md) (29 June 2020)

55 | * [Using error groups in Go](go/using-error-groups.md) (29 June 2020)

56 |

57 | ### Kubernetes

58 |

59 | * [Install TLS certificates as a Kubernetes secret](kubernetes/installing-ssl-certs.md) (29 April 2020)

60 |

61 | ### Linux

62 |

63 | * [Create a cron job using bash without the interactive editor](linux/create-cron-without-an-editor.md) (7 May 2020)

64 | * [Create a Linux service with systemd](linux/creating-a-linux-service-with-systemd.md) (8 May 2020)

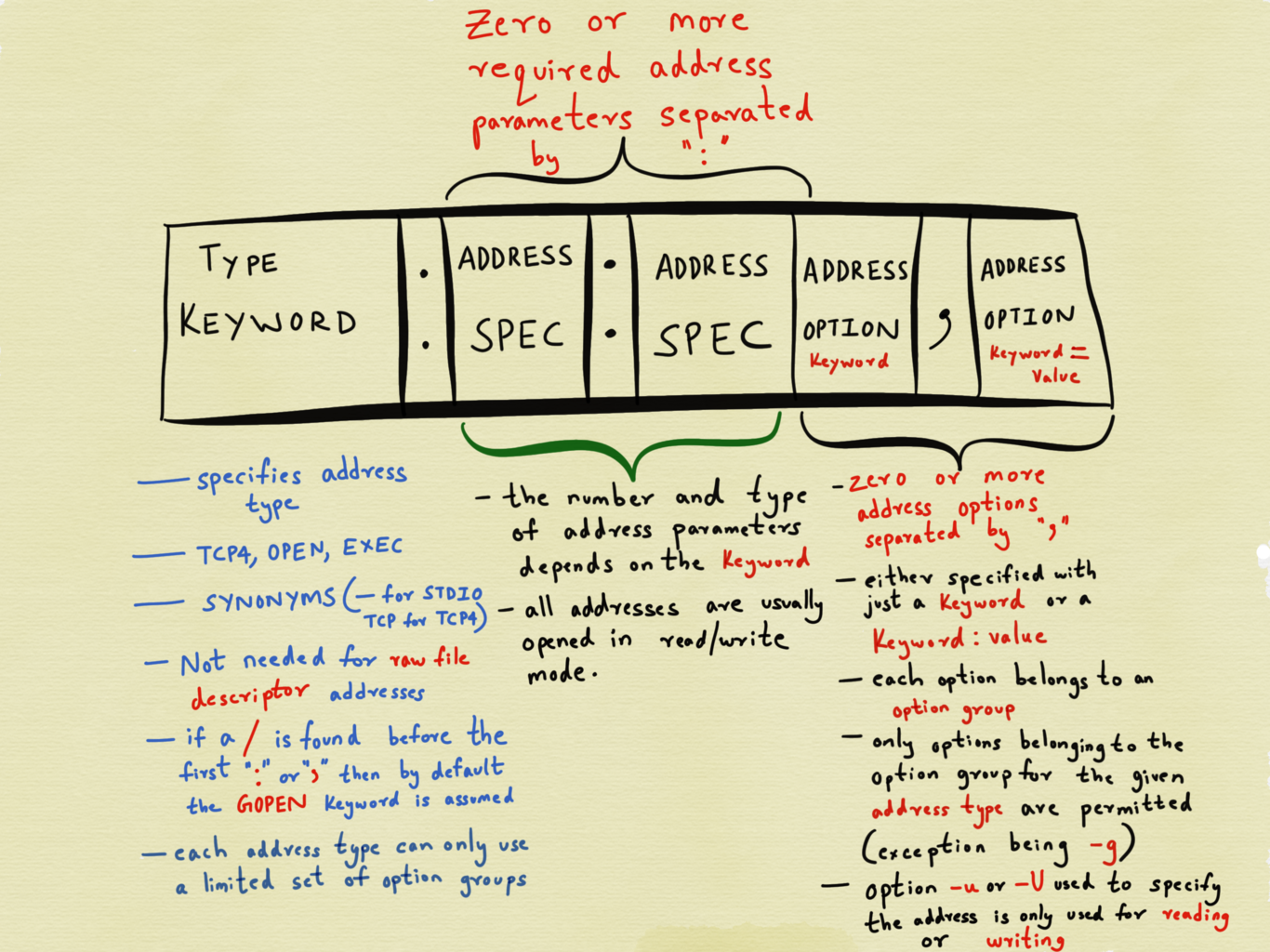

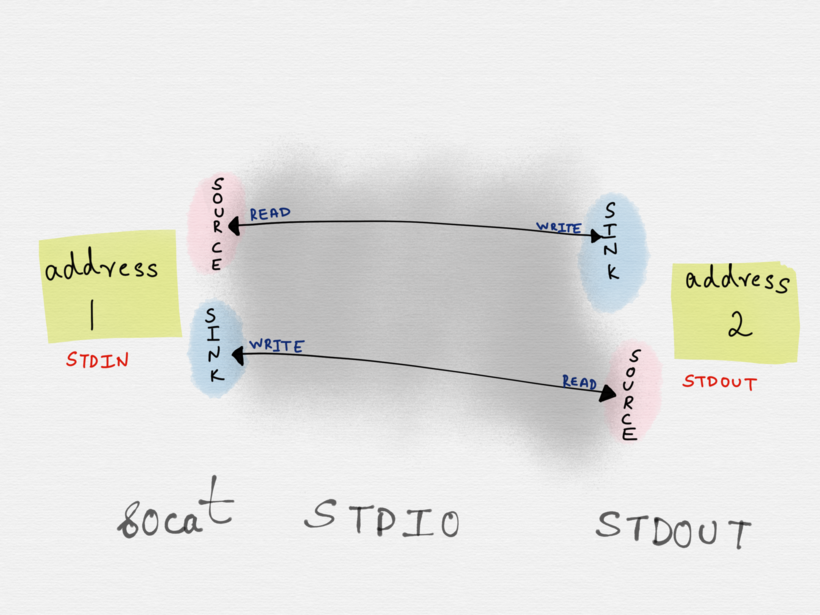

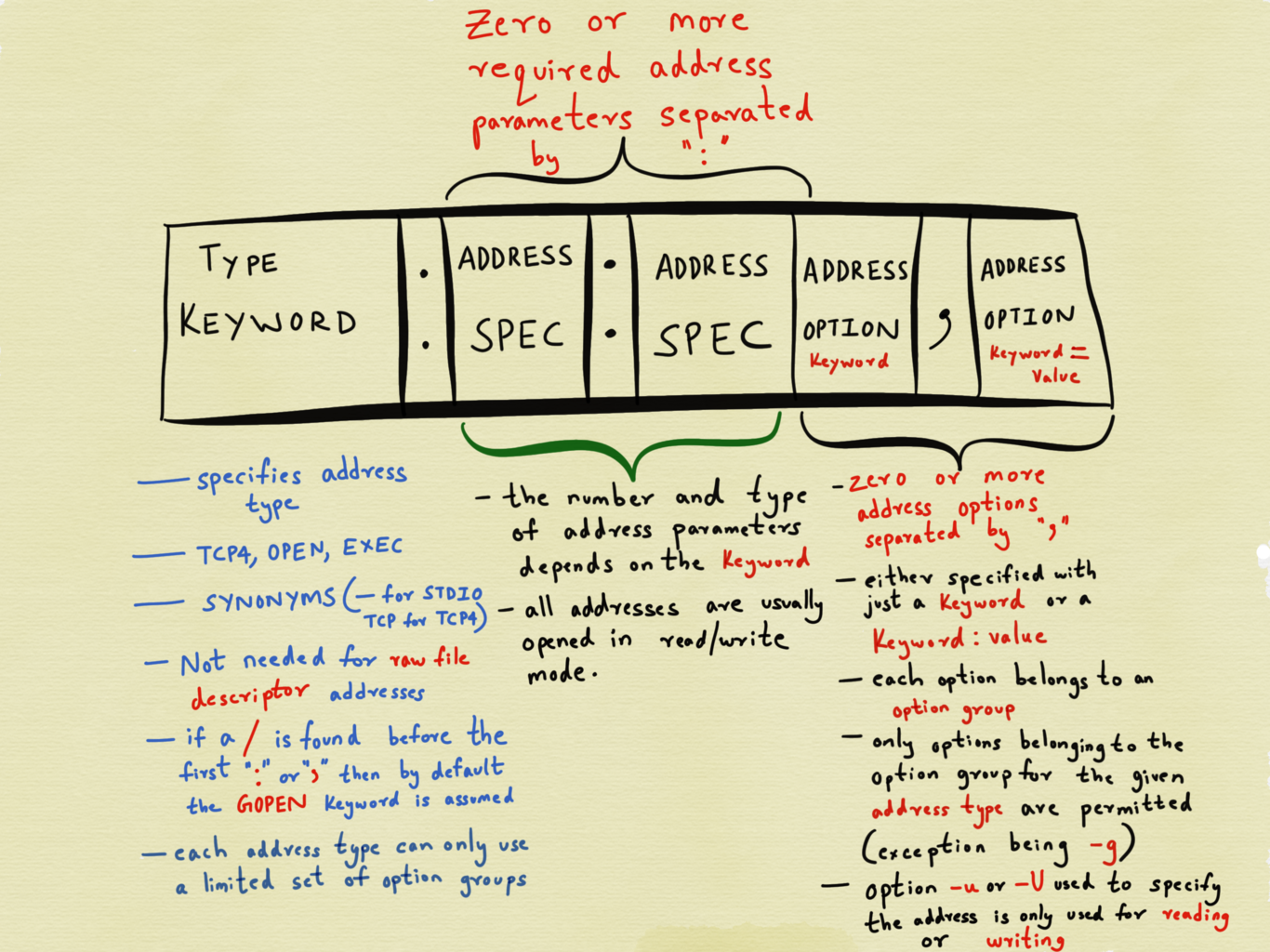

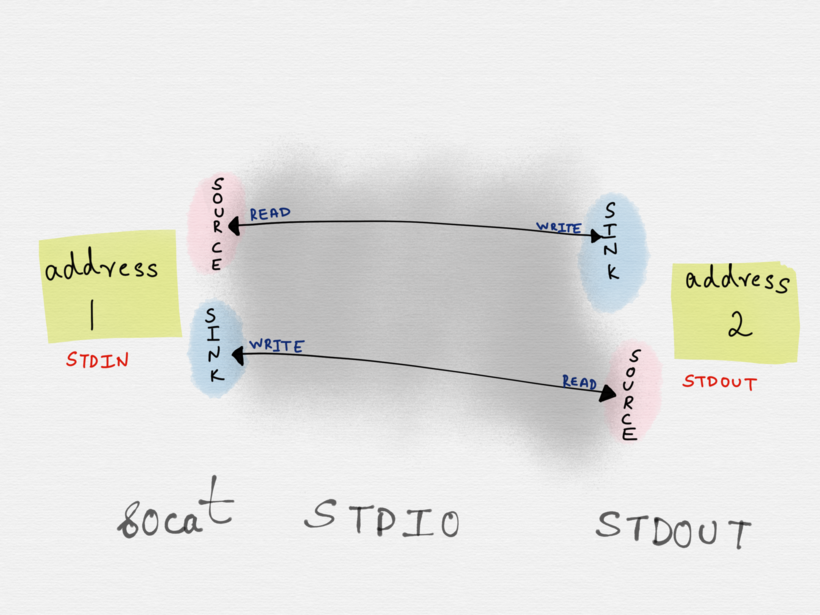

65 | * [The socat (SOcket CAT) command](linux/socat.md) (15 May 2020)

66 | * [The lastlog utility](linux/lastlog.md) (23 May 2020)

67 | * [Sparse files](linux/sparse-files.md) (23 May 2020)

68 |

69 | ### Mac

70 |

71 | * [The osascript command is a thing](mac/osascript.md) (18 May 2020)

72 | * [Installing flock(1) on Mac](mac/installing-flock-on-mac.md) (21 June 2020)

73 |

74 | ### Miscellaneous

75 |

76 | * [Install and set up the Hugo static site generator](misc/hugo-quickstart.md) (7 June 2020)

77 | * [Chesterton's Fence](misc/chestertons-fence.md) (27 May 2020)

78 | * [Easily Create New Google Docs/Sheets/Forms/Slideshows](misc/new-gdocs.md) (22 September 2020)

79 |

80 | ### SSH

81 |

82 | * [Break out of a stuck SSH session](ssh/break-out-of-a-stuck-session.md) (30 April 2020)

83 | * [Exit SSH automatically on network interruptions](ssh/exit-on-network-interruptions.md) (30 April 2020)

84 |

85 | ### Software Architecture

86 |

87 | * [Hexagonal architecture](software-architecture/hexagonal-architecture.md) (30 May 2020)

88 |

89 | ### Team

90 |

91 | * [Amazon leadership principles](team/amazon-leadership-principles.md) (3 May 2020)

92 | * [Never attribute to stupidity that which is adequately explained by opportunity cost](team/never-attribute-to-stupidity-that-which-is-adequately-explained-by-opportunity-cost.md) (4 May 2020)

93 | * [Stop writing code and engineering in the critical path](team/stay-out-of-the-critical-path.md) (3 May 2020)

94 |

95 | ### Terraform

96 |

97 | * [Custom Terraform module variable validation rules](terraform/custom-validation-rules.md) (20 May 2020)

98 | * [Terraform plugin basics](terraform/plugin-basics.md) (3 May 2020)

99 | * [Terraform provider plugin development](terraform/provider-plugin-development.md) (3 May 2020)

100 | * [Terraform provider for generic REST APIs](terraform/rest-provider.md) (26 June 2020)

101 | * [Terraform provider for time](terraform/time-provider.md) (15 June 2020)

102 |

103 | ### TLS/SSL

104 |

105 | * [Anatomy of a TLS+SSL certificate](tls+ssl/dissecting-an-ssl-cert.md) (6 May 2020)

106 | * [Using Hashicorp Vault to build a certificate authority (CA)](tls+ssl/use-vault-as-a-ca.md) (6 May 2020)

107 |

108 | ## Non-Technical Things

109 |

110 | Non-work things: baking, gardening, and random cool trivia.

111 |

112 | ### Baking

113 |

114 | * [Banana bread recipe - 2lb loaf (Bread Machine)](baking/banana-bread.md) (23 April 2020)

115 | * [Perfect Pie Crust](baking/perfect-pie-crust.md) (7 March 2021)

116 | * [The Best Chocolate Chip Cookie Recipe Ever](baking/best-chocolate-chip-cookies.md) (9 May 2020)

117 | * [Apple Pie](baking/apple-pie.md) (12 October 2021)

118 | * [The Great Pumpkin Pie Recipe](baking/great-pumpkin-pie.md) (12 October 2021)

119 |

120 | ### Gardening

121 |

122 | * [Why are the cotyledon leaves on a tomato plant falling off?](gardening/cotyledon-leaves.md) (5 May 2020)

123 |

124 | ### History

125 |

126 | * [The Late Bronze Age collapse](history/the-late-bronze-age-collapse.md) (17 May 2020)

127 | * [Geological eons and eras](history/geological-eons-and-eras.md) (1 June 2020)

128 |

129 | ### Miscellaneous

130 |

131 | * ["Button up" vs "button down"](misc/buttons.md) (6 May 2021)

132 | * [Converting a YouTube video to a Zoom background](misc/convert-youtube-video-to-zoom-background.md) (23 April 2020)

133 | * [Voronoi diagram](misc/voronoi-diagram.md) (17 June 2020)

134 |

135 | ### Services

136 |

137 | * [Ephemeral, trivially revocable cards that pass-through to your actual card (privacy.com)](https://privacy.com/) (21 May 2020)

138 |

139 | ### Vocabulary

140 |

141 | * [Sealioning](vocabulary/sealioning.md) (13 May 2020)

142 |

--------------------------------------------------------------------------------

/_config.yml:

--------------------------------------------------------------------------------

1 | theme: jekyll-theme-architect

--------------------------------------------------------------------------------

/aws/aws-volume-types.md:

--------------------------------------------------------------------------------

1 | # Amazon EBS Volume Types

2 |

3 | *Source: [Amazon EBS volume types](https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ebs-volume-types.html)*

4 |

5 | Amazon EBS provides the following volume types, which differ in performance characteristics and price, so that you can tailor your storage performance and cost to the needs of your applications. The volume types fall into these categories:

6 |

7 | * Solid state drives (SSD) — Optimized for transactional workloads involving frequent read/write operations with small I/O size, where the dominant performance attribute is IOPS.

8 |

9 | * Hard disk drives (HDD) — Optimized for large streaming workloads where the dominant performance attribute is throughput.

10 |

11 | * Previous generation — Hard disk drives that can be used for workloads with small datasets where data is accessed infrequently and performance is not of primary importance. We recommend that you consider a current generation volume type instead.

12 |

13 | There are several factors that can affect the performance of EBS volumes, such as instance configuration, I/O characteristics, and workload demand. To fully use the IOPS provisioned on an EBS volume, use EBS-optimized instances. For more information about getting the most out of your EBS volumes, see Amazon EBS volume performance on Linux instances.

14 |

15 | ## Solid state drives (SSD)

16 |

17 | The SSD-backed volumes provided by Amazon EBS fall into these categories:

18 |

19 | * General Purpose SSD — Provides a balance of price and performance. We recommend these volumes for most workloads.

20 |

21 | * Provisioned IOPS SSD — Provides high performance for mission-critical, low-latency, or high-throughput workloads.

22 |

23 | The following is a summary of the use cases and characteristics of SSD-backed volumes. For information about the maximum IOPS and throughput per instance, see [Amazon EBS–optimized instances](https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ebs-optimized.html).

24 |

25 | ### General Purpose SSD

26 |

27 | #### `gp2`

28 |

29 | * **Durability:** 99.8% - 99.9%

30 | * **Use cases:** low-latency interactive apps; development and test environments

31 | * **Volume size:** 1 GiB - 16 TiB

32 | * **IOPS per volume (16 KiB I/O):** 3 IOPS per GiB of volume size (100 IOPS at 33.33 GiB and below to 16,000 IOPS at 5,334 GiB and above)

33 | * **Throughput per volume:** 250 MiB/s

34 | * **Amazon EBS Multi-attach supported?** No

35 | * **Boot volume supported?** Yes
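
The `gp2` IOPS rule above (3 IOPS per GiB, with a floor of 100 and a ceiling of 16,000) is easy to sanity-check with a few lines of code. The following is only a rough sketch of that arithmetic; the function name `gp2_baseline_iops` is made up for illustration and is not part of any AWS SDK.

```python
def gp2_baseline_iops(size_gib: float) -> int:
    """Baseline IOPS for a gp2 volume: 3 IOPS per GiB, clamped to [100, 16000].

    Hypothetical helper for illustration only; the limits are the ones
    listed in the gp2 summary above.
    """
    if not 1 <= size_gib <= 16 * 1024:
        raise ValueError("gp2 volumes range from 1 GiB to 16 TiB")
    # Apply the floor first, then the ceiling.
    return int(min(16_000, max(100, 3 * size_gib)))


if __name__ == "__main__":
    for size in (8, 100, 1_000, 5_334, 16_384):
        print(f"{size:>6} GiB -> {gp2_baseline_iops(size):>6} IOPS")
```

Small volumes sit on the 100 IOPS floor, and anything at or above 5,334 GiB is capped at 16,000 IOPS.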

36 |

37 | #### `gp3`

38 |

39 | * **Durability:** 99.8% - 99.9%

40 | * **Use cases:** low-latency interactive apps; development and test environments

41 | * **Volume size:** 1 GiB - 16 TiB

42 | * **IOPS per volume (16 KiB I/O):** 3,000 IOPS base; can be increased up to 16,000 IOPS (see below)

43 | * **Throughput per volume:** 125 MiB/s base; can be increased up to 1,000 MiB/s (see below)

44 | * **Amazon EBS Multi-attach supported?** No

45 | * **Boot volume supported?** Yes

46 |

47 | The maximum ratio of provisioned IOPS to provisioned volume size is 500 IOPS per GiB. The maximum ratio of provisioned throughput to provisioned IOPS is 0.25 MiB/s per IOPS. The following volume configurations support provisioning either maximum IOPS or maximum throughput:

48 |

49 | * 32 GiB or larger: 500 IOPS/GiB x 32 GiB = 16,000 IOPS

50 | * 8 GiB or larger and 4,000 IOPS or higher: 4,000 IOPS x 0.25 MiB/s/IOPS = 1,000 MiB/s
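
The two `gp3` ratios can be combined to estimate the ceiling for a given volume size. This is a minimal sketch under stated assumptions: the function and constant names are hypothetical, and it assumes the 3,000 IOPS and 125 MiB/s baselines are available regardless of volume size, which the summary above implies but does not spell out.

```python
GP3_BASE_IOPS = 3_000
GP3_MAX_IOPS = 16_000
GP3_BASE_THROUGHPUT = 125    # MiB/s
GP3_MAX_THROUGHPUT = 1_000   # MiB/s


def gp3_max_iops(size_gib: int) -> int:
    """Highest IOPS provisionable at this size (cap: 500 IOPS per GiB).

    Assumption: the 3,000 IOPS baseline applies even to very small volumes.
    """
    return max(GP3_BASE_IOPS, min(GP3_MAX_IOPS, 500 * size_gib))


def gp3_max_throughput(iops: int) -> float:
    """Highest throughput in MiB/s at this IOPS level (cap: 0.25 MiB/s per IOPS).

    Assumption: the 125 MiB/s baseline is always available.
    """
    return max(GP3_BASE_THROUGHPUT, min(GP3_MAX_THROUGHPUT, 0.25 * iops))


if __name__ == "__main__":
    for size in (1, 8, 32, 1_000):
        iops = gp3_max_iops(size)
        print(f"{size:>5} GiB: up to {iops} IOPS, "
              f"up to {gp3_max_throughput(iops):.0f} MiB/s")
```

This reproduces the two configurations listed above: 32 GiB is the smallest size that reaches 16,000 IOPS, and 8 GiB at 4,000 IOPS is the smallest that reaches 1,000 MiB/s.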

51 |

52 |

53 | ### Provisioned IOPS SSD

54 |

55 | #### `io1`

56 |

57 | * **Durability:** 99.8% - 99.9%

58 | * **Use cases:** Workloads that require sustained IOPS performance or more than 16,000 IOPS; I/O-intensive database workloads

59 | * **Volume size:** 4 GiB - 16 TiB

60 | * **IOPS per volume (16 KiB I/O):** 64,000 (see note 2)

61 | * **Throughput per volume:** 1,000 MiB/s

62 | * **Amazon EBS Multi-attach supported?** Yes

63 | * **Boot volume supported?** Yes

64 |

65 | Note 2: Maximum IOPS and throughput are guaranteed only on Instances built on the Nitro System provisioned with more than 32,000 IOPS. Other instances guarantee up to 32,000 IOPS and 500 MiB/s. io1 volumes that were created before December 6, 2017 and that have not been modified since creation might not reach full performance unless you modify the volume.

66 |

67 | #### `io2`

68 |

69 | * **Durability:** 99.999%

70 | * **Use cases:** Workloads that require sustained IOPS performance or more than 16,000 IOPS; I/O-intensive database workloads

71 | * **Volume size:** 4 GiB - 16 TiB

72 | * **IOPS per volume (16 KiB I/O):** 64,000 (see note 3)

73 | * **Throughput per volume:** 1,000 MiB/s

74 | * **Amazon EBS Multi-attach supported?** Yes

75 | * **Boot volume supported?** Yes

76 |

77 | Note 3: Maximum IOPS and throughput are guaranteed only on Instances built on the Nitro System provisioned with more than 32,000 IOPS. Other instances guarantee up to 32,000 IOPS and 500 MiB/s. io1 volumes that were created before December 6, 2017 and that have not been modified since creation might not reach full performance unless you modify the volume.

78 |

79 | #### `io2 Block Express` (in opt-in preview only as of March 2021)

80 |

81 | * **Durability:** 99.999%

82 | * **Use cases:** Workloads that require sub-millisecond latency, and sustained IOPS performance or more than 64,000 IOPS or 1,000 MiB/s of throughput

83 | * **Volume size:** 4 GiB - 64 TiB

84 | * **IOPS per volume (16 KiB I/O):** 256,000

85 | * **Throughput per volume:** 4,000 MiB/s

86 | * **Amazon EBS Multi-attach supported?** No

87 | * **Boot volume supported?** Yes

88 |

--------------------------------------------------------------------------------

/baking/apple-pie.md:

--------------------------------------------------------------------------------

1 | # Apple Pie

2 |

3 | _Source: Modified from [A Taste of Home: Apple Pie](https://www.tasteofhome.com/recipes/apple-pie/)_

4 |

5 | The original recipe produces a pie that's delicious, but runny and somewhat underdone. Replacing the flour with ground tapioca thickens the filling sufficiently, and increasing the baking time and temperature ensures an adequate bake.

6 |

7 | ## Ingredients

8 |

9 | * 1/2 cup **sugar**

10 | * 1/2 cup packed **brown sugar**

11 | * 3 tablespoons **ground tapioca**

12 | * 1 teaspoon **cinnamon**

13 | * 1/4 teaspoon **ginger**

14 | * 1/4 teaspoon **nutmeg**

15 | * 6 to 7 cups thinly sliced peeled **tart apples** (Granny Smith, maybe some sweet, crisp apples like Golden Delicious thrown in, work great)

16 | * 1 tablespoon **lemon juice**

17 | * **Dough for double-crust pie** ([this recipe](perfect-pie-crust.md) works great)

18 | * 1 tablespoon **butter**

19 | * 1 large **egg**

20 | * Additional **sugar**

21 |

22 | ## Directions

23 |

24 | 1. Preheat oven to 425°F (218°C).

25 |

26 | 2. In a small bowl, combine sugars, ground tapioca and spices; set aside.

27 |

28 | 3. In a large bowl, toss apples with lemon juice. Add sugar mixture; toss to coat.

29 |

30 | 4. On a lightly floured surface, roll one half of dough to a 12-inch circle; transfer to a 9-in. pie plate. Trim even with rim.

31 |

32 | 5. Add filling; dot with butter.

33 |

34 | 6. Roll remaining dough to a 1/8-in.-thick circle. Place over filling. Trim, seal and flute edge. Cut slits in top.

35 |

36 | 7. Beat egg until foamy; brush over crust. Sprinkle with sugar. Cover edge loosely with foil.

37 |

38 | 8. Bake at 425°F (218°C) for 20-25 minutes.

39 |

40 | 9. Reduce temperature to 350°F (177°C); remove foil; bake until crust is golden brown and filling is bubbly, 30-50 minutes longer. Cool on a wire rack.

41 |

--------------------------------------------------------------------------------

/baking/banana-bread.md:

--------------------------------------------------------------------------------

1 | # Banana Bread Recipe - 2lb Loaf (Bread Machine)

2 |

3 | _Source: modified from https://breaddad.com/bread-machine-banana-bread/_

4 |

5 | ## Ingredients

6 |

7 | * 1/2 Cup – Milk (warm)

8 | * 2 – Eggs (beaten)

9 | * 8 Tablespoons – Butter (softened)

10 | * 1 Teaspoon – Vanilla Extract

11 | * 3 – Bananas (Ripe), Medium Sized (mashed)

12 | * 1 Cup – White Granulated Sugar

13 | * 2 Cups – Flour (all-purpose)

14 | * 1/2 Teaspoon – Salt

15 | * 2 Teaspoons – Baking Powder (aluminum free)

16 | * 1 Teaspoon – Baking Soda

17 | * 1/2 Cup - Chopped Walnuts

18 |

19 | ## Instructions

20 |

21 | * Prep Time – 10 minutes

22 | * Baking Time – 1:40 hours

23 | * Bread Machine Setting – Quick Bread

24 | * Beat the eggs.

25 | * Mash bananas with a fork.

26 | * Soften the butter in microwave.

27 | * Pour the milk into the bread pan and then add the other ingredients (except the walnuts). Try to follow the order of the ingredients listed above so that liquid ingredients are placed in the bread pan first and the dry ingredients second. Be aware that the bread pan should be removed from the bread machine before you start to add any ingredients. This helps to avoid spilling any material inside the bread machine.

28 | * Put the bread pan (with all of the ingredients) back into the bread machine and close the bread machine lid.

29 | * Start the machine after checking the settings (i.e. make sure it is on the quick bread setting).

30 | * Add chopped walnuts when the machine stops after the first kneading.

31 | * When the bread machine has finished baking the bread, remove the bread pan and place it on a wooden cutting board. Let the bread stay within the bread pan (bread loaf container) for 10 minutes before you remove it from the bread pan. Use oven mitts when removing the bread pan because it will be very hot!

32 | * After removing the bread from the bread pan, place the bread on a cooling rack. Use oven mitts when removing the bread.

33 | * Don’t forget to remove the mixing paddle if it is stuck in the bread. Use oven mitts as the mixing paddle could be hot.

34 | * You should allow the banana bread to completely cool before cutting. This can take up to 2 hours. Otherwise, the banana bread will break (crumble) more easily when cut.

35 |

36 |

--------------------------------------------------------------------------------

/baking/best-chocolate-chip-cookies.md:

--------------------------------------------------------------------------------

1 | # The Best Chocolate Chip Cookie Recipe Ever

2 |

3 | _Source: [Joy Food Sunshine: The Best Chocolate Chip Cookie Recipe Ever](https://joyfoodsunshine.com/the-most-amazing-chocolate-chip-cookies/)_

4 |

5 | This is the best chocolate chip cookie recipe ever. No funny ingredients, no chilling time, etc. Just a simple, straightforward, amazingly delicious, doughy yet still fully cooked, chocolate chip cookie that turns out perfectly every single time!

6 |

7 | ## How to make easy cookies from scratch

8 |

9 | Like I said, these cookies are crazy easy; however, here are a few notes.

10 |

11 | 1. **Soften butter.** If you are planning on making these, take the butter out of the fridge first thing in the morning so it’s ready to go when you need it.

12 |

13 | 2. **Measure the flour correctly.** Be sure to use a measuring cup made for dry ingredients (NOT a pyrex liquid measuring cup). There has been some controversy on how to measure flour. I personally use the scoop and shake method and always have (gasp)! It’s easier and I have never had that method fail me. Many of you say that the only way to measure flour is to scoop it into the measuring cup and level with a knife. I say, measure it the way _**you**_ always do. Just make sure that the dough matches the consistency of the dough in the photos in this post.

14 |

15 | 3. **Use LOTS of chocolate chips.** Do I really need to explain this?!

16 |

17 | 4. **DO NOT over-bake these chocolate chip cookies!** I explain this more below, but these chocolate chip cookies will not look done when you pull them out of the oven, and that is GOOD.

18 |

19 | ## How do you make gooey chocolate chip cookies?

20 |

21 | The trick to making this best chocolate chip cookie recipe gooey is to not over-bake them. At the end of the baking time, these chocolate chip cookies **won’t look done** but they are.

22 |

23 | These chocolate chip cookies will look a little doughy when you remove them from the oven, and that's **good**. They will set up as they sit on the cookie sheet for a few minutes.

24 |

25 | ## Ingredients

26 |

27 | * 1 cup salted butter softened

28 | * 1 cup white (granulated) sugar

29 | * 1 cup light brown sugar packed

30 | * 2 tsp pure vanilla extract

31 | * 2 large eggs

32 | * 3 cups all-purpose flour

33 | * 1 tsp baking soda

34 | * 1/2 tsp baking powder

35 | * 1 tsp sea salt

36 | * 2 cups chocolate chips (or chunks, or chopped chocolate)

37 |

38 | ### Notes

39 |

40 | * When you remove the cookies from the oven they will still look doughy. THIS is the secret that makes these cookies so absolutely amazing! Please, I beg you, do NOT overbake!

41 | * Butter. If you use unsalted butter, increase the salt to 1 1/2 tsp total.

42 | * Some people have said they think the cookies are too salty. Please be sure to use natural Sea Salt (not iodized table salt). If you are concerned about saltiness start with 1/2 tsp salt and adjust to your tastes.

43 |

44 | ## Instructions

45 |

46 | 1. Preheat oven to 375 degrees F. Line a baking pan with parchment paper and set aside.

47 | 2. In a separate bowl mix flour, baking soda, salt, baking powder. Set aside.

48 | 3. Cream together butter and sugars until combined.

49 | 4. Beat in eggs and vanilla until fluffy.

50 | 5. Mix in the dry ingredients until combined.

51 | 6. Add 12 oz package of chocolate chips and mix well.

52 | 7. Roll 2-3 tbsp (depending on how large you like your cookies) of dough at a time into balls and place them evenly spaced on your prepared cookie sheets. (Alternatively, use a small cookie scoop to make your cookies.)

53 | 8. Bake in preheated oven for approximately 8-10 minutes. Take them out when they are just BARELY starting to turn brown.

54 | 9. Let them sit on the baking pan for 2 minutes before removing to cooling rack.

55 |

--------------------------------------------------------------------------------

/baking/great-pumpkin-pie.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/clockworksoul/today-i-learned/1257079ee89defd9da4c261fd30d7d4a85f8ceb7/baking/great-pumpkin-pie.jpg

--------------------------------------------------------------------------------

/baking/great-pumpkin-pie.md:

--------------------------------------------------------------------------------

1 | # The Great Pumpkin Pie Recipe

2 |

3 | _Source: Combination of [Sally's Baking Addiction: The Great Pumpkin Pie Recipe](https://sallysbakingaddiction.com/the-great-pumpkin-pie-recipe/) and [The Perfect Pie](https://smile.amazon.com/Perfect-Pie-Ultimate-Classic-Galettes/dp/1945256915/ref=sr_1_1_)_

4 |

5 |

6 |

7 | * Prep Time: 45 minutes

8 | * Cook Time: 65 minutes

9 | * Total Time: Overnight (14 hours - includes time for pie dough and cranberries)

10 | * Yield: serves 8-10; 1 cup sugared cranberries

11 |

12 | ## Description

13 |

14 | _Bursting with flavor, this pumpkin pie recipe is my very favorite. It’s rich, smooth, and tastes incredible on my homemade pie crust and served with whipped cream. The pie crust leaves are purely for decor; you can leave those off of the pie and only make 1 pie crust. You can also leave off the sugared cranberries._

15 |

16 | ## Ingredients

17 |

18 | ### Sugared Cranberries

19 |

20 | * 1 cup (120g) **fresh cranberries**

21 | * 2 cups (400g) **granulated sugar**, divided

22 | * 1 cup (240ml) **water**

23 |

24 | ### Pumpkin Pie

25 |

26 | * 1 recipe [double-crust pie dough](perfect-pie-crust.md) (full recipe makes 2 crusts: 1 for bottom, 1 for leaf decor)

27 | * Egg wash: 1 **large egg** beaten with 1 Tablespoon **whole milk**

28 | * 1 cup (240ml) **heavy cream**

29 | * 1/4 cup (60ml) **whole milk**

30 | * 3 **large eggs** plus 2 **large yolks**

31 | * 1 teaspoon **vanilla extract**

32 | * One 15oz can (about 2 cups; 450g) **pumpkin puree**

33 | * 1 cup drained candied **sweet potatoes or yams**

34 | * 3/4 cup (5 1/4 ounces) **sugar**

35 | * 1/4 cup **maple syrup**

36 | * 1 teaspoon table **salt**

37 | * 2 teaspoons ground or freshly grated **ginger**

38 | * 1/2 teaspoon ground **cinnamon**

39 | * 1/4 teaspoon ground **nutmeg**

40 | * 1/8 teaspoon ground **cloves**

41 | * 1/8 teaspoon fresh ground **black pepper** (seriously!)

42 |

43 |

83 |

84 | ## Instructions

85 |

86 | ### Sugared Cranberries (Optional)

87 |

88 | 1. Place cranberries in a large bowl; set aside.

89 |

90 | 2. In a medium saucepan, bring 1 cup of sugar and the water to a boil and whisk until the sugar has dissolved. Remove pan from the heat and allow to cool for 5 minutes.

91 |

92 | 3. Pour sugar syrup over the cranberries and stir. Let the cranberries sit at room temperature or in the refrigerator for 6 hours or overnight (ideal). You’ll notice the sugar syrup is quite thick after this amount of time.

93 |

94 | 4. Drain the cranberries from the syrup and pour 1 cup of sugar on top. Toss the cranberries, coating them all the way around.

95 |

96 | 5. Pour the sugared cranberries on a parchment paper or silicone baking mat-lined baking sheet and let them dry for at least 2 hours at room temperature or in the refrigerator.

97 |

98 | 6. Cover tightly and store in the refrigerator for up to 3 days.

99 |

100 | You’ll have extra, but they’re great for eating or as garnish on other dishes.

101 |

102 | ### Prepare the Crust

103 |

104 | 1. Roll half of the 2-crust dough into a 12-inch circle on floured counter. Loosely roll dough around rolling pin and gently unroll it onto 9-inch pie plate, letting excess dough hang over edge. Ease dough into plate by gently lifting edge of dough with your hand while pressing into plate bottom with your other hand.

105 |

106 | 2. Trim overhang to 1/2 inch beyond lip of plate. Tuck overhang under itself; folded edge should be flush with edge of plate. Crimp dough evenly around edge of plate.

107 | Crimp the edges with a fork or flute the edges with your fingers, if desired. Wrap dough-lined plate loosely in plastic wrap and refrigerate until firm, about 30 minutes.

108 |

109 | ### Pie Crust Leaves (Optional)

110 |

111 | If you're using a store-bought crust or have only enough dough for a single crust, skip this step.

112 |

113 | 1. On a floured work surface, roll out one of the balls of chilled dough to about 1/8 inch thickness (the shape doesn’t matter).

114 |

115 | 2. Using leaf cookie cutters, cut into shapes.

116 |

117 | 3. Brush each lightly with the beaten egg + milk mixture.

118 |

119 | 4. Cut leaf veins into leaves using a sharp knife, if desired.

120 |

121 | 5. Place onto a parchment paper or silicone baking mat-lined baking sheet and bake at 350°F (177°C) for 10 minutes or until lightly browned. Remove and set aside to cool before decorating pie.

122 |

123 | ### The Pie Proper

124 |

125 | 1. Adjust oven rack to middle position and heat to 375°F (190°C).

126 |

127 | 2. Brush edges of chilled pie shell lightly with egg wash mixture. Line with double layer of aluminum foil, covering edges to prevent burning, and fill with pie weights. Bake on foil-lined rimmed baking sheet for 10-15 minutes.

128 |

129 | 3. Remove foil and weights, rotate sheet, and continue to bake crust until golden brown and crisp, 10 to 15 minutes longer. Transfer sheet to wire rack. (The crust must still be warm when filling is added).

130 |

131 | 4. While crust is baking, whisk cream, milk, eggs and yolks, and vanilla together in bowl; set aside. Bring pumpkin, sweet potatoes, sugar, maple syrup, ginger, salt, cinnamon, and nutmeg to simmer in large saucepan over medium heat and cook, stirring constantly and mashing sweet potatoes against sides of saucepan, until thick and shiny, 15 to 20 minutes.

132 |

133 | 5. Remove saucepan from heat and whisk in cream mixture until fully incorporated.

134 |

135 | 6. Strain mixture through fine-mesh strainer into bowl, using back of ladle or spatula to press solids through strainer.

136 |

137 | 7. Whisk mixture, then, with pie still on sheet, pour into warm crust. Bake the pie until the center is almost set, about 55-60 minutes. A small part of the center will be wobbly – that’s ok. After 25 minutes of baking, be sure to cover the edges of the crust with aluminum foil or use a pie crust shield to prevent the edges from getting too brown. Check for doneness at minute 50, and then 55, and then 60, etc.

138 |

139 | 8. Once done, transfer the pie to a wire rack and allow to cool completely for at least 4 hours. Decorate with sugared cranberries and pie crust leaves (see note). You’ll definitely have leftover cranberries – they’re tasty for snacking. Serve pie with whipped cream if desired. Cover leftovers tightly and store in the refrigerator for up to 5 days.

140 |

141 | ## Notes

142 |

143 | 1. **Make Ahead & Freezing Instructions:** Pumpkin pie freezes well for up to 3 months. Thaw overnight in the refrigerator before serving. Pie crust dough freezes well for up to 3 months. Thaw overnight in the refrigerator before using. If decorating your pie with sugared cranberries, start them the night before. You’ll also begin the pie crust the night before (the dough needs at least 2 hours to chill; overnight is best). The filling can be made the night before as well. In fact, I prefer it that way. It gives the spices, pumpkin, and brown sugar flavors a chance to infuse and blend. It’s awesome. Cover and refrigerate overnight. No need to bring to room temperature before baking.

144 |

145 | 1. **Cranberries:** Use fresh cranberries, not frozen. The sugar syrup doesn’t coat frozen berries evenly, leaving you with some rather ugly, plain, shriveled cranberries.

146 |

147 | 1. **Pumpkin:** Canned pumpkin is best in this pumpkin pie recipe. If using fresh pumpkin puree, lightly blot it before adding to remove some moisture; the bake time may also be longer.

148 |

149 | 1. **Spices:** Instead of ground ginger and nutmeg, you can use 2 teaspoons of pumpkin pie spice. Be sure to still add 1/2 teaspoon cinnamon.

150 |

151 | 1. **Pie Crust:** No matter if you’re using homemade crust or store-bought crust, pre-bake the crust (The Pie Proper; steps 1-3). You can use graham cracker crust if you’d like, but the slices may get a little messy. Pre-bake for 10 minutes just as you do with regular pie crust in this recipe. No need to use pie weights if using a cookie crust.

152 |

153 | 1. **Mini Pumpkin Pies:** Many have asked about a mini version. Here are my mini pumpkin pies. They’re pretty easy– no blind baking the crust!

154 |

155 |

156 |

157 |

158 |

159 |

160 |

161 |

162 |

163 |

164 |

165 |

166 |

167 |

168 |

169 |

--------------------------------------------------------------------------------

/baking/perfect-pie-crust.md:

--------------------------------------------------------------------------------

1 | # Homemade Buttery Flaky Pie Crust

2 |

3 | _Source: [Sally's Baking Addiction: Homemade Buttery Flaky Pie Crust](https://sallysbakingaddiction.com/baking-basics-homemade-buttery-flaky-pie-crust/)_

4 |

5 | * Prep Time: 15 minutes

6 | * Yield: 2 pie crusts

7 |

8 | This recipe provides enough for a double crust pie. If you only need one crust, you can cut the recipe in half, or freeze the other half.

9 |

10 | ## Ingredients

11 |

12 | * 2 1/2 cups (315g) **all-purpose flour** (spooned & leveled)

13 | * 1 teaspoon **salt**

14 | * 2 tablespoons **sugar**

15 | * 6 tablespoons (90g) **unsalted butter**, chilled and cubed

16 | * 3/4 cup (148g) **vegetable shortening**, chilled

17 | * 1/2 cup of **ice water**, plus extra as needed

18 |

19 | ## Instructions

20 |

21 | 1. Mix the flour, salt, and sugar together in a large bowl. Add the butter and shortening.

22 |

23 | 2. Using a pastry cutter ([the one I own](https://smile.amazon.com/gp/product/B07D471TC8/ref=ppx_yo_dt_b_search_asin_title)) or two forks, cut the butter and shortening into the mixture until it resembles coarse meal (pea-sized bits with a few larger bits of fat is OK). A pastry cutter makes this step very easy and quick.

24 |

25 | 3. Measure 1/2 cup (120ml) of water in a cup. Add ice. Stir it around. From that, measure 1/2 cup (120ml) of water– since the ice has melted a bit. Drizzle the cold water in, 1 Tablespoon (15ml) at a time, and stir with a rubber spatula or wooden spoon after every Tablespoon (15ml) added. Do not add any more water than you need to. Stop adding water when the dough begins to form large clumps. I always use about 1/2 cup (120ml) of water and a little more in dry winter months (up to 3/4 cup).

26 |

27 | 4. Transfer the pie dough to a floured work surface. The dough should come together easily and should not feel overly sticky. Using floured hands, fold the dough into itself until the flour is fully incorporated into the fats. Form it into a ball. Divide dough in half. Flatten each half into 1-inch thick discs using your hands.

28 |

29 | 5. Wrap each tightly in plastic wrap. Refrigerate for at least 2 hours (and up to 5 days).

30 |

31 | 6. When rolling out the chilled pie dough discs to use in your pie, always use gentle force with your rolling pin. Start from the center of the disc and work your way out in all directions, turning the dough with your hands as you go. Visible specks of butter and fat in the dough are perfectly normal and expected!

32 |

33 | 7. Proceed with the pie per your recipe’s instructions.

34 |

35 | ## Notes

36 |

37 | 1. **Make Ahead & Freezing Instructions:** Prepare the pie dough through step 4 and freeze the discs for up to 3 months. Thaw overnight in the refrigerator before using in your pie recipe.

38 |

39 | 2. **Salt:** I use and strongly recommend regular table salt. If using kosher salt, use 1 and 1/2 teaspoons.

40 |

--------------------------------------------------------------------------------

/bash/a-generalized-single-instance-script.md:

--------------------------------------------------------------------------------

1 | # A generalized single-instance bash script

2 |

3 | _Source: [Przemyslaw Pawelczyk](https://gist.github.com/przemoc/571091)_

4 |

5 | An excellent generalized script that ensures that only one instance of the script can run at a time.

6 |

7 | It does the following:

8 |

9 | * Sets an [exit trap](http://redsymbol.net/articles/bash-exit-traps/) so the lock is always released (via `_prepare_locking`)

10 | * Obtains an [exclusive file lock](https://en.wikipedia.org/wiki/File_locking) on `$LOCKFILE` or immediately fails (via `exlock_now`)

11 | * Executes any additional custom logic

12 |

13 | ```bash

14 | #!/usr/bin/env bash

15 |

16 | set -euo pipefail

17 |

18 | # This script will do the following:

19 | #

20 | # - Set an exit trap so the lock is always released (via _prepare_locking)

21 | # - Obtain an exclusive lock on $LOCKFILE or immediately fail (via exlock_now)

22 | # - Execute any additional logic

23 | #

24 | # If this script is run while another has a lock it will exit with status 1.

25 |

26 | ### HEADER ###

27 | readonly LOCKFILE="/tmp/$(basename "$0").lock" # source has "/var/lock/`basename $0`"

28 | readonly LOCKFD=99

29 |

30 | # PRIVATE

31 | _lock() { flock "-$1" "$LOCKFD"; }

32 | _no_more_locking() { _lock u; _lock xn && rm -f "$LOCKFILE"; }

33 | _prepare_locking() { eval "exec $LOCKFD>\"$LOCKFILE\""; trap _no_more_locking EXIT; }

34 |

35 | # ON START

36 | _prepare_locking

37 |

38 | # PUBLIC

39 | exlock_now() { _lock xn; } # obtain an exclusive lock immediately or fail

40 | exlock() { _lock x; } # obtain an exclusive lock

41 | shlock() { _lock s; } # obtain a shared lock

42 | unlock() { _lock u; } # drop a lock

43 |

44 | ### BEGIN OF SCRIPT ###

45 |

46 | # Simplest example is avoiding running multiple instances of script.

47 | exlock_now || exit 1

48 |

49 | # All script logic goes below.

50 | #

51 | # Remember! Lock file is removed when one of the scripts exits and it is

52 | # the only script holding the lock or lock is not acquired at all.

53 | ```
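To see the lock doing its job, the following sketch holds an exclusive lock in a background subshell and then tries to take it again from the parent. It assumes `flock` from util-linux is installed; the descriptor numbers 8 and 9, the temporary lock file, and the variable names are illustrative choices, not part of the original script.

```bash
#!/usr/bin/env bash
# Illustrative demo only: fds 8/9 and the temp lock file are arbitrary choices.
lockfile="$(mktemp)"

# First "instance": take the lock without blocking, then hold it for a few seconds.
(
  exec 9>"$lockfile"
  flock -xn 9 && sleep 3
) &

sleep 1  # give the background subshell time to acquire the lock

# Second "instance": a separate open of the same file, so the two locks conflict.
exec 8>"$lockfile"
if flock -xn 8; then
  second_attempt="acquired"
else
  second_attempt="busy"
fi
echo "second attempt: $second_attempt"

wait        # the background subshell exits, which releases its lock
exec 8>&-   # close our own descriptor
rm -f "$lockfile"
```

While the first holder is still sleeping, the second attempt should come back as busy, which is the same condition that makes `exlock_now || exit 1` bail out in the script above.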

--------------------------------------------------------------------------------

/bash/bash-shell-prompts.md:

--------------------------------------------------------------------------------

1 | # Bash Shell: Take Control of PS1, PS2, PS3, PS4 and PROMPT_COMMAND

2 |

3 | _Source: https://www.thegeekstuff.com/2008/09/bash-shell-take-control-of-ps1-ps2-ps3-ps4-and-prompt_command/_

4 |

5 | Your interaction with Linux Bash shell will become very pleasant if you use PS1, PS2, PS3, PS4, and PROMPT_COMMAND effectively. PS stands for prompt statement. This article will give you a jumpstart on the Linux command prompt environment variables using simple examples.

6 |

7 | ## PS1 – Default interactive prompt

8 |

9 | The default interactive prompt on your Linux system can be modified as shown below to something useful and informative. In the following example, the default PS1 was `\s-\v\$`, which displays the shell name and the version number. Let us change this default behavior to display the username, hostname and current working directory name as shown below.

10 |

11 | ```bash

12 | -bash-3.2$ export PS1="\u@\h \w> "

13 |

14 | ramesh@dev-db ~> cd /etc/mail

15 | ramesh@dev-db /etc/mail>

16 | ```

17 |

18 | Prompt changed to "username@hostname current-dir>" format.

19 |

20 | The following PS1 codes are used in this example:

21 |

22 | * `\u` – Username

23 | * `\h` – Hostname

24 | * `\w` – Full pathname of current directory. Please note that when you are in the home directory, this will display only `~` as shown above

25 | * Note that there is a space at the end in the value of PS1. Personally, I prefer a space at the end of the prompt for better readability.

26 |

27 | Make this setting permanent by adding `export PS1="\u@\h \w> "` to either `.bash_profile` (Mac) or `.bashrc` (Linux/WSL).

28 |

29 | ## PS2 – Continuation interactive prompt

30 |

31 | A very long Unix command can be broken into multiple lines by ending each line with `\`. The default interactive prompt for a multi-line command is "> ". Let us change this default behavior to display `continue->` by using the PS2 environment variable as shown below.

32 |

33 | ```

34 | ramesh@dev-db ~> myisamchk --silent --force --fast --update-state \

35 | > --key_buffer_size=512M --sort_buffer_size=512M \

36 | > --read_buffer_size=4M --write_buffer_size=4M \

37 | > /var/lib/mysql/bugs/*.MYI

38 | ```

39 |

40 | This uses the default ">" for continuation prompt.

41 |

42 | ```

43 | ramesh@dev-db ~> export PS2="continue-> "

44 |

45 | ramesh@dev-db ~> myisamchk --silent --force --fast --update-state \

46 | continue-> --key_buffer_size=512M --sort_buffer_size=512M \

47 | continue-> --read_buffer_size=4M --write_buffer_size=4M \

48 | continue-> /var/lib/mysql/bugs/*.MYI

49 | ```

50 |

51 | This uses the modified "continue-> " for continuation prompt.

52 |

53 | I find long commands much easier to read when I break them into multiple lines using `\`. I have also seen others who don’t like to break up long commands. What is your preference? Do you like breaking up long commands into multiple lines?

54 |

55 | ## PS3 – Prompt used by "select" inside shell script

56 |

57 | You can define a custom prompt for the select loop inside a shell script, using the PS3 environment variable, as explained below.

58 |

59 | ### Shell script and output WITHOUT PS3

60 |

61 | ```

62 | ramesh@dev-db ~> cat ps3.sh

63 |

64 | select i in mon tue wed exit

65 | do

66 | case $i in

67 | mon) echo "Monday";;

68 | tue) echo "Tuesday";;

69 | wed) echo "Wednesday";;

70 | exit) exit;;

71 | esac

72 | done

73 |

74 | ramesh@dev-db ~> ./ps3.sh

75 |

76 | 1) mon

77 | 2) tue

78 | 3) wed

79 | 4) exit

80 | #? 1

81 | Monday

82 | #? 4

83 | ```

84 |

85 | This displays the default "#?" for select command prompt.

86 |

87 | ### Shell script and output WITH PS3

88 |

89 | ```

90 | ramesh@dev-db ~> cat ps3.sh

91 |

92 | PS3="Select a day (1-4): "

93 | select i in mon tue wed exit

94 | do

95 | case $i in

96 | mon) echo "Monday";;

97 | tue) echo "Tuesday";;

98 | wed) echo "Wednesday";;

99 | exit) exit;;

100 | esac

101 | done

102 |

103 | ramesh@dev-db ~> ./ps3.sh

104 | 1) mon

105 | 2) tue

106 | 3) wed

107 | 4) exit

108 | Select a day (1-4): 1

109 | Monday

110 | Select a day (1-4): 4

111 | ```

112 |

113 | This displays the modified "Select a day (1-4): " for select command prompt.

114 |

115 | ## PS4 – Used by "set -x" to prefix tracing output

116 |

117 | The PS4 shell variable defines the prompt that gets displayed, when you execute a shell script in debug mode as shown below.

118 |

119 | ### Shell script and output WITHOUT PS4

120 |

121 | ```

122 | ramesh@dev-db ~> cat ps4.sh

123 |

124 | set -x

125 | echo "PS4 demo script"

126 | ls -l /etc/ | wc -l

127 | du -sh ~

128 |

129 | ramesh@dev-db ~> ./ps4.sh

130 |

131 | ++ echo 'PS4 demo script'

132 | PS4 demo script

133 | ++ ls -l /etc/

134 | ++ wc -l

135 | 243

136 | ++ du -sh /home/ramesh

137 | 48K /home/ramesh

138 | ```

139 |

140 | This displays the default "++" while tracing the output using `set -x`.

141 |

142 | ### Shell script and output WITH PS4

143 |

144 | The PS4 defined below in the ps4.sh has the following two codes:

145 |

146 | * `$0` – indicates the name of script

147 | * `$LINENO` – displays the current line number within the script

148 |

149 | ```

150 | ramesh@dev-db ~> cat ps4.sh

151 |

152 | export PS4='$0.$LINENO+ '

153 | set -x

154 | echo "PS4 demo script"

155 | ls -l /etc/ | wc -l

156 | du -sh ~

157 |

158 | ramesh@dev-db ~> ./ps4.sh

159 | ../ps4.sh.3+ echo 'PS4 demo script'

160 | PS4 demo script

161 | ../ps4.sh.4+ ls -l /etc/

162 | ../ps4.sh.4+ wc -l

163 | 243

164 | ../ps4.sh.5+ du -sh /home/ramesh

165 | 48K /home/ramesh

166 | ```

167 |

168 | This displays the modified "{script-name}.{line-number}+" while tracing the output using `set -x`.

169 |

170 | ## PROMPT_COMMAND

171 |

172 | Bash shell executes the content of the `PROMPT_COMMAND` just before displaying the PS1 variable.

173 |

174 | ```

175 | ramesh@dev-db ~> export PROMPT_COMMAND="date +%k:%M:%S"

176 | 22:08:42

177 | ramesh@dev-db ~>

178 | ```

179 |

180 | This displays the `PROMPT_COMMAND` and PS1 output on different lines.

181 |

182 | If you want to display the value of `PROMPT_COMMAND` in the same line as the PS1, use the `echo -n` as shown below:

183 |

184 | ```

185 | ramesh@dev-db ~> export PROMPT_COMMAND='echo -n "[$(date +%k:%M:%S)]"'

186 | [22:08:51]ramesh@dev-db ~>

187 | ```

188 |

189 | This displays the PROMPT_COMMAND and PS1 output on the same line.
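One caveat worth sketching: a command substitution inside double quotes runs once, when the variable is assigned, so a timestamp embedded that way never changes. A small function called by name from `PROMPT_COMMAND` is re-evaluated before every prompt. The function name `show_clock` is a hypothetical choice, and the format uses `%M` for minutes (`%m` is the month in `date` formats).

```bash
# Hypothetical helper: prints the current time in brackets, without a trailing newline.
show_clock() {
  printf '[%s]' "$(date +%H:%M:%S)"
}

# Bash evaluates this string each time it is about to draw PS1,
# so the function runs afresh for every prompt.
PROMPT_COMMAND='show_clock'
```

Keeping the logic in a function also makes it easy to extend later without fighting nested quotes.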

--------------------------------------------------------------------------------

/bash/dont-do-grep-wc-l.md:

--------------------------------------------------------------------------------

1 | # Don't do `grep | wc -l`

2 |

3 | _Source: [Useless Use of Cat Award](http://porkmail.org/era/unix/award.html)_

4 |

5 | There is actually a whole class of "Useless Use of (something) | grep (something) | (something)" problems but this one usually manifests itself in scripts riddled by useless backticks and pretzel logic.

6 |

7 | Anything that looks like:

8 |

9 | ```bash

10 | something | grep '..*' | wc -l

11 | ```

12 |

13 | can usually be rewritten along the lines of

14 |

15 | ```bash

16 | something | grep -c . # Notice that . is better than '..*'

17 | ```

18 |

19 | or even (if all we want to do is check whether something produced any non-empty output lines)

20 |

21 | ```bash

22 | something | grep . >/dev/null && ...

23 | ```

24 |

25 | (or `grep -q` if your grep has that).

26 |

27 | ## Addendum

28 |

29 | `grep -c` can actually solve a large class of problems that `grep | wc -l` can't.

30 |

31 | If what interests you is the count for each of a group of files, then the only way to do it with `grep | wc -l` is to put a loop round it. So where I had this:

32 |

33 | ```bash

34 | grep -c "^~h" [A-Z]*/hmm[39]/newMacros

35 | ```

36 |

37 | the naive solution using `wc -l` would have been...

38 |

39 | ```bash

40 | for f in [A-Z]*/hmm[39]/newMacros; do

41 | # or worse, for f in `ls [A-Z]*/hmm[39]/newMacros` ...

42 | echo -n "$f:"

43 | # so that we know which file's results we're looking at

44 | grep "^~h" "$f" | wc -l

45 | # gag me with a spoon

46 | done

47 | ```

48 |

49 | ...and notice that we also had to fiddle to get the output in a convenient form.
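A small, self-contained sketch of the same point, using throwaway files whose names and contents are made up for illustration:

```bash
#!/usr/bin/env bash
# Illustrative files; the names and contents are invented for this sketch.
tmpdir="$(mktemp -d)"
printf 'alpha\n\nbeta\n' > "$tmpdir/one.txt"   # two non-empty lines, one empty
printf 'gamma\n'         > "$tmpdir/two.txt"   # one non-empty line

# A single grep prints one "file:count" line per file, with no loop and no wc.
counts="$(cd "$tmpdir" && grep -c . one.txt two.txt)"
printf '%s\n' "$counts"

# To test only whether there is any non-empty output, -q stops at the first match.
if printf 'x\n' | grep -q .; then
  has_output="yes"
else
  has_output="no"
fi

rm -rf "$tmpdir"
```

Because `grep -c` labels each count with its file name when given several files, the output needs no extra formatting step.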

50 |

--------------------------------------------------------------------------------

/bash/groot.md:

--------------------------------------------------------------------------------

1 | # Move to the Root of a Git Directory

2 |

3 | _Source: My friend Alex Novak_

4 |

5 | ```bash

6 | cd `git rev-parse --show-toplevel`

7 | ```

8 |

9 | I keep this aliased as `groot` and use it very frequently when moving through a codebase.
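If you define this as an alias, use single quotes so the substitution runs when the alias is used rather than when it is defined. A function is one way to sidestep the quoting question and to cope with paths that contain spaces. This sketch assumes `git` is on the `PATH`; the name `groot` comes from the note above, the rest is illustrative.

```bash
# Jump to the top level of the current Git working tree.
# Returns non-zero and stays put when not inside a repository.
groot() {
  local top
  top="$(git rev-parse --show-toplevel)" || return
  cd "$top" || return
}
```

Placed in `~/.bashrc`, it can be called from any subdirectory of a checkout.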

10 |

--------------------------------------------------------------------------------

/bash/md5sum-of-a-path.md:

--------------------------------------------------------------------------------

1 | # Bash One-Liner to Calculate the md5sum of a Path in Linux

2 |

3 | To compute the md5sum of an entire path in Linux, we `tar` the directory in question and pipe the product to `md5sum`.

4 |

5 | Note in this example that I specifically exclude the `.git` directory, which I've found can vary between runs even if there's no change to the repository contents.

6 |

7 | ```bash

8 | $ tar --exclude='.git' -cf - "${SOME_DIR}" 2> /dev/null | md5sum

9 | 851ce98b3e97b42c1b01dd26e23e2efa -

10 | ```
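Wrapped as a function, the one-liner is easier to reuse and to compare across runs. This is a sketch that assumes GNU `tar` and `md5sum`; `path_md5` and the demo directory are hypothetical names. Since a tar archive records metadata such as modification times alongside file contents, the digest is only stable while both stay unchanged.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print only the hex digest for a directory tree.
path_md5() {
  tar --exclude='.git' -cf - "$1" 2> /dev/null | md5sum | cut -d ' ' -f 1
}

# Throwaway directory to show when the digest changes.
demo_dir="$(mktemp -d)"
echo "hello" > "$demo_dir/file.txt"

first="$(path_md5 "$demo_dir")"
second="$(path_md5 "$demo_dir")"    # unchanged tree, same digest

echo "changed" > "$demo_dir/file.txt"
third="$(path_md5 "$demo_dir")"     # different contents, different digest

echo "$first $second $third"
rm -rf "$demo_dir"
```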

11 |

--------------------------------------------------------------------------------

/bash/pipefail.md:

--------------------------------------------------------------------------------

1 | # Safer Bash Scripts With `set -euxo pipefail`

2 |

3 | _Source: [Safer bash scripts with 'set -euxo pipefail'](https://vaneyckt.io/posts/safer_bash_scripts_with_set_euxo_pipefail/)_

4 |

5 | The bash shell comes with several builtin commands for modifying the behavior of the shell itself. We are particularly interested in the `set` builtin, as this command has several options that will help us write safer scripts. I hope to convince you that it’s a really good idea to add `set -euxo pipefail` to the beginning of all your future bash scripts.

6 |

7 | ## `set -e`

8 |

9 | The `-e` option will cause a bash script to exit immediately when a command fails.

10 |

11 | This is generally a vast improvement upon the default behavior where the script just ignores the failing command and continues with the next line. This option is also smart enough to not react to failing commands that are part of conditional statements.

12 |

13 | You can append a command with `|| true` for those rare cases where you don’t want a failing command to trigger an immediate exit.

14 |

15 | ### Before

16 |

17 | ```bash

18 | #!/bin/bash

19 |

20 | # 'foo' is a non-existing command

21 | foo

22 | echo "bar"

23 |

24 | # output

25 | # ------

26 | # line 4: foo: command not found

27 | # bar

28 | #

29 | # Note how the script didn't exit when the foo command could not be found.

30 | # Instead it continued on and echoed 'bar'.

31 | ```

32 |

33 | ### After

34 |

35 | ```bash

36 | #!/bin/bash

37 | set -e

38 |

39 | # 'foo' is a non-existing command

40 | foo

41 | echo "bar"

42 |

43 | # output

44 | # ------

45 | # line 5: foo: command not found

46 | #

47 | # This time around the script exited immediately when the foo command wasn't found.

48 | # Such behavior is much more in line with that of higher-level languages.

49 | ```

50 |

51 | ### Any command returning a non-zero exit code will cause an immediate exit

52 |

53 | ```bash

54 | #!/bin/bash

55 | set -e

56 |

57 | # 'ls' is an existing command, but giving it a nonsensical param will cause

58 | # it to exit with exit code 1

59 | $(ls foobar)

60 | echo "bar"

61 |

62 | # output

63 | # ------

64 | # ls: foobar: No such file or directory

65 | #

66 | # I'm putting this in here to illustrate that it's not just non-existing commands

67 | # that will cause an immediate exit.

68 | ```

69 |

70 | ### Preventing an immediate exit

71 |

72 | ```bash

73 | #!/bin/bash

74 | set -e

75 |

76 | foo || true

77 | $(ls foobar) || true

78 | echo "bar"

79 |

80 | # output

81 | # ------

82 | # line 4: foo: command not found

83 | # ls: foobar: No such file or directory

84 | # bar

85 | #

86 | # Sometimes we want to ensure that, even when 'set -e' is used, the failure of

87 | # a particular command does not cause an immediate exit. We can use '|| true' for this.

88 | ```

89 |

90 | ### Failing commands in a conditional statement will not cause an immediate exit

91 |

92 | ```bash

93 | #!/bin/bash

94 | set -e

95 |

96 | # we make 'ls' exit with exit code 1 by giving it a nonsensical param

97 | if ls foobar; then

98 | echo "foo"

99 | else

100 | echo "bar"

101 | fi

102 |

103 | # output

104 | # ------

105 | # ls: foobar: No such file or directory

106 | # bar

107 | #

108 | # Note that 'ls foobar' did not cause an immediate exit despite exiting with

109 | # exit code 1. This is because the command was evaluated as part of a

110 | # conditional statement.

111 | ```

112 |

113 | That’s all for `set -e`. However, `set -e` by itself is far from enough. We can further improve upon the behavior created by `set -e` by combining it with `set -o pipefail`. Let’s have a look at that next.

114 |

115 | ## `set -o pipefail`

116 |

117 | The bash shell normally only looks at the exit code of the last command of a pipeline. This behavior is not ideal as it causes the `-e` option to only be able to act on the exit code of a pipeline’s last command. This is where `-o pipefail` comes in. This particular option sets the exit code of a pipeline to that of the rightmost command to exit with a non-zero status, or to zero if all commands of the pipeline exit successfully.
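The "rightmost non-zero status" rule is easy to check in isolation. The sketch below deliberately leaves `-e` off so that both statuses can be captured; the subshells with fixed exit codes and the variable names are illustrative stand-ins for real commands.

```bash
#!/usr/bin/env bash
# Without pipefail, only the last command's status counts.
(exit 2) | (exit 3) | true
default_status=$?

# With pipefail, the pipeline reports the rightmost non-zero status.
set -o pipefail
(exit 2) | (exit 3) | true
pipefail_status=$?
set +o pipefail

echo "default=$default_status pipefail=$pipefail_status"
```

Here the pipeline ends in `true`, so the default status is 0, while with `pipefail` the status is 3, taken from the rightmost failing stage rather than the first one.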

118 |

119 | ### Before

120 |

121 | ```bash

122 | #!/bin/bash

123 | set -e

124 |

125 | # 'foo' is a non-existing command

126 | foo | echo "a"

127 | echo "bar"

128 |

129 | # output

130 | # ------

131 | # a

132 | # line 5: foo: command not found

133 | # bar

134 | #

135 | # Note how the non-existing foo command does not cause an immediate exit, as

136 | # its non-zero exit code is ignored by piping it with '| echo "a"'.

137 | ```

138 |

139 | ### After

140 |

141 | ```bash

142 | #!/bin/bash

143 | set -eo pipefail

144 |

145 | # 'foo' is a non-existing command

146 | foo | echo "a"

147 | echo "bar"

148 |

149 | # output

150 | # ------

151 | # a

152 | # line 5: foo: command not found

153 | #

154 | # This time around the non-existing foo command causes an immediate exit, as

155 | # '-o pipefail' will prevent piping from causing non-zero exit codes to be ignored.

156 | ```

157 |

158 | This section hopefully made it clear that `-o pipefail` provides an important improvement upon just using `-e` by itself. However, as we shall see in the next section, we can still do more to make our scripts behave like higher-level languages.

159 |

160 | ## `set -u`

161 |

162 | This option causes the bash shell to treat unset variables as an error and exit immediately. Unset variables are a common cause of bugs in shell scripts, so having unset variables cause an immediate exit is often highly desirable behavior.

163 |

164 | ### Before

165 |

166 | ```bash

167 | #!/bin/bash

168 | set -eo pipefail

169 |

170 | echo $a

171 | echo "bar"

172 |

173 | # output

174 | # ------

175 | #

176 | # bar

177 | #

178 | # The default behavior will not cause unset variables to trigger an immediate exit.

179 | # In this particular example, echoing the non-existing $a variable will just cause

180 | # an empty line to be printed.

181 | ```

182 |

183 | ### After

184 |

185 | ```bash

186 | #!/bin/bash

187 | set -euo pipefail

188 |

189 | echo "$a"

190 | echo "bar"

191 |

192 | # output

193 | # ------

194 | # line 4: a: unbound variable

195 | #

196 | # Notice how 'bar' no longer gets printed. We can clearly see that '-u' did indeed

197 | # cause an immediate exit upon encountering an unset variable.

198 | ```

199 |

200 | ### Dealing with `${a:-b}` variable assignments

201 |

202 | Sometimes you’ll want to use a [`${a:-b}` variable assignment](https://unix.stackexchange.com/questions/122845/using-a-b-for-variable-assignment-in-scripts/122878) to ensure a variable is assigned a default value of b when a is either empty or undefined. The `-u` option is smart enough to not cause an immediate exit in such a scenario.

203 |

204 | ```bash

205 | #!/bin/bash

206 | set -euo pipefail

207 |

208 | DEFAULT=5

209 | RESULT=${VAR:-$DEFAULT}

210 | echo "$RESULT"

211 |

212 | # output

213 | # ------

214 | # 5

215 | #

216 | # Even though VAR was not defined, the '-u' option realizes there's no need to cause

217 | # an immediate exit in this scenario as a default value has been provided.

218 | ```

219 |

220 | ### Using conditional statements that check if variables are set

221 |

222 | Sometimes you want your script to not immediately exit when an unset variable is encountered. A common example is checking for a variable’s existence inside an `if` statement.

223 |

224 | ```bash

225 | #!/bin/bash

226 | set -euo pipefail

227 |

228 | if [ -z "${MY_VAR:-}" ]; then

229 | echo "MY_VAR was not set"

230 | fi

231 |

232 | # output

233 | # ------

234 | # MY_VAR was not set

235 | #

236 | # In this scenario we don't want our program to exit when the unset MY_VAR variable

237 | # is evaluated. We can prevent such an exit by using the same syntax as we did in the

238 | # previous example, but this time around we specify no default value.

239 | ```

240 |

241 | This section has brought us a lot closer to making our bash shell behave like higher-level languages. While `-euo pipefail` is great for the early detection of all kinds of problems, sometimes it won’t be enough. This is why in the next section we’ll look at an option that will help us figure out those really tricky bugs that you encounter every once in a while.

242 |

243 | ## `set -x`

244 |

245 | The `-x` option causes bash to print each command before executing it.

246 |

247 | This can be a great help when trying to debug a bash script failure. Note that arguments get expanded before a command gets printed, which will cause our logs to contain the actual argument values that were present at the time of execution!

248 |

249 | ```bash

250 | #!/bin/bash

251 | set -euxo pipefail

252 |

253 | a=5

254 | echo $a

255 | echo "bar"

256 |

257 | # output

258 | # ------

259 | # + a=5

260 | # + echo 5

261 | # 5

262 | # + echo bar

263 | # bar

264 | ```

265 |

266 | That’s it for the `-x` option. It’s pretty straightforward, but can be a great help for debugging.

267 |

268 | ## `set -E`

269 |

270 | Traps are pieces of code that fire when a bash script catches certain signals. Aside from the usual signals (e.g. `SIGINT`, `SIGTERM`, ...), traps can also be used to catch special bash signals like `EXIT`, `DEBUG`, `RETURN`, and `ERR`. However, reader Kevin Gibbs pointed out that using `-e` without `-E` will cause an `ERR` trap to not fire in certain scenarios.

271 |

272 | ### Before

273 |

274 | ```bash

275 | #!/bin/bash

276 | set -euo pipefail

277 |

278 | trap "echo ERR trap fired!" ERR

279 |

280 | myfunc()

281 | {

282 | # 'foo' is a non-existing command

283 | foo

284 | }

285 |

286 | myfunc

287 | echo "bar"

288 |

289 | # output

290 | # ------

291 | # line 9: foo: command not found

292 | #

293 | # Notice that while '-e' did indeed cause an immediate exit upon trying to execute

294 | # the non-existing foo command, it did not cause the ERR trap to be fired.

295 | ```

296 |

297 | ### After

298 |

299 | ```bash

300 | #!/bin/bash

301 | set -Eeuo pipefail

302 |

303 | trap "echo ERR trap fired!" ERR

304 |

305 | myfunc()

306 | {

307 | # 'foo' is a non-existing command

308 | foo

309 | }

310 |

311 | myfunc

312 | echo "bar"

313 |

314 | # output

315 | # ------

316 | # line 9: foo: command not found

317 | # ERR trap fired!

318 | #

319 | # Not only do we still have an immediate exit, we can also clearly see that the

320 | # ERR trap was actually fired now.

321 | ```

322 |

323 | The documentation states that `-E` needs to be set if we want the `ERR` trap to be inherited by shell functions, command substitutions, and commands that are executed in a subshell environment. The `ERR` trap is normally not inherited in such cases.
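To compare the two settings side by side without editing a script, the same snippet can be run in a child `bash` with different flags. This is a sketch: `try_flags`, the trap message, and `myfunc` are hypothetical names, and `false` stands in for any failing command.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run one snippet in a child bash with the given `set` flags
# and let the caller capture whatever it prints.
try_flags() {
  bash -c "set $1"'
    trap "echo ERR trap fired" ERR
    myfunc() { false; }
    myfunc
    echo "not reached"
  ' || true
}

without_E="$(try_flags -e)"
with_E="$(try_flags -eE)"

echo "without -E: '${without_E}'"
echo "with -E:    '${with_E}'"
```

With `-eE` the trap message is captured before the child shell exits; comparing it with the `-e` run shows whether the function inherited the trap.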

324 |

--------------------------------------------------------------------------------

/bash/prompt-statement-variables.md:

--------------------------------------------------------------------------------

1 | # Bash Shell Prompt Statement Variables

2 |

3 | _Source: https://ss64.com/bash/syntax-prompt.html_

4 |

5 | There are several variables that can be set to control the appearance of the bash command prompt: PS1, PS2, PS3, PS4 and PROMPT_COMMAND. The contents of PROMPT_COMMAND are executed just as if they had been typed on the command line.

6 |

7 | * `PS1` – Default interactive prompt (this is the variable most often customized)

8 | * `PS2` – Continuation interactive prompt (when a long command is broken up with \ at the end of the line) default=">"

9 | * `PS3` – Prompt used by `select` loop inside a shell script

10 | * `PS4` – Prompt used when a shell script is executed in debug mode (`set -x` will turn this on) default="+ "

11 | * `PROMPT_COMMAND` - If this variable is set and has a non-null value, then it will be executed just before the PS1 variable.

12 |

13 | Set your prompt by changing the value of the PS1 environment variable, as follows:

14 |

15 | ```

16 | $ export PS1="My simple prompt> "

17 | My simple prompt> 

18 | ```

19 |

20 | This change can be made permanent by placing the `export` definition in your `~/.bashrc` (or `~/.bash_profile`) file.

21 |

22 | ## Special prompt variable characters

23 |

24 | ```

25 | \d The date, in "Weekday Month Date" format (e.g., "Tue May 26").

26 |

27 | \h The hostname, up to the first . (e.g. deckard)

28 | \H The hostname. (e.g. deckard.SS64.com)

29 |

30 | \j The number of jobs currently managed by the shell.

31 |

32 | \l The basename of the shell's terminal device name.

33 |

34 | \s The name of the shell, the basename of $0 (the portion following

35 | the final slash).

36 |

37 | \t The time, in 24-hour HH:MM:SS format.

38 | \T The time, in 12-hour HH:MM:SS format.

39 | \@ The time, in 12-hour am/pm format.

40 |

41 | \u The username of the current user.

42 |

43 | \v The version of Bash (e.g., 2.00)

44 |

45 | \V The release of Bash, version + patchlevel (e.g., 2.00.0)

46 |

47 | \w The current working directory.

48 | \W The basename of $PWD.

49 |

50 | \! The history number of this command.

51 | \# The command number of this command.

52 |

53 | \$ If you are not root, inserts a "$"; if you are root, you get a "#" (root uid = 0)

54 |

55 | \nnn The character whose ASCII code is the octal value nnn.

56 |

57 | \n A newline.

58 | \r A carriage return.

59 | \e An escape character (typically a color code).

60 | \a A bell character.

61 | \\ A backslash.

62 |

63 | \[ Begin a sequence of non-printing characters. (like color escape sequences). This

64 | allows bash to calculate word wrapping correctly.

65 |

66 | \] End a sequence of non-printing characters.

67 | ```

68 |

69 | Using single quotes instead of double quotes when exporting your PS variables is recommended: it makes the prompt a tiny bit faster to evaluate, and you can then run `echo $PS1` to see the current prompt settings.

70 |

71 | ## Color Codes (ANSI Escape Sequences)

72 |

73 | ### Foreground colors

74 |

75 | Normal (non-bold) is the default, so the `0;` prefix is optional.

76 |

77 | ```

78 | \e[0;30m = Dark Gray

79 | \e[1;30m = Bold Dark Gray

80 | \e[0;31m = Red

81 | \e[1;31m = Bold Red

82 | \e[0;32m = Green

83 | \e[1;32m = Bold Green

84 | \e[0;33m = Yellow

85 | \e[1;33m = Bold Yellow

86 | \e[0;34m = Blue

87 | \e[1;34m = Bold Blue

88 | \e[0;35m = Purple

89 | \e[1;35m = Bold Purple

90 | \e[0;36m = Turquoise

91 | \e[1;36m = Bold Turquoise

92 | \e[0;37m = Light Gray

93 | \e[1;37m = Bold Light Gray

94 | ```

95 |

96 | ### Background colors

97 |

98 | ```

99 | \e[40m = Dark Gray

100 | \e[41m = Red

101 | \e[42m = Green

102 | \e[43m = Yellow

103 | \e[44m = Blue

104 | \e[45m = Purple

105 | \e[46m = Turquoise

106 | \e[47m = Light Gray

107 | ```

108 |

109 | ## Examples

110 |

111 | Set a prompt like: `[username@hostname:~/CurrentWorkingDirectory]$`

112 |

113 | `export PS1='[\u@\h:\w]\$ '`

114 |

115 | Set a prompt in color. Note the `\[` and `\]` escapes around the non-printing characters. These ensure that readline can keep track of the cursor position correctly.

116 |

117 | `export PS1='\[\e[31m\]\u@\h:\w\[\e[0m\] '`

118 |

--------------------------------------------------------------------------------

/bash/ring-the-audio-bell.md:

--------------------------------------------------------------------------------

1 | # Ring the audio bell in bash

2 |

3 | In many shells, the `\a` character will trigger the audio bell.

4 |

5 | Example:

6 |

7 | ```bash

8 | $ echo -n $'\a'

9 | ```

10 |

11 | You can also use `tput`, since `\a` doesn't work in some shells:

12 |

13 | ```bash

14 | $ tput bel

15 | ```

16 |

--------------------------------------------------------------------------------

/consul/ingress-gateways.md:

--------------------------------------------------------------------------------

1 | # Ingress Gateways in HashiCorp Consul 1.8

2 |

3 | _Source: [Ingress Gateways in HashiCorp Consul 1.8](https://www.hashicorp.com/blog/ingress-gateways-in-hashicorp-consul-1-8/), [HashiCorp Learn](https://learn.hashicorp.com/consul/developer-mesh/ingress-gateways)_

4 |

5 | Consul ingress gateways provide an easy and secure way for external services to communicate with services inside the Consul service mesh.

6 |

7 |

8 |

9 | Using the Consul CLI or the Consul Kubernetes Helm chart, you can set up multiple instances of ingress gateways and configure and secure traffic flows natively via Consul's Layer 7 routing and intentions features.

10 |

11 | ## Overview

12 |

13 | Applications that reside outside of a service mesh often need to communicate with applications within the mesh. This is typically done by providing a gateway that allows the traffic to the upstream service. Consul 1.8 allows us to create an ingress solution without having to roll our own.

14 |

15 | ## Using Ingress Gateways on VMs

16 |

17 | Let’s take a look at how Ingress Gateways work in practice. First, let’s register our Ingress Gateway on port 8888:

18 |

19 | ```bash

20 | $ consul connect envoy -gateway=ingress -register -service ingress-gateway \

21 |   -address '{{ GetInterfaceIP "eth0" }}:8888'

22 | ```

23 |

24 | Next, let’s create and apply an ingress-gateway [configuration entry](https://www.consul.io/docs/agent/config-entries/ingress-gateway) that defines a set of listeners that expose the desired backing services:

25 |

26 | ```hcl

27 | Kind = "ingress-gateway"

28 | Name = "ingress-gateway"

29 | Listeners = [

30 |   {

31 |     Port = 80

32 |     Protocol = "http"

33 |     Services = [

34 |       {

35 |         Name = "api"

36 |       }

37 |     ]

38 |   }

39 | ]

40 | ```

41 |

42 | With the ingress gateway now listening on port 80, we can route traffic destined for specific paths to specific services via a [service-router](https://www.consul.io/docs/agent/config-entries/service-router) config entry:

43 |

44 | ```hcl

45 | Kind = "service-router"

46 | Name = "api"

47 | Routes = [

48 |   {

49 |     Match {

50 |       HTTP {

51 |         PathPrefix = "/api_service1"

52 |       }

53 |     }

54 |

55 |     Destination {

56 |       Service = "api_service1"

57 |     }

58 |   },

59 |   {

60 |     Match {

61 |       HTTP {

62 |         PathPrefix = "/api_service2"

63 |       }

64 |     }

65 |

66 |     Destination {

67 |       Service = "api_service2"

68 |     }

69 |   }

70 | ]

71 | ```

72 |

73 | Finally, to secure traffic from the ingress gateway, we can define intentions that allow it to communicate with specific upstream services:

74 |

75 | ```bash

76 | $ consul intention create -allow ingress-gateway api_service1

77 | $ consul intention create -allow ingress-gateway api_service2

78 | ```

79 |

80 | Since Consul also specializes in service discovery, we can discover gateways for ingress-gateway-enabled services via DNS using [built-in subdomains](https://www.consul.io/docs/agent/dns#ingress-service-lookups):

81 |

82 | ```bash

83 | $ dig @127.0.0.1 -p 8600 api_service1.ingress... ANY

84 | $ dig @127.0.0.1 -p 8600 api_service2.ingress... ANY

85 | ```

86 |

--------------------------------------------------------------------------------

/consul/ingress-gateways.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/clockworksoul/today-i-learned/1257079ee89defd9da4c261fd30d7d4a85f8ceb7/consul/ingress-gateways.png

--------------------------------------------------------------------------------

/containers/non-privileged-containers-based-on-the-scratch-image.md:

--------------------------------------------------------------------------------

1 | # Non-privileged containers based on the scratch image

2 |

3 | _Source: [Non-privileged containers based on the scratch image](https://medium.com/@lizrice/non-privileged-containers-based-on-the-scratch-image-a80105d6d341)_

4 |

5 | ## Multi-stage builds give us the power

6 |

7 | Here’s a Dockerfile that gives us what we’re looking for.

8 |

9 | ```dockerfile

10 | FROM ubuntu:latest

11 | RUN useradd -u 10001 scratchuser

12 |

13 | FROM scratch

14 | COPY dosomething /dosomething

15 | COPY --from=0 /etc/passwd /etc/passwd

16 | USER scratchuser

17 |

18 | ENTRYPOINT ["/dosomething"]

19 | ```

20 |

21 | Building from this Dockerfile starts FROM an Ubuntu base image, and creates a new user called _scratchuser_. See that second `FROM` command? That’s the start of the next stage in the multi-stage build, this time working from the scratch base image (which contains nothing).

22 |

23 | Into that empty file system we copy a binary called `dosomething` — it’s a trivial Go app that simply sleeps for a while.

24 |

25 | ```go

26 | package main

27 |

28 | import "time"

29 |

30 | func main() {

31 | 	time.Sleep(500 * time.Second)

32 | }

33 | ```

34 |

35 | We also copy over the `/etc/passwd` file from the first stage of the build into the new image. This has `scratchuser` in it.

36 |

37 | This is all we need to be able to transition to `scratchuser` before starting the dosomething executable.

38 |

--------------------------------------------------------------------------------

/est/approximate-with-powers.md:

--------------------------------------------------------------------------------

1 | # Approximate with powers

2 |

3 | _Source: [robertovitillo.com](https://robertovitillo.com/back-of-the-envelope-estimation-hacks/) (Check out [his book](https://leanpub.com/systemsmanual)!)_

4 |

5 | Using powers of 2 or 10 makes multiplication very easy because, in logarithmic space, multiplications become additions.

6 |

7 | For example, let’s say you have 800K users watching videos in UHD at 12 Mbps. A machine in your Content Delivery Network can egress at 1 Gbps. How many machines do you need?

8 |

9 | Approximating 800K with 10^6 and 12 Mbps with 10^7 yields:

10 |

11 | ```

12 | 1e6 * 1e7 bps

13 | --------------- = 1e(6+7-9) = 1e4 = 10,000

14 | 1e9 bps

15 | ```

16 |

17 | Adding 6 and 7 and then subtracting 9 is much easier than trying to get to the exact answer:

18 |

19 | ```

20 | 0.8M * 12 Mbps

21 | --------------- = 9,600

22 | 1,000 Mbps

23 | ```

24 |

25 | That's pretty close.
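
Both calculations can be checked with shell arithmetic. This is a small sketch using the figures from the example above; the variable names are illustrative.

```bash
# Figures from the example: 800K users, 12 Mbps per stream, 1 Gbps per machine.
users=800000
stream_bps=12000000       # 12 Mbps
machine_bps=1000000000    # 1 Gbps

# Exact answer: total egress divided by per-machine egress.
exact=$(( users * stream_bps / machine_bps ))

# Estimate: round to powers of 10 and work with the exponents only.
exponent=$(( 6 + 7 - 9 ))

echo "exact: ${exact} machines, estimate: 1e${exponent} machines"
```

The estimate (1e4) and the exact figure (9,600) agree to within the same order of magnitude, which is all the estimate is meant to provide.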

26 |

--------------------------------------------------------------------------------

/est/ballpark-io-performance-figures.md:

--------------------------------------------------------------------------------

1 | # Ballpark I/O performance figures

2 |

3 | _Source: [robertovitillo.com](https://robertovitillo.com/back-of-the-envelope-estimation-hacks/) (Check out [his book](https://leanpub.com/systemsmanual)!)_

4 |

5 | What’s important are not the exact numbers per se but their relative differences in terms of orders of magnitude.

6 |

7 | The goal of estimation is not to get a correct answer, but to get one in the right ballpark.

8 |

9 | ## Know your numbers

10 |

11 | How fast can you read data from the disk? How quickly from the network? You should be familiar with ballpark performance figures of your components.

12 |

13 | | Storage | IOPS | MB/s (random) | MB/s (sequential) |

14 | |------------------------|-------------|---------------|-------------------|

15 | | Mechanical hard drives | 100 | 10 | 50 |