├── .circleci

└── config.yml

├── .gitignore

├── README.md

├── common

└── provision.sh

├── current_architecture.png

├── dev-requirements.txt

├── get.py

├── image-builder

├── docker

│ ├── README.md

│ ├── packerfile.json

│ └── provision.sh

├── packman

│ ├── README.md

│ ├── packerfile.json

│ └── provision.sh

└── quickstart-vagrantbox

│ ├── README.md

│ ├── cloudify-hpcloud

│ └── Vagrantfile

│ ├── keys

│ └── insecure_private_key

│ ├── nightly-builder.py

│ ├── nightly.png

│ ├── packer_inputs.json

│ ├── packerfile.json

│ ├── provision

│ ├── Vagrantfile

│ ├── cleanup.sh

│ ├── common.sh

│ ├── influxdb_monkeypatch.sh

│ ├── install_ga.sh

│ └── prepare_nightly.sh

│ ├── settings.py

│ ├── templates

│ ├── box_Vagrantfile.template

│ ├── extlinux.conf.template

│ └── publish_Vagrantfile.template

│ └── userdata

│ └── add_vagrant_user.sh

├── offline-configuration

├── .pydistutils

├── bandersnatch.conf

├── nginx.conf

├── pip.conf

└── provision.sh

├── package-configuration

├── .gitignore

├── debian-agent

│ ├── debian-agent-disable-requiretty.sh

│ ├── debian-celeryd-cloudify.conf.template

│ └── debian-celeryd-cloudify.init.template

├── linux-cli

│ ├── get-cloudify.py

│ ├── test_cli_install.py

│ └── test_get_cloudify.py

├── manager

│ ├── conf

│ │ └── guni.conf.template

│ └── init

│ │ ├── amqpflux.conf.template

│ │ └── manager.conf.template

├── rabbitmq

│ └── init

│ │ └── rabbitmq-server.conf.template

├── riemann

│ └── init

│ │ └── riemann.conf.template

└── ubuntu-commercial-agent

│ ├── Ubuntu-agent-disable-requiretty.sh

│ ├── Ubuntu-celeryd-cloudify.conf.template

│ └── Ubuntu-celeryd-cloudify.init.template

├── package-scripts

└── .gitignore

├── package-templates

├── .gitignore

├── agent-centos-bootstrap.template

├── agent-debian-bootstrap.template

├── agent-ubuntu-bootstrap.template

├── agent-windows-bootstrap.template

├── cli-linux.template

├── manager-bootstrap.template

└── virtualenv-bootstrap.template

├── tox.ini

├── user_definitions-DEPRECATED.py

└── vagrant

├── .gitignore

├── agents

├── Vagrantfile

├── packager.yaml

├── provision.bat

├── provision.sh

├── runit.sh

└── windows

│ ├── packaging

│ ├── create_install_wizard.iss

│ └── source

│ │ ├── icons

│ │ └── Cloudify.ico

│ │ ├── license.txt

│ │ ├── pip

│ │ ├── get-pip.py

│ │ ├── pip-7.0.1-py2.py3-none-any.whl

│ │ └── setuptools-17.0-py2.py3-none-any.whl

│ │ ├── python

│ │ └── python.msi

│ │ └── virtualenv

│ │ └── virtualenv-13.0.1-py2.py3-none-any.whl

│ └── provision.sh

├── cli

├── Vagrantfile

├── provision.sh

└── windows

│ ├── packaging

│ ├── create_install_wizard.iss

│ ├── source

│ │ ├── icons

│ │ │ └── Cloudify.ico

│ │ ├── license.txt

│ │ ├── pip

│ │ │ ├── get-pip.py

│ │ │ ├── pip-6.1.1-py2.py3-none-any.whl

│ │ │ └── setuptools-15.2-py2.py3-none-any.whl

│ │ ├── python

│ │ │ └── python.msi

│ │ └── virtualenv

│ │ │ └── virtualenv-12.1.1-py2.py3-none-any.whl

│ └── update_wheel.py

│ └── provision.sh

└── docker_images

├── Vagrantfile

└── provision.sh

/.circleci/config.yml:

--------------------------------------------------------------------------------

1 | version: 2

2 |

3 | checkout:

4 |   post:

5 |     - >

6 |       if [ -n "$CI_PULL_REQUEST" ]; then

7 |         PR_ID=${CI_PULL_REQUEST##*/}

8 |         git fetch origin +refs/pull/$PR_ID/merge:

9 |         git checkout -qf FETCH_HEAD

10 |       fi

11 |

12 | defaults:

13 |   - &tox_defaults

14 |     docker:

15 |       - image: circleci/python:2.7

16 |

17 |     steps:

18 |       - checkout

19 |       - run:

20 |           name: Install tox

21 |           command: sudo pip install tox

22 |       - run:

23 |           name: Run tox of specific environment

24 |           command: python -m tox -e $DO_ENV

25 |

26 | jobs:

27 |   flake8:

28 |     <<: *tox_defaults

29 |     environment:

30 |       DO_ENV: flake8

31 |

32 |   test:

33 |     <<: *tox_defaults

34 |     environment:

35 |       DO_ENV: py27

36 |

37 | workflows:

38 |   version: 2

39 |

40 |   build_and_test:

41 |     jobs:

42 |       - flake8

43 |       - test

44 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | *.py[cod]

2 | *.pyc

3 | *.iml

4 |

5 | # C extensions

6 | *.so

7 |

8 | # Packages

9 | *.egg

10 | *.egg-info

11 | *.deb

12 | *.iml

13 | .idea

14 | dist

15 | build

16 | eggs

17 | parts

18 | bin

19 | var

20 | sdist

21 | develop-eggs

22 | .installed.cfg

23 | lib

24 | lib64

25 | __pycache__

26 |

27 | # Installer logs

28 | pip-log.txt

29 |

30 | # Unit test / coverage reports

31 | .coverage

32 | .tox

33 | nosetests.xml

34 |

35 | # Translations

36 | *.mo

37 |

38 | # Mr Developer

39 | .mr.developer.cfg

40 | .project

41 | .pydevproject

42 |

43 | .vagrant/

44 | *COMMIT_MSG

45 |

46 | packager.log

47 |

48 | # QuickBuild

49 | .qbcache/

50 |

51 | # OS X

52 | .DS_Store

53 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | Cloudify-Packager

2 | =================

3 |

4 | * Master [](https://circleci.com/gh/cloudify-cosmo/cloudify-packager/tree/master)

5 |

6 | Cloudify's packager provides tools and configuration objects we use to build Cloudify's Management environments, agents and demo images.

7 |

8 | ### [Docker Images](http://www.docker.com)

9 |

10 | Please see [Bootstrapping using Docker](http://getcloudify.org/guide/3.1/installation-bootstrapping.html#bootstrapping-using-docker) for information on our transition from packages to container-based installations.

11 |

12 | To generate our [Dockerfile](https://github.com/cloudify-cosmo/cloudify-packager/raw/master/docker/Dockerfile.template) templates, we're using [Jocker](https://github.com/nir0s/jocker).

13 |

14 | ### Generate a custom Cloudify manager image

15 |

16 | * Clone the cloudify-packager repository from github:

17 | `git clone https://github.com/cloudify-cosmo/cloudify-packager.git`

18 |

19 | * Make your changes in [vars.py](https://github.com/cloudify-cosmo/cloudify-packager/blob/master/docker/vars.py)

20 |

21 | - For example:

22 |

23 | - Use a specific branch of Cloudify related [modules](https://github.com/cloudify-cosmo/cloudify-packager/blob/master/docker/vars.py#L123).

24 | For example, replace the `master` branch with `my-branch` in `cloudify_rest_client` module:

25 | `"cloudify_rest_client": "git+git://github.com/cloudify-cosmo/cloudify-rest-client.git@my-branch"`

26 | - Add system packages to be installed on the image.

27 | For example, add the package "my-package" to the manager's requirements list (the [reqs](https://github.com/cloudify-cosmo/cloudify-packager/blob/master/docker/vars.py#L119) list):

28 |

29 | ```

30 | "manager": {

31 | "service_name": "manager",

32 | "reqs": [

33 | "git",

34 | "python2.7",

35 | "my-package"

36 | ],

37 | ...

38 | }

39 | ```

40 |

41 | * Run the [build.sh](https://github.com/cloudify-cosmo/cloudify-packager/blob/master/docker/build.sh)

42 | script from the [docker folder](https://github.com/cloudify-cosmo/cloudify-packager/tree/master/docker):

43 | ```

44 | cd cloudify-packager/docker/

45 | . build.sh

46 | ```

47 | - Create a tar file from the generated image:

48 | {% highlight bash %}

49 | sudo docker run -t --name=cloudifycommercial -d cloudify-commercial:latest /bin/bash

50 | sudo docker export cloudifycommercial > /tmp/cloudify-docker_commercial.tar

51 | {% endhighlight %}

52 |

53 | - Create a url from which you can download the tar file.

54 |

55 | * Set the `docker_url` property in your manager blueprint (see the `cloudify_packages` property in [CloudifyManager Type](http://getcloudify.org/guide/3.2/reference-types.html#cloudifymanager-type)) to your custom image URL, e.g.:

56 | ```

57 | cloudify_packages:

58 |   ...

59 |   docker:

60 |     docker_url: {url to download the custom Cloudify manager image tar file}

61 | ```

62 |

63 | * Run cfy [bootstrap](http://getcloudify.org/guide/3.1/installation-bootstrapping.html) using your manager blueprint.
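
The two customizations described in the steps above (pinning a module to a branch and adding a system package) amount to small edits of a dictionary. The following is a minimal sketch, assuming a simplified stand-in for the structures in `vars.py`; the names `MODULES`, `PACKAGES`, `pin_branch` and `add_requirement` are hypothetical and are not taken from the repository.

```python
import copy

# Hypothetical, simplified stand-ins for the structures in docker/vars.py.
MODULES = {
    "cloudify_rest_client":
        "git+git://github.com/cloudify-cosmo/cloudify-rest-client.git@master",
}
PACKAGES = {
    "manager": {
        "service_name": "manager",
        "reqs": ["git", "python2.7"],
    },
}


def pin_branch(modules, name, branch):
    """Return a copy of modules with `name` pointing at `branch`."""
    updated = dict(modules)
    # Everything before the last "@" is the repository URL.
    base_url = updated[name].rsplit("@", 1)[0]
    updated[name] = "{0}@{1}".format(base_url, branch)
    return updated


def add_requirement(packages, component, package_name):
    """Return a copy of packages with package_name appended to reqs."""
    updated = copy.deepcopy(packages)
    reqs = updated[component]["reqs"]
    if package_name not in reqs:
        reqs.append(package_name)
    return updated


modules = pin_branch(MODULES, "cloudify_rest_client", "my-branch")
packages = add_requirement(PACKAGES, "manager", "my-package")
```

Both helpers return copies, so the original definitions stay untouched and the change can be compared against them before building the image.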

64 |

65 |

66 | ### [packman](http://packman.readthedocs.org) configuration

67 |

68 | Package based provisioning will be deprecated in Cloudify 3.2!

69 |

70 | Packman is used to generate Cloudify's packages.

71 | This repository contains packman's configuration for creating the packages.

72 |

73 | #### package-configuration

74 |

75 | The package-configuration folder contains the init scripts and configuration files for Cloudify's management environment components.

76 |

77 | #### package-templates

78 |

79 | The package-templates folder contains the bootstrap scripts that are used to install Cloudify's management environment.

80 |

81 | #### packages.py

82 |

83 | The packages.py file is the base packman configuration file containing the configuration of the entire stack (including agents).

84 |

85 | ### [Vagrant](http://www.vagrantup.com)

86 |

87 | Cloudify's packages are created using Vagrant VMs (currently on AWS).

88 |

89 | The vagrant folder contains the Vagrant configuration for the different components that are generated using packman. Each package is built as follows:

90 |

91 | - A Vagrant VM is initialized.

92 | - Packman is installed on the machine alongside its requirements.

93 | - If a virtualenv is required, it is created and the relevant modules are installed in it.

94 | - Packman is used to create the environment into which the components are retrieved.

95 | - Packman is used to create the package.

96 |

97 | NOTE: the Windows Agent Vagrantfile uses a premade image already containing the basic requirements for creating the Windows agent.

98 |

99 | #### image-builder

100 |

101 | Creates a Vagrant box (using Virtualbox, AWS or HPCloud) with the Cloudify Manager installed on it.

102 |

--------------------------------------------------------------------------------

/common/provision.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | function print_params() {

4 |

5 | echo "## print common parameters"

6 |

7 | arr[0]="VERSION=$VERSION"

8 | arr[1]="PRERELEASE=$PRERELEASE"

9 | arr[2]="CORE_BRANCH=$CORE_BRANCH"

10 | arr[3]="CORE_TAG_NAME=$CORE_TAG_NAME"

11 | echo ${arr[@]}

12 | }

13 |

14 | function install_common_prereqs () {

15 |

16 | echo "## install common prerequisites"

17 | if which yum >> /dev/null; then

18 | sudo yum -y install openssl curl

19 | SUDO="sudo"

20 | # Setting this for Centos only, as it seems to break otherwise on 6.5

21 | CURL_OPTIONS="-1"

22 | elif which apt-get >> /dev/null; then

23 | sudo apt-get update &&

24 | sudo apt-get -y install openssl libssl-dev

25 | SUDO="sudo"

26 | if [ "`lsb_release -r -s`" == "16.04" ];then

27 | sudo apt-get -y install python

28 | fi

29 | elif [[ "$OSTYPE" == "darwin"* ]]; then

30 | echo "Installing on OSX"

31 | else

32 | echo 'Probably windows machine'

33 | fi

34 |

35 | curl $CURL_OPTIONS "https://bootstrap.pypa.io/2.6/get-pip.py" -o "get-pip.py" &&

36 | $SUDO python get-pip.py pip==9.0.1 &&

37 | $SUDO pip install wheel==0.29.0 &&

38 | $SUDO pip install setuptools==36.8.0 &&

39 | $SUDO pip install awscli &&

40 | echo "## end of installing common prerequisites"

41 |

42 | }

43 |

44 | function create_md5() {

45 |

46 | local file_ext=$1

47 | echo "## create md5"

48 | if [[ "$OSTYPE" == "darwin"* ]]; then

49 | md5cmd="md5 -r"

50 | else

51 | md5cmd="md5sum -t"

52 | fi

53 | md5sum=$($md5cmd *.$file_ext) &&

54 | echo $md5sum | $SUDO tee ${md5sum##* }.md5

55 | }

56 |

57 | function upload_to_s3() {

58 |

59 | local file_ext=$1

60 | file=$(basename $(find . -type f -name "*.$file_ext"))

61 |

62 | echo "## uploading https://$AWS_S3_BUCKET.s3.amazonaws.com/$AWS_S3_PATH/$file"

63 | export AWS_SECRET_ACCESS_KEY=${AWS_ACCESS_KEY} &&

64 | export AWS_ACCESS_KEY_ID=${AWS_ACCESS_KEY_ID} &&

65 | awscli="aws"

66 | if [[ "$OSTYPE" == "cygwin" ]]; then

67 | awscli="python `cygpath -w $(which aws)`"

68 | fi

69 | echo "$awscli s3 cp --acl public-read $file s3://$AWS_S3_BUCKET/$AWS_S3_PATH/"

70 | $awscli s3 cp --acl public-read $file s3://$AWS_S3_BUCKET/$AWS_S3_PATH/ &&

71 | echo "## successfully uploaded $file"

72 |

73 | }

74 |

75 | print_params

76 |

--------------------------------------------------------------------------------

/current_architecture.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cloudify-cosmo/cloudify-packager/0c2c2637fd1fe024e61c55d959ead28e9b9178af/current_architecture.png

--------------------------------------------------------------------------------

/dev-requirements.txt:

--------------------------------------------------------------------------------

1 | coverage==3.7.1

2 | nose

3 | nose-cov

4 | testfixtures

5 | testtools

6 | mock

7 | virtualenv

--------------------------------------------------------------------------------

/get.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | ########

3 | # Copyright (c) 2014 GigaSpaces Technologies Ltd. All rights reserved

4 | #

5 | # Licensed under the Apache License, Version 2.0 (the "License");

6 | # you may not use this file except in compliance with the License.

7 | # You may obtain a copy of the License at

8 | #

9 | # http://www.apache.org/licenses/LICENSE-2.0

10 | #

11 | # Unless required by applicable law or agreed to in writing, software

12 | # distributed under the License is distributed on an "AS IS" BASIS,

13 | # * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

14 | # * See the License for the specific language governing permissions and

15 | # * limitations under the License.

16 |

17 | from packman import logger

18 | from packman.packman import get_package_config as get_conf

19 | from packman import utils

20 | from packman import python

21 | from packman import retrieve

22 |

23 | lgr = logger.init()

24 |

25 |

26 | def _prepare(package):

27 |

28 |     common = utils.Handler()

29 |     common.rmdir(package['sources_path'])

30 |     common.mkdir('{0}/archives'.format(package['sources_path']))

31 |     common.mkdir(package['package_path'])

32 |

33 |

34 | def create_agent(package, download=False):

35 |     dl_handler = retrieve.Handler()

36 |     common = utils.Handler()

37 |     py_handler = python.Handler()

38 |     _prepare(package)

39 |     py_handler.make_venv(package['sources_path'])

40 |     if download:

41 |         tar_file = '{0}/{1}.tar.gz'.format(

42 |             package['sources_path'], package['name'])

43 |         for url in package['source_urls']:

44 |             dl_handler.download(url, file=tar_file)

45 |         common.untar(package['sources_path'], tar_file)

46 |     for module in package['modules']:

47 |         py_handler.pip(module, package['sources_path'])

48 |

49 |

50 | def get_ubuntu_precise_agent(download=False):

51 |     package = get_conf('Ubuntu-precise-agent')

52 |     create_agent(package, download)

53 |

54 |

55 | def get_ubuntu_trusty_agent(download=False):

56 |     package = get_conf('Ubuntu-trusty-agent')

57 |     create_agent(package, download)

58 |

59 |

60 | def get_centos_final_agent(download=False):

61 |     package = get_conf('centos-Final-agent')

62 |     create_agent(package, download)

63 |

64 |

65 | def get_debian_jessie_agent(download=False):

66 |     package = get_conf('debian-jessie-agent')

67 |     create_agent(package, download)

68 |

69 |

70 | def get_celery(download=False):

71 |     package = get_conf('celery')

72 |

73 |     dl_handler = retrieve.Handler()

74 |     common = utils.Handler()

75 |     py_handler = python.Handler()

76 |     _prepare(package)

77 |     py_handler.make_venv(package['sources_path'])

78 |     tar_file = '{0}/{1}.tar.gz'.format(

79 |         package['sources_path'], package['name'])

80 |     for url in package['source_urls']:

81 |         dl_handler.download(url, file=tar_file)

82 |     common.untar(package['sources_path'], tar_file)

83 |     if download:

84 |         for module in package['modules']:

85 |             py_handler.pip(module, package['sources_path'])

86 |

87 |

88 | def get_manager(download=False):

89 |     package = get_conf('manager')

90 |

91 |     dl_handler = retrieve.Handler()

92 |     common = utils.Handler()

93 |     py_handler = python.Handler()

94 |     _prepare(package)

95 |     py_handler.make_venv(package['sources_path'])

96 |     tar_file = '{0}/{1}.tar.gz'.format(

97 |         package['sources_path'], package['name'])

98 |     for url in package['source_urls']:

99 |         dl_handler.download(url, file=tar_file)

100 |     common.untar(package['sources_path'], tar_file)

101 |

102 |     common.mkdir(package['file_server_dir'])

103 |     common.cp(package['resources_path'], package['file_server_dir'])

104 |     if download:

105 |         for module in package['modules']:

106 |             py_handler.pip(module, package['sources_path'])

107 |

108 |

109 | def main():

110 |

111 |     lgr.debug('VALIDATED!')

112 |

113 |

114 | if __name__ == '__main__':

115 |     main()

116 |

--------------------------------------------------------------------------------

/image-builder/docker/README.md:

--------------------------------------------------------------------------------

1 | # Docker Image Builder

2 |

3 | This allows generating a Docker image using Packer to be used in our build processes.

4 | It is meant to be generated manually per request.

5 |

6 | Currently, the image will be provisioned with the following:

7 |

8 | * Docker (version not hardcoded)

9 | * docker-compose (Docker's API will be exposed for docker-compose to work)

10 | * boto

--------------------------------------------------------------------------------

/image-builder/docker/packerfile.json:

--------------------------------------------------------------------------------

1 | {

2 | "variables": {

3 | "aws_access_key": "{{env `AWS_ACCESS_KEY_ID`}}",

4 | "aws_secret_key": "{{env `AWS_ACCESS_KEY`}}",

5 | "aws_ubuntu_trusty_source_ami": "ami-f0b11187",

6 | "instance_type": "m3.medium",

7 | "region": "eu-west-1"

8 | },

9 | "builders": [

10 | {

11 | "name": "ubuntu_trusty_docker",

12 | "type": "amazon-ebs",

13 | "access_key": "{{user `aws_access_key`}}",

14 | "secret_key": "{{user `aws_secret_key`}}",

15 | "region": "{{user `region`}}",

16 | "source_ami": "{{user `aws_ubuntu_trusty_source_ami`}}",

17 | "instance_type": "{{user `instance_type`}}",

18 | "ssh_username": "ubuntu",

19 | "ami_name": "ubuntu-trusty-docker {{timestamp}}",

20 | "run_tags": {

21 | "Name": "ubuntu trusty docker image generator"

22 | }

23 | }

24 | ],

25 | "provisioners": [

26 | {

27 | "type": "shell",

28 | "script": "provision.sh"

29 | }

30 | ]

31 | }

32 |

--------------------------------------------------------------------------------

/image-builder/docker/provision.sh:

--------------------------------------------------------------------------------

1 | #! /bin/bash -e

2 |

3 | function install_docker

4 | {

5 | echo Installing Docker

6 | curl -sSL https://get.docker.com/ubuntu/ | sudo sh

7 | }

8 |

9 | function install_docker_compose

10 | {

11 | echo Installing docker-compose

12 | # docker-compose requires requests in version 2.2.1. will probably change.

13 | sudo pip install requests==2.2.1 --upgrade

14 | sudo pip install docker-compose==1.1.0

15 |

16 | echo Exposing docker api

17 | sudo /bin/sh -c 'echo DOCKER_OPTS=\"-H tcp://127.0.0.1:4243 -H unix:///var/run/docker.sock\" >> /etc/default/docker'

18 | sudo restart docker

19 | export DOCKER_HOST=tcp://localhost:4243

20 | }

21 |

22 | function install_pip

23 | {

24 | echo Installing pip

25 | curl --silent --show-error --retry 5 https://bootstrap.pypa.io/get-pip.py | sudo python

26 | }

27 |

28 | function install_boto

29 | {

30 | echo Installing boto

31 | sudo pip install boto==2.36.0

32 |

33 | }

34 |

35 | install_docker

36 | install_pip

37 | install_boto

38 | install_docker_compose

39 |

--------------------------------------------------------------------------------

/image-builder/packman/README.md:

--------------------------------------------------------------------------------

1 | # Packman Image Builder

2 |

3 | This allows generating a packman image using Packer to be used in our build processes.

4 | It is meant to be generated manually per request.

5 |

6 | We use packman to generate our Agent and CLI packages.

7 |

8 | The provisioning script supports Debian, Ubuntu and CentOS (and potentially, RHEL) based images.

9 | Currently, the image will be provisioned with the following:

10 |

11 | * Build prerequisites such as gcc, g++, python-dev, etc.

12 | * git

13 | * fpm (and consequently, Ruby 1.9.3), for packman to generate packages

14 | * Virtualenv and boto

15 | * packman (hardcoded version. should be upgraded if needed)

16 |

17 | The supplied packerfile currently generates images for:

18 |

19 | * debian jessie

20 | * Ubuntu precise

21 | * Ubuntu trusty

22 | * Centos 6.4

23 |

24 | Note that all images are ebs backed - a prerequisite of baking them using Packer.
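
Because the images are meant to be generated manually per request, it can help to build only the image that is needed. The sketch below assembles a `packer build` command line that uses Packer's `-only` option to limit the run to selected builders. The builder names are the ones in the supplied `packerfile.json`; the helper `packer_build_command` is hypothetical.

```python
# Builder names as they appear in the supplied packerfile.json.
BUILDERS = (
    "ubuntu_precise_packman",
    "ubuntu_trusty_packman",
    "debian_jessie_packman",
    "centos_64_packman",
)


def packer_build_command(only=None, template="packerfile.json"):
    """Build the argv for `packer build`, optionally limited to some builders."""
    command = ["packer", "build"]
    if only:
        unknown = sorted(set(only) - set(BUILDERS))
        if unknown:
            raise ValueError("unknown builders: {0}".format(", ".join(unknown)))
        # -only takes a comma-separated list of builder names.
        command.append("-only={0}".format(",".join(only)))
    command.append(template)
    return command


print(" ".join(packer_build_command(only=["ubuntu_trusty_packman"])))
```

Rejecting unknown names up front avoids starting a build that would match no builder.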

--------------------------------------------------------------------------------

/image-builder/packman/packerfile.json:

--------------------------------------------------------------------------------

1 | {

2 | "variables": {

3 | "aws_access_key": "{{env `AWS_ACCESS_KEY_ID`}}",

4 | "aws_secret_key": "{{env `AWS_ACCESS_KEY`}}",

5 | "aws_debian_jessie_source_ami": "ami-f9a9238e",

6 | "aws_ubuntu_trusty_source_ami": "ami-f0b11187",

7 | "aws_ubuntu_precise_source_ami": "ami-00d12677",

8 | "aws_ubuntu_precise_official_ami": "ami-73cb5a04",

9 | "aws_centos_64_source_ami": "ami-3b39ee4c",

10 | "instance_type": "m3.large",

11 | "region": "eu-west-1"

12 | },

13 | "builders": [

14 | {

15 | "name": "ubuntu_precise_packman",

16 | "type": "amazon-ebs",

17 | "access_key": "{{user `aws_access_key`}}",

18 | "secret_key": "{{user `aws_secret_key`}}",

19 | "region": "{{user `region`}}",

20 | "source_ami": "{{user `aws_ubuntu_precise_source_ami`}}",

21 | "instance_type": "{{user `instance_type`}}",

22 | "ssh_username": "ubuntu",

23 | "ami_name": "ubuntu-precise-packman {{timestamp}}",

24 | "run_tags": {

25 | "Name": "ubuntu precise packman image generator"

26 | }

27 | },

28 | {

29 | "name": "ubuntu_trusty_packman",

30 | "type": "amazon-ebs",

31 | "access_key": "{{user `aws_access_key`}}",

32 | "secret_key": "{{user `aws_secret_key`}}",

33 | "region": "{{user `region`}}",

34 | "source_ami": "{{user `aws_ubuntu_trusty_source_ami`}}",

35 | "instance_type": "{{user `instance_type`}}",

36 | "ssh_username": "ubuntu",

37 | "ami_name": "ubuntu-trusty-packman {{timestamp}}",

38 | "run_tags": {

39 | "Name": "ubuntu trusty packman image generator"

40 | }

41 | },

42 | {

43 | "name": "debian_jessie_packman",

44 | "type": "amazon-ebs",

45 | "access_key": "{{user `aws_access_key`}}",

46 | "secret_key": "{{user `aws_secret_key`}}",

47 | "region": "{{user `region`}}",

48 | "source_ami": "{{user `aws_debian_jessie_source_ami`}}",

49 | "instance_type": "{{user `instance_type`}}",

50 | "ssh_username": "admin",

51 | "ami_name": "debian-jessie-packman {{timestamp}}",

52 | "run_tags": {

53 | "Name": "debian jessie packman image generator"

54 | }

55 | },

56 | {

57 | "name": "centos_64_packman",

58 | "type": "amazon-ebs",

59 | "access_key": "{{user `aws_access_key`}}",

60 | "secret_key": "{{user `aws_secret_key`}}",

61 | "region": "{{user `region`}}",

62 | "source_ami": "{{user `aws_centos_64_source_ami`}}",

63 | "instance_type": "{{user `instance_type`}}",

64 | "ssh_username": "root",

65 | "ssh_private_key_file": "/home/nir0s/.ssh/aws/vagrant_centos_build.pem",

66 | "ami_name": "centos-64-packman {{timestamp}}",

67 | "run_tags": {

68 | "Name": "centos 64 packman image generator"

69 | }

70 | }

71 | ],

72 | "provisioners": [

73 | {

74 | "type": "shell",

75 | "script": "provision.sh"

76 | }

77 | ]

78 | }

79 |

--------------------------------------------------------------------------------

/image-builder/packman/provision.sh:

--------------------------------------------------------------------------------

1 | function install_prereqs

2 | {

3 | # some of these are not related to the image specifically but rather to processes that will be executed later

4 | # such as git, python-dev and make

5 | if which apt-get; then

6 | # ubuntu

7 | sudo apt-get -y update &&

8 | # the commented below might not be required. If the latest version of git is required, we'll have to enable them

9 | # precise - python-software-properties

10 | # trusty - software-properties-common

11 | # sudo apt-get install -y software-properties-common

12 | # sudo apt-get install -y python-software-properties

13 | # sudo add-apt-repository -y ppa:git-core/ppa &&

14 | sudo apt-get install -y curl python-dev git make gcc libyaml-dev zlib1g-dev g++

15 | elif which yum; then

16 | # centos/RHEL

17 | sudo yum -y update &&

18 | sudo yum install -y yum-downloadonly wget mlocate yum-utils &&

19 | sudo yum install -y python-devel libyaml-devel ruby rubygems ruby-devel make gcc git gcc-c++

20 | # this is required to build pyzmq under centos/RHEL

21 | sudo yum install -y zeromq-devel -c http://download.opensuse.org/repositories/home:/fengshuo:/zeromq/CentOS_CentOS-6/home:fengshuo:zeromq.repo

22 | else

23 | echo 'unsupported package manager, exiting'

24 | exit 1

25 | fi

26 | }

27 |

28 | function install_ruby

29 | {

30 | wget https://ftp.ruby-lang.org/pub/ruby/ruby-1.9.3-rc1.tar.gz --no-check-certificate

31 | # alt since ruby-lang's ftp blows

32 | # wget http://mirrors.ibiblio.org/ruby/1.9/ruby-1.9.3-rc1.tar.gz --no-check-certificate

33 | tar -xzvf ruby-1.9.3-rc1.tar.gz

34 | cd ruby-1.9.3-rc1

35 | ./configure --disable-install-doc

36 | make

37 | sudo make install

38 | cd ~

39 | }

40 |

41 | function install_fpm

42 | {

43 | sudo gem install fpm --no-ri --no-rdoc

44 | # if we want to download gems as a part of the packman run, this should be enabled

45 | # echo -e 'gem: --no-ri --no-rdoc\ninstall: --no-rdoc --no-ri\nupdate: --no-rdoc --no-ri' >> ~/.gemrc

46 | }

47 |

48 | function install_pip

49 | {

50 | curl --silent --show-error --retry 5 https://bootstrap.pypa.io/get-pip.py | sudo python

51 | }

52 |

53 | install_prereqs &&

54 | if ! which ruby; then

55 | install_ruby

56 | fi

57 | install_fpm &&

58 | install_pip &&

59 | sudo pip install "packman==0.5.0" &&

60 | sudo pip install "virtualenv==12.0.7" &&

61 | sudo pip install "boto==2.36.0"

62 |

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/README.md:

--------------------------------------------------------------------------------

1 | # Image Builder

2 | This directory contains configuration and script files for creating a Vagrant box with a working Cloudify Manager for a number of Vagrant providers.

3 | Supported scenarios:

4 |

5 | 1. Create Vagrant box locally by using Virtualbox (for Virtualbox Vagrant provider)

6 | 1. Create Vagrant box remotely by using AWS (for Virtualbox Vagrant provider)

7 | 1. Create Vagrant box remotely by using AWS (for AWS Vagrant provider)

8 | 1. Create Vagrant box locally by using HPCloud (for HPCloud Vagrant provider)

9 |

10 | # Directory Structure

11 | * `userdata` - contains userdata scripts for AWS machines

12 | * `templates` - templates files used for building

13 | * `provision` - provisioning scripts used by Packer & Vagrant

14 |   * common.sh - Main provisioning script (installing manager). Includes all the GIT repos (SHA)

15 |   * cleanup.sh - Post install for provisioning with AWS

16 |   * prepare_nightly.sh - All the needed changes to make Cloud image into Virtualbox image

17 | * `keys` - insecure keys for Vagrant

18 | * `cloudify-hpcloud` - Vagrant box creator for hpcloud

19 |

20 | # How to use this

21 | ## Pre Requirements

22 |

23 | 1. Python 2.7 (for scenario 2):

24 |    * [Fabric](http://www.fabfile.org/)

25 |    * [Boto](http://docs.pythonboto.org/en/latest/)

26 | 1. [Packer](https://www.packer.io/)

27 | 1. [Virtualbox](https://www.virtualbox.org/) (for scenarios 1 & 4 only)

28 | 1. [Vagrant](https://www.vagrantup.com/) (for scenario 4 only):

29 |    * [HPCloud Vagrant plugin](https://github.com/mohitsethi/vagrant-hp)

30 |

31 | ## Configuration files

32 | ### settings.py (scenario 2 only)

33 | This file contains settings used by `nightly-builder.py` script. You'll need to configure it in case you want to build nightly Virtualbox image on AWS.

34 | * `region` - The region where `nightly-builder` will launch its worker instance. This should be the same region as in Packer config.

35 | * `username` - The username to use when connecting to the worker instance. This depends on which instance you use. Usually `ubuntu` for Ubuntu AMIs.

36 | * `aws_s3_bucket` - S3 bucket name where nightlies should be uploaded to.

37 | * `aws_iam_group` - IAM group for worker instance (see below).

38 | * `factory_ami` - Base AMI for worker instance.

39 | * `instance_type` - Worker instance type (m3.medium, m3.large,...). Note that not all AMIs support all instance types.

40 | * `packer_var_file` - Packer var file path. This is the `packer_inputs.json` file, which is used by Packer.
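
As an illustration only, a `settings.py` with the options listed above might look like the sketch below. It assumes the settings are plain module-level variables, which the list does not confirm, and every value is a placeholder. The helper `region_matches_packer` is hypothetical; it checks the requirement that the region matches the one in the Packer config, assuming that config exposes a `region` variable.

```python
# Hypothetical settings.py contents; every value is a placeholder.
region = "eu-west-1"
username = "ubuntu"
aws_s3_bucket = "name-of-s3-bucket-here"
aws_iam_group = "name-of-iam-group-here"
factory_ami = "ami-xxxxxxxx"
instance_type = "m3.medium"
packer_var_file = "packer_inputs.json"


def region_matches_packer(packer_variables):
    """Check that the worker region equals the region in the Packer variables.

    `packer_variables` is assumed to be the parsed `variables` section of the
    Packer template, containing a `region` key.
    """
    return packer_variables.get("region") == region
```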

41 |

42 | ### packer_inputs.json

43 | This is the input file for Packer.

44 | * `cloudify_release` - Release version number.

45 | * `aws_source_ami` - Base AWS AMI.

46 | * `components_package_url` - Components package url

47 | * `core_package_url` - Core package url

48 | * `ui_package_url` - UI package url

49 | * `ubuntu_agent_url` - Ubuntu agent url

50 | * `centos_agent_url` - Centos agent url

51 | * `windows_agent_url` - Windows agent url
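
A small script can generate `packer_inputs.json` and fail early when a key is missing. This is a sketch under assumptions: the key names come from the list above, while `render_packer_inputs` and the placeholder values are hypothetical.

```python
import json

# Keys listed in the packer_inputs.json description above.
EXPECTED_KEYS = (
    "cloudify_release",
    "aws_source_ami",
    "components_package_url",
    "core_package_url",
    "ui_package_url",
    "ubuntu_agent_url",
    "centos_agent_url",
    "windows_agent_url",
)


def render_packer_inputs(values):
    """Return the JSON text for packer_inputs.json, requiring every key."""
    missing = [key for key in EXPECTED_KEYS if key not in values]
    if missing:
        raise KeyError("missing inputs: {0}".format(", ".join(missing)))
    selected = dict((key, values[key]) for key in EXPECTED_KEYS)
    return json.dumps(selected, indent=2, sort_keys=True)


# Placeholder values only; replace them with real release data.
example = dict((key, "<{0}>".format(key)) for key in EXPECTED_KEYS)
rendered = render_packer_inputs(example)
```

The rendered text can then be written to the path that `packer_var_file` points to.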

52 |

53 | ### packerfile.json

54 | Packer template file. It contains a number of user variables defined at the top of the file (`variables` section). `packer_inputs.json` is the inputs file for these variables. Note that some variables are not passed via that file:

55 | * `aws_access_key` - AWS key ID, taken from environment variable `AWS_ACCESS_KEY_ID`

56 | * `aws_secret_key` - AWS Secret key, taken from environment variable `AWS_ACCESS_KEY`

57 | * `instance_type` - Instance type for the provisioning machine

58 | * `virtualbox_source_image` - Source image (ovf) for when building local virtualbox image with Packer (without AWS)

59 | * `insecure_private_key` - Path of Vagrant's default insecure private key
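
Since the AWS credentials come from the environment rather than from `packer_inputs.json`, a pre-flight check can report unset variables before Packer is started. A minimal sketch; the variable names are the ones listed above, and the helper `missing_env` is hypothetical.

```python
import os

# Environment variables that the packerfile reads, per the list above.
REQUIRED_ENV = ("AWS_ACCESS_KEY_ID", "AWS_ACCESS_KEY")


def missing_env(environ=None):
    """Return the required variables that are unset or empty."""
    environ = os.environ if environ is None else environ
    return [name for name in REQUIRED_ENV if not environ.get(name)]


if __name__ == "__main__":
    missing = missing_env()
    if missing:
        print("Please set: {0}".format(", ".join(missing)))
```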

60 |

61 | ## AWS requirements

62 | * Valid credentials - Your user must be able to launch/terminate instances, add/remove security groups and private keys

63 | * AMI base image - This was tested with Ubuntu base image

64 | * S3 bucket - S3 bucket where final images will be stored

65 | * IAM role - An IAM group must be created for the worker instances with sufficient rights to upload into the S3 bucket.

66 |

67 | ### IAM role

68 | Example for role policy:

69 | ```json

70 | {

71 | "Version": "2012-10-17",

72 | "Statement": [

73 | {

74 | "Effect": "Allow",

75 | "Action": [

76 | "s3:List*",

77 | "s3:Put*"

78 | ],

79 | "Resource": [

80 | "arn:aws:s3:::name-of-s3-bucket-here",

81 | "arn:aws:s3:::name-of-s3-bucket-here/*"

82 | ]

83 | }

84 | ]

85 | }

86 | ```
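
The same policy can be generated for a specific bucket instead of editing the placeholder by hand. A sketch that reproduces the example policy above; the function name `s3_upload_policy` is hypothetical.

```python
import json


def s3_upload_policy(bucket_name):
    """Return the example role policy above for the given S3 bucket."""
    bucket_arn = "arn:aws:s3:::{0}".format(bucket_name)
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:List*", "s3:Put*"],
                # The bucket itself (for listing) and its objects (for uploads).
                "Resource": [bucket_arn, bucket_arn + "/*"],
            }
        ],
    }


print(json.dumps(s3_upload_policy("name-of-s3-bucket-here"), indent=2))
```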

87 |

88 | ## Running

89 |

90 | ### Create Vagrant box locally by using Virtualbox

91 | ```shell

92 | packer build \

93 |   -only=virtualbox \

94 |   -var-file=packer_inputs.json \

95 |   packerfile.json

96 | ```

97 |

98 | ### Create Vagrant box remotely by using AWS (for Virtualbox provider)

99 | ```shell

100 | python nightly-builder.py

101 | ```

102 |

103 | ### Create Vagrant box remotely by using AWS (for AWS provider)

104 | ```shell

105 | packer build \

106 |   -only=amazon \

107 |   -var-file=packer_inputs.json \

108 |   packerfile.json

109 | ```

110 |

111 | ### Create Vagrant box locally by using HPCloud

112 | ```shell

113 | cd cloudify-hpcloud

114 | vagrant up --provider hp

115 | ```
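
The four ways of running the build can be summarized as a lookup from scenario to command. In this sketch the scenario labels and the `command_for` helper are hypothetical; the commands and the `cloudify-hpcloud` working directory are copied from the sections above.

```python
# Scenario labels are hypothetical; commands come from the sections above.
PACKER_ARGS = ["-var-file=packer_inputs.json", "packerfile.json"]

SCENARIOS = {
    "virtualbox-local": (["packer", "build", "-only=virtualbox"] + PACKER_ARGS, "."),
    "virtualbox-on-aws": (["python", "nightly-builder.py"], "."),
    "aws": (["packer", "build", "-only=amazon"] + PACKER_ARGS, "."),
    "hpcloud": (["vagrant", "up", "--provider", "hp"], "cloudify-hpcloud"),
}


def command_for(scenario):
    """Return (argv, working_directory) for a scenario label."""
    try:
        return SCENARIOS[scenario]
    except KeyError:
        raise ValueError("unknown scenario: {0}".format(scenario))
```

The returned pair can be passed to `subprocess.check_call(argv, cwd=working_directory)` to run the chosen build.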

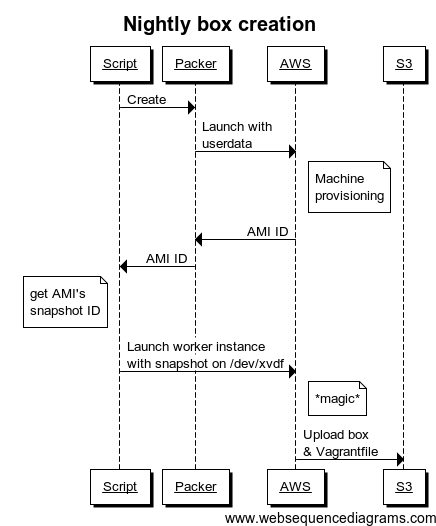

116 | ## How nightly image is built

117 | The nightly image process is more complicated than the rest. This is because we use AWS as the platform to build our images on. The following diagram explains the process:

118 |

119 |

120 |

121 |

122 |
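In `nightly-builder.py`, the nightly flow starts by running Packer with `-machine-readable`, reading the baked AMI ID from its output, and later publishing the converted box under a URL built from the region, bucket and box name. The sketch below isolates those two pure steps so they can be tried without AWS. The function names are hypothetical, and the sample output line in the usage note is illustrative rather than captured from a real build.

```python
import re

# Same pattern nightly-builder.py uses to spot the artifact id line.
ARTIFACT_ID_LINE = re.compile(r"^.+artifact.+id")


def extract_ami_id(lines):
    """Return the text after the last ':' of the last matching line, or ''."""
    matched = ""
    for line in lines:
        if ARTIFACT_ID_LINE.match(line):
            matched = line
    return matched.split(":")[-1].rstrip()


def box_url(region, bucket, box_name):
    """Build the public S3 URL of an uploaded box, as nightly-builder.py does."""
    return "https://s3-{0}.amazonaws.com/{1}/{2}.box".format(
        region, bucket, box_name)
```

Given an illustrative line such as `2,nightly_virtualbox_build,artifact,0,id,eu-west-1:ami-0123abcd`, `extract_ami_id` returns `ami-0123abcd`; an empty result means no artifact line was seen and the build should be treated as failed.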

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/cloudify-hpcloud/Vagrantfile:

--------------------------------------------------------------------------------

1 | ########

2 | # Copyright (c) 2014 GigaSpaces Technologies Ltd. All rights reserved

3 | #

4 | # Licensed under the Apache License, Version 2.0 (the "License");

5 | # you may not use this file except in compliance with the License.

6 | # You may obtain a copy of the License at

7 | #

8 | # http://www.apache.org/licenses/LICENSE-2.0

9 | #

10 | # Unless required by applicable law or agreed to in writing, software

11 | # distributed under the License is distributed on an "AS IS" BASIS,

12 | # * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | # * See the License for the specific language governing permissions and

14 | # * limitations under the License.

15 |

16 | # -*- mode: ruby -*-

17 | # vi: set ft=ruby :

18 |

19 | INSTALL_FROM_PYPI = ENV['INSTALL_FROM_PYPI']

20 |

21 | HP_ACCESS_KEY = ENV['HP_ACCESS_KEY']

22 | HP_SECRET_KEY = ENV['HP_SECRET_KEY']

23 | HP_TENANT_ID = ENV['HP_TENANT_ID']

24 | HP_KEYPAIR_NAME = ENV['HP_KEYPAIR_NAME']

25 | HP_PRIVATE_KEY_PATH = ENV['HP_PRIVATE_KEY_PATH']

26 | HP_SERVER_IMAGE = ENV['HP_SERVER_IMAGE'] || "Ubuntu Server 12.04.5 LTS (amd64 20140927) - Partner Image"

27 | HP_SSH_USERNAME = ENV['HP_SSH_USERNAME'] || "ubuntu"

28 | HP_AVAILABILITY_ZONE = ENV['HP_AVAILABILITY_ZONE'] || "us-east"

29 |

30 | SERVER_NAME = INSTALL_FROM_PYPI == "true" ? "install_cloudify_3_stable" : "install_cloudify_3_latest"

31 |

32 | Vagrant.configure('2') do |config|

33 | config.vm.provider :hp do |hp, override|

34 | unless Vagrant.has_plugin?("vagrant-hp")

35 | raise 'vagrant-hp plugin not installed!'

36 | end

37 | override.vm.box = "dummy_hp"

38 | override.vm.box_url = "https://github.com/mohitsethi/vagrant-hp/raw/master/dummy_hp.box"

39 |

40 | hp.access_key = "#{HP_ACCESS_KEY}"

41 | hp.secret_key = "#{HP_SECRET_KEY}"

42 | hp.flavor = "standard.medium"

43 | hp.tenant_id = "#{HP_TENANT_ID}"

44 | hp.server_name = "#{SERVER_NAME}"

45 | hp.image = "#{HP_SERVER_IMAGE}"

46 | hp.keypair_name = "#{HP_KEYPAIR_NAME}"

47 | hp.ssh_private_key_path = "#{HP_PRIVATE_KEY_PATH}"

48 | hp.ssh_username = "#{HP_SSH_USERNAME}"

49 | hp.availability_zone = "#{HP_AVAILABILITY_ZONE}"

50 | end

51 |

52 | config.vm.provision "shell" do |sh|

53 | sh.path = "../provision/common.sh"

54 | sh.args = "#{INSTALL_FROM_PYPI}"

55 | sh.privileged = false

56 | end

57 | end

58 |

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/keys/insecure_private_key:

--------------------------------------------------------------------------------

1 | -----BEGIN RSA PRIVATE KEY-----

2 | MIIEogIBAAKCAQEA6NF8iallvQVp22WDkTkyrtvp9eWW6A8YVr+kz4TjGYe7gHzI

3 | w+niNltGEFHzD8+v1I2YJ6oXevct1YeS0o9HZyN1Q9qgCgzUFtdOKLv6IedplqoP

4 | kcmF0aYet2PkEDo3MlTBckFXPITAMzF8dJSIFo9D8HfdOV0IAdx4O7PtixWKn5y2

5 | hMNG0zQPyUecp4pzC6kivAIhyfHilFR61RGL+GPXQ2MWZWFYbAGjyiYJnAmCP3NO

6 | Td0jMZEnDkbUvxhMmBYSdETk1rRgm+R4LOzFUGaHqHDLKLX+FIPKcF96hrucXzcW

7 | yLbIbEgE98OHlnVYCzRdK8jlqm8tehUc9c9WhQIBIwKCAQEA4iqWPJXtzZA68mKd

8 | ELs4jJsdyky+ewdZeNds5tjcnHU5zUYE25K+ffJED9qUWICcLZDc81TGWjHyAqD1

9 | Bw7XpgUwFgeUJwUlzQurAv+/ySnxiwuaGJfhFM1CaQHzfXphgVml+fZUvnJUTvzf

10 | TK2Lg6EdbUE9TarUlBf/xPfuEhMSlIE5keb/Zz3/LUlRg8yDqz5w+QWVJ4utnKnK

11 | iqwZN0mwpwU7YSyJhlT4YV1F3n4YjLswM5wJs2oqm0jssQu/BT0tyEXNDYBLEF4A

12 | sClaWuSJ2kjq7KhrrYXzagqhnSei9ODYFShJu8UWVec3Ihb5ZXlzO6vdNQ1J9Xsf

13 | 4m+2ywKBgQD6qFxx/Rv9CNN96l/4rb14HKirC2o/orApiHmHDsURs5rUKDx0f9iP

14 | cXN7S1uePXuJRK/5hsubaOCx3Owd2u9gD6Oq0CsMkE4CUSiJcYrMANtx54cGH7Rk

15 | EjFZxK8xAv1ldELEyxrFqkbE4BKd8QOt414qjvTGyAK+OLD3M2QdCQKBgQDtx8pN

16 | CAxR7yhHbIWT1AH66+XWN8bXq7l3RO/ukeaci98JfkbkxURZhtxV/HHuvUhnPLdX

17 | 3TwygPBYZFNo4pzVEhzWoTtnEtrFueKxyc3+LjZpuo+mBlQ6ORtfgkr9gBVphXZG

18 | YEzkCD3lVdl8L4cw9BVpKrJCs1c5taGjDgdInQKBgHm/fVvv96bJxc9x1tffXAcj

19 | 3OVdUN0UgXNCSaf/3A/phbeBQe9xS+3mpc4r6qvx+iy69mNBeNZ0xOitIjpjBo2+

20 | dBEjSBwLk5q5tJqHmy/jKMJL4n9ROlx93XS+njxgibTvU6Fp9w+NOFD/HvxB3Tcz

21 | 6+jJF85D5BNAG3DBMKBjAoGBAOAxZvgsKN+JuENXsST7F89Tck2iTcQIT8g5rwWC

22 | P9Vt74yboe2kDT531w8+egz7nAmRBKNM751U/95P9t88EDacDI/Z2OwnuFQHCPDF

23 | llYOUI+SpLJ6/vURRbHSnnn8a/XG+nzedGH5JGqEJNQsz+xT2axM0/W/CRknmGaJ

24 | kda/AoGANWrLCz708y7VYgAtW2Uf1DPOIYMdvo6fxIB5i9ZfISgcJ/bbCUkFrhoH

25 | +vq/5CIWxCPp0f85R4qxxQ5ihxJ0YDQT9Jpx4TMss4PSavPaBH3RXow5Ohe+bYoQ

26 | NE5OgEXk2wVfZczCZpigBKbKZHNYcelXtTt/nP3rsCuGcM4h53s=

27 | -----END RSA PRIVATE KEY-----

28 |

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/nightly-builder.py:

--------------------------------------------------------------------------------

1 | from __future__ import print_function

2 | import os

3 | import re

4 | import string

5 | import random

6 | from time import sleep, strftime

7 | from string import Template

8 | from tempfile import gettempdir

9 | from StringIO import StringIO

10 | from subprocess import Popen, PIPE

11 |

12 | import boto.ec2

13 | from boto.ec2 import blockdevicemapping as bdm

14 | from fabric.api import env, run, sudo, execute, put

15 |

16 | from settings import settings

17 |

18 | RESOURCES = []

19 |

20 |

21 | def main():

22 | print('Starting nightly build: {}'.format(strftime("%Y-%m-%d %H:%M:%S")))

23 | print('Opening connection..')

24 | access_key = os.environ.get('AWS_ACCESS_KEY_ID')

25 | secret_key = os.environ.get('AWS_ACCESS_KEY')

26 | conn = boto.ec2.connect_to_region(settings['region'],

27 | aws_access_key_id=access_key,

28 | aws_secret_access_key=secret_key)

29 | RESOURCES.append(conn)

30 |

31 | print('Running Packer..')

32 | baked_ami_id = run_packer()

33 | baked_ami = conn.get_image(baked_ami_id)

34 | RESOURCES.append(baked_ami)

35 |

36 | baked_snap = baked_ami.block_device_mapping['/dev/sda1'].snapshot_id

37 |

38 | print('Launching worker machine..')

39 | mapping = bdm.BlockDeviceMapping()

40 | mapping['/dev/sda1'] = bdm.BlockDeviceType(size=10,

41 | volume_type='gp2',

42 | delete_on_termination=True)

43 | mapping['/dev/sdf'] = bdm.BlockDeviceType(snapshot_id=baked_snap,

44 | volume_type='gp2',

45 | delete_on_termination=True)

46 |

47 | kp_name = random_generator()

48 | kp = conn.create_key_pair(kp_name)

49 | kp.save(gettempdir())

50 | print('Keypair created: {}'.format(kp_name))

51 |

52 | sg_name = random_generator()

53 | sg = conn.create_security_group(sg_name, 'vagrant nightly')

54 | sg.authorize(ip_protocol='tcp',

55 | from_port=22,

56 | to_port=22,

57 | cidr_ip='0.0.0.0/0')

58 | print('Security Group created: {}'.format(sg_name))

59 |

60 | reserv = conn.run_instances(

61 | image_id=settings['factory_ami'],

62 | key_name=kp_name,

63 | instance_type=settings['instance_type'],

64 | security_groups=[sg],

65 | block_device_map=mapping,

66 | instance_profile_name=settings['aws_iam_group'])

67 |

68 | factory_instance = reserv.instances[0]

69 | RESOURCES.append(factory_instance)

70 | RESOURCES.append(kp)

71 | RESOURCES.append(sg)

72 |

73 | env.key_filename = os.path.join(gettempdir(), '{}.pem'.format(kp_name))

74 | env.timeout = 10

75 | env.connection_attempts = 12

76 |

77 | while factory_instance.state != 'running':

78 | sleep(5)

79 | factory_instance.update()

80 | print('machine state: {}'.format(factory_instance.state))

81 |

82 | print('Executing script..')

83 | execute(do_work, host='{}@{}'.format(settings['username'],

84 | factory_instance.ip_address))

85 |

86 |

87 | def random_generator(size=8, chars=string.ascii_uppercase + string.digits):

88 | return ''.join(random.choice(chars) for _ in range(size))

89 |

90 |

91 | def run_packer():

92 | packer_cmd = 'packer build ' \

93 | '-machine-readable ' \

94 | '-only=nightly_virtualbox_build ' \

95 | '-var-file={} ' \

96 | 'packerfile.json'.format(settings['packer_var_file'])

97 | p = Popen(packer_cmd.split(), stdout=PIPE, stderr=PIPE)

98 |

99 | packer_output = ''

100 | while True:

101 | line = p.stdout.readline()

102 | if line == '':

103 | break

104 | else:

105 | print(line, end="")

106 | if re.match('^.+artifact.+id', line):

107 | packer_output = line

108 | return packer_output.split(':')[-1].rstrip()

109 |

110 |

111 | def do_work():

112 | sudo('apt-get update')

113 | sudo('apt-get install -y virtualbox kpartx extlinux qemu-utils python-pip')

114 | sudo('pip install awscli')

115 |

116 | sudo('mkdir -p /mnt/image')

117 | sudo('mount /dev/xvdf1 /mnt/image')

118 |

119 | run('dd if=/dev/zero of=image.raw bs=1M count=8192')

120 | sudo('losetup --find --show image.raw')

121 | sudo('parted -s -a optimal /dev/loop0 mklabel msdos'

122 | ' -- mkpart primary ext4 1 -1')

123 | sudo('parted -s /dev/loop0 set 1 boot on')

124 | sudo('kpartx -av /dev/loop0')

125 | sudo('mkfs.ext4 /dev/mapper/loop0p1')

126 | sudo('mkdir -p /mnt/raw')

127 | sudo('mount /dev/mapper/loop0p1 /mnt/raw')

128 |

129 | sudo('cp -a /mnt/image/* /mnt/raw')

130 |

131 | sudo('extlinux --install /mnt/raw/boot')

132 | sudo('dd if=/usr/lib/syslinux/mbr.bin conv=notrunc bs=440 count=1 '

133 | 'of=/dev/loop0')

134 | sudo('echo -e "DEFAULT cloudify\n'

135 | 'LABEL cloudify\n'

136 | 'LINUX /vmlinuz\n'

137 | 'APPEND root=/dev/disk/by-uuid/'

138 | '`sudo blkid -s UUID -o value /dev/mapper/loop0p1` ro\n'

139 | 'INITRD /initrd.img" | sudo -s tee /mnt/raw/boot/extlinux.conf')

140 |

141 | sudo('umount /mnt/raw')

142 | sudo('kpartx -d /dev/loop0')

143 | sudo('losetup --detach /dev/loop0')

144 |

145 | run('qemu-img convert -f raw -O vmdk image.raw image.vmdk')

146 | run('rm image.raw')

147 |

148 | run('mkdir output')

149 | run('VBoxManage createvm --name cloudify --ostype Ubuntu_64 --register')

150 | run('VBoxManage storagectl cloudify '

151 | '--name SATA '

152 | '--add sata '

153 | '--sataportcount 1 '

154 | '--hostiocache on '

155 | '--bootable on')

156 | run('VBoxManage storageattach cloudify '

157 | '--storagectl SATA '

158 | '--port 0 '

159 | '--type hdd '

160 | '--medium image.vmdk')

161 | run('VBoxManage modifyvm cloudify '

162 | '--memory 2048 '

163 | '--cpus 2 '

164 | '--vram 12 '

165 | '--ioapic on '

166 | '--rtcuseutc on '

167 | '--pae off '

168 | '--boot1 disk '

169 | '--boot2 none '

170 | '--boot3 none '

171 | '--boot4 none ')

172 | run('VBoxManage export cloudify --output output/box.ovf')

173 |

174 | run('echo "Vagrant::Config.run do |config|" > output/Vagrantfile')

175 | run('echo " config.vm.base_mac = `VBoxManage showvminfo cloudify '

176 | '--machinereadable | grep macaddress1 | cut -d"=" -f2`"'

177 | ' >> output/Vagrantfile')

178 | run('echo -e "end\n\n" >> output/Vagrantfile')

179 | run('echo \'include_vagrantfile = File.expand_path'

180 | '("../include/_Vagrantfile", __FILE__)\' >> output/Vagrantfile')

181 | run('echo "load include_vagrantfile if File.exist?'

182 | '(include_vagrantfile)" >> output/Vagrantfile')

183 | run('echo \'{ "provider": "virtualbox" }\' > output/metadata.json')

184 | run('tar -cvf cloudify.box -C output/ .')

185 |

186 | box_name = 'cloudify_{}'.format(strftime('%y%m%d-%H%M'))

187 | box_url = 'https://s3-{0}.amazonaws.com/{1}/{2}.box'.format(

188 | settings['region'], settings['aws_s3_bucket'], box_name

189 | )

190 | run('aws s3 cp '

191 | 'cloudify.box s3://{}/{}.box'.format(settings['aws_s3_bucket'],

192 | box_name))

193 | with open('templates/publish_Vagrantfile.template') as f:

194 | template = Template(f.read())

195 | vfile = StringIO()

196 | vfile.write(template.substitute(BOX_NAME=box_name,

197 | BOX_URL=box_url))

198 | put(vfile, 'publish_Vagrantfile')

199 | run('aws s3 cp publish_Vagrantfile s3://{}/{}'.format(

200 | settings['aws_s3_bucket'], 'Vagrantfile'))

201 |

202 |

203 | def cleanup():

204 | print('cleaning up..')

205 | for item in RESOURCES:

206 | if type(item) == boto.ec2.image.Image:

207 | item.deregister()

208 | print('{} deregistered'.format(item))

209 | elif type(item) == boto.ec2.instance.Instance:

210 | item.terminate()

211 | while item.state != 'terminated':

212 | sleep(5)

213 | item.update()

214 | print('{} terminated'.format(item))

215 | elif type(item) == boto.ec2.connection.EC2Connection:

216 | item.close()

217 | print('{} closed'.format(item))

218 | elif (type(item) == boto.ec2.securitygroup.SecurityGroup or

219 | type(item) == boto.ec2.keypair.KeyPair):

220 | item.delete()

221 | print('{} deleted'.format(item))

222 | else:

223 | print('{} not cleared'.format(item))

224 |

225 |

226 | try:

227 | main()

228 | finally:

229 | cleanup()

230 | print('finished build: {}'.format(strftime("%Y-%m-%d %H:%M:%S")))

231 |

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/nightly.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cloudify-cosmo/cloudify-packager/0c2c2637fd1fe024e61c55d959ead28e9b9178af/image-builder/quickstart-vagrantbox/nightly.png

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/packer_inputs.json:

--------------------------------------------------------------------------------

1 | {

2 | "aws_source_ami": "ami-234ecc54"

3 | }

4 |

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/packerfile.json:

--------------------------------------------------------------------------------

1 | {

2 | "variables": {

3 | "aws_access_key": "{{env `AWS_ACCESS_KEY_ID`}}",

4 | "aws_secret_key": "{{env `AWS_ACCESS_KEY`}}",

5 | "aws_source_ami": "",

6 | "instance_type": "m3.large",

7 | "virtualbox_source_image": "",

8 | "insecure_private_key": "./keys/insecure_private_key"

9 | },

10 | "builders": [

11 | {

12 | "name": "virtualbox",

13 | "type": "virtualbox-ovf",

14 | "source_path": "{{user `virtualbox_source_image`}}",

15 | "vm_name": "cloudify",

16 | "ssh_username": "vagrant",

17 | "ssh_key_path": "{{user `insecure_private_key`}}",

18 | "ssh_wait_timeout": "2m",

19 | "shutdown_command": "sudo -S shutdown -P now",

20 | "vboxmanage": [

21 | ["modifyvm", "{{.Name}}", "--memory", "2048"],

22 | ["modifyvm", "{{.Name}}", "--cpus", "2"],

23 | ["modifyvm", "{{.Name}}", "--natdnshostresolver1", "on"]

24 | ],

25 | "headless": true

26 | },

27 | {

28 | "name": "nightly_virtualbox_build",

29 | "type": "amazon-ebs",

30 | "access_key": "{{user `aws_access_key`}}",

31 | "secret_key": "{{user `aws_secret_key`}}",

32 | "ssh_private_key_file": "{{user `insecure_private_key`}}",

33 | "region": "eu-west-1",

34 | "source_ami": "{{user `aws_source_ami`}}",

35 | "instance_type": "{{user `instance_type`}}",

36 | "ssh_username": "vagrant",

37 | "user_data_file": "userdata/add_vagrant_user.sh",

38 | "ami_name": "cloudify nightly {{timestamp}}"

39 | },

40 | {

41 | "name": "amazon",

42 | "type": "amazon-ebs",

43 | "access_key": "{{user `aws_access_key`}}",

44 | "secret_key": "{{user `aws_secret_key`}}",

45 | "region": "eu-west-1",

46 | "source_ami": "{{user `aws_source_ami`}}",

47 | "instance_type": "{{user `instance_type`}}",

48 | "ssh_username": "ubuntu",

49 | "ami_name": "cloudify {{timestamp}}"

50 | }

51 | ],

52 | "provisioners": [

53 | {

54 | "type": "shell",

55 | "script": "provision/prepare_nightly.sh",

56 | "only": ["nightly_virtualbox_build"]

57 | },

58 | {

59 | "type": "shell",

60 | "script": "provision/common.sh"

61 | },

62 | {

63 | "type": "shell",

64 | "inline": ["sudo reboot"],

65 | "only": ["nightly_virtualbox_build"]

66 | },

67 | {

68 | "type": "shell",

69 | "script": "provision/install_ga.sh",

70 | "only": ["nightly_virtualbox_build"]

71 | },

72 | {

73 | "type": "shell",

74 | "script": "provision/influxdb_monkeypatch.sh",

75 | "only": ["nightly_virtualbox_build"]

76 | },

77 | {

78 | "type": "shell",

79 | "script": "provision/cleanup.sh",

80 | "only": ["nightly_virtualbox_build"]

81 | }

82 | ],

83 | "post-processors": [

84 | {

85 | "type": "vagrant",

86 | "only": ["virtualbox"],

87 | "output": "cloudify_{{.Provider}}.box"

88 | }

89 | ]

90 | }

91 |

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/provision/Vagrantfile:

--------------------------------------------------------------------------------

1 | ########

2 | # Copyright (c) 2014 GigaSpaces Technologies Ltd. All rights reserved

3 | #

4 | # Licensed under the Apache License, Version 2.0 (the "License");

5 | # you may not use this file except in compliance with the License.

6 | # You may obtain a copy of the License at

7 | #

8 | # http://www.apache.org/licenses/LICENSE-2.0

9 | #

10 | # Unless required by applicable law or agreed to in writing, software

11 | # distributed under the License is distributed on an "AS IS" BASIS,

12 | # * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | # * See the License for the specific language governing permissions and

14 | # * limitations under the License.

15 |

16 | # -*- mode: ruby -*-

17 | # vi: set ft=ruby :

18 |

19 | AWS_ACCESS_KEY_ID = ENV['AWS_ACCESS_KEY_ID']

20 | AWS_ACCESS_KEY = ENV['AWS_ACCESS_KEY']

21 |

22 | UBUNTU_TRUSTY_BOX_NAME = 'ubuntu/trusty64'

23 |

24 | Vagrant.configure('2') do |config|

25 | config.vm.define :ubuntu_trusty_box do |local|

26 | local.vm.provider :virtualbox do |vb|

27 | vb.customize ['modifyvm', :id, '--memory', '4096']

28 | end

29 | local.vm.box = UBUNTU_TRUSTY_BOX_NAME

30 | local.vm.hostname = 'local'

31 | local.vm.network :private_network, ip: "10.10.1.10"

32 | local.vm.synced_folder "../../", "/cloudify-packager", create: true

33 | local.vm.provision "shell" do |s|

34 | s.path = "common.sh"

35 | s.privileged = false

36 | end

37 | end

38 | end

39 |

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/provision/cleanup.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | sudo apt-get purge -y build-essential

4 | sudo apt-get autoremove -y

5 | sudo apt-get clean -y

6 | sudo rm -rf /var/lib/apt/lists/*

7 |

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/provision/common.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # accepted arguments

4 | # $1 = true iff install from PYPI

5 |

6 | function set_username

7 | {

8 | USERNAME=$(id -u -n)

9 | if [ "$USERNAME" = "" ]; then

10 | echo "using default username"

11 | USERNAME="vagrant"

12 | fi

13 | echo "username is [$USERNAME]"

14 | }

15 |

16 | function install_prereqs

17 | {

18 | echo updating apt cache

19 | sudo apt-get -y update

20 | echo installing prerequisites

21 | sudo apt-get install -y curl vim git gcc python-dev

22 | }

23 |

24 | function install_pip

25 | {

26 | curl --silent --show-error --retry 5 https://bootstrap.pypa.io/get-pip.py | sudo python

27 | }

28 |

29 | function create_and_source_virtualenv

30 | {

31 | cd ~

32 | echo installing virtualenv

33 | sudo pip install virtualenv==1.11.4

34 | echo creating cloudify virtualenv

35 | virtualenv cloudify

36 | source cloudify/bin/activate

37 | }

38 |

39 | function install_cli

40 | {

41 | if [ "$INSTALL_FROM_PYPI" = "true" ]; then

42 | echo installing cli from pypi

43 | pip install cloudify

44 | else

45 | echo installing cli from github

46 | pip install git+https://github.com/cloudify-cosmo/cloudify-dsl-parser.git@$CORE_TAG_NAME

47 | pip install git+https://github.com/cloudify-cosmo/flask-securest.git@0.6

48 | pip install git+https://github.com/cloudify-cosmo/cloudify-rest-client.git@$CORE_TAG_NAME

49 | pip install git+https://github.com/cloudify-cosmo/cloudify-plugins-common.git@$CORE_TAG_NAME

50 | pip install git+https://github.com/cloudify-cosmo/cloudify-script-plugin.git@$PLUGINS_TAG_NAME

51 | pip install git+https://github.com/cloudify-cosmo/cloudify-cli.git@$CORE_TAG_NAME

52 | fi

53 | }

54 |

55 | function init_cfy_workdir

56 | {

57 | cd ~

58 | mkdir -p cloudify

59 | cd cloudify

60 | cfy init

61 | }

62 |

63 | function get_manager_blueprints

64 | {

65 | cd ~/cloudify

66 | echo "Retrieving Manager Blueprints"

67 | sudo curl -O http://cloudify-public-repositories.s3.amazonaws.com/cloudify-manager-blueprints/${CORE_TAG_NAME}/cloudify-manager-blueprints.tar.gz &&

68 | sudo tar -zxvf cloudify-manager-blueprints.tar.gz &&

69 | mv cloudify-manager-blueprints-*/ cloudify-manager-blueprints

70 | sudo rm cloudify-manager-blueprints.tar.gz

71 | }

72 |

73 | function generate_keys

74 | {

75 | # generate public/private key pair and add to authorized_keys

76 | ssh-keygen -t rsa -f ~/.ssh/id_rsa -q -N ''

77 | cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

78 |

79 | }

80 |

81 | function configure_manager_blueprint_inputs

82 | {

83 | # configure inputs

84 | cd ~/cloudify

85 | cp cloudify-manager-blueprints/simple-manager-blueprint-inputs.yaml inputs.yaml

86 | sed -i "s|public_ip: ''|public_ip: \'127.0.0.1\'|g" inputs.yaml

87 | sed -i "s|private_ip: ''|private_ip: \'127.0.0.1\'|g" inputs.yaml

88 | sed -i "s|ssh_user: ''|ssh_user: \'${USERNAME}\'|g" inputs.yaml

89 | sed -i "s|ssh_key_filename: ''|ssh_key_filename: \'~/.ssh/id_rsa\'|g" inputs.yaml

90 | # configure manager blueprint

91 | sudo sed -i "s|/cloudify-docker_3|/cloudify-docker-commercial_3|g" cloudify-manager-blueprints/simple-manager-blueprint.yaml

92 | }

93 |

94 | function bootstrap

95 | {

96 | cd ~/cloudify

97 | echo "bootstrapping..."

98 | # bootstrap the manager locally

99 | cfy bootstrap -v -p cloudify-manager-blueprints/simple-manager-blueprint.yaml -i inputs.yaml --install-plugins

100 | if [ "$?" -ne "0" ]; then

101 | echo "Bootstrap failed, stopping provision."

102 | exit 1

103 | fi

104 | echo "bootstrap done."

105 | }

106 |

107 | function create_blueprints_and_inputs_dir

108 | {

109 | mkdir -p ~/cloudify/blueprints/inputs

110 | }

111 |

112 | function configure_nodecellar_blueprint_inputs

113 | {

114 | echo '

115 | host_ip: 10.10.1.10

116 | agent_user: vagrant

117 | agent_private_key_path: /root/.ssh/id_rsa

118 | ' >> ~/cloudify/blueprints/inputs/nodecellar-singlehost.yaml

119 | }

120 |

121 | function configure_shell_login

122 | {

123 | # source virtualenv on login

124 | echo "source /home/${USERNAME}/cloudify/bin/activate" >> /home/${USERNAME}/.bashrc

125 |

126 | # set shell login base dir

127 | echo "cd ~/cloudify" >> /home/${USERNAME}/.bashrc

128 | }

129 |

130 | INSTALL_FROM_PYPI=$1

131 | echo "Install from PyPI: ${INSTALL_FROM_PYPI}"

132 | CORE_TAG_NAME="master"

133 | PLUGINS_TAG_NAME="master"

134 |

135 | set_username

136 | install_prereqs

137 | install_pip

138 | create_and_source_virtualenv

139 | install_cli

140 | # activate_cfy_bash_completion  # not defined in this script

141 | init_cfy_workdir

142 | get_manager_blueprints

143 | generate_keys

144 | configure_manager_blueprint_inputs

145 | bootstrap

146 | create_blueprints_and_inputs_dir

147 | configure_nodecellar_blueprint_inputs

148 | configure_shell_login

149 |

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/provision/influxdb_monkeypatch.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash -e

2 |

3 | sudo apt-get update

4 | sudo apt-get install -y jq

5 |

6 | DEST=`sudo docker inspect cfy | jq -r '.[0].Volumes["/opt/influxdb/shared/data"]'`

7 | sudo find $DEST -type f -delete

8 | sudo docker exec cfy /usr/bin/pkill influxdb

9 | sleep 10

10 | curl --fail "http://localhost:8086/db?u=root&p=root" -d "{\"name\": \"cloudify\"}"

11 |

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/provision/install_ga.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # update apt cache

4 | sudo apt-get update

5 |

6 | # install guest additions

7 | sudo apt-get install -y dkms module-assistant

8 | sudo m-a -i prepare

9 | wget http://download.virtualbox.org/virtualbox/4.3.20/VBoxGuestAdditions_4.3.20.iso

10 | sudo mkdir -p /mnt/iso

11 | sudo mount -o loop VBoxGuestAdditions_4.3.20.iso /mnt/iso/

12 | sudo /mnt/iso/VBoxLinuxAdditions.run

13 | sudo umount /mnt/iso

14 | sudo rmdir /mnt/iso

15 | rm VBoxGuestAdditions_4.3.20.iso

16 |

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/provision/prepare_nightly.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # update apt cache

4 | sudo apt-get update

5 |

6 | # change partition uuid

7 | sudo apt-get install -y uuid

8 | sudo tune2fs /dev/xvda1 -U `uuid`

9 |

10 | # disable cloud-init datasource retries

11 | echo 'datasource_list: [ None ]' | sudo -s tee /etc/cloud/cloud.cfg.d/90_dpkg.cfg

12 | sudo dpkg-reconfigure -f noninteractive cloud-init

13 |

14 | # disable ttyS0

15 | echo manual | sudo tee /etc/init/ttyS0.override

16 |

17 | # change hostname

18 | echo cloudify | sudo -S tee /etc/hostname

19 | echo 127.0.0.1 cloudify | sudo -S tee -a /etc/hosts

20 |

21 | # change dns resolver to 8.8.8.8

22 | # this is done so docker won't start with AWS dns resolver

23 | echo nameserver 8.8.8.8 | sudo -S tee /etc/resolv.conf

24 |

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/settings.py:

--------------------------------------------------------------------------------

1 | settings = {

2 | "region": "eu-west-1",

3 | "username": "ubuntu",

4 | "aws_s3_bucket": "cloudify-nightly-vagrant",

5 | "aws_iam_group": "nightly-vagrant-build",

6 | "factory_ami": "ami-6ca1011b",

7 | "instance_type": "m3.large",

8 | "packer_var_file": "packer_inputs.json"

9 | }

10 |

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/templates/box_Vagrantfile.template:

--------------------------------------------------------------------------------

1 | Vagrant::Config.run do |config|

2 | config.vm.base_mac = $MACHINE_MAC `VBoxManage showvminfo cloudify --machinereadable | grep macaddress1 | cut -d"=" -f2`"'

3 | end

4 |

5 |

6 | include_vagrantfile = File.expand_path("../include/_Vagrantfile", __FILE__)

7 | load include_vagrantfile if File.exist?(include_vagrantfile)

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/templates/extlinux.conf.template:

--------------------------------------------------------------------------------

1 | DEFAULT cloudify

2 | LABEL cloudify

3 | LINUX /vmlinuz

4 | APPEND root=/dev/disk/by-uuid/$UUID `sudo blkid -s UUID -o value /dev/mapper/loop0p1`

5 | INITRD /initrd.img

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/templates/publish_Vagrantfile.template:

--------------------------------------------------------------------------------

1 | ########

2 | # Copyright (c) 2014 GigaSpaces Technologies Ltd. All rights reserved

3 | #

4 | # Licensed under the Apache License, Version 2.0 (the "License");

5 | # you may not use this file except in compliance with the License.

6 | # You may obtain a copy of the License at

7 | #

8 | # http://www.apache.org/licenses/LICENSE-2.0

9 | #

10 | # Unless required by applicable law or agreed to in writing, software

11 | # distributed under the License is distributed on an "AS IS" BASIS,

12 | # * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 | # * See the License for the specific language governing permissions and

14 | # * limitations under the License.

15 |

16 | # -*- mode: ruby -*-

17 | # vi: set ft=ruby :

18 |

19 | Vagrant.configure("2") do |config|

20 | config.vm.box = "$BOX_NAME"

21 | config.vm.box_url = "$BOX_URL"

22 | config.vm.network :private_network, ip: "10.10.1.10"

23 | end

24 |

--------------------------------------------------------------------------------

/image-builder/quickstart-vagrantbox/userdata/add_vagrant_user.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | useradd -m -s /bin/bash -U vagrant

4 | adduser vagrant admin

5 | echo 'vagrant ALL=(ALL) NOPASSWD:ALL' > /etc/sudoers.d/99-vagrant

6 | chmod 0440 /etc/sudoers.d/99-vagrant

7 | mkdir -p /home/vagrant/.ssh/

8 | wget https://raw.githubusercontent.com/mitchellh/vagrant/master/keys/vagrant.pub -O /home/vagrant/.ssh/authorized_keys

9 | chown -R vagrant:vagrant /home/vagrant/

10 | chmod 0700 /home/vagrant/.ssh/

11 | chmod 0600 /home/vagrant/.ssh/authorized_keys

--------------------------------------------------------------------------------

/offline-configuration/.pydistutils:

--------------------------------------------------------------------------------

1 | [easy_install]

2 | index-url = http:///simple/

--------------------------------------------------------------------------------

/offline-configuration/bandersnatch.conf:

--------------------------------------------------------------------------------

1 | # a configuration file for nginx to serve general files from

2 | # /srv/cloudify and PyPi mirror on /srv/pypi/web

3 | server {

4 | listen 80;

5 | location /simple/ {

6 | root /srv/pypi/web;

7 | }

8 | location /packages/ {

9 | root /srv/pypi/web;

10 | }

11 | location /cloudify/ {

12 | root /srv/;

13 | }

14 | autoindex on;

15 | charset utf-8;

16 | }

17 |

--------------------------------------------------------------------------------

/offline-configuration/nginx.conf:

--------------------------------------------------------------------------------

1 | user www-data;

2 | worker_processes 4;

3 | pid /var/run/nginx.pid;

4 |

5 | events {

6 | worker_connections 768;

7 | # multi_accept on;

8 | }

9 |

10 | http {

11 |

12 | ##

13 | # Basic Settings

14 | ##

15 |

16 | sendfile on;

17 | tcp_nopush on;

18 | tcp_nodelay on;

19 | keepalive_timeout 65;

20 | types_hash_max_size 2048;

21 | # server_tokens off;

22 |

23 | # server_names_hash_bucket_size 64;

24 | # server_name_in_redirect off;

25 |

26 | include /etc/nginx/mime.types;

27 | default_type application/octet-stream;

28 |

29 | ##

30 | # Logging Settings

31 | ##

32 |

33 | access_log /var/log/nginx/access.log;

34 | error_log /var/log/nginx/error.log;

35 |

36 | ##

37 | # Gzip Settings

38 | ##

39 |

40 | gzip on;

41 | gzip_disable "msie6";

42 |

43 | ##

44 | # Virtual Host Configs

45 | ##

46 |

47 | include /etc/nginx/conf.d/*.conf;

48 | include /etc/nginx/sites-enabled/bandersnatch.conf;

49 |

50 | }

--------------------------------------------------------------------------------

/offline-configuration/pip.conf:

--------------------------------------------------------------------------------

1 | [global]

2 | index-url = http:///simple/

--------------------------------------------------------------------------------

/offline-configuration/provision.sh:

--------------------------------------------------------------------------------

1 | # This script configures and starts a PyPi mirror and http-server for cloudify offline

2 | # Note that you should edit HOME_FOLDER and FILES_DIR variables before running this script

3 |

4 | HOME_FOLDER="/home/ubuntu"

5 |

6 | # Expected to contain all the needed files - nginx.conf, bandersnatch.conf etc

7 | FILES_DIR="/home/ubuntu"

8 |

9 | echo "Using $HOME_FOLDER as a home folder"

10 | echo "Using $FILES_DIR as a files folder"

11 | echo ' INSTALLING '

12 | echo '------------'

13 | cd "$HOME_FOLDER"

14 | sudo apt-get update -y

15 | echo "### Installing python-pip python-dev build-essential nginx"

16 | sudo apt-get install python-pip python-dev build-essential nginx -y

17 | sudo pip install --upgrade pip

18 | sudo pip install virtualenv

19 | sudo pip install --upgrade virtualenv

20 | virtualenv env

21 | source "$HOME_FOLDER/env/bin/activate"

22 | echo "### Installing bandersnatch"

23 | pip install -r https://bitbucket.org/pypa/bandersnatch/raw/stable/requirements.txt

24 | echo "### Configuring nginx"

25 | sudo mv /etc/nginx/nginx.conf /etc/nginx/nginx.conf.original # backup the original

26 | sudo cp "$FILES_DIR/nginx.conf" /etc/nginx/nginx.conf

27 | sudo cp "$FILES_DIR/bandersnatch.conf" /etc/nginx/sites-available/bandersnatch.conf

28 | sudo ln -s /etc/nginx/sites-available/bandersnatch.conf /etc/nginx/sites-enabled/bandersnatch.conf

29 | sudo mkdir /srv/cloudify

30 | echo "### Starting nginx"

31 | sudo nginx

32 |

33 | echo "Creating configuration file for bandersnatch"

34 | sudo bandersnatch mirror

35 |

36 | echo "Using nohup to download the PyPI mirror"

37 | nohup sudo bandersnatch mirror &

38 | PID=$!

39 | echo "nohup pid is $PID"

40 | echo "execute ps -p $PID to check if the download has finished"
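
The script ends by printing the PID of the background mirror job and asking the operator to check it by hand. A minimal sketch of the same check from Python, assuming a POSIX host; the helper name `is_running` is hypothetical and not part of this repository:

```python
import os


def is_running(pid: int) -> bool:
    """Return True if a process with this PID currently exists (POSIX only)."""
    try:
        # Signal 0 performs the existence and permission checks only;
        # no signal is actually delivered to the process.
        os.kill(pid, 0)
    except ProcessLookupError:
        return False  # no process with this PID
    except PermissionError:
        # The process exists but belongs to another user, which is the
        # likely case for a job started through sudo.
        return True
    return True


if __name__ == "__main__":
    # Demonstrated on the current interpreter; pass the PID printed by the
    # provisioning script instead to poll the mirror download.
    print(is_running(os.getpid()))
```

This only reports whether the process still exists; it does not say whether the mirror run succeeded, so the nohup output is still worth reading.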

--------------------------------------------------------------------------------

/package-configuration/.gitignore:

--------------------------------------------------------------------------------

1 | *.pyc

2 | *.deb

3 | debs/

--------------------------------------------------------------------------------

/package-configuration/debian-agent/debian-agent-disable-requiretty.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 | # now modify sudoers configuration to allow execution without tty

3 | grep -i ubuntu /proc/version > /dev/null

4 | if [ "$?" -eq "0" ]; then

5 | # ubuntu

6 | echo Running on Ubuntu

7 | if sudo grep -q -E '[^!]requiretty' /etc/sudoers; then

8 | echo creating sudoers user file

9 | echo "Defaults:`whoami` !requiretty" | sudo tee /etc/sudoers.d/`whoami` >/dev/null

10 | sudo chmod 0440 /etc/sudoers.d/`whoami`

11 | else

12 | echo No requiretty directive found, nothing to do

13 | fi

14 | else

15 | # other - modify sudoers file

16 | if [ ! -f "/etc/sudoers" ]; then

17 | error_exit 116 "Could not find sudoers file at expected location (/etc/sudoers)"

18 | fi

19 | echo Setting privileged mode

20 | sudo sed -i 's/^Defaults.*requiretty/#&/g' /etc/sudoers || error_exit_on_level $? 117 "Failed to edit sudoers file to disable requiretty directive" 1

21 | fi

22 |

--------------------------------------------------------------------------------

/package-configuration/debian-agent/debian-celeryd-cloudify.conf.template:

--------------------------------------------------------------------------------

1 | . {{ includes_file_path }}

2 | CELERY_BASE_DIR="{{ celery_base_dir }}"

3 |

4 | # replaces management__worker

5 | WORKER_MODIFIER="{{ worker_modifier }}"

6 |

7 | export BROKER_IP="{{ broker_ip }}"

8 | export MANAGEMENT_IP="{{ management_ip }}"

9 | export BROKER_URL="amqp://guest:guest@${BROKER_IP}:5672//"

10 | export MANAGER_REST_PORT="8101"

11 | export CELERY_WORK_DIR="${CELERY_BASE_DIR}/cloudify.${WORKER_MODIFIER}/work"

12 | export IS_MANAGEMENT_NODE="False"

13 | export AGENT_IP="{{ agent_ip }}"

14 | export VIRTUALENV="${CELERY_BASE_DIR}/cloudify.${WORKER_MODIFIER}/env"

15 | export MANAGER_FILE_SERVER_URL="http://${MANAGEMENT_IP}:53229"

16 | export MANAGER_FILE_SERVER_BLUEPRINTS_ROOT_URL="${MANAGER_FILE_SERVER_URL}/blueprints"

17 | export PATH="${VIRTUALENV}/bin:${PATH}"

18 | # enable running celery as root

19 | export C_FORCE_ROOT="true"

20 |

21 | CELERYD_MULTI="${VIRTUALENV}/bin/celeryd-multi"

22 | CELERYD_USER="{{ celery_user }}"

23 | CELERYD_GROUP="{{ celery_group }}"

24 | CELERY_TASK_SERIALIZER="json"

25 | CELERY_RESULT_SERIALIZER="json"

26 | CELERY_RESULT_BACKEND="$BROKER_URL"

27 | DEFAULT_PID_FILE="${CELERY_WORK_DIR}/celery.pid"

28 | DEFAULT_LOG_FILE="${CELERY_WORK_DIR}/celery%I.log"

29 | CELERYD_OPTS="-Ofair --events --loglevel=debug --app=cloudify --include=${INCLUDES} -Q ${WORKER_MODIFIER} --broker=${BROKER_URL} --hostname=${WORKER_MODIFIER} --autoscale={{ worker_autoscale }} --maxtasksperchild=10 --without-gossip --without-mingle"

30 |
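
The template above mixes two kinds of placeholders: `{{ name }}` fields filled in when the file is generated, and `${NAME}` shell variables expanded later when the init script sources it. The source does not show which engine renders the `{{ }}` fields (the syntax resembles Jinja2), so the following is only a standard-library sketch of that first step; the `render` helper and the sample values are hypothetical:

```python
import re

# Matches "{{ name }}" with optional inner whitespace. A shell expansion
# such as ${BROKER_IP} has single braces and is left untouched.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, values: dict) -> str:
    """Replace every {{ name }} field with values[name]; fail on unknown names."""

    def substitute(match):
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value supplied for placeholder {name!r}")
        return str(values[name])

    return PLACEHOLDER.sub(substitute, template)


if __name__ == "__main__":
    sample = (
        'export BROKER_IP="{{ broker_ip }}"\n'
        'export BROKER_URL="amqp://guest:guest@${BROKER_IP}:5672//"\n'
        'CELERYD_USER="{{ celery_user }}"'
    )
    # Hypothetical values, for illustration only.
    print(render(sample, {"broker_ip": "10.0.0.5", "celery_user": "ubuntu"}))
```

Raising on a missing name, rather than leaving the field blank, keeps a half-rendered configuration from reaching `/etc/default`.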

--------------------------------------------------------------------------------

/package-configuration/debian-agent/debian-celeryd-cloudify.init.template:

--------------------------------------------------------------------------------

1 | #!/bin/sh -e

2 | # ============================================

3 | # celeryd - Starts the Celery worker daemon.

4 | # ============================================

5 | #

6 | # :Usage: /etc/init.d/celeryd {start|stop|force-reload|restart|try-restart|status}

7 | # :Configuration file: /etc/default/celeryd

8 | #

9 | # See http://docs.celeryproject.org/en/latest/tutorials/daemonizing.html#generic-init-scripts

10 |

11 |

12 | ### BEGIN INIT INFO

13 | # Provides: celeryd

14 | # Required-Start: $network $local_fs $remote_fs

15 | # Required-Stop: $network $local_fs $remote_fs