├── .gitignore

├── README.md

└── aws-cloudformation

└── couchbase_server.template

/.gitignore:

--------------------------------------------------------------------------------

1 | *~

2 | \#*\#

3 | .\#*

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | [Gitter chat](https://gitter.im/couchbase/discuss?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge&utm_content=badge)

2 |

3 | # Couchbase Server under Docker + CoreOS

4 |

5 | Here are instructions for spinning up a Couchbase Server cluster running under CoreOS on AWS, provisioned with CloudFormation. You will end up with the following system:

6 |

7 |

8 |

9 | *Disclaimer*: this approach to running Couchbase Server and Sync Gateway is entirely **experimental** and you should do your own testing before running a production system.

10 |

11 | ## Launch CoreOS instances via AWS CloudFormation

12 |

13 | Click the "Launch Stack" button to launch your CoreOS instances via AWS CloudFormation:

14 |

15 | [Launch Stack](https://console.aws.amazon.com/cloudformation/home?region=us-east-1#cstack=sn%7ECouchbase-CoreOS%7Cturl%7Ehttp://tleyden-misc.s3.amazonaws.com/couchbase-coreos/sync_gateway.template)

16 |

17 | *NOTE: this is hardcoded to use the us-east-1 region, so if you need a different region, you should edit the URL accordingly*
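If you do need another region, a small Bash helper can rewrite the `region` query parameter of the Launch Stack URL. This is only a sketch: `launch_url_for_region` is a hypothetical name, and it changes nothing but the URL. The `couchbase_server.template` in this repo maps a CoreOS AMI only for `us-east-1` in its `RegionMap`, so a stack built from that template elsewhere would also need a `RegionMap` entry for the target region (the button itself points at a separately hosted `sync_gateway.template`, which may differ).

```bash
# Launch Stack URL copied from this README (us-east-1 is hardcoded in it)
LAUNCH_URL='https://console.aws.amazon.com/cloudformation/home?region=us-east-1#cstack=sn%7ECouchbase-CoreOS%7Cturl%7Ehttp://tleyden-misc.s3.amazonaws.com/couchbase-coreos/sync_gateway.template'

# Hypothetical helper: print the same URL with a different console region.
launch_url_for_region() {
  local region="$1"
  # Bash pattern substitution replaces the first (and only) "region=us-east-1"
  printf '%s\n' "${LAUNCH_URL/region=us-east-1/region=${region}}"
}

# Usage:
#   launch_url_for_region eu-west-1
```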

18 |

19 | Use the following parameters in the form:

20 |

21 | * **ClusterSize**: 3 nodes (default)

22 | * **DiscoveryURL**: grab a new token from https://discovery.etcd.io/new and paste it into the box.

23 | * **KeyPair**: the EC2 key pair you normally use to launch instances. In this guide we assume it is named `aws`, which corresponds to a private key file on your laptop called `aws.cer`.
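The same parameters can also be passed from the command line. The sketch below assumes the AWS CLI is installed and configured and that you run it from the root of this repo; it uses the bundled `aws-cloudformation/couchbase_server.template` rather than the template behind the Launch Stack button. The function name `launch_stack` and the `AWS` override variable (set `AWS=echo` for a dry run) are illustrative, not part of this project.

```bash
# Hypothetical helper: create the stack from the bundled template.
# Assumes a configured AWS CLI and that you run this from the repo root.
# Set AWS=echo to print the command instead of running it.
launch_stack() {
  local discovery_url="$1" key_pair="$2" cluster_size="${3:-3}"
  "${AWS:-aws}" cloudformation create-stack \
    --stack-name Couchbase-CoreOS \
    --template-body file://aws-cloudformation/couchbase_server.template \
    --parameters \
      "ParameterKey=ClusterSize,ParameterValue=${cluster_size}" \
      "ParameterKey=DiscoveryURL,ParameterValue=${discovery_url}" \
      "ParameterKey=KeyPair,ParameterValue=${key_pair}"
}

# Usage: fetch a fresh discovery token and use the "aws" key pair
#   launch_stack "$(curl -s https://discovery.etcd.io/new)" aws
```

A new discovery token is needed for every new cluster, which is why the usage line fetches one inline.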

24 |

25 | ### Wait until instances are up

26 |

27 |

28 |
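If you created the stack from the CLI, one way to block until CloudFormation reports success is the CLI's built-in waiter. This is a hedged sketch: `wait_for_stack` is a hypothetical helper and the stack name is assumed to be `Couchbase-CoreOS`, as in the Launch Stack URL. The stack can report completion while the instances are still booting CoreOS, so the sanity check further down is still worth running.

```bash
# Hypothetical helper: block until the stack reaches CREATE_COMPLETE.
# The CLI waiter exits non-zero if creation fails or the waiter times out.
# Set AWS=echo to print the command instead of running it.
wait_for_stack() {
  local stack_name="${1:-Couchbase-CoreOS}"
  "${AWS:-aws}" cloudformation wait stack-create-complete --stack-name "$stack_name"
}

# Usage:
#   wait_for_stack && echo "stack created"
```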

29 | ### ssh into a CoreOS instance

30 |

31 | Go to the EC2 Instances section of the AWS console and find the public IP of one of your newly launched CoreOS instances.
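In `couchbase_server.template`, the Auto Scaling group tags every instance with `Name` set to the stack name, so the public hostnames can also be listed with the AWS CLI. A sketch assuming the stack is named `Couchbase-CoreOS` and the CLI points at the stack's region (the `list_public_hosts` helper is hypothetical):

```bash
# Hypothetical helper: print the public DNS names of the stack's running instances.
# Relies on the Name=<stack name> tag set by the template's Auto Scaling group.
# Set AWS=echo to print the command instead of running it.
list_public_hosts() {
  local stack_name="${1:-Couchbase-CoreOS}"
  "${AWS:-aws}" ec2 describe-instances \
    --filters "Name=tag:Name,Values=${stack_name}" "Name=instance-state-name,Values=running" \
    --query 'Reservations[].Instances[].PublicDnsName' \
    --output text
}
```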

32 |

33 |

34 |

35 | Choose any one of them (it doesn't matter which) and ssh into it as the **core** user, using the key file from the previous step:

36 |

37 | ```

38 | $ ssh -i aws.cer -A core@ec2-54-83-80-161.compute-1.amazonaws.com

39 | ```

40 |

41 | ## Sanity check

42 |

43 | Let's make sure the CoreOS cluster is healthy first:

44 |

45 | ```

46 | $ fleetctl list-machines

47 | ```

48 |

49 | This should return a list of machines in the cluster, like this:

50 |

51 | ```

52 | MACHINE IP METADATA

53 | 03b08680... 10.33.185.16 -

54 | 209a8a2e... 10.164.175.9 -

55 | 25dd84b7... 10.13.180.194 -

56 | ```
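To check that every instance joined, you can count the rows in that output and compare the result against **ClusterSize**. A minimal sketch (the `count_machines` name is hypothetical; it only parses the text format shown above):

```bash
# Hypothetical helper: count machine rows in `fleetctl list-machines` output.
# Skips the "MACHINE IP METADATA" header and any blank lines.
count_machines() {
  awk '$1 != "MACHINE" && NF { n++ } END { print n + 0 }'
}

# Usage on the CoreOS instance (expect the ClusterSize you chose, e.g. 3):
#   fleetctl list-machines | count_machines
```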

57 |

58 | ## Launch cluster

59 |

60 |

61 | ```

62 | $ sudo docker run --net=host tleyden5iwx/couchbase-cluster-go:0.8.6 couchbase-fleet launch-cbs \

63 | --version latest \

64 | --num-nodes 3 \

65 | --userpass "user:passw0rd" \

66 | --docker-tag 0.8.6

67 | ```

68 |

69 | Where:

70 |

71 | * `--version`: Couchbase Server version; see [Docker Tags](https://registry.hub.docker.com/u/couchbase/server/tags) for a list of versions that can be used.

72 | * `--num-nodes`: number of Couchbase Server nodes to start.

73 | * `--userpass`: the username and password as a single string, delimited by a colon (`:`).

74 | * `--etcd-servers`: comma-separated list of etcd servers; omit it to connect to etcd running on localhost.

75 | * `--docker-tag`: if present, the Docker tag of the couchbase-cluster-go image to use in spawned containers; otherwise it defaults to `latest`.

76 |

77 | Replace `user:passw0rd` with a sensible username and password. It **must** be colon-separated, with no spaces, and the password must be at least 6 characters long.
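Those rules are easy to check before launching. The helper below is a hypothetical sketch that encodes only the rules stated here, plus a non-empty username (an assumption); couchbase-cluster-go may apply additional checks of its own.

```bash
# Hypothetical helper: validate a "user:password" string before passing it to --userpass.
validate_userpass() {
  local userpass="$1"
  # Reject any whitespace anywhere in the string
  if [[ "$userpass" =~ [[:space:]] ]]; then
    echo "userpass must not contain spaces" >&2
    return 1
  fi
  # Require a colon separating username and password
  if [[ "$userpass" != *:* ]]; then
    echo "userpass must look like user:password" >&2
    return 1
  fi
  local user="${userpass%%:*}"   # text before the first colon
  local pass="${userpass#*:}"    # text after the first colon
  if [[ -z "$user" ]]; then
    echo "username must not be empty" >&2
    return 1
  fi
  if (( ${#pass} < 6 )); then
    echo "password must be at least 6 characters" >&2
    return 1
  fi
  return 0
}

# Usage:
#   validate_userpass "user:passw0rd" && echo "ok"
```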

78 |

79 | After you kick it off, you can expect it to take approximately **10-20 minutes** to download the Docker images and bootstrap the cluster. Once it's finished, you should see the following log entry:

80 |

81 | ```

82 | Cluster is up!

83 | ```

84 |

85 | If you never see that message, compare your output against this [expected output](https://gist.github.com/tleyden/6b903e40cf87b5dd4ed8) and please file an [issue](https://github.com/couchbaselabs/couchbase-server-coreos/issues).

86 |

87 | ## Verify

88 |

89 | To check the status of your cluster, run:

90 |

91 | ```

92 | $ fleetctl list-units

93 | ```

94 |

95 | You should see three units, all in the `active` state:

96 |

97 | ```

98 | UNIT MACHINE ACTIVE SUB

99 | couchbase_node@1.service 3c819355.../10.239.170.243 active running

100 | couchbase_node@2.service 782b35d4.../10.168.87.23 active running

101 | couchbase_node@3.service 7cd5f94c.../10.234.188.145 active running

102 | ```
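To script this check, count the `couchbase_node@` units whose ACTIVE column reads `active`. A sketch that parses the column layout shown above (`count_active_units` is a hypothetical helper):

```bash
# Hypothetical helper: count units whose name starts with a prefix and whose
# ACTIVE column (3rd field: UNIT MACHINE ACTIVE SUB) is "active".
count_active_units() {
  local prefix="$1"
  awk -v p="$prefix" 'index($1, p) == 1 && $3 == "active" { n++ } END { print n + 0 }'
}

# Usage (expect 3 for a three-node cluster):
#   fleetctl list-units | count_active_units couchbase_node@
```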

103 |

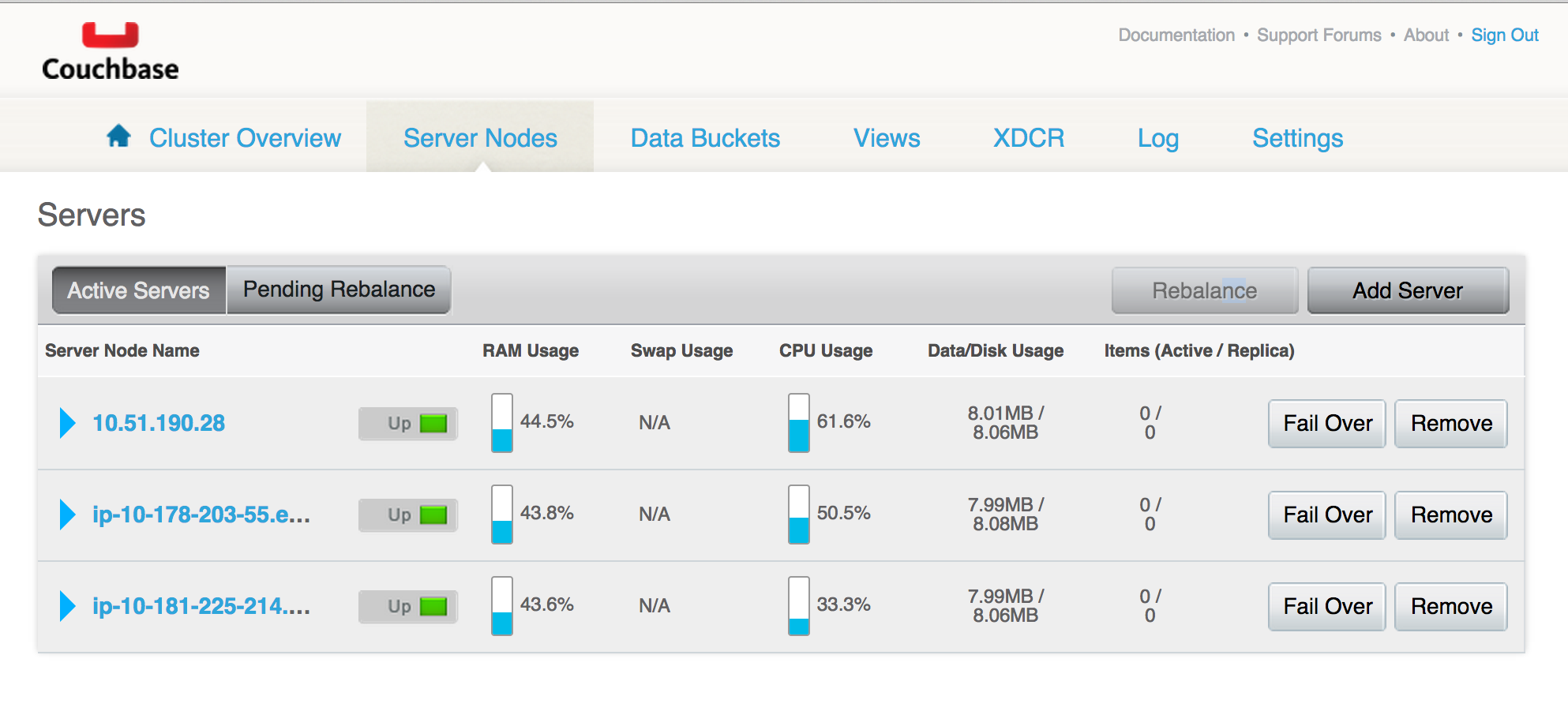

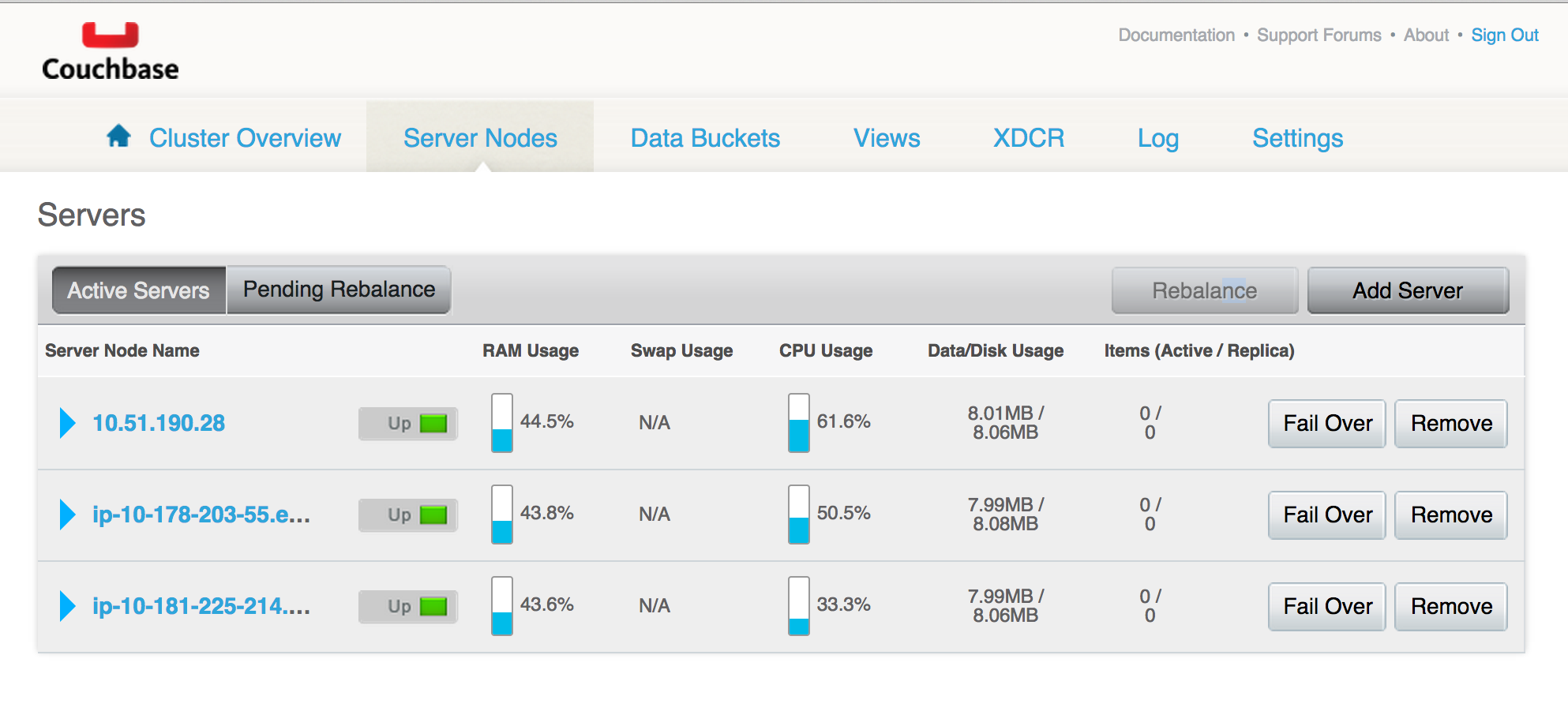

104 | # Log in to the Admin Web UI

105 |

106 | * Find the public IP of any of your CoreOS instances via the AWS console.

107 | * In a browser, go to `http://<public-ip>:8091`.

108 | * Log in with the username and password you provided above.
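As an alternative check from the command line, Couchbase Server's REST API on the same port returns cluster details at `/pools/default`, including a `nodes` array. The sketch below assumes each node entry carries `"clusterMembership":"active"` in compact JSON (no spaces) and simply counts those occurrences with `grep`; a JSON tool such as `jq` would be more robust if available. `count_active_cb_nodes` is a hypothetical name.

```bash
# Hypothetical helper: count nodes reported as active members in the JSON
# returned by GET /pools/default (read from stdin). Assumes compact JSON.
count_active_cb_nodes() {
  grep -o '"clusterMembership":"active"' | wc -l | tr -d ' '
}

# Usage from your laptop (substitute your host and credentials):
#   curl -s -u user:passw0rd "http://<public-ip>:8091/pools/default" | count_active_cb_nodes
```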

109 |

110 | You should see:

111 |

112 |

113 |

114 | Congratulations! You now have a 3 node Couchbase Server cluster running under CoreOS / Docker.

115 |

116 | # Sync Gateway

117 |

118 | The steps below will walk you through adding Sync Gateway into the cluster.

119 |

120 |

121 |

122 |

123 | ### Kick off Sync Gateway cluster

124 |

125 | ```

126 | $ sudo docker run --net=host tleyden5iwx/couchbase-cluster-go:0.8.6 sync-gw-cluster launch-sgw \

127 | --num-nodes=1 \

128 | --config-url=http://git.io/b9PK \

129 | --create-bucket todos \

130 | --create-bucket-size 512 \

131 | --create-bucket-replicas 1 \

132 | --docker-tag 0.8.6

133 | ```

134 |

135 | Where:

136 |

137 | * `--num-nodes`: number of Sync Gateway nodes to start.

138 | * `--config-url`: the URL where the Sync Gateway config JSON is stored.

139 | * `--sync-gw-commit`: the branch or commit of Sync Gateway to use; defaults to `image`, which is the master branch at the time the Docker image was built.

140 | * `--create-bucket`: create a bucket on Couchbase Server with the given name.

141 | * `--create-bucket-size`: if creating a bucket, its size in MB.

142 | * `--create-bucket-replicas`: if creating a bucket, its replica count (defaults to 1).

143 | * `--etcd-servers`: comma-separated list of etcd servers; omit it to connect to etcd running on localhost.

144 | * `--docker-tag`: if present, the Docker tag to use for spawned containers; otherwise it defaults to `latest`.

145 |

146 |

147 | ### View cluster

148 |

149 | After the command above finishes, run `fleetctl list-units` to list the services in your cluster. You should see:

150 |

151 | ```

152 | UNIT MACHINE ACTIVE SUB

153 | couchbase_node@1.service 2ad1cfaf.../10.95.196.213 active running

154 | couchbase_node@2.service a688ca8e.../10.216.199.207 active running

155 | couchbase_node@3.service fb241f71.../10.153.232.237 active running

156 | sync_gw_node@1.service 2ad1cfaf.../10.95.196.213 active running

157 | ```

158 |

159 | They should all be in the `active` state. If any are still `activating` (normal while the Docker image is being downloaded), wait until they are all active before continuing.
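Rather than re-running the command by hand, you can poll until every unit is active. A sketch (hypothetical `all_units_active` helper) that succeeds only when each unit row has `active` in the ACTIVE column, and treats an empty unit list as a failure:

```bash
# Hypothetical helper: succeed only if every unit row has ACTIVE == "active".
# The header row is skipped; an empty unit list counts as failure.
all_units_active() {
  awk '$1 != "UNIT" && NF { total++; if ($3 != "active") bad++ }
       END { exit (total == 0 || bad > 0) }'
}

# Usage: poll every 10 seconds until all units are active (Ctrl-C to give up)
#   until fleetctl list-units | all_units_active; do sleep 10; done
```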

160 |

161 | ## Verify internal

162 |

163 | **Find the internal IP**

164 |

165 | ```

166 | $ fleetctl list-units | grep sync_gw_node

167 | sync_gw_node@1.service 209a8a2e.../10.164.175.9 active running

168 | ```
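The IP can also be pulled out of that output directly, since the MACHINE column has the form `<machine-id>.../<ip>`. A sketch using a hypothetical `unit_ip` helper:

```bash
# Hypothetical helper: print the IP of each unit whose name starts with a prefix.
# The MACHINE column looks like "209a8a2e.../10.164.175.9"; keep the part after "/".
unit_ip() {
  local prefix="$1"
  awk -v p="$prefix" 'index($1, p) == 1 { split($2, m, "/"); print m[2] }'
}

# Usage:
#   SGW_IP=$(fleetctl list-units | unit_ip sync_gw_node@ | head -n 1)
```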

169 |

170 | **Curl**

171 |

172 | On the CoreOS instance you are already ssh'd into, use the IP found above and run a curl request against the server root:

173 |

174 | ```

175 | $ curl 10.164.175.9:4984

176 | {"couchdb":"Welcome","vendor":{"name":"Couchbase Sync Gateway","version":1},"version":"Couchbase Sync Gateway/master(6356065)"}

177 | ```
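To turn this into a pass/fail check, look for the vendor name in the response. A minimal sketch (`is_sync_gateway_root` is hypothetical; it matches the compact JSON shown above as a fixed string, so a differently formatted response would not match):

```bash
# Hypothetical helper: succeed if the response read from stdin contains the
# Sync Gateway vendor name (fixed-string match on the compact JSON above).
is_sync_gateway_root() {
  grep -qF '"vendor":{"name":"Couchbase Sync Gateway"'
}

# Usage on the CoreOS instance:
#   curl -s 10.164.175.9:4984 | is_sync_gateway_root && echo "Sync Gateway is up"
```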

178 |

179 | ## Verify external

180 |

181 | **Find the external hostname**

182 |

183 | Using the internal IP found above, go to the EC2 Instances section of the AWS console, find the instance with that private IP, and note its public DNS name (or public IP), e.g. `ec2-54-211-206-18.compute-1.amazonaws.com`.
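Instead of hunting through the console, the AWS CLI can look up an instance by its private IP with the `private-ip-address` filter of `describe-instances`. A sketch assuming a configured AWS CLI pointed at the stack's region (hypothetical `public_dns_for_private_ip` helper; `AWS=echo` gives a dry run):

```bash
# Hypothetical helper: look up the public DNS name for a private IP.
# Set AWS=echo to print the command instead of running it.
public_dns_for_private_ip() {
  local private_ip="$1"
  "${AWS:-aws}" ec2 describe-instances \
    --filters "Name=private-ip-address,Values=${private_ip}" \
    --query 'Reservations[].Instances[].PublicDnsName' \
    --output text
}

# Usage:
#   public_dns_for_private_ip 10.164.175.9
```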

184 |

185 | **Curl**

186 |

187 | From your laptop, run a curl request against the server root using the public hostname found above (port 4984 must be reachable from your address through the instances' security group):

188 |

189 | ```

190 | $ curl ec2-54-211-206-18.compute-1.amazonaws.com:4984

191 | {"couchdb":"Welcome","vendor":{"name":"Couchbase Sync Gateway","version":1},"version":"Couchbase Sync Gateway/master(6356065)"}

192 | ```

193 |

194 | Congratulations! You now have a Couchbase Server + Sync Gateway cluster running.

195 |

196 | ## Kicking off more Sync Gateway nodes

197 |

198 | To launch two more Sync Gateway nodes, run the following command:

199 |

200 | ```

201 | $ sudo docker run --net=host tleyden5iwx/couchbase-cluster-go:0.8.6 sync-gw-cluster launch-sgw \

202 | --num-nodes=2 \

203 | --config-url=http://git.io/b9PK \

204 | --docker-tag 0.8.6

205 | ```

206 |

207 | ## Shutting down the cluster

208 |

209 | Warning: to shut down the cluster, **you must use the CloudFormation console** and delete the stack. If you terminate the instances from the EC2 control panel instead, AWS will launch replacements, because the stack's Auto Scaling group is configured to keep the requested number of instances running.

210 |

211 | Here is the web UI where you shut down the cluster:

212 |

213 |

214 |
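The equivalent from the AWS CLI is to delete the stack and wait for the deletion to finish. A sketch assuming the stack name `Couchbase-CoreOS` (hypothetical `delete_stack` helper); as with the console, this tears down everything the stack created, including the instances.

```bash
# Hypothetical helper: delete the stack, then wait until deletion finishes.
# Deleting the stack also removes its Auto Scaling group, so no replacement
# instances are launched. Set AWS=echo to print the commands instead.
delete_stack() {
  local stack_name="${1:-Couchbase-CoreOS}"
  "${AWS:-aws}" cloudformation delete-stack --stack-name "$stack_name" &&
    "${AWS:-aws}" cloudformation wait stack-delete-complete --stack-name "$stack_name"
}
```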

215 | ## Extended instructions

216 |

217 | * [Disabling CoreOS restarts](https://github.com/couchbaselabs/couchbase-server-coreos/wiki/Disabling-CoreOS-auto-restart)

218 | * [Running Sync Gateway behind an Nginx proxy](https://github.com/tleyden/couchbase-cluster-go#running-sync-gateway-behind-an-nginx-proxy)

219 | * [How reboots are handled](https://github.com/couchbaselabs/couchbase-server-docker/issues/3#issuecomment-75984021)

220 | * [Couchbase-Cluster-Go README](https://github.com/tleyden/couchbase-cluster-go/blob/master/README.md)

221 |

222 | ## References

223 |

224 | * [Couchbase Server on Docker Hub](https://hub.docker.com/u/couchbase/server)

225 | * [Sync Gateway on Docker Hub](https://hub.docker.com/u/couchbase/sync-gateway)

226 | * [couchbase-cluster-go GitHub repo](https://github.com/tleyden/couchbase-cluster-go)

227 | * [How I built couchbase 2.2 for docker](https://gist.github.com/dustin/6605182) by [@dlsspy](https://twitter.com/dlsspy)

228 | * [ncolomer/couchbase on Docker Hub](https://registry.hub.docker.com/u/ncolomer/couchbase/)

229 | * [lifegadget/docker-couchbase](https://github.com/lifegadget/docker-couchbase)

230 |

231 |

232 |

233 |

234 |

--------------------------------------------------------------------------------

/aws-cloudformation/couchbase_server.template:

--------------------------------------------------------------------------------

1 | {

2 | "AWSTemplateFormatVersion": "2010-09-09",

3 | "Description": "CoreOS on EC2: http://coreos.com/docs/running-coreos/cloud-providers/ec2/",

4 | "Mappings" : {

5 | "RegionMap" : {

6 | "us-east-1" : {

7 | "AMI" : "ami-d6bceabe"

8 | }

9 | }

10 | },

11 | "Parameters": {

12 | "InstanceType" : {

13 | "Description" : "EC2 PV instance type (m3.medium, etc).",

14 | "Type" : "String",

15 | "Default" : "m3.medium",

16 | "ConstraintDescription" : "Must be a valid EC2 PV instance type."

17 | },

18 | "ClusterSize": {

19 | "Default": "3",

20 | "MinValue": "1",

21 | "MaxValue": "12",

22 | "Description": "Number of nodes in cluster (1-12). 3 or larger is recommended.",

23 | "Type": "Number"

24 | },

25 | "DiscoveryURL": {

26 | "Description": "A unique etcd cluster discovery URL. Grab a new token from https://discovery.etcd.io/new",

27 | "Type": "String"

28 | },

29 | "AdvertisedIPAddress": {

30 | "Description": "Use 'private' if your etcd cluster is within one region or 'public' if it spans regions or cloud providers.",

31 | "Default": "private",

32 | "AllowedValues": ["private", "public"],

33 | "Type": "String"

34 | },

35 | "AllowSSHFrom": {

36 | "Description": "The net block (CIDR) that SSH is available to.",

37 | "Default": "0.0.0.0/0",

38 | "Type": "String"

39 | },

40 | "KeyPair" : {

41 | "Description" : "The name of an EC2 Key Pair to allow SSH access to the instance.",

42 | "Type" : "String"

43 | }

44 | },

45 | "Resources": {

46 | "CoreOSSecurityGroup": {

47 | "Type": "AWS::EC2::SecurityGroup",

48 | "Properties": {

49 | "GroupDescription": "CoreOS SecurityGroup",

50 | "SecurityGroupIngress": [

51 | {"IpProtocol": "tcp", "FromPort": "22", "ToPort": "22", "CidrIp": {"Ref": "AllowSSHFrom"}}

52 | ]

53 | }

54 | },

55 | "Ingress4001": {

56 | "Type": "AWS::EC2::SecurityGroupIngress",

57 | "Properties": {

58 | "GroupName": {"Ref": "CoreOSSecurityGroup"}, "IpProtocol": "tcp", "FromPort": "4001", "ToPort": "4001", "SourceSecurityGroupId": {

59 | "Fn::GetAtt" : [ "CoreOSSecurityGroup", "GroupId" ]

60 | }

61 | }

62 | },

63 | "Ingress7001": {

64 | "Type": "AWS::EC2::SecurityGroupIngress",

65 | "Properties": {

66 | "GroupName": {"Ref": "CoreOSSecurityGroup"}, "IpProtocol": "tcp", "FromPort": "7001", "ToPort": "7001", "SourceSecurityGroupId": {

67 | "Fn::GetAtt" : [ "CoreOSSecurityGroup", "GroupId" ]

68 | }

69 | }

70 | },

71 |

72 | "Ingress112xx": {

73 | "Type": "AWS::EC2::SecurityGroupIngress",

74 | "Properties": {

75 | "GroupName": {"Ref": "CoreOSSecurityGroup"}, "IpProtocol": "tcp", "FromPort": "11209", "ToPort": "11211", "SourceSecurityGroupId": {

76 | "Fn::GetAtt" : [ "CoreOSSecurityGroup", "GroupId" ]

77 | }

78 | }

79 | },

80 | "Ingress8091": {

81 | "Type": "AWS::EC2::SecurityGroupIngress",

82 | "Properties": {

83 | "GroupName": {"Ref": "CoreOSSecurityGroup"}, "IpProtocol": "tcp", "FromPort": "8091", "ToPort": "8091", "CidrIp": "0.0.0.0/0"

84 | }

85 | },

86 | "Ingress8092": {

87 | "Type": "AWS::EC2::SecurityGroupIngress",

88 | "Properties": {

89 | "GroupName": {"Ref": "CoreOSSecurityGroup"}, "IpProtocol": "tcp", "FromPort": "8092", "ToPort": "8092", "SourceSecurityGroupId": {

90 | "Fn::GetAtt" : [ "CoreOSSecurityGroup", "GroupId" ]

91 | }

92 | }

93 | },

94 | "Ingress4369": {

95 | "Type": "AWS::EC2::SecurityGroupIngress",

96 | "Properties": {

97 | "GroupName": {"Ref": "CoreOSSecurityGroup"}, "IpProtocol": "tcp", "FromPort": "4369", "ToPort": "4369", "SourceSecurityGroupId": {

98 | "Fn::GetAtt" : [ "CoreOSSecurityGroup", "GroupId" ]

99 | }

100 | }

101 | },

102 | "Ingress211xx": {

103 | "Type": "AWS::EC2::SecurityGroupIngress",

104 | "Properties": {

105 | "GroupName": {"Ref": "CoreOSSecurityGroup"}, "IpProtocol": "tcp", "FromPort": "21100", "ToPort": "21199", "SourceSecurityGroupId": {

106 | "Fn::GetAtt" : [ "CoreOSSecurityGroup", "GroupId" ]

107 | }

108 | }

109 | },

110 |

111 |

112 |

113 |

114 | "CoreOSServerAutoScale": {

115 | "Type": "AWS::AutoScaling::AutoScalingGroup",

116 | "Properties": {

117 | "AvailabilityZones": {"Fn::GetAZs": ""},

118 | "LaunchConfigurationName": {"Ref": "CoreOSServerLaunchConfig"},

119 | "MinSize": "1",

120 | "MaxSize": "12",

121 | "DesiredCapacity": {"Ref": "ClusterSize"},

122 | "Tags": [

123 | {"Key": "Name", "Value": { "Ref" : "AWS::StackName" }, "PropagateAtLaunch": true}

124 | ]

125 | }

126 | },

127 | "CoreOSServerLaunchConfig": {

128 | "Type": "AWS::AutoScaling::LaunchConfiguration",

129 | "Properties": {

130 | "ImageId" : { "Fn::FindInMap" : [ "RegionMap", { "Ref" : "AWS::Region" }, "AMI" ]},

131 | "InstanceType": {"Ref": "InstanceType"},

132 | "KeyName": {"Ref": "KeyPair"},

133 | "SecurityGroups": [{"Ref": "CoreOSSecurityGroup"}],

134 | "BlockDeviceMappings": [{

135 | "DeviceName": "/dev/xvda",

136 | "Ebs" : {"VolumeSize": "20"}

137 | }],

138 | "UserData" : { "Fn::Base64":

139 | { "Fn::Join": [ "", [

140 | "#cloud-config\n\n",

141 | "write_files:\n",

142 | "  - path: /etc/systemd/system/docker.service.d/increase-ulimit.conf\n",

143 | "    owner: core:core\n",

144 | "    permissions: 0644\n",

145 | "    content: |\n",

146 | "      [Service]\n",

147 | "      LimitMEMLOCK=infinity\n",

148 | "  - path: /etc/systemd/system/fleet.socket.d/30-ListenStream.conf\n",

149 | "    owner: core:core\n",

150 | "    permissions: 0644\n",

151 | "    content: |\n",

152 | "      [Socket]\n",

153 | "      ListenStream=127.0.0.1:49153\n",

154 | "  - path: /opt/couchbase/var/.README\n",

155 | "    owner: core:core\n",

156 | "    permissions: 0644\n",

157 | "    content: |\n",

158 | "      Couchbase /opt/couchbase/var data volume in container mounted here\n",

159 | "  - path: /var/lib/cbfs/data/.README\n",

160 | "    owner: core:core\n",

161 | "    permissions: 0644\n",

162 | "    content: |\n",

163 | "      CBFS files are stored here\n",

164 | "coreos:\n",

165 | "  etcd:\n",

166 | "    discovery: ", { "Ref": "DiscoveryURL" }, "\n",

167 | "    addr: $", { "Ref": "AdvertisedIPAddress" }, "_ipv4:4001\n",

168 | "    peer-addr: $", { "Ref": "AdvertisedIPAddress" }, "_ipv4:7001\n",

169 | "  units:\n",

170 | "    - name: etcd.service\n",

171 | "      command: start\n",

172 | "    - name: fleet.service\n",

173 | "      command: start\n",

174 | "    - name: docker.service\n",

175 | "      command: restart\n"

176 | ] ]

177 | }

178 | }

179 | }

180 | }

181 | }

182 | }

183 |

--------------------------------------------------------------------------------

](https://console.aws.amazon.com/cloudformation/home?region=us-east-1#cstack=sn%7ECouchbase-CoreOS%7Cturl%7Ehttp://tleyden-misc.s3.amazonaws.com/couchbase-coreos/sync_gateway.template)

16 |

17 | *NOTE: this is hardcoded to use the us-east-1 region, so if you need a different region, you should edit the URL accordingly*

18 |

19 | Use the following parameters in the form:

20 |

21 | * **ClusterSize**: 3 nodes (default)

22 | * **Discovery URL**: as it says, you need to grab a new token from https://discovery.etcd.io/new and paste it in the box.

23 | * **KeyPair**: use whatever you normally use to start EC2 instances. For this discussion, let's assumed you used `aws`, which corresponds to a file you have on your laptop called `aws.cer`

24 |

25 | ### Wait until instances are up

26 |

27 |

28 |

29 | ### ssh into a CoreOS instance

30 |

31 | Go to the AWS console under EC2 instances and find the public ip of one of your newly launched CoreOS instances.

32 |

33 |

34 |

35 | Choose any one of them (it doesn't matter which), and ssh into it as the **core** user with the cert provided in the previous step:

36 |

37 | ```

38 | $ ssh -i aws.cer -A core@ec2-54-83-80-161.compute-1.amazonaws.com

39 | ```

40 |

41 | ## Sanity check

42 |

43 | Let's make sure the CoreOS cluster is healthy first:

44 |

45 | ```

46 | $ fleetctl list-machines

47 | ```

48 |

49 | This should return a list of machines in the cluster, like this:

50 |

51 | ```

52 | MACHINE IP METADATA

53 | 03b08680... 10.33.185.16 -

54 | 209a8a2e... 10.164.175.9 -

55 | 25dd84b7... 10.13.180.194 -

56 | ```

57 |

58 | ## Launch cluster

59 |

60 |

61 | ```

62 | $ sudo docker run --net=host tleyden5iwx/couchbase-cluster-go:0.8.6 couchbase-fleet launch-cbs \

63 | --version latest \

64 | --num-nodes 3 \

65 | --userpass "user:passw0rd" \

66 | --docker-tag 0.8.6

67 | ```

68 |

69 | Where:

70 |

71 | * --version= Couchbase Server version -- see [Docker Tags](https://registry.hub.docker.com/u/couchbase/server/tags) for a list of versions that can be used.

72 | * --num-nodes= number of couchbase nodes to start

73 | * --userpass the username and password as a single string, delimited by a colon (:)

74 | * --etcd-servers= Comma separated list of etcd servers, or omit to connect to etcd running on localhost

75 | * --docker-tag= if present, use this docker tag the couchbase-cluster-go version in spawned containers, otherwise, default to "latest"

76 |

77 | Replace `user:passw0rd` with a sensible username and password. It **must** be colon separated, with no spaces. The password itself must be at least 6 characters.

78 |

79 | After you kick it off, you can expect it to take approximately **10-20 minutes** to download the Docker images and bootstrap the cluster. Once it's finished, you should see the following log entry:

80 |

81 | ```

82 | Cluster is up!

83 | ```

84 |

85 | If you never got that far, you can check your output against this [expected output](https://gist.github.com/tleyden/6b903e40cf87b5dd4ed8). Please file an [issue](https://github.com/couchbaselabs/couchbase-server-coreos/issues) here.

86 |

87 | ## Verify

88 |

89 | To check the status of your cluster, run:

90 |

91 | ```

92 | $ fleetctl list-units

93 | ```

94 |

95 | You should see three units, all as active.

96 |

97 | ```

98 | UNIT MACHINE ACTIVE SUB

99 | couchbase_node@1.service 3c819355.../10.239.170.243 active running

100 | couchbase_node@2.service 782b35d4.../10.168.87.23 active running

101 | couchbase_node@3.service 7cd5f94c.../10.234.188.145 active running

102 | ```

103 |

104 | # Login to Admin Web UI

105 |

106 | * Find the public ip of any of your CoreOS instances via the AWS console

107 | * In a browser, go to `http://:8091`

108 | * Login with the username/password you provided above

109 |

110 | You should see:

111 |

112 |

113 |

114 | Congratulations! You now have a 3 node Couchbase Server cluster running under CoreOS / Docker.

115 |

116 | # Sync Gateway

117 |

118 | The steps below will walk you through adding Sync Gateway into the cluster.

119 |

120 |

121 |

122 |

123 | ### Kick off Sync Gateway cluster

124 |

125 | ```

126 | $ sudo docker run --net=host tleyden5iwx/couchbase-cluster-go:0.8.6 sync-gw-cluster launch-sgw \

127 | --num-nodes=1 \

128 | --config-url=http://git.io/b9PK \

129 | --create-bucket todos \

130 | --create-bucket-size 512 \

131 | --create-bucket-replicas 1 \

132 | --docker-tag 0.8.6

133 | ```

134 |

135 | Where:

136 |

137 | * --num-nodes= number of sync gw nodes to start

138 | * --config-url= the url where the sync gw config json is stored

139 | * --sync-gw-commit= the branch or commit of sync gw to use, defaults to "image", which is the master branch at the time the docker image was built.

140 | * --create-bucket= create a bucket on couchbase server with the given name

141 | * --create-bucket-size= if creating a bucket, use this size in MB

142 | * --create-bucket-replicas= if creating a bucket, use this replica count (defaults to 1)

143 | * --etcd-servers= Comma separated list of etcd servers, or omit to connect to etcd running on localhost

144 | * --docker-tag= if present, use this docker tag for spawned containers, otherwise, default to "latest"

145 |

146 |

147 | ### View cluster

148 |

149 | After the above script finishes, run `fleetctl list-units` to list the services in your cluster, and you should see:

150 |

151 | ```

152 | UNIT MACHINE ACTIVE SUB

153 | couchbase_node@1.service 2ad1cfaf.../10.95.196.213 active running

154 | couchbase_node@2.service a688ca8e.../10.216.199.207 active running

155 | couchbase_node@3.service fb241f71.../10.153.232.237 active running

156 | sync_gw_node@1.service 2ad1cfaf.../10.95.196.213 active running

157 | ```

158 |

159 | They should all be in the `active` state. If any are in the `activating` state -- which is normal because it might take some time to download the docker image -- then you should wait until they are all active before continuing.

160 |

161 | ## Verify internal

162 |

163 | **Find internal ip**

164 |

165 | ```

166 | $ fleetctl list-units

167 | sync_gw_node.1.service 209a8a2e.../10.164.175.9 active running

168 | ```

169 |

170 | **Curl**

171 |

172 | On the CoreOS instance you are already ssh'd into, Use the ip found above and run a curl request against the server root:

173 |

174 | ```

175 | $ curl 10.164.175.9:4984

176 | {"couchdb":"Welcome","vendor":{"name":"Couchbase Sync Gateway","version":1},"version":"Couchbase Sync Gateway/master(6356065)"}

177 | ```

178 |

179 | ## Verify external

180 |

181 | **Find external ip**

182 |

183 | Using the internal ip found above, go to the EC2 Instances section of the AWS console, and hunt around until you find the instance with that internal ip, and then get the public ip for that instance, eg: `ec2-54-211-206-18.compute-1.amazonaws.com`

184 |

185 | **Curl**

186 |

187 | From your laptop, use the ip found above and run a curl request against the server root:

188 |

189 | ```

190 | $ curl ec2-54-211-206-18.compute-1.amazonaws.com:4984

191 | {"couchdb":"Welcome","vendor":{"name":"Couchbase Sync Gateway","version":1},"version":"Couchbase Sync Gateway/master(6356065)"}

192 | ```

193 |

194 | Congratulations! You now have a Couchbase Server + Sync Gateway cluster running.

195 |

196 | ## Kicking off more Sync Gateway nodes.

197 |

198 | To launch two more Sync Gateway nodes, run the following command:

199 |

200 | ```

201 | $ sudo docker run --net=host tleyden5iwx/couchbase-cluster-go:0.8.6 sync-gw-cluster launch-sgw \

202 | --num-nodes=2 \

203 | --config-url=http://git.io/b9PK \

204 | --docker-tag 0.8.6

205 | ```

206 |

207 | ## Shutting down the cluster.

208 |

209 | Warning: if you try to shutdown the individual ec2 instances, **you must use the CloudFormation console**. If you try to shutdown the instances via the EC2 control panel, AWS will restart them, because that is what the CloudFormation is telling it to do.

210 |

211 | Here is the web UI where you need to shutdown the cluster:

212 |

213 |

214 |

215 | ## Extended instructions

216 |

217 | * [Disabling CoreOS restarts](https://github.com/couchbaselabs/couchbase-server-coreos/wiki/Disabling-CoreOS-auto-restart)

218 | * [Running Sync Gateway behind an Nginx proxy](https://github.com/tleyden/couchbase-cluster-go#running-sync-gateway-behind-an-nginx-proxy)

219 | * [How reboots are handled](https://github.com/couchbaselabs/couchbase-server-docker/issues/3#issuecomment-75984021)

220 | * [Couchbase-Cluster-Go README](https://github.com/tleyden/couchbase-cluster-go/blob/master/README.md)

221 |

222 | ## References

223 |

224 | * [Couchbase Server on Dockerhub](https://hub.docker.com/u/couchbase/server)

225 | * [Sync Gateway on Dockerhub](https://hub.docker.com/u/couchbase/sync-gateway)

226 | * [couchbase-cluster-go github repo](https://github.com/tleyden/couchbase-cluster-go)

227 | * [How I built couchbase 2.2 for docker](https://gist.github.com/dustin/6605182) by [@dlsspy](https://twitter.com/dlsspy)

228 | * https://registry.hub.docker.com/u/ncolomer/couchbase/

229 | * https://github.com/lifegadget/docker-couchbase

230 |

231 |

232 |

233 |

234 |

--------------------------------------------------------------------------------

/aws-cloudformation/couchbase_server.template:

--------------------------------------------------------------------------------

1 | {

2 | "AWSTemplateFormatVersion": "2010-09-09",

3 | "Description": "CoreOS on EC2: http://coreos.com/docs/running-coreos/cloud-providers/ec2/",

4 | "Mappings" : {

5 | "RegionMap" : {

6 | "us-east-1" : {

7 | "AMI" : "ami-d6bceabe"

8 | }

9 | }

10 | },

11 | "Parameters": {

12 | "InstanceType" : {

13 | "Description" : "EC2 PV instance type (m3.medium, etc).",

14 | "Type" : "String",

15 | "Default" : "m3.medium",

16 | "ConstraintDescription" : "Must be a valid EC2 PV instance type."

17 | },

18 | "ClusterSize": {

19 | "Default": "3",

20 | "MinValue": "1",

21 | "MaxValue": "12",

22 | "Description": "Number of nodes in cluster (1-12). 3 or larger is recommended.",

23 | "Type": "Number"

24 | },

25 | "DiscoveryURL": {

26 | "Description": "An unique etcd cluster discovery URL. Grab a new token from https://discovery.etcd.io/new",

27 | "Type": "String"

28 | },

29 | "AdvertisedIPAddress": {

30 | "Description": "Use 'private' if your etcd cluster is within one region or 'public' if it spans regions or cloud providers.",

31 | "Default": "private",

32 | "AllowedValues": ["private", "public"],

33 | "Type": "String"

34 | },

35 | "AllowSSHFrom": {

36 | "Description": "The net block (CIDR) that SSH is available to.",

37 | "Default": "0.0.0.0/0",

38 | "Type": "String"

39 | },

40 | "KeyPair" : {

41 | "Description" : "The name of an EC2 Key Pair to allow SSH access to the instance.",

42 | "Type" : "String"

43 | }

44 | },

45 | "Resources": {

46 | "CoreOSSecurityGroup": {

47 | "Type": "AWS::EC2::SecurityGroup",

48 | "Properties": {

49 | "GroupDescription": "CoreOS SecurityGroup",

50 | "SecurityGroupIngress": [

51 | {"IpProtocol": "tcp", "FromPort": "22", "ToPort": "22", "CidrIp": {"Ref": "AllowSSHFrom"}}

52 | ]

53 | }

54 | },

55 | "Ingress4001": {

56 | "Type": "AWS::EC2::SecurityGroupIngress",

57 | "Properties": {

58 | "GroupName": {"Ref": "CoreOSSecurityGroup"}, "IpProtocol": "tcp", "FromPort": "4001", "ToPort": "4001", "SourceSecurityGroupId": {

59 | "Fn::GetAtt" : [ "CoreOSSecurityGroup", "GroupId" ]

60 | }

61 | }

62 | },

63 | "Ingress7001": {

64 | "Type": "AWS::EC2::SecurityGroupIngress",

65 | "Properties": {

66 | "GroupName": {"Ref": "CoreOSSecurityGroup"}, "IpProtocol": "tcp", "FromPort": "7001", "ToPort": "7001", "SourceSecurityGroupId": {

67 | "Fn::GetAtt" : [ "CoreOSSecurityGroup", "GroupId" ]

68 | }

69 | }

70 | },

71 |

72 | "Ingress112xx": {

73 | "Type": "AWS::EC2::SecurityGroupIngress",

74 | "Properties": {

75 | "GroupName": {"Ref": "CoreOSSecurityGroup"}, "IpProtocol": "tcp", "FromPort": "11209", "ToPort": "11211", "SourceSecurityGroupId": {

76 | "Fn::GetAtt" : [ "CoreOSSecurityGroup", "GroupId" ]

77 | }

78 | }

79 | },

80 | "Ingress8091": {

81 | "Type": "AWS::EC2::SecurityGroupIngress",

82 | "Properties": {

83 | "GroupName": {"Ref": "CoreOSSecurityGroup"}, "IpProtocol": "tcp", "FromPort": "8091", "ToPort": "8091", "CidrIp": "0.0.0.0/0"

84 | }

85 | },

86 | "Ingress8092": {

87 | "Type": "AWS::EC2::SecurityGroupIngress",

88 | "Properties": {

89 | "GroupName": {"Ref": "CoreOSSecurityGroup"}, "IpProtocol": "tcp", "FromPort": "8092", "ToPort": "8092", "SourceSecurityGroupId": {

90 | "Fn::GetAtt" : [ "CoreOSSecurityGroup", "GroupId" ]

91 | }

92 | }

93 | },

94 | "Ingress4369": {

95 | "Type": "AWS::EC2::SecurityGroupIngress",

96 | "Properties": {

97 | "GroupName": {"Ref": "CoreOSSecurityGroup"}, "IpProtocol": "tcp", "FromPort": "4369", "ToPort": "4369", "SourceSecurityGroupId": {

98 | "Fn::GetAtt" : [ "CoreOSSecurityGroup", "GroupId" ]

99 | }

100 | }

101 | },

102 | "Ingress211xx": {

103 | "Type": "AWS::EC2::SecurityGroupIngress",

104 | "Properties": {

105 | "GroupName": {"Ref": "CoreOSSecurityGroup"}, "IpProtocol": "tcp", "FromPort": "21100", "ToPort": "21199", "SourceSecurityGroupId": {

106 | "Fn::GetAtt" : [ "CoreOSSecurityGroup", "GroupId" ]

107 | }

108 | }

109 | },

110 |

111 |

112 |

113 |

114 | "CoreOSServerAutoScale": {

115 | "Type": "AWS::AutoScaling::AutoScalingGroup",

116 | "Properties": {

117 | "AvailabilityZones": {"Fn::GetAZs": ""},

118 | "LaunchConfigurationName": {"Ref": "CoreOSServerLaunchConfig"},

119 | "MinSize": "1",

120 | "MaxSize": "12",

121 | "DesiredCapacity": {"Ref": "ClusterSize"},

122 | "Tags": [

123 | {"Key": "Name", "Value": { "Ref" : "AWS::StackName" }, "PropagateAtLaunch": true}

124 | ]

125 | }

126 | },

127 | "CoreOSServerLaunchConfig": {

128 | "Type": "AWS::AutoScaling::LaunchConfiguration",

129 | "Properties": {

130 | "ImageId" : { "Fn::FindInMap" : [ "RegionMap", { "Ref" : "AWS::Region" }, "AMI" ]},

131 | "InstanceType": {"Ref": "InstanceType"},

132 | "KeyName": {"Ref": "KeyPair"},

133 | "SecurityGroups": [{"Ref": "CoreOSSecurityGroup"}],

134 | "BlockDeviceMappings": [{

135 | "DeviceName": "/dev/xvda",

136 | "Ebs" : {"VolumeSize": "20"}

137 | }],

138 | "UserData" : { "Fn::Base64":

139 | { "Fn::Join": [ "", [

140 | "#cloud-config\n\n",

141 | "write_files:\n",

142 | "  - path: /etc/systemd/system/docker.service.d/increase-ulimit.conf\n",

143 | "    owner: core:core\n",

144 | "    permissions: 0644\n",

145 | "    content: |\n",

146 | "      [Service]\n",

147 | "      LimitMEMLOCK=infinity\n",

148 | "  - path: /etc/systemd/system/fleet.socket.d/30-ListenStream.conf\n",

149 | "    owner: core:core\n",

150 | "    permissions: 0644\n",

151 | "    content: |\n",

152 | "      [Socket]\n",

153 | "      ListenStream=127.0.0.1:49153\n",

154 | "  - path: /opt/couchbase/var/.README\n",

155 | "    owner: core:core\n",

156 | "    permissions: 0644\n",

157 | "    content: |\n",

158 | "      Couchbase /opt/couchbase/var data volume in container mounted here\n",

159 | "  - path: /var/lib/cbfs/data/.README\n",

160 | "    owner: core:core\n",

161 | "    permissions: 0644\n",

162 | "    content: |\n",

163 | "      CBFS files are stored here\n",

164 | "coreos:\n",

165 | "  etcd:\n",

166 | "    discovery: ", { "Ref": "DiscoveryURL" }, "\n",

167 | "    addr: $", { "Ref": "AdvertisedIPAddress" }, "_ipv4:4001\n",

168 | "    peer-addr: $", { "Ref": "AdvertisedIPAddress" }, "_ipv4:7001\n",

169 | "  units:\n",

170 | "    - name: etcd.service\n",

171 | "      command: start\n",

172 | "    - name: fleet.service\n",

173 | "      command: start\n",

174 | "    - name: docker.service\n",

175 | "      command: restart\n"

176 | ] ]

177 | }

178 | }

179 | }

180 | }

181 | }

182 | }

183 |

--------------------------------------------------------------------------------


16 |

17 | *NOTE: this link is hardcoded to the us-east-1 region. To launch in a different region, change the `region=` parameter in the URL.*
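Only the `region=` query parameter needs to change: the template looks up its AMI per region from its `RegionMap`, so this assumes your target region is listed there. Here's a small sketch of a helper that rebuilds the link for another region; `launch_url_for_region` is a hypothetical name, not something shipped in this repo.

```shell
# Hypothetical helper: rebuild the "Launch Stack" link for another AWS region.
# Only the region= query parameter changes; the stack name and template URL in
# the # fragment are copied verbatim from the link above.
launch_url_for_region() {
  stack_fragment='#cstack=sn%7ECouchbase-CoreOS%7Cturl%7Ehttp://tleyden-misc.s3.amazonaws.com/couchbase-coreos/sync_gateway.template'
  printf '%s\n' "https://console.aws.amazon.com/cloudformation/home?region=$1$stack_fragment"
}
```

For example, `launch_url_for_region us-west-2` prints a link you can paste into your browser.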

18 |

19 | Use the following parameters in the form:

20 |

21 | * **ClusterSize**: 3 nodes (default)

22 | * **DiscoveryURL**: grab a fresh token from https://discovery.etcd.io/new and paste it into the box.

23 | * **KeyPair**: use whichever key pair you normally use to launch EC2 instances. For this walkthrough, let's assume you chose `aws`, which corresponds to a file on your laptop called `aws.cer`.
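The discovery token can also be fetched from the command line. This is a sketch in plain `sh`, assuming `curl` is installed. `is_discovery_url` is a hypothetical sanity check of our own; neither the template nor etcd performs it. Use a new token for every stack, since reusing one from an old stack typically leaves the new machines trying to join stale peers.

```shell
# Fetch a brand-new etcd discovery URL (one per stack; don't reuse old ones).
new_discovery_url() {
  curl -s https://discovery.etcd.io/new
}

# Hypothetical check: does the value look like a discovery URL before we
# paste it into the CloudFormation form?
is_discovery_url() {
  case "$1" in
    https://discovery.etcd.io/?*) return 0 ;;
    *) return 1 ;;
  esac
}
```

Usage: `url=$(new_discovery_url) && is_discovery_url "$url" && echo "$url"`, then paste the printed URL into the form.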

24 |

25 | ### Wait until instances are up

26 |

27 |

28 |

29 | ### ssh into a CoreOS instance

30 |

31 | Go to the AWS console under EC2 instances and find the public IP of one of your newly launched CoreOS instances.

32 |

33 |

34 |

35 | Choose any one of them (it doesn't matter which) and ssh into it as the **core** user, using the key file from the previous step:

36 |

37 | ```

38 | $ ssh -i aws.cer -A core@ec2-54-83-80-161.compute-1.amazonaws.com

39 | ```
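If you'd rather skip the console, the AWS CLI can list the hostnames for you. This sketch assumes the AWS CLI is installed and configured for us-east-1, and that you kept the stack name `Couchbase-CoreOS` from the launch link. It relies on the template tagging every Auto Scaling instance with `Name` set to the stack name. `stack_public_dns` is a hypothetical helper name.

```shell
# Hypothetical helper: print the public DNS names of the running instances in
# a stack. The template's Auto Scaling group propagates a Name=<stack name>
# tag to each instance, so we filter on that tag.
stack_public_dns() {
  aws ec2 describe-instances \
    --filters "Name=tag:Name,Values=$1" "Name=instance-state-name,Values=running" \
    --query 'Reservations[].Instances[].PublicDnsName' \
    --output text
}
```

For example, `ssh -i aws.cer -A core@$(stack_public_dns Couchbase-CoreOS | awk '{print $1}')` connects to the first instance listed.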

40 |

41 | ## Sanity check

42 |

43 | Let's make sure the CoreOS cluster is healthy first:

44 |

45 | ```

46 | $ fleetctl list-machines

47 | ```

48 |

49 | This should return a list of machines in the cluster, like this:

50 |

51 | ```

52 | MACHINE         IP              METADATA

53 | 03b08680...     10.33.185.16    -

54 | 209a8a2e...     10.164.175.9    -

55 | 25dd84b7...     10.13.180.194   -

56 | ```
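To turn this into a pass/fail check, count the machine rows and compare the total against the **ClusterSize** you chose (3 by default). This is a minimal sketch with a hypothetical helper, `check_machine_count`. It reads `fleetctl list-machines` output on stdin and skips the `MACHINE IP METADATA` header row.

```shell
# Hypothetical helper: count machine rows (skipping the header and any blank
# lines) and compare against the expected cluster size given as $1.
check_machine_count() {
  expected=$1
  actual=$(awk 'NR > 1 && NF > 0' | wc -l | tr -d ' ')
  if [ "$actual" -eq "$expected" ]; then
    echo "ok: $actual machines"
  else
    echo "expected $expected machines, got $actual" >&2
    return 1
  fi
}
```

Usage: `fleetctl list-machines | check_machine_count 3`. A non-zero exit usually means some instances haven't joined the cluster yet, so give them a minute and try again.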

57 |

58 | ## Launch cluster

59 |

60 |

61 | ```

62 | $ sudo docker run --net=host tleyden5iwx/couchbase-cluster-go:0.8.6 couchbase-fleet launch-cbs \

63 | --version latest \

64 | --num-nodes 3 \

65 | --userpass "user:passw0rd" \

66 | --docker-tag 0.8.6

67 | ```

68 |

69 | Where:

70 |

71 | * --version=
