├── .gitignore

├── PromGuard.Network.Diagram.png

├── PromGuard.Network.Diagram.xml

├── README.md

├── ansible.cfg

├── playbooks

│   ├── promguard.yml

│   └── roles

│       ├── node_exporter

│       │   ├── handlers

│       │   │   └── main.yml

│       │   ├── tasks

│       │   │   └── main.yml

│       │   ├── templates

│       │   │   └── node_exporter.service.j2

│       │   └── vars

│       │       └── main.yml

│       ├── prometheus-server

│       │   ├── handlers

│       │   │   └── main.yml

│       │   ├── tasks

│       │   │   └── main.yml

│       │   ├── templates

│       │   │   ├── prometheus.service.j2

│       │   │   └── prometheus.yml.j2

│       │   └── vars

│       │       └── main.yml

│       ├── ufw

│       │   └── tasks

│       │       └── main.yml

│       └── wireguard

│           ├── tasks

│           │   └── main.yml

│           ├── templates

│           │   ├── 60-wireguard.cfg.j2

│           │   └── wg0.conf.j2

│           └── vars

│               └── main.yml

├── promguard.tf

├── run.sh

├── templates

│   ├── hostname.tpl

│   └── inventory.tpl

└── util

    ├── ansible-check.sh

    ├── quit.sh

    └── terraform-check.sh

/.gitignore:

--------------------------------------------------------------------------------

1 | terraform.tfplan

2 | terraform.tfstate

3 | .terraform.tfstate.*

4 | terraform.tfvars

5 | inventory

6 | *.backup

7 | /*.tfstate

8 | .terraform/

9 | *.log

10 | *.bak

11 | *~

12 | .*.swp

13 | *.test

14 | *.iml

15 | *.retry

16 |

--------------------------------------------------------------------------------

/PromGuard.Network.Diagram.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cpu/PromGuard/ccc9d0306ad84c8b61358678e32ff353552badb5/PromGuard.Network.Diagram.png

--------------------------------------------------------------------------------

/PromGuard.Network.Diagram.xml:

--------------------------------------------------------------------------------

1 | 7Zpbc9o4FMc/DY94dPH1sUDafcgmnaYzfcwILIyntuWVRSD76VeSZcAXKE0MJbMmD9hHt6PzE3/dMsLTdPuFk3z1NwtpMkIg3I7wbIQQdD0sv5TltbT40C4NEY9Dk2lveIr/pcYIjHUdh7SoZRSMJSLO68YFyzK6EDUb4Zxt6tmWLKm3mpOItgxPC5K0rT/iUKxMLxywt/9F42hVtQyBSZmTxc+Is3Vm2hshvNSfMjklVV0mf7EiIdscmPDdCE85Y6J8SrdTmqjYVmEry30+krrzm9NMnFMA+2WJF5KsaeWydky8VsF4oVzEMjb3ZE6Tr6yIRcwymZTGYajyTFYiTeQ7lI9V3k9JHNXyFIKzn7tgqqwhKVZUuQFU8orkqrl0G6kRZZFNgUOryFUcJqTIS8jLeKtKTJYsE2bQqJBNkoZnvEQzIcaLhC7Vq+mqdJFuj8YL7ijI0U1ZSgV/lVnMQIa+Gcib/bDAVRBXh0MicM1wNEMx2tW1xyEfDJEjdLyBzll0TAG70g9Dywb+NWm5A63foYVAnRaqIFyHljPQ+r3fll2jBT3UQQt20ULvp4U6YLmJ6tq8hsz9Z60Dp+I0LnSgPskMsqPbfaJ8isx3UmV+ey05Z2m0JjwcpyyLBeNjWFUse1XWXW9PmudNWxi/dLoggYmxoal80EAR0CVBwz/o9NHL07XsHCdZEc8T+rxihdAFO/tG0lw+ZPNCfUHXsRDyLIwtPY/+Kkg6Jh88TJuYUz06nuP83DABS/2dMYqOBahuKnKStfzjHdV1ZWy2cqZCzpkQLD2tkILlb5VHkueJrEo19lxQLmtWgOIkmbKEce0apjB0qLdr4SAlkPsT4nbJa1NOF1Icdd2Vnu4MMlaLOIu+GZ3TYmxs31W/ZjDoR3dxY5bE1RrnQHchwh26i+z3627XAvTmdDeT+89BdI+piedbLrIgcKzAGzT3tOaitwfoz2tuqacnBHcnyhfTXKm4fmh3aa6P5tjtR3NLfQV7w8T0aza2+9HcoLExAdeU3K4TmduUXDxIbreYIN+CQSAXua6FwaC5pzX3HRuBP6+5N7DO7UNzjxwbNBa5B5J7rzPMxujAVuqy048EO43jBhu1D14vp8HBh9HgM1Ys/08NxkqDPQsBz4KuP4jwaRE+YyoflqQdS9JelM6vH6z6+IpKh8Hbj8EvPfmpi9yHuzZSoD9d4Po458aNxb8ftHHAjnNuF/dAA982jaer02icftmofaMHQQcNx++Bhn3bNB5+XJlG88YOwzaN6ranBqOHGyDcXpM9ffmq5sYWEzU/1yPPqZxLyVxnUMHJWZwJ7YwzGTkzFa61YOV8qws0FsJHyB2ue/XZQ/N6Tr8bx6B5P4A1nbquhsWZIGbgjDHoB5asugbL6TjF8EEbVqV/74Flt2eV74/fPgCsLjhNiJeAZTuw/ssKrggLtmDdPz4MsI7LYKVn1RLBa+9NLwbr+E242UwsdlHZ7xfwYqHj8+stxNPnR7X1PLV9bG0RbnaQXENWkdNeH/YEX77u/xNQpx38uyW++w8=

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # PromGuard - Authenticated/Encrypted Prometheus stat scraping over WireGuard

2 |

3 | 1. [Summary](https://github.com/cpu/PromGuard#summary)

4 | 1. [Demo Prerequisites](https://github.com/cpu/PromGuard#demo-prerequisites)

5 | 1. [Initial Setup](https://github.com/cpu/PromGuard#initial-setup)

6 | 1. [Demo Usage](https://github.com/cpu/PromGuard#demo-usage)

7 | 1. [Background](https://github.com/cpu/PromGuard#background)

8 | 1. [Implementation](https://github.com/cpu/PromGuard#implementation)

9 | 1. [Conclusion](https://github.com/cpu/PromGuard#conclusion)

10 | 1. [Example run](https://github.com/cpu/PromGuard#example-run)

11 |

12 | ## Summary

13 |

14 | [Prometheus](https://prometheus.io/) doesn't support authentication/encryption

15 | out of the box. Scraping metrics over the capital-I Internet without them is a no-go.

16 | Putting mutually authenticated TLS in front is a hassle.

17 |

18 | [WireGuard](http://wireguard.com/) is a next-generation VPN technology likely to

19 | be part of the mainline Linux kernel Soon(TM). It is: simple, fast, effective.

20 |

21 | Can we configure Prometheus to scrape stats over WireGuard? Of course. This is

22 | a repository showing an example of this approach using

23 | [Terraform](https://www.terraform.io/) and [Ansible](http://ansible.com/) so

24 | you can easily try it yourself with as little as _one command*_.

25 |

26 | `*` - _Not counting installing Terraform & Ansible, and configuring

27 | a DigitalOcean API token! Some limitations apply, batteries not included, offer

28 | not valid in Quebec._

29 |

30 | ## Demo Prerequisites

31 |

32 | 1. [Install Ansible](http://docs.ansible.com/ansible/latest/intro_installation.html)

33 | 1. [Install Terraform](https://www.terraform.io/intro/getting-started/install.html)

34 | 1. [Get a DigitalOcean API key](https://cloud.digitalocean.com/settings/api/tokens)

35 | 1. Clone this repo and `cd` into it.

36 |

37 | ## Initial Setup

38 |

39 | 1. Create `terraform.tfvars` in the root of the project directory

40 | 1. Inside of `terraform.tfvars` put:

41 | ```

42 | do_token = "YOUR_DIGITAL_OCEAN_API_KEY_HERE"

43 | do_ssh_key_file = "PATH_TO_YOUR_SSH_PUBLIC_KEY_HERE"

44 | do_ssh_key_name = "A_NAME_TO_ADD_YOUR_SSH_PUBLIC_KEY_UNDER_IDK_PICK_ONE"

45 | ```

46 | 1. Run `terraform init` to get required plugins

47 |

48 | ## Demo Usage

49 |

50 | 1. Run `./run.sh`

51 | 1. Follow the instructions at completion to access the Prometheus interface on the

52 | monitoring host.

53 |

54 | ## Background

55 |

56 | ### Prometheus

57 |

58 | [Prometheus](https://prometheus.io/) is "an open-source systems monitoring and

59 | alerting toolkit originally built at SoundCloud". It provides a slick

60 | multi-dimensional time series metrics system exposing a powerful query language.

61 | [LWN](https://lwn.net) recently published a [great introduction to monitoring

62 | with Prometheus](https://lwn.net/Articles/744410/). Much like the author of the

63 | article, I've recently transitioned my own systems from [Munin

64 | monitoring](http://munin-monitoring.org/) to Prometheus with great success.

65 |

66 | Prometheus is simple and easy to understand. At its core, metrics from individual

67 | services/machines are exposed via HTTP at a `/metrics` URL path. These endpoints

68 | are often made available by dedicated programs Prometheus calls "exporters".

69 | Periodically (every 15s in Prometheus' example configuration; the built-in default is 1m) the Prometheus server scrapes configured

70 | metrics endpoints ("targets" in Prometheus parlance), collecting the data into

71 | the time series database.
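To make the `/metrics` idea concrete, here's a minimal Python sketch of reading the plain-text format an exporter serves. It only understands simple `name{label="value"} number` sample lines and skips `#` comment lines. Escaped label values and timestamps, which the real format allows, are out of scope. The function name and the sample metrics are illustrative (metric names vary between `node_exporter` versions), not taken from PromGuard.

```python
import re
from typing import Dict, List, Tuple

# A sample line looks like: metric_name{label="value",other="x"} 12.5
# Assumption: label values contain no escaped quotes and lines carry no timestamp.
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')

Sample = Tuple[str, Dict[str, str], float]


def parse_metrics(text: str) -> List[Sample]:
    """Parse simple Prometheus text-format samples into (name, labels, value)."""
    samples: List[Sample] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and # HELP / # TYPE metadata
        match = SAMPLE_RE.match(line)
        if match is None:
            continue  # outside the subset this sketch understands
        name, raw_labels, value = match.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))
    return samples


if __name__ == "__main__":
    body = (
        "# HELP node_load1 1m load average.\n"
        "# TYPE node_load1 gauge\n"
        "node_load1 0.42\n"
        'node_filesystem_avail_bytes{device="/dev/vda1",mountpoint="/"} 2.1e+10\n'
    )
    for sample in parse_metrics(body):
        print(sample)
```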

72 |

73 | Prometheus makes available a first-party

74 | [`node_exporter`](https://github.com/prometheus/node_exporter) that exposes

75 | typical system stats (disk space, CPU usage, network interface stats, etc) via

76 | a `/metrics` endpoint. This repository/example only configures this one exporter

77 | but [others are

78 | available](https://github.com/prometheus/docs/blob/master/content/docs/instrumenting/exporters.md)

79 | and this approach generalizes to them as well.

80 |

81 | ### Prometheus Authentication/Authorization/Encryption

82 |

83 | [Prometheus' own documentation](https://prometheus.io/docs/operating/security/#authentication-authorisation-encryption)

84 | is clear and up-front about the fact that "Prometheus and its components do not

85 | provide any server-side authentication, authorisation or encryption". Alone,

86 | the `node_exporter` has no ability to encrypt the metrics data it provides to a

87 | Prometheus scraper, and no way to authenticate that the thing requesting metrics

88 | data is the Prometheus scraper you expect. If your Prometheus server is in

89 | Toronto and your nodes are spread out around the world this poses a significant

90 | obstacle to overcome.

91 |

92 | The official recommendation is to deploy mutually authenticated TLS with [client

93 | certificates](https://en.wikipedia.org/wiki/Transport_Layer_Security#Client-authenticated_TLS_handshake),

94 | using a reverse proxy. I'm certainly [not averse to

95 | TLS](https://letsencrypt.org/) but building your own internal PKI, deploying

96 | a dedicated reverse proxy to each host next to the exporter, and configuring

97 | the reverse proxy instances, the exporter instances, and Prometheus for

98 | client authentication is certainly not a walk in the park.

99 |

100 | Avoiding the hassle has driven folks to creative (but cumbersome) [SSH-based

101 | solutions](https://miek.nl/2016/february/24/monitoring-with-ssh-and-prometheus/)

102 | and, more creatively, [Tor hidden services](https://ef.gy/secure-prometheus-ssh-hidden-service).

103 |

104 | What if there was.... :sparkles: _A better way_ :sparkles:

105 |

106 | ### WireGuard

107 |

108 | [WireGuard](https://www.wireguard.com/) rules. It's an "extremely

109 | simple yet fast and modern VPN that utilizes state-of-the-art cryptography". The

110 | [white paper](https://www.wireguard.com/papers/wireguard.pdf), originally

111 | published at [NDSS

112 | 2017](https://www.ndss-symposium.org/ndss2017/ndss-2017-programme/wireguard-next-generation-kernel-network-tunnel/),

113 | goes into exquisite detail on the protocol and the small, easy-to-audit, and

114 | performant kernel-mode implementation.

115 |

116 | The tl;dr is that WireGuard lets us create fast, encrypted, authenticated

117 | links between servers. Its implementation is perfectly suited to writing

118 | firewall rules and we can easily work with the standard network interface it

119 | creates. No PKI or certificates required. There's not a single byte of ASN.1 in

120 | sight. It's enough to bring you to tears.

121 |

122 | ### WireGuard meets Prometheus

123 |

124 | If each target machine and Prometheus server has a WireGuard keypair

125 | & interface, then we can configure the target exporters to bind only to the

126 | WireGuard interface. We can also write firewall rules that restrict traffic to

127 | the exporter such that it must arrive over the WireGuard interface and from

128 | the Prometheus server's WireGuard peer IP. The end result is a system that only

129 | allows fully encrypted, fully authenticated access to the exporter stats from

130 | the minimum number of hosts. It also fails closed! If something goes wrong with

131 | the WireGuard configuration, the exporter will not be internet-accessible. Rad!

132 | No extra services or complex configuration.
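The policy above comes down to a few checks on every inbound connection to the exporter. Here's a toy Python model of that decision, purely to spell out the logic. PromGuard itself enforces it with UFW and WireGuard, not with code like this. The `Packet` type and `exporter_allows` function are hypothetical. `wg0`, port `9100` and the `10.0.0.x` addresses come from the example run at the end of this README.

```python
from dataclasses import dataclass
from ipaddress import ip_address


@dataclass(frozen=True)
class Packet:
    """Hypothetical view of an inbound TCP packet on a monitored node."""
    iface: str  # interface the packet arrived on, e.g. "wg0" or "eth0"
    src: str    # source IP address
    dst: str    # destination IP address
    dport: int  # destination TCP port


def exporter_allows(pkt: Packet, node_wg_ip: str, monitor_wg_ip: str,
                    port: int = 9100, wg_iface: str = "wg0") -> bool:
    """True only if the packet may reach the exporter: over WireGuard, from the monitor."""
    if pkt.dport != port:
        return False  # this rule only ever opens the exporter port
    # Fail closed: every condition must hold, otherwise the answer is "no".
    return (
        pkt.iface == wg_iface
        and ip_address(pkt.src) == ip_address(monitor_wg_ip)
        and ip_address(pkt.dst) == ip_address(node_wg_ip)
    )


if __name__ == "__main__":
    scrape = Packet("wg0", "10.0.0.1", "10.0.0.4", 9100)
    print(exporter_allows(scrape, node_wg_ip="10.0.0.4", monitor_wg_ip="10.0.0.1"))
```

The UFW rule PromGuard writes matches on the source and destination WireGuard addresses. WireGuard's `AllowedIPs` is what ties the `10.0.0.1` source address to the monitor's key.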

133 |

134 | ### Implementation

135 |

136 | Initially I was going to write this as a blog post, but talk is cheap! Running

137 | code is much better. Using Terraform and Ansible makes this a reproducible

138 | demonstration of the idea.

139 |

140 | #### Terraform

141 |

142 | The Terraform config in

143 | [`promguard.tf`](https://github.com/cpu/PromGuard/blob/master/promguard.tf)

144 | has three main responsibilities:

145 |

146 | 1. Creating 1 monitor droplet and 3 to-be-monitored node droplets

147 | 1. Generating an Ansible inventory

148 | 1. Assigning WireGuard IPs to each droplet

149 |

150 | There isn't anything especially fancy about item 1. The [`monitor`

151 | droplet](https://github.com/cpu/PromGuard/blob/af52c13d83367f0f049cbb29b5dc73c91270ad93/promguard.tf#L153:L171)

152 | and the `${var.node_count}` individual [`node`

153 | droplets](https://github.com/cpu/PromGuard/blob/af52c13d83367f0f049cbb29b5dc73c91270ad93/promguard.tf#L173:L194)

154 | both use a `remote-exec` provisioner. This ensures the droplets have SSH

155 | available before continuing and also bootstraps the droplets with Python so that

156 | Ansible playbooks can be run.

157 |

158 | The [Ansible

159 | inventory](http://docs.ansible.com/ansible/latest/intro_inventory.html) is

160 | generated in three parts. First, for [each to-be-monitored

161 | node](https://github.com/cpu/PromGuard/blob/af52c13d83367f0f049cbb29b5dc73c91270ad93/templates/hostname.tpl),

162 | an inventory line is

163 | [templated](https://github.com/cpu/PromGuard/blob/af52c13d83367f0f049cbb29b5dc73c91270ad93/templates/hostname.tpl). The end result is a line of the form: ` ansible_host= wireguard_ip=`. An inventory line [for the monitor

165 | node](https://github.com/cpu/PromGuard/blob/af52c13d83367f0f049cbb29b5dc73c91270ad93/promguard.tf#L98:L109) is generated the same way. Lastly, another [template](https://github.com/cpu/PromGuard/blob/af52c13d83367f0f049cbb29b5dc73c91270ad93/templates/inventory.tpl) is used to [stitch together the node and monitor inventory lines](https://github.com/cpu/PromGuard/blob/af52c13d83367f0f049cbb29b5dc73c91270ad93/promguard.tf#L111:L119) into one Ansible inventory.
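For a feel of what that templating produces without running Terraform, here's a rough Python equivalent. It assumes each host line is the host name followed by `ansible_host` and `wireguard_ip` variables, grouped into INI sections. The `monitors` group name comes from `playbooks/promguard.yml`. The `nodes` group name, the host names and the `203.0.113.x` documentation IPs are illustrative, and the real `inventory.tpl` layout may differ.

```python
from typing import Dict, List


def host_line(name: str, public_ip: str, wireguard_ip: str) -> str:
    """One INI inventory line: host name plus per-host variables."""
    return f"{name} ansible_host={public_ip} wireguard_ip={wireguard_ip}"


def render_inventory(groups: Dict[str, List[str]]) -> str:
    """Stitch pre-rendered host lines into INI groups such as [monitors]."""
    sections = ["\n".join([f"[{group}]", *lines]) for group, lines in groups.items()]
    return "\n\n".join(sections) + "\n"


if __name__ == "__main__":
    inventory = render_inventory({
        "monitors": [host_line("promguard-monitor-1", "203.0.113.10", "10.0.0.1")],
        # Hypothetical group name for the monitored droplets.
        "nodes": [
            host_line("promguard-node-1", "203.0.113.11", "10.0.0.2"),
            host_line("promguard-node-2", "203.0.113.12", "10.0.0.3"),
        ],
    })
    print(inventory, end="")
```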

166 |

167 | When generating the inventory line, each server is given a WireGuard IP in the

168 | `10.0.0.0` RFC 1918 private network.

169 | To make life easy [the monitor is always the first

170 | address](https://github.com/cpu/PromGuard/blob/af52c13d83367f0f049cbb29b5dc73c91270ad93/promguard.tf#L105:L107),

171 | `10.0.0.1`. The nodes are [assigned sequential

172 | addresses](https://github.com/cpu/PromGuard/blob/af52c13d83367f0f049cbb29b5dc73c91270ad93/promguard.tf#L90:L94)

173 | starting at `10.0.0.2`.
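`promguard.tf` does this numbering in HCL. The same scheme as a Python sketch: the monitor takes the first host address and the nodes follow in order. The `/24` prefix is an assumption, since the README only names the `10.0.0.0` network. `assign_wireguard_ips` is a hypothetical helper.

```python
from ipaddress import IPv4Network
from typing import List, Tuple


def assign_wireguard_ips(node_count: int,
                         network: str = "10.0.0.0/24") -> Tuple[str, List[str]]:
    """Monitor gets the first host address; nodes get the following ones."""
    net = IPv4Network(network)
    first = net.network_address + 1  # 10.0.0.1 for the default network
    addrs = [first + i for i in range(node_count + 1)]
    if addrs[-1] >= net.broadcast_address:
        raise ValueError("not enough host addresses in the WireGuard network")
    return str(addrs[0]), [str(a) for a in addrs[1:]]


if __name__ == "__main__":
    monitor, nodes = assign_wireguard_ips(3)
    print(monitor, nodes)
```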

174 |

175 | #### Ansible

176 |

177 | There are four main Ansible roles at work, applied by `playbooks/promguard.yml`:

178 | 1. The [UFW

179 | role](https://github.com/cpu/PromGuard/tree/367f334819b6ba4c6a323e3bbec76b934f93b7c7/playbooks/roles/ufw/tasks)

180 | 1. The [WireGuard

181 | role](https://github.com/cpu/PromGuard/tree/367f334819b6ba4c6a323e3bbec76b934f93b7c7/playbooks/roles/wireguard)

182 | 1. The [Node Exporter role](https://github.com/cpu/PromGuard/tree/367f334819b6ba4c6a323e3bbec76b934f93b7c7/playbooks/roles/node_exporter)

183 | 1. The [Prometheus server

184 | role](https://github.com/cpu/PromGuard/tree/367f334819b6ba4c6a323e3bbec76b934f93b7c7/playbooks/roles/prometheus-server)

185 |

186 | The UFW role is very straightforward. It [installs

187 | UFW](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/ufw/tasks/main.yml#L3:L5), allows [inbound TCP on port 22](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/ufw/tasks/main.yml#L7:L12) for SSH, and [enables UFW at boot](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/ufw/tasks/main.yml#L14:L18) with a default deny inbound policy.

188 |

189 | The WireGuard role [installs `wireguard-dkms` and

190 | `wireguard-tools`](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/wireguard/tasks/main.yml#L3:L17)

191 | after setting up the Ubuntu PPA. Each server [generates its own WireGuard

192 | private

193 | key](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/wireguard/tasks/main.yml#L27:L30).

194 | The public key is [derived from the private key](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/wireguard/tasks/main.yml#L37:L40) and registered as an Ansible fact [for

195 | that

196 | host](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/wireguard/tasks/main.yml#L42:L44). This makes it easy to refer to a server's WireGuard public key from templates and tasks. Each private key is only known by the server it belongs to and the host running the Ansible playbooks.
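Each server uses the WireGuard tools to make its keys. If you're curious what "derived from the private key" means, here's a pure-Python sketch of the Curve25519 (X25519, RFC 7748) math behind `wg genkey` and `wg pubkey`. It clamps 32 random bytes, multiplies the base point u=9 by them, and base64-encodes both halves the way `wg` prints them. It's a teaching aid only and isn't constant-time, so stick with `wg` for real keys. The function names are hypothetical.

```python
import base64
import os

P = 2**255 - 19                          # Curve25519 field prime
A24 = 121665                             # (486662 - 2) / 4, per RFC 7748
BASE_POINT = (9).to_bytes(32, "little")  # u-coordinate of the base point


def clamp(key: bytes) -> bytes:
    """Clamp a 32-byte scalar as RFC 7748 specifies."""
    b = bytearray(key)
    b[0] &= 248
    b[31] &= 127
    b[31] |= 64
    return bytes(b)


def x25519(scalar: bytes, u: bytes) -> bytes:
    """Montgomery-ladder X25519 from RFC 7748, section 5 (NOT constant-time)."""
    k = int.from_bytes(clamp(scalar), "little")
    x1 = int.from_bytes(u, "little") & ((1 << 255) - 1)  # drop the top bit
    x2, z2, x3, z3, swap = 1, 0, x1, 1, 0
    for t in reversed(range(255)):
        k_t = (k >> t) & 1
        swap ^= k_t
        if swap:
            x2, x3, z2, z3 = x3, x2, z3, z2
        swap = k_t
        a, b = (x2 + z2) % P, (x2 - z2) % P
        aa, bb = a * a % P, b * b % P
        e = (aa - bb) % P
        c, d = (x3 + z3) % P, (x3 - z3) % P
        da, cb = d * a % P, c * b % P
        x3 = (da + cb) ** 2 % P
        z3 = x1 * (da - cb) ** 2 % P
        x2 = aa * bb % P
        z2 = e * (aa + A24 * e) % P
    if swap:
        x2, x3, z2, z3 = x3, x2, z3, z2
    return (x2 * pow(z2, P - 2, P) % P).to_bytes(32, "little")


def genkey() -> str:
    """Random clamped private key, base64-encoded like `wg genkey` output."""
    return base64.b64encode(clamp(os.urandom(32))).decode()


def pubkey(private_b64: str) -> str:
    """Derive the base64 public key from a base64 private key (what `wg pubkey` does)."""
    return base64.b64encode(x25519(base64.b64decode(private_b64), BASE_POINT)).decode()


if __name__ == "__main__":
    priv = genkey()
    print("public key:", pubkey(priv))
```

Only the public half ever needs to leave the server, which is why registering it as an Ansible fact is enough for building peer configs.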

197 |

198 | Beyond installing WireGuard and computing keys, the WireGuard role also

199 | writes a [WireGuard config

200 | file](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/wireguard/tasks/main.yml#L46:L52) and a [network interface config

201 | file](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/wireguard/tasks/main.yml#L54:L60).

202 |

203 | The WireGuard config file (`/etc/wireguard/wg0.conf`) for each host is written from a template that [declares an `[Interface]`](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/wireguard/templates/wg0.conf.j2#L4:L6) and the required `[Peer]` entries. The `[Peer]` config differs based on whether the host is a monitor, needing [one `[Peer]` for every server](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/wireguard/templates/wg0.conf.j2#L19:L23), or a monitored server, needing only [one `[Peer]` for the monitor](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/wireguard/templates/wg0.conf.j2#L10:L14). In both cases, the `PublicKey` and `AllowedIPs` for each peer are populated using Ansible facts and the inventory.
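A rough Python rendition of that per-role logic, to show the shape of the resulting file. The `hosts` dictionary layout is hypothetical. `ListenPort = 51820` and the `/32` `AllowedIPs` match the example `wg` output below. Including an `Endpoint` for each peer is an assumption about the real `wg0.conf.j2`.

```python
from typing import Dict, List

LISTEN_PORT = 51820  # matches "listening port" in the example `wg` output


def render_wg0(host: str, hosts: Dict[str, Dict[str, str]], monitors: List[str]) -> str:
    """Render a wg0.conf-style file: one [Interface] plus role-dependent [Peer]s."""
    me = hosts[host]
    lines = [
        "[Interface]",
        f"PrivateKey = {me['private_key']}",
        f"ListenPort = {LISTEN_PORT}",
    ]
    if host in monitors:
        peers = [name for name in hosts if name not in monitors]  # every monitored server
    else:
        peers = list(monitors)  # a monitored server only peers with the monitor
    for name in peers:
        peer = hosts[name]
        lines += [
            "",
            "[Peer]",
            f"PublicKey = {peer['public_key']}",
            # /32: only this peer's own WireGuard IP is routed to it and accepted from it.
            f"AllowedIPs = {peer['wireguard_ip']}/32",
            f"Endpoint = {peer['public_ip']}:{LISTEN_PORT}",  # assumption
        ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    demo = {
        "monitor": {"private_key": "PRIV-M", "public_key": "PUB-M",
                    "wireguard_ip": "10.0.0.1", "public_ip": "192.0.2.1"},
        "node-1": {"private_key": "PRIV-1", "public_key": "PUB-1",
                   "wireguard_ip": "10.0.0.2", "public_ip": "192.0.2.2"},
    }
    print(render_wg0("node-1", demo, ["monitor"]), end="")
```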

204 |

205 | The network interface config file (`/etc/network/interfaces.d/60-wireguard.cfg`) for each host is written from the `60-wireguard.cfg.j2` template, which [configures a `wg0` network `iface`](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/wireguard/templates/60-wireguard.cfg.j2#L6:L11). The `address` is populated based on the server's `wireguard_ip` assigned in the Ansible inventory. The `pre-up` statements configure the interface as a WireGuard-type interface that should use the `/etc/wireguard/wg0.conf` file the WireGuard role creates.
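Here's a sketch of what such an ifupdown stanza can look like, rendered from Python so it is easy to poke at. `ip link add dev wg0 type wireguard` and `wg setconf wg0 <file>` are the standard commands for creating a kernel WireGuard link and loading its keys and peers. The `auto` line, the `/24` netmask and the `post-down` cleanup are assumptions, and the real template may differ.

```python
def render_wg_iface(wireguard_ip: str, netmask: str = "255.255.255.0",
                    conf: str = "/etc/wireguard/wg0.conf") -> str:
    """Render an ifupdown stanza that creates wg0 and loads its WireGuard config."""
    return "\n".join([
        "auto wg0",
        "iface wg0 inet static",
        f"    address {wireguard_ip}",
        f"    netmask {netmask}",  # assumption: a /24 for the 10.0.0.x addresses
        # Create the kernel WireGuard link first, then load keys and peers into it.
        "    pre-up ip link add dev wg0 type wireguard",
        f"    pre-up wg setconf wg0 {conf}",
        "    post-down ip link del dev wg0",  # assumption: remove the link on ifdown
    ]) + "\n"


if __name__ == "__main__":
    print(render_wg_iface("10.0.0.4"), end="")
```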

206 |

207 | This gives us a `wg0` interface on each server, configured with the right

208 | IP/keypair, and ready with peer configuration based on the server's role.

209 |

210 | #### Node Exporter

211 |

212 | The `node_exporter` role is pretty simple. The [majority of the

213 | tasks](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/node_exporter/tasks/main.yml#L3:L46)

214 | are for setting up a dedicated user, downloading the exporter code, unpacking

215 | it, and making sure it runs on start with a systemd unit.

216 |

217 | Notably [the systemd unit

218 | template](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/node_exporter/templates/node_exporter.service.j2)

219 | makes sure the `ExecStart` line [passes

220 | `--web.listen-address`](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/node_exporter/templates/node_exporter.service.j2#L11)

221 | to restrict the `node_exporter` to listening on the `wireguard_ip` (i.e. on

222 | `wg0`). By default it listens on all interfaces (`:9100`), and we only want it to be

223 | accessible over WireGuard instead.
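`node_exporter` is written in Go, but the effect of `--web.listen-address` is easy to demonstrate with a small Python stand-in. A socket bound to one specific IP only accepts connections addressed to that IP, not to every address on the host as `:9100` would. `MetricsHandler`, `serve_metrics`, `fetch_metrics` and the `demo_up` metric are hypothetical. Here `127.0.0.1` stands in for a node's `wireguard_ip`.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class MetricsHandler(BaseHTTPRequestHandler):
    """Serve a fixed, hypothetical metric at /metrics."""

    def do_GET(self) -> None:
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = b"# TYPE demo_up gauge\ndemo_up 1\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # keep the demo quiet


def serve_metrics(listen_ip: str, port: int) -> HTTPServer:
    """Bind to one specific address, like `--web.listen-address ip:port`."""
    server = HTTPServer((listen_ip, port), MetricsHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_metrics(ip: str, port: int) -> bytes:
    """Scrape /metrics the way a Prometheus server would (plain HTTP GET)."""
    conn = http.client.HTTPConnection(ip, port, timeout=5)
    try:
        conn.request("GET", "/metrics")
        return conn.getresponse().read()
    finally:
        conn.close()


if __name__ == "__main__":
    # Port 0 lets the OS pick a free port for the demo.
    srv = serve_metrics("127.0.0.1", 0)
    print(srv.server_address, fetch_metrics(*srv.server_address))
    srv.shutdown()
    srv.server_close()
```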

224 |

225 | The `node_exporter` role also [adds a new firewall

226 | rule](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/node_exporter/tasks/main.yml#L48:L58)

227 | for all of the to-be-monitored servers. This rule allows TCP traffic to the

228 | `node_exporter` port destined to the `wireguard_ip` from the [monitor's

229 | `wireguard_ip`](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/node_exporter/tasks/main.yml#L50).
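Roughly, that Ansible task amounts to one `ufw allow` command per monitor on each monitored host, and none on the monitors themselves (the task's `when:` guard). The Python sketch below builds those command lines so you can compare them with the `ufw status` output further down. `exporter_ufw_commands` and the dictionary shapes are hypothetical, and the Ansible `ufw` module does not necessarily invoke the CLI in exactly this form.

```python
import shlex
from typing import Dict, List


def exporter_ufw_commands(host: str, hostvars: Dict[str, Dict[str, str]],
                          monitors: List[str], port: int = 9100) -> List[List[str]]:
    """Build one `ufw allow` command per monitor for a monitored host."""
    if host in monitors:
        return []  # mirrors the task's `when:` guard: monitors get no rule
    dest = hostvars[host]["wireguard_ip"]
    return [
        ["ufw", "allow", "in", "proto", "tcp",
         "from", hostvars[m]["wireguard_ip"],
         "to", dest, "port", str(port),
         "comment", f"{m} WireGuard node-exporter scraper"]
        for m in monitors
    ]


if __name__ == "__main__":
    hv = {"promguard-monitor-1": {"wireguard_ip": "10.0.0.1"},
          "promguard-node-3": {"wireguard_ip": "10.0.0.4"}}
    for cmd in exporter_ufw_commands("promguard-node-3", hv, ["promguard-monitor-1"]):
        print(shlex.join(cmd))
```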

230 |

231 | The end result is that every to-be-monitored server has a `node_exporter` that

232 | can only be accessed over WireGuard, and only by the monitor server. The monitor

233 | server isn't able to access any other ports/services and the metrics data will

234 | always be encrypted while it travels between the server and the monitor.

235 |

236 | #### Prometheus

237 |

238 | Like the `node_exporter` role, the [bulk of the

239 | tasks](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/prometheus-server/tasks/main.yml)

240 | in the Prometheus role are for adding a dedicated user, downloading Prometheus,

241 | installing it, and making sure it has a systemd unit.

242 |

243 | The main point of interest is the

244 | [`prometheus.yml.j2`](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/prometheus-server/templates/prometheus.yml.j2)

245 | template that is used to write the Prometheus server yaml config file on the

246 | monitor server.

247 |

248 | For every server in the inventory a [target scrape job is

249 | written](https://github.com/cpu/PromGuard/blob/901e88d145f8dc971822546a130f685bb5035ce7/playbooks/roles/prometheus-server/templates/prometheus.yml.j2#L10:L12). The `targets` IP is the `wireguard_ip` of each server, ensuring the stat collection is done over WireGuard.
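Here's a Python sketch of the kind of `scrape_configs` section that templating yields. `scrape_configs`, `job_name`, `static_configs` and `targets` are standard Prometheus configuration keys. Naming each job after the inventory host is an assumption, and `render_scrape_configs` is hypothetical. The real `prometheus.yml.j2` may label jobs differently.

```python
from typing import Dict


def render_scrape_configs(wireguard_ips: Dict[str, str], port: int = 9100) -> str:
    """One scrape job per server, targeting its WireGuard address."""
    out = ["scrape_configs:"]
    for name, ip in wireguard_ips.items():
        out += [
            f"  - job_name: '{name}'",
            "    static_configs:",
            f"      - targets: ['{ip}:{port}']",  # the wireguard_ip, never the public IP
        ]
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    print(render_scrape_configs({"promguard-node-1": "10.0.0.2",
                                 "promguard-node-2": "10.0.0.3"}), end="")
```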

250 |

251 | The end result is that Prometheus is configured to scrape stats for each server,

252 | over the monitor server's WireGuard link to each target server. Each target

253 | server's `node_exporter` is configured to listen on the WireGuard interface and

254 | the firewall has a rule in place to allow the monitor to access the

255 | `node_exporter`.

256 |

257 | ## Conclusion

258 |

259 | Phew! That's a lot of text. Thanks for sticking it out. I hope this was a useful

260 | example/resource.

261 |

262 | While this Terraform/Ansible code is just a demo, and specific to

263 | Prometheus/Node Exporter, the idea and much of the code are transferable to other

264 | scenarios where you need to offer a service to a trusted set of hosts in an

265 | encrypted/authenticated setting or want to use Terraform and Ansible together.

266 | Feel free to fork & adapt. Definitely let me know if you use this as a starting

267 | point for another fun WireGuard project :-)

268 |

269 | ## Example Run

270 |

271 | * An example `./run.sh` invocation recorded with `asciinema`. The IP addresses

272 | referred to elsewhere in this README match up with this recording.

273 |

274 | [asciinema recording](https://asciinema.org/a/RUGQCKxe8UAPPAMXtfRXrW33F)

275 |

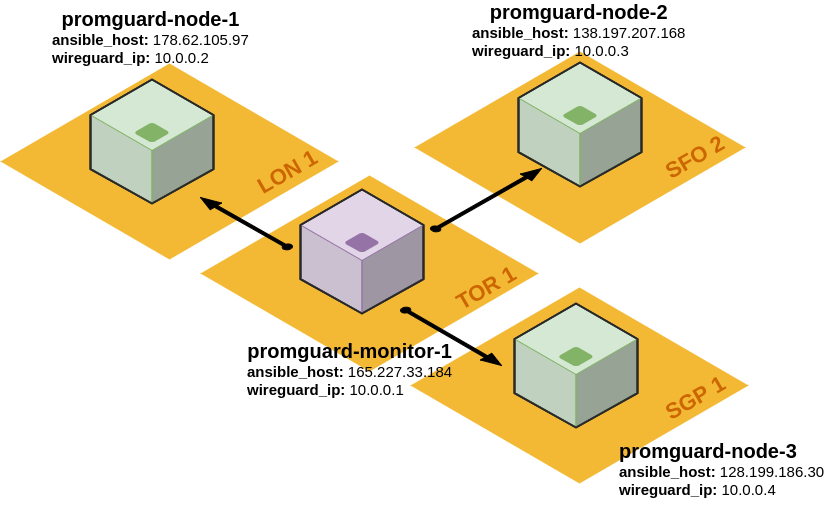

276 | * A small diagram of the resulting infrastructure. One monitor node

277 | (`promguard-monitor-1`) located in Toronto is configured with a WireGuard

278 | tunnel to three nodes to be monitored (`promguard-node-1` in London,

279 | `promguard-node-2` in San Francisco, and `promguard-node-3` in Singapore):

280 |

281 |

282 |

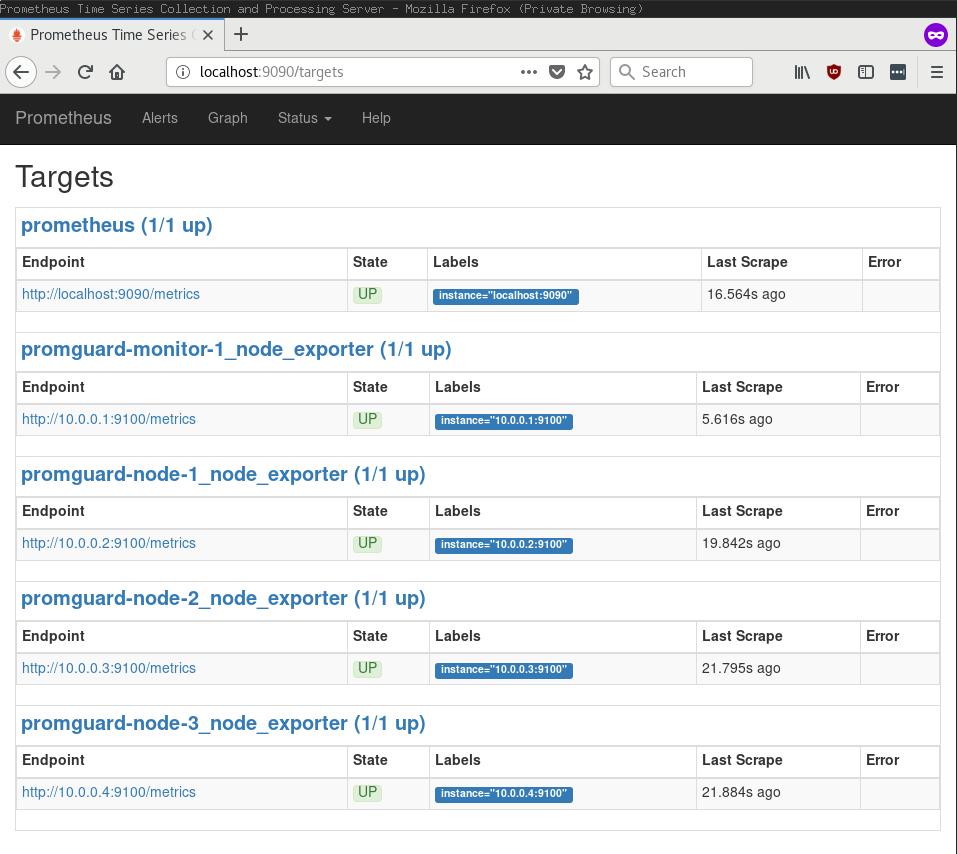

283 | * Here's what the Prometheus targets interface looks like when accessed over an SSH

284 | port forward to the monitor host. Each target is specified by a WireGuard

285 | address (`10.0.0.x`):

286 |

287 |

288 |

289 | * The monitor host's (`promguard-monitor-1`) firewall is very simple. Nothing

290 | but SSH and WireGuard here! Strictly speaking, this node doesn't even need to

291 | expose WireGuard since it only connects outbound to the monitored nodes.

292 |

293 | ```

294 | root@promguard-monitor-1:~# ufw status

295 | Status: active

296 |

297 | To                         Action      From

298 | --                         ------      ----

299 | 22/tcp                     ALLOW       Anywhere                   # OpenSSH

300 | 51820/udp                  ALLOW       Anywhere                   # WireGuard

301 | 22/tcp (v6)                ALLOW       Anywhere (v6)              # OpenSSH

302 | 51820/udp (v6)             ALLOW       Anywhere (v6)              # WireGuard

303 | ```

304 |

305 | * Here's what the monitor host's (`promguard-monitor-1`) `wg0` interface status

306 | looks like. It has one peer configured for each of the nodes (`10.0.0.2`,

307 | `10.0.0.3`, and `10.0.0.4`):

308 |

309 | ```

310 | root@promguard-monitor-1:~# wg

311 | interface: wg0

312 |   public key: TxMVo4TkXvp+Av44qL1TiW1E0m6qhdM48E/L8AxdYj4=

313 |   private key: (hidden)

314 |   listening port: 51820

315 |

316 | peer: uJIL7F6e/02Z4byfX2Tl+WRrAu7SXLt6FpP3WBum3U8=

317 |   endpoint: 178.62.105.97:51820

318 |   allowed ips: 10.0.0.2/32

319 |   latest handshake: 1 minute, 47 seconds ago

320 |   transfer: 240.56 KiB received, 21.58 KiB sent

321 |

322 | peer: oJ0y/SGhq4ebIT1m2Ago4/W4/opkeY9WzKLrxFyxlWw=

323 |   endpoint: 128.199.186.30:51820

324 |   allowed ips: 10.0.0.4/32

325 |   latest handshake: 1 minute, 48 seconds ago

326 |   transfer: 242.62 KiB received, 21.58 KiB sent

327 |

328 | peer: MOCzYMLelX8uo2WaU/y/xSBRUUphPPoMNl8FymHOGlU=

329 |   endpoint: 138.197.207.168:51820

330 |   allowed ips: 10.0.0.3/32

331 |   latest handshake: 1 minute, 49 seconds ago

332 |   transfer: 241.71 KiB received, 21.58 KiB sent

333 | ```

334 |

335 | * Here's what an example node's (`promguard-node-3`) firewall looks like. It

336 | only allows access to the `node_exporter` port (`9100`) over the WireGuard

337 | interface, and only for the monitor node's source IP (`10.0.0.1`):

338 |

339 | ```

340 | root@promguard-node-3:~# ufw status

341 | Status: active

342 |

343 | To                         Action      From

344 | --                         ------      ----

345 | 22/tcp                     ALLOW       Anywhere                   # OpenSSH

346 | 51820/udp                  ALLOW       Anywhere                   # WireGuard

347 | 10.0.0.4 9100/tcp          ALLOW       10.0.0.1                   # promguard-monitor-1 WireGuard node-exporter scraper

348 | 22/tcp (v6)                ALLOW       Anywhere (v6)              # OpenSSH

349 | 51820/udp (v6)             ALLOW       Anywhere (v6)              # WireGuard

349 | 51820/udp (v6) ALLOW Anywhere (v6) # WireGuard

350 | ```

351 |

352 | * An example node's (`promguard-node-3` again) `wg0` interface shows only one

353 | peer, the monitor host:

354 |

355 | ```

356 | root@promguard-node-3:~# wg

357 | interface: wg0

358 |   public key: oJ0y/SGhq4ebIT1m2Ago4/W4/opkeY9WzKLrxFyxlWw=

359 |   private key: (hidden)

360 |   listening port: 51820

361 |

362 | peer: TxMVo4TkXvp+Av44qL1TiW1E0m6qhdM48E/L8AxdYj4=

363 |   endpoint: 165.227.33.184:51820

364 |   allowed ips: 10.0.0.1/32

365 |   latest handshake: 31 seconds ago

366 |   transfer: 25.50 KiB received, 285.54 KiB sent

367 | ```

368 |

--------------------------------------------------------------------------------

/ansible.cfg:

--------------------------------------------------------------------------------

1 | [defaults]

2 | inventory = inventory

3 | remote_user = root

4 | become = true

5 | # NOTE(@cpu): This makes the demo much smoother by not requiring that someone

6 | # SSH to each host between the `terraform apply` step and the initial

7 | # `ansible-playbook` invocation. It is also a security risk in a production

8 | # setting! Don't reuse this without thinking first!

9 | host_key_checking = False

10 |

11 | [ssh_connection]

12 | pipelining=True

13 |

--------------------------------------------------------------------------------

/playbooks/promguard.yml:

--------------------------------------------------------------------------------

1 | ---

2 |

3 | - name: "Apply roles common to all servers"

4 |   hosts: all

5 |   roles:

6 |     - ufw

7 |     - wireguard

8 |     - node_exporter

9 |

10 | - name: "Apply roles specific to monitor servers"

11 |   hosts: monitors

12 |   roles:

13 |     - prometheus-server

14 |

--------------------------------------------------------------------------------

/playbooks/roles/node_exporter/handlers/main.yml:

--------------------------------------------------------------------------------

1 | ---

2 | - name: "restart node_exporter"

3 |   service:

4 |     name: "node_exporter"

5 |     state: "restarted"

6 |

--------------------------------------------------------------------------------

/playbooks/roles/node_exporter/tasks/main.yml:

--------------------------------------------------------------------------------

1 | ---

2 |

3 | - name: Add node_exporter user

4 |   user:

5 |     name: "node_exporter"

6 |     createhome: no

7 |     shell: "/bin/false"

8 |

9 | - name: Download Prometheus node_exporter tar.gz

10 |   get_url:

11 |     url: "{{ node_exporter_download_url }}"

12 |     checksum: "{{ node_exporter_sha256 }}"

13 |     dest: "/tmp/node_exporter-{{ node_exporter_version }}.linux-amd64.tar.gz"

14 |

15 | - name: Unpack the node_exporter tar.gz

16 |   unarchive:

17 |     src: "/tmp/node_exporter-{{ node_exporter_version }}.linux-amd64.tar.gz"

18 |     remote_src: yes

19 |     dest: "/tmp/"

20 |     creates: "/tmp/node_exporter-{{ node_exporter_version }}.linux-amd64"

21 |

22 | - name: Copy the node_exporter binary to /usr/local/bin

23 |   copy:

24 |     src: "/tmp/node_exporter-{{ node_exporter_version }}.linux-amd64/node_exporter"

25 |     remote_src: yes

26 |     dest: "/usr/local/bin/node_exporter"

27 |     mode: 0755

28 |     owner: "node_exporter"

29 |     group: "node_exporter"

30 |

31 | - name: Generate the node_exporter systemd unit

32 |   template:

33 |     src: "node_exporter.service.j2"

34 |     dest: "/etc/systemd/system/node_exporter.service"

35 |     mode: 0755

36 |   notify: restart node_exporter

37 |

38 | - name: Enable the node_exporter systemd service

39 |   service:

40 |     name: node_exporter

41 |     enabled: yes

42 |

43 | - name: Start the node_exporter systemd service

44 |   service:

45 |     name: node_exporter

46 |     state: started

47 |

48 | - name: Open a firewall port for each Monitor's WireGuard IP to access the node_exporter

49 |   ufw:

50 |     src: "{{ hostvars[item]['wireguard_ip'] }}"

51 |     dest: "{{ wireguard_ip }}"

52 |     direction: in

53 |     proto: tcp

54 |     port: "{{ node_exporter_port }}"

55 |     rule: allow

56 |     comment: "{{ item }} WireGuard node-exporter scraper"

57 |   with_items: "{{ hostvars[ansible_hostname]['groups']['monitors'] }}"

58 |   when: ansible_hostname not in hostvars[ansible_hostname]['groups']['monitors']

59 |

--------------------------------------------------------------------------------

/playbooks/roles/node_exporter/templates/node_exporter.service.j2:

--------------------------------------------------------------------------------

1 | [Unit]

2 | Description=Node Exporter

3 | Wants=network-online.target

4 | After=network-online.target

5 |

6 | [Service]

7 | User=node_exporter

8 | Group=node_exporter

9 | Type=simple

10 | ExecStart=/usr/local/bin/node_exporter \

11 |   --web.listen-address {{ wireguard_ip }}:{{ node_exporter_port }}

12 |

13 | [Install]

14 | WantedBy=multi-user.target

15 |

--------------------------------------------------------------------------------

/playbooks/roles/node_exporter/vars/main.yml:

--------------------------------------------------------------------------------

1 | ---

2 |

3 | node_exporter_version: "0.15.2"

4 | node_exporter_sha256: "sha256:1ce667467e442d1f7fbfa7de29a8ffc3a7a0c84d24d7c695cc88b29e0752df37"

5 | node_exporter_download_url: "https://github.com/prometheus/node_exporter/releases/download/v{{ node_exporter_version }}/node_exporter-{{ node_exporter_version }}.linux-amd64.tar.gz"

6 | node_exporter_port: 9100

7 |

--------------------------------------------------------------------------------

/playbooks/roles/prometheus-server/handlers/main.yml:

--------------------------------------------------------------------------------

1 | ---

2 | - name: "restart prometheus"

3 |   service:

4 |     name: "prometheus"

5 |     state: "restarted"

6 |

--------------------------------------------------------------------------------

/playbooks/roles/prometheus-server/tasks/main.yml:

--------------------------------------------------------------------------------

1 | ---

2 |

3 | # Include the node-exporter vars to have access to the node_exporter_port var

4 | - include_vars: "../../node_exporter/vars/main.yml"

5 |

6 | - name: Add prometheus user

7 | user:

8 | name: "prometheus"

9 | createhome: no

10 | shell: "/bin/false"

11 |

12 | - name: Create required prometheus directories

13 | file:

14 | path: "{{ item }}"

15 | state: directory

16 | owner: prometheus

17 | group: prometheus

18 | with_items:

19 | - "/etc/prometheus"

20 | - "/var/lib/prometheus"

21 |

22 | - name: Download the prometheus tar.gz

23 | get_url:

24 | url: "{{ prometheus_download_url }}"

25 | checksum: "{{ prometheus_sha256 }}"

26 | dest: "/tmp/prometheus-{{ prometheus_version }}.linux-amd64.tar.gz"

27 |

28 | - name: Unpack the prometheus tar.gz

29 | unarchive:

30 | src: "/tmp/prometheus-{{ prometheus_version }}.linux-amd64.tar.gz"

31 | remote_src: yes

32 | dest: "/tmp/"

33 | creates: "/tmp/prometheus-{{ prometheus_version }}.linux-amd64"

34 |

35 | - name: Copy the prometheus binaries to /usr/local/bin

36 | copy:

37 | src: "/tmp/prometheus-{{ prometheus_version }}.linux-amd64/{{ item }}"

38 | remote_src: yes

39 | dest: "/usr/local/bin/{{ item }}"

40 | mode: 0755

41 | owner: "prometheus"

42 | group: "prometheus"

43 | with_items:

44 | - "prometheus"

45 | - "promtool"

46 |

47 | - name: Copy the console libraries to /etc/prometheus

48 | command: "cp -r /tmp/prometheus-{{ prometheus_version }}.linux-amd64/{{ item }} /etc/prometheus/{{ item }}"

49 | args:

50 | creates: "/etc/prometheus/{{ item }}"

51 | with_items:

52 | - "consoles"

53 | - "console_libraries"

54 |

55 | - name: Fix permissions on console libraries in /etc/prometheus

56 | file:

57 | path: "/etc/prometheus/{{ item }}"

58 | state: directory

59 | mode: 0755

60 | owner: "prometheus"

61 | group: "prometheus"

62 | with_items:

63 | - "consoles"

64 | - "console_libraries"

65 |

66 | - name: Generate the prometheus config file

67 | template:

68 | src: "prometheus.yml.j2"

69 | dest: "/etc/prometheus/prometheus.yml"

70 | mode: 0644

71 | owner: "prometheus"

72 | group: "prometheus"

73 | notify: "restart prometheus"

74 |

75 | - name: Generate the prometheus systemd service

76 | template:

77 | src: "prometheus.service.j2"

78 | dest: "/etc/systemd/system/prometheus.service"

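79 | mode: 0644

80 |

81 | - name: Enable and start the prometheus systemd service

82 | systemd:

83 | name: prometheus

84 | state: started

85 | enabled: yes

86 | # Reload systemd first so edits to prometheus.service take effect

87 | daemon_reload: yes

88 |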

--------------------------------------------------------------------------------

/playbooks/roles/prometheus-server/templates/prometheus.service.j2:

--------------------------------------------------------------------------------

1 | [Unit]

2 | Description=Prometheus

3 | Wants=network-online.target

4 | After=network-online.target

5 |

6 | [Service]

7 | User=prometheus

8 | Group=prometheus

9 | Type=simple

10 | ExecStart=/usr/local/bin/prometheus \

11 | --config.file=/etc/prometheus/prometheus.yml \

12 | --storage.tsdb.path=/var/lib/prometheus/ \

13 | --web.console.templates=/etc/prometheus/consoles \

14 | --web.console.libraries=/etc/prometheus/console_libraries

15 |

16 | [Install]

17 | WantedBy=multi-user.target

18 |

--------------------------------------------------------------------------------

/playbooks/roles/prometheus-server/templates/prometheus.yml.j2:

--------------------------------------------------------------------------------

1 | global:

2 | scrape_interval: {{ prometheus_scrape_interval }}

3 |

4 | scrape_configs:

5 | - job_name: 'prometheus'

6 | static_configs:

7 | - targets: ['localhost:9090']

8 |

9 | {% for item in hostvars[ansible_hostname]["groups"]["all"]: %}

10 | - job_name: '{{ item }}_node_exporter'

11 | static_configs:

12 | - targets: ['{{ hostvars[item]["wireguard_ip"] }}:{{ node_exporter_port }}']

13 |

14 | {% endfor %}

15 |

--------------------------------------------------------------------------------
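To make the Jinja loop in `prometheus.yml.j2` concrete, the Bash sketch below prints the scrape jobs it would generate for an inventory that uses the default host names and WireGuard addresses from `promguard.tf`. The helper `render_node_jobs` is hypothetical (it is not part of PromGuard), and the indentation shown is the conventional Prometheus YAML layout.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print one node_exporter scrape job per "name ip" pair,
# mirroring the loop in prometheus.yml.j2. The first argument is the port
# (9100 matches node_exporter_port in roles/node_exporter/vars/main.yml).
render_node_jobs() {
  local port="$1"; shift
  local entry name ip
  for entry in "$@"; do
    name="${entry%% *}"   # first word: inventory host name
    ip="${entry##* }"     # last word: WireGuard IP
    printf "  - job_name: '%s_node_exporter'\n" "$name"
    printf "    static_configs:\n"
    printf "      - targets: ['%s:%s']\n\n" "$ip" "$port"
  done
}

render_node_jobs 9100 \
  "promguard-monitor-1 10.0.0.1" \
  "promguard-node-1 10.0.0.2" \
  "promguard-node-2 10.0.0.3" \
  "promguard-node-3 10.0.0.4"
```

Because the template iterates over the `all` group, the monitor is included as well, so Prometheus also scrapes the monitor's own node_exporter over its WireGuard address.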

/playbooks/roles/prometheus-server/vars/main.yml:

--------------------------------------------------------------------------------

1 | ---

2 |

3 | prometheus_version: "2.0.0"

4 | prometheus_sha256: "sha256:e12917b25b32980daee0e9cf879d9ec197e2893924bd1574604eb0f550034d46"

5 | prometheus_download_url: "https://github.com/prometheus/prometheus/releases/download/v{{ prometheus_version }}/prometheus-{{ prometheus_version }}.linux-amd64.tar.gz"

6 | prometheus_scrape_interval: "30s"

7 |

--------------------------------------------------------------------------------
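The `*_download_url` and `*_sha256` variables work together: the URL is expanded from the version string, and `get_url` refuses the download unless its digest matches the `sha256:<hex>` value. A Bash sketch of the same check, useful for verifying a tarball by hand, follows; `prometheus_url` and `verify_checksum` are illustrative names, and `sha256sum` is assumed to be the GNU coreutils tool.

```bash
#!/usr/bin/env bash
# Illustrative helper: expand the URL the same way prometheus_download_url does.
prometheus_url() {
  local version="$1"
  echo "https://github.com/prometheus/prometheus/releases/download/v${version}/prometheus-${version}.linux-amd64.tar.gz"
}

# Illustrative helper: check FILE against an "algorithm:hex" string such as
# prometheus_sha256. Only sha256 is handled in this sketch.
verify_checksum() {
  local file="$1" spec="$2"
  local algo="${spec%%:*}" expected="${spec#*:}"
  if [ "$algo" != "sha256" ]; then
    echo "unsupported algorithm: $algo" >&2
    return 2
  fi
  local actual
  actual="$(sha256sum "$file" | cut -d' ' -f1)"
  [ "$actual" = "$expected" ]
}

prometheus_url "2.0.0"
```

For example, after `curl -LO "$(prometheus_url 2.0.0)"`, calling `verify_checksum` on the downloaded file with the value of `prometheus_sha256` should succeed; any other digest makes it return non-zero.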

/playbooks/roles/ufw/tasks/main.yml:

--------------------------------------------------------------------------------

1 | ---

2 |

3 | - name: "Install UFW"

4 | apt:

5 | name: ufw

6 |

7 | - name: "Ensure UFW allows SSH"

8 | ufw:

9 | to_port: "22"

10 | proto: "tcp"

11 | rule: "allow"

12 | comment: "OpenSSH"

13 |

14 | - name: "Ensure UFW is enabled and denies by default"

15 | ufw:

16 | state: "enabled"

17 | policy: "deny"

18 | direction: "incoming"

19 |

--------------------------------------------------------------------------------
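The order of the UFW tasks matters: the SSH rule is added before the deny-by-default policy is enabled, so the Ansible connection is not cut off mid-run. The Bash sketch below lists roughly equivalent `ufw` CLI calls in the same order. `configure_ufw` and the `DRY_RUN` switch are hypothetical conveniences; with `DRY_RUN=1` the commands are only printed, while actually running them requires root.

```bash
#!/usr/bin/env bash
# Print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Same order as the role: install, allow SSH, set the default policy, enable.
configure_ufw() {
  run apt-get install -y ufw
  run ufw allow 22/tcp comment OpenSSH
  run ufw default deny incoming
  run ufw --force enable   # --force skips the interactive confirmation
}

DRY_RUN=1 configure_ufw
```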

/playbooks/roles/wireguard/tasks/main.yml:

--------------------------------------------------------------------------------

1 | ---

2 |

3 | - name: Add WireGuard apt repo

4 | apt_repository:

5 | repo: "{{ wireguard_apt_repo }}"

6 | state: present

7 |

8 | - name: Update the apt cache

9 | apt:

10 | update_cache: yes

11 |

12 | - name: Install WireGuard components

13 | apt:

14 | name: "{{ item }}"

15 | with_items:

16 | - wireguard-dkms

17 | - wireguard-tools

18 |

19 | - name: Ensure WireGuard directory exists

20 | file:

21 | path: "/etc/wireguard"

22 | state: directory

23 | owner: root

24 | group: root

25 | mode: 0640

26 |

27 | - name: Generate a WireGuard private key

28 | shell: "umask 077; wg genkey > /etc/wireguard/wg.priv"

29 | args:

30 | creates: "/etc/wireguard/wg.priv"

31 |

32 | - name: Register the WireGuard private key

33 | command: "cat /etc/wireguard/wg.priv"

34 | register: wireguard_private_key_cmd

35 | changed_when: false

36 |

37 | - name: Compute the WireGuard public key

38 | shell: "wg pubkey < /etc/wireguard/wg.priv"

39 | register: "wireguard_public_key_cmd"

40 | changed_when: false

41 |

42 | - name: Register the WireGuard public key as a fact

43 | set_fact:

44 | wireguard_public_key: "{{ wireguard_public_key_cmd.stdout }}"

45 |

46 | - name: Write the WireGuard config

47 | template:

48 | src: "wg0.conf.j2"

49 | dest: "/etc/wireguard/wg0.conf"

50 | owner: root

51 | group: root

52 | mode: 0640

53 |

54 | - name: Write the wg0 interface config

55 | template:

56 | src: "60-wireguard.cfg.j2"

57 | dest: "/etc/network/interfaces.d/60-wireguard.cfg"

58 | owner: root

59 | group: root

60 | mode: 0644

61 |

62 | - name: Open a firewall port for WireGuard

63 | ufw:

64 | direction: in

65 | proto: udp

66 | port: "{{ wireguard_listen_port }}"

67 | rule: allow

68 | comment: "WireGuard"

69 |

70 | - name: Bring down the wg0 interface

71 | command: ifdown wg0

72 | # Ignore errors: wg0 might not exist yet

73 | ignore_errors: true

74 | changed_when: false

75 |

76 | - name: Bring up the wg0 interface

77 | command: ifup wg0

78 | changed_when: false

79 |

--------------------------------------------------------------------------------
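The key-handling tasks in the WireGuard role follow a "generate once, then reuse" pattern: `wg genkey` runs under `umask 077`, so `wg.priv` is created readable only by its owner, and `creates:` skips generation when the file already exists. A Bash sketch of that pattern follows. The `keygen` function is a placeholder standing in for `wg genkey` so the sketch runs without WireGuard installed (use `wg genkey` for real keys), and the `stat -c` call in the usage assumes GNU coreutils.

```bash
#!/usr/bin/env bash
# Placeholder for `wg genkey`: 32 random bytes, base64-encoded (44 characters).
keygen() {
  head -c 32 /dev/urandom | base64
}

# Generate a private key at PATH only if none exists yet.
ensure_private_key() {
  local path="$1"
  if [ -e "$path" ]; then
    return 0   # like `creates:`, an existing key is never overwritten
  fi
  # The subshell keeps the restrictive umask from leaking into the caller;
  # files created under umask 077 get mode 0600.
  ( umask 077 && keygen > "$path" )
}
```

Calling `ensure_private_key /tmp/wg.priv` twice leaves the first key in place, and `stat -c %a /tmp/wg.priv` reports `600`. The role then derives the public key from this file with `wg pubkey`.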

/playbooks/roles/wireguard/templates/60-wireguard.cfg.j2:

--------------------------------------------------------------------------------

1 | #

2 | # WireGuard wg0 interface config for {{ ansible_hostname }}

3 | #

4 |

5 | auto wg0

6 | iface wg0 inet static

7 | address {{ wireguard_ip }}/24

8 | netmask 255.255.255.0

9 | pre-up ip link add dev $IFACE type wireguard

10 | pre-up wg setconf $IFACE /etc/wireguard/$IFACE.conf

11 | post-down ip link del $IFACE

12 |

--------------------------------------------------------------------------------
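The `wg0` stanza relies on ifupdown hooks: `pre-up` creates the WireGuard link and loads its config, the static address is then assigned, and `post-down` deletes the link. The Bash sketch below spells out roughly equivalent `ip`/`wg` commands, which can help when debugging the interface by hand. The function names and `DRY_RUN` switch are hypothetical; running the commands for real needs root and the WireGuard kernel module.

```bash
#!/usr/bin/env bash
# Print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Roughly what `ifup wg0` does with 60-wireguard.cfg.
wg_up() {
  local iface="$1" cidr="$2"
  run ip link add dev "$iface" type wireguard            # pre-up
  run wg setconf "$iface" "/etc/wireguard/$iface.conf"   # pre-up
  run ip address add "$cidr" dev "$iface"                # address / netmask
  run ip link set up dev "$iface"
}

# Roughly what `ifdown wg0` does.
wg_down() {
  local iface="$1"
  run ip link set down dev "$iface"
  run ip link del dev "$iface"                           # post-down
}

DRY_RUN=1 wg_up wg0 10.0.0.2/24
```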

/playbooks/roles/wireguard/templates/wg0.conf.j2:

--------------------------------------------------------------------------------

1 | #

2 | # {{ ansible_hostname }} wg0 configuration

3 | #

4 | [Interface]

5 | PrivateKey = {{ wireguard_private_key_cmd.stdout }}

6 | ListenPort = {{ wireguard_listen_port }}

7 |

8 | {% if ansible_hostname not in hostvars[ansible_hostname]['groups']['monitors'] %}

9 | {% for item in hostvars[ansible_hostname]['groups']['monitors']: %}

10 | # {{ item }} (Monitor)

11 | [Peer]

12 | Endpoint = {{ hostvars[item]['ansible_default_ipv4']['address'] }}:{{ wireguard_listen_port }}

13 | PublicKey = {{ hostvars[item]['wireguard_public_key'] }}

14 | AllowedIPs = {{ hostvars[item]['wireguard_ip'] }}

15 |

16 | {% endfor %}

17 | {% else %}

18 | {% for item in hostvars[ansible_hostname]['groups']['all']: %}

19 | # {{ item }} (Monitored node)

20 | [Peer]

21 | Endpoint = {{ hostvars[item]['ansible_default_ipv4']['address'] }}:{{ wireguard_listen_port }}

22 | PublicKey = {{ hostvars[item]['wireguard_public_key'] }}

23 | AllowedIPs = {{ hostvars[item]['wireguard_ip'] }}

24 |

25 | {% endfor %}

26 | {% endif %}

27 |

28 |

29 |

--------------------------------------------------------------------------------
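`wg0.conf.j2` builds a hub-and-spoke layout: a monitored node lists only the monitor(s) as peers, while a monitor lists every host in the inventory, so nodes never tunnel to each other directly. The Bash sketch below reproduces that selection rule for the default host names from `promguard.tf`; `peers_of` and `is_monitor` are hypothetical helpers, not part of PromGuard.

```bash
#!/usr/bin/env bash
# Default inventory from promguard.tf (illustrative).
MONITORS=(promguard-monitor-1)
ALL_HOSTS=(promguard-node-1 promguard-node-2 promguard-node-3 promguard-monitor-1)

is_monitor() {
  local m
  for m in "${MONITORS[@]}"; do
    [ "$m" = "$1" ] && return 0
  done
  return 1
}

# Print the peer list the template would emit for HOST.
peers_of() {
  local host="$1"
  if is_monitor "$host"; then
    printf '%s\n' "${ALL_HOSTS[@]}"   # monitors peer with everyone in `all`
  else
    printf '%s\n' "${MONITORS[@]}"    # nodes peer with the monitor(s) only
  fi
}

peers_of promguard-node-2
```

As in the template, the monitor's list comes from the `all` group and therefore contains the monitor itself.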

/playbooks/roles/wireguard/vars/main.yml:

--------------------------------------------------------------------------------

1 | ---

2 |

3 | wireguard_apt_repo: "ppa:wireguard/wireguard"

4 |

5 | wireguard_listen_port: 51820

6 |

--------------------------------------------------------------------------------

/promguard.tf:

--------------------------------------------------------------------------------

1 | /*

2 | * PromGuard Terraform

3 | * @cpu - 2018

4 | *

5 | * An example of creating 1 monitoring node, and n target nodes. Target nodes

6 | * are spread across geographically diverse regions. Template datasources are

7 | * used to populate an Ansible inventory output. Later Ansible playbooks will

8 | * create an encrypted site-to-site tunnel between the monitoring node and the

9 | * target nodes.

10 | *

11 | */

12 |

13 | /*

14 | * Placeholder variables - populate these in your `terraform.tfvars` file.

15 | */

16 | variable "do_token" {}

17 | variable "do_ssh_key_file" {}

18 | variable "do_ssh_key_name" {

19 | default = "promguard-ssh"

20 | }

21 |

22 | /*

23 | * PromGuard Configuration Options

24 | */

25 |

26 | # What size of Droplet should be used?

27 | variable "do_size" {

28 | default = "1gb"

29 | }

30 |

31 | # What droplet image should be used?

32 | variable "do_image" {

33 | default = "ubuntu-16-04-x64"

34 | }

35 |

36 | # What region should the monitoring droplet be in?

37 | # Default: Toronto, CA

38 | variable "do_monitor_region" {

39 | default = "tor1"

40 | }

41 |

42 | # How many nodes should be created? If you change this, make sure

43 | # `do_node_regions` has an entry for each of the `node_count` nodes.

44 | variable "node_count" {

45 | default = 3

46 | }

47 |

48 | # What regions should each of the remote nodes be created in?

49 | # Default:

50 | # Node 1 - London, UK

51 | # Node 2 - San Francisco, US

52 | # Node 3 - Singapore, SG

53 | variable "do_node_regions" {

54 | type = "map"

55 |

56 | default = {

57 | "promguard-node-1" = "lon1"

58 | "promguard-node-2" = "sfo2"

59 | "promguard-node-3" = "sgp1"

60 | }

61 | }

62 |

63 | /*

64 | * Terraform outputs

65 | */

66 |

67 | # ansible_inventory is an output for the rendered ansible_inventory template

68 | output "ansible_inventory" {

69 | value = "${data.template_file.ansible_inventory.rendered}"

70 | }

71 |

72 | # monitor_ip is an output for the public IPv4 address of the monitor droplet

73 | # used for SSH port forwarding

74 | output "monitor_ip" {

75 | value = "${digitalocean_droplet.monitor.ipv4_address}"

76 | }

77 |

78 | /*

79 | * Template Data Sources

80 | */

81 |

82 | # nodes_ansible is a template datasource that constructs a hostname template for

83 | # each of the "node" droplets

84 | data "template_file" "nodes_ansible" {

85 | count = "${var.node_count}"

86 | template = "${file("${path.module}/templates/hostname.tpl")}"

87 | vars {

88 | name = "promguard-node-${count.index + 1}"

89 | ansible_host = "ansible_host=${digitalocean_droplet.node.*.ipv4_address[count.index]}"

90 | # Each node host is given a sequential address in the RFC 1918 10.0.0.0/24

91 | # network using the `cidrhost` function. The host offset is

92 | # `count.index + 2`: one for zero-based indexing, and one to skip

93 | # 10.0.0.1, which is reserved for the monitor host.

94 | wireguard_ip = "wireguard_ip=${cidrhost("10.0.0.0/24", count.index + 2)}"

95 | }

96 | }

97 |

98 | # monitor_ansible is a template datasource that constructs a hostname template

99 | # only for the "monitor" droplet

100 | data "template_file" "monitor_ansible" {

101 | template = "${file("${path.module}/templates/hostname.tpl")}"

102 | vars {

103 | name = "promguard-monitor-1"

104 | ansible_host = "ansible_host=${digitalocean_droplet.monitor.ipv4_address}"

105 | # The monitor wireguard_ip is always the first host in the 10.0.0.0 subnet

106 | # for this example code.

107 | wireguard_ip = "wireguard_ip=${cidrhost("10.0.0.0/24", 1)}"

108 | }

109 | }

110 |

111 | # ansible_inventory is a template datasource that stitches together the

112 | # nodes_ansible and monitor_ansible datasources to make an Ansible inventory

113 | data "template_file" "ansible_inventory" {

114 | template = "${file("${path.module}/templates/inventory.tpl")}"

115 | vars {

116 | monitor = "${data.template_file.monitor_ansible.rendered}"

117 | node_hosts = "${join("",data.template_file.nodes_ansible.*.rendered)}"

118 | }

119 | }

120 |

121 |

122 | /*

123 | * DigitalOcean provider & resources

124 | */

125 |

126 | # Use DigitalOcean as the provider

127 | provider "digitalocean" {

128 | token = "${var.do_token}"

129 | }

130 |

131 | # Create an SSH key for the PromGuard droplets to reference

132 | resource "digitalocean_ssh_key" "promguard-ssh" {

133 | name = "${var.do_ssh_key_name}"

134 | public_key = "${file(var.do_ssh_key_file)}"

135 | }

136 |

137 | # Create an overall "promguard" tag that all droplets will reference

138 | resource "digitalocean_tag" "promguard" {

139 | name = "promguard"

140 | }

141 |

142 | # Create a "promguard_monitor" tag that only the monitor host will reference

143 | resource "digitalocean_tag" "promguard_monitor" {

144 | name = "promguard_monitor"

145 | }

146 |

147 | # Create a "promguard_node" tag that only the to-be-monitored nodes will

148 | # reference

149 | resource "digitalocean_tag" "promguard_node" {

150 | name = "promguard_node"

151 | }

152 |

153 | # Create a single "monitor" droplet in the do_monitor_region

154 | # A "remote-exec" provisioner is used to ensure SSH access is available and

155 | # that Python is installed before continuing with further provisioning stages.

156 | resource "digitalocean_droplet" "monitor" {

157 | name = "promguard-monitor-1"

158 | region = "${var.do_monitor_region}"

159 | image = "${var.do_image}"

160 | size = "${var.do_size}"

161 | ssh_keys = [ "${digitalocean_ssh_key.promguard-ssh.id}" ]

162 | tags = [ "${digitalocean_tag.promguard.id}", "${digitalocean_tag.promguard_monitor.id}" ]

163 |

164 | provisioner "remote-exec" {

165 | inline = [

166 | "# Connected!",

167 | "apt update",

168 | "apt install -y python"

169 | ]

170 | }

171 | }

172 |

173 | # Create node_count separate to-be-monitored nodes, each in a unique region

174 | # based on the do_node_regions map.

175 | # A "remote-exec" provisioner is used to ensure SSH access is available and

176 | # that Python is installed before continuing with further provisioning stages.

177 | resource "digitalocean_droplet" "node" {

178 | count = "${var.node_count}"

179 | name = "promguard-node-${count.index + 1}"

180 | region = "${var.do_node_regions["promguard-node-${count.index + 1}"]}"

181 | image = "${var.do_image}"

182 | size = "${var.do_size}"

183 | ssh_keys = [ "${digitalocean_ssh_key.promguard-ssh.id}" ]

184 | tags = [ "${digitalocean_tag.promguard.id}", "${digitalocean_tag.promguard_node.id}" ]

185 | depends_on = [ "digitalocean_droplet.monitor" ]

186 |

187 | provisioner "remote-exec" {

188 | inline = [

189 | "# Connected!",

190 | "apt update",

191 | "apt install -y python"

192 | ]

193 | }

194 | }

195 |

--------------------------------------------------------------------------------
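The `cidrhost()` calls in `promguard.tf` assign WireGuard addresses by offset inside 10.0.0.0/24: host 1 for the monitor and `count.index + 2` for each node. The Bash function below is a sketch of that arithmetic for IPv4 and non-negative host numbers only (Terraform's own function covers more cases); `cidrhost_v4` is a hypothetical name.

```bash
#!/usr/bin/env bash
# cidrhost_v4 PREFIX HOSTNUM: print the HOSTNUM-th address in IPv4 block PREFIX.
cidrhost_v4() {
  local prefix="$1" hostnum="$2"
  local base="${prefix%/*}" bits="${prefix#*/}"
  local a b c d
  IFS=. read -r a b c d <<< "$base"
  local size=$(( 1 << (32 - bits) ))
  if (( hostnum < 0 || hostnum >= size )); then
    echo "cidrhost_v4: host number $hostnum out of range for $prefix" >&2
    return 1
  fi
  # Mask the base address down to its network address, then add the offset.
  local net=$(( ((a << 24) | (b << 16) | (c << 8) | d) & ~(size - 1) & 0xFFFFFFFF ))
  local ip=$(( net + hostnum ))
  echo "$(( (ip >> 24) & 255 )).$(( (ip >> 16) & 255 )).$(( (ip >> 8) & 255 )).$(( ip & 255 ))"
}

# Monitor: host 1. Node i (zero-based count.index): host i + 2.
echo "promguard-monitor-1 $(cidrhost_v4 10.0.0.0/24 1)"
for i in 0 1 2; do
  echo "promguard-node-$((i + 1)) $(cidrhost_v4 10.0.0.0/24 $((i + 2)))"
done
```

With a /24 and the monitor on .1, nodes can occupy .2 through .254 (leaving .255 as the broadcast address), so `node_count` is effectively capped at 253, and in practice by the number of entries in `do_node_regions`.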

/run.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | set -e

4 |

5 | source "util/ansible-check.sh"

6 | source "util/terraform-check.sh"

7 | source "util/quit.sh"

8 |

9 | # Ensure ansible is installed

10 | check_ansible

11 | # Ensure terraform is installed

12 | check_terraform

13 | # Ensure the terraform.tfvars file with secret vars exists

14 | notexists_quit "./terraform.tfvars" "Create terraform.tfvars, see README.md"

15 |

16 | # Apply changes as required

17 | terraform apply

18 | # Output an Ansible inventory

19 | terraform output ansible_inventory > inventory

20 |

21 | # Configure everything with Ansible

22 | ansible-playbook playbooks/promguard.yml

23 |

24 | # All done!

25 | echo "All finished! WooHoo!"

26 | echo "You can now access prometheus through an SSH port forward to the monitor host."

27 | echo "Run:"

28 | echo " ssh -L9090:localhost:9090 root@$(terraform output monitor_ip) &"

29 | echo "And then:"

30 | echo " xdg-open http://localhost:9090/targets"

31 | echo ""

32 | echo "Have fun! Keep it Encrypted!"

33 |

--------------------------------------------------------------------------------

/templates/hostname.tpl:

--------------------------------------------------------------------------------

1 | ${name} ${ansible_host} ${wireguard_ip}

2 |

--------------------------------------------------------------------------------

/templates/inventory.tpl:

--------------------------------------------------------------------------------

1 | [nodes]

2 | ${node_hosts}

3 | [monitors]

4 | ${monitor}

5 |

--------------------------------------------------------------------------------
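Terraform renders `hostname.tpl` once per droplet and splices the results into `inventory.tpl`. Each rendered host line ends in a newline, which is why joining the node lines with `""` still yields one host per line. The Bash sketch below prints an equivalent inventory (blank lines aside, which Ansible ignores). The public IPs are placeholders from the 203.0.113.0/24 documentation range, and `host_line`/`render_inventory` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# One inventory line, like hostname.tpl: name, public IP, WireGuard IP.
host_line() {
  printf '%s ansible_host=%s wireguard_ip=%s\n' "$1" "$2" "$3"
}

# render_inventory MONITOR_IP NODE_IP...
render_inventory() {
  local monitor_ip="$1"; shift
  local i=0 ip
  echo "[nodes]"
  for ip in "$@"; do
    # 10.0.0.$((i + 2)) matches cidrhost("10.0.0.0/24", count.index + 2)
    host_line "promguard-node-$((i + 1))" "$ip" "10.0.0.$((i + 2))"
    i=$((i + 1))
  done
  echo "[monitors]"
  host_line "promguard-monitor-1" "$monitor_ip" "10.0.0.1"
}

render_inventory 203.0.113.10 203.0.113.11 203.0.113.12 203.0.113.13
```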

/util/ansible-check.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # Set errexit option to exit immediately on any non-zero status return.

4 | set -e

5 |

6 | # check_ansible checks that Ansible is installed on the local system

7 | # and that it is a supported version.

8 | function check_ansible() {

9 | local REQUIRED_ANSIBLE_VERSION="2.4.0"

10 |

11 | if ! command -v ansible > /dev/null 2>&1; then

12 | echo "

13 | This project requires Ansible and it is not installed.

14 | Please see the README Installation section on Prerequisites"

15 | exit 1

16 | fi

17 |

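18 | # Extract the version from the first line of `ansible --version`

19 | # (e.g. "ansible 2.4.2.0"); later lines contain other version numbers,

20 | # such as the Python version, that must be ignored.

21 | local INSTALLED_ANSIBLE_VERSION

22 | INSTALLED_ANSIBLE_VERSION=$(ansible --version | head -n1 | grep -oE '[0-9]+(\.[0-9]+)+' | head -n1)

23 |

24 | # Compare with `sort -V`; a lexicographic comparison would rank e.g.

25 | # "2.10.0" below "2.4.0".

26 | if [ "$(printf '%s\n' "$REQUIRED_ANSIBLE_VERSION" "$INSTALLED_ANSIBLE_VERSION" | sort -V | head -n1)" != "$REQUIRED_ANSIBLE_VERSION" ]; then

27 | echo "

28 | This project requires Ansible version $REQUIRED_ANSIBLE_VERSION or higher.

29 | This system has Ansible $INSTALLED_ANSIBLE_VERSION."

30 | exit 1

31 | fi

32 | }

33 |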

--------------------------------------------------------------------------------
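Bash's `[[ a < b ]]` compares strings character by character, so it ranks "2.10.0" below "2.4.0" and "0.9.11" above "0.11.0". `sort -V` (GNU coreutils) orders dotted version numbers numerically instead. A minimal sketch of a version check built on it follows; `version_ge` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# version_ge A B: succeed if version A >= version B.
version_ge() {
  # The smaller of the two sorts first; A >= B exactly when B comes out on top.
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

if [[ "2.10.0" < "2.4.0" ]]; then
  echo "lexicographic: 2.10.0 < 2.4.0 (wrong)"
fi
if version_ge "2.10.0" "2.4.0"; then
  echo "sort -V: 2.10.0 >= 2.4.0"
fi
```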

/util/quit.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | set -e

4 |

5 | function err_quit() {

6 | echo "Error: $1" 1>&2

7 | exit 1

8 | }

9 |

10 | function notexists_quit() {

11 | if [ ! -f "$1" ]

12 | then

13 | err_quit "\"$1\" doesn't exist - $2"

14 | fi

15 | }

16 |

17 | function exists_quit() {

18 | if [ -f "$1" ]

19 | then

20 | err_quit "\"$1\" already exists - $2"

21 | fi

22 | }

23 |

--------------------------------------------------------------------------------

/util/terraform-check.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | # Set errexit option to exit immediately on any non-zero status return.

4 | set -e

5 |

6 | # check_terraform checks that Terraform is installed on the local system

7 | # and that it is a supported version.

8 | function check_terraform() {

9 | local REQUIRED_TERRAFORM_VERSION="0.11.0"

10 |

11 | if ! command -v terraform > /dev/null 2>&1; then

12 | echo "

13 | This project requires Terraform and it is not installed.

14 | Please see the README Installation section on Prerequisites"

15 | exit 1

16 | fi

17 |

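18 | # Extract the version from the first line of `terraform --version`

19 | # (e.g. "Terraform v0.11.1" -> "0.11.1"), dropping the "v" prefix so it

20 | # can be compared with REQUIRED_TERRAFORM_VERSION.

21 | local INSTALLED_TERRAFORM_VERSION

22 | INSTALLED_TERRAFORM_VERSION=$(terraform --version | head -n1 | grep -oE '[0-9]+(\.[0-9]+)+' | head -n1)

23 |

24 | # Compare with `sort -V`; a lexicographic comparison would rank e.g.

25 | # "0.9.11" above "0.11.0".

26 | if [ "$(printf '%s\n' "$REQUIRED_TERRAFORM_VERSION" "$INSTALLED_TERRAFORM_VERSION" | sort -V | head -n1)" != "$REQUIRED_TERRAFORM_VERSION" ]; then

27 | echo "

28 | This project requires Terraform version $REQUIRED_TERRAFORM_VERSION or higher.

29 | This system has $(terraform --version)."

30 | exit 1

31 | fi

32 | }

33 |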

--------------------------------------------------------------------------------
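Extracting the version is the other half of these checks. Matching with a pattern that keeps Terraform's leading "v" yields strings such as "v0.11.1", and since "v" sorts after every digit, a lexicographic test against "0.11.0" can never fail. Scanning all output lines can also pick up unrelated numbers, such as the Python version printed by `ansible --version`. A Bash sketch that reads only the first line and keeps just the dotted digits follows; `first_version` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Read a tool's --version output on stdin and print the first dotted version
# number on its first line, without any "v" prefix.
first_version() {
  head -n1 | grep -oE '[0-9]+(\.[0-9]+)+' | head -n1
}

printf 'Terraform v0.11.1\n' | first_version
printf 'ansible 2.4.2.0\n  python version = 2.7.12\n' | first_version
```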