├── book

├── statistics

│ ├── consistency.md

│ ├── sufficiency.md

│ ├── information-geometry.md

│ ├── lhc_stats_thumbnail.md

│ ├── neyman_pearson.md

│ ├── neyman_construction.md

│ ├── estimators.md

│ ├── statistical_decision_theory.md

│ ├── cramer-rao-bound.md

│ └── bias-variance.md

├── test-sphinxext-opengraph.md

├── logo.png

├── assets

│ ├── dag.png

│ ├── mvp.png

│ ├── vmp.png

│ ├── graphs.png

│ ├── pAandB.png

│ ├── backward.png

│ ├── forward.png

│ ├── AperpBmidC.png

│ ├── composition.png

│ ├── conditional.png

│ ├── intro_bwd.png

│ ├── intro_fwd.png

│ ├── pA_and_pB.png

│ ├── schmidhuber.png

│ ├── prob_cousins.png

│ ├── Data_Science_VD.png

│ ├── atlas-higgs-2012.png

│ ├── autodiff_systems.png

│ ├── intro_autodiff.png

│ ├── nbgrader-fetch.png

│ ├── schematic_p_xy.png

│ ├── change_kernel_lab.png

│ ├── change_kernel_new.png

│ ├── nbgrader-validate.png

│ ├── 001_vanilla_ellipse.png

│ ├── change_kernel_classic.png

│ ├── nbgrader-assignments.png

│ ├── schematic_p_x_given_y.png

│ ├── schematic_p_y_given_x.png

│ ├── LHC-stats-thumbnail.001.png

│ ├── Bayes-theorem-in-pictures.png

│ ├── HCPSS-stats-lectures-2020.001.png

│ ├── HCPSS-stats-lectures-2020.002.png

│ ├── Neyman-pearson

│ │ ├── Neyman-pearson.001.png

│ │ ├── Neyman-pearson.002.png

│ │ ├── Neyman-pearson.003.png

│ │ ├── Neyman-pearson.004.png

│ │ ├── Neyman-pearson.005.png

│ │ └── Neyman-pearson.006.png

│ ├── Neyman-construction

│ │ ├── Neyman-construction.001.png

│ │ ├── Neyman-construction.002.png

│ │ ├── Neyman-construction.003.png

│ │ ├── Neyman-construction.004.png

│ │ ├── Neyman-construction.005.png

│ │ ├── Neyman-construction.006.png

│ │ ├── Neyman-construction.007.png

│ │ ├── Neyman-construction.008.png

│ │ ├── Neyman-construction.009.png

│ │ ├── Neyman-construction.010.png

│ │ ├── Neyman-construction.011.png

│ │ └── Neyman-construction.012.png

│ └── wilks-delta-log-likelihood

│ │ ├── wilks-delta-log-likelihood-1.gif

│ │ └── wilks-delta-log-likelihood-2.gif

├── bibliography.md

├── chapter.md

├── pgm

│ └── exoplanets.png

├── content.md

├── introduction.md

├── central-limit-theorem

│ └── introduction.md

├── error-propagation

│ └── introduction.md

├── requirements.txt

├── discussion_forum.md

├── prml_notebooks

│ ├── attribution.md

│ └── ch08_Graphical_Models.ipynb

├── empirical_distribution.md

├── test_embed_video.md

├── _static

│ ├── pdf_print.css

│ └── save_state.js

├── color-in-equations.md

├── computing-topics.md

├── expectation.md

├── ml-topics.md

├── preliminaries.md

├── built-on.ipynb

├── statistics-topics.md

├── datasaurus.md

├── independence.md

├── _config.yml

├── probability-topics.md

├── section.md

├── other_resources

├── jupyterhub.md

├── distributions

│ ├── introduction.md

│ └── Binomial-Distribution.ipynb

├── intro.md

├── _toc.yml

├── notebooks.ipynb

├── markdown.md

├── nbgrader.md

├── measures_of_dependence.md

├── other_resources.md

├── references.bib

├── data-science-topics.md

├── conditional.md

├── random_variables.md

├── bayes_theorem.md

├── correlation.md

└── schedule.md

├── requirements.txt

├── .gitattributes

├── Makefile

├── binder

├── postBuild

└── trigger_binder.sh

├── .github

└── workflows

│ ├── merged.yml

│ └── deploy-jupyter-book.yml

├── README.md

├── LICENSE

└── .gitignore

/book/statistics/consistency.md:

--------------------------------------------------------------------------------

1 | # Consistency

2 |

3 | coming soon

--------------------------------------------------------------------------------

/book/statistics/sufficiency.md:

--------------------------------------------------------------------------------

1 | # Sufficiency

2 |

3 | coming soon

--------------------------------------------------------------------------------

/book/test-sphinxext-opengraph.md:

--------------------------------------------------------------------------------

1 | # Test Sphinxext-opengraph

2 |

3 | fixed?

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | jupyter~=1.0

2 | jupyterlab~=2.0

3 | jupyter-book~=0.8.3

4 |

--------------------------------------------------------------------------------

/book/statistics/information-geometry.md:

--------------------------------------------------------------------------------

1 | # Information Geometry

2 |

3 | coming soon

--------------------------------------------------------------------------------

/.gitattributes:

--------------------------------------------------------------------------------

1 | # Auto detect text files and perform LF normalization

2 | * text=auto

3 |

--------------------------------------------------------------------------------

/book/logo.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/logo.png

--------------------------------------------------------------------------------

/book/assets/dag.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/dag.png

--------------------------------------------------------------------------------

/book/assets/mvp.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/mvp.png

--------------------------------------------------------------------------------

/book/assets/vmp.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/vmp.png

--------------------------------------------------------------------------------

/book/bibliography.md:

--------------------------------------------------------------------------------

1 | # Bibliography

2 |

3 | ```{bibliography} references.bib

4 | ```

5 |

6 |

--------------------------------------------------------------------------------

/book/assets/graphs.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/graphs.png

--------------------------------------------------------------------------------

/book/assets/pAandB.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/pAandB.png

--------------------------------------------------------------------------------

/book/assets/backward.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/backward.png

--------------------------------------------------------------------------------

/book/assets/forward.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/forward.png

--------------------------------------------------------------------------------

/book/chapter.md:

--------------------------------------------------------------------------------

1 | # Chapter title

2 |

3 | Some text so that following files may be treated like sections

4 |

--------------------------------------------------------------------------------

/book/pgm/exoplanets.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/pgm/exoplanets.png

--------------------------------------------------------------------------------

/book/assets/AperpBmidC.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/AperpBmidC.png

--------------------------------------------------------------------------------

/book/assets/composition.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/composition.png

--------------------------------------------------------------------------------

/book/assets/conditional.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/conditional.png

--------------------------------------------------------------------------------

/book/assets/intro_bwd.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/intro_bwd.png

--------------------------------------------------------------------------------

/book/assets/intro_fwd.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/intro_fwd.png

--------------------------------------------------------------------------------

/book/assets/pA_and_pB.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/pA_and_pB.png

--------------------------------------------------------------------------------

/book/assets/schmidhuber.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/schmidhuber.png

--------------------------------------------------------------------------------

/book/assets/prob_cousins.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/prob_cousins.png

--------------------------------------------------------------------------------

/book/assets/Data_Science_VD.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Data_Science_VD.png

--------------------------------------------------------------------------------

/book/assets/atlas-higgs-2012.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/atlas-higgs-2012.png

--------------------------------------------------------------------------------

/book/assets/autodiff_systems.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/autodiff_systems.png

--------------------------------------------------------------------------------

/book/assets/intro_autodiff.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/intro_autodiff.png

--------------------------------------------------------------------------------

/book/assets/nbgrader-fetch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/nbgrader-fetch.png

--------------------------------------------------------------------------------

/book/assets/schematic_p_xy.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/schematic_p_xy.png

--------------------------------------------------------------------------------

/book/assets/change_kernel_lab.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/change_kernel_lab.png

--------------------------------------------------------------------------------

/book/assets/change_kernel_new.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/change_kernel_new.png

--------------------------------------------------------------------------------

/book/assets/nbgrader-validate.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/nbgrader-validate.png

--------------------------------------------------------------------------------

/book/assets/001_vanilla_ellipse.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/001_vanilla_ellipse.png

--------------------------------------------------------------------------------

/book/assets/change_kernel_classic.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/change_kernel_classic.png

--------------------------------------------------------------------------------

/book/assets/nbgrader-assignments.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/nbgrader-assignments.png

--------------------------------------------------------------------------------

/book/assets/schematic_p_x_given_y.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/schematic_p_x_given_y.png

--------------------------------------------------------------------------------

/book/assets/schematic_p_y_given_x.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/schematic_p_y_given_x.png

--------------------------------------------------------------------------------

/book/assets/LHC-stats-thumbnail.001.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/LHC-stats-thumbnail.001.png

--------------------------------------------------------------------------------

/book/assets/Bayes-theorem-in-pictures.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Bayes-theorem-in-pictures.png

--------------------------------------------------------------------------------

/book/assets/HCPSS-stats-lectures-2020.001.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/HCPSS-stats-lectures-2020.001.png

--------------------------------------------------------------------------------

/book/assets/HCPSS-stats-lectures-2020.002.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/HCPSS-stats-lectures-2020.002.png

--------------------------------------------------------------------------------

/book/statistics/lhc_stats_thumbnail.md:

--------------------------------------------------------------------------------

1 | # Thumbnail of LHC Statistical Procedures

2 |

3 | ```{figure} ../assets/LHC-stats-thumbnail.001.png

4 | ```

5 |

--------------------------------------------------------------------------------

/Makefile:

--------------------------------------------------------------------------------

1 | all: build

2 |

3 | default: build

4 |

5 | build:

6 | jupyter-book build book/

7 |

8 | clean: book/_build

9 | rm -rf book/_build

10 |

--------------------------------------------------------------------------------

/book/assets/Neyman-pearson/Neyman-pearson.001.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-pearson/Neyman-pearson.001.png

--------------------------------------------------------------------------------

/book/assets/Neyman-pearson/Neyman-pearson.002.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-pearson/Neyman-pearson.002.png

--------------------------------------------------------------------------------

/book/assets/Neyman-pearson/Neyman-pearson.003.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-pearson/Neyman-pearson.003.png

--------------------------------------------------------------------------------

/book/assets/Neyman-pearson/Neyman-pearson.004.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-pearson/Neyman-pearson.004.png

--------------------------------------------------------------------------------

/book/assets/Neyman-pearson/Neyman-pearson.005.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-pearson/Neyman-pearson.005.png

--------------------------------------------------------------------------------

/book/assets/Neyman-pearson/Neyman-pearson.006.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-pearson/Neyman-pearson.006.png

--------------------------------------------------------------------------------

/book/assets/Neyman-construction/Neyman-construction.001.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-construction/Neyman-construction.001.png

--------------------------------------------------------------------------------

/book/assets/Neyman-construction/Neyman-construction.002.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-construction/Neyman-construction.002.png

--------------------------------------------------------------------------------

/book/assets/Neyman-construction/Neyman-construction.003.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-construction/Neyman-construction.003.png

--------------------------------------------------------------------------------

/book/assets/Neyman-construction/Neyman-construction.004.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-construction/Neyman-construction.004.png

--------------------------------------------------------------------------------

/book/assets/Neyman-construction/Neyman-construction.005.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-construction/Neyman-construction.005.png

--------------------------------------------------------------------------------

/book/assets/Neyman-construction/Neyman-construction.006.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-construction/Neyman-construction.006.png

--------------------------------------------------------------------------------

/book/assets/Neyman-construction/Neyman-construction.007.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-construction/Neyman-construction.007.png

--------------------------------------------------------------------------------

/book/assets/Neyman-construction/Neyman-construction.008.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-construction/Neyman-construction.008.png

--------------------------------------------------------------------------------

/book/assets/Neyman-construction/Neyman-construction.009.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-construction/Neyman-construction.009.png

--------------------------------------------------------------------------------

/book/assets/Neyman-construction/Neyman-construction.010.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-construction/Neyman-construction.010.png

--------------------------------------------------------------------------------

/book/assets/Neyman-construction/Neyman-construction.011.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-construction/Neyman-construction.011.png

--------------------------------------------------------------------------------

/book/assets/Neyman-construction/Neyman-construction.012.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/Neyman-construction/Neyman-construction.012.png

--------------------------------------------------------------------------------

/book/content.md:

--------------------------------------------------------------------------------

1 | Content in Jupyter Book

2 | =======================

3 |

4 | There are many ways to write content in Jupyter Book. This short section

5 | covers a few tips for how to do so.

6 |

--------------------------------------------------------------------------------

/book/assets/wilks-delta-log-likelihood/wilks-delta-log-likelihood-1.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/wilks-delta-log-likelihood/wilks-delta-log-likelihood-1.gif

--------------------------------------------------------------------------------

/book/assets/wilks-delta-log-likelihood/wilks-delta-log-likelihood-2.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/cranmer/stats-ds-book/master/book/assets/wilks-delta-log-likelihood/wilks-delta-log-likelihood-2.gif

--------------------------------------------------------------------------------

/book/introduction.md:

--------------------------------------------------------------------------------

1 | # Central Limit Theorem

2 |

3 | Some words

4 |

5 | Some equations $e^{i\pi}+1=0$

6 |

7 | \begin{equation}

8 | \frac{1}{\sqrt{2 \pi} \sigma}

9 | \end{equation}

10 |

11 |

--------------------------------------------------------------------------------

/book/central-limit-theorem/introduction.md:

--------------------------------------------------------------------------------

1 | # Central Limit Theorem

2 |

3 | Some words

4 |

5 | Some equations $e^{i\pi}+1=0$

6 |

7 | \begin{equation}

8 | \frac{1}{\sqrt{2 \pi} \sigma}

9 | \end{equation}

10 |

11 |

--------------------------------------------------------------------------------

/book/error-propagation/introduction.md:

--------------------------------------------------------------------------------

1 | # Error propagation

2 |

3 | is often taught poorly

4 |

5 | Some equations $e^{i\pi}+1=0$

6 |

7 | \begin{equation}

8 | \frac{1}{\sqrt{2 \pi} \sigma}

9 | \end{equation}

10 |

11 |

--------------------------------------------------------------------------------

/binder/postBuild:

--------------------------------------------------------------------------------

1 | python -m pip install --no-cache-dir -r requirements.txt

2 | python -m pip install --no-cache-dir -r book/requirements.txt

3 | jupyter labextension install jupyterlab-jupytext --no-build

4 | jupyter labextension install nbdime-jupyterlab --no-build

5 | jupyter lab build -y

6 | jupyter lab clean -y

7 |

--------------------------------------------------------------------------------

/book/requirements.txt:

--------------------------------------------------------------------------------

1 | datascience~=0.17.0 # Gets scipy, numpy, pandas, folium, bokeh, and plotly

2 | nbinteract~=0.2

3 | sympy~=1.7.0

4 | jax~=0.2.7

5 | jaxlib~=0.1.57

6 | pyprob~=1.2.5 # Gets scikit-learn

7 | pyhf~=0.5

8 | daft~=0.1.0

9 | seaborn~=0.11.0 # Gets matplotlib

10 | altair~=4.1.0

11 | jupytext~=1.7

12 | sphinx-click~=2.5

13 | sphinx-tabs~=1.3

14 | sphinx-panels~=0.5

15 | sphinxext-opengraph~=0.3

16 | sphinxcontrib-bibtex<2.0.0

17 | git+https://github.com/ctgk/PRML.git

18 |

--------------------------------------------------------------------------------

/book/discussion_forum.md:

--------------------------------------------------------------------------------

1 | # Discussion Forum

2 |

3 |

4 | While it's not totally decided, the original plan was to use piazza for the course discussion forum.

5 |

6 | ```{admonition} Piazza Discussion Forum

7 | [https://piazza.com/nyu/fall2020/physga2059/home](https://piazza.com/nyu/fall2020/physga2059/home)

8 | ```

9 |

10 | ## A short video about piazza

11 |

12 |

--------------------------------------------------------------------------------

/.github/workflows/merged.yml:

--------------------------------------------------------------------------------

1 | name: Merged PR

2 |

3 | on:

4 | pull_request:

5 | types: [closed]

6 |

7 | jobs:

8 | binder:

9 | name: Trigger Binder build

10 | runs-on: ubuntu-latest

11 | if: github.event.pull_request.merged

12 | steps:

13 | - uses: actions/checkout@v2

14 | - name: Trigger Binder build

15 | run: |

16 | # Use Binder build API to trigger repo2docker to build image on Google Cloud cluster of Binder Federation

17 | bash binder/trigger_binder.sh https://gke.mybinder.org/build/gh/cranmer/stats-ds-book/master

18 |

--------------------------------------------------------------------------------

/book/prml_notebooks/attribution.md:

--------------------------------------------------------------------------------

1 | # PRML Examples

2 |

3 |

4 | The repository provides python implementation of the algorithms described in [Pattern Recognition and Machine Learning (Christopher Bishop)](https://research.microsoft.com/en-us/um/people/cmbishop/PRML/).

5 | It's highly recommended, but unfortunately not free online.

6 |

7 | ```{admonition} Attribution

8 | These notebooks and the underlying `prml` library are from the wonderful repository: [https://github.com/ctgk/PRML](https://github.com/ctgk/PRML)

9 | ```

10 |

11 |

12 | ```{image} https://davidrosenberg.github.io/ml2017/images/bishop-2x.jpg

13 | :name: bishop-cover

14 | ```

--------------------------------------------------------------------------------

/book/empirical_distribution.md:

--------------------------------------------------------------------------------

1 | # Empirical Distribution

2 |

3 | Often we are working directly with data and we don't know the parent distribution that generated the data.

4 |

5 | We often denote a dataset with $N$ data points indexed by $i$ as $\{x_i\}_{i=1}^N$.

6 |

7 | Sometimes this dataset is thought of a samples or realizatiosn from some parent distribution. For instance, we often assume that we have **independent and identically distributed (iid)** data $x_i \sim p_X$ for $i=1\dots N$.

8 |

9 | In other cases one thinks of this data set as an **emperical distribution**

10 |

11 | $$

12 | p_\textrm{emp, X} = \frac{1}{N} \sum_{i=1}^N \delta(x-x_i)

13 | $$

14 |

15 |

16 |

--------------------------------------------------------------------------------

/binder/trigger_binder.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 |

3 | function trigger_binder() {

4 | local URL="${1}"

5 |

6 | curl -L --connect-timeout 10 --max-time 30 "${URL}"

7 | curl_return=$?

8 |

9 | # Return code 28 is when the --max-time is reached

10 | if [ "${curl_return}" -eq 0 ] || [ "${curl_return}" -eq 28 ]; then

11 | if [[ "${curl_return}" -eq 28 ]]; then

12 | printf "\nBinder build started.\nCheck back soon.\n"

13 | fi

14 | else

15 | return "${curl_return}"

16 | fi

17 |

18 | return 0

19 | }

20 |

21 | function main() {

22 | # 1: the Binder build API URL to curl

23 | trigger_binder $1

24 | }

25 |

26 | main "$@" || exit 1

27 |

--------------------------------------------------------------------------------

/book/statistics/neyman_pearson.md:

--------------------------------------------------------------------------------

1 | # Neyman-Pearson lemma

2 |

3 |

4 |

5 | `````{tabs}

6 | ````{tab} Step 1

7 |

8 | ```{figure} ../assets/Neyman-pearson/Neyman-pearson.001.png

9 | ```

10 |

11 | ````

12 | ````{tab} Step 2

13 |

14 | ```{figure} ../assets/Neyman-pearson/Neyman-pearson.002.png

15 | ```

16 |

17 | ````

18 | ````{tab} Step 3

19 |

20 | ```{figure} ../assets/Neyman-pearson/Neyman-pearson.003.png

21 | ```

22 |

23 | ````

24 | ````{tab} Step 4

25 |

26 | ```{figure} ../assets/Neyman-pearson/Neyman-pearson.004.png

27 | ```

28 |

29 | ````

30 | ````{tab} Step 5

31 |

32 | ```{figure} ../assets/Neyman-pearson/Neyman-pearson.005.png

33 | ```

34 |

35 | ````

36 | ````{tab} Step 6

37 |

38 | ```{figure} ../assets/Neyman-pearson/Neyman-pearson.006.png

39 | ```

40 |

41 | ````

42 | `````

43 |

44 |

--------------------------------------------------------------------------------

/book/test_embed_video.md:

--------------------------------------------------------------------------------

1 | # Test Embed Video

2 |

3 | Below is a Video

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 | ```{warning}

15 | This fa role doesn't seem to work.

16 | ```

17 |

18 | {fa}`check,text-success mr-1`

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Statistics and Data Science Jupyter Book

2 |

3 | [](https://github.com/cranmer/stats-ds-book/actions?query=workflow%3A%22Deploy+Jupyter+Book%22+branch%3Amaster)

4 | [](https://mybinder.org/v2/gh/cranmer/stats-ds-book/master?urlpath=lab/tree/book)

5 |

6 | This is the start of a book for Statistics and Data Science course for Fall 2020 at NYU Physics.

7 |

8 | This uses [Jupyter book](https://jupyterbook.org/customize/toc.html)

9 |

10 | The book itself is here: [http://cranmer.github.io/stats-ds-book](http://cranmer.github.io/stats-ds-book)

11 |

12 |

13 | Many thanks to Jupyter book team, Matthew Feickert for some assistance, and ctgk for the wonderful [ctgk/PRML](https://github.com/ctgk/PRML) repository.

14 |

--------------------------------------------------------------------------------

/book/_static/pdf_print.css:

--------------------------------------------------------------------------------

1 | /*********************************************

2 | * Print-specific CSS *

3 | *********************************************/

4 |

5 | @media print {

6 |

7 | div.topbar {

8 | display: none;

9 | }

10 |

11 | .pr-md-0 {

12 | flex: 0 0 100% !important;

13 | max-width: 100% !important;

14 | }

15 |

16 | .page_break {

17 | /*

18 | Control where and how page-breaks happen in pdf prints

19 | This page has a nice guide: https://tympanus.net/codrops/css_reference/break-before/

20 | This SO link describes how to use it: https://stackoverflow.com/a/1664058

21 | Simply add an empty div with this class where you want a page break

22 | like so: ;

23 | */

24 | clear: both;

25 | page-break-after: always !important;

26 | break-after: always !important;

27 | }

28 |

29 | }

--------------------------------------------------------------------------------

/book/color-in-equations.md:

--------------------------------------------------------------------------------

1 | # Color in equations

2 |

3 | Test 1:

4 |

5 | ```

6 | $${\color{#0271AE}{\int dx e^-x}}$$

7 | ```

8 |

9 | yields

10 |

11 | $$

12 | {\color{#0271AE}{\int dx e^-x}}

13 | $$

14 |

15 | Test 2:

16 |

17 | ```

18 | $$(x={\color{#DC2830}{c_1}} \cdot {\color{#0271AE}{x_1}} + {\color{#DC2830}{c_2}} \cdot {\color{#0271AE}{x_2}})$$

19 | ```

20 | yields

21 |

22 | $$

23 | (x={\color{#DC2830}{c_1}} \cdot {\color{#0271AE}{x_1}} + {\color{#DC2830}{c_2}} \cdot {\color{#0271AE}{x_2}})

24 | $$

25 |

26 | Test macro:

27 |

28 | ```

29 | $$

30 | A = \bmat{} 1 & 1 \\ 2 & 1\\ 3 & 2 \emat{},\ b=\bmat{} 2\\ 3 \\ 4\emat{},\ \gamma = 0.5

31 | $$

32 | ```

33 |

34 | yields

35 |

36 | $$

37 | A = \bmat{} 1 & 1 \\ 2 & 1\\ 3 & 2 \emat{},\ b=\bmat{} 2\\ 3 \\ 4\emat{},\ \gamma = 0.5

38 | $$

39 |

40 | test sphinx shortcut for color

41 |

42 | ```$$\bered{\int dx e^-x}$$```

43 |

44 | yields

45 |

46 | $$

47 | \bered{\int dx e^-x}

48 | $$

49 |

--------------------------------------------------------------------------------

/book/computing-topics.md:

--------------------------------------------------------------------------------

1 | # Software & Computing Topics

2 |

3 | 1. Basics

4 | 1. Shell / POSIX [Software Carpentries](http://swcarpentry.github.io/shell-novice/)

5 | 1. Version Control

6 | 1. Git [Software Carpentries](http://swcarpentry.github.io/git-novice/)

7 | 1. GitHub

8 | 1. Basic Model

9 | 1. Pull Requests

10 | 1. Actions

11 | 1. Licenses

12 | 1. Binder

13 | 1. Colab

14 | 1. Continuous Integration [HSF training](https://hsf-training.github.io/hsf-training-cicd/index.html)

15 | 1. Cloud computing

16 | 1. Containers

17 | 1. Docker

18 | 1. Singularity

19 | 1. Kubernetes

20 | 1. AWS

21 | 1. GKE

22 | 1. Environment management

23 | 1. [conda](https://docs.conda.io/projects/conda/en/latest/user-guide/cheatsheet.html)

24 | 1. virtual env

25 | 1. jupyter

26 | 1. Jupyter Lab

27 | 1. Voila

28 | 1. Configuration

29 | 1. JSON

30 | 1. YAML

31 | 1. XML

32 | 1. Testing

33 | 1. Documentation

34 | 1. DOIs

35 | 1. GitHub

36 | 1. Zenodo

37 |

38 |

--------------------------------------------------------------------------------

/book/_static/save_state.js:

--------------------------------------------------------------------------------

1 |

2 | /* This code is copied verbatim from this SO post by Rory McCrossan: https://stackoverflow.com/a/51543474/2217577.

3 | The code was shared under the CC BY-SA 4.0 license: https://creativecommons.org/licenses/by-sa/4.0/

4 | It's purpose is to simply store the state of checked boxes locally as a localStorage object.

5 | To use it, simply add checkboxes as normal within your md files:

6 | Item 1

7 | Item 2

8 | Item 3

9 | */

10 |

11 | function onClickBox() {

12 | var arr = $('.box').map(function() {

13 | return this.checked;

14 | }).get();

15 | localStorage.setItem("checked", JSON.stringify(arr));

16 | }

17 |

18 | $(document).ready(function() {

19 | var arr = JSON.parse(localStorage.getItem('checked')) || [];

20 | arr.forEach(function(checked, i) {

21 | $('.box').eq(i).prop('checked', checked);

22 | });

23 |

24 | $(".box").click(onClickBox);

25 | });

--------------------------------------------------------------------------------

/book/expectation.md:

--------------------------------------------------------------------------------

1 | # Expectation

2 |

3 | If a $X$ is a random variable, then a function $g(x)$ is also a random variable. We will touch on this again we talk about [How do distributions transform under a change of variables?](distributions/change-of-variables).

4 |

5 | The **expected value** of a function $g(x)$, which may just be $x$ itself or a component of $x$, is defined by

6 |

7 | $$

8 | \mathbb{E}[g(x)] := \int g(x) p_X(x) dx

9 | $$

10 |

11 | ```{admonition} Synonymous terms:

12 | Expected value, expectation, mean, or average, or first moment .

13 | ```

14 |

15 | Note in physics, one would often write $\langle g \rangle$ for the expected value of $g$.

16 |

17 | Note, sometimes one writes $\mathbb{E}_{p_X}$ to make the distribution $p_X$ more explicit.

18 |

19 | ## Expectations with emperical data

20 |

21 | If $\{x_i\}_{i=1}^N$ is a dataset (emperical distribution) with independent and identically distributed (iid) $x_i \sim p_X$, then one can estimate the expectation with the **sample mean**

22 |

23 | $$

24 | \mathbb{E}[g(x)] \approx \frac{1}{N} \sum_{i=1}^N g(x_i)

25 | $$

26 |

27 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2020 Kyle Cranmer

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/book/ml-topics.md:

--------------------------------------------------------------------------------

1 | # Machine Learning Topics

2 |

3 | 1. Loss, Risk

4 | 1. Emperical Risk

5 | 1. Generalization

6 | 1. Train / Test

7 | 1. Loss functions

8 | 1. classification

9 | 1. density estimation

10 | 1. Regression

11 | 1. linear regression

12 | 1. logistic regression

13 | 1. Gaussian Processes

14 | 1. Models

15 | 1. Decision trees

16 | 1. Support Vector Machines

17 | 1. Neural Networks

18 | 1. MLP

19 | 1. conv nets

20 | 1. RNN

21 | 1. Graph Networks

22 | 1. Paradigms

23 | 1. supervised

24 | 1. unsupervised

25 | 1. reinforcement

26 | 1. BackProp and AutoDiff

27 | 1. Forward mode

28 | 1. Reverse Mode

29 | 1. Fixed point / implicit

30 | 1. Learning Algorithms

31 | 1. Gradient Descent

32 | 1. SGD

33 | 1. Adam etc.

34 | 1. Natural Gradients

35 | 1. Domain adaptation

36 | 1. Transfer learning

37 | 1. No free lunch

38 | 1. Inductive Bias

39 | 1. Differentiable Programming

40 | 1. sorting

41 | 1. Gumbel

42 | 1. Probabilistic ML

43 | 1. VAE

44 | 1. GAN

45 | 1. Normalizing Flows

46 | 1. Blackbox optimization

47 | 1. Multiarm bandits

48 | 1. Bayesian Optimization

49 | 1. Hyperparameter optimization

50 |

51 |

--------------------------------------------------------------------------------

/book/preliminaries.md:

--------------------------------------------------------------------------------

1 | # Preliminaries

2 |

3 |

4 | The status of this checklist should be stored in your browser locally, so that you can come back to the same page and update the checkboxes.

5 | Note that this will NOT work across browsers, across devices, likely will not work in privacy/incognito browsing mode, and definitly will not work if you clear/reset your cache and temporary files.

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 |

18 |

--------------------------------------------------------------------------------

/.github/workflows/deploy-jupyter-book.yml:

--------------------------------------------------------------------------------

1 | name: Deploy Jupyter Book

2 |

3 | on:

4 | push:

5 | pull_request:

6 |

7 | jobs:

8 |

9 | deploy-book:

10 | runs-on: ubuntu-latest

11 |

12 | steps:

13 | - uses: actions/checkout@v2

14 |

15 | - name: Set up Python 3.8

16 | uses: actions/setup-python@v2

17 | with:

18 | python-version: 3.8

19 |

20 | - name: Install dependencies

21 | run: |

22 | python -m pip install --upgrade pip setuptools wheel

23 | python -m pip install --no-cache-dir -r requirements.txt

24 | python -m pip install --no-cache-dir -r book/requirements.txt

25 | python -m pip list

26 |

27 | - name: Build the book

28 | run: |

29 | jupyter-book build book/

30 | # cp book/_static/* book/_build/html/_static

31 |

32 | - name: Deploy Jupyter book to GitHub pages

33 | if: success() && github.event_name == 'push' && github.ref == 'refs/heads/master' && github.repository == 'cranmer/stats-ds-book'

34 | uses: peaceiris/actions-gh-pages@v3

35 | with:

36 | github_token: ${{ secrets.GITHUB_TOKEN }}

37 | publish_dir: book/_build/html

38 | force_orphan: true

39 | user_name: 'github-actions[bot]'

40 | user_email: 'github-actions[bot]@users.noreply.github.com'

41 | commit_message: Deploy to GitHub pages

42 |

--------------------------------------------------------------------------------

/book/built-on.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "# Built on"

8 | ]

9 | },

10 | {

11 | "cell_type": "code",

12 | "execution_count": 3,

13 | "metadata": {},

14 | "outputs": [

15 | {

16 | "name": "stdout",

17 | "output_type": "stream",

18 | "text": [

19 | "Wed Aug 19 17:30:25 CDT 2020\r\n"

20 | ]

21 | }

22 | ],

23 | "source": [

24 | "!date"

25 | ]

26 | },

27 | {

28 | "cell_type": "markdown",

29 | "metadata": {},

30 | "source": [

31 | "## Status\n",

32 | "\n",

33 | "[](https://github.com/cranmer/stats-ds-book/actions?query=workflow%3A%22Deploy+Jupyter+Book%22+branch%3Amaster)\n"

34 | ]

35 | },

36 | {

37 | "cell_type": "code",

38 | "execution_count": null,

39 | "metadata": {},

40 | "outputs": [],

41 | "source": []

42 | }

43 | ],

44 | "metadata": {

45 | "kernelspec": {

46 | "display_name": "Python 3",

47 | "language": "python",

48 | "name": "python3"

49 | },

50 | "language_info": {

51 | "codemirror_mode": {

52 | "name": "ipython",

53 | "version": 3

54 | },

55 | "file_extension": ".py",

56 | "mimetype": "text/x-python",

57 | "name": "python",

58 | "nbconvert_exporter": "python",

59 | "pygments_lexer": "ipython3",

60 | "version": "3.8.5"

61 | }

62 | },

63 | "nbformat": 4,

64 | "nbformat_minor": 2

65 | }

66 |

--------------------------------------------------------------------------------

/book/statistics-topics.md:

--------------------------------------------------------------------------------

1 | # Statistics Topics

2 |

3 |

4 | 1. Estimators

5 | 1. Bias, Variance, MSE

6 | 1. Cramer-Rao bound

7 | 1. Information Geometry

8 | 1. Sufficiency

9 | 1. Consistency

10 | 1. Asymptotic Properties

11 | 1. Maximum likelihood

12 | 1. Bias-Variance Tradeoff

13 | 1. [James-Stein Paradox](https://en.wikipedia.org/wiki/James–Stein_estimator)

14 | 1. Goodness of fit

15 | 1. chi-square test

16 | 1. other tests

17 | 1. anomoly detection

18 | 1. Hypothesis Testing

19 | 1. Simple vs. Compound hypotheses

20 | 1. Nuisance Parameters

21 | 1. TypeI and TypeII error

22 | 1. Test statistics

23 | 1. Neyman-Pearson Lemma

24 | 1. Connection to classification

25 | 1. multiple testing

26 | 1. look elsewhere effect

27 | 1. Family wise error rate

28 | 1. False Discovery Rate

29 | 1. [Asymptotics, Daves, Gross and Vitells](https://arxiv.org/abs/1005.1891)

30 | 1. Confidence Intervals

31 | 1. Interpretation

32 | 1. Coverage

33 | 1. Power

34 | 1. No UMPU Tests

35 | 1. Neyman-Construction

36 | 1. Likelihood-Ratio tests

37 | 1. Profile likelihood

38 | 1. Profile construction

39 | 1. Asymptotic Properties of Likelihood Ratio

40 | 1. Bayesian Model Selection

41 | 1. Bayes Factors

42 | 1. BIC, etc.

43 | 1. Bayesian Credible Intervals

44 | 1. Interpretation

45 | 1. Metropolis Hastings

46 | 1. Variational Inference

47 | 1. LDA

48 | 1. Causal Inference

49 | 1. [Elements of Causal Inference by Jonas Peters, Dominik Janzing and Bernhard Schölkopf](https://mitpress.mit.edu/books/elements-causal-inference) [free PDF](https://www.dropbox.com/s/dl/gkmsow492w3oolt/11283.pdf)

50 | 1. Statistical Decision Theory

51 | 1. [Admissible decision rule](https://en.wikipedia.org/wiki/Admissible_decision_rule)

52 | 1. Experimental Design

53 | 1. Expected Information Gain

54 | 1. Bayesian Optimization

55 |

56 |

--------------------------------------------------------------------------------

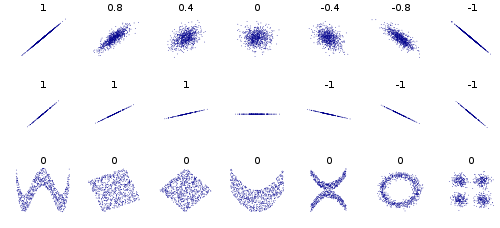

/book/datasaurus.md:

--------------------------------------------------------------------------------

1 |

2 | # Linear summary statistics and visualization

3 |

4 | ## Correlation and Dependence

5 |

6 | http://en.wikipedia.org/wiki/Correlation_and_dependence

7 |

8 | https://en.wikipedia.org/wiki/Anscombe%27s_quartet

9 |

10 | ## Draw my data

11 |

12 | http://robertgrantstats.co.uk/drawmydata.html

13 |

14 | ## Datasaurus

15 |

16 | [data source](https://www.autodeskresearch.com/publications/samestats)

17 |

18 | Justin Matejka, George Fitzmaurice (2017)

19 | Same Stats, Different Graphs: Generating Datasets with Varied Appearance and Identical Statistics through Simulated Annealing

20 | CHI 2017 Conference proceedings:

21 | ACM SIGCHI Conference on Human Factors in Computing Systems

22 |

23 |

24 | https://twitter.com/JustinMatejka/status/859075295059562498?s=20

25 |

26 |

27 |

28 |

29 |

30 | https://youtu.be/DbJyPELmhJc

31 |

32 |

--------------------------------------------------------------------------------

/book/independence.md:

--------------------------------------------------------------------------------

1 | # Independence

2 | ```{math}

3 | \newcommand\indep{\perp\kern-5pt\perp}

4 | ```

5 |

6 | As discussed in the previous section, **conditional probabilities** quantify the extent to which the knowledge of the occurrence of a certain event affects the probability of another event [^footnote1].

7 | In some cases, it makes no difference: the events are independent. More formally, events $A$ and $B$ are **independent** if and only if

8 |

9 | $$

10 | P (A|B) = P (A) .

11 | $$

12 |

13 | This definition is not valid if $P (B) = 0$. The following definition covers this case and is otherwise

14 | equivalent.

15 |

16 | ```{admonition} Definition (Independence).

17 | Let $(\Omega,\mathcal{F},P)$ be a probability space. Two events $A,B \in \mathcal{F}$

18 | are independent if and only if

19 |

20 | $$

21 | P (A \cap B) = P (A) P (B) .

22 | $$

23 | ```

24 | ```{admonition} Notation

25 | This is often denoted $ A \indep B $

26 | ```

27 |

28 | Similarly, we can define **conditional independence** between two events given a third event.

29 | $A$ and $B$ are conditionally independent given $C$ if and only if

30 |

31 | $$

32 | P (A|B, C) = P (A|C) ,

33 | $$

34 |

35 | where $P (A|B, C) := P (A|B \cap C)$. Intuitively, this means that the probability of $A$ is not affected by whether $B$ occurs or not, as long as $C$ occurs.

36 |

37 | ```{admonition} Notation

38 | This is often denoted $ A \indep B \mid C$

39 | ```

40 |

41 | ## Graphical Models

42 |

43 | There is a graphical model representation for joint distributions $P(A,B,C)$ that encodes their conditional (in)dependence known as a **probabilistic graphical model**. For this situation $ A \indep B \mid C$, the graphical model looks like this:

44 |

45 |  46 |

47 | The lack of an edge directly between $A$ and $B$ indicates that the two varaibles are conditionally independent. This image was produced with `daft`, and there are more examples in [Visualizing Graphical Models](./pgm/daft).

48 |

49 | [^footnote1]: This text is based on excerpts from Section 1.3 of [NYU CDS lecture notes on Probability and Statistics](https://cims.nyu.edu/~cfgranda/pages/stuff/probability_stats_for_DS.pdf)

50 |

--------------------------------------------------------------------------------

/book/_config.yml:

--------------------------------------------------------------------------------

1 | # Book settings

2 | title: Statistics and Data Science

3 | author: Kyle Cranmer

4 | logo: logo.png

5 | copyright: ""

6 |

7 | parse:

8 | myst_extended_syntax: true

9 |

10 | execute:

11 | exclude_patterns : ["*/Central-Limit-Theorem.ipynb","*/prop-error-plots.ipynb","*/track-example.ipynb"]

12 | execute_notebooks : off # force, off, auto

13 |

14 | # Information about where the book exists on the web

15 | repository:

16 | url: https://github.com/cranmer/stats-ds-book

17 | path_to_book: book

18 | branch: master

19 |

20 | html:

21 | home_page_in_navbar : true

22 | use_repository_button: true

23 | use_issues_button: true

24 | use_edit_page_button: true

25 | google_analytics_id: UA-178330963-1

26 | comments:

27 | hypothesis: true

28 | extra_footer : |

29 |

33 |

34 | sphinx:

35 | extra_extensions:

36 | - sphinx_tabs.tabs

37 | - sphinxext.opengraph

38 | html_show_copyright: false

39 | config:

40 | ogp_site_url: "https://cranmer.github.io/stats-ds-book/"

41 | ogp_image: "https://cranmer.github.io/stats-ds-book/_images/Neyman-pearson.006.png"

42 | ogp_description_length: 200

43 | mathjax_config:

44 | TeX:

45 | Macros:

46 | "N": "\\mathbb{N}"

47 | "indep": "{\\perp\\kern-5pt\\perp}"

48 | "floor": ["\\lfloor#1\\rfloor", 1]

49 | "bmat": ["\\left[\\begin{array}"]

50 | "emat": ["\\end{array}\\right]"]

51 | "bered": ["\\color{#DC2830}{#1}",1]

52 | "ecol": ["}}"]

53 |

54 | # Launch button settings

55 | launch_buttons:

56 | notebook_interface: classic #jupyterlab

57 | binderhub_url: https://mybinder.org

58 | colab_url: https://colab.research.google.com

59 |

60 | latex:

61 | latex_documents:

62 | targetname: book.tex

63 |

64 | extra_extensions:

65 | - sphinx_click.ext

66 | - sphinx_tabs.tabs

67 | - sphinx_panels

--------------------------------------------------------------------------------

/book/probability-topics.md:

--------------------------------------------------------------------------------

1 | # Probability Topics

2 |

3 | 1. Probability models

4 | 1. Probability denstiy functions

5 | 1. Classic distributons

6 | 1. Bernouli

7 | 1. Binomial

8 | 1. Poisson

9 | 1. Gaussian

10 | 1. Chi-Square

11 | 1. Exponential family

12 | 1. Multivariate distributions

13 | 1. Independence

14 | 1. Covariance

15 | 1. Conditional distributions

16 | 1. Marginal distributions

17 | 1. Graphical Models

18 | 1. [https://github.com/pgmpy/pgmpy](https://github.com/pgmpy/pgmpy)

19 | 1. [https://github.com/jmschrei/pomegranate](https://github.com/jmschrei/pomegranate)

20 | 1. [Video](https://youtu.be/DEHqIxX1Kq4)

21 | 1. Copula

22 | 1. Information theory

23 | 1. Entropy

24 | 1. Mutual information

25 | 1. KL divergence

26 | 1. cross entropy

27 | 1. Divergences

28 | 1. KL Divergence

29 | 1. Fisher distance

30 | 1. Optimal Transport

31 | 1. Hellinger distance

32 | 1. f-divergences

33 | 1. Stein divergence

34 | 1. Implicit probabity models

35 | 1. Simulators

36 | 1. Probabilistic Programming

37 | 1. https://docs.pymc.io

38 | 1. [ppymc3 vs. stan vs edward](https://statmodeling.stat.columbia.edu/2017/05/31/compare-stan-pymc3-edward-hello-world/)

39 | 1. pyro

40 | 1. pyprob

41 | 1. Likelihood function

42 | 1. [Axioms of probability](https://en.wikipedia.org/wiki/Probability_axioms)

43 | 1. [Probability Space](https://en.wikipedia.org/wiki/Probability_space)

44 | 1. Transformation properties

45 | 1. Change of variables

46 | 1. Propagation of errors

47 | 1. Reparameterization

48 | 1. Bayes Theorem

49 | 1. Subjective priors

50 | 1. Emperical Bayes

51 | 1. Jeffreys' prior

52 | 1. Unfiform priors

53 | 1. Reference Priors

54 | 1. Transformation Properties

55 | 1. Convolutions and the Central Limit Theorem

56 | 1. Binomial example

57 | 1. Convolutions in Fourier domain

58 | 1. [Extreme Value Theory](https://en.wikipedia.org/wiki/Extreme_value_theory)

59 | 1. Weibull law

60 | 1. Gumbel law

61 | 1. Fréchet Law

62 |

63 |

64 |

--------------------------------------------------------------------------------

/book/statistics/neyman_construction.md:

--------------------------------------------------------------------------------

1 | # Neyman construction

2 |

3 |

4 | `````{tabs}

5 | ````{tab} Step 1

6 |

7 | ```{figure} ../assets/Neyman-construction/Neyman-construction.001.png

8 | ```

9 |

10 | ````

11 | ````{tab} Step 2

12 |

13 | ```{figure} ../assets/Neyman-construction/Neyman-construction.002.png

14 | ```

15 |

16 | ````

17 | ````{tab} Step 3

18 |

19 | ```{figure} ../assets/Neyman-construction/Neyman-construction.003.png

20 | ```

21 |

22 | ````

23 | ````{tab} Step 4

24 |

25 | ```{figure} ../assets/Neyman-construction/Neyman-construction.004.png

26 | ```

27 |

28 | ````

29 | ````{tab} Step 5

30 |

31 | ```{figure} ../assets/Neyman-construction/Neyman-construction.005.png

32 | ```

33 |

34 | ````

35 | ````{tab} Step 6

36 |

37 | ```{figure} ../assets/Neyman-construction/Neyman-construction.006.png

38 | ```

39 |

40 | ````

41 | ````{tab} Step 7

42 |

43 | ```{figure} ../assets/Neyman-construction/Neyman-construction.007.png

44 | ```

45 |

46 | ````

47 | ````{tab} Step 8

48 |

49 | ```{figure} ../assets/Neyman-construction/Neyman-construction.008.png

50 | ```

51 |

52 | ````

53 | ````{tab} Step 9

54 |

55 | ```{figure} ../assets/Neyman-construction/Neyman-construction.009.png

56 | ```

57 |

58 | ````

59 | ````{tab} Step 10

60 |

61 | ```{figure} ../assets/Neyman-construction/Neyman-construction.010.png

62 | ```

63 |

64 | ````

65 | ````{tab} Step 11

66 |

67 | ```{figure} ../assets/Neyman-construction/Neyman-construction.011.png

68 | ```

69 |

70 | ````

71 | ````{tab} Step 12

72 |

73 | ```{figure} ../assets/Neyman-construction/Neyman-construction.012.png

74 | ```

75 |

76 | ````

77 | `````

78 |

79 |

80 | ## Generalizing to higher dimensional data

81 |

82 | ```{figure} ../assets/HCPSS-stats-lectures-2020.001.png

83 | ```

84 |

85 | ```{figure} ../assets/HCPSS-stats-lectures-2020.002.png

86 | ```

87 |

88 |

89 |

90 | ## Connection to Wilks's theorem

91 |

92 |

93 |

94 | `````{tabs}

95 | ````{tab} Step 1

96 |

97 | ```{figure} ../assets/wilks-delta-log-likelihood/wilks-delta-log-likelihood-1.gif

98 | ```

99 |

100 | ````

101 | ````{tab} Step 2

102 |

103 | ```{figure} ../assets/wilks-delta-log-likelihood/wilks-delta-log-likelihood-2.gif

104 | ```

105 |

106 | ````

107 | `````

--------------------------------------------------------------------------------

/book/section.md:

--------------------------------------------------------------------------------

1 | # Section title

2 |

3 | The "Section title" still uses a single #

4 |

5 | # Syllabus

6 |

7 | * Basics of probability

8 | * Probability models

9 | * Probability denstiy functions

10 | * Classic distributons

11 | * Bernouli

12 | * Binomial

13 | * Poisson

14 | * Gaussian

15 | * Chi-Square

16 | * Exponential family

17 | * Multivariate distributions

18 | * Independence

19 | * Covariance

20 | * Conditional distributions

21 | * Marginal distributions

22 | * Graphical Models

23 | * Copula

24 | * Information theory

25 | * Entropy

26 | * Mutual information

27 | * Implicit probabity models

28 | * Simulators

29 | * Probabilistic Programming

30 | * Likelihood function

31 | * Axioms of probability

32 | * Transformation properties

33 | * Change of variables

34 | * Propagation of errors

35 | * Reparameterization

36 | * Bayes Theorem

37 | * Subjective priors

38 | * Emperical Bayes

39 | * Jeffreys' prior

40 | * Unfiform priors

41 | * Reference Priors

42 | * Transformation Properties

43 | * Convolutions and the Central Limit Theorem

44 | * Binomial example

45 | * Convolutions in Fourier domain

46 | * Estimators

47 | * Bias, Variance, MSE

48 | * Cramer-Rao bound

49 | * Information Geometry

50 | * Sufficiency

51 | * Bias-Variance Tradeoff

52 | * James-Stein Paradox

53 | * Statistical Decision Theory

54 | * Hypothesis Testing

55 | * Simple vs. Compound hypotheses

56 | * Nuisance Parameters

57 | * TypeI and TypeII error

58 | * Test statistics

59 | * Neyman-Pearson Lemma

60 | * Confidence Intervals

61 | * Interpretation

62 | * Coverage

63 | * Power

64 | * No UMPU Tests

65 | * Neyman-Construction

66 | * Likelihood-Ratio tests

67 | * Profile likelihood

68 | * Profile construction

69 | * Asymptotic Properties of Likelihood Ratio

70 |

71 | * Bayesian Model Selection

72 | * Bayes Factors

73 | * BIC, etc.

74 | * Bayesian Credible Intervals

75 | * Interpretation

76 | * Metropolis Hastings

77 | * Variational Inference

78 | * LDA

79 | * Causality

80 |

81 |

82 |

83 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | pip-wheel-metadata/

24 | share/python-wheels/

25 | *.egg-info/

26 | .installed.cfg

27 | *.egg

28 | MANIFEST

29 |

30 | # PyInstaller

31 | # Usually these files are written by a python script from a template

32 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

33 | *.manifest

34 | *.spec

35 |

36 | # Installer logs

37 | pip-log.txt

38 | pip-delete-this-directory.txt

39 |

40 | # Unit test / coverage reports

41 | htmlcov/

42 | .tox/

43 | .nox/

44 | .coverage

45 | .coverage.*

46 | .cache

47 | nosetests.xml

48 | coverage.xml

49 | *.cover

50 | *.py,cover

51 | .hypothesis/

52 | .pytest_cache/

53 |

54 | # Translations

55 | *.mo

56 | *.pot

57 |

58 | # Django stuff:

59 | *.log

60 | local_settings.py

61 | db.sqlite3

62 | db.sqlite3-journal

63 |

64 | # Flask stuff:

65 | instance/

66 | .webassets-cache

67 |

68 | # Scrapy stuff:

69 | .scrapy

70 |

71 | # Sphinx documentation

72 | docs/_build/

73 |

74 | # PyBuilder

75 | target/

76 |

77 | # Jupyter Notebook

78 | .ipynb_checkpoints

79 |

80 | # IPython

81 | profile_default/

82 | ipython_config.py

83 |

84 | # pyenv

85 | .python-version

86 |

87 | # pipenv

88 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

89 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

90 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

91 | # install all needed dependencies.

92 | #Pipfile.lock

93 |

94 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

95 | __pypackages__/

96 |

97 | # Celery stuff

98 | celerybeat-schedule

99 | celerybeat.pid

100 |

101 | # SageMath parsed files

102 | *.sage.py

103 |

104 | # Environments

105 | .env

106 | .venv

107 | env/

108 | venv/

109 | ENV/

110 | env.bak/

111 | venv.bak/

112 |

113 | # Spyder project settings

114 | .spyderproject

115 | .spyproject

116 |

117 | # Rope project settings

118 | .ropeproject

119 |

120 | # mkdocs documentation

121 | /site

122 |

123 | # mypy

124 | .mypy_cache/

125 | .dmypy.json

126 | dmypy.json

127 |

128 | # Pyre type checker

129 | .pyre/

130 |

131 | # General

132 | .DS_Store

133 |

134 | # Jupyter book

135 | _build/

136 | plots/

137 |

--------------------------------------------------------------------------------

/book/statistics/estimators.md:

--------------------------------------------------------------------------------

1 | # Estimators

2 |

3 | One of the main differences between topics of probability and topics in statistics is that in statistics we have some task in mind.

4 | While a probability model $P_X(X \mid \theta)$ is an object of study when discussing probability, in statistics we usually want to

5 | *do* something with it.

6 |

7 | The first example that we will consider is to estimate the true, unknown value $\theta^*$ given some dataset $\{x_i\}_{i=1}^N$

8 | assuming that the data were drawn from $X_i \sim p_X(X|\theta^*)$.

9 |

10 | ```{admonition} Definition

11 | An estimator $\hat{\theta}(x_1, \dots, x_N)$ is a function of the data (that aims to estimate the true, unknown value $\theta^*$ assuming that the data were drawn from $X_i \sim p_X(X|\theta^*)$.

12 | ```

13 |

14 | There are several concrete estimators for different quantities, but this is an abstract definition of what is meant by an estimator. It is useful to think of the estimator as a procedure that you apply to the data, and then you can ask about the properties of a given procedure.

15 |

16 |

17 | ```{admonition} Terminology

18 | These closely related terms have slightly different meanings:

19 | * The *estimand* refers to the parameter $\theta$ being estimated.

20 | * The *estimator* refers to the function or procedure $\hat{\theta}(x_1, \dots, x_N)$

21 | * The specific value that an estimator takes (returns) for specific data is known as the *estimate*.

22 | ```

23 |

24 | We already introduced two estimators when studying [Transformation properties of the likelihood and posterior](.distributions/invariance-of-likelihood-to-reparameterizaton.html#equivariance-of-the-mle):

25 | * The maximum likelihood estimator: $\hat{\theta}_\textrm{MLE} := \textrm{argmax}_\theta p(X=x \mid \theta)$

26 | * The maximum a posteriori estimator: $\hat{\theta}_{MAP} := \textrm{argmax}_\theta p(\theta \mid X=x)$

27 |

28 | Note both of these estimators are defined by procedures that you apply once you have specific data.

29 |

30 |

31 | ```{admonition} Notation

32 | The estimate $\hat{\theta}(X_1, \dots, X_N)$ depends on the random variables $X_i$, so it is itself a random variable (unlike the parameter $\theta$).

33 | Often the estimate is denoted $\hat{\theta}$ and the dependence on the data is implicit.

34 | Subscripts are often used to indicate which estimator is being used, eg. the maximum likelihood estimator $\hat{\theta}_\textrm{MLE}$ and the maximum a posteriori estimator $\hat{\theta}_\textrm{MAP}$.

35 | ```

36 |

37 | ```{hint}

38 | It is often useful to consider two straw man estimators:

39 | * A constant estimator: $\hat{\theta}_\textrm{const} = \theta_0$ for $\theta_0 \in \Theta$

40 | * A random estimator: $\hat{\theta}_\textrm{random} =$ some random value for $\theta$ independent of the data

41 | Neither of these are useful estimators, but they can be used to help clarify your thinking due to their obvious properties.

42 | ```

43 |

--------------------------------------------------------------------------------

/book/other_resources:

--------------------------------------------------------------------------------

1 |

2 | Note this is not a markdown file.

3 |

4 | 1. Introduction to Causal Inference by Brady Neal [Course website](https://www.bradyneal.com/causal-inference-course)

5 | 1. [Elements of Causal Inference by Jonas Peters, Dominik Janzing and Bernhard Schölkopf](https://mitpress.mit.edu/books/elements-causal-inference) [free PDF](https://www.dropbox.com/s/dl/gkmsow492w3oolt/11283.pdf)

6 |

7 | 1. [Probability and Statistics](https://cims.nyu.edu/~cfgranda/pages/DSGA1002_fall17/index.html)

8 | 1. [Inference and Representation](https://inf16nyu.github.io/home/)

9 | 1. [Big Data 2015](https://www.vistrails.org/index.php/Course:_Big_Data_2015)

10 | 1. [Stanford Prob](http://cs229.stanford.edu/section/cs229-prob.pdf)

11 | 1. Linear Algebra links:

12 | 1. [Essence of linear algebra youtube videos by 3blue1brown](https://www.youtube.com/playlist?list=PLZHQObOWTQDPD3MizzM2xVFitgF8hE_ab)

13 | 1. [Introduction to Applied Linear Algebra – Vectors, Matrices, and Least Squares, Stephen Boyd and Lieven Vandenberghe](http://vmls-book.stanford.edu)

14 | 1. [Linear dynamical systems](https://www.youtube.com/watch?v=bf1264iFr-w&list=PLzvEnvQ9sS15pwCo8DYnJ-gArIkKZwJjF)

15 | 1. [Linear Algebra done right](https://linear.axler.net)

16 | 1. [NUMERICAL LINEAR ALGEBRA Lloyd N. Trefethen and David Bau, III](https://people.maths.ox.ac.uk/trefethen/text.html)

17 | 1. [Scientific Computing for PhDs](http://podcasts.ox.ac.uk/series/scientific-computing-dphil-students)

18 | 1. [Machine Learning](https://davidrosenberg.github.io/ml2017/#resources)

19 | 1. [PRML](https://github.com/cranmer/PRML)

20 | 1. [Mathematics for Machine Learning](https://mml-book.github.io)

21 | 1. Algorithms for Convex Optimization by Nisheeth K. Vishnoi [Course website](https://convex-optimization.github.io)

22 | 1. [Basic Python](https://swcarpentry.github.io/python-novice-inflammation/)

23 | 1. [Plotting and Programming with Python](https://swcarpentry.github.io/python-novice-gapminder/)

24 | 1. [Gentle Introduction to Automatic Differentiation on Kaggle](https://www.kaggle.com/borisettinger/gentle-introduction-to-automatic-differentiation)

25 |

26 | 1. [NeurIPS astro tutorial with datasets etc.](https://dwh.gg/NeurIPSastro)

27 |

28 | 1. [Paper about statistical combinations](https://arxiv.org/abs/2012.09874)

29 |

30 |

31 |

32 |

33 |

34 |

--------------------------------------------------------------------------------

/book/jupyterhub.md:

--------------------------------------------------------------------------------

1 | # JupyterHub for class

2 |

3 | In doing your work, you will need a python3 environment with several libraries installed. To streamline this, we created a JupyterHub instance with the necessary environment pre-installed. We will use this JupyterHub for some homework assignments that are graded with `nbgrader`. Below are the links to the

4 | * For students: [https://physga-2059-fall.rcnyu.org](https://physga-2059-fall.rcnyu.org)

5 | * For instructors: [https://physga-2059-fall-instructor.rcnyu.org](https://physga-2059-fall-instructor.rcnyu.org)

6 |

7 | Please give it a try and let us know how it works for you

8 |

9 | ```{tip}

10 | Course material will be put in the `shared` folder, which is read-only. You will need to copy the files to your home area to modify them.

11 | ```

12 |

13 |

14 | ```{tip}

15 | If you prefer the Jupyter Lab interface over the classic notebook, change the last part of the URL to "lab", e.g. [https://physga-2059-fall.rcnyu.org/user//lab/](https://physga-2059-fall.rcnyu.org/user//lab/) (and replace `` with your netid)

16 | ```

17 |

18 |

19 | ```{tip}

20 | The server will shutdown after 15 min of inactivity or (3 hours hard time limit). If you know you are done, click `Control Panel` in the top right and shutdown your server.

21 | ```

22 |

23 |

24 | ## Changing Kernels

25 |

26 | ```{tip}

27 | The default environment (kernel) is `Python 3`, you will need to change it to `Python [conda env:course]` to pick up the right environment with the installed libraries.

28 | ```

29 |

30 |

31 | `````{tabs}

32 | ````{tab} New Kernel

33 |

34 | ```{figure} ./assets/change_kernel_new.png

35 |

36 | Selecting the kernel for a new notebook

37 | ```

38 |

39 | ````

40 | ````{tab} Classic Notebook

41 |

42 | ```{figure} ./assets/change_kernel_classic.png

43 |

44 | Selecting the kernel for a the classic notebook

45 | ```

46 |

47 | ````

48 | ````{tab} Jupyter Lab

49 |

50 | ```{figure} ./assets/change_kernel_lab.png

51 |

52 | Selecting the kernel in Jupyter Lab

53 | ```

54 |

55 | ````

56 | `````

57 |

58 |

59 |

60 | %```{figure} ./assets/change_kernel_classic.png

61 | %

62 | %Selecting the kernel for a the classic notebook

63 | %```

64 | %

65 | %```{figure} ./assets/change_kernel_lab.png

66 | %

67 | %Selecting the kernel in Jupyter Lab

68 | %```

69 | %

70 | %```{figure} ./assets/change_kernel_new.png

71 | %

72 | %Selecting the kernel for a new notebook

73 | %```

74 |

75 | ## Documentation

76 |

77 | Overview and instructions

78 | [https://sites.google.com/a/nyu.edu/nyu-hpc/services/resources-for-classes/rc-jupyterhub](https://sites.google.com/a/nyu.edu/nyu-hpc/services/resources-for-classes/rc-jupyterhub)

79 |

80 | FAQ