├── LICENSE
├── README.md
├── config.py
├── model_data
│   └── readme.md
├── test.py
├── test_data
│   ├── bikestunt.jpg
│   ├── readme.md
│   └── surfing.jpeg
├── train_val.py
├── train_val_data
│   └── readme.md
└── utils
    ├── load_data.py
    ├── model.py
    └── preprocessing.py

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2018 Ajay Dabas

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | ## Image Caption Generator

2 |


7 |

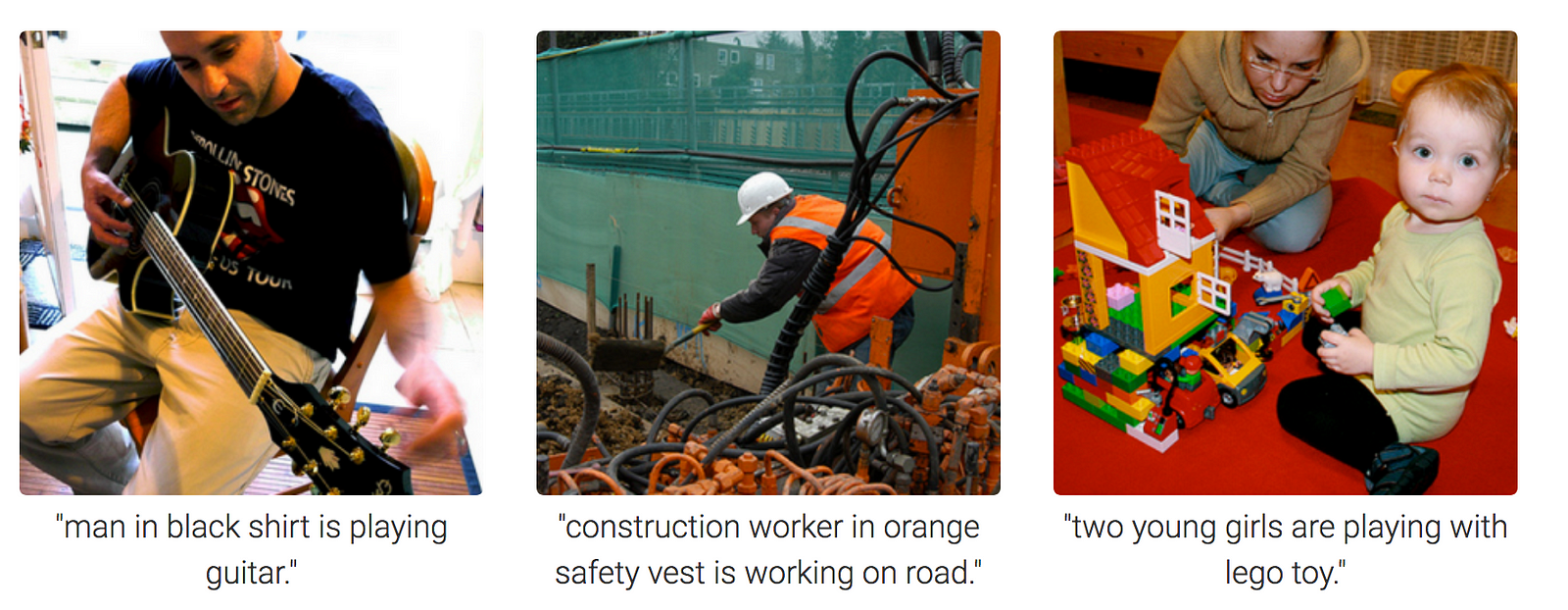

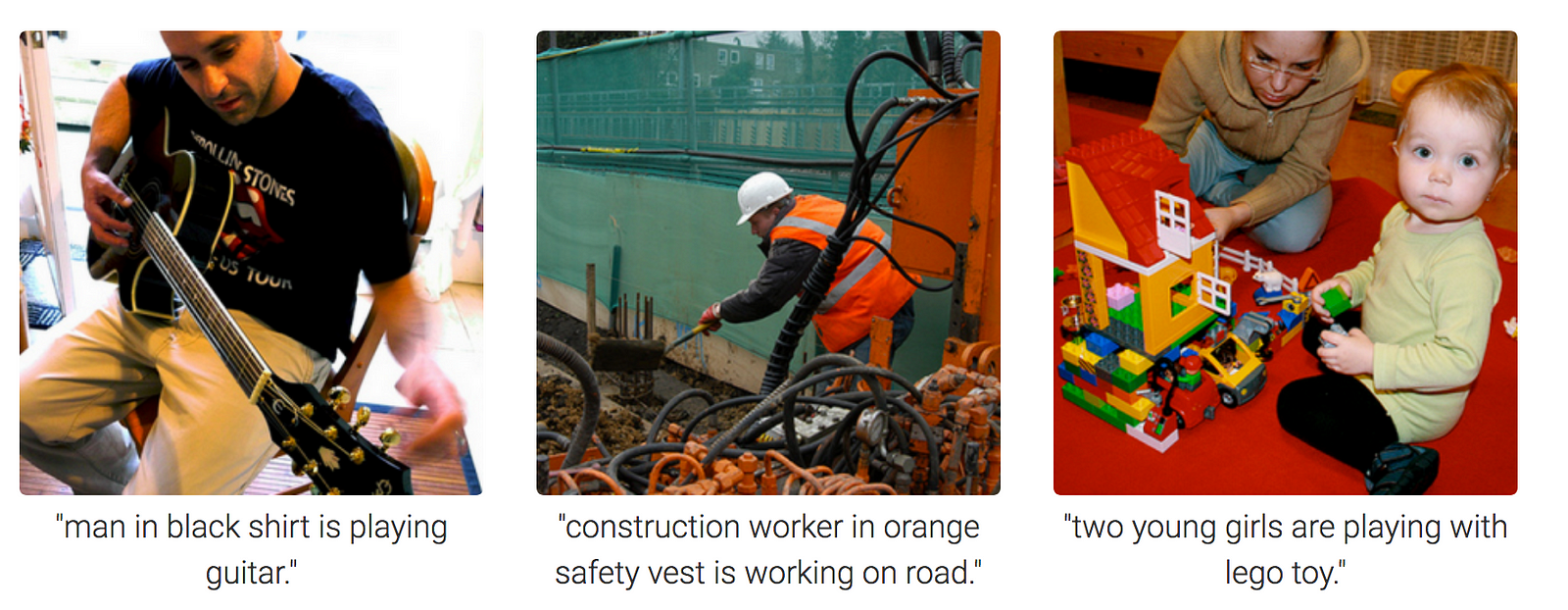

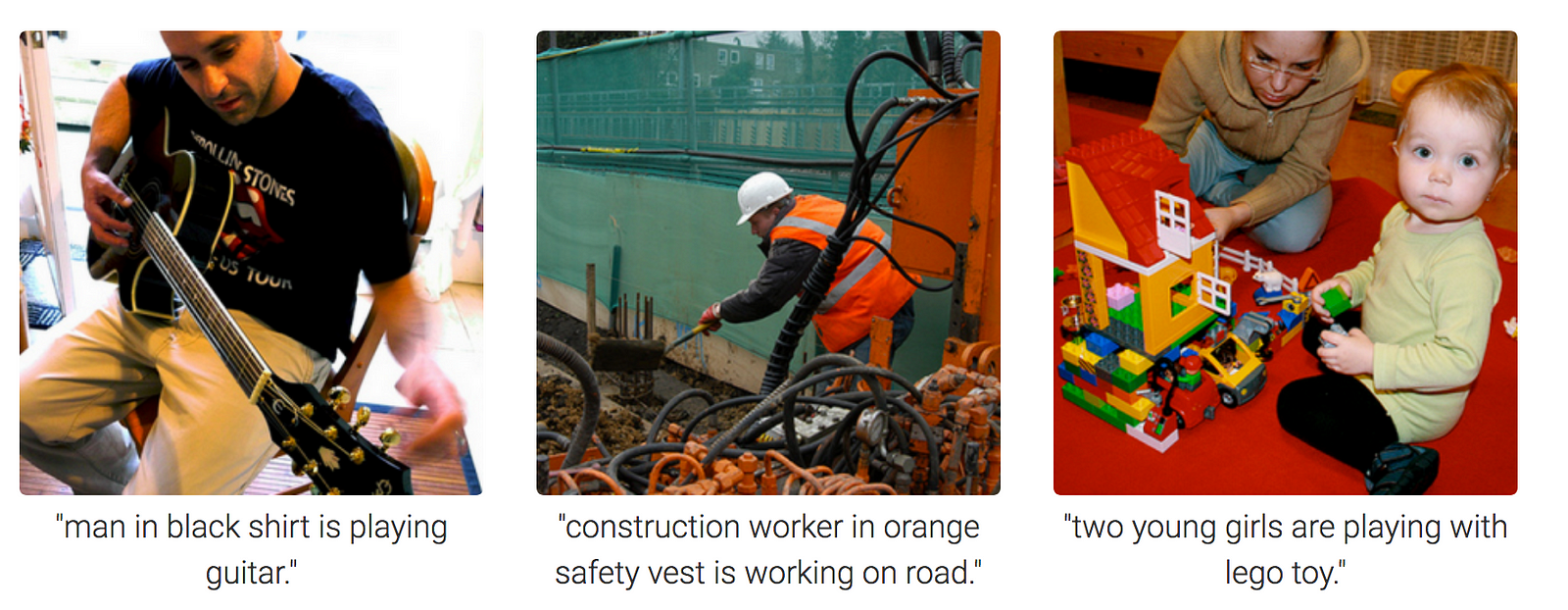

8 | A neural network to generate captions for an image using CNN and RNN with BEAM Search.

9 |

10 |

11 | Examples

12 |

13 |

14 |

15 |

16 |

17 |

18 |

19 | Image Credits : Towardsdatascience

20 |

21 |

22 | ## Table of Contents

23 |

24 | 1. [Requirements](#1-requirements)

25 | 2. [Training parameters and results](#2-training-parameters-and-results)

26 | 3. [Generated Captions on Test Images](#3-generated-captions-on-test-images)

27 | 4. [Procedure to Train Model](#4-procedure-to-train-model)

28 | 5. [Procedure to Test on new images](#5-procedure-to-test-on-new-images)

29 | 6. [Configurations (config.py)](#6-configurations-configpy)

30 | 7. [Frequently encountered problems](#7-frequently-encountered-problems)

31 | 8. [TODO](#8-todo)

32 | 9. [References](#9-references)

33 |

34 | ## 1. Requirements

35 |

36 | Recommended System Requirements to train model.

37 |

38 |

39 | - A good CPU and a GPU with at least 8GB of memory

40 | - At least 8GB of RAM

41 | - An active internet connection so that Keras can download the InceptionV3/VGG16 model weights

42 |

43 |

44 | Python libraries required, with the version numbers used while developing and testing this project:

45 |

46 |

47 | - Python - 3.6.7

48 | - Numpy - 1.16.4

49 | - Tensorflow - 1.13.1

50 | - Keras - 2.2.4

51 | - nltk - 3.2.5

52 | - Pillow (PIL) - 4.3.0

53 | - Matplotlib - 3.0.3

54 | - tqdm - 4.28.1

55 |

56 |

57 | Flickr8k Dataset: Dataset Request Form

58 |

59 | UPDATE (April/2019): The official site seems to have been taken down (although the form still works). Here are some direct download links:

60 |

61 |

66 |

67 | Important: After downloading the dataset, put the required files in the train_val_data folder

68 |

69 | ## 2. Training parameters and results

70 |

71 | #### NOTE

72 |

73 | - `batch_size=64` took ~14GB of GPU memory for *InceptionV3 + AlternativeRNN* and *VGG16 + AlternativeRNN*

74 | - `batch_size=64` took ~8GB of GPU memory for *InceptionV3 + RNN* and *VGG16 + RNN*

75 | - **If you're low on memory**, use Google Colab or reduce the batch size

76 | - BEAM search and argmax decode from the same trained model, so `loss` and `val_loss` are identical for both

77 |

78 | | Model & Config | Argmax | BEAM Search |

79 | | :--- | :--- | :--- |

80 | | **InceptionV3 + AlternativeRNN**<br>- Epochs = 20<br>- Batch Size = 64<br>- Optimizer = Adam | **Crossentropy loss** *(Lower the better)*<br>- loss(train_loss): 2.4050<br>- val_loss: 3.0527<br>**BLEU Scores on Validation data** *(Higher the better)*<br>- BLEU-1: 0.596818<br>- BLEU-2: 0.356009<br>- BLEU-3: 0.252489<br>- BLEU-4: 0.129536 | **k = 3**<br>**BLEU Scores on Validation data** *(Higher the better)*<br>- BLEU-1: 0.606086<br>- BLEU-2: 0.359171<br>- BLEU-3: 0.249124<br>- BLEU-4: 0.126599 |

81 | | **InceptionV3 + RNN**<br>- Epochs = 11<br>- Batch Size = 64<br>- Optimizer = Adam | **Crossentropy loss** *(Lower the better)*<br>- loss(train_loss): 2.5254<br>- val_loss: 3.1769<br>**BLEU Scores on Validation data** *(Higher the better)*<br>- BLEU-1: 0.601791<br>- BLEU-2: 0.344289<br>- BLEU-3: 0.230025<br>- BLEU-4: 0.108898 | **k = 3**<br>**BLEU Scores on Validation data** *(Higher the better)*<br>- BLEU-1: 0.605097<br>- BLEU-2: 0.356094<br>- BLEU-3: 0.251132<br>- BLEU-4: 0.129900 |

82 | | **VGG16 + AlternativeRNN**<br>- Epochs = 18<br>- Batch Size = 64<br>- Optimizer = Adam | **Crossentropy loss** *(Lower the better)*<br>- loss(train_loss): 2.2880<br>- val_loss: 3.1889<br>**BLEU Scores on Validation data** *(Higher the better)*<br>- BLEU-1: 0.596655<br>- BLEU-2: 0.342127<br>- BLEU-3: 0.229676<br>- BLEU-4: 0.108707 | **k = 3**<br>**BLEU Scores on Validation data** *(Higher the better)*<br>- BLEU-1: 0.593876<br>- BLEU-2: 0.348569<br>- BLEU-3: 0.242063<br>- BLEU-4: 0.123221 |

83 | | **VGG16 + RNN**<br>- Epochs = 7<br>- Batch Size = 64<br>- Optimizer = Adam | **Crossentropy loss** *(Lower the better)*<br>- loss(train_loss): 2.6297<br>- val_loss: 3.3486<br>**BLEU Scores on Validation data** *(Higher the better)*<br>- BLEU-1: 0.557626<br>- BLEU-2: 0.317652<br>- BLEU-3: 0.216636<br>- BLEU-4: 0.105288 | **k = 3**<br>**BLEU Scores on Validation data** *(Higher the better)*<br>- BLEU-1: 0.568993<br>- BLEU-2: 0.326569<br>- BLEU-3: 0.226629<br>- BLEU-4: 0.113102 |

84 |
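The BLEU-n figures above are built on clipped (modified) n-gram precision. The sketch below shows only that building block in plain Python; it is not full BLEU (no brevity penalty, no geometric mean over n) and not the `corpus_bleu` call the project imports from nltk. The function names and the example captions are made up for illustration.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return all contiguous n-grams of a token list as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def modified_precision(references, hypothesis, n):
    """Clipped n-gram precision of a hypothesis against several references."""
    hyp_counts = Counter(ngrams(hypothesis, n))
    if not hyp_counts:
        return 0.0
    # For every n-gram, the largest count seen in any single reference
    max_ref_counts = Counter()
    for ref in references:
        for gram, count in Counter(ngrams(ref, n)).items():
            max_ref_counts[gram] = max(max_ref_counts[gram], count)
    # A hypothesis n-gram is credited at most as often as it appears in a reference
    clipped = sum(min(count, max_ref_counts[gram]) for gram, count in hyp_counts.items())
    return clipped / sum(hyp_counts.values())


# Hypothetical reference captions and generated caption
references = [
    "a man is riding a bike on a dirt path".split(),
    "a man rides a bicycle down a trail".split(),
]
hypothesis = "a man is riding a bicycle on a dirt path".split()

p1 = modified_precision(references, hypothesis, 1)
p2 = modified_precision(references, hypothesis, 2)
print("unigram precision:", p1)
print("bigram precision:", round(p2, 4))
```

Higher-order precisions drop faster than unigram precision because a single substituted word breaks every n-gram that spans it, which is consistent with BLEU-4 being much lower than BLEU-1 in the table.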

85 |

86 | ## 3. Generated Captions on Test Images

87 |

88 | **Model used** - *InceptionV3 + AlternativeRNN*

89 |

90 | | Image | Caption |

91 | | :---: | :--- |

92 | |  | - Argmax: A man in a blue shirt is riding a bike on a dirt path.<br>- BEAM Search, k=3: A man is riding a bicycle on a dirt path. |

93 | |  | - Argmax: A man in a red kayak is riding down a waterfall.<br>- BEAM Search, k=3: A man on a surfboard is riding a wave. |

94 |

95 | ## 4. Procedure to Train Model

96 |

97 | 1. Clone the repository to preserve directory structure.

98 | `git clone https://github.com/dabasajay/Image-Caption-Generator.git`

99 | 2. Put the required dataset files in `train_val_data` folder (files mentioned in readme there).

100 | 3. Review `config.py` for paths and other configurations (explained below).

101 | 4. Run `train_val.py`.
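Before running `train_val.py`, it can help to confirm that the dataset paths in `config.py` actually exist. The helper below is a minimal sketch, not part of the repository: `missing_paths` is a hypothetical name, and the example dictionary simply mirrors the path keys shown in `config.py`.

```python
import os


def missing_paths(config, keys=("images_path", "train_data_path",
                                "val_data_path", "captions_path")):
    """Return the config entries whose paths do not exist on disk."""
    return {key: config[key] for key in keys if not os.path.exists(config[key])}


# Example values copied from config.py; adjust to your own layout
example_config = {
    "images_path": "train_val_data/Flicker8k_Dataset/",
    "train_data_path": "train_val_data/Flickr_8k.trainImages.txt",
    "val_data_path": "train_val_data/Flickr_8k.devImages.txt",
    "captions_path": "train_val_data/Flickr8k.token.txt",
}

for key, path in missing_paths(example_config).items():
    print("Missing {}: {}".format(key, path))
```

In a real run you would pass the `config` dictionary imported from `config.py` instead of `example_config`.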

102 |

103 | ## 5. Procedure to Test on new images

104 |

105 | 1. Clone the repository to preserve directory structure.

106 | `git clone https://github.com/dabasajay/Image-Caption-Generator.git`

107 | 2. Train the model to generate required files in `model_data` folder (steps given above).

108 | 3. Put the test images in `test_data` folder.

109 | 4. Review `config.py` for paths and other configurations (explained below).

110 | 5. Run `test.py`.

111 |

112 | ## 6. Configurations (config.py)

113 |

114 | **config**

115 |

116 | 1. **`images_path`** :- Folder path containing flickr dataset images

117 | 2. `train_data_path` :- .txt file path containing image ids for training

118 | 3. `val_data_path` :- .txt file path containing image ids for validation

119 | 4. `captions_path` :- .txt file path containing captions

120 | 5. `tokenizer_path` :- path for saving tokenizer

121 | 6. `model_data_path` :- path for saving files related to model

122 | 7. **`model_load_path`** :- path for loading trained model

123 | 8. **`num_of_epochs`** :- Number of epochs

124 | 9. **`max_length`** :- Maximum length of captions. This is set manually after training of model and required for test.py

125 | 10. **`batch_size`** :- Batch size for training (larger will consume more GPU & CPU memory)

126 | 11. **`beam_search_k`** :- BEAM search width: how many candidate captions the algorithm keeps at each decoding step.

127 | 12. `test_data_path` :- Folder path containing images for testing/inference

128 | 13. **`model_type`** :- CNN Model type to use -> inceptionv3 or vgg16

129 | 14. **`random_seed`** :- Random seed for reproducibility of results

130 |

131 | **rnnConfig**

132 |

133 | 1. **`embedding_size`** :- Embedding size used in Decoder(RNN) Model

134 | 2. **`LSTM_units`** :- Number of LSTM units in Decoder(RNN) Model

135 | 3. **`dense_units`** :- Number of Dense units in Decoder(RNN) Model

136 | 4. **`dropout`** :- Dropout probability used in Dropout layer in Decoder(RNN) Model
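To illustrate what the `beam_search_k` setting controls, here is a generic beam search over a toy next-word distribution. It is a sketch under stated assumptions, not the repository's `generate_caption_beam_search`: the function names, the toy probabilities and the vocabulary are all invented for the example.

```python
import math


def beam_search(next_log_probs, start, end, k, max_length):
    """Keep the k best partial sequences at every step; return the best one."""
    # Each beam is (token_sequence, cumulative_log_probability)
    beams = [([start], 0.0)]
    for _ in range(max_length - 1):
        candidates = []
        for seq, score in beams:
            if seq[-1] == end:
                # Finished sequences are carried over unchanged
                candidates.append((seq, score))
                continue
            for token, logp in next_log_probs(seq).items():
                candidates.append((seq + [token], score + logp))
        beams = sorted(candidates, key=lambda b: b[1], reverse=True)[:k]
        if all(seq[-1] == end for seq, _ in beams):
            break
    return beams[0][0]


def toy_next_log_probs(seq):
    """Hypothetical stand-in for the decoder: log-probabilities of the next word."""
    last = seq[-1]
    if last == "startseq":
        probs = {"a": 0.6, "the": 0.4}
    elif last == "a":
        probs = {"dog": 0.55, "cat": 0.45}
    elif last == "the":
        probs = {"dog": 0.9, "cat": 0.1}
    else:
        probs = {"endseq": 1.0}
    return {token: math.log(p) for token, p in probs.items()}


greedy = beam_search(toy_next_log_probs, "startseq", "endseq", k=1, max_length=4)
beam = beam_search(toy_next_log_probs, "startseq", "endseq", k=2, max_length=4)
print("k=1:", " ".join(greedy))
print("k=2:", " ".join(beam))
```

With `k=1` the search is equivalent to argmax decoding and commits to the locally best first word. With `k=2` it also keeps the second-best prefix, which in this toy setup leads to a caption with higher overall probability.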

137 |

138 | ## 7. Frequently encountered problems

139 |

140 | - **Out of memory issue**:

141 |   - Try reducing `batch_size`

142 | - **Results differ every time I run the script**:

143 |   - Due to the stochastic nature of these algorithms, results *may* differ slightly between runs, even though a random seed is set to make them reproducible.

144 | - **Results aren't very great using beam search compared to argmax**:

145 |   - Try a higher `k` in BEAM search using the `beam_search_k` parameter in config. Note that a higher `k` usually improves results but also increases inference time significantly.
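One likely contributor to run-to-run differences: in the files shown here, only Python's `random` module is seeded, while NumPy and TensorFlow keep their own random state. The helper below is a hedged sketch (the name `seed_everything` is hypothetical and not part of the repository) that seeds all three when they are installed. Even with every seed set, GPU kernels can still introduce small nondeterminism.

```python
import random


def seed_everything(seed):
    """Seed Python's RNG and, when installed, NumPy's and TensorFlow's."""
    random.seed(seed)
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass
    try:
        import tensorflow as tf
        # TF 2.x exposes tf.random.set_seed; TF 1.x uses tf.set_random_seed
        if hasattr(tf, "random") and hasattr(tf.random, "set_seed"):
            tf.random.set_seed(seed)
        else:
            tf.set_random_seed(seed)
    except ImportError:
        pass


seed_everything(1035)
first_draw = [random.random() for _ in range(3)]
seed_everything(1035)
second_draw = [random.random() for _ in range(3)]
print(first_draw == second_draw)
```

The call would go at the top of the training script, before any model or generator is created.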

146 |

147 | ## 8. TODO

148 |

149 | - [X] Support for VGG16 Model. Uses InceptionV3 Model by default.

150 |

151 | - [X] Implement 2 architectures of RNN Model.

152 |

153 | - [X] Support for batch processing in data generator with shuffling.

154 |

155 | - [X] Implement BEAM Search.

156 |

157 | - [X] Calculate BLEU Scores using BEAM Search.

158 |

159 | - [ ] Implement Attention and change model architecture.

160 |

161 | - [ ] Support for pre-trained word vectors like word2vec, GloVe etc.

162 |

163 | ## 9. References

164 |

165 |

170 |

--------------------------------------------------------------------------------

/config.py:

--------------------------------------------------------------------------------

1 | # All paths are relative to train_val.py file

2 | config = {

3 | 'images_path': 'train_val_data/Flicker8k_Dataset/', #Make sure you put that last slash(/)

4 | 'train_data_path': 'train_val_data/Flickr_8k.trainImages.txt',

5 | 'val_data_path': 'train_val_data/Flickr_8k.devImages.txt',

6 | 'captions_path': 'train_val_data/Flickr8k.token.txt',

7 | 'tokenizer_path': 'model_data/tokenizer.pkl',

8 | 'model_data_path': 'model_data/', #Make sure you put that last slash(/)

9 | 'model_load_path': 'model_data/model_inceptionv3_epoch-20_train_loss-2.4050_val_loss-3.0527.hdf5',

10 | 'num_of_epochs': 20,

11 | 'max_length': 40, #This is set manually after training of model and required for test.py

12 | 'batch_size': 64,

13 | 'beam_search_k':3,

14 | 'test_data_path': 'test_data/', #Make sure you put that last slash(/)

15 | 'model_type': 'inceptionv3', # inceptionv3 or vgg16

16 | 'random_seed': 1035

17 | }

18 |

19 | rnnConfig = {

20 | 'embedding_size': 300,

21 | 'LSTM_units': 256,

22 | 'dense_units': 256,

23 | 'dropout': 0.3

24 | }

--------------------------------------------------------------------------------

/model_data/readme.md:

--------------------------------------------------------------------------------

1 | Model Data Folder

2 |

3 | When you run the project, some files will be generated which'll be stored here

4 |

5 |

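6 | - captions.txt : contains the saved text features (cleaned captions)

7 | - features_<model_type>.pkl : contains the saved image features (e.g. features_inceptionv3.pkl)

8 | - tokenizer.pkl : contains the saved tokenizer

9 | - model_<model_type>_epoch-..._train_loss-..._val_loss-....hdf5 : the trained model checkpoints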

10 |

--------------------------------------------------------------------------------

/test.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | from PIL import Image

3 | from pickle import load

4 | import matplotlib.pyplot as plt

5 | from keras.models import load_model

6 | from keras.preprocessing.image import load_img, img_to_array

7 | from utils.model import CNNModel, generate_caption_beam_search

8 | import os

9 |

10 | from config import config

11 |

12 | """

13 | *Some simple checking

14 | """

15 | assert type(config['max_length']) is int, 'Please provide an integer value for `max_length` parameter in config.py file'

16 | assert type(config['beam_search_k']) is int, 'Please provide an integer value for `beam_search_k` parameter in config.py file'

17 |

18 | # Extract CNN features for a single image file

19 | def extract_features(filename, model, model_type):

20 |     if model_type == 'inceptionv3':

21 |         from keras.applications.inception_v3 import preprocess_input

22 |         target_size = (299, 299)

23 |     elif model_type == 'vgg16':

24 |         from keras.applications.vgg16 import preprocess_input

25 |         target_size = (224, 224)

26 |     # Loading and resizing image

27 |     image = load_img(filename, target_size=target_size)

28 |     # Convert the image pixels to a numpy array

29 |     image = img_to_array(image)

30 |     # Reshape data for the model

31 |     image = image.reshape((1, image.shape[0], image.shape[1], image.shape[2]))

32 |     # Prepare the image for the CNN model

33 |     image = preprocess_input(image)

34 |     # Pass image into model to get encoded features

35 |     features = model.predict(image, verbose=0)

36 |     return features

37 |

38 | # Load the tokenizer

39 | tokenizer_path = config['tokenizer_path']

40 | tokenizer = load(open(tokenizer_path, 'rb'))

41 |

42 | # Max sequence length (from training)

43 | max_length = config['max_length']

44 |

45 | # Load the model

46 | caption_model = load_model(config['model_load_path'])

47 |

48 | image_model = CNNModel(config['model_type'])

49 |

50 | # Caption every JPEG image in the test folder (outputs of earlier runs are skipped)

51 | for image_file in os.listdir(config['test_data_path']):

52 |     if(image_file.split('--')[0]=='output'):

53 |         continue

54 |     if os.path.splitext(image_file)[1].lower() in ('.jpg', '.jpeg'):

55 |         print('Generating caption for {}'.format(image_file))

56 |         # Encode image using CNN Model

57 |         image = extract_features(config['test_data_path']+image_file, image_model, config['model_type'])

58 |         # Generate caption using Decoder RNN Model + BEAM search

59 |         generated_caption = generate_caption_beam_search(caption_model, tokenizer, image, max_length, beam_index=config['beam_search_k'])

60 |         # Remove startseq and endseq

61 |         caption = 'Caption: ' + generated_caption.split()[1].capitalize()

62 |         for x in generated_caption.split()[2:len(generated_caption.split())-1]:

63 |             caption = caption + ' ' + x

64 |         caption += '.'

65 |         # Show image and its caption

66 |         pil_im = Image.open(config['test_data_path']+image_file, 'r')

67 |         fig, ax = plt.subplots(figsize=(8, 8))

68 |         ax.get_xaxis().set_visible(False)

69 |         ax.get_yaxis().set_visible(False)

70 |         _ = ax.imshow(np.asarray(pil_im), interpolation='nearest')

71 |         _ = ax.set_title("BEAM Search with k={}\n{}".format(config['beam_search_k'],caption),fontdict={'fontsize': '20','fontweight' : '40'})

72 |         plt.savefig(config['test_data_path']+'output--'+image_file)

--------------------------------------------------------------------------------
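The caption clean-up in `test.py` drops the first and last tokens by position, which assumes the generated string always starts with `startseq` and ends with `endseq`. A minimal alternative sketch that filters the tokens by value instead is shown below; `clean_caption` is a hypothetical helper, not part of the repository, and whether the decoder can return a caption without `endseq` (for example when `max_length` is reached) is an assumption.

```python
def clean_caption(generated_caption, start_token="startseq", end_token="endseq"):
    """Strip the start/end markers and format the caption as a sentence."""
    words = [w for w in generated_caption.split() if w not in (start_token, end_token)]
    if not words:
        return ""
    # Capitalize only the first word, as test.py does
    words[0] = words[0].capitalize()
    return " ".join(words) + "."


print(clean_caption("startseq a man is riding a bike endseq"))
print(clean_caption("startseq a dog runs"))
```

Filtering by value keeps the final word intact when the end marker is missing, at the cost of also removing the markers if they ever appeared mid-caption.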

/test_data/bikestunt.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/dabasajay/Image-Caption-Generator/afa5abac6b713c081312f2b226986db8df57e2f1/test_data/bikestunt.jpg

--------------------------------------------------------------------------------

/test_data/readme.md:

--------------------------------------------------------------------------------

1 | Test Folder

2 |

3 | Put here the images you want to test the model on.

4 |

5 | Output images will be generated with the prefix `output--`

--------------------------------------------------------------------------------

/test_data/surfing.jpeg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/dabasajay/Image-Caption-Generator/afa5abac6b713c081312f2b226986db8df57e2f1/test_data/surfing.jpeg

--------------------------------------------------------------------------------

/train_val.py:

--------------------------------------------------------------------------------

1 | from pickle import load

2 | from utils.model import *

3 | from utils.load_data import loadTrainData, loadValData, data_generator

4 | from tensorflow.keras.callbacks import ModelCheckpoint

5 | from config import config, rnnConfig

6 | import random

7 | # Setting random seed for reproducibility of results

8 | random.seed(config['random_seed'])

9 |

10 | """

11 | *Some simple checking

12 | """

13 | assert type(config['num_of_epochs']) is int, 'Please provide an integer value for `num_of_epochs` parameter in config.py file'

14 | assert type(config['max_length']) is int, 'Please provide an integer value for `max_length` parameter in config.py file'

15 | assert type(config['batch_size']) is int, 'Please provide an integer value for `batch_size` parameter in config.py file'

16 | assert type(config['beam_search_k']) is int, 'Please provide an integer value for `beam_search_k` parameter in config.py file'

17 | assert type(config['random_seed']) is int, 'Please provide an integer value for `random_seed` parameter in config.py file'

18 | assert type(rnnConfig['embedding_size']) is int, 'Please provide an integer value for `embedding_size` parameter in config.py file'

19 | assert type(rnnConfig['LSTM_units']) is int, 'Please provide an integer value for `LSTM_units` parameter in config.py file'

20 | assert type(rnnConfig['dense_units']) is int, 'Please provide an integer value for `dense_units` parameter in config.py file'

21 | assert type(rnnConfig['dropout']) is float, 'Please provide a float value for `dropout` parameter in config.py file'

22 |

23 | """

24 | *Load Data

25 | *X1 : Image features

26 | *X2 : Text features(Captions)

27 | """

28 | X1train, X2train, max_length = loadTrainData(config)

29 |

30 | X1val, X2val = loadValData(config)

31 |

32 | """

33 | *Load the tokenizer

34 | """

35 | tokenizer = load(open(config['tokenizer_path'], 'rb'))

36 | vocab_size = len(tokenizer.word_index) + 1

37 |

38 | """

39 | *Now that we have the image features from CNN model, we need to feed them to a RNN Model.

40 | *Define the RNN model

41 | """

42 | # model = RNNModel(vocab_size, max_length, rnnConfig, config['model_type'])

43 | model = AlternativeRNNModel(vocab_size, max_length, rnnConfig, config['model_type'])

44 | print('RNN Model (Decoder) Summary : ')

45 | model.summary()

46 |

47 | """

48 | *Train the model, saving a checkpoint whenever val_loss improves

49 | """

50 | num_of_epochs = config['num_of_epochs']

51 | batch_size = config['batch_size']

52 | steps_train = len(X2train)//batch_size

53 | if len(X2train)%batch_size!=0:

54 |     steps_train = steps_train+1

55 | steps_val = len(X2val)//batch_size

56 | if len(X2val)%batch_size!=0:

57 |     steps_val = steps_val+1

58 | model_save_path = config['model_data_path']+"model_"+str(config['model_type'])+"_epoch-{epoch:02d}_train_loss-{loss:.4f}_val_loss-{val_loss:.4f}.hdf5"

59 | checkpoint = ModelCheckpoint(model_save_path, monitor='val_loss', verbose=1, save_best_only=True, mode='min')

60 | callbacks = [checkpoint]

61 |

62 | print('steps_train: {}, steps_val: {}'.format(steps_train,steps_val))

63 | print('Batch Size: {}'.format(batch_size))

64 | print('Total Number of Epochs = {}'.format(num_of_epochs))

65 |

66 | # Shuffle train data

67 | ids_train = list(X2train.keys())

68 | random.shuffle(ids_train)

69 | X2train_shuffled = {_id: X2train[_id] for _id in ids_train}

70 | X2train = X2train_shuffled

71 |

72 | # Create the train data generator

73 | # returns [[img_features, text_features], out_word]

74 | generator_train = data_generator(X1train, X2train, tokenizer, max_length, batch_size, config['random_seed'])

75 | # Create the validation data generator

76 | # returns [[img_features, text_features], out_word]

77 | generator_val = data_generator(X1val, X2val, tokenizer, max_length, batch_size, config['random_seed'])

78 |

79 | # Fit the model for `num_of_epochs` epochs

80 | model.fit_generator(generator_train,

81 |             epochs=num_of_epochs,

82 |             steps_per_epoch=steps_train,

83 |             validation_data=generator_val,

84 |             validation_steps=steps_val,

85 |             callbacks=callbacks,

86 |             verbose=1)

87 |

88 | """

89 | *Evaluate the model on validation data and output BLEU score

90 | """

91 | print('Model trained successfully. Running model on validation set for calculating BLEU score using BEAM search with k={}'.format(config['beam_search_k']))

92 | evaluate_model_beam_search(model, X1val, X2val, tokenizer, max_length, beam_index=config['beam_search_k'])

--------------------------------------------------------------------------------
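The `steps_train` / `steps_val` computation in `train_val.py` (integer division, plus one when there is a remainder) is a ceiling division. A compact sketch of the same arithmetic follows; `steps_per_epoch` is a hypothetical helper name, and the counts of 6000 training and 1000 validation images are assumptions about the Flickr8k split files.

```python
import math


def steps_per_epoch(num_items, batch_size):
    """Number of batches needed to cover num_items, counting a final partial batch."""
    return math.ceil(num_items / batch_size)


# Assumed image counts for the Flickr8k train and dev splits
print(steps_per_epoch(6000, 64))
print(steps_per_epoch(1000, 64))
```

Note that a step here is a batch of images, not of training samples: `data_generator` expands every image in the batch into one sample per caption prefix, so the arrays yielded per step are much larger than `batch_size`.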

/train_val_data/readme.md:

--------------------------------------------------------------------------------

1 | Train-Validation Folder

2 |

3 | Download link for Flickr8k Dataset : Dataset Request Form

4 |

5 | UPDATE (April/2019): The official site seems to have been taken down (although the form still works). Here are some direct download links:

6 |

11 |

12 | Put the following files/folders in this directory:

13 |

14 |

15 | - Flicker8k_Dataset Folder

16 | - Flickr8k.token.txt File

17 | - Flickr_8k.trainImages.txt File

18 | - Flickr_8k.devImages.txt File

19 |

--------------------------------------------------------------------------------

/utils/load_data.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | from utils.preprocessing import *

3 | from pickle import load, dump

4 | from keras.preprocessing.text import Tokenizer

5 | from keras.preprocessing.sequence import pad_sequences

6 | from keras.utils import to_categorical

7 | import random

8 | '''

9 | *We have Flickr_8k.trainImages.txt and Flickr_8k.devImages.txt files which consist of unique identifiers(id)

10 | which can be used to filter the images and their descriptions

11 | *Load a pre-defined list of image identifiers(id)

12 | *Glimpse of file:

13 | 2513260012_03d33305cf.jpg

14 | 2903617548_d3e38d7f88.jpg

15 | 3338291921_fe7ae0c8f8.jpg

16 | 488416045_1c6d903fe0.jpg

17 | 2644326817_8f45080b87.jpg

18 | '''

19 | def load_set(filename):

20 |     file = open(filename, 'r')

21 |     doc = file.read()

22 |     file.close()

23 |     ids = list()

24 |     # Process line by line

25 |     for line in doc.split('\n'):

26 |         # Skip empty lines

27 |         if len(line) < 1:

28 |             continue

29 |         # Get the image identifier(id)

30 |         _id = line.split('.')[0]

31 |         ids.append(_id)

32 |     return set(ids)

33 |

34 | '''

35 | *The model we'll develop will generate a caption for a given image and the caption will be generated one word at a time.

36 | *The sequence of previously generated words will be provided as input. Therefore, we will need a ‘first word’ to

37 | kick-off the generation process and a ‘last word‘ to signal the end of the caption.

38 | *We'll use the strings ‘startseq‘ and ‘endseq‘ for this purpose. These tokens are added to the captions

39 | as they are loaded.

40 | *It is important to do this now before we encode the text so that the tokens are also encoded correctly.

41 | *Load captions into memory

42 | *Glimpse of file:

43 | 1000268201_693b08cb0e child in pink dress is climbing up set of stairs in an entry way

44 | 1000268201_693b08cb0e girl going into wooden building

45 | 1000268201_693b08cb0e little girl climbing into wooden playhouse

46 | 1000268201_693b08cb0e little girl climbing the stairs to her playhouse

47 | 1000268201_693b08cb0e little girl in pink dress going into wooden cabin

48 | '''

49 | def load_cleaned_captions(filename, ids):

50 |     file = open(filename, 'r')

51 |     doc = file.read()

52 |     file.close()

53 |     captions = dict()

54 |     _count = 0

55 |     # Process line by line, skipping blank lines (e.g. a trailing newline)

56 |     for line in filter(str.strip, doc.split('\n')):

57 |         # Split line on white space

58 |         tokens = line.split()

59 |         # Split id from caption

60 |         image_id, image_caption = tokens[0], tokens[1:]

61 |         # Skip images not in the ids set

62 |         if image_id in ids:

63 |             # Create list

64 |             if image_id not in captions:

65 |                 captions[image_id] = list()

66 |             # Wrap caption in start & end tokens

67 |             caption = 'startseq ' + ' '.join(image_caption) + ' endseq'

68 |             # Store

69 |             captions[image_id].append(caption)

70 |             _count = _count+1

71 |     return captions, _count

72 |

73 | # Load image features

74 | def load_image_features(filename, ids):

75 |     # load all features

76 |     all_features = load(open(filename, 'rb'))

77 |     # filter features

78 |     features = {_id: all_features[_id] for _id in ids}

79 |     return features

80 |

81 | # Flatten a dictionary of caption lists into a single list of captions

82 | def to_lines(captions):

83 |     all_captions = list()

84 |     for image_id in captions.keys():

85 |         all_captions.extend(captions[image_id])

86 |     return all_captions

87 |

88 | '''

89 | *The captions will need to be encoded to numbers before it can be presented to the model.

90 | *The first step in encoding the captions is to create a consistent mapping from words to unique integer values.

91 | Keras provides the Tokenizer class that can learn this mapping from the loaded captions.

92 | *Fit a tokenizer on given captions

93 | '''

94 | def create_tokenizer(captions):

95 |     lines = to_lines(captions)

96 |     tokenizer = Tokenizer()

97 |     tokenizer.fit_on_texts(lines)

98 |     return tokenizer

99 |

100 | # Calculate the length of the caption with the most words

101 | def calc_max_length(captions):

102 |     lines = to_lines(captions)

103 |     return max(len(line.split()) for line in lines)

104 |

105 | '''

106 | *Each caption will be split into words. The model will be provided one word & the image and it generates the next word.

107 | *Then the first two words of the caption will be provided to the model as input with the image to generate the next word.

108 | *This is how the model will be trained.

109 | *For example, the input sequence “little girl running in field” would be

110 | split into 6 input-output pairs to train the model:

111 |

112 | X1      X2 (text sequence)                             y (word)

113 | -----------------------------------------------------------------

114 | image   startseq,                                      little

115 | image   startseq, little,                              girl

116 | image   startseq, little, girl,                        running

117 | image   startseq, little, girl, running,               in

118 | image   startseq, little, girl, running, in,           field

119 | image   startseq, little, girl, running, in, field,    endseq

120 | '''

121 | # Create sequences of images, input sequences and output words for an image

122 | def create_sequences(tokenizer, max_length, captions_list, image):

123 |     # X1 : input for image features

124 |     # X2 : input for text features

125 |     # y : output word

126 |     X1, X2, y = list(), list(), list()

127 |     vocab_size = len(tokenizer.word_index) + 1

128 |     # Walk through each caption for the image

129 |     for caption in captions_list:

130 |         # Encode the sequence

131 |         seq = tokenizer.texts_to_sequences([caption])[0]

132 |         # Split one sequence into multiple X,y pairs

133 |         for i in range(1, len(seq)):

134 |             # Split into input and output pair

135 |             in_seq, out_seq = seq[:i], seq[i]

136 |             # Pad input sequence

137 |             in_seq = pad_sequences([in_seq], maxlen=max_length)[0]

138 |             # Encode output sequence

139 |             out_seq = to_categorical([out_seq], num_classes=vocab_size)[0]

140 |             # Store

141 |             X1.append(image)

142 |             X2.append(in_seq)

143 |             y.append(out_seq)

144 |     return X1, X2, y

145 |

146 | # Data generator, intended to be used in a call to model.fit_generator()

147 | def data_generator(images, captions, tokenizer, max_length, batch_size, random_seed):

148 |     # Setting random seed for reproducibility of results

149 |     random.seed(random_seed)

150 |     # Image ids

151 |     image_ids = list(captions.keys())

152 |     _count=0

153 |     assert batch_size<= len(image_ids), 'Batch size must be less than or equal to {}'.format(len(image_ids))

154 |     while True:

155 |         if _count >= len(image_ids):

156 |             # Generator exceeded or reached the end so restart it

157 |             _count = 0

158 |         # Batch list to store data

159 |         input_img_batch, input_sequence_batch, output_word_batch = list(), list(), list()

160 |         for i in range(_count, min(len(image_ids), _count+batch_size)):

161 |             # Retrieve the image id

162 |             image_id = image_ids[i]

163 |             # Retrieve the image features

164 |             image = images[image_id][0]

165 |             # Retrieve the captions list

166 |             captions_list = captions[image_id]

167 |             # Shuffle captions list

168 |             random.shuffle(captions_list)

169 |             input_img, input_sequence, output_word = create_sequences(tokenizer, max_length, captions_list, image)

170 |             # Add to batch

171 |             for j in range(len(input_img)):

172 |                 input_img_batch.append(input_img[j])

173 |                 input_sequence_batch.append(input_sequence[j])

174 |                 output_word_batch.append(output_word[j])

175 |         _count = _count + batch_size

176 |         yield [[np.array(input_img_batch), np.array(input_sequence_batch)], np.array(output_word_batch)]

177 |

178 | def loadTrainData(config):

179 |     train_image_ids = load_set(config['train_data_path'])

180 |     # Check if we already have preprocessed data saved and if not, preprocess the data.

181 |     # Create and save 'captions.txt' & features.pkl

182 |     preprocessData(config)

183 |     # Load captions

184 |     train_captions, _count = load_cleaned_captions(config['model_data_path']+'captions.txt', train_image_ids)

185 |     # Load image features

186 |     train_image_features = load_image_features(config['model_data_path']+'features_'+str(config['model_type'])+'.pkl', train_image_ids)

187 |     print('{}: Available images for training: {}'.format(mytime(),len(train_image_features)))

188 |     print('{}: Available captions for training: {}'.format(mytime(),_count))

189 |     if not os.path.exists(config['model_data_path']+'tokenizer.pkl'):

190 |         # Prepare tokenizer

191 |         tokenizer = create_tokenizer(train_captions)

192 |         # Save the tokenizer

193 |         dump(tokenizer, open(config['model_data_path']+'tokenizer.pkl', 'wb'))

194 |     # Determine the maximum sequence length

195 |     max_length = calc_max_length(train_captions)

196 |     return train_image_features, train_captions, max_length

197 |

198 | def loadValData(config):

199 |     val_image_ids = load_set(config['val_data_path'])

200 |     # Load captions

201 |     val_captions, _count = load_cleaned_captions(config['model_data_path']+'captions.txt', val_image_ids)

202 |     # Load image features

203 |     val_features = load_image_features(config['model_data_path']+'features_'+str(config['model_type'])+'.pkl', val_image_ids)

204 |     print('{}: Available images for validation: {}'.format(mytime(),len(val_features)))

205 |     print('{}: Available captions for validation: {}'.format(mytime(),_count))

206 |     return val_features, val_captions

--------------------------------------------------------------------------------
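The prefix/next-word expansion described in the `create_sequences` docstring of `utils/load_data.py` can be sketched without Keras. This is an illustration only: `expand_caption` and the toy `word_index` are hypothetical, the left-padding imitates the default `'pre'` behaviour of Keras `pad_sequences`, and the one-hot encoding of the target word is left out.

```python
def expand_caption(caption, max_length, word_index):
    """Turn one caption into (zero-padded prefix, next-word id) training pairs."""
    seq = [word_index[word] for word in caption.split()]
    pairs = []
    for i in range(1, len(seq)):
        # Keep at most max_length tokens of the prefix, then left-pad with zeros
        prefix = seq[:i][-max_length:]
        padded = [0] * (max_length - len(prefix)) + prefix
        pairs.append((padded, seq[i]))
    return pairs


# Toy vocabulary; index 0 is reserved for padding
word_index = {"startseq": 1, "little": 2, "girl": 3, "running": 4,
              "in": 5, "field": 6, "endseq": 7}
pairs = expand_caption("startseq little girl running in field endseq",
                       max_length=7, word_index=word_index)
for padded, target in pairs:
    print(padded, "->", target)
```

A caption of n tokens (including the start and end markers) yields n - 1 pairs, which is why a batch of images produces many more training samples than `batch_size`.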

/utils/model.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | # Keras

3 | from keras.applications.inception_v3 import InceptionV3

4 | from keras.applications.vgg16 import VGG16

5 | from keras.models import Model

6 | from keras.layers import Input, Dense, Dropout, LSTM, Embedding, concatenate, RepeatVector, TimeDistributed, Bidirectional

7 | from keras.preprocessing.sequence import pad_sequences

8 | from tqdm import tqdm

9 | # To measure BLEU Score

10 | from nltk.translate.bleu_score import corpus_bleu

11 |

12 | """

13 | *Define the CNN model

14 | """

15 | def CNNModel(model_type):

16 | if model_type == 'inceptionv3':

17 | model = InceptionV3()

18 | elif model_type == 'vgg16':

19 | model = VGG16()

20 | # Use the penultimate layer as the output, which drops the final classification layer

21 | model = Model(inputs=model.inputs, outputs=model.layers[-2].output)

22 | return model

23 |

24 | """

25 | *Define the RNN model

26 | """

27 | def RNNModel(vocab_size, max_len, rnnConfig, model_type):

28 | embedding_size = rnnConfig['embedding_size']

29 | if model_type == 'inceptionv3':

30 | # InceptionV3 outputs a 2048 dimensional vector for each image, which we'll feed to RNN Model

31 | image_input = Input(shape=(2048,))

32 | elif model_type == 'vgg16':

33 | # VGG16 outputs a 4096 dimensional vector for each image, which we'll feed to RNN Model

34 | image_input = Input(shape=(4096,))

35 | image_model_1 = Dropout(rnnConfig['dropout'])(image_input)

36 | image_model = Dense(embedding_size, activation='relu')(image_model_1)

37 |

38 | caption_input = Input(shape=(max_len,))

39 | # mask_zero: inputs are zero-padded to the same length; the mask makes downstream layers ignore the padded positions.

40 | caption_model_1 = Embedding(vocab_size, embedding_size, mask_zero=True)(caption_input)

41 | caption_model_2 = Dropout(rnnConfig['dropout'])(caption_model_1)

42 | caption_model = LSTM(rnnConfig['LSTM_units'])(caption_model_2)

43 |

44 | # Merging the models and creating a softmax classifier

45 | final_model_1 = concatenate([image_model, caption_model])

46 | final_model_2 = Dense(rnnConfig['dense_units'], activation='relu')(final_model_1)

47 | final_model = Dense(vocab_size, activation='softmax')(final_model_2)

48 |

49 | model = Model(inputs=[image_input, caption_input], outputs=final_model)

50 | model.compile(loss='categorical_crossentropy', optimizer='adam')

51 | return model

52 |

53 | """

54 | *Define the RNN model with different architecture

55 | """

56 | def AlternativeRNNModel(vocab_size, max_len, rnnConfig, model_type):

57 | embedding_size = rnnConfig['embedding_size']

58 | if model_type == 'inceptionv3':

59 | # InceptionV3 outputs a 2048 dimensional vector for each image, which we'll feed to RNN Model

60 | image_input = Input(shape=(2048,))

61 | elif model_type == 'vgg16':

62 | # VGG16 outputs a 4096 dimensional vector for each image, which we'll feed to RNN Model

63 | image_input = Input(shape=(4096,))

64 | image_model_1 = Dense(embedding_size, activation='relu')(image_input)

65 | image_model = RepeatVector(max_len)(image_model_1)

66 |

67 | caption_input = Input(shape=(max_len,))

68 | # mask_zero: inputs are zero-padded to the same length; the mask makes downstream layers ignore the padded positions.

69 | caption_model_1 = Embedding(vocab_size, embedding_size, mask_zero=True)(caption_input)

70 | # Since we are going to predict the next word using the previous words

71 | # (length of previous words changes with every iteration over the caption), we have to set return_sequences = True.

72 | caption_model_2 = LSTM(rnnConfig['LSTM_units'], return_sequences=True)(caption_model_1)

73 | # caption_model = TimeDistributed(Dense(embedding_size, activation='relu'))(caption_model_2)

74 | caption_model = TimeDistributed(Dense(embedding_size))(caption_model_2)

75 |

76 | # Merging the models and creating a softmax classifier

77 | final_model_1 = concatenate([image_model, caption_model])

78 | # final_model_2 = LSTM(rnnConfig['LSTM_units'], return_sequences=False)(final_model_1)

79 | final_model_2 = Bidirectional(LSTM(rnnConfig['LSTM_units'], return_sequences=False))(final_model_1)

80 | # final_model_3 = Dense(rnnConfig['dense_units'], activation='relu')(final_model_2)

81 | # final_model = Dense(vocab_size, activation='softmax')(final_model_3)

82 | final_model = Dense(vocab_size, activation='softmax')(final_model_2)

83 |

84 | model = Model(inputs=[image_input, caption_input], outputs=final_model)

85 | model.compile(loss='categorical_crossentropy', optimizer='adam')

86 | # model.compile(loss='categorical_crossentropy', optimizer='rmsprop')

87 | return model

88 |

89 | """

90 | *Map an integer to a word

91 | """

92 | def int_to_word(integer, tokenizer):

93 | for word, index in tokenizer.word_index.items():

94 | if index == integer:

95 | return word

96 | return None

97 |

98 | """

99 | *Generate a caption for an image, given a pre-trained model and a tokenizer to map integer back to word

100 | *Uses simple argmax

101 | """

102 | def generate_caption(model, tokenizer, image, max_length):

103 | # Seed the generation process

104 | in_text = 'startseq'

105 | # Iterate over the whole length of the sequence

106 | for _ in range(max_length):

107 | # Integer encode input sequence

108 | sequence = tokenizer.texts_to_sequences([in_text])[0]

109 | # Pad input

110 | sequence = pad_sequences([sequence], maxlen=max_length)

111 | # Predict next word

112 | # The model will output a prediction, which will be a probability distribution over all words in the vocabulary.

113 | yhat = model.predict([image,sequence], verbose=0)

114 | # The output vector represents a probability distribution; the index of the maximum probability is the predicted word

115 | # Take output class with maximum probability and convert to integer

116 | yhat = np.argmax(yhat)

117 | # Map integer back to word

118 | word = int_to_word(yhat, tokenizer)

119 | # Stop if we cannot map the word

120 | if word is None:

121 | break

122 | # Append as input for generating the next word

123 | in_text += ' ' + word

124 | # Stop if we predict the end of the sequence

125 | if word == 'endseq':

126 | break

127 | return in_text

128 |

129 | """

130 | *Generate a caption for an image, given a pre-trained model and a tokenizer to map integer back to word

131 | *Uses BEAM Search algorithm

132 | """

133 | def generate_caption_beam_search(model, tokenizer, image, max_length, beam_index=3):

134 | # in_text --> [[idx,prob]] ;prob=0 initially

135 | in_text = [[tokenizer.texts_to_sequences(['startseq'])[0], 0.0]]

136 | while len(in_text[0][0]) < max_length:

137 | tempList = []

138 | for seq in in_text:

139 | padded_seq = pad_sequences([seq[0]], maxlen=max_length)

140 | preds = model.predict([image,padded_seq], verbose=0)

141 | # Take the top `beam_index` predictions (i.e. those with the highest probabilities)

142 | top_preds = np.argsort(preds[0])[-beam_index:]

143 | # Extend the current sequence with each of the top `beam_index` predictions

144 | for word in top_preds:

145 | next_seq, prob = seq[0][:], seq[1]

146 | next_seq.append(word)

147 | # Accumulate the log-probability, so the score reflects the product of the step probabilities

148 | prob += np.log(preds[0][word] + 1e-12)

149 | # Append as input for generating the next word

150 | tempList.append([next_seq, prob])

151 | in_text = tempList

152 | # Sorting according to the probabilities

153 | in_text = sorted(in_text, reverse=False, key=lambda l: l[1])

154 | # Keep the top `beam_index` sequences

155 | in_text = in_text[-beam_index:]

156 | in_text = in_text[-1][0]

157 | final_caption_raw = [int_to_word(i,tokenizer) for i in in_text]

158 | final_caption = []

159 | for word in final_caption_raw:

160 | if word=='endseq':

161 | break

162 | else:

163 | final_caption.append(word)

164 | final_caption.append('endseq')

165 | return ' '.join(final_caption)

166 |

167 | """

168 | *Evaluate the model on BLEU Score using argmax predictions

169 | """

170 | def evaluate_model(model, images, captions, tokenizer, max_length):

171 | actual, predicted = list(), list()

172 | for image_id, caption_list in tqdm(captions.items()):

173 | yhat = generate_caption(model, tokenizer, images[image_id], max_length)

174 | ground_truth = [caption.split() for caption in caption_list]

175 | actual.append(ground_truth)

176 | predicted.append(yhat.split())

177 | print('BLEU Scores :')

178 | print('A perfect match results in a score of 1.0, whereas a perfect mismatch results in a score of 0.0.')

179 | print('BLEU-1: %f' % corpus_bleu(actual, predicted, weights=(1.0, 0, 0, 0)))

180 | print('BLEU-2: %f' % corpus_bleu(actual, predicted, weights=(0.5, 0.5, 0, 0)))

181 | print('BLEU-3: %f' % corpus_bleu(actual, predicted, weights=(0.3, 0.3, 0.3, 0)))

182 | print('BLEU-4: %f' % corpus_bleu(actual, predicted, weights=(0.25, 0.25, 0.25, 0.25)))

183 |

184 | """

185 | *Evaluate the model on BLEU Score using BEAM search predictions

186 | """

187 | def evaluate_model_beam_search(model, images, captions, tokenizer, max_length, beam_index=3):

188 | actual, predicted = list(), list()

189 | for image_id, caption_list in tqdm(captions.items()):

190 | yhat = generate_caption_beam_search(model, tokenizer, images[image_id], max_length, beam_index=beam_index)

191 | ground_truth = [caption.split() for caption in caption_list]

192 | actual.append(ground_truth)

193 | predicted.append(yhat.split())

194 | print('BLEU Scores :')

195 | print('A perfect match results in a score of 1.0, whereas a perfect mismatch results in a score of 0.0.')

196 | print('BLEU-1: %f' % corpus_bleu(actual, predicted, weights=(1.0, 0, 0, 0)))

197 | print('BLEU-2: %f' % corpus_bleu(actual, predicted, weights=(0.5, 0.5, 0, 0)))

198 | print('BLEU-3: %f' % corpus_bleu(actual, predicted, weights=(0.3, 0.3, 0.3, 0)))

199 | print('BLEU-4: %f' % corpus_bleu(actual, predicted, weights=(0.25, 0.25, 0.25, 0.25)))

--------------------------------------------------------------------------------
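
Note on beam search as used by `generate_caption_beam_search` above: the idea is to keep several partial captions alive at each step instead of committing to the single most probable word. The following is a minimal, framework-free sketch of that idea, not the repository's implementation. The callback `next_probs` is a hypothetical stand-in for `model.predict`, the four-token vocabulary is invented for the example, and scoring by summed log-probabilities and carrying finished sequences forward unchanged are choices made by this sketch.

```python
import math


def beam_search(next_probs, start_token, end_token, max_length, beam_width=3):
    """Return the highest-scoring token sequence found by beam search.

    `next_probs(seq)` is a hypothetical callback that returns a list of
    probabilities, one per token id, for the token following `seq`.
    """
    # Each beam entry is (token_ids, cumulative log-probability).
    beams = [([start_token], 0.0)]
    for _ in range(max_length - 1):
        candidates = []
        for seq, score in beams:
            # Finished sequences are carried over unchanged.
            if seq[-1] == end_token:
                candidates.append((seq, score))
                continue
            probs = next_probs(seq)
            # Expand only the `beam_width` most probable next tokens.
            top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:beam_width]
            for token in top:
                if probs[token] > 0.0:
                    candidates.append((seq + [token], score + math.log(probs[token])))
        # Keep the best `beam_width` candidates overall.
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
        if all(seq[-1] == end_token for seq, _ in beams):
            break
    return beams[0][0]


def toy_next_probs(seq):
    """Invented toy model over token ids 0 (start), 1, 2 and 3 (end)."""
    table = {
        (0,): [0.0, 0.6, 0.4, 0.0],
        (0, 1): [0.0, 0.0, 0.45, 0.55],
        (0, 2): [0.0, 0.0, 0.0, 1.0],
    }
    # Any other prefix ends the sequence with certainty.
    return table.get(tuple(seq), [0.0, 0.0, 0.0, 1.0])


greedy = beam_search(toy_next_probs, 0, 3, max_length=5, beam_width=1)
beam = beam_search(toy_next_probs, 0, 3, max_length=5, beam_width=2)
```

In this toy setting a beam width of 1 behaves like the argmax decoder and commits to token 1 first (sequence probability 0.6 × 0.55 = 0.33), whereas a width of 2 also keeps token 2 alive and finds the more probable sequence (0.4 × 1.0 = 0.4).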

/utils/preprocessing.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | import os

3 | from pickle import dump

4 | import string

5 | from tqdm import tqdm

6 | from utils.model import CNNModel

7 | from keras.preprocessing.image import load_img, img_to_array

8 | from datetime import datetime as dt

9 |

10 | # Utility function for pretty printing

11 | def mytime(with_date=False):

12 | _str = ''

13 | if with_date:

14 | _str = str(dt.now().year)+'-'+str(dt.now().month)+'-'+str(dt.now().day)+' '

15 | _str = _str+str(dt.now().hour)+':'+str(dt.now().minute)+':'+str(dt.now().second)

16 | else:

17 | _str = str(dt.now().hour)+':'+str(dt.now().minute)+':'+str(dt.now().second)

18 | return _str

19 |

20 | """

21 | *This function returns a dictionary of form:

22 | {

23 | image_id1 : image_features1,

24 | image_id2 : image_features2,

25 | ...

26 | }

27 | """

28 | def extract_features(path, model_type):

29 | if model_type == 'inceptionv3':

30 | from keras.applications.inception_v3 import preprocess_input

31 | target_size = (299, 299)

32 | elif model_type == 'vgg16':

33 | from keras.applications.vgg16 import preprocess_input

34 | target_size = (224, 224)

35 | # Get CNN Model from model.py

36 | model = CNNModel(model_type)

37 | features = dict()

38 | # Extract features from each photo

39 | for name in tqdm(os.listdir(path)):

40 | # Loading and resizing image

41 | filename = path + name

42 | image = load_img(filename, target_size=target_size)

43 | # Convert the image pixels to a numpy array

44 | image = img_to_array(image)

45 | # Reshape data for the model

46 | image = image.reshape((1, image.shape[0], image.shape[1], image.shape[2]))

47 | # Prepare the image for the CNN model

48 | image = preprocess_input(image)

49 | # Pass image into model to get encoded features

50 | feature = model.predict(image, verbose=0)

51 | # Store encoded features for the image

52 | image_id = name.split('.')[0]

53 | features[image_id] = feature

54 | return features

55 |

56 | """

57 | *Extract captions for images

58 | *Glimpse of file:

59 | 1000268201_693b08cb0e.jpg#0 A child in a pink dress is climbing up a set of stairs in an entry way .

60 | 1000268201_693b08cb0e.jpg#1 A girl going into a wooden building .

61 | 1000268201_693b08cb0e.jpg#2 A little girl climbing into a wooden playhouse .

62 | 1000268201_693b08cb0e.jpg#3 A little girl climbing the stairs to her playhouse .

63 | 1000268201_693b08cb0e.jpg#4 A little girl in a pink dress going into a wooden cabin .

64 | """

65 | def load_captions(filename):

66 | file = open(filename, 'r')

67 | doc = file.read()

68 | file.close()

69 | """

70 | Captions dict is of form:

71 | {

72 | image_id1 : [caption1, caption2, etc],

73 | image_id2 : [caption1, caption2, etc],

74 | ...

75 | }

76 | """

77 | captions = dict()

78 | # Process line by line

79 | _count = 0

80 | for line in doc.split('\n'):

81 | # Split line on white space

82 | tokens = line.split()

83 | if len(tokens) < 2:

84 | continue

85 | # Take the first token as the image id, the rest as the caption

86 | image_id, image_caption = tokens[0], tokens[1:]

87 | # Extract filename from image id

88 | image_id = image_id.split('.')[0]

89 | # Convert caption tokens back to caption string

90 | image_caption = ' '.join(image_caption)

91 | # Create the list if needed

92 | if image_id not in captions:

93 | captions[image_id] = list()

94 | # Store caption

95 | captions[image_id].append(image_caption)

96 | _count = _count+1

97 | print('{}: Parsed captions: {}'.format(mytime(),_count))

98 | return captions

99 |

100 | def clean_captions(captions):

101 | # Prepare translation table for removing punctuation

102 | table = str.maketrans('', '', string.punctuation)

103 | for _, caption_list in captions.items():

104 | for i in range(len(caption_list)):

105 | caption = caption_list[i]

106 | # Tokenize i.e. split on white spaces

107 | caption = caption.split()

108 | # Convert to lowercase

109 | caption = [word.lower() for word in caption]

110 | # Remove punctuation from each token

111 | caption = [w.translate(table) for w in caption]

112 | # Remove hanging 's' and 'a'

113 | caption = [word for word in caption if len(word)>1]

114 | # Remove tokens with numbers in them

115 | caption = [word for word in caption if word.isalpha()]

116 | # Store as string

117 | caption_list[i] = ' '.join(caption)

118 |

119 | """

120 | *Save captions to file, one per line

121 | *After saving, captions.txt is of form :- `id` `caption`

122 | Example : 2252123185_487f21e336 stadium full of people watch game

123 | """

124 | def save_captions(captions, filename):

125 | lines = list()

126 | for key, captions_list in captions.items():

127 | for caption in captions_list:

128 | lines.append(key + ' ' + caption)

129 | data = '\n'.join(lines)

130 | file = open(filename, 'w')

131 | file.write(data)

132 | file.close()

133 |

134 | def preprocessData(config):

135 | print('{}: Using {} model'.format(mytime(),config['model_type'].title()))

136 | # Extract features from all images

137 | if os.path.exists(config['model_data_path']+'features_'+str(config['model_type'])+'.pkl'):

138 | print('{}: Image features already generated at {}'.format(mytime(), config['model_data_path']+'features_'+str(config['model_type'])+'.pkl'))

139 | else:

140 | print('{}: Generating image features using {} model...'.format(mytime(), config['model_type']))

141 | features = extract_features(config['images_path'], config['model_type'])

142 | # Save to file

143 | dump(features, open(config['model_data_path']+'features_'+str(config['model_type'])+'.pkl', 'wb'))

144 | print('{}: Completed & Saved features for {} images successfully'.format(mytime(),len(features)))

145 | # Load file containing captions and parse them

146 | if os.path.exists(config['model_data_path']+'captions.txt'):

147 | print('{}: Parsed caption file already generated at {}'.format(mytime(), config['model_data_path']+'captions.txt'))

148 | else:

149 | print('{}: Parsing captions file...'.format(mytime()))

150 | captions = load_captions(config['captions_path'])

151 | # Clean captions

152 | # Ignore this function because Tokenizer from keras will handle cleaning

153 | # clean_captions(captions)

154 | # Save captions

155 | save_captions(captions, config['model_data_path']+'captions.txt')

156 | print('{}: Parsed & Saved successfully'.format(mytime()))

--------------------------------------------------------------------------------
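
Note on the caption pipeline in `load_captions` and `clean_captions` above: each line of the captions file is split into an image id and a caption, and cleaning lowercases the words, strips punctuation, and drops one-character and non-alphabetic tokens. The following is a minimal sketch of those two steps on sample lines taken from the docstring above. The function names `parse_caption_lines` and `clean_caption` are hypothetical and work on in-memory strings rather than files.

```python
import string


def parse_caption_lines(lines):
    """Group captions by image id; lines without caption text are skipped."""
    captions = {}
    for line in lines:
        tokens = line.split()
        if len(tokens) < 2:
            continue
        # '1000268201_693b08cb0e.jpg#0' -> '1000268201_693b08cb0e'
        image_id = tokens[0].split('.')[0]
        captions.setdefault(image_id, []).append(' '.join(tokens[1:]))
    return captions


def clean_caption(caption):
    """Lowercase, strip punctuation, drop 1-character and non-alphabetic tokens."""
    table = str.maketrans('', '', string.punctuation)
    words = [word.lower().translate(table) for word in caption.split()]
    return ' '.join(word for word in words if len(word) > 1 and word.isalpha())


sample_lines = [
    '1000268201_693b08cb0e.jpg#1 A girl going into a wooden building .',
    '1000268201_693b08cb0e.jpg#2 A little girl climbing into a wooden playhouse .',
    '',
]
parsed = parse_caption_lines(sample_lines)
cleaned = [clean_caption(c) for c in parsed['1000268201_693b08cb0e']]
```

Note that cleaning removes the article "a" along with the trailing period, since both become tokens of length one or zero.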
