[**HouseKeeping**](#housekeeping)
- [Objectives](#objectives)
- [Frequently asked questions](#frequently-asked-questions)
- [Materials for the Session](#materials-for-the-session)

[**LAB**](#lab)
- [01. Create Astra Account](#-1---create-your-datastax-astra-account)
- [02. Create Astra Token](#-2---create-an-astra-token)
- [03. Copy the token](#-3---copy-the-token-value-in-your-clipboard)
- [04. Open Gitpod](#-4---open-gitpod)
- [05. Setup CLI](#-5---set-up-the-cli-with-your-token)
- [06. Create Database](#-6---create-destination-database-and-a-keyspace)
- [07. Create Destination Table](#-7---create-destination-table)
- [08. Setup env variables](#-8---setup-env-variables)
- [09. Setup Project](#-9---setup-project)
- [10. Run Importing Flow](#-10---run-importing-flow)
- [11. Validate Data](#-11---validate-data)

[**WalkThrough**](#walkthrough)
- [01. Compute Embeddings](#-1-run-flow-compute)
- [02. Show results](#-2-validate-output)
- [03. Create Google Project](#-3-create-google-project)
- [04. Enable project Billing](#-4-enable-billing)
- [05. Save Project Id](#-5-save-project-id)
- [06. Install gcloud CLI](#-6-download-and-install-gcoud-cli)
- [07. Authenticate Against Google Cloud](#-7-authenticate-with-google-cloud)
- [08. Select your project](#-8-set-your-project-)
- [09. Enable Needed APIs](#-9-enable-needed-api)
- [10. Setup Dataflow user](#-10-add-roles-to-dataflow-users)
- [11. Create Secret](#11----create-secrets-for-the-project-in-secret-manager)
- [12. Move to the proper folder](#-12-make-sure-you-are-in-samples-dataflow-folder)
- [13. Setup env var](#13--make-sure-you-have-those-variables-initialized)
- [14. Run the pipeline](#14----run-the-pipeline)
- [15. Show Content of Table](#15----show-the-content-of-the-table)

----

## HouseKeeping

### Objectives

* Introduce AstraDB and its Vector Search capability
* Give you a first understanding of Apache Beam and Google Dataflow
* Discover NoSQL distributed databases, especially Apache Cassandra™
* Get familiar with a few Google Cloud Platform services

### Frequently asked questions

1️⃣ **Can I run this workshop on my computer?**

There is nothing preventing you from running the workshop on your own machine. If you do so, you will need the following:

- git installed on your local system
- Java installed on your local system
- Maven installed on your local system
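If you go the local route, a quick way to confirm that the three tools above are on your `PATH` is sketched below, assuming a bash shell. `missing_tools` is a hypothetical helper written for this README, not part of the workshop repository.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the workshop repo): print the names of the
# given commands that cannot be found on PATH, space-separated.
missing_tools() {
  local tool
  local missing=()
  for tool in "$@"; do
    # `command -v` exits non-zero when the name does not resolve to a command
    command -v "$tool" >/dev/null 2>&1 || missing+=("$tool")
  done
  echo "${missing[*]}"
}

# Check the three prerequisites listed above
missing="$(missing_tools git java mvn)"
if [ -n "$missing" ]; then
  echo "Missing prerequisites: $missing"
else
  echo "git, java and mvn are all available"
fi
```

Running `java -version` and `mvn -version` afterwards shows which versions you have installed.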

2️⃣ **What other prerequisites are required?**

- You will need enough *real estate* on screen: we will ask you to open a few windows, so it does not fit mobile phones (tablets should be OK)
- You will need a GitHub account and, optionally, a Google account for Google authentication
- You will need an Astra account: don't worry, we'll work through that below
- As this is an intermediate-level workshop, we expect you to know what Java and Maven are

3️⃣ **Do I need to pay for anything for this workshop?**

No. All tools and services we provide here are FREE. FREE not only during the session but also after.

4️⃣ **Will I get a certificate if I attend this workshop?**

Attending the session is not enough. You need to complete the homework detailed below and you will get a nice badge that you can share on LinkedIn or anywhere else *(open api badge)*.

### Materials for the Session

It doesn't matter if you join our workshop live or prefer to work at your own pace, we have you covered. In this repository, you'll find everything you need for this workshop:

- [Slide deck](/slides/slides.pdf)
- [Discord chat](https://dtsx.io/discord)

----

## LAB

#### ✅ `1` - Create your DataStax Astra account

> ℹ️ Account creation tutorial is available in [awesome astra](https://awesome-astra.github.io/docs/pages/astra/create-account/)

_Click the image below or go to [https://astra.datastax.com](https://bit.ly/3QxhO6t)_


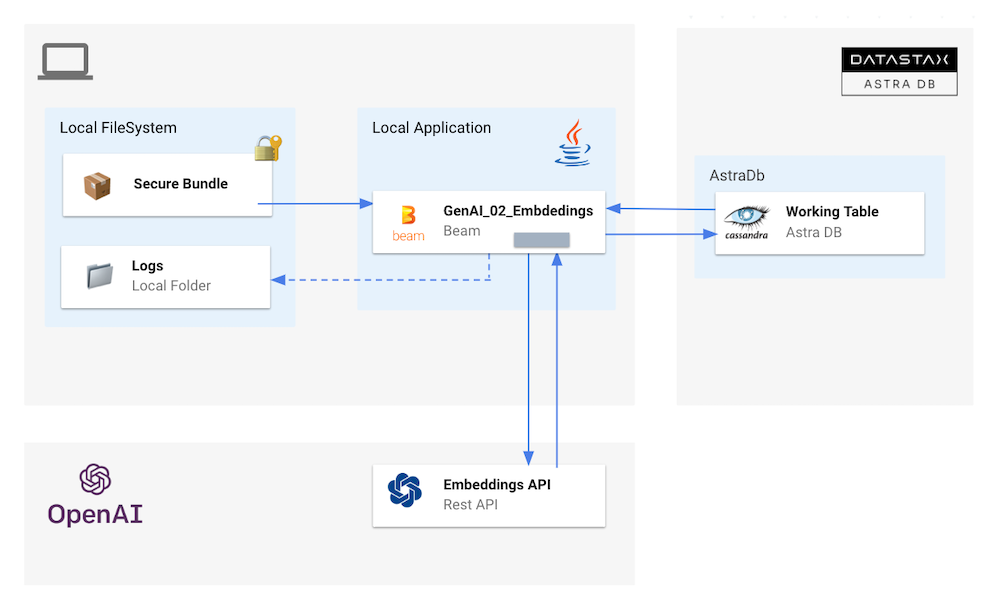

#### ✅ `2` - Create an Astra Token

> ℹ️ Token creation tutorial is available in [awesome astra](https://awesome-astra.github.io/docs/pages/astra/create-token/#c-procedure)

- Locate `Settings` (#1) in the menu on the left, then `Token Management` (#2)

- Select the role `Organization Administrator` before clicking `[Generate Token]`

The token is in fact three separate strings: a `Client ID`, a `Client Secret` and the `token` proper. You will need some of these strings to access the database, depending on the type of access you plan. Although the Client ID, strictly speaking, is not a secret, you should regard this whole object as a secret and make sure not to share it inadvertently (e.g. by committing it to a Git repository), as it grants access to your databases.

```json
{
  "ClientId": "ROkiiDZdvPOvHRSgoZtyAapp",
  "ClientSecret": "fakedfaked",
  "Token": "AstraCS:fake"
}
```

#### ✅ `3` - Copy the token value in your clipboard

You can also leave the window open so you can copy the value again in a moment.
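Of the three strings, the one passed to `astra login --token` is the `Token` value, which starts with `AstraCS:`. A minimal sketch, assuming a bash shell, of a guard against pasting the Client ID or Client Secret by mistake; `is_astra_token` and `safe_astra_login` are hypothetical helpers, not part of the Astra CLI.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if $1 looks like an Astra application
# token, i.e. "AstraCS:" followed by at least one more character.
is_astra_token() {
  case "$1" in
    AstraCS:?*) return 0 ;;
    *) return 1 ;;  # e.g. the Client ID or the Client Secret
  esac
}

# Hypothetical wrapper: only call `astra login` when the prefix matches.
safe_astra_login() {
  if is_astra_token "$1"; then
    astra login --token "$1"
  else
    echo "This does not look like an AstraCS: token" >&2
    return 1
  fi
}
```

For example, `read -rs ASTRA_TOKEN` lets you paste the value without echoing it to the terminal or recording it in your shell history, after which `safe_astra_login "$ASTRA_TOKEN"` can stand in for the plain `astra login --token` command. `ASTRA_TOKEN` is only an illustrative variable name.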
#### ✅ `4` - Open Gitpod

>
> ↗️ _Right Click and select open as a new Tab..._
>
> [Open in Gitpod](https://gitpod.io/#https://github.com/datastaxdevs/workshop-beam)
>

#### ✅ `5` - Set up the CLI with your token

_In gitpod, in a terminal window:_

- Login

```bash
astra login --token AstraCS:fake
```

- Validate that you are set up

```bash
astra org
```

> **Output**
> ```
> gitpod /workspace/workshop-beam (main) $ astra org
> +----------------+-----------------------------------------+
> | Attribute      | Value                                   |
> +----------------+-----------------------------------------+
> | Name           | cedrick.lunven@datastax.com             |
> | id             | f9460f14-9879-4ebe-83f2-48d3f3dce13c    |
> +----------------+-----------------------------------------+
> ```

#### ✅ `6` - Create destination Database and a keyspace

> ℹ️ Note that the Vector Search capability is enabled with the `--vector` flag.

- Create db `workshop_beam` and wait for the DB to become active

```
astra db create workshop_beam -k beam --vector --if-not-exists
```

- List databases

```
astra db list
```

- Describe your db

```
astra db describe workshop_beam
```

#### ✅ `7` - Create Destination table

- Create Table:

```bash
astra db cqlsh workshop_beam -k beam \
  -e "CREATE TABLE IF NOT EXISTS fable(document_id TEXT PRIMARY KEY, title TEXT, document TEXT)"
```

- Show Table:

```bash
astra db cqlsh workshop_beam -k beam -e "SELECT * FROM fable"
```

#### ✅ `8` - Setup env variables

- Create a `.env` file with the variables

```bash
astra db create-dotenv workshop_beam
```

- Display the file

```bash
cat .env
```

- Load the env variables

```
set -a
source .env
set +a
env | grep ASTRA
```

#### ✅ `9` - Setup project

This command validates that Java, Maven and Lombok are working as expected:

```
mvn clean compile
```

#### ✅ `10` - Run Importing flow

- Open the CSV. It is deliberately short and simple, for demo purposes (and to keep OpenAI costs low later on).

```bash
gp open /workspace/workshop-beam/samples-beam/src/main/resources/fables_of_fontaine.csv
```

- Open the Java file with the code

```bash
gp open /workspace/workshop-beam/samples-beam/src/main/java/com/datastax/astra/beam/genai/GenAI_01_ImportData.java
```

- Run the Flow

```
cd samples-beam
mvn clean compile exec:java \
  -Dexec.mainClass=com.datastax.astra.beam.genai.GenAI_01_ImportData \
  -Dexec.args="\
  --astraToken=${ASTRA_DB_APPLICATION_TOKEN} \
  --astraSecureConnectBundle=${ASTRA_DB_SECURE_BUNDLE_PATH} \
  --astraKeyspace=${ASTRA_DB_KEYSPACE} \
  --csvInput=`pwd`/src/main/resources/fables_of_fontaine.csv"
```

#### ✅ `11` - Validate Data

```bash
astra db cqlsh workshop_beam -k beam -e "SELECT * FROM fable"
```

----

## WalkThrough

We will now compute the embeddings using OpenAI. The OpenAI API is not free: you need to provide a credit card to access it, so this part is a walkthrough. If you have an OpenAI key, follow along with me!
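The workshop code relies on a third-party library for this, but under the hood computing an embedding is a single HTTP request to the OpenAI Embeddings API. A minimal bash sketch of that request, assuming `curl` is installed and `OPENAI_API_KEY` is exported; the default model name `text-embedding-ada-002` is an assumption, not necessarily the one the workshop code uses, and `json_escape`, `embedding_request_body` and `request_embedding` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical helpers sketching a raw call to the OpenAI Embeddings API.

# Make text safe inside a JSON string: flatten newlines, CRs and tabs to
# spaces, then escape backslashes (first) and double quotes.
# Other control characters are not handled in this sketch.
json_escape() {
  printf '%s' "$1" | tr '\n\r\t' '   ' | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Build the JSON request body; the default model name is an assumption.
embedding_request_body() {
  local text model
  text="$(json_escape "$1")"
  model="${2:-text-embedding-ada-002}"
  printf '{"input": "%s", "model": "%s"}' "$text" "$model"
}

# POST the body. ${OPENAI_API_KEY:?...} stops with an error message
# if the key is unset or empty.
request_embedding() {
  curl -sS https://api.openai.com/v1/embeddings \
    -H "Authorization: Bearer ${OPENAI_API_KEY:?OPENAI_API_KEY is not set}" \
    -H "Content-Type: application/json" \
    -d "$(embedding_request_body "$@")"
}
```

With the key exported, `request_embedding "The Crow and the Fox"` prints the raw JSON response; per the Embeddings API reference, the vector itself is under `data[0].embedding`.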
- [Access the OpenAI interface and create a key](https://platform.openai.com/account/api-keys)

- [Learn more about the Embeddings API](https://platform.openai.com/docs/api-reference/embeddings)

- [Learn more about the third-party library in use](https://platform.openai.com/docs/libraries/community-libraries)

#### ✅ `1` Run Flow Compute

- Set up OpenAI

```
export OPENAI_API_KEY="