├── .github
│   └── FUNDING.yml
├── .gitignore
├── LICENSE
├── Pipfile
├── Pipfile.lock
├── README.md
├── exp
│   ├── __init__.py
│   ├── __version__.py
│   ├── args.py
│   ├── gopt.py
│   ├── params.py
│   └── run.py
├── extras
│   ├── convergence.png
│   ├── exp.png
│   └── getting_started.gif
├── setup.py
└── test
    ├── __init__.py
    ├── assets
    │   ├── __init__.py
    │   ├── dummy.conf
    │   ├── dummy_runnable.py
    │   ├── multiple_params.conf
    │   └── runnable_tensorflow.py
    └── test_params.py

/.github/FUNDING.yml:

--------------------------------------------------------------------------------

1 | ko_fi: davidenunes

2 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C Extensions

7 | *.so

8 |

9 | # swap files for vim

10 | *.swp

11 |

12 | # Packaging / Distribution

13 | .Python

14 | env/

15 | build/

16 | develop-eggs/

17 | dist/

18 | eggs/

19 | .eggs/

20 | lib/

21 | lib64/

22 | sdist/

23 | var/

24 | *.egg-info/

25 | .installed.cfg

26 | *.egg

27 |

28 | # PyInstaller

29 | # Usually these files are written by a python script from a template

30 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

31 | *.manifest

32 | *.spec

33 |

34 | # Installer Logs

35 | pip-log.txt

36 | pip-delete-this-directory.txt

37 |

38 | # Tests

39 | htmlcov/

40 | .tox/

41 | .coverage

42 | .coverage.*

43 | .cache

44 | nosetests.xml

45 | coverage.xml

46 | *,cover

47 |

48 | # PyBuilder

49 | target/

50 | # IPythonNotebook template

51 | ## Temporary data

52 | .ipynb_checkpoints/

53 |

54 | # sphinx

55 | _build/

56 |

57 |

58 | .vscode

59 |

60 |

61 | # Idea

62 | .idea

63 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "[]"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright [yyyy] [name of copyright owner]

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

--------------------------------------------------------------------------------

/Pipfile:

--------------------------------------------------------------------------------

1 | [[source]]

2 | url = "https://pypi.org/simple"

3 | verify_ssl = true

4 | name = "pypi"

5 |

6 | [packages]

7 | gputil = "*"

8 | click = "*"

9 | matplotlib = "*"

10 | scikit-optimize = "*"

11 | toml = "*"

12 | tqdm = "*"

13 |

14 | [requires]

15 | python_version = "3.6"

16 |

--------------------------------------------------------------------------------

/Pipfile.lock:

--------------------------------------------------------------------------------

1 | {

2 | "_meta": {

3 | "hash": {

4 | "sha256": "9ad6c768704115a440294837bebf50d15e51f837034db9ab61610aa9b9417f74"

5 | },

6 | "pipfile-spec": 6,

7 | "requires": {

8 | "python_version": "3.6"

9 | },

10 | "sources": [

11 | {

12 | "name": "pypi",

13 | "url": "https://pypi.org/simple",

14 | "verify_ssl": true

15 | }

16 | ]

17 | },

18 | "default": {

19 | "click": {

20 | "hashes": [

21 | "sha256:2335065e6395b9e67ca716de5f7526736bfa6ceead690adf616d925bdc622b13",

22 | "sha256:5b94b49521f6456670fdb30cd82a4eca9412788a93fa6dd6df72c94d5a8ff2d7"

23 | ],

24 | "index": "pypi",

25 | "version": "==7.0"

26 | },

27 | "cycler": {

28 | "hashes": [

29 | "sha256:1d8a5ae1ff6c5cf9b93e8811e581232ad8920aeec647c37316ceac982b08cb2d",

30 | "sha256:cd7b2d1018258d7247a71425e9f26463dfb444d411c39569972f4ce586b0c9d8"

31 | ],

32 | "version": "==0.10.0"

33 | },

34 | "gputil": {

35 | "hashes": [

36 | "sha256:9afc56eb7ada21888673cf3ab2046932c6207fb2396797b9736b3d02a59532c6"

37 | ],

38 | "index": "pypi",

39 | "version": "==1.3.0"

40 | },

41 | "kiwisolver": {

42 | "hashes": [

43 | "sha256:0ee4ed8b3ae8f5f712b0aa9ebd2858b5b232f1b9a96b0943dceb34df2a223bc3",

44 | "sha256:0f7f532f3c94e99545a29f4c3f05637f4d2713e7fd91b4dd8abfc18340b86cd5",

45 | "sha256:1a078f5dd7e99317098f0e0d490257fd0349d79363e8c923d5bb76428f318421",

46 | "sha256:1aa0b55a0eb1bd3fa82e704f44fb8f16e26702af1a073cc5030eea399e617b56",

47 | "sha256:2874060b91e131ceeff00574b7c2140749c9355817a4ed498e82a4ffa308ecbc",

48 | "sha256:379d97783ba8d2934d52221c833407f20ca287b36d949b4bba6c75274bcf6363",

49 | "sha256:3b791ddf2aefc56382aadc26ea5b352e86a2921e4e85c31c1f770f527eb06ce4",

50 | "sha256:4329008a167fac233e398e8a600d1b91539dc33c5a3eadee84c0d4b04d4494fa",

51 | "sha256:45813e0873bbb679334a161b28cb9606d9665e70561fd6caa8863e279b5e464b",

52 | "sha256:53a5b27e6b5717bdc0125338a822605084054c80f382051fb945d2c0e6899a20",

53 | "sha256:574f24b9805cb1c72d02b9f7749aa0cc0b81aa82571be5201aa1453190390ae5",

54 | "sha256:66f82819ff47fa67a11540da96966fb9245504b7f496034f534b81cacf333861",

55 | "sha256:79e5fe3ccd5144ae80777e12973027bd2f4f5e3ae8eb286cabe787bed9780138",

56 | "sha256:83410258eb886f3456714eea4d4304db3a1fc8624623fc3f38a487ab36c0f653",

57 | "sha256:8b6a7b596ce1d2a6d93c3562f1178ebd3b7bb445b3b0dd33b09f9255e312a965",

58 | "sha256:9576cb63897fbfa69df60f994082c3f4b8e6adb49cccb60efb2a80a208e6f996",

59 | "sha256:95a25d9f3449046ecbe9065be8f8380c03c56081bc5d41fe0fb964aaa30b2195",

60 | "sha256:a424f048bebc4476620e77f3e4d1f282920cef9bc376ba16d0b8fe97eec87cde",

61 | "sha256:aaec1cfd94f4f3e9a25e144d5b0ed1eb8a9596ec36d7318a504d813412563a85",

62 | "sha256:acb673eecbae089ea3be3dcf75bfe45fc8d4dcdc951e27d8691887963cf421c7",

63 | "sha256:b15bc8d2c2848a4a7c04f76c9b3dc3561e95d4dabc6b4f24bfabe5fd81a0b14f",

64 | "sha256:b1c240d565e977d80c0083404c01e4d59c5772c977fae2c483f100567f50847b",

65 | "sha256:c595693de998461bcd49b8d20568c8870b3209b8ea323b2a7b0ea86d85864694",

66 | "sha256:ce3be5d520b4d2c3e5eeb4cd2ef62b9b9ab8ac6b6fedbaa0e39cdb6f50644278",

67 | "sha256:e0f910f84b35c36a3513b96d816e6442ae138862257ae18a0019d2fc67b041dc",

68 | "sha256:ea36e19ac0a483eea239320aef0bd40702404ff8c7e42179a2d9d36c5afcb55c",

69 | "sha256:efabbcd4f406b532206b8801058c8bab9e79645b9880329253ae3322b7b02cd5",

70 | "sha256:f923406e6b32c86309261b8195e24e18b6a8801df0cfc7814ac44017bfcb3939"

71 | ],

72 | "version": "==1.0.1"

73 | },

74 | "matplotlib": {

75 | "hashes": [

76 | "sha256:66a6b7264fb200dd217ebc95c53d59b5e5fa8cac6b8a650a50ed05438667ff32",

77 | "sha256:69ff0d7139f3886be552ff29478c886b461081c0afb3a3ad46afb1a445bae722",

78 | "sha256:70f8782c50ac2c7617aad0fa5ba59fc49f690a851d6afc0178813c49767644dd",

79 | "sha256:716caa55ebfb82d66f7a5584ad818b349998d9cf7e6282e5eda5fdddf4752742",

80 | "sha256:91bf4be2477aa7408131ae1a499b1c8904ea8eb1eb3f88412b4809ebe0698868",

81 | "sha256:d1bd008db1e389d14523345719c30fd0fb3c724b71ae098360c3c8e85b7c560f",

82 | "sha256:d419a5fb5654f620756ad9883bc3f1db6875f6f2760c367bee775357d1bbb38c",

83 | "sha256:dc5b097546eeadc3a91eee35a1dbbf876e78ebed83b934c391f0f14605234c76",

84 | "sha256:de25d893f54e1d50555e4a4babf66d337917499c33c78a24216838b3d2c6bf3b",

85 | "sha256:e4ad891787ad2f181e7582997520a19912990b5d0644b1fdaae365b6699b953f",

86 | "sha256:e69ab0def9b053f4ea5800306ff9c671776a2d151ec6b206465309bb468c0bcc",

87 | "sha256:e9d37b22467e0e4d6f989892a998db5f59ddbf3ab811b515585dfdde9aacc5f9",

88 | "sha256:ee4471dd1c5ed03f2f46149af351b7a2e6618eced329660f1b4b8bf573422b70"

89 | ],

90 | "index": "pypi",

91 | "version": "==3.0.1"

92 | },

93 | "numpy": {

94 | "hashes": [

95 | "sha256:032df9b6571c5f1d41ea6f6a189223208cb488990373aa686aca55570fcccb42",

96 | "sha256:094f8a83e5bd0a44a7557fa24a46db6ba7d5299c389ddbc9e0e18722f567fb63",

97 | "sha256:1c0c80e74759fa4942298044274f2c11b08c86230b25b8b819e55e644f5ff2b6",

98 | "sha256:2aa0910eaeb603b1a5598193cc3bc8eacf1baf6c95cbc3955eb8e15fa380c133",

99 | "sha256:2f5ebc7a04885c7d69e5daa05208faef4db7f1ae6a99f4d36962df8cd54cdc76",

100 | "sha256:32a07241cb624e104b88b08dea2851bf4ec5d65a1f599d7735041ced7171fd7a",

101 | "sha256:3c7959f750b54b445f14962a3ddc41b9eadbab00b86da55fbb1967b2b79aad10",

102 | "sha256:3d8f9273c763a139a99e65c2a3c10f1109df30bedae7f011b10d95c538364704",

103 | "sha256:63bca71691339d2d6f8a7c970821f2b12098a53afccc0190d4e1555e75e5223a",

104 | "sha256:7ae9c3baff3b989859c88e0168ad10902118595b996bf781eaf011bb72428798",

105 | "sha256:866a7c8774ccc7d603667fad95456b4cf56d79a2bb5a7648ac9f0082e0b9416e",

106 | "sha256:8bc4b92a273659e44ca3f3a2f8786cfa39d8302223bcfe7df794429c63d5f5a1",

107 | "sha256:919f65e0732195474897b1cafefb4d4e7c2bb8174a725e506b62e9096e4df28d",

108 | "sha256:9d1598573d310104acb90377f0a8c2319f737084689f5eb18012becaf345cda5",

109 | "sha256:9fff90c88bfaad2901be50453d5cd7897a826c1d901f0654ee1d73ab3a48cd18",

110 | "sha256:a245464ddf6d90e2d6287e9cef6bcfda2a99467fdcf1b677b99cd0b6c7b43de2",

111 | "sha256:a988db28f54e104a01e8573ceb6f28202b4c15635b1450b2e3b2b822c6564f9b",

112 | "sha256:b12fe6f31babb9477aa0f9692730654b3ee0e71f33b4568170dfafd439caf0a2",

113 | "sha256:b7599ff4acd23f5de983e3aec772153b1043e131487a5c6ad0f94b41a828877a",

114 | "sha256:c9f4dafd6065c4c782be84cd67ceeb9b1d4380af60a7af32be10ebecd723385e",

115 | "sha256:ce3622b73ccd844ba301c1aea65d36cf9d8331e7c25c16b1725d0f14db99aaf4",

116 | "sha256:d0f36a24cf8061a2c03e151be3418146717505b9b4ec17502fa3bbdb04ec1431",

117 | "sha256:d263f8f14f2da0c079c0297e829e550d8f2c4e0ffef215506bd1d0ddd2bff3de",

118 | "sha256:d8837ff272800668aabdfe70b966631914b0d6513aed4fc1b1428446f771834d",

119 | "sha256:ef694fe72a3995aa778a5095bda946e0d31f7efabd5e8063ad8c6238ab7d3f78",

120 | "sha256:f1fd1a6f40a501ba4035f5ed2c1f4faa68245d1407bf97d2ee401e4f23d1720b",

121 | "sha256:fa337b6bd5fe2b8c4e705f4102186feb9985de9bb8536d32d5129a658f1789e0",

122 | "sha256:febd31cd0d2fd2509ca2ec53cb339f8bf593c1bd245b9fc55c1917a68532a0af"

123 | ],

124 | "version": "==1.15.3"

125 | },

126 | "pyparsing": {

127 | "hashes": [

128 | "sha256:bc6c7146b91af3f567cf6daeaec360bc07d45ffec4cf5353f4d7a208ce7ca30a",

129 | "sha256:d29593d8ebe7b57d6967b62494f8c72b03ac0262b1eed63826c6f788b3606401"

130 | ],

131 | "version": "==2.2.2"

132 | },

133 | "python-dateutil": {

134 | "hashes": [

135 | "sha256:063df5763652e21de43de7d9e00ccf239f953a832941e37be541614732cdfc93",

136 | "sha256:88f9287c0174266bb0d8cedd395cfba9c58e87e5ad86b2ce58859bc11be3cf02"

137 | ],

138 | "version": "==2.7.5"

139 | },

140 | "scikit-learn": {

141 | "hashes": [

142 | "sha256:1ca280bbdeb0f9950f9427c71e29d9f14e63b2ffa3e8fdf95f25e13773e6d898",

143 | "sha256:33ad23aa0928c64567a24aac771aea4e179fab2a20f9f786ab00ca9fe0a13c82",

144 | "sha256:344bc433ccbfbadcac8c16b4cec9d7c4722bcea9ce19f6da42e2c2f805571941",

145 | "sha256:35ee532b5e992a6e8d8a71d325fd9e0b58716894657e7d3da3e7a1d888c2e7d4",

146 | "sha256:37cbbba2d2a3895bba834d50488d22268a511279e053135bb291f637fe30512b",

147 | "sha256:40cf1908ee712545f4286cc21f3ee21f3466c81438320204725ab37c96849f27",

148 | "sha256:4130760ac54f5946523c1a1fb32a6c0925e5245f77285270a8f6fb5901b7b733",

149 | "sha256:46cc8c32496f02affde7abe507af99cd752de0e41aec951a0bc40c693c2a1e07",

150 | "sha256:4a364cf22be381a17c05ada9f9ce102733a0f75893c51b83718cd9358444921e",

151 | "sha256:56aff3fa3417cd69807c1c74db69aee34ce08d7161cbdfebbff9b4023d9d224b",

152 | "sha256:58debb34a15cfc03f4876e450068dbd711d9ec36ae5503ed2868f2c1f88522f7",

153 | "sha256:7bcf7ade62ef3443470af32afb82646640d653f42502cf31a13cc17d3ff85d57",

154 | "sha256:7d4eab203ed260075f47e2bf6a2bd656367e4e8683b3ad46d4651070c5d1e9aa",

155 | "sha256:86697c6e4c2d74fbbf110c6d5979d34196a55108fa9896bf424f9795a8d935ad",

156 | "sha256:911115db6669c9b11efd502dcc5483cd0c53e4e3c4bcdfe2e73bbb27eb5e81da",

157 | "sha256:97d1d971f8ec257011e64b7d655df68081dd3097322690afa1a71a1d755f8c18",

158 | "sha256:99f22c3228ec9ab3933597825dc7d595b6c8c7b9ae725cfa557f16353fac8314",

159 | "sha256:a2e18e5a4095b3ca4852eb087d28335f3bb8515df4ccf906d380ee627613837f",

160 | "sha256:a3070f71a4479a9827148609f24f2978f10acffa3b8012fe9606720d271066bd",

161 | "sha256:a6a197499429d2eaa2ae922760aa3966ef353545422d5f47ea2ca9369cbf7d26",

162 | "sha256:a7f6f5b3bc7b8e2066076098788579af12bd507ccea8ca6859e52761aa61eaca",

163 | "sha256:a82b90b6037fcc6b311431395c11b02555a3fbf96921a0667c8f8b0c495991cb",

164 | "sha256:ab2c4266b8cd159a266eb03c709ad5400756dca9c45aa48fb523263344475093",

165 | "sha256:b983a2dfdb9d707c78790608bcfd63692e5c2d996865a9689f3db768d0a2978d",

166 | "sha256:bb33d447f4c6fb164d426467d7bf8a4901c303333c5809b85319b2e0626763cd",

167 | "sha256:bc2a0116a67081167f1fbfed731d361671e5925db291b70e65fa66170045c53f",

168 | "sha256:bd189f6d0c2fdccb7c0d3fd1227c6626dc17d00257edbb63dd7c88f31928db61",

169 | "sha256:d393f810da9cd4746cad7350fb89f0509c3ae702c79d2ba8bd875201be4102d1"

170 | ],

171 | "version": "==0.20.0"

172 | },

173 | "scikit-optimize": {

174 | "hashes": [

175 | "sha256:1d7657a4b8ef9aa6d81e49b369c677c584e83269f11710557741d3b3f8fa0a75",

176 | "sha256:a2304413f7b66b27dfaed64271c370e5e2c926fbc1cbecdb67fe47cd847f9d5c"

177 | ],

178 | "index": "pypi",

179 | "version": "==0.5.2"

180 | },

181 | "scipy": {

182 | "hashes": [

183 | "sha256:0611ee97296265af4a21164a5323f8c1b4e8e15c582d3dfa7610825900136bb7",

184 | "sha256:08237eda23fd8e4e54838258b124f1cd141379a5f281b0a234ca99b38918c07a",

185 | "sha256:0e645dbfc03f279e1946cf07c9c754c2a1859cb4a41c5f70b25f6b3a586b6dbd",

186 | "sha256:0e9bb7efe5f051ea7212555b290e784b82f21ffd0f655405ac4f87e288b730b3",

187 | "sha256:108c16640849e5827e7d51023efb3bd79244098c3f21e4897a1007720cb7ce37",

188 | "sha256:340ef70f5b0f4e2b4b43c8c8061165911bc6b2ad16f8de85d9774545e2c47463",

189 | "sha256:3ad73dfc6f82e494195144bd3a129c7241e761179b7cb5c07b9a0ede99c686f3",

190 | "sha256:3b243c77a822cd034dad53058d7c2abf80062aa6f4a32e9799c95d6391558631",

191 | "sha256:404a00314e85eca9d46b80929571b938e97a143b4f2ddc2b2b3c91a4c4ead9c5",

192 | "sha256:423b3ff76957d29d1cce1bc0d62ebaf9a3fdfaf62344e3fdec14619bb7b5ad3a",

193 | "sha256:42d9149a2fff7affdd352d157fa5717033767857c11bd55aa4a519a44343dfef",

194 | "sha256:625f25a6b7d795e8830cb70439453c9f163e6870e710ec99eba5722775b318f3",

195 | "sha256:698c6409da58686f2df3d6f815491fd5b4c2de6817a45379517c92366eea208f",

196 | "sha256:729f8f8363d32cebcb946de278324ab43d28096f36593be6281ca1ee86ce6559",

197 | "sha256:8190770146a4c8ed5d330d5b5ad1c76251c63349d25c96b3094875b930c44692",

198 | "sha256:878352408424dffaa695ffedf2f9f92844e116686923ed9aa8626fc30d32cfd1",

199 | "sha256:8b984f0821577d889f3c7ca8445564175fb4ac7c7f9659b7c60bef95b2b70e76",

200 | "sha256:8f841bbc21d3dad2111a94c490fb0a591b8612ffea86b8e5571746ae76a3deac",

201 | "sha256:c22b27371b3866c92796e5d7907e914f0e58a36d3222c5d436ddd3f0e354227a",

202 | "sha256:d0cdd5658b49a722783b8b4f61a6f1f9c75042d0e29a30ccb6cacc9b25f6d9e2",

203 | "sha256:d40dc7f494b06dcee0d303e51a00451b2da6119acbeaccf8369f2d29e28917ac",

204 | "sha256:d8491d4784aceb1f100ddb8e31239c54e4afab8d607928a9f7ef2469ec35ae01",

205 | "sha256:dfc5080c38dde3f43d8fbb9c0539a7839683475226cf83e4b24363b227dfe552",

206 | "sha256:e24e22c8d98d3c704bb3410bce9b69e122a8de487ad3dbfe9985d154e5c03a40",

207 | "sha256:e7a01e53163818d56eabddcafdc2090e9daba178aad05516b20c6591c4811020",

208 | "sha256:ee677635393414930541a096fc8e61634304bb0153e4e02b75685b11eba14cae",

209 | "sha256:f0521af1b722265d824d6ad055acfe9bd3341765735c44b5a4d0069e189a0f40",

210 | "sha256:f25c281f12c0da726c6ed00535ca5d1622ec755c30a3f8eafef26cf43fede694"

211 | ],

212 | "version": "==1.1.0"

213 | },

214 | "six": {

215 | "hashes": [

216 | "sha256:70e8a77beed4562e7f14fe23a786b54f6296e34344c23bc42f07b15018ff98e9",

217 | "sha256:832dc0e10feb1aa2c68dcc57dbb658f1c7e65b9b61af69048abc87a2db00a0eb"

218 | ],

219 | "version": "==1.11.0"

220 | },

221 | "toml": {

222 | "hashes": [

223 | "sha256:229f81c57791a41d65e399fc06bf0848bab550a9dfd5ed66df18ce5f05e73d5c",

224 | "sha256:235682dd292d5899d361a811df37e04a8828a5b1da3115886b73cf81ebc9100e"

225 | ],

226 | "index": "pypi",

227 | "version": "==0.10.0"

228 | },

229 | "tqdm": {

230 | "hashes": [

231 | "sha256:3c4d4a5a41ef162dd61f1edb86b0e1c7859054ab656b2e7c7b77e7fbf6d9f392",

232 | "sha256:5b4d5549984503050883bc126280b386f5f4ca87e6c023c5d015655ad75bdebb"

233 | ],

234 | "index": "pypi",

235 | "version": "==4.28.1"

236 | }

237 | },

238 | "develop": {}

239 | }

240 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 | Experiment design, deployment, and optimization

7 |

8 |

9 | [License: Apache 2.0](http://www.apache.org/licenses/LICENSE-2.0.html)

10 |

11 |

12 | EXP is a Python experiment management toolset created to simplify two common use cases: designing and deploying experiments in the form of Python modules/files.

13 |

14 | An experiment is a series of runs of a given configurable module for a specified set of parameters. This tool covers one of the most common experiment deployment scenarios: testing a set of parameters in parallel on a local machine or a homogeneous cluster. EXP also supports [global optimization](https://www.cs.ox.ac.uk/people/nando.defreitas/publications/BayesOptLoop.pdf) using **Gaussian processes** or other surrogate models such as **random forests**. This can be used, for instance, for **hyperparameter tuning** of machine learning models.

15 |

16 | ## Features

17 | * **parameter space design** based on configuration files ([TOML](https://github.com/toml-lang/toml) format);

18 | * **parallel experiment deployment** using ``multiprocessing`` processes;

19 | * **CUDA GPU workers**: one parallel process per available GPU, using the [CUDA_VISIBLE_DEVICES](https://devblogs.nvidia.com/cuda-pro-tip-control-gpu-visibility-cuda_visible_devices) environment variable;

20 | * **global optimization** over parameter spaces (e.g. for hyperparameter tuning) using [scikit-optimize](https://scikit-optimize.github.io/).
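The one-process-per-GPU model can be sketched with the standard library: each worker process receives a copy of the environment in which ``CUDA_VISIBLE_DEVICES`` names a single device, so any CUDA library inside that process only sees that GPU. This is a minimal sketch under assumptions, not EXP's implementation; the helpers ``gpu_env``, ``assign_runs`` and ``launch_worker`` and the worker script path are hypothetical.

```python
import os
import subprocess
import sys
from typing import Dict, List, Mapping, Sequence


def gpu_env(gpu_id: int, base: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Return a copy of `base` that exposes only GPU `gpu_id` to CUDA."""
    env = dict(base)
    env["CUDA_VISIBLE_DEVICES"] = str(gpu_id)
    return env


def assign_runs(runs: Sequence[dict], gpu_ids: Sequence[int]) -> Dict[int, List[dict]]:
    """Distribute parameter configurations round-robin, one list per GPU."""
    queues: Dict[int, List[dict]] = {g: [] for g in gpu_ids}
    for i, params in enumerate(runs):
        queues[gpu_ids[i % len(gpu_ids)]].append(params)
    return queues


def launch_worker(gpu_id: int, script: str) -> subprocess.Popen:
    """Start one worker process (hypothetical `script`) that can only see GPU `gpu_id`."""
    return subprocess.Popen([sys.executable, script], env=gpu_env(gpu_id))
```

Setting the variable in the child's environment, rather than in the already-running parent, ensures it is in place before the child initializes CUDA.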

21 |

22 | ##

23 |

24 |

25 | ## Installation

26 | ``pip install exp``

27 |

28 | ``pipenv install exp`` with [pipenv](https://pipenv.readthedocs.io/en/latest/install/#pragmatic-installation-of-pipenv)

29 |

30 | ## Available CLI tools

31 | EXP provides two CLI modules:

32 | * ``exp.run``: ``python -m exp.run -p basic.conf -m runnable.py --workers 10``

33 | * ``exp.gopt``: ``python -m exp.gopt -p basic.conf -m runnable.py --workers 4 -n 100 --plot``

34 |

35 | For more information, check each command's help:

36 |

37 | ``python -m exp.run -h``

38 |

39 | ## Getting Started: Optimization

40 |

41 | ### 1. Runnable Module

42 | The first step is to create a module to use in our experiments. A basic configurable module ``runnable.py`` looks like this:

43 |

44 | ```python

45 | def run(x=1, **kwargs):

46 |     return x ** 2

47 | ```

48 |

49 | This module computes the square of a parameter ``x``. Note that ``**kwargs`` captures any other parameters the experiment runner might pass, even if your module does not use them. Since the parameters arrive as keyword arguments, you could also read them from ``kwargs`` directly:

50 |

51 | ```python

52 | def run(**kwargs):

53 |     x = kwargs.get('x', 1)

54 |     return x ** 2

55 | ```
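To see why ``**kwargs`` matters, the snippet below calls both versions of ``run`` the way a runner plausibly would, by unpacking a parameter dictionary as keyword arguments. The extra key ``id`` is a hypothetical stand-in for metadata an experiment runner might add; a function without ``**kwargs`` rejects it with a ``TypeError``.

```python
def run(x=1, **kwargs):
    return x ** 2


def run_kwargs(**kwargs):
    x = kwargs.get("x", 1)
    return x ** 2


def run_strict(x=1):
    # No **kwargs: any parameter the module does not declare is an error.
    return x ** 2


# Hypothetical parameters: "x" comes from basic.conf, "id" is extra runner metadata.
params = {"x": 3, "id": 0}

print(run(**params))         # 9
print(run_kwargs(**params))  # 9
try:
    run_strict(**params)
except TypeError as err:
    print("rejected:", err)
```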

56 |

57 | ### 2. Parameter Space Definition

58 | Next, we need a configuration file ``basic.conf`` where the parameters are specified:

59 | ```toml

60 | [x]

61 | type = "range"

62 | bounds = [-10,10]

63 | ```

64 | This defines a parameter space with a single parameter ``x`` with values in the range ``[-10,10]``. For how to specify parameter spaces, see the [Parameter Space Specification](#parameter-space-specification).

65 |

66 | ### 3. Module Optimization

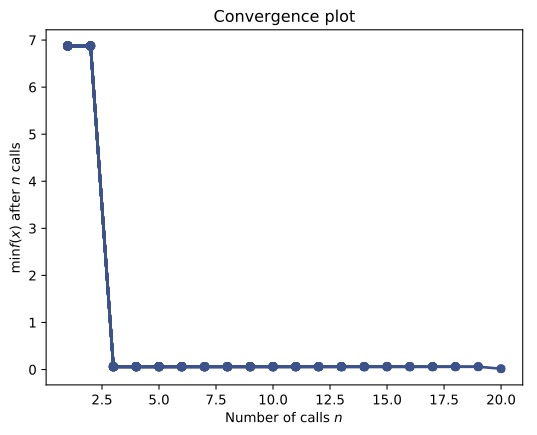

67 | Our simple module returns ``x**2``, and the optimizer tries to find the minimum of this function within the parameter space given by the configuration file. In this case, it searches values of ``x`` in ``[-10,10]`` for the one that minimizes the output.

68 |

69 | ```bash

70 | python -m exp.gopt --params basic.conf --module runnable.py --n 20 --workers 4

71 | ```
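EXP's optimizer relies on surrogate models from scikit-optimize to decide where to evaluate next. Purely as a point of comparison, the stdlib sketch below runs plain random search over the same space: it draws values of ``x`` uniformly from ``[-10, 10]``, evaluates the module's ``run`` on each, and keeps the best. This is not the algorithm ``exp.gopt`` uses; it only makes the evaluate-and-keep-the-minimum loop concrete, and ``random_search`` is a hypothetical helper.

```python
import random


def run(x=1, **kwargs):
    return x ** 2


def random_search(fn, low, high, n_calls=20, seed=0):
    """Evaluate fn at n_calls uniform samples in [low, high]; return (best_x, best_y)."""
    rng = random.Random(seed)  # seeded so the result is reproducible
    best_x, best_y = None, float("inf")
    for _ in range(n_calls):
        x = rng.uniform(low, high)
        y = fn(x=x)
        if y < best_y:
            best_x, best_y = x, y
    return best_x, best_y


best_x, best_y = random_search(run, -10, 10, n_calls=20)
print(f"best x = {best_x:.3f}, x**2 = {best_y:.4f}")
```

A surrogate-model optimizer replaces the blind ``rng.uniform`` draw with a choice informed by all previous evaluations, which is why it typically needs fewer calls when each evaluation is expensive.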

72 |

73 |

24 |

25 | ## Installation

26 | ``pip install exp``

27 |

28 | ``pipenv install exp`` with [pipenv](https://pipenv.readthedocs.io/en/latest/install/#pragmatic-installation-of-pipenv)

29 |

30 | ## Available CLI tools

31 | EXP provides two CLI modules:

32 | * exp.run: ``python -m exp.run -p basic.conf -m runnable.py --workers 10``

33 | * exp.gopt:``python -m exp.gopt -p basic.conf -m runnable.py --workers 4 -n 100 --plot``

34 |

35 | for more information check each commands help:

36 |

37 | ``python -m exp.run -h``

38 |

39 | ## Getting Started: Optimization

40 |

41 | ### 1. Runnable Module

42 | The first step is to create a module to use in our experiments. A basic configurable module ``runnable.py`` looks like this:

43 |

44 | ```python

45 | def run(x=1, **kwargs):

46 |     return x ** 2

47 | ```

48 |

49 | This module computes the square of a parameter ``x``. The ``**kwargs`` catch-all is needed to capture other parameters that the experiment runner passes along (for example, ``gopt`` adds a configuration ``id`` and a worker ``pid``), even if your module does not use them. Since ``run`` receives its configuration as keyword arguments, you could also define it as follows:

50 |

51 | ```python

52 | def run(**kwargs):

53 |     x = kwargs.get('x', 1)

54 |     return x ** 2

55 | ```
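
The ``**kwargs`` catch-all matters because the runner adds bookkeeping keys to every configuration: in ``exp/gopt.py`` each worker receives a configuration that already carries an ``id`` and adds its own ``pid`` before calling ``run(**kwargs)``. The sketch below mimics that call with a hypothetical ``call_like_worker`` helper (a simplification for illustration, not part of EXP's API) to show what happens with and without the catch-all.

```python
def run(x=1, **kwargs):
    # extra keys such as "id" and "pid" land in kwargs and are ignored
    return x ** 2


def run_without_kwargs(x=1):
    return x ** 2


def call_like_worker(module_run, config, pid):
    """Hypothetical helper mimicking how a gopt worker calls run()."""
    kwargs = dict(config)  # copy so the caller's dict is not modified
    kwargs["pid"] = pid    # the worker injects its own id
    return module_run(**kwargs)


config = {"x": 3, "id": 0}
print(call_like_worker(run, config, pid=0))  # 9

try:
    call_like_worker(run_without_kwargs, config, pid=0)
except TypeError as err:
    # run_without_kwargs() does not accept the "id" and "pid" keywords
    print("TypeError:", err)
```

Without ``**kwargs``, the very first configuration fails with a ``TypeError``, which the worker would report as a failed run.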

56 |

57 | ### 2. Parameter Space Definition

58 | Next, we need a configuration file ``basic.conf`` where the parameters are specified:

59 | ```toml

60 | [x]

61 | type = "range"

62 | bounds = [-10,10]

63 | ```

64 | This defines a parameter space with a single parameter ``x`` taking values in the range ``[-10,10]``. For details on how to specify parameter spaces, see the [Parameter Space Specification](#parameter-space-specification).

65 |

66 | ### 3. Module Optimization

67 | Our simple module returns ``x**2``, and the optimizer tries to find the minimum of this function within the parameter space given by the configuration file. In this case, the optimizer looks at values of ``x`` in ``[-10,10]`` and tries to find the one that minimizes ``x**2``.

68 |

69 | ```bash

70 | python -m exp.gopt --params basic.conf --module runnable.py --n 20 --workers 4

71 | ```

72 |

73 |

74 |

75 |

76 |

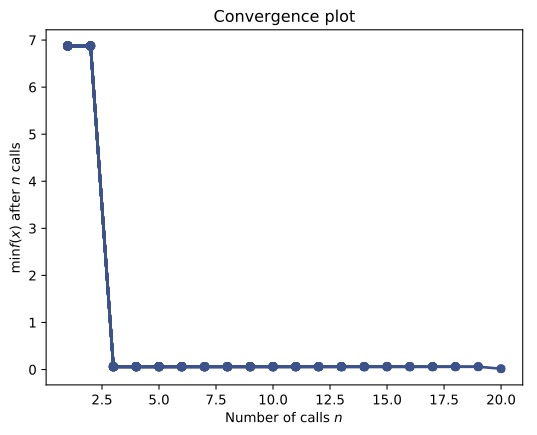

77 | The optimizer finds a solution very close to ``0``. By default, the optimizer assumes that a range defines the boundaries of a real-valued variable. If you wish to optimize over discrete integers, use the following specification:

78 |

79 | ```toml

80 | [x]

81 | type = "range"

82 | bounds = [-10,10]

83 | dtype = "int"

84 | ```

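85 | The optimizer then explores discrete values between -10 and 10, inclusive. Also, the ``--plot`` flag displays a real-time **convergence plot** of the optimization process; when the run ends, the plot is saved as ``<name>_convergence.pdf`` (``opt_convergence.pdf`` with the default ``--name``).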
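
The ``dtype`` choice decides which scikit-optimize dimension ``gopt`` builds. In ``exp/gopt.py``, ``params_to_skopt`` maps every parameter with more than one value in its domain to ``Integer`` (``dtype = "int"``), ``Real`` (``"float"``, with the optional prior), or ``Categorical`` (lists); single-valued parameters are left out of the search. The sketch below mirrors that decision logic with plain tuples so it runs without scikit-optimize; ``to_dimension``, the tuple format, and the assumption that a range's domain holds its bounds are all hypothetical.

```python
def to_dimension(name, domain, dtype, prior=None):
    """Hypothetical mirror of params_to_skopt, returning a tuple instead of a skopt object."""
    if len(domain) <= 1:
        return None  # single-valued parameters are left out of the search
    if dtype == "int":
        return ("Integer", name, min(domain), max(domain))
    if dtype == "float":
        return ("Real", name, min(domain), max(domain), prior)
    if dtype == "categorical":
        return ("Categorical", name, list(domain))
    return None  # any other dtype is not turned into a dimension


print(to_dimension("x", [-10, 10], "int"))    # ('Integer', 'x', -10, 10)
print(to_dimension("x", [-10, 10], "float"))  # ('Real', 'x', -10, 10, None)
print(to_dimension("x", [0.5], "float"))      # None
```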

86 |

87 |

88 |

89 |

90 |

91 | In this case, the convergence plot shows the optimization converging almost immediately, because the function being optimized is very simple. The goal, however, is to optimize complex models, choosing from a large set of parameters without running an exhaustive search over all possible parameter combinations.
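
Conceptually, ``exp.gopt`` drives a scikit-optimize ``Optimizer`` through an ask/tell loop: it asks for candidate points, evaluates ``run`` on them in worker processes, and tells the optimizer each result, tracking the best value found (``"fun"``). The sequential sketch below shows only the shape of that loop; the ``RandomSearch`` class is a hypothetical stand-in that samples uniformly at random, so it is plain random search, not the Gaussian-process search ``gopt`` uses by default.

```python
import random


def run(x=1, **kwargs):
    return x ** 2


class RandomSearch:
    """Hypothetical stand-in for skopt's Optimizer: uniform random sampling, no surrogate model."""

    def __init__(self, low, high, seed=0):
        self.low, self.high = low, high
        self.rng = random.Random(seed)
        self.best_x, self.best_y = None, float("inf")

    def ask(self):
        # propose the next point to evaluate
        return self.rng.uniform(self.low, self.high)

    def tell(self, x, y):
        # record an evaluation and report the best result so far
        if y < self.best_y:
            self.best_x, self.best_y = x, y
        return {"x": self.best_x, "fun": self.best_y}


optimizer = RandomSearch(-10, 10)
for cfg_id in range(20):  # like --n 20, but sequential instead of parallel workers
    x = optimizer.ask()
    result = optimizer.tell(x, run(x=x, id=cfg_id))
print(result)
```

A surrogate model replaces ``ask`` with a proposal that uses all previous ``tell`` results, which is what lets ``gopt`` avoid an exhaustive search.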

92 |

93 | ## Parameter Space Specification

94 | Parameter space files use the [TOML](https://github.com/toml-lang/toml) format; I recommend reading the specification to get familiar with how to define values, arrays, etc. Parameter spaces in EXP support **4 types of parameters**, namely:

95 | * **value**: single value parameter;

96 | * **range**: a range of numbers between bounds;

97 | * **random**: a random *real/int* value between bounds;

98 | * **list**: a list of values (used, for example, to specify categorical parameters).

99 |

100 | Below is an example for each type of parameter:

101 |

102 | ### Value

103 | Single value parameter.

104 | ```toml

105 | # this is a single-valued parameter with a boolean value

106 | [some_param]

107 | type = "value"

108 | value = true

109 | ```

110 | ### Range

111 | A parameter with a set of values within a range.

112 | ```toml

113 | # TOML files support comments, which is useful for documenting experiment configurations

114 | [some_range_param]

115 | type = "range"

116 | bounds = [-10,10]

117 | step = 1 # this is optional and assumed to be 1

118 | dtype = "float" # also optional and assumed to be float

119 | ```

120 | The ``run`` and ``gopt`` commands treat this parameter definition differently. The optimizer explores values within the bounds, including both end-points. The runner takes values from ``bounds[0]`` up to ``bounds[1]``, excluding the last end-point (much like Python's ``range`` or NumPy's ``arange``).

121 |

122 | The ``dtype`` also influences how the optimizer looks for values in the range: if set to ``"int"``, it explores discrete integer values within the bounds; if set to ``"float"``, it treats the parameter as continuous between the specified bounds.
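
To make the difference concrete, here is a sketch of both readings of ``bounds = [-10,10]`` with ``step = 1``: the runner's half-open enumeration (same length rule as ``numpy.arange``) and the optimizer's closed interval. Both helper names are hypothetical, and EXP's internal implementation may differ.

```python
import math


def grid_values(low, high, step=1, dtype=float):
    """Hypothetical helper: values a grid runner enumerates, high end-point excluded."""
    count = math.ceil((high - low) / step)  # same length rule as numpy.arange
    return [dtype(low + i * step) for i in range(count)]


def in_optimizer_bounds(low, high, value):
    """The optimizer treats the bounds as a closed interval [low, high]."""
    return low <= value <= high


values = grid_values(-10, 10, step=1, dtype=int)
print(values[0], values[-1], len(values))  # -10 9 20
print(in_optimizer_bounds(-10, 10, 10))     # True: gopt may explore x = 10
```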

123 |

124 | ### Random

125 | A parameter with ``n`` random values sampled from a "uniform" or "log-uniform" distribution between the given bounds. When used with ``run``, the parameter space is populated with a list of random values according to the specification. When used with ``gopt``, ``n`` is ignored and the bounds are used instead, along with the prior.

126 |

127 | For optimization purposes, this works like ``range``, except that you can specify the prior, which can be "uniform" or "log-uniform"; when a ``range`` parameter is used for optimization, its values are assumed to come from a "uniform" prior.

128 |

129 | The other difference between grid search and optimization is that, when generating parameter values for a grid, the bounds do not include the high end-point. The optimizer explores random values within the specified bounds, including the high end-point.

130 |

131 | ```toml

132 | [random_param]

133 | type="random"

134 | bounds=[0,3] # optional, default range is [0,1]

135 | prior="uniform" # optional, default value is "uniform"

136 | dtype="float" # optional, default value is "float"

137 | n=1 # optional, default value is 1 (number of random parameters to be sampled)

138 | ```
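
A sketch of how ``n`` values could be drawn for the grid runner under each prior. The ``sample_random`` helper is hypothetical, not EXP's code; the key idea is that "log-uniform" samples uniformly in log-space, so each order of magnitude between the bounds is equally likely, which is also why it needs strictly positive bounds.

```python
import math
import random


def sample_random(low=0.0, high=1.0, prior="uniform", n=1, dtype=float, seed=None):
    """Hypothetical helper: draws n values between low and high under the given prior."""
    rng = random.Random(seed)
    values = []
    for _ in range(n):
        if prior == "uniform":
            v = low + (high - low) * rng.random()  # rng.random() is in [0, 1)
        elif prior == "log-uniform":
            if low <= 0:
                raise ValueError("log-uniform requires low > 0")
            log_low, log_high = math.log(low), math.log(high)
            v = math.exp(log_low + (log_high - log_low) * rng.random())
        else:
            raise ValueError("unknown prior: {}".format(prior))
        values.append(dtype(v))
    return values


print(sample_random(0, 3, n=3, seed=1))
print(sample_random(1e-4, 1e-1, prior="log-uniform", n=3, seed=1))
```

A log-uniform prior is a common choice for scale parameters such as learning rates, where ``1e-4`` and ``1e-3`` deserve as much attention as ``0.01`` and ``0.1``.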

139 | ### List

140 | A list is just a homogeneous series of values that a parameter can take.

141 | ```toml

142 | [another_param]

143 | type="list"

144 | value = [1,2,3]

145 | ```

146 | The array in ``"value"`` must be homogeneous: something like ``value=[1,2,"A"]`` would throw a *Not a homogeneous array* error. The ``gopt`` command treats list parameters as **categorical** parameters, which are one-hot encoded for optimization.

147 |

148 | Also, for optimization purposes, a list is treated like a set: if you provide duplicate values, the optimizer only explores the unique values. For example, to specify a boolean parameter, use a list:

149 |

150 | ```toml

151 | [some_boolean_decision]

152 | type="list"

153 | value = [true,false]

154 | ```
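
In ``exp/gopt.py`` a list parameter becomes a scikit-optimize ``Categorical`` dimension with ``transform="onehot"``, so the actual encoding happens inside scikit-optimize. The sketch below only illustrates the two ideas from this section, set-like deduplication and one-hot encoding, using hypothetical helper names.

```python
def unique_values(values):
    """Drop duplicates, keeping the first occurrence (set-like, order preserved)."""
    unique = []
    for v in values:
        if v not in unique:
            unique.append(v)
    return unique


def one_hot(value, categories):
    """Encode value as a vector with a 1 at its category's position."""
    return [1 if value == c else 0 for c in categories]


categories = unique_values([True, False, True])
print(categories)                     # [True, False]
print(one_hot(False, categories))     # [0, 1]
print(one_hot("B", ["A", "B", "C"]))  # [0, 1, 0]
```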

155 |

156 | # Library Modules

157 | EXP also provides tools to specify parameter spaces programmatically.

158 | ## ParamSpace

159 | The ``exp.params.ParamSpace`` class provides a way to create parameter spaces and iterate over all the possible

160 | combinations of parameters as follows:

161 | ```python

162 | >>> from exp.params import ParamSpace

163 | >>> ps = ParamSpace()

164 | >>> ps.add_value("p1", 1)

165 | >>> ps.add_list("p2", [True, False])

166 | >>> ps.add_range("p3", low=0, high=10, dtype=int)

167 | >>> ps.size

168 | 20

169 | ```

170 |

171 | ```python

172 | grid = ps.param_grid(runs=2)

173 | ```

174 | ``grid`` has ``2*ps.size`` configurations because we repeat each configuration ``2`` times (number of runs). Each configuration dictionary includes 2 additional parameters ``"id"`` and ``"run"`` which are the unique configuration id and run id respectively.

175 |

176 | ```python

177 | for config in grid:

178 |     # config is a dictionary with the params of a unique configuration in the parameter space

179 |     do_something(config)

180 | ```
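
The sizes above follow from the Cartesian product of the parameter domains: ``p1`` has 1 value, ``p2`` has 2, and the integer range ``p3`` has 10 (0 to 9, end-point excluded), so there are ``1 * 2 * 10 = 20`` configurations, and ``40`` with ``runs=2``. Below is a sketch of that enumeration with ``itertools.product``, using a hypothetical ``param_grid`` function rather than EXP's own. Whether EXP numbers runs from 0 or 1, and whether repeated runs share a configuration id, are assumptions here.

```python
import itertools


def param_grid(params, runs=1):
    """Hypothetical sketch: yields every combination of parameter values, `runs` times each."""
    names = list(params)
    combos = itertools.product(*(params[name] for name in names))
    for cfg_id, values in enumerate(combos):
        for run_id in range(runs):  # assumption: run ids start at 0
            cfg = dict(zip(names, values))
            cfg["id"] = cfg_id   # assumption: shared by all runs of one configuration
            cfg["run"] = run_id
            yield cfg


# the same space as above: p3 is the integer range [0, 10) with step 1
params = {"p1": [1], "p2": [True, False], "p3": list(range(0, 10))}
grid = list(param_grid(params, runs=2))
print(len(grid))  # 40
print(grid[0])    # {'p1': 1, 'p2': True, 'p3': 0, 'id': 0, 'run': 0}
```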

181 |

182 | ## ParamDict & Namespace

183 | ``ParamDict`` from the ``exp.args`` module is a simple dictionary where you can specify default values for different parameters. ``exp.args.Param`` is a named tuple ``(typefn, value, options)``, where ``typefn`` is a type function like ``int`` or ``float`` that converts strings into values of the given type if necessary, ``value`` is the default value, and ``options`` is an optional list of allowed values for the parameter.

184 |

185 | This is a simple alternative to using argparse when there are many parameters. Example usage:

186 |

187 | ```python

188 | from exp.args import ParamDict,Namespace

189 |

190 | # these are interpreted by a ParamDict as exp.args.Param named tuples

191 | param_spec = {

192 |     'x': (float, None),

193 |     'id': (int, 0),

194 |     'run': (int, 1),

195 |     'cat': (str, "A", ["A", "B"])

196 | }

197 |

198 | def run(**kwargs):

199 |     args = ParamDict(param_spec)  # creates a param dict from default values and options

200 |     args.from_dict(kwargs)  # updates the dictionary with new values where the parameter name overlaps

201 |     ns = args.to_namespace()  # creates a namespace object so you can access ns.x, ns.run, etc.

202 |     ...

203 | ```

204 |

205 | ``ParamDict`` also performs basic type conversion from strings to booleans, integers, floats, etc.: depending

206 | on the arguments received in ``kwargs``, ``ParamDict`` converts the values automatically according to the parameter

207 | specifications, and raises a ``ValueError`` if a value is not among the parameter's ``options``.
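
The conversion rules live in ``exp/args.py`` (``as_bool``, ``as_int``, ``convert_type``). Below is a simplified, standalone sketch of those rules applied to string arguments; the ``convert`` helper is hypothetical and stands in for what ``ParamDict`` does when a value is assigned.

```python
def as_bool(v):
    """Strings like "yes"/"no" map to True/False; numbers <= 0 are False."""
    if v is None:
        return False
    if isinstance(v, bool):
        return v
    if isinstance(v, str):
        if v.lower() in ("yes", "true", "t", "y", "1"):
            return True
        if v.lower() in ("no", "false", "f", "n", "0"):
            return False
        raise TypeError("Boolean value expected.")
    return v > 0


def as_int(v):
    # int("2.3") raises ValueError, so convert to float first
    return int(float(v))


def convert(type_class, value):
    """Hypothetical helper: picks the conversion function like convert_type does."""
    fn = {bool: as_bool, int: as_int}.get(type_class, type_class)
    return fn(value)


spec = {"x": float, "epochs": int, "shuffle": bool, "cat": str}
received = {"x": "0.5", "epochs": "10.0", "shuffle": "yes", "cat": "B"}
converted = {name: convert(spec[name], value) for name, value in received.items()}
print(converted)  # {'x': 0.5, 'epochs': 10, 'shuffle': True, 'cat': 'B'}
```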

208 |

209 | ## Created by

210 | **[Davide Nunes](https://github.com/davidenunes)**

211 |

212 | ## If you find this useful

213 |

214 |

215 | ## Licence

216 |

217 | [Apache License 2.0](LICENSE)

218 |

--------------------------------------------------------------------------------

/exp/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/davidenunes/exp/091dba3b7ef60b463c9be7ad2e9c1458f06a1e38/exp/__init__.py

--------------------------------------------------------------------------------

/exp/__version__.py:

--------------------------------------------------------------------------------

1 | __version__ = "0.5.1"

2 |

--------------------------------------------------------------------------------

/exp/args.py:

--------------------------------------------------------------------------------

1 | """ args is a simple module to allow for quick

2 | specification of function parameters with default arguments,

3 | option restriction and type conversion based on type classes

4 |

5 | Param: namedtuple used to specify parameters from tuples

6 | Namespace: simple object that maps param["param"] parameter

7 | dictionaries to namespace.param access.

8 | ParamDict: stores Param instances with default values

9 | """

10 | from collections import namedtuple

11 |

12 |

13 | class Param(namedtuple('Param', ["typefn", "value", "options"])):

14 | """ Simple class representing parameters

15 |

16 | with a value, validated/restricted by options

17 | and converted from other values using a type function;

18 | used to read the parameter tuples that serve as entries to :obj:`ParamDict`

19 |

20 | """

21 |

22 | def __new__(cls, typefn, value=None, options=None):

23 | if options is not None:

24 | options = set(options)

25 | typefn = convert_type(typefn)

26 |

27 | if value is not None:

28 | value = typefn(value)

29 |

30 | if options is not None and value is not None:

31 | if value not in options:

32 | raise ValueError("Invalid Param Value: {} not in options {}".format(value, options))

33 |

34 | return super().__new__(cls, typefn, value, options)

35 |

36 |

37 | class Namespace(object):

38 | def __init__(self, dict_attr):

39 | if not isinstance(dict_attr, dict):

40 | raise TypeError("Namespace requires a dict, {} found".format(dict_attr))

41 |

42 | for k, v in dict_attr.items():

43 | self.__setattr__(k, v)

44 |

45 | def __str__(self):

46 | attr = ["{}={}".format(k, v) for k, v in self.__dict__.items()]

47 |

48 | return "Namespace({s})".format(s=','.join(attr))

49 |

50 | def __setattr__(self, key, value):

51 | super().__setattr__(key, value)

52 |

53 |

54 | class ParamDict(dict):

55 | """ Dictionary of parameters with default values

56 |

57 | Attributes:

58 | defaults = a dictionary :py:`str` -> :obj:`Param`

59 |

60 | """

61 |

62 | def __init__(self, defaults):

63 | self.defaults = dict()

64 | self.add_params(defaults)

65 |

66 | for arg in self.defaults:

67 | param = self.defaults[arg]

68 | dict.__setitem__(self, arg, param.value)

69 |

70 | # self.__dict__ .update(self)

71 |

72 | def __setitem__(self, key, val):

73 | if key in self.defaults:

74 | default = self.defaults[key]

75 | if val is None:

76 | val = default.value

77 | else:

78 | val = default.typefn(val)

79 | if default.options is not None:

80 | if val not in default.options:

81 | raise ValueError("Invalid Param Value: {} not in options {}".format(val, default.options))

82 |

83 | dict.__setitem__(self, key, val)

84 |

85 | def add_params(self, param_dict):

86 | """ Adds a set of parameter values from a given dictionary to the current values

87 | overwrites the default values for the parameters that already exist in the defaults

88 |

89 | Args:

90 | param_dict: a dictionary with param_name -> (typefn, value, options) tuples, read as :obj:`Param`

91 | """

92 | for arg in param_dict:

93 | param = Param(*param_dict[arg])

94 | self.defaults[arg] = param

95 |

96 | def from_dict(self, args):

97 | for arg in args:

98 | self.__setitem__(arg, args[arg])

99 |

100 | def to_namespace(self):

101 | """ Converts the ParamDict to a :obj:`Namespace` object

102 | which allows you to access ``namespace.param1``

103 |

104 | Returns:

105 | a :obj:`Namespace` object with the current values of this parameter dictionary

106 | """

107 | return Namespace(self)

108 |

109 |

110 | def as_bool(v):

111 | """ Converts a given value to a boolean

112 |

113 | Args:

114 | v (int,float,str,bool): an integer, float, string, or boolean to be converted to a boolean value.

115 | Returns:

116 | (bool): None returns False; if the value is a number, any value <= 0 returns False, else True

117 | if the value is a boolean simply forwards this value

118 | if the value is a string, ignores case and converts any (yes,true,t,y,1) to True

119 | and ('no', 'false', 'f', 'n', '0') to False

120 | """

121 | if v is None:

122 | return False

123 | elif isinstance(v, bool):

124 | return v

125 | elif isinstance(v, str):

126 | if v.lower() in ('yes', 'true', 't', 'y', '1'):

127 | return True

128 | elif v.lower() in ('no', 'false', 'f', 'n', '0'):

129 | return False

130 | else:

131 | raise TypeError('Boolean value expected.')

132 | elif isinstance(v, int) or isinstance(v, float):

133 | if v <= 0:

134 | return False

135 | else:

136 | return True

137 |

138 |

139 | def as_int(v):

140 | """ Converts a value to int by converting it first to float

141 |

142 | because calling ``int("2.3")`` raises a ValueError since 2.3 is

143 | not an integer. ``as_int("2.3")`` returns the same as ``int(2.3)``

144 |

145 | Args:

146 | v: a value convertible to numerical

147 |

148 | Returns:

149 | (int) the integer value of the given value

150 |

151 | """

152 | return int(float(v))

153 |

154 |

155 | def convert_type(type_class):

156 | """ Maps classes to convert functions present in this module

157 | Args:

158 | type_class: some class that can also be called to convert values into its types

159 |

160 |

161 | Returns:

162 | a type conversion function for the given class capable of converting more than literal values, for instance,

163 | requesting a type conversion class for boolean, returns a function capable of converting strings, or integers

164 | to a boolean value (see :obj:`as_bool`)

165 | """

166 | if type_class == bool:

167 | return as_bool

168 | elif type_class == int:

169 | return as_int

170 | else:

171 | return type_class

172 |

--------------------------------------------------------------------------------

/exp/gopt.py:

--------------------------------------------------------------------------------

1 | """ CLI for Hyper parameter optimisation

2 |

3 | runs sequential model-based optimisation

4 | based on gaussian processes, random forests, etc.

5 | """

6 | import sys

7 |

8 | import GPUtil

9 | import click

10 | import csv

11 | import importlib.util

12 | import logging

13 | import multiprocessing as mp

14 | import os

15 | from matplotlib import pyplot as plt

16 | from multiprocessing import Event

17 | from multiprocessing import Queue, Process

18 | from multiprocessing.queues import Empty

19 | from skopt import Optimizer, plots

20 | from skopt.space import Integer, Real, Categorical

21 | from tqdm import tqdm

22 | from tqdm._utils import _term_move_up

23 | import traceback

24 | from toml import TomlDecodeError

25 | from exp.params import ParamSpace, DTypes, ParamDecodeError

26 |

27 |

28 | def params_to_skopt(param_space: ParamSpace):

29 | """ Converts a parameter space to a list of Dimension objects that can be used with

30 | a skopt Optimizer.

31 |

32 | A skopt Optimizer only receives 3 types of Dimensions: Categorical, Real, or Integer

33 | we convert parameters from our parameter space into one of those 3 types. Note that we only

34 | convert parameters whose domain has more than 1 value (e.g. bounded ranges or categorical lists).

35 | If we have constant values in our parameter space, these don't need to be optimized anyway.

36 |

37 | Another function is provided to convert skopt output values back into a dictionary with

38 | a full configuration according to the parameter space (@see values_to_params).

39 |

40 | Args:

41 | param_space: a ParameterSpace where we can get the domain of each parameter

42 |

43 | Returns:

44 | a list of Dimension that can be passed to a skopt Optimizer

45 |

46 | """

47 | dimensions = []

48 | for param_name in param_space.param_names():

49 | domain_param = param_space.domain(param_name)

50 | domain = domain_param["domain"]

51 | dtype = DTypes.from_type(domain_param["dtype"])

52 | if len(domain) > 1:

53 | if dtype == DTypes.INT:

54 | low = min(domain)

55 | high = max(domain)

56 | dimensions.append(Integer(low, high, name=param_name))

57 | elif dtype == DTypes.FLOAT:

58 | low = min(domain)

59 | high = max(domain)

60 | prior = domain_param.get("prior", None)

61 | dimensions.append(

62 | Real(low, high, prior=prior, name=param_name))

63 | elif dtype == DTypes.CATEGORICAL:

64 | prior = domain_param.get("prior", None)

65 | dimensions.append(Categorical(

66 | domain, prior, transform="onehot", name=param_name))

67 | return dimensions

68 |

69 |

70 | def values_to_params(param_values, param_space):

71 | """

72 | We don't need to optimize parameters with a single value in their domain, so params_to_skopt filters

73 | them out; this method converts the optimizer values back into a full configuration

74 |

75 | Args:

76 | param_values: dict {param_name: value}

77 | param_space: rest of the parameter space used to complete this configuration

78 |

79 | """

80 | cfg = {}

81 | for param_name in param_space.param_names():

82 | if param_name in param_values:

83 | cfg[param_name] = param_values[param_name]

84 | else:

85 | # complete with value from parameter space

86 | value = param_space.get_param(param_name)

87 |

88 | if isinstance(value, (tuple, list)) and len(value) > 1:

89 | raise ValueError(

90 | "don't know how to complete a configuration that might have multiple values")

91 | else:

92 | if isinstance(value, (tuple, list)):

93 | value = value[0]

94 | cfg[param_name] = value

95 |

96 | return cfg

97 |

98 |

99 | def load_module(runnable_path):

100 | """ Loads a python file with the module to be evaluated.

101 |

102 | The module is a python file with a run function member. Each worker then

103 | passes each configuration it receives from a Queue to run as keyword arguments

104 |

105 | Args:

106 | runnable_path: path to the python file that defines the run function

107 |

108 | Raises:

109 | TypeError: if the loaded module doesn't have a run function

110 |

111 | Returns:

112 | a reference to the newly loaded module

113 |

114 | """

115 | runnable_path = os.path.abspath(runnable_path)

116 | spec = importlib.util.spec_from_file_location(

117 | "runnable", location=runnable_path)

118 | runnable = importlib.util.module_from_spec(spec)

119 | spec.loader.exec_module(runnable)

120 |

121 | try:

122 | getattr(runnable, "run")

123 | except AttributeError:

124 | raise TypeError(

125 | "module in {} does not contain a \"run\" method".format(runnable_path))

126 |

127 | return runnable

128 |

129 |

130 | def submit(n, optimizer: Optimizer, opt_param_names, current_configs, param_space: ParamSpace, queue: Queue):

131 | """ Generate and submit n new configurations to a queue.

132 |

133 | Asks the optimizer for n new values to explore, creates configurations for those points and puts them

134 | in the given queue.

135 |

136 | Args:

137 | n: the number of configurations to be generated

138 | optimizer: the optimiser object from skopt with the model used for the suggested points to explore

139 | opt_param_names: the names for the parameters using the same order of the dimensions in the optimizer

140 | current_configs: current list of configurations (updated with the newly generated ones)

141 | param_space: parameter space which we can use to convert optimizer points to fully specified configurations

142 | queue: the multiprocessing queue in which we put the new configurations

143 | """

144 | dims = opt_param_names

145 | xs = optimizer.ask(n_points=n)

146 | cfgs = [values_to_params(dict(zip(dims, x)), param_space) for x in xs]

147 | for i, c in enumerate(cfgs):

148 | c["id"] = i + len(current_configs)

149 | queue.put(c)

150 | current_configs += cfgs

151 |

152 |

153 | def worker(pid: int,

154 | module_path: str,

155 | config_queue: Queue,

156 | result_queue: Queue,

157 | error_queue: Queue,

158 | terminated: Event):

159 | """ Worker to be executed in its own process

160 |

161 | Args:

162 | module_path: path to the runnable module that must be imported and run on each configuration

163 | terminated: termination flag (each worker should have its own)

164 | pid: (int) with worker id

165 | config_queue: configuration queue used to receive the parameters for this worker, each configuration is a task

166 | result_queue: queue where the worker deposits the results

167 |

168 | Returns:

169 | each time a new result is returned from calling the run function on the module, the worker puts this in to its

170 | result multiprocessing Queue in the form (worker_id, configuration_id, result)

171 |

172 | If an exception occurs during run(...) the worker puts that exception as the result into the queue instead

173 |

174 | """

175 | # ids should be 0, 1, 2, n where n is the maximum number of gpus available

176 | os.environ["CUDA_VISIBLE_DEVICES"] = str(pid)

177 | module = load_module(module_path)

178 |

179 | while not terminated.is_set():

180 | try:

181 | kwargs = config_queue.get(timeout=0.5)

182 | cfg_id = kwargs["id"]

183 | kwargs["pid"] = pid

184 | # e.g. model score

185 | result = module.run(**kwargs)

186 | result_queue.put((pid, cfg_id, result))

187 | except Empty:

188 | # nothing to do, check if it's time to terminate

189 | pass

190 | except Exception as e:

191 | terminated.set()

192 | error_queue.put((pid, cfg_id, traceback.format_exc()))

193 | result_queue.put((pid, cfg_id, e))

194 |

195 |

196 | def update_progress_kuma(progress):

197 | tqdm.write(_term_move_up() * 10) # move cursor up

198 | offset = " " * int(progress.n / progress.total * (progress.ncols - 40))

199 |

200 | tqdm.write(offset + ' _______________')

201 | tqdm.write(offset + ' | |')

202 | tqdm.write(offset + ' | KUMA-SAN IS |')

203 | tqdm.write(offset + ' | OPTIMIZING! |')

204 | tqdm.write(

205 | offset + ' | {:>3}/{:<3} |'.format(progress.n, progress.total))

206 | tqdm.write(offset + ' |________|')

207 | tqdm.write(offset + ' ( ) ( )||')

208 | tqdm.write(offset + ' ( •(エ)•)|| ')

209 | tqdm.write(offset + ' / づ')

210 |

211 |

212 | @click.command(help='optimizes the hyperparameters for a given function',

213 | context_settings=dict(help_option_names=['-h', '--help'])

214 | )

215 | @click.option('-p', '--params', required=True, type=click.Path(exists=True), help='path to parameter space file')

216 | @click.option('-m', '--module', required=True, type=click.Path(exists=True), help='path to python module file')

217 | @click.option('-w', '--workers', default=1, type=int, help="number of workers: limited to CPU core count or GPU "

218 | "count, cannot be <=0.")

219 | @click.option('-g', '--gpu', is_flag=True,

220 | help="bounds the number of workers to the number of available GPUs (not under load). "

221 | "Each process only sees a single GPU.")

222 | @click.option('-n', '--n', default=1, type=int, help='number of configuration runs')

223 | @click.option('--name', default="opt", type=str,

224 | help='optimization experiment name: used as prefix for some output files')

225 | @click.option('-s', '--surrogate', default="GP",

226 | type=click.Choice(["GP", "RF", "ET"], case_sensitive=False),

227 | help='surrogate model for global optimisation: '

228 | '(GP) gaussian process, '

229 | '(RF) random forest, or '

230 | '(ET) extra trees.')

231 | @click.option('-a', '--acquisition', default="EI",

232 | type=click.Choice(["LCB", "EI", "PI"], case_sensitive=False),

233 | help='acquisition function: '

234 | '(LCB) Lower Confidence Bound, '

235 | '(EI) Expected Improvement, or '

236 | '(PI) Probability of Improvement.')

237 | @click.option('--plot', is_flag=True, help='shows a convergence plot during the optimization process and saves it at '

238 | 'the current working dir')

239 | @click.option('-o', '--out', type=click.Path(), help="output directory for the results file. If plotting "

240 | "convergence, the plot is also saved in the first directory in the "

241 | "path.")

242 | @click.option('--sync/--async', default=False, help="--async (default) submission, means that we submit a new "

243 | "configuration to a worker for each new result the optimizer gets. "

244 | "--sync mode makes the optimizer wait for all workers before "

245 | "submitting new configurations to all of them")

246 | @click.option('--kappa', type=float, default=1.96, help="(default=1.96) Used when the acquisition is LCB. "

247 | "Controls how much of the variance in the "

248 | "predicted values should be taken into account. High values "

249 | "favour exploration vs exploitation")

250 | @click.option('--xi', type=float, default=0.01, help="(default=0.01) Used with EI acquisition: controls how much "

251 | "improvement we want over previous best.")

252 | @click.option('--kuma', is_flag=True, help='kuma-san will display the progress on your global optimization procedure.')

253 | def run(params, module, workers, gpu, n, surrogate, acquisition, name, plot, out, sync, kappa, xi, kuma):

254 | logger = logging.getLogger(__name__)

255 | handler = logging.FileHandler('{name}.log'.format(name=name), delay=True)

256 | handler.setLevel(logging.ERROR)

257 | formatter = logging.Formatter(

258 | '%(asctime)s - %(name)s - %(levelname)s - %(message)s')

259 | handler.setFormatter(formatter)

260 | logger.addHandler(handler)

261 |

262 | opt_results = None

263 | out_file = None

264 | try:

265 | if gpu:

266 | # detecting available gpus with load < 0.2

267 | gpu_ids = [g.id for g in GPUtil.getGPUs() if g.load < 0.2]

268 | num_workers = min(workers, len(gpu_ids))

269 |

270 | if num_workers <= 0:

271 | sys.exit(1)

272 | else:

273 | num_workers = min(workers, mp.cpu_count())

274 |

275 | logger.log(logging.DEBUG, "Spawning {} workers".format(num_workers))

276 | if num_workers <= 0:

277 | logger.log(logging.ERROR, "--workers cannot be 0")

278 | sys.exit(1)

279 |

280 | # prepare output file

281 | out_file_name = '{}_configurations.csv'.format(name)

282 | out = out_file_name if out is None else out

283 | if out is not None and os.path.isdir(out):

284 | out_file_path = os.path.join(out, out_file_name)

285 | else:

286 | out_file_path = out

287 |

288 | out_dir = os.path.abspath(os.path.join(out_file_path, os.pardir))

289 | out_file_path = os.path.join(out_dir, out_file_name)

290 |

291 | param_space = ParamSpace(params)

292 |

293 | dimensions = params_to_skopt(param_space)

294 | optimizer_dims = [d.name for d in dimensions]

295 | acquisition_kwargs = None

296 | if acquisition == "LCB":

297 | acquisition_kwargs = {'kappa': kappa}

298 | elif acquisition == "EI":

299 | acquisition_kwargs = {'xi': xi}

300 |

301 | optimizer = Optimizer(dimensions=dimensions,

302 | acq_func_kwargs=acquisition_kwargs,

303 | base_estimator=surrogate,

304 | acq_func=acquisition)

305 |

306 | out_file = open(out_file_path, 'w')

307 | out_writer = csv.DictWriter(

308 | out_file, fieldnames=param_space.param_names() + ["id", "evaluation"])

309 | out_writer.writeheader()

310 |

311 | # setup process pool and queues

312 | # manager = mp.Manager()

313 | config_queue = Queue()

314 | result_queue = Queue()

315 | error_queue = Queue()

316 |

317 | terminate_flags = [Event() for _ in range(num_workers)]

318 | processes = [

319 | Process(target=worker, args=(i, module, config_queue,

320 | result_queue, error_queue, terminate_flags[i]))

321 | for i in range(num_workers)]

322 |

323 | configs = []

324 | scores = {}

325 | # get initial points at random and submit one job per worker

326 | submit(num_workers, optimizer, optimizer_dims,

327 | configs, param_space, config_queue)

328 | # scores maps cfg_id -> score

329 |

330 | num_completed = 0

331 | pending = len(configs)

332 | cancel = False

333 |

334 | for p in processes:

335 | p.daemon = True

336 | p.start()

337 |

338 | if plot:

339 | fig = plt.gcf()

340 | fig.show()

341 | fig.canvas.draw()

342 |

343 | progress_bar = tqdm(total=n, leave=True)

344 |

345 | if kuma:

346 | update_progress_kuma(progress_bar)

347 |

348 | while num_completed < n and not cancel:

349 | try:

350 | res = result_queue.get(timeout=1)

351 | pid, cfg_id, result = res

352 | if not isinstance(result, Exception):

353 | cfg = configs[cfg_id]

354 | # convert dictionary to x vector that optimizer takes

355 | x = [cfg[param] for param in optimizer_dims]

356 | # store scores for each config

357 | scores[cfg_id] = result

358 |

359 | out_row = dict(cfg)

360 | out_row["evaluation"] = result

361 | out_writer.writerow(out_row)

362 | # make sure we can see the results in the file as we run the optimizer

363 | out_file.flush()

364 | opt_results = optimizer.tell(x, result)

365 |

366 | num_completed += 1

367 | pending -= 1

368 |

369 | if plot:

370 | plots.plot_convergence(opt_results)

371 | fig.canvas.draw()

372 |

373 | # sync submission of jobs means we wait for all workers to finish

374 | if sync and pending == 0:

375 | if num_completed != n:

376 | num_submit = min(num_workers, n - num_completed)

377 | submit(num_submit, optimizer, optimizer_dims,

378 | configs, param_space, config_queue)

379 | pending = num_submit

380 | else:

381 | terminate_flags[pid].set()

382 |

383 | # async submission of jobs: as soon as we receive one result we submit the next

384 | if not sync:

385 | if (num_completed + pending) != n:

386 | submit(1, optimizer, optimizer_dims,

387 | configs, param_space, config_queue)

388 | pending += 1

389 | else:

390 | # signal the current worker for termination

391 | terminate_flags[pid].set()

392 |

393 | progress_bar.update()

394 | progress_bar.set_postfix(

395 | {"best solution ": opt_results["fun"]})

396 |

397 | if kuma:

398 | update_progress_kuma(progress_bar)

399 |

400 | else:

401 | _, cfg_id_err, err = error_queue.get()

402 | logger.error("configuration {} failed".format(cfg_id_err))

403 | logger.error(err)

404 |

405 | cancel = True

406 | except Empty:

407 | pass

408 |

409 | # try to wait for process termination

410 | for process in processes:

411 | process.join(timeout=0.5)

412 |

413 | if process.is_alive():

414 | process.terminate()

415 |

416 | progress_bar.close()

417 |

418 | except TomlDecodeError as e:

419 | logger.error(traceback.format_exc())

420 | print("\n\n[Invalid parameter file] TOML decode error:\n {}".format(

421 | e), file=sys.stderr)

422 | except ParamDecodeError as e:

423 | logger.error(traceback.format_exc())

424 | print("\n\n[Invalid parameter file]\n {}".format(e), file=sys.stderr)

425 | except Exception as e:

426 | logger.error(traceback.format_exc())

427 | raise e

428 | except KeyboardInterrupt:

429 | pass

430 | finally:

431 | # save the convergence plot next to the results file

432 | if opt_results is not None and plot:

433 | plt_file = '{}_convergence.pdf'.format(name)

434 | out_path = os.path.join(out_dir, plt_file)

435 | plt.savefig(out_path, bbox_inches='tight')

436 |

437 | if out_file is not None:

438 | out_file.close()

439 |

440 |

441 | if __name__ == '__main__':

442 | run()

443 |
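The submission loop in `exp/gopt.py` supports two policies. With `sync`, it waits until every pending job has finished before submitting the next batch. Otherwise it submits one replacement job as soon as any result arrives. The sketch below simulates only the bookkeeping of these two policies, with no processes, queues, or optimizer. `schedule` is a hypothetical helper. It also assumes the first submission fills `min(num_workers, n)` workers, because the initial submission happens outside this excerpt.

```python
from typing import List


def schedule(n: int, num_workers: int, sync: bool) -> List[int]:
    """Return the size of every submission made while evaluating n configurations.

    Hypothetical simulation of the sync/async submission policy in exp/gopt.py;
    each loop iteration stands for one result arriving from a worker.
    """
    # assumption: the initial submission fills as many workers as there is work for
    submissions = [min(num_workers, n)]
    pending = submissions[0]
    completed = 0
    while completed < n:
        # one worker reports a result
        completed += 1
        pending -= 1
        if sync:
            # wait for the whole batch to finish, then submit the next batch
            if pending == 0 and completed != n:
                num_submit = min(num_workers, n - completed)
                submissions.append(num_submit)
                pending = num_submit
        else:
            # async: replace the finished job immediately while work remains
            if completed + pending != n:
                submissions.append(1)
                pending += 1
    return submissions


print(schedule(10, 4, sync=True))   # batches of up to 4 jobs
print(schedule(10, 4, sync=False))  # one initial batch, then single top-ups
```

In this simulation, synchronous submission keeps batches aligned, which suits an optimizer that proposes several points at once. The cost is that workers sit idle while the slowest job in a batch finishes. Asynchronous submission keeps every worker busy.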

--------------------------------------------------------------------------------
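`ParamSpace.add_random` in `exp/params.py` accepts either a `"uniform"` or a `"log-uniform"` prior, but the sampling code in `get_random` lies outside this excerpt. The sketch below assumes that "log-uniform" means uniform in log space, which is how scikit-optimize defines its `"log-uniform"` prior. Under that assumption, it draws values with NumPy. `sample_random` is a hypothetical helper for illustration and is not part of `exp`.

```python
import numpy as np


def sample_random(low, high, prior="uniform", dtype=float, n=1, rng=None):
    """Draw n values in [low, high] from a uniform or log-uniform prior.

    Hypothetical helper, not part of exp: illustrates what the two priors
    accepted by ParamSpace.add_random mean.
    """
    rng = np.random.default_rng() if rng is None else rng
    if prior == "uniform":
        values = rng.uniform(low, high, size=n)
    elif prior == "log-uniform":
        if low <= 0:
            raise ValueError("log-uniform prior requires low > 0")
        # uniform in log space, so every order of magnitude is equally likely
        values = np.exp(rng.uniform(np.log(low), np.log(high), size=n))
    else:
        raise ValueError("unknown prior: {}".format(prior))
    if dtype is int:
        # round to the nearest integer inside the bounds
        values = np.round(values).astype(int)
    return values


lr = sample_random(1e-4, 1e-1, prior="log-uniform", n=5)
```

With bounds `1e-4` and `1e-1`, roughly a third of the log-uniform samples fall in each decade. A plain uniform prior would put about 90% of them above `1e-2`. This is why log-uniform is a common choice for scale-like hyperparameters such as learning rates.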

/exp/params.py:

--------------------------------------------------------------------------------

1 | """ Parameter space definition, writing, and loading

2 |

3 | :obj:`ParamSpace` uses TOML as the underlying format to write configurations

4 | to files and to load them from files.

5 | """

6 | import csv

7 | import itertools

8 | import math

9 | import os

10 | from enum import Enum

11 |

12 | import numpy as np

13 |

14 | import toml

15 |

16 |

17 | def _repeat_it(iterable, n):

18 | return itertools.chain.from_iterable(itertools.repeat(x, n) for x in iterable)

19 |

20 |

21 | class ParamError(Exception):

22 | pass

23 |

24 |

25 | class ParamDecodeError(ParamError):

26 | pass

27 |

28 |

29 | class Types(Enum):

30 | """ Enum with valid parameter types supported by :obj:`ParamSpace`

31 |

32 | """

33 | VALUE = "value"

34 | LIST = "list"

35 | RANGE = "range"

36 | RANDOM = "random"

37 |

38 | @staticmethod

39 | def from_str(value, case_sensitive=False):

40 | if not case_sensitive:

41 | value = value.lower()

42 | if value == Types.VALUE.value:

43 | return Types.VALUE

44 | elif value == Types.LIST.value:

45 | return Types.LIST

46 | elif value == Types.RANGE.value:

47 | return Types.RANGE

48 | elif value == Types.RANDOM.value:

49 | return Types.RANDOM

50 | else:

51 | raise ValueError("invalid parameter type: {}\n"

52 | "supported values: {}".format(value,

53 | ", ".join(t.value for t in Types)))

54 |

55 |

56 | class DTypes(Enum):

57 | """ Enum with valid parameter dtypes supported by :obj:`ParamSpace`

58 |

59 | Used when converting parameter spaces into scikit-optimize ``Dimension`` objects

60 | """

61 | INT = "int"

62 | FLOAT = "float"

63 | CATEGORICAL = "categorical"

64 |

65 | @staticmethod

66 | def from_type(dtype, case_sensitive=False):

67 | if not case_sensitive and isinstance(dtype, str):

68 | dtype = dtype.lower()

69 |

70 | if dtype in (DTypes.FLOAT.value, float):

71 | return DTypes.FLOAT

72 | elif dtype in (DTypes.INT.value, int):

73 | return DTypes.INT

74 | elif dtype == DTypes.CATEGORICAL.value:

75 | return DTypes.CATEGORICAL

76 | else:

77 | raise ValueError("invalid parameter dtype: {}\n"

78 | "supported values: {}".format(dtype,

79 | ", ".join(t.value for t in DTypes)))

80 |

81 |

82 | class ParamSpace:

83 | """ ParamSpace

84 |

85 | Create parameter spaces from configuration files.

86 |

87 | ParamSpace reads and writes parameter configuration files

88 | to build parameter spaces used for hyperparameter grid search

89 | (:py:mod:`exp.run`) or global optimization procedures (:py:mod:`exp.gopt`).

90 |

91 | Args:

92 | filename (str): [optional] path to a configuration file. If not specified, creates an empty ParamSpace.

93 | """

94 |

95 | def __init__(self, filename=None):

96 | if filename is not None:

97 | self.params = toml.load(filename)

98 | else:

99 | self.params = {}

100 | self.size = self._compute_size()

101 |

102 | def _compute_size(self):

103 | """ Returns the size of the parameter space in terms of number of

104 | unique configurations

105 |

106 | Returns:

107 | (int) number of unique configurations

108 |

109 | """

110 | params = self.params.keys()

111 | if len(params) > 0:

112 | size = 1

113 | else:

114 | return 0

115 |

116 | for param in params:

117 | param_type = Types.from_str(self.params[param]["type"])

118 | param_value = self.get_param(param, param_type)

119 |

120 | if param_type == Types.VALUE:

121 | param_value = [param_value]

122 | size *= len(param_value)

123 | return size

124 |

125 | def param_names(self):

126 | """ Parameter names.

127 |

128 | Returns:

129 | (list) list of strings with the names of the parameters in this space

130 |

131 | """

132 | return list(self.params.keys())

133 |

134 | def _update_space_size(self, n):

135 | """ Updates the cached grid size each instance maintains, so returning it is cheap

136 |

137 | Args:

138 | n: number of values of the parameter being added

139 | """

140 | if self.size == 0:

141 | self.size = n

142 | else:

143 | self.size *= n

144 |

145 | def _get_param(self, name, param_type):

146 | """ get parameter

147 |

148 | Returns a parameter after checking that it has the given type

149 |

150 | Args:

151 | name: name of the parameter to be returned

152 | param_type: (Types) expected parameter type

153 |

154 | Raises:

155 | LookupError: if parameter is not found in parameter space

156 | TypeError: if parameter found is not of the type specified

157 |

158 | Returns:

159 | (dict) dictionary with the parameter value and configurations

160 |

161 | """

162 | if name not in self.params:

163 | raise LookupError("Parameter not found: {}".format(name))

164 |

165 | param = self.params[name]

166 | actual_type = Types.from_str(param['type'])

167 | if actual_type != param_type:

168 | raise TypeError("expected {param} to be a {expected} but got {actual}".format(param=name,

169 | expected=param_type,

170 | actual=actual_type))

171 |

172 | return param

173 |

174 | def add_random(self, name, low=0., high=1., prior="uniform", dtype=float, n=None, persist=False):

175 | """ Adds a random parameter sampled within bounds from a given prior distribution

176 |

177 | Args:

178 | name: name for the parameter

179 | low: lower bound for the distribution (optional)

180 | high: upper bound for the distribution (optional)

181 | prior: distribution used for random sampling, either "uniform" or "log-uniform"

182 | dtype: type of the sampled values, either float or int

183 | n: number of random values to sample (optional)

184 | persist: if True, samples the values once and stores them as a list parameter

185 |

186 | Raises:

187 | TypeError: if dtype or prior is not supported

188 | """

189 | # validate dtype and prior before mutating self.params or self.size

190 | if dtype not in (float, int):

191 | raise TypeError(

192 | """\n Unknown dtype "{}", valid dtypes are:\n \t-float, \n \t-int """.format(dtype))

193 | if prior not in ("uniform", "log-uniform"):

194 | raise TypeError(

195 | """\n Unknown prior "{}", valid priors are:\n \t-"uniform", \n \t-"log-uniform" """.format(prior))

196 |

197 | param = self.params[name] = {}

198 | param["type"] = Types.RANDOM.value

199 | param["dtype"] = dtype.__name__

200 | if n:

201 | param["n"] = n

202 | self._update_space_size(n)

203 | else:

204 | self._update_space_size(1)

205 |

206 | param["bounds"] = [low, high]

207 | param["prior"] = prior

208 |

209 | if persist:

210 | value = self.get_random(name)

211 | del self.params[name]

212 | self.add_list(name, value)

213 |

214 | def get_random(self, name):

215 | """ Samples values for a random parameter

216 |

217 | Args:

218 | name: parameter name

219 |

220 | Returns:

221 | array with one or more random parameter values according to the prior distribution