├── .github
│   └── workflows
│       └── dotnetcore.yml
├── .gitignore
├── Channels.md
├── Demultiplexer.md
├── Generator.md
├── LICENSE
├── Multiplexer.md
├── Pipelines.md
├── QuitChannel.md
├── README.md
├── Timeout.md
├── WebSearch.md
└── src
    ├── Program.cs
    ├── TryChannelsDemo.csproj
    └── nuget.config

/.github/workflows/dotnetcore.yml:

--------------------------------------------------------------------------------

1 | name: .NET Core

2 |

3 | on: [push, pull_request]

4 |

5 | jobs:

6 | build:

7 |

8 | runs-on: ubuntu-latest

9 |

10 | steps:

11 | - uses: actions/checkout@v1

12 | - name: Setup .NET Core

13 | uses: actions/setup-dotnet@v1

14 | with:

15 | dotnet-version: 3.0.100

16 | - name: Build with dotnet

17 | run: dotnet build --configuration Release src

18 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | ## Ignore Visual Studio temporary files, build results, and

2 | ## files generated by popular Visual Studio add-ons.

3 | ##

4 | ## Get latest from https://github.com/github/gitignore/blob/master/VisualStudio.gitignore

5 |

6 | # User-specific files

7 | *.suo

8 | *.user

9 | *.userosscache

10 | *.sln.docstates

11 | *.zip

12 | *.pptx

13 | *.ppt

14 | # User-specific files (MonoDevelop/Xamarin Studio)

15 | *.userprefs

16 | .vscode/

17 | .git/

18 | # Build results

19 | [Dd]ebug/

20 | [Dd]ebugPublic/

21 | [Rr]elease/

22 | [Rr]eleases/

23 | x64/

24 | x86/

25 | bld/

26 | [Bb]in/

27 | [Oo]bj/

28 | [Ll]og/

29 |

30 | # Visual Studio 2015 cache/options directory

31 | .vs/

32 | # Uncomment if you have tasks that create the project's static files in wwwroot

33 | #wwwroot/

34 |

35 | # MSTest test Results

36 | [Tt]est[Rr]esult*/

37 | [Bb]uild[Ll]og.*

38 |

39 | # NUNIT

40 | *.VisualState.xml

41 | TestResult.xml

42 |

43 | # Build Results of an ATL Project

44 | [Dd]ebugPS/

45 | [Rr]eleasePS/

46 | dlldata.c

47 |

48 | # .NET Core

49 | project.lock.json

50 | project.fragment.lock.json

51 | artifacts/

52 | **/Properties/launchSettings.json

53 |

54 | *_i.c

55 | *_p.c

56 | *_i.h

57 | *.ilk

58 | *.meta

59 | *.obj

60 | *.pch

61 | *.pdb

62 | *.pgc

63 | *.pgd

64 | *.rsp

65 | *.sbr

66 | *.tlb

67 | *.tli

68 | *.tlh

69 | *.tmp

70 | *.tmp_proj

71 | *.log

72 | *.vspscc

73 | *.vssscc

74 | .builds

75 | *.pidb

76 | *.svclog

77 | *.scc

78 |

79 | # Chutzpah Test files

80 | _Chutzpah*

81 |

82 | # Visual C++ cache files

83 | ipch/

84 | *.aps

85 | *.ncb

86 | *.opendb

87 | *.opensdf

88 | *.sdf

89 | *.cachefile

90 | *.VC.db

91 | *.VC.VC.opendb

92 |

93 | # Visual Studio profiler

94 | *.psess

95 | *.vsp

96 | *.vspx

97 | *.sap

98 |

99 | # TFS 2012 Local Workspace

100 | $tf/

101 |

102 | # Guidance Automation Toolkit

103 | *.gpState

104 |

105 | # ReSharper is a .NET coding add-in

106 | _ReSharper*/

107 | *.[Rr]e[Ss]harper

108 | *.DotSettings.user

109 |

110 | # JustCode is a .NET coding add-in

111 | .JustCode

112 |

113 | # TeamCity is a build add-in

114 | _TeamCity*

115 |

116 | # DotCover is a Code Coverage Tool

117 | *.dotCover

118 |

119 | # Visual Studio code coverage results

120 | *.coverage

121 | *.coveragexml

122 |

123 | # NCrunch

124 | _NCrunch_*

125 | .*crunch*.local.xml

126 | nCrunchTemp_*

127 |

128 | # MightyMoose

129 | *.mm.*

130 | AutoTest.Net/

131 |

132 | # Web workbench (sass)

133 | .sass-cache/

134 |

135 | # Installshield output folder

136 | [Ee]xpress/

137 |

138 | # DocProject is a documentation generator add-in

139 | DocProject/buildhelp/

140 | DocProject/Help/*.HxT

141 | DocProject/Help/*.HxC

142 | DocProject/Help/*.hhc

143 | DocProject/Help/*.hhk

144 | DocProject/Help/*.hhp

145 | DocProject/Help/Html2

146 | DocProject/Help/html

147 |

148 | # Click-Once directory

149 | publish/

150 |

151 | # Publish Web Output

152 | *.[Pp]ublish.xml

153 | *.azurePubxml

154 | # TODO: Comment the next line if you want to checkin your web deploy settings

155 | # but database connection strings (with potential passwords) will be unencrypted

156 | *.pubxml

157 | *.publishproj

158 |

159 | # Microsoft Azure Web App publish settings. Comment the next line if you want to

160 | # checkin your Azure Web App publish settings, but sensitive information contained

161 | # in these scripts will be unencrypted

162 | PublishScripts/

163 |

164 | # NuGet Packages

165 | *.nupkg

166 | # The packages folder can be ignored because of Package Restore

167 | **/packages/*

168 | # except build/, which is used as an MSBuild target.

169 | !**/packages/build/

170 | # Uncomment if necessary however generally it will be regenerated when needed

171 | #!**/packages/repositories.config

172 | # NuGet v3's project.json files produces more ignorable files

173 | *.nuget.props

174 | *.nuget.targets

175 |

176 | # Microsoft Azure Build Output

177 | csx/

178 | *.build.csdef

179 |

180 | # Microsoft Azure Emulator

181 | ecf/

182 | rcf/

183 |

184 | # Windows Store app package directories and files

185 | AppPackages/

186 | BundleArtifacts/

187 | Package.StoreAssociation.xml

188 | _pkginfo.txt

189 | *.appx

190 |

191 | # Visual Studio cache files

192 | # files ending in .cache can be ignored

193 | *.[Cc]ache

194 | # but keep track of directories ending in .cache

195 | !*.[Cc]ache/

196 |

197 | # Others

198 | ClientBin/

199 | ~$*

200 | *~

201 | *.dbmdl

202 | *.dbproj.schemaview

203 | *.jfm

204 | *.pfx

205 | *.publishsettings

206 | orleans.codegen.cs

207 |

208 | # Since there are multiple workflows, uncomment next line to ignore bower_components

209 | # (https://github.com/github/gitignore/pull/1529#issuecomment-104372622)

210 | #bower_components/

211 |

212 | # RIA/Silverlight projects

213 | Generated_Code/

214 |

215 | # Backup & report files from converting an old project file

216 | # to a newer Visual Studio version. Backup files are not needed,

217 | # because we have git ;-)

218 | _UpgradeReport_Files/

219 | Backup*/

220 | UpgradeLog*.XML

221 | UpgradeLog*.htm

222 |

223 | # SQL Server files

224 | *.mdf

225 | *.ldf

226 | *.ndf

227 |

228 | # Business Intelligence projects

229 | *.rdl.data

230 | *.bim.layout

231 | *.bim_*.settings

232 |

233 | # Microsoft Fakes

234 | FakesAssemblies/

235 |

236 | # GhostDoc plugin setting file

237 | *.GhostDoc.xml

238 |

239 | # Node.js Tools for Visual Studio

240 | .ntvs_analysis.dat

241 | node_modules/

242 |

243 | # Typescript v1 declaration files

244 | typings/

245 |

246 | # Visual Studio 6 build log

247 | *.plg

248 |

249 | # Visual Studio 6 workspace options file

250 | *.opt

251 |

252 | # Visual Studio 6 auto-generated workspace file (contains which files were open etc.)

253 | *.vbw

254 |

255 | # Visual Studio LightSwitch build output

256 | **/*.HTMLClient/GeneratedArtifacts

257 | **/*.DesktopClient/GeneratedArtifacts

258 | **/*.DesktopClient/ModelManifest.xml

259 | **/*.Server/GeneratedArtifacts

260 | **/*.Server/ModelManifest.xml

261 | _Pvt_Extensions

262 |

263 | # Paket dependency manager

264 | .paket/paket.exe

265 | paket-files/

266 |

267 | # FAKE - F# Make

268 | .fake/

269 |

270 | # JetBrains Rider

271 | .idea/

272 | *.sln.iml

273 |

274 | # CodeRush

275 | .cr/

276 |

277 | # Python Tools for Visual Studio (PTVS)

278 | __pycache__/

279 | *.pyc

280 |

281 | # Cake - Uncomment if you are using it

282 | # tools/**

283 | # !tools/packages.config

284 |

285 | # Telerik's JustMock configuration file

286 | *.jmconfig

287 |

288 | # BizTalk build output

289 | *.btp.cs

290 | *.btm.cs

291 | *.odx.cs

292 | *.xsd.cs

293 | *.binlog

294 |

--------------------------------------------------------------------------------

/Channels.md:

--------------------------------------------------------------------------------

1 | ## Introducing Channels

2 |

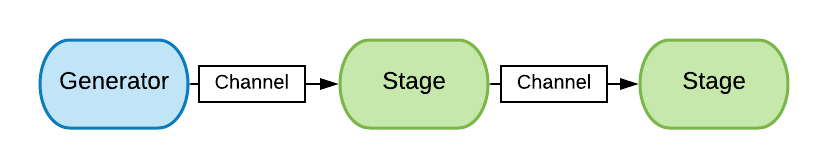

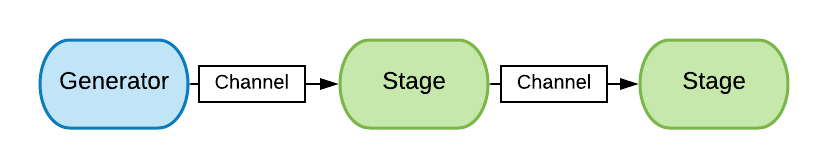

3 | A channel is a data structure that allows one thread to communicate with another thread. By communicating, we mean sending and receiving data in a first in first out (FIFO) order. Currently, they are part of the `System.Threading.Channels` namespace.

4 |

5 |  6 |

7 | That's how we create a channel:

8 |

9 | ```csharp

10 | Channel<string> ch = Channel.CreateUnbounded<string>();

11 | ```

12 |

13 | `Channel` is a static class that exposes several factory methods for creating channels. `Channel<T>` is a data structure that supports reading and writing. That's how we write asynchronously to a channel:

14 |

15 | ```csharp

16 | await ch.Writer.WriteAsync("My first message");

17 | await ch.Writer.WriteAsync("My second message");

18 | ch.Writer.Complete();

19 | ```

20 |

21 | And this is how we read from a channel.

22 |

23 | ```csharp

24 | while (await ch.Reader.WaitToReadAsync())

25 |     Console.WriteLine(await ch.Reader.ReadAsync());

26 | ```

27 |

28 | The reader's `WaitToReadAsync()` will complete with `true` when data is available to read, or with `false` when no further data will ever be read, that is, after the writer invokes `Complete()`. The reader also provides an option to consume the data as an async stream by exposing a method that returns `IAsyncEnumerable<T>`:

29 |

30 | ```cs

31 | await foreach (var item in ch.Reader.ReadAllAsync())

32 |     Console.WriteLine(item);

33 | ```

34 |

35 | ## A basic pub/sub messaging scenario

36 |

37 | Here's a basic example in which separate producer and consumer threads communicate through a channel.

38 |

39 | ``` cs --region run_basic_channel_usage --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj

40 | ```

41 |

42 | The consumer (reader) waits until there's an available message to read. The producer (writer), on the other hand, waits until it's able to send a message. Hence, we say that **channels both communicate and synchronize**. Both operations are non-blocking, that is, while we wait, the thread is free to do some other work.

43 | Notice that we have created an **unbounded** channel, meaning that it accepts as many messages as available memory allows. With **bounded** channels, however, we can limit the number of messages that can be buffered at a time.
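To make the difference concrete, here is a minimal sketch of a bounded channel. The capacity of 2 and the variable names are chosen for illustration only and are not taken from the demo project; the sketch assumes the default behaviour of `Channel.CreateBounded<T>(int)`, where a full channel makes writers wait.

```csharp
using System;
using System.Threading.Channels;
using System.Threading.Tasks;

// A bounded channel that buffers at most 2 unread messages (illustrative capacity).
var bounded = Channel.CreateBounded<int>(capacity: 2);

// TryWrite never waits: it returns false once the buffer is full.
bool first = bounded.Writer.TryWrite(1);   // fits
bool second = bounded.Writer.TryWrite(2);  // fits
bool third = bounded.Writer.TryWrite(3);   // buffer is full, so the write is rejected

Console.WriteLine($"{first} {second} {third}");

// WriteAsync, in contrast, waits (without blocking the thread) until a slot is free.
ValueTask pending = bounded.Writer.WriteAsync(3);
int taken = await bounded.Reader.ReadAsync(); // reading frees one slot
await pending;                                 // the pending write can now complete
bounded.Writer.Complete();
```

The pending `WriteAsync` only completes after the reader has taken a message, which is the synchronization effect described above.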

44 |

45 | #### Next: [Generator »](../Generator.md) Previous: [Home «](../Readme.md)

46 |

--------------------------------------------------------------------------------

/Demultiplexer.md:

--------------------------------------------------------------------------------

1 | ## Demultiplexer

2 |

3 | We want to distribute the work amongst several consumers. Let's define `Split()`:

4 |

5 |  6 |

7 | ``` cs --region split --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_demultiplexing

8 | ```

9 |

10 | `Split` takes a channel and redirects its messages to `n` number of newly created channels in a round-robin fashion. It returns these channels as read-only. Here's how to use it:

11 |

12 |

13 | ``` cs --region run_demultiplexing --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_demultiplexing

14 | ```

15 |

16 | Joe sends 10 messages, which we distribute amongst 3 channels. Some channels may take longer to process a message, so we have no guarantee that the order of emission is going to be preserved. Our code is structured so that we process (in this case log) a message as soon as it arrives.
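For reference, a round-robin `Split` along the lines described above could be sketched as follows. This is not the code from `Program.cs`: the signature, the use of unbounded output channels, and the test data are assumptions made for the example.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Channels;
using System.Threading.Tasks;

// Hypothetical Split: forwards messages from one input to n outputs in round-robin order.
static IReadOnlyList<ChannelReader<T>> Split<T>(ChannelReader<T> input, int n)
{
    var outputs = new Channel<T>[n];
    for (int i = 0; i < n; i++)
        outputs[i] = Channel.CreateUnbounded<T>();

    // Redirect in the background so the readers are returned immediately.
    _ = Task.Run(async () =>
    {
        int index = 0;
        await foreach (var item in input.ReadAllAsync())
        {
            await outputs[index].Writer.WriteAsync(item);
            index = (index + 1) % n; // move on to the next output channel
        }

        // The input is exhausted, so every output can be completed.
        foreach (var ch in outputs)
            ch.Writer.Complete();
    });

    // Expose only the read side of each output.
    return outputs.Select(ch => ch.Reader).ToArray();
}

// Usage: 10 pre-written messages distributed over 3 channels.
var source = Channel.CreateUnbounded<int>();
for (int i = 0; i < 10; i++)
    source.Writer.TryWrite(i);
source.Writer.Complete();

var counts = new List<int>();
foreach (var reader in Split(source.Reader, 3))
{
    int count = 0;
    await foreach (var item in reader.ReadAllAsync())
        count++;
    counts.Add(count);
}

Console.WriteLine(string.Join(", ", counts));
```

With 10 messages and 3 outputs, the first channel receives 4 messages and the other two receive 3 each.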

17 |

18 | ``` cs --region generator --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_demultiplexing

19 | ```

20 |

21 | #### Next: [Timeout »](../Timeout.md) Previous: [Multiplexer «](../Multiplexer.md)

22 |

--------------------------------------------------------------------------------

/Generator.md:

--------------------------------------------------------------------------------

1 | ## Generator

2 |

3 | A generator is a method that returns a channel. The one below creates a channel and writes a given number of messages asynchronously from a separate thread.

4 |

5 | ``` cs --region generator --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_generator

6 | ```

7 |

8 | By returning a `ChannelReader<T>` we ensure that our consumers won't be able to attempt writing to it.
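A generator of this shape might look like the sketch below. The parameter list, message format, and delay are assumptions for illustration; only the idea of returning the read side of a channel comes from the text above.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Channels;
using System.Threading.Tasks;

// Hypothetical generator: writes `count` messages from a background task.
static ChannelReader<string> CreateMessenger(string name, int count)
{
    var ch = Channel.CreateUnbounded<string>();

    // Produce in the background so the caller gets the reader right away.
    _ = Task.Run(async () =>
    {
        for (int i = 1; i <= count; i++)
        {
            await ch.Writer.WriteAsync($"{name} {i}");
            await Task.Delay(10); // simulate some work between messages
        }

        ch.Writer.Complete(); // signal that no more messages will come
    });

    // Only the reader is exposed, so consumers cannot write.
    return ch.Reader;
}

var received = new List<string>();
await foreach (var msg in CreateMessenger("Joe", 3).ReadAllAsync())
{
    received.Add(msg);
    Console.WriteLine(msg);
}
```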

9 |

10 | That's how we use the generator.

11 |

12 | ``` cs --region run_generator --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_generator

13 | ```

14 |

15 | #### Next: [Multiplexer »](../Multiplexer.md) Previous: [Channels «](../Channels.md)

16 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2019 Denis Kyashif

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/Multiplexer.md:

--------------------------------------------------------------------------------

1 | ## Multiplexer

2 |

3 | We have two channels and want to read from both, processing whichever message arrives first. We're going to solve this by consolidating their messages into a single channel. Let's define the `Merge()` method.

4 |

5 | ``` cs --region merge --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_multiplexing

6 | ```

7 |

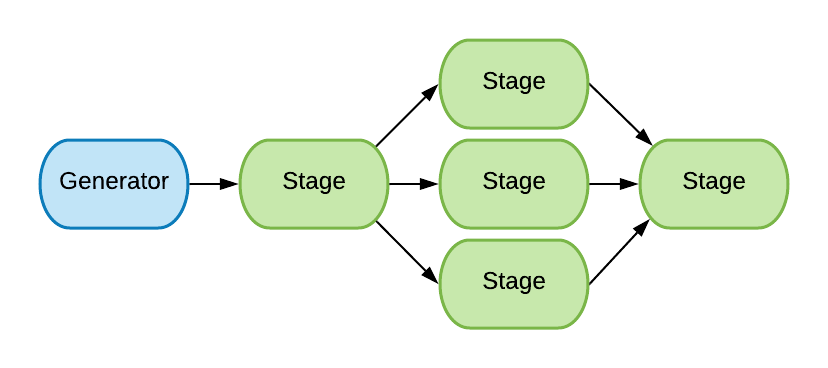

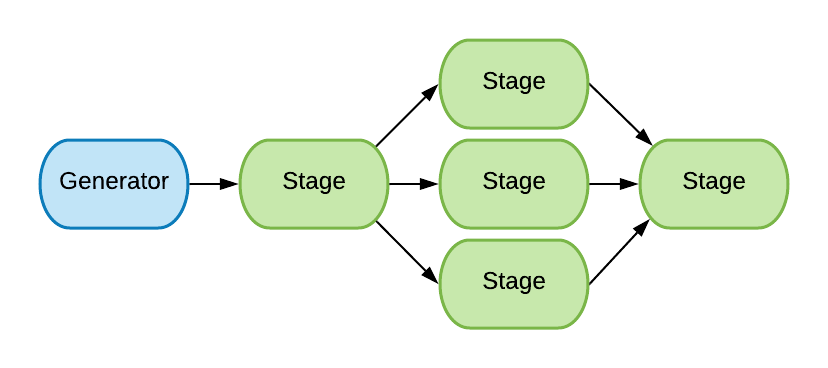

8 | `Merge()` takes two channels and starts reading from them simultaneously. It creates and immediately returns a new channel which consolidates the outputs from the input channels. The reading procedures are run asynchronously on separate threads. Think of it like this:

9 |

10 |  11 |

12 | `Merge()` also works with an arbitrary number of inputs.

13 | We've created the local asynchronous `Redirect()` function, which takes a channel as an input and writes its messages to the consolidated output. It returns a `Task` so we can use `WhenAll()` to wait for the input channels to complete. This allows us to also capture potential exceptions. In the end, we know that there's nothing left to be read, so we can safely close the writer.
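The description above can be sketched roughly as follows. This is an assumed reconstruction rather than the `merge` region from `Program.cs`; in particular, passing the exception to `Complete()` is one possible way to surface errors and is not confirmed by the source.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Channels;
using System.Threading.Tasks;

// Hypothetical Merge: consolidates any number of inputs into a single output channel.
static ChannelReader<T> Merge<T>(params ChannelReader<T>[] inputs)
{
    var output = Channel.CreateUnbounded<T>();

    _ = Task.Run(async () =>
    {
        // Copies every message of one input to the shared output.
        async Task Redirect(ChannelReader<T> input)
        {
            await foreach (var item in input.ReadAllAsync())
                await output.Writer.WriteAsync(item);
        }

        try
        {
            // Wait until every input has been drained.
            await Task.WhenAll(inputs.Select(i => Redirect(i)));
            output.Writer.Complete();
        }
        catch (Exception ex)
        {
            // Assumption: propagate the failure to whoever reads the output.
            output.Writer.Complete(ex);
        }
    });

    return output.Reader;
}

// Usage with two pre-filled channels.
var ann = Channel.CreateUnbounded<string>();
var joe = Channel.CreateUnbounded<string>();
ann.Writer.TryWrite("Ann 1");
ann.Writer.Complete();
joe.Writer.TryWrite("Joe 1");
joe.Writer.TryWrite("Joe 2");
joe.Writer.Complete();

var merged = new List<string>();
await foreach (var msg in Merge(ann.Reader, joe.Reader).ReadAllAsync())
    merged.Add(msg);

Console.WriteLine(merged.Count);
```

The relative order of Ann's and Joe's messages in the output is not deterministic; only the order within each input is preserved.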

14 |

15 | ``` cs --region run_multiplexing --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_multiplexing

16 | ```

17 |

18 | Our code is concurrent and non-blocking. The messages are being processed at the time of arrival and there's no need to use locks or any kind of conditional logic. While we're waiting, the thread is free to perform other work. We also don't have to handle the case when one of the writers completes (as you can see, Ann has sent all of her messages before Joe).

19 |

20 | ``` cs --region generator --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_multiplexing

21 | ```

22 |

23 | #### Next: [Demultiplexer »](../Demultiplexer.md) Previous: [Generator «](../Generator.md)

24 |

25 |

--------------------------------------------------------------------------------

/Pipelines.md:

--------------------------------------------------------------------------------

1 | ## Pipelines

2 |

3 | A pipeline is a concurrency model which consists of a sequence of stages. Each stage performs a part of the full job and when it's done, it forwards it to the next stage. It also runs concurrently and **shares no state** with the other stages.

4 |

5 |  6 |

7 | ### Generator

8 |

9 | Each pipeline starts with a generator method, which initiates jobs by passing them to the stages. Each stage is also a method that runs on its own thread. The stages communicate through channels: a stage takes a channel as an input, reads from it asynchronously, performs some work, and passes data to an output channel.

10 |

11 | To see it in action, we're going to design a program that efficiently counts the lines of code in a project. Let's start with the generator.

12 |

13 | ``` cs --region get_files_recursively --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_pipeline

14 | ```

15 |

16 | We perform a depth-first traversal of the folder and its subfolders and write each file name we encounter to the output channel. When we're done with the traversal, we mark the channel as complete so the consumer (the next stage) knows when to stop reading from it.

17 |

18 | ### Stage 1 - Keep the Source Files

19 |

20 | Stage 1 determines whether each file contains source code. Files that don't are discarded.
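As a rough illustration of such a stage, the sketch below filters file names by extension. The call shape `FilterByExtension(reader, extensions)` follows the usage shown later on this page, but the body is an assumption and may differ from the `filter_by_extension` region in `Program.cs`.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Threading.Channels;
using System.Threading.Tasks;

// Hypothetical stage: forwards only the files whose extension is in the given set.
static ChannelReader<string> FilterByExtension(
    ChannelReader<string> input, HashSet<string> extensions)
{
    var output = Channel.CreateUnbounded<string>();

    _ = Task.Run(async () =>
    {
        await foreach (var file in input.ReadAllAsync())
        {
            // Path.GetExtension returns the extension including the leading dot.
            if (extensions.Contains(Path.GetExtension(file)))
                await output.Writer.WriteAsync(file);
        }

        output.Writer.Complete(); // let the next stage know we are done
    });

    return output.Reader;
}

// Usage with a few made-up file names.
var files = Channel.CreateUnbounded<string>();
foreach (var name in new[] { "app.ts", "logo.png", "index.js", "README.md" })
    files.Writer.TryWrite(name);
files.Writer.Complete();

var kept = new List<string>();
var sourceFiles = FilterByExtension(files.Reader, new HashSet<string> { ".js", ".ts" });
await foreach (var file in sourceFiles.ReadAllAsync())
    kept.Add(file);

Console.WriteLine(string.Join(", ", kept));
```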

21 |

22 | ``` cs --region filter_by_extension --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_pipeline

23 | ```

24 |

25 | ### Stage 2 - Get the Line Count

26 |

27 | This stage is responsible for determining the number of lines in each file.

28 |

29 | ``` cs --region get_line_count --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_pipeline

30 | ```

31 |

32 | ``` cs --region count_lines --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_pipeline

33 | ```

34 |

35 | ## The Sink Stage

36 |

37 | Now that we've implemented the stages of our pipeline, we are ready to put them all together.

38 |

39 | ``` cs --region run_pipeline --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_pipeline

40 | ```

41 |

42 | ## Dealing with Backpressure

43 |

44 | In the line counter example, the stage where we read the file and count its lines might cause backpressure when a file is sufficiently large. It makes sense to increase the capacity of this stage, and that's where `Merge` and `Split`, which we discussed as [Multiplexer](/Multiplexer.md) and [Demultiplexer](/Demultiplexer.md), come into use.

45 |

46 |  47 |

48 | We use `Split` to distribute the source code files among 5 channels, which will let us process up to 5 files simultaneously.

49 |

50 | ```cs

51 | var fileSource = GetFilesRecursively("node_modules");

52 | var sourceCodeFiles =

53 |     FilterByExtension(fileSource, new HashSet<string> { ".js", ".ts" });

54 | var splitter = Split(sourceCodeFiles, 5);

55 | ```

56 |

57 | During `Merge`, we read concurrently from several channels and write the messages to a single output. This is the stage that we need to tweak a little so that it also performs the line counting.

58 |

59 | We introduce `CountLinesAndMerge`, which not only redirects but also transforms.

60 |

61 | ``` cs --region count_lines_and_merge --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_pipeline

62 | ```

63 |

64 | You can update the code above for the sink stage and see how it performs for folders with lots of files. For more details on cancellation and error handling, check out the [blog post](https://deniskyashif.com/csharp-channels-part-3/).

65 |

66 | #### Next: [Home »](../Readme.md) Previous: [Web Search «](../WebSearch.md)

67 |

--------------------------------------------------------------------------------

/QuitChannel.md:

--------------------------------------------------------------------------------

1 | ## Quit Channel

2 |

3 | Unlike the Timeout, where we cancel reading, this time we want to cancel the writing procedure. We need to modify our `CreateMessenger()` generator.

4 |

5 | ``` cs --region generator_with_cancellation --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_quit_channel

6 | ```

7 |

8 | Now we need to pass our cancellation token to the generator which gives us control over the channel's longevity.

9 |

10 | ``` cs --region run_quit_channel --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_quit_channel

11 | ```

12 |

13 | Joe had 10 messages to send, but we gave him only 5 seconds, so he managed to send fewer than that. We can also manually send a cancellation request, for example, after reading `N` messages.
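The manual variant can be sketched like this. The generator below is a hypothetical stand-in for the `generator_with_cancellation` region (its signature and the 100 ms delay are assumptions), and the consumer cancels after reading 3 messages.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Channels;
using System.Threading.Tasks;

// Hypothetical generator that stops writing once the token is cancelled.
static ChannelReader<string> CreateMessenger(
    string name, int count, CancellationToken token = default)
{
    var ch = Channel.CreateUnbounded<string>();

    _ = Task.Run(async () =>
    {
        try
        {
            for (int i = 1; i <= count; i++)
            {
                await ch.Writer.WriteAsync($"{name} {i}", token);
                await Task.Delay(100, token); // simulate work between messages
            }
        }
        catch (OperationCanceledException)
        {
            // Cancellation was requested: stop producing.
        }
        finally
        {
            ch.Writer.Complete(); // always let the reader finish
        }
    });

    return ch.Reader;
}

using var cts = new CancellationTokenSource();
var received = new List<string>();

await foreach (var msg in CreateMessenger("Joe", 10, cts.Token).ReadAllAsync())
{
    received.Add(msg);
    if (received.Count == 3)
        cts.Cancel(); // ask the producer to quit after the third message
}

Console.WriteLine($"Received {received.Count} of 10 messages");
```

Messages that were already written before the cancellation took effect are still delivered, so the reader may occasionally see slightly more than 3 messages.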

14 |

15 | #### Next: [Web Search »](../WebSearch.md) Previous: [Timeout «](../Timeout.md)

16 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # C# Concurrency Patterns with Channels

2 |

3 |

4 |

5 | This project contains interactive examples for implementing concurrent workflows in C# using channels. Check my blog posts on the topic:

6 | * [C# Channels - Publish / Subscribe Workflows](https://deniskyashif.com/csharp-channels-part-1/)

7 | * [C# Channels - Timeout and Cancellation](https://deniskyashif.com/csharp-channels-part-2/)

8 | * [C# Channels - Async Data Pipelines](https://deniskyashif.com/csharp-channels-part-3/)

9 |

10 | ## Table of contents

11 |

12 | - [Using Channels](Channels.md)

13 | - [Generator](Generator.md)

14 | - [Multiplexer](Multiplexer.md)

15 | - [Demultiplexer](Demultiplexer.md)

16 | - [Timeout](Timeout.md)

17 | - [Quit Channel](QuitChannel.md)

18 | - [Web Search](WebSearch.md)

19 | - [Pipelines](Pipelines.md)

20 |

21 | ## How to run it

22 |

23 | Install [dotnet try](https://github.com/dotnet/try/blob/master/DotNetTryLocal.md), then run:

24 |

25 | ```

26 | git clone https://github.com/deniskyashif/trydotnet-channels.git

27 | cd trydotnet-channels

28 | dotnet try

29 | ```

30 |

--------------------------------------------------------------------------------

/Timeout.md:

--------------------------------------------------------------------------------

1 | ## Timeout

2 |

3 | We want to read from a channel for a certain amount of time.

4 |

5 | ``` cs --region run_timeout --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_timeout

6 | ```

7 |

8 | In this example, Joe was set to send 10 messages, but within 5 seconds we received fewer than that and then canceled the reading operation. If we reduce the number of messages Joe sends, or sufficiently increase the timeout duration, we'll read everything and thus avoid ending up in the `catch` block.

9 |

10 | ``` cs --region generator --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_timeout

11 | ```
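The two outcomes described above (everything read, or the read canceled) can be wrapped in a small helper. This is a sketch only: `TimeoutSketch` and `TryReadAll` are hypothetical names, not part of `Program.cs`.

```csharp
using System;
using System.Threading;
using System.Threading.Channels;
using System.Threading.Tasks;

public static class TimeoutSketch
{
    // Returns true if the channel completed before the timeout elapsed,
    // false if reading was canceled first.
    public static async Task<bool> TryReadAll(
        ChannelReader<string> reader, TimeSpan timeout, Action<string> onItem)
    {
        using var cts = new CancellationTokenSource(timeout);

        try
        {
            await foreach (var item in reader.ReadAllAsync(cts.Token))
                onItem(item);

            return true;
        }
        catch (OperationCanceledException)
        {
            return false;
        }
    }
}
```

Increasing `timeout` or sending fewer messages moves the result from `false` to `true`, which mirrors avoiding the `catch` block in the example.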

12 |

13 | #### Next: [Quit Channel »](../QuitChannel.md) Previous: [Demultiplexer «](../Demultiplexer.md)

14 |

--------------------------------------------------------------------------------

/WebSearch.md:

--------------------------------------------------------------------------------

1 | ## Web Search

2 |

3 | We're given the task of querying several data sources and mixing the results. The queries should run concurrently, and we should disregard the ones that take too long. Also, we should handle each query response as it arrives, instead of waiting for all of them to complete.

4 |

5 | ``` cs --region web_search --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj

6 | ```

7 | Depending on the timeout interval, we might end up receiving responses for all of the queries, or cut off the ones that are too slow.

8 |

9 | #### Next: [Pipelines »](../Pipelines.md) Previous: [Quit Channel «](../QuitChannel.md)

10 |

--------------------------------------------------------------------------------

/src/Program.cs:

--------------------------------------------------------------------------------

1 | using System;

2 | using System.IO;

3 | using System.Collections.Generic;

4 | using System.Diagnostics;

5 | using System.Linq;

6 | using System.Threading;

7 | using System.Threading.Channels;

8 | using System.Threading.Tasks;

9 | using static System.Console;

10 |

11 | public class Program

12 | {

13 | static Action<string> WriteLineWithTime =

14 | (str) => WriteLine($"[{DateTime.UtcNow.ToLongTimeString()}] {str}");

15 |

16 | static async Task Main(

17 | string region = null,

18 | string session = null,

19 | string package = null,

20 | string project = null,

21 | string[] args = null)

22 | {

23 | if (!string.IsNullOrWhiteSpace(session))

24 | {

25 | switch (session)

26 | {

27 | case "run_generator":

28 | await RunGenerator(); break;

29 | case "run_multiplexing":

30 | await RunMultiplexing(); break;

31 | case "run_demultiplexing":

32 | await RunDemultiplexing(); break;

33 | case "run_timeout":

34 | await RunTimeout(); break;

35 | case "run_quit_channel":

36 | await RunQuitChannel(); break;

37 | case "run_pipeline":

38 | await RunPipeline(); break;

39 | default:

40 | WriteLine("Unrecognized session"); break;

41 | }

42 | return;

43 | }

44 |

45 | switch (region)

46 | {

47 | case "run_basic_channel_usage":

48 | await RunBasicChannelUsage(); break;

49 | case "web_search":

50 | await RunWebSearch(); break;

51 | default:

52 | WriteLine("Unrecognized region"); break;

53 | }

54 | }

55 |

56 | public static async Task RunBasicChannelUsage()

57 | {

58 | #region run_basic_channel_usage

59 | var ch = Channel.CreateUnbounded<string>();

60 |

61 | var consumer = Task.Run(async () =>

62 | {

63 | while (await ch.Reader.WaitToReadAsync())

64 | WriteLineWithTime(await ch.Reader.ReadAsync());

65 | });

66 |

67 | var producer = Task.Run(async () =>

68 | {

69 | var rnd = new Random();

70 | for (int i = 0; i < 5; i++)

71 | {

72 | await Task.Delay(TimeSpan.FromSeconds(rnd.Next(3)));

73 | await ch.Writer.WriteAsync($"Message {i}");

74 | }

75 | ch.Writer.Complete();

76 | });

77 |

78 | await Task.WhenAll(consumer, producer);

79 | #endregion

80 | }

81 |

82 | public static async Task RunGenerator()

83 | {

84 | #region run_generator

85 | var joe = CreateMessenger("Joe", 5);

86 |

87 | await foreach (var item in joe.ReadAllAsync())

88 | WriteLineWithTime(item);

89 | #endregion

90 | }

91 |

92 | public static async Task RunMultiplexing()

93 | {

94 | #region run_multiplexing

95 | var ch = Merge(CreateMessenger("Joe", 3), CreateMessenger("Ann", 5));

96 |

97 | await foreach (var item in ch.ReadAllAsync())

98 | WriteLineWithTime(item);

99 | #endregion

100 | }

101 |

102 | public static async Task RunDemultiplexing()

103 | {

104 | #region run_demultiplexing

105 | var joe = CreateMessenger("Joe", 10);

106 | var readers = Split(joe, 3);

107 | var tasks = new List<Task>();

108 |

109 | for (int i = 0; i < readers.Count; i++)

110 | {

111 | var reader = readers[i];

112 | var index = i;

113 | tasks.Add(Task.Run(async () =>

114 | {

115 | await foreach (var item in reader.ReadAllAsync())

116 | {

117 | WriteLineWithTime(string.Format("Reader {0}: {1}", index, item));

118 | }

119 | }));

120 | }

121 |

122 | await Task.WhenAll(tasks);

123 | #endregion

124 | }

125 |

126 | public static async Task RunTimeout()

127 | {

128 | #region run_timeout

129 | var joe = CreateMessenger("Joe", 10);

130 | var cts = new CancellationTokenSource();

131 | cts.CancelAfter(TimeSpan.FromSeconds(5));

132 |

133 | try

134 | {

135 | await foreach (var item in joe.ReadAllAsync(cts.Token))

136 | Console.WriteLine(item);

137 |

138 | Console.WriteLine("Joe sent all of his messages.");

139 | }

140 | catch (OperationCanceledException)

141 | {

142 | Console.WriteLine("Joe, you are too slow!");

143 | }

144 | #endregion

145 | }

146 |

147 | public static async Task RunQuitChannel()

148 | {

149 | #region run_quit_channel

150 | var cts = new CancellationTokenSource();

151 | var joe = CreateMessenger("Joe", 10, cts.Token);

152 | cts.CancelAfter(TimeSpan.FromSeconds(5));

153 |

154 | await foreach (var item in joe.ReadAllAsync())

155 | WriteLineWithTime(item);

156 | #endregion

157 | }

158 |

159 | #region generator

160 | static ChannelReader<string> CreateMessenger(string msg, int count)

161 | {

162 | var ch = Channel.CreateUnbounded<string>();

163 | var rnd = new Random();

164 |

165 | Task.Run(async () =>

166 | {

167 | for (int i = 0; i < count; i++)

168 | {

169 | await ch.Writer.WriteAsync($"{msg} {i}");

170 | await Task.Delay(TimeSpan.FromSeconds(rnd.Next(3)));

171 | }

172 | ch.Writer.Complete();

173 | });

174 |

175 | return ch.Reader;

176 | }

177 | #endregion

178 |

179 | #region generator_with_cancellation

180 | static ChannelReader<string> CreateMessenger(

181 | string msg,

182 | int count = 5,

183 | CancellationToken token = default(CancellationToken))

184 | {

185 | var ch = Channel.CreateUnbounded<string>();

186 | var rnd = new Random();

187 |

188 | Task.Run(async () =>

189 | {

190 | for (int i = 0; i < count; i++)

191 | {

192 | if (token.IsCancellationRequested)

193 | {

194 | await ch.Writer.WriteAsync($"{msg} says bye!");

195 | break;

196 | }

197 | await ch.Writer.WriteAsync($"{msg} {i}");

198 | await Task.Delay(TimeSpan.FromSeconds(rnd.Next(0, 3)));

199 | }

200 | ch.Writer.Complete();

201 | });

202 |

203 | return ch.Reader;

204 | }

205 | #endregion

206 |

207 | #region merge

208 | static ChannelReader<T> Merge<T>(params ChannelReader<T>[] inputs)

209 | {

210 | var output = Channel.CreateUnbounded<T>();

211 |

212 | Task.Run(async () =>

213 | {

214 | async Task Redirect(ChannelReader<T> input)

215 | {

216 | await foreach (var item in input.ReadAllAsync())

217 | await output.Writer.WriteAsync(item);

218 | }

219 |

220 | await Task.WhenAll(inputs.Select(i => Redirect(i)).ToArray());

221 | output.Writer.Complete();

222 | });

223 |

224 | return output;

225 | }

226 | #endregion

227 |

228 | #region split

229 | static IList<ChannelReader<T>> Split<T>(ChannelReader<T> ch, int n)

230 | {

231 | var outputs = new Channel<T>[n];

232 |

233 | for (int i = 0; i < n; i++)

234 | outputs[i] = Channel.CreateUnbounded<T>();

235 |

236 | Task.Run(async () =>

237 | {

238 | var index = 0;

239 | await foreach (var item in ch.ReadAllAsync())

240 | {

241 | await outputs[index].Writer.WriteAsync(item);

242 | index = (index + 1) % n;

243 | }

244 |

245 | foreach (var ch in outputs)

246 | ch.Writer.Complete();

247 | });

248 |

249 | return outputs.Select(ch => ch.Reader).ToArray();

250 | }

251 | #endregion

252 |

253 | public static async Task RunWebSearch()

254 | {

255 | #region web_search

256 | var ch = Channel.CreateUnbounded<string>();

257 |

258 | async Task Search(string source, string term, CancellationToken token)

259 | {

260 | await Task.Delay(TimeSpan.FromSeconds(new Random().Next(5)), token);

261 | await ch.Writer.WriteAsync($"Result from {source} for {term}", token);

262 | }

263 |

264 | var term = "Jupyter";

265 | var token = new CancellationTokenSource(TimeSpan.FromSeconds(3)).Token;

266 |

267 | var search1 = Search("Wikipedia", term, token);

268 | var search2 = Search("Quora", term, token);

269 | var search3 = Search("Everything2", term, token);

270 |

271 | try

272 | {

273 | for (int i = 0; i < 3; i++)

274 | {

275 | Console.WriteLine(await ch.Reader.ReadAsync(token));

276 | }

277 | Console.WriteLine("All searches have completed.");

278 | }

279 | catch (OperationCanceledException)

280 | {

281 | Console.WriteLine("Timeout.");

282 | }

283 |

284 | ch.Writer.Complete();

285 | #endregion

286 | }

287 |

288 | public static async Task RunPipeline()

289 | {

290 | #region run_pipeline

291 | var sw = new Stopwatch();

292 | sw.Start();

293 | var cts = new CancellationTokenSource(TimeSpan.FromSeconds(5));

294 |

295 | // Try with a large folder e.g. node_modules

296 | var fileSource = GetFilesRecursively(".", cts.Token);

297 | var sourceCodeFiles = FilterByExtension(

298 | fileSource, new HashSet<string> { ".cs", ".json", ".xml" });

299 | var (counter, errors) = GetLineCount(sourceCodeFiles);

300 | // Distribute the file reading stage amongst several workers

301 | // var (counter, errors) = CountLinesAndMerge(Split(sourceCodeFiles, 5));

302 |

303 | var totalLines = 0;

304 | await foreach (var item in counter.ReadAllAsync())

305 | {

306 | WriteLineWithTime($"{item.file.FullName} {item.lines}");

307 | totalLines += item.lines;

308 | }

309 | WriteLine($"Total lines: {totalLines}");

310 |

311 | await foreach (var errMessage in errors.ReadAllAsync())

312 | WriteLine(errMessage);

313 |

314 | sw.Stop();

315 | WriteLine(sw.Elapsed);

316 | #endregion

317 | }

318 |

319 | #region get_files_recursively

320 | static ChannelReader<string> GetFilesRecursively(string root, CancellationToken token = default)

321 | {

322 | var output = Channel.CreateUnbounded<string>();

323 |

324 | async Task WalkDir(string path)

325 | {

326 | if (token.IsCancellationRequested)

327 | throw new OperationCanceledException();

328 |

329 | foreach (var file in Directory.GetFiles(path))

330 | await output.Writer.WriteAsync(file, token);

331 |

332 | var tasks = Directory.GetDirectories(path).Select(WalkDir);

333 | await Task.WhenAll(tasks.ToArray());

334 | }

335 |

336 | Task.Run(async () =>

337 | {

338 | try

339 | {

340 | await WalkDir(root);

341 | }

342 | catch (OperationCanceledException) { WriteLine("Cancelled."); }

343 | finally { output.Writer.Complete(); }

344 | });

345 |

346 | return output;

347 | }

348 | #endregion

349 | #region filter_by_extension

350 | static ChannelReader<FileInfo> FilterByExtension(

351 | ChannelReader<string> input, HashSet<string> exts)

352 | {

353 | var output = Channel.CreateUnbounded<FileInfo>();

354 | Task.Run(async () =>

355 | {

356 | await foreach (var file in input.ReadAllAsync())

357 | {

358 | var fileInfo = new FileInfo(file);

359 | if (exts.Contains(fileInfo.Extension))

360 | await output.Writer.WriteAsync(fileInfo);

361 | }

362 |

363 | output.Writer.Complete();

364 | });

365 |

366 | return output;

367 | }

368 | #endregion

369 | #region get_line_count

370 | static (ChannelReader<(FileInfo file, int lines)> output, ChannelReader<string> errors)

371 | GetLineCount(ChannelReader<FileInfo> input)

372 | {

373 | var output = Channel.CreateUnbounded<(FileInfo, int)>();

374 | var errors = Channel.CreateUnbounded<string>();

375 |

376 | Task.Run(async () =>

377 | {

378 | await foreach (var file in input.ReadAllAsync())

379 | {

380 | var lines = CountLines(file);

381 | if (lines == 0)

382 | await errors.Writer.WriteAsync($"[Error] Empty file {file}");

383 | else

384 | await output.Writer.WriteAsync((file, lines));

385 | }

386 | output.Writer.Complete();

387 | errors.Writer.Complete();

388 | });

389 |

390 | return (output, errors);

391 | }

392 | #endregion

393 | #region count_lines

394 | static int CountLines(FileInfo file)

395 | {

396 | using var sr = new StreamReader(file.FullName);

397 | var lines = 0;

398 |

399 | while (sr.ReadLine() != null)

400 | lines++;

401 |

402 | return lines;

403 | }

404 | #endregion

405 | #region count_lines_and_merge

406 | static (ChannelReader<(FileInfo file, int lines)> output, ChannelReader<string> errors)

407 | CountLinesAndMerge(IList<ChannelReader<FileInfo>> inputs)

408 | {

409 | var output = Channel.CreateUnbounded<(FileInfo file, int lines)>();

410 | var errors = Channel.CreateUnbounded<string>();

411 |

412 | Task.Run(async () =>

413 | {

414 | async Task Redirect(ChannelReader<FileInfo> input)

415 | {

416 | await foreach (var file in input.ReadAllAsync())

417 | {

418 | var lines = CountLines(file);

419 | if (lines == 0)

420 | await errors.Writer.WriteAsync($"[Error] Empty file {file}");

421 | else

422 | await output.Writer.WriteAsync((file, lines));

423 | }

424 | }

425 |

426 | await Task.WhenAll(inputs.Select(Redirect).ToArray());

427 | output.Writer.Complete();

428 | errors.Writer.Complete();

429 | });

430 |

431 | return (output, errors);

432 | }

433 | #endregion

434 | }

435 |

--------------------------------------------------------------------------------

/src/TryChannelsDemo.csproj:

--------------------------------------------------------------------------------

1 |

2 |

3 |

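4 | <OutputType>Exe</OutputType>

5 | <TargetFramework>netcoreapp3.0</TargetFramework>

6 | <LangVersion>8</LangVersion>

7 | <NoWarn>$(NoWarn);1591</NoWarn>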

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 |

18 |

19 |

20 |

21 |

--------------------------------------------------------------------------------

/src/nuget.config:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

--------------------------------------------------------------------------------

6 |

7 | ``` cs --region split --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_demultiplexing

8 | ```

9 |

10 | `Split

11 |

12 | `Merge

6 |

7 | ### Generator

8 |

9 | Each pipeline starts with a generator method, which initiates jobs by passing them to the stages. Each stage is also a method, and it runs concurrently as its own task (started with `Task.Run`). Stages communicate through channels: a stage takes a channel as input, reads from it asynchronously, performs some work, and writes the results to an output channel.
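The shape of a single stage can be sketched generically. This is a minimal illustration rather than code from the repository: `StageSketch`, `Stage`, and the `work` delegate are hypothetical names, and the sketch assumes an unbounded output channel like the ones used elsewhere in this demo.

```cs
using System;
using System.Threading.Channels;
using System.Threading.Tasks;

static class StageSketch
{
    // Hypothetical helper: reads every item from `input`, applies `work`,
    // and writes the result to a newly created output channel.
    public static ChannelReader<TOut> Stage<TIn, TOut>(
        ChannelReader<TIn> input, Func<TIn, TOut> work)
    {
        var output = Channel.CreateUnbounded<TOut>();

        // Run the stage in the background so the caller can wire up
        // the next stage immediately.
        Task.Run(async () =>
        {
            try
            {
                await foreach (var item in input.ReadAllAsync())
                    await output.Writer.WriteAsync(work(item));
            }
            finally
            {
                // Always complete the writer, otherwise the downstream
                // reader would wait forever.
                output.Writer.Complete();
            }
        });

        return output.Reader;
    }
}
```

Because each call returns a `ChannelReader<TOut>`, stages written this way compose by passing one stage's result as the next stage's input.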

10 |

11 | To see it in action, we're going to design a program that efficiently counts the lines of code in a project. Let's start with the generator.

12 |

13 | ``` cs --region get_files_recursively --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_pipeline

14 | ```

15 |

16 | We perform a depth-first traversal of the folder and its subfolders and write each file name we encounter to the output channel. When we're done with the traversal, we mark the channel as complete so the consumer (the next stage) knows when to stop reading from it.
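To make the role of completion concrete, here is a small sketch of a consumer that stops reading once the writer is completed. `CompletionSketch` and `DrainAsync` are hypothetical names used only for illustration; the stages in this demo use `ReadAllAsync` instead, which relies on the same completion signal.

```cs
using System.Collections.Generic;
using System.Threading.Channels;
using System.Threading.Tasks;

static class CompletionSketch
{
    // Hypothetical helper: collects every item until the channel is
    // completed and empty.
    public static async Task<List<string>> DrainAsync(ChannelReader<string> reader)
    {
        var items = new List<string>();

        // WaitToReadAsync yields false only after the writer has called
        // Complete() and all buffered items have been read.
        while (await reader.WaitToReadAsync())
        {
            while (reader.TryRead(out var item))
                items.Add(item);
        }

        return items;
    }
}
```

If the producer never called `Complete()`, the outer loop would keep waiting after the last item, which is why the generator completes its writer in a `finally` block.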

17 |

18 | ### Stage 1 - Keep the Source Files

19 |

20 | Stage 1 determines whether each file contains source code, based on its extension. Files that do not are discarded.

21 |

22 | ``` cs --region filter_by_extension --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_pipeline

23 | ```

24 |

25 | ### Stage 2 - Get the Line Count

26 |

27 | This stage is responsible for determining the number of lines in each file.

28 |

29 | ``` cs --region get_line_count --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_pipeline

30 | ```

31 |

32 | ``` cs --region count_lines --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_pipeline

33 | ```

34 |

35 | ## The Sink Stage

36 |

37 | Now that we've implemented the stages of our pipeline, we are ready to put them all together.

38 |

39 | ``` cs --region run_pipeline --source-file ./src/Program.cs --project ./src/TryChannelsDemo.csproj --session run_pipeline

40 | ```

41 |

42 | ## Dealing with Backpressure

43 |

44 | In the line counter example, the stage where we read the file and count its lines might cause backpressure when a file is sufficiently large. It makes sense to increase the capacity of this stage and that's where `Merge


15 |