├── scripts

└── install_requirements.sh

├── decision_trees

├── __pycache__

│ ├── data.cpython-35.pyc

│ └── utils.cpython-35.pyc

├── data.py

├── model.py

└── utils.py

├── neural_network

├── __pycache__

│ ├── data.cpython-35.pyc

│ └── utils.cpython-35.pyc

├── data.py

├── model.py

└── utils.py

├── network_analysis

├── __pycache__

│ ├── data.cpython-35.pyc

│ └── utils.cpython-35.pyc

├── data.py

├── model.py

└── utils.py

├── working_with_data

├── __pycache__

│ ├── data.cpython-35.pyc

│ └── utils.cpython-35.pyc

├── comma_delimited_stock_prices.csv

├── model.py

└── data.py

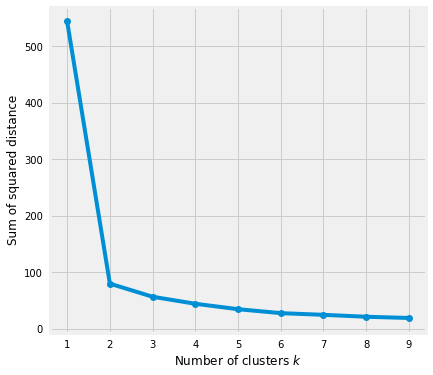

├── k_means_clustering

├── __pycache__

│ ├── data.cpython-35.pyc

│ └── utils.cpython-35.pyc

├── data.py

├── model.py

├── Understanding the algorithm.md

└── utils.py

├── k_nearest_neighbors

├── __pycache__

│ ├── data.cpython-35.pyc

│ └── utils.cpython-35.pyc

├── model.py

├── Understanding the algorithm.md

├── data.py

└── utils.py

├── logistic_regression

├── __pycache__

│ ├── data.cpython-35.pyc

│ └── utils.cpython-35.pyc

├── utils.py

├── model.py

└── data.py

├── multiple_regression

├── __pycache__

│ ├── data.cpython-35.pyc

│ └── utils.cpython-35.pyc

├── model.py

├── utils.py

└── data.py

├── recommender_systems

├── __pycache__

│ ├── data.cpython-35.pyc

│ └── utils.cpython-35.pyc

├── data.py

├── model.py

└── utils.py

├── LDA scikit-learn

├── __pycache__

│ ├── preprocess.cpython-34.pyc

│ ├── load20newsgroups.cpython-34.pyc

│ └── nmf_lda_scikitlearn.cpython-34.pyc

├── load20newsgroups.py

├── nmf_lda_scikitlearn.py

├── displaytopics.py

└── preprocess.py

├── natural_language_processing

├── __pycache__

│ ├── data.cpython-35.pyc

│ └── utils.cpython-35.pyc

├── model.py

├── data.py

└── utils.py

├── .github

├── dependabot.yml

└── ISSUE_TEMPLATE

│ ├── feature_request.md

│ └── bug_report.md

├── SECURITY.md

├── naive_bayes_classfier

├── naivebayesclassifier.py

├── utils.py

└── model.py

├── requirements.txt

├── mnist-deep-learning.py

├── logistic_regression_banking

├── utils.py

└── binary_logisitic_regression.py

├── LICENSE

├── simple_linear_regression

├── model.py

├── utils.py

└── data.py

├── helpers

├── machine_learning.py

├── linear_algebra.py

├── probabilty.py

├── gradient_descent.py

└── stats.py

├── .gitignore

├── NN_churn_prediction.py

├── telecom_churn_prediction.py

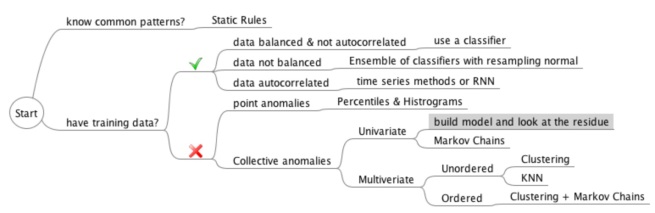

├── Anamoly_Detection_notes.md

├── regression_intro.py

├── hparams_grid_search_keras_nn.py

├── CODE_OF_CONDUCT.md

├── use_cases_insurnace.md

├── Understanding Vanishing Gradient.md

├── CONTRIBUTING.md

├── prec_rec_curve.py

├── Understanding SQL Queries.md

├── hypothesis_inference.py

├── friendster_network.py

├── README.md

└── sonar_clf_rf.py

/scripts/install_requirements.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | pip install -r requirements.txt

4 |

--------------------------------------------------------------------------------

/decision_trees/__pycache__/data.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/decision_trees/__pycache__/data.cpython-35.pyc

--------------------------------------------------------------------------------

/decision_trees/__pycache__/utils.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/decision_trees/__pycache__/utils.cpython-35.pyc

--------------------------------------------------------------------------------

/neural_network/__pycache__/data.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/neural_network/__pycache__/data.cpython-35.pyc

--------------------------------------------------------------------------------

/neural_network/__pycache__/utils.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/neural_network/__pycache__/utils.cpython-35.pyc

--------------------------------------------------------------------------------

/network_analysis/__pycache__/data.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/network_analysis/__pycache__/data.cpython-35.pyc

--------------------------------------------------------------------------------

/network_analysis/__pycache__/utils.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/network_analysis/__pycache__/utils.cpython-35.pyc

--------------------------------------------------------------------------------

/working_with_data/__pycache__/data.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/working_with_data/__pycache__/data.cpython-35.pyc

--------------------------------------------------------------------------------

/k_means_clustering/__pycache__/data.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/k_means_clustering/__pycache__/data.cpython-35.pyc

--------------------------------------------------------------------------------

/k_means_clustering/__pycache__/utils.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/k_means_clustering/__pycache__/utils.cpython-35.pyc

--------------------------------------------------------------------------------

/k_nearest_neighbors/__pycache__/data.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/k_nearest_neighbors/__pycache__/data.cpython-35.pyc

--------------------------------------------------------------------------------

/k_nearest_neighbors/__pycache__/utils.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/k_nearest_neighbors/__pycache__/utils.cpython-35.pyc

--------------------------------------------------------------------------------

/logistic_regression/__pycache__/data.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/logistic_regression/__pycache__/data.cpython-35.pyc

--------------------------------------------------------------------------------

/logistic_regression/__pycache__/utils.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/logistic_regression/__pycache__/utils.cpython-35.pyc

--------------------------------------------------------------------------------

/multiple_regression/__pycache__/data.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/multiple_regression/__pycache__/data.cpython-35.pyc

--------------------------------------------------------------------------------

/multiple_regression/__pycache__/utils.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/multiple_regression/__pycache__/utils.cpython-35.pyc

--------------------------------------------------------------------------------

/recommender_systems/__pycache__/data.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/recommender_systems/__pycache__/data.cpython-35.pyc

--------------------------------------------------------------------------------

/recommender_systems/__pycache__/utils.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/recommender_systems/__pycache__/utils.cpython-35.pyc

--------------------------------------------------------------------------------

/working_with_data/__pycache__/utils.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/working_with_data/__pycache__/utils.cpython-35.pyc

--------------------------------------------------------------------------------

/LDA scikit-learn/__pycache__/preprocess.cpython-34.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/LDA scikit-learn/__pycache__/preprocess.cpython-34.pyc

--------------------------------------------------------------------------------

/working_with_data/comma_delimited_stock_prices.csv:

--------------------------------------------------------------------------------

1 | 6/20/2014,AAPL,90.91

2 | 6/20/2014,MSFT,41.68

3 | 6/20/3014,FB,64.5

4 | 6/19/2014,AAPL,91.86

5 | 6/19/2014,MSFT,n/a

6 | 6/19/2014,FB,64.34

--------------------------------------------------------------------------------

/natural_language_processing/__pycache__/data.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/natural_language_processing/__pycache__/data.cpython-35.pyc

--------------------------------------------------------------------------------

/LDA scikit-learn/__pycache__/load20newsgroups.cpython-34.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/LDA scikit-learn/__pycache__/load20newsgroups.cpython-34.pyc

--------------------------------------------------------------------------------

/natural_language_processing/__pycache__/utils.cpython-35.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/natural_language_processing/__pycache__/utils.cpython-35.pyc

--------------------------------------------------------------------------------

/LDA scikit-learn/__pycache__/nmf_lda_scikitlearn.cpython-34.pyc:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devAmoghS/Machine-Learning-with-Python/HEAD/LDA scikit-learn/__pycache__/nmf_lda_scikitlearn.cpython-34.pyc

--------------------------------------------------------------------------------

/LDA scikit-learn/load20newsgroups.py:

--------------------------------------------------------------------------------

1 | from sklearn.datasets import fetch_20newsgroups

2 |

3 | dataset = fetch_20newsgroups(shuffle=True, random_state=1, remove=('headers', 'footers', 'quotes'))

4 | documents = dataset.data

5 |

--------------------------------------------------------------------------------

/k_means_clustering/data.py:

--------------------------------------------------------------------------------

1 | inputs = [[-14, -5],

2 | [13, 13],

3 | [20, 23],

4 | [-19, -11],

5 | [-9, -16],

6 | [21, 27],

7 | [-49, 15],

8 | [26, 13],

9 | [-46, 5],

10 | [-34, -1],

11 | [11, 15],

12 | [-49, 0],

13 | [-22, -16],

14 | [19, 28],

15 | [-12, -8],

16 | [-13, -19],

17 | [-41, 8],

18 | [-11, -6],

19 | [-25, -9],

20 | [-18, -3]]

21 |

--------------------------------------------------------------------------------

/LDA scikit-learn/nmf_lda_scikitlearn.py:

--------------------------------------------------------------------------------

1 | from sklearn.decomposition import NMF, LatentDirichletAllocation

2 | from preprocess import tfidf, tf

3 | num_topics = 20

4 |

5 | # Run NMF

6 | nmf = NMF(n_components=num_topics, random_state=1, alpha=.1, l1_ratio=.5, init='nndsvd').fit(tfidf)

7 |

8 | # Run LDA

9 | lda = LatentDirichletAllocation(n_topics=num_topics, max_iter=5, learning_method='online',

10 | learning_offset=50, random_state=0).fit(tf)

11 |

--------------------------------------------------------------------------------

/.github/dependabot.yml:

--------------------------------------------------------------------------------

1 | # To get started with Dependabot version updates, you'll need to specify whic

2 | # package ecosystems to update and where the package manifests are located.

3 | # Please see the documentation for all configuration options:

4 | # https://docs.github.com/github/administering-a-repository/configuration-options-for-dependency-updates

5 |

6 | version: 2

7 | updates:

8 | - package-ecosystem: "" # See documentation for possible values

9 | directory: "/" # Location of package manifests

10 | schedule:

11 | interval: "weekly"

12 |

--------------------------------------------------------------------------------

/LDA scikit-learn/displaytopics.py:

--------------------------------------------------------------------------------

1 | from nmf_lda_scikitlearn import nmf, lda

2 | from preprocess import tfidf_feature_names, tf_feature_names

3 |

4 |

5 | def display_topics(model, feature_names, num_top_words):

6 | for topic_idx, topic in enumerate(model.components_):

7 | print("Topic %d:" % (topic_idx))

8 | print("".join([feature_names[i]

9 | for i in topic.argsort()[:-num_top_words - 1:-1]])

10 | )

11 |

12 |

13 | num_top_words = 10

14 | display_topics(nmf, tfidf_feature_names, num_top_words)

15 | display_topics(lda, tf_feature_names, num_top_words)

16 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/feature_request.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Feature request

3 | about: Suggest an idea for this project

4 | title: ''

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **Is your feature request related to a problem? Please describe.**

11 | A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

12 |

13 | **Describe the solution you'd like**

14 | A clear and concise description of what you want to happen.

15 |

16 | **Describe alternatives you've considered**

17 | A clear and concise description of any alternative solutions or features you've considered.

18 |

19 | **Additional context**

20 | Add any other context or screenshots about the feature request here.

21 |

--------------------------------------------------------------------------------

/SECURITY.md:

--------------------------------------------------------------------------------

1 | # Security Policy

2 |

3 | ## Supported Versions

4 |

5 | Use this section to tell people about which versions of your project are

6 | currently being supported with security updates.

7 |

8 | | Version | Supported |

9 | | ------- | ------------------ |

10 | | 5.1.x | :white_check_mark: |

11 | | 5.0.x | :x: |

12 | | 4.0.x | :white_check_mark: |

13 | | < 4.0 | :x: |

14 |

15 | ## Reporting a Vulnerability

16 |

17 | Use this section to tell people how to report a vulnerability.

18 |

19 | Tell them where to go, how often they can expect to get an update on a

20 | reported vulnerability, what to expect if the vulnerability is accepted or

21 | declined, etc.

22 |

--------------------------------------------------------------------------------

/network_analysis/data.py:

--------------------------------------------------------------------------------

1 | users = [

2 | { "id": 0, "name": "Hero" },

3 | { "id": 1, "name": "Dunn" },

4 | { "id": 2, "name": "Sue" },

5 | { "id": 3, "name": "Chi" },

6 | { "id": 4, "name": "Thor" },

7 | { "id": 5, "name": "Clive" },

8 | { "id": 6, "name": "Hicks" },

9 | { "id": 7, "name": "Devin" },

10 | { "id": 8, "name": "Kate" },

11 | { "id": 9, "name": "Klein" }

12 | ]

13 |

14 | friendships = [(0, 1), (0, 2), (1, 2), (1, 3), (2, 3), (3, 4),

15 | (4, 5), (5, 6), (5, 7), (6, 8), (7, 8), (8, 9)]

16 |

17 | endorsements = [(0, 1), (1, 0), (0, 2), (2, 0), (1, 2),

18 | (2, 1), (1, 3), (2, 3), (3, 4), (5, 4),

19 | (5, 6), (7, 5), (6, 8), (8, 7), (8, 9)]

--------------------------------------------------------------------------------

/LDA scikit-learn/preprocess.py:

--------------------------------------------------------------------------------

1 | from sklearn.feature_extraction.text import TfidfVectorizer, CountVectorizer

2 | from load20newsgroups import documents

3 |

4 | num_features = 1000

5 |

6 | # NMF is able to use tf-idf

7 | tfidf_vectorizer = TfidfVectorizer(max_df=0.95, min_df=2, max_features=num_features, stop_words='english')

8 | tfidf = tfidf_vectorizer.fit_transform(documents)

9 | tfidf_feature_names = tfidf_vectorizer.get_feature_names()

10 |

11 | # LDA can only use raw term counts

12 | # because it is a probablistic graphical model

13 | tf_vectorizer = CountVectorizer(max_df=0.95, min_df=2, max_features=num_features, stop_words='english')

14 | tf = tf_vectorizer.fit_transform(documents)

15 | tf_feature_names = tf_vectorizer.get_feature_names()

16 |

--------------------------------------------------------------------------------

/network_analysis/model.py:

--------------------------------------------------------------------------------

1 | from network_analysis.data import users

2 | from network_analysis.utils import eigenvector_centralities, page_rank

3 |

4 | if __name__ == '__main__':

5 |

6 | print("Betweenness Centrality")

7 | for user in users:

8 | print(user["id"], user["betweenness_centrality"])

9 | print()

10 |

11 | print("Closeness Centrality")

12 | for user in users:

13 | print(user["id"], user["closeness_centrality"])

14 | print()

15 |

16 | print("Eigenvector Centrality")

17 | for user_id, centrality in enumerate(eigenvector_centralities):

18 | print(user_id, centrality)

19 | print()

20 |

21 | print("PageRank")

22 | for user_id, pr in page_rank(users).items():

23 | print(user_id, pr)

--------------------------------------------------------------------------------

/naive_bayes_classfier/naivebayesclassifier.py:

--------------------------------------------------------------------------------

1 | from naive_bayes_classfier.utils import count_words, word_probabilities, spam_probability

2 |

3 |

4 | class NaiveBayesClassifier:

5 |

6 | def __init__(self, k=0.5):

7 | self.k = k

8 | self.word_probs = []

9 |

10 | def train(self, training_set):

11 |

12 | # count spam and non-spam messages

13 | num_spams = len([is_spam

14 | for message, is_spam in training_set

15 | if is_spam])

16 | num_non_spams = len(training_set) - num_spams

17 |

18 | # run training data through a "pipeline"

19 | word_counts = count_words(training_set)

20 | self.word_probs = word_probabilities(word_counts, num_spams, num_non_spams, self.k)

21 |

22 | def classify(self, message):

23 | return spam_probability(self.word_probs, message)

24 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | Keras==2.13.1

2 | Keras-Preprocessing==1.0.5

3 | PySocks==1.6.8

4 | Pygments==2.15.0

5 | Quandl==3.4.5

6 | asn1crypto==0.24.0

7 | backcall==0.1.0

8 | beautifulsoup4==4.6.3

9 | certifi==2023.7.22

10 | cffi==1.11.5

11 | chardet==3.0.4

12 | cryptography==44.0.1

13 | cycler==0.10.0

14 | h5py==2.9.0

15 | idna==3.7

16 | inflection==0.3.1

17 | ipython==8.10.0

18 | jedi==0.13.2

19 | kiwisolver==1.0.1

20 | matplotlib==3.0.0

21 | more-itertools==5.0.0

22 | numpy==1.22.0

23 | pandas==0.23.4

24 | patsy==0.5.0

25 | pexpect==4.6.0

26 | pickleshare==0.7.5

27 | pip==23.3

28 | ptyprocess==0.6.0

29 | pyOpenSSL==18.0.0

30 | pycparser==2.19

31 | pyparsing==2.2.1

32 | python-dateutil==2.7.3

33 | pytz==2018.5

34 | requests>=2.20.0

35 | scikit-learn==1.5.0

36 | scipy==1.10.0

37 | seaborn==0.9.0

38 | setuptools==70.0.0

39 | six==1.11.0

40 | statsmodels==0.9.0

41 | tornado==6.4.2

42 | traitlets==4.3.2

43 | wcwidth==0.1.7

44 | wheel==0.38.1

45 |

--------------------------------------------------------------------------------

/neural_network/data.py:

--------------------------------------------------------------------------------

1 | raw_digits = [

2 | """11111

3 | 1...1

4 | 1...1

5 | 1...1

6 | 11111""",

7 |

8 | """..1..

9 | ..1..

10 | ..1..

11 | ..1..

12 | ..1..""",

13 |

14 | """11111

15 | ....1

16 | 11111

17 | 1....

18 | 11111""",

19 |

20 | """11111

21 | ....1

22 | 11111

23 | ....1

24 | 11111""",

25 |

26 | """1...1

27 | 1...1

28 | 11111

29 | ....1

30 | ....1""",

31 |

32 | """11111

33 | 1....

34 | 11111

35 | ....1

36 | 11111""",

37 |

38 | """11111

39 | 1....

40 | 11111

41 | 1...1

42 | 11111""",

43 |

44 | """11111

45 | ....1

46 | ....1

47 | ....1

48 | ....1""",

49 |

50 | """11111

51 | 1...1

52 | 11111

53 | 1...1

54 | 11111""",

55 |

56 | """11111

57 | 1...1

58 | 11111

59 | ....1

60 | 11111"""]

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/bug_report.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Bug report

3 | about: Create a report to help us improve

4 | title: ''

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **Describe the bug**

11 | A clear and concise description of what the bug is.

12 |

13 | **To Reproduce**

14 | Steps to reproduce the behavior:

15 | 1. Go to '...'

16 | 2. Click on '....'

17 | 3. Scroll down to '....'

18 | 4. See error

19 |

20 | **Expected behavior**

21 | A clear and concise description of what you expected to happen.

22 |

23 | **Screenshots**

24 | If applicable, add screenshots to help explain your problem.

25 |

26 | **Desktop (please complete the following information):**

27 | - OS: [e.g. iOS]

28 | - Browser [e.g. chrome, safari]

29 | - Version [e.g. 22]

30 |

31 | **Smartphone (please complete the following information):**

32 | - Device: [e.g. iPhone6]

33 | - OS: [e.g. iOS8.1]

34 | - Browser [e.g. stock browser, safari]

35 | - Version [e.g. 22]

36 |

37 | **Additional context**

38 | Add any other context about the problem here.

39 |

--------------------------------------------------------------------------------

/recommender_systems/data.py:

--------------------------------------------------------------------------------

1 | users_interests = [

2 | ["Hadoop", "Big Data", "HBase", "Java", "Spark", "Storm", "Cassandra"],

3 | ["NoSQL", "MongoDB", "Cassandra", "HBase", "Postgres"],

4 | ["Python", "scikit-learn", "scipy", "numpy", "statsmodels", "pandas"],

5 | ["R", "Python", "statistics", "regression", "probability"],

6 | ["machine learning", "regression", "decision trees", "libsvm"],

7 | ["Python", "R", "Java", "C++", "Haskell", "programming languages"],

8 | ["statistics", "probability", "mathematics", "theory"],

9 | ["machine learning", "scikit-learn", "Mahout", "neural networks"],

10 | ["neural networks", "deep learning", "Big Data", "artificial intelligence"],

11 | ["Hadoop", "Java", "MapReduce", "Big Data"],

12 | ["statistics", "R", "statsmodels"],

13 | ["C++", "deep learning", "artificial intelligence", "probability"],

14 | ["pandas", "R", "Python"],

15 | ["databases", "HBase", "Postgres", "MySQL", "MongoDB"],

16 | ["libsvm", "regression", "support vector machines"]

17 | ]

18 |

--------------------------------------------------------------------------------

/mnist-deep-learning.py:

--------------------------------------------------------------------------------

1 | from keras.datasets import mnist

2 | (train_images, train_labels), (test_images, test_labels) = mnist.load_data()

3 |

4 | # 1. Prepare the Data

5 | from keras.utils import to_categorical

6 | train_images = train_images.reshape((60000, 28 * 28)).astype('float32') / 255

7 | test_images = test_images.reshape((10000, 28 * 28)).astype('float32') / 255

8 | train_labels = to_categorical(train_labels)

9 | test_labels = to_categorical(test_labels)

10 |

11 | # 2. Set up network architecture

12 | from keras import models, layers

13 | network = models.Sequential()

14 | network.add(layers.Dense(512, activation='relu', input_shape=(28 * 28,)))

15 | network.add(layers.Dense(10, activation='softmax'))

16 |

17 | # 3/4. Pick Optimizer and Loss

18 | network.compile(optimizer='rmsprop', loss='categorical_crossentropy', metrics=['accuracy'])

19 | network.fit(train_images, train_labels, epochs=5, batch_size=128)

20 |

21 | # 5. Measure on test

22 | test_loss, test_acc = network.evaluate(test_images, test_labels)

23 | print('test_acc:', test_acc)

24 |

--------------------------------------------------------------------------------

/logistic_regression_banking/utils.py:

--------------------------------------------------------------------------------

1 | import seaborn as sns

2 | import matplotlib.pyplot as plt

3 |

4 | plt.rc("font", size=14)

5 | sns.set(style="white")

6 | sns.set(style="whitegrid", color_codes=True)

7 |

8 |

9 | def plot_data(data):

10 | # barplot for the depencent variable

11 | sns.countplot(x='y', data=data, palette='hls')

12 | plt.show()

13 |

14 | # check the missing values

15 | print(data.isnull().sum())

16 |

17 | # customer distribution plot

18 | sns.countplot(y='job', data=data)

19 | plt.show()

20 |

21 | # customer marital status distribution

22 | sns.countplot(x='marital', data=data)

23 | plt.show()

24 |

25 | # barplot for credit in default

26 | sns.countplot(x='default', data=data)

27 | plt.show()

28 |

29 | # barptot for housing loan

30 | sns.countplot(x='housing', data=data)

31 | plt.show()

32 |

33 | # barplot for personal loan

34 | sns.countplot(x='loan', data=data)

35 | plt.show()

36 |

37 | # barplot for previous marketing campaign outcome

38 | sns.countplot(x='poutcome', data=data)

39 | plt.show()

40 |

41 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2019 Amogh Singhal

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/simple_linear_regression/model.py:

--------------------------------------------------------------------------------

1 | import random

2 | from helpers.gradient_descent import minimize_stochastic

3 | from simple_linear_regression.data import num_friends_good, daily_minutes_good

4 | from simple_linear_regression.utils import least_squares_fit, r_squared, squared_error, squared_error_gradient

5 |

6 | if __name__ == '__main__':

7 |

8 | alpha, beta = least_squares_fit(num_friends_good, daily_minutes_good)

9 | print("alpha", alpha)

10 | print("beta", beta)

11 |

12 | print("r-squared", r_squared(alpha, beta, num_friends_good, daily_minutes_good))

13 |

14 | print()

15 |

16 | print("gradient descent:")

17 | # choose random value to start

18 | random.seed(0)

19 | theta = [random.random(), random.random()]

20 | alpha, beta = minimize_stochastic(squared_error,

21 | squared_error_gradient,

22 | num_friends_good,

23 | daily_minutes_good,

24 | theta,

25 | 0.0001)

26 | print("alpha", alpha)

27 | print("beta", beta)

28 |

--------------------------------------------------------------------------------

/decision_trees/data.py:

--------------------------------------------------------------------------------

1 | inputs = [

2 | ({'level': 'Senior', 'lang': 'Java', 'tweets': 'no', 'phd': 'no'}, False),

3 | ({'level': 'Senior', 'lang': 'Java', 'tweets': 'no', 'phd': 'yes'}, False),

4 | ({'level': 'Mid', 'lang': 'Python', 'tweets': 'no', 'phd': 'no'}, True),

5 | ({'level': 'Junior', 'lang': 'Python', 'tweets': 'no', 'phd': 'no'}, True),

6 | ({'level': 'Junior', 'lang': 'R', 'tweets': 'yes', 'phd': 'no'}, True),

7 | ({'level': 'Junior', 'lang': 'R', 'tweets': 'yes', 'phd': 'yes'}, False),

8 | ({'level': 'Mid', 'lang': 'R', 'tweets': 'yes', 'phd': 'yes'}, True),

9 | ({'level': 'Senior', 'lang': 'Python', 'tweets': 'no', 'phd': 'no'}, False),

10 | ({'level': 'Senior', 'lang': 'R', 'tweets': 'yes', 'phd': 'no'}, True),

11 | ({'level': 'Junior', 'lang': 'Python', 'tweets': 'yes', 'phd': 'no'}, True),

12 | ({'level': 'Senior', 'lang': 'Python', 'tweets': 'yes', 'phd': 'yes'}, True),

13 | ({'level': 'Mid', 'lang': 'Python', 'tweets': 'no', 'phd': 'yes'}, True),

14 | ({'level': 'Mid', 'lang': 'Java', 'tweets': 'yes', 'phd': 'no'}, True),

15 | ({'level': 'Junior', 'lang': 'Python', 'tweets': 'no', 'phd': 'yes'}, False)

16 | ]

--------------------------------------------------------------------------------

/k_means_clustering/model.py:

--------------------------------------------------------------------------------

1 | import random

2 |

3 | from k_means_clustering.data import inputs

4 | from k_means_clustering.utils import KMeans, bottom_up_cluster, \

5 | generate_clusters, get_values

6 |

7 | if __name__ == '__main__':

8 | random.seed(0)

9 | cluster = KMeans(3)

10 | cluster.train(inputs=inputs)

11 | print("3-means:")

12 | print(cluster.means)

13 | print()

14 |

15 | random.seed(0)

16 | cluster = KMeans(2)

17 | cluster.train(inputs=inputs)

18 | print("2-means:")

19 | print(cluster.means)

20 | print()

21 |

22 | # for k in range(1, len(inputs) + 1):

23 | # print(k, squared_clustering_errors(inputs, k))

24 | # print()

25 |

26 | # recolor_image('/home/amogh/Pictures/symantec.png')

27 |

28 | print("bottom up hierarchical clustering")

29 |

30 | base_cluster = bottom_up_cluster(inputs)

31 | print(base_cluster)

32 |

33 | print()

34 | print("three clusters, min:")

35 | for cluster in generate_clusters(base_cluster, 3):

36 | print(get_values(cluster))

37 |

38 | print()

39 | print("three clusters, max:")

40 | base_cluster = bottom_up_cluster(inputs, max)

41 | for cluster in generate_clusters(base_cluster, 3):

42 | print(get_values(cluster))

--------------------------------------------------------------------------------

/k_nearest_neighbors/model.py:

--------------------------------------------------------------------------------

1 | import random

2 | from k_nearest_neighbors.data import cities

3 | from k_nearest_neighbors.utils import knn_classify, random_distances

4 | from helpers.stats import mean

5 |

6 | if __name__ == "__main__":

7 |

8 | # try several different values for k

9 | for k in [1, 3, 5, 7]:

10 | num_correct = 0

11 |

12 | for location, actual_language in cities:

13 |

14 | other_cities = [other_city

15 | for other_city in cities

16 | if other_city != (location, actual_language)]

17 |

18 | predicted_language = knn_classify(k, other_cities, location)

19 |

20 | if predicted_language == actual_language:

21 | num_correct += 1

22 |

23 | print(k, "neighbor[s]:", num_correct, "correct out of", len(cities))

24 |

25 | dimensions = range(1, 101, 5)

26 |

27 | avg_distances = []

28 | min_distances = []

29 |

30 | random.seed(0)

31 | for dim in dimensions:

32 | distances = random_distances(dim, 10000) # 10,000 random pairs

33 | avg_distances.append(mean(distances)) # track the average

34 | min_distances.append(min(distances)) # track the minimum

35 | print(dim, min(distances), mean(distances), min(distances) / mean(distances))

36 |

--------------------------------------------------------------------------------

/logistic_regression/utils.py:

--------------------------------------------------------------------------------

1 | import math

2 | from functools import reduce

3 |

4 | from helpers.linear_algebra import vector_add, dot

5 |

6 |

7 | def logistic(x):

8 | return 1.0 / (1 + math.exp(-x))

9 |

10 |

11 | def logistic_prime(x):

12 | return logistic(x) * (1 - logistic(x))

13 |

14 |

15 | def logistic_log_likelihood_i(x_i, y_i, beta):

16 | if y_i == 1:

17 | return math.log(logistic(dot(x_i, beta)))

18 | else:

19 | return math.log(1 - logistic(dot(x_i, beta)))

20 |

21 |

22 | def logistic_log_likelihood(x, y, beta):

23 | return sum(logistic_log_likelihood_i(x_i, y_i, beta)

24 | for x_i, y_i in zip(x, y))

25 |

26 |

27 | def logistic_log_partial_ij(x_i, y_i, beta, j):

28 | """here i is the index of the data point,

29 | j the index of the derivative"""

30 |

31 | return (y_i - logistic(dot(x_i, beta))) * x_i[j]

32 |

33 |

34 | def logistic_log_gradient_i(x_i, y_i, beta):

35 | """the gradient of the log likelihood

36 | corresponding to the i-th data point"""

37 |

38 | return [logistic_log_partial_ij(x_i, y_i, beta, j)

39 | for j, _ in enumerate(beta)]

40 |

41 |

42 | def logistic_log_gradient(x, y, beta):

43 | return reduce(vector_add,

44 | [logistic_log_gradient_i(x_i, y_i, beta)

45 | for x_i, y_i in zip(x,y)])

--------------------------------------------------------------------------------

/simple_linear_regression/utils.py:

--------------------------------------------------------------------------------

1 | from helpers.stats import correlation, standard_deviation, mean, de_mean

2 |

3 |

4 | def predict(alpha, beta, x_i):

5 | return beta * x_i + alpha

6 |

7 |

8 | def error(alpha, beta, x_i, y_i):

9 | return y_i - predict(alpha, beta, x_i)

10 |

11 |

12 | def sum_of_squared_errors(alpha, beta, x, y):

13 | return sum(error(alpha, beta, x_i, y_i) ** 2

14 | for x_i, y_i in zip(x, y))

15 |

16 |

17 | def least_squares_fit(x, y):

18 | beta = correlation(x, y) * standard_deviation(y) / standard_deviation(x)

19 | alpha = mean(y) - beta * mean(x)

20 | return alpha, beta

21 |

22 |

23 | def total_sum_of_squares(y):

24 | """The total squared variation of y_i's from their mean"""

25 | return sum(v ** 2 for v in de_mean(y))

26 |

27 |

28 | def r_squared(alpha, beta, x, y):

29 | """the fraction of variation in y captured by the model"""

30 | return 1 - sum_of_squared_errors(alpha, beta, x, y) / total_sum_of_squares(y)

31 |

32 |

33 | def squared_error(x_i, y_i, theta):

34 | alpha, beta = theta

35 | return error(alpha, beta, x_i, y_i) ** 2

36 |

37 |

38 | def squared_error_gradient(x_i, y_i, theta):

39 | alpha, beta = theta

40 | return [-2 * error(alpha, beta, x_i, y_i), # alpha partial derivative

41 | -2 * error(alpha, beta, x_i, y_i) * x_i] # beta partial derivative

42 |

43 |

44 |

45 |

--------------------------------------------------------------------------------

/helpers/machine_learning.py:

--------------------------------------------------------------------------------

1 | import random

2 |

3 | #

4 | # data splitting

5 | #

6 |

7 |

8 | def split_data(data, prob):

9 | """split data into fractions [prob, 1 - prob]"""

10 | results = [], []

11 | for row in data:

12 | results[0 if random.random() < prob else 1].append(row)

13 | return results

14 |

15 |

16 | def train_test_split(x, y, test_pct):

17 | data = list(zip(x, y)) # pair corresponding values

18 | train, test = split_data(data, 1 - test_pct) # split the data-set of pairs

19 | x_train, y_train = list(zip(*train)) # magical un-zip trick

20 | x_test, y_test = list(zip(*test))

21 | return x_train, x_test, y_train, y_test

22 |

23 | #

24 | # correctness

25 | #

26 |

27 |

28 | def accuracy(tp, fp, fn, tn):

29 | correct = tp + tn

30 | total = tp + fp + fn + tn

31 | return correct / total

32 |

33 |

34 | def precision(tp, fp):

35 | return tp / (tp + fp)

36 |

37 |

38 | def recall(tp, fn):

39 | return tp / (tp + fn)

40 |

41 |

42 | def f1_score(tp, fp, fn):

43 | p = precision(tp, fp)

44 | r = recall(tp, fn)

45 |

46 | return 2 * p * r / (p + r)

47 |

48 |

49 | if __name__ == "__main__":

50 |

51 | print("accuracy(70, 4930, 13930, 981070)", accuracy(70, 4930, 13930, 981070))

52 | print("precision(70, 4930, 13930, 981070)", precision(70, 4930))

53 | print("recall(70, 4930, 13930, 981070)", recall(70, 13930))

54 | print("f1_score(70, 4930, 13930, 981070)", f1_score(70, 4930, 13930))

55 |

--------------------------------------------------------------------------------

/decision_trees/model.py:

--------------------------------------------------------------------------------

1 | from decision_trees.data import inputs

2 | from decision_trees.utils import partition_entropy_by, build_tree_id3, classify

3 |

4 | if __name__ == "__main__":

5 |

6 | for key in ['level', 'lang', 'tweets', 'phd']:

7 | print(key, partition_entropy_by(inputs, key))

8 | print()

9 |

10 | senior_inputs = [(input, label)

11 | for input, label in inputs if input["level"] == "Senior"]

12 |

13 | for key in ['lang', 'tweets', 'phd']:

14 | print(key, partition_entropy_by(senior_inputs, key))

15 | print()

16 |

17 | print("building the tree")

18 | tree = build_tree_id3(inputs)

19 | print(tree)

20 |

21 | print("Junior / Java / tweets / no phd", classify(tree,

22 | {"level": "Junior",

23 | "lang": "Java",

24 | "tweets": "yes",

25 | "phd": "no"}))

26 |

27 | print("Junior / Java / tweets / phd", classify(tree,

28 | {"level": "Junior",

29 | "lang": "Java",

30 | "tweets": "yes",

31 | "phd": "yes"}))

32 |

33 | print("Intern", classify(tree, {"level": "Intern"}))

34 | print("Senior", classify(tree, {"level": "Senior"}))

35 |

--------------------------------------------------------------------------------

/natural_language_processing/model.py:

--------------------------------------------------------------------------------

1 | from natural_language_processing.data import grammar, documents

2 | from natural_language_processing.utils import generate_sentence, topic_word_counts, document_topic_counts

3 |

4 | if __name__ == '__main__':

5 | # plot_resumes()

6 |

7 | # document = get_document()

8 |

9 | # bigrams = zip(document, document[1:]) # gives us precisely the pairs of consecutive elements of document

10 | # bigrams_transitions = defaultdict(list)

11 | # for prev, current in bigrams:

12 | # bigrams_transitions[prev].append(current)

13 |

14 | # trigrams = zip(document, document[1:], document[2:])

15 | # trigrams_transitions = defaultdict(list)

16 | # starts = []

17 | #

18 | # for prev, current, next in trigrams:

19 | # if prev == ".": # if previous word is a period

20 | # starts.append(current) # then this is start word

21 | #

22 | # trigrams_transitions[(prev, current)].append(next)

23 | #

24 | # print(generate_using_trigrams(starts, trigrams_transitions))

25 |

26 | # print(generate_sentence(grammar=grammar))

27 |

28 | for k, word_counts in enumerate(topic_word_counts):

29 | for word, count in word_counts.most_common():

30 | if count > 0:

31 | print(k, word, count)

32 |

33 | topic_names = ["Big Data and programming languages",

34 | "databases",

35 | "machine learning",

36 | "statistics"]

37 |

38 | for document, topic_counts in zip(documents, document_topic_counts):

39 | for topic, count in topic_counts.most_common():

40 | if count > 0:

41 | print(topic_names[topic], count)

42 | print()

43 |

44 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | *.egg-info/

24 | .installed.cfg

25 | *.egg

26 | MANIFEST

27 |

28 | # PyInstaller

29 | # Usually these files are written by a python script from a template

30 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

31 | *.manifest

32 | *.spec

33 |

34 | # Installer logs

35 | pip-log.txt

36 | pip-delete-this-directory.txt

37 |

38 | # Unit test / coverage reports

39 | htmlcov/

40 | .tox/

41 | .coverage

42 | .coverage.*

43 | .cache

44 | nosetests.xml

45 | coverage.xml

46 | *.cover

47 | .hypothesis/

48 | .pytest_cache/

49 |

50 | # Translations

51 | *.mo

52 | *.pot

53 |

54 | # Django stuff:

55 | *.log

56 | local_settings.py

57 | db.sqlite3

58 |

59 | # Flask stuff:

60 | instance/

61 | .webassets-cache

62 |

63 | # Scrapy stuff:

64 | .scrapy

65 |

66 | # Sphinx documentation

67 | docs/_build/

68 |

69 | # PyBuilder

70 | target/

71 |

72 | # Jupyter Notebook

73 | .ipynb_checkpoints

74 |

75 | # pyenv

76 | .python-version

77 |

78 | # celery beat schedule file

79 | celerybeat-schedule

80 |

81 | # SageMath parsed files

82 | *.sage.py

83 |

84 | # Environments

85 | .env

86 | .venv

87 | env/

88 | venv/

89 | ENV/

90 | env.bak/

91 | venv.bak/

92 |

93 | # Spyder project settings

94 | .spyderproject

95 | .spyproject

96 |

97 | # Rope project settings

98 | .ropeproject

99 |

100 | # mkdocs documentation

101 | /site

102 |

103 | # mypy

104 | .mypy_cache/

105 |

--------------------------------------------------------------------------------

/recommender_systems/model.py:

--------------------------------------------------------------------------------

1 | from functools import partial

2 |

3 | from recommender_systems.data import users_interests

4 | from recommender_systems.utils import make_user_interest_vector, cosine_similarity, most_similar_users_to, \

5 | user_based_suggestions, most_similar_interests_to, item_based_suggestions

6 |

7 | if __name__ == '__main__':

8 | unique_interests = sorted(list({interest

9 | for user_interests in users_interests

10 | for interest in user_interests}))

11 |

12 | print("unique interests")

13 | print(unique_interests)

14 |

15 | user_interest_matrix = map(partial(make_user_interest_vector, unique_interests), users_interests)

16 |

17 | user_similarities = [[cosine_similarity(interest_vector_i, interest_vector_j)

18 | for interest_vector_j in user_interest_matrix]

19 | for interest_vector_i in user_interest_matrix]

20 |

21 | print(most_similar_users_to(user_similarities, 0))

22 |

23 | print(user_based_suggestions(user_similarities, users_interests, 0))

24 |

25 | # item-based

26 | interest_user_matrix = [[user_interest_vector[j]

27 | for user_interest_vector in user_interest_matrix]

28 | for j, _ in enumerate(unique_interests)]

29 |

30 | interest_similarities = [[cosine_similarity(user_vector_i, user_vector_j)

31 | for user_vector_j in interest_user_matrix]

32 | for user_vector_i in interest_user_matrix]

33 |

34 | print(most_similar_interests_to(interest_similarities, 0, unique_interests))

35 |

36 | print(item_based_suggestions(interest_similarities, users_interests, user_interest_matrix, unique_interests, 0))

--------------------------------------------------------------------------------

/natural_language_processing/data.py:

--------------------------------------------------------------------------------

1 | data = [("big data", 100, 15), ("Hadoop", 95, 25), ("Python", 75, 50),

2 | ("R", 50, 40), ("machine learning", 80, 20), ("statistics", 20, 60),

3 | ("data science", 60, 70), ("analytics", 90, 3),

4 | ("team player", 85, 85), ("dynamic", 2, 90), ("synergies", 70, 0),

5 | ("actionable insights", 40, 30), ("think out of the box", 45, 10),

6 | ("self-starter", 30, 50), ("customer focus", 65, 15),

7 | ("thought leadership", 35, 35)]

8 |

9 | grammar = {

10 | "_S": ["_NP _VP"],

11 | "_NP": ["_N", "_A _NP _P _A _N"],

12 | "_VP": ["_V", "_V _NP"],

13 | "_N": ["data science", "Python", "regression"],

14 | "_A": ["big", "linear", "logistic"],

15 | "_P": ["about", "near"],

16 | "_V": ["learns", "trains", "tests", "is"]

17 | }

18 |

19 | documents = [

20 | ["Hadoop", "Big Data", "HBase", "Java", "Spark", "Storm", "Cassandra"],

21 | ["NoSQL", "MongoDB", "Cassandra", "HBase", "Postgres"],

22 | ["Python", "scikit-learn", "scipy", "numpy", "statsmodels", "pandas"],

23 | ["R", "Python", "statistics", "regression", "probability"],

24 | ["machine learning", "regression", "decision trees", "libsvm"],

25 | ["Python", "R", "Java", "C++", "Haskell", "programming languages"],

26 | ["statistics", "probability", "mathematics", "theory"],

27 | ["machine learning", "scikit-learn", "Mahout", "neural networks"],

28 | ["neural networks", "deep learning", "Big Data", "artificial intelligence"],

29 | ["Hadoop", "Java", "MapReduce", "Big Data"],

30 | ["statistics", "R", "statsmodels"],

31 | ["C++", "deep learning", "artificial intelligence", "probability"],

32 | ["pandas", "R", "Python"],

33 | ["databases", "HBase", "Postgres", "MySQL", "MongoDB"],

34 | ["libsvm", "regression", "support vector machines"]

35 | ]

36 |

--------------------------------------------------------------------------------

/simple_linear_regression/data.py:

--------------------------------------------------------------------------------

1 | num_friends_good = [49, 41, 40, 25, 21, 21, 19, 19, 18, 18, 16, 15, 15, 15, 15, 14, 14, 13, 13, 13, 13, 12, 12, 11, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 7, 7, 7, 7, 7, 7, 7, 7, 7, 7, 7, 7, 7, 7, 7, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]

2 |

3 | daily_minutes_good = [68.77, 51.25, 52.08, 38.36, 44.54, 57.13, 51.4, 41.42, 31.22, 34.76, 54.01, 38.79, 47.59, 49.1, 27.66, 41.03, 36.73, 48.65, 28.12, 46.62, 35.57, 32.98, 35, 26.07, 23.77, 39.73, 40.57, 31.65, 31.21, 36.32, 20.45, 21.93, 26.02, 27.34, 23.49, 46.94, 30.5, 33.8, 24.23, 21.4, 27.94, 32.24, 40.57, 25.07, 19.42, 22.39, 18.42, 46.96, 23.72, 26.41, 26.97, 36.76, 40.32, 35.02, 29.47, 30.2, 31, 38.11, 38.18, 36.31, 21.03, 30.86, 36.07, 28.66, 29.08, 37.28, 15.28, 24.17, 22.31, 30.17, 25.53, 19.85, 35.37, 44.6, 17.23, 13.47, 26.33, 35.02, 32.09, 24.81, 19.33, 28.77, 24.26, 31.98, 25.73, 24.86, 16.28, 34.51, 15.23, 39.72, 40.8, 26.06, 35.76, 34.76, 16.13, 44.04, 18.03, 19.65, 32.62, 35.59, 39.43, 14.18, 35.24, 40.13, 41.82, 35.45, 36.07, 43.67, 24.61, 20.9, 21.9, 18.79, 27.61, 27.21, 26.61, 29.77, 20.59, 27.53, 13.82, 33.2, 25, 33.1, 36.65, 18.63, 14.87, 22.2, 36.81, 25.53, 24.62, 26.25, 18.21, 28.08, 19.42, 29.79, 32.8, 35.99, 28.32, 27.79, 35.88, 29.06, 36.28, 14.1, 36.63, 37.49, 26.9, 18.58, 38.48, 24.48, 18.95, 33.55, 14.24, 29.04, 32.51, 25.63, 22.22, 19, 32.73, 15.16, 13.9, 27.2, 32.01, 29.27, 33, 13.74, 20.42, 27.32, 18.23, 35.35, 28.48, 9.08, 24.62, 20.12, 35.26, 19.92, 31.02, 16.49, 12.16, 30.7, 31.22, 34.65, 13.13, 27.51, 33.2, 31.57, 14.1, 33.42, 17.44, 10.12, 24.42, 9.82, 23.39, 30.93, 15.03, 21.67, 31.09, 33.29, 22.61, 26.89, 23.48, 8.38, 27.81, 32.35, 23.84]

4 |

--------------------------------------------------------------------------------

/naive_bayes_classfier/utils.py:

--------------------------------------------------------------------------------

1 | import math

2 | import re

3 | from collections import defaultdict

4 |

5 |

6 | def tokenise(message):

7 | """Tokenise message into distinct words"""

8 | message = message.lower() # convert to lowercase

9 | all_words = re.findall("[a-z0-9']+", message) # extract the words

10 | return set(all_words) # remove duplicates

11 |

12 |

13 | def count_words(training_set):

14 | """training set consists of parts (meesage, is_spam)"""

15 | counts = defaultdict(lambda: [0, 0])

16 | for message, is_spam in training_set:

17 | for word in tokenise(message):

18 | counts[word][0 if is_spam else 1] += 1

19 | return counts

20 |

21 |

22 | def word_probabilities(counts, total_spams, total_non_spams, k=0.5):

23 | """Turn the word_counts into a list of triplets: w, p(w|spam) and p(w|~spam)"""

24 | return [(w,

25 | (spam + k)/(total_spams + 2 * k),

26 | (non_spam + k)/(total_non_spams + 2 * k))

27 | for w, (spam, non_spam) in counts.items()]

28 |

29 |

30 | def spam_probability(word_probs, message):

31 | """assigns word probabilities to messages"""

32 | message_words = tokenise(message)

33 | log_prob_if_spam = log_prob_if_not_spam = 0.0

34 |

35 | # iterate through each word in our vocabulary

36 | for word, prob_if_spam, prob_if_not_spam in word_probs:

37 |

38 | # if "word" appears in the message,

39 | # add the log probability of seeing it

40 | if word in message_words:

41 | log_prob_if_spam += math.log(prob_if_spam)

42 | log_prob_if_not_spam += math.log(prob_if_not_spam)

43 |

44 | # if the "word" doesn't appear in the message

45 | # add the log probability of not seeing it

46 | else:

47 | log_prob_if_spam += math.log(1.0 - prob_if_spam)

48 | log_prob_if_not_spam += math.log(1.0 - prob_if_not_spam)

49 |

50 | prob_if_spam = math.exp(log_prob_if_spam)

51 | prob_if_not_spam = math.exp(log_prob_if_not_spam)

52 |

53 | return prob_if_spam / (prob_if_spam + prob_if_not_spam)

54 |

55 |

--------------------------------------------------------------------------------

/NN_churn_prediction.py:

--------------------------------------------------------------------------------

1 | """Importing the libraries"""

2 | from keras.models import Sequential

3 | from keras.layers import Dense

4 | import pandas as pd

5 | from sklearn.metrics import confusion_matrix, classification_report, accuracy_score

6 | from sklearn.model_selection import train_test_split

7 | from sklearn.preprocessing import LabelEncoder, StandardScaler

8 |

9 | """Loading the data"""

10 | dataset = pd.read_csv("/home/amogh/Downloads/Churn_Modelling.csv")

11 |

12 | # filtering features and labels

13 | X = dataset.iloc[:, 3:13].values

14 | y = dataset.iloc[:, 13].values

15 |

16 | """Preprocessing the data"""

17 | # encoding the Gender and Geography

18 | labelencoder_X_1 = LabelEncoder()

19 | X[:, 1] = labelencoder_X_1.fit_transform(X[:, 1]) # Column 4 [France, Germany, Spain] => [0, 1, 2]

20 | labelencoder_X_2 = LabelEncoder()

21 | X[:, 2] = labelencoder_X_2.fit_transform(X[:, 2]) # Column 5 [Male, Female] => [0, 1]

22 |

23 | # splitting the data into training and testing

24 | X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.20)

25 |

26 | # scaling features

27 | sc = StandardScaler()

28 | X_train = sc.fit_transform(X_train)

29 | X_test = sc.fit_transform(X_test)

30 |

31 | """Building the neural network"""

32 | # initializing the neural network

33 | model = Sequential()

34 | # input and first hidden layer

35 | model.add(Dense(6, input_dim=10, activation='relu'))

36 | # second hidden layer

37 | model.add(Dense(6, activation='relu'))

38 | # output layer - probability of churning

39 | model.add(Dense(1, activation='sigmoid'))

40 | # compiling the model

41 | model.compile(optimizer='adam',

42 | loss='binary_crossentropy',

43 | metrics=['accuracy'])

44 |

45 | """Running the model on the data"""

46 | # fitting the model

47 | model.fit(X_train, y_train, batch_size=10, epochs=100)

48 |

49 | y_pred = model.predict(X_test)

50 | y_pred = (y_pred > 0.5) # converting probabilities into binary

51 |

52 | """Evaluating the results"""

53 | # generating the confusion matrix

54 | cm = confusion_matrix(y_test, y_pred)

55 | print(cm)

56 |

57 | # determining the accuracy

58 | accuracy = accuracy_score(y_test, y_pred)

59 | print(accuracy)

60 |

61 | # generating the classification report

62 | cr = classification_report(y_test, y_pred)

63 | print(cr)

64 |

--------------------------------------------------------------------------------

/k_nearest_neighbors/Understanding the algorithm.md:

--------------------------------------------------------------------------------

1 | ### Introduction

2 |

3 | K-nearest nieghbor is a supervised machine learning algorithm.

4 |

5 | ### Problem Statement

6 |

7 | Given some labelled data points, we have to classify a new data point according to its nearest neigbors.

8 |

9 | **Example used here**

10 |

11 | We have the data for a large social networking company which ran polls for their favroite programming language. The users belong from a group of large cities. Now the VP of Community Engagement want you to `predict the` **favorite programming language** `for the places that were` **not** `part of the survey`

12 |

13 | ### Intuition

14 |

15 | * In kNN, k is the no. of neigbors you will evaluate to decide which group a new data point will belong to ?

16 | * Value of k is decided by plotting the error rate against the different value of k

17 | * Once the value of k is initiliazed, we take the nearest the k neigbors from the data point

18 | * The measure of distance between the data points can be calculated using either `Euclidean Distance` or `Manhattan Distance`

19 | * Once we calculate the distance of all the k nearest neigbors, we then look for the majority of labels in the neigbots

20 | * The data point is assigned to the group which has maximum no. of neigbors

21 |

22 | ### Choosing K value

23 | * First divide the entire data set into training set and test set.

24 | * Apply the KNN algorithm into training set and cross validate it with test set.

25 | * Lets assume you have a train set `xtrain` and test set `xtest`

26 | * Now create the model with `k` value `1` and predict with test set data

27 | * Check the accuracy and other parameters then repeat the same process after increasing the k value by 1 each time.

28 |

29 |

30 | Here I am increasing the k value by 1 from `1 to 29` and printing the accuracy with respected `k` value.

31 |

32 |

33 | ### Note

34 |

35 | * kNN is impacted by `Imbalanced datasets`.

36 | Suppose there are `m` instances of **class 1** and `n` insatnces of **class 2** where `n << m`.

37 | In a case where `k > n`, then this may lead to counting of more instances of m and

38 | hence it will impact the majority election in k nearest neigbors

39 |

40 | * kNN is also very sensitve to `outliers`

41 |

--------------------------------------------------------------------------------

/telecom_churn_prediction.py:

--------------------------------------------------------------------------------

1 | import pandas as pd

2 | import numpy as np

3 | from IPython.display import display

4 | from sklearn.ensemble import RandomForestClassifier

5 | from sklearn.metrics import confusion_matrix, roc_curve

6 | from sklearn.model_selection import train_test_split

7 | import matplotlib.pyplot as plt

8 |

9 | df = pd.read_csv("/home/amogh/Downloads/Churn.csv")

10 | display(df.head(5))

11 |

12 | """Data Exploration and Cleaning"""

13 | # print("Number of rows: ", df.shape[0])

14 | # counts = df.describe().iloc[0]

15 | # display(pd.DataFrame(counts.tolist(), columns=["Count of values"], index=counts.index.values).transpose)

16 |

17 | """Feature Selection"""

18 | df = df.drop(["Phone", "Area Code", "State"], axis=1)

19 | features = df.drop(["Churn"], axis=1).columns

20 |

21 | """Fitting the model"""

22 | df_train, df_test = train_test_split(df, test_size=0.25)

23 | clf = RandomForestClassifier()

24 | clf.fit(df_train[features], df_train["Churn"])

25 |

26 | # Make predictions

27 | preds = clf.predict(df_test[features])

28 | probs = clf.predict_proba(df_test[features])

29 | display(preds)

30 |

31 | """Evaluating the model"""

32 | score = clf.score(df_test[features], df_test["Churn"])

33 | print("Accuracy: ", score)

34 |

35 | cf = pd.DataFrame(confusion_matrix(df_test["Churn"], preds), columns=["Predicted False", "Predicted True"], index=["Actual False", "Actual True"])

36 |

37 | display(cf)

38 |

39 | # Plotting the ROC curve

40 |

41 | fpr, tpr, threshold = roc_curve(df_test["Churn"], probs[:, 1])

42 | plt.title('Receiver Operating Characteristic')

43 | plt.plot(fpr, tpr, 'b')

44 | plt.plot([0, 1], [0, 1],'r--')

45 | plt.xlim([0, 1])

46 | plt.ylim([0, 1])

47 | plt.ylabel('True Positive Rate')

48 | plt.xlabel('False Positive Rate')

49 | plt.show()

50 |

51 | # Feature Importance Plot

52 | fig = plt.figure(figsize=(20, 18))

53 | ax = fig.add_subplot(111)

54 |

55 | df_f = pd.DataFrame(clf.feature_importances_, columns=["importance"])

56 | df_f["labels"] = features

57 | df_f.sort_values("importance", inplace=True, ascending=False)

58 | display(df_f.head(5))

59 |

60 | index = np.arange(len(clf.feature_importances_))

61 | bar_width = 0.5

62 | rects = plt.barh(index, df_f["importance"], bar_width, alpha=0.4, color='b', label='Main')

63 | plt.yticks(index, df_f["labels"])

64 | plt.show()

65 |

66 | df_test["prob_true"] = probs[:, 1]

67 | df_risky = df_test[df_test["prob_true"] > 0.9]

68 | display(df_risky.head(5)[["prob_true"]])

69 |

--------------------------------------------------------------------------------

/multiple_regression/model.py:

--------------------------------------------------------------------------------

1 | import random

2 |

3 | from helpers.linear_algebra import dot

4 | from helpers.stats import median, standard_deviation

5 | from multiple_regression.data import x, daily_minutes_good

6 | from multiple_regression.utils import estimate_beta, multiple_r_squared, bootstrap_statistic, estimate_sample_beta, \

7 | p_value, estimate_beta_ridge

8 |

9 | if __name__ == '__main__':

10 | random.seed(0)

11 | beta = estimate_beta(x, daily_minutes_good) # [30.63, 0.972, -1.868, 0.911]

12 | print("beta", beta)

13 | print("r-squared", multiple_r_squared(x, daily_minutes_good, beta))

14 | print()

15 |

16 | print("digression: the bootstrap")

17 | # 101 points all very close to 100

18 | close_to_100 = [99.5 + random.random() for _ in range(101)]

19 |

20 | # 101 points, 50 of them near 0, 50 of them near 200

21 | far_from_100 = ([99.5 + random.random()] +

22 | [random.random() for _ in range(50)] +

23 | [200 + random.random() for _ in range(50)])

24 |

25 | print("bootstrap_statistic(close_to_100, median, 100):")

26 | print(bootstrap_statistic(close_to_100, median, 100))

27 | print("bootstrap_statistic(far_from_100, median, 100):")

28 | print(bootstrap_statistic(far_from_100, median, 100))

29 | print()

30 |

31 | random.seed(0) # so that you get the same results as me

32 |

33 | bootstrap_betas = bootstrap_statistic(list(zip(x, daily_minutes_good)),

34 | estimate_sample_beta,

35 | 100)

36 |

37 | bootstrap_standard_errors = [

38 | standard_deviation([beta[i] for beta in bootstrap_betas])

39 | for i in range(4)]

40 |

41 | print("bootstrap standard errors", bootstrap_standard_errors)

42 | print()

43 |

44 | print("p_value(30.63, 1.174)", p_value(30.63, 1.174))

45 | print("p_value(0.972, 0.079)", p_value(0.972, 0.079))

46 | print("p_value(-1.868, 0.131)", p_value(-1.868, 0.131))

47 | print("p_value(0.911, 0.990)", p_value(0.911, 0.990))

48 | print()

49 |

50 | print("regularization")

51 |

52 | random.seed(0)

53 | for alpha in [0.0, 0.01, 0.1, 1, 10]:

54 | beta = estimate_beta_ridge(x, daily_minutes_good, alpha=alpha)

55 | print("alpha", alpha)

56 | print("beta", beta)

57 | print("dot(beta[1:],beta[1:])", dot(beta[1:], beta[1:]))

58 | print("r-squared", multiple_r_squared(x, daily_minutes_good, beta))

59 | print()

--------------------------------------------------------------------------------

/naive_bayes_classfier/model.py:

--------------------------------------------------------------------------------

1 | import glob

2 | import re

3 | from collections import Counter

4 | import random

5 | from naive_bayes_classfier.naivebayesclassifier import NaiveBayesClassifier

6 |

7 |

8 | def split_data(data, prob):

9 | """split data into fractions [prob, 1 - prob]"""

10 | results = [], []

11 | for row in data:

12 | results[0 if random.random() < prob else 1].append(row)

13 | return results

14 |

15 |

16 | def get_subject_data(path):

17 |

18 | data = []

19 |

20 | # regex for stripping out the leading "Subject:" and any spaces after it

21 | subject_regex = re.compile(r"^Subject:\s+")

22 |

23 | # glob.glob returns every filename that matches the wildcarded path

24 | for fn in glob.glob(path):

25 | is_spam = "ham" not in fn

26 |

27 | with open(fn, 'r', encoding='ISO-8859-1') as file:

28 | for line in file:

29 | if line.startswith("Subject:"):

30 | subject = subject_regex.sub("", line).strip()

31 | data.append((subject, is_spam))

32 |

33 | return data

34 |

35 |

36 | def p_spam_given_word(word_prob):

37 | word, prob_if_spam, prob_if_not_spam = word_prob

38 | return prob_if_spam / (prob_if_spam + prob_if_not_spam)

39 |

40 |

41 | def train_and_test_model(path):

42 |

43 | data = get_subject_data(path)

44 | random.seed(0) # just so you get the same answers as me

45 | train_data, test_data = split_data(data, 0.75)

46 |

47 | classifier = NaiveBayesClassifier()

48 | classifier.train(train_data)

49 |

50 | classified = [(subject, is_spam, classifier.classify(subject))

51 | for subject, is_spam in test_data]

52 |

53 | counts = Counter((is_spam, spam_probability > 0.5) # (actual, predicted)

54 | for _, is_spam, spam_probability in classified)

55 |

56 | print(counts)

57 |

58 | classified.sort(key=lambda row: row[2])

59 | spammiest_hams = list(filter(lambda row: not row[1], classified))[-5:]

60 | hammiest_spams = list(filter(lambda row: row[1], classified))[:5]

61 |

62 | print("\nspammiest_hams", spammiest_hams)

63 | print("\nhammiest_spams", hammiest_spams)

64 |

65 | words = sorted(classifier.word_probs, key=p_spam_given_word)

66 |

67 | spammiest_words = words[-5:]

68 | hammiest_words = words[:5]

69 |

70 | print("\nspammiest_words", spammiest_words)

71 | print("\nhammiest_words", hammiest_words)

72 |

73 |

74 | if __name__ == "__main__":

75 | train_and_test_model(r"data/*/*")

76 |

--------------------------------------------------------------------------------

/logistic_regression/model.py:

--------------------------------------------------------------------------------

1 | import random

2 | from functools import partial

3 |

4 | from helpers.gradient_descent import maximize_batch, maximize_stochastic

5 | from helpers.linear_algebra import dot

6 | from helpers.machine_learning import train_test_split

7 | from logistic_regression.data import data

8 | from logistic_regression.utils import logistic_log_likelihood, logistic_log_gradient, logistic_log_likelihood_i, \

9 | logistic_log_gradient_i, logistic

10 | from multiple_regression.utils import estimate_beta

11 | from working_with_data.utils import rescale

12 |

13 | if __name__ == '__main__':

14 | x = [[1] + row[:2] for row in data] # each element is [1, experience, salary]

15 | y = [row[2] for row in data] # each element is paid_account

16 |

17 | print("linear regression:")

18 |

19 | rescaled_x = rescale(x)

20 | beta = estimate_beta(rescaled_x, y)

21 | print(beta)

22 |

23 | print("logistic regression:")

24 |

25 | random.seed(0)

26 | x_train, x_test, y_train, y_test = train_test_split(rescaled_x, y, 0.33)

27 |

28 | # want to maximize log likelihood on the training data

29 | fn = partial(logistic_log_likelihood, x_train, y_train)

30 | gradient_fn = partial(logistic_log_gradient, x_train, y_train)

31 |

32 | # pick a random starting point

33 | beta_0 = [1, 1, 1]

34 |

35 | # and maximize using gradient descent

36 | beta_hat = maximize_batch(fn, gradient_fn, beta_0)

37 |

38 | print("beta_batch", beta_hat)

39 |

40 | beta_0 = [1, 1, 1]

41 | beta_hat = maximize_stochastic(logistic_log_likelihood_i,

42 | logistic_log_gradient_i,

43 | x_train, y_train, beta_0)

44 |

45 | print("beta stochastic", beta_hat)

46 |

47 | true_positives = false_positives = true_negatives = false_negatives = 0

48 |

49 | for x_i, y_i in zip(x_test, y_test):

50 | predict = logistic(dot(beta_hat, x_i))

51 |

52 | if y_i == 1 and predict >= 0.5: # TP: paid and we predict paid

53 | true_positives += 1

54 | elif y_i == 1: # FN: paid and we predict unpaid

55 | false_negatives += 1

56 | elif predict >= 0.5: # FP: unpaid and we predict paid

57 | false_positives += 1

58 | else: # TN: unpaid and we predict unpaid

59 | true_negatives += 1

60 |

61 | precision = true_positives / (true_positives + false_positives)

62 | recall = true_positives / (true_positives + false_negatives)

63 |

64 | print("precision", precision)

65 | print("recall", recall)

--------------------------------------------------------------------------------

/neural_network/model.py:

--------------------------------------------------------------------------------

1 | import random

2 |

3 | from neural_network.data import raw_digits

4 | from neural_network.utils import backpropagate, feed_forward

5 |

6 | if __name__ == "__main__":

7 |

8 | def make_digit(raw_digit):

9 | return [1 if c == '1' else 0

10 | for row in raw_digit.split("\n")

11 | for c in row.strip()]

12 |

13 |

14 | inputs = list(map(make_digit, raw_digits))

15 |

16 | targets = [[1 if i == j else 0 for i in range(10)]

17 | for j in range(10)]

18 |

19 | random.seed(0) # to get repeatable results

20 | input_size = 25 # each input is a vector of length 25

21 | num_hidden = 5 # we'll have 5 neurons in the hidden layer

22 | output_size = 10 # we need 10 outputs for each input

23 |

24 | # each hidden neuron has one weight per input, plus a bias weight

25 | hidden_layer = [[random.random() for __ in range(input_size + 1)]

26 | for __ in range(num_hidden)]

27 |

28 | # each output neuron has one weight per hidden neuron, plus a bias weight

29 | output_layer = [[random.random() for __ in range(num_hidden + 1)]

30 | for __ in range(output_size)]

31 |

32 | # the network starts out with random weights

33 | network = [hidden_layer, output_layer]

34 |

35 | # 10,000 iterations seems enough to converge

36 | for __ in range(10000):

37 | for input_vector, target_vector in zip(inputs, targets):

38 | backpropagate(network, input_vector, target_vector)

39 |

40 |

41 | def predict(input):

42 | return feed_forward(network, input)[-1]

43 |

44 |

45 | for i, input in enumerate(inputs):

46 | outputs = predict(input)

47 | print(i, [round(p, 2) for p in outputs])

48 |

49 | print(""".@@@.

50 | ...@@

51 | ..@@.

52 | ...@@

53 | .@@@.""")

54 |

55 | print([round(x, 2) for x in

56 | predict([0, 1, 1, 1, 0, # .@@@.

57 | 0, 0, 0, 1, 1, # ...@@

58 | 0, 0, 1, 1, 0, # ..@@.

59 | 0, 0, 0, 1, 1, # ...@@

60 | 0, 1, 1, 1, 0])]) # .@@@.

61 | print()

62 |

63 | print(""".@@@.

64 | @..@@

65 | .@@@.

66 | @..@@

67 | .@@@.""")

68 |

69 | print([round(x, 2) for x in

70 | predict([0, 1, 1, 1, 0, # .@@@.

71 | 1, 0, 0, 1, 1, # @..@@

72 | 0, 1, 1, 1, 0, # .@@@.

73 | 1, 0, 0, 1, 1, # @..@@

74 | 0, 1, 1, 1, 0])]) # .@@@.

75 | print()

76 |

--------------------------------------------------------------------------------

/recommender_systems/utils.py:

--------------------------------------------------------------------------------

1 | import math

2 | from collections import defaultdict

3 |

4 | from helpers.linear_algebra import dot

5 |

6 |

7 | def cosine_similarity(v, w):

8 | return dot(v, w) / math.sqrt(dot(v, v) * dot(w, w))

9 |

10 |

11 | def make_user_interest_vector(interests, user_interests):

12 | return [1 if interest in user_interests else 0

13 | for interest in interests]

14 |

15 |

16 | def most_similar_users_to(user_similarities, user_id):

17 | pairs = [(other_user_id, similarity)

18 | for other_user_id, similarity in

19 | enumerate(user_similarities[user_id])

20 | if user_id != other_user_id and similarity > 0]

21 |

22 | return sorted(pairs, key=lambda pair: pair[1], reverse=True)

23 |

24 |

25 | def most_similar_interests_to(interest_similarities, interest_id, unique_interests):

26 | pairs = [(unique_interests[other_interest_id], similarity)

27 | for other_interest_id, similarity in