├── Dockerfile
├── LICENSE
├── README.md
├── paramspider
│   ├── __init__.py
│   ├── client.py
│   └── main.py
├── setup.py
└── static
    └── paramspider.png

/Dockerfile:

--------------------------------------------------------------------------------

# Use a slim base image
FROM python:3.8-slim

# Set the working directory
WORKDIR /app

# Install git and dependencies
RUN apt-get update && apt-get install -y git

# Clone ParamSpider repository
RUN git clone https://github.com/devanshbatham/paramspider

# Change the working directory to the cloned repository
WORKDIR /app/paramspider

# Install ParamSpider dependencies using pip
RUN pip install .

# Set the entrypoint to run paramspider
ENTRYPOINT ["paramspider"]

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

MIT License

Copyright (c) 2023 Devansh Batham

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

# paramspider

Mining URLs from dark corners of Web Archives for bug hunting/fuzzing/further probing

📖 About • 🏗️ Installation • ⛏️ Usage • 🚀 Examples • 🤝 Contributing

## About

`paramspider` fetches URLs related to a domain, or a list of domains, from the Wayback Machine archives. It filters out "boring" URLs, allowing you to focus on the ones that matter the most.

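As a rough illustration of what "boring" means here, the sketch below drops URLs whose path ends in a static-asset extension, which is the kind of check `has_extension` in `paramspider/main.py` performs. The name `is_boring`, the shortened extension set, and the sample URLs are assumptions made for this example only.

```python
import os
from urllib.parse import urlparse

# Hypothetical, shortened list of static-asset extensions treated as "boring".
BORING_EXTENSIONS = {".jpg", ".png", ".css", ".js"}


def is_boring(url: str) -> bool:
    """Return True when the URL path ends in one of the boring extensions."""
    path = urlparse(url).path
    return os.path.splitext(path)[1].lower() in BORING_EXTENSIONS


# Made-up sample URLs standing in for archive results.
urls = [
    "http://example.com/static/app.js?v=3",
    "http://example.com/search?q=shoes",
    "http://example.com/logo.PNG",
]

interesting = [u for u in urls if not is_boring(u)]
print(interesting)
```

Only the `search` URL survives, because the extension is read from the path and compared case-insensitively, so a query string after `app.js` does not rescue it.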

23 | ## Installation

24 |

25 | To install `paramspider`, follow these steps:

26 |

27 | ```sh

28 | git clone https://github.com/devanshbatham/paramspider

29 | cd paramspider

30 | pip install .

31 | ```

32 |

33 | ## Usage

34 |

35 | To use `paramspider`, follow these steps:

36 |

37 | ```sh

38 | paramspider -d example.com

39 | ```

40 |

41 | ## Examples

42 |

43 | Here are a few examples of how to use `paramspider`:

44 |

45 | - Discover URLs for a single domain:

46 |

47 | ```sh

48 | paramspider -d example.com

49 | ```

50 |

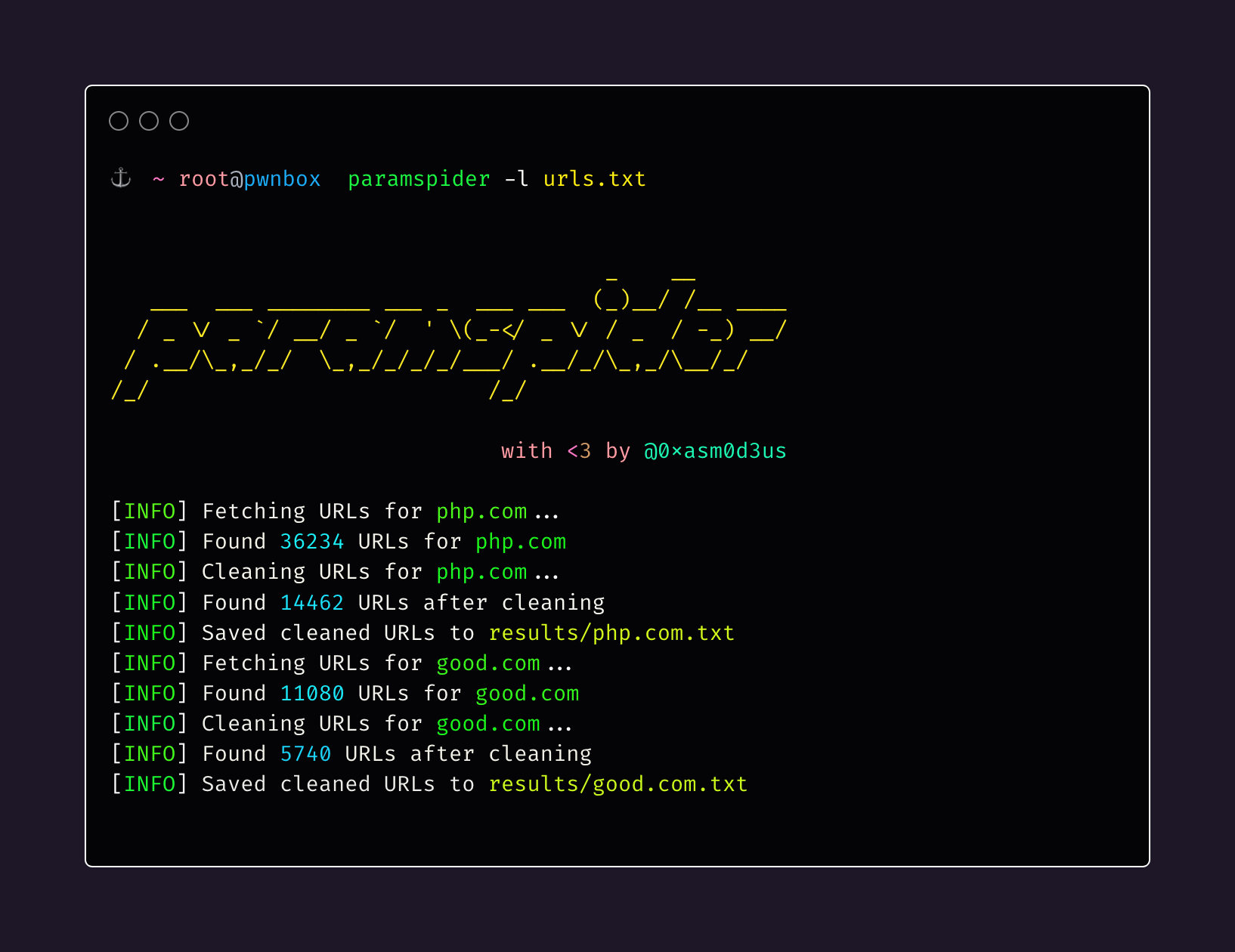

51 | - Discover URLs for multiple domains from a file:

52 |

53 | ```sh

54 | paramspider -l domains.txt

55 | ```

56 |

57 | - Stream URLs on the termial:

58 |

59 | ```sh

60 | paramspider -d example.com -s

61 | ```

62 |

63 | - Set up web request proxy:

64 |

65 | ```sh

66 | paramspider -d example.com --proxy '127.0.0.1:7890'

67 | ```

68 | - Adding a placeholder for URL parameter values (default: "FUZZ"):

69 |

70 | ```sh

71 | paramspider -d example.com -p '">reflection

'

72 | ```

73 |
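To picture what the placeholder option does to a mined URL, here is a minimal sketch that follows the same `parse_qs` / `urlencode` approach as `clean_urls` in `paramspider/main.py`. The function name `apply_placeholder` and the sample URL are assumptions for illustration and are not part of the tool.

```python
from urllib.parse import urlparse, parse_qs, urlencode


def apply_placeholder(url: str, placeholder: str = "FUZZ") -> str:
    """Replace every query parameter value in the URL with the placeholder."""
    parsed = urlparse(url)
    # parse_qs maps each parameter name to its list of values; only the
    # names are kept, and each one is assigned the placeholder instead.
    params = {key: placeholder for key in parse_qs(parsed.query)}
    return parsed._replace(query=urlencode(params)).geturl()


print(apply_placeholder("http://example.com/item?id=42&ref=home"))
# http://example.com/item?id=FUZZ&ref=FUZZ
```

Two details of this approach are worth knowing: `urlencode` percent-encodes the placeholder, so characters such as `"` and `>` come out as `%22` and `%3E`, and `parse_qs` skips parameters with empty values by default, so `?a=` yields no parameters at all.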

## Contributing

Contributions are welcome! If you'd like to contribute to `paramspider`, please follow these steps:

1. Fork the repository.
2. Create a new branch.
3. Make your changes and commit them.
4. Submit a pull request.

## Star History

[Star History Chart](https://star-history.com/#devanshbatham/paramspider&Date)

--------------------------------------------------------------------------------

/paramspider/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/devanshbatham/ParamSpider/790eb91213419e9c4ddec2c91201d4be5399cb77/paramspider/__init__.py

--------------------------------------------------------------------------------

/paramspider/client.py:

--------------------------------------------------------------------------------

import requests
import random
import logging
import time
import sys

logging.basicConfig(level=logging.INFO)

MAX_RETRIES = 3


def load_user_agents():
    """
    Loads user agents
    """

    return [
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36",
        "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:54.0) Gecko/20100101 Firefox/54.0",
        "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36",
        "Mozilla/5.0 (Windows NT 10.0; WOW64; rv:54.0) Gecko/20100101 Firefox/54.0",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/603.3.8 (KHTML, like Gecko) Version/10.1.2 Safari/603.3.8",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.82 Safari/537.36 Edg/89.0.774.45",
        "Mozilla/5.0 (Windows NT 10.0; WOW64; Trident/7.0; AS; rv:11.0) like Gecko",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.96 Safari/537.36 Edge/16.16299",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36 OPR/45.0.2552.898",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36 Vivaldi/1.8.770.50",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:54.0) Gecko/20100101 Firefox/54.0",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36 Edge/15.15063",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36 Edge/15.15063",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.81 Safari/537.36"
    ]


def fetch_url_content(url, proxy):
    """
    Fetches the content of a URL using a random user agent.
    Retries up to MAX_RETRIES times if the request fails.
    """
    user_agents = load_user_agents()
    # requests expects a scheme-to-proxy mapping; route both schemes through the same proxy.
    if proxy is not None:
        proxy = {
            'http': proxy,
            'https': proxy
        }
    for i in range(MAX_RETRIES):
        # Pick a fresh user agent for every attempt.
        user_agent = random.choice(user_agents)
        headers = {
            "User-Agent": user_agent
        }

        try:
            response = requests.get(url, proxies=proxy, headers=headers)
            response.raise_for_status()
            return response
        except (requests.exceptions.RequestException, ValueError):
            logging.warning(f"Error fetching URL {url}. Retrying in 5 seconds...")
            time.sleep(5)
        except KeyboardInterrupt:
            logging.warning("Keyboard Interrupt received. Exiting gracefully...")
            sys.exit()

    logging.error(f"Failed to fetch URL {url} after {MAX_RETRIES} retries.")
    sys.exit()

--------------------------------------------------------------------------------
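The retry loop in `fetch_url_content` can be exercised without network access using a sketch like the one below. `fetch_with_retries`, its parameters, and the injectable `sleep` are hypothetical names that are not part of ParamSpider. Unlike the original, this version returns `None` instead of calling `sys.exit()` and does not sleep after the final attempt.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def fetch_with_retries(
    fetch: Callable[[], T],
    max_retries: int = 3,
    delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[T]:
    """Call fetch() up to max_retries times, pausing between failed attempts."""
    for attempt in range(1, max_retries + 1):
        try:
            return fetch()
        except Exception:
            # Pause only if another attempt is still to come.
            if attempt < max_retries:
                sleep(delay)
    return None


# A stand-in for a flaky request: fails twice, then succeeds.
attempts = []


def flaky():
    attempts.append(1)
    if len(attempts) < 3:
        raise ValueError("temporary failure")
    return "ok"


result = fetch_with_retries(flaky, delay=0, sleep=lambda seconds: None)
print(result, len(attempts))
```

Passing the sleep function in as a parameter keeps the example fast to run and makes the retry count easy to check in a test.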

/paramspider/main.py:

--------------------------------------------------------------------------------

import argparse
import os
import logging
import colorama
from colorama import Fore, Style
from . import client  # HTTP client helper from this package
from urllib.parse import urlparse, parse_qs, urlencode

yellow_color_code = "\033[93m"
reset_color_code = "\033[0m"

colorama.init(autoreset=True)  # Initialize colorama for colored terminal output

log_format = '%(message)s'
logging.basicConfig(format=log_format, level=logging.INFO)
logging.getLogger('').handlers[0].setFormatter(logging.Formatter(log_format))

HARDCODED_EXTENSIONS = [
    ".jpg", ".jpeg", ".png", ".gif", ".pdf", ".svg", ".json",
    ".css", ".js", ".webp", ".woff", ".woff2", ".eot", ".ttf", ".otf", ".mp4", ".txt"
]


def has_extension(url, extensions):
    """
    Check if the URL has a file extension matching any of the provided extensions.

    Args:
        url (str): The URL to check.
        extensions (list): List of file extensions to match against.

    Returns:
        bool: True if the URL has a matching extension, False otherwise.
    """
    parsed_url = urlparse(url)
    path = parsed_url.path
    extension = os.path.splitext(path)[1].lower()

    return extension in extensions


def clean_url(url):
    """
    Clean the URL by removing redundant port information for HTTP and HTTPS URLs.

    Args:
        url (str): The URL to clean.

    Returns:
        str: Cleaned URL.
    """
    parsed_url = urlparse(url)

    # Drop the port only when it is the default for the scheme.
    if (parsed_url.port == 80 and parsed_url.scheme == "http") or (parsed_url.port == 443 and parsed_url.scheme == "https"):
        parsed_url = parsed_url._replace(netloc=parsed_url.netloc.rsplit(":", 1)[0])

    return parsed_url.geturl()


def clean_urls(urls, extensions, placeholder):
    """
    Clean a list of URLs: skip URLs with unwanted file extensions and replace
    every query parameter value with the placeholder.

    Args:
        urls (list): List of URLs to clean.
        extensions (list): List of file extensions to check against.
        placeholder (str): Value substituted for each query parameter value.

    Returns:
        list: List of cleaned URLs.
    """
    cleaned_urls = set()  # A set removes duplicates produced by the substitution.
    for url in urls:
        cleaned_url = clean_url(url)
        if not has_extension(cleaned_url, extensions):
            parsed_url = urlparse(cleaned_url)
            query_params = parse_qs(parsed_url.query)
            cleaned_params = {key: placeholder for key in query_params}
            cleaned_query = urlencode(cleaned_params, doseq=True)
            cleaned_url = parsed_url._replace(query=cleaned_query).geturl()
            cleaned_urls.add(cleaned_url)
    return list(cleaned_urls)


def fetch_and_clean_urls(domain, extensions, stream_output, proxy, placeholder):
    """
    Fetch and clean URLs related to a specific domain from the Wayback Machine.

    Args:
        domain (str): The domain name to fetch URLs for.
        extensions (list): List of file extensions to check against.
        stream_output (bool): True to stream URLs to the terminal.
        proxy (str): Proxy address for web requests, or None.
        placeholder (str): Value substituted for each query parameter value.

    Returns:
        None
    """
    logging.info(f"{Fore.YELLOW}[INFO]{Style.RESET_ALL} Fetching URLs for {Fore.CYAN + domain + Style.RESET_ALL}")
    wayback_uri = f"https://web.archive.org/cdx/search/cdx?url={domain}/*&output=txt&collapse=urlkey&fl=original&page=/"
    response = client.fetch_url_content(wayback_uri, proxy)
    urls = response.text.split()

    logging.info(f"{Fore.YELLOW}[INFO]{Style.RESET_ALL} Found {Fore.GREEN + str(len(urls)) + Style.RESET_ALL} URLs for {Fore.CYAN + domain + Style.RESET_ALL}")

    cleaned_urls = clean_urls(urls, extensions, placeholder)
    logging.info(f"{Fore.YELLOW}[INFO]{Style.RESET_ALL} Cleaning URLs for {Fore.CYAN + domain + Style.RESET_ALL}")
    logging.info(f"{Fore.YELLOW}[INFO]{Style.RESET_ALL} Found {Fore.GREEN + str(len(cleaned_urls)) + Style.RESET_ALL} URLs after cleaning")
    logging.info(f"{Fore.YELLOW}[INFO]{Style.RESET_ALL} Extracting URLs with parameters")

    results_dir = "results"
    if not os.path.exists(results_dir):
        os.makedirs(results_dir)

    result_file = os.path.join(results_dir, f"{domain}.txt")

    # Only URLs that still carry a query string are written out.
    with open(result_file, "w") as f:
        for url in cleaned_urls:
            if "?" in url:
                f.write(url + "\n")
                if stream_output:
                    print(url)

    logging.info(f"{Fore.YELLOW}[INFO]{Style.RESET_ALL} Saved cleaned URLs to {Fore.CYAN + result_file + Style.RESET_ALL}")


def main():
    """
    Main function to handle command-line arguments and start URL mining process.
    """
    log_text = """

_ __
___ ___ ________ ___ _ ___ ___ (_)__/ /__ ____
/ _ \/ _ `/ __/ _ `/ ' \(_-