├── .DS_Store
├── Problem 6
│   ├── problem6.sql
│   ├── students.sql
│   ├── Students.csv
│   ├── README.md
│   └── problem6.ipynb
├── Problem 7
│   ├── .DS_Store
│   ├── problem7.sql
│   ├── transaction.sql
│   ├── README.md
│   ├── transaction.csv
│   └── problem7.ipynb
├── Problem 2
│   ├── department.csv
│   ├── department.sql
│   ├── problem2.sql
│   ├── README.md
│   ├── problem2_2.ipynb
│   └── problem2_1.ipynb
├── Problem 5
│   ├── problem5.sql
│   ├── station.sql
│   ├── README.md
│   ├── problem5.ipynb
│   └── stations.csv
├── Problem 1
│   ├── problem1.sql
│   ├── employee_table.sql
│   ├── README.md
│   ├── employee.csv
│   ├── employee.json
│   └── problem1.ipynb
├── Problem 3
│   ├── problem3.sql
│   ├── station.sql
│   ├── README.md
│   ├── problem3.ipynb
│   └── stations.csv
├── Problem 0
│   ├── employee_salary.sql
│   ├── problem0.sql
│   ├── README.md
│   └── employee_salary.csv
├── Problem 4
│   ├── station.sql
│   ├── problem4.sql
│   ├── README.md
│   ├── problem4.ipynb
│   └── stations.csv
├── Problem 9
│   ├── user_type.csv
│   ├── README.md
│   ├── user_info.csv
│   ├── problem9.sql
│   └── download_facts.csv
├── Problem 8
│   ├── problem8.sql
│   ├── user.csv
│   ├── README.md
│   ├── ride_log.csv
│   └── problem8.ipynb
└── README.md

/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/developershomes/DataEngineeringProblems/main/.DS_Store

--------------------------------------------------------------------------------

/Problem 6/problem6.sql:

--------------------------------------------------------------------------------

1 | SELECT name

2 | FROM public.students

3 | WHERE marks > 75

4 | ORDER BY right(name,3),ID;

--------------------------------------------------------------------------------
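The ordering rule in problem6.sql (filter on marks, then sort by the last three characters of the name, then by ID) can be sketched in plain Python. This is only an illustration: the rows are a few entries copied from Students.csv, the function name `names_above` is hypothetical, and Python compares strings by code point, which may differ from the database collation.

```python
# A few rows copied from Students.csv: (ID, Name, Marks)
students = [
    (19, "Samantha", 87),
    (21, "Julia", 96),
    (11, "Britney", 95),
    (12, "Dyana", 55),
    (21, "Belvet", 78),
    (31, "Scarlet", 80),
]


def names_above(rows, threshold=75):
    """Names with marks above threshold, sorted by last three characters, then ID."""
    kept = [row for row in rows if row[2] > threshold]
    # Mirrors ORDER BY right(name, 3), ID
    kept.sort(key=lambda row: (row[1][-3:], row[0]))
    return [row[1] for row in kept]


result = names_above(students)
print(result)
```

Dyana is dropped by the marks filter, and the remaining names are ordered by their suffixes ("let", "lia", "ney", "tha", "vet").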

/Problem 7/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/developershomes/DataEngineeringProblems/main/Problem 7/.DS_Store

--------------------------------------------------------------------------------

/Problem 2/department.csv:

--------------------------------------------------------------------------------

1 | department_id,department_name

2 | 1005,Sales

3 | 1002,Finanace

4 | 1004,Purchase

5 | 1001,Operations

6 | 1006,Marketing

7 | 1003,Technoogy

--------------------------------------------------------------------------------

/Problem 7/problem7.sql:

--------------------------------------------------------------------------------

1 | SELECT DISTINCT(a1.user_id)

2 | FROM transaction a1

3 | JOIN transaction a2 ON a1.user_id=a2.user_id

4 | AND a1.id <> a2.id

5 | AND DATEDIFF(a2.created_at,a1.created_at) BETWEEN 0 AND 7

6 | ORDER BY a1.user_id;

--------------------------------------------------------------------------------
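The self-join in problem7.sql can be tried without a MySQL server by using Python's built-in `sqlite3` module. The sketch below makes several assumptions: the table is named `transactions` (because `TRANSACTION` is a keyword in SQLite), `DATEDIFF` is replaced by a difference of SQLite's `julianday` values, and the sample rows are hypothetical rather than taken from transaction.csv.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (id INTEGER PRIMARY KEY, user_id INTEGER, created_at TEXT)"
)
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?)",
    [
        (1, 100, "2020-03-01"),
        (2, 100, "2020-03-05"),  # 4 days after id 1, so user 100 qualifies
        (3, 200, "2020-03-01"),
        (4, 200, "2020-03-20"),  # 19 days apart, so user 200 does not qualify
        (5, 300, "2020-03-10"),  # single purchase, does not qualify
    ],
)

# Pair each purchase with every other purchase by the same user and keep
# pairs where the second one falls 0 to 7 days after the first.
query = """
SELECT DISTINCT a1.user_id
FROM transactions a1
JOIN transactions a2
  ON a1.user_id = a2.user_id
 AND a1.id <> a2.id
 AND julianday(a2.created_at) - julianday(a1.created_at) BETWEEN 0 AND 7
ORDER BY a1.user_id
"""
returning_users = [row[0] for row in conn.execute(query)]
print(returning_users)
```

The `a1.id <> a2.id` condition keeps a purchase from matching itself, which would otherwise mark every user as returning because the day difference would be 0.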

/Problem 5/problem5.sql:

--------------------------------------------------------------------------------

1 | -- Query the list of CITY names starting with vowels (i.e., a, e, i, o, or u) from STATION. Your result cannot contain duplicates.

2 |

3 | SELECT DISTINCT(CITY) FROM STATION WHERE LEFT(CITY,1) IN ('A','E','I','O','U');

--------------------------------------------------------------------------------
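The query above compares the first letter against uppercase vowels only, so whether a lowercase city name matches depends on how the data is stored and on the database's comparison rules. A minimal Python sketch of the same filter, made case-insensitive on purpose, is shown below; the function name and the sample values are hypothetical and not taken from stations.csv.

```python
def cities_starting_with_vowel(cities):
    """Return distinct city names whose first letter is a vowel, in first-seen order."""
    seen = set()
    result = []
    for city in cities:
        # Upper-casing the first letter makes the check case-insensitive.
        if city and city[0].upper() in "AEIOU" and city not in seen:
            seen.add(city)
            result.append(city)
    return result


# Hypothetical sample values.
sample = ["Albany", "Eugene", "boston", "Albany", "oakland", "Irvine"]
vowel_cities = cities_starting_with_vowel(sample)
print(vowel_cities)
```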

/Problem 1/problem1.sql:

--------------------------------------------------------------------------------

1 | -- Active: 1675109399578@@127.0.0.1@5432@postgres

2 | SELECT id, first_name, last_name, MAX(salary) AS MaxSalary, department_id

3 | FROM public.employee

4 | GROUP BY id, first_name, last_name, department_id

5 | ORDER BY id

--------------------------------------------------------------------------------
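The query above relies on the problem's stated assumption that salaries only increase, so the highest salary recorded for an employee is the current one. The same grouping can be sketched in Python as follows; the function name and the sample rows are hypothetical and not taken from employee.csv.

```python
def current_salaries(rows):
    """rows: (id, first_name, last_name, salary, department_id) tuples.

    Returns one row per employee with the highest salary seen, ordered by id.
    """
    latest = {}
    for emp_id, first, last, salary, dept in rows:
        key = (emp_id, first, last, dept)  # same columns as the GROUP BY
        latest[key] = max(salary, latest.get(key, salary))
    return sorted(
        (emp_id, first, last, salary, dept)
        for (emp_id, first, last, dept), salary in latest.items()
    )


# Hypothetical rows: employee 1 has an outdated record.
rows = [
    (2, "Ben", "Lee", 900, 1002),
    (1, "Ana", "Cruz", 1000, 1001),
    (1, "Ana", "Cruz", 1200, 1001),
]
salaries = current_salaries(rows)
print(salaries)
```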

/Problem 3/problem3.sql:

--------------------------------------------------------------------------------

1 | --Find the difference between the total number of CITY entries in the table and the number of distinct CITY entries in the table.

2 |

3 | SELECT count(city) as citycount, count(distinct(city)) as distinctcitycount,(count(city) - count(distinct(city))) as diffbetweenboth

4 | FROM public.station;

--------------------------------------------------------------------------------
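As a quick check of the arithmetic, the difference between `count(city)` and `count(distinct city)` equals the number of repeated entries. Note that both SQL counts skip NULL values. A small Python sketch under those assumptions, with a hypothetical function name and sample data:

```python
def city_count_difference(cities):
    """Number of non-null city entries minus the number of distinct city names."""
    non_null = [city for city in cities if city is not None]  # COUNT(col) skips NULLs
    return len(non_null) - len(set(non_null))


# Hypothetical sample: 5 non-null entries, 3 distinct names.
sample = ["Austin", "Boston", "Austin", None, "Denver", "Boston"]
difference = city_count_difference(sample)
print(difference)
```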

/Problem 7/transaction.sql:

--------------------------------------------------------------------------------

1 | CREATE TABLE `transaction` (

2 | `id` int NOT NULL,

3 | `user_id` int DEFAULT NULL,

4 | `item` varchar(45) DEFAULT NULL,

5 | `created_at` date DEFAULT NULL,

6 | `revenue` int DEFAULT NULL,

7 | PRIMARY KEY (`id`)

8 | ) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci

--------------------------------------------------------------------------------

/Problem 6/students.sql:

--------------------------------------------------------------------------------

1 | -- Table: public.students

2 |

3 | -- DROP TABLE IF EXISTS public.students;

4 |

5 | CREATE TABLE IF NOT EXISTS public.students

6 | (

7 | ID bigint,

8 | Name character varying(100) COLLATE pg_catalog."default",

9 | Marks bigint

10 | )

11 |

12 | TABLESPACE pg_default;

13 |

14 | ALTER TABLE IF EXISTS public.students

15 | OWNER to postgres;

--------------------------------------------------------------------------------

/Problem 2/department.sql:

--------------------------------------------------------------------------------

1 | -- Table: public.department

2 |

3 | -- DROP TABLE IF EXISTS public.department;

4 |

5 | CREATE TABLE IF NOT EXISTS public.department

6 | (

7 | department_id bigint,

8 | department_name character varying(100) COLLATE pg_catalog."default"

9 | )

10 |

11 | TABLESPACE pg_default;

12 |

13 | ALTER TABLE IF EXISTS public.department

14 | OWNER to postgres;

--------------------------------------------------------------------------------

/Problem 0/employee_salary.sql:

--------------------------------------------------------------------------------

1 | CREATE TABLE IF NOT EXISTS public.employee_salary

2 | (

3 | id bigint,

4 | first_name character varying(100) COLLATE pg_catalog."default",

5 | last_name character varying(100) COLLATE pg_catalog."default",

6 | salary bigint,

7 | department_id bigint

8 | )

9 |

10 | TABLESPACE pg_default;

11 |

12 | ALTER TABLE IF EXISTS public.employee_salary

13 | OWNER to postgres;

--------------------------------------------------------------------------------

/Problem 3/station.sql:

--------------------------------------------------------------------------------

1 | -- Table: public.station

2 |

3 | -- DROP TABLE IF EXISTS public.station;

4 |

5 | CREATE TABLE IF NOT EXISTS public.station

6 | (

7 | id bigint,

8 | city character varying(100) COLLATE pg_catalog."default",

9 | state character varying(100) COLLATE pg_catalog."default",

10 | lattitude numeric(20,10),

11 | longtitude numeric(20,10)

12 | )

13 |

14 | TABLESPACE pg_default;

15 |

16 | ALTER TABLE IF EXISTS public.station

17 | OWNER to postgres;

--------------------------------------------------------------------------------

/Problem 4/station.sql:

--------------------------------------------------------------------------------

1 | -- Table: public.station

2 |

3 | -- DROP TABLE IF EXISTS public.station;

4 |

5 | CREATE TABLE IF NOT EXISTS public.station

6 | (

7 | id bigint,

8 | city character varying(100) COLLATE pg_catalog."default",

9 | state character varying(100) COLLATE pg_catalog."default",

10 | lattitude numeric(20,10),

11 | longtitude numeric(20,10)

12 | )

13 |

14 | TABLESPACE pg_default;

15 |

16 | ALTER TABLE IF EXISTS public.station

17 | OWNER to postgres;

--------------------------------------------------------------------------------

/Problem 5/station.sql:

--------------------------------------------------------------------------------

1 | -- Table: public.station

2 |

3 | -- DROP TABLE IF EXISTS public.station;

4 |

5 | CREATE TABLE IF NOT EXISTS public.station

6 | (

7 | id bigint,

8 | city character varying(100) COLLATE pg_catalog."default",

9 | state character varying(100) COLLATE pg_catalog."default",

10 | lattitude numeric(20,10),

11 | longtitude numeric(20,10)

12 | )

13 |

14 | TABLESPACE pg_default;

15 |

16 | ALTER TABLE IF EXISTS public.station

17 | OWNER to postgres;

--------------------------------------------------------------------------------

/Problem 1/employee_table.sql:

--------------------------------------------------------------------------------

1 | -- Table: public.employee

2 |

3 | -- DROP TABLE IF EXISTS public.employee;

4 |

5 | CREATE TABLE IF NOT EXISTS public.employee

6 | (

7 | id bigint,

8 | first_name character varying(100) COLLATE pg_catalog."default",

9 | last_name character varying(100) COLLATE pg_catalog."default",

10 | salary bigint,

11 | department_id bigint

12 | )

13 |

14 | TABLESPACE pg_default;

15 |

16 | ALTER TABLE IF EXISTS public.employee

17 | OWNER to postgres;

--------------------------------------------------------------------------------

/Problem 6/Students.csv:

--------------------------------------------------------------------------------

1 | ID,Name,Marks

2 | 19,Samantha,87

3 | 21,Julia,96

4 | 11,Britney,95

5 | 32,Kristeen,100

6 | 12,Dyana,55

7 | 13,Jenny,66

8 | 14,Christene,88

9 | 15,Meera,24

10 | 16,Priya,76

11 | 17,Priyanka,77

12 | 18,Paige,74

13 | 19,Jane,64

14 | 21,Belvet,78

15 | 31,Scarlet,80

16 | 41,Salma,81

17 | 51,Amanda,34

18 | 61,Heraldo,94

19 | 71,Stuart,99

20 | 81,Aamina,77

21 | 76,Amina,89

22 | 91,Vivek,84

23 | 17,Evil,79

24 | 16,Devil,76

25 | 34,Fanny,75

26 | 38,Danny,75

--------------------------------------------------------------------------------

/Problem 4/problem4.sql:

--------------------------------------------------------------------------------

1 | --Query the two cities in STATION with the shortest and longest CITY names, as well as their respective lengths (i.e.: number of characters in the name). If there is more than one smallest or largest city, choose the one that comes first when ordered alphabetically.

2 |

3 | SELECT q1.city, q1.citylength

4 | FROM

5 | (SELECT CITY,LENGTH(CITY) as citylength, RANK() OVER (PARTITION BY LENGTH(CITY) ORDER BY LENGTH(CITY),CITY) as actualrank

6 | FROM STATION) q1

7 | WHERE q1.actualrank = 1

8 | AND (q1.citylength = (SELECT MIN(LENGTH(CITY)) FROM STATION)

9 | OR q1.citylength = (SELECT MAX(LENGTH(CITY)) FROM STATION));

10 |

--------------------------------------------------------------------------------
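In SQL, `AND` binds more tightly than `OR`, so a filter of the form `rank = 1 AND length = min OR length = max` keeps every city of maximum length unless the `OR` is parenthesized. The intended result (one shortest and one longest name, ties broken alphabetically) can be sketched in Python as below; the function name and the city list are hypothetical and not taken from stations.csv.

```python
def shortest_and_longest(cities):
    """Return [(shortest, length), (longest, length)], ties broken alphabetically."""
    shortest = min(cities, key=lambda city: (len(city), city))
    # Negating the length lets min() pick the longest name while still
    # preferring the alphabetically first one among ties.
    longest = min(cities, key=lambda city: (-len(city), city))
    return [(shortest, len(shortest)), (longest, len(longest))]


# Hypothetical sample with ties at both ends.
sample = ["Roy", "Amo", "Lee", "Springfield", "Bakersfield", "Ada"]
extremes = shortest_and_longest(sample)
print(extremes)
```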

/Problem 0/problem0.sql:

--------------------------------------------------------------------------------

1 | -- 1. List all the employees whose salary is more than 100K

2 |

3 | SELECT id, first_name, last_name, salary, department_id

4 | FROM public.employee_salary

5 | WHERE salary > 100000 ;

6 |

7 | -- 2. Provide distinct department id

8 |

9 | SELECT DISTINCT department_id

10 | FROM public.employee_salary ;

11 |

12 | -- 3. Provide first and last name of employees

13 |

14 | SELECT first_name, last_name

15 | FROM public.employee_salary ;

16 |

17 | -- 4. Provide all the details with the employees whose last name is 'Johnson'

18 |

19 | SELECT id, first_name, last_name, salary, department_id

20 | FROM public.employee_salary

21 | WHERE last_name = 'Johnson' ;

--------------------------------------------------------------------------------

/Problem 9/user_type.csv:

--------------------------------------------------------------------------------

1 | acc_id,paying_customer

2 | 700,no

3 | 701,no

4 | 702,no

5 | 703,no

6 | 704,no

7 | 705,no

8 | 706,no

9 | 707,no

10 | 708,no

11 | 709,no

12 | 710,no

13 | 711,no

14 | 712,no

15 | 713,no

16 | 714,no

17 | 715,no

18 | 716,no

19 | 717,no

20 | 718,no

21 | 719,no

22 | 720,no

23 | 721,no

24 | 722,no

25 | 723,no

26 | 724,no

27 | 725,yes

28 | 726,yes

29 | 727,yes

30 | 728,yes

31 | 729,yes

32 | 730,yes

33 | 731,yes

34 | 732,yes

35 | 733,yes

36 | 734,yes

37 | 735,yes

38 | 736,yes

39 | 737,yes

40 | 738,yes

41 | 739,yes

42 | 740,yes

43 | 741,yes

44 | 742,yes

45 | 743,yes

46 | 744,yes

47 | 745,yes

48 | 746,yes

49 | 747,yes

50 | 748,yes

51 | 749,yes

52 | 750,yes

--------------------------------------------------------------------------------

/Problem 8/problem8.sql:

--------------------------------------------------------------------------------

1 | -- Top 10 users by highest distance travelled

2 | SELECT q.user_id, q.name, q.total

3 | FROM

4 | ( select user_id

5 | ,name

6 | , sum(distance) as total

7 | , RANK() OVER (ORDER BY sum(distance) DESC) as actualrank

8 | from DATAENG.ride_log as log

9 | LEFT OUTER JOIN DATAENG.user as users

10 | ON log.user_id = users.id

11 | GROUP BY user_id, name

12 | ORDER BY sum(distance) DESC) as q

13 | WHERE q.actualrank <= 10;

14 |

15 |

16 | -- Top 10 users by least distance travelled

17 | SELECT q.user_id, q.name, q.total

18 | FROM

19 | ( select user_id

20 | ,name

21 | , sum(distance) as total

22 | , RANK() OVER (ORDER BY sum(distance)) as actualrank

23 | from DATAENG.ride_log as log

24 | LEFT OUTER JOIN DATAENG.user as users

25 | ON log.user_id = users.id

26 | GROUP BY user_id, name

27 | ORDER BY sum(distance)) as q

28 | WHERE q.actualrank <= 10;

--------------------------------------------------------------------------------
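Both queries above use `RANK()`, which gives tied totals the same rank and then skips ahead, so "top 10" can return more than 10 rows when totals tie. The following Python sketch mimics that behaviour; the function name and the distances are hypothetical, and only the user names come from user.csv.

```python
from collections import defaultdict


def rank_users_by_distance(rides, names, limit=10, descending=False):
    """rides: (user_id, distance) pairs. Returns (user_id, name, total) rows
    whose RANK()-style rank is at most `limit`."""
    totals = defaultdict(int)
    for user_id, distance in rides:
        totals[user_id] += distance
    ordered = sorted(totals.items(), key=lambda item: item[1], reverse=descending)

    ranked = []
    rank = 0
    previous_total = None
    for position, (user_id, total) in enumerate(ordered, start=1):
        if total != previous_total:
            rank = position  # ties keep the earlier rank, like RANK()
            previous_total = total
        if rank > limit:
            break
        ranked.append((user_id, names.get(user_id), total))
    return ranked


names = {1: "Dustin Smith", 2: "Jay Ramirez", 3: "Joseph Cooke", 4: "Melinda Young"}
rides = [(1, 10), (2, 5), (1, 5), (3, 5), (4, 20)]  # hypothetical distances

least = rank_users_by_distance(rides, names, limit=2)
most = rank_users_by_distance(rides, names, limit=1, descending=True)
print(least, most)
```

With `limit=2`, users 2 and 3 tie at rank 1 and user 1 receives rank 3, so only the two tied users are returned.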

/Problem 2/problem2.sql:

--------------------------------------------------------------------------------

1 | -- We have an employee table containing employee details, including the salary and department id of each employee. We have a second table containing the department id and department name.

2 | -- Provide the queries below.

3 | -- 1. Use both tables to list all the employees working in the Marketing department, ordered from highest to lowest salary.

4 |

5 | SELECT first_name, last_name, salary

6 | FROM public.employee_salary as emp

7 | LEFT OUTER JOIN public.department as department

8 | ON emp.department_id = department.department_id

9 | WHERE department.department_name = 'Marketing'

10 | ORDER BY salary DESC;

11 |

12 | -- 2. Provide the count of employees in each department, with the department name.

13 |

14 | SELECT department.department_name, count(emp.id) as count_of_employee

15 | FROM public.department as department

16 | LEFT OUTER JOIN public.employee_salary as emp

17 | ON emp.department_id = department.department_id

18 | GROUP BY department.department_name;

19 |

--------------------------------------------------------------------------------
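When the department table is on the left side of a `LEFT OUTER JOIN`, a department with no employees still produces one row (with NULLs on the employee side). `COUNT(*)` counts that row as 1, while `COUNT` over an employee column counts it as 0. The sketch below shows the difference using Python's built-in `sqlite3`; the table layout is simplified and the employee rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE department (department_id INTEGER, department_name TEXT);
    CREATE TABLE employee_salary (id INTEGER, first_name TEXT, department_id INTEGER);
    INSERT INTO department VALUES (1005, 'Sales'), (1006, 'Marketing');
    -- Hypothetical employees: both work in Marketing, nobody in Sales.
    INSERT INTO employee_salary VALUES (1, 'Ana', 1006), (2, 'Ben', 1006);
    """
)

query = """
SELECT d.department_name,
       COUNT(*)    AS star_count,
       COUNT(e.id) AS employee_count
FROM department AS d
LEFT OUTER JOIN employee_salary AS e
  ON e.department_id = d.department_id
GROUP BY d.department_name
ORDER BY d.department_name
"""
counts = conn.execute(query).fetchall()
print(counts)
```

For Sales, `star_count` is 1 and `employee_count` is 0, which is why counting an employee column is the safer choice for this report.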

/Problem 8/user.csv:

--------------------------------------------------------------------------------

1 | id,name

2 | 1,Dustin Smith

3 | 2,Jay Ramirez

4 | 3,Joseph Cooke

5 | 4,Melinda Young

6 | 5,Sean Parker

7 | 6,Ian Foster

8 | 7,Christopher Schmitt

9 | 8,Patrick Gutierrez

10 | 9,Dennis Douglas

11 | 10,Brenda Morris

12 | 11,Jeffery Hernandez

13 | 12,David Rice

14 | 13,Charles Foster

15 | 14,Keith Perez DVM

16 | 15,Dean Cuevas

17 | 16,Melissa Bishop

18 | 17,Alexander Howell

19 | 18,Austin Robertson

20 | 19,Sherri Mcdaniel

21 | 20,Nancy Nguyen

22 | 21,Melody Ball

23 | 22,Christopher Stokes

24 | 23,Joseph Hamilton

25 | 24,Kevin Fischer

26 | 25,Crystal Berg

27 | 26,Barbara Larson

28 | 27,Jacqueline Heath

29 | 28,Eric Gardner

30 | 29,Daniel Kennedy

31 | 30,Kaylee Sims

32 | 31,Shannon Green

33 | 32,Stacy Collins

34 | 33,Donna Ortiz

35 | 34,Jennifer Simmons

36 | 35,Michael Gill

37 | 36,Alyssa Shaw

38 | 37,Destiny Clark

39 | 38,Thomas Lara

40 | 39,Mark Diaz

41 | 40,Stacy Bryant

42 | 41,Howard Rose

43 | 42,Brian Schwartz

44 | 43,Kimberly Potter

45 | 44,Cassidy Ryan

46 | 45,Benjamin Mcbride

47 | 46,Elizabeth Ward

48 | 47,Christina Price

49 | 48,Pamela Cox

50 | 49,Jessica Peterson

51 | 50,Michael Nelson

--------------------------------------------------------------------------------

/Problem 8/README.md:

--------------------------------------------------------------------------------

1 | # Problems 8 -> Top distance travelled

2 |

3 | Find the top 10 users that have traveled the least distance. Output their id, name and a total distance traveled.

4 |

5 | Problem Difficulty Level : Medium

6 |

7 | Data Structure

8 | ride_log

9 |

10 | - id

11 | - user_id

12 | - travel

13 |

14 | user

15 |

16 | - id

17 | - name

18 |

19 |

20 |

21 | Data for ride_log and user table

22 |

23 | [In CSV Format](ride_log.csv)

24 | [In CSV Format](user.csv)

25 |

26 | ## Solving using PySpark

27 |

28 | In Spark, we will solve this problem in two ways:

29 | 1. Using PySpark Functions

30 | 2. Using Spark SQL

31 |

32 | Use the notebook below for the solution

33 |

34 | [Problem Solution First Part](problem8.ipynb)

35 |

36 | ## Solving using MySQL

37 |

38 | In MySQL, we will load the data from CSV using the MySQL import functionality and then solve this problem.

39 |

40 | Output Query

41 |

42 | [Problem Solution](problem8.sql)

43 |

44 | Please also follow the blog below to understand this problem

45 |

--------------------------------------------------------------------------------

/Problem 5/README.md:

--------------------------------------------------------------------------------

1 | # Problems 5 -> CITY names starting with vowels

2 |

3 | Query the list of CITY names starting with vowels (i.e., a, e, i, o, or u) from STATION. Your result cannot contain duplicates.

4 | The STATION table is described as follows:

5 |

6 | Problem Difficulty Level : Easy

7 |

8 | Data Structure

9 |

10 | - ID

11 | - City

12 | - State

13 | - Lattitude

14 | - Longitude

15 |

16 |

17 |

18 | Data for station table

19 |

20 | [In CSV Format](stations.csv)

21 |

22 | ## Solving using PySpark

23 |

24 | In Spark, we will solve this problem in two ways:

25 | 1. Using PySpark Functions

26 | 2. Using Spark SQL

27 |

28 | Use the notebook below for the solution

29 |

30 | [Problem Solution First Part](problem5.ipynb)

31 |

32 | ## Solving using PostgreSQL

33 |

34 | In PostgreSQL, we will load the data from CSV using the PostgreSQL import functionality and then solve this problem.

35 |

36 | Output Query

37 |

38 | [Problem Solution](problem5.sql)

39 |

40 | Please also follow the blog below to understand this problem

41 |

--------------------------------------------------------------------------------

/Problem 3/README.md:

--------------------------------------------------------------------------------

1 | # Problems 3 -> Difference between total number of cities and distinct cities

2 |

3 | Find the difference between the total number of CITY entries in the table and the number of distinct CITY entries in the table.

4 | The STATION table is described as follows:

5 |

6 | Problem Difficulty Level : Easy

7 |

8 | Data Structure

9 |

10 | - ID

11 | - City

12 | - State

13 | - Lattitude

14 | - Longitude

15 |

16 |

17 |

18 | Data for station table

19 |

20 | [In CSV Format](stations.csv)

21 |

22 | ## Solving using PySpark

23 |

24 | In Spark, we will solve this problem in two ways:

25 | 1. Using PySpark Functions

26 | 2. Using Spark SQL

27 |

28 | Use the notebook below for the solution

29 |

30 | [Problem Solution First Part](problem3.ipynb)

31 |

32 | ## Solving using PostgreSQL

33 |

34 | In PostgreSQL, we will load the data from CSV using the PostgreSQL import functionality and then solve this problem.

35 |

36 | Output Query

37 |

38 | [Problem Solution](problem3.sql)

39 |

40 | Please also follow the blog below to understand this problem

41 |

--------------------------------------------------------------------------------

/Problem 7/README.md:

--------------------------------------------------------------------------------

1 | # Problems 7 -> Returning active users

2 |

3 | Write a query that'll identify returning active users. A returning active user is a user that has made a second purchase within 7 days of any other of their purchases. Output a list of user_ids of these returning active users.

4 |

5 | Problem Difficulty Level : Medium

6 |

7 | Data Structure

8 |

9 | - id

10 | - user_id

11 | - item

12 | - created_at

13 | - revenue

14 |

15 |

16 |

17 | Data for transaction table

18 |

19 | [In CSV Format](transaction.csv)

20 |

21 | ## Solving using PySpark

22 |

23 | In Spark, we will solve this problem in two ways:

24 | 1. Using PySpark Functions

25 | 2. Using Spark SQL

26 |

27 | Use the notebook below for the solution

28 |

29 | [Problem Solution First Part](problem7.ipynb)

30 |

31 | ## Solving using MySQL

32 |

33 | In MySQL, we will load the data from CSV using the MySQL import functionality and then solve this problem.

34 |

35 | Output Query

36 |

37 | [Problem Solution](problem7.sql)

38 |

39 | Please also follow the blog below to understand this problem

40 |

--------------------------------------------------------------------------------

/Problem 0/README.md:

--------------------------------------------------------------------------------

1 | # Problems 0 -> Employee Salary more than

2 |

3 | We have a table with employees and their salaries. Write queries to solve the problems below:

4 | 1. List all the employees whose salary is more than 100K

5 | 2. Provide distinct department id

6 | 3. Provide first and last name of employees

7 | 4. Provide all the details with the employees whose last name is 'Johnson'

8 |

9 | Problem Difficulty Level : Easy

10 |

11 | Data Structure

12 |

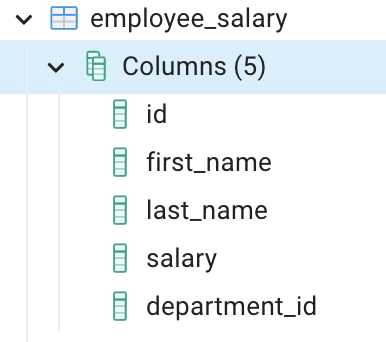

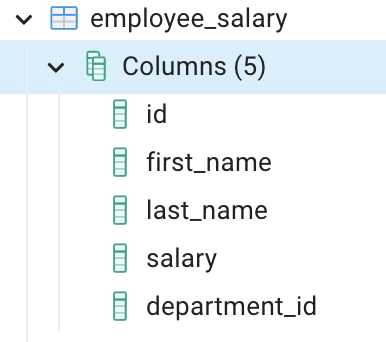

13 |  14 |

15 |

16 | Data for this problem

17 |

18 | [In CSV Format](employee_salary.csv)

19 |

20 | ## Solving using PySpark

21 |

22 | In Spark, we will solve this problem in two ways:

23 | 1. Using PySpark Functions

24 | 2. Using Spark SQL

25 |

26 | Use the notebook below for the solution

27 |

28 | [Problem Solution](problem0.ipynb)

29 |

30 | ## Solving using PostgreSQL

31 |

32 | In PostgreSQL, we will load the data from CSV using the PostgreSQL import functionality and then solve this problem.

33 |

34 | Output Query

35 |

36 | [Problem Solution](problem0.sql)

37 |

38 | Please also follow the blog below to understand this problem

39 |

--------------------------------------------------------------------------------

/Problem 1/README.md:

--------------------------------------------------------------------------------

1 | # Problems 1 -> Employee With his Latest Salary

2 |

3 | We have a table with employees and their salaries, however, some of the records are old and contain outdated salary information. Find the current salary of each employee assuming that salaries increase each year. Output their id, first name, last name, department ID, and current salary. Order your list by employee ID in ascending order.

4 |

5 | Problem Difficulty Level : Medium

6 |

7 | Data Structure

8 |

9 |

10 |

11 |

12 | Data for this problem

13 |

14 | [In CSV Format](employee.csv)

15 |

16 | [In JSON Format](employee.json)

17 |

18 | ## Solving using PySpark

19 |

20 | In Spark, we will solve this problem in two ways:

21 | 1. Using PySpark Functions

22 | 2. Using Spark SQL

23 |

24 | Use the notebook below for the solution

25 |

26 | [Problem Solution](problem1.ipynb)

27 |

28 | ## Solving using PostgreSQL

29 |

30 | In PostgreSQL, we will load the data from CSV using the PostgreSQL import functionality and then solve this problem.

31 |

32 | Output Query

33 |

34 | [Problem Solution](problem1.sql)

35 |

36 | Please also follow the blog below to understand this problem

37 |

--------------------------------------------------------------------------------

/Problem 9/README.md:

--------------------------------------------------------------------------------

1 | # Problems 9 -> Premium vs Freemium

2 |

3 | Find the total number of downloads for paying and non-paying users by date. Include only records where non-paying customers have more downloads than paying customers. The output should be sorted by earliest date first and contain 3 columns date, non-paying downloads, paying downloads.

4 |

5 | Problem Difficulty Level : Hard

6 |

7 | Data Structure

8 | user_info

9 |

10 | - user_id

11 | - acc_id

12 |

13 | user_type

14 |

15 | - acc_id

16 | - paying_customer

17 |

18 | download_facts

19 |

20 | - date

21 | - user_id

22 | - downloads

23 |

24 |

25 | Data for the user_info, user_type and download_facts tables

26 |

27 | [User data CSV Format](user_info.csv)

28 | [User Type data CSV Format](user_type.csv)

29 | [Download facts data CSV Format](download_facts.csv)

30 |

31 | ## Solving using PySpark

32 |

33 | In Spark, we will solve this problem in two ways:

34 | 1. Using PySpark Functions

35 | 2. Using Spark SQL

36 |

37 | Use the notebook below for the solution

38 |

39 | [Problem Solution First Part](problem9.ipynb)

40 |

41 | ## Solving using MySQL

42 |

43 | In MySQL, we will load the data from CSV using the MySQL import functionality and then solve this problem.

44 |

45 | Output Query

46 |

47 | [Problem Solution](problem9.sql)

48 |

49 | Please also follow the blog below to understand this problem

50 |

--------------------------------------------------------------------------------
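The contents of problem9.sql are not shown here, so the following is only one possible approach to the problem statement, not the repository's solution. It uses conditional aggregation per date and a `HAVING` clause to keep dates where non-paying downloads exceed paying downloads, run through Python's built-in `sqlite3`. The account types for `acc_id` 716 and 749 match user_type.csv; the download rows and dates are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE user_info (user_id INTEGER, acc_id INTEGER);
    CREATE TABLE user_type (acc_id INTEGER, paying_customer TEXT);
    CREATE TABLE download_facts (date TEXT, user_id INTEGER, downloads INTEGER);
    INSERT INTO user_info VALUES (1, 716), (2, 749);
    INSERT INTO user_type VALUES (716, 'no'), (749, 'yes');
    -- Hypothetical download rows.
    INSERT INTO download_facts VALUES
        ('2020-08-15', 1, 6), ('2020-08-15', 2, 2),
        ('2020-08-16', 1, 1), ('2020-08-16', 2, 5);
    """
)

query = """
SELECT f.date,
       SUM(CASE WHEN t.paying_customer = 'no'  THEN f.downloads ELSE 0 END) AS non_paying,
       SUM(CASE WHEN t.paying_customer = 'yes' THEN f.downloads ELSE 0 END) AS paying
FROM download_facts AS f
JOIN user_info AS u ON u.user_id = f.user_id
JOIN user_type AS t ON t.acc_id = u.acc_id
GROUP BY f.date
HAVING SUM(CASE WHEN t.paying_customer = 'no'  THEN f.downloads ELSE 0 END)
     > SUM(CASE WHEN t.paying_customer = 'yes' THEN f.downloads ELSE 0 END)
ORDER BY f.date
"""
rows = conn.execute(query).fetchall()
print(rows)
```

Only 2020-08-15 is returned, because on 2020-08-16 the paying user downloaded more than the non-paying user.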

/Problem 9/user_info.csv:

--------------------------------------------------------------------------------

1 | user_id,acc_id

2 | 0,1

3 | 1,716

4 | 2,749

5 | 3,713

6 | 4,744

7 | 5,726

8 | 6,706

9 | 7,750

10 | 8,732

11 | 9,706

12 | 10,729

13 | 11,748

14 | 12,731

15 | 13,739

16 | 14,740

17 | 15,705

18 | 16,706

19 | 17,701

20 | 18,746

21 | 19,726

22 | 20,748

23 | 21,701

24 | 22,707

25 | 23,710

26 | 24,702

27 | 25,720

28 | 26,730

29 | 27,721

30 | 28,733

31 | 29,732

32 | 30,729

33 | 31,716

34 | 32,722

35 | 33,745

36 | 34,737

37 | 35,730

38 | 36,729

39 | 37,723

40 | 38,710

41 | 39,707

42 | 40,737

43 | 41,717

44 | 42,741

45 | 43,718

46 | 44,736

47 | 45,720

48 | 46,743

49 | 47,707

50 | 48,721

51 | 49,748

52 | 50,715

53 | 51,709

54 | 52,732

55 | 53,732

56 | 54,712

57 | 55,701

58 | 56,721

59 | 57,744

60 | 58,724

61 | 59,727

62 | 60,743

63 | 61,744

64 | 62,717

65 | 63,723

66 | 64,713

67 | 65,706

68 | 66,731

69 | 67,722

70 | 68,744

71 | 69,705

72 | 70,703

73 | 71,725

74 | 72,740

75 | 73,713

76 | 74,732

77 | 75,720

78 | 76,709

79 | 77,739

80 | 78,703

81 | 79,732

82 | 80,728

83 | 81,737

84 | 82,711

85 | 83,745

86 | 84,734

87 | 85,723

88 | 86,718

89 | 87,702

90 | 88,718

91 | 89,744

92 | 90,710

93 | 91,727

94 | 92,739

95 | 93,728

96 | 94,740

97 | 95,744

98 | 96,737

99 | 97,726

100 | 98,722

101 | 99,727

102 | 100,712

--------------------------------------------------------------------------------

/Problem 4/README.md:

--------------------------------------------------------------------------------

1 | # Problem 4 -> Get Shortest and Longest City Name

2 |

3 | Query the two cities in STATION with the shortest and longest CITY names, as well as their respective lengths (i.e., the number of characters in the name). If more than one city has the shortest or longest name, choose the one that comes first when ordered alphabetically.

4 | The STATION table is described as follows:

5 |

6 | Problem Difficulty Level : Hard

7 |

8 | Data Structure

9 |

10 | - ID

11 | - City

12 | - State

13 | - Lattitude

14 | - Longitude

15 |

16 |  17 |

18 | Data for station table

19 |

20 | [In CSV Format](stations.csv)

21 |

22 | Sample Input

23 |

24 | For example, CITY has four entries: DEF, ABC, PQRS and WXY.

25 |

26 | Sample Output

27 |

28 | ``````````

29 | ABC 3

30 | PQRS 4

31 | ``````````
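
The tie-breaking rule can be illustrated with a short Python sketch. The function name `shortest_and_longest` is hypothetical; the input is the sample list from this README.

```python
def shortest_and_longest(cities):
    """Return ((shortest, length), (longest, length)) with alphabetical tie-breaks."""
    # Sort key (length, name): shortest name first, ties broken alphabetically.
    shortest = min(cities, key=lambda c: (len(c), c))
    # Sort key (-length, name): longest name first, ties broken alphabetically.
    longest = min(cities, key=lambda c: (-len(c), c))
    return (shortest, len(shortest)), (longest, len(longest))


print(shortest_and_longest(["DEF", "ABC", "PQRS", "WXY"]))
# (('ABC', 3), ('PQRS', 4))
```

Three names share the minimum length of 3, and `ABC` wins because it sorts first alphabetically.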

32 |

33 | ## Solving using PySpark

34 |

35 | In Spark, we will solve this problem in two ways:

36 | 1. Using PySpark functions

37 | 2. Using Spark SQL

38 |

39 | Use the notebook below for the solution:

40 |

41 | [Problem Solution First Part](problem4.ipynb)

42 |

43 | ## Solving using PostgreSQL

44 |

45 | In PostgreSQL, we will load the data from CSV using PostgreSQL's import functionality and then solve the problem.

46 |

47 | Output Query

48 |

49 | [Problem Solution](problem4.sql)

50 |

51 | Please also see the blog below for an explanation of this problem.

52 |

--------------------------------------------------------------------------------

/Problem 9/problem9.sql:

--------------------------------------------------------------------------------

1 | SELECT paying_customer.date, nonpaying_download, paying_download

2 | FROM

3 |   ( SELECT acc.paying_customer  -- per-date download totals for paying users

4 |     , download.date, SUM(download.downloads) AS paying_download

5 |     FROM user_info AS usr

6 |     LEFT OUTER JOIN user_type AS acc

7 |       ON usr.acc_id = acc.acc_id

8 |     LEFT OUTER JOIN download_facts AS download

9 |       ON usr.user_id = download.user_id

10 |     WHERE acc.paying_customer = 'yes'

11 |     GROUP BY acc.paying_customer, download.date ) AS paying_customer

12 | LEFT OUTER JOIN

13 |   ( SELECT acc.paying_customer  -- per-date download totals for non-paying users

14 |     , download.date, SUM(download.downloads) AS nonpaying_download

15 |     FROM user_info AS usr

16 |     LEFT OUTER JOIN user_type AS acc

17 |       ON usr.acc_id = acc.acc_id

18 |     LEFT OUTER JOIN download_facts AS download

19 |       ON usr.user_id = download.user_id

20 |     WHERE acc.paying_customer = 'no'

21 |     GROUP BY acc.paying_customer, download.date ) AS non_paying_customer

22 |   ON paying_customer.date = non_paying_customer.date

23 | WHERE nonpaying_download > paying_download

24 | ORDER BY paying_customer.date;

25 |

26 | -- Alternative solution: conditional aggregation in a single pass

27 |

28 | SELECT date, non_paying,

29 |        paying

30 | FROM

31 |   (SELECT date, SUM(CASE

32 |                       WHEN paying_customer = 'yes' THEN downloads

33 |                     END) AS paying,

34 |                 SUM(CASE

35 |                       WHEN paying_customer = 'no' THEN downloads

36 |                     END) AS non_paying

37 |    FROM user_info a

38 |    INNER JOIN user_type b ON a.acc_id = b.acc_id

39 |    INNER JOIN download_facts c ON a.user_id = c.user_id

40 |    GROUP BY date

41 |   ) t

42 | WHERE non_paying > paying

43 | ORDER BY t.date ASC;

--------------------------------------------------------------------------------

/Problem 6/README.md:

--------------------------------------------------------------------------------

1 | # Problem 6 -> Students more than 75 Marks

2 |

3 | Query the Name of any student in STUDENTS who scored higher than 75 Marks. Order your output by the last three characters of each name. If two or more students have names ending in the same last three characters (e.g., Bobby, Robby), sort them secondarily by ascending ID.

4 |

5 | Problem Difficulty Level : Medium

6 |

7 | Data Structure

8 |

9 | - ID

10 | - Name

11 | - Marks

12 |

13 |  14 |

15 | Data for students table

16 |

17 | [In CSV Format](Students.csv)

18 |

19 | ## Sample Input

20 |

21 | ```

22 | 1 Ashley 81

23 | 2 Samantha 75

24 | 3 Julia 76

25 | 4 Belvet 84

26 | ```

27 |

28 | ## Sample Output

29 |

30 | ```

31 | Ashley

32 | Julia

33 | Belvet

34 | ```

35 |

36 | ## Explanation

37 |

38 | Only Ashley, Julia, and Belvet have Marks > 75. If you look at the last three characters of each of their names, there are no duplicates and 'ley' < 'lia' < 'vet'.
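
The filter and the two-level sort can be sketched in plain Python using the sample input above. The function name `names_above` and its `threshold` parameter are hypothetical.

```python
# Sample input from this README: (ID, Name, Marks).
students = [
    (1, "Ashley", 81),
    (2, "Samantha", 75),
    (3, "Julia", 76),
    (4, "Belvet", 84),
]


def names_above(students, threshold=75):
    """Names with Marks > threshold, ordered by last three characters, then ID."""
    passed = [s for s in students if s[2] > threshold]
    # Primary key: last three characters of the name; secondary key: ascending ID.
    passed.sort(key=lambda s: (s[1][-3:], s[0]))
    return [name for _, name, _ in passed]


print(names_above(students))
# ['Ashley', 'Julia', 'Belvet']
```

Samantha is excluded because 75 is not strictly greater than 75.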

39 |

40 | ## Solving using PySpark

41 |

42 | In Spark, we will solve this problem in two ways:

43 | 1. Using PySpark functions

44 | 2. Using Spark SQL

45 |

46 | Use the notebook below for the solution:

47 |

48 | [Problem Solution First Part](problem6.ipynb)

49 |

50 | ## Solving using PostgreSQL

51 |

52 | In PostgreSQL, we will load the data from CSV using PostgreSQL's import functionality and then solve the problem.

53 |

54 | Output Query

55 |

56 | [Problem Solution](problem6.sql)

57 |

58 | Please also see the blog below for an explanation of this problem.

59 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Data Engineering Problems

2 | Data Engineering Problems with Solutions

3 |

4 |  5 |

6 | Here, we solve each data engineering problem using the following methods:

7 | 1. Solving the problem using PySpark

8 |     1. Using PySpark functions

9 |     2. Using Spark SQL

10 | 2. Solving the problem using SQL (PostgreSQL or MySQL)

11 |

12 | Below is the list of all the problems:

13 |

14 | 0. Problem0 -> [Get Employee with salary more than 100K](Problem%200/README.md)

15 | 1. Problem1 -> [Get Max Salary for each Employee](Problem%201/README.md)

16 | 2. Problem2 -> [Get Salary of all employees in Marketing department](Problem%202/README.md)

17 | 3. Problem3 -> [Find diff between count of cities and distinct count of cities](Problem%203/README.md)

18 | 4. Problem4 -> [Get Shortest and Longest City Name](Problem%204/README.md)

19 | 5. Problem5 -> [CITY names starting with vowels](Problem%205/README.md)

20 | 6. Problem6 -> [Students more than 75 Marks ](Problem%206/README.md)

21 | 7. Problem7 -> [Returning active users](Problem%207/README.md)

22 | 8. Problem8 -> [Top distance travelled](Problem%208/README.md)

23 | 9. Problem9 -> [Premium vs Freemium](Problem%209/README.md)

24 |

25 |

26 | The blog below explains all of these data engineering problems:

27 |

28 | https://developershome.blog/category/data-engineering/problem-solving/

29 |

30 | The YouTube channel below also walks through these problems and introduces new data engineering concepts:

31 |

32 | https://www.youtube.com/@developershomeIn

33 |

--------------------------------------------------------------------------------

/Problem 8/ride_log.csv:

--------------------------------------------------------------------------------

1 | id,user_id,distance

2 | 101,8,93

3 | 102,40,56

4 | 103,28,83

5 | 104,33,83

6 | 105,1,87

7 | 106,32,49

8 | 107,3,5

9 | 108,23,37

10 | 109,31,62

11 | 110,1,35

12 | 111,41,89

13 | 112,19,64

14 | 113,49,57

15 | 114,28,68

16 | 115,48,94

17 | 116,50,89

18 | 117,48,29

19 | 118,13,16

20 | 119,24,58

21 | 120,25,19

22 | 121,39,13

23 | 122,36,10

24 | 123,37,38

25 | 124,32,76

26 | 125,34,61

27 | 126,37,10

28 | 127,11,61

29 | 128,47,35

30 | 129,46,17

31 | 130,15,8

32 | 131,11,36

33 | 132,31,24

34 | 133,7,96

35 | 134,34,64

36 | 135,2,75

37 | 136,45,11

38 | 137,48,58

39 | 138,15,92

40 | 139,47,88

41 | 140,18,27

42 | 141,34,67

43 | 142,47,70

44 | 143,24,52

45 | 144,26,98

46 | 145,20,45

47 | 146,27,60

48 | 147,26,94

49 | 148,10,90

50 | 149,12,63

51 | 150,9,43

52 | 151,36,18

53 | 152,12,11

54 | 153,44,76

55 | 154,9,93

56 | 155,14,82

57 | 156,28,26

58 | 157,39,68

59 | 158,5,92

60 | 159,46,91

61 | 160,14,66

62 | 161,8,47

63 | 162,44,52

64 | 163,21,81

65 | 164,11,69

66 | 165,38,82

67 | 166,23,42

68 | 167,34,85

69 | 168,12,30

70 | 169,43,85

71 | 170,20,30

72 | 171,20,50

73 | 172,25,74

74 | 173,25,96

75 | 174,8,74

76 | 175,50,46

77 | 176,43,77

78 | 177,11,40

79 | 178,17,90

80 | 179,1,78

81 | 180,20,25

82 | 181,27,31

83 | 182,17,91

84 | 183,8,29

85 | 184,42,85

86 | 185,43,95

87 | 186,17,24

88 | 187,15,42

89 | 188,47,37

90 | 189,9,15

91 | 190,42,71

92 | 191,43,9

93 | 192,12,53

94 | 193,49,73

95 | 194,25,50

96 | 195,32,85

97 | 196,9,55

98 | 197,47,98

99 | 198,43,9

100 | 199,14,66

101 | 200,2,39

--------------------------------------------------------------------------------

/Problem 2/README.md:

--------------------------------------------------------------------------------

1 | # Problem 2 -> Employees from the Marketing Department with Salary

2 |

3 | We have an employee table that holds each employee's details, including salary and department ID. A second table holds the department ID and department name.

4 | Write the following queries:

5 | 1. Using both tables, list all employees working in the Marketing department, ordered from highest to lowest salary.

6 | 2. Provide the count of employees in each department, along with the department name.
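
Both queries amount to a join on department ID followed by a sort or a count, which can be sketched in plain Python. The employee rows are copied from `employee_salary.csv`; the mapping of department IDs to names is an assumption made for illustration, since the contents of `department.csv` are not shown here.

```python
from collections import Counter

# (id, first_name, last_name, salary, department_id), from employee_salary.csv.
employees = [
    (45, "Kevin", "Duncan", 45210, 1003),
    (34, "Justin", "Dunn", 67992, 1003),
    (48, "Robert", "Lynch", 117960, 1004),
]

# Hypothetical department_id -> department name mapping (not the real data).
departments = {1003: "Marketing", 1004: "Sales"}


def employees_in(department_name):
    """Employees of one department, ordered from highest to lowest salary."""
    rows = [e for e in employees if departments.get(e[4]) == department_name]
    return sorted(rows, key=lambda e: e[3], reverse=True)


def headcount_by_department():
    """Number of employees per department name."""
    return Counter(departments[e[4]] for e in employees if e[4] in departments)


print(employees_in("Marketing"))
print(headcount_by_department())
```

In SQL the same shape would be a join between the two tables with an `ORDER BY salary DESC` for the first query and a `GROUP BY` on the department name for the second.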

7 |

8 | Problem Difficulty Level : Easy

9 |

10 | Data Structure

11 |

12 | Employee table

13 |

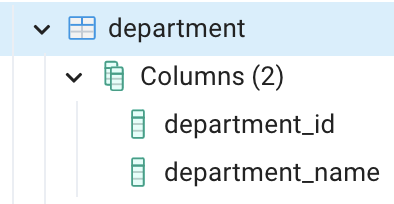

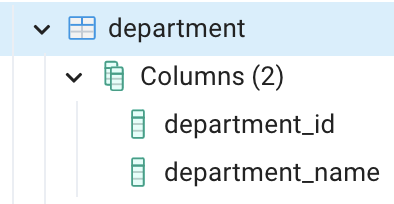

14 |  15 |

16 | Department table

17 |

18 |

19 |

20 | Data for employee salary table

21 |

22 | [In CSV Format](../Problem%200/employee_salary.csv)

23 |

24 | Data for department table

25 |

26 | [In CSV Format](department.csv)

27 |

28 | ## Solving using PySpark

29 |

30 | In Spark, we will solve this problem in two ways:

31 | 1. Using PySpark functions

32 | 2. Using Spark SQL

33 |

34 | Use the notebooks below for the solution:

35 |

36 | [Problem Solution First Part](problem2_1.ipynb)

37 | [Problem Solution Second Part](problem2_2.ipynb)

38 |

39 | ## Solving using PostgreSQL

40 |

41 | In PostgreSQL, we will load the data from CSV using PostgreSQL's import functionality and then solve the problem.

42 |

43 | Output Query

44 |

45 | [Problem Solution](problem2.sql)

46 |

47 | Please also see the blog below for an explanation of this problem.

48 |

--------------------------------------------------------------------------------

/Problem 9/download_facts.csv:

--------------------------------------------------------------------------------

1 | date,user_id,downloads

2 | 24/8/2020,1,6

3 | 22/8/2020,2,6

4 | 18/8/2020,3,2

5 | 24/8/2020,4,4

6 | 19/8/2020,5,7

7 | 21/8/2020,6,3

8 | 24/8/2020,7,1

9 | 24/8/2020,8,8

10 | 17/8/2020,9,5

11 | 16/8/2020,10,4

12 | 22/8/2020,11,8

13 | 19/8/2020,12,6

14 | 15/8/2020,13,3

15 | 21/8/2020,14,0

16 | 24/8/2020,15,0

17 | 15/8/2020,16,5

18 | 18/8/2020,17,5

19 | 23/8/2020,18,8

20 | 15/8/2020,19,6

21 | 25/8/2020,20,4

22 | 16/8/2020,21,1

23 | 25/8/2020,22,4

24 | 22/8/2020,23,7

25 | 21/8/2020,24,4

26 | 25/8/2020,25,5

27 | 23/8/2020,26,6

28 | 19/8/2020,27,9

29 | 24/8/2020,28,3

30 | 20/8/2020,29,0

31 | 25/8/2020,30,8

32 | 20/8/2020,31,5

33 | 21/8/2020,32,8

34 | 15/8/2020,33,6

35 | 24/8/2020,34,4

36 | 25/8/2020,35,1

37 | 24/8/2020,36,7

38 | 17/8/2020,37,8

39 | 16/8/2020,38,8

40 | 17/8/2020,39,1

41 | 20/8/2020,40,8

42 | 18/8/2020,41,3

43 | 16/8/2020,42,0

44 | 23/8/2020,43,9

45 | 25/8/2020,44,9

46 | 16/8/2020,45,2

47 | 15/8/2020,46,2

48 | 21/8/2020,47,1

49 | 21/8/2020,48,4

50 | 22/8/2020,49,8

51 | 17/8/2020,50,6

52 | 21/8/2020,51,4

53 | 20/8/2020,52,7

54 | 16/8/2020,53,7

55 | 20/8/2020,54,6

56 | 20/8/2020,55,0

57 | 21/8/2020,56,8

58 | 18/8/2020,57,5

59 | 17/8/2020,58,2

60 | 24/8/2020,59,3

61 | 20/8/2020,60,7

62 | 22/8/2020,61,8

63 | 15/8/2020,62,6

64 | 23/8/2020,63,3

65 | 17/8/2020,64,4

66 | 16/8/2020,65,4

67 | 16/8/2020,66,3

68 | 19/8/2020,67,1

69 | 18/8/2020,68,2

70 | 17/8/2020,69,4

71 | 22/8/2020,70,7

72 | 20/8/2020,71,6

73 | 15/8/2020,72,2

74 | 17/8/2020,73,7

75 | 22/8/2020,74,1

76 | 17/8/2020,75,8

77 | 19/8/2020,76,0

78 | 25/8/2020,77,1

79 | 25/8/2020,78,0

80 | 17/8/2020,79,8

81 | 23/8/2020,80,7

82 | 24/8/2020,81,2

83 | 21/8/2020,82,0

84 | 24/8/2020,83,4

85 | 21/8/2020,84,0

86 | 25/8/2020,85,7

87 | 22/8/2020,86,1

88 | 20/8/2020,87,2

89 | 19/8/2020,88,3

90 | 22/8/2020,89,8

91 | 24/8/2020,90,0

92 | 22/8/2020,91,9

93 | 25/8/2020,92,7

94 | 25/8/2020,93,0

95 | 17/8/2020,94,1

96 | 23/8/2020,95,2

97 | 24/8/2020,96,3

98 | 21/8/2020,97,8

99 | 24/8/2020,98,0

100 | 21/8/2020,99,9

101 | 25/8/2020,100,7

--------------------------------------------------------------------------------

/Problem 0/employee_salary.csv:

--------------------------------------------------------------------------------

1 | id,first_name,last_name,salary,department_id

2 | 45,Kevin,Duncan,45210,1003

3 | 25,Pamela,Matthews,57944,1005

4 | 48,Robert,Lynch,117960,1004

5 | 34,Justin,Dunn,67992,1003

6 | 62,Dale,Hayes,97662,1005

7 | 1,Todd,Wilson,110000,1006

8 | 61,Ryan,Brown,120000,1003

9 | 21,Stephen,Berry,123617,1002

10 | 13,Julie,Sanchez,210000,1001

11 | 55,Michael,Morris,106799,1005

12 | 44,Trevor,Carter,38670,1001

13 | 73,William,Preston,155225,1003

14 | 39,Linda,Clark,186781,1002

15 | 10,Sean,Crawford,190000,1006

16 | 30,Stephen,Smith,194791,1001

17 | 75,Julia,Ramos,105000,1006

18 | 59,Kevin,Robinson,100924,1005

19 | 69,Ernest,Peterson,115993,1005

20 | 65,Deborah,Martin,67389,1004

21 | 63,Richard,Sanford,136083,1001

22 | 29,Jason,Olsen,51937,1006

23 | 11,Kevin,Townsend,166861,1002

24 | 43,Joseph,Rogers,22800,1005

25 | 32,Eric,Zimmerman,83093,1006

26 | 6,Natasha,Swanson,90000,1005

27 | 3,Kelly,Rosario,42689,1002

28 | 16,Briana,Rivas,151668,1005

29 | 38,Nicole,Lewis,114079,1001

30 | 42,Traci,Williams,180000,1003

31 | 49,Amber,Harding,77764,1002

32 | 26,Allison,Johnson,128782,1001

33 | 74,Richard,Cole,180361,1003

34 | 23,Angela,Williams,100875,1004

35 | 19,Michael,Ramsey,63159,1003

36 | 28,Alexis,Beck,12260,1005

37 | 64,Danielle,Williams,120000,1006

38 | 51,Theresa,Everett,31404,1002

39 | 58,Edward,Sharp,41077,1005

40 | 36,Jesus,Ward,36078,1005

41 | 5,Sherry,Golden,44101,1002

42 | 9,Christy,Mitchell,150000,1001

43 | 35,John,Ball,47795,1004

44 | 54,Wesley,Tucker,90221,1005

45 | 20,Cody,Gonzalez,112809,1004

46 | 57,Patricia,Harmon,147417,1005

47 | 24,William,Flores,142674,1003

48 | 60,Charles,Pearson,173317,1004

49 | 17,Jason,Burnett,42525,1006

50 | 7,Diane,Gordon,74591,1002

51 | 15,Anthony,Valdez,96898,1001

52 | 41,John,George,21642,1001

53 | 71,Kristine,Casey,67651,1003

54 | 12,Joshua,Johnson,123082,1004

55 | 68,Antonio,Carpenter,83684,1002

56 | 47,Kimberly,Dean,71416,1003

57 | 37,Philip,Gillespie,36424,1006

58 | 31,Kimberly,Brooks,95327,1003

59 | 27,Anthony,Ball,34386,1003

60 | 40,Colleen,Carrillo,147723,1004

61 | 70,Karen,Fernandez,101238,1003

62 | 4,Patricia,Powell,170000,1004

63 | 22,Brittany,Scott,162537,1002

64 | 8,Mercedes,Rodriguez,61048,1005

65 | 67,Tyler,Green,111085,1002

66 | 52,Kara,Smith,192838,1004

67 | 46,Joshua,Ewing,73088,1003

68 | 18,Jeffrey,Harris,20000,1002

69 | 56,Rachael,Williams,103585,1002

70 | 50,Victoria,Wilson,176620,1002

71 | 14,John,Coleman,152434,1001

72 | 72,Christine,Frye,137244,1004

73 | 2,Justin,Simon,130000,1005

74 | 53,Teresa,Cohen,98860,1001

75 | 66,Dustin,Bush,47567,1004

76 | 33,Peter,Holt,69945,1002

77 |

--------------------------------------------------------------------------------

/Problem 1/employee.csv:

--------------------------------------------------------------------------------

1 | "id","first_name","last_name","salary","department_id"

2 | 1,Todd,Wilson,110000,1006

3 | 1,Todd,Wilson,106119,1006

4 | 2,Justin,Simon,128922,1005

5 | 2,Justin,Simon,130000,1005

6 | 3,Kelly,Rosario,42689,1002

7 | 4,Patricia,Powell,162825,1004

8 | 4,Patricia,Powell,170000,1004

9 | 5,Sherry,Golden,44101,1002

10 | 6,Natasha,Swanson,79632,1005

11 | 6,Natasha,Swanson,90000,1005

12 | 7,Diane,Gordon,74591,1002

13 | 8,Mercedes,Rodriguez,61048,1005

14 | 9,Christy,Mitchell,137236,1001

15 | 9,Christy,Mitchell,140000,1001

16 | 9,Christy,Mitchell,150000,1001

17 | 10,Sean,Crawford,182065,1006

18 | 10,Sean,Crawford,190000,1006

19 | 11,Kevin,Townsend,166861,1002

20 | 12,Joshua,Johnson,123082,1004

21 | 13,Julie,Sanchez,185663,1001

22 | 13,Julie,Sanchez,200000,1001

23 | 13,Julie,Sanchez,210000,1001

24 | 14,John,Coleman,152434,1001

25 | 15,Anthony,Valdez,96898,1001

26 | 16,Briana,Rivas,151668,1005

27 | 17,Jason,Burnett,42525,1006

28 | 18,Jeffrey,Harris,14491,1002

29 | 18,Jeffrey,Harris,20000,1002

30 | 19,Michael,Ramsey,63159,1003

31 | 20,Cody,Gonzalez,112809,1004

32 | 21,Stephen,Berry,123617,1002

33 | 22,Brittany,Scott,162537,1002

34 | 23,Angela,Williams,100875,1004

35 | 24,William,Flores,142674,1003

36 | 25,Pamela,Matthews,57944,1005

37 | 26,Allison,Johnson,128782,1001

38 | 27,Anthony,Ball,34386,1003

39 | 28,Alexis,Beck,12260,1005

40 | 29,Jason,Olsen,51937,1006

41 | 30,Stephen,Smith,194791,1001

42 | 31,Kimberly,Brooks,95327,1003

43 | 32,Eric,Zimmerman,83093,1006

44 | 33,Peter,Holt,69945,1002

45 | 34,Justin,Dunn,67992,1003

46 | 35,John,Ball,47795,1004

47 | 36,Jesus,Ward,36078,1005

48 | 37,Philip,Gillespie,36424,1006

49 | 38,Nicole,Lewis,114079,1001

50 | 39,Linda,Clark,186781,1002

51 | 40,Colleen,Carrillo,147723,1004

52 | 41,John,George,21642,1001

53 | 42,Traci,Williams,138892,1003

54 | 42,Traci,Williams,150000,1003

55 | 42,Traci,Williams,160000,1003

56 | 42,Traci,Williams,180000,1003

57 | 43,Joseph,Rogers,22800,1005

58 | 44,Trevor,Carter,38670,1001

59 | 45,Kevin,Duncan,45210,1003

60 | 46,Joshua,Ewing,73088,1003

61 | 47,Kimberly,Dean,71416,1003

62 | 48,Robert,Lynch,117960,1004

63 | 49,Amber,Harding,77764,1002

64 | 50,Victoria,Wilson,176620,1002

65 | 51,Theresa,Everett,31404,1002

66 | 52,Kara,Smith,192838,1004

67 | 53,Teresa,Cohen,98860,1001

68 | 54,Wesley,Tucker,90221,1005

69 | 55,Michael,Morris,106799,1005

70 | 56,Rachael,Williams,103585,1002

71 | 57,Patricia,Harmon,147417,1005

72 | 58,Edward,Sharp,41077,1005

73 | 59,Kevin,Robinson,100924,1005

74 | 60,Charles,Pearson,173317,1004

75 | 61,Ryan,Brown,110225,1003

76 | 61,Ryan,Brown,120000,1003

77 | 62,Dale,Hayes,97662,1005

78 | 63,Richard,Sanford,136083,1001

79 | 64,Danielle,Williams,98655,1006

80 | 64,Danielle,Williams,110000,1006

81 | 64,Danielle,Williams,120000,1006

82 | 65,Deborah,Martin,67389,1004

83 | 66,Dustin,Bush,47567,1004

84 | 67,Tyler,Green,111085,1002

85 | 68,Antonio,Carpenter,83684,1002

86 | 69,Ernest,Peterson,115993,1005

87 | 70,Karen,Fernandez,101238,1003

88 | 71,Kristine,Casey,67651,1003

89 | 72,Christine,Frye,137244,1004

90 | 73,William,Preston,155225,1003

91 | 74,Richard,Cole,180361,1003

92 | 75,Julia,Ramos,61398,1006

93 | 75,Julia,Ramos,70000,1006

94 | 75,Julia,Ramos,83000,1006

95 | 75,Julia,Ramos,90000,1006

96 | 75,Julia,Ramos,105000,1006

97 |

--------------------------------------------------------------------------------

/Problem 7/transaction.csv:

--------------------------------------------------------------------------------

1 | id,user_id,item,created_at,revenue

2 | 1,109,milk,2020-03-03,123

3 | 2,139,biscuit,2020-03-18,421

4 | 3,120,milk,2020-03-18,176

5 | 4,108,banana,2020-03-18,862

6 | 5,130,milk,2020-03-28,333

7 | 6,103,bread,2020-03-29,862

8 | 7,122,banana,2020-03-07,952

9 | 8,125,bread,2020-03-13,317

10 | 9,139,bread,2020-03-30,929

11 | 10,141,banana,2020-03-17,812

12 | 11,116,bread,2020-03-31,226

13 | 12,128,bread,2020-03-04,112

14 | 13,146,biscuit,2020-03-04,362

15 | 14,119,banana,2020-03-28,127

16 | 15,142,bread,2020-03-09,503

17 | 16,122,bread,2020-03-06,593

18 | 17,128,biscuit,2020-03-24,160

19 | 18,112,banana,2020-03-24,262

20 | 19,149,banana,2020-03-29,382

21 | 20,100,banana,2020-03-18,599

22 | 21,130,milk,2020-03-16,604

23 | 22,103,milk,2020-03-31,290

24 | 23,112,banana,2020-03-23,523

25 | 24,102,bread,2020-03-25,325

26 | 25,120,biscuit,2020-03-21,858

27 | 26,109,bread,2020-03-22,432

28 | 27,101,milk,2020-03-01,449

29 | 28,138,milk,2020-03-19,961

30 | 29,100,milk,2020-03-29,410

31 | 30,129,milk,2020-03-02,771

32 | 31,123,milk,2020-03-31,434

33 | 32,104,biscuit,2020-03-31,957

34 | 33,110,bread,2020-03-13,210

35 | 34,143,bread,2020-03-27,870

36 | 35,130,milk,2020-03-12,176

37 | 36,128,milk,2020-03-28,498

38 | 37,133,banana,2020-03-21,837

39 | 38,150,banana,2020-03-20,927

40 | 39,120,milk,2020-03-27,793

41 | 40,109,bread,2020-03-02,362

42 | 41,110,bread,2020-03-13,262

43 | 42,140,milk,2020-03-09,468

44 | 43,112,banana,2020-03-04,381

45 | 44,117,biscuit,2020-03-19,831

46 | 45,137,banana,2020-03-23,490

47 | 46,130,bread,2020-03-09,149

48 | 47,133,bread,2020-03-08,658

49 | 48,143,milk,2020-03-11,317

50 | 49,111,biscuit,2020-03-23,204

51 | 50,150,banana,2020-03-04,299

52 | 51,131,bread,2020-03-10,155

53 | 52,140,biscuit,2020-03-17,810

54 | 53,147,banana,2020-03-22,702

55 | 54,119,biscuit,2020-03-15,355

56 | 55,116,milk,2020-03-12,468

57 | 56,141,milk,2020-03-14,254

58 | 57,143,bread,2020-03-16,647

59 | 58,105,bread,2020-03-21,562

60 | 59,149,biscuit,2020-03-11,827

61 | 60,117,banana,2020-03-22,249

62 | 61,150,banana,2020-03-21,450

63 | 62,134,bread,2020-03-08,981

64 | 63,133,banana,2020-03-26,353

65 | 64,127,milk,2020-03-27,300

66 | 65,101,milk,2020-03-26,740

67 | 66,137,biscuit,2020-03-12,473

68 | 67,113,biscuit,2020-03-21,278

69 | 68,141,bread,2020-03-21,118

70 | 69,112,biscuit,2020-03-14,334

71 | 70,118,milk,2020-03-30,603

72 | 71,111,milk,2020-03-19,205

73 | 72,146,biscuit,2020-03-13,599

74 | 73,148,banana,2020-03-14,530

75 | 74,100,banana,2020-03-13,175

76 | 75,105,banana,2020-03-05,815

77 | 76,129,milk,2020-03-02,489

78 | 77,121,milk,2020-03-16,476

79 | 78,117,bread,2020-03-11,270

80 | 79,133,milk,2020-03-12,446

81 | 80,124,bread,2020-03-31,937

82 | 81,145,bread,2020-03-07,821

83 | 82,105,banana,2020-03-09,972

84 | 83,131,milk,2020-03-09,808

85 | 84,114,biscuit,2020-03-31,202

86 | 85,120,milk,2020-03-06,898

87 | 86,130,milk,2020-03-06,581

88 | 87,141,biscuit,2020-03-11,749

89 | 88,147,bread,2020-03-14,262

90 | 89,118,milk,2020-03-15,735

91 | 90,136,biscuit,2020-03-22,410

92 | 91,132,bread,2020-03-06,161

93 | 92,137,biscuit,2020-03-31,427

94 | 93,107,bread,2020-03-01,701

95 | 94,111,biscuit,2020-03-18,218

96 | 95,100,bread,2020-03-07,410

97 | 96,106,milk,2020-03-21,379

98 | 97,114,banana,2020-03-25,705

99 | 98,110,bread,2020-03-27,225

100 | 99,130,milk,2020-03-16,494

101 | 100,117,bread,2020-03-10,209

--------------------------------------------------------------------------------

/Problem 6/problem6.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "id": "4328d022-1f8d-442f-921e-d16693058a4c",

6 | "metadata": {},

7 | "source": [

8 | "Here, we will solve the problem in two ways:\n",

9 | "1. First, using PySpark functions\n",

10 | "2. Second, using Spark SQL"

11 | ]

12 | },

13 | {

14 | "cell_type": "code",

15 | "execution_count": 1,

16 | "id": "6d4647c5-df06-4d53-b4b4-66677cc54ed1",

17 | "metadata": {},

18 | "outputs": [],

19 | "source": [

20 | "# Load the required libraries before starting the Spark session\n",

21 | "# SparkSession is the entry point for DataFrame and Spark SQL functionality\n",

22 | "from pyspark.sql import SparkSession"

23 | ]

24 | },

25 | {

26 | "cell_type": "code",

27 | "execution_count": 2,

28 | "id": "c0fdceb9-20df-4588-8820-672d48778b09",

29 | "metadata": {},

30 | "outputs": [

31 | {

32 | "name": "stderr",

33 | "output_type": "stream",

34 | "text": [

35 | "WARNING: An illegal reflective access operation has occurred\n",

36 | "WARNING: Illegal reflective access by org.apache.spark.unsafe.Platform (file:/opt/spark/jars/spark-unsafe_2.12-3.2.1.jar) to constructor java.nio.DirectByteBuffer(long,int)\n",

37 | "WARNING: Please consider reporting this to the maintainers of org.apache.spark.unsafe.Platform\n",

38 | "WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations\n",

39 | "WARNING: All illegal access operations will be denied in a future release\n",

40 | "Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties\n",

41 | "Setting default log level to \"WARN\".\n",

42 | "To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).\n",

43 | "23/02/14 14:24:31 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable\n"

44 | ]

45 | }

46 | ],

47 | "source": [

48 | "# Start the Spark session\n",

49 | "spark = SparkSession.builder.appName(\"problem6\").getOrCreate()\n",

50 | "sqlContext = spark  # reuse the session; the SparkSession constructor expects a SparkContext\n",

51 | "# Show only errors, not warnings\n",

52 | "spark.sparkContext.setLogLevel(\"ERROR\")"

53 | ]

54 | },

55 | {

56 | "cell_type": "code",

57 | "execution_count": 4,

58 | "id": "d5ec58af-280e-4eef-a95e-308df1bcbf68",

59 | "metadata": {},

60 | "outputs": [],

61 | "source": [

62 | "# Load the CSV file into a DataFrame\n",

63 | "studentdf = spark.read.format(\"csv\").option(\"header\",\"true\").option(\"inferSchema\",\"true\").load(\"students.csv\")"

64 | ]