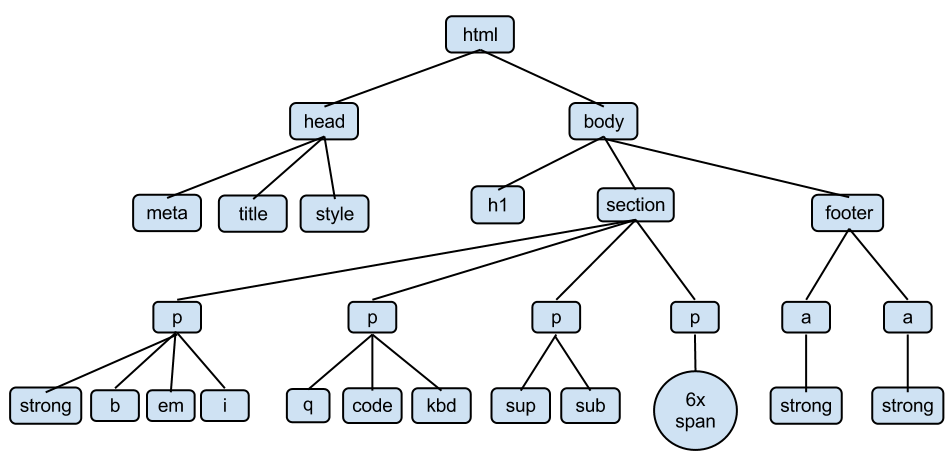

` tags *inside* of a `` tag.

143 | * Enter CSS

144 |

145 | ## CSS

146 |

147 | * CSS = Cascading Style Sheet.

148 | * CSS defines how HTML elements are to be displayed

149 | * HTML came first. But it was only meant to define content, not format it. While HTML contains tags like `` and ``, this is a very inefficient way to develop a website.

150 | * To solve this problem, CSS was created specifically to display content on a webpage. Now, one can change the look of an entire website just by changing one file.

151 | * Most web designers litter the HTML markup with tons of `class`'s and `id`'s to provide "hooks" for their CSS.

152 | * You can piggyback on these "hooks" to jump to the parts of the markup that contain the data you need.

153 | * The infographic [here](http://www.codingdojo.com/blog/html-vs-css-inforgraphic/) shows the difference between HTML and CSS and how together they form a web page:

154 |

155 |

156 |

157 | ## CSS Anatomy: Selectors

158 |

159 | | Type | HTML | CSS Selector |

160 | | :----- | :-------: | -------------: |

161 | | Element | ``, | `a`

`p a`|

162 | | Class | `` | `.blue`

`a.blue` |

163 | | ID | `` | `#blue`

`a#blue` |

164 |

165 | ## CSS Anatomy: Declarations

166 |

167 | - Selector: `a`

168 | - Property: `background-color`

169 | - Value: `yellow`

170 |

171 | ## CSS Anatomy: Hooks

172 |

173 |

174 |

175 | ## CSS + HTML

176 |

177 | What does the following HTML render to?

178 |

179 | ```html

180 |

181 |

182 |

183 | |

184 | Kurtis

185 | |

186 |

187 | McCoy

188 | |

189 |

190 |

191 | |

192 | Leah

193 | |

194 |

195 | Guerrero

196 | |

197 |

198 |

199 |

200 | ```

201 |

202 | > #### Exercises 1

203 | >

204 | > Find the CSS selectors for the following elements in the HTML above.

205 | > (Hint: There will be multiple solutions for each)

206 | >

207 | > 1. The entire table

208 | > 2. Just the row containing "Kurtis McCoy"

209 | > 3. Just the elements containing first names

210 |

211 | > #### Exercises 2

212 | >

213 | > A great resource to practice your CSS selection skills is http://flukeout.github.io/

214 | > Complete the first 10 exercises

215 |

216 | ## Inspect Element

217 |

218 | Google Chrome comes with great developer tools to help parse a webpage.

219 |

220 |  221 |

222 | The inspector gives you the HTML tree, as well as all the CSS selectors and style information.

223 |

224 | ## Inspect Element

225 |

226 |

227 |

228 | ---

229 | > #### Exercise 3

230 | >

231 | > Go to http://rochelleterman.github.io/. Using Google Chrome's inspect element:

232 | >

233 | > 1. Change the background color of each of the rows in the table

234 | > 2. Find the image source URL

235 | > 3. Find the HREF attribute of the link.

236 | >

237 | > Useful CSS declarations [here](http://miriamposner.com/blog/wp-content

238 | > uploads/2011/11/usefulcss.pdf)

239 |

240 | > #### Extra Challenge:

241 | >

242 | > Go to any website, and redesign the site using Google Chrome's inspect

243 | > element.

244 | >

245 |

246 | ## Putting it all together:

247 |

248 | 1. Use Inspect Element to see how your data is structured

249 | 2. Pay attention to HTML tags and CSS selectors

250 | 3. Pray that there is some kind of pattern

251 | 4. Leverage that pattern using Python

252 |

253 | ## Scraping Multiple Pages and Difficult sites

254 |

255 | * We're start by scrape one webpage. But what if you wanted to do many?

256 | * Two solutions:

257 | - URL patterns

258 | - Crawling ([Scrapy](http://scrapy.org/))

259 | * Lots of javascript or other problems? Check out [Selenium for Python](https://selenium-python.readthedocs.org/)

260 |

261 |

262 | **To the iPython Notebook!**

--------------------------------------------------------------------------------

/1_APIs/2_api_full-notes.md:

--------------------------------------------------------------------------------

1 | ---

2 | title: "Accessing Databases via Web APIs: Lecture Notes"

3 | author: "PS239T"

4 | date: "Fall 2015"

5 | output: html_document

6 | ---

7 |

8 | ### Accessing Data: Some Preliminary Considerations

9 |

10 | Whenever you're trying to get information from the web, it's very important to first know whether you're accessing it through appropriate means.

11 |

12 | The UC Berkeley library has some excellent resources on this topic. Here is a flowchart that can help guide your course of action.

13 |

14 |

15 |

16 | You can see the library's licensed sources [here](http://guides.lib.berkeley.edu/text-mining).

17 |

18 | ### What is an API?

19 |

20 | * API stands for **Application Programming Interface**

21 |

22 | * Broadly defined: a set of rules and procedures that facilitate interactions between computers and their applications

23 |

24 | * A very common type of API is the Web API, which (among other things) allows users to query a remote database over the internet

25 |

26 | * Web APIs take on a variety of formats, but the vast majority adhere to a particular style known as **Representational State Transfer** or **REST**

27 |

28 | * What makes these "RESTful" APIs so convenient is that we can use them to query databases using URLs

29 |

30 | ### RESTful Web APIs are All Around You...

31 |

32 | Consider a simple Google search:

33 |

34 |

35 |

36 | Ever wonder what all that extra stuff in the address bar was all about? In this case, the full address is Google's way of sending a query to its databases asking requesting information related to the search term "golden state warriors".

37 |

38 |

39 |

40 | In fact, it looks like Google makes its query by taking the search terms, separating each of them with a "+", and appending them to the link "https://www.google.com/#q=". Therefore, we should be able to actually change our Google search by adding some terms to the URL and following the general format...

41 |

42 |

43 |

44 | Learning how to use RESTful APIs is all about learning how to format these URLs so that you can get the response you want.

45 |

46 | ### Some Basic Terminology

47 |

48 | * **Uniform Resource Location (URL)**: a string of characters that, when interpreted via the Hypertext Transfer Protocol (HTTP), points to a data resource, notably files written in Hypertext Markup Language (HTML) or a subset of a database. This is often referred to as a "call".

49 |

50 | * **HTTP Methods/Verbs**:

51 |

52 | + *GET*: requests a representation of a data resource corresponding to a particular URL. The process of executing the GET method is often referred to as a "GET request" and is the main method used for querying RESTful databases.

53 |

54 | + *HEAD*, *POST*, *PUT*, *DELETE*: other common methods, though mostly never used for database querying.

55 |

56 | ### How Do GET Requests Work? A Web Browsing Example

57 |

58 | As you might suspect from the example above, surfing the web is basically equivalent to sending a bunch of GET requests to different servers and asking for different files written in HTML.

59 |

60 | Suppose, for instance, I wanted to look something up on Wikipedia. My first step would be to open my web browser and type in http://www.wikipedia.org. Once I hit return, I'd see the page below.

61 |

62 |

63 |

64 | Several different processes occured, however, between me hitting "return" and the page finally being rendered. In order:

65 |

66 | 1. The web browser took the entered character string and used the command-line tool "Curl" to write a properly formatted HTTP GET request and submitted it to the server that hosts the Wikipedia homepage.

67 |

68 | 2. After receiving this request, the server sent an HTTP response, from which Curl extracted the HTML code for the page (partially shown below).

69 |

70 | 3. The raw HTML code was parsed and then executed by the web browser, rendering the page as seen in the window.

71 |

72 | ```

73 | [1] "\n\n\n\n\nWikipedia\n\n

2 |

3 |

4 |

5 | PS239T: Welcome!

6 |

166 |

167 |

168 |

343 |

344 |

347 |

348 |

349 |

350 |

--------------------------------------------------------------------------------

/1_APIs/all-formated.csv:

--------------------------------------------------------------------------------

1 | headline,word_count,keywords,date,id

2 | "Review: Alvin Ailey, at City Center, Maintains Polished Approach to Familiar Footwork",403,"['Dancing', 'Battle, Robert', 'New York City Center Theater', 'Ailey, Alvin, American Dance Theater', 'Blues Suite (Dance)', 'Revelations (Dance)']",2015-12-18,56733bdc38f0d85bed90f410

3 | New York Celebrates Billy Strayhorn’s Centennial With Special ‘A Train’ Ride,256,"['Ellington, Duke', 'Marsalis, Wynton', 'Jazz at Lincoln Center', 'New York Transit Museum', 'Jazz', 'Music', 'Subways']",2015-11-27,56589a4238f0d86d6ede4595

4 | Dance Listings for Dec. 18-24,1330,"['Culture (Arts)', 'Dancing']",2015-12-18,567447b338f0d805d002ea19

5 | Dance Listings for Dec. 11-17,2208,"['Dancing', 'Culture (Arts)']",2015-12-11,566a0bfa38f0d857ec8b0964

6 | Washington’s Shaw Neighborhood Is Remade for Young Urbanites,1194,"['Real Estate and Housing (Residential)', 'Real Estate (Commercial)', 'Washington (DC)', 'JBG Cos']",2015-12-02,565e1ee538f0d8640ffa095b

7 | "The Mets, the Royals and Charlie Parker, Linked by Autumn in New York",1078,"['Baseball', 'Ellington, Duke', 'Parker, Charlie', 'Kansas City Royals', 'New York Mets', 'World Series', 'Music', 'Kansas City (Kan)', 'New York City', 'Jazz', 'Birdland (Manhattan, NY)', 'Count Basie Orchestra', 'New York Giants (Baseball)', 'Coltrane, John', 'Armstrong, Louis']",2015-11-01,563331ad38f0d8310a2a0755

8 | "Gene Norman, Music Producer With an Ear for Jazz, Dies at 93",582,"['Deaths (Obituaries)', 'Jazz', 'Norman, Gene (1922-2015)']",2015-11-16,56464e6138f0d853f8379b62

9 | Fantasy Football Week 10: Rankings and Matchup Analysis,3588,"['Football', 'Fantasy Sports']",2015-11-14,56460b1838f0d8244c6375ad

10 | "New Music From Nate Wooley, Alicia Hall Moran and Adam Larson",882,"['Music', 'Third Man Records', 'Moran, Alicia Hall', 'Wooley, Nate', 'Larson, Adam R (1990- )', 'Von Hausswolff, Anna']",2015-11-08,563a318338f0d8786b6fe694

11 | Green-Wood Is the Brooklyn Cemetery With a Velvet Rope,1462,"['Cemeteries', 'Green-Wood Cemetery (Brooklyn, NY)', 'Parties (Social)', 'Brooklyn (NYC)']",2015-11-01,5633ed9338f0d85e68a21e53

12 | Review: Dance Heginbotham Shows Off Its Eccentric Style at the Joyce,371,"['Dancing', 'Heginbotham, John', 'Joyce Theater', 'Dance Heginbotham']",2015-10-13,561c30a038f0d84d2f3b8a88

13 | Fantasy Football Week 6: Rankings and Matchup Analysis,3078,"['Football', 'Fantasy Sports']",2015-10-17,5620f27f38f0d84dbbafe2d5

14 | Fantasy Football Week 5: Rankings and Matchup Analysis,2909,"['Football', 'Fantasy Sports', 'Freeman, Devonta (1992- )']",2015-10-10,5618111e38f0d814e13702e1

15 | "Phil Woods, Saxophonist Revered in Jazz and Heard on Hits, Dies at 83",724,"['Deaths (Obituaries)', 'Jazz', 'Saxophones', 'Woods, Phil (1931-2015)']",2015-09-30,560b30a238f0d84e27991244

16 | Jazz Listings for Oct. 2-8,1769,"['Music', 'Jazz']",2015-10-02,560db8bb38f0d81aa77a51d2

17 | Nancy Harms Celebrates Duke Ellington,326,"['Music', 'Jazz', 'Metropolitan Room', 'Harms, Nancy (Singer)']",2015-09-09,55ef4b7638f0d867b4c8968b

18 | "Gary Keys, Filmmaker Who Documented Duke Ellington, Dies at 81",523,"['Deaths (Obituaries)', 'Documentary Films and Programs', 'Ellington, Duke', 'Jazz', 'Keys, Gary (1934-2015)']",2015-08-31,55e39f4738f0d84c14715dc4

19 | "Review: ‘Negroland,’ by Margo Jefferson, on Growing Up Black and Privileged",1122,"['Books and Literature', 'Jefferson, Margo', 'Blacks', 'Negroland: A Memoir (Book)']",2015-09-11,55f1fcd138f0d824d9bc6c83

20 | "Corrections: September 3, 2015",594,[],2015-09-03,55e7e91638f0d80b7eeea706

21 | "Paid Notice: Deaths KEYS, GARY",195,"['KEYS, GARY']",2015-08-18,55dd2c6838f0d8657895ea0f

22 | "Paid Notice: Deaths KEYS, GARY ",194,[],2015-08-17,55d2f42038f0d80f08415c0c

23 | "Kevin Henkes’s ‘Waiting,’ and More",1059,"['Books and Literature', 'Henkes, Kevin', 'Daywalt, Drew (1970- )', 'Pinkney, Brian', 'Jeffers, Oliver', 'Waiting (Book)', 'The Day the Crayons Came Home (Book)', 'On the Ball (Book)']",2015-08-23,55d741ff38f0d81d6b2f0148

24 | ‘Cuba: The Conversation Continues’ and ‘Live in Cuba’ Expand a Musical Dialogue,960,"['Music', 'Cuba', 'Jazz', 'United States International Relations', 'Marsalis, Wynton', ""O'Farrill, Arturo"", 'Jazz at Lincoln Center Orchestra', 'Afro Latin Jazz Orchestra']",2015-08-22,55d7998838f0d83feb7c3fa0

25 | Beyond Green Eggs and Ham,2697,['News'],2015-07-29,55b890f738f0d878f9fef7ef

26 | Times Critics’ Guide: What to Do This Week,476,['Culture (Arts)'],2015-07-21,55acf7df38f0d83cdf2a9bc9

27 | Jazz Listings for July 17-23,1583,"['Music', 'Jazz']",2015-07-17,55a824ac38f0d87d1f9a7d7b

28 | Review: ‘Thelonious’ is a Tap Tribute to a Musician,418,"['Dancing', 'American Tap Dance Foundation', 'Monk, Thelonious', 'Music']",2015-07-11,55a031e738f0d8721baf65b2

29 | "Charles Winick, Author Who Challenged Views on Drugs and Gender, Dies at 92",998,"['Deaths (Obituaries)', 'Archaeology and Anthropology', 'City University of New York', 'Winick, Charles (1922-2015)']",2015-07-13,55a30e1738f0d80f8ac35d4c

30 | "Masabumi Kikuchi, Jazz Pianist Who Embraced Individualism, Dies at 75",557,"['Deaths (Obituaries)', 'Music', 'Jazz', 'Kikuchi, Masabumi']",2015-07-10,559f266538f0d8526a38bba9

31 | Gunther Schuller Dies at 89; Composer Synthesized Classical and Jazz,1879,"['Deaths (Obituaries)', 'Music', 'Jazz', 'Classical Music', 'Schuller, Gunther']",2015-06-22,5587636b38f0d810c2364153

32 | Review: Aaron Diehl’s ‘Space Time Continuum’ Is a Jubilant New Album,461,"['Music', 'Jazz', 'Golson, Benny', 'Temperley, Joe', 'Diehl, Aaron']",2015-06-11,5578932638f0d808b502d28c

33 | "Mario Cooper, Nexus Between AIDS Activists and Black Leaders, Dies at 61",810,"['Deaths (Obituaries)', 'Acquired Immune Deficiency Syndrome', 'Blacks', 'Cooper, Mario (1954-2015)']",2015-06-07,556facf238f0d865b59b35b9

34 | "Review: Maria Schneider Orchestra’s ‘The Thompson Fields,’ Connections to the Natural World",385,"['Music', 'Jazz', 'Schneider, Maria']",2015-06-02,556cc2e238f0d81d5c02cd44

35 | "Dudley Williams, Eloquent Dancer Who Defied Age, Dies at 76",1136,"['Deaths (Obituaries)', 'Dancing', 'Williams, Dudley', 'Ailey, Alvin, American Dance Theater']",2015-06-04,556f9c0738f0d865b59b3596

36 | Paperback Row,486,['Books and Literature'],2015-05-31,55688d4838f0d87c79ae5ee9

37 | Cara McCarty,508,"['Cooper Hewitt, Smithsonian Design Museum', 'McCarty, Cara (1956- )']",2015-05-17,55578cc138f0d86cbb7b7a2e

38 | "Stan Cornyn, Creative Record Executive, Is Dead at 81",765,"['Deaths (Obituaries)', 'Grammy Awards', 'Music', 'Warner Brothers', 'Cornyn, Stan (1933-2015)']",2015-05-16,555560ce38f0d833d435341f

39 | Word of the Day | cantankerous,214,[],2015-05-05,5548411638f0d81a8df26666

40 | ‘Harlem Nights/U Street Lights’ Samples Two Jazz Sources,152,['Jazz'],2015-05-03,554794e438f0d8091569448a

41 | "Louis Armstrong, the Real Ambassador",858,"['Music', 'Cold War Era', 'Blacks', 'United States International Relations', 'Jazz', 'Race and Ethnicity', 'Armstrong, Louis']",2015-05-02,55443d7638f0d85f67dd3571

42 | Jazz Listings for May 1-7,2340,"['Music', 'Jazz']",2015-05-01,5542a23338f0d83d46e51e6b

43 | "$1 Million Homes in Washington, D.C., Denver and Vermont",1070,"['Real Estate and Housing (Residential)', 'Denver (Colo)', 'Vermont', 'Washington (DC)']",2015-04-19,552e67a338f0d87fbef52e0d

44 | "In ‘Something Rotten!,’ if Music Be the Food of Farce, Play On",1761,"['Theater', 'Shakespeare, William', 'Kirkpatrick, Wayne (1961- )', 'Kirkpatrick, Karey (1964- )', 'Nicholaw, Casey', ""O'Farrell, John (1962- )"", 'Something Rotten! (Play)']",2015-04-19,552fa44838f0d824112d690a

45 | Jazz Listings for April 17-23,2079,"['Music', 'Jazz']",2015-04-17,553032fc38f0d847eef0f56a

46 | A Piece of Harlem History Turns to Dust,591,"['Renaissance Theater and Casino (Manhattan, NY)', 'Historic Buildings and Sites', 'Harlem (Manhattan, NY)', 'Demolition', 'Blacks']",2015-04-12,55282ccc38f0d855b3a9512b

47 | "Sugar Hill, Rich in Culture, and Affordable",1273,"['Real Estate and Housing (Residential)', 'Sugar Hill (Manhattan, NY)', 'Historic Buildings and Sites']",2015-04-12,552525df38f0d873d654723e

48 | Jazz Listings for April 10-16,2087,"['Music', 'Jazz']",2015-04-10,5526fc9538f0d8359e979810

49 | In Performance: T. Oliver Reid,205,"['Ellington, Duke', 'Jazz', 'Music', 'Reid, T Oliver', 'Theater']",2015-03-17,550817e338f0d87501d7055d

50 | In Performance | T. Oliver Reid,34,"['Theater', 'Ellington, Duke', 'Metropolitan Room', 'Music', 'Reid, T Oliver']",2015-03-16,55071c2638f0d87501d70245

51 | In Performance: T. Oliver Reid,31,"['Reid, T Oliver', 'Theater', 'Music', 'Metropolitan Room', 'Ellington, Duke']",2015-03-16,550717ef38f0d87501d70232

52 | Ecuatoriana Restaurant Is a Link to Ecuador in Harlem,567,"['Restaurants', 'Hamilton Heights (Manhattan, NY)', 'Ecuadorean-Americans', 'Ecuatoriana Restaurant (Manhattan, NY, Restaurant)']",2015-03-29,5515cf5a38f0d84d44f0de14

53 | Letters: The Content of Character,878,['Books and Literature'],2015-03-22,550b211b38f0d8631c7b1049

54 | Jazz Listings for March 20-26,2214,"['Music', 'Jazz']",2015-03-20,550b526d38f0d8631c7b112a

55 | "Orrin Keepnews, Record Executive and Producer of Jazz Classics, Dies at 91",984,"['Deaths (Obituaries)', 'Jazz', 'Music', 'Keepnews, Orrin (1923-2015)']",2015-03-02,54f357ef38f0d84018916389

56 | Jazz That Spans Generations,939,"['Music', 'Jazz', 'New Haven (Conn)', 'Yale School of Music', 'Diehl, Aaron']",2015-03-01,54f23e4738f0d8529ba34a08

57 | William Dieterle’s ‘Syncopation’ on DVD: Bending Notes and Jazz History,1267,"['Movies', 'Jazz', 'Video Releases (Entertainment)', 'William Dieterle (1893-1972)', 'Clarke, Shirley', 'Music', 'Syncopation (Movie)', 'The Connection (Movie)']",2015-03-01,54effca438f0d85d8e627423

58 | "Clark Terry, Master of Jazz Trumpet, Dies at 94",1453,"['Deaths (Obituaries)', 'Music', 'Jazz', 'Terry, Clark (1920-2015)']",2015-02-23,54e9eb3538f0d8377bb76cbd

59 | Amiri Baraka’s ‘S O S’,1185,"['Books and Literature', 'Baraka, Amiri', 'Poetry and Poets', 'S O S: Poems 1961-2013 (Book)']",2015-02-15,54db6e7938f0d8613900777f

60 | Been Rich All My Life,43,[],2015-01-29,54ca014938f0d8372df4ba64

61 | Which Literary Figure Is Overdue for a Biography?,1601,"['Books and Literature', 'Writing and Writers', 'Murray, Albert (1916-2013)', 'Wolfe, Tom']",2015-01-25,54be80b338f0d807ab72e00d

62 | "Ervin Drake, Composer of Pop Songs, Dies at 95",686,"['Deaths (Obituaries)', 'Music', 'Drake, Ervin']",2015-01-17,54b9cc0e38f0d83735b5a8ca

63 | Paperback Row,472,['Books and Literature'],2015-01-18,54b95f6238f0d83735b5a631

64 | Peggy Cooper Cafritz: Everything in a Big Way,1802,"['Cafritz, Peggy Cooper', 'Interior Design and Furnishings', 'Art', 'Fires and Firefighters', 'Minorities']",2015-01-15,54b6fd1438f0d8598e1e88bf

65 | Romantic Fancy in French Flavors,1296,"['Movies', 'Video Releases (Entertainment)', 'Gondry, Michel', 'Rohmer, Eric', 'Mood Indigo (Movie)', ""A Summer's Tale (Movie)""]",2015-01-11,54afe61038f0d84dd51add1e

66 | Duke Ellington: Memories Of Duke,0,[],2015-01-01,54a55dce38f0d83a07dc783f

67 |

--------------------------------------------------------------------------------

/2_HTML_CSS/1_HTML_slides.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 | PS239T: Welcome!

6 |

166 |

167 |

168 |

453 |

454 |

457 |

458 |

459 |

460 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 |

2 | Creative Commons Attribution-NonCommercial 4.0 International Public License

3 |

4 | By exercising the Licensed Rights (defined below), You accept and agree to be bound by the terms and conditions of this Creative Commons Attribution-NonCommercial 4.0 International Public License ("Public License"). To the extent this Public License may be interpreted as a contract, You are granted the Licensed Rights in consideration of Your acceptance of these terms and conditions, and the Licensor grants You such rights in consideration of benefits the Licensor receives from making the Licensed Material available under these terms and conditions.

5 |

6 | Section 1 – Definitions.

7 |

8 | Adapted Material means material subject to Copyright and Similar Rights that is derived from or based upon the Licensed Material and in which the Licensed Material is translated, altered, arranged, transformed, or otherwise modified in a manner requiring permission under the Copyright and Similar Rights held by the Licensor. For purposes of this Public License, where the Licensed Material is a musical work, performance, or sound recording, Adapted Material is always produced where the Licensed Material is synched in timed relation with a moving image.

9 | Adapter's License means the license You apply to Your Copyright and Similar Rights in Your contributions to Adapted Material in accordance with the terms and conditions of this Public License.

10 | Copyright and Similar Rights means copyright and/or similar rights closely related to copyright including, without limitation, performance, broadcast, sound recording, and Sui Generis Database Rights, without regard to how the rights are labeled or categorized. For purposes of this Public License, the rights specified in Section 2(b)(1)-(2) are not Copyright and Similar Rights.

11 | Effective Technological Measures means those measures that, in the absence of proper authority, may not be circumvented under laws fulfilling obligations under Article 11 of the WIPO Copyright Treaty adopted on December 20, 1996, and/or similar international agreements.

12 | Exceptions and Limitations means fair use, fair dealing, and/or any other exception or limitation to Copyright and Similar Rights that applies to Your use of the Licensed Material.

13 | Licensed Material means the artistic or literary work, database, or other material to which the Licensor applied this Public License.

14 | Licensed Rights means the rights granted to You subject to the terms and conditions of this Public License, which are limited to all Copyright and Similar Rights that apply to Your use of the Licensed Material and that the Licensor has authority to license.

15 | Licensor means the individual(s) or entity(ies) granting rights under this Public License.

16 | NonCommercial means not primarily intended for or directed towards commercial advantage or monetary compensation. For purposes of this Public License, the exchange of the Licensed Material for other material subject to Copyright and Similar Rights by digital file-sharing or similar means is NonCommercial provided there is no payment of monetary compensation in connection with the exchange.

17 | Share means to provide material to the public by any means or process that requires permission under the Licensed Rights, such as reproduction, public display, public performance, distribution, dissemination, communication, or importation, and to make material available to the public including in ways that members of the public may access the material from a place and at a time individually chosen by them.

18 | Sui Generis Database Rights means rights other than copyright resulting from Directive 96/9/EC of the European Parliament and of the Council of 11 March 1996 on the legal protection of databases, as amended and/or succeeded, as well as other essentially equivalent rights anywhere in the world.

19 | You means the individual or entity exercising the Licensed Rights under this Public License. Your has a corresponding meaning.

20 | Section 2 – Scope.

21 |

22 | License grant.

23 | Subject to the terms and conditions of this Public License, the Licensor hereby grants You a worldwide, royalty-free, non-sublicensable, non-exclusive, irrevocable license to exercise the Licensed Rights in the Licensed Material to:

24 | reproduce and Share the Licensed Material, in whole or in part, for NonCommercial purposes only; and

25 | produce, reproduce, and Share Adapted Material for NonCommercial purposes only.

26 | Exceptions and Limitations. For the avoidance of doubt, where Exceptions and Limitations apply to Your use, this Public License does not apply, and You do not need to comply with its terms and conditions.

27 | Term. The term of this Public License is specified in Section 6(a).

28 | Media and formats; technical modifications allowed. The Licensor authorizes You to exercise the Licensed Rights in all media and formats whether now known or hereafter created, and to make technical modifications necessary to do so. The Licensor waives and/or agrees not to assert any right or authority to forbid You from making technical modifications necessary to exercise the Licensed Rights, including technical modifications necessary to circumvent Effective Technological Measures. For purposes of this Public License, simply making modifications authorized by this Section 2(a)(4) never produces Adapted Material.

29 | Downstream recipients.

30 | Offer from the Licensor – Licensed Material. Every recipient of the Licensed Material automatically receives an offer from the Licensor to exercise the Licensed Rights under the terms and conditions of this Public License.

31 | No downstream restrictions. You may not offer or impose any additional or different terms or conditions on, or apply any Effective Technological Measures to, the Licensed Material if doing so restricts exercise of the Licensed Rights by any recipient of the Licensed Material.

32 | No endorsement. Nothing in this Public License constitutes or may be construed as permission to assert or imply that You are, or that Your use of the Licensed Material is, connected with, or sponsored, endorsed, or granted official status by, the Licensor or others designated to receive attribution as provided in Section 3(a)(1)(A)(i).

33 | Other rights.

34 |

35 | Moral rights, such as the right of integrity, are not licensed under this Public License, nor are publicity, privacy, and/or other similar personality rights; however, to the extent possible, the Licensor waives and/or agrees not to assert any such rights held by the Licensor to the limited extent necessary to allow You to exercise the Licensed Rights, but not otherwise.

36 | Patent and trademark rights are not licensed under this Public License.

37 | To the extent possible, the Licensor waives any right to collect royalties from You for the exercise of the Licensed Rights, whether directly or through a collecting society under any voluntary or waivable statutory or compulsory licensing scheme. In all other cases the Licensor expressly reserves any right to collect such royalties, including when the Licensed Material is used other than for NonCommercial purposes.

38 | Section 3 – License Conditions.

39 |

40 | Your exercise of the Licensed Rights is expressly made subject to the following conditions.

41 |

42 | Attribution.

43 |

44 | If You Share the Licensed Material (including in modified form), You must:

45 |

46 | retain the following if it is supplied by the Licensor with the Licensed Material:

47 | identification of the creator(s) of the Licensed Material and any others designated to receive attribution, in any reasonable manner requested by the Licensor (including by pseudonym if designated);

48 | a copyright notice;

49 | a notice that refers to this Public License;

50 | a notice that refers to the disclaimer of warranties;

51 | a URI or hyperlink to the Licensed Material to the extent reasonably practicable;

52 | indicate if You modified the Licensed Material and retain an indication of any previous modifications; and

53 | indicate the Licensed Material is licensed under this Public License, and include the text of, or the URI or hyperlink to, this Public License.

54 | You may satisfy the conditions in Section 3(a)(1) in any reasonable manner based on the medium, means, and context in which You Share the Licensed Material. For example, it may be reasonable to satisfy the conditions by providing a URI or hyperlink to a resource that includes the required information.

55 | If requested by the Licensor, You must remove any of the information required by Section 3(a)(1)(A) to the extent reasonably practicable.

56 | If You Share Adapted Material You produce, the Adapter's License You apply must not prevent recipients of the Adapted Material from complying with this Public License.

57 | Section 4 – Sui Generis Database Rights.

58 |

59 | Where the Licensed Rights include Sui Generis Database Rights that apply to Your use of the Licensed Material:

60 |

61 | for the avoidance of doubt, Section 2(a)(1) grants You the right to extract, reuse, reproduce, and Share all or a substantial portion of the contents of the database for NonCommercial purposes only;

62 | if You include all or a substantial portion of the database contents in a database in which You have Sui Generis Database Rights, then the database in which You have Sui Generis Database Rights (but not its individual contents) is Adapted Material; and

63 | You must comply with the conditions in Section 3(a) if You Share all or a substantial portion of the contents of the database.

64 | For the avoidance of doubt, this Section 4 supplements and does not replace Your obligations under this Public License where the Licensed Rights include other Copyright and Similar Rights.

65 | Section 5 – Disclaimer of Warranties and Limitation of Liability.

66 |

67 | Unless otherwise separately undertaken by the Licensor, to the extent possible, the Licensor offers the Licensed Material as-is and as-available, and makes no representations or warranties of any kind concerning the Licensed Material, whether express, implied, statutory, or other. This includes, without limitation, warranties of title, merchantability, fitness for a particular purpose, non-infringement, absence of latent or other defects, accuracy, or the presence or absence of errors, whether or not known or discoverable. Where disclaimers of warranties are not allowed in full or in part, this disclaimer may not apply to You.

68 | To the extent possible, in no event will the Licensor be liable to You on any legal theory (including, without limitation, negligence) or otherwise for any direct, special, indirect, incidental, consequential, punitive, exemplary, or other losses, costs, expenses, or damages arising out of this Public License or use of the Licensed Material, even if the Licensor has been advised of the possibility of such losses, costs, expenses, or damages. Where a limitation of liability is not allowed in full or in part, this limitation may not apply to You.

69 | The disclaimer of warranties and limitation of liability provided above shall be interpreted in a manner that, to the extent possible, most closely approximates an absolute disclaimer and waiver of all liability.

70 | Section 6 – Term and Termination.

71 |

72 | This Public License applies for the term of the Copyright and Similar Rights licensed here. However, if You fail to comply with this Public License, then Your rights under this Public License terminate automatically.

73 | Where Your right to use the Licensed Material has terminated under Section 6(a), it reinstates:

74 |

75 | automatically as of the date the violation is cured, provided it is cured within 30 days of Your discovery of the violation; or

76 | upon express reinstatement by the Licensor.

77 | For the avoidance of doubt, this Section 6(b) does not affect any right the Licensor may have to seek remedies for Your violations of this Public License.

78 | For the avoidance of doubt, the Licensor may also offer the Licensed Material under separate terms or conditions or stop distributing the Licensed Material at any time; however, doing so will not terminate this Public License.

79 | Sections 1, 5, 6, 7, and 8 survive termination of this Public License.

80 | Section 7 – Other Terms and Conditions.

81 |

82 | The Licensor shall not be bound by any additional or different terms or conditions communicated by You unless expressly agreed.

83 | Any arrangements, understandings, or agreements regarding the Licensed Material not stated herein are separate from and independent of the terms and conditions of this Public License.

84 | Section 8 – Interpretation.

85 |

86 | For the avoidance of doubt, this Public License does not, and shall not be interpreted to, reduce, limit, restrict, or impose conditions on any use of the Licensed Material that could lawfully be made without permission under this Public License.

87 | To the extent possible, if any provision of this Public License is deemed unenforceable, it shall be automatically reformed to the minimum extent necessary to make it enforceable. If the provision cannot be reformed, it shall be severed from this Public License without affecting the enforceability of the remaining terms and conditions.

88 | No term or condition of this Public License will be waived and no failure to comply consented to unless expressly agreed to by the Licensor.

89 | Nothing in this Public License constitutes or may be interpreted as a limitation upon, or waiver of, any privileges and immunities that apply to the Licensor or You, including from the legal processes of any jurisdiction or authority.

90 |

--------------------------------------------------------------------------------

/4_Selenium/Selenium.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "### Accessing Data: Some Preliminary Considerations\n",

8 | "\n",

9 | "Whenever you're trying to get information from the web, it's very important to first know whether you're accessing it through appropriate means.\n",

10 | "\n",

11 | "The UC Berkeley library has some excellent resources on this topic. Here is a flowchart that can help guide your course of action.\n",

12 | "\n",

13 | "\n",

14 | "\n",

15 | "You can see the library's licensed sources [here](http://guides.lib.berkeley.edu/text-mining)."

16 | ]

17 | },

18 | {

19 | "cell_type": "markdown",

20 | "metadata": {},

21 | "source": [

22 | "# Installing Selenium\n",

23 | "\n",

24 | "We're going to use Selenium for Firefox, which means we'll have to install `geckodriver`. You can download it [here](https://github.com/mozilla/geckodriver/releases/). Download the right version for your system, and then unzip it.\n",

25 | "\n",

26 | "You'll need to then move it to the correct path. This workshop expects you to be running Python 3.X with Anaconda. If you drag geckodriver into your anaconda/bin folder, then you should be all set."

27 | ]

28 | },

29 | {

30 | "cell_type": "markdown",

31 | "metadata": {},

32 | "source": [

33 | "# Selenium\n",

34 | "\n",

35 | "Very helpful documentation on how to navigate a webpage with selenium can be found [here](http://selenium-python.readthedocs.io/navigating.html). There are a lot of different ways to navigate, so you'll want to refer to this throughout the workshops, as well as when you're working on your own projects in the future."

36 | ]

37 | },

38 | {

39 | "cell_type": "code",

40 | "execution_count": null,

41 | "metadata": {

42 | "collapsed": true

43 | },

44 | "outputs": [],

45 | "source": [

46 | "from selenium import webdriver\n",

47 | "from selenium.webdriver.common.keys import Keys\n",

48 | "from selenium.webdriver.support.ui import Select\n",

49 | "from bs4 import BeautifulSoup"

50 | ]

51 | },

52 | {

53 | "cell_type": "markdown",

54 | "metadata": {},

55 | "source": [

56 | "First we'll set up the (web)driver. This will open up a Firefox window."

57 | ]

58 | },

59 | {

60 | "cell_type": "code",

61 | "execution_count": null,

62 | "metadata": {

63 | "collapsed": true

64 | },

65 | "outputs": [],

66 | "source": [

67 | "# setup driver\n",

68 | "driver = webdriver.Firefox()"

69 | ]

70 | },

71 | {

72 | "cell_type": "markdown",

73 | "metadata": {},

74 | "source": [

75 | "To go to a webpage, we just enter the url as the argument of the `get` method."

76 | ]

77 | },

78 | {

79 | "cell_type": "code",

80 | "execution_count": null,

81 | "metadata": {

82 | "collapsed": true

83 | },

84 | "outputs": [],

85 | "source": [

86 | "driver.get(\"http://www.google.com\")"

87 | ]

88 | },

89 | {

90 | "cell_type": "code",

91 | "execution_count": null,

92 | "metadata": {

93 | "collapsed": true

94 | },

95 | "outputs": [],

96 | "source": [

97 | "# go to page\n",

98 | "driver.get(\"http://wbsec.gov.in/(S(eoxjutirydhdvx550untivvu))/DetailedResult/Detailed_gp_2013.aspx\")"

99 | ]

100 | },

101 | {

102 | "cell_type": "markdown",

103 | "metadata": {},

104 | "source": [

105 | "### Zilla Parishad Name"

106 | ]

107 | },

108 | {

109 | "cell_type": "markdown",

110 | "metadata": {},

111 | "source": [

112 | "We can use the method `find_element_by_name` to find an element on the page by its name. An easy way to do this is to inspect the element."

113 | ]

114 | },

115 | {

116 | "cell_type": "code",

117 | "execution_count": null,

118 | "metadata": {

119 | "collapsed": true

120 | },

121 | "outputs": [],

122 | "source": [

123 | "# find \"district\" drop down\n",

124 | "district = driver.find_element_by_name(\"ddldistrict\")"

125 | ]

126 | },

127 | {

128 | "cell_type": "markdown",

129 | "metadata": {},

130 | "source": [

131 | "Now if we want to get the different options in this drop down, we can do the same. You'll notice that each name is associated with a unique value. Here since we're getting multiple elements, we'll use `find_elements_by_tag_name`"

132 | ]

133 | },

134 | {

135 | "cell_type": "code",

136 | "execution_count": null,

137 | "metadata": {

138 | "collapsed": true

139 | },

140 | "outputs": [],

141 | "source": [

142 | "# find options in that drop down\n",

143 | "district_options = district.find_elements_by_tag_name(\"option\")\n",

144 | "\n",

145 | "print(district_options[1].get_attribute(\"value\"))\n",

146 | "print(district_options[1].text)"

147 | ]

148 | },

149 | {

150 | "cell_type": "markdown",

151 | "metadata": {},

152 | "source": [

153 | "Now we'll make a dictionary associating each name with its value."

154 | ]

155 | },

156 | {

157 | "cell_type": "code",

158 | "execution_count": null,

159 | "metadata": {

160 | "collapsed": true

161 | },

162 | "outputs": [],

163 | "source": [

164 | "d_options = {option.text.strip(): option.get_attribute(\"value\") for option in district_options if option.get_attribute(\"value\").isdigit()}\n",

165 | "print(d_options)"

166 | ]

167 | },

168 | {

169 | "cell_type": "markdown",

170 | "metadata": {},

171 | "source": [

172 | "Now we can select a district by using its name and our dictionary. First we'll make our own function using Selenium's `Select`, and then we'll call it on \"Bankura\"."

173 | ]

174 | },

175 | {

176 | "cell_type": "code",

177 | "execution_count": null,

178 | "metadata": {

179 | "collapsed": true

180 | },

181 | "outputs": [],

182 | "source": [

183 | "district_select = Select(district)\n",

184 | "district_select.select_by_value(d_options[\"Bankura\"])"

185 | ]

186 | },

187 | {

188 | "cell_type": "markdown",

189 | "metadata": {},

190 | "source": [

191 | "### Panchayat Samity Name"

192 | ]

193 | },

194 | {

195 | "cell_type": "markdown",

196 | "metadata": {},

197 | "source": [

198 | "We can do the same as we did above to find the different blocks."

199 | ]

200 | },

201 | {

202 | "cell_type": "code",

203 | "execution_count": null,

204 | "metadata": {

205 | "collapsed": true

206 | },

207 | "outputs": [],

208 | "source": [

209 | "# find the \"block\" drop down\n",

210 | "block = driver.find_element_by_name(\"ddlblock\")"

211 | ]

212 | },

213 | {

214 | "cell_type": "code",

215 | "execution_count": null,

216 | "metadata": {

217 | "collapsed": true

218 | },

219 | "outputs": [],

220 | "source": [

221 | "# get options\n",

222 | "block_options = block.find_elements_by_tag_name(\"option\")\n",

223 | "\n",

224 | "print(block_options[1].get_attribute(\"value\"))\n",

225 | "print(block_options[1].text)"

226 | ]

227 | },

228 | {

229 | "cell_type": "code",

230 | "execution_count": null,

231 | "metadata": {

232 | "collapsed": true

233 | },

234 | "outputs": [],

235 | "source": [

236 | "b_options = {option.text.strip(): option.get_attribute(\"value\") for option in block_options if option.get_attribute(\"value\").isdigit()}\n",

237 | "print(b_options)"

238 | ]

239 | },

240 | {

241 | "cell_type": "code",

242 | "execution_count": null,

243 | "metadata": {

244 | "collapsed": true

245 | },

246 | "outputs": [],

247 | "source": [

248 | "block_select = Select(block)\n",

249 | "block_select.select_by_value(b_options[\"BANKURA-I\"])"

250 | ]

251 | },

252 | {

253 | "cell_type": "markdown",

254 | "metadata": {},

255 | "source": [

256 | "### Gram Panchayat Name"

257 | ]

258 | },

259 | {

260 | "cell_type": "markdown",

261 | "metadata": {},

262 | "source": [

263 | "Let's do it again for the third drop down menu."

264 | ]

265 | },

266 | {

267 | "cell_type": "code",

268 | "execution_count": null,

269 | "metadata": {

270 | "collapsed": true

271 | },

272 | "outputs": [],

273 | "source": [

274 | "# get options\n",

275 | "gp = driver.find_element_by_name(\"ddlgp\")\n",

276 | "gp_options = gp.find_elements_by_tag_name(\"option\")\n",

277 | "\n",

278 | "print(gp_options[1].get_attribute(\"value\"))\n",

279 | "print(gp_options[1].text)"

280 | ]

281 | },

282 | {

283 | "cell_type": "code",

284 | "execution_count": null,

285 | "metadata": {

286 | "collapsed": true

287 | },

288 | "outputs": [],

289 | "source": [

290 | "gp_options = {option.text.strip(): option.get_attribute(\"value\") for option in gp_options if option.get_attribute(\"value\").isdigit()}\n",

291 | "print(gp_options)"

292 | ]

293 | },

294 | {

295 | "cell_type": "code",

296 | "execution_count": null,

297 | "metadata": {

298 | "collapsed": true

299 | },

300 | "outputs": [],

301 | "source": [

302 | "gp_select = Select(gp)\n",

303 | "gp_select.select_by_value(gp_options[\"ANCHURI\"])"

304 | ]

305 | },

306 | {

307 | "cell_type": "markdown",

308 | "metadata": {},

309 | "source": [

310 | "### Save data from the generated table"

311 | ]

312 | },

313 | {

314 | "cell_type": "markdown",

315 | "metadata": {},

316 | "source": [

317 | "Our selections brought us to a table. Now let's get the underlying html. First we'll identify it by its CSS selector, and then use the `get_attribute` method."

318 | ]

319 | },

320 | {

321 | "cell_type": "code",

322 | "execution_count": null,

323 | "metadata": {

324 | "collapsed": true

325 | },

326 | "outputs": [],

327 | "source": [

328 | "# get the html for the table\n",

329 | "table = driver.find_element_by_css_selector(\"#DataGrid1\").get_attribute('innerHTML')"

330 | ]

331 | },

332 | {

333 | "cell_type": "markdown",

334 | "metadata": {},

335 | "source": [

336 | "To parse the html, we'll use BeautifulSoup."

337 | ]

338 | },

339 | {

340 | "cell_type": "code",

341 | "execution_count": null,

342 | "metadata": {

343 | "collapsed": true

344 | },

345 | "outputs": [],

346 | "source": [

347 | "# soup-ify\n",

348 | "table = BeautifulSoup(table, 'lxml')"

349 | ]

350 | },

351 | {

352 | "cell_type": "code",

353 | "execution_count": null,

354 | "metadata": {

355 | "collapsed": true

356 | },

357 | "outputs": [],

358 | "source": [

359 | "table"

360 | ]

361 | },

362 | {

363 | "cell_type": "markdown",

364 | "metadata": {},

365 | "source": [

366 | "First we'll get all the rows of the table using the `tr` selector."

367 | ]

368 | },

369 | {

370 | "cell_type": "code",

371 | "execution_count": null,

372 | "metadata": {

373 | "collapsed": true

374 | },

375 | "outputs": [],

376 | "source": [

377 | "# get list of rows\n",

378 | "rows = [row for row in table.select(\"tr\")]"

379 | ]

380 | },

381 | {

382 | "cell_type": "markdown",

383 | "metadata": {},

384 | "source": [

385 | "But the first row is the header so we don't want that."

386 | ]

387 | },

388 | {

389 | "cell_type": "code",

390 | "execution_count": null,

391 | "metadata": {

392 | "collapsed": true

393 | },

394 | "outputs": [],

395 | "source": [

396 | "print(rows[0])\n",

397 | "print()\n",

398 | "print(rows[1])\n",

399 | "\n",

400 | "rows = rows[1:]"

401 | ]

402 | },

403 | {

404 | "cell_type": "markdown",

405 | "metadata": {},

406 | "source": [

407 | "Each cell in the row corresponds to the data we want."

408 | ]

409 | },

410 | {

411 | "cell_type": "code",

412 | "execution_count": null,

413 | "metadata": {

414 | "collapsed": true

415 | },

416 | "outputs": [],

417 | "source": [

418 | "rows[0].select('td')"

419 | ]

420 | },

421 | {

422 | "cell_type": "markdown",

423 | "metadata": {},

424 | "source": [

425 | "Now it's just a matter of looping through the rows and getting the information we want from each one."

426 | ]

427 | },

428 | {

429 | "cell_type": "code",

430 | "execution_count": null,

431 | "metadata": {

432 | "collapsed": true

433 | },

434 | "outputs": [],

435 | "source": [

436 | "#for row in rows:\n",

437 | "data = []\n",

438 | "for row in rows:\n",

439 | " dic = {}\n",

440 | " dic['seat'] = row.select('td')[0].text\n",

441 | " dic['electors'] = row.select('td')[1].text\n",

442 | " dic['polled'] = row.select('td')[2].text\n",

443 | " dic['rejected'] = row.select('td')[3].text\n",

444 | " dic['osn'] = row.select('td')[4].text\n",

445 | " dic['candidate'] = row.select('td')[5].text\n",

446 | " dic['party'] = row.select('td')[6].text\n",

447 | " dic['secured'] = row.select('td')[7].text\n",

448 | " data.append(dic)"

449 | ]

450 | },

451 | {

452 | "cell_type": "markdown",

453 | "metadata": {},

454 | "source": [

455 | "Let's clean up the text a little bit."

456 | ]

457 | },

458 | {

459 | "cell_type": "code",

460 | "execution_count": null,

461 | "metadata": {

462 | "collapsed": true

463 | },

464 | "outputs": [],

465 | "source": [

466 | "# strip whitespace\n",

467 | "for dic in data:\n",

468 | " for key in dic:\n",

469 | " dic[key] = dic[key].strip()"

470 | ]

471 | },

472 | {

473 | "cell_type": "code",

474 | "execution_count": null,

475 | "metadata": {

476 | "collapsed": true

477 | },

478 | "outputs": [],

479 | "source": [

480 | "not data[0]['seat']"

481 | ]

482 | },

483 | {

484 | "cell_type": "markdown",

485 | "metadata": {},

486 | "source": [

487 | "You'll notice that some of the information, such as total electors, is not supplied for each canddiate. This code will add that information for the candidates who don't have it."

488 | ]

489 | },

490 | {

491 | "cell_type": "code",

492 | "execution_count": null,

493 | "metadata": {

494 | "collapsed": true

495 | },

496 | "outputs": [],

497 | "source": [

498 | "#fill out info\n",

499 | "\n",

500 | "i = 0\n",

501 | "while i < len(data):\n",

502 | " if data[i]['seat']:\n",

503 | " seat = data[i]['seat']\n",

504 | " electors = data[i]['electors']\n",

505 | " polled = data[i]['polled']\n",

506 | " rejected = data[i]['rejected']\n",

507 | " i = i+1\n",

508 | " else:\n",

509 | " data[i]['seat'] = seat\n",

510 | " data[i]['electors'] = electors\n",

511 | " data[i]['polled'] = polled\n",

512 | " data[i]['rejected'] = rejected\n",

513 | " i = i+1"

514 | ]

515 | },

516 | {

517 | "cell_type": "code",

518 | "execution_count": null,

519 | "metadata": {

520 | "collapsed": true

521 | },

522 | "outputs": [],

523 | "source": [

524 | "data"

525 | ]

526 | }

527 | ],

528 | "metadata": {

529 | "anaconda-cloud": {},

530 | "kernelspec": {

531 | "display_name": "Python 3",

532 | "language": "python",

533 | "name": "python3"

534 | },

535 | "language_info": {

536 | "codemirror_mode": {

537 | "name": "ipython",

538 | "version": 3

539 | },

540 | "file_extension": ".py",

541 | "mimetype": "text/x-python",

542 | "name": "python",

543 | "nbconvert_exporter": "python",

544 | "pygments_lexer": "ipython3",

545 | "version": "3.6.3"

546 | }

547 | },

548 | "nbformat": 4,

549 | "nbformat_minor": 1

550 | }

551 |

--------------------------------------------------------------------------------

/1_APIs/3_api_workbook.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "# Accessing Databases via Web APIs\n",

8 | "* * * * *"

9 | ]

10 | },

11 | {

12 | "cell_type": "code",

13 | "execution_count": null,

14 | "metadata": {

15 | "collapsed": false

16 | },

17 | "outputs": [],

18 | "source": [

19 | "# Import required libraries\n",

20 | "import requests\n",

21 | "import json\n",

22 | "from __future__ import division\n",

23 | "import math\n",

24 | "import csv\n",

25 | "import matplotlib.pyplot as plt\n",

26 | "import time"

27 | ]

28 | },

29 | {

30 | "cell_type": "markdown",

31 | "metadata": {},

32 | "source": [

33 | "## 1. Constructing API GET Request\n",

34 | "*****\n",

35 | "\n",

36 | "In the first place, we know that every call will require us to provide:\n",

37 | "\n",

38 | "1. a base URL for the API, and\n",

39 | "2. some authorization code or key.\n",

40 | "\n",

41 | "So let's store those in some variables."

42 | ]

43 | },

44 | {

45 | "cell_type": "markdown",

46 | "metadata": {},

47 | "source": [

48 | "To get the base url, we can simply use the [documentation](https://developer.nytimes.com/). The New York Times has a lot of different APIs. If we scroll down, the second one is the [Article Search API](https://developer.nytimes.com/article_search_v2.json), which is what we want. From that page we can find the url. Now let's assign it to a variable."

49 | ]

50 | },

51 | {

52 | "cell_type": "code",

53 | "execution_count": null,

54 | "metadata": {

55 | "collapsed": true

56 | },

57 | "outputs": [],

58 | "source": [

59 | "# set base url\n",

60 | "base_url = \"https://api.nytimes.com/svc/search/v2/articlesearch.json\""

61 | ]

62 | },

63 | {

64 | "cell_type": "markdown",

65 | "metadata": {},

66 | "source": [

67 | "For the API key, we'll use the following demonstration keys for now, but in the future, [get your own](https://developer.nytimes.com/signup), it only takes a few seconds!\n",

68 | "\n",

69 | "1. ef9055ba947dd842effe0ecf5e338af9:15:72340235\n",

70 | "2. 25e91a4f7ee4a54813dca78f474e45a0:15:73273810\n",

71 | "3. e15cea455f73cc47d6d971667e09c31c:19:44644296\n",

72 | "4. b931c838cdb745bbab0f213cfc16b7a5:12:44644296\n",

73 | "5. 1dc1475b6e7d5ff5a982804cc565cd0b:6:44644296\n",

74 | "6. 18046cd15e21e1b9996ddfb6dafbb578:4:44644296\n",

75 | "7. be8992a420bfd16cf65e8757f77a5403:8:44644296"

76 | ]

77 | },

78 | {

79 | "cell_type": "code",

80 | "execution_count": null,

81 | "metadata": {

82 | "collapsed": true

83 | },

84 | "outputs": [],

85 | "source": [

86 | "# set key\n",

87 | "key = \"be8992a420bfd16cf65e8757f77a5403:8:44644296\""

88 | ]

89 | },

90 | {

91 | "cell_type": "markdown",

92 | "metadata": {},

93 | "source": [

94 | "For many API's, you'll have to specify the response format, such as xml or JSON. But for this particular API, the only possible response format is JSON, as we can see in the url, so we don't have to name it explicitly."

95 | ]

96 | },

97 | {

98 | "cell_type": "markdown",

99 | "metadata": {},

100 | "source": [

101 | "Now we need to send some sort of data in the URL’s query string. This data tells the API what information we want. In our case, we want articles about Duke Ellington. Requests allows you to provide these arguments as a dictionary, using the `params` keyword argument. In addition to the search term `q`, we have to put in the `api-key` term."

102 | ]

103 | },

104 | {

105 | "cell_type": "code",

106 | "execution_count": null,

107 | "metadata": {

108 | "collapsed": true

109 | },

110 | "outputs": [],

111 | "source": [

112 | "# set search parameters\n",

113 | "search_params = {\"q\": \"Duke Ellington\",\n",

114 | " \"api-key\": key}"

115 | ]

116 | },

117 | {

118 | "cell_type": "markdown",

119 | "metadata": {},

120 | "source": [

121 | "Now we're ready to make the request. We use the `.get` method from the `requests` library to make an HTTP GET Request."

122 | ]

123 | },

124 | {

125 | "cell_type": "code",

126 | "execution_count": null,

127 | "metadata": {

128 | "collapsed": false

129 | },

130 | "outputs": [],

131 | "source": [

132 | "# make request\n",

133 | "r = requests.get(base_url, params=search_params)"

134 | ]

135 | },

136 | {

137 | "cell_type": "markdown",

138 | "metadata": {},

139 | "source": [

140 | "Now, we have a [response](http://docs.python-requests.org/en/latest/api/#requests.Response) object called `r`. We can get all the information we need from this object. For instance, we can see that the URL has been correctly encoded by printing the URL. Click on the link to see what happens."

141 | ]

142 | },

143 | {

144 | "cell_type": "code",

145 | "execution_count": null,

146 | "metadata": {

147 | "collapsed": false

148 | },

149 | "outputs": [],

150 | "source": [

151 | "print(r.url)"

152 | ]

153 | },

154 | {

155 | "cell_type": "markdown",

156 | "metadata": {},

157 | "source": [

158 | "Click on that link to see what it returns!\n",

159 | "\n",

160 | "It's not very pleasant looking, but in the next section we will work on parsing it into something more palatable. For now let's try adding some parameters to our search."

161 | ]

162 | },

163 | {

164 | "cell_type": "markdown",

165 | "metadata": {},

166 | "source": [

167 | "### Challenge 1: Adding a date range\n",

168 | "\n",

169 | "What if we only want to search within a particular date range? The NYT Article Search API allows us to specify start and end dates.\n",

170 | "\n",

171 | "Alter `search_params` so that the request only searches for articles in the year 2015. Remember, since `search_params` is a dictionary, we can simply add the new keys to it.\n",

172 | "\n",

173 | "Use the [documentation](https://developer.nytimes.com/article_search_v2.json#/Documentation/GET/articlesearch.json) to see how to format the new parameters."

174 | ]

175 | },

176 | {

177 | "cell_type": "code",

178 | "execution_count": null,

179 | "metadata": {

180 | "collapsed": true

181 | },

182 | "outputs": [],

183 | "source": [

184 | "# set date parameters here"

185 | ]

186 | },

187 | {

188 | "cell_type": "code",

189 | "execution_count": null,

190 | "metadata": {

191 | "collapsed": false

192 | },

193 | "outputs": [],

194 | "source": [

195 | "# Uncomment to test\n",

196 | "# r = requests.get(base_url, params=search_params)\n",

197 | "# print(r.url)"

198 | ]

199 | },

200 | {

201 | "cell_type": "markdown",

202 | "metadata": {},

203 | "source": [

204 | "### Challenge 2: Specifying a results page\n",

205 | "\n",

206 | "The above will return the first 10 results. To get the next ten, you need to add a \"page\" parameter. Change the search parameters above to get the second 10 results. "

207 | ]

208 | },

209 | {

210 | "cell_type": "code",

211 | "execution_count": null,

212 | "metadata": {

213 | "collapsed": true

214 | },

215 | "outputs": [],

216 | "source": [

217 | "# set page parameters here"

218 | ]

219 | },

220 | {

221 | "cell_type": "code",

222 | "execution_count": null,

223 | "metadata": {

224 | "collapsed": false

225 | },

226 | "outputs": [],

227 | "source": [

228 | "# Uncomment to test\n",

229 | "# r = requests.get(base_url, params=search_params)\n",

230 | "# print(r.url)"

231 | ]

232 | },

233 | {

234 | "cell_type": "markdown",

235 | "metadata": {},

236 | "source": [

237 | "## 2. Parsing the response text\n",

238 | "*****"

239 | ]

240 | },

241 | {

242 | "cell_type": "markdown",

243 | "metadata": {},

244 | "source": [

245 | "We can read the content of the server’s response using `.text` from `requests`."

246 | ]

247 | },

248 | {

249 | "cell_type": "code",

250 | "execution_count": null,

251 | "metadata": {

252 | "collapsed": false

253 | },

254 | "outputs": [],

255 | "source": [

256 | "# Inspect the content of the response, parsing the result as text\n",

257 | "response_text = r.text\n",

258 | "print(response_text[:1000])"

259 | ]

260 | },

261 | {

262 | "cell_type": "markdown",

263 | "metadata": {},

264 | "source": [

265 | "What you see here is JSON text, encoded as unicode text. JSON stands for \"Javascript object notation.\" It has a very similar structure to a python dictionary -- both are built on key/value pairs. This makes it easy to convert JSON response to a python dictionary. We do this with the `json.loads()` function."

266 | ]

267 | },

268 | {

269 | "cell_type": "code",

270 | "execution_count": null,

271 | "metadata": {

272 | "collapsed": false

273 | },

274 | "outputs": [],

275 | "source": [

276 | "# Convert JSON response to a dictionary\n",

277 | "data = json.loads(response_text)\n",

278 | "print(data)"

279 | ]

280 | },

281 | {

282 | "cell_type": "markdown",

283 | "metadata": {},

284 | "source": [

285 | "That looks intimidating! But it's really just a big dictionary. Let's see what keys we got in there."

286 | ]

287 | },

288 | {

289 | "cell_type": "code",

290 | "execution_count": null,

291 | "metadata": {

292 | "collapsed": false

293 | },

294 | "outputs": [],

295 | "source": [

296 | "print(data.keys())"

297 | ]

298 | },

299 | {

300 | "cell_type": "code",

301 | "execution_count": null,

302 | "metadata": {

303 | "collapsed": false

304 | },

305 | "outputs": [],

306 | "source": [

307 | "# this is boring\n",

308 | "data['status']"

309 | ]

310 | },

311 | {

312 | "cell_type": "code",

313 | "execution_count": null,

314 | "metadata": {

315 | "collapsed": false

316 | },

317 | "outputs": [],

318 | "source": [

319 | "# so is this\n",

320 | "data['copyright']"

321 | ]

322 | },

323 | {

324 | "cell_type": "code",

325 | "execution_count": null,

326 | "metadata": {

327 | "collapsed": false

328 | },

329 | "outputs": [],

330 | "source": [

331 | "# this looks more promising\n",

332 | "data['response']"

333 | ]

334 | },

335 | {

336 | "cell_type": "markdown",

337 | "metadata": {},

338 | "source": [

339 | "We'll need to parse this dictionary even further. Let's look at its keys."

340 | ]

341 | },

342 | {

343 | "cell_type": "code",

344 | "execution_count": null,

345 | "metadata": {

346 | "collapsed": false

347 | },

348 | "outputs": [],

349 | "source": [

350 | "data['response'].keys()"

351 | ]

352 | },

353 | {

354 | "cell_type": "code",

355 | "execution_count": null,

356 | "metadata": {

357 | "collapsed": false

358 | },

359 | "outputs": [],

360 | "source": [

361 | "data['response']['meta']"

362 | ]

363 | },

364 | {

365 | "cell_type": "markdown",

366 | "metadata": {

367 | "collapsed": false

368 | },

369 | "source": [

370 | "Looks like we probably want `docs`."

371 | ]

372 | },

373 | {

374 | "cell_type": "code",

375 | "execution_count": null,

376 | "metadata": {

377 | "collapsed": false

378 | },

379 | "outputs": [],

380 | "source": [

381 | "print(data['response']['docs'])"

382 | ]

383 | },

384 | {

385 | "cell_type": "markdown",

386 | "metadata": {},

387 | "source": [

388 | "That looks what we want! Let's assign that to its own variable."

389 | ]

390 | },

391 | {

392 | "cell_type": "code",

393 | "execution_count": null,

394 | "metadata": {

395 | "collapsed": false

396 | },

397 | "outputs": [],

398 | "source": [

399 | "docs = data['response']['docs']"

400 | ]

401 | },

402 | {

403 | "cell_type": "markdown",

404 | "metadata": {},

405 | "source": [

406 | "So that we can further manipulate this, we need to know what type of object it is."

407 | ]

408 | },

409 | {

410 | "cell_type": "code",

411 | "execution_count": null,

412 | "metadata": {

413 | "collapsed": false

414 | },

415 | "outputs": [],

416 | "source": [

417 | "type(docs)"

418 | ]

419 | },

420 | {

421 | "cell_type": "markdown",

422 | "metadata": {},

423 | "source": [

424 | "That makes things easy. Let's take a look at the first doc."

425 | ]

426 | },

427 | {

428 | "cell_type": "code",

429 | "execution_count": null,

430 | "metadata": {

431 | "collapsed": false

432 | },

433 | "outputs": [],

434 | "source": [

435 | "docs[0]"

436 | ]

437 | },

438 | {

439 | "cell_type": "markdown",

440 | "metadata": {},

441 | "source": [

442 | "## 3. Putting everything together to get all the articles.\n",

443 | "*****"

444 | ]

445 | },

446 | {

447 | "cell_type": "markdown",

448 | "metadata": {},

449 | "source": [

450 | "That's great. But we only have 10 items. The original response said we had 65 hits! Which means we have to make 65 /10, or 7 requests to get them all. Sounds like a job for a loop! \n",

451 | "\n",

452 | "But first, let's review what we've done so far."

453 | ]

454 | },

455 | {

456 | "cell_type": "code",

457 | "execution_count": null,

458 | "metadata": {

459 | "collapsed": false

460 | },

461 | "outputs": [],

462 | "source": [

463 | "# set key\n",

464 | "key = \"be8992a420bfd16cf65e8757f77a5403:8:44644296\"\n",

465 | "\n",

466 | "# set base url\n",

467 | "base_url = \"https://api.nytimes.com/svc/search/v2/articlesearch.json\"\n",

468 | "\n",

469 | "# set search parameters\n",

470 | "search_params = {\"q\": \"Duke Ellington\",\n",

471 | " \"api-key\": key,\n",

472 | " \"begin_date\": \"20150101\", # date must be in YYYYMMDD format\n",

473 | " \"end_date\": \"20151231\"}\n",

474 | "\n",

475 | "# make request\n",

476 | "r = requests.get(base_url, params=search_params)\n",

477 | "\n",

478 | "# wait 3 seconds for the GET request\n",

479 | "time.sleep(3)\n",

480 | "\n",

481 | "# convert to a dictionary\n",

482 | "data = json.loads(r.text)\n",

483 | "\n",

484 | "# get number of hits\n",

485 | "hits = data['response']['meta']['hits']\n",

486 | "print(\"number of hits: \", str(hits))\n",

487 | "\n",

488 | "# get number of pages\n",

489 | "pages = int(math.ceil(hits / 10))\n",

490 | "print(\"number of pages: \", str(pages))"

491 | ]

492 | },

493 | {

494 | "cell_type": "markdown",

495 | "metadata": {},

496 | "source": [

497 | "Now we're ready to loop through our pages. We'll start off by creating an empty list `all_docs` which will be our accumulator variable. Then we'll loop through `pages` and make a request for each one."

498 | ]

499 | },

500 | {

501 | "cell_type": "code",

502 | "execution_count": null,

503 | "metadata": {

504 | "collapsed": false

505 | },

506 | "outputs": [],

507 | "source": [

508 | "# make an empty list where we'll hold all of our docs for every page\n",

509 | "all_docs = []\n",

510 | "\n",

511 | "# now we're ready to loop through the pages\n",

512 | "for i in range(pages):\n",

513 | " print(\"collecting page\", str(i))\n",

514 | "\n",

515 | " # set the page parameter\n",

516 | " search_params['page'] = i\n",

517 | "\n",

518 | " # make request\n",

519 | " r = requests.get(base_url, params=search_params)\n",

520 | "\n",

521 | " # get text and convert to a dictionary\n",

522 | " data = json.loads(r.text)\n",

523 | "\n",

524 | " # get just the docs\n",

525 | " docs = data['response']['docs']\n",

526 | "\n",

527 | " # add those docs to the big list\n",

528 | " all_docs = all_docs + docs\n",

529 | "\n",

530 | " time.sleep(3) # pause between calls"

531 | ]

532 | },

533 | {

534 | "cell_type": "markdown",

535 | "metadata": {},

536 | "source": [

537 | "Let's make sure we got all the articles."

538 | ]

539 | },

540 | {

541 | "cell_type": "code",

542 | "execution_count": null,

543 | "metadata": {

544 | "collapsed": false

545 | },

546 | "outputs": [],

547 | "source": [

548 | "assert len(all_docs) == data['response']['meta']['hits']"

549 | ]

550 | },

551 | {

552 | "cell_type": "markdown",

553 | "metadata": {},

554 | "source": [

555 | "We did it!"

556 | ]

557 | },

558 | {

559 | "cell_type": "markdown",

560 | "metadata": {},

561 | "source": [

562 | "### Challenge 3: Make a function\n",

563 | "\n",

564 | "Using the code above, create a function called `get_api_data()` with the parameters `term` and a `year` that returns all the documents containing that search term in that year."

565 | ]

566 | },

567 | {

568 | "cell_type": "code",

569 | "execution_count": null,

570 | "metadata": {

571 | "collapsed": true

572 | },

573 | "outputs": [],

574 | "source": [

575 | "#DEFINE YOUR FUNCTION HERE"

576 | ]

577 | },

578 | {

579 | "cell_type": "code",

580 | "execution_count": null,

581 | "metadata": {

582 | "collapsed": false

583 | },

584 | "outputs": [],

585 | "source": [

586 | "# uncomment to test\n",

587 | "# get_api_data(\"Duke Ellington\", 2014)"

588 | ]

589 | },

590 | {

591 | "cell_type": "markdown",

592 | "metadata": {},

593 | "source": [

594 | "## 4. Formatting\n",

595 | "*****\n",

596 | "\n",

597 | "Let's take another look at one of these documents."

598 | ]

599 | },

600 | {

601 | "cell_type": "code",

602 | "execution_count": null,

603 | "metadata": {

604 | "collapsed": false

605 | },

606 | "outputs": [],

607 | "source": [

608 | "all_docs[0]"

609 | ]

610 | },

611 | {

612 | "cell_type": "markdown",

613 | "metadata": {},

614 | "source": [

615 | "This is all great, but it's pretty messy. What we’d really like to to have, eventually, is a CSV, with each row representing an article, and each column representing something about that article (header, date, etc). As we saw before, the best way to do this is to make a list of dictionaries, with each dictionary representing an article and each dictionary representing a field of metadata from that article (e.g. headline, date, etc.) We can do this with a custom function:"

616 | ]

617 | },

618 | {

619 | "cell_type": "code",

620 | "execution_count": null,

621 | "metadata": {

622 | "collapsed": true

623 | },

624 | "outputs": [],

625 | "source": [

626 | "def format_articles(unformatted_docs):\n",

627 | " '''\n",

628 | " This function takes in a list of documents returned by the NYT api \n",

629 | " and parses the documents into a list of dictionaries, \n",

630 | " with 'id', 'header', and 'date' keys\n",

631 | " '''\n",

632 | " formatted = []\n",

633 | " for i in unformatted_docs:\n",

634 | " dic = {}\n",

635 | " dic['id'] = i['_id']\n",

636 | " dic['headline'] = i['headline']['main']\n",

637 | " dic['date'] = i['pub_date'][0:10] # cutting time of day.\n",

638 | " formatted.append(dic)\n",

639 | " return(formatted)"

640 | ]

641 | },

642 | {

643 | "cell_type": "code",

644 | "execution_count": null,

645 | "metadata": {

646 | "collapsed": false

647 | },

648 | "outputs": [],

649 | "source": [

650 | "all_formatted = format_articles(all_docs)"

651 | ]

652 | },

653 | {

654 | "cell_type": "code",

655 | "execution_count": null,

656 | "metadata": {

657 | "collapsed": false

658 | },

659 | "outputs": [],

660 | "source": [

661 | "all_formatted[:5]"

662 | ]

663 | },

664 | {

665 | "cell_type": "markdown",

666 | "metadata": {},

667 | "source": [

668 | "### Challenge 4: Collect more fields\n",

669 | "\n",

670 | "Edit the function above so that we include the `lead_paragraph` and `word_count` fields.\n",

671 | "\n",

672 | "**HINT**: Some articles may not contain a lead_paragraph, in which case, it'll throw an error if you try to address this value (which doesn't exist.) You need to add a conditional statement that takes this into consideration. If\n",

673 | "\n",

674 | "**Advanced**: Add another key that returns a list of `keywords` associated with the article."

675 | ]

676 | },

677 | {

678 | "cell_type": "code",

679 | "execution_count": null,

680 | "metadata": {

681 | "collapsed": true

682 | },

683 | "outputs": [],

684 | "source": [

685 | "def format_articles(unformatted_docs):\n",

686 | " '''\n",

687 | " This function takes in a list of documents returned by the NYT api \n",

688 | " and parses the documents into a list of dictionaries, \n",

689 | " with 'id', 'header', 'date', 'lead paragrph' and 'word count' keys\n",

690 | " '''\n",

691 | " formatted = []\n",

692 | " for i in unformatted_docs:\n",

693 | " dic = {}\n",

694 | " dic['id'] = i['_id']\n",

695 | " dic['headline'] = i['headline']['main']\n",

696 | " dic['date'] = i['pub_date'][0:10] # cutting time of day.\n",

697 | "\n",

698 | " # YOUR CODE HERE\n",

699 | "\n",

700 | " formatted.append(dic)\n",

701 | " \n",

702 | " return(formatted)"

703 | ]

704 | },

705 | {

706 | "cell_type": "code",

707 | "execution_count": null,

708 | "metadata": {

709 | "collapsed": false

710 | },

711 | "outputs": [],

712 | "source": [

713 | "# uncomment to test\n",

714 | "all_formatted = format_articles(all_docs)\n",

715 | "all_formatted[:5]"

716 | ]

717 | },

718 | {

719 | "cell_type": "markdown",

720 | "metadata": {},

721 | "source": [

722 | "## 5. Exporting\n",

723 | "*****\n",

724 | "\n",

725 | "We can now export the data to a CSV."

726 | ]

727 | },

728 | {

729 | "cell_type": "code",

730 | "execution_count": null,

731 | "metadata": {

732 | "collapsed": false

733 | },

734 | "outputs": [],

735 | "source": [

736 | "keys = all_formatted[1]\n",

737 | "# writing the rest\n",

738 | "with open('all-formated.csv', 'w') as output_file:\n",

739 | " dict_writer = csv.DictWriter(output_file, keys)\n",

740 | " dict_writer.writeheader()\n",

741 | " dict_writer.writerows(all_formatted)"

742 | ]

743 | },

744 | {

745 | "cell_type": "markdown",

746 | "metadata": {},

747 | "source": [

748 | "## Capstone Challenge\n",

749 | "\n",

750 | "Using what you learned, tell me if Chris' claim (i.e. that Duke Ellington has gotten more popular lately) holds water."

751 | ]

752 | },

753 | {

754 | "cell_type": "code",

755 | "execution_count": null,

756 | "metadata": {

757 | "collapsed": true

758 | },

759 | "outputs": [],

760 | "source": [

761 | "# YOUR CODE HERE\n"

762 | ]

763 | }

764 | ],

765 | "metadata": {

766 | "anaconda-cloud": {},

767 | "kernelspec": {

768 | "display_name": "Python 3",

769 | "language": "python",

770 | "name": "python3"

771 | },

772 | "language_info": {