├── .gitignore
├── README.md
├── src-human-correlations
│   ├── chatGPT_evaluate_intruders.py
│   ├── chatGPT_evaluate_topic_ratings.py
│   ├── generate_intruder_words_dataset.py
│   └── human_correlations_bootstrap.py
├── src-misc
│   ├── 01-get_data.sh
│   ├── 02-process_data.ipynb
│   ├── 02-process_data.py
│   ├── 03-figures_nmpi_llm.py
│   ├── 04-launch_figures_nmpi_llm.sh
│   ├── 05-find_rating_errors.ipynb
│   ├── 06-figures_ari_llm.py
│   ├── 07-pairwise_scores.ipynb
│   └── fig_utils.py
├── src-number-of-topics
│   ├── LLM_scores_and_ARI.py
│   ├── chatGPT_document_label_assignment.py
│   └── chatGPT_ratings_assignment.py
└── topic-modeling-output
    ├── dvae-topics-best-c_npmi_10_full.json
    ├── etm-topics-best-c_npmi_10_full.json
    └── mallet-topics-best-c_npmi_10_full.json

/.gitignore:

--------------------------------------------------------------------------------

__pycache__
repo-old
*.pyc
computed/

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

# Re-visiting Automated Topic Model Evaluation with Large Language Models

This repo contains code and data for our [EMNLP 2023 paper](https://aclanthology.org/2023.emnlp-main.581/) about assessing topic model output with Large Language Models.
```
@inproceedings{stammbach-etal-2023-revisiting,
    title = "Revisiting Automated Topic Model Evaluation with Large Language Models",
    author = "Stammbach, Dominik and
      Zouhar, Vil{\'e}m and
      Hoyle, Alexander and
      Sachan, Mrinmaya and
      Ash, Elliott",
    booktitle = "Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing",
    month = dec,
    year = "2023",
    address = "Singapore",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2023.emnlp-main.581",
    doi = "10.18653/v1/2023.emnlp-main.581",
    pages = "9348--9357"
}
```

## Prerequisites

The scripts call `openai.ChatCompletion`, which was removed in version 1.0 of the `openai` Python package, so install a pre-1.0 release:

```shell
pip install "openai<1.0" pandas numpy scipy scikit-learn tqdm matplotlib
```

## Large Language Models and Topics with Human Annotations

Download the topic words and human annotations from the paper [Is Automated Topic Model Evaluation Broken?](https://arxiv.org/abs/2107.02173) from its [GitHub repository](https://github.com/ahoho/topics/blob/dev/data/human/all_data/all_data.json), and save the file as `all_data.json` in the directory from which you run the scripts below.


### Intruder Detection Test

Following Hoyle et al. (2021), for each topic we randomly sample intruder words that are not among its top 50 topic words. The script writes 10 sampled intruders per topic to `intruder_outfile.jsonl`.

```shell
python src-human-correlations/generate_intruder_words_dataset.py
```
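A minimal sketch of the sampling and prompt construction, assuming topics are plain lists of words. `sample_intruders` and `build_intrusion_prompt` are hypothetical helpers that approximate what `generate_intruder_words_dataset.py` and `chatGPT_evaluate_intruders.py` do: intruders come from the words of other topics, and the prompt shows five shuffled topic words plus one intruder.

```python
import random


def sample_intruders(topics, topic_idx, n_intruders=10, rng=None):
    """Sample intruder candidates for topics[topic_idx].

    Candidates are words that occur in some topic of the model but not in the
    target topic. The vocabulary is sorted so that a seeded RNG gives
    reproducible samples across interpreter runs.
    """
    rng = rng or random.Random(42)
    vocab = sorted({w for topic in topics for w in topic})
    topic_set = set(topics[topic_idx])
    candidates = [w for w in vocab if w not in topic_set]
    rng.shuffle(candidates)
    return candidates[:n_intruders]


def build_intrusion_prompt(topic_words, intruder, n_shown=5, rng=None):
    """Return n_shown shuffled topic words plus the intruder, quoted and comma-separated."""
    rng = rng or random.Random(42)
    words = list(topic_words)  # copy, so the caller's list is not shuffled in place
    rng.shuffle(words)
    words = words[:n_shown] + [intruder]
    rng.shuffle(words)  # shuffle again so the intruder's position is random
    return ", ".join(f'"{w}"' for w in words)


# Toy topics (hypothetical data)
topics = [
    ["species", "birds", "males", "females", "bird", "eggs"],
    ["court", "judge", "law", "trial", "case", "appeal"],
]
intruders = sample_intruders(topics, topic_idx=0, n_intruders=3)
print(intruders)
print(build_intrusion_prompt(topics[0], intruders[0]))
```

Sorting the vocabulary before shuffling matters: the iteration order of a Python `set` of strings can change between interpreter runs, so shuffling `list(set(...))` is not reproducible even with a fixed seed.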

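We then call an LLM (`gpt-3.5-turbo`) to identify the intruder word for each topic automatically. Each prompt shows five shuffled topic words plus the intruder; pass `--dataset_description exclude` (or any value other than `include`) to leave the corpus description out of the system prompt.

```shell
python src-human-correlations/chatGPT_evaluate_intruders.py --API_KEY a_valid_openAI_api_key
```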
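The LLM replies with free text, so each reply has to be mapped to a 0/1 score. A sketch, assuming the same rule as `postprocess_chatGPT_intrusion_test` in `human_correlations_bootstrap.py` (the first lower-cased word token of the reply must equal the intruder); `score_intrusion_response` is a hypothetical name:

```python
import re


def score_intrusion_response(response, intruder_term):
    """Return 1 if the first word token of the LLM reply is the intruder, else 0."""
    tokens = re.findall(r"\b[a-z_\d]+\b", response.lower())
    return int(bool(tokens) and tokens[0] == intruder_term.lower())


print(score_intrusion_response('"Shark"', "shark"))                # 1
print(score_intrusion_response("eggs", "shark"))                   # 0
print(score_intrusion_response("", "shark"))                       # 0
print(score_intrusion_response("The intruder is shark", "shark"))  # 0
```

As the last case shows, this rule only credits replies that start with the intruder, which is one reason the system prompt asks for a single word.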

For the ratings task, simply run the script that rates topic word sets (there is no need to generate a dataset first). It rates each topic three times, reshuffling the topic's top 10 words each time:

```shell
python src-human-correlations/chatGPT_evaluate_topic_ratings.py --API_KEY a_valid_openAI_api_key
```
(All output is written line by line to a JSONL file; if the OpenAI API breaks, we restart the script and skip all datapoints that are already annotated.)
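The scripts as written open their output file with mode `"w"`, which overwrites earlier results, so skipping already-annotated datapoints needs a small change. A minimal sketch of one way to do it, assuming each intrusion datapoint is identified by `(dataset_name, model_name, topic_id, intruder_term)` (for the ratings output, `run` would take the place of `intruder_term`); `load_done_keys` is a hypothetical helper, not part of this repo:

```python
import json
import os
import tempfile

# Assumed key that identifies one intrusion datapoint in the output file
KEY_FIELDS = ("dataset_name", "model_name", "topic_id", "intruder_term")


def load_done_keys(path, key_fields=KEY_FIELDS):
    """Return the keys of all datapoints already written to a JSONL output file."""
    done = set()
    if not os.path.exists(path):
        return done
    with open(path) as f:
        for line in f:
            line = line.strip()
            if not line:
                continue
            try:
                record = json.loads(line)
            except json.JSONDecodeError:
                continue  # skip a malformed line instead of aborting the resume
            done.add(tuple(record[k] for k in key_fields))
    return done


# Toy run: three datapoints, of which only the first was annotated before a crash.
path = os.path.join(tempfile.mkdtemp(), "intrusion_outfile.jsonl")
rows = [{"dataset_name": "wikitext", "model_name": "mallet", "topic_id": t, "intruder_term": "shark"}
        for t in range(3)]
with open(path, "w") as f:
    f.write(json.dumps({**rows[0], "response": "shark"}) + "\n")

done = load_done_keys(path)
with open(path, "a") as f:  # append mode keeps the earlier output
    for row in rows:
        if tuple(row[k] for k in KEY_FIELDS) in done:
            continue
        record = {**row, "response": "placeholder"}  # the LLM call would go here
        f.write(json.dumps(record) + "\n")
```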

### Evaluating LLMs and Human Correlations

We evaluate with a bootstrap approach: for each datapoint (topic), we resample the human annotations and the LLM annotations with replacement and average each resampled set. We then compute Spearman's rho between the averaged LLM and human scores for each bootstrap sample, and report the mean Spearman's rho over 1,000 bootstrap samples.

```shell
python src-human-correlations/human_correlations_bootstrap.py --filename coherence-outputs-section-2/ratings_outfile_with_dataset_description.jsonl --task ratings
```

Use `--task intrusion` with an intrusion output file, or `--task human_ceiling` to estimate a human upper bound by correlating two halves of the human annotations. By default, only annotations marked as confident are used (`--only_confident true`).
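A self-contained sketch of this bootstrap in pure Python, assuming one list of raw human scores and one list of raw LLM scores per topic. The repo's script reads these from `all_data.json` and the LLM output file and uses `scipy.stats.spearmanr`; the helper names below are hypothetical. Ties receive average ranks, as in the usual definition of Spearman's rho, and the 95% interval is a plain percentile interval over the bootstrap samples.

```python
import random
import statistics


def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = statistics.fmean(rx), statistics.fmean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        return float("nan")  # undefined when one side is constant
    return cov / (var_x * var_y) ** 0.5


def bootstrap_spearman(human_scores, llm_scores, n_boot=1000, seed=42):
    """Mean bootstrapped Spearman's rho and a 95% percentile interval.

    human_scores and llm_scores hold one list of raw annotations per topic.
    """
    rng = random.Random(seed)
    rhos = []
    for _ in range(n_boot):
        # resample each topic's annotations with replacement, then average them
        human_means = [statistics.fmean(rng.choices(s, k=len(s))) for s in human_scores]
        llm_means = [statistics.fmean(rng.choices(s, k=len(s))) for s in llm_scores]
        rhos.append(spearman_rho(llm_means, human_means))
    cuts = statistics.quantiles(rhos, n=40)  # 39 cut points in steps of 2.5%
    return statistics.fmean(rhos), (cuts[0], cuts[-1])


# Toy data (hypothetical): four topics with raw human ratings and three LLM ratings each
human = [[1, 1, 2], [2, 2, 3], [3, 3, 3], [1, 2, 1]]
llm = [[1, 1, 1], [2, 3, 2], [3, 3, 3], [2, 1, 1]]
mean_rho, (lower, upper) = bootstrap_spearman(human, llm)
print(f"mean rho = {mean_rho:.3f}, 95% interval = [{lower:.3f}, {upper:.3f}]")
```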

63 |

64 |

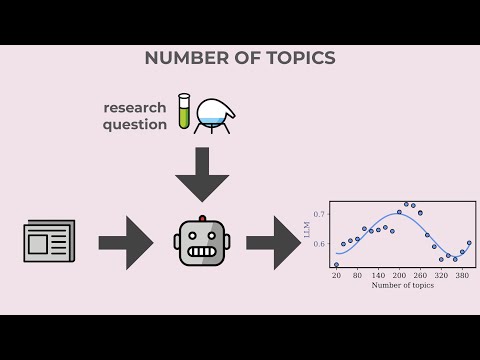

65 | ## Evaluating Topic Models with Different Numbers of Topics

66 |

67 | Download fitted topic models and metadata for two datasets (bills and wikitext) [here](https://www.dropbox.com/s/huxdloe5l6w2tu5/topic_model_k_selection.zip?dl=0) and unzip

68 |

69 | ### Rating Topic Word Sets

70 |

71 | To run LLM ratings of topic word sets on a dataset (wiki or NYT) with broad or specific ground-truth example topics, simply run:

72 |

73 | ```shell

74 | python src-number-of-topics/chatGPT_ratings_assignment.py --API_KEY a_valid_openAI_api_key --dataset wikitext --label_categories broad

75 | ```

76 |

77 | ### Purity of Document Collections

78 |

79 | We also assign a document label to the top documents belonging to a topic, following [Doogan and Buntine, 2021](https://aclanthology.org/2021.naacl-main.300/). We then average purity per document collection, and the number of topics with on averag highest purities is the preferred cluster of this procedure.
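A minimal sketch of this selection rule, assuming the standard definition of purity (the share of a topic's top documents that carry its most frequent label) and toy labels. The function names are hypothetical; the repo computes its own scores in `src-number-of-topics/LLM_scores_and_ARI.py`, which may differ in detail.

```python
from collections import Counter
from statistics import fmean


def topic_purity(doc_labels):
    """Share of a topic's top documents that carry the topic's most frequent label."""
    most_common_count = Counter(doc_labels).most_common(1)[0][1]
    return most_common_count / len(doc_labels)


def average_purity(labels_per_topic):
    """Mean purity over all topics of one fitted model."""
    return fmean(topic_purity(labels) for labels in labels_per_topic)


def preferred_num_topics(labels_by_k):
    """labels_by_k maps a number of topics k to per-topic lists of document labels."""
    return max(labels_by_k, key=lambda k: average_purity(labels_by_k[k]))


# Toy LLM-assigned labels for the top 3 documents of each topic (hypothetical data)
labels_by_k = {
    2: [["sports", "sports", "politics"], ["politics", "science", "sports"]],
    3: [["sports", "sports", "sports"], ["politics", "politics", "science"],
        ["science", "science", "science"]],
}
for k, labels in labels_by_k.items():
    print(k, round(average_purity(labels), 3))
print("preferred k:", preferred_num_topics(labels_by_k))
```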

To run LLM label assignments on a dataset (wikitext or bills) with broad or specific ground-truth example topics, run:

```shell
python src-number-of-topics/chatGPT_document_label_assignment.py --API_KEY a_valid_openAI_api_key --dataset wikitext --label_categories broad
```

### Plot the resulting scores

```shell
python src-number-of-topics/LLM_scores_and_ARI.py --method label_assignment --dataset wikitext --label_categories broad --filename number-of-topics-section-4/document_label_assignment_wikitext_broad.jsonl
```

## Questions

Please contact [Dominik Stammbach](mailto:dominik.stammbach@gess.ethz.ch) with any questions.


[Video](https://www.youtube.com/watch?v=qIDjtgWTgjs)

--------------------------------------------------------------------------------

/src-human-correlations/chatGPT_evaluate_intruders.py:

--------------------------------------------------------------------------------

import pandas as pd
import json
import random
import openai  # uses openai.ChatCompletion, which requires openai<1.0
from tqdm import tqdm
import time
import argparse
import os


def get_prompts(include_dataset_description="include"):
    """Return the NYT and Wikipedia system prompts and the output path.

    Any value other than "include" drops the corpus description from the prompts.
    """
    if include_dataset_description == "include":
        system_prompt_NYT = """You are a helpful assistant evaluating the top words of a topic model output for a given topic. Select which word is the least related to all other words. If multiple words do not fit, choose the word that is most out of place.
The topic modeling is based on The New York Times corpus. The corpus consists of articles from 1987 to 2007. Sections from a typical paper include International, National, New York Regional, Business, Technology, and Sports news; features on topics such as Dining, Movies, Travel, and Fashion; there are also obituaries and opinion pieces.
Reply with a single word."""

        system_prompt_wikitext = """You are a helpful assistant evaluating the top words of a topic model output for a given topic. Select which word is the least related to all other words. If multiple words do not fit, choose the word that is most out of place.
The topic modeling is based on the Wikipedia corpus. Wikipedia is an online encyclopedia covering a huge range of topics. Articles can include biographies ("George Washington"), scientific phenomena ("Solar Eclipse"), art pieces ("La Danse"), music ("Amazing Grace"), transportation ("U.S. Route 131"), sports ("1952 winter olympics"), historical events or periods ("Tang Dynasty"), media and pop culture ("The Simpsons Movie"), places ("Yosemite National Park"), plants and animals ("koala"), and warfare ("USS Nevada (BB-36)"), among others.
Reply with a single word."""
        outfile_name = "coherence-outputs-section-2/intrusion_outfile_with_dataset_description.jsonl"
    else:
        system_prompt_NYT = """You are a helpful assistant evaluating the top words of a topic model output for a given topic. Select which word is the least related to all other words. If multiple words do not fit, choose the word that is most out of place.
Reply with a single word."""

        system_prompt_wikitext = """You are a helpful assistant evaluating the top words of a topic model output for a given topic. Select which word is the least related to all other words. If multiple words do not fit, choose the word that is most out of place.
Reply with a single word."""
        outfile_name = "coherence-outputs-section-2/intrusion_outfile_without_dataset_description.jsonl"
    return system_prompt_NYT, system_prompt_wikitext, outfile_name


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--API_KEY", type=str, required=True, help="valid OpenAI API key")
    parser.add_argument("--dataset_description", default="include", type=str, help="'include' (default) adds a corpus description to the system prompt; any other value omits it")
    args = parser.parse_args()

    openai.api_key = args.API_KEY
    random.seed(42)
    # written by generate_intruder_words_dataset.py
    df = pd.read_json("intruder_outfile.jsonl", lines=True)

    system_prompt_NYT, system_prompt_wikitext, outfile_name = get_prompts(include_dataset_description=args.dataset_description)
    os.makedirs("coherence-outputs-section-2", exist_ok=True)

    columns = df.columns.tolist()

    with open(outfile_name, "w") as outfile:
        for i, row in tqdm(df.iterrows(), total=len(df)):
            if row.dataset_name == "wikitext":
                system_prompt = system_prompt_wikitext
            else:
                system_prompt = system_prompt_NYT

            # shuffle a copy of the topic words and prompt only 5 of them
            words = list(row.topic_terms)
            random.shuffle(words)
            words = words[:5]

            # add the intruder term, then shuffle again so its position is random
            words.append(row.intruder_term)
            random.shuffle(words)

            # user prompt: the six words, quoted and comma-separated
            user_prompt = ", ".join(['"' + w + '"' for w in words])

            out = {col: row[col] for col in columns}
            response = openai.ChatCompletion.create(
                model="gpt-3.5-turbo",
                messages=[{"role": "system", "content": system_prompt}, {"role": "user", "content": user_prompt}],
                temperature=0.7,
                max_tokens=15,
            )["choices"][0]["message"]["content"].strip()
            out["response"] = response
            out["user_prompt"] = user_prompt
            json.dump(out, outfile)
            outfile.write("\n")
            # short pause between API requests
            time.sleep(0.5)

--------------------------------------------------------------------------------

/src-human-correlations/chatGPT_evaluate_topic_ratings.py:

--------------------------------------------------------------------------------

import json
import random
import openai  # uses openai.ChatCompletion, which requires openai<1.0
from tqdm import tqdm
import time
import argparse
import os


def get_prompts(include_dataset_description=True):
    """Return the NYT and Wikipedia system prompts and the output path.

    include_dataset_description is a bool; True adds a corpus description.
    """
    if include_dataset_description:
        system_prompt_NYT = """You are a helpful assistant evaluating the top words of a topic model output for a given topic. Please rate how related the following words are to each other on a scale from 1 to 3 ("1" = not very related, "2" = moderately related, "3" = very related).
The topic modeling is based on The New York Times corpus. The corpus consists of articles from 1987 to 2007. Sections from a typical paper include International, National, New York Regional, Business, Technology, and Sports news; features on topics such as Dining, Movies, Travel, and Fashion; there are also obituaries and opinion pieces.
Reply with a single number, indicating the overall appropriateness of the topic."""

        system_prompt_wikitext = """You are a helpful assistant evaluating the top words of a topic model output for a given topic. Please rate how related the following words are to each other on a scale from 1 to 3 ("1" = not very related, "2" = moderately related, "3" = very related).
The topic modeling is based on the Wikipedia corpus. Wikipedia is an online encyclopedia covering a huge range of topics. Articles can include biographies ("George Washington"), scientific phenomena ("Solar Eclipse"), art pieces ("La Danse"), music ("Amazing Grace"), transportation ("U.S. Route 131"), sports ("1952 winter olympics"), historical events or periods ("Tang Dynasty"), media and pop culture ("The Simpsons Movie"), places ("Yosemite National Park"), plants and animals ("koala"), and warfare ("USS Nevada (BB-36)"), among others.
Reply with a single number, indicating the overall appropriateness of the topic."""
        outfile_name = "coherence-outputs-section-2/ratings_outfile_with_dataset_description.jsonl"
    else:
        system_prompt_NYT = """You are a helpful assistant evaluating the top words of a topic model output for a given topic. Please rate how related the following words are to each other on a scale from 1 to 3 ("1" = not very related, "2" = moderately related, "3" = very related).
Reply with a single number, indicating the overall appropriateness of the topic."""

        system_prompt_wikitext = """You are a helpful assistant evaluating the top words of a topic model output for a given topic. Please rate how related the following words are to each other on a scale from 1 to 3 ("1" = not very related, "2" = moderately related, "3" = very related).
Reply with a single number, indicating the overall appropriateness of the topic."""
        outfile_name = "coherence-outputs-section-2/ratings_outfile_without_dataset_description.jsonl"
    return system_prompt_NYT, system_prompt_wikitext, outfile_name


if __name__ == "__main__":

    parser = argparse.ArgumentParser()
    parser.add_argument("--API_KEY", type=str, required=True, help="valid OpenAI API key")
    parser.add_argument("--dataset_description", default="include", type=str, help="'include' (default) adds a corpus description to the system prompt; any other value omits it")
    args = parser.parse_args()

    openai.api_key = args.API_KEY
    random.seed(42)

    # human annotations from Hoyle et al. (2021)
    with open("all_data.json") as f:
        data = json.load(f)

    # get_prompts expects a bool; passing the raw string would always be truthy
    system_prompt_NYT, system_prompt_wikitext, outfile_name = get_prompts(include_dataset_description=(args.dataset_description == "include"))
    os.makedirs("coherence-outputs-section-2", exist_ok=True)

    with open(outfile_name, "w") as outfile:
        for dataset_name, dataset in data.items():
            for model_name, dataset_model in dataset.items():
                print(dataset_name, model_name)
                topics = dataset_model["topics"]
                human_evaluations = dataset_model["metrics"]["ratings_scores_avg"]
                system_prompt = system_prompt_wikitext if dataset_name == "wikitext" else system_prompt_NYT
                for i, (topic, human_eval) in enumerate(tqdm(zip(topics, human_evaluations), total=len(topics))):
                    # the LLM sees only the top 10 words of each topic
                    topic = topic[:10]
                    # three runs per topic, each with a fresh word order
                    for run in range(3):
                        random.shuffle(topic)
                        user_prompt = ", ".join(topic)
                        # logit_bias favours token ids 16, 17 and 18 (the digits "1", "2" and "3" in the
                        # cl100k_base tokenizer of gpt-3.5-turbo); max_tokens=1 limits the reply to one token
                        response = openai.ChatCompletion.create(
                            model="gpt-3.5-turbo",
                            messages=[{"role": "system", "content": system_prompt}, {"role": "user", "content": user_prompt}],
                            temperature=1.0,
                            logit_bias={16: 100, 17: 100, 18: 100},
                            max_tokens=1,
                        )["choices"][0]["message"]["content"].strip()
                        out = {"dataset_name": dataset_name, "model_name": model_name, "topic_id": i, "user_prompt": user_prompt, "response": response, "human_eval": human_eval, "run": run}
                        json.dump(out, outfile)
                        outfile.write("\n")
                        time.sleep(0.5)

--------------------------------------------------------------------------------

/src-human-correlations/generate_intruder_words_dataset.py:

--------------------------------------------------------------------------------

import json
import random


if __name__ == "__main__":
    # human annotations from Hoyle et al. (2021)
    with open("all_data.json") as f:
        data = json.load(f)

    random.seed(42)
    with open("intruder_outfile.jsonl", "w") as outfile:
        for dataset_name, dataset in data.items():
            for model_name, dataset_model in dataset.items():
                print(dataset_name, model_name)
                if model_name == "mallet":
                    fn = "topic-modeling-output/mallet-topics-best-c_npmi_10_full.json"
                elif model_name == "dvae":
                    fn = "topic-modeling-output/dvae-topics-best-c_npmi_10_full.json"
                else:
                    fn = "topic-modeling-output/etm-topics-best-c_npmi_10_full.json"
                with open(fn) as f:
                    topics_data = json.load(f)

                raw_topics = topics_data[dataset_name]["topics"]

                # vocabulary of all words that occur in any topic of this model;
                # sorted, because the iteration order of a set of strings changes
                # between runs and would make the seeded shuffle below irreproducible
                words = set()
                for topic in raw_topics:
                    words.update(topic)
                words = sorted(words)
                # the third zipped list (the annotated topic words) is not used
                for i, (topic, metric, _) in enumerate(zip(raw_topics, dataset_model["metrics"]["intrusion_scores_avg"], dataset_model["topics"])):
                    topic_set = set(topic)
                    # intruder candidates: vocabulary words that are not in this topic
                    candidate_words = [w for w in words if w not in topic_set]
                    random.shuffle(candidate_words)
                    sampled_intruders = candidate_words[:10]
                    for intruder in sampled_intruders:
                        out = {
                            "topic_id": i,
                            "intruder_term": intruder,
                            "topic_terms": topic[:10],
                            "intrusion_scores_avg": metric,
                            "dataset_name": dataset_name,
                            "model_name": model_name,
                        }
                        json.dump(out, outfile)
                        outfile.write("\n")

--------------------------------------------------------------------------------

/src-human-correlations/human_correlations_bootstrap.py:

--------------------------------------------------------------------------------

import pandas as pd
import re
from scipy.stats import spearmanr
import numpy as np
import random
import json
from tqdm import tqdm
import argparse


def load_dataframe(fn, task=""):
    """Load an LLM output file (.csv or .jsonl) and derive the per-row LLM score."""
    if fn.endswith(".csv"):
        df = pd.read_csv(fn)
    elif fn.endswith(".jsonl"):
        df = pd.read_json(fn, lines=True)
    else:
        raise ValueError(f"expected a .csv or .jsonl file, got {fn}")
    print(df)
    print(df.iloc[0])
    if task == "intrusion":
        # 1 if the LLM picked the intruder word, 0 otherwise
        if "response_correct" not in df.columns:
            df["response_correct"] = postprocess_chatGPT_intrusion_test(df)
    else:
        # ratings: the response is a single digit from 1 to 3
        if "gpt_ratings" not in df.columns:
            df["gpt_ratings"] = df.response.astype(int)
    return df

def postprocess_chatGPT_intrusion_test(df):
    # lower-case each response and split it into word tokens;
    # the first token is taken as the word the LLM chose
    responses = [re.findall(r"\b[a-z_\d]+\b", r.lower()) for r in df.response.tolist()]
    response_correct = []
    for tokens, intruder_term in zip(responses, df.intruder_term):
        if tokens and tokens[0] == intruder_term.lower():
            response_correct.append(1)
        else:
            response_correct.append(0)
    return response_correct


def postprocess_chatGPT_intrusion_new(df):
    # alternative scoring: the whole response must equal the intruder (case-insensitive)
    response_correct = []
    for response, intruder_term in zip(df.response, df.intruder_term):
        if not response:
            response_correct.append(0)
        elif response.lower() == intruder_term.lower():
            response_correct.append(1)
        else:
            response_correct.append(0)
    return response_correct

def get_confidence_intervals(statistic, alpha=0.05):
    # percentile bootstrap interval: the alpha/2 and 1 - alpha/2 quantiles of the
    # bootstrapped statistics (2.5th and 97.5th percentiles by default); unlike fixed
    # indices into the sorted list, this holds for any number of bootstrap samples
    lower, upper = np.percentile(statistic, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    print("lower", lower, "upper", upper)

def get_filenames(with_dataset_description=True):
    # output paths written by chatGPT_evaluate_intruders.py and chatGPT_evaluate_topic_ratings.py
    if with_dataset_description:
        intrusion_fn = "coherence-outputs-section-2/intrusion_outfile_with_dataset_description.jsonl"
        rating_fn = "coherence-outputs-section-2/ratings_outfile_with_dataset_description.jsonl"
    else:
        intrusion_fn = "coherence-outputs-section-2/intrusion_outfile_without_dataset_description.jsonl"
        rating_fn = "coherence-outputs-section-2/ratings_outfile_without_dataset_description.jsonl"
    return intrusion_fn, rating_fn

def compute_human_ceiling_intrusion(data, only_confident=False):
    # split-half human ceiling: split each topic's human annotations into two halves,
    # resample each half with replacement, and correlate the half means across topics
    spearman_wiki = []
    spearman_NYT = []
    spearman_concat = []
    for _ in tqdm(range(1000), total=1000):
        ratings_human, ratings_chatGPT = [], []
        for dataset in ["wikitext", "nytimes"]:
            for model in ["mallet", "dvae", "etm"]:
                for topic_id in range(50):
                    intrusion_scores_raw = data[dataset][model]["metrics"]["intrusion_scores_raw"][topic_id]
                    if only_confident:
                        intrusion_scores_raw = [i for i, j in zip(intrusion_scores_raw, data[dataset][model]["metrics"]["intrusion_confidences_raw"][topic_id]) if j == 1]
                    if not intrusion_scores_raw:
                        intrusion_scores_raw = [0]

                    if len(intrusion_scores_raw) == 1:
                        intrusion_scores_1 = intrusion_scores_raw
                        intrusion_scores_2 = intrusion_scores_raw
                    else:
                        length = len(intrusion_scores_raw) // 2
                        intrusion_scores_1 = intrusion_scores_raw[:length]
                        intrusion_scores_2 = intrusion_scores_raw[length:]
                    intrusion_scores_1 = random.choices(intrusion_scores_1, k=len(intrusion_scores_1))
                    intrusion_scores_2 = random.choices(intrusion_scores_2, k=len(intrusion_scores_2))
                    ratings_human.append(np.mean(intrusion_scores_1))
                    ratings_chatGPT.append(np.mean(intrusion_scores_2))
        # first 150 entries: wikitext (3 models x 50 topics); last 150: nytimes
        spearman_wiki.append(spearmanr(ratings_chatGPT[:150], ratings_human[:150]).statistic)
        spearman_NYT.append(spearmanr(ratings_chatGPT[150:], ratings_human[150:]).statistic)
        spearman_concat.append(spearmanr(ratings_chatGPT, ratings_human).statistic)
    print("wiki", np.mean(spearman_wiki))
    #get_confidence_intervals(spearman_wiki)
    print("NYT", np.mean(spearman_NYT))
    #get_confidence_intervals(spearman_NYT)
    print("concat", np.mean(spearman_concat))
    #get_confidence_intervals(spearman_concat)


def compute_human_ceiling_rating(data, only_confident=False):
    # same split-half procedure as above, applied to the human ratings
    spearman_wiki = []
    spearman_NYT = []
    spearman_concat = []
    for _ in tqdm(range(1000), total=1000):
        ratings_human, ratings_chatGPT = [], []
        for dataset in ["wikitext", "nytimes"]:
            for model in ["mallet", "dvae", "etm"]:
                for topic_id in range(50):
                    intrusion_scores_raw = data[dataset][model]["metrics"]["ratings_scores_raw"][topic_id]
                    if only_confident:
                        intrusion_scores_raw = [i for i, j in zip(intrusion_scores_raw, data[dataset][model]["metrics"]["ratings_confidences_raw"][topic_id]) if j == 1]

                    if not intrusion_scores_raw:
                        intrusion_scores_raw = [1]
                    if len(intrusion_scores_raw) == 1:
                        intrusion_scores_1 = intrusion_scores_raw
                        intrusion_scores_2 = intrusion_scores_raw
                    else:
                        length = len(intrusion_scores_raw) // 2
                        intrusion_scores_1 = intrusion_scores_raw[:length]
                        intrusion_scores_2 = intrusion_scores_raw[length:]
                    intrusion_scores_1 = random.choices(intrusion_scores_1, k=len(intrusion_scores_1))
                    intrusion_scores_2 = random.choices(intrusion_scores_2, k=len(intrusion_scores_2))
                    ratings_human.append(np.mean(intrusion_scores_1))
                    ratings_chatGPT.append(np.mean(intrusion_scores_2))
        spearman_wiki.append(spearmanr(ratings_chatGPT[:150], ratings_human[:150]).statistic)
        spearman_NYT.append(spearmanr(ratings_chatGPT[150:], ratings_human[150:]).statistic)
        spearman_concat.append(spearmanr(ratings_chatGPT, ratings_human).statistic)
    print("wiki", np.mean(spearman_wiki))
    #get_confidence_intervals(spearman_wiki)
    print("NYT", np.mean(spearman_NYT))
    #get_confidence_intervals(spearman_NYT)
    print("concat", np.mean(spearman_concat))
    #get_confidence_intervals(spearman_concat)

def compute_spearmanr_bootstrap_intrusion(df_intruder_scores, data, only_confident=False):
    spearman_wiki = []
    spearman_NYT = []
    spearman_concat = []

    for _ in tqdm(range(1000), total=1000):
        ratings_human, ratings_chatGPT = [], []
        for dataset in ["wikitext", "nytimes"]:
            for model in ["mallet", "dvae", "etm"]:
                for topic_id in range(50):
                    intrusion_scores_raw = data[dataset][model]["metrics"]["intrusion_scores_raw"][topic_id]
                    if only_confident:
                        intrusion_scores_raw = [i for i, j in zip(intrusion_scores_raw, data[dataset][model]["metrics"]["intrusion_confidences_raw"][topic_id]) if j == 1]
                    # sample bootstrap fold: resample this topic's human and LLM scores with replacement
                    intrusion_scores_raw = random.choices(intrusion_scores_raw, k=len(intrusion_scores_raw))
                    df_topic = df_intruder_scores[(df_intruder_scores.dataset_name == dataset) & (df_intruder_scores.model_name == model) & (df_intruder_scores.topic_id == topic_id)]
                    gpt_ratings = random.choices(df_topic.response_correct.tolist(), k=len(df_topic.response_correct))

                    # save results
                    ratings_human.append(np.mean(intrusion_scores_raw))
                    ratings_chatGPT.append(np.mean(gpt_ratings))
        # Spearman's rho per corpus (first 150 topics: wikitext, last 150: nytimes) and overall
        spearman_wiki.append(spearmanr(ratings_chatGPT[:150], ratings_human[:150]).statistic)
        spearman_NYT.append(spearmanr(ratings_chatGPT[150:], ratings_human[150:]).statistic)
        spearman_concat.append(spearmanr(ratings_chatGPT, ratings_human).statistic)
    print("wiki", np.mean(spearman_wiki))
    get_confidence_intervals(spearman_wiki)
    print("NYT", np.mean(spearman_NYT))
    get_confidence_intervals(spearman_NYT)
    print("concat", np.mean(spearman_concat))
    get_confidence_intervals(spearman_concat)

def compute_spearmanr_bootstrap_rating(df_rating_scores, data, only_confident=False):
    spearman_wiki = []
    spearman_NYT = []
    spearman_concat = []

    # 1000 bootstrap samples, matching the intrusion task and the README
    for _ in tqdm(range(1000), total=1000):
        ratings_human, ratings_chatGPT = [], []
        for dataset in ["wikitext", "nytimes"]:
            for model in ["mallet", "dvae", "etm"]:
                for topic_id in range(50):
                    rating_scores_raw = data[dataset][model]["metrics"]["ratings_scores_raw"][topic_id]
                    if only_confident:
                        rating_scores_raw = [i for i, j in zip(rating_scores_raw, data[dataset][model]["metrics"]["ratings_confidences_raw"][topic_id]) if j == 1]
                    # sample bootstrap fold: resample this topic's human and LLM ratings with replacement
                    rating_scores_raw = random.choices(rating_scores_raw, k=len(rating_scores_raw))
                    df_topic = df_rating_scores[(df_rating_scores.dataset_name == dataset) & (df_rating_scores.model_name == model) & (df_rating_scores.topic_id == topic_id)]
                    gpt_ratings = random.choices(df_topic.gpt_ratings.tolist(), k=len(df_topic.gpt_ratings))

                    # save results
                    ratings_human.append(np.mean(rating_scores_raw))
                    ratings_chatGPT.append(np.mean(gpt_ratings))
        # Spearman's rho per corpus (first 150 topics: wikitext, last 150: nytimes) and overall
        spearman_wiki.append(spearmanr(ratings_chatGPT[:150], ratings_human[:150]).statistic)
        spearman_NYT.append(spearmanr(ratings_chatGPT[150:], ratings_human[150:]).statistic)
        spearman_concat.append(spearmanr(ratings_chatGPT, ratings_human).statistic)
    print("wiki", np.mean(spearman_wiki))
    get_confidence_intervals(spearman_wiki)
    print("NYT", np.mean(spearman_NYT))
    get_confidence_intervals(spearman_NYT)
    print("concat", np.mean(spearman_concat))
    get_confidence_intervals(spearman_concat)

if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--task", default="ratings", type=str, help="one of: ratings, intrusion, human_ceiling")
    parser.add_argument("--filename", default="coherence-outputs-section-2/ratings_outfile_with_dataset_description.jsonl", type=str, help="LLM output file (.jsonl or .csv) to correlate with the human annotations")
    parser.add_argument("--only_confident", default="true", type=str, help="'true' (default) keeps only annotations marked as confident")
    args = parser.parse_args()

    only_confident = args.only_confident == "true"

    random.seed(42)

    # human annotations from Hoyle et al. (2021)
    with open("all_data.json") as f:
        data = json.load(f)

    if args.task == "human_ceiling":
        compute_human_ceiling_intrusion(data, only_confident=only_confident)
        compute_human_ceiling_rating(data, only_confident=only_confident)
    elif args.task == "ratings":
        df_rating = load_dataframe(args.filename, task="ratings")
        compute_spearmanr_bootstrap_rating(df_rating, data, only_confident=only_confident)
    elif args.task == "intrusion":
        # task="intrusion" scores each response as correct (1) or incorrect (0)
        df_intrusion = load_dataframe(args.filename, task="intrusion")
        compute_spearmanr_bootstrap_intrusion(df_intrusion, data, only_confident=only_confident)
    else:
        raise ValueError(f"unknown task: {args.task}")

--------------------------------------------------------------------------------

/src-misc/01-get_data.sh:

--------------------------------------------------------------------------------

#!/usr/bin/env bash

mkdir -p data

# human annotations from Hoyle et al. (2021), saved as data/intrusion.json for the other src-misc scripts
wget https://raw.githubusercontent.com/ahoho/topics/dev/data/human/all_data/all_data.json -O data/intrusion.json

--------------------------------------------------------------------------------

/src-misc/02-process_data.ipynb:

--------------------------------------------------------------------------------

{
 "cells": [
  {
   "cell_type": "code",
   "execution_count": 1,
   "metadata": {},
   "outputs": [],
   "source": [
    "import json\n",
    "data = json.load(open(\"../data/intrusion.json\", \"r\"))"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "metadata": {},
   "outputs": [],
   "source": [
    "data[\"wikitext\"][\"mallet\"][\"metrics\"].keys()\n",
    "\n",
    "[(k, len(v), None if type(v[0]) != list else len(v[0])) for k, v in data[\"nytimes\"][\"mallet\"][\"metrics\"].items()]"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": 2,
   "metadata": {},
   "outputs": [
    {
     "data": {
      "text/plain": [
       "['species',\n",
       " 'birds',\n",
       " 'males',\n",
       " 'females',\n",
       " 'bird',\n",
       " 'white',\n",
       " 'found',\n",
       " 'female',\n",
       " 'male',\n",
       " 'range',\n",
       " 'large',\n",
       " 'breeding',\n",
       " 'long',\n",
       " 'black',\n",
       " 'small',\n",
       " 'shark',\n",
       " 'population',\n",
       " 'common',\n",
       " 'prey',\n",
       " 'eggs']"
      ]
     },
     "execution_count": 2,
     "metadata": {},
     "output_type": "execute_result"
    }
   ],
   "source": [
    "data[\"wikitext\"][\"mallet\"][\"topics\"][1]"
   ]
  }
 ],
 "metadata": {
  "kernelspec": {
   "display_name": "Python 3.10.7 64-bit",
   "language": "python",
   "name": "python3"
  },
  "language_info": {
   "codemirror_mode": {
    "name": "ipython",
    "version": 3
   },
   "file_extension": ".py",
   "mimetype": "text/x-python",
   "name": "python",
   "nbconvert_exporter": "python",
   "pygments_lexer": "ipython3",
   "version": "3.10.7"
  },
  "orig_nbformat": 4,
  "vscode": {
   "interpreter": {
    "hash": "916dbcbb3f70747c44a77c7bcd40155683ae19c65e1c03b4aa3499c5328201f1"
   }
  }
 },
 "nbformat": 4,
 "nbformat_minor": 2
}

--------------------------------------------------------------------------------
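The second cell of the notebook above summarizes, for each metric under `data["nytimes"]["mallet"]["metrics"]`, how many entries it holds and, for nested lists, the length of the first entry. A minimal stand-alone version of that summary follows. It assumes only that every metric maps to a non-empty list; the helper name `describe_metrics` and the toy metric names are hypothetical and are not taken from `intrusion.json`.

```python
def describe_metrics(metrics):
    """Summarize a metrics dict as (name, number of entries, length of first entry or None).

    Mirrors the list comprehension in the notebook cell. Assumes every value is a
    non-empty list; an empty list would raise IndexError on values[0].
    """
    return [
        (name, len(values), len(values[0]) if isinstance(values[0], list) else None)
        for name, values in metrics.items()
    ]


if __name__ == "__main__":
    # hypothetical toy metrics: one flat list, one list of per-topic lists
    toy = {"npmi": [0.1, 0.2, 0.3], "scores_raw": [[1, 0], [0, 0], [1, 1]]}
    print(describe_metrics(toy))  # [('npmi', 3, None), ('scores_raw', 3, 2)]
```

Printing the keys explicitly matters in a notebook because only the last expression of a cell is displayed.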

/src-misc/02-process_data.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3
2 |
3 | import json
4 |
5 | data = json.load(open("data/intrusion.json", "r"))  # path relative to the repository root
6 | # for each wikitext/ETM topic, split its words by the raw intrusion score they are paired with
7 | for topic_scores, topic_words in zip(data["wikitext"]["etm"]["metrics"]["intrusion_scores_raw"], data["wikitext"]["etm"]["topics"]):
8 |     print(len(topic_scores), len(topic_words))
9 |     group_a = [w for s, w in zip(topic_scores, topic_words) if s == 0]
10 |     group_b = [w for s, w in zip(topic_scores, topic_words) if s == 1]
11 |     print(group_a, group_b)

--------------------------------------------------------------------------------
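The loop in `02-process_data.py` pairs the k-th raw intrusion score with the k-th topic word. Because `zip` stops at the shorter sequence, a length mismatch (which the `print(len(...), len(...))` line only reports) silently drops items. The sketch below makes that check explicit. The function name `split_by_score` is hypothetical, and the score-to-word pairing is carried over from the script rather than verified against the data.

```python
def split_by_score(topic_scores, topic_words):
    """Split words into those paired with score 0 and those paired with score 1.

    Hypothetical helper: assumes, as 02-process_data.py does, that
    topic_scores[k] belongs to topic_words[k]. Scores other than 0 or 1 are ignored.
    """
    if len(topic_scores) != len(topic_words):
        # zip() would silently truncate to the shorter list; fail loudly instead
        raise ValueError(f"{len(topic_scores)} scores but {len(topic_words)} words")
    group_a = [w for s, w in zip(topic_scores, topic_words) if s == 0]
    group_b = [w for s, w in zip(topic_scores, topic_words) if s == 1]
    return group_a, group_b


if __name__ == "__main__":
    print(split_by_score([0, 1, 0, 1], ["species", "birds", "males", "shark"]))
    # (['species', 'males'], ['birds', 'shark'])
```

On Python 3.10 and later, `zip(topic_scores, topic_words, strict=True)` raises on a length mismatch as well.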

/src-misc/03-figures_nmpi_llm.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python3
2 |
3 | import matplotlib.pyplot as plt
4 | import pandas as pd
5 | import os
6 | from tqdm import tqdm
7 | import numpy as np
8 | from sklearn.metrics import adjusted_mutual_info_score, adjusted_rand_score, completeness_score, homogeneity_score
9 | from scipy.stats import spearmanr
10 | from collections import defaultdict
11 | import fig_utils
12 | from matplotlib.ticker import FormatStrFormatter
13 | import argparse
14 |
15 | args = argparse.ArgumentParser()
16 | args.add_argument("--experiment", default="wikitext_specific", choices=["bills_broad", "wikitext_broad", "wikitext_specific"])
17 | args = args.parse_args()
18 |
19 | os.makedirs("computed/figures", exist_ok=True)
20 |
21 | N_CLUSTER = range(20, 420, 20)  # k = 20, 40, ..., 400 (20 values)
22 |
23 | # trailing moving average: point i is the mean of a[max(0, i - window_size) : i + 1]
24 | def moving_average(a, window_size=3):
25 |     if window_size == 0:
26 |         return a
27 |     out = []
28 |     for i in range(len(a)):
29 |         start = max(0, i - window_size)
30 |         out.append(np.mean(a[start:i + 1]))
31 |     return out
32 |
33 |

34 | def compute_adjusted_NMI_bills():
35 |     df_metadata = pd.read_json(
36 |         "data/bills/processed/labeled/vocab_15k/train.metadata.jsonl",
37 |         lines=True
38 |     )
39 |     print(df_metadata)
40 |     paths = [
41 |         "runs/outputs/k_selection/" + "bills" +
42 |         "-labeled/vocab_15k/k-" + str(i)
43 |         for i in N_CLUSTER
44 |     ]
45 |     topic = df_metadata.topic.tolist()
46 |     cluster_metrics = defaultdict(list)
47 |     # hard-assign each document to its most probable topic and score against the gold labels
48 |     for path, num_topics in tqdm(zip(paths, N_CLUSTER), total=len(N_CLUSTER)):
49 |         path = os.path.join(path, "2972")
50 |         beta = np.load(os.path.join(path, "beta.npy"))
51 |         theta = np.load(os.path.join(path, "train.theta.npy"))
52 |         argmax_theta = theta.argmax(axis=-1)
53 |         cluster_metrics["ami"].append(
54 |             adjusted_mutual_info_score(topic, argmax_theta)
55 |         )
56 |         cluster_metrics["ari"].append(
57 |             adjusted_rand_score(topic, argmax_theta)
58 |         )
59 |         cluster_metrics["completeness"].append(
60 |             completeness_score(topic, argmax_theta)
61 |         )
62 |         cluster_metrics["homogeneity"].append(
63 |             homogeneity_score(topic, argmax_theta)
64 |         )
65 |     return cluster_metrics
66 |
67 |

68 | def compute_adjusted_NMI_wikitext_old(broad_categories=True):
69 |     df_metadata = pd.read_json(
70 |         "data/wikitext/processed/labeled/vocab_15k/train.metadata.jsonl",
71 |         lines=True
72 |     )
73 |     print(df_metadata)
74 |     paths = [
75 |         "runs/outputs/k_selection/" + "wikitext" +
76 |         "-labeled/vocab_15k/k-" + str(i)
77 |         for i in N_CLUSTER
78 |     ]
79 |
80 |     if broad_categories:
81 |         topic = df_metadata.category.tolist()
82 |     else:
83 |         topic = df_metadata.subcategory.tolist()
84 |
85 |     cluster_metrics = defaultdict(list)
86 |
87 |     for path, num_topics in tqdm(zip(paths, N_CLUSTER), total=len(N_CLUSTER)):
88 |         path = os.path.join(path, "2972")
89 |         beta = np.load(os.path.join(path, "beta.npy"))
90 |         theta = np.load(os.path.join(path, "train.theta.npy"))
91 |         argmax_theta = theta.argmax(axis=-1)
92 |         cluster_metrics["ami"].append(
93 |             adjusted_mutual_info_score(topic, argmax_theta)
94 |         )
95 |         cluster_metrics["ari"].append(adjusted_rand_score(topic, argmax_theta))
96 |         cluster_metrics["completeness"].append(
97 |             completeness_score(topic, argmax_theta)
98 |         )
99 |         cluster_metrics["homogeneity"].append(
100 |             homogeneity_score(topic, argmax_theta)
101 |         )
102 |     return cluster_metrics
103 |

104 | def compute_adjusted_NMI_wikitext(broad_categories=True):
105 |     df_metadata = pd.read_json("data/wikitext/processed/labeled/vocab_15k/train.metadata.jsonl", lines=True)
106 |     print(df_metadata)
107 |     paths = ["runs/outputs/k_selection/" + "wikitext" + "-labeled/vocab_15k/k-" + str(i) for i in N_CLUSTER]
108 |
109 |     # collapse labels with <= 25 documents into "other" (these columns are not used by the metrics below)
110 |     value_counts = df_metadata.category.value_counts().rename_axis("topic").reset_index(name="counts")
111 |     value_counts = {i: j for i, j in zip(value_counts.topic, value_counts.counts) if j > 25}
112 |     df_metadata["topic"] = ["other" if i not in value_counts else i for i in df_metadata.category]
113 |
114 |     value_counts = df_metadata.subcategory.value_counts().rename_axis("topic").reset_index(name="counts")
115 |     value_counts = {i: j for i, j in zip(value_counts.topic, value_counts.counts) if j > 25}
116 |     df_metadata["subtopic"] = ["other" if i not in value_counts else i for i in df_metadata.subcategory]
117 |
118 |     if broad_categories:
119 |         topic = df_metadata.category.tolist()
120 |     else:
121 |         topic = df_metadata.subcategory.tolist()
122 |
123 |     cluster_metrics = defaultdict(list)
124 |
125 |     for path, num_topics in tqdm(zip(paths, N_CLUSTER), total=len(N_CLUSTER)):
126 |         path = os.path.join(path, "2972")
127 |         beta = np.load(os.path.join(path, "beta.npy"))
128 |         theta = np.load(os.path.join(path, "train.theta.npy"))
129 |         argmax_theta = theta.argmax(axis=-1)
130 |         cluster_metrics["ami"].append(adjusted_mutual_info_score(topic, argmax_theta))
131 |         cluster_metrics["ari"].append(adjusted_rand_score(topic, argmax_theta))
132 |         cluster_metrics["completeness"].append(completeness_score(topic, argmax_theta))
133 |         cluster_metrics["homogeneity"].append(homogeneity_score(topic, argmax_theta))
134 |     return cluster_metrics
135 |
136 |
137 |
138 |
139 | # select the setting with --experiment: bills_broad, wikitext_broad or wikitext_specific
140 |
141 |
142 |

143 | if args.experiment == "bills_broad":
144 |     dataset = "bills"
145 |     cluster_metrics = compute_adjusted_NMI_bills()
146 |     paths = [
147 |         "runs/outputs/k_selection/bills-labeled/vocab_15k/k-" + str(i)
148 |         for i in N_CLUSTER
149 |     ]
150 |     df = pd.read_csv("LLM-scores/LLM_outputs_bills_broad.csv")
151 |     plt_label = "Adjusted MI"
152 |     outfile_name = "n_clusters_bills_dataset.pdf"
153 |     plt_title = "BillSum, broad, $\\rho = RHO_CORR$"
154 |     left_ylab = True
155 |     right_ylab = False
156 |     degree = 1
157 | elif args.experiment == "wikitext_broad":
158 |     dataset = "wikitext"
159 |     cluster_metrics = compute_adjusted_NMI_wikitext()
160 |     paths = [
161 |         "runs/outputs/k_selection/wikitext-labeled/vocab_15k/k-" + str(i)
162 |         for i in N_CLUSTER
163 |     ]
164 |     df = pd.read_csv("LLM-scores/LLM_outputs_wikitext_broad.csv")
165 |     plt_label = "Adjusted MI"
166 |     outfile_name = "n_clusters_wikitext_broad.pdf"
167 |     plt_title = "Wikitext, broad, $\\rho = RHO_CORR$"
168 |     left_ylab = False
169 |     right_ylab = False
170 |     degree = 1
171 | elif args.experiment == "wikitext_specific":
172 |     dataset = "wikitext"
173 |     cluster_metrics = compute_adjusted_NMI_wikitext(broad_categories=False)
174 |     paths = [
175 |         "runs/outputs/k_selection/wikitext-labeled/vocab_15k/k-" + str(i)
176 |         for i in N_CLUSTER
177 |     ]
178 |     df = pd.read_csv("LLM-scores/LLM_outputs_wikitext_specific.csv")
179 |     plt_label = "Adjusted MI"
180 |     outfile_name = "n_clusters_wikitext_specific.pdf"
181 |     plt_title = "Wikitext, specific, $\\rho = RHO_CORR$"
182 |     left_ylab = False
183 |     right_ylab = True
184 |     degree = 3
185 |

186 | df["gpt_ratings"] = df.chatGPT_eval.astype(int)
187 | average_goodness = []
188 | # average the LLM ratings (gpt_ratings) for each k
189 | for path in paths:
190 |     path = os.path.join(path, "2972")
191 |     df_at_k = df[df.path == path]
192 |     average_goodness.append(df_at_k.gpt_ratings.mean())
193 |
194 | # smooth with a trailing moving average to damp outliers
195 | average_goodness = moving_average(average_goodness)
196 |
197 | # set to True to print Spearman's rho (and p-value) between the LLM scores and each clustering metric
198 | compute_spearmanR_different_cluster_metrics = False
199 | if compute_spearmanR_different_cluster_metrics:
200 |     for key in ["ami", "ari", "completeness", "homogeneity"]:
201 |         value = cluster_metrics[key]
202 |         statistic = spearmanr(average_goodness, value)
203 |         print(
204 |             "topics " + key, statistic.statistic.round(3),
205 |             statistic.pvalue.round(3)
206 |         )
207 |
208 | # reshape to column vectors for the (disabled) z-scoring below; LLM scores and clustering metrics are on different scales
209 | average_goodness = np.array(average_goodness).reshape(-1, 1)
210 | AMI = np.array(cluster_metrics["ami"]).reshape(-1, 1)
211 |
212 | # optional z-scoring, currently disabled (would need `from sklearn.preprocessing import StandardScaler`)
213 | # average_goodness = StandardScaler().fit_transform(average_goodness).squeeze()
214 | # AMI = StandardScaler().fit_transform(AMI).squeeze()
215 | average_goodness = np.array(average_goodness).squeeze()
216 | AMI = np.array(AMI).squeeze()
217 |

218 | # plot figures: LLM score on the left axis (ax1), clustering metric on the right axis (ax2)
219 | fig = plt.figure(figsize=(3.5, 2))
220 | ax1 = plt.gca()
221 | ax2 = ax1.twinx()
222 | SCATTER_STYLE = {"edgecolor": "black", "s": 30}
223 | l1 = ax1.scatter(
224 |     N_CLUSTER,
225 |     average_goodness,
226 |     label="LLM score",
227 |     color=fig_utils.COLORS[0],
228 |     **SCATTER_STYLE
229 | )
230 | l2 = ax2.scatter(
231 |     N_CLUSTER,
232 |     AMI,
233 |     label=plt_label,
234 |     color=fig_utils.COLORS[1],
235 |     **SCATTER_STYLE
236 | )
237 |
238 | # format right-axis tick labels with one decimal place
239 | ax2.yaxis.set_major_formatter(FormatStrFormatter('%.1f'))
240 |
241 | xticks_fine = np.linspace(min(N_CLUSTER), max(N_CLUSTER), 500)
242 | # overlay polynomial trend lines of the chosen degree
243 | poly_ag = np.poly1d(np.polyfit(N_CLUSTER, average_goodness, degree))
244 | ax1.plot(xticks_fine, poly_ag(xticks_fine), '-', color=fig_utils.COLORS[0], zorder=-100)
245 | poly_ami = np.poly1d(np.polyfit(N_CLUSTER, AMI, degree))
246 | ax2.plot(xticks_fine, poly_ami(xticks_fine), '-', color=fig_utils.COLORS[1], zorder=-100)
247 |

248 | plt.legend(
249 |     [l1, l2], [p_.get_label() for p_ in [l1, l2]],
250 |     loc="upper right",
251 |     handletextpad=-0.2,
252 |     labelspacing=0.15,
253 |     borderpad=0.15,
254 |     borderaxespad=0.1,
255 | )
256 | if left_ylab:
257 |     ax1.set_ylabel("Averaged LLM score")  # ax1 (left axis) plots the LLM scores
258 | if right_ylab:
259 |     ax2.set_ylabel(plt_label)  # ax2 (right axis) plots the clustering metric
260 | ax1.set_xlabel("Number of topics")
261 | plt.xticks(N_CLUSTER[::3], N_CLUSTER[::3])
262 |
263 | # Spearman's rho between the smoothed LLM scores and AMI, reported in the title
264 | statistic = spearmanr(average_goodness, cluster_metrics["ami"])
265 | plt.title(plt_title.replace("RHO_CORR", f"{statistic[0]:.2f}"))
266 |
267 | plt.tight_layout(pad=0.2)
268 | plt.savefig("computed/figures/" + outfile_name)
269 | plt.show()
270 |

--------------------------------------------------------------------------------
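`03-figures_nmpi_llm.py` turns each document-topic matrix into a hard clustering (`theta.argmax(axis=-1)`) and scores it against the gold labels with scikit-learn. For intuition about one of those scores, here is a dependency-free sketch of the adjusted Rand index computed from pair counts over the contingency table. It is meant for checking small cases by hand, not as a replacement for `sklearn.metrics.adjusted_rand_score`; the function name `adjusted_rand_index` is hypothetical.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_true, labels_pred):
    """Adjusted Rand index from pair counts over the contingency table."""
    if len(labels_true) != len(labels_pred):
        raise ValueError("label lists must have the same length")
    n = len(labels_true)
    # pairs of documents sharing both the gold label and the predicted topic
    sum_cells = sum(comb(c, 2) for c in Counter(zip(labels_true, labels_pred)).values())
    # pairs sharing the gold label, and pairs sharing the predicted topic
    sum_rows = sum(comb(c, 2) for c in Counter(labels_true).values())
    sum_cols = sum(comb(c, 2) for c in Counter(labels_pred).values())
    total_pairs = comb(n, 2)
    if total_pairs == 0:
        return 1.0
    expected = sum_rows * sum_cols / total_pairs
    max_index = (sum_rows + sum_cols) / 2
    if max_index == expected:
        # degenerate partitions (e.g. both put everything in one cluster)
        return 1.0
    return (sum_cells - expected) / (max_index - expected)


if __name__ == "__main__":
    print(adjusted_rand_index([0, 0, 1, 1], [1, 1, 0, 0]))  # 1.0 (relabeling does not matter)
    print(round(adjusted_rand_index([0, 0, 1, 1], [0, 0, 1, 2]), 3))  # 0.571
```

With the script's variables, a call would look like `adjusted_rand_index(topic, argmax_theta.tolist())`.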

/src-misc/04-launch_figures_nmpi_llm.sh:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash
2 |
3 | for EXPERIMENT in "bills_broad" "wikitext_broad" "wikitext_specific"; do
4 |     # empty DISPLAY: on Linux, matplotlib falls back to a non-GUI backend, so plt.show() opens no window
5 |     DISPLAY="" python3 src-misc/03-figures_nmpi_llm.py --experiment "${EXPERIMENT}"
6 | done

--------------------------------------------------------------------------------
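Each iteration of the loop above writes one figure whose title reports Spearman's ρ between the smoothed per-k LLM scores and AMI (the `spearmanr(...)` call in `03-figures_nmpi_llm.py`). As a dependency-free sketch of that statistic: rank both series, give tied values their average rank, then take the Pearson correlation of the ranks. This reproduces only the correlation coefficient, not scipy's p-value; the names `average_ranks` and `spearman_rho` are hypothetical.

```python
from math import sqrt


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j over the run of values tied with values[order[i]]
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson correlation of the average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant input")
    return cov / sqrt(var_x * var_y)


if __name__ == "__main__":
    # made-up per-k averages, not values from the paper
    print(spearman_rho([3.1, 3.4, 3.3, 3.6], [0.20, 0.25, 0.22, 0.30]))  # 1.0
```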

/src-misc/05-find_rating_errors.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": 34,

6 | "metadata": {},

7 | "outputs": [

8 | {

9 | "name": "stderr",

10 | "output_type": "stream",

11 | "text": [

12 | "/tmp/ipykernel_32934/435855610.py:6: FutureWarning: Indexing with multiple keys (implicitly converted to a tuple of keys) will be deprecated, use a list instead.\n",

13 | " df_grouped = df_rating_scores.groupby([\"dataset_name\", \"model_name\", \"topic_id\"])[\"chatGPT_eval\", \"human_eval\"].mean().reset_index()\n"

14 | ]

15 | }

16 | ],

17 | "source": [

18 | "#!/usr/bin/env python3\n",

19 | "\n",

20 | "import pandas as pd\n",

21 | "\n",

22 | "df_rating_scores = pd.read_json(\"../computed/ratings_outfile_with_logit_bias_old_prompt_5_runs.jsonl\", lines=True)\n",

23 | "df_grouped = df_rating_scores.groupby([\"dataset_name\", \"model_name\", \"topic_id\"])[[\"chatGPT_eval\", \"human_eval\"]].mean().reset_index()\n",

24 | "df_grouped[\"diff_abs\"] = (df_grouped[\"chatGPT_eval\"]-df_grouped[\"human_eval\"]).abs()\n",

25 | "df_grouped[\"diff\"] = (df_grouped[\"chatGPT_eval\"]-df_grouped[\"human_eval\"])"

26 | ]

27 | },

28 | {

29 | "cell_type": "code",

30 | "execution_count": 36,

31 | "metadata": {},

32 | "outputs": [

33 | {

34 | "data": {

35 | "text/html": [

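36 | "<div>\n",
37 | "<style scoped>\n",
38 | "    .dataframe tbody tr th:only-of-type {\n",
39 | "        vertical-align: middle;\n",
40 | "    }\n",
41 | "\n",
42 | "    .dataframe tbody tr th {\n",
43 | "        vertical-align: top;\n",
44 | "    }\n",
45 | "\n",
46 | "    .dataframe thead th {\n",
47 | "        text-align: right;\n",
48 | "    }\n",
49 | "</style>\n",
50 | "<table border=\"1\" class=\"dataframe\">\n",
51 | "  <thead>\n",
52 | "    <tr style=\"text-align: right;\">\n",
53 | "      <th></th>\n",
54 | "      <th>dataset_name</th>\n",
55 | "      <th>model_name</th>\n",
56 | "      <th>topic_id</th>\n",
57 | "      <th>chatGPT_eval</th>\n",
58 | "      <th>human_eval</th>\n",
59 | "      <th>diff_abs</th>\n",
60 | "      <th>diff</th>\n",
61 | "    </tr>\n",
62 | "  </thead>\n",
63 | "  <tbody>\n",
64 | "    <tr>\n",
65 | "      <th>299</th>\n",
66 | "      <td>wikitext</td>\n",
67 | "      <td>mallet</td>\n",
68 | "      <td>49</td>\n",
69 | "      <td>2.6</td>\n",
70 | "      <td>2.6</td>\n",
71 | "      <td>0.0</td>\n",
72 | "      <td>0.0</td>\n",
73 | "    </tr>\n",
74 | "    <tr>\n",
75 | "      <th>157</th>\n",
76 | "      <td>wikitext</td>\n",
77 | "      <td>dvae</td>\n",
78 | "      <td>7</td>\n",
79 | "      <td>2.8</td>\n",
80 | "      <td>2.8</td>\n",
81 | "      <td>0.0</td>\n",
82 | "      <td>0.0</td>\n",
83 | "    </tr>\n",
84 | "    <tr>\n",
85 | "      <th>16</th>\n",
86 | "      <td>nytimes</td>\n",
87 | "      <td>dvae</td>\n",
88 | "      <td>16</td>\n",
89 | "      <td>2.0</td>\n",
90 | "      <td>2.0</td>\n",
91 | "      <td>0.0</td>\n",
92 | "      <td>0.0</td>\n",
93 | "    </tr>\n",
94 | "    <tr>\n",
95 | "      <th>110</th>\n",
96 | "      <td>nytimes</td>\n",
97 | "      <td>mallet</td>\n",
98 | "      <td>10</td>\n",
99 | "      <td>2.0</td>\n",
100 | "      <td>2.0</td>\n",
101 | "      <td>0.0</td>\n",
102 | "      <td>0.0</td>\n",
103 | "    </tr>\n",
104 | "    <tr>\n",
105 | "      <th>101</th>\n",
106 | "      <td>nytimes</td>\n",
107 | "      <td>mallet</td>\n",
108 | "      <td>1</td>\n",
109 | "      <td>3.0</td>\n",
110 | "      <td>3.0</td>\n",
111 | "      <td>0.0</td>\n",
112 | "      <td>0.0</td>\n",
113 | "    </tr>\n",
114 | "  </tbody>\n",
115 | "</table>\n",
116 | "</div>"
151 | "<div>\n",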

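152 | "<style scoped>\n",
153 | "    .dataframe tbody tr th:only-of-type {\n",
154 | "        vertical-align: middle;\n",
155 | "    }\n",
156 | "\n",
157 | "    .dataframe tbody tr th {\n",
158 | "        vertical-align: top;\n",
159 | "    }\n",
160 | "\n",
161 | "    .dataframe thead th {\n",
162 | "        text-align: right;\n",
163 | "    }\n",
164 | "</style>\n",
165 | "<table border=\"1\" class=\"dataframe\">\n",
166 | "  <thead>\n",
167 | "    <tr style=\"text-align: right;\">\n",
168 | "      <th></th>\n",
169 | "      <th>dataset_name</th>\n",
170 | "      <th>model_name</th>\n",
171 | "      <th>topic_id</th>\n",
172 | "      <th>chatGPT_eval</th>\n",
173 | "      <th>human_eval</th>\n",
174 | "      <th>diff_abs</th>\n",
175 | "      <th>diff</th>\n",
176 | "    </tr>\n",
177 | "  </thead>\n",
178 | "  <tbody>\n",
179 | "    <tr>\n",
180 | "      <th>58</th>\n",
181 | "      <td>nytimes</td>\n",
182 | "      <td>etm</td>\n",
183 | "      <td>8</td>\n",
184 | "      <td>1.4</td>\n",
185 | "      <td>2.866667</td>\n",
186 | "      <td>1.466667</td>\n",
187 | "      <td>-1.466667</td>\n",
188 | "    </tr>\n",
189 | "    <tr>\n",
190 | "      <th>41</th>\n",
191 | "      <td>nytimes</td>\n",
192 | "      <td>dvae</td>\n",
193 | "      <td>41</td>\n",
194 | "      <td>1.0</td>\n",
195 | "      <td>2.533333</td>\n",
196 | "      <td>1.533333</td>\n",
197 | "      <td>-1.533333</td>\n",
198 | "    </tr>\n",
199 | "    <tr>\n",
200 | "      <th>28</th>\n",
201 | "      <td>nytimes</td>\n",
202 | "      <td>dvae</td>\n",
203 | "      <td>28</td>\n",
204 | "      <td>1.2</td>\n",
205 | "      <td>2.733333</td>\n",
206 | "      <td>1.533333</td>\n",
207 | "      <td>-1.533333</td>\n",
208 | "    </tr>\n",
209 | "    <tr>\n",
210 | "      <th>34</th>\n",
211 | "      <td>nytimes</td>\n",
212 | "      <td>dvae</td>\n",
213 | "      <td>34</td>\n",
214 | "      <td>1.0</td>\n",
215 | "      <td>2.666667</td>\n",
216 | "      <td>1.666667</td>\n",
217 | "      <td>-1.666667</td>\n",
218 | "    </tr>\n",
219 | "    <tr>\n",
220 | "      <th>216</th>\n",
221 | "      <td>wikitext</td>\n",
222 | "      <td>etm</td>\n",
223 | "      <td>16</td>\n",
224 | "      <td>1.0</td>\n",
225 | "      <td>2.933333</td>\n",
226 | "      <td>1.933333</td>\n",
227 | "      <td>-1.933333</td>\n",
228 | "    </tr>\n",
229 | "  </tbody>\n",
230 | "</table>\n",
231 | "</div>"
266 | "<div>\n",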

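267 | "<style scoped>\n",
268 | "    .dataframe tbody tr th:only-of-type {\n",
269 | "        vertical-align: middle;\n",
270 | "    }\n",
271 | "\n",
272 | "    .dataframe tbody tr th {\n",
273 | "        vertical-align: top;\n",
274 | "    }\n",
275 | "\n",
276 | "    .dataframe thead th {\n",
277 | "        text-align: right;\n",
278 | "    }\n",
279 | "</style>\n",
280 | "<table border=\"1\" class=\"dataframe\">\n",
281 | "  <thead>\n",
282 | "    <tr style=\"text-align: right;\">\n",
283 | "      <th></th>\n",
284 | "      <th>dataset_name</th>\n",
285 | "      <th>model_name</th>\n",
286 | "      <th>topic_id</th>\n",
287 | "      <th>chatGPT_eval</th>\n",
288 | "      <th>human_eval</th>\n",
289 | "      <th>diff_abs</th>\n",
290 | "      <th>diff</th>\n",
291 | "    </tr>\n",
292 | "  </thead>\n",
293 | "  <tbody>\n",
294 | "    <tr>\n",
295 | "      <th>200</th>\n",
296 | "      <td>wikitext</td>\n",
297 | "      <td>etm</td>\n",
298 | "      <td>0</td>\n",
299 | "      <td>2.0</td>\n",
300 | "      <td>1.600000</td>\n",
301 | "      <td>0.400000</td>\n",
302 | "      <td>0.400000</td>\n",
303 | "    </tr>\n",
304 | "    <tr>\n",
305 | "      <th>210</th>\n",
306 | "      <td>wikitext</td>\n",
307 | "      <td>etm</td>\n",
308 | "      <td>10</td>\n",
309 | "      <td>1.8</td>\n",
310 | "      <td>1.333333</td>\n",
311 | "      <td>0.466667</td>\n",
312 | "      <td>0.466667</td>\n",
313 | "    </tr>\n",
314 | "    <tr>\n",
315 | "      <th>248</th>\n",
316 | "      <td>wikitext</td>\n",
317 | "      <td>etm</td>\n",
318 | "      <td>48</td>\n",
319 | "      <td>3.0</td>\n",
320 | "      <td>2.533333</td>\n",
321 | "      <td>0.466667</td>\n",
322 | "      <td>0.466667</td>\n",
323 | "    </tr>\n",
324 | "    <tr>\n",
325 | "      <th>207</th>\n",
326 | "      <td>wikitext</td>\n",
327 | "      <td>etm</td>\n",
328 | "      <td>7</td>\n",
329 | "      <td>1.8</td>\n",
330 | "      <td>1.266667</td>\n",
331 | "      <td>0.533333</td>\n",
332 | "      <td>0.533333</td>\n",
333 | "    </tr>\n",
334 | "    <tr>\n",
335 | "      <th>242</th>\n",
336 | "      <td>wikitext</td>\n",
337 | "      <td>etm</td>\n",
338 | "      <td>42</td>\n",
339 | "      <td>1.8</td>\n",
340 | "      <td>1.133333</td>\n",
341 | "      <td>0.666667</td>\n",
342 | "      <td>0.666667</td>\n",
343 | "    </tr>\n",
344 | "  </tbody>\n",
345 | "</table>\n",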

346 | "