├── .gitignore

├── README.md

├── docs

├── anaconda-install.md

├── collections-study.ipynb

├── matplotlib-study.ipynb

├── numpy-study.ipynb

├── opencv

│ ├── canny.py

│ ├── lane-line-detection.py

│ ├── opencv-study.ipynb

│ └── opencv-study.py

└── pytorch-study.ipynb

├── experiment

├── Face-Detection-opencv

│ ├── README.md

│ ├── data

│ │ ├── haarcascade_frontalface_default.xml

│ │ └── lbpcascade_frontalface.xml

│ ├── face-detection.ipynb

│ ├── img

│ │ ├── multi.jpg

│ │ ├── multi2.jpg

│ │ └── single.jpg

│ ├── video-face-detection.py

│ └── video

│ │ ├── output.mp4

│ │ └── video.MOV

├── GAN

│ ├── README.md

│ └── gan.py

├── Image-Super-Resolution

│ ├── README.md

│ └── models.py

├── MNIST-Classification

│ └── MNIST-classification.ipynb

├── Mento-Carlo

│ ├── MenteCarlo.ipynb

│ └── README.md

├── Regression

│ ├── DNN-generation.ipynb

│ ├── function-fitting-batch.ipynb

│ └── function-fitting.ipynb

├── Style-Transfer

│ ├── README.md

│ └── networks.py

├── ViT

│ └── vit.py

└── YOLO

│ └── README.md

├── homework

├── CNN-MNIST

│ ├── README.md

│ └── W6_MNIST_FC.ipynb

├── DNN

│ ├── README.md

│ └── W5_Homework.ipynb

└── gradient-calc

│ ├── gradient-calc.ipynb

│ └── gradient-calc.md

├── paper-reading-list.md

└── resources

├── doubleZ.jpg

├── face-detection

├── gaussian.png

├── output.gif

└── threshold.png

├── opencv

├── canny.jpg

├── card.jpg

├── hough.jpg

├── lane.jpg

├── lane2.jpg

├── lena.jpg

└── match_shape.jpg

├── paper-reading-list

├── Attention-Aware-overview.png

├── Cascade-Cost-Volume-overview.png

├── Cost-Volume-Pyramid-overview.png

├── MVSNet-overview.png

├── P-mvsnet-overview.png

├── PatchmatchNet-overview.png

├── R-MVSNet-overview.png

└── point-mvs-overview.png

└── poster.jpg

/.gitignore:

--------------------------------------------------------------------------------

1 | .DS_Store

2 | __pycache__

3 | .idea/

4 | .vscode/

5 | doubleZ-*

6 | resources/opencv/lane.mp4

7 | t10k*

8 | train-labels-idx*

9 | train-images-idx*

10 | *.pt

11 | README.assets/

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # PKU计算机视觉课程材料

2 |

3 | > 北京大学深圳研究生院信息工程学院2021秋计算机视觉(04711432)课程材料

4 |

5 | ## 课程作业

6 |

7 |

40 |

41 |

42 | | 课时/时间 | 主题 | 作业内容 | 腾讯文档汇总版 |

43 | | :---------------------------- | ------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ |

44 | | ✅第一次作业

(2021.09.21) | 课程导论 | 📃[计算机视觉课论文列表](https://github.com/doubleZ0108/Computer-Vision-PKU/blob/master/paper-reading-list.md) | [计算机视觉课论文汇总](https://docs.qq.com/doc/DSGNEZVlES3R0REt0) |

45 | | ✅第二次作业

(2021.09.28) | 基础知识(OpenCV等) | ⚗️[OpenCV人脸检测demo](https://github.com/doubleZ0108/Computer-Vision-PKU/blob/master/experiment/Face-Detection-opencv/face-detection.ipynb)

📝[Anaconda安装及使用](https://github.com/doubleZ0108/Computer-Vision-PKU/blob/master/docs/anaconda-install.md)

📔[Matplotlib学习](https://github.com/doubleZ0108/Computer-Vision-PKU/blob/master/docs/matplotlib-study.ipynb)

📔[NumPy学习](https://github.com/doubleZ0108/Computer-Vision-PKU/blob/master/docs/numpy-study.ipynb)

📔[OpenCV学习](https://github.com/doubleZ0108/Computer-Vision-PKU/blob/master/docs/opencv/opencv-study.ipynb)

📔[collections容器学习](https://github.com/doubleZ0108/Computer-Vision-PKU/blob/master/docs/collections-study.ipynb) | / |

46 | | ✅第三次作业

(2021.10.12) | 基础知识(PyTorch) | :pencil:[视频人脸检测](https://github.com/doubleZ0108/Computer-Vision-PKU/tree/master/experiment/Face-Detection-opencv) \| ⚗️[code](https://github.com/doubleZ0108/Computer-Vision-PKU/blob/master/experiment/Face-Detection-opencv/video-face-detection.py)

📔[PyTorch学习](https://github.com/doubleZ0108/Computer-Vision-PKU/blob/master/docs/pytorch-study.ipynb)

:alembic:[传统方法车道线检测](https://github.com/doubleZ0108/Computer-Vision-PKU/blob/master/docs/opencv/lane-line-detection.py)

:alembic:[Canny边缘检测动态展示](https://github.com/doubleZ0108/Computer-Vision-PKU/blob/master/docs/opencv/canny.py) | [第三次作业汇总](https://docs.qq.com/doc/DSFNJSUZlTXNZRFFC) |

47 | | ✅第四次作业

(2021.10.19) | 矩阵求导 | :pencil:[矩阵求导问题](https://github.com/doubleZ0108/Computer-Vision-PKU/blob/master/homework/gradient-calc/gradient-calc.ipynb) | [第四次作业汇总](https://docs.qq.com/pdf/DSGhVTmNNeXNtTkZj) |

48 | | ✅第五次作业

(2021.10.26) | 初识神经网络 | :alembic:[两层全连接网络拟合曲线](https://github.com/doubleZ0108/Computer-Vision-PKU/blob/master/homework/DNN/W5_Homework.ipynb)

:pencil:[两层全连接网络逐步衍化](https://github.com/doubleZ0108/Computer-Vision-PKU/blob/master/experiment/Regression/DNN-generation.ipynb) | [第五次作业汇总](https://docs.qq.com/pdf/DSEpHR0xSU1FRQWVT) |

49 | | ✅第六次作业

(2021.11.03) | 卷积神经网络 | :alembic:[CNN处理MNIST手写数字识别问题](https://github.com/doubleZ0108/Computer-Vision-PKU/blob/master/homework/CNN-MNIST/W6_MNIST_FC.ipynb)

:pencil:[CNN处理MNIST手写数字识别问题](https://github.com/doubleZ0108/Computer-Vision-PKU/tree/master/homework/CNN-MNIST) | [第六次作业汇总](https://docs.qq.com/pdf/DSGN4eHBJTGF3cm9p) |

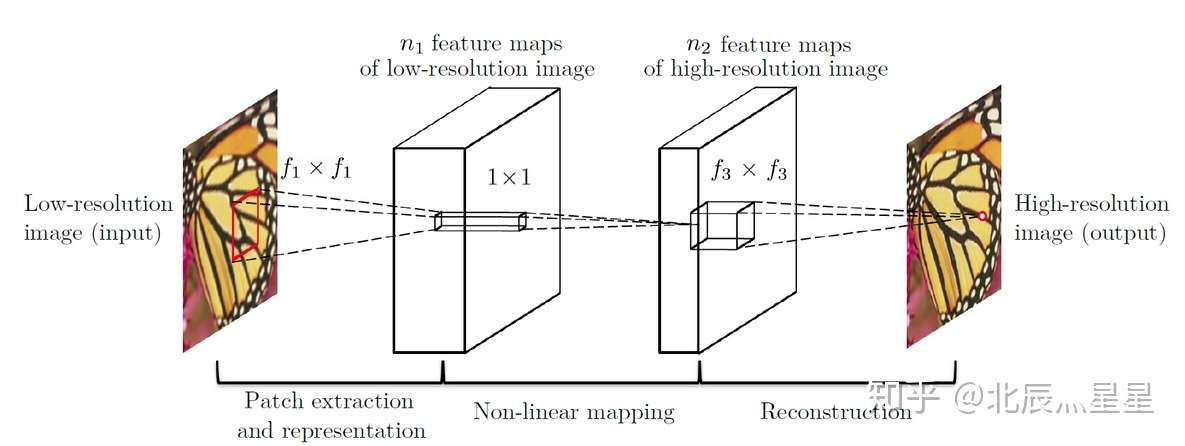

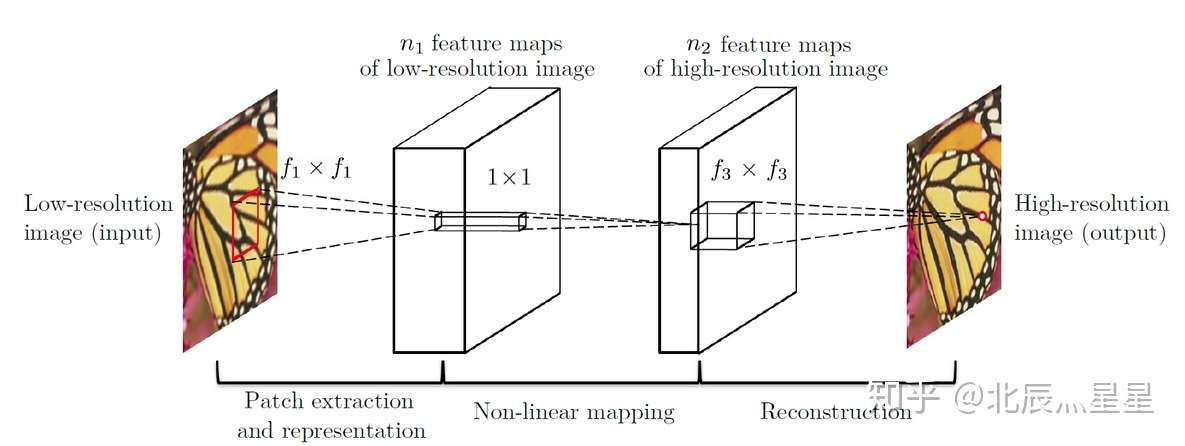

50 | | ✅第七次作业

(2021.11.10) | 图像超分 | :pencil:[图像超分辨率](https://github.com/doubleZ0108/Computer-Vision-PKU/tree/master/experiment/Image-Super-Resolution) | [第七次作业汇总](https://docs.qq.com/pdf/DSENtVE55eXNlS2JY) |

51 | | ✅第八次作业

(2021.11.17) | GAN生成对抗网络 | :pencil:[GAN论文阅读和实验](https://github.com/doubleZ0108/Computer-Vision-PKU/tree/master/experiment/GAN) | [第八次作业汇总](https://docs.qq.com/pdf/DSExKV21SY3lLc2FT) |

52 | | ✅第九次作业

(2021.11.24) | 风格迁移 | :alembic:[风格迁移实验](https://github.com/doubleZ0108/Computer-Vision-PKU/tree/master/experiment/Style-Transfer)

🌐[风格迁移Demo网站](https://doublez0108.github.io/CV/Style-Transfer/style-transfer.html) | [第九次作业汇总](https://docs.qq.com/pdf/DSHFhVlV2ZGdJYUpi) |

53 | | ✅第十次作业

(2021.12.01) | YOLO目标检测 | :pencil:[YOLO目标检测实验](https://github.com/doubleZ0108/Computer-Vision-PKU/tree/master/experiment/YOLO) | [第十次作业汇总](https://docs.qq.com/pdf/DSFhzaUpNTW5jVU5J) |

54 |

55 |

56 |

57 | ## 期末展示

58 |

59 |

60 |

61 |

62 |

63 | ## 关于作者

64 |

65 | - **姓名/学号**:张喆 2101212846

66 | - **学院/专业**:北京大学信息工程学院 计算机应用技术

67 | - **课程**:计算机视觉(04711432)

68 | - **指导老师**:[张健助理教授](http://www.ece.pku.edu.cn/info/1012/1075.htm)

69 | - **联系方式**:[doublez@stu.pku.edu.cn](mailto:doublez@stu.pku.edu.cn)

70 |

--------------------------------------------------------------------------------

/docs/anaconda-install.md:

--------------------------------------------------------------------------------

1 | # Anaconda安装及使用

2 |

3 | * [系统环境](#系统环境)

4 | * [Anaconda GUI](#anaconda-gui)

5 | * [安装](#安装)

6 | * [使用](#使用)

7 | * [Anaconda 命令行](#anaconda-命令行)

8 | * [安装](#安装-1)

9 | * [使用](#使用-1)

10 | * [Anaconda配置CV所需环境](#anaconda配置cv所需环境)

11 |

12 | ------

13 |

14 | 【Anaconda官网】[Anaconda | Individual Edition](https://www.anaconda.com/products/individual#Downloads)

15 |

16 |

17 |

18 | ## 系统环境

19 |

20 | - 本地**操作系统**:macOS Big Sur 11.6

21 | - 服务器操作系统:Ubuntu 18.04

22 |

23 | ---

24 |

25 | ## Anaconda GUI

26 |

27 | ### 安装

28 |

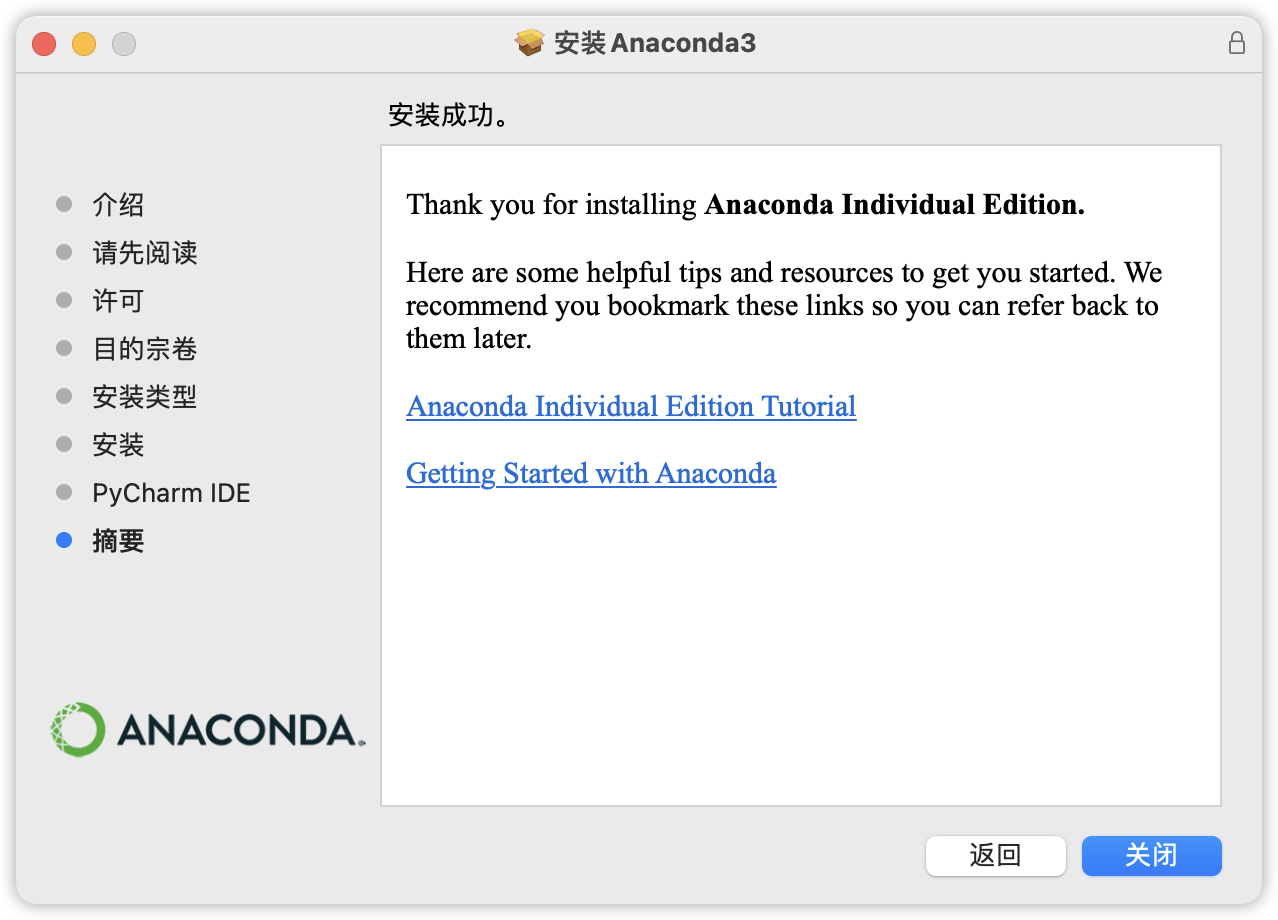

29 | 在官网下载安装包,根据提示一步步进行安装即可

30 |

31 |  32 |

33 |

32 |

33 |  34 |

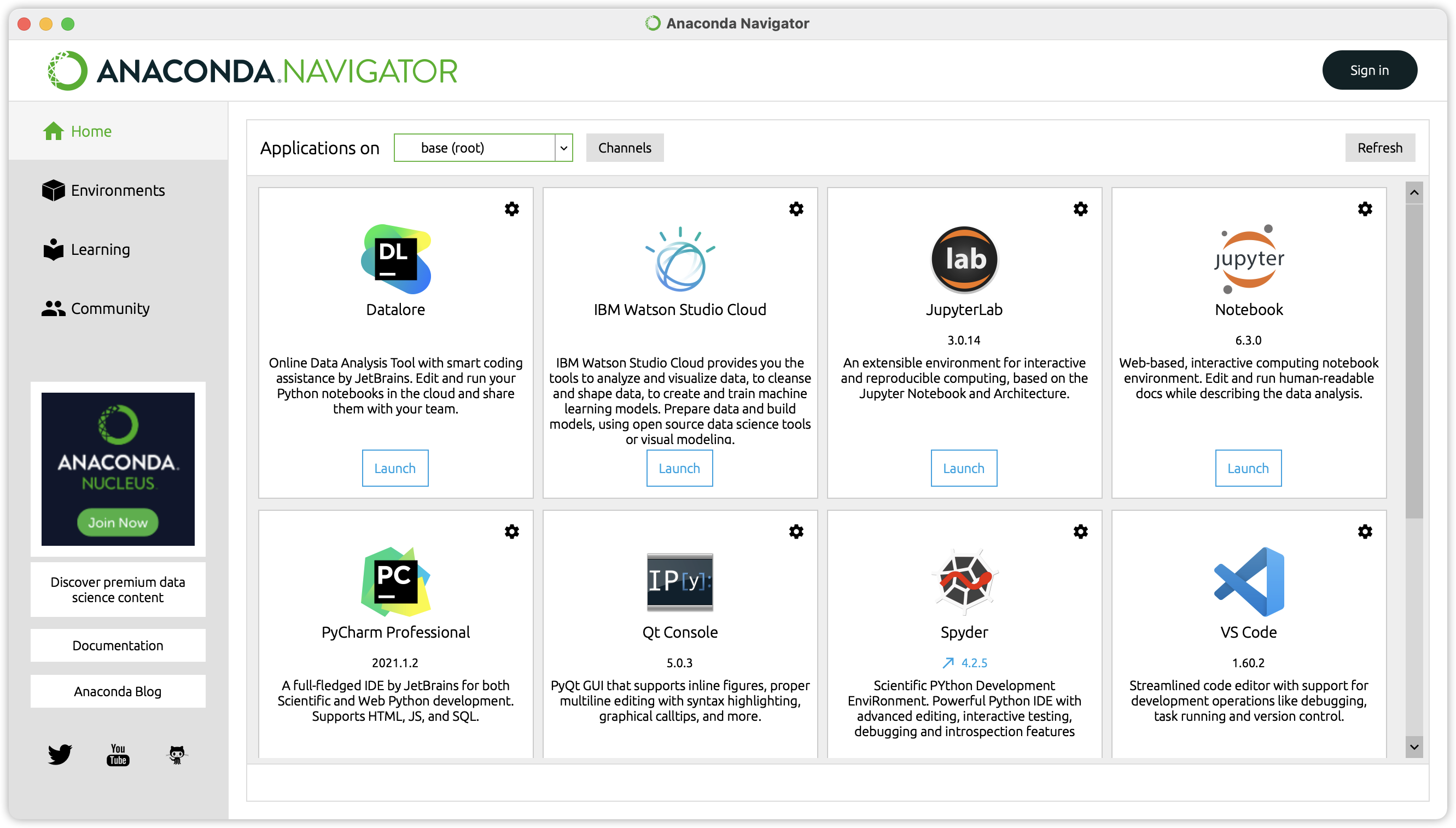

35 | ### 使用

36 |

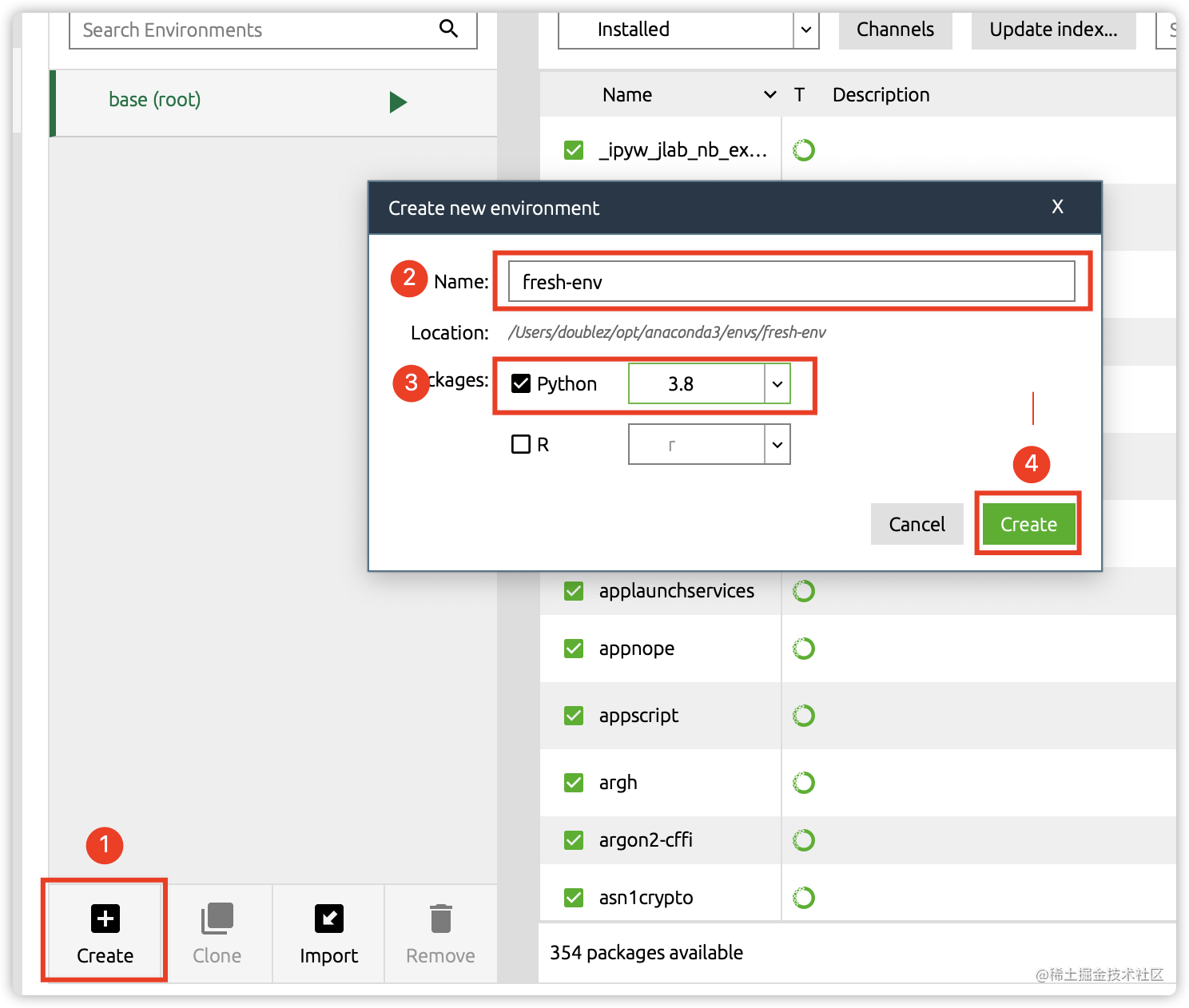

37 | 1. 创建虚拟环境

38 |

39 |

34 |

35 | ### 使用

36 |

37 | 1. 创建虚拟环境

38 |

39 |  40 |

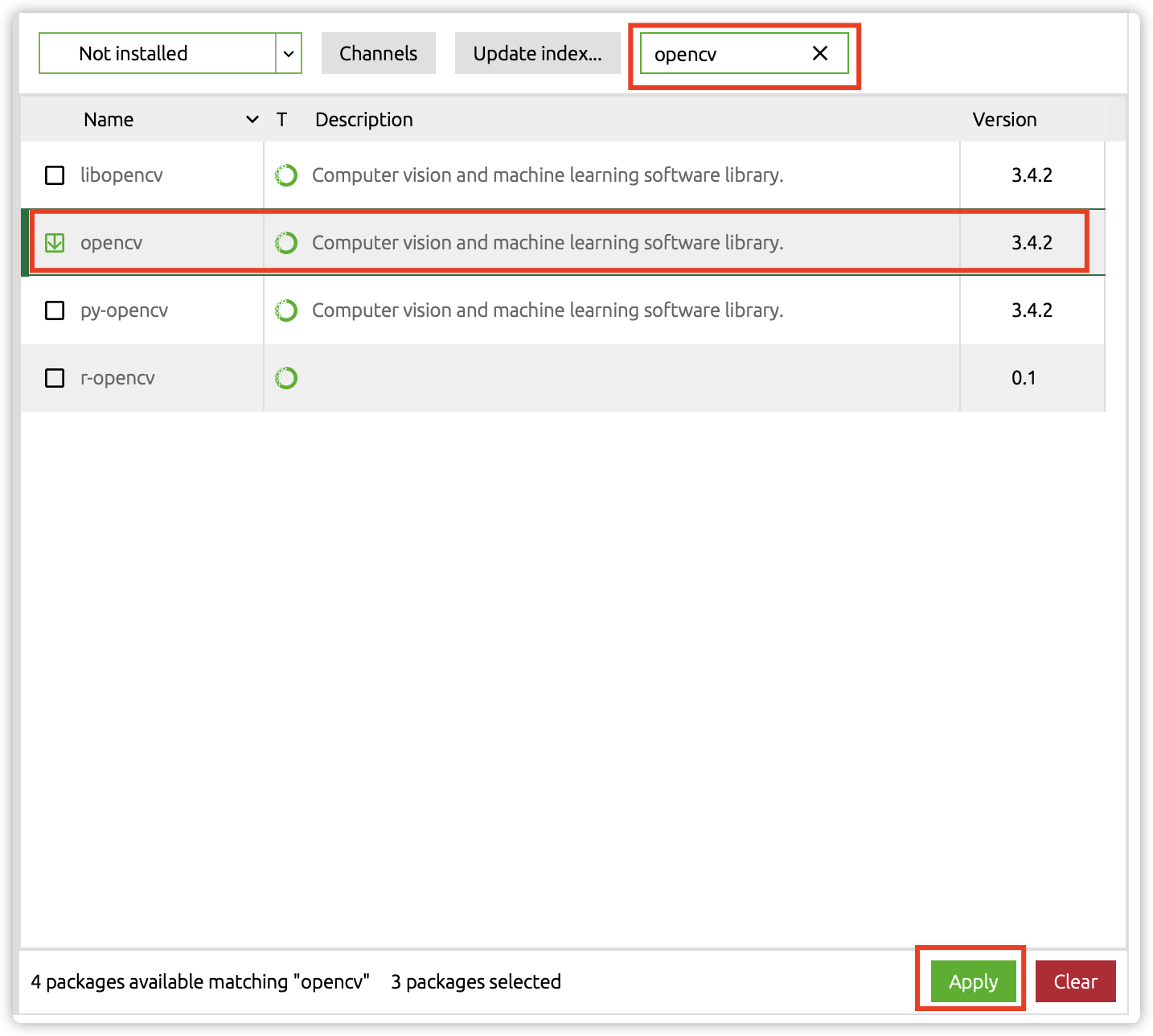

41 | 2. 选择所需包进行安装

42 |

43 |

40 |

41 | 2. 选择所需包进行安装

42 |

43 |  44 |

45 | 3. 在IDE中使用虚拟环境进行测试

46 |

47 | > 需要安装`ipykernel`

48 |

49 |

44 |

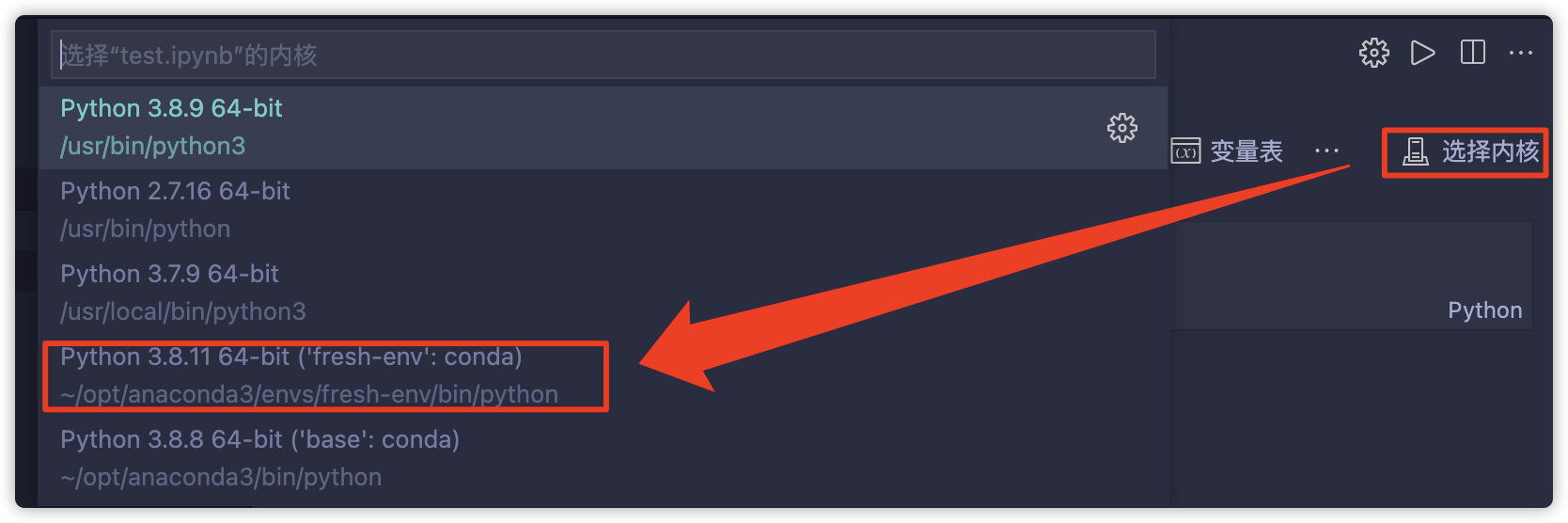

45 | 3. 在IDE中使用虚拟环境进行测试

46 |

47 | > 需要安装`ipykernel`

48 |

49 |  50 |

51 |

50 |

51 |  52 |

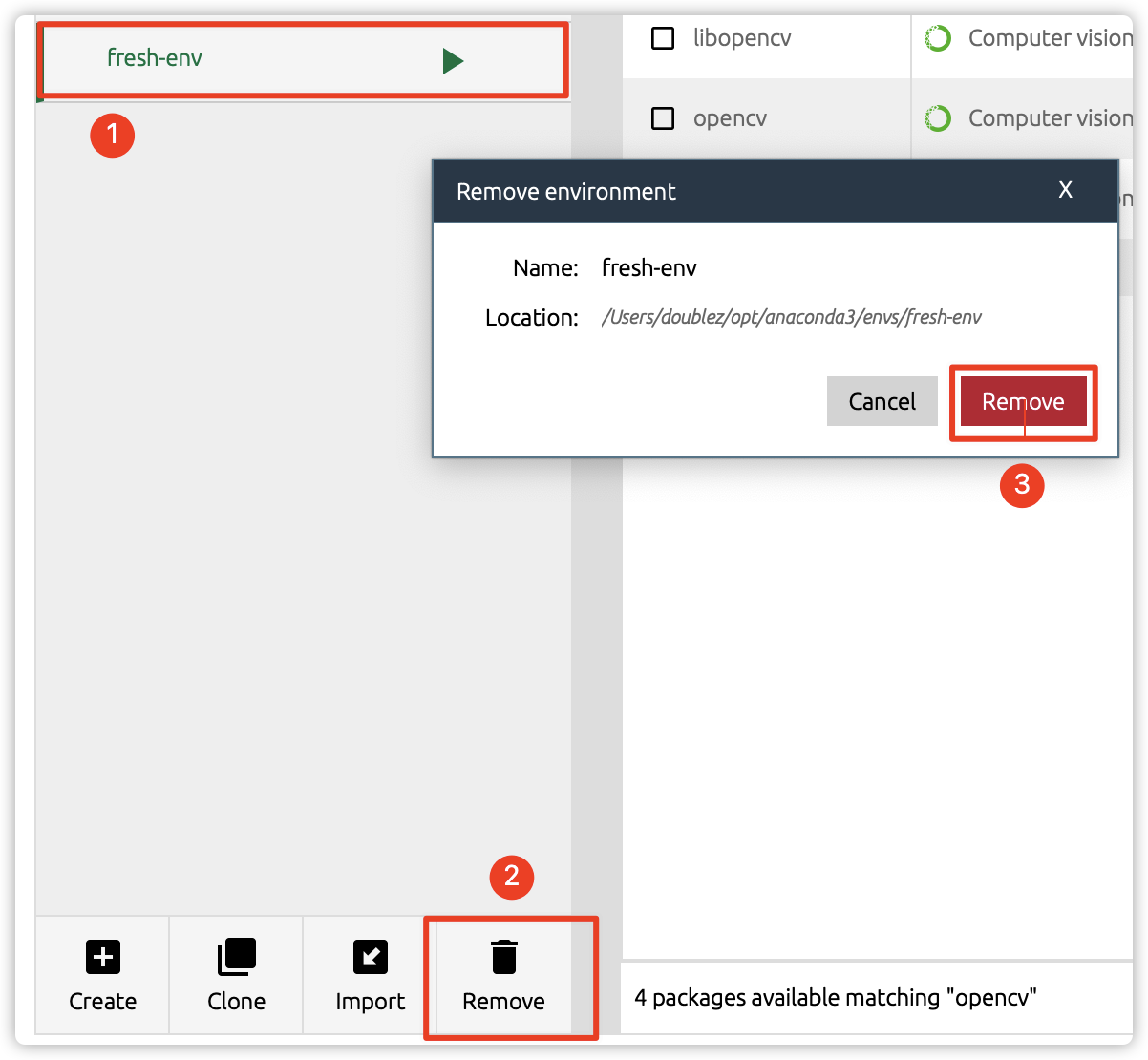

53 | 4. 删除虚拟环境

54 |

55 |

52 |

53 | 4. 删除虚拟环境

54 |

55 |  56 |

57 | ---

58 |

59 | ## Anaconda 命令行

60 |

61 | ### 安装

62 |

63 | 1. 在官网下载Anaconda安装包

64 | 2. 按照官网建议通过SHA-256验证数据的正确性

65 |

66 | ```bash

67 | sha256sum /path/filename

68 | ```

69 |

70 | 3. 运行shell脚本

71 | 4. 根据提示进行安装

72 | 5. 写入环境变量

73 | 6. 最后通过`conda —version`验证是否安装成功

74 |

75 | > 由于服务器上之前已经安装好Anaconda,这里不再展示安装步骤截图

76 |

77 | ### 使用

78 |

79 | ```bash

80 | # 创建虚拟环境

81 | conda create -n [env_name]

82 |

83 | # 查看所有环境信息

84 | conda info --envs

85 |

86 | # 激活某个环境

87 | conda activate [env_name]

88 |

89 | # 退出激活的环境

90 | conda deactivate

91 |

92 | # 删除某个环境

93 | conda remove -n [env_name] --all

94 | ```

95 |

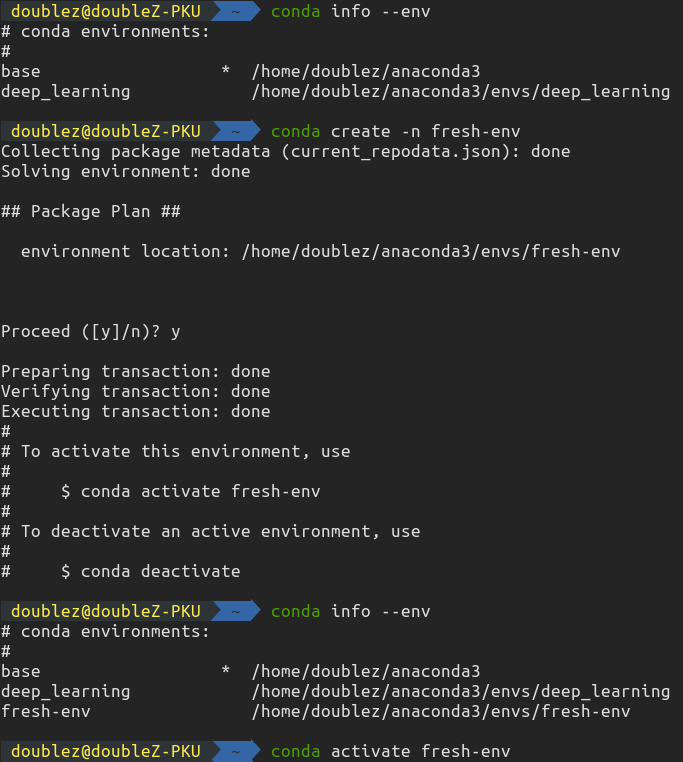

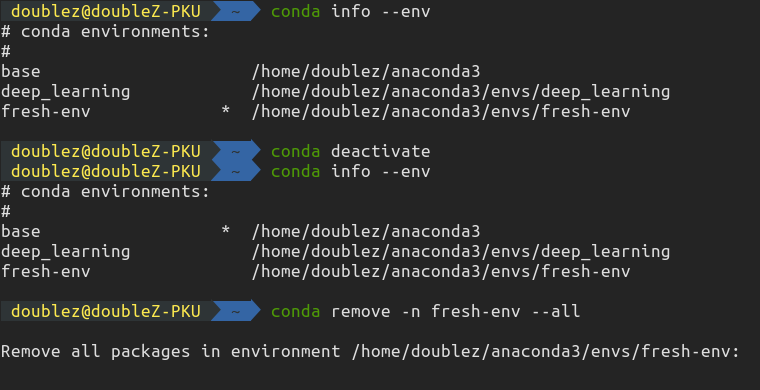

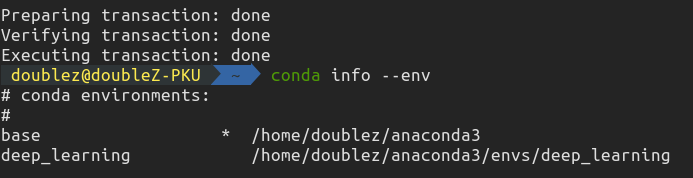

96 | 下图为在Ubuntu下的实际操作:

97 |

98 |

56 |

57 | ---

58 |

59 | ## Anaconda 命令行

60 |

61 | ### 安装

62 |

63 | 1. 在官网下载Anaconda安装包

64 | 2. 按照官网建议通过SHA-256验证数据的正确性

65 |

66 | ```bash

67 | sha256sum /path/filename

68 | ```

69 |

70 | 3. 运行shell脚本

71 | 4. 根据提示进行安装

72 | 5. 写入环境变量

73 | 6. 最后通过`conda —version`验证是否安装成功

74 |

75 | > 由于服务器上之前已经安装好Anaconda,这里不再展示安装步骤截图

76 |

77 | ### 使用

78 |

79 | ```bash

80 | # 创建虚拟环境

81 | conda create -n [env_name]

82 |

83 | # 查看所有环境信息

84 | conda info --envs

85 |

86 | # 激活某个环境

87 | conda activate [env_name]

88 |

89 | # 退出激活的环境

90 | conda deactivate

91 |

92 | # 删除某个环境

93 | conda remove -n [env_name] --all

94 | ```

95 |

96 | 下图为在Ubuntu下的实际操作:

97 |

98 |  99 |

100 |

99 |

100 |  101 |

102 |

101 |

102 |  103 |

104 | ---

105 |

106 | ## Anaconda配置CV所需环境

107 |

108 | 由于最新版的anaconda默认python版本是3.8导致opencv不支持,因此首先把conda的python版本降为3.7

109 |

110 | ```bash

111 | conda install python=3.7 anaconda=custom

112 | ```

113 |

114 | 之后创建虚拟环境并安装所需python包

115 |

116 | ```bash

117 | conda create -n cv

118 | conda activate cv

119 | conda install opencv, numpy, matplotlib

120 | ```

121 |

122 |

103 |

104 | ---

105 |

106 | ## Anaconda配置CV所需环境

107 |

108 | 由于最新版的anaconda默认python版本是3.8导致opencv不支持,因此首先把conda的python版本降为3.7

109 |

110 | ```bash

111 | conda install python=3.7 anaconda=custom

112 | ```

113 |

114 | 之后创建虚拟环境并安装所需python包

115 |

116 | ```bash

117 | conda create -n cv

118 | conda activate cv

119 | conda install opencv, numpy, matplotlib

120 | ```

121 |

122 |  123 |

124 |

125 |

126 |

--------------------------------------------------------------------------------

/docs/collections-study.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "# collection容器库"

8 | ]

9 | },

10 | {

11 | "cell_type": "code",

12 | "execution_count": 113,

13 | "metadata": {},

14 | "outputs": [],

15 | "source": [

16 | "import collections\n",

17 | "from collections import Counter, deque, defaultdict, OrderedDict, namedtuple, ChainMap"

18 | ]

19 | },

20 | {

21 | "cell_type": "code",

22 | "execution_count": 114,

23 | "metadata": {},

24 | "outputs": [

25 | {

26 | "name": "stdout",

27 | "output_type": "stream",

28 | "text": [

29 | "['deque', 'defaultdict', 'namedtuple', 'UserDict', 'UserList', 'UserString', 'Counter', 'OrderedDict', 'ChainMap', 'Awaitable', 'Coroutine', 'AsyncIterable', 'AsyncIterator', 'AsyncGenerator', 'Hashable', 'Iterable', 'Iterator', 'Generator', 'Reversible', 'Sized', 'Container', 'Callable', 'Collection', 'Set', 'MutableSet', 'Mapping', 'MutableMapping', 'MappingView', 'KeysView', 'ItemsView', 'ValuesView', 'Sequence', 'MutableSequence', 'ByteString']\n"

30 | ]

31 | }

32 | ],

33 | "source": [

34 | "print(collections.__all__)"

35 | ]

36 | },

37 | {

38 | "cell_type": "markdown",

39 | "metadata": {},

40 | "source": [

41 | "## Counter\n",

42 | "\n",

43 | "- 基础用法跟正常的dict()是一样的\n",

44 | " - `elements()`, `items()`\n",

45 | "- `most_common(n)`:出现次数最多的n个\n",

46 | "- 可以对两个Counter对象`+` `-` `&` `|`"

47 | ]

48 | },

49 | {

50 | "cell_type": "code",

51 | "execution_count": 115,

52 | "metadata": {},

53 | "outputs": [

54 | {

55 | "data": {

56 | "text/plain": [

57 | "Counter({'red': 2, 'blue': 3, 'green': 1})"

58 | ]

59 | },

60 | "execution_count": 115,

61 | "metadata": {},

62 | "output_type": "execute_result"

63 | }

64 | ],

65 | "source": [

66 | "colors = ['red', 'blue', 'red', 'green', 'blue', 'blue']\n",

67 | "c = Counter(colors)\n",

68 | "\n",

69 | "d = {'red': 2, 'blue': 3, 'green': 1}\n",

70 | "c = Counter(d)\n",

71 | "c"

72 | ]

73 | },

74 | {

75 | "cell_type": "code",

76 | "execution_count": 116,

77 | "metadata": {},

78 | "outputs": [

79 | {

80 | "name": "stdout",

81 | "output_type": "stream",

82 | "text": [

83 | "['red', 'red', 'blue', 'blue', 'blue', 'green']\n",

84 | "red 2\n",

85 | "blue 3\n",

86 | "green 1\n"

87 | ]

88 | }

89 | ],

90 | "source": [

91 | "print(list(c.elements()))\n",

92 | "for key, val in c.items():\n",

93 | " print(key, val)"

94 | ]

95 | },

96 | {

97 | "cell_type": "code",

98 | "execution_count": 117,

99 | "metadata": {},

100 | "outputs": [

101 | {

102 | "data": {

103 | "text/plain": [

104 | "[('blue', 3), ('red', 2)]"

105 | ]

106 | },

107 | "execution_count": 117,

108 | "metadata": {},

109 | "output_type": "execute_result"

110 | }

111 | ],

112 | "source": [

113 | "# 相同计数的按照首次出现的顺序排序\n",

114 | "c.most_common(2)"

115 | ]

116 | },

117 | {

118 | "cell_type": "code",

119 | "execution_count": 118,

120 | "metadata": {},

121 | "outputs": [

122 | {

123 | "data": {

124 | "text/plain": [

125 | "Counter({'red': 1, 'blue': 2, 'green': 1})"

126 | ]

127 | },

128 | "execution_count": 118,

129 | "metadata": {},

130 | "output_type": "execute_result"

131 | }

132 | ],

133 | "source": [

134 | "c.subtract(['red', 'blue'])\n",

135 | "c"

136 | ]

137 | },

138 | {

139 | "cell_type": "code",

140 | "execution_count": 119,

141 | "metadata": {},

142 | "outputs": [

143 | {

144 | "name": "stdout",

145 | "output_type": "stream",

146 | "text": [

147 | "Counter({'blue': 4, 'red': 2, 'green': 2})\n",

148 | "Counter({'blue': 2})\n",

149 | "Counter({'red': 4, 'blue': 2, 'green': 1})\n"

150 | ]

151 | }

152 | ],

153 | "source": [

154 | "print(c + c) # add\n",

155 | "print(c & Counter(blue=3)) # intersection\n",

156 | "print(c | Counter(red=4)) # union"

157 | ]

158 | },

159 | {

160 | "cell_type": "markdown",

161 | "metadata": {},

162 | "source": [

163 | "## deque\n",

164 | "\n",

165 | "- `count()`\n",

166 | "- `clear()`\n",

167 | "- `append()`, `appendleft()`\n",

168 | "- `extend()`, `extendleft()`:添加iterable中的元素\n",

169 | "- `index()`:返回第一次匹配的位置\n",

170 | "- `insert(pos, val)`:在pos位置插入元素\n",

171 | "- `pop()`, `popleft()`\n",

172 | "- `remove(val)`:移除deque里第一个匹配的val值\n",

173 | "- `rotate(x)`:向右循环x步,等价于`d.appendleft(d.pop())`"

174 | ]

175 | },

176 | {

177 | "cell_type": "code",

178 | "execution_count": 120,

179 | "metadata": {},

180 | "outputs": [

181 | {

182 | "name": "stdout",

183 | "output_type": "stream",

184 | "text": [

185 | "deque([1, 2, 3])\n",

186 | "deque([1, 2, 3, 4])\n",

187 | "deque([0, 1, 2, 3, 4])\n"

188 | ]

189 | }

190 | ],

191 | "source": [

192 | "d = deque([1,2,3])\n",

193 | "print(d)\n",

194 | "\n",

195 | "d.append(4)\n",

196 | "print(d)\n",

197 | "\n",

198 | "d.appendleft(0)\n",

199 | "print(d)"

200 | ]

201 | },

202 | {

203 | "cell_type": "code",

204 | "execution_count": 121,

205 | "metadata": {},

206 | "outputs": [

207 | {

208 | "name": "stdout",

209 | "output_type": "stream",

210 | "text": [

211 | "deque([0, 1, 2, 3, 4, 7, 8, 9])\n",

212 | "deque([-3, -2, -1, 0, 1, 2, 3, 4, 7, 8, 9])\n"

213 | ]

214 | }

215 | ],

216 | "source": [

217 | "d.extend([7,8,9])\n",

218 | "print(d)\n",

219 | "\n",

220 | "d.extendleft([-1,-2,-3]) # 注意在左面添加会反过来的\n",

221 | "print(d)"

222 | ]

223 | },

224 | {

225 | "cell_type": "code",

226 | "execution_count": 122,

227 | "metadata": {},

228 | "outputs": [

229 | {

230 | "name": "stdout",

231 | "output_type": "stream",

232 | "text": [

233 | "3\n",

234 | "deque([-3, 'a', -2, -1, 0, 1, 2, 3, 4, 7, 8, 9])\n"

235 | ]

236 | }

237 | ],

238 | "source": [

239 | "print(d.index(0))\n",

240 | "\n",

241 | "d.insert(1, 'a')\n",

242 | "print(d)"

243 | ]

244 | },

245 | {

246 | "cell_type": "code",

247 | "execution_count": 123,

248 | "metadata": {},

249 | "outputs": [

250 | {

251 | "name": "stdout",

252 | "output_type": "stream",

253 | "text": [

254 | "deque([-3, -2, -1, 0, 1, 2, 3, 4, 7, 8, 9])\n"

255 | ]

256 | }

257 | ],

258 | "source": [

259 | "d.remove('a')\n",

260 | "print(d)"

261 | ]

262 | },

263 | {

264 | "cell_type": "code",

265 | "execution_count": 124,

266 | "metadata": {},

267 | "outputs": [

268 | {

269 | "name": "stdout",

270 | "output_type": "stream",

271 | "text": [

272 | "deque([9, -3, -2, -1, 0, 1, 2, 3, 4, 7, 8])\n"

273 | ]

274 | }

275 | ],

276 | "source": [

277 | "d.rotate(1)\n",

278 | "print(d)"

279 | ]

280 | },

281 | {

282 | "cell_type": "markdown",

283 | "metadata": {},

284 | "source": [

285 | "## defaultdict\n",

286 | "\n",

287 | "主要用来解决默认dict值不存在时会报错(例如+1必须要特判)\n",

288 | "\n",

289 | "通过设定类型可指定默认值(例如list缺失值是`[]`,int缺失值是0)"

290 | ]

291 | },

292 | {

293 | "cell_type": "code",

294 | "execution_count": 125,

295 | "metadata": {},

296 | "outputs": [

297 | {

298 | "data": {

299 | "text/plain": [

300 | "dict_items([('yellow', [1, 3]), ('blue', [2, 4]), ('red', [1])])"

301 | ]

302 | },

303 | "execution_count": 125,

304 | "metadata": {},

305 | "output_type": "execute_result"

306 | }

307 | ],

308 | "source": [

309 | "# 将 键-值对 转换为 键-列表\n",

310 | "s = [('yellow', 1), ('blue', 2), ('yellow', 3), ('blue', 4), ('red', 1)]\n",

311 | "\n",

312 | "d = defaultdict(list)\n",

313 | "for k, v in s:\n",

314 | " d[k].append(v)\n",

315 | "\n",

316 | "d.items()"

317 | ]

318 | },

319 | {

320 | "cell_type": "code",

321 | "execution_count": 126,

322 | "metadata": {},

323 | "outputs": [

324 | {

325 | "data": {

326 | "text/plain": [

327 | "dict_items([('h', 1), ('e', 1), ('l', 3), ('o', 2), ('w', 1), ('r', 1), ('d', 1)])"

328 | ]

329 | },

330 | "execution_count": 126,

331 | "metadata": {},

332 | "output_type": "execute_result"

333 | }

334 | ],

335 | "source": [

336 | "# 计数\n",

337 | "s = \"helloworld\"\n",

338 | "d = defaultdict(int)\n",

339 | "for k in s:\n",

340 | " d[k] += 1\n",

341 | "d.items()"

342 | ]

343 | },

344 | {

345 | "cell_type": "markdown",

346 | "metadata": {},

347 | "source": [

348 | "## OrderedDict\n",

349 | "\n",

350 | "因为是有序的字典,因此可以记住顺序\n",

351 | "\n",

352 | "- `popitem()`\n",

353 | "- `move_to_end()`"

354 | ]

355 | },

356 | {

357 | "cell_type": "code",

358 | "execution_count": 127,

359 | "metadata": {},

360 | "outputs": [

361 | {

362 | "name": "stdout",

363 | "output_type": "stream",

364 | "text": [

365 | "OrderedDict([('a', None), ('b', None)])\n",

366 | "OrderedDict([('b', None), ('a', None)])\n"

367 | ]

368 | }

369 | ],

370 | "source": [

371 | "od = OrderedDict.fromkeys('abc')\n",

372 | "\n",

373 | "od.popitem(last=True)\n",

374 | "print(od)\n",

375 | "\n",

376 | "od.move_to_end('a', last=True)\n",

377 | "print(od)"

378 | ]

379 | },

380 | {

381 | "cell_type": "markdown",

382 | "metadata": {},

383 | "source": [

384 | "## namedtyple\n",

385 | "\n",

386 | "允许自定义名字的tuple子类"

387 | ]

388 | },

389 | {

390 | "cell_type": "code",

391 | "execution_count": 128,

392 | "metadata": {},

393 | "outputs": [

394 | {

395 | "name": "stdout",

396 | "output_type": "stream",

397 | "text": [

398 | "3\n"

399 | ]

400 | },

401 | {

402 | "data": {

403 | "text/plain": [

404 | "OrderedDict([('x', 1), ('y', 2)])"

405 | ]

406 | },

407 | "execution_count": 128,

408 | "metadata": {},

409 | "output_type": "execute_result"

410 | }

411 | ],

412 | "source": [

413 | "Point = namedtuple('Point', ['x','y'])\n",

414 | "\n",

415 | "p = Point(x=1,y=2)\n",

416 | "print(p.x + p[1])\n",

417 | "\n",

418 | "p._asdict()"

419 | ]

420 | },

421 | {

422 | "cell_type": "markdown",

423 | "metadata": {},

424 | "source": [

425 | "## ChainMap\n",

426 | "\n",

427 | "把多个字典融合成一个\n",

428 | "\n",

429 | "当多个字典有重复的key时,按照链的顺序第一次查找的返回\n",

430 | "\n",

431 | "例如应用在:命令行参数、系统环境参数、默认参数 的优先级决策上\n"

432 | ]

433 | },

434 | {

435 | "cell_type": "code",

436 | "execution_count": 129,

437 | "metadata": {},

438 | "outputs": [

439 | {

440 | "name": "stdout",

441 | "output_type": "stream",

442 | "text": [

443 | "10\n"

444 | ]

445 | }

446 | ],

447 | "source": [

448 | "colors = {'red': 3, 'blue': 1}\n",

449 | "phones = {'iPhone': 10, 'Huawei': 5}\n",

450 | "langs = {'python': 2, 'js': 3}\n",

451 | "\n",

452 | "chainmap = ChainMap(colors, phones, langs)\n",

453 | "\n",

454 | "print(chainmap['iPhone'])"

455 | ]

456 | },

457 | {

458 | "cell_type": "code",

459 | "execution_count": 130,

460 | "metadata": {},

461 | "outputs": [

462 | {

463 | "name": "stdout",

464 | "output_type": "stream",

465 | "text": [

466 | "ChainMap({'red': 3, 'blue': 1}, {'iPhone': 10, 'Huawei': 5}, {'python': 2, 'js': 3, 'c': 10})\n"

467 | ]

468 | }

469 | ],

470 | "source": [

471 | "langs['c'] = 10\n",

472 | "print(chainmap) # chainmap会自动更新"

473 | ]

474 | },

475 | {

476 | "cell_type": "code",

477 | "execution_count": 131,

478 | "metadata": {},

479 | "outputs": [

480 | {

481 | "name": "stdout",

482 | "output_type": "stream",

483 | "text": [

484 | "ChainMap({'red': 3}, {'iPhone': 10, 'Huawei': 5}, {'python': 2, 'js': 3, 'c': 10})\n",

485 | "{'red': 3}\n"

486 | ]

487 | }

488 | ],

489 | "source": [

490 | "chainmap.pop('blue')\n",

491 | "\n",

492 | "print(chainmap)\n",

493 | "print(colors) # chainmap中删除,原字典也会同步删除"

494 | ]

495 | }

496 | ],

497 | "metadata": {

498 | "kernelspec": {

499 | "display_name": "Python 3.6.13 ('ourmvsnet')",

500 | "language": "python",

501 | "name": "python3"

502 | },

503 | "language_info": {

504 | "codemirror_mode": {

505 | "name": "ipython",

506 | "version": 3

507 | },

508 | "file_extension": ".py",

509 | "mimetype": "text/x-python",

510 | "name": "python",

511 | "nbconvert_exporter": "python",

512 | "pygments_lexer": "ipython3",

513 | "version": "3.6.13"

514 | },

515 | "orig_nbformat": 4,

516 | "vscode": {

517 | "interpreter": {

518 | "hash": "ce3435b4fd239f1f6f12780933ef58404daca4493791636b6dc56d0922f97ec9"

519 | }

520 | }

521 | },

522 | "nbformat": 4,

523 | "nbformat_minor": 2

524 | }

525 |

--------------------------------------------------------------------------------

/docs/numpy-study.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "source": [

6 | "# NumPy学习"

7 | ],

8 | "metadata": {}

9 | },

10 | {

11 | "cell_type": "markdown",

12 | "source": [

13 | "**Table of Content**\n",

14 | "* 属性\n",

15 | "* 创建\n",

16 | " * 随机数\n",

17 | "* 运算\n",

18 | " * 聚合函数\n",

19 | "* 索引\n",

20 | "* 数组操作\n",

21 | "* 拷贝\n",

22 | "\n",

23 | "[Numpy中文网](https://www.numpy.org.cn)\n",

24 | "\n",

25 | "采用C语言编写,采用矩阵运算,消耗资源少,比自带数据结构的运算款很多\n",

26 | "\n",

27 | "Vectorization is one of the main reasons why NumPy is so powerful."

28 | ],

29 | "metadata": {}

30 | },

31 | {

32 | "cell_type": "markdown",

33 | "source": [

34 | "## 属性"

35 | ],

36 | "metadata": {}

37 | },

38 | {

39 | "cell_type": "code",

40 | "execution_count": 1,

41 | "source": [

42 | "import numpy as np"

43 | ],

44 | "outputs": [],

45 | "metadata": {}

46 | },

47 | {

48 | "cell_type": "code",

49 | "execution_count": 2,

50 | "source": [

51 | "array = np.array([[1,2,3],[4,5,6]])\n",

52 | "print(array)"

53 | ],

54 | "outputs": [

55 | {

56 | "output_type": "stream",

57 | "name": "stdout",

58 | "text": [

59 | "[[1 2 3]\n",

60 | " [4 5 6]]\n"

61 | ]

62 | }

63 | ],

64 | "metadata": {}

65 | },

66 | {

67 | "cell_type": "code",

68 | "execution_count": 3,

69 | "source": [

70 | "# 维度\n",

71 | "print(array.ndim)\n",

72 | "\n",

73 | "# 行列数\n",

74 | "print(array.shape)\n",

75 | "\n",

76 | "# 元素个数\n",

77 | "print(array.size)"

78 | ],

79 | "outputs": [

80 | {

81 | "output_type": "stream",

82 | "name": "stdout",

83 | "text": [

84 | "2\n",

85 | "(2, 3)\n",

86 | "6\n"

87 | ]

88 | }

89 | ],

90 | "metadata": {}

91 | },

92 | {

93 | "cell_type": "markdown",

94 | "source": [

95 | "## 创建"

96 | ],

97 | "metadata": {}

98 | },

99 | {

100 | "cell_type": "code",

101 | "execution_count": 8,

102 | "source": [

103 | "# 指定数据类型\n",

104 | "a = np.array([1, 2, 3], dtype=np.int32)\n",

105 | "b = np.array([1, 2, 3], dtype=np.float64)\n",

106 | "\n",

107 | "print(a)\n",

108 | "print(b)"

109 | ],

110 | "outputs": [

111 | {

112 | "output_type": "stream",

113 | "name": "stdout",

114 | "text": [

115 | "[1 2 3] [1. 2. 3.]\n",

116 | "[[0. 0. 0. 0.]\n",

117 | " [0. 0. 0. 0.]\n",

118 | " [0. 0. 0. 0.]]\n",

119 | "[[1 1 1]\n",

120 | " [1 1 1]]\n"

121 | ]

122 | }

123 | ],

124 | "metadata": {}

125 | },

126 | {

127 | "cell_type": "code",

128 | "execution_count": 3,

129 | "source": [

130 | "# 快速创建\n",

131 | "zero = np.zeros((3, 4))\n",

132 | "one = np.ones((2, 3), dtype=np.int32)\n",

133 | "empty = np.empty((2, 3)) # 接近零的数\n",

134 | "full = np.full((2, 3), 7) # 全为某值的矩阵\n",

135 | "identity = np.eye(3) # 对角矩阵\n",

136 | "\n",

137 | "print(zero)\n",

138 | "print(one)\n",

139 | "print(empty)\n",

140 | "print(full)\n",

141 | "print(identity)"

142 | ],

143 | "outputs": [

144 | {

145 | "output_type": "stream",

146 | "name": "stdout",

147 | "text": [

148 | "[[0. 0. 0. 0.]\n",

149 | " [0. 0. 0. 0.]\n",

150 | " [0. 0. 0. 0.]]\n",

151 | "[[1 1 1]\n",

152 | " [1 1 1]]\n",

153 | "[[-1.72723371e-077 1.29073736e-231 1.97626258e-323]\n",

154 | " [ 0.00000000e+000 0.00000000e+000 4.17201348e-309]]\n",

155 | "[[7 7 7]\n",

156 | " [7 7 7]]\n",

157 | "[[1. 0. 0.]\n",

158 | " [0. 1. 0.]\n",

159 | " [0. 0. 1.]]\n"

160 | ]

161 | }

162 | ],

163 | "metadata": {}

164 | },

165 | {

166 | "cell_type": "code",

167 | "execution_count": 13,

168 | "source": [

169 | "# 连续数字\n",

170 | "arange = np.arange(1, 10, 2) # 步长为2\n",

171 | "\n",

172 | "# 线段形数据\n",

173 | "linspace = np.linspace(1, 10, 8) # 分割成8个点\n",

174 | "\n",

175 | "# 改变形状\n",

176 | "reshape = np.arange(20).reshape(4, 5) # 参数为-1则会自动计算\n",

177 | "\n",

178 | "print(arange)\n",

179 | "print(linspace)\n",

180 | "print(reshape)"

181 | ],

182 | "outputs": [

183 | {

184 | "output_type": "stream",

185 | "name": "stdout",

186 | "text": [

187 | "[1 3 5 7 9]\n",

188 | "[ 1. 2.28571429 3.57142857 4.85714286 6.14285714 7.42857143\n",

189 | " 8.71428571 10. ]\n",

190 | "[[ 0 1 2 3 4]\n",

191 | " [ 5 6 7 8 9]\n",

192 | " [10 11 12 13 14]\n",

193 | " [15 16 17 18 19]]\n"

194 | ]

195 | }

196 | ],

197 | "metadata": {}

198 | },

199 | {

200 | "cell_type": "markdown",

201 | "source": [

202 | "### 随机数"

203 | ],

204 | "metadata": {}

205 | },

206 | {

207 | "cell_type": "code",

208 | "execution_count": 131,

209 | "source": [

210 | "# 0~1间的浮点数\n",

211 | "r = np.random.rand(2, 3)\n",

212 | "print(\"rand: \", r)\n",

213 | "\n",

214 | "# 0~1间均匀取样随机数\n",

215 | "r = np.random.random((2, 4))\n",

216 | "print(\"random: \", r)\n",

217 | "\n",

218 | "# [0, 10)间的随机整数\n",

219 | "r = np.random.randint(10)\n",

220 | "print(\"randint: \", r)\n",

221 | "\n",

222 | "# 基于给定值生成随机数\n",

223 | "r = np.random.choice([3, 5, 7, 9], size=(2, 3))\n",

224 | "print(\"choice: \", r)\n",

225 | "\n",

226 | "# 均匀分布随机数 [low, high)\n",

227 | "r = np.random.uniform(1, 10, (3, 2))\n",

228 | "print(\"uniform: \", r)\n",

229 | "\n",

230 | "# 标准正态分布 均值0 方差1\n",

231 | "r = np.random.randn(3, 2)\n",

232 | "print(\"randn: \", r)\n",

233 | "\n",

234 | "# 正态分布 均值10 方差1\n",

235 | "r = np.random.normal(10, 1, (3, 2))\n",

236 | "print(\"normal: \", r)"

237 | ],

238 | "outputs": [

239 | {

240 | "output_type": "stream",

241 | "name": "stdout",

242 | "text": [

243 | "rand: [[0.44155749 0.44601869 0.05318859]\n",

244 | " [0.28594465 0.72112534 0.91809614]]\n",

245 | "random: [[0.07914297 0.68466181 0.49258672 0.1509604 ]\n",

246 | " [0.63843674 0.85319365 0.3285023 0.60701208]]\n",

247 | "randint: 2\n",

248 | "choice: [[3 9 9]\n",

249 | " [7 3 5]]\n",

250 | "uniform: [[2.87500892 9.01628853]\n",

251 | " [1.99811272 3.47776626]\n",

252 | " [7.94675885 4.08628526]]\n",

253 | "randn: [[-0.8897855 0.66911561]\n",

254 | " [ 1.32854304 0.34210128]\n",

255 | " [-1.62014918 -0.21174625]]\n",

256 | "[[10.49353599 10.8195831 ]\n",

257 | " [ 9.44557101 8.93493195]\n",

258 | " [10.00297058 9.5695596 ]]\n"

259 | ]

260 | }

261 | ],

262 | "metadata": {}

263 | },

264 | {

265 | "cell_type": "markdown",

266 | "source": [

267 | "## 运算"

268 | ],

269 | "metadata": {}

270 | },

271 | {

272 | "cell_type": "code",

273 | "execution_count": 5,

274 | "source": [

275 | "a = np.array([[1,2], [3, 4]])\n",

276 | "b = np.arange(4).reshape((2,2))\n",

277 | "\n",

278 | "# 矩阵乘法\n",

279 | "print(np.dot(a, b))\n",

280 | "print(a.dot(b))\n",

281 | "\n",

282 | "# 对位乘法\n",

283 | "print(a * b)\n",

284 | "print(np.multiply(a, b))\n",

285 | "\n",

286 | "print(a ** 2)"

287 | ],

288 | "outputs": [

289 | {

290 | "output_type": "stream",

291 | "name": "stdout",

292 | "text": [

293 | "[[ 4 7]\n",

294 | " [ 8 15]]\n",

295 | "[[ 4 7]\n",

296 | " [ 8 15]]\n",

297 | "[[ 0 2]\n",

298 | " [ 6 12]]\n",

299 | "[[ 0 2]\n",

300 | " [ 6 12]]\n",

301 | "[[ 1 4]\n",

302 | " [ 9 16]]\n"

303 | ]

304 | }

305 | ],

306 | "metadata": {}

307 | },

308 | {

309 | "cell_type": "code",

310 | "execution_count": 27,

311 | "source": [

312 | "# 一些数学函数\n",

313 | "e = np.sin(a)\n",

314 | "print(e)"

315 | ],

316 | "outputs": [

317 | {

318 | "output_type": "stream",

319 | "name": "stdout",

320 | "text": [

321 | "[[ 0.84147098 0.90929743]\n",

322 | " [ 0.14112001 -0.7568025 ]]\n"

323 | ]

324 | }

325 | ],

326 | "metadata": {}

327 | },

328 | {

329 | "cell_type": "code",

330 | "execution_count": 28,

331 | "source": [

332 | "# 逻辑判断\n",

333 | "print(b < 3)"

334 | ],

335 | "outputs": [

336 | {

337 | "output_type": "stream",

338 | "name": "stdout",

339 | "text": [

340 | "[[ True True]\n",

341 | " [ True False]]\n"

342 | ]

343 | }

344 | ],

345 | "metadata": {}

346 | },

347 | {

348 | "cell_type": "markdown",

349 | "source": [

350 | "### 聚合函数"

351 | ],

352 | "metadata": {}

353 | },

354 | {

355 | "cell_type": "code",

356 | "execution_count": 154,

357 | "source": [

358 | "r = np.random.randint(0, 100, (2, 5))\n",

359 | "print(r)\n",

360 | "\n",

361 | "print(\"sum: \", np.sum(r))\n",

362 | "print(\"sum[axis=0]: \", np.sum(r, axis=0)) # 列为方向\n",

363 | "print(\"sum[axis=1]: \", np.sum(r, axis=1)) # 一行运算一次\n",

364 | "\n",

365 | "print(\"min: \", np.min(r))\n",

366 | "print(\"min[axis=0]: \", np.min(r, axis=0))\n",

367 | "print(\"min[axis=1]: \", np.min(r, axis=1))\n",

368 | "\n",

369 | "print(\"argmax: \", np.argmax(r))\n",

370 | "print(\"argmax[axis=0]: \", np.argmax(r, axis=0))\n",

371 | "print(\"argmax[axis=1]: \", np.argmax(r, axis=1))\n",

372 | "\n",

373 | "print(\"mean: \", np.mean(r))\n",

374 | "print(\"average: \", np.average(r))\n",

375 | "print(\"median: \", np.median(r))"

376 | ],

377 | "outputs": [

378 | {

379 | "output_type": "stream",

380 | "name": "stdout",

381 | "text": [

382 | "[[ 7 60 21 79 84]\n",

383 | " [72 82 40 36 1]]\n",

384 | "sum: 482\n",

385 | "sum[axis=0]: [ 79 142 61 115 85]\n",

386 | "sum[axis=1]: [251 231]\n",

387 | "min: 1\n",

388 | "min[axis=0]: [ 7 60 21 36 1]\n",

389 | "min[axis=1]: [7 1]\n",

390 | "argmax: 4\n",

391 | "argmax[axis=0]: [1 1 1 0 0]\n",

392 | "argmax[axis=1]: [4 1]\n",

393 | "mean: 48.2\n",

394 | "average: 48.2\n",

395 | "median: 50.0\n"

396 | ]

397 | }

398 | ],

399 | "metadata": {}

400 | },

401 | {

402 | "cell_type": "code",

403 | "execution_count": 156,

404 | "source": [

405 | "r = np.random.randint(0, 10, (2, 3))\n",

406 | "print(r)\n",

407 | "\n",

408 | "# 将非零元素的行与列分隔开,冲构成两个分别关于行与列的矩阵\n",

409 | "print(np.nonzero(r))"

410 | ],

411 | "outputs": [

412 | {

413 | "output_type": "stream",

414 | "name": "stdout",

415 | "text": [

416 | "[[6 1 0]\n",

417 | " [8 8 9]]\n",

418 | "(array([0, 0, 1, 1, 1]), array([0, 1, 0, 1, 2]))\n"

419 | ]

420 | }

421 | ],

422 | "metadata": {}

423 | },

424 | {

425 | "cell_type": "code",

426 | "execution_count": 160,

427 | "source": [

428 | "a = np.arange(6, 0, -1).reshape(2, 3)\n",

429 | "print(a)\n",

430 | "\n",

431 | "print(np.sort(a)) # 行内排序\n",

432 | "print(np.sort(a, axis=0)) # 列内排序\n"

433 | ],

434 | "outputs": [

435 | {

436 | "output_type": "stream",

437 | "name": "stdout",

438 | "text": [

439 | "[[6 5 4]\n",

440 | " [3 2 1]]\n",

441 | "[[4 5 6]\n",

442 | " [1 2 3]]\n",

443 | "[[3 2 1]\n",

444 | " [6 5 4]]\n"

445 | ]

446 | }

447 | ],

448 | "metadata": {}

449 | },

450 | {

451 | "cell_type": "code",

452 | "execution_count": 161,

453 | "source": [

454 | "# 两种转置表达方式\n",

455 | "print(np.transpose(a))\n",

456 | "print(a.T)"

457 | ],

458 | "outputs": [

459 | {

460 | "output_type": "stream",

461 | "name": "stdout",

462 | "text": [

463 | "[[6 3]\n",

464 | " [5 2]\n",

465 | " [4 1]]\n",

466 | "[[6 3]\n",

467 | " [5 2]\n",

468 | " [4 1]]\n"

469 | ]

470 | }

471 | ],

472 | "metadata": {}

473 | },

474 | {

475 | "cell_type": "code",

476 | "execution_count": 165,

477 | "source": [

478 | "# 将数组中过大过小的数据进行裁切\n",

479 | "a = np.arange(0, 10).reshape(2, 5)\n",

480 | "print(a)\n",

481 | "\n",

482 | "# 小于3的都变为3, 大于8的都变为8\n",

483 | "print(np.clip(a, 3, 8))"

484 | ],

485 | "outputs": [

486 | {

487 | "output_type": "stream",

488 | "name": "stdout",

489 | "text": [

490 | "[[0 1 2 3 4]\n",

491 | " [5 6 7 8 9]]\n",

492 | "[[3 3 3 3 4]\n",

493 | " [5 6 7 8 8]]\n"

494 | ]

495 | }

496 | ],

497 | "metadata": {}

498 | },

499 | {

500 | "cell_type": "markdown",

501 | "source": [

502 | "## 索引"

503 | ],

504 | "metadata": {}

505 | },

506 | {

507 | "cell_type": "code",

508 | "execution_count": 6,

509 | "source": [

510 | "a = np.arange(6)\n",

511 | "\n",

512 | "print(a)\n",

513 | "print(a[1])\n",

514 | "\n",

515 | "a = a.reshape((2, 3))\n",

516 | "print(a)\n",

517 | "print(a[1]) # 矩阵的第二行\n",

518 | "print(a[1][2], a[1, 2]) # 第二行第三个元素(两种表示)"

519 | ],

520 | "outputs": [

521 | {

522 | "output_type": "stream",

523 | "name": "stdout",

524 | "text": [

525 | "[0 1 2 3 4 5]\n",

526 | "1\n",

527 | "[[0 1 2]\n",

528 | " [3 4 5]]\n",

529 | "[3 4 5]\n",

530 | "5 5\n"

531 | ]

532 | }

533 | ],

534 | "metadata": {}

535 | },

536 | {

537 | "cell_type": "code",

538 | "execution_count": 8,

539 | "source": [

540 | "# 切片\n",

541 | "print(a)\n",

542 | "print(a[1, 1:3])\n",

543 | "print(a[:, 1])\n",

544 | "\n",

545 | "print(a[:, ::-1]) # 交换每一行的顺序\n",

546 | "print(a[np.arange(2), [1, 2]]) # [0,1] [1,2] 取(0 1) (1 2)两个元素"

547 | ],

548 | "outputs": [

549 | {

550 | "output_type": "stream",

551 | "name": "stdout",

552 | "text": [

553 | "[[0 1 2]\n",

554 | " [3 4 5]]\n",

555 | "[4 5]\n",

556 | "[1 4]\n",

557 | "[[2 1 0]\n",

558 | " [5 4 3]]\n",

559 | "[1 5]\n"

560 | ]

561 | }

562 | ],

563 | "metadata": {}

564 | },

565 | {

566 | "cell_type": "code",

567 | "execution_count": 9,

568 | "source": [

569 | "a[a > 3] = 999\n",

570 | "print(a)"

571 | ],

572 | "outputs": [

573 | {

574 | "output_type": "stream",

575 | "name": "stdout",

576 | "text": [

577 | "[[ 0 1 2]\n",

578 | " [ 3 999 999]]\n"

579 | ]

580 | }

581 | ],

582 | "metadata": {}

583 | },

584 | {

585 | "cell_type": "code",

586 | "execution_count": 183,

587 | "source": [

588 | "# 遍历\n",

589 | "for row in a:\n",

590 | " for item in row:\n",

591 | " print(item, end=\" \")\n",

592 | "\n",

593 | "print()\n",

594 | "\n",

595 | "for item in a.flat: # a.flatten() 将多维矩阵展开成1行的矩阵 flatten()直接是数组,flat是迭代器\n",

596 | " print(item, end=\" \")"

597 | ],

598 | "outputs": [

599 | {

600 | "output_type": "stream",

601 | "name": "stdout",

602 | "text": [

603 | "0 1 2 3 4 5 \n",

604 | "0 1 2 3 4 5 "

605 | ]

606 | }

607 | ],

608 | "metadata": {}

609 | },

610 | {

611 | "cell_type": "markdown",

612 | "source": [

613 | "## 数组操作"

614 | ],

615 | "metadata": {}

616 | },

617 | {

618 | "cell_type": "code",

619 | "execution_count": 185,

620 | "source": [

621 | "# 合并\n",

622 | "a = np.array([1, 1, 1])\n",

623 | "b = np.array([2, 2, 2])\n",

624 | "\n",

625 | "# 垂直拼接\n",

626 | "print(np.vstack((a, b)))\n",

627 | "\n",

628 | "# 水平拼接\n",

629 | "print(np.hstack((a, b)))"

630 | ],

631 | "outputs": [

632 | {

633 | "output_type": "stream",

634 | "name": "stdout",

635 | "text": [

636 | "[[1 1 1]\n",

637 | " [2 2 2]]\n",

638 | "[1 1 1 2 2 2]\n"

639 | ]

640 | }

641 | ],

642 | "metadata": {}

643 | },

644 | {

645 | "cell_type": "code",

646 | "execution_count": 194,

647 | "source": [

648 | "# 添加维度\n",

649 | "# 一维数组转置会没有效果的\n",

650 | "print(a.T)\n",

651 | "\n",

652 | "print(a[np.newaxis, :]) # 一行三列\n",

653 | "print(a[:, np.newaxis]) # 三行一列\n",

654 | "\n",

655 | "print(a[np.newaxis, :].T)\n",

656 | "print(a[:, np.newaxis].shape)"

657 | ],

658 | "outputs": [

659 | {

660 | "output_type": "stream",

661 | "name": "stdout",

662 | "text": [

663 | "[1 1 1]\n",

664 | "[[1 1 1]]\n",

665 | "[[1]\n",

666 | " [1]\n",

667 | " [1]]\n",

668 | "[[1]\n",

669 | " [1]\n",

670 | " [1]]\n",

671 | "(3, 1)\n"

672 | ]

673 | }

674 | ],

675 | "metadata": {}

676 | },

677 | {

678 | "cell_type": "code",

679 | "execution_count": 200,

680 | "source": [

681 | "# 合并多个矩阵\n",

682 | "a, b = np.array([1, 1, 1]), np.array([2, 2, 2])\n",

683 | "a, b = a[:, np.newaxis], b[:, np.newaxis]\n",

684 | "\n",

685 | "print(np.concatenate((a,b,b,a), axis=0))\n",

686 | "print(np.concatenate((a,b,b,a), axis=1))"

687 | ],

688 | "outputs": [

689 | {

690 | "output_type": "stream",

691 | "name": "stdout",

692 | "text": [

693 | "[[1]\n",

694 | " [1]\n",

695 | " [1]\n",

696 | " [2]\n",

697 | " [2]\n",

698 | " [2]\n",

699 | " [2]\n",

700 | " [2]\n",

701 | " [2]\n",

702 | " [1]\n",

703 | " [1]\n",

704 | " [1]]\n",

705 | "[[1 2 2 1]\n",

706 | " [1 2 2 1]\n",

707 | " [1 2 2 1]]\n"

708 | ]

709 | }

710 | ],

711 | "metadata": {}

712 | },

713 | {

714 | "cell_type": "code",

715 | "execution_count": 206,

716 | "source": [

717 | "# 分割\n",

718 | "a = np.arange(12).reshape(3, -1)\n",

719 | "print(a)\n",

720 | "\n",

721 | "# 横向切\n",

722 | "print(np.split(a, 3, axis=0))\n",

723 | "print(np.vsplit(a, 3))\n",

724 | "\n",

725 | "# 纵向切\n",

726 | "print(np.split(a, 2, axis=1))\n",

727 | "print(np.hsplit(a, 2))\n",

728 | "\n",

729 | "# 不等量切割\n",

730 | "print(np.array_split(a, 3, axis=1))\n"

731 | ],

732 | "outputs": [

733 | {

734 | "output_type": "stream",

735 | "name": "stdout",

736 | "text": [

737 | "[[ 0 1 2 3]\n",

738 | " [ 4 5 6 7]\n",

739 | " [ 8 9 10 11]]\n",

740 | "[array([[0, 1, 2, 3]]), array([[4, 5, 6, 7]]), array([[ 8, 9, 10, 11]])]\n",

741 | "[array([[0, 1, 2, 3]]), array([[4, 5, 6, 7]]), array([[ 8, 9, 10, 11]])]\n",

742 | "[array([[0, 1],\n",

743 | " [4, 5],\n",

744 | " [8, 9]]), array([[ 2, 3],\n",

745 | " [ 6, 7],\n",

746 | " [10, 11]])]\n",

747 | "[array([[0, 1],\n",

748 | " [4, 5],\n",

749 | " [8, 9]]), array([[ 2, 3],\n",

750 | " [ 6, 7],\n",

751 | " [10, 11]])]\n",

752 | "[array([[0, 1],\n",

753 | " [4, 5],\n",

754 | " [8, 9]]), array([[ 2],\n",

755 | " [ 6],\n",

756 | " [10]]), array([[ 3],\n",

757 | " [ 7],\n",

758 | " [11]])]\n"

759 | ]

760 | }

761 | ],

762 | "metadata": {}

763 | },

764 | {

765 | "cell_type": "markdown",

766 | "source": [

767 | "## 拷贝"

768 | ],

769 | "metadata": {}

770 | },

771 | {

772 | "cell_type": "code",

773 | "execution_count": 208,

774 | "source": [

775 | "# 浅拷贝\n",

776 | "a = np.arange(3)\n",

777 | "b = a\n",

778 | "b[1] = 100\n",

779 | "print(a, b, b is a)"

780 | ],

781 | "outputs": [

782 | {

783 | "output_type": "stream",

784 | "name": "stdout",

785 | "text": [

786 | "[ 0 100 2] [ 0 100 2] True\n"

787 | ]

788 | }

789 | ],

790 | "metadata": {}

791 | },

792 | {

793 | "cell_type": "code",

794 | "execution_count": 210,

795 | "source": [

796 | "# 深拷贝\n",

797 | "b = a.copy()\n",

798 | "a[2] = 99\n",

799 | "print(a, b, a is b)"

800 | ],

801 | "outputs": [

802 | {

803 | "output_type": "stream",

804 | "name": "stdout",

805 | "text": [

806 | "[ 0 100 99] [ 0 100 99] False\n"

807 | ]

808 | }

809 | ],

810 | "metadata": {}

811 | }

812 | ],

813 | "metadata": {

814 | "orig_nbformat": 4,

815 | "language_info": {

816 | "name": "python",

817 | "version": "3.7.11",

818 | "mimetype": "text/x-python",

819 | "codemirror_mode": {

820 | "name": "ipython",

821 | "version": 3

822 | },

823 | "pygments_lexer": "ipython3",

824 | "nbconvert_exporter": "python",

825 | "file_extension": ".py"

826 | },

827 | "kernelspec": {

828 | "name": "python3",

829 | "display_name": "Python 3.7.11 64-bit ('cv': conda)"

830 | },

831 | "interpreter": {

832 | "hash": "fae0b8db5daef04bdef6b28b91130d8cf2746b07f3f9c6da64121295d3f83694"

833 | }

834 | },

835 | "nbformat": 4,

836 | "nbformat_minor": 2

837 | }

--------------------------------------------------------------------------------

/docs/opencv/canny.py:

--------------------------------------------------------------------------------

1 | import cv2

2 |

3 | img = cv2.imread('../../resources/opencv/card.jpg')

4 | img = cv2.resize(img, (1000, 500))

5 | gray = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)

6 | _, th = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)

7 |

8 | cv2.namedWindow('canny')

9 | cv2.createTrackbar('minVal', 'canny', 0, 225, lambda x: x)

10 | cv2.createTrackbar('maxVal', 'canny', 0, 255, lambda x: x)

11 | cv2.setTrackbarPos('minVal', 'canny', 0)

12 | cv2.setTrackbarPos('maxVal', 'canny', 20)

13 |

14 | while True:

15 | minVal, maxVal = cv2.getTrackbarPos('minVal', 'canny'), cv2.getTrackbarPos('maxVal', 'canny')

16 |

17 | # ⚠️要在gray上做才能看到效果,threshold已经滤波的差不多了根本看不出来差距

18 | # canny = cv2.Canny(th, minVal, maxVal)

19 | canny = cv2.Canny(gray, minVal, maxVal)

20 |

21 | cv2.imshow('canny', canny)

22 | if cv2.waitKey(1) == 27:

23 | break

24 |

25 | cv2.destroyAllWindows()

--------------------------------------------------------------------------------

/docs/opencv/lane-line-detection.py:

--------------------------------------------------------------------------------

1 | import cv2

2 | import matplotlib.pyplot as plt

3 | import numpy as np

4 | from moviepy.editor import VideoFileClip

5 |

6 | PLOT_FLAG = False

7 |

8 | def pre_process(img, blur_ksize=5, canny_low=50, canny_high=100):

9 | """

10 | (1) 图像预处理:灰度-高斯模糊-Canny边缘检测

11 | @param img: 原RGB图像

12 | @param blur_ksize: 高斯卷积核

13 | @param canny_low: canny最低阈值

14 | @param canny_high: canny最高阈值

15 | @return: 只含有边缘信息的图像

16 | """

17 | gray = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)

18 | blur = cv2.GaussianBlur(gray, (blur_ksize, blur_ksize), 1)

19 | edges = cv2.Canny(blur, canny_low, canny_high)

20 |

21 | if PLOT_FLAG:

22 | plt.imshow(edges, cmap='gray'), plt.title("pre process: edges"), plt.show()

23 |

24 | return edges

25 |

26 |

27 | def roi_extract(img, boundary):

28 | """

29 | (2) 感兴趣区域提取

30 | @param img: 包含边缘信息的图像

31 | @return: 提取感兴趣区域后的边缘信息图像

32 | """

33 | rows, cols = img.shape[:2]

34 | points = np.array([[(0, rows), (460, boundary), (520, boundary), (cols, rows)]])

35 |

36 | mask = np.zeros_like(img)

37 | cv2.fillPoly(mask, points, 255)

38 | if PLOT_FLAG:

39 | plt.imshow(mask, cmap='gray'), plt.title("roi mask"), plt.show()

40 |

41 | roi = cv2.bitwise_and(img, mask)

42 | if PLOT_FLAG:

43 | plt.imshow(roi, cmap='gray'), plt.title("roi"), plt.show()

44 |

45 | return roi

46 |

47 |

48 | def hough_extract(img, rho=1, theta=np.pi/180, threshold=15, min_line_len=40, max_line_gap=20):

49 | """

50 | (3) 霍夫变换

51 | @param img: 提取感兴趣区域后的边缘信息图像

52 | @param rho, theta, threshold, min_line_len, max_line_gap: 霍夫变换参数

53 | @return: 提取的直线信息

54 | """

55 | lines = cv2.HoughLinesP(img, rho, theta, threshold, minLineLength=min_line_len, maxLineGap=max_line_gap)

56 |

57 | if PLOT_FLAG:

58 | drawing = np.zeros_like(img)

59 | for line in lines:

60 | for x1, y1, x2, y2 in line:

61 | cv2.line(drawing, (x1,y1), (x2,y2), 255, 2)

62 | plt.imshow(drawing, cmap='gray'), plt.title("hough lines"), plt.show()

63 | print("Total of Hough lines: ", len(lines))

64 |

65 | return lines

66 |

67 |

68 | def line_fit(lines, boundary, width):

69 | """

70 | (4): 通过霍夫变换检测的直线拟合最终的左右车道

71 | @param lines: 霍夫变换得到的直线

72 | @param boundary: 裁剪的roi边界(车道下方在图像的边界,尽头是上面roi定义的325)

73 | @param width: 图像下边界

74 | @return:

75 | """

76 | # 按照斜率正负划分直线

77 | left_lines, right_lines = [], []

78 | for line in lines:

79 | for x1, y1, x2, y2 in line:

80 | k = (y2 - y1) / (x2 - x1)

81 | left_lines.append(line) if k < 0 else right_lines.append(line)

82 |

83 | # 直线过滤

84 | # left_lines = line_filter(left_lines)

85 | # right_lines = line_filter(right_lines)

86 | # print(len(left_lines)+len(right_lines))

87 |

88 | # 将所有点汇总

89 | left_points = [(x1, y1) for line in left_lines for x1, y1, x2, y2 in line] + [(x2, y2) for line in left_lines for

90 | x1, y1, x2, y2 in line]

91 | right_points = [(x1, y1) for line in right_lines for x1, y1, x2, y2 in line] + [(x2, y2) for line in right_lines for

92 | x1, y1, x2, y2 in line]

93 | # 最小二乘法拟合这些点为直线

94 | left_results = least_squares_fit(left_points, boundary, width)

95 | right_results = least_squares_fit(right_points, boundary, width)

96 |

97 | # 最终区域定点的坐标

98 | vtxs = np.array([[left_results[1], left_results[0], right_results[0], right_results[1]]])

99 | return vtxs

100 |

101 |

102 | def line_filter(lines, offset=0.1):

103 | """

104 | (4'): 直线过滤

105 | @param lines: 霍夫变换直接提取的所有直线

106 | @param offset: 斜率大于此偏移量的直线将被筛出

107 | @return: 筛出后的直线

108 | """

109 | slope = [(y2-y1)/(x2-x1) for line in lines for x1, y1, x2, y2 in line]

110 | while len(lines) > 0:

111 | mean = np.mean(slope)

112 | diff = [abs(s - mean) for s in slope]

113 | index = np.argmax(diff)

114 | if diff[index] > offset:

115 | slope.pop(index)

116 | lines.pop(index)

117 | else:

118 | break

119 | return lines

120 |

121 |

122 | def least_squares_fit(points, ymin, ymax):

123 | x = [p[0] for p in points]

124 | y = [p[1] for p in points]

125 |

126 | fit = np.polyfit(y, x, 1)

127 | fit_fn = np.poly1d(fit)

128 |

129 | # 我们知道的是车道线的y坐标,通过拟合的函数求出x坐标

130 | xmin, xmax = int(fit_fn(ymin)), int(fit_fn(ymax))

131 | return [(xmin, ymin), (xmax, ymax)]

132 |

133 |

134 | def lane_line_detection(img):

135 | pre_process_img = pre_process(img)

136 |

137 | roi_img = roi_extract(pre_process_img, boundary=325)

138 |

139 | lines = hough_extract(roi_img)

140 |

141 | vtxs = line_fit(lines, 325, img.shape[0])

142 |

143 | cv2.fillPoly(img, vtxs, (0, 255, 0))

144 | if PLOT_FLAG:

145 | plt.imshow(img[:, :, ::-1]), plt.title("final output"), plt.show()

146 | return img

147 |

148 |

149 | if __name__ == '__main__':

150 | # img = cv2.imread('../../resources/opencv/lane2.jpg')

151 | # detected_img = lane_line_detection(img)

152 |

153 | clip = VideoFileClip('../../resources/opencv/lane.mp4')

154 | out_clip = clip.fl_image(lane_line_detection)

155 | out_clip.write_videofile('lane-detected.mp4', audio=False)

156 |

157 |

--------------------------------------------------------------------------------

/docs/opencv/opencv-study.py:

--------------------------------------------------------------------------------

1 | import cv2

2 | import numpy as np

3 | import matplotlib.pyplot as plt

4 |

5 | def call_back_func(x):

6 | print(x)

7 |

8 |

9 | def mouse_event(event, x, y, flags, param):

10 | if event == cv2.EVENT_LBUTTONDOWN:

11 | print(x,y)

12 |

13 | img = cv2.imread("../resources/lena.jpg")

14 | cv2.namedWindow('image')

15 | cv2.createTrackbar('attr', 'image', 0, 255, call_back_func)

16 | while True:

17 | cv2.imshow('image', img)

18 | if cv2.waitKey(1) == 27:

19 | break

20 |

21 | # attr = cv2.getTrackbarPos('attr', 'image')

22 | # img[:] = [attr, attr, attr]

23 |

24 | cv2.setMouseCallback('image', mouse_event)

25 |

26 |

27 | ###

28 |

--------------------------------------------------------------------------------

/docs/pytorch-study.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "code",

5 | "execution_count": 1,

6 | "metadata": {},

7 | "outputs": [],

8 | "source": [

9 | "import torch\n",

10 | "import numpy as np"

11 | ]

12 | },

13 | {

14 | "cell_type": "markdown",

15 | "metadata": {},

16 | "source": [

17 | "## Tensor"

18 | ]

19 | },

20 | {

21 | "cell_type": "code",

22 | "execution_count": 26,

23 | "metadata": {},

24 | "outputs": [

25 | {

26 | "name": "stdout",

27 | "output_type": "stream",

28 | "text": [

29 | "Numpy: [[1. 1. 1. 1.]\n",

30 | " [1. 1. 1. 1.]\n",

31 | " [1. 1. 1. 1.]]\n",

32 | "Tensor: tensor([[0, 0, 0, 0],\n",

33 | " [0, 0, 0, 0],\n",

34 | " [0, 0, 0, 0]])\n",

35 | "tensor([-1.9611, -0.2047, 0.6853])\n",

36 | "tensor([[7959390389040738153, 2318285298082652788, 8675445202132104482],\n",

37 | " [7957695011165139568, 2318365875964093043, 7233184988217307170]])\n"

38 | ]

39 | }

40 | ],

41 | "source": [

42 | "# 创建tensor\n",

43 | "n = np.ones((3, 4))\n",

44 | "\n",

45 | "t = torch.ones(3, 4)\n",

46 | "t = torch.rand(5, 3)\n",

47 | "t = torch.zeros(3, 4, dtype=torch.long)\n",

48 | "\n",

49 | "a = torch.tensor([1, 2, 3])\n",

50 | "a = torch.randn_like(a, dtype=torch.float)\n",

51 | "\n",

52 | "y = t.new_empty(2, 3) # 复用t的其他属性\n",

53 | "\n",

54 | "print(\"Numpy: \", n)\n",

55 | "print(\"Tensor: \", t)\n",

56 | "print(a)\n",

57 | "print(y)"

58 | ]

59 | },

60 | {

61 | "cell_type": "code",

62 | "execution_count": 27,

63 | "metadata": {},

64 | "outputs": [

65 | {

66 | "name": "stdout",

67 | "output_type": "stream",

68 | "text": [

69 | "torch.Size([3, 4])\n",

70 | "torch.Size([3, 4])\n"

71 | ]

72 | }

73 | ],

74 | "source": [

75 | "# 基础属性\n",

76 | "print(t.size())\n",

77 | "print(t.shape)"

78 | ]

79 | },

80 | {

81 | "cell_type": "code",

82 | "execution_count": 29,

83 | "metadata": {},

84 | "outputs": [

85 | {

86 | "name": "stdout",

87 | "output_type": "stream",

88 | "text": [

89 | "tensor([[1.1634, 1.8894, 1.0713],\n",

90 | " [0.5683, 1.0986, 0.8609]])\n",

91 | "tensor([[1.1634, 1.8894, 1.0713],\n",

92 | " [0.5683, 1.0986, 0.8609]])\n"

93 | ]

94 | }

95 | ],

96 | "source": [

97 | "# 简单运算\n",

98 | "x = torch.rand(2, 3)\n",

99 | "y = torch.rand_like(x)\n",

100 | "\n",

101 | "print(x + y)\n",

102 | "print(torch.add(x, y))"

103 | ]

104 | },

105 | {

106 | "cell_type": "code",

107 | "execution_count": 30,

108 | "metadata": {},

109 | "outputs": [

110 | {

111 | "name": "stdout",

112 | "output_type": "stream",

113 | "text": [

114 | "tensor([[1.1634, 1.8894, 1.0713],\n",

115 | " [0.5683, 1.0986, 0.8609]])\n"

116 | ]

117 | }

118 | ],

119 | "source": [

120 | "# in-place运算 会改变变量的值\n",

121 | "y.add_(x)\n",

122 | "print(y)"

123 | ]

124 | },

125 | {

126 | "cell_type": "code",

127 | "execution_count": 31,

128 | "metadata": {},

129 | "outputs": [

130 | {

131 | "name": "stdout",

132 | "output_type": "stream",

133 | "text": [

134 | "tensor([0.9541, 0.4638])\n"

135 | ]

136 | }

137 | ],

138 | "source": [

139 | "# 切片\n",

140 | "print(x[:, 1])"

141 | ]

142 | },

143 | {

144 | "cell_type": "code",

145 | "execution_count": 32,

146 | "metadata": {},

147 | "outputs": [

148 | {

149 | "name": "stdout",

150 | "output_type": "stream",

151 | "text": [

152 | "torch.Size([4, 4]) torch.Size([16]) torch.Size([2, 2, 4])\n"

153 | ]

154 | }

155 | ],

156 | "source": [

157 | "# resize\n",

158 | "x = torch.randn(4, 4)\n",

159 | "y = x.view(16)\n",

160 | "z = x.view(-1, 2, 4)\n",

161 | "\n",

162 | "print(x.size(), y.size(), z.size())"

163 | ]

164 | },

165 | {

166 | "cell_type": "code",

167 | "execution_count": 33,

168 | "metadata": {},

169 | "outputs": [

170 | {

171 | "name": "stdout",

172 | "output_type": "stream",

173 | "text": [

174 | "tensor([0.1197])\n",

175 | "0.11974674463272095\n"

176 | ]

177 | }

178 | ],

179 | "source": [

180 | "# get value(if only have one element)\n",

181 | "x = torch.rand(1)\n",

182 | "print(x)\n",

183 | "print(x.item())"

184 | ]

185 | },

186 | {

187 | "cell_type": "markdown",

188 | "metadata": {},

189 | "source": [

190 | "### Tensor与Numpy转换"

191 | ]

192 | },

193 | {

194 | "cell_type": "code",

195 | "execution_count": 39,

196 | "metadata": {},

197 | "outputs": [

198 | {

199 | "name": "stdout",

200 | "output_type": "stream",

201 | "text": [

202 | "tensor([[0.2107, 0.2437, 0.0706],\n",

203 | " [0.6233, 0.4380, 0.4589]])\n",

204 | "[[0.21070534 0.24373996 0.07062978]\n",

205 | " [0.6232684 0.43795878 0.45893037]]\n"

206 | ]

207 | }

208 | ],

209 | "source": [

210 | "# torch -> numpy\n",

211 | "t = torch.rand(2, 3)\n",

212 | "n = t.numpy()\n",

213 | "\n",

214 | "print(t)\n",

215 | "print(n)"

216 | ]

217 | },

218 | {

219 | "cell_type": "code",

220 | "execution_count": 37,

221 | "metadata": {},

222 | "outputs": [

223 | {

224 | "name": "stdout",

225 | "output_type": "stream",

226 | "text": [

227 | "[[0.16070166 0.54871463]\n",

228 | " [0.07759188 0.32236617]\n",

229 | " [0.14265208 0.4026539 ]]\n",

230 | "tensor([[0.1607, 0.5487],\n",

231 | " [0.0776, 0.3224],\n",

232 | " [0.1427, 0.4027]], dtype=torch.float64)\n"

233 | ]

234 | }

235 | ],

236 | "source": [

237 | "# numpy -> tensor\n",

238 | "n = np.random.rand(3, 2)\n",

239 | "t = torch.from_numpy(n)\n",

240 | "\n",

241 | "print(n)\n",

242 | "print(t)"

243 | ]

244 | },

245 | {

246 | "cell_type": "code",

247 | "execution_count": 40,

248 | "metadata": {},

249 | "outputs": [

250 | {

251 | "name": "stdout",

252 | "output_type": "stream",

253 | "text": [

254 | "tensor([[1.2107, 1.2437, 1.0706],\n",

255 | " [1.6233, 1.4380, 1.4589]])\n",

256 | "[[1.2107053 1.24374 1.0706298]\n",

257 | " [1.6232684 1.4379587 1.4589304]]\n"

258 | ]

259 | }

260 | ],

261 | "source": [

262 | "# 修改一个两个都会跟着改的\n",

263 | "t.add_(1)\n",

264 | "\n",

265 | "print(t)\n",

266 | "print(n)"

267 | ]

268 | },

269 | {

270 | "cell_type": "code",

271 | "execution_count": 6,

272 | "metadata": {},

273 | "outputs": [

274 | {

275 | "name": "stdout",

276 | "output_type": "stream",

277 | "text": [

278 | "[[0.30370266 0.36835002 0.99111293]\n",

279 | " [0.36966819 0.68330543 0.12247895]]\n",

280 | "tensor([[0.5291, 0.4583, 0.6517],\n",

281 | " [0.5869, 0.5024, 0.0601]])\n"

282 | ]

283 | }

284 | ],

285 | "source": [

286 | "# 复制之后就不会共享内存了\n",

287 | "t = torch.rand(2, 3)\n",

288 | "n = np.random.rand(2, 3)\n",

289 | "\n",

290 | "# 二者的函数名是不一样的\n",

291 | "t_ = n.copy()\n",

292 | "n_ = t.clone()\n",

293 | "\n",

294 | "t.zero_()\n",

295 | "n = np.zeros((2, 3))\n",

296 | "\n",

297 | "print(t_)\n",

298 | "print(n_)"

299 | ]

300 | },

301 | {

302 | "cell_type": "code",

303 | "execution_count": 57,

304 | "metadata": {},

305 | "outputs": [],

306 | "source": [

307 | "# 如果添加了求导,则需要将data转换为numpy\n",

308 | "n = torch.rand(2, 3, requires_grad=True)\n",

309 | "t = n.data.numpy()"

310 | ]

311 | },

312 | {

313 | "cell_type": "markdown",

314 | "metadata": {},

315 | "source": [

316 | "### CUDA"

317 | ]

318 | },

319 | {

320 | "cell_type": "code",

321 | "execution_count": 46,

322 | "metadata": {},

323 | "outputs": [

324 | {

325 | "name": "stdout",

326 | "output_type": "stream",

327 | "text": [

328 | "tensor([[1.3248, 0.3975, 0.7192],\n",

329 | " [0.7229, 0.7046, 0.7572]], device='cuda:0')\n",

330 | "tensor([[1.3248, 0.3975, 0.7192],\n",

331 | " [0.7229, 0.7046, 0.7572]])\n"

332 | ]

333 | }

334 | ],

335 | "source": [

336 | "if torch.cuda.is_available():\n",

337 | " device = torch.device(\"cuda\")\n",

338 | " y = torch.rand(2, 3, device=device)\n",

339 | " x = torch.rand(2, 3)\n",

340 | " x = x.to(device)\n",

341 | "\n",

342 | " z = x + y\n",

343 | " print(z)\n",

344 | " print(z.to(\"cpu\"))"

345 | ]

346 | },

347 | {

348 | "cell_type": "markdown",

349 | "metadata": {},

350 | "source": [

351 | "## 自动求导"

352 | ]

353 | },

354 | {

355 | "cell_type": "code",

356 | "execution_count": 50,

357 | "metadata": {},

358 | "outputs": [

359 | {

360 | "name": "stdout",

361 | "output_type": "stream",

362 | "text": [

363 | "tensor([[1.7932]], grad_fn=)\n"

364 | ]

365 | }

366 | ],

367 | "source": [

368 | "x = torch.randn(4, 1, requires_grad=True)\n",

369 | "b = torch.randn(4, 1, requires_grad=True)\n",

370 | "W = torch.randn(4, 4)\n",

371 | "\n",

372 | "# y = torch.mm(torch.mm(torch.t(x), W), b)\n",

373 | "y = x.t().mm(W).mm(b)\n",

374 | "print(y)"

375 | ]

376 | },

377 | {

378 | "cell_type": "code",

379 | "execution_count": 51,

380 | "metadata": {},

381 | "outputs": [

382 | {

383 | "name": "stdout",

384 | "output_type": "stream",

385 | "text": [

386 | "None\n",

387 | "tensor([[ 0.4634],\n",

388 | " [-1.1022],\n",

389 | " [-3.5316],\n",

390 | " [ 1.3660]])\n"

391 | ]

392 | }

393 | ],

394 | "source": [

395 | "print(x.grad)\n",

396 | "\n",

397 | "y.backward()\n",

398 | "\n",

399 | "print(x.grad)"

400 | ]

401 | },

402 | {

403 | "cell_type": "code",

404 | "execution_count": 24,

405 | "metadata": {},

406 | "outputs": [

407 | {

408 | "name": "stdout",

409 | "output_type": "stream",

410 | "text": [

411 | "tensor([[-0.7865],\n",

412 | " [-0.1405],\n",

413 | " [-0.2294],\n",

414 | " [ 0.3251]], requires_grad=True)\n",

415 | "tensor([[0.7966]], grad_fn=)\n",

416 | "tensor([[-1.5730],\n",

417 | " [-0.2811],\n",

418 | " [-0.4588],\n",

419 | " [ 0.6501]])\n"

420 | ]

421 | }

422 | ],

423 | "source": [

424 | "x = torch.randn(4, 1, requires_grad=True)\n",

425 | "y = torch.mm(torch.t(x), x)\n",

426 | "print(x)\n",

427 | "print(y)\n",

428 | "\n",

429 | "y.backward(retain_graph=True) # 可以再次求导,否则只能backward一次\n",

430 | "print(x.grad)"

431 | ]

432 | },

433 | {

434 | "cell_type": "code",

435 | "execution_count": 25,

436 | "metadata": {},

437 | "outputs": [

438 | {

439 | "name": "stdout",

440 | "output_type": "stream",

441 | "text": [

442 | "tensor([[-0.7865],\n",

443 | " [-0.1405],\n",

444 | " [-0.2294],\n",

445 | " [ 0.3251]], requires_grad=True)\n",

446 | "tensor([[0.7966]], grad_fn=)\n",

447 | "tensor([[-3.1460],\n",

448 | " [-0.5622],\n",

449 | " [-0.9177],\n",

450 | " [ 1.3003]])\n"

451 | ]

452 | }

453 | ],

454 | "source": [

455 | "print(x)\n",

456 | "print(y)\n",

457 | "\n",

458 | "y.backward(retain_graph=True)\n",

459 | "print(x.grad)"

460 | ]

461 | },

462 | {

463 | "cell_type": "code",

464 | "execution_count": 26,

465 | "metadata": {},

466 | "outputs": [

467 | {

468 | "name": "stdout",

469 | "output_type": "stream",

470 | "text": [

471 | "tensor([[-1.5730],\n",

472 | " [-0.2811],\n",

473 | " [-0.4588],\n",

474 | " [ 0.6501]])\n"

475 | ]

476 | }

477 | ],

478 | "source": [

479 | "x.grad.zero_() # 梯度清零\n",

480 | "y.backward()\n",

481 | "print(x.grad) # 跟第一次的值相同"

482 | ]

483 | },

484 | {

485 | "cell_type": "markdown",

486 | "metadata": {},

487 | "source": [

488 | "## 全连接"

489 | ]

490 | },

491 | {

492 | "cell_type": "code",

493 | "execution_count": 5,

494 | "metadata": {},

495 | "outputs": [

496 | {

497 | "name": "stdout",

498 | "output_type": "stream",

499 | "text": [

500 | "torch.Size([10, 200])\n",

501 | "weight : torch.Size([200, 100])\n",

502 | "bias : torch.Size([200])\n"

503 | ]

504 | }

505 | ],

506 | "source": [

507 | "input = torch.randn(10, 100) # 第一个10是batch size\n",

508 | "linear_network = torch.nn.Linear(100, 200)\n",

509 | "output = linear_network(input)\n",

510 | "\n",

511 | "print(output.shape)\n",

512 | "\n",

513 | "for name, parameter in linear_network.named_parameters():\n",

514 | " print(name, ':', parameter.size())"

515 | ]

516 | },

517 | {

518 | "cell_type": "markdown",

519 | "metadata": {},

520 | "source": [

521 | "## CNN"

522 | ]

523 | },

524 | {

525 | "cell_type": "code",

526 | "execution_count": 4,

527 | "metadata": {},

528 | "outputs": [

529 | {

530 | "name": "stdout",

531 | "output_type": "stream",

532 | "text": [

533 | "torch.Size([1, 5, 28, 28])\n",

534 | "weight : torch.Size([5, 1, 3, 3])\n",

535 | "bias : torch.Size([5])\n"

536 | ]

537 | }

538 | ],

539 | "source": [

540 | "input = torch.randn(1, 1, 28, 28) # batch, channel, height, width\n",

541 | "conv = torch.nn.Conv2d(in_channels=1, out_channels=5, kernel_size=3, padding=1, stride=1, bias=True)\n",

542 | "output = conv(input)\n",

543 | "\n",

544 | "print(output.shape)\n",

545 | "\n",

546 | "for name, parameters in conv.named_parameters():\n",

547 | " print(name, ':', parameters.shape)\n",

548 | "\n",

549 | "# weight : torch.Size([5, 1, 3, 3])\n",

550 | "# out_channels, channel, kernel_size, kernel_size"

551 | ]

552 | },

553 | {

554 | "cell_type": "code",

555 | "execution_count": 5,

556 | "metadata": {},

557 | "outputs": [

558 | {

559 | "name": "stdout",

560 | "output_type": "stream",

561 | "text": [

562 | "torch.Size([1, 5, 14, 14])\n"

563 | ]

564 | }

565 | ],

566 | "source": [

567 | "input = torch.randn(1, 1, 28, 28)\n",

568 | "conv = torch.nn.Conv2d(in_channels=1, out_channels=5, kernel_size=3, padding=1, stride=2)\n",

569 | "output = conv(input)\n",

570 | "print(output.shape) # (input - kernel + 2*padding) / stride + 1"

571 | ]

572 | },

573 | {

574 | "cell_type": "code",

575 | "execution_count": null,

576 | "metadata": {},

577 | "outputs": [],

578 | "source": []

579 | }

580 | ],

581 | "metadata": {

582 | "interpreter": {

583 | "hash": "a3b0ff572e5cd24ec265c5b4da969dd40707626c6e113422fd84bd2b9440fcfc"

584 | },

585 | "kernelspec": {

586 | "display_name": "Python 3.9.7 64-bit ('deep_learning': conda)",

587 | "name": "python3"

588 | },

589 | "language_info": {

590 | "codemirror_mode": {

591 | "name": "ipython",

592 | "version": 3

593 | },

594 | "file_extension": ".py",

595 | "mimetype": "text/x-python",

596 | "name": "python",

597 | "nbconvert_exporter": "python",

598 | "pygments_lexer": "ipython3",

599 | "version": "3.9.7"

600 | },

601 | "orig_nbformat": 4

602 | },

603 | "nbformat": 4,

604 | "nbformat_minor": 2

605 | }

606 |

--------------------------------------------------------------------------------

/experiment/Face-Detection-opencv/README.md:

--------------------------------------------------------------------------------

1 | # 视频人脸检测

2 |

3 | 姓名:张喆 学号:2101212846 指导老师:张健助理教授

4 |

5 | * [具体实现](#具体实现)

6 | * [结果展示](#结果展示)

7 | * [扩展分析](#扩展分析)

8 |

9 | -----

10 |

11 | ## 具体实现

12 |

13 | 本实验主要分为两大模块:视频处理 + 图像人脸检测

14 |

15 | 图像人脸检测部分与作业二类似,依然采用OpenCV中的`detectMultiScale()方法,模型选用`haarcascade`。

16 |

17 | 视频处理模块代码如下:

18 |

19 | ```python

20 | capture = cv2.VideoCapture('video/video.MOV')

21 |

22 | width, height = int(capture.get(cv2.CAP_PROP_FRAME_WIDTH)), int(capture.get(cv2.CAP_PROP_FRAME_HEIGHT))

23 | fourcc = cv2.VideoWriter_fourcc(*'mp4v')

24 | writer = cv2.VideoWriter('video/output.mp4', fourcc, 25, (width, height))

25 |

26 | if capture.isOpened():

27 | ret, frame = capture.read()

28 | else:

29 | ret = False

30 |

31 | while ret:

32 | detected_frame = face_detection(frame)

33 | writer.write(detected_frame)

34 | ret, frame = capture.read()

35 |

36 | writer.release()

37 | ```

38 |

39 | ## 结果展示

40 |

41 | > 输出的视频通过`ffmpeg -i output.mp4 -vf scale=640:-1 output.gif`导出为gif展示

42 |

43 |

44 |

45 | ## 扩展分析

46 |

47 | 在初始实验时将原始帧图像转换为灰度图后就进行人脸检测,输出的结果中有较多人脸没有被很好检测,尤其是带墨镜的男生存在较多的帧未能检测。因此我又多次尝试调整了`minNeighbors`和`minSize`的阈值,过小时会有很多噪声(非人脸区域被框选),过大时女生人脸也难以被正确检测。因此又尝试了些图像处理的简单方法加以改进。

48 |

49 | 1. 高斯滤波器

50 |

51 | ```python

52 | blur = cv2.GaussianBlur(gray, (3,3), 0)

53 | ```

54 |

55 | 采用高斯滤波器后图像得到一定的平滑,噪声得到一定程度的消除,从结果来看效果较好

56 |

57 |

58 |

59 | 2. 阈值分割处理

60 |

61 | ```python

62 | _, th = cv2.threshold(blur, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)

63 | ```

64 |

65 | 在高斯滤波基础上又采用了阈值分割处理,这里是用的是Otsu自适应阈值分割算法,但从中间输出的灰度图中可以看到人脸经过阈值处理后细节丢失了很多,可能对于检测器而言变得特征不明显,因此大量人脸无法被正确标注

66 |

67 |

68 |

69 | 最终本实验选择先将原图转换为灰度图后进行高斯滤波处理,处理后的图像再进行人脸检测已经能得到比较满意的效果。

--------------------------------------------------------------------------------

/experiment/Face-Detection-opencv/img/multi.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/doubleZ0108/Computer-Vision-PKU/4ab2fa3deb1edfd65943f52b1e0d8dc0d8ee6d0e/experiment/Face-Detection-opencv/img/multi.jpg

--------------------------------------------------------------------------------

/experiment/Face-Detection-opencv/img/multi2.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/doubleZ0108/Computer-Vision-PKU/4ab2fa3deb1edfd65943f52b1e0d8dc0d8ee6d0e/experiment/Face-Detection-opencv/img/multi2.jpg

--------------------------------------------------------------------------------

/experiment/Face-Detection-opencv/img/single.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/doubleZ0108/Computer-Vision-PKU/4ab2fa3deb1edfd65943f52b1e0d8dc0d8ee6d0e/experiment/Face-Detection-opencv/img/single.jpg