├── .gitignore

├── .travis.yml

├── CMakeLists.txt

├── COPYING

├── COPYING.LESSER

├── FindEigen3.cmake

├── README.md

├── doc

└── Doxyfile

├── include

├── distributions.h

├── libcluster.h

└── probutils.h

├── python

├── CMakeLists.txt

├── FindNumpy.cmake

├── libclusterpy.cpp

├── libclusterpy.h

└── testapi.py

├── src

├── cluster.cpp

├── comutils.cpp

├── comutils.h

├── distributions.cpp

├── mcluster.cpp

├── probutils.cpp

└── scluster.cpp

└── test

├── CMakeLists.txt

├── cluster_test.cpp

├── mcluster_test.cpp

├── scluster_test.cpp

├── scott25.dat

└── testdata.h

/.gitignore:

--------------------------------------------------------------------------------

1 | # ignore list for git status etc.

2 | *.mex*

3 | *.user

4 |

--------------------------------------------------------------------------------

/.travis.yml:

--------------------------------------------------------------------------------

1 | language: cpp

2 | dist: trusty

3 | sudo: required

4 |

5 | addons:

6 | apt:

7 | packages:

8 | - cmake

9 | - python3

10 | - python3-dev

11 | - libeigen3-dev

12 | - libboost-all-dev

13 | - libboost-python-dev

14 | - python3-numpy

15 |

16 | install:

17 | - cd /usr/lib/x86_64-linux-gnu/

18 | - sudo ln -s libboost_python-py34.so libboost_python3.so

19 | - cd $TRAVIS_BUILD_DIR

20 | - mkdir build

21 | - cd build

22 | - cmake -DBUILD_PYTHON_INTERFACE=ON -DBUILD_USE_PYTHON3=ON ..

23 | - make

24 | - sudo make install

25 |

26 | script:

27 | - cd $TRAVIS_BUILD_DIR/build

28 | - ./cluster_test

29 | - ./scluster_test

30 | - ./mcluster_test

31 | - sudo ldconfig

32 | - cd ../python

33 | - python3 testapi.py

34 |

--------------------------------------------------------------------------------

/CMakeLists.txt:

--------------------------------------------------------------------------------

1 | project(cluster)

2 | cmake_minimum_required(VERSION 2.6)

3 |

4 |

5 | #--------------------------------#

6 | # Includes #

7 | #--------------------------------#

8 |

9 | find_package(Boost REQUIRED)

10 | include_directories(${Boost_INCLUDE_DIRS})

11 | include(${PROJECT_SOURCE_DIR}/FindEigen3.cmake REQUIRED)

12 | include_directories(${EIGEN_INCLUDE_DIRS})

13 | include(FindOpenMP)

14 |

15 |

16 | #--------------------------------#

17 | # Enforce an out-of-source build #

18 | #--------------------------------#

19 |

20 | string(COMPARE EQUAL "${PROJECT_SOURCE_DIR}" "${PROJECT_BINARY_DIR}" INSOURCE)

21 | if(INSOURCE)

22 | message(FATAL_ERROR "This project requires an out of source build.")

23 | endif(INSOURCE)

24 |

25 |

26 | #--------------------------------#

27 | # Compiler environment Setup #

28 | #--------------------------------#

29 |

30 | # Some compilation options (changeable from ccmake)

31 | option(BUILD_EXHAUST_SPLIT "Use the exhaustive cluster split heuristic?" off)

32 | option(BUILD_PYTHON_INTERFACE "Build the python interface?" off)

33 | option(BUILD_USE_PYTHON3 "Use python3 instead of python 2?" on)

34 |

35 | # Locations for source code

36 | set(LIB_SOURCE_DIR ${PROJECT_SOURCE_DIR}/src)

37 | set(LIB_INCLUDE_DIR ${PROJECT_SOURCE_DIR}/include)

38 | set(TEST_SOURCE_DIR ${PROJECT_SOURCE_DIR}/test)

39 | set(PYTHON_SOURCE_DIR ${PROJECT_SOURCE_DIR}/python)

40 |

41 | # Locations for binary files

42 | set(LIBRARY_OUTPUT_PATH ${PROJECT_SOURCE_DIR}/lib)

43 | set(EXECUTABLE_OUTPUT_PATH ${PROJECT_SOURCE_DIR}/build)

44 |

45 | # Automatically or from command line set build type

46 | if(NOT CMAKE_BUILD_TYPE)

47 | set(CMAKE_BUILD_TYPE Release CACHE STRING

48 | "Build type options are: None Debug Release RelWithDebInfo MinSizeRel."

49 | FORCE

50 | )

51 | endif(NOT CMAKE_BUILD_TYPE)

52 |

53 | # If we want to use the greedy splitting heuristic, define it here

54 | if(BUILD_EXHAUST_SPLIT)

55 | set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -DEXHAUST_SPLIT")

56 | endif(BUILD_EXHAUST_SPLIT)

57 |

58 | # Python needs row major matrices (for convenience)

59 | if(BUILD_PYTHON_INTERFACE)

60 | set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -DEIGEN_DEFAULT_TO_ROW_MAJOR")

61 | endif(BUILD_PYTHON_INTERFACE)

62 |

63 | # Search for OpenMP support for multi-threading

64 | if(OPENMP_FOUND)

65 | set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} ${OpenMP_CXX_FLAGS}")

66 | set(CMAKE_EXE_LINKER_FLAGS

67 | "${CMAKE_EXE_LINKER_FLAGS} ${OpenMP_EXE_LINKER_FLAGS}"

68 | )

69 | # Disable Eigen's parallelisation (this will get in the way of mine)

70 | set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -DEIGEN_DONT_PARALLELIZE")

71 | endif(OPENMP_FOUND)

72 |

73 |

74 | #--------------------------------#

75 | # Library Build Instructions #

76 | #--------------------------------#

77 |

78 | # Make sure we include library headers in compile

79 | include_directories(${LIB_INCLUDE_DIR})

80 |

81 | # Library build instructions

82 | add_library(${PROJECT_NAME} SHARED

83 | ${LIB_INCLUDE_DIR}/libcluster.h

84 | ${LIB_INCLUDE_DIR}/probutils.h

85 | ${LIB_INCLUDE_DIR}/distributions.h

86 | ${LIB_SOURCE_DIR}/distributions.cpp

87 | ${LIB_SOURCE_DIR}/comutils.h

88 | ${LIB_SOURCE_DIR}/comutils.cpp

89 | ${LIB_SOURCE_DIR}/cluster.cpp

90 | ${LIB_SOURCE_DIR}/scluster.cpp

91 | ${LIB_SOURCE_DIR}/mcluster.cpp

92 | ${LIB_SOURCE_DIR}/probutils.cpp

93 | )

94 |

95 | add_definitions("-Wall")

96 |

97 |

98 | #--------------------------------#

99 | # Library Install Instructions #

100 | #--------------------------------#

101 |

102 | if(NOT CMAKE_INSTALL_PREFIX)

103 | set(CMAKE_INSTALL_PREFIX "/usr/local" )

104 | endif(NOT CMAKE_INSTALL_PREFIX)

105 |

106 | install(TARGETS ${PROJECT_NAME} DESTINATION lib)

107 | install(FILES

108 | ${LIB_INCLUDE_DIR}/libcluster.h

109 | ${LIB_INCLUDE_DIR}/probutils.h

110 | ${LIB_INCLUDE_DIR}/distributions.h

111 | DESTINATION include/libcluster

112 | )

113 |

114 |

115 | #--------------------------------#

116 | # Subdirectories to recurse to #

117 | #--------------------------------#

118 |

119 | subdirs(test python)

120 |

--------------------------------------------------------------------------------

/COPYING.LESSER:

--------------------------------------------------------------------------------

1 | GNU LESSER GENERAL PUBLIC LICENSE

2 | Version 3, 29 June 2007

3 |

4 | Copyright (C) 2007 Free Software Foundation, Inc.

5 | Everyone is permitted to copy and distribute verbatim copies

6 | of this license document, but changing it is not allowed.

7 |

8 |

9 | This version of the GNU Lesser General Public License incorporates

10 | the terms and conditions of version 3 of the GNU General Public

11 | License, supplemented by the additional permissions listed below.

12 |

13 | 0. Additional Definitions.

14 |

15 | As used herein, "this License" refers to version 3 of the GNU Lesser

16 | General Public License, and the "GNU GPL" refers to version 3 of the GNU

17 | General Public License.

18 |

19 | "The Library" refers to a covered work governed by this License,

20 | other than an Application or a Combined Work as defined below.

21 |

22 | An "Application" is any work that makes use of an interface provided

23 | by the Library, but which is not otherwise based on the Library.

24 | Defining a subclass of a class defined by the Library is deemed a mode

25 | of using an interface provided by the Library.

26 |

27 | A "Combined Work" is a work produced by combining or linking an

28 | Application with the Library. The particular version of the Library

29 | with which the Combined Work was made is also called the "Linked

30 | Version".

31 |

32 | The "Minimal Corresponding Source" for a Combined Work means the

33 | Corresponding Source for the Combined Work, excluding any source code

34 | for portions of the Combined Work that, considered in isolation, are

35 | based on the Application, and not on the Linked Version.

36 |

37 | The "Corresponding Application Code" for a Combined Work means the

38 | object code and/or source code for the Application, including any data

39 | and utility programs needed for reproducing the Combined Work from the

40 | Application, but excluding the System Libraries of the Combined Work.

41 |

42 | 1. Exception to Section 3 of the GNU GPL.

43 |

44 | You may convey a covered work under sections 3 and 4 of this License

45 | without being bound by section 3 of the GNU GPL.

46 |

47 | 2. Conveying Modified Versions.

48 |

49 | If you modify a copy of the Library, and, in your modifications, a

50 | facility refers to a function or data to be supplied by an Application

51 | that uses the facility (other than as an argument passed when the

52 | facility is invoked), then you may convey a copy of the modified

53 | version:

54 |

55 | a) under this License, provided that you make a good faith effort to

56 | ensure that, in the event an Application does not supply the

57 | function or data, the facility still operates, and performs

58 | whatever part of its purpose remains meaningful, or

59 |

60 | b) under the GNU GPL, with none of the additional permissions of

61 | this License applicable to that copy.

62 |

63 | 3. Object Code Incorporating Material from Library Header Files.

64 |

65 | The object code form of an Application may incorporate material from

66 | a header file that is part of the Library. You may convey such object

67 | code under terms of your choice, provided that, if the incorporated

68 | material is not limited to numerical parameters, data structure

69 | layouts and accessors, or small macros, inline functions and templates

70 | (ten or fewer lines in length), you do both of the following:

71 |

72 | a) Give prominent notice with each copy of the object code that the

73 | Library is used in it and that the Library and its use are

74 | covered by this License.

75 |

76 | b) Accompany the object code with a copy of the GNU GPL and this license

77 | document.

78 |

79 | 4. Combined Works.

80 |

81 | You may convey a Combined Work under terms of your choice that,

82 | taken together, effectively do not restrict modification of the

83 | portions of the Library contained in the Combined Work and reverse

84 | engineering for debugging such modifications, if you also do each of

85 | the following:

86 |

87 | a) Give prominent notice with each copy of the Combined Work that

88 | the Library is used in it and that the Library and its use are

89 | covered by this License.

90 |

91 | b) Accompany the Combined Work with a copy of the GNU GPL and this license

92 | document.

93 |

94 | c) For a Combined Work that displays copyright notices during

95 | execution, include the copyright notice for the Library among

96 | these notices, as well as a reference directing the user to the

97 | copies of the GNU GPL and this license document.

98 |

99 | d) Do one of the following:

100 |

101 | 0) Convey the Minimal Corresponding Source under the terms of this

102 | License, and the Corresponding Application Code in a form

103 | suitable for, and under terms that permit, the user to

104 | recombine or relink the Application with a modified version of

105 | the Linked Version to produce a modified Combined Work, in the

106 | manner specified by section 6 of the GNU GPL for conveying

107 | Corresponding Source.

108 |

109 | 1) Use a suitable shared library mechanism for linking with the

110 | Library. A suitable mechanism is one that (a) uses at run time

111 | a copy of the Library already present on the user's computer

112 | system, and (b) will operate properly with a modified version

113 | of the Library that is interface-compatible with the Linked

114 | Version.

115 |

116 | e) Provide Installation Information, but only if you would otherwise

117 | be required to provide such information under section 6 of the

118 | GNU GPL, and only to the extent that such information is

119 | necessary to install and execute a modified version of the

120 | Combined Work produced by recombining or relinking the

121 | Application with a modified version of the Linked Version. (If

122 | you use option 4d0, the Installation Information must accompany

123 | the Minimal Corresponding Source and Corresponding Application

124 | Code. If you use option 4d1, you must provide the Installation

125 | Information in the manner specified by section 6 of the GNU GPL

126 | for conveying Corresponding Source.)

127 |

128 | 5. Combined Libraries.

129 |

130 | You may place library facilities that are a work based on the

131 | Library side by side in a single library together with other library

132 | facilities that are not Applications and are not covered by this

133 | License, and convey such a combined library under terms of your

134 | choice, if you do both of the following:

135 |

136 | a) Accompany the combined library with a copy of the same work based

137 | on the Library, uncombined with any other library facilities,

138 | conveyed under the terms of this License.

139 |

140 | b) Give prominent notice with the combined library that part of it

141 | is a work based on the Library, and explaining where to find the

142 | accompanying uncombined form of the same work.

143 |

144 | 6. Revised Versions of the GNU Lesser General Public License.

145 |

146 | The Free Software Foundation may publish revised and/or new versions

147 | of the GNU Lesser General Public License from time to time. Such new

148 | versions will be similar in spirit to the present version, but may

149 | differ in detail to address new problems or concerns.

150 |

151 | Each version is given a distinguishing version number. If the

152 | Library as you received it specifies that a certain numbered version

153 | of the GNU Lesser General Public License "or any later version"

154 | applies to it, you have the option of following the terms and

155 | conditions either of that published version or of any later version

156 | published by the Free Software Foundation. If the Library as you

157 | received it does not specify a version number of the GNU Lesser

158 | General Public License, you may choose any version of the GNU Lesser

159 | General Public License ever published by the Free Software Foundation.

160 |

161 | If the Library as you received it specifies that a proxy can decide

162 | whether future versions of the GNU Lesser General Public License shall

163 | apply, that proxy's public statement of acceptance of any version is

164 | permanent authorization for you to choose that version for the

165 | Library.

166 |

--------------------------------------------------------------------------------

/FindEigen3.cmake:

--------------------------------------------------------------------------------

1 | # Make sure that we can find Eigen

2 | # This creates the following variables:

3 | # - EIGEN_INCLUDE_DIRS where to find the library

4 | # - EIGEN_FOUND TRUE if found, FALSE otherwise

5 |

6 | find_path(

7 | EIGEN_INCLUDE_DIRS Eigen

8 | /usr/local/eigen3

9 | /usr/local/include/eigen3

10 | /usr/include/eigen3

11 | )

12 |

13 | # Check found Eigen

14 | if(EIGEN_INCLUDE_DIRS)

15 | set(EIGEN_FOUND TRUE)

16 | message(STATUS "Found Eigen: ${EIGEN_INCLUDE_DIRS}")

17 | else(EIGEN_INCLUDE_DIRS)

18 | if(EIGEN_FIND_REQUIRED)

19 | set(EIGEN_FOUND FALSE)

20 | message(FATAL_ERROR "Eigen not found")

21 | endif(EIGEN_FIND_REQUIRED)

22 | endif(EIGEN_INCLUDE_DIRS)

23 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | Libcluster

2 | ==========

3 |

4 | [](https://travis-ci.org/dsteinberg/libcluster)

5 |

6 | ***Author***:

7 | [Daniel Steinberg](http://dsteinberg.github.io/)

8 |

9 | ***License***:

10 | LGPL v3 (See COPYING and COPYING.LESSER)

11 |

12 | ***Overview***:

13 |

14 | This library implements the following algorithms with variational Bayes

15 | learning procedures and efficient cluster splitting heuristics:

16 |

17 | * The Variational Dirichlet Process (VDP) [1, 2, 6]

18 | * The Bayesian Gaussian Mixture Model [3 - 6]

19 | * The Grouped Mixtures Clustering (GMC) model [6]

20 | * The Symmetric Grouped Mixtures Clustering (S-GMC) model [4 - 6]. This is

21 | referred to as Gaussian latent Dirichlet allocation (G-LDA) in [4, 5].

22 | * Simultaneous Clustering Model (SCM) for Multinomial Documents, and Gaussian

23 | Observations [5, 6].

24 | * Multiple-source Clustering Model (MCM) for clustering two observations,

25 | one of an image/document, and multiple of segments/words

26 | simultaneously [4 - 6].

27 | * And more clustering algorithms based on diagonal Gaussian, and

28 | Exponential distributions.

29 |

30 | And also,

31 | * Various functions for evaluating means, standard deviations, covariance,

32 | primary Eigenvalues etc of data.

33 | * Extensible template interfaces for creating new algorithms within the

34 | variational Bayes framework.

35 |

36 |

37 |

38 |  39 |

39 |  40 |

41 |

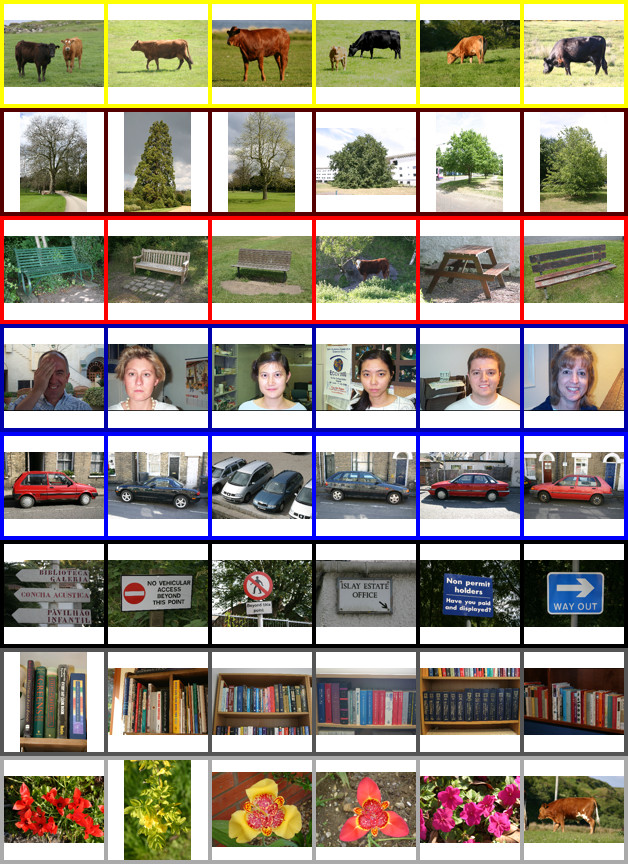

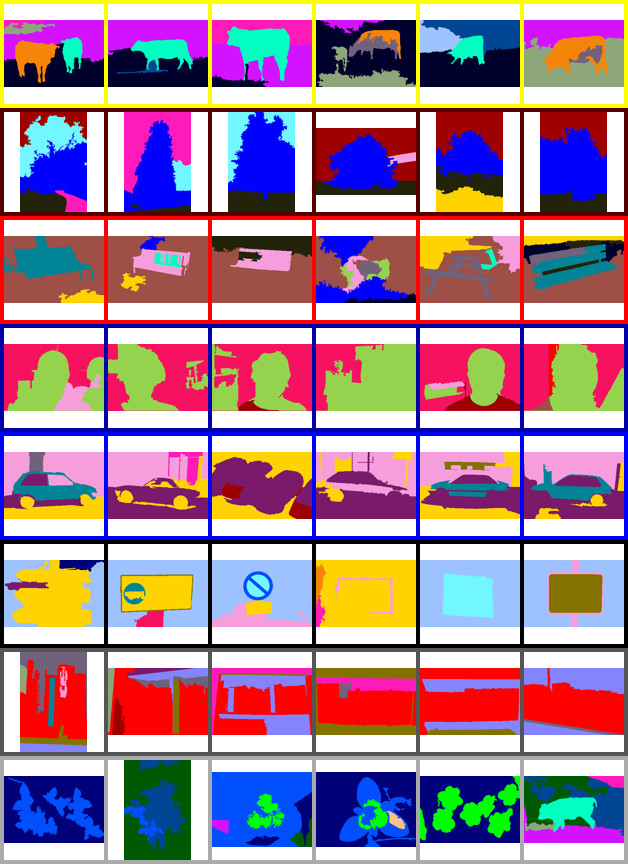

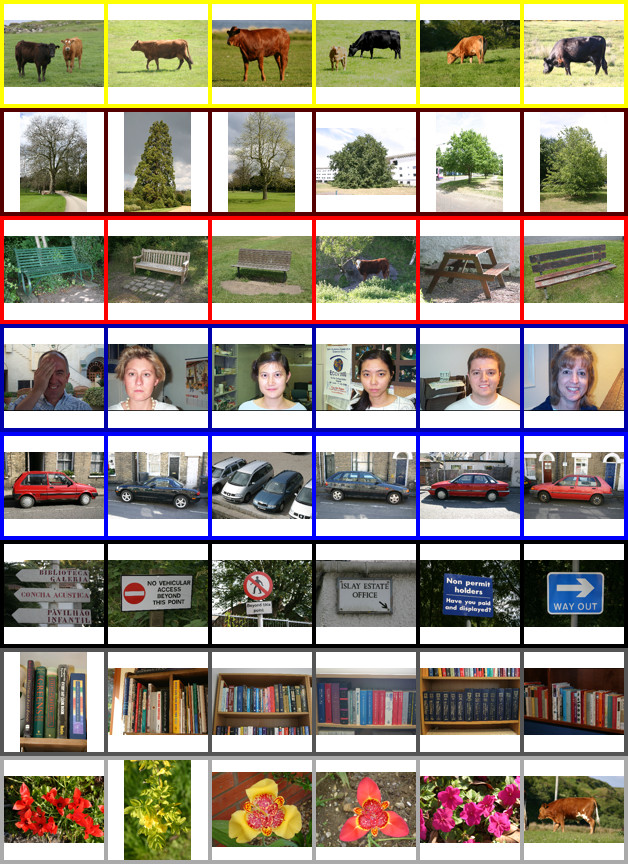

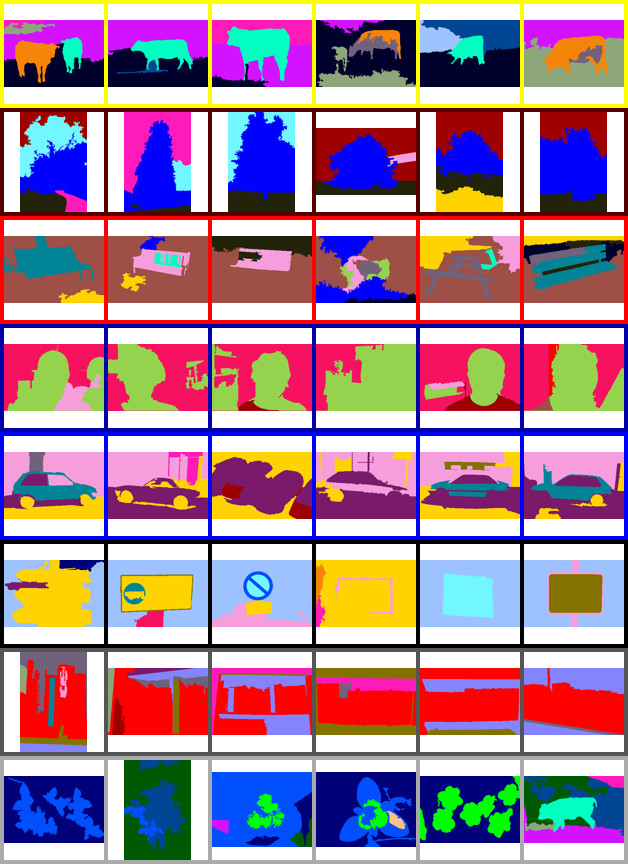

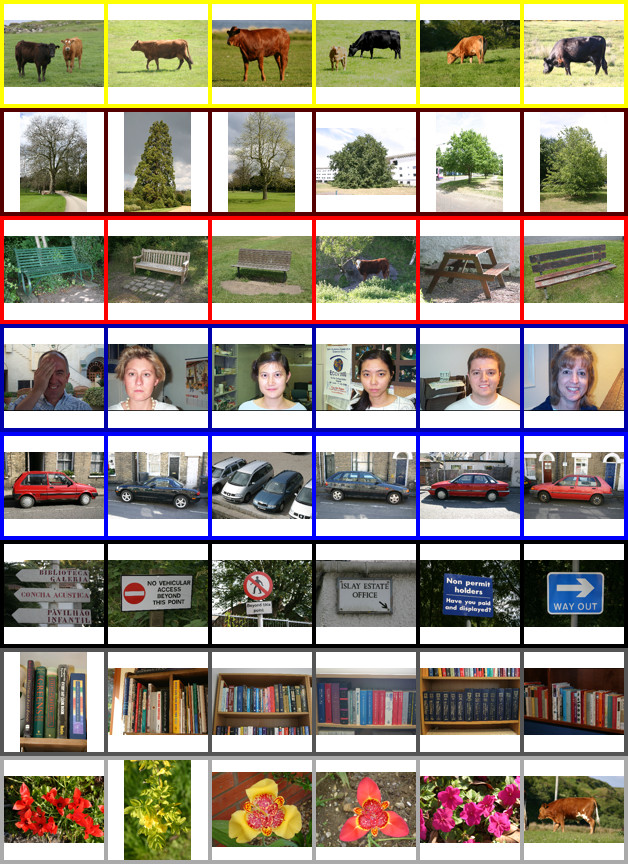

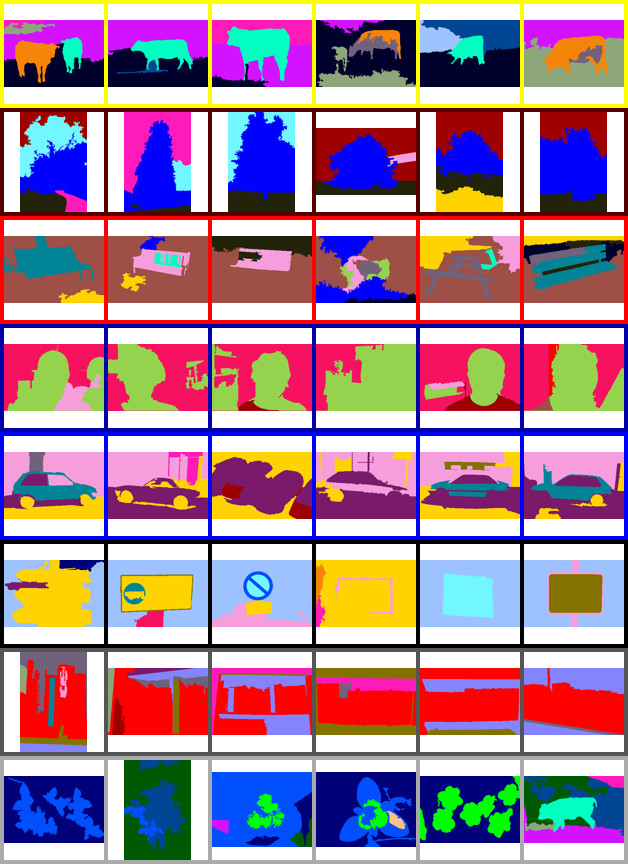

42 | An example of using the MCM to simultaneously cluster images and objects within

43 | images for unsupervised scene understanding. See [4 - 6] for more information.

44 |

45 | * * *

46 |

47 |

48 | TABLE OF CONTENTS

49 | -----------------

50 |

51 | * [Dependencies](#dependencies)

52 |

53 | * [Install Instructions](#install-instructions)

54 |

55 | * [C++ Interface](#c-interface)

56 |

57 | * [Python Interface](#python-interface)

58 |

59 | * [General Usability Tips](#general-usability-tips)

60 |

61 | * [References and Citing](#references-and-citing)

62 |

63 |

64 | * * *

65 |

66 |

67 | DEPENDENCIES

68 | ------------

69 |

70 | - Eigen version 3.0 or greater

71 | - Boost version 1.4.x or greater and devel packages (special math functions)

72 | - OpenMP, comes default with most compilers (may need a special version of

73 | [LLVM](http://openmp.llvm.org/)).

74 | - CMake

75 |

76 | For the python interface:

77 |

78 | - Python 2 or 3

79 | - Boost python and boost python devel packages (make sure you have version 2

80 | or 3 for the relevant version of python)

81 | - Numpy (tested with v1.7)

82 |

83 |

84 | INSTALL INSTRUCTIONS

85 | --------------------

86 |

87 | *For Linux and OS X -- I've never tried to build on Windows.*

88 |

89 | To build libcluster:

90 |

91 | 1. Make sure you have CMake installed, and Eigen and Boost preferably in the

92 | usual locations:

93 |

94 | /usr/local/include/eigen3/ or /usr/include/eigen3

95 | /usr/local/include/boost or /usr/include/boost

96 |

97 | 2. Make a build directory where you checked out the source if it does not

98 | already exist, then change into this directory,

99 |

100 | cd {where you checked out the source}

101 | mkdir build

102 | cd build

103 |

104 | 3. To build libcluster, run the following from the build directory:

105 |

106 | cmake ..

107 | make

108 | sudo make install

109 |

110 | This installs:

111 |

112 | libcluster.h /usr/local/include

113 | distributions.h /usr/local/include

114 | probutils.h /usr/local/include

115 | libcluster.* /usr/local/lib (* this is either .dylib or .so)

116 |

117 | 4. Use the doxyfile in {where you checked out the source}/doc to make the

118 | documentation with doxygen:

119 |

120 | doxygen Doxyfile

121 |

122 | **NOTE**: There are few options you can change using ccmake (or the cmake gui),

123 | these include:

124 |

125 | - `BUILD_EXHAUST_SPLIT` (toggle `ON` or `OFF`, default `OFF`) This uses the

126 | exhaustive cluster split heuristic [1, 2] instead of the greedy heuristic [4,

127 | 5] for all algorithms but the SCM and MCM. The greedy heuristic is MUCH

128 | faster, but does give different results. I have yet to determine whether it

129 | is actually worse than the exhaustive method (if it is, it is not by much).

130 | The SCM and MCM only use the greedy split heuristic at this stage.

131 |

132 | - `BUILD_PYTHON_INTERFACE` (toggle `ON` or `OFF`, default `OFF`) Build the

133 | python interface. This requires boost python, and also uses row-major storage

134 | to be compatible with python.

135 |

136 | - `BUILD_USE_PYTHON3` (toggle `ON` or `OFF`, default `ON`) Use python 3 or 2 to

137 | build the python interface. Make sure you have the relevant python and boost

138 | python libraries installed!

139 |

140 | - `CMAKE_INSTALL_PREFIX` (default `/usr/local`) The default prefix for

141 | installing the library and binaries.

142 |

143 | - `EIGEN_INCLUDE_DIRS` (default `/usr/include/eigen3`) Where to look for the

144 | Eigen matrix library.

145 |

146 | **NOTE**: On linux you may have to run `sudo ldconfig` before the system can

147 | find libcluster.so (or just reboot).

148 |

149 | **NOTE**: On Red-Hat based systems, `/usr/local/lib` is not checked unless

150 | added to `/etc/ld.so.conf`! This may lead to "cannot find libcluster.so"

151 | errors.

152 |

153 |

154 | C++ INTERFACE

155 | -------------

156 |

157 | All of the interfaces to this library are documented in `include/libcluster.h`.

158 | There are far too many algorithms to go into here, and I *strongly* recommend

159 | looking at the `test/` directory for example usage, specifically,

160 |

161 | * `cluster_test.cpp` for the group mixture models (GMC etc)

162 | * `scluster_test.cpp` for the SCM

163 | * `mcluster_test.cpp` for the MCM

164 |

165 | Here is an example for regular mixture models, such as the BGMM, which simply

166 | clusters some test data and prints the resulting posterior parameters to the

167 | terminal,

168 |

169 | ```C++

170 |

171 | #include "libcluster.h"

172 | #include "distributions.h"

173 | #include "testdata.h"

174 |

175 |

176 | //

177 | // Namespaces

178 | //

179 |

180 | using namespace std;

181 | using namespace Eigen;

182 | using namespace libcluster;

183 | using namespace distributions;

184 |

185 |

186 | //

187 | // Functions

188 | //

189 |

190 | // Main

191 | int main()

192 | {

193 |

194 | // Populate test data from testdata.h

195 | MatrixXd Xcat;

196 | vMatrixXd X;

197 | makeXdata(Xcat, X);

198 |

199 | // Set up the inputs for the BGMM

200 | Dirichlet weights;

201 | vector clusters;

202 | MatrixXd qZ;

203 |

204 | // Learn the BGMM

205 | double F = learnBGMM(Xcat, qZ, weights, clusters, PRIORVAL, true);

206 |

207 | // Print the posterior parameters

208 | cout << endl << "Cluster Weights:" << endl;

209 | cout << weights.Elogweight().exp().transpose() << endl;

210 |

211 | cout << endl << "Cluster means:" << endl;

212 | for (vector::iterator k=clusters.begin(); k < clusters.end(); ++k)

213 | cout << k->getmean() << endl;

214 |

215 | cout << endl << "Cluster covariances:" << endl;

216 | for (vector::iterator k=clusters.begin(); k < clusters.end(); ++k)

217 | cout << k->getcov() << endl << endl;

218 |

219 | return 0;

220 | }

221 |

222 | ```

223 |

224 | Note that `distributions.h` has also been included. In fact, all of the

225 | algorithms in `libcluster.h` are just wrappers over a few key functions in

226 | `cluster.cpp`, `scluster.cpp` and `mcluster.cpp` that can take in *arbitrary*

227 | distributions as inputs, and so more algorithms potentially exist than

228 | enumerated in `libcluster.h`. If you want to create different algorithms, or

229 | define more cluster distributions (like categorical) have a look at inheriting

230 | the `WeightDist` and `ClusterDist` base classes in `distributions.h`. Depending

231 | on the distributions you use, you may also have to come up with a way to

232 | 'split' clusters. Otherwise you can create an algorithm with a random initial

233 | set of clusters like the MCM at the top level, which then variational Bayes

234 | will prune.

235 |

236 | There are also some generally useful functions included in `probutils.h` when

237 | dealing with mixture models (such as the log-sum-exp trick).

238 |

239 |

240 | PYTHON INTERFACE

241 | ----------------

242 |

243 | ### Installation

244 |

245 | Easy, follow the normal build instructions up to step (4) (if you haven't

246 | already), then from the build directory:

247 |

248 | cmake ..

249 | ccmake .

250 |

251 | Make sure `BUILD_PYTHON_INTERFACE` is `ON`

252 |

253 | make

254 | sudo make install

255 |

256 | This installs all the same files as step (4), as well as `libclusterpy.so` to

257 | your python staging directory, so it should be on your python path. I.e. just

258 | run

259 |

260 | ```python

261 | import libclusterpy

262 | ```

263 |

264 | **Trouble Shooting**:

265 |

266 | On Fedora 20/21 I have to append `/usr/local/lib` to the file `/etc/ld.so.conf`

267 | to make python find the compiled shared object.

268 |

269 |

270 | ### Usage

271 |

272 | Import the library as

273 |

274 | ```python

275 | import numpy as np

276 | import libclusterpy as lc

277 | ```

278 |

279 | Then for the mixture models, assuming `X` is a numpy array where `X.shape` is

280 | `(N, D)` -- `N` being the number of samples, and `D` being the dimension of

281 | each sample,

282 |

283 | f, qZ, w, mu, cov = lc.learnBGMM(X)

284 |

285 | where `f` is the final free energy value, `qZ` is a distribution over all of

286 | the cluster labels where `qZ.shape` is `(N, K)` and `K` is the number of

287 | clusters (each row of `qZ` sums to 1). Then `w`, `mu` and `cov` the expected

288 | posterior cluster parameters (see the documentation for details. Alternatively,

289 | tuning the `prior` argument can be used to change the number of clusters found,

290 |

291 | f, qZ, w, mu, cov = lc.learnBGMM(X, prior=0.1)

292 |

293 | This interface is common to all of the simple mixture models (i.e. VDP, BGMM

294 | etc).

295 |

296 | For the group mixture models (GMC, SGMC etc) `X` is a *list* of arrays of size

297 | `(Nj, D)` (indexed by j), one for each group/album, `X = [X_1, X_2, ...]`. The

298 | returned `qZ` and `w` are also lists of arrays, one for each group, e.g.,

299 |

300 | f, qZ, w, mu, cov = lc.learnSGMC(X)

301 |

302 | The SCM again has a similar interface to the above models, but now `X` is a

303 | *list of lists of arrays*, `X = [[X_11, X_12, ...], [X_21, X_22, ...], ...]`.

304 | This specifically for modelling situations where `X` is a matrix of all of the

305 | features of, for example, `N_ij` segments in image `ij` in album `j`.

306 |

307 | f, qY, qZ, wi, wij, mu, cov = lc.learnSCM(X)

308 |

309 | Where `qY` is a list of arrays of top-level/image cluster probabilities, `qZ`

310 | is a list of lists of arrays of bottom-level/segment cluster probabilities.

311 | `wi` are the mixture weights (list of arrays) corresponding to the `qY` labels,

312 | and `wij` are the weights (list of lists of arrays) corresponding the `qZ`

313 | labels. This has two optional prior inputs, and a cluster truncation level

314 | (max number of clusters) for the top-level/image clusters,

315 |

316 | f, qY, qZ, wi, wij, mu, cov = lc.learnSCM(X, trunc=10, dirprior=1,

317 | gausprior=0.1)

318 |

319 | Where `dirprior` refers to the top-level cluster prior, and `gausprior` the

320 | bottom-level.

321 |

322 | Finally, the MCM has a similar interface to the MCM, but with an extra input,

323 | `W` which is of the same format as the `X` in the GMC-style models, i.e. it is

324 | a list of arrays of top-level or image features, `W = [W_1, W_2, ...]`. The

325 | usage is,

326 |

327 | f, qY, qZ, wi, wij, mu_t, mu_k, cov_t, cov_k = lc.learnMCM(W, X)

328 |

329 | Here `mu_t` and `cov_t` are the top-level posterior cluster parameters -- these

330 | are both lists of `T` cluster parameters (`T` being the number of clusters

331 | found. Similarly `mu_k` and `cov_k` are lists of `K` bottom-level posterior

332 | cluster parameters. Like the SCM, this has a number of optional inputs,

333 |

334 |

335 | f, qY, qZ, wi, wij, mu_t, mu_k, cov_t, cov_k = lc.learnMCM(W, X, trunc=10,

336 | gausprior_t=1,

337 | gausprior_k=0.1)

338 |

339 | Where `gausprior_t` refers to the top-level cluster prior, and `gausprior_k`

340 | the bottom-level.

341 |

342 | Look at the `libclusterpy` docstrings for more help on usage, and the

343 | `testapi.py` script in the `python` directory for more usage examples.

344 |

345 | **NOTE** if you get the following message when importing libclusterpy:

346 |

347 | ImportError: /lib64/libboost_python.so.1.54.0: undefined symbol: PyClass_Type

348 |

349 | Make sure you have `boost-python3` installed!

350 |

351 |

352 | GENERAL USABILITY TIPS

353 | ----------------------

354 |

355 | When verbose mode is activated you will get output that looks something like

356 | this:

357 |

358 | Learning MODEL X...

359 | --------<=>

360 | ---<==>

361 | --------x<=>

362 | --------------<====>

363 | ----<*>

364 | ---<>

365 | Finished!

366 | Number of clusters = 4

367 | Free Energy = 41225

368 |

369 | What this means:

370 |

371 | * `-` iteration of Variational Bayes (VBE and VBM step)

372 | * `<` cluster splitting has started (model selection)

373 | * `=` found a valid candidate split

374 | * `>` chosen candidate split and testing for inclusion into model

375 | * `x` clusters have been deleted because they became devoid of observations

376 | * `*` clusters (image/document clusters) that are empty have been removed.

377 |

378 | For best clustering results, I have found the following tips may help:

379 |

380 | 1. If clustering runs REALLY slowly then it may be because of hyper-threading.

381 | OpenMP will by default use as many cores available to it as possible, this

382 | includes virtual hyper-threading cores. Unfortunately this may result in

383 | large slow-downs, so try only allowing these functions to use a number of

384 | threads less than or equal to the number of PHYSICAL cores on your machine.

385 |

386 | 2. Garbage in = garbage out. Make sure your assumptions about the data are

387 | reasonable for the type of cluster distribution you use. For instance, if

388 | your observations do not resemble a mixture of Gaussians in feature space,

389 | then it may not be appropriate to use Gaussian clusters.

390 |

391 | 3. For Gaussian clusters: standardising or whitening your data may help, i.e.

392 |

393 | if X is an NxD matrix of observations you wish to cluster, you may get

394 | better results if you use a standardised version of it, X*,

395 |

396 | X_s = C * ( X - mean(X) ) / std(X)

397 |

398 | where `C` is some constant (optional) and the mean and std are for each

399 | column of X.

400 |

401 | You may obtain even better results by using PCA or ZCA whitening on X

402 | (assuming ZERO MEAN data), using python syntax:

403 |

404 | [U, S, V] = svd(cov(X))

405 | X_w = X.dot(U).dot(diag(1. / sqrt(diag(S)))) # PCA Whitening

406 |

407 | Such that

408 |

409 | cov(X_w) = I_D.

410 |

411 | Also, to get some automatic scaling you can multiply the prior by the

412 | PRINCIPAL eigenvector of `cov(X)` (or `cov(X_s)`, `cov(X_w)`).

413 |

414 | **NOTE**: If you use diagonal covariance Gaussians I STRONGLY recommend PCA

415 | or ZCA whitening your data first, otherwise you may end up with hundreds of

416 | clusters!

417 |

418 | 4. For Exponential clusters: Your observations have to be in the range [0,

419 | inf). The clustering solution may also be sensitive to the prior. I find

420 | usually using a prior value that has the approximate magnitude of your data

421 | or more leads to better convergence.

422 |

423 |

424 | * * *

425 |

426 |

427 | REFERENCES AND CITING

428 | ---------------------

429 |

430 | **[1]** K. Kurihara, M. Welling, and N. Vlassis. Accelerated variational

431 | Dirichlet process mixtures, Advances in Neural Information Processing Systems,

432 | vol. 19, p. 761, 2007.

433 |

434 | **[2]** D. M. Steinberg, A. Friedman, O. Pizarro, and S. B. Williams. A

435 | Bayesian nonparametric approach to clustering data from underwater robotic

436 | surveys. In International Symposium on Robotics Research, Flagstaff, AZ, Aug.

437 | 2011.

438 |

439 | **[3]** C. M. Bishop. Pattern Recognition and Machine Learning. Cambridge, UK:

440 | Springer Science+Business Media, 2006.

441 |

442 | **[4]** D. M. Steinberg, O. Pizarro, S. B. Williams. Synergistic Clustering of

443 | Image and Segment Descriptors for Unsupervised Scene Understanding, In

444 | International Conference on Computer Vision (ICCV). IEEE, Sydney, NSW, 2013.

445 |

446 | **[5]** D. M. Steinberg, O. Pizarro, S. B. Williams. Hierarchical Bayesian

447 | Models for Unsupervised Scene Understanding. Journal of Computer Vision and

448 | Image Understanding (CVIU). Elsevier, 2014.

449 |

450 | **[6]** D. M. Steinberg, An Unsupervised Approach to Modelling Visual Data, PhD

451 | Thesis, 2013.

452 |

453 | Please consider citing the following if you use this code:

454 |

455 | * VDP: [2, 4, 6]

456 | * BGMM: [5, 6]

457 | * GMC: [6]

458 | * SGMC/GLDA: [4, 5, 6]

459 | * SCM: [5, 6]

460 | * MCM: [4, 5, 6]

461 |

462 | You can find these on my [homepage](http://dsteinberg.github.io/).

463 | Thank you!

464 |

--------------------------------------------------------------------------------

/include/distributions.h:

--------------------------------------------------------------------------------

1 | /*

2 | * libcluster -- A collection of hierarchical Bayesian clustering algorithms.

3 | * Copyright (C) 2013 Daniel M. Steinberg (daniel.m.steinberg@gmail.com)

4 | *

5 | * This file is part of libcluster.

6 | *

7 | * libcluster is free software: you can redistribute it and/or modify it under

8 | * the terms of the GNU Lesser General Public License as published by the Free

9 | * Software Foundation, either version 3 of the License, or (at your option)

10 | * any later version.

11 | *

12 | * libcluster is distributed in the hope that it will be useful, but WITHOUT

13 | * ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or

14 | * FITNESS FOR A PARTICULAR PURPOSE. See the GNU Lesser General Public License

15 | * for more details.

16 | *

17 | * You should have received a copy of the GNU Lesser General Public License

18 | * along with libcluster. If not, see .

19 | */

20 |

21 | #ifndef DISTRIBUTIONS_H

22 | #define DISTRIBUTIONS_H

23 |

24 | #include

25 | #include

26 | #include

27 |

28 | //TODO: make all protected variables private and accessed by protected functions

29 | // to improve encapsulation??

30 |

31 | /*! Namespace that implements weight and cluster distributions. */

32 | namespace distributions

33 | {

34 |

35 | //

36 | // Namespace 'symbolic' constants

37 | //

38 |

39 | const double BETAPRIOR = 1.0; //!< beta prior value (Gaussians)

40 | const double NUPRIOR = 1.0; //!< nu prior value (diagonal Gaussians)

41 | const double ALPHA1PRIOR = 1.0; //!< alpha1 prior value (All weight dists)

42 | const double ALPHA2PRIOR = 1.0; //!< alpha2 prior value (SB & Gdir)

43 | const double APRIOR = 1.0; //!< a prior value (Exponential)

44 |

45 |

46 | //

47 | // Useful Typedefs

48 | //

49 |

50 | typedef Eigen::Array ArrayXb; //!< Boolean Array

51 |

52 |

53 | //

54 | // Weight Parameter Distribution classes

55 | //

56 |

57 | /*! \brief To make a new weight class that will work with the algorithm

58 | * templates, your class must have this as the minimum interface.

59 | */

60 | class WeightDist

61 | {

62 | public:

63 |

64 | // WeightDist(), required inherited constructor template

65 |

66 | /*! \brief Update the distribution.

67 | * \param Nk an array of observations counts.

68 | */

69 | virtual void update (const Eigen::ArrayXd& Nk) = 0;

70 |

71 | /*! \brief Evaluate the expectation of the log label weights in the mixtures.

72 | * \returns An array of likelihoods for the labels given the weights

73 | */

74 | virtual const Eigen::ArrayXd& Elogweight () const = 0;

75 |

76 | /*! \brief Get the number of observations contributing to each weight.

77 | * \returns An array the number of observations contributing to each weight.

78 | */

79 | const Eigen::ArrayXd& getNk () const { return this->Nk; }

80 |

81 | /*! \brief Get the free energy contribution of these weights.

82 | * \returns the free energy contribution of these weights

83 | */

84 | virtual double fenergy () const = 0;

85 |

86 | /*! \brief virtual destructor.

87 | */

88 | virtual ~WeightDist() {}

89 |

90 | protected:

91 |

92 | /*! \brief Default constructor to set an empty observation array.

93 | */

94 | WeightDist () : Nk(Eigen::ArrayXd::Zero(1)) {}

95 |

96 | Eigen::ArrayXd Nk; //!< Number of observations making up the weights.

97 | };

98 |

99 |

100 | /*!

101 | * \brief Stick-Breaking (Dirichlet Process) parameter distribution.

102 | */

103 | class StickBreak : public WeightDist

104 | {

105 | public:

106 |

107 | StickBreak ();

108 |

109 | StickBreak (const double concentration);

110 |

111 | void update (const Eigen::ArrayXd& Nk);

112 |

113 | const Eigen::ArrayXd& Elogweight () const { return this->E_logpi; }

114 |

115 | double fenergy () const;

116 |

117 | virtual ~StickBreak () {}

118 |

119 | protected:

120 |

121 | // Prior hyperparameters, expectations etc

122 | double alpha1_p; //!< First prior param \f$ Beta(\alpha_1,\alpha_2) \f$

123 | double alpha2_p; //!< Second prior param \f$ Beta(\alpha_1,\alpha_2) \f$

124 | double F_p; //!< Free energy component dependent on priors only

125 |

126 | // Posterior hyperparameters and expectations

127 | Eigen::ArrayXd alpha1; //!< First posterior param corresp to \f$ \alpha_1 \f$

128 | Eigen::ArrayXd alpha2; //!< Second posterior param corresp to \f$ \alpha_2 \f$

129 | Eigen::ArrayXd E_logv; //!< Stick breaking log expectation

130 | Eigen::ArrayXd E_lognv; //!< Inverse stick breaking log expectation

131 | Eigen::ArrayXd E_logpi; //!< Expected log weights

132 |

133 | // Order tracker

134 | std::vector< std::pair > ordvec; //!< For order specific updates

135 |

136 | private:

137 |

138 | // Do some prior free energy calcs

139 | void priorfcalc (void);

140 | };

141 |

142 |

143 | /*!

144 | * \brief Generalised Dirichlet parameter distribution (truncated stick

145 | * breaking).

146 | */

147 | class GDirichlet : public StickBreak

148 | {

149 | public:

150 |

151 | void update (const Eigen::ArrayXd& Nk);

152 |

153 | double fenergy () const;

154 |

155 | virtual ~GDirichlet () {}

156 |

157 | };

158 |

159 |

160 | /*!

161 | * \brief Dirichlet parameter distribution.

162 | */

163 | class Dirichlet : public WeightDist

164 | {

165 | public:

166 |

167 | Dirichlet ();

168 |

169 | Dirichlet (const double alpha);

170 |

171 | void update (const Eigen::ArrayXd& Nk);

172 |

173 | const Eigen::ArrayXd& Elogweight () const { return this->E_logpi; }

174 |

175 | double fenergy () const;

176 |

177 | virtual ~Dirichlet () {}

178 |

179 | private:

180 |

181 | // Prior hyperparameters, expectations etc

182 | double alpha_p; // Symmetric Dirichlet prior \f$ Dir(\alpha) \f$

183 | double F_p; // Free energy component dependent on priors only

184 |

185 | // Posterior hyperparameters and expectations

186 | Eigen::ArrayXd alpha; // Posterior param corresp to \f$ \alpha \f$

187 | Eigen::ArrayXd E_logpi; // Expected log weights

188 |

189 | };

190 |

191 |

192 | //

193 | // Cluster Parameter Distribution classes

194 | //

195 |

196 | /*! \brief To make a new cluster distribution class that will work with the

197 | * algorithm templates your class must have this as the minimum

198 | * interface.

199 | */

200 | class ClusterDist

201 | {

202 | public:

203 |

204 | /*! \brief Add observations to the cluster without updating the parameters

205 | * (i.e. add to the sufficient statistics)

206 | * \param qZk the observation indicators for this cluster, corresponding to

207 | * X.

208 | * \param X the observations [obs x dims], to add to this cluster according

209 | * to qZk.

210 | */

211 | virtual void addobs (

212 | const Eigen::VectorXd& qZk,

213 | const Eigen::MatrixXd& X

214 | ) = 0;

215 |

216 | /*! \brief Update the cluster parameters from the observations added from

217 | * addobs().

218 | */

219 | virtual void update () = 0;

220 |

221 | /*! \brief Clear the all parameters and observation accumulations from

222 | * addobs().

223 | */

224 | virtual void clearobs () = 0;

225 |

226 | /*! \brief Evaluate the log marginal likelihood of the observations.

227 | * \param X a matrix of observations, [obs x dims].

228 | * \returns An array of likelihoods for the observations given this dist.

229 | */

230 | virtual Eigen::VectorXd Eloglike (const Eigen::MatrixXd& X) const = 0;

231 |

232 | /*! \brief Get the free energy contribution of these cluster parameters.

233 | * \returns the free energy contribution of these cluster parameters.

234 | */

235 | virtual double fenergy () const = 0;

236 |

237 | /*! \brief Propose a split for the observations given these cluster parameters

238 | * \param X a matrix of observations, [obs x dims], to split.

239 | * \returns a binary array of split assignments.

240 | * \note this needs to consistently split observations between multiple

241 | * subsequent calls, but can change after each update().

242 | */

243 | virtual ArrayXb splitobs (const Eigen::MatrixXd& X) const = 0;

244 |

245 | /*! \brief Return the number of observations belonging to this cluster.

246 | * \returns the number of observations belonging to this cluster.

247 | */

248 | double getN () const { return this->N; }

249 |

250 | /*! \brief Return the cluster prior value.

251 | * \returns the cluster prior value.

252 | */

253 | double getprior () const { return this->prior; }

254 |

255 | /*! \brief virtual destructor.

256 | */

257 | virtual ~ClusterDist() {}

258 |

259 | protected:

260 |

261 | /*! \brief Constructor that must be called to set the prior and cluster

262 | * dimensionality.

263 | * \param prior the cluster prior.

264 | * \param D the dimensionality of this cluster.

265 | */

266 | ClusterDist (const double prior, const unsigned int D)

267 | : D(D), prior(prior), N(0) {}

268 |

269 | unsigned int D; //!< Dimensionality

270 | double prior; //!< Cluster prior

271 | double N; //!< Number of observations making up this cluster.

272 |

273 | };

274 |

275 |

276 | /*!

277 | * \brief Gaussian-Wishart parameter distribution for full Gaussian clusters.

278 | */

279 | class GaussWish : public ClusterDist

280 | {

281 | public:

282 |

283 | /*! \brief Make a Gaussian-Wishart prior.

284 | *

285 | * \param clustwidth makes the covariance prior \f$ clustwidth \times D

286 | * \times \mathbf{I}_D \f$.

287 | * \param D is the dimensionality of the data

288 | */

289 | GaussWish (const double clustwidth, const unsigned int D);

290 |

291 | void addobs (const Eigen::VectorXd& qZk, const Eigen::MatrixXd& X);

292 |

293 | void update ();

294 |

295 | void clearobs ();

296 |

297 | Eigen::VectorXd Eloglike (const Eigen::MatrixXd& X) const;

298 |

299 | ArrayXb splitobs (const Eigen::MatrixXd& X) const;

300 |

301 | double fenergy () const;

302 |

303 | /*! \brief Get the estimated cluster mean.

304 | * \returns the expected cluster mean.

305 | */

306 | const Eigen::RowVectorXd& getmean () const { return this->m; }

307 |

308 | /*! \brief Get the estimated cluster covariance.

309 | * \returns the expected cluster covariance.

310 | */

311 | Eigen::MatrixXd getcov () const { return this->iW/this->nu; }

312 |

313 | virtual ~GaussWish () {}

314 |

315 | private:

316 |

317 | // Prior hyperparameters etc

318 | double nu_p;

319 | double beta_p;

320 | Eigen::RowVectorXd m_p;

321 | Eigen::MatrixXd iW_p;

322 | double logdW_p;

323 | double F_p;

324 |

325 | // Posterior hyperparameters

326 | double nu; // nu, Lambda ~ Wishart(W, nu)

327 | double beta; // beta, mu ~ Normal(m, (beta*Lambda)^-1)

328 | Eigen::RowVectorXd m; // m, mu ~ Normal(m, (beta*Lambda)^-1)

329 | Eigen::MatrixXd iW; // Inverse W, Lambda ~ Wishart(W, nu)

330 | double logdW; // log(det(W))

331 |

332 | // Sufficient Statistics

333 | double N_s;

334 | Eigen::RowVectorXd x_s;

335 | Eigen::MatrixXd xx_s;

336 |

337 | };

338 |

339 |

340 | /*!

341 | * \brief Normal-Gamma parameter distribution for diagonal Gaussian clusters.

342 | */

343 | class NormGamma : public ClusterDist

344 | {

345 | public:

346 |

347 | /*! \brief Make a Normal-Gamma prior.

348 | *

349 | * \param clustwidth makes the covariance prior \f$ clustwidth \times

350 | * \mathbf{I}_D \f$.

351 | * \param D is the dimensionality of the data

352 | */

353 | NormGamma (const double clustwidth, const unsigned int D);

354 |

355 | void addobs (const Eigen::VectorXd& qZk, const Eigen::MatrixXd& X);

356 |

357 | void update ();

358 |

359 | void clearobs ();

360 |

361 | Eigen::VectorXd Eloglike (const Eigen::MatrixXd& X) const;

362 |

363 | ArrayXb splitobs (const Eigen::MatrixXd& X) const;

364 |

365 | double fenergy () const;

366 |

367 | /*! \brief Get the estimated cluster mean.

368 | * \returns the expected cluster mean.

369 | */

370 | const Eigen::RowVectorXd& getmean () const { return this->m; }

371 |

372 | /*! \brief Get the estimated cluster covariance.

373 | * \returns the expected cluster covariance (just the diagonal elements).

374 | */

375 | Eigen::RowVectorXd getcov () const { return this->L*this->nu; }

376 |

377 | virtual ~NormGamma () {}

378 |

379 | private:

380 |

381 | // Prior hyperparameters etc

382 | double nu_p;

383 | double beta_p;

384 | Eigen::RowVectorXd m_p;

385 | Eigen::RowVectorXd L_p;

386 | double logL_p;

387 |

388 | // Posterior hyperparameters

389 | double nu;

390 | double beta;

391 | Eigen::RowVectorXd m;

392 | Eigen::RowVectorXd L;

393 | double logL;

394 |

395 | // Sufficient Statistics

396 | double N_s;

397 | Eigen::RowVectorXd x_s;

398 | Eigen::RowVectorXd xx_s;

399 |

400 | };

401 |

402 |

403 | /*!

404 | * \brief Exponential-Gamma parameter distribution for Exponential clusters.

405 | */

406 | class ExpGamma : public ClusterDist

407 | {

408 | public:

409 |

410 | /*! \brief Make a Gamma prior.

411 | *

412 | * \param obsmag is the prior value for b in Gamma(a, b), which works well

413 | * when it is approximately the magnitude of the observation

414 | * dimensions, x_djn.

415 | * \param D is the dimensionality of the data

416 | */

417 | ExpGamma (const double obsmag, const unsigned int D);

418 |

419 | void addobs (const Eigen::VectorXd& qZk, const Eigen::MatrixXd& X);

420 |

421 | void update ();

422 |

423 | void clearobs ();

424 |

425 | Eigen::VectorXd Eloglike (const Eigen::MatrixXd& X) const;

426 |

427 | ArrayXb splitobs (const Eigen::MatrixXd& X) const;

428 |

429 | double fenergy () const;

430 |

431 | /*! \brief Get the estimated cluster rate parameter, i.e. Exp(E[lambda]),

432 | * where lambda is the rate parameter.

433 | * \returns the expected cluster rate parameter.

434 | */

435 | Eigen::RowVectorXd getrate () { return this->a*this->ib; }

436 |

437 | virtual ~ExpGamma () {}

438 |

439 | private:

440 |

441 | // Prior hyperparameters

442 | double a_p;

443 | double b_p;

444 |

445 | // Posterior hyperparameters etc

446 | double a;

447 | Eigen::RowVectorXd ib; // inverse b

448 | double logb;

449 |

450 | // Sufficient Statistics

451 | double N_s;

452 | Eigen::RowVectorXd x_s;

453 |

454 | };

455 |

456 |

457 | }

458 |

459 | #endif // DISTRIBUTIONS_H

460 |

--------------------------------------------------------------------------------

/include/probutils.h:

--------------------------------------------------------------------------------

1 | /*

2 | * libcluster -- A collection of hierarchical Bayesian clustering algorithms.

3 | * Copyright (C) 2013 Daniel M. Steinberg (daniel.m.steinberg@gmail.com)

4 | *

5 | * This file is part of libcluster.

6 | *

7 | * libcluster is free software: you can redistribute it and/or modify it under

8 | * the terms of the GNU Lesser General Public License as published by the Free

9 | * Software Foundation, either version 3 of the License, or (at your option)

10 | * any later version.

11 | *

12 | * libcluster is distributed in the hope that it will be useful, but WITHOUT

13 | * ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or

14 | * FITNESS FOR A PARTICULAR PURPOSE. See the GNU Lesser General Public License

15 | * for more details.

16 | *

17 | * You should have received a copy of the GNU Lesser General Public License

18 | * along with libcluster. If not, see .

19 | */

20 |

21 | #ifndef PROBUTILS_H

22 | #define PROBUTILS_H

23 |

24 | #include

25 | #include

26 | #include

27 |

28 |

29 | //

30 | // Namespaces

31 | //

32 |

33 | /*! \brief Namespace for various linear algebra tools useful for dealing with

34 | * Gaussians and log-probability expressions.

35 | *

36 | * \author Daniel Steinberg

37 | * Australian Centre for Field Robotics

38 | * The University of Sydney

39 | *

40 | * \date 15/02/2011

41 | */

42 | namespace probutils

43 | {

44 |

45 |

46 | //

47 | // Useful Functions

48 | //

49 |

50 | /*! \brief Calculate the column means of a matrix.

51 | *

52 | * \param X an NxD matrix.

53 | * \returns a 1xD row vector of the means of each column of X.

54 | */

55 | Eigen::RowVectorXd mean (const Eigen::MatrixXd& X);

56 |

57 |

58 | /*! \brief Calculate the column means of a vector of matrices (one mean for

59 | * all data in the matrices).

60 | *

61 | * \param X a vector of N_jxD matrices for j = 1:J.

62 | * \returns a 1xD row vector of the means of each column of X.

63 | * \throws std::invalid_argument if X has inconsistent D between elements.

64 | */

65 | Eigen::RowVectorXd mean (const std::vector& X);

66 |

67 |

68 | /*! \brief Calculate the column standard deviations of a matrix, uses N - 1.

69 | *

70 | * \param X an NxD matrix.

71 | * \returns a 1xD row vector of the standard deviations of each column of X.

72 | */

73 | Eigen::RowVectorXd stdev (const Eigen::MatrixXd& X);

74 |

75 |

76 | /*! \brief Calculate the covariance of a matrix.

77 | *

78 | * If X is an NxD matrix, then this calculates:

79 | *

80 | * \f[ Cov(X) = \frac{1} {N-1} (X-E[X])^T (X-E[X]) \f]

81 | *

82 | * \param X is a NxD matrix to calculate the covariance of.

83 | * \returns a DxD covariance matrix.

84 | * \throws std::invalid_argument if X is 1xD or less (has one or less

85 | * observations).

86 | */

87 | Eigen::MatrixXd cov (const Eigen::MatrixXd& X);

88 |

89 |

90 | /*! \brief Calculate the covariance of a vector of matrices (one mean for

91 | * all data in the matrices).

92 | *

93 | * This calculates:

94 | *

95 | * \f[ Cov(X) = \frac{1} {\sum_j N_j-1} \sum_j (X_j-E[X])^T (X_j-E[X]) \f]

96 | *

97 | * \param X is a a vector of N_jxD matrices for j = 1:J.

98 | * \returns a DxD covariance matrix.

99 | * \throws std::invalid_argument if any X_j has one or less observations.

100 | * \throws std::invalid_argument if X has inconsistent D between elements.

101 | */

102 | Eigen::MatrixXd cov (const std::vector& X);

103 |

104 |

105 | /*! \brief Calculate the Mahalanobis distance, (x-mu)' * A^-1 * (x-mu), N

106 | * times.

107 | *

108 | * \param X an NxD matrix of samples/obseravtions.

109 | * \param mu a 1XD vector of means.

110 | * \param A a DxD marix of weights, A must be invertable.

111 | * \returns an Nx1 matrix of distances evaluated for each row of X.

112 | * \throws std::invalid_argument If X, mu and A do not have compatible

113 | * dimensionality, or if A is not PSD.

114 | */

115 | Eigen::VectorXd mahaldist (

116 | const Eigen::MatrixXd& X,

117 | const Eigen::RowVectorXd& mu,

118 | const Eigen::MatrixXd& A

119 | );

120 |

121 |

122 | /*! \brief Perform a log(sum(exp(X))) in a numerically stable fashion.

123 | *

124 | * \param X is a NxK matrix. We wish to sum along the rows (sum out K).

125 | * \returns an Nx1 vector where the log(sum(exp(X))) operation has been

126 | * performed along the rows.

127 | */

128 | Eigen::VectorXd logsumexp (const Eigen::MatrixXd& X);

129 |

130 |

131 | /*! \brief The eigen power method. Return the principal eigenvalue and

132 | * eigenvector.

133 | *

134 | * \param A is the square DxD matrix to decompose.

135 | * \param eigvec is the Dx1 principal eigenvector (mutable)

136 | * \returns the principal eigenvalue.

137 | * \throws std::invalid_argument if the matrix A is not square

138 | *

139 | */

140 | double eigpower (const Eigen::MatrixXd& A, Eigen::VectorXd& eigvec);

141 |

142 |

143 | /*! \brief Get the log of the determinant of a PSD matrix.

144 | *

145 | * \param A a DxD positive semi-definite matrix.

146 | * \returns log(det(A))

147 | * \throws std::invalid_argument if the matrix A is not square or if it is

148 | * not positive semidefinite.

149 | */

150 | double logdet (const Eigen::MatrixXd& A);

151 |

152 |

153 | /*! \brief Calculate digamma(X) for each element of X.

154 | *

155 | * \param X an NxM matrix

156 | * \returns an NxM matrix for which digamma(X) has been calculated for each

157 | * element

158 | */

159 | Eigen::MatrixXd mxdigamma (const Eigen::MatrixXd& X);

160 |

161 |

162 | /*! \brief Calculate log(gamma(X)) for each element of X.

163 | *

164 | * \param X an NxM matrix

165 | * \returns an NxM matrix for which log(gamma(X)) has been calculated for

166 | * each element

167 | */

168 | Eigen::MatrixXd mxlgamma (const Eigen::MatrixXd& X);

169 |

170 | }

171 |

172 | #endif // PROBUTILS_H

173 |

--------------------------------------------------------------------------------

/python/CMakeLists.txt:

--------------------------------------------------------------------------------

1 | if(BUILD_PYTHON_INTERFACE)

2 |

3 | message(STATUS "Will build the python interface")

4 | if(BUILD_USE_PYTHON3)

5 | set(PYCMD "python3")

6 | message(STATUS "Will use python 3")

7 | else(BUILD_USE_PYTHON3)

8 | set(PYCMD "python2")

9 | message(STATUS "Will use python 2")

10 | endif(BUILD_USE_PYTHON3)

11 |

12 | # Python needs row major matrices (for convenience)

13 | set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -DEIGEN_DEFAULT_TO_ROW_MAJOR")

14 |

15 |

16 | #--------------------------------#

17 | # Includes #

18 | #--------------------------------#

19 |

20 | if(BUILD_USE_PYTHON3)

21 | find_package(Boost COMPONENTS python3 REQUIRED)

22 | else(BUILD_USE_PYTHON3)

23 | find_package(Boost COMPONENTS python REQUIRED)

24 | endif(BUILD_USE_PYTHON3)

25 |

26 | include(${PYTHON_SOURCE_DIR}/FindNumpy.cmake REQUIRED)

27 | include_directories(${NUMPY_INCLUDE_DIR})

28 | find_package(PythonLibs REQUIRED)

29 | include_directories(${PYTHON_INCLUDE_DIRS})

30 |

31 |

32 | #--------------------------------#

33 | # Library Build Instructions #

34 | #--------------------------------#

35 |

36 | add_library(${PROJECT_NAME}py SHARED

37 | ${PYTHON_SOURCE_DIR}/libclusterpy.h

38 | ${PYTHON_SOURCE_DIR}/libclusterpy.cpp

39 | )

40 |

41 | if(BUILD_USE_PYTHON3)

42 | set(BOOST_PYTHON boost_python3)

43 | else(BUILD_USE_PYTHON3)

44 | set(BOOST_PYTHON boost_python)

45 | endif(BUILD_USE_PYTHON3)

46 |

47 | target_link_libraries(${PROJECT_NAME}py

48 | ${BOOST_PYTHON}

49 | ${PYTHON_LIBRARIES}

50 | ${Boost_LIBRARIES}

51 | ${PROJECT_NAME}

52 | )

53 |

54 |

55 | #--------------------------------#

56 | # Install Instructions #

57 | #--------------------------------#

58 |

59 | # Get python path

60 | execute_process(COMMAND ${PYCMD} -c

61 | "from distutils.sysconfig import get_python_lib; print(get_python_lib())"

62 | OUTPUT_VARIABLE PYTHON_SITE_PACKAGES OUTPUT_STRIP_TRAILING_WHITESPACE

63 | )

64 |

65 | # Install target

66 | install(TARGETS ${PROJECT_NAME}py DESTINATION ${PYTHON_SITE_PACKAGES})

67 |

68 | endif(BUILD_PYTHON_INTERFACE)

69 |

--------------------------------------------------------------------------------

/python/FindNumpy.cmake:

--------------------------------------------------------------------------------

1 | # - Find numpy

2 | # Find the native numpy includes

3 | # This module defines

4 | # NUMPY_INCLUDE_DIR, where to find numpy/arrayobject.h, etc.

5 | # NUMPY_FOUND, If false, do not try to use numpy headers.

6 |

7 | # This is (modified) from the avogadro project, http://avogadro.cc (GPL)

8 |

9 | if (NUMPY_INCLUDE_DIR)

10 | # in cache already

11 | set (NUMPY_FIND_QUIETLY TRUE)

12 | endif (NUMPY_INCLUDE_DIR)

13 |

14 | EXEC_PROGRAM ("${PYCMD}"

15 | ARGS "-c 'import numpy; print(numpy.get_include())'"

16 | OUTPUT_VARIABLE NUMPY_INCLUDE_DIR)

17 |

18 |

19 | if (NUMPY_INCLUDE_DIR MATCHES "Traceback")

20 | # Did not successfully include numpy

21 | set(NUMPY_FOUND FALSE)

22 | else (NUMPY_INCLUDE_DIR MATCHES "Traceback")

23 | # successful

24 | set (NUMPY_FOUND TRUE)

25 | set (NUMPY_INCLUDE_DIR ${NUMPY_INCLUDE_DIR} CACHE STRING "Numpy include path")

26 | endif (NUMPY_INCLUDE_DIR MATCHES "Traceback")

27 |

28 | if (NUMPY_FOUND)

29 | if (NOT NUMPY_FIND_QUIETLY)

30 | message (STATUS "Numpy headers found")

31 | endif (NOT NUMPY_FIND_QUIETLY)

32 | else (NUMPY_FOUND)

33 | if (NUMPY_FIND_REQUIRED)

34 | message (FATAL_ERROR "Numpy headers missing")

35 | endif (NUMPY_FIND_REQUIRED)

36 | endif (NUMPY_FOUND)

37 |

38 | MARK_AS_ADVANCED (NUMPY_INCLUDE_DIR)

39 |

--------------------------------------------------------------------------------

/python/libclusterpy.cpp:

--------------------------------------------------------------------------------

1 | /*

2 | * libcluster -- A collection of hierarchical Bayesian clustering algorithms.

3 | * Copyright (C) 2013 Daniel M. Steinberg (daniel.m.steinberg@gmail.com)

4 | *

5 | * This file is part of libcluster.

6 | *

7 | * libcluster is free software: you can redistribute it and/or modify it under

8 | * the terms of the GNU Lesser General Public License as published by the Free

9 | * Software Foundation, either version 3 of the License, or (at your option)

10 | * any later version.

11 | *

12 | * libcluster is distributed in the hope that it will be useful, but WITHOUT

13 | * ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or

14 | * FITNESS FOR A PARTICULAR PURPOSE. See the GNU Lesser General Public License

15 | * for more details.

16 | *

17 | * You should have received a copy of the GNU Lesser General Public License

18 | * along with libcluster. If not, see .

19 | */

20 |

21 | #include

22 | #include "distributions.h"

23 | #include "libclusterpy.h"

24 |

25 | //

26 | // Namespaces

27 | //

28 |

29 | using namespace std;

30 | using namespace Eigen;

31 | using namespace distributions;

32 | using namespace libcluster;

33 | using namespace boost::python;

34 | using namespace boost::python::api;

35 |

36 |

37 | //

38 | // Private Functions

39 | //

40 |

41 |

42 | // Convert (memory share) a numpy array to an Eigen MatrixXd

43 | MatrixXd numpy2MatrixXd (const object& X)

44 | {

45 | if (PyArray_Check(X.ptr()) == false)

46 | throw invalid_argument("PyObject is not an array!");

47 |

48 | // Cast PyObject* to PyArrayObject* now we know that it's valid

49 | PyArrayObject* Xptr = (PyArrayObject*) X.ptr();

50 |

51 | if (PyArray_ISFLOAT(Xptr) == false)

52 | throw invalid_argument("PyObject is not an array of floats/doubles!");

53 |

54 | return Map ((double*) PyArray_DATA(Xptr),

55 | PyArray_DIMS(Xptr)[0], PyArray_DIMS(Xptr)[1]);

56 | }

57 |

58 |

59 | // Convert (memory share) a list of numpy arrays to a vector of Eigen MatrixXd

60 | vMatrixXd lnumpy2vMatrixXd (const boost::python::list& X)

61 | {

62 |

63 | vMatrixXd X_;

64 |

65 | for (int i=0; i < len(X); ++i)

66 | X_.push_back(numpy2MatrixXd(X[i]));

67 |

68 | return X_;

69 | }

70 |

71 |

72 | // Convert (memory share) a list of lists of arrays to a vector of vectors of

73 | // matrices

74 | vvMatrixXd llnumpy2vvMatrixXd (const boost::python::list& X)

75 | {

76 |

77 | vvMatrixXd X_;

78 |

79 | for (int i=0; i < len(X); ++i)

80 | {

81 | vMatrixXd Xi_;

82 |

83 | // Compiler complains when try to use lnumpy2vmatrix instead of following

84 | for (int j=0; j < len(X[i]); ++j)

85 | Xi_.push_back(numpy2MatrixXd(X[i][j]));

86 |

87 | X_.push_back(Xi_);

88 | }

89 |

90 | return X_;

91 | }

92 |

93 |

94 | // Get all the means from Gaussian clusters, Kx[1xD] matrices

95 | vMatrixXd getmean (const vector& clusters)

96 | {

97 | vMatrixXd means;

98 |

99 | for (size_t k=0; k < clusters.size(); ++k)

100 | means.push_back(clusters[k].getmean());

101 |

102 | return means;

103 | }

104 |

105 |

106 | // Get all of the covarances of Gaussian clusters, Kx[DxD] matrices

107 | vMatrixXd getcov (const vector& clusters)

108 | {

109 | vMatrixXd covs;

110 |

111 | for (size_t k=0; k < clusters.size(); ++k)

112 | covs.push_back(clusters[k].getcov());

113 |

114 | return covs;

115 | }

116 |

117 |

118 | // Get the expected cluster weights in each of the groups

119 | template

120 | vector getweights (const vector& weights)

121 | {

122 | vector rwgt;

123 | for (size_t k=0; k < weights.size(); ++k)

124 | rwgt.push_back(ArrayXd(weights[k].Elogweight().exp()));

125 |

126 | return rwgt;

127 | }

128 |

129 |

130 | //

131 | // Public Wrappers

132 | //

133 |

134 | // VDP

135 | tuple wrapperVDP (

136 | const object& X,

137 | const float clusterprior,

138 | const int maxclusters,

139 | const bool verbose,

140 | const int nthreads

141 | )

142 | {

143 | // Convert X

144 | const MatrixXd X_ = numpy2MatrixXd(X);

145 |

146 | // Pre-allocate some stuff

147 | MatrixXd qZ;

148 | StickBreak weights;

149 | vector clusters;

150 |

151 | // Do the clustering

152 | double f = learnVDP(X_, qZ, weights, clusters, clusterprior, maxclusters,

153 | verbose, nthreads);

154 |

155 | // Return relevant objects

156 | return make_tuple(f, qZ, ArrayXd(weights.Elogweight().exp()),

157 | getmean(clusters), getcov(clusters));

158 | }

159 |

160 |

161 | // BGMM

162 | tuple wrapperBGMM (

163 | const object& X,

164 | const float clusterprior,

165 | const int maxclusters,

166 | const bool verbose,

167 | const int nthreads

168 | )

169 | {

170 | // Convert X

171 | const MatrixXd X_ = numpy2MatrixXd(X);

172 |

173 | // Pre-allocate some stuff

174 | MatrixXd qZ;

175 | Dirichlet weights;

176 | vector clusters;

177 |

178 | // Do the clustering

179 | double f = learnBGMM(X_, qZ, weights, clusters, clusterprior, maxclusters,

180 | verbose, nthreads);

181 |

182 | // Return relevant objects

183 | return make_tuple(f, qZ, ArrayXd(weights.Elogweight().exp()),

184 | getmean(clusters), getcov(clusters));

185 | }

186 |

187 |

188 | // GMC

189 | tuple wrapperGMC (

190 | const boost::python::list &X,

191 | const float clusterprior,

192 | const int maxclusters,

193 | const bool sparse,

194 | const bool verbose,

195 | const int nthreads

196 | )

197 | {

198 | // Convert X

199 | const vMatrixXd X_ = lnumpy2vMatrixXd(X);

200 |

201 | // Pre-allocate some stuff

202 | vMatrixXd qZ;

203 | vector weights;

204 | vector clusters;

205 |

206 | // Do the clustering

207 | double f = learnGMC(X_, qZ, weights, clusters, clusterprior, maxclusters,

208 | sparse, verbose, nthreads);

209 |

210 | // Return relevant objects

211 | return make_tuple(f, qZ, getweights(weights), getmean(clusters),

212 | getcov(clusters));

213 | }

214 |

215 |

216 | // SGMC

217 | tuple wrapperSGMC (

218 | const boost::python::list &X,

219 | const float clusterprior,

220 | const int maxclusters,

221 | const bool sparse,

222 | const bool verbose,

223 | const int nthreads

224 | )

225 | {

226 | // Convert X

227 | const vMatrixXd X_ = lnumpy2vMatrixXd(X);

228 |

229 | // Pre-allocate some stuff

230 | vMatrixXd qZ;

231 | vector weights;

232 | vector clusters;

233 |

234 | // Do the clustering

235 | double f = learnSGMC(X_, qZ, weights, clusters, clusterprior, maxclusters,

236 | sparse, verbose, nthreads);

237 |

238 | // Return relevant objects

239 | return make_tuple(f, qZ, getweights(weights), getmean(clusters),

240 | getcov(clusters));

241 | }

242 |

243 |

244 | // SCM

245 | tuple wrapperSCM (

246 | const boost::python::list &X,

247 | const float dirprior,

248 | const float gausprior,

249 | const int trunc,

250 | const int maxclusters,

251 | const bool verbose,

252 | const int nthreads

253 | )

254 | {

255 | // Convert X

256 | const vvMatrixXd X_ = llnumpy2vvMatrixXd(X);

257 |

258 | // Pre-allocate some stuff

259 | vMatrixXd qY;

260 | vvMatrixXd qZ;

261 | vector weights_j;

262 | vector weights_t;

263 | vector clusters;

264 |

265 | // Do the clustering

266 | double f = learnSCM(X_, qY, qZ, weights_j, weights_t, clusters, dirprior,

267 | gausprior, trunc, maxclusters, verbose, nthreads);

268 |

269 | // Return relevant objects

270 | return make_tuple(f, qY, qZ, getweights(weights_j),

271 | getweights(weights_t), getmean(clusters), getcov(clusters));

272 | }

273 |

274 |

275 | // MCM

276 | tuple wrapperMCM (

277 | const boost::python::list &W,

278 | const boost::python::list &X,

279 | const float gausprior_t,

280 | const float gausprior_k,

281 | const int trunc,

282 | const int maxclusters,

283 | const bool verbose,

284 | const int nthreads

285 | )

286 | {

287 | // Convert W and X

288 | const vMatrixXd W_ = lnumpy2vMatrixXd(W);

289 | const vvMatrixXd X_ = llnumpy2vvMatrixXd(X);

290 |

291 | // Pre-allocate some stuff

292 | vMatrixXd qY;

293 | vvMatrixXd qZ;

294 | vector weights_j;

295 | vector weights_t;

296 | vector clusters_t;

297 | vector clusters_k;

298 |

299 | // Do the clustering

300 | double f = learnMCM(W_, X_, qY, qZ, weights_j, weights_t, clusters_t,

301 | clusters_k, gausprior_t, gausprior_k, trunc, maxclusters,

302 | verbose, nthreads);

303 |

304 | // Return relevant objects

305 | return make_tuple(f, qY, qZ, getweights(weights_j),

306 | getweights(weights_t), getmean(clusters_t),

307 | getmean(clusters_k), getcov(clusters_t), getcov(clusters_k));

308 | }

309 |

--------------------------------------------------------------------------------

/python/libclusterpy.h:

--------------------------------------------------------------------------------

1 | /*

2 | * libcluster -- A collection of hierarchical Bayesian clustering algorithms.

3 | * Copyright (C) 2013 Daniel M. Steinberg (daniel.m.steinberg@gmail.com)

4 | *

5 | * This file is part of libcluster.

6 | *

7 | * libcluster is free software: you can redistribute it and/or modify it under

8 | * the terms of the GNU Lesser General Public License as published by the Free

9 | * Software Foundation, either version 3 of the License, or (at your option)

10 | * any later version.

11 | *

12 | * libcluster is distributed in the hope that it will be useful, but WITHOUT

13 | * ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or

14 | * FITNESS FOR A PARTICULAR PURPOSE. See the GNU Lesser General Public License

15 | * for more details.

16 | *

17 | * You should have received a copy of the GNU Lesser General Public License

18 | * along with libcluster. If not, see .

19 | */

20 |

21 | #ifndef LIBCLUSTERPY_H

22 | #define LIBCLUSTERPY_H

23 |

24 | #define NPY_NO_DEPRECATED_API NPY_1_7_API_VERSION // Test deprication for v1.7

25 |

26 | #include

27 | #include

28 | #include

29 | #include "libcluster.h"

30 |

31 |

32 | //

33 | // To-python type converters

34 | //

35 |

36 | // Eigen::MatrixXd/ArrayXd (double) to numpy array ([[...]])

37 | template

38 | struct eigen2numpy

39 | {

40 | static PyObject* convert (const M& X)

41 | {

42 | npy_intp arsize[] = {X.rows(), X.cols()};

43 | M* X_ = new M(X); // Copy to persistent array

44 | PyObject* Xp = PyArray_SimpleNewFromData(2, arsize, NPY_DOUBLE, X_->data());

45 |

46 | if (Xp == NULL)

47 | throw std::runtime_error("Cannot convert Eigen matrix to Numpy array!");

48 |

49 | return Xp;

50 | }

51 | };

52 |

53 |

54 | // std::vector to python list [...].

55 | template

56 | struct vector2list

57 | {

58 | static PyObject* convert (const std::vector& X)

59 | {

60 | boost::python::list* Xp = new boost::python::list();

61 |

62 | for (size_t i = 0; i < X.size(); ++i)

63 | Xp->append(X[i]);

64 |

65 | return Xp->ptr();

66 | }

67 | };

68 |

69 |

70 | //

71 | // Wrappers

72 | //

73 |

74 | // VDP

75 | boost::python::tuple wrapperVDP (

76 | const boost::python::api::object& X,

77 | const float clusterprior,

78 | const int maxclusters,

79 | const bool verbose,

80 | const int nthreads

81 | );

82 |

83 |

84 | // BGMM

85 | boost::python::tuple wrapperBGMM (

86 | const boost::python::api::object& X,

87 | const float clusterprior,

88 | const int maxclusters,

89 | const bool verbose,

90 | const int nthreads

91 | );

92 |

93 |

94 | // GMC

95 | boost::python::tuple wrapperGMC (

96 | const boost::python::list& X,

97 | const float clusterprior,