├── doc.go
├── helper
│   ├── test-cover.sh
│   ├── golint.sh
│   ├── README-cn.md
│   └── README-en.md
├── compose.store.yml
├── .gitignore
├── .golangci.yml
├── go.mod
├── .github
│   └── workflows
│       ├── tests.yml
│       └── codeql-analysis.yml
├── utils.go
├── LICENSE
├── script.go
├── client_cover_test.go
├── go.sum
├── batch_cover_test.go
├── README.md
├── batch_test.go
├── client_test.go
├── client.go
└── batch.go

/doc.go:

--------------------------------------------------------------------------------

1 | // Package rockscache is the first Redis cache library to ensure eventual consistency and strong consistency with the DB.

2 | package rockscache

3 |

--------------------------------------------------------------------------------

/helper/test-cover.sh:

--------------------------------------------------------------------------------

1 | set -x

2 | go test -covermode count -coverprofile=coverage.txt || exit 1

3 | curl -s https://codecov.io/bash | bash

4 |

5 | # go tool cover -html=coverage.txt

6 |

--------------------------------------------------------------------------------

/helper/golint.sh:

--------------------------------------------------------------------------------

1 | set -x

2 |

3 | curl -sSfL https://raw.githubusercontent.com/golangci/golangci-lint/master/install.sh | sh -s -- -b $(go env GOPATH)/bin v1.51.2

4 | $(go env GOPATH)/bin/golangci-lint run

5 |

--------------------------------------------------------------------------------

/compose.store.yml:

--------------------------------------------------------------------------------

1 | version: '3.3'
2 | services:
3 |   redis:
4 |     image: 'redis'
5 |     volumes:
6 |       - /etc/localtime:/etc/localtime:ro
7 |       - /etc/timezone:/etc/timezone:ro
8 |     ports:
9 |       - '6379:6379'
10 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Binaries for programs and plugins

2 | *.exe

3 | *.exe~

4 | *.dll

5 | *.so

6 | *.dylib

7 |

8 | # Test binary, built with `go test -c`

9 | *.test

10 |

11 | # Output of the go coverage tool, specifically when used with LiteIDE

12 | *.out

13 |

14 | coverage.txt

15 | # Dependency directories (remove the comment below to include it)

16 | # vendor/

17 | .idea/

18 |

--------------------------------------------------------------------------------

/.golangci.yml:

--------------------------------------------------------------------------------

1 | run:
2 |   timeout: 5m
3 |   skip-dirs:
4 |     # - test
5 |     # - bench
6 |
7 | linters-settings:
8 |   goconst:
9 |     min-len: 2
10 |     min-occurrences: 2
11 |
12 | linters:
13 |   enable:
14 |     - revive
15 |     - goconst
16 |     - gofmt
17 |     - goimports
18 |     - misspell
19 |     - unparam
20 |
21 | issues:
22 |   exclude-use-default: false
23 |   exclude-rules:
24 |     - path: _test.go
25 |       linters:
26 |         - errcheck
27 |         - revive
28 |

--------------------------------------------------------------------------------

/go.mod:

--------------------------------------------------------------------------------

1 | module github.com/dtm-labs/rockscache

2 |

3 | go 1.18

4 |

5 | require (

6 | github.com/lithammer/shortuuid v3.0.0+incompatible

7 | github.com/redis/go-redis/v9 v9.0.3

8 | github.com/stretchr/testify v1.8.4

9 | golang.org/x/sync v0.1.0

10 | )

11 |

12 | require (

13 | github.com/cespare/xxhash/v2 v2.2.0 // indirect

14 | github.com/davecgh/go-spew v1.1.1 // indirect

15 | github.com/dgryski/go-rendezvous v0.0.0-20200823014737-9f7001d12a5f // indirect

16 | github.com/google/uuid v1.3.0 // indirect

17 | github.com/pmezard/go-difflib v1.0.0 // indirect

18 | gopkg.in/yaml.v3 v3.0.1 // indirect

19 | )

20 |

--------------------------------------------------------------------------------

/.github/workflows/tests.yml:

--------------------------------------------------------------------------------

1 | name: Tests
2 | on:
3 |   push:
4 |     branches-ignore:
5 |       - 'tmp-*'
6 |   pull_request:
7 |     branches-ignore:
8 |       - 'tmp-*'
9 |
10 | jobs:
11 |   tests:
12 |     name: CI
13 |     runs-on: ubuntu-latest
14 |     services:
15 |       redis:
16 |         image: 'redis'
17 |         volumes:
18 |           - /etc/localtime:/etc/localtime:ro
19 |           - /etc/timezone:/etc/timezone:ro
20 |         ports:
21 |           - 6379:6379
22 |     steps:
23 |       - name: Set up Go 1.18
24 |         uses: actions/setup-go@v2
25 |         with:
26 |           go-version: '1.18.10'
27 |
28 |       - name: Check out code
29 |         uses: actions/checkout@v2
30 |
31 |       - name: Install dependencies
32 |         run: |
33 |           go mod download
34 |
35 |       - name: Run CI lint
36 |         run: sh helper/golint.sh
37 |
38 |       - name: Run test cover
39 |         run: sh helper/test-cover.sh
40 |

--------------------------------------------------------------------------------

/utils.go:

--------------------------------------------------------------------------------

1 | package rockscache

2 |

3 | import (

4 | "context"

5 | "log"

6 | "runtime/debug"

7 | "time"

8 |

9 | "github.com/redis/go-redis/v9"

10 | )

11 |

12 | var verbose = false

13 |

14 | // SetVerbose sets verbose mode.

15 | func SetVerbose(v bool) {

16 | verbose = v

17 | }

18 |

19 | func debugf(format string, args ...interface{}) {

20 | if verbose {

21 | log.Printf(format, args...)

22 | }

23 | }

24 | func now() int64 {

25 | return time.Now().Unix()

26 | }

27 |

28 | func callLua(ctx context.Context, rdb redis.Scripter, script *redis.Script, keys []string, args []interface{}) (interface{}, error) {

29 | debugf("callLua: script=%s, keys=%v, args=%v", script.Hash(), keys, args)

30 | r := script.EvalSha(ctx, rdb, keys, args)

31 | if redis.HasErrorPrefix(r.Err(), "NOSCRIPT") {

32 | // try load script

33 | if err := script.Load(ctx, rdb).Err(); err != nil {

34 | debugf("callLua: load script failed: %v", err)

35 | r = script.Eval(ctx, rdb, keys, args) // fallback to EVAL

36 | } else {

37 | r = script.EvalSha(ctx, rdb, keys, args) // retry EVALSHA

38 | }

39 | }

40 | v, err := r.Result()

41 | if err == redis.Nil {

42 | err = nil

43 | }

44 | debugf("callLua result: v=%v, err=%v", v, err)

45 | return v, err

46 | }

47 |

48 | func withRecover(f func()) {

49 | defer func() {

50 | if r := recover(); r != nil {

51 | debug.PrintStack()

52 | }

53 | }()

54 | f()

55 | }

56 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | BSD 3-Clause License

2 |

3 | Copyright (c) 2022, DTM Development and Communities

4 | All rights reserved.

5 |

6 | Redistribution and use in source and binary forms, with or without

7 | modification, are permitted provided that the following conditions are met:

8 |

9 | 1. Redistributions of source code must retain the above copyright notice, this

10 | list of conditions and the following disclaimer.

11 |

12 | 2. Redistributions in binary form must reproduce the above copyright notice,

13 | this list of conditions and the following disclaimer in the documentation

14 | and/or other materials provided with the distribution.

15 |

16 | 3. Neither the name of the copyright holder nor the names of its

17 | contributors may be used to endorse or promote products derived from

18 | this software without specific prior written permission.

19 |

20 | THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

21 | AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

22 | IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

23 | DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

24 | FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

25 | DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

26 | SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

27 | CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

28 | OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

29 | OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

30 |

--------------------------------------------------------------------------------

/.github/workflows/codeql-analysis.yml:

--------------------------------------------------------------------------------

1 | # For most projects, this workflow file will not need changing; you simply need
2 | # to commit it to your repository.
3 | #
4 | # You may wish to alter this file to override the set of languages analyzed,
5 | # or to provide custom queries or build logic.
6 | #
7 | # ******** NOTE ********
8 | # We have attempted to detect the languages in your repository. Please check
9 | # the `language` matrix defined below to confirm you have the correct set of
10 | # supported CodeQL languages.
11 | #
12 | name: "CodeQL"
13 |
14 | on:
15 |   push:
16 |     branches: [ main ]
17 |   pull_request:
18 |     # The branches below must be a subset of the branches above
19 |     branches: [ main ]
20 |   schedule:
21 |     - cron: '25 19 * * 2'
22 |
23 | jobs:
24 |   analyze:
25 |     name: Analyze
26 |     runs-on: ubuntu-latest
27 |     permissions:
28 |       actions: read
29 |       contents: read
30 |       security-events: write
31 |
32 |     strategy:
33 |       fail-fast: false
34 |       matrix:
35 |         language: [ 'go' ]
36 |         # CodeQL supports [ 'cpp', 'csharp', 'go', 'java', 'javascript', 'python', 'ruby' ]
37 |         # Learn more about CodeQL language support at https://git.io/codeql-language-support
38 |
39 |     steps:
40 |     - name: Checkout repository
41 |       uses: actions/checkout@v2
42 |
43 |     # Initializes the CodeQL tools for scanning.
44 |     - name: Initialize CodeQL
45 |       uses: github/codeql-action/init@v1
46 |       with:
47 |         languages: ${{ matrix.language }}
48 |         # If you wish to specify custom queries, you can do so here or in a config file.
49 |         # By default, queries listed here will override any specified in a config file.
50 |         # Prefix the list here with "+" to use these queries and those in the config file.
51 |         # queries: ./path/to/local/query, your-org/your-repo/queries@main
52 |
53 |     # Autobuild attempts to build any compiled languages (C/C++, C#, or Java).
54 |     # If this step fails, then you should remove it and run the build manually (see below)
55 |     - name: Autobuild
56 |       uses: github/codeql-action/autobuild@v1
57 |
58 |     # ℹ️ Command-line programs to run using the OS shell.
59 |     # 📚 https://git.io/JvXDl
60 |
61 |     # ✏️ If the Autobuild fails above, remove it and uncomment the following three lines
62 |     #    and modify them (or add more) to build your code if your project
63 |     #    uses a compiled language
64 |
65 |     #- run: |
66 |     #   make bootstrap
67 |     #   make release
68 |
69 |     - name: Perform CodeQL Analysis
70 |       uses: github/codeql-action/analyze@v1
71 |

71 |

--------------------------------------------------------------------------------

/script.go:

--------------------------------------------------------------------------------

1 | package rockscache

2 |

3 | import "github.com/redis/go-redis/v9"

4 |

5 | var (

6 | deleteScript = redis.NewScript(`

7 | redis.call('HSET', KEYS[1], 'lockUntil', 0)

8 | redis.call('HDEL', KEYS[1], 'lockOwner')

9 | redis.call('EXPIRE', KEYS[1], ARGV[1])`)

10 |

11 | getScript = redis.NewScript(`

12 | local v = redis.call('HGET', KEYS[1], 'value')

13 | local lu = redis.call('HGET', KEYS[1], 'lockUntil')

14 | if lu ~= false and tonumber(lu) < tonumber(ARGV[1]) or lu == false and v == false then

15 | redis.call('HSET', KEYS[1], 'lockUntil', ARGV[2])

16 | redis.call('HSET', KEYS[1], 'lockOwner', ARGV[3])

17 | return { v, 'LOCKED' }

18 | end

19 | return {v, lu}`)

20 |

21 | setScript = redis.NewScript(`

22 | local o = redis.call('HGET', KEYS[1], 'lockOwner')

23 | if o ~= ARGV[2] then

24 | return

25 | end

26 | redis.call('HSET', KEYS[1], 'value', ARGV[1])

27 | redis.call('HDEL', KEYS[1], 'lockUntil')

28 | redis.call('HDEL', KEYS[1], 'lockOwner')

29 | redis.call('EXPIRE', KEYS[1], ARGV[3])`)

30 |

31 | lockScript = redis.NewScript(`

32 | local lu = redis.call('HGET', KEYS[1], 'lockUntil')

33 | local lo = redis.call('HGET', KEYS[1], 'lockOwner')

34 | if lu == false or tonumber(lu) < tonumber(ARGV[2]) or lo == ARGV[1] then

35 | redis.call('HSET', KEYS[1], 'lockUntil', ARGV[2])

36 | redis.call('HSET', KEYS[1], 'lockOwner', ARGV[1])

37 | return 'LOCKED'

38 | end

39 | return lo`)

40 |

41 | unlockScript = redis.NewScript(`

42 | local lo = redis.call('HGET', KEYS[1], 'lockOwner')

43 | if lo == ARGV[1] then

44 | redis.call('HSET', KEYS[1], 'lockUntil', 0)

45 | redis.call('HDEL', KEYS[1], 'lockOwner')

46 | redis.call('EXPIRE', KEYS[1], ARGV[2])

47 | end`)

48 |

49 | getBatchScript = redis.NewScript(`

50 | local rets = {}

51 | for i, key in ipairs(KEYS)

52 | do

53 | local v = redis.call('HGET', key, 'value')

54 | local lu = redis.call('HGET', key, 'lockUntil')

55 | if lu ~= false and tonumber(lu) < tonumber(ARGV[1]) or lu == false and v == false then

56 | redis.call('HSET', key, 'lockUntil', ARGV[2])

57 | redis.call('HSET', key, 'lockOwner', ARGV[3])

58 | table.insert(rets, { v, 'LOCKED' })

59 | else

60 | table.insert(rets, {v, lu})

61 | end

62 | end

63 | return rets`)

64 |

65 | setBatchScript = redis.NewScript(`

66 | local n = #KEYS

67 | for i, key in ipairs(KEYS)

68 | do

69 | local o = redis.call('HGET', key, 'lockOwner')

70 | if o ~= ARGV[1] then

71 | return

72 | end

73 | redis.call('HSET', key, 'value', ARGV[i+1])

74 | redis.call('HDEL', key, 'lockUntil')

75 | redis.call('HDEL', key, 'lockOwner')

76 | redis.call('EXPIRE', key, ARGV[i+1+n])

77 | end`)

78 |

79 | deleteBatchScript = redis.NewScript(`

80 | for i, key in ipairs(KEYS) do

81 | redis.call('HSET', key, 'lockUntil', 0)

82 | redis.call('HDEL', key, 'lockOwner')

83 | redis.call('EXPIRE', key, ARGV[1])

84 | end`)

85 | )

86 |

--------------------------------------------------------------------------------

/client_cover_test.go:

--------------------------------------------------------------------------------

1 | package rockscache

2 |

3 | import (

4 | "context"

5 | "errors"

6 | "fmt"

7 | "testing"

8 | "time"

9 |

10 | "github.com/stretchr/testify/assert"

11 | )

12 |

13 | func TestBadOptions(t *testing.T) {

14 | assert.Panics(t, func() {

15 | NewClient(nil, Options{})

16 | })

17 | }

18 |

19 | func TestDisable(t *testing.T) {

20 | rc := NewClient(nil, NewDefaultOptions())

21 | rc.Options.DisableCacheDelete = true

22 | rc.Options.DisableCacheRead = true

23 | fn := func() (string, error) { return "", nil }

24 | _, err := rc.Fetch2(context.Background(), "key", 60, fn)

25 | assert.Nil(t, err)

26 | err = rc.TagAsDeleted2(context.Background(), "key")

27 | assert.Nil(t, err)

28 | }

29 |

30 | func TestEmptyExpire(t *testing.T) {

31 | testEmptyExpire(t, 0)

32 | testEmptyExpire(t, 10*time.Second)

33 | }

34 |

35 | func testEmptyExpire(t *testing.T, expire time.Duration) {

36 | clearCache()

37 | rc := NewClient(rdb, NewDefaultOptions())

38 | rc.Options.EmptyExpire = expire

39 | fn := func() (string, error) { return "", nil }

40 | fetchError := errors.New("fetch error")

41 | errFn := func() (string, error) {

42 | return "", fetchError

43 | }

44 | _, err := rc.Fetch("key1", 600, fn)

45 | assert.Nil(t, err)

46 | _, err = rc.Fetch("key1", 600, errFn)

47 | if expire == 0 {

48 | assert.ErrorIs(t, err, fetchError)

49 | } else {

50 | assert.Nil(t, err)

51 | }

52 |

53 | rc.Options.StrongConsistency = true

54 | _, err = rc.Fetch("key2", 600, fn)

55 | assert.Nil(t, err)

56 | _, err = rc.Fetch("key2", 600, errFn)

57 | if expire == 0 {

58 | assert.ErrorIs(t, err, fetchError)

59 | } else {

60 | assert.Nil(t, err)

61 | }

62 | }

63 |

64 | func TestErrorFetch(t *testing.T) {

65 | fn := func() (string, error) { return "", fmt.Errorf("error") }

66 | clearCache()

67 | rc := NewClient(rdb, NewDefaultOptions())

68 | _, err := rc.Fetch("key1", 60, fn)

69 | assert.Equal(t, fmt.Errorf("error"), err)

70 |

71 | rc.Options.StrongConsistency = true

72 | _, err = rc.Fetch("key2", 60, fn)

73 | assert.Equal(t, fmt.Errorf("error"), err)

74 | }

75 |

76 | func TestPanicFetch(t *testing.T) {

77 | fn := func() (string, error) { return "abc", nil }

78 | pfn := func() (string, error) { panic(fmt.Errorf("error")) }

79 | clearCache()

80 | rc := NewClient(rdb, NewDefaultOptions())

81 | _, err := rc.Fetch("key1", 60*time.Second, fn)

82 | assert.Nil(t, err)

83 | rc.TagAsDeleted("key1")

84 | _, err = rc.Fetch("key1", 60*time.Second, pfn)

85 | assert.Nil(t, err)

86 | time.Sleep(20 * time.Millisecond)

87 | }

88 |

89 | func TestTagAsDeletedWait(t *testing.T) {

90 | clearCache()

91 | rc := NewClient(rdb, NewDefaultOptions())

92 | rc.Options.WaitReplicas = 1

93 | rc.Options.WaitReplicasTimeout = 10

94 | err := rc.TagAsDeleted("key1")

95 | if getCluster() != nil {

96 | assert.Nil(t, err)

97 | } else {

98 | assert.EqualError(t, err, fmt.Sprintf("wait replicas %d failed. result replicas: %d", 1, 0))

99 | }

100 | }

101 |

--------------------------------------------------------------------------------

/go.sum:

--------------------------------------------------------------------------------

1 | github.com/bsm/ginkgo/v2 v2.7.0 h1:ItPMPH90RbmZJt5GtkcNvIRuGEdwlBItdNVoyzaNQao=

2 | github.com/bsm/gomega v1.26.0 h1:LhQm+AFcgV2M0WyKroMASzAzCAJVpAxQXv4SaI9a69Y=

3 | github.com/cespare/xxhash/v2 v2.2.0 h1:DC2CZ1Ep5Y4k3ZQ899DldepgrayRUGE6BBZ/cd9Cj44=

4 | github.com/cespare/xxhash/v2 v2.2.0/go.mod h1:VGX0DQ3Q6kWi7AoAeZDth3/j3BFtOZR5XLFGgcrjCOs=

5 | github.com/davecgh/go-spew v1.1.0/go.mod h1:J7Y8YcW2NihsgmVo/mv3lAwl/skON4iLHjSsI+c5H38=

6 | github.com/davecgh/go-spew v1.1.1 h1:vj9j/u1bqnvCEfJOwUhtlOARqs3+rkHYY13jYWTU97c=

7 | github.com/davecgh/go-spew v1.1.1/go.mod h1:J7Y8YcW2NihsgmVo/mv3lAwl/skON4iLHjSsI+c5H38=

8 | github.com/dgryski/go-rendezvous v0.0.0-20200823014737-9f7001d12a5f h1:lO4WD4F/rVNCu3HqELle0jiPLLBs70cWOduZpkS1E78=

9 | github.com/dgryski/go-rendezvous v0.0.0-20200823014737-9f7001d12a5f/go.mod h1:cuUVRXasLTGF7a8hSLbxyZXjz+1KgoB3wDUb6vlszIc=

10 | github.com/google/uuid v1.3.0 h1:t6JiXgmwXMjEs8VusXIJk2BXHsn+wx8BZdTaoZ5fu7I=

11 | github.com/google/uuid v1.3.0/go.mod h1:TIyPZe4MgqvfeYDBFedMoGGpEw/LqOeaOT+nhxU+yHo=

12 | github.com/lithammer/shortuuid v3.0.0+incompatible h1:NcD0xWW/MZYXEHa6ITy6kaXN5nwm/V115vj2YXfhS0w=

13 | github.com/lithammer/shortuuid v3.0.0+incompatible/go.mod h1:FR74pbAuElzOUuenUHTK2Tciko1/vKuIKS9dSkDrA4w=

14 | github.com/pmezard/go-difflib v1.0.0 h1:4DBwDE0NGyQoBHbLQYPwSUPoCMWR5BEzIk/f1lZbAQM=

15 | github.com/pmezard/go-difflib v1.0.0/go.mod h1:iKH77koFhYxTK1pcRnkKkqfTogsbg7gZNVY4sRDYZ/4=

16 | github.com/redis/go-redis/v9 v9.0.3 h1:+7mmR26M0IvyLxGZUHxu4GiBkJkVDid0Un+j4ScYu4k=

17 | github.com/redis/go-redis/v9 v9.0.3/go.mod h1:WqMKv5vnQbRuZstUwxQI195wHy+t4PuXDOjzMvcuQHk=

18 | github.com/stretchr/objx v0.1.0/go.mod h1:HFkY916IF+rwdDfMAkV7OtwuqBVzrE8GR6GFx+wExME=

19 | github.com/stretchr/objx v0.4.0/go.mod h1:YvHI0jy2hoMjB+UWwv71VJQ9isScKT/TqJzVSSt89Yw=

20 | github.com/stretchr/objx v0.5.0/go.mod h1:Yh+to48EsGEfYuaHDzXPcE3xhTkx73EhmCGUpEOglKo=

21 | github.com/stretchr/testify v1.7.1/go.mod h1:6Fq8oRcR53rry900zMqJjRRixrwX3KX962/h/Wwjteg=

22 | github.com/stretchr/testify v1.8.0/go.mod h1:yNjHg4UonilssWZ8iaSj1OCr/vHnekPRkoO+kdMU+MU=

23 | github.com/stretchr/testify v1.8.1 h1:w7B6lhMri9wdJUVmEZPGGhZzrYTPvgJArz7wNPgYKsk=

24 | github.com/stretchr/testify v1.8.1/go.mod h1:w2LPCIKwWwSfY2zedu0+kehJoqGctiVI29o6fzry7u4=

25 | github.com/stretchr/testify v1.8.4 h1:CcVxjf3Q8PM0mHUKJCdn+eZZtm5yQwehR5yeSVQQcUk=

26 | github.com/stretchr/testify v1.8.4/go.mod h1:sz/lmYIOXD/1dqDmKjjqLyZ2RngseejIcXlSw2iwfAo=

27 | golang.org/x/sync v0.1.0 h1:wsuoTGHzEhffawBOhz5CYhcrV4IdKZbEyZjBMuTp12o=

28 | golang.org/x/sync v0.1.0/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=

29 | gopkg.in/check.v1 v0.0.0-20161208181325-20d25e280405 h1:yhCVgyC4o1eVCa2tZl7eS0r+SDo693bJlVdllGtEeKM=

30 | gopkg.in/check.v1 v0.0.0-20161208181325-20d25e280405/go.mod h1:Co6ibVJAznAaIkqp8huTwlJQCZ016jof/cbN4VW5Yz0=

31 | gopkg.in/yaml.v3 v3.0.0-20200313102051-9f266ea9e77c/go.mod h1:K4uyk7z7BCEPqu6E+C64Yfv1cQ7kz7rIZviUmN+EgEM=

32 | gopkg.in/yaml.v3 v3.0.1 h1:fxVm/GzAzEWqLHuvctI91KS9hhNmmWOoWu0XTYJS7CA=

33 | gopkg.in/yaml.v3 v3.0.1/go.mod h1:K4uyk7z7BCEPqu6E+C64Yfv1cQ7kz7rIZviUmN+EgEM=

34 |

--------------------------------------------------------------------------------

/helper/README-cn.md:

--------------------------------------------------------------------------------

1 |
2 |
3 | [codecov](https://codecov.io/gh/dtm-labs/rockscache)
4 | [Go Report Card](https://goreportcard.com/report/github.com/dtm-labs/rockscache)
5 | [Go Reference](https://pkg.go.dev/github.com/dtm-labs/rockscache)
6 |
7 | Simplified Chinese | [English](https://github.com/dtm-labs/rockscache/blob/main/helper/README-en.md)

8 |

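9 | # RocksCache
10 | The first `Redis` cache library to ensure eventual consistency and strong consistency with the DB.
11 |
12 | ## Features
13 | - Eventual consistency: ensures the cache is eventually consistent, even in extreme cases
14 | - Strong consistency: provides strongly consistent access to applications
15 | - High performance: no significant performance difference compared with common caching schemes
16 | - Anti-breakdown: a better solution for cache breakdown
17 | - Anti-penetration
18 | - Anti-avalanche
19 | - Very easy to use: provides just two minimal functions and places no requirements on the application
20 | - Provides a batch query interface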

21 |

22 | ## 使用

23 | This cache library uses the most common cache-management policy: `update the DB, then delete the cache`

24 |

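25 | ### Read the cache
26 | ``` Go
27 | import "github.com/dtm-labs/rockscache"
28 |
29 | // create a rockscache client with the default options
30 | rc := rockscache.NewClient(redisClient, rockscache.NewDefaultOptions())
31 |
32 | // use Fetch to get data: the first parameter is the key, the second is the expiration time, and the third is the function that fetches the data when the cache entry does not exist
33 | v, err := rc.Fetch("key1", 300*time.Second, func() (string, error) {
34 | 	// fetch the data from the database or another source
35 | 	return "value1", nil
36 | })
37 | ```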

38 |

39 | ### Delete the cache

40 | ``` Go

41 | rc.TagAsDeleted(key)

42 | ```

43 |

44 | ## Batch query usage
45 |
46 | ### Read the cache in batches
47 | ``` Go
48 | import "github.com/dtm-labs/rockscache"
49 |
50 | // create a rockscache client with the default options
51 | rc := rockscache.NewClient(redisClient, rockscache.NewDefaultOptions())
52 |
53 | // use FetchBatch to get data: the first parameter is the list of keys, the second is the expiration time, and the third is the function that fetches the data when the cache entries do not exist
54 | // the fetch function receives the indexes (into keys) of the keys that missed the cache, which can be used to build a batch query; it returns a map from index to string value
55 | v, err := rc.FetchBatch([]string{"key1", "key2", "key3"}, 300*time.Second, func(idxs []int) (map[int]string, error) {
56 | 	// fetch the data from the database or another source
57 | 	values := make(map[int]string)
58 | 	for _, i := range idxs {
59 | 		values[i] = fmt.Sprintf("value%d", i)
60 | 	}
61 | 	return values, nil
62 | })
63 | ```
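The index-based contract described above can be illustrated with a small in-memory sketch. `fetchBatch` below is a hypothetical helper, not rockscache's implementation: a plain map stands in for Redis, and treating an index that `fn` leaves out of its result as an empty value is an assumption of this sketch.

```go
package main

import "fmt"

// fetchBatch is a hypothetical in-memory illustration of the FetchBatch
// contract; a plain map stands in for Redis. It is not rockscache's code.
func fetchBatch(cache map[string]string, keys []string,
	fn func(idxs []int) (map[int]string, error)) (map[int]string, error) {
	result := make(map[int]string, len(keys))
	var missing []int // indexes into keys whose values are not cached
	for i, k := range keys {
		if v, ok := cache[k]; ok {
			result[i] = v
		} else {
			missing = append(missing, i)
		}
	}
	if len(missing) == 0 {
		return result, nil
	}
	fetched, err := fn(missing) // one batch query covers all misses
	if err != nil {
		return nil, err
	}
	for _, i := range missing {
		v := fetched[i] // assumption: an index absent from fetched counts as ""
		cache[keys[i]] = v
		result[i] = v
	}
	return result, nil
}

func main() {
	cache := map[string]string{"key2": "cached"}
	keys := []string{"key1", "key2", "key3"}
	res, err := fetchBatch(cache, keys, func(idxs []int) (map[int]string, error) {
		fmt.Println("missing indexes:", idxs) // prints: missing indexes: [0 2]
		values := make(map[int]string)
		for _, i := range idxs {
			values[i] = fmt.Sprintf("value%d", i)
		}
		return values, nil
	})
	fmt.Println(res, err) // prints: map[0:value0 1:cached 2:value2] <nil>
}
```

Passing indexes rather than keys lets the fetch function map each result straight back to its position in the original `keys` slice.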

64 |

65 | ### Delete the cache in batches

66 | ``` Go

67 | rc.TagAsDeletedBatch(keys)

68 | ```

69 |

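70 | ## Eventual consistency
71 | Once a cache is introduced, data is stored in two places, the database and Redis, so the consistency problem of distributed systems arises. For background on this consistency problem and an overview of popular Redis caching schemes, see:
72 | - An accessible introduction: [聊聊数据库与缓存数据一致性问题](https://juejin.cn/post/6844903941646319623) ("On data consistency between the database and the cache")
73 | - A more in-depth article: [携程最终一致和强一致性缓存实践](https://www.infoq.cn/article/hh4iouiijhwb4x46vxeo) ("Ctrip's practice of eventually and strongly consistent caching")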

74 |

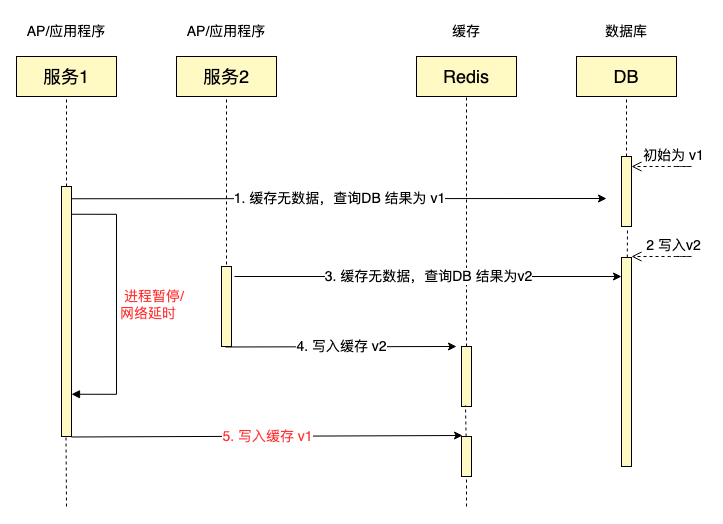

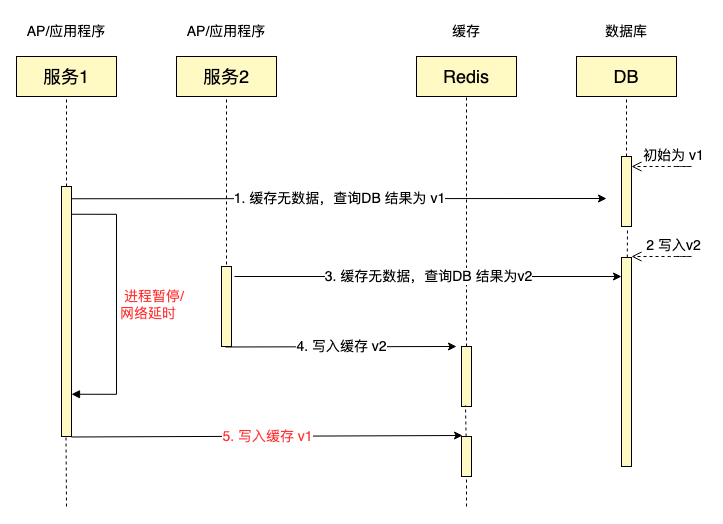

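75 | However, none of the caching schemes we have seen so far can resolve the following data-inconsistency scenario unless versioning is introduced at the application layer:
76 |
77 |
78 |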

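79 | ### Solution
80 | This project introduces a brand-new solution, tag as deleted, which ensures that the cache and the database stay consistent and solves this hard problem. The approach is original, a patent has been applied for, and it is now open-sourced for everyone to use.
81 |
82 | If developers call `Fetch` to read data and make sure to call `TagAsDeleted` after updating the database, the cache is guaranteed to be eventually consistent. When step 5 in the figure above tries to write v1, that write is eventually abandoned.
83 | - For how to make sure `TagAsDeleted` is called after the database is updated, see [Atomicity of DB and cache operations](https://dtm.pub/app/cache.html#atomic)
84 | - For why the write of v1 in step 5 is abandoned, see [Cache consistency](https://dtm.pub/app/cache.html)
85 |
86 | For a complete runnable example, see [dtm-cases/cache](https://github.com/dtm-labs/dtm-cases/tree/main/cache)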
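To make the tag-as-deleted mechanism concrete, here is a single-threaded Go model of three Lua scripts from `script.go` (`getScript`, `setScript`, `deleteScript`), with a map standing in for the Redis hash fields `value`, `lockUntil` and `lockOwner`. It is a sketch for illustration only: the names `store`, `get`, `set` and `tagAsDeleted`, the integer clock, and the requirement that owner strings be non-empty are assumptions, and `EXPIRE` is not modeled. It replays the problem scenario: a reader locks the key and reads v1, the DB is updated and the key is tagged as deleted, and the reader's late write of v1 is rejected because it no longer owns the lock.

```go
package main

import "fmt"

// entry mirrors the hash fields used by the Lua scripts in script.go.
// A nil pointer stands for a field that is absent from the Redis hash.
type entry struct {
	value     *string
	lockUntil *int64
	lockOwner string // "" stands for an absent lockOwner field
}

// store stands in for Redis. Each Lua script runs atomically in Redis,
// so plain single-threaded method calls model them faithfully.
type store map[string]*entry

func ptr[T any](v T) *T { return &v }

// get models getScript: a missing value with no lock, or a lock that has
// expired (TagAsDeleted sets lockUntil to 0), hands the lock to owner.
func (s store) get(key string, now, lockUntil int64, owner string) (*string, bool) {
	e := s[key]
	if e == nil {
		e = &entry{}
		s[key] = e
	}
	if (e.lockUntil != nil && *e.lockUntil < now) || (e.lockUntil == nil && e.value == nil) {
		e.lockUntil, e.lockOwner = ptr(lockUntil), owner
		return e.value, true
	}
	return e.value, false
}

// set models setScript: the write happens only if owner still holds the lock.
func (s store) set(key, value, owner string) bool {
	e := s[key]
	if e == nil || e.lockOwner != owner {
		return false
	}
	e.value, e.lockUntil, e.lockOwner = ptr(value), nil, ""
	return true
}

// tagAsDeleted models deleteScript: keep the old value, expire the lock
// immediately, and drop the current owner.
func (s store) tagAsDeleted(key string) {
	e := s[key]
	if e == nil {
		e = &entry{}
		s[key] = e
	}
	e.lockUntil, e.lockOwner = ptr(int64(0)), ""
}

func main() {
	s := store{}
	const now, lockUntil = 100, 103 // hypothetical clock values
	// 1. Reader A misses the cache, takes the lock, and reads v1 from the DB.
	_, lockedA := s.get("k", now, lockUntil, "A")
	// 2. The DB is updated to v2, then the application calls TagAsDeleted.
	s.tagAsDeleted("k")
	// 3. Reader A's delayed write of the stale v1 is rejected.
	wroteA := s.set("k", "v1", "A")
	// 4. The next reader sees the deleted tag, re-locks, and stores v2.
	_, lockedB := s.get("k", now+1, lockUntil+1, "B")
	wroteB := s.set("k", "v2", "B")
	fmt.Println(lockedA, wroteA, lockedB, wroteB, *s["k"].value) // prints: true false true true v2
}
```

The key design point the model shows is that deletion is a tag rather than a removal: dropping `lockOwner` is what invalidates any write already in flight.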

87 |

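88 | ## Strongly consistent access
89 | If your application uses the cache and needs strong consistency rather than eventual consistency, enable the `StrongConsistency` option; the way you access the cache stays the same
90 | ``` Go
91 | rc.Options.StrongConsistency = true
92 | ```
93 |
94 | For the detailed principle, see [Cache consistency](https://dtm.pub/app/cache.html); for an example, see [dtm-cases/cache](https://github.com/dtm-labs/dtm-cases/tree/main/cache)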

95 |

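96 | ## Downgrading and strong consistency
97 | This library supports downgrading. The downgrade switches are:
98 | - `DisableCacheRead`: disables cache reads, default `false`; when enabled, `Fetch` does not read from the cache but calls `fn` directly to get the data
99 | - `DisableCacheDelete`: disables cache deletion, default `false`; when enabled, `TagAsDeleted` does nothing and returns immediately
100 |
101 | When Redis has problems and you need to downgrade, you can control it with these two switches. If you need to keep strongly consistent access while downgrading and upgrading, rockscache supports that as well
102 |
103 | For the detailed principle, see [Cache consistency](https://dtm.pub/app/cache.html); for an example, see [dtm-cases/cache](https://github.com/dtm-labs/dtm-cases/tree/main/cache)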
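A minimal sketch of how the two switches short-circuit cache access. The `client` type, its map-backed cache, and the lowercase `fetch`/`tagAsDeleted` methods are hypothetical stand-ins; only the option names `DisableCacheRead` and `DisableCacheDelete` come from rockscache, and the real `TagAsDeleted` tags the key rather than removing it.

```go
package main

import "fmt"

// Options keeps only the two downgrade switches described above.
type Options struct {
	DisableCacheRead   bool
	DisableCacheDelete bool
}

// client is a hypothetical stand-in for the rockscache client; a map
// plays the role of Redis.
type client struct {
	Options Options
	cache   map[string]string
}

// fetch bypasses the cache entirely when DisableCacheRead is set.
func (c *client) fetch(key string, fn func() (string, error)) (string, error) {
	if c.Options.DisableCacheRead {
		return fn()
	}
	if v, ok := c.cache[key]; ok {
		return v, nil
	}
	v, err := fn()
	if err == nil {
		c.cache[key] = v
	}
	return v, err
}

// tagAsDeleted is a no-op when DisableCacheDelete is set. For simplicity this
// sketch removes the entry; rockscache instead tags it as deleted.
func (c *client) tagAsDeleted(key string) error {
	if c.Options.DisableCacheDelete {
		return nil
	}
	delete(c.cache, key)
	return nil
}

func main() {
	calls := 0
	fn := func() (string, error) { calls++; return "value", nil }
	c := &client{cache: map[string]string{}}
	c.fetch("k", fn)
	c.fetch("k", fn) // served from the cache
	c.Options.DisableCacheRead = true
	c.fetch("k", fn)                // goes straight to fn
	fmt.Println("fn calls:", calls) // prints: fn calls: 2
}
```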

104 |

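105 | ## Anti-breakdown
106 | Using the cache through this library gives you built-in protection against cache breakdown. On one hand, `Fetch` uses `singleflight` within the process so that multiple requests in one process do not all go to Redis; on the other hand, a distributed lock is used at the Redis layer so that multiple requests from multiple processes do not hit the DB at the same time, ensuring that only one data query ultimately reaches the DB.
107 |
108 | This project's anti-breakdown also provides faster response times when hot cached data is deleted. Suppose a hot cache entry takes 3s to compute: a typical anti-breakdown scheme makes every request for that hot entry wait 3s during that time, whereas this project's scheme can return immediately.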

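109 | ## Anti-penetration
110 | Using the cache through this library gives you built-in protection against cache penetration. When `fn` in `Fetch` returns an empty string, it is treated as an empty result, and its expiration time is set to the rockscache option `EmptyExpire`.
111 |
112 | `EmptyExpire` defaults to 60s; if it is set to 0, anti-penetration is turned off and empty results are not stored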

113 |

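114 | ## Anti-avalanche
115 | Using the cache through this library gives you built-in protection against cache avalanche. The rockscache option `RandomExpireAdjustment` defaults to 0.1, so if the expiration time is set to 600s, the actual expiration is a random value between `540s - 600s`, which prevents data from expiring all at once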

116 |

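117 | ## Contact us
118 | ### WeChat Official Account
119 | The official dtm-labs WeChat Official Account, 《分布式事务》 ("Distributed Transactions"), shares plenty of practical content on distributed transactions, as well as the latest dtm-labs news
120 | ### Discussion group
121 | If you want to get feedback faster, or learn more about other users' experience with the library, you are welcome to join our WeChat discussion group
122 |
123 | Add the author's WeChat account yedf2008 as a friend, or scan the QR code to do so, with the note `rockscache`, then follow the instructions to join the group
124 |
125 |
126 |
127 | You are welcome to use [dtm-labs/rockscache](https://github.com/dtm-labs/rockscache); please give it a star to support us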

128 |

--------------------------------------------------------------------------------

/batch_cover_test.go:

--------------------------------------------------------------------------------

1 | package rockscache

2 |

3 | import (

4 | "context"

5 | "errors"

6 | "fmt"

7 | "math/rand"

8 | "testing"

9 | "time"

10 |

11 | "github.com/stretchr/testify/assert"

12 | )

13 |

14 | var (

15 | n = int(rand.Int31n(20) + 10)

16 | )

17 |

18 | func TestDisableForBatch(t *testing.T) {

19 | idxs := genIdxs(n)

20 | keys := genKeys(idxs)

21 | getFn := func(idxs []int) (map[int]string, error) {

22 | return nil, nil

23 | }

24 |

25 | rc := NewClient(nil, NewDefaultOptions())

26 | rc.Options.DisableCacheDelete = true

27 | rc.Options.DisableCacheRead = true

28 |

29 | _, err := rc.FetchBatch2(context.Background(), keys, 60, getFn)

30 | assert.Nil(t, err)

31 | err = rc.TagAsDeleted2(context.Background(), keys[0])

32 | assert.Nil(t, err)

33 | }

34 |

35 | func TestErrorFetchForBatch(t *testing.T) {

36 | idxs := genIdxs(n)

37 | keys := genKeys(idxs)

38 | fetchError := errors.New("fetch error")

39 | fn := func(idxs []int) (map[int]string, error) {

40 | return nil, fetchError

41 | }

42 | clearCache()

43 | rc := NewClient(rdb, NewDefaultOptions())

44 | _, err := rc.FetchBatch(keys, 60, fn)

45 | assert.ErrorIs(t, err, fetchError)

46 |

47 | rc.Options.StrongConsistency = true

48 | _, err = rc.FetchBatch(keys, 60, fn)

49 | assert.ErrorIs(t, err, fetchError)

50 | }

51 |

52 | func TestEmptyExpireForBatch(t *testing.T) {

53 | testEmptyExpireForBatch(t, 0)

54 | testEmptyExpireForBatch(t, 10*time.Second)

55 | }

56 |

57 | func testEmptyExpireForBatch(t *testing.T, expire time.Duration) {

58 | idxs := genIdxs(n)

59 | keys := genKeys(idxs)

60 | fn := func(idxs []int) (map[int]string, error) {

61 | return nil, nil

62 | }

63 | fetchError := errors.New("fetch error")

64 | errFn := func(idxs []int) (map[int]string, error) {

65 | return nil, fetchError

66 | }

67 |

68 | clearCache()

69 | rc := NewClient(rdb, NewDefaultOptions())

70 | rc.Options.EmptyExpire = expire

71 |

72 | _, err := rc.FetchBatch(keys, 60, fn)

73 | assert.Nil(t, err)

74 | _, err = rc.FetchBatch(keys, 60, errFn)

75 | if expire == 0 {

76 | assert.ErrorIs(t, err, fetchError)

77 | } else {

78 | assert.Nil(t, err)

79 | }

80 |

81 | clearCache()

82 | rc.Options.StrongConsistency = true

83 | _, err = rc.FetchBatch(keys, 60, fn)

84 | assert.Nil(t, err)

85 | _, err = rc.FetchBatch(keys, 60, errFn)

86 | if expire == 0 {

87 | assert.ErrorIs(t, err, fetchError)

88 | } else {

89 | assert.Nil(t, err)

90 | }

91 | }

92 |

93 | func TestPanicFetchForBatch(t *testing.T) {

94 | idxs := genIdxs(n)

95 | keys := genKeys(idxs)

96 | fn := func(idxs []int) (map[int]string, error) {

97 | return nil, nil

98 | }

99 | fetchError := errors.New("fetch error")

100 | errFn := func(idxs []int) (map[int]string, error) {

101 | panic(fetchError)

102 | }

103 | clearCache()

104 | rc := NewClient(rdb, NewDefaultOptions())

105 |

106 | _, err := rc.FetchBatch(keys, 60, fn)

107 | assert.Nil(t, err)

108 | rc.TagAsDeleted("key1")

109 | _, err = rc.FetchBatch(keys, 60, errFn)

110 | assert.Nil(t, err)

111 | time.Sleep(20 * time.Millisecond)

112 | }

113 |

114 | func TestTagAsDeletedBatchWait(t *testing.T) {

115 | clearCache()

116 | rc := NewClient(rdb, NewDefaultOptions())

117 | rc.Options.WaitReplicas = 1

118 | rc.Options.WaitReplicasTimeout = 10

119 | err := rc.TagAsDeletedBatch([]string{"key1", "key2"})

120 | if getCluster() != nil {

121 | assert.Nil(t, err)

122 | } else {

123 | assert.EqualError(t, err, fmt.Sprintf("wait replicas %d failed. result replicas: %d", 1, 0))

124 | }

125 | }

126 |

127 | func TestWeakFetchBatchCanceled(t *testing.T) {

128 | clearCache()

129 | rc := NewClient(rdb, NewDefaultOptions())

130 | n := int(rand.Int31n(20) + 10)

131 | idxs := genIdxs(n)

132 | keys, values1, values2 := genKeys(idxs), genValues(n, "value_"), genValues(n, "eulav_")

133 | values3 := genValues(n, "vvvv_")

134 | go func() {

135 | dc2 := NewClient(rdb, NewDefaultOptions())

136 | v, err := dc2.FetchBatch(keys, 60*time.Second, genBatchDataFunc(values1, 450))

137 | assert.Nil(t, err)

138 | assert.Equal(t, values1, v)

139 | }()

140 | time.Sleep(20 * time.Millisecond)

141 |

142 | began := time.Now()

143 | ctx, cancel := context.WithTimeout(context.Background(), 200*time.Millisecond)

144 | defer cancel()

145 | _, err := rc.FetchBatch2(ctx, keys, 60*time.Second, genBatchDataFunc(values2, 200))

146 | assert.ErrorIs(t, err, context.DeadlineExceeded)

147 | assertEqualDuration(t, time.Duration(200)*time.Millisecond, time.Since(began))

148 |

149 | ctx, cancel = context.WithCancel(context.Background())

150 | go func() {

151 | time.Sleep(200 * time.Millisecond)

152 | cancel()

153 | }()

154 | began = time.Now()

155 | _, err = rc.FetchBatch2(ctx, keys, 60*time.Second, genBatchDataFunc(values3, 200))

156 | assert.ErrorIs(t, err, context.Canceled)

157 | assertEqualDuration(t, time.Duration(200)*time.Millisecond, time.Since(began))

158 | }

159 |

160 | func TestStrongFetchBatchCanceled(t *testing.T) {

161 | clearCache()

162 | rc := NewClient(rdb, NewDefaultOptions())

163 | rc.Options.StrongConsistency = true

164 | n := int(rand.Int31n(20) + 10)

165 | idxs := genIdxs(n)

166 | keys, values1, values2 := genKeys(idxs), genValues(n, "value_"), genValues(n, "eulav_")

167 | values3 := genValues(n, "vvvv_")

168 | go func() {

169 | dc2 := NewClient(rdb, NewDefaultOptions())

170 | v, err := dc2.FetchBatch(keys, 60*time.Second, genBatchDataFunc(values1, 450))

171 | assert.Nil(t, err)

172 | assert.Equal(t, values1, v)

173 | }()

174 | time.Sleep(20 * time.Millisecond)

175 |

176 | began := time.Now()

177 | ctx, cancel := context.WithTimeout(context.Background(), 200*time.Millisecond)

178 | defer cancel()

179 | _, err := rc.FetchBatch2(ctx, keys, 60*time.Second, genBatchDataFunc(values2, 200))

180 | assert.ErrorIs(t, err, context.DeadlineExceeded)

181 | assertEqualDuration(t, time.Duration(200)*time.Millisecond, time.Since(began))

182 |

183 | ctx, cancel = context.WithCancel(context.Background())

184 | go func() {

185 | time.Sleep(200 * time.Millisecond)

186 | cancel()

187 | }()

188 | began = time.Now()

189 | _, err = rc.FetchBatch2(ctx, keys, 60*time.Second, genBatchDataFunc(values3, 200))

190 | assert.ErrorIs(t, err, context.Canceled)

191 | assertEqualDuration(t, time.Duration(200)*time.Millisecond, time.Since(began))

192 | }

193 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | [codecov](https://codecov.io/gh/dtm-labs/rockscache)
4 | [Go Report Card](https://goreportcard.com/report/github.com/dtm-labs/rockscache)
5 | [Go Reference](https://pkg.go.dev/github.com/dtm-labs/rockscache)

6 |

7 | English | [简体中文](https://github.com/dtm-labs/rockscache/blob/main/helper/README-cn.md)

8 |

9 | # RocksCache

10 | The first Redis cache library to ensure eventual consistency and strong consistency with DB.

11 |

12 | ## Features

13 | - Eventual Consistency: ensures eventual consistency of cache even in extreme cases

14 | - Strong consistency: provides strong consistent access to applications

15 | - Anti-breakdown: a better solution for cache breakdown

16 | - Anti-penetration

17 | - Anti-avalanche

18 | - Batch Query

19 |

20 | ## Usage

21 | This library uses the most common cache management policy: update the DB first, then delete the cache.

22 |

23 | ### Read cache

24 | ``` Go

25 | import "github.com/dtm-labs/rockscache"

26 |

27 | // create a rockscache client with the default options

28 | rc := rockscache.NewClient(redisClient, rockscache.NewDefaultOptions())

29 |

30 | // use Fetch to fetch data

31 | // 1. the first parameter is the key of the data

32 | // 2. the second parameter is the data expiration time

33 | // 3. the third parameter is the data fetch function which is called when the cache does not exist

34 | v, err := rc.Fetch("key1", 300 * time.Second, func()(string, error) {

35 | // fetch data from database or other sources

36 | return "value1", nil

37 | })

38 | ```

39 |

40 | ### Delete the cache

41 | ``` Go

42 | rc.TagAsDeleted(key)

43 | ```

44 |

45 | ## Batch usage

46 |

47 | ### Batch read cache

48 | ``` Go

49 | import "github.com/dtm-labs/rockscache"

50 |

51 | // create a rockscache client with the default options

52 | rc := rockscache.NewClient(redisClient, rockscache.NewDefaultOptions())

53 |

54 | // use FetchBatch to fetch data

55 | // 1. the first parameter is the list of keys to fetch

56 | // 2. the second parameter is the data expiration time

57 | // 3. the third parameter is the batch data fetch function, which is called for the keys missing from the cache

58 | // the fetch function receives the indexes (positions in the keys list) of the

59 | // keys missing from the cache, so it can load all missing data in one batch query.

60 | // it returns a map from each of those indexes to the corresponding value,

61 | // encoded as a string

62 | v, err := rc.FetchBatch([]string{"key1", "key2", "key3"}, 300*time.Second, func(idxs []int) (map[int]string, error) {

63 | // fetch data from database or other sources

64 | values := make(map[int]string)

65 | for _, i := range idxs {

66 | values[i] = fmt.Sprintf("value%d", i)

67 | }

68 | return values, nil

69 | })

70 | ```
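To make the `FetchBatch` callback contract concrete, here is a minimal, stdlib-only sketch of the read path it implies. It is not rockscache's implementation. A plain `map[string]string` stands in for Redis, the helper name `fetchBatchSketch` is hypothetical, and there is no locking, expiry or consistency handling.

```go
package main

// fetchBatchSketch models the FetchBatch callback contract with a plain map
// standing in for Redis (hypothetical helper, not part of rockscache).
// It collects the indexes of keys missing from the cache, calls fn once with
// all of them, writes the loaded values back, and returns values keyed by index.
func fetchBatchSketch(keys []string, cache map[string]string,
	fn func(idxs []int) (map[int]string, error)) (map[int]string, error) {
	result := make(map[int]string, len(keys))
	var missing []int
	for i, k := range keys {
		if v, ok := cache[k]; ok {
			result[i] = v // cache hit
		} else {
			missing = append(missing, i) // remember the position of the miss
		}
	}
	if len(missing) == 0 {
		return result, nil
	}
	loaded, err := fn(missing) // one batch query for all misses
	if err != nil {
		return nil, err
	}
	for _, i := range missing {
		v := loaded[i] // in this sketch, an index absent from loaded becomes "" (an empty result)
		result[i] = v
		cache[keys[i]] = v // fill the cache so the next call is a hit
	}
	return result, nil
}
```

With `key2` already cached, `fn` is called once with `[0 2]`, and the returned map holds all three positions.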

71 |

72 | ### Batch delete cache

73 | ``` Go

74 | rc.TagAsDeletedBatch(keys)

75 | ```

76 |

77 | ## Eventual consistency

78 | Introducing a cache creates a consistency problem in a distributed system, because the same data is stored in two places at once: the database and Redis. For background on this problem and an overview of popular Redis caching solutions, see:

79 | - [https://yunpengn.github.io/blog/2019/05/04/consistent-redis-sql/](https://yunpengn.github.io/blog/2019/05/04/consistent-redis-sql/)

80 |

81 | However, every caching solution we have seen so far fails to handle the following inconsistency scenario unless versioning is introduced at the application level.

82 |

83 |

78 |

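79 | ### Solution

80 | This project offers a brand-new solution, mark-as-deleted, which guarantees data consistency between the cache and the database and solves this hard problem. The solution is the first of its kind, a patent has been filed for it, and it is now open-sourced for everyone to use.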

81 |

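82 | If developers call `Fetch` when reading data and make sure to call `TagAsDeleted` after updating the database, the cache is guaranteed to be eventually consistent. When step 5 in the diagram above tries to write v1, the write is eventually discarded.

83 | - For how to ensure that `TagAsDeleted` is called after the database is updated, see [Atomicity of DB and cache operations](https://dtm.pub/app/cache.html#atomic)

84 | - For why the write of v1 in step 5 is discarded, see [Cache consistency](https://dtm.pub/app/cache.html)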
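The following toy model illustrates the mark-as-deleted idea inside a single process. It is a hypothetical sketch, not rockscache's Redis-based implementation, which is described in the linked Cache consistency article. The type `markDeleteCache` and its lock-owner field are assumptions made for illustration. The key point is that `tagAsDeleted` marks the key stale and revokes the lock of any reader that is still refilling it, so the late write of v1 in step 5 is rejected.

```go
package main

import "sync"

// entry is one cached key in this toy model (hypothetical fields).
type entry struct {
	value     string
	stale     bool   // set by tagAsDeleted: the value must be reloaded
	lockOwner string // reader currently allowed to write a fresh value; "" = none
}

// markDeleteCache is a single-process toy model of "tag as deleted": instead
// of removing the key, tagAsDeleted marks it stale and revokes the lock, so a
// reader that loaded an old DB value before the update cannot write it back.
type markDeleteCache struct {
	mu   sync.Mutex
	data map[string]*entry
}

func newMarkDeleteCache() *markDeleteCache {
	return &markDeleteCache{data: make(map[string]*entry)}
}

// lock records owner as the reader that will refill key after a miss.
func (c *markDeleteCache) lock(key, owner string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	e, ok := c.data[key]
	if !ok {
		e = &entry{}
		c.data[key] = e
	}
	e.lockOwner = owner
}

// set stores value only if owner still holds the lock and reports whether
// the write was applied.
func (c *markDeleteCache) set(key, owner, value string) bool {
	c.mu.Lock()
	defer c.mu.Unlock()
	e, ok := c.data[key]
	if !ok || e.lockOwner != owner {
		return false // lock revoked by tagAsDeleted: drop the stale write
	}
	e.value, e.stale, e.lockOwner = value, false, ""
	return true
}

// tagAsDeleted is called after the DB update: it marks the value stale and
// revokes any in-flight lock.
func (c *markDeleteCache) tagAsDeleted(key string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	if e, ok := c.data[key]; ok {
		e.stale = true
		e.lockOwner = ""
	}
}
```

In the diagram's scenario, reader A takes the lock and reads v1. The writer then updates the DB to v2 and calls `tagAsDeleted`. A's `set` of v1 is refused, and the next reader's write of v2 succeeds.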

85 |

86 | For a complete runnable example, see [dtm-cases/cache](https://github.com/dtm-labs/dtm-cases/tree/main/cache)

87 |

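88 | ## Strongly consistent access

89 | If your application uses a cache and requires strong consistency rather than eventual consistency, enable the `StrongConsistency` option; the access methods stay the same.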

90 | ``` Go

91 | rc.Options.StrongConsistency = true

92 | ```

93 |

94 | For the detailed principles, see [Cache consistency](https://dtm.pub/app/cache.html); for examples, see [dtm-cases/cache](https://github.com/dtm-labs/dtm-cases/tree/main/cache)

95 |

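96 | ## Downgrading and strong consistency

97 | The library supports downgrading through two switches:

98 | - `DisableCacheRead`: disables cache reads, default `false`. When enabled, `Fetch` does not read from the cache but calls `fn` directly to fetch the data.

99 | - `DisableCacheDelete`: disables cache deletes, default `false`. When enabled, `TagAsDeleted` does nothing and returns immediately.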

100 |

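101 | When Redis has problems and you need to downgrade, use these two switches. If you also need strongly consistent access while downgrading and upgrading, rockscache supports that as well.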
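Here is a minimal sketch of what the two switches mean for the call path. It uses a hypothetical in-memory `toyClient` instead of rockscache's `Client`; only the option names `DisableCacheRead` and `DisableCacheDelete` come from the library. It does not model the strong-consistency handling during a downgrade.

```go
package main

// downgradeOptions mirrors the two switches described above; this struct and
// toyClient are hypothetical illustrations, not rockscache types.
type downgradeOptions struct {
	DisableCacheRead   bool
	DisableCacheDelete bool
}

// toyClient uses a plain map in place of Redis.
type toyClient struct {
	opts  downgradeOptions
	cache map[string]string
}

// fetch reads through the cache unless cache reads are disabled, in which
// case it calls fn directly and leaves the cache untouched.
func (c *toyClient) fetch(key string, fn func() (string, error)) (string, error) {
	if c.opts.DisableCacheRead {
		return fn()
	}
	if v, ok := c.cache[key]; ok {
		return v, nil
	}
	v, err := fn()
	if err != nil {
		return "", err
	}
	c.cache[key] = v
	return v, nil
}

// tagAsDeleted does nothing and returns immediately when cache deletes are disabled.
func (c *toyClient) tagAsDeleted(key string) error {
	if c.opts.DisableCacheDelete {
		return nil
	}
	delete(c.cache, key)
	return nil
}
```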

102 |

103 | For the detailed principles, see [Cache consistency](https://dtm.pub/app/cache.html); for examples, see [dtm-cases/cache](https://github.com/dtm-labs/dtm-cases/tree/main/cache)

104 |

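105 | ## Anti-breakdown

106 | Caching through this library comes with built-in anti-breakdown protection. Within a process, `Fetch` uses `singleflight` so that concurrent requests do not all go to Redis; across processes, a distributed lock in Redis keeps requests from multiple processes from hitting the DB at the same time, so that only one data query request ultimately reaches the DB.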

107 |

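108 | This project's anti-breakdown also gives faster response times when hot cached data is deleted. If a hot cache entry takes 3s to compute, a typical anti-breakdown solution makes every request for that entry wait those 3s, whereas this project's solution returns immediately.

109 | ## Anti-penetration

110 | Caching through this library comes with built-in anti-penetration protection. When `fn` in `Fetch` returns an empty string, the result is treated as empty and its expiry time is set to the `EmptyExpire` option.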

111 |

112 | `EmptyExpire` defaults to 60s. If it is set to 0, anti-penetration is turned off and empty results are not cached.

113 |

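114 | ## Anti-avalanche

115 | Caching through this library comes with built-in anti-avalanche protection. `RandomExpireAdjustment` defaults to 0.1: if the expiry time is set to 600s, the actual expiry time is a random value between `540s` and `600s`, so that entries do not all expire at the same time.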

116 |

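117 | ## Contact us

118 | ### WeChat official account

119 | The official dtm-labs WeChat account, 《分布式事务》 (Distributed Transactions), shares in-depth articles on distributed transactions and the latest dtm-labs news.

120 | ### Chat group

121 | If you want faster feedback, or want to learn more about other users' experiences, you are welcome to join our WeChat group.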

122 |

123 | Add the author on WeChat (yedf2008) or scan the QR code to add him, include the note `rockscache`, and follow the instructions to join the group.

124 |

125 |

126 |

127 | You are welcome to use [dtm-labs/rockscache](https://github.com/dtm-labs/rockscache); please give us a star to show your support!

128 |


--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | [codecov](https://codecov.io/gh/dtm-labs/rockscache)

4 | [Go Report Card](https://goreportcard.com/report/github.com/dtm-labs/rockscache)

5 | [Go Reference](https://pkg.go.dev/github.com/dtm-labs/rockscache)

6 |

7 | English | [简体中文](https://github.com/dtm-labs/rockscache/blob/main/helper/README-cn.md)

8 |

9 | # RocksCache

10 | The first Redis cache library to ensure eventual consistency and strong consistency with DB.

11 |

12 | ## Features

13 | - Eventual Consistency: ensures eventual consistency of cache even in extreme cases

14 | - Strong consistency: provides strong consistent access to applications

15 | - Anti-breakdown: a better solution for cache breakdown

16 | - Anti-penetration

17 | - Anti-avalanche

18 | - Batch Query

19 |

20 | ## Usage

21 | This library uses the most common cache management policy: update the DB first, then delete the cache.

22 |

23 | ### Read cache

24 | ``` Go

25 | import "github.com/dtm-labs/rockscache"

26 |

27 | // create a rockscache client with the default options

28 | rc := rockscache.NewClient(redisClient, rockscache.NewDefaultOptions())

29 |

30 | // use Fetch to fetch data

31 | // 1. the first parameter is the key of the data

32 | // 2. the second parameter is the data expiration time

33 | // 3. the third parameter is the data fetch function which is called when the cache does not exist

34 | v, err := rc.Fetch("key1", 300 * time.Second, func()(string, error) {

35 | // fetch data from database or other sources

36 | return "value1", nil

37 | })

38 | ```

39 |

40 | ### Delete the cache

41 | ``` Go

42 | rc.TagAsDeleted(key)

43 | ```

44 |

45 | ## Batch usage

46 |

47 | ### Batch read cache

48 | ``` Go

49 | import "github.com/dtm-labs/rockscache"

50 |

51 | // create a rockscache client with the default options

52 | rc := rockscache.NewClient(redisClient, rockscache.NewDefaultOptions())

53 |

54 | // use FetchBatch to fetch data

55 | // 1. the first parameter is the list of keys to fetch

56 | // 2. the second parameter is the data expiration time

57 | // 3. the third parameter is the batch data fetch function, which is called for the keys missing from the cache

58 | // the fetch function receives the indexes (positions in the keys list) of the

59 | // keys missing from the cache, so it can load all missing data in one batch query.

60 | // it returns a map from each of those indexes to the corresponding value,

61 | // encoded as a string

62 | v, err := rc.FetchBatch([]string{"key1", "key2", "key3"}, 300*time.Second, func(idxs []int) (map[int]string, error) {

63 | // fetch data from database or other sources

64 | values := make(map[int]string)

65 | for _, i := range idxs {

66 | values[i] = fmt.Sprintf("value%d", i)

67 | }

68 | return values, nil

69 | })

70 | ```

71 |

72 | ### Batch delete cache

73 | ``` Go

74 | rc.TagAsDeletedBatch(keys)

75 | ```

76 |

77 | ## Eventual consistency

78 | Introducing a cache creates a consistency problem in a distributed system, because the same data is stored in two places at once: the database and Redis. For background on this problem and an overview of popular Redis caching solutions, see:

79 | - [https://yunpengn.github.io/blog/2019/05/04/consistent-redis-sql/](https://yunpengn.github.io/blog/2019/05/04/consistent-redis-sql/)

80 |

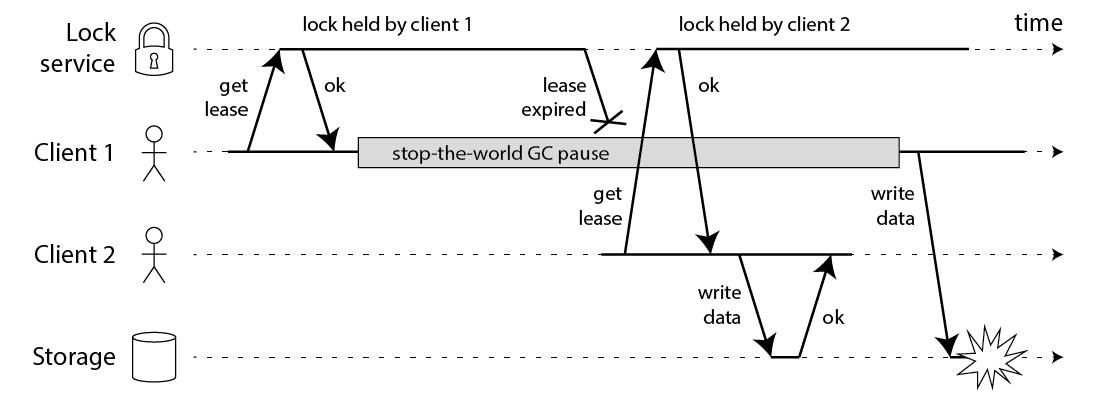

81 | However, every caching solution we have seen so far fails to handle the following inconsistency scenario unless versioning is introduced at the application level.

82 |

83 |  84 |

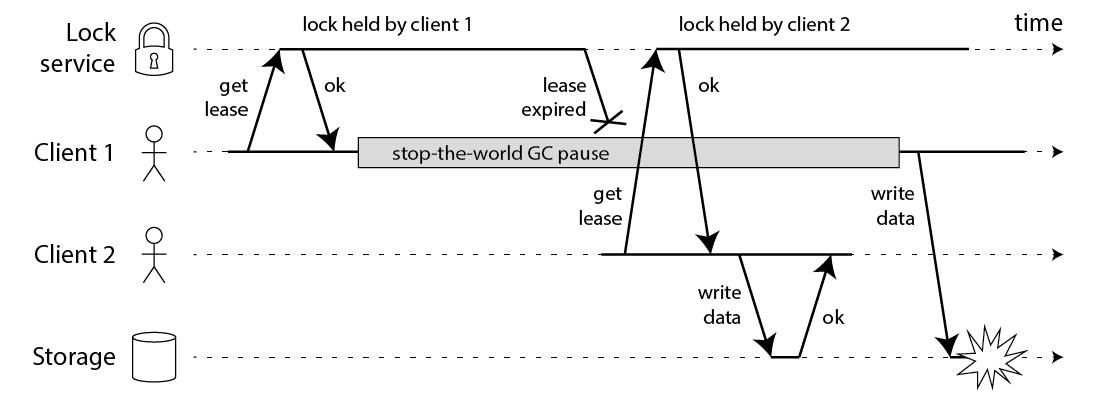

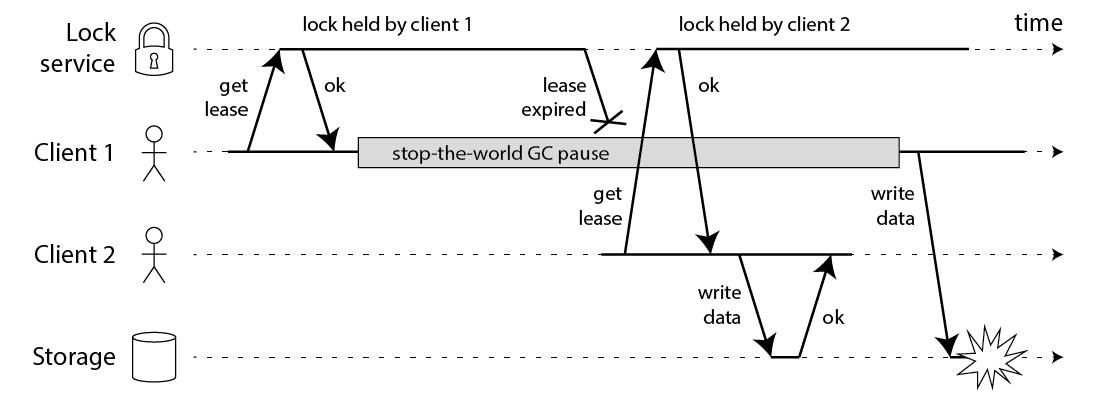

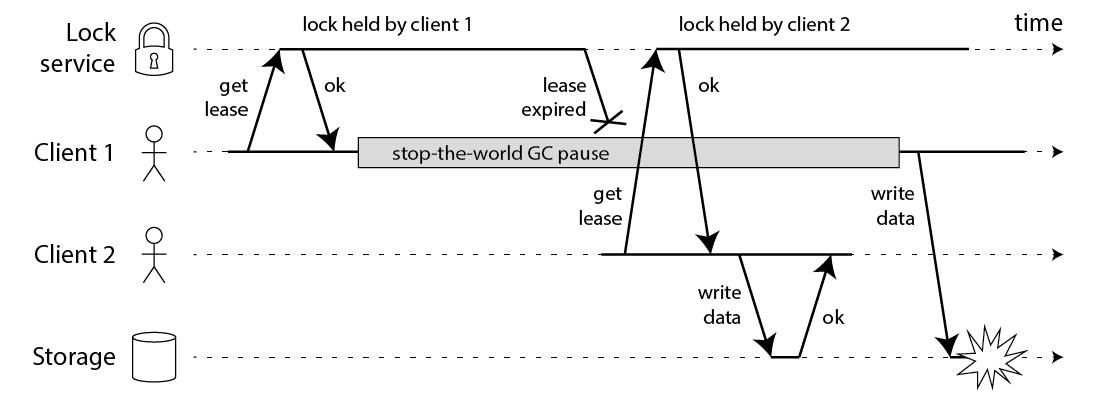

85 | Even if you use lock to do the updating, there are still corner cases that can cause inconsistency.

86 |

87 |

84 |

85 | Even if you use lock to do the updating, there are still corner cases that can cause inconsistency.

86 |

87 |  88 |

89 | ### Solution

90 | This project offers a brand-new solution that guarantees data consistency between the cache and the database without introducing versioning. The solution is the first of its kind, has been patented, and is now open-sourced for everyone to use.

91 |

92 | If developers call `Fetch` when reading data and make sure to call `TagAsDeleted` after updating the database, the cache is guaranteed to be eventually consistent. When step 5 in the diagram above tries to write v1 to the cache, the write is eventually discarded.

93 | - See [Atomicity of DB and cache operations](https://en.dtm.pub/app/cache.html#atomic) for how to ensure that `TagAsDeleted` is called after the database is updated.

94 | - See [Cache consistency](https://en.dtm.pub/app/cache.html) for why the write of v1 in step 5 is discarded.

95 |

96 | For a full runnable example, see [dtm-cases/cache](https://github.com/dtm-labs/dtm-cases/tree/main/cache)

97 |

98 | ## Strongly consistent access

99 | If your application uses a cache and requires strong consistency rather than eventual consistency, enable the `StrongConsistency` option; the access methods stay the same.

100 | ``` Go

101 | rc.Options.StrongConsistency = true

102 | ```

103 |

104 | Refer to [cache consistency](https://en.dtm.pub/app/cache.html) for detailed principles and [dtm-cases/cache](https://github.com/dtm-labs/dtm-cases/tree/main/cache) for examples

105 |

106 | ## Downgrading and strong consistency

107 | The library supports downgrading through two switches:

108 | - `DisableCacheRead`: disables cache reads, default `false`. When enabled, `Fetch` does not read from the cache but calls `fn` directly to fetch the data.

109 | - `DisableCacheDelete`: disables cache deletes, default `false`. When enabled, `TagAsDeleted` does nothing and returns immediately.

110 |

111 | When Redis has problems and you need to downgrade, use these two switches. If you also need strongly consistent access during a downgrade, rockscache supports that as well.

112 |

113 | Refer to [cache-consistency](https://en.dtm.pub/app/cache.html) for detailed principles and [dtm-cases/cache](https://github.com/dtm-labs/dtm-cases/tree/main/cache) for examples

114 |

115 | ## Anti-Breakdown

116 | Caching through this library comes with built-in anti-breakdown protection. Within a process, `Fetch` uses `singleflight` so that concurrent requests do not all go to Redis; across processes, a distributed lock in Redis keeps requests from multiple processes from hitting the DB at the same time, so that only one data query request ultimately reaches the DB.

117 |

118 | This anti-breakdown design also gives faster response times when hot cached data is deleted. If a hot cache entry takes 3s to compute, a typical anti-breakdown solution makes every request for that entry wait those 3s, whereas this project's solution returns immediately.

119 |

120 | ## Anti-Penetration

121 | Caching through this library comes with built-in anti-penetration protection. When `fn` in `Fetch` returns an empty string, the result is treated as empty and its expiry time is set to the `EmptyExpire` option.

122 |

123 | `EmptyExpire` defaults to 60s. If it is set to 0, anti-penetration is turned off and empty results are not cached.

124 |

125 | ## Anti-Avalanche

126 | Caching through this library comes with built-in anti-avalanche protection. `RandomExpireAdjustment` defaults to 0.1: if the expiry time is set to 600s, the actual expiry time is a random value between `540s` and `600s`, so that entries do not all expire at the same time.

127 |

128 | ## Contact us

129 |

130 | ## Chat Group

131 |

132 | Join the chat via [https://discord.gg/dV9jS5Rb33](https://discord.gg/dV9jS5Rb33).

133 |

134 | ## Give a star! ⭐

135 |

136 | If you think this project is interesting, or helpful to you, please give a star!

137 |

--------------------------------------------------------------------------------

/helper/README-en.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | [codecov](https://codecov.io/gh/dtm-labs/rockscache)

4 | [Go Report Card](https://goreportcard.com/report/github.com/dtm-labs/rockscache)

5 | [Go Reference](https://pkg.go.dev/github.com/dtm-labs/rockscache)

6 |

7 | English | [简体中文](https://github.com/dtm-labs/rockscache/blob/main/helper/README-cn.md)

8 |

9 | # RocksCache

10 | The first Redis cache library to ensure eventual consistency and strong consistency with DB.

11 |

12 | ## Features

13 | - Eventual Consistency: ensures eventual consistency of cache even in extreme cases

14 | - Strong consistency: provides strong consistent access to applications

15 | - Anti-breakdown: a better solution for cache breakdown

16 | - Anti-penetration

17 | - Anti-avalanche

18 | - Batch Query

19 |

20 | ## Usage

21 | This library uses the most common cache management policy: update the DB first, then delete the cache.

22 |

23 | ### Read cache

24 | ``` Go

25 | import "github.com/dtm-labs/rockscache"

26 |

27 | // create a rockscache client with the default options

28 | rc := rockscache.NewClient(redisClient, rockscache.NewDefaultOptions())

29 |

30 | // use Fetch to fetch data

31 | // 1. the first parameter is the key of the data

32 | // 2. the second parameter is the data expiration time

33 | // 3. the third parameter is the data fetch function which is called when the cache does not exist

34 | v, err := rc.Fetch("key1", 300*time.Second, func() (string, error) {

35 | // fetch data from database or other sources

36 | return "value1", nil

37 | })

38 | ```

39 |

40 | ### Delete the cache

41 | ``` Go

42 | rc.TagAsDeleted(key)

43 | ```

44 |

45 | ## Batch usage

46 |

47 | ### Batch read cache

48 | ``` Go

49 | import "github.com/dtm-labs/rockscache"

50 |

51 | // create a rockscache client with the default options

52 | rc := rockscache.NewClient(redisClient, rockscache.NewDefaultOptions())

53 |

54 | // use FetchBatch to fetch data

55 | // 1. the first parameter is the list of keys to fetch

56 | // 2. the second parameter is the data expiration time

57 | // 3. the third parameter is the batch data fetch function, which is called for the keys missing from the cache

58 | // the fetch function receives the indexes (positions in the keys list) of the

59 | // keys missing from the cache, so it can load all missing data in one batch query.

60 | // it returns a map from each of those indexes to the corresponding value,

61 | // encoded as a string

62 | v, err := rc.FetchBatch([]string{"key1", "key2", "key3"}, 300*time.Second, func(idxs []int) (map[int]string, error) {

63 | // fetch data from database or other sources

64 | values := make(map[int]string)

65 | for _, i := range idxs {

66 | values[i] = fmt.Sprintf("value%d", i)

67 | }

68 | return values, nil

69 | })

70 | ```

71 |

72 | ### Batch delete cache

73 | ``` Go

74 | rc.TagAsDeletedBatch(keys)

75 | ```

76 |

77 | ## Eventual consistency

78 | Introducing a cache creates a consistency problem in a distributed system, because the same data is stored in two places at once: the database and Redis. For background on this problem and an overview of popular Redis caching solutions, see:

79 | - [https://yunpengn.github.io/blog/2019/05/04/consistent-redis-sql/](https://yunpengn.github.io/blog/2019/05/04/consistent-redis-sql/)

80 |

81 | However, every caching solution we have seen so far fails to handle the following inconsistency scenario unless versioning is introduced at the application level.

82 |

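83 |

84 |

85 | Even if you use a lock to perform the update, there are still corner cases that can cause inconsistency.

86 |

87 |

88 |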

89 | ### Solution

90 | This project offers a brand-new solution that guarantees data consistency between the cache and the database without introducing versioning. The solution is the first of its kind, has been patented, and is now open-sourced for everyone to use.

91 |

92 | If developers call `Fetch` when reading data and make sure to call `TagAsDeleted` after updating the database, the cache is guaranteed to be eventually consistent. When step 5 in the diagram above tries to write v1 to the cache, the write is eventually discarded.

93 | - See [Atomicity of DB and cache operations](https://en.dtm.pub/app/cache.html#atomic) for how to ensure that `TagAsDeleted` is called after the database is updated.

94 | - See [Cache consistency](https://en.dtm.pub/app/cache.html) for why the write of v1 in step 5 is discarded.

95 |

96 | For a full runnable example, see [dtm-cases/cache](https://github.com/dtm-labs/dtm-cases/tree/main/cache)

97 |

98 | ## Strongly consistent access

99 | If your application uses a cache and requires strong consistency rather than eventual consistency, enable the `StrongConsistency` option; the access methods stay the same.

100 | ``` Go

101 | rc.Options.StrongConsistency = true

102 | ```

103 |

104 | Refer to [cache consistency](https://en.dtm.pub/app/cache.html) for detailed principles and [dtm-cases/cache](https://github.com/dtm-labs/dtm-cases/tree/main/cache) for examples

105 |

106 | ## Downgrading and strong consistency

107 | The library supports downgrading through two switches:

108 | - `DisableCacheRead`: disables cache reads, default `false`. When enabled, `Fetch` does not read from the cache but calls `fn` directly to fetch the data.

109 | - `DisableCacheDelete`: disables cache deletes, default `false`. When enabled, `TagAsDeleted` does nothing and returns immediately.

110 |

111 | When Redis has problems and you need to downgrade, use these two switches. If you also need strongly consistent access during a downgrade, rockscache supports that as well.

112 |

113 | Refer to [cache-consistency](https://en.dtm.pub/app/cache.html) for detailed principles and [dtm-cases/cache](https://github.com/dtm-labs/dtm-cases/tree/main/cache) for examples

114 |

115 | ## Anti-Breakdown

116 | Caching through this library comes with built-in anti-breakdown protection. Within a process, `Fetch` uses `singleflight` so that concurrent requests do not all go to Redis; across processes, a distributed lock in Redis keeps requests from multiple processes from hitting the DB at the same time, so that only one data query request ultimately reaches the DB.

117 |

118 | This anti-breakdown design also gives faster response times when hot cached data is deleted. If a hot cache entry takes 3s to compute, a typical anti-breakdown solution makes every request for that entry wait those 3s, whereas this project's solution returns immediately.

119 |

120 | ## Anti-Penetration

121 | Caching through this library comes with built-in anti-penetration protection. When `fn` in `Fetch` returns an empty string, the result is treated as empty and its expiry time is set to the `EmptyExpire` option.

122 |

123 | `EmptyExpire` defaults to 60s. If it is set to 0, anti-penetration is turned off and empty results are not cached.

124 |

125 | ## Anti-Avalanche

126 | Caching through this library comes with built-in anti-avalanche protection. `RandomExpireAdjustment` defaults to 0.1: if the expiry time is set to 600s, the actual expiry time is a random value between `540s` and `600s`, so that entries do not all expire at the same time.

127 |

128 | ## Contact us

129 |

130 | ## Chat Group

131 |

132 | Join the chat via [https://discord.gg/dV9jS5Rb33](https://discord.gg/dV9jS5Rb33).

133 |

134 | ## Give a star! ⭐

135 |

136 | If you think this project is interesting, or helpful to you, please give a star!

137 |

--------------------------------------------------------------------------------

/helper/README-en.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | [codecov](https://codecov.io/gh/dtm-labs/rockscache)

4 | [Go Report Card](https://goreportcard.com/report/github.com/dtm-labs/rockscache)

5 | [Go Reference](https://pkg.go.dev/github.com/dtm-labs/rockscache)

6 |

7 | English | [简体中文](https://github.com/dtm-labs/rockscache/blob/main/helper/README-cn.md)

8 |

9 | # RocksCache

10 | The first Redis cache library to ensure eventual consistency and strong consistency with DB.

11 |

12 | ## Features

13 | - Eventual Consistency: ensures eventual consistency of cache even in extreme cases

14 | - Strong consistency: provides strong consistent access to applications

15 | - Anti-breakdown: a better solution for cache breakdown

16 | - Anti-penetration

17 | - Anti-avalanche

18 | - Batch Query

19 |

20 | ## Usage

21 | This library uses the most common cache management policy: update the DB first, then delete the cache.

22 |

23 | ### Read cache

24 | ``` Go

25 | import "github.com/dtm-labs/rockscache"

26 |

27 | // create a rockscache client with the default options

28 | rc := rockscache.NewClient(redisClient, rockscache.NewDefaultOptions())

29 |

30 | // use Fetch to fetch data

31 | // 1. the first parameter is the key of the data

32 | // 2. the second parameter is the data expiration time

33 | // 3. the third parameter is the data fetch function which is called when the cache does not exist

34 | v, err := rc.Fetch("key1", 300*time.Second, func() (string, error) {

35 | // fetch data from database or other sources

36 | return "value1", nil

37 | })

38 | ```

39 |

40 | ### Delete the cache

41 | ``` Go

42 | rc.TagAsDeleted(key)

43 | ```

44 |

45 | ## Batch usage

46 |

47 | ### Batch read cache

48 | ``` Go

49 | import "github.com/dtm-labs/rockscache"

50 |

51 | // create a rockscache client with the default options

52 | rc := rockscache.NewClient(redisClient, rockscache.NewDefaultOptions())

53 |

54 | // use FetchBatch to fetch data

55 | // 1. the first parameter is the list of keys to fetch

56 | // 2. the second parameter is the data expiration time

57 | // 3. the third parameter is the batch data fetch function, which is called for the keys missing from the cache

58 | // the fetch function receives the indexes (positions in the keys list) of the

59 | // keys missing from the cache, so it can load all missing data in one batch query.

60 | // it returns a map from each of those indexes to the corresponding value,

61 | // encoded as a string

62 | v, err := rc.FetchBatch([]string{"key1", "key2", "key3"}, 300*time.Second, func(idxs []int) (map[int]string, error) {

63 | // fetch data from database or other sources

64 | values := make(map[int]string)

65 | for _, i := range idxs {

66 | values[i] = fmt.Sprintf("value%d", i)

67 | }

68 | return values, nil

69 | })

70 | ```

71 |

72 | ### Batch delete cache

73 | ``` Go

74 | rc.TagAsDeletedBatch(keys)

75 | ```

76 |

77 | ## Eventual consistency

78 | Introducing a cache creates a consistency problem in a distributed system, because the same data is stored in two places at once: the database and Redis. For background on this problem and an overview of popular Redis caching solutions, see:

79 | - [https://yunpengn.github.io/blog/2019/05/04/consistent-redis-sql/](https://yunpengn.github.io/blog/2019/05/04/consistent-redis-sql/)

80 |

81 | However, every caching solution we have seen so far fails to handle the following inconsistency scenario unless versioning is introduced at the application level.

82 |

83 |

84 |

85 | Even if you use a lock to perform the update, there are still corner cases that can cause inconsistency.

86 |

87 |

88 |

89 | ### Solution

90 | This project offers a brand-new solution that guarantees data consistency between the cache and the database without introducing versioning. The solution is the first of its kind, has been patented, and is now open-sourced for everyone to use.

91 |

92 | When the developer calls `Fetch` to read data and makes sure to call `TagAsDeleted` after updating the database, the cache is guaranteed to be eventually consistent. When step 5 in the diagram above writes v1 to the cache, that write is eventually ignored under this solution.

93 | - See [Atomicity of DB and cache operations](https://en.dtm.pub/app/cache.html#atomic) for how to ensure that TagAsDeleted is called after updating the database.

94 | - See [Cache consistency](https://en.dtm.pub/app/cache.html) for why data writes are ignored when step 5 is writing v1 to cache.
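The toy, single-process model below gives one way to picture how a stale write can be ignored. It is a simplified illustration and not the library's code; see the linked article for the actual design. The model assumes that each cache entry records which fetcher currently owns the right to write it, and that `TagAsDeleted` clears that owner. A fetcher that read v1 from the database before the update then no longer owns the entry, so its late write of v1 is dropped. The `entry`, `store`, `lock`, `write` and `tagAsDeleted` names are hypothetical, and the real library performs its checks atomically in Redis rather than in a Go map.

```go
package main

import "fmt"

// entry is a hypothetical cache record: the cached value plus the owner
// (if any) currently allowed to write a freshly computed value.
type entry struct {
	value string
	owner string
}

// store is a toy, non-concurrent stand-in for the cache.
type store struct{ m map[string]*entry }

// lock records owner as the writer for key, e.g. after a cache miss.
func (s *store) lock(key, owner string) {
	e, ok := s.m[key]
	if !ok {
		e = &entry{}
		s.m[key] = e
	}
	e.owner = owner
}

// write stores value only if owner still holds the entry; otherwise the
// write is ignored and false is returned.
func (s *store) write(key, owner, value string) bool {
	e, ok := s.m[key]
	if !ok || e.owner != owner {
		return false
	}
	e.value, e.owner = value, ""
	return true
}

// tagAsDeleted clears the owner, which invalidates any write in progress.
func (s *store) tagAsDeleted(key string) {
	if e, ok := s.m[key]; ok {
		e.owner = ""
	}
}

func main() {
	s := &store{m: map[string]*entry{}}
	// Fetcher A misses the cache, takes ownership and reads v1 from the DB.
	s.lock("k", "fetcherA")
	// The DB is updated to v2, then TagAsDeleted runs.
	s.tagAsDeleted("k")
	// A's late write of v1 (step 5) is rejected.
	fmt.Println("stale write applied:", s.write("k", "fetcherA", "v1")) // prints "stale write applied: false"
}
```

A later fetcher that takes ownership after `tagAsDeleted` can still write the fresh value v2, so the cache converges to the database.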

95 |

96 | For a full runnable example, see [dtm-cases/cache](https://github.com/dtm-labs/dtm-cases/tree/main/cache).

97 |

98 | ## Strongly consistent access

99 | If your application uses caching and requires strong consistency rather than eventual consistency, turn on the option `StrongConsisteny`; the access methods remain the same:

100 | ``` Go

101 | rc.Options.StrongConsisteny = true

102 | ```

103 |

104 | Refer to [cache consistency](https://en.dtm.pub/app/cache.html) for detailed principles and to [dtm-cases/cache](https://github.com/dtm-labs/dtm-cases/tree/main/cache) for examples.

105 |

106 | ## Downgrading and strong consistency

107 | The library supports downgrading through two switches:

108 | - `DisableCacheRead`: turns off cache reads, default `false`; if on, `Fetch` does not read from the cache but calls `fn` directly to fetch the data

109 | - `DisableCacheDelete`: turns off cache deletes, default `false`; if on, `TagAsDeleted` does nothing and returns directly
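The sketch below shows the effect of the two switches. The `options` struct and the `fetch` and `tagAsDeleted` methods are hypothetical stand-ins that follow the behavior documented above, with a plain map instead of Redis. As a further simplification, `tagAsDeleted` here just removes the key, whereas the library marks the entry as deleted.

```go
package main

import "fmt"

// options mirrors the two downgrade switches described above (hypothetical struct).
type options struct {
	DisableCacheRead   bool
	DisableCacheDelete bool
}

// client is a toy cache client backed by a map.
type client struct {
	opts  options
	cache map[string]string
}

// fetch reads from the cache unless DisableCacheRead is set, in which case
// it calls fn directly without touching the cache.
func (c *client) fetch(key string, fn func() (string, error)) (string, error) {
	if c.opts.DisableCacheRead {
		return fn()
	}
	if v, ok := c.cache[key]; ok {
		return v, nil
	}
	v, err := fn()
	if err == nil {
		c.cache[key] = v
	}
	return v, err
}

// tagAsDeleted returns immediately when DisableCacheDelete is set; otherwise
// it simply removes the key (a simplification of the library's behavior).
func (c *client) tagAsDeleted(key string) error {
	if c.opts.DisableCacheDelete {
		return nil
	}
	delete(c.cache, key)
	return nil
}

func main() {
	c := &client{opts: options{DisableCacheRead: true}, cache: map[string]string{"k": "cached"}}
	v, _ := c.fetch("k", func() (string, error) { return "from-db", nil })
	fmt.Println(v) // prints "from-db": the cache was bypassed
}
```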

110 |

111 | When Redis has a problem and needs to be downgraded, you can control this with these two switches. If you need to maintain strongly consistent access even during a downgrade, rockscache supports that as well.

112 |

113 | Refer to [cache consistency](https://en.dtm.pub/app/cache.html) for detailed principles and to [dtm-cases/cache](https://github.com/dtm-labs/dtm-cases/tree/main/cache) for examples.

114 |

115 | ## Anti-Breakdown

116 | Caching through this library comes with an anti-breakdown feature. On the one hand, `Fetch` uses `singleflight` within the process so that concurrent requests from the same process send only one request to Redis. On the other hand, distributed locks in the Redis layer prevent multiple processes from querying the DB at the same time, so only one data query request ends up at the DB.

117 |

118 | This anti-breakdown mechanism also gives faster responses when hot cached data is deleted. If a hot data item takes 3s to compute, a typical anti-breakdown solution makes every request for it wait those 3s. With this project's solution in the default (eventually consistent) mode, requests immediately receive the previously cached value while the new one is being computed.
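The in-process half of this protection is the singleflight pattern: concurrent callers asking for the same key share one execution of the loader. The sketch below is a minimal, standard-library-only version of the pattern written for illustration, so it runs without extra dependencies. It is not the package rockscache uses and omits details such as panic handling. The Redis-level distributed lock is not shown. `group`, `Do` and `concurrentFetch` are hypothetical names.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"time"
)

// call tracks one in-flight invocation for a key.
type call struct {
	wg  sync.WaitGroup
	val string
	err error
}

// group deduplicates concurrent calls that share a key (hypothetical,
// minimal singleflight).
type group struct {
	mu sync.Mutex
	m  map[string]*call
}

// Do runs fn once per key at a time; concurrent callers with the same key
// wait for the first call and receive its result.
func (g *group) Do(key string, fn func() (string, error)) (string, error) {
	g.mu.Lock()
	if g.m == nil {
		g.m = make(map[string]*call)
	}
	if c, ok := g.m[key]; ok {
		g.mu.Unlock()
		c.wg.Wait() // another goroutine is already loading this key
		return c.val, c.err
	}
	c := &call{}
	c.wg.Add(1)
	g.m[key] = c
	g.mu.Unlock()

	c.val, c.err = fn()
	c.wg.Done()

	g.mu.Lock()
	delete(g.m, key)
	g.mu.Unlock()
	return c.val, c.err
}

// concurrentFetch starts n goroutines that all request the same hot key
// and reports how many times the slow loader actually ran.
func concurrentFetch(n int) (loads int32, results []string) {
	var g group
	var wg sync.WaitGroup
	results = make([]string, n)
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func(i int) {
			defer wg.Done()
			results[i], _ = g.Do("hot-key", func() (string, error) {
				atomic.AddInt32(&loads, 1)
				time.Sleep(100 * time.Millisecond) // simulate a slow DB query
				return "value", nil
			})
		}(i)
	}
	wg.Wait()
	return loads, results
}

func main() {
	loads, _ := concurrentFetch(10)
	fmt.Println("loader calls:", loads)
}
```

Because all ten goroutines start well within the simulated 100ms query, they normally share a single loader call.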

119 |

120 | ## Anti-Penetration

121 | Caching through this library also comes with an anti-penetration feature. When `fn` in `Fetch` returns an empty string, the result is treated as empty and is cached with its expiry time set to `EmptyExpire` from the rockscache options.

122 |

123 | `EmptyExpire` defaults to 60s; if it is set to 0, anti-penetration is turned off and empty results are not saved.

124 |

125 | ## Anti-Avalanche

126 | Caching through this library also comes with anti-avalanche protection. `RandomExpireAdjustment` in the rockscache options defaults to 0.1; with an expiry time of 600s, the actual expiry is set to a random value between `540s` and `600s`, so that cached data does not all expire at the same time.

127 |

128 | ## Contact us

129 |

130 | ## Chat Group

131 |

132 | Join the chat via [https://discord.gg/dV9jS5Rb33](https://discord.gg/dV9jS5Rb33).

133 |

134 | ## Give a star! ⭐

135 |

136 | If you think this project is interesting, or helpful to you, please give a star!

137 |

--------------------------------------------------------------------------------

/batch_test.go:

--------------------------------------------------------------------------------

1 | package rockscache

2 |

3 | import (

4 | "errors"

5 | "math/rand"

6 | "strconv"

7 | "testing"

8 | "time"

9 |

10 | "github.com/stretchr/testify/assert"

11 | )

12 |

13 | func genBatchDataFunc(values map[int]string, sleepMilli int) func(idxs []int) (map[int]string, error) {

14 | return func(idxs []int) (map[int]string, error) {

15 | debugf("batch fetching: %v", idxs)

16 | time.Sleep(time.Duration(sleepMilli) * time.Millisecond)

17 | return values, nil

18 | }

19 | }

20 |

21 | func genIdxs(to int) (idxs []int) {

22 | for i := 0; i < to; i++ {

23 | idxs = append(idxs, i)

24 | }

25 | return

26 | }

27 |

28 | func genKeys(idxs []int) (keys []string) {

29 | for _, i := range idxs {

30 | suffix := strconv.Itoa(i)

31 | k := "key" + suffix

32 | keys = append(keys, k)

33 | }

34 | return

35 | }

36 |

37 | func genValues(n int, prefix string) map[int]string {

38 | values := make(map[int]string)

39 | for i := 0; i < n; i++ {

40 | v := prefix + strconv.Itoa(i)

41 | values[i] = v

42 | }

43 | return values

44 | }

45 |

46 | func TestWeakFetchBatch(t *testing.T) {

47 | clearCache()

48 | rc := NewClient(rdb, NewDefaultOptions())

49 | began := time.Now()

50 | n := int(rand.Int31n(20) + 10)

51 | idxs := genIdxs(n)

52 | keys, values1, values2 := genKeys(idxs), genValues(n, "value_"), genValues(n, "eulav_")

53 | values3 := genValues(n, "vvvv_")

54 | go func() {

55 | dc2 := NewClient(rdb, NewDefaultOptions())

56 | v, err := dc2.FetchBatch(keys, 60*time.Second, genBatchDataFunc(values1, 200))

57 | assert.Nil(t, err)

58 | assert.Equal(t, values1, v)

59 | }()

60 | time.Sleep(20 * time.Millisecond)

61 |

62 | v, err := rc.FetchBatch(keys, 60*time.Second, genBatchDataFunc(values2, 200))

63 | assert.Nil(t, err)

64 | assert.Equal(t, values1, v)

65 | assert.True(t, time.Since(began) > time.Duration(150)*time.Millisecond)

66 |

67 | err = rc.TagAsDeletedBatch(keys)

68 | assert.Nil(t, err)

69 |

70 | began = time.Now()

71 | v, err = rc.FetchBatch(keys, 60*time.Second, genBatchDataFunc(values3, 200))

72 | assert.Nil(t, err)

73 | assert.Equal(t, values1, v)

74 | assert.True(t, time.Since(began) < time.Duration(200)*time.Millisecond)

75 |

76 | time.Sleep(300 * time.Millisecond)

77 | v, err = rc.FetchBatch(keys, 60*time.Second, genBatchDataFunc(values3, 200))

78 | assert.Nil(t, err)

79 | assert.Equal(t, values3, v)

80 | }

81 |

82 | func TestWeakFetchBatchOverlap(t *testing.T) {

83 | clearCache()

84 | rc := NewClient(rdb, NewDefaultOptions())

85 | began := time.Now()

86 | n := 100

87 | idxs := genIdxs(n)

88 | keys := genKeys(idxs)