├── .netrc
├── bot
│   ├── modules
│   │   ├── __init__.py
│   │   ├── deleteme.txt
│   │   ├── delete.py
│   │   ├── mirror_status.py
│   │   ├── count.py
│   │   ├── shell.py
│   │   ├── speedtest.py
│   │   ├── list.py
│   │   ├── watch.py
│   │   ├── updates.py
│   │   ├── cancel_mirror.py
│   │   ├── clone.py
│   │   ├── eval.py
│   │   ├── authorize.py
│   │   ├── config.py
│   │   └── torrent_search.py
│   ├── helper
│   │   ├── ext_utils
│   │   │   ├── __init__.py
│   │   │   ├── delete-me.txt
│   │   │   ├── exceptions.py
│   │   │   ├── db_handler.py
│   │   │   ├── fs_utils.py
│   │   │   └── bot_utils.py
│   │   ├── mirror_utils
│   │   │   ├── __init__.py
│   │   │   ├── download_utils
│   │   │   │   ├── deleteme.txt
│   │   │   │   ├── __init__.py
│   │   │   │   ├── download_helper.py
│   │   │   │   ├── telegram_downloader.py
│   │   │   │   ├── aria2_download.py
│   │   │   │   ├── youtube_dl_download_helper.py
│   │   │   │   ├── mega_downloader.py
│   │   │   │   └── qbit_downloader.py
│   │   │   ├── status_utils
│   │   │   │   ├── __init__.py
│   │   │   │   ├── deleteme.txt
│   │   │   │   ├── listeners.py
│   │   │   │   ├── tar_status.py
│   │   │   │   ├── extract_status.py
│   │   │   │   ├── status.py
│   │   │   │   ├── telegram_download_status.py
│   │   │   │   ├── clone_status.py
│   │   │   │   ├── upload_status.py
│   │   │   │   ├── youtube_dl_download_status.py
│   │   │   │   ├── gdownload_status.py
│   │   │   │   ├── mega_download_status.py
│   │   │   │   ├── qbit_download_status.py
│   │   │   │   └── aria_download_status.py
│   │   │   └── upload_utils
│   │   │       ├── __init__.py
│   │   │       ├── deleteme.txt
│   │   │       └── gdtot_helper.py
│   │   ├── telegram_helper
│   │   │   ├── __init__.py
│   │   │   ├── deleteme.txt
│   │   │   ├── button_build.py
│   │   │   ├── bot_commands.py
│   │   │   ├── filters.py
│   │   │   └── message_utils.py
│   │   ├── custom_filters.py
│   │   └── __init__.py
│   ├── __main__.py
│   └── __init__.py
├── _config.yml
├── heroku.yml
├── captain-definition
├── requirements-cli.txt
├── aria.bat
├── vps.md
├── début.sh
├── Dockerfile
├── .github
│   └── workflows
│       └── manual.yml
├── alive.py
├── generate_drive_token.py
├── qBittorrent.conf
├── aria.sh
├── config.env
├── heroku-guide.md
├── add_to_team_drive.py
├── extract
├── pextract
└── nodes.py

/.netrc:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/bot/modules/__init__.py:

--------------------------------------------------------------------------------

1 | #TEKEAYUSH

--------------------------------------------------------------------------------

/bot/modules/deleteme.txt:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/_config.yml:

--------------------------------------------------------------------------------

1 | theme: jekyll-theme-cayman

--------------------------------------------------------------------------------

/bot/helper/ext_utils/__init__.py:

--------------------------------------------------------------------------------

1 | #TEKEAYUSH

--------------------------------------------------------------------------------

/bot/helper/ext_utils/delete-me.txt:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/bot/helper/mirror_utils/__init__.py:

--------------------------------------------------------------------------------

1 | #TEKEAYUSH

--------------------------------------------------------------------------------

/bot/helper/telegram_helper/__init__.py:

--------------------------------------------------------------------------------

1 | #TEKEAYUSH

--------------------------------------------------------------------------------

/bot/helper/telegram_helper/deleteme.txt:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/bot/helper/mirror_utils/download_utils/deleteme.txt:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/bot/helper/mirror_utils/status_utils/__init__.py:

--------------------------------------------------------------------------------

1 | #TEKEAYUSH

--------------------------------------------------------------------------------

/bot/helper/mirror_utils/status_utils/deleteme.txt:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/bot/helper/mirror_utils/upload_utils/__init__.py:

--------------------------------------------------------------------------------

1 | #TEKEAYUSH

--------------------------------------------------------------------------------

/bot/helper/mirror_utils/upload_utils/deleteme.txt:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/bot/helper/mirror_utils/download_utils/__init__.py:

--------------------------------------------------------------------------------

1 | #TEKEAYUSH

--------------------------------------------------------------------------------

/heroku.yml:

--------------------------------------------------------------------------------

1 | build:
2 |   docker:
3 |     web: Dockerfile
4 | run:
5 |   web: bash début.sh
6 |

--------------------------------------------------------------------------------

/captain-definition:

--------------------------------------------------------------------------------

1 | {
2 |   "schemaVersion": 2,
3 |   "dockerfilePath": "./Dockerfile"
4 | }
5 |

--------------------------------------------------------------------------------

/requirements-cli.txt:

--------------------------------------------------------------------------------

1 | oauth2client

2 | google-api-python-client

3 | progress

4 | progressbar2

5 | httplib2shim

6 | google_auth_oauthlib

7 | pyrogram

8 |

--------------------------------------------------------------------------------

/aria.bat:

--------------------------------------------------------------------------------

1 | aria2c --enable-rpc --rpc-listen-all=false --rpc-listen-port 6800 --max-connection-per-server=10 --rpc-max-request-size=1024M --seed-time=0.01 --min-split-size=10M --follow-torrent=mem --split=10 --daemon=true --allow-overwrite=true

2 |

--------------------------------------------------------------------------------

/bot/helper/ext_utils/exceptions.py:

--------------------------------------------------------------------------------

1 | class DirectDownloadLinkException(Exception):
2 |     """No method was found for extracting a direct download link from the HTTP link"""
3 |     pass
4 |
5 |
6 | class NotSupportedExtractionArchive(Exception):
7 |     """The archive format the user is trying to extract is not supported"""
8 |     pass
9 |

--------------------------------------------------------------------------------

/vps.md:

--------------------------------------------------------------------------------

1 | ## Run the following:
2 | 1. Clone the repository:
3 | ```
4 | git clone https://github.com/TheCaduceus/Dr.Torrent/
5 | cd Dr.Torrent
6 | ```
7 | 2. Install the requirements (Ubuntu):
8 | ```
9 | sudo apt install python3
10 | sudo snap install docker
11 | ```
12 | On Arch Linux, install them with pacman instead:
13 | ```
14 | sudo pacman -S docker python
15 | ```
16 |

--------------------------------------------------------------------------------

/début.sh:

--------------------------------------------------------------------------------

1 | if [[ -n $TOKEN_PICKLE_URL ]]; then
2 |     wget -q "$TOKEN_PICKLE_URL" -O /usr/src/app/token.pickle
3 | fi
4 |
5 | if [[ -n $ACCOUNTS_ZIP_URL ]]; then
6 |     wget -q "$ACCOUNTS_ZIP_URL" -O /usr/src/app/accounts.zip
7 |     unzip /usr/src/app/accounts.zip -d /usr/src/app/accounts
8 |     rm /usr/src/app/accounts.zip
9 | fi
10 |
11 | gunicorn wserver:start_server --bind 0.0.0.0:$PORT --worker-class aiohttp.GunicornWebWorker & qbittorrent-nox -d --webui-port=8090 & python3 alive.py & ./aria.sh; python3 -m bot
12 |

--------------------------------------------------------------------------------

/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM breakdowns/mega-sdk-python:latest

2 |

3 | WORKDIR /usr/src/app

4 | RUN chmod 777 /usr/src/app

5 |

6 | COPY extract /usr/local/bin

7 | COPY pextract /usr/local/bin

8 | RUN chmod +x /usr/local/bin/extract && chmod +x /usr/local/bin/pextract

9 |

10 | COPY requirements.txt .

11 | RUN pip3 install --no-cache-dir -r requirements.txt

12 |

13 | COPY . .

14 | COPY .netrc /root/.netrc

15 | RUN chmod 600 /usr/src/app/.netrc /root/.netrc

16 | RUN chmod +x aria.sh

17 |

18 | CMD ["bash","début.sh"]

19 |

--------------------------------------------------------------------------------

/.github/workflows/manual.yml:

--------------------------------------------------------------------------------

1 | name: Deploy on Heroku
2 | on: workflow_dispatch
3 |
4 | jobs:
5 |   deploy:
6 |     runs-on: ubuntu-latest
7 |     steps:
8 |       - uses: actions/checkout@v2
9 |       - uses: akhileshns/heroku-deploy@v3.12.12
10 |         with:
11 |           heroku_api_key: ${{secrets.HEROKU_API_KEY}}
12 |           heroku_app_name: ${{secrets.HEROKU_APP_NAME}}
13 |           heroku_email: ${{secrets.HEROKU_EMAIL}}
14 |           usedocker: true
15 |           docker_heroku_process_type: web
16 |           stack: "container"
17 |           region: "us"
18 |         env:
19 |           HD_CONFIG_FILE_URL: ${{secrets.CONFIG_FILE_URL}}
20 |

--------------------------------------------------------------------------------

/alive.py:

--------------------------------------------------------------------------------

1 | import os
2 | import time
3 |
4 | import requests
5 | from dotenv import load_dotenv
6 |
7 | load_dotenv('config.env')
8 |
9 | # Public URL of the bot's web server; an unset or empty value disables pinging.
10 | BASE_URL = os.environ.get('BASE_URL_OF_BOT', '').strip() or None
11 |
12 | # IS_VPS=true is taken to mean the host never idles the app, so no keep-alive is needed.
13 | IS_VPS = os.environ.get('IS_VPS', 'False').lower() == 'true'
14 |
15 | if not IS_VPS and BASE_URL is not None:
16 |     # Periodically request the bot's own URL so the hosting platform does not idle it.
17 |     while True:
18 |         time.sleep(1000)
19 |         try:
20 |             requests.get(BASE_URL, timeout=30)
21 |         except requests.RequestException:
22 |             # Ignore transient network errors and retry on the next cycle.
23 |             pass
24 |

--------------------------------------------------------------------------------

/bot/helper/custom_filters.py:

--------------------------------------------------------------------------------

1 | from pyrogram import filters
2 |
3 | def callback_data(data):
4 |     def func(flt, client, callback_query):
5 |         return callback_query.data in flt.data
6 |
7 |     data = data if isinstance(data, list) else [data]
8 |     return filters.create(
9 |         func,
10 |         'CustomCallbackDataFilter',
11 |         data=data
12 |     )
13 |
14 | def callback_chat(chats):
15 |     def func(flt, client, callback_query):
16 |         return callback_query.message.chat.id in flt.chats
17 |
18 |     chats = chats if isinstance(chats, list) else [chats]
19 |     return filters.create(
20 |         func,
21 |         'CustomCallbackChatsFilter',
22 |         chats=chats
23 |     )
24 |

--------------------------------------------------------------------------------
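The two helpers in custom_filters.py rely on pyrogram's `filters.create`. That function stores the extra keyword arguments (`data`, `chats`) on the filter object, and the predicate then receives that object as `flt`. The sketch below reproduces only the membership check in plain Python, so it runs without a Telegram client. `make_filter` is a hypothetical stand-in for `filters.create` and is not pyrogram's implementation.

```python
from types import SimpleNamespace
from typing import Any, Callable, List, Union


def make_filter(func: Callable, **attrs: Any) -> Callable[[Any, Any], bool]:
    """Hypothetical stand-in for pyrogram's filters.create: keep attrs on an
    object and hand that object to func as its first argument."""
    flt = SimpleNamespace(**attrs)
    return lambda client, update: func(flt, client, update)


def callback_data(data: Union[str, List[str]]):
    def func(flt, client, callback_query):
        return callback_query.data in flt.data

    # Accept a single value or a list, as the original helper does.
    data = data if isinstance(data, list) else [data]
    return make_filter(func, data=data)


is_cancel = callback_data(["cancel", "cancel_all"])
print(is_cancel(None, SimpleNamespace(data="cancel")))  # True
print(is_cancel(None, SimpleNamespace(data="status")))  # False
```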

/bot/helper/telegram_helper/button_build.py:

--------------------------------------------------------------------------------

1 | from telegram import InlineKeyboardButton
2 |
3 |
4 | class ButtonMaker:
5 |     def __init__(self):
6 |         self.button = []
7 |
8 |     def buildbutton(self, key, link):
9 |         self.button.append(InlineKeyboardButton(text=key, url=link))
10 |
11 |     def sbutton(self, key, data):
12 |         self.button.append(InlineKeyboardButton(text=key, callback_data=data))
13 |
14 |     def build_menu(self, n_cols, footer_buttons=None, header_buttons=None):
15 |         menu = [self.button[i:i + n_cols] for i in range(0, len(self.button), n_cols)]
16 |         if header_buttons:
17 |             menu.insert(0, header_buttons)
18 |         if footer_buttons:
19 |             menu.append(footer_buttons)
20 |         return menu
21 |

--------------------------------------------------------------------------------
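`build_menu` splits the flat list of buttons into rows of `n_cols`. It can then put a header row on top and a footer row at the bottom. The sketch below applies the same slicing to plain strings, an assumption made so it runs without python-telegram-bot. In the bot the rows hold `InlineKeyboardButton` objects, and the resulting list of rows is the shape that `InlineKeyboardMarkup` accepts.

```python
from typing import List, Optional


def build_menu(buttons: List[str], n_cols: int,
               footer_buttons: Optional[List[str]] = None,
               header_buttons: Optional[List[str]] = None) -> List[List[str]]:
    # Slice the flat button list into rows of at most n_cols buttons.
    menu = [buttons[i:i + n_cols] for i in range(0, len(buttons), n_cols)]
    if header_buttons:
        menu.insert(0, header_buttons)  # header row goes on top
    if footer_buttons:
        menu.append(footer_buttons)  # footer row goes at the bottom
    return menu


print(build_menu(["A", "B", "C", "D", "E"], 2, footer_buttons=["Close"]))
# [['A', 'B'], ['C', 'D'], ['E'], ['Close']]
```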

/generate_drive_token.py:

--------------------------------------------------------------------------------

1 | import pickle
2 | import os
3 | from google_auth_oauthlib.flow import InstalledAppFlow
4 | from google.auth.transport.requests import Request
5 |
6 | credentials = None
7 | __G_DRIVE_TOKEN_FILE = "token.pickle"
8 | __OAUTH_SCOPE = ["https://www.googleapis.com/auth/drive"]
9 | if os.path.exists(__G_DRIVE_TOKEN_FILE):
10 |     with open(__G_DRIVE_TOKEN_FILE, 'rb') as f:
11 |         credentials = pickle.load(f)
12 | if credentials is None or not credentials.valid:
13 |     if credentials and credentials.expired and credentials.refresh_token:
14 |         # Refresh an expired token instead of running the consent flow again.
15 |         credentials.refresh(Request())
16 |     else:
17 |         flow = InstalledAppFlow.from_client_secrets_file(
18 |             'credentials.json', __OAUTH_SCOPE)
19 |         # Run the OAuth consent flow through a temporary local server on a free port.
20 |         credentials = flow.run_local_server(port=0)
21 |
22 | # Save the credentials for the next run
23 | with open(__G_DRIVE_TOKEN_FILE, 'wb') as token:
24 |     pickle.dump(credentials, token)

--------------------------------------------------------------------------------

/bot/helper/mirror_utils/status_utils/listeners.py:

--------------------------------------------------------------------------------

1 | class MirrorListeners:
2 |     def __init__(self, context, update):
3 |         self.bot = context
4 |         self.update = update
5 |         self.message = update.message
6 |         self.uid = self.message.message_id
7 |
8 |     def onDownloadStarted(self):
9 |         raise NotImplementedError
10 |
11 |     def onDownloadProgress(self):
12 |         raise NotImplementedError
13 |
14 |     def onDownloadComplete(self):
15 |         raise NotImplementedError
16 |
17 |     def onDownloadError(self, error: str):
18 |         raise NotImplementedError
19 |
20 |     def onUploadStarted(self):
21 |         raise NotImplementedError
22 |
23 |     def onUploadProgress(self):
24 |         raise NotImplementedError
25 |
26 |     def onUploadComplete(self, link: str):
27 |         raise NotImplementedError
28 |
29 |     def onUploadError(self, error: str):
30 |         raise NotImplementedError
31 |

--------------------------------------------------------------------------------
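`MirrorListeners` is an abstract callback interface. Each download or upload engine calls these hooks, and a concrete subclass decides how to react. Below is a minimal sketch built on a trimmed copy of the base class. It assumes a hypothetical `LoggingListener` and a stand-in `update` object made with `SimpleNamespace`; the real bot passes python-telegram-bot objects instead.

```python
from types import SimpleNamespace


class MirrorListeners:
    # Trimmed copy of the base class above: only what this sketch needs.
    def __init__(self, context, update):
        self.bot = context
        self.update = update
        self.message = update.message
        self.uid = self.message.message_id

    def onDownloadComplete(self):
        raise NotImplementedError

    def onDownloadError(self, error: str):
        raise NotImplementedError


class LoggingListener(MirrorListeners):
    """Hypothetical listener that records events instead of messaging a chat."""

    def __init__(self, context, update):
        super().__init__(context, update)
        self.events = []

    def onDownloadComplete(self):
        self.events.append(f"{self.uid}: download complete")

    def onDownloadError(self, error: str):
        self.events.append(f"{self.uid}: download failed ({error})")


fake_update = SimpleNamespace(message=SimpleNamespace(message_id=42))
listener = LoggingListener(context=None, update=fake_update)
listener.onDownloadComplete()
listener.onDownloadError("timeout")
print(listener.events)
# ['42: download complete', '42: download failed (timeout)']
```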

/qBittorrent.conf:

--------------------------------------------------------------------------------

1 | [AutoRun]

2 | enabled=true

3 | program=

4 |

5 | [LegalNotice]

6 | Accepted=true

7 |

8 | [BitTorrent]

9 | Session\AsyncIOThreadsCount=8

10 | Session\SlowTorrentsDownloadRate=100

11 | Session\SlowTorrentsInactivityTimer=600

12 |

13 | [Preferences]

14 | Advanced\AnnounceToAllTrackers=true

15 | Advanced\AnonymousMode=false

16 | Advanced\IgnoreLimitsLAN=true

17 | Advanced\RecheckOnCompletion=true

18 | Advanced\LtTrackerExchange=true

19 | Bittorrent\MaxConnecs=3000

20 | Bittorrent\MaxConnecsPerTorrent=500

21 | Bittorrent\DHT=true

22 | Bittorrent\DHTPort=6881

23 | Bittorrent\PeX=true

24 | Bittorrent\LSD=true

25 | Bittorrent\sameDHTPortAsBT=true

26 | Downloads\DiskWriteCacheSize=32

27 | Downloads\PreAllocation=true

28 | Downloads\UseIncompleteExtension=true

29 | General\PreventFromSuspendWhenDownloading=true

30 | Queueing\IgnoreSlowTorrents=true

31 | Queueing\MaxActiveDownloads=15

32 | Queueing\MaxActiveTorrents=50

33 | Queueing\QueueingEnabled=false

34 | WebUI\Enabled=true

35 | WebUI\Port=8090

36 |

--------------------------------------------------------------------------------
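The WebUI port configured here (8090) has to match the `--webui-port=8090` flag that début.sh passes to `qbittorrent-nox`. To check values like this, you can inspect the INI-style file with Python's `configparser`. This is only a sketch for reading the file: qBittorrent loads it with its own settings code, not with `configparser`. The snippet also parses an excerpt rather than the full file.

```python
import configparser

# Excerpt of qBittorrent.conf; a raw string keeps the backslashes in the keys.
QBIT_CONF = r"""
[LegalNotice]
Accepted=true

[Preferences]
Queueing\MaxActiveDownloads=15
WebUI\Enabled=true
WebUI\Port=8090
"""

parser = configparser.ConfigParser(interpolation=None)
parser.optionxform = str  # keep key case as written instead of lowercasing
parser.read_string(QBIT_CONF)

prefs = parser["Preferences"]
webui_port = prefs.getint("WebUI\\Port")
print(webui_port)  # 8090
print(prefs.getboolean("WebUI\\Enabled"))  # True
```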

/bot/helper/mirror_utils/status_utils/tar_status.py:

--------------------------------------------------------------------------------

1 | from .status import Status
2 | from bot.helper.ext_utils.bot_utils import get_readable_file_size, MirrorStatus
3 |
4 |
5 | class TarStatus(Status):
6 |     def __init__(self, name, path, size):
7 |         self.__name = name
8 |         self.__path = path
9 |         self.__size = size
10 |
11 |     # The progress of the tar function cannot be tracked, so we just return dummy values.
12 |     # If this becomes possible in the future, we should implement it.
13 |
14 |     def progress(self):
15 |         return '0'
16 |
17 |     def speed(self):
18 |         return '0'
19 |
20 |     def name(self):
21 |         return self.__name
22 |
23 |     def path(self):
24 |         return self.__path
25 |
26 |     def size(self):
27 |         return get_readable_file_size(self.__size)
28 |
29 |     def eta(self):
30 |         return '0s'
31 |
32 |     def status(self):
33 |         return MirrorStatus.STATUS_ARCHIVING
34 |
35 |     def processed_bytes(self):
36 |         return 0
37 |

--------------------------------------------------------------------------------

/bot/helper/mirror_utils/status_utils/extract_status.py:

--------------------------------------------------------------------------------

1 | from .status import Status
2 | from bot.helper.ext_utils.bot_utils import get_readable_file_size, MirrorStatus
3 |
4 |
5 | class ExtractStatus(Status):
6 |     def __init__(self, name, path, size):
7 |         self.__name = name
8 |         self.__path = path
9 |         self.__size = size
10 |
11 |     # The progress of the extract function cannot be tracked, so we just return dummy values.
12 |     # If this becomes possible in the future, we should implement it.
13 |
14 |     def progress(self):
15 |         return '0'
16 |
17 |     def speed(self):
18 |         return '0'
19 |
20 |     def name(self):
21 |         return self.__name
22 |
23 |     def path(self):
24 |         return self.__path
25 |
26 |     def size(self):
27 |         return get_readable_file_size(self.__size)
28 |
29 |     def eta(self):
30 |         return '0s'
31 |
32 |     def status(self):
33 |         return MirrorStatus.STATUS_EXTRACTING
34 |
35 |     def processed_bytes(self):
36 |         return 0
37 |

--------------------------------------------------------------------------------

/bot/helper/mirror_utils/download_utils/download_helper.py:

--------------------------------------------------------------------------------

1 | import threading
2 |
3 |
4 | class MethodNotImplementedError(NotImplementedError):
5 |     def __init__(self):
6 |         super().__init__('Not implemented method')
7 |
8 |
9 | class DownloadHelper:
10 |     def __init__(self):
11 |         self.name = ''  # Name of the download; empty string if no download has been started
12 |         self.size = 0.0  # Size of the download
13 |         self.downloaded_bytes = 0.0  # Bytes downloaded
14 |         self.speed = 0.0  # Download speed in bytes per second
15 |         self.progress = 0.0
16 |         self.progress_string = '0.00%'
17 |         self.eta = 0  # Estimated time until the download completes
18 |         self.eta_string = '0s'  # Human-readable form of eta
19 |         self._resource_lock = threading.Lock()
20 |
21 |     def add_download(self, link: str, path):
22 |         raise MethodNotImplementedError
23 |
24 |     def cancel_download(self):
25 |         # Returns None if successfully cancelled, else error string
26 |         raise MethodNotImplementedError
27 |

--------------------------------------------------------------------------------
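`DownloadHelper` is a base class: subclasses must override `add_download` and `cancel_download`. When the constructor calls `super().__init__('Not implemented method')`, any un-overridden method raises an ordinary `NotImplementedError` with that message. Passing `self` as the first argument to `super()` would raise `TypeError` instead. The sketch uses a trimmed copy of the class and a hypothetical `FakeDownloadHelper` subclass that updates its fields under the lock.

```python
import threading


class MethodNotImplementedError(NotImplementedError):
    def __init__(self):
        # Forward the message to the parent exception.
        super().__init__('Not implemented method')


class DownloadHelper:
    # Trimmed copy of the base class above.
    def __init__(self):
        self.name = ''
        self.size = 0.0
        self.downloaded_bytes = 0.0
        self._resource_lock = threading.Lock()

    def add_download(self, link: str, path):
        raise MethodNotImplementedError

    def cancel_download(self):
        raise MethodNotImplementedError


class FakeDownloadHelper(DownloadHelper):
    """Hypothetical subclass that pretends to download a fixed 1 KiB file."""

    def add_download(self, link: str, path):
        with self._resource_lock:  # fields may be read by status threads
            self.name = link.rsplit('/', 1)[-1]
            self.size = 1024.0
            self.downloaded_bytes = 1024.0

    def cancel_download(self):
        return None  # None signals a successful cancel, per the base class comment


try:
    DownloadHelper().add_download('https://example.com/file.bin', '/tmp')
except NotImplementedError as err:
    print(err)  # Not implemented method

helper = FakeDownloadHelper()
helper.add_download('https://example.com/file.bin', '/tmp')
print(helper.name, helper.downloaded_bytes)  # file.bin 1024.0
```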

/bot/modules/delete.py:

--------------------------------------------------------------------------------

1 | from telegram.ext import CommandHandler
2 | import threading
3 | from telegram import Update
4 | from bot import dispatcher, LOGGER
5 | from bot.helper.telegram_helper.message_utils import auto_delete_message, sendMessage
6 | from bot.helper.telegram_helper.filters import CustomFilters
7 | from bot.helper.telegram_helper.bot_commands import BotCommands
8 | from bot.helper.mirror_utils.upload_utils import gdriveTools
9 |
10 |
11 | def deletefile(update, context):
12 |     msg_args = update.message.text.split(None, 1)
13 |     msg = ''
14 |     try:
15 |         link = msg_args[1]
16 |         LOGGER.info(link)
17 |     except IndexError:
18 |         msg = 'Send a link along with the command'
19 |
20 |     if msg == '':
21 |         drive = gdriveTools.GoogleDriveHelper()
22 |         msg = drive.deletefile(link)
23 |     LOGGER.info(f"DeleteFileCmd : {msg}")
24 |     reply_message = sendMessage(msg, context.bot, update)
25 |
26 |     threading.Thread(target=auto_delete_message, args=(context.bot, update.message, reply_message)).start()
27 |
28 | delete_handler = CommandHandler(command=BotCommands.DeleteCommand, callback=deletefile, filters=CustomFilters.owner_filter | CustomFilters.sudo_user, run_async=True)
29 | dispatcher.add_handler(delete_handler)
30 |

--------------------------------------------------------------------------------
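`deletefile` treats everything after the command as the link. It splits the text once on the first run of whitespace (`split(None, 1)`) and treats a missing second element as a usage error. Below is a standalone sketch of that parsing step, with a hypothetical `parse_link_arg` helper and an example Drive-style URL.

```python
from typing import Optional, Tuple


def parse_link_arg(text: str) -> Tuple[Optional[str], str]:
    """Hypothetical helper: split '/del <link>' into (link, error_message)."""
    msg_args = text.split(None, 1)  # split on any whitespace, at most once
    try:
        return msg_args[1], ''
    except IndexError:
        return None, 'Send a link along with the command'


print(parse_link_arg('/del https://drive.google.com/file/d/abc/view'))
# ('https://drive.google.com/file/d/abc/view', '')
print(parse_link_arg('/del'))
# (None, 'Send a link along with the command')
```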

/aria.sh:

--------------------------------------------------------------------------------

1 | export MAX_DOWNLOAD_SPEED=0
2 | tracker_list=$(curl -Ns https://raw.githubusercontent.com/XIU2/TrackersListCollection/master/all.txt https://ngosang.github.io/trackerslist/trackers_all_http.txt https://raw.githubusercontent.com/DeSireFire/animeTrackerList/master/AT_all.txt https://raw.githubusercontent.com/hezhijie0327/Trackerslist/main/trackerslist_combine.txt | awk '$0' | tr '\n' ',' | sed 's/,$//')
3 | export MAX_CONCURRENT_DOWNLOADS=4
4 |
5 | aria2c --enable-rpc --rpc-listen-all=false --check-certificate=false \
6 |     --max-connection-per-server=10 --rpc-max-request-size=1024M \
7 |     --bt-tracker="$tracker_list" --bt-max-peers=0 --bt-tracker-connect-timeout=300 --bt-stop-timeout=1200 --min-split-size=10M \
8 |     --follow-torrent=mem --split=10 \
9 |     --daemon=true --allow-overwrite=true --max-overall-download-limit=$MAX_DOWNLOAD_SPEED \
10 |     --max-overall-upload-limit=1K --max-concurrent-downloads=$MAX_CONCURRENT_DOWNLOADS \
11 |     --peer-id-prefix=-qB4360- --user-agent=qBittorrent/4.3.6 --peer-agent=qBittorrent/4.3.6 \
12 |     --disk-cache=64M --file-allocation=prealloc --continue=true \
13 |     --max-file-not-found=0 --max-tries=20 --auto-file-renaming=true \
14 |     --bt-enable-lpd=true --seed-time=0.01 --seed-ratio=1.0 \
15 |     --content-disposition-default-utf8=true --http-accept-gzip=true --reuse-uri=true --netrc-path=/usr/src/app/.netrc
16 |

--------------------------------------------------------------------------------
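aria2's `--bt-tracker` option takes a comma-separated list of announce URIs. The tracker lists that aria.sh downloads therefore have to be flattened into a single line, which is the job of `awk '$0' | tr '\n' ','`. The Python sketch below does roughly the same transformation on sample text. It also removes duplicates, an extra step the script does not perform, added here on the assumption that the merged lists overlap.

```python
def build_tracker_option(raw_lists: str) -> str:
    """Join one-tracker-per-line text into a comma-separated --bt-tracker value."""
    # Roughly what `awk '$0'` does: drop empty lines (whitespace is trimmed too).
    trackers = [line.strip() for line in raw_lists.splitlines() if line.strip()]
    # Extra step: drop duplicates while keeping first-seen order.
    unique = list(dict.fromkeys(trackers))
    return ",".join(unique)


sample = """udp://tracker.example.org:1337/announce

http://tracker.example.net:80/announce
udp://tracker.example.org:1337/announce
"""
print(build_tracker_option(sample))
# udp://tracker.example.org:1337/announce,http://tracker.example.net:80/announce
```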

/bot/helper/mirror_utils/status_utils/status.py:

--------------------------------------------------------------------------------

1 | # Generic status class. All other status classes must inherit from this class.
2 |
3 |
4 | class Status:
5 |
6 |     def progress(self):
7 |         """
8 |         Calculates the progress of the mirror (upload or download)
9 |         :return: progress in percentage
10 |         """
11 |         raise NotImplementedError
12 |
13 |     def speed(self):
14 |         """:return: speed in bytes per second"""
15 |         raise NotImplementedError
16 |
17 |     def name(self):
18 |         """:return: name of the file/directory being processed"""
19 |         raise NotImplementedError
20 |
21 |     def path(self):
22 |         """:return: path of the file/directory"""
23 |         raise NotImplementedError
24 |
25 |     def size(self):
26 |         """:return: size of the file/folder"""
27 |         raise NotImplementedError
28 |
29 |     def eta(self):
30 |         """:return: ETA of the process to complete"""
31 |         raise NotImplementedError
32 |
33 |     def status(self):
34 |         """:return: string describing what the object of this class is tracking (upload/download/something
35 |         else)"""
36 |         raise NotImplementedError
37 |
38 |     def processed_bytes(self):
39 |         """:return: size of the file that has been processed (downloaded/uploaded/archived)"""
40 |         raise NotImplementedError
41 |

--------------------------------------------------------------------------------
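Every status class implements the `Status` interface. That lets the status-message code treat downloads, uploads, clones and archive jobs uniformly. Below is a minimal sketch of a new subclass. It assumes a hypothetical `InMemoryStatus` whose byte counter is updated by other code, and uses a trimmed copy of the base class.

```python
class Status:
    # Trimmed copy of the base interface above.
    def progress(self):
        raise NotImplementedError

    def size(self):
        raise NotImplementedError

    def processed_bytes(self):
        raise NotImplementedError


class InMemoryStatus(Status):
    """Hypothetical status for a transfer whose byte count is updated elsewhere."""

    def __init__(self, total_bytes: int):
        self.total_bytes = total_bytes
        self.done_bytes = 0

    def processed_bytes(self):
        return self.done_bytes

    def size(self):
        return self.total_bytes

    def progress(self):
        # Guard against zero-size transfers, as the clone/upload status classes do.
        if self.total_bytes == 0:
            return '0%'
        return f'{round(self.done_bytes / self.total_bytes * 100, 2)}%'


s = InMemoryStatus(total_bytes=2048)
s.done_bytes = 512
print(s.progress())  # 25.0%
```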

/bot/modules/mirror_status.py:

--------------------------------------------------------------------------------

1 | from telegram.ext import CommandHandler
2 | from bot import dispatcher, status_reply_dict, status_reply_dict_lock, download_dict, download_dict_lock
3 | from bot.helper.telegram_helper.message_utils import *
4 | from telegram.error import BadRequest
5 | from bot.helper.telegram_helper.filters import CustomFilters
6 | from bot.helper.telegram_helper.bot_commands import BotCommands
7 | import threading
8 |
9 |
10 | def mirror_status(update, context):
11 |     with download_dict_lock:
12 |         if len(download_dict) == 0:
13 |             message = "No active downloads"
14 |             reply_message = sendMessage(message, context.bot, update)
15 |             threading.Thread(target=auto_delete_message, args=(context.bot, update.message, reply_message)).start()
16 |             return
17 |     index = update.effective_chat.id
18 |     with status_reply_dict_lock:
19 |         if index in status_reply_dict.keys():
20 |             # Remove this chat's previous status message before sending a new one.
21 |             deleteMessage(context.bot, status_reply_dict[index])
22 |             del status_reply_dict[index]
23 |     sendStatusMessage(update, context.bot)
24 |     deleteMessage(context.bot, update.message)
25 |
26 |
27 | mirror_status_handler = CommandHandler(BotCommands.StatusCommand, mirror_status,
28 |                                        filters=CustomFilters.authorized_chat | CustomFilters.authorized_user, run_async=True)
29 | dispatcher.add_handler(mirror_status_handler)
30 |

--------------------------------------------------------------------------------

/bot/helper/telegram_helper/bot_commands.py:

--------------------------------------------------------------------------------

1 | class _BotCommands:
2 |     def __init__(self):
3 |         self.StartCommand = 'start'
4 |         self.MirrorCommand = 'mirror'
5 |         self.UnzipMirrorCommand = 'unzip'
6 |         self.TarMirrorCommand = 'tar'
7 |         self.ZipMirrorCommand = 'zip'
8 |         self.CancelMirror = 'cancel'
9 |         self.CancelAllCommand = 'cnlall'
10 |         self.ListCommand = 'list'
11 |         self.StatusCommand = 'status'
12 |         self.AuthorizedUsersCommand = 'users'
13 |         self.AuthorizeCommand = 'auth'
14 |         self.UnAuthorizeCommand = 'unauth'
15 |         self.AddSudoCommand = 'addsudo'
16 |         self.RmSudoCommand = 'rmsudo'
17 |         self.PingCommand = 'ping'
18 |         self.RestartCommand = 'restart'
19 |         self.StatsCommand = 'stat'
20 |         self.HelpCommand = 'hlp'
21 |         self.LogCommand = 'log'
22 |         self.SpeedCommand = 'speedtest'
23 |         self.CloneCommand = 'clone'
24 |         self.CountCommand = 'count'
25 |         self.WatchCommand = 'watch'
26 |         self.TarWatchCommand = 'tarwatch'
27 |         self.DeleteCommand = 'del'
28 |         self.ConfigMenuCommand = 'config'
29 |         self.ShellCommand = 'shell'
30 |         self.UpdateCommand = 'update'
31 |         self.ExecHelpCommand = 'exechelp'
32 |         self.TsHelpCommand = 'tshelp'
33 |         self.GDTOTCommand = 'gdtot'
34 |
35 | BotCommands = _BotCommands()
36 |

36 |

--------------------------------------------------------------------------------

/bot/modules/count.py:

--------------------------------------------------------------------------------

1 | from telegram.ext import CommandHandler
2 | from bot.helper.mirror_utils.upload_utils.gdriveTools import GoogleDriveHelper
3 | from bot.helper.telegram_helper.message_utils import deleteMessage, sendMessage
4 | from bot.helper.telegram_helper.filters import CustomFilters
5 | from bot.helper.telegram_helper.bot_commands import BotCommands
6 | from bot import dispatcher
7 |
8 |
9 | def countNode(update, context):
10 |     args = update.message.text.split(" ", maxsplit=1)
11 |     if len(args) > 1:
12 |         link = args[1]
13 |         msg = sendMessage(f"Counting: {link}", context.bot, update)
14 |         gd = GoogleDriveHelper()
15 |         result = gd.count(link)
16 |         deleteMessage(context.bot, msg)
17 |         user = update.message.from_user
18 |         # Mention the requester by @username, falling back to their first name.
19 |         uname = f'@{user.username}' if user.username else user.first_name
20 |         cc = f'\n\ncc: {uname}'
21 |         sendMessage(result + cc, context.bot, update)
22 |     else:
23 |         sendMessage("Provide a G-Drive shareable link to count.", context.bot, update)
24 |
25 | count_handler = CommandHandler(BotCommands.CountCommand, countNode, filters=CustomFilters.authorized_chat | CustomFilters.authorized_user, run_async=True)
26 | dispatcher.add_handler(count_handler)
27 |

29 |

--------------------------------------------------------------------------------

/bot/helper/mirror_utils/status_utils/telegram_download_status.py:

--------------------------------------------------------------------------------

1 | from bot import DOWNLOAD_DIR
2 | from bot.helper.ext_utils.bot_utils import MirrorStatus, get_readable_file_size, get_readable_time
3 | from .status import Status
4 |
5 |
6 | class TelegramDownloadStatus(Status):
7 |     def __init__(self, obj, listener):
8 |         self.obj = obj
9 |         self.uid = listener.uid
10 |         self.message = listener.message
11 |
12 |     def gid(self):
13 |         return self.obj.gid
14 |
15 |     def path(self):
16 |         return f"{DOWNLOAD_DIR}{self.uid}"
17 |
18 |     def processed_bytes(self):
19 |         return self.obj.downloaded_bytes
20 |
21 |     def size_raw(self):
22 |         return self.obj.size
23 |
24 |     def size(self):
25 |         return get_readable_file_size(self.size_raw())
26 |
27 |     def status(self):
28 |         return MirrorStatus.STATUS_DOWNLOADING
29 |
30 |     def name(self):
31 |         return self.obj.name
32 |
33 |     def progress_raw(self):
34 |         return self.obj.progress
35 |
36 |     def progress(self):
37 |         return f'{round(self.progress_raw(), 2)}%'
38 |
39 |     def speed_raw(self):
40 |         """
41 |         :return: Download speed in Bytes/Seconds
42 |         """
43 |         return self.obj.download_speed
44 |
45 |     def speed(self):
46 |         return f'{get_readable_file_size(self.speed_raw())}/s'
47 |
48 |     def eta(self):
49 |         try:
50 |             seconds = (self.size_raw() - self.processed_bytes()) / self.speed_raw()
51 |             return f'{get_readable_time(seconds)}'
52 |         except ZeroDivisionError:
53 |             return '-'
54 |
55 |     def download(self):
56 |         return self.obj
57 |

--------------------------------------------------------------------------------
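The ETA this class reports is the number of remaining bytes divided by the current speed. A stalled transfer (speed 0) is shown as `-`. The sketch below isolates that calculation. `readable_time` is a hypothetical stand-in for `bot_utils.get_readable_time`, whose source is not shown here, so its `1d1h1m1s` output format is an assumption.

```python
def readable_time(seconds: float) -> str:
    """Hypothetical stand-in for bot_utils.get_readable_time."""
    seconds = int(seconds)
    parts = []
    for unit, length in (('d', 86400), ('h', 3600), ('m', 60)):
        value, seconds = divmod(seconds, length)
        if value:
            parts.append(f'{value}{unit}')
    parts.append(f'{seconds}s')
    return ''.join(parts)


def eta(size: float, processed: float, speed: float) -> str:
    # Remaining bytes divided by the current speed; a stalled transfer has no ETA.
    try:
        return readable_time((size - processed) / speed)
    except ZeroDivisionError:
        return '-'


print(eta(size=10_000_000, processed=4_000_000, speed=50_000))  # 2m0s
print(eta(size=10_000_000, processed=4_000_000, speed=0))  # -
```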

/bot/helper/mirror_utils/status_utils/clone_status.py:

--------------------------------------------------------------------------------

1 | from .status import Status
2 | from bot.helper.ext_utils.bot_utils import MirrorStatus, get_readable_file_size, get_readable_time
3 |
4 |
5 | class CloneStatus(Status):
6 |     def __init__(self, obj, size, update, gid):
7 |         self.cobj = obj
8 |         self.__csize = size
9 |         self.message = update.message
10 |         self.__cgid = gid
11 |
12 |     def processed_bytes(self):
13 |         return self.cobj.transferred_size
14 |
15 |     def size_raw(self):
16 |         return self.__csize
17 |
18 |     def size(self):
19 |         return get_readable_file_size(self.__csize)
20 |
21 |     def status(self):
22 |         return MirrorStatus.STATUS_CLONING
23 |
24 |     def name(self):
25 |         return self.cobj.name
26 |
27 |     def gid(self) -> str:
28 |         return self.__cgid
29 |
30 |     def progress_raw(self):
31 |         try:
32 |             return self.cobj.transferred_size / self.__csize * 100
33 |         except ZeroDivisionError:
34 |             return 0
35 |
36 |     def progress(self):
37 |         return f'{round(self.progress_raw(), 2)}%'
38 |
39 |     def speed_raw(self):
40 |         """
41 |         :return: Clone speed in Bytes/Seconds
42 |         """
43 |         return self.cobj.cspeed()
44 |
45 |     def speed(self):
46 |         return f'{get_readable_file_size(self.speed_raw())}/s'
47 |
48 |     def eta(self):
49 |         try:
50 |             seconds = (self.__csize - self.cobj.transferred_size) / self.speed_raw()
51 |             return f'{get_readable_time(seconds)}'
52 |         except ZeroDivisionError:
53 |             return '-'
54 |
55 |     def download(self):
56 |         return self.cobj
57 |

--------------------------------------------------------------------------------

/bot/modules/shell.py:

--------------------------------------------------------------------------------

1 | import subprocess

2 | from bot import LOGGER, dispatcher

3 | from telegram import ParseMode

4 | from telegram.ext import CommandHandler

5 | from bot.helper.telegram_helper.filters import CustomFilters

6 | from bot.helper.telegram_helper.bot_commands import BotCommands

7 |

8 |

9 | def shell(update, context):

10 | message = update.effective_message

11 | cmd = message.text.split(' ', 1)

12 | if len(cmd) == 1:

13 | message.reply_text('No command to execute was given.')

14 | return

15 | cmd = cmd[1]

16 | process = subprocess.Popen(

17 | cmd, stdout=subprocess.PIPE, stderr=subprocess.PIPE, shell=True)

18 | stdout, stderr = process.communicate()

19 | reply = ''

20 | stderr = stderr.decode()

21 | stdout = stdout.decode()

22 | if stdout:

23 | reply += f"*Stdout*\n`{stdout}`\n"

24 | LOGGER.info(f"Shell - {cmd} - {stdout}")

25 | if stderr:

26 | reply += f"*Stderr*\n`{stderr}`\n"

27 | LOGGER.error(f"Shell - {cmd} - {stderr}")

28 | if len(reply) > 3000:

29 | with open('shell_output.txt', 'w') as file:

30 | file.write(reply)

31 | with open('shell_output.txt', 'rb') as doc:

32 | context.bot.send_document(

33 | document=doc,

34 | filename=doc.name,

35 | reply_to_message_id=message.message_id,

36 | chat_id=message.chat_id)

37 | elif reply:

38 | message.reply_text(reply, parse_mode=ParseMode.MARKDOWN)

39 | else:

40 | message.reply_text('No Reply')

41 |

42 |

43 | SHELL_HANDLER = CommandHandler(BotCommands.ShellCommand, shell,

44 | filters=CustomFilters.owner_filter, run_async=True)

45 | dispatcher.add_handler(SHELL_HANDLER)

46 |

--------------------------------------------------------------------------------
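The `shell` handler runs a command, labels its stdout and stderr, and sends the result as a document once it is longer than 3000 characters. The same flow can be sketched without Telegram by using `subprocess.run`. `build_shell_reply` and its `limit` parameter are hypothetical. The sketch returns a placeholder instead of an empty string because Telegram rejects messages with empty text.

```python
import subprocess
from typing import Tuple


def build_shell_reply(cmd: str, limit: int = 3000) -> Tuple[str, bool]:
    """Run `cmd` in a shell; return (reply text, whether to send it as a file)."""
    # capture_output/text need Python 3.7+. shell=True matches the bot's behaviour,
    # so only a trusted user (the owner) should ever be able to reach this code.
    proc = subprocess.run(cmd, shell=True, capture_output=True, text=True)
    reply = ""
    if proc.stdout:
        reply += f"*Stdout*\n`{proc.stdout}`\n"
    if proc.stderr:
        reply += f"*Stderr*\n`{proc.stderr}`\n"
    if not reply:
        reply = "No Reply"  # never hand an empty string to the chat API
    return reply, len(reply) > limit


text, as_file = build_shell_reply("echo hello")
print(text)
print(as_file)  # False
```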

/bot/helper/mirror_utils/status_utils/upload_status.py:

--------------------------------------------------------------------------------

1 | from .status import Status

2 | from bot.helper.ext_utils.bot_utils import MirrorStatus, get_readable_file_size, get_readable_time

3 | from bot import DOWNLOAD_DIR

4 |

5 |

6 | class UploadStatus(Status):

7 | def __init__(self, obj, size, gid, listener):

8 | self.obj = obj

9 | self.__size = size

10 | self.uid = listener.uid

11 | self.message = listener.message

12 | self.__gid = gid

13 |

14 | def path(self):

15 | return f"{DOWNLOAD_DIR}{self.uid}"

16 |

17 | def processed_bytes(self):

18 | return self.obj.uploaded_bytes

19 |

20 | def size_raw(self):

21 | return self.__size

22 |

23 | def size(self):

24 | return get_readable_file_size(self.__size)

25 |

26 | def status(self):

27 | return MirrorStatus.STATUS_UPLOADING

28 |

29 | def name(self):

30 | return self.obj.name

31 |

32 | def progress_raw(self):

33 | try:

34 | return self.obj.uploaded_bytes / self.__size * 100

35 | except ZeroDivisionError:

36 | return 0

37 |

38 | def progress(self):

39 | return f'{round(self.progress_raw(), 2)}%'

40 |

41 | def speed_raw(self):

42 | """

43 | :return: Upload speed in Bytes/Seconds

44 | """

45 | return self.obj.speed()

46 |

47 | def speed(self):

48 | return f'{get_readable_file_size(self.speed_raw())}/s'

49 |

50 | def eta(self):

51 | try:

52 | seconds = (self.__size - self.obj.uploaded_bytes) / self.speed_raw()

53 | return f'{get_readable_time(seconds)}'

54 | except ZeroDivisionError:

55 | return '-'

56 |

57 | def gid(self) -> str:

58 | return self.__gid

59 |

60 | def download(self):

61 | return self.obj

62 |

--------------------------------------------------------------------------------
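Each status class implements the same informal interface, with methods such as `name`, `size`, `progress`, `speed`, `eta`, `status`, `gid`, and `download`. Code that renders the status message can therefore call these methods without knowing which downloader is behind a task. `status.py` is not shown in this section. The abstract base below is an assumption about how that interface could be expressed, not its actual contents, and `ExampleStatus` is hypothetical.

```python
from abc import ABC, abstractmethod


class Status(ABC):
    """Hypothetical abstract version of the common status interface."""

    @abstractmethod
    def name(self) -> str: ...

    @abstractmethod
    def size_raw(self) -> int: ...

    @abstractmethod
    def processed_bytes(self) -> int: ...

    @abstractmethod
    def status(self) -> str: ...

    def progress(self) -> str:
        # Shared implementation: subclasses only need to report byte counts.
        try:
            return f"{round(self.processed_bytes() / self.size_raw() * 100, 2)}%"
        except ZeroDivisionError:
            return "0%"


class ExampleStatus(Status):
    def __init__(self, name: str, size: int, done: int):
        self._name, self._size, self._done = name, size, done

    def name(self) -> str:
        return self._name

    def size_raw(self) -> int:
        return self._size

    def processed_bytes(self) -> int:
        return self._done

    def status(self) -> str:
        return "Uploading"


print(ExampleStatus("file.bin", 400, 100).progress())  # 25.0%
```

Moving `progress` into the base class would remove the copy that each status class currently carries. That is a design option, not something the listed files do.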

/bot/helper/mirror_utils/status_utils/youtube_dl_download_status.py:

--------------------------------------------------------------------------------

1 | from bot import DOWNLOAD_DIR

2 | from bot.helper.ext_utils.bot_utils import MirrorStatus, get_readable_file_size, get_readable_time

3 | from .status import Status

4 | from bot.helper.ext_utils.fs_utils import get_path_size

5 |

6 | class YoutubeDLDownloadStatus(Status):

7 | def __init__(self, obj, listener):

8 | self.obj = obj

9 | self.uid = listener.uid

10 | self.message = listener.message

11 |

12 | def gid(self):

13 | return self.obj.gid

14 |

15 | def path(self):

16 | return f"{DOWNLOAD_DIR}{self.uid}"

17 |

18 | def processed_bytes(self):

19 | if self.obj.downloaded_bytes != 0:

20 | return self.obj.downloaded_bytes

21 | else:

22 | return get_path_size(f"{DOWNLOAD_DIR}{self.uid}")

23 |

24 | def size_raw(self):

25 | return self.obj.size

26 |

27 | def size(self):

28 | return get_readable_file_size(self.size_raw())

29 |

30 | def status(self):

31 | return MirrorStatus.STATUS_DOWNLOADING

32 |

33 | def name(self):

34 | return self.obj.name

35 |

36 | def progress_raw(self):

37 | return self.obj.progress

38 |

39 | def progress(self):

40 | return f'{round(self.progress_raw(), 2)}%'

41 |

42 | def speed_raw(self):

43 | """

44 | :return: Download speed in Bytes/Seconds

45 | """

46 | return self.obj.download_speed

47 |

48 | def speed(self):

49 | return f'{get_readable_file_size(self.speed_raw())}/s'

50 |

51 | def eta(self):

52 | try:

53 | seconds = (self.size_raw() - self.processed_bytes()) / self.speed_raw()

54 | return f'{get_readable_time(seconds)}'

55 | except Exception:

56 | return '-'

57 |

58 | def download(self):

59 | return self.obj

60 |

--------------------------------------------------------------------------------
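youtube-dl may not have reported a byte count yet (`downloaded_bytes == 0`). In that case, `processed_bytes` falls back to measuring the download directory with `get_path_size`. That helper lives in `fs_utils.py`, which is not shown here. The sketch below is one plausible implementation using `os.walk`. It is an assumption, not the bot's actual code.

```python
import os
import tempfile


def get_path_size(path: str) -> int:
    """Total size in bytes of a file, or of every file below a directory."""
    if os.path.isfile(path):
        return os.path.getsize(path)
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            total += os.path.getsize(os.path.join(root, name))
    return total  # 0 if the path does not exist yet


# Usage: two files (10 + 5 bytes) in nested folders.
with tempfile.TemporaryDirectory() as tmp:
    os.makedirs(os.path.join(tmp, "sub"))
    with open(os.path.join(tmp, "a.bin"), "wb") as fh:
        fh.write(b"x" * 10)
    with open(os.path.join(tmp, "sub", "b.bin"), "wb") as fh:
        fh.write(b"y" * 5)
    print(get_path_size(tmp))  # 15
```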

/bot/helper/mirror_utils/status_utils/gdownload_status.py:

--------------------------------------------------------------------------------

1 | from .status import Status

2 | from bot.helper.ext_utils.bot_utils import MirrorStatus, get_readable_file_size, get_readable_time

3 | from bot import DOWNLOAD_DIR

4 |

5 |

6 | class DownloadStatus(Status):

7 | def __init__(self, obj, size, listener, gid):

8 | self.dobj = obj

9 | self.__dsize = size

10 | self.uid = listener.uid

11 | self.message = listener.message

12 | self.__dgid = gid

13 |

14 | def path(self):

15 | return f"{DOWNLOAD_DIR}{self.uid}"

16 |

17 | def processed_bytes(self):

18 | return self.dobj.downloaded_bytes

19 |

20 | def size_raw(self):

21 | return self.__dsize

22 |

23 | def size(self):

24 | return get_readable_file_size(self.__dsize)

25 |

26 | def status(self):

27 | return MirrorStatus.STATUS_DOWNLOADING

28 |

29 | def name(self):

30 | return self.dobj.name

31 |

32 | def gid(self) -> str:

33 | return self.__dgid

34 |

35 | def progress_raw(self):

36 | try:

37 | return self.dobj.downloaded_bytes / self.__dsize * 100

38 | except ZeroDivisionError:

39 | return 0

40 |

41 | def progress(self):

42 | return f'{round(self.progress_raw(), 2)}%'

43 |

44 | def speed_raw(self):

45 | """

46 | :return: Download speed in Bytes/Seconds

47 | """

48 | return self.dobj.dspeed()

49 |

50 | def speed(self):

51 | return f'{get_readable_file_size(self.speed_raw())}/s'

52 |

53 | def eta(self):

54 | try:

55 | seconds = (self.__dsize - self.dobj.downloaded_bytes) / self.speed_raw()

56 | return f'{get_readable_time(seconds)}'

57 | except ZeroDivisionError:

58 | return '-'

59 |

60 | def download(self):

61 | return self.dobj

62 |

--------------------------------------------------------------------------------
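All of these classes format sizes and speeds with `get_readable_file_size` from `bot_utils.py`, which is not included in this section. The sketch below is a minimal formatter of that kind. It assumes binary (1024-based) units and two decimal places, and both choices are guesses about the real helper.

```python
SIZE_UNITS = ["B", "KB", "MB", "GB", "TB", "PB"]


def get_readable_file_size(size_in_bytes) -> str:
    """Format a byte count with 1024-based units, e.g. 1536 -> '1.50KB'."""
    if size_in_bytes is None:
        return "0B"
    index = 0
    size = float(size_in_bytes)
    # Stop at the last unit so huge values cannot index past the list.
    while size >= 1024 and index < len(SIZE_UNITS) - 1:
        size /= 1024
        index += 1
    return f"{size:.2f}{SIZE_UNITS[index]}"


print(f"{get_readable_file_size(1536)}/s")  # 1.50KB/s
```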

/bot/modules/speedtest.py:

--------------------------------------------------------------------------------

1 | from speedtest import Speedtest

2 | from bot.helper.telegram_helper.filters import CustomFilters

3 | from bot import dispatcher

4 | from bot.helper.telegram_helper.bot_commands import BotCommands

5 | from bot.helper.telegram_helper.message_utils import sendMessage, editMessage

6 | from telegram.ext import CommandHandler

7 |

8 |

9 | def speedtest(update, context):

10 | speed = sendMessage("Running Speed Test . . . ", context.bot, update)

11 | test = Speedtest()

12 | test.get_best_server()

13 | test.download()

14 | test.upload()

15 | test.results.share()

16 | result = test.results.dict()

17 | string_speed = f'''

18 | Server

19 | Name: {result['server']['name']}

20 | Country: {result['server']['country']}, {result['server']['cc']}

21 | Sponsor: {result['server']['sponsor']}

22 | ISP: {result['client']['isp']}

23 | SpeedTest Results

24 | Upload: {speed_convert(result['upload'] / 8)}

25 | Download: {speed_convert(result['download'] / 8)}

26 | Ping: {result['ping']} ms

27 | ISP Rating: {result['client']['isprating']}

28 | '''

29 | editMessage(string_speed, speed)

30 |

31 |

32 | def speed_convert(size):

33 | """Convert a speed in bytes per second into a human-readable string."""

34 | power = 2 ** 10

35 | zero = 0

36 | units = {0: "B/s", 1: "KB/s", 2: "MB/s", 3: "GB/s", 4: "TB/s"}

37 | while size > power:

38 | size /= power

39 | zero += 1

40 | return f"{round(size, 2)} {units[zero]}"

41 |

42 |

43 | SPEED_HANDLER = CommandHandler(BotCommands.SpeedCommand, speedtest,

44 | filters=CustomFilters.authorized_chat | CustomFilters.authorized_user, run_async=True)

45 |

46 | dispatcher.add_handler(SPEED_HANDLER)

47 |

--------------------------------------------------------------------------------
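speedtest reports `download` and `upload` in bits per second, which is why the handler divides by 8 before formatting. The sketch below shows that conversion on its own. It uses byte-based unit labels and caps the unit index so that very large values cannot run past the last unit. `format_speed` is a hypothetical name.

```python
def format_speed(bits_per_second: float) -> str:
    """Convert a bits-per-second reading into a 1024-based bytes-per-second string."""
    size = bits_per_second / 8  # bits -> bytes
    units = ["B/s", "KB/s", "MB/s", "GB/s", "TB/s"]
    index = 0
    while size >= 1024 and index < len(units) - 1:
        size /= 1024
        index += 1
    return f"{round(size, 2)} {units[index]}"


print(format_speed(8 * 1024 * 1024))  # 1.0 MB/s
```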

/bot/helper/mirror_utils/status_utils/mega_download_status.py:

--------------------------------------------------------------------------------

1 | from bot.helper.ext_utils.bot_utils import get_readable_file_size, MirrorStatus, get_readable_time

2 | from bot import DOWNLOAD_DIR

3 | from .status import Status

4 |

5 |

6 | class MegaDownloadStatus(Status):

7 |

8 | def __init__(self, obj, listener):

9 | self.uid = obj.uid

10 | self.listener = listener

11 | self.obj = obj

12 | self.message = listener.message

13 |

14 | def name(self) -> str:

15 | return self.obj.name

16 |

17 | def progress_raw(self):

18 | try:

19 | return round(self.processed_bytes() / self.obj.size * 100,2)

20 | except ZeroDivisionError:

21 | return 0.0

22 |

23 | def progress(self):

24 | """Progress of download in percentage"""

25 | return f"{self.progress_raw()}%"

26 |

27 | def status(self) -> str:

28 | return MirrorStatus.STATUS_DOWNLOADING

29 |

30 | def processed_bytes(self):

31 | return self.obj.downloaded_bytes

32 |

33 | def eta(self):

34 | try:

35 | seconds = (self.size_raw() - self.processed_bytes()) / self.speed_raw()

36 | return f'{get_readable_time(seconds)}'

37 | except ZeroDivisionError:

38 | return '-'

39 |

40 | def size_raw(self):

41 | return self.obj.size

42 |

43 | def size(self) -> str:

44 | return get_readable_file_size(self.size_raw())

45 |

46 | def downloaded(self) -> str:

47 | return get_readable_file_size(self.obj.downloaded_bytes)

48 |

49 | def speed_raw(self):

50 | return self.obj.speed

51 |

52 | def speed(self) -> str:

53 | return f'{get_readable_file_size(self.speed_raw())}/s'

54 |

55 | def gid(self) -> str:

56 | return self.obj.gid

57 |

58 | def path(self) -> str:

59 | return f"{DOWNLOAD_DIR}{self.uid}"

60 |

61 | def download(self):

62 | return self.obj

63 |

--------------------------------------------------------------------------------
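`eta()` passes a number of seconds to `get_readable_time`, which is also defined in `bot_utils.py` and not shown here. The sketch below is a compact day/hour/minute/second formatter. It assumes that output style, and the real helper's format may differ.

```python
def get_readable_time(seconds: float) -> str:
    """Format seconds as e.g. '1h1m1s'; fractions of a second are dropped."""
    seconds = int(seconds)
    parts = []
    for label, length in (("d", 86400), ("h", 3600), ("m", 60)):
        value, seconds = divmod(seconds, length)
        if value:
            parts.append(f"{value}{label}")
    if seconds or not parts:  # always show something, e.g. '0s'
        parts.append(f"{seconds}s")
    return "".join(parts)


print(get_readable_time(3661))  # 1h1m1s
```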

/bot/helper/telegram_helper/filters.py:

--------------------------------------------------------------------------------

1 | from telegram.ext import MessageFilter

2 | from telegram import Message

3 | from bot import AUTHORIZED_CHATS, SUDO_USERS, OWNER_ID, download_dict, download_dict_lock

4 |

5 |

6 | class CustomFilters:

7 | class _OwnerFilter(MessageFilter):

8 | def filter(self, message):

9 | return bool(message.from_user.id == OWNER_ID)

10 |

11 | owner_filter = _OwnerFilter()

12 |

13 | class _AuthorizedUserFilter(MessageFilter):

14 | def filter(self, message):

15 | user_id = message.from_user.id

16 | return bool(user_id in AUTHORIZED_CHATS or user_id in SUDO_USERS or user_id == OWNER_ID)

17 |

18 | authorized_user = _AuthorizedUserFilter()

19 |

20 | class _AuthorizedChat(MessageFilter):

21 | def filter(self, message):

22 | return bool(message.chat.id in AUTHORIZED_CHATS)

23 |

24 | authorized_chat = _AuthorizedChat()

25 |

26 | class _SudoUser(MessageFilter):

27 | def filter(self,message):

28 | return bool(message.from_user.id in SUDO_USERS)

29 |

30 | sudo_user = _SudoUser()

31 |

32 | class _MirrorOwner(MessageFilter):

33 | def filter(self, message: Message):

34 | user_id = message.from_user.id

35 | if user_id == OWNER_ID:

36 | return True

37 | args = str(message.text).split(' ')

38 | if len(args) > 1:

39 | # Cancelling by gid

40 | with download_dict_lock:

41 | for message_id, status in download_dict.items():

42 | if status.gid() == args[1] and status.message.from_user.id == user_id:

43 | return True

44 | else:

45 | return False

46 | if not message.reply_to_message and len(args) == 1:

47 | return True

48 | # Cancelling by replying to original mirror message

49 | reply_user = message.reply_to_message.from_user.id

50 | return bool(reply_user == user_id)

51 | mirror_owner_filter = _MirrorOwner()

52 |

--------------------------------------------------------------------------------
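`_MirrorOwner` decides who may cancel a task. The owner can always cancel. `/cancel <gid>` is accepted only from the user who started that task. A bare `/cancel` passes through so that the handler can show usage help. A reply to a mirror message is accepted only when the replied-to message belongs to the same user. The sketch below restates that decision as a pure function. The `Task` class and `may_cancel` are hypothetical stand-ins for the status objects in `download_dict` and for the filter itself, and the `download_dict_lock` is omitted.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Task:
    """Hypothetical stand-in for a status object stored in download_dict."""
    gid: str
    requester_id: int


def may_cancel(user_id: int, owner_id: int, args: List[str],
               tasks: Dict[int, Task], reply_user_id: Optional[int]) -> bool:
    """Restate the decision made by CustomFilters._MirrorOwner."""
    if user_id == owner_id:
        return True  # the bot owner may cancel anything
    if len(args) > 1:
        # "/cancel <gid>": only the user who started that task may cancel it
        return any(t.gid == args[1] and t.requester_id == user_id
                   for t in tasks.values())
    if reply_user_id is None:
        return True  # bare "/cancel": let the handler reply with usage help
    # "/cancel" sent as a reply: the mirror message must be the user's own
    return reply_user_id == user_id
```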

/bot/helper/__init__.py:

--------------------------------------------------------------------------------

1 | from functools import wraps

2 | from typing import Optional

3 | import heroku3

4 | from pyrogram.types import Message

5 | from bot import HEROKU_API_KEY, HEROKU_APP_NAME

6 |

7 | def get_text(message: Message) -> Optional[str]:

8 | """Extract Text From Commands"""

9 | text_to_return = message.text

10 | if message.text is None:

11 | return None

12 | if " " in text_to_return:

13 | try:

14 | return message.text.split(None, 1)[1]

15 | except IndexError:

16 | return None

17 | else:

18 | return None

19 |

20 | heroku_client = None

21 | if HEROKU_API_KEY:

22 | heroku_client = heroku3.from_key(HEROKU_API_KEY)

23 |

24 | def check_heroku(func):

25 | @wraps(func)

26 | async def heroku_cli(client, message):

27 | heroku_app = None

28 | if not heroku_client:

29 | await message.reply_text("`Please add HEROKU_API_KEY for this function to work!`", parse_mode="markdown")

30 | elif not HEROKU_APP_NAME:

31 | await message.reply_text("`Please add HEROKU_APP_NAME for this function to work!`", parse_mode="markdown")

32 | if HEROKU_APP_NAME and heroku_client:

33 | try:

34 | heroku_app = heroku_client.app(HEROKU_APP_NAME)

35 | except Exception:

36 | await message.reply_text("`Heroku API key and app name don't match!`", parse_mode="markdown")

37 | if heroku_app:

38 | await func(client, message, heroku_app)

39 |

40 | return heroku_cli

41 |

42 | def fetch_heroku_git_url(api_key, app_name):

43 | if not api_key:

44 | return None

45 | if not app_name:

46 | return None

47 | heroku = heroku3.from_key(api_key)

48 | try:

49 | heroku_applications = heroku.apps()

50 | except Exception:

51 | return None

52 | heroku_app = None

53 | for app in heroku_applications:

54 | if app.name == app_name:

55 | heroku_app = app

56 | break

57 | if not heroku_app:

58 | return None

59 | return heroku_app.git_url.replace("https://", "https://api:" + api_key + "@")

60 |

61 | HEROKU_URL = fetch_heroku_git_url(HEROKU_API_KEY, HEROKU_APP_NAME)

62 |

--------------------------------------------------------------------------------
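`get_text` returns everything after the first space in a command. `fetch_heroku_git_url` inserts the API key into the app's HTTPS Git URL so that Git operations authenticate. Both are plain string transformations. The sketch below reproduces them closely on strings instead of Pyrogram messages and Heroku objects. `command_argument`, `authenticated_git_url`, and the example URL are hypothetical. The resulting URL contains the API key, so it should never be logged or sent to a chat.

```python
from typing import Optional


def command_argument(text: Optional[str]) -> Optional[str]:
    """Return the text after the command word, or None if there is none."""
    if text is None:
        return None
    parts = text.split(None, 1)
    return parts[1] if len(parts) == 2 else None


def authenticated_git_url(git_url: str, api_key: str) -> str:
    """Insert 'api:<key>@' after the scheme, as fetch_heroku_git_url does."""
    return git_url.replace("https://", "https://api:" + api_key + "@")


print(command_argument("/update now"))  # now
print(authenticated_git_url("https://git.example.com/app.git", "KEY"))
# https://api:KEY@git.example.com/app.git
```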

/config.env:

--------------------------------------------------------------------------------

1 | # REQUIRED CONFIG

2 | BOT_TOKEN = ""

3 | GDRIVE_FOLDER_ID = ""

4 | OWNER_ID =

5 | DOWNLOAD_DIR = "/usr/src/app/downloads"

6 | DOWNLOAD_STATUS_UPDATE_INTERVAL = 5 # Keep this 5 or 7

7 | AUTO_DELETE_MESSAGE_DURATION = 20 # Change it to -1 to disable it

8 | IS_TEAM_DRIVE = "" # True if using Shared Drive

9 | TELEGRAM_API =

10 | TELEGRAM_HASH = ""

11 | UPSTREAM_REPO = "https://github.com/TheCaduceus/DrTorrent" # Don't touch this

12 | UPSTREAM_BRANCH = "master" # Don't touch this

13 | # OPTIONAL CONFIG

14 | DATABASE_URL = "" # Not required if Heroku

15 | AUTHORIZED_CHATS = "" # Split by space

16 | SUDO_USERS = "" # Split by space

17 | IGNORE_PENDING_REQUESTS = "False"

18 | USE_SERVICE_ACCOUNTS = "" # True if using Service Accounts

19 | INDEX_URL = "" # Index URL without a trailing /

20 | STATUS_LIMIT = "" # Recommended: limit the status message to 4 tasks max

21 | UPTOBOX_TOKEN = ""

22 | # Mega Configurations

23 | MEGA_API_KEY = "" # Required to clone Mega files

24 | MEGA_EMAIL_ID = "" # Required to clone Mega files

25 | MEGA_PASSWORD = "" # Required to clone Mega files

26 | BLOCK_MEGA_FOLDER = ""

27 | BLOCK_MEGA_LINKS = ""

28 | STOP_DUPLICATE = ""

29 | # Link Shortener Configurations

30 | SHORTENER = ""

31 | SHORTENER_API = ""

32 | # GDTOT Configurations

33 | GDTOT_COOKIES = "crypt= ; PHPSESSID= " # Required to use GDTOT Links

34 | # VPS Configurations

35 | IS_VPS = "" # True if deployed on VPS

36 | SERVER_PORT = "80" # Only for VPS

37 | BASE_URL_OF_BOT = "" # Required for Heroku

38 | # To load credentials from external links (such as your Index), fill these vars with direct links

39 | # These are optional; if you don't know what they are, leave them empty.

40 | ACCOUNTS_ZIP_URL = ""

41 | TOKEN_PICKLE_URL = ""

42 | # Limit Configurations

43 | TORRENT_DIRECT_LIMIT = ""

44 | TAR_UNZIP_LIMIT = ""

45 | CLONE_LIMIT = ""

46 | MEGA_LIMIT = ""

47 | # Heroku Configurations

48 | HEROKU_API_KEY = "" # Mandatory

49 | HEROKU_APP_NAME = "" # Mandatory

50 | VIEW_LINK = "False" # True if using Index

51 | # Extra buttons (optional). Three buttons (Drive Link, Index Link, and View Link) are already added; you can add up to three more below.

52 | # If you don't know what the entries below are, leave them empty.

53 | BUTTON_FOUR_NAME = "Official Website"

54 | BUTTON_FOUR_URL = "https://www.caduceus.ml/"

55 | BUTTON_FIVE_NAME = ""

56 | BUTTON_FIVE_URL = ""

57 | BUTTON_SIX_NAME = ""

58 | BUTTON_SIX_URL = ""

59 |

--------------------------------------------------------------------------------
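Several values in `config.env` are strings that the bot has to interpret. `AUTHORIZED_CHATS` and `SUDO_USERS` hold space-separated IDs, and flags such as `IS_TEAM_DRIVE` or `IGNORE_PENDING_REQUESTS` are written as `"True"` or `"False"`. The loader in `bot/__init__.py` is not part of this section. The helpers below (`parse_id_set`, `parse_bool`) are therefore a hypothetical sketch of that parsing, not the bot's own code.

```python
import os
from typing import Set


def parse_id_set(value: str) -> Set[int]:
    """Turn '123 -100456' into {123, -100456}; extra spaces are ignored."""
    return {int(item) for item in value.split()}


def parse_bool(value: str, default: bool = False) -> bool:
    """'True'/'true' -> True, '' -> default, anything else -> False."""
    value = value.strip()
    if not value:
        return default
    return value.lower() == "true"


# Usage with the process environment (unset variables count as empty).
authorized_chats = parse_id_set(os.environ.get("AUTHORIZED_CHATS", ""))
is_team_drive = parse_bool(os.environ.get("IS_TEAM_DRIVE", ""))
```

Group chat IDs are negative, which is why the sketch relies on `int()` instead of checking for digits only.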

/heroku-guide.md:

--------------------------------------------------------------------------------

1 | ## Deploying slam-mirrorbot on Heroku with GitHub Workflows

2 |

3 | ## Pre-requisites

4 |

5 | - [Heroku](https://heroku.com) account(s)

6 | - It is recommended to run only one app per Heroku account

7 | - Don't use a BIN/fake credit card, or your Heroku account will be banned

8 |

9 | ## Deployment

10 |

11 | 1. Star and fork this repo, then upload **token.pickle** to your fork. Alternatively, upload **token.pickle** to your Index and put its link in **TOKEN_PICKLE_URL** (**NOTE**: without **token.pickle**, uploading will not work). How to generate **token.pickle**? [Read here](https://github.com/breakdowns/slam-mirrorbot#getting-google-oauth-api-credential-file)

12 |

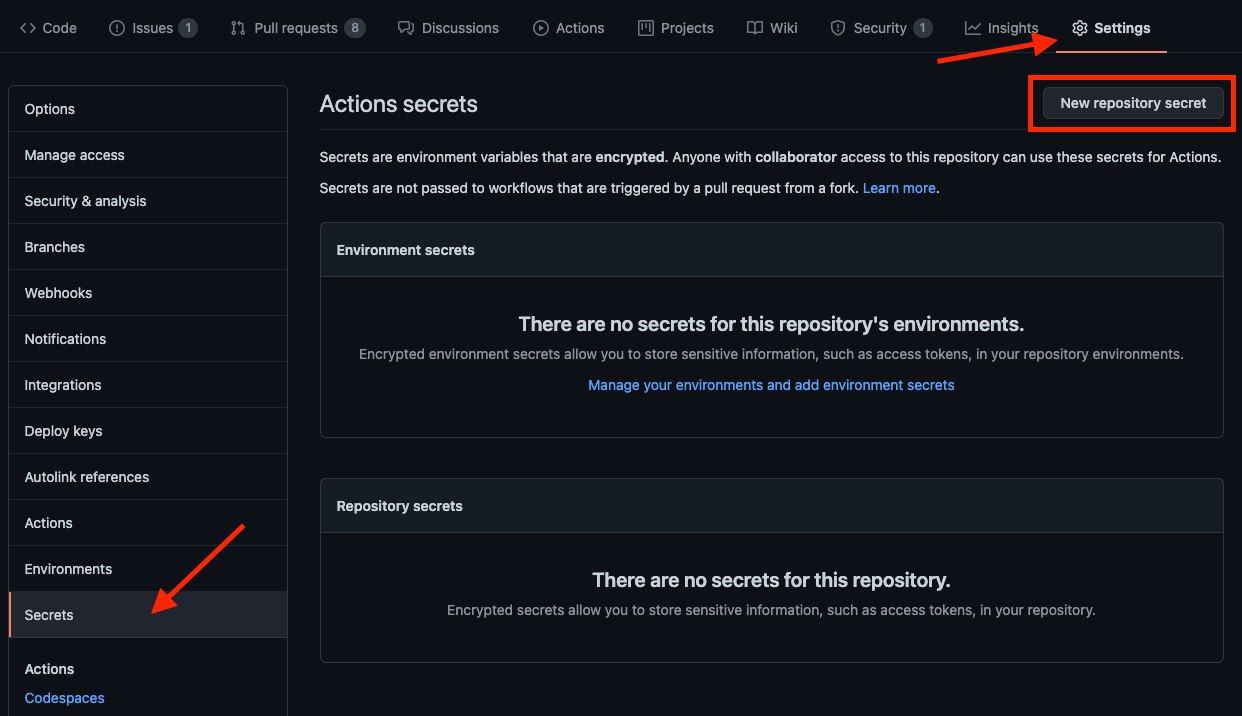

13 | 2. Go to Repository `Settings` -> `Secrets`

14 |

15 |

16 |

17 | 3. Add the required variables below one by one, clicking `New Repository Secret` each time.

18 |

19 | ```

20 | HEROKU_EMAIL

21 | HEROKU_API_KEY

22 | HEROKU_APP_NAME

23 | CONFIG_FILE_URL

24 | ```

25 |

26 | ### Description of the above Required Variables

27 | * `HEROKU_EMAIL` The email ID of the Heroku account the app will be deployed to.

28 | * `HEROKU_API_KEY` Go to your Heroku account's Account Settings and scroll to the bottom until you see API Key. Copy this key and add it.

29 | * `HEROKU_APP_NAME` Your Heroku app name. The name must be unique.

30 | * `CONFIG_FILE_URL` Fill in your config in any text editor: remove the _____REMOVE_THIS_LINE_____=True line and fill in the variables. For details about the config, you can see Here. Go to https://gist.github.com and paste your config data. Rename the file to config.env, then create a secret gist. Click on Raw and copy the link. This will be your CONFIG_FILE_URL.

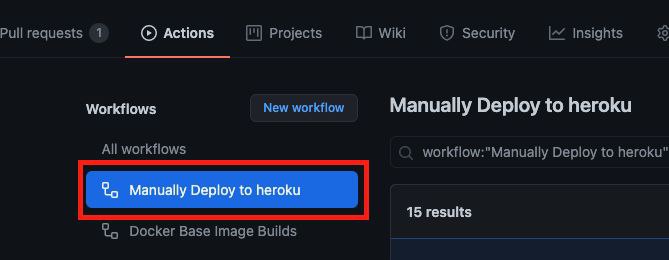

31 | 4. After adding all the required variables above, go to the GitHub Actions tab in your repo.

32 |

33 | 5. Select the `Manually Deploy to heroku` workflow as shown below:

34 |

35 |

36 |

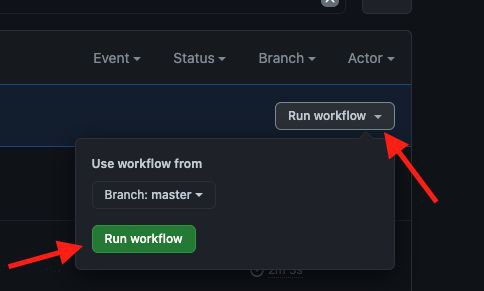

37 | 6. Then click on `Run workflow`.

38 |

39 |

40 |

41 | 7. _Done!_ Your bot will now be deployed.

42 |

43 | ## NOTE

44 | - Don't change/edit variables from Heroku; if you want to change/edit them, do it from GitHub Secrets.

45 | - If you want to set optional variables, go to your Heroku app settings and add them there.

46 |

47 | ## Credits

48 | - [arghyac35](https://github.com/arghyac35) for the tutorial

49 |

--------------------------------------------------------------------------------

/bot/modules/list.py:

--------------------------------------------------------------------------------

1 | from telegram.ext import CommandHandler

2 | from bot.helper.mirror_utils.upload_utils.gdriveTools import GoogleDriveHelper

3 | from bot import LOGGER, dispatcher

4 | from bot.helper.telegram_helper.message_utils import sendMessage, sendMarkup, editMessage

5 | from bot.helper.telegram_helper.filters import CustomFilters

6 | import threading

7 | from bot.helper.telegram_helper.bot_commands import BotCommands

8 | from bot.helper.mirror_utils.upload_utils.gdtot_helper import GDTOT

9 |

10 | def list_drive(update, context):

11 | try:

12 | search = update.message.text.split(' ',maxsplit=1)[1]

13 | LOGGER.info(f"Searching: {search}")

14 | reply = sendMessage('Searching..... Please wait!', context.bot, update)

15 | gdrive = GoogleDriveHelper(None)

16 | msg, button = gdrive.drive_list(search)

17 |

18 | if button:

19 | editMessage(msg, reply, button)

20 | else:

21 | editMessage(f'No result found for {search}', reply, button)

22 |

23 | except IndexError:

24 | sendMessage('Send a search key along with command', context.bot, update)

25 |

26 |

27 | def gdtot(update, context):

28 | try:

29 | search = update.message.text.split(' ', 1)[1]

30 | search_list = search.split()

31 | for glink in search_list:

32 | LOGGER.info(f"Extracting gdtot link: {glink}")

33 | button = None

34 | reply = sendMessage('Getting Your GDTOT File Wait....', context.bot, update)

35 | file_name, file_url = GDTOT().parse(url=glink)

36 | if file_name == 404:

37 | sendMessage(file_url, context.bot, update)

38 | return

39 | if file_url != 404:

40 | gdrive = GoogleDriveHelper(None)

41 | msg, button = gdrive.clone(file_url)

42 | if button:

43 | editMessage(msg, reply, button)

44 | else:

45 | editMessage(file_name, reply, button)

46 | except IndexError:

47 | sendMessage('Send cmd along with url', context.bot, update)

48 | except Exception as e:

49 | LOGGER.error(e)

50 |

51 | list_handler = CommandHandler(BotCommands.ListCommand, list_drive, filters=CustomFilters.authorized_chat | CustomFilters.authorized_user, run_async=True)

52 | gdtot_handler = CommandHandler(BotCommands.GDTOTCommand, gdtot, filters=CustomFilters.authorized_chat | CustomFilters.authorized_user, run_async=True)

53 |

54 |

55 |

56 | dispatcher.add_handler(list_handler)

57 | dispatcher.add_handler(gdtot_handler)

58 |

--------------------------------------------------------------------------------
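A command can carry several links. Splitting on a single space with `split(' ')` turns repeated spaces into empty strings that look like extra links. Calling `str.split()` with no argument drops them. The sketch below demonstrates the difference. `links_from_command` and the example text are hypothetical.

```python
from typing import List


def links_from_command(text: str) -> List[str]:
    """Return the whitespace-separated arguments after the command word."""
    parts = text.split(None, 1)
    return parts[1].split() if len(parts) == 2 else []


message = "/gdtot https://a.example/1  https://a.example/2"  # note the double space
print(message.split(' ', 1)[1].split(' '))  # ['https://a.example/1', '', 'https://a.example/2']
print(links_from_command(message))          # ['https://a.example/1', 'https://a.example/2']
```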

/bot/helper/mirror_utils/status_utils/qbit_download_status.py:

--------------------------------------------------------------------------------

1 | from bot import DOWNLOAD_DIR, LOGGER, get_client

2 | from bot.helper.ext_utils.bot_utils import MirrorStatus, get_readable_file_size, get_readable_time

3 | from .status import Status

4 |

5 |

6 | class QbDownloadStatus(Status):

7 |

8 | def __init__(self, gid, listener, qbhash, client):

9 | super().__init__()

10 | self.__gid = gid

11 | self.__hash = qbhash

12 | self.client = client

13 | self.__uid = listener.uid

14 | self.listener = listener

15 | self.message = listener.message

16 |

17 |

18 | def progress(self):

19 | """

20 | Calculates the progress of the mirror (upload or download)

21 | :return: returns progress in percentage

22 | """

23 | return f'{round(self.torrent_info().progress*100,2)}%'

24 |

25 | def size_raw(self):

26 | """

27 | Gets total size of the mirror file/folder

28 | :return: total size of mirror

29 | """

30 | return self.torrent_info().size

31 |

32 | def processed_bytes(self):

33 | return self.torrent_info().downloaded

34 |

35 | def speed(self):

36 | return f"{get_readable_file_size(self.torrent_info().dlspeed)}/s"

37 |

38 | def name(self):

39 | return self.torrent_info().name

40 |

41 | def path(self):

42 | return f"{DOWNLOAD_DIR}{self.__uid}"

43 |

44 | def size(self):

45 | return get_readable_file_size(self.torrent_info().size)

46 |

47 | def eta(self):

48 | return get_readable_time(self.torrent_info().eta)

49 |

50 | def status(self):

51 | download = self.torrent_info().state

52 | if download == "queuedDL":

53 | status = MirrorStatus.STATUS_WAITING

54 | elif download in ("metaDL", "checkingResumeData"):

55 | status = MirrorStatus.STATUS_DOWNLOADING + " (Metadata)"

56 | elif download == "pausedDL":

57 | status = MirrorStatus.STATUS_PAUSE

58 | else:

59 | status = MirrorStatus.STATUS_DOWNLOADING

60 | return status

61 |

62 | def torrent_info(self):

63 | return self.client.torrents_info(torrent_hashes=self.__hash)[0]

64 |

65 | def download(self):

66 | return self

67 |

68 | def uid(self):

69 | return self.__uid

70 |

71 | def gid(self):

72 | return self.__gid

73 |

74 | def cancel_download(self):

75 | LOGGER.info(f"Cancelling Download: {self.name()}")

76 | self.listener.onDownloadError('Download stopped by user!')

77 | self.client.torrents_delete(torrent_hashes=self.__hash, delete_files=True)

78 |

--------------------------------------------------------------------------------
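`status()` maps qBittorrent's torrent `state` strings onto the bot's own labels and falls back to "downloading" for anything else. The same mapping can be kept in a lookup table, which makes it easier to add states later. The label strings below are placeholders, because `MirrorStatus` is defined in `bot_utils.py` and not shown here. `STATE_LABELS` and `label_for_state` are hypothetical.

```python
STATE_LABELS = {
    "queuedDL": "Waiting",
    "metaDL": "Downloading (Metadata)",
    "checkingResumeData": "Downloading (Metadata)",
    "pausedDL": "Paused",
}


def label_for_state(state: str, default: str = "Downloading") -> str:
    """Return the display label for a qBittorrent state, defaulting to downloading."""
    return STATE_LABELS.get(state, default)


print(label_for_state("metaDL"))       # Downloading (Metadata)
print(label_for_state("downloading"))  # Downloading
```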

/bot/modules/watch.py:

--------------------------------------------------------------------------------

1 | from telegram.ext import CommandHandler

2 | from telegram import Bot, Update

3 | from bot import DOWNLOAD_DIR, dispatcher, LOGGER

4 | from bot.helper.telegram_helper.message_utils import sendMessage, sendStatusMessage

5 | from .mirror import MirrorListener

6 | from bot.helper.mirror_utils.download_utils.youtube_dl_download_helper import YoutubeDLHelper

7 | from bot.helper.telegram_helper.bot_commands import BotCommands

8 | from bot.helper.telegram_helper.filters import CustomFilters

9 | import threading

10 |

11 |

12 | def _watch(bot: Bot, update, isTar=False):

13 | mssg = update.message.text

14 | message_args = mssg.split(' ')

15 | name_args = mssg.split('|')

16 |

17 | try:

18 | link = message_args[1]

19 | except IndexError:

20 | msg = f"/{BotCommands.WatchCommand} [youtube-dl supported link] [quality] |[CustomName] to mirror with youtube-dl.\n\n"

21 | msg += "Note: Quality and custom name are optional.\n\nQuality examples: audio, 144, 240, 360, 480, 720, 1080, 2160."

22 | msg += "\n\nIf you want to use custom filename, enter it after |"

23 | msg += f"\n\nExample:\n/{BotCommands.WatchCommand} https://youtu.be/Pk_TthHfLeE 720 |Slam\n\n"

24 | msg += "This file will be downloaded in 720p quality and its name will be Slam"

25 | sendMessage(msg, bot, update)

26 | return

27 |

28 | try:

29 | if "|" in mssg:

30 | mssg = mssg.split("|")

31 | qual = mssg[0].split(" ")[2]

32 | if qual == "":

33 | raise IndexError

34 | else:

35 | qual = message_args[2]

36 | if qual != "audio":

37 | qual = f'bestvideo[height<={qual}]+bestaudio/best[height<={qual}]'

38 | except IndexError:

39 | qual = "bestvideo+bestaudio/best"

40 |

41 | try:

42 | name = name_args[1]

43 | except IndexError:

44 | name = ""

45 |

46 | pswd = ""

47 | listener = MirrorListener(bot, update, pswd, isTar)

48 | ydl = YoutubeDLHelper(listener)

49 | threading.Thread(target=ydl.add_download,args=(link, f'{DOWNLOAD_DIR}{listener.uid}', qual, name)).start()

50 | sendStatusMessage(update, bot)

51 |

52 |

53 | def watchTar(update, context):

54 | _watch(context.bot, update, True)

55 |

56 |

57 | def watch(update, context):

58 | _watch(context.bot, update)

59 |

60 |

61 | mirror_handler = CommandHandler(BotCommands.WatchCommand, watch,

62 | filters=CustomFilters.authorized_chat | CustomFilters.authorized_user, run_async=True)

63 | tar_mirror_handler = CommandHandler(BotCommands.TarWatchCommand, watchTar,

64 | filters=CustomFilters.authorized_chat | CustomFilters.authorized_user, run_async=True)

65 |

66 |

67 | dispatcher.add_handler(mirror_handler)

68 | dispatcher.add_handler(tar_mirror_handler)

69 |

--------------------------------------------------------------------------------
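`_watch` derives three values from the message:

- the link, which is the second word;
- an optional quality, which is the third word, read from the part before `|` when a name is given;
- an optional custom name, which is the text after `|`.

The quality becomes a youtube-dl format selector unless it is `audio`. The sketch below performs close to the same parsing as a pure function. `parse_watch_args` is a hypothetical name, and it returns `None` for the link when none is given.

```python
from typing import Optional, Tuple


def parse_watch_args(text: str) -> Tuple[Optional[str], str, str]:
    """Return (link, youtube-dl format selector, custom name) for a /watch message."""
    head, _, name = text.partition("|")
    words = head.split()
    link = words[1] if len(words) > 1 else None
    quality = words[2] if len(words) > 2 else ""
    if not quality:
        fmt = "bestvideo+bestaudio/best"
    elif quality == "audio":
        fmt = "audio"  # passed through unchanged, as in _watch
    else:
        fmt = f"bestvideo[height<={quality}]+bestaudio/best[height<={quality}]"
    return link, fmt, name


print(parse_watch_args("/watch https://youtu.be/Pk_TthHfLeE 720 |Slam"))
```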

/bot/helper/ext_utils/db_handler.py:

--------------------------------------------------------------------------------

1 | import psycopg2

2 | from psycopg2 import Error

3 | from bot import AUTHORIZED_CHATS, SUDO_USERS, DB_URI, LOGGER

4 |

5 | class DbManger:

6 | def __init__(self):

7 | self.err = False

8 |

9 | def connect(self):

10 | try:

11 | self.conn = psycopg2.connect(DB_URI)

12 | self.cur = self.conn.cursor()

13 | except psycopg2.DatabaseError as error:

14 | LOGGER.error(f"Error in dbMang: {error}")

15 | self.err = True

16 |

17 | def disconnect(self):

18 | self.cur.close()

19 | self.conn.close()

20 |

21 | def db_auth(self,chat_id: int):

22 | self.connect()

23 | if self.err:

24 | return "There was an error; check the logs for details"

25 | else:

26 | sql = 'INSERT INTO users VALUES (%s);'

27 | self.cur.execute(sql, (chat_id,))

28 | self.conn.commit()

29 | self.disconnect()

30 | AUTHORIZED_CHATS.add(chat_id)

31 | return 'Authorized successfully'

32 |

33 | def db_unauth(self,chat_id: int):

34 | self.connect()

35 | if self.err:

36 | return "There was an error; check the logs for details"

37 | else:

38 | sql = 'DELETE FROM users WHERE uid = %s;'

39 | self.cur.execute(sql, (chat_id,))

40 | self.conn.commit()

41 | self.disconnect()

42 | AUTHORIZED_CHATS.remove(chat_id)

43 | return 'Unauthorized successfully'

44 |

45 | def db_addsudo(self,chat_id: int):

46 | self.connect()

47 | if self.err:

48 | return "There was an error; check the logs for details"

49 | else:

50 | if chat_id in AUTHORIZED_CHATS:

51 | sql = 'UPDATE users SET sudo = TRUE WHERE uid = %s;'

52 | self.cur.execute(sql, (chat_id,))

53 | self.conn.commit()

54 | self.disconnect()

55 | SUDO_USERS.add(chat_id)

56 | return 'Successfully promoted as Sudo'

57 | else:

58 | sql = 'INSERT INTO users VALUES (%s, TRUE);'

59 | self.cur.execute(sql, (chat_id,))

60 | self.conn.commit()

61 | self.disconnect()

62 | SUDO_USERS.add(chat_id)

63 | return 'Successfully Authorized and promoted as Sudo'

64 |

65 | def db_rmsudo(self,chat_id: int):

66 | self.connect()

67 | if self.err:

68 | return "There was an error; check the logs for details"

69 | else:

70 | sql = 'UPDATE users SET sudo = FALSE WHERE uid = %s;'

71 | self.cur.execute(sql, (chat_id,))

72 | self.conn.commit()

73 | self.disconnect()

74 | SUDO_USERS.remove(chat_id)

75 | return 'Successfully removed from Sudo'

76 |

--------------------------------------------------------------------------------
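Passing values as query parameters, instead of formatting them into the SQL string, lets the driver handle quoting. This rules out SQL injection even if a caller ever passes something other than an `int`. With psycopg2 the placeholder is `%s`, as in `cur.execute("INSERT INTO users VALUES (%s)", (chat_id,))`. The runnable sketch below uses the standard-library `sqlite3` module as a stand-in for PostgreSQL, and sqlite3 uses `?` placeholders instead. The `users` table layout (`uid`, `sudo`) is inferred from the queries above rather than taken from the real schema. `authorize` and `set_sudo` are hypothetical.

```python
import sqlite3

# In-memory SQLite database standing in for PostgreSQL (an assumption for this sketch).
conn = sqlite3.connect(":memory:")
cur = conn.cursor()
# Assumed schema: one row per authorized chat, sudo flag defaulting to 0 (false).
cur.execute("CREATE TABLE users (uid INTEGER, sudo INTEGER DEFAULT 0)")


def authorize(chat_id: int) -> None:
    # The value travels separately from the SQL text; the driver does the quoting.
    cur.execute("INSERT INTO users (uid) VALUES (?)", (chat_id,))
    conn.commit()


def set_sudo(chat_id: int, is_sudo: bool) -> None:
    cur.execute("UPDATE users SET sudo = ? WHERE uid = ?", (int(is_sudo), chat_id))
    conn.commit()


authorize(123)
set_sudo(123, True)
print(cur.execute("SELECT uid, sudo FROM users").fetchall())  # [(123, 1)]
```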

/bot/helper/mirror_utils/status_utils/aria_download_status.py:

--------------------------------------------------------------------------------

1 | from bot import aria2, DOWNLOAD_DIR, LOGGER

2 | from bot.helper.ext_utils.bot_utils import MirrorStatus

3 | from .status import Status



def get_download(gid):
    return aria2.get_download(gid)


class AriaDownloadStatus(Status):

    def __init__(self, gid, listener):
        super().__init__()
        self.upload_name = None
        self.__gid = gid
        self.__download = get_download(self.__gid)
        self.__uid = listener.uid
        self.__listener = listener
        self.message = listener.message

    def __update(self):
        # Refresh the download. If aria2 has handed the job off to a follow-up
        # download (e.g. magnet metadata -> actual torrent), switch to it now
        # instead of reporting the finished parent until the next refresh.
        self.__download = get_download(self.__gid)
        if self.__download.followed_by_ids:
            self.__gid = self.__download.followed_by_ids[0]
            self.__download = get_download(self.__gid)

    def progress(self):
        """
        Calculates the progress of the mirror (upload or download)
        :return: progress as a percentage string
        """
        self.__update()
        return self.__download.progress_string()

    def size_raw(self):
        """
        Gets total size of the mirror file/folder
        :return: total size of mirror in bytes
        """
        return self.aria_download().total_length

    def processed_bytes(self):
        return self.aria_download().completed_length

    def speed(self):
        return self.aria_download().download_speed_string()

    def name(self):
        return self.aria_download().name

    def path(self):
        return f"{DOWNLOAD_DIR}{self.__uid}"

    def size(self):
        return self.aria_download().total_length_string()

    def eta(self):
        return self.aria_download().eta_string()

    def status(self):
        download = self.aria_download()
        if download.is_waiting:
            status = MirrorStatus.STATUS_WAITING
        elif download.has_failed:
            status = MirrorStatus.STATUS_FAILED
        else:
            status = MirrorStatus.STATUS_DOWNLOADING
        return status

    def aria_download(self):
        self.__update()
        return self.__download

    def download(self):
        return self

    def getListener(self):
        return self.__listener

    def uid(self):
        return self.__uid

    def gid(self):
        self.__update()
        return self.__gid

    def cancel_download(self):
        LOGGER.info(f"Cancelling Download: {self.name()}")
        download = self.aria_download()
        if download.is_waiting:
            self.__listener.onDownloadError("★ 𝗗𝗼𝘄𝗻𝗹𝗼𝗮𝗱 𝗖𝗮𝗻𝗰𝗲𝗹𝗹𝗲𝗱 𝗕𝘆 𝗨𝘀𝗲𝗿!! ★")
            aria2.remove([download], force=True)
            return
        if download.followed_by_ids:
            # Also remove any downloads aria2 spawned from this one.
            downloads = aria2.get_downloads(download.followed_by_ids)
            aria2.remove(downloads, force=True)
        self.__listener.onDownloadError('★ 𝗗𝗼𝘄𝗻𝗹𝗼𝗮𝗱 𝗖𝗮𝗻𝗰𝗲𝗹𝗹𝗲𝗱 𝗕𝘆 𝗨𝘀𝗲𝗿!! ★')
        aria2.remove([download], force=True)

--------------------------------------------------------------------------------
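AriaDownloadStatus re-resolves its gid on every update because aria2 can hand a job off to a new download: a magnet link first fetches torrent metadata, and aria2 then starts the real transfer under a different GID, listed in the original download's `followedBy` field (exposed by aria2p as `followed_by_ids`). A minimal, self-contained sketch of that chain-following follows. It is an illustration, not the bot's code: `FakeDownload`, `FakeClient`, `resolve_current`, `progress_percent` and the `max_hops` limit are hypothetical stand-ins for the aria2p objects.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FakeDownload:
    # Hypothetical stand-in for aria2p.Download: only the fields used here.
    gid: str
    completed_length: int
    total_length: int
    followed_by_ids: List[str] = field(default_factory=list)


class FakeClient:
    # Hypothetical in-memory replacement for the aria2p API object.
    def __init__(self, downloads: Dict[str, FakeDownload]):
        self._downloads = downloads

    def get_download(self, gid: str) -> FakeDownload:
        return self._downloads[gid]


def resolve_current(client: FakeClient, gid: str, max_hops: int = 10) -> FakeDownload:
    """Follow followed_by_ids until reaching the download doing the actual work."""
    download = client.get_download(gid)
    hops = 0
    # max_hops guards against a malformed (e.g. cyclic) chain.
    while download.followed_by_ids and hops < max_hops:
        download = client.get_download(download.followed_by_ids[0])
        hops += 1
    return download


def progress_percent(download: FakeDownload) -> float:
    # Total length is 0 until aria2 knows the size, so avoid dividing by zero.
    if download.total_length == 0:
        return 0.0
    return 100.0 * download.completed_length / download.total_length


# Usage: a finished metadata download ("meta") that spawned the real one ("data").
client = FakeClient({
    "meta": FakeDownload("meta", 10, 10, followed_by_ids=["data"]),
    "data": FakeDownload("data", 250, 1000),
})
current = resolve_current(client, "meta")
print(current.gid, progress_percent(current))  # data 25.0
```

The status class follows only one hop per refresh; a loop with a hop limit is one way to handle longer chains without risking an endless walk.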

/bot/modules/updates.py:

--------------------------------------------------------------------------------

import sys
import subprocess

from os import environ, execle

from git import Repo
from git.exc import GitCommandError, InvalidGitRepositoryError

from pyrogram import filters

from bot import app, OWNER_ID, UPSTREAM_REPO, UPSTREAM_BRANCH, bot
from bot.helper import HEROKU_URL
from bot.helper.telegram_helper.bot_commands import BotCommands

REPO_ = UPSTREAM_REPO
BRANCH_ = UPSTREAM_BRANCH


# Update Command

@app.on_message(filters.command([BotCommands.UpdateCommand, f'{BotCommands.UpdateCommand}@{bot.username}']) & filters.user(OWNER_ID))
async def update_it(client, message):
    msg_ = await message.reply_text("`Updating, Please Wait!`")
    try:
        repo = Repo()
    except GitCommandError:
        return await msg_.edit(
            "**Invalid Git Command. Please Report This Bug To [Support Group](https://t.me/SlamMirrorSupport)**"
        )
    except InvalidGitRepositoryError:
        # Not a git checkout: initialise a repo that tracks the upstream branch.
        repo = Repo.init()
        if "upstream" in repo.remotes:
            origin = repo.remote("upstream")
        else:
            origin = repo.create_remote("upstream", REPO_)
        origin.fetch()
        repo.create_head(UPSTREAM_BRANCH, origin.refs[UPSTREAM_BRANCH])
        repo.heads[UPSTREAM_BRANCH].set_tracking_branch(origin.refs[UPSTREAM_BRANCH])
        repo.heads[UPSTREAM_BRANCH].checkout(force=True)
    if repo.active_branch.name != UPSTREAM_BRANCH:
        return await msg_.edit(
            f"`Seems Like You Are Using Custom Branch - {repo.active_branch.name}! Please Switch To {UPSTREAM_BRANCH} To Make This Updater Function!`"
        )
    if "upstream" not in repo.remotes:
        repo.create_remote("upstream", REPO_)
    ups_rem = repo.remote("upstream")
    ups_rem.fetch(UPSTREAM_BRANCH)
    if not HEROKU_URL:
        try:
            ups_rem.pull(UPSTREAM_BRANCH)
        except GitCommandError:
            # Pull failed (e.g. conflicting local changes): discard them.
            repo.git.reset("--hard", "FETCH_HEAD")
        # Install through the running interpreter so packages land in its environment.
        subprocess.run([sys.executable, "-m", "pip", "install", "--no-cache-dir", "-r", "requirements.txt"])
        await msg_.edit("`Updated Successfully! Give Me Some Time To Restart!`")
        subprocess.call("./aria.sh", shell=True)
        # execle replaces this process with a fresh "python -m bot" and does not return.
        args = [sys.executable, "-m", "bot"]
        execle(sys.executable, *args, environ)
    else:
        await msg_.edit("`Heroku Detected! Pushing, Please wait!`")
        repo.git.reset("--hard", "FETCH_HEAD")
        if "heroku" in repo.remotes:
            remote = repo.remote("heroku")
            remote.set_url(HEROKU_URL)
        else:
            remote = repo.create_remote("heroku", HEROKU_URL)
        try:
            # Deploy by force-pushing the fetched upstream commit to the app's master branch.
            remote.push(refspec="HEAD:refs/heads/master", force=True)
        except Exception as error:
            await msg_.edit(f"**Updater Error** \nTraceBack : `{error}`")
            repo.close()
            return
        await msg_.edit(f"`Updated Successfully! \n\nCheck your config with` `/{BotCommands.ConfigMenuCommand}`")

--------------------------------------------------------------------------------
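After pulling, the non-Heroku path of the updater reinstalls requirements and restarts the bot by re-executing `python -m bot` with `os.execle`, which replaces the running process instead of spawning a child. A minimal sketch of that restart step, assuming a hypothetical `dry_run` flag so the command lines can be inspected without actually exec-ing; `pip_install_cmd`, `restart_argv` and `restart_in_place` are illustrative names, not part of the bot.

```python
import os
import sys
from typing import List, Mapping, Optional


def pip_install_cmd(requirements: str = "requirements.txt") -> List[str]:
    # Running pip via the current interpreter installs into the same environment
    # that will be re-executed; a bare "pip3" on PATH may belong to another Python.
    return [sys.executable, "-m", "pip", "install", "--no-cache-dir", "-r", requirements]


def restart_argv(module: str = "bot") -> List[str]:
    # Equivalent to running "python -m <module>"; argv[0] is the interpreter path.
    return [sys.executable, "-m", module]


def restart_in_place(module: str = "bot",
                     env: Optional[Mapping[str, str]] = None,
                     dry_run: bool = False) -> List[str]:
    argv = restart_argv(module)
    if dry_run:
        # Hypothetical flag: return the command instead of replacing the process.
        return argv
    # os.execle takes the environment as its final positional argument and,
    # on success, never returns: the current process image is replaced.
    os.execle(sys.executable, *argv, dict(os.environ if env is None else env))
    return argv  # unreachable; kept only so every path returns a value
```

Because a successful `execle` never returns, any statement placed after it (such as a trailing `exit()`) will not run; failures surface as an `OSError` instead.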

/add_to_team_drive.py:

--------------------------------------------------------------------------------

from __future__ import print_function

import argparse
import glob
import json
import os
import pickle
import sys
import time

import googleapiclient.discovery
import progress.bar
from google.auth.transport.requests import Request
from google.oauth2.service_account import Credentials
from google_auth_oauthlib.flow import InstalledAppFlow

stt = time.time()  # script start time

parse = argparse.ArgumentParser(
    description='A tool to add service accounts to a shared drive from a folder containing credential files.')
parse.add_argument('--path', '-p', default='accounts',
                   help='Specify an alternative path to the service accounts folder.')
parse.add_argument('--credentials', '-c', default='./credentials.json',
                   help='Specify the relative path for the credentials file.')
parse.add_argument('--yes', '-y', default=False, action='store_true', help='Skips the sanity prompt.')
parsereq = parse.add_argument_group('required arguments')
parsereq.add_argument('--drive-id', '-d', help='The ID of the Shared Drive.', required=True)

args = parse.parse_args()
acc_dir = args.path
did = args.drive_id