├── .gitignore

├── MAVSlam

├── .DS_Store

├── .gitignore

├── .project

├── .settings

│ └── org.eclipse.jdt.core.prefs

├── LICENSE.md

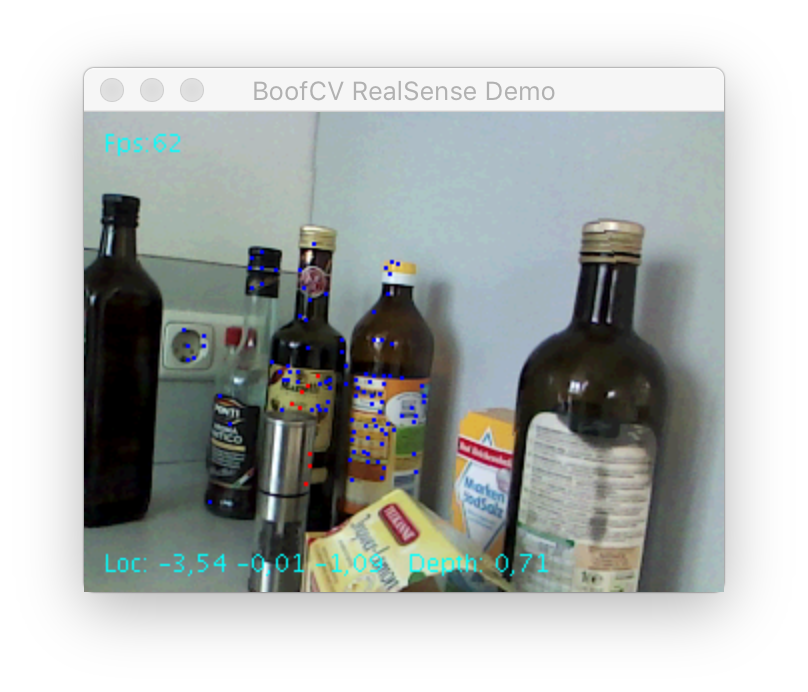

├── RealSense.png

├── build.xml

├── dis

│ └── .gitignore

├── heading.png

├── lib

│ ├── .gitignore

│ ├── BoofCV-calibration-0.23.jar

│ ├── BoofCV-feature-0.23.jar

│ ├── BoofCV-geo-0.23.jar

│ ├── BoofCV-io-0.23.jar

│ ├── BoofCV-ip-0.23.jar

│ ├── BoofCV-jcodec-0.23.jar

│ ├── BoofCV-learning-0.23.jar

│ ├── BoofCV-recognition-0.23.jar

│ ├── BoofCV-sfm-0.23.jar

│ ├── BoofCV-visualize-0.23.jar

│ ├── core-0.29.jar

│ ├── ddogleg-0.9.jar

│ ├── dense64-0.29.jar

│ ├── equation-0.29.jar

│ ├── fastcast-3.0.jar

│ ├── fst-2.42-onejar.jar

│ ├── georegression-0.10.jar

│ ├── gson-2.8.5.jar

│ ├── http.jar

│ ├── jna-4.2.2.jar

│ ├── jna-platform-4.2.2.jar

│ ├── jnaerator-runtime-0.13.jar

│ ├── mavcomm.jar

│ ├── mavmq.jar

│ ├── simple-0.29.jar

│ ├── xmlpull-1.1.3.1.jar

│ ├── xpp3_min-1.1.4c.jar

│ └── xstream-1.4.7.jar

├── microslam.png

├── msp.properties

├── native

│ ├── librealsense.dylib

│ └── librealsense.so

├── obstacle.png

├── screenshot6.png

└── src

│ ├── .DS_Store

│ └── com

│ ├── .gitignore

│ └── comino

│ ├── .gitignore

│ ├── dev

│ ├── CoVarianceEjML.java

│ └── RotationMatrixTest.java

│ ├── librealsense

│ ├── .gitignore

│ └── wrapper

│ │ ├── LibRealSenseIntrinsics.java

│ │ ├── LibRealSenseUtils.java

│ │ └── LibRealSenseWrapper.java

│ ├── realsense

│ ├── .gitignore

│ └── boofcv

│ │ ├── RealSenseInfo.java

│ │ ├── StreamRealSenseTest.java

│ │ ├── StreamRealSenseTestVIO.java

│ │ └── StreamRealSenseVisDepth.java

│ ├── server

│ └── mjpeg

│ │ ├── IMJPEGOverlayListener.java

│ │ ├── IVisualStreamHandler.java

│ │ └── impl

│ │ └── HttpMJPEGHandler.java

│ └── slam

│ ├── boofcv

│ ├── MAVDepthVisualOdometry.java

│ ├── sfm

│ │ ├── DepthSparse3D.java

│ │ └── DepthSparse3D_to_PixelTo3D.java

│ ├── vio

│ │ ├── FactoryMAVOdometryVIO.java

│ │ ├── odometry

│ │ │ ├── MAVOdomPixelDepthPnPVIO.java

│ │ │ └── MAVOdomPixelDepthPnP_to_DepthVisualOdometryVIO.java

│ │ └── tracker

│ │ │ └── FactoryMAVPointTrackerTwoPassVIO.java

│ └── vo

│ │ ├── FactoryMAVOdometry.java

│ │ ├── odometry

│ │ ├── MAVOdomPixelDepthPnP.java

│ │ └── MAVOdomPixelDepthPnP_to_DepthVisualOdometry.java

│ │ └── tracker

│ │ └── FactoryMAVPointTrackerTwoPass.java

│ ├── detectors

│ ├── ISLAMDetector.java

│ └── impl

│ │ ├── DirectDepthDetector.java

│ │ ├── FwDirectDepthDetector.java

│ │ ├── VfhDepthDetector.java

│ │ ├── VfhDynamicDirectDepthDetector.java

│ │ └── VfhFeatureDetector.java

│ ├── estimators

│ ├── IPositionEstimator.java

│ ├── vio

│ │ └── MAVVisualPositionEstimatorVIO.java

│ └── vo

│ │ └── MAVVisualPositionEstimatorVO.java

│ └── main

│ └── StartUp.java

└── README.md

/.gitignore:

--------------------------------------------------------------------------------

1 | .DS_Store

2 | MAVSlam/.classpath

3 | MAVSlam/dis

4 | MAVSlam/bin

5 | MAVSlam/build_number

--------------------------------------------------------------------------------

/MAVSlam/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/.DS_Store

--------------------------------------------------------------------------------

/MAVSlam/.gitignore:

--------------------------------------------------------------------------------

1 | /bin/

2 | /dis/

3 | /build.number

4 |

--------------------------------------------------------------------------------

/MAVSlam/.project:

--------------------------------------------------------------------------------

1 |

2 |

3 | MAVSlam

4 |

5 |

6 |

7 |

8 |

9 | org.eclipse.jdt.core.javabuilder

10 |

11 |

12 |

13 |

14 |

15 | org.eclipse.jdt.core.javanature

16 |

17 |

18 |

--------------------------------------------------------------------------------

/MAVSlam/.settings/org.eclipse.jdt.core.prefs:

--------------------------------------------------------------------------------

1 | eclipse.preferences.version=1

2 | org.eclipse.jdt.core.compiler.codegen.inlineJsrBytecode=enabled

3 | org.eclipse.jdt.core.compiler.codegen.targetPlatform=1.8

4 | org.eclipse.jdt.core.compiler.codegen.unusedLocal=preserve

5 | org.eclipse.jdt.core.compiler.compliance=1.8

6 | org.eclipse.jdt.core.compiler.debug.lineNumber=generate

7 | org.eclipse.jdt.core.compiler.debug.localVariable=generate

8 | org.eclipse.jdt.core.compiler.debug.sourceFile=generate

9 | org.eclipse.jdt.core.compiler.problem.assertIdentifier=error

10 | org.eclipse.jdt.core.compiler.problem.enumIdentifier=error

11 | org.eclipse.jdt.core.compiler.source=1.8

12 |

--------------------------------------------------------------------------------

/MAVSlam/LICENSE.md:

--------------------------------------------------------------------------------

1 | The MACGCL is licensed generally under a permissive 3-clause BSD license. Contributions are required to be made under the same license.

2 |

3 | ```

4 | /****************************************************************************

5 | *

6 | * Copyright (c) 2016 Eike Mansfeld ecm@gmx.de. All rights reserved.

7 | *

8 | * Redistribution and use in source and binary forms, with or without

9 | * modification, are permitted provided that the following conditions

10 | * are met:

11 | *

12 | * 1. Redistributions of source code must retain the above copyright

13 | * notice, this list of conditions and the following disclaimer.

14 | * 2. Redistributions in binary form must reproduce the above copyright

15 | * notice, this list of conditions and the following disclaimer in

16 | * the documentation and/or other materials provided with the

17 | * distribution.

18 | * 3. Neither the name of the copyright holder nor the names of its

19 | contributors may be used to endorse or promote products derived

20 | from this software without specific prior written permission.

21 | *

22 | * THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

23 | * "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

24 | * LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS

25 | * FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE

26 | * COPYRIGHT OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT,

27 | * INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING,

28 | * BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS

29 | * OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED

30 | * AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT

31 | * LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN

32 | * ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

33 | * POSSIBILITY OF SUCH DAMAGE.

34 | *

35 | ****************************************************************************/

36 | ```

--------------------------------------------------------------------------------

/MAVSlam/RealSense.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/RealSense.png

--------------------------------------------------------------------------------

/MAVSlam/build.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 |

18 |

19 |

20 |

21 |

22 |

23 |

24 |

25 |

28 |

29 |

30 |

31 |

32 |

61 |

62 |

63 |

64 |

65 |

66 |

67 |

68 |

69 |

70 |

71 |

72 |

73 |

74 |

75 | Current build number:${build.number}

76 |

77 |

78 |

79 |

80 |

81 |

82 |

--------------------------------------------------------------------------------

/MAVSlam/dis/.gitignore:

--------------------------------------------------------------------------------

1 | /.DS_Store

2 |

--------------------------------------------------------------------------------

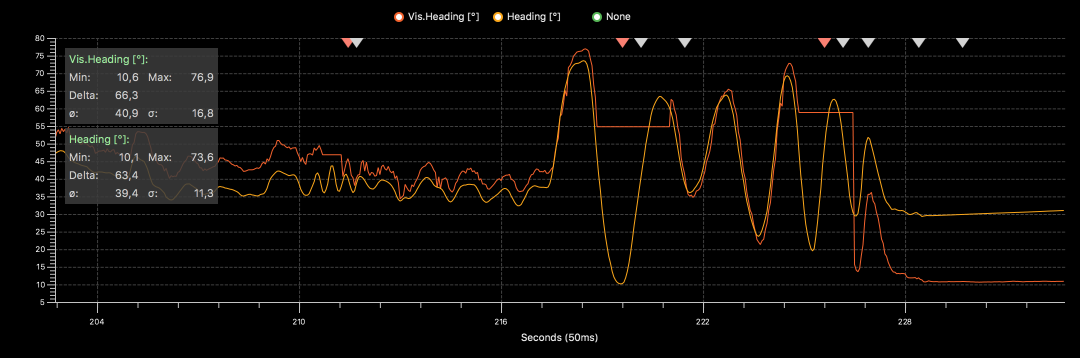

/MAVSlam/heading.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/heading.png

--------------------------------------------------------------------------------

/MAVSlam/lib/.gitignore:

--------------------------------------------------------------------------------

1 | /.DS_Store

2 |

--------------------------------------------------------------------------------

/MAVSlam/lib/BoofCV-calibration-0.23.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/BoofCV-calibration-0.23.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/BoofCV-feature-0.23.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/BoofCV-feature-0.23.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/BoofCV-geo-0.23.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/BoofCV-geo-0.23.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/BoofCV-io-0.23.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/BoofCV-io-0.23.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/BoofCV-ip-0.23.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/BoofCV-ip-0.23.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/BoofCV-jcodec-0.23.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/BoofCV-jcodec-0.23.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/BoofCV-learning-0.23.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/BoofCV-learning-0.23.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/BoofCV-recognition-0.23.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/BoofCV-recognition-0.23.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/BoofCV-sfm-0.23.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/BoofCV-sfm-0.23.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/BoofCV-visualize-0.23.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/BoofCV-visualize-0.23.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/core-0.29.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/core-0.29.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/ddogleg-0.9.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/ddogleg-0.9.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/dense64-0.29.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/dense64-0.29.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/equation-0.29.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/equation-0.29.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/fastcast-3.0.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/fastcast-3.0.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/fst-2.42-onejar.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/fst-2.42-onejar.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/georegression-0.10.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/georegression-0.10.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/gson-2.8.5.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/gson-2.8.5.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/http.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/http.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/jna-4.2.2.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/jna-4.2.2.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/jna-platform-4.2.2.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/jna-platform-4.2.2.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/jnaerator-runtime-0.13.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/jnaerator-runtime-0.13.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/mavcomm.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/mavcomm.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/mavmq.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/mavmq.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/simple-0.29.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/simple-0.29.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/xmlpull-1.1.3.1.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/xmlpull-1.1.3.1.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/xpp3_min-1.1.4c.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/xpp3_min-1.1.4c.jar

--------------------------------------------------------------------------------

/MAVSlam/lib/xstream-1.4.7.jar:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/lib/xstream-1.4.7.jar

--------------------------------------------------------------------------------

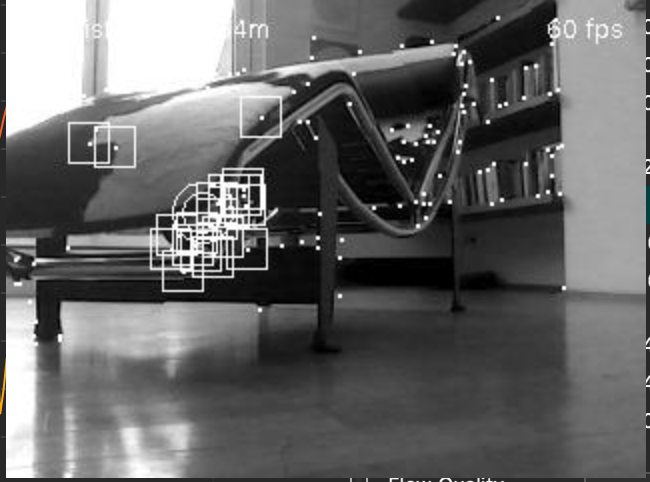

/MAVSlam/microslam.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/microslam.png

--------------------------------------------------------------------------------

/MAVSlam/msp.properties:

--------------------------------------------------------------------------------

1 | #Sat, 17 Feb 2018 08:39:55 +0100

2 | #Build

3 | build=638

4 |

5 | # Vision general settings

6 | vision_enabled=true

7 | vision_debug=true

8 |

9 | vision_heading_init=false

10 | vision_highres=false

11 |

12 | vision_min_quality=30

13 |

14 | vision_pub_pos_xy=true

15 | vision_pub_pos_z=true

16 |

17 | vision_pub_speed_xy=true

18 | vision_pub_speed_z=true

19 |

20 | vision_detector_cycle=100

21 |

22 |

23 | # DirectDepthDetector

24 |

25 |

26 |

27 | # FeatureDetector

28 |

29 | feature_max_distance=2.50

30 | feature_min_altitude=0.30

31 |

32 | #SLAM Grid

33 |

34 | slam_publish_microslam=true

35 |

36 | #Autopilot

37 | autopilot_forget_map=true

38 |

--------------------------------------------------------------------------------
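The settings in `msp.properties` use the standard Java properties format, so they can be read with `java.util.Properties`. The following is a minimal sketch under that assumption; the class `MspConfigSketch` and its accessor names are hypothetical and are not part of MAVSlam, whose own configuration loader is not shown in this listing.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical helper, not part of MAVSlam: typed access to msp.properties-style keys.
final class MspConfigSketch {

    private final Properties props = new Properties();

    // Parses text in the standard java.util.Properties format ('#' lines are comments).
    MspConfigSketch(String text) {
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Returns the fallback when the key is absent.
    boolean getBool(String key, boolean fallback) {
        String v = props.getProperty(key);
        return v == null ? fallback : Boolean.parseBoolean(v.trim());
    }

    int getInt(String key, int fallback) {
        String v = props.getProperty(key);
        return v == null ? fallback : Integer.parseInt(v.trim());
    }

    double getDouble(String key, double fallback) {
        String v = props.getProperty(key);
        return v == null ? fallback : Double.parseDouble(v.trim());
    }

    public static void main(String[] args) {
        // A few keys copied from the listing above; a real caller would read the file instead.
        String text = String.join("\n",
                "# Vision general settings",
                "vision_enabled=true",
                "vision_min_quality=30",
                "feature_max_distance=2.50");
        MspConfigSketch cfg = new MspConfigSketch(text);
        System.out.println("vision_enabled       = " + cfg.getBool("vision_enabled", false));
        System.out.println("vision_min_quality   = " + cfg.getInt("vision_min_quality", 0));
        System.out.println("feature_max_distance = " + cfg.getDouble("feature_max_distance", 0.0));
    }
}
```

Passing a fallback to every accessor keeps the sketch tolerant of keys that are commented out or missing, such as the empty `DirectDepthDetector` section.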

/MAVSlam/native/librealsense.dylib:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/native/librealsense.dylib

--------------------------------------------------------------------------------

/MAVSlam/native/librealsense.so:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/native/librealsense.so

--------------------------------------------------------------------------------

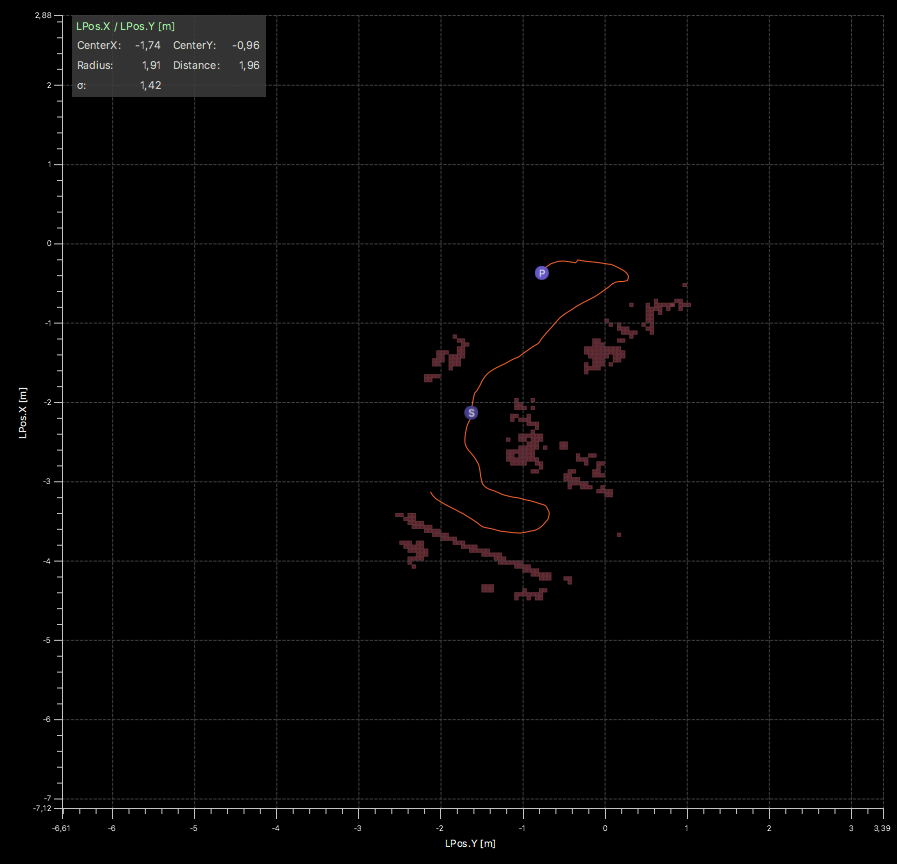

/MAVSlam/obstacle.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/obstacle.png

--------------------------------------------------------------------------------

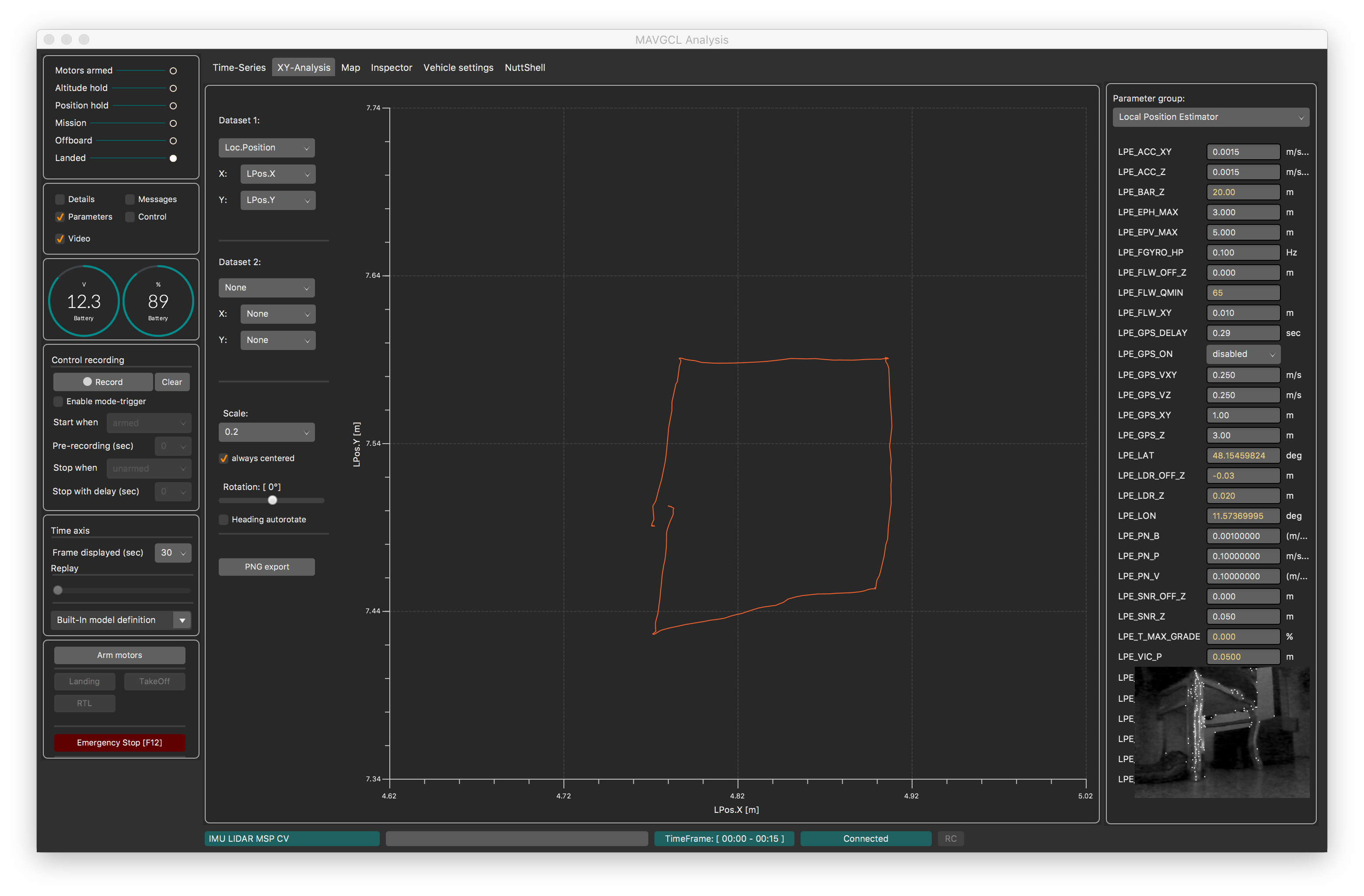

/MAVSlam/screenshot6.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/screenshot6.png

--------------------------------------------------------------------------------

/MAVSlam/src/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecmnet/MAVSlam/3049d30bc7d0fba3ec6130f172e72bcb64475142/MAVSlam/src/.DS_Store

--------------------------------------------------------------------------------

/MAVSlam/src/com/.gitignore:

--------------------------------------------------------------------------------

1 | /.DS_Store

2 |

--------------------------------------------------------------------------------

/MAVSlam/src/com/comino/.gitignore:

--------------------------------------------------------------------------------

1 | /.DS_Store

2 |

--------------------------------------------------------------------------------

/MAVSlam/src/com/comino/dev/CoVarianceEjML.java:

--------------------------------------------------------------------------------

1 | package com.comino.dev;

2 |

3 | import org.ejml.simple.SimpleMatrix;

4 |

5 | public class CoVarianceEjML {

6 |

7 | public static void main(String[] args){

8 |

9 | double data[][] = new double[][]{

10 | { 90, 60, 90 },

11 | { 90, 90, 30 },

12 | { 60, 60, 60 },

13 | { 60, 60, 90 },

14 | { 30, 30, 30 }

15 | };

16 |

17 | SimpleMatrix X = new SimpleMatrix(data);

18 | int n = X.numRows();

19 | SimpleMatrix Xt = X.transpose();

20 | int m = Xt.numRows();

21 |

22 | // Means:

23 | SimpleMatrix x = new SimpleMatrix(m, 1);

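24 | for(int r=0; r<m; r++ ){

25 | x.set(r, 0, Xt.extractVector(true, r).elementSum() / n);

26 | }

27 |

28 |

29 | // Covariance matrix:

30 | SimpleMatrix S = new SimpleMatrix(m, m);

31 | for(int r=0; r<m; r++){

32 | for(int c=0; c<m; c++){

33 | if(r > c){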

34 | S.set(r, c, S.get(c, r));

35 | } else {

36 | double cov = Xt.extractVector(true, r).minus( x.get((r), 0) ).dot(Xt.extractVector(true, c).minus( x.get((c), 0) ).transpose());

37 | S.set(r, c, (cov / n));

38 | }

39 | }

40 | }

41 | // System.out.println(S);

42 |

43 | // Plotting:

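44 | for(int r=0; r<…

--------------------------------------------------------------------------------

/MAVSlam/src/com/comino/realsense/boofcv/StreamRealSenseTest.java:

--------------------------------------------------------------------------------

…

95 | ivrgb.setOnMouseMoved(event -> {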

96 | MouseEvent ev = event;

97 | mouse_x = (int)ev.getX();

98 | mouse_y = (int)ev.getY();

99 | });

100 |

101 |

102 | // RealSenseInfo info = new RealSenseInfo(320,240, RealSenseInfo.MODE_RGB);

103 | RealSenseInfo info = new RealSenseInfo(640,480, RealSenseInfo.MODE_RGB);

104 |

105 | try {

106 |

107 | realsense = new StreamRealSenseVisDepth(0,info);

108 |

109 | } catch(Exception e) {

110 | System.out.println("REALSENSE:"+e.getMessage());

111 | return;

112 | }

113 |

114 | mouse_x = info.width/2;

115 | mouse_y = info.height/2;

116 |

117 | primaryStage.setScene(new Scene(root, info.width,info.height));

118 | primaryStage.show();

119 |

120 |

121 | PkltConfig configKlt = new PkltConfig();

122 | configKlt.pyramidScaling = new int[]{1, 2, 4, 8};

123 | configKlt.templateRadius = 3;

124 |

125 | PointTrackerTwoPass<GrayU8> tracker =

126 | FactoryPointTrackerTwoPass.klt(configKlt, new ConfigGeneralDetector(900, 2, 1),

127 | GrayU8.class, GrayS16.class);

128 |

129 | DepthSparse3D sparseDepth = new DepthSparse3D.I(1e-3);

130 |

131 | // declares the algorithm

132 | MAVDepthVisualOdometry<GrayU8,GrayU16> visualOdometry =

133 | FactoryMAVOdometry.depthPnP(1.2, 120, 2, 200, 50, true,

134 | sparseDepth, tracker, GrayU8.class, GrayU16.class);

135 |

136 | visualOdometry.setCalibration(realsense.getIntrinsics(),new DoNothingPixelTransform_F32());

137 |

138 |

139 | output = new BufferedImage(info.width, info.height, BufferedImage.TYPE_3BYTE_BGR);

140 | wirgb = new WritableImage(info.width, info.height);

141 | ivrgb.setImage(wirgb);

142 |

143 |

144 | realsense.registerListener(new Listener() {

145 |

146 | int fps; float mouse_depth; float md; int mc; int mf=0; int fpm;

147 |

148 | @Override

149 | public void process(Planar<GrayU8> rgb, GrayU16 depth, long timeRgb, long timeDepth) {

150 |

151 |

152 | if((System.currentTimeMillis() - tms) > 250) {

153 | tms = System.currentTimeMillis();

154 | if(mf>0)

155 | fps = fpm/mf;

156 | if(mc>0)

157 | mouse_depth = md / mc;

158 | mc = 0; md = 0; mf=0; fpm=0;

159 | }

160 | mf++;

161 | fpm += (int)(1f/((timeRgb - oldTimeDepth)/1000f)+0.5f);

162 | oldTimeDepth = timeRgb;

163 |

164 | if( !visualOdometry.process(rgb.getBand(0),depth) ) {

165 | bus1.writeObject(position);

166 | System.out.println("VO Failed!");

167 | visualOdometry.reset();

168 | return;

169 | }

170 |

171 |

172 |

173 | Se3_F64 leftToWorld = visualOdometry.getCameraToWorld();

174 | Vector3D_F64 T = leftToWorld.getT();

175 |

176 |

177 | AccessPointTracks3D points = (AccessPointTracks3D)visualOdometry;

178 | ConvertBufferedImage.convertTo(rgb, output, false);

179 |

180 | Graphics c = output.getGraphics();

181 |

182 | int count = 0; float total = 0; int dx=0, dy=0; int dist=999;

183 | int x, y; int index = -1;

184 |

185 | for( int i = 0; i < points.getAllTracks().size(); i++ ) {

186 | if(points.isInlier(i)) {

187 |

188 |

189 | c.setColor(Color.BLUE);

190 |

191 | x = (int)points.getAllTracks().get(i).x;

192 | y = (int)points.getAllTracks().get(i).y;

193 |

194 |

195 |

196 | int d = depth.get(x,y);

197 | if(d > 0) {

198 |

199 |

200 | int di = (int)Math.sqrt((x-mouse_x)*(x-mouse_x) + (y-mouse_y)*(y-mouse_y));

201 | if(di < dist) {

202 | index = i;

203 | dx = x;

204 | dy = y;

205 | dist = di;

206 | }

207 | total++;

208 | if(d<500) {

209 | c.setColor(Color.RED); count++;

210 | }

211 | c.drawRect(x,y, 1, 1);

212 | }

213 | }

214 | }

215 |

216 | if(depth!=null) {

217 |

218 | System.out.println(visualOdometry.getPoint3DFromPixel(160, 120));

219 | // if(index > -1)

220 | // System.out.println(visualOdometry.getTrackLocation(index));

221 |

222 | mc++;

223 | md = md + depth.get(dx,dy) / 1000f;

224 | c.setColor(Color.GREEN);

225 | c.drawOval(dx-3,dy-3, 6, 6);

226 | }

227 |

228 |

229 | c.setColor(Color.CYAN);

230 | c.drawString("Fps:"+fps, 10, 20);

231 | c.drawString(String.format("Loc: %4.2f %4.2f %4.2f", T.x, T.y, T.z), 10, info.height-10);

232 | c.drawString(String.format("Depth: %3.2f", mouse_depth), info.width-85, info.height-10);

233 |

234 | position.x = T.x;

235 | position.y = T.y;

236 | position.z = T.z;

237 |

238 | position.tms = timeRgb;

239 |

240 | bus1.writeObject(position);

241 |

242 | if((count / total)>0.6f) {

243 | c.setColor(Color.RED);

244 | c.drawString("WARNING!", info.width-70, 20);

245 | }

246 | c.dispose();

247 |

248 | Platform.runLater(() -> {

249 | SwingFXUtils.toFXImage(output, wirgb);

250 | });

251 | }

252 |

253 | }).start();

254 |

255 | }

256 |

257 | @Override

258 | public void stop() throws Exception {

259 | realsense.stop();

260 | bus1.release();

261 | super.stop();

262 | }

263 |

264 | public static void main(String[] args) {

265 | launch(args);

266 | }

267 |

268 | }

269 |

--------------------------------------------------------------------------------
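The listener above draws "WARNING!" when more than 60% of the inlier tracks with a valid depth reading are closer than 500 depth units. The following is a minimal standalone sketch of that check, assuming the depth values are millimetres (the listing divides by `1000f` to display metres) and that a value of 0 means no measurement. The class `ProximityWarningSketch` and its constant names are hypothetical and do not appear in MAVSlam.

```java
// Hypothetical standalone version of the proximity check; not part of MAVSlam.
final class ProximityWarningSketch {

    static final int NEAR_LIMIT_MM = 500;    // tracks closer than this count as "near"
    static final float WARN_RATIO = 0.6f;    // warn when the near fraction exceeds this

    // Returns true when more than WARN_RATIO of the valid depth samples are near.
    static boolean shouldWarn(int[] depthsMm) {
        int valid = 0;
        int near = 0;
        for (int d : depthsMm) {
            if (d <= 0) {
                continue;                    // assumed: 0 means no depth measurement
            }
            valid++;
            if (d < NEAR_LIMIT_MM) {
                near++;
            }
        }
        // Guard the empty case explicitly instead of relying on 0/0 producing NaN.
        return valid > 0 && (near / (float) valid) > WARN_RATIO;
    }

    public static void main(String[] args) {
        int[] mostlyNear = {100, 200, 300, 900, 0};   // 3 of 4 valid samples are near
        int[] mostlyFar = {600, 700, 100};            // 1 of 3 valid samples is near
        System.out.println(shouldWarn(mostlyNear));
        System.out.println(shouldWarn(mostlyFar));
    }
}
```

The explicit `valid > 0` guard makes the no-data case visible; the original expression `count / total` yields NaN when no track has a depth reading, which also compares as false.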

/MAVSlam/src/com/comino/realsense/boofcv/StreamRealSenseTestVIO.java:

--------------------------------------------------------------------------------

1 | /****************************************************************************

2 | *

3 | * Copyright (c) 2017 Eike Mansfeld ecm@gmx.de. All rights reserved.

4 | *

5 | * Redistribution and use in source and binary forms, with or without

6 | * modification, are permitted provided that the following conditions

7 | * are met:

8 | *

9 | * 1. Redistributions of source code must retain the above copyright

10 | * notice, this list of conditions and the following disclaimer.

11 | * 2. Redistributions in binary form must reproduce the above copyright

12 | * notice, this list of conditions and the following disclaimer in

13 | * the documentation and/or other materials provided with the

14 | * distribution.

15 | * 3. Neither the name of the copyright holder nor the names of its

16 | * contributors may be used to endorse or promote products derived

17 | * from this software without specific prior written permission.

18 | *

19 | * THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

20 | * "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

21 | * LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS

22 | * FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE

23 | * COPYRIGHT OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT,

24 | * INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING,

25 | * BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS

26 | * OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED

27 | * AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT

28 | * LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN

29 | * ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

30 | * POSSIBILITY OF SUCH DAMAGE.

31 | *

32 | ****************************************************************************/

33 |

34 | package com.comino.realsense.boofcv;

35 |

36 | import java.awt.Color;

37 | import java.awt.Graphics;

38 | import java.awt.image.BufferedImage;

39 |

40 | import com.comino.mq.tests.OptPos;

41 | import com.comino.msp.utils.MSPMathUtils;

42 | import com.comino.realsense.boofcv.StreamRealSenseVisDepth.Listener;

43 | import com.comino.slam.boofcv.MAVDepthVisualOdometry;

44 | import com.comino.slam.boofcv.sfm.DepthSparse3D;

45 | import com.comino.slam.boofcv.vio.FactoryMAVOdometryVIO;

46 | import com.comino.slam.boofcv.vio.tracker.FactoryMAVPointTrackerTwoPassVIO;

47 |

48 | import boofcv.abst.feature.detect.interest.ConfigGeneralDetector;

49 | import boofcv.abst.feature.tracker.PointTrackerTwoPass;

50 | import boofcv.abst.sfm.AccessPointTracks3D;

51 | import boofcv.alg.distort.DoNothingPixelTransform_F32;

52 | import boofcv.alg.tracker.klt.PkltConfig;

53 | import boofcv.core.image.ConvertImage;

54 | import boofcv.io.image.ConvertBufferedImage;

55 | import boofcv.struct.image.GrayS16;

56 | import boofcv.struct.image.GrayU16;

57 | import boofcv.struct.image.GrayU8;

58 | import boofcv.struct.image.Planar;

59 | import georegression.geometry.ConvertRotation3D_F64;

60 | import georegression.struct.EulerType;

61 | import georegression.struct.point.Point3D_F64;

62 | import georegression.struct.point.Vector3D_F64;

63 | import georegression.struct.se.Se3_F64;

64 | import javafx.application.Application;

65 | import javafx.application.Platform;

66 | import javafx.embed.swing.SwingFXUtils;

67 | import javafx.scene.Scene;

68 | import javafx.scene.image.ImageView;

69 | import javafx.scene.image.WritableImage;

70 | import javafx.scene.input.MouseEvent;

71 | import javafx.scene.layout.FlowPane;

72 | import javafx.stage.Stage;

73 |

74 | public class StreamRealSenseTestVIO extends Application {

75 |

76 | private static final float INLIER_PIXEL_TOL = 1.5f;

77 | private static final int MAXTRACKS = 100;

78 | private static final int KLT_RADIUS = 3;

79 | private static final float KLT_THRESHOLD = 1f;

80 | private static final int RANSAC_ITERATIONS = 150;

81 | private static final int RETIRE_THRESHOLD = 2;

82 | private static final int ADD_THRESHOLD = 70;

83 | private static final int REFINE_ITERATIONS = 80;

84 |

85 | private BufferedImage output;

86 | private final ImageView ivrgb = new ImageView();

87 | private WritableImage wirgb;

88 |

89 | private StreamRealSenseVisDepth realsense;

90 |

91 | private GrayU8 gray = null;

92 | private Se3_F64 pose = new Se3_F64();

93 | private double[] visAttitude = new double[3];

94 |

95 | private long oldTimeDepth=0;

96 | private long tms = 0;

97 |

98 | private int mouse_x;

99 | private int mouse_y;

100 |

101 | private OptPos position = new OptPos();

102 |

103 | @Override

104 | public void start(Stage primaryStage) {

105 | primaryStage.setTitle("BoofCV RealSense Demo");

106 |

107 | FlowPane root = new FlowPane();

108 |

109 | root.getChildren().add(ivrgb);

110 |

111 |

112 | ivrgb.setOnMouseMoved(event -> {

113 | MouseEvent ev = event;

114 | mouse_x = (int)ev.getX();

115 | mouse_y = (int)ev.getY();

116 | });

117 |

118 |

119 | RealSenseInfo info = new RealSenseInfo(320,240, RealSenseInfo.MODE_RGB);

120 | // RealSenseInfo info = new RealSenseInfo(640,480, RealSenseInfo.MODE_RGB);

121 |

122 | gray = new GrayU8(info.width,info.height);

123 |

124 | try {

125 |

126 | realsense = new StreamRealSenseVisDepth(0,info);

127 |

128 | } catch(Exception e) {

129 | System.out.println("REALSENSE:"+e.getMessage());

130 | return;

131 | }

132 |

133 | mouse_x = info.width/2;

134 | mouse_y = info.height/2;

135 |

136 | primaryStage.setScene(new Scene(root, info.width,info.height));

137 | primaryStage.show();

138 |

139 |

140 | PkltConfig configKlt = new PkltConfig();

141 | configKlt.pyramidScaling = new int[]{1, 2, 4, 8};

142 | configKlt.templateRadius = 3;

143 |

144 | PointTrackerTwoPass<GrayU8> tracker =

145 | FactoryMAVPointTrackerTwoPassVIO.klt(configKlt, new ConfigGeneralDetector(MAXTRACKS, KLT_RADIUS, KLT_THRESHOLD),

146 | GrayU8.class, GrayS16.class);

147 |

148 | DepthSparse3D<GrayU16> sparseDepth = new DepthSparse3D.I<GrayU16>(1e-3);

149 |

150 | // declares the algorithm

151 | MAVDepthVisualOdometry<GrayU8,GrayU16> visualOdometry =

152 | FactoryMAVOdometryVIO.depthPnP(INLIER_PIXEL_TOL, ADD_THRESHOLD, RETIRE_THRESHOLD, RANSAC_ITERATIONS, REFINE_ITERATIONS, true,

153 | new Point3D_F64(),

154 | sparseDepth, tracker, GrayU8.class, GrayU16.class);

155 |

156 | visualOdometry.setCalibration(realsense.getIntrinsics(),new DoNothingPixelTransform_F32());

157 |

158 | Runtime.getRuntime().addShutdownHook(new Thread() {

159 | public void run() {

160 | realsense.stop();

161 | }

162 | });

163 |

164 |

165 | output = new BufferedImage(info.width, info.height, BufferedImage.TYPE_INT_RGB);

166 | wirgb = new WritableImage(info.width, info.height);

167 | ivrgb.setImage(wirgb);

168 |

169 | visualOdometry.reset();

170 |

171 |

172 | realsense.registerListener(new Listener() {

173 |

174 | int fps; float mouse_depth; float md; int mc; int mf=0; int fpm;

175 |

176 | @Override

177 | public void process(Planar<GrayU8> rgb, GrayU16 depth, long timeRgb, long timeDepth) {

178 |

179 |

180 | if((System.currentTimeMillis() - tms) > 250) {

181 | tms = System.currentTimeMillis();

182 | if(mf>0)

183 | fps = fpm/mf;

184 | if(mc>0)

185 | mouse_depth = md / mc;

186 | mc = 0; md = 0; mf=0; fpm=0;

187 | }

188 | if(timeRgb > oldTimeDepth) { // skip repeated timestamps: a zero interval would divide by zero

189 | mf++; fpm += (int)(1000f/(timeRgb - oldTimeDepth)+0.5f); }

190 | oldTimeDepth = timeRgb;

191 |

192 | ConvertImage.average(rgb, gray);

193 |

194 | if( !visualOdometry.process(gray, depth, pose) ) {

195 | visualOdometry.reset();

196 | System.err.println("No motion estimate");

197 | return;

198 | }

199 |

200 |

201 | pose.set(visualOdometry.getCameraToWorld());

202 | Vector3D_F64 T = pose.getT();

203 |

204 | ConvertRotation3D_F64.matrixToEuler(pose.getRotation(), EulerType.ZXY, visAttitude);

205 |

206 |

207 | AccessPointTracks3D points = (AccessPointTracks3D)visualOdometry;

208 | ConvertBufferedImage.convertTo_U8(rgb, output, false);

209 |

210 | Graphics c = output.getGraphics();

211 |

212 | int count = 0; float total = 0; int dx=0, dy=0; int dist=999;

213 | int x, y; int index = -1;

214 |

215 | for( int i = 0; i < points.getAllTracks().size(); i++ ) {

216 | if(points.isInlier(i)) {

217 |

218 |

219 | c.setColor(Color.WHITE);

220 |

221 | x = (int)points.getAllTracks().get(i).x;

222 | y = (int)points.getAllTracks().get(i).y;

223 |

224 |

225 |

226 | int d = depth.get(x,y);

227 | if(d > 0) {

228 |

229 |

230 | int di = (int)Math.sqrt((x-mouse_x)*(x-mouse_x) + (y-mouse_y)*(y-mouse_y));

231 | if(di < dist) {

232 | index = i;

233 | dx = x;

234 | dy = y;

235 | dist = di;

236 | }

237 | total++;

238 | if(d<500) {

239 | c.setColor(Color.RED); count++;

240 | }

241 | c.drawRect(x,y, 1, 1);

242 | }

243 | }

244 | }

245 |

246 | if(depth!=null) {

247 | // if(index > -1)

248 | // System.out.println(visualOdometry.getTrackLocation(index));

249 |

250 | mc++;

251 | md = md + depth.get(dx,dy) / 1000f;

252 | c.setColor(Color.WHITE);

253 | c.drawOval(dx-3,dy-3, 6, 6);

254 | }

255 |

256 |

257 | c.setColor(Color.CYAN);

258 | c.drawString("Fps:"+fps, 10, 20);

259 | c.drawString(String.format("Quality: %3.0f",visualOdometry.getQuality()), info.width-85, 20);

260 | c.drawString(String.format("Loc: %4.2f %4.2f %4.2f", T.x, T.y, T.z), 10, info.height-10);

261 | c.drawString(String.format("Depth: %3.2f", mouse_depth), info.width-85, info.height-10);

262 | c.drawString(String.format("ROLL: %3.0f°", MSPMathUtils.fromRad(visAttitude[0])), info.width-85, info.height-55);

263 | c.drawString(String.format("PITCH: %3.0f°", MSPMathUtils.fromRad(visAttitude[1])), info.width-85, info.height-40);

264 | c.drawString(String.format("YAW: %3.0f°", MSPMathUtils.fromRad(visAttitude[2])), info.width-85, info.height-25);

265 |

266 | position.x = T.x;

267 | position.y = T.y;

268 | position.z = T.z;

269 |

270 | position.tms = timeRgb;

271 |

272 | // if((count / total)>0.6f) {

273 | // c.setColor(Color.RED);

274 | // c.drawString("WARNING!", info.width-70, 20);

275 | // }

276 | c.dispose();

277 |

278 | Platform.runLater(() -> {

279 | SwingFXUtils.toFXImage(output, wirgb);

280 | });

281 | }

282 |

283 | }).start();

284 |

285 | }

286 |

287 | @Override

288 | public void stop() throws Exception {

289 | realsense.stop();

290 | super.stop();

291 | }

292 |

293 | public static void main(String[] args) {

294 |

295 |

296 | launch(args);

297 | }

298 |

299 | }

300 |
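The listener above averages per-frame rates over a window of roughly 250 ms before updating the displayed FPS value. A minimal, self-contained sketch of that idea follows. The class name `FrameRateMeter` and its method are hypothetical and not part of this repository; the sketch also skips frames whose timestamp did not advance, which is an added assumption.

```java
// Hypothetical helper, not part of MAVSlam: windowed frame-rate estimate.
final class FrameRateMeter {
    private final long windowMs;     // length of the averaging window in ms
    private long windowStartMs = -1; // timestamp at which the current window began
    private long lastFrameMs = -1;   // timestamp of the previous frame
    private long rateSum = 0;        // sum of instantaneous rates in this window
    private int samples = 0;         // number of rates accumulated in this window
    private int fps = 0;             // last published average

    FrameRateMeter(long windowMs) {
        this.windowMs = windowMs;
    }

    /** Feeds one frame timestamp in milliseconds and returns the latest averaged rate. */
    int onFrame(long timestampMs) {
        if (windowStartMs < 0)
            windowStartMs = timestampMs;
        if (lastFrameMs >= 0) {
            long dt = timestampMs - lastFrameMs;
            if (dt > 0) { // ignore repeated or out-of-order timestamps
                rateSum += Math.round(1000.0 / dt);
                samples++;
            }
        }
        lastFrameMs = timestampMs;
        // publish a new average once the window has elapsed
        if (timestampMs - windowStartMs > windowMs && samples > 0) {
            fps = (int) (rateSum / samples);
            rateSum = 0;
            samples = 0;
            windowStartMs = timestampMs;
        }
        return fps;
    }
}
```

A caller would create one meter per stream, for example `new FrameRateMeter(250)`, and pass each frame's `timeRgb` value to `onFrame`.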

--------------------------------------------------------------------------------

/MAVSlam/src/com/comino/realsense/boofcv/StreamRealSenseVisDepth.java:

--------------------------------------------------------------------------------

1 | /****************************************************************************

2 | *

3 | * Copyright (c) 2017 Eike Mansfeld ecm@gmx.de. All rights reserved.

4 | *

5 | * Redistribution and use in source and binary forms, with or without

6 | * modification, are permitted provided that the following conditions

7 | * are met:

8 | *

9 | * 1. Redistributions of source code must retain the above copyright

10 | * notice, this list of conditions and the following disclaimer.

11 | * 2. Redistributions in binary form must reproduce the above copyright

12 | * notice, this list of conditions and the following disclaimer in

13 | * the documentation and/or other materials provided with the

14 | * distribution.

15 | * 3. Neither the name of the copyright holder nor the names of its

16 | * contributors may be used to endorse or promote products derived

17 | * from this software without specific prior written permission.

18 | *

19 | * THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

20 | * "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

21 | * LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS

22 | * FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE

23 | * COPYRIGHT OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT,

24 | * INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING,

25 | * BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS

26 | * OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED

27 | * AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT

28 | * LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN

29 | * ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

30 | * POSSIBILITY OF SUCH DAMAGE.

31 | *

32 | ****************************************************************************/

33 |

34 |

35 | package com.comino.realsense.boofcv;

36 |

37 | import java.util.ArrayList;

38 | import java.util.List;

39 |

40 | import com.comino.librealsense.wrapper.LibRealSenseIntrinsics;

41 | import com.comino.librealsense.wrapper.LibRealSenseUtils;

42 | import com.comino.librealsense.wrapper.LibRealSenseWrapper;

43 | import com.comino.librealsense.wrapper.LibRealSenseWrapper.rs_format;

44 | import com.comino.librealsense.wrapper.LibRealSenseWrapper.rs_intrinsics;

45 | import com.comino.librealsense.wrapper.LibRealSenseWrapper.rs_option;

46 | import com.comino.librealsense.wrapper.LibRealSenseWrapper.rs_stream;

47 | import com.sun.jna.Pointer;

48 | import com.sun.jna.ptr.PointerByReference;

49 |

50 | import boofcv.struct.calib.IntrinsicParameters;

51 | import boofcv.struct.image.GrayU16;

52 | import boofcv.struct.image.GrayU8;

53 | import boofcv.struct.image.Planar;

54 |

55 | public class StreamRealSenseVisDepth {

56 |

57 | private static final long MAX_RATE = 20;

58 |

59 | // time out used in some places

60 | private long timeout=10000;

61 |

62 |

63 | private List<Listener> listeners;

64 |

65 | // image with depth information

66 | private GrayU16 depth = new GrayU16(1,1);

67 |

68 | private Planar<GrayU8> rgb = new Planar<GrayU8>(GrayU8.class,1,1,3);

69 |

70 |

71 |

72 | private CombineThread thread;

73 |

74 | private PointerByReference error= new PointerByReference();

75 | private PointerByReference ctx;

76 |

77 | private PointerByReference dev;

78 |

79 | private RealSenseInfo info;

80 | private float scale;

81 |

82 | private LibRealSenseIntrinsics intrinsics;

83 |

84 | public StreamRealSenseVisDepth(int devno , RealSenseInfo info)

85 | {

86 |

87 | ctx = LibRealSenseWrapper.INSTANCE.rs_create_context(11201, error);

88 | if(LibRealSenseWrapper.INSTANCE.rs_get_device_count(ctx, error)<1)

89 | ctx = LibRealSenseWrapper.INSTANCE.rs_create_context(4, error);

90 |

91 | if(LibRealSenseWrapper.INSTANCE.rs_get_device_count(ctx, error)<1) {

92 | LibRealSenseWrapper.INSTANCE.rs_delete_context(ctx, error);

93 | throw new IllegalArgumentException("No device found");

94 | }

95 | this.listeners = new ArrayList<Listener>();

96 |

97 | this.info = info;

98 |

99 | dev = LibRealSenseWrapper.INSTANCE.rs_get_device(ctx, devno, error);

100 | LibRealSenseWrapper.INSTANCE.rs_wait_for_frames(dev, error);

101 |

102 |

103 | Pointer ch = LibRealSenseWrapper.INSTANCE.rs_get_device_firmware_version(dev, error);

104 | System.out.println("Firmware version: "+ch.getString(0));

105 |

106 | LibRealSenseUtils.rs_apply_depth_control_preset(dev, LibRealSenseUtils.PRESET_DEPTH_HIGH);

107 | LibRealSenseWrapper.INSTANCE.rs_set_device_option(dev, rs_option.RS_OPTION_COLOR_ENABLE_AUTO_WHITE_BALANCE, 0, error);

108 | LibRealSenseWrapper.INSTANCE.rs_set_device_option(dev, rs_option.RS_OPTION_R200_EMITTER_ENABLED, 1, error);

109 | LibRealSenseWrapper.INSTANCE.rs_set_device_option(dev, rs_option.RS_OPTION_COLOR_ENABLE_AUTO_EXPOSURE, 1, error);

110 | LibRealSenseWrapper.INSTANCE.rs_set_device_option(dev, rs_option.RS_OPTION_R200_LR_AUTO_EXPOSURE_ENABLED,1 , error);

111 | LibRealSenseWrapper.INSTANCE.rs_set_device_option(dev, rs_option.RS_OPTION_COLOR_BACKLIGHT_COMPENSATION, 0, error);

112 |

113 |

114 | LibRealSenseWrapper.INSTANCE.rs_enable_stream(dev, rs_stream.RS_STREAM_COLOR,

115 | info.width,info.height,rs_format.RS_FORMAT_RGB8, info.framerate, error);

116 |

117 | if(info.mode==RealSenseInfo.MODE_INFRARED) {

118 | LibRealSenseWrapper.INSTANCE.rs_enable_stream(dev, rs_stream.RS_STREAM_INFRARED2_ALIGNED_TO_DEPTH,

119 | info.width,info.height,rs_format.RS_FORMAT_ANY, info.framerate, error);

120 | }

121 |

122 | LibRealSenseWrapper.INSTANCE.rs_enable_stream(dev, rs_stream.RS_STREAM_DEPTH,

123 | info.width,info.height,rs_format.RS_FORMAT_Z16, info.framerate, error);

124 |

125 |

126 | scale = LibRealSenseWrapper.INSTANCE.rs_get_device_depth_scale(dev, error);

127 |

128 | rs_intrinsics rs_int= new rs_intrinsics();

129 | LibRealSenseWrapper.INSTANCE.rs_get_stream_intrinsics(dev, rs_stream.RS_STREAM_RECTIFIED_COLOR, rs_int, error);

130 | intrinsics = new LibRealSenseIntrinsics(rs_int);

131 |

132 | System.out.println("Depth scale: "+scale+" Intrinsics: "+intrinsics.toString());

133 |

134 | depth.reshape(info.width,info.height);

135 | rgb.reshape(info.width,info.height);

136 |

137 | }

138 |

139 | public StreamRealSenseVisDepth registerListener(Listener listener) {

140 | listeners.add(listener);

141 | return this;

142 | }

143 |

144 |

145 | public void start() {

146 | LibRealSenseWrapper.INSTANCE.rs_start_device(dev, error);

147 |

148 | thread = new CombineThread();

149 | thread.setName("VIO");

150 | thread.setPriority(Thread.NORM_PRIORITY);

151 | thread.start();

152 |

153 | while(!thread.running)

154 | Thread.yield();

155 | }

156 |

157 | public void stop() {

158 | thread.requestStop = true;

159 | long start = System.currentTimeMillis()+timeout;

160 | while( start > System.currentTimeMillis() && thread.running )

161 | Thread.yield();

162 | LibRealSenseWrapper.INSTANCE.rs_stop_device(dev, error);

163 | }

164 |

165 |

166 |

167 | public IntrinsicParameters getIntrinsics() {

168 | return intrinsics;

169 | }

170 |

171 |

172 | private class CombineThread extends Thread {

173 |

174 | public volatile boolean running = false;

175 | public volatile boolean requestStop = false;

176 |

177 | public volatile Pointer depthData;

178 | public volatile Pointer rgbData;

179 |

180 | private long timeDepth;

181 | private long timeRgb;

182 |

183 | private long tms_offset_depth = 0;

184 | private long tms_offset_rgb = 0;

185 |

186 | @Override

187 | public void run() {

188 | running = true; long tms; long wait;

189 |

190 | while( !requestStop ) {

191 |

192 | try {

193 |

194 | tms = System.currentTimeMillis();

195 |

196 | LibRealSenseWrapper.INSTANCE.rs_wait_for_frames(dev, error);

197 |

198 | depthData = LibRealSenseWrapper.INSTANCE.rs_get_frame_data(dev,

199 | rs_stream.RS_STREAM_DEPTH_ALIGNED_TO_RECTIFIED_COLOR, error);

200 | timeDepth = (long)LibRealSenseWrapper.INSTANCE.rs_get_frame_timestamp(dev,

201 | rs_stream.RS_STREAM_DEPTH_ALIGNED_TO_RECTIFIED_COLOR, error);

202 | if(tms_offset_depth==0)

203 | tms_offset_depth = System.currentTimeMillis() - timeDepth;

204 |

205 | if(depthData!=null)

206 | bufferDepthToU16(depthData,depth);

207 |

208 | switch(info.mode) {

209 | case RealSenseInfo.MODE_RGB:

210 | synchronized ( this ) {

211 | timeRgb = (long)LibRealSenseWrapper.INSTANCE.rs_get_frame_timestamp(dev,

212 | rs_stream.RS_STREAM_RECTIFIED_COLOR, error);

213 | if(tms_offset_rgb==0)

214 | tms_offset_rgb = System.currentTimeMillis() - timeRgb;

215 | rgbData = LibRealSenseWrapper.INSTANCE.rs_get_frame_data(dev,

216 | rs_stream.RS_STREAM_RECTIFIED_COLOR, error);

217 | if(rgbData!=null)

218 | bufferRgbToMsU8(rgbData,rgb);

219 | }

220 | break;

221 | case RealSenseInfo.MODE_INFRARED:

222 | synchronized ( this ) {

223 | timeRgb = (long)LibRealSenseWrapper.INSTANCE.rs_get_frame_timestamp(dev,

224 | rs_stream.RS_STREAM_INFRARED2_ALIGNED_TO_DEPTH, error);

225 | if(tms_offset_rgb==0)

226 | tms_offset_rgb = System.currentTimeMillis() - timeRgb;

227 | rgbData = LibRealSenseWrapper.INSTANCE.rs_get_frame_data(dev,

228 | rs_stream.RS_STREAM_INFRARED2_ALIGNED_TO_DEPTH, error);

229 | if(rgbData!=null)

230 | bufferGrayToMsU8(rgbData,rgb);

231 | }

232 | break;

233 | }

234 |

235 | if(listeners.size()>0) {

236 | for(Listener listener : listeners)

237 | listener.process(rgb, depth, timeRgb+tms_offset_rgb, timeDepth+tms_offset_depth);

238 | }

239 |

240 | // Limit the loop rate: MAX_RATE is the minimum period per iteration in ms (20 ms, i.e. at most about 50 Hz)

241 | wait = MAX_RATE - ( System.currentTimeMillis() - tms + 1 );

242 | if(wait>0)

243 | Thread.sleep(wait);

244 |

245 | } catch(Exception e) {

246 | e.printStackTrace();

247 | }

248 | }

249 |

250 | running = false;

251 | }

252 | }

253 |

254 | public void bufferGrayToU8(Pointer input , GrayU8 output ) {

255 | byte[] inp = input.getByteArray(0, output.width * output.height );

256 | System.arraycopy(inp, 0, output.data, 0, output.width * output.height);

257 | }

258 |

259 |

260 | public void bufferDepthToU16(Pointer input , GrayU16 output ) {

261 | short[] inp = input.getShortArray(0, output.width * output.height);

262 | // System.arraycopy(inp, 0, output.data, 0, output.width * output.height);

263 |

264 | // Upside down mounting

265 | indexIn = output.width * output.height -1;

266 | for( y = 0; y < output.height; y++ ) {

267 | indexOut = output.startIndex + y*output.stride;

268 | for( x = 0; x < output.width; x++ , indexOut++ ) {

269 | output.data[indexOut] = inp[indexIn--];

270 | }

271 | }

272 | }

273 |

274 |

275 | private int x,y,indexOut, indexIn;

276 | private byte[] input;

277 |

278 | public void bufferRgbToMsU8( Pointer inp , Planar<GrayU8> output ) {

279 |

280 | input = inp.getByteArray(0, output.width * output.height * 3);

281 |

282 | // Upside down mounting

283 | indexIn = output.width * output.height * 3 -1;

284 | for( y = 0; y < output.height; y++ ) {

285 | indexOut = output.startIndex + y*output.stride;

286 | for( x = 0; x < output.width; x++ , indexOut++ ) {

287 | output.getBand(0).data[indexOut] = input[indexIn--];

288 | output.getBand(1).data[indexOut] = input[indexIn--];

289 | output.getBand(2).data[indexOut] = input[indexIn--];

290 | }

291 | }

292 | }

293 |

294 | public void bufferGrayToMsU8( Pointer inp , Planar<GrayU8> output ) {

295 |

296 | input = inp.getByteArray(0, output.width * output.height);

297 |

298 | // Upside down mounting

299 | indexIn = output.width * output.height -1;

300 | for(y = 0; y < output.height; y++ ) {

301 | int indexOut = output.startIndex + y*output.stride;

302 | for(x = 0; x < output.width; x++ , indexOut++ ) {

303 | output.getBand(0).data[indexOut] = input[indexIn];

304 | output.getBand(1).data[indexOut] = input[indexIn];

305 | output.getBand(2).data[indexOut] = input[indexIn--];

306 | }

307 | }

308 | }

309 |

310 | public interface Listener {

311 | public void process(Planar<GrayU8> rgb, GrayU16 depth, long timeRgb, long timeDepth);

312 | }

313 | }

314 |
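The buffer conversion methods above handle an upside-down camera mounting by reading the source buffer backwards, which rotates the image by 180 degrees. The sketch below shows the same rotation on plain arrays, assuming a tightly packed interleaved RGB8 source. The class `Rotate180` is hypothetical and not part of this repository. Unlike a single decrementing byte index, which also reverses the channel order inside each pixel, this version reverses only the pixel order so band 0 keeps the red channel; whether the original intends the swapped order is not stated in the source.

```java
// Hypothetical helper, not part of MAVSlam: 180-degree rotation of an
// interleaved RGB image into three planar bands.
final class Rotate180 {

    /**
     * @param rgb    interleaved RGB bytes, width*height*3 long, row-major
     * @param width  image width in pixels
     * @param height image height in pixels
     * @return bands[0]=R, bands[1]=G, bands[2]=B, each rotated by 180 degrees
     */
    static byte[][] interleavedRgbToPlanarRotated(byte[] rgb, int width, int height) {
        int pixels = width * height;
        if (rgb.length != pixels * 3)
            throw new IllegalArgumentException("buffer size does not match image size");

        byte[][] bands = new byte[3][pixels];
        int last = pixels - 1;
        for (int p = 0; p <= last; p++) {
            // pixel p of the output comes from pixel (last - p) of the input
            int src = (last - p) * 3;
            bands[0][p] = rgb[src];
            bands[1][p] = rgb[src + 1];
            bands[2][p] = rgb[src + 2];
        }
        return bands;
    }
}
```

Reversing the linear pixel index flips both rows and columns at once, which is why no separate row loop is needed when the destination has no padding.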

--------------------------------------------------------------------------------

/MAVSlam/src/com/comino/server/mjpeg/IMJPEGOverlayListener.java:

--------------------------------------------------------------------------------

1 | /****************************************************************************

2 | *

3 | * Copyright (c) 2017 Eike Mansfeld ecm@gmx.de. All rights reserved.

4 | *

5 | * Redistribution and use in source and binary forms, with or without

6 | * modification, are permitted provided that the following conditions

7 | * are met:

8 | *

9 | * 1. Redistributions of source code must retain the above copyright

10 | * notice, this list of conditions and the following disclaimer.

11 | * 2. Redistributions in binary form must reproduce the above copyright

12 | * notice, this list of conditions and the following disclaimer in

13 | * the documentation and/or other materials provided with the

14 | * distribution.

15 | * 3. Neither the name of the copyright holder nor the names of its

16 | * contributors may be used to endorse or promote products derived

17 | * from this software without specific prior written permission.

18 | *

19 | * THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

20 | * "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

21 | * LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS

22 | * FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE

23 | * COPYRIGHT OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT,

24 | * INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING,

25 | * BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS

26 | * OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED

27 | * AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT

28 | * LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN

29 | * ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

30 | * POSSIBILITY OF SUCH DAMAGE.

31 | *

32 | ****************************************************************************/

33 |

34 |

35 | package com.comino.server.mjpeg;

36 |

37 | import java.awt.Graphics;

38 |

39 | public interface IMJPEGOverlayListener {

40 |

41 | public void processOverlay(Graphics ctx);

42 |

43 | }

44 |

--------------------------------------------------------------------------------

/MAVSlam/src/com/comino/server/mjpeg/IVisualStreamHandler.java:

--------------------------------------------------------------------------------

1 | /****************************************************************************

2 | *

3 | * Copyright (c) 2017 Eike Mansfeld ecm@gmx.de. All rights reserved.

4 | *

5 | * Redistribution and use in source and binary forms, with or without

6 | * modification, are permitted provided that the following conditions

7 | * are met:

8 | *

9 | * 1. Redistributions of source code must retain the above copyright

10 | * notice, this list of conditions and the following disclaimer.

11 | * 2. Redistributions in binary form must reproduce the above copyright

12 | * notice, this list of conditions and the following disclaimer in

13 | * the documentation and/or other materials provided with the

14 | * distribution.

15 | * 3. Neither the name of the copyright holder nor the names of its

16 | * contributors may be used to endorse or promote products derived

17 | * from this software without specific prior written permission.

18 | *

19 | * THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

20 | * "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

21 | * LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS

22 | * FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE

23 | * COPYRIGHT OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT,

24 | * INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING,

25 | * BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS

26 | * OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED

27 | * AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT

28 | * LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN

29 | * ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

30 | * POSSIBILITY OF SUCH DAMAGE.

31 | *

32 | ****************************************************************************/

33 |

34 | package com.comino.server.mjpeg;

35 |

36 | import com.comino.msp.model.DataModel;

37 |

38 | public interface IVisualStreamHandler<T> {

39 |

40 | public final static int HTTPVIDEO = 0;

41 | public final static int FILE = 1;

42 |

43 | public void addToStream(T image, DataModel model, long tms_us);

44 | public void registerOverlayListener(IMJPEGOverlayListener listener);

45 |

46 | }
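An HTTP implementation of this interface streams frames as parts of a `multipart/x-mixed-replace` response. The sketch below shows one conventional way to frame a single JPEG as such a part, with each header on its own line. The class `MjpegFraming` and its methods are hypothetical and not part of this repository; the boundary token is an assumption chosen for illustration.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of MAVSlam: frames one JPEG image as a
// part of a multipart/x-mixed-replace stream.
final class MjpegFraming {

    static final String BOUNDARY = "BoundaryString";

    /** Value for the response's Content-Type header. */
    static String contentTypeHeader() {
        return "multipart/x-mixed-replace; boundary=" + BOUNDARY;
    }

    /** Builds delimiter, part headers, payload, and trailing CRLF for one frame. */
    static byte[] framePart(byte[] jpeg) {
        String header = "--" + BOUNDARY + "\r\n"
                + "Content-Type: image/jpeg\r\n"
                + "Content-Length: " + jpeg.length + "\r\n"
                + "\r\n";
        byte[] head = header.getBytes(StandardCharsets.US_ASCII);
        byte[] tail = "\r\n".getBytes(StandardCharsets.US_ASCII);

        ByteArrayOutputStream out = new ByteArrayOutputStream(head.length + jpeg.length + tail.length);
        out.write(head, 0, head.length);
        out.write(jpeg, 0, jpeg.length);
        out.write(tail, 0, tail.length);
        return out.toByteArray();
    }
}
```

Writing the result of `framePart` to the response body once per frame keeps the delimiter, headers, and payload together, so a client can replace the displayed image as each part arrives.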

--------------------------------------------------------------------------------

/MAVSlam/src/com/comino/server/mjpeg/impl/HttpMJPEGHandler.java:

--------------------------------------------------------------------------------

1 | /****************************************************************************

2 | *

3 | * Copyright (c) 2017 Eike Mansfeld ecm@gmx.de. All rights reserved.

4 | *

5 | * Redistribution and use in source and binary forms, with or without

6 | * modification, are permitted provided that the following conditions

7 | * are met:

8 | *

9 | * 1. Redistributions of source code must retain the above copyright

10 | * notice, this list of conditions and the following disclaimer.

11 | * 2. Redistributions in binary form must reproduce the above copyright

12 | * notice, this list of conditions and the following disclaimer in

13 | * the documentation and/or other materials provided with the

14 | * distribution.

15 | * 3. Neither the name of the copyright holder nor the names of its

16 | * contributors may be used to endorse or promote products derived

17 | * from this software without specific prior written permission.

18 | *

19 | * THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

20 | * "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

21 | * LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS

22 | * FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE

23 | * COPYRIGHT OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT,

24 | * INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING,

25 | * BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS

26 | * OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED

27 | * AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT

28 | * LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN

29 | * ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

30 | * POSSIBILITY OF SUCH DAMAGE.

31 | *

32 | ****************************************************************************/

33 |

34 |

35 | package com.comino.server.mjpeg.impl;

36 |

37 | import java.awt.Graphics2D;

38 | import java.awt.image.BufferedImage;

39 | import java.io.BufferedOutputStream;

40 | import java.io.IOException;

41 | import java.io.OutputStream;

42 | import java.util.ArrayList;

43 | import java.util.List;

44 |

45 | import javax.imageio.ImageIO;

46 |

47 | import com.comino.msp.model.DataModel;

48 | import com.comino.realsense.boofcv.RealSenseInfo;

49 | import com.comino.server.mjpeg.IMJPEGOverlayListener;

50 | import com.comino.server.mjpeg.IVisualStreamHandler;

51 | import com.sun.net.httpserver.HttpExchange;

52 | import com.sun.net.httpserver.HttpHandler;

53 |

54 | import boofcv.io.image.ConvertBufferedImage;

55 | import boofcv.struct.image.GrayU8;

56 | import boofcv.struct.image.Planar;

57 |

58 | public class HttpMJPEGHandler<T> implements HttpHandler, IVisualStreamHandler<T> {

59 |

60 | private static final int MAX_VIDEO_RATE_MS = 40;

61 |

62 | private List<IMJPEGOverlayListener> listeners = null;

63 | private BufferedImage image = null;

64 | private DataModel model = null;

65 | private Graphics2D ctx;

66 |

67 | private T input_image;

68 |

69 | private long last_image_tms = 0;

70 |

71 | public HttpMJPEGHandler(RealSenseInfo info, DataModel model) {

72 | this.model = model;

73 | this.listeners = new ArrayList<IMJPEGOverlayListener>();

74 | this.image = new BufferedImage(info.width, info.height, BufferedImage.TYPE_3BYTE_BGR);

75 | this.ctx = image.createGraphics();

76 |

77 | ImageIO.setUseCache(false);

78 |

79 | }

80 |

81 | @Override @SuppressWarnings("unchecked")

82 | public void handle(HttpExchange he) throws IOException {

83 |

84 | he.getResponseHeaders().add("content-type","multipart/x-mixed-replace; boundary=--BoundaryString");

85 | he.sendResponseHeaders(200, 0);

86 | OutputStream os = new BufferedOutputStream(he.getResponseBody());

87 | while(true) {

88 | os.write(("--BoundaryString\r\nContent-Type: image/jpeg\r\n\r\n").getBytes());

89 |

90 | try {

91 |

92 | synchronized(this) {

93 |

94 | while(input_image==null) // loop to guard against spurious wakeups

95 | wait();

96 | }

97 |

98 | if(input_image instanceof Planar) {

99 | ConvertBufferedImage.convertTo_U8((Planar<GrayU8>)input_image, image, true);

100 | }

101 | else if(input_image instanceof GrayU8)

102 | ConvertBufferedImage.convertTo((GrayU8)input_image, image, true);

103 |

104 | if(listeners.size()>0) {

105 | for(IMJPEGOverlayListener listener : listeners)

106 | listener.processOverlay(ctx);

107 | }

108 |

109 | ImageIO.write(image, "jpg", os );

110 | os.write("\r\n\r\n".getBytes());

111 |

112 |

113 |

114 | input_image = null;

115 |

116 | } catch (Exception e) { }

117 | }

118 | }

119 |

120 | @Override

121 | public void registerOverlayListener(IMJPEGOverlayListener listener) {

122 | this.listeners.add(listener);

123 | }

124 |

125 | @Override

126 | public void addToStream(T input, DataModel model, long tms_us) {

127 |

128 | if((System.currentTimeMillis()-last_image_tms)

10 | extends DepthVisualOdometry {

11 |

12 | public int getInlierCount();

13 |

14 | public Point3D_F64 getTrackLocation(int index);

15 |

16 | public double getQuality();

17 |

18 | public void reset(Se3_F64 initialState);

19 |

20 | public boolean process(Vis visual, Depth depth, Se3_F64 currentAttitude);

21 |

22 | public Point3D_F64 getPoint3DFromPixel(int pixelx, int pixely);

23 |

24 | }

25 |
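The `getPoint3DFromPixel` method declared above maps a pixel to a 3D point. A minimal sketch of the underlying back-projection follows, assuming an ideal pinhole camera without lens distortion or skew and a depth image already aligned to the visual image. The class `PinholeBackProjection` and its parameter names are hypothetical and not part of this repository; the actual implementation may also remove distortion.

```java
// Hypothetical helper, not part of MAVSlam: pinhole back-projection of a
// pixel with a raw depth reading into camera coordinates.
final class PinholeBackProjection {

    /**
     * @param px, py     pixel coordinates in the visual image
     * @param rawDepth   raw depth reading at that pixel; 0 means no measurement
     * @param depthScale factor converting raw depth units to metres (e.g. 1e-3 for mm)
     * @param fx, fy     focal lengths in pixels
     * @param cx, cy     principal point in pixels
     * @return {x, y, z} in the camera frame, or null if there is no depth
     */
    static double[] pixelTo3D(int px, int py, int rawDepth, double depthScale,
                              double fx, double fy, double cx, double cy) {
        if (rawDepth == 0)
            return null; // no valid depth measurement at this pixel

        // depth is the z coordinate along the optical axis
        double z = rawDepth * depthScale;

        // normalized image coordinates of the pixel
        double nx = (px - cx) / fx;
        double ny = (py - cy) / fy;

        // scale the normalized ray by the measured depth
        return new double[] { z * nx, z * ny, z };
    }
}
```

A pixel at the principal point maps to a point on the optical axis, and moving one focal length away from it in x yields a point whose x coordinate equals its depth.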

--------------------------------------------------------------------------------

/MAVSlam/src/com/comino/slam/boofcv/sfm/DepthSparse3D.java:

--------------------------------------------------------------------------------

1 | package com.comino.slam.boofcv.sfm;

2 |

3 | /*

4 | * Copyright (c) 2011-2016, Peter Abeles. All Rights Reserved.

5 | *

6 | * This file is part of BoofCV (http://boofcv.org).

7 | *

8 | * Licensed under the Apache License, Version 2.0 (the "License");

9 | * you may not use this file except in compliance with the License.

10 | * You may obtain a copy of the License at

11 | *

12 | * http://www.apache.org/licenses/LICENSE-2.0

13 | *

14 | * Unless required by applicable law or agreed to in writing, software

15 | * distributed under the License is distributed on an "AS IS" BASIS,

16 | * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

17 | * See the License for the specific language governing permissions and

18 | * limitations under the License.

19 | */

20 |

21 | import boofcv.alg.distort.radtan.RemoveRadialPtoN_F64;

22 | import boofcv.struct.calib.IntrinsicParameters;

23 | import boofcv.struct.distort.PixelTransform_F32;

24 | import boofcv.struct.image.GrayF32;

25 | import boofcv.struct.image.GrayI;

26 | import boofcv.struct.image.ImageGray;

27 | import georegression.struct.point.Point2D_F64;

28 | import georegression.struct.point.Point3D_F64;

29 |

30 | /**

31 | * Computes the 3D coordinate of a point in a visual camera given a depth image. The visual camera is a standard camera

32 | * while the depth camera contains the depth (value along z-axis) of objects inside its field of view. The

33 | * Kinect (structured light) and flash ladar (time of flight) are examples of sensors which could use this class.

34 | * The z-axis is defined to be pointing straight out of the visual camera and both depth and visual cameras are

35 | * assumed to be parallel with identical pointing vectors for the z-axis.

36 | *

37 | * A mapping is provided for converting between pixels in the visual camera and the depth camera. This mapping

38 | * is assumed to be fixed with time.

39 | *

40 | * @author Peter Abeles

41 | */

42 | public abstract class DepthSparse3D<T extends ImageGray> {

43 |

44 | // Storage for the depth image

45 | protected T depthImage;

46 |

47 | // transform from visual camera pixels to normalized image coordinates

48 | private RemoveRadialPtoN_F64 p2n = new RemoveRadialPtoN_F64();

49 |

50 | // location of point in visual camera coordinate system

51 | private Point3D_F64 worldPt = new Point3D_F64();

52 |

53 | // pixel in normalized image coordinates

54 | private Point2D_F64 norm = new Point2D_F64();

55 |

56 | // transform from visual image coordinate system to depth image coordinate system

57 | private PixelTransform_F32 visualToDepth;

58 |

59 | // scales the values from the depth image

60 | private double depthScale;

61 |

62 | /**

63 | * Configures parameters

64 | *

65 | * @param depthScale Used to change units found in the depth image.

66 | */

67 | public DepthSparse3D(double depthScale) {

68 | this.depthScale = depthScale;

69 | }

70 |

71 | /**

72 | * Configures intrinsic camera parameters

73 | *

74 | * @param paramVisual Intrinsic parameters of visual camera.

75 | * @param visualToDepth Transform from visual to depth camera pixel coordinate systems.

76 | */

77 | public void configure(IntrinsicParameters paramVisual , PixelTransform_F32 visualToDepth ) {

78 | this.visualToDepth = visualToDepth;

79 | p2n.setK(paramVisual.fx,paramVisual.fy,paramVisual.skew,paramVisual.cx,paramVisual.cy).

80 | setDistortion(paramVisual.radial, paramVisual.t1,paramVisual.t2);

81 | }

82 |

83 |

84 | /**

85 | * Sets the depth image. A reference is saved internally.

86 | *

87 | * @param depthImage Image containing depth information.

88 | */

89 | public void setDepthImage(T depthImage) {

90 | this.depthImage = depthImage;

91 | }

92 |

93 | /**

94 | * Given a pixel coordinate in the visual camera, compute the 3D coordinate of that point.

95 | *

96 | * @param x x-coordinate of point in visual camera

97 | * @param y y-coordinate of point in visual camera

98 | * @return true if a 3D point could be computed and false if not

99 | */

100 | public boolean process( int x , int y ) {

101 | visualToDepth.compute(x, y);

102 |

103 | int depthX = (int)visualToDepth.distX;

104 | int depthY = (int)visualToDepth.distY;

105 |

106 | if( depthImage.isInBounds(depthX,depthY) ) {

107 | // get the depth at the specified location

108 | double value = lookupDepth(depthX, depthY);

109 |

110 | // see if it's an invalid value

111 | // ecmnet modification: intended to use the last value instead; currently the point is rejected

112 | if( value == 0 ) {

113 | return false;

114 |

115 | }

116 |

117 | // convert visual pixel into normalized image coordinate

118 | p2n.compute(x,y,norm);

119 |

120 | // project into 3D space

121 | worldPt.z = value*depthScale;

122 | worldPt.x = worldPt.z*norm.x;

123 | worldPt.y = worldPt.z*norm.y;

124 |

125 | return true;

126 | } else {

127 |

128 | return false;

129 | }

130 | }

131 |

132 | /**

133 | * The found 3D coordinate of the point in the visual camera coordinate system. It is only valid when

134 | * {@link #process(int, int)} returns true.

135 | *

136 | * @return 3D coordinate of point in visual camera coordinate system

137 | */

138 | public Point3D_F64 getWorldPt() {

139 | return worldPt;

140 | }

141 |

142 | /**

143 | * Internal function which looks up the pixel's depth. Depth is defined as the value of the z-coordinate which

144 | * is pointing out of the camera. If there is no depth measurement at this location return 0.

145 | *

146 | * @param depthX x-coordinate of pixel in depth camera

147 | * @param depthY y-coordinate of pixel in depth camera

148 | * @return depth at the specified coordinate

149 | */

150 | protected abstract double lookupDepth(int depthX, int depthY);

151 |

152 | /**

153 | * Implementation for {@link GrayI}.

154 | */

155 | public static class I<T extends GrayI> extends DepthSparse3D<T> {

156 |

157 | public I(double depthScale) {

158 | super(depthScale);

159 | }

160 |

161 | @Override

162 | protected double lookupDepth(int depthX, int depthY) {

163 | return depthImage.get(depthX,depthY);

164 | }

165 | }

166 |

167 | /**

168 | * Implementation for {@link GrayF32}.

169 | */

170 | public static class F32 extends DepthSparse3D<GrayF32> {

171 |

172 | public F32(double depthScale) {

173 | super(depthScale);

174 | }

175 |

176 | @Override

177 | protected double lookupDepth(int depthX, int depthY) {

178 | return depthImage.unsafe_get(depthX,depthY);

179 | }

180 | }

181 | }

182 |
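
Note on the projection step in `process(int, int)` above: the visual pixel is converted to normalized image coordinates, then scaled by the measured depth. The following is a minimal, dependency-free sketch of that arithmetic. It assumes a pinhole camera with no lens distortion, whereas the class above removes radial and tangential distortion through `RemoveRadialPtoN_F64`. The class `BackProjectSketch` and its method names are hypothetical and are not part of BoofCV or MAVSlam.

```java
// Sketch only: BackProjectSketch and its methods are hypothetical names,
// not part of BoofCV or MAVSlam. Lens distortion is assumed to be zero.
class BackProjectSketch {

    // Inverts pixel = K * norm for K = [fx skew cx; 0 fy cy; 0 0 1].
    static double[] pixelToNorm(double px, double py,
                                double fx, double fy, double skew,
                                double cx, double cy) {
        double ny = (py - cy) / fy;
        double nx = (px - cx - skew * ny) / fx;
        return new double[] { nx, ny };
    }

    // Returns {x, y, z} in the camera frame, or null when the raw depth is 0,
    // which the class above treats as "no measurement".
    static double[] backProject(double px, double py,
                                double rawDepth, double depthScale,
                                double fx, double fy, double skew,
                                double cx, double cy) {
        if (rawDepth == 0) {
            return null;
        }
        double[] n = pixelToNorm(px, py, fx, fy, skew, cx, cy);
        double z = rawDepth * depthScale;   // convert raw units, e.g. mm to m
        return new double[] { z * n[0], z * n[1], z };
    }

    public static void main(String[] args) {
        // Illustrative values: 640x480 camera, depth in millimetres scaled to metres.
        double[] p = backProject(420, 240, 2000, 0.001, 500, 500, 0, 320, 240);
        System.out.printf("x=%.3f y=%.3f z=%.3f%n", p[0], p[1], p[2]);
    }
}
```

Returning `null` here plays the role of the `false` return value in `process`; the original class instead reuses a single `Point3D_F64` instance to avoid allocating per pixel.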

--------------------------------------------------------------------------------

/MAVSlam/src/com/comino/slam/boofcv/sfm/DepthSparse3D_to_PixelTo3D.java:

--------------------------------------------------------------------------------

1 | package com.comino.slam.boofcv.sfm;

2 |

3 | import boofcv.abst.sfm.ImagePixelTo3D;

4 | import boofcv.struct.image.ImageGray;

5 |

6 | /**

7 | * Wrapper around {@link DepthSparse3D} for {@link ImagePixelTo3D}.

8 | *

9 | * @author Peter Abeles

10 | */

11 | public class DepthSparse3D_to_PixelTo3D<T extends ImageGray>

12 | implements ImagePixelTo3D

13 | {

14 | DepthSparse3D<T> alg;

15 |

16 | public DepthSparse3D_to_PixelTo3D(DepthSparse3D<T> alg) {

17 | this.alg = alg;

18 | }

19 |

20 | @Override

21 | public boolean process(double x, double y) {

22 | return alg.process((int)x,(int)y);

23 | }

24 |

25 | @Override

26 | public double getX() {

27 | return alg.getWorldPt().x;

28 | }

29 |

30 | @Override

31 | public double getY() {

32 | return alg.getWorldPt().y;

33 | }

34 |

35 | @Override

36 | public double getZ() {

37 | return alg.getWorldPt().z;

38 | }

39 |

40 | @Override

41 | public double getW() {

42 | return 1;

43 | }

44 | }

--------------------------------------------------------------------------------

/MAVSlam/src/com/comino/slam/boofcv/vio/FactoryMAVOdometryVIO.java:

--------------------------------------------------------------------------------

1 | /*

2 | * Copyright (c) 2011-2016, Peter Abeles, Eike Mansfeld. All Rights Reserved.

3 | *

4 | * This file is part of BoofCV (http://boofcv.org).

5 | *

6 | * Licensed under the Apache License, Version 2.0 (the "License");

7 | * you may not use this file except in compliance with the License.

8 | * You may obtain a copy of the License at

9 | *

10 | * http://www.apache.org/licenses/LICENSE-2.0

11 | *

12 | * Unless required by applicable law or agreed to in writing, software

13 | * distributed under the License is distributed on an "AS IS" BASIS,

14 | * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

15 | * See the License for the specific language governing permissions and

16 | * limitations under the License.

17 | */

18 |

19 | package com.comino.slam.boofcv.vio;

20 |

21 | import org.ddogleg.fitting.modelset.ModelMatcher;

22 | import org.ddogleg.fitting.modelset.ransac.Ransac;

23 |

24 | import com.comino.slam.boofcv.sfm.DepthSparse3D;

25 | import com.comino.slam.boofcv.sfm.DepthSparse3D_to_PixelTo3D;

26 | import com.comino.slam.boofcv.vio.odometry.MAVOdomPixelDepthPnPVIO;

27 | import com.comino.slam.boofcv.vio.odometry.MAVOdomPixelDepthPnP_to_DepthVisualOdometryVIO;

28 | import com.comino.slam.boofcv.vo.odometry.MAVOdomPixelDepthPnP;

29 |

30 | import boofcv.abst.feature.tracker.PointTrackerTwoPass;

31 | import boofcv.abst.geo.Estimate1ofPnP;

32 | import boofcv.abst.geo.RefinePnP;

33 |

34 | import boofcv.abst.sfm.ImagePixelTo3D;

35 | import boofcv.alg.geo.DistanceModelMonoPixels;

36 | import boofcv.alg.geo.pose.PnPDistanceReprojectionSq;

37 | import boofcv.factory.geo.EnumPNP;

38 | import boofcv.factory.geo.EstimatorToGenerator;

39 | import boofcv.factory.geo.FactoryMultiView;

40 | import boofcv.struct.geo.Point2D3D;

41 | import boofcv.struct.image.ImageGray;

42 | import boofcv.struct.image.ImageType;

43 | import georegression.fitting.se.ModelManagerSe3_F64;

44 | import georegression.struct.point.Point3D_F64;