├── LICENSE
├── README.md
├── bin
│   ├── _tp_modules
│   │   └── .placeholder
│   ├── commons.py
│   ├── crtsh.py
│   ├── cybercrime_tracker.py
│   ├── greynoise.py
│   ├── hybrid_analysis.py
│   ├── malshare.py
│   ├── phishing_catcher.py
│   ├── phishing_catcher_confusables.py
│   ├── phishing_catcher_suspicious.yaml
│   ├── phishing_kit_tracker.py
│   ├── psbdmp.py
│   ├── py_pkg_update.sh
│   ├── requirements.txt
│   ├── threatcrowd.py
│   ├── twitter.py
│   ├── urlhaus.py
│   ├── urlscan.py
│   └── urlscan_file_search.py
├── default
│   ├── app.conf
│   ├── authorize.conf
│   ├── commands.conf
│   ├── data
│   │   └── ui
│   │       ├── nav
│   │       │   └── default.xml
│   │       └── views
│   │           ├── crtsh.xml
│   │           ├── cybercrimeTracker.xml
│   │           ├── greyNoise.xml
│   │           ├── hybridAnalysis.xml
│   │           ├── malshare.xml
│   │           ├── osintSweep.xml
│   │           ├── pastebinDump.xml
│   │           ├── phishingCatcher.xml
│   │           ├── phishingKitTracker.xml
│   │           ├── threatCrowd.xml
│   │           ├── twitter.xml
│   │           ├── urlhaus.xml
│   │           └── urlscan.xml
│   ├── inputs.conf
│   ├── props.conf
│   └── transforms.conf
├── etc
│   └── config.py
├── local
│   ├── .placeholder
│   └── data
│       └── ui
│           └── views
│               └── .placeholder
├── lookups
│   └── .placeholder
├── metadata
│   ├── default.meta
│   └── local.meta
└── static
    └── assets
        ├── .placeholder
        ├── crtsh_dashboard.png
        ├── cybercrimeTracker_dashboard.png
        ├── greynoise_dashboard.png
        ├── hybridAnalysis_dashboard.png
        ├── input_from_pipeline.png
        ├── input_from_pipeline_correlation.png
        ├── malshare_dashboard.png
        ├── osintSweep_dashboard.png
        ├── phishingCatcher_dashboard.png
        ├── phishingKitTracker_dashboard.png
        ├── psbdmp_dashboard.png
        ├── threatcrowd_dashboard.png
        ├── twitter_dashboard.png
        ├── urlhaus_dashboard.png
        └── urlscan_dashboard.png

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2019 ecstatic-nobel

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # [OSweep™]

2 | ##### Don't Just Search OSINT. Sweep It.

3 |

4 | ### Description

5 | If you work in IT security, you most likely use OSINT to understand what your SIEM alerted on and what the rest of the world knows about it. You are probably using more than one OSINT service, because most of the time OSINT only gives you reports based on the last analysis of the IOC. For some, that's good enough. They create network and email blocks, create new rules for their IDS/IPS, update the content in the SIEM, create new alerts for monitors in Google Alerts and DomainTools, etc. Others deploy these same countermeasures based on reports from third-party tools that the company is paying THOUSANDS of dollars for.

6 |

7 | The problem with both approaches is that the analyst needs to dig a little deeper (e.g., FULLY deobfuscate a PowerShell command found in a malicious macro) to gather all of the IOCs. And what if the additional IOC(s) you are basing your analysis on have nothing to do with what is true about that site today? And then you get pwned? And then other questions from management arise...

8 |

9 | See where this is headed? You're about to get a pink slip and be walked out of the building so you can start looking for another job in a different line of work.

10 |

11 | So why did you get pwned? You know that if you wasted time gathering all the IOCs for that one alert manually, it would have taken you half of your shift to complete and you would've got pwned regardless.

12 |

13 | The fix? **OSweep™**.

14 |

15 | ### Prerequisites

16 | Before getting started, ensure you have the following:

17 | **Ubuntu 18.04+**

18 | - Python 2.7.14 ($SPLUNK_HOME/bin/python)

19 | - Splunk 7.1.3+

20 | - Deb Packages

21 |   - gcc

22 |   - python-pip

23 |

24 | **CentOS 7+**

25 | - Python 2.7.14 ($SPLUNK_HOME/bin/python)

26 | - Splunk 7.1.3+

27 | - Yum Packages

28 |   - epel-release

29 |   - gcc

30 |   - python-pip

31 |

32 | **Optional Packages**

33 | - Git

34 |

35 | Click **[HERE](https://github.com/ecstatic-nobel/OSweep/wiki/Setup)** to get started.

36 |

37 | ### Gallery

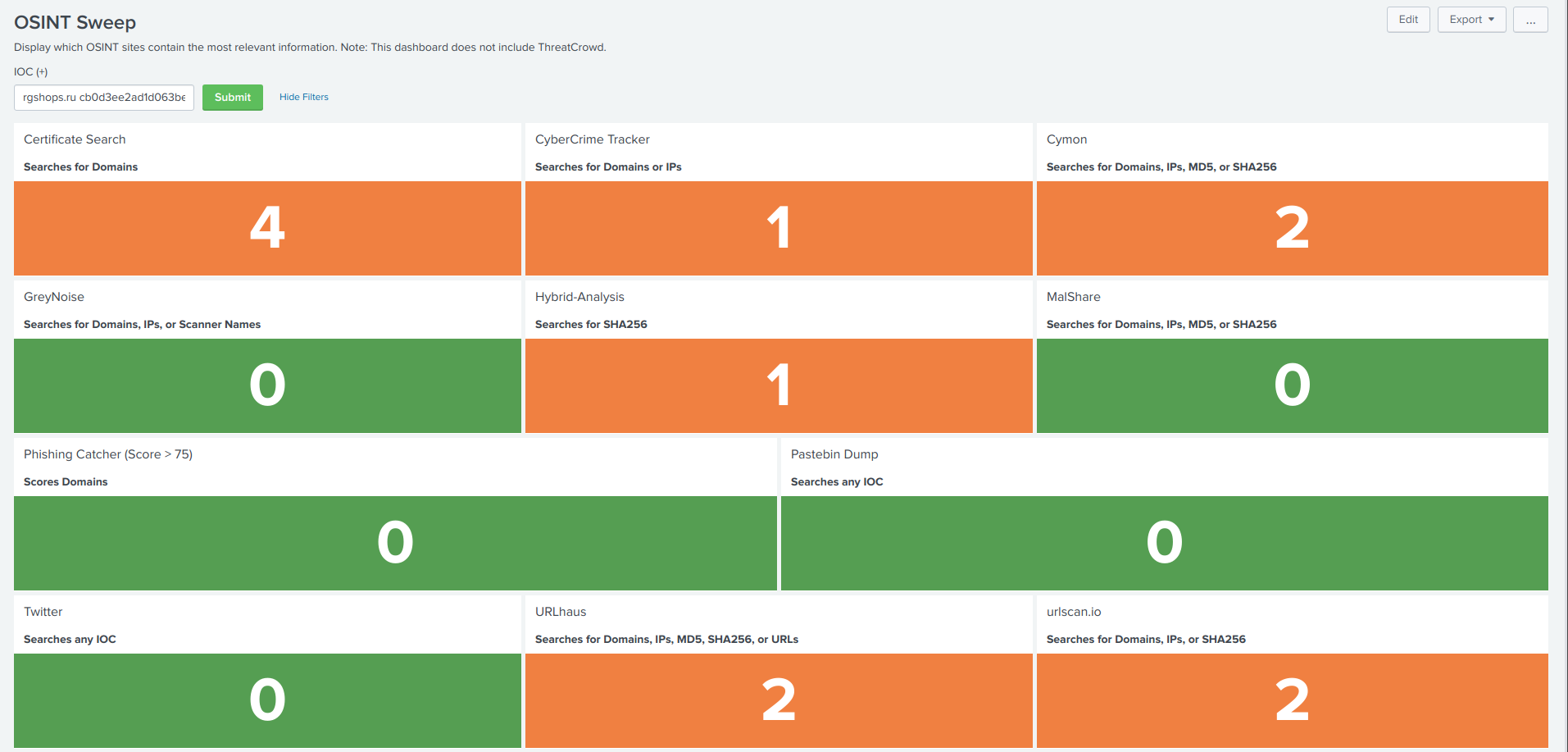

38 | **OSINT Sweep - Dashboard**

39 |

40 |

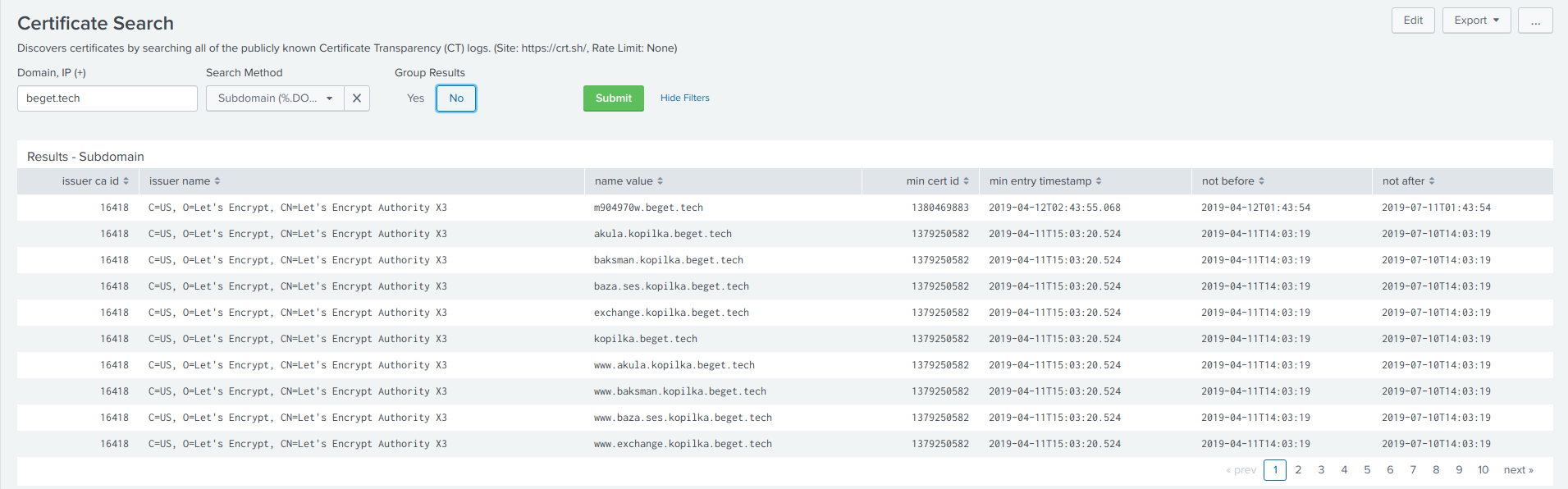

41 | **Certificate Search - Dashboard**

42 |

43 |

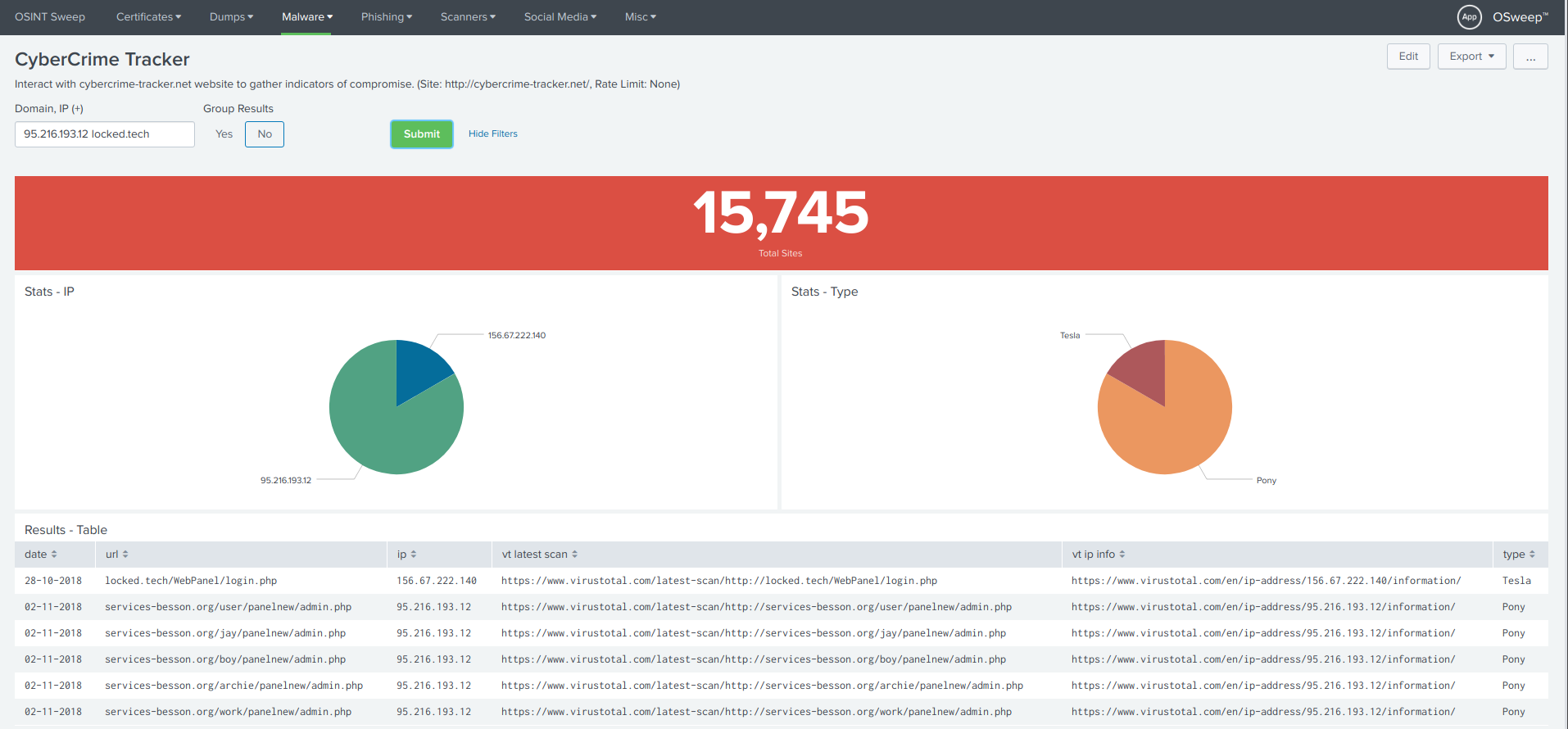

44 | **CyberCrime Tracker - Dashboard**

45 |

46 |

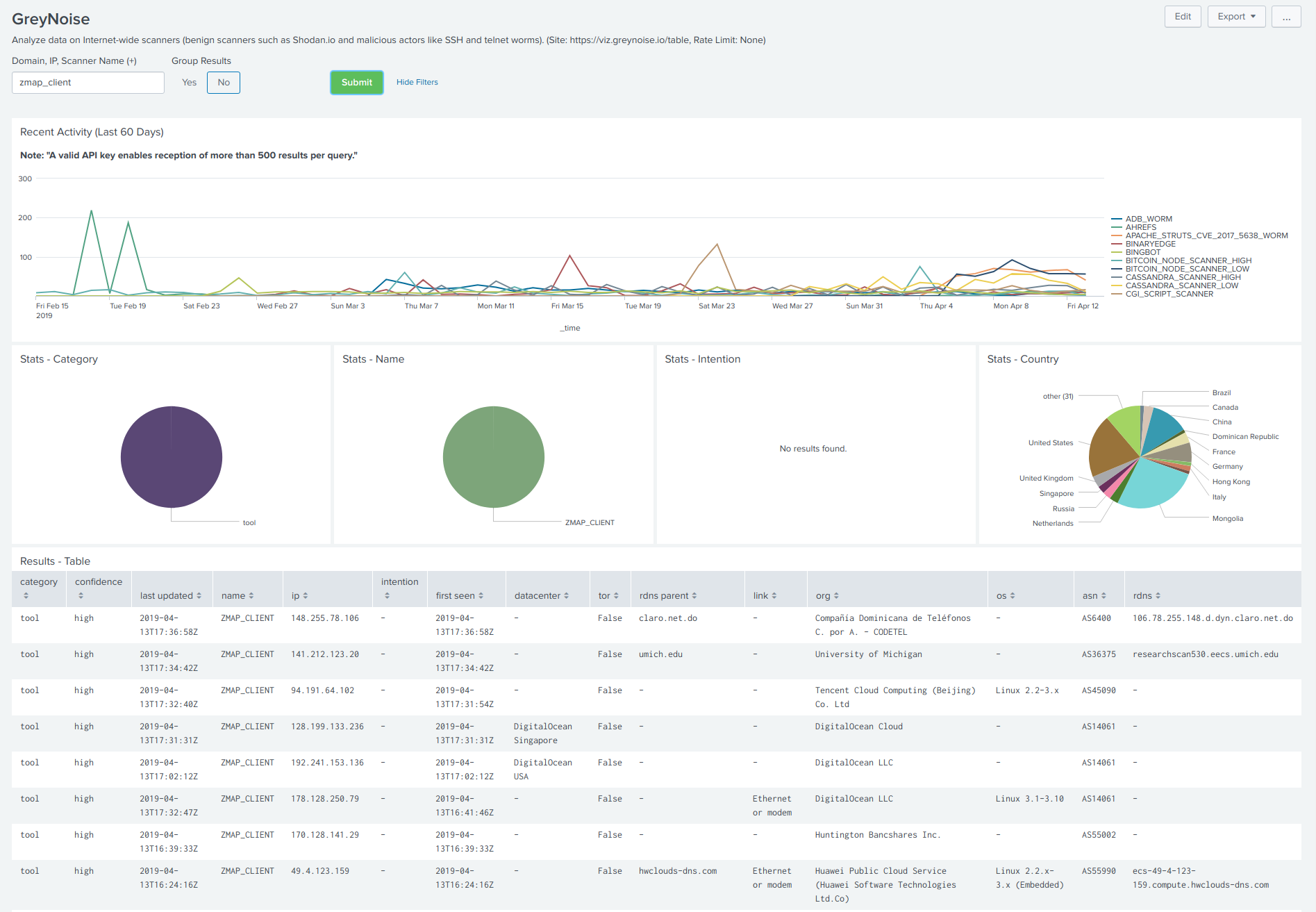

47 | **GreyNoise - Dashboard**

48 |

49 |

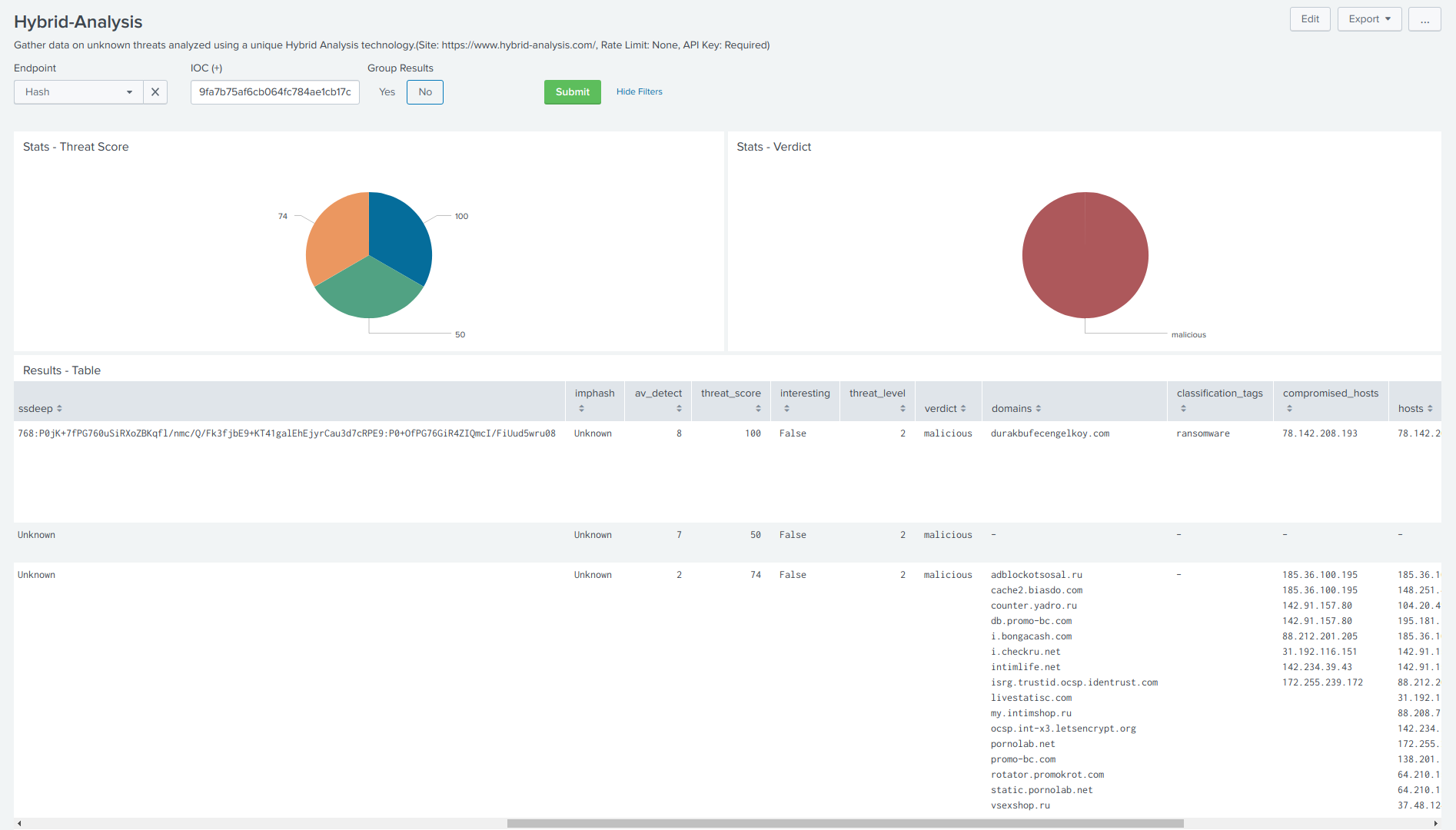

50 | **Hybrid-Analysis - Dashboard**

51 |

52 |

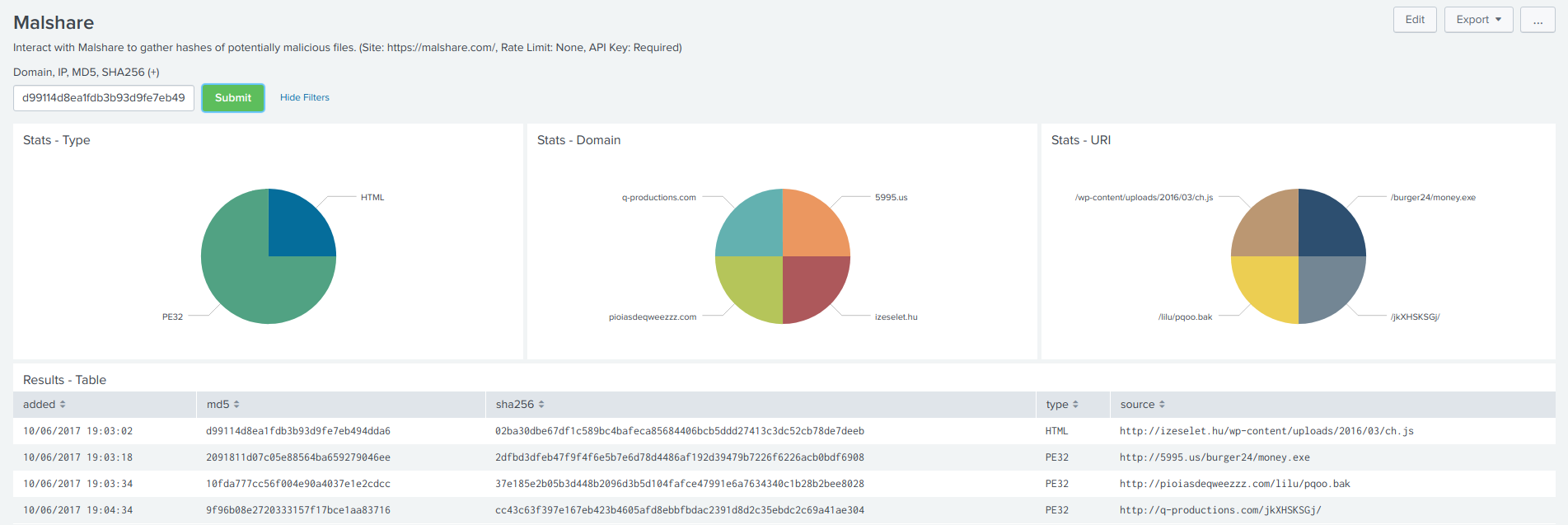

53 | **MalShare - Dashboard**

54 |

55 |

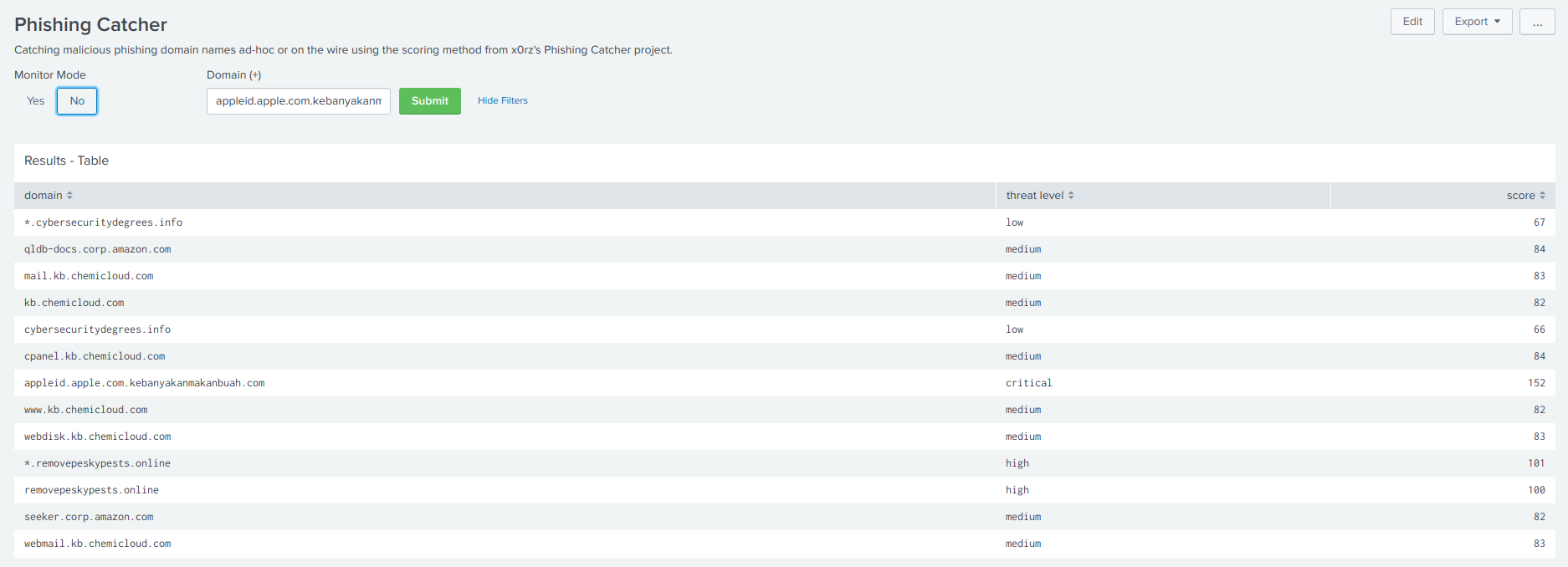

56 | **Phishing Catcher - Dashboard**

57 |

58 |

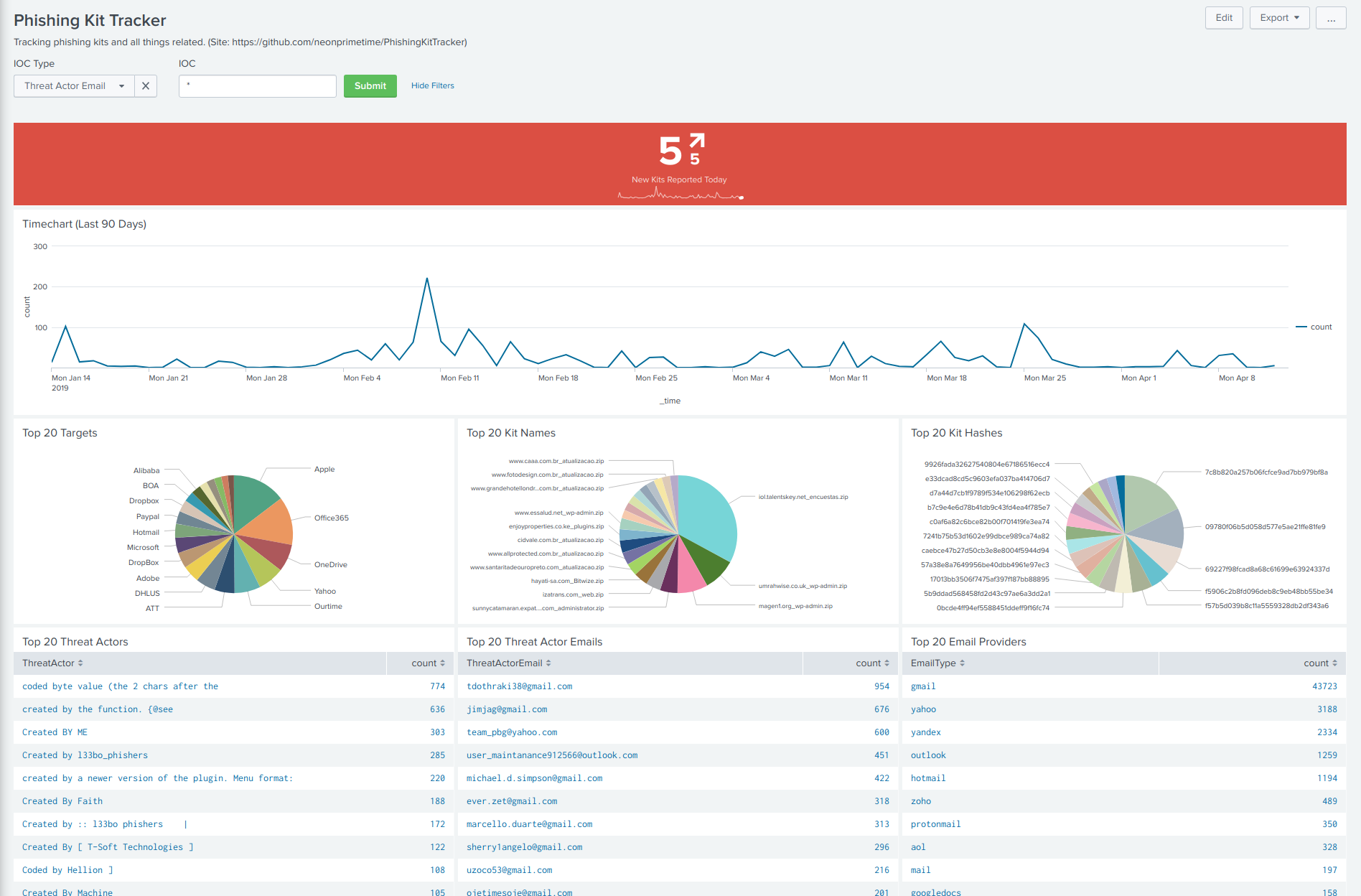

59 | **Phishing Kit Tracker - Dashboard**

60 |

61 |

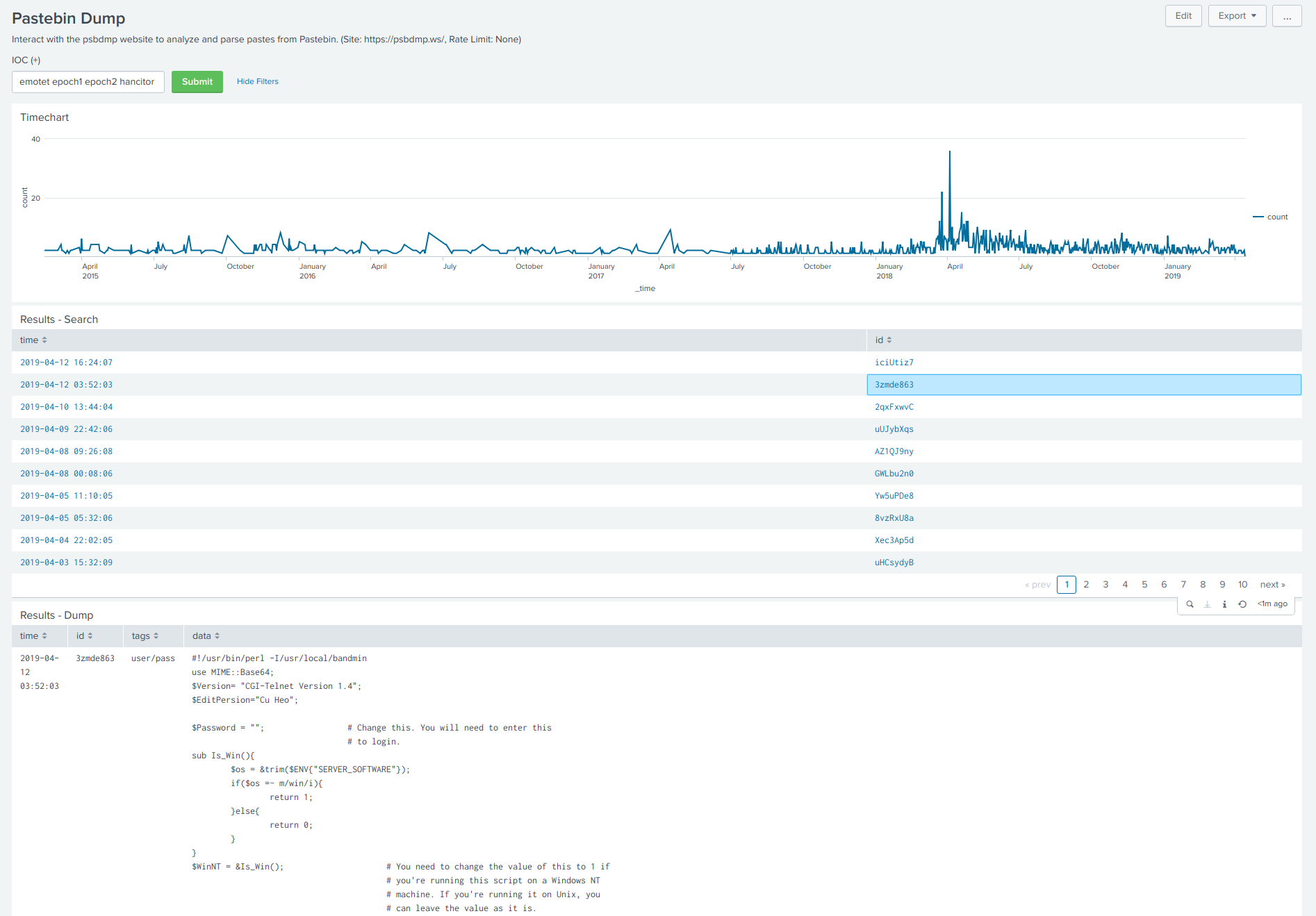

62 | **Pastebin Dump - Dashboard**

63 |

64 |

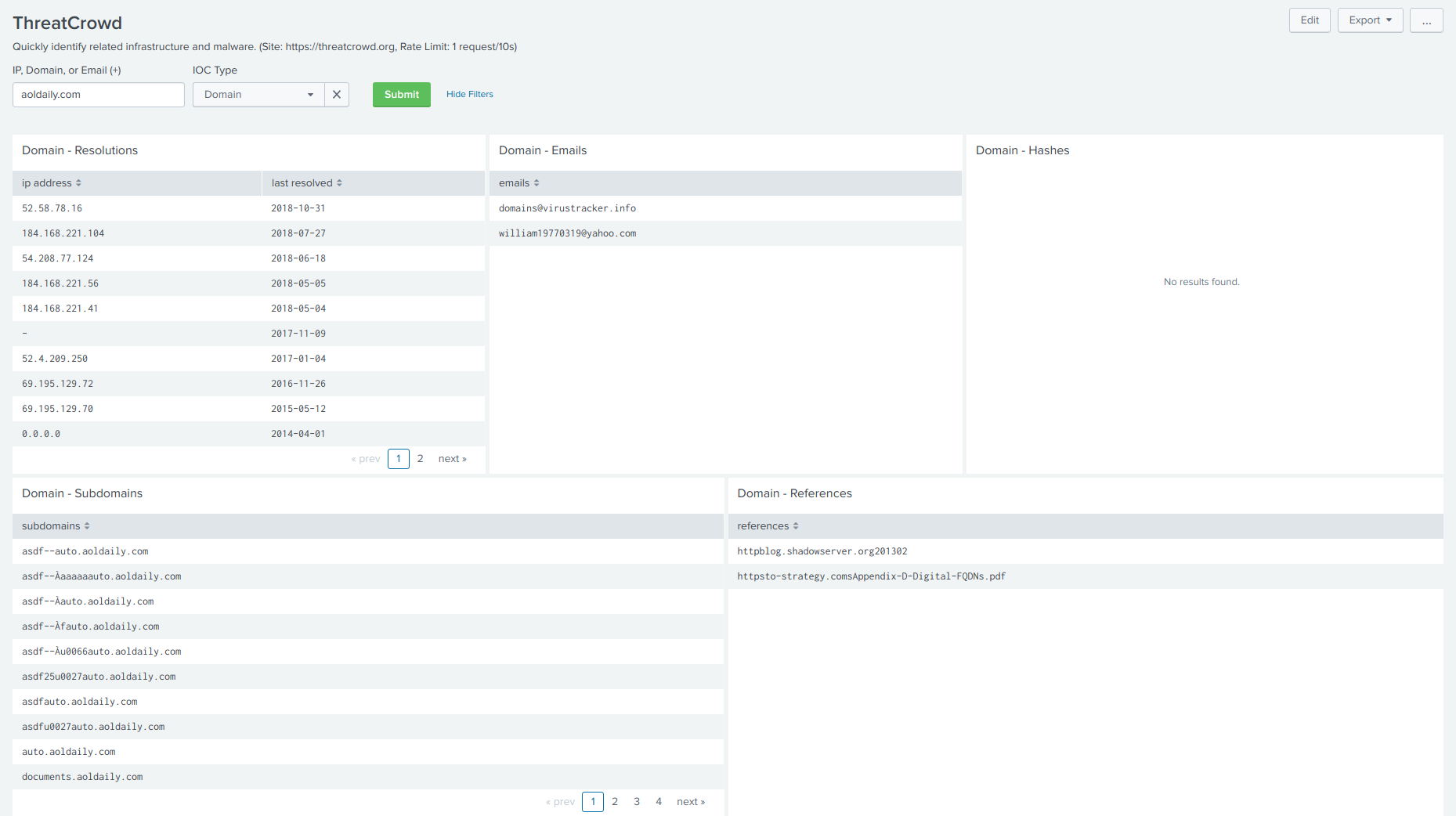

65 | **ThreatCrowd - Dashboard**

66 |

67 |

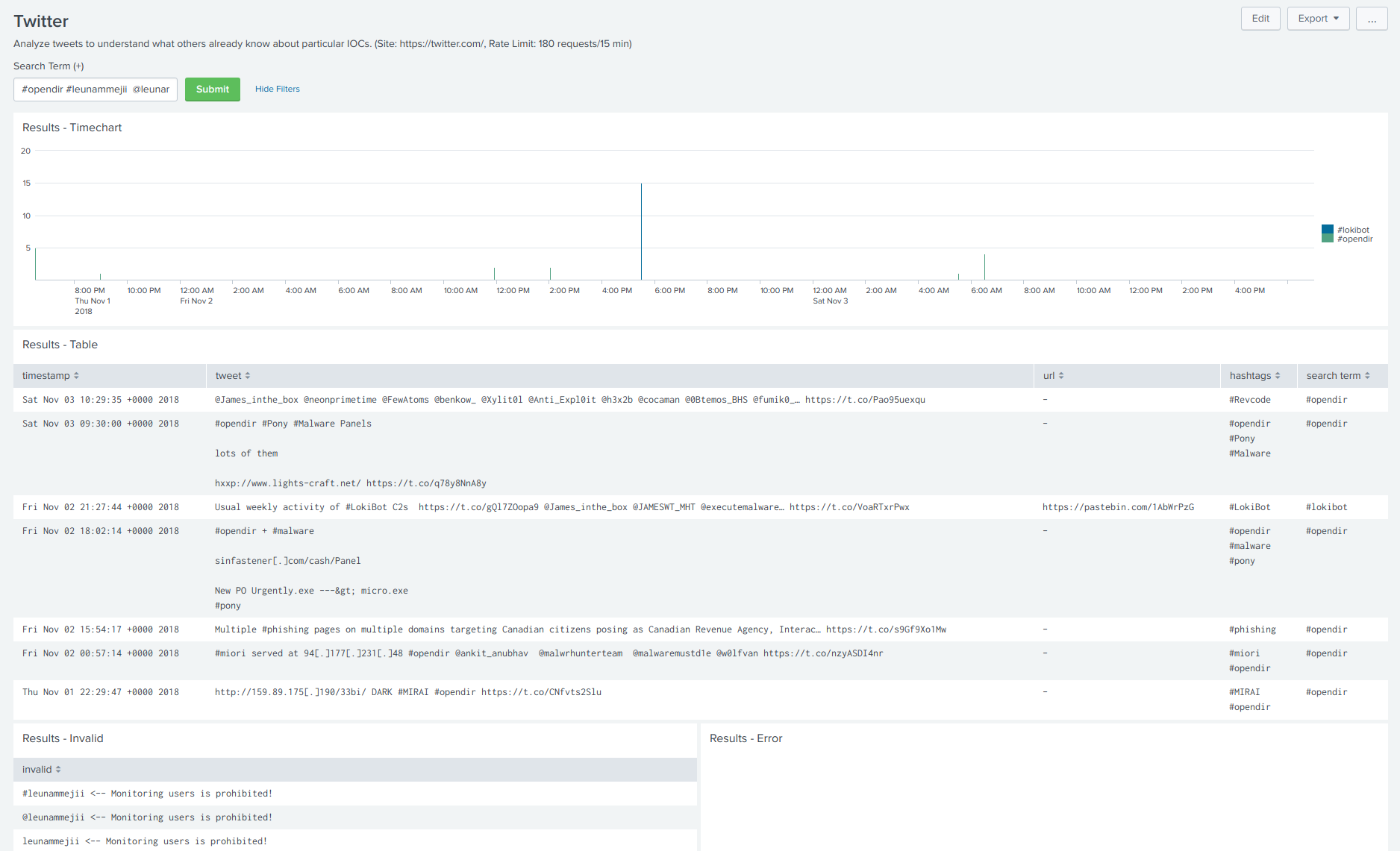

68 | **Twitter - Dashboard**

69 |

70 |

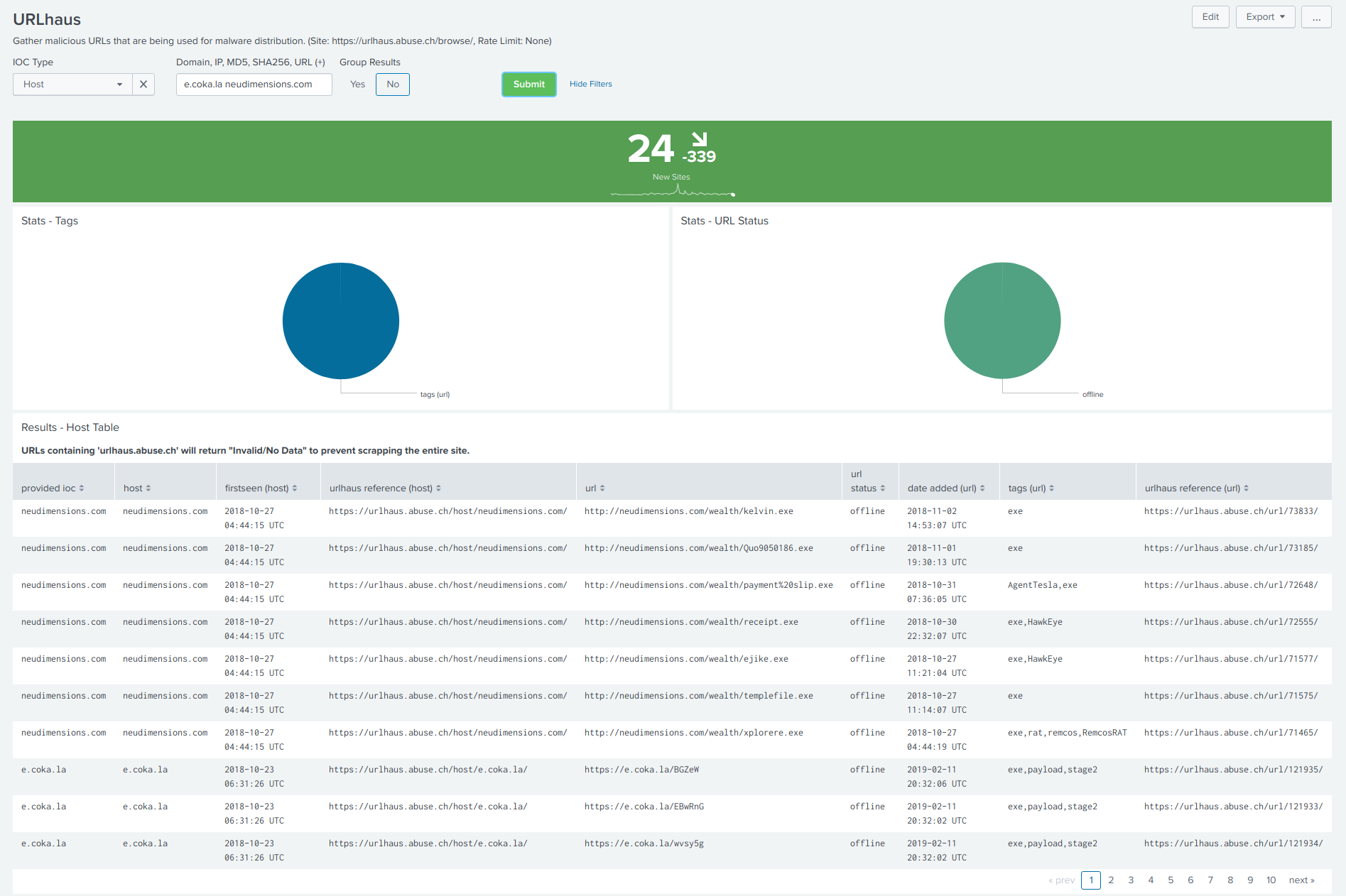

71 | **URLhaus - Dashboard**

72 |

73 |

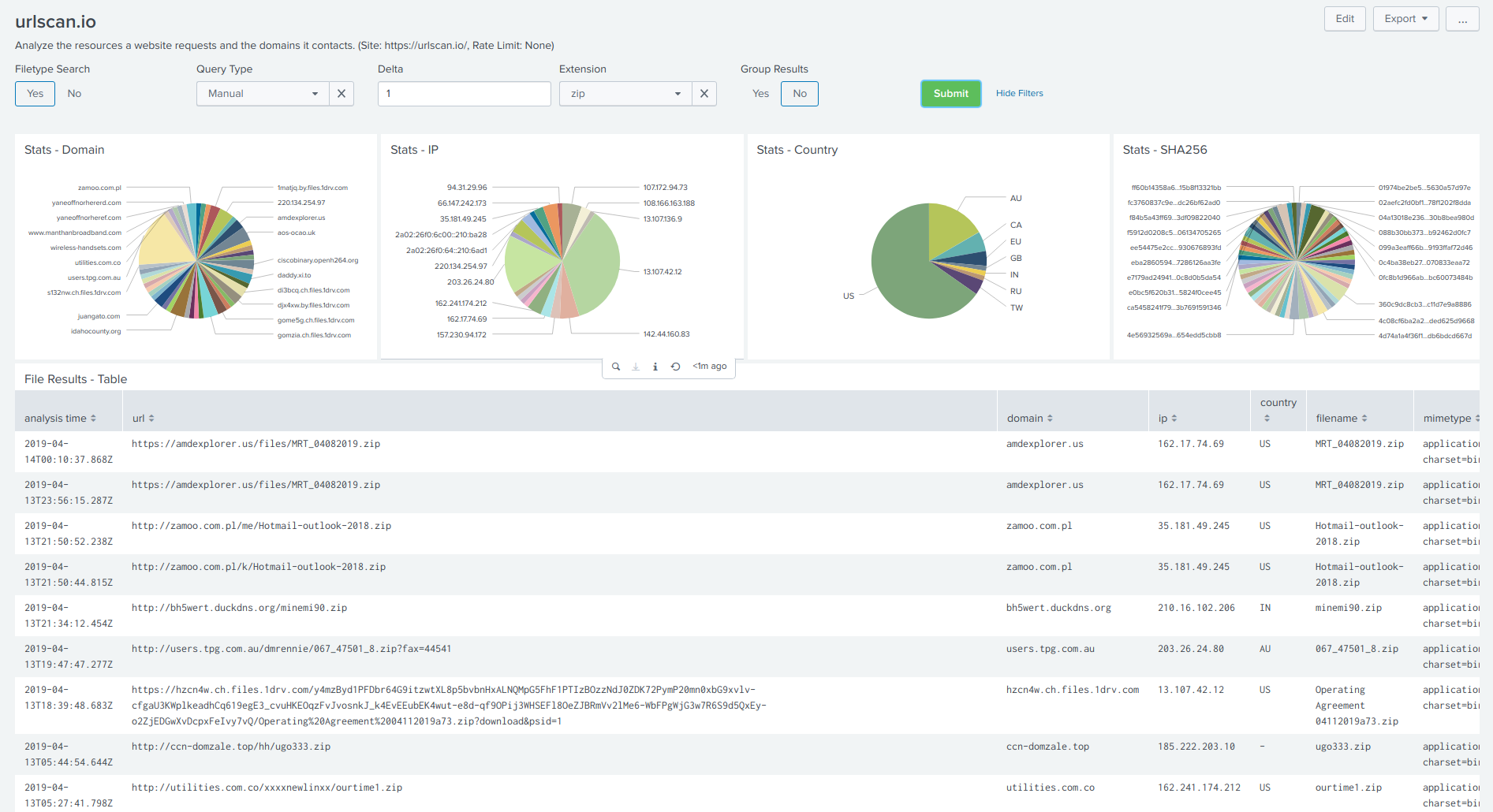

74 | **urlscan.io - Dashboard**

75 |

76 |

77 | ### Dashboards Coming Soon

78 | - Alienvault

79 | - Censys

80 | - PulseDive

81 |

82 | Please fork, create merge requests, and help make this better.

83 |

--------------------------------------------------------------------------------

/bin/_tp_modules/.placeholder:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ecstatic-nobel/OSweep/48018747b050c4f95109d2be4f8faa9645502816/bin/_tp_modules/.placeholder

--------------------------------------------------------------------------------

/bin/commons.py:

--------------------------------------------------------------------------------

1 | #!/opt/splunk/bin/python

2 | """

3 | Common functions

4 | """

5 |

6 | import os

7 | import re

8 | import sys

9 | import traceback

10 |

11 | app_home = "{}/etc/apps/OSweep".format(os.environ['SPLUNK_HOME'])

12 | tp_modules = "{}/bin/_tp_modules".format(app_home)

13 | sys.path.insert(0, tp_modules)

14 | import splunk.Intersplunk as InterSplunk

15 | import requests

16 |

17 | config_path = "{}/etc/".format(app_home)

18 | sys.path.insert(1, config_path)

19 | import config

20 |

21 |

22 | def create_session():

23 | """Create a Requests Session object."""

24 | session = requests.session()

25 | uagent = "Mozilla/5.0 (Windows NT 6.3; Trident/7.0; rv:11.0) like Gecko"

26 | session.headers.update({"User-Agent": uagent})

27 | session.proxies.update({

28 | "http": config.http_proxy_url,

29 | "https": config.https_proxy_url

30 | })

31 | return session

32 |

33 | def get_apidomain(api):

34 | """Return the API domain."""

35 | if api == "hybrid-analysis":

36 | return config.hybrid_analysis_api_domain

37 |

38 | def get_apikey(api):

39 | """Return the API key."""

40 | if api == "greynoise":

41 | return config.greynoise_key

42 | if api == "hybrid-analysis":

43 | return config.hybrid_analysis_apikey

44 | if api == "malshare":

45 | return config.malshare_apikey

46 | if api == "pulsedive":

47 | return config.pulsedive_apikey

48 | if api == "twitter":

49 | return {

50 | "access_token": config.twitter_access_token,

51 | "access_token_secret": config.twitter_access_token_secret,

52 | "consumer_key": config.twitter_consumer_key,

53 | "consumer_secret": config.twitter_consumer_secret

54 | }

55 |

56 | def lower_keys(target):

57 | """Return a string, or a dictionary's keys, lowercased with underscores replaced by spaces."""

58 | if isinstance(target, str) or isinstance(target, unicode):

59 | words = target.encode("UTF-8").split("_")

60 | return " ".join(words).lower()

61 |

62 | if isinstance(target, dict):

63 | dictionary = {}

64 | for key, value in target.iteritems():

65 | words = key.encode("UTF-8").split("_")

66 | key = " ".join(words).lower()

67 | dictionary[key] = value

68 | return dictionary

69 |

70 | def merge_dict(one, two):

71 | """Merge two dictionaries."""

72 | merged_dict = {}

73 | merged_dict.update(lower_keys(one))

74 | merged_dict.update(lower_keys(two))

75 | return merged_dict

76 |

77 | def return_results(module):

78 | try:

79 | results, dummy_results, settings = InterSplunk.getOrganizedResults()

80 |

81 | if isinstance(results, list) and len(results) > 0:

82 | new_results = module.process_iocs(results)

83 | elif len(sys.argv) > 1:

84 | new_results = module.process_iocs(None)

85 | except:

86 | stack = traceback.format_exc()

87 | new_results = InterSplunk.generateErrorResults("Error: " + str(stack))

88 |

89 | InterSplunk.outputResults(new_results)

90 | return

91 |

92 | def deobfuscate_string(provided_ioc):

93 | """Return deobfuscated URLs."""

94 | pattern = re.compile("^h..p", re.IGNORECASE)

95 | provided_ioc = pattern.sub("http", provided_ioc)

96 |

97 | pattern = re.compile("\[.\]", re.IGNORECASE)

98 | provided_ioc = pattern.sub(".", provided_ioc)

99 |

100 | pattern = re.compile("^\*\.", re.IGNORECASE)

101 | provided_ioc = pattern.sub("", provided_ioc)

102 | return provided_ioc

103 |

--------------------------------------------------------------------------------

/bin/crtsh.py:

--------------------------------------------------------------------------------

1 | #!/opt/splunk/bin/python

2 | """

3 | Description: Use crt.sh to discover certificates by searching all of the publicly

4 | known Certificate Transparency (CT) logs. The script accepts a list of strings

5 | (domains or IPs):

6 | | crtsh $ioc$

7 |

8 | or input from the pipeline (any field where the value is a domain or IP). The

9 | first argument is the name of one field:

10 |

11 | | fields

12 | | crtsh

13 |

14 | Source: https://github.com/PaulSec/crt.sh

15 |

16 | Instructions:

17 | 1. Switch to the Certificate Search dashboard in the OSweep app.

18 | 2. Add the list of IOCs to the "Domain, IP (+)" textbox.

19 | 3. Select "Yes" or "No" from the "Wildcard" dropdown to search for subdomains.

20 | 4. Click "Submit".

21 |

22 | Rate Limit: None

23 |

24 | Results Limit: None

25 |

26 | Notes: Search for subdomains by passing "wildcard" as the first argument:

27 | | crtsh wildcard $domain$

28 |

29 | Debugger: open("/tmp/splunk_script.txt", "a").write("{}: \n".format())

30 | """

31 |

32 | import json

33 | import os

34 | import sys

35 |

36 | app_home = "{}/etc/apps/OSweep".format(os.environ['SPLUNK_HOME'])

37 | tp_modules = "{}/bin/_tp_modules".format(app_home)

38 | sys.path.insert(0, tp_modules)

39 | import validators

40 |

41 | import commons

42 |

43 |

44 | def process_iocs(results):

45 | """Return data formatted for Splunk from crt.sh."""

46 | if results != None:

47 | provided_iocs = [y for x in results for y in x.values()]

48 | elif sys.argv[1] != "subdomain" and sys.argv[1] != "wildcard":

49 | if len(sys.argv) > 1:

50 | provided_iocs = sys.argv[1:]

51 | elif sys.argv[1] == "subdomain" or sys.argv[1] == "wildcard":

52 | if len(sys.argv) > 2:

53 | provided_iocs = sys.argv[2:]

54 |

55 | session = commons.create_session()

56 | splunk_table = []

57 |

58 | for provided_ioc in set(provided_iocs):

59 | provided_ioc = commons.deobfuscate_string(provided_ioc)

60 |

61 | if validators.domain(provided_ioc) or validators.ipv4(provided_ioc):

62 | crt_dicts = query_crtsh(provided_ioc, session)

63 | else:

64 | splunk_table.append({"invalid": provided_ioc})

65 | continue

66 |

67 | for crt_dict in crt_dicts:

68 | splunk_table.append(crt_dict)

69 |

70 | session.close()

71 | return splunk_table

72 |

73 | def query_crtsh(provided_ioc, session):

74 | """Search crt.sh for the given domain."""

75 | if sys.argv[1] == "subdomain":

76 | provided_ioc = "%25.{}".format(provided_ioc)

77 | elif sys.argv[1] == "wildcard":

78 | provided_ioc = "%25{}".format(provided_ioc)

79 |

80 | base_url = "https://crt.sh/?q={}&output=json"

81 | url = base_url.format(provided_ioc)

82 | resp = session.get(url, timeout=180)

83 | crt_dicts = []

84 |

85 | if resp.status_code == 200 and resp.content != "":

86 | content = resp.content.decode("UTF-8")

87 | cert_history = json.loads("{}".format(content.replace("}{", "},{")))

88 | # cert_history = json.loads("[{}]".format(content.replace("}{", "},{")))

89 |

90 | for cert in cert_history:

91 | cert = commons.lower_keys(cert)

92 | crt_dicts.append(cert)

93 | else:

94 | provided_ioc = provided_ioc.replace("%25.", "").replace("%25", "")

95 | crt_dicts.append({"no data": provided_ioc})

96 | return crt_dicts

97 |

98 | if __name__ == "__main__":

99 | current_module = sys.modules[__name__]

100 | commons.return_results(current_module)

101 |

--------------------------------------------------------------------------------
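
Note on `/bin/crtsh.py`: the difference between the `subdomain` and `wildcard` modes is only the prefix placed in front of the IOC before it is put into the query string. The sketch below isolates that step as a pure function so it can be checked without a network call. The function name `build_crtsh_url` and its `mode` parameter are hypothetical; in the script the mode is read from `sys.argv[1]`.

```python
def build_crtsh_url(ioc, mode=None):
    """Return the crt.sh JSON query URL for an IOC.

    mode is a hypothetical stand-in for the first CLI argument:
    "subdomain" prefixes "%25." and "wildcard" prefixes "%25",
    where "%25" is the URL-encoded "%" wildcard character.
    """
    if mode == "subdomain":
        ioc = "%25.{}".format(ioc)
    elif mode == "wildcard":
        ioc = "%25{}".format(ioc)
    return "https://crt.sh/?q={}&output=json".format(ioc)


if __name__ == "__main__":
    for mode in (None, "subdomain", "wildcard"):
        print(build_crtsh_url("example.com", mode))
```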

/bin/cybercrime_tracker.py:

--------------------------------------------------------------------------------

1 | #!/opt/splunk/bin/python

2 | """

3 | Description: Use cybercrime-tracker.net to better understand the type of malware

4 | a site is hosting. The script accepts a list of strings (domain or IP):

5 | | cybercrimeTracker

6 |

7 | or input from the pipeline (any field where the value is a domain). The first

8 | argument is the name of one field:

9 |

10 | | fields

11 | | cybercrimeTracker

12 |

13 | Source: https://github.com/PaulSec/cybercrime-tracker.net

14 |

15 | Instructions:

16 | 1. Switch to the CyberCrime Tracker dashboard in the OSweep app.

17 | 2. Add the list of IOCs to the "Domain, IP (+)" textbox.

18 | 3. Select whether the results will be grouped and how from the dropdowns.

19 | 4. Click "Submit".

20 |

21 | Rate Limit: None

22 |

23 | Results Limit: None

24 |

25 | Notes: None

26 |

27 | Debugger: open("/tmp/splunk_script.txt", "a").write("{}: \n".format())

28 | """

29 |

30 | from collections import OrderedDict

31 | import os

32 | import re

33 | import sys

34 |

35 | app_home = "{}/etc/apps/OSweep".format(os.environ['SPLUNK_HOME'])

36 | tp_modules = "{}/bin/_tp_modules".format(app_home)

37 | sys.path.insert(0, tp_modules)

38 | from bs4 import BeautifulSoup

39 | import validators

40 |

41 | import commons

42 |

43 |

44 | def get_feed():

45 | """Return OSINT data feed."""

46 | api = "http://cybercrime-tracker.net/all.php"

47 | session = commons.create_session()

48 | resp = session.get(api, timeout=180)

49 | session.close()

50 |

51 | if resp.status_code == 200 and resp.text != "":

52 | data = resp.text.splitlines()

53 | header = "url"

54 | data_feed = []

55 |

56 | for line in data:

57 | data_feed.append({"url": line})

58 | return data_feed

59 | return

60 |

61 | def write_file(data_feed, file_path):

62 | """Write data to a file."""

63 | if data_feed == None:

64 | return

65 |

66 | with open(file_path, "w") as open_file:

67 | keys = data_feed[0].keys()

68 | header = ",".join(keys)

69 |

70 | open_file.write("{}\n".format(header))

71 |

72 | for data in data_feed:

73 | data_string = ",".join(data.values())

74 | open_file.write("{}\n".format(data_string.encode("UTF-8")))

75 | return

76 |

77 | def process_iocs(results):

78 | """Return data formatted for Splunk from CyberCrime Tracker."""

79 | if results != None:

80 | provided_iocs = [y for x in results for y in x.values()]

81 | else:

82 | provided_iocs = sys.argv[1:]

83 |

84 | session = commons.create_session()

85 | splunk_table = []

86 |

87 | for provided_ioc in set(provided_iocs):

88 | provided_ioc = commons.deobfuscate_string(provided_ioc)

89 |

90 | if validators.domain(provided_ioc) or validators.ipv4(provided_ioc):

91 | cct_dicts = query_cct(provided_ioc, session)

92 | else:

93 | splunk_table.append({"invalid": provided_ioc})

94 | continue

95 |

96 | for cct_dict in cct_dicts:

97 | splunk_table.append(cct_dict)

98 |

99 | session.close()

100 | return splunk_table

101 |

102 | def query_cct(provided_ioc, session):

103 | """Search cybercrime-tracker.net for specific information about panels."""

104 | api = "http://cybercrime-tracker.net/index.php?search={}&s=0&m=10000"

105 | vt_latest = "https://www.virustotal.com/latest-scan/http://{}"

106 | vt_ip = "https://www.virustotal.com/en/ip-address/{}/information/"

107 | base_url = api.format(provided_ioc)

108 | resp = session.get(base_url, timeout=180)

109 | cct_dicts = []

110 |

111 | if resp.status_code == 200:

112 | soup = BeautifulSoup(resp.content, "html.parser")

113 | table = soup.findAll("table", attrs={"class": "ExploitTable"})[0]

114 | rows = table.find_all(["tr"])[1:]

115 |

116 | if len(rows) == 0:

117 | cct_dicts.append({"no data": provided_ioc})

118 | return cct_dicts

119 |

120 | for row in rows:

121 | cells = row.find_all("td", limit=5)

122 |

123 | if len(cells) > 0:

124 | tmp = {

125 | "date": cells[0].text,

126 | "url": cells[1].text,

127 | "ip": cells[2].text,

128 | "type": cells[3].text,

129 | "vt latest scan": vt_latest.format(cells[1].text),

130 | "vt ip info": None

131 | }

132 |

133 | if tmp["ip"] != "":

134 | tmp["vt ip info"] = vt_ip.format(tmp["ip"])

135 |

136 | if tmp not in cct_dicts:

137 | cct_dicts.append(tmp)

138 | else:

139 | cct_dicts.append({"no data": provided_ioc})

140 | return cct_dicts

141 |

142 | if __name__ == "__main__":

143 | if sys.argv[1].lower() == "feed":

144 | data_feed = get_feed()

145 | lookup_path = "{}/lookups".format(app_home)

146 | file_path = "{}/cybercrime_tracker_feed.csv".format(lookup_path)

147 | write_file(data_feed, file_path)

148 | exit(0)

149 |

150 | current_module = sys.modules[__name__]

151 | commons.return_results(current_module)

152 |

--------------------------------------------------------------------------------
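
Note on `/bin/cybercrime_tracker.py`: each table row becomes one dictionary, and the VirusTotal IP link is only filled in when the row carries an IP. The following sketch shows that row-building step on plain strings, without BeautifulSoup or a network request. The function name `build_row` and its parameters are hypothetical; the URL templates are copied from `query_cct`.

```python
VT_LATEST = "https://www.virustotal.com/latest-scan/http://{}"
VT_IP = "https://www.virustotal.com/en/ip-address/{}/information/"


def build_row(date, url, ip, panel_type):
    """Build one result row from the text of the first four table cells."""
    row = {
        "date": date,
        "url": url,
        "ip": ip,
        "type": panel_type,
        "vt latest scan": VT_LATEST.format(url),
        "vt ip info": None,  # stays None when the row has no IP
    }
    if ip != "":
        row["vt ip info"] = VT_IP.format(ip)
    return row


if __name__ == "__main__":
    print(build_row("01-01-2019", "example.com/panel", "198.51.100.7", "Pony"))
```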

/bin/greynoise.py:

--------------------------------------------------------------------------------

1 | #!/opt/splunk/bin/python

2 | """

3 | Description: Use GreyNoise to analyze data on Internet-wide scanners (benign

4 | scanners such as Shodan.io and malicious actors like SSH and telnet worms). The

5 | script accepts a list of strings (domain, IP, and/or scanner name):

6 | | greyNoise

7 |

8 | or input from the pipeline (any field where the value is a domain, IP, scanner

9 | name). The first argument is the name of one field:

10 |

11 | | fields

12 | | greyNoise

13 |

14 | Source: https://viz.greynoise.io/table

15 |

16 | Instructions:

17 | 1. Manually download the data feed (one-time)

18 | ```

19 | | greyNoise feed

20 | ```

21 | 2. Switch to the **GreyNoise** dashboard in the OSweep app.

22 | 3. Add the list of IOCs to the "Domain, IP, Scanner Name (+)" textbox.

23 | 4. Select whether the results will be grouped and how from the dropdowns.

24 | 5. Click "Submit".

25 |

26 | Rate Limit: None

27 |

28 | Results Limit: None

29 |

30 | Notes: None

31 |

32 | Debugger: open("/tmp/splunk_script.txt", "a").write("{}: \n".format())

33 | """

34 |

35 | from collections import OrderedDict

36 | import os

37 | import sys

38 |

39 | app_home = "{}/etc/apps/OSweep".format(os.environ['SPLUNK_HOME'])

40 | tp_modules = "{}/bin/_tp_modules".format(app_home)

41 | sys.path.insert(0, tp_modules)

42 | import validators

43 |

44 | import commons

45 |

46 |

47 | api = "https://api.greynoise.io/v1/query"

48 |

49 | def get_feed():

50 | """Return the latest report summaries from the feed."""

51 | session = commons.create_session()

52 | api_key = commons.get_apikey("greynoise")

53 | tags = query_list(session)

54 |

55 | if tags == None:

56 | return

57 |

58 | if api_key != None:

59 | session.params = {"key": api_key}

60 | return query_tags(tags, session)

61 |

62 | def query_list(session):

63 | """Return a list of tags."""

64 | resp = session.get("{}/list".format(api), timeout=180)

65 |

66 | if resp.status_code == 200 and "tags" in resp.json().keys():

67 | return resp.json()["tags"]

68 | return

69 |

70 | def query_tags(tags, session):

71 | """Return dictionaries containing information about a tag."""

72 | data_feed = []

73 |

74 | for tag in tags:

75 | resp = session.post("{}/tag".format(api), data={"tag": tag})

76 |

77 | if resp.status_code == 200 and "records" in resp.json().keys() and \

78 | len(resp.json()["records"]):

79 | records = resp.json()["records"]

80 |

81 | for record in records:

82 | record["datacenter"] = record["metadata"].get("datacenter", "")

83 | record["tor"] = str(record["metadata"].get("tor", ""))

84 | record["rdns_parent"] = record["metadata"].get("rdns_parent", "")

85 | record["link"] = record["metadata"].get("link", "")

86 | record["org"] = record["metadata"].get("org", "")

87 | record["os"] = record["metadata"].get("os", "")

88 | record["asn"] = record["metadata"].get("asn", "")

89 | record["rdns"] = record["metadata"].get("rdns", "")

90 | record.pop("metadata")

91 | data_feed.append(record)

92 |

93 | session.close()

94 |

95 | if len(data_feed) == 0:

96 | return

97 | return data_feed

98 |

99 | def write_file(data_feed, file_path):

100 | """Write data to a file."""

101 | if data_feed == None:

102 | return

103 |

104 | with open(file_path, "w") as open_file:

105 | keys = data_feed[0].keys()

106 | header = ",".join(keys)

107 |

108 | open_file.write("{}\n".format(header))

109 |

110 | for data in data_feed:

111 | data_string = "^^".join(data.values())

112 | data_string = data_string.replace(",", "")

113 | data_string = data_string.replace("^^", ",")

114 | data_string = data_string.replace('"', "")

115 | open_file.write("{}\n".format(data_string.encode("UTF-8")))

116 | return

117 |

118 | def process_iocs(results):

119 | """Return data formatted for Splunk from GreyNoise."""

120 | if results != None:

121 | provided_iocs = [y for x in results for y in x.values()]

122 | else:

123 | provided_iocs = sys.argv[1:]

124 |

125 | splunk_table = []

126 | lookup_path = "{}/lookups".format(app_home)

127 | open_file = open("{}/greynoise_feed.csv".format(lookup_path), "r")

128 | data_feed = open_file.read().splitlines()

129 | header = data_feed[0].split(",")

130 | open_file.close()

131 |

132 | open_file = open("{}/greynoise_scanners.csv".format(lookup_path), "r")

133 | scanners = set(open_file.read().splitlines()[1:])

134 | scanners = [x.lower() for x in scanners]

135 | open_file.close()

136 |

137 | for provided_ioc in set(provided_iocs):

138 | provided_ioc = commons.deobfuscate_string(provided_ioc)

139 |

140 | if not validators.ipv4(provided_ioc) and \

141 | not validators.domain(provided_ioc) and \

142 | provided_ioc.lower() not in scanners:

143 | splunk_table.append({"invalid": provided_ioc})

144 | continue

145 |

146 | line_found = False

147 |

148 | for line in data_feed:

149 | if provided_ioc.lower() in line.lower():

150 | line_found = True

151 | scanner_data = line.split(",")

152 | scanner_dict = OrderedDict(zip(header, scanner_data))

153 | scanner_dict = commons.lower_keys(scanner_dict)

154 | splunk_table.append(scanner_dict)

155 |

156 | if line_found == False:

157 | splunk_table.append({"no data": provided_ioc})

158 | return splunk_table

159 |

160 | if __name__ == "__main__":

161 | if sys.argv[1].lower() == "feed":

162 | data_feed = get_feed()

163 | lookup_path = "{}/lookups".format(app_home)

164 | scanner_list = "{}/greynoise_scanners.csv".format(lookup_path)

165 | file_path = "{}/greynoise_feed.csv".format(lookup_path)

166 |

167 | with open(scanner_list, "w") as sfile:

168 | sfile.write("scanner\n")

169 |

170 | scanners = []

171 | for data in data_feed:

172 | scanner = data["name"].encode("UTF-8")

173 |

174 | if scanner not in scanners:

175 | scanners.append(scanner)

176 | sfile.write("{}\n".format(scanner.lower()))

177 |

178 | write_file(data_feed, file_path)

179 | exit(0)

180 |

181 | current_module = sys.modules[__name__]

182 | commons.return_results(current_module)

183 |

--------------------------------------------------------------------------------
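
Note on `/bin/greynoise.py`: `write_file` keeps the feed parseable by deleting commas and double quotes from every value before joining the fields. An alternative, which is not what the script does, is to let the standard `csv` module quote such values so nothing is lost. The sketch below assumes Python 3 and a hypothetical helper name `rows_to_csv`.

```python
import csv
import io


def rows_to_csv(rows):
    """Serialize a list of dictionaries to CSV text with proper quoting."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    feed = [{"name": "Shodan, Inc", "ip": "198.51.100.7"}]
    print(rows_to_csv(feed))
```

A reader on the other side would then need `csv.DictReader` rather than `line.split(",")`, since quoted values may contain commas.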

/bin/hybrid_analysis.py:

--------------------------------------------------------------------------------

1 | #!/opt/splunk/bin/python

2 | """

3 | Description:

4 |

5 | Source:

6 |

7 | Instructions:

8 |

9 | Rate Limit: None

10 |

11 | Results Limit: None

12 |

13 | Debugger: open("/tmp/splunk_script.txt", "a").write("{}: \n".format())

14 | """

15 |

16 | import os

17 | import re

18 | import sys

19 |

20 | app_home = "{}/etc/apps/OSweep".format(os.environ['SPLUNK_HOME'])

21 | tp_modules = "{}/bin/_tp_modules".format(app_home)

22 | sys.path.insert(0, tp_modules)

23 | import validators

24 |

25 | import commons

26 |

27 |

28 | api = "https://{}/api/v2/search/{}".lower()

29 |

30 | def process_iocs(results):

31 | """Return data formatted for Splunk from Hybrid-Analysis."""

32 | params = [

33 | 'authentihash',

34 | 'av_detect',

35 | 'context',

36 | 'country',

37 | 'domain',

38 | 'env_id',

39 | 'filename',

40 | 'filetype_desc',

41 | 'filetype',

42 | 'hash',

43 | 'host',

44 | 'imp_hash',

45 | 'port',

46 | 'similar_to',

47 | 'ssdeep',

48 | 'tag',

49 | 'url',

50 | 'verdict',

51 | 'vx_family'

52 | ]

53 |

54 | if results != None:

55 | provided_iocs = [y for x in results for y in x.values()]

56 | elif sys.argv[1] == "terms" and sys.argv[2] in params:

57 | if len(sys.argv) > 2:

58 | endpoint = sys.argv[1]

59 | param = sys.argv[2]

60 | provided_iocs = sys.argv[3:]

61 | elif sys.argv[1] == "hash" and sys.argv[2] == "hash":

62 | if len(sys.argv) > 2:

63 | endpoint = sys.argv[1]

64 | param = sys.argv[2]

65 | provided_iocs = sys.argv[3:]

66 |

67 | session = commons.create_session()

68 | api_domain = commons.get_apidomain("hybrid-analysis")

69 | api_key = commons.get_apikey("hybrid-analysis")

70 | splunk_table = []

71 |

72 | for provided_ioc in set(provided_iocs):

73 | provided_ioc = commons.deobfuscate_string(provided_ioc)

74 | provided_ioc = provided_ioc.lower()

75 |

76 | ioc_dicts = query_hybridanalysis(endpoint, param, provided_ioc, api_domain, api_key, session)

77 |

78 | for ioc_dict in ioc_dicts:

79 | splunk_table.append(ioc_dict)

80 |

81 | session.close()

82 | return splunk_table

83 |

84 | def query_hybridanalysis(endpoint, param, provided_ioc, api_domain, api_key, session):

85 | """Query Hybrid-Analysis for the provided IOC and return the results as a list of dictionaries."""

86 | ioc_dicts = []

87 |

88 | session.headers.update({

89 | "api-key":api_key,

90 | "Accept":"application/json",

91 | "User-Agent":"Falcon Sandbox"

92 | })

93 | resp = session.post(api.format(api_domain, endpoint), data={param:provided_ioc}, timeout=180)

94 |

95 | if resp.status_code == 200 and resp.content != '':

96 | results = resp.json()

97 | else:

98 | ioc_dicts.append({"no data": provided_ioc})

99 | return ioc_dicts

100 |

101 | if isinstance(results, dict):

102 | if "result" in results.keys() and len(results["result"]) > 0:

103 | results = results["result"]

104 | else:

105 | ioc_dicts.append({"no data": provided_ioc})

106 | return ioc_dicts

107 |

108 | for result in results:

109 | ioc_dict = {}

110 | ioc_dict["type"] = result.get("type", None)

111 | ioc_dict["target_url"] = result.get("target_url", None)

112 | ioc_dict["submit_name"] = result.get("submit_name", None)

113 | ioc_dict["md5"] = result.get("md5", None)

114 | ioc_dict["sha256"] = result.get("sha256", None)

115 | ioc_dict["ssdeep"] = result.get("ssdeep", None)

116 | ioc_dict["imphash"] = result.get("imphash", None)

117 | ioc_dict["av_detect"] = result.get("av_detect", None)

118 | ioc_dict["analysis_start_time"] = result.get("analysis_start_time", None)

119 | ioc_dict["threat_score"] = result.get("threat_score", None)

120 | ioc_dict["interesting"] = result.get("interesting", None)

121 | ioc_dict["threat_level"] = result.get("threat_level", None)

122 | ioc_dict["verdict"] = result.get("verdict", None)

123 | ioc_dict["domains"] = result.get("domains", None)

124 | if ioc_dict["domains"] != None:

125 | ioc_dict["domains"] = "\n".join(ioc_dict["domains"])

126 | ioc_dict["classification_tags"] = result.get("classification_tags", None)

127 | if ioc_dict["classification_tags"] != None:

128 | ioc_dict["classification_tags"] = "\n".join(ioc_dict["classification_tags"])

129 | ioc_dict["compromised_hosts"] = result.get("compromised_hosts", None)

130 | if ioc_dict["compromised_hosts"] != None:

131 | ioc_dict["compromised_hosts"] = "\n".join(ioc_dict["compromised_hosts"])

132 | ioc_dict["hosts"] = result.get("hosts", None)

133 | if ioc_dict["hosts"] != None:

134 | ioc_dict["hosts"] = "\n".join(ioc_dict["hosts"])

135 | ioc_dict["total_network_connections"] = result.get("total_network_connections", None)

136 | ioc_dict["total_processes"] = result.get("total_processes", None)

137 | ioc_dict["extracted_files"] = result.get("extracted_files", None)

138 | if ioc_dict["extracted_files"] != None:

139 | ioc_dict["extracted_files"] = "\n".join(ioc_dict["extracted_files"])

140 | ioc_dict["processes"] = result.get("processes", None)

141 | if ioc_dict["processes"] != None:

142 | ioc_dict["processes"] = "\n".join(ioc_dict["processes"])

143 | ioc_dict["tags"] = result.get("tags", None)

144 | if ioc_dict["tags"] != None:

145 | ioc_dict["tags"] = "\n".join(ioc_dict["tags"])

146 | ioc_dicts.append(ioc_dict)

147 | return ioc_dicts

148 |

149 | if __name__ == "__main__":

150 | current_module = sys.modules[__name__]

151 | commons.return_results(current_module)

152 |

--------------------------------------------------------------------------------
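
Note on `/bin/hybrid_analysis.py`: `query_hybridanalysis` copies a fixed set of keys from each result and joins the list-valued ones with newlines so they fit in a single Splunk cell. The same idea can be expressed with two key lists, as in this sketch. The names `flatten_result`, `SCALAR_KEYS`, and `LIST_KEYS` are hypothetical, and the key lists are shortened for illustration.

```python
SCALAR_KEYS = ["type", "md5", "sha256", "verdict", "threat_score"]
LIST_KEYS = ["domains", "hosts", "tags"]


def flatten_result(result, scalar_keys=SCALAR_KEYS, list_keys=LIST_KEYS):
    """Copy selected keys from a result, joining list values with newlines."""
    flat = {key: result.get(key) for key in scalar_keys}
    for key in list_keys:
        value = result.get(key)
        # Missing lists stay None, matching the original's behavior.
        flat[key] = "\n".join(value) if value is not None else None
    return flat


if __name__ == "__main__":
    sample = {"md5": "abc", "domains": ["a.example", "b.example"]}
    print(flatten_result(sample))
```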

/bin/malshare.py:

--------------------------------------------------------------------------------

1 | #!/opt/splunk/bin/python

2 | """

3 | Description: Use Malshare to gather hashes of potentially malicious files. The

4 | script accepts a list of strings (domains, IPs, MD5, or SHA256):

5 | | malshare $ioc$

6 |

7 | or input from the pipeline (any field where the value is a domain, IP, MD5 or

8 | SHA256). The first argument is the name of one field:

9 |

10 | | fields

11 | | malshare

12 |

13 | Source: https://malshare.com/

14 |

15 | Instructions:

16 | 1. Switch to the Malshare dashboard in the OSweep app.

17 | 2. Add the list of IOCs to the "Domain, IP, MD5, SHA256 (+)" textbox.

18 | 3. Click "Submit".

19 |

20 | Rate Limit: None

21 |

22 | Results Limit: None

23 |

24 | Debugger: open("/tmp/splunk_script.txt", "a").write("{}: \n".format())

25 | """

26 |

27 | import json

28 | import os

29 | import re

30 | import sys

31 |

32 | app_home = "{}/etc/apps/OSweep".format(os.environ['SPLUNK_HOME'])

33 | tp_modules = "{}/bin/_tp_modules".format(app_home)

34 | sys.path.insert(0, tp_modules)

35 | import validators

36 |

37 | import commons

38 |

39 |

40 | api = "https://malshare.com/api.php?api_key={}&action=search&query={}".lower()

41 |

42 | def process_iocs(results):

43 | """Return data formatted for Splunk from Malshare."""

44 | if results != None:

45 | provided_iocs = [y for x in results for y in x.values()]

46 | else:

47 | provided_iocs = sys.argv[1:]

48 |

49 | session = commons.create_session()

50 | api_key = commons.get_apikey("malshare")

51 | splunk_table = []

52 |

53 | for provided_ioc in set(provided_iocs):

54 | provided_ioc = commons.deobfuscate_string(provided_ioc)

55 | provided_ioc = provided_ioc.lower()

56 |

57 | if validators.ipv4(provided_ioc) or validators.domain(provided_ioc) or \

58 | re.match("^[a-f\d]{32}$", provided_ioc) or re.match("^[a-f\d]{64}$", provided_ioc):

59 | pass

60 | else:

61 | splunk_table.append({"invalid": provided_ioc})

62 | continue

63 |

64 | ioc_dicts = query_malshare(provided_ioc, api_key, session)

65 |

66 | for ioc_dict in ioc_dicts:

67 | splunk_table.append(ioc_dict)

68 |

69 | session.close()

70 | return splunk_table

71 |

72 | def query_malshare(provided_ioc, api_key, session):

73 | """Query Malshare using the provided IOC and return the data as a list of dictionaries."""

74 | ioc_dicts = []

75 |

76 | resp = session.get(api.format(api_key, provided_ioc), timeout=180)

77 |

78 | if resp.status_code == 200 and resp.content != '':

79 | content = re.sub("^", "[", resp.content.decode("UTF-8"))

80 | content = re.sub("$", "]", content)

81 | content = json.loads("{}".format(content.replace("}{", "},{")))

82 | else:

83 | ioc_dicts.append({"no data": provided_ioc})

84 | return ioc_dicts

85 |

86 | for data in content:

87 | ioc_dict = {}

88 | ioc_dict["md5"] = data.get("md5", None)

89 | ioc_dict["sha256"] = data.get("sha256", None)

90 | ioc_dict["type"] = data.get("type", None)

91 | ioc_dict["added"] = data.get("added", None)

92 | ioc_dict["source"] = data.get("source", None)

93 | ioc_dicts.append(ioc_dict)

94 | return ioc_dicts

95 |

96 | if __name__ == "__main__":

97 | current_module = sys.modules[__name__]

98 | commons.return_results(current_module)

99 |

--------------------------------------------------------------------------------
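The `query_malshare` function above turns a response made of back-to-back JSON objects into a list by wrapping it in brackets and replacing `}{` with `},{`. The sketch below shows one alternative under stated assumptions: `parse_concatenated_json` is a hypothetical helper (not part of OSweep), and it assumes the response body is a sequence of JSON objects with optional whitespace between them. It relies on `json.JSONDecoder.raw_decode` from the standard library, so a literal `}{` inside a string value is not mistaken for an object boundary.

```python
import json


def parse_concatenated_json(text):
    """Parse back-to-back JSON objects, e.g. '{"a": 1}{"a": 2}', into a list.

    Hypothetical helper for illustration; not part of the OSweep code base.
    """
    decoder = json.JSONDecoder()
    objects = []
    index = 0
    length = len(text)

    while index < length:
        # raw_decode does not skip leading whitespace, so do it here.
        while index < length and text[index].isspace():
            index += 1
        if index >= length:
            break

        # raw_decode returns the parsed object and the index where it ended.
        obj, index = decoder.raw_decode(text, index)
        objects.append(obj)
    return objects
```

Called on the decoded response body, this would return the same list of dictionaries that the loop over `content` expects.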

/bin/phishing_catcher.py:

--------------------------------------------------------------------------------

1 | #!/opt/splunk/bin/python

2 | """

3 | Description: Use the Phishing Catcher project to catch malicious phishing domain

4 | names either ad-hoc or on the wire using the project's scoring method. The

5 | script accepts a list of strings (domains):

6 | | phishingCatcher

7 |

8 | or input from the pipeline (any field where the value is a domain). The first

9 | argument is the name of one field:

10 |

11 | | fields

12 | | phishingCatcher

13 |

14 | Source: https://github.com/x0rz/phishing_catcher

15 |

16 | Instructions:

17 | 1. Switch to the **Phishing Catcher** dashboard in the OSweep app.

18 | 2. Select whether you want to monitor the logs in realtime or add a list of domains.

19 | 3. If Monitor Mode is "Yes":

20 | - Add a search string to the 'Base Search' textbox.

21 | - Add the field name of the field containing the domain to the "Field Name" textbox.

22 | - Select the time range to search.

23 | 4. If Monitor Mode is "No":

24 | - Add the list of domains to the 'Domain (+)' textbox.

25 | 5. Click 'Submit'.

26 |

27 | Rate Limit: None

28 |

29 | Results Limit: None

30 |

31 | Notes: None

32 |

33 | Debugger: open("/tmp/splunk_script.txt", "a").write("{}: \n".format())

34 | """

35 |

36 | import os

37 | import re

38 | import sys

39 |

40 | app_home = "{}/etc/apps/OSweep".format(os.environ['SPLUNK_HOME'])

41 | tp_modules = "{}/bin/_tp_modules".format(app_home)

42 | sys.path.insert(0, tp_modules)

43 | import entropy

44 | import pylev

45 | import tld

46 | import yaml

47 |

48 | import commons

49 | import phishing_catcher_confusables as confusables

50 |

51 |

52 | def get_modules():

53 | """Return Phishing Catcher modules."""

54 | session = commons.create_session()

55 | suspicious = request_module(session, "/phishing_catcher_suspicious.yaml")

56 | confusables = request_module(session, "/phishing_catcher_confusables.py")

57 | session.close()

58 |

59 | if suspicious == None or confusables == None:

60 | return

61 | return suspicious, confusables

62 |

63 | def request_module(session, filename):

64 | """Return the lines of the requested Phishing Catcher module file."""

65 | base_url = "https://raw.githubusercontent.com/x0rz/phishing_catcher/master{}"

66 | resp = session.get(base_url.format(filename), timeout=180)

67 |

68 | if resp.status_code == 200 and resp.content != "":

69 | return resp.content.splitlines()

70 | return

71 |

72 | def write_file(file_contents, file_path):

73 | """Write data to a file."""

74 | with open(file_path, "w") as open_file:

75 | for content in file_contents:

76 | open_file.write("{}\n".format(content))

77 | return

78 |

79 | def process_iocs(results):

80 | """Return data formatted for Splunk."""

81 | with open("phishing_catcher_suspicious.yaml", "r") as s:

82 | global suspicious

83 | suspicious = yaml.safe_load(s)

84 |

85 | if results != None:

86 | provided_iocs = [y for x in results for y in x.values()]

87 | else:

88 | provided_iocs = sys.argv[1:]

89 |

90 | splunk_table = []

91 |

92 | for provided_ioc in set(provided_iocs):

93 | score = score_domain(provided_ioc.lower())

94 |

95 | if score >= 120:

96 | threat_level = "critical"

97 | elif score >= 90:

98 | threat_level = "high"

99 | elif score >= 80:

100 | threat_level = "medium"

101 | elif score >= 65:

102 | threat_level = "low"

103 | elif score < 65:

104 | threat_level = "harmless"

105 |

106 | splunk_table.append({

107 | "threat level": threat_level,

108 | "domain": provided_ioc,

109 | "score": score

110 | })

111 | return splunk_table

112 |

113 | def score_domain(provided_ioc):

114 | """Return the scores of the provided domain."""

115 | score = 0

116 |

117 | for suspicious_tld in suspicious["tlds"]:

118 | if provided_ioc.endswith(suspicious_tld):

119 | score += 20

120 |

121 | try:

122 | res = tld.get_tld(provided_ioc, as_object=True, fail_silently=True,

123 | fix_protocol=True)

124 | domain = ".".join([res.subdomain, res.domain])

125 | except Exception:

126 | domain = provided_ioc

127 |

128 | score += int(round(entropy.shannon_entropy(domain)*50))

129 | domain = confusables.unconfuse(domain)

130 | words_in_domain = re.split("\W+", domain)

131 |

132 |

133 | if domain.startswith("*."):

134 | domain = domain[2:]

135 |

136 | if words_in_domain[0] in ["com", "net", "org"]:

137 | score += 10

138 |

139 | for word in suspicious["keywords"]:

140 | if word in domain:

141 | score += suspicious["keywords"][word]

142 |

143 | for key in [k for k, v in suspicious["keywords"].items() if v >= 70]:

144 | for word in [w for w in words_in_domain if w not in ["email", "mail", "cloud"]]:

145 | if pylev.levenshtein(str(word), str(key)) == 1:

146 | score += 70

147 |

148 | if "xn--" not in domain and domain.count("-") >= 4:

149 | score += domain.count("-") * 3

150 |

151 | if domain.count(".") >= 3:

152 | score += domain.count(".") * 3

153 | return score

154 |

155 | if __name__ == "__main__":

156 | if sys.argv[1].lower() == "modules":

157 | suspicious, confusables = get_modules()

158 | sfile = "{}/bin/phishing_catcher_suspicious.yaml".format(app_home)

159 | cfile = "{}/bin/phishing_catcher_confusables.py".format(app_home)

160 |

161 | write_file(suspicious, sfile)

162 | write_file(confusables, cfile)

163 | exit(0)

164 |

165 | current_module = sys.modules[__name__]

166 | commons.return_results(current_module)

167 |

--------------------------------------------------------------------------------
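The score-to-threat-level mapping in `process_iocs` and the hyphen and dot penalties at the end of `score_domain` can be read in isolation. The sketch below restates them as two small functions; the names `threat_level` and `structure_penalty` are hypothetical and chosen for illustration, while the thresholds and multipliers are copied from the listing above.

```python
def threat_level(score):
    """Map a numeric score to the labels used in process_iocs."""
    # (minimum score, label) pairs, checked from highest to lowest.
    thresholds = [(120, "critical"), (90, "high"), (80, "medium"), (65, "low")]

    for minimum, label in thresholds:
        if score >= minimum:
            return label
    return "harmless"


def structure_penalty(domain):
    """Return the points added for many hyphens or many dots."""
    penalty = 0
    hyphens = domain.count("-")
    dots = domain.count(".")

    # Punycode domains ("xn--") are exempt from the hyphen penalty.
    if "xn--" not in domain and hyphens >= 4:
        penalty += hyphens * 3

    # Deeply nested subdomains add 3 points per dot.
    if dots >= 3:
        penalty += dots * 3
    return penalty
```

For example, a domain with four hyphens and a single dot would gain 12 points from this part of the scoring alone.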

/bin/phishing_catcher_suspicious.yaml:

--------------------------------------------------------------------------------

1 | keywords:

2 | # Domains are checked and scored with the following keywords.

3 | # Any changes must use the same format.

4 | # This section cannot be empty.

5 |

6 | # Generic

7 | 'account': 35

8 | 'activity': 40

9 | 'alert': 25

10 | 'authenticate': 60

11 | 'authentication': 70

12 | 'authorize': 45

13 | 'bank': 20

14 | 'bill': 20

15 | 'client': 30

16 | 'confirm': 35

17 | 'credential': 50

18 | 'customer': 40

19 | 'form': 20

20 | 'invoice': 35

21 | 'live': 20

22 | 'loan': 20

23 | 'log-in': 30

24 | 'login': 25

25 | 'lottery': 35

26 | 'manage': 30

27 | 'office': 30

28 | 'online': 30

29 | 'password': 40

30 | 'portal': 30

31 | 'purchase': 40

32 | 'recover': 35

33 | 'refund': 30

34 | 'rezulta': 35

35 | 'safe': 20

36 | 'secure': 30

37 | 'security': 40

38 | 'servehttp': 45

39 | 'service': 35

40 | 'sign-in': 35

41 | 'signin': 30

42 | 'support': 35

43 | 'tax': 15

44 | 'transaction': 55

45 | 'transfer': 40

46 | 'unlock': 30

47 | 'update': 30

48 | 'verification': 60

49 | 'verify': 30

50 | 'wallet': 30

51 | 'webscr': 30

52 |

53 | # Apple iCloud

54 | 'apple': 25

55 | 'appleid': 35

56 | 'icloud': 30

57 | 'iforgot': 35

58 | 'itunes': 30

59 |

60 | # Bank/money

61 | 'absabank': 40

62 | 'accurint': 40

63 | 'advcash': 35

64 | 'allegro': 35

65 | 'alliancebank': 60

66 | 'americanexpress': 75

67 | 'ameritrade': 50

68 | 'arenanet': 40

69 | 'associatedbank': 70

70 | 'atb': 15

71 | 'bancadiroma': 55

72 | 'bancaintesa': 55

73 | 'bancasa': 35

74 | 'bancodebrasil': 65

75 | 'bancoreal': 45

76 | 'bankmillennium': 70

77 | 'bankofamerica': 65

78 | 'bankofkc': 40

79 | 'bankofthewest': 65

80 | 'barclays': 40

81 | 'bendigo': 35

82 | 'bloomspot': 45

83 | 'bmo': 15

84 | 'bnymellon': 65

85 | 'bradesco': 40

86 | 'buywithme': 45

87 | 'cahoot': 30

88 | 'caixabank': 25

89 | 'capitalone': 50

90 | 'capitecbank': 55

91 | 'cartasi': 35

92 | 'centurylink': 55

93 | 'cibc': 20

94 | 'cimbbank': 40

95 | 'citi': 20

96 | 'citigroup': 45

97 | 'citizens': 40

98 | 'citizensbank': 50

99 | 'comerica': 40

100 | 'commbank': 80

101 | 'craigslist': 50

102 | 'creditkarma': 55

103 | 'cua': 15

104 | 'db': 10

105 | 'delta': 25

106 | 'desjardins': 50

107 | 'dinersclub': 50

108 | 'discover': 60

109 | 'discovercard': 60

110 | 'discovery': 45

111 | 'entromoney': 50

112 | 'eppicard': 40

113 | 'fichiers': 40

114 | 'fifththirdbank': 70

115 | 'firstdirect': 55

116 | 'firstfedca': 40

117 | 'fnb': 15

118 | 'franklintempleton': 70

119 | 'gardenislandfcu': 80

120 | 'gatherfcu': 80

121 | 'gofund': 30

122 | 'gruppocarige': 60

123 | 'gtbank': 30

124 | 'halifax': 35

125 | 'hmrc': 20

126 | 'hsbc': 20

127 | 'huntington': 50

128 | 'idex': 20

129 | 'independentbank': 75

130 | 'indexexchange': 65

131 | 'ing': 15

132 | 'interac': 35

133 | 'interactivebrokers': 90

134 | 'intesasanpaolo': 70

135 | 'itau': 20

136 | 'key': 15

137 | 'kgefcu': 80

138 | 'kiwibank': 40

139 | 'latam': 65

140 | 'livingsocial': 60

141 | 'lloydsbank': 50

142 | 'localbitcoins': 65

143 | 'lottomatica': 55

144 | 'mastercard': 50

145 | 'mbtrading': 45

146 | 'meridian': 40

147 | 'merilledge': 50

148 | 'metrobank': 45

149 | 'moneygram': 45

150 | 'morganstanley': 65

151 | 'nantucketbank': 65

152 | 'nationwide': 50

153 | 'natwest': 35

154 | 'nedbank': 35

155 | 'neteller': 40

156 | 'netsuite': 40

157 | 'nexiit': 35

158 | 'nordea': 30

159 | 'okpay': 25

160 | 'operativebank': 65

161 | 'pagseguro': 45

162 | 'paxful': 30

163 | 'payeer': 30

164 | 'paypal': 30

165 | 'payza': 25

166 | 'peoples': 35

167 | 'perfectmoney': 60

168 | 'permanenttsb': 60

169 | 'pnc': 15

170 | 'qiwi': 20

171 | 'rackspace': 45

172 | 'raiffeisenbanken': 80

173 | 'royalbank': 45

174 | 'safra': 25

175 | 'salemfive': 45

176 | 'santander': 45

177 | 'sars': 20

178 | 'scotia': 30

179 | 'scotiabank': 50

180 | 'scottrade': 45

181 | 'skrill': 30

182 | 'skyfinancial': 60

183 | 'smile': 25

184 | 'solidtrustpay': 65

185 | 'standardbank': 60

186 | 'steam': 25

187 | 'stgeorge': 60

188 | 'suncorp': 35

189 | 'swedbank': 40

190 | 'tdcanadatrust': 65

191 | 'tesco': 25

192 | 'tippr': 25

193 | 'unicredit': 45

194 | 'uquid': 25

195 | 'usaa': 20

196 | 'usairways': 45

197 | 'usbank': 30

198 | 'visa': 20

199 | 'vodafone': 40

200 | 'volksbanken': 55

201 | 'wachovia': 40

202 | 'walmart': 35

203 | 'webmoney': 40

204 | 'weezzo': 30

205 | 'wellsfargo': 50

206 | 'westernunion': 60

207 | 'westpac': 35

208 |

209 | # Coffee

210 | 'starbucks': 45

211 |

212 | # Cryptocurrency

213 | 'bibox': 25

214 | 'binance': 35

215 | 'bitconnect': 50

216 | 'bitfinex': 40

217 | 'bitflyer': 40

218 | 'bithumb': 35

219 | 'bitmex': 30

220 | 'bitstamp': 40

221 | 'bittrex': 35

222 | 'blockchain': 50

223 | 'coinbase': 40

224 | 'coinhive': 40

225 | 'coinsbank': 45

226 | 'hitbtc': 30

227 | 'kraken': 30

228 | 'lakebtc': 35

229 | 'localbitcoin': 60

230 | 'mycrypto': 40

231 | 'myetherwallet': 65

232 | 'mymonero': 40

233 | 'poloniex': 40

234 | 'xapo': 20

235 |

236 | # Ecommerce

237 | 'amazon': 30

238 | 'overstock': 45

239 | 'alibaba': 35

240 | 'aliexpress': 50

241 | 'leboncoin': 45

242 |

243 | # Email

244 | 'gmail': 25

245 | 'google': 30

246 | 'hotmail': 35

247 | 'microsoft': 45

248 | 'office365': 45

249 | 'onedrive': 40

250 | 'outlook': 35

251 | 'protonmail': 50

252 | 'tutanota': 40

253 | 'windows': 35

254 | 'yahoo': 25

255 | 'yandex': 30

256 |

257 | # Insurance

258 | 'geico': 25

259 | 'philamlife': 50

260 |

261 | # Logistics

262 | 'ceva': 20

263 | 'dhl': 15

264 | 'fedex': 25

265 | 'kuehne': 30

266 | 'lbcexpress': 50

267 | 'maersk': 30

268 | 'panalpina': 45

269 | 'robinson': 40

270 | 'schenker': 40

271 | 'sncf': 20

272 | 'ups': 15

273 |

274 | # Malware

275 | '16shop': 30

276 | 'agenttesla': 50

277 | 'arkei': 25

278 | 'azorult': 35

279 | 'batman': 30

280 | 'betabot': 35

281 | 'botnet': 30

282 | 'diamondfox': 50

283 | 'emotet': 30

284 | 'formbook': 40

285 | 'gaudox': 30

286 | 'gorynch': 35

287 | 'iceIX': 25

288 | 'kardon': 30

289 | 'keybase': 35

290 | 'kronos': 30

291 | 'litehttp': 40

292 | 'lokibot': 35

293 | 'megalodon': 45

294 | 'mirai': 25

295 | 'neutrino': 40

296 | 'pekanbaru': 45

297 | 'predatorthethief': 80

298 | 'quasar': 30

299 | 'rarog': 25

300 | 'tesla': 25

301 | 'ursnif': 30

302 | 'workhard': 40

303 |

304 | # Security

305 | '1password': 45

306 | 'dashlane': 40

307 | 'lastpass': 40

308 | 'nordvpn': 35

309 | 'protonvpn': 45

310 | 'tunnelbear': 50

311 |

312 | # Social Media

313 | 'facebook': 40

314 | 'flickr': 30

315 | 'instagram': 45

316 | 'linkedin': 40

317 | 'myspace': 35

318 | 'orkut': 25

319 | 'pintrest': 40

320 | 'reddit': 30

321 | 'tumblr': 30

322 | 'twitter': 35

323 | 'whatsapp': 40

324 | 'youtube': 35

325 |

326 | # Other

327 | 'adobe': 25

328 | 'aetna': 25

329 | 'americanairlines': 80

330 | 'americangreetings': 85

331 | 'baracuda': 40

332 | 'cabletv': 35

333 | 'careerbuilder': 65

334 | 'coxcable': 40

335 | 'directtv': 40

336 | 'docusign': 40

337 | 'dropbox': 35

338 | 'ebay': 20

339 | 'flash': 25

340 | 'github': 30

341 | 'groupon': 35

342 | 'mydish': 30

343 | 'netflix': 35

344 | 'pandora': 35

345 | 'salesforce': 50

346 | 'skype': 25

347 | 'spotify': 35

348 | 'tibia': 25

349 | 'trello': 30

350 | 'verizon': 35

351 |

352 | # Miscellaneous & SE tricks

353 | '-com.': 15

354 | '-gouv-': 20

355 | '.com-': 15

356 | '.com.': 15

357 | '.gouv-': 20

358 | '.gouv.': 20

359 | '.gov-': 15

360 | '.gov.': 15

361 | '.net-': 15

362 | '.net.': 15

363 | '.org-': 15

364 | '.org.': 15

365 | 'cgi-bin': 30

366 |

367 | # FR specific

368 | 'laposte': 35

369 | 'suivi': 25

370 |

371 | # BE specific

372 | 'argenta': 35

373 | 'belfius': 35

374 | 'beobank': 35

375 | 'bepost': 30

376 | 'crelan': 30

377 | 'europabank': 50

378 | 'fintro': 30

379 | 'fortis': 30

380 | 'keytrade': 40

381 | 'mobileviking': 60

382 | 'nagelmackers': 60

383 | 'proximus': 40

384 | 'rabobank': 40

385 |

386 | # ------------------------------------------------------------------------------

387 |

388 | tlds:

389 | # Domains containing the following TLDs will have 20 added to the score.

390 | # Any changes must use the same format.

391 | # This section cannot be empty.

392 | - '.app'

393 | - '.au'

394 | - '.bank'

395 | - '.biz'

396 | - '.br'

397 | - '.business'

398 | - '.ca'

399 | - '.cc'

400 | - '.cf'

401 | - '.cl'

402 | - '.click'

403 | - '.club'

404 | - '.co'

405 | - '.country'

406 | - '.de'

407 | - '.discount'

408 | - '.download'

409 | - '.eu'

410 | - '.fr'

411 | - '.ga'

412 | - '.gb'

413 | - '.gdn'

414 | - '.gq'

415 | - '.hu'

416 | - '.icu'

417 | - '.id'

418 | - '.il'

419 | - '.in'

420 | - '.info'

421 | - '.jp'

422 | - '.ke'

423 | - '.kim'

424 | - '.live'

425 | - '.lk'

426 | - '.loan'

427 | - '.me'

428 | - '.men'

429 | - '.ml'

430 | - '.mom'

431 | - '.mx'

432 | - '.ng'

433 | - '.online'

434 | - '.ooo'

435 | - '.page'

436 | - '.party'

437 | - '.pe'

438 | - '.pk'

439 | - '.pw'

440 | - '.racing'

441 | - '.ren'

442 | - '.review'

443 | - '.ru'

444 | - '.science'

445 | - '.services'

446 | - '.site'

447 | - '.stream'

448 | - '.study'

449 | - '.support'

450 | - '.tech'

451 | - '.tk'

452 | - '.top'

453 | - '.uk'

454 | - '.us'

455 | - '.vip'

456 | - '.win'

457 | - '.work'

458 | - '.xin'

459 | - '.xyz'

460 | - '.za'

461 |

--------------------------------------------------------------------------------
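The YAML file above feeds two checks in `score_domain`: a fixed 20 points when the domain ends with a listed TLD, and the keyword's weight for every keyword found as a substring. The sketch below illustrates that with a tiny inline dictionary in place of the loaded YAML; `SUSPICIOUS` and `keyword_and_tld_score` are hypothetical names, and only three keywords and two TLDs from the file are included.

```python
# A small stand-in for the structure yaml.safe_load would return.
SUSPICIOUS = {
    "keywords": {"login": 25, "paypal": 30, "secure": 30},
    "tlds": [".xyz", ".top"],
}


def keyword_and_tld_score(domain, suspicious=SUSPICIOUS):
    """Return the TLD and keyword portion of a domain's score."""
    score = 0

    # Each matching TLD suffix adds a flat 20 points.
    for suspicious_tld in suspicious["tlds"]:
        if domain.endswith(suspicious_tld):
            score += 20

    # Keywords are matched as substrings, so every hit adds its weight.
    for word, weight in suspicious["keywords"].items():
        if word in domain:
            score += weight
    return score
```

Because matching is by substring, overlapping entries in the full file (for example `scotia` and `scotiabank`) would both contribute when the longer one appears in a domain.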

/bin/phishing_kit_tracker.py:

--------------------------------------------------------------------------------

1 | #!/opt/splunk/bin/python

2 | """

3 | Description: Tracking threat actor emails in phishing kits.

4 |

5 | Source: https://github.com/neonprimetime/PhishingKitTracker

6 |

7 | Instructions: None

8 |

9 | Rate Limit: None

10 |

11 | Results Limit: None

12 |

13 | Notes: None

14 |

15 | Debugger: open("/tmp/splunk_script.txt", "a").write("{}: \n".format())

16 | """

17 |

18 | import time

19 | from collections import OrderedDict

20 | import glob

21 | import os

22 | import shutil

23 | import sys

24 | import zipfile

25 |

26 | app_home = "{}/etc/apps/OSweep".format(os.environ["SPLUNK_HOME"])

27 | tp_modules = "{}/bin/_tp_modules".format(app_home)

28 | sys.path.insert(0, tp_modules)

29 | import validators

30 |

31 | import commons

32 |

33 |

34 | date = time.strftime("%Y-%m")

35 | csv = "https://raw.githubusercontent.com/neonprimetime/PhishingKitTracker/master/{}_PhishingKitTracker.csv"

36 |

37 | def get_project():

38 | """Download the project to /tmp"""

39 | session = commons.create_session()

40 | project = "https://github.com/neonprimetime/PhishingKitTracker/archive/master.zip"

41 | resp = session.get(project, timeout=180)

42 |

43 | if not (resp.status_code == 200 and resp.content != ""):

44 | return

45 |

46 | with open("/tmp/master.zip", "wb") as repo:

47 | repo.write(resp.content)

48 |

49 | repo_zip = zipfile.ZipFile("/tmp/master.zip", "r")

50 | repo_zip.extractall("/tmp/")

51 | repo_zip.close()

52 |

53 | # Remove current files

54 | for csv in glob.glob("/{}/etc/apps/OSweep/lookups/2*_PhishingKitTracker.csv".format(os.environ["SPLUNK_HOME"])):

55 | os.remove(csv)

56 |

57 | # Add new files

58 | for csv in glob.glob("/tmp/PhishingKitTracker-master/2*_PhishingKitTracker.csv"):

59 | shutil.move(csv, "/{}/etc/apps/OSweep/lookups".format(os.environ["SPLUNK_HOME"]))

60 |

61 | os.remove("/tmp/master.zip")

62 | shutil.rmtree("/tmp/PhishingKitTracker-master")

63 | return

64 |

65 | def get_feed():

66 | """Return the latest report summaries from the feed."""

67 | session = commons.create_session()

68 | data_feed = get_file(session)

69 |

70 | if data_feed == None:

71 | return

72 | return data_feed

73 |

74 | def get_file(session):

75 | """Return the lines of the current month's PhishingKitTracker CSV."""

76 | resp = session.get(csv.format(date), timeout=180)

77 |

78 | if resp.status_code == 200 and resp.content != "":

79 | return resp.content.splitlines()

80 | return

81 |

82 | def write_file(data_feed, file_path):

83 | """Write data to a file."""

84 | if data_feed == None:

85 | return

86 |

87 | with open(file_path, "w") as open_file:

88 | header = data_feed[0]

89 |

90 | open_file.write("{}\n".format(header))

91 |

92 | for data in data_feed[1:]:

93 | open_file.write("{}\n".format(data.encode("UTF-8")))

94 | return

95 |

96 | if __name__ == "__main__":

97 | if sys.argv[1].lower() == "feed":

98 | data_feed = get_feed()

99 | lookup_path = "{}/lookups".format(app_home)

100 | file_path = "{}/{}_PhishingKitTracker.csv".format(lookup_path, date)

101 |

102 | write_file(data_feed, file_path)

103 | elif sys.argv[1].lower() == "git":

104 | get_project()

105 |

--------------------------------------------------------------------------------
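The feed mode above depends on the upstream repository naming its files `YYYY-MM_PhishingKitTracker.csv`, with the month taken from `time.strftime("%Y-%m")` at import time. A minimal sketch of that URL construction follows; `monthly_csv_url` is a hypothetical helper, and its optional `when` parameter is an addition for illustration that lets a specific month be requested.

```python
import time

CSV_URL = ("https://raw.githubusercontent.com/neonprimetime/"
           "PhishingKitTracker/master/{}_PhishingKitTracker.csv")


def monthly_csv_url(when=None):
    """Build the CSV URL for the month of `when` (a struct_time), or for now."""
    if when is None:
        stamp = time.strftime("%Y-%m")
    else:
        stamp = time.strftime("%Y-%m", when)
    return CSV_URL.format(stamp)
```

Passing an explicit month could be useful early in a month, when the current file may not have been published yet; whether that happens depends on the upstream project.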

/bin/psbdmp.py:

--------------------------------------------------------------------------------

1 | #!/opt/splunk/bin/python

2 | """

3 | Description: Use psbdmp.ws to search Pastebin dumps for a string, or retrieve

4 | the raw contents of a paste by its ID. The script accepts a list of strings

5 | (search terms or paste IDs):

6 | | psbdmp search $string$

7 |

8 | or input from the pipeline (any field where the value is a domain or IP). The

9 | first argument is the name of one field:

10 |

11 | | fields

12 | | psbdmp

13 |

14 | Source: https://psbdmp.ws/api

15 |

16 | Instructions:

17 | 1. Switch to the Certificate Search dashboard in the OSweep app.

18 | 2. Add the list of IOCs to the "Domain, IP (+)" textbox.

19 | 3. Select "Yes" or "No" from the "Wildcard" dropdown to search for subdomains.

20 | 4. Click "Submit".

21 |

22 | Rate Limit: None

23 |

24 | Results Limit: None

25 |

26 | Notes: Select the endpoint by passing "search" or "dump" as the first argument:

27 | | psbdmp search $string$

28 |

29 | Debugger: open("/tmp/splunk_script.txt", "a").write("{}: \n".format())

30 | """

31 |

32 | import json

33 | import os

34 | import sys

35 |

36 | app_home = "{}/etc/apps/OSweep".format(os.environ['SPLUNK_HOME'])

37 | tp_modules = "{}/bin/_tp_modules".format(app_home)

38 | sys.path.insert(0, tp_modules)

39 | import validators

40 |

41 | import commons

42 |

43 |

44 | def process_iocs(results):

45 | """Return data formatted for Splunk from psbdmp."""

46 | if sys.argv[1] == "search" or sys.argv[1] == "dump":

47 | endpoint = sys.argv[1]

48 | provided_iocs = sys.argv[2:]

49 |

50 | session = commons.create_session()

51 | splunk_table = []

52 |

53 | for provided_ioc in set(provided_iocs):

54 | provided_ioc = commons.deobfuscate_string(provided_ioc)

55 |

56 | if endpoint == "search":

57 | psbdmp_dicts = psbdmp_search(provided_ioc, session)

58 | elif endpoint == "dump":

59 | psbdmp_dicts = psbdmp_dump(provided_ioc, session)

60 | else:

61 | splunk_table.append({"invalid": provided_ioc})

62 | continue

63 |

64 | for psbdmp_dict in psbdmp_dicts:

65 | splunk_table.append(psbdmp_dict)

66 |

67 | session.close()

68 | return splunk_table

69 |

70 | def psbdmp_search(provided_ioc, session):

71 | """Search psbdmp.ws for the provided string and return the matches as a list of dictionaries."""

72 | base_url = "https://psbdmp.ws/api/search/{}"

73 | url = base_url.format(provided_ioc)

74 | resp = session.get(url, timeout=180)

75 | psd_dicts = []

76 |

77 | if resp.status_code == 200 and resp.json()["error"] != 1 and len(resp.json()["data"]) > 0:

78 | data = resp.json()["data"]

79 |

80 | for result in data:

81 | result = commons.lower_keys(result)

82 | result.update({"provided_ioc": provided_ioc})

83 | psd_dicts.append(result)

84 | else:

85 | psd_dicts.append({"no data": provided_ioc})

86 | return psd_dicts

87 |

88 | def psbdmp_dump(provided_ioc, session):

89 | """Return the raw contents of the paste with the provided ID as a list of dictionaries."""

90 | # psbdmp.ws does not have an endpoint to the archive

91 | # base_url = "https://psbdmp.ws/api/dump/get/{}"

92 | base_url = "https://pastebin.com/raw/{}"

93 | url = base_url.format(provided_ioc)

94 | resp = session.get(url, timeout=180)

95 | psd_dicts = []

96 |

97 | # psbdmp.ws does not have an endpoint to the archive

98 | # if resp.status_code == 200 and resp.json()["error"] != 1:

99 | # dump = resp.json()

100 | # psd_dicts.append(dump)

101 | if resp.status_code == 200 and resp.content != "":

102 | dump = {"id":provided_ioc, "data":resp.content}

103 | psd_dicts.append(dump)

104 | else:

105 | psd_dicts.append({"no data": provided_ioc})

106 | return psd_dicts

107 |

108 | if __name__ == "__main__":

109 | current_module = sys.modules[__name__]

110 | commons.return_results(current_module)

111 |

--------------------------------------------------------------------------------
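In `process_iocs` above, `endpoint` and `provided_iocs` are only assigned when the first argument is `search` or `dump`, so any other first argument appears to reach the loop with both names unbound. The sketch below shows one way to make the dispatch explicit with a lookup table; `ENDPOINTS` and `build_url` are hypothetical names, and the two URL templates are the ones used in the listing.

```python
# URL templates keyed by the endpoint name passed as the first argument.
ENDPOINTS = {
    "search": "https://psbdmp.ws/api/search/{}",
    "dump": "https://pastebin.com/raw/{}",
}


def build_url(endpoint, value):
    """Return the request URL for an endpoint, or None if it is unknown."""
    template = ENDPOINTS.get(endpoint)

    if template is None:
        # Callers can report the value as invalid instead of failing.
        return None
    return template.format(value)
```

A caller could check for `None` once, before creating the session, and append an `invalid` row for each provided value.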

/bin/py_pkg_update.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | rm -rf _tp_modules/* && \

4 | sudo pip install -r requirements.txt -t _tp_modules/

5 |

--------------------------------------------------------------------------------

/bin/requirements.txt:

--------------------------------------------------------------------------------

1 | bs4

2 | entropy

3 | HTMLParser

4 | pylev

5 | PySocks

6 | PyYAML

7 | requests

8 | requests_oauthlib

9 | six

10 | tld

11 | tweepy

12 | validators

13 |

--------------------------------------------------------------------------------

/bin/threatcrowd.py:

--------------------------------------------------------------------------------

1 | #!/opt/splunk/bin/python

2 | """

3 | Description: Use ThreatCrowd to quickly identify related infrastructure and

4 | malware. The script accepts a list of strings (domains, IPs, or email addresses):

5 | | threatcrowd

6 |

7 | or input from the pipeline (any field where the value is a domain, IP, and/or

8 | email address). The first argument is the name of one field:

9 |

10 | | fields

11 | | threatcrowd

12 |

13 | Output: List of dictionaries

14 |

15 | Source: https://www.threatcrowd.org/index.php

16 |

17 | Instructions:

18 | 1. Switch to the ThreatCrowd dashboard in the OSweep app.

19 | 2. Add the list of IOCs to the "IP, Domain, or Email (+)" textbox.

20 | 3. Select the IOC type.

21 | 4. Click "Submit".

22 |

23 | Rate Limit: 1 request/10s

24 |

25 | Results Limit: None

26 |

27 | Notes: None

28 |

29 | Debugger: open("/tmp/splunk_script.txt", "a").write("{}: \n".format())

30 | """

31 |

32 | import os

33 | import re

34 | import sys

35 | from time import sleep

36 |

37 | app_home = "{}/etc/apps/OSweep".format(os.environ['SPLUNK_HOME'])

38 | tp_modules = "{}/bin/_tp_modules".format(app_home)

39 | sys.path.insert(0, tp_modules)

40 | import validators

41 |

42 | import commons

43 |

44 |

45 | api = "http://www.threatcrowd.org/searchApi/v2/{}/report/?{}={}"

46 |

47 | def process_iocs(results):

48 | """Return data formatted for Splunk from ThreatCrowd."""

49 | if results != None:

50 | provided_iocs = [y for x in results for y in x.values()]

51 | else:

52 | provided_iocs = sys.argv[1:]

53 |

54 | session = commons.create_session()

55 | splunk_table = []

56 |

57 | for provided_ioc in set(provided_iocs):

58 | provided_ioc = commons.deobfuscate_string(provided_ioc)

59 | provided_ioc = provided_ioc.lower()

60 |

61 | if validators.ipv4(provided_ioc):

62 | ioc_type = "ip"

63 | elif validators.domain(provided_ioc):

64 | ioc_type = "domain"

65 | elif validators.email(provided_ioc):

66 | ioc_type = "email"

67 | elif re.match("^[a-f\d]{32}$", provided_ioc) or re.match("^[a-f\d]{64}$", provided_ioc):

68 | ioc_type = "resource"

69 | else:

70 | splunk_table.append({"invalid": provided_ioc})

71 | continue

72 |

73 | ioc_dicts = query_threatcrowd(provided_ioc, ioc_type, session)

74 |

75 | for ioc_dict in ioc_dicts:

76 | splunk_table.append(ioc_dict)

77 |

78 | if len(provided_iocs) > 1:

79 | sleep(10)

80 |

81 | session.close()

82 | return splunk_table

83 |

84 | def query_threatcrowd(provided_ioc, ioc_type, session):

85 | """Pivot off an IP, domain, email address, or file hash and return the data as a list of dictionaries."""

86 | ioc_dicts = []

87 |

88 | if ioc_type == "resource":

89 | resp = session.get(api.format("file", ioc_type, provided_ioc), timeout=180)

90 | else:

91 | resp = session.get(api.format(ioc_type, ioc_type, provided_ioc), timeout=180)

92 |

93 | if resp.status_code == 200 and "permalink" in resp.json().keys() and \

94 | provided_ioc in resp.json()["permalink"]:

95 | for key in resp.json().keys():

96 | if key == "votes" or key == "permalink" or key == "response_code":

97 | continue

98 | elif key in ("md5", "sha1"):

99 | value = resp.json()[key]

100 | ioc_dicts.append({key: value})

101 | elif key == "resolutions":

102 | for res in resp.json()[key]:

103 | res = commons.lower_keys(res)

104 | ioc_dicts.append(res)

105 | else:

106 | for value in resp.json()[key]:

107 | key = commons.lower_keys(key)

108 | ioc_dicts.append({key: value})

109 | else:

110 | ioc_dicts.append({"no data": provided_ioc})

111 | return ioc_dicts

112 |

113 | if __name__ == "__main__":

114 | current_module = sys.modules[__name__]

115 | commons.return_results(current_module)

116 |

--------------------------------------------------------------------------------
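The IOC classification in `process_iocs` above checks, in order, for an IPv4 address, a domain, an email address, and an MD5 or SHA256 hash. The sketch below mirrors that order using only the standard library. It is an approximation, not the script's method: the regular expressions `DOMAIN_RE` and `EMAIL_RE` are simplified stand-ins for the third-party `validators` checks, and `classify_ioc` is a hypothetical name.

```python
import ipaddress
import re

# Simplified patterns; the real script delegates to the validators package.
DOMAIN_RE = re.compile(r"^(?:[a-z\d](?:[a-z\d-]{0,61}[a-z\d])?\.)+[a-z]{2,63}$")
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
HASH_RE = re.compile(r"^(?:[a-f\d]{32}|[a-f\d]{64})$")


def classify_ioc(value):
    """Return 'ip', 'domain', 'email', 'resource', or None for invalid input."""
    value = value.lower()

    try:
        ipaddress.IPv4Address(value)
        return "ip"
    except ValueError:
        pass

    if DOMAIN_RE.match(value):
        return "domain"
    if EMAIL_RE.match(value):
        return "email"
    if HASH_RE.match(value):
        return "resource"
    return None
```

The returned label corresponds to the `ioc_type` that `query_threatcrowd` substitutes into the API URL, with `resource` mapped to the `file` report.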

/bin/twitter.py:

--------------------------------------------------------------------------------

1 | #!/opt/splunk/bin/python

2 | """

3 | Description: Analyze tweets to understand what others already know about

4 | particular IOCs. The script accepts a list of strings:

5 | | twitter

6 |

7 | or input from the pipeline. The first argument is the name of one field:

8 |

9 | | fields

10 | | twitter

11 |

12 | If the string is a username, it will return as invalid.

13 |

14 | Source: https://twitter.com/

15 |

16 | Instructions:

17 | 1. Open the terminal

18 | 2. Navigate to "$SPLUNK_HOME/etc/apps/OSweep/etc/".

19 | 3. Edit "config.py" and add the following values as strings to the config file:

20 | - twitter_consumer_key -> Consumer Key

21 | - twitter_consumer_secret -> Consumer Secret

22 | - twitter_access_token -> Access Token

23 | - twitter_access_token_secret -> Access Token Secret

24 | 4. Save "config.py" and close the terminal.

25 | 5. Switch to the Twitter dashboard in the OSweep app.

26 | 6. Add the list of IOCs to the "Search Term (+)" textbox.

27 | 7. Click "Submit".

28 |

29 | Rate Limit: 180 requests/15 min

30 |

31 | Results Limit: -

32 |

33 | Notes: None

34 |

35 | Debugger: open("/tmp/splunk_script.txt", "a").write("{}: \n".format())

36 | """

37 |

38 | import os

39 | import sys

40 | import time

41 | import urllib

42 |

43 | app_home = "{}/etc/apps/OSweep".format(os.environ['SPLUNK_HOME'])

44 | tp_modules = "{}/bin/_tp_modules".format(app_home)

45 | sys.path.insert(0, tp_modules)

46 | import tweepy

47 | import validators

48 |

49 | import commons

50 |

51 |

52 | def create_session():

53 | """Return Twitter session."""

54 | keys = commons.get_apikey("twitter")

55 | auth = tweepy.OAuthHandler(keys["consumer_key"],

56 | keys["consumer_secret"])

57 | auth.set_access_token(keys["access_token"],

58 | keys["access_token_secret"])

59 | session = tweepy.API(auth)

60 |

61 | try:

62 | session.rate_limit_status()

63 | except:

64 | sc = session.last_response.status_code

65 | msg = session.last_response.content

66 | return {"error": "HTTP Status Code {}: {}".format(sc, msg)}

67 | return session

68 |

69 | def process_iocs(results):

70 | """Return data formatted for Splunk from Twitter."""

71 | if results != None:

72 | provided_iocs = [y for x in results for y in x.values()]

73 | else:

74 | provided_iocs = sys.argv[1:]

75 |

76 | if len(provided_iocs) > 180:

77 | return {"error": "Search term limit: 180\nTotal Search Terms Provided: {}".format(len(provided_iocs))}

78 |

79 | session = create_session()

80 | splunk_table = []

81 |

82 | if isinstance(session, dict):

83 | splunk_table.append(session)

84 | return splunk_table

85 |

86 | rate_limit = check_rate_limit(session, provided_iocs)

87 | if isinstance(rate_limit, dict):

88 | splunk_table.append(rate_limit)

89 | return splunk_table

90 |

91 | empty_files = ["d41d8cd98f00b204e9800998ecf8427e",

92 | "e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855"]

93 | splunk_table = []

94 |

95 | for provided_ioc in set(provided_iocs):

96 | provided_ioc = commons.deobfuscate_string(provided_ioc)

97 |

98 | if provided_ioc in empty_files:

99 | splunk_table.append({"invalid": provided_ioc})

100 | continue

101 |

102 | if validators.url(provided_ioc) or validators.domain(provided_ioc) or \

103 | validators.ipv4(provided_ioc) or validators.md5(provided_ioc) or \

104 | validators.sha256(provided_ioc) or \

105 | 2 < len(provided_ioc) <= 140:

106 | ioc_dicts = query_twitter(session, provided_ioc)

107 | else:

108 | splunk_table.append({"invalid": provided_ioc})

109 | continue

110 |

111 | for ioc_dict in ioc_dicts:

112 | ioc_dict = commons.lower_keys(ioc_dict)

113 | splunk_table.append(ioc_dict)

114 | return splunk_table

115 |

116 | def check_rate_limit(session, provided_iocs):

117 | """Return rate limit information."""

118 | rate_limit = session.rate_limit_status()["resources"]["search"]["/search/tweets"]

119 |

120 | if rate_limit["remaining"] == 0:

121 | reset_time = rate_limit["reset"]

122 | rate_limit["reset"] = time.strftime("%Y-%m-%d %H:%M:%S",

123 | time.localtime(reset_time))

124 | return rate_limit

125 |

126 | if len(provided_iocs) > rate_limit["remaining"]:

127 | rate_limit = {"Search term limit": rate_limit["remaining"],

128 | "Total Search Terms Provided": len(provided_iocs)}

129 | return rate_limit

130 | return

131 |

132 | def query_twitter(session, provided_ioc):

133 | """Return results from Twitter as a dictionary."""

134 | ioc_dicts = []

135 |

136 | if provided_ioc.startswith("@"):

137 | ioc_dicts.append({"invalid": "{} <-- Monitoring users is prohibited!".format(provided_ioc)})

138 | return ioc_dicts

139 |

140 | encoded_ioc = urllib.quote_plus(provided_ioc)

141 | search_tweets = session.search(q=encoded_ioc,

142 | lang="en",

143 | result_type="mixed",

144 | count="100")

145 |

146 | for tweet in search_tweets:

147 | if tweet._json["user"]["name"] == provided_ioc.replace("#", "") or \

148 | tweet._json["user"]["screen_name"] == provided_ioc.replace("#", ""):

149 | ioc_dicts.append({"invalid": "{} <-- Monitoring users is prohibited!".format(provided_ioc)})

150 | return ioc_dicts

151 |

152 | if "retweeted_status" in tweet._json:

153 | if tweet._json["retweeted_status"]["user"]["name"] == provided_ioc.replace("#", "") or \

154 | tweet._json["retweeted_status"]["user"]["screen_name"] == provided_ioc.replace("#", ""):

155 | ioc_dicts.append({"invalid": "{} <-- Monitoring users is prohibited!".format(provided_ioc)})

156 | return ioc_dicts

157 |

158 | urls = []

159 | for x in tweet._json["entities"]["urls"]:

160 | if not x["expanded_url"].startswith("https://twitter.com/i/web/status/"):

161 | urls.append(x["expanded_url"])

162 |

163 | hashtags = []

164 | for x in tweet._json["entities"]["hashtags"]:

165 | hashtags.append("#{}".format(x["text"]))

166 |

167 | ioc_dict = {}

168 | ioc_dict["search_term"] = provided_ioc

169 | ioc_dict["url"] = "\n".join(urls)

170 | ioc_dict["hashtags"] = "\n".join(hashtags)

171 | ioc_dict["timestamp"] = tweet._json["created_at"]

172 | ioc_dict["tweet"] = tweet._json["text"]

173 |

174 | if "retweeted_status" in tweet._json:

175 | ioc_dict["timestamp"] = tweet._json["retweeted_status"]["created_at"]

176 | ioc_dict["tweet"] = tweet._json["retweeted_status"]["text"]

177 |

178 | ioc_dicts.append(ioc_dict)

179 | return ioc_dicts

180 |

181 | if __name__ == "__main__":

182 | current_module = sys.modules[__name__]

183 | commons.return_results(current_module)

184 |
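
The rate-limit handling in `check_rate_limit` above can be exercised without a Twitter session. The following is a minimal sketch, not part of the app: `summarize_rate_limit` is a hypothetical helper name, and the input dictionary is assumed to have the `limit`/`remaining`/`reset` shape that the script reads from the `/search/tweets` entry of the rate-limit status. Unlike the original, it copies the dictionary instead of modifying it in place.

```python
import time


def summarize_rate_limit(rate_limit, term_count):
    """Return a dict describing the problem, or None if the search can proceed.

    Assumption: `rate_limit` looks like
    {"limit": int, "remaining": int, "reset": epoch_seconds}.
    """
    if rate_limit["remaining"] == 0:
        # Copy so the caller's dictionary is left untouched.
        exhausted = dict(rate_limit)
        exhausted["reset"] = time.strftime("%Y-%m-%d %H:%M:%S",
                                           time.localtime(rate_limit["reset"]))
        return exhausted

    if term_count > rate_limit["remaining"]:
        # More search terms were supplied than requests remain in this window.
        return {"Search term limit": rate_limit["remaining"],
                "Total Search Terms Provided": term_count}
    return None
```

A caller would treat any returned dictionary as a row to show in Splunk and stop, and would continue with the queries only when the result is `None`.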

--------------------------------------------------------------------------------

/bin/urlhaus.py:

--------------------------------------------------------------------------------

1 | #!/opt/splunk/bin/python

2 | """

3 | Description: Use URLhaus to gain insights, browse the URLhaus database, and find

4 | the most recent additions. The script accepts a list of strings (domain, IP, MD5,

5 | SHA256, and/or URL):

6 | | urlhaus

7 |

8 | or input from the pipeline (any field where the value is a domain, MD5, SHA256,

9 | and/or URL). The first argument is the name of one field:

10 |

11 | | fields

12 | | urlhaus

13 |

14 | Source: https://urlhaus.abuse.ch/api/

15 |

16 | Instructions:

17 | 1. Manually download URL dump (one-time)

18 | ```