├── .editorconfig
├── .gitignore
├── .npmignore
├── .travis.yml
├── README.md
├── example.html
├── lib
│   └── wikigeo.js
├── package.json
├── src
│   └── wikigeo.coffee
└── test
    └── wikigeo.coffee

/.editorconfig:

--------------------------------------------------------------------------------

1 | # EditorConfig helps developers define and maintain consistent

2 | # coding styles between different editors and IDEs

3 | # editorconfig.org

4 |

5 | root = true

6 |

7 |

8 | [*]

9 |

10 | # Change these settings to your own preference

11 | indent_style = space

12 | indent_size = 2

13 |

14 | # We recommend you to keep these unchanged

15 | end_of_line = lf

16 | charset = utf-8

17 | trim_trailing_whitespace = true

18 | insert_final_newline = true

19 |

20 | [*.md]

21 | trim_trailing_whitespace = false

22 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | node_modules

2 |

--------------------------------------------------------------------------------

/.npmignore:

--------------------------------------------------------------------------------

1 | node_modules

2 | src

3 | test

4 |

--------------------------------------------------------------------------------

/.travis.yml:

--------------------------------------------------------------------------------

1 | language: node_js

2 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | wikigeo

2 | =======

3 |

4 | [Build Status](http://travis-ci.org/edsu/wikigeo)

5 |

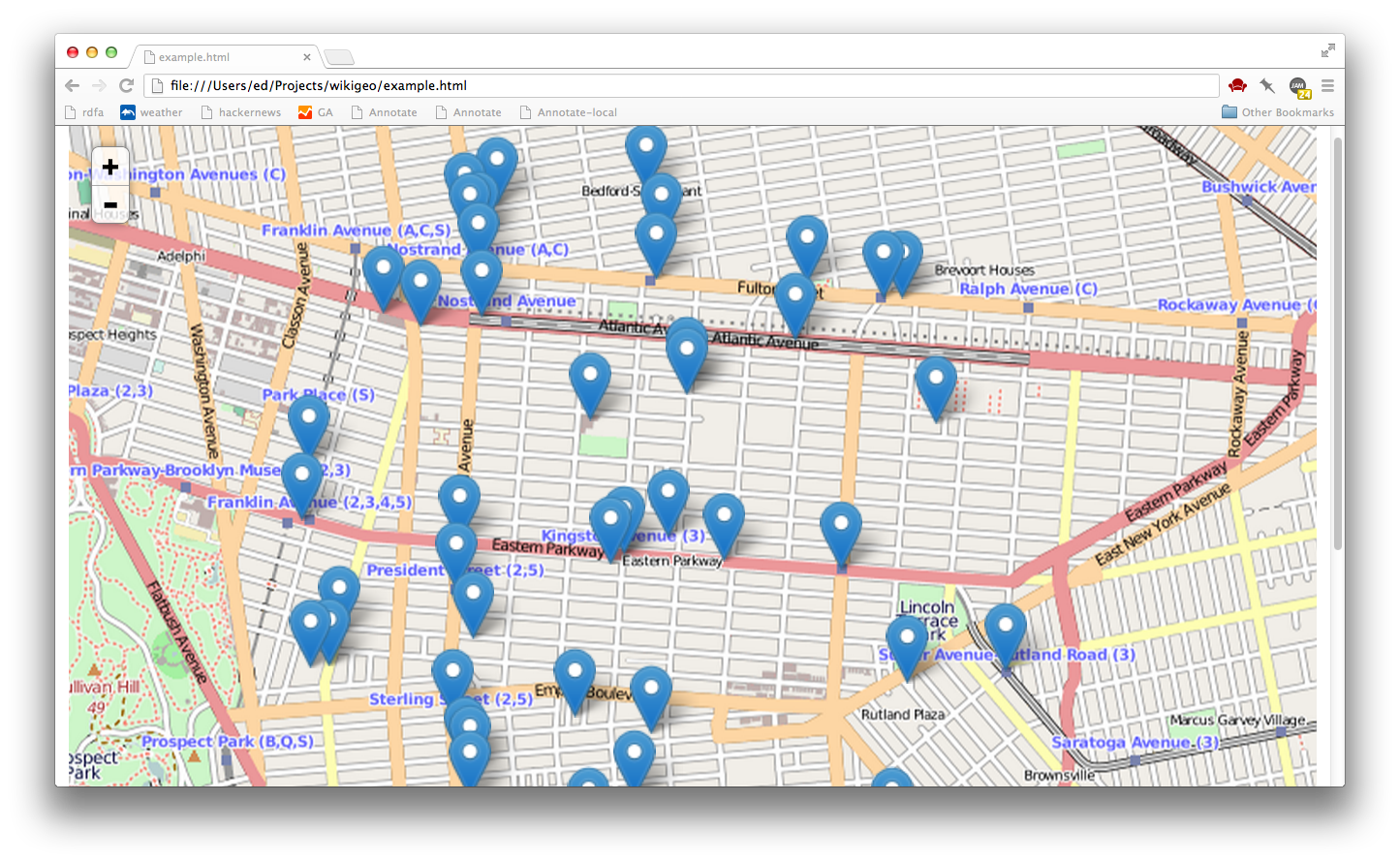

6 | wikigeo allows you to fetch [geojson](http://www.geojson.org/geojson-spec.html)

7 | for [Wikipedia](http://wikipedia.org) articles around a given geographic

8 | coordinate. It can be easily added to a map using [Leaflet's geojson

9 | support](http://leafletjs.com/examples/geojson.html). The data comes directly

10 | from the [Wikipedia API](http://en.wikipedia.org/w/api.php) which has the

11 | [GeoData](http://www.mediawiki.org/wiki/Extension:GeoData) extension installed.

12 |

13 |

14 |

15 | Basics

16 | ------

17 |

18 | When you add wikigeo.js to your HTML page you will get a function called

19 | `geojson` which you pass a coordinate `[longitude, latitude]` and a callback

20 | function that will receive the geojson data:

21 |

22 | ```javascript

23 | geojson([-73.94, 40.67], function(data) {

24 | console.log(data);

25 | });

26 | ```

27 |

28 | The geojson for this call should look something like:

29 |

30 | ```javascript

31 | {

32 | "type": "FeatureCollection",

33 | "features": [

34 | {

35 | "id": "30854694",

36 | "type": "Feature",

37 | "properties": {

38 | "url": "https://en.wikipedia.org/?curid=30854694",

39 | "name": "Jewish Children's Museum",

40 | "touched": "2014-05-18T12:50:57Z"

41 | },

42 | "geometry": {

43 | "type": "Point",

44 | "coordinates": [

45 | -73.9419,

46 | 40.6689

47 | ]

48 | }

49 | },

50 | {

51 | "id": "729460",

52 | "type": "Feature",

53 | "properties": {

54 | "url": "https://en.wikipedia.org/?curid=729460",

55 | "name": "Kingston Avenue (IRT Eastern Parkway Line)",

56 | "touched": "2014-05-13T00:12:17Z"

57 | },

58 | "geometry": {

59 | "type": "Point",

60 | "coordinates": [

61 | -73.9422,

62 | 40.6694

63 | ]

64 | }

65 | }

66 | ]

67 | }

68 | ```

69 |

70 | To get more than 10 results or to change the search radius (in meters), use the

71 | `limit` and `radius` options:

72 |

73 | ```javascript

74 | geojson([-73.94, 40.67], {radius: 10000, limit: 100}, function(data) {

75 | console.log(data);

76 | });

77 | ```

78 |
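
Note that `geojson` throws an `Error` when `radius` is greater than 10000 or `limit` is greater than 500. If these values come from user input, one option is to clamp them before the call. The sketch below is only an illustration: `clampOptions`, `MAX_RADIUS` and `MAX_LIMIT` are hypothetical names and are not part of wikigeo.

```javascript
// Hypothetical helper (not part of wikigeo): keep radius and limit within
// the maximums that geojson() accepts, so the call does not throw.
var MAX_RADIUS = 10000; // meters
var MAX_LIMIT = 500;    // articles

function clampOptions(opts) {
  var clamped = {};
  var key;
  // shallow copy so the caller's object is left untouched
  for (key in opts) {
    if (Object.prototype.hasOwnProperty.call(opts, key)) {
      clamped[key] = opts[key];
    }
  }
  if (typeof clamped.radius === "number") {
    clamped.radius = Math.min(clamped.radius, MAX_RADIUS);
  }
  if (typeof clamped.limit === "number") {
    clamped.limit = Math.min(clamped.limit, MAX_LIMIT);
  }
  return clamped;
}

// usage, assuming wikigeo.js has been loaded:
// geojson([-73.94, 40.67], clampOptions({radius: 25000, limit: 1000}), function(data) {
//   console.log(data);
// });
```

Options that are not numbers, or that are missing, are passed through unchanged, so wikigeo's own defaults still apply.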

79 | Additional Wikipedia Properties

80 | -------------------------------

81 |

82 | Additional properties of the Wikipedia articles can be added to the

83 | geojson feature properties by setting any of the following options to true:

84 | `images`, `categories`, `summaries`, `templates`. For example:

85 |

86 | ```javascript

87 | geojson(

88 | [-73.94, 40.67],

89 | {

90 | limit: 5,

91 | radius: 1000,

92 | images: true,

93 | categories: true,

94 | summaries: true,

95 | templates: true

96 | },

97 | function(data) {

98 | console.log(data);

99 | }

100 | );

101 | ```

102 |

103 | Beware, this can increase your query time since multiple trips to the Wikipedia

104 | API are often required. Here's what the results would look like for the above

105 | call:

106 |

107 | ```javascript

108 |

109 | {

110 | "type": "FeatureCollection",

111 | "features": [

112 | {

113 | "id": "43485",

114 | "type": "Feature",

115 | "properties": {

116 | "url": "https://en.wikipedia.org/?curid=43485",

117 | "name": "Silver Spring, Maryland",

118 | "touched": "2014-05-20T04:25:48Z",

119 | "image": "Silver_Spring_Montage.jpg",

120 | "imageUrl": "https://upload.wikimedia.org/wikipedia/commons/4/48/Silver_Spring_Montage.jpg",

121 | "templates": ["!",

122 | "-",

123 | "Abbr",

124 | "Ambox",

125 | "Both",

126 | "Br separated entries",

127 | "Category handler",

128 | "Citation",

129 | "Citation needed",

130 | "Cite news",

131 | "Cite web",

132 | "Column-width",

133 | "Commons",

134 | "Commons category",

135 | "Convert",

136 | "Coord",

137 | "Country data Maryland",

138 | "Country data United States",

139 | "Country data United States of America",

140 | "DCMetroArea",

141 | "Dead link",

142 | "Expand section",

143 | "Fix",

144 | "Fix/category",

145 | "Flag",

146 | "Flag/core",

147 | "Flagicon image",

148 | "GR",

149 | "Geobox coor",

150 | "Geographic reference",

151 | "Infobox",

152 | "Infobox settlement",

153 | "Infobox settlement/areadisp",

154 | "Infobox settlement/densdisp",

155 | "Infobox settlement/impus",

156 | "Infobox settlement/lengthdisp",

157 | "Infobox settlement/pref",

158 | "Largest cities",

159 | "Largest cities of Maryland",

160 | "Maryland",

161 | "Max",

162 | "Max/2",

163 | "Montgomery County, Maryland",

164 | "Navbar",

165 | "Navbox",

166 | "Navbox subgroup",

167 | "Navboxes",

168 | "Nowrap",

169 | "Order of magnitude",

170 | "Precision",

171 | "Reflist",

172 | "Rnd",

173 | "Side box",

174 | "Sister",

175 | "Talk other",

176 | "Transclude",

177 | "US Census population",

178 | "US county navigation box",

179 | "US state navigation box",

180 | "Unbulleted list",

181 | "Wikivoyage",

182 | "National Register of Historic Places in Maryland",

183 | "Navbar",

184 | "Navbox",

185 | "Reflist",

186 | "Str left",

187 | "Talk other",

188 | "Transclude",

189 | "Arguments",

190 | "Citation/CS1",

191 | "Citation/CS1/Configuration",

192 | "Citation/CS1/Date validation",

193 | "Citation/CS1/Whitelist",

194 | "Coordinates",

195 | "HtmlBuilder",

196 | "Infobox",

197 | "Location map",

198 | "Math",

199 | "Navbar",

200 | "Navbox",

201 | "Yesno"

202 | ],

203 | "summary": "The Polychrome Historic District is a national historic district in the Four Corners neighborhood in Silver Spring, Montgomery County, Maryland. It recognizes a group of five houses built by John Joseph Earley in 1934 and 1935. Earley used precast concrete panels with brightly colored aggregate to produce the polychrome effect, with Art Deco details. The two-inch-thick panels were attached to a conventional wood frame. Earley was interested in the use of mass-production techniques to produce small, inexpensive houses, paralleling Frank Lloyd Wright's Usonian house concepts.",

204 | "categories": ["Art Deco architecture in Maryland",

205 | "Coordinates on Wikidata",

206 | "Historic American Buildings Survey in Maryland",

207 | "Historic districts in Maryland",

208 | "Houses in Montgomery County, Maryland",

209 | "Houses on the National Register of Historic Places in Maryland",

210 | "Maryland Registered Historic Place stubs"

211 | ]

212 | },

213 | "geometry": {

214 | "type": "Point",

215 | "coordinates": [

216 | -77.0158,

217 | 39.0181

218 | ]

219 | }

220 | }

221 | ]

222 | }

223 | ```

224 |
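
The `imageUrl` property is derived from the `image` filename: wikigeo takes the MD5 hash of the filename and uses its first character and its first two characters as directory names under the Wikimedia Commons upload path. A minimal Node sketch of that derivation follows. The function name `commonsImageUrl` is made up for illustration, and the sketch assumes the file is hosted on Commons.

```javascript
var crypto = require("crypto");

// Hypothetical helper: rebuild the upload URL from an image filename,
// following the hashed directory layout that wikigeo uses.
function commonsImageUrl(filename) {
  var md5sum = crypto.createHash("md5").update(filename).digest("hex");
  return "https://upload.wikimedia.org/wikipedia/commons/" +
    md5sum[0] + "/" + md5sum.slice(0, 2) + "/" + filename;
}

console.log(commonsImageUrl("Silver_Spring_Montage.jpg"));
```

Because this relies on Node's `crypto` module, it will not run unmodified in a browser.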

225 | Quick Start With Leaflet

226 | ------------------------

227 |

228 | In your HTML, you'll want to use Leaflet as usual:

229 |

230 | ```javascript

231 |

232 | // create the map

233 |

234 | var map = L.map('map').setView([40.67, -73.94], 13);

235 | var osmLayer = L.tileLayer('http://{s}.tile.openstreetmap.org/{z}/{x}/{y}.png', {});

236 | osmLayer.addTo(map);

237 |

238 | // get geojson and add it to the map

239 |

240 | geojson([-73.94, 40.67], {radius: 10000, limit: 50}, function(data) {

241 | L.geoJson(data).addTo(map)

242 | });

243 | ```

244 |
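
To show the article name in a popup, Leaflet's `onEachFeature` option can be passed to `L.geoJson`. A small sketch follows. `popupHtml` and `escapeHtml` are hypothetical helpers, not part of wikigeo or Leaflet, and the commented usage assumes the `map` created above.

```javascript
// Hypothetical helper: escape text before placing it into popup markup.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Hypothetical helper: build a link to the article from a wikigeo feature.
function popupHtml(feature) {
  var props = feature.properties;
  return '<a href="' + escapeHtml(props.url) + '">' +
    escapeHtml(props.name) + '</a>';
}

// usage in the browser, assuming Leaflet and wikigeo.js are loaded:
// geojson([-73.94, 40.67], {radius: 10000, limit: 50}, function(data) {
//   L.geoJson(data, {
//     onEachFeature: function(feature, layer) {
//       layer.bindPopup(popupHtml(feature));
//     }
//   }).addTo(map);
// });
```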

245 | Please note that the order of geo coordinates in Leaflet is (lat, lon) whereas

246 | in geojson it is (lon, lat).

247 |
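
If you already have a Leaflet-style position, for example from `map.getCenter()`, it has to be flipped before it is handed to `geojson`. A minimal sketch, where `toLonLat` is a hypothetical helper name that accepts either a `[lat, lon]` array or an object with `lat` and `lng` properties:

```javascript
// Hypothetical helper: convert a Leaflet position to the [lon, lat]
// order that geojson() expects.
function toLonLat(latLng) {
  if (Array.isArray(latLng)) {
    return [latLng[1], latLng[0]];
  }
  return [latLng.lng, latLng.lat];
}

// usage in the browser, assuming a Leaflet `map` and wikigeo.js:
// geojson(toLonLat(map.getCenter()), function(data) {
//   L.geoJson(data).addTo(map);
// });
```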

248 | That's all it takes to get the basic functionality working. Open example.html

249 | for a working example.

250 |

251 | Develop

252 | -------

253 |

254 | If you want to hack on wikigeo you'll need [Node](http://nodejs.org) and

255 | then you'll need to install some dependencies for testing:

256 |

257 | npm install

258 |

259 | This will install mocha, which you can use to run the tests. Simply run:

260 |

261 | npm test

262 |

263 | and you should see something like a nyan cat:

264 |

265 | ```

266 | > wikigeo@1.0.0 test /home/despairblue/vcs/git/wikigeo

267 | > mocha --colors --reporter nyan --compilers coffee:coffee-script/register test/wikigeo.coffee

268 |

269 | 11 -_-_-_-_-_-__,------,

270 | 0 -_-_-_-_-_-__| /\_/\

271 | 0 -_-_-_-_-_-_~|_( ^ .^)

272 | -_-_-_-_-_-_ "" ""

273 |

274 | 11 passing (5s)

275 | ```

276 |

277 | License

278 | -------

279 |

280 | [CC0 1.0 Universal Public Domain Dedication](http://creativecommons.org/publicdomain/zero/1.0/)

281 |

--------------------------------------------------------------------------------

/example.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 |

32 |

33 |

34 |

35 |

--------------------------------------------------------------------------------

/lib/wikigeo.js:

--------------------------------------------------------------------------------

1 | // Generated by CoffeeScript 1.6.2

2 | /*

3 |

4 | geojson takes a geo coordinate (longitude, latitude) and a callback that will

5 | be given geojson for Wikipedia articles that are relevant for that location

6 |

7 | options:

8 | radius: search radius in meters (default: 10000, max: 10000)

9 | limit: the number of wikipedia articles to limit to (default: 10, max: 500)

10 | images: set to true to get the image filename and URL for the article

11 | summaries: set to true to get short text summaries for the article

12 | templates: set to true to get a list of templates used in the article

13 | categories: set to true to get a list of categories the article is in

14 |

15 | example:

16 | geojson([-77.0155, 39.0114], {radius: 5000}, function(data) {

17 | console.log(data);

18 | })

19 | */

20 |

21 | var error, fetch, geojson, request, root, _browserFetch, _clean, _convert, _fetch, _search,

22 | _this = this;

23 |

24 | geojson = function(geo, opts, callback) {

25 | if (opts == null) {

26 | opts = {};

27 | }

28 | if (typeof opts === "function") {

29 | callback = opts;

30 | opts = {};

31 | }

32 | if (!opts.limit) {

33 | opts.limit = 10;

34 | }

35 | if (!opts.radius) {

36 | opts.radius = 10000;

37 | }

38 | if (!opts.language) {

39 | opts.language = "en";

40 | }

41 | if (opts.radius > 10000) {

42 | throw new Error("radius cannot be greater than 10000");

43 | }

44 | if (opts.limit > 500) {

45 | throw new Error("limit cannot be greater than 500");

46 | }

47 | return _search(geo, opts, callback);

48 | };

49 |

50 | _search = function(geo, opts, callback, results, queryContinue) {

51 | var continueParams, name, param, q, url;

52 |

53 | url = "http://" + opts.language + ".wikipedia.org/w/api.php";

54 | q = {

55 | action: "query",

56 | prop: "info|coordinates",

57 | generator: "geosearch",

58 | ggsradius: opts.radius,

59 | ggscoord: "" + geo[1] + "|" + geo[0],

60 | ggslimit: opts.limit,

61 | format: "json"

62 | };

63 | if (opts.images) {

64 | q.prop += "|pageprops";

65 | }

66 | if (opts.summaries) {

67 | q.prop += "|extracts";

68 | q.exlimit = "max";

69 | q.exintro = 1;

70 | q.explaintext = 1;

71 | }

72 | if (opts.templates) {

73 | q.prop += "|templates";

74 | q.tllimit = 500;

75 | }

76 | if (opts.categories) {

77 | q.prop += "|categories";

78 | q.cllimit = 500;

79 | }

80 | continueParams = {

81 | extracts: "excontinue",

82 | coordinates: "cocontinue",

83 | templates: "tlcontinue",

84 | categories: "clcontinue"

85 | };

86 | if (queryContinue) {

87 | for (name in continueParams) {

88 | param = continueParams[name];

89 | if (queryContinue[name]) {

90 | q[param] = queryContinue[name][param];

91 | }

92 | }

93 | }

94 | return fetch(url, {

95 | params: q

96 | }, function(response) {

97 | var article, articleId, first, newValues, prop, resultsArticle, _ref;

98 |

99 | if (!results) {

100 | first = true;

101 | results = response;

102 | } else {

103 | first = false;

104 | }

105 | if (!(response.query && response.query.pages)) {

106 | _convert(results, opts, callback);

107 | return;

108 | }

109 | _ref = response.query.pages;

110 | for (articleId in _ref) {

111 | article = _ref[articleId];

112 | resultsArticle = results.query.pages[articleId];

113 | if (!resultsArticle) {

114 | continue;

115 | }

116 | for (prop in continueParams) {

117 | param = continueParams[prop];

118 | if (prop === 'extracts') {

119 | prop = 'extract';

120 | }

121 | newValues = article[prop];

122 | if (!newValues) {

123 | continue;

124 | }

125 | if (Array.isArray(newValues)) {

126 | if (!resultsArticle[prop]) {

127 | resultsArticle[prop] = newValues;

128 | } else if (!first && JSON.stringify(resultsArticle[prop][resultsArticle[prop].length - 1]) !== JSON.stringify(newValues[newValues.length - 1])) {

129 | resultsArticle[prop] = resultsArticle[prop].concat(newValues);

130 | }

131 | } else {

132 | resultsArticle[prop] = article[prop];

133 | }

134 | }

135 | }

136 | if (response['query-continue']) {

137 | if (!queryContinue) {

138 | queryContinue = response['query-continue'];

139 | } else {

140 | for (name in continueParams) {

141 | param = continueParams[name];

142 | if (response['query-continue'][name]) {

143 | queryContinue[name] = response['query-continue'][name];

144 | }

145 | }

146 | }

147 | return _search(geo, opts, callback, results, queryContinue);

148 | } else {

149 | return _convert(results, opts, callback);

150 | }

151 | });

152 | };

153 |

154 | _convert = function(results, opts, callback) {

155 | var article, articleId, feature, geo, image, imageUrl, md5sum, titleEscaped, url, _ref;

156 |

157 | geo = {

158 | type: "FeatureCollection",

159 | features: []

160 | };

161 | if (!(results && results.query && results.query.pages)) {

162 | callback(geo);

163 | return;

164 | }

165 | _ref = results.query.pages;

166 | for (articleId in _ref) {

167 | article = _ref[articleId];

168 | if (!article.coordinates) {

169 | continue;

170 | }

171 | titleEscaped = article.title.replace(/\s/g, "_");

172 | url = "https://" + opts.language + ".wikipedia.org/?curid=" + articleId;

173 | feature = {

174 | id: articleId,

175 | type: "Feature",

176 | properties: {

177 | url: url,

178 | name: article.title,

179 | touched: article.touched

180 | },

181 | geometry: {

182 | type: "Point",

183 | coordinates: [Number(article.coordinates[0].lon), Number(article.coordinates[0].lat)]

184 | }

185 | };

186 | if (opts.images) {

187 | if (article.pageprops && article.pageprops.page_image) {

188 | md5sum = require('crypto').createHash('md5').update(article.pageprops.page_image).digest("hex");

189 | image = article.pageprops.page_image;

190 | imageUrl = "https://upload.wikimedia.org/wikipedia/commons/" + md5sum[0] + "/" + md5sum.slice(0, 2) + "/" + article.pageprops.page_image;

191 | } else {

192 | image = null;

193 | imageUrl = null;

194 | }

195 | feature.properties.image = image;

196 | feature.properties.imageUrl = imageUrl;

197 | }

198 | if (opts.templates) {

199 | feature.properties.templates = _clean(article.templates);

200 | }

201 | if (opts.summaries) {

202 | feature.properties.summary = article.extract;

203 | }

204 | if (opts.categories) {

205 | feature.properties.categories = _clean(article.categories);

206 | }

207 | geo.features.push(feature);

208 | }

209 | callback(geo);

210 | };

211 |

212 | _clean = function(list) {

213 | var i;

214 |

215 | return (function() {

216 | var _i, _len, _results;

217 |

218 | _results = [];

219 | for (_i = 0, _len = list.length; _i < _len; _i++) {

220 | i = list[_i];

221 | _results.push(i.title.replace(/^.+?:/, ''));

222 | }

223 | return _results;

224 | })();

225 | };

226 |

227 | _fetch = function(uri, opts, callback) {

228 | return request(uri, {

229 | qs: opts.params,

230 | json: true

231 | }, function(e, r, data) {

232 | return callback(data);

233 | });

234 | };

235 |

236 | _browserFetch = function(uri, opts, callback) {

237 | return $.ajax({

238 | url: uri,

239 | data: opts.params,

240 | dataType: "jsonp",

241 | success: function(response) {

242 | return callback(response);

243 | }

244 | });

245 | };

246 |

247 | try {

248 | request = require('request');

249 | fetch = _fetch;

250 | } catch (_error) {

251 | error = _error;

252 | fetch = _browserFetch;

253 | }

254 |

255 | root = typeof exports !== "undefined" && exports !== null ? exports : this;

256 |

257 | root.geojson = geojson;

258 |

--------------------------------------------------------------------------------

/package.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "wikigeo",

3 | "version": "1.0.1",

4 | "main": "lib/wikigeo",

5 | "scripts": {

6 | "build": "coffee --bare --compile --output lib src/wikigeo.coffee",

7 | "prepublish": "coffee --bare --compile --output lib src/wikigeo.coffee",

8 | "watch": "coffee -w --output lib src/wikigeo.coffee",

9 | "test": "mocha --colors --reporter nyan --compilers coffee:coffee-script/register test/wikigeo.coffee"

10 | },

11 | "dependencies": {

12 | "coffee-script": "^1.7.1",

13 | "request": "^2.35.0"

14 | },

15 | "devDependencies": {

16 | "chai": "^1.9.1",

17 | "mocha": "^1.19.0"

18 | }

19 | }

20 |

--------------------------------------------------------------------------------

/src/wikigeo.coffee:

--------------------------------------------------------------------------------

1 | ###

2 |

3 | geojson takes a geo coordinate (longitude, latitude) and a callback that will

4 | be given geojson for Wikipedia articles that are relevant for that location

5 |

6 | options:

7 | radius: search radius in meters (default: 10000, max: 10000)

8 | limit: the number of wikipedia articles to limit to (default: 10, max: 500)

9 | images: set to true to get the image filename and URL for the article

10 | summaries: set to true to get short text summaries for the article

11 | templates: set to true to get a list of templates used in the article

12 | categories: set to true to get a list of categories the article is in

13 |

14 | example:

15 | geojson([-77.0155, 39.0114], {radius: 5000}, function(data) {

16 | console.log(data);

17 | })

18 |

19 | ###

20 |

21 | geojson = (geo, opts={}, callback) =>

22 | if typeof opts == "function"

23 | callback = opts

24 | opts = {}

25 | opts.limit = 10 if not opts.limit

26 | opts.radius = 10000 if not opts.radius

27 |

28 | if not opts.language

29 | opts.language = "en"

30 |

31 | if opts.radius > 10000

32 | throw new Error("radius cannot be greater than 10000")

33 |

34 | if opts.limit > 500

35 | throw new Error("limit cannot be greater than 500")

36 |

37 | _search(geo, opts, callback)

38 |

39 |

40 | #

41 | # recursive function to collect the results from all search result pages

42 | #

43 |

44 | _search = (geo, opts, callback, results, queryContinue) =>

45 | url = "http://#{ opts.language }.wikipedia.org/w/api.php"

46 | q =

47 | action: "query"

48 | prop: "info|coordinates"

49 | generator: "geosearch"

50 | ggsradius: opts.radius

51 | ggscoord: "#{geo[1]}|#{geo[0]}"

52 | ggslimit: opts.limit

53 | format: "json"

54 |

55 | if opts.images

56 | q.prop += "|pageprops"

57 |

58 | if opts.summaries

59 | q.prop += "|extracts"

60 | q.exlimit = "max"

61 | q.exintro = 1

62 | q.explaintext = 1

63 |

64 | if opts.templates

65 | q.prop += "|templates"

66 | q.tllimit = 500

67 |

68 | if opts.categories

69 | q.prop += "|categories"

70 | q.cllimit = 500

71 |

72 | # add continue parameters if they have been provided, these are

73 | # parameters that are used to fetch more results from the api

74 |

75 | continueParams =

76 | extracts: "excontinue"

77 | coordinates: "cocontinue"

78 | templates: "tlcontinue"

79 | categories: "clcontinue"

80 |

81 | if queryContinue

82 | for name, param of continueParams

83 | if queryContinue[name]

84 | q[param] = queryContinue[name][param]

85 |

86 | fetch url, params: q, (response) =>

87 |

88 | if not results

89 | first = true

90 | results = response

91 | else

92 | first = false

93 |

94 | # no results, oh well just give them empty geojson

95 | if not (response.query and response.query.pages)

96 | _convert(results, opts, callback)

97 | return

98 |

99 | for articleId, article of response.query.pages

100 | resultsArticle = results.query.pages[articleId]

101 | if not resultsArticle

102 | continue

103 |

104 | # this parameter is singular in article data...

105 | for prop, param of continueParams

106 | if prop == 'extracts'

107 | prop = 'extract'

108 |

109 | # continue if there are no new values to merge

110 | newValues = article[prop]

111 | if not newValues

112 | continue

113 |

114 | # merge arrays by concatenating with old values

115 | if Array.isArray(newValues)

116 | if not resultsArticle[prop]

117 | resultsArticle[prop] = newValues

118 | else if not first and JSON.stringify(resultsArticle[prop][resultsArticle[prop].length - 1]) != JSON.stringify(newValues[newValues.length - 1])

119 | resultsArticle[prop] = resultsArticle[prop].concat(newValues)

120 |

121 | # otherwise just assign

122 | else

123 | resultsArticle[prop] = article[prop]

124 |

125 | if response['query-continue']

126 | if not queryContinue

127 | queryContinue = response['query-continue']

128 | else

129 | for name, param of continueParams

130 | if response['query-continue'][name]

131 | queryContinue[name] = response['query-continue'][name]

132 | _search(geo, opts, callback, results, queryContinue)

133 |

134 | else

135 | _convert(results, opts, callback)

136 |

137 |

138 | #

139 | # do the work of converting a wikipedia response to geojson

140 | #

141 |

142 | _convert = (results, opts, callback) ->

143 | geo =

144 | type: "FeatureCollection"

145 | features: []

146 |

147 | if not (results and results.query and results.query.pages)

148 | callback(geo)

149 | return

150 |

151 | for articleId, article of results.query.pages

152 | if not article.coordinates

153 | continue

154 |

155 | titleEscaped = article.title.replace /\s/g, "_"

156 | url = "https://#{opts.language}.wikipedia.org/?curid=#{articleId}"

157 |

158 | feature =

159 | id: articleId

160 | type: "Feature"

161 | properties:

162 | url: url

163 | name: article.title

164 | touched: article.touched

165 | geometry:

166 | type: "Point"

167 | coordinates: [

168 | (Number) article.coordinates[0].lon

169 | (Number) article.coordinates[0].lat

170 | ]

171 |

172 | if opts.images

173 | if article.pageprops?.page_image

174 | # @see https://www.mediawiki.org/wiki/Manual:$wgHashedUploadDirectory

175 | md5sum = require('crypto').createHash('md5').update(article.pageprops.page_image).digest("hex")

176 | image = article.pageprops.page_image

177 | imageUrl = "https://upload.wikimedia.org/wikipedia/commons/#{md5sum[0]}/#{md5sum[0..1]}/#{article.pageprops.page_image}"

178 | else

179 | image = null

180 | imageUrl = null

181 | feature.properties.image = image

182 | feature.properties.imageUrl = imageUrl

183 |

184 | if opts.templates

185 | feature.properties.templates = _clean(article.templates)

186 |

187 | if opts.summaries

188 | feature.properties.summary = article.extract

189 |

190 | if opts.categories

191 | feature.properties.categories = _clean(article.categories)

192 |

193 | geo.features.push feature

194 |

195 | callback(geo)

196 | return

197 |

198 | #

199 | # strip wikipedia specific information

200 | #

201 |

202 | _clean = (list) ->

203 | return (i.title.replace(/^.+?:/, '') for i in list)

204 |

205 | #

206 | # helpers for doing http in browser (w/ jQuery) and in node (w/ request)

207 | #

208 |

209 | _fetch = (uri, opts, callback) ->

210 | request uri, qs: opts.params, json: true, (e, r, data) ->

211 | callback(data)

212 |

213 | _browserFetch = (uri, opts, callback) ->

214 | $.ajax url: uri, data: opts.params, dataType: "jsonp", success: (response) ->

215 | callback(response)

216 |

217 | try

218 | request = require('request')

219 | fetch = _fetch

220 | catch error

221 | fetch = _browserFetch

222 |

223 | root = exports ? this

224 | root.geojson = geojson

225 |

--------------------------------------------------------------------------------

/test/wikigeo.coffee:

--------------------------------------------------------------------------------

1 | wikigeo = require '../src/wikigeo'

2 | geojson = wikigeo.geojson

3 | assert = require('chai').assert

4 |

5 | describe 'wikigeo', ->

6 |

7 | describe 'geojson', ->

8 | this.timeout(10000)

9 |

10 | it 'should work with just lon/lat', (done) ->

11 | geojson [-73.94, 40.67], (data) ->

12 | assert.equal data.type, "FeatureCollection"

13 | assert.ok data.features

14 | assert.ok Array.isArray(data.features)

15 | assert.ok data.features.length > 0 and data.features.length <= 10

16 |

17 | f = data.features[0]

18 | assert.ok f.id

19 | assert.ok f.properties.url

20 | assert.match f.properties.url, /https:\/\/en.wikipedia.org\//

21 | assert.equal f.type, "Feature"

22 | assert.ok f.properties

23 | assert.ok f.properties.name

24 | assert.ok f.properties.touched

25 | assert.ok f.geometry

26 | assert.equal f.geometry.type, "Point"

27 | assert.ok f.geometry.coordinates

28 | assert.equal typeof f.geometry.coordinates[0], "number"

29 | assert.equal typeof f.geometry.coordinates[1], "number"

30 | done()

31 |

32 | it 'should return empty results when there are no hits', (done) ->

33 | geojson [77.0155, 39.0114], (data) ->

34 | assert.equal data.type, "FeatureCollection"

35 | assert.ok data.features

36 | assert.ok Array.isArray(data.features)

37 | assert.equal data.features.length, 0

38 | done()

39 |

40 | it 'should be able to get summaries', (done) ->

41 | geojson [-77.0155, 39.0114], summaries: true, (data) ->

42 | assert.ok data.features[0].properties.summary

43 | done()

44 |

45 | it 'should be able to get images', (done) ->

46 | geojson [-77.0155, 39.0114], images: true, (data) ->

47 | assert.ok data.features[0].properties.image

48 | done()

49 |

50 | it 'should contain an imageUrl', (done) ->

51 | geojson [-77.0155, 39.0114], images: true, (data) ->

52 | assert.match data.features[0].properties.imageUrl, /https:\/\/upload.wikimedia.org\/wikipedia\/commons/

53 | done()

54 |

55 | it 'should be able to get templates', (done) ->

56 | geojson [-77.0155, 39.0114], templates: true, (data) ->

57 | assert.ok data.features[0].properties.templates

58 | done()

59 |

60 | it 'should be able to get categories', (done) ->

61 | geojson [-77.0155, 39.0114], categories: true, (data) ->

62 | assert.ok data.features[0].properties.categories

63 | done()

64 |

65 | it 'limit should cause more results to come in', (done) ->

66 | geojson [-77.0155, 39.0114], limit: 100, (data) ->

67 | assert.ok data.features.length > 5 and data.features.length < 100

68 | done()

69 |

70 | it 'respects maximum limit', (done) ->

71 | doit = -> geojson([-77.0155, 39.0114], limit: 501, (data) ->)

72 | assert.throws doit, 'limit cannot be greater than 500'

73 | done()

74 |

75 | it 'respects maximum radius', (done) ->

76 | doit = -> geojson([-77.0155, 39.0114], radius: 10001, (data) ->)

77 | assert.throws doit, 'radius cannot be greater than 10000'

78 | done()

79 |

80 | it 'allows for non en wikipedias', (done) ->

81 | geojson [-77.0155, 39.0114], language: 'de', (data) ->

82 | assert.match data.features[0].properties.url, /https:\/\/de\.wikipedia\.org/

83 | done()

84 |

--------------------------------------------------------------------------------