├── README.md

├── assets

├── OpenSans-Regular.ttf

├── character_sheet_default_ref.jpg

├── characters_parts

│ ├── part_a.jpg

│ ├── part_b.jpg

│ └── part_c.jpg

├── openimages_classes.txt

├── plush_parts

│ ├── part_a.jpg

│ ├── part_b.jpg

│ └── part_c.jpg

└── product_parts

│ ├── part_a.jpg

│ ├── part_b.jpg

│ └── part_c.jpg

├── configs

├── infer

│ ├── infer_characters.yaml

│ ├── infer_plush.yaml

│ └── infer_products.yaml

└── train

│ └── train_characters.yaml

├── demo

├── app.py

└── pit.py

├── ip_adapter

├── __init__.py

├── attention_processor.py

├── attention_processor_faceid.py

├── custom_pipelines.py

├── ip_adapter.py

├── ip_adapter_faceid.py

├── ip_adapter_faceid_separate.py

├── resampler.py

├── sd3_attention_processor.py

├── test_resampler.py

└── utils.py

├── ip_lora_inference

├── download_ip_adapter.sh

├── download_loras.sh

└── inference_ip_lora.py

├── ip_lora_train

├── ip_adapter_for_lora.py

├── ip_lora_dataset.py

├── run_example.sh

├── sdxl_ip_lora_pipeline.py

└── train_ip_lora.py

├── ip_plus_space_exploration

├── download_directions.sh

├── download_ip_adapter.sh

├── edit_by_direction.py

├── find_direction.py

└── ip_model_utils.py

├── model

├── __init__.py

├── dit.py

└── pipeline_pit.py

├── pyproject.toml

├── scripts

├── generate_characters.py

├── generate_products.py

├── infer.py

└── train.py

├── training

├── __init__.py

├── coach.py

├── dataset.py

└── train_config.py

└── utils

├── __init__.py

├── bezier_utils.py

├── vis_utils.py

└── words_bank.py

/README.md:

--------------------------------------------------------------------------------

1 | # Piece it Together: Part-Based Concepting with IP-Priors

2 | > Elad Richardson, Kfir Goldberg, Yuval Alaluf, Daniel Cohen-Or

3 | > Tel Aviv University, Bria AI

4 | >

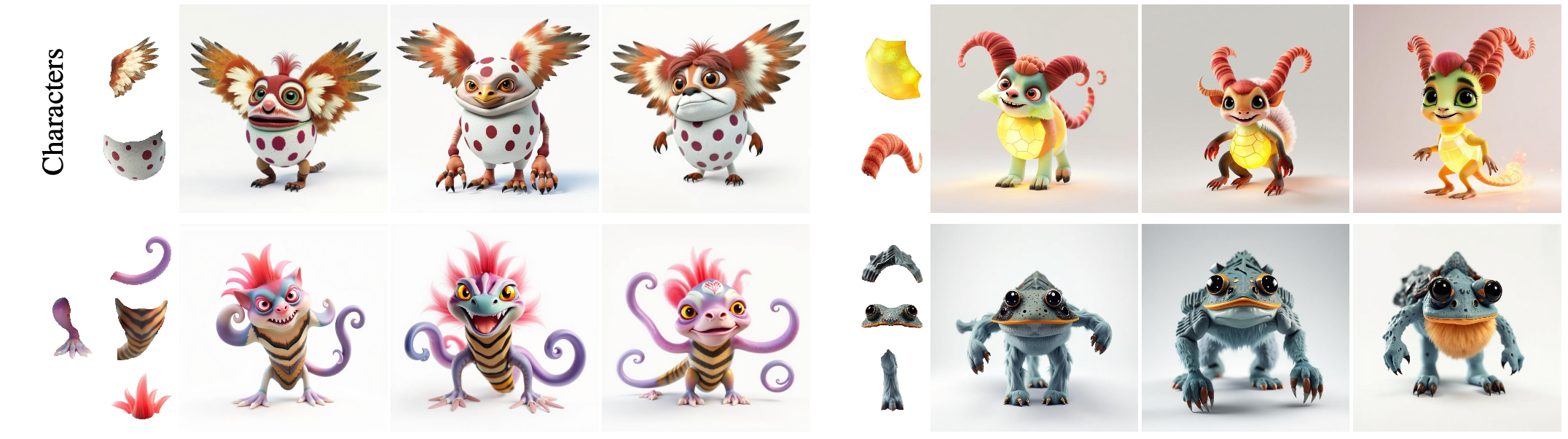

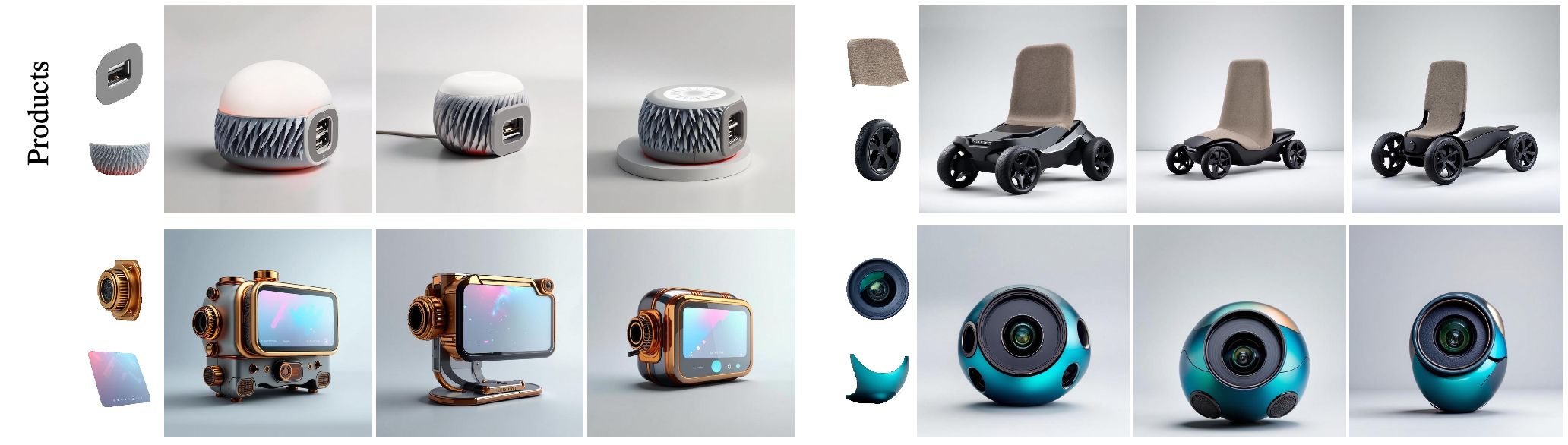

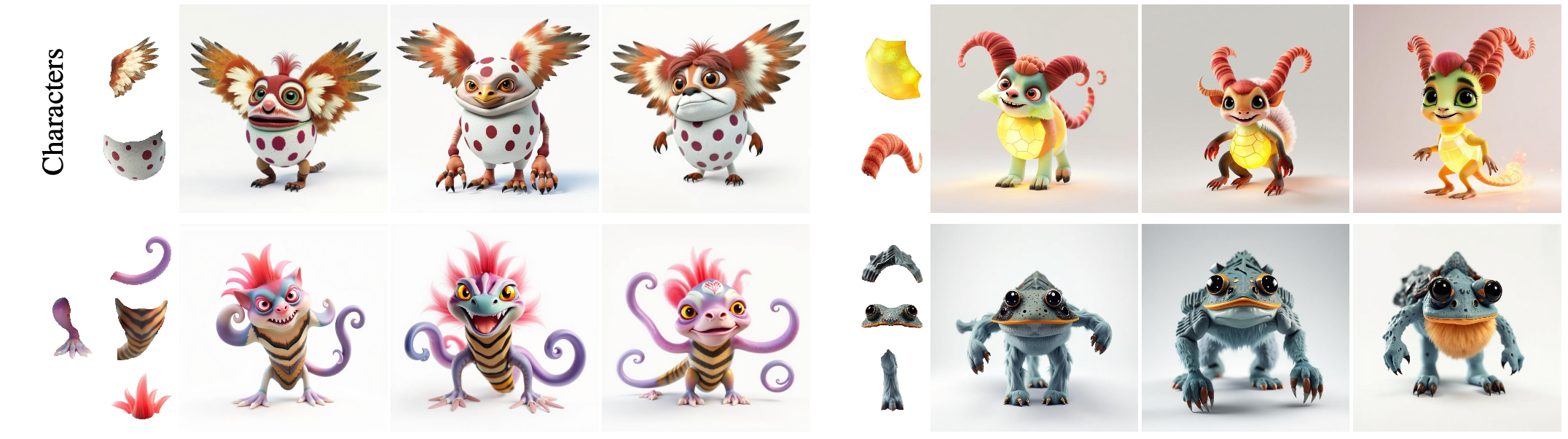

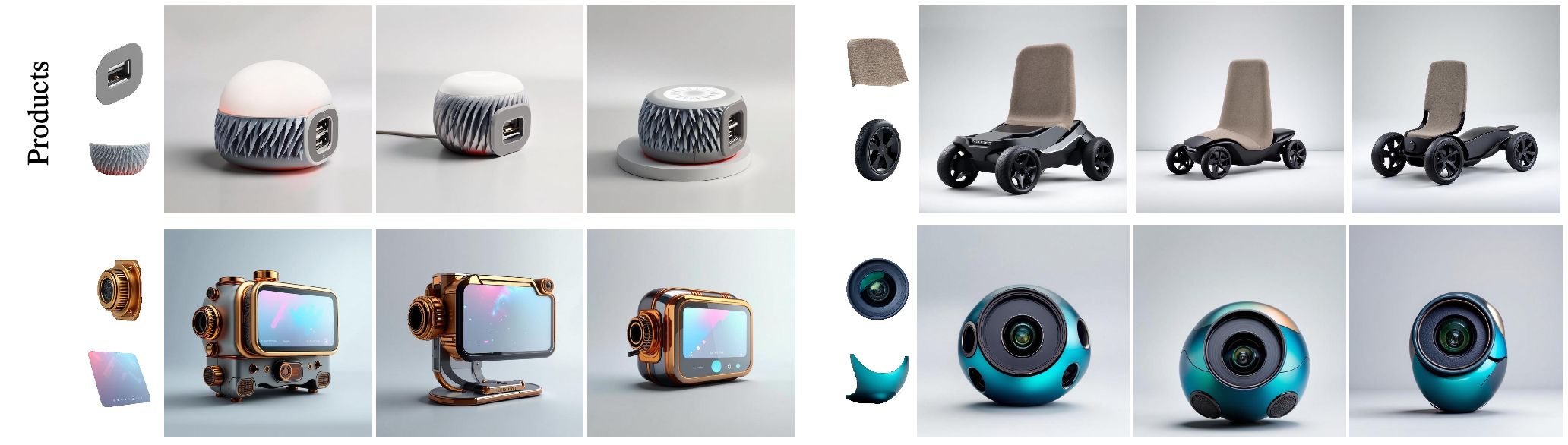

5 | > Advanced generative models excel at synthesizing images but often rely on text-based conditioning. Visual designers, however, often work beyond language, directly drawing inspiration from existing visual elements. In many cases, these elements represent only fragments of a potential concept-such as an uniquely structured wing, or a specific hairstyle-serving as inspiration for the artist to explore how they can come together creatively into a coherent whole. Recognizing this need, we introduce a generative framework that seamlessly integrates a partial set of user-provided visual components into a coherent composition while simultaneously sampling the missing parts needed to generate a plausible and complete concept. Our approach builds on a strong and underexplored representation space, extracted from IP-Adapter+, on which we train IP-Prior, a lightweight flow-matching model that synthesizes coherent compositions based on domain-specific priors, enabling diverse and context-aware generations. Additionally, we present a LoRA-based fine-tuning strategy that significantly improves prompt adherence in IP-Adapter+ for a given task, addressing its common trade-off between reconstruction quality and prompt adherence.

6 |

7 |  8 |

8 |  9 |

10 |

11 |

12 |

13 |

9 |

10 |

11 |

12 |

13 |

14 |  15 |

15 |

16 | Using a dedicated prior for the target domain, our method, Piece it Together (PiT), effectively completes missing information by seamlessly integrating given elements into a coherent composition while adding the necessary missing pieces needed for the complete concept to reside in the prior domain.

17 |

18 |

19 | ## Description :scroll:

20 | Official implementation of the paper "Piece it Together: Part-Based Concepting with IP-Priors"

21 |

22 |

23 | ## Table of contents

24 | - [Piece it Together: Part-Based Concepting with IP-Priors](#piece-it-together-part-based-concepting-with-ip-priors)

25 | - [Description :scroll:](#description-scroll)

26 | - [Table of contents](#table-of-contents)

27 | - [Getting started with PiT :rocket:](#getting-started-with-pit-rocket)

28 | - [Setup your environment](#setup-your-environment)

29 | - [Inference with PiT](#inference-with-pit)

30 | - [Training PiT](#training-pit)

31 | - [Inference with IP-LoRA](#inference-with-ip-lora)

32 | - [Training IP-LoRA](#training-ip-lora)

33 | - [Preparing your data](#preparing-your-data)

34 | - [Running the training script](#running-the-training-script)

35 | - [Exploring the IP+ space](#exploring-the-ip-space)

36 | - [Finding new directions](#finding-new-directions)

37 | - [Editing images with found directions](#editing-images-with-found-directions)

38 | - [Acknowledgments](#acknowledgments)

39 | - [Citation](#citation)

40 |

41 |

42 |

43 | ## Getting started with PiT :rocket:

44 |

45 | ### Setup your environment

46 |

47 | 1. Clone the repo:

48 |

49 | ```bash

50 | git clone https://github.com/eladrich/PiT

51 | cd PiT

52 | ```

53 |

54 | 2. Install `uv`:

55 |

56 | Instructions taken from [here](https://docs.astral.sh/uv/getting-started/installation/).

57 |

58 | For linux systems this should be:

59 | ```bash

60 | curl -LsSf https://astral.sh/uv/install.sh | sh

61 | source $HOME/.local/bin/env

62 | ```

63 |

64 | 3. Install the dependencies:

65 |

66 | ```bash

67 | uv sync

68 | ```

69 |

70 | 4. Activate your `.venv` and set the Python env:

71 |

72 | ```bash

73 | source .venv/bin/activate

74 | export PYTHONPATH=${PYTHONPATH}:${PWD}

75 | ```

76 |

77 |

78 |

79 | ## Inference with PiT

80 | | Domain | Examples | Link |

81 | |--------|--------------|----------------------------------------------------------------------------------------------|

82 | | Characters |  | [Here](https://huggingface.co/kfirgold99/Piece-it-Together/tree/main/models/characters_ckpt) |

83 | | Products |

| [Here](https://huggingface.co/kfirgold99/Piece-it-Together/tree/main/models/characters_ckpt) |

83 | | Products |  | [Here](https://huggingface.co/kfirgold99/Piece-it-Together/tree/main/models/products_ckpt) |

84 | | Toys |

| [Here](https://huggingface.co/kfirgold99/Piece-it-Together/tree/main/models/products_ckpt) |

84 | | Toys |  | [Here](https://huggingface.co/kfirgold99/Piece-it-Together/tree/main/models/plush_ckpt) |

85 |

86 |

87 | ## Training PiT

88 |

89 | ### Data Generation

90 | PiT assumes that the data is structured so that the the target images and part images are in the same directory with the naming convention being `image_name.jpg` for hte base image and `image_name_i.jpg` for the parts.

91 |

92 | To use a generated data see the sample scripts

93 | ```bash

94 | python -m scripts.generate_characters

95 | ```

96 |

97 | ```bash

98 | python -m scripts.generate_products

99 | ```

100 |

101 | ### Training

102 |

103 | For training see the `training/coach.py` file and the example below

104 |

105 | ``bash

106 | python -m scripts.train --config_path=configs/train/train_characters.yaml

107 | ``

108 |

109 | ## PiT Inference

110 |

111 | For inference see `scripts.infer.py` with the corresponding configs under `configs/infer`

112 |

113 | ```bash

114 | python -m scripts.infer --config_path=configs/infer/infer_characters.yaml

115 | ```

116 |

117 |

118 | ## Inference with IP-LoRA

119 |

120 | 1. Download the IP checkpoint and the LoRAs

121 |

122 | ```bash

123 | ip_lora_inference/download_ip_adapter.sh

124 | ip_lora_inference/download_loras.sh

125 | ```

126 |

127 | 2. Run inference with your preferred model

128 |

129 | example for running the styled-generation LoRA

130 |

131 | ```bash

132 | python ip_lora_inference/inference_ip_lora.py --lora_type "character_sheet" --lora_path "weights/character_sheet/pytorch_lora_weights.safetensors" --prompt "a character sheet displaying a creature, from several angles with 1 large front view in the middle, clean white background. In the background we can see half-completed, partially colored, sketches of different parts of the object" --output_dir "ip_lora_inference/character_sheet/" --ref_images_paths "assets/character_sheet_default_ref.jpg"

133 | --ip_adapter_path "weights/ip_adapter/sdxl_models/ip-adapter-plus_sdxl_vit-h.bin"

134 | ```

135 |

136 | ## Training IP-LoRA

137 |

138 | ### Preparing your data

139 |

140 | The expected data format for the training script is as follows:

141 |

142 | ```

143 | --base_dir/

144 | ----targets/

145 | ------img1.jpg

146 | ------img1.txt

147 | ------img2.jpg

148 | ------img2.txt

149 | ------img3.jpg

150 | ------img3.txt

151 | .

152 | .

153 | .

154 | ----refs/

155 | ------img1_ref.jpg

156 | ------img2_ref.jpg

157 | ------img3_ref.jpg

158 | .

159 | .

160 | .

161 | ```

162 |

163 | Where `imgX.jpg` is the target image for the input reference image `imgX_ref.jpg` with the prompt `imgX.txt`

164 |

165 | ### Running the training script

166 |

167 | For training a character-sheet styled generation LoRA, run the following command:

168 |

169 | ```bash

170 | python ./ip_lora_train/train_ip_lora.py \

171 | --rank 64 \

172 | --resolution 1024 \

173 | --validation_epochs 1 \

174 | --num_train_epochs 100 \

175 | --checkpointing_steps 50 \

176 | --train_batch_size 2 \

177 | --learning_rate 1e-4 \

178 | --dataloader_num_workers 1 \

179 | --gradient_accumulation_steps 8 \

180 | --dataset_base_dir \

181 | --prompt_mode character_sheet \

182 | --output_dir ./output/train_ip_lora/character_sheet

183 |

184 | ```

185 |

186 | and for the text adherence LoRA, run the following command:

187 |

188 | ```bash

189 | python ./ip_lora_train/train_ip_lora.py \

190 | --rank 64 \

191 | --resolution 1024 \

192 | --validation_epochs 1 \

193 | --num_train_epochs 100 \

194 | --checkpointing_steps 50 \

195 | --train_batch_size 2 \

196 | --learning_rate 1e-4 \

197 | --dataloader_num_workers 1 \

198 | --gradient_accumulation_steps 8 \

199 | --dataset_base_dir \

200 | --prompt_mode creature_in_scene \

201 | --output_dir ./output/train_ip_lora/creature_in_scene

202 | ```

203 |

204 | ## Exploring the IP+ space

205 |

206 | Start by downloading the needed IP+ checkpoint and the directions presented in the paper:

207 |

208 | ```bash

209 | ip_plus_space_exploration/download_directions.sh

210 | ip_plus_space_exploration/download_ip_adapter.sh

211 | ```

212 |

213 | ### Finding new directions

214 |

215 | To find a direction in the IP+ space from "class1" (e.g. "scrawny") to "class2" (e.g. "muscular"):

216 |

217 | 1. Create `class1_dir` and `class2_dir` containing images of the source and target classes respectively

218 |

219 | 2. Run the `find_direction` script:

220 |

221 | ```bash

222 | python ip_plus_space_exploration/find_direction.py --class1_dir --class2_dir --output_dir ./ip_directions --ip_model_type "plus"

223 | ```

224 |

225 | ### Editing images with found directions

226 |

227 | Use the direction found in the previous stage, or one downloaded from [HuggingFace](https://huggingface.co/kfirgold99/Piece-it-Together) in the previous stage.

228 |

229 | ```bash

230 | python ip_plus_space_exploration/edit_by_direction.py --ip_model_type "plus" --image_path --direction_path --direction_type "ip" --output_dir "./edit_by_direction/"

231 | ```

232 |

233 | ## Acknowledgments

234 |

235 | Code is based on

236 | - https://github.com/pOpsPaper/pOps

237 | - https://github.com/cloneofsimo/minRF by the great [@cloneofsimo](https://github.com/cloneofsimo)

238 |

239 | ## Citation

240 |

241 | If you use this code for your research, please cite the following paper:

242 |

243 | ```

244 | @misc{richardson2025piece,

245 | title={Piece it Together: Part-Based Concepting with IP-Priors},

246 | author={Richardson, Elad and Goldberg, Kfir and Alaluf, Yuval and Cohen-Or, Daniel},

247 | year={2025},

248 | eprint={2503.10365},

249 | archivePrefix={arXiv},

250 | primaryClass={cs.CV},

251 | url={https://arxiv.org/abs/2503.10365},

252 | }

253 | ```

--------------------------------------------------------------------------------

/assets/OpenSans-Regular.ttf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/OpenSans-Regular.ttf

--------------------------------------------------------------------------------

/assets/character_sheet_default_ref.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/character_sheet_default_ref.jpg

--------------------------------------------------------------------------------

/assets/characters_parts/part_a.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/characters_parts/part_a.jpg

--------------------------------------------------------------------------------

/assets/characters_parts/part_b.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/characters_parts/part_b.jpg

--------------------------------------------------------------------------------

/assets/characters_parts/part_c.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/characters_parts/part_c.jpg

--------------------------------------------------------------------------------

/assets/plush_parts/part_a.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/plush_parts/part_a.jpg

--------------------------------------------------------------------------------

/assets/plush_parts/part_b.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/plush_parts/part_b.jpg

--------------------------------------------------------------------------------

/assets/plush_parts/part_c.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/plush_parts/part_c.jpg

--------------------------------------------------------------------------------

/assets/product_parts/part_a.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/product_parts/part_a.jpg

--------------------------------------------------------------------------------

/assets/product_parts/part_b.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/product_parts/part_b.jpg

--------------------------------------------------------------------------------

/assets/product_parts/part_c.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/product_parts/part_c.jpg

--------------------------------------------------------------------------------

/configs/infer/infer_characters.yaml:

--------------------------------------------------------------------------------

1 | prior_path: models/characters_ckpt/prior.ckpt

2 | prior_repo: kfirgold99/Piece-it-Together

3 | crops_dir: assets/characters_parts

4 | output_dir: inference/characters

--------------------------------------------------------------------------------

/configs/infer/infer_plush.yaml:

--------------------------------------------------------------------------------

1 | prior_path: models/plush_ckpt/prior.ckpt

2 | prior_repo: kfirgold99/Piece-it-Together

3 | crops_dir: assets/plush_parts

4 | output_dir: inference/plush

--------------------------------------------------------------------------------

/configs/infer/infer_products.yaml:

--------------------------------------------------------------------------------

1 | prior_path: models/products_ckpt/prior.ckpt

2 | prior_repo: kfirgold99/Piece-it-Together

3 | crops_dir: assets/product_parts

4 | output_dir: inference/products

--------------------------------------------------------------------------------

/configs/train/train_characters.yaml:

--------------------------------------------------------------------------------

1 | dataset_path: 'datasets/generated/characters'

2 | val_dataset_path: 'datasets/generated/characters'

3 | output_dir: 'training_results/train_characters'

4 | train_batch_size: 64

5 | num_layers: 4

6 | max_crops: 7

7 |

8 |

9 |

10 |

--------------------------------------------------------------------------------

/demo/app.py:

--------------------------------------------------------------------------------

1 | import gradio as gr

2 | import spaces

3 | from pit import PiTDemoPipeline

4 |

5 | BLOCK_WIDTH = 300

6 | BLOCK_HEIGHT = 360

7 | FONT_SIZE = 3.5

8 |

9 | pit_pipeline = PiTDemoPipeline(

10 | prior_repo="kfirgold99/Piece-it-Together", prior_path="models/characters_ckpt/prior.ckpt"

11 | )

12 |

13 |

14 | @spaces.GPU

15 | def run_character_generation(part_1, part_2, part_3, seed=None):

16 | crops_paths = [part_1, part_2, part_3]

17 | image = pit_pipeline.run(crops_paths=crops_paths, seed=seed, n_images=1)[0]

18 | return image

19 |

20 |

21 | with gr.Blocks(css="style.css") as demo:

22 | gr.HTML(

23 | """

| [Here](https://huggingface.co/kfirgold99/Piece-it-Together/tree/main/models/plush_ckpt) |

85 |

86 |

87 | ## Training PiT

88 |

89 | ### Data Generation

90 | PiT assumes that the data is structured so that the the target images and part images are in the same directory with the naming convention being `image_name.jpg` for hte base image and `image_name_i.jpg` for the parts.

91 |

92 | To use a generated data see the sample scripts

93 | ```bash

94 | python -m scripts.generate_characters

95 | ```

96 |

97 | ```bash

98 | python -m scripts.generate_products

99 | ```

100 |

101 | ### Training

102 |

103 | For training see the `training/coach.py` file and the example below

104 |

105 | ``bash

106 | python -m scripts.train --config_path=configs/train/train_characters.yaml

107 | ``

108 |

109 | ## PiT Inference

110 |

111 | For inference see `scripts.infer.py` with the corresponding configs under `configs/infer`

112 |

113 | ```bash

114 | python -m scripts.infer --config_path=configs/infer/infer_characters.yaml

115 | ```

116 |

117 |

118 | ## Inference with IP-LoRA

119 |

120 | 1. Download the IP checkpoint and the LoRAs

121 |

122 | ```bash

123 | ip_lora_inference/download_ip_adapter.sh

124 | ip_lora_inference/download_loras.sh

125 | ```

126 |

127 | 2. Run inference with your preferred model

128 |

129 | example for running the styled-generation LoRA

130 |

131 | ```bash

132 | python ip_lora_inference/inference_ip_lora.py --lora_type "character_sheet" --lora_path "weights/character_sheet/pytorch_lora_weights.safetensors" --prompt "a character sheet displaying a creature, from several angles with 1 large front view in the middle, clean white background. In the background we can see half-completed, partially colored, sketches of different parts of the object" --output_dir "ip_lora_inference/character_sheet/" --ref_images_paths "assets/character_sheet_default_ref.jpg"

133 | --ip_adapter_path "weights/ip_adapter/sdxl_models/ip-adapter-plus_sdxl_vit-h.bin"

134 | ```

135 |

136 | ## Training IP-LoRA

137 |

138 | ### Preparing your data

139 |

140 | The expected data format for the training script is as follows:

141 |

142 | ```

143 | --base_dir/

144 | ----targets/

145 | ------img1.jpg

146 | ------img1.txt

147 | ------img2.jpg

148 | ------img2.txt

149 | ------img3.jpg

150 | ------img3.txt

151 | .

152 | .

153 | .

154 | ----refs/

155 | ------img1_ref.jpg

156 | ------img2_ref.jpg

157 | ------img3_ref.jpg

158 | .

159 | .

160 | .

161 | ```

162 |

163 | Where `imgX.jpg` is the target image for the input reference image `imgX_ref.jpg` with the prompt `imgX.txt`

164 |

165 | ### Running the training script

166 |

167 | For training a character-sheet styled generation LoRA, run the following command:

168 |

169 | ```bash

170 | python ./ip_lora_train/train_ip_lora.py \

171 | --rank 64 \

172 | --resolution 1024 \

173 | --validation_epochs 1 \

174 | --num_train_epochs 100 \

175 | --checkpointing_steps 50 \

176 | --train_batch_size 2 \

177 | --learning_rate 1e-4 \

178 | --dataloader_num_workers 1 \

179 | --gradient_accumulation_steps 8 \

180 | --dataset_base_dir \

181 | --prompt_mode character_sheet \

182 | --output_dir ./output/train_ip_lora/character_sheet

183 |

184 | ```

185 |

186 | and for the text adherence LoRA, run the following command:

187 |

188 | ```bash

189 | python ./ip_lora_train/train_ip_lora.py \

190 | --rank 64 \

191 | --resolution 1024 \

192 | --validation_epochs 1 \

193 | --num_train_epochs 100 \

194 | --checkpointing_steps 50 \

195 | --train_batch_size 2 \

196 | --learning_rate 1e-4 \

197 | --dataloader_num_workers 1 \

198 | --gradient_accumulation_steps 8 \

199 | --dataset_base_dir \

200 | --prompt_mode creature_in_scene \

201 | --output_dir ./output/train_ip_lora/creature_in_scene

202 | ```

203 |

204 | ## Exploring the IP+ space

205 |

206 | Start by downloading the needed IP+ checkpoint and the directions presented in the paper:

207 |

208 | ```bash

209 | ip_plus_space_exploration/download_directions.sh

210 | ip_plus_space_exploration/download_ip_adapter.sh

211 | ```

212 |

213 | ### Finding new directions

214 |

215 | To find a direction in the IP+ space from "class1" (e.g. "scrawny") to "class2" (e.g. "muscular"):

216 |

217 | 1. Create `class1_dir` and `class2_dir` containing images of the source and target classes respectively

218 |

219 | 2. Run the `find_direction` script:

220 |

221 | ```bash

222 | python ip_plus_space_exploration/find_direction.py --class1_dir --class2_dir --output_dir ./ip_directions --ip_model_type "plus"

223 | ```

224 |

225 | ### Editing images with found directions

226 |

227 | Use the direction found in the previous stage, or one downloaded from [HuggingFace](https://huggingface.co/kfirgold99/Piece-it-Together) in the previous stage.

228 |

229 | ```bash

230 | python ip_plus_space_exploration/edit_by_direction.py --ip_model_type "plus" --image_path --direction_path --direction_type "ip" --output_dir "./edit_by_direction/"

231 | ```

232 |

233 | ## Acknowledgments

234 |

235 | Code is based on

236 | - https://github.com/pOpsPaper/pOps

237 | - https://github.com/cloneofsimo/minRF by the great [@cloneofsimo](https://github.com/cloneofsimo)

238 |

239 | ## Citation

240 |

241 | If you use this code for your research, please cite the following paper:

242 |

243 | ```

244 | @misc{richardson2025piece,

245 | title={Piece it Together: Part-Based Concepting with IP-Priors},

246 | author={Richardson, Elad and Goldberg, Kfir and Alaluf, Yuval and Cohen-Or, Daniel},

247 | year={2025},

248 | eprint={2503.10365},

249 | archivePrefix={arXiv},

250 | primaryClass={cs.CV},

251 | url={https://arxiv.org/abs/2503.10365},

252 | }

253 | ```

--------------------------------------------------------------------------------

/assets/OpenSans-Regular.ttf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/OpenSans-Regular.ttf

--------------------------------------------------------------------------------

/assets/character_sheet_default_ref.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/character_sheet_default_ref.jpg

--------------------------------------------------------------------------------

/assets/characters_parts/part_a.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/characters_parts/part_a.jpg

--------------------------------------------------------------------------------

/assets/characters_parts/part_b.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/characters_parts/part_b.jpg

--------------------------------------------------------------------------------

/assets/characters_parts/part_c.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/characters_parts/part_c.jpg

--------------------------------------------------------------------------------

/assets/plush_parts/part_a.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/plush_parts/part_a.jpg

--------------------------------------------------------------------------------

/assets/plush_parts/part_b.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/plush_parts/part_b.jpg

--------------------------------------------------------------------------------

/assets/plush_parts/part_c.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/plush_parts/part_c.jpg

--------------------------------------------------------------------------------

/assets/product_parts/part_a.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/product_parts/part_a.jpg

--------------------------------------------------------------------------------

/assets/product_parts/part_b.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/product_parts/part_b.jpg

--------------------------------------------------------------------------------

/assets/product_parts/part_c.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/eladrich/PiT/73d26b0a4ab627e3f26c50763178bd75d0408cc2/assets/product_parts/part_c.jpg

--------------------------------------------------------------------------------

/configs/infer/infer_characters.yaml:

--------------------------------------------------------------------------------

1 | prior_path: models/characters_ckpt/prior.ckpt

2 | prior_repo: kfirgold99/Piece-it-Together

3 | crops_dir: assets/characters_parts

4 | output_dir: inference/characters

--------------------------------------------------------------------------------

/configs/infer/infer_plush.yaml:

--------------------------------------------------------------------------------

1 | prior_path: models/plush_ckpt/prior.ckpt

2 | prior_repo: kfirgold99/Piece-it-Together

3 | crops_dir: assets/plush_parts

4 | output_dir: inference/plush

--------------------------------------------------------------------------------

/configs/infer/infer_products.yaml:

--------------------------------------------------------------------------------

1 | prior_path: models/products_ckpt/prior.ckpt

2 | prior_repo: kfirgold99/Piece-it-Together

3 | crops_dir: assets/product_parts

4 | output_dir: inference/products

--------------------------------------------------------------------------------

/configs/train/train_characters.yaml:

--------------------------------------------------------------------------------

1 | dataset_path: 'datasets/generated/characters'

2 | val_dataset_path: 'datasets/generated/characters'

3 | output_dir: 'training_results/train_characters'

4 | train_batch_size: 64

5 | num_layers: 4

6 | max_crops: 7

7 |

8 |

9 |

10 |

--------------------------------------------------------------------------------

/demo/app.py:

--------------------------------------------------------------------------------

1 | import gradio as gr

2 | import spaces

3 | from pit import PiTDemoPipeline

4 |

5 | BLOCK_WIDTH = 300

6 | BLOCK_HEIGHT = 360

7 | FONT_SIZE = 3.5

8 |

9 | pit_pipeline = PiTDemoPipeline(

10 | prior_repo="kfirgold99/Piece-it-Together", prior_path="models/characters_ckpt/prior.ckpt"

11 | )

12 |

13 |

14 | @spaces.GPU

15 | def run_character_generation(part_1, part_2, part_3, seed=None):

16 | crops_paths = [part_1, part_2, part_3]

17 | image = pit_pipeline.run(crops_paths=crops_paths, seed=seed, n_images=1)[0]

18 | return image

19 |

20 |

21 | with gr.Blocks(css="style.css") as demo:

22 | gr.HTML(

23 | """Piece it Together: Part-Based Concepting with IP-Priors

"""

24 | )

25 | gr.HTML(

26 | ''

27 | )

28 | gr.HTML(

29 | 'Piece it Together (PiT) combines different input parts to generate a complete concept in a prior domain.

'

30 | )

31 | with gr.Row(equal_height=True, elem_classes="justified-element"):

32 | with gr.Column(scale=0, min_width=BLOCK_WIDTH):

33 | part_1 = gr.Image(label="Upload part 1 (or keep empty)", type="filepath", width=BLOCK_WIDTH, height=BLOCK_HEIGHT)

34 | with gr.Column(scale=0, min_width=BLOCK_WIDTH):

35 | part_2 = gr.Image(label="Upload part 2 (or keep empty)", type="filepath", width=BLOCK_WIDTH, height=BLOCK_HEIGHT)

36 | with gr.Column(scale=0, min_width=BLOCK_WIDTH):

37 | part_3 = gr.Image(label="Upload part 3 (or keep empty)", type="filepath", width=BLOCK_WIDTH, height=BLOCK_HEIGHT)

38 | with gr.Column(scale=0, min_width=BLOCK_WIDTH):

39 | output_eq_1 = gr.Image(label="Output", width=BLOCK_WIDTH, height=BLOCK_HEIGHT)

40 | with gr.Row(equal_height=True, elem_classes="justified-element"):

41 | run_button = gr.Button("Create your character!", elem_classes="small-elem")

42 | run_button.click(fn=run_character_generation, inputs=[part_1, part_2, part_3], outputs=[output_eq_1])

43 | with gr.Row(equal_height=True, elem_classes="justified-element"):

44 | pass

45 |

46 | with gr.Row(equal_height=True, elem_classes="justified-element"):

47 | with gr.Column(scale=1):

48 | examples = [

49 | [

50 | "assets/characters_parts/part_a.jpg",

51 | "assets/characters_parts/part_b.jpg",

52 | "assets/characters_parts/part_c.jpg",

53 | ]

54 | ]

55 | gr.Examples(

56 | examples=examples,

57 | inputs=[part_1, part_2, part_3],

58 | outputs=[output_eq_1],

59 | fn=run_character_generation,

60 | cache_examples=False,

61 | )

62 |

63 | demo.queue().launch(share=True)

64 |

--------------------------------------------------------------------------------

/demo/pit.py:

--------------------------------------------------------------------------------

1 | import random

2 | from pathlib import Path

3 | from typing import Optional

4 |

5 | import numpy as np

6 | import pyrallis

7 | import torch

8 | from diffusers import (

9 | StableDiffusionXLPipeline,

10 | )

11 | from huggingface_hub import hf_hub_download

12 | from PIL import Image

13 |

14 | from ip_adapter import IPAdapterPlusXL

15 | from model.dit import DiT_Llama

16 | from model.pipeline_pit import PiTPipeline

17 | from training.train_config import TrainConfig

18 |

19 |

20 | def paste_on_background(image, background, min_scale=0.4, max_scale=0.8, scale=None):

21 | # Calculate aspect ratio and determine resizing based on the smaller dimension of the background

22 | aspect_ratio = image.width / image.height

23 | scale = random.uniform(min_scale, max_scale) if scale is None else scale

24 | new_width = int(min(background.width, background.height * aspect_ratio) * scale)

25 | new_height = int(new_width / aspect_ratio)

26 |

27 | # Resize image and calculate position

28 | image = image.resize((new_width, new_height), resample=Image.LANCZOS)

29 | pos_x = random.randint(0, background.width - new_width)

30 | pos_y = random.randint(0, background.height - new_height)

31 |

32 | # Paste the image using its alpha channel as mask if present

33 | background.paste(image, (pos_x, pos_y), image if "A" in image.mode else None)

34 | return background

35 |

36 |

37 | def set_seed(seed: int):

38 | """Ensures reproducibility across multiple libraries."""

39 | random.seed(seed) # Python random module

40 | np.random.seed(seed) # NumPy random module

41 | torch.manual_seed(seed) # PyTorch CPU random seed

42 | torch.cuda.manual_seed_all(seed) # PyTorch GPU random seed

43 | torch.backends.cudnn.deterministic = True # Ensures deterministic behavior

44 | torch.backends.cudnn.benchmark = False # Disable benchmarking to avoid randomness

45 |

46 |

47 | class PiTDemoPipeline:

48 | def __init__(self, prior_repo: str, prior_path: str):

49 | # Download model and config

50 | prior_ckpt_path = hf_hub_download(

51 | repo_id=prior_repo,

52 | filename=str(prior_path),

53 | local_dir="pretrained_models",

54 | )

55 | prior_cfg_path = hf_hub_download(

56 | repo_id=prior_repo, filename=str(Path(prior_path).parent / "cfg.yaml"), local_dir="pretrained_models"

57 | )

58 | self.model_cfg: TrainConfig = pyrallis.load(TrainConfig, open(prior_cfg_path, "r"))

59 |

60 | self.weight_dtype = torch.float32

61 | self.device = "cuda:0"

62 | prior = DiT_Llama(

63 | embedding_dim=2048,

64 | hidden_dim=self.model_cfg.hidden_dim,

65 | n_layers=self.model_cfg.num_layers,

66 | n_heads=self.model_cfg.num_attention_heads,

67 | )

68 | prior.load_state_dict(torch.load(prior_ckpt_path))

69 | image_pipe = StableDiffusionXLPipeline.from_pretrained(

70 | "stabilityai/stable-diffusion-xl-base-1.0",

71 | torch_dtype=torch.float16,

72 | add_watermarker=False,

73 | )

74 | ip_ckpt_path = hf_hub_download(

75 | repo_id="h94/IP-Adapter",

76 | filename="ip-adapter-plus_sdxl_vit-h.bin",

77 | subfolder="sdxl_models",

78 | local_dir="pretrained_models",

79 | )

80 |

81 | self.ip_model = IPAdapterPlusXL(

82 | image_pipe,

83 | "models/image_encoder",

84 | ip_ckpt_path,

85 | self.device,

86 | num_tokens=16,

87 | )

88 | self.image_processor = self.ip_model.clip_image_processor

89 |

90 | empty_image = Image.new("RGB", (256, 256), (255, 255, 255))

91 | zero_image = torch.Tensor(self.image_processor(empty_image)["pixel_values"][0])

92 | self.zero_image_embeds = self.ip_model.get_image_embeds(zero_image.unsqueeze(0), skip_uncond=True)

93 |

94 | prior_pipeline = PiTPipeline(

95 | prior=prior,

96 | )

97 | self.prior_pipeline = prior_pipeline.to(self.device)

98 | set_seed(42)

99 |

100 | def run(self, crops_paths: list[str], scale: float = 2.0, seed: Optional[int] = None, n_images: int = 1):

101 | if seed is not None:

102 | set_seed(seed)

103 | processed_crops = []

104 | input_images = []

105 |

106 | crops_paths = [None] + crops_paths

107 | # Extend to >3 with Nones

108 | while len(crops_paths) < 3:

109 | crops_paths.append(None)

110 |

111 | for path_ind, path in enumerate(crops_paths):

112 | if path is None:

113 | image = Image.new("RGB", (224, 224), (255, 255, 255))

114 | else:

115 | image = Image.open(path).convert("RGB")

116 | if path_ind > 0 or not self.model_cfg.use_ref:

117 | background = Image.new("RGB", (1024, 1024), (255, 255, 255))

118 | image = paste_on_background(image, background, scale=0.92)

119 | else:

120 | image = image.resize((1024, 1024))

121 | input_images.append(image)

122 | # Name should be parent directory name

123 | processed_image = (

124 | torch.Tensor(self.image_processor(image)["pixel_values"][0])

125 | .to(self.device)

126 | .unsqueeze(0)

127 | .to(self.weight_dtype)

128 | )

129 | processed_crops.append(processed_image)

130 |

131 | image_embed_inputs = []

132 | for crop_ind in range(len(processed_crops)):

133 | image_embed_inputs.append(self.ip_model.get_image_embeds(processed_crops[crop_ind], skip_uncond=True))

134 | crops_input_sequence = torch.cat(image_embed_inputs, dim=1)

135 | generated_images = []

136 | for _ in range(n_images):

137 | seed = random.randint(0, 1000000)

138 | for curr_scale in [scale]:

139 | negative_cond_sequence = torch.zeros_like(crops_input_sequence)

140 | embeds_len = self.zero_image_embeds.shape[1]

141 | for i in range(0, negative_cond_sequence.shape[1], embeds_len):

142 | negative_cond_sequence[:, i : i + embeds_len] = self.zero_image_embeds.detach()

143 |

144 | img_emb = self.prior_pipeline(

145 | cond_sequence=crops_input_sequence,

146 | negative_cond_sequence=negative_cond_sequence,

147 | num_inference_steps=25,

148 | num_images_per_prompt=1,

149 | guidance_scale=curr_scale,

150 | generator=torch.Generator(device="cuda").manual_seed(seed),

151 | ).image_embeds

152 |

153 | for seed_2 in range(1):

154 | images = self.ip_model.generate(

155 | image_prompt_embeds=img_emb,

156 | num_samples=1,

157 | num_inference_steps=50,

158 | )

159 | generated_images += images

160 |

161 | return generated_images

162 |

--------------------------------------------------------------------------------

/ip_adapter/__init__.py:

--------------------------------------------------------------------------------

1 | from .ip_adapter import IPAdapter, IPAdapterPlus, IPAdapterPlusXL, IPAdapterXL, IPAdapterFull

2 |

3 | __all__ = [

4 | "IPAdapter",

5 | "IPAdapterPlus",

6 | "IPAdapterPlusXL",

7 | "IPAdapterXL",

8 | "IPAdapterFull",

9 | ]

10 |

--------------------------------------------------------------------------------

/ip_adapter/attention_processor.py:

--------------------------------------------------------------------------------

1 | # modified from https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/attention_processor.py

2 | import torch

3 | import torch.nn as nn

4 | import torch.nn.functional as F

5 |

6 |

7 | class AttnProcessor(nn.Module):

8 | r"""

9 | Default processor for performing attention-related computations.

10 | """

11 |

12 | def __init__(

13 | self,

14 | hidden_size=None,

15 | cross_attention_dim=None,

16 | ):

17 | super().__init__()

18 |

19 | def __call__(

20 | self,

21 | attn,

22 | hidden_states,

23 | encoder_hidden_states=None,

24 | attention_mask=None,

25 | temb=None,

26 | *args,

27 | **kwargs,

28 | ):

29 | residual = hidden_states

30 |

31 | if attn.spatial_norm is not None:

32 | hidden_states = attn.spatial_norm(hidden_states, temb)

33 |

34 | input_ndim = hidden_states.ndim

35 |

36 | if input_ndim == 4:

37 | batch_size, channel, height, width = hidden_states.shape

38 | hidden_states = hidden_states.view(batch_size, channel, height * width).transpose(1, 2)

39 |

40 | batch_size, sequence_length, _ = (

41 | hidden_states.shape if encoder_hidden_states is None else encoder_hidden_states.shape

42 | )

43 | attention_mask = attn.prepare_attention_mask(attention_mask, sequence_length, batch_size)

44 |

45 | if attn.group_norm is not None:

46 | hidden_states = attn.group_norm(hidden_states.transpose(1, 2)).transpose(1, 2)

47 |

48 | query = attn.to_q(hidden_states)

49 |

50 | if encoder_hidden_states is None:

51 | encoder_hidden_states = hidden_states

52 | elif attn.norm_cross:

53 | encoder_hidden_states = attn.norm_encoder_hidden_states(encoder_hidden_states)

54 |

55 | key = attn.to_k(encoder_hidden_states)

56 | value = attn.to_v(encoder_hidden_states)

57 |

58 | query = attn.head_to_batch_dim(query)

59 | key = attn.head_to_batch_dim(key)

60 | value = attn.head_to_batch_dim(value)

61 |

62 | attention_probs = attn.get_attention_scores(query, key, attention_mask)

63 | hidden_states = torch.bmm(attention_probs, value)

64 | hidden_states = attn.batch_to_head_dim(hidden_states)

65 |

66 | # linear proj

67 | hidden_states = attn.to_out[0](hidden_states)

68 | # dropout

69 | hidden_states = attn.to_out[1](hidden_states)

70 |

71 | if input_ndim == 4:

72 | hidden_states = hidden_states.transpose(-1, -2).reshape(batch_size, channel, height, width)

73 |

74 | if attn.residual_connection:

75 | hidden_states = hidden_states + residual

76 |

77 | hidden_states = hidden_states / attn.rescale_output_factor

78 |

79 | return hidden_states

80 |

81 |

82 | class IPAttnProcessor(nn.Module):

83 | r"""

84 | Attention processor for IP-Adapater.

85 | Args:

86 | hidden_size (`int`):

87 | The hidden size of the attention layer.

88 | cross_attention_dim (`int`):

89 | The number of channels in the `encoder_hidden_states`.

90 | scale (`float`, defaults to 1.0):

91 | the weight scale of image prompt.

92 | num_tokens (`int`, defaults to 4 when do ip_adapter_plus it should be 16):

93 | The context length of the image features.

94 | """

95 |

96 | def __init__(self, hidden_size, cross_attention_dim=None, scale=1.0, num_tokens=4):

97 | super().__init__()

98 |

99 | self.hidden_size = hidden_size

100 | self.cross_attention_dim = cross_attention_dim

101 | self.scale = scale

102 | self.num_tokens = num_tokens

103 |

104 | self.to_k_ip = nn.Linear(cross_attention_dim or hidden_size, hidden_size, bias=False)

105 | self.to_v_ip = nn.Linear(cross_attention_dim or hidden_size, hidden_size, bias=False)

106 |

107 | def __call__(

108 | self,

109 | attn,

110 | hidden_states,

111 | encoder_hidden_states=None,

112 | attention_mask=None,

113 | temb=None,

114 | *args,

115 | **kwargs,

116 | ):

117 | residual = hidden_states

118 |

119 | if attn.spatial_norm is not None:

120 | hidden_states = attn.spatial_norm(hidden_states, temb)

121 |

122 | input_ndim = hidden_states.ndim

123 |

124 | if input_ndim == 4:

125 | batch_size, channel, height, width = hidden_states.shape

126 | hidden_states = hidden_states.view(batch_size, channel, height * width).transpose(1, 2)

127 |

128 | batch_size, sequence_length, _ = (

129 | hidden_states.shape if encoder_hidden_states is None else encoder_hidden_states.shape

130 | )

131 | attention_mask = attn.prepare_attention_mask(attention_mask, sequence_length, batch_size)

132 |

133 | if attn.group_norm is not None:

134 | hidden_states = attn.group_norm(hidden_states.transpose(1, 2)).transpose(1, 2)

135 |

136 | query = attn.to_q(hidden_states)

137 |

138 | if encoder_hidden_states is None:

139 | encoder_hidden_states = hidden_states

140 | else:

141 | # get encoder_hidden_states, ip_hidden_states

142 | end_pos = encoder_hidden_states.shape[1] - self.num_tokens

143 | encoder_hidden_states, ip_hidden_states = (

144 | encoder_hidden_states[:, :end_pos, :],

145 | encoder_hidden_states[:, end_pos:, :],

146 | )

147 | if attn.norm_cross:

148 | encoder_hidden_states = attn.norm_encoder_hidden_states(encoder_hidden_states)

149 |

150 | key = attn.to_k(encoder_hidden_states)

151 | value = attn.to_v(encoder_hidden_states)

152 |

153 | query = attn.head_to_batch_dim(query)

154 | key = attn.head_to_batch_dim(key)

155 | value = attn.head_to_batch_dim(value)

156 |

157 | attention_probs = attn.get_attention_scores(query, key, attention_mask)

158 | hidden_states = torch.bmm(attention_probs, value)

159 | hidden_states = attn.batch_to_head_dim(hidden_states)

160 |

161 | # for ip-adapter

162 | ip_key = self.to_k_ip(ip_hidden_states)

163 | ip_value = self.to_v_ip(ip_hidden_states)

164 |

165 | ip_key = attn.head_to_batch_dim(ip_key)

166 | ip_value = attn.head_to_batch_dim(ip_value)

167 |

168 | ip_attention_probs = attn.get_attention_scores(query, ip_key, None)

169 | self.attn_map = ip_attention_probs

170 | ip_hidden_states = torch.bmm(ip_attention_probs, ip_value)

171 | ip_hidden_states = attn.batch_to_head_dim(ip_hidden_states)

172 |

173 | hidden_states = hidden_states + self.scale * ip_hidden_states

174 |

175 | # linear proj

176 | hidden_states = attn.to_out[0](hidden_states)

177 | # dropout

178 | hidden_states = attn.to_out[1](hidden_states)

179 |

180 | if input_ndim == 4:

181 | hidden_states = hidden_states.transpose(-1, -2).reshape(batch_size, channel, height, width)

182 |

183 | if attn.residual_connection:

184 | hidden_states = hidden_states + residual

185 |

186 | hidden_states = hidden_states / attn.rescale_output_factor

187 |

188 | return hidden_states

189 |

190 |

191 | class AttnProcessor2_0(torch.nn.Module):

192 | r"""

193 | Processor for implementing scaled dot-product attention (enabled by default if you're using PyTorch 2.0).

194 | """

195 |

196 | def __init__(

197 | self,

198 | hidden_size=None,

199 | cross_attention_dim=None,

200 | ):

201 | super().__init__()

202 | if not hasattr(F, "scaled_dot_product_attention"):

203 | raise ImportError("AttnProcessor2_0 requires PyTorch 2.0, to use it, please upgrade PyTorch to 2.0.")

204 |

205 | def __call__(

206 | self,

207 | attn,

208 | hidden_states,

209 | encoder_hidden_states=None,

210 | attention_mask=None,

211 | temb=None,

212 | *args,

213 | **kwargs,

214 | ):

215 | residual = hidden_states

216 |

217 | if attn.spatial_norm is not None:

218 | hidden_states = attn.spatial_norm(hidden_states, temb)

219 |

220 | input_ndim = hidden_states.ndim

221 |

222 | if input_ndim == 4:

223 | batch_size, channel, height, width = hidden_states.shape

224 | hidden_states = hidden_states.view(batch_size, channel, height * width).transpose(1, 2)

225 |

226 | batch_size, sequence_length, _ = (

227 | hidden_states.shape if encoder_hidden_states is None else encoder_hidden_states.shape

228 | )

229 |

230 | if attention_mask is not None:

231 | attention_mask = attn.prepare_attention_mask(attention_mask, sequence_length, batch_size)

232 | # scaled_dot_product_attention expects attention_mask shape to be

233 | # (batch, heads, source_length, target_length)

234 | attention_mask = attention_mask.view(batch_size, attn.heads, -1, attention_mask.shape[-1])

235 |

236 | if attn.group_norm is not None:

237 | hidden_states = attn.group_norm(hidden_states.transpose(1, 2)).transpose(1, 2)

238 |

239 | query = attn.to_q(hidden_states)

240 |

241 | if encoder_hidden_states is None:

242 | encoder_hidden_states = hidden_states

243 | elif attn.norm_cross:

244 | encoder_hidden_states = attn.norm_encoder_hidden_states(encoder_hidden_states)

245 |

246 | key = attn.to_k(encoder_hidden_states)

247 | value = attn.to_v(encoder_hidden_states)

248 |

249 | inner_dim = key.shape[-1]

250 | head_dim = inner_dim // attn.heads

251 |

252 | query = query.view(batch_size, -1, attn.heads, head_dim).transpose(1, 2)

253 |

254 | key = key.view(batch_size, -1, attn.heads, head_dim).transpose(1, 2)

255 | value = value.view(batch_size, -1, attn.heads, head_dim).transpose(1, 2)

256 |

257 | # the output of sdp = (batch, num_heads, seq_len, head_dim)

258 | # TODO: add support for attn.scale when we move to Torch 2.1

259 | hidden_states = F.scaled_dot_product_attention(

260 | query, key, value, attn_mask=attention_mask, dropout_p=0.0, is_causal=False

261 | )

262 |

263 | hidden_states = hidden_states.transpose(1, 2).reshape(batch_size, -1, attn.heads * head_dim)

264 | hidden_states = hidden_states.to(query.dtype)

265 |

266 | # linear proj

267 | hidden_states = attn.to_out[0](hidden_states)

268 | # dropout

269 | hidden_states = attn.to_out[1](hidden_states)

270 |

271 | if input_ndim == 4:

272 | hidden_states = hidden_states.transpose(-1, -2).reshape(batch_size, channel, height, width)

273 |

274 | if attn.residual_connection:

275 | hidden_states = hidden_states + residual

276 |

277 | hidden_states = hidden_states / attn.rescale_output_factor

278 |

279 | return hidden_states

280 |

281 |

282 | class IPAttnProcessor2_0(torch.nn.Module):

283 | r"""

284 | Attention processor for IP-Adapater for PyTorch 2.0.

285 | Args:

286 | hidden_size (`int`):

287 | The hidden size of the attention layer.

288 | cross_attention_dim (`int`):

289 | The number of channels in the `encoder_hidden_states`.

290 | scale (`float`, defaults to 1.0):

291 | the weight scale of image prompt.

292 | num_tokens (`int`, defaults to 4 when do ip_adapter_plus it should be 16):

293 | The context length of the image features.

294 | """

295 |

296 | def __init__(self, hidden_size, cross_attention_dim=None, scale=1.0, num_tokens=4):

297 | super().__init__()

298 |

299 | if not hasattr(F, "scaled_dot_product_attention"):

300 | raise ImportError("AttnProcessor2_0 requires PyTorch 2.0, to use it, please upgrade PyTorch to 2.0.")

301 |

302 | self.hidden_size = hidden_size

303 | self.cross_attention_dim = cross_attention_dim

304 | self.scale = scale

305 | self.num_tokens = num_tokens

306 |

307 | self.to_k_ip = nn.Linear(cross_attention_dim or hidden_size, hidden_size, bias=False)

308 | self.to_v_ip = nn.Linear(cross_attention_dim or hidden_size, hidden_size, bias=False)

309 |

310 | def __call__(

311 | self,

312 | attn,

313 | hidden_states,

314 | encoder_hidden_states=None,

315 | attention_mask=None,

316 | temb=None,

317 | *args,

318 | **kwargs,

319 | ):

320 | residual = hidden_states

321 |

322 | if attn.spatial_norm is not None:

323 | hidden_states = attn.spatial_norm(hidden_states, temb)

324 |

325 | input_ndim = hidden_states.ndim

326 |

327 | if input_ndim == 4:

328 | batch_size, channel, height, width = hidden_states.shape

329 | hidden_states = hidden_states.view(batch_size, channel, height * width).transpose(1, 2)

330 |

331 | batch_size, sequence_length, _ = (

332 | hidden_states.shape if encoder_hidden_states is None else encoder_hidden_states.shape

333 | )

334 |

335 | if attention_mask is not None:

336 | attention_mask = attn.prepare_attention_mask(attention_mask, sequence_length, batch_size)

337 | # scaled_dot_product_attention expects attention_mask shape to be

338 | # (batch, heads, source_length, target_length)

339 | attention_mask = attention_mask.view(batch_size, attn.heads, -1, attention_mask.shape[-1])

340 |

341 | if attn.group_norm is not None:

342 | hidden_states = attn.group_norm(hidden_states.transpose(1, 2)).transpose(1, 2)

343 |

344 | query = attn.to_q(hidden_states)

345 |

346 | if encoder_hidden_states is None:

347 | encoder_hidden_states = hidden_states

348 | else:

349 | # get encoder_hidden_states, ip_hidden_states

350 | end_pos = encoder_hidden_states.shape[1] - self.num_tokens

351 | encoder_hidden_states, ip_hidden_states = (

352 | encoder_hidden_states[:, :end_pos, :],

353 | encoder_hidden_states[:, end_pos:, :],

354 | )

355 | if attn.norm_cross:

356 | encoder_hidden_states = attn.norm_encoder_hidden_states(encoder_hidden_states)

357 |

358 | key = attn.to_k(encoder_hidden_states)

359 | value = attn.to_v(encoder_hidden_states)

360 |

361 | inner_dim = key.shape[-1]

362 | head_dim = inner_dim // attn.heads

363 |

364 | query = query.view(batch_size, -1, attn.heads, head_dim).transpose(1, 2)

365 |

366 | key = key.view(batch_size, -1, attn.heads, head_dim).transpose(1, 2)

367 | value = value.view(batch_size, -1, attn.heads, head_dim).transpose(1, 2)

368 |

369 | # the output of sdp = (batch, num_heads, seq_len, head_dim)

370 | # TODO: add support for attn.scale when we move to Torch 2.1

371 | hidden_states = F.scaled_dot_product_attention(

372 | query, key, value, attn_mask=attention_mask, dropout_p=0.0, is_causal=False

373 | )

374 |

375 | hidden_states = hidden_states.transpose(1, 2).reshape(batch_size, -1, attn.heads * head_dim)

376 | hidden_states = hidden_states.to(query.dtype)

377 |

378 | # for ip-adapter

379 | ip_key = self.to_k_ip(ip_hidden_states)

380 | ip_value = self.to_v_ip(ip_hidden_states)

381 |

382 | ip_key = ip_key.view(batch_size, -1, attn.heads, head_dim).transpose(1, 2)

383 | ip_value = ip_value.view(batch_size, -1, attn.heads, head_dim).transpose(1, 2)

384 |

385 | # the output of sdp = (batch, num_heads, seq_len, head_dim)

386 | # TODO: add support for attn.scale when we move to Torch 2.1

387 | ip_hidden_states = F.scaled_dot_product_attention(

388 | query, ip_key, ip_value, attn_mask=None, dropout_p=0.0, is_causal=False

389 | )

390 | with torch.no_grad():

391 | self.attn_map = query @ ip_key.transpose(-2, -1).softmax(dim=-1)

392 | #print(self.attn_map.shape)

393 |

394 | ip_hidden_states = ip_hidden_states.transpose(1, 2).reshape(batch_size, -1, attn.heads * head_dim)

395 | ip_hidden_states = ip_hidden_states.to(query.dtype)

396 |

397 | hidden_states = hidden_states + self.scale * ip_hidden_states

398 |

399 | # linear proj

400 | hidden_states = attn.to_out[0](hidden_states)

401 | # dropout

402 | hidden_states = attn.to_out[1](hidden_states)

403 |

404 | if input_ndim == 4:

405 | hidden_states = hidden_states.transpose(-1, -2).reshape(batch_size, channel, height, width)

406 |

407 | if attn.residual_connection:

408 | hidden_states = hidden_states + residual

409 |

410 | hidden_states = hidden_states / attn.rescale_output_factor

411 |

412 | return hidden_states

413 |

414 |

415 | ## for controlnet

416 | class CNAttnProcessor:

417 | r"""

418 | Default processor for performing attention-related computations.

419 | """

420 |

421 | def __init__(self, num_tokens=4):

422 | self.num_tokens = num_tokens

423 |

424 | def __call__(self, attn, hidden_states, encoder_hidden_states=None, attention_mask=None, temb=None, *args, **kwargs,):

425 | residual = hidden_states

426 |

427 | if attn.spatial_norm is not None:

428 | hidden_states = attn.spatial_norm(hidden_states, temb)

429 |

430 | input_ndim = hidden_states.ndim

431 |

432 | if input_ndim == 4:

433 | batch_size, channel, height, width = hidden_states.shape

434 | hidden_states = hidden_states.view(batch_size, channel, height * width).transpose(1, 2)

435 |

436 | batch_size, sequence_length, _ = (

437 | hidden_states.shape if encoder_hidden_states is None else encoder_hidden_states.shape

438 | )

439 | attention_mask = attn.prepare_attention_mask(attention_mask, sequence_length, batch_size)

440 |

441 | if attn.group_norm is not None:

442 | hidden_states = attn.group_norm(hidden_states.transpose(1, 2)).transpose(1, 2)

443 |

444 | query = attn.to_q(hidden_states)

445 |

446 | if encoder_hidden_states is None:

447 | encoder_hidden_states = hidden_states

448 | else:

449 | end_pos = encoder_hidden_states.shape[1] - self.num_tokens

450 | encoder_hidden_states = encoder_hidden_states[:, :end_pos] # only use text

451 | if attn.norm_cross:

452 | encoder_hidden_states = attn.norm_encoder_hidden_states(encoder_hidden_states)

453 |

454 | key = attn.to_k(encoder_hidden_states)

455 | value = attn.to_v(encoder_hidden_states)

456 |

457 | query = attn.head_to_batch_dim(query)

458 | key = attn.head_to_batch_dim(key)

459 | value = attn.head_to_batch_dim(value)

460 |

461 | attention_probs = attn.get_attention_scores(query, key, attention_mask)

462 | hidden_states = torch.bmm(attention_probs, value)

463 | hidden_states = attn.batch_to_head_dim(hidden_states)

464 |

465 | # linear proj

466 | hidden_states = attn.to_out[0](hidden_states)

467 | # dropout

468 | hidden_states = attn.to_out[1](hidden_states)

469 |

470 | if input_ndim == 4:

471 | hidden_states = hidden_states.transpose(-1, -2).reshape(batch_size, channel, height, width)

472 |

473 | if attn.residual_connection:

474 | hidden_states = hidden_states + residual

475 |

476 | hidden_states = hidden_states / attn.rescale_output_factor

477 |

478 | return hidden_states

479 |

480 |

481 | class CNAttnProcessor2_0:

482 | r"""

483 | Processor for implementing scaled dot-product attention (enabled by default if you're using PyTorch 2.0).

484 | """

485 |

486 | def __init__(self, num_tokens=4):

487 | if not hasattr(F, "scaled_dot_product_attention"):

488 | raise ImportError("AttnProcessor2_0 requires PyTorch 2.0, to use it, please upgrade PyTorch to 2.0.")

489 | self.num_tokens = num_tokens

490 |

491 | def __call__(

492 | self,

493 | attn,

494 | hidden_states,

495 | encoder_hidden_states=None,

496 | attention_mask=None,

497 | temb=None,

498 | *args,

499 | **kwargs,

500 | ):

501 | residual = hidden_states

502 |

503 | if attn.spatial_norm is not None:

504 | hidden_states = attn.spatial_norm(hidden_states, temb)

505 |

506 | input_ndim = hidden_states.ndim

507 |

508 | if input_ndim == 4:

509 | batch_size, channel, height, width = hidden_states.shape

510 | hidden_states = hidden_states.view(batch_size, channel, height * width).transpose(1, 2)

511 |

512 | batch_size, sequence_length, _ = (

513 | hidden_states.shape if encoder_hidden_states is None else encoder_hidden_states.shape

514 | )

515 |

516 | if attention_mask is not None:

517 | attention_mask = attn.prepare_attention_mask(attention_mask, sequence_length, batch_size)

518 | # scaled_dot_product_attention expects attention_mask shape to be

519 | # (batch, heads, source_length, target_length)

520 | attention_mask = attention_mask.view(batch_size, attn.heads, -1, attention_mask.shape[-1])

521 |

522 | if attn.group_norm is not None:

523 | hidden_states = attn.group_norm(hidden_states.transpose(1, 2)).transpose(1, 2)

524 |

525 | query = attn.to_q(hidden_states)

526 |

527 | if encoder_hidden_states is None:

528 | encoder_hidden_states = hidden_states

529 | else:

530 | end_pos = encoder_hidden_states.shape[1] - self.num_tokens

531 | encoder_hidden_states = encoder_hidden_states[:, :end_pos] # only use text

532 | if attn.norm_cross:

533 | encoder_hidden_states = attn.norm_encoder_hidden_states(encoder_hidden_states)

534 |

535 | key = attn.to_k(encoder_hidden_states)

536 | value = attn.to_v(encoder_hidden_states)

537 |

538 | inner_dim = key.shape[-1]

539 | head_dim = inner_dim // attn.heads

540 |

541 | query = query.view(batch_size, -1, attn.heads, head_dim).transpose(1, 2)

542 |

543 | key = key.view(batch_size, -1, attn.heads, head_dim).transpose(1, 2)

544 | value = value.view(batch_size, -1, attn.heads, head_dim).transpose(1, 2)

545 |

546 | # the output of sdp = (batch, num_heads, seq_len, head_dim)

547 | # TODO: add support for attn.scale when we move to Torch 2.1

548 | hidden_states = F.scaled_dot_product_attention(

549 | query, key, value, attn_mask=attention_mask, dropout_p=0.0, is_causal=False

550 | )

551 |

552 | hidden_states = hidden_states.transpose(1, 2).reshape(batch_size, -1, attn.heads * head_dim)

553 | hidden_states = hidden_states.to(query.dtype)

554 |

555 | # linear proj

556 | hidden_states = attn.to_out[0](hidden_states)

557 | # dropout

558 | hidden_states = attn.to_out[1](hidden_states)

559 |

560 | if input_ndim == 4:

561 | hidden_states = hidden_states.transpose(-1, -2).reshape(batch_size, channel, height, width)

562 |

563 | if attn.residual_connection:

564 | hidden_states = hidden_states + residual

565 |

566 | hidden_states = hidden_states / attn.rescale_output_factor

567 |

568 | return hidden_states

569 |

--------------------------------------------------------------------------------

/ip_adapter/attention_processor_faceid.py:

--------------------------------------------------------------------------------

1 | # modified from https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/attention_processor.py

2 | import torch

3 | import torch.nn as nn

4 | import torch.nn.functional as F

5 |

6 | from diffusers.models.lora import LoRALinearLayer

7 |

8 |

9 | class LoRAAttnProcessor(nn.Module):

10 | r"""

11 | Default processor for performing attention-related computations.

12 | """

13 |

14 | def __init__(

15 | self,

16 | hidden_size=None,

17 | cross_attention_dim=None,

18 | rank=4,

19 | network_alpha=None,

20 | lora_scale=1.0,

21 | ):

22 | super().__init__()

23 |

24 | self.rank = rank

25 | self.lora_scale = lora_scale

26 |

27 | self.to_q_lora = LoRALinearLayer(hidden_size, hidden_size, rank, network_alpha)

28 | self.to_k_lora = LoRALinearLayer(cross_attention_dim or hidden_size, hidden_size, rank, network_alpha)

29 | self.to_v_lora = LoRALinearLayer(cross_attention_dim or hidden_size, hidden_size, rank, network_alpha)

30 | self.to_out_lora = LoRALinearLayer(hidden_size, hidden_size, rank, network_alpha)

31 |

32 | def __call__(

33 | self,

34 | attn,

35 | hidden_states,

36 | encoder_hidden_states=None,

37 | attention_mask=None,

38 | temb=None,

39 | *args,

40 | **kwargs,

41 | ):

42 | residual = hidden_states

43 |

44 | if attn.spatial_norm is not None:

45 | hidden_states = attn.spatial_norm(hidden_states, temb)

46 |

47 | input_ndim = hidden_states.ndim

48 |

49 | if input_ndim == 4:

50 | batch_size, channel, height, width = hidden_states.shape

51 | hidden_states = hidden_states.view(batch_size, channel, height * width).transpose(1, 2)

52 |

53 | batch_size, sequence_length, _ = (

54 | hidden_states.shape if encoder_hidden_states is None else encoder_hidden_states.shape

55 | )

56 | attention_mask = attn.prepare_attention_mask(attention_mask, sequence_length, batch_size)

57 |

58 | if attn.group_norm is not None:

59 | hidden_states = attn.group_norm(hidden_states.transpose(1, 2)).transpose(1, 2)

60 |

61 | query = attn.to_q(hidden_states) + self.lora_scale * self.to_q_lora(hidden_states)

62 |

63 | if encoder_hidden_states is None:

64 | encoder_hidden_states = hidden_states

65 | elif attn.norm_cross:

66 | encoder_hidden_states = attn.norm_encoder_hidden_states(encoder_hidden_states)

67 |

68 | key = attn.to_k(encoder_hidden_states) + self.lora_scale * self.to_k_lora(encoder_hidden_states)

69 | value = attn.to_v(encoder_hidden_states) + self.lora_scale * self.to_v_lora(encoder_hidden_states)

70 |

71 | query = attn.head_to_batch_dim(query)

72 | key = attn.head_to_batch_dim(key)

73 | value = attn.head_to_batch_dim(value)

74 |

75 | attention_probs = attn.get_attention_scores(query, key, attention_mask)

76 | hidden_states = torch.bmm(attention_probs, value)

77 | hidden_states = attn.batch_to_head_dim(hidden_states)

78 |

79 | # linear proj

80 | hidden_states = attn.to_out[0](hidden_states) + self.lora_scale * self.to_out_lora(hidden_states)

81 | # dropout

82 | hidden_states = attn.to_out[1](hidden_states)

83 |

84 | if input_ndim == 4:

85 | hidden_states = hidden_states.transpose(-1, -2).reshape(batch_size, channel, height, width)

86 |

87 | if attn.residual_connection:

88 | hidden_states = hidden_states + residual

89 |

90 | hidden_states = hidden_states / attn.rescale_output_factor

91 |

92 | return hidden_states

93 |

94 |

95 | class LoRAIPAttnProcessor(nn.Module):

96 | r"""

97 | Attention processor for IP-Adapater.

98 | Args:

99 | hidden_size (`int`):

100 | The hidden size of the attention layer.

101 | cross_attention_dim (`int`):

102 | The number of channels in the `encoder_hidden_states`.

103 | scale (`float`, defaults to 1.0):

104 | the weight scale of image prompt.

105 | num_tokens (`int`, defaults to 4 when do ip_adapter_plus it should be 16):

106 | The context length of the image features.

107 | """

108 |

109 | def __init__(self, hidden_size, cross_attention_dim=None, rank=4, network_alpha=None, lora_scale=1.0, scale=1.0, num_tokens=4):

110 | super().__init__()

111 |

112 | self.rank = rank

113 | self.lora_scale = lora_scale

114 |

115 | self.to_q_lora = LoRALinearLayer(hidden_size, hidden_size, rank, network_alpha)

116 | self.to_k_lora = LoRALinearLayer(cross_attention_dim or hidden_size, hidden_size, rank, network_alpha)

117 | self.to_v_lora = LoRALinearLayer(cross_attention_dim or hidden_size, hidden_size, rank, network_alpha)

118 | self.to_out_lora = LoRALinearLayer(hidden_size, hidden_size, rank, network_alpha)

119 |

120 | self.hidden_size = hidden_size

121 | self.cross_attention_dim = cross_attention_dim

122 | self.scale = scale

123 | self.num_tokens = num_tokens

124 |

125 | self.to_k_ip = nn.Linear(cross_attention_dim or hidden_size, hidden_size, bias=False)

126 | self.to_v_ip = nn.Linear(cross_attention_dim or hidden_size, hidden_size, bias=False)

127 |

128 | def __call__(

129 | self,

130 | attn,

131 | hidden_states,

132 | encoder_hidden_states=None,

133 | attention_mask=None,

134 | temb=None,

135 | *args,

136 | **kwargs,

137 | ):

138 | residual = hidden_states

139 |

140 | if attn.spatial_norm is not None:

141 | hidden_states = attn.spatial_norm(hidden_states, temb)

142 |

143 | input_ndim = hidden_states.ndim

144 |

145 | if input_ndim == 4:

146 | batch_size, channel, height, width = hidden_states.shape

147 | hidden_states = hidden_states.view(batch_size, channel, height * width).transpose(1, 2)

148 |

149 | batch_size, sequence_length, _ = (

150 | hidden_states.shape if encoder_hidden_states is None else encoder_hidden_states.shape

151 | )

152 | attention_mask = attn.prepare_attention_mask(attention_mask, sequence_length, batch_size)

153 |

154 | if attn.group_norm is not None:

155 | hidden_states = attn.group_norm(hidden_states.transpose(1, 2)).transpose(1, 2)

156 |

157 | query = attn.to_q(hidden_states) + self.lora_scale * self.to_q_lora(hidden_states)

158 |

159 | if encoder_hidden_states is None:

160 | encoder_hidden_states = hidden_states

161 | else:

162 | # get encoder_hidden_states, ip_hidden_states

163 | end_pos = encoder_hidden_states.shape[1] - self.num_tokens

164 | encoder_hidden_states, ip_hidden_states = (

165 | encoder_hidden_states[:, :end_pos, :],

166 | encoder_hidden_states[:, end_pos:, :],

167 | )

168 | if attn.norm_cross:

169 | encoder_hidden_states = attn.norm_encoder_hidden_states(encoder_hidden_states)

170 |

171 | key = attn.to_k(encoder_hidden_states) + self.lora_scale * self.to_k_lora(encoder_hidden_states)

172 | value = attn.to_v(encoder_hidden_states) + self.lora_scale * self.to_v_lora(encoder_hidden_states)

173 |

174 | query = attn.head_to_batch_dim(query)

175 | key = attn.head_to_batch_dim(key)

176 | value = attn.head_to_batch_dim(value)

177 |

178 | attention_probs = attn.get_attention_scores(query, key, attention_mask)

179 | hidden_states = torch.bmm(attention_probs, value)

180 | hidden_states = attn.batch_to_head_dim(hidden_states)

181 |

182 | # for ip-adapter

183 | ip_key = self.to_k_ip(ip_hidden_states)

184 | ip_value = self.to_v_ip(ip_hidden_states)

185 |

186 | ip_key = attn.head_to_batch_dim(ip_key)

187 | ip_value = attn.head_to_batch_dim(ip_value)

188 |

189 | ip_attention_probs = attn.get_attention_scores(query, ip_key, None)

190 | self.attn_map = ip_attention_probs

191 | ip_hidden_states = torch.bmm(ip_attention_probs, ip_value)

192 | ip_hidden_states = attn.batch_to_head_dim(ip_hidden_states)

193 |

194 | hidden_states = hidden_states + self.scale * ip_hidden_states

195 |

196 | # linear proj

197 | hidden_states = attn.to_out[0](hidden_states) + self.lora_scale * self.to_out_lora(hidden_states)

198 | # dropout

199 | hidden_states = attn.to_out[1](hidden_states)

200 |

201 | if input_ndim == 4:

202 | hidden_states = hidden_states.transpose(-1, -2).reshape(batch_size, channel, height, width)

203 |

204 | if attn.residual_connection:

205 | hidden_states = hidden_states + residual

206 |

207 | hidden_states = hidden_states / attn.rescale_output_factor

208 |

209 | return hidden_states

210 |

211 |

212 | class LoRAAttnProcessor2_0(nn.Module):

213 |

214 | r"""

215 | Default processor for performing attention-related computations.

216 | """

217 |

218 | def __init__(

219 | self,

220 | hidden_size=None,

221 | cross_attention_dim=None,

222 | rank=4,

223 | network_alpha=None,

224 | lora_scale=1.0,

225 | ):

226 | super().__init__()

227 |

228 | self.rank = rank

229 | self.lora_scale = lora_scale

230 |

231 | self.to_q_lora = LoRALinearLayer(hidden_size, hidden_size, rank, network_alpha)

232 | self.to_k_lora = LoRALinearLayer(cross_attention_dim or hidden_size, hidden_size, rank, network_alpha)

233 | self.to_v_lora = LoRALinearLayer(cross_attention_dim or hidden_size, hidden_size, rank, network_alpha)

234 | self.to_out_lora = LoRALinearLayer(hidden_size, hidden_size, rank, network_alpha)

235 |

236 | def __call__(

237 | self,

238 | attn,

239 | hidden_states,

240 | encoder_hidden_states=None,

241 | attention_mask=None,

242 | temb=None,

243 | *args,

244 | **kwargs,

245 | ):

246 | residual = hidden_states

247 |

248 | if attn.spatial_norm is not None:

249 | hidden_states = attn.spatial_norm(hidden_states, temb)

250 |

251 | input_ndim = hidden_states.ndim

252 |

253 | if input_ndim == 4:

254 | batch_size, channel, height, width = hidden_states.shape

255 | hidden_states = hidden_states.view(batch_size, channel, height * width).transpose(1, 2)

256 |

257 | batch_size, sequence_length, _ = (

258 | hidden_states.shape if encoder_hidden_states is None else encoder_hidden_states.shape

259 | )

260 | attention_mask = attn.prepare_attention_mask(attention_mask, sequence_length, batch_size)

261 |

262 | if attn.group_norm is not None:

263 | hidden_states = attn.group_norm(hidden_states.transpose(1, 2)).transpose(1, 2)

264 |

265 | query = attn.to_q(hidden_states) + self.lora_scale * self.to_q_lora(hidden_states)

266 |

267 | if encoder_hidden_states is None:

268 | encoder_hidden_states = hidden_states

269 | elif attn.norm_cross:

270 | encoder_hidden_states = attn.norm_encoder_hidden_states(encoder_hidden_states)

271 |

272 | key = attn.to_k(encoder_hidden_states) + self.lora_scale * self.to_k_lora(encoder_hidden_states)

273 | value = attn.to_v(encoder_hidden_states) + self.lora_scale * self.to_v_lora(encoder_hidden_states)

274 |

275 | inner_dim = key.shape[-1]

276 | head_dim = inner_dim // attn.heads

277 |

278 | query = query.view(batch_size, -1, attn.heads, head_dim).transpose(1, 2)

279 |

280 | key = key.view(batch_size, -1, attn.heads, head_dim).transpose(1, 2)

281 | value = value.view(batch_size, -1, attn.heads, head_dim).transpose(1, 2)

282 |

283 | # the output of sdp = (batch, num_heads, seq_len, head_dim)

284 | # TODO: add support for attn.scale when we move to Torch 2.1