├── .dockerignore
├── .github
│   └── workflows
│       └── main.yaml
├── .gitignore
├── Dockerfile
├── LICENSE
├── README.md
├── app.py
├── data
│   ├── .gitkeep
│   └── inputImage.jpg
├── docs
│   └── .gitkeep
├── flowcharts
│   ├── Data Ingetions.png
│   ├── Data validation.png
│   ├── Model Pusher.png
│   ├── Model trainer.png
│   └── deployment.jpeg
├── requirements.txt
├── setup.py
├── signLanguage
│   ├── __init__.py
│   ├── components
│   │   ├── __init__.py
│   │   ├── data_ingestion.py
│   │   ├── data_validation.py
│   │   ├── model_pusher.py
│   │   └── model_trainer.py
│   ├── configuration
│   │   ├── __init__.py
│   │   └── s3_operations.py
│   ├── constant
│   │   ├── __init__.py
│   │   ├── application.py
│   │   └── training_pipeline
│   │       └── __init__.py
│   ├── entity
│   │   ├── __init__.py
│   │   ├── artifact_entity.py
│   │   └── config_entity.py
│   ├── exception
│   │   └── __init__.py
│   ├── logger
│   │   └── __init__.py
│   ├── pipeline
│   │   ├── __init__.py
│   │   └── training_pipeline.py
│   └── utils
│       ├── __init__.py
│       └── main_utils.py
├── template.py
├── templates
│   └── index.html
└── yolov5
    ├── .dockerignore
    ├── .gitattributes
    ├── .github
    │   ├── ISSUE_TEMPLATE
    │   │   ├── bug-report.yml
    │   │   ├── config.yml
    │   │   ├── feature-request.yml
    │   │   └── question.yml
    │   ├── PULL_REQUEST_TEMPLATE.md
    │   ├── dependabot.yml
    │   └── workflows
    │       ├── ci-testing.yml
    │       ├── codeql-analysis.yml
    │       ├── docker.yml
    │       ├── greetings.yml
    │       ├── stale.yml
    │       └── translate-readme.yml
    ├── .pre-commit-config.yaml
    ├── CITATION.cff
    ├── CONTRIBUTING.md
    ├── LICENSE
    ├── README.md
    ├── README.zh-CN.md
    ├── benchmarks.py
    ├── best.pt
    ├── classify
    │   ├── predict.py
    │   ├── train.py
    │   ├── tutorial.ipynb
    │   └── val.py
    ├── data
    │   ├── Argoverse.yaml
    │   ├── GlobalWheat2020.yaml
    │   ├── ImageNet.yaml
    │   ├── Objects365.yaml
    │   ├── SKU-110K.yaml
    │   ├── VOC.yaml
    │   ├── VisDrone.yaml
    │   ├── coco.yaml
    │   ├── coco128-seg.yaml
    │   ├── coco128.yaml
    │   ├── hyps
    │   │   ├── hyp.Objects365.yaml
    │   │   ├── hyp.VOC.yaml
    │   │   ├── hyp.no-augmentation.yaml
    │   │   ├── hyp.scratch-high.yaml
    │   │   ├── hyp.scratch-low.yaml
    │   │   └── hyp.scratch-med.yaml
    │   ├── images
    │   │   ├── bus.jpg
    │   │   └── zidane.jpg
    │   ├── scripts
    │   │   ├── download_weights.sh
    │   │   ├── get_coco.sh
    │   │   ├── get_coco128.sh
    │   │   └── get_imagenet.sh
    │   └── xView.yaml
    ├── detect.py
    ├── export.py
    ├── hubconf.py
    ├── models
    │   ├── __init__.py
    │   ├── common.py
    │   ├── custom_yolov5s.yaml
    │   ├── experimental.py
    │   ├── hub
    │   │   ├── anchors.yaml
    │   │   ├── yolov3-spp.yaml
    │   │   ├── yolov3-tiny.yaml
    │   │   ├── yolov3.yaml
    │   │   ├── yolov5-bifpn.yaml
    │   │   ├── yolov5-fpn.yaml
    │   │   ├── yolov5-p2.yaml
    │   │   ├── yolov5-p34.yaml
    │   │   ├── yolov5-p6.yaml
    │   │   ├── yolov5-p7.yaml
    │   │   ├── yolov5-panet.yaml
    │   │   ├── yolov5l6.yaml
    │   │   ├── yolov5m6.yaml
    │   │   ├── yolov5n6.yaml
    │   │   ├── yolov5s-LeakyReLU.yaml
    │   │   ├── yolov5s-ghost.yaml
    │   │   ├── yolov5s-transformer.yaml
    │   │   ├── yolov5s6.yaml
    │   │   └── yolov5x6.yaml
    │   ├── segment
    │   │   ├── yolov5l-seg.yaml
    │   │   ├── yolov5m-seg.yaml
    │   │   ├── yolov5n-seg.yaml
    │   │   ├── yolov5s-seg.yaml
    │   │   └── yolov5x-seg.yaml
    │   ├── tf.py
    │   ├── yolo.py
    │   ├── yolov5l.yaml
    │   ├── yolov5m.yaml
    │   ├── yolov5n.yaml
    │   ├── yolov5s.yaml
    │   └── yolov5x.yaml
    ├── my_model.pt
    ├── requirements.txt
    ├── segment
    │   ├── predict.py
    │   ├── train.py
    │   ├── tutorial.ipynb
    │   └── val.py
    ├── setup.cfg
    ├── train.py
    ├── tutorial.ipynb
    ├── utils
    │   ├── __init__.py
    │   ├── activations.py
    │   ├── augmentations.py
    │   ├── autoanchor.py
    │   ├── autobatch.py
    │   ├── aws
    │   │   ├── __init__.py
    │   │   ├── mime.sh
    │   │   ├── resume.py
    │   │   └── userdata.sh
    │   ├── callbacks.py
    │   ├── dataloaders.py
    │   ├── docker
    │   │   ├── Dockerfile
    │   │   ├── Dockerfile-arm64
    │   │   └── Dockerfile-cpu
    │   ├── downloads.py
    │   ├── flask_rest_api
    │   │   ├── README.md
    │   │   ├── example_request.py
    │   │   └── restapi.py
    │   ├── general.py
    │   ├── google_app_engine
    │   │   ├── Dockerfile
    │   │   ├── additional_requirements.txt
    │   │   └── app.yaml
    │   ├── loggers
    │   │   ├── __init__.py
    │   │   ├── clearml
    │   │   │   ├── README.md
    │   │   │   ├── __init__.py
    │   │   │   ├── clearml_utils.py
    │   │   │   └── hpo.py
    │   │   ├── comet
    │   │   │   ├── README.md
    │   │   │   ├── __init__.py
    │   │   │   ├── comet_utils.py
    │   │   │   ├── hpo.py
    │   │   │   └── optimizer_config.json
    │   │   └── wandb
    │   │       ├── __init__.py
    │   │       └── wandb_utils.py
    │   ├── loss.py
    │   ├── metrics.py
    │   ├── plots.py
    │   ├── segment
    │   │   ├── __init__.py
    │   │   ├── augmentations.py
    │   │   ├── dataloaders.py
    │   │   ├── general.py
    │   │   ├── loss.py
    │   │   ├── metrics.py
    │   │   └── plots.py
    │   ├── torch_utils.py
    │   └── triton.py
    ├── val.py
    └── yolov5s.pt

/.dockerignore:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/entbappy/End-to-end-Sign-Language-Detection/0bbe4e9fb7fccec9869dad566fec7c83c0497da9/.dockerignore

--------------------------------------------------------------------------------

/.github/workflows/main.yaml:

--------------------------------------------------------------------------------

1 | name: workflow
2 |
3 | on:
4 |   push:
5 |     branches:
6 |       - main
7 |     paths-ignore:
8 |       - 'README.md'
9 |
10 | permissions:
11 |   id-token: write
12 |   contents: read
13 |
14 | jobs:
15 |   integration:
16 |     name: Continuous Integration
17 |     runs-on: ubuntu-latest
18 |     steps:
19 |       - name: Checkout Code
20 |         uses: actions/checkout@v3
21 |
22 |       - name: Lint code
23 |         run: echo "Linting repository"
24 |
25 |       - name: Run unit tests
26 |         run: echo "Running unit tests"
27 |
28 |   build-and-push-ecr-image:
29 |     name: Continuous Delivery
30 |     needs: integration
31 |     runs-on: ubuntu-latest
32 |     steps:
33 |       - name: Checkout Code
34 |         uses: actions/checkout@v3
35 |
36 |       - name: Install Utilities
37 |         run: |
38 |           sudo apt-get update
39 |           sudo apt-get install -y jq unzip
40 |       - name: Configure AWS credentials
41 |         uses: aws-actions/configure-aws-credentials@v1
42 |         with:
43 |           aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
44 |           aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
45 |           aws-region: ${{ secrets.AWS_REGION }}
46 |
47 |       - name: Login to Amazon ECR
48 |         id: login-ecr
49 |         uses: aws-actions/amazon-ecr-login@v1
50 |
51 |       - name: Build, tag, and push image to Amazon ECR
52 |         id: build-image
53 |         env:
54 |           ECR_REGISTRY: ${{ steps.login-ecr.outputs.registry }}
55 |           ECR_REPOSITORY: ${{ secrets.ECR_REPOSITORY_NAME }}
56 |           IMAGE_TAG: latest
57 |         run: |
58 |           # Build a docker container and
59 |           # push it to ECR so that it can be pulled
60 |           # and run by the self-hosted deployment job.
61 |           docker build -t $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG .
62 |           docker push $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG
63 |           echo "image=$ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG" >> "$GITHUB_OUTPUT"
64 |
65 |
66 |   Continuous-Deployment:
67 |     needs: build-and-push-ecr-image
68 |     runs-on: self-hosted
69 |     steps:
70 |       - name: Checkout
71 |         uses: actions/checkout@v3
72 |
73 |       - name: Configure AWS credentials
74 |         uses: aws-actions/configure-aws-credentials@v1
75 |         with:
76 |           aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
77 |           aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
78 |           aws-region: ${{ secrets.AWS_REGION }}
79 |
80 |       - name: Login to Amazon ECR
81 |         id: login-ecr
82 |         uses: aws-actions/amazon-ecr-login@v1
83 |
84 |
85 |       - name: Pull latest images
86 |         run: |
87 |           docker pull ${{secrets.AWS_ECR_LOGIN_URI}}/${{ secrets.ECR_REPOSITORY_NAME }}:latest
88 |
89 |       # - name: Stop and remove container if running
90 |       #   run: |
91 |       #     docker ps -q --filter "name=sign" | grep -q . && docker stop sign && docker rm -fv sign
92 |
93 |       - name: Run Docker Image to serve users
94 |         run: |
95 |           docker run -d -p 8080:8080 --ipc="host" --name=sign -e 'AWS_ACCESS_KEY_ID=${{ secrets.AWS_ACCESS_KEY_ID }}' -e 'AWS_SECRET_ACCESS_KEY=${{ secrets.AWS_SECRET_ACCESS_KEY }}' -e 'AWS_REGION=${{ secrets.AWS_REGION }}' ${{secrets.AWS_ECR_LOGIN_URI}}/${{ secrets.ECR_REPOSITORY_NAME }}:latest
96 |       - name: Clean previous images and containers
97 |         run: |
98 |           docker system prune -f

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files
2 | __pycache__/
3 | *.py[cod]
4 | *$py.class
5 |
6 | # C extensions
7 | *.so
8 |
9 | # Distribution / packaging
10 | .Python
11 | build/
12 | develop-eggs/
13 | dist/
14 | downloads/
15 | eggs/
16 | .eggs/
17 | lib/
18 | lib64/
19 | parts/
20 | sdist/
21 | var/
22 | wheels/
23 | pip-wheel-metadata/
24 | share/python-wheels/
25 | *.egg-info/
26 | .installed.cfg
27 | *.egg
28 | MANIFEST
29 |
30 | # PyInstaller
31 | # Usually these files are written by a python script from a template
32 | # before PyInstaller builds the exe, so as to inject date/other infos into it.
33 | *.manifest
34 | *.spec
35 |
36 | # Installer logs
37 | pip-log.txt
38 | pip-delete-this-directory.txt
39 |
40 | # Unit test / coverage reports
41 | htmlcov/
42 | .tox/
43 | .nox/
44 | .coverage
45 | .coverage.*
46 | .cache
47 | nosetests.xml
48 | coverage.xml
49 | *.cover
50 | *.py,cover
51 | .hypothesis/
52 | .pytest_cache/
53 |
54 | # Translations
55 | *.mo
56 | *.pot
57 |
58 | # Django stuff:
59 | *.log
60 | local_settings.py
61 | db.sqlite3
62 | db.sqlite3-journal
63 |
64 | # Flask stuff:
65 | instance/
66 | .webassets-cache
67 |
68 | # Scrapy stuff:
69 | .scrapy
70 |
71 | # Sphinx documentation
72 | docs/_build/
73 |
74 | # PyBuilder
75 | target/
76 |
77 | # Jupyter Notebook
78 | .ipynb_checkpoints
79 |
80 | # IPython
81 | profile_default/
82 | ipython_config.py
83 |
84 | # pyenv
85 | .python-version
86 |
87 | # pipenv
88 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
89 | # However, in case of collaboration, if having platform-specific dependencies or dependencies
90 | # having no cross-platform support, pipenv may install dependencies that don't work, or not
91 | # install all needed dependencies.
92 | #Pipfile.lock
93 |
94 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow
95 | __pypackages__/
96 |
97 | # Celery stuff
98 | celerybeat-schedule
99 | celerybeat.pid
100 |
101 | # SageMath parsed files
102 | *.sage.py
103 |
104 | # Environments
105 | .env
106 | .venv
107 | env/
108 | venv/
109 | ENV/
110 | env.bak/
111 | venv.bak/
112 |
113 | # Spyder project settings
114 | .spyderproject
115 | .spyproject
116 |
117 | # Rope project settings
118 | .ropeproject
119 |
120 | # mkdocs documentation
121 | /site
122 |
123 | # mypy
124 | .mypy_cache/
125 | .dmypy.json
126 | dmypy.json
127 |
128 | # Pyre type checker
129 | .pyre/
130 | artifacts/*
131 | Sign_language_data.zip
132 |

--------------------------------------------------------------------------------

/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM python:3.7-slim-buster
2 | WORKDIR /app
3 | COPY . /app
4 |
5 | # System packages: AWS CLI, ffmpeg/libsm6/libxext6 for OpenCV, unzip for the dataset
6 | RUN apt-get update -y && apt-get install -y awscli ffmpeg libsm6 libxext6 unzip \
7 |     && pip install -r requirements.txt
8 |
9 | CMD ["python3", "app.py"]

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License
2 |
3 | Copyright (c) 2023 BAPPY AHMED
4 |
5 | Permission is hereby granted, free of charge, to any person obtaining a copy
6 | of this software and associated documentation files (the "Software"), to deal
7 | in the Software without restriction, including without limitation the rights
8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
9 | copies of the Software, and to permit persons to whom the Software is
10 | furnished to do so, subject to the following conditions:
11 |
12 | The above copyright notice and this permission notice shall be included in all
13 | copies or substantial portions of the Software.
14 |
15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
21 | SOFTWARE.
22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # End-to-end-Sign-Language-Detection
2 |
3 | 1. constants
4 | 2. entity
5 | 3. components
6 | 4. pipelines
7 | 5. app.py
8 |

--------------------------------------------------------------------------------

/app.py:

--------------------------------------------------------------------------------

1 | import sys, os
2 | from signLanguage.pipeline.training_pipeline import TrainPipeline
3 | from signLanguage.exception import SignException
4 | from signLanguage.utils.main_utils import decodeImage, encodeImageIntoBase64
5 | from flask import Flask, request, jsonify, render_template, Response
6 | from flask_cors import CORS, cross_origin
7 | from signLanguage.constant.application import APP_HOST, APP_PORT
8 |
9 |
10 | app = Flask(__name__)
11 | CORS(app)
12 |
13 |
14 | class ClientApp:
15 |     def __init__(self):
16 |         # File name passed to decodeImage() for the uploaded image
17 |         self.filename = "inputImage.jpg"
18 |
19 |
20 | # Created at import time so the routes can use it even when the app
21 | # is not started via `python app.py`
22 | clApp = ClientApp()
23 |
24 |
25 | @app.route("/train")
26 | def trainRoute():
27 |     obj = TrainPipeline()
28 |     obj.run_pipeline()
29 |     return "Training Successful!!"
30 |
31 |
32 | @app.route("/")
33 | def home():
34 |     return render_template("index.html")
35 |
36 |
37 | @app.route("/predict", methods=['POST', 'GET'])
38 | @cross_origin()
39 | def predictRoute():
40 |     try:
41 |         # Expects a JSON body of the form {"image": "<base64-encoded image>"}
42 |         image = request.json['image']
43 |         decodeImage(image, clApp.filename)
44 |
45 |         # Run YOLOv5 inference on the decoded image with the custom weights
46 |         os.system("cd yolov5/ && python detect.py --weights my_model.pt --img 416 --conf 0.5 --source ../data/inputImage.jpg")
47 |
48 |         opencodedbase64 = encodeImageIntoBase64("yolov5/runs/detect/exp/inputImage.jpg")
49 |         result = {"image": opencodedbase64.decode('utf-8')}
50 |         # Delete the run folder so the next request writes to runs/detect/exp again
51 |         os.system("rm -rf yolov5/runs")
52 |
53 |     except ValueError as val:
54 |         print(val)
55 |         return Response("Value not found inside json data")
56 |     except KeyError:
57 |         return Response("Key value error incorrect key passed")
58 |     except Exception as e:
59 |         print(e)
60 |         result = "Invalid input"
61 |
62 |     return jsonify(result)
63 |
64 |
65 | @app.route("/live", methods=['GET'])
66 | @cross_origin()
67 | def predictLive():
68 |     try:
69 |         # Runs detection on webcam 0; this call blocks until detect.py exits
70 |         os.system("cd yolov5/ && python detect.py --weights my_model.pt --img 416 --conf 0.5 --source 0")
71 |         os.system("rm -rf yolov5/runs")
72 |         return "Camera starting!!"
73 |
74 |     except ValueError as val:
75 |         print(val)
76 |         return Response("Value not found inside json data")
77 |
78 |
79 | if __name__ == "__main__":
80 |     app.run(host=APP_HOST, port=APP_PORT)
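A minimal client sketch for the `/predict` route, assuming the app is reachable at `http://localhost:8080` (port 8080 is `APP_PORT` in `signLanguage/constant/application.py`). The helper names `build_predict_payload`, `decode_predict_response` and `predict` are hypothetical; they only mirror what `predictRoute` reads (a JSON body with a base64 `image` field) and what it returns (the detection output image, base64-encoded under the same key).

```python
import base64
import json
import urllib.request

# Hypothetical default endpoint; 8080 matches APP_PORT in constant/application.py
PREDICT_URL = "http://localhost:8080/predict"


def build_predict_payload(image_bytes: bytes) -> bytes:
    """Encode raw image bytes as the JSON body predictRoute() reads: {"image": "<base64>"}."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return json.dumps({"image": encoded}).encode("utf-8")


def decode_predict_response(body: bytes) -> bytes:
    """Return the annotated image bytes, or raise ValueError for error responses."""
    # Error branches return plain text or the JSON string "Invalid input";
    # json.JSONDecodeError is itself a subclass of ValueError.
    data = json.loads(body)
    if not isinstance(data, dict) or "image" not in data:
        raise ValueError(f"unexpected response: {data!r}")
    return base64.b64decode(data["image"])


def predict(image_path: str, url: str = PREDICT_URL) -> bytes:
    """POST an image file to the running Flask app and return the annotated image."""
    with open(image_path, "rb") as f:
        payload = build_predict_payload(f.read())
    # Flask only parses request.json when the Content-Type is application/json
    req = urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return decode_predict_response(resp.read())
```

With the server running, `predict("data/inputImage.jpg")` should return the bytes of the image that `detect.py` wrote, which can be saved straight to a `.jpg` file.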

--------------------------------------------------------------------------------

/data/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/entbappy/End-to-end-Sign-Language-Detection/0bbe4e9fb7fccec9869dad566fec7c83c0497da9/data/.gitkeep

--------------------------------------------------------------------------------

/data/inputImage.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/entbappy/End-to-end-Sign-Language-Detection/0bbe4e9fb7fccec9869dad566fec7c83c0497da9/data/inputImage.jpg

--------------------------------------------------------------------------------

/docs/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/entbappy/End-to-end-Sign-Language-Detection/0bbe4e9fb7fccec9869dad566fec7c83c0497da9/docs/.gitkeep

--------------------------------------------------------------------------------

/flowcharts/Data Ingetions.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/entbappy/End-to-end-Sign-Language-Detection/0bbe4e9fb7fccec9869dad566fec7c83c0497da9/flowcharts/Data Ingetions.png

--------------------------------------------------------------------------------

/flowcharts/Data validation.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/entbappy/End-to-end-Sign-Language-Detection/0bbe4e9fb7fccec9869dad566fec7c83c0497da9/flowcharts/Data validation.png

--------------------------------------------------------------------------------

/flowcharts/Model Pusher.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/entbappy/End-to-end-Sign-Language-Detection/0bbe4e9fb7fccec9869dad566fec7c83c0497da9/flowcharts/Model Pusher.png

--------------------------------------------------------------------------------

/flowcharts/Model trainer.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/entbappy/End-to-end-Sign-Language-Detection/0bbe4e9fb7fccec9869dad566fec7c83c0497da9/flowcharts/Model trainer.png

--------------------------------------------------------------------------------

/flowcharts/deployment.jpeg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/entbappy/End-to-end-Sign-Language-Detection/0bbe4e9fb7fccec9869dad566fec7c83c0497da9/flowcharts/deployment.jpeg

--------------------------------------------------------------------------------

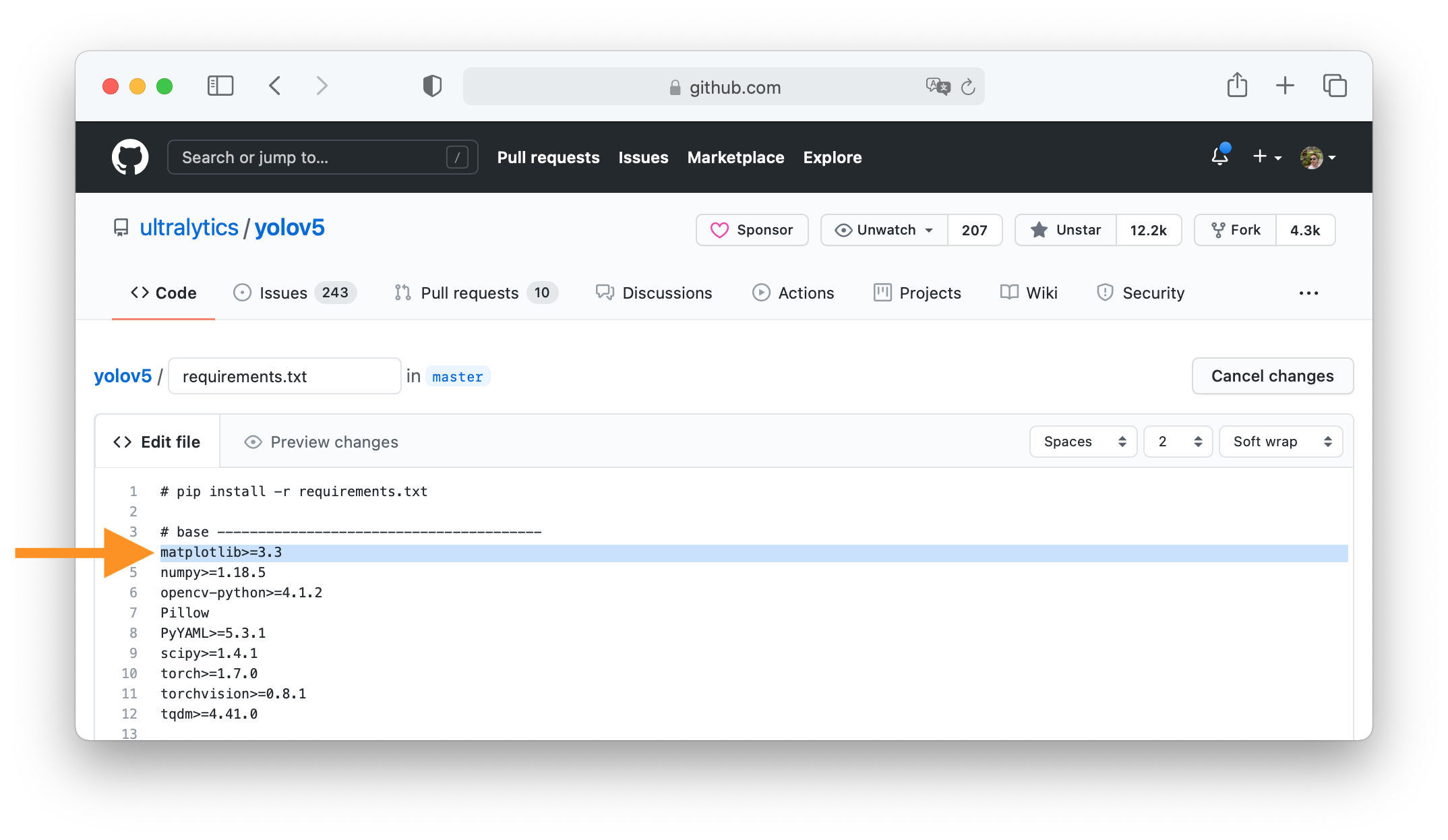

/requirements.txt:

--------------------------------------------------------------------------------

1 | dill==0.3.5.1
2 | from-root==1.0.2
3 | notebook==7.0.0a7
4 | boto3
5 | mypy-boto3-s3
6 | flask-cors
7 | flask
8 |
9 | # YOLOv5 requirements
10 | # Usage: pip install -r requirements.txt
11 |
12 | # Base ----------------------------------------
13 | matplotlib>=3.2.2
14 | numpy>=1.18.5
15 | opencv-python>=4.1.1
16 | Pillow>=7.1.2
17 | PyYAML>=5.3.1
18 | requests>=2.23.0
19 | scipy>=1.4.1
20 | torch>=1.7.0  # see https://pytorch.org/get-started/locally/ (recommended)
21 | torchvision>=0.8.1
22 | tqdm>=4.64.0
23 | # protobuf<=3.20.1  # https://github.com/ultralytics/yolov5/issues/8012
24 |
25 | # Logging -------------------------------------
26 | tensorboard>=2.4.1
27 | # clearml>=1.2.0
28 | # comet
29 |
30 | # Plotting ------------------------------------
31 | pandas>=1.1.4
32 | seaborn>=0.11.0
33 |
34 | # Export --------------------------------------
35 | # coremltools>=6.0  # CoreML export
36 | # onnx>=1.9.0  # ONNX export
37 | # onnx-simplifier>=0.4.1  # ONNX simplifier
38 | # nvidia-pyindex  # TensorRT export
39 | # nvidia-tensorrt  # TensorRT export
40 | # scikit-learn<=1.1.2  # CoreML quantization
41 | # tensorflow>=2.4.1  # TF exports (-cpu, -aarch64, -macos)
42 | # tensorflowjs>=3.9.0  # TF.js export
43 | # openvino-dev  # OpenVINO export
44 |
45 | # Deploy --------------------------------------
46 | # tritonclient[all]~=2.24.0
47 |
48 | # Extras --------------------------------------
49 | ipython  # interactive notebook
50 | psutil  # system utilization
51 | thop>=0.1.1  # FLOPs computation
52 | # mss  # screenshots
53 | # albumentations>=1.0.3
54 | # pycocotools>=2.0  # COCO mAP
55 | # roboflow
56 |
57 |
58 | -e .

--------------------------------------------------------------------------------

/setup.py:

--------------------------------------------------------------------------------

1 | from setuptools import find_packages, setup
2 |
3 | setup(
4 |     name='signLanguages',
5 |     version='0.0.0',
6 |     author='iNeuron',
7 |     author_email='boktiar@ineuron.ai',
8 |     packages=find_packages(),
9 |     install_requires=[]
10 |
11 | )

--------------------------------------------------------------------------------

/signLanguage/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/entbappy/End-to-end-Sign-Language-Detection/0bbe4e9fb7fccec9869dad566fec7c83c0497da9/signLanguage/__init__.py

--------------------------------------------------------------------------------

/signLanguage/components/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/entbappy/End-to-end-Sign-Language-Detection/0bbe4e9fb7fccec9869dad566fec7c83c0497da9/signLanguage/components/__init__.py

--------------------------------------------------------------------------------

/signLanguage/components/data_ingestion.py:

--------------------------------------------------------------------------------

1 | import os
2 | import sys
3 | import urllib.request
4 | import zipfile
5 | from signLanguage.logger import logging
6 | from signLanguage.exception import SignException
7 | from signLanguage.entity.config_entity import DataIngestionConfig
8 | from signLanguage.entity.artifact_entity import DataIngestionArtifact
9 |
10 |
11 | class DataIngestion:
12 |     def __init__(self, data_ingestion_config: DataIngestionConfig = DataIngestionConfig()):
13 |         try:
14 |             self.data_ingestion_config = data_ingestion_config
15 |         except Exception as e:
16 |             raise SignException(e, sys)
17 |
18 |     def download_data(self) -> str:
19 |         '''
20 |         Fetch the dataset zip from the configured URL and return its local path
21 |         '''
22 |
23 |         try:
24 |             dataset_url = self.data_ingestion_config.data_download_url
25 |             zip_download_dir = self.data_ingestion_config.data_ingestion_dir
26 |             os.makedirs(zip_download_dir, exist_ok=True)
27 |             data_file_name = os.path.basename(dataset_url)
28 |             zip_file_path = os.path.join(zip_download_dir, data_file_name)
29 |             logging.info(f"Downloading data from {dataset_url} into file {zip_file_path}")
30 |             urllib.request.urlretrieve(dataset_url, zip_file_path)
31 |             logging.info(f"Downloaded data from {dataset_url} into file {zip_file_path}")
32 |             return zip_file_path
33 |
34 |         except Exception as e:
35 |             raise SignException(e, sys)
36 |
37 |     def extract_zip_file(self, zip_file_path: str) -> str:
38 |         """
39 |         zip_file_path: str
40 |         Extracts the zip file into the feature store directory
41 |         Returns the feature store path
42 |         """
43 |         try:
44 |             feature_store_path = self.data_ingestion_config.feature_store_file_path
45 |             os.makedirs(feature_store_path, exist_ok=True)
46 |             with zipfile.ZipFile(zip_file_path, 'r') as zip_ref:
47 |                 zip_ref.extractall(feature_store_path)
48 |             logging.info(f"Extracted zip file: {zip_file_path} into dir: {feature_store_path}")
49 |
50 |             return feature_store_path
51 |
52 |         except Exception as e:
53 |             raise SignException(e, sys)
54 |
55 |     def initiate_data_ingestion(self) -> DataIngestionArtifact:
56 |         logging.info("Entered initiate_data_ingestion method of Data_Ingestion class")
57 |         try:
58 |             zip_file_path = self.download_data()
59 |             feature_store_path = self.extract_zip_file(zip_file_path)
60 |
61 |             data_ingestion_artifact = DataIngestionArtifact(
62 |                 data_zip_file_path=zip_file_path,
63 |                 feature_store_path=feature_store_path
64 |             )
65 |
66 |             logging.info("Exited initiate_data_ingestion method of Data_Ingestion class")
67 |             logging.info(f"Data ingestion artifact: {data_ingestion_artifact}")
68 |
69 |             return data_ingestion_artifact
70 |
71 |         except Exception as e:
72 |             raise SignException(e, sys)
73 |

81 |

--------------------------------------------------------------------------------

/signLanguage/components/data_validation.py:

--------------------------------------------------------------------------------

1 | import os, sys
2 | import shutil
3 | from signLanguage.logger import logging
4 | from signLanguage.exception import SignException
5 | from signLanguage.entity.config_entity import DataValidationConfig
6 | from signLanguage.entity.artifact_entity import (DataIngestionArtifact,
7 |                                                  DataValidationArtifact)
8 |
9 |
10 | class DataValidation:
11 |     def __init__(
12 |         self,
13 |         data_ingestion_artifact: DataIngestionArtifact,
14 |         data_validation_config: DataValidationConfig,
15 |     ):
16 |         try:
17 |             self.data_ingestion_artifact = data_ingestion_artifact
18 |             self.data_validation_config = data_validation_config
19 |
20 |         except Exception as e:
21 |             raise SignException(e, sys)
22 |
23 |     def validate_all_files_exist(self) -> bool:
24 |         try:
25 |             all_files = os.listdir(self.data_ingestion_artifact.feature_store_path)
26 |
27 |             # Validation passes only if every required file/folder is present;
28 |             # deciding per listed file would let the last entry overwrite the result
29 |             missing_files = [
30 |                 file for file in self.data_validation_config.required_file_list
31 |                 if file not in all_files
32 |             ]
33 |             validation_status = len(missing_files) == 0
34 |             if missing_files:
35 |                 logging.info(f"Missing required files: {missing_files}")
36 |
37 |             os.makedirs(self.data_validation_config.data_validation_dir, exist_ok=True)
38 |             with open(self.data_validation_config.valid_status_file_dir, 'w') as f:
39 |                 f.write(f"Validation status: {validation_status}")
40 |
41 |             return validation_status
42 |
43 |         except Exception as e:
44 |             raise SignException(e, sys)
45 |
46 |     def initiate_data_validation(self) -> DataValidationArtifact:
47 |         logging.info("Entered initiate_data_validation method of DataValidation class")
48 |         try:
49 |             status = self.validate_all_files_exist()
50 |             data_validation_artifact = DataValidationArtifact(
51 |                 validation_status=status)
52 |
53 |             logging.info("Exited initiate_data_validation method of DataValidation class")
54 |             logging.info(f"Data validation artifact: {data_validation_artifact}")
55 |
56 |             # Copy the validated zip to the working directory for the model trainer
57 |             if status:
58 |                 shutil.copy(self.data_ingestion_artifact.data_zip_file_path, os.getcwd())
59 |
60 |             return data_validation_artifact
61 |
62 |         except Exception as e:
63 |             raise SignException(e, sys)
64 |

71 |

--------------------------------------------------------------------------------

/signLanguage/components/model_pusher.py:

--------------------------------------------------------------------------------

1 | import sys
2 | from signLanguage.configuration.s3_operations import S3Operation
3 | from signLanguage.entity.artifact_entity import (
4 |     ModelPusherArtifacts,
5 |     ModelTrainerArtifact
6 | )
7 | from signLanguage.entity.config_entity import ModelPusherConfig
8 | from signLanguage.exception import SignException
9 | from signLanguage.logger import logging
10 |
11 |
12 | class ModelPusher:
13 |     def __init__(self, model_pusher_config: ModelPusherConfig, model_trainer_artifact: ModelTrainerArtifact, s3: S3Operation):
14 |
15 |         self.model_pusher_config = model_pusher_config
16 |         self.model_trainer_artifacts = model_trainer_artifact
17 |         self.s3 = s3
18 |
19 |     def initiate_model_pusher(self) -> ModelPusherArtifacts:
20 |
21 |         """
22 |         Method Name : initiate_model_pusher
23 |
24 |         Description : Uploads the trained model to the configured S3 bucket.
25 |
26 |         Output      : Model pusher artifact
27 |         """
28 |         logging.info("Entered initiate_model_pusher method of ModelPusher class")
29 |         try:
30 |             # Uploading the best model to s3 bucket
31 |             self.s3.upload_file(
32 |                 self.model_trainer_artifacts.trained_model_file_path,
33 |                 self.model_pusher_config.S3_MODEL_KEY_PATH,
34 |                 self.model_pusher_config.BUCKET_NAME,
35 |                 remove=False,
36 |             )
37 |             logging.info("Uploaded best model to s3 bucket")
38 |             logging.info("Exited initiate_model_pusher method of ModelPusher class")
39 |
40 |             # Saving the model pusher artifacts
41 |             model_pusher_artifact = ModelPusherArtifacts(
42 |                 bucket_name=self.model_pusher_config.BUCKET_NAME,
43 |                 s3_model_path=self.model_pusher_config.S3_MODEL_KEY_PATH,
44 |             )
45 |
46 |             return model_pusher_artifact
47 |
48 |         except Exception as e:
49 |             raise SignException(e, sys) from e
50 |
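`S3Operation` itself is not included in this listing. A minimal sketch of the underlying upload, assuming a boto3-style S3 client whose `upload_file(Filename, Bucket, Key)` method is used directly; `push_model` and `PushedModel` are hypothetical stand-ins for `ModelPusher.initiate_model_pusher` and `ModelPusherArtifacts`, and the client is passed in so it can be replaced in tests.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class PushedModel:
    """Hypothetical stand-in for ModelPusherArtifacts."""
    bucket_name: str
    s3_model_path: str


def push_model(s3_client: Any, local_path: str, bucket: str, key: str) -> PushedModel:
    """Upload a trained model file with a boto3-style client and record where it went."""
    # boto3's S3 client exposes upload_file(Filename, Bucket, Key)
    s3_client.upload_file(local_path, bucket, key)
    return PushedModel(bucket_name=bucket, s3_model_path=key)
```

With boto3 installed and credentials configured, `push_model(boto3.client("s3"), "yolov5/best.pt", "sign-lang-23", "best.pt")` would upload using the `BUCKET_NAME` and `S3_MODEL_NAME` constants; whether `S3_MODEL_KEY_PATH` in `config_entity.py` resolves to the same key is not shown here.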

--------------------------------------------------------------------------------

/signLanguage/components/model_trainer.py:

--------------------------------------------------------------------------------

1 | import os, sys
2 | import yaml
3 | from signLanguage.utils.main_utils import read_yaml_file
4 | from signLanguage.logger import logging
5 | from signLanguage.exception import SignException
6 | from signLanguage.entity.config_entity import ModelTrainerConfig
7 | from signLanguage.entity.artifact_entity import ModelTrainerArtifact
8 |
9 |
10 | class ModelTrainer:
11 |     def __init__(
12 |         self,
13 |         model_trainer_config: ModelTrainerConfig,
14 |     ):
15 |         self.model_trainer_config = model_trainer_config
16 |
17 |     def initiate_model_trainer(self) -> ModelTrainerArtifact:
18 |         logging.info("Entered initiate_model_trainer method of ModelTrainer class")
19 |
20 |         try:
21 |             # The zip was copied into the working directory by DataValidation
22 |             logging.info("Unzipping data")
23 |             os.system("unzip Sign_language_data.zip")
24 |             os.system("rm Sign_language_data.zip")
25 |
26 |             with open("data.yaml", 'r') as stream:
27 |                 num_classes = int(yaml.safe_load(stream)['nc'])
28 |
29 |             # e.g. "yolov5s.pt" -> "yolov5s"
30 |             model_config_file_name = self.model_trainer_config.weight_name.split(".")[0]
31 |             logging.info(f"Base model config: {model_config_file_name}")
32 |
33 |             # Copy the base YOLOv5 config, overriding the number of classes with the dataset's value
34 |             config = read_yaml_file(f"yolov5/models/{model_config_file_name}.yaml")
35 |             config['nc'] = num_classes
36 |
37 |             with open(f'yolov5/models/custom_{model_config_file_name}.yaml', 'w') as f:
38 |                 yaml.dump(config, f)
39 |
40 |             os.system(f"cd yolov5/ && python train.py --img 416 --batch {self.model_trainer_config.batch_size} --epochs {self.model_trainer_config.no_epochs} --data ../data.yaml --cfg ./models/custom_{model_config_file_name}.yaml --weights {self.model_trainer_config.weight_name} --name yolov5s_results --cache")
41 |             os.system("cp yolov5/runs/train/yolov5s_results/weights/best.pt yolov5/")
42 |             os.makedirs(self.model_trainer_config.model_trainer_dir, exist_ok=True)
43 |             os.system(f"cp yolov5/runs/train/yolov5s_results/weights/best.pt {self.model_trainer_config.model_trainer_dir}/")
44 |
45 |             # Clean up the training run and the extracted dataset
46 |             os.system("rm -rf yolov5/runs")
47 |             os.system("rm -rf train")
48 |             os.system("rm -rf test")
49 |             os.system("rm -rf data.yaml")
50 |
51 |             model_trainer_artifact = ModelTrainerArtifact(
52 |                 trained_model_file_path="yolov5/best.pt",
53 |             )
54 |
55 |             logging.info("Exited initiate_model_trainer method of ModelTrainer class")
56 |             logging.info(f"Model trainer artifact: {model_trainer_artifact}")
57 |
58 |             return model_trainer_artifact
59 |
60 |         except Exception as e:
61 |             raise SignException(e, sys)
62 |
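The trainer's two core steps, rewriting `nc` in a copy of the base YOLOv5 config and launching `train.py`, can be expressed as pure functions. This is a sketch under assumptions: `with_num_classes` and `build_train_command` are hypothetical names, the flags are the ones `initiate_model_trainer` already passes, and the interpreter defaults to `sys.executable` rather than a bare `python`.

```python
import sys
from typing import Any, Dict, List


def with_num_classes(model_cfg: Dict[str, Any], data_cfg: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of a YOLOv5 model config whose `nc` matches the dataset's data.yaml."""
    cfg = dict(model_cfg)  # shallow copy so the base config dict is left untouched
    cfg["nc"] = int(data_cfg["nc"])
    return cfg


def build_train_command(weight_name: str, batch_size: int, epochs: int,
                        img_size: int = 416, python: str = sys.executable) -> List[str]:
    """Build the yolov5/train.py invocation as an argument list (no shell involved)."""
    stem = weight_name.split(".")[0]  # "yolov5s.pt" -> "yolov5s"
    return [
        python, "train.py",
        "--img", str(img_size),
        "--batch", str(batch_size),
        "--epochs", str(epochs),
        "--data", "../data.yaml",
        "--cfg", f"./models/custom_{stem}.yaml",
        "--weights", weight_name,
        "--name", f"{stem}_results",
        "--cache",
    ]
```

Running the list with `subprocess.run(cmd, cwd="yolov5", check=True)` raises `subprocess.CalledProcessError` when training exits with a non-zero status, whereas `os.system` only returns that status, which `initiate_model_trainer` never inspects.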

--------------------------------------------------------------------------------

/signLanguage/configuration/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/entbappy/End-to-end-Sign-Language-Detection/0bbe4e9fb7fccec9869dad566fec7c83c0497da9/signLanguage/configuration/__init__.py

--------------------------------------------------------------------------------

/signLanguage/constant/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/entbappy/End-to-end-Sign-Language-Detection/0bbe4e9fb7fccec9869dad566fec7c83c0497da9/signLanguage/constant/__init__.py

--------------------------------------------------------------------------------

/signLanguage/constant/application.py:

--------------------------------------------------------------------------------

1 | APP_HOST = "0.0.0.0"
2 | APP_PORT = 8080

--------------------------------------------------------------------------------

/signLanguage/constant/training_pipeline/__init__.py:

--------------------------------------------------------------------------------

1 | import os

2 |

3 | ARTIFACTS_DIR: str = "artifacts"

4 |

5 |

6 | """

7 | Data Ingestion related constants (names start with DATA_INGESTION, except DATA_DOWNLOAD_URL)

8 | """

9 | DATA_INGESTION_DIR_NAME: str = "data_ingestion"

10 |

11 | DATA_INGESTION_FEATURE_STORE_DIR: str = "feature_store"

12 |

13 | DATA_DOWNLOAD_URL: str = "https://github.com/entbappy/Branching-tutorial/raw/master/Sign_language_data.zip"

14 |

15 |

16 |

17 | """

18 | Data Validation related constants start with the DATA_VALIDATION var name

19 | """

20 |

21 | DATA_VALIDATION_DIR_NAME: str = "data_validation"

22 |

23 | DATA_VALIDATION_STATUS_FILE = 'status.txt'

24 |

25 | DATA_VALIDATION_ALL_REQUIRED_FILES = ["train", "test", "data.yaml"]

26 |

27 |

28 |

29 | """

30 | Model Trainer related constants start with the MODEL_TRAINER var name

31 | """

32 | MODEL_TRAINER_DIR_NAME: str = "model_trainer"

33 |

34 | MODEL_TRAINER_PRETRAINED_WEIGHT_NAME: str = "yolov5s.pt"

35 |

36 | MODEL_TRAINER_NO_EPOCHS: int = 1

37 |

38 | MODEL_TRAINER_BATCH_SIZE: int = 16

39 |

40 |

41 |

42 | """

43 | Model Pusher related constants: S3 bucket name and model object key

44 | """

45 | BUCKET_NAME = "sign-lang-23"

46 | S3_MODEL_NAME = "best.pt"

47 |
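`DATA_VALIDATION_ALL_REQUIRED_FILES` and `DATA_VALIDATION_STATUS_FILE` suggest a validation step with two jobs: check that the unzipped feature store contains `train`, `test` and `data.yaml`, then record the outcome in `status.txt`. The `DataValidation` component is not shown in this section, so what follows is only a minimal sketch under that assumption. `validate_feature_store` is a hypothetical name, and the `Validation status: …` line format is invented for illustration.

```python
import os

# Mirrors DATA_VALIDATION_ALL_REQUIRED_FILES (a tuple here to avoid a mutable default)
REQUIRED_FILES = ("train", "test", "data.yaml")


def validate_feature_store(feature_store_dir: str, status_file: str,
                           required=REQUIRED_FILES) -> bool:
    """Return True only if every required entry exists directly inside
    feature_store_dir, and write the result to status_file."""
    present = set(os.listdir(feature_store_dir))
    missing = [name for name in required if name not in present]
    status = not missing

    # Create the status file's directory (e.g. artifacts/<ts>/data_validation)
    os.makedirs(os.path.dirname(status_file) or ".", exist_ok=True)
    with open(status_file, "w") as f:
        f.write(f"Validation status: {status}\n")
        if missing:
            f.write(f"Missing: {', '.join(missing)}\n")
    return status
```

The returned boolean fits the `validation_status: bool` field of `DataValidationArtifact`. The status file is a human-readable record of the same result.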

--------------------------------------------------------------------------------

/signLanguage/entity/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/entbappy/End-to-end-Sign-Language-Detection/0bbe4e9fb7fccec9869dad566fec7c83c0497da9/signLanguage/entity/__init__.py

--------------------------------------------------------------------------------

/signLanguage/entity/artifact_entity.py:

--------------------------------------------------------------------------------

1 | from dataclasses import dataclass
2 |
3 |
4 | @dataclass
5 | class DataIngestionArtifact:
6 |     data_zip_file_path: str
7 |     feature_store_path: str
8 |
9 |
10 | @dataclass
11 | class DataValidationArtifact:
12 |     validation_status: bool
13 |
14 |
15 | @dataclass
16 | class ModelTrainerArtifact:
17 |     trained_model_file_path: str
18 |
19 |
20 | @dataclass
21 | class ModelPusherArtifacts:
22 |     bucket_name: str
23 |     s3_model_path: str

--------------------------------------------------------------------------------

/signLanguage/entity/config_entity.py:

--------------------------------------------------------------------------------

1 | import os
2 | from dataclasses import dataclass
3 | from datetime import datetime
4 | from signLanguage.constant.training_pipeline import *
5 |
6 | TIMESTAMP: str = datetime.now().strftime("%m_%d_%Y_%H_%M_%S")
7 |
8 |
9 | @dataclass
10 | class TrainingPipelineConfig:
11 |     artifacts_dir: str = os.path.join(ARTIFACTS_DIR, TIMESTAMP)
12 |
13 |
14 | training_pipeline_config: TrainingPipelineConfig = TrainingPipelineConfig()
15 |
16 |
17 | @dataclass
18 | class DataIngestionConfig:
19 |     data_ingestion_dir: str = os.path.join(
20 |         training_pipeline_config.artifacts_dir, DATA_INGESTION_DIR_NAME
21 |     )
22 |
23 |     feature_store_file_path: str = os.path.join(
24 |         data_ingestion_dir, DATA_INGESTION_FEATURE_STORE_DIR
25 |     )
26 |
27 |     data_download_url: str = DATA_DOWNLOAD_URL
28 |
29 |
30 | @dataclass
31 | class DataValidationConfig:
32 |     data_validation_dir: str = os.path.join(
33 |         training_pipeline_config.artifacts_dir, DATA_VALIDATION_DIR_NAME
34 |     )
35 |
36 |     valid_status_file_dir: str = os.path.join(data_validation_dir, DATA_VALIDATION_STATUS_FILE)
37 |
38 |     required_file_list = DATA_VALIDATION_ALL_REQUIRED_FILES
39 |
40 |
41 | @dataclass
42 | class ModelTrainerConfig:
43 |     model_trainer_dir: str = os.path.join(
44 |         training_pipeline_config.artifacts_dir, MODEL_TRAINER_DIR_NAME
45 |     )
46 |
47 |     weight_name = MODEL_TRAINER_PRETRAINED_WEIGHT_NAME
48 |
49 |     no_epochs = MODEL_TRAINER_NO_EPOCHS
50 |
51 |     batch_size = MODEL_TRAINER_BATCH_SIZE
52 |
53 |
54 | @dataclass
55 | class ModelPusherConfig:
56 |     BUCKET_NAME: str = BUCKET_NAME
57 |     S3_MODEL_KEY_PATH: str = S3_MODEL_NAME
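Each config dataclass computes its paths from class-level defaults, so every stage of a run shares the same timestamped root, `artifacts/<TIMESTAMP>/`. The self-contained sketch below reproduces that layout for two of the configs, using constant values copied from this section. It fixes `TIMESTAMP` to a literal string (an assumption for illustration, in place of `datetime.now()`) so that the resulting paths are predictable.

```python
import os
from dataclasses import dataclass

# Values copied from signLanguage/constant/training_pipeline; TIMESTAMP is a
# fixed placeholder here instead of datetime.now()
ARTIFACTS_DIR = "artifacts"
TIMESTAMP = "01_01_2024_00_00_00"
DATA_INGESTION_DIR_NAME = "data_ingestion"
DATA_INGESTION_FEATURE_STORE_DIR = "feature_store"
DATA_VALIDATION_DIR_NAME = "data_validation"
DATA_VALIDATION_STATUS_FILE = "status.txt"


@dataclass
class TrainingPipelineConfig:
    artifacts_dir: str = os.path.join(ARTIFACTS_DIR, TIMESTAMP)


training_pipeline_config = TrainingPipelineConfig()


@dataclass
class DataIngestionConfig:
    # Evaluated once, when the class body runs at import time
    data_ingestion_dir: str = os.path.join(
        training_pipeline_config.artifacts_dir, DATA_INGESTION_DIR_NAME
    )
    # Names defined earlier in a class body are visible to later defaults
    feature_store_file_path: str = os.path.join(
        data_ingestion_dir, DATA_INGESTION_FEATURE_STORE_DIR
    )


@dataclass
class DataValidationConfig:
    data_validation_dir: str = os.path.join(
        training_pipeline_config.artifacts_dir, DATA_VALIDATION_DIR_NAME
    )
    valid_status_file_dir: str = os.path.join(
        data_validation_dir, DATA_VALIDATION_STATUS_FILE
    )


ingestion = DataIngestionConfig()
validation = DataValidationConfig()
print(ingestion.feature_store_file_path)
print(validation.valid_status_file_dir)
```

Because the real `TIMESTAMP` is computed once, when `config_entity` is imported, all configs created in one process share a single run directory. A new directory only appears when the pipeline runs in a new process.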

--------------------------------------------------------------------------------

/signLanguage/exception/__init__.py:

--------------------------------------------------------------------------------

1 | import sys
2 |
3 |
4 | def error_message_detail(error, error_detail: sys):
5 |     _, _, exc_tb = error_detail.exc_info()
6 |
7 |     file_name = exc_tb.tb_frame.f_code.co_filename
8 |
9 |     error_message = "Error occurred python script name [{0}] line number [{1}] error message [{2}]".format(
10 |         file_name, exc_tb.tb_lineno, str(error)
11 |     )
12 |
13 |     return error_message
14 |
15 |
16 | class SignException(Exception):
17 |     def __init__(self, error_message, error_detail):
18 |         """
19 |         :param error_message: the caught exception (or a message) to wrap
20 |         :param error_detail: the sys module, used to read the active traceback
21 |         """
22 |         super().__init__(error_message)
23 |
24 |         self.error_message = error_message_detail(
25 |             error_message, error_detail=error_detail
26 |         )
27 |
28 |     def __str__(self):
29 |         return self.error_message
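`SignException` only works when raised inside an `except` block. The reason is that `error_message_detail` reads the active traceback through `sys.exc_info()`, and outside exception handling that call returns `(None, None, None)`, so `exc_tb.tb_frame` would raise `AttributeError`. The sketch below copies the two definitions from this file and adds a hypothetical `load_config` function to show what a wrapped message looks like.

```python
import sys


def error_message_detail(error, error_detail) -> str:
    # Traceback of the exception currently being handled
    _, _, exc_tb = error_detail.exc_info()
    file_name = exc_tb.tb_frame.f_code.co_filename
    return "Error occurred python script name [{0}] line number [{1}] error message [{2}]".format(
        file_name, exc_tb.tb_lineno, str(error)
    )


class SignException(Exception):
    def __init__(self, error_message, error_detail):
        super().__init__(error_message)
        self.error_message = error_message_detail(error_message, error_detail=error_detail)

    def __str__(self):
        return self.error_message


def load_config(path: str) -> str:
    """Hypothetical helper, used only to trigger and wrap an error."""
    try:
        with open(path) as f:
            return f.read()
    except Exception as e:
        # Wrap while the original exception is still active
        raise SignException(e, sys) from e


try:
    load_config("does/not/exist.yaml")
except SignException as err:
    print(err)
```

`exc_info()[2]` is the outermost traceback entry. As a result, the reported file and line belong to the function that caught the error, not to the deepest call where the error was raised. Chaining with `from e` keeps the original exception available as `__cause__`.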

--------------------------------------------------------------------------------

/signLanguage/logger/__init__.py:

--------------------------------------------------------------------------------

1 | import logging
2 | import os
3 | from datetime import datetime
4 | from from_root import from_root
5 |
6 |
7 | LOG_FILE = f"{datetime.now().strftime('%m_%d_%Y_%H_%M_%S')}.log"
8 |
9 | # Create the log directory itself, not a directory named after the log file
10 | log_dir = os.path.join(from_root(), 'log')
11 |
12 | os.makedirs(log_dir, exist_ok=True)
13 |
14 | LOG_FILE_PATH = os.path.join(log_dir, LOG_FILE)
15 |
16 | logging.basicConfig(
17 |     filename=LOG_FILE_PATH,
18 |     format="[ %(asctime)s ] %(name)s - %(levelname)s - %(message)s",
19 |     level=logging.INFO,
20 | )
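For comparison, here is a minimal sketch of timestamped-file logging built on a named logger and a `FileHandler`, instead of `logging.basicConfig`. It creates only the `log/` directory and writes the timestamped file inside it. The base directory is a parameter (an assumption standing in for `from_root()`) so that the sketch can run in a temporary directory, and `setup_file_logger` is a hypothetical name. A relevant design point: `basicConfig` does nothing if the root logger already has handlers (unless `force=True` is passed, Python 3.8+), which is easy to trip over when another library configures logging first.

```python
import logging
import os
from datetime import datetime


def setup_file_logger(base_dir: str, name: str = "signLanguage") -> tuple:
    """Create <base_dir>/log/<timestamp>.log and return (logger, log_file_path)."""
    log_dir = os.path.join(base_dir, "log")
    os.makedirs(log_dir, exist_ok=True)  # create the directory, not the file path

    log_file = f"{datetime.now().strftime('%m_%d_%Y_%H_%M_%S')}.log"
    log_file_path = os.path.join(log_dir, log_file)

    handler = logging.FileHandler(log_file_path)
    handler.setFormatter(
        logging.Formatter("[ %(asctime)s ] %(name)s - %(levelname)s - %(message)s")
    )

    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    return logger, log_file_path
```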

--------------------------------------------------------------------------------

/signLanguage/pipeline/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/entbappy/End-to-end-Sign-Language-Detection/0bbe4e9fb7fccec9869dad566fec7c83c0497da9/signLanguage/pipeline/__init__.py

--------------------------------------------------------------------------------

/signLanguage/pipeline/training_pipeline.py:

--------------------------------------------------------------------------------

1 | import sys
2 | from signLanguage.logger import logging
3 | from signLanguage.exception import SignException
4 | from signLanguage.components.data_ingestion import DataIngestion
5 | from signLanguage.components.data_validation import DataValidation
6 | from signLanguage.components.model_trainer import ModelTrainer
7 | from signLanguage.components.model_pusher import ModelPusher
8 | from signLanguage.configuration.s3_operations import S3Operation
9 |
10 | from signLanguage.entity.config_entity import (DataIngestionConfig,
11 |                                                DataValidationConfig,
12 |                                                ModelTrainerConfig,
13 |                                                ModelPusherConfig)
14 |
15 | from signLanguage.entity.artifact_entity import (DataIngestionArtifact,
16 |                                                  DataValidationArtifact,
17 |                                                  ModelTrainerArtifact,
18 |                                                  ModelPusherArtifacts)
19 |
20 |
21 | class TrainPipeline:
22 |     def __init__(self):
23 |         self.data_ingestion_config = DataIngestionConfig()
24 |         self.data_validation_config = DataValidationConfig()
25 |         self.model_trainer_config = ModelTrainerConfig()
26 |         self.model_pusher_config = ModelPusherConfig()
27 |         self.s3_operations = S3Operation()
28 |
29 |     def start_data_ingestion(self) -> DataIngestionArtifact:
30 |         try:
31 |             logging.info(
32 |                 "Entered the start_data_ingestion method of TrainPipeline class"
33 |             )
34 |             logging.info("Getting the data from URL")
35 |
36 |             data_ingestion = DataIngestion(
37 |                 data_ingestion_config=self.data_ingestion_config
38 |             )
39 |
40 |             data_ingestion_artifact = data_ingestion.initiate_data_ingestion()
41 |             logging.info("Got the data from URL")
42 |             logging.info(
43 |                 "Exited the start_data_ingestion method of TrainPipeline class"
44 |             )
45 |
46 |             return data_ingestion_artifact
47 |
48 |         except Exception as e:
49 |             raise SignException(e, sys) from e
50 |
51 |     def start_data_validation(
52 |         self, data_ingestion_artifact: DataIngestionArtifact
53 |     ) -> DataValidationArtifact:
54 |         logging.info("Entered the start_data_validation method of TrainPipeline class")
55 |
56 |         try:
57 |             data_validation = DataValidation(
58 |                 data_ingestion_artifact=data_ingestion_artifact,
59 |                 data_validation_config=self.data_validation_config,
60 |             )
61 |
62 |             data_validation_artifact = data_validation.initiate_data_validation()
63 |
64 |             logging.info("Performed the data validation operation")
65 |
66 |             logging.info(
67 |                 "Exited the start_data_validation method of TrainPipeline class"
68 |             )
69 |
70 |             return data_validation_artifact
71 |
72 |         except Exception as e:
73 |             raise SignException(e, sys) from e
74 |
75 |     def start_model_trainer(self) -> ModelTrainerArtifact:
76 |         try:
77 |             model_trainer = ModelTrainer(
78 |                 model_trainer_config=self.model_trainer_config,
79 |             )
80 |             model_trainer_artifact = model_trainer.initiate_model_trainer()
81 |             return model_trainer_artifact
82 |
83 |         except Exception as e:
84 |             raise SignException(e, sys) from e
85 |
86 |     def start_model_pusher(self, model_trainer_artifact: ModelTrainerArtifact, s3: S3Operation):
87 |         try:
88 |             model_pusher = ModelPusher(
89 |                 model_pusher_config=self.model_pusher_config,
90 |                 model_trainer_artifact=model_trainer_artifact,
91 |                 s3=s3,
92 |             )
93 |             model_pusher_artifact = model_pusher.initiate_model_pusher()
94 |             return model_pusher_artifact
95 |
96 |         except Exception as e:
97 |             raise SignException(e, sys) from e
98 |
99 |     def run_pipeline(self) -> None:
100 |         try:
101 |             data_ingestion_artifact = self.start_data_ingestion()
102 |             data_validation_artifact = self.start_data_validation(
103 |                 data_ingestion_artifact=data_ingestion_artifact
104 |             )
105 |             if data_validation_artifact.validation_status:
106 |                 model_trainer_artifact = self.start_model_trainer()
107 |                 # Pushing the model to S3 is currently disabled:
108 |                 # model_pusher_artifact = self.start_model_pusher(model_trainer_artifact=model_trainer_artifact, s3=self.s3_operations)
109 |             else:
110 |                 raise Exception("Data validation failed: your data is not in the correct format")
111 |
112 |         except Exception as e:
113 |             raise SignException(e, sys) from e
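`run_pipeline` chains the stages by passing each artifact to the next stage, and it only starts training when `validation_status` is true. The dependency-free sketch below mirrors that control flow with stub stages. `MiniPipeline`, its `has_data` switch and the stub stage bodies are hypothetical placeholders, not the project's components.

```python
from dataclasses import dataclass


@dataclass
class DataIngestionArtifact:
    data_zip_file_path: str
    feature_store_path: str


@dataclass
class DataValidationArtifact:
    validation_status: bool


@dataclass
class ModelTrainerArtifact:
    trained_model_file_path: str


class MiniPipeline:
    """Stub stages that mimic TrainPipeline's hand-off between artifacts."""

    def __init__(self, has_data: bool):
        self.has_data = has_data  # hypothetical switch that drives validation
        self.stages_run = []

    def start_data_ingestion(self) -> DataIngestionArtifact:
        self.stages_run.append("ingestion")
        return DataIngestionArtifact("data.zip", "feature_store")

    def start_data_validation(self, data_ingestion_artifact) -> DataValidationArtifact:
        self.stages_run.append("validation")
        return DataValidationArtifact(validation_status=self.has_data)

    def start_model_trainer(self) -> ModelTrainerArtifact:
        self.stages_run.append("training")
        return ModelTrainerArtifact("yolov5/best.pt")

    def run_pipeline(self) -> ModelTrainerArtifact:
        ingestion = self.start_data_ingestion()
        validation = self.start_data_validation(data_ingestion_artifact=ingestion)
        if not validation.validation_status:
            # Stop before the expensive training stage
            raise ValueError("Data validation failed: data is not in the expected format")
        return self.start_model_trainer()
```

Unlike the original, which returns `None`, this sketch returns the trainer artifact so that the outcome can be checked directly. Whether that change suits the project is a judgment call.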

--------------------------------------------------------------------------------

/signLanguage/utils/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/entbappy/End-to-end-Sign-Language-Detection/0bbe4e9fb7fccec9869dad566fec7c83c0497da9/signLanguage/utils/__init__.py

--------------------------------------------------------------------------------

/signLanguage/utils/main_utils.py:

--------------------------------------------------------------------------------

1 | import os
2 | import sys
3 | import yaml
4 | import base64
5 |
6 | from signLanguage.exception import SignException
7 | from signLanguage.logger import logging
8 |
9 |
10 | def read_yaml_file(file_path: str) -> dict:
11 |     try:
12 |         with open(file_path, "rb") as yaml_file:
13 |             content = yaml.safe_load(yaml_file)
14 |         logging.info("Read yaml file successfully")
15 |         return content
16 |
17 |     except Exception as e:
18 |         raise SignException(e, sys) from e
19 |
20 |
21 | def write_yaml_file(file_path: str, content: object, replace: bool = False) -> None:
22 |     try:
23 |         if replace:
24 |             if os.path.exists(file_path):
25 |                 os.remove(file_path)
26 |
27 |         # os.makedirs("") raises, so skip it for bare file names
28 |         dir_name = os.path.dirname(file_path)
29 |         if dir_name:
30 |             os.makedirs(dir_name, exist_ok=True)
31 |
32 |         with open(file_path, "w") as file:
33 |             yaml.dump(content, file)
34 |         logging.info("Wrote yaml file successfully")
35 |
36 |     except Exception as e:
37 |         raise SignException(e, sys) from e
38 |
39 |
40 | def decodeImage(imgstring, fileName):
41 |     imgdata = base64.b64decode(imgstring)
42 |     # The with-block closes the file, so no explicit f.close() is needed
43 |     with open(os.path.join("./data", fileName), 'wb') as f:
44 |         f.write(imgdata)
45 |
46 |
47 | def encodeImageIntoBase64(croppedImagePath):
48 |     with open(croppedImagePath, "rb") as f:
49 |         return base64.b64encode(f.read())
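`decodeImage` and `encodeImageIntoBase64` are inverses around a file on disk. The app presumably receives an image as a base64 string, writes it under `./data/`, and later returns results in base64. The sketch below checks that round trip. It parametrises the output directory (an assumption; the originals hard-code `./data/`) and converts the encoding to `str` with `.decode("ascii")`, because `b64encode` returns `bytes`, which `json` cannot serialise.

```python
import base64
import os


def decode_image(img_string: str, file_name: str, out_dir: str = "./data") -> str:
    """Write a base64-encoded image to out_dir/file_name and return the path."""
    os.makedirs(out_dir, exist_ok=True)
    path = os.path.join(out_dir, file_name)
    with open(path, "wb") as f:  # closed automatically by the with-block
        f.write(base64.b64decode(img_string))
    return path


def encode_image_into_base64(image_path: str) -> str:
    """Read an image file and return its base64 encoding as text."""
    with open(image_path, "rb") as f:
        return base64.b64encode(f.read()).decode("ascii")
```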

--------------------------------------------------------------------------------

/template.py:

--------------------------------------------------------------------------------

1 | import os
2 | from pathlib import Path
3 | import logging
4 |
5 | logging.basicConfig(level=logging.INFO, format='[%(asctime)s]: %(message)s')
6 |
7 |
8 | project_name = "signLanguage"
9 |
10 |
11 | list_of_files = [
12 |     ".github/workflows/.gitkeep",
13 |     "data/.gitkeep",
14 |     "docs/.gitkeep",
15 |     f"{project_name}/__init__.py",
16 |     f"{project_name}/components/__init__.py",
17 |     f"{project_name}/components/data_ingestion.py",
18 |     f"{project_name}/components/data_validation.py",
19 |     f"{project_name}/components/model_trainer.py",
20 |     f"{project_name}/components/model_pusher.py",
21 |     f"{project_name}/configuration/__init__.py",
22 |     f"{project_name}/configuration/s3_operations.py",
23 |     f"{project_name}/constant/__init__.py",
24 |     f"{project_name}/constant/training_pipeline/__init__.py",
25 |     f"{project_name}/constant/application.py",
26 |     f"{project_name}/entity/__init__.py",
27 |     f"{project_name}/entity/artifact_entity.py",
28 |     f"{project_name}/entity/config_entity.py",
29 |     f"{project_name}/exception/__init__.py",
30 |     f"{project_name}/logger/__init__.py",
31 |     f"{project_name}/pipeline/__init__.py",
32 |     f"{project_name}/pipeline/training_pipeline.py",
33 |     f"{project_name}/utils/__init__.py",
34 |     f"{project_name}/utils/main_utils.py",
35 |     "templates/index.html",
36 |     ".dockerignore",
37 |     "app.py",
38 |     "Dockerfile",
39 |     "requirements.txt",
40 |     "setup.py",
41 | ]
42 |
43 |
44 | for filepath in list_of_files:
45 |     filepath = Path(filepath)
46 |
47 |     filedir, filename = os.path.split(filepath)
48 |
49 |     if filedir != "":
50 |         os.makedirs(filedir, exist_ok=True)
51 |         logging.info(f"Creating directory: {filedir} for the file {filename}")
52 |
53 |     # Check the full path; checking only the bare file name would truncate existing files
54 |     if (not os.path.exists(filepath)) or (os.path.getsize(filepath) == 0):
55 |         with open(filepath, 'w') as f:
56 |             pass
57 |         logging.info(f"Creating empty file: {filepath}")
58 |
59 |     else:
60 |         logging.info(f"{filepath} is already created")
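The scaffolding loop can also be written with `pathlib` alone. This sketch wraps it in a hypothetical `scaffold(root, files)` function so that it can run against a temporary directory. It checks the full path of each file, so files that already have content are never truncated.

```python
import logging
from pathlib import Path

logging.basicConfig(level=logging.INFO, format="[%(asctime)s]: %(message)s")


def scaffold(root: str, files: list) -> list:
    """Create each file under root (plus parent dirs) if it is missing or empty.
    Returns the paths that were created or left as empty placeholders."""
    created = []
    for rel in files:
        path = Path(root) / rel
        path.parent.mkdir(parents=True, exist_ok=True)
        if not path.exists() or path.stat().st_size == 0:
            path.touch()  # never overwrites existing content
            created.append(path)
            logging.info("Creating empty file: %s", path)
        else:
            logging.info("%s is already created", path)
    return created
```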

--------------------------------------------------------------------------------

/yolov5/.dockerignore:

--------------------------------------------------------------------------------

1 | # Repo-specific DockerIgnore -------------------------------------------------------------------------------------------

2 | .git

3 | .cache

4 | .idea

5 | runs

6 | output

7 | coco

8 | storage.googleapis.com

9 |

10 | data/samples/*

11 | **/results*.csv

12 | *.jpg

13 |

14 | # Neural Network weights -----------------------------------------------------------------------------------------------

15 | **/*.pt

16 | **/*.pth

17 | **/*.onnx

18 | **/*.engine

19 | **/*.mlmodel

20 | **/*.torchscript

21 | **/*.torchscript.pt

22 | **/*.tflite

23 | **/*.h5

24 | **/*.pb

25 | *_saved_model/

26 | *_web_model/

27 | *_openvino_model/

28 |

29 | # Below Copied From .gitignore -----------------------------------------------------------------------------------------

30 |

31 |

32 |

33 | # GitHub Python GitIgnore ----------------------------------------------------------------------------------------------

34 | # Byte-compiled / optimized / DLL files

35 | __pycache__/

36 | *.py[cod]

37 | *$py.class

38 |

39 | # C extensions

40 | *.so

41 |

42 | # Distribution / packaging

43 | .Python

44 | env/

45 | build/

46 | develop-eggs/

47 | dist/

48 | downloads/

49 | eggs/

50 | .eggs/

51 | lib/

52 | lib64/

53 | parts/

54 | sdist/

55 | var/

56 | wheels/

57 | *.egg-info/

58 | wandb/

59 | .installed.cfg

60 | *.egg

61 |

62 | # PyInstaller

63 | # Usually these files are written by a python script from a template

64 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

65 | *.manifest

66 | *.spec

67 |

68 | # Installer logs

69 | pip-log.txt

70 | pip-delete-this-directory.txt

71 |

72 | # Unit test / coverage reports

73 | htmlcov/

74 | .tox/

75 | .coverage

76 | .coverage.*

77 | .cache

78 | nosetests.xml

79 | coverage.xml

80 | *.cover

81 | .hypothesis/

82 |

83 | # Translations

84 | *.mo

85 | *.pot

86 |

87 | # Django stuff:

88 | *.log

89 | local_settings.py

90 |

91 | # Flask stuff:

92 | instance/

93 | .webassets-cache

94 |

95 | # Scrapy stuff:

96 | .scrapy

97 |

98 | # Sphinx documentation

99 | docs/_build/

100 |

101 | # PyBuilder

102 | target/

103 |

104 | # Jupyter Notebook

105 | .ipynb_checkpoints

106 |

107 | # pyenv

108 | .python-version

109 |

110 | # celery beat schedule file

111 | celerybeat-schedule

112 |

113 | # SageMath parsed files

114 | *.sage.py

115 |

116 | # dotenv

117 | .env

118 |

119 | # virtualenv

120 | .venv*

121 | venv*/

122 | ENV*/

123 |

124 | # Spyder project settings

125 | .spyderproject

126 | .spyproject

127 |

128 | # Rope project settings

129 | .ropeproject

130 |

131 | # mkdocs documentation

132 | /site

133 |

134 | # mypy

135 | .mypy_cache/

136 |

137 |

138 | # https://github.com/github/gitignore/blob/master/Global/macOS.gitignore -----------------------------------------------

139 |

140 | # General

141 | .DS_Store

142 | .AppleDouble

143 | .LSOverride

144 |

145 | # Icon must end with two \r

146 | Icon

147 | Icon?

148 |

149 | # Thumbnails

150 | ._*

151 |

152 | # Files that might appear in the root of a volume

153 | .DocumentRevisions-V100

154 | .fseventsd

155 | .Spotlight-V100

156 | .TemporaryItems

157 | .Trashes

158 | .VolumeIcon.icns

159 | .com.apple.timemachine.donotpresent

160 |

161 | # Directories potentially created on remote AFP share

162 | .AppleDB

163 | .AppleDesktop

164 | Network Trash Folder

165 | Temporary Items

166 | .apdisk

167 |

168 |

169 | # https://github.com/github/gitignore/blob/master/Global/JetBrains.gitignore

170 | # Covers JetBrains IDEs: IntelliJ, RubyMine, PhpStorm, AppCode, PyCharm, CLion, Android Studio and WebStorm

171 | # Reference: https://intellij-support.jetbrains.com/hc/en-us/articles/206544839

172 |

173 | # User-specific stuff:

174 | .idea/*

175 | .idea/**/workspace.xml

176 | .idea/**/tasks.xml

177 | .idea/dictionaries

178 | # Bokeh plots, TensorFlow frozen graphs, videos

179 | *.html

180 | *.pg

181 | *.avi

182 | # Sensitive or high-churn files:

183 | .idea/**/dataSources/

184 | .idea/**/dataSources.ids

185 | .idea/**/dataSources.local.xml

186 | .idea/**/sqlDataSources.xml

187 | .idea/**/dynamic.xml

188 | .idea/**/uiDesigner.xml

189 |

190 | # Gradle:

191 | .idea/**/gradle.xml

192 | .idea/**/libraries

193 |

194 | # CMake

195 | cmake-build-debug/

196 | cmake-build-release/

197 |

198 | # Mongo Explorer plugin:

199 | .idea/**/mongoSettings.xml

200 |

201 | ## File-based project format:

202 | *.iws

203 |

204 | ## Plugin-specific files:

205 |

206 | # IntelliJ

207 | out/

208 |

209 | # mpeltonen/sbt-idea plugin

210 | .idea_modules/

211 |

212 | # JIRA plugin

213 | atlassian-ide-plugin.xml

214 |

215 | # Cursive Clojure plugin

216 | .idea/replstate.xml

217 |

218 | # Crashlytics plugin (for Android Studio and IntelliJ)

219 | com_crashlytics_export_strings.xml

220 | crashlytics.properties

221 | crashlytics-build.properties

222 | fabric.properties

223 |

--------------------------------------------------------------------------------

/yolov5/.gitattributes:

--------------------------------------------------------------------------------

1 | # This drops notebooks from GitHub language stats

2 | *.ipynb linguist-vendored

3 |

--------------------------------------------------------------------------------

/yolov5/.github/ISSUE_TEMPLATE/bug-report.yml:

--------------------------------------------------------------------------------

1 | name: 🐛 Bug Report
2 | # title: " "
3 | description: Problems with YOLOv5
4 | labels: [bug, triage]
5 | body:
6 |   - type: markdown
7 |     attributes:
8 |       value: |
9 |         Thank you for submitting a YOLOv5 🐛 Bug Report!
10 |
11 |   - type: checkboxes
12 |     attributes:
13 |       label: Search before asking
14 |       description: >
15 |         Please search the [issues](https://github.com/ultralytics/yolov5/issues) to see if a similar bug report already exists.
16 |       options:
17 |         - label: >
18 |             I have searched the YOLOv5 [issues](https://github.com/ultralytics/yolov5/issues) and found no similar bug report.
19 |           required: true
20 |
21 |   - type: dropdown
22 |     attributes:
23 |       label: YOLOv5 Component
24 |       description: |
25 |         Please select the part of YOLOv5 where you found the bug.
26 |       multiple: true
27 |       options:
28 |         - "Training"
29 |         - "Validation"
30 |         - "Detection"
31 |         - "Export"
32 |         - "PyTorch Hub"
33 |         - "Multi-GPU"
34 |         - "Evolution"
35 |         - "Integrations"
36 |         - "Other"
37 |     validations:
38 |       required: false
39 |
40 |   - type: textarea
41 |     attributes:
42 |       label: Bug
43 |       description: Provide console output with error messages and/or screenshots of the bug.
44 |       placeholder: |
45 |         💡 ProTip! Include as much information as possible (screenshots, logs, tracebacks etc.) to receive the most helpful response.
46 |     validations:
47 |       required: true
48 |
49 |   - type: textarea
50 |     attributes:
51 |       label: Environment
52 |       description: Please specify the software and hardware you used to produce the bug.
53 |       placeholder: |
54 |         - YOLO: YOLOv5 🚀 v6.0-67-g60e42e1 torch 1.9.0+cu111 CUDA:0 (A100-SXM4-40GB, 40536MiB)
55 |         - OS: Ubuntu 20.04
56 |         - Python: 3.9.0
57 |     validations:
58 |       required: false
59 |
60 |   - type: textarea
61 |     attributes:
62 |       label: Minimal Reproducible Example
63 |       description: >
64 |         When asking a question, people will be better able to provide help if you provide code that they can easily understand and use to **reproduce** the problem.
65 |         This is referred to by community members as creating a [minimal reproducible example](https://stackoverflow.com/help/minimal-reproducible-example).
66 |       placeholder: |
67 |         ```
68 |         # Code to reproduce your issue here
69 |         ```
70 |     validations:
71 |       required: false
72 |
73 |   - type: textarea
74 |     attributes:
75 |       label: Additional
76 |       description: Anything else you would like to share?
77 |
78 |   - type: checkboxes
79 |     attributes:
80 |       label: Are you willing to submit a PR?
81 |       description: >
82 |         (Optional) We encourage you to submit a [Pull Request](https://github.com/ultralytics/yolov5/pulls) (PR) to help improve YOLOv5 for everyone, especially if you have a good understanding of how to implement a fix or feature.
83 |         See the YOLOv5 [Contributing Guide](https://github.com/ultralytics/yolov5/blob/master/CONTRIBUTING.md) to get started.
84 |       options:
85 |         - label: Yes I'd like to help by submitting a PR!

86 |

--------------------------------------------------------------------------------

/yolov5/.github/ISSUE_TEMPLATE/config.yml:

--------------------------------------------------------------------------------

1 | blank_issues_enabled: true
2 | contact_links:
3 |   - name: 📄 Docs
4 |     url: https://docs.ultralytics.com/yolov5
5 |     about: View Ultralytics YOLOv5 Docs
6 |   - name: 💬 Forum
7 |     url: https://community.ultralytics.com/
8 |     about: Ask on Ultralytics Community Forum
9 |   - name: 🎧 Discord
10 |     url: https://discord.gg/n6cFeSPZdD
11 |     about: Ask on Ultralytics Discord

12 |

--------------------------------------------------------------------------------

/yolov5/.github/ISSUE_TEMPLATE/feature-request.yml:

--------------------------------------------------------------------------------

1 | name: 🚀 Feature Request
2 | description: Suggest a YOLOv5 idea
3 | # title: " "
4 | labels: [enhancement]
5 | body:
6 |   - type: markdown
7 |     attributes:
8 |       value: |
9 |         Thank you for submitting a YOLOv5 🚀 Feature Request!
10 |
11 |   - type: checkboxes
12 |     attributes:
13 |       label: Search before asking
14 |       description: >
15 |         Please search the [issues](https://github.com/ultralytics/yolov5/issues) to see if a similar feature request already exists.
16 |       options:
17 |         - label: >
18 |             I have searched the YOLOv5 [issues](https://github.com/ultralytics/yolov5/issues) and found no similar feature requests.
19 |           required: true
20 |
21 |   - type: textarea
22 |     attributes:
23 |       label: Description
24 |       description: A short description of your feature.
25 |       placeholder: |
26 |         What new feature would you like to see in YOLOv5?
27 |     validations:
28 |       required: true
29 |
30 |   - type: textarea
31 |     attributes:
32 |       label: Use case
33 |       description: |
34 |         Describe the use case of your feature request. It will help us understand and prioritize the feature request.
35 |       placeholder: |
36 |         How would this feature be used, and who would use it?
37 |
38 |   - type: textarea
39 |     attributes:
40 |       label: Additional
41 |       description: Anything else you would like to share?
42 |
43 |   - type: checkboxes
44 |     attributes:
45 |       label: Are you willing to submit a PR?
46 |       description: >
47 |         (Optional) We encourage you to submit a [Pull Request](https://github.com/ultralytics/yolov5/pulls) (PR) to help improve YOLOv5 for everyone, especially if you have a good understanding of how to implement a fix or feature.
48 |         See the YOLOv5 [Contributing Guide](https://github.com/ultralytics/yolov5/blob/master/CONTRIBUTING.md) to get started.
49 |       options:
50 |         - label: Yes I'd like to help by submitting a PR!

51 |

--------------------------------------------------------------------------------

/yolov5/.github/ISSUE_TEMPLATE/question.yml:

--------------------------------------------------------------------------------

1 | name: ❓ Question
2 | description: Ask a YOLOv5 question
3 | # title: " "
4 | labels: [question]
5 | body:
6 |   - type: markdown
7 |     attributes:
8 |       value: |
9 |         Thank you for asking a YOLOv5 ❓ Question!
10 |
11 |   - type: checkboxes
12 |     attributes:
13 |       label: Search before asking
14 |       description: >
15 |         Please search the [issues](https://github.com/ultralytics/yolov5/issues) and [discussions](https://github.com/ultralytics/yolov5/discussions) to see if a similar question already exists.
16 |       options:
17 |         - label: >
18 |             I have searched the YOLOv5 [issues](https://github.com/ultralytics/yolov5/issues) and [discussions](https://github.com/ultralytics/yolov5/discussions) and found no similar questions.
19 |           required: true
20 |
21 |   - type: textarea
22 |     attributes:
23 |       label: Question
24 |       description: What is your question?
25 |       placeholder: |
26 |         💡 ProTip! Include as much information as possible (screenshots, logs, tracebacks etc.) to receive the most helpful response.
27 |     validations:
28 |       required: true
29 |
30 |   - type: textarea
31 |     attributes:
32 |       label: Additional
33 |       description: Anything else you would like to share?

34 |

--------------------------------------------------------------------------------

/yolov5/.github/PULL_REQUEST_TEMPLATE.md:

--------------------------------------------------------------------------------

1 |

12 |

13 | copilot:all

14 |

--------------------------------------------------------------------------------

/yolov5/.github/dependabot.yml:

--------------------------------------------------------------------------------

1 | version: 2
2 | updates:
3 |   - package-ecosystem: pip
4 |     directory: "/"
5 |     schedule:
6 |       interval: weekly
7 |       time: "04:00"
8 |     open-pull-requests-limit: 10
9 |     reviewers:
10 |       - glenn-jocher
11 |     labels:
12 |       - dependencies
13 |
14 |   - package-ecosystem: github-actions
15 |     directory: "/"
16 |     schedule:
17 |       interval: weekly
18 |       time: "04:00"
19 |     open-pull-requests-limit: 5
20 |     reviewers:
21 |       - glenn-jocher
22 |     labels:
23 |       - dependencies

24 |

--------------------------------------------------------------------------------

/yolov5/.github/workflows/codeql-analysis.yml:

--------------------------------------------------------------------------------

1 | # This action runs GitHub's industry-leading static analysis engine, CodeQL, against a repository's source code to find security vulnerabilities.
2 | # https://github.com/github/codeql-action
3 |
4 | name: "CodeQL"
5 |
6 | on:
7 |   schedule:
8 |     - cron: '0 0 1 * *' # Runs at 00:00 UTC on the 1st of every month
9 |
10 | jobs:
11 |   analyze:
12 |     name: Analyze
13 |     runs-on: ubuntu-latest
14 |
15 |     strategy:
16 |       fail-fast: false
17 |       matrix:
18 |         language: ['python']
19 |         # CodeQL supports [ 'cpp', 'csharp', 'go', 'java', 'javascript', 'python' ]
20 |         # Learn more:
21 |         # https://docs.github.com/en/free-pro-team@latest/github/finding-security-vulnerabilities-and-errors-in-your-code/configuring-code-scanning#changing-the-languages-that-are-analyzed
22 |
23 |     steps:
24 |       - name: Checkout repository
25 |         uses: actions/checkout@v3
26 |
27 |       # Initializes the CodeQL tools for scanning.
28 |       - name: Initialize CodeQL
29 |         uses: github/codeql-action/init@v2
30 |         with:
31 |           languages: ${{ matrix.language }}
32 |           # If you wish to specify custom queries, you can do so here or in a config file.
33 |           # By default, queries listed here will override any specified in a config file.
34 |           # Prefix the list here with "+" to use these queries and those in the config file.
35 |           # queries: ./path/to/local/query, your-org/your-repo/queries@main
36 |
37 |       # Autobuild attempts to build any compiled languages (C/C++, C#, or Java).
38 |       # If this step fails, then you should remove it and run the build manually (see below)
39 |       - name: Autobuild
40 |         uses: github/codeql-action/autobuild@v2
41 |
42 |       # ℹ️ Command-line programs to run using the OS shell.
43 |       # 📚 https://git.io/JvXDl
44 |
45 |       # ✏️ If the Autobuild fails above, remove it and uncomment the following three lines
46 |       #    and modify them (or add more) to build your code if your project
47 |       #    uses a compiled language
48 |
49 |       #- run: |
50 |       #   make bootstrap
51 |       #   make release
52 |
53 |       - name: Perform CodeQL Analysis
54 |         uses: github/codeql-action/analyze@v2

55 |

--------------------------------------------------------------------------------

/yolov5/.github/workflows/docker.yml:

--------------------------------------------------------------------------------

1 | # YOLOv5 🚀 by Ultralytics, AGPL-3.0 license
2 | # Builds ultralytics/yolov5:latest images on DockerHub https://hub.docker.com/r/ultralytics/yolov5
3 |
4 | name: Publish Docker Images
5 |
6 | on:
7 |   push:
8 |     branches: [ master ]
9 |
10 | jobs:
11 |   docker:
12 |     if: github.repository == 'ultralytics/yolov5'
13 |     name: Push Docker image to Docker Hub
14 |     runs-on: ubuntu-latest
15 |     steps:
16 |       - name: Checkout repo
17 |         uses: actions/checkout@v3
18 |
19 |       - name: Set up QEMU
20 |         uses: docker/setup-qemu-action@v2
21 |
22 |       - name: Set up Docker Buildx
23 |         uses: docker/setup-buildx-action@v2
24 |
25 |       - name: Login to Docker Hub
26 |         uses: docker/login-action@v2
27 |         with:
28 |           username: ${{ secrets.DOCKERHUB_USERNAME }}
29 |           password: ${{ secrets.DOCKERHUB_TOKEN }}
30 |
31 |       - name: Build and push arm64 image
32 |         uses: docker/build-push-action@v4
33 |         continue-on-error: true
34 |         with:
35 |           context: .
36 |           platforms: linux/arm64
37 |           file: utils/docker/Dockerfile-arm64
38 |           push: true
39 |           tags: ultralytics/yolov5:latest-arm64
40 |
41 |       - name: Build and push CPU image
42 |         uses: docker/build-push-action@v4
43 |         continue-on-error: true
44 |         with:
45 |           context: .
46 |           file: utils/docker/Dockerfile-cpu
47 |           push: true
48 |           tags: ultralytics/yolov5:latest-cpu
49 |
50 |       - name: Build and push GPU image
51 |         uses: docker/build-push-action@v4
52 |         continue-on-error: true
53 |         with:
54 |           context: .
55 |           file: utils/docker/Dockerfile
56 |           push: true
57 |           tags: ultralytics/yolov5:latest

58 |

--------------------------------------------------------------------------------
/yolov5/.github/workflows/stale.yml:
--------------------------------------------------------------------------------
# YOLOv5 🚀 by Ultralytics, AGPL-3.0 license

name: Close stale issues
on:
  schedule:
    - cron: '0 0 * * *' # Runs at 00:00 UTC every day

jobs:
  stale:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/stale@v8
        with:
          repo-token: ${{ secrets.GITHUB_TOKEN }}
          stale-issue-message: |
            👋 Hello, this issue has been automatically marked as stale because it has not had recent activity. Please note it will be closed if no further activity occurs.

            Access additional [YOLOv5](https://ultralytics.com/yolov5) 🚀 resources:
            - **Wiki** – https://github.com/ultralytics/yolov5/wiki
            - **Tutorials** – https://github.com/ultralytics/yolov5#tutorials
            - **Docs** – https://docs.ultralytics.com

            Access additional [Ultralytics](https://ultralytics.com) ⚡ resources:
            - **Ultralytics HUB** – https://ultralytics.com/hub
            - **Vision API** – https://ultralytics.com/yolov5
            - **About Us** – https://ultralytics.com/about
            - **Join Our Team** – https://ultralytics.com/work
            - **Contact Us** – https://ultralytics.com/contact

            Feel free to inform us of any other **issues** you discover or **feature requests** that come to mind in the future. Pull Requests (PRs) are also always welcomed!

            Thank you for your contributions to YOLOv5 🚀 and Vision AI ⭐!

          stale-pr-message: 'This pull request has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions to YOLOv5 🚀 and Vision AI ⭐.'
          days-before-issue-stale: 30
          days-before-issue-close: 10
          days-before-pr-stale: 90
          days-before-pr-close: 30
          exempt-issue-labels: 'documentation,tutorial,TODO'
          operations-per-run: 300 # The maximum number of operations per run, used to control rate limiting.

--------------------------------------------------------------------------------
/yolov5/.github/workflows/translate-readme.yml:
--------------------------------------------------------------------------------
# YOLOv5 🚀 by Ultralytics, AGPL-3.0 license
# README translation action to translate README.md to Chinese as README.zh-CN.md on any change to README.md

name: Translate README

on:
  push:
    branches:
      - translate_readme # replace with 'master' to enable action
    paths:
      - README.md

jobs:
  Translate:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - name: Setup Node.js
        uses: actions/setup-node@v3
        with:
          node-version: 16
      # ISO Language Codes: https://cloud.google.com/translate/docs/languages
      - name: Adding README - Chinese Simplified
        uses: dephraiim/translate-readme@main
        with:
          LANG: zh-CN

--------------------------------------------------------------------------------
/yolov5/.pre-commit-config.yaml:
--------------------------------------------------------------------------------
# Ultralytics YOLO 🚀, AGPL-3.0 license
# Pre-commit hooks. For more information see https://github.com/pre-commit/pre-commit-hooks/blob/main/README.md

exclude: 'docs/'
# Define bot property if installed via https://github.com/marketplace/pre-commit-ci
ci:
  autofix_prs: true
  autoupdate_commit_msg: '[pre-commit.ci] pre-commit suggestions'
  autoupdate_schedule: monthly
  # submodules: true
