├── .github
│   └── workflows
│       ├── buildsdist.yml
│       ├── ciwheels.yml
│       ├── draft-pdf.yml
│       ├── scripts
│       │   └── windows-setup.sh
│       └── test.yml
├── .gitignore
├── .readthedocs.yml
├── CMakeLists.txt
├── LICENSE
├── README.md
├── build.sh
├── cmake
│   └── Modules
│       ├── FindGLPK.cmake
│       └── FindSphinx.cmake
├── docs
│   ├── CMakeLists.txt
│   ├── Doxyfile
│   ├── code_design1.png
│   ├── conf.py
│   ├── cpp_dense_walk.rst
│   ├── cpp_init.rst
│   ├── cpp_sparse_walk.rst
│   ├── cpp_utils.rst
│   ├── index.rst
│   ├── installation.rst
│   ├── logo1.png
│   ├── make.bat
│   ├── py_dense_walk.rst
│   ├── py_init.rst
│   ├── py_modules.rst
│   ├── py_sparse_walk.rst
│   ├── py_utils.rst
│   ├── requirements.txt
│   └── support.rst
├── examples
│   ├── data
│   │   ├── ADLITTLE.pkl
│   │   ├── ISRAEL.pkl
│   │   └── SCTAP1.pkl
│   ├── dense_walks.ipynb
│   └── sparse_walks.ipynb
├── paper
│   ├── images
│   │   └── Code_Design.pdf
│   ├── paper.bib
│   └── paper.md
├── pybind11
│   └── PybindExt.cpp
├── pyproject.toml
├── requirements.txt
├── src
│   ├── CMakeLists.txt
│   ├── dense
│   │   ├── BallWalk.cpp
│   │   ├── BallWalk.hpp
│   │   ├── BarrierWalk.cpp
│   │   ├── BarrierWalk.hpp
│   │   ├── Common.hpp
│   │   ├── DikinLSWalk.cpp
│   │   ├── DikinLSWalk.hpp
│   │   ├── DikinWalk.cpp
│   │   ├── DikinWalk.hpp
│   │   ├── HitRun.cpp
│   │   ├── HitRun.hpp
│   │   ├── JohnWalk.cpp
│   │   ├── JohnWalk.hpp
│   │   ├── RandomWalk.cpp
│   │   ├── RandomWalk.hpp
│   │   ├── VaidyaWalk.cpp
│   │   └── VaidyaWalk.hpp
│   ├── sparse
│   │   ├── Common.hpp
│   │   ├── LeverageScore.cpp
│   │   ├── LeverageScore.hpp
│   │   ├── SparseBallWalk.cpp
│   │   ├── SparseBallWalk.hpp
│   │   ├── SparseBarrierWalk.cpp
│   │   ├── SparseBarrierWalk.hpp
│   │   ├── SparseDikinLSWalk.cpp
│   │   ├── SparseDikinLSWalk.hpp
│   │   ├── SparseDikinWalk.cpp
│   │   ├── SparseDikinWalk.hpp
│   │   ├── SparseHitRun.cpp
│   │   ├── SparseHitRun.hpp
│   │   ├── SparseJohnWalk.cpp
│   │   ├── SparseJohnWalk.hpp
│   │   ├── SparseRandomWalk.cpp
│   │   ├── SparseRandomWalk.hpp
│   │   ├── SparseVaidyaWalk.cpp
│   │   └── SparseVaidyaWalk.hpp
│   └── utils
│       ├── Common.hpp
│       ├── DenseCenter.cpp
│       ├── DenseCenter.hpp
│       ├── FacialReduction.cpp
│       ├── FacialReduction.hpp
│       ├── FullWalkRun.hpp
│       ├── SparseCenter.cpp
│       ├── SparseCenter.hpp
│       ├── SparseLP.cpp
│       └── SparseLP.hpp
└── tests
    ├── CMakeLists.txt
    ├── cpp
    │   ├── test_dense_walk.cpp
    │   ├── test_fr.cpp
    │   ├── test_init.cpp
    │   ├── test_sparse_walk.cpp
    │   └── test_weights.cpp
    └── python
        ├── test_dense_walk.py
        ├── test_fr.py
        ├── test_init.py
        ├── test_sparse_walk.py
        └── test_weights.py

/.github/workflows/buildsdist.yml:

--------------------------------------------------------------------------------

1 | name: Build source distribution

2 | # Build the source distribution under Linux

3 |

4 | on:

5 | push:

6 | branches:

7 | - main

8 | tags:

9 | - 'v*' # Triggers on tag pushes that match the pattern (e.g., v1.0.0, v2.0.1, etc.)

10 | pull_request:

11 | branches:

12 | - main

13 |

14 | jobs:

15 | build_sdist:

16 | name: Source distribution

17 | runs-on: ubuntu-latest

18 |

19 | steps:

20 | - name: Checkout repository

21 | uses: actions/checkout@v4

22 |

23 | - name: Set up Python

24 | uses: actions/setup-python@v5

25 | with:

26 | python-version: "3.9"

27 |

28 | - name: Install dependencies

29 | shell: bash

30 | run: |

31 | sudo apt-get install -y libeigen3-dev libglpk-dev

32 | python -m pip install numpy scipy

33 | python -m pip install twine build

34 |

35 | - name: Build source distribution

36 | run: |

37 | python -m build --sdist

38 | # Check whether the source distribution will render correctly

39 | twine check dist/*.tar.gz

40 |

41 | - name: Store artifacts

42 | uses: actions/upload-artifact@v4

43 | with:

44 | name: cibw-sdist

45 | path: dist/*.tar.gz

46 |

47 | publish-to-pypi:

48 | name: >-

49 | Publish Python 🐍 distribution 📦 to PyPI

50 | if: startsWith(github.ref, 'refs/tags/') # only publish on tag pushes

51 | needs:

52 | - build_sdist

53 | runs-on: ubuntu-latest

54 |

55 | environment:

56 | name: pypi

57 | url: https://pypi.org/p/polytopewalk

58 |

59 | permissions:

60 | id-token: write # IMPORTANT: mandatory for trusted publishing

61 |

62 | steps:

63 | - name: Download the sdist

64 | uses: actions/download-artifact@v4

65 | with:

66 | pattern: cibw-*

67 | path: dist

68 | merge-multiple: true

69 |

70 | - name: Publish distribution 📦 to PyPI

71 | uses: pypa/gh-action-pypi-publish@release/v1

72 |

73 | publish-to-testpypi:

74 | name: >-

75 | Publish Python 🐍 distribution 📦 to TestPyPI

76 | if: startsWith(github.ref, 'refs/tags/') # only publish on tag pushes

77 | needs:

78 | - build_sdist

79 | runs-on: ubuntu-latest

80 |

81 | environment:

82 | name: testpypi

83 | url: https://test.pypi.org/p/polytopewalk

84 |

85 | permissions:

86 | id-token: write # IMPORTANT: mandatory for trusted publishing

87 |

88 | steps:

89 | - name: Download the sdist

90 | uses: actions/download-artifact@v4

91 | with:

92 | pattern: cibw-*

93 | path: dist

94 | merge-multiple: true

95 |

96 | - name: Publish distribution 📦 to TestPyPI

97 | uses: pypa/gh-action-pypi-publish@release/v1

98 | with:

99 | repository-url: https://test.pypi.org/legacy/

100 | verbose: true

101 |

102 |

103 |

104 |

105 |

--------------------------------------------------------------------------------
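The workflow above builds on pushes to `main`, on pull requests, and on tags matching `v*`, but the two publish jobs only run when the ref starts with `refs/tags/`. The sketch below mimics that gating logic in Python. The function names are hypothetical, and `fnmatchcase` only approximates GitHub's own filter-pattern syntax, so treat it as an illustration rather than a faithful reimplementation.

```python
from fnmatch import fnmatchcase

TAG_PREFIX = "refs/tags/"


def triggers_tag_build(ref, pattern="v*"):
    """Approximate the `tags: - 'v*'` push filter (hypothetical helper)."""
    return ref.startswith(TAG_PREFIX) and fnmatchcase(ref[len(TAG_PREFIX):], pattern)


def should_publish(ref):
    """Mirror the job condition `startsWith(github.ref, 'refs/tags/')`."""
    return ref.startswith(TAG_PREFIX)


for ref in ("refs/tags/v1.0.0", "refs/heads/main", "refs/pull/12/merge"):
    print(ref, triggers_tag_build(ref), should_publish(ref))
```

Only the tag ref passes both checks; a push to `main` still runs `build_sdist` but skips both publish jobs.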

/.github/workflows/ciwheels.yml:

--------------------------------------------------------------------------------

1 | name: Build CI wheels

2 |

3 | on:

4 | push:

5 | branches:

6 | - main

7 | tags:

8 | - 'v*' # Triggers on tag pushes that match the pattern (e.g., v1.0.0, v2.0.1, etc.)

9 | pull_request:

10 | branches:

11 | - main

12 |

13 | jobs:

14 | build_wheels:

15 | name: Build wheel for ${{ matrix.os }} on cp${{ matrix.python }}-${{ matrix.platform_id }}-${{ matrix.manylinux_image }}

16 | runs-on: ${{ matrix.os }}

17 | strategy:

18 | matrix:

19 | include:

20 | # Windows 64 bit

21 | - os: windows-latest

22 | python: 39

23 | platform_id: win_amd64

24 | - os: windows-latest

25 | python: 310

26 | platform_id: win_amd64

27 | - os: windows-latest

28 | python: 311

29 | platform_id: win_amd64

30 | - os: windows-latest

31 | python: 312

32 | platform_id: win_amd64

33 | - os: windows-latest

34 | python: 313

35 | platform_id: win_amd64

36 |

37 | # Linux 64 bit manylinux2014

38 | - os: ubuntu-latest

39 | python: 39

40 | platform_id: manylinux_x86_64

41 | manylinux_image: manylinux2014

42 | - os: ubuntu-latest

43 | python: 310

44 | platform_id: manylinux_x86_64

45 | manylinux_image: manylinux2014

46 | - os: ubuntu-latest

47 | python: 311

48 | platform_id: manylinux_x86_64

49 | manylinux_image: manylinux2014

50 | - os: ubuntu-latest

51 | python: 312

52 | platform_id: manylinux_x86_64

53 | manylinux_image: manylinux2014

54 | - os: ubuntu-latest

55 | python: 313

56 | platform_id: manylinux_x86_64

57 | manylinux_image: manylinux2014

58 |

59 | # macOS macos-12 x86_64 is deprecated

60 | # macOS macos-13 x86_64

61 | - os: macos-13

62 | python: 39

63 | platform_id: macosx_x86_64

64 | deployment_target: "13"

65 | - os: macos-13

66 | python: 310

67 | platform_id: macosx_x86_64

68 | deployment_target: "13"

69 | - os: macos-13

70 | python: 311

71 | platform_id: macosx_x86_64

72 | deployment_target: "13"

73 | - os: macos-13

74 | python: 312

75 | platform_id: macosx_x86_64

76 | deployment_target: "13"

77 | - os: macos-13

78 | python: 313

79 | platform_id: macosx_x86_64

80 | deployment_target: "13"

81 | # macOS macos-14 arm64

82 | - os: macos-latest

83 | python: 39

84 | platform_id: macosx_arm64

85 | deployment_target: "14"

86 | - os: macos-latest

87 | python: 310

88 | platform_id: macosx_arm64

89 | deployment_target: "14"

90 | - os: macos-latest

91 | python: 311

92 | platform_id: macosx_arm64

93 | deployment_target: "14"

94 | - os: macos-latest

95 | python: 312

96 | platform_id: macosx_arm64

97 | deployment_target: "14"

98 | - os: macos-latest

99 | python: 313

100 | platform_id: macosx_arm64

101 | deployment_target: "14"

102 |

103 | steps:

104 | - name: Checkout code

105 | uses: actions/checkout@v4

106 |

107 | - name: Install packages (Windows)

108 | if: runner.os == 'Windows'

109 | shell: bash

110 | run: |

111 | curl -owinglpk-4.65.zip -L --insecure https://jaist.dl.sourceforge.net/project/winglpk/winglpk/GLPK-4.65/winglpk-4.65.zip

112 | 7z x winglpk-4.65.zip

113 | cp glpk-4.65/w64/glpk_4_65.lib glpk-4.65/w64/glpk.lib

114 | mkdir lib

115 | cp glpk-4.65/w64/glpk_4_65.dll lib/glpk_4_65.dll

116 | echo GLPK_LIB_DIR=${GITHUB_WORKSPACE}\\glpk-4.65\\w64 >> $GITHUB_ENV

117 | echo GLPK_INCLUDE_DIR=${GITHUB_WORKSPACE}\\glpk-4.65\\src >> $GITHUB_ENV

118 |

119 | - name: Build wheels

120 | uses: pypa/cibuildwheel@v2.20.0

121 | env:

122 | # Skip 32-bit builds and musllinux

123 | CIBW_SKIP: "*-win32 *-manylinux_i686 *musllinux*"

124 | CIBW_BUILD: cp${{ matrix.python }}-${{ matrix.platform_id }}

125 | BUILD_DOCS: "OFF"

126 | MACOSX_DEPLOYMENT_TARGET: ${{ matrix.deployment_target }}

127 | CIBW_BEFORE_ALL_MACOS: |

128 | brew install eigen glpk

129 | CIBW_BEFORE_ALL_LINUX: |

130 | yum install -y epel-release eigen3-devel glpk-devel

131 | CIBW_BEFORE_ALL_WINDOWS: >

132 | echo "OK starts Windows build" &&

133 | choco install eigen -y

134 | CIBW_BEFORE_BUILD_WINDOWS: "pip install delvewheel"

135 | CIBW_REPAIR_WHEEL_COMMAND_WINDOWS: "delvewheel repair --add-path \"glpk-4.65/w64/\" -w {dest_dir} {wheel}"

136 | with:

137 | package-dir: .

138 | output-dir: wheelhouse

139 | config-file: '{package}/pyproject.toml'

140 |

141 | - uses: actions/upload-artifact@v4

142 | with:

143 | name: cibw-wheels-${{ matrix.os }}-cp${{ matrix.python }}-${{ matrix.platform_id }}

144 | path: ./wheelhouse/*.whl

145 |

146 | publish-to-pypi:

147 | name: >-

148 | Publish Python 🐍 distribution 📦 to PyPI

149 | if: startsWith(github.ref, 'refs/tags/') # only publish on tag pushes

150 | needs:

151 | - build_wheels

152 | runs-on: ubuntu-latest

153 |

154 | environment:

155 | name: pypi

156 | url: https://pypi.org/p/polytopewalk

157 |

158 | permissions:

159 | id-token: write # IMPORTANT: mandatory for trusted publishing

160 |

161 | steps:

162 | - name: Download all the wheels

163 | uses: actions/download-artifact@v4

164 | with:

165 | pattern: cibw-*

166 | path: dist

167 | merge-multiple: true

168 |

169 | - name: Publish distribution 📦 to PyPI

170 | uses: pypa/gh-action-pypi-publish@release/v1

171 |

172 | publish-to-testpypi:

173 | name: >-

174 | Publish Python 🐍 distribution 📦 to TestPyPI

175 | if: startsWith(github.ref, 'refs/tags/') # only publish on tag pushes

176 | needs:

177 | - build_wheels

178 | runs-on: ubuntu-latest

179 |

180 | environment:

181 | name: testpypi

182 | url: https://test.pypi.org/p/polytopewalk

183 |

184 | permissions:

185 | id-token: write # IMPORTANT: mandatory for trusted publishing

186 |

187 | steps:

188 | - name: Download all the wheels

189 | uses: actions/download-artifact@v4

190 | with:

191 | pattern: cibw-*

192 | path: dist

193 | merge-multiple: true

194 |

195 | - name: Publish distribution 📦 to TestPyPI

196 | uses: pypa/gh-action-pypi-publish@release/v1

197 | with:

198 | repository-url: https://test.pypi.org/legacy/

199 |

--------------------------------------------------------------------------------
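Each matrix entry in the workflow above selects exactly one wheel through `CIBW_BUILD: cp${{ matrix.python }}-${{ matrix.platform_id }}`. As a rough illustration, the following sketch enumerates the resulting build identifiers from the Python versions and platform IDs listed in the matrix. The helper name and the two constants are hypothetical; they are not part of the repository.

```python
from itertools import product

# Values copied from the matrix in ciwheels.yml.
PYTHONS = ["39", "310", "311", "312", "313"]
PLATFORM_IDS = ["win_amd64", "manylinux_x86_64", "macosx_x86_64", "macosx_arm64"]


def build_identifiers(pythons=PYTHONS, platform_ids=PLATFORM_IDS):
    """Return one cibuildwheel build identifier per (platform, python) pair."""
    return [f"cp{py}-{plat}" for plat, py in product(platform_ids, pythons)]


identifiers = build_identifiers()
print(len(identifiers))  # one identifier per matrix entry
print(identifiers[:3])
```

Five Python versions across four platform IDs give twenty identifiers, matching the twenty `include` entries in the matrix.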

/.github/workflows/draft-pdf.yml:

--------------------------------------------------------------------------------

1 | name: Draft PDF

2 | on:

3 | push:

4 | branches:

5 | - main

6 | paths:

7 | - 'paper/**'

8 |

9 | jobs:

10 | paper:

11 | runs-on: ubuntu-latest

12 | name: Paper Draft

13 | steps:

14 | - name: Checkout

15 | uses: actions/checkout@v4

16 | - name: Build draft PDF

17 | uses: openjournals/openjournals-draft-action@master

18 | with:

19 | journal: joss

20 | # This should be the path to the paper within your repo.

21 | paper-path: paper/paper.md

22 | - name: Upload

23 | uses: actions/upload-artifact@v4

24 | with:

25 | name: paper

26 | # This is the output path where Pandoc will write the compiled

27 | # PDF. Note, this should be the same directory as the input

28 | # paper.md

29 | path: paper/paper.pdf

30 |

--------------------------------------------------------------------------------

/.github/workflows/scripts/windows-setup.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | echo "Let's start windows-setup"

4 | # export PATH="/c/msys64/mingw64/bin:/c/Program Files/Git/bin:$PATH"

5 | export PATH="/mingw64/bin:$PATH"

6 |

7 | # # install GLPK for Windows from binary

8 | # wget https://downloads.sourceforge.net/project/winglpk/winglpk/GLPK-4.65/winglpk-4.65.zip

9 | # unzip winglpk-4.65.zip

10 | # mkdir /mingw64/local

11 | # cp -r winglpk-4.65/* /mingw64/

12 |

13 | # # install ipopt from binary

14 | # wget https://github.com/coin-or/Ipopt/releases/download/releases%2F3.14.16/Ipopt-3.14.16-win64-msvs2019-md.zip

15 | # unzip Ipopt-3.14.16-win64-msvs2019-md.zip

16 | # mkdir /mingw64/local

17 | # cp -r Ipopt-3.14.16-win64-msvs2019-md/* /mingw64/

18 |

19 | # # # install ipopt via https://coin-or.github.io/Ipopt/INSTALL.html

20 | # # # install Mumps

21 | # # git clone https://github.com/coin-or-tools/ThirdParty-Mumps.git

22 | # # cd ThirdParty-Mumps

23 | # # ./get.Mumps

24 | # # ./configure --prefix=/mingw64/

25 | # # make

26 | # # make install

27 | # # cd ..

28 |

29 | # # # install ipopt from source

30 | # # git clone https://github.com/coin-or/Ipopt.git

31 | # # cd Ipopt

32 | # # mkdir build

33 | # # cd build

34 | # # ../configure --prefix=/mingw64/

35 | # # make

36 | # # make install

37 | # # cd ..

38 | # # cd ..

39 |

40 | # # get FindIPOPT_DIR from casadi, which is better written

41 | # git clone --depth 1 --branch 3.6.5 https://github.com/casadi/casadi.git

42 |

43 | # # install ifopt from source

44 | # git clone https://github.com/ethz-adrl/ifopt.git

45 | # cd ifopt

46 | # # move FindIPOPT.cmake around

47 | # mv ifopt_ipopt/cmake/FindIPOPT.cmake ifopt_ipopt/cmake/FindIPOPT.cmakeold

48 | # cp ../casadi/cmake/FindIPOPT.cmake ifopt_ipopt/cmake/

49 | # cp ../casadi/cmake/canonicalize_paths.cmake ifopt_ipopt/cmake/

50 | # cmake -A x64 -B build \

51 | # -DCMAKE_VERBOSE_MAKEFILE=ON \

52 | # -DCMAKE_INSTALL_PREFIX="/mingw64/local" \

53 | # -DCMAKE_PREFIX_PATH="/mingw64" \

54 | # -G "Visual Studio 17 2022"

55 |

56 | # cmake --build build --config Release

57 | # cmake --install build --config Release

58 | # cd ..

59 |

60 | # # mkdir build

61 | # # cd build

62 | # # cmake .. -DCMAKE_VERBOSE_MAKEFILE=ON \

63 | # # -DCMAKE_INSTALL_PREFIX="/mingw64/local" \

64 | # # -DCMAKE_PREFIX_PATH="/mingw64" \

65 | # # -DIPOPT_LIBRARIES="/mingw64/lib/libipopt.dll.a" \

66 | # # -DIPOPT_INCLUDE_DIRS="/mingw64/include/coin-or" \

67 | # # -G "Unix Makefiles"

68 |

69 | # # make VERBOSE=1

70 | # # make install

71 | # # cd ..

72 | # # cd ..

73 |

74 | # eigen_dir=$(cygpath -w /mingw64/share/eigen3/cmake)

75 | # echo $eigen_dir

76 | # echo "Eigen3_DIR=$eigen_dir" >> $GITHUB_ENV

77 | # ifopt_dir=$(cygpath -w /mingw64/local/share/ifopt/cmake)

78 | # echo `ls /mingw64/local/share/ifopt/cmake`

79 | # echo $ifopt_dir

80 | # echo "ifopt_DIR=$ifopt_dir" >> $GITHUB_ENV

81 | eigen_dir=$(cygpath -w /mingw64/share/eigen3/cmake)

82 | echo $eigen_dir

83 | echo "Eigen3_DIR=$eigen_dir" >> $GITHUB_ENV

84 |

85 | # glpk_include_dir=$(cygpath -w /mingw64/include)

86 | # glpk_library=$(cygpath -w /mingw64/lib)

87 | # echo "GLPK_INCLUDE_DIR=$glpk_include_dir" >> $GITHUB_ENV

88 | # echo "GLPK_LIBRARY=$glpk_library" >> $GITHUB_ENV

--------------------------------------------------------------------------------

/.github/workflows/test.yml:

--------------------------------------------------------------------------------

1 | name: Run automated test suite

2 |

3 | on:

4 | push:

5 | branches:

6 | - main

7 |

8 | jobs:

9 | test:

10 | runs-on: windows-latest

11 | strategy:

12 | matrix:

13 | python-version: ["3.9", "3.10", "3.11", "3.12", "3.13"]

14 |

15 | steps:

16 | - name: Checkout repository

17 | uses: actions/checkout@v4

18 |

19 | - name: Set up Python ${{ matrix.python-version }}

20 | uses: actions/setup-python@v4

21 | with:

22 | python-version: ${{ matrix.python-version }}

23 |

24 | - name: Install dependencies

25 | shell: bash

26 | run: |

27 | python -m pip install --upgrade pip

28 | pip install -r requirements.txt

29 | pip install polytopewalk

30 |

31 | - name: Run tests

32 | run: python -m unittest discover -s tests/python -p "*.py"

33 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | CMakeLists.txt.user

2 | CMakeCache.txt

3 | CMakeFiles

4 | CMakeScripts

5 | Testing

6 | Makefile

7 | cmake_install.cmake

8 | install_manifest.txt

9 | compile_commands.json

10 | CTestTestfile.cmake

11 | _deps

12 | .vscode

13 |

14 | .DS_Store

15 |

16 | test.ipynb

17 | # Byte-compiled / optimized / DLL files

18 | __pycache__/

19 | *.py[cod]

20 | *$py.class

21 |

22 | # C extensions

23 | *.so

24 |

25 | # Distribution / packaging

26 | .Python

27 | build/

28 | develop-eggs/

29 | dist/

30 | downloads/

31 | eggs/

32 | .eggs/

33 | lib/

34 | lib64/

35 | parts/

36 | sdist/

37 | var/

38 | wheels/

39 | share/python-wheels/

40 | *.egg-info/

41 | .installed.cfg

42 | *.egg

43 | MANIFEST

44 |

45 | # PyInstaller

46 | # Usually these files are written by a python script from a template

47 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

48 | *.manifest

49 | *.spec

50 |

51 | # Installer logs

52 | pip-log.txt

53 | pip-delete-this-directory.txt

54 |

55 | # Unit test / coverage reports

56 | htmlcov/

57 | .tox/

58 | .nox/

59 | .coverage

60 | .coverage.*

61 | .cache

62 | nosetests.xml

63 | coverage.xml

64 | *.cover

65 | *.py,cover

66 | .hypothesis/

67 | .pytest_cache/

68 | cover/

69 |

70 | # Translations

71 | *.mo

72 | *.pot

73 |

74 | # Django stuff:

75 | *.log

76 | local_settings.py

77 | db.sqlite3

78 | db.sqlite3-journal

79 |

80 | # Flask stuff:

81 | instance/

82 | .webassets-cache

83 |

84 | # Scrapy stuff:

85 | .scrapy

86 |

87 | # Sphinx documentation

88 | docs/_build/

89 |

90 | # PyBuilder

91 | .pybuilder/

92 | target/

93 |

94 | # Jupyter Notebook

95 | .ipynb_checkpoints

96 |

97 | # IPython

98 | profile_default/

99 | ipython_config.py

100 |

101 | # pyenv

102 | # For a library or package, you might want to ignore these files since the code is

103 | # intended to run in multiple environments; otherwise, check them in:

104 | # .python-version

105 |

106 | # pipenv

107 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

108 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

109 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

110 | # install all needed dependencies.

111 | #Pipfile.lock

112 |

113 | # poetry

114 | # Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

115 | # This is especially recommended for binary packages to ensure reproducibility, and is more

116 | # commonly ignored for libraries.

117 | # https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

118 | #poetry.lock

119 |

120 | # pdm

121 | # Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

122 | #pdm.lock

123 | # pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

124 | # in version control.

125 | # https://pdm.fming.dev/#use-with-ide

126 | .pdm.toml

127 |

128 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

129 | __pypackages__/

130 |

131 | # Celery stuff

132 | celerybeat-schedule

133 | celerybeat.pid

134 |

135 | # SageMath parsed files

136 | *.sage.py

137 |

138 | # Environments

139 | .env

140 | .venv

141 | env/

142 | venv/

143 | ENV/

144 | env.bak/

145 | venv.bak/

146 |

147 | # Spyder project settings

148 | .spyderproject

149 | .spyproject

150 |

151 | # Rope project settings

152 | .ropeproject

153 |

154 | # mkdocs documentation

155 | /site

156 |

157 | # mypy

158 | .mypy_cache/

159 | .dmypy.json

160 | dmypy.json

161 |

162 | # Pyre type checker

163 | .pyre/

164 |

165 | # pytype static type analyzer

166 | .pytype/

167 |

168 | # Cython debug symbols

169 | cython_debug/

170 |

171 | # PyCharm

172 | # JetBrains specific template is maintained in a separate JetBrains.gitignore that can

173 | # be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

174 | # and can be added to the global gitignore or merged into this file. For a more nuclear

175 | # option (not recommended) you can uncomment the following to ignore the entire idea folder.

176 | #.idea/

177 |

178 | .pypirc

--------------------------------------------------------------------------------

/.readthedocs.yml:

--------------------------------------------------------------------------------

1 | version: 2

2 |

3 | build:

4 | os: ubuntu-22.04

5 | apt_packages:

6 | - cmake

7 | tools:

8 | python: "3.10"

9 | # You can also specify other tool versions:

10 | # nodejs: "16"

11 | # commands:

12 | # - cmake -B build -S . -DBUILD_DOCS=ON # Configure CMake

13 | # - cmake --build build --target Doxygen Sphinx # Build project

14 |

15 | # Build documentation in the docs/ directory with Sphinx

16 | sphinx:

17 | configuration: docs/conf.py

18 |

19 | # Dependencies required to build your docs

20 | python:

21 | install:

22 | - requirements: docs/requirements.txt

--------------------------------------------------------------------------------

/CMakeLists.txt:

--------------------------------------------------------------------------------

1 | cmake_minimum_required(VERSION 3.15)

2 | project(polytopewalk)

3 |

4 | set(CMAKE_CXX_STANDARD 11)

5 | if(UNIX)

6 | # For Unix-like systems, add the -fPIC option

7 | set(CMAKE_CXX_FLAGS "-O2 -fPIC")

8 | elseif(WIN32)

9 | set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -D_USE_MATH_DEFINES")

10 | # For Windows, specific Windows options can be set here

11 | get_property(dirs DIRECTORY ${CMAKE_CURRENT_SOURCE_DIR} PROPERTY INCLUDE_DIRECTORIES)

12 | foreach(dir ${dirs})

13 | message(STATUS "dir='${dir}'")

14 | endforeach()

15 | endif()

16 |

17 | set(CMAKE_MODULE_PATH ${CMAKE_MODULE_PATH} "${CMAKE_SOURCE_DIR}/cmake/Modules/")

18 | message(STATUS "CMAKE_MODULE_PATH: ${CMAKE_MODULE_PATH}")

19 |

20 | # Set project directories

21 | set(POLYTOPEWALK_DIR ${CMAKE_CURRENT_SOURCE_DIR})

22 | set(SOURCES_DIR ${POLYTOPEWALK_DIR}/src)

23 | set(TESTS_DIR ${POLYTOPEWALK_DIR}/tests/cpp)

24 |

25 | # Documentation configuration

26 | if (BUILD_DOCS)

27 | add_subdirectory("docs")

28 | return() # Stop processing further targets for BUILD_DOCS

29 | endif()

30 |

31 | # Main project dependencies (only when not building docs)

32 | find_package(Python COMPONENTS Interpreter Development.Module REQUIRED)

33 | find_package(pybind11 CONFIG REQUIRED)

34 | find_package(Eigen3 CONFIG REQUIRED)

35 | find_package(GLPK REQUIRED)

36 |

37 | add_subdirectory("src")

38 | # set(CMAKE_MODULE_PATH "${PROJECT_SOURCE_DIR}/cmake" ${CMAKE_MODULE_PATH})

39 |

40 | pybind11_add_module(${PROJECT_NAME} MODULE pybind11/PybindExt.cpp)

41 | target_link_libraries(${PROJECT_NAME} PUBLIC utils dense sparse pybind11::module Eigen3::Eigen ${GLPK_LIBRARY})

42 | target_include_directories(${PROJECT_NAME} PRIVATE ${Eigen3_INCLUDE_DIRS} ${GLPK_INCLUDE_DIR})

43 | install(TARGETS ${PROJECT_NAME} LIBRARY DESTINATION .)

44 |

45 | # # Windows-specific DLL installation

46 | # if(WIN32)

47 | # file(GLOB DLL_FILES "${CMAKE_CURRENT_SOURCE_DIR}/lib/*.dll")

48 | # install(FILES ${DLL_FILES} DESTINATION ${PROJECT_NAME})

49 | # endif()

50 |

51 | # if (BUILD_TESTS)

52 | add_subdirectory("tests")

53 | # endif()

54 |

55 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2023 Yuansi Chen, Benny Sun

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |  3 |

3 |

4 |

5 |

6 |

7 |

8 |

9 | # PolytopeWalk

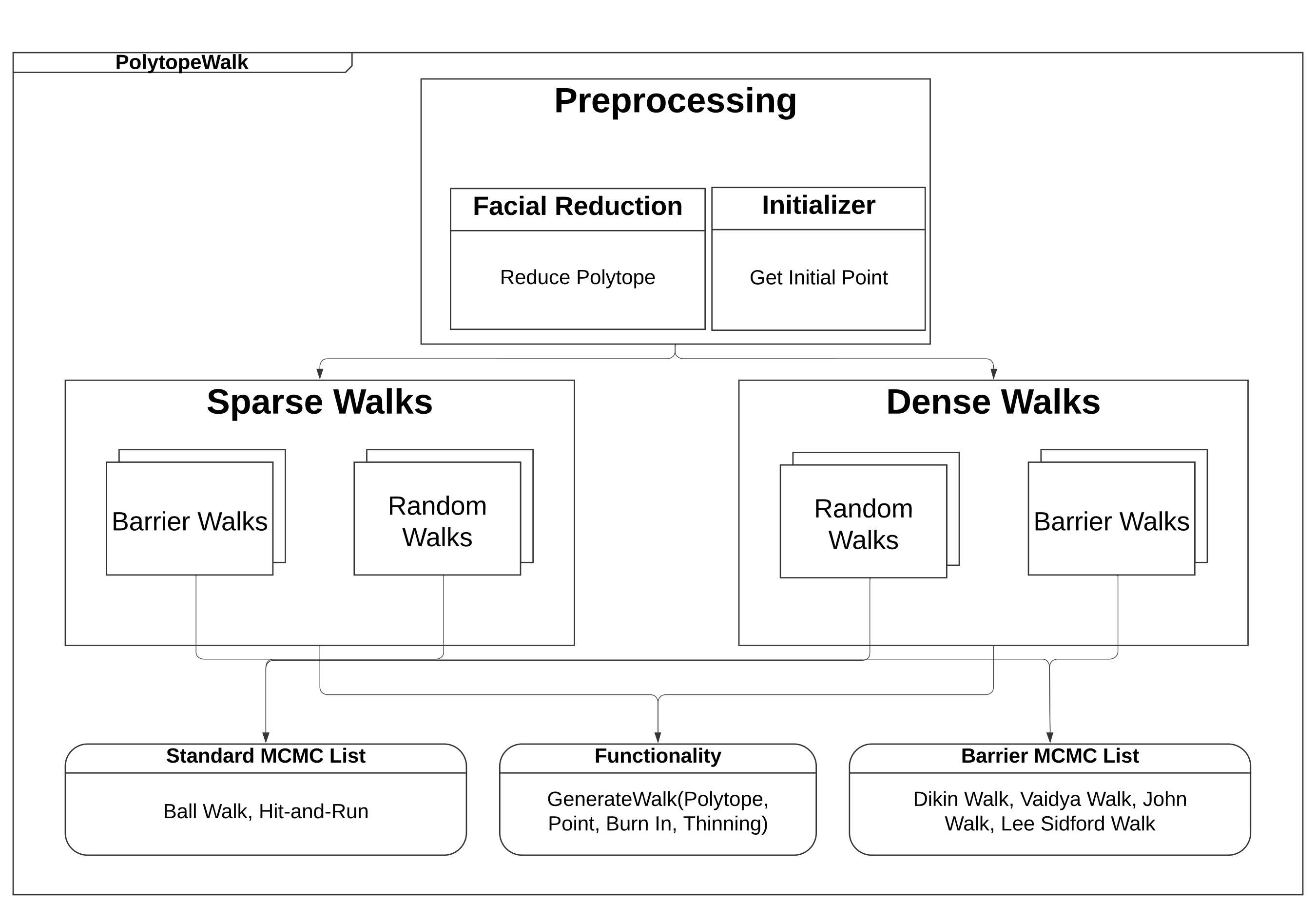

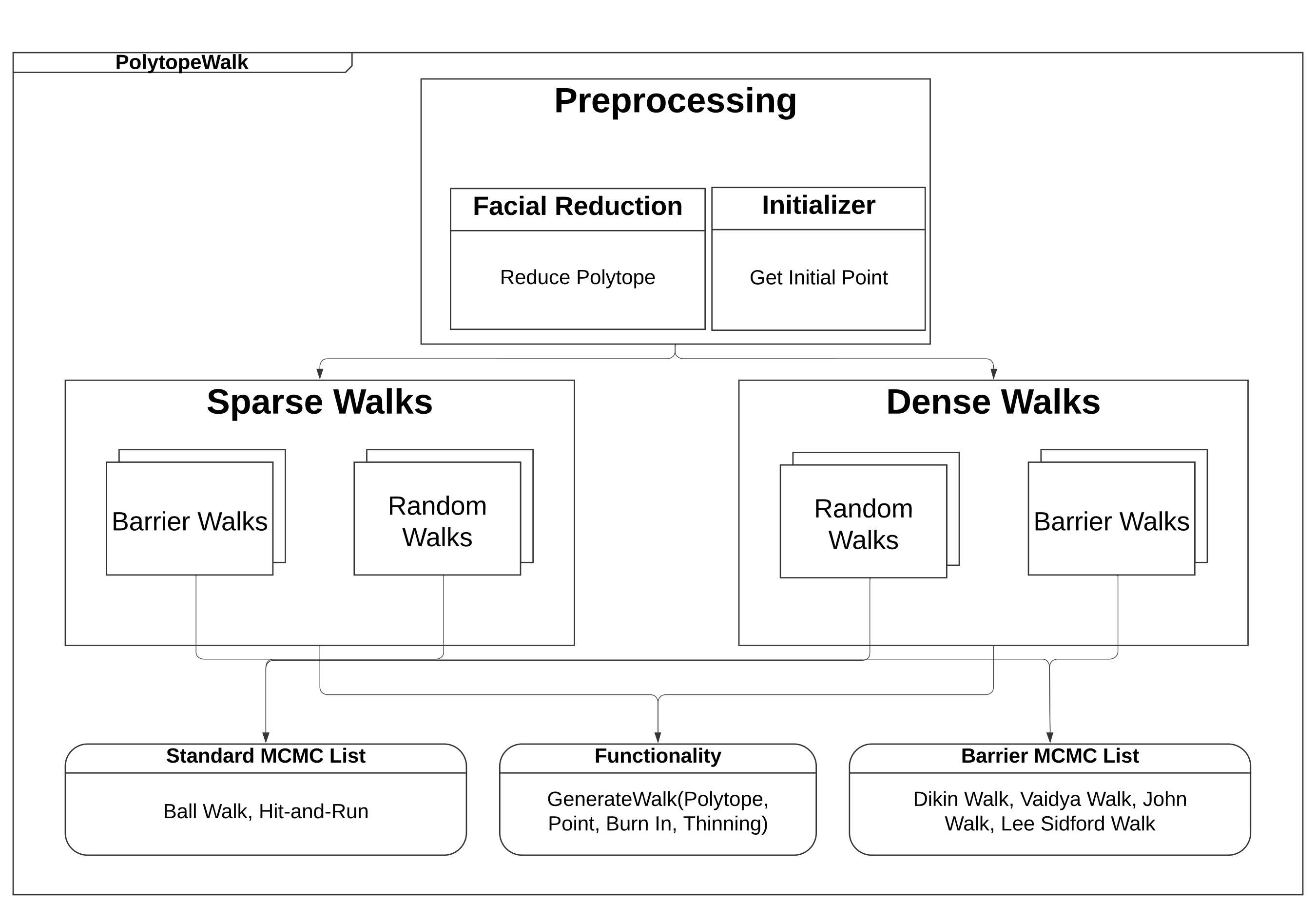

10 | **PolytopeWalk** is a `C++` library, with a `Python` interface, for running MCMC sampling algorithms that generate samples from the uniform distribution over a polytope. It also handles preprocessing of the polytope (via the Facial Reduction algorithm) and initialization. Current implementations include the Dikin Walk, John Walk, Vaidya Walk, Ball Walk, Lee Sidford Walk, and Hit-and-Run, each in both the full-dimensional formulation and the sparse constrained formulation. For documentation on all functions and methods, please visit our webpage: https://polytopewalk.readthedocs.io/en/latest/ and read our paper on arXiv: https://arxiv.org/abs/2412.06629. For example inputs and outputs, please see the `examples` folder, which includes code to uniformly sample from both real-world polytopes from the `Netlib` dataset and structured polytopes.

11 |

12 | ## Code Structure

13 |

14 |

15 |  16 |

16 |

17 |

18 | ## Implemented Algorithms

19 | Let `d` be the dimension of the polytope and `n` the number of boundaries (inequality constraints), and let `R/r` be the ratio of two radii such that the convex body contains a ball of radius `r` and is mostly contained in a ball of radius `R`. We implement the following 6 MCMC sampling algorithms for uniform sampling over polytopes.

20 |

21 | | Name | Mixing Time | Author |

22 | | ------------ | ----------------- | ------------------- |

23 | | `Ball Walk` | $O(d^2R^2/r^2)$ | [Vempala (2005)](https://faculty.cc.gatech.edu/~vempala/papers/survey.pdf) |

24 | | `Hit and Run` | $O(d^2R^2/r^2)$ | [Lovasz (1999)](https://link.springer.com/content/pdf/10.1007/s101070050099.pdf) |

25 | | `Dikin Walk` | $O(nd)$ | [Sachdeva and Vishnoi (2015)](https://arxiv.org/pdf/1508.01977) |

26 | | `Vaidya Walk` | $O(n^{1/2}d^{3/2})$ | [Chen et al. (2018)](https://jmlr.org/papers/v19/18-158.html) |

27 | | `John Walk` | $O(d^{2.5})$ | [Chen et al. (2018)](https://jmlr.org/papers/v19/18-158.html) |

28 | | `Lee Sidford Walk` | $\tau(d^{2})$ | [Laddha et al. (2019)](https://arxiv.org/abs/1911.05656) (conjectured, proof incomplete) |
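To get a feel for how the bounds in the table compare, the sketch below evaluates each expression for a given `n`, `d`, and `R/r`. It drops constants and logarithmic factors and treats the Lee Sidford entry as order `d^2`, so the numbers are only indicative. The function name `mixing_time_bounds` is hypothetical and not part of the library.

```python
import math


def mixing_time_bounds(n, d, R_over_r):
    """Evaluate the table's expressions, ignoring constants and log factors."""
    return {
        "Ball Walk": d**2 * R_over_r**2,
        "Hit and Run": d**2 * R_over_r**2,
        "Dikin Walk": n * d,
        "Vaidya Walk": math.sqrt(n) * d**1.5,
        "John Walk": d**2.5,
        "Lee Sidford Walk": d**2,  # conjectured entry in the table
    }


bounds = mixing_time_bounds(n=400, d=100, R_over_r=10.0)
for name, value in sorted(bounds.items(), key=lambda item: item[1]):
    print(f"{name:>16}: {value:.3g}")
```

With these illustrative inputs the barrier-based walks come out well below the Ball Walk and Hit-and-Run, whose expressions grow with `R/r`.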

29 |

30 | For each implemented algorithm, we provide both the full-dimensional formulation and the sparse constrained formulation. A polytope expressed in one formulation can be converted to the other. The main benefit of the constrained formulation is that it keeps operations on A sparse, which helps scalability in higher dimensions. Many of the sparse polytopes in the `netlib` dataset are represented in this formulation. The two formulations are specified below.

31 |

32 | In the full-dimensional formulation with dense matrix A ($n$ x $d$ matrix) and vector b ($n$ dimensional vector), we specify the following:

33 |

34 | ```math

35 | \mathcal{K}_1 = \{x \in \mathbb{R}^{d} | Ax \le b\}

36 | ```

37 |

38 | where the polytope is specified with $n$ constraints.

39 |

40 | In the constrained formulation with sparse matrix A ($n$ x $d$ matrix) and vector b ($n$ dimensional vector), we specify the following:

41 |

42 | ```math

43 | \mathcal{K}_2 = \{x \in \mathbb{R}^{d} | Ax = b, x \succeq_k 0\}

44 | ```

45 |

46 | where the polytope is specified with $n$ equality constraints and $k$ coordinate-wise inequality constraints.

47 |

48 | In **PolytopeWalk**, we implement the MCMC algorithms in both the dense, full-dimensional and the sparse, constrained polytope formulation.
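As an illustration of how the two formulations above relate, a full-dimensional polytope `Ax <= b` can be rewritten in constrained form by appending one slack variable per inequality: `[A | I] (x, s) = b` with `s >= 0`. The pure-Python sketch below does this with nested lists. It assumes the `k` non-negativity constraints apply to the last `k` coordinates, and the helper names are hypothetical rather than part of the library.

```python
def to_constrained_form(A, b):
    """Rewrite {x : A x <= b} as {z : A_eq z = b, last k coordinates of z >= 0}."""
    n = len(A)  # one slack variable per inequality
    A_eq = [
        list(row) + [1.0 if i == j else 0.0 for j in range(n)]
        for i, row in enumerate(A)
    ]
    return A_eq, list(b), n  # k = n slack coordinates must be non-negative


def lift_point(A, b, x):
    """Map a point x of the dense polytope to z = (x, slack) in the lifted one."""
    slack = [b_i - sum(a * x_j for a, x_j in zip(row, x)) for row, b_i in zip(A, b)]
    return list(x) + slack


# Unit square 0 <= x1, x2 <= 1 written as A x <= b.
A = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]]
b = [1.0, 0.0, 1.0, 0.0]
A_eq, b_eq, k = to_constrained_form(A, b)
z = lift_point(A, b, [0.25, 0.5])
print(k, z)
```

The lifted point satisfies the equality constraints exactly, and its last `k` entries are the non-negative slacks.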

49 |

50 |

51 | ## Installation

52 |

53 | ### Dependencies

54 | **PolytopeWalk** requires:

55 | - Python (>= 3.9)

56 | - NumPy (>= 1.20)

57 | - SciPy (>= 1.6.0)

58 |

59 | ### User installation

60 | If you already have a working installation of NumPy and SciPy, the easiest way to install **PolytopeWalk** is using `pip`:

61 | ```bash

62 | pip install -U polytopewalk

63 | ```

64 |

65 |

66 | ## Developer Installation Instructions

67 |

68 | ### Important links

69 | - Official source code repo: https://github.com/ethz-randomwalk/polytopewalk

70 | - Download releases: https://pypi.org/project/polytopewalk/

71 |

72 | ### Install prerequisites

73 | (listed by operating system)

74 | - macOS: ``brew install eigen glpk``

75 | - Linux:

76 | - Ubuntu ``sudo apt-get install -y libeigen3-dev libglpk-dev``

77 | - CentOS ``yum install -y epel-release eigen3-devel glpk-devel``

78 | - Windows: ``choco install eigen -y``

79 | - Then, install winglpk from sourceforge

80 |

81 | ### Local install from source via pip

82 | ```bash

83 | git clone https://github.com/ethz-randomwalk/polytopewalk.git

84 | cd polytopewalk

85 | pip install .

86 | ```

87 |

88 |

89 | ### Compile C++ from source (not necessary)

90 | Only do this if you need to run and test the C++ code directly. For normal users, we recommend using only the Python interface.

91 |

92 | Build with cmake

93 | ```bash

94 | git clone https://github.com/ethz-randomwalk/polytopewalk.git && cd polytopewalk

95 | cmake -B build -S . && cd build

96 | make

97 | sudo make install

98 | ```

99 |

100 | ## Examples

101 | The `examples` folder provides examples of sampling with the MCMC algorithms in both the sparse (constrained) and dense (full-dimensional) formulations. We test our random walk algorithms on a family of 3 structured polytopes and on 3 polytopes from `netlib` for real-world analysis. The lines below give a quick demonstration of sampling from a polytope using a sparse MCMC algorithm.

102 | ```python

103 | import numpy as np

104 | from scipy.sparse import csr_matrix, lil_matrix, csr_array

105 | from polytopewalk.sparse import SparseDikinWalk

106 |

107 | def generate_simplex(d):

108 |     return np.array([1/d] * d), np.array([[1] * d]), np.array([1]), d, 'simplex'

109 |

110 | x, A, b, k, name = generate_simplex(5)

111 | sparse_dikin = SparseDikinWalk(r = 0.9, thin = 1)

112 | dikin_res = sparse_dikin.generateCompleteWalk(10_000, x, A, b, k, burn = 100)

113 | ```

114 | We also demonstrate how to sample from a polytope in a dense, full-dimensional formulation. We additionally introduce the Facial Reduction algorithm, used to simplify the constrained polytope into the full-dimensional form.

115 | ```python

116 | import numpy as np

117 | from scipy.sparse import csr_matrix, lil_matrix, csr_array

118 | from polytopewalk.dense import DikinWalk, DenseCenter  # assumption: DenseCenter is exposed in polytopewalk.dense

119 | from polytopewalk import FacialReduction

120 |

121 | def generate_simplex(d):

122 | return np.array([1/d] * d), np.array([[1] * d]), np.array([1]), d, 'simplex'

123 |

124 | fr = FacialReduction()

125 | _, A, b, k, name = generate_simplex(5)

126 |

127 | polytope = fr.reduce(A, b, k, sparse = False)

128 | dense_A = polytope.dense_A

129 | dense_b = polytope.dense_b

130 |

131 | dc = DenseCenter()

132 | init = dc.getInitialPoint(dense_A, dense_b)

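133 | dikin = DikinWalk(r = 0.9, thin = 1)  # assumption: same constructor arguments as SparseDikinWalk above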

134 | dikin_res = dikin.generateCompleteWalk(1_000, init, dense_A, dense_b, burn = 100)

135 | ```
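To give a feel for what "full-dimensional form" means, the sketch below reduces the simplex by hand: it eliminates the last coordinate via `x_d = 1 - (z_1 + ... + z_{d-1})`, which leaves only inequalities of the form `A_dense z <= b_dense`. This is an illustration written for the simplex only. It is not the Facial Reduction algorithm the package implements, and `reduce_simplex` and `lift` are hypothetical names.

```python
def reduce_simplex(d):
    # Inequalities in the reduced variable z (dimension d - 1):
    #   -z_i <= 0 for each i   (from x_i >= 0)
    #   sum(z) <= 1            (from x_d = 1 - sum(z) >= 0)
    n = d - 1
    A_dense = [[-1.0 if j == i else 0.0 for j in range(n)] for i in range(n)]
    A_dense.append([1.0] * n)
    b_dense = [0.0] * n + [1.0]
    return A_dense, b_dense

def lift(z):
    # Map a reduced point back to the original d coordinates.
    return list(z) + [1.0 - sum(z)]

A_dense, b_dense = reduce_simplex(5)
z = [0.2] * 4  # a point strictly inside the reduced polytope
inside = all(sum(a * zi for a, zi in zip(row, z)) <= rhs
             for row, rhs in zip(A_dense, b_dense))
print(inside, lift(z))
```

The reduced polytope has a non-empty interior in four dimensions, which is the property that dense walks rely on when they take steps in every direction.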

136 |

137 | ## Testing

138 | The `tests` folder includes comprehensive tests of the Facial Reduction algorithm, Initialization, Weights from MCMC algorithms, and Sparse/Dense Random Walk algorithms in both Python and C++. Our GitHub repository comes with an automated test suite hooked up to continuous integration, which runs after pushes to the main branch.

139 |

140 | We provide instructions for locally testing **PolytopeWalk** in both Python and C++. For both, we must locally clone the repository (assuming we have installed the package already):

141 |

142 | ```bash

143 | git clone https://github.com/ethz-randomwalk/polytopewalk.git

144 | cd polytopewalk

145 | ```

146 |

147 | ### Python Testing

148 | We can simply run the command:

149 | ```bash

150 | python -m unittest discover -s tests/python -p "*.py"

151 | ```
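The discovery command picks up any `unittest.TestCase` defined in a matching file under `tests/python`. A minimal sketch of such a test is shown below. The file name (say, `tests/python/test_simplex_example.py`), the class, and the checks are hypothetical and do not reproduce the project's actual tests. The test deliberately avoids importing `polytopewalk` so that it runs without the package installed.

```python
import unittest

def generate_simplex(d):
    # Plain-list version of the helper used in the examples above.
    return [1 / d] * d, [[1] * d], [1], d, 'simplex'

class TestSimplexExample(unittest.TestCase):
    def test_start_point_sums_to_one(self):
        # The start point must satisfy the single equality constraint.
        x, A, b, k, name = generate_simplex(5)
        self.assertAlmostEqual(sum(x), b[0])

    def test_dimensions_match(self):
        # A has one column per coordinate of x.
        x, A, b, k, name = generate_simplex(5)
        self.assertEqual(len(A[0]), len(x))
        self.assertEqual(k, 5)
```

No `unittest.main()` call is needed here, because `python -m unittest discover` loads the test class itself.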

152 |

153 | ### C++ Testing

154 | First, we must compile the C++ code:

155 | ```bash

156 | cmake -B build -S . && cd build

157 | make

158 | ```

159 | Then, we can individually run the test files:

160 | ```bash

161 | ./tests/test_weights

162 | ./tests/test_fr

163 | ./tests/test_dense_walk

164 | ./tests/test_sparse_walk

165 | ./tests/test_init

166 | ```

167 |

168 | ## Community Guidelines

169 |

170 | To contribute to the software, please open a pull request on our GitHub page with a brief description of the improvements to the code. If you run into any problems with the software, please report them in the issues section of our GitHub page. Finally, if you have any questions, feel free to contact the authors at this email address: bys7@duke.edu.

171 |

--------------------------------------------------------------------------------

/build.sh:

--------------------------------------------------------------------------------

1 | cmake -B build -S .

2 | # rm -r build; mkdir build ; cd build; cmake ..

3 | # get source distribution

4 | # python3 -m build --sdist

5 | # upload to pypi

6 | # python3 -m twine upload dist/*

7 |

--------------------------------------------------------------------------------

/cmake/Modules/FindGLPK.cmake:

--------------------------------------------------------------------------------

1 | find_path(GLPK_INCLUDE_DIR glpk.h

2 | PATHS

3 | D:/a/polytopewalk/polytopewalk/glpk-4.65/src

4 | glpk-4.65/src

5 | )

6 | find_library(GLPK_LIBRARY NAMES glpk

7 | PATHS

8 | D:/a/polytopewalk/polytopewalk/glpk-4.65/w64

9 | glpk-4.65/w64

10 | )

11 |

12 | # Handle finding status with CMake standard arguments

13 | include(FindPackageHandleStandardArgs)

14 | find_package_handle_standard_args(GLPK DEFAULT_MSG GLPK_LIBRARY GLPK_INCLUDE_DIR)

15 |

16 | mark_as_advanced(GLPK_INCLUDE_DIR GLPK_LIBRARY)

--------------------------------------------------------------------------------

/cmake/Modules/FindSphinx.cmake:

--------------------------------------------------------------------------------

1 | #Look for an executable called sphinx-build

2 | find_program(SPHINX_EXECUTABLE

3 | NAMES sphinx-build

4 | DOC "Path to sphinx-build executable")

5 |

6 | include(FindPackageHandleStandardArgs)

7 |

8 | #Handle standard arguments to find_package like REQUIRED and QUIET

9 | find_package_handle_standard_args(Sphinx

10 | "Failed to find sphinx-build executable"

11 | SPHINX_EXECUTABLE)

--------------------------------------------------------------------------------

/docs/CMakeLists.txt:

--------------------------------------------------------------------------------

1 | find_package(Doxygen REQUIRED)

2 |

3 | # Find all the public headers

4 | # get_target_property(MY_PUBLIC_HEADER_DIR polytopewalk INTERFACE_INCLUDE_DIRECTORIES)

5 | set(MY_PUBLIC_HEADER_DIR "${CMAKE_SOURCE_DIR}/src")

6 | file(GLOB_RECURSE MY_PUBLIC_HEADERS ${MY_PUBLIC_HEADER_DIR}/*.hpp)

7 |

8 | set(DOXYGEN_INPUT_DIR ${PROJECT_SOURCE_DIR}/src)

9 | set(DOXYGEN_RECURSIVE YES)

10 | set(DOXYGEN_USE_MDFILE_AS_MAINPAGE ${PROJECT_SOURCE_DIR}/README.md)

11 | set(DOXYGEN_EXCLUDE_PATTERNS */tests/*)

12 | set(DOXYGEN_OUTPUT_DIR ${CMAKE_CURRENT_BINARY_DIR})

13 | set(DOXYGEN_INDEX_FILE ${DOXYGEN_OUTPUT_DIR}/html/index.html)

14 | set(DOXYFILE_IN ${CMAKE_CURRENT_SOURCE_DIR}/Doxyfile)

15 | set(DOXYFILE_OUT ${CMAKE_CURRENT_BINARY_DIR}/Doxyfile.out)

16 |

17 | #Replace variables inside @@ with the current values

18 | configure_file(${DOXYFILE_IN} ${DOXYFILE_OUT} @ONLY)

19 |

20 | file(MAKE_DIRECTORY ${DOXYGEN_OUTPUT_DIR}) #Doxygen won't create this for us

21 | add_custom_command(OUTPUT ${DOXYGEN_INDEX_FILE}

22 | DEPENDS ${MY_PUBLIC_HEADERS}

23 | COMMAND ${DOXYGEN_EXECUTABLE} ${DOXYFILE_OUT}

24 | MAIN_DEPENDENCY ${DOXYFILE_OUT} ${DOXYFILE_IN}

25 | COMMENT "Generating docs")

26 |

27 | add_custom_target(Doxygen ALL DEPENDS ${DOXYGEN_INDEX_FILE})

28 |

29 | find_package(Sphinx REQUIRED)

30 |

31 | set(SPHINX_SOURCE ${CMAKE_CURRENT_SOURCE_DIR})

32 | set(SPHINX_BUILD ${CMAKE_CURRENT_BINARY_DIR}/sphinx)

33 |

34 | add_custom_target(Sphinx ALL

35 | COMMAND ${SPHINX_EXECUTABLE} -b html

36 | # Tell Breathe where to find the Doxygen output

37 | -Dbreathe_projects.polytopewalk=${DOXYGEN_OUTPUT_DIR}/xml

38 | ${SPHINX_SOURCE} ${SPHINX_BUILD}

39 | WORKING_DIRECTORY ${CMAKE_CURRENT_BINARY_DIR}

40 | COMMENT "Generating documentation with Sphinx")

41 |

--------------------------------------------------------------------------------

/docs/code_design1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ethz-randomwalk/polytopewalk/c6ff026acff73c09c678d8394fa327778b0a8ed5/docs/code_design1.png

--------------------------------------------------------------------------------

/docs/conf.py:

--------------------------------------------------------------------------------

1 | # Configuration file for the Sphinx documentation builder.

2 | #

3 | # This file only contains a selection of the most common options. For a full

4 | # list see the documentation:

5 | # https://www.sphinx-doc.org/en/master/usage/configuration.html

6 |

7 | # -- Path setup --------------------------------------------------------------

8 |

9 | # If extensions (or modules to document with autodoc) are in another directory,

10 | # add these directories to sys.path here. If the directory is relative to the

11 | # documentation root, use os.path.abspath to make it absolute, like shown here.

12 | #

13 | import os

14 | import subprocess

15 | import toml

16 | # import sys

17 | # sys.path.insert(0, os.path.abspath('.'))

18 |

19 |

20 |

21 | # -- Project information -----------------------------------------------------

22 | # obtain project information from pyproject.toml

23 | # Path to your pyproject.toml file

24 | pyproject_path = os.path.join(os.path.dirname(__file__), '..', 'pyproject.toml')

25 |

26 | # Load the pyproject.toml file

27 | with open(pyproject_path, 'r') as f:

28 | pyproject_data = toml.load(f)

29 |

30 | # Extract the relevant fields from the pyproject.toml

31 | project = pyproject_data['project']['name']

32 | authors = pyproject_data['project']['authors']

33 | author = ', '.join([author['name'] for author in authors]) # Comma-separated list of author names

34 | release = pyproject_data['project']['version']

35 | copyright = f"2024, {author}" # Copyright line: fixed year plus the author names

36 |

37 | # -- General configuration ---------------------------------------------------

38 |

39 | # Settings to determine if we are building on readthedocs

40 | read_the_docs_build = os.environ.get('READTHEDOCS', None) == 'True'

41 | if read_the_docs_build:

42 | subprocess.call('pwd', shell=True)

43 | subprocess.call('ls', shell=True)

44 | subprocess.call('cmake -B ../build -S .. -DBUILD_DOCS=ON', shell=True)

45 | subprocess.call('cmake --build ../build --target Doxygen', shell=True)

46 | xml_dir = os.path.abspath("../build/docs/xml")

47 |

48 |

49 |

50 | # Add any Sphinx extension module names here, as strings. They can be

51 | # extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

52 | # ones.

53 | extensions = [

54 | 'sphinx.ext.autodoc',

55 | 'sphinx.ext.napoleon', # For Google/NumPy-style docstrings

56 | 'sphinx.ext.viewcode', # Links to source code

57 | 'breathe', # For C++ API

58 | ]

59 |

60 | # Breathe Configuration

61 | breathe_default_project = "polytopewalk"

62 | if read_the_docs_build:

63 | breathe_projects = {"polytopewalk": xml_dir}

64 |

65 |

66 | # Add any paths that contain templates here, relative to this directory.

67 | templates_path = ['_templates']

68 |

69 | # List of patterns, relative to source directory, that match files and

70 | # directories to ignore when looking for source files.

71 | # This pattern also affects html_static_path and html_extra_path.

72 | exclude_patterns = ['_build', 'Thumbs.db', '.DS_Store']

73 |

74 | autoclass_content = 'both'

75 |

76 |

77 | # -- Options for HTML output -------------------------------------------------

78 |

79 | # The theme to use for HTML and HTML Help pages. See the documentation for

80 | # a list of builtin themes.

81 | #

82 | html_theme = 'sphinx_rtd_theme'

83 |

84 | # Add any paths that contain custom static files (such as style sheets) here,

85 | # relative to this directory. They are copied after the builtin static files,

86 | # so a file named "default.css" will overwrite the builtin "default.css".

87 | html_static_path = ['_static']

--------------------------------------------------------------------------------

/docs/cpp_dense_walk.rst:

--------------------------------------------------------------------------------

1 | C++ Dense Random Walks

2 | ======================

3 |

4 | .. doxygenclass:: RandomWalk

5 | :members:

6 |

7 | .. doxygenclass:: BallWalk

8 | :members:

9 |

10 | .. doxygenclass:: HitAndRun

11 | :members:

12 |

13 | .. doxygenclass:: BarrierWalk

14 | :members:

15 |

16 | .. doxygenclass:: DikinWalk

17 | :members:

18 |

19 | .. doxygenclass:: VaidyaWalk

20 | :members:

21 |

22 | .. doxygenclass:: JohnWalk

23 | :members:

24 |

25 | .. doxygenclass:: DikinLSWalk

26 | :members:

--------------------------------------------------------------------------------

/docs/cpp_init.rst:

--------------------------------------------------------------------------------

1 | C++ Initialization Classes

2 | ==========================

3 |

4 | .. doxygenstruct:: FROutput

5 | :members:

6 |

7 | .. doxygenclass:: FacialReduction

8 | :members:

9 |

10 | .. doxygenclass:: SparseCenter

11 | :members:

12 |

13 | .. doxygenclass:: DenseCenter

14 | :members:

--------------------------------------------------------------------------------

/docs/cpp_sparse_walk.rst:

--------------------------------------------------------------------------------

1 | C++ Sparse Random Walks

2 | =======================

3 |

4 | .. doxygenclass:: SparseRandomWalk

5 | :members:

6 |

7 | .. doxygenclass:: SparseBallWalk

8 | :members:

9 |

10 | .. doxygenclass:: SparseHitAndRun

11 | :members:

12 |

13 | .. doxygenclass:: SparseBarrierWalk

14 | :members:

15 |

16 | .. doxygenclass:: SparseDikinWalk

17 | :members:

18 |

19 | .. doxygenclass:: SparseVaidyaWalk

20 | :members:

21 |

22 | .. doxygenclass:: SparseJohnWalk

23 | :members:

24 |

25 | .. doxygenclass:: SparseDikinLSWalk

26 | :members:

--------------------------------------------------------------------------------

/docs/cpp_utils.rst:

--------------------------------------------------------------------------------

1 | C++ PolytopeWalk Utils

2 | ======================

3 |

4 | .. doxygenclass:: LeverageScore

5 | :members:

6 |

7 | .. doxygenfunction:: sparseFullWalkRun

8 |

9 | .. doxygenfunction:: denseFullWalkRun

--------------------------------------------------------------------------------

/docs/index.rst:

--------------------------------------------------------------------------------

1 | .. polytopewalk documentation master file, created by

2 | sphinx-quickstart on Tue May 9 22:50:40 2023.

3 | You can adapt this file completely to your liking, but it should at least

4 | contain the root `toctree` directive.

5 |

6 | Welcome to polytopewalk's documentation!

7 | =======================================

8 |

9 | .. toctree::

10 | :maxdepth: 2

11 | :caption: User Guide

12 |

13 | installation

14 | python_api

15 | cpp_api

16 | support

17 |

18 |

19 | Indices and tables

20 | ==================

21 |

22 | * :ref:`genindex`

23 | * :ref:`modindex`

24 | * :ref:`search`

25 |

26 | Python API

27 | ===========

28 |

29 | The Python bindings are created using `pybind11` and are documented here. This section contains the Python API documentation for the PolytopeWalk library.

30 |

31 | .. toctree::

32 | :maxdepth: 4

33 | :caption: Python Modules

34 |

35 | py_init

36 | py_dense_walk

37 | py_sparse_walk

38 | py_utils

39 |

40 |

41 | C++ API

42 | ========

43 |

44 | The C++ API is documented using Doxygen.

45 | This section provides an overview of the C++ API.

46 |

47 | Here is a list of important classes in the C++ API:

48 |

49 | * `RandomWalk`

50 | * `BallWalk`

51 | * `HitAndRun`

52 | * `BarrierWalk`

53 | * `DikinWalk`

54 | * `VaidyaWalk`

55 | * `JohnWalk`

56 | * `DikinLSWalk`

57 | * `SparseRandomWalk`

58 | * `SparseBallWalk`

59 | * `SparseHitAndRun`

60 | * `SparseBarrierWalk`

61 | * `SparseDikinWalk`

62 | * `SparseVaidyaWalk`

63 | * `SparseJohnWalk`

64 | * `SparseDikinLSWalk`

65 |

66 |

67 | For more detailed documentation on these classes, see the following pages.

68 |

69 | .. toctree::

70 | :maxdepth: 4

71 | :caption: C++ Modules

72 |

73 | cpp_init

74 | cpp_dense_walk

75 | cpp_sparse_walk

76 | cpp_utils

--------------------------------------------------------------------------------

/docs/installation.rst:

--------------------------------------------------------------------------------

1 | ============

2 | Installation

3 | ============

4 |

5 | Developer instructions are listed at https://github.com/ethz-randomwalk/polytopewalk

6 |

7 | Or, if you have pip installed:

8 |

9 | .. code-block:: bash

10 |

11 | pip install polytopewalk

--------------------------------------------------------------------------------

/docs/logo1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ethz-randomwalk/polytopewalk/c6ff026acff73c09c678d8394fa327778b0a8ed5/docs/logo1.png

--------------------------------------------------------------------------------

/docs/make.bat:

--------------------------------------------------------------------------------

1 | @ECHO OFF

2 |

3 | pushd %~dp0

4 |

5 | REM Command file for Sphinx documentation

6 |

7 | if "%SPHINXBUILD%" == "" (

8 | set SPHINXBUILD=sphinx-build

9 | )

10 | set SOURCEDIR=source

11 | set BUILDDIR=build

12 |

13 | if "%1" == "" goto help

14 |

15 | %SPHINXBUILD% >NUL 2>NUL

16 | if errorlevel 9009 (

17 | echo.

18 | echo.The 'sphinx-build' command was not found. Make sure you have Sphinx

19 | echo.installed, then set the SPHINXBUILD environment variable to point

20 | echo.to the full path of the 'sphinx-build' executable. Alternatively you

21 | echo.may add the Sphinx directory to PATH.

22 | echo.

23 | echo.If you don't have Sphinx installed, grab it from

24 | echo.https://www.sphinx-doc.org/

25 | exit /b 1

26 | )

27 |

28 | %SPHINXBUILD% -M %1 %SOURCEDIR% %BUILDDIR% %SPHINXOPTS% %O%

29 | goto end

30 |

31 | :help

32 | %SPHINXBUILD% -M help %SOURCEDIR% %BUILDDIR% %SPHINXOPTS% %O%

33 |

34 | :end

35 | popd

36 |

--------------------------------------------------------------------------------

/docs/py_dense_walk.rst:

--------------------------------------------------------------------------------

1 | Python Dense Random Walks

2 | =========================

3 |

4 | .. autoclass:: polytopewalk.dense.RandomWalk

5 | :members:

6 | :undoc-members:

7 | :show-inheritance:

8 |

9 | .. autoclass:: polytopewalk.dense.BarrierWalk

10 | :members:

11 | :undoc-members:

12 | :inherited-members:

13 | :show-inheritance:

14 |

15 | .. autoclass:: polytopewalk.dense.DikinWalk

16 | :members:

17 | :undoc-members:

18 |

19 | .. autoclass:: polytopewalk.dense.VaidyaWalk

20 | :members:

21 | :undoc-members:

22 |

23 | .. autoclass:: polytopewalk.dense.JohnWalk

24 | :members:

25 | :undoc-members:

26 |

27 | .. autoclass:: polytopewalk.dense.DikinLSWalk

28 | :members:

29 | :undoc-members:

30 |

31 | .. autoclass:: polytopewalk.dense.BallWalk

32 | :members:

33 | :undoc-members:

34 |

35 | .. autoclass:: polytopewalk.dense.HitAndRun

36 | :members:

37 | :undoc-members:

--------------------------------------------------------------------------------

/docs/py_init.rst:

--------------------------------------------------------------------------------

1 | Python Initialization Classes

2 | =============================

3 |

4 | .. autoclass:: polytopewalk.FROutput

5 | :members:

6 | :undoc-members:

7 | :show-inheritance:

8 |

9 | .. autoclass:: polytopewalk.FacialReduction

10 | :members:

11 | :undoc-members:

12 | :show-inheritance:

13 |

14 | .. autoclass:: polytopewalk.dense.DenseCenter

15 | :members:

16 | :undoc-members:

17 | :show-inheritance:

18 |

19 | .. autoclass:: polytopewalk.sparse.SparseCenter

20 | :members:

21 | :undoc-members:

22 | :show-inheritance:

--------------------------------------------------------------------------------

/docs/py_modules.rst:

--------------------------------------------------------------------------------

1 | Python API Documentation

2 | ========================

3 |

4 | This section contains the Python API documentation for the PolytopeWalk library.

5 |

6 | Module: polytopewalk

7 | ---------------------

8 |

9 | .. automodule:: polytopewalk

10 | :members:

11 | :undoc-members:

12 | :show-inheritance:

13 |

14 | Initialization Classes

15 | ----------------------

16 |

17 | .. autoclass:: polytopewalk.FacialReduction

18 | :members:

19 | :undoc-members:

20 | :show-inheritance:

21 |

22 | .. autoclass:: polytopewalk.dense.DenseCenter

23 | :members:

24 | :undoc-members:

25 | :show-inheritance:

26 |

27 | .. autoclass:: polytopewalk.sparse.SparseCenter

28 | :members:

29 | :undoc-members:

30 | :show-inheritance:

31 |

32 | RandomWalk Classes

33 | -------------------

34 |

35 | .. autoclass:: polytopewalk.dense.RandomWalk

36 | :members:

37 | :undoc-members:

38 | :show-inheritance:

39 |

40 | .. autoclass:: polytopewalk.sparse.SparseRandomWalk

41 | :members:

42 | :undoc-members:

43 | :show-inheritance:

44 |

45 | Subclasses of RandomWalk

46 | ------------------------

47 |

48 | .. autoclass:: polytopewalk.dense.BarrierWalk

49 | :members:

50 | :undoc-members:

51 | :inherited-members:

52 | :show-inheritance:

53 |

54 | .. autoclass:: polytopewalk.dense.DikinWalk

55 | :members:

56 | :undoc-members:

57 |

58 | .. autoclass:: polytopewalk.dense.VaidyaWalk

59 | :members:

60 | :undoc-members:

61 |

62 | .. autoclass:: polytopewalk.dense.JohnWalk

63 | :members:

64 | :undoc-members:

65 |

66 | .. autoclass:: polytopewalk.dense.DikinLSWalk

67 | :members:

68 | :undoc-members:

69 |

70 | .. autoclass:: polytopewalk.dense.BallWalk

71 | :members:

72 | :undoc-members:

73 |

74 | .. autoclass:: polytopewalk.dense.HitAndRun

75 | :members:

76 | :undoc-members:

77 |

78 | Subclasses of SparseRandomWalk

79 | ------------------------------

80 |

81 | .. autoclass:: polytopewalk.sparse.SparseBarrierWalk

82 | :members:

83 | :undoc-members:

84 | :inherited-members:

85 | :show-inheritance:

86 |

87 | .. autoclass:: polytopewalk.sparse.SparseDikinWalk

88 | :members:

89 | :undoc-members:

90 |

91 | .. autoclass:: polytopewalk.sparse.SparseVaidyaWalk

92 | :members:

93 | :undoc-members:

94 |

95 | .. autoclass:: polytopewalk.sparse.SparseJohnWalk

96 | :members:

97 | :undoc-members:

98 |

99 | .. autoclass:: polytopewalk.sparse.SparseDikinLSWalk

100 | :members:

101 | :undoc-members:

102 |

103 | .. autoclass:: polytopewalk.sparse.SparseBallWalk

104 | :members:

105 | :undoc-members:

106 |

107 | .. autoclass:: polytopewalk.sparse.SparseHitAndRun

108 | :members:

109 | :undoc-members:

--------------------------------------------------------------------------------

/docs/py_sparse_walk.rst:

--------------------------------------------------------------------------------

1 | Python Sparse Random Walks

2 | ==========================

3 |

4 | .. autoclass:: polytopewalk.sparse.SparseRandomWalk

5 | :members:

6 | :undoc-members:

7 | :show-inheritance:

8 |

9 | .. autoclass:: polytopewalk.sparse.SparseBarrierWalk

10 | :members:

11 | :undoc-members:

12 | :inherited-members:

13 | :show-inheritance:

14 |

15 | .. autoclass:: polytopewalk.sparse.SparseDikinWalk

16 | :members:

17 | :undoc-members:

18 |

19 | .. autoclass:: polytopewalk.sparse.SparseVaidyaWalk

20 | :members:

21 | :undoc-members:

22 |

23 | .. autoclass:: polytopewalk.sparse.SparseJohnWalk

24 | :members:

25 | :undoc-members:

26 |

27 | .. autoclass:: polytopewalk.sparse.SparseDikinLSWalk

28 | :members:

29 | :undoc-members:

30 |

31 | .. autoclass:: polytopewalk.sparse.SparseBallWalk

32 | :members:

33 | :undoc-members:

34 |

35 | .. autoclass:: polytopewalk.sparse.SparseHitAndRun

36 | :members:

37 | :undoc-members:

--------------------------------------------------------------------------------

/docs/py_utils.rst:

--------------------------------------------------------------------------------

1 | Python PolytopeWalk Utils

2 | =========================

3 |

4 | .. autofunction:: polytopewalk.sparseFullWalkRun

5 |

6 | .. autofunction:: polytopewalk.denseFullWalkRun

7 |

--------------------------------------------------------------------------------

/docs/requirements.txt:

--------------------------------------------------------------------------------

1 | toml

2 | sphinx

3 | breathe

4 | sphinx-rtd-theme

5 | polytopewalk # to have prebuilt python polytopewalk package, so that docs from pybind11 can be generated

--------------------------------------------------------------------------------

/docs/support.rst:

--------------------------------------------------------------------------------

1 | =======

2 | Support

3 | =======

4 |

5 | Contact us via benny.sun@duke.edu.

6 |

7 | Or open an issue on GitHub.

--------------------------------------------------------------------------------

/examples/data/ADLITTLE.pkl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ethz-randomwalk/polytopewalk/c6ff026acff73c09c678d8394fa327778b0a8ed5/examples/data/ADLITTLE.pkl

--------------------------------------------------------------------------------

/examples/data/ISRAEL.pkl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ethz-randomwalk/polytopewalk/c6ff026acff73c09c678d8394fa327778b0a8ed5/examples/data/ISRAEL.pkl

--------------------------------------------------------------------------------

/examples/data/SCTAP1.pkl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ethz-randomwalk/polytopewalk/c6ff026acff73c09c678d8394fa327778b0a8ed5/examples/data/SCTAP1.pkl

--------------------------------------------------------------------------------

/paper/images/Code_Design.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/ethz-randomwalk/polytopewalk/c6ff026acff73c09c678d8394fa327778b0a8ed5/paper/images/Code_Design.pdf

--------------------------------------------------------------------------------

/paper/paper.bib:

--------------------------------------------------------------------------------

1 |

2 | @Article{chow:68,

3 | author = {C. K. Chow and C. N. Liu},

4 | title = {Approximating discrete probability distributions with dependence trees},

5 | journal = {IEEE Transactions on Information Theory},

6 | year = {1968},

7 | volume = {IT-14},

8 | number = {3},

9 | pages = {462--467}}

10 |

11 |

12 | @article{10.1093/biomet/57.1.97,

13 | author = {Hastings, W. K.},

14 | title = {{Monte Carlo sampling methods using Markov chains and their applications}},

15 | journal = {Biometrika},

16 | volume = {57},

17 | number = {1},

18 | pages = {97-109},

19 | year = {1970},

20 | month = {04},

21 | abstract = {A generalization of the sampling method introduced by Metropolis et al. (1953) is presented along with an exposition of the relevant theory, techniques of application and methods and difficulties of assessing the error in Monte Carlo estimates. Examples of the methods, including the generation of random orthogonal matrices and potential applications of the methods to numerical problems arising in statistics, are discussed.},

22 | issn = {0006-3444},

23 | doi = {10.1093/biomet/57.1.97},

24 | url = {https://doi.org/10.1093/biomet/57.1.97},

25 | eprint = {https://academic.oup.com/biomet/article-pdf/57/1/97/23940249/57-1-97.pdf},

26 | }

27 |

28 |

29 | @misc{pybind11,

30 | author = {Wenzel Jakob and Jason Rhinelander and Dean Moldovan},

31 | year = {2017},

32 | note = {https://github.com/pybind/pybind11},

33 | title = {pybind11 -- Seamless operability between C++11 and Python}

34 | }

35 | @MISC{eigenweb,

36 | author = {Ga\"{e}l Guennebaud and Beno\^{i}t Jacob and others},

37 | title = {Eigen v3},

38 | howpublished = {http://eigen.tuxfamily.org},

39 | year = {2010}

40 | }

41 |

42 | @misc{glpk,

43 | author = {Andrew Makhorin},

44 | year = {2012},

45 | note = {https://www.gnu.org/software/glpk/glpk.html},

46 | title = {(GNU Linear Programming Kit) package}

47 | }

48 |

49 | @Article{ harris2020array,

50 | title = {Array programming with {NumPy}},

51 | author = {Charles R. Harris and K. Jarrod Millman and St{\'{e}}fan J.

52 | van der Walt and Ralf Gommers and Pauli Virtanen and David

53 | Cournapeau and Eric Wieser and Julian Taylor and Sebastian

54 | Berg and Nathaniel J. Smith and Robert Kern and Matti Picus

55 | and Stephan Hoyer and Marten H. van Kerkwijk and Matthew

56 | Brett and Allan Haldane and Jaime Fern{\'{a}}ndez del

57 | R{\'{i}}o and Mark Wiebe and Pearu Peterson and Pierre

58 | G{\'{e}}rard-Marchant and Kevin Sheppard and Tyler Reddy and

59 | Warren Weckesser and Hameer Abbasi and Christoph Gohlke and

60 | Travis E. Oliphant},

61 | year = {2020},

62 | month = sep,

63 | journal = {Nature},

64 | volume = {585},

65 | number = {7825},

66 | pages = {357--362},

67 | doi = {10.1038/s41586-020-2649-2},

68 | publisher = {Springer Science and Business Media {LLC}},

69 | url = {https://doi.org/10.1038/s41586-020-2649-2}

70 | }

71 |

72 | @ARTICLE{2020SciPy-NMeth,

73 | author = {Virtanen, Pauli and Gommers, Ralf and Oliphant, Travis E. and

74 | Haberland, Matt and Reddy, Tyler and Cournapeau, David and

75 | Burovski, Evgeni and Peterson, Pearu and Weckesser, Warren and

76 | Bright, Jonathan and {van der Walt}, St{\'e}fan J. and

77 | Brett, Matthew and Wilson, Joshua and Millman, K. Jarrod and

78 | Mayorov, Nikolay and Nelson, Andrew R. J. and Jones, Eric and

79 | Kern, Robert and Larson, Eric and Carey, C J and

80 | Polat, {\.I}lhan and Feng, Yu and Moore, Eric W. and

81 | {VanderPlas}, Jake and Laxalde, Denis and Perktold, Josef and

82 | Cimrman, Robert and Henriksen, Ian and Quintero, E. A. and

83 | Harris, Charles R. and Archibald, Anne M. and

84 | Ribeiro, Ant{\^o}nio H. and Pedregosa, Fabian and

85 | {van Mulbregt}, Paul and {SciPy 1.0 Contributors}},

86 | title = {{{SciPy} 1.0: Fundamental Algorithms for Scientific

87 | Computing in Python}},

88 | journal = {Nature Methods},

89 | year = {2020},

90 | volume = {17},

91 | pages = {261--272},

92 | adsurl = {https://rdcu.be/b08Wh},

93 | doi = {10.1038/s41592-019-0686-2},

94 | }

95 |

96 | @article{Metropolis1953,

97 | added-at = {2010-08-02T15:41:00.000+0200},

98 | author = {Metropolis, Nicholas and Rosenbluth, Arianna W. and Rosenbluth, Marshall N. and Teller, Augusta H. and Teller, Edward},

99 | biburl = {https://www.bibsonomy.org/bibtex/25bdc169acdc743b5f9946748d3ce587b/lopusz},

100 | doi = {10.1063/1.1699114},

101 | interhash = {b67019ed11f34441c67cc69ee5683945},

102 | intrahash = {5bdc169acdc743b5f9946748d3ce587b},

103 | journal = {The Journal of Chemical Physics},

104 | keywords = {MonteCarlo},

105 | number = 6,

106 | pages = {1087-1092},

107 | publisher = {AIP},

108 | timestamp = {2010-08-02T15:41:00.000+0200},

109 | title = {Equation of State Calculations by Fast Computing Machines},

110 | url = {http://link.aip.org/link/?JCP/21/1087/1},

111 | volume = 21,

112 | year = 1953

113 | }

114 |

115 |

116 | @Book{pearl:88,

117 | author = {Judea Pearl},

118 | title = {Probabilistic {R}easoning in {I}ntelligent {S}ystems:

119 | {N}etworks of {P}lausible {I}nference},

120 | publisher = {Morgan Kaufmann Publishers},

121 | year = {1988},

122 | address = {San Mateo, CA}

123 | }

124 |

125 | @article{DBLP:journals/corr/abs-1911-05656,

126 | author = {Aditi Laddha and

127 | Yin Tat Lee and

128 | Santosh S. Vempala},

129 | title = {Strong Self-Concordance and Sampling},

130 | journal = {CoRR},

131 | volume = {abs/1911.05656},

132 | year = {2019},

133 | url = {http://arxiv.org/abs/1911.05656},

134 | eprinttype = {arXiv},

135 | eprint = {1911.05656},

136 | timestamp = {Mon, 02 Dec 2019 13:44:01 +0100},

137 | biburl = {https://dblp.org/rec/journals/corr/abs-1911-05656.bib},

138 | bibsource = {dblp computer science bibliography, https://dblp.org}

139 | }

140 |

141 | @article{DBLP:journals/corr/abs-2202-01908,

142 | author = {Yunbum Kook and

143 | Yin Tat Lee and

144 | Ruoqi Shen and

145 | Santosh S. Vempala},

146 | title = {Sampling with Riemannian Hamiltonian Monte Carlo in a Constrained

147 | Space},

148 | journal = {CoRR},

149 | volume = {abs/2202.01908},

150 | year = {2022},

151 | url = {https://arxiv.org/abs/2202.01908},

152 | eprinttype = {arXiv},

153 | eprint = {2202.01908},

154 | timestamp = {Wed, 09 Feb 2022 15:43:34 +0100},

155 | biburl = {https://dblp.org/rec/journals/corr/abs-2202-01908.bib},

156 | bibsource = {dblp computer science bibliography, https://dblp.org}

157 | }

158 |

159 | @article{JMLR:v19:18-158,

160 | author = {Yuansi Chen and Raaz Dwivedi and Martin J. Wainwright and Bin Yu},

161 | title = {Fast MCMC Sampling Algorithms on Polytopes},

162 | journal = {Journal of Machine Learning Research},

163 | year = {2018},

164 | volume = {19},

165 | number = {55},

166 | pages = {1--86},

167 | url = {http://jmlr.org/papers/v19/18-158.html}

168 | }

169 |

170 | @article{drusvyatskiy2017many,

171 | author = {Drusvyatskiy, Dmitriy and Wolkowicz, Henry and others},

172 | date-added = {2022-11-20 21:00:56 -0500},

173 | date-modified = {2022-11-20 21:00:56 -0500},

174 | journal = {Foundations and Trends{\textregistered} in Optimization},

175 | number = {2},

176 | pages = {77--170},

177 | publisher = {Now Publishers, Inc.},

178 | title = {The many faces of degeneracy in conic optimization},

179 | volume = {3},

180 | year = {2017}}

181 |

182 | @article{DBLP:journals/corr/SachdevaV15,

183 | author = {Sushant Sachdeva and

184 | Nisheeth K. Vishnoi},

185 | title = {A Simple Analysis of the Dikin Walk},

186 | journal = {CoRR},

187 | volume = {abs/1508.01977},

188 | year = {2015},

189 | url = {http://arxiv.org/abs/1508.01977},

190 | eprinttype = {arXiv},

191 | eprint = {1508.01977},

192 | timestamp = {Mon, 13 Aug 2018 16:46:57 +0200},

193 | biburl = {https://dblp.org/rec/journals/corr/SachdevaV15.bib},

194 | bibsource = {dblp computer science bibliography, https://dblp.org}

195 | }

196 |

197 | @article{vempala2005,

198 | author = {Santosh Vempala},

199 | title = {Geometric Random Walks: A Survey},

200 | journal = {Combinatorial and Computational Geometry},

201 | volume = {52},

202 | year = {2005},

203 | url = {https://faculty.cc.gatech.edu/~vempala/papers/survey.pdf}

204 | }

205 |

206 | @article{lovasz1999,

207 | author = {László Lovász},

208 | title = {Hit-and-run mixes fast},

209 | journal = {Mathematical Programming},

210 | volume = {86},

211 | year = {1999},

212 | url = {https://link.springer.com/content/pdf/10.1007/s101070050099.pdf}

213 | }

214 |

215 | @article{Simonovits2003,

216 | author = {Miklós Simonovits},

217 | title = {How to compute the volume in high dimension?},

218 | journal = {Mathematical Programming},

219 | volume = {97},

220 | year = {2003},

221 | url = {https://link.springer.com/article/10.1007/s10107-003-0447-x}

222 | }

223 |

224 | @article{DBLP:journals/corr/abs-1803-05861,

225 | author = {Ludovic Cal{\`{e}}s and

226 | Apostolos Chalkis and

227 | Ioannis Z. Emiris and

228 | Vissarion Fisikopoulos},

229 | title = {Practical volume computation of structured convex bodies, and an application

230 | to modeling portfolio dependencies and financial crises},

231 | journal = {CoRR},

232 | volume = {abs/1803.05861},

233 | year = {2018},

234 | url = {http://arxiv.org/abs/1803.05861},

235 | eprinttype = {arXiv},

236 | eprint = {1803.05861},

237 | timestamp = {Mon, 13 Aug 2018 16:45:57 +0200},

238 | biburl = {https://dblp.org/rec/journals/corr/abs-1803-05861.bib},

239 | bibsource = {dblp computer science bibliography, https://dblp.org}

240 | }

241 |

242 |

243 | @misc{COBRA,

244 | title={Creation and analysis of biochemical constraint-based models: the COBRA Toolbox v3.0},

245 | author={Laurent Heirendt and Sylvain Arreckx and Thomas Pfau and Sebastián N. Mendoza and Anne Richelle and Almut Heinken and Hulda S. Haraldsdóttir and Jacek Wachowiak and Sarah M. Keating and Vanja Vlasov and Stefania Magnusdóttir and Chiam Yu Ng and German Preciat and Alise Žagare and Siu H. J. Chan and Maike K. Aurich and Catherine M. Clancy and Jennifer Modamio and John T. Sauls and Alberto Noronha and Aarash Bordbar and Benjamin Cousins and Diana C. El Assal and Luis V. Valcarcel and Iñigo Apaolaza and Susan Ghaderi and Masoud Ahookhosh and Marouen Ben Guebila and Andrejs Kostromins and Nicolas Sompairac and Hoai M. Le and Ding Ma and Yuekai Sun and Lin Wang and James T. Yurkovich and Miguel A. P. Oliveira and Phan T. Vuong and Lemmer P. El Assal and Inna Kuperstein and Andrei Zinovyev and H. Scott Hinton and William A. Bryant and Francisco J. Aragón Artacho and Francisco J. Planes and Egils Stalidzans and Alejandro Maass and Santosh Vempala and Michael Hucka and Michael A. Saunders and Costas D. Maranas and Nathan E. Lewis and Thomas Sauter and Bernhard Ø. Palsson and Ines Thiele and Ronan M. T. Fleming},

246 | year={2018},

247 | eprint={1710.04038},

248 | archivePrefix={arXiv},

249 | primaryClass={q-bio.QM}

250 | }

251 |

252 | @article{10.1093/nar/gkv1049,

253 | author = {King, Zachary A. and Lu, Justin and Dräger, Andreas and Miller, Philip and Federowicz, Stephen and Lerman, Joshua A. and Ebrahim, Ali and Palsson, Bernhard O. and Lewis, Nathan E.},

254 | title = "{BiGG Models: A platform for integrating, standardizing and sharing genome-scale models}",

255 | journal = {Nucleic Acids Research},

256 | volume = {44},

257 | number = {D1},

258 | pages = {D515--D522},

259 | year = {2015},

260 | month = {10},