├── excercise
│   ├── P1
│   │   └── nginx-pod.yml
│   └── 1-Basic-kubectl
│       ├── nginx-pod.yml
│       └── Readme.md
├── workloads
│   ├── daemonset
│   │   ├── README.md
│   │   ├── nginx.yml
│   │   └── simple-daemonset.yml
│   ├── job
│   │   ├── paralel-job
│   │   │   ├── Readme.md
│   │   │   └── sleep-20s.yml
│   │   └── uuidgen.yml
│   ├── replicaset
│   │   └── replicaset.yml
│   ├── deployment
│   │   ├── nginx-deployment.yml
│   │   ├── nginx-svc-nodeport.yml
│   │   └── README.md
│   └── cronjob
│       └── wget-15min.yml
├── storage
│   ├── localpath - nginx
│   │   ├── Readme.md
│   │   ├── pvc.yml
│   │   ├── nginx-pod.yml
│   │   └── pv.yml
│   ├── localpath
│   │   ├── README.md
│   │   ├── persistentVolumeClaim.yaml
│   │   ├── redis-example
│   │   │   ├── redis-pvc.yaml
│   │   │   ├── redis-pv.yaml
│   │   │   ├── redispod.yaml
│   │   │   └── README.md
│   │   ├── http-pod.yaml
│   │   └── persistentVolume.yaml
│   ├── resizeable-pv
│   │   ├── README.md
│   │   ├── storage-class.yml
│   │   ├── pvc.yml
│   │   ├── pv.yml
│   │   └── pod.yml
│   ├── nfs
│   │   ├── nfs-server.md
│   │   ├── pod-using-nfs.yml
│   │   ├── simple-nfs.yml
│   │   └── Readme.md
│   ├── emty-dir-accessing-2pod-same-volume
│   │   ├── readme.md
│   │   └── ngnix-shared-volume.yml
│   └── longhorn
│       └── Readme.md
├── installation
│   ├── k3s.md
│   ├── helm-install.sh
│   ├── kubespray.md
│   ├── containerd-installation.md
│   └── kubeadm-installation.md
├── rbac
│   └── scenario
│       ├── creating-new-clusteadmin
│       │   ├── Readme.md
│       │   ├── 01-serviceacount.yml
│       │   ├── 02-secret.yml
│       │   ├── 04-ClusterRolebinding.yml
│       │   ├── 03-clusterRole.yml
│       │   └── 05-kubeconfig-creator.sh
│       └── pod-get-resource
│           ├── service-account.yaml
│           ├── role.yml
│           ├── pod.yml
│           ├── rolebinding.yaml
│           └── Readme.md
├── Resource-Limit
│   ├── Namespace-limit
│   │   ├── Readme.md
│   │   └── resource-quota.yml
│   └── Pod-Limit
│       ├── Readme.md
│       └── Nginx.yml
├── keda
│   └── time-based-scale
│       ├── prometheus
│       │   ├── Readme.md
│       │   ├── scale-object.yml
│       │   └── deployment.yml
│       └── Readme.md
├── PolicyManagement
│   └── Kyverno
│       ├── Pod.yml
│       ├── policy.yml
│       └── Readme.md
├── ingress
│   ├── apple-orange-ingress
│   │   ├── Readme.md
│   │   ├── ingress.yml
│   │   └── pod.yml
│   ├── README.md
│   ├── nginx-ingress-manifest
│   │   └── simple-app-ingress.yml
│   └── nginx-ingress-helm
│       └── Readme.md
├── Resource-Management
│   ├── Limit-Range
│   │   ├── test-pod.yaml
│   │   ├── test-pod-exceed.yaml
│   │   ├── limitrange.yaml
│   │   └── Readme.md
│   ├── Resource-Quota
│   │   ├── resource-quota.yaml
│   │   ├── quota-test-pod.yaml
│   │   └── Readme.md
│   ├── metric-server
│   │   └── Readme.md
│   ├── HPA
│   │   ├── hpa-memory.yml
│   │   ├── hpa.yml
│   │   ├── nginx-deployment.yml
│   │   └── Readme.md
│   ├── VPA
│   │   ├── Readme.md
│   │   ├── vpa.yml
│   │   └── nginx-deployment.yml
│   └── QOS
│       └── Readme.md
├── secret
│   ├── using-env-from-secret
│   │   ├── secret.yml
│   │   ├── nginx-secret-env.yml
│   │   └── readme.md
│   └── dockerhub-imagepull-secret
│       └── Readme.md
├── ConfigMap
│   ├── configmap-to-env
│   │   ├── configmap.yml
│   │   ├── pod.yml
│   │   └── Readme.md
│   ├── Configmap-as-volume-redis
│   │   ├── Readme.md
│   │   └── redis-manifest-with-cm.yml
│   ├── nginx-configmap
│   │   └── Readme.md
│   └── Volume-from-Configmap.yml
├── Network-policy
│   ├── default-deny-np.yml
│   ├── auth-server-np.yml
│   ├── webauth-server.yml
│   ├── web-auth-client.yml
│   └── README.md
├── sidecar-container
│   ├── Readme.md
│   ├── side-car-tail-log.yml
│   └── tail-log-with-initcontainer.yml
├── scenario
│   ├── manual-canary-deployment
│   │   ├── nginx-service.yml
│   │   ├── nginx-ingress.yml
│   │   ├── nginx-configmap-canary.yml
│   │   ├── nginx-configmap-stable.yml
│   │   ├── Readme.md
│   │   ├── nginx-canary.yml
│   │   └── nginx-stable.yml
│   ├── ipnetns-container
│   │   └── Readme.md
│   ├── curl-kubernetes-object
│   │   └── Readme.md
│   ├── Creating a ClusterRole to Access a Pod to get pod list in Kubernetes
│   │   └── README.md
│   └── turn-dockercompose-to-k8s-manifest
│       └── Readme.md
├── scheduler
│   ├── nodeName
│   │   └── Readme.md
│   ├── node-affinity
│   │   └── README.md
│   ├── node-selector
│   │   └── Readme.md
│   ├── drain
│   │   └── Readme.md
│   └── pod-afinity
│       ├── hpa.yml
│       └── Readme.md
├── metalLB
│   ├── nginx-service.yaml
│   ├── nginx-deployment.yml
│   ├── metallb-config.yaml
│   └── Readme.md
├── audit-log
│   ├── audit-policy.yaml
│   └── Readme.md
├── statefulset
│   ├── mysql-cluster-scenario
│   │   ├── readme.md
│   │   ├── mysql-configmap.yml
│   │   ├── mysql-service.yml
│   │   └── mysql-statfulset.yml
│   └── nginx-scenario
│       ├── README.md
│       ├── pv.yml
│       └── statefulset.yml
├── service
│   ├── external
│   │   └── varzesh3
│   │       └── Readme.md
│   ├── headless
│   │   ├── nginx-deployment.yml
│   │   └── Readme.md
│   ├── LoadBalancer
│   │   └── Readme.md
│   ├── ClusterIP
│   │   └── Readme.md
│   └── NodePort
│       └── Readme.md
├── initcontainer
│   ├── Readme.md
│   └── Pod-address.yml
├── kwatch
│   ├── nginx-corrupted.yml
│   └── Readme.md
├── node-affinity
│   └── node-affinity.yml
├── Monitoring
│   └── Prometheus
│       ├── scrape-config
│       │   └── scrape.yaml
│       ├── prometheus-rules
│       │   └── pod-down.yml
│       ├── app.yml
│       └── Readme.md
├── readiness-liveness
│   ├── liveness-exec
│   │   └── Readme.md
│   ├── readiness-exec
│   │   └── Readme.md
│   ├── liveness-http
│   │   └── Readme.md
│   ├── liveness-grpc
│   │   └── Readme.md
│   ├── liveness-tcp
│   │   └── Readme.md
│   └── startup-prob
│       └── Readme.md
├── Service-Mesh
│   └── isitio
│       ├── kiali-dashboard
│       │   └── Readme.md
│       └── canary-deployment
│           ├── Readme.md
│           ├── Virtualservice.yml
│           └── deployment.yml
├── logging
│   └── side-car
│       ├── fluent-configmap.yml
│       └── deployment-with-fluentd.yml
├── rolebinding
│   └── Create.md
├── CONTRIBUTING.md
├── enviornment
│   └── expose-pod-info-with-env
│       └── expose-data-env.yml
├── Static-POD
│   └── Readme.md
├── etcd
│   ├── etcd.md
│   ├── Readme.md
│   └── What Configurations are inside of ETCD.MD
├── api-gateway
│   ├── istio-api-gateway
│   │   └── all-in-one.yml
│   └── Readme.md
├── PriorityClass
│   └── Readme.md
├── security
│   ├── gate-keeper
│   │   └── Readme.md
│   ├── kube-bench
│   │   └── Readme.md
│   └── kubescape
│       └── Readme.md
├── multi-container-pattern
│   ├── side-car
│   │   └── sidecar.yml
│   └── adaptor
│       └── adaptor.yml
├── README.md
├── helm
│   └── helm-cheatsheet.md
├── istio
│   ├── installation.sh
│   └── istio-app.yml
├── kubeconfig-access-for-one-namespace
│   └── kubeconfig-generator.sh
└── cheat-sheet
    └── kubectl.md

/excercise/P1/nginx-pod.yml:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/workloads/daemonset/README.md:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

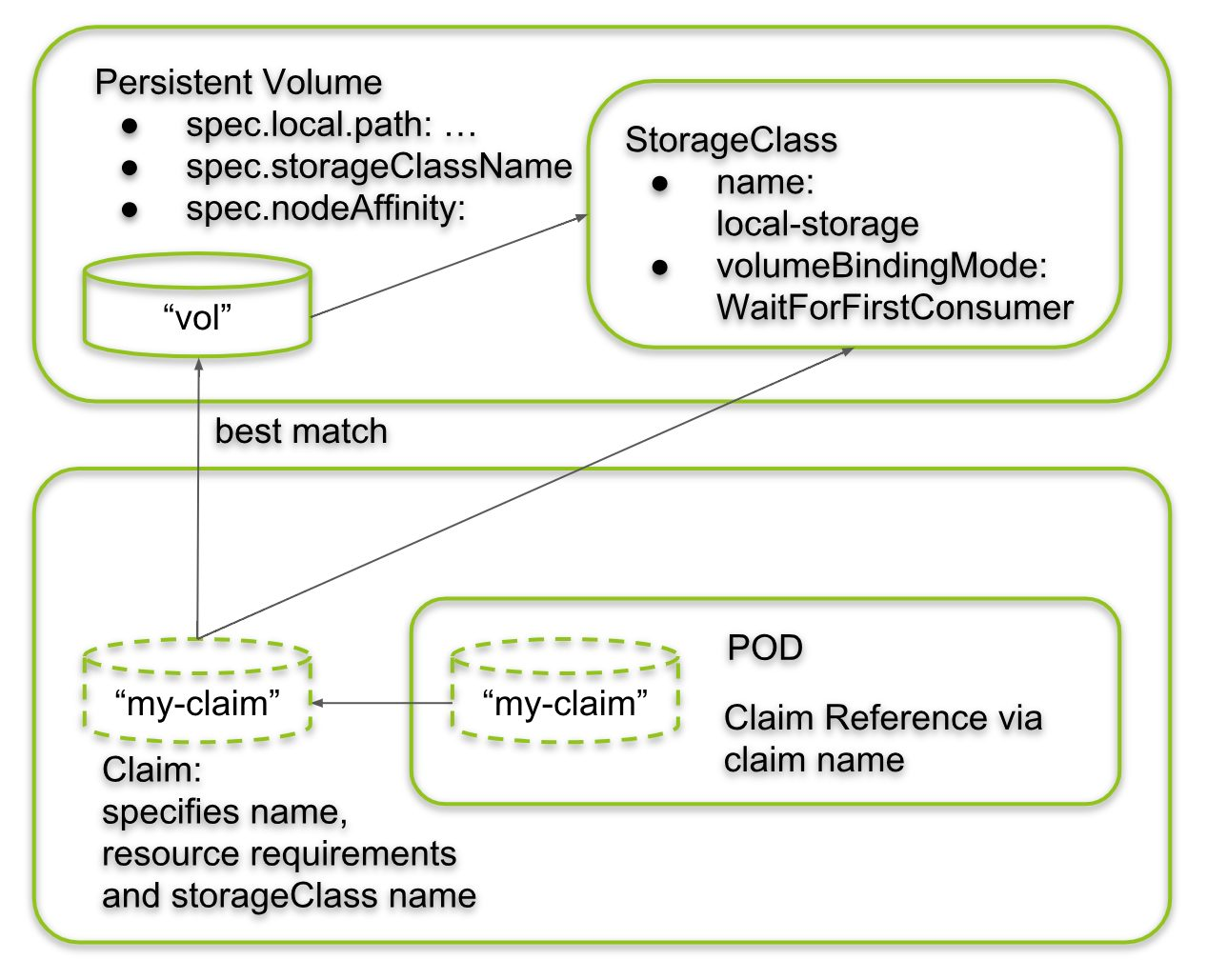

/storage/localpath - nginx/Readme.md:

--------------------------------------------------------------------------------

mkdir -p /mnt/disks/vol1

--------------------------------------------------------------------------------

/installation/k3s.md:

--------------------------------------------------------------------------------

```
curl -sfL https://get.k3s.io | sh -
```

--------------------------------------------------------------------------------

/rbac/scenario/creating-new-clusteadmin/Readme.md:

--------------------------------------------------------------------------------

https://devopscube.com/kubernetes-kubeconfig-file/

--------------------------------------------------------------------------------

/Resource-Limit/Namespace-limit/Readme.md:

--------------------------------------------------------------------------------

kubectl apply -f resource-quota.yml
kubectl get resourcequota -n farshad

--------------------------------------------------------------------------------

/storage/localpath/README.md:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/storage/resizeable-pv/README.md:

--------------------------------------------------------------------------------

## After applying all resources
**Change:**
``storage: 100Mi`` to ``storage: 200Mi`` in ``pvc.yml``, then re-apply it.
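
The change described above might look like the following sketch of the edited `pvc.yml`. The names `test-pvc` and `localdisk` come from the manifests in this directory; only the `storage` value differs. Whether the new size is actually reflected on the volume depends on the storage backend, so treat this as an illustration of the edit rather than a guarantee that the resize completes.

```yaml
# pvc.yml after the edit (sketch)
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-pvc
spec:
  storageClassName: localdisk   # this StorageClass sets allowVolumeExpansion: true
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 200Mi            # raised from 100Mi
```

After re-applying the file, `kubectl describe pvc test-pvc` shows the requested size and any resize-related conditions.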

--------------------------------------------------------------------------------

/rbac/scenario/pod-get-resource/service-account.yaml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: ServiceAccount
metadata:
  name: pod-reader-sa
  namespace: default

--------------------------------------------------------------------------------

/keda/time-based-scale/prometheus/Readme.md:

--------------------------------------------------------------------------------

1 |  2 |

--------------------------------------------------------------------------------

/storage/nfs/nfs-server.md:

--------------------------------------------------------------------------------

```
apt update && apt install nfs-server
mkdir /exports
echo "/exports *(rw,sync,no_subtree_check)" > /etc/exports
```

--------------------------------------------------------------------------------

/PolicyManagement/Kyverno/Pod.yml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: Pod
metadata:
  name: test-pod
spec:
  containers:
  - name: nginx
    image: nginx

--------------------------------------------------------------------------------

/rbac/scenario/creating-new-clusteadmin/01-serviceacount.yml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: ServiceAccount
metadata:
  name: devops-cluster-admin
  namespace: kube-system

--------------------------------------------------------------------------------

/ingress/apple-orange-ingress/Readme.md:

--------------------------------------------------------------------------------

```
curl -H 'Host:packops.local' http://192.168.6.130/apple
```

--------------------------------------------------------------------------------

/storage/resizeable-pv/storage-class.yml:

--------------------------------------------------------------------------------

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: localdisk
provisioner: kubernetes.io/no-provisioner
allowVolumeExpansion: true

--------------------------------------------------------------------------------

/Resource-Management/Limit-Range/test-pod.yaml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: Pod
metadata:
  name: test-pod
  namespace: dev-namespace
spec:
  containers:
  - name: test-container
    image: nginx

--------------------------------------------------------------------------------

/secret/using-env-from-secret/secret.yml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: Secret
metadata:
  name: backend-user
type: Opaque
data:
  backend-username: YmFja2VuZC1hZG1pbg== # base64-encoded value of 'backend-admin'

--------------------------------------------------------------------------------

/ConfigMap/configmap-to-env/configmap.yml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: ConfigMap
metadata:
  name: simple-config
data:
  database_url: "mongodb://db1.packops.dev:27017"
  feature_flag: "true"
  log_level: "debug"

--------------------------------------------------------------------------------

/installation/helm-install.sh:

--------------------------------------------------------------------------------

#!/bin/bash
VERSION=3.9.0
wget https://get.helm.sh/helm-v$VERSION-linux-amd64.tar.gz
tar -xzf helm-v$VERSION-linux-amd64.tar.gz
cp ./linux-amd64/helm /usr/local/bin/ && rm -rf ./linux-amd64

--------------------------------------------------------------------------------

/Resource-Limit/Pod-Limit/Readme.md:

--------------------------------------------------------------------------------

kubectl describe pod nginx-deployment-8c6b98574-27csj -n farshad

--------------------------------------------------------------------------------

/Network-policy/default-deny-np.yml:

--------------------------------------------------------------------------------

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: web-auth
spec:
  podSelector: {}
  policyTypes:
  - Ingress

--------------------------------------------------------------------------------

/sidecar-container/Readme.md:

--------------------------------------------------------------------------------

When sidecar containers must start in a specific order, or a sidecar must start before the main container, define the sidecars as init containers: they start sequentially, and the main container starts only after they have started.
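
The ordering described above can be sketched with a sidecar declared under `initContainers`. This is a minimal illustration, not one of the manifests in this directory: the pod name, container names, image, and log path are hypothetical, and it assumes a cluster where init containers with `restartPolicy: Always` are supported (enabled by default from Kubernetes 1.29).

```yaml
# Hypothetical manifest: names, image, and paths are illustrative only.
apiVersion: v1
kind: Pod
metadata:
  name: app-with-log-sidecar
spec:
  initContainers:
  # restartPolicy: Always marks this init container as a sidecar:
  # it is started before the main container and keeps running beside it.
  - name: log-tailer
    image: busybox
    restartPolicy: Always
    command: ["sh", "-c", "touch /var/log/app/app.log; tail -F /var/log/app/app.log"]
    volumeMounts:
    - name: logs
      mountPath: /var/log/app
  containers:
  # The main container starts only after the sidecar above has started.
  - name: app
    image: busybox
    command: ["sh", "-c", "while true; do date >> /var/log/app/app.log; sleep 5; done"]
    volumeMounts:
    - name: logs
      mountPath: /var/log/app
  volumes:
  - name: logs
    emptyDir: {}
```

With several sidecars, their order in the `initContainers` list is the order in which they are started.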

--------------------------------------------------------------------------------

/excercise/1-Basic-kubectl/nginx-pod.yml:

--------------------------------------------------------------------------------

# pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
  - name: my-container
    image: nginx
    ports:
    - containerPort: 80

--------------------------------------------------------------------------------

/scenario/manual-canary-deployment/nginx-service.yml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

--------------------------------------------------------------------------------

/rbac/scenario/pod-get-resource/role.yml:

--------------------------------------------------------------------------------

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: pod-reader
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["get", "watch", "list"]

--------------------------------------------------------------------------------

/storage/resizeable-pv/pvc.yml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-pvc
spec:
  storageClassName: localdisk
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 100Mi

--------------------------------------------------------------------------------

/storage/localpath - nginx/pvc.yml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: local-pvc
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: local-storage
  resources:
    requests:
      storage: 5Gi

--------------------------------------------------------------------------------

/storage/resizeable-pv/pv.yml:

--------------------------------------------------------------------------------

kind: PersistentVolume
apiVersion: v1
metadata:
  name: test-pv
spec:
  storageClassName: localdisk
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  hostPath:
    path: /etc/output

--------------------------------------------------------------------------------

/storage/localpath/persistentVolumeClaim.yaml:

--------------------------------------------------------------------------------

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: my-claim
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: my-local-storage
  resources:
    requests:
      storage: 5Gi

--------------------------------------------------------------------------------

/storage/localpath/redis-example/redis-pvc.yaml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: redisdb-pvc
spec:
  storageClassName: "pv-local"
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi

--------------------------------------------------------------------------------

/Resource-Limit/Namespace-limit/resource-quota.yml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: ResourceQuota
metadata:
  name: mem-cpu-demo
  namespace: farshad

spec:
  hard:
    requests.cpu: 2
    requests.memory: 1Gi
    limits.cpu: 3
    limits.memory: 2Gi

--------------------------------------------------------------------------------

/rbac/scenario/creating-new-clusteadmin/02-secret.yml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: Secret
metadata:
  name: devops-cluster-admin-secret
  namespace: kube-system
  annotations:
    kubernetes.io/service-account.name: devops-cluster-admin
type: kubernetes.io/service-account-token

--------------------------------------------------------------------------------

/storage/localpath/redis-example/redis-pv.yaml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-pv
spec:
  storageClassName: "pv-local"
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  hostPath:
    path: "/mnt/data"

--------------------------------------------------------------------------------

/Resource-Management/Resource-Quota/resource-quota.yaml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: ResourceQuota
metadata:
  name: resource-quota
  namespace: prod-namespace
spec:
  hard:
    requests.cpu: "10"
    requests.memory: "20Gi"
    limits.cpu: "15"
    limits.memory: "30Gi"

--------------------------------------------------------------------------------

/scheduler/nodeName/Readme.md:

--------------------------------------------------------------------------------

```
#nodename-pod.yml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    name: nginx
spec:
  containers:
  - name: nginx
    image: nginx
    ports:
    - containerPort: 8080
  nodeName: node1
```

--------------------------------------------------------------------------------

/rbac/scenario/pod-get-resource/pod.yml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: Pod
metadata:
  name: pod-checker
  namespace: default
spec:
  serviceAccountName: pod-reader-sa
  containers:
  - name: kubectl-container
    image: bitnami/kubectl:latest
    command: ["sleep", "3600"]

--------------------------------------------------------------------------------

/storage/emty-dir-accessing-2pod-same-volume/readme.md:

--------------------------------------------------------------------------------

```
kubectl apply -f ngnix-shared-volume.yml
kubectl exec -it two-containers -c nginx-container -- /bin/bash
curl localhost
```

--------------------------------------------------------------------------------

/Resource-Management/Resource-Quota/quota-test-pod.yaml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: Pod
metadata:
  name: quota-test-pod
  namespace: prod-namespace
spec:
  containers:
  - name: test-container
    image: nginx
    resources:
      requests:
        cpu: "1"
        memory: "512Mi"

--------------------------------------------------------------------------------

/metalLB/nginx-service.yaml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: LoadBalancer
  loadBalancerIP: 192.168.6.210 # Assigning a specific IP from MetalLB's range

--------------------------------------------------------------------------------

/ConfigMap/Configmap-as-volume-redis/Readme.md:

--------------------------------------------------------------------------------

```
kubectl apply -f redis-manifest-with-cm.yml
kubectl get cm shared-packops-redis-config -o yaml
kubectl get pods
kubectl exec -it shared-packops-redis-7cddbbf994-k5szl -- bash
redis-cli
```
```
cat /redis-master/redis.conf
auth PASSWORD1234P
get keys
```

--------------------------------------------------------------------------------

/rbac/scenario/pod-get-resource/rolebinding.yaml:

--------------------------------------------------------------------------------

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: default
subjects:
- kind: ServiceAccount
  name: pod-reader-sa
  namespace: default
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io

--------------------------------------------------------------------------------

/audit-log/audit-policy.yaml:

--------------------------------------------------------------------------------

#/etc/kubernetes/audit-policy.yaml

apiVersion: audit.k8s.io/v1
kind: Policy
rules:
- level: Metadata
  verbs: ["create", "update", "patch", "delete"]
  resources:
  - group: ""
    resources: ["pods", "services", "configmaps"]
  - group: "apps"
    resources: ["deployments", "statefulsets"]

--------------------------------------------------------------------------------

/secret/using-env-from-secret/nginx-secret-env.yml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: Pod
metadata:
  name: env-single-secret
spec:
  containers:
  - name: envars-test-container
    image: nginx
    env:
    - name: SECRET_USERNAME
      valueFrom:
        secretKeyRef:
          name: backend-user
          key: backend-username

--------------------------------------------------------------------------------

/Resource-Management/Limit-Range/test-pod-exceed.yaml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: Pod
metadata:
  name: test-pod-exceed
  namespace: dev-namespace
spec:
  containers:
  - name: test-container
    image: nginx
    resources:
      requests:
        cpu: "3" # Exceeds the max limit of 2 CPU
        memory: "2Gi" # Exceeds the max limit of 1Gi memory

--------------------------------------------------------------------------------

/metalLB/nginx-deployment.yml:

--------------------------------------------------------------------------------

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80

--------------------------------------------------------------------------------

/rbac/scenario/creating-new-clusteadmin/04-ClusterRolebinding.yml:

--------------------------------------------------------------------------------

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: devops-cluster-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: devops-cluster-admin
subjects:
- kind: ServiceAccount
  name: devops-cluster-admin
  namespace: kube-system

--------------------------------------------------------------------------------

/workloads/job/paralel-job/Readme.md:

--------------------------------------------------------------------------------

You can also run a job with parallelism. There are two fields in the spec, called completions and parallelism. completions is set to 1 by default. If you want more than one successful completion, then increase this value. parallelism determines how many pods to launch. A job will not launch more pods than needed for successful completion, even if the parallelism value is greater.
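
As a minimal sketch of the two fields described above, the following Job asks for six successful completions while running at most two pods at a time. The Job name, image, and the specific numbers are assumptions chosen for illustration; they are not taken from `sleep-20s.yml`.

```yaml
# Hypothetical manifest: name, image, and counts are illustrative only.
apiVersion: batch/v1
kind: Job
metadata:
  name: parallel-sleep
spec:
  completions: 6     # the Job is complete once 6 pods have succeeded
  parallelism: 2     # at most 2 pods run at the same time
  template:
    spec:
      restartPolicy: Never
      containers:
      - name: sleep
        image: busybox
        command: ["sleep", "20"]
```

Watching `kubectl get pods -w` while this runs should show pods appearing in batches of two until six have completed.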

--------------------------------------------------------------------------------

/statefulset/mysql-cluster-scenario/readme.md:

--------------------------------------------------------------------------------

1 |

2 | https://kubernetes.io/docs/tasks/run-application/run-replicated-stateful-application/

3 |

4 |

5 | ```

6 | kubectl run mysql-client --image=mysql:5.7 -i --rm --restart=Never --\

7 | mysql -h mysql-0.mysql <> /output/output.log; sleep 10; done']

10 | volumeMounts:

11 | - name: pv-storage

12 | mountPath: /output

13 | volumes:

14 | - name: pv-storage

15 | persistentVolumeClaim:

16 | claimName: test-pvc

17 |

--------------------------------------------------------------------------------

/kwatch/nginx-corrupted.yml:

--------------------------------------------------------------------------------

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-failing-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-failing
  template:
    metadata:
      labels:
        app: nginx-failing
    spec:
      containers:
      - name: nginx
        image: nginx

        command: ["/bin/sh", "-c"]
        args:
        - "sleep 60; exit 1"

--------------------------------------------------------------------------------

/node-affinity/node-affinity.yml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: nodename
            operator: In
            values:
            - bi-team
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent

--------------------------------------------------------------------------------

/scheduler/node-affinity/README.md:

--------------------------------------------------------------------------------

# Label a Kubernetes Node
1- kubectl get nodes

```
worker0 Ready 1d v1.13.0 ...,kubernetes.io/hostname=worker0
worker1 Ready 1d v1.13.0 ...,kubernetes.io/hostname=worker1
worker2 Ready 1d v1.13.0 ...,kubernetes.io/hostname=worker2
```

2- kubectl label nodes <node-name> nodename=bi-team

3- kubectl apply -f node-affinity.yml

--------------------------------------------------------------------------------

/Resource-Management/VPA/Readme.md:

--------------------------------------------------------------------------------

## Install VPA
```
kubectl apply -f https://raw.githubusercontent.com/kubernetes/autoscaler/vpa-release-1.0/vertical-pod-autoscaler/deploy/vpa-v1-crd-gen.yaml
kubectl apply -f https://raw.githubusercontent.com/kubernetes/autoscaler/vpa-release-1.0/vertical-pod-autoscaler/deploy/vpa-rbac.yaml
```
```
kubectl apply -f nginx-deployment.yml
kubectl apply -f vpa.yml

kubectl describe vpa nginx-vpa
```

--------------------------------------------------------------------------------

/storage/localpath/http-pod.yaml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: Pod
metadata:
  name: www
  labels:
    name: www
spec:
  containers:
  - name: www
    image: nginx:alpine
    ports:
    - containerPort: 80
      name: www
    volumeMounts:
    - name: www-persistent-storage
      mountPath: /usr/share/nginx/html
  volumes:
  - name: www-persistent-storage
    persistentVolumeClaim:
      claimName: my-claim

--------------------------------------------------------------------------------

/scheduler/node-selector/Readme.md:

--------------------------------------------------------------------------------

1. Label your node
First, you label the node where you want the pod to be scheduled.
```
kubectl label nodes <node-name> disktype=ssd
```
2. Pod Specification with Node Selector
Next, you define a pod that will use this node selector:
```
apiVersion: v1
kind: Pod
metadata:
  name: selector
spec:
  containers:
  - name: my-container
    image: nginx
  nodeSelector:
    disktype: ssd
```

--------------------------------------------------------------------------------

/ConfigMap/configmap-to-env/pod.yml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: Pod
metadata:
  name: app-pod
spec:
  containers:
  - name: app-container
    image: nginx
    env:
    - name: DATABASE_URL
      valueFrom:
        configMapKeyRef:
          name: simple-config
          key: database_url
    - name: LOG_LEVEL
      valueFrom:
        configMapKeyRef:
          name: simple-config
          key: log_level

--------------------------------------------------------------------------------

/scenario/manual-canary-deployment/nginx-configmap-canary.yml:

--------------------------------------------------------------------------------

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config-canary
data:
  nginx.conf: |
    worker_processes 1;

    events {
      worker_connections 1024;
    }

    http {
      server {
        listen 80;
        location / {
          add_header Content-Type text/plain;
          return 200 "Release: Canary";
        }
      }
    }

--------------------------------------------------------------------------------

/Network-policy/auth-server-np.yml:

--------------------------------------------------------------------------------

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: auth-server-ingress
  namespace: web-auth
spec:
  podSelector:
    matchLabels:
      app: auth-server
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          role: auth
      podSelector:
        matchLabels:
          app: auth-client
    ports:
    - protocol: TCP
      port: 80

--------------------------------------------------------------------------------

/Resource-Management/Resource-Quota/Readme.md:

--------------------------------------------------------------------------------

You want to manage resource usage in the prod-namespace by setting both requests and limits. Specifically, you will:

- Limit the total CPU requests in the namespace to 10 CPUs.
- Limit the total memory requests in the namespace to 20Gi.
- Cap the total CPU limits in the namespace to 15 CPUs.
- Cap the total memory limits in the namespace to 30Gi.

```
kubectl get resourcequota resource-quota --namespace=prod-namespace --output=yaml
```
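
Because this quota constrains both requests and limits, a pod in `prod-namespace` generally has to declare all four values (unless a LimitRange in the namespace supplies defaults); otherwise the API server may reject it at admission. The sketch below shows such a pod. The pod name and the limit values are assumptions for illustration and do not come from `quota-test-pod.yaml`.

```yaml
# Hypothetical manifest: the name and the limit values are illustrative only.
apiVersion: v1
kind: Pod
metadata:
  name: quota-full-spec-pod
  namespace: prod-namespace
spec:
  containers:
  - name: test-container
    image: nginx
    resources:
      requests:          # counted against requests.cpu / requests.memory
        cpu: "1"
        memory: "512Mi"
      limits:            # counted against limits.cpu / limits.memory
        cpu: "2"
        memory: "1Gi"
```

After the pod is created, `kubectl describe resourcequota resource-quota -n prod-namespace` shows the used amounts next to the hard caps.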

--------------------------------------------------------------------------------

/scenario/manual-canary-deployment/nginx-configmap-stable.yml:

--------------------------------------------------------------------------------

1 | apiVersion: v1

2 | kind: ConfigMap

3 | metadata:

4 | name: nginx-config-stable

5 | data:

6 | nginx.conf: |

7 | worker_processes 1;

8 |

9 | events {

10 | worker_connections 1024;

11 | }

12 |

13 | http {

14 | server {

15 | listen 80;

16 | location / {

17 | add_header Content-Type text/plain;

18 | return 200 "Release: Stable";

19 | }

20 | }

21 | }

22 |

23 |

--------------------------------------------------------------------------------

/Monitoring/Prometheus/scrape-config/scrape.yaml:

--------------------------------------------------------------------------------

1 | apiVersion: monitoring.coreos.com/v1alpha1

2 | kind: ScrapeConfig

3 | metadata:

4 | name: static-targets

5 | namespace: monitoring

6 | labels:

7 | release: kube-prom-stack # must match your Prometheus release label

8 | spec:

9 | staticConfigs:

10 | - targets:

11 | - "10.0.0.5:9100"

12 | - "10.0.0.6:9100"

13 | labels:

14 | job: "node-exporter-external"

15 | environment: "prod"

16 | metricsPath: /metrics

17 | scrapeInterval: 30s

18 |

--------------------------------------------------------------------------------

/Resource-Management/HPA/hpa.yml:

--------------------------------------------------------------------------------

1 | #kubectl autoscale deployment nginx-deployment --cpu-percent=50 --min=1 --max=10

2 | apiVersion: autoscaling/v2

3 | kind: HorizontalPodAutoscaler

4 | metadata:

5 | name: nginx-hpa

6 | spec:

7 | scaleTargetRef:

8 | apiVersion: apps/v1

9 | kind: Deployment

10 | name: nginx-deployment

11 | minReplicas: 1

12 | maxReplicas: 10

13 | metrics:

14 | - type: Resource

15 | resource:

16 | name: cpu

17 | target:

18 | type: Utilization

19 | averageUtilization: 50

20 |

--------------------------------------------------------------------------------

/Resource-Management/VPA/vpa.yml:

--------------------------------------------------------------------------------

1 | apiVersion: autoscaling.k8s.io/v1

2 | kind: VerticalPodAutoscaler

3 | metadata:

4 | name: nginx-vpa

5 | spec:

6 | targetRef:

7 | apiVersion: "apps/v1"

8 | kind: Deployment

9 | name: nginx-deployment

10 | updatePolicy:

11 | updateMode: "Auto"

12 | resourcePolicy:

13 | containerPolicies:

14 | - containerName: "nginx"

15 | minAllowed:

16 | cpu: "50m"

17 | memory: "64Mi"

18 | maxAllowed:

19 | cpu: "500m"

20 | memory: "512Mi"

21 |

--------------------------------------------------------------------------------

/scheduler/drain/Readme.md:

--------------------------------------------------------------------------------

1 | Cordoning the Node: Prevents new pods from being scheduled on the node.

2 | ```

3 | kubectl cordon <node-name>

4 | ```

5 | Draining the Node: Evicts the pods from the node while respecting PodDisruptionBudgets and termination grace periods.

6 |

7 | ```

8 | kubectl drain <node-name> --ignore-daemonsets --delete-emptydir-data

9 | ```

10 | Perform Maintenance: After draining, perform any maintenance needed on the node.

11 |

12 | Uncordon the Node (if needed): Allow new pods to be scheduled again.

13 |

14 | ```

15 | kubectl uncordon <node-name>

16 | ```

17 |

--------------------------------------------------------------------------------
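Draining evicts pods through the eviction API, which honours any PodDisruptionBudget that selects them. The sketch below shows what such a budget might look like; the name `nginx-pdb` and the `app: nginx` label are hypothetical and only borrowed from the nginx examples elsewhere in this repository.

```yaml
# Hypothetical PodDisruptionBudget: while a node is drained, evictions are
# paused whenever they would leave fewer than one matching pod available.
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: nginx-pdb        # assumed name
spec:
  minAvailable: 1
  selector:
    matchLabels:
      app: nginx         # assumed label
```

With a single-replica workload and `minAvailable: 1`, a drain would wait indefinitely, so the budget is usually paired with at least two replicas.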

/readiness-liveness/liveness-exec/Readme.md:

--------------------------------------------------------------------------------

1 | ```

2 | apiVersion: v1

3 | kind: Pod

4 | metadata:

5 | labels:

6 | test: liveness

7 | name: liveness-exec

8 | spec:

9 | containers:

10 | - name: liveness

11 | image: registry.k8s.io/busybox

12 | args:

13 | - /bin/sh

14 | - -c

15 | - touch /tmp/healthy; sleep 30; rm -f /tmp/healthy; sleep 600

16 | livenessProbe:

17 | exec:

18 | command:

19 | - cat

20 | - /tmp/healthy

21 | initialDelaySeconds: 5

22 | periodSeconds: 5

23 | ```

24 |

--------------------------------------------------------------------------------

/readiness-liveness/readiness-exec/Readme.md:

--------------------------------------------------------------------------------

1 | ```

2 |

3 | apiVersion: v1

4 | kind: Pod

5 | metadata:

6 | labels:

7 | test: readiness

8 | name: readiness-exec

9 | spec:

10 | containers:

11 | - name: readiness

12 | image: registry.k8s.io/busybox

13 | args:

14 | - /bin/sh

15 | - -c

16 | - touch /tmp/healthy; sleep 30; rm -f /tmp/healthy; sleep 600

17 | readinessProbe:

18 | exec:

19 | command:

20 | - cat

21 | - /tmp/healthy

22 | initialDelaySeconds: 5

23 | periodSeconds: 5

24 | ```

25 |

--------------------------------------------------------------------------------

/kwatch/Readme.md:

--------------------------------------------------------------------------------

1 | 1- Configmap

2 |

3 | ```

4 | #config.yml

5 | apiVersion: v1

6 | kind: Namespace

7 | metadata:

8 | name: kwatch

9 | ---

10 | apiVersion: v1

11 | kind: ConfigMap

12 | metadata:

13 | name: kwatch

14 | namespace: kwatch

15 | data:

16 | config.yaml: |

17 | alert:

18 | telegram:

19 | token: TOKEN

20 | chatId: CHAT_ID

21 | ```

22 | ```

23 | kubectl apply -f config.yml

24 | ```

25 | 2- Deploy Kwatch

26 | ```

27 | kubectl apply -f https://raw.githubusercontent.com/abahmed/kwatch/v0.8.4/deploy/deploy.yaml

28 | ```

29 |

--------------------------------------------------------------------------------

/scheduler/pod-afinity/hpa.yml:

--------------------------------------------------------------------------------

1 | #kubectl autoscale deployment nginx-deployment --cpu-percent=50 --min=1 --max=10

2 | apiVersion: autoscaling/v2

3 | kind: HorizontalPodAutoscaler

4 | metadata:

5 |   name: nginx-hpa

6 | spec:

7 |   scaleTargetRef:

8 |     apiVersion: apps/v1

9 |     kind: Deployment

10 |     name: nginx-deployment

11 |   minReplicas: 1

12 |   maxReplicas: 10

13 |   metrics:

14 |   - type: Resource

15 |     resource:

16 |       name: cpu

17 |       target:

18 |         type: Utilization

19 |         averageUtilization: 50

20 |

--------------------------------------------------------------------------------

/readiness-liveness/liveness-http/Readme.md:

--------------------------------------------------------------------------------

1 | ```

2 | apiVersion: v1

3 | kind: Pod

4 | metadata:

5 | labels:

6 | test: liveness

7 | name: liveness-http

8 | spec:

9 | containers:

10 | - name: liveness

11 | image: registry.k8s.io/e2e-test-images/agnhost:2.40

12 | args:

13 | - liveness

14 | livenessProbe:

15 | httpGet:

16 | path: /healthz

17 | port: 8080

18 | httpHeaders:

19 | - name: Custom-Header

20 | value: Awesome

21 | initialDelaySeconds: 3

22 | periodSeconds: 3

23 |

24 | ```

25 |

--------------------------------------------------------------------------------

/readiness-liveness/liveness-grpc/Readme.md:

--------------------------------------------------------------------------------

1 | ```

2 | apiVersion: v1

3 | kind: Pod

4 | metadata:

5 | name: etcd-with-grpc

6 | spec:

7 | containers:

8 | - name: etcd

9 | image: registry.k8s.io/etcd:3.5.1-0

10 | command: [ "/usr/local/bin/etcd", "--data-dir", "/var/lib/etcd", "--listen-client-urls", "http://0.0.0.0:2379", "--advertise-client-urls", "http://127.0.0.1:2379", "--log-level", "debug"]

11 | ports:

12 | - containerPort: 2379

13 | livenessProbe:

14 | grpc:

15 | port: 2379

16 | initialDelaySeconds: 10

17 |

18 | ```

19 |

--------------------------------------------------------------------------------

/readiness-liveness/liveness-tcp/Readme.md:

--------------------------------------------------------------------------------

1 | ```

2 | apiVersion: v1

3 | kind: Pod

4 | metadata:

5 | name: goproxy

6 | labels:

7 | app: goproxy

8 | spec:

9 | containers:

10 | - name: goproxy

11 | image: registry.k8s.io/goproxy:0.1

12 | ports:

13 | - containerPort: 8080

14 | readinessProbe:

15 | tcpSocket:

16 | port: 8080

17 | initialDelaySeconds: 15

18 | periodSeconds: 10

19 | livenessProbe:

20 | tcpSocket:

21 | port: 8080

22 | initialDelaySeconds: 15

23 | periodSeconds: 10

24 |

25 | ```

26 |

--------------------------------------------------------------------------------

/storage/localpath - nginx/pv.yml:

--------------------------------------------------------------------------------

1 | apiVersion: v1

2 | kind: PersistentVolume

3 | metadata:

4 | name: local-pv

5 | spec:

6 | capacity:

7 | storage: 10Gi

8 | accessModes:

9 | - ReadWriteOnce

10 | persistentVolumeReclaimPolicy: Retain

11 | storageClassName: local-storage

12 | local:

13 | path: /mnt/disks/vol1

14 | nodeAffinity:

15 | required:

16 | nodeSelectorTerms:

17 | - matchExpressions:

18 | - key: kubernetes.io/hostname

19 | operator: In

20 | values:

21 | - node1

22 |

23 |

--------------------------------------------------------------------------------

/Service-Mesh/isitio/kiali-dashboard/Readme.md:

--------------------------------------------------------------------------------

1 | # Install the Kiali addon

2 | ```

3 | git clone https://github.com/istio/istio.git

4 | kubectl apply -f istio/samples/addons

5 | ```

6 |

7 | # Create a NodePort service for Kiali

8 |

9 | ```

10 | apiVersion: v1

11 | kind: Service

12 | metadata:

13 | name: kiali-nodeport

14 | namespace: istio-system

15 | spec:

16 | type: NodePort

17 | ports:

18 | - port: 20001

19 | targetPort: 20001

20 | nodePort: 30001

21 | selector:

22 | app.kubernetes.io/instance: kiali

23 | app.kubernetes.io/name: kiali

24 | ```

25 |

--------------------------------------------------------------------------------

/logging/side-car/fluent-configmap.yml:

--------------------------------------------------------------------------------

1 | apiVersion: v1

2 | kind: ConfigMap

3 | metadata:

4 |   name: fluentd-config

5 | data:

6 |   fluentd.conf: |

7 |     <source>

8 |       type tail

9 |       format none

10 |       path /var/log/1.log

11 |       pos_file /var/log/1.log.pos

12 |       tag count.format1

13 |     </source>

14 |

15 |     <source>

16 |       type tail

17 |       format none

18 |       path /var/log/2.log

19 |       pos_file /var/log/2.log.pos

20 |       tag count.format2

21 |     </source>

22 |

23 |     <match **>

24 |       type google_cloud

25 |     </match>

26 |

--------------------------------------------------------------------------------

/statefulset/nginx-scenario/README.md:

--------------------------------------------------------------------------------

1 |

2 | # Create a PersistentVolume (if you don't have one)

3 | Create a PV and make its StorageClass the default. In our scenario the storage is a local path.

4 | ```

5 | kubectl apply -f pv.yml

6 | ```

7 | ## Make it the default StorageClass

8 | ```

9 | kubectl patch storageclass my-local-storage -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

10 | ```

11 |

12 | # Bring up your StatefulSet

13 | ```

14 | kubectl apply -f statefulset.yml

15 | ```

16 |

17 | # Verify your PV and PVC

18 |

19 | ```

20 | kubectl get pv

21 | kubectl get pvc

22 | ```

23 |

--------------------------------------------------------------------------------
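The README patches a StorageClass named `my-local-storage`, but `pv.yml` only defines the PersistentVolume. A minimal sketch of the StorageClass that the patch command expects is shown below; it assumes statically provisioned local volumes, which is the usual pairing for a `local:` PV, and the repository may define it elsewhere.

```yaml
# Sketch: StorageClass for statically provisioned local volumes.
# The name matches storageClassName in pv.yml and the kubectl patch command.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: my-local-storage
provisioner: kubernetes.io/no-provisioner   # no dynamic provisioning for local PVs
volumeBindingMode: WaitForFirstConsumer     # bind only once a pod is scheduled
```

`WaitForFirstConsumer` delays binding until the scheduler has picked a node, so the PV's `nodeAffinity` is taken into account.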

/statefulset/nginx-scenario/pv.yml:

--------------------------------------------------------------------------------

1 | apiVersion: v1

2 | kind: PersistentVolume

3 | metadata:

4 | name: my-local-pv

5 | spec:

6 | capacity:

7 | storage: 500Gi

8 | accessModes:

9 | - ReadWriteOnce

10 | persistentVolumeReclaimPolicy: Retain

11 | storageClassName: my-local-storage

12 | local:

13 | path: /opt/st

14 | nodeAffinity:

15 | required:

16 | nodeSelectorTerms:

17 | - matchExpressions:

18 | - key: kubernetes.io/hostname

19 | operator: In

20 | values:

21 | - node1

22 | - node2

23 | - node3

24 |

--------------------------------------------------------------------------------

/storage/localpath/persistentVolume.yaml:

--------------------------------------------------------------------------------

1 | apiVersion: v1

2 | kind: PersistentVolume

3 | metadata:

4 | name: my-local-pv

5 | spec:

6 | capacity:

7 | storage: 500Gi

8 | accessModes:

9 | - ReadWriteOnce

10 | persistentVolumeReclaimPolicy: Retain

11 | storageClassName: my-local-storage

12 | local:

13 | path: /opt/st

14 | nodeAffinity:

15 | required:

16 | nodeSelectorTerms:

17 | - matchExpressions:

18 | - key: kubernetes.io/hostname

19 | operator: In

20 | values:

21 | - node1

22 | - node2

23 | - node3

24 |

--------------------------------------------------------------------------------

/Monitoring/Prometheus/prometheus-rules/pod-down.yml:

--------------------------------------------------------------------------------

1 | apiVersion: monitoring.coreos.com/v1

2 | kind: PrometheusRule

3 | metadata:

4 | labels:

5 | prometheus: kube-prometheus-stack-prometheus

6 | role: alert-rules

7 | name: your-application-name

8 | spec:

9 | groups:

10 | - name: "your-application-name.rules"

11 | rules:

12 | - alert: PodDown

13 | for: 1m

14 | expr: sum(up{job="your-service-monitor-name"}) < 1 or absent(up{job="your-service-monitor-name"})

15 | annotations:

16 | message: The deployment has less than 1 pod running.

17 |

--------------------------------------------------------------------------------

/scenario/manual-canary-deployment/Readme.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | ```

4 | kubectl apply -f nginx-canary.yml

5 | kubectl apply -f nginx-stable.yml

6 | kubectl apply -f nginx-service.yml

7 | kubectl apply -f nginx-configmap-canary.yml

8 | kubectl apply -f nginx-configmap-stable.yml

9 | ```

10 |

11 | ```

12 | kubectl get svc

13 | ```

14 |

15 | ```

16 | for i in {1..30}; do curl -s http://10.233.57.87 && echo ""; sleep 1; done

17 | ```

18 |

19 |

20 |

--------------------------------------------------------------------------------
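The curl loop in this Readme only alternates between "Release: Stable" and "Release: Canary" if the Service selects pods from both Deployments. A minimal sketch of what `nginx-service.yml` could look like follows; the Service name is hypothetical and the actual file in the repository may differ.

```yaml
# Sketch: one Service in front of both Deployments.
# Selecting only on app (not version) lets stable and canary pods share traffic.
apiVersion: v1
kind: Service
metadata:
  name: nginx-service   # assumed name
spec:
  type: ClusterIP
  selector:
    app: nginx          # shared by nginx-stable and nginx-canary pods
  ports:
    - port: 80
      targetPort: 80
```

With 3 stable replicas and 1 canary replica, roughly one request in four should reach the canary, and the split is tuned by changing the replica counts.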

/workloads/job/uuidgen.yml:

--------------------------------------------------------------------------------

1 | apiVersion: batch/v1

2 | kind: Job

3 | metadata:

4 | name: uuidgen

5 | spec:

6 | template:

7 | metadata:

8 | spec:

9 | containers:

10 | - name: ubuntu

11 | image: ubuntu:latest

12 | imagePullPolicy: Always

13 | command: ["bash"]

14 | args:

15 | - -c

16 | - |

17 | apt update

18 | apt install -y uuid-runtime

19 | for i in {1..10}; do echo `uuidgen`@mailinator.com; done > /tmp/emails.txt

20 | cat /tmp/emails.txt

21 | sleep 60

22 | restartPolicy: OnFailure

23 |

--------------------------------------------------------------------------------

/workloads/deployment/nginx-deployment.yml:

--------------------------------------------------------------------------------

1 | apiVersion: apps/v1

2 | kind: Deployment

3 | metadata:

4 | name: nginx-deployment

5 | spec:

6 | replicas: 3

7 | selector:

8 | matchLabels:

9 | app: webserver

10 | template:

11 | metadata:

12 | labels:

13 | app: webserver

14 | spec:

15 | containers:

16 | - name: nginx

17 | image: nginx:latest

18 | ports:

19 | - containerPort: 80

20 |

21 |

22 |

23 |

24 |

25 |

26 |

27 |

28 |

--------------------------------------------------------------------------------

/Resource-Management/VPA/nginx-deployment.yml:

--------------------------------------------------------------------------------

1 | apiVersion: apps/v1

2 | kind: Deployment

3 | metadata:

4 | name: nginx-deployment

5 | spec:

6 | replicas: 1

7 | selector:

8 | matchLabels:

9 | app: nginx

10 | template:

11 | metadata:

12 | labels:

13 | app: nginx

14 | spec:

15 | containers:

16 | - name: nginx

17 | image: nginx:1.14.2

18 | ports:

19 | - containerPort: 80

20 | resources:

21 | requests:

22 | cpu: "100m"

23 | memory: "128Mi"

24 | limits:

25 | cpu: "200m"

26 | memory: "256Mi"

27 |

--------------------------------------------------------------------------------

/ingress/apple-orange-ingress/ingress.yml:

--------------------------------------------------------------------------------

1 | apiVersion: networking.k8s.io/v1

2 | kind: Ingress

3 | metadata:

4 | name: ingress-packops-local

5 | spec:

6 | ingressClassName: nginx

7 | rules:

8 | - host: packops.local

9 | http:

10 | paths:

11 | - path: /apple

12 | pathType: Prefix

13 | backend:

14 | service:

15 | name: apple-service

16 | port:

17 | number: 5678

18 | - path: /orange

19 | pathType: Prefix

20 | backend:

21 | service:

22 | name: orange-service

23 | port:

24 | number: 5678

25 |

--------------------------------------------------------------------------------

/keda/time-based-scale/prometheus/scale-object.yml:

--------------------------------------------------------------------------------

1 | apiVersion: keda.sh/v1alpha1

2 | kind: ScaledObject

3 | metadata:

4 | name: packops-scaler

5 | namespace: default

6 | spec:

7 | scaleTargetRef:

8 | kind: Deployment

9 | name: nginx-deployment

10 | # minReplicaCount: 2

11 | maxReplicaCount: 10

12 | cooldownPeriod: 10

13 | pollingInterval: 10

14 | triggers:

15 | - type: prometheus

16 | metadata:

17 | serverAddress: http://kube-prom-stack-kube-prome-prometheus.monitoring.svc.cluster.local:9090

18 | metricName: custom_metric

19 | query: "custom_metric"

20 | threshold: "1"

21 |

--------------------------------------------------------------------------------

/workloads/daemonset/nginx.yml:

--------------------------------------------------------------------------------

1 | apiVersion: apps/v1

2 | kind: DaemonSet

3 | metadata:

4 | name: nginx-da

5 | namespace: devops

6 | labels:

7 | app: nginx-da

8 | spec:

9 | selector:

10 | matchLabels:

11 | app: nginx-da

12 | template:

13 | metadata:

14 | labels:

15 | app: nginx-da

16 | spec:

17 | containers:

18 | - name: nginx-da

19 | image: nginx:alpine

20 | volumeMounts:

21 | - name: localtime

22 | mountPath: /etc/localtime

23 | volumes:

24 | - name: localtime

25 | hostPath:

26 | path: /usr/share/zoneinfo/Asia/Tehran

27 |

--------------------------------------------------------------------------------

/workloads/cronjob/wget-15min.yml:

--------------------------------------------------------------------------------

1 | apiVersion: batch/v1

2 | kind: CronJob

3 | metadata:

4 |   namespace: devops

5 |   name: packops-cronjob

6 | spec:

7 |   schedule: "*/15 * * * *"

8 |   jobTemplate:

9 |     spec:

10 |       template:

11 |         spec:

12 |           containers:

13 |           - name: reference

14 |             image: busybox

15 |             imagePullPolicy: IfNotPresent

16 |             command:

17 |             - /bin/sh

18 |             - -c

19 |             - >-

20 |               date; echo "This pod is scheduled every 15 min";

21 |               wget -q -O - 'https://packops.ir/wp-cron.php?doing_wp_cron' >/dev/null 2>&1

22 |           restartPolicy: OnFailure

--------------------------------------------------------------------------------

/storage/emty-dir-accessing-2pod-same-volume/ngnix-shared-volume.yml:

--------------------------------------------------------------------------------

1 | apiVersion: v1

2 | kind: Pod

3 | metadata:

4 | name: two-containers

5 | spec:

6 |

7 | restartPolicy: Never

8 |

9 | volumes:

10 | - name: shared-data

11 | emptyDir: {}

12 |

13 | containers:

14 |

15 | - name: nginx-container

16 | image: nginx

17 | volumeMounts:

18 | - name: shared-data

19 | mountPath: /usr/share/nginx/html

20 |

21 | - name: debian-container

22 | image: alpine

23 | volumeMounts:

24 | - name: shared-data

25 | mountPath: /pod-data

26 | command: ["/bin/sh"]

27 | args: ["-c", "echo Hello from the debian container > /pod-data/index.html"]

28 |

--------------------------------------------------------------------------------

/rolebinding/Create.md:

--------------------------------------------------------------------------------

1 | ```

2 | kubectl create rolebinding farshad-admin --clusterrole=admin --user=farshad

3 | ```

4 |

5 | ```

6 | kubectl get RoleBinding -o yaml

7 | ```

8 |

9 | # output would be something like this

10 | ```

11 | apiVersion: rbac.authorization.k8s.io/v1

12 | kind: RoleBinding

13 | metadata:

14 | creationTimestamp: "2023-01-15T14:54:47Z"

15 | name: farshad-admin

16 | namespace: default

17 | resourceVersion: "22145"

18 | uid: 6bf97d50-fa9f-4437-b2d0-e1aa1dbe22ae

19 | roleRef:

20 | apiGroup: rbac.authorization.k8s.io

21 | kind: ClusterRole

22 | name: admin

23 | subjects:

24 | - apiGroup: rbac.authorization.k8s.io

25 | kind: User

26 | name: farshad

27 |

28 | ```

29 |

--------------------------------------------------------------------------------

/service/LoadBalancer/Readme.md:

--------------------------------------------------------------------------------

1 | ```

2 | apiVersion: v1

3 | kind: Service

4 | metadata:

5 | name: myapp-loadbalancer-service

6 | spec:

7 | type: LoadBalancer

8 | ports:

9 | - port: 80

10 | targetPort: 80

11 | selector:

12 | app: nginx

13 | ---

14 | apiVersion: apps/v1

15 | kind: Deployment

16 | metadata:

17 | name: nginx-deployment

18 | labels:

19 | app: nginx

20 | spec:

21 | replicas: 3

22 | selector:

23 | matchLabels:

24 | app: nginx

25 | template:

26 | metadata:

27 | labels:

28 | app: nginx

29 | spec:

30 | containers:

31 | - name: nginx

32 | image: nginx:latest

33 | ports:

34 | - containerPort: 80

35 | ---

36 |

37 | ```

38 |

--------------------------------------------------------------------------------

/Network-policy/webauth-server.yml:

--------------------------------------------------------------------------------

1 | apiVersion: apps/v1

2 | kind: Deployment

3 | metadata:

4 | name: web-auth-server

5 | labels:

6 | app: auth-server

7 | namespace: web-auth

8 | spec:

9 | replicas: 1

10 | selector:

11 | matchLabels:

12 | app: auth-server

13 | template:

14 | metadata:

15 | labels:

16 | app: auth-server

17 | spec:

18 | containers:

19 | - name: nginx

20 | image: nginx:1.14.2

21 |

22 | ---

23 |

24 | apiVersion: v1

25 | kind: Service

26 | metadata:

27 | name: web-auth-server-svc

28 | namespace: web-auth

29 | spec:

30 | ports:

31 | - port: 80

32 | protocol: TCP

33 | targetPort: 80

34 | selector:

35 | app: auth-server

36 | type: ClusterIP

37 |

--------------------------------------------------------------------------------

/secret/using-env-from-secret/readme.md:

--------------------------------------------------------------------------------

1 | Create a secret named `backend-user` with the key `backend-username` and the value `backend-admin`,

2 | this way:

3 | ```

4 | kubectl create secret generic backend-user --from-literal=backend-username='backend-admin'

5 | ```

6 | Or create it as a manifest:

7 | ```

8 | apiVersion: v1

9 | kind: Secret

10 | metadata:

11 | name: backend-user

12 | type: Opaque

13 | data:

14 | backend-username: YmFja2VuZC1hZG1pbg== # base64-encoded value of 'backend-admin'

15 | ```

16 | Then apply the nginx pod:

17 | ```

18 | kubectl apply -f nginx-secret-env.yml

19 | ```

20 | Check the environment variable; it should show backend-admin:

21 | ```

22 | kubectl exec -i -t env-single-secret -- /bin/sh -c 'echo $SECRET_USERNAME'

23 | ```

24 |

25 |

--------------------------------------------------------------------------------
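The readme applies `nginx-secret-env.yml` and then reads `$SECRET_USERNAME` from a pod called `env-single-secret`, but the manifest itself is not shown here. A minimal sketch of a pod that would satisfy those commands is given below; the container name and image are assumptions, and the repository's file may differ.

```yaml
# Sketch: expose one key of the backend-user Secret as an environment variable.
apiVersion: v1
kind: Pod
metadata:
  name: env-single-secret
spec:
  containers:
    - name: nginx            # assumed container name
      image: nginx           # assumed image
      env:
        - name: SECRET_USERNAME
          valueFrom:
            secretKeyRef:
              name: backend-user       # Secret created in the first step
              key: backend-username    # key inside that Secret
```

The value is decoded from base64 by Kubernetes before it reaches the container, so the `echo` should print the plain string.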

/Network-policy/web-auth-client.yml:

--------------------------------------------------------------------------------

1 | apiVersion: apps/v1

2 | kind: Deployment

3 | metadata:

4 | labels:

5 | app: auth-client

6 | name: auth-client

7 | namespace: web-auth

8 | spec:

9 | replicas: 1

10 | selector:

11 | matchLabels:

12 | app: auth-client

13 | template:

14 | metadata:

15 | labels:

16 | app: auth-client

17 | spec:

18 | containers:

19 | - name: busybox

20 | image: radial/busyboxplus:curl

21 | command:

22 | - sh

23 | - -c

24 | - while true; do if curl -s -o /dev/null -m 3 web-auth-server-svc; then echo "[SUCCESS]

25 | Successfully reached auth server!"; else echo "[FAIL] Failed to reach auth server!";

26 | fi; sleep 5; done

27 |

--------------------------------------------------------------------------------

/service/ClusterIP/Readme.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | ```

4 | apiVersion: apps/v1

5 | kind: Deployment

6 | metadata:

7 | name: nginx-deployment

8 | labels:

9 | app: nginx

10 | spec:

11 | replicas: 3

12 | selector:

13 | matchLabels:

14 | app: nginx

15 | template:

16 | metadata:

17 | labels:

18 | app: nginx

19 | spec:

20 | containers:

21 | - name: nginx

22 | image: nginx:latest

23 | ports:

24 | - containerPort: 80

25 | ---

26 | apiVersion: v1

27 | kind: Service

28 | metadata:

29 | name: myapp-clusterip-service

30 | spec:

31 | type: ClusterIP

32 | ports:

33 | - port: 80

34 | targetPort: 80

35 | selector:

36 | app: nginx

37 | ```

38 |

--------------------------------------------------------------------------------

/service/NodePort/Readme.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | ```

4 | apiVersion: apps/v1

5 | kind: Deployment

6 | metadata:

7 | name: nginx-deployment

8 | labels:

9 | app: nginx

10 | spec:

11 | replicas: 3

12 | selector:

13 | matchLabels:

14 | app: nginx

15 | template:

16 | metadata:

17 | labels:

18 | app: nginx

19 | spec:

20 | containers:

21 | - name: nginx

22 | image: nginx:latest

23 | ports:

24 | - containerPort: 80

25 | ---

26 | apiVersion: v1

27 | kind: Service

28 | metadata:

29 | name: myapp-service

30 | spec:

31 | type: NodePort

32 | ports:

33 | - targetPort: 80

34 | port: 80

35 | nodePort: 30008

36 | selector:

37 | app: nginx

38 | ```

--------------------------------------------------------------------------------

/statefulset/mysql-cluster-scenario/mysql-service.yml:

--------------------------------------------------------------------------------

1 | # Headless service for stable DNS entries of StatefulSet members.

2 | apiVersion: v1

3 | kind: Service

4 | metadata:

5 | name: mysql

6 | labels:

7 | app: mysql

8 | app.kubernetes.io/name: mysql

9 | spec:

10 | ports:

11 | - name: mysql

12 | port: 3306

13 | clusterIP: None

14 | selector:

15 | app: mysql

16 | ---

17 | # Client service for connecting to any MySQL instance for reads.

18 | # For writes, you must instead connect to the primary: mysql-0.mysql.

19 | apiVersion: v1

20 | kind: Service

21 | metadata:

22 | name: mysql-read

23 | labels:

24 | app: mysql

25 | app.kubernetes.io/name: mysql

26 | readonly: "true"

27 | spec:

28 | ports:

29 | - name: mysql

30 | port: 3306

31 | selector:

32 | app: mysql

33 |

--------------------------------------------------------------------------------

/storage/nfs/pod-using-nfs.yml:

--------------------------------------------------------------------------------

1 | kind: Pod

2 | apiVersion: v1

3 | metadata:

4 | name: pod-using-nfs

5 | spec:

6 | # Add the server as an NFS volume for the pod

7 | volumes:

8 | - name: nfs-volume

9 | nfs:

10 | # URL for the NFS server

11 | server: 10.108.211.244 # Change this!

12 | path: /

13 |

14 | # In this container, we'll mount the NFS volume

15 | # and write the date to a file inside it.

16 | containers:

17 | - name: app

18 | image: alpine

19 |

20 | # Mount the NFS volume in the container

21 | volumeMounts:

22 | - name: nfs-volume

23 | mountPath: /var/nfs

24 |

25 | # Write to a file inside our NFS

26 | command: ["/bin/sh"]

27 | args: ["-c", "while true; do date >> /var/nfs/dates.txt; sleep 5; done"]

28 |

--------------------------------------------------------------------------------

/Resource-Management/HPA/nginx-deployment.yml:

--------------------------------------------------------------------------------

1 | apiVersion: apps/v1

2 | kind: Deployment

3 | metadata:

4 | name: nginx-deployment

5 | spec:

6 | replicas: 1

7 | selector:

8 | matchLabels:

9 | app: nginx

10 | template:

11 | metadata:

12 | labels:

13 | app: nginx

14 | spec:

15 | containers:

16 | - name: nginx

17 | image: nginx:1.17.4

18 | ports:

19 | - containerPort: 80

20 | resources:

21 | requests:

22 | cpu: 50m

23 | limits:

24 | cpu: 100m

25 | ---

26 | apiVersion: v1

27 | kind: Service

28 | metadata:

29 | name: nginx-service

30 | labels:

31 | app: nginx

32 | spec:

33 | selector:

34 | app: nginx

35 | ports:

36 | - protocol: TCP

37 | port: 80

38 | targetPort: 80

39 |

40 |

--------------------------------------------------------------------------------

/workloads/deployment/nginx-svc-nodeport.yml:

--------------------------------------------------------------------------------

1 | apiVersion: v1

2 | kind: Service

3 | metadata:

4 |   name: nginx-svc # create a service named "nginx-svc"

5 |   labels:

6 |     app: webserver

7 | spec:

8 |   type: NodePort

9 |   selector: # must match the pod labels set in nginx-deployment.yml

10 |     app: webserver

11 |   ports:

12 |     - port: 80

13 |       # By default and for convenience, the `targetPort` is set to

14 |       # the same value as the `port` field.

15 |       targetPort: 80

16 |       # Optional field

17 |       # By default and for convenience, the Kubernetes control plane

18 |       # will allocate a port from a range (default: 30000-32767)

19 |       nodePort: 30007

20 |

--------------------------------------------------------------------------------

/scenario/manual-canary-deployment/nginx-canary.yml:

--------------------------------------------------------------------------------

1 | apiVersion: apps/v1

2 | kind: Deployment

3 | metadata:

4 | name: nginx-canary

5 | labels:

6 | app: nginx

7 | version: canary

8 | spec:

9 | replicas: 1

10 | selector:

11 | matchLabels:

12 | app: nginx

13 | version: canary

14 | template:

15 | metadata:

16 | labels:

17 | app: nginx

18 | version: canary

19 | spec:

20 | containers:

21 | - name: nginx

22 | image: nginx:latest

23 | volumeMounts:

24 | - name: nginx-config-canary

25 | mountPath: /etc/nginx/nginx.conf

26 | subPath: nginx.conf

27 | ports:

28 | - containerPort: 80

29 | volumes:

30 | - name: nginx-config-canary

31 | configMap:

32 | name: nginx-config-canary

33 |

--------------------------------------------------------------------------------

/scenario/manual-canary-deployment/nginx-stable.yml:

--------------------------------------------------------------------------------

1 | apiVersion: apps/v1

2 | kind: Deployment

3 | metadata:

4 | name: nginx-stable

5 | labels:

6 | app: nginx

7 | version: stable

8 | spec:

9 | replicas: 3

10 | selector:

11 | matchLabels:

12 | app: nginx

13 | version: stable

14 | template:

15 | metadata:

16 | labels:

17 | app: nginx

18 | version: stable

19 | spec:

20 | containers:

21 | - name: nginx

22 | image: nginx:latest

23 | volumeMounts:

24 | - name: nginx-config-stable

25 | mountPath: /etc/nginx/nginx.conf

26 | subPath: nginx.conf

27 | ports:

28 | - containerPort: 80

29 | volumes:

30 | - name: nginx-config-stable

31 | configMap:

32 | name: nginx-config-stable

33 |

--------------------------------------------------------------------------------

/sidecar-container/side-car-tail-log.yml:

--------------------------------------------------------------------------------

1 | # log-shipper-sidecar-deployment.yaml

2 | apiVersion: apps/v1

3 | kind: Deployment

4 | metadata:

5 |   name: myapp

6 |   labels:

7 |     app: myapp

8 | spec:

9 |   replicas: 1

10 |   selector:

11 |     matchLabels:

12 |       app: myapp

13 |   template:

14 |     metadata:

15 |       labels:

16 |         app: myapp

17 |     spec:

18 |       containers:

19 |       - name: myapp

20 |         image: alpine:latest

21 |         # Keep appending so the container stays running instead of exiting after one line

22 |         command: ['sh', '-c', 'while true; do echo "logging" >> /opt/logs.txt; sleep 5; done']

23 |         volumeMounts:

24 |         - name: data

25 |           mountPath: /opt

26 |       - name: logshipper

27 |         image: alpine:latest

28 |         # Follow the shared log file; -F keeps retrying until the file exists

29 |         command: ['sh', '-c', 'tail -F /opt/logs.txt']

30 |         volumeMounts:

31 |         - name: data

32 |           mountPath: /opt

33 |       volumes:

34 |       - name: data

35 |         emptyDir: {}

36 |

--------------------------------------------------------------------------------

/ingress/README.md:

--------------------------------------------------------------------------------

1 | # Install ingress in host-network mode (listening on port 80)

2 | To make the controller reachable on port 80, the Deployment must run in host network mode.

3 | This change is already included. For your information, `hostNetwork: true` was added to the Deployment section of the manifest ingress-host-mode.yml:

4 |

5 | ```

6 | template:

7 |   spec:

8 |     hostNetwork: true

9 |

10 | ```

11 |

12 | ```

13 | kubectl apply -f ingress-host-mode.yml

14 | kubectl delete -A ValidatingWebhookConfiguration ingress-nginx-admission

15 | ```

16 | # Scale the ingress controller up to 3 replicas (our scenario has 3 nodes)

17 | ```

18 | kubectl scale deployments ingress-nginx-controller -n ingress-nginx --replicas 3

19 |

20 | ```

21 |

22 | # Apply a simple app that listens on port 5678 (apple.packops.local)

23 | kubectl apply -f simple-app-ingress.yml

24 |

25 |

26 |

27 |

28 | # Point the host apple.packops.local to the Kubernetes node IP

29 |
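The host mapping mentioned in the last heading can be sketched as a small shell helper. This is only an illustration: the function name `add_host_entry` and the IP `192.168.1.10` are assumptions, not part of this repository, so substitute the address of one of your own nodes.

```shell
# Hypothetical helper: append "<ip> <name>" to a hosts file unless the name is already listed.
add_host_entry() {
  hosts_file="$1"
  ip="$2"
  name="$3"
  # -F treats the name as a fixed string, -w matches it as a whole word
  if ! grep -qwF -- "$name" "$hosts_file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Example call (run as root; 192.168.1.10 is an assumed node IP):
# add_host_entry /etc/hosts 192.168.1.10 apple.packops.local
```

Once the entry exists, `curl http://apple.packops.local` from the same machine should reach the ingress controller on port 80, provided the controller runs in host mode on that node.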

--------------------------------------------------------------------------------

/secret/dockerhub-imagepull-secret/Readme.md:

--------------------------------------------------------------------------------

1 | ## 1- Create the imagePullSecret

2 | Create the secret from your Docker Hub username and password, using this structure:

3 | ```

4 | kubectl create secret docker-registry my-registry-secret \

5 | --docker-server=https://index.docker.io/v1/ \

6 | --docker-username=mrnickfetrat@gmail.com \

7 | --docker-password=PASSS \

8 | --docker-email=mrnickfetrat@gmail.com

9 |

10 | ```

11 | ## 1-1 Check your secret

12 | ```

13 | kubectl get secrets my-registry-secret -o yaml

14 | ```

15 | ## 2- Create your manifest based on a private image that you have on Docker Hub

16 | ```

17 | apiVersion: v1

18 | kind: Pod

19 | metadata:

20 |   name: my-private-image-pod

21 | spec:

22 |   containers:

23 |     - name: my-container

24 |       image: farshadnikfetrat/hello-nodejs:5918f3e7

25 |   imagePullSecrets:

26 |     - name: my-registry-secret

27 |

28 | ```

29 |

--------------------------------------------------------------------------------

/scenario/ipnetns-container/Readme.md:

--------------------------------------------------------------------------------

1 | ```

2 | ip netns add packops1-ns

3 | ip netns exec packops1-ns python3 -m http.server 80

4 | ip netns exec packops1-ns ip a

5 |

6 | ip netns list

7 |

8 |

9 | ip netns add packops2-ns

10 | ip netns exec packops2-ns python3 -m http.server 80

11 | ip netns exec packops2-ns ip a

12 |

13 |

14 | sudo ip link add veth-packops1 type veth peer name veth-packops2

15 | sudo ip link set veth-packops1 netns packops1-ns

16 | sudo ip link set veth-packops2 netns packops2-ns

17 |

18 |