├── DataSelection
│   ├── 2.DataPreparation
│   │   └── data_preparation.ipynb
│   ├── 3.ExploreLATData
│   │   └── explore_latdata.ipynb
│   ├── 4.ExploreLATDataBurst
│   │   └── explore_latdata_burst.ipynb
│   └── 5.UsingLATAllSkyWeekly
│       └── LAT_weekly_allsky.ipynb
├── Exposure
│   └── Exposure.ipynb
├── GRBAnalysis
│   └── 1.LATGRBAnalysis
│       └── lat_grb_analysis.ipynb
├── LICENSE
├── ObsSim
│   ├── 1.ObservationSim
│   │   └── obssim_tutorial.ipynb
│   └── 2.OrbitSim
│       └── orbsim_tutorial.ipynb
├── README.md
└── SourceAnalysis
    ├── 1.BinnedLikelihood
    │   └── binned_likelihood_tutorial.ipynb
    ├── 10.EnergyDispersion
    │   └── binnededisp_tutorial.ipynb
    ├── 2.UnbinnedLikelihood
    │   └── likelihood_tutorial.ipynb
    ├── 3.PythonLikelihood
    │   └── python_tutorial.ipynb
    ├── 3C279_input_model.xml
    ├── 4.SummedPythonLikelihood
    │   └── summed_tutorial.ipynb
    ├── 5.PythonUpperLimits
    │   └── upper_limits.ipynb
    ├── 6.ExtendedSourceAnalysis
    │   └── extended.ipynb
    ├── 7.LATAperturePhotometry
    │   └── aperture_photometry.ipynb
    └── 8.PulsarGating
        └── pulsar_gating_tutorial.ipynb

/DataSelection/2.DataPreparation/data_preparation.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "# Data Preparation\n",

8 | "\n",

9 | "Photon and spacecraft data are all that a user needs for the analysis. For the definition of LAT data products, see the information in the [Cicerone](https://fermi.gsfc.nasa.gov/ssc/data/analysis/documentation/Cicerone/Cicerone_Data/LAT_DP.html).\n",

10 | "\n",

11 | "The LAT data can be extracted from the Fermi Science Support Center web site as described in the section [Extract LAT data](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/extract_latdata.html). Preparing these data for analysis depends on the type of analysis you wish to perform (e.g. point source, extended source, GRB spectral analysis, timing analysis, etc). The different cuts to the data are described in detail in the [Cicerone](https://fermi.gsfc.nasa.gov/ssc/data/analysis/documentation/Cicerone/Cicerone_Data/LAT_DP.html)."

12 | ]

13 | },

14 | {

15 | "cell_type": "markdown",

16 | "metadata": {},

17 | "source": [

18 | "Data preparation consists of two steps:\n",

19 | "* ([gtselect](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtselect.txt)): Used to make cuts based on columns in the event data file such as time, energy, position, zenith angle, instrument coordinates, event class, and event type (new in Pass 8).\n",

20 | "* ([gtmktime](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtmktime.txt)): In addition to cutting the selected events, gtmktime makes cuts based on the spacecraft file and updates the Good Time Interval (GTI) extension.\n",

21 | "\n",

22 | "\n",

23 | "Here we give an example of how to prepare the data for the analysis of a point source. For your own source analysis, prepare your data with similar steps, but use the cuts suggested in the Cicerone for your case."

24 | ]

25 | },

26 | {

27 | "cell_type": "markdown",

28 | "metadata": {},

29 | "source": [

30 | "## 1. Event Selection with gtselect\n",

31 | "\n",

32 | "In this section, we look at making basic data cuts using [gtselect](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtselect.txt). By default, gtselect prompts for cuts on:\n",

33 | "* Time\n",

34 | "* Energy\n",

35 | "* Position (RA,Dec,radius)\n",

36 | "* Maximum Zenith Angle\n",

37 | "\n",

38 | "However, by using the following hidden parameters (or using the '_Show Advanced Parameters_' check box in GUI mode), you can also make cuts on:\n",

39 | "\n",

40 | "* Minimum Event class ID (``evclsmin``)\n",

41 | "* Maximum Event class ID (``evclsmax``)\n",

42 | "* Event conversion type ID (``convtype``)\n",

43 | "* Minimum pulse phase (``phasemin``)\n",

44 | "* Maximum pulse phase (``phasemax``)"

45 | ]

46 | },

47 | {

48 | "cell_type": "markdown",

49 | "metadata": {},

50 | "source": [

51 | "For this example, we use data that was extracted using the procedure described in the [Extract LAT Data](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/extract_latdata.html) tutorial. The original selection used the following information:\n",

52 | "\n",

53 | "* Search Center (RA,DEC) = (193.98,-5.82)\n",

54 | "* Radius = 20 degrees\n",

55 | "* Start Time (MET) = 239557417 seconds (2008-08-04 T15:43:37)\n",

56 | "* Stop Time (MET) = 255398400 seconds (2009-02-04 T00:00:00)\n",

57 | "* Minimum Energy = 100 MeV\n",

58 | "* Maximum Energy = 500000 MeV\n",

59 | "\n",

60 | "The LAT operated in survey mode for that period of time. We provide the user with the photon and spacecraft data files extracted in the same method as described in the [Extract LAT data](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/extract_latdata.html) tutorial:\n",

61 | "\n",

62 | "1. L1506091032539665347F73_PH00.fits\n",

63 | "2. L1506091032539665347F73_PH01.fits\n",

64 | "3. L1506091032539665347F73_SC00.fits"

65 | ]

66 | },
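The MET values quoted in the selection above can be cross-checked against their calendar dates with a few lines of Python. This is a minimal sketch, assuming that MET counts seconds from 2001-01-01 00:00:00 UTC and ignoring leap seconds; the helper name `met_to_utc` is hypothetical and is not part of the Fermitools.

```python
from datetime import datetime, timedelta, timezone

# Assumption: MET zero point is 2001-01-01 00:00:00 UTC.
# Leap seconds are ignored, so the result is only approximate.
MET_EPOCH = datetime(2001, 1, 1, tzinfo=timezone.utc)


def met_to_utc(met_seconds):
    """Convert Mission Elapsed Time (seconds) to an approximate UTC datetime."""
    return MET_EPOCH + timedelta(seconds=met_seconds)


# Start and stop times of the example data selection.
start = met_to_utc(239557417)
stop = met_to_utc(255398400)
print(start.isoformat())
print(stop.isoformat())
```

With leap seconds ignored, the two results agree with the dates listed next to the MET values in the selection.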

67 | {

68 | "cell_type": "code",

69 | "execution_count": null,

70 | "metadata": {},

71 | "outputs": [],

72 | "source": [

73 | "!wget https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data/dataPreparation/L1506091032539665347F73_PH00.fits\n",

74 | "!wget https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data/dataPreparation/L1506091032539665347F73_PH01.fits\n",

75 | "!wget https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data/dataPreparation/L1506091032539665347F73_SC00.fits"

76 | ]

77 | },

78 | {

79 | "cell_type": "code",

80 | "execution_count": null,

81 | "metadata": {},

82 | "outputs": [],

83 | "source": [

84 | "!mkdir data\n",

85 | "!mv *.fits ./data"

86 | ]

87 | },

88 | {

89 | "cell_type": "markdown",

90 | "metadata": {},

91 | "source": [

92 | "If more than one photon file was returned by the [LAT Data Server](https://fermi.gsfc.nasa.gov/cgi-bin/ssc/LAT/LATDataQuery.cgi), we will need to provide an input file list in order to use all the event data files in the same analysis. This text file can be generated by typing:"

93 | ]

94 | },

95 | {

96 | "cell_type": "code",

97 | "execution_count": null,

98 | "metadata": {},

99 | "outputs": [],

100 | "source": [

101 | "!ls ./data/*_PH* > ./data/events.txt"

102 | ]

103 | },

104 | {

105 | "cell_type": "code",

106 | "execution_count": null,

107 | "metadata": {

108 | "scrolled": true

109 | },

110 | "outputs": [],

111 | "source": [

112 | "!cat ./data/events.txt"

113 | ]

114 | },

115 | {

116 | "cell_type": "markdown",

117 | "metadata": {},

118 | "source": [

119 | "This input file list can be used in place of a single input events (or FT1) file by placing an `@` symbol before the text filename. The output from [gtselect](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtselect.txt) will be a single file containing all events from the combined file list that satisfy the other specified cuts.\n",

120 | "\n",

121 | "For a simple point source analysis, it is recommended that you only include events with a high probability of being photons. This cut is performed by selecting \"source\" class events with the the [gtselect](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtselect.txt) tool by including the hidden parameter ``evclass`` on the command line. For LAT Pass 8 data, `source` events are specified as event class 128 (the default value).\n",

122 | "\n",

123 | "Additionally, in Pass 8, you can supply the hidden parameter `evtype` (event type) which is a sub-selection on `evclass`. For a simple analysis, we wish to include all front+back converting events within all PSF and Energy subclasses. This is specified as `evtype` 3 (the default value).\n",

124 | "\n",

125 | "The recommended values for both `evclass` and `evtype` may change as LAT data processing develops.\n",

126 | "\n",

127 | "Now run [gtselect](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtselect.txt) to select the data you wish to analyze. For this example, we consider the \"source class\" photons within a 20 degree acceptance cone of the blazar 3C 279. We apply the **gtselect** tool to the data file as follows:"

128 | ]

129 | },

130 | {

131 | "cell_type": "code",

132 | "execution_count": null,

133 | "metadata": {},

134 | "outputs": [],

135 | "source": [

136 | "%%bash\n",

137 | "gtselect evclass=128 evtype=3\n",

138 | " @./data/events.txt\n",

139 | " ./data/3C279_region_filtered.fits\n",

140 | " 193.98\n",

141 | " -5.82\n",

142 | " 20\n",

143 | " INDEF\n",

144 | " INDEF\n",

145 | " 100\n",

146 | " 500000\n",

147 | " 90\n",

148 | "\n",

149 | "#### Parameters:\n",

150 | "# Input file or files (if multiple files are in a .txt file,\n",

151 | "# don't forget the @ symbol)\n",

152 | "# Output file\n",

153 | "# RA for new search center\n",

154 | "# Dec or new search center\n",

155 | "# Radius of the new search region\n",

156 | "# Start time (MET in s)\n",

157 | "# End time (MET in s)\n",

158 | "# Lower energy limit (MeV)\n",

159 | "# Upper energy limit (MeV)\n",

160 | "# Maximum zenith angle value (degrees)"

161 | ]

162 | },

163 | {

164 | "cell_type": "markdown",

165 | "metadata": {},

166 | "source": [

167 | "The filtered data will be found in the file `./data/3C279_region_filtered.fits`.\n",

168 | "\n",

169 | "**Note**: If you don't want to make a selection on a given parameter, just enter a zero (0) as the value.\n",

170 | "\n",

171 | "In this step we also selected the maximum zenith angle value as suggested in the [Cicerone](https://fermi.gsfc.nasa.gov/ssc/data/analysis/documentation/Cicerone/Cicerone_Data_Exploration/Data_preparation.html). Gamma-ray photons coming from the Earth limb (\"albedo gammas\") are a strong source of background. You can minimize this effect with a zenith angle cut. The value of `zmax` = 90 degrees is suggested for reconstructing events above 100 MeV and provides a sufficient buffer between your region of interest (ROI) and the Earth's limb.\n",

172 | "\n",

173 | "In the next step, [gtmktime](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtmktime.txt) will remove any time period for which our ROI overlaps this buffer region. While increasing the buffer (reducing `zmax`) may decrease the background rate from albedo gammas, it will also reduce the amount of time your ROI is completely free of the buffer zone and thus reduce the livetime on the source of interest."

174 | ]

175 | },

176 | {

177 | "cell_type": "markdown",

178 | "metadata": {},

179 | "source": [

180 | "**Notes**:\n",

181 | "\n",

182 | "* The RA and Dec of the search center must exactly match that used in the dataserver selection. If they are not the same, multiple copies of the source position will appear in your prepared data file which will cause later stages of analysis to fail. See \"DSS Keywords\" below.\n",

183 | "\n",

184 | "\n",

185 | "* The radius of the search region selected here must lie entirely within the region defined in the dataserver selection. They can be the same values, with no negative effects.\n",

186 | "\n",

187 | "\n",

188 | "* The time span selected here must lie within the time span defined in the dataserver selection. They can be the same values with no negative effects.\n",

189 | "\n",

190 | "\n",

191 | "* The energy range selected here must lie within the energy range defined in the dataserver selection. They can be the same values with no negative effects."

192 | ]

193 | },

194 | {

195 | "cell_type": "markdown",

196 | "metadata": {},

197 | "source": [

198 | "**BE AWARE**: [gtselect](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtselect.txt) writes descriptions of the data selections to a series of _Data Sub-Space_ (DSS) keywords in the `EVENTS` extension header.\n",

199 | "\n",

200 | "These keywords are used by the exposure-related tools and by [gtlike](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtlike.txt) for calculating various quantities, such as the predicted number of detected events given by the source model. These keywords MUST be same for all of the filtered event files considered in a given analysis.\n",

201 | "\n",

202 | "[gtlike](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtlike.txt) will check to ensure that all of the DSS keywords are the same in all of the event data files. For a discussion of the DSS keywords see the [Data Sub-Space Keywords page](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/dss_keywords.html)."

203 | ]

204 | },

205 | {

206 | "cell_type": "markdown",

207 | "metadata": {},

208 | "source": [

209 | "There are multiple ways to view information about your data file. For example:\n",

210 | "* You may obtain the start and end times of your file by using the fkeypar tool. This tool is part of the [FTOOLS](http://heasarc.nasa.gov/lheasoft/ftools/ftools_menu.html) software package and is used to read the value of a FITS header keyword and write it to an output parameter file. For more information on `fkeypar`, type: "

211 | ]

212 | },

213 | {

214 | "cell_type": "markdown",

215 | "metadata": {},

216 | "source": [

217 | "`fhelp fkeypar`"

218 | ]

219 | },

220 | {

221 | "cell_type": "markdown",

222 | "metadata": {},

223 | "source": [

224 | "* The [gtvcut](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtvcut.txt) tool can be used to view the DSS keywords in a given extension, where the EVENTS extension is assumed by default. This is an excellent way to find out what selections have already been made on your data file (by either the dataserver or previous runs of gtselect).\n",

225 | "\n",

226 | " * NOTE: If you wish to view the (very long) list of good time intervals (GTIs), you can use the hidden parameter `suppress_gtis=no` on the command line. The full list of GTIs is suppressed by default."

227 | ]

228 | },

229 | {

230 | "cell_type": "markdown",

231 | "metadata": {},

232 | "source": [

233 | "## 2. Time Selection with gtmktime\n",

234 | "\n",

235 | "Good Time Intervals (GTIs):\n",

236 | "\n",

237 | "* A GTI is a time range when the data can be considered valid. The GTI extension contains a list of these GTI's for the file. Thus the sum of the entries in the GTI extension of a file corresponds to the time when the data in the file is \"good.\"\n",

238 | "\n",

239 | "* The initial list of GTI's are the times that the LAT was collecting data over the time range you selected. The LAT does not collect data while the observatory is transiting the South Atlantic Anomaly (SAA), or during rare events such as software updates or spacecraft maneuvers.\n",

240 | "\n",

241 | "**Notes**:\n",

242 | "* Your object will most likely not be in the field of view during the entire time that the LAT was taking data.\n",

243 | "\n",

244 | "* Additional data cuts made with gtmktime will update the GTIs based on the cuts specified in both gtmktime and gtselect.\n",

245 | "\n",

246 | "* The Fermitools use the GTIs when calculating exposure. If the GTIs have not been properly updated, the exposure correction made during science analysis may be incorrect."

247 | ]

248 | },

249 | {

250 | "cell_type": "markdown",

251 | "metadata": {},

252 | "source": [

253 | "[gtmktime](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtmktime.txt) is used to update the GTI extension and make cuts based on spacecraft parameters contained in the spacecraft (pointing and livetime history) file. It reads the spacecraft file and, based on the filter expression and specified cuts, creates a set of GTIs. These are then combined (logical and) with the existing GTIs in the Event data file, and all events outside this new set of GTIs are removed from the file. New GTIs are then written to the GTI extension of the new file.\n",

254 | "\n",

255 | "Cuts can be made on any field in the spacecraft file by adding terms to the filter expression using C-style relational syntax:\n",

256 | "\n",

257 | " ! -> not, && -> and, || -> or, ==, !=, >, <, >=, <=\n",

258 | "\n",

259 | " ABS(), COS(), SIN(), etc., also work\n",

260 | "\n",

261 | ">**NOTE**: Every time you specify an additional cut on time, ROI, zenith angle, event class, or event type using [gtselect](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtselect.txt), you must run [gtmktime](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtmktime.txt) to reevaluate the GTI selection.\n",

262 | "\n",

263 | "Several of the cuts made above with **gtselect** will directly affect the exposure. Running [gtmktime](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtmktime.txt) will select the correct GTIs to handle these cuts."

264 | ]

265 | },
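The "logical and" of two GTI lists described above amounts to an interval intersection, followed by dropping events that fall outside the result. The following is a simplified illustration of that idea, not gtmktime's actual implementation; the function names and the toy intervals are hypothetical.

```python
def intersect_gtis(gtis_a, gtis_b):
    """Return the logical AND of two sorted, non-overlapping interval lists."""
    result = []
    i = j = 0
    while i < len(gtis_a) and j < len(gtis_b):
        start = max(gtis_a[i][0], gtis_b[j][0])
        stop = min(gtis_a[i][1], gtis_b[j][1])
        if start < stop:
            result.append((start, stop))
        # Advance whichever interval ends first.
        if gtis_a[i][1] < gtis_b[j][1]:
            i += 1
        else:
            j += 1
    return result


def filter_events(event_times, gtis):
    """Keep only the event times that fall inside one of the GTIs."""
    return [t for t in event_times if any(a <= t <= b for a, b in gtis)]


# Toy values in arbitrary time units (not real MET values).
existing_gtis = [(0, 100), (200, 300)]   # GTIs already in the event file
filter_gtis = [(50, 250), (280, 400)]    # GTIs implied by the filter expression
combined = intersect_gtis(existing_gtis, filter_gtis)
kept = filter_events([10, 60, 150, 210, 260, 290], combined)
print(combined)
print(kept)
```

Only the time ranges present in both lists survive, which is why each additional cut can only shorten the total good time.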

266 | {

267 | "cell_type": "markdown",

268 | "metadata": {},

269 | "source": [

270 | "It is also especially important to apply a zenith cut for small ROIs (< 20 degrees), as this brings your source of interest close to the Earth's limb. There are two different methods for handling the complex cut on zenith angle:\n",

271 | "\n",

272 | "* One method is to exclude time intervals where the buffer zone defined by the zenith cut intersects the ROI from the list of GTIs. In order to do that, run [gtmktime](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtmktime.txt) and answer \"yes\" at the prompt:"

273 | ]

274 | },

275 | {

276 | "cell_type": "markdown",

277 | "metadata": {},

278 | "source": [

279 | "```\n",

280 | "> gtmktime\n",

281 | "...\n",

282 | "> Apply ROI-based zenith angle cut [] yes\n",

283 | "```"

284 | ]

285 | },
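The geometric idea behind the ROI-based zenith cut can be sketched as follows: at a given time, the ROI is free of the buffer zone when the angle between the zenith direction and the ROI center, plus the ROI radius, stays below `zmax`. This is an assumption-laden illustration of the geometry only, not the code gtmktime runs; the function names and the zenith coordinates used in the example are hypothetical.

```python
import math


def angular_separation(ra1, dec1, ra2, dec2):
    """Great-circle separation in degrees between two sky positions in degrees."""
    ra1, dec1, ra2, dec2 = map(math.radians, (ra1, dec1, ra2, dec2))
    cos_sep = (math.sin(dec1) * math.sin(dec2)
               + math.cos(dec1) * math.cos(dec2) * math.cos(ra1 - ra2))
    # Clamp to [-1, 1] to guard against floating-point round-off.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_sep))))


def roi_clear_of_zenith_cut(roi_ra, roi_dec, roi_radius, zen_ra, zen_dec, zmax=90.0):
    """True when every point of the ROI lies within zmax degrees of the zenith."""
    return angular_separation(roi_ra, roi_dec, zen_ra, zen_dec) + roi_radius < zmax


# 3C 279 ROI from this tutorial, tested against two made-up zenith directions.
print(roi_clear_of_zenith_cut(193.98, -5.82, 20, 193.98, 30.0))  # zenith 35.82 deg away
print(roi_clear_of_zenith_cut(193.98, -5.82, 20, 193.98, 70.0))  # zenith 75.82 deg away
```

A time interval would be kept under this criterion only while the function returns `True`, which shows why a larger ROI or a smaller `zmax` removes more time.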

286 | {

287 | "cell_type": "markdown",

288 | "metadata": {},

289 | "source": [

290 | ">**NOTE**: If you are studying a very broad region (or the whole sky) you would lose most (all) of your data when you implement the ROI-based zenith angle cut in [gtmktime](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtmktime.txt).\n",

291 | ">\n",

292 | ">In this case you can allow all time intervals where the cut intersects the ROI, but the intersection lies outside the FOV. To do this, run _gtmktime_ specifying a filter expression defining your analysis region, and answer \"no\" to the question regarding the ROI-based zenith angle cut:\n",

293 | ">\n",

294 | ">`> Apply ROI-based zenith angle cut [] no`\n",

295 | ">\n",

296 | ">Here, RA_of_center_ROI, DEC_of_center_ROI and radius_ROI correspond to the ROI selection made with gtselect, zenith_cut is defined as 90 degrees (as above), and limb_angle_minus_FOV is (zenith angle of horizon - FOV radius) where the zenith angle of the horizon is 113 degrees."

297 | ]

298 | },

299 | {

300 | "cell_type": "markdown",

301 | "metadata": {},

302 | "source": [

303 | "* Alternatively, you can apply the zenith cut to the livetime calculation while running [gtltcube](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtltcube.txt). This is the method that is currently recommended by the LAT team (see the [Livetimes and Exposure](https://fermi.gsfc.nasa.gov/ssc/data/analysis/documentation/Cicerone/Cicerone_Likelihood/Exposure.html) section of the [Cicerone](https://fermi.gsfc.nasa.gov/ssc/data/analysis/documentation/Cicerone/)), and is the method we will use most commonly in these analysis threads. To do this, answer \"no\" at the [gtmktime](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtmktime.txt) prompt:"

304 | ]

305 | },

306 | {

307 | "cell_type": "markdown",

308 | "metadata": {},

309 | "source": [

310 | "`> Apply ROI-based zenith angle cut [] no`\n",

311 | "\n",

312 | "You'll then need to specify a value for gtltcube's `zmax` parameter when calculating the livetime cube:\n",

313 | "\n",

314 | "`> gtltcube zmax=90`"

315 | ]

316 | },

317 | {

318 | "cell_type": "markdown",

319 | "metadata": {},

320 | "source": [

321 | "[gtmktime](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtmktime.txt) also provides the ability to exclude periods when some event has negatively affected the quality of the LAT data. To do this, we select good time intervals (GTIs) by using a logical filter for any of the [quantities in the spacecraft file](https://fermi.gsfc.nasa.gov/ssc/data/analysis/documentation/Cicerone/Cicerone_Data/LAT_Data_Columns.html#SpacecraftFile). Some possible quantities for filtering data are:\n",

322 | "\n",

323 | "* `DATA_QUAL` - quality flag set by the LAT instrument team (1 = ok, 2 = waiting review, 3 = good with bad parts, 0 = bad)\n",

324 | "\n",

325 | "* `LAT_CONFIG` - instrument configuration (0 = not recommended for analysis, 1 = science configuration)\n",

326 | "\n",

327 | "* `ROCK_ANGLE` - can be used to eliminate pointed observations from the dataset.\n",

328 | "\n",

329 | ">**NOTE**: A history of the rocking profiles that have been used by the LAT can be found in the [SSC's LAT observations page.](https://fermi.gsfc.nasa.gov/ssc/observations/types/allsky/)"

330 | ]

331 | },

332 | {

333 | "cell_type": "markdown",

334 | "metadata": {},

335 | "source": [

336 | "The current [gtmktime](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtmktime.txt) filter expression recommended by the LAT team is:\n",

337 | "\n",

338 | "**(DATA_QUAL>0)&&(LAT_CONFIG==1).**\n",

339 | "\n",

340 | ">**NOTE**: The \"DATA_QUAL\" parameter can be set to different values, based on the type of object and analysis the user is interested in (see this page of the Cicerone for the most up-to-date detailed description of the parameter's values). Typically, setting the parameter to 1 is the best option. For GRB analysis, on the contrary, the parameter should be set to \">0\".\n",

341 | "\n",

342 | "Here is an example of running [gtmktime](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtmktime.txt) on the 3C 279 filtered events file. For convenience, we rename the spacecraft file to `spacecraft.fits`."

343 | ]

344 | },

345 | {

346 | "cell_type": "code",

347 | "execution_count": null,

348 | "metadata": {},

349 | "outputs": [],

350 | "source": [

351 | "!mv ./data/L1506091032539665347F73_SC00.fits ./data/spacecraft.fits"

352 | ]

353 | },

354 | {

355 | "cell_type": "markdown",

356 | "metadata": {},

357 | "source": [

358 | "Now, we run **gtmktime**:"

359 | ]

360 | },

361 | {

362 | "cell_type": "code",

363 | "execution_count": null,

364 | "metadata": {},

365 | "outputs": [],

366 | "source": [

367 | "%%bash\n",

368 | "gtmktime\n",

369 | " ./data/spacecraft.fits\n",

370 | " (DATA_QUAL>0)&&(LAT_CONFIG==1)\n",

371 | " no\n",

372 | " ./data/3C279_region_filtered.fits\n",

373 | " ./data/3C279_region_filtered_gti.fits\n",

374 | " \n",

375 | "#### Parameters specified above are:\n",

376 | "# Spacecraft file\n",

377 | "# Filter expression\n",

378 | "# Apply ROI-based zenith angle cut\n",

379 | "# Event data file\n",

380 | "# Output event file name"

381 | ]

382 | },

383 | {

384 | "cell_type": "code",

385 | "execution_count": null,

386 | "metadata": {},

387 | "outputs": [],

388 | "source": [

389 | "!ls ./data/"

390 | ]

391 | },

392 | {

393 | "cell_type": "markdown",

394 | "metadata": {},

395 | "source": [

396 | "The filtered event file, [3C279_region_filtered_gti.fits,](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data/dataPreparation/3C279_region_filtered_gti.fits) output from [gtmktime](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtmktime.txt) can be downloaded from the Fermi SSC site.\n",

397 | "\n",

398 | "After the data preparation, it is advisable to examine your data before beginning detailed analysis. The [Explore LAT data](3.ExploreLATData.ipynb) tutorial has suggestions on methods of getting a quick preview of your data."

399 | ]

400 | }

401 | ],

402 | "metadata": {

403 | "kernelspec": {

404 | "display_name": "Python 3",

405 | "language": "python",

406 | "name": "python3"

407 | },

408 | "language_info": {

409 | "codemirror_mode": {

410 | "name": "ipython",

411 | "version": 3

412 | },

413 | "file_extension": ".py",

414 | "mimetype": "text/x-python",

415 | "name": "python",

416 | "nbconvert_exporter": "python",

417 | "pygments_lexer": "ipython3",

418 | "version": "3.7.9"

419 | },

420 | "pycharm": {

421 | "stem_cell": {

422 | "cell_type": "raw",

423 | "metadata": {

424 | "collapsed": false

425 | },

426 | "source": []

427 | }

428 | }

429 | },

430 | "nbformat": 4,

431 | "nbformat_minor": 2

432 | }

433 |

--------------------------------------------------------------------------------

/DataSelection/3.ExploreLATData/explore_latdata.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "# Exploring LAT Data\n",

8 | "\n",

9 | "Before detailed analysis, we recommend gaining familiarity with the structure and content of the [LAT data products](https://fermi.gsfc.nasa.gov/ssc/data/analysis/documentation/Cicerone/Cicerone_Data/LAT_DP.html), as well as the process for [preparing the data](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data_preparation.html) by making cuts on the data file. This tutorial demonstrates simple ways to quickly explore LAT data.\n",

10 | "\n",

11 | ">**IMPORTANT**! In almost all cases, light curves and energy spectra need to be produced by a [Likelihood Analysis](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/binned_likelihood_tutorial.html) using [gtlike](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtlike.txt) to be scientifically valid.\n",

12 | "\n",

13 | "In addition to the Fermitools, you will also be using the following FITS File Viewers:\n",

14 | "\n",

15 | "* _ds9_ (image viewer); download and install from: http://ds9.si.edu/site/Home.html\n",

16 | "\n",

17 | "* _fv_ (view images and tables; can also make plots and histograms);\n",

18 | "download and install from: http://heasarc.gsfc.nasa.gov/docs/software/ftools/fv"

19 | ]

20 | },

21 | {

22 | "cell_type": "markdown",

23 | "metadata": {},

24 | "source": [

25 | "## Data Files\n",

26 | "\n",

27 | "Some of the files used in this tutorial were prepared within the [Data Preparation](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data_preparation.html) tutorial. These are:\n",

28 | "\n",

29 | "* [`3C279_region_filtered_gti.fits`](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data/dataPreparation/3C279_region_filtered_gti.fits) (16.6 MB) - a 20 degree region around the blazar 3C 279 with appropriate selection cuts applied\n",

30 | "* [`spacecraft.fits`](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data/dataPreparation/spacecraft.fits) (67.6 MB) - the spacecraft data file for 3C 279.\n",

31 | "\n",

32 | "You can retrieve these via `wget`. Below we download these data files and move them to a `data` subdirectory:"

33 | ]

34 | },

35 | {

36 | "cell_type": "code",

37 | "execution_count": null,

38 | "metadata": {},

39 | "outputs": [],

40 | "source": [

41 | "!wget \"https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data/exploreLATData/3C279_region_filtered_gti.fits\"\n",

42 | "!wget \"https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data/exploreLATData/spacecraft.fits\""

43 | ]

44 | },

45 | {

46 | "cell_type": "code",

47 | "execution_count": null,

48 | "metadata": {},

49 | "outputs": [],

50 | "source": [

51 | "!mkdir ./data\n",

52 | "!mv *.fits ./data"

53 | ]

54 | },

55 | {

56 | "cell_type": "markdown",

57 | "metadata": {},

58 | "source": [

59 | "Alternatively, you can select your own region and time period of interest from the [LAT data server](http://fermi.gsfc.nasa.gov/cgi-bin/ssc/LAT/LATDataQuery.cgi) and substitute them. **Photon** and **spacecraft** data files are all that you need for the analysis."

60 | ]

61 | },

62 | {

63 | "cell_type": "markdown",

64 | "metadata": {},

65 | "source": [

66 | "# 1. Using `ds9`\n",

67 | "\n",

68 | "First, we'll look at making quick counts maps with *ds9*."

69 | ]

70 | },

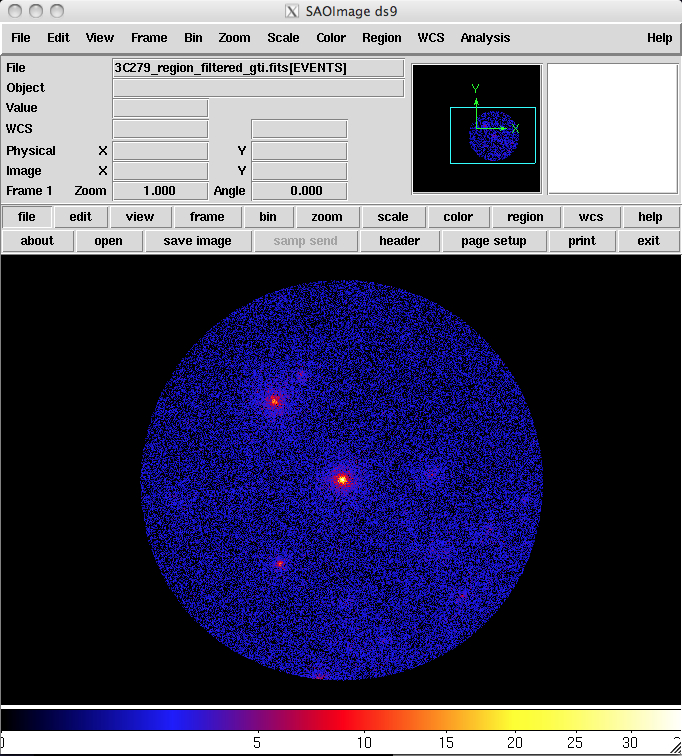

71 | {

72 | "cell_type": "markdown",

73 | "metadata": {},

74 | "source": [

75 | "### *ds9* Quick look:\n",

76 | "\n",

77 | "To see the data, use *ds9* to create a quick counts map of the events in the file.\n",

78 | "\n",

79 | "For example, to look at the 3C 279 data file, type the following command in your command line:"

80 | ]

81 | },

82 | {

83 | "cell_type": "markdown",

84 | "metadata": {},

85 | "source": [

86 | "```\n",

87 | "> ds9 -bin factor 0.1 0.1 -cmap b -scale sqrt 3C279_region_filtered_gti.fits &\n",

88 | "```"

89 | ]

90 | },

91 | {

92 | "cell_type": "markdown",

93 | "metadata": {},

94 | "source": [

95 | "A *ds9* window will open up and an image similar to the one shown below will be displayed.\n",

96 | "\n",

97 | "\n",

98 | "\n",

99 | "\n",

100 | "Breaking the command line into its parts:\n",

101 | "\n",

102 | "* *ds9* - executes the *ds9* application.\n",

103 | "\n",

104 | "* -bin factor 0.1 0.1 - Tells *ds9* that the x and y bin sizes are to be 0.1 units in each direction. Since we will be binning on the coordinates (RA, DEC), this means we will have 0.1 degree bins.\n",

105 | "\n",

106 | ">**Note**: The default factor is 1, so if you leave this off the *ds9* command line the image will use 1 degree bins\n",

107 | "\n",

108 | "* -cmap b - Tells ds9 to use the \"b\" color map to display the image. This is completely optional and the choice of map \"b\" represents the personal preference of the author. If left off, the default color map is \"gray\" (a grayscale color map).\n",

109 | "\n",

110 | "\n",

111 | "* -scale sqrt - Tells *ds9* to scale the colormap using the square root of the counts in the pixels. This particular scale helps to accentuate faint maxima where there is a bright source in the field as is the case here. Again this is the author's personal preference for this option. If left off, the default scale is linear.\n",

112 | "\n",

113 | "\n",

114 | "* & - backgrounds the task, allowing continued use of the command line."

115 | ]

116 | },
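To make the effect of `-bin factor 0.1 0.1` concrete, the sketch below counts events in 0.1 degree pixels directly on (RA, Dec). It is a rough illustration only: it ignores the sky projection, it is not how *ds9* is implemented, and the function name and the four sample coordinates are made up.

```python
import math
from collections import Counter


def bin_counts(ra_values, dec_values, bin_size=0.1):
    """Count events per (RA, Dec) pixel of width bin_size degrees."""
    counts = Counter()
    for ra, dec in zip(ra_values, dec_values):
        # Integer pixel indices; floor keeps negative declinations consistent.
        pixel = (math.floor(ra / bin_size), math.floor(dec / bin_size))
        counts[pixel] += 1
    return counts


# Made-up event positions near 3C 279 (degrees).
ra = [193.98, 193.96, 194.25, 193.93]
dec = [-5.82, -5.84, -5.61, -5.87]
counts_map = bin_counts(ra, dec)
for pixel, n in sorted(counts_map.items()):
    print(pixel, n)
```

Calling `bin_counts(ra, dec, bin_size=1.0)` instead mimics the coarser 1 degree default mentioned in the note above.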

117 | {

118 | "cell_type": "markdown",

119 | "metadata": {},

120 | "source": [

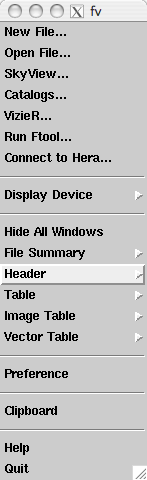

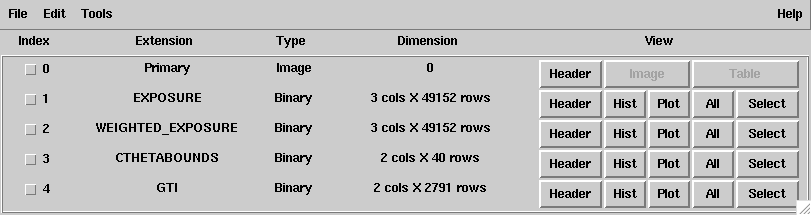

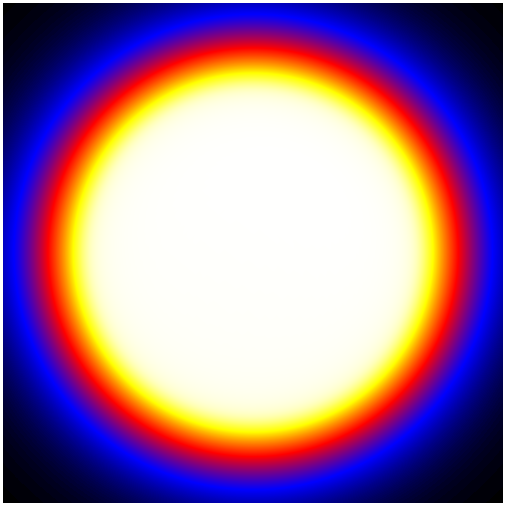

121 | "### Exploring with fv:\n",

122 | "\n",

123 | "*fv* gives you much more interactive control of how you explore the data.\n",

124 | "\n",

125 | "It can make plots and 1D and 2D histograms, allow you to look at the data directly, and enable you to view the FITS file headers to look at some of the important keywords.\n",

126 | "\n",

127 | "To display a file in *fv*, type *fv* and the filename in the command line:"

128 | ]

129 | },

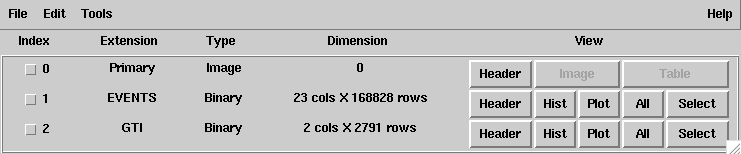

130 | {

131 | "cell_type": "markdown",

132 | "metadata": {},

133 | "source": [

134 | "```\n",

135 | "> fv 3C279_region_filtered_gti.fits &\n",

136 | "```\n",

137 | "\n",

138 | "\n",

139 | "\n",

140 | "\n",

141 | "This will bring up two windows:\n",

142 | "- the *fv* menu window \n",

143 | "- summary information about the file you are looking at \n",

144 | "\n",

145 | "\n",

146 | "For the purposes of the tutorial, we will only be using the summary window, but feel free to explore the options in the main menu window as well.\n",

147 | "\n",

148 | "The summary window shows:\n",

149 | "\n",

150 | "1. There is an empty primary array and two FITS extensions (`EVENTS`, the events table & `GTI`, the table of good time intervals). This is the normal file structure for a LAT events file. (If you don't see this structure, there is something wrong with your file.)\n",

151 | "\n",

152 | "2. There are 168828 events in the filtered 3C279 file (the number of rows in the EVENTS extension), and 22 pieces of information (the number of columns) for each event.\n",

153 | "\n",

154 | "3. There are 2791 GTI entries.\n",

155 | "\n",

156 | "From this window, data can be viewed in different ways:\n",

157 | "\n",

158 | "* For each extension, the FITS header can be examined for keywords and their values.\n",

159 | "\n",

160 | "* Histograms and plots can be made of the data in the EVENTS and GTI extensions.\n",

161 | "\n",

162 | "* Data in the EVENTS or GTI extensions can also be viewed directly.\n",

163 | "\n",

164 | "Let's look at each of these in turn."

165 | ]

166 | },

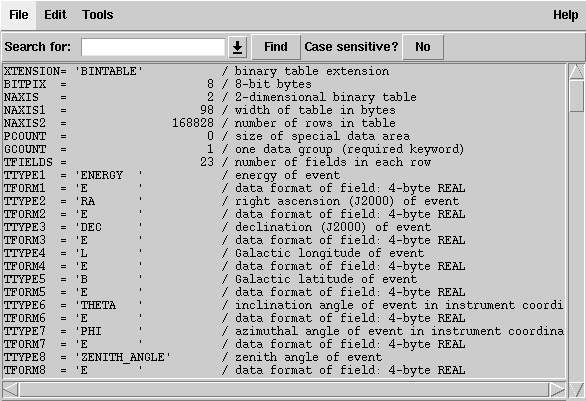

167 | {

168 | "cell_type": "markdown",

169 | "metadata": {},

170 | "source": [

171 | "### Viewing an Extension Header\n",

172 | "\n",

173 | "Click on the `Header` button for the EVENTS extension; a new window listing all the header keywords and their values for this extension will be displayed. Notice that the same information is presented that was shown in the summary window; namely that: the data is a binary table (XTENSION='BINTABLE'); there are 123857 entries (NAXIS2=123857); and there are 22 data values for each event (TFIELDS=22).\n",

174 | "\n",

175 | "In addition, there is information about the size (in bytes) of each row and the descriptions of each of the data fields contained in the table.\n",

176 | "\n",

177 | "\n",

178 | "\n",

179 | "As you scroll down, you will find some other useful information:\n",

180 | "\n",

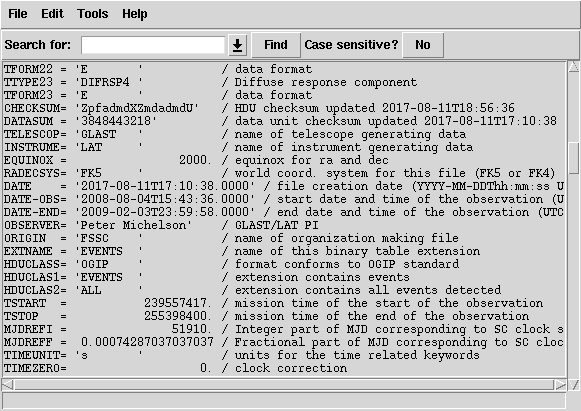

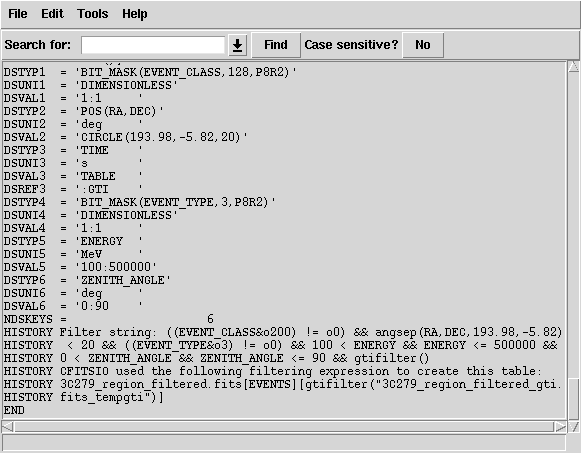

181 | "\n",

182 | "\n",

183 | "Important keywords in the HEADER include:\n",

184 | "* **DATE** - The date the file was created.\n",

185 | "* **DATE-OBS** - The starting time, in UTC, of the data in the file.\n",

186 | "* **DATE-END** - The ending time, in UTC, of the data in the file.\n",

187 | "* **TSTART** - The equivalent of **DATE-OBS** but in Mission Elapsed Time (MET). Note: MET is the time system used in the event file to time tag all events.\n",

188 | "* **TSTOP** - The equivalent of **DATE-END** in MET.\n",

189 | "* **MJDREFI** - The integer Modified Julian Date (MJD) of the zero point for MET. For *Fermi*, this corresponds to midnight, Jan. 1st, 2001.\n",

190 | "* **MJDREFF** - The fractional value of the reference Modified Julian Date, = 0.0007428703770377037\n",

191 | "\n",

192 | "Finally, as you continue scrolling to the bottom of the header, you will see:\n",

193 | "\n",

194 | "\n",

195 | "\n",

196 | "This part of the header contains information about the data cuts that were used to extract the data. These are contained in the various DSS keywords. For a full description of the meaning of these values see the [DSS keyword page](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/dss_keywords.html). In this file, the DSVAL1 keyword tells us which event class has been used, and the DSVAL2 keyword tells us that the data were extracted from a circular region, 20 degrees in radius, centered on RA=193.98 and DEC=-5.82. The DSVAL3 keyword shows us that the valid time range is defined by the GTIs. The DSVAL4 keyword shows the selected energy range in MeV, and DSVAL5 indicates that a zenith angle cut has been defined."

197 | ]

198 | },
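The region described by the DSVAL2 keyword can also be read programmatically. The snippet below is a minimal sketch, assuming the keyword value is a string of the form `CIRCLE(ra,dec,radius)`; the helper name `parse_circle` is hypothetical and is not part of the Fermitools.

```python
import re

# Assumed value format: "CIRCLE(ra,dec,radius)", all three numbers in degrees.
_CIRCLE = re.compile(
    r"^\s*CIRCLE\(\s*([-+0-9.eE]+)\s*,\s*([-+0-9.eE]+)\s*,\s*([-+0-9.eE]+)\s*\)\s*$",
    re.IGNORECASE,
)


def parse_circle(dsval):
    """Return (ra, dec, radius) as floats from a CIRCLE(...) region string."""
    match = _CIRCLE.match(dsval)
    if match is None:
        raise ValueError(f"not a CIRCLE(...) region: {dsval!r}")
    ra, dec, radius = (float(group) for group in match.groups())
    return ra, dec, radius


# Region used in this tutorial: 20 degrees around RA=193.98, DEC=-5.82.
print(parse_circle("CIRCLE(193.98,-5.82,20)"))
```

If the header in your file stores the region differently, adjust the pattern accordingly.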

199 | {

200 | "cell_type": "markdown",

201 | "metadata": {},

202 | "source": [

203 | "### Making a Counts Map"

204 | ]

205 | },

206 | {

207 | "cell_type": "markdown",

208 | "metadata": {},

209 | "source": [

210 | "*fv* can also be used to make a quick counts map to see what the region you extracted looks like. To do this:\n",

211 | "\n",

212 | "1. In the summary window for the FITS file, click on the **Hist** button for the EVENTS extension. A Histogram window will open.\n",

213 | ">**Note**: We use the histogram option rather than the plot option, because the plot option would produce a scatter plot.\n",

214 | "\n",

215 | "2. From the X column's drop down menu in the column name field, select **RA**.\n",

216 | "fv will automatically fill in the TLMin, TLMax, Data Min and Data Max fields based on the header keywords and data values for that column. It will also make guesses for the values of the Min, Max and Bin Size fields.\n",

217 | "\n",

218 | "3. From the Y column's drop down menu in the column name field, select **DEC** from the list of columns.\n",

219 | "\n",

220 | "4. Select the limits on each of the coordinates in the Min and Max boxes.\n",

221 | "In this example, we've selected the limits to be just larger than the values in the Data Min and Data Max field for each column.\n",

222 | "\n",

223 | "5. Set the bin size for each column (in the units of the respective column; in this case we used 0.1 degrees).\n",

224 | "\n",

225 | "\n",

226 | "\n",

227 | "For this map, we've selected 0.1 degree bins."

228 | ]

229 | },

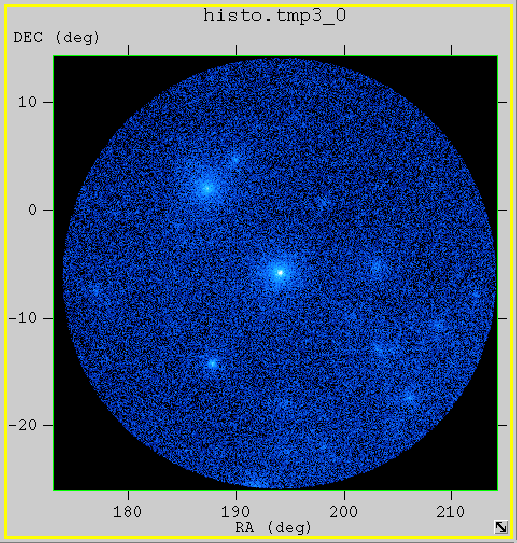

230 | {

231 | "cell_type": "markdown",

232 | "metadata": {},

233 | "source": [

234 | "6. You can also select a data column from the FITS file to use as a weight if you desire.\n",

235 | "For example, if you wanted to make an approximated flux map, you could select the ENERGY column in the Weight field and the counts would be weighted by their energy.\n",

236 | "\n",

237 | "\n",

238 | "7. Click on the `Make` button to generate the map.\n",

239 | "\n",

240 | " This will create the plot in a new window and keep the histogram window open in case you want to make changes and create a different image. The \"Make/Close\" button will create the image and close the histogram window.\n",

241 | "\n",

242 | " *fv* also allows you to adjust the color and scale, just as you can in *ds9*. However, it has a different selection of color maps.\n",

243 | "\n",

244 | " As in *ds9*, the default is gray scale. The image at right was displayed with the cold color map, selected by clicking on the \"Colors\" menu item, then selecting: \"Continuous\" submenu --> \"cold\" check box."

245 | ]

246 | },
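The histogram steps above amount to counting events in square RA/Dec bins, optionally weighting each event by another column. The following is a rough sketch of that idea in plain Python, not a reproduction of what *fv* does internally; the function name `bin_events` and the sample events are made up for illustration.

```python
import math
from collections import Counter


def bin_events(ra_dec_pairs, bin_size=0.1, weights=None):
    """Count (or weight-sum) events in square RA/Dec bins of bin_size degrees."""
    counts = Counter()
    for i, (ra, dec) in enumerate(ra_dec_pairs):
        # Integer bin indices; floor() keeps negative declinations consistent.
        key = (math.floor(ra / bin_size), math.floor(dec / bin_size))
        counts[key] += 1 if weights is None else weights[i]
    return counts


# Made-up events near 3C 279 (RA=193.98, DEC=-5.82); these are not real LAT data.
events = [(193.98, -5.82), (193.97, -5.83), (194.25, -5.55)]
cmap = bin_events(events)

# Weighting by a made-up ENERGY column (MeV) gives an energy-weighted map.
cmap_weighted = bin_events(events, weights=[100.0, 200.0, 300.0])
print(cmap, cmap_weighted)
```

Each key is a pair of bin indices, so multiplying an index by the bin size recovers the lower edge of that bin in degrees.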

247 | {

248 | "cell_type": "markdown",

249 | "metadata": {},

250 | "source": [

251 | "# 2. Binning the Data\n",

252 | "\n",

253 | "While *ds9* and *fv* can be used to make quick look plots when exploring the data, they don't automatically do all the things you would like when making data files for analysis. For this, you will need to use the *Fermi*-specific [gtbin](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtbin.txt) tool to manipulate the data."

254 | ]

255 | },

256 | {

257 | "cell_type": "markdown",

258 | "metadata": {},

259 | "source": [

260 | "You can use [gtbin](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtbin.txt) to bin photon data into the following representations:\n",

261 | "\n",

262 | "* Images (maps)\n",

263 | "* Light curves\n",

264 | "* Energy spectra (PHA files)\n",

265 | "\n",

266 | "This has the advantage of creating the files in exactly the format needed by the other science tools as well as by other analysis tools such as [XSPEC](http://heasarc.gsfc.nasa.gov/docs/xanadu/xspec/), and of adding correct WCS keywords to the images so that the coordinate systems are properly displayed when using image viewers (such as *ds9* and *fv*) that can correctly interpret the WCS keywords."

267 | ]

268 | },

269 | {

270 | "cell_type": "markdown",

271 | "metadata": {},

272 | "source": [

273 | "In this section we will use the [3C279_region_filtered_gti.fits](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data/exploreLATData/3C279_region_filtered_gti.fits) file to make images and will look at the results with *ds9*. In the [Explore LAT Data (for Burst)](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/explore_latdata_burst.html) section we will show how to use [gtbin](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtbin.txt) to produce a light curve.\n",

274 | "\n",

275 | "Just as with *fv* and *ds9*, *gtbin* can be used to make counts maps out of the extracted data.\n",

276 | "\n",

277 | "The main advantage of using [gtbin](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtbin.txt) is that it adds the proper header keywords so that the coordinate system is properly displayed as you move around the image.\n",

278 | "\n",

279 | "Here, we'll make the same image of the 3C 279 region that we made with *fv* and *ds9*, but this time we'll use the [gtbin](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtbin.txt) tool to make the image."

280 | ]

281 | },

282 | {

283 | "cell_type": "markdown",

284 | "metadata": {},

285 | "source": [

286 | "[gtbin](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtbin.txt) is invoked on the command line with or without the name of the file you want to process. If no file name is given, [gtbin](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtbin.txt) will prompt for it."

287 | ]

288 | },

289 | {

290 | "cell_type": "code",

291 | "execution_count": null,

292 | "metadata": {},

293 | "outputs": [],

294 | "source": [

295 | "%%bash\n",

296 | "gtbin\n",

297 | " CMAP\n",

298 | " ./data/3C279_region_filtered_gti.fits\n",

299 | " ./data/3C279_region_cmap.fits\n",

300 | " NONE\n",

301 | " 400\n",

302 | " 400\n",

303 | " 0.1\n",

304 | " CEL\n",

305 | " 193.98\n",

306 | " -5.82\n",

307 | " 0\n",

308 | " AIT\n",

309 | "\n",

310 | "#### Parameters:\n",

311 | "# Type of output file (CCUBE|CMAP|LC|PHA1|PHA2)\n",

312 | "# Event data file name\n",

313 | "# Output file name\n",

314 | "# Spacecraft data file name [NONE is valid]\n",

315 | "# Size of the X axis in pixels\n",

316 | "# Size of the Y axis in pixels\n",

317 | "# Image scale (in degrees/pixel)\n",

318 | "# Coordinate system (CEL - celestial, GAL -galactic) (CEL|GAL)\n",

319 | "# First coordinate of image center in degrees (RA or galactic l)\n",

320 | "# Second coordinate of image center in degrees (DEC or galactic b)\n",

321 | "# Rotation angle of image axis, in degrees\n",

322 | "# Projection method e.g. AIT|ARC|CAR|GLS|MER|NCP|SIN|STG|TAN\n",

323 | "#\n",

324 | "# For strange reasons, gtbin cannot be run with the ! magic; instead, we use the %%bash magic."

325 | ]

326 | },

327 | {

328 | "cell_type": "markdown",

329 | "metadata": {},

330 | "source": [

331 | "There are many different possible projection types. For a small region of the sky, the difference between projections is small, but is more significant for larger regions of the sky."

332 | ]

333 | },

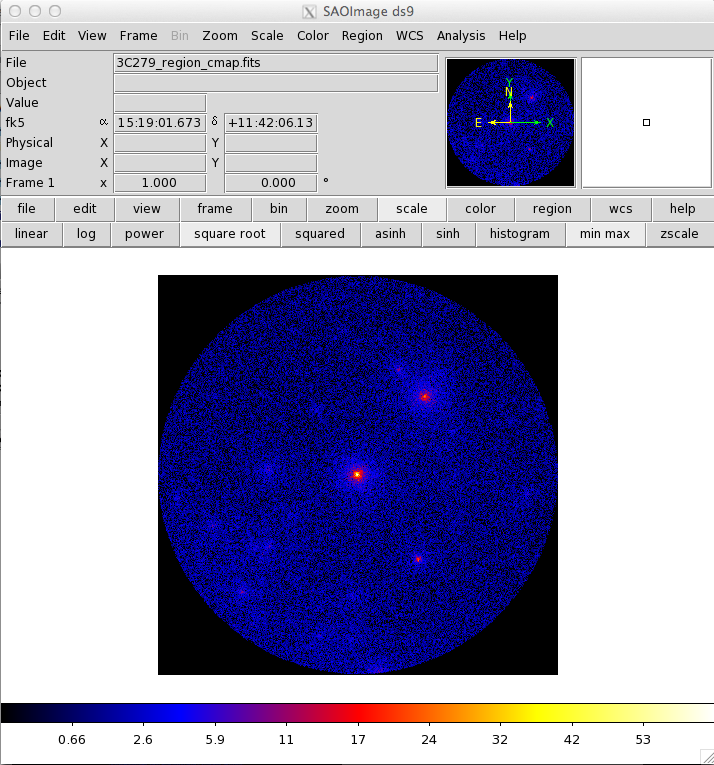

334 | {

335 | "cell_type": "markdown",

336 | "metadata": {},

337 | "source": [

338 | "In this case, we want a counts map, so we:\n",

339 | "1. Select `CMAP`.\n",

340 | "\n",

341 | " The CCUBE (counts cube) option produces a set of count maps over several energy bins.\n",

342 | " \n",

343 | " \n",

344 | "2. Provide an **output file name**.\n",

345 | "3. Specify `NONE` for the spacecraft file as it is not needed for the counts map.\n",

346 | "4. Input **image size and scale** in pixels and degrees/pixel.\n",

347 | "\n",

348 | " **Note**: We select a 400x400 pixel image with 0.1 degree pixels in order to create an image that contains all the extracted data.\n",

349 | " \n",

350 | " \n",

351 | "5. Enter the **coordinate system**, either celestial (CEL) or galactic (GAL), to be used in generating the image. The coordinates for the image center (next bullet) must be in the indicated coordinate system.\n",

352 | "6. Enter the **coordinates** for the center of the image, which here correspond to the position of 3C 279.\n",

353 | "7. Enter the **rotation angle (0)**.\n",

354 | "8. Enter the projection method for the image. See Calabretta & Greisen 2002, A&A, 395, 1077 for definitions of these projections. An AITOFF projection is selected.\n",

355 | "\n",

356 | "Here is the output [counts map file](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data/exploreLATData/3C279_region_cmap.fits) to use for comparison, displayed in *ds9*.\n",

357 | "\n",

358 | "\n",

359 | "\n",

360 | "Compare this result to the images made with *fv* and *ds9* and you will notice that the image is flipped along the y-axis.\n",

361 | "\n",

362 | "This is because the coordinate system keywords have been properly added to the image header and the Right Ascension (RA) coordinate actually increases right to left and not left to right.\n",

363 | "\n",

364 | "Moving the cursor over the image now shows the RA and Dec of the cursor position in the FK5 fields in the top left section of the display.\n",

365 | "\n",

366 | "If you want to look at coordinates in another system, such as galactic coordinates, you can make the change by first selecting the '**WCS**' button (on the right in the top row of buttons), and then the appropriate coordinate system from the choices that appear in the second row of buttons (FK4, FK5, ICRS, Galactic or Ecliptic)."

367 | ]

368 | },
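The 400x400 pixel choice follows from the extraction radius and the pixel scale: a 20 degree radius spans 40 degrees, which at 0.1 degrees/pixel is 400 pixels. A small sketch of that arithmetic is given below; it assumes the scale is roughly uniform across the image (reasonable near the image center) and the function name is hypothetical.

```python
import math


def pixels_to_cover(roi_radius_deg, scale_deg_per_pixel):
    """Smallest pixel count per axis that spans the full ROI diameter."""
    # round() guards against floating-point noise before taking the ceiling.
    n_pixels = round(2.0 * roi_radius_deg / scale_deg_per_pixel, 9)
    return math.ceil(n_pixels)


# Values used in this tutorial: 20 degree radius, 0.1 degrees per pixel.
print(pixels_to_cover(20, 0.1))
```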

369 | {

370 | "cell_type": "markdown",

371 | "metadata": {},

372 | "source": [

373 | "# 3. Examining Exposure Maps\n",

374 | "\n",

375 | "In this section, we explore ways of generating and looking at exposure maps. If you have not yet run [gtmktime](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtmktime.txt) on the data file you are examining, this analysis will likely yield incorrect results. It is advisable to prepare your data file properly by following the [Data Preparation](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data_preparation.html) tutorial before looking in detail at the [livetime and exposure](https://fermi.gsfc.nasa.gov/ssc/data/analysis/documentation/Cicerone/Cicerone_Data_Exploration/livetime_and_exposure.html).\n",

376 | "\n",

377 | "Generally, to look at the exposure you must:\n",

378 | "\n",

379 | "1. Make a livetime cube from the spacecraft data file using **gtltcube**.\n",

380 | "2. As necessary, merge multiple livetime cubes covering different time ranges.\n",

381 | "3. Create the exposure map using the **gtexpmap** tool.\n",

382 | "4. Examine the map using *ds9*."

383 | ]

384 | },

385 | {

386 | "cell_type": "markdown",

387 | "metadata": {},

388 | "source": [

389 | "### Calculate the Livetime\n",

390 | "\n",

391 | "In order to determine the exposure for your source, you need to understand how much time the LAT has observed any given position on the sky at any given inclination angle.\n",

392 | "\n",

393 | "[gtltcube](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtltcube.txt) calculates this 'livetime cube' for the entire sky for the time range covered by the spacecraft file.\n",

394 | "\n",

395 | "To do this, you will need to make the livetime cube from the spacecraft (pointing and livetime history) file, using the [gtltcube](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtltcube.txt) tool."

396 | ]

397 | },

398 | {

399 | "cell_type": "code",

400 | "execution_count": null,

401 | "metadata": {},

402 | "outputs": [],

403 | "source": [

404 | "!gtltcube \\\n",

405 | " evfile =./data/3C279_region_filtered_gti.fits \\\n",

406 | " scfile = ./data/spacecraft.fits \\\n",

407 | " outfile = ./data/3C279_region_ltcube.fits \\\n",

408 | " dcostheta = 0.025 \\\n",

409 | " binsz = 1\n",

410 | "\n",

411 | "#### gtltcube Parameters:\n",

412 | "# Event data file\n",

413 | "# Spacecraft data file\n",

414 | "# Output file\n",

415 | "# Step size in cos(theta) (0.:1.)\n",

416 | "# Pixel size (degrees)\n",

417 | "#\n",

418 | "# May take a while to finish"

419 | ]

420 | },

421 | {

422 | "cell_type": "markdown",

423 | "metadata": {},

424 | "source": [

425 | "**gtltcube** may take some time to finish."

426 | ]

427 | },

428 | {

429 | "cell_type": "markdown",

430 | "metadata": {},

431 | "source": [

432 | "As you can see in the image of the *fv* summary window below, the details recorded in the livetime cube file are multi-dimensional and difficult to visualize. For that, we will need an exposure map.\n",

433 | "\n",

434 | "\n",

435 | "### Combining _multiple_ livetime cubes\n",

436 | "\n",

437 | "In some cases, you will have multiple livetime cubes covering different periods of time that you wish to combine in order to examine the exposure over the entire time range.\n",

438 | "\n",

439 | "One example would be the researcher who generates weekly flux datapoints for light curves, and has the need to analyze the source significance over a larger time period. In this case, it is much less CPU-intensive to combine previously generated livetime cubes before calculating the exposure map, than to start the livetime cube generation from scratch.\n",

440 | "\n",

441 | "To combine multiple livetime cubes into a single cube use the [gtltsum](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtltsum.txt) tool."

442 | ]

443 | },

444 | {

445 | "cell_type": "markdown",

446 | "metadata": {},

447 | "source": [

448 | "**Note**: [gtltsum](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtltsum.txt) is quick, but it does have a few limitations, including:\n",

449 | "* It will only add two cubes at a time. If you have more than two cubes to add, you must do them sequentially.\n",

450 | "\n",

451 | "* It does not allow you to append to or overwrite an existing livetime cube file.\n",

452 | "\n",

453 | " For example: if you wanted to add four cubes (c1, c2, c3 and c4), you cannot add c1 and c2 to get cube_a, then add cube_a and c3 and save the result as cube_a; you must use different file names for the output livetime cubes at each step.\n",

454 | "\n",

455 | "\n",

456 | "* The calculation parameters that were used to generate the livetime cubes (step size and pixel size) must be identical between the livetime cubes."

457 | ]

458 | },
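The sequential addition described in the note can be planned ahead of time. The sketch below only builds the argument lists for each pairwise step, using the `infile1`, `infile2`, and `outfile` parameters shown in this tutorial; it does not run anything. The function name and the intermediate file names (`partial_sum_N.fits`) are hypothetical.

```python
def gtltsum_chain(cubes, prefix="partial_sum"):
    """Return one gtltsum argument list per pairwise addition."""
    if len(cubes) < 2:
        raise ValueError("need at least two livetime cubes")
    commands = []
    running = cubes[0]
    for step, cube in enumerate(cubes[1:], start=1):
        # Use a fresh output name at every step, since an existing
        # livetime cube file cannot be appended to or overwritten.
        out = f"{prefix}_{step}.fits"
        commands.append(
            ["gtltsum", f"infile1={running}", f"infile2={cube}", f"outfile={out}"]
        )
        running = out
    return commands


# Four cubes need three pairwise additions.
for cmd in gtltsum_chain(["c1.fits", "c2.fits", "c3.fits", "c4.fits"]):
    print(" ".join(cmd))
```

Each list could then be passed to `subprocess.run` once the Fermitools are available in the environment; the output of the last step is the fully summed cube.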

459 | {

460 | "cell_type": "markdown",

461 | "metadata": {},

462 | "source": [

463 | "Here is an example of adding two livetime cubes from the first and second halves of the six months of 3C 279 data using **gtltsum** (where the midpoint was 247477908 MET):"

464 | ]

465 | },

466 | {

467 | "cell_type": "code",

468 | "execution_count": null,

469 | "metadata": {},

470 | "outputs": [],

471 | "source": [

472 | "!wget \"https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data/exploreLATData/3C279_region_first_ltcube.fits\"\n",

473 | "!wget \"https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data/exploreLATData/3C279_region_second_ltcube.fits\""

474 | ]

475 | },

476 | {

477 | "cell_type": "code",

478 | "execution_count": null,

479 | "metadata": {},

480 | "outputs": [],

481 | "source": [

482 | "!mv *cube.fits ./data"

483 | ]

484 | },

485 | {

486 | "cell_type": "code",

487 | "execution_count": null,

488 | "metadata": {},

489 | "outputs": [],

490 | "source": [

491 | "!ls ./data"

492 | ]

493 | },

494 | {

495 | "cell_type": "code",

496 | "execution_count": null,

497 | "metadata": {},

498 | "outputs": [],

499 | "source": [

500 | "!gtltsum \\\n",

501 | " infile1 = ./data/3C279_region_first_ltcube.fits \\\n",

502 | " infile2 = ./data/3C279_region_second_ltcube.fits \\\n",

503 | " outfile = ./data/3C279_region_summed_ltcube.fits\n",

504 | "\n",

505 | "#### Parameters:\n",

506 | "# Livetime cube 1 or list of files\n",

507 | "# Livetime cube 2\n",

508 | "# Output file"

509 | ]

510 | },

511 | {

512 | "cell_type": "markdown",

513 | "metadata": {},

514 | "source": [

515 | "### Generate an Exposure Map or Cube\n",

516 | "\n",

517 | "Once you have a livetime cube for the entire dataset, you need to calculate the exposure for your dataset. This can be in the form of an exposure **map** or an exposure **cube**.\n",

518 | "\n",

519 | "* Exposure **maps** are mono-energetic, and each plane represents the exposure at the midpoint of the energy band, not integrated over the band's energy range. Exposure maps are used for **unbinned** analysis methods. You will specify the number of energy bands when you run the [gtexpmap](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtexpmap.txt) tool.\n",

520 | "\n",

521 | "* Exposure **cubes** are used for **binned** analysis methods. The binning in both position and energy must match the binning of the input data file, which will be a counts cube. When you run the [gtexpcube2](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtexpcube2.txt) tool, you must be sure the binning matches.\n",

522 | "\n",

523 | "For simplicity, we will generate an exposure map by running the [gtexpmap](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtexpmap.txt) tool on the event file."

524 | ]

525 | },

526 | {

527 | "cell_type": "markdown",

528 | "metadata": {},

529 | "source": [

530 | "[**gtexpmap**](https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/help/gtexpmap.txt) allows you to control the exposure map parameters, including:\n",

531 | "\n",

532 | "* Map center, size, and scale\n",

533 | "* Projection type (selection includes Aitoff, Cartesian, Mercator, Tangential, etc.; default is Aitoff)\n",

534 | "* Energy range\n",

535 | "* Number of energy bins\n",

536 | "\n",

537 | "The following example shows input and output for generating an exposure map for the region surrounding 3C 279."

538 | ]

539 | },

540 | {

541 | "cell_type": "code",

542 | "execution_count": null,

543 | "metadata": {},

544 | "outputs": [],

545 | "source": [

546 | "!gtexpmap \\\n",

547 | " evfile = ./data/3C279_region_filtered_gti.fits \\\n",

548 | " scfile = ./data/spacecraft.fits \\\n",

549 | " expcube = ./data/3C279_region_summed_ltcube.fits \\\n",

550 | " outfile = ./data/3C279_exposure_map.fits \\\n",

551 | " irfs = P8R3_SOURCE_V2 \\\n",

552 | " srcrad = 30 \\\n",

553 | " nlong = 500 \\\n",

554 | " nlat = 500 \\\n",

555 | " nenergies = 30\n",

556 | "\n",

557 | "#### gtexpmap Parameters: ALSO SEE BELOW\n",

558 | "# Event data file\n",

559 | "# Spacecraft data file\n",

560 | "# Exposure hypercube file\n",

561 | "# Output file name\n",

562 | "# Response functions\n",

563 | "# Radius of the source region (in degrees)\n",

564 | "# Number of longitude points (2:1000)\n",

565 | "# Number of latitude points (2:1000)\n",

566 | "# Number of energies (2:100)\n",

567 | "\n",

568 | "# This will generate an exposure map on six months of data.\n",

569 | "# This may take a long time.\n",

570 | "# Below you will find a wget command to get the resulting file."

571 | ]

572 | },

573 | {

574 | "cell_type": "markdown",

575 | "metadata": {},

576 | "source": [

577 | "**As six months is far too much data for an unbinned analysis, this computation will take a long time. Skip past this section to find a copy of the output file.**\n",

578 | "\n",

579 | "Running **gtexpmap** in the command line will prompt you to enter the following:\n",

580 | "\n",

581 | "* Name of an events file to determine the energy range to use.\n",

582 | "* Name of the exposure cube file to use.\n",

583 | "* Name of the output file and the instrument response function to use.\n",

584 | " * For more discussion on the proper instrument response function (IRF) to use in your data analysis, see the overview discussion in the [Cicerone](https://fermi.gsfc.nasa.gov/ssc/data/analysis/documentation/Cicerone/Cicerone_LAT_IRFs/IRF_overview.html), as well as the current [recommended data selection](https://fermi.gsfc.nasa.gov/ssc/data/analysis/documentation/Cicerone/Cicerone_Data_Exploration/Data_preparation.html) information from the LAT team. The [LAT data caveats](https://fermi.gsfc.nasa.gov/ssc/data/analysis/LAT_caveats.html) are also important to review before starting LAT analysis.\n",

585 | "\n",

586 | "The next set of parameters specify the size, scale, and position of the map to generate.\n",

587 | "\n",

588 | "* The radius of the 'source region'\n",

589 | " * The source region is different from the region of interest (ROI): it is the region that you will model when fitting your data. As every region of the sky that contains sources will also have adjacent regions containing sources, it is advisable to model an area larger than that covered by your dataset. Here we have increased the source region by an additional 10°, which is the minimum needed for an actual analysis. Be aware of what sources may be near your region, and model them if appropriate (especially if they are very bright in gamma rays).\n",

590 | "* Number of longitude and latitude points\n",

591 | "* Number of energy bins that will have maps created\n",

592 | " * This number can be small (∼5) for sources with flat spectra in the LAT regime. However, for sources like pulsars that vary in flux significantly over the LAT energy range, a larger number of energies is recommended, typically 10 per decade in energy."

593 | ]

594 | },
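The "10 per decade" guideline can be turned into a quick estimate of the number of energies to request. This is a minimal sketch with hypothetical helper names; the 100 MeV to 300 GeV range is only an illustrative choice, not necessarily the range of the 3C 279 file.

```python
import math


def energies_per_decade(emin_mev, emax_mev, per_decade=10):
    """Number of energies giving about per_decade points per decade."""
    decades = math.log10(emax_mev / emin_mev)
    # At least two energies are needed to span a range.
    return max(2, math.ceil(per_decade * decades))


def log_spaced(emin_mev, emax_mev, n):
    """n logarithmically spaced energies from emin_mev to emax_mev (inclusive)."""
    step = math.log10(emax_mev / emin_mev) / (n - 1)
    return [emin_mev * 10 ** (i * step) for i in range(n)]


n = energies_per_decade(100.0, 300000.0)
grid = log_spaced(100.0, 300000.0, n)
print(n, grid[0], grid[-1])
```

For this illustrative range the estimate is somewhat above the 30 energies used in the example cell; the guideline is a rule of thumb rather than a hard requirement.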

595 | {

596 | "cell_type": "code",

597 | "execution_count": null,

598 | "metadata": {},

599 | "outputs": [],

600 | "source": [

601 | "# Get the output exposure map\n",

602 | "!wget \"https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data/exploreLATData/3C279_exposure_map.fits\""

603 | ]

604 | },

605 | {

606 | "cell_type": "code",

607 | "execution_count": null,

608 | "metadata": {},

609 | "outputs": [],

610 | "source": [

611 | "!mv 3C279_exposure_map.fits ./data/3C279_exposure_map.fits"

612 | ]

613 | },

614 | {

615 | "cell_type": "code",

616 | "execution_count": null,

617 | "metadata": {},

618 | "outputs": [],

619 | "source": [

620 | "!ls ./data"

621 | ]

622 | },

623 | {

624 | "cell_type": "markdown",

625 | "metadata": {},

626 | "source": [

627 | "Once the file has been generated, it can be viewed with *ds9*. When you open the file in *ds9*, a \"Data Cube\" window will appear, allowing you to select between the various maps generated."

628 | ]

629 | },

630 | {

631 | "cell_type": "code",

632 | "execution_count": null,

633 | "metadata": {},

634 | "outputs": [],

635 | "source": [

636 | "!ds9 ./data/3C279_exposure_map.fits"

637 | ]

638 | },

639 | {

640 | "cell_type": "markdown",

641 | "metadata": {

642 | "pycharm": {

643 | "name": "#%% md\n"

644 | }

645 | },

646 | "source": [

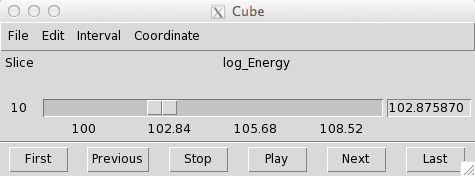

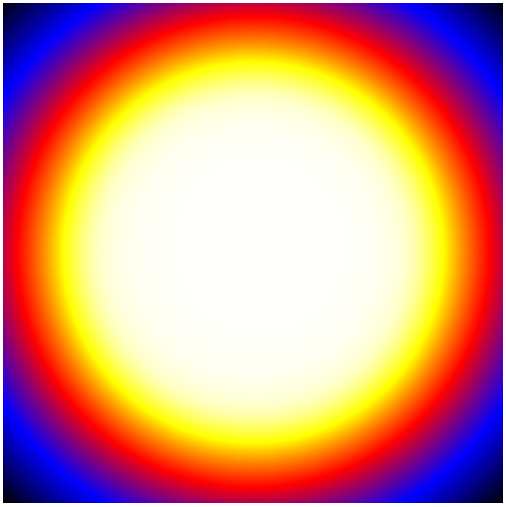

647 | "\n",

648 | "\n",

649 | "\n",

650 | "Below are four of the 30 layers in the map, scaled by `log(Energy)`, and extracted from *ds9*.\n",

651 | "\n",

652 | "| First Layer | Fourth Layer |\n",

653 | "| --- | --- |\n",

654 | "| |  |\n",

655 | "\n",

656 | "| Tenth Layer | Last Layer |\n",

657 | "| --- | --- |\n",

658 | "|  |  |\n",

659 | "\n",

660 | "\n",

661 | "As you can see, the exposure changes as you go to higher energies. This is due to two effects:\n",

662 | "\n",

663 | "1. The [PSF](https://fermi.gsfc.nasa.gov/ssc/data/analysis/documentation/Cicerone/Cicerone_LAT_IRFs/IRF_PSF.html) is broader at lower energies, making the wings of the exposure expand well outside the region of interest. This is why it is necessary to add at least 10 degrees to your ROI. In this case, you can see that even 10 degrees has not fully captured the wings of the PSF at low energies.\n",

664 | "\n",

665 | "\n",

666 | "2. The [effective area](https://fermi.gsfc.nasa.gov/ssc/data/analysis/documentation/Cicerone/Cicerone_LAT_IRFs/IRF_EA.html) of the LAT changes at higher energies.\n",

667 | "\n",

668 | "Both of these effects are quantified on the [LAT performance page](http://www.slac.stanford.edu/exp/glast/groups/canda/lat_Performance.htm)."

669 | ]

670 | }

671 | ],

672 | "metadata": {

673 | "kernelspec": {

674 | "display_name": "Python 3",

675 | "language": "python",

676 | "name": "python3"

677 | },

678 | "language_info": {

679 | "codemirror_mode": {

680 | "name": "ipython",

681 | "version": 3

682 | },

683 | "file_extension": ".py",

684 | "mimetype": "text/x-python",

685 | "name": "python",

686 | "nbconvert_exporter": "python",

687 | "pygments_lexer": "ipython3",

688 | "version": "3.7.9"

689 | },

690 | "nav_menu": {},

691 | "pycharm": {

692 | "stem_cell": {

693 | "cell_type": "raw",

694 | "metadata": {

695 | "collapsed": false

696 | },

697 | "source": []

698 | }

699 | },

700 | "toc": {

701 | "navigate_menu": true,

702 | "number_sections": true,

703 | "sideBar": true,

704 | "threshold": 6,

705 | "toc_cell": false,

706 | "toc_section_display": "block",

707 | "toc_window_display": false

708 | }

709 | },

710 | "nbformat": 4,

711 | "nbformat_minor": 2

712 | }

713 |

--------------------------------------------------------------------------------

/DataSelection/4.ExploreLATDataBurst/explore_latdata_burst.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "# Explore LAT Data (for Burst)\n",

8 | "\n",

9 | "In this example, we will examine the LAT data for a gamma-ray burst based on the time and position derived from a GBM trigger."

10 | ]

11 | },

12 | {

13 | "cell_type": "markdown",

14 | "metadata": {},

15 | "source": [

16 | "# Prerequisites\n",

17 | "\n",

18 | "It is assumed that:\n",

19 | "\n",

20 | "* You are in your working directory.\n",

21 | "* The GBM reported a burst via a [GCN circular]() with the following information:\n",

22 | " * Name = GRB 080916C\n",

23 | " * RA = 121.8\n",

24 | " * Dec = -61.3\n",

25 | " * TStart = 243216766 s (Mission Elapsed Time)\n",

26 | " * T90 = 66 s"

27 | ]

28 | },

29 | {

30 | "cell_type": "markdown",

31 | "metadata": {},

32 | "source": [

33 | "# Steps\n",

34 | "\n",

35 | "The analysis steps are:\n",

36 | "\n",

37 | "1. Extract the Data\n",

38 | "2. Data Selections\n",

39 | "3. Bin the Data\n",

40 | "4. Look at the Data"

41 | ]

42 | },

43 | {

44 | "cell_type": "markdown",

45 | "metadata": {},

46 | "source": [

47 | "## 1. Extract the Data\n",

48 | "\n",

49 | "Generally, one should refer to the [Extract LAT data](https://fermi.gsfc.nasa.gov/ssc/data/p6v11/analysis/scitools/extract_latdata.html) tutorial and use the time and spatial information from some source (here, a GCN notice) to make the appropriate extraction cuts from the [LAT data server](http://fermi.gsfc.nasa.gov/cgi-bin/ssc/LAT/LATDataQuery.cgi)."

50 | ]

51 | },

52 | {

53 | "cell_type": "markdown",

54 | "metadata": {},

55 | "source": [

56 | "We use the following parameters to extract the data for an ROI of 20 degrees from 500 seconds before to 1500 seconds after the trigger time:\n",

57 | "\n",

58 | "* Search Center (RA,Dec)\t=\t(121.8,-61.3)\n",

59 | "* Radius\t=\t20 degrees\n",

60 | "* Start Time (MET)\t=\t243216266 seconds (2008-09-16T00:04:26)\n",

61 | "* Stop Time (MET)\t=\t243218266 seconds (2008-09-16T00:37:46)\n",

62 | "* Minimum Energy\t=\t20 MeV\n",

63 | "* Maximum Energy\t=\t300000 MeV\n",

64 | "\n",

65 | "Note that for analyses that require the diffuse background, the standard model starts at 60 MeV."

66 | ]

67 | },
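The calendar times quoted next to the MET values above can be reproduced with a simple offset from the MET zero point (midnight, Jan. 1st, 2001). The sketch below is deliberately naive: it ignores leap seconds, so its result can differ from true UTC by a few seconds. The function name is hypothetical.

```python
from datetime import datetime, timedelta

# MET = 0 corresponds to midnight, Jan. 1st, 2001.
MET_EPOCH = datetime(2001, 1, 1)


def met_to_utc_naive(met_seconds):
    """Approximate UTC for a MET value, ignoring leap seconds."""
    return MET_EPOCH + timedelta(seconds=met_seconds)


trigger = 243216766  # TStart of GRB 080916C (MET)
start = met_to_utc_naive(trigger - 500)   # 500 seconds before the trigger
stop = met_to_utc_naive(trigger + 1500)   # 1500 seconds after the trigger
print(start.isoformat(), stop.isoformat())
```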

68 | {

69 | "cell_type": "code",

70 | "execution_count": null,

71 | "metadata": {},

72 | "outputs": [],

73 | "source": [

74 | "!wget https://fermi.gsfc.nasa.gov/ssc/data/analysis/scitools/data/exploreLATDataGRB/LAT_explore_GRB.tgz"

75 | ]

76 | },

77 | {

78 | "cell_type": "code",

79 | "execution_count": null,

80 | "metadata": {},

81 | "outputs": [],

82 | "source": [

83 | "!tar xvzf LAT_explore_GRB.tgz"

84 | ]

85 | },

86 | {

87 | "cell_type": "code",

88 | "execution_count": null,

89 | "metadata": {},

90 | "outputs": [],

91 | "source": [

92 | "!mkdir data\n",

93 | "!mv *.fits ./data"

94 | ]

95 | },

96 | {

97 | "cell_type": "code",

98 | "execution_count": null,

99 | "metadata": {},

100 | "outputs": [],

101 | "source": [

102 | "!ls ./data"

103 | ]

104 | },

105 | {

106 | "cell_type": "markdown",

107 | "metadata": {},

108 | "source": [

109 | "This will extract the data files used in this tutorial into the `data` directory."

110 | ]

111 | },

112 | {

113 | "cell_type": "markdown",

114 | "metadata": {},

115 | "source": [

116 | "## 2. Data Selections\n",

117 | "\n",

118 | "NOTE: For information on the recommended selections for burst analysis of LAT data, you should refer to the [Cicerone](http://fermi.gsfc.nasa.gov/ssc/data/p6v11/analysis/documentation/Cicerone/Cicerone_Data_Exploration/Data_preparation.html).\n",

119 | "\n",

120 | "To map the region of the burst, you should select a large spatial region from within a short time range bracketing the burst. For the lightcurve, on the other hand, it is best to select a small region around the burst from within a long time range. Therefore, you will need to make two different data selections.\n",

121 | "\n",

122 | "In both cases, we will use the loosest event class cut (to include events of all classes). This works because rapid, bright events like GRBs overwhelm the background rate during the short period of the burst."

123 | ]

124 | },

125 | {

126 | "cell_type": "markdown",

127 | "metadata": {},

128 | "source": [

129 | "Here, we use [gtselect](https://fermi.gsfc.nasa.gov/ssc/data/p6v11/analysis/scitools/help/gtselect.txt) to extract the region for the spatial mapping:"

130 | ]

131 | },

132 | {

133 | "cell_type": "code",

134 | "execution_count": null,

135 | "metadata": {},

136 | "outputs": [],

137 | "source": [

138 | "%%bash\n",

139 | "gtselect evclass=128\n",

140 | " ./data/grb_events.fits\n",

141 | " ./data/GRB081916C_map_events.fits\n",

142 | " 121.8\n",

143 | " -61.3\n",

144 | " 20\n",

145 | " 243216666\n",

146 | " 243216966\n",

147 | " 100\n",

148 | " 300000\n",

149 | " 180"

150 | ]

151 | },

152 | {

153 | "cell_type": "markdown",

154 | "metadata": {},

155 | "source": [

156 | "**Notes**:\n",

157 | "* The input photon file is `grb_events.fits`, and the output file is `GRB081916C_map_events.fits`.\n",

158 | "* We selected a circular region with a radius of 20 degrees around the burst location (the ROI here has to fall within the region selected in the data server) from a 300-second time period around the trigger.\n",

159 | "* We made an additional energy cut, selecting only photons between 100 MeV and 300 GeV. This removes the low-energy, high-background events from the map."

160 | ]

161 | },

162 | {

163 | "cell_type": "markdown",

164 | "metadata": {},

165 | "source": [

166 | "**Run [gtselect](https://fermi.gsfc.nasa.gov/ssc/data/p6v11/analysis/scitools/help/gtselect.txt) again**, this time with a smaller region but a longer time range. (Note that _gtselect_ saves the values from the previous run and uses them as defaults for the next run.)"

167 | ]

168 | },

169 | {

170 | "cell_type": "code",

171 | "execution_count": null,

172 | "metadata": {},

173 | "outputs": [],

174 | "source": [

175 | "%%bash\n",

176 | "gtselect evclass=128\n",

177 | " ./data/grb_events.fits\n",

178 | " ./data/GRB081916C_lc_events.fits\n",

179 | " 121.8\n",

180 | " -61.3\n",

181 | " 10\n",

182 | " 243216266\n",

183 | " 243218266\n",

184 | " 30\n",

185 | " 300000\n",

186 | " 180"

187 | ]

188 | },

189 | {

190 | "cell_type": "markdown",

191 | "metadata": {},

192 | "source": [

193 | "**Notes**:\n",

194 | "\n",

195 | "* A new output file, `GRB081916C_lc_events.fits`, has been produced.\n",

196 | "* The search radius was reduced from 20 to 10 degrees.\n",

197 | "* The time range was expanded to the full 2000 seconds of extracted data (MET 243216266 to 243218266).\n",

198 | "* The energy range was expanded by lowering the minimum energy from 100 MeV to 30 MeV."

199 | ]

200 | },

201 | {

202 | "cell_type": "markdown",

203 | "metadata": {},

204 | "source": [

205 | "## Bin the Data\n",

206 | "\n",

207 | "Use [gtbin](https://fermi.gsfc.nasa.gov/ssc/data/p6v11/analysis/scitools/help/gtbin.txt) to bin the photon data into a map and a lightcurve.\n",

208 | "\n",

209 | "First, create the counts map:"

210 | ]

211 | },

212 | {

213 | "cell_type": "code",

214 | "execution_count": null,

215 | "metadata": {},

216 | "outputs": [],

217 | "source": [

218 | "%%bash\n",

219 | "gtbin\n",

220 | " CMAP\n",

221 | " ./data/GRB081916C_map_events.fits\n",

222 | " ./data/GRB081916C_counts_map.fits\n",

223 | " NONE\n",

224 | " 50\n",

225 | " 50\n",

226 | " 0.5\n",

227 | " CEL\n",

228 | " 121.8\n",

229 | " -61.3\n",

230 | " 0.\n",

231 | " AIT"

232 | ]

233 | },

234 | {

235 | "cell_type": "markdown",

236 | "metadata": {},

237 | "source": [

238 | "**Notes**:\n",

239 | " \n",

240 | "* We selected `CMAP` (counts map) as the output type.\n",

241 | "* The input is `GRB081916C_map_events.fits`, the large-area event file we created with _gtselect_.\n",

242 | "* The counts map file will be called `GRB081916C_counts_map.fits`.\n",

243 | "* Although in _gtselect_ we selected a circular region with a 20 degree radius, we form a counts map with 50 pixels on a side and 0.5 degree square pixels, so the map covers only the central 25 x 25 degrees of the selection.\n",

244 | "\n",

245 | "Now, we create the lightcurve. Once again, the previous inputs were saved and provided as defaults where appropriate."

246 | ]

247 | },

248 | {

249 | "cell_type": "code",

250 | "execution_count": null,

251 | "metadata": {},

252 | "outputs": [],

253 | "source": [

254 | "%%bash\n",

255 | "gtbin\n",

256 | " LC\n",

257 | " ./data/GRB081916C_lc_events.fits\n",

258 | " ./data/GRB081916C_light_curve.fits\n",

259 | " NONE\n",

260 | " LIN\n",

261 | " 243216266\n",

262 | " 243218266\n",

263 | " 10"

264 | ]

265 | },

266 | {

267 | "cell_type": "markdown",

268 | "metadata": {},

269 | "source": [

270 | "**Notes**:\n",

271 | "* We used **gtselect** to create `GRB081916C_lc_events.fits`, a photon list from a small region but using a long time range.\n",

272 | "\n",

273 | "\n",

274 | "* **gtbin** binned these photons and output the result into `GRB081916C_light_curve.fits`.\n",

275 | "\n",

276 | " There are a number of options for choosing the time bins. Here we have chosen linear bins with equal time widths of 10 seconds."

277 | ]

278 | },

279 | {

280 | "cell_type": "markdown",

281 | "metadata": {},

282 | "source": [

283 | "## Examine the Data\n",

284 | "\n",

285 | "We now have two FITS files with binned data: one with a lightcurve (`GRB081916C_light_curve.fits`), and a second with a counts map (`GRB081916C_counts_map.fits`).\n",

286 | "\n",

287 | "**Note**: Currently we do not have any Fermi-specific graphics programs, but there are various tools available to plot FITS data files, such as [_fv_](http://heasarc.nasa.gov/ftools/fv/) and [_ds9_](http://hea-www.harvard.edu/RD/ds9/)."

288 | ]

289 | },

290 | {

291 | "cell_type": "markdown",

292 | "metadata": {},

293 | "source": [

294 | "To look at the counts map, we will use *fv*.\n",

295 | "\n",

296 | "1. First, start up *fv* in your terminal using:\n",

297 | " prompt> fv &\n",

298 | " (Or see the code cell below)\n",

299 | " \n",

300 | " \n",

301 | "2. Then open `GRB081916C_counts_map.fits`. Click on 'open file' to get the `File Dialog` GUI. Choose `GRB081916C_counts_map.fits`. A new GUI will open up with a table with two rows.\n",

302 | "\n",

303 | "\n",

304 | "3. FITS files consist of a series of extensions with data. Since the counts map is an image, it is stored in the primary extension (for historical reasons, only images can be stored in the primary extension). Clicking on the 'Image' button in the first row results in:"

305 | ]

306 | },

307 | {

308 | "cell_type": "code",

309 | "execution_count": null,

310 | "metadata": {},

311 | "outputs": [],

312 | "source": [

313 | "!fv ./data/GRB081916C_counts_map.fits"

314 | ]

315 | },

316 | {

317 | "cell_type": "code",

318 | "execution_count": null,

319 | "metadata": {},

320 | "outputs": [],

321 | "source": [

322 | "from IPython.display import HTML"

323 | ]

324 | },

325 | {

326 | "cell_type": "code",

327 | "execution_count": null,

328 | "metadata": {},

329 | "outputs": [],

330 | "source": [

331 | "HTML(\"\")"

332 | ]

333 | },

334 | {

335 | "cell_type": "markdown",

336 | "metadata": {},

337 | "source": [

338 | "One readily observes that there are many pixels containing a few counts each near the burst location, and few pixels containing any counts away from the burst. It is also evident that the burst location is not centered in the counts map. This means the preliminary location sent out in the GCN was not quite the right location. This is not uncommon for automated transient localizations.\n",

339 | "\n",