├── README.md

├── images

├── GSEA.png

├── GSEA02.png

├── HMM_01.png

├── MA_PLM.png

├── MA_RLE.png

├── MA_rma.png

├── MA_NUSE.png

├── MA_types.png

├── GSEA_Barbie.png

├── GSEA_ssGSEA.png

├── MA_GCprobes.png

├── MA_mapping.png

├── MA_oneColor.png

├── tcgsa

│ ├── clst3.png

│ ├── clust2.png

│ ├── baselineD1.png

│ ├── noBaseline.png

│ ├── hm_baseline.png

│ └── noBaseline_precluster.png

├── GSEA_Verhaak.png

├── MA_twoColors.png

├── intro

│ ├── ngsapps.png

│ ├── replicates.png

│ ├── wcm_schema.png

│ ├── replicates2.png

│ ├── AuerDoerge2010.png

│ └── biology-02-00378-g001.jpg

├── MA_medianPolish.png

├── nanopore_principle.png

├── MA_differentProbesets.png

├── nanopore_processing.png

├── nanopore_processing02.png

└── MA_normMethodComparison_TACmanual.png

├── references

└── GSEA

│ ├── lec14a.pdf

│ └── ssGSEA_caw_BIG_120314.pptx

├── RNA_heteroskedasticity_files

└── figure-html

│ ├── unnamed-chunk-3-1.png

│ ├── unnamed-chunk-5-1.png

│ ├── unnamed-chunk-8-1.png

│ ├── unnamed-chunk-9-1.png

│ └── unnamed-chunk-10-1.png

├── vdj_tcr_sequencing.md

├── bashrc

├── scRNA-seq_python

├── installing_scanpyEtc.sh

└── scRNA-seq_scanorama.Rmd

├── scRRBS.md

├── proteomics.md

├── shiny.md

├── integrativeAnalysis.md

├── alignment_vs_pseudoalignment.md

├── metabolomics.md

├── GOterm_overrepresentation.md

├── ExpHub_submission.md

├── hidden_markov_model.md

├── clusterProfiler_with_goseq.md

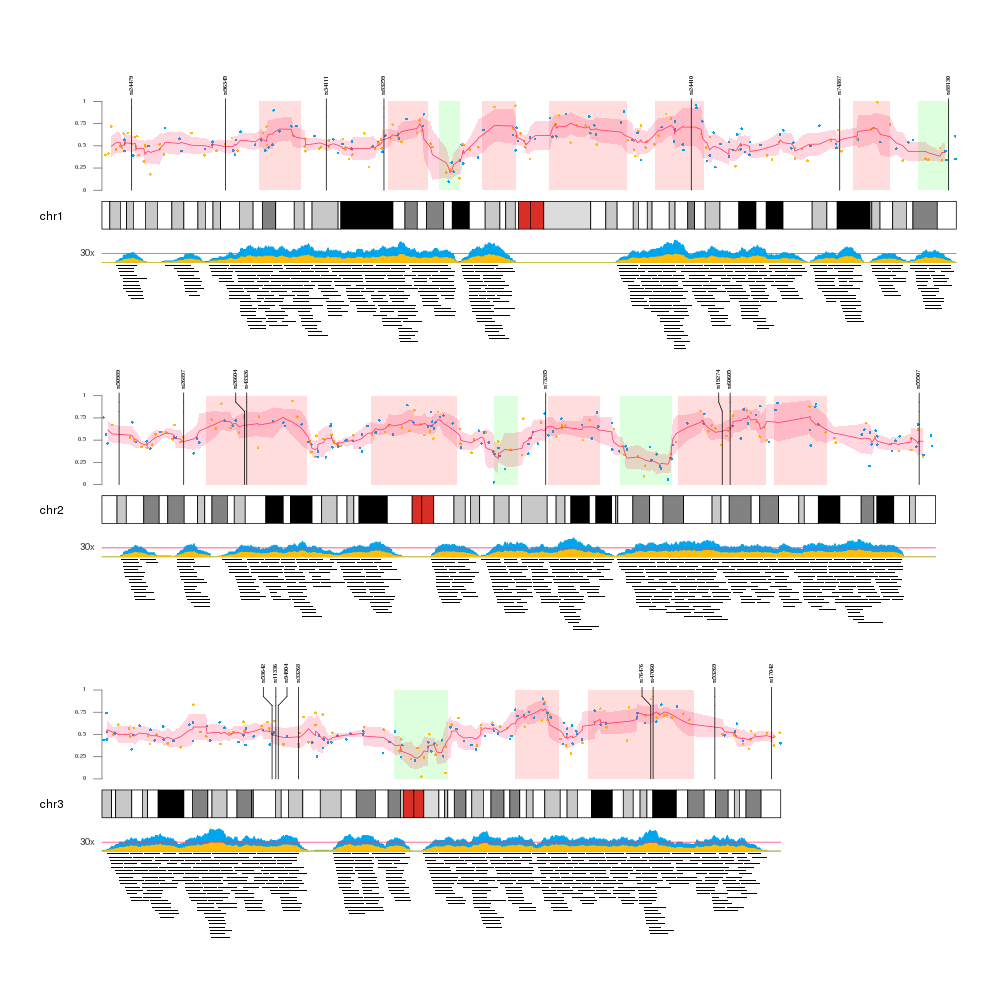

├── karyotype.md

├── rscript.md

├── dna_methylation.md

├── revigo.md

├── repeatMasker.md

├── scRNA-seq_RNAvelocity.md

├── Seurat_labelTransfer.md

├── spatial_transcriptomics.md

├── DimReds.md

├── atac.md

├── scRNA-seq_zinbwave_zinger.R

├── motif_analyses.md

├── nanopore_sequencing.md

├── repetitiveElements.md

├── scRNA-seq.md

├── Seurat.md

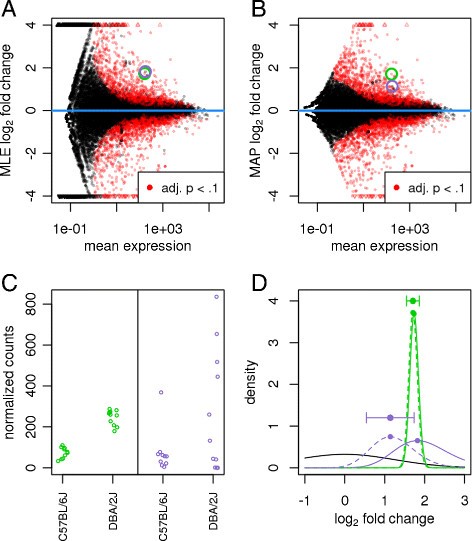

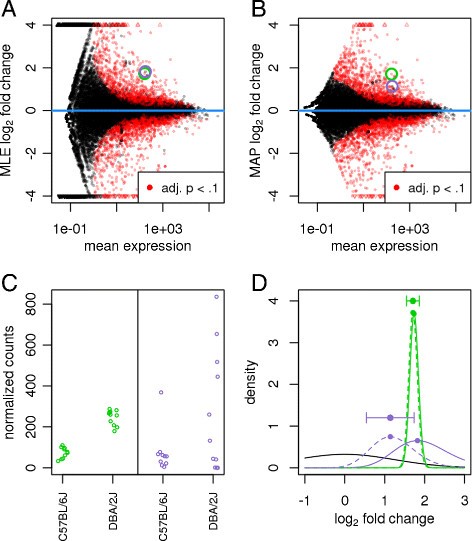

├── RNA_heteroskedasticity.md

├── RNA_heteroskedasticity.Rmd

├── CITE-seq.md

├── notes_10Q.md

├── IntroToNGS_WCMGS.md

└── microarrays.md

/README.md:

--------------------------------------------------------------------------------

1 | # Collection of notes about *omics analyses

2 |

--------------------------------------------------------------------------------

/images/GSEA.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/GSEA.png

--------------------------------------------------------------------------------

/images/GSEA02.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/GSEA02.png

--------------------------------------------------------------------------------

/images/HMM_01.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/HMM_01.png

--------------------------------------------------------------------------------

/images/MA_PLM.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/MA_PLM.png

--------------------------------------------------------------------------------

/images/MA_RLE.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/MA_RLE.png

--------------------------------------------------------------------------------

/images/MA_rma.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/MA_rma.png

--------------------------------------------------------------------------------

/images/MA_NUSE.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/MA_NUSE.png

--------------------------------------------------------------------------------

/images/MA_types.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/MA_types.png

--------------------------------------------------------------------------------

/images/GSEA_Barbie.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/GSEA_Barbie.png

--------------------------------------------------------------------------------

/images/GSEA_ssGSEA.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/GSEA_ssGSEA.png

--------------------------------------------------------------------------------

/images/MA_GCprobes.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/MA_GCprobes.png

--------------------------------------------------------------------------------

/images/MA_mapping.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/MA_mapping.png

--------------------------------------------------------------------------------

/images/MA_oneColor.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/MA_oneColor.png

--------------------------------------------------------------------------------

/images/tcgsa/clst3.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/tcgsa/clst3.png

--------------------------------------------------------------------------------

/images/GSEA_Verhaak.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/GSEA_Verhaak.png

--------------------------------------------------------------------------------

/images/MA_twoColors.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/MA_twoColors.png

--------------------------------------------------------------------------------

/images/intro/ngsapps.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/intro/ngsapps.png

--------------------------------------------------------------------------------

/images/tcgsa/clust2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/tcgsa/clust2.png

--------------------------------------------------------------------------------

/images/MA_medianPolish.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/MA_medianPolish.png

--------------------------------------------------------------------------------

/images/intro/replicates.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/intro/replicates.png

--------------------------------------------------------------------------------

/images/intro/wcm_schema.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/intro/wcm_schema.png

--------------------------------------------------------------------------------

/images/tcgsa/baselineD1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/tcgsa/baselineD1.png

--------------------------------------------------------------------------------

/images/tcgsa/noBaseline.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/tcgsa/noBaseline.png

--------------------------------------------------------------------------------

/references/GSEA/lec14a.pdf:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/references/GSEA/lec14a.pdf

--------------------------------------------------------------------------------

/images/intro/replicates2.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/intro/replicates2.png

--------------------------------------------------------------------------------

/images/nanopore_principle.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/nanopore_principle.png

--------------------------------------------------------------------------------

/images/tcgsa/hm_baseline.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/tcgsa/hm_baseline.png

--------------------------------------------------------------------------------

/images/MA_differentProbesets.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/MA_differentProbesets.png

--------------------------------------------------------------------------------

/images/intro/AuerDoerge2010.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/intro/AuerDoerge2010.png

--------------------------------------------------------------------------------

/images/nanopore_processing.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/nanopore_processing.png

--------------------------------------------------------------------------------

/images/nanopore_processing02.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/nanopore_processing02.png

--------------------------------------------------------------------------------

/images/intro/biology-02-00378-g001.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/intro/biology-02-00378-g001.jpg

--------------------------------------------------------------------------------

/images/tcgsa/noBaseline_precluster.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/tcgsa/noBaseline_precluster.png

--------------------------------------------------------------------------------

/references/GSEA/ssGSEA_caw_BIG_120314.pptx:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/references/GSEA/ssGSEA_caw_BIG_120314.pptx

--------------------------------------------------------------------------------

/images/MA_normMethodComparison_TACmanual.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/images/MA_normMethodComparison_TACmanual.png

--------------------------------------------------------------------------------

/RNA_heteroskedasticity_files/figure-html/unnamed-chunk-3-1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/RNA_heteroskedasticity_files/figure-html/unnamed-chunk-3-1.png

--------------------------------------------------------------------------------

/RNA_heteroskedasticity_files/figure-html/unnamed-chunk-5-1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/RNA_heteroskedasticity_files/figure-html/unnamed-chunk-5-1.png

--------------------------------------------------------------------------------

/RNA_heteroskedasticity_files/figure-html/unnamed-chunk-8-1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/RNA_heteroskedasticity_files/figure-html/unnamed-chunk-8-1.png

--------------------------------------------------------------------------------

/RNA_heteroskedasticity_files/figure-html/unnamed-chunk-9-1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/RNA_heteroskedasticity_files/figure-html/unnamed-chunk-9-1.png

--------------------------------------------------------------------------------

/RNA_heteroskedasticity_files/figure-html/unnamed-chunk-10-1.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/friedue/Notes/HEAD/RNA_heteroskedasticity_files/figure-html/unnamed-chunk-10-1.png

--------------------------------------------------------------------------------

/vdj_tcr_sequencing.md:

--------------------------------------------------------------------------------

1 | VDJ/TCR sequencing

2 | ====================

3 |

4 |

5 |

--------------------------------------------------------------------------------

/bashrc:

--------------------------------------------------------------------------------

1 | ```sh

2 | # .bashrc

3 |

4 | # Source global definitions

5 | #if [ -f /etc/bashrc ]; then

6 | # . /etc/bashrc

7 | #fi

8 |

9 | # User specific aliases and functions

10 |

11 | alias ls='ls -lahFG'

12 | export LC_NUMERIC=en_US.UTF-8

13 | export LC_ALL=en_US.UTF-8

14 |

15 | # This makes the system reluctant to wipe out existing files via cp and mv

16 | set -o noclobber

17 | ```

18 |

--------------------------------------------------------------------------------

/scRNA-seq_python/installing_scanpyEtc.sh:

--------------------------------------------------------------------------------

1 |

2 | # combining anaconda & pip: https://www.anaconda.com/using-pip-in-a-conda-environment/

3 | conda search scanpy

4 | conda info --envs

5 | conda activate scrna # make sure to switch to that environment

6 | conda install pip

7 | /scratchLocal/frd2007/software/anaconda3/envs/scrna/bin/pip install scanorama

8 |

9 | ## testing scanorama

10 | wget https://raw.githubusercontent.com/brianhie/scanorama/master/bin/process.py --no-check-certificate

11 |

12 |

13 |

14 |

--------------------------------------------------------------------------------

/scRRBS.md:

--------------------------------------------------------------------------------

1 | scRRBS

2 | ============

3 |

4 | * original paper: [Guo et al.](https://www.ncbi.nlm.nih.gov/pubmed/24179143)

5 |

6 | * general pros/cons of RRBS apply:

7 | * low coverage of regions with little CpG density

8 | * generally sparse representation: ca. 1 mio CpGsv(1-10% of all CpGs)

9 | * improved detection of promoter/CpG-rich regions

10 | * relatively consistent across replicates

11 |

12 |

13 | [DeepCpG](https://github.com/PMBio/deepcpg/blob/master/examples/README.md) can be used to impute missing values to get a more granular picture at the single-cell level

14 | [Angermueller et al., 2017](https://genomebiology.biomedcentral.com/articles/10.1186/s13059-017-1189-z#Abs1)

--------------------------------------------------------------------------------

/proteomics.md:

--------------------------------------------------------------------------------

1 | Proteomics

2 | ===========

3 |

4 | | Score | Meaning | Reference |

5 | |--------|---------|-----------|

6 | | Ascore | localization of a phosphorylation site; score is the difference between the top scorer - 2nd best scorer | [Ascore][Ascore] |

7 | | Maxscore | | |

8 | | Modscore | modscore is an updated version of Ascore | |

9 | | Localization score | | |

10 |

11 | - `#` missing TMT label

12 | - `]` missing TMT label at N-terminus

13 | - `@` lysine

14 | - `@]@` glitch

15 |

16 | References for the Gygi lab pipeline:

17 |

18 | * [Mouse Phospho-Proteomics Atlas][Mouse Atlas]

19 | * [Ascore][Ascore]

20 |

21 |

22 |

23 |

24 |

25 | [Ascore]: https://www.ncbi.nlm.nih.gov/pubmed/21183079 "Huttlin et al., Cell (2010)"

26 | [Mouse Atlas]: http://ascore.med.harvard.edu/ "Ascore"

27 |

--------------------------------------------------------------------------------

/shiny.md:

--------------------------------------------------------------------------------

1 |

2 | Why shiny apps tend to become unwieldy quickly:

3 |

4 | >Input and output IDs in Shiny apps share a global namespace, meaning, each ID must be unique across the entire app. If you’re using functions to generate UI, and those functions generate inputs and outputs, then you need to ensure that none of the IDs collide. [1][1]

5 |

6 | ## Shiny modules

7 |

8 | * piece of a Shiny app

9 | * can’t be directly run

10 | * included as part of a larger app (or as part of a larger Shiny module–they are composable)

11 | * add namespacing to Shiny UI and server logic

12 |

13 | Modules can represent input, output, or both.

14 |

15 | * composed of two functions that represent 1) a piece of UI, and 2) a fragment of server logic that uses that UI

16 |

17 | ---------------

18 | [1]: https://shiny.rstudio.com/articles/modules.html

19 |

--------------------------------------------------------------------------------

/integrativeAnalysis.md:

--------------------------------------------------------------------------------

1 | Integrative analyses

2 | ---------------------

3 |

4 | * [Review of methods](http://insights.sagepub.com/redirect_file.php?fileId=6771&filename=5060-BMI-Genomic,-Proteomic,-and-Metabolomic-Data-Integration-Strategies.pdf&fileType=pdf) and introduction to the Grinn R package (predecessor to Metabobox)

5 |

6 | ### Metabobox (R package, 2017)

7 |

8 | - see Metabolomics Notes

9 | - [github repo](https://github.com/kwanjeeraw/mETABOX)

10 |

11 | ### __3omics__ (not updated since 2012)

12 | - has a Name-ID converter

13 | - [Example data](http://3omics.cmdm.tw/help.php#examples) and analysis details

14 | - Correlation Network

15 | - "3Omics generates inter-omic correlation network to display the relationship or common patterns in data over time or experimental conditions for all transcripts, proteins and metabolites. Where users may only have two of the three -omics data-sets, 3Omics supplements the missing transcript, protein or metabolite information by searching iHOP"

16 | - Coexpression Profile

17 | - Phenotype Analysis

18 | - Pathway Analysis

19 | - GO Enrichment Analysis

--------------------------------------------------------------------------------

/alignment_vs_pseudoalignment.md:

--------------------------------------------------------------------------------

1 | Rob Patro:

2 |

3 | >Selective alignment is simply an efficient mechanism to compute actual alignment scores, it performs sensitive seeding, seed chaining & scoring, and crucially, actually computes an alignment score (via DP), while pseudo-alignment does not do these things, and is a way to quickly determine the likely compatibility between a read and a set of references.

4 |

5 | > Both of the approaches are algorithmic ways to return, in the case of pseudoalignment, sets of references [that are most compatible with the reads at hand],

6 | and in the case of selective alignment, scored mappings. They can both be run over "arbitrary" referenced indices.

7 |

8 | >If you don't (1) validate the "compatibility" you find and (2) seed in a sensitive fashion, then you end up with mappings whose accuracy is lesser than that derived from alignments. [see their pre-print on this](https://www.biorxiv.org/content/10.1101/657874v1)

9 |

10 | > For example, a 100bp read could "pseudoalign" to a transcript with which it shares only a single k-mer although that would preclude any reasonable quality alignment. There is no scoring adopted in the determination of compatibility, which can and does lead to spurious mapping.

11 |

--------------------------------------------------------------------------------

/metabolomics.md:

--------------------------------------------------------------------------------

1 | Metabolomics

2 | ---------------

3 |

4 | ### Metabobox (2017)

5 |

6 | - R package, [paper](http://journals.plos.org/plosone/article?id=10.1371/journal.pone.0171046)

7 | - can probably be used with other data types, too

8 | - [github repo](https://github.com/kwanjeeraw/mETABOX)

9 | - features:

10 | - Data normalization and data transformation

11 | - Univariate statistical analyses with hypothesis testing procedures that are automatically selected based on study designs

12 | - Joint and interactive visualization of genes, proteins and metabolites in different combinations of networks

13 | - Calculation of data-driven networks using correlation-based approaches

14 | - Calculation of chemical structure similarity networks from substructure fingerprints

15 | - Functional interpretation with overrepresentation analysis, functional class scoring and WordCloud generation

16 |

17 |

18 |

19 | #### Network analysis

20 |

21 | * internal graph database: a _pre-compiled graph database_, which is used for collecting prior knowledge from several [resources](http://journals.plos.org/plosone/article/file?id=10.1371/journal.pone.0171046.s001&type=supplementary) (Note: based on GRCh38 (!))

22 |

23 |

24 |

--------------------------------------------------------------------------------

/GOterm_overrepresentation.md:

--------------------------------------------------------------------------------

1 | ## ClusterProfiler

2 |

3 | * `enrichGO` and `enrichKEGG`: test based on hypergeometric distribution with additional multiple hypothesis-testing correction [Paper](https://www.liebertpub.com/doi/10.1089/omi.2011.0118)

4 |

5 | ```

6 | ## retrieve ENTREZ IDs

7 | eg <- clusterProfiler::bitr(t_tx$gene_symbol, fromType="SYMBOL", toType="ENTREZID", OrgDb="org.Hs.eg.db") %>%

8 | as.data.table

9 | setnames(eg, names(eg), c("gene_symbol", "entrez"))

10 |

11 | clstcomp <- eg[t_tx, on = "gene_symbol"] %>%

12 | .[, c("gene_symbol","entrez","cluster_k60","day","logFC"), with=FALSE] %>%

13 | .[!is.na(entrez)] %>% unique

14 |

15 | ## make a list of ENTREZ IDs, one per cluster

16 | clstcomp.list <- lapply(sort(unique(clstcomp$cluster_k60)), function(x) clstcomp[cluster_k60 == x]$entrez )

17 |

18 | ## run enrichGO

19 | ck.GO_bp <- compareCluster(clstcomp.list, fun = "enrichGO", OrgDb = org.Hs.eg.db, ont = "BP")

20 |

21 | ## visualization

22 | dotplot(ck.GO_bp) + ggtitle("Overrepresented GO terms (Biological Processes)")

23 |

24 | ```

25 |

26 | Individual lists (no comparisons)

27 |

28 | ```

29 | cp.GO_bp.ind <- lapply(clstcomp.list, function(x){

30 | out <- enrichGO(x, OrgDb = org.Mm.eg.db, universe = unique(deg.dt$entrez), ont = "BP", pvalueCutoff = 0.05, pAdjustMethod = "BH")

31 | out <- DOSE::setReadable(out, 'org.Mm.eg.db', 'ENTREZID')

32 | return(out)

33 | })

34 | ```

35 |

36 | * `geneRatio` should be number of genes that overlap gene set divided by size of gene set

37 | * the number in parentheses for the clusterCompare plot corresponds to the number of genes that were in that group

38 |

--------------------------------------------------------------------------------

/ExpHub_submission.md:

--------------------------------------------------------------------------------

1 | For **every data set**:

2 |

3 | ## 1. Save data and metadata in ExpHub (documentation by [ExperimentHub](https://bioconductor.org/packages/3.10/bioc/vignettes/ExperimentHub/inst/doc/CreateAnExperimentHubPackage.html))

4 | - .R or .Rmds stored in `inst/scripts/`

5 | - `make-data.Rmd` -- describe how the data stored in ExpHub was obtained and saved. [example Rmd](https://github.com/LTLA/scRNAseq/blob/master/inst/scripts/make-nestorowa-hsc-data.Rmd)

6 | ```

7 | ## describe how the data was obtained and processed

8 | count.file <- read.table("file.txt")

9 | counts <- as.matrix(count.file)

10 | coldata <- DataFrame(row.names = colnames(count.file),

11 | condition = c("A","A","B","B"))

12 |

13 | ## save the data

14 | path <- file.path("myPackage", "storeddata", "1.0.0")

15 | dir.create(path, showWarnings-FALSE, recursive=TRUE)

16 |

17 | saveRDS(counts, file = file.path(path, "counts.rds"))

18 | saveRDS(coldata, file = file.path(path, "coldata.rds"))

19 | ```

20 | - `make-metadata.R` -- generate a csv file that will be stored in the `inst/extdata` folder of the package, the result can look [like this](https://github.com/LTLA/scRNAseq/blob/master/inst/extdata/metadata-nestorowa-hsc.csv). [example R script](https://github.com/LTLA/scRNAseq/blob/master/inst/scripts/make-nestorowa-hsc-metadata.R)

21 | ```

22 | write.csv(file = "../extdata/metadata-storeddata.csv", stringsAsFactors = FALSE, data.frame(...) )

23 | ```

24 | ## 2. Provide functions to generate the R object that will be exposed to the user

25 | - scripts in `R/`

26 | - `get_storeddata.R`

27 | ```

28 | get_data_hpca <- function() {

29 | version <- "1.0.0"

30 | se <- .create_se(file.path("hpca", version), has.rowdata=FALSE)

31 | }

32 | ```

33 | - `create_se.R`

34 |

--------------------------------------------------------------------------------

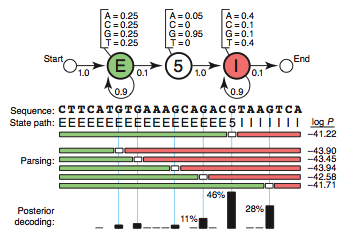

/hidden_markov_model.md:

--------------------------------------------------------------------------------

1 | ## Hidden Markov Model

2 |

3 | from [[1]]():

4 |

5 | Each state has its own __emission probabilities__, which, for example, models the base composition of exons, introns and the consensus G at the 5′SS.

6 |

7 | Each state also has __transition probabilities__, the probabilities of moving from this state to a new state.

8 |

9 |

10 |

11 | [Casino example](http://learninglover.com/blog/?p=635)

12 |

13 | ### Baum-Welch algorithm

14 |

15 | The Baum–Welch algorithm is used to find the unknown parameters of a hidden Markov model (HMM). [[2]] I.e. given a sequence of observations, generate a transition and emission matrix that may have generated the observations. [[4]]

16 |

17 | The BWA makes use of the forward-backward algorithm. [[2]]

18 |

19 |

20 |

21 | ### Viterbi path

22 |

23 | There are potentially many state paths that could generate the same sequence. We want to find the one with the highest probability. (...) The efficient Viterbi algorithm is guaranteed to find the most probable state path given a sequence and an HMM. [[1]]

24 | The Baum–Welch algorithm uses the well known EM algorithm to find the maximum likelihood estimate of the parameters of a hidden Markov model given a set of observed feature vectors. [[3]]

25 |

26 | HMMs don’t deal well with correlations between residues, because they assume that each residue depends only on one underlying state. [[1]]

27 |

28 | [interactive spreasheet for teaching forward-backward algorithm](http://www.cs.jhu.edu/~jason/papers/#eisner-2002-tnlp)

29 |

30 |

31 | ----------------------

32 | [1]: http://dx.doi.org/10.1038/nbt1004-1315 "Eddy, S. Nat Biotech (2014)"

33 | [2]: https://en.wikipedia.org/wiki/Baum%E2%80%93Welch_algorithm "Wikipedia: Baum Welch algorithm]"

34 | [3]: https://en.wikipedia.org/wiki/Viterbi_algorithm "Wikipedia: Viterbi algorithm"

35 | [4]: http://biostat.jhsph.edu/bstcourse/bio638/readings/BW.pdf "Baum-Welch Algorithm explained"

36 |

--------------------------------------------------------------------------------

/clusterProfiler_with_goseq.md:

--------------------------------------------------------------------------------

1 | I've been perpetually annoyed by the fact that clusterProfiler offers useful visualizations, but I don't want to miss out on the correction that GOseq offers.

2 | So, here's a way to combine them both by replacing the p-values of the clusterProfiler result with the goseq results.

3 | It would probably make sense to use no cut-off when running enrichGO to obtain all possible gene sets.

4 |

5 | ```

6 | # GOSEQ =================

7 | library(goseq) # package for GO term enrichment that also accounts for gene legnth

8 | gene.vector <- row.names(DGE.results) %in% DGEgenes %>% as.integer

9 | names(gene.vector) <- row.names(DGE.results)

10 | pwf <- nullp(gene.vector, "sacCer3", "ensGene")

11 | GO.wall <- goseq(pwf, "sacCer3", "ensGene")

12 |

13 | # CLUSTERPROFILE=========

14 | library(clusterProfiler)

15 | dge <- subset(dgeres, padj <= 0.01 )$ENTREZID

16 | go_enrich <- enrichGO(gene = dge,

17 | universe = dgeres$ENTREZID,

18 | OrgDb = "org.Sc.sgd.db",

19 | keyType = 'ENTREZID',

20 | readable = F, # setting this TRUE won't work with yeast

21 | ont = "BP",

22 | pvalueCutoff = 0.05, # probably better to turn this off

23 | qvalueCutoff = 0.10)

24 |

25 | # COMBINE =================

26 |

27 | comp.go <- merge(go_enrich@result[,1:7], GO.wall,

28 | by.x = "ID", by.y = "category", all = TRUE)

29 |

30 | ## get adjusted goseq p.values

31 | comp.go$padj_for_cp <- p.adjust(comp.go$pvalue, method = "BH") # BH is the default choice in enrichGO

32 |

33 | ## before we replace, we need to make sure that our df matches the results of enrichGO

34 | rownames(comp.go) <- comp.go$ID

35 | comp.go <- subset(comp.go, !is.na(pvalue))

36 | comp.go <- comp.go[rownames(go_enrich@result),]

37 |

38 | ## add the goseq-values to the enrichGO results

39 | go.res <- go_enrich

40 | go.res@result$p.adjust <- comp.go$padj_for_cp

41 | go.res@result$pvalue <- comp.go$over_represented_pvalue

42 |

43 | # TADAA

44 | dotplot(go.res, showCategory = 10)

45 | ````

--------------------------------------------------------------------------------

/karyotype.md:

--------------------------------------------------------------------------------

1 | ## Karyotype plots

2 |

3 | - Cytogenetic G-banding data (G-banding = Giemsa staining based)

4 | - Giemsa bands are related to functional nuclear processes such as replication or transcription in the following points

5 | - G bands are late- replicating

6 | - chromatins in G bands are more condensed

7 | - G-band DNA is localized at the nuclear periphery

8 | - G bands are homoge- neous in GC content and essentially consist of GC-poor iso- chores

9 |

10 | [PNAS 2002](doi.dx.org/10.1073/pnas.022437999)

11 |

12 | Recommended package: [karyoploteR](https://bernatgel.github.io/karyoploter_tutorial/) ([vignette](https://bioconductor.org/packages/release/bioc/vignettes/karyoploteR/inst/doc/karyoploteR.html))

13 |

14 |

15 |

16 | >Chemically staining the metaphase chromosomes results in a alternating dark and light banding pattern, which could provide information about abnormalities for chromosomes. Cytogenetic bands could also provide potential predictions of chromosomal structural characteristics, such as repeat structure content, CpG island density, gene density, and GC content.

17 | biovizBase package provides utilities to get ideograms from the UCSC genome browser, as a wrapper around some functionality from rtracklayer. It gets the table for cytoBand and stores the table for certain species as a GRanges object.

18 | We found a color setting scheme in package geneplotter, and we implemented it in biovisBase.

19 | The function .cytobandColor will return a default color set. You could also get it from options after you load biovizBase package.

20 | And we recommended function getBioColor to get the color vector you want, and names of the color is biological categorical data. This function hides interval color genenerators and also the complexity of getting color from options. You could specify whether you want to get colors by default or from options, in this way, you can temporarily edit colors in options and could change or all the graphics. This give graphics a uniform color scheme.

21 |

22 | [BioViz Vignette](https://www.bioconductor.org/packages/release/bioc/vignettes/biovizBase/inst/doc/intro.pdf)

23 |

24 | - brown/red corresponds to "stalk"

25 |

--------------------------------------------------------------------------------

/rscript.md:

--------------------------------------------------------------------------------

1 | # Basic use of `Rscript`

2 |

3 | Example script content:

4 |

5 | ```

6 | #!/usr/bin/env Rscript

7 | args <- commandArgs(trailingOnly = TRUE)

8 | rnorm(n=as.numeric(args[1]), mean=as.numeric(args[2]))

9 | ```

10 |

11 | Invoke the script:

12 |

13 | ```

14 | $ Rscript myScript.R 5 100

15 | ```

16 |

17 | Obviously, this creates a string vector `args` which contains the entries `5` and `100`

18 |

19 | General usage:

20 |

21 | ```

22 | Rscript [options] [-e expr [-e expr2 ...] | file] [args]

23 | ```

24 |

25 | Handling mising arguments:

26 |

27 | ```

28 | # test if there is at least one argument: if not, return an error

29 | if (length(args)==0) {

30 | stop("At least one argument must be supplied (input file).n", call.=FALSE)

31 | } else if (length(args)==1) {

32 | # default output file

33 | args[2] = "out.txt"

34 | }

35 | ```

36 |

37 | For storing helpful information about what types of parameters are expected etc., the `optparse` package is the way to go. [Eric Minikel](https://gist.github.com/ericminikel/8428297) has a great example. Below is a shorter summary:

38 |

39 | ```

40 | #!/usr/bin/env Rscript

41 | library("optparse")

42 |

43 | option_list = list(

44 | make_option(c("-f", "--file"), type="character", default=NULL,

45 | help="dataset file name", metavar="character"),

46 | make_option(c("-o", "--out"), type="character", default="out.txt",

47 | help="output file name [default= %default]", metavar="character")

48 | );

49 |

50 | opt_parser = OptionParser(option_list=option_list);

51 | opt = parse_args(opt_parser); # list with all the arguments sorted by order of appearance in option_list

52 | ```

53 |

54 | Arguments can be called by their names; here: `opt$file` and `opt$out`:

55 |

56 | ```

57 | ## program...

58 | df = read.table(opt$file, header=TRUE)

59 | num_vars = which(sapply(df, class)=="numeric")

60 | df_out = df[ ,num_vars]

61 | write.table(df_out, file=opt$out, row.names=FALSE)

62 | ```

63 |

64 | ## References

65 |

66 | -

67 | -

68 | -

69 | - [Great presentation touching on all important points](https://nbisweden.github.io/RaukR-2018/working_with_scripts_Markus/presentation/WorkingWithScriptsPresentation.html#1)

70 |

--------------------------------------------------------------------------------

/dna_methylation.md:

--------------------------------------------------------------------------------

1 | * [DNA methylation basics](#basics)

2 | * [Regions defined by DNA methylation](#regions)

3 |

4 | -------------------------------

5 |

6 |

7 | ## DNA methylation basics

8 |

9 | see [Messerschmidt Review (2014)]

10 |

11 | * undetectable in certain yeast, nematode, and fly species

12 | * in mamamals, DNA methylation is vital

13 | * mostly in __symmetrical CpG context__

14 | * single CpGs typically hypermethylated vs. unmethylated CpG islands --> __low CpG density promoters__ are generally hypermethylated, yet active vs. __high CpG density promoters__ that are inactive if methylated

15 | * __transposons__ are repressed if methylated (esp. LINEs, LTRs), so are __pericentromeric repeats__

16 | * __imprinted genes__ show parent-of-origin-specific DNA methylation (introduced during gamete differentiation) and subsequently allele-specific gene expression

17 |

18 | ### DNA methylation machinery

19 |

20 | #### _de novo_ methylation

21 |

22 | --> __Dnmt3a, Dnmt3b__

23 |

24 | * maternally provided (Dnmt3a)

25 |

26 | #### methylation maintenance

27 |

28 | --> __Dnmt1__

29 |

30 | * high affinity for hemimethylation

31 | * its deletion in mESCs causes hypotmethylation, but not a complete loss (lethal effect, though)

32 |

33 | _Dnmt2_ is a misnomer: it methylates RNA, not DNA

34 |

35 |

36 | ## Regions defined by DNA methylation

37 |

38 | | |LMR|UMR|IMR|PMD|

39 | |-|---|---|---|---|

40 | |me level| 10-50% | 0-10% |mean: 57% | 0-100%, seemingly disordered|

41 | |size|||271 bp| 150 kb|

42 | |abundance||||20-40% of the genome|

43 | |tissue-specific?|yes||yes, mostly| yes (not present in ESC)|

44 | |CpG content|low|high|not defined|variable|

45 | |localization|DHS overlap|mostly promoters, CGIs|mostly intragenic|heterochromatin|

46 | |reference|[Stadler (2011)]()|[Stadler (2011)]()|[Elliott (2015)]()|[Lister (2009)](), [Gaidatzis (2014)]()|

47 |

48 |

49 | -------------

50 | [Elliott (2015)]: http://dx.doi.org/10.1038/ncomms7363 "Elliott et al., Nat Comms (2015): Intermediate DNA methylation is a conserved signature of genome regulation"

51 | [Lister (2009)]: http://dx.doi.org/10.1038/nature08514 "Lister et al., Nature (2009): Human DNA methylomes at base resolution show widespread epigenomic differences"

52 | [Messerschmidt Review (2014)]:http://dx.doi.org/10.1101/gad.234294.113 "Messerschmidt et al., Genes & Development (2014): DNA methylation dynamics during epigenetic reprogramming in the germline and preimplantation embryos"

53 | [Stadler (2011)]:http://dx.doi.org/10.1038/nature11086 "Stadler et al., Nature (2011): DNA-binding factors shape the mouse methylome at distal regulatory regions"

54 |

55 |

--------------------------------------------------------------------------------

/revigo.md:

--------------------------------------------------------------------------------

1 | # Summarizing GO terms using semantic similarity

2 |

3 | [REVIGO](http://revigo.irb.hr/) clusters GO terms based on their semantic similarity, p-values, and relatedness resulting in a hierarchical clustering where less dispensable terms are placed closer to the root. The clustering is visualized in a clustered heatmap showing the p-values for each term.

4 |

5 | REVIGO generates multiple plots; the easiest way to obtain them in high quality is to download the R scripts that it offers underneath each of the options (e.g. scatter plot, tree map) and to run those yourself.

6 |

7 | Here, I've downloaded the R script after selecting the treemap plot ("Make R script for plotting").

8 | The script is aptly named `REVIGO_treemap.r`.

9 | The plot can be generated as easily as this:

10 |

11 | ```{r eval=FALSE}

12 | ## this will generate a PDF file named "REVIGO_treemap.pdf" in your current

13 | ## working directory

14 | source("~/Downloads/REVIGO_treemap.r")

15 | ```

16 |

17 | Since I personally don't like plots being immediately printed to PDF (much more difficult to include them in an Rmarkdown!),

18 | I've tweaked the function a bit; it's essentially the original REVIGO script that I downloaded minus the part where the `revigo.data` object is generated and with a couple more options to tune the resulting heatmap.

19 |

20 | ```{r define_own_treemap_function}

21 | REVIGO_treemap <- function(revigo.data, col_palette = "Paired",

22 | title = "REVIGO Gene Ontology treemap", ...){

23 | stuff <- data.frame(revigo.data)

24 | names(stuff) <- c("term_ID","description","freqInDbPercent","abslog10pvalue",

25 | "uniqueness","dispensability","representative")

26 | stuff$abslog10pvalue <- as.numeric( as.character(stuff$abslog10pvalue) )

27 | stuff$freqInDbPercent <- as.numeric( as.character(stuff$freqInDbPercent) )

28 | stuff$uniqueness <- as.numeric( as.character(stuff$uniqueness) )

29 | stuff$dispensability <- as.numeric( as.character(stuff$dispensability) )

30 | # check the treemap command documentation for all possible parameters -

31 | # there are a lot more

32 | treemap::treemap(

33 | stuff,

34 | index = c("representative","description"),

35 | vSize = "abslog10pvalue",

36 | type = "categorical",

37 | vColor = "representative",

38 | title = title,

39 | inflate.labels = FALSE,

40 | lowerbound.cex.labels = 0,

41 | bg.labels = 255,

42 | position.legend = "none",

43 | fontsize.title = 22, fontsize.labels=c(18,12,8),

44 | palette= col_palette, ...

45 | )

46 | }

47 | ```

48 |

49 | I still need the originally downloaded script to generate the `revigo.data` object, which I will then pass onto my newly tweaked function.

50 | Using the command line (!) tools `sed` and `egrep`, I'm going to only keep the lines between the one starting with "revigo.data" and the line starting with "stuff".

51 | The output of that will be parsed into a new file, which will only generate the `revigo.data` object that I'm after (no PDF!).

52 |

53 | ```{r}

54 | # the system function allows me to run command line stuff outside of R

55 | # just for legibility purposes, I'll break up the command into individual

56 | # components, which I'll join back together using paste()

57 | sed_cmd <- "sed -n '/^revigo\\.data.*/,/^stuff.*/p'"

58 | fname <- "~/Downloads/REVIGO_treemap.r"

59 | egrep_cmd <- "egrep '^rev|^c'"

60 | out_fname <- "~/Downloads/REVIGO_myData.r"

61 | system(paste(sed_cmd, fname, "|", egrep_cmd, ">", out_fname))

62 | ## upon sourcing; no treemap PDF will be generated, but the revigo.data object

63 | ## should appear in your R environment

64 | source("~/Downloads/REVIGO_myData.r")

65 | REVIGO_treemap(revigo.data)

66 | ```

67 |

68 | If this is all too tedious, you may want to check out a recent Python implementation of REVIGO's principles: [GO-figure](https://www.biorxiv.org/content/10.1101/2020.12.02.408534v1.full).

--------------------------------------------------------------------------------

/repeatMasker.md:

--------------------------------------------------------------------------------

1 | Understanding repeatMasker and repeat annotation

2 | ==================================================

3 |

4 | >RepeatMasker is a program that screens DNA sequences for interspersed repeats and low complexity DNA sequences. The output of the program is a detailed annotation of the repeats that are present in the query sequence as well as a modified version of the query sequence in which all the annotated repeats have been masked

5 |

6 | From:

7 |

8 | Repeats are identified with `RepeatModeler`.

9 |

10 | The full repeatMasker track can be downloaded e.g. via `wget "https://hgdownload.soe.ucsc.edu/goldenPath/mm10/database/rmsk.txt.gz"`

11 | The fields should be labelled as follows:

12 |

13 | | Field | Meaning |

14 | |-------|---------|

15 | | chrom | "Genomic sequence name" |

16 | | chromStart | "Start in genomic sequence" |

17 | | chromEnd | "End in genomic sequence" |

18 | | name | "Name of repeat"|

19 | | score | "always 0 place holder"|

20 | | strand | "Relative orientation + or -"|

21 | | swScore | "Smith Waterman alignment score"|

22 | | milliDiv| "Base mismatches in parts per thousand"|

23 | |milliDel | "Bases deleted in parts per thousand"|

24 | | milliIns | "Bases inserted in parts per thousand"|

25 | | genoLeft | "-#bases after match in genomic sequence"|

26 | | repClass | "Class of repeat"|

27 | |repFamily | "Family of repeat"|

28 | |repStart| "Start (if strand is +) or -#bases after match (if strand is -) in repeat sequence"|

29 | | repEnd| "End in repeat sequence"|

30 | | repLeft| "-#bases after match (if strand is +) or start (if strand is -) in repeat sequence"|

31 |

32 | Based on info from

33 |

34 | * up to ten different **classes** of repeats:

35 | * Short interspersed nuclear elements (SINE), which include ALUs

36 | * Long interspersed nuclear elements (LINE)

37 | * Long terminal repeat elements (LTR), which include retroposons

38 | * DNA repeat elements (DNA)

39 | * Simple repeats (micro-satellites)

40 | * Low complexity repeats

41 | * Satellite repeats

42 | * RNA repeats (including RNA, tRNA, rRNA, snRNA, scRNA, srpRNA)

43 | * Other repeats, which includes class RC (Rolling Circle)

44 | * Unknown

45 |

46 | "A "?" at the end of the "Family" or "Class" (for example, DNA?) signifies that the curator was unsure of the classification. At some point in the future, either the "?" will be removed or the classification will be changed."

47 |

48 | from [UCSC GenomeBrowser Track description](https://genome.ucsc.edu/cgi-bin/hgTables?db=hg19&hgta_group=rep&hgta_track=rmsk&hgta_table=rmsk&hgta_doSchema=describe+table+schema)

49 |

50 | ## Families, classes and so on

51 |

52 | >The most elementary level of classification of TEs is the family, which designates interspersed genomic copies derived from the amplification of an ancestral progenitor sequence (10). Each TE family can be represented by a consensus sequence approximating that of the ancestral progenitor.

53 |

54 | From [Flynn et al. (2020)](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7196820/)

55 |

56 | >RepeatModeler contains a basic homology-based classification module (RepeatClassifier) which compares the TE families generated by the various de novo tools to both the RepeatMasker Repeat Protein Database (DB) and to the RepeatMasker libraries (e.g., Dfam and/or RepBase). The Repeat Protein DB is a set of TE-derived coding sequences that covers a wide range of TE classes and organisms. As is often the case with a search against all known TE consensus sequences, there will be a high number of false positive or partial matches. RepeatClassifier uses a combination of score and overlap filters to produce a reduced set of high-confidence results. If there is a concordance in classification among the filtered results, RepeatClassifier will label the family using the RepeatMasker/Dfam classification system and adjust the orientation (if necessary). Remaining families are labeled “Unknown” if a call cannot be made. Classification is the only step that requires a database, and can be completed with only open-source Dfam if Repbase is not available.

57 |

--------------------------------------------------------------------------------

/scRNA-seq_RNAvelocity.md:

--------------------------------------------------------------------------------

1 | # RNA velocity

2 |

3 | ## Basics

4 |

5 | * Goal: **predict the future expression state of a cell**

6 | * input: two gene-by-cell matrices

7 | * one with spliced (`S`)

8 | * one with unspliced counts (`U`)

9 |

10 | For each *gene*, a phase-plot is constructed.

11 | That phase-plot is used to estimate the gene- and cell-specific velocity.

12 | The phase-plot is a scatterplot of the relevant row (gene) from the U and S matrices (well, a moment estimator of these quantities, but that is a minor point). In other words, the gene-specific velocities are determined by the relationship of S and U. [(HansenLabBlog)](http://www.hansenlab.org/velocity_batch)

13 |

14 | In the words of the Theis Lab:

15 | >For each gene, a steady-state-ratio of pre-mature (unspliced) and mature (spliced) mRNA counts is fitted, which constitutes a constant transcriptional state. Velocities are then obtained as **residuals from this ratio**. Positive velocity indicates that a gene is up-regulated, which occurs for cells that show higher abundance of unspliced mRNA for that gene than expected in steady state. Conversely, negative velocity indicates that a gene is down-regulated.

16 |

17 | [This gif](https://user-images.githubusercontent.com/31883718/80227452-eb822480-864d-11ea-9399-56886c5e2785.gif) illustrates the principles.

18 |

19 | In brief, velocity is estimated for each gene in each cell and then projected into lower dimensional space to reveal the direction of *cell* fate transitions.

20 | The extraplolation of traditional RNA velocity measurements is valid for approx. a couple of hours (based on [Qiu et al., 2022](https://doi.org/10.1016/j.cell.2021.12.045).

21 |

22 | ### Batch effect

23 |

24 | Batch effect seems to be present according to the Hansen Lab. [(HansenLabBlog)](http://www.hansenlab.org/velocity_batch)

25 |

26 | Challenge for RNA velocity: we need to batch correct not 1 but 2 matrices simultaneously

27 |

28 | `scVelo` currently does not pay attention to this, as they state "any additional preprocessing step only affects X and is not applied to spliced/unspliced counts." [(Ref)](https://colab.research.google.com/github/theislab/scvelo_notebooks/blob/master/VelocityBasics.ipynb#scrollTo=SgjdS1emFTbq)

29 |

30 | HansenLab suggests:

31 |

32 | >we correct S and U for library size, and form M=U+S. Then we log-transform M as log(M+1), use ComBat and invert the log transformation. This is what we feed to scVelo and friends. [Details](http://www.hansenlab.org/velocity_batch)

33 |

34 | ## Processing details

35 |

36 | The starting point for any type of velocity analysis: **2 count matrices of pre-mature (unspliced) and mature (spliced) abundances**.

37 |

38 | These can be obtained from standard sequencing protocols, using the `velocyto.py` or `loompy/kallisto` counting pipeline.

39 |

40 | [**Velocyto**](http://velocyto.org/velocyto.py/tutorial/index.html) offers multiple wrappers around 10X Genomics (CellRanger) data, Smart-seq2 data etc.

41 | It essentially looks at every single mapped read and determines whether it represents a spliced, unspliced or ambiguous molecule.

42 |

43 | The BAM file will have to:

44 |

45 | - Be sorted by mapping position.

46 | - Represents either a single sample (multiple cells prepared using a certain barcode set in a single experiment) or single cell.

47 | - Contain an error corrected cell barcodes as a TAG named CB or XC.

48 | - Contain an error corrected molecular barcodes as a TAG named UB or XM.

49 |

50 | ```

51 | # wrapper for 10X

52 | velocyto run10x -m repeat_msk.gtf mypath/sample01 somepath/refdata-cellranger-mm10-1.2.0/genes/genes.gtf

53 |

54 | # same thing with the simple velocyto run command

55 | velocyto run -b filtered_barcodes.tsv -o output_path -m repeat_msk_srt.gtf bam_file.bam annotation.gtf

56 | ```

57 |

58 | The output is a 4-layered [loom file](http://linnarssonlab.org/loompy/index.html), i.e. an HDF5 file that contains specific groups representing the main matrix as well as row and column attribute. ([loom details here](http://linnarssonlab.org/loompy/conventions/index.html)).

59 |

60 |

61 |

62 | For a more detailed run-down of how to move from R-processed data over to velocity, see [Sam's description](https://smorabit.github.io/tutorials/8_velocyto/) or [Basil's](https://github.com/basilkhuder/Seurat-to-RNA-Velocity).

63 |

64 | ### scanpy, scVelo and AnnData

65 |

66 | scVelo is based on `adata`

67 |

68 | - stores a data matrix `adata.X`,

69 | - annotation of observations `adata.obs`

70 | - variables `adata.var`, and

71 | - unstructured annotations `adata.uns`

72 | - computed velocities are stored in `adata.layers` just like the count matrices.

73 |

74 | **Names** of observations and variables can be accessed via `adata.obs_names` and `adata.var_names`, respectively.

75 |

76 | AnnData objects can be sliced like dataframes: `adata_subset = adata[:, list_of_gene_names]`. For more details, see the [anndata docs](https://anndata.readthedocs.io/en/latest/api.html).

77 |

78 | ### Additional resources:

79 |

80 | * For getting a better understanding of `AnnData`, it probably serves to look at a general `pandas` tutorial, e.g. [this one](https://blog.jetbrains.com/datalore/2021/02/25/pandas-tutorial-10-popular-questions-for-python-data-frames/)

81 | * A good overview of typical `scanpy` commands (incl. PCA, UMAP), is given by the [PBMC tutorial](https://scanpy-tutorials.readthedocs.io/en/latest/pbmc3k.html).

82 | * The [complete run-down of **scVelo** and visualization commands](https://scvelo.readthedocs.io/VelocityBasics.html)

83 |

--------------------------------------------------------------------------------

/Seurat_labelTransfer.md:

--------------------------------------------------------------------------------

1 | Testing Seurat's label transfer

2 | ================================

3 |

4 | > Friederike Dündar | 10/26/2020

5 |

6 | ## `Seurat`'s Azimuth workflow

7 |

8 | >Azimuth, a workflow to leverage high-quality reference datasets to rapidly map new scRNA-seq datasets (queries). For example, you can map any scRNA-seq dataset of human PBMC onto our reference, automating the process of visualization, clustering annotation, and differential expression. Azimuth can be run within Seurat, or using a standalone web application that requires no installation or programming experience.

9 | [Ref](https://satijalab.org/seurat/)

10 |

11 | The [vignette](https://satijalab.org/seurat/v4.0/reference_mapping.html) is based on `Seurat v4` and describes how to transfer labels from [CITE-seq reference of 162,000 PBMC measures with 228 antibodies](https://www.biorxiv.org/content/10.1101/2020.10.12.335331v1)

12 |

13 | >in this version of Azimuth, **we do not recommend mapping samples that have been enriched to consist primarily of a single cell type. This is due to assumptions that are made during SCTransform normalization**, and will be extended in future versions.

14 | [Ref: app description](https://satijalab.org/azimuth/)

15 |

16 | ## How to handle batches

17 |

18 | >UMAP and label transfer results are very similar whether a dataset containing multiple batches is mapped one batch at a time or combined. However, the mapping score returned by the app (see below for more discussion) may change. In the presence of batch effects, cells from certain batches sometimes receive high mapping scores when the batches are mapped separately but receive low mapping scores when batches are mapped together. Since the mapping score is meant to identify cells that are defined by a source of heterogeneity that is not present in the reference dataset, the presence of batch effects may cause low mapping scores.

19 | [Ref](https://satijalab.org/azimuth/)

20 |

21 | ## Prediction vs. mapping score

22 |

23 | **prediction score**:

24 |

25 | Prediction scores are calculated based on the cell type identities of the reference cells near to the mapped query cell.

26 |

27 | - For a given cell, the sum of prediction scores for all cell types is 1.

28 | - The PS for a given assigned cell type is the maximum score over all possible cell types

29 | - the higher it is, the more **reference cells near a query cell have the same label**

30 | - low prediction score may be caused if the probability for a given label is equally split between two clusters

31 |

32 | **mapping score**:

33 |

34 | From `?MappingScore()`

35 |

36 | >This metric was designed to help identify query cells that aren't

37 | well represented in the reference dataset. The intuition for the

38 | score is that we are going to project the query cells into a

39 | reference-defined space and then project them back onto the query.

40 | By comparing the neighborhoods before and after projection, we

41 | identify cells who's local neighborhoods are the most affected by

42 | this transformation. This could be because there is a population

43 | of query cells that aren't present in the reference or the state

44 | of the cells in the query is significantly different from the

45 | equivalent cell type in the reference.

46 |

47 | - This value from 0 to 1 reflects confidence that this cell is well represented by the reference

48 | - cell types that are not present in the reference should have lower mapping scores

49 |

50 |

51 | ### How can a cell get a high prediction score and a low mapping score?

52 |

53 | >A high prediction score means that a high proportion of reference cells near a query cell have the same label. However, these reference cells may not represent the query cell well, resulting in a low mapping score. Cell types that are not present in the reference should have lower mapping scores. For example, we have observed that query datasets containing neutrophils (which are not present in our reference), will be confidently annotated as CD14 Monocytes, as Monocytes are the closest cell type to neutrophils, but receive a low mapping score.

54 |

55 | ### How can a cell get a low prediction score and a high mapping score?

56 |

57 | > A cell can get a low prediction score because its probability is equally split between two clusters (for example, for some cells, it may not be possible to confidently classify them between the two possibilities of CD4 Central Memory (CM), and Effector Memory (EM), which lowers the prediction score, but the mapping score will remain high.

58 |

59 | ## UMAP

60 |

61 | From the help of `ProjectUMAP()`:

62 |

63 | This function will take a query dataset and project it into the coordinates of a provided reference UMAP. This is essentially a wrapper around two steps:

64 |

65 | * `FindNeighbors()`: Find the nearest reference cell neighbors and

66 | their distances for each query cell.

67 | * `RunUMAP()`: Perform umap projection by providing the neighbor

68 | set calculated above and the umap model previously computed

69 | in the reference.

70 |

71 | If there are cell states that are present in the query dataset that are not represented in the reference, they will project to the most similar cell in the reference. This is the expected behavior and functionality as established by the UMAP package, but can potentially mask the presence of new cell types in the query which may be of interest.

72 |

73 |

74 | ## Recommended "de novo" visualization by merging the PCs for reference and query

75 |

76 | ```

77 | #merge reference and query

78 | reference$id <- 'reference'

79 | pbmc3k$id <- 'query'

80 | refquery <- merge(reference, pbmc3k)

81 | refquery[["spca"]] <- merge(reference[["spca"]], pbmc3k[["ref.spca"]])

82 | refquery <- RunUMAP(refquery, reduction = 'spca', dims = 1:50)

83 | DimPlot(refquery, group.by = 'id')

84 | ```

85 |

--------------------------------------------------------------------------------

/spatial_transcriptomics.md:

--------------------------------------------------------------------------------

1 | # Spatial transcriptomics

2 |

3 | [Github repo](https://github.com/SpatialTranscriptomicsResearch) of the original lab that developed Visium

4 |

5 | * [Viewer](https://github.com/jfnavarro/st_viewer/wiki)

6 |

7 | ## Detection of genes whose expression is highly localized

8 |

9 | ### Mark variogram

10 |

11 | A spatial pattern records the locations of events produced by an underlying spatial process in a study region.

12 |

13 | `spatstat`[http://www.spatstat.org/] package

14 |

15 | Auxiliary information attached to each point in the point pattern is called a mark and we speak of a marked point pattern.

16 |

17 | **Variogram**:

18 |

19 | - function describing the degree of spatial dependence of a spatial random field

20 | - how much do the values of two marks differ depending on the distance between those samples (assumption: samples taken far apart will vary more than samples taken close to each other)

21 | - variogram = variance of the difference between fiedl values at two locations

22 |

23 | #### Application to spatial transcriptomics

24 |

25 | Originally introduced by the Sandberg Lab [(Edsgard, Johnsson & Sandberg (2018), Nat Methods)](https://www.nature.com/articles/nmeth.4634) in their package [`trendsceek`](https://github.com/edsgard/trendsceek):

26 |

27 | >To identify genes for which dependencies exist between the spatial distribution of cells and gene expression in those cells, we modeled data as marked point processes, which we used to rank and assess the significance of the spatial expression trends of each gene.

28 |

29 | * points = spatial locations of cells (or regions)

30 | * marks on each point = expression levels

31 | * tests for significant dependency between the spatial distributions of points and their associated marks (expression levels) through **pairwise analyses of points as a function of the distance r (radius)** between them

32 | * if marks and the locations of points are independent, the scores obtained should be constant across the different distances r

33 | * To assess the significance of a gene's spatial expression pattern, we implemented a resampling procedure in which the expression values are permuted, reflecting a null model with no spatial dependency of expression

34 |

35 | They also applied their method to the coordinates defined by UMAP/t-SNE

36 |

37 | >spatial methods have the ability to identify continuous gradients or spatial expression patterns defined by fewer genes that would be hard to identify through clustering of pairwise cellular expression profile correlations

38 | >

39 | >- only a subset of highly variable genes have significant spatial expression patterns

40 |

41 | * For the distribution of all pairs at a particular radius, a mark segregation is said to be present if the distribution is dependent on r such that it deviates from what would be expected if the marks were randomly distributed

42 | * Four summary statistics of the pair distribution were calculated for each radius and compared to the null distribution of the summary statistic derived from the permuted expression labels.

43 |

44 | ### SpatialDE

45 |

46 | * [Svensson et al.](https://www.nature.com/articles/nmeth.4636)

47 | * [repo of the python package](https://github.com/Teichlab/SpatialDE/)

48 |

49 | ### Splotch

50 |

51 | * [Aijo et al.](https://www.biorxiv.org/content/10.1101/757096v1.full.pdf+html)

52 | * [repo](https://github.com/tare/Splotch)

53 |

54 | ## Spatial representations in R

55 |

56 | ### `sf` library

57 |

58 | from [Jesse Sadler](https://www.jessesadler.com/post/simple-feature-objects/)

59 |

60 | `sf class object` = `sfg` + `sfc` objects

61 |

62 | - basically a data frame with rows of features, columns of attributes and a special geometry column with the spatial aspects of the features

63 | - `sf` object: collection of simple features represented by a data frame

64 | - `sfg` object: geometry of a single feature

65 | - `sfc` object: geometry *column* with the spatial attributes of the object printed above the data frame

66 |

67 |

68 | ### `sfg`

69 |

70 | Represents the coordinates of the objects.

71 |

72 | Geometry types:

73 |

74 | | Name | Represents | Created with | Function |

75 | |-----|-------------|--------------|----------|

76 | | POINT | a single point | a vector | `sf_point()` |

77 | | MULTIPOINT |multiple points |matrix with each row = point | `sf_multipoint()` |

78 | LINESTRING | sequence of two or more points connected by straight lines | matrix with each row = point | `sf_linestring()` |

79 | | MULTILINESTRING | multiple lines | list of matrices | `sf_multilinestring()`

80 | | POLYGON | closed (!) ring with zero or more interior holes | list of matrices | `sf_polygon()`|

81 | | MULTIPOLYGON | multiple polygons | list of list of matrices | `sf_multipolygon()` |

82 | | GEOMETRYCOLLECTION | any combination of the above types | list that combines any of the above | `sf_geometrycollection()` |

83 |

84 | ### `sfc`

85 |

86 | For representing *geospatial* data, i.e. they are lists of of one ore more `sfg` objects with attributes that contain the coordinate reference system

87 |

88 | Functions for creating `sfc` objects: `st_sfc(multipoint_sfg)` (this would create an `sfc` object with `NA`'s in the `epsg` and `proj4string` attributes)

89 |

90 | ### Creating an `sf` object

91 |

92 | The `sf` objects combine the spatial information with any number of attributes, e.g. names, values etc.

93 |

94 | They can be created with the `st_sf()` function

95 | * joins a df to an sfg object

96 |

97 | ## Generating a point pattern (`ppp`) object for `markvariogram` function (`spatstat` package)

98 |

99 | ```

100 | ## extract coordinates

101 | spatial.coords <- reducedDim(sce.obj, coords_accessor)

102 |

103 | ## generate ppp object

104 | x.coord = spatial.coords[, 1]

105 | y.coord = spatial.coords[, 2]

106 |

107 | pp <- ppp(

108 | x = x.coord,

109 | y = y.coord,

110 | xrange = range(x.coord),

111 | yrange = range(y.coord)

112 | )

113 | pp[["marks"]] <- as.data.frame(x = t(x = exprs_data))

114 | mv <- markvario(X = pp, normalise = TRUE, ...)

115 | ```

116 |

117 |

--------------------------------------------------------------------------------

/DimReds.md:

--------------------------------------------------------------------------------

1 | # Dimensionality reduction techniques

2 |

3 | we are concerned with defining similarities between two objects *i* and *j* in the high dimensional input space *X* and low dimensional embedded space *Y*

4 |

5 | >It is interesting to think about why basically each of the techniques is applicable in one particular research area and not common in other areas. For example, Independent Component Analysis (ICA) is used in signal processing, Non-Negative Matrix Factorization (NMF) is popular for text mining, Non-Metric Multi-Dimensional Scaling (NMDS) is very common in Metagenomics analysis etc., but it is rare to see e.g. NMF to be used for RNA sequencing data analysis. [Oskolkov](https://towardsdatascience.com/reduce-dimensions-for-single-cell-4224778a2d67)

6 |

7 | * PCA

8 | * UMAP

9 | * tSNE

10 | * diffusion map

11 |

12 | >linear dimensionality reduction techniques can not fully resolve the heterogeneity in the single cell data. (...) Linear dimension reduction techniques are good at preserving the global structure of the data (connections between all the data points) while it seems that for single cell data it is more important to keep the local structure of the data (connections between neighboring points). [Oskolkov](https://towardsdatascience.com/reduce-dimensions-for-single-cell-4224778a2d67)

13 |

14 | ## UMAP: Uniform Manifold Approximation and Projection for Dimension Reduction

15 |

16 | "The UMAP algorithm seeks to find an embedding by searching for a low-dimensional projection of the data that has the closest possible equivalent fuzzy topological structure"

17 |

18 | Cost function: Cross-Entropy --> probably the main reason for why UMAP can preserve the global structure better than tSNE

19 |

20 |

21 |

22 | [Mathematical background](https://arxiv.org/abs/1802.03426)

23 |

24 | 3 basic assumptions [1](https://umap-learn.readthedocs.io/en/latest/):

25 |

26 |

27 | 1. The data is uniformly distributed on Riemannian manifold;

28 | 2. The Riemannian metric is locally constant (or can be approximated as such);

29 | 3. The manifold is locally connected.

30 |

31 | In contrast to tSNE, UMAP *estimates* the nearest-neighbors distances (with the [nearest-neighbor-descent algorithm](https://dl.acm.org/citation.cfm?id=1963487)), which relieves some of the computational burden

32 |

33 | From [Oskolkov](https://towardsdatascience.com/how-exactly-umap-works-13e3040e1668):

34 | Both tSNE and UMAP essentially consist of two steps:

35 |

36 | 1. Building a **graph** in high dimensions and computing the bandwidth of the exponential probability, σ, using the binary search and the fixed number of nearest neighbors to consider.

37 | 2. **Optimization of the low-dimensional representation** via Gradient Descent. The second step is the bottleneck of the algorithm, it is consecutive and can not be multi-threaded. Since both tSNE and UMAP do the second step, it is not immediately obvious why UMAP can do it more efficiently than tSNE.

38 |

39 | ### `n_neighbors`

40 |

41 | - constraining the size of the local neighborhood when learning the manifold structure

42 | - low values --> focus on local structure

43 | - default value: 15

44 |

45 | ### `min_dist`

46 |

47 | - minimum distance between points in the low dimensional representation

48 | - low values --> clumps

49 | - range: 0-1; default: 0.1

50 |

51 | ### `n_components`

52 |

53 | = number of dimensions in which the data should be represented

54 |

55 | ### `metric`

56 |

57 | - distance computation, e.g. Euclidean, Manhttan, Minkowski...

58 |

59 | ## tSNE

60 |

61 | The t-SNE cost function seeks to minimize the Kullback–Leibler divergence between the joint probability distribution in the high-dimensional space, *pij*, and the joint probability distribution in the low-dimensional space, *qij*. The fact that both *pij* and *qij* require calculations over all pairs of points imposes a high computational burden on t-SNE. [2](https://pubs.acs.org/doi/10.1021/acs.analchem.8b05827)

62 |

63 | From Appendix C of [McInnes et al.](https://arxiv.org/abs/1802.03426):

64 |

65 | t-SNE defines input probabilities in three stages:

66 |

67 | 1. For each pair of points, i and j, in X, a pair-wise similarity, vij, is calculated, Gaussian with respect to the Euclidean distance between xi and xj.

68 | 2. The similarities are converted into N conditional probability distributions by normalization. The perplexity of the probability distribution is matched to a user-defined value.

69 | 3. The probability distributions are symmetrized and further normalized over the entire matrix of values.

70 |

71 | Similarities between pairs of points in the output space *Y* are defined using a Student t-distribution with one degree of freedom on the squared Euclidean distance followed by the matrix-wise normalization to form qij.

72 |

73 | Barnes-Hut: only calculate vj|i for n nearest neighbors of i where n is a multiple of the user-selected perplexity; for all other j, vi|j is assumed to be 0 (justified because similarities in the high dimensions are effectively zero outside of the nearest neighbors of each point due to the calibration of the pj|i values to reprorcuce a desired perplexity)

74 |

75 | ## PCA

76 |

77 | PCA relies on the determination of orthogonal eigenvectors along which the largest variances in the data are to be found. PCA works very well to approximate data by a low-dimensional subspace, which is equivalent to the existence of many linear relations among the projected data points.

78 |

79 | ## Diffusion Map

80 |

81 | - based on calculating transition probabilities between cells; this is used to calculate the diffusion distance

82 | - main idea: embed data into a lower-dimensional space such that the Euclidean distance between points approximates diffusion distance data [3](www.dam.brown.edu/people/mmcguirl/diffusionmapTDA.pdf)

83 |

84 | 1. Calculate diffusion distance: K(x,y) = exp(- (|x-y|)/alpha)

85 | 2. Create distance/kernel matrix: Kij = K(xi, xj)

86 | 3. Create diffusion matrix (Markov) M by normalizing so that sum over rows is 1

87 | 4. Calculate eigenvectors of M, sort by eigenvalues

88 | 5. Return top eigenvectors

--------------------------------------------------------------------------------

/atac.md:

--------------------------------------------------------------------------------

1 | # ATAC-seq normalization

2 |

3 | - IMO, the background (in ChIP-seq) is really much more representative of how well the sequencing worked than the peaks, especially for tricky ChIPs

4 | - for ATAC-seq, I'm not so sure. Might depend on the sample. "Efficiency bias" could be confounded with "population heterogeneity", ergo, subtle changes in 'peak height' may reflect biologically meaningful differences in the number of cells that show chromatin accessibility at a given locus

5 | - It all comes down to having replicates of the same condition -- those will help with the decision about the types of biases we're encountering in a given set of samples -- and it doesn't fully matter whether the differences between the replicates represent biological or technical causes as long as we can agree that these differences are *uninteresting* for the bigger picture.

6 |

7 | What could cause efficiency bias in ATAC-seq samples?

8 |

9 | - tagmentation differences -- how fickle is the digestion step? or, rather, how similar are the outputs for the same sample types when digestion times are kept constant?

10 | - over-digestion --> tags from open regions are lost (too small); too much of the normally closed chromatin ends up being sequenced

11 | - under-digestion

12 |

13 | ## CSAW's take

14 |

15 | From [csaw vignette](https://bioconductor.org/packages/3.12/workflows/vignettes/csawUsersGuide/inst/doc/csaw.pdf):

16 |

17 | If one assumes that the differences at high abundances represent genuine DB events, then we need to remove **composition bias**.

18 |

19 | - composition biases are formed when there are differences in the composition of sequences across libraries, i.e. in the region repertoire

20 | - Highly enriched regions consume more sequencing resources and thereby suppress the representation of other regions. Differences in the magnitude of suppression between libraries can lead to spurious DB calls.

21 | - Scaling by library size fails to correct for this as composition biases can still occur in libraries of the same size.

22 | - to correct for this, use **non-DB background regions**

23 |