├── README.md

├── faq

├── GATK workshop的变异寻找流程.md

├── GATK的学习小组包括哪些内容呢.md

├── GATK软件需要的输入文件是哪些.md

├── 参加GATK的研讨会操作课程前需要准备什么.md

└── 如何向GATK官方汇报bugs.md

├── methods

├── 基于DNAseq的GATK最佳实践概述.md

├── 基于RNAseq的GATK最佳实践概述.md

└── 基于RNAseq的GATK最佳实践流程.md

├── processing.md

└── tutorials

├── .DS_Store

├── filetype

├── 从vcf格式的变异文件里面挑选感兴趣位点.md

├── 如何不比对就把fastq文件转为bam格式呢?.md

├── 如何从fasta序列里面模拟测序reads?.md

├── 如何处理参考基因组的非染色体序列?.md

├── 如何对bam文件进行修复.md

└── 如何根据坐标区间对bam文件取子集?.md

├── steps

├── 如何去除PCR重复?.md

├── 如何用HC找变异.md

├── 如何用UG找变异.md

├── 如何运行BQSR.md

├── 如何运行VQSR.md

└── 如何高效的比对?.md

├── vcf-filter-annotation

├── .DS_Store

├── GVCF和普通的VCF文件的区别是什么.md

├── VCF文件是什么,该如何解释呢.md

├── 变异位点评价模块是干什么的.md

├── 如何对vcf文件进行过滤?.md

├── 用CollectVariantCallingMetrics来评价找到的变异.md

├── 用VariantEval来评价找到的变异.md

├── 突变碱基特异性注释和过滤.md

└── 评价找到的变异.md

└── 如何安装GATK最佳实践的必备软件.md

/README.md:

--------------------------------------------------------------------------------

1 | # GATK中文社区招募

2 |

3 | 昨天gatk官网放出了征求中文社区合作伙伴的消息,作为生物信息学领域最活跃的中文社群,我们当仁不让的要接下这茬。GATK流程是目前科研界+工业界应用最广泛变异位点识别流程,而且是由大名鼎鼎的broad开发和维护,非常具有学习参考价值。

4 |

5 | 首先介绍一下我们的计划:

6 |

7 | ## 建设协作论坛

8 |

9 | 采用开源论坛系统Flarum建设GATK中文社区,该论坛所有帖子将以markdown代码形式发帖,所有的markdown源代码都会同步存放在此github的organization的这个project下面。GATK中文社区地址是: 对应的broad的GATK主页是;

10 |

11 | ## 建设协作github

12 |

13 | 因为论坛都是以markdown写作,所以源代码很容易上传保存到统一的github合作仓库。https://github.com/gatk-chinese/forum

14 |

15 | - faq

16 | - tools

17 | - tutorials

18 | - methods

19 | - others

20 | - Images (存储上面5个文件夹的所有图片)

21 |

22 | 下面介绍一下GATK官方的工作,我们在github建立的5个存放markdown源代码的文件夹,对应着下面的GATK官网的5块知识点。

23 |

24 | ## 文档

25 |

26 | - 约40多个最常见的用户问题[Frequently Asked Questions ](https://software.broadinstitute.org/gatk/documentation/topic?name=faqs)

27 | - 约30多个官方教程[Step-by-step instructions targeted by use case](https://software.broadinstitute.org/gatk/documentation/topic?name=tutorials)

28 | - 约20多个[专有名词解释](https://software.broadinstitute.org/gatk/documentation/topic?name=dictionary)

29 | - 一些PPT和[youtube视频下载](https://software.broadinstitute.org/gatk/documentation/presentations) 存放在 the [GATK Workshops](https://drive.google.com/open?id=1y7q0gJ-ohNDhKG85UTRTwW1Jkq4HJ5M3) directory 需要翻墙!

30 |

31 | 这个工作量是最大的,需要**GATK熟手**才能比较流畅的翻译加工。我已经在自己的社交圈物色了3名不错的人才负责这部分知识点,同时也欢迎读者推荐自己或者认识的高手加盟,我会认真对待这个合作。

32 |

33 | ## 套件的工具

34 |

35 | GATK涉及到了对高通量测序数据寻找遗传变异位点过程的方方面面,绝大部分用户用到了就是GATK对bam文件的一个再处理,还有UG或者HC模式的变异寻找工具。

36 |

37 | - [Diagnostics and Quality Control Tools](https://software.broadinstitute.org/gatk/documentation/tooldocs/current/#DiagnosticsandQualityControlTools)

38 | - [Sequence Data Processing Tools](https://software.broadinstitute.org/gatk/documentation/tooldocs/current/#SequenceDataProcessingTools)

39 | - [Variant Discovery Tools](https://software.broadinstitute.org/gatk/documentation/tooldocs/current/#VariantDiscoveryTools)

40 | - [Variant Evaluation Tools](https://software.broadinstitute.org/gatk/documentation/tooldocs/current/#VariantEvaluationTools)

41 | - [Variant Manipulation Tools](https://software.broadinstitute.org/gatk/documentation/tooldocs/current/#VariantManipulationTools)

42 |

43 | 这个主要是工具的帮助文档的翻译,理论上谷歌翻译+人工修改就可以了,并没有涉及到过多的生物信息学知识点,对**经验要求也不高**,大部分都是工具的参数的解读。这个版块需要比较多的志愿者参与,欢迎联系我邮箱 jmzeng1314@163.com 获取参与细节,来信请务必做好一定程度的自我介绍。

44 |

45 | ## 用法及算法

46 |

47 | 这个版块与其官方教程部分重叠,但是侧重于对步骤细节的理解以及取舍,对变异位点识别原理的掌握要求也很高。目前处于待定状态,可能需要专家加盟,甚至需要部分从事遗传咨询研究的朋友合作翻译,而不仅仅是做数据处理的生物信息学家。

48 |

49 | ## [最佳实践](https://software.broadinstitute.org/gatk/best-practices/)

50 |

51 | 这里准备结合几个项目数据分析实际案例来讲解如何应用GATK官网推荐的最佳流程。

52 |

53 | ## [博客](https://software.broadinstitute.org/gatk/blog)

54 |

55 | 主要是GATK官方维护小组的人会在上面发布一些关于GATK的动态,版本,小故事,会议等等。并不在我们的翻译计划中。

56 |

57 | ## [论坛](https://gatkforums.broadinstitute.org/gatk)

58 |

59 | 这里有全世界各地不同生物信息学水平的遗传变异研究者对于GATK,但不限于该软件使用方法的各种讨论。目前也不在我们的翻译计划中。

60 |

--------------------------------------------------------------------------------

/faq/GATK的学习小组包括哪些内容呢.md:

--------------------------------------------------------------------------------

1 | # GATK的研讨会包括哪些内容呢?

2 |

3 | 原文链接:[ What do GATK workshops cover?](https://software.broadinstitute.org/gatk/documentation/article?id=9218)

4 |

5 | ## 为期三天的GATK研讨会

6 |

7 | 这次研讨会将会集中在使用broad研究所开发的GATK软件来寻找变异位点的核心步骤,即GATK小组开发的最佳实践流程。你将会了解为什么最佳实践的每个步骤都是寻找变异位点这一过程必不可少的,每一步骤分别对数据做了什么,如何使用GATK工具来处理你自己的数据并得到最可信最准确的结果。同时,在研讨会的课程中,我们会突出讲解部分GATK的新功能,比如用GVCF模式来对多样本联合寻找变异,还有如何对RNA-seq数据特异的寻找变异流程,和如何使用mutech2来选择somatic变异。我们也会介绍一下即将到来的GATK4的功能,比如它的CNV流程。最后我们会演练整个GATK流程的各个步骤的衔接和实战。

8 |

9 | ## 课程设置

10 |

11 | 本次研讨会包括一天的讲座,中间会提供大量的问答环节,力图把GATK的基础知识介绍给大家。并且伴随着2天的上机实战,具体安排如下:

12 |

13 | - 第一天全天:演讲,关于GATK最佳实践应用于高通量测序数据的前因后果,理论基础以及应用形式。

14 | - 第二天上午:上机操作, 使用GATK寻找变异位点,包括(SNPs + Indels)

15 | - 第二天下午:上机操作,使用GATK对找到变异位点进行过滤。

16 | - 第三天上午:上机操作,使用GATK寻找somatic的变异位点,包括 (SNPs + Indels + CNV)

17 | - 第三天下午:介绍通过WDL(Workflow Description Language)整合的云端流程

18 |

19 | 上机实践侧重于数据分析步骤,希望提供练习的方式帮助用户了解寻找变异位点这一过程中涉及到的各种文件格式的转换,以及如何更好的利用GATK工具来完成这一流程。在这些实践课程中,我们会有机的结合GATK和PICARDS工具,还有其它一些第三方工具,比如Samtools, IGV, RStudio and RTG Tools ,介绍它们的一些操作技巧,比较有趣的分析方法,还有中间可能会遇到的报错信息的处理。

20 |

21 | 还会讲解如何使用WDL来写GATK分析流程的脚本,这个是broad最新开发的一个流程描述语言。并且在本地或者云端执行自己写好的流程。

22 |

23 | 请注意,本次研讨会侧重于人类研究数据的处理,本次研究会讲解的大部分操作步骤都适合其它非人类研究数据的处理,但是应用起来会有一些需要解决的移植问题,不过在这一点上面我们不会讲太多。

24 |

25 | ## 目标受众

26 |

27 | 第一天的基础知识讲解的受众比较广泛,既可以是第一次接触变异位点寻找这个分析方法,或者第一次接触GATK,想从零开始了解;也可以是已经使用过GATK的用户,想提升自己对GATK的理解。但是参会者需要熟练一些基础名词,并且有基因组或者遗传学的基本背景。

28 |

29 | 上机操作是为那些想了解GATK具体使用过程的细节新手或者中级用户准备的,需要对LINUX命令行有着基础的理解能力。希望参会者可以带自己的笔记本电脑,并且预先安装好一些必备的软件工具,具体细节将会在研究会正式开始前两周公布,除非研究会的举办方提供了练习用的电脑或者云服务器。而且必须是mac或者linux系统,Windows系统是不能参加上机实践的。限30人!

30 |

31 | ## GATK最佳实践的PPT演讲内容如下:

32 |

33 | - Introduction to variant discovery analysis and GATK Best Practices

34 | - (coffee break)

35 | - Marking Duplicates

36 | - Indel Realignment (to be phased out)

37 | - Base Recalibration

38 | - (lunch break)

39 | - Germline Variant Calling and Joint Genotyping

40 | - Filtering germline variants with VQSR

41 | - Genotype Refinement Workflow

42 | - (coffee break)

43 | - Callset Evaluation

44 | - Somatic variant discovery with MuTect2

45 | - Preview of CNV discovery with GATK4

--------------------------------------------------------------------------------

/faq/GATK软件需要的输入文件是哪些.md:

--------------------------------------------------------------------------------

1 | # GATK软件需要的输入文件是哪些?

2 |

3 | 原文链接:[ What input files does the GATK accept / require? ](https://software.broadinstitute.org/gatk/documentation/article?id=1204)

4 |

5 | All analyses done with the GATK typically involve several (though not necessarily all) of the following inputs:

6 |

7 | - Reference genome sequence

8 | - Sequencing reads

9 | - Intervals of interest

10 | - Reference-ordered data

11 |

12 | This article describes the corresponding file formats that are acceptable for use with the GATK.

13 |

14 | ------

15 |

16 | ### 1. Reference Genome Sequence

17 |

18 | The GATK requires the reference sequence in a single reference sequence in FASTA format, with all contigs in the same file. The GATK requires strict adherence to the FASTA standard. All the standard IUPAC bases are accepted, but keep in mind that non-standard bases (i.e. other than ACGT, such as W for example) will be ignored (i.e. those positions in the genome will be skipped).

19 |

20 | **Some users have reported having issues with reference files that have been stored or modified on Windows filesystems. The issues manifest as "10" characters (corresponding to encoded newlines) inserted in the sequence, which cause the GATK to quit with an error. If you encounter this issue, you will need to re-download a valid master copy of the reference file, or clean it up yourself.**

21 |

22 | Gzipped fasta files will not work with the GATK, so please make sure to unzip them first. Please see [this article](http://www.broadinstitute.org/gatk/guide/article?id=1601) for more information on preparing FASTA reference sequences for use with the GATK.

23 |

24 | #### Important note about human genome reference versions

25 |

26 | If you are using human data, your reads must be aligned to one of the official b3x (e.g. b36, b37) or hg1x (e.g. hg18, hg19) references. The names and order of the contigs in the reference you used must exactly match that of one of the official references canonical orderings. These are defined by historical karotyping of largest to smallest chromosomes, followed by the X, Y, and MT for the b3x references; the order is thus 1, 2, 3, ..., 10, 11, 12, ... 20, 21, 22, X, Y, MT. The hg1x references differ in that the chromosome names are prefixed with "chr" and chrM appears first instead of last. The GATK will detect misordered contigs (for example, lexicographically sorted) and throw an error. This draconian approach, though unnecessary technically, ensures that all supplementary data provided with the GATK works correctly. You can use ReorderSam to fix a BAM file aligned to a missorted reference sequence.

27 |

28 | **Our Best Practice recommendation is that you use a standard GATK reference from the GATK resource bundle.**

29 |

30 | ------

31 |

32 | ### 2. Sequencing Reads

33 |

34 | The only input format for sequence reads that the GATK itself supports is the [Sequence Alignment/Map (SAM)] format. See [SAM/BAM] for more details on the SAM/BAM format as well as [Samtools](http://samtools.sourceforge.net/) and [Picard](http://picard.sourceforge.net/), two complementary sets of utilities for working with SAM/BAM files.

35 |

36 | If you don't find the information you need in this section, please see our [FAQs on BAM files](http://www.broadinstitute.org/gatk/guide/article?id=1317).

37 |

38 | If you are starting out your pipeline with raw reads (typically in FASTQ format) you'll need to make sure that when you map those reads to the reference and produce a BAM file, the resulting BAM file is fully compliant with the GATK requirements. See the Best Practices documentation for detailed instructions on how to do this.

39 |

40 | In addition to being in SAM format, we require the following additional constraints in order to use your file with the GATK:

41 |

42 | - The file must be binary (with `.bam` file extension).

43 | - The file must be indexed.

44 | - The file must be sorted in coordinate order with respect to the reference (i.e. the contig ordering in your bam must exactly match that of the reference you are using).

45 | - The file must have a proper bam header with read groups. Each read group must contain the platform (PL) and sample (SM) tags. For the platform value, we currently support 454, LS454, Illumina, Solid, ABI_Solid, and CG (all case-insensitive).

46 | - Each read in the file must be associated with exactly one read group.

47 |

48 | Below is an example well-formed SAM field header and fields (with @SQ dictionary truncated to show only the first two chromosomes for brevity):

49 |

50 | ```

51 | @HD VN:1.0 GO:none SO:coordinate

52 | @SQ SN:1 LN:249250621 AS:NCBI37 UR:file:/lustre/scratch102/projects/g1k/ref/main_project/human_g1k_v37.fasta M5:1b22b98cdeb4a9304cb5d48026a85128

53 | @SQ SN:2 LN:243199373 AS:NCBI37 UR:file:/lustre/scratch102/projects/g1k/ref/main_project/human_g1k_v37.fasta M5:a0d9851da00400dec1098a9255ac712e

54 | @RG ID:ERR000162 PL:ILLUMINA LB:g1k-sc-NA12776-CEU-1 PI:200 DS:SRP000031 SM:NA12776 CN:SC

55 | @RG ID:ERR000252 PL:ILLUMINA LB:g1k-sc-NA12776-CEU-1 PI:200 DS:SRP000031 SM:NA12776 CN:SC

56 | @RG ID:ERR001684 PL:ILLUMINA LB:g1k-sc-NA12776-CEU-1 PI:200 DS:SRP000031 SM:NA12776 CN:SC

57 | @RG ID:ERR001685 PL:ILLUMINA LB:g1k-sc-NA12776-CEU-1 PI:200 DS:SRP000031 SM:NA12776 CN:SC

58 | @PG ID:GATK TableRecalibration VN:v2.2.16 CL:Covariates=[ReadGroupCovariate, QualityScoreCovariate, DinucCovariate, CycleCovariate], use_original_quals=true, defau

59 | t_read_group=DefaultReadGroup, default_platform=Illumina, force_read_group=null, force_platform=null, solid_recal_mode=SET_Q_ZERO, window_size_nqs=5, homopolymer_nback=7, except on_if_no_tile=false, pQ=5, maxQ=40, smoothing=137 UR:file:/lustre/scratch102/projects/g1k/ref/main_project/human_g1k_v37.fasta M5:b4eb71ee878d3706246b7c1dbef69299

60 | @PG ID:bwa VN:0.5.5

61 | ERR001685.4315085 16 1 9997 25 35M * 0 0 CCGATCTCCCTAACCCTAACCCTAACCCTAACCCT ?8:C7ACAABBCBAAB?CCAABBEBA@ACEBBB@? XT:A:U XN:i:4 X0:i:1 X1:i:0 XM:i:2 XO:i:0 XG:i:0 RG:Z:ERR001685 NM:i:6 MD:Z:0N0N0N0N1A0A28 OQ:Z:>>:>2>>>>>>>>>>>>>>>>>>?>>>>??>???>

62 | ERR001689.1165834 117 1 9997 0 * = 9997 0 CCGATCTAGGGTTAGGGTTAGGGTTAGGGTTAGGG >7AA<@@C?@?B?B??>9?B??>A?B???BAB??@ RG:Z:ERR001689 OQ:Z:>:<<8<<<><<><><<>7<>>>?>>??>???????

63 | ERR001689.1165834 185 1 9997 25 35M = 9997 0 CCGATCTCCCTAACCCTAACCCTAACCCTAACCCT 758A:?>>8?=@@>>?;4<>=??@@==??@?==?8 XT:A:U XN:i:4 SM:i:25 AM:i:0 X0:i:1 X1:i:0 XM:i:2 XO:i:0 XG:i:0 RG:Z:ERR001689 NM:i:6 MD:Z:0N0N0N0N1A0A28 OQ:Z:;74>7><><><>>>>><:<>>>>>>>>>>>>>>>>

64 | ERR001688.2681347 117 1 9998 0 * = 9998 0 CGATCTTAGGGTTAGGGTTAGGGTTAGGGTTAGGG 5@BA@A6B???A?B??>B@B??>B@B??>BAB??? RG:Z:ERR001688 OQ:Z:=>>>><4><

45 | ```

46 |

47 | 2) Alignment jobs were executed as follows:

48 |

49 | ```

50 | runDir=/path/to/1pass

51 | mkdir $runDir

52 | cd $runDir

53 | STAR --genomeDir $genomeDir --readFilesIn mate1.fq mate2.fq --runThreadN

54 | ```

55 |

56 | 3) For the 2-pass STAR, a new index is then created using splice junction information contained in the file SJ.out.tab from the first pass:

57 |

58 | ```

59 | genomeDir=/path/to/hg19_2pass

60 | mkdir $genomeDir

61 | STAR --runMode genomeGenerate --genomeDir $genomeDir --genomeFastaFiles hg19.fa \

62 | --sjdbFileChrStartEnd /path/to/1pass/SJ.out.tab --sjdbOverhang 75 --runThreadN

63 | ```

64 |

65 | 4) The resulting index is then used to produce the final alignments as follows:

66 |

67 | ```

68 | runDir=/path/to/2pass

69 | mkdir $runDir

70 | cd $runDir

71 | STAR --genomeDir $genomeDir --readFilesIn mate1.fq mate2.fq --runThreadN

72 | ```

73 |

74 | #### 2. Add read groups, sort, mark duplicates, and create index

75 |

76 | The above step produces a SAM file, which we then put through the usual Picard processing steps: adding read group information, sorting, marking duplicates and indexing.

77 |

78 | ```

79 | java -jar picard.jar AddOrReplaceReadGroups I=star_output.sam O=rg_added_sorted.bam SO=coordinate RGID=id RGLB=library RGPL=platform RGPU=machine RGSM=sample

80 |

81 | java -jar picard.jar MarkDuplicates I=rg_added_sorted.bam O=dedupped.bam CREATE_INDEX=true VALIDATION_STRINGENCY=SILENT M=output.metrics

82 | ```

83 |

84 | #### 3. Split'N'Trim and reassign mapping qualities

85 |

86 | Next, we use a new GATK tool called SplitNCigarReads developed specially for RNAseq, which splits reads into exon segments (getting rid of Ns but maintaining grouping information) and hard-clip any sequences overhanging into the intronic regions.

87 |

88 | In the future we plan to integrate this into the GATK engine so that it will be done automatically where appropriate, but for now it needs to be run as a separate step.

89 |

90 | At this step we also add one important tweak: we need to reassign mapping qualities, because STAR assigns good alignments a MAPQ of 255 (which technically means “unknown” and is therefore meaningless to GATK). So we use the GATK’s [ReassignOneMappingQuality](http://www.broadinstitute.org/gatk/gatkdocs/org_broadinstitute_sting_gatk_filters_ReassignOneMappingQualityFilter.html) read filter to reassign all good alignments to the default value of 60. This is not ideal, and we hope that in the future RNAseq mappers will emit meaningful quality scores, but in the meantime this is the best we can do. In practice we do this by adding the ReassignOneMappingQuality read filter to the splitter command.

91 |

92 | Finally, be sure to specify that reads with N cigars should be allowed. This is currently still classified as an "unsafe" option, but this classification will change to reflect the fact that this is now a supported option for RNAseq processing.

93 |

94 | ```

95 | java -jar GenomeAnalysisTK.jar -T SplitNCigarReads -R ref.fasta -I dedupped.bam -o split.bam -rf ReassignOneMappingQuality -RMQF 255 -RMQT 60 -U ALLOW_N_CIGAR_READS

96 | ```

97 |

98 | #### 4. Indel Realignment (optional)

99 |

100 | After the splitting step, we resume our regularly scheduled programming... to some extent. We have found that performing realignment around indels can help rescue a few indels that would otherwise be missed, but to be honest the effect is marginal. So while it can’t hurt to do it, we only recommend performing the realignment step if you have compute and time to spare (or if it’s important not to miss any potential indels).

101 |

102 | #### 5. Base Recalibration

103 |

104 | We do recommend running base recalibration (BQSR). Even though the effect is also marginal when applied to good quality data, it can absolutely save your butt in cases where the qualities have systematic error modes.

105 |

106 | Both steps 4 and 5 are run as described for DNAseq (with the same known sites resource files), without any special arguments. Finally, please note that you should NOT run ReduceReads on your RNAseq data. The ReduceReads tool will no longer be available in GATK 3.0.

107 |

108 | #### 6. Variant calling

109 |

110 | Finally, we have arrived at the variant calling step! Here, we recommend using HaplotypeCaller because it is performing much better in our hands than UnifiedGenotyper (our tests show that UG was able to call less than 50% of the true positive indels that HC calls). We have added some functionality to the variant calling code which will intelligently take into account the information about intron-exon split regions that is embedded in the BAM file by SplitNCigarReads. In brief, the new code will perform “dangling head merging” operations and avoid using soft-clipped bases (this is a temporary solution) as necessary to minimize false positive and false negative calls. To invoke this new functionality, just add `-dontUseSoftClippedBases` to your regular HC command line. Note that the `-recoverDanglingHeads` argument which was previously required is no longer necessary as that behavior is now enabled by default in HaplotypeCaller. Also, we found that we get better results if we set the minimum phred-scaled confidence threshold for calling variants 20, but you can lower this to increase sensitivity if needed.

111 |

112 | ```

113 | java -jar GenomeAnalysisTK.jar -T HaplotypeCaller -R ref.fasta -I input.bam -dontUseSoftClippedBases -stand_call_conf 20.0 -o output.vcf

114 | ```

115 |

116 | #### 7. Variant filtering

117 |

118 | To filter the resulting callset, you will need to apply hard filters, as we do not yet have the RNAseq training/truth resources that would be needed to run variant recalibration (VQSR).

119 |

120 | We recommend that you filter clusters of at least 3 SNPs that are within a window of 35 bases between them by adding `-window 35 -cluster 3` to your command. This filter recommendation is specific for RNA-seq data.

121 |

122 | As in DNA-seq, we recommend filtering based on Fisher Strand values (FS > 30.0) and Qual By Depth values (QD < 2.0).

123 |

124 | ```

125 | java -jar GenomeAnalysisTK.jar -T VariantFiltration -R hg_19.fasta -V input.vcf -window 35 -cluster 3 -filterName FS -filter "FS > 30.0" -filterName QD -filter "QD < 2.0" -o output.vcf

126 | ```

127 |

128 | Please note that we selected these hard filtering values in attempting to optimize both high sensitivity and specificity together. By applying the hard filters, some real sites will get filtered. This is a tradeoff that each analyst should consider based on his/her own project. If you care more about sensitivity and are willing to tolerate more false positives calls, you can choose not to filter at all (or to use less restrictive thresholds).

129 |

130 | An example of filtered (SNPs cluster filter) and unfiltered false variant calls:

131 |

132 | An example of true variants that were filtered (false negatives). As explained in text, there is a tradeoff that comes with applying filters:

133 |

134 | ------

135 |

136 | ### Known issues

137 |

138 | There are a few known issues; one is that the allelic ratio is problematic. In many heterozygous sites, even if we can see in the RNAseq data both alleles that are present in the DNA, the ratio between the number of reads with the different alleles is far from 0.5, and thus the HaplotypeCaller (or any caller that expects a diploid genome) will miss that call. A DNA-aware mode of the caller might be able to fix such cases (which may be candidates also for downstream analysis of allele specific expression).

139 |

140 | Although our new tool (splitNCigarReads) cleans many false positive calls that are caused by splicing inaccuracies by the aligners, we still call some false variants for that same reason, as can be seen in the example below. Some of those errors might be fixed in future versions of the pipeline with more sophisticated filters, with another realignment step in those regions, or by making the caller aware of splice positions.

141 |

142 |

143 |

144 | As stated previously, we will continue to improve the tools and process over time. We have plans to improve the splitting/clipping functionalities, improve true positive and minimize false positive rates, as well as developing statistical filtering (i.e. variant recalibration) recommendations.

145 |

146 | We also plan to add functionality to process DNAseq and RNAseq data from the same samples simultaneously, in order to facilitate analyses of post-transcriptional processes. Future extensions to the HaplotypeCaller will provide this functionality, which will require both DNAseq and RNAseq in order to produce the best results. Finally, we are also looking at solutions for measuring differential expression of alleles.

147 |

148 |

--------------------------------------------------------------------------------

/processing.md:

--------------------------------------------------------------------------------

1 | # GATK翻译任务安排

2 |

3 | 参考 https://github.com/broadinstitute/picard 的翻译策略,最终形成 http://broadinstitute.github.io/picard/ 的可视化网页界面!协作的翻译稿件以markdown形式存放在统一的github里面 https://github.com/gatk-chinese/forum

4 |

5 | ## [名词解释](https://software.broadinstitute.org/gatk/documentation/topic?name=dictionary)

6 |

7 | 一个人即可

8 |

9 | ### [官方教程](https://software.broadinstitute.org/gatk/documentation/topic?name=tutorials)

10 |

11 | 初识GATK系列:

12 |

13 | - GATK best practices 相关软件安装以及测试

14 | - GATK workshops 相关软件安装及测试

15 | - Queue 以及 cloud 的安装及测试 使用

16 | - GATK4 beta的使用

17 | - IGV 使用

18 |

19 | **如何使用系列:**

20 |

21 | - mapping

22 | - Markduplicates

23 | - local realignment

24 | - BQSR(recallibrate base quality scores)

25 | - VQSR(recallibrate variant quality scores)

26 | - haplotypeCaller

27 | - UnifiedGenotyper

28 |

29 | 文件格式转换

30 |

31 | - 生成uBAM

32 | - bam -> fastq

33 | - Genomic interval

34 | - GRCh38 以及 ALT contig

35 |

36 | **评价,注释,过滤**

37 |

38 | - bam和vcf格式的检查

39 | - vcf的过滤(SelectVariants)

40 | - vcf的评价

41 | - vcf的注释

42 |

43 | ## FAQs

44 |

45 | FAQs是对上面教程的补充,主要是有很多人没有仔细阅读文档,或者没能充分理解,提出的常见的问题。

46 |

47 | 比如VCA,BAM,interval list等文件格式的问题。

48 |

49 | 运行GATK的准备文件,包括参考基因组,bundle 数据集。

50 |

51 | 一些参数的不理解。

52 |

53 | 一些命令的不理解,是否可以省略某些步骤

54 |

55 | 是否适用于非二倍体,是否适用于转录组数据,多样本分析注意事项

56 |

57 | ## [用法及算法](https://software.broadinstitute.org/gatk/documentation/topic?name=methods)

58 |

59 | 首先是DNA和RNA测序的数据分析最佳实践

60 |

61 | 其次是多样本的GVCF模式

62 |

63 | 还有 HaplotypeCaller流程的详细解读

64 |

65 | HC overview: How the HaplotypeCaller works

66 | HC step 1: Defining ActiveRegions by measuring data entropy

67 | HC step 2: Local re-assembly and haplotype determination

68 | HC step 3 : Evaluating the evidence for haplotypes and variant alleles

69 | HC step 4: Assigning per-sample genotypes

70 |

71 | 还有变异位点的深度分析,包括过滤,质量值,评价体系,统计检验模型的讲解。

72 |

--------------------------------------------------------------------------------

/tutorials/.DS_Store:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gatk-chinese/forum/01188653096fa32c9a32de3e19f8423cddede238/tutorials/.DS_Store

--------------------------------------------------------------------------------

/tutorials/filetype/从vcf格式的变异文件里面挑选感兴趣位点.md:

--------------------------------------------------------------------------------

1 | # 如何对vcf文件进行挑选子集?

2 |

3 | 原文链接: [ Selecting variants of interest from a callset](https://software.broadinstitute.org/gatk/documentation/article?id=54)

4 |

5 | 这个内容并不多,只是看起来步骤有点多。

6 |

7 | Often, a VCF containing many samples and/or variants will need to be subset in order to facilitate certain analyses (e.g. comparing and contrasting cases vs. controls; extracting variant or non-variant loci that meet certain requirements, displaying just a few samples in a browser like IGV, etc.). The GATK tool that we use the most for subsetting calls in various ways is SelectVariants; it enables easy and convenient subsetting of VCF files according to many criteria.

8 |

9 | Select Variants operates on VCF files (also sometimes referred to as ROD in our documentation, for Reference Ordered Data) provided at the command line using the GATK's built in `--variant` option. You can provide multiple VCF files for Select Variants, but at least one must be named 'variant' and this will be the file (or set of files) from which variants will be selected. Other files can be used to modify the selection based on concordance or discordance between the callsets (see --discordance / --concordance arguments in the [tool documentation](https://www.broadinstitute.org/gatk/gatkdocs/org_broadinstitute_gatk_tools_walkers_variantutils_SelectVariants.php)).

10 |

11 | There are many options for setting the selection criteria, depending on what you want to achieve. For example, given a single VCF file, one or more samples can be extracted from the file, based either on a complete sample name, or on a pattern match. Variants can also be selected based on annotated properties, such as depth of coverage or allele frequency. This is done using [JEXL expressions](http://www.broadinstitute.org/gatk/guide/article?id=1255); make sure to read the linked document for details, especially the section on working with complex expressions.

12 |

13 | Note that in the output VCF, some annotations such as AN (number of alleles), AC (allele count), AF (allele frequency), and DP (depth of coverage) are recalculated as appropriate to accurately reflect the composition of the subset callset. See further below for an explanation of how that works.

14 |

15 | ------

16 |

17 | ### Command-line arguments

18 |

19 | For a complete, detailed argument reference, refer to the GATK document page [here](http://www.broadinstitute.org/gatk/gatkdocs/org_broadinstitute_gatk_tools_walkers_variantutils_SelectVariants.php).

20 |

21 | ------

22 |

23 | ### Subsetting by sample and ALT alleles

24 |

25 | SelectVariants now keeps (r5832) the alt allele, even if a record is AC=0 after subsetting the site down to selected samples. For example, when selecting down to just sample NA12878 from the OMNI VCF in 1000G (1525 samples), the resulting VCF will look like:

26 |

27 | ```

28 | 1 82154 rs4477212 A G . PASS AC=0;AF=0.00;AN=2;CR=100.0;DP=0;GentrainScore=0.7826;HW=1.0 GT:GC 0/0:0.7205

29 | 1 534247 SNP1-524110 C T . PASS AC=0;AF=0.00;AN=2;CR=99.93414;DP=0;GentrainScore=0.7423;HW=1.0 GT:GC 0/0:0.6491

30 | 1 565286 SNP1-555149 C T . PASS AC=2;AF=1.00;AN=2;CR=98.8266;DP=0;GentrainScore=0.7029;HW=1.0 GT:GC 1/1:0.3471

31 | 1 569624 SNP1-559487 T C . PASS AC=2;AF=1.00;AN=2;CR=97.8022;DP=0;GentrainScore=0.8070;HW=1.0 GT:GC 1/1:0.3942

32 | ```

33 |

34 | Although NA12878 is 0/0 at the first sites, ALT allele is preserved in the VCF record. This is the correct behavior, as reducing samples down shouldn't change the character of the site, only the AC in the subpopulation. This is related to the tricky issue of isPolymorphic() vs. isVariant().

35 |

36 | - isVariant => is there an ALT allele?

37 | - isPolymorphic => is some sample non-ref in the samples?

38 |

39 | For clarity, in previous versions of SelectVariants, the first two monomorphic sites lose the ALT allele, because NA12878 is hom-ref at this site, resulting in VCF that looks like:

40 |

41 | ```

42 | 1 82154 rs4477212 A . . PASS AC=0;AF=0.00;AN=2;CR=100.0;DP=0;GentrainScore=0.7826;HW=1.0 GT:GC 0/0:0.7205

43 | 1 534247 SNP1-524110 C . . PASS AC=0;AF=0.00;AN=2;CR=99.93414;DP=0;GentrainScore=0.7423;HW=1.0 GT:GC 0/0:0.6491

44 | 1 565286 SNP1-555149 C T . PASS AC=2;AF=1.00;AN=2;CR=98.8266;DP=0;GentrainScore=0.7029;HW=1.0 GT:GC 1/1:0.3471

45 | 1 569624 SNP1-559487 T C . PASS AC=2;AF=1.00;AN=2;CR=97.8022;DP=0;GentrainScore=0.8070;HW=1.0 GT:GC 1/1:0.3942

46 | ```

47 |

48 | If you really want a VCF without monomorphic sites, use the option to drop monomorphic sites after subsetting.

49 |

50 | ------

51 |

52 | ### How do the AC, AF, AN, and DP fields change?

53 |

54 | Let's say you have a file with three samples. The numbers before the ":" will be the genotype (0/0 is hom-ref, 0/1 is het, and 1/1 is hom-var), and the number after will be the depth of coverage.

55 |

56 | ```

57 | BOB MARY LINDA

58 | 1/0:20 0/0:30 1/1:50

59 | ```

60 |

61 | In this case, the INFO field will say AN=6, AC=3, AF=0.5, and DP=100 (in practice, I think these numbers won't necessarily add up perfectly because of some read filters we apply when calling, but it's approximately right).

62 |

63 | Now imagine I only want a file with the samples "BOB" and "MARY". The new file would look like:

64 |

65 | ```

66 | BOB MARY

67 | 1/0:20 0/0:30

68 | ```

69 |

70 | The INFO field will now have to change to reflect the state of the new data. It will be AN=4, AC=1, AF=0.25, DP=50.

71 |

72 | Let's pretend that MARY's genotype wasn't 0/0, but was instead "./." (no genotype could be ascertained). This would look like

73 |

74 | ```

75 | BOB MARY

76 | 1/0:20 ./.:.

77 | ```

78 |

79 | with AN=2, AC=1, AF=0.5, and DP=20.

80 |

81 | ------

82 |

83 | ### Additional information

84 |

85 | For information on how to construct regular expressions for use with this tool, see the method article on [variant filtering with JEXL](https://www.broadinstitute.org/gatk/guide/article?id=1255), or "Summary of regular-expression constructs" section [here](http://docs.oracle.com/javase/1.4.2/docs/api/java/util/regex/Pattern.html) for more hardcore reading.

--------------------------------------------------------------------------------

/tutorials/filetype/如何不比对就把fastq文件转为bam格式呢?.md:

--------------------------------------------------------------------------------

1 | # 如何不比对就把fastq文件转为bam格式呢?

2 |

3 | 原文链接: [(How to) Generate an unmapped BAM (uBAM)](http://gatkforums.broadinstitute.org/discussion/6484/).

4 |

5 | 不需要全文翻译,只需要说清楚这个需求是为什么,然后讲一下两种方法即可。

6 |

7 | [](https://us.v-cdn.net/5019796/uploads/FileUpload/31/992f952c8e9819d57bf74b0a4ac308.png)Here we outline how to generate an unmapped BAM (uBAM) from either a FASTQ or aligned BAM file. We use Picard's FastqToSam to convert a FASTQ (**Option A**) or Picard's RevertSam to convert an aligned BAM (**Option B**).

8 |

9 | #### Jump to a section on this page

10 |

11 | (A) [Convert FASTQ to uBAM](https://gatkforums.broadinstitute.org/gatk/discussion/6484#optionA) and add read group information using FastqToSam

12 | (B) [Convert aligned BAM to uBAM](https://gatkforums.broadinstitute.org/gatk/discussion/6484#optionB) and discard problematic records using RevertSam

13 |

14 | #### Tools involved

15 |

16 | - [FastqToSam](https://broadinstitute.github.io/picard/command-line-overview.html#FastqToSam)

17 | - [RevertSam](https://broadinstitute.github.io/picard/command-line-overview.html#RevertSam)

18 |

19 | #### Prerequisites

20 |

21 | - Installed Picard tools

22 |

23 | #### Download example data

24 |

25 | - [tutorial_6484_FastqToSam.tar.gz](https://drive.google.com/open?id=0BzI1CyccGsZiUXVNT3hsNldvUFk)

26 | - [tutorial_6484_RevertSam.tar.gz](https://drive.google.com/open?id=0BzI1CyccGsZiMWZacmVWWnV2VFE)

27 |

28 | Tutorial data reads were originally aligned to the [advanced tutorial bundle](http://gatkforums.broadinstitute.org/discussion/4610/)'s human_g1k_v37_decoy.fasta reference and to 10:91,000,000-92,000,000.

29 |

30 | #### Related resources

31 |

32 | - Our [dictionary entry on read groups](http://gatkforums.broadinstitute.org/discussion/6472/read-groups#latest) discusses the importance of assigning appropriate read group fields that differentiate samples and factors that contribute to batch effects, e.g. flow cell lane. Be sure your read group fields conform to the recommendations.

33 | - [This post](http://gatkforums.broadinstitute.org/discussion/2909#addRG) provides an example command for AddOrReplaceReadGroups.

34 | - This *How to* is part of a larger workflow and tutorial on [(How to) Efficiently map and clean up short read sequence data](http://gatkforums.broadinstitute.org/discussion/6483).

35 | - To extract reads in a genomic interval from the aligned BAM, [use GATK's PrintReads](http://gatkforums.broadinstitute.org/discussion/6517/).

36 |

37 | ------

38 |

39 | ### (A) Convert FASTQ to uBAM and add read group information using FastqToSam

40 |

41 | Picard's [FastqToSam](https://broadinstitute.github.io/picard/command-line-overview.html#FastqToSam) transforms a FASTQ file to an unmapped BAM, requires two read group fields and makes optional specification of other read group fields. In the command below we note which fields are required for GATK Best Practices Workflows. All other read group fields are optional.

42 |

43 | ```

44 | java -Xmx8G -jar picard.jar FastqToSam \

45 | FASTQ=6484_snippet_1.fastq \ #first read file of pair

46 | FASTQ2=6484_snippet_2.fastq \ #second read file of pair

47 | OUTPUT=6484_snippet_fastqtosam.bam \

48 | READ_GROUP_NAME=H0164.2 \ #required; changed from default of A

49 | SAMPLE_NAME=NA12878 \ #required

50 | LIBRARY_NAME=Solexa-272222 \ #required

51 | PLATFORM_UNIT=H0164ALXX140820.2 \

52 | PLATFORM=illumina \ #recommended

53 | SEQUENCING_CENTER=BI \

54 | RUN_DATE=2014-08-20T00:00:00-0400

55 |

56 | ```

57 |

58 | Some details on select parameters:

59 |

60 | - For paired reads, specify each FASTQ file with `FASTQ` and `FASTQ2` for the first read file and the second read file, respectively. Records in each file must be queryname sorted as the tool assumes identical ordering for pairs. The tool automatically strips the `/1` and `/2` read name suffixes and adds SAM flag values to indicate reads are paired. Do not provide a single interleaved fastq file, as the tool will assume reads are unpaired and the SAM flag values will reflect single ended reads.

61 | - For single ended reads, specify the input file with `FASTQ`.

62 | - `QUALITY_FORMAT` is detected automatically if unspecified.

63 | - `SORT_ORDER` by default is queryname.

64 | - `PLATFORM_UNIT` is often in run_barcode.lane format. Include if sample is multiplexed.

65 | - `RUN_DATE` is in [Iso8601 date format](https://en.wikipedia.org/wiki/ISO_8601).

66 |

67 | Paired reads will have [SAM flag](https://broadinstitute.github.io/picard/explain-flags.html) values that reflect pairing and the fact that the reads are unmapped as shown in the example read pair below.

68 |

69 | **Original first read**

70 |

71 | ```

72 | @H0164ALXX140820:2:1101:10003:49022/1

73 | ACTTTAGAAATTTACTTTTAAGGACTTTTGGTTATGCTGCAGATAAGAAATATTCTTTTTTTCTCCTATGTCAGTATCCCCCATTGAAATGACAATAACCTAATTATAAATAAGAATTAGGCTTTTTTTTGAACAGTTACTAGCCTATAGA

74 | +

75 | -FFFFFJJJJFFAFFJFJJFJJJFJFJFJJJ<@AAB@AA@AA>6@@A:>,*@A@<@??@8?9>@==8?:?@?;?:>==9?>8>@:?>>=>;<==>>;>?=?>>=<==>>=>9<=>??>?>;8>?>>>;4>=>7=6>=>>=><;=;>===?=>=>>?9>>>>??==== MC:Z:60M91S MD:Z:151 PG:Z:MarkDuplicates RG:Z:H0164.2 NM:i:0 MQ:i:0 OQ:Z:769=,<;0:=<0=:9===/,:-==29>;,5,98=599;<=########################################################################################### SA:Z:2,33141573,-,37S69M45S,0,1; MC:Z:151M MD:Z:48T4T6 PG:Z:MarkDuplicates RG:Z:H0164.2 NM:i:2 MQ:i:60 OQ:Z:<-<-FA, indexing with Samtools and then calling with HaplotypeCaller as follows. Note this workaround creates an invalid BAM according to ValidateSamFile. Also, another caveat is that because HaplotypeCaller uses softclipped sequences, any overlapping regions of read pairs will count twice towards variation instead of once. Thus, this step may lead to overconfident calls in such regions.

98 |

99 | Remove the 0x1 bitwise flag from alignments

100 |

101 | ```

102 | samtools view -h altalt_snaut.bam | gawk '{printf "%s\t", $1; if(and($2,0x1))

103 | {t=$2-0x1}else{t=$2}; printf "%s\t" , t; for (i=3; i altalt_se.bam

105 | ```

106 |

107 | Index the resulting BAM

108 |

109 | ```

110 | samtools index altalt_se.bam

111 | ```

112 |

113 | Call variants in `-ERC GVCF` mode with HaplotypeCaller for each sample

114 |

115 | ```

116 | java -jar GenomeAnalysisTK.jar -T HaplotypeCaller \

117 | -R chr19_chr19_KI270866v1_alt.fasta \

118 | -I altalt_se.bam -o altalt_hc.g.vcf \

119 | -ERC GVCF --emitDroppedReads -bamout altalt_hc.bam

120 | ```

121 |

122 | Finally, use GenotypeGVCFs as shown in [section 4](https://software.broadinstitute.org/gatk/documentation/topic?name=tutorials#4)'s command [4.7] for a multisample variant callset. Tutorial_8017_toSE calls 68 variant sites--66 on the primary assembly and two on the alternate contig.

123 |

124 | ### 6.2 Variant calls for **tutorial_8017_postalt**

125 |

126 | BWA's postalt-processing requires the query-grouped output of BWA-MEM. Piping an alignment step with postalt-processing is possible. However, to be able to compare variant calls from an identical alignment, we present the postalt-processing as an *add-on* workflow that takes the alignment from the first workflow.

127 |

128 | The command uses the `bwa-postalt.js` script, which we run through `k8`, a Javascript execution shell. It then lists the ALT index, the aligned SAM `altalt.sam` and names the resulting file `> altalt_postalt.sam`.

129 |

130 | ```

131 | k8 bwa-postalt.js \

132 | chr19_chr19_KI270866v1_alt.fasta.alt \

133 | altalt.sam > altalt_postalt.sam

134 | ```

135 |

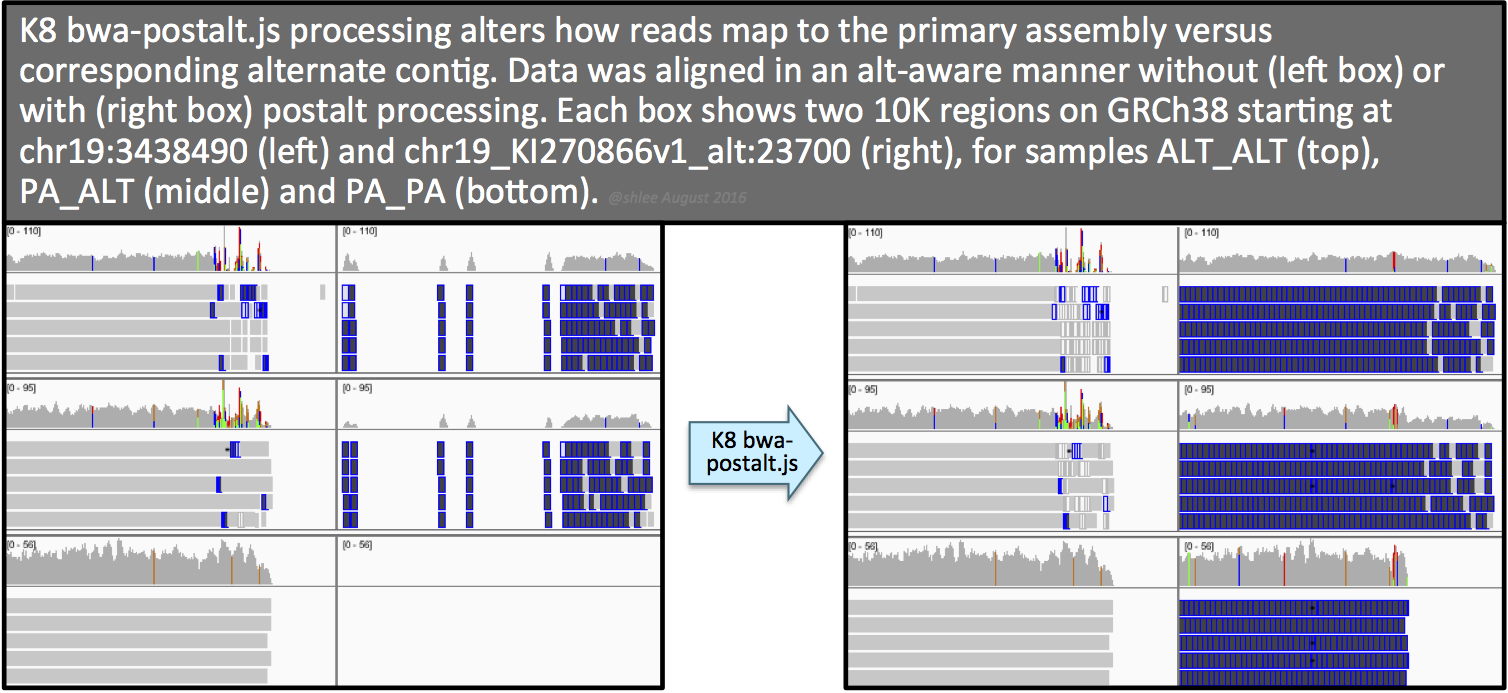

136 | [](https://us.v-cdn.net/5019796/uploads/FileUpload/a5/3522324635aec94071a9ff688a4aa6.png)The resulting postalt-processed SAM, `altalt_postalt.sam`, undergoes the same processing as the first workflow (commands 4.1 through 4.7) except that (i) we omit `--max_alternate_alleles 3`and `--read_filter OverclippedRead` options for the HaplotypeCaller command like we did in **section 6.1** and (ii) we perform the 0x1 flag removal step from **section 6.1**.

137 |

138 | The effect of this postalt-processing is immediately apparent in the IGV screenshots. Previously empty regions are now filled with alignments. Look closely in the highly divergent region of the primary locus. Do you notice a change, albeit subtle, before and after postalt-processing for samples ALTALT and PAALT?

139 |

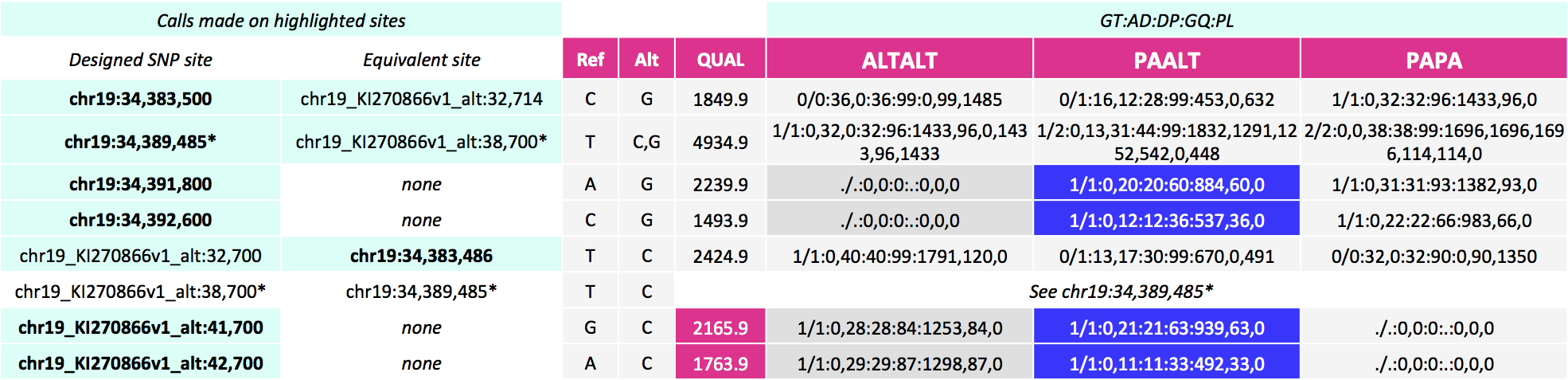

140 | These alignments give the calls below for our SNP sites of interest. Here, notice calls are made for more sites--on the equivalent site if present in addition to the design site (highlighted in the first two columns). For the three pairs of sites that can be called on either the primary locus or alternate contig, the variant site QUALs, the INFO field annotation metrics and the sample level annotation values are identical for each pair.

141 |

142 | [](https://us.v-cdn.net/5019796/uploads/FileUpload/c4/5f07b27798374175ba40f970e77a62.png)

143 |

144 | Postalt-processing lowers the MAPQ of primary locus alignments in the highly divergent region that map better to the alt locus. You can see this as a subtle change in the IGV screenshot. After postalt-processing we see an increase in white zero MAPQ reads in the highly divergent region of the primary locus for ALTALT and PAALT. For ALTALT, this effectively cleans up the variant calls in this region at chr19:34,391,800 and chr19:34,392,600. Previously for ALTALT, these calls contained some reads: 4 and 25 for the first workflow and 0 and 28 for the second workflow. After postalt-processing, no reads are considered in this region giving us `./.:0,0:0:.:0,0,0`calls for both sites.

145 |

146 | What we omit from examination are the effects of postalt-processing on decoy contig alignments. Namely, if an alignment on the primary assembly aligns better on a decoy contig, then postalt-processing discounts the alignment on the primary assembly by assigning it a zero MAPQ score.

147 |

148 | To wrap up, here are the number of variant sites called for the three workflows. As you can see, this last workflow calls the most variants at 95 variant sites, with 62 on the primary assembly and 33 on the alternate contig.

149 |

150 | ```

151 | Workflow total on primary assembly on alternate contig

152 | tutorial_8017 56 54 2

153 | tutorial_8017_toSE 68 66 2

154 | tutorial_8017_postalt 95 62 33

155 | ```

156 |

157 | [back to top](https://software.broadinstitute.org/gatk/documentation/topic?name=tutorials#top)

158 |

159 | ------

160 |

161 | ### 7. Related resources

162 |

163 | - For WDL scripts of the workflows represented in this tutorial, see the [GATK WDL scripts repository](https://github.com/broadinstitute/wdl/tree/develop/scripts/tutorials/gatk).

164 | - To revert an aligned BAM to unaligned BAM, see **Section B** of [Tutorial#6484](https://software.broadinstitute.org/gatk/documentation/article?id=6484).

165 | - To simulate reads from a reference contig, see [Tutorial#7859](https://software.broadinstitute.org/gatk/documentation/article?id=7859).

166 | - *Dictionary* entry [Reference Genome Components](https://software.broadinstitute.org/gatk/documentation/article?id=7857) reviews terminology that describe reference genome components.

167 | - The [GATK resource bundle](https://software.broadinstitute.org/gatk/download/bundle) provides an analysis set GRCh38 reference FASTA as well as several other related resource files.

168 | - As of this writing (August 8, 2016), the SAM format ALT index file for GRCh38 is available only in the [x86_64-linux bwakit download](https://sourceforge.net/projects/bio-bwa/files/bwakit/) as stated in this [bwakit README](https://github.com/lh3/bwa/tree/master/bwakit). The `hs38DH.fa.alt` file is in the `resource-GRCh38` folder. Rename this file's basename to match that of the corresponding reference FASTA.

169 | - For more details on MergeBamAlignment features, see [Section 3C](https://software.broadinstitute.org/gatk/documentation/article?id=6483#step3C) of [Tutorial#6483](https://software.broadinstitute.org/gatk/documentation/article?id=6483).

170 | - For details on the PairedEndSingleSampleWorkflow that uses GRCh38, see [here](https://github.com/broadinstitute/wdl/blob/develop/scripts/broad_pipelines/PairedSingleSampleWf_160720.md).

171 | - See [here](https://samtools.github.io/hts-specs) for VCF specifications.

172 |

173 |

--------------------------------------------------------------------------------

/tutorials/filetype/如何对bam文件进行修复.md:

--------------------------------------------------------------------------------

1 | # 如何对bam文件进行修复

2 |

3 | 原文链接: [ (How to) Fix a badly formatted BAM](https://software.broadinstitute.org/gatk/documentation/article?id=2909)

4 |

5 | 这一个内容比较重要

6 |

7 | Fix a BAM that is not indexed or not sorted, has not had duplicates marked, or is lacking read group information. The options on this page are listed in order of decreasing complexity.

8 |

9 | You may ask, is all of this really necessary? The GATK imposes strict formatting guidelines, including requiring certain [read group information](http://gatkforums.broadinstitute.org/discussion/6472/), that other software packages do not require. Although this represents a small additional processing burden upfront, the downstream benefits are numerous, including the ability to process library data individually, and significant gains in speed and parallelization options.

10 |

11 | #### Prerequisites

12 |

13 | - Installed Picard tools

14 | - If indexing or marking duplicates, then coordinate sorted reads

15 | - If coordinate sorting, then reference aligned reads

16 | - For each read group ID, a single BAM file. If you have a multiplexed file, separate to individual files per sample.

17 |

18 | #### Jump to a section on this page

19 |

20 | 1. [Add read groups, coordinate sort and index](https://software.broadinstitute.org/gatk/documentation/topic?name=tutorials#addRG) using AddOrReplaceReadGroups

21 | 2. [Coordinate sort and index](https://software.broadinstitute.org/gatk/documentation/topic?name=tutorials#sort) using SortSam

22 | 3. [Index an already coordinate-sorted BAM](https://software.broadinstitute.org/gatk/documentation/topic?name=tutorials#index) using BuildBamIndex

23 | 4. [Mark duplicates](https://software.broadinstitute.org/gatk/documentation/topic?name=tutorials#markduplicates) using MarkDuplicates

24 |

25 | #### Tools involved

26 |

27 | - [AddOrReplaceReadGroups](http://broadinstitute.github.io/picard/command-line-overview.html#AddOrReplaceReadGroups)

28 | - [SortSam](https://broadinstitute.github.io/picard/command-line-overview.html#SortSam)

29 | - [BuildBamIndex](https://software.broadinstitute.org/gatk/documentation/broadinstitute.github.io/picard/command-line-overview.html#BuildBamIndex)

30 | - [MarkDuplicates](https://broadinstitute.github.io/picard/command-line-overview.html#MarkDuplicates)

31 |

32 | #### Related resources

33 |

34 | - Our [dictionary entry on read groups](http://gatkforums.broadinstitute.org/discussion/6472/) discusses the importance of assigning appropriate read group fields that differentiate samples and factors that contribute to batch effects, e.g. flow cell lane. Be sure that your read group fields conform to the recommendations.

35 | - [Picard's standard options](http://broadinstitute.github.io/picard/command-line-overview.html#Overview) includes adding `CREATE_INDEX` to the commands of any of its tools that produce coordinate sorted BAMs.

36 |

37 | ------

38 |

39 | ### 1. Add read groups, coordinate sort and index using AddOrReplaceReadGroups

40 |

41 | Use Picard's [AddOrReplaceReadGroups](http://broadinstitute.github.io/picard/command-line-overview.html#AddOrReplaceReadGroups) to appropriately label read group (`@RG`) fields, coordinate sort and index a BAM file. Only the five required `@RG` fields are included in the command shown. Consider the other optional `@RG` fields for better record keeping.

42 |

43 | ```

44 | java -jar picard.jar AddOrReplaceReadGroups \

45 | INPUT=reads.bam \

46 | OUTPUT=reads_addRG.bam \

47 | RGID=H0164.2 \ #be sure to change from default of 1

48 | RGLB= library1 \

49 | RGPL=illumina \

50 | RGPU=H0164ALXX140820.2 \

51 | RGSM=sample1 \

52 | ```

53 |

54 | This creates a file called `reads_addRG.bam` with the same content and sorting as the input file, except the SAM record header's `@RG` line will be updated with the new information for the specified fields and each read will now have an RG tag filled with the `@RG` ID field information. Because of this repetition, the length of the `@RG` ID field contributes to file size.

55 |

56 | To additionally coordinate sort by genomic location and create a `.bai` index, add the following options to the command.

57 |

58 | ```

59 | SORT_ORDER=coordinate \

60 | CREATE_INDEX=true

61 | ```

62 |

63 | To process large files, also designate a temporary directory.

64 |

65 | ```

66 | TMP_DIR=/path/shlee #sets environmental variable for temporary directory

67 | ```

68 |

69 | ------

70 |

71 | ### 2. Coordinate sort and index using SortSam

72 |

73 | Picard's [SortSam](https://broadinstitute.github.io/picard/command-line-overview.html#SortSam) both sorts and indexes and converts between SAM and BAM formats. For coordinate sorting, reads must be aligned to a reference genome.

74 |

75 | ```

76 | java -jar picard.jar SortSam \

77 | INPUT=reads.bam \

78 | OUTPUT=reads_sorted.bam \

79 | SORT_ORDER=coordinate \

80 | ```

81 |

82 | Concurrently index by tacking on the following option.

83 |

84 | ```

85 | CREATE_INDEX=true

86 | ```

87 |

88 | This creates a file called `reads_sorted.bam` containing reads sorted by genomic location, aka coordinate, and a `.bai` index file with the same prefix as the output, e.g. `reads_sorted.bai`, within the same directory.

89 |

90 | To process large files, also designate a temporary directory.

91 |

92 | ```

93 | TMP_DIR=/path/shlee #sets environmental variable for temporary directory

94 | ```

95 |

96 | ------

97 |

98 | ### 3. Index an already coordinate-sorted BAM using BuildBamIndex

99 |

100 | Picard's [BuildBamIndex](https://software.broadinstitute.org/gatk/documentation/broadinstitute.github.io/picard/command-line-overview.html#BuildBamIndex) allows you to index a BAM that is already coordinate sorted.

101 |

102 | ```

103 | java -jar picard.jar BuildBamIndex \

104 | INPUT=reads_sorted.bam

105 | ```

106 |

107 | This creates a `.bai` index file with the same prefix as the input file, e.g. `reads_sorted.bai`, within the same directory as the input file. You want to keep this default behavior as many tools require the same prefix and directory location for the pair of files. Note that Picard tools do not systematically create an index file when they output a new BAM file, whereas GATK tools will always output indexed files.

108 |

109 | To process large files, also designate a temporary directory.

110 |

111 | ```

112 | TMP_DIR=/path/shlee #sets environmental variable for temporary directory

113 | ```

114 |

115 | ------

116 |

117 | ### 4. Mark duplicates using MarkDuplicates

118 |

119 | Picard's [MarkDuplicates](https://broadinstitute.github.io/picard/command-line-overview.html#MarkDuplicates) flags both PCR and optical duplicate reads with a 1024 (0x400) [SAM flag](https://broadinstitute.github.io/picard/explain-flags.html). The input BAM must be coordinate sorted.

120 |

121 | ```

122 | java -jar picard.jar MarkDuplicates \

123 | INPUT=reads_sorted.bam \

124 | OUTPUT=reads_markdup.bam \

125 | METRICS_FILE=metrics.txt \

126 | CREATE_INDEX=true

127 | ```

128 |

129 | This creates a file called `reads_markdup.bam` with duplicate reads marked. It also creates a file called `metrics.txt` containing duplicate read data metrics and a `.bai` index file.

130 |

131 | To process large files, also designate a temporary directory.

132 |

133 | ```

134 | TMP_DIR=/path/shlee #sets environmental variable for temporary directory

135 | ```

136 |

137 | - During sequencing, which involves PCR amplification within the sequencing machine, by a stochastic process we end up sequencing a proportion of DNA molecules that arose from the same parent insert. To be stringent in our variant discovery, GATK tools discount the duplicate reads as evidence for or against a putative variant.

138 |

139 | - Marking duplicates is less relevant to targeted amplicon sequencing and RNA-Seq analysis.

140 |

141 | - Optical duplicates arise from a read being sequenced twice as neighboring clusters.

142 |

143 |

--------------------------------------------------------------------------------

/tutorials/filetype/如何根据坐标区间对bam文件取子集?.md:

--------------------------------------------------------------------------------

1 | # 如何根据坐标区间对bam文件取子集?

2 |

3 | 原文链接:[ (How to) Create a snippet of reads corresponding to a genomic interval](https://software.broadinstitute.org/gatk/documentation/article?id=6517)

4 |

5 |

6 |

7 | #### Tools involved

8 |

9 | - [PrintReads](https://www.broadinstitute.org/gatk/gatkdocs/org_broadinstitute_gatk_tools_walkers_readutils_PrintReads.php)

10 |

11 | #### Prerequisites

12 |

13 | - Installed GATK tools

14 | - Reference genome

15 | - Coordinate-sorted, aligned and indexed BAM

16 |

17 | #### Download example data

18 |

19 | - Use the [advanced tutorial bundle](http://gatkforums.broadinstitute.org/discussion/4610/)'s human_g1k_v37_decoy.fasta as reference

20 | - [tutorial_6517.tar.gz](https://drive.google.com/open?id=0BzI1CyccGsZiTmlDLW13MXdTSG8) contains four files: 6517_2Mbp_input.bam and .bai covering reads aligning to 10:90,000,000-92,000,000 and 6517_1Mbp_output.bam and .bai covering 10:91,000,000-92,000,000

21 |

22 | #### Related resources

23 |

24 | - This *How to* is referenced in a tutorial on [(How to) Generate an unmapped BAM (uBAM)](http://gatkforums.broadinstitute.org/discussion/6484/).

25 | - See [this tutorial](http://gatkforums.broadinstitute.org/discussion/2909/) to coordinate-sort and index a BAM.

26 | - See [here](https://www.broadinstitute.org/gatk/gatkdocs/org_broadinstitute_gatk_engine_CommandLineGATK.php#--unsafe) for command line parameters accepted by all GATK tools.

27 | - For applying interval lists, e.g. to whole exome data, see [When should I use L to pass in a list of intervals](http://gatkforums.broadinstitute.org/discussion/4133/when-should-i-use-l-to-pass-in-a-list-of-intervals).

28 |

29 | ------

30 |

31 | ### Create a snippet of reads corresponding to a genomic interval using PrintReads

32 |

33 | PrintReads merges or subsets sequence data. The tool automatically applies MalformedReadFilter and BadCigarFilter to filter out certain types of reads that cause problems for downstream GATK tools, e.g. reads with mismatching numbers of bases and base qualities or reads with CIGAR strings containing the N operator.

34 |

35 | - To create a test snippet of RNA-Seq data that retains reads with Ns in CIGAR strings, use `-U ALLOW_N_CIGAR_READS`.

36 |

37 | Subsetting reads corresponding to a genomic interval using PrintReads requires reads that are aligned to a reference genome, coordinate-sorted and indexed. Place the `.bai` index in the same directory as the `.bam` file.

38 |

39 | ```

40 | java -Xmx8G -jar /path/GenomeAnalysisTK.jar \

41 | -T PrintReads \

42 | -R /path/human_g1k_v37_decoy.fasta \ #reference fasta

43 | -L 10:91000000-92000000 \ #desired genomic interval chr:start-end

44 | -I 6517_2Mbp_input.bam \ #input

45 | -o 6517_1Mbp_output.bam

46 | ```

47 |

48 | This creates a subset of reads from the input file, `6517_2Mbp_input.bam`, that align to the interval defined by the `-L` option, here a 1 Mbp region on chromosome 10. The tool creates two new files, `6517_1Mbp_output.bam`and corresponding index `6517_1Mbp_output.bai`.

49 |

50 | - For paired reads, the tool does not account for reads whose mate aligns outside of the defined interval. To filter these lost mate reads, use RevertSam's `SANITIZE` option.

51 |

52 | To process large files, also designate a temporary directory.

53 |

54 | ```

55 | TMP_DIR=/path/shlee #sets environmental variable for temporary directory

56 | ```

--------------------------------------------------------------------------------

/tutorials/steps/如何用HC找变异.md:

--------------------------------------------------------------------------------

1 | # 如何用HC找变异

2 |

3 | 原文链接:[ (howto) Call variants with HaplotypeCaller](https://software.broadinstitute.org/gatk/documentation/article?id=2803)

4 |

5 | 这个内容比较单一,就是翻译一个命令的使用

6 |

7 | #### Objective

8 |

9 | Call variants on a single genome with the HaplotypeCaller, producing a raw (unfiltered) VCF.

10 |

11 | #### Caveat

12 |

13 | This is meant only for single-sample analysis. To analyze multiple samples, see the Best Practices documentation on joint analysis.

14 |

15 | #### Prerequisites

16 |

17 | - TBD

18 |

19 | #### Steps

20 |

21 | 1. Determine the basic parameters of the analysis

22 | 2. Call variants in your sequence data

23 |

24 | ------

25 |

26 | ### 1. Determine the basic parameters of the analysis

27 |

28 | If you do not specify these parameters yourself, the program will use default values. However we recommend that you set them explicitly because it will help you understand how the results are bounded and how you can modify the program's behavior.

29 |

30 | - Genotyping mode (`--genotyping_mode`)

31 |

32 | This specifies how we want the program to determine the alternate alleles to use for genotyping. In the default `DISCOVERY` mode, the program will choose the most likely alleles out of those it sees in the data. In `GENOTYPE_GIVEN_ALLELES` mode, the program will only use the alleles passed in from a VCF file (using the `-alleles` argument). This is useful if you just want to determine if a sample has a specific genotype of interest and you are not interested in other alleles.

33 |

34 | - Emission confidence threshold (`-stand_emit_conf`)

35 |

36 | This is the minimum confidence threshold (phred-scaled) at which the program should emit sites that appear to be possibly variant.

37 |

38 | - Calling confidence threshold (`-stand_call_conf`)

39 |

40 | This is the minimum confidence threshold (phred-scaled) at which the program should emit variant sites as called. If a site's associated genotype has a confidence score lower than the calling threshold, the program will emit the site as filtered and will annotate it as LowQual. This threshold separates high confidence calls from low confidence calls.

41 |

42 | *The terms "called" and "filtered" are tricky because they can mean different things depending on context. In ordinary language, people often say a site was called if it was emitted as variant. But in the GATK's technical language, saying a site was called means that that site passed the confidence threshold test. For filtered, it's even more confusing, because in ordinary language, when people say that sites were filtered, they usually mean that those sites successfully passed a filtering test. However, in the GATK's technical language, the same phrase (saying that sites were filtered) means that those sites failed the filtering test. In effect, it means that those would be filtered out if the filter was used to actually remove low-confidence calls from the callset, instead of just tagging them. In both cases, both usages are valid depending on the point of view of the person who is reporting the results. So it's always important to check what is the context when interpreting results that include these terms.*

43 |

44 | ------

45 |

46 | ### 2. Call variants in your sequence data

47 |

48 | #### Action

49 |

50 | Run the following GATK command:

51 |

52 | ```

53 | java -jar GenomeAnalysisTK.jar \

54 | -T HaplotypeCaller \

55 | -R reference.fa \

56 | -I preprocessed_reads.bam \

57 | -L 20 \

58 | --genotyping_mode DISCOVERY \

59 | -stand_emit_conf 10 \

60 | -stand_call_conf 30 \

61 | -o raw_variants.vcf

62 | ```

63 |

64 | *Note that -L specifies that we only want to run the command on a subset of the data (here, chromosome 20). This is useful for testing as well as other purposes, as documented here. For example, when running on exome data, we use -L to specify a file containing the list of exome targets corresponding to the capture kit that was used to generate the exome libraries.*

65 |

66 | #### Expected Result

67 |

68 | This creates a VCF file called `raw_variants.vcf`, containing all the sites that the HaplotypeCaller evaluated to be potentially variant. Note that this file contains both SNPs and Indels.

69 |

70 | Although you now have a nice fresh set of variant calls, the variant discovery stage is not over. The distinctions made by the caller itself between low-confidence calls and the rest is very primitive, and should not be taken as a definitive guide for filtering. The GATK callers are designed to be very lenient in calling variants, so it is extremely important to apply one of the recommended filtering methods (variant recalibration or hard-filtering), in order to move on to downstream analyses with the highest-quality call set possible.

71 |

72 |

73 |

74 |

--------------------------------------------------------------------------------

/tutorials/steps/如何用UG找变异.md:

--------------------------------------------------------------------------------

1 | # 如何用UG找变异

2 |

3 | 原文链接: [ (howto) Call variants with the UnifiedGenotyper](https://software.broadinstitute.org/gatk/documentation/article?id=2804)

4 |

5 | 这个内容比较单一,就是翻译一个命令的使用,但是GATK官方推荐不用这个工具了。

6 |

7 | 所以不需要翻译。

8 |

9 |

--------------------------------------------------------------------------------

/tutorials/steps/如何运行BQSR.md:

--------------------------------------------------------------------------------

1 | # 如何运行BQSR

2 |

3 | 原文链接: [ (howto) Recalibrate base quality scores = run BQSR](https://software.broadinstitute.org/gatk/documentation/article?id=2801)

4 |

5 | 内容不多,需要翻译

6 |

7 | #### Objective

8 |

9 | Recalibrate base quality scores in order to correct sequencing errors and other experimental artifacts.

10 |

11 | #### Prerequisites

12 |

13 | - TBD

14 |

15 | #### Steps

16 |

17 | 1. Analyze patterns of covariation in the sequence dataset

18 | 2. Do a second pass to analyze covariation remaining after recalibration

19 | 3. Generate before/after plots

20 | 4. Apply the recalibration to your sequence data

21 |

22 | ------

23 |

24 | ### 1. Analyze patterns of covariation in the sequence dataset

25 |

26 | #### Action

27 |

28 | Run the following GATK command:

29 |

30 | ```

31 | java -jar GenomeAnalysisTK.jar \

32 | -T BaseRecalibrator \

33 | -R reference.fa \

34 | -I input_reads.bam \

35 | -L 20 \

36 | -knownSites dbsnp.vcf \

37 | -knownSites gold_indels.vcf \

38 | -o recal_data.table

39 | ```

40 |

41 | #### Expected Result

42 |

43 | This creates a GATKReport file called `recal_data.table` containing several tables. These tables contain the covariation data that will be used in a later step to recalibrate the base qualities of your sequence data.

44 |

45 | It is imperative that you provide the program with a set of known sites, otherwise it will refuse to run. The known sites are used to build the covariation model and estimate empirical base qualities. For details on what to do if there are no known sites available for your organism of study, please see the online GATK documentation.

46 |

47 | Note that `-L 20` is used here and in the next steps to restrict analysis to only chromosome 20 in the b37 human genome reference build. To run against a different reference, you may need to change the name of the contig according to the nomenclature used in your reference.

48 |

49 | ------

50 |

51 | ### 2. Do a second pass to analyze covariation remaining after recalibration

52 |

53 | #### Action

54 |

55 | Run the following GATK command:

56 |

57 | ```

58 | java -jar GenomeAnalysisTK.jar \

59 | -T BaseRecalibrator \

60 | -R reference.fa \

61 | -I input_reads.bam \

62 | -L 20 \

63 | -knownSites dbsnp.vcf \

64 | -knownSites gold_indels.vcf \

65 | -BQSR recal_data.table \

66 | -o post_recal_data.table

67 | ```

68 |

69 | #### Expected Result

70 |

71 | This creates another GATKReport file, which we will use in the next step to generate plots. Note the use of the `-BQSR` flag, which tells the GATK engine to perform on-the-fly recalibration based on the first recalibration data table.

72 |

73 | ------

74 |

75 | ### 3. Generate before/after plots

76 |

77 | #### Action

78 |

79 | Run the following GATK command:

80 |

81 | ```

82 | java -jar GenomeAnalysisTK.jar \

83 | -T AnalyzeCovariates \

84 | -R reference.fa \

85 | -L 20 \

86 | -before recal_data.table \

87 | -after post_recal_data.table \

88 | -plots recalibration_plots.pdf

89 | ```

90 |

91 | #### Expected Result

92 |

93 | This generates a document called `recalibration_plots.pdf` containing plots that show how the reported base qualities match up to the empirical qualities calculated by the BaseRecalibrator. Comparing the **before** and **after**plots allows you to check the effect of the base recalibration process before you actually apply the recalibration to your sequence data. For details on how to interpret the base recalibration plots, please see the online GATK documentation.

94 |

95 | ------

96 |

97 | ### 4. Apply the recalibration to your sequence data

98 |

99 | #### Action

100 |

101 | Run the following GATK command:

102 |

103 | ```

104 | java -jar GenomeAnalysisTK.jar \

105 | -T PrintReads \

106 | -R reference.fa \

107 | -I input_reads.bam \

108 | -L 20 \

109 | -BQSR recal_data.table \

110 | -o recal_reads.bam

111 | ```

112 |

113 | #### Expected Result

114 |

115 | This creates a file called `recal_reads.bam` containing all the original reads, but now with exquisitely accurate base substitution, insertion and deletion quality scores. By default, the original quality scores are discarded in order to keep the file size down. However, you have the option to retain them by adding the flag `–emit_original_quals` to the PrintReads command, in which case the original qualities will also be written in the file, tagged `OQ`.

116 |

117 | Notice how this step uses a very simple tool, PrintReads, to apply the recalibration. What’s happening here is that we are loading in the original sequence data, having the GATK engine recalibrate the base qualities on-the-fly thanks to the `-BQSR` flag (as explained earlier), and just using PrintReads to write out the resulting data to the new file.

--------------------------------------------------------------------------------

/tutorials/steps/如何运行VQSR.md:

--------------------------------------------------------------------------------

1 | # 如何运行VQSR

2 |

3 | 原文链接: [ (howto) Recalibrate variant quality scores = run VQSR](https://software.broadinstitute.org/gatk/documentation/article?id=2805)

4 |

5 | 内容有点多,估计跟GATK最佳实践也是很大部分重合着的

6 |

7 | #### Objective

8 |

9 | Recalibrate variant quality scores and produce a callset filtered for the desired levels of sensitivity and specificity.

10 |

11 | #### Prerequisites

12 |

13 | - TBD

14 |

15 | #### Caveats

16 |

17 | This document provides a typical usage example including parameter values. However, the values given may not be representative of the latest Best Practices recommendations. When in doubt, please consult the [FAQ document on VQSR training sets and parameters](https://www.broadinstitute.org/gatk/guide/article?id=1259), which overrides this document. See that document also for caveats regarding exome vs. whole genomes analysis design.

18 |

19 | #### Steps

20 |

21 | 1. Prepare recalibration parameters for SNPs

22 | a. Specify which call sets the program should use as resources to build the recalibration model

23 | b. Specify which annotations the program should use to evaluate the likelihood of Indels being real

24 | c. Specify the desired truth sensitivity threshold values that the program should use to generate tranches

25 | d. Determine additional model parameters

26 | 2. Build the SNP recalibration model

27 | 3. Apply the desired level of recalibration to the SNPs in the call set

28 | 4. Prepare recalibration parameters for Indels a. Specify which call sets the program should use as resources to build the recalibration model b. Specify which annotations the program should use to evaluate the likelihood of Indels being real c. Specify the desired truth sensitivity threshold values that the program should use to generate tranches d. Determine additional model parameters

29 | 5. Build the Indel recalibration model

30 | 6. Apply the desired level of recalibration to the Indels in the call set

31 |

32 | ------

33 |

34 | ### 1. Prepare recalibration parameters for SNPs

35 |

36 | #### a. Specify which call sets the program should use as resources to build the recalibration model

37 |

38 | For each training set, we use key-value tags to qualify whether the set contains known sites, training sites, and/or truth sites. We also use a tag to specify the prior likelihood that those sites are true (using the Phred scale).

39 |

40 | - True sites training resource: HapMap

41 |

42 | This resource is a SNP call set that has been validated to a very high degree of confidence. The program will consider that the variants in this resource are representative of true sites (truth=true), and will use them to train the recalibration model (training=true). We will also use these sites later on to choose a threshold for filtering variants based on sensitivity to truth sites. The prior likelihood we assign to these variants is Q15 (96.84%).

43 |

44 | - True sites training resource: Omni

45 |

46 | This resource is a set of polymorphic SNP sites produced by the Omni genotyping array. The program will consider that the variants in this resource are representative of true sites (truth=true), and will use them to train the recalibration model (training=true). The prior likelihood we assign to these variants is Q12 (93.69%).

47 |

48 | - Non-true sites training resource: 1000G

49 |

50 | This resource is a set of high-confidence SNP sites produced by the 1000 Genomes Project. The program will consider that the variants in this resource may contain true variants as well as false positives (truth=false), and will use them to train the recalibration model (training=true). The prior likelihood we assign to these variants is Q10 (90%).

51 |

52 | - Known sites resource, not used in training: dbSNP

53 |

54 | This resource is a SNP call set that has not been validated to a high degree of confidence (truth=false). The program will not use the variants in this resource to train the recalibration model (training=false). However, the program will use these to stratify output metrics such as Ti/Tv ratio by whether variants are present in dbsnp or not (known=true). The prior likelihood we assign to these variants is Q2 (36.90%).

55 |

56 | *The default prior likelihood assigned to all other variants is Q2 (36.90%). This low value reflects the fact that the philosophy of the GATK callers is to produce a large, highly sensitive callset that needs to be heavily refined through additional filtering.*

57 |

58 | #### b. Specify which annotations the program should use to evaluate the likelihood of SNPs being real

59 |

60 | These annotations are included in the information generated for each variant call by the caller. If an annotation is missing (typically because it was omitted from the calling command) it can be added using the VariantAnnotator tool.

61 |

62 | - [Coverage (DP)](https://www.broadinstitute.org/gatk/guide/tooldocs/org_broadinstitute_gatk_tools_walkers_annotator_Coverage.php)

63 |

64 | Total (unfiltered) depth of coverage. Note that this statistic should not be used with exome datasets; see caveat detailed in the [VQSR arguments FAQ doc](https://software.broadinstitute.org/gatk/documentation/(https://www.broadinstitute.org/gatk/guide/article?id=1259)).

65 |

66 | - [QualByDepth (QD)](https://www.broadinstitute.org/gatk/guide/tooldocs/org_broadinstitute_gatk_tools_walkers_annotator_QualByDepth.php)

67 |

68 | Variant confidence (from the QUAL field) / unfiltered depth of non-reference samples.

69 |

70 | - [FisherStrand (FS)](https://www.broadinstitute.org/gatk/guide/tooldocs/org_broadinstitute_gatk_tools_walkers_annotator_StrandOddsRatio.php)

71 |

72 | Measure of strand bias (the variation being seen on only the forward or only the reverse strand). More bias is indicative of false positive calls. This complements the [StrandOddsRatio (SOR)](https://www.broadinstitute.org/gatk/guide/tooldocs/org_broadinstitute_gatk_tools_walkers_annotator_StrandOddsRatio.php) annotation.

73 |

74 | - [StrandOddsRatio (SOR)](https://www.broadinstitute.org/gatk/guide/tooldocs/org_broadinstitute_gatk_tools_walkers_annotator_StrandOddsRatio.php)

75 |

76 | Measure of strand bias (the variation being seen on only the forward or only the reverse strand). More bias is indicative of false positive calls. This complements the [FisherStrand (FS)](https://www.broadinstitute.org/gatk/guide/tooldocs/org_broadinstitute_gatk_tools_walkers_annotator_FisherStrand.php) annotation.

77 |

78 | - [MappingQualityRankSumTest (MQRankSum)](https://www.broadinstitute.org/gatk/guide/tooldocs/org_broadinstitute_gatk_tools_walkers_annotator_MappingQualityRankSumTest.php)

79 |

80 | The rank sum test for mapping qualities. Note that the mapping quality rank sum test can not be calculated for sites without a mixture of reads showing both the reference and alternate alleles.

81 |

82 | - [ReadPosRankSumTest (ReadPosRankSum)](https://www.broadinstitute.org/gatk/guide/tooldocs/org_broadinstitute_gatk_tools_walkers_annotator_ReadPosRankSumTest.php)

83 |

84 | The rank sum test for the distance from the end of the reads. If the alternate allele is only seen near the ends of reads, this is indicative of error. Note that the read position rank sum test can not be calculated for sites without a mixture of reads showing both the reference and alternate alleles.

85 |

86 | - [RMSMappingQuality (MQ)](https://www.broadinstitute.org/gatk/guide/tooldocs/org_broadinstitute_gatk_tools_walkers_annotator_RMSMappingQuality.php)

87 |

88 | Estimation of the overall mapping quality of reads supporting a variant call.

89 |

90 | - [InbreedingCoeff](https://www.broadinstitute.org/gatk/guide/tooldocs/org_broadinstitute_gatk_tools_walkers_annotator_InbreedingCoeff.php)

91 |

92 | Evidence of inbreeding in a population. See caveats regarding population size and composition detailed in the [VQSR arguments FAQ doc](https://software.broadinstitute.org/gatk/documentation/(https://www.broadinstitute.org/gatk/guide/article?id=1259)).

93 |

94 | #### c. Specify the desired truth sensitivity threshold values that the program should use to generate tranches

95 |

96 | - First tranche threshold 100.0

97 | - Second tranche threshold 99.9

98 | - Third tranche threshold 99.0

99 | - Fourth tranche threshold 90.0

100 |