4 |

5 | ## Citation

6 | If you find this package helpful, please consider citing our work:

7 | ```

8 | @inproceedings{

9 | mishra2023generative,

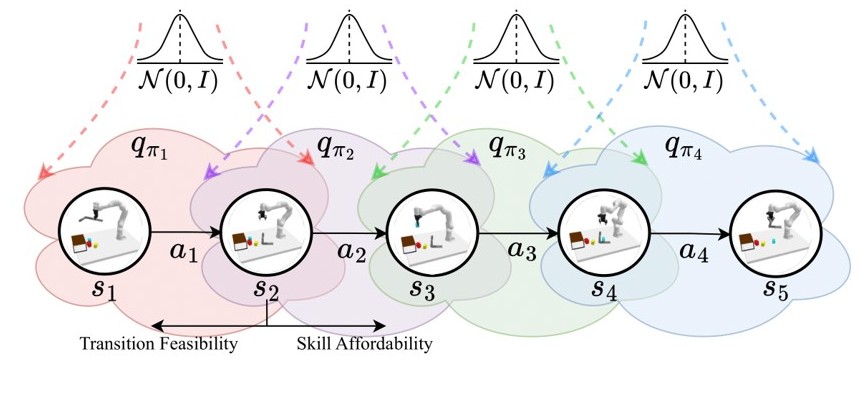

10 | title={Generative Skill Chaining: Long-Horizon Skill Planning with Diffusion Models},

11 | author={Utkarsh Aashu Mishra and Shangjie Xue and Yongxin Chen and Danfei Xu},

12 | booktitle={7th Annual Conference on Robot Learning},

13 | year={2023},

14 | url={https://openreview.net/forum?id=HtJE9ly5dT}

15 | }

16 | ```

17 |

--------------------------------------------------------------------------------

/conda/environment_cpu.yaml:

--------------------------------------------------------------------------------

1 | name: generative_skill_chaining

2 |

3 | channels:

4 | - pytorch

5 | - conda-forge

6 | - default

7 |

8 | dependencies:

9 | - python=3.7

10 |

11 | # PyTorch

12 | - pytorch

13 | - cpuonly

14 | - torchvision

15 | - torchtext

16 |

17 | # NumPy Family

18 | - numpy

19 | - scipy

20 | - networkx

21 | - scikit-image

22 |

23 | # Env

24 | - pybox2d

25 |

26 | # IO

27 | - imageio

28 | - pillow

29 | - pyyaml

30 | - cloudpickle

31 | - h5py

32 | - absl-py

33 | - pyparsing

34 |

35 | # Plotting

36 | - tensorboard

37 | - pandas

38 | - matplotlib

39 | - seaborn

40 |

41 | # Other

42 | - pytest

43 | - tqdm

44 | - future

45 |

46 | - pip

47 | - pip:

48 | - -r requirements.txt

--------------------------------------------------------------------------------

/conda/environment_gpu.yaml:

--------------------------------------------------------------------------------

1 | name: dplan

2 |

3 | channels:

4 | - pytorch

5 | - conda-forge

6 | - nvidia

7 | - default

8 |

9 | dependencies:

10 | - python=3.7

11 |

12 | # PyTorch

13 | - pytorch

14 | - cudatoolkit=11.1

15 | - torchvision

16 | - torchtext

17 |

18 | # NumPy Family

19 | - numpy

20 | - scipy

21 | - networkx

22 | - scikit-image

23 |

24 | # Env

25 | - pybox2d

26 |

27 | # IO

28 | - imageio

29 | - pillow

30 | - pyyaml

31 | - cloudpickle

32 | - h5py

33 | - absl-py

34 | - pyparsing

35 |

36 | # Plotting

37 | - tensorboard

38 | - pandas

39 | - matplotlib

40 | - seaborn

41 |

42 | # Other

43 | - pytest

44 | - tqdm

45 | - future

46 |

47 | - pip

48 | - pip:

49 | - -r requirements.txt

--------------------------------------------------------------------------------

/conda/requirements.txt:

--------------------------------------------------------------------------------

1 | gym>=0.12

2 | pygame

3 | functorch

4 | -e ../third_party/scod-regression

5 | black

6 | mypy

7 | flake8

8 | -e ../.

--------------------------------------------------------------------------------

/configs/pybullet/dynamics/table_env.yaml:

--------------------------------------------------------------------------------

1 | dynamics: TableEnvDynamics

2 | dynamics_kwargs:

3 | network_class: dynamics.MLPDynamics

4 | network_kwargs:

5 | hidden_layers: [256, 256]

6 | ortho_init: true

7 |

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/finger.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/finger.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/hand.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/hand.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/link0.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/link0.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/link1.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/link1.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/link2.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/link2.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/link3.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/link3.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/link4.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/link4.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/link5.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/link5.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/link6.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/link6.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/link7.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/link7.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/robotiq_arg2f_85_base_link.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/robotiq_arg2f_85_base_link.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/robotiq_arg2f_base_link.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/robotiq_arg2f_base_link.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/visual/robotiq_arg2f_85_pad.dae:

--------------------------------------------------------------------------------

1 |

4 |

5 | ## Citation

6 | If you find this package helpful, please consider citing our work:

7 | ```

8 | @inproceedings{

9 | mishra2023generative,

10 | title={Generative Skill Chaining: Long-Horizon Skill Planning with Diffusion Models},

11 | author={Utkarsh Aashu Mishra and Shangjie Xue and Yongxin Chen and Danfei Xu},

12 | booktitle={7th Annual Conference on Robot Learning},

13 | year={2023},

14 | url={https://openreview.net/forum?id=HtJE9ly5dT}

15 | }

16 | ```

17 |

--------------------------------------------------------------------------------

/conda/environment_cpu.yaml:

--------------------------------------------------------------------------------

1 | name: generative_skill_chaining

2 |

3 | channels:

4 | - pytorch

5 | - conda-forge

6 | - default

7 |

8 | dependencies:

9 | - python=3.7

10 |

11 | # PyTorch

12 | - pytorch

13 | - cpuonly

14 | - torchvision

15 | - torchtext

16 |

17 | # NumPy Family

18 | - numpy

19 | - scipy

20 | - networkx

21 | - scikit-image

22 |

23 | # Env

24 | - pybox2d

25 |

26 | # IO

27 | - imageio

28 | - pillow

29 | - pyyaml

30 | - cloudpickle

31 | - h5py

32 | - absl-py

33 | - pyparsing

34 |

35 | # Plotting

36 | - tensorboard

37 | - pandas

38 | - matplotlib

39 | - seaborn

40 |

41 | # Other

42 | - pytest

43 | - tqdm

44 | - future

45 |

46 | - pip

47 | - pip:

48 | - -r requirements.txt

--------------------------------------------------------------------------------

/conda/environment_gpu.yaml:

--------------------------------------------------------------------------------

1 | name: dplan

2 |

3 | channels:

4 | - pytorch

5 | - conda-forge

6 | - nvidia

7 | - default

8 |

9 | dependencies:

10 | - python=3.7

11 |

12 | # PyTorch

13 | - pytorch

14 | - cudatoolkit=11.1

15 | - torchvision

16 | - torchtext

17 |

18 | # NumPy Family

19 | - numpy

20 | - scipy

21 | - networkx

22 | - scikit-image

23 |

24 | # Env

25 | - pybox2d

26 |

27 | # IO

28 | - imageio

29 | - pillow

30 | - pyyaml

31 | - cloudpickle

32 | - h5py

33 | - absl-py

34 | - pyparsing

35 |

36 | # Plotting

37 | - tensorboard

38 | - pandas

39 | - matplotlib

40 | - seaborn

41 |

42 | # Other

43 | - pytest

44 | - tqdm

45 | - future

46 |

47 | - pip

48 | - pip:

49 | - -r requirements.txt

--------------------------------------------------------------------------------

/conda/requirements.txt:

--------------------------------------------------------------------------------

1 | gym>=0.12

2 | pygame

3 | functorch

4 | -e ../third_party/scod-regression

5 | black

6 | mypy

7 | flake8

8 | -e ../.

--------------------------------------------------------------------------------

/configs/pybullet/dynamics/table_env.yaml:

--------------------------------------------------------------------------------

1 | dynamics: TableEnvDynamics

2 | dynamics_kwargs:

3 | network_class: dynamics.MLPDynamics

4 | network_kwargs:

5 | hidden_layers: [256, 256]

6 | ortho_init: true

7 |

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/finger.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/finger.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/hand.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/hand.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/link0.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/link0.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/link1.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/link1.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/link2.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/link2.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/link3.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/link3.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/link4.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/link4.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/link5.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/link5.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/link6.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/link6.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/link7.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/link7.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/robotiq_arg2f_85_base_link.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/robotiq_arg2f_85_base_link.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/collision/robotiq_arg2f_base_link.stl:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/generative-skill-chaining/gsc-code/b8f5bc0d44bd453827f3f254ca1b03ec049849c5/configs/pybullet/envs/assets/franka_panda/collision/robotiq_arg2f_base_link.stl

--------------------------------------------------------------------------------

/configs/pybullet/envs/assets/franka_panda/visual/robotiq_arg2f_85_pad.dae:

--------------------------------------------------------------------------------

1 | 0 0 1 0 2 0 3 1 1 1 0 1 4 2 5 2 6 2 5 3 7 3 6 3 2 4 5 4 4 4 2 5 4 5 0 5 5 6 2 6 1 6 5 7 1 7 7 7 7 8 1 8 6 8 1 9 3 9 6 9 0 10 4 10 3 10 4 11 6 11 3 11

79 |