├── .dockerignore

├── .github

├── ISSUE_TEMPLATE

│ ├── bug_report.md

│ └── feature_request.md

└── workflows

│ ├── lint_and_format.yml

│ ├── publish_pypi.yml

│ ├── publish_to_ghcr.yml

│ └── pytest.yml

├── .gitignore

├── CONTRIBUTING.md

├── Dockerfile

├── LICENSE

├── Makefile

├── README.md

├── assets

├── bert.gif

├── glass-gif.gif

├── inference.gif

├── llama_images

│ ├── llama-13b-class.png

│ ├── llama-13b-summ.png

│ ├── llama-7b-class.png

│ └── llama-7b-summ.png

├── money.gif

├── overview_diagram.png

├── progress.gif

├── readme_images

│ └── redpajama_results

│ │ ├── RedPajama-3B Classification.png

│ │ ├── RedPajama-3B Summarization.png

│ │ ├── RedPajama-7B Classification.png

│ │ └── RedPajama-7B Summarization.png

├── repo-main.png

├── rocket.gif

├── time.gif

└── toolkit-animation.gif

├── examples

├── ablation_config.yml

├── config.yml

├── llama2_config.yml

├── mistral_config.yml

└── test_suite

│ ├── dot_product_tests.csv

│ └── json_validity_tests.csv

├── llama2

├── README.md

├── baseline_inference.sh

├── llama2_baseline_inference.py

├── llama2_classification.py

├── llama2_classification_inference.py

├── llama2_summarization.py

├── llama2_summarization_inference.py

├── llama_patch.py

├── prompts.py

├── run_lora.sh

└── sample_ablate.sh

├── llmtune

├── __init__.py

├── cli

│ ├── __init__.py

│ └── toolkit.py

├── config.yml

├── constants

│ └── files.py

├── data

│ ├── __init__.py

│ ├── dataset_generator.py

│ └── ingestor.py

├── finetune

│ ├── __init__.py

│ ├── generics.py

│ └── lora.py

├── inference

│ ├── __init__.py

│ ├── generics.py

│ └── lora.py

├── pydantic_models

│ ├── __init__.py

│ └── config_model.py

├── qa

│ ├── __init__.py

│ ├── metric_suite.py

│ ├── qa_metrics.py

│ ├── qa_tests.py

│ └── test_suite.py

├── ui

│ ├── __init__.py

│ ├── generics.py

│ └── rich_ui.py

└── utils

│ ├── __init__.py

│ ├── ablation_utils.py

│ ├── rich_print_utils.py

│ └── save_utils.py

├── mistral

├── README.md

├── baseline_inference.sh

├── mistral_baseline_inference.py

├── mistral_classification.py

├── mistral_classification_inference.py

├── mistral_summarization.py

├── mistral_summarization_inference.py

├── prompts.py

├── run_lora.sh

└── sample_ablate.sh

├── poetry.lock

├── pyproject.toml

├── requirements.txt

├── test_utils

├── __init__.py

└── test_config.py

└── tests

├── data

├── test_dataset_generator.py

└── test_ingestor.py

├── finetune

├── test_finetune_generics.py

└── test_finetune_lora.py

├── inference

├── test_inference_generics.py

└── test_inference_lora.py

├── qa

├── test_metric_suite.py

├── test_qa_metrics.py

├── test_qa_tests.py

└── test_test_suite.py

├── test_ablation_utils.py

├── test_cli.py

├── test_directory_helper.py

└── test_version.py

/.dockerignore:

--------------------------------------------------------------------------------

1 | # .git

2 |

3 | # exlcude temp folder

4 | temp

5 |

6 | # exclude data files

7 | data/ucr/*

8 |

9 | # Jupyter

10 | *.bundle.*

11 | lib/

12 | node_modules/

13 | *.egg-info/

14 | .ipynb_checkpoints

15 | .ipynb_checkpoints/

16 | *.tsbuildinfo

17 |

18 | # IDE

19 | .vscode

20 | .vscode/*

21 |

22 | #Cython

23 | *.pyc

24 |

25 | # Packages

26 | *.egg

27 | !/tests/**/*.egg

28 | /*.egg-info

29 | /dist/*

30 | build

31 | _build

32 | .cache

33 | *.so

34 |

35 | # Installer logs

36 | pip-log.txt

37 |

38 | # Unit test / coverage reports

39 | .coverage

40 | .tox

41 | .pytest_cache

42 |

43 | .DS_Store

44 | .idea/*

45 | .python-version

46 | .vscode/*

47 |

48 | /test.py

49 | /test_*.*

50 |

51 | /setup.cfg

52 | MANIFEST.in

53 | /docs/site/*

54 | /tests/fixtures/simple_project/setup.py

55 | /tests/fixtures/project_with_extras/setup.py

56 | .mypy_cache

57 |

58 | .venv

59 | /releases/*

60 | pip-wheel-metadata

61 | /poetry.toml

62 |

63 | poetry/core/*

64 |

65 | # Logs

66 | log.txt

67 | logs

68 | /logs/*

69 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/bug_report.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Bug report

3 | about: Create a report to help us improve

4 | title: ''

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **Describe the bug**

11 | A clear and concise description of what the bug is.

12 |

13 | **To Reproduce**

14 | Steps to reproduce the behavior:

15 | 1. Go to '...'

16 | 2. Click on '....'

17 | 3. Scroll down to '....'

18 | 4. See error

19 |

20 | **Expected behavior**

21 | A clear and concise description of what you expected to happen.

22 |

23 | **Screenshots**

24 | If applicable, add screenshots to help explain your problem.

25 |

26 | **Environment:**

27 | - OS: [e.g. Ubuntu 22.04]

28 | - GPU model and number [e.g. 1x NVIDIA RTX 3090]

29 | - GPU driver version [e.g. 535.171.04]

30 | - CUDA Driver version [e.g. 12.2]

31 | - Packages Installed

32 |

33 | **Additional context**

34 | Add any other context about the problem here.

35 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/feature_request.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: Feature request

3 | about: Suggest an idea for this project

4 | title: ''

5 | labels: ''

6 | assignees: ''

7 |

8 | ---

9 |

10 | **Is your feature request related to a problem? Please describe.**

11 | A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

12 |

13 | **Describe the solution you'd like**

14 | A clear and concise description of what you want to happen.

15 |

16 | **Describe alternatives you've considered**

17 | A clear and concise description of any alternative solutions or features you've considered.

18 |

19 | **Additional context**

20 | Add any other context or screenshots about the feature request here.

21 |

--------------------------------------------------------------------------------

/.github/workflows/lint_and_format.yml:

--------------------------------------------------------------------------------

1 | name: Ruff

2 | on: pull_request

3 | jobs:

4 | lint:

5 | name: Lint & Format

6 | runs-on: ubuntu-latest

7 | steps:

8 | - uses: actions/checkout@v4

9 | - uses: chartboost/ruff-action@v1

10 | name: Lint

11 | with:

12 | version: 0.3.5

13 | args: "check --output-format=full --statistics"

14 | - uses: chartboost/ruff-action@v1

15 | name: Format

16 | with:

17 | version: 0.3.5

18 | args: "format --check"

19 |

--------------------------------------------------------------------------------

/.github/workflows/publish_pypi.yml:

--------------------------------------------------------------------------------

1 | name: PyPI CD

2 | on:

3 | release:

4 | types: [published]

5 | jobs:

6 | pypi:

7 | name: Build and Upload Release

8 | runs-on: ubuntu-latest

9 | environment:

10 | name: pypi

11 | url: https://pypi.org/p/llm-toolkit

12 | permissions:

13 | id-token: write # IMPORTANT: this permission is mandatory for trusted publishing

14 | steps:

15 | # ----------------

16 | # Set Up

17 | # ----------------

18 | - name: Checkout

19 | uses: actions/checkout@v4

20 | with:

21 | fetch-depth: 0

22 | - name: Setup Python

23 | uses: actions/setup-python@v5

24 | with:

25 | python-version: "3.11"

26 | - name: Install poetry

27 | uses: snok/install-poetry@v1

28 | with:

29 | version: 1.5.1

30 | virtualenvs-create: true

31 | virtualenvs-in-project: true

32 | installer-parallel: true

33 | # ----------------

34 | # Install Deps

35 | # ----------------

36 | - name: Install Dependencies

37 | run: |

38 | poetry install --no-interaction --no-root

39 | poetry self add "poetry-dynamic-versioning[plugin]"

40 | # ----------------

41 | # Build & Publish

42 | # ----------------

43 | - name: Build

44 | run: poetry build

45 | - name: Publish package distributions to PyPI

46 | uses: pypa/gh-action-pypi-publish@release/v1

47 |

--------------------------------------------------------------------------------

/.github/workflows/publish_to_ghcr.yml:

--------------------------------------------------------------------------------

1 | name: Github Packages CD

2 | on:

3 | release:

4 | types: [published]

5 | env:

6 | REGISTRY: ghcr.io

7 | IMAGE_NAME: ${{ github.repository }}

8 | jobs:

9 | build-and-push-image:

10 | name: Build and Push Image

11 | runs-on: ubuntu-latest

12 | permissions:

13 | contents: read

14 | packages: write

15 | steps:

16 | - name: Checkout repository

17 | uses: actions/checkout@v4

18 | - name: Log in to the Container registry

19 | uses: docker/login-action@65b78e6e13532edd9afa3aa52ac7964289d1a9c1

20 | with:

21 | registry: ${{ env.REGISTRY }}

22 | username: ${{ github.actor }}

23 | password: ${{ secrets.GITHUB_TOKEN }}

24 | - name: Extract metadata (tags, labels) for Docker

25 | id: meta

26 | uses: docker/metadata-action@9ec57ed1fcdbf14dcef7dfbe97b2010124a938b7

27 | with:

28 | images: ${{ env.REGISTRY }}/${{ env.IMAGE_NAME }}

29 | - name: Build and push Docker image

30 | uses: docker/build-push-action@f2a1d5e99d037542a71f64918e516c093c6f3fc4

31 | with:

32 | context: .

33 | push: true

34 | tags: ${{ steps.meta.outputs.tags }}

35 | labels: ${{ steps.meta.outputs.labels }}

36 |

--------------------------------------------------------------------------------

/.github/workflows/pytest.yml:

--------------------------------------------------------------------------------

1 | name: pytest CI

2 | on: pull_request

3 | jobs:

4 | pytest:

5 | name: Run pytest and check min coverage threshold (80%)

6 | runs-on: ubuntu-latest

7 | steps:

8 | # ----------------

9 | # Set Up

10 | # ----------------

11 | - name: Checkout

12 | uses: actions/checkout@v4

13 | with:

14 | fetch-depth: 0

15 | - name: Setup Python

16 | uses: actions/setup-python@v5

17 | with:

18 | python-version: "3.11"

19 | - name: Install poetry

20 | uses: snok/install-poetry@v1

21 | with:

22 | version: 1.5.1

23 | virtualenvs-create: true

24 | virtualenvs-in-project: true

25 | installer-parallel: true

26 | # ----------------

27 | # Install Deps

28 | # ----------------

29 | - name: Install Dependencies

30 | run: |

31 | poetry install --no-interaction --no-root

32 | # ----------------

33 | # Run Test

34 | # ----------------

35 | - name: Run pytest

36 | run: poetry run pytest --cov=./ --cov-report=term

37 | - name: Check Coverage

38 | run: poetry run coverage report --fail-under=80

39 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | .DS_Store

2 |

3 | # experiment files

4 | */experiments

5 | */experiment

6 | experiment/*

7 | */archive

8 | */backup

9 | */baseline_results

10 |

11 | # Byte-compiled / optimized / DLL files

12 | __pycache__/

13 | *.py[cod]

14 | *$py.class

15 |

16 | # C extensions

17 | *.so

18 |

19 | # Distribution / packaging

20 | .Python

21 | build/

22 | develop-eggs/

23 | dist/

24 | downloads/

25 | eggs/

26 | .eggs/

27 | lib/

28 | lib64/

29 | parts/

30 | sdist/

31 | var/

32 | wheels/

33 | share/python-wheels/

34 | *.egg-info/

35 | .installed.cfg

36 | *.egg

37 | MANIFEST

38 |

39 | # Jupyter Notebook

40 | .ipynb_checkpoints

41 |

42 | # Environments

43 | .env

44 | .venv

45 | env/

46 | venv/

47 | ENV/

48 | env.bak/

49 | venv.bak/

50 |

51 | # Coverage Report

52 | .coverage

53 | /htmlcov

54 |

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | ## Contributing

2 |

3 | If you would like to contribute to this project, we recommend following the ["fork-and-pull" Git workflow](https://www.atlassian.com/git/tutorials/comparing-workflows/forking-workflow).

4 |

5 | 1. **Fork** the repo on GitHub

6 | 2. **Clone** the project to your own machine

7 | 3. **Commit** changes to your own branch

8 | 4. **Push** your work back up to your fork

9 | 5. Submit a **Pull request** so that we can review your changes

10 |

11 | NOTE: Be sure to merge the latest from "upstream" before making a pull request!

12 |

13 | ### Set Up Dev Environment

14 |

15 |

16 | 1. Clone Repo

17 |

18 | ```shell

19 | git clone https://github.com/georgian-io/LLM-Finetuning-Toolkit.git

20 | cd LLM-Finetuning-Toolkit/

21 | ```

22 |

23 |

24 |

25 |

26 | 2. Install Dependencies

27 |

28 | Install with Docker [Recommended]

29 |

30 | ```shell

31 | docker build -t llm-toolkit .

32 | ```

33 |

34 | ```shell

35 | # CPU

36 | docker run -it llm-toolkit

37 | # GPU

38 | docker run -it --gpus all llm-toolkit

39 | ```

40 |

41 |

42 |

43 |

44 | Poetry (recommended)

45 |

46 | See poetry documentation page for poetry [installation instructions](https://python-poetry.org/docs/#installation)

47 |

48 | ```shell

49 | poetry install

50 | ```

51 |

52 |

53 |

54 | pip

55 | We recommend using a virtual environment like `venv` or `conda` for installation

56 |

57 | ```shell

58 | pip install -e .

59 | ```

60 |

61 |

62 |

63 |

64 | ### Checklist Before Pull Request (Optional)

65 |

66 | 1. Use `ruff check --fix` to check and fix lint errors

67 | 2. Use `ruff format` to apply formatting

68 | 3. Run `pytest` at the top level directory to run unit tests

69 |

70 | NOTE: Ruff linting and formatting checks are done when PR is raised via Git Action. Before raising a PR, it is a good practice to check and fix lint errors, as well as apply formatting.

71 |

72 | ### Releasing

73 |

74 | To manually release a PyPI package, please run:

75 |

76 | ```shell

77 | make build-release

78 | ```

79 |

80 | Note: Make sure you have a pypi token for this [PyPI repo](https://pypi.org/project/llm-toolkit/).

81 |

--------------------------------------------------------------------------------

/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM nvidia/cuda:12.1.0-cudnn8-devel-ubuntu20.04

2 | RUN apt-get update && \

3 | DEBIAN_FRONTEND=noninteractive apt-get install -y --no-install-recommends git curl software-properties-common && \

4 | add-apt-repository ppa:deadsnakes/ppa && apt-get update && apt install -y python3.10 python3.10-distutils && \

5 | curl -sS https://bootstrap.pypa.io/get-pip.py | python3.10

6 |

7 |

8 | RUN update-alternatives --install /usr/bin/python3 python3 /usr/bin/python3.8 1 && \

9 | update-alternatives --install /usr/bin/python3 python3 /usr/bin/python3.10 2 && \

10 | update-alternatives --auto python3

11 |

12 | RUN export CUDA_HOME=/usr/local/cuda/

13 |

14 | COPY . /home/llm-finetuning-hub

15 | WORKDIR /home/llm-finetuning-hub

16 | RUN pip3 install --no-cache-dir -r ./requirements.txt

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 |

179 | Copyright 2024 Georgian Partners

180 |

181 | Licensed under the Apache License, Version 2.0 (the "License");

182 | you may not use this file except in compliance with the License.

183 | You may obtain a copy of the License at

184 |

185 | http://www.apache.org/licenses/LICENSE-2.0

186 |

187 | Unless required by applicable law or agreed to in writing, software

188 | distributed under the License is distributed on an "AS IS" BASIS,

189 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

190 | See the License for the specific language governing permissions and

191 | limitations under the License.

192 |

--------------------------------------------------------------------------------

/Makefile:

--------------------------------------------------------------------------------

1 | test-coverage:

2 | pytest --cov=llmtune tests/

3 |

4 | fix-format:

5 | ruff check --fix

6 | ruff format

7 |

8 | build-release:

9 | rm -rf dist

10 | rm -rf build

11 | poetry build

12 | poetry publish

13 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # LLM Finetuning Toolkit

2 |

3 |

4 |  5 |

5 |

6 |

7 | ## Overview

8 |

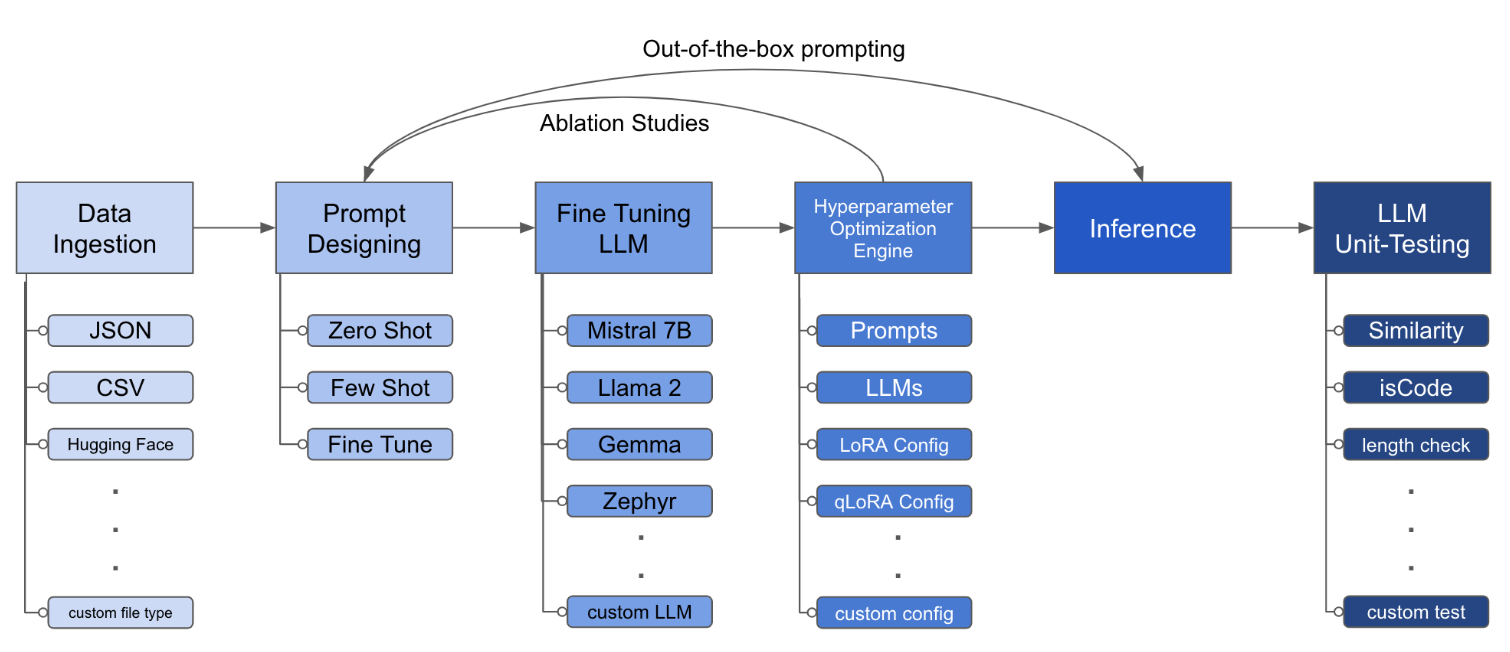

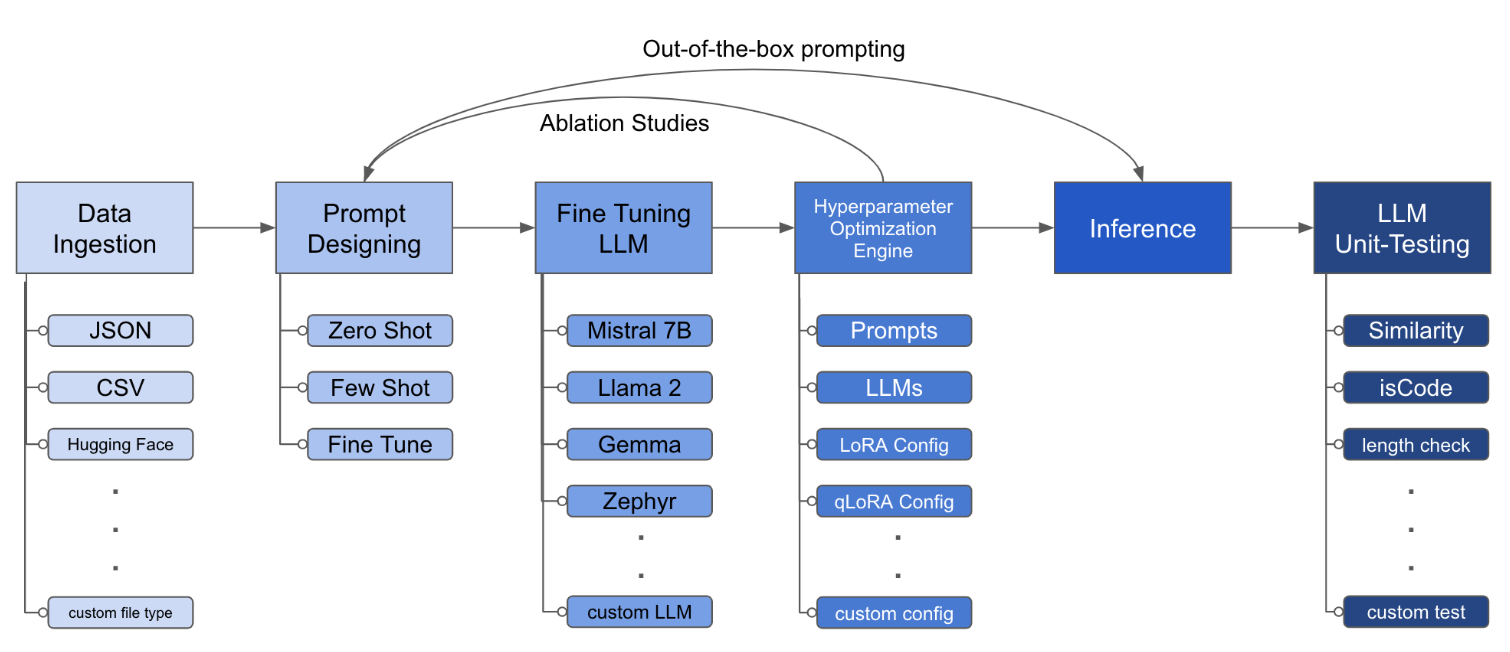

9 | LLM Finetuning toolkit is a config-based CLI tool for launching a series of LLM fine-tuning experiments on your data and gathering their results. From one single `yaml` config file, control all elements of a typical experimentation pipeline - **prompts**, **open-source LLMs**, **optimization strategy** and **LLM testing**.

10 |

11 |

12 |  13 |

13 |

14 |

15 | ## Installation

16 |

17 | ### [pipx](https://pipx.pypa.io/stable/) (recommended)

18 |

19 | [pipx](https://pipx.pypa.io/stable/) installs the package and dependencies in a separate virtual environment

20 |

21 | ```shell

22 | pipx install llm-toolkit

23 | ```

24 |

25 | ### pip

26 |

27 | ```shell

28 | pip install llm-toolkit

29 | ```

30 |

31 | ## Quick Start

32 |

33 | This guide contains 3 stages that will enable you to get the most out of this toolkit!

34 |

35 | - **Basic**: Run your first LLM fine-tuning experiment

36 | - **Intermediate**: Run a custom experiment by changing the components of the YAML configuration file

37 | - **Advanced**: Launch series of fine-tuning experiments across different prompt templates, LLMs, optimization techniques -- all through **one** YAML configuration file

38 |

39 | ### Basic

40 |

41 | ```shell

42 | llmtune generate config

43 | llmtune run ./config.yml

44 | ```

45 |

46 | The first command generates a helpful starter `config.yml` file and saves in the current working directory. This is provided to users to quickly get started and as a base for further modification.

47 |

48 | Then the second command initiates the fine-tuning process using the settings specified in the default YAML configuration file `config.yaml`.

49 |

50 | ### Intermediate

51 |

52 | The configuration file is the central piece that defines the behavior of the toolkit. It is written in YAML format and consists of several sections that control different aspects of the process, such as data ingestion, model definition, training, inference, and quality assurance. We highlight some of the critical sections.

53 |

54 | #### Flash Attention 2

55 |

56 | To enable Flash-attention for [supported models](https://huggingface.co/docs/transformers/perf_infer_gpu_one#flashattention-2). First install `flash-attn`:

57 |

58 | **pipx**

59 |

60 | ```shell

61 | pipx inject llm-toolkit flash-attn --pip-args=--no-build-isolation

62 | ```

63 |

64 | **pip**

65 |

66 | ```

67 | pip install flash-attn --no-build-isolation

68 | ```

69 |

70 | Then, add to config file.

71 |

72 | ```yaml

73 | model:

74 | torch_dtype: "bfloat16" # or "float16" if using older GPU

75 | attn_implementation: "flash_attention_2"

76 | ```

77 |

78 | #### Data Ingestion

79 |

80 | An example of what the data ingestion may look like:

81 |

82 | ```yaml

83 | data:

84 | file_type: "huggingface"

85 | path: "yahma/alpaca-cleaned"

86 | prompt:

87 | ### Instruction: {instruction}

88 | ### Input: {input}

89 | ### Output:

90 | prompt_stub: { output }

91 | test_size: 0.1 # Proportion of test as % of total; if integer then # of samples

92 | train_size: 0.9 # Proportion of train as % of total; if integer then # of samples

93 | train_test_split_seed: 42

94 | ```

95 |

96 | - While the above example illustrates using a public dataset from Hugging Face, the config file can also ingest your own data.

97 |

98 | ```yaml

99 | file_type: "json"

100 | path: "

101 | ```

102 |

103 | ```yaml

104 | file_type: "csv"

105 | path: "

106 | ```

107 |

108 | - The prompt fields help create instructions to fine-tune the LLM on. It reads data from specific columns, mentioned in {} brackets, that are present in your dataset. In the example provided, it is expected for the data file to have column names: `instruction`, `input` and `output`.

109 |

110 | - The prompt fields use both `prompt` and `prompt_stub` during fine-tuning. However, during testing, **only** the `prompt` section is used as input to the fine-tuned LLM.

111 |

112 | #### LLM Definition

113 |

114 | ```yaml

115 | model:

116 | hf_model_ckpt: "NousResearch/Llama-2-7b-hf"

117 | quantize: true

118 | bitsandbytes:

119 | load_in_4bit: true

120 | bnb_4bit_compute_dtype: "bf16"

121 | bnb_4bit_quant_type: "nf4"

122 |

123 | # LoRA Params -------------------

124 | lora:

125 | task_type: "CAUSAL_LM"

126 | r: 32

127 | lora_dropout: 0.1

128 | target_modules:

129 | - q_proj

130 | - v_proj

131 | - k_proj

132 | - o_proj

133 | - up_proj

134 | - down_proj

135 | - gate_proj

136 | ```

137 |

138 | - While the above example showcases using Llama2 7B, in theory, any open-source LLM supported by Hugging Face can be used in this toolkit.

139 |

140 | ```yaml

141 | hf_model_ckpt: "mistralai/Mistral-7B-v0.1"

142 | ```

143 |

144 | ```yaml

145 | hf_model_ckpt: "tiiuae/falcon-7b"

146 | ```

147 |

148 | - The parameters for LoRA, such as the rank `r` and dropout, can be altered.

149 |

150 | ```yaml

151 | lora:

152 | r: 64

153 | lora_dropout: 0.25

154 | ```

155 |

156 | #### Quality Assurance

157 |

158 | ```yaml

159 | qa:

160 | llm_metrics:

161 | - length_test

162 | - word_overlap_test

163 | ```

164 |

165 | - To ensure that the fine-tuned LLM behaves as expected, you can add tests that check if the desired behaviour is being attained. Example: for an LLM fine-tuned for a summarization task, we may want to check if the generated summary is indeed smaller in length than the input text. We would also like to learn the overlap between words in the original text and generated summary.

166 |

167 | #### Artifact Outputs

168 |

169 | This config will run fine-tuning and save the results under directory `./experiment/[unique_hash]`. Each unique configuration will generate a unique hash, so that our tool can automatically pick up where it left off. For example, if you need to exit in the middle of the training, by relaunching the script, the program will automatically load the existing dataset that has been generated under the directory, instead of doing it all over again.

170 |

171 | After the script finishes running you will see these distinct artifacts:

172 |

173 | ```shell

174 | /dataset # generated pkl file in hf datasets format

175 | /model # peft model weights in hf format

176 | /results # csv of prompt, ground truth, and predicted values

177 | /qa # csv of test results: e.g. vector similarity between ground truth and prediction

178 | ```

179 |

180 | Once all the changes have been incorporated in the YAML file, you can simply use it to run a custom fine-tuning experiment!

181 |

182 | ```shell

183 | python toolkit.py --config-path

184 | ```

185 |

186 | ### Advanced

187 |

188 | Fine-tuning workflows typically involve running ablation studies across various LLMs, prompt designs and optimization techniques. The configuration file can be altered to support running ablation studies.

189 |

190 | - Specify different prompt templates to experiment with while fine-tuning.

191 |

192 | ```yaml

193 | data:

194 | file_type: "huggingface"

195 | path: "yahma/alpaca-cleaned"

196 | prompt:

197 | - >-

198 | This is the first prompt template to iterate over

199 | ### Input: {input}

200 | ### Output:

201 | - >-

202 | This is the second prompt template

203 | ### Instruction: {instruction}

204 | ### Input: {input}

205 | ### Output:

206 | prompt_stub: { output }

207 | test_size: 0.1 # Proportion of test as % of total; if integer then # of samples

208 | train_size: 0.9 # Proportion of train as % of total; if integer then # of samples

209 | train_test_split_seed: 42

210 | ```

211 |

212 | - Specify various LLMs that you would like to experiment with.

213 |

214 | ```yaml

215 | model:

216 | hf_model_ckpt:

217 | [

218 | "NousResearch/Llama-2-7b-hf",

219 | mistralai/Mistral-7B-v0.1",

220 | "tiiuae/falcon-7b",

221 | ]

222 | quantize: true

223 | bitsandbytes:

224 | load_in_4bit: true

225 | bnb_4bit_compute_dtype: "bf16"

226 | bnb_4bit_quant_type: "nf4"

227 | ```

228 |

229 | - Specify different configurations of LoRA that you would like to ablate over.

230 |

231 | ```yaml

232 | lora:

233 | r: [16, 32, 64]

234 | lora_dropout: [0.25, 0.50]

235 | ```

236 |

237 | ## Extending

238 |

239 | The toolkit provides a modular and extensible architecture that allows developers to customize and enhance its functionality to suit their specific needs. Each component of the toolkit, such as data ingestion, fine-tuning, inference, and quality assurance testing, is designed to be easily extendable.

240 |

241 | ## Contributing

242 |

243 | Open-source contributions to this toolkit are welcome and encouraged.

244 | If you would like to contribute, please see [CONTRIBUTING.md](CONTRIBUTING.md).

245 |

--------------------------------------------------------------------------------

/assets/bert.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/bert.gif

--------------------------------------------------------------------------------

/assets/glass-gif.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/glass-gif.gif

--------------------------------------------------------------------------------

/assets/inference.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/inference.gif

--------------------------------------------------------------------------------

/assets/llama_images/llama-13b-class.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/llama_images/llama-13b-class.png

--------------------------------------------------------------------------------

/assets/llama_images/llama-13b-summ.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/llama_images/llama-13b-summ.png

--------------------------------------------------------------------------------

/assets/llama_images/llama-7b-class.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/llama_images/llama-7b-class.png

--------------------------------------------------------------------------------

/assets/llama_images/llama-7b-summ.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/llama_images/llama-7b-summ.png

--------------------------------------------------------------------------------

/assets/money.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/money.gif

--------------------------------------------------------------------------------

/assets/overview_diagram.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/overview_diagram.png

--------------------------------------------------------------------------------

/assets/progress.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/progress.gif

--------------------------------------------------------------------------------

/assets/readme_images/redpajama_results/RedPajama-3B Classification.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/readme_images/redpajama_results/RedPajama-3B Classification.png

--------------------------------------------------------------------------------

/assets/readme_images/redpajama_results/RedPajama-3B Summarization.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/readme_images/redpajama_results/RedPajama-3B Summarization.png

--------------------------------------------------------------------------------

/assets/readme_images/redpajama_results/RedPajama-7B Classification.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/readme_images/redpajama_results/RedPajama-7B Classification.png

--------------------------------------------------------------------------------

/assets/readme_images/redpajama_results/RedPajama-7B Summarization.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/readme_images/redpajama_results/RedPajama-7B Summarization.png

--------------------------------------------------------------------------------

/assets/repo-main.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/repo-main.png

--------------------------------------------------------------------------------

/assets/rocket.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/rocket.gif

--------------------------------------------------------------------------------

/assets/time.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/time.gif

--------------------------------------------------------------------------------

/assets/toolkit-animation.gif:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/georgian-io/LLM-Finetuning-Toolkit/1593c3ca14a99ba98518c051eb22d80e51b625d7/assets/toolkit-animation.gif

--------------------------------------------------------------------------------

/examples/ablation_config.yml:

--------------------------------------------------------------------------------

1 | save_dir: "./experiment/"

2 |

3 | ablation:

4 | use_ablate: false

5 |

6 | # Data Ingestion -------------------

7 | data:

8 | file_type: "huggingface" # one of 'json', 'csv', 'huggingface'

9 | path: "yahma/alpaca-cleaned"

10 | prompt:

11 | >- # prompt, make sure column inputs are enclosed in {} brackets and that they match your data

12 | Below is an instruction that describes a task.

13 | Write a response that appropriately completes the request.

14 | ### Instruction: {instruction}

15 | ### Input: {input}

16 | ### Output:

17 | prompt_stub:

18 | >- # Stub to add for training at the end of prompt, for test set or inference, this is omitted; make sure only one variable is present

19 | {output}

20 | test_size: 0.1 # Proportion of test as % of total; if integer then # of samples

21 | train_size: 0.9 # Proportion of train as % of total; if integer then # of samples

22 | train_test_split_seed: 42

23 |

24 | # Model Definition -------------------

25 | model:

26 | hf_model_ckpt: ["NousResearch/Llama-2-7b-hf", "mistralai/Mistral-7B-v0.1"]

27 | quantize: true

28 | bitsandbytes:

29 | load_in_4bit: true

30 | bnb_4bit_compute_dtype: "bfloat16"

31 | bnb_4bit_quant_type: "nf4"

32 |

33 | # LoRA Params -------------------

34 | lora:

35 | task_type: "CAUSAL_LM"

36 | r: [16, 32, 64]

37 | lora_dropout: [0.1, 0.25]

38 | target_modules:

39 | - q_proj

40 | - v_proj

41 | - k_proj

42 | - o_proj

43 | - up_proj

44 | - down_proj

45 | - gate_proj

46 |

47 | # Training -------------------

48 | training:

49 | training_args:

50 | num_train_epochs: 5

51 | per_device_train_batch_size: 4

52 | gradient_accumulation_steps: 4

53 | gradient_checkpointing: True

54 | optim: "paged_adamw_32bit"

55 | logging_steps: 100

56 | learning_rate: 2.0e-4

57 | bf16: true # Set to true for mixed precision training on Newer GPUs

58 | tf32: true

59 | # fp16: false # Set to true for mixed precision training on Older GPUs

60 | max_grad_norm: 0.3

61 | warmup_ratio: 0.03

62 | lr_scheduler_type: "constant"

63 | sft_args:

64 | max_seq_length: 5000

65 | # neftune_noise_alpha: None

66 |

67 | inference:

68 | max_new_tokens: 1024

69 | use_cache: True

70 | do_sample: True

71 | top_p: 0.9

72 | temperature: 0.8

73 |

--------------------------------------------------------------------------------

/examples/config.yml:

--------------------------------------------------------------------------------

1 | save_dir: "./experiment/"

2 |

3 | ablation:

4 | use_ablate: false

5 |

6 | # Data Ingestion -------------------

7 | data:

8 | file_type: "huggingface" # one of 'json', 'csv', 'huggingface'

9 | path: "yahma/alpaca-cleaned"

10 | prompt:

11 | >- # prompt, make sure column inputs are enclosed in {} brackets and that they match your data

12 | Below is an instruction that describes a task.

13 | Write a response that appropriately completes the request.

14 | ### Instruction: {instruction}

15 | ### Input: {input}

16 | ### Output:

17 | prompt_stub:

18 | >- # Stub to add for training at the end of prompt, for test set or inference, this is omitted; make sure only one variable is present

19 | {output}

20 | test_size: 0.1 # Proportion of test as % of total; if integer then # of samples

21 | train_size: 0.9 # Proportion of train as % of total; if integer then # of samples

22 | train_test_split_seed: 42

23 |

24 | # Model Definition -------------------

25 | model:

26 | hf_model_ckpt: "NousResearch/Llama-2-7b-hf"

27 | quantize: true

28 | bitsandbytes:

29 | load_in_4bit: true

30 | bnb_4bit_compute_dtype: "bfloat16"

31 | bnb_4bit_quant_type: "nf4"

32 |

33 | # LoRA Params -------------------

34 | lora:

35 | task_type: "CAUSAL_LM"

36 | r: 32

37 | lora_dropout: 0.1

38 | target_modules:

39 | - q_proj

40 | - v_proj

41 | - k_proj

42 | - o_proj

43 | - up_proj

44 | - down_proj

45 | - gate_proj

46 |

47 | # Training -------------------

48 | training:

49 | training_args:

50 | num_train_epochs: 5

51 | per_device_train_batch_size: 4

52 | gradient_accumulation_steps: 4

53 | gradient_checkpointing: True

54 | optim: "paged_adamw_32bit"

55 | logging_steps: 100

56 | learning_rate: 2.0e-4

57 | bf16: true # Set to true for mixed precision training on Newer GPUs

58 | tf32: true

59 | # fp16: false # Set to true for mixed precision training on Older GPUs

60 | max_grad_norm: 0.3

61 | warmup_ratio: 0.03

62 | lr_scheduler_type: "constant"

63 | sft_args:

64 | max_seq_length: 5000

65 | # neftune_noise_alpha: None

66 |

67 | inference:

68 | max_new_tokens: 1024

69 | use_cache: True

70 | do_sample: True

71 | top_p: 0.9

72 | temperature: 0.8

--------------------------------------------------------------------------------

/examples/llama2_config.yml:

--------------------------------------------------------------------------------

1 | save_dir: "./experiment/"

2 |

3 | ablation:

4 | use_ablate: false

5 |

6 | # Data Ingestion -------------------

7 | data:

8 | file_type: "huggingface" # one of 'json', 'csv', 'huggingface'

9 | path: "yahma/alpaca-cleaned"

10 | prompt:

11 | >- # prompt, make sure column inputs are enclosed in {} brackets and that they match your data

12 | Below is an instruction that describes a task.

13 | Write a response that appropriately completes the request.

14 | ### Instruction: {instruction}

15 | ### Input: {input}

16 | ### Output:

17 | prompt_stub:

18 | >- # Stub to add for training at the end of prompt, for test set or inference, this is omitted; make sure only one variable is present

19 | {output}

20 | test_size: 0.1 # Proportion of test as % of total; if integer then # of samples

21 | train_size: 0.9 # Proportion of train as % of total; if integer then # of samples

22 | train_test_split_seed: 42

23 |

24 | # Model Definition -------------------

25 | model:

26 | hf_model_ckpt: "NousResearch/Llama-2-7b-hf"

27 | quantize: true

28 | bitsandbytes:

29 | load_in_4bit: true

30 | bnb_4bit_compute_dtype: "bfloat16"

31 | bnb_4bit_quant_type: "nf4"

32 |

33 | # LoRA Params -------------------

34 | lora:

35 | task_type: "CAUSAL_LM"

36 | r: 32

37 | lora_dropout: 0.1

38 | target_modules:

39 | - q_proj

40 | - v_proj

41 | - k_proj

42 | - o_proj

43 | - up_proj

44 | - down_proj

45 | - gate_proj

46 |

47 | # Training -------------------

48 | training:

49 | training_args:

50 | num_train_epochs: 5

51 | per_device_train_batch_size: 4

52 | gradient_accumulation_steps: 4

53 | gradient_checkpointing: True

54 | optim: "paged_adamw_32bit"

55 | logging_steps: 100

56 | learning_rate: 2.0e-4

57 | bf16: true # Set to true for mixed precision training on Newer GPUs

58 | tf32: true

59 | # fp16: false # Set to true for mixed precision training on Older GPUs

60 | max_grad_norm: 0.3

61 | warmup_ratio: 0.03

62 | lr_scheduler_type: "constant"

63 | sft_args:

64 | max_seq_length: 5000

65 | # neftune_noise_alpha: None

66 |

67 | inference:

68 | max_new_tokens: 1024

69 | use_cache: True

70 | do_sample: True

71 | top_p: 0.9

72 | temperature: 0.8

73 |

--------------------------------------------------------------------------------

/examples/mistral_config.yml:

--------------------------------------------------------------------------------

1 | save_dir: "./experiment/"

2 |

3 | ablation:

4 | use_ablate: false

5 |

6 | # Data Ingestion -------------------

7 | data:

8 | file_type: "huggingface" # one of 'json', 'csv', 'huggingface'

9 | path: "yahma/alpaca-cleaned"

10 | prompt:

11 | >- # prompt, make sure column inputs are enclosed in {} brackets and that they match your data

12 | Below is an instruction that describes a task.

13 | Write a response that appropriately completes the request.

14 | ### Instruction: {instruction}

15 | ### Input: {input}

16 | ### Output:

17 | prompt_stub:

18 | >- # Stub to add for training at the end of prompt, for test set or inference, this is omitted; make sure only one variable is present

19 | {output}

20 | test_size: 0.1 # Proportion of test as % of total; if integer then # of samples

21 | train_size: 0.9 # Proportion of train as % of total; if integer then # of samples

22 | train_test_split_seed: 42

23 |

24 | # Model Definition -------------------

25 | model:

26 | hf_model_ckpt: "mistralai/Mistral-7B-v0.1"

27 | quantize: true

28 | bitsandbytes:

29 | load_in_4bit: true

30 | bnb_4bit_compute_dtype: "bfloat16"

31 | bnb_4bit_quant_type: "nf4"

32 |

33 | # LoRA Params -------------------

34 | lora:

35 | task_type: "CAUSAL_LM"

36 | r: 32

37 | lora_dropout: 0.1

38 | target_modules:

39 | - q_proj

40 | - v_proj

41 | - k_proj

42 | - o_proj

43 | - up_proj

44 | - down_proj

45 | - gate_proj

46 |

47 | # Training -------------------

48 | training:

49 | training_args:

50 | num_train_epochs: 5

51 | per_device_train_batch_size: 4

52 | gradient_accumulation_steps: 4

53 | gradient_checkpointing: True

54 | optim: "paged_adamw_32bit"

55 | logging_steps: 100

56 | learning_rate: 2.0e-4

57 | bf16: true # Set to true for mixed precision training on Newer GPUs

58 | tf32: true

59 | # fp16: false # Set to true for mixed precision training on Older GPUs

60 | max_grad_norm: 0.3

61 | warmup_ratio: 0.03

62 | lr_scheduler_type: "constant"

63 | sft_args:

64 | max_seq_length: 5000

65 | # neftune_noise_alpha: None

66 |

67 | inference:

68 | max_new_tokens: 1024

69 | use_cache: True

70 | do_sample: True

71 | top_p: 0.9

72 | temperature: 0.8

73 |

--------------------------------------------------------------------------------

/examples/test_suite/dot_product_tests.csv:

--------------------------------------------------------------------------------

1 | "Test Type","prompt","ground_truth","threshold"

2 | "cosine_similarity","Output “the dog is brown”, word for word:","the dog is brown",0.95

3 | "cosine_similarity","You are a customer service chatbot, offering polite responses to customer questions. The company has a 30 day cancellation policy. Answer the following customer question. Question: “How long is the cancellation policy? Answer: ","30 days",0.2

4 |

--------------------------------------------------------------------------------

/examples/test_suite/json_validity_tests.csv:

--------------------------------------------------------------------------------

1 | "Test Type","prompt"

2 | "json_valid","Hi my name is John. My age is 30 and I have blue eyes. Here is the same data in json format: "

3 | "json_valid","Here is an example of a simple json object: "

4 |

--------------------------------------------------------------------------------

/llama2/baseline_inference.sh:

--------------------------------------------------------------------------------

1 | python llama2_baseline_inference.py --task_type classification --prompt_type zero-shot & wait

2 | python llama2_baseline_inference.py --task_type classification --prompt_type few-shot & wait

3 | python llama2_baseline_inference.py --task_type summarization --prompt_type zero-shot & wait

4 | python llama2_baseline_inference.py --task_type summarization --prompt_type few-shot

5 |

--------------------------------------------------------------------------------

/llama2/llama2_baseline_inference.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import os

3 | import evaluate

4 | import warnings

5 | import json

6 | import pandas as pd

7 | import pickle

8 | import torch

9 | import time

10 |

11 | from datasets import load_dataset

12 | from prompts import (

13 | ZERO_SHOT_CLASSIFIER_PROMPT,

14 | FEW_SHOT_CLASSIFIER_PROMPT,

15 | ZERO_SHOT_SUMMARIZATION_PROMPT,

16 | FEW_SHOT_SUMMARIZATION_PROMPT,

17 | get_newsgroup_data,

18 | get_samsum_data,

19 | )

20 | from transformers import (

21 | AutoTokenizer,

22 | AutoModelForCausalLM,

23 | BitsAndBytesConfig,

24 | )

25 | from sklearn.metrics import (

26 | accuracy_score,

27 | f1_score,

28 | precision_score,

29 | recall_score,

30 | )

31 |

32 | metric = evaluate.load("rouge")

33 | warnings.filterwarnings("ignore")

34 |

35 |

36 | def compute_metrics_decoded(decoded_labs, decoded_preds, args):

37 | if args.task_type == "summarization":

38 | rouge = metric.compute(

39 | predictions=decoded_preds, references=decoded_labs, use_stemmer=True

40 | )

41 | metrics = {metric: round(rouge[metric] * 100.0, 3) for metric in rouge.keys()}

42 |

43 | elif args.task_type == "classification":

44 | metrics = {

45 | "micro_f1": f1_score(decoded_labs, decoded_preds, average="micro"),

46 | "macro_f1": f1_score(decoded_labs, decoded_preds, average="macro"),

47 | "precision": precision_score(decoded_labs, decoded_preds, average="micro"),

48 | "recall": recall_score(decoded_labs, decoded_preds, average="micro"),

49 | "accuracy": accuracy_score(decoded_labs, decoded_preds),

50 | }

51 |

52 | return metrics

53 |

54 |

55 | def main(args):

56 | # replace attention with flash attention

57 | if torch.cuda.get_device_capability()[0] >= 8:

58 | from llama_patch import replace_attn_with_flash_attn

59 |

60 | print("Using flash attention")

61 | replace_attn_with_flash_attn()

62 | use_flash_attention = True

63 |

64 | save_dir = os.path.join(

65 | "baseline_results", args.pretrained_ckpt, args.task_type, args.prompt_type

66 | )

67 | if not os.path.exists(save_dir):

68 | os.makedirs(save_dir)

69 |

70 | if args.task_type == "classification":

71 | dataset = load_dataset("rungalileo/20_Newsgroups_Fixed")

72 | test_dataset = dataset["test"]

73 | test_data, test_labels = test_dataset["text"], test_dataset["label"]

74 |

75 | newsgroup_classes, few_shot_samples, _ = get_newsgroup_data()

76 |

77 | elif args.task_type == "summarization":

78 | dataset = load_dataset("samsum")

79 | test_dataset = dataset["test"]

80 | test_data, test_labels = test_dataset["dialogue"], test_dataset["summary"]

81 |

82 | few_shot_samples = get_samsum_data()

83 |

84 | if args.prompt_type == "zero-shot":

85 | if args.task_type == "classification":

86 | prompt = ZERO_SHOT_CLASSIFIER_PROMPT

87 | elif args.task_type == "summarization":

88 | prompt = ZERO_SHOT_SUMMARIZATION_PROMPT

89 |

90 | elif args.prompt_type == "few-shot":

91 | if args.task_type == "classification":

92 | prompt = FEW_SHOT_CLASSIFIER_PROMPT

93 | elif args.task_type == "summarization":

94 | prompt = FEW_SHOT_SUMMARIZATION_PROMPT

95 |

96 | # BitsAndBytesConfig int-4 config

97 | bnb_config = BitsAndBytesConfig(

98 | load_in_4bit=True,

99 | bnb_4bit_use_double_quant=True,

100 | bnb_4bit_quant_type="nf4",

101 | bnb_4bit_compute_dtype=torch.bfloat16,

102 | )

103 |

104 | # Load model and tokenizer

105 | model = AutoModelForCausalLM.from_pretrained(

106 | args.pretrained_ckpt,

107 | quantization_config=bnb_config,

108 | use_cache=False,

109 | device_map="auto",

110 | )

111 | model.config.pretraining_tp = 1

112 |

113 | if use_flash_attention:

114 | from llama_patch import forward

115 |

116 | assert (

117 | model.model.layers[0].self_attn.forward.__doc__ == forward.__doc__

118 | ), "Model is not using flash attention"

119 |

120 | tokenizer = AutoTokenizer.from_pretrained(args.pretrained_ckpt)

121 | tokenizer.pad_token = tokenizer.eos_token

122 | tokenizer.padding_side = "right"

123 |

124 | results = []

125 | good_data, good_labels = [], []

126 | ctr = 0

127 | # for instruct, label in zip(instructions, labels):

128 | for data, label in zip(test_data, test_labels):

129 | if not isinstance(data, str):

130 | continue

131 | if not isinstance(label, str):

132 | continue

133 |

134 | # example = instruct[:-len(label)] # remove the answer from the example

135 | if args.prompt_type == "zero-shot":

136 | if args.task_type == "classification":

137 | example = prompt.format(

138 | newsgroup_classes=newsgroup_classes,

139 | sentence=data,

140 | )

141 | elif args.task_type == "summarization":

142 | example = prompt.format(

143 | dialogue=data,

144 | )

145 |

146 | elif args.prompt_type == "few-shot":

147 | if args.task_type == "classification":

148 | example = prompt.format(

149 | newsgroup_classes=newsgroup_classes,

150 | few_shot_samples=few_shot_samples,

151 | sentence=data,

152 | )

153 | elif args.task_type == "summarization":

154 | example = prompt.format(

155 | few_shot_samples=few_shot_samples,

156 | dialogue=data,

157 | )

158 |

159 | input_ids = tokenizer(

160 | example, return_tensors="pt", truncation=True

161 | ).input_ids.cuda()

162 |

163 | with torch.inference_mode():

164 | outputs = model.generate(

165 | input_ids=input_ids,

166 | max_new_tokens=20 if args.task_type == "classification" else 50,

167 | do_sample=True,

168 | top_p=0.95,

169 | temperature=1e-3,

170 | )

171 | result = tokenizer.batch_decode(

172 | outputs.detach().cpu().numpy(), skip_special_tokens=True

173 | )[0]

174 |

175 | # Extract the generated text, and do basic processing

176 | result = result[len(example) :].replace("\n", "").lstrip().rstrip()

177 | results.append(result)

178 | good_labels.append(label)

179 | good_data.append(data)

180 |

181 | print(f"Example {ctr}/{len(test_data)} | GT: {label} | Pred: {result}")

182 | ctr += 1

183 |

184 | metrics = compute_metrics_decoded(good_labels, results, args)

185 | print(metrics)

186 | metrics["predictions"] = results

187 | metrics["labels"] = good_labels

188 | metrics["data"] = good_data

189 |

190 | with open(os.path.join(save_dir, "metrics.pkl"), "wb") as handle:

191 | pickle.dump(metrics, handle)

192 |

193 | print(f"Completed experiment {save_dir}")

194 | print("----------------------------------------")

195 |

196 |

197 | if __name__ == "__main__":

198 | parser = argparse.ArgumentParser()

199 | parser.add_argument("--pretrained_ckpt", default="NousResearch/Llama-2-7b-hf")

200 | parser.add_argument("--prompt_type", default="zero-shot")

201 | parser.add_argument("--task_type", default="classification")

202 | args = parser.parse_args()

203 |

204 | main(args)

205 |

--------------------------------------------------------------------------------

/llama2/llama2_classification.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import torch

3 | import os

4 | import numpy as np

5 | import pandas as pd

6 | import pickle

7 |

8 |

9 | from peft import (

10 | LoraConfig,

11 | prepare_model_for_kbit_training,

12 | get_peft_model,

13 | )

14 | from transformers import (

15 | AutoTokenizer,

16 | AutoModelForCausalLM,

17 | BitsAndBytesConfig,

18 | TrainingArguments,

19 | )

20 | from trl import SFTTrainer

21 |

22 | from prompts import get_newsgroup_data_for_ft

23 |

24 |

25 | def main(args):

26 | train_dataset, test_dataset = get_newsgroup_data_for_ft(

27 | mode="train", train_sample_fraction=args.train_sample_fraction

28 | )

29 | print(f"Sample fraction:{args.train_sample_fraction}")

30 | print(f"Training samples:{train_dataset.shape}")

31 |

32 | # BitsAndBytesConfig int-4 config

33 | bnb_config = BitsAndBytesConfig(

34 | load_in_4bit=True,

35 | bnb_4bit_use_double_quant=True,

36 | bnb_4bit_quant_type="nf4",

37 | bnb_4bit_compute_dtype=torch.bfloat16,

38 | )

39 |

40 | # Load model and tokenizer

41 | model = AutoModelForCausalLM.from_pretrained(

42 | args.pretrained_ckpt,

43 | quantization_config=bnb_config,

44 | use_cache=False,

45 | device_map="auto",

46 | )

47 | model.config.pretraining_tp = 1

48 |

49 | tokenizer = AutoTokenizer.from_pretrained(args.pretrained_ckpt)

50 | tokenizer.pad_token = tokenizer.eos_token

51 | tokenizer.padding_side = "right"

52 |

53 | # LoRA config based on QLoRA paper

54 | peft_config = LoraConfig(

55 | lora_alpha=16,

56 | lora_dropout=args.dropout,

57 | r=args.lora_r,

58 | bias="none",

59 | task_type="CAUSAL_LM",

60 | )

61 |

62 | # prepare model for training

63 | model = prepare_model_for_kbit_training(model)

64 | model = get_peft_model(model, peft_config)

65 |

66 | results_dir = f"experiments/classification-sampleFraction-{args.train_sample_fraction}_epochs-{args.epochs}_rank-{args.lora_r}_dropout-{args.dropout}"

67 |

68 | training_args = TrainingArguments(

69 | output_dir=results_dir,

70 | logging_dir=f"{results_dir}/logs",

71 | num_train_epochs=args.epochs,

72 | per_device_train_batch_size=6 if use_flash_attention else 4,

73 | gradient_accumulation_steps=2,

74 | gradient_checkpointing=True,

75 | optim="paged_adamw_32bit",

76 | logging_steps=100,

77 | learning_rate=2e-4,

78 | bf16=True,

79 | tf32=True,

80 | max_grad_norm=0.3,

81 | warmup_ratio=0.03,

82 | lr_scheduler_type="constant",

83 | report_to="none",

84 | # disable_tqdm=True # disable tqdm since with packing values are in correct

85 | )

86 |

87 | max_seq_length = 512 # max sequence length for model and packing of the dataset

88 |

89 | trainer = SFTTrainer(

90 | model=model,

91 | train_dataset=train_dataset,

92 | peft_config=peft_config,

93 | max_seq_length=max_seq_length,

94 | tokenizer=tokenizer,

95 | packing=True,

96 | args=training_args,

97 | dataset_text_field="instructions",

98 | )

99 |

100 | trainer_stats = trainer.train()

101 | train_loss = trainer_stats.training_loss

102 | print(f"Training loss:{train_loss}")

103 |

104 | peft_model_id = f"{results_dir}/assets"

105 | trainer.model.save_pretrained(peft_model_id)

106 | tokenizer.save_pretrained(peft_model_id)

107 |

108 | with open(f"{results_dir}/results.pkl", "wb") as handle:

109 | run_result = [

110 | args.epochs,

111 | args.lora_r,

112 | args.dropout,

113 | train_loss,

114 | ]

115 | pickle.dump(run_result, handle)

116 | print("Experiment over")

117 |

118 |

119 | if __name__ == "__main__":

120 | parser = argparse.ArgumentParser()

121 | parser.add_argument("--pretrained_ckpt", default="NousResearch/Llama-2-7b-hf")

122 | parser.add_argument("--lora_r", default=8, type=int)

123 | parser.add_argument("--epochs", default=5, type=int)

124 | parser.add_argument("--dropout", default=0.1, type=float)

125 | parser.add_argument("--train_sample_fraction", default=0.99, type=float)

126 |

127 | args = parser.parse_args()

128 | main(args)

129 |

--------------------------------------------------------------------------------

/llama2/llama2_classification_inference.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import torch

3 | import os

4 | import pandas as pd

5 | import evaluate

6 | import pickle

7 | import warnings

8 | from tqdm import tqdm

9 |

10 | from llama_patch import unplace_flash_attn_with_attn

11 | from peft import AutoPeftModelForCausalLM

12 | from transformers import AutoTokenizer

13 | from sklearn.metrics import (

14 | accuracy_score,

15 | f1_score,

16 | precision_score,

17 | recall_score,

18 | )

19 |

20 | from prompts import get_newsgroup_data_for_ft

21 |

22 | metric = evaluate.load("rouge")

23 | warnings.filterwarnings("ignore")

24 |

25 |

26 | def main(args):

27 | _, test_dataset = get_newsgroup_data_for_ft(mode="inference")

28 |

29 | experiment = args.experiment_dir

30 | peft_model_id = f"{experiment}/assets"

31 |

32 | # unpatch flash attention

33 | unplace_flash_attn_with_attn()

34 |

35 | # load base LLM model and tokenizer

36 | model = AutoPeftModelForCausalLM.from_pretrained(

37 | peft_model_id,

38 | low_cpu_mem_usage=True,

39 | torch_dtype=torch.float16,

40 | load_in_4bit=True,

41 | )

42 | model.eval()

43 |

44 | tokenizer = AutoTokenizer.from_pretrained(peft_model_id)

45 |

46 | results = []

47 | oom_examples = []

48 | instructions, labels = test_dataset["instructions"], test_dataset["labels"]

49 |

50 | for instruct, label in tqdm(zip(instructions, labels)):

51 | input_ids = tokenizer(

52 | instruct, return_tensors="pt", truncation=True

53 | ).input_ids.cuda()

54 |

55 | with torch.inference_mode():

56 | try:

57 | outputs = model.generate(

58 | input_ids=input_ids,

59 | max_new_tokens=20,

60 | do_sample=True,

61 | top_p=0.95,

62 | temperature=1e-3,

63 | )

64 | result = tokenizer.batch_decode(

65 | outputs.detach().cpu().numpy(), skip_special_tokens=True

66 | )[0]

67 | result = result[len(instruct) :]

68 | except:

69 | result = ""

70 | oom_examples.append(input_ids.shape[-1])

71 |

72 | results.append(result)

73 |

74 | metrics = {

75 | "micro_f1": f1_score(labels, results, average="micro"),

76 | "macro_f1": f1_score(labels, results, average="macro"),

77 | "precision": precision_score(labels, results, average="micro"),

78 | "recall": recall_score(labels, results, average="micro"),

79 | "accuracy": accuracy_score(labels, results),

80 | "oom_examples": oom_examples,

81 | }

82 | print(metrics)

83 |

84 | save_dir = os.path.join(experiment, "metrics")

85 | if not os.path.exists(save_dir):

86 | os.makedirs(save_dir)

87 |

88 | with open(os.path.join(save_dir, "metrics.pkl"), "wb") as handle:

89 | pickle.dump(metrics, handle)

90 |

91 | print(f"Completed experiment {peft_model_id}")

92 | print("----------------------------------------")

93 |

94 |

95 | if __name__ == "__main__":

96 | parser = argparse.ArgumentParser()

97 | parser.add_argument(

98 | "--experiment_dir",

99 | default="experiments/classification-sampleFraction-0.1_epochs-5_rank-8_dropout-0.1",

100 | )

101 |

102 | args = parser.parse_args()

103 | main(args)

104 |

--------------------------------------------------------------------------------

/llama2/llama2_summarization.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import torch

3 | import os

4 | import numpy as np

5 | import pandas as pd

6 | import pickle

7 | import datasets

8 | from datasets import Dataset, load_dataset

9 |

10 | from peft import (

11 | LoraConfig,

12 | prepare_model_for_kbit_training,

13 | get_peft_model,

14 | )

15 | from transformers import (

16 | AutoTokenizer,

17 | AutoModelForCausalLM,

18 | BitsAndBytesConfig,

19 | TrainingArguments,

20 | )

21 | from trl import SFTTrainer

22 |

23 | from prompts import TRAINING_SUMMARIZATION_PROMPT_v2

24 |

25 |

26 | def prepare_instructions(dialogues, summaries):

27 | instructions = []

28 |

29 | prompt = TRAINING_SUMMARIZATION_PROMPT_v2

30 |

31 | for dialogue, summary in zip(dialogues, summaries):

32 | example = prompt.format(

33 | dialogue=dialogue,

34 | summary=summary,

35 | )

36 | instructions.append(example)

37 |

38 | return instructions

39 |

40 |

41 | def prepare_samsum_data():

42 | dataset = load_dataset("samsum")

43 | train_dataset = dataset["train"]

44 | val_dataset = dataset["test"]

45 |

46 | dialogues = train_dataset["dialogue"]

47 | summaries = train_dataset["summary"]

48 | train_instructions = prepare_instructions(dialogues, summaries)

49 | train_dataset = datasets.Dataset.from_pandas(

50 | pd.DataFrame(data={"instructions": train_instructions})

51 | )

52 |

53 | return train_dataset

54 |

55 |

56 | def main(args):

57 | train_dataset = prepare_samsum_data()

58 |

59 | # BitsAndBytesConfig int-4 config

60 | bnb_config = BitsAndBytesConfig(

61 | load_in_4bit=True,

62 | bnb_4bit_use_double_quant=True,

63 | bnb_4bit_quant_type="nf4",

64 | bnb_4bit_compute_dtype=torch.bfloat16,

65 | )

66 |

67 | # Load model and tokenizer

68 | model = AutoModelForCausalLM.from_pretrained(

69 | args.pretrained_ckpt,