├── Installation

├── .gitkeep

└── install_anaconda_python.md

├── Pratice Guide

├── .gitkeep

└── research_guide_for_fyp.md

├── ML from Scratch

├── README.md

├── Linear Regression

│ ├── .gitkeep

│ ├── salaries.csv

│ ├── README.md

│ └── Predict-Salary-based-on-Experience---Linear-Regression.ipynb

├── Random Forest

│ └── README.md

├── Support Vector Machine

│ └── README.md

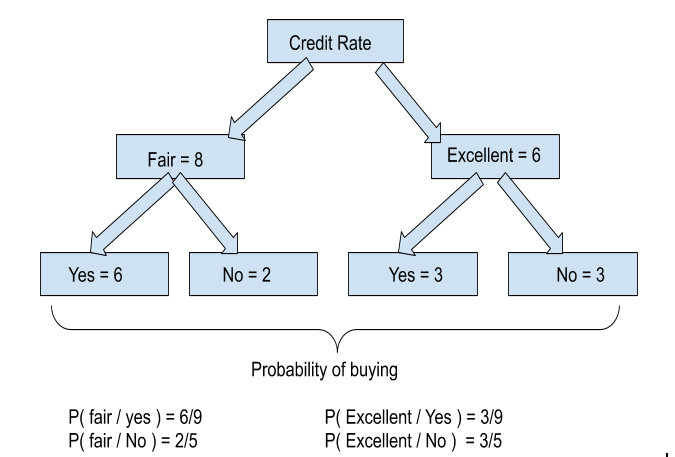

├── Decision Tree

│ └── README.md

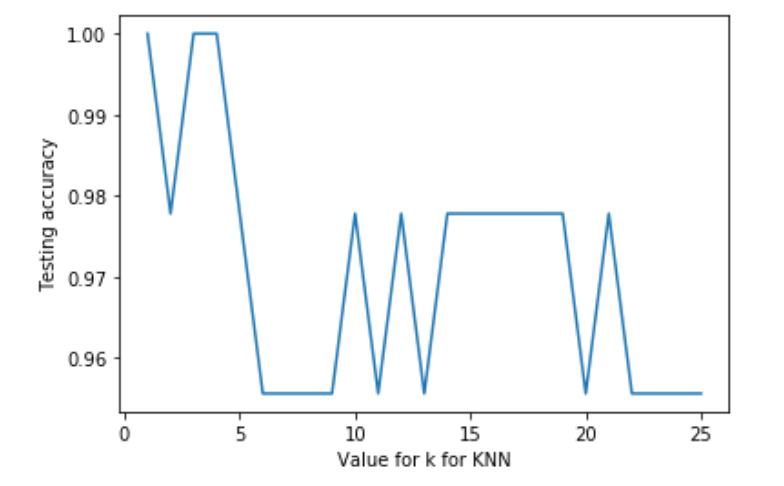

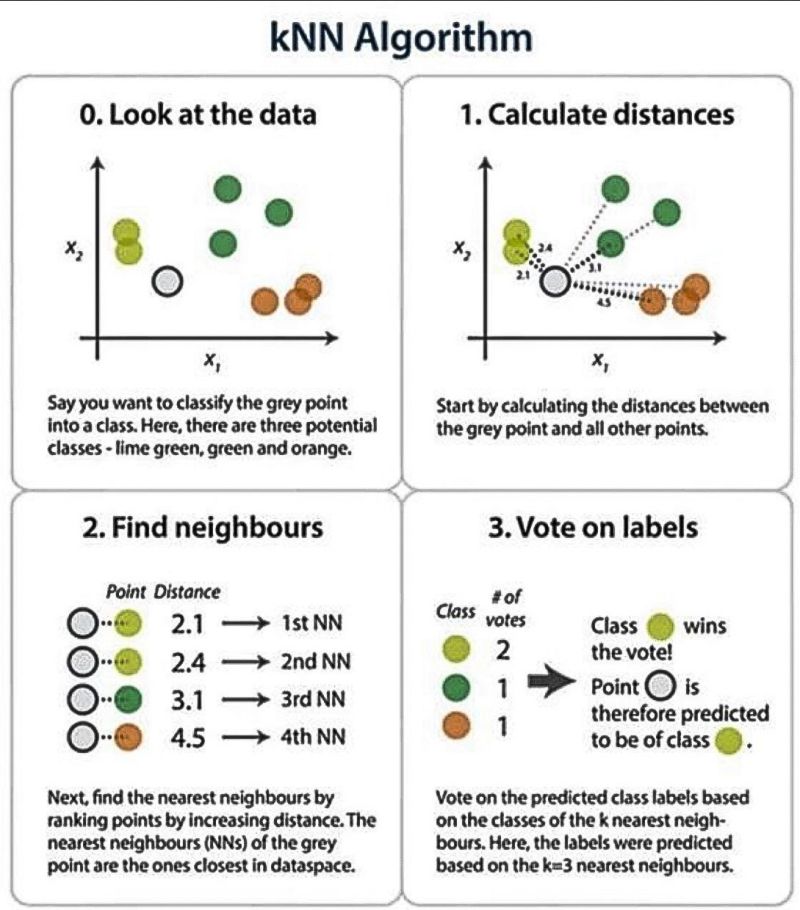

├── KNN

│ └── README.md

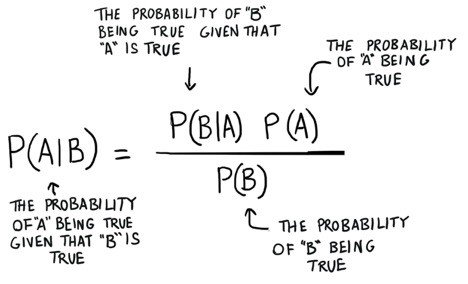

├── Naive Bayes

│ └── README.md

├── K Means Clustering

│ └── README.md

└── Logistic Regression

│ └── README.md

├── Reality vs Expectation

├── .gitkeep

└── Is AI Overhyped? Reality vs Expectation.md

├── Mathematical Implementation

├── .gitkeep

├── PCA.md

├── Compute MSA, RMSE, and MAE.md

└── confusion_matrix.md

├── Machine Learning from Beginner to Advanced

├── .gitkeep

├── Data Cleaning and Pre-processing.md

├── data_preparation.md

├── Introduction to ML and AI.md

├── Key terms used in ML.md

├── Regression Performance Metrics.md

├── mathematics_ai_ml_history_motivation.md

└── Classification Performance Metrics.md

├── LICENSE

└── README.md

/Installation/.gitkeep:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/Pratice Guide/.gitkeep:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/ML from Scratch/README.md:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/Reality vs Expectation/.gitkeep:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/Mathematical Implementation/.gitkeep:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/ML from Scratch/Linear Regression/.gitkeep:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/Machine Learning from Beginner to Advanced/.gitkeep:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/Mathematical Implementation/PCA.md:

--------------------------------------------------------------------------------

1 | Working............

2 |

--------------------------------------------------------------------------------

/Mathematical Implementation/Compute MSA, RMSE, and MAE.md:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/Machine Learning from Beginner to Advanced/Data Cleaning and Pre-processing.md:

--------------------------------------------------------------------------------

1 |

2 |

--------------------------------------------------------------------------------

/ML from Scratch/Linear Regression/salaries.csv:

--------------------------------------------------------------------------------

1 | Years of Experience,Salary

2 | 1.1,39343

3 | 1.3,46205

4 | 1.5,37731

5 | 2,43525

6 | 2.2,39891

7 | 2.9,56642

8 | 3,60150

9 | 3.2,54445

10 | 3.2,64445

11 | 3.7,57189

12 | 3.9,63218

13 | 4,55794

14 | 4,56957

15 | 4.1,57081

16 | 4.5,61111

17 | 4.9,67938

18 | 5.1,66029

19 | 5.3,83088

20 | 5.9,81363

21 | 6,93940

22 | 6.8,91738

23 | 7.1,98273

24 | 7.9,101302

25 | 8.2,113812

26 | 8.7,109431

27 | 9,105582

28 | 9.5,116969

29 | 9.6,112635

30 | 10.3,122391

31 | 10.5,121872

32 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2022 Sunil Ghimire

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/Machine Learning from Beginner to Advanced/data_preparation.md:

--------------------------------------------------------------------------------

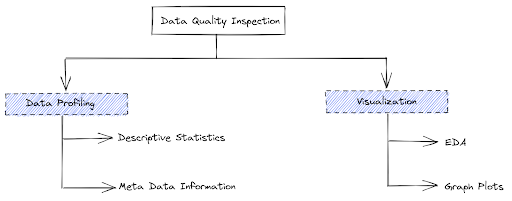

1 | # 1. Data Quality Inspection

2 |

3 |

4 |

5 |

6 | The two most popular approach to data quality inspection is: Data Profiling and Data visualization

7 |

8 | ## 1.1. Data Profiling

9 |

10 | Data profiling is reviewing source data, understanding structure, content, and interrelationships, and identifying potential for data projects

11 |

12 | Data profiling is a crucial part of

13 |

14 | - Data warehouse and business intelligence (DW/BI) projects—data profiling can uncover data quality issues in data sources, and what needs to be corrected in ETL.

15 | - Data conversion and migration projects—data profiling can identify data quality issues, which you can handle in scripts and data integration tools copying data from source to target. It can also uncover new requirements for the target system.

16 | - Source system data quality projects—data profiling can highlight data that suffers from serious or numerous quality issues, and the source of the issues (e.g. user inputs, errors in interfaces, data corruption).

17 |

18 | ### 1.1.1. Data profiling involves:

19 |

20 | - Collecting descriptive statistics like min, max, count, and sum.

21 | - Collecting data types, length, and recurring patterns.

22 | - Tagging data with keywords, descriptions, or categories.

23 | - Performing data quality assessment, risk of performing joins on the data.

24 | - Discovering metadata and assessing its accuracy.

25 | - Identifying distributions, key candidates, foreign-key candidates, functional dependencies, embedded value dependencies, and performing inter-table analysis

26 |

27 | ### 1.1.2. Data profiling involves:

28 | Data profiling collects statistics and meta-information about available data that provide insight into the content of data sets

29 |

--------------------------------------------------------------------------------

/Pratice Guide/research_guide_for_fyp.md:

--------------------------------------------------------------------------------

1 | # PART 1: The Reflective Individual Report

2 |

3 | **I. Finding a research topic:- approx 500 words.**

4 | In this task identify your topic and explain how you crafted your research project, selected research tools and search techniques, and which library Library Collections did you explore.

5 |

6 | **Areas that can be covered include**:

7 | * What is your chosen research topic?

8 | * How and why did you choose it?

9 | * What is your research aims and objectives?

10 | * What specific techniques and strategies did you use for finding relevant information?

11 | * What specific library search tools did you use and why?

12 | * What did you learn about finding information on your topic or in your discipline?

13 | * Was it necessary to move outside your discipline to find sufficient sources?

14 |

15 | **II. Professional Activities:- Approx 500 words.**

16 | In this task, you have to show a reflection on a wide range of professional research development activities and responsibilities, through your own self-discovery…

17 |

18 | **Areas which can be covered included:**

19 | * Student’s research tasks and schedule including for example a Gantt Chart

20 | * How did you fit in the dependent researcher role?

21 | * Any Challenges you faced and how did you tackle them?

22 |

23 | **III. Literature Review:- Approx 1500 words.**

24 | This task brings together the main themes of the modules and requires you to build a portfolio from your literature collections.

25 |

26 | **Areas which can be covered included:**

27 | * A mind map of your research topic

28 | * What tools and resources did you use to develop the mind map

29 | * A brief summary of your literature review with proper citation

30 | * What resources and techniques did you use to write your literature review

31 | * Did you have any reason for not selecting specific resources, even though they appeared promising?

32 | * What is your research method and what are the practical steps you used to complete your research?

33 | * Comment on the feasibility of your chosen method including challenges and recommendations.

34 |

35 | **IV. Refection of Research Project:- Approx 500 words**

36 | Here is your opportunity to bring your achievements and contributions in a portfolio of the work done in a professional manner and reflected upon the relevance of the various activities.

37 |

38 | Summarise how you have reflected on your learning and development whilst on a research project. Demonstrate awareness of your personal strengths and weaknesses and the ability to adapt to the independent research process and how you would engage further in continuing self-development.

39 |

40 | **Areas which can be covered included:**

41 | * What was the achievement during this research?

42 | * What did you learn about your own research process and style?

43 | * What expertise have you gained as a researcher?

44 | * What do you still need to learn?

45 | * What would you change about your process if you had another chance?

46 | * Any recommendations

47 |

48 | **PART 2: The Reflective Notebook File**

49 | The final 50% will be awarded for your notebook file.

50 |

51 | Note: Your work needs to be fully referenced, which is good practice for your dissertation.

52 |

53 | If you have any queries, feedback, or suggestions then you can leave a comment or mail on info@sunilghimire.com.np. See you in the next tutorial.

54 |

55 | ### **Stay safe !! Happy Learning !!!**

56 |

57 | _**STEPHEN HAWKING ONCE SAID, “The thing about smart people is that they seem like crazy people to dumb people.”**_

58 |

--------------------------------------------------------------------------------

/Machine Learning from Beginner to Advanced/Introduction to ML and AI.md:

--------------------------------------------------------------------------------

1 | # INTRODUCTION TO ARTIFICIAL INTELLIGENCE & MACHINE LEARNING

2 |

3 |

4 | Artificial intelligence can be interpreted as adding human intelligence in a machine. Artificial intelligence is not a system but a discipline that focuses on making machine smart enough to tackle the problem like the human brain does. The ability to learn, understand, imagine are the qualities that are naturally found in Humans. Developing a system that has the same or better level of these qualities artificially is termed as Artificial Intelligence.

5 |

6 | Machine Learning is a subset of AI. That is, all machine learning counts as AI, but not all AI counts as machine learning. Machine learning refers to the system that can learn by themselves. **Machine learning is the study of computer algorithms that comprises of algorithms and statistical models that allow computer programs to automatically improve through experience.**

7 |

8 | > “Machine learning is the tendency of machines to learn from data analysis and achieve Artificial Intelligence.”

9 |

10 | **Machine Learning** is the science of getting computers to act by feeding them data and letting them learn a few tricks on their own without being explicitly programmed.

11 |

12 | ## DIFFERENCE BETWEEN MACHINE LEARNING AND ARTIFICIAL INTELLIGENCE

13 | * Artificial intelligence focuses on Success whereas Machine Learning focuses on Accuracy

14 | * AI is not a system, but it can be implemented on system to make system intelligent. ML is a system that can extract knowledge from datasets

15 | * AI is used in decision making whereas ML is used in learning from experience

16 | * AI mimics human whereas ML develops self-learning algorithm

17 | * AI leads to wisdom or intelligence whereas ML leads to knowledge or experience

18 | * Machine Learning is one of the way to achieve Artificial Intelligence.

19 |

20 | ## TYPES OF MACHINE LEARNING

21 | * Supervised Learning

22 | * Unsupervised learning

23 | * Reinforced Learning

24 |

25 | **Supervised** means to oversee or direct a certain activity and make sure it is done correctly. In this type of learning the machine learns under guidance. So, at school or teachers guided us and taught us similarly in supervised learning machines learn by feeding them label data and explicitly telling them that this is the input and this is exactly how the output must look. So, the teacher, in this case, is the training data.

26 | * Linear Regression

27 | * Logistic Regression

28 | * Support Vector Machine

29 | * Naive Bayes Classifier

30 | * Artificial Neural Networks, etc

31 |

32 | **Unsupervised** means to act without anyone’s supervision or without anybody’s direction. Now, here the data is not labeled. There is no guide and the machine has to figure out the data set given and it has to find hidden patterns in order to make predictions about the output. An example of unsupervised learning is an adult-like you and me. We do not need a guide to help us with our daily activities. We can figure things out on our own without any supervision.

33 | * K-means clustering

34 | * Principal Component Analysis

35 | * Generative Adversarial Networks

36 |

37 | **Reinforcement** means to establish or encourage a pattern of behavior. It is a learning method wherein an agent learns by producing actions and discovers errors or rewards. Once the agent gets trained it gets ready to predict the new data presented to it.

38 |

39 | Let’s say a child is born. What will he do? But after some months or years, he tries to walk. So here he basically follows the hit and trial concept because he is new to the surroundings and the only way to learn is experience. We notice baby stretching and kicking his legs and starts to roll over. Then he starts crawling. He then tries to stand up but he fails in doing so for many attempts. Then the baby will learn to support all his weight when held in a standing position. This is what reinforcement learning is.

40 |

--------------------------------------------------------------------------------

/Machine Learning from Beginner to Advanced/Key terms used in ML.md:

--------------------------------------------------------------------------------

1 | # KEY TERMS USED IN MACHINE LEARNING

2 |

3 | Before starting tutorials on machine learning, we came with an idea of providing a brief definition of key terms used in Machine Learning. These key terms will be regularly used in our coming tutorials on machine learning from scratch and will also be used in further higher courses. So let’s start with the term Machine Learning:

4 |

5 | ## MACHINE LEARNING

6 | Machine learning is the study of computer algorithms that comprises of algorithms and statistical models that allow computer programs to automatically improve through experience. It is the science of getting computers to act by feeding them data and letting them learn a few tricks on their own without being explicitly programmed.

7 |

8 | > TYPES OF MACHINE LEARNING

9 | > **Do you want to know about supervised, unsupervised and reinforcement learning?** [Read More](https://github.com/ghimiresunil/Implementation-of-Machine-Learning-Algorithm-from-Scratch/blob/main/Machine%20Learning%20from%20Beginner%20to%20Advanced/Introduction%20to%20ML%20and%20AI.md)

10 |

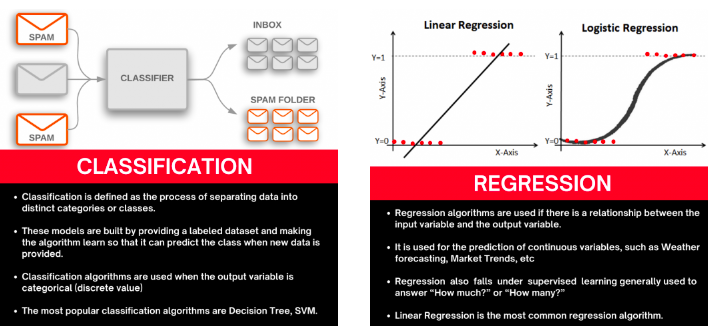

11 | ## CLASSIFICATION

12 | Classification, a sub-category of supervised learning, is defined as the process of separating data into distinct categories or classes. These models are built by providing a labeled dataset and making the algorithm learn so that it can predict the class when new data is provided. The most popular classification algorithms are Decision Tree, SVM.

13 |

14 | We have two types of learners in respective classification problems:

15 |

16 | * Lazy Learners: As the name suggests, such kind of learners waits for the testing data to be appeared after storing the training data. Classification is done only after getting the testing data. They spend less time on training but more time on predicting. Examples of lazy learners are K-nearest neighbor and case-based reasoning.

17 |

18 | * Eager Learners: As opposite to lazy learners, eager learners construct a classification model without waiting for the testing data to be appeared after storing the training data. They spend more time on training but less time on predicting. Examples of eager learners are Decision Trees, Naïve Bayes, and Artificial Neural Networks (ANN).

19 |

20 | **_Note_**: We will study these algorithms in the coming tutorials.

21 |

22 | ## REGRESSION

23 | While classification deals with predicting discrete classes, regression is used in predicting continuous numerical valued classes. Regression is also falls under supervised learning generally used to answer “How much?” or “How many?”. Regressions create relationships and correlations between different types of data. Linear Regression is the most common regression algorithm.

24 |

25 | Regression models are of two types:

26 |

27 | * Simple regression model: This is the most basic regression model in which predictions are formed from a single, univariate feature of the data.

28 | * Multiple regression model: As the name implies, in this regression model the predictions are formed from multiple features of the data.

29 | ## CLUSTERING

30 | Cluster is defined as groups of data points such that data points in a group will be similar or related to one another and different from the data points of another group. And the process is known as clustering. The goal of clustering is to determine the intrinsic grouping in a set of unlabelled data. Clustering is a form of unsupervised learning since it doesn’t require labeled data.

31 |

32 | ## DIMENSIONALITY

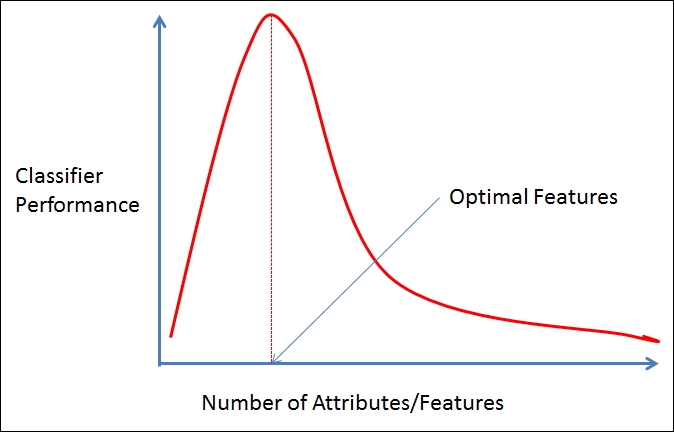

33 | The dimensionality of a data set is the number of attributes or features that the objects in the dataset have. In a particular dataset, if there are number of attributes, then it can be difficult to analyze such dataset which is known as curse of dimensionality.

34 |

35 | ## CURSE OF DIMENSIONALITY

36 | Data analysis becomes difficult as the dimensionality of data set increases. As dimensionality increases, the data becomes increasingly sparse in the space that it occupies.

37 |

38 | * For classification, there will not be enough data objects to allow the creation of model that reliably assigns a class to all possible objects.

39 | * For clustering, the density and distance between points which are critical for clustering becomes less meaningful.

40 |

41 |

42 |

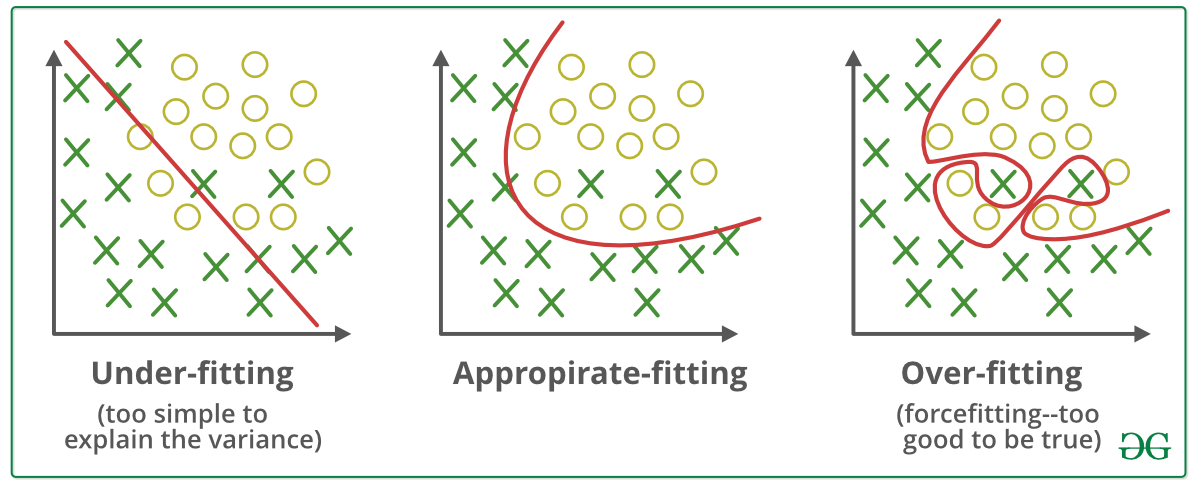

43 | ## UNDERFITTING

44 | A machine learning algorithm is said to have underfitting when it can’t capture the underlying trend of data. It means that our model doesn’t fit the data well enough. It usually happens when we have fewer data to build a model and also when we try to build a linear model with non-linear data when using a less complex model.

45 |

46 | ## OVERFITTING

47 | When a model gets trained with so much data, it starts learning from the noise and inaccurate data entries in our dataset. The cause of overfitting is non-parametric and non-linear methods. We use cross-validation to reduce overfitting which allows you to tune hyperparameters with only your original training set. This allows you to keep your test set as truly unseen dataset for selecting your final model.

48 |

49 |

50 |

51 |

--------------------------------------------------------------------------------

/Machine Learning from Beginner to Advanced/Regression Performance Metrics.md:

--------------------------------------------------------------------------------

1 | # Performance Metrics: Regression Model

2 |

3 | Today I am going to discuss Performance Metrics, and this time it will be Regression model metrics. As in my previous blog, I have discussed Classification Metrics, this time its Regression. I am going to talk about the 5 most widely used Regression metrics:

4 |

5 | Let’s understand one thing first and that is the Difference between Classification and Regression:

6 |

7 |

8 |

9 | ## WHAT IS AN ERROR?

10 | Any deviation from the actual value is an error.

11 |

12 | Error = $Y_{actual} - Y_{predicted}$

13 |

14 | So keeping this in mind, we have understood the requirement of the metrics, let’s deep dive into the methods we can use to find out ways to understand our model’s performance.

15 |

16 | ### Mean Squared Error (MSE)

17 |

18 | Let’s try to breakdown the name, it says Mean, it says Squared, it says Error. We know what Error is, from the above explanation, we know what square is. We square the Error, and then we know what the Mean is. So we take the mean of all the errors which are squared and added.

19 |

20 | First question should arise is, why are we doing a Square? Why can we not take the error directly?

21 |

22 | Let’s take the height example, My model predicted 167cm whereas my actual value is 163cm, so the deviation is of +5cm. Let’s take another example where my predicted height is 158cm and my actual height is 163cm. Now, my model made a mistake of -5cm.

23 |

24 | Now let’s find Mean Error for 2 points, so the calculation states [+5 + (-5)]/2 = 0

25 |

26 | This shows that my model has 0 error, but is that true? No right? So to avoid such problems we have to take square to get rid of the Sign of the error.

27 |

28 | So let’s see the formulation of this Metric:

29 |

30 | MSE = $\frac{1}{n}\Sigma_{i=1}^n(y_i - \hat{y_i})^2$

31 |

32 | Where,

33 |

34 | n = total otal number of data points

35 | $y_i$ = actual value

36 | $\hat{y_i}$ = predicted value

37 |

38 | Root Mean Squared Error (RMSE)

39 |

40 | Now as we all understood what MSE is, it is pretty much obvious that taking root of the equation will give us RMSE. Let’s see the formula first.

41 |

42 | RMSE = $\sqrt{\Sigma_{i=1}^n \frac{(\hat{y_i} - y_i)^2}{n}}$

43 |

44 | Where,

45 |

46 | n = total otal number of data points

47 | $y_i$ = actual value

48 | $\hat{y_i}$ = predicted value

49 |

50 | Now the question is, if we already have the MSE, why we require RMSE?

51 |

52 | Let’s try to understand it with example. Take the above example of the 2 data points and calculate MSE and RMSE for them,

53 |

54 | MSE = $\[(5)^2 + (-5)^2\]/2 = 50 / 2 = 25 $

55 |

56 | RMSE = Sqrt(MSE) = $(25)^{0.5}$ = 5

57 |

58 | Now, you tell among these values which one is more accurate and relevant to the actual error of the model?

59 |

60 | RMSE right? So in actual Squaring off, the values increase them exponentially. While not taking a root might affect our understanding that where my model is actually making mistakes.

61 |

62 | ### Mean Absolute Error (MAE)

63 |

64 | Now, I am sure you might have given this a thought, why squaring? Why not just taking the Absolute value of them, so here we have it. Everything stays the same, the only difference is, we take the Absolute value of our error, this also takes care of the sign issues we had earlier. So let’s look into the formula :

65 |

66 | MAE = $ \frac{1}{N} \Sigma_{i=1}^n|y_i - \hat{y_i}|$

67 |

68 | Where,

69 |

70 | n = total otal number of data points

71 | $y_i$ = actual value

72 | $\hat{y_i}$ = predicted value

73 |

74 | #### MAE VS RMSE

75 |

76 | Let’s understand, MAE and RMSE can be used together to diagnose the variation in the errors in a set of forecasts. RMSE will always be larger or equal to the MAE. The greater difference between them, the greater the variance in the individual errors in the sample. If the RMSE=MAE, then all the errors are of the same magnitude.

77 |

78 | Errors $[2, -3, 5, 120, -116, 197]$

79 | RMSE = 115.5

80 | MAE = 88.6

81 |

82 | If we see the difference, RMSE has a higher value then MAE, which states that RMSE gives more importance to higher error due to squaring the values.

83 |

84 | ### Mean Absolute Percentage Error (MAPE)

85 |

86 | MAPE = $\frac{1}{n}\Sigma_{i=1}^n \frac{|y_i - \hat{y_i}|}{y_i} \times 100 \%$

87 |

88 | Where,

89 |

90 | n = total otal number of data points

91 | $y_i$ = actual value

92 | $\hat{y_i}$ = predicted value

93 |

94 | MAPE represents the error in percentage and therefore it’s not relative to the size of the numbers in the data itself, whereas any other performance metrics in the regression model.

95 |

96 | ### $R^2$ or Coefficient of Determination

97 |

98 | It is the Ratio of the MSE (Prediction Error) and Baseline Variance of target Variable, here baseline is the deviation of our Y values from the Mean value.

99 |

100 | The metric helps us to compare our current model with constant baseline value (i.e. mean) and tells us how much our model is better, R2 is always less than 1, and it doesn’t matter how large or small the errors are R2 is always less than 1. Let’s See the Formulation:

101 |

102 | $R^2$ = $1- \frac{SS_{RES}}{SS_{TOT}} = 1 - \frac{\Sigma_{i}(y_i - \hat{y_i})^2}{\Sigma_{i}(y_i - \bar{y_i})^2}$

103 |

--------------------------------------------------------------------------------

/Reality vs Expectation/Is AI Overhyped? Reality vs Expectation.md:

--------------------------------------------------------------------------------

1 | # IS AI OVERHYPED? REALITY VS EXPECTATION

2 |

3 |

4 |

5 |

6 | Humans are the most intelligent species found on Earth. The ability to learn, understand, imagine are the qualities that are naturally found in Humans. Developing a system that has the same or better level of these qualities artificially is termed as Artificial Intelligence. Before talking about AI hype, let’s go through brief history of AI.

7 |

8 | ## HISTORY

9 |

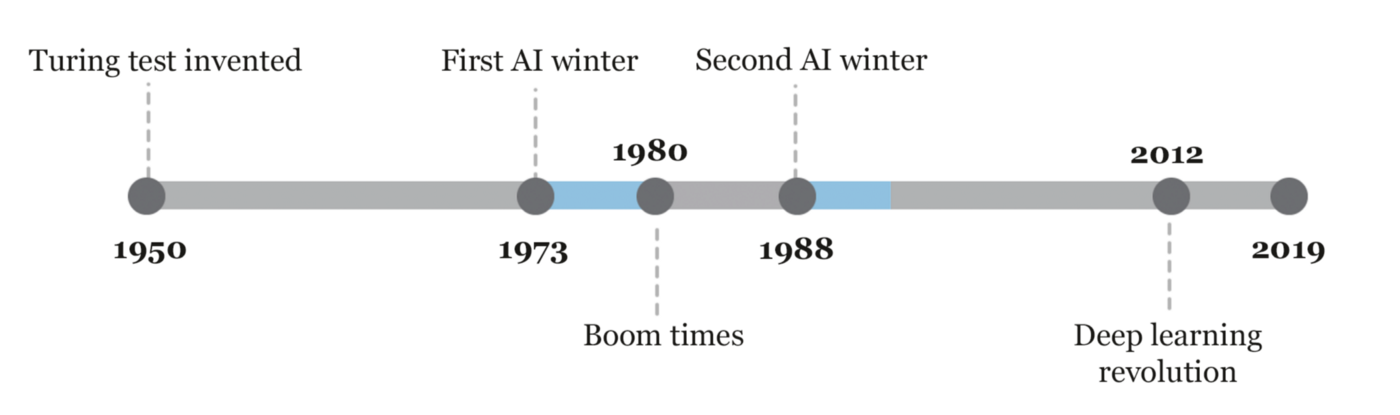

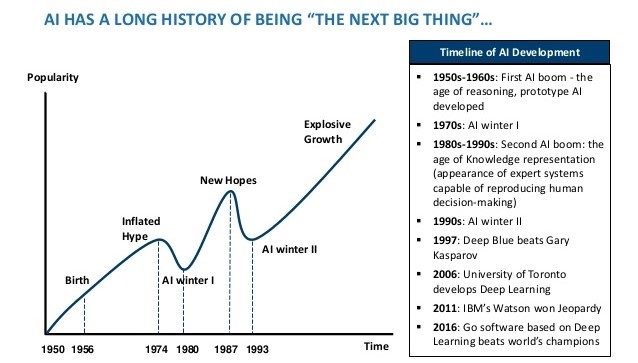

10 | The beginning of modern AI started when the term “Artificial Intelligence” was coined in 1956, at a conference at Dartmouth College, in Hanover. Government funding and interest increased but expectations exceeded the reality which led to AI Winter from 1974 to 1980. British government started funding again to compete with the Japanese but couldn’t stop the 2nd AI winter which occurred from 1987 to 1993.

11 |

12 |

13 |

14 | The field has come a very long way in the past decade. The 10s were the hottest AI summer with tech giants like Google, Facebook, Microsoft repeatedly touting AI’s abilities.

15 |

16 |

17 |

18 | After the development of higher processing power like GPU, TPU in 10s, Deep Learning made huge jumps. Neural networks offered better results than other algorithms when used on the same data. Talking about the achievement because of Deep learning, Alexa and Siri understanding your voice, Spotify, and Netflix recommending what you watch next, Facebook tagging all your friends in a photo automatically are the remarkable one. With the continuous progress in speed, memory capacity, Quantum Computing performance of AI is being enhanced gradually. Simulations that usually take months are available in days, hours, or less.

19 |

20 | ## CURRENT SCENARIO

21 |

22 | Albert Einstein had once said, “The measure of intelligence is the ability to change”. AI, which started in the 1950s, is aimed at creating a machine that can imitate human behavior with great proficiency. And the question is how AI handles things beyond Human’s imagination? Apart from all the achievements so far in the field of AI, expectations are unreasonably high rather than understanding how it works. Most of the Tech leaders excite people with claims that AI will replace humans but the reality is we do not have the ability to create an AI controlling a robot that can peel an orange.

23 |

24 | In recent years, there’s been intense hype about AI but we aren’t even near living up to the public expectation. Self-driving cars and health care are currently the most hyped areas of artificial intelligence, the most challenging and are taking much longer than the optimistic timetables. AI is still in its infancy, but people are expecting amazing advancements in the next few years like in movies.

25 |

26 | Business-oriented companies are brainwashing or stimulating customers by using AI as a marketing term. Companies deploy some small AI project in their products in a commercial setting. Nowadays, AI is being forcefully tagged in consumer products, like AI Fan, AI fridge, and even AI Toilet. The below picture shows the use of “Artificial Intelligence” in marketing and related key terms.

27 |

28 | ## CHANGE YOUR MIND

29 |

30 | AI is gaining more attention among students and researchers. But, people still don’t realize that they can’t become an expert using stuff like PyTorch, TensorFlow etc. Implementing built-in models with frameworks like Keras, Tensorflow, and Scikit is not unique and special, especially, when we are following an online tutorial and simply changing the data to ours. Coming up and understanding the things behind those architectures is extremely hard. We need a full understanding of probability and statistics, information theory, calculus, etc to just grasp the main ideas.

31 |

32 | AI components are made up of mathematically proven methods or implementation which are coded to work on data. The use of AI depends upon the type of problems they are designed to work on. If ML doesn’t work for you, it’s because you’re not using it right, not because the methods are wrong.

33 |

34 | A routine job that doesn’t require a brain is sure to be replaced by AI. It’s not going to close the job opportunity but will open the different sets of skilled jobs. Let’s take an example of self-driving cars. General Motors, Google’s Waymo, Toyota, Honda, and Tesla are making self-driving cars. After its success, drivers’ jobs will disappear but new job roles of creating and maintaining them will open. Humanity has to evolve towards being more intelligent and it’s the right time.

35 |

36 |

37 | ## CONCLUSION

38 |

39 | We currently call AI as Big Data & Machine Learning. We are focused on finding patterns and predicting the near future trends but AI completely fails when it comes to generating explanations. Reality is, we can’t still trust the decisions of AI in highly-valued & highly-risked domains like finance, healthcare, and energy where even 0.01% accuracy matters.

40 |

41 | With all things taken into consideration, it is sure that AI hype will gradually decrease when people realize we are still using “pseudo-AI” where the fact is humans are operating AI behind the scenes. Though AI has been bookended by fear, doubt, and misinformation, AI hype doesn’t seem to come to an end sooner.

42 |

--------------------------------------------------------------------------------

/ML from Scratch/Linear Regression/README.md:

--------------------------------------------------------------------------------

1 | # LINEAR REGRESSION FROM SCRATCH

2 |

3 |

4 |

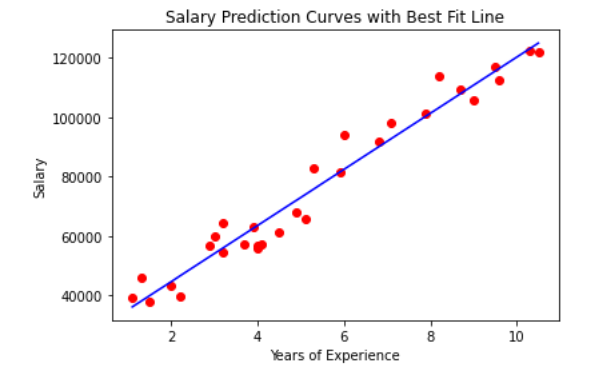

5 | Regression is the method which measures the average relationship between two or more continuous variables in term of the response variable and feature variables. In other words, regression analysis is to know the nature of the relationship between two or more variables to use it for predicting the most likely value of dependent variables for a given value of independent variables. Linear regression is a mostly used regression algorithm.

6 |

7 | For more concrete understanding, let’s say there is a high correlation between day temperature and sales of tea and coffee. Then the salesman might wish to know the temperature for the next day to decide for the stock of tea and coffee. This can be done with the help of regression.

8 |

9 | The variable, whose value is estimated, predicted, or influenced is called a dependent variable. And the variable which is used for prediction or is known is called an independent variable. It is also called explanatory, regressor, or predictor variable.

10 |

11 | ## 1. LINEAR REGRESSION

12 |

13 | Linear Regression is a supervised method that tries to find a relation between a continuous set of variables from any given dataset. So, the problem statement that the algorithm tries to solve linearly is to best fit a line/plane/hyperplane (as the dimension goes on increasing) for any given set of data.

14 |

15 | This algorithm use statistics on the training data to find the best fit linear or straight-line relationship between the input variables (X) and output variable (y). Simple equation of Linear Regression model can be written as:

16 |

17 | ```

18 | Y=mX+c ; Here m and c are calculated on training

19 | ```

20 |

21 | In the above equation, m is the scale factor or coefficient, c being the bias coefficient, Y is the dependent variable and X is the independent variable. Once the coefficient m and c are known, this equation can be used to predict the output value Y when input X is provided.

22 |

23 | Mathematically, coefficients m and c can be calculated as:

24 |

25 | ```

26 | m = sum((X(i) - mean(X)) * (Y(i) - mean(Y))) / sum( (X(i) - mean(X))^2)

27 | c = mean(Y) - m * mean(X)

28 | ```

29 |

30 |

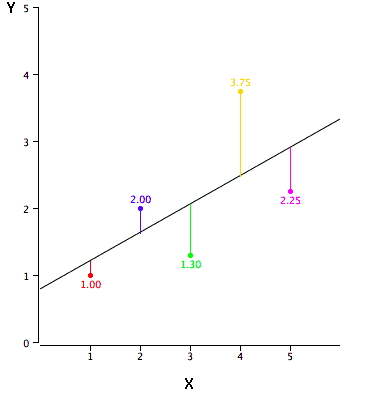

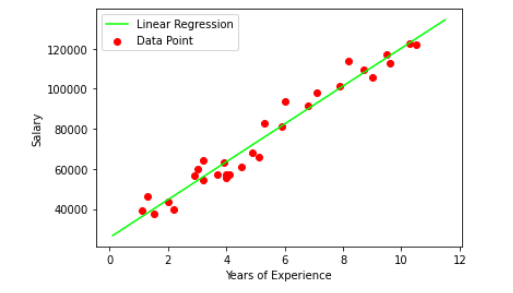

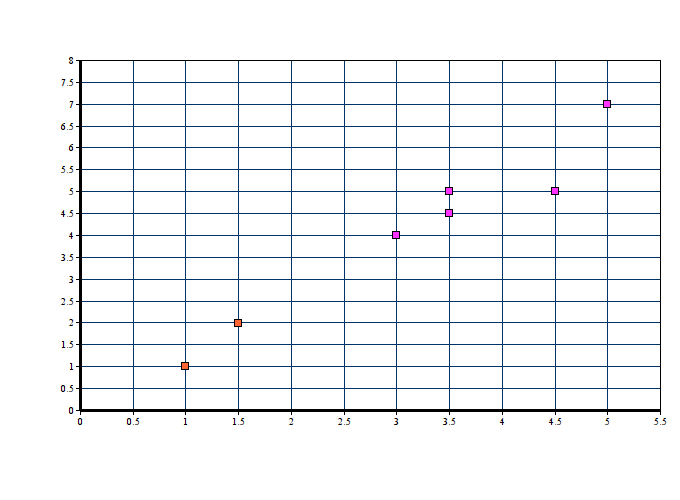

31 | As you can see, the red point is very near the regression line; its error of prediction is small. By contrast, the yellow point is much higher than the regression line and therefore its error of prediction is large. The best-fitting line is the line that minimizes the sum of the squared errors of prediction.

32 |

33 | ## 1.1. LINEAR REGRESSION FROM SCRATCH

34 |

35 | We will build a linear regression model to predict the salary of a person on the basis of years of experience from scratch. You can download the dataset from the link given below. Let’s start with importing required libraries:

36 |

37 | ```

38 | %matplotlib inline

39 | import numpy as np

40 | import matplotlib.pyplot as plt

41 | import pandas as pd

42 | ```

43 |

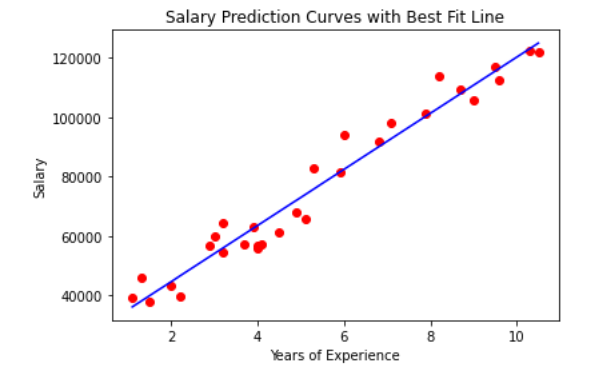

44 | We are using dataset of 30 data items consisting of features like years of experience and salary. Let’s visualize the dataset first.

45 |

46 | ```

47 | dataset = pd.read_csv('salaries.csv')

48 |

49 | #Scatter Plot

50 | X = dataset['Years of Experience']

51 | Y = dataset['Salary']

52 |

53 | plt.scatter(X,Y,color='blue')

54 | plt.xlabel('Years of Experience')

55 | plt.ylabel('Salary')

56 | plt.title('Salary Prediction Curves')

57 | plt.show()

58 | ```

59 |

60 |

61 | ```

62 | def mean(values):

63 | return sum(values) / float(len(values))

64 |

65 | # initializing our inputs and outputs

66 | X = dataset['Years of Experience'].values

67 | Y = dataset['Salary'].values

68 |

69 | # mean of our inputs and outputs

70 | x_mean = mean(X)

71 | y_mean = mean(Y)

72 |

73 | #total number of values

74 | n = len(X)

75 |

76 | # using the formula to calculate the b1 and b0

77 | numerator = 0

78 | denominator = 0

79 | for i in range(n):

80 | numerator += (X[i] - x_mean) * (Y[i] - y_mean)

81 | denominator += (X[i] - x_mean) ** 2

82 |

83 | b1 = numerator / denominator

84 | b0 = y_mean - (b1 * x_mean)

85 |

86 | #printing the coefficient

87 | print(b1, b0)

88 | ```

89 | Finally, we have calculated the unknown coefficient m as b1 and c as b0. Here we have b1 = **9449.962321455077** and b0 = **25792.20019866869**.

90 |

91 | Let’s visualize the best fit line from scratch.

92 |

93 |

94 |

95 | Now let’s predict the salary Y by providing years of experience as X:

96 |

97 | ```

98 | def predict(x):

99 | return (b0 + b1 * x)

100 | y_pred = predict(6.5)

101 | print(y_pred)

102 |

103 | Output: 87216.95528812669

104 | ```

105 | ### 1.2. LINEAR REGRESSION USING SKLEARN

106 |

107 | ```

108 | from sklearn.linear_model import LinearRegression

109 |

110 | X = dataset.drop(['Salary'],axis=1)

111 | Y = dataset['Salary']

112 |

113 | reg = LinearRegression() #creating object reg

114 | reg.fit(X,Y) # Fitting the Data set

115 | ```

116 |

117 | Let’s visualize the best fit line using Linear Regression from sklearn

118 |

119 |

120 |

121 | Now let’s predict the salary Y by providing years of experience as X:

122 |

123 | ```

124 | y_pred = reg.predict([[6.5]])

125 | y_pred

126 |

127 | Output: 87216.95528812669

128 | ```

129 |

130 | ## 1.3. CONCLUSION

131 |

132 | We need to able to measure how good our model is (accuracy). There are many methods to achieve this but we would implement Root mean squared error and coefficient of Determination (R² Score).

133 |

134 | * Try Model with Different error metric for Linear Regression like Mean Absolute Error, Root mean squared error.

135 | * Try algorithm with large data set, imbalanced & balanced dataset so that you can have all flavors of Regression.

136 |

137 |

--------------------------------------------------------------------------------

/Machine Learning from Beginner to Advanced/mathematics_ai_ml_history_motivation.md:

--------------------------------------------------------------------------------

1 | ## HISTORY OF MATHEMATICS

2 |

3 | * ...........?

4 | * Number system: **2500 Years ago**

5 | * 0 1 2 3 4 5 6 7 8 9

6 | * 0 1 2 3 4 5 6 7 8 9 A B

7 | * 0 1

8 |

9 | * Logical Reasoning: Language and Logics

10 | * If **A**, then **B** (if $x^2$ is even, then x is even)

11 | * if not **B**, then not **A** (If x is not even, then $x^2$ is not even)

12 | * Euclidean Algorithm: **2000 years ago**

13 | * **The Elements**: The most influential book of all-time

14 | * Greatest Common Divisor of 2 numbers: **GCD(1054, 714)**

15 | * **Cryptography** (Encryption and Decryption)

16 | * $\pi$ and Geometry (About $5^{th}$ Century)

17 | * Pythagoras Theorem

18 | * Metcalfe's Law

19 | * Suppose, Weight of network is $n^2$ then,

20 | * **Network of 50M = Network of 40M + Network of 30M**

21 | * Input Analysis

22 | * Some programs with n inputs take $n^2$ time to run (Bubble Sort). In terms of processing time: **50 inputs = 40 inputs + 30 inputs**

23 | * Surface Area

24 | * **Area of radius 50 = Area of radius 40 + Area of radius 30**

25 | * Physics

26 | * The KE of an object with mass(m) and velocity(v) is **$1/2mv^2$**. In terms of energy:

27 | * **Energy at 500 mph = Energy at 400 mph + Energy at 300 mph**

28 | * With the energy used to accelerate one bullet to 500 mph, we could accelerate two others to 400 mph and 300 mph

29 | * Logarithms and Algebra

30 | | | Logarithms = Time| Exponents = Growth |

31 | |-|:------------------:|:--------------------:|

32 | |Time/ Growth Perspective| $ln(x)$ Time needed to grow to x (with 100% continuous compounding) |$e^x$ Amount of growth after time x (with 100% continuous compounding)|

33 | * Radius of earth was calcutated using basic Geometry, deductive reasoning and just measuring the shadow length

34 | * Cartesian Co-ordinates

35 | * Complex Numbers

36 | * Euler'ss Formula: **$17^{th} Century$**

37 | * Calculus

38 | * Math and Physics changed forever

39 | * Rate of Change, slope

40 | * Algebra, Rate of speed

41 | * Calculus, Exact speed at any time

42 | * Radar reads the real time of Aeroplane

43 | * Motion of planet: How they change their speed throughout the orbit?

44 | * Chemistry: Diffusion rates

45 | * Biology

46 | * **In 1736: Euler** Published a paper

47 | * Seven Bridges of Konigsberg

48 | * $1^{st} paper of graph theory$

49 | * Can you cross each bridge exactly once?

50 | * Google

51 | * Facebook

52 | * **In 1822**: J. Fourier

53 | * Heat Flow

54 | * Fourier Series

55 | * Fourier Transform

56 | * Group Theory

57 | * Symmetry Analysis (Chemistry)

58 | * Boolean Algebra

59 | * Set Theory

60 | * Probability and statistics

61 | * Permutation and Combination

62 | * **In 1990**: Game Theory, Chaos Theory (Butterfly Effect)

63 | * Game Theory: The Science of Decision Making

64 | * Mathematics and Decision: Social Interactions

65 | * 1950, **John Nash**

66 | * A game is any interaction between multiple people in which each person's **payoff** is affected by the decisions made by others

67 | * Did you interact with anyone today?

68 | * You can probably analyze the decisions you made using game theory

69 | * Economics, Political Science, Biology, Military, Psychology, **Computer Science**, Mathematics, Physics

70 | * Non-cooperative (Competitive) and Cooperative Game Theory

71 | * Game Theory: Non-cooperative (Competitive)

72 | * It covers competitive social interactions where there will be some winners and some losers

73 | * The Prisoner's Dilemma

74 | | Shyam / Hari | No Confession | Confession |

75 | | ------------ | ------------- | ---------- |

76 | | No Confession | 3 Years - 3 Years | Shyam (10 Yrs) - Hari Free |

77 | | Confession | Shyam(Free) - Hari (10 Yrs) | 5 years - 5 Years |

78 | * Optimal Solution ..........?

79 | * The Turing Test

80 | * In the 19550s, Alan Turing created Turing Test which is used to determine the level of intelligence of a computer

81 | * Controversy of Turing Test

82 | * Some people disagree with the Turing Test. They claim it does not actually measure a computer's intelligence

83 | * Instead of coming up with a response themselves, they can use the same generic phrases to pass the test

84 |

85 | ## ARTIFICIAL INTELLIGENCE

86 |

87 | * Thinking

88 | * It must have sth to do with thinking

89 | * Thinking, Perception & Action

90 | * Philosophy: We would love to talk about problems involving thinking, perception & action

91 | * Computer Science: We will talk about models that are targeted at thinking, perception & action

92 | * Models (Models of thinking)

93 | * Differential Equations, Probability Functions, Physical & Computational Simulations

94 |

95 | Note: We need to build models in order to explain the past, predict the future, understand the subject matter and control the world… **THAT'S WHAT THIS SUBJECT IS ALL ABOUT**

96 |

97 | ## REPRESENTATIONS STRATEGIES

98 |

99 | * Representations that support models targeted at thinking, perception & action

100 | * What is “Representation”?

101 | * River Crossing Puzzle (Farmer, Tiger, Goat, Grass)

102 |

103 |

104 | * What would be the right representation of this problem?

105 | * Picture of the Farmer?

106 | * Poem of the situation? (Haiku, Story…?)

107 | * As we know: The right representation must involve sth about the location of the participants in this scenario…!

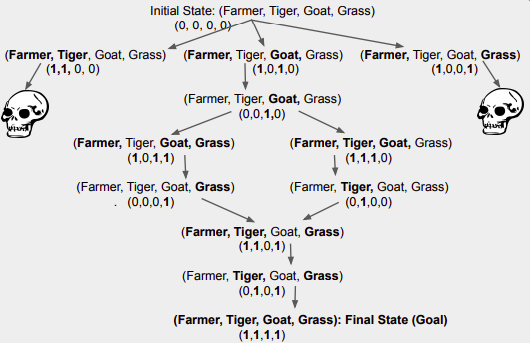

108 | * Representation Solution

109 |

110 |

111 | * Representation Strategies & Constraints

112 | * General mathematics exposes constraints

113 | * AI is constraints exposed by representation that supports models targeted to thinking... but….?

114 | * **According to Dr. Winston (Professor, Computer Scientist, MIT)** - AI is all about algorithms enabled by constraints exposed by representations that supports models targeted thinking, perception & action…!

115 |

--------------------------------------------------------------------------------

/ML from Scratch/Random Forest/README.md:

--------------------------------------------------------------------------------

1 | # RANDOM FOREST FROM SCRATCH PYTHON

2 |

3 |

4 |

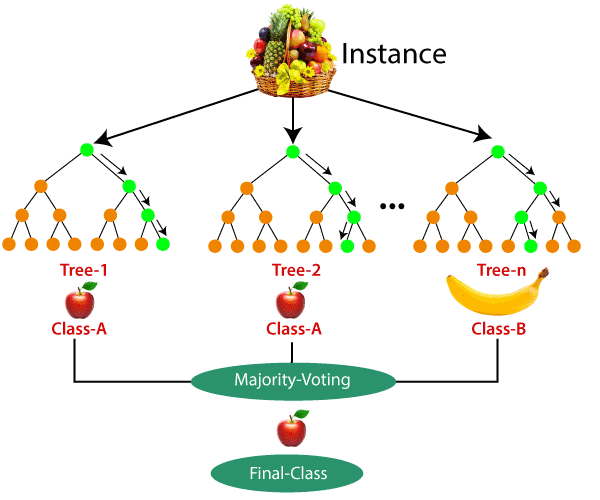

5 | As the name suggests, the Random forest is a “forest” of trees! i.e Decision Trees. A random forest is a tree-based machine learning algorithm that randomly selects specific features to build multiple decision trees. The random forest then combines the output of individual decision trees to generate the final output. Now, let’s start our today’s topic on random forest from scratch.

6 |

7 | Decision trees involve the greedy selection to the best split point from the dataset at each step. We can use random forest for classification as well as regression problems. If the total number of column in the training dataset is denoted by p :

8 |

9 | - We take sqrt(p) number of columns for classification

10 | - For regression, we take a p/3 number of columns.

11 |

12 |

13 | ## WHEN TO USE RANDOM FOREST?

14 | - When we focus on accuracy rather than interpretation

15 | - If you want better accuracy on the unexpected validation dataset

16 |

17 | ## HOW TO USE RANDOM FOREST ?

18 | - Select random samples from a given dataset

19 | - Construct decision trees from every sample and obtain their output

20 | - Perform a vote for each predicted result

21 | - Most voted prediction is selected as the final prediction result

22 |

23 |

24 |

25 | ### STOCK PREDICTION USING RANDOM FOREST

26 |

27 | In this tutorial of Random forest from scratch, since it is totally based on a decision tree we aren’t going to cover scratch tutorial. You can go through [decision tree from scratch](https://github.com/ghimiresunil/Implementation-of-Machine-Learning-Algorithm-from-Scratch/tree/main/ML%20from%20Scratch/Decision%20Tree).

28 |

29 | ```

30 | import matplotlib.pyplot as plt

31 | import numpy as np

32 | import pandas as pd

33 |

34 | # Import the model we are using

35 | from sklearn.ensemble import RandomForestRegressor

36 |

37 | data = pd.read_csv('data.csv')

38 | data.head()

39 | ```

40 |

41 | | | Date| Open | High| Low| Close| Volume | Name|

42 | | --- | ----| ----| ---- | ---- | ---- | ----| ---- |

43 | | 0 | 2006-01-03| 211.47 | 218.05 | 209.32 | 217.83 | 13137450 | GOOGL|

44 | | 1 | 2006-01-04| 222.17 | 224.70 | 220.09 | 222.84 | 15292353 | GOOGL|

45 | | 2 | 2006-01-05| 223.22 | 226.70 | 220.97 | 225.85 | 10815661a | GOOGL|

46 | | 3 | 2006-01-06| 228.66 | 235.49 | 226.85 | 233.06 | 17759521 | GOOGL|

47 | | 4 | 2006-01-09| 233.44 | 236.94 | 230.70 | 233.68 | 12795837 | GOOGL|

48 |

49 | Here, we will be using the dataset (available below) which contains seven columns namely date, open, high, low, close, volume, and name of the company. Here in this case google is the only company we have used. Open refers to the time at which people can begin trading on a particular exchange. Low represents a lower price point for a stock or index. High refers to a market milestone in which a stock or index reaches a greater price point than previously for a particular time period. Close simply refers to the time at which a stock exchange closes to trading. Volume refers to the number of shares of stock traded during a particular time period, normally measured in average daily trading volume.

50 |

51 | ```

52 | abc=[]

53 | for i in range(len(data)):

54 | abc.append(data['Date'][i].split('-'))

55 | data['Date'][i] = ''.join(abc[i])

56 | ```

57 | Using above dataset, we are now trying to predict the ‘Close’ Value based on all attributes. Let’s split the data into train and test dataset.

58 |

59 | ```

60 | #These are the labels: They describe what the stock price was over a period.

61 | X_1 = data.drop('Close',axis=1)

62 | Y_1 = data['Close']

63 |

64 | # Using Skicit-learn to split data into training and testing sets

65 | from sklearn.model_selection import train_test_split

66 |

67 | X_train_1, X_test_1, y_train_1, y_test_1 = train_test_split(X_1, Y_1, test_size=0.33, random_state=42)

68 | ```

69 |

70 | Now, let’s instantiate the model and train the model on training dataset:

71 |

72 | ```

73 | rfg = RandomForestRegressor(n_estimators= 10, random_state=42)

74 | rfg.fit(X_train_1,y_train_1)

75 | ```

76 |

77 | Let’s find out the features on the basis of their importance by calculating numerical feature importances

78 |

79 | ```

80 | # Saving feature names for later use

81 | feature_list = list(X_1.columns)

82 |

83 | # Get numerical feature importances

84 | importances = list(rfg.feature_importances_)

85 |

86 | # List of tuples with variable and importance

87 | feature_importances = [(feature, round(importance, 2)) for feature, importance in zip(feature_list, importances)]

88 |

89 | # Sort the feature importances by most important first

90 | feature_importances = sorted(feature_importances, key = lambda x: x[1], reverse = True)

91 |

92 | # Print out the feature and importances

93 | [print('Variable: {:20} Importance: {}'.format(*pair)) for pair in feature_importances];

94 | ```

95 |

96 |

97 |

98 |

99 | ```

100 | rfg.score(X_test_1, y_test_1)

101 |

102 | output:- 0.9997798214978976

103 | ```

104 |

105 | We are getting an accuracy of ~99% while predicting. We then display the original value and the predicted Values.

106 |

107 | ```

108 | pd.concat([pd.Series(rfg.predict(X_test_1)), y_test_1.reset_index(drop=True)], axis=1)

109 | ```

110 |

111 | ### ADVANTAGES OF RANDOM FOREST

112 | - It reduces overfitting as it yields prediction based on majority voting.

113 | - Random forest can be used for classification as well as regression.

114 | - It works well on a large range of datasets.

115 | - Random forest provides better accuracy on unseen data and even if some data is missing

116 | - Data normalization isn’t required as it is a rule-based approach

117 |

118 | ### DISADVANTAGES

119 |

120 | - Random forest requires much more computational power and memory space to build numerous decision trees.

121 | - Due to the ensemble of decision trees, it also suffers interpretability and fails to determine the significance of each variable.

122 | - Random forests can be less intuitive for a large collection of decision trees.

123 | - Using bagging techniques, Random forest makes trees only which are dependent on each other. Bagging might provide similar predictions in each tree as the same greedy algorithm is used to create each decision tree. Hence, it is likely to be using the same or very similar split points in each tree which mitigates the variance originally sought.

124 |

--------------------------------------------------------------------------------

/ML from Scratch/Support Vector Machine/README.md:

--------------------------------------------------------------------------------

1 | # Support Vector Machine – SVM From Scratch Python

2 |

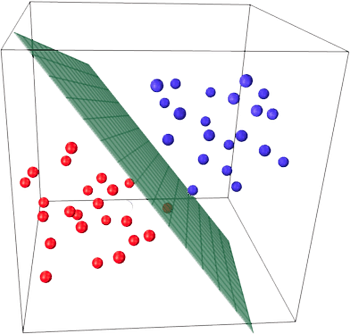

3 |

4 |

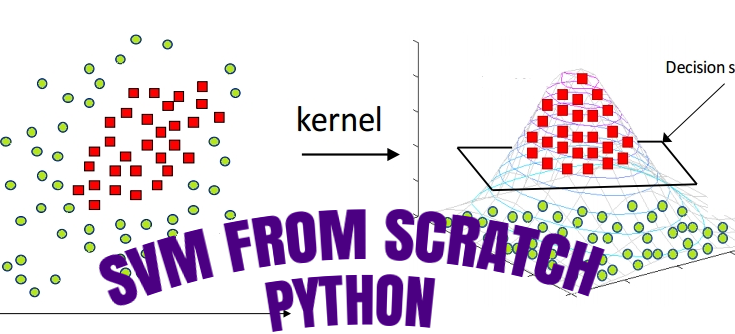

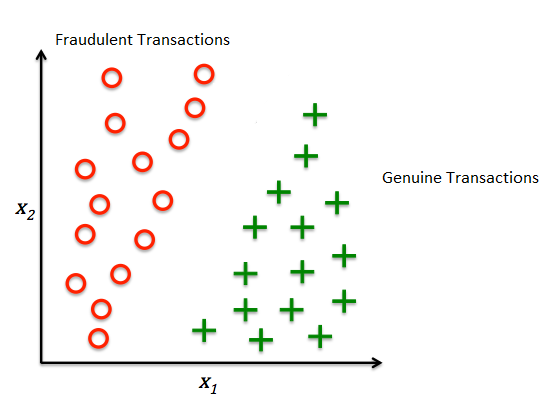

5 | In the 1960s, Support vector Machine (SVM) known as supervised machine learning classification was first developed, and later refined in the 1990s which has become extremely popular nowadays owing to its extremely efficient results. The SVM is a supervised algorithm is capable of performing classification, regression, and outlier detection. But, it is widely used in classification objectives. SVM is known as a fast and dependable classification algorithm that performs well even on less amount of data. Let’s begin today’s tutorial on SVM from scratch python.

6 |

7 |

8 |

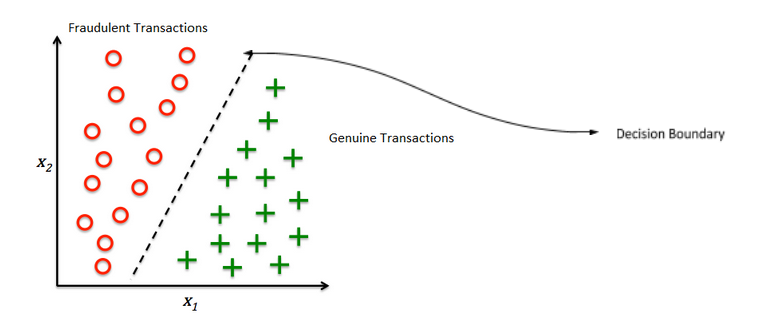

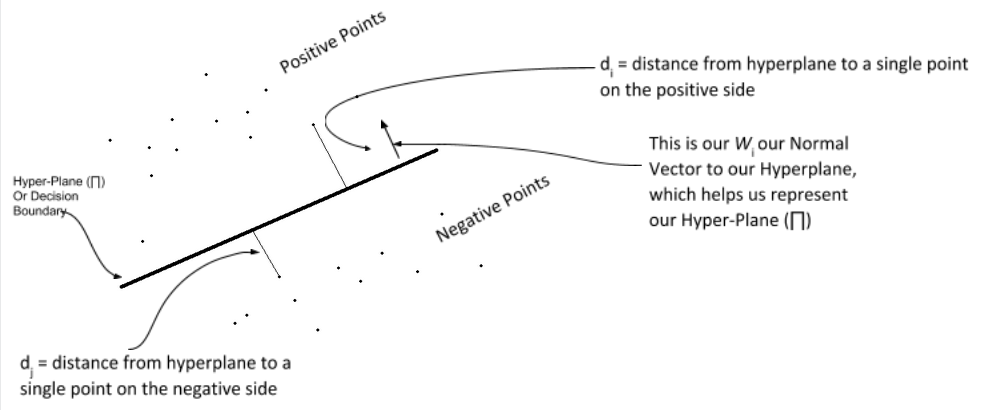

9 | ## HOW SVM WORKS ?

10 |

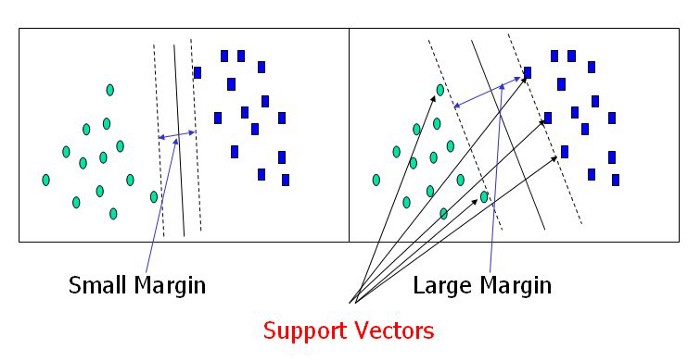

11 | SVM finds the best N-dimensional hyperplane in space that classifies the data points into distinct classes. Support Vector Machines uses the concept of ‘Support Vectors‘, which are the closest points to the hyperplane. A hyperplane is constructed in such a way that distance to the nearest element(support vectors) is the largest. The better the gap, the better the classifier works.

12 |

13 |

14 |

15 | The line (in 2 input feature) or plane (in 3 input feature) is known as a decision boundary. Every new data from test data will be classified according to this decision boundary. The equation of the hyperplane in the ‘M’ dimension:

16 |

17 | $y = w_0 + w_1x_1 + w_2x_2 + w_3x_3 + ......= w_0 + \Sigma_{i=1}^mw_ix_i = w_0 + w^{T}X = b + w^{T}X $

18 |

19 | where,

20 | $W_i$ = $vectors\(w_0, w_1, w_2, w_3, ...... w_m\)$

21 |

22 |

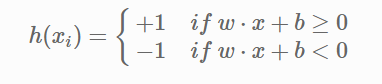

23 |

24 | The point above or on the hyperplane will be classified as class +1, and the point below the hyperplane will be classified as class -1.

25 |

26 |

27 |

28 | ## SVM IN NON-LINEAR DATA

29 |

30 | SVM can also conduct non-linear classification.

31 |

32 |

33 |

34 | For the above dataset, it is obvious that it is not possible to draw a linear margin to divide the data sets. In such cases, we use the kernel concept.

35 |

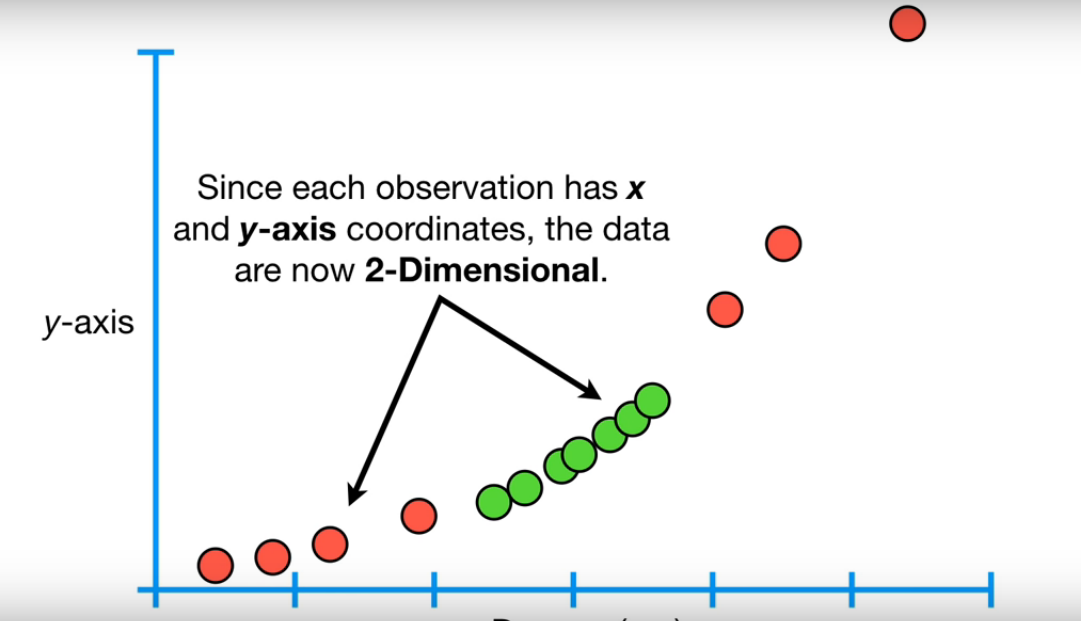

36 | SVM works on mapping data to higher dimensional feature space so that data points can be categorized even when the data aren’t otherwise linearly separable. SVM finds mapping function to convert 2D input space into 3D output space. In the above condition, we start by adding Y-axis with an idea of moving dataset into higher dimension.. So, we can draw a graph where the y-axis will be the square of data points of the X-axis.

37 |

38 |

39 |

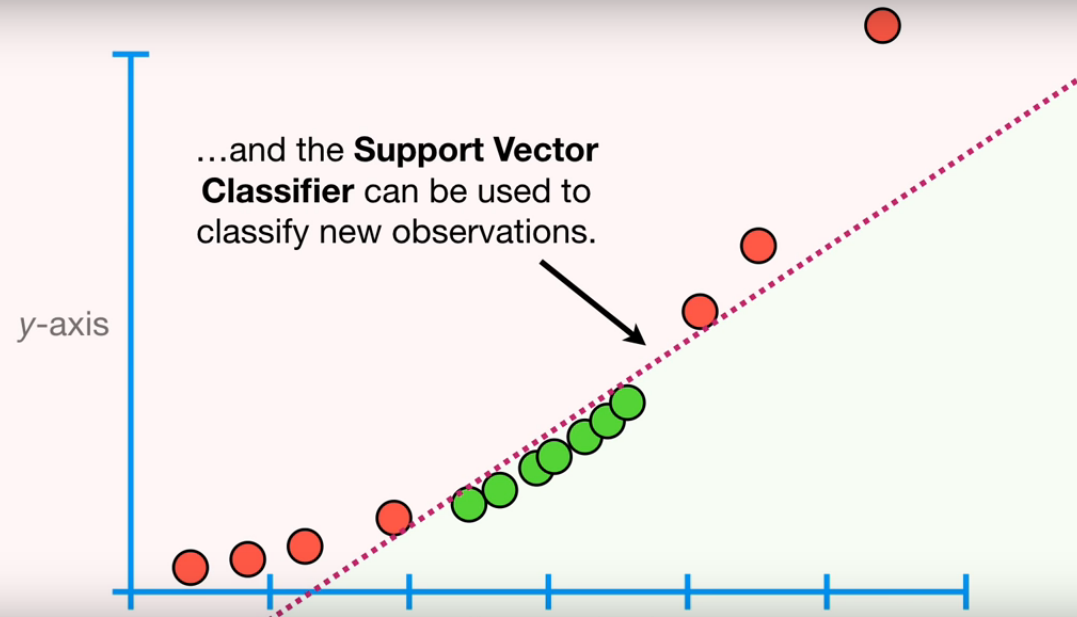

40 | And now, the data are two dimensional, we can draw a Support Vector Classifier that classifies the dataset into two distinct regions. Now, let’s draw a support vector classifier.

41 |

42 |

43 |

44 | **_Note_**: This example is taken from [Statquest](https://www.youtube.com/watch?v=efR1C6CvhmE).

45 |

46 | ## HOW TO TRANSFORM DATA ??

47 |

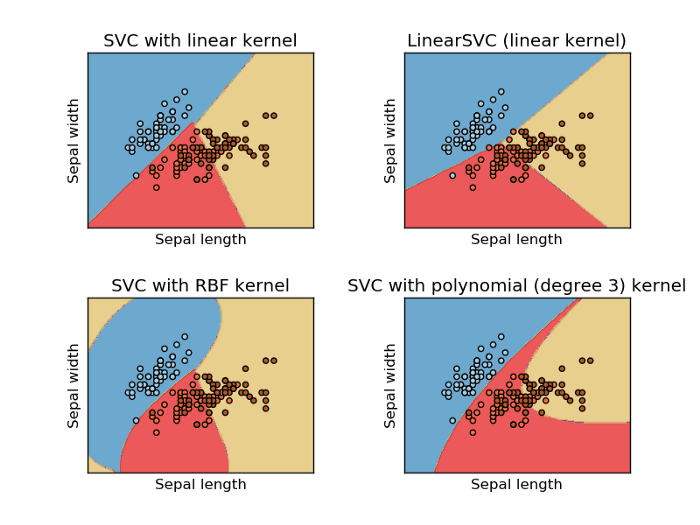

48 | SVM uses a kernel function to draw Support Vector Classifier in a higher dimension. Types of Kernel Functions are :

49 |

50 | - Linear

51 | - Polynomial

52 | - Radial Basis Function(rbf)

53 |

54 | In the above example, we have used a polynomial kernel function which has a parameter d (degree of polynomial). Kernel systematically increases the degree of the polynomial and the relationship between each pair of observation are used to find Support Vector Classifier. We also use cross-validation to find the good value of d.

55 |

56 | ### Radial Basis Function Kernel

57 |

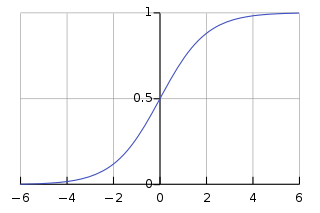

58 | Widely used kernel in SVM, we will be discussing radial basis Function Kernel in this tutorial for SVM from Scratch Python. Radial kernel finds a Support vector Classifier in infinite dimensions. Radial kernel behaves like the Weighted Nearest Neighbour model that means closest observation will have more influence on classifying new data.

59 |

60 | $K(X_1, X_2) = exponent(-\gamma||X_1 - X_2||^2)$

61 |

62 | Where,

63 | $||X_1 - X_2||$ = Euclidean distance between $X_1$ & $X_2$

64 |

65 | ## SOFT MARGIN – SVM

66 | n this method, SVM makes some incorrect classification and tries to balance the tradeoff between finding the line that maximizes the margin and minimizes misclassification. The level of misclassification tolerance is defined as a hyperparameter termed as a penalty- ‘C’.

67 |

68 | For large values of C, the optimization will choose a smaller-margin hyperplane if that hyperplane does a better job of getting all the training points classified correctly. Conversely, a very small value of C will cause the optimizer to look for a larger-margin separating hyperplane, even if that hyperplane misclassifies more points. For very tiny values of C, you should get misclassified examples, often even if your training data is linearly separable.

69 |

70 | Due to the presence of some outliers, the hyperplane can’t classify the data points region correctly. In this case, we use a soft margin & C hyperparameter.

71 |

72 | ## SVM IMPLEMENTATION IN PYTHON

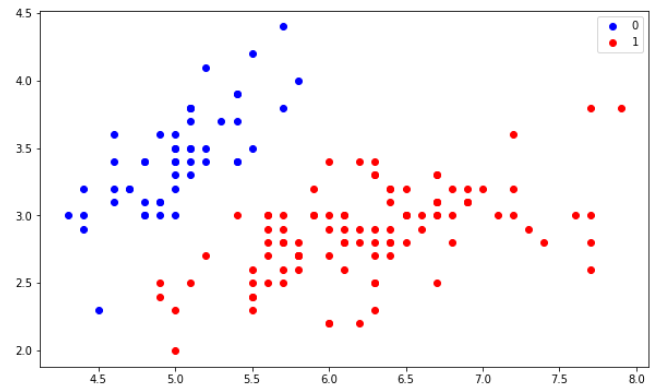

73 | In this tutorial, we will be using to implement our SVM algorithm is the Iris dataset. You can download it from this [link](https://www.kaggle.com/code/jchen2186/machine-learning-with-iris-dataset/data). Since the Iris dataset has three classes. Also, there are four features available for us to use. We will be using only two features, i.e Sepal length, and Sepal Width.

74 |

75 |

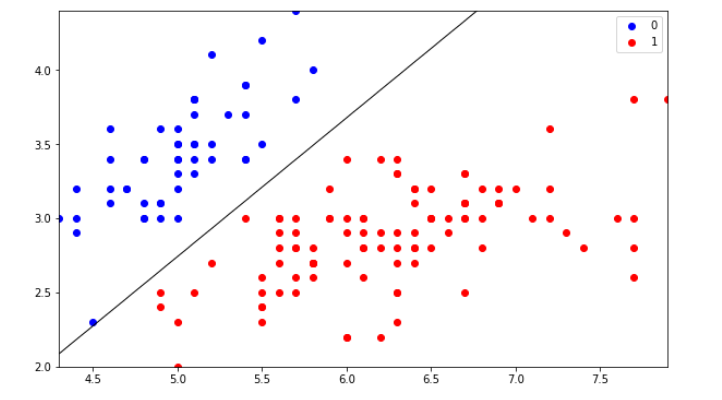

76 |

77 | ## BONUS – SVM FROM SCRATCH PYTHON!!

78 | Kernel Trick: Earlier, we had studied SVM classifying non-linear datasets by increasing the dimension of data. When we map data to a higher dimension, there are chances that we may overfit the model. Kernel trick actually refers to using efficient and less expensive ways to transform data into higher dimensions.

79 |

80 | Kernel function only calculates relationship between every pair of points as if they are in the higher dimensions; they don’t actually do the transformation. This trick , calculating the high dimensional relationships without actually transforming data to the higher dimension, is called the **Kernel Trick**.

81 |

--------------------------------------------------------------------------------

/Machine Learning from Beginner to Advanced/Classification Performance Metrics.md:

--------------------------------------------------------------------------------

1 | # Performance Metrics in Machine Learning Classification Model

2 |

3 |

4 |

5 | In this file, we are going to talk about 5 of the most widely used Evaluation Metrics of Classification Model. Before going into the details of performance metrics, let’s answer a few points:

6 |

7 | ## WHY DO WE NEED EVALUATION METRICS?

8 |

9 | Being Humans we want to know the efficiency or the performance of any machine or software we come across. For example, if we consider a car we want to know the Mileage, or if we there is a certain algorithm we want to know about the Time and Space Complexity, similarly there must be some or the other way we can measure the efficiency or performance of our Machine Learning Models as well.

10 |

11 | That being said, let’s look at some of the metrics for our Classification Models. Here, there are separate metrics for Regression and Classification models. As Regression gives us continuous values as output and Classification gives us discrete values as output, we will focus on Classification Metrics.

12 |

13 | ### ACCURACY

14 |

15 | The most commonly and widely used metric, for any model, is accuracy, it basically does what It says, calculates what is the prediction accuracy of our model. The formulation is given below:

16 |

17 | Accuracy = $\frac{Number \ of \ correct \ prediction}{Total \ number \ of \ points}\ *\ 100$

18 |

19 | As we can see, it basically tells us among all the points how many of them are correctly predicted.

20 |

21 | **Advantages**

22 | * Easy to use Metric

23 | * Highly Interpretable

24 | * If data points are balanced it gives proper effectiveness of the model

25 |

26 | **Disadvantages**

27 | * Not recommended for Imbalanced data, as results can be misleading. Let me give you an exatample. Let’s say we have 100 data points among which 95 points are negative and 5 points are positive. If I have a dumb model, which only predicts negative results then at the end of training I will have a model that will only predict negative. But still, be 95% accurate based on the above formula. Hence not recommended for imbalanced data.

28 | * We don’t understand where our model is making mistakes.

29 |

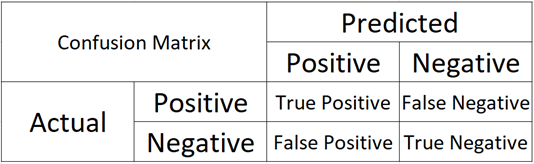

30 | ### CONFUSION METRICS

31 |

32 | As the name suggests it is a 2×2 matrix that has Actual and Predicted as Rows and Columns respectively. It determines the number of Correct and Incorrect Predictions, we didn’t bother about incorrect prediction in the Accuracy method, and we only consider the correct ones, so the Confusion Matrix helps us understand both aspects.

33 |

34 | Let’s have a look at the diagram to have a better understanding of it:

35 |

36 |

37 |

38 | ### WHAT DOES THESE NOTATION MEANS?

39 |

40 | Imagine I have a binary classification problem with classes as positive and negative labels, now, If my actual point is Positive and my Model predicted point is also positive then I get a True Positive, here “True” means correctly classified and “Positive” is the predicted class by the model, Similarly If I have actual class as Negative and I predicted it as Positive, i.e. an incorrect predicted, then I get False Positive, “False” means Incorrect prediction, and “Positive” is the predicted class by the model.

41 |

42 | We always want diagonal elements to have high values. As they are correct predictions, i.e. TP & TN.

43 |

44 | **Advantages**:

45 | * It specifies a model is confused between which class labels.

46 | * You get the types of errors made by the model, especially Type I or Type II.

47 | * Better than accuracy as it shows the incorrect predictions as well, you understand in-depth the errors made by the model, and rectify the areas where it is going incorrect.

48 |

49 | **Disadvantage**:

50 | * Not very much well suited for Multi-class

51 |

52 | **PRECISION & RECALL**

53 | Precision is the measure which states, among all the predicted positive class, how many are actually positive, formula is given below:

54 |

55 | Precision = $\frac{True Positive}{True Positive + False Positive}$

56 |

57 | Recall is the measure which states, among all the Positive classes how many are actually predicted correctly, formula is given below:

58 |

59 | Recall = $\frac{True Positive}{True Positive + False Negative}$

60 |

61 | We often seek for getting high precision and recall. If both are high means our model is sensible. Here, we also take into consideration, the incorrect points, hence we are aware where our model is making mistakes, and Minority class is also taken into consideration.

62 |

63 | **Advantages**:

64 | * It tells us about the efficiency of the model

65 | * Also shows us how much or data is biased towards one class.

66 | * Helps us understand whether our model is performing well in an imbalanced dataset for the minority class.

67 |

68 | **Disadvantage**

69 | * Recall deals with true positives and false negatives and precision deals with true positives and false positives. It doesn’t deal with all the cells of the confusion matrix. True negatives are never taken into account.

70 | * Hence, precision and recall should only be used in situations, where the correct identification of the negative class does not play a role.

71 | * Focuses only on Positive class.

72 | * Best suited for Binary Classification.

73 |

74 | ### F1-SCORE

75 | F1 score is the harmonic mean of the precision and recall, where an F1 score reaches its best value at 1 (perfect precision and recall). The F1 score is also known as the Sorensen–Dice coefficient or Dice similarity coefficient (DSC).

76 |

77 | It leverages both the advantages of Precision and Recall. An Ideal model will have precision and recall as 1 hence F1 score will also be 1.

78 |

79 | F1 - Score = $2 * \frac{Precision * Recall}{Precision + Recall}$

80 |

81 | **Advantages and Disadvantages**:

82 | * It is as same as Precision and Recall.

83 |

84 | **AU-ROC**

85 |

86 | AU-ROC is the Area Under the Receiver Operating Curve, which is a graph showing the performance of a model, for all the values considered as a threshold. As AU-ROC is a graph it has its own X-axis and Y-axis, whereas X-axis is FPR and Y-axis is TPR

87 |

88 | TPR = $\frac{True Positive}{True Positive + False Negative}$

89 | FPR = $\frac{False Positive}{False Positive + True Negative}$

90 |

91 | ROC curve plots are basically TPR vs. FPR calculated at different classification thresholds. Lowering the classification threshold classifies more items as positive, thus increasing both False Positives and True Positives i.e. basically correct predictions.

92 |

93 | All the values are sorted and plotted in a graph, and the area under the ROC curve is the actual performance of the model at different thresholds.

94 |

95 |

96 |

97 |

98 |

99 | **Advantages**:

100 | * A simple graphical representation of the diagnostic accuracy of a test: the closer the apex of the curve toward the upper left corner, the greater the discriminatory ability of the test.

101 | * Also, allows a more complex (and more exact) measure of the accuracy of a test, which is the AUC.

102 | * The AUC in turn can be used as a simple numeric rating of diagnostic test accuracy, which simplifies comparison between diagnostic tests.

103 |

104 | **Disadvantages**:

105 | * Actual decision thresholds are usually not displayed in the plot.

106 | * As the sample size decreases, the plot becomes more jagged.

107 | * Not easily interpretable from a business perspective.

108 |

109 | _**So there you have it, some of the widely used performance metrics for Classification Models.**_

110 |

--------------------------------------------------------------------------------

/Installation/install_anaconda_python.md:

--------------------------------------------------------------------------------

1 | # How To Install the Anaconda Python on Windows and Linux (Ubuntu and it's Derivatives)

2 |

3 | Setting up software requirements (environment) is the first and the most important step in getting started with Machine Learning. It takes a lot of effort to get all of those things ready at times. When we finish preparing for the work environment, we will be halfway there.

4 |

5 | In this file, you’ll learn how to use Anaconda to build up a Python machine learning development environment. These instructions are suitable for both Windows and Linux systems.

6 |

7 | * Steps are to install Anaconda in Windows & Ubuntu

8 | * Creating & Working On Conda Environment

9 | * Walkthrough on ML Project

10 |

11 | ## 1. Introduction

12 | Anaconda is an open-source package manager, environment manager, and distribution of the Python and R programming languages. It is used for data science, machine learning, large-scale data processing, scientific computing, and predictive analytics.

13 |

14 | Anaconda aims to simplify package management and deployment. The distribution includes 250 open-source data packages, with over 7,500 more available via the Anaconda repositories suitable for Windows,Linux and MacOS. It also includes the conda command-line tool and a desktop GUI called Anaconda Navigator.

15 |

16 | ### 1.1. Install Anaconda on Windows

17 |

18 | * Go to anaconda official website

19 | * [Download](https://www.anaconda.com/products/distribution) based on your Operating set up (64x or 32x) for windows

20 | * After Anaconda has finished downloading, double-click the _.exe_ file to start the installation process.

21 | * Then, until the installation of Windows is complete, follow the on-screen instructions.

22 | * Don’t forget to add the path to the environmental variable. The benefit is that you can use Anaconda in your Command Prompt, Git Bash, cmder, and so on.

23 | * If you like, you can install Microsoft [VSCode](https://code.visualstudio.com/), but it’s not required.

24 | * Click on Finish

25 | * Open a Command Prompt. If the conda is successfully installed then run conda -V or conda –version in the command prompt and it will pop out the installed version.

26 |

27 | ### 1.2. Install Anaconda on Ubuntu and it's derivatives

28 |

29 | ```Anaconda3-2022.05-Linux-x86_64.sh``` is the most recent stable version at the time of writing this post. Check the [Downloads page](https://www.anaconda.com/distribution/) to see whether there is a new version of Anaconda for Python 3 available for download before downloading the installation script.

30 |

31 | Downloading the newest Anaconda installer bash script, verifying it, and then running it is the best approach to install Anaconda. To install Anaconda on Ubuntu, follow the steps below:

32 |

33 | #### Step 01

34 |

35 | Install the following packages if you’re installing Anaconda on a desktop system and want to use the GUI application.

36 |

37 | ```

38 | $ sudo apt install libgl1-mesa-glx libegl1-mesa libxrandr2 libxrandr2 libxss1 libxcursor1 libxcomposite1 libasound2 libxi6 libxtst6

39 | ```

40 |

41 | #### Step 02

42 |

43 | Download the Anaconda installation script with wget

44 |

45 | ```

46 | $ wget -P /tmp https://repo.anaconda.com/archive/Anaconda3-2022.05-Linux-x86_64.sh

47 | ```

48 |

49 | **_Note_**: If you want to work with different version of anaconda, adjust the [version of anaconda](https://repo.anaconda.com/archive/).

50 |

51 | #### Step 03

52 |

53 | For verifying the data integrity of the installer with cryptographic hash verification you can use the ```SHA-256``` checksum. You’ll use the sha256sum command along with the filename of the script:

54 |

55 | ```

56 | $ sha256sum /tmp/Anaconda3-2022.05-Linux-x86_64.sh`

57 | ```

58 |

59 | You’ll receive output that looks similar to this:

60 |

61 | ```

62 | Output:

63 | afcd2340039557f7b5e8a8a86affa9ddhsdg887jdfifji988686bds

64 | ```

65 |

66 | #### Step 04

67 |

68 | To begin the installation procedure, run the script

69 |

70 | ```

71 | $ bash /tmp/Anaconda3-2022.05-Linux-x86_64.sh

72 | ```

73 |

74 | You’ll receive a welcome mesage as output. Press `ENTER` to continue and then press `ENTER` to read through the license. Once you complete reading the license, you will be asked for approving the license terms.

75 |

76 | ```

77 | Output

78 | Do you approve the license terms? [yes|no]

79 | ```

80 |

81 | Type `yes`.

82 |

83 | Next, you’ll be asked to choose the location of the installation. You can press `ENTER` to accept the default location, or specify a different location to modify it.

84 |

85 | Once you are done you will get a thank you message.

86 |

87 | #### Step 05

88 |

89 | Enter the following bash command to activate the Anaconda installation.

90 |

91 | ```

92 | $ source ~/.bashrc

93 | ```

94 |

95 | Once you have done that, you’ll be placed into the default `base` programming environment of Anaconda, and your command prompt will will show base environment.

96 |

97 | ```

98 | $(base) linus@ubuntu

99 | ```

100 |

101 | ### 1.3. Updating Anaconda

102 |

103 | To update the anaconda to the latest version, open and enter the following command:

104 |

105 | ```

106 | (base) linus@ubuntu:~$ conda update --all -y

107 | ```

108 |

109 | ### 1.4. Creating & Working on Conda Environment

110 |

111 | Anaconda virtual environments let us specify specific package versions. You can specify which version of Python to use for each Anaconda environment you create.

112 |

113 | _**Note**_: Name the environment ```venv_first_env``` or some nice and relevant name as per your project.

114 |

115 | ```

116 | (base) linus@ubuntu:~$ conda create --name venv_first_env python=3.9

117 | ```

118 |

119 | You’ll receive output with information about what is downloaded and which packages will be installed, and then be prompted to proceed with `y` or `n`. As long as you agree, type `y`.

120 |

121 | The `conda` utility will now fetch the packages for the environment and let you know when it’s complete.

122 |

123 | You can activate your new environment by typing the following:

124 |

125 | ```

126 | (base) linus@ubuntu:~$ conda activate venv_first_env

127 | ```

128 |

129 | With your environment activated, your command prompt prefix will reflect that you are no longer in the `base` environment, but in the new one that you just created.

130 |

131 | When you’re ready to deactivate your Anaconda environment, you can do so by typing:

132 |

133 | ```

134 | (venv_first_env) linus@ubuntu:~$ conda deactivate

135 | ```

136 |

137 | With this command, you can see the list of all of the environments you’ve created:

138 |

139 | ```

140 | (base) linus@ubuntu:~$ conda info --envs

141 | ```

142 |

143 | When you create environment using `conda create` , it will come with several default packages. Few examples of then are:

144 |

145 |

146 | - `openssl`

147 | - `pip`

148 | - `python`

149 | - `readline`

150 | - `setuptools`

151 | - `sqlite`

152 | - `tk`

153 |

154 | You might need to add additional package in your environment .

155 |

156 | You can add packages such as `matplotlib` for example, with the following command:

157 |

158 | ```

159 | (venv_first_env) linus@ubuntu:~$ conda install matplotlib

160 | ```

161 |

162 |

163 | For installing the specific version, you can specify specific version with the following command:

164 | ```

165 | (venv_first_env) linus@ubuntu:~$ conda install matplotlib=1.4.3

166 | ```

167 |

168 |

169 | ### 1.5. Getting Started With Jupyter Notebook

170 |

171 | Jupyter Notebooks are capable of performing data visualization in the same environment and are strong, versatile, and shared. Data scientists may use Jupyter Notebooks to generate and distribute documents ranging from code to full-fledged reports.

172 |

173 | You can directly launch Juypter through the terminal using the following command:

174 |

175 | #### Command 01:

176 | ```

177 | (venv_first_env) linus@ubuntu:~$ jupyter notebook

178 | ```

179 |

180 | #### command 02:

181 | ```

182 | (venv_first_env) linus@ubuntu:~$ jupyter notebook --no-browser --port=8885

183 | ```

184 |

185 | ### 06. Working with Jupyter Noteboook

186 |

187 | * create a new notebook, click on the New button on the top right hand corner of the web page and select `Python 3 notebook`. The Python statements are entered in each cell. To execute the Python statements within each cell, press both the `SHIFT` and `ENTER` keys simultaneously. The result will be displayed right below the cell.