├── .gitignore

├── LICENSE

├── README.md

├── bark

├── __init__.py

├── __main__.py

├── api.py

├── assets

│ └── prompts

│ │ ├── announcer.npz

│ │ ├── de_speaker_0.npz

│ │ ├── de_speaker_1.npz

│ │ ├── de_speaker_2.npz

│ │ ├── de_speaker_3.npz

│ │ ├── de_speaker_4.npz

│ │ ├── de_speaker_5.npz

│ │ ├── de_speaker_6.npz

│ │ ├── de_speaker_7.npz

│ │ ├── de_speaker_8.npz

│ │ ├── de_speaker_9.npz

│ │ ├── en_speaker_0.npz

│ │ ├── en_speaker_1.npz

│ │ ├── en_speaker_2.npz

│ │ ├── en_speaker_3.npz

│ │ ├── en_speaker_4.npz

│ │ ├── en_speaker_5.npz

│ │ ├── en_speaker_6.npz

│ │ ├── en_speaker_7.npz

│ │ ├── en_speaker_8.npz

│ │ ├── en_speaker_9.npz

│ │ ├── es_speaker_0.npz

│ │ ├── es_speaker_1.npz

│ │ ├── es_speaker_2.npz

│ │ ├── es_speaker_3.npz

│ │ ├── es_speaker_4.npz

│ │ ├── es_speaker_5.npz

│ │ ├── es_speaker_6.npz

│ │ ├── es_speaker_7.npz

│ │ ├── es_speaker_8.npz

│ │ ├── es_speaker_9.npz

│ │ ├── fr_speaker_0.npz

│ │ ├── fr_speaker_1.npz

│ │ ├── fr_speaker_2.npz

│ │ ├── fr_speaker_3.npz

│ │ ├── fr_speaker_4.npz

│ │ ├── fr_speaker_5.npz

│ │ ├── fr_speaker_6.npz

│ │ ├── fr_speaker_7.npz

│ │ ├── fr_speaker_8.npz

│ │ ├── fr_speaker_9.npz

│ │ ├── hi_speaker_0.npz

│ │ ├── hi_speaker_1.npz

│ │ ├── hi_speaker_2.npz

│ │ ├── hi_speaker_3.npz

│ │ ├── hi_speaker_4.npz

│ │ ├── hi_speaker_5.npz

│ │ ├── hi_speaker_6.npz

│ │ ├── hi_speaker_7.npz

│ │ ├── hi_speaker_8.npz

│ │ ├── hi_speaker_9.npz

│ │ ├── it_speaker_0.npz

│ │ ├── it_speaker_1.npz

│ │ ├── it_speaker_2.npz

│ │ ├── it_speaker_3.npz

│ │ ├── it_speaker_4.npz

│ │ ├── it_speaker_5.npz

│ │ ├── it_speaker_6.npz

│ │ ├── it_speaker_7.npz

│ │ ├── it_speaker_8.npz

│ │ ├── it_speaker_9.npz

│ │ ├── ja_speaker_0.npz

│ │ ├── ja_speaker_1.npz

│ │ ├── ja_speaker_2.npz

│ │ ├── ja_speaker_3.npz

│ │ ├── ja_speaker_4.npz

│ │ ├── ja_speaker_5.npz

│ │ ├── ja_speaker_6.npz

│ │ ├── ja_speaker_7.npz

│ │ ├── ja_speaker_8.npz

│ │ ├── ja_speaker_9.npz

│ │ ├── ko_speaker_0.npz

│ │ ├── ko_speaker_1.npz

│ │ ├── ko_speaker_2.npz

│ │ ├── ko_speaker_3.npz

│ │ ├── ko_speaker_4.npz

│ │ ├── ko_speaker_5.npz

│ │ ├── ko_speaker_6.npz

│ │ ├── ko_speaker_7.npz

│ │ ├── ko_speaker_8.npz

│ │ ├── ko_speaker_9.npz

│ │ ├── pl_speaker_0.npz

│ │ ├── pl_speaker_1.npz

│ │ ├── pl_speaker_2.npz

│ │ ├── pl_speaker_3.npz

│ │ ├── pl_speaker_4.npz

│ │ ├── pl_speaker_5.npz

│ │ ├── pl_speaker_6.npz

│ │ ├── pl_speaker_7.npz

│ │ ├── pl_speaker_8.npz

│ │ ├── pl_speaker_9.npz

│ │ ├── pt_speaker_0.npz

│ │ ├── pt_speaker_1.npz

│ │ ├── pt_speaker_2.npz

│ │ ├── pt_speaker_3.npz

│ │ ├── pt_speaker_4.npz

│ │ ├── pt_speaker_5.npz

│ │ ├── pt_speaker_6.npz

│ │ ├── pt_speaker_7.npz

│ │ ├── pt_speaker_8.npz

│ │ ├── pt_speaker_9.npz

│ │ ├── readme.md

│ │ ├── ru_speaker_0.npz

│ │ ├── ru_speaker_1.npz

│ │ ├── ru_speaker_2.npz

│ │ ├── ru_speaker_3.npz

│ │ ├── ru_speaker_4.npz

│ │ ├── ru_speaker_5.npz

│ │ ├── ru_speaker_6.npz

│ │ ├── ru_speaker_7.npz

│ │ ├── ru_speaker_8.npz

│ │ ├── ru_speaker_9.npz

│ │ ├── speaker_0.npz

│ │ ├── speaker_1.npz

│ │ ├── speaker_2.npz

│ │ ├── speaker_3.npz

│ │ ├── speaker_4.npz

│ │ ├── speaker_5.npz

│ │ ├── speaker_6.npz

│ │ ├── speaker_7.npz

│ │ ├── speaker_8.npz

│ │ ├── speaker_9.npz

│ │ ├── tr_speaker_0.npz

│ │ ├── tr_speaker_1.npz

│ │ ├── tr_speaker_2.npz

│ │ ├── tr_speaker_3.npz

│ │ ├── tr_speaker_4.npz

│ │ ├── tr_speaker_5.npz

│ │ ├── tr_speaker_6.npz

│ │ ├── tr_speaker_7.npz

│ │ ├── tr_speaker_8.npz

│ │ ├── tr_speaker_9.npz

│ │ ├── v2

│ │ ├── de_speaker_0.npz

│ │ ├── de_speaker_1.npz

│ │ ├── de_speaker_2.npz

│ │ ├── de_speaker_3.npz

│ │ ├── de_speaker_4.npz

│ │ ├── de_speaker_5.npz

│ │ ├── de_speaker_6.npz

│ │ ├── de_speaker_7.npz

│ │ ├── de_speaker_8.npz

│ │ ├── de_speaker_9.npz

│ │ ├── en_speaker_0.npz

│ │ ├── en_speaker_1.npz

│ │ ├── en_speaker_2.npz

│ │ ├── en_speaker_3.npz

│ │ ├── en_speaker_4.npz

│ │ ├── en_speaker_5.npz

│ │ ├── en_speaker_6.npz

│ │ ├── en_speaker_7.npz

│ │ ├── en_speaker_8.npz

│ │ ├── en_speaker_9.npz

│ │ ├── es_speaker_0.npz

│ │ ├── es_speaker_1.npz

│ │ ├── es_speaker_2.npz

│ │ ├── es_speaker_3.npz

│ │ ├── es_speaker_4.npz

│ │ ├── es_speaker_5.npz

│ │ ├── es_speaker_6.npz

│ │ ├── es_speaker_7.npz

│ │ ├── es_speaker_8.npz

│ │ ├── es_speaker_9.npz

│ │ ├── fr_speaker_0.npz

│ │ ├── fr_speaker_1.npz

│ │ ├── fr_speaker_2.npz

│ │ ├── fr_speaker_3.npz

│ │ ├── fr_speaker_4.npz

│ │ ├── fr_speaker_5.npz

│ │ ├── fr_speaker_6.npz

│ │ ├── fr_speaker_7.npz

│ │ ├── fr_speaker_8.npz

│ │ ├── fr_speaker_9.npz

│ │ ├── hi_speaker_0.npz

│ │ ├── hi_speaker_1.npz

│ │ ├── hi_speaker_2.npz

│ │ ├── hi_speaker_3.npz

│ │ ├── hi_speaker_4.npz

│ │ ├── hi_speaker_5.npz

│ │ ├── hi_speaker_6.npz

│ │ ├── hi_speaker_7.npz

│ │ ├── hi_speaker_8.npz

│ │ ├── hi_speaker_9.npz

│ │ ├── it_speaker_0.npz

│ │ ├── it_speaker_1.npz

│ │ ├── it_speaker_2.npz

│ │ ├── it_speaker_3.npz

│ │ ├── it_speaker_4.npz

│ │ ├── it_speaker_5.npz

│ │ ├── it_speaker_6.npz

│ │ ├── it_speaker_7.npz

│ │ ├── it_speaker_8.npz

│ │ ├── it_speaker_9.npz

│ │ ├── ja_speaker_0.npz

│ │ ├── ja_speaker_1.npz

│ │ ├── ja_speaker_2.npz

│ │ ├── ja_speaker_3.npz

│ │ ├── ja_speaker_4.npz

│ │ ├── ja_speaker_5.npz

│ │ ├── ja_speaker_6.npz

│ │ ├── ja_speaker_7.npz

│ │ ├── ja_speaker_8.npz

│ │ ├── ja_speaker_9.npz

│ │ ├── ko_speaker_0.npz

│ │ ├── ko_speaker_1.npz

│ │ ├── ko_speaker_2.npz

│ │ ├── ko_speaker_3.npz

│ │ ├── ko_speaker_4.npz

│ │ ├── ko_speaker_5.npz

│ │ ├── ko_speaker_6.npz

│ │ ├── ko_speaker_7.npz

│ │ ├── ko_speaker_8.npz

│ │ ├── ko_speaker_9.npz

│ │ ├── pl_speaker_0.npz

│ │ ├── pl_speaker_1.npz

│ │ ├── pl_speaker_2.npz

│ │ ├── pl_speaker_3.npz

│ │ ├── pl_speaker_4.npz

│ │ ├── pl_speaker_5.npz

│ │ ├── pl_speaker_6.npz

│ │ ├── pl_speaker_7.npz

│ │ ├── pl_speaker_8.npz

│ │ ├── pl_speaker_9.npz

│ │ ├── pt_speaker_0.npz

│ │ ├── pt_speaker_1.npz

│ │ ├── pt_speaker_2.npz

│ │ ├── pt_speaker_3.npz

│ │ ├── pt_speaker_4.npz

│ │ ├── pt_speaker_5.npz

│ │ ├── pt_speaker_6.npz

│ │ ├── pt_speaker_7.npz

│ │ ├── pt_speaker_8.npz

│ │ ├── pt_speaker_9.npz

│ │ ├── ru_speaker_0.npz

│ │ ├── ru_speaker_1.npz

│ │ ├── ru_speaker_2.npz

│ │ ├── ru_speaker_3.npz

│ │ ├── ru_speaker_4.npz

│ │ ├── ru_speaker_5.npz

│ │ ├── ru_speaker_6.npz

│ │ ├── ru_speaker_7.npz

│ │ ├── ru_speaker_8.npz

│ │ ├── ru_speaker_9.npz

│ │ ├── tr_speaker_0.npz

│ │ ├── tr_speaker_1.npz

│ │ ├── tr_speaker_2.npz

│ │ ├── tr_speaker_3.npz

│ │ ├── tr_speaker_4.npz

│ │ ├── tr_speaker_5.npz

│ │ ├── tr_speaker_6.npz

│ │ ├── tr_speaker_7.npz

│ │ ├── tr_speaker_8.npz

│ │ ├── tr_speaker_9.npz

│ │ ├── zh_speaker_0.npz

│ │ ├── zh_speaker_1.npz

│ │ ├── zh_speaker_2.npz

│ │ ├── zh_speaker_3.npz

│ │ ├── zh_speaker_4.npz

│ │ ├── zh_speaker_5.npz

│ │ ├── zh_speaker_6.npz

│ │ ├── zh_speaker_7.npz

│ │ ├── zh_speaker_8.npz

│ │ └── zh_speaker_9.npz

│ │ ├── zh_speaker_0.npz

│ │ ├── zh_speaker_1.npz

│ │ ├── zh_speaker_2.npz

│ │ ├── zh_speaker_3.npz

│ │ ├── zh_speaker_4.npz

│ │ ├── zh_speaker_5.npz

│ │ ├── zh_speaker_6.npz

│ │ ├── zh_speaker_7.npz

│ │ ├── zh_speaker_8.npz

│ │ └── zh_speaker_9.npz

├── cli.py

├── generation.py

├── model.py

└── model_fine.py

├── create_data.py

├── create_wavs.py

├── data.py

├── model-card.md

├── notebooks

├── fake_classifier.ipynb

├── long_form_generation.ipynb

├── memory_profiling_bark.ipynb

└── use_small_models_on_cpu.ipynb

├── pyproject.toml

└── setup.py

/.gitignore:

--------------------------------------------------------------------------------

1 | __pycache__/

2 | suno_bark.egg-info/

3 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) Suno, Inc

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Set up training data generation (Windows venv setup)

2 | > Download or clone this repository to a local folder.

3 | >

4 | > If you have git installed: `git clone https://github.com/gitmylo/bark-data-gen`

5 |

6 | > `py -m venv venv` - create the venv (this uses `py`, which picks the latest installed version; if you have a Windows Store install, use `python` instead)

7 |

8 | > `call venv/Scripts/activate.bat` - activate the venv

9 |

10 | > `pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu117 --force` - install torch with cuda (with --force to ensure it gets reinstalled)

11 |

12 | > `python create_data.py` - run the script, outputs to `output` (make sure you're in the venv if you're running it again later)

13 |

14 | > `python create_wavs.py` - extra processing to create the wavs for the data. saved in `out_wavs`

15 |

16 | ## ~Currently there's no public training available.~

17 | ~I will release my model when I consider it ready.

18 | Dataset created from shared npy files will be shared on huggingface~

19 |

20 | ## Model, running and training.

21 | [Model](https://huggingface.co/GitMylo/bark-voice-cloning), [Training and running code](https://github.com/gitmylo/bark-voice-cloning-HuBERT-quantizer), [Dataset used](https://huggingface.co/datasets/GitMylo/bark-semantic-training)

22 |

23 | ### ~Please share your created semantics and associated wavs to help me train~

24 | ~Do NOT rename the files, making a mistake during renaming will pollute the training data. It won't know which wavs fit which semantics.~

25 |

26 | ~Create a zip with your semantics. This is the data I'll need for training. There are still 2 more steps of processing required, but having the semantics helps out a bunch. Thanks.~

27 |

28 | ~Send them in dms to `mylo#6228` on discord, or create an issue on [this github repo](https://github.com/gitmylo/bark-data-gen/issues) with a link to download your semantics.~

29 |

30 | Training has completed.

31 |

32 | ## ------------- Old readme -------------

33 | # 🐶 Bark

34 |

35 | [](https://discord.gg/J2B2vsjKuE)

36 | [](https://twitter.com/OnusFM)

37 |

38 |

39 | > 🔗 [Examples](https://suno-ai.notion.site/Bark-Examples-5edae8b02a604b54a42244ba45ebc2e2) • [Suno Studio Waitlist](https://3os84zs17th.typeform.com/suno-studio) • [Updates](#-updates) • [How to Use](#-usage-in-python) • [Installation](#-installation) • [FAQ](#-faq)

40 |

41 | [//]:

(vertical spaces around image)

42 |

43 |

44 |

45 |

46 |

47 |

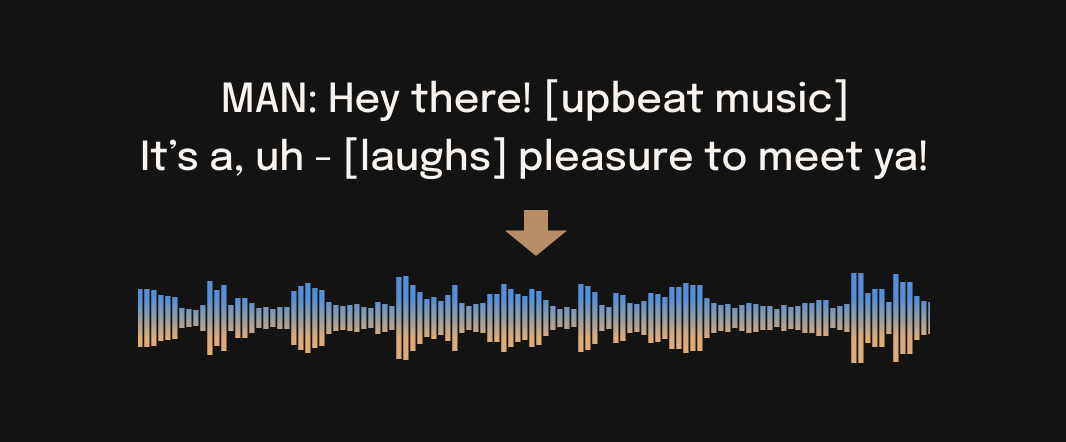

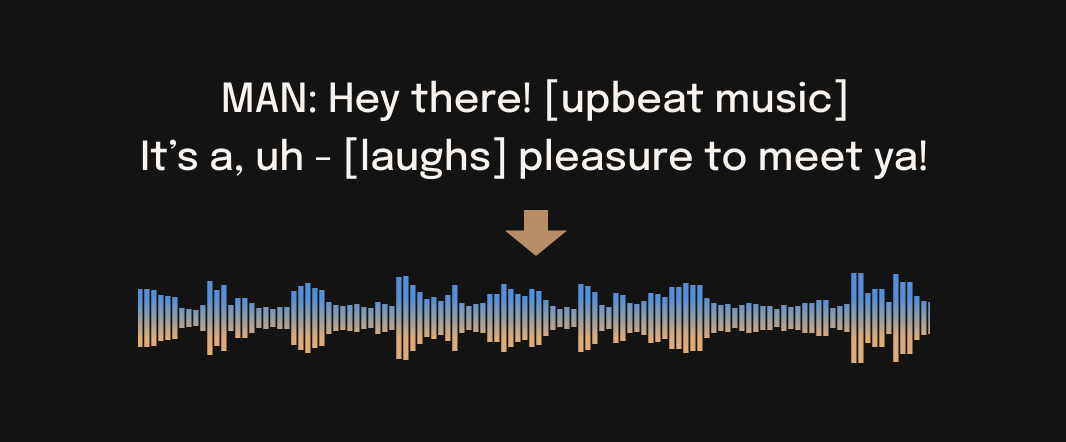

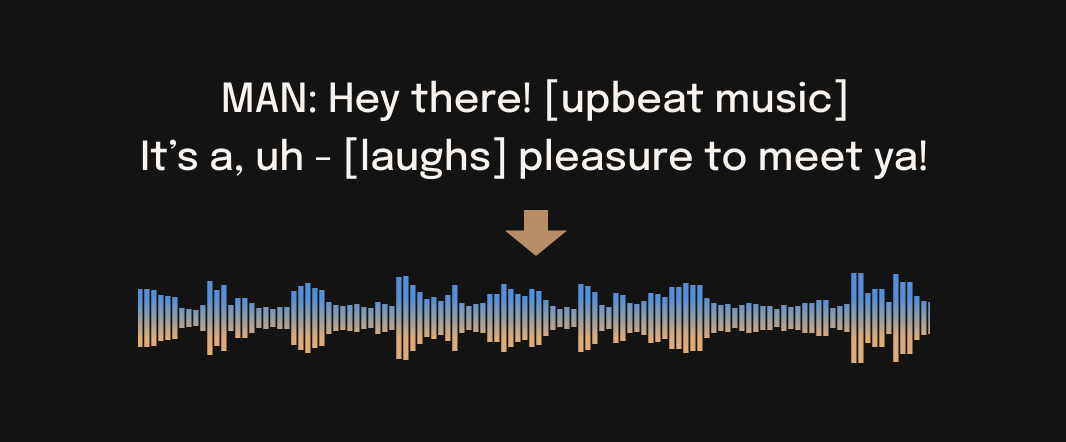

48 | Bark is a transformer-based text-to-audio model created by [Suno](https://suno.ai). Bark can generate highly realistic, multilingual speech as well as other audio - including music, background noise and simple sound effects. The model can also produce nonverbal communications like laughing, sighing and crying. To support the research community, we are providing access to pretrained model checkpoints, which are ready for inference and available for commercial use.

49 |

50 | ## ⚠ Disclaimer

51 | Bark was developed for research purposes. It is not a conventional text-to-speech model but instead a fully generative text-to-audio model, which can deviate in unexpected ways from provided prompts. Suno does not take responsibility for any output generated. Use at your own risk, and please act responsibly.

52 |

53 | ## 📖 Quick Index

54 | * [🚀 Updates](#-updates)

55 | * [💻 Installation](#-installation)

56 | * [🐍 Usage](#-usage-in-python)

57 | * [🌀 Live Examples](https://suno-ai.notion.site/Bark-Examples-5edae8b02a604b54a42244ba45ebc2e2)

58 | * [❓ FAQ](#-faq)

59 |

60 | ## 🎧 Demos

61 |

62 | [](https://huggingface.co/spaces/suno/bark)

63 | [](https://replicate.com/suno-ai/bark)

64 | [](https://colab.research.google.com/drive/1eJfA2XUa-mXwdMy7DoYKVYHI1iTd9Vkt?usp=sharing)

65 |

66 | ## 🚀 Updates

67 |

68 | **2023.05.01**

69 | - ©️ Bark is now licensed under the MIT License, meaning it's now available for commercial use!

70 | - ⚡ 2x speed-up on GPU. 10x speed-up on CPU. We also added an option for a smaller version of Bark, which offers additional speed-up with the trade-off of slightly lower quality.

71 | - 📕 [Long-form generation](notebooks/long_form_generation.ipynb), voice consistency enhancements and other examples are now documented in a new [notebooks](./notebooks) section.

72 | - 👥 We created a [voice prompt library](https://suno-ai.notion.site/8b8e8749ed514b0cbf3f699013548683?v=bc67cff786b04b50b3ceb756fd05f68c). We hope this resource helps you find useful prompts for your use cases! You can also join us on [Discord](https://discord.gg/J2B2vsjKuE), where the community actively shares useful prompts in the **#audio-prompts** channel.

73 | - 💬 Growing community support and access to new features here:

74 |

75 | [](https://discord.gg/J2B2vsjKuE)

76 |

77 | - 💾 You can now use Bark with GPUs that have low VRAM (<4GB).

78 |

79 | **2023.04.20**

80 | - 🐶 Bark release!

81 |

82 | ## 🐍 Usage in Python

83 |

84 |

85 | 🪑 Basics

86 |

87 | ```python

88 | from bark import SAMPLE_RATE, generate_audio, preload_models

89 | from scipy.io.wavfile import write as write_wav

90 | from IPython.display import Audio

91 |

92 | # download and load all models

93 | preload_models()

94 |

95 | # generate audio from text

96 | text_prompt = """

97 | Hello, my name is Suno. And, uh — and I like pizza. [laughs]

98 | But I also have other interests such as playing tic tac toe.

99 | """

100 | audio_array = generate_audio(text_prompt)

101 |

102 | # save audio to disk

103 | write_wav("bark_generation.wav", SAMPLE_RATE, audio_array)

104 |

105 | # play text in notebook

106 | Audio(audio_array, rate=SAMPLE_RATE)

107 | ```

108 |

109 | [pizza1.webm](https://user-images.githubusercontent.com/34592747/cfa98e54-721c-4b9c-b962-688e09db684f.webm)

110 |

111 |

112 |

113 |

114 | 🌎 Foreign Language

115 |

116 | Bark supports various languages out-of-the-box and automatically determines language from input text. When prompted with code-switched text, Bark will attempt to employ the native accent for the respective languages. English quality is best for the time being, and we expect other languages to further improve with scaling.

117 |

118 |

119 |

120 | ```python

121 |

122 | text_prompt = """

123 | 추석은 내가 가장 좋아하는 명절이다. 나는 며칠 동안 휴식을 취하고 친구 및 가족과 시간을 보낼 수 있습니다.

124 | """

125 | audio_array = generate_audio(text_prompt)

126 | ```

127 | [suno_korean.webm](https://user-images.githubusercontent.com/32879321/235313033-dc4477b9-2da0-4b94-9c8b-a8c2d8f5bb5e.webm)

128 |

129 | *Note: since Bark recognizes languages automatically from input text, it is possible to use, for example, a German history prompt with English text. This usually leads to English audio with a German accent.*

130 | ```python

131 | text_prompt = """

132 | Der Dreißigjährige Krieg (1618-1648) war ein verheerender Konflikt, der Europa stark geprägt hat.

133 | This is a beginning of the history. If you want to hear more, please continue.

134 | """

135 | audio_array = generate_audio(text_prompt)

136 | ```

137 | [suno_german_accent.webm](https://user-images.githubusercontent.com/34592747/3f96ab3e-02ec-49cb-97a6-cf5af0b3524a.webm)

138 |

139 |

140 |

141 |

142 |

143 |

144 |

145 | 🎶 Music

146 | Bark can generate all types of audio, and, in principle, doesn't see a difference between speech and music. Sometimes Bark chooses to generate text as music, but you can help it out by adding music notes around your lyrics.

147 |

148 |

149 |

150 | ```python

151 | text_prompt = """

152 | ♪ In the jungle, the mighty jungle, the lion barks tonight ♪

153 | """

154 | audio_array = generate_audio(text_prompt)

155 | ```

156 | [lion.webm](https://user-images.githubusercontent.com/5068315/230684766-97f5ea23-ad99-473c-924b-66b6fab24289.webm)

157 |

158 |

159 |

160 | 🎤 Voice Presets

161 |

162 | Bark supports 100+ speaker presets across [supported languages](#supported-languages). You can browse the library of supported voice presets [HERE](https://suno-ai.notion.site/8b8e8749ed514b0cbf3f699013548683?v=bc67cff786b04b50b3ceb756fd05f68c), or in the [code](bark/assets/prompts). The community also often shares presets in [Discord](https://discord.gg/J2B2vsjKuE).

163 |

164 | > Bark tries to match the tone, pitch, emotion and prosody of a given preset, but does not currently support custom voice cloning. The model also attempts to preserve music, ambient noise, etc.

165 |

166 | ```python

167 | text_prompt = """

168 | I have a silky smooth voice, and today I will tell you about

169 | the exercise regimen of the common sloth.

170 | """

171 | audio_array = generate_audio(text_prompt, history_prompt="v2/en_speaker_1")

172 | ```

173 |

174 | [sloth.webm](https://user-images.githubusercontent.com/5068315/230684883-a344c619-a560-4ff5-8b99-b4463a34487b.webm)

175 |

176 |

177 | ### 📃 Generating Longer Audio

178 |

179 | By default, `generate_audio` works well with around 13 seconds of spoken text. For an example of how to do long-form generation, see 👉 **[Notebook](notebooks/long_form_generation.ipynb)** 👈
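One common way to get past the roughly 13-second limit is to split the text into sentence-sized chunks and generate each chunk separately. The sketch below shows only the chunking step in plain Python. The helper names `split_into_chunks` and `generate_long` and the `max_words=30` budget are illustrative assumptions, not part of Bark; the linked notebook remains the reference for the actual approach.

```python
import re
from typing import Any, Callable, List


def split_into_chunks(text: str, max_words: int = 30) -> List[str]:
    """Greedily pack whole sentences into chunks of at most max_words words.

    A single sentence longer than max_words is kept whole as its own chunk.
    max_words is an assumed rough stand-in for ~13 seconds of speech.
    """
    # Split after sentence-ending punctuation that is followed by whitespace.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    sentences = [s.strip() for s in parts if s.strip()]

    chunks: List[str] = []
    current: List[str] = []
    count = 0
    for sentence in sentences:
        n_words = len(sentence.split())
        # Flush the current chunk if this sentence would exceed the budget.
        if current and count + n_words > max_words:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n_words
    if current:
        chunks.append(" ".join(current))
    return chunks


def generate_long(text: str, generate_fn: Callable[[str], Any], max_words: int = 30) -> List[Any]:
    """Call generate_fn once per chunk and return the results in order."""
    return [generate_fn(chunk) for chunk in split_into_chunks(text, max_words)]
```

With Bark installed, `generate_fn` could be `generate_audio`, and the returned arrays could then be joined with `numpy.concatenate` before writing the WAV file.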

180 |

181 |

182 | Click to toggle example long-form generations (from the example notebook)

183 |

184 | [dialog.webm](https://user-images.githubusercontent.com/2565833/235463539-f57608da-e4cb-4062-8771-148e29512b01.webm)

185 |

186 | [longform_advanced.webm](https://user-images.githubusercontent.com/2565833/235463547-1c0d8744-269b-43fe-9630-897ea5731652.webm)

187 |

188 | [longform_basic.webm](https://user-images.githubusercontent.com/2565833/235463559-87efe9f8-a2db-4d59-b764-57db83f95270.webm)

189 |

190 |

191 |

192 |

193 | ## Command line

194 | ```commandline

195 | python -m bark --text "Hello, my name is Suno." --output_filename "example.wav"

196 | ```

197 |

198 | ## 💻 Installation

199 | *‼️ CAUTION ‼️ Do NOT use `pip install bark`. It installs a different package, which is not managed by Suno.*

200 | ```bash

201 | pip install git+https://github.com/suno-ai/bark.git

202 | ```

203 |

204 | or

205 |

206 | ```bash

207 | git clone https://github.com/suno-ai/bark

208 | cd bark && pip install .

209 | ```

210 |

211 |

212 | ## 🛠️ Hardware and Inference Speed

213 |

214 | Bark has been tested and works on both CPU and GPU (`pytorch 2.0+`, CUDA 11.7 and CUDA 12.0).

215 |

216 | On enterprise GPUs and PyTorch nightly, Bark can generate audio in roughly real-time. On older GPUs, default colab, or CPU, inference time might be significantly slower. For older GPUs or CPU you might want to consider using smaller models. Details can be found in our tutorial sections here.

217 |

218 | The full version of Bark requires around 12GB of VRAM to hold everything on GPU at the same time.

219 | To use a smaller version of the models, which should fit into 8GB VRAM, set the environment flag `SUNO_USE_SMALL_MODELS=True`.

220 |

221 | If you don't have hardware available or if you want to play with bigger versions of our models, you can also sign up for early access to our model playground [here](https://3os84zs17th.typeform.com/suno-studio).

222 |

223 | ## ⚙️ Details

224 |

225 | Bark is a fully generative text-to-audio model developed for research and demo purposes. It follows a GPT-style architecture similar to [AudioLM](https://arxiv.org/abs/2209.03143) and [Vall-E](https://arxiv.org/abs/2301.02111) and uses a quantized audio representation from [EnCodec](https://github.com/facebookresearch/encodec). It is not a conventional TTS model, but instead a fully generative text-to-audio model capable of deviating in unexpected ways from any given script. Unlike previous approaches, the input text prompt is converted directly to audio without the intermediate use of phonemes. It can therefore generalize to arbitrary instructions beyond speech such as music lyrics, sound effects or other non-speech sounds.

226 |

227 | Below is a list of some known non-speech sounds, but we are finding more every day. Please let us know if you find patterns that work particularly well on [Discord](https://discord.gg/J2B2vsjKuE)!

228 |

229 | - `[laughter]`

230 | - `[laughs]`

231 | - `[sighs]`

232 | - `[music]`

233 | - `[gasps]`

234 | - `[clears throat]`

235 | - `—` or `...` for hesitations

236 | - `♪` for song lyrics

237 | - CAPITALIZATION for emphasis of a word

238 | - `[MAN]` and `[WOMAN]` to bias Bark toward male and female speakers, respectively

239 |

240 | ### Supported Languages

241 |

242 | | Language | Status |

243 | | --- | :---: |

244 | | English (en) | ✅ |

245 | | German (de) | ✅ |

246 | | Spanish (es) | ✅ |

247 | | French (fr) | ✅ |

248 | | Hindi (hi) | ✅ |

249 | | Italian (it) | ✅ |

250 | | Japanese (ja) | ✅ |

251 | | Korean (ko) | ✅ |

252 | | Polish (pl) | ✅ |

253 | | Portuguese (pt) | ✅ |

254 | | Russian (ru) | ✅ |

255 | | Turkish (tr) | ✅ |

256 | | Chinese, simplified (zh) | ✅ |
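
The language codes in this table line up with the preset file names under `bark/assets/prompts/v2` in the directory listing. As a small sketch, the preset names accepted by `history_prompt` can be enumerated as below. It assumes ten speakers per language and the `v2/<code>_speaker_<n>` pattern, both inferred from the listing and the `"v2/en_speaker_1"` example; the helper name `v2_preset_names` is hypothetical.

```python
from typing import List

# Language codes copied from the Supported Languages table.
LANGUAGE_CODES = ["en", "de", "es", "fr", "hi", "it", "ja", "ko", "pl", "pt", "ru", "tr", "zh"]


def v2_preset_names(speakers_per_language: int = 10) -> List[str]:
    """Build preset names such as "v2/en_speaker_1" for every language code.

    Assumes speakers are numbered from 0, as in the directory listing.
    """
    return [
        f"v2/{code}_speaker_{index}"
        for code in LANGUAGE_CODES
        for index in range(speakers_per_language)
    ]
```

Any of these names could then be passed as `history_prompt` to `generate_audio`, provided the matching `.npz` file exists in the installed package.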

257 |

258 | Request future language support [here](https://github.com/suno-ai/bark/discussions/111) or in the **#forums** channel on [Discord](https://discord.com/invite/J2B2vsjKuE).

259 |

260 | ## 🙏 Appreciation

261 |

262 | - [nanoGPT](https://github.com/karpathy/nanoGPT) for a dead-simple and blazing fast implementation of GPT-style models

263 | - [EnCodec](https://github.com/facebookresearch/encodec) for a state-of-the-art implementation of a fantastic audio codec

264 | - [AudioLM](https://github.com/lucidrains/audiolm-pytorch) for related training and inference code

265 | - [Vall-E](https://arxiv.org/abs/2301.02111), [AudioLM](https://arxiv.org/abs/2209.03143) and many other ground-breaking papers that enabled the development of Bark

266 |

267 | ## © License

268 |

269 | Bark is licensed under the MIT License.

270 |

271 | Please contact us at 📧 [bark@suno.ai](mailto:bark@suno.ai) to request access to a larger version of the model.

272 |

273 | ## 📱 Community

274 |

275 | - [Twitter](https://twitter.com/OnusFM)

276 | - [Discord](https://discord.gg/J2B2vsjKuE)

277 |

278 | ## 🎧 Suno Studio (Early Access)

279 |

280 | We’re developing a playground for our models, including Bark.

281 |

282 | If you are interested, you can sign up for early access [here](https://3os84zs17th.typeform.com/suno-studio).

283 |

284 | ## ❓ FAQ

285 |

286 | #### How do I specify where models are downloaded and cached?

287 | * Bark uses Hugging Face to download and store models. You can find more info [here](https://huggingface.co/docs/huggingface_hub/package_reference/environment_variables#hfhome).

288 |

289 |

290 | #### Bark's generations sometimes differ from my prompts. What's happening?

291 | * Bark is a GPT-style model. As such, it may take some creative liberties in its generations, resulting in higher-variance model outputs than traditional text-to-speech approaches.
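
The variance is partly controlled by the `text_temp` and `waveform_temp` arguments of `generate_audio`, which are documented as "1.0 more diverse, 0.0 more conservative". The sketch below is a generic illustration of how a sampling temperature reshapes a probability distribution. It is not Bark's actual sampling code, which is not shown here, and the function name `softmax_with_temperature` is made up for this example.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temp: float) -> List[float]:
    """Turn raw scores into probabilities, sharpened or flattened by temp.

    Lower temp concentrates probability on the highest score;
    higher temp spreads it more evenly across the options.
    """
    if temp <= 0:
        raise ValueError("temp must be positive")
    scaled = [x / temp for x in logits]
    # Subtract the maximum for numerical stability before exponentiating.
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


conservative = softmax_with_temperature([2.0, 1.0, 0.0], temp=0.2)
diverse = softmax_with_temperature([2.0, 1.0, 0.0], temp=1.0)
# The top-scoring option gets a larger share at the lower temperature.
print(conservative[0] > diverse[0])
```

In practice this means lowering `text_temp` or `waveform_temp` should make generations stick closer to the most likely output, at the cost of less variety.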

292 |

293 | #### What voices are supported by Bark?

294 | * Bark supports 100+ speaker presets across [supported languages](#supported-languages). You can browse the library of speaker presets [here](https://suno-ai.notion.site/8b8e8749ed514b0cbf3f699013548683?v=bc67cff786b04b50b3ceb756fd05f68c). The community also shares presets in [Discord](https://discord.gg/J2B2vsjKuE). Bark also supports generating unique random voices that fit the input text. Bark does not currently support custom voice cloning.

295 |

296 | #### Why is the output limited to ~13-14 seconds?

297 | * Bark is a GPT-style model, and its architecture/context window is optimized to output generations with roughly this length.

298 |

299 | #### How much VRAM do I need?

300 | * The full version of Bark requires around 12GB of memory to hold everything on GPU at the same time. However, even smaller cards down to ~2GB work with some additional settings. Simply add the following code snippet before your generation:

301 |

302 | ```python

303 | import os

304 | os.environ["SUNO_OFFLOAD_CPU"] = "True"  # os.environ only accepts string values

305 | os.environ["SUNO_USE_SMALL_MODELS"] = "True"

306 | ```

307 |

308 | #### My generated audio sounds like a 1980s phone call. What's happening?

309 | * Bark generates audio from scratch. It is not meant to create only high-fidelity, studio-quality speech. Rather, outputs could be anything from perfect speech to multiple people arguing at a baseball game recorded with bad microphones.

310 |

--------------------------------------------------------------------------------

/bark/__init__.py:

--------------------------------------------------------------------------------

1 | from .api import generate_audio, text_to_semantic, semantic_to_waveform, save_as_prompt

2 | from .generation import SAMPLE_RATE, preload_models

3 |

--------------------------------------------------------------------------------

/bark/__main__.py:

--------------------------------------------------------------------------------

1 | from .cli import cli

2 |

3 | cli()

4 |

--------------------------------------------------------------------------------

/bark/api.py:

--------------------------------------------------------------------------------

1 | from typing import Dict, Optional, Union

2 |

3 | import numpy as np

4 |

5 | from .generation import codec_decode, generate_coarse, generate_fine, generate_text_semantic

6 |

7 |

8 | def text_to_semantic(

9 |     text: str,

10 |     history_prompt: Optional[Union[Dict, str]] = None,

11 |     temp: float = 0.7,

12 |     silent: bool = False,

13 | ):

14 |     """Generate semantic array from text.

15 |

16 |     Args:

17 |         text: text to be turned into audio

18 |         history_prompt: history choice for audio cloning

19 |         temp: generation temperature (1.0 more diverse, 0.0 more conservative)

20 |         silent: disable progress bar

21 |

22 |     Returns:

23 |         numpy semantic array to be fed into `semantic_to_waveform`

24 |     """

25 |     x_semantic = generate_text_semantic(

26 |         text,

27 |         history_prompt=history_prompt,

28 |         temp=temp,

29 |         silent=silent,

30 |         use_kv_caching=True

31 |     )

32 |     return x_semantic

33 |

34 |

35 | def semantic_to_waveform(

36 |     semantic_tokens: np.ndarray,

37 |     history_prompt: Optional[Union[Dict, str]] = None,

38 |     temp: float = 0.7,

39 |     silent: bool = False,

40 |     output_full: bool = False,

41 | ):

42 |     """Generate audio array from semantic input.

43 |

44 |     Args:

45 |         semantic_tokens: semantic token output from `text_to_semantic`

46 |         history_prompt: history choice for audio cloning

47 |         temp: generation temperature (1.0 more diverse, 0.0 more conservative)

48 |         silent: disable progress bar

49 |         output_full: return full generation to be used as a history prompt

50 |

51 |     Returns:

52 |         numpy audio array at sample frequency 24khz

53 |     """

54 |     coarse_tokens = generate_coarse(

55 |         semantic_tokens,

56 |         history_prompt=history_prompt,

57 |         temp=temp,

58 |         silent=silent,

59 |         use_kv_caching=True

60 |     )

61 |     fine_tokens = generate_fine(

62 |         coarse_tokens,

63 |         history_prompt=history_prompt,

64 |         temp=0.5,

65 |     )

66 |     audio_arr = codec_decode(fine_tokens)

67 |     if output_full:

68 |         full_generation = {

69 |             "semantic_prompt": semantic_tokens,

70 |             "coarse_prompt": coarse_tokens,

71 |             "fine_prompt": fine_tokens,

72 |         }

73 |         return full_generation, audio_arr

74 |     return audio_arr

75 |

76 |

77 | def save_as_prompt(filepath, full_generation):

78 |     assert(filepath.endswith(".npz"))

79 |     assert(isinstance(full_generation, dict))

80 |     assert("semantic_prompt" in full_generation)

81 |     assert("coarse_prompt" in full_generation)

82 |     assert("fine_prompt" in full_generation)

83 |     np.savez(filepath, **full_generation)

84 |

85 |

86 | def generate_audio(

87 |     text: str,

88 |     history_prompt: Optional[Union[Dict, str]] = None,

89 |     text_temp: float = 0.7,

90 |     waveform_temp: float = 0.7,

91 |     silent: bool = False,

92 |     output_full: bool = False,

93 | ):

94 |     """Generate audio array from input text.

95 |

96 |     Args:

97 |         text: text to be turned into audio

98 |         history_prompt: history choice for audio cloning

99 |         text_temp: generation temperature (1.0 more diverse, 0.0 more conservative)

100 |         waveform_temp: generation temperature (1.0 more diverse, 0.0 more conservative)

101 |         silent: disable progress bar

102 |         output_full: return full generation to be used as a history prompt

103 |

104 |     Returns:

105 |         numpy audio array at sample frequency 24khz

106 |     """

107 |     semantic_tokens = text_to_semantic(

108 |         text,

109 |         history_prompt=history_prompt,

110 |         temp=text_temp,

111 |         silent=silent,

112 |     )

113 |     out = semantic_to_waveform(

114 |         semantic_tokens,

115 |         history_prompt=history_prompt,

116 |         temp=waveform_temp,

117 |         silent=silent,

118 |         output_full=output_full,

119 |     )

120 |     if output_full:

121 |         full_generation, audio_arr = out

122 |         return full_generation, audio_arr

123 |     else:

124 |         audio_arr = out

125 |         return audio_arr

126 |
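
`save_as_prompt` above checks its inputs with `assert`, and Python skips `assert` statements when run with the `-O` flag. As a hedged sketch only, the same checks could be written with explicit exceptions. The helper name `check_prompt` and the constant `REQUIRED_PROMPT_KEYS` are hypothetical and not part of this module; the three key names are taken from `save_as_prompt`.

```python
from typing import Any, Dict

# Keys that save_as_prompt expects in a full generation dict.
REQUIRED_PROMPT_KEYS = ("semantic_prompt", "coarse_prompt", "fine_prompt")


def check_prompt(filepath: str, full_generation: Dict[str, Any]) -> None:
    """Raise a descriptive error if the inputs would not form a valid prompt file."""
    if not filepath.endswith(".npz"):
        raise ValueError(f"prompt path must end with .npz, got {filepath!r}")
    if not isinstance(full_generation, dict):
        raise TypeError("full_generation must be a dict")
    missing = [key for key in REQUIRED_PROMPT_KEYS if key not in full_generation]
    if missing:
        raise KeyError(f"full_generation is missing keys: {missing}")
```

A caller could run `check_prompt(filepath, full_generation)` before `np.savez(filepath, **full_generation)` to get a clear error message instead of a bare `AssertionError`.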

--------------------------------------------------------------------------------

/bark/assets/prompts/announcer.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/announcer.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/de_speaker_0.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/de_speaker_0.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/de_speaker_1.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/de_speaker_1.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/de_speaker_2.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/de_speaker_2.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/de_speaker_3.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/de_speaker_3.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/de_speaker_4.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/de_speaker_4.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/de_speaker_5.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/de_speaker_5.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/de_speaker_6.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/de_speaker_6.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/de_speaker_7.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/de_speaker_7.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/de_speaker_8.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/de_speaker_8.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/de_speaker_9.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/de_speaker_9.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/en_speaker_0.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/en_speaker_0.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/en_speaker_1.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/en_speaker_1.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/en_speaker_2.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/en_speaker_2.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/en_speaker_3.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/en_speaker_3.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/en_speaker_4.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/en_speaker_4.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/en_speaker_5.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/en_speaker_5.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/en_speaker_6.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/en_speaker_6.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/en_speaker_7.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/en_speaker_7.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/en_speaker_8.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/en_speaker_8.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/en_speaker_9.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/en_speaker_9.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/es_speaker_0.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/es_speaker_0.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/es_speaker_1.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/es_speaker_1.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/es_speaker_2.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/es_speaker_2.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/es_speaker_3.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/es_speaker_3.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/es_speaker_4.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/es_speaker_4.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/es_speaker_5.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/es_speaker_5.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/es_speaker_6.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/es_speaker_6.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/es_speaker_7.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/es_speaker_7.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/es_speaker_8.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/es_speaker_8.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/es_speaker_9.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/es_speaker_9.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/fr_speaker_0.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/fr_speaker_0.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/fr_speaker_1.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/fr_speaker_1.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/fr_speaker_2.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/fr_speaker_2.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/fr_speaker_3.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/fr_speaker_3.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/fr_speaker_4.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/fr_speaker_4.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/fr_speaker_5.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/fr_speaker_5.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/fr_speaker_6.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/fr_speaker_6.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/fr_speaker_7.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/fr_speaker_7.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/fr_speaker_8.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/fr_speaker_8.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/fr_speaker_9.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/fr_speaker_9.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/hi_speaker_0.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/hi_speaker_0.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/hi_speaker_1.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/hi_speaker_1.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/hi_speaker_2.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/hi_speaker_2.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/hi_speaker_3.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/hi_speaker_3.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/hi_speaker_4.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/hi_speaker_4.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/hi_speaker_5.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/hi_speaker_5.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/hi_speaker_6.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/hi_speaker_6.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/hi_speaker_7.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/hi_speaker_7.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/hi_speaker_8.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/hi_speaker_8.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/hi_speaker_9.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/hi_speaker_9.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/it_speaker_0.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/it_speaker_0.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/it_speaker_1.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/it_speaker_1.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/it_speaker_2.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/it_speaker_2.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/it_speaker_3.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/it_speaker_3.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/it_speaker_4.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/it_speaker_4.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/it_speaker_5.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/it_speaker_5.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/it_speaker_6.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/it_speaker_6.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/it_speaker_7.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/it_speaker_7.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/it_speaker_8.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/it_speaker_8.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/it_speaker_9.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/it_speaker_9.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ja_speaker_0.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ja_speaker_0.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ja_speaker_1.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ja_speaker_1.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ja_speaker_2.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ja_speaker_2.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ja_speaker_3.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ja_speaker_3.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ja_speaker_4.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ja_speaker_4.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ja_speaker_5.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ja_speaker_5.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ja_speaker_6.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ja_speaker_6.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ja_speaker_7.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ja_speaker_7.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ja_speaker_8.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ja_speaker_8.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ja_speaker_9.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ja_speaker_9.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ko_speaker_0.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ko_speaker_0.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ko_speaker_1.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ko_speaker_1.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ko_speaker_2.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ko_speaker_2.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ko_speaker_3.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ko_speaker_3.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ko_speaker_4.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ko_speaker_4.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ko_speaker_5.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ko_speaker_5.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ko_speaker_6.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ko_speaker_6.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ko_speaker_7.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ko_speaker_7.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ko_speaker_8.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ko_speaker_8.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ko_speaker_9.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ko_speaker_9.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pl_speaker_0.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pl_speaker_0.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pl_speaker_1.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pl_speaker_1.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pl_speaker_2.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pl_speaker_2.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pl_speaker_3.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pl_speaker_3.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pl_speaker_4.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pl_speaker_4.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pl_speaker_5.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pl_speaker_5.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pl_speaker_6.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pl_speaker_6.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pl_speaker_7.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pl_speaker_7.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pl_speaker_8.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pl_speaker_8.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pl_speaker_9.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pl_speaker_9.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pt_speaker_0.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pt_speaker_0.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pt_speaker_1.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pt_speaker_1.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pt_speaker_2.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pt_speaker_2.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pt_speaker_3.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pt_speaker_3.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pt_speaker_4.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pt_speaker_4.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pt_speaker_5.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pt_speaker_5.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pt_speaker_6.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pt_speaker_6.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pt_speaker_7.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pt_speaker_7.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pt_speaker_8.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pt_speaker_8.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/pt_speaker_9.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/pt_speaker_9.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/readme.md:

--------------------------------------------------------------------------------

1 | # Example Prompts Data

2 |

3 | ## Version Two

4 | The `v2` prompts are better engineered to follow text with a consistent voice.

5 | To use them, prefix the history prompt name with `v2/`. For example:

6 | ```python

7 | from bark import generate_audio

8 | text_prompt = "madam I'm adam"

9 | audio_array = generate_audio(text_prompt, history_prompt="v2/en_speaker_1")

10 | ```

11 |

12 | ## Prompt Format

13 | The provided data is in NumPy's `.npz` format, an archive that stores several named arrays in a single file. Each file contains three arrays: semantic_prompt, coarse_prompt, and fine_prompt.

14 |

15 | ```semantic_prompt```

16 |

17 | The semantic_prompt array contains a sequence of semantic token IDs, the output of the text-to-semantic stage. (The input text itself is tokenized with the BERT tokenizer from Hugging Face before it reaches that stage.) These tokens encode the spoken content and are used as input for generating the audio output. The shape of this array is (n,), where n is the number of semantic tokens.

18 |

19 | ```coarse_prompt```

20 |

21 | The coarse_prompt array is an intermediate output of the text-to-speech pipeline, and contains token IDs from the first two codebooks of the EnCodec codec from Facebook. This step converts the semantic tokens into a representation that is better suited for the subsequent step. The shape of this array is (2, m), where m is the number of tokens per codebook.

22 |

23 | ```fine_prompt```

24 |

25 | The fine_prompt array is a further processed output of the pipeline, and contains token IDs from 8 codebooks of the EnCodec codec. These codebooks represent the final stage of tokenization, and the resulting tokens are used to generate the audio output. The shape of this array is (8, p), where p is the number of tokens per codebook.

26 |

27 | Overall, these arrays represent different stages of a text-to-speech pipeline that converts text input into synthesized audio output. The semantic_prompt array captures the content at the semantic level, while coarse_prompt and fine_prompt hold the intermediate and final stages of EnCodec tokenization, respectively.
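The layout described above can be checked with a few lines of NumPy. The following is a minimal sketch, not part of the library: `check_prompt` and `EXPECTED_CODEBOOKS` are hypothetical names, and the demo file is a synthetic stand-in with made-up lengths and zero-filled values rather than one of the shipped prompts.

```python
import os
import tempfile

import numpy as np

# Expected number of codebooks (leading dimension) for each array, taken from
# the description above. None means the array is one-dimensional.
EXPECTED_CODEBOOKS = {"semantic_prompt": None, "coarse_prompt": 2, "fine_prompt": 8}


def check_prompt(path):
    """Load a prompt .npz and return {name: shape}; raise ValueError on a mismatch."""
    shapes = {}
    with np.load(path) as data:
        for name, n_codebooks in EXPECTED_CODEBOOKS.items():
            if name not in data.files:
                raise ValueError(f"missing array: {name}")
            arr = data[name]
            expected_ndim = 1 if n_codebooks is None else 2
            if arr.ndim != expected_ndim:
                raise ValueError(f"{name}: expected {expected_ndim} dims, got {arr.ndim}")
            if n_codebooks is not None and arr.shape[0] != n_codebooks:
                raise ValueError(f"{name}: expected {n_codebooks} codebooks, got {arr.shape[0]}")
            shapes[name] = arr.shape
    return shapes


# Synthetic stand-in for a real prompt file (lengths and values are made up).
demo_path = os.path.join(tempfile.mkdtemp(), "demo_prompt.npz")
np.savez(
    demo_path,
    semantic_prompt=np.zeros(100, dtype=np.int64),
    coarse_prompt=np.zeros((2, 150), dtype=np.int64),
    fine_prompt=np.zeros((8, 150), dtype=np.int64),
)

shapes = check_prompt(demo_path)
for name, shape in shapes.items():
    print(name, shape)
```

Pointing `check_prompt` at one of the `.npz` files in this directory instead of the demo file should report that file's actual array shapes.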

28 |

29 |

30 |

31 |

--------------------------------------------------------------------------------

/bark/assets/prompts/ru_speaker_0.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ru_speaker_0.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ru_speaker_1.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ru_speaker_1.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ru_speaker_2.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ru_speaker_2.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ru_speaker_3.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ru_speaker_3.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ru_speaker_4.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ru_speaker_4.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ru_speaker_5.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ru_speaker_5.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ru_speaker_6.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ru_speaker_6.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ru_speaker_7.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ru_speaker_7.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ru_speaker_8.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ru_speaker_8.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/ru_speaker_9.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/ru_speaker_9.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/speaker_0.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/speaker_0.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/speaker_1.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/speaker_1.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/speaker_2.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/speaker_2.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/speaker_3.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/speaker_3.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/speaker_4.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/speaker_4.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/speaker_5.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/speaker_5.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/speaker_6.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/speaker_6.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/speaker_7.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/speaker_7.npz

--------------------------------------------------------------------------------

/bark/assets/prompts/speaker_8.npz:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gitmylo/bark-data-gen/8f676db0be07be8c0e16577c5b99eea2157f3e9b/bark/assets/prompts/speaker_8.npz