├── .vscode
│   └── settings.json
├── KubeServices
├── LICENSE
├── README.md
├── Resources
│   ├── KubernetesDashboard.png
│   ├── RaspberryPiKubernetesCluster.jpg
│   ├── blinkt.jpg
│   ├── fan-shim.jpg
│   ├── k8s-first-node.png
│   ├── k8s-master.png
│   ├── kubernetes-nodes.png
│   ├── kubernetes-up-and-running.png
│   ├── network.png
│   ├── network.pptx
│   ├── nfs-server.png
│   ├── patch-cable.jpg
│   ├── power-supply.jpg
│   ├── rack.jpg
│   ├── rpi-kube-cluster.jpg
│   ├── rpi4.png
│   ├── samsung-flash-128.png
│   ├── sd-cards.png
│   ├── ssh-login.jpg
│   ├── static-route-linksys.png
│   ├── switch.png
│   └── usb-ssd.jpg
├── kubecluster.md
├── kubesetup
│   ├── README.md
│   ├── archive
│   │   ├── persistent-storage
│   │   │   ├── README.md
│   │   │   ├── nfs-client-deployment-arm.yaml
│   │   │   ├── persistent-volume.yaml
│   │   │   └── storage-class.yaml
│   │   └── storage-class-archive
│   │       ├── nginx-deployment.yaml
│   │       ├── nginx-pv-claim.yaml
│   │       └── nginx-pv.yaml
│   ├── cluster-config
│   │   ├── README.md
│   │   └── config
│   ├── dashboard
│   │   ├── dashboard-admin-role-binding.yml
│   │   └── dashboard-admin-user.yml
│   ├── image-classifier.yml
│   ├── jupyter
│   │   ├── jupyter-deployment.yaml
│   │   ├── jupyter-volume-claim.yaml
│   │   └── jupyter-volume.yaml
│   ├── kuard
│   │   ├── kuard-deployment.yaml
│   │   ├── kuard-volume-claim.yaml
│   │   └── kuard-volume.yaml
│   ├── led-controller
│   │   └── led-controller.yml
│   ├── metallb
│   │   └── metallb.yml
│   ├── mysql
│   │   ├── mysql-deployment.yaml
│   │   ├── mysql-service.yaml
│   │   ├── mysql-volume-claim.yaml
│   │   └── mysql-volume.yaml
│   ├── nginx
│   │   ├── nginx-daemonset.yaml
│   │   ├── nginx-pv-claim.yaml
│   │   └── nginx-pv.yaml
│   ├── openweathermap.yml
│   └── owm-python.loadbalancer.yml
├── nginx
│   ├── healthy
│   │   └── index.html
│   ├── index.html
│   ├── kubernetes-up-and-running.png
│   └── static
│       ├── index.png
│       ├── kube.png
│       ├── style.css
│       └── vscode.png
├── raspisetup.md
├── scripts
│   ├── admin
│   │   ├── all-command.sh
│   │   ├── all-restart.sh
│   │   └── all-shutdown.sh
│   ├── get-dashboard-token.sh
│   ├── install-master-auto.sh
│   ├── install-master.sh
│   ├── install-node-auto.sh
│   ├── install-node.sh
│   └── scriptlets
│       ├── common
│       │   ├── boot-from-usb.sh
│       │   ├── install-docker.sh
│       │   ├── install-fanshim.sh
│       │   ├── install-kubernetes.sh
│       │   └── install-log2ram.sh
│       ├── master
│       │   ├── dhcpd.conf
│       │   ├── install-dhcp-server.sh
│       │   ├── install-init.sh
│       │   ├── install-nfs.sh
│       │   ├── kubernetes-init.sh
│       │   ├── kubernetes-setup.sh
│       │   └── setup-networking.sh
│       └── node
│           └── install-init.sh
├── setup.sh
└── wifirouter.md

/.vscode/settings.json:

--------------------------------------------------------------------------------

1 | {

2 | "spellright.language": "English (Australian)",

3 | "spellright.documentTypes": [

4 | "markdown",

5 | "latex",

6 | "plaintext"

7 | ]

8 | }

--------------------------------------------------------------------------------

/KubeServices:

--------------------------------------------------------------------------------

1 | https://hub.docker.com/r/arm32v7/redis/

2 |

3 |

4 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2019 Dave Glover

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Part 1: Building a Kubernetes "Intelligent Edge" Cluster on Raspberry Pi

2 |

3 |

4 |

5 | |Author|[Dave Glover, Microsoft Australia](https://developer.microsoft.com/advocates/dave-glover?WT.mc_id=iot-0000-dglover)|

6 | |----|---|

7 | |Platform| Raspberry Pi, Raspbian Buster, Kernel 4.19|

8 | |Date|Updated May 2020|

9 | | Acknowledgments | Inspired by [Alex Ellis' work with his Raspberry Pi Zero Docker Cluster](https://blog.alexellis.io/visiting-pimoroni/) |

10 | |Skill Level| This guide assumes you have some Raspberry Pi and networking experience. |

11 |

12 | ## Building a Raspberry Pi Kubernetes Cluster

13 |

14 | Building a Kubernetes Intelligent Edge cluster on Raspberry Pi is a great learning experience, a stepping stone to building robust Intelligent Edge solutions, and an awesome way to impress your friends. Skills you develop on the _edge_ can be used in the _cloud_ with [Azure Kubernetes Service](https://azure.microsoft.com/services/kubernetes-service/?WT.mc_id=iot-0000-dglover).

15 |

16 | ### Learning Kubernetes

17 |

18 | You can download a free copy of the [Kubernetes: Up and Running, Second Edition](https://azure.microsoft.com/resources/kubernetes-up-and-running/?WT.mc_id=iot-0000-dglover) book. It is an excellent introduction that will accelerate your understanding of Kubernetes.

19 |

20 |

21 |

22 | Published: 8/22/2019

23 |

24 | Improve the agility, reliability, and efficiency of your distributed systems by using Kubernetes. Get the practical Kubernetes deployment skills you need in this O’Reilly e-book. You’ll learn how to:

25 |

26 | * Develop and deploy real-world applications.

27 |

28 | * Create and run a simple cluster.

29 |

30 | * Integrate storage into containerized microservices.

31 |

32 | * Use Kubernetes concepts and specialized objects like DaemonSet jobs, ConfigMaps, and secrets.

33 |

34 | Learn how to use tools and APIs to automate scalable distributed systems for online services, machine learning applications, or even a cluster of Raspberry Pi computers.

35 |

36 | ## Introduction

37 |

38 | The Kubernetes cluster is built with Raspberry Pi 4 nodes and is very capable. It has been tested with Python and C# [Azure Functions](https://azure.microsoft.com/services/functions?WT.mc_id=iot-0000-dglover), [Azure Custom Vision](https://azure.microsoft.com/services/cognitive-services/custom-vision-service?WT.mc_id=iot-0000-dglover) Machine Learning models, and [NGINX](https://www.nginx.com/) Web Server.

39 |

40 | This project forms the basis for a four-part _Intelligence on the Edge_ series. The follow-up topics will include:

41 |

42 | * Build, debug, and deploy Python and C# [Azure Functions](https://azure.microsoft.com/services/functions?WT.mc_id=iot-0000-dglover) to a Raspberry Pi Kubernetes Cluster, and learn how to access hardware from a Kubernetes managed container.

43 |

44 | * Developing, deploying and managing _Intelligence on the Edge_ with [Azure IoT Edge on Kubernetes](https://docs.microsoft.com/azure/iot-edge/how-to-install-iot-edge-kubernetes?WT.mc_id=iot-0000-dglover).

45 |

46 | * Getting started with [dapr.io](https://dapr.io?WT.mc_id=github-blog-dglover), an event-driven, portable runtime for building microservices on cloud and edge.

47 |

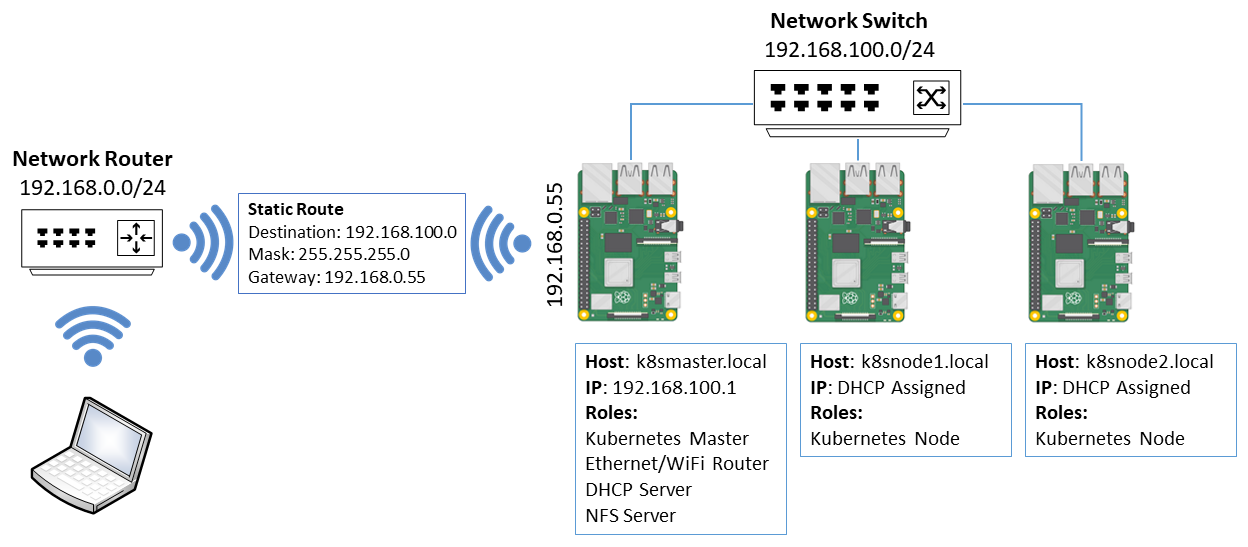

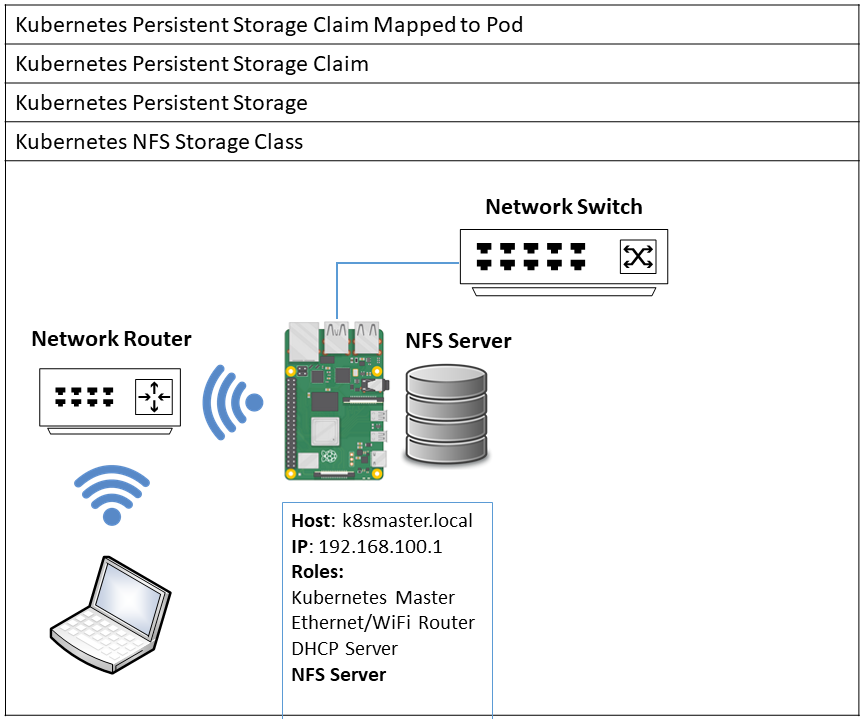

48 | ## System Configuration

49 |

50 |

51 |

52 | The Kubernetes Master and Node installations are fully scripted, and along with Kubernetes itself, the following services are installed and configured:

53 |

54 | 1. [Flannel](https://github.com/coreos/flannel) Container Network Interface (CNI) Plugin.

55 | 2. [MetalLB](https://metallb.universe.tf/) LoadBalancer. MetalLB is a load-balancer implementation for bare metal Kubernetes clusters, using standard routing protocols.

56 | 3. [Kubernetes Dashboard](https://kubernetes.io/docs/tasks/access-application-cluster/web-ui-dashboard/).

57 | 4. [Kubernetes Persistent Volumes](https://kubernetes.io/docs/concepts/storage/persistent-volumes/) Storage on NFS.

58 | 5. NFS Server.

59 | 6. NGINX Web Server.

60 |

61 | ## Parts List

62 |

63 | The following list assumes a Kubernetes cluster built with a minimum of three Raspberry Pis.

64 |

65 | |Items||

66 | |-----|----|

67 | | 1 x Raspberry Pi for the Kubernetes Master.<ul><li>I used a Raspberry Pi 3B+ because I had one spare. It has dual-band WiFi and Gigabit Ethernet over USB 2.0 (300Mbps), which is fast enough.</li></ul>2 x Raspberry Pis for the Kubernetes Nodes.<ul><li>I used two Raspberry Pi 4 4GBs.</li><li>Raspberry Pi 4s make great Kubernetes Nodes, but Raspberry Pi 3s and 2s work very well too.</li></ul> | |

68 | |3 x SD Cards, one for each Raspberry Pi in the cluster.<ul><li>Minimum 16GB, 32GB recommended. The new U3 Series from SanDisk is fast!</li><li>The SD Cards can be smaller if you intend to run the Kubernetes Nodes from USB3.</li><li>Unsure which SD Card to buy? Check out these [SD Card recommendations](https://www.androidcentral.com/best-sd-cards-raspberry-pi-4).</li></ul> | |

69 | |3 x Power supplies, one for each Raspberry Pi.||

70 | |1 x Network Switch [Dlink DGS-1005A](https://www.dlink.com.au/home-solutions/DGS-1005A-5-port-gigabit-desktop-switch) or similar|  |

71 | |3 x Ethernet Patch Cables (I used 25cm patch cables to reduce clutter.) | |

72 | | Optional: If you are using Raspberry Pi 4s, active cooling is recommended: Pimoroni [Fan SHIM](https://shop.pimoroni.com/products/fan-shim) |  |

73 | |Optional: 1 x [Raspberry Pi Rack](https://www.amazon.com.au/gp/product/B013SSA3HA/ref=ppx_yo_dt_b_asin_title_o02_s00?ie=UTF8&psc=1) or similar |  |

74 | |Optional: 2 x [Pimoroni Blinkt](https://shop.pimoroni.com/products/blinkt) RGB LED Strips. The Blinkt LED Strip can be a great way to visualize pod activity. | |

75 | |Optional: 2 x USB3 Flash Drives for the Kubernetes Nodes, or similar. I recommend the Samsung [USB 3.1 Flash Drive FIT Plus 128GB](https://www.samsung.com/us/computing/memory-storage/usb-flash-drives/usb-3-1-flash-drive-fit-plus-128gb-muf-128ab-am/). See also [5 of the Fastest and Best USB 3.0 Flash Drives](https://www.makeuseof.com/tag/5-of-the-fastest-usb-3-0-flash-drives-you-should-buy/). The installation script sets up the Raspberry Pi to boot from USB3.|  |

76 | |Optional: 2 x USB3 SSDs for the Kubernetes Nodes, or similar, i.e. something small. The installation script sets up the Raspberry Pi to boot from a USB3 SSD. Note: these are [SSD Enclosures](https://www.amazon.com.au/Wavlink-10Gbps-Enclosure-Aluminum-Include/dp/B07D54JH16/ref=sr_1_8?keywords=usb+3+ssd&qid=1571218898&s=electronics&sr=1-8), so you need the M.2 drives as well.|  |

77 |

78 | ## Flashing Raspbian Buster Lite Boot SD Cards

79 |

80 | Build your Kubernetes cluster with Raspbian Buster Lite. Raspbian Lite is headless, takes less space, and leaves more resources available for your applications. You must enable **SSH** for each SD Card, and add a **WiFi profile** for the Kubernetes Master SD Card.

81 |

82 | There are plenty of guides for flashing Raspbian Lite SD Cards. Here are a couple of useful references:

83 |

84 | * Download [Raspbian Buster Lite](https://www.raspberrypi.org/downloads/).

85 | * [Setting up a Raspberry Pi headless](https://www.raspberrypi.org/documentation/configuration/wireless/headless.md).

86 | * If you've not set up a Raspberry Pi before, then this is a great guide: ["HEADLESS RASPBERRY PI 3 B+ SSH WIFI SETUP (MAC + WINDOWS)"](https://desertbot.io/blog/headless-raspberry-pi-3-bplus-ssh-wifi-setup). The instructions outlined for macOS will also work on Linux.

87 |

88 | ### Creating Raspbian SD Card Boot Images

89 |

90 | 1. Using [balena Etcher](https://www.balena.io/etcher/), flash 3 x SD Cards with [Raspbian Buster Lite](https://www.raspberrypi.org/downloads/raspbian/). See the introduction to [Installing operating system images](https://www.raspberrypi.org/documentation/installation/installing-images/).

91 | 2. On each SD Card, create an empty file named **ssh** on the _boot_ drive; this enables SSH login on the Raspberry Pi.

92 | * **Windows:** From PowerShell, open the drive labeled _boot_, most likely the _d:_ drive, and type `echo $null > ssh; exit`. From the Windows Command Prompt, open the drive labeled _boot_, most likely the _d:_ drive, and type `type NUL > ssh & exit`.

93 | * **macOS and Linux:** Open a terminal in the drive labeled _boot_ and type `touch ssh && exit`.

94 | 3. On the Kubernetes Master SD Card, add a **wpa_supplicant.conf** file to the _boot_ drive with your WiFi router's settings.

95 |

96 | ```text

97 | ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=netdev

98 | update_config=1

99 | country=AU

100 |

101 | network={

102 | ssid="SSID"

103 | psk="WiFi Password"

104 | }

105 | ```
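
For reference, steps 2 and 3 can be scripted on macOS or Linux. The sketch below is a hypothetical helper, not one of this project's scripts; it assumes the SD Card _boot_ partition is already mounted at a path you pass in (the mount point varies by system), writes the empty **ssh** file, and, only when WiFi details are given, writes a **wpa_supplicant.conf** in the format shown above.

```bash
# Hypothetical helper (not part of this repository): prepares a freshly flashed
# Raspbian boot partition for headless start-up.
#   $1 = mount point of the SD Card "boot" partition
#   $2 = WiFi SSID, $3 = WiFi password, $4 = two-letter country code (default AU)
# Pass only $1 for Kubernetes Node cards, which need SSH but no WiFi profile.
prepare_boot_partition() (
    boot_dir=$1
    [ -d "$boot_dir" ] || { echo "boot partition not found: $boot_dir" >&2; exit 1; }

    # An empty file named "ssh" enables the SSH server on first boot.
    : > "$boot_dir/ssh"

    # Only the Kubernetes Master card gets a WiFi profile.
    if [ -n "${2:-}" ]; then
        cat > "$boot_dir/wpa_supplicant.conf" <<EOF
ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=netdev
update_config=1
country=${4:-AU}

network={
    ssid="$2"
    psk="$3"
}
EOF
    fi
)
```

For example, on macOS the Raspbian boot partition typically mounts at `/Volumes/boot`, so `prepare_boot_partition /Volumes/boot "SSID" "WiFi Password"` prepares the Kubernetes Master card and `prepare_boot_partition /Volumes/boot` prepares a Node card. A password containing double quotes would need escaping in the generated file.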

106 |

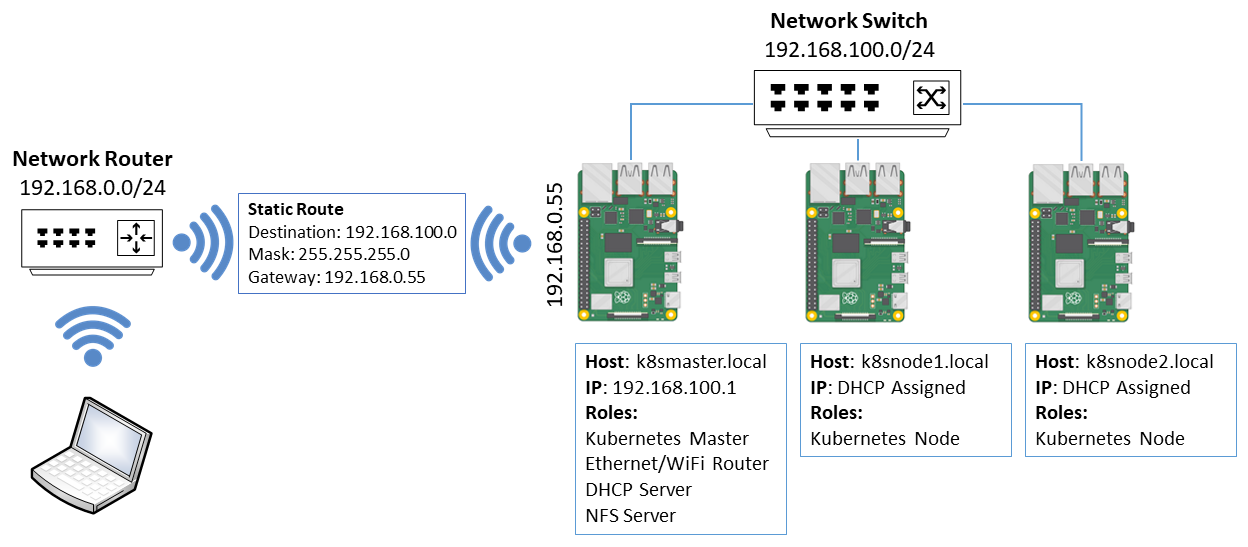

107 | ## Kubernetes Network Topology

108 |

109 | The Kubernetes Master is also responsible for:

110 |

111 | 1. Allocating IP Addresses to the Kubernetes Nodes.

112 | 2. Bridging network traffic between the external WiFi network and the internal cluster Ethernet network.

113 | 3. NFS Services to support Kubernetes Persistent Storage.

114 |

115 |

116 |

117 | ## Installation Script Naming Conventions

118 |

119 | The following naming conventions are enforced in the installation scripts:

120 |

121 | 1. The Kubernetes Master will be named **k8smaster.local**

122 | 2. The Kubernetes Nodes will be named **k8snode1..n**

123 |

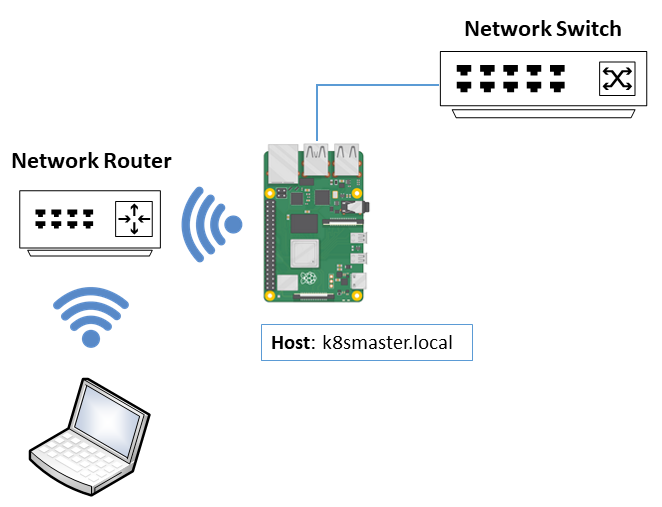

124 | ## Kubernetes Master Installation

125 |

126 |

127 |

128 | ### Installation Process

129 |

130 | Before you start, ensure that:

131 |

132 | 1. The Raspberry Pi to be configured as the **Kubernetes Master** is connected by **Ethernet** to the **Network Switch**, and the **Network Switch** is powered **on**.

133 | 2. The **WiFi Router** is in range and powered on.

134 | 3. If you are rebuilding the Kubernetes Master, **disconnect** the existing Kubernetes Nodes from the Network Switch, as they can interfere with Kubernetes Master initialization.

135 |

136 | #### Step 1: Start the Kubernetes Master Installation Process

137 |

138 | 1. Open a new terminal window on macOS or Linux, or a Bash shell on Windows (Windows Subsystem for Linux).

139 | 2. Run the following command from the terminal you opened in step 1.

140 | > Note: as at July 2020, [CGroup support for the Raspberry Pi 3B and 3B+](https://github.com/raspberrypi/linux/issues/3644) is not included in the kernel, and CGroups are required by Kubernetes. The workaround is to enable the 64-bit kernel; you will be prompted to do so.

141 |

142 | ```bash

143 | bash -c "$(curl -fsSL https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/master/setup.sh)"

144 | ```

145 |

146 | 3. Select **M**aster set up.

147 |

148 | 4. Configure Installation Options

149 | * Enable Boot from USB3 support

150 |

151 | * Install [Pimoroni Fan SHIM](https://shop.pimoroni.com/products/fan-shim) Support

152 |

153 | 5. The automated installation will start. Note, the entire automated Kubernetes Master installation process is driven from your desktop computer.

154 |

155 | ## Kubernetes Node Installation

156 |

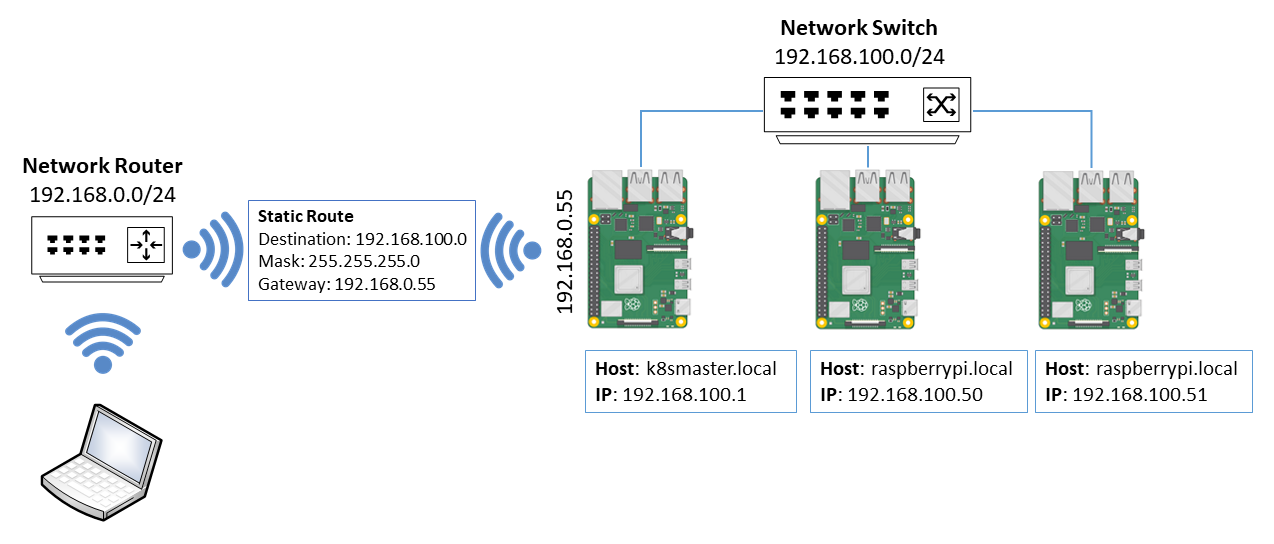

157 | Ensure the k8smaster and all the Raspberry Pis to be configured are **powered on** and connected to the **Network Switch**. The DHCP Server running on the k8smaster allocates IP addresses to the Raspberry Pis that will become the Kubernetes Nodes.

158 |

159 |

160 |

161 | ### Step 1: Connect to the newly configured Kubernetes Master

162 |

163 | 1. From your desktop computer, start an SSH Session to the k8smaster `ssh pi@k8smaster.local`

164 |

165 | ### Step 2: Start the Installation Process

166 |

167 | 1. Run the following command from the SSH terminal you started in step 1.

168 | > Note: as at July 2020, [CGroup support for the Raspberry Pi 3B and 3B+](https://github.com/raspberrypi/linux/issues/3644) is not included in the kernel, and CGroups are required by Kubernetes. The workaround is to enable the 64-bit kernel; you will be prompted to do so.

169 |

170 | ```bash

171 | bash -c "$(curl -fsSL https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/master/setup.sh)"

172 | ```

173 |

174 | 2. Select **N**ode set up.

175 |

176 | 3. Configure Installation Options

177 | * Enable Boot from USB3 support

178 |

179 | * Install [Pimoroni Fan SHIM](https://shop.pimoroni.com/products/fan-shim) Support

180 |

181 |

182 |

183 |

184 | ### Step 3: Review Devices

185 |

186 | A list of the devices found will be displayed. These are the devices that have been allocated an IP address by the DHCP Server running on the Kubernetes Master. Note: Kubernetes Nodes will only be installed on devices named _raspberrypi_.

187 |

188 | ```text

189 | HostName : IP Address

190 | ================================

191 | raspberrypi : 192.168.100.50

192 | raspberrypi : 192.168.100.51

193 | ```

194 |

195 | Answer yes when all devices you wish to install Kubernetes on are displayed. The automated installation will now start.
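
To make the naming convention concrete, the following hypothetical sketch reads a device list in the `HostName : IP Address` format above, keeps only the _raspberrypi_ entries, and pairs each with the next **k8snode** name. It is illustrative only and is not the logic used by the installation scripts, which may assign names differently.

```bash
# Hypothetical sketch (not the logic in install-node.sh): reads "HostName : IP Address"
# lines on stdin, keeps hosts named "raspberrypi", and proposes k8snode1..n names.
#   $1 = number of the first node (default 1), useful when extending a cluster
propose_node_names() (
    n=${1:-1}
    # "|| [ -n "$line" ]" also handles a final line without a trailing newline.
    while IFS= read -r line || [ -n "$line" ]; do
        # Split "raspberrypi : 192.168.100.50" into host and address, removing spaces.
        host=$(printf '%s' "$line" | cut -d: -f1 | tr -d ' ')
        addr=$(printf '%s' "$line" | cut -d: -f2 | tr -d ' ')
        # Header, separator, and already-named devices are skipped.
        [ "$host" = "raspberrypi" ] || continue
        printf '%s k8snode%d\n' "$addr" "$n"
        n=$((n + 1))
    done
)
```

For example, save the displayed list to a file and run `propose_node_names < devices.txt`, or `propose_node_names 3 < devices.txt` to start numbering at k8snode3 when adding nodes to an existing cluster.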

196 |

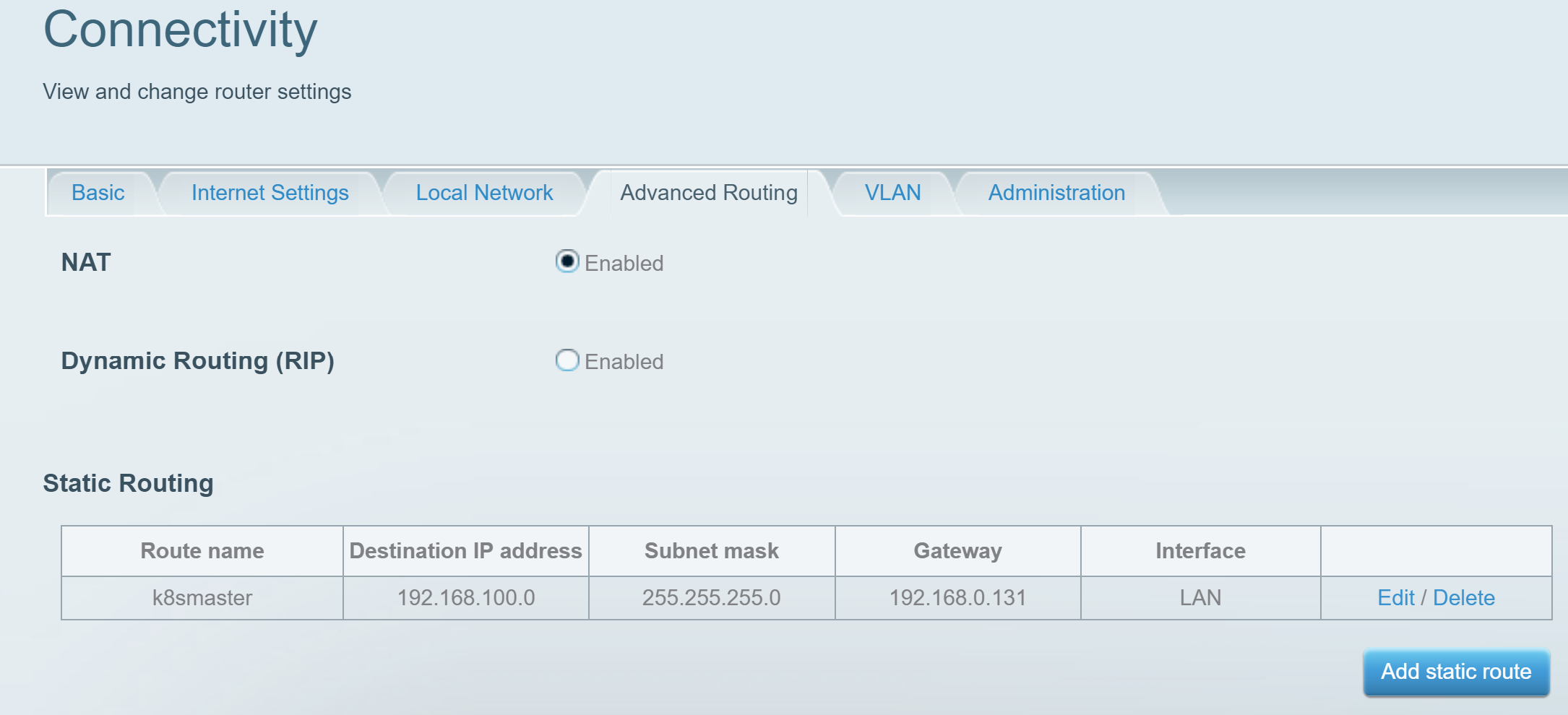

197 | ## Setting up a Static Route to the Kubernetes Cluster

198 |

199 | 1. The Kubernetes Cluster runs isolated on the **Network Switch** and operates on subnet 192.168.100.0/24.

200 | 2. A static route needs to be configured either on the **Network Router** or on your computer to define the entry point (gateway) into the Cluster subnet (192.168.100.0/24).

201 | 3. The gateway IP Address is allocated by your **Network Router** to the Kubernetes Master WiFi adapter. In the above example, the Gateway address is 192.168.0.55.
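
A static route only applies to destinations inside the cluster subnet; all other traffic still goes through your default gateway. The following sketch (hypothetical helpers using POSIX shell arithmetic) makes that subnet test explicit by masking both addresses with the prefix-length netmask and comparing the results, which is how a router decides whether the 192.168.100.0/24 route matches.

```bash
# Hypothetical helpers, for illustration only.
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() (
    IFS=.
    set -- $1   # split the address into its four octets
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeed when address $1 lies inside subnet $2, given in CIDR form (e.g. 192.168.100.0/24).
ip_in_cidr() (
    net=${2%/*}
    bits=${2#*/}
    # Build the netmask, e.g. /24 -> 0xFFFFFF00, limited to 32 bits.
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & $mask )) -eq $(( $(ip_to_int "$net") & $mask )) ]
)
```

For example, `ip_in_cidr 192.168.100.50 192.168.100.0/24 && echo "via 192.168.0.55"` matches a Kubernetes Node address, while the Kubernetes Master's WiFi address 192.168.0.55 is outside the cluster subnet and is reached directly.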

202 |

203 | Most **Network Routers** allow you to configure a static route. The following is an example configured on a Linksys Router.

204 |

205 |

206 |

207 | ### Alternative: Set Local Static Route to Cluster Subnet (192.168.100.0/24)

208 |

209 | If you don't have access to configure the Network Router, you can set a static route on your local computer.

210 |

211 | ### Windows

212 |

213 | From a Command Prompt started with **Run as Administrator**:

214 |

215 | ```cmd

216 | route add 192.168.100.0 mask 255.255.255.0 192.168.0.55

217 | ```

218 |

219 | ### macOS and Linux

220 |

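221 | Linux uses the iproute2 `ip` command, while macOS uses the BSD `route` syntax; both need administrator rights.

222 |

223 | ```bash

224 | sudo ip route add 192.168.100.0/24 via 192.168.0.55   # Linux
sudo route -n add -net 192.168.100.0/24 192.168.0.55   # macOS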

225 | ```

226 |

227 | ### Troubleshooting Worker Node DNS issues

228 |

229 | The Kubernetes master node network installation script configures the cluster's DHCP server to hand out the public DNS servers *8.8.8.8* and *8.8.4.4* (the *domain-name-servers* option in */etc/dhcp/dhcpd.conf*).

230 |

231 | On corporate or managed networks, queries to these public DNS servers may be blocked. If they are, the Kubernetes worker node installation will fail.

232 |

233 | Update the domain-name-servers in the */etc/dhcp/dhcpd.conf* file to the IP addresses of your managed network DNS servers.
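
The edit can also be scripted. The following is a hypothetical helper, not part of the installation scripts; it assumes the file contains a standard ISC dhcpd `option domain-name-servers ...;` line and replaces only that line's server list. The addresses in the example are placeholders for your own DNS servers.

```bash
# Hypothetical helper: rewrites the "option domain-name-servers" line of a dhcpd.conf,
# assuming standard ISC dhcpd syntax such as:
#   option domain-name-servers 8.8.8.8, 8.8.4.4;
#   $1 = path to dhcpd.conf, $2 = comma-separated list of DNS servers
set_dhcp_dns() (
    conf=$1
    servers=$2
    grep -q '^[[:space:]]*option domain-name-servers' "$conf" || {
        echo "no domain-name-servers option in $conf" >&2; exit 1; }
    # Edit into a temporary file first so a failed sed never truncates the config;
    # copying back with cat keeps the original file's owner and permissions.
    tmp=$(mktemp) || exit 1
    sed "s/^\([[:space:]]*option domain-name-servers\).*;/\1 $servers;/" "$conf" > "$tmp" &&
        cat "$tmp" > "$conf"
    status=$?
    rm -f "$tmp"
    exit $status
)
```

Run it from a root shell (for example after `sudo -s`), e.g. `set_dhcp_dns /etc/dhcp/dhcpd.conf "10.0.0.10, 10.0.0.11"`, then restart the DHCP server so the change takes effect. Assuming the master runs ISC DHCP (the package that provides `dhcp-lease-list`), that is `systemctl restart isc-dhcp-server`. Nodes pick up the new DNS servers when they renew their lease or reboot.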

234 |

235 | ## Installing kubectl on your Desktop Computer

236 |

237 | 1. [Install kubectl](https://kubernetes.io/docs/tasks/tools/install-kubectl/)

238 | 2. Open a terminal window on your desktop computer

239 | 3. Change directory to your home directory

240 | * macOS, Linux, and Windows Powershell `cd ~/`, Windows Command Prompt `cd %USERPROFILE%`

241 | 4. Copy Kube Config from **k8smaster.local**

242 |

243 | ```bash

244 | scp -r pi@k8smaster.local:~/.kube ./

245 | ```

246 |

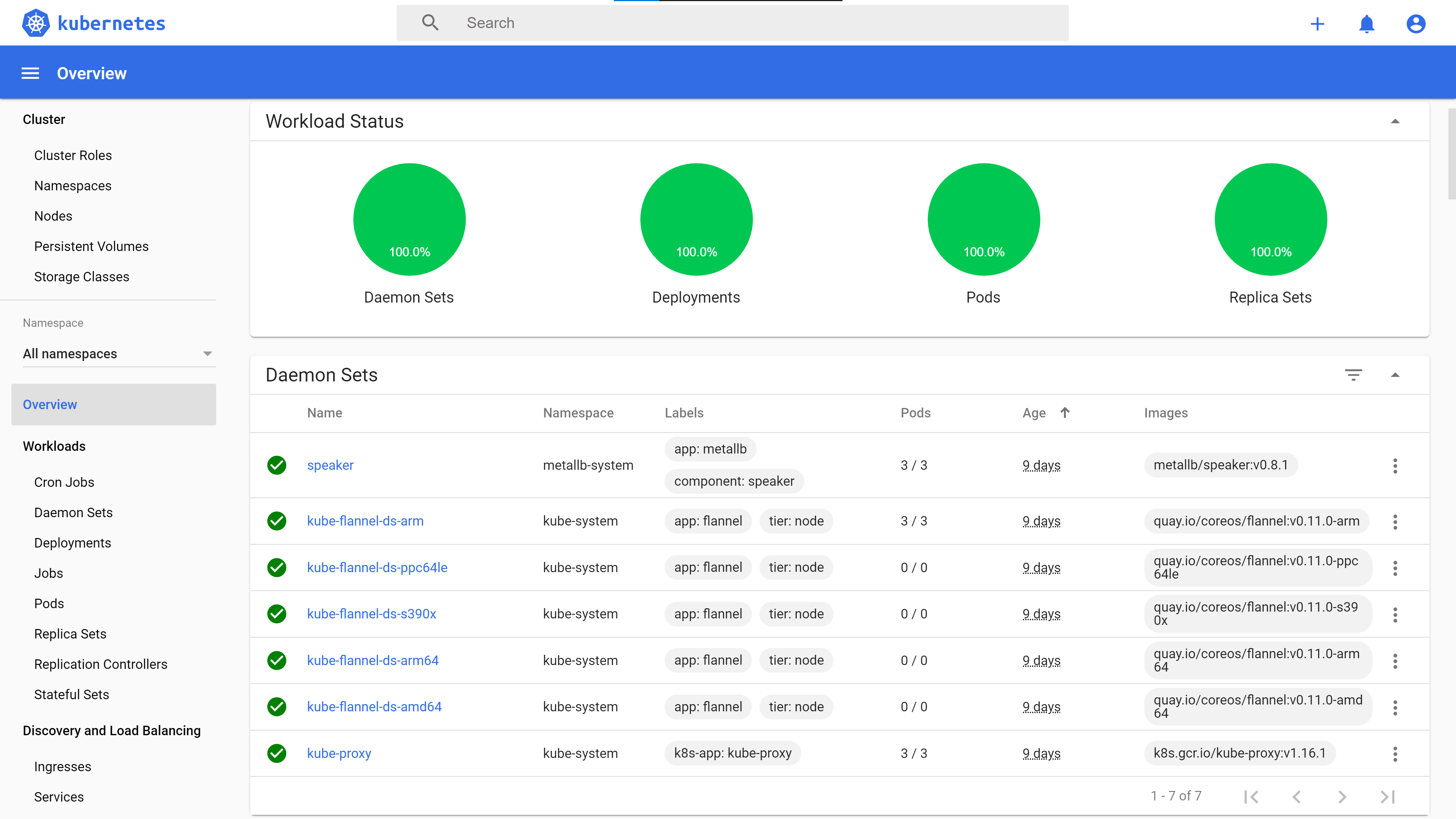

247 | ## Kubernetes Dashboard

248 |

249 | ### Step 1: Get the Dashboard Access Token

250 |

251 | From the Windows Command Prompt (or PowerShell), macOS, or Linux Terminal, run the following command:

252 |

253 | Note, you will be prompted for the k8smaster.local password.

254 |

255 | ```bash

256 | ssh pi@k8smaster.local ./get-dashboard-token.sh

257 | ```

258 |

259 | ### Step 2: Start the Kubernetes Proxy

260 |

261 | On your Linux, macOS, or Windows computer, start a command prompt/terminal and start the Kubernetes Proxy.

262 |

263 | ```bash

264 | kubectl proxy

265 | ```

266 |

267 | ### Step 3: Browse to the Kubernetes Dashboard

268 |

269 | Click the following link to open the Kubernetes Dashboard. Select **Token** authentication, paste in the token you created from **Step 1** and connect.

270 |

271 | **http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/#/overview?namespace=default**
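
The Dashboard URL follows the API server's service proxy path, `/api/v1/namespaces/<namespace>/services/[https:]<service>:[port]/proxy/`, served locally by `kubectl proxy` on port 8001 by default. The small hypothetical helper below assembles that path; it can be handy if you run the proxy on another port or want to reach a different HTTPS Service the same way.

```bash
# Hypothetical helper: builds a kubectl proxy URL for an HTTPS Service.
# Path format: /api/v1/namespaces/<namespace>/services/https:<service>:<port>/proxy/
# The port name is left empty, as in the Dashboard URL, so the Service's default port is used.
#   $1 = namespace, $2 = Service name, $3 = local proxy port (default 8001)
proxy_service_url() {
    printf 'http://localhost:%s/api/v1/namespaces/%s/services/https:%s:/proxy/\n' \
        "${3:-8001}" "$1" "$2"
}
```

For example, after starting the proxy with `kubectl proxy --port=9000`, open the URL printed by `proxy_service_url kubernetes-dashboard kubernetes-dashboard 9000`.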

272 |

273 |

274 |

275 | ## Kubernetes Cluster Persistent Storage

276 |

277 | 1. An NFS Server is installed on k8smaster.local.

278 |

279 | 2. It is installed and provisioned by the Kubernetes Master installation script.

280 | 3. The following diagram describes how persistent storage is configured in the cluster.

281 |

282 |

283 |

284 | ## Useful Commands

285 |

286 | ### List DHCP Leases

287 |

288 | ```bash

289 | dhcp-lease-list

290 | ```

291 |

292 | ### Switching Clusters

293 |

294 | ```bash

295 | kubectl config get-contexts

296 | kubectl config view

297 | kubectl config current-context

298 |

299 | kubectl config use-context pi4   # or pi3; use a context name listed by get-contexts

300 | ```

301 |

302 | ### Resetting Kubernetes Master or Node

303 |

304 | ```bash

305 | sudo kubeadm reset && sudo systemctl daemon-reload && sudo systemctl restart kubelet.service

306 | ```

307 |

308 | ## References and Acknowledgements

309 |

310 | 1. Setting [iptables to legacy mode](https://github.com/kubernetes/kubernetes/issues/71305) on Raspbian Buster/Debian 10 for Kubernetes kube-proxy. Configured in installation scripts.

311 |

312 | ```bash

313 | sudo update-alternatives --set iptables /usr/sbin/iptables-legacy > /dev/null

314 | sudo update-alternatives --set ip6tables /usr/sbin/ip6tables-legacy > /dev/null

315 | ```

316 | 2. [Kubernetes Secrets](https://www.tutorialspoint.com/kubernetes/kubernetes_secrets.htm)

317 |

318 | ### Kubernetes Dashboard

319 |

320 | 1. [Kubernetes Web UI (Dashboard)](https://kubernetes.io/docs/tasks/access-application-cluster/web-ui-dashboard/)

321 | 2. [Creating admin user to access Kubernetes dashboard](https://medium.com/@kanrangsan/creating-admin-user-to-access-kubernetes-dashboard-723d6c9764e4)

322 |

323 | ### Kubernetes Persistent Storage

324 |

325 | 1. [Kubernetes NFS-Client Provisioner](https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client)

326 | 2. [kubernetes-incubator/external-storage](https://github.com/kubernetes-incubator/external-storage/blob/master/nfs-client/deploy/deployment-arm.yaml)

327 |

333 | ### Flannel Cluster Networking

334 |

335 | 1. [Flannel CNI](https://kubernetes.io/docs/concepts/cluster-administration/networking/#the-kubernetes-network-model) (Cluster Networking) installation.

336 |

337 | ### MetalLB Load Balancer

338 |

339 | 1. [MetalLB Load Balancer](https://metallb.universe.tf/) installation.

340 |

--------------------------------------------------------------------------------

/Resources/KubernetesDashboard.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/KubernetesDashboard.png

--------------------------------------------------------------------------------

/Resources/RaspberryPiKubernetesCluster.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/RaspberryPiKubernetesCluster.jpg

--------------------------------------------------------------------------------

/Resources/blinkt.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/blinkt.jpg

--------------------------------------------------------------------------------

/Resources/fan-shim.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/fan-shim.jpg

--------------------------------------------------------------------------------

/Resources/k8s-first-node.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/k8s-first-node.png

--------------------------------------------------------------------------------

/Resources/k8s-master.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/k8s-master.png

--------------------------------------------------------------------------------

/Resources/kubernetes-nodes.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/kubernetes-nodes.png

--------------------------------------------------------------------------------

/Resources/kubernetes-up-and-running.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/kubernetes-up-and-running.png

--------------------------------------------------------------------------------

/Resources/network.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/network.png

--------------------------------------------------------------------------------

/Resources/network.pptx:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/network.pptx

--------------------------------------------------------------------------------

/Resources/nfs-server.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/nfs-server.png

--------------------------------------------------------------------------------

/Resources/patch-cable.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/patch-cable.jpg

--------------------------------------------------------------------------------

/Resources/power-supply.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/power-supply.jpg

--------------------------------------------------------------------------------

/Resources/rack.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/rack.jpg

--------------------------------------------------------------------------------

/Resources/rpi-kube-cluster.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/rpi-kube-cluster.jpg

--------------------------------------------------------------------------------

/Resources/rpi4.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/rpi4.png

--------------------------------------------------------------------------------

/Resources/samsung-flash-128.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/samsung-flash-128.png

--------------------------------------------------------------------------------

/Resources/sd-cards.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/sd-cards.png

--------------------------------------------------------------------------------

/Resources/ssh-login.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/ssh-login.jpg

--------------------------------------------------------------------------------

/Resources/static-route-linksys.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/static-route-linksys.png

--------------------------------------------------------------------------------

/Resources/switch.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/switch.png

--------------------------------------------------------------------------------

/Resources/usb-ssd.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/gloveboxes/Raspberry-Pi-Kubernetes-Cluster/090c70a67d97923736adf70f3ce14434ae6fc503/Resources/usb-ssd.jpg

--------------------------------------------------------------------------------

/kubecluster.md:

--------------------------------------------------------------------------------

1 | # Setting up Raspberry Pi Kubernetes Cluster

2 |

3 | Reference: https://github.com/codesqueak/k18srpi4

4 |

5 | * Date: **Oct 2019**

6 | * Operating System: **Raspbian Buster**

7 | * Kernel: **4.19**

8 |

9 | Follow notes at:

10 |

11 | 1. [Kubernetes on Raspberry Pi with .NET Core](https://medium.com/@mczachurski/kubernetes-on-raspberry-pi-with-net-core-36ea79681fe7)

12 |

13 | 2. [k8s-pi.md](https://codegists.com/snippet/shell/k8s-pimd_elafargue_shell)

14 |

15 | ## Rename and Update your Raspberry Pi

16 |

17 | ```bash

18 | read -p "Name your Raspberry Pi (eg k8smaster, k8snode1, ...): " RPINAME && \

19 | sudo raspi-config nonint do_hostname "$RPINAME" && \

20 | sudo apt update && sudo apt upgrade -y && sudo reboot

21 |

22 | ```
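
Because the rest of the set-up relies on the **k8smaster** and **k8snode1, k8snode2, ...** names, it can help to validate the name before applying it. The function below is a hypothetical sketch of that check, not part of the original notes; it accepts `k8smaster`, or `k8snode` followed by a number without leading zeros. All accepted names are valid host names for `raspi-config` (the `.local` suffix is added by mDNS, not by the host name itself).

```bash
# Hypothetical check for this guide's naming convention.
# Returns 0 for k8smaster or k8snode<N> (N = 1, 2, ... without leading zeros).
is_cluster_hostname() {
    case $1 in
        k8smaster) return 0 ;;
        k8snode0*) return 1 ;;          # reject k8snode0 and leading zeros such as k8snode01
        k8snode*[!0-9]*) return 1 ;;    # reject any non-digit after "k8snode"
        k8snode?*) return 0 ;;          # "k8snode" followed by one or more digits
        *) return 1 ;;
    esac
}
```

For example, after the `read` prompt you could run `is_cluster_hostname "$RPINAME" || echo "Unexpected name: $RPINAME"` before calling `raspi-config`.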

23 |

24 | ## Ensure iptables tooling does not use the nftables backend

25 |

26 | - [Installing kubeadm on Debian Buster](https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/install-kubeadm/)

27 | - [kube-proxy currently incompatible with `iptables >= 1.8`](https://github.com/kubernetes/kubernetes/issues/71305)

28 |

29 | ```bash

30 | sudo update-alternatives --set iptables /usr/sbin/iptables-legacy && \

31 | sudo update-alternatives --set ip6tables /usr/sbin/ip6tables-legacy

32 | ```

33 |

34 | ### Test iptables in legacy mode

35 |

36 | ```bash

37 | iptables -V

38 |

39 | # Expected output: iptables v1.8.2 (legacy)

40 | ```
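If you script the setup, you can check the backend rather than reading the output by eye. A minimal sketch, assuming `iptables -V` reports the backend in parentheses as shown above (the nftables backend reports `(nf_tables)` instead); `is_iptables_legacy` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: succeed only if an `iptables -V` version string reports the legacy backend.
# Assumes output such as "iptables v1.8.2 (legacy)" or "iptables v1.8.2 (nf_tables)".
is_iptables_legacy() {
  local version_line="$1"
  [[ $version_line == *"(legacy)"* ]]
}

# Usage on the Pi:
#   if is_iptables_legacy "$(iptables -V)"; then echo "legacy backend"; else echo "nf_tables backend" >&2; fi
```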

41 |

42 | ## Kubernetes Prerequisites

43 |

44 | 1. Disable swap

45 |    Required: by default the kubelet will not start while swap is enabled.

46 | 2. Optimise memory

47 |    If using Raspbian Lite (headless), you can reduce the GPU memory split to 16 MB, leaving more RAM for the rest of the system.

48 | 3. Enable cgroups

49 |    Append `cgroup_enable=cpuset cgroup_enable=memory cgroup_memory=1` to the end of the single line in `/boot/cmdline.txt`. The command below also appends `ipv6.disable=1`, which disables IPv6.

50 |

51 | ```bash

52 | sudo dphys-swapfile swapoff && \

53 | sudo dphys-swapfile uninstall && \

54 | sudo systemctl disable dphys-swapfile && \

55 | echo "gpu_mem=16" | sudo tee -a /boot/config.txt && \

56 | sudo sed -i 's/$/ ipv6.disable=1 cgroup_enable=cpuset cgroup_enable=memory cgroup_memory=1/' /boot/cmdline.txt

57 | ```
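The `sed` command above appends the parameters every time it runs, so running it twice duplicates them. A sketch of an idempotent alternative, assuming `/boot/cmdline.txt` holds a single line as Raspbian expects; `add_cmdline_flags` is a hypothetical helper that takes the file path as a parameter so it can be tried on a copy first.

```bash
#!/usr/bin/env bash
# Sketch: append kernel parameters to a one-line cmdline.txt only if they are
# not already present. Only the first line of the file is kept, because the
# Raspberry Pi firmware expects cmdline.txt to be a single line.
add_cmdline_flags() {
  local file="$1"; shift
  local line flag
  line="$(head -n 1 "$file")"
  for flag in "$@"; do
    # Pad with spaces so a flag only matches a whole space-separated token.
    case " $line " in
      *" $flag "*) ;;                 # already present, leave it alone
      *) line="$line $flag" ;;
    esac
  done
  printf '%s\n' "$line" > "$file"
}
```

For example, as root: `add_cmdline_flags /boot/cmdline.txt ipv6.disable=1 cgroup_enable=cpuset cgroup_enable=memory cgroup_memory=1`.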

58 |

59 | ## Install Docker

60 |

61 | ```bash

62 | curl -sSL https://get.docker.com | sh && sudo usermod -aG docker $USER && sudo reboot

63 | ```

64 |

65 | ## Install Kubernetes

66 |

67 | Copy the next complete block of commands and paste it into the Raspberry Pi terminal.

68 |

69 | ```bash

70 | curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add - && \

71 | echo "deb http://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list && \

72 | sudo apt-get update -q && \

73 | sudo apt-get install -qy kubeadm && \

74 | sudo reboot

75 | ```

76 |

77 | ## Pull Kubernetes Images (Optional)

78 |

79 | Optional, but useful because you can watch the images being pulled. If you skip this step, `kubeadm init` pulls them instead.

80 |

81 | ```bash

82 | kubeadm config images pull

83 | ```

84 |

85 | ## Kubernetes Master and Node Set Up

86 |

87 | Follow the next section to install the Kubernetes master; otherwise skip to [Kubernetes Node Set Up](#kubernetes-node-set-up).

88 |

89 | ### Kubernetes Master Set Up

90 |

91 | ```bash

92 | sudo kubeadm init --apiserver-advertise-address=192.168.100.1 --pod-network-cidr=10.244.0.0/16 --token-ttl 0

93 | ```

94 |

95 | Notes:

96 |

97 | 1. For flannel to work, you must pass `--pod-network-cidr=10.244.0.0/16` to `kubeadm init`.

98 | 2. `--token-ttl 0` (a join token that never expires) is not recommended for production environments, but it simplifies a development or test environment.

99 |

100 | #### Make the install generally available

101 |

102 | ```bash

103 | mkdir -p $HOME/.kube

104 | sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

105 | sudo chown $(id -u):$(id -g) $HOME/.kube/config

106 | ```

107 |

108 | #### Install Flannel Pod Network add-on

109 |

110 | [Creating a single control-plane cluster with kubeadm](https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/)

111 |

112 | ```bash

113 | sudo sysctl net.bridge.bridge-nf-call-iptables=1 && \

114 | kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/2140ac876ef134e0ed5af15c65e414cf26827915/Documentation/kube-flannel.yml

115 | ```
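`sudo sysctl ...` only changes the running kernel, so the setting is lost on reboot. One way to persist it, sketched below, is a drop-in file under `/etc/sysctl.d/`, which systemd applies at boot. The file name `k8s.conf` and the helper `persist_bridge_sysctl` are assumptions, and the key only exists while the `br_netfilter` kernel module is loaded.

```bash
#!/usr/bin/env bash
# Sketch: persist net.bridge.bridge-nf-call-iptables=1 across reboots.
# The target file is a parameter; /etc/sysctl.d/k8s.conf is an assumed name.
persist_bridge_sysctl() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}"
  local setting="net.bridge.bridge-nf-call-iptables = 1"
  # Append only if this exact line is not already in the file.
  grep -qxF "$setting" "$conf" 2>/dev/null || printf '%s\n' "$setting" >> "$conf"
}

# Usage (root needed to write /etc/sysctl.d), then reload all sysctl files:
#   persist_bridge_sysctl && sysctl --system
```

Run it on every node, since each node's kernel needs the setting.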

116 |

117 | ### Kubernetes Node Set Up

118 |

119 | When `kubeadm init` completes, it prints a `kubeadm join` command that includes a token. Note it down: you run that command on each node to join it to the Kubernetes master.

120 |

121 | ```bash

122 | sudo sysctl net.bridge.bridge-nf-call-iptables=1

123 | ```

124 |

125 | ```bash

126 | sudo kubeadm join 192.168.100.1:6443 --token ......

127 | ```
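If you saved the `kubeadm init` output to a file (for example with `sudo kubeadm init ... | tee kubeadm-init.log`, where the log file name is an assumption), the sketch below pulls out the worker join command, joining the backslash-continued lines and skipping any `--control-plane` variant. `extract_join_command` is a hypothetical helper. On the master, `kubeadm token create --print-join-command` prints a fresh join command if the original is lost.

```bash
#!/usr/bin/env bash
# Sketch: print the worker "kubeadm join" command found in saved kubeadm init output.
# Continuation lines ending in a backslash are joined into one command, and
# commands containing --control-plane are skipped.
extract_join_command() {
  awk '
    /^[ \t]*kubeadm join/ { capturing = 1; cmd = "" }
    capturing {
      line = $0
      sub(/^[ \t]+/, "", line)              # drop leading indentation
      if (line ~ /\\$/) {                   # continued on the next line
        sub(/[ \t]*\\$/, "", line)
        cmd = cmd line " "
        next
      }
      cmd = cmd line
      capturing = 0
      if (cmd !~ /--control-plane/) worker = cmd
    }
    END { if (worker != "") print worker }
  ' "$1"
}

# Usage:
#   extract_join_command kubeadm-init.log
```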

128 |

129 | ## Resetting Kubernetes Master or Node

130 |

131 | ```bash

132 | sudo kubeadm reset && \

133 | sudo systemctl daemon-reload && \

134 | sudo systemctl restart kubelet.service

135 | ```

136 |

137 | ## Useful Kubernetes Commands

138 |

139 | ```bash

140 | kubectl get pods --namespace=kube-system -o wide

141 |

142 | kubectl get nodes

143 |

144 | for i in {1..1000}; do date; kubectl get pods --namespace=kube-system -o wide; sleep 5; done

145 | ```
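Rather than looping a fixed number of times, you can stop once every pod is up, or simply use `watch -n 5 kubectl get pods --namespace=kube-system -o wide`. The sketch below assumes the default `kubectl get pods` column layout for a single namespace (NAME, READY, STATUS, ...); `all_pods_ready` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: read `kubectl get pods` output on stdin and succeed only when every
# pod is Running with all of its containers ready (READY shows e.g. "1/1").
all_pods_ready() {
  awk '
    NR == 1 { next }                  # skip the header row
    {
      split($2, r, "/")               # READY column: ready/total
      if ($3 != "Running" || r[1] != r[2]) bad++
      total++
    }
    END { if (total > 0 && bad == 0) exit 0; exit 1 }
  '
}

# Usage: poll every 5 seconds until kube-system has settled.
#   until kubectl get pods --namespace=kube-system | all_pods_ready; do date; sleep 5; done
```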

146 |

147 | ## Allow Pods on Master

148 |

149 | ```bash

150 | kubectl taint nodes --all node-role.kubernetes.io/master-

151 | ```

152 |

153 |

154 | ## Kubernetes Dashboard Security

155 |

156 | On your Kubernetes Master

157 |

158 | Create a file called `dashboard-admin.yaml` with the following content:

159 |

160 | ```yaml

161 | apiVersion: rbac.authorization.k8s.io/v1
162 | kind: ClusterRoleBinding
163 | metadata:
164 |   name: kubernetes-dashboard
165 |   labels:
166 |     k8s-app: kubernetes-dashboard
167 | roleRef:
168 |   apiGroup: rbac.authorization.k8s.io
169 |   kind: ClusterRole
170 |   name: cluster-admin
171 | subjects:
172 | - kind: ServiceAccount
173 |   name: kubernetes-dashboard
174 |   namespace: kube-system
175 |

176 | ```

177 |

178 | Then run:

179 |

180 | ```bash

181 | kubectl create -f dashboard-admin.yaml

182 | ```
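To log in to the dashboard you need the service account's token, which on clusters of this era is stored in a secret whose name starts with `kubernetes-dashboard-token-` (an assumption about the naming; check with `kubectl -n kube-system get secret`). A sketch that picks the secret name out of the `kubectl get secret` table; `find_secret_name` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: read `kubectl get secret` output on stdin and print the first
# secret whose NAME (first column) starts with the given prefix.
find_secret_name() {
  local prefix="$1"
  awk -v p="$prefix" 'NR > 1 && index($1, p) == 1 { print $1; exit }'
}

# Usage (the token is shown in the describe output):
#   SECRET="$(kubectl -n kube-system get secret | find_secret_name kubernetes-dashboard-token-)"
#   kubectl -n kube-system describe secret "$SECRET"
```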

183 |

184 |

185 | ## SSH Tunnel to Cluster Master

186 |

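187 | `ssh -f -N -L 8080:10.101.166.227:80 pi@192.168.0.150` runs in the background (`-f`) without executing a remote command (`-N`) and forwards local port 8080 (`-L`) through the master to port 80 on `10.101.166.227`, an address inside the cluster. Then browse to http://localhost:8080.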

188 |

189 |

190 | To log in with SSH keys instead of a password, see:

191 | https://www.digitalocean.com/community/tutorials/ssh-essentials-working-with-ssh-servers-clients-and-keys

192 |

193 | http://kamilslab.com/2016/12/17/how-to-set-up-ssh-keys-on-the-raspberry-pi/

194 |

195 | ```bash

196 | ssh-copy-id username@remote_host

197 | ```

198 |

199 |

200 | ## Exercise .NET App

201 |

202 | ```bash

203 | for i in {1..1000000}; do curl http://192.168.0.142:8002/api/values; echo $i; done

204 | ```
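The loop above prints every response, which makes errors easy to miss. A sketch that counts requests that did not return HTTP 200, using curl's `-w '%{http_code}'` write-out; `exercise_endpoint` is a hypothetical helper and the 5-second timeout is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Sketch: send COUNT requests to URL and print how many did not return HTTP 200.
exercise_endpoint() {
  local url="$1" count="${2:-100}" i code failures=0
  for ((i = 1; i <= count; i++)); do
    # -s silences progress, -o discards the body, -w prints only the status code.
    # curl prints 000 when no HTTP response was received (e.g. connection refused).
    code="$(curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$url")"
    [ "$code" = "200" ] || failures=$((failures + 1))
  done
  echo "$failures"
}

# Usage:
#   exercise_endpoint http://192.168.0.142:8002/api/values 1000
```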

205 |

206 |

207 | ```bash

208 | nslookup netcoreapi.default.svc.cluster.local

209 | ```

210 |

211 |

212 |

213 | ## Docker Build Process

214 |

215 | ```bash

216 | docker build -t netcoreapi .

217 | docker tag netcoreapi glovebox/netcoreapi:v002

218 | docker push glovebox/netcoreapi:v002

219 |

220 | ```
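The image tag is bumped by hand on each build (`v002` here). A small sketch, assuming tags are always `v` followed by a three-digit number, that computes the next tag; `next_tag` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: given a tag like v002, print the next one (v003).
# Assumes the format "v" + zero-padded three-digit number.
next_tag() {
  local tag="$1" num
  num="${tag#v}"                          # strip the leading "v"
  printf 'v%03d\n' "$((10#$num + 1))"     # 10# forces base 10, so "008" is not read as octal
}

# Usage:
#   TAG="$(next_tag v002)"   # v003
#   docker build -t netcoreapi . && docker tag netcoreapi "glovebox/netcoreapi:$TAG" && docker push "glovebox/netcoreapi:$TAG"
```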

221 |

222 |

223 | ### Update Kubernetes Image

224 |

225 | [Interactive Tutorial - Updating Your App](https://kubernetes.io/docs/tutorials/kubernetes-basics/update-interactive/)

226 |

227 | ```bash

228 | kubectl set image deployments/netcoreapi-deployment netcoreapi=glovebox/netcoreapi:v002

229 | ```

230 |

231 |

232 |

233 | ## MySQL on Raspberry Pi

234 |

235 | https://hub.docker.com/r/hypriot/rpi-mysql/

236 |

237 | ## References

238 |

239 | https://blog.alexellis.io/test-drive-k3s-on-raspberry-pi/

240 |

241 | https://downloads.raspberrypi.org/raspbian_lite/images/raspbian_lite-2019-04-09/

242 |

243 | https://www.syncfusion.com/ebooks/using-netcore-docker-and-kubernetes-succinctly

244 |

245 | https://itnext.io/building-a-kubernetes-cluster-on-raspberry-pi-and-low-end-equipment-part-1-a768359fbba3

246 |

247 | function.yml https://github.com/teamserverless/k8s-on-raspbian/blob/master/GUIDE.md

248 |

249 | ## Persistent Storage

250 |

251 | 1. [How to Setup Raspberry Pi NFS Server](https://pimylifeup.com/raspberry-pi-nfs/)

252 | 2. [Building an ARM Kubernetes Cluster](https://itnext.io/building-an-arm-kubernetes-cluster-ef31032636f9)

253 | 3. [kubernetes-incubator/external-storage](https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client)

254 | 4. [Persistent Volumes](https://kubernetes.io/docs/concepts/storage/persistent-volumes/#persistent-volumes)

--------------------------------------------------------------------------------

/kubesetup/README.md:

--------------------------------------------------------------------------------

1 | # Kube Set Up

2 |

3 | ## MetalLB

4 |

5 | [Installation](https://metallb.universe.tf/installation/)

6 |

7 | ```bash

8 | kubectl apply -f https://raw.githubusercontent.com/google/metallb/v0.8.1/manifests/metallb.yaml

9 | ```

10 |

11 | MetalLB remains idle until configured. You configure it by deploying a ConfigMap into the same namespace (`metallb-system`) as the MetalLB deployment.

12 |

13 | ```bash

14 | kubectl apply -f metallb.yml

15 | ```
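The contents of `metallb.yml` are not shown here. For MetalLB v0.8 in layer 2 mode, the ConfigMap is named `config` in the `metallb-system` namespace and lists address pools. The sketch below writes such a file; `write_metallb_config` is hypothetical, and the address range in the usage line is an assumption that should be replaced with a block of unused addresses on your cluster's network.

```bash
#!/usr/bin/env bash
# Sketch: write a MetalLB v0.8-style layer 2 ConfigMap to OUT,
# handing out LoadBalancer IPs from RANGE (e.g. "first-last").
write_metallb_config() {
  local out="$1" range="$2"
  cat > "$out" <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - ${range}
EOF
}

# Usage (hypothetical address range):
#   write_metallb_config metallb.yml 192.168.100.200-192.168.100.250 && kubectl apply -f metallb.yml
```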

16 |

17 | ```bash

18 | kubectl apply -f https://raw.githubusercontent.com/google/metallb/v0.8.1/manifests/metallb.yaml

19 | ```

20 |

21 | MetalLB remains idle until configured. This is accomplished by creating and deploying a configmap into the same namespace (metallb-system) as the deployment.

22 |

23 | ```bash

24 | kubectl apply -f metallb.yml

25 | ```

26 |

27 | ### View MetalLB State

28 |

29 | ```bash

30 | kubectl get nodes -o wide

31 |

32 | kubectl get pods --namespace=metallb-system -o wide

33 |

34 | kubectl get svc --namespace=metallb-system -o wide

35 | ```

36 |

37 |

--------------------------------------------------------------------------------

/kubesetup/archive/persistent-storage/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | # Kubernetes NFS-Client Provisioner

4 |

5 | ## Deploy NFS-Client provisioner

6 |

7 | Creates an NFS client provisioner (a ServiceAccount and a Deployment) named **nfs-client-provisioner**

8 |

9 | ```bash

10 | kubectl apply -f nfs-client-deployment-arm.yaml

11 | ```

12 |

13 | ## Create Storage Class

14 |

15 | Creates a storage class named **managed-nfs-storage**

16 |

17 | ```bash

18 | kubectl apply -f storage-class.yaml

19 | ```

20 |

21 | ## Check Storage Class

22 |

23 | ```bash

24 | kubectl get storageclass

25 | ```

26 |

27 | ## Create a Persistent Volume

28 |

29 | Creates a persistent volume named **glovebox-storage**

30 |

31 | ```bash

32 | kubectl apply -f persistent-volume.yaml

33 | ```

34 |

35 | ### Check Persistent Volume

36 |

37 | ```bash

38 | kubectl get pv

39 | ```

40 |

41 | ## Create a Persistent Volume Claim

42 |

43 | Creates a persistent volume claim named **glovebox-claim**

44 |

45 | ```bash

46 | kubectl apply -f persistent-volume-claim.yaml

47 | ```
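The `persistent-volume-claim.yaml` file is not included in this folder. A sketch that writes a claim which should be able to bind to the `glovebox-storage` volume (same `managed-nfs-storage` class, `ReadWriteOnce`, a request no larger than its 5Gi); `write_pvc` and the 1Gi size are assumptions.

```bash
#!/usr/bin/env bash
# Sketch: write a PersistentVolumeClaim manifest to OUT with the given NAME and SIZE,
# using the managed-nfs-storage class created earlier.
write_pvc() {
  local out="$1" name="$2" size="$3"
  cat > "$out" <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  storageClassName: managed-nfs-storage
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: ${size}
EOF
}

# Usage:
#   write_pvc persistent-volume-claim.yaml glovebox-claim 1Gi && kubectl apply -f persistent-volume-claim.yaml
```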

48 |

49 | ### Check Persistent Volume Claims

50 |

51 | ```bash

52 | kubectl get pvc

53 | ```

54 |

55 | ## Test Storage

56 |

57 | [Configure a Pod to Use a PersistentVolume for Storage](https://kubernetes.io/docs/tasks/configure-pod-container/configure-persistent-volume-storage/)

58 |

59 | ```bash

60 | kubectl apply -f nginx-test-pod.yaml

61 | ```

62 |

63 | ### Verify that the Container in the Pod is running

64 |

65 | ```bash

66 | kubectl get pod

67 | ```

68 |

69 | ### Verify Service running

70 |

71 | ```bash

72 | kubectl get svc

73 | ```

74 |

75 | From a web browser or with curl, verify that **index.html** is served from the NFS server.

76 |

77 | ## Useful Commands

78 |

79 | ```bash

80 | kubectl exec -it task-pv-pod -- /bin/bash

81 | ```

82 |

--------------------------------------------------------------------------------

/kubesetup/archive/persistent-storage/nfs-client-deployment-arm.yaml:

--------------------------------------------------------------------------------

1 | # https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client
2 | # https://github.com/kubernetes-incubator/external-storage/blob/master/nfs-client/deploy/deployment-arm.yaml
3 | apiVersion: v1
4 | kind: ServiceAccount
5 | metadata:
6 |   name: nfs-client-provisioner
7 | ---
8 | kind: Deployment
9 | apiVersion: apps/v1
10 | metadata:
11 |   name: nfs-client-provisioner
12 | spec:
13 |   replicas: 1
14 |   selector:
15 |     matchLabels:
16 |       app: nfs-client-provisioner
17 |   strategy:
18 |     type: Recreate
19 |   template:
20 |     metadata:
21 |       labels:
22 |         app: nfs-client-provisioner
23 |     spec:
24 |       serviceAccountName: nfs-client-provisioner
25 |       containers:
26 |         - name: nfs-client-provisioner
27 |           image: quay.io/external_storage/nfs-client-provisioner-arm:latest
28 |           volumeMounts:
29 |             - name: nfs-client-root
30 |               mountPath: /persistentvolumes
31 |           env:
32 |             - name: PROVISIONER_NAME
33 |               value: fuseim.pri/ifs
34 |             - name: NFS_SERVER
35 |               value: k8smaster.local
36 |             - name: NFS_PATH
37 |               value: /home/pi/nfsshare
38 |       volumes:
39 |         - name: nfs-client-root
40 |           nfs:
41 |             server: k8smaster.local
42 |             path: /home/pi/nfsshare

--------------------------------------------------------------------------------

/kubesetup/archive/persistent-storage/persistent-volume.yaml:

--------------------------------------------------------------------------------

1 | # https://kubernetes.io/docs/concepts/storage/persistent-volumes/#persistent-volumes
2 |
3 | apiVersion: v1
4 | kind: PersistentVolume
5 | metadata:
6 |   name: glovebox-storage
7 | spec:
8 |   capacity:
9 |     storage: 5Gi
10 |   volumeMode: Filesystem
11 |   accessModes:
12 |     - ReadWriteOnce
13 |   persistentVolumeReclaimPolicy: Recycle
14 |   storageClassName: managed-nfs-storage
15 |   mountOptions:
16 |     - hard
17 |     - nfsvers=4.1
18 |   nfs:
19 |     path: /home/pi/nfsshare
20 |     server: k8smaster.local

--------------------------------------------------------------------------------

/kubesetup/archive/persistent-storage/storage-class.yaml:

--------------------------------------------------------------------------------

1 | # https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client
2 | # https://raw.githubusercontent.com/kubernetes-incubator/external-storage/master/nfs-client/deploy/class.yaml
3 | apiVersion: storage.k8s.io/v1
4 | kind: StorageClass
5 | metadata:
6 |   name: managed-nfs-storage
7 | provisioner: fuseim.pri/ifs # or choose another name; must match the deployment's env PROVISIONER_NAME
8 | parameters:
9 |   archiveOnDelete: "false"

--------------------------------------------------------------------------------

/kubesetup/archive/storage-class-archive/nginx-deployment.yaml:

--------------------------------------------------------------------------------

1 | apiVersion: v1
2 | kind: Service
3 | metadata:
4 |   name: nginx-service
5 |   labels:
6 |     app: nginx-service
7 | spec:
8 |   type: LoadBalancer
9 |   ports:
10 |     - port: 80
11 |       protocol: TCP
12 |       targetPort: 80
13 |   selector:
14 |     app: nginx-deployment
15 | ---
16 | apiVersion: apps/v1
17 | kind: Deployment
18 | metadata:
19 |   name: nginx-deployment
20 |   labels:
21 |     app: nginx-deployment
22 | spec:
23 |   replicas: 1
24 |   selector:
25 |     matchLabels:
26 |       app: nginx-deployment
27 |   template:
28 |     metadata:
29 |       labels:
30 |         app: nginx-deployment
31 |     spec:
32 |       volumes:
33 |         - name: nginx-storage
34 |           persistentVolumeClaim:
35 |             claimName: nginx-claim
36 |       containers:
37 |         - name: task-pv-container
38 |           image: nginx
39 |           ports:
40 |             - containerPort: 80
41 |               name: "http-server"
42 |           volumeMounts:
43 |             - mountPath: "/usr/share/nginx/html"
44 |               name: nginx-storage

--------------------------------------------------------------------------------

/kubesetup/archive/storage-class-archive/nginx-pv-claim.yaml:

--------------------------------------------------------------------------------

1 | # https://kubernetes.io/docs/tasks/configure-pod-container/configure-persistent-volume-storage/
2 |
3 | apiVersion: v1
4 | kind: PersistentVolumeClaim
5 | metadata:
6 |   name: nginx-claim
7 | spec:
8 |   storageClassName: managed-nfs-storage
9 |   accessModes:
10 |     - ReadWriteOnce
11 |   resources:
12 |     requests:
13 |       storage: 3Gi
14 |   selector:
15 |     matchLabels:
16 |       storage-tier: nfs

--------------------------------------------------------------------------------

/kubesetup/archive/storage-class-archive/nginx-pv.yaml:

--------------------------------------------------------------------------------

1 | # https://kubernetes.io/docs/concepts/storage/persistent-volumes/#persistent-volumes
2 |
3 | apiVersion: v1
4 | kind: PersistentVolume
5 | metadata:
6 |   name: nginx-volume
7 |   labels:
8 |     storage-tier: nfs
9 | spec:
10 |   capacity:
11 |     storage: 5Gi
12 |   volumeMode: Filesystem
13 |   accessModes:
14 |     - ReadWriteOnce
15 |   persistentVolumeReclaimPolicy: Recycle
16 |   storageClassName: managed-nfs-storage
17 |   mountOptions:
18 |     - hard
19 |     - nfsvers=4.1
20 |   nfs:
21 |     path: /home/pi/nfsshare/nginx
22 |     server: k8smaster.local

--------------------------------------------------------------------------------

/kubesetup/cluster-config/README.md:

--------------------------------------------------------------------------------

1 | # Kubectl Config

2 |

3 | Useful for switching between multiple clusters.

4 |

5 | ## Config

6 |

7 | This sample config file needs to be updated with:

8 |

9 | 1. `certificate-authority-data`

10 | 2. `server`: the IP address of your cluster's API server

11 | 3. `users`: `client-certificate-data` and `client-key-data`

12 |

13 | Notes:

14 |

15 | 1. The sample config file contains data specific to the author's machines and will not work with your cluster.

16 | 2. You can get this information for your own cluster by copying the Kubernetes config to your local dev machine with:

17 |

18 | ```bash

19 | scp -r pi@k8smaster.local:~/.kube ./kube

20 | ```

21 |

22 | ## Switching Clusters

23 |

24 | ```bash

25 | kubectl config use-context pi3   # or pi4

26 | ```

27 |

28 | or edit the config file and set the **current-context** property.

29 |

30 | ## Kubernetes Reference

31 |

32 | [Configure Access to Multiple Clusters](https://kubernetes.io/docs/tasks/access-application-cluster/configure-access-multiple-clusters/)

--------------------------------------------------------------------------------

/kubesetup/cluster-config/config:

--------------------------------------------------------------------------------

1 | apiVersion: v1

2 | clusters:

3 | - cluster:

4 | certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN5RENDQWJDZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJd01ERXdNVEl5TVRRd01sb1hEVEk1TVRJeU9USXlNVFF3TWxvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTmVJCnNSVzRQdHloS2lBVFNYUnR6ek1QVXd1elNzOXV5UFA1THhLZE1oYyt3VHNWQVQyMURmYytZelA1YUhRVjZZRC8KOXk0c2lZZXJRQVBsZXB5blJaekVaaG1zYXk0MGpkWHB1TDJORFdMVzNHQTBvTGt0UjVJYmdIbUJUVGJMS3c2YQpqTmRhQkNaN3BCamQ3Y0NWa0ZlWG96VnlRdkNYUU1uSWluZUFNSHhKalpiSnBvTnRYOGFzWnVnZmxSRG1ZbC9GCmVWaGJmcEdOdHUzei9lNks2d2M3d0ZKWWxDU0RoN3RIVVNMUzVMUkdXMHlld3gvU2RFR2U4c0RHRXowM3NSRW8KdlpTV0N2d1RXbnlJZVFsRGp4M0dOZTBVQS9MeEdob1R6VEQvcDhYNlZlWFp0YzVEUDdRWFpmb2JXWEkzUzJRQgo5Mzlld0RuUkVObGRjQW1uRllrQ0F3RUFBYU1qTUNFd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFMdEZudlNCUnpSU0s5ZlFqRGJDR2cvRGxrZG4KSGpoMWI0VXc3M2orMUxyZE85Q0ZOYzl6UnhuVWtaaWZJTVJUOUV6K1JFWXZlVFRMM2o1VTdQRW0wLzVEKzNBQwo0Qm5ZL0h2cVRPQWoyM2ZwRmFkSEM1cnNpT0JzTTR4UUlQMHVudmlvWkRJT3R3eEVJRFZlMW5hTmJSYVo4RXJwCnZNa2dCcVpzSGdyV05ZMDVEMWQyU2F3ZjJyQWRTbDdyenlMaVZrUHVKVVBuNEx2ZUlESVZCMXpwdFp5Y05CL1AKLzFsdCtic083cTZIbXlPSy9nbCs0aUhzb2RDdjVFbkdoM1hVMCtsaG81Njh0MzAzNzM0UlZBWWJ5WGZpWjNybQpxdFB1alJoNmtUSS9ZS3dGR05xSHhRRjJOK0lzUkl1UStZZkhnQXEySmg3aW5ySVhqZk5MY3YxVjBwST0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

5 | server: https://192.168.100.1:6443

6 | name: pi3

7 | - cluster:

8 | certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN5RENDQWJDZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJd01ERXdNakF3TXpZMU5Gb1hEVEk1TVRJek1EQXdNelkxTkZvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBSzliCkZPcmxTa1lPME1sdFNCU25QcnE4M2x5aFF4L201MG9yNy9EbEdoajdMSlJYU3BmWGZYbDZUR1NSQjU2SjhFTVkKcUdQV3E5bG9vSjlaaUdDVEpBeXFUUGgrVmF0dEpHbk5pbEZ3RVNac0U3cmFTdkpwdm8rMk81MTBOR0lsQU1EcQpTTHVjTmtuVlZZYld2SkdsejZab1U4UUdTaUVwWFUrelNDL201ZDlUcENPcGJsK2dLWWNENTJqSGZwNkhNTEcrCkZCcnFOaTErR042ZUk3KytzZjE5Vk5FN0NacW1PbXh6MS8xZXhJajhwc0dhK3dWaUhJVzZkN0hoV01IQkFncloKVkNOd3hqcytJbGMrUHhjMk1EeDIzREJhWGd6a1pVQVFSd2JVakZQSnc2eUwvKzR5MHd0TmZmbnhIQnhLbU9zZgpVcHJ1S3lCZnREeU1JditHRW5rQ0F3RUFBYU1qTUNFd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFDdnd0Szg3NXRaUU9LU3YxSitiYkhTNko0L3MKbUhkRWdCejY2MHZlNCtnRUZEYjZyMm44N2kyM0IzaWNVU05McWx0Vk1paFNYUVpwYTVoUVBTWW1DeVVWb1M0WAptOVlmT2Y4WTBGK2Vtc1UrOGhic0hjRjk4N3JiZi8vTnlvd29PcUh0YlhsRU5qTjJ3aVdLSS9uRDdkSGsydk1PCkxtYzFDZkhLYThuNThrcThsSm9xckd3QW1vaWFJZWZJVS9xTlJmNGUwYW9BVWlLUzZrNlJlYWR1V1craUtDMnQKMmtoYm5RZFZQWmJRUXpzV0xwSkNHekxXaXdIb1kvMi9tc1FBRWpsU2NRU0k2aDJYVlhVbk5lcm1hODNMbDVVQgo0ZUR2S0lZaVdUT2tKM2dVT2RDWFliSXo1Qkp1NEVYSXBHcXB1d0NWSGRJcWF1R0tUbFI1UjdReCtVVT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

9 | server: https://192.168.100.1:6443

10 | name: pi4

11 | contexts:

12 | - context:

13 | cluster: pi3

14 | user: pi3-kubernetes-admin

15 | name: pi3

16 | - context:

17 | cluster: pi4

18 | user: pi4-kubernetes-admin

19 | name: pi4

20 | current-context: pi3

21 | kind: Config

22 | preferences: {}

23 | users:

24 | - name: pi3-kubernetes-admin

25 | user:

26 | client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM4akNDQWRxZ0F3SUJBZ0lJVjgzbkpsTEtlNDB3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TURBeE1ERXlNakUwTURKYUZ3MHlNREV5TXpFeU1qRTFNVEZhTURReApGekFWQmdOVkJBb1REbk41YzNSbGJUcHRZWE4wWlhKek1Sa3dGd1lEVlFRREV4QnJkV0psY201bGRHVnpMV0ZrCmJXbHVNSUlCSWpBTkJna3Foa2lHOXcwQkFRRUZBQU9DQVE4QU1JSUJDZ0tDQVFFQTBsYnZLZ1paT2d1K09FNFgKbWNNbjhQeDFMOFF1WlQzQnBVL2R1WUhVT2NwUzc4RHJwWUtJdVV6emRuTWxjVitGZU5MR2MxKzRhWkZteXgrTgpSdlV3YVc1QjNERDhzdi9pclZDWlVwYWZGTC9RclczKzVZZFYzVUc5N1FhU0pjY0JYRVJQT2NSa1FRVTVZaUM4CkNnL3Y0SUY1Rmw5bkVXVlg1NHU3a2EyT3hVWXFGMWZJTXE3SUU0NElnalVzbDBraFVJbUlCVDJaSUV2ZS9vdDgKOWZuczZtWXVMaFM4VWVsNWJIUnQrWmYwZVVyV2JkVEFCaTc3WnJEV0NsWWlGNDFpcVFRTDZYb3E4SUw1eVFyegpFWXo1b1pXdVZ6SzN4TDZOdlBHM3VJOHQ5V3JnajVBZlN6VjFGSzd3eWRDd0p0NmErVW1RQkdnVWFvYUJtRG52CnJjWVZUd0lEQVFBQm95Y3dKVEFPQmdOVkhROEJBZjhFQkFNQ0JhQXdFd1lEVlIwbEJBd3dDZ1lJS3dZQkJRVUgKQXdJd0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFFTjdReUVSTklWb2dzdlNTU09oeGZZOVRFeWtGcjMxaHhDWApSSGJGdi95Y1lwNjFoNEJER3lubVhPVmZXYTJxUFBJZVAxbE9naEs1UFJRK2dPK1pLZy9XNUhYRGlXQ2lEclFICnpCVENsbTdDZlAzZUlYV25WSEEreTNIVmZRT25XRWpZbG4vZ3lFc2xNRWVCSHJaSUVSNk04aWJSeGtzTHVnQ3gKWlMrcFZ5U01za2xlTXNYV3JvMS9mTTkxMmkrNWdPeHhMc0tFamtqWm9iTUZlNWRCRHlONVlqcElTeDFyT28vdQpDREo0WGUwSk9UUVBtTDB5Z25KVlg2ckw1aEQrSXJJNEJqa3ZQY2ZXSUtHbVhFTE9VYlU2K1U4Zklkdms5K0p1CjZiWnlzOHkvcUFiQjdHcDRFMWsxV0Uzbmg1aVpKUlVBTVZndm1sclV2cWU5dmNZMlpHYz0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

27 | client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcFFJQkFBS0NBUUVBMGxidktnWlpPZ3UrT0U0WG1jTW44UHgxTDhRdVpUM0JwVS9kdVlIVU9jcFM3OERyCnBZS0l1VXp6ZG5NbGNWK0ZlTkxHYzErNGFaRm15eCtOUnZVd2FXNUIzREQ4c3YvaXJWQ1pVcGFmRkwvUXJXMysKNVlkVjNVRzk3UWFTSmNjQlhFUlBPY1JrUVFVNVlpQzhDZy92NElGNUZsOW5FV1ZYNTR1N2thMk94VVlxRjFmSQpNcTdJRTQ0SWdqVXNsMGtoVUltSUJUMlpJRXZlL290ODlmbnM2bVl1TGhTOFVlbDViSFJ0K1pmMGVVcldiZFRBCkJpNzdackRXQ2xZaUY0MWlxUVFMNlhvcThJTDV5UXJ6RVl6NW9aV3VWekszeEw2TnZQRzN1STh0OVdyZ2o1QWYKU3pWMUZLN3d5ZEN3SnQ2YStVbVFCR2dVYW9hQm1EbnZyY1lWVHdJREFRQUJBb0lCQUhEWVl3ZFEwSjNybnVubQpPNU1xdUVyNXBvVXg0eEk5eDU1QTh0dUxZNmg5ZTNGVk54ZGNxSzJCTXp6aEdiMXhXZEl3Z25kemF5UjM5WVlVCkwxOWFPOWJVYUZFUmx3RVJkek0wZ28xa2NZUllSRVJITnZFOVlqdUtBYk1nUzFncEkvbTBUQ3paeUU5NTFnZG4KT0hyTmdnd1lhall1aU1VMGNheXZzcm05TzFOcUVCZWhLS2lqLzFDOTc1ZzgzTHF4ZTYyOERVdDJqOXFVNi90MgpuTDFuOWNFTTlmMG4wbjlXRXQ0enBnRFE1aWZqazFCNFhueFh5WjlBbEJXQTZTTTJsYnQ5UEpGWXlXenVDNklZCkNsaWk5ZEpPVDRldHVtR3IwbjkvWDFLaHNmMUZBVlJvVGJ0V3J1ZmxLeHBGN25NRHRIU2xaV0E2czI4cVlKRVgKL1pmWnJvRUNnWUVBNENkOS9LanBRemwxb016U0cwbk1HZnd3cFc3WU12K3FoRGl4YmpMS0YxM1VGT1hUaUNWTwpTT1AzQTJtZU1xZVJKTGdkemtyeWUyNlZXUnpNN3hKQ3F6Snp4TlRZMjhQbGEyckkzUmc1dUZlTlRCdlpIUXBHCjJ3MkV4TSswV2xnZDMzWHpYQmRkOGRhb2gzZ3VJTnRnM3pqaU8xRGdWMGVCTXpEd0IvQnRlYWtDZ1lFQThEa0EKUzM3Mk52Q1pYUjdtWVhTSER5UHg4RlpwWk5aeUlEc1dEVGYvaGdpQXNJbHpQY3FTRmp0d1I2T3I0eEszc0wrWApDQzVKNldleHpVUU9YVGh2aGNNeVE5UTZJY0hvc1NRb2U0MnoxMm9LQUZUaDhxL3dFUGJ3eGMrZ1FQUVkvOEFECjJJMlI0OVBzL2xKRXFLQTU3OE8xMVo4VnFad3hTUlBmbkxla29qY0NnWUVBbUN5M2Iyd00wRUtXQk5DSVkxWTYKWmZtNzNOUGZtdC9QRjJ5VnFFWjZ4RnBDdk4wNk9sZDVTaXJaYTB1c3hwN1QvcVd3Tm5qVEhkRDVPMEkrTHArcQoreWFKU2J0bWJld1VPRlNLZ084TllJU3Z2RmU3a2VlRUt2cUdoRWF1SGhkc1VHUjNEckllYVN4ZHhYcGxkcEQ0CnR4S2JJOEhJUy9pVFVmbUxPeGlTZWVFQ2dZRUExdzh3NUdYVnAzbmUweTlHc1JqUmtReHRITzAvamJjdWxReFEKd2FUUWJmNU90NzFXSG91c0haczQrZW5kaUh6SlZzTXZRM090Vi9ndGhjYlgxVDBoR20rV0lJTnZSNm1CMkpTMgphV2FEQ3VjejdQZ1JFR1BTV0YyN1VGeUE2NldjZTlvN2x6T20ySUJ5TzMwTFdxdVhNci9UbWx2QjJRYXNXUEFoCjBQdlh1Q0VDZ1lFQW0rbm42WEFFdm
pxNUNPSVJucTNDS3NlT1ZmbW9YLzVNZDdsQ1ZwNlpXOXBlTXNiVmF0clAKQ1BCc1YrdERnSitTMzVMTXpLdFErZ3JCMzlrdGVadVBMZlRXMEhocno4T0RxK3ZYWEZMT2p1VnVkdnZoVE9nUAovMmNIQUh6KytYcjBoakRJcEpzTUdzdEpEZ2ZzdGZiV1lPK3VwTy9UY0tTK2h2bUhaY2V6Wm9ZPQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

28 | - name: pi4-kubernetes-admin

29 | user:

30 | client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM4akNDQWRxZ0F3SUJBZ0lJSkRLbFhCaHFVK2d3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TURBeE1ESXdNRE0yTlRSYUZ3MHlNVEF4TURFd01ETTRNRGxhTURReApGekFWQmdOVkJBb1REbk41YzNSbGJUcHRZWE4wWlhKek1Sa3dGd1lEVlFRREV4QnJkV0psY201bGRHVnpMV0ZrCmJXbHVNSUlCSWpBTkJna3Foa2lHOXcwQkFRRUZBQU9DQVE4QU1JSUJDZ0tDQVFFQTA4MndpL1hKODhKV3RIcmcKaWZGZjkya0lkcCsxNi85ZzdwcjVaSnRlMnVxUmF1UFJScXU2MVdvR2JDMUUrR2lyQ3JZMFRqVXp0SUhDdW81Qwp1M2xlS2VsZFRDaTFGUFpTZnFnbmNRNXJFalNOWEZRVFNRRmxDc2RLRXNHaGlIMVFaOG10Q3RoMzgreUppSEhHCklhdHRnOU5xdU1iWnc4WCs4WXhoVS8vY1AvbWZOTTUvZWJReDNQWU1zZ1A0NjhDeXV1TkZLRXdTdGtkVlRJWVQKdUJEYmVNWGt3b0lNbzFuS0FGOTRFdzVCaFhrWHNSTHFnajJOalh6Y25UU05JTnFYV2J5OE1YRnRKMlRKcXFUMApaSnMyY0tXdTVaN2VEakw4K3BKSWNBclhLRFRKSzJtY210ODc4aUoyZG8rZnhSd0d1T0xQakNyYVpMMTNjRnRiCm5USk1Dd0lEQVFBQm95Y3dKVEFPQmdOVkhROEJBZjhFQkFNQ0JhQXdFd1lEVlIwbEJBd3dDZ1lJS3dZQkJRVUgKQXdJd0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFBOHNSREVtZ0gvR1poUWhBeEp4cGxnUW5TYjJVTmJnRlhseQpKbUNiYW1SZng2V2ZwNEpUaGZXSTArU2hnTzZuKy8wMEsxM01GOVVvN21vVmtQREdqK3M3MEhDSUtGb1dIUVhFCjNVSVFmSDZnVGNtNTVHZ1NiMGE2Yzc4c2d5YUNyRWpoaDRPU1lzRVRMWnk5cEJHcmFKWDVBeThIeGtnOWs0MjIKQW10QmxQVytDSXl3NXN1cTFnVDFoU25KTTY3N2EzQlA0VXNOZG1uNXVvTjJPRmVsQldqakxtbUFsLzV0MTNVVQplWnhTcnl4MWJ4R0tzdTJVOU03OFNxMGlYWXFxVW5XWWVFcmJJSENZREU2RU1Cb3JWZVlsWnhGN3R2eGg2bWtuCjZxbENuWHpTblRESG1SSlZ5OXpUUWhIUVZpRVo3aVp4ZzVBNS9LNVUvUDJxcWxhWmd1VT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

31 | client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb3dJQkFBS0NBUUVBMDgyd2kvWEo4OEpXdEhyZ2lmRmY5MmtJZHArMTYvOWc3cHI1Wkp0ZTJ1cVJhdVBSClJxdTYxV29HYkMxRStHaXJDclkwVGpVenRJSEN1bzVDdTNsZUtlbGRUQ2kxRlBaU2ZxZ25jUTVyRWpTTlhGUVQKU1FGbENzZEtFc0doaUgxUVo4bXRDdGgzOCt5SmlISEdJYXR0ZzlOcXVNYlp3OFgrOFl4aFUvL2NQL21mTk01LwplYlF4M1BZTXNnUDQ2OEN5dXVORktFd1N0a2RWVElZVHVCRGJlTVhrd29JTW8xbktBRjk0RXc1QmhYa1hzUkxxCmdqMk5qWHpjblRTTklOcVhXYnk4TVhGdEoyVEpxcVQwWkpzMmNLV3U1WjdlRGpMOCtwSkljQXJYS0RUSksybWMKbXQ4NzhpSjJkbytmeFJ3R3VPTFBqQ3JhWkwxM2NGdGJuVEpNQ3dJREFRQUJBb0lCQVFER0hyWlJCVFhHUFBnTAoxSkRxbDQwMENkeXYwWTlEVk52ZjlianBJZWlsa0JzOFNDUC9IaTRpNlExZTdTMkJ5NjZLMDRxenlWSTNPOVhoCjJhYVVaTi92Qm1xT0RkbnM1TWlmenowdHBOWUU3b0Y0WnZDdkxvM01la1JRclMwalZrejYrSXhVQTg2WXJaNnMKc2ZncWtJZGRjMHAxMHhQcDYvQWhGRFlLYytBYk9mbk5YMHc3czdKQWFEekxTZ01Ea3J6RjdKd20zTGJpaTNIbgovUGVDM2FVVlZPTjc4aDJiWjVzQmtIM0ZJL0s2Z0FHL29TL0dTMU9xdG00ZnA2RlFtMG5DS0l4YXNQdFViQ0xMClpEZE5FdjM0TndSdWdQRkx1VEhVUkZsdDZ2dFpkUlI5MWFHMDFYSFBQU2lqRFFFTE5PSDJYbHVzby9LQWRrTzcKSEtlci92T2hBb0dCQU9wdXRqbXNiOGdlV0dtWmdHNFZjc1VEYzRtSWRqTGt0WmNmS3RnUnY0SWZ4M29FSVRWWQpRSXNKRW53SHBpTzRkaU5IVC9XMkdlU0FwdUczRXZnRjBKcjl2K2ZQUE9YWjFJS1NJUyt6cXBKTTM3bys1YmlCClV0c0VCQjJ4NzRucTNYZGJRR3pKY3NnVUFLS1AyMTcvU3B0eDJ1V052WkQyTy9CRXdZQlMxajQ3QW9HQkFPZEsKQnEwK3QySFNoRFZ1cjVzaUx0ZFZzQTh5UE1uRXRMYitJR2g0blg1M3p2aGpyWDR4U0NZWi9oKzgrWU1TRXpHdgpRaW5Idk1YVG50RHE0RWwzRXphU0V0ZmVteTZ0dmlaaUZDWmV5ZmVkM3QzcVFXNDU2dXZNZWpsSVFUQnkxbmMvCk9IT3dNLzZ2MklHbFpMQU5wandMelRxVTl0SjAwaTZheHNZNmNUeHhBb0dBY0xibVduaDBEazI0eUowTFNPSjcKR2dwOHhJV2QvdjVENlBNTlVISElHREpiWUdrWDVtUVdORU1hWmhQdlo0RkxHODh2dkwzZldTUWFHTEJES0lqegpNWElMa05MdFByNHJGTlJackd1LytUT0k1aTFUbWhCajIvWGtYTHF1cHlzTGJGV3RkaUN0VlZGNHRMQmlFeHkvCnJGbGptN2M0aTdnNFBWOXhnZGRTTnYwQ2dZQkhlcXRCazJaZFJ4QXc2em8rT1h3OGRIRHE0VjNFQlpUTUVSRzIKOTcvRXZBWXM0YkZXbEtoMWpnYnBqQitZa0ZkNlBXMjNOOUZ4V2d0MUNZR3pjcWR2Y0FsK3lYOHdGK1h5T3RGNwpZa1FNMEs5MTZkVzYyUTl2UEV4eHM1RGlCanVkc3Q0aGNzMCs0dDJJZzdMd2JlZDRHeldiNnptMHBRSG9BVkY1CkpjcWxJUUtCZ0RJWDY4QTdRRXFvTz
cxdUwvNWh1cmpmRlF5Y3dFSFlNRjJ4Zy9SZXRMem45WklQUEw1ZzAzWWUKTWR1eS9VRlpTOHh3a2Vzcnd5ZFg0SXJ2YWIyT25qMlVSK1VkNjF0Zm9ZaDA3V1U2S3lOcG1za2VDbGNDSllhUQpFSnZtdlRZaGNGcDU4Mm5SWHUvUkRMc2ZNVkxzN29xNm9FcnRnSi9GblQwTDUwZ0tWNkhFCi0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

32 |

--------------------------------------------------------------------------------

/kubesetup/dashboard/dashboard-admin-role-binding.yml:

--------------------------------------------------------------------------------

1 | apiVersion: rbac.authorization.k8s.io/v1
2 | kind: ClusterRoleBinding
3 | metadata:
4 |   name: admin-user
5 | roleRef:
6 |   apiGroup: rbac.authorization.k8s.io
7 |   kind: ClusterRole
8 |   name: cluster-admin
9 | subjects:
10 | - kind: ServiceAccount
11 |   name: admin-user
12 |   namespace: kube-system

--------------------------------------------------------------------------------

/kubesetup/dashboard/dashboard-admin-user.yml:

--------------------------------------------------------------------------------

1 | apiVersion: v1
2 | kind: ServiceAccount
3 | metadata:
4 |   name: admin-user
5 |   namespace: kube-system

--------------------------------------------------------------------------------

/kubesetup/image-classifier.yml:

--------------------------------------------------------------------------------

1 | apiVersion: v1
2 | kind: Service
3 | metadata:
4 |   name: image-classifier
5 |   labels:
6 |     app: image-classifier
7 | spec:
8 |   type: LoadBalancer
9 |   ports:
10 |     - port: 80
11 |       protocol: TCP
12 |       targetPort: 80
13 |   selector:
14 |     app: image-classifier
15 | ---
16 | apiVersion: apps/v1
17 | kind: Deployment
18 | metadata:
19 |   name: image-classifier
20 |   labels:
21 |     app: image-classifier
22 | spec:
23 |   replicas: 0
24 |   selector:
25 |     matchLabels:
26 |       app: image-classifier
27 |   template:
28 |     metadata:
29 |       labels:
30 |         app: image-classifier
31 |     spec:
32 |       containers:
33 |         - name: image-classifier
34 |           image: glovebox/image-classifier-v2:arm32v7-1.0
35 |           resources:
36 |             limits:
37 |               memory: "512Mi"
38 |           imagePullPolicy: Always
39 |           ports:
40 |             - containerPort: 80
41 |               protocol: TCP

--------------------------------------------------------------------------------

/kubesetup/jupyter/jupyter-deployment.yaml:

--------------------------------------------------------------------------------

1 | apiVersion: v1
2 | kind: Service
3 | metadata:
4 |   name: jupyter
5 |   labels:
6 |     app: jupyter
7 | spec:
8 |   type: LoadBalancer
9 |   ports:
10 |     - port: 8888
11 |       protocol: TCP
12 |       targetPort: 8888
13 |   selector:
14 |     app: jupyter
15 | ---
16 | apiVersion: apps/v1
17 | kind: ReplicaSet
18 | metadata:
19 |   name: jupyter
20 |   labels:
21 |     app: jupyter
22 | spec:
23 |
24 |   replicas: 0
25 |   selector:
26 |     matchLabels:
27 |       app: jupyter
28 |   template:
29 |     metadata:
30 |       labels:
31 |         app: jupyter
32 |     spec:
33 |       volumes:
34 |         - name: "jupyter-data"
35 |           persistentVolumeClaim:
36 |             claimName: "jupyter-claim"
37 |           # hostPath:
38 |           #   path: "/home/pi/notebooks"
39 |       containers:
40 |         - name: jupyter
41 |           image: glovebox/jupyter-datascience:latest
42 |           volumeMounts:
43 |             - mountPath: "/notebooks"
44 |               name: "jupyter-data"
45 |           imagePullPolicy: Always
46 |           ports:
47 |             - containerPort: 8888
48 |               protocol: TCP
49 |
50 | # For the hostPath option: on the node, as user pi, run mkdir notebooks
51 | # sudo chmod -R 757 notebooks/

--------------------------------------------------------------------------------

/kubesetup/jupyter/jupyter-volume-claim.yaml:

--------------------------------------------------------------------------------

1 | kind: PersistentVolumeClaim
2 | apiVersion: v1
3 | metadata:
4 |   name: jupyter-claim
5 | spec:
6 |   accessModes:
7 |     - ReadWriteMany
8 |   resources:
9 |     requests:
10 |       storage: 5Gi
11 |   selector:
12 |     matchLabels:
13 |       volume: jupyter-volume

--------------------------------------------------------------------------------
/kubesetup/jupyter/jupyter-volume.yaml:
--------------------------------------------------------------------------------
apiVersion: v1
kind: PersistentVolume
metadata:
  name: jupyter-nfs
  labels:
    volume: jupyter-volume
spec:
  accessModes:
  - ReadWriteMany
  capacity:
    storage: 5Gi
  nfs:
    server: k8smaster.local
    path: "/home/pi/nfsshare/notebooks"

--------------------------------------------------------------------------------
/kubesetup/kuard/kuard-deployment.yaml:
--------------------------------------------------------------------------------
apiVersion: v1
kind: Service
metadata:
  name: kuard
  labels:
    app: kuard
spec:
  type: LoadBalancer
  ports:
  - port: 8080
    protocol: TCP
    targetPort: 8080
  selector:
    app: kuard
---
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: kuard
  labels:
    app: kuard
spec:
  replicas: 0
  selector:
    matchLabels:
      app: kuard
  template:
    metadata:
      labels:
        app: kuard
    spec:
      volumes:
      - name: "kuard-data"
        persistentVolumeClaim:
          claimName: "kuard-claim"
      containers:
      - name: kuard
        # https://github.com/kubernetes-up-and-running/kuard
        image: gcr.io/kuar-demo/kuard-arm:blue
        volumeMounts:
        - mountPath: "/data"
          name: "kuard-data"
        livenessProbe:
          httpGet:
            path: /healthy
            port: 8080
          initialDelaySeconds: 5
          timeoutSeconds: 1
          periodSeconds: 10
          failureThreshold: 3
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
          name: http
          protocol: TCP

--------------------------------------------------------------------------------
/kubesetup/kuard/kuard-volume-claim.yaml:
--------------------------------------------------------------------------------
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: kuard-claim
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
  selector:
    matchLabels:
      volume: kuard-volume

--------------------------------------------------------------------------------
/kubesetup/kuard/kuard-volume.yaml:
--------------------------------------------------------------------------------
apiVersion: v1
kind: PersistentVolume
metadata:
  name: kuard-nfs
  labels:
    volume: kuard-volume
spec:
  accessModes:
  - ReadWriteMany
  capacity:
    storage: 5Gi
  nfs:
    server: k8smaster.local
    path: "/home/pi/nfsshare/kuard"

--------------------------------------------------------------------------------
/kubesetup/led-controller/led-controller.yml:
--------------------------------------------------------------------------------
apiVersion: v1
kind: Service
metadata:
  name: led-controller
  labels:
    app: led-controller
spec:
  type: LoadBalancer
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: led-controller
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: led-controller
  labels:
    app: led-controller
spec:
  selector:
    matchLabels:
      app: led-controller
  template:
    metadata:
      labels:
        app: led-controller
    spec:
      containers:
      - name: led-controller
        image: glovebox/led-controller:arm32v7-0.6
        imagePullPolicy: Always
        securityContext:
          privileged: true
        ports:
        - containerPort: 80
          protocol: TCP

--------------------------------------------------------------------------------
/kubesetup/metallb/metallb.yml:
--------------------------------------------------------------------------------
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.100.200-192.168.100.250
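# Sketch, not part of the original setup: MetalLB v0.13 and later no longer
# read this ConfigMap and are configured through custom resources instead.
# Assuming such a release, the same layer-2 address pool would look roughly
# like this (the resource name "default" is illustrative):
# ---
# apiVersion: metallb.io/v1beta1
# kind: IPAddressPool
# metadata:
#   name: default
#   namespace: metallb-system
# spec:
#   addresses:
#   - 192.168.100.200-192.168.100.250
# ---
# apiVersion: metallb.io/v1beta1
# kind: L2Advertisement
# metadata:
#   name: default
#   namespace: metallb-system
# spec:
#   ipAddressPools:
#   - default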

--------------------------------------------------------------------------------
/kubesetup/mysql/mysql-deployment.yaml:
--------------------------------------------------------------------------------
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: mysql
  # labels so that we can bind a Service to this Pod
  labels:
    app: mysql
spec:
  replicas: 0
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: database
        image: biarms/mysql
        # securityContext:
        #   privileged: true
        #   runAsUser: 0
        #   runAsGroup: 0
        #   fsGroup: 2000   # fsGroup is only valid in the Pod-level securityContext
        resources:
          requests:
            memory: 2Gi
        env:
        # Environment variables are not a best practice for security,
        # but we're using them here for brevity in the example.
        # See Chapter 11 for better options.
        - name: MYSQL_ROOT_PASSWORD
          value: raspberry
        livenessProbe:
          tcpSocket:
            port: 3306
        ports:
        - containerPort: 3306
        # While these volumeMounts stay commented out, the "database" volume below
        # is declared but not mounted into the container, so MySQL data lives in
        # the container filesystem and is lost when the Pod is replaced.
        # volumeMounts:
        # - name: database
        #   mountPath: "/var/lib/mysql"
      volumes:
      - name: database
        persistentVolumeClaim:
          claimName: "mysql-claim"
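# Sketch of one of the "better options" (an assumption, not the original setup):
# keep the root password in a Kubernetes Secret. "mysql-secret" and the key
# "root-password" are hypothetical names; create the Secret before applying
# this file, for example:
#   kubectl create secret generic mysql-secret --from-literal=root-password=<password>
# then replace the MYSQL_ROOT_PASSWORD entry above with:
#   - name: MYSQL_ROOT_PASSWORD
#     valueFrom:
#       secretKeyRef:
#         name: mysql-secret
#         key: root-password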

--------------------------------------------------------------------------------
/kubesetup/mysql/mysql-service.yaml:
--------------------------------------------------------------------------------
apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  type: LoadBalancer
  ports:
  - port: 3306
    protocol: TCP
  selector:
    app: mysql

--------------------------------------------------------------------------------
/kubesetup/mysql/mysql-volume-claim.yaml:
--------------------------------------------------------------------------------
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: mysql-claim
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
  selector:
    matchLabels:
      volume: mysql-volume

--------------------------------------------------------------------------------
/kubesetup/mysql/mysql-volume.yaml:
--------------------------------------------------------------------------------
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-nfs
  labels:
    volume: mysql-volume
spec:
  accessModes:
  - ReadWriteOnce
  capacity:
    storage: 5Gi
  nfs:
    server: k8smaster.local
    path: "/home/pi/nfsshare/mysql"

--------------------------------------------------------------------------------
/kubesetup/nginx/nginx-daemonset.yaml:
--------------------------------------------------------------------------------
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  labels:
    app: nginx-service
spec:
  type: LoadBalancer
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx-web-server
---
apiVersion: apps/v1
kind: DaemonSet