├── .gitignore

├── CONTRIBUTING.md

├── LICENSE

├── README.md

├── experiments

├── constants.py

├── curriculum.py

├── example.py

├── range_evaluation.py

├── training.py

└── utils.py

├── models

├── positional_encodings.py

├── transformer.py

└── transformer_utils.py

├── overview.png

├── requirements.txt

└── tasks

├── cs

├── binary_addition.py

├── binary_multiplication.py

├── bucket_sort.py

├── compute_sqrt.py

├── duplicate_string.py

├── missing_duplicate_string.py

└── odds_first.py

├── dcf

├── modular_arithmetic_brackets.py

├── reverse_string.py

├── solve_equation.py

└── stack_manipulation.py

├── regular

├── cycle_navigation.py

├── even_pairs.py

├── modular_arithmetic.py

└── parity_check.py

└── task.py

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # Distribution / packaging

7 | .Python

8 | build/

9 | develop-eggs/

10 | dist/

11 | downloads/

12 | eggs/

13 | .eggs/

14 | lib/

15 | lib64/

16 | parts/

17 | sdist/

18 | var/

19 | wheels/

20 | share/python-wheels/

21 | *.egg-info/

22 | .installed.cfg

23 | *.egg

24 | MANIFEST

25 |

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | # How to Contribute

2 |

3 | ## Contributor License Agreement

4 |

5 | Contributions to this project must be accompanied by a Contributor License

6 | Agreement. You (or your employer) retain the copyright to your contribution,

7 | this simply gives us permission to use and redistribute your contributions as

8 | part of the project. Head over to to see

9 | your current agreements on file or to sign a new one.

10 |

11 | You generally only need to submit a CLA once, so if you've already submitted one

12 | (even if it was for a different project), you probably don't need to do it

13 | again.

14 |

15 | ## Code reviews

16 |

17 | All submissions, including submissions by project members, require review. We

18 | use GitHub pull requests for this purpose. Consult

19 | [GitHub Help](https://help.github.com/articles/about-pull-requests/) for more

20 | information on using pull requests.

21 |

22 | ## Community Guidelines

23 |

24 | This project follows [Google's Open Source Community

25 | Guidelines](https://opensource.google/conduct/).

26 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 |

2 | Apache License

3 | Version 2.0, January 2004

4 | http://www.apache.org/licenses/

5 |

6 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

7 |

8 | 1. Definitions.

9 |

10 | "License" shall mean the terms and conditions for use, reproduction,

11 | and distribution as defined by Sections 1 through 9 of this document.

12 |

13 | "Licensor" shall mean the copyright owner or entity authorized by

14 | the copyright owner that is granting the License.

15 |

16 | "Legal Entity" shall mean the union of the acting entity and all

17 | other entities that control, are controlled by, or are under common

18 | control with that entity. For the purposes of this definition,

19 | "control" means (i) the power, direct or indirect, to cause the

20 | direction or management of such entity, whether by contract or

21 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

22 | outstanding shares, or (iii) beneficial ownership of such entity.

23 |

24 | "You" (or "Your") shall mean an individual or Legal Entity

25 | exercising permissions granted by this License.

26 |

27 | "Source" form shall mean the preferred form for making modifications,

28 | including but not limited to software source code, documentation

29 | source, and configuration files.

30 |

31 | "Object" form shall mean any form resulting from mechanical

32 | transformation or translation of a Source form, including but

33 | not limited to compiled object code, generated documentation,

34 | and conversions to other media types.

35 |

36 | "Work" shall mean the work of authorship, whether in Source or

37 | Object form, made available under the License, as indicated by a

38 | copyright notice that is included in or attached to the work

39 | (an example is provided in the Appendix below).

40 |

41 | "Derivative Works" shall mean any work, whether in Source or Object

42 | form, that is based on (or derived from) the Work and for which the

43 | editorial revisions, annotations, elaborations, or other modifications

44 | represent, as a whole, an original work of authorship. For the purposes

45 | of this License, Derivative Works shall not include works that remain

46 | separable from, or merely link (or bind by name) to the interfaces of,

47 | the Work and Derivative Works thereof.

48 |

49 | "Contribution" shall mean any work of authorship, including

50 | the original version of the Work and any modifications or additions

51 | to that Work or Derivative Works thereof, that is intentionally

52 | submitted to Licensor for inclusion in the Work by the copyright owner

53 | or by an individual or Legal Entity authorized to submit on behalf of

54 | the copyright owner. For the purposes of this definition, "submitted"

55 | means any form of electronic, verbal, or written communication sent

56 | to the Licensor or its representatives, including but not limited to

57 | communication on electronic mailing lists, source code control systems,

58 | and issue tracking systems that are managed by, or on behalf of, the

59 | Licensor for the purpose of discussing and improving the Work, but

60 | excluding communication that is conspicuously marked or otherwise

61 | designated in writing by the copyright owner as "Not a Contribution."

62 |

63 | "Contributor" shall mean Licensor and any individual or Legal Entity

64 | on behalf of whom a Contribution has been received by Licensor and

65 | subsequently incorporated within the Work.

66 |

67 | 2. Grant of Copyright License. Subject to the terms and conditions of

68 | this License, each Contributor hereby grants to You a perpetual,

69 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

70 | copyright license to reproduce, prepare Derivative Works of,

71 | publicly display, publicly perform, sublicense, and distribute the

72 | Work and such Derivative Works in Source or Object form.

73 |

74 | 3. Grant of Patent License. Subject to the terms and conditions of

75 | this License, each Contributor hereby grants to You a perpetual,

76 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

77 | (except as stated in this section) patent license to make, have made,

78 | use, offer to sell, sell, import, and otherwise transfer the Work,

79 | where such license applies only to those patent claims licensable

80 | by such Contributor that are necessarily infringed by their

81 | Contribution(s) alone or by combination of their Contribution(s)

82 | with the Work to which such Contribution(s) was submitted. If You

83 | institute patent litigation against any entity (including a

84 | cross-claim or counterclaim in a lawsuit) alleging that the Work

85 | or a Contribution incorporated within the Work constitutes direct

86 | or contributory patent infringement, then any patent licenses

87 | granted to You under this License for that Work shall terminate

88 | as of the date such litigation is filed.

89 |

90 | 4. Redistribution. You may reproduce and distribute copies of the

91 | Work or Derivative Works thereof in any medium, with or without

92 | modifications, and in Source or Object form, provided that You

93 | meet the following conditions:

94 |

95 | (a) You must give any other recipients of the Work or

96 | Derivative Works a copy of this License; and

97 |

98 | (b) You must cause any modified files to carry prominent notices

99 | stating that You changed the files; and

100 |

101 | (c) You must retain, in the Source form of any Derivative Works

102 | that You distribute, all copyright, patent, trademark, and

103 | attribution notices from the Source form of the Work,

104 | excluding those notices that do not pertain to any part of

105 | the Derivative Works; and

106 |

107 | (d) If the Work includes a "NOTICE" text file as part of its

108 | distribution, then any Derivative Works that You distribute must

109 | include a readable copy of the attribution notices contained

110 | within such NOTICE file, excluding those notices that do not

111 | pertain to any part of the Derivative Works, in at least one

112 | of the following places: within a NOTICE text file distributed

113 | as part of the Derivative Works; within the Source form or

114 | documentation, if provided along with the Derivative Works; or,

115 | within a display generated by the Derivative Works, if and

116 | wherever such third-party notices normally appear. The contents

117 | of the NOTICE file are for informational purposes only and

118 | do not modify the License. You may add Your own attribution

119 | notices within Derivative Works that You distribute, alongside

120 | or as an addendum to the NOTICE text from the Work, provided

121 | that such additional attribution notices cannot be construed

122 | as modifying the License.

123 |

124 | You may add Your own copyright statement to Your modifications and

125 | may provide additional or different license terms and conditions

126 | for use, reproduction, or distribution of Your modifications, or

127 | for any such Derivative Works as a whole, provided Your use,

128 | reproduction, and distribution of the Work otherwise complies with

129 | the conditions stated in this License.

130 |

131 | 5. Submission of Contributions. Unless You explicitly state otherwise,

132 | any Contribution intentionally submitted for inclusion in the Work

133 | by You to the Licensor shall be under the terms and conditions of

134 | this License, without any additional terms or conditions.

135 | Notwithstanding the above, nothing herein shall supersede or modify

136 | the terms of any separate license agreement you may have executed

137 | with Licensor regarding such Contributions.

138 |

139 | 6. Trademarks. This License does not grant permission to use the trade

140 | names, trademarks, service marks, or product names of the Licensor,

141 | except as required for reasonable and customary use in describing the

142 | origin of the Work and reproducing the content of the NOTICE file.

143 |

144 | 7. Disclaimer of Warranty. Unless required by applicable law or

145 | agreed to in writing, Licensor provides the Work (and each

146 | Contributor provides its Contributions) on an "AS IS" BASIS,

147 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

148 | implied, including, without limitation, any warranties or conditions

149 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

150 | PARTICULAR PURPOSE. You are solely responsible for determining the

151 | appropriateness of using or redistributing the Work and assume any

152 | risks associated with Your exercise of permissions under this License.

153 |

154 | 8. Limitation of Liability. In no event and under no legal theory,

155 | whether in tort (including negligence), contract, or otherwise,

156 | unless required by applicable law (such as deliberate and grossly

157 | negligent acts) or agreed to in writing, shall any Contributor be

158 | liable to You for damages, including any direct, indirect, special,

159 | incidental, or consequential damages of any character arising as a

160 | result of this License or out of the use or inability to use the

161 | Work (including but not limited to damages for loss of goodwill,

162 | work stoppage, computer failure or malfunction, or any and all

163 | other commercial damages or losses), even if such Contributor

164 | has been advised of the possibility of such damages.

165 |

166 | 9. Accepting Warranty or Additional Liability. While redistributing

167 | the Work or Derivative Works thereof, You may choose to offer,

168 | and charge a fee for, acceptance of support, warranty, indemnity,

169 | or other liability obligations and/or rights consistent with this

170 | License. However, in accepting such obligations, You may act only

171 | on Your own behalf and on Your sole responsibility, not on behalf

172 | of any other Contributor, and only if You agree to indemnify,

173 | defend, and hold each Contributor harmless for any liability

174 | incurred by, or claims asserted against, such Contributor by reason

175 | of your accepting any such warranty or additional liability.

176 |

177 | END OF TERMS AND CONDITIONS

178 |

179 | APPENDIX: How to apply the Apache License to your work.

180 |

181 | To apply the Apache License to your work, attach the following

182 | boilerplate notice, with the fields enclosed by brackets "[]"

183 | replaced with your own identifying information. (Don't include

184 | the brackets!) The text should be enclosed in the appropriate

185 | comment syntax for the file format. We also recommend that a

186 | file or class name and description of purpose be included on the

187 | same "printed page" as the copyright notice for easier

188 | identification within third-party archives.

189 |

190 | Copyright [yyyy] [name of copyright owner]

191 |

192 | Licensed under the Apache License, Version 2.0 (the "License");

193 | you may not use this file except in compliance with the License.

194 | You may obtain a copy of the License at

195 |

196 | http://www.apache.org/licenses/LICENSE-2.0

197 |

198 | Unless required by applicable law or agreed to in writing, software

199 | distributed under the License is distributed on an "AS IS" BASIS,

200 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

201 | See the License for the specific language governing permissions and

202 | limitations under the License.

203 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Randomized Positional Encodings Boost Length Generalization of Transformers

2 |

3 |

4 |  5 |

5 |

6 |

7 | This repository provides an implementation of our ACL 2023 paper [Randomized Positional Encodings Boost Length Generalization of Transformers](https://arxiv.org/abs/2305.16843).

8 |

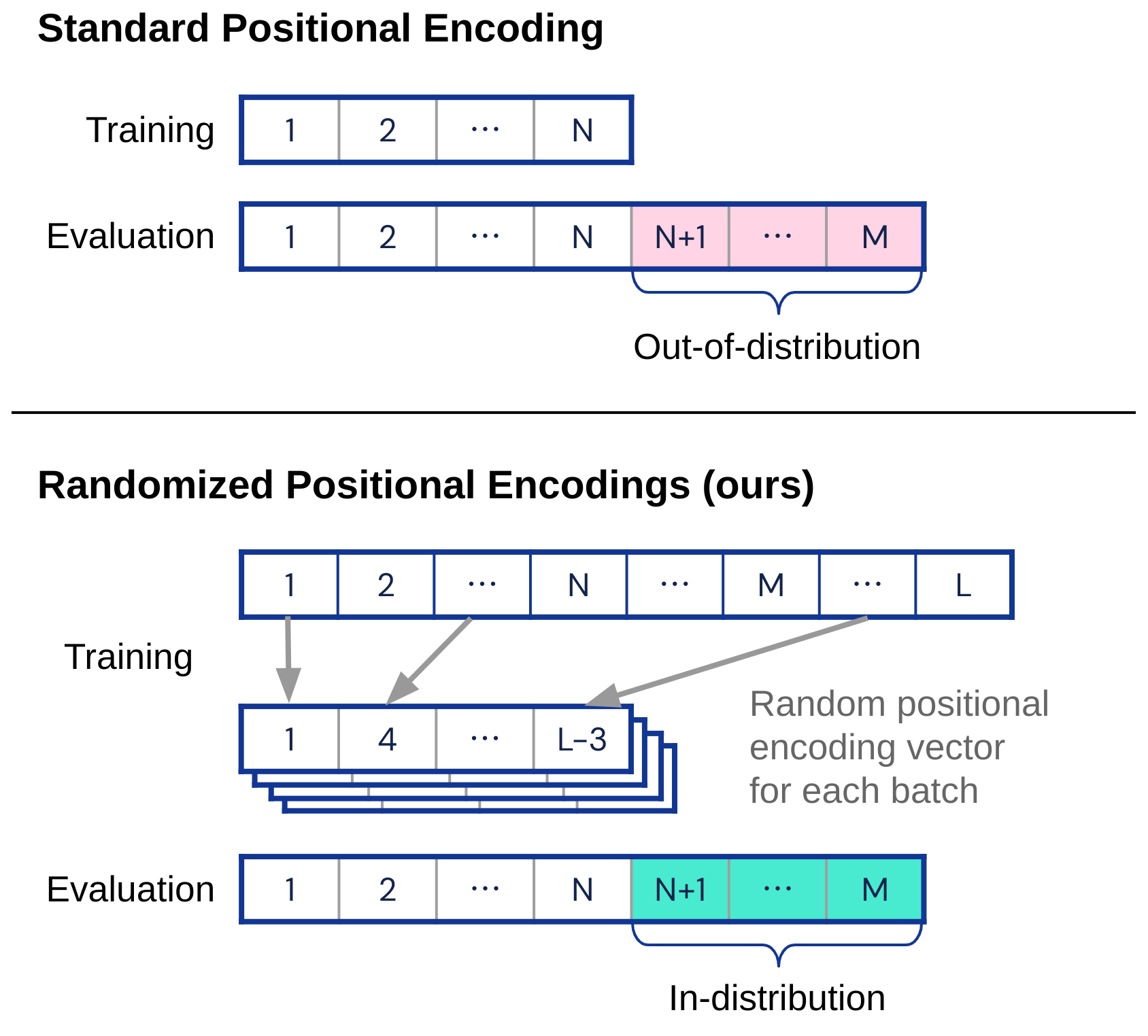

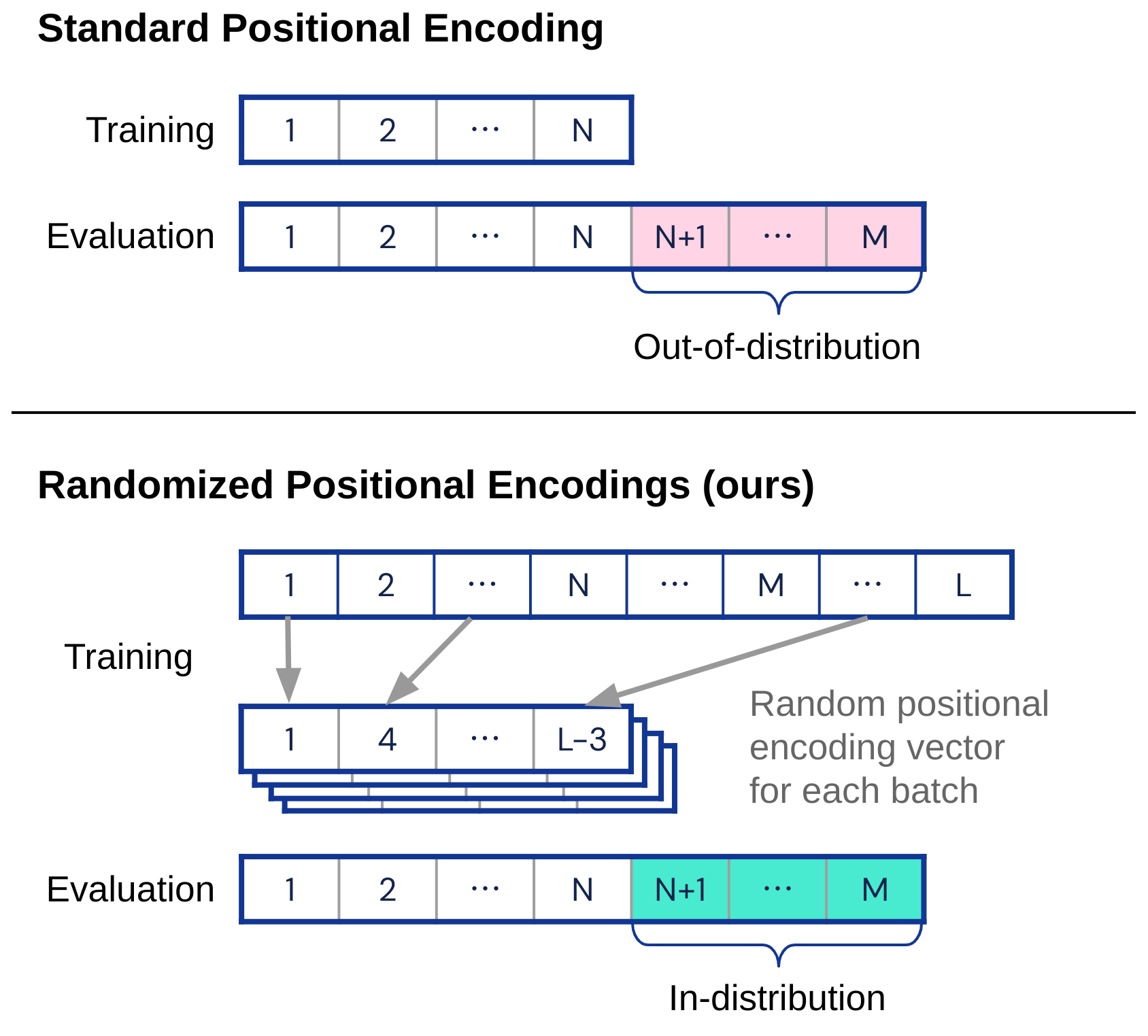

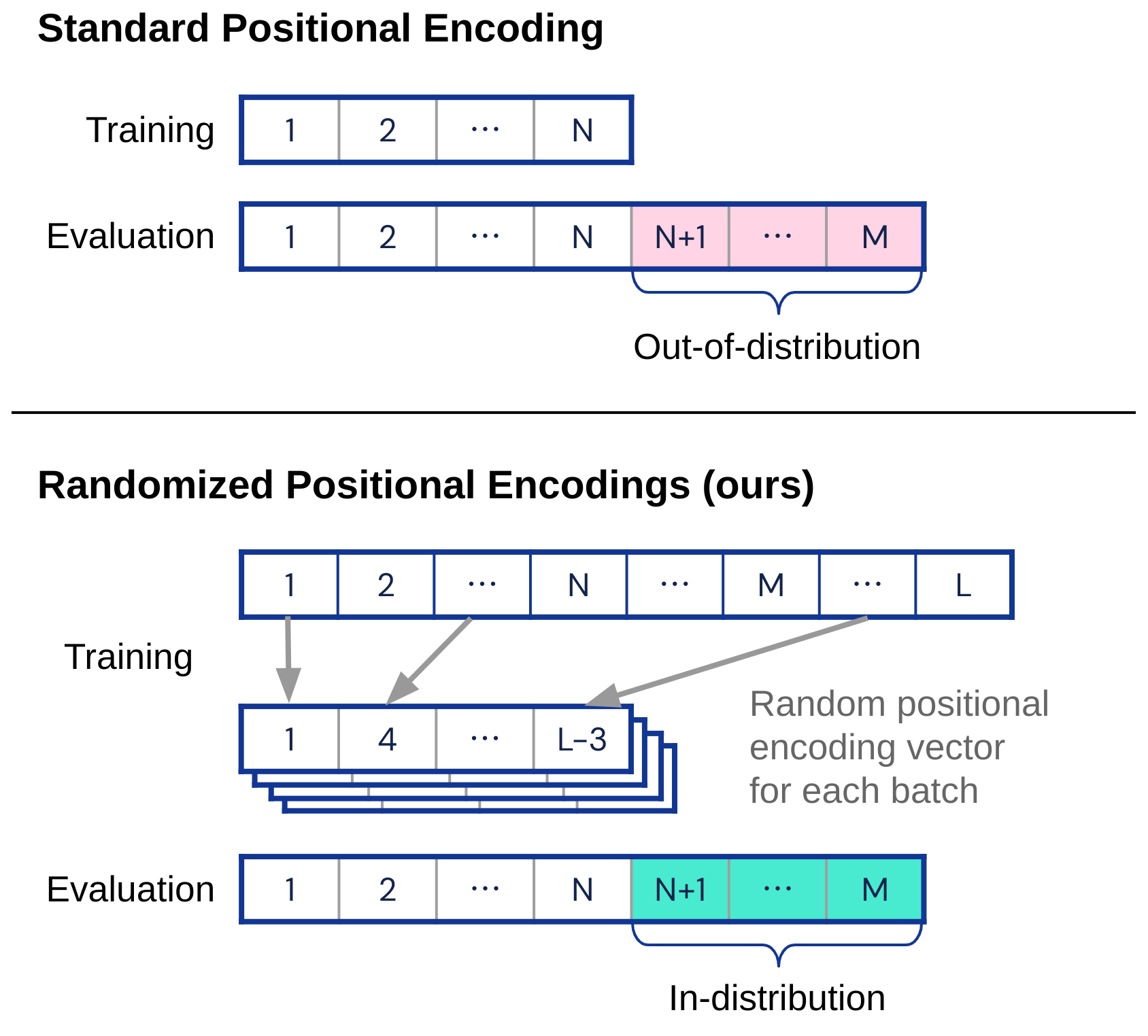

9 | >Transformers have impressive generalization capabilities on tasks with a fixed context length.

10 | However, they fail to generalize to sequences of arbitrary length, even for seemingly simple tasks such as duplicating a string.

11 | Moreover, simply training on longer sequences is inefficient due to the quadratic computation complexity of the global attention mechanism.

12 | In this work, we demonstrate that this failure mode is linked to positional encodings being out-of-distribution for longer sequences (even for relative encodings) and introduce a novel family of positional encodings that can overcome this problem.

13 | Concretely, our randomized positional encoding scheme simulates the positions of longer sequences and randomly selects an ordered subset to fit the sequence's length.

14 | Our large-scale empirical evaluation of 6000 models across 15 algorithmic reasoning tasks shows that our method allows Transformers to generalize to sequences of unseen length (increasing test accuracy by 12.0% on average).

15 |

16 | It is based on [JAX](https://jax.readthedocs.io) and [Haiku](https://dm-haiku.readthedocs.io) and contains all the code, datasets, and models necessary to reproduce the paper's results.

17 |

18 |

19 | ## Content

20 |

21 | ```

22 | .

23 | ├── models

24 | │ ├── positional_encodings.py

25 | │ ├── transformer.py - Transformer (Vaswani et al., 2017)

26 | │ └── transformer_utils.py

27 | ├── tasks

28 | │ ├── cs - Context-sensitive tasks

29 | │ ├── dcf - Deterministic context-free tasks

30 | │ ├── regular - Regular tasks

31 | │ └── task.py - Abstract `GeneralizationTask`

32 | ├── experiments

33 | | ├── constants.py - Training/Evaluation constants

34 | | ├── curriculum.py - Training curricula (over sequence lengths)

35 | | ├── example.py - Example traning script

36 | | ├── range_evaluation.py - Evaluation loop (test sequences lengths)

37 | | ├── training.py - Training loop

38 | | └── utils.py - Utility functions

39 | ├── README.md

40 | └── requirements.txt - Dependencies

41 | ```

42 |

43 |

44 | ## Installation

45 |

46 | Clone the source code into a local directory:

47 | ```bash

48 | git clone https://github.com/google-deepmind/randomized_positional_encodings.git

49 | cd randomized_positional_encodings

50 | ```

51 |

52 | `pip install -r requirements.txt` will install all required dependencies.

53 | This is best done inside a [conda environment](https://www.anaconda.com/).

54 | To that end, install [Anaconda](https://www.anaconda.com/download#downloads).

55 | Then, create and activate the conda environment:

56 | ```bash

57 | conda create --name randomized_positional_encodings

58 | conda activate randomized_positional_encodings

59 | ```

60 |

61 | Install `pip` and use it to install all the dependencies:

62 | ```bash

63 | conda install pip

64 | pip install -r requirements.txt

65 | ```

66 |

67 | If you have a GPU available (highly recommended for fast training), then you can install JAX with CUDA support.

68 | ```bash

69 | pip install --upgrade "jax[cuda12_pip]" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

70 | ```

71 | Note that the jax version must correspond to the existing CUDA installation you wish to use (CUDA 12 in the example above).

72 | Please see the [JAX documentation](https://github.com/google/jax#installation) for more details.

73 |

74 |

75 | ## Usage

76 |

77 | Before running any code, make sure to activate the conda environment and set the `PYTHONPATH`:

78 | ```bash

79 | conda activate randomized_positional_encodings

80 | export PYTHONPATH=$(pwd)/..

81 | ```

82 |

83 | We provide an example of a training and evaluation run at:

84 | ```bash

85 | python experiments/example.py

86 | ```

87 |

88 |

89 | ## Citing this work

90 |

91 | ```bibtex

92 | @inproceedings{ruoss2023randomized,

93 | author = {Anian Ruoss and

94 | Gr{\'{e}}goire Del{\'{e}}tang and

95 | Tim Genewein and

96 | Jordi Grau{-}Moya and

97 | R{\'{o}}bert Csord{\'{a}}s and

98 | Mehdi Bennani and

99 | Shane Legg and

100 | Joel Veness},

101 | title = {Randomized Positional Encodings Boost Length Generalization of Transformers},

102 | booktitle = {61st Annual Meeting of the Association for Computational Linguistics}

103 | year = {2023},

104 | }

105 | ```

106 |

107 |

108 | ## License and disclaimer

109 |

110 | Copyright 2023 DeepMind Technologies Limited

111 |

112 | All software is licensed under the Apache License, Version 2.0 (Apache 2.0);

113 | you may not use this file except in compliance with the Apache 2.0 license.

114 | You may obtain a copy of the Apache 2.0 license at:

115 | https://www.apache.org/licenses/LICENSE-2.0

116 |

117 | All other materials are licensed under the Creative Commons Attribution 4.0

118 | International License (CC-BY). You may obtain a copy of the CC-BY license at:

119 | https://creativecommons.org/licenses/by/4.0/legalcode

120 |

121 | Unless required by applicable law or agreed to in writing, all software and

122 | materials distributed here under the Apache 2.0 or CC-BY licenses are

123 | distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND,

124 | either express or implied. See the licenses for the specific language governing

125 | permissions and limitations under those licenses.

126 |

127 | This is not an official Google product.

128 |

--------------------------------------------------------------------------------

/experiments/constants.py:

--------------------------------------------------------------------------------

1 | # Copyright 2024 DeepMind Technologies Limited

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

16 | """Constants for our length generalization experiments."""

17 |

18 | import functools

19 |

20 | from randomized_positional_encodings.experiments import curriculum as curriculum_lib

21 | from randomized_positional_encodings.models import transformer

22 | from randomized_positional_encodings.tasks.cs import binary_addition

23 | from randomized_positional_encodings.tasks.cs import binary_multiplication

24 | from randomized_positional_encodings.tasks.cs import bucket_sort

25 | from randomized_positional_encodings.tasks.cs import compute_sqrt

26 | from randomized_positional_encodings.tasks.cs import duplicate_string

27 | from randomized_positional_encodings.tasks.cs import missing_duplicate_string

28 | from randomized_positional_encodings.tasks.cs import odds_first

29 | from randomized_positional_encodings.tasks.dcf import modular_arithmetic_brackets

30 | from randomized_positional_encodings.tasks.dcf import reverse_string

31 | from randomized_positional_encodings.tasks.dcf import solve_equation

32 | from randomized_positional_encodings.tasks.dcf import stack_manipulation

33 | from randomized_positional_encodings.tasks.regular import cycle_navigation

34 | from randomized_positional_encodings.tasks.regular import even_pairs

35 | from randomized_positional_encodings.tasks.regular import modular_arithmetic

36 | from randomized_positional_encodings.tasks.regular import parity_check

37 |

38 |

39 | MODEL_BUILDERS = {

40 | 'transformer_encoder': functools.partial(

41 | transformer.make_transformer,

42 | transformer_module=transformer.TransformerEncoder, # pytype: disable=module-attr

43 | ),

44 | }

45 |

46 | CURRICULUM_BUILDERS = {

47 | 'fixed': curriculum_lib.FixedCurriculum,

48 | 'regular_increase': curriculum_lib.RegularIncreaseCurriculum,

49 | 'reverse_exponential': curriculum_lib.ReverseExponentialCurriculum,

50 | 'uniform': curriculum_lib.UniformCurriculum,

51 | }

52 |

53 | TASK_BUILDERS = {

54 | 'even_pairs': even_pairs.EvenPairs,

55 | 'modular_arithmetic': functools.partial(

56 | modular_arithmetic.ModularArithmetic, modulus=5

57 | ),

58 | 'parity_check': parity_check.ParityCheck,

59 | 'cycle_navigation': cycle_navigation.CycleNavigation,

60 | 'stack_manipulation': stack_manipulation.StackManipulation,

61 | 'reverse_string': functools.partial(

62 | reverse_string.ReverseString, vocab_size=2

63 | ),

64 | 'modular_arithmetic_brackets': functools.partial(

65 | modular_arithmetic_brackets.ModularArithmeticBrackets,

66 | modulus=5,

67 | mult=True,

68 | ),

69 | 'solve_equation': functools.partial(

70 | solve_equation.SolveEquation, modulus=5

71 | ),

72 | 'duplicate_string': functools.partial(

73 | duplicate_string.DuplicateString, vocab_size=2

74 | ),

75 | 'missing_duplicate_string': missing_duplicate_string.MissingDuplicateString,

76 | 'odds_first': functools.partial(odds_first.OddsFirst, vocab_size=2),

77 | 'binary_addition': binary_addition.BinaryAddition,

78 | 'binary_multiplication': binary_multiplication.BinaryMultiplication,

79 | 'compute_sqrt': compute_sqrt.ComputeSqrt,

80 | 'bucket_sort': functools.partial(bucket_sort.BucketSort, vocab_size=5),

81 | }

82 |

83 | TASK_LEVELS = {

84 | 'even_pairs': 'regular',

85 | 'modular_arithmetic': 'regular',

86 | 'parity_check': 'regular',

87 | 'cycle_navigation': 'regular',

88 | 'stack_manipulation': 'dcf',

89 | 'reverse_string': 'dcf',

90 | 'modular_arithmetic_brackets': 'dcf',

91 | 'solve_equation': 'dcf',

92 | 'duplicate_string': 'cs',

93 | 'missing_duplicate_string': 'cs',

94 | 'odds_first': 'cs',

95 | 'binary_addition': 'cs',

96 | 'binary_multiplication': 'cs',

97 | 'compute_sqrt': 'cs',

98 | 'bucket_sort': 'cs',

99 | }

100 |

--------------------------------------------------------------------------------

/experiments/curriculum.py:

--------------------------------------------------------------------------------

1 | # Copyright 2024 DeepMind Technologies Limited

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

16 | """Curricula over sequence lengths used to evaluate length generalization.

17 |

18 | Allows to sample different sequence lengths along training. For instance,

19 | one might want to start with length=1 and regularly increase the length by 1,

20 | every 50k steps.

21 | """

22 |

23 | import abc

24 | from collections.abc import Collection

25 | import random

26 |

27 | import numpy as np

28 |

29 |

30 | class Curriculum(abc.ABC):

31 | """Curriculum to sample lengths."""

32 |

33 | @abc.abstractmethod

34 | def sample_sequence_length(self, step: int) -> int:

35 | """Samples a sequence length from the current distribution."""

36 |

37 |

38 | class FixedCurriculum(Curriculum):

39 | """A fixed curriculum, always sampling the same sequence length."""

40 |

41 | def __init__(self, sequence_length: int):

42 | """Initializes.

43 |

44 | Args:

45 | sequence_length: The sequence length to sample.

46 | """

47 | super().__init__()

48 | self._sequence_length = sequence_length

49 |

50 | def sample_sequence_length(self, step: int) -> int:

51 | """Returns a fixed sequence length."""

52 | del step

53 | return self._sequence_length

54 |

55 |

56 | class UniformCurriculum(Curriculum):

57 | """A uniform curriculum, sampling different sequence lengths."""

58 |

59 | def __init__(self, values: Collection[int]):

60 | """Initializes.

61 |

62 | Args:

63 | values: The sequence lengths to sample.

64 | """

65 | super().__init__()

66 | self._values = tuple(values)

67 |

68 | def sample_sequence_length(self, step: int) -> int:

69 | """Returns a sequence length sampled from a uniform distribution."""

70 | del step

71 | return random.choice(self._values)

72 |

73 |

74 | class ReverseExponentialCurriculum(Curriculum):

75 | """A reverse exponential curriculum, sampling different sequence lengths."""

76 |

77 | def __init__(self, values: Collection[int], tau: bool):

78 | """Initializes.

79 |

80 | Args:

81 | values: The sequence lengths to sample.

82 | tau: The exponential rate to use.

83 | """

84 | super().__init__()

85 | self._values = tuple(values)

86 | self._tau = tau

87 |

88 | def sample_sequence_length(self, step: int) -> int:

89 | """Returns a length sampled from a reverse exponential distribution."""

90 | del step

91 | probs = self._tau ** np.array(self._values)

92 | probs = np.array(probs, dtype=np.float32)

93 | probs = probs / np.sum(probs)

94 | return np.random.choice(self._values, p=probs)

95 |

96 |

97 | class RegularIncreaseCurriculum(Curriculum):

98 | """Curriculum for sequence lengths with a regular increase."""

99 |

100 | def __init__(

101 | self,

102 | initial_sequence_length: int,

103 | increase_frequency: int,

104 | increase_amount: int,

105 | sample_all_length: bool,

106 | ):

107 | """Initializes.

108 |

109 | Args:

110 | initial_sequence_length: The value of the sequence length at the beginning

111 | of the curriculum.

112 | increase_frequency: How often we increase the possible sequence length.

113 | increase_amount: The amount of the increase in length.

114 | sample_all_length: Whether to sample all length lower than the current one

115 | or just return the current one.

116 | """

117 | super().__init__()

118 | self._initial_sequence_length = initial_sequence_length

119 | self._increase_frequency = increase_frequency

120 | self._increase_amount = increase_amount

121 | self._sample_all_length = sample_all_length

122 |

123 | def sample_sequence_length(self, step: int) -> int:

124 | """Returns a sequence length from the curriculum with the current step."""

125 | if not self._sample_all_length:

126 | return self._initial_sequence_length + self._increase_amount * (

127 | step // self._increase_frequency

128 | )

129 | return (

130 | self._initial_sequence_length

131 | + self._increase_amount

132 | * np.random.randint(0, step // self._increase_frequency + 1)

133 | )

134 |

--------------------------------------------------------------------------------

/experiments/example.py:

--------------------------------------------------------------------------------

1 | # Copyright 2024 DeepMind Technologies Limited

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

16 | """Example script to train and evaluate a network."""

17 |

18 | from absl import app

19 | from absl import flags

20 | import haiku as hk

21 | import jax.numpy as jnp

22 | import numpy as np

23 |

24 | from randomized_positional_encodings.experiments import constants

25 | from randomized_positional_encodings.experiments import curriculum as curriculum_lib

26 | from randomized_positional_encodings.experiments import training

27 | from randomized_positional_encodings.experiments import utils

28 |

29 |

30 | _BATCH_SIZE = flags.DEFINE_integer(

31 | 'batch_size',

32 | default=128,

33 | help='Training batch size.',

34 | lower_bound=1,

35 | )

36 | _SEQUENCE_LENGTH = flags.DEFINE_integer(

37 | 'sequence_length',

38 | default=40,

39 | help='Maximum training sequence length.',

40 | lower_bound=1,

41 | )

42 | _TASK = flags.DEFINE_string(

43 | 'task',

44 | default='missing_duplicate_string',

45 | help='Length generalization task (see `constants.py` for other tasks).',

46 | )

47 | _ARCHITECTURE = flags.DEFINE_string(

48 | 'architecture',

49 | default='transformer_encoder',

50 | help='Model architecture (see `constants.py` for other architectures).',

51 | )

52 | _IS_AUTOREGRESSIVE = flags.DEFINE_boolean(

53 | 'is_autoregressive',

54 | default=False,

55 | help='Whether to use autoregressive sampling or not.',

56 | )

57 | _COMPUTATION_STEPS_MULT = flags.DEFINE_integer(

58 | 'computation_steps_mult',

59 | default=0,

60 | help=(

61 | 'The amount of computation tokens to append to the input tape (defined'

62 | ' as a multiple of the input length)'

63 | ),

64 | lower_bound=0,

65 | )

66 | # The architecture parameters depend on the architecture, so we cannot define

67 | # them as via flags. See `constants.py` for the required values.

68 | _ARCHITECTURE_PARAMS = {

69 | 'num_layers': 5,

70 | 'embedding_dim': 64,

71 | 'dropout_prob': 0.1,

72 | 'positional_encodings': 'NOISY_RELATIVE',

73 | 'positional_encodings_params': {'noise_max_length': 2048},

74 | }

75 |

76 |

77 | def main(_) -> None:

78 | # Create the task.

79 | curriculum = curriculum_lib.UniformCurriculum(

80 | values=list(range(1, _SEQUENCE_LENGTH.value + 1))

81 | )

82 | task = constants.TASK_BUILDERS[_TASK.value]()

83 |

84 | # Create the model.

85 | single_output = task.output_length(10) == 1

86 | model = constants.MODEL_BUILDERS[_ARCHITECTURE.value](

87 | output_size=task.output_size,

88 | return_all_outputs=True,

89 | **_ARCHITECTURE_PARAMS,

90 | )

91 | if _IS_AUTOREGRESSIVE.value:

92 | if 'transformer' not in _ARCHITECTURE.value:

93 | model = utils.make_model_with_targets_as_input(

94 | model, _COMPUTATION_STEPS_MULT.value

95 | )

96 | model = utils.add_sampling_to_autoregressive_model(model, single_output)

97 | else:

98 | model = utils.make_model_with_empty_targets(

99 | model, task, _COMPUTATION_STEPS_MULT.value, single_output

100 | )

101 | model = hk.transform(model)

102 |

103 | # Create the loss and accuracy based on the pointwise ones.

104 | def loss_fn(output, target):

105 | loss = jnp.mean(jnp.sum(task.pointwise_loss_fn(output, target), axis=-1))

106 | return loss, {}

107 |

108 | def accuracy_fn(output, target):

109 | mask = task.accuracy_mask(target)

110 | return jnp.sum(mask * task.accuracy_fn(output, target)) / jnp.sum(mask)

111 |

112 | # Create the final training parameters.

113 | training_params = training.ClassicTrainingParams(

114 | seed=0,

115 | model_init_seed=0,

116 | training_steps=10_000,

117 | log_frequency=100,

118 | length_curriculum=curriculum,

119 | batch_size=_BATCH_SIZE.value,

120 | task=task,

121 | model=model,

122 | loss_fn=loss_fn,

123 | learning_rate=1e-3,

124 | l2_weight=0.0,

125 | accuracy_fn=accuracy_fn,

126 | compute_full_range_test=True,

127 | max_range_test_length=100,

128 | range_test_total_batch_size=512,

129 | range_test_sub_batch_size=64,

130 | is_autoregressive=_IS_AUTOREGRESSIVE.value,

131 | )

132 |

133 | training_worker = training.TrainingWorker(training_params, use_tqdm=True)

134 | _, eval_results, _ = training_worker.run()

135 |

136 | # Gather results and print final score.

137 | accuracies = [r['accuracy'] for r in eval_results]

138 | score = np.mean(accuracies[_SEQUENCE_LENGTH.value + 1 :])

139 | print(f'Score: {score}')

140 |

141 |

142 | if __name__ == '__main__':

143 | app.run(main)

144 |

--------------------------------------------------------------------------------

/experiments/range_evaluation.py:

--------------------------------------------------------------------------------

1 | # Copyright 2024 DeepMind Technologies Limited

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

16 | """Evaluation of a network on sequences of different lengths."""

17 |

18 | import dataclasses

19 | import random

20 | from typing import Any, Callable, Mapping

21 |

22 | from absl import logging

23 | import chex

24 | import haiku as hk

25 | import jax

26 | import jax.numpy as jnp

27 | import numpy as np

28 | import tqdm

29 |

30 | _Batch = Mapping[str, jnp.ndarray]

31 |

32 |

33 | @dataclasses.dataclass

34 | class EvaluationParams:

35 | """The parameters used for range evaluation of networks."""

36 |

37 | model: hk.Transformed

38 | params: chex.ArrayTree

39 |

40 | accuracy_fn: Callable[[jnp.ndarray, jnp.ndarray], jnp.ndarray]

41 | sample_batch: Callable[[chex.Array, int, int], _Batch]

42 |

43 | max_test_length: int

44 | total_batch_size: int

45 | sub_batch_size: int

46 |

47 | is_autoregressive: bool = False

48 |

49 |

50 | def range_evaluation(

51 | eval_params: EvaluationParams,

52 | use_tqdm: bool = False,

53 | ) -> list[Mapping[str, Any]]:

54 | """Evaluates the model on longer, never seen strings and log the results.

55 |

56 | Args:

57 | eval_params: The evaluation parameters, see above.

58 | use_tqdm: Whether to use a progress bar with tqdm.

59 |

60 | Returns:

61 | The list of dicts containing the accuracies.

62 | """

63 | model = eval_params.model

64 | params = eval_params.params

65 |

66 | random.seed(1)

67 | np.random.seed(1)

68 | rng_seq = hk.PRNGSequence(1)

69 |

70 | if eval_params.is_autoregressive:

71 | apply_fn = jax.jit(model.apply, static_argnames=('sample',))

72 | else:

73 | apply_fn = jax.jit(model.apply)

74 |

75 | results = []

76 | lengths = range(1, eval_params.max_test_length + 1)

77 |

78 | if use_tqdm:

79 | lengths = tqdm.tqdm(lengths)

80 |

81 | for length in lengths:

82 | # We need to clear the cache of jitted functions, to avoid overflow as we

83 | # are jitting len(lengths) ones, which can be a lot.

84 | apply_fn.clear_cache()

85 | sub_accuracies = []

86 |

87 | for _ in range(eval_params.total_batch_size // eval_params.sub_batch_size):

88 | batch = eval_params.sample_batch(

89 | next(rng_seq), eval_params.sub_batch_size, length

90 | )

91 |

92 | if eval_params.is_autoregressive:

93 | outputs = apply_fn(

94 | params,

95 | next(rng_seq),

96 | batch['input'],

97 | jnp.empty_like(batch['output']),

98 | sample=True,

99 | )

100 | else:

101 | outputs = apply_fn(params, next(rng_seq), batch['input'])

102 |

103 | sub_accuracies.append(

104 | float(np.mean(eval_params.accuracy_fn(outputs, batch['output'])))

105 | )

106 | log_data = {

107 | 'length': length,

108 | 'accuracy': np.mean(sub_accuracies),

109 | }

110 | logging.info(log_data)

111 | results.append(log_data)

112 | return results

113 |

--------------------------------------------------------------------------------

/experiments/training.py:

--------------------------------------------------------------------------------

1 | # Copyright 2024 DeepMind Technologies Limited

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

16 | """Training loop for length generalization experiments."""

17 |

18 | import dataclasses

19 | import functools

20 | import random

21 | from typing import Any, Callable, Mapping, Optional

22 |

23 | from absl import logging

24 | import chex

25 | import haiku as hk

26 | import jax

27 | import jax.numpy as jnp

28 | import numpy as np

29 | import optax

30 | import tqdm

31 |

32 | from randomized_positional_encodings.experiments import curriculum as curriculum_lib

33 | from randomized_positional_encodings.experiments import range_evaluation

34 | from randomized_positional_encodings.tasks import task as task_lib

35 |

36 |

37 | _Batch = Mapping[str, jnp.ndarray]

38 | _LossMetrics = Optional[Mapping[str, jnp.ndarray]]

39 | _LossFn = Callable[[chex.Array, chex.Array], tuple[float, _LossMetrics]]

40 | _AccuracyFn = Callable[[chex.Array, chex.Array], float]

41 | _ModelApplyFn = Callable[..., chex.Array]

42 | _MAX_RNGS_RESERVE = 50000

43 |

44 |

45 | @dataclasses.dataclass

46 | class ClassicTrainingParams:

47 | """Parameters needed to train classical architectures."""

48 |

49 | seed: int # Used to sample during forward pass (e.g. from final logits).

50 | model_init_seed: int # Used to initialize model parameters.

51 | training_steps: int

52 | log_frequency: int

53 |

54 | task: task_lib.GeneralizationTask

55 | length_curriculum: curriculum_lib.Curriculum

56 | batch_size: int

57 |

58 | model: hk.Transformed

59 | loss_fn: Callable[[jnp.ndarray, jnp.ndarray], tuple[float, _LossMetrics]]

60 | learning_rate: float

61 | l2_weight: float

62 | test_model: Optional[hk.Transformed] = None

63 | max_grad_norm: float = 1.0

64 | is_autoregressive: bool = False

65 |

66 | compute_full_range_test: bool = False

67 | range_test_total_batch_size: int = 512

68 | range_test_sub_batch_size: int = 64

69 | max_range_test_length: int = 100

70 |

71 | accuracy_fn: Optional[Callable[[jnp.ndarray, jnp.ndarray], jnp.ndarray]] = (

72 | None

73 | )

74 |

75 |

76 | def _apply_loss_and_metrics_fn(

77 | params: hk.Params,

78 | rng_key: chex.PRNGKey,

79 | batch: _Batch,

80 | model_apply_fn: _ModelApplyFn,

81 | loss_fn: _LossFn,

82 | accuracy_fn: _AccuracyFn,

83 | is_autoregressive: bool = False,

84 | ) -> tuple[float, tuple[_LossMetrics, float]]:

85 | """Computes the model output and applies the loss function.

86 |

87 | Depending on whether a model is autoregressive or not, it will have a

88 | different number of input parameters (i.e., autoregressive models also require

89 | the targets as an input).

90 |

91 | Args:

92 | params: The model parameters.

93 | rng_key: The prng key to use for random number generation.

94 | batch: The data (consists of both inputs and outputs).

95 | model_apply_fn: The model function that converts inputs into outputs.

96 | loss_fn: A function that computes the loss for a batch of logits and labels.

97 | accuracy_fn: A function that computes the accuracy for a batch of logits and

98 | labels.

99 | is_autoregressive: Whether the model is autoregressive or not.

100 |

101 | Returns:

102 | The loss of the model for the batch of data, extra loss metrics and the

103 | accuracy, if accuracy_fn is not None.

104 | """

105 | if is_autoregressive:

106 | outputs = model_apply_fn(

107 | params, rng_key, batch["input"], batch["output"], sample=False

108 | )

109 | else:

110 | outputs = model_apply_fn(params, rng_key, batch["input"])

111 |

112 | loss, loss_metrics = loss_fn(outputs, batch["output"])

113 | if accuracy_fn is not None:

114 | accuracy = accuracy_fn(outputs, batch["output"])

115 | else:

116 | accuracy = None

117 | return loss, (loss_metrics, accuracy)

118 |

119 |

120 | @functools.partial(

121 | jax.jit,

122 | static_argnames=(

123 | "model_apply_fn",

124 | "loss_fn",

125 | "accuracy_fn",

126 | "optimizer",

127 | "is_autoregressive",

128 | ),

129 | )

130 | def _update_parameters(

131 | params: hk.Params,

132 | rng_key: chex.PRNGKey,

133 | batch: _Batch,

134 | model_apply_fn: _ModelApplyFn,

135 | loss_fn: _LossFn,

136 | accuracy_fn: _AccuracyFn,

137 | optimizer: optax.GradientTransformation,

138 | opt_state: optax.OptState,

139 | is_autoregressive: bool = False,

140 | ) -> tuple[hk.Params, optax.OptState, tuple[float, _LossMetrics, float]]:

141 | """Applies a single SGD update step to the model parameters.

142 |

143 | Args:

144 | params: The model parameters.

145 | rng_key: The prng key to use for random number generation.

146 | batch: The data (consists of both inputs and outputs).

147 | model_apply_fn: The model function that converts inputs into outputs.

148 | loss_fn: A function that computes the loss for a batch of logits and labels.

149 | accuracy_fn: A function that computes the accuracy for a batch of logits and

150 | labels.

151 | optimizer: The optimizer that computes the updates from the gradients of the

152 | `loss_fn` with respect to the `params` and the previous `opt_state`.

153 | opt_state: The optimizer state, e.g., momentum for each variable when using

154 | Adam.

155 | is_autoregressive: Whether the model is autoregressive or not.

156 |

157 | Returns:

158 | The updated parameters, the new optimizer state, and the loss, loss metrics

159 | and accuracy.

160 | """

161 | (loss, (metrics, accuracy)), grads = jax.value_and_grad(

162 | _apply_loss_and_metrics_fn, has_aux=True

163 | )(

164 | params,

165 | rng_key,

166 | batch,

167 | model_apply_fn,

168 | loss_fn,

169 | accuracy_fn,

170 | is_autoregressive,

171 | )

172 | updates, new_opt_state = optimizer.update(grads, opt_state, params)

173 | new_params = optax.apply_updates(params, updates)

174 | return new_params, new_opt_state, (loss, metrics, accuracy)

175 |

176 |

177 | class TrainingWorker:

178 | """Training worker."""

179 |

180 | def __init__(

181 | self, training_params: ClassicTrainingParams, use_tqdm: bool = False

182 | ):

183 | """Initializes the worker.

184 |

185 | Args:

186 | training_params: The training parameters.

187 | use_tqdm: Whether to add a progress bar to stdout.

188 | """

189 | self._training_params = training_params

190 | self._use_tqdm = use_tqdm

191 | self._params = None

192 | self._step = 0

193 |

194 | def step_for_evaluator(self) -> int:

195 | return self._step

196 |

197 | def run(

198 | self,

199 | ) -> tuple[list[Mapping[str, Any]], list[Mapping[str, Any]], chex.ArrayTree]:

200 | """Trains the model with the provided config.

201 |

202 | Returns:

203 | Results (various training and validation metrics), module parameters

204 | and router parameters.

205 | """

206 | logging.info("Starting training!")

207 | training_params = self._training_params

208 | rngs_reserve = min(_MAX_RNGS_RESERVE, training_params.training_steps)

209 |

210 | random.seed(training_params.seed)

211 | np.random.seed(training_params.seed)

212 | rng_seq = hk.PRNGSequence(training_params.seed)

213 | rng_seq.reserve(rngs_reserve)

214 |

215 | step = 0

216 |

217 | results = []

218 | model = training_params.model

219 | task = training_params.task

220 | length_curriculum = training_params.length_curriculum

221 |

222 | if training_params.l2_weight is None or training_params.l2_weight == 0:

223 | optimizer = optax.adam(training_params.learning_rate)

224 | else:

225 | optimizer = optax.adamw(

226 | training_params.learning_rate, weight_decay=training_params.l2_weight

227 | )

228 |

229 | optimizer = optax.chain(

230 | optax.clip_by_global_norm(training_params.max_grad_norm), optimizer

231 | )

232 |

233 | dummy_batch = task.sample_batch(

234 | next(rng_seq), length=10, batch_size=training_params.batch_size

235 | )

236 | model_init_rng_key = jax.random.PRNGKey(training_params.model_init_seed)

237 |

238 | if training_params.is_autoregressive:

239 | params = model.init(

240 | model_init_rng_key,

241 | dummy_batch["input"],

242 | dummy_batch["output"],

243 | sample=False,

244 | )

245 | else:

246 | params = model.init(model_init_rng_key, dummy_batch["input"])

247 |

248 | opt_state = optimizer.init(params)

249 | self._params, self._step = params, 0

250 |

251 | steps = range(training_params.training_steps + 1)

252 |

253 | if self._use_tqdm:

254 | steps = tqdm.tqdm(steps)

255 |

256 | for step in steps:

257 | # Randomness handled by either python.random or numpy.

258 | length = length_curriculum.sample_sequence_length(step)

259 | # Randomness handled by either jax, python.random or numpy.

260 | train_batch = task.sample_batch(

261 | next(rng_seq), length=length, batch_size=training_params.batch_size

262 | )

263 | params, opt_state, (train_loss, train_metrics, train_accuracy) = (

264 | _update_parameters(

265 | params=params,

266 | rng_key=next(rng_seq),

267 | batch=train_batch,

268 | model_apply_fn=model.apply,

269 | loss_fn=training_params.loss_fn,

270 | accuracy_fn=training_params.accuracy_fn,

271 | optimizer=optimizer,

272 | opt_state=opt_state,

273 | is_autoregressive=training_params.is_autoregressive,

274 | )

275 | )

276 | self._params, self._step = params, step

277 |

278 | log_freq = training_params.log_frequency

279 | if (log_freq > 0) and (step % log_freq == 0):

280 | log_data = {

281 | "step": step,

282 | "train_loss": float(train_loss),

283 | }

284 | if training_params.accuracy_fn is not None:

285 | log_data["train_accuracy"] = float(train_accuracy)

286 | for key, value in train_metrics.items():

287 | log_data[".".join(["train_metrics", key])] = np.array(value)

288 | logging.info(log_data)

289 | results.append(log_data)

290 |

291 | # We need to access this private attribute since the default reserve size

292 | # can not be edited yet.

293 | if not rng_seq._subkeys: # pylint: disable=protected-access

294 | rng_seq.reserve(rngs_reserve)

295 |

296 | eval_results = list()

297 |

298 | if training_params.compute_full_range_test:

299 | eval_params = range_evaluation.EvaluationParams(

300 | model=training_params.test_model or model,

301 | params=params,

302 | accuracy_fn=training_params.accuracy_fn,

303 | sample_batch=task.sample_batch,

304 | max_test_length=training_params.max_range_test_length,

305 | total_batch_size=training_params.range_test_total_batch_size,

306 | sub_batch_size=training_params.range_test_sub_batch_size,

307 | is_autoregressive=training_params.is_autoregressive,

308 | )

309 | eval_results = range_evaluation.range_evaluation(

310 | eval_params,

311 | use_tqdm=True,

312 | )

313 |

314 | return results, eval_results, params

315 |

--------------------------------------------------------------------------------

/experiments/utils.py:

--------------------------------------------------------------------------------

1 | # Copyright 2024 DeepMind Technologies Limited

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

16 | """Provides utility functions for training and evaluation."""

17 |

18 | import inspect

19 | from typing import Any, Callable

20 |

21 | import chex

22 | import haiku as hk

23 | from jax import nn as jnn

24 | from jax import numpy as jnp

25 |

26 | from randomized_positional_encodings.tasks import task

27 |

28 | COMPUTATION_EMPTY_TOKEN = 0

29 | OUTPUT_EMPTY_TOKEN = 1

30 |

31 |

32 | def make_model_with_empty_targets(

33 | model: Callable[[chex.Array], chex.Array],

34 | generalization_task: task.GeneralizationTask,

35 | computation_steps_mult: int = 0,

36 | single_output: bool = False,

37 | ) -> Callable[[chex.Array], chex.Array]:

38 | """Returns a wrapped model that pads the inputs to match the output length.

39 |

40 | For a given input tape `input_tape` of vocabulary size `vocab_size`, the

41 | wrapped model will process a tape of the format

42 | [`input_tape`, `empty_tape`], where the empty tape token is `vocab_size + 1`.

43 | The `empty_tape` has the same length as the task output.

44 |

45 | Args:

46 | model: A model function that converts inputs to outputs.

47 | generalization_task: The task that we train on.

48 | computation_steps_mult: The amount of empty cells to append to the input

49 | tape. This variable is a multiplier and the actual number of cells is

50 | `computation_steps_mult * input_length`.

51 | single_output: Whether to return the squeezed tensor of values.

52 | """

53 |

54 | def new_model(x: chex.Array) -> chex.Array:

55 | batch_size, input_length, input_size = x.shape

56 | output_length = generalization_task.output_length(input_length)

57 | extra_dims_onehot = 1 + int(computation_steps_mult > 0)

58 | final_input_size = input_size + extra_dims_onehot

59 |

60 | # Add trailing zeros to account for new final_input_size.

61 | extra_zeros_x = jnp.zeros(

62 | (batch_size, input_length, final_input_size - input_size)

63 | )

64 | x = jnp.concatenate([x, extra_zeros_x], axis=-1)

65 |

66 | computation_tape = jnp.full(

67 | (batch_size, computation_steps_mult * input_length),

68 | fill_value=input_size + COMPUTATION_EMPTY_TOKEN,

69 | )

70 | computation_tape = jnn.one_hot(

71 | computation_tape, num_classes=final_input_size

72 | )

73 |

74 | output_tokens = jnp.full(

75 | (batch_size, output_length),

76 | fill_value=input_size

77 | + OUTPUT_EMPTY_TOKEN

78 | - int(computation_steps_mult == 0),

79 | )

80 | output_tokens = jnn.one_hot(output_tokens, num_classes=final_input_size)

81 | final_input = jnp.concatenate([x, computation_tape, output_tokens], axis=1)

82 |

83 | if 'input_length' in inspect.getfullargspec(model).args:

84 | output = model(final_input, input_length=input_length) # pytype: disable=wrong-keyword-args

85 | else:

86 | output = model(final_input)

87 | output = output[:, -output_length:]

88 | if single_output:

89 | output = jnp.squeeze(output, axis=1)

90 | return output

91 |

92 | return new_model

93 |

94 |

95 | def make_model_with_targets_as_input(

96 | model: Callable[[chex.Array], chex.Array], computation_steps_mult: int = 0

97 | ) -> Callable[[chex.Array, chex.Array], chex.Array]:

98 | """Returns a wrapped model that takes the targets as inputs.

99 |

100 | This function is useful for the autoregressive case where we pass the targets

101 | as inputs to the model. The final input looks like:

102 | [inputs, computation_tokens, output_token, targets]

103 |

104 | Args:

105 | model: A haiku model that takes 'x' as input.

106 | computation_steps_mult: The amount of computation tokens to append to the

107 | input tape. This variable is a multiplier and the actual number of cell is

108 | computation_steps_mult * input_length.

109 | """

110 |

111 | def new_model(x: chex.Array, y: chex.Array) -> chex.Array:

112 | """Returns an output from the inputs and targets.

113 |

114 | Args:

115 | x: One-hot input vectors, shape (B, T, input_size).

116 | y: One-hot target output vectors, shape (B, T, output_size).

117 | """

118 | batch_size, input_length, input_size = x.shape

119 | _, output_length, output_size = y.shape

120 | extra_dims_onehot = 1 + int(computation_steps_mult > 0)

121 | final_input_size = max(input_size, output_size) + extra_dims_onehot

122 |

123 | # Add trailing zeros to account for new final_input_size.

124 | extra_zeros_x = jnp.zeros(

125 | (batch_size, input_length, final_input_size - input_size)

126 | )

127 | x = jnp.concatenate([x, extra_zeros_x], axis=-1)

128 | extra_zeros_y = jnp.zeros(

129 | (batch_size, output_length, final_input_size - output_size)

130 | )

131 | y = jnp.concatenate([y, extra_zeros_y], axis=-1)

132 |

133 | computation_tape = jnp.full(

134 | (batch_size, computation_steps_mult * input_length),

135 | fill_value=input_size + COMPUTATION_EMPTY_TOKEN,

136 | )

137 | computation_tape = jnn.one_hot(

138 | computation_tape, num_classes=final_input_size

139 | )

140 |

141 | output_token = jnp.full(

142 | (batch_size, 1),

143 | fill_value=input_size

144 | + OUTPUT_EMPTY_TOKEN

145 | - int(computation_steps_mult == 0),

146 | )

147 | output_token = jnn.one_hot(output_token, num_classes=final_input_size)

148 | final_input = jnp.concatenate(

149 | [x, computation_tape, output_token, y], axis=1

150 | )

151 |

152 | if 'input_length' in inspect.getfullargspec(model).args:

153 | output = model(final_input, input_length=input_length) # pytype: disable=wrong-keyword-args

154 | else:

155 | output = model(final_input)

156 |

157 | return output[:, -output_length - 1 : -1]

158 |

159 | return new_model

160 |

161 |

162 | def add_sampling_to_autoregressive_model(

163 | model: Callable[[chex.Array, chex.Array], chex.Array],

164 | single_output: bool = False,

165 | ) -> Callable[[chex.Array, chex.Array, bool], chex.Array]:

166 | """Adds a 'sample' argument to the model, to use autoregressive sampling."""

167 |

168 | def new_model_with_sampling(

169 | x: chex.Array,

170 | y: chex.Array,

171 | sample: bool,

172 | ) -> chex.Array:

173 | """Returns an autoregressive model if `sample == True and output_size > 1`.

174 |

175 | Args:

176 | x: The input sequences of shape (b, t, i), where i is the input size.

177 | y: The target sequences of shape (b, t, o), where o is the output size.

178 | sample: Whether to evaluate the model using autoregressive decoding.

179 | """

180 | output_length = 1 if len(y.shape) == 2 else y.shape[1]

181 | output_size = y.shape[-1]

182 |

183 | if not sample or output_length == 1:

184 | output = model(x, y)

185 |

186 | else:

187 |

188 | def evaluate_model_autoregressively(

189 | idx: int,

190 | predictions: chex.Array,

191 | ) -> chex.Array:

192 | """Iteratively evaluates the model based on the previous predictions.

193 |

194 | Args:

195 | idx: The index of the target sequence that should be evaluated.

196 | predictions: The logits for the predictions up to but not including

197 | the index `idx`.

198 |

199 | Returns:

200 | The `predictions` array modified only at position `idx` where the

201 | logits for index `idx` have been inserted.

202 | """

203 | one_hot_predictions = jnn.one_hot(

204 | jnp.argmax(predictions, axis=-1),

205 | num_classes=output_size,

206 | )

207 | logits = model(x, one_hot_predictions)

208 | return predictions.at[:, idx].set(logits[:, idx])

209 |

210 | output = hk.fori_loop(

211 | lower=0,

212 | upper=output_length,

213 | body_fun=evaluate_model_autoregressively,

214 | init_val=jnp.empty_like(y),

215 | )

216 |

217 | if single_output:

218 | output = jnp.squeeze(output, axis=1)

219 | return output

220 |

221 | return new_model_with_sampling

222 |

223 |

224 | def update_tree_with_new_containers(

225 | tree: Any, update_dict: dict[str, Any]

226 | ) -> None:

227 | """Updates a dataclass tree in place, adding new containers.

228 |

229 | This method is useful for the nested library to add fields to a tree, for

230 | which containers have not been created.

231 | For instance, if A is a dataclass with attribute architecture_params, and we

232 | want to add the value architecture_params.rnn_model.size, we need to create

233 | the container 'rnn_model' inside architecture_params.

234 |

235 | Args:

236 | tree: An object with attribute (typically a dataclass).

237 | update_dict: A dict of nested updates. See example above.

238 | """

239 | for key in update_dict:

240 | subkeys = key.split('.')

241 | if len(subkeys) >= 2:

242 | # Example: architecture.params.size

243 | for i in range(0, len(subkeys) - 2):

244 | getattr(tree, subkeys[i])[subkeys[i + 1]] = {}

245 |

--------------------------------------------------------------------------------

/models/positional_encodings.py:

--------------------------------------------------------------------------------

1 | # Copyright 2024 DeepMind Technologies Limited

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

16 | """Positional encodings, used in `transformer.py`."""

17 |

18 | import enum

19 | import functools

20 | import math

21 | from typing import Any, Optional, Union

22 |

23 | import chex

24 | import haiku as hk

25 | import jax

26 | import jax.numpy as jnp

27 | import jax.random as jrandom

28 | import numpy as np

29 |

30 |

31 | class PositionalEncodings(enum.Enum):

32 | """Enum for all the positional encodings implemented."""

33 |

34 | NONE = 0

35 | SIN_COS = 1

36 | ALIBI = 2

37 | RELATIVE = 3

38 | ROTARY = 4

39 | LEARNT = 5

40 | NOISY_SIN_COS = 6

41 | NOISY_RELATIVE = 7

42 | NOISY_LEARNT = 8

43 | NOISY_ROTARY = 9

44 | NOISY_ALIBI = 10

45 |

46 |

47 | @chex.dataclass

48 | class SinCosParams:

49 | """Parameters for the classical sin/cos positional encoding."""

50 |

51 | # The maximum wavelength used.

52 | max_time: int = 10_000

53 |

54 |

55 | # We will use this same class for Rotary and Relative.

56 | RotaryParams = SinCosParams

57 | RelativeParams = SinCosParams

58 |

59 |

60 | @chex.dataclass

61 | class LearntParams:

62 | """Parameters for the classical sin/cos positional encoding."""

63 |

64 | # The size of the embedding matrix to use.

65 | max_sequence_length: int

66 |

67 |

68 | @chex.dataclass

69 | class NoisySinCosParams:

70 | """Parameters for the noisy sin/cos positional encoding."""

71 |

72 | # The maximum length to sample.

73 | noise_max_length: int

74 | # The maximum wavelength used.

75 | max_time: int = 10_000

76 |

77 |

78 | @chex.dataclass

79 | class NoisyRelativeParams:

80 | """Parameters for the noisy relative positional encoding."""

81 |

82 | # The maximum length to sample.

83 | noise_max_length: int

84 | # Either randomize the right side and keep the same encodings for the left

85 | # part, keeping the symmetry, or randomize each side independently.

86 | randomize_both_sides: bool = False

87 | # The maximum wavelength used.

88 | max_time: int = 10_000

89 |

90 |

91 | @chex.dataclass

92 | class NoisyLearntParams:

93 | """Parameters for the noisy relative positional encoding."""

94 |

95 | # The maximum length to sample.

96 | noise_max_length: int

97 |

98 |

99 | @chex.dataclass

100 | class NoisyAlibiParams:

101 | """Parameters for the noisy alibi positional encoding."""

102 |

103 | # The maximum length to sample.

104 | noise_max_length: int

105 | # Either randomize the right side and keep the same encodings for the left

106 | # part, maintaining symmetry, or randomize each side independently.

107 | randomize_both_sides: bool = False

108 |

109 |

110 | @chex.dataclass

111 | class NoisyRotaryParams:

112 | """Parameters for the noisy rotary positional encoding."""

113 |

114 | # The maximum length to sample.

115 | noise_max_length: int

116 |

117 |

118 | PositionalEncodingsParams = Union[

119 | SinCosParams,

120 | RelativeParams,

121 | RotaryParams,

122 | LearntParams,

123 | NoisySinCosParams,

124 | NoisyAlibiParams,

125 | NoisyRelativeParams,

126 | NoisyRotaryParams,

127 | NoisyLearntParams,

128 | ]

129 |

130 |

131 | POS_ENC_TABLE = {

132 | 'NONE': PositionalEncodings.NONE,

133 | 'SIN_COS': PositionalEncodings.SIN_COS,

134 | 'ALIBI': PositionalEncodings.ALIBI,

135 | 'RELATIVE': PositionalEncodings.RELATIVE,

136 | 'ROTARY': PositionalEncodings.ROTARY,

137 | 'LEARNT': PositionalEncodings.LEARNT,

138 | 'NOISY_SIN_COS': PositionalEncodings.NOISY_SIN_COS,

139 | 'NOISY_ALIBI': PositionalEncodings.NOISY_ALIBI,

140 | 'NOISY_RELATIVE': PositionalEncodings.NOISY_RELATIVE,

141 | 'NOISY_ROTARY': PositionalEncodings.NOISY_ROTARY,

142 | 'NOISY_LEARNT': PositionalEncodings.NOISY_LEARNT,

143 | }

144 |

145 | POS_ENC_PARAMS_TABLE = {

146 | 'NONE': SinCosParams,

147 | 'SIN_COS': SinCosParams,

148 | 'ALIBI': SinCosParams,

149 | 'RELATIVE': RelativeParams,

150 | 'ROTARY': RotaryParams,

151 | 'LEARNT': LearntParams,

152 | 'NOISY_SIN_COS': NoisySinCosParams,

153 | 'NOISY_ALIBI': NoisyAlibiParams,

154 | 'NOISY_RELATIVE': NoisyRelativeParams,

155 | 'NOISY_ROTARY': NoisyRotaryParams,

156 | 'NOISY_LEARNT': NoisyLearntParams,

157 | }

158 |

159 |

160 | def sinusoid_position_encoding(

161 | sequence_length: int,

162 | hidden_size: int,

163 | max_timescale: float = 1e4,

164 | add_negative_side: bool = False,

165 | ) -> np.ndarray:

166 | """Creates sinusoidal encodings from the original transformer paper.

167 |

168 | The returned values are, for all i < D/2:

169 | array[pos, i] = sin(pos / (max_timescale^(2*i / D)))

170 | array[pos, D/2 + i] = cos(pos / (max_timescale^(2*i / D)))

171 |

172 | Args:

173 | sequence_length: Sequence length.

174 | hidden_size: Dimension of the positional encoding vectors, D. Should be

175 | even.

176 | max_timescale: Maximum timescale for the frequency.

177 | add_negative_side: Whether to also include the positional encodings for

178 | negative positions.

179 |

180 | Returns:

181 | An array of shape [L, D] if add_negative_side is False, else [2 * L, D].

182 | """

183 | if hidden_size % 2 != 0:

184 | raise ValueError(

185 | 'The feature dimension should be even for sin/cos positional encodings.'

186 | )

187 | freqs = np.arange(0, hidden_size, 2)

188 | inv_freq = max_timescale ** (-freqs / hidden_size)

189 | pos_seq = np.arange(

190 | start=-sequence_length if add_negative_side else 0, stop=sequence_length

191 | )

192 | sinusoid_inp = np.einsum('i,j->ij', pos_seq, inv_freq)

193 | return np.concatenate([np.sin(sinusoid_inp), np.cos(sinusoid_inp)], axis=-1)

194 |

195 |

196 | def noisy_fixed_positional_encodings(

197 | fixed_positional_encodings: chex.Array,

198 | sequence_length: int,

199 | rng: Optional[chex.PRNGKey] = None,

200 | ) -> chex.Array:

201 | """Generates noisy positional encodings from fixed positional encodings.

202 |

203 | Randomly samples and orders sequence_length positional encodings from a wider

204 | range [0, noise_max_length) rather than just [0, sequence_length).

205 | The user provides the full_encodings, which should span the entire range

206 | [0, noise_max_length).

207 |

208 | Args:

209 | fixed_positional_encodings: A tensor of shape (noise_max_length,

210 | embedding_size). This is from what the encodings will be sampled.

211 | sequence_length: The length of the output sequence.

212 | rng: Optional rng to use rather than hk.next_rng_key().

213 |

214 | Returns:

215 | A tensor of size [sequence_length, embedding_size].

216 | """

217 | noise_max_length, _ = fixed_positional_encodings.shape

218 | indexes = jrandom.choice(

219 | rng if rng is not None else hk.next_rng_key(),

220 | jnp.arange(noise_max_length),

221 | shape=(sequence_length,),

222 | replace=False,

223 | )

224 | indexes = jnp.sort(indexes)

225 | encodings = fixed_positional_encodings[indexes]

226 | return encodings

227 |

228 |

229 | def _rel_shift_inner(logits: jax.Array, attention_length: int) -> jax.Array:

230 | """Shifts the relative logits.

231 |

232 | This is a more general than the original Transformer-XL implementation as

233 | inputs may also see the future. (The implementation does not rely on a

234 | causal mask removing the upper-right triangle.)

235 |

236 | Given attention length 3 and inputs:

237 | [[-3, -2, -1, 0, 1, 2],

238 | [-3, -2, -1, 0, 1, 2],

239 | [-3, -2, -1, 0, 1, 2]]

240 |

241 | The shifted output is:

242 | [[0, 1, 2],

243 | [-1, 0, 1],

244 | [-2, -1, 0]]

245 |

246 | Args:

247 | logits: input tensor of shape [T_q, T_v + T_q]

248 | attention_length: T_v `int` length of the attention, should be equal to

249 | memory size + sequence length.

250 |

251 | Returns:

252 | A shifted version of the input of size [T_q, T_v]. In each row, a window of

253 | size T_v elements is kept. The window starts at

254 | subsequent row.

255 | """

256 | if logits.ndim != 2:

257 | raise ValueError('`logits` needs to be an array of dimension 2.')

258 | tq, total_len = logits.shape

259 | assert total_len == tq + attention_length

260 | logits = jnp.reshape(logits, [total_len, tq])

261 | logits = jnp.reshape(logits, [total_len, tq])

262 | logits = jax.lax.slice(logits, (1, 0), logits.shape) # logits[1:]

263 | logits = jnp.reshape(logits, [tq, total_len - 1])

264 | # Equiv to logits[:, :attention_length].

265 | logits = jax.lax.slice(logits, (0, 0), (tq, attention_length))

266 | return logits

267 |

268 |

269 | def _rel_shift_causal(logits: jax.Array) -> jax.Array:

270 | """Shifts the relative logits, assuming causal attention.

271 |

272 | Given inputs:

273 | [[-4, -3, -2, -1],

274 | [-4, -3, -2, -1]]

275 |

276 | The shifted (and, later, masked) output is:

277 | [[-3, -2, -1, 0],

278 | [-4, -3, -2, -1]]

279 |

280 | Args:

281 | logits: input tensor of shape [T_q, T_v]

282 |

283 | Returns:

284 | A shifted version of the input of size [T_q, T_v].

285 | """

286 | t1, t2 = logits.shape

287 | # We prepend zeros on the final timescale dimension.

288 | to_pad = jnp.zeros_like(logits[..., :1])

289 | x = jnp.concatenate((to_pad, logits), axis=-1)

290 |

291 | # Reshape trick to shift input.

292 | x = jnp.reshape(x, [t2 + 1, t1])

293 |

294 | # Remove extra time dimension and re-shape.

295 | x = jax.lax.slice(x, [1] + [0] * (x.ndim - 1), x.shape)

296 |

297 | return jnp.reshape(x, [t1, t2])

298 |

299 |

300 | def relative_shift(

301 | logits: jax.Array, attention_length: int, causal: bool = False

302 | ) -> jax.Array:

303 | if causal:

304 | fn = _rel_shift_causal

305 | else:

306 | fn = lambda t: _rel_shift_inner(t, attention_length)

307 | return jax.vmap(jax.vmap(fn))(logits)