├── CONTRIBUTING.md

├── solutions

├── x_media_review

│ └── README.md

└── causal-impact

│ ├── README.md

│ └── CausalImpact_with_Experimental_Design.ipynb

├── README.md

├── .github

└── workflows

│ └── scorecard.yml

└── LICENSE

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | # How to Contribute

2 |

3 | We'd love to accept your patches and contributions to this project. There are

4 | just a few small guidelines you need to follow.

5 |

6 | ## Contributor License Agreement

7 |

8 | Contributions to this project must be accompanied by a Contributor License

9 | Agreement (CLA). You (or your employer) retain the copyright to your

10 | contribution; this simply gives us permission to use and redistribute your

11 | contributions as part of the project. Head over to

12 | to see your current agreements on file or

13 | to sign a new one.

14 |

15 | You generally only need to submit a CLA once, so if you've already submitted one

16 | (even if it was for a different project), you probably don't need to do it

17 | again.

18 |

19 | ## Code Reviews

20 |

21 | All submissions, including submissions by project members, require review. We

22 | use GitHub pull requests for this purpose. Consult

23 | [GitHub Help](https://help.github.com/articles/about-pull-requests/) for more

24 | information on using pull requests.

25 |

26 | ## Community Guidelines

27 |

28 | This project follows

29 | [Google's Open Source Community Guidelines](https://opensource.google/conduct/).

30 |

--------------------------------------------------------------------------------

/solutions/x_media_review/README.md:

--------------------------------------------------------------------------------

1 | # Cross-Media Review with same level

2 |

3 | ##### This is not an official Google product.

4 |

5 | Advertisers often use many media to achieve their goals, but because standardsvary from one medium to another,

6 | it can be difficult to properly evaluate strategies and make appropriate decisions.

7 |

8 | By using Google Analytics 4 conversions, the media that directly drive sessions are limited,

9 | but multiple media can be evaluated at the same level.

10 |

11 | By providing the monthly investment amount for each medium,

12 | it produces consistent output about the effectiveness and efficiency of each measure, which is what marketers want to know.

13 |

14 |

15 | ## Overview

16 |

17 | ### What you can do with Cross-Media Review with same level

18 |

19 | - Visualization of effectiveness and efficiency in time series

20 | - Visualization of monthly differences in effectiveness for each media

21 | - Visualization of monthly efficiency by media

22 |

23 |

24 | ### Motivation to develop and open the source code

25 |

26 | There are cases where the effectiveness and efficiency of media with different standards are evaluated as they are,

27 | and cases where media with different roles are evaluated with the same standards (last click model).

28 | In these cases, there is a possibility that resources are not being allocated to measures that are truly contributing to acquisition,

29 | so we created this to reduce this possibility and improve productivity.

30 |

31 |

32 | ### Typical procedure for use

33 |

34 | 1. TBW

35 |

36 |

37 |

38 | ## Getting started

39 |

40 | 1. Prepare the time series data on spreadsheet

41 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Business Intelligence Group - Marketing Analysis & Data Science Solution Packages

2 |

3 | ##### This is not an official Google product.

4 |

5 | ## Overview

6 | This repository provides a collection of Jupyter Notebooks designed to help marketing analysts and data scientists measure and analyze the effectiveness of marketing campaigns. These notebooks facilitate data preprocessing, visualization, statistical analysis, and machine learning model building, enabling a deep dive into campaign performance and the extraction of actionable insights. By customizing and utilizing these notebooks, marketing analysts and data scientists can develop and execute more effective campaign strategies, ultimately driving data-informed decisions and optimizing marketing ROI.

7 |

8 | ### Motivation to develop and open the source code

9 | - CausalImpact with experimental design

10 | - Some marketing practitioners pay attention to

11 | [Causal inference in statistics](https://en.wikipedia.org/wiki/Causal_inference). However,

12 | using time series data without parallel trend assumptions does not allow for

13 | appropriate analysis. Therefore, the purpose is to enable the implementation and

14 | analysis of interventions after classifying time-series data for which parallel

15 | trend assumptions can be made.

16 |

17 | For contributions, see [CONTRIBUTING.md](https://github.com/google/business_intelligence_group/blob/main/CONTRIBUTING.md).

18 |

19 | ### Available solution packages:

20 | - [CausalImpact with experimental design](https://github.com/google/business_intelligence_group/tree/main/solutions/causal-impact)

21 |

22 |

23 | ## Note

24 | - **Analysis should not be the goal**

25 | - Solving business problems is the goal.

26 | - Be clear about the decision you want to make to solve business problems.

27 | - Make clear the path to what you need to know to make a decision.

28 | - Analysis is one way to find out what you need to know.

29 | - **[Test your hypotheses instead of testing the effectiveness]**

30 | - Formulate hypotheses about why there are issues in the current situation and how to solve them.

31 | - Business situations are constantly changing, so analysis without a hypothesis will not be reproducible.

32 | - **[Be honest with the data]**

33 | - However, playing with data to prove a hypothesis is strictly prohibited.

34 | - Acquire the necessary knowledge to be able to conduct appropriate verification.

35 | - Do not do [HARKing](https://en.wikipedia.org/wiki/HARKing)(hypothesizing after the results are known)

36 | - Do not do [p-hacking](https://en.wikipedia.org/wiki/Data_dredging)

37 |

--------------------------------------------------------------------------------

/.github/workflows/scorecard.yml:

--------------------------------------------------------------------------------

1 | # This workflow uses actions that are not certified by GitHub. They are provided

2 | # by a third-party and are governed by separate terms of service, privacy

3 | # policy, and support documentation.

4 |

5 | name: Scorecard supply-chain security

6 | on:

7 | # For Branch-Protection check. Only the default branch is supported. See

8 | # https://github.com/ossf/scorecard/blob/main/docs/checks.md#branch-protection

9 | branch_protection_rule:

10 | # To guarantee Maintained check is occasionally updated. See

11 | # https://github.com/ossf/scorecard/blob/main/docs/checks.md#maintained

12 | schedule:

13 | - cron: '29 10 * * 1'

14 | push:

15 | branches: [ "main" ]

16 |

17 | # Declare default permissions as read only.

18 | permissions: read-all

19 |

20 | jobs:

21 | analysis:

22 | name: Scorecard analysis

23 | runs-on: ubuntu-latest

24 | permissions:

25 | # Needed to upload the results to code-scanning dashboard.

26 | security-events: write

27 | # Needed to publish results and get a badge (see publish_results below).

28 | id-token: write

29 | # Uncomment the permissions below if installing in a private repository.

30 | # contents: read

31 | # actions: read

32 |

33 | steps:

34 | - name: "Checkout code"

35 | uses: actions/checkout@93ea575cb5d8a053eaa0ac8fa3b40d7e05a33cc8 # v3.1.0

36 | with:

37 | persist-credentials: false

38 |

39 | - name: "Run analysis"

40 | uses: ossf/scorecard-action@99c53751e09b9529366343771cc321ec74e9bd3d # v2.0.6

41 | with:

42 | results_file: results.sarif

43 | results_format: sarif

44 | # (Optional) "write" PAT token. Uncomment the `repo_token` line below if:

45 | # - you want to enable the Branch-Protection check on a *public* repository, or

46 | # - you are installing Scorecard on a *private* repository

47 | # To create the PAT, follow the steps in https://github.com/ossf/scorecard-action#authentication-with-pat.

48 | # repo_token: ${{ secrets.SCORECARD_TOKEN }}

49 |

50 | # Public repositories:

51 | # - Publish results to OpenSSF REST API for easy access by consumers

52 | # - Allows the repository to include the Scorecard badge.

53 | # - See https://github.com/ossf/scorecard-action#publishing-results.

54 | # For private repositories:

55 | # - `publish_results` will always be set to `false`, regardless

56 | # of the value entered here.

57 | publish_results: true

58 |

59 | # Upload the results as artifacts (optional). Commenting out will disable uploads of run results in SARIF

60 | # format to the repository Actions tab.

61 | - name: "Upload artifact"

62 | uses: actions/upload-artifact@3cea5372237819ed00197afe530f5a7ea3e805c8 # v3.1.0

63 | with:

64 | name: SARIF file

65 | path: results.sarif

66 | retention-days: 5

67 |

68 | # Upload the results to GitHub's code scanning dashboard.

69 | - name: "Upload to code-scanning"

70 | uses: github/codeql-action/upload-sarif@807578363a7869ca324a79039e6db9c843e0e100 # v2.1.27

71 | with:

72 | sarif_file: results.sarif

73 |

--------------------------------------------------------------------------------

/solutions/causal-impact/README.md:

--------------------------------------------------------------------------------

1 | # CausalImpact with experimental design

2 |

3 | ##### This is not an official Google product.

4 |

5 | CausalImpact is a R package for causal inference using Bayesian structural

6 | time-series models. In using CausalImpact, the parallel trend assumption is

7 | needed for counterfactual modeling, so this code performs classification of time

8 | series data based on DTW distances.

9 |

10 | ## Overview

11 |

12 | ### What you can do with CausalImpact with experiment design

13 |

14 | - Experimental Design

15 | - load time series data from google spreadsheet or csv file

16 | - classify time series data so that parallel trend assumptions can be made

17 | - simulate the conditions required for verification

18 | - CausalImpact Analysis

19 | - load time series data from google spreadsheet or csv file

20 | - CausalImpact Analysis

21 |

22 | ### Motivation to develop and open the source code

23 |

24 | Some marketing practitioners pay attention to

25 | [Causal inference in statistics](https://en.wikipedia.org/wiki/Causal_inference). However,

26 | using time series data without parallel trend assumptions does not allow for

27 | appropriate analysis. Therefore, the purpose is to enable the implementation and

28 | analysis of interventions after classifying time-series data for which parallel

29 | trend assumptions can be made.

30 |

31 | ### Typical procedure for use

32 |

33 | 1. Assume a hypothetical solution to the issue and its factors.

34 | 2. Assume room for KPIs and the mechanisms that drive KPIs depending on the solution.

35 | 3. In advance, decide next-actions to be taken for each result of hypothesis testing (with/without significant difference).

36 | - Recommend supporting the mechanism with relevant data other than KPIs

37 | 4. Prepare time-series KPI data for at least 100 time points.

38 | - Regional segmentation is recommended.

39 | - Previous data, such as the previous year, may make a difference in the market environment.

40 | - Relevant data must be independent and unaffected by interventions

41 | 5. **(Experimental Design)** This tool is used to conduct the experimental design.

42 | - Split into groups that are closest to each other where the parallel trend assumption can be placed.

43 | - Simulation of required timeframe and budget

44 | - :warning: If the parallel trend assumption cannot be placed, we recommend considering another approach

45 | 6. Implemented interventions.

46 | 7. Prepare time-series KPI data, including intervention period and assumed residual period, in addition to previous data.

47 | 8. **(CausalImpact Analysis)** Conduct CausalImpact analysis.

48 | 9. Implement next actions based on results of hypothesis testing

49 |

50 | ## Note

51 |

52 | - Do not do [HARKing](https://en.wikipedia.org/wiki/HARKing)(hypothesizing after the results are known)

53 | - Do not do [p-hacking](https://en.wikipedia.org/wiki/Data_dredging)

54 |

55 | ## Getting started

56 |

57 | 1. Prepare the time series data on spreadsheet or csv file

58 | 2. Open ipynb file with **[Open in Colab](https://colab.research.google.com/github/google/business_intelligence_group/blob/main/solutions/causal-impact/CausalImpact_with_Experimental_Design.ipynb)** Button.

59 | 3. Run cells in sequence

60 |

61 | ## Tutorial

62 | #### CausalImpact Analysis Section

63 |

64 | 1. Press the **Connect** button to connect to the runtime

65 |

66 | 2. Run **Step 1** cell. Step 1 cells take a little longer because they install the [tfcausalImpact library](https://github.com/WillianFuks/tfcausalimpact).

If you do so, you will see some selections in the Step 1 cell.

67 |

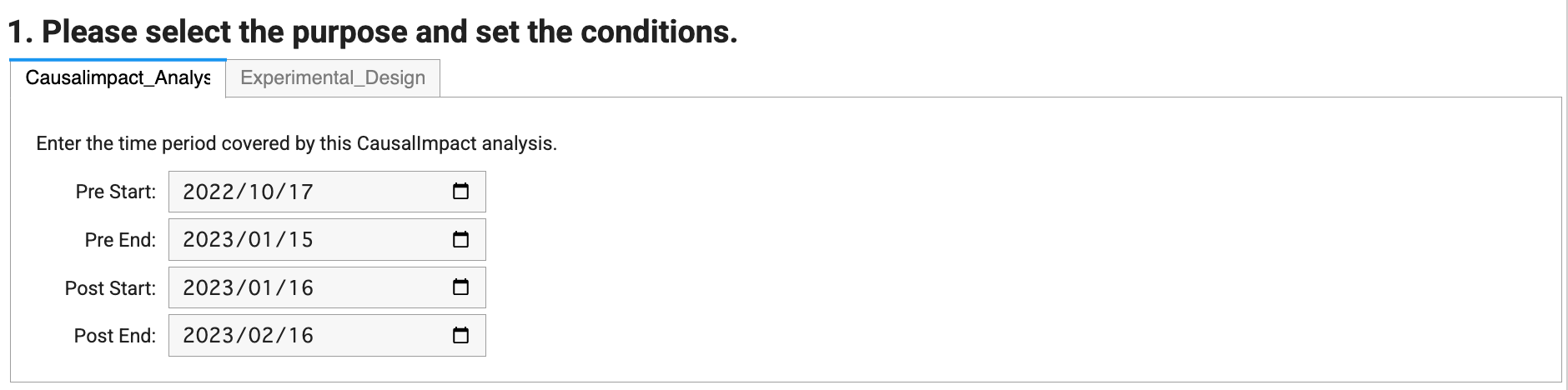

68 | 3. In question 1, choose **CausalImpact Analysis** and update period before the intervention(**Pre Start & Pre End**) and the period during the intervention(**Post Start and Post End**).

69 |

70 |

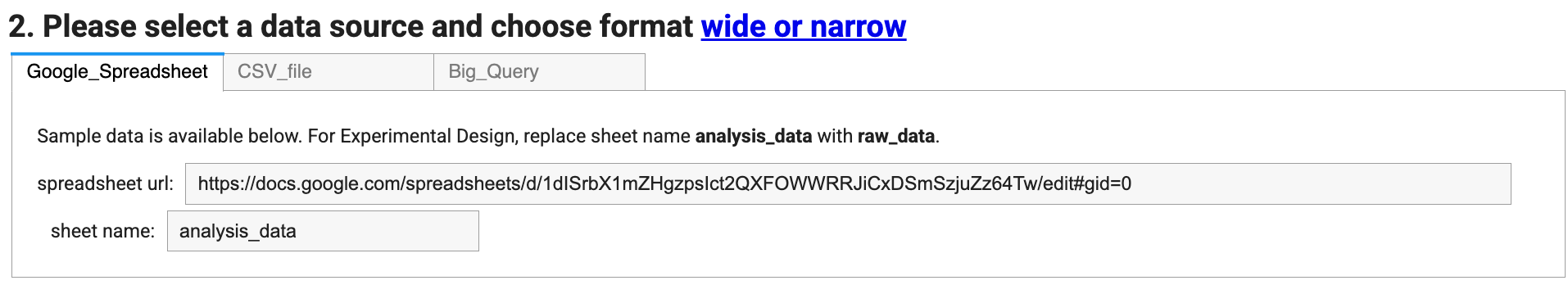

71 | 4. In question 2, please select the data source from **google_spreadsheet**, **CSV_file**, or **Big_Query**.

72 | Then enter the required items.

73 |

74 |

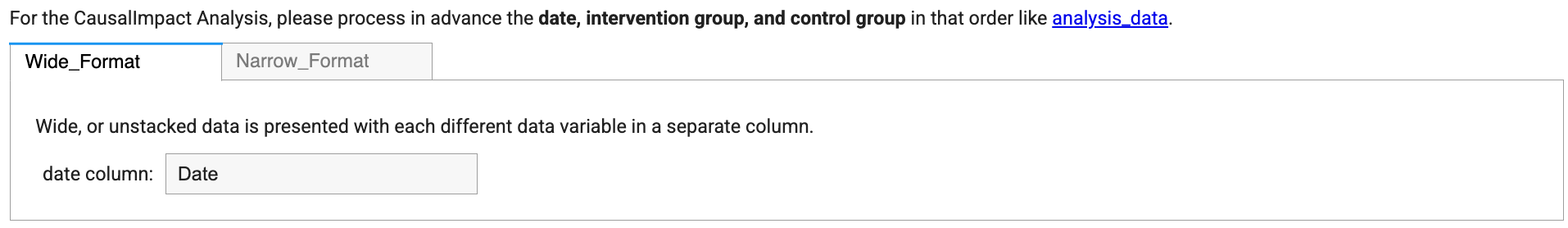

75 | 5. After entering the required items, the data format will be selected. For CausalImpact analysis, please prepare the data in **wide format** in advance.

76 | After selecting wide format, enter the **date column name**.

77 |

78 |

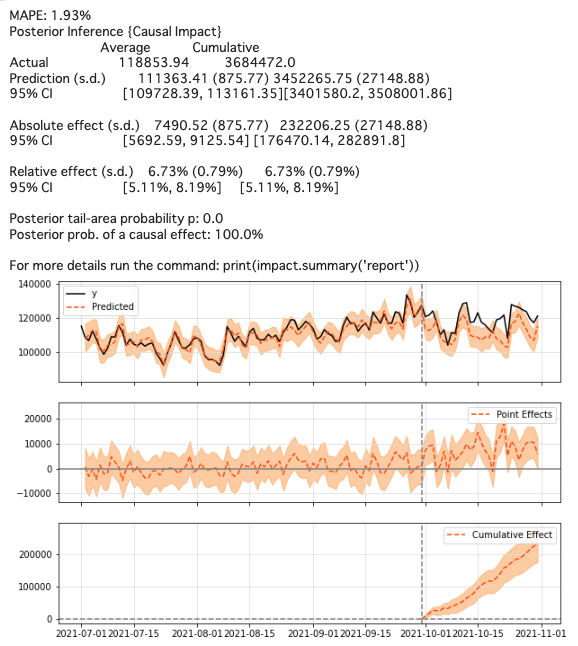

79 | 6. Once the items are filled in, run the **Step 2** cell.

80 | (:warning: If you have selected **google_spreadsheet** or **big_query**, a pop-up will appear regarding granting permission, so please grant it to Colab.)

81 |

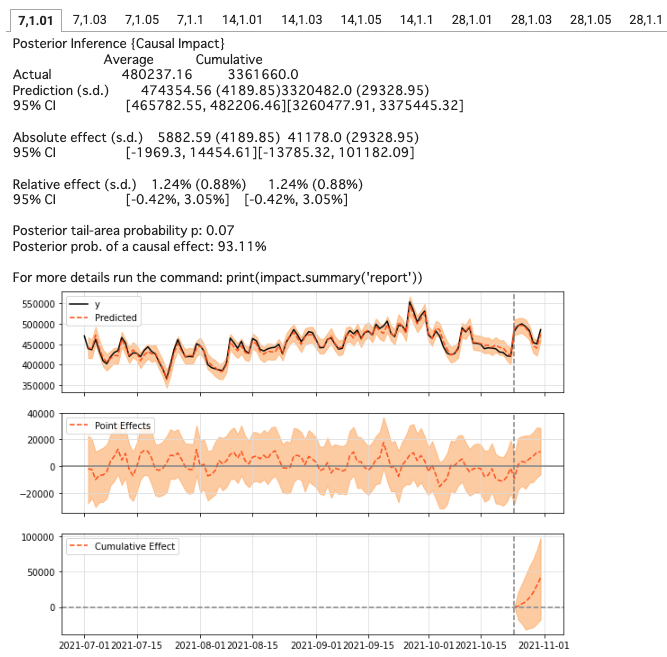

82 | 7. After Step 2 is executed, you will see **the results of CausalImpact Analysis**.

83 |

84 |

85 | #### Experimental Design Section

86 |

87 | 1. Press the **Connect** button to connect to the runtime

88 |

89 | 2. Run **Step 1** cell. Step 1 cells take a little longer because they install the [tfcausalImpact library](https://github.com/WillianFuks/tfcausalimpact).

If you do so, you will see some selections in the Step 1 cell.

90 |

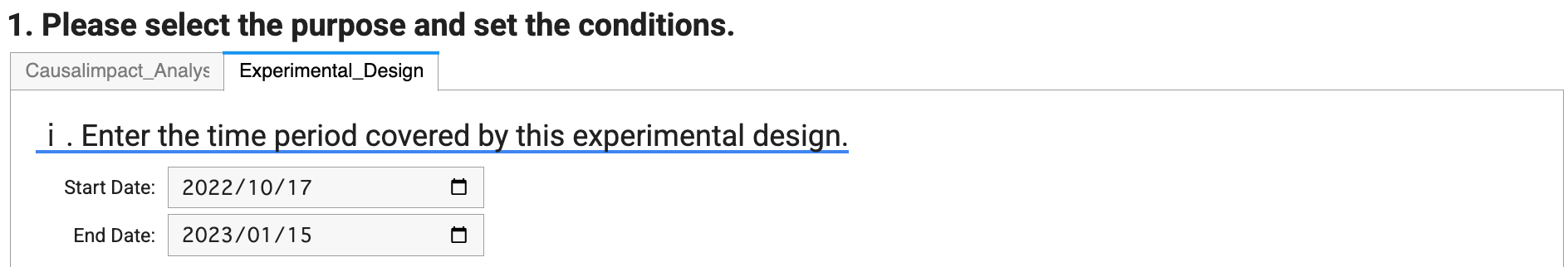

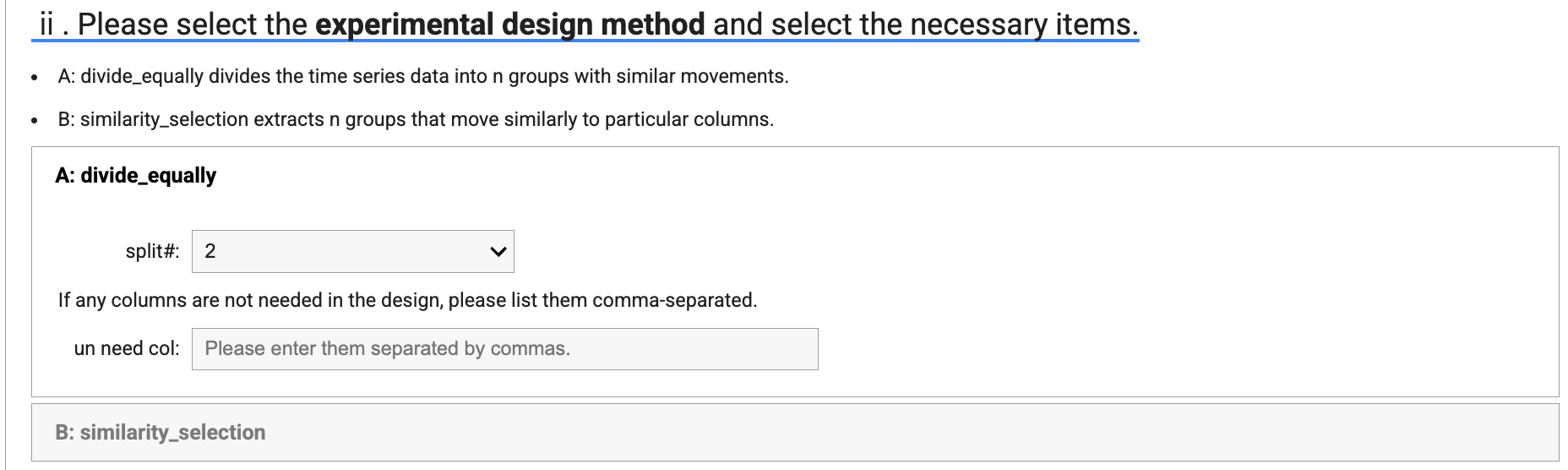

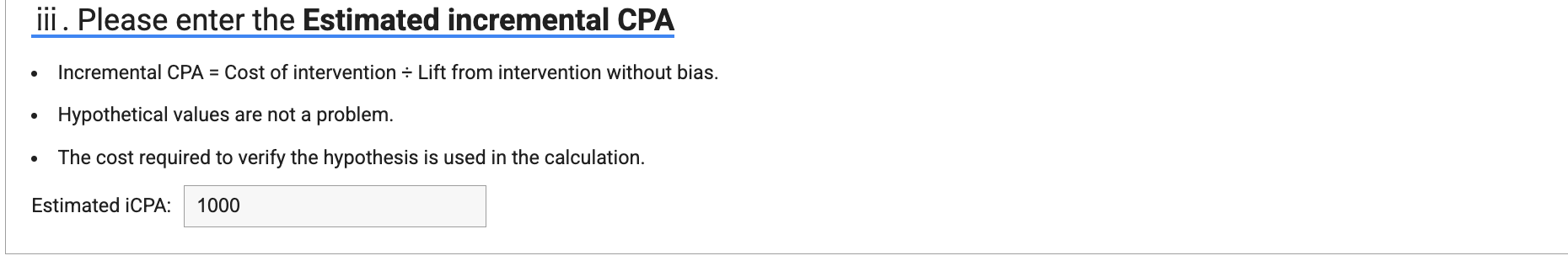

91 | 3. In question 1, choose **Experimental Design** and update the term(**Start Date & End Date**) to be used in the Experimental Design.

92 |

93 |

94 | 4. After updating the term, select the **type of Experimental Design** and update the required items.

95 | * A: divide_equally divides the time series data into n groups with similar movements.

96 | * B: similarity_selection extracts n groups that move similarly to a particular column.

97 |

98 |

99 |

100 | 5. After updating required items, enter the estimated incremental CPA.

101 |

102 |

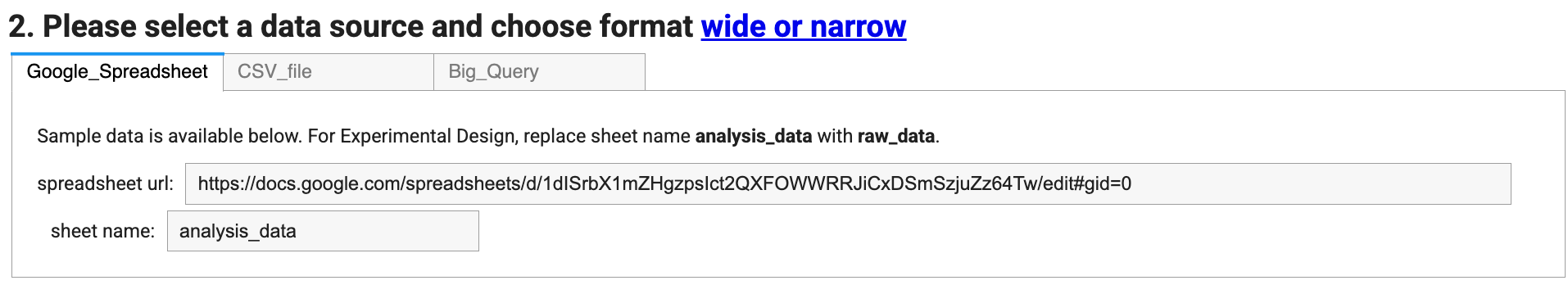

103 | 6. In question 2, please select the data source from **google_spreadsheet**, **CSV_file**, or **Big_Query**.

104 | Then enter the required items.

105 |

106 |

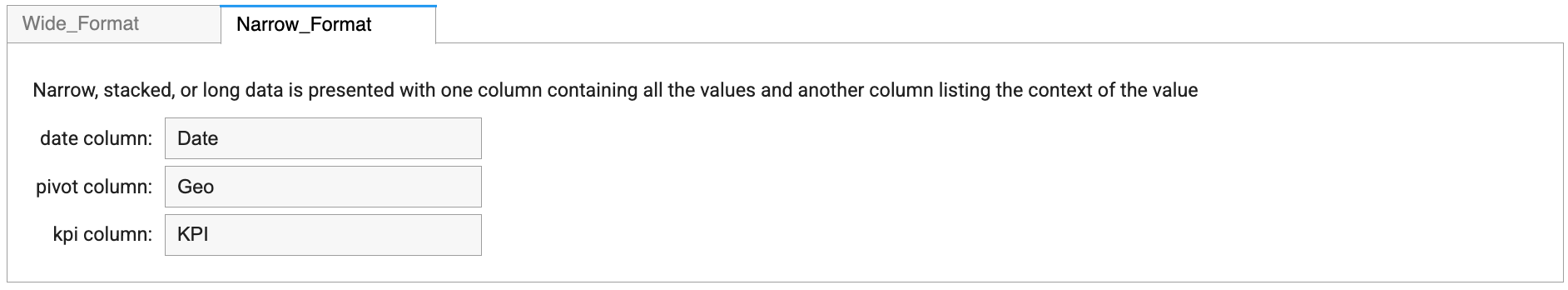

107 | 6. After entering the required items, select data format [**narrow_format** or **wide_format**](https://en.wikipedia.org/wiki/Wide_and_narrow_data) and enter the required fields.

108 |

109 |

110 | 7. Once the items are filled in, run the **Step 2** cell.

111 | (:warning: If you have selected **google_spreadsheet** or **big_query**, a pop-up will appear regarding granting permission, so please grant it to Colab.)

112 |

113 | 8. The output results will vary depending on the type of experimental design, but select the data on which you want to run the simulation.

114 |

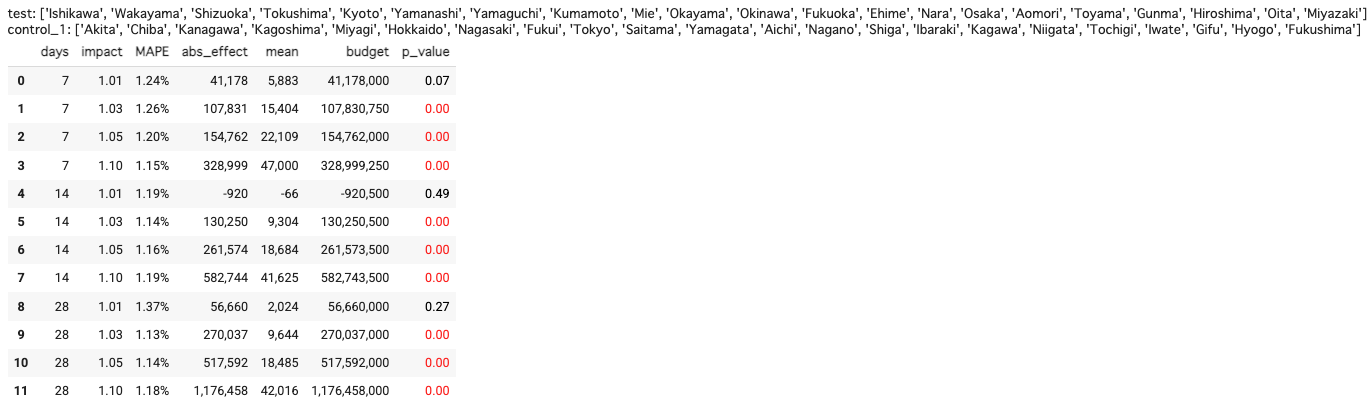

115 | 9. Once the items are filled in, run the **Step 3** cell. Depending on the data, this may take more than 10 minutes.

116 | After Step 3 is run, the results are displayed in a table. Check the MAPE, budget and p-value, and consider the intervention period and the assumed increments to experimental design.

117 |

118 |

119 | 10. run the **Step 4** cell.

120 |

121 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "[]"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright [yyyy] [name of copyright owner]

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

--------------------------------------------------------------------------------

/solutions/causal-impact/CausalImpact_with_Experimental_Design.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {

6 | "id": "view-in-github",

7 | "colab_type": "text"

8 | },

9 | "source": [

10 | " "

11 | ]

12 | },

13 | {

14 | "cell_type": "markdown",

15 | "metadata": {

16 | "id": "dH_QXijHFzJP"

17 | },

18 | "source": [

19 | "# **CausalImpact with Experimental Design**\n",

20 | "\n",

21 | "This Colab file contains *Experimental Design* and *CausalImpact Analysis*.\n",

22 | "\n",

23 | "See [README.md](https://github.com/google/business_intelligence_group/tree/main/solutions/causal-impact) for details\n",

24 | "\n",

25 | "---\n",

26 | "\n",

27 | "Copyright 2024 Google LLC\n",

28 | "\n",

29 | "Licensed under the Apache License, Version 2.0 (the \"License\"); you may not use this file except in compliance with the License. You may obtain a copy of the License at https://www.apache.org/licenses/LICENSE-2.0\n",

30 | "\n",

31 | "Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an \"AS IS\" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License."

32 | ]

33 | },

34 | {

35 | "cell_type": "code",

36 | "source": [

37 | "# @title Step.1 (~ 2min)\n",

38 | "%%time\n",

39 | "\n",

40 | "import sys\n",

41 | "if 'fastdtw' not in sys.modules:\n",

42 | " !pip install 'fastdtw' --q\n",

43 | "if 'tslearn' not in sys.modules:\n",

44 | " !pip install 'tslearn' --q\n",

45 | "if 'tfp-causalimpact' not in sys.modules:\n",

46 | " !pip install 'tfp-causalimpact' --q\n",

47 | "\n",

48 | "# Data Load\n",

49 | "from google.colab import auth, files, widgets\n",

50 | "from google.auth import default\n",

51 | "from google.cloud import bigquery\n",

52 | "import io\n",

53 | "import os\n",

54 | "import gspread\n",

55 | "from oauth2client.client import GoogleCredentials\n",

56 | "\n",

57 | "# Calculate\n",

58 | "import altair as alt\n",

59 | "import itertools\n",

60 | "import random\n",

61 | "import numpy as np\n",

62 | "import pandas as pd\n",

63 | "import fastdtw\n",

64 | "\n",

65 | "from tslearn.clustering import TimeSeriesKMeans\n",

66 | "from decimal import Decimal, ROUND_HALF_UP\n",

67 | "from scipy.spatial.distance import euclidean\n",

68 | "from sklearn.metrics import mean_absolute_percentage_error\n",

69 | "from sklearn.preprocessing import MinMaxScaler\n",

70 | "from statsmodels.tsa.seasonal import STL\n",

71 | "\n",

72 | "# UI/UX\n",

73 | "import datetime\n",

74 | "from dateutil.relativedelta import relativedelta\n",

75 | "import ipywidgets\n",

76 | "from IPython.display import display, Markdown, HTML, Javascript\n",

77 | "from tqdm.auto import tqdm\n",

78 | "import warnings\n",

79 | "warnings.simplefilter('ignore')\n",

80 | "\n",

81 | "# causalimpact\n",

82 | "import causalimpact\n",

83 | "import tensorflow as tf\n",

84 | "import tensorflow_probability as tfp\n",

85 | "tfd = tfp.distributions\n",

86 | "\n",

87 | "class PreProcess(object):\n",

88 | " \"\"\"PreProcess handles process from data loading to visualization.\n",

89 | "\n",

90 | " Create a UI, load time series data based on input and do some\n",

91 | " transformations to pass it to analysis. This also includes visualization of\n",

92 | " points that should be confirmed in time series data.\n",

93 | "\n",

94 | " Attributes:\n",

95 | " _apply_text_style: Decorate the text\n",

96 | " define_ui: Define the UI using ipywidget\n",

97 | " generate_ui: Generates UI for input from the user\n",

98 | " load_data: Load data from any data source\n",

99 | " _load_data_from_sheet: Load data from spreadsheet\n",

100 | " _load_data_from_csv: Load data from CSV\n",

101 | " _load_data_from_bigquery: Load data from Big Query\n",

102 | " format_date: Set index\n",

103 | " _shape_wide: Configure narrow/wide conversion\n",

104 | " _trend_check: Visualize data\n",

105 | " saving_params: Save the contents entered in the UI\n",

106 | " set_params: Set the saved input contents to the instance\n",

107 | " \"\"\"\n",

108 | "\n",

109 | " def __init__(self):\n",

110 | " self.define_ui()\n",

111 | "\n",

112 | " @staticmethod\n",

113 | " def _apply_text_style(type, text):\n",

114 | " if type == 'success':\n",

115 | " return print(f\"\\033[38;2;15;157;88m \" + text + \"\\033[0m\")\n",

116 | "\n",

117 | " if type == 'failure':\n",

118 | " return print(f\"\\033[38;2;219;68;55m \" + text + \"\\033[0m\")\n",

119 | "\n",

120 | " if isinstance(type, int):\n",

121 | " span_style = ipywidgets.HTML(\n",

122 | " \"\"\n",

124 | " + text\n",

125 | " + ''\n",

126 | " )\n",

127 | " return span_style\n",

128 | "\n",

129 | " def define_ui(self):\n",

130 | " self._define_data_source_widgets()\n",

131 | " self._define_data_format_widgets()\n",

132 | " self._define_date_widgets()\n",

133 | " self._define_experimental_design_widgets()\n",

134 | " self._define_simulation_widgets()\n",

135 | "\n",

136 | " def _define_data_source_widgets(self):\n",

137 | " # Input box for data sources\n",

138 | " self.sheet_url = ipywidgets.Text(\n",

139 | " placeholder='Please enter google spreadsheet url',\n",

140 | " value='https://docs.google.com/spreadsheets/d/1dISrbX1mZHgzpsIct2QXFOWWRRJiCxDSmSzjuZz64Tw/edit#gid=0',\n",

141 | " description='spreadsheet url:',\n",

142 | " style={'description_width': 'initial'},\n",

143 | " layout=ipywidgets.Layout(width='800px'),\n",

144 | " )\n",

145 | " self.sheet_name = ipywidgets.Text(\n",

146 | " placeholder='Please enter sheet name',\n",

147 | " value='analysis_data',\n",

148 | " # value='raw_data',\n",

149 | " description='sheet name:',\n",

150 | " )\n",

151 | " self.csv_name = ipywidgets.Text(\n",

152 | " placeholder='Please enter csv name',\n",

153 | " description='csv name:',\n",

154 | " layout=ipywidgets.Layout(width='500px'),\n",

155 | " )\n",

156 | " self.bq_project_id = ipywidgets.Text(\n",

157 | " placeholder='Please enter project id',\n",

158 | " description='project id:',\n",

159 | " layout=ipywidgets.Layout(width='500px'),\n",

160 | " )\n",

161 | " self.bq_table_name = ipywidgets.Text(\n",

162 | " placeholder='Please enter table name',\n",

163 | " description='table name:',\n",

164 | " layout=ipywidgets.Layout(width='500px'),\n",

165 | " )\n",

166 | "\n",

167 | " def _define_data_format_widgets(self):\n",

168 | " # Input box for data format\n",

169 | " self.date_col = ipywidgets.Text(\n",

170 | " placeholder='Please enter date column name',\n",

171 | " value='Date',\n",

172 | " description='date column:',\n",

173 | " )\n",

174 | " self.pivot_col = ipywidgets.Text(\n",

175 | " placeholder='Please enter pivot column name',\n",

176 | " value='Geo',\n",

177 | " description='pivot column:',\n",

178 | " )\n",

179 | " self.kpi_col = ipywidgets.Text(\n",

180 | " placeholder='Please enter kpi column name',\n",

181 | " value='KPI',\n",

182 | " description='kpi column:',\n",

183 | " )\n",

184 | "\n",

185 | " def _define_experimental_design_widgets(self):\n",

186 | " # Input box for Experimental_Design-related\n",

187 | " self.exclude_cols = ipywidgets.Text(\n",

188 | " placeholder=(\n",

189 | " 'Enter comma-separated columns if any columns are not used in the'\n",

190 | " ' design.'\n",

191 | " ),\n",

192 | " description='exclude cols:',\n",

193 | " layout=ipywidgets.Layout(width='1000px'),\n",

194 | " )\n",

195 | " self.num_of_split = ipywidgets.Dropdown(\n",

196 | " options=[2, 3, 4, 5],\n",

197 | " value=2,\n",

198 | " description='split#:',\n",

199 | " disabled=False,\n",

200 | " )\n",

201 | " self.target_columns = ipywidgets.Text(\n",

202 | " placeholder='Please enter comma-separated entries',\n",

203 | " value='Tokyo, Kanagawa',\n",

204 | " description='target_cols:',\n",

205 | " layout=ipywidgets.Layout(width='500px'),\n",

206 | " )\n",

207 | " self.num_of_pick_range = ipywidgets.IntRangeSlider(\n",

208 | " value=[5, 10],\n",

209 | " min=1,\n",

210 | " max=50,\n",

211 | " step=1,\n",

212 | " description='pick range:',\n",

213 | " orientation='horizontal',\n",

214 | " readout=True,\n",

215 | " readout_format='d',\n",

216 | " )\n",

217 | " self.num_of_covariate = ipywidgets.Dropdown(\n",

218 | " options=[1, 2, 3, 4, 5],\n",

219 | " value=1,\n",

220 | " description='covariate#:',\n",

221 | " layout=ipywidgets.Layout(width='192px'),\n",

222 | " )\n",

223 | " self.target_share = ipywidgets.FloatSlider(\n",

224 | " value=0.3,\n",

225 | " min=0.05,\n",

226 | " max=0.5,\n",

227 | " step=0.05,\n",

228 | " description='target share#:',\n",

229 | " orientation='horizontal',\n",

230 | " readout=True,\n",

231 | " readout_format='.1%',\n",

232 | " )\n",

233 | " self.control_columns = ipywidgets.Text(\n",

234 | " placeholder='Please enter comma-separated entries',\n",

235 | " value='Aomori, Akita',\n",

236 | " description='control_cols:',\n",

237 | " layout=ipywidgets.Layout(width='500px'),\n",

238 | " )\n",

239 | "\n",

240 | " def _define_simulation_widgets(self):\n",

241 | " # Input box for simulation params\n",

242 | " self.num_of_seasons = ipywidgets.IntText(\n",

243 | " value=1,\n",

244 | " description='num_of_seasons:',\n",

245 | " disabled=False,\n",

246 | " style={'description_width': 'initial'},\n",

247 | " )\n",

248 | " self.estimate_icpa = ipywidgets.IntText(\n",

249 | " value=1000,\n",

250 | " description='Estimated iCPA:',\n",

251 | " style={'description_width': 'initial'},\n",

252 | " )\n",

253 | " self.credible_interval = ipywidgets.RadioButtons(\n",

254 | " options=[70, 80, 90, 95],\n",

255 | " value=90,\n",

256 | " description='Credible interval %:',\n",

257 | " style={'description_width': 'initial'},\n",

258 | " )\n",

259 | "\n",

260 | " def _define_date_widgets(self):\n",

261 | " # Input box for Date-related\n",

262 | " self.pre_period_start = ipywidgets.DatePicker(\n",

263 | " description='Pre Start:',\n",

264 | " value=datetime.date.today() - relativedelta(days=122),\n",

265 | " )\n",

266 | " self.pre_period_end = ipywidgets.DatePicker(\n",

267 | " description='Pre End:',\n",

268 | " value=datetime.date.today() - relativedelta(days=32),\n",

269 | " )\n",

270 | " self.post_period_start = ipywidgets.DatePicker(\n",

271 | " description='Post Start:',\n",

272 | " value=datetime.date.today() - relativedelta(days=31),\n",

273 | " )\n",

274 | " self.post_period_end = ipywidgets.DatePicker(\n",

275 | " description='Post End:',\n",

276 | " value=datetime.date.today(),\n",

277 | " )\n",

278 | " self.start_date = ipywidgets.DatePicker(\n",

279 | " description='Start Date:',\n",

280 | " value=datetime.date.today() - relativedelta(days=122),\n",

281 | " )\n",

282 | " self.end_date = ipywidgets.DatePicker(\n",

283 | " description='End Date:',\n",

284 | " value=datetime.date.today() - relativedelta(days=32),\n",

285 | " )\n",

286 | " self.depend_data = ipywidgets.ToggleButton(\n",

287 | " value=False,\n",

288 | " description='Click >> Use the beginning and end of data',\n",

289 | " disabled=False,\n",

290 | " button_style='info',\n",

291 | " tooltip='Description',\n",

292 | " layout=ipywidgets.Layout(width='300px'),\n",

293 | " )\n",

294 | "\n",

295 | " def generate_ui(self):\n",

296 | " self._build_source_selection_tab()\n",

297 | " self._build_data_type_selection_tab()\n",

298 | " self._build_design_type_tab()\n",

299 | " self._build_purpose_selection_tab()\n",

300 | "\n",

301 | " def _build_source_selection_tab(self):\n",

302 | " # UI for data soure\n",

303 | " self.soure_selection = ipywidgets.Tab()\n",

304 | " self.soure_selection.children = [\n",

305 | " ipywidgets.VBox([self.sheet_url, self.sheet_name]),\n",

306 | " ipywidgets.VBox([self.csv_name]),\n",

307 | " ipywidgets.VBox([self.bq_project_id, self.bq_table_name]),\n",

308 | " ]\n",

309 | " self.soure_selection.set_title(0, 'Google_Spreadsheet')\n",

310 | " self.soure_selection.set_title(1, 'CSV_file')\n",

311 | " self.soure_selection.set_title(2, 'Big_Query')\n",

312 | "\n",

313 | " def _build_data_type_selection_tab(self):\n",

314 | " # UI for data type(narrow or wide)\n",

315 | " self.data_type_selection = ipywidgets.Tab()\n",

316 | " self.data_type_selection.children = [\n",

317 | " ipywidgets.VBox([\n",

318 | " ipywidgets.Label(\n",

319 | " 'Wide, or unstacked data is presented with each different'\n",

320 | " ' data variable in a separate column.'\n",

321 | " ),\n",

322 | " self.date_col,\n",

323 | " ]),\n",

324 | " ipywidgets.VBox([\n",

325 | " ipywidgets.Label(\n",

326 | " 'Narrow, stacked, or long data is presented with one column '\n",

327 | " 'containing all the values and another column listing the '\n",

328 | " 'context of the value'\n",

329 | " ),\n",

330 | " ipywidgets.HBox([self.date_col, self.pivot_col, self.kpi_col]),\n",

331 | " ]),\n",

332 | " ]\n",

333 | " self.data_type_selection.set_title(0, 'Wide_Format')\n",

334 | " self.data_type_selection.set_title(1, 'Narrow_Format')\n",

335 | "\n",

336 | " def _build_design_type_tab(self):\n",

337 | " # UI for experimental design\n",

338 | " self.design_type = ipywidgets.Tab(\n",

339 | " children=[\n",

340 | " ipywidgets.VBox([\n",

341 | " ipywidgets.HTML(\n",

342 | " 'divide_equally divides the time series data into N'\n",

343 | " ' groups(split#) with similar movements.'\n",

344 | " ),\n",

345 | " self.num_of_split,\n",

346 | " self.exclude_cols,\n",

347 | " ]),\n",

348 | " ipywidgets.VBox([\n",

349 | " ipywidgets.HTML(\n",

350 | " 'similarity_selection extracts N groups(covariate#) that '\n",

351 | " 'move similarly to particular columns(target_cols).'\n",

352 | " ),\n",

353 | " ipywidgets.HBox([\n",

354 | " self.target_columns,\n",

355 | " self.num_of_covariate,\n",

356 | " self.num_of_pick_range,\n",

357 | " ]),\n",

358 | " self.exclude_cols,\n",

359 | " ]),\n",

360 | " ipywidgets.VBox([\n",

361 | " ipywidgets.HTML(\n",

362 | " 'target share extracts targeted time series data from'\n",

363 | " ' the proportion of interventions.'\n",

364 | " ),\n",

365 | " self.target_share,\n",

366 | " self.exclude_cols,\n",

367 | " ]),\n",

368 | " ipywidgets.VBox([\n",

369 | " ipywidgets.HTML(\n",

370 | " 'To improve reproducibility, it is important to create an'\n",

371 | " ' accurate counterfactual model rather than a balanced'\n",

372 | " ' assignment.'\n",

373 | " ),\n",

374 | " self.target_columns,\n",

375 | " self.control_columns,\n",

376 | " ]),\n",

377 | " ]\n",

378 | " )\n",

379 | " self.design_type.set_title(0, 'A: divide_equally')\n",

380 | " self.design_type.set_title(1, 'B: similarity_selection')\n",

381 | " self.design_type.set_title(2, 'C: target_share')\n",

382 | " self.design_type.set_title(3, 'D: pre-allocated')\n",

383 | "\n",

384 | " def _build_purpose_selection_tab(self):\n",

385 | " # UI for purpose (CausalImpact or Experimental Design)\n",

386 | " self.purpose_selection = ipywidgets.Tab()\n",

387 | " self.date_selection = ipywidgets.Tab()\n",

388 | " self.date_selection.children = [\n",

389 | " ipywidgets.VBox(\n",

390 | " [\n",

391 | " ipywidgets.HTML('The minimum date of the data is '\n",

392 | " 'selected as the start date.'),\n",

393 | " ipywidgets.HTML('The maximum date in the data is '\n",

394 | " 'selected as the end date.'),\n",

395 | " ]),\n",

396 | " ipywidgets.VBox(\n",

397 | " [\n",

398 | " self.start_date,\n",

399 | " self.end_date,\n",

400 | " ]\n",

401 | " )]\n",

402 | " self.date_selection.set_title(0, 'automatic selection')\n",

403 | " self.date_selection.set_title(1, 'manual input')\n",

404 | "\n",

405 | " self.purpose_selection.children = [\n",

406 | " # Causalimpact\n",

407 | " ipywidgets.VBox([\n",

408 | " PreProcess._apply_text_style(\n",

409 | " 15, '⑶ - a: Enter the Pre and Post the intervention.'\n",

410 | " ),\n",

411 | " self.pre_period_start,\n",

412 | " self.pre_period_end,\n",

413 | " self.post_period_start,\n",

414 | " self.post_period_end,\n",

415 | " PreProcess._apply_text_style(\n",

416 | " 15,\n",

417 | " '⑶ - b: Enter the number of periodicities in the'\n",

418 | " ' time series data.(default=1)',\n",

419 | " ),\n",

420 | " ipywidgets.VBox([self.num_of_seasons, self.credible_interval]),\n",

421 | " ]),\n",

422 | " # Experimental_Design\n",

423 | " ipywidgets.VBox([\n",

424 | " PreProcess._apply_text_style(\n",

425 | " 15,\n",

426 | " '⑶ - a: Please select date for experimental design',\n",

427 | " ),\n",

428 | " self.date_selection,\n",

429 | " PreProcess._apply_text_style(\n",

430 | " 15,\n",

431 | " '⑶ - b: Select the experimental design method and'\n",

432 | " ' enter the necessary items.',\n",

433 | " ),\n",

434 | " self.design_type,\n",

435 | " PreProcess._apply_text_style(\n",

436 | " 15,\n",

437 | " '⑶ - c: (Optional) Enter Estimated incremental CPA(Cost'\n",

438 | " ' of intervention ÷ Lift from intervention without bias) & the '\n",

439 | " 'number of periodicities in the time series data.',\n",

440 | " ),\n",

441 | " ipywidgets.VBox([\n",

442 | " self.estimate_icpa,\n",

443 | " self.num_of_seasons,\n",

444 | " self.credible_interval,\n",

445 | " ]),\n",

446 | " ]),\n",

447 | " ]\n",

448 | " self.purpose_selection.set_title(0, 'Causalimpact')\n",

449 | " self.purpose_selection.set_title(1, 'Experimental_Design')\n",

450 | "\n",

451 | " display(\n",

452 | " PreProcess._apply_text_style(18, '⑴ Please select a data source.'),\n",

453 | " self.soure_selection,\n",

454 | " Markdown('

"

11 | ]

12 | },

13 | {

14 | "cell_type": "markdown",

15 | "metadata": {

16 | "id": "dH_QXijHFzJP"

17 | },

18 | "source": [

19 | "# **CausalImpact with Experimental Design**\n",

20 | "\n",

21 | "This Colab file contains *Experimental Design* and *CausalImpact Analysis*.\n",

22 | "\n",

23 | "See [README.md](https://github.com/google/business_intelligence_group/tree/main/solutions/causal-impact) for details\n",

24 | "\n",

25 | "---\n",

26 | "\n",

27 | "Copyright 2024 Google LLC\n",

28 | "\n",

29 | "Licensed under the Apache License, Version 2.0 (the \"License\"); you may not use this file except in compliance with the License. You may obtain a copy of the License at https://www.apache.org/licenses/LICENSE-2.0\n",

30 | "\n",

31 | "Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an \"AS IS\" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License."

32 | ]

33 | },

34 | {

35 | "cell_type": "code",

36 | "source": [

37 | "# @title Step.1 (~ 2min)\n",

38 | "%%time\n",

39 | "\n",

40 | "import sys\n",

41 | "if 'fastdtw' not in sys.modules:\n",

42 | " !pip install 'fastdtw' --q\n",

43 | "if 'tslearn' not in sys.modules:\n",

44 | " !pip install 'tslearn' --q\n",

45 | "if 'tfp-causalimpact' not in sys.modules:\n",

46 | " !pip install 'tfp-causalimpact' --q\n",

47 | "\n",

48 | "# Data Load\n",

49 | "from google.colab import auth, files, widgets\n",

50 | "from google.auth import default\n",

51 | "from google.cloud import bigquery\n",

52 | "import io\n",

53 | "import os\n",

54 | "import gspread\n",

55 | "from oauth2client.client import GoogleCredentials\n",

56 | "\n",

57 | "# Calculate\n",

58 | "import altair as alt\n",

59 | "import itertools\n",

60 | "import random\n",

61 | "import numpy as np\n",

62 | "import pandas as pd\n",

63 | "import fastdtw\n",

64 | "\n",

65 | "from tslearn.clustering import TimeSeriesKMeans\n",

66 | "from decimal import Decimal, ROUND_HALF_UP\n",

67 | "from scipy.spatial.distance import euclidean\n",

68 | "from sklearn.metrics import mean_absolute_percentage_error\n",

69 | "from sklearn.preprocessing import MinMaxScaler\n",

70 | "from statsmodels.tsa.seasonal import STL\n",

71 | "\n",

72 | "# UI/UX\n",

73 | "import datetime\n",

74 | "from dateutil.relativedelta import relativedelta\n",

75 | "import ipywidgets\n",

76 | "from IPython.display import display, Markdown, HTML, Javascript\n",

77 | "from tqdm.auto import tqdm\n",

78 | "import warnings\n",

79 | "warnings.simplefilter('ignore')\n",

80 | "\n",

81 | "# causalimpact\n",

82 | "import causalimpact\n",

83 | "import tensorflow as tf\n",

84 | "import tensorflow_probability as tfp\n",

85 | "tfd = tfp.distributions\n",

86 | "\n",

87 | "class PreProcess(object):\n",

88 | " \"\"\"PreProcess handles process from data loading to visualization.\n",

89 | "\n",

90 | " Create a UI, load time series data based on input and do some\n",

91 | " transformations to pass it to analysis. This also includes visualization of\n",

92 | " points that should be confirmed in time series data.\n",

93 | "\n",

94 | " Attributes:\n",

95 | " _apply_text_style: Decorate the text\n",

96 | " define_ui: Define the UI using ipywidget\n",

97 | " generate_ui: Generates UI for input from the user\n",

98 | " load_data: Load data from any data source\n",

99 | " _load_data_from_sheet: Load data from spreadsheet\n",

100 | " _load_data_from_csv: Load data from CSV\n",

101 | " _load_data_from_bigquery: Load data from Big Query\n",

102 | " format_date: Set index\n",

103 | " _shape_wide: Configure narrow/wide conversion\n",

104 | " _trend_check: Visualize data\n",

105 | " saving_params: Save the contents entered in the UI\n",

106 | " set_params: Set the saved input contents to the instance\n",

107 | " \"\"\"\n",

108 | "\n",

109 | " def __init__(self):\n",

110 | " self.define_ui()\n",

111 | "\n",

112 | " @staticmethod\n",

113 | " def _apply_text_style(type, text):\n",

114 | " if type == 'success':\n",

115 | " return print(f\"\\033[38;2;15;157;88m \" + text + \"\\033[0m\")\n",

116 | "\n",

117 | " if type == 'failure':\n",

118 | " return print(f\"\\033[38;2;219;68;55m \" + text + \"\\033[0m\")\n",

119 | "\n",

120 | " if isinstance(type, int):\n",

121 | " span_style = ipywidgets.HTML(\n",

122 | " \"\"\n",

124 | " + text\n",

125 | " + ''\n",

126 | " )\n",

127 | " return span_style\n",

128 | "\n",

129 | " def define_ui(self):\n",

130 | " self._define_data_source_widgets()\n",

131 | " self._define_data_format_widgets()\n",

132 | " self._define_date_widgets()\n",

133 | " self._define_experimental_design_widgets()\n",

134 | " self._define_simulation_widgets()\n",

135 | "\n",

136 | " def _define_data_source_widgets(self):\n",

137 | " # Input box for data sources\n",

138 | " self.sheet_url = ipywidgets.Text(\n",

139 | " placeholder='Please enter google spreadsheet url',\n",

140 | " value='https://docs.google.com/spreadsheets/d/1dISrbX1mZHgzpsIct2QXFOWWRRJiCxDSmSzjuZz64Tw/edit#gid=0',\n",

141 | " description='spreadsheet url:',\n",

142 | " style={'description_width': 'initial'},\n",

143 | " layout=ipywidgets.Layout(width='800px'),\n",

144 | " )\n",

145 | " self.sheet_name = ipywidgets.Text(\n",

146 | " placeholder='Please enter sheet name',\n",

147 | " value='analysis_data',\n",

148 | " # value='raw_data',\n",

149 | " description='sheet name:',\n",

150 | " )\n",

151 | " self.csv_name = ipywidgets.Text(\n",

152 | " placeholder='Please enter csv name',\n",

153 | " description='csv name:',\n",

154 | " layout=ipywidgets.Layout(width='500px'),\n",

155 | " )\n",

156 | " self.bq_project_id = ipywidgets.Text(\n",

157 | " placeholder='Please enter project id',\n",

158 | " description='project id:',\n",

159 | " layout=ipywidgets.Layout(width='500px'),\n",

160 | " )\n",

161 | " self.bq_table_name = ipywidgets.Text(\n",

162 | " placeholder='Please enter table name',\n",

163 | " description='table name:',\n",

164 | " layout=ipywidgets.Layout(width='500px'),\n",

165 | " )\n",

166 | "\n",

167 | " def _define_data_format_widgets(self):\n",

168 | " # Input box for data format\n",

169 | " self.date_col = ipywidgets.Text(\n",

170 | " placeholder='Please enter date column name',\n",

171 | " value='Date',\n",

172 | " description='date column:',\n",

173 | " )\n",

174 | " self.pivot_col = ipywidgets.Text(\n",

175 | " placeholder='Please enter pivot column name',\n",

176 | " value='Geo',\n",

177 | " description='pivot column:',\n",

178 | " )\n",

179 | " self.kpi_col = ipywidgets.Text(\n",

180 | " placeholder='Please enter kpi column name',\n",

181 | " value='KPI',\n",

182 | " description='kpi column:',\n",

183 | " )\n",

184 | "\n",

185 | " def _define_experimental_design_widgets(self):\n",

186 | " # Input box for Experimental_Design-related\n",

187 | " self.exclude_cols = ipywidgets.Text(\n",

188 | " placeholder=(\n",

189 | " 'Enter comma-separated columns if any columns are not used in the'\n",

190 | " ' design.'\n",

191 | " ),\n",

192 | " description='exclude cols:',\n",

193 | " layout=ipywidgets.Layout(width='1000px'),\n",

194 | " )\n",

195 | " self.num_of_split = ipywidgets.Dropdown(\n",

196 | " options=[2, 3, 4, 5],\n",

197 | " value=2,\n",

198 | " description='split#:',\n",

199 | " disabled=False,\n",

200 | " )\n",

201 | " self.target_columns = ipywidgets.Text(\n",

202 | " placeholder='Please enter comma-separated entries',\n",

203 | " value='Tokyo, Kanagawa',\n",

204 | " description='target_cols:',\n",

205 | " layout=ipywidgets.Layout(width='500px'),\n",

206 | " )\n",

207 | " self.num_of_pick_range = ipywidgets.IntRangeSlider(\n",

208 | " value=[5, 10],\n",

209 | " min=1,\n",

210 | " max=50,\n",

211 | " step=1,\n",

212 | " description='pick range:',\n",

213 | " orientation='horizontal',\n",

214 | " readout=True,\n",

215 | " readout_format='d',\n",

216 | " )\n",

217 | " self.num_of_covariate = ipywidgets.Dropdown(\n",

218 | " options=[1, 2, 3, 4, 5],\n",

219 | " value=1,\n",

220 | " description='covariate#:',\n",

221 | " layout=ipywidgets.Layout(width='192px'),\n",

222 | " )\n",

223 | " self.target_share = ipywidgets.FloatSlider(\n",

224 | " value=0.3,\n",

225 | " min=0.05,\n",

226 | " max=0.5,\n",

227 | " step=0.05,\n",

228 | " description='target share#:',\n",

229 | " orientation='horizontal',\n",

230 | " readout=True,\n",

231 | " readout_format='.1%',\n",

232 | " )\n",

233 | " self.control_columns = ipywidgets.Text(\n",

234 | " placeholder='Please enter comma-separated entries',\n",

235 | " value='Aomori, Akita',\n",

236 | " description='control_cols:',\n",

237 | " layout=ipywidgets.Layout(width='500px'),\n",

238 | " )\n",

239 | "\n",

240 | " def _define_simulation_widgets(self):\n",

241 | " # Input box for simulation params\n",

242 | " self.num_of_seasons = ipywidgets.IntText(\n",

243 | " value=1,\n",

244 | " description='num_of_seasons:',\n",

245 | " disabled=False,\n",

246 | " style={'description_width': 'initial'},\n",

247 | " )\n",

248 | " self.estimate_icpa = ipywidgets.IntText(\n",

249 | " value=1000,\n",

250 | " description='Estimated iCPA:',\n",

251 | " style={'description_width': 'initial'},\n",

252 | " )\n",

253 | " self.credible_interval = ipywidgets.RadioButtons(\n",

254 | " options=[70, 80, 90, 95],\n",

255 | " value=90,\n",

256 | " description='Credible interval %:',\n",

257 | " style={'description_width': 'initial'},\n",

258 | " )\n",

259 | "\n",

260 | " def _define_date_widgets(self):\n",

261 | " # Input box for Date-related\n",

262 | " self.pre_period_start = ipywidgets.DatePicker(\n",

263 | " description='Pre Start:',\n",

264 | " value=datetime.date.today() - relativedelta(days=122),\n",

265 | " )\n",

266 | " self.pre_period_end = ipywidgets.DatePicker(\n",

267 | " description='Pre End:',\n",

268 | " value=datetime.date.today() - relativedelta(days=32),\n",

269 | " )\n",

270 | " self.post_period_start = ipywidgets.DatePicker(\n",

271 | " description='Post Start:',\n",

272 | " value=datetime.date.today() - relativedelta(days=31),\n",

273 | " )\n",

274 | " self.post_period_end = ipywidgets.DatePicker(\n",

275 | " description='Post End:',\n",

276 | " value=datetime.date.today(),\n",

277 | " )\n",

278 | " self.start_date = ipywidgets.DatePicker(\n",

279 | " description='Start Date:',\n",

280 | " value=datetime.date.today() - relativedelta(days=122),\n",

281 | " )\n",

282 | " self.end_date = ipywidgets.DatePicker(\n",

283 | " description='End Date:',\n",

284 | " value=datetime.date.today() - relativedelta(days=32),\n",

285 | " )\n",

286 | " self.depend_data = ipywidgets.ToggleButton(\n",

287 | " value=False,\n",

288 | " description='Click >> Use the beginning and end of data',\n",

289 | " disabled=False,\n",

290 | " button_style='info',\n",

291 | " tooltip='Description',\n",

292 | " layout=ipywidgets.Layout(width='300px'),\n",

293 | " )\n",

294 | "\n",

295 | " def generate_ui(self):\n",

296 | " self._build_source_selection_tab()\n",

297 | " self._build_data_type_selection_tab()\n",

298 | " self._build_design_type_tab()\n",

299 | " self._build_purpose_selection_tab()\n",

300 | "\n",

301 | " def _build_source_selection_tab(self):\n",

302 | " # UI for data soure\n",

303 | " self.soure_selection = ipywidgets.Tab()\n",

304 | " self.soure_selection.children = [\n",

305 | " ipywidgets.VBox([self.sheet_url, self.sheet_name]),\n",

306 | " ipywidgets.VBox([self.csv_name]),\n",

307 | " ipywidgets.VBox([self.bq_project_id, self.bq_table_name]),\n",

308 | " ]\n",

309 | " self.soure_selection.set_title(0, 'Google_Spreadsheet')\n",

310 | " self.soure_selection.set_title(1, 'CSV_file')\n",

311 | " self.soure_selection.set_title(2, 'Big_Query')\n",

312 | "\n",

313 | " def _build_data_type_selection_tab(self):\n",

314 | " # UI for data type(narrow or wide)\n",

315 | " self.data_type_selection = ipywidgets.Tab()\n",

316 | " self.data_type_selection.children = [\n",

317 | " ipywidgets.VBox([\n",

318 | " ipywidgets.Label(\n",

319 | " 'Wide, or unstacked data is presented with each different'\n",

320 | " ' data variable in a separate column.'\n",

321 | " ),\n",

322 | " self.date_col,\n",

323 | " ]),\n",

324 | " ipywidgets.VBox([\n",

325 | " ipywidgets.Label(\n",

326 | " 'Narrow, stacked, or long data is presented with one column '\n",

327 | " 'containing all the values and another column listing the '\n",

328 | " 'context of the value'\n",

329 | " ),\n",

330 | " ipywidgets.HBox([self.date_col, self.pivot_col, self.kpi_col]),\n",

331 | " ]),\n",

332 | " ]\n",

333 | " self.data_type_selection.set_title(0, 'Wide_Format')\n",

334 | " self.data_type_selection.set_title(1, 'Narrow_Format')\n",

335 | "\n",

336 | " def _build_design_type_tab(self):\n",

337 | " # UI for experimental design\n",

338 | " self.design_type = ipywidgets.Tab(\n",

339 | " children=[\n",

340 | " ipywidgets.VBox([\n",

341 | " ipywidgets.HTML(\n",

342 | " 'divide_equally divides the time series data into N'\n",

343 | " ' groups(split#) with similar movements.'\n",

344 | " ),\n",

345 | " self.num_of_split,\n",

346 | " self.exclude_cols,\n",

347 | " ]),\n",

348 | " ipywidgets.VBox([\n",

349 | " ipywidgets.HTML(\n",

350 | " 'similarity_selection extracts N groups(covariate#) that '\n",

351 | " 'move similarly to particular columns(target_cols).'\n",

352 | " ),\n",

353 | " ipywidgets.HBox([\n",

354 | " self.target_columns,\n",

355 | " self.num_of_covariate,\n",

356 | " self.num_of_pick_range,\n",

357 | " ]),\n",

358 | " self.exclude_cols,\n",

359 | " ]),\n",

360 | " ipywidgets.VBox([\n",

361 | " ipywidgets.HTML(\n",

362 | " 'target share extracts targeted time series data from'\n",

363 | " ' the proportion of interventions.'\n",

364 | " ),\n",

365 | " self.target_share,\n",

366 | " self.exclude_cols,\n",

367 | " ]),\n",

368 | " ipywidgets.VBox([\n",

369 | " ipywidgets.HTML(\n",

370 | " 'To improve reproducibility, it is important to create an'\n",

371 | " ' accurate counterfactual model rather than a balanced'\n",

372 | " ' assignment.'\n",

373 | " ),\n",

374 | " self.target_columns,\n",

375 | " self.control_columns,\n",

376 | " ]),\n",

377 | " ]\n",

378 | " )\n",

379 | " self.design_type.set_title(0, 'A: divide_equally')\n",

380 | " self.design_type.set_title(1, 'B: similarity_selection')\n",

381 | " self.design_type.set_title(2, 'C: target_share')\n",

382 | " self.design_type.set_title(3, 'D: pre-allocated')\n",

383 | "\n",

384 | " def _build_purpose_selection_tab(self):\n",

385 | " # UI for purpose (CausalImpact or Experimental Design)\n",

386 | " self.purpose_selection = ipywidgets.Tab()\n",

387 | " self.date_selection = ipywidgets.Tab()\n",

388 | " self.date_selection.children = [\n",

389 | " ipywidgets.VBox(\n",

390 | " [\n",

391 | " ipywidgets.HTML('The minimum date of the data is '\n",

392 | " 'selected as the start date.'),\n",

393 | " ipywidgets.HTML('The maximum date in the data is '\n",

394 | " 'selected as the end date.'),\n",

395 | " ]),\n",

396 | " ipywidgets.VBox(\n",

397 | " [\n",

398 | " self.start_date,\n",

399 | " self.end_date,\n",

400 | " ]\n",

401 | " )]\n",

402 | " self.date_selection.set_title(0, 'automatic selection')\n",

403 | " self.date_selection.set_title(1, 'manual input')\n",

404 | "\n",

405 | " self.purpose_selection.children = [\n",

406 | " # Causalimpact\n",

407 | " ipywidgets.VBox([\n",

408 | " PreProcess._apply_text_style(\n",

409 | " 15, '⑶ - a: Enter the Pre and Post the intervention.'\n",

410 | " ),\n",

411 | " self.pre_period_start,\n",

412 | " self.pre_period_end,\n",

413 | " self.post_period_start,\n",

414 | " self.post_period_end,\n",

415 | " PreProcess._apply_text_style(\n",

416 | " 15,\n",

417 | " '⑶ - b: Enter the number of periodicities in the'\n",

418 | " ' time series data.(default=1)',\n",

419 | " ),\n",

420 | " ipywidgets.VBox([self.num_of_seasons, self.credible_interval]),\n",

421 | " ]),\n",

422 | " # Experimental_Design\n",

423 | " ipywidgets.VBox([\n",

424 | " PreProcess._apply_text_style(\n",

425 | " 15,\n",

426 | " '⑶ - a: Please select date for experimental design',\n",

427 | " ),\n",

428 | " self.date_selection,\n",

429 | " PreProcess._apply_text_style(\n",

430 | " 15,\n",

431 | " '⑶ - b: Select the experimental design method and'\n",

432 | " ' enter the necessary items.',\n",

433 | " ),\n",

434 | " self.design_type,\n",

435 | " PreProcess._apply_text_style(\n",

436 | " 15,\n",

437 | " '⑶ - c: (Optional) Enter Estimated incremental CPA(Cost'\n",

438 | " ' of intervention ÷ Lift from intervention without bias) & the '\n",

439 | " 'number of periodicities in the time series data.',\n",

440 | " ),\n",

441 | " ipywidgets.VBox([\n",

442 | " self.estimate_icpa,\n",

443 | " self.num_of_seasons,\n",

444 | " self.credible_interval,\n",

445 | " ]),\n",

446 | " ]),\n",

447 | " ]\n",

448 | " self.purpose_selection.set_title(0, 'Causalimpact')\n",

449 | " self.purpose_selection.set_title(1, 'Experimental_Design')\n",

450 | "\n",

451 | " display(\n",

452 | " PreProcess._apply_text_style(18, '⑴ Please select a data source.'),\n",

453 | " self.soure_selection,\n",

454 | " Markdown('

'),\n",

455 | " PreProcess._apply_text_style(\n",

456 | " 18, '⑵ Please select wide or narrow data format.'\n",

457 | " ),\n",

458 | " self.data_type_selection,\n",

459 | " Markdown('

'),\n",

460 | " PreProcess._apply_text_style(\n",

461 | " 18, '⑶ Please select the purpose and set conditions.'\n",

462 | " ),\n",

463 | " self.purpose_selection,\n",

464 | " )\n",

465 | "\n",

466 | " def load_data(self):\n",

467 | " if self.soure_selection.selected_index == 0:\n",

468 | " try:\n",

469 | " self.loaded_df = self._load_data_from_sheet(\n",

470 | " self.sheet_url.value, self.sheet_name.value\n",

471 | " )\n",

472 | " except Exception as e:\n",

473 | " self._apply_text_style('failure', '\\n\\nFailure!!')\n",

474 | " print('Error: {}'.format(e))\n",

475 | " print('Please check the following:')\n",

476 | " print('* sheet url:{}'.format(self.sheet_url.value))\n",

477 | " print('* sheet name:{}'.format(self.sheet_name.value))\n",

478 | " raise Exception('Please check Failure')\n",

479 | "\n",

480 | " elif self.soure_selection.selected_index == 1:\n",

481 | " try:\n",

482 | " self.loaded_df = self._load_data_from_csv(self.csv_name.value)\n",

483 | " except Exception as e:\n",

484 | " self._apply_text_style('failure', '\\n\\nFailure!!')\n",

485 | " print('Error: {}'.format(e))\n",

486 | " print('Please check the following:')\n",

487 | " print('* There is something wrong with the CSV-related settings.')\n",

488 | " print('* CSV namel:{}'.format(self.csv_name.value))\n",

489 | " raise Exception('Please check Failure')\n",

490 | "\n",

491 | " elif self.soure_selection.selected_index == 2:\n",

492 | " try:\n",

493 | " self.loaded_df = self._load_data_from_bigquery(\n",

494 | " self.bq_project_id.value, self.bq_table_name.value\n",

495 | " )\n",

496 | " except Exception as e:\n",

497 | " self._apply_text_style('failure', '\\n\\nFailure!!')\n",

498 | " print('Error: {}'.format(e))\n",

499 | " print('Please check the following:')\n",

500 | " print('* There is something wrong with the bq-related settings.')\n",

501 | " print('* bq project id:{}'.format(self.bq_project_id.value))\n",

502 | " print('* bq table name :{}'.format(self.bq_table_name.value))\n",

503 | " raise Exception('Please check Failure')\n",

504 | "\n",

505 | " else:\n",

506 | " raise Exception('Please select a data souce at Step.1-2.')\n",

507 | "\n",

508 | " self._apply_text_style(\n",

509 | " 'success',\n",

510 | " 'Success! The target data has been loaded.')\n",

511 | " display(self.loaded_df.head(3))\n",

512 | "\n",

513 | " @staticmethod\n",

514 | " def _load_data_from_sheet(spreadsheet_url, sheet_name):\n",

515 | " \"\"\"load_data_from_sheet load data from spreadsheet.\n",

516 | "\n",

517 | " Args:\n",

518 | " spreadsheet_url: Spreadsheet url with data.\n",

519 | " sheet_name: Sheet name with data.\n",

520 | " \"\"\"\n",

521 | " auth.authenticate_user()\n",

522 | " creds, _ = default()\n",

523 | " gc = gspread.authorize(creds)\n",

524 | " _workbook = gc.open_by_url(spreadsheet_url)\n",

525 | " _worksheet = _workbook.worksheet(sheet_name)\n",

526 | " df_sheet = pd.DataFrame(_worksheet.get_all_values())\n",

527 | " df_sheet.columns = list(df_sheet.loc[0, :])\n",

528 | " df_sheet.drop(0, inplace=True)\n",

529 | " df_sheet.reset_index(drop=True, inplace=True)\n",

530 | " df_sheet.replace(',', '', regex=True, inplace=True)\n",

531 | " df_sheet.rename(columns=lambda x: x.replace(\" \", \"\"), inplace=True)\n",

532 | " df_sheet = df_sheet.apply(pd.to_numeric, errors='ignore')\n",

533 | " return df_sheet\n",

534 | "\n",

535 | " @staticmethod\n",

536 | " def _load_data_from_csv(csv_name):\n",

537 | " \"\"\"load_data_from_csv read data from csv.\n",

538 | "\n",

539 | " Args:\n",

540 | " csv_name: csv file name.\n",

541 | " \"\"\"\n",

542 | " uploaded = files.upload()\n",

543 | " df_csv = pd.read_csv(io.BytesIO(uploaded[csv_name]))\n",

544 | " df_csv.replace(',', '', regex=True, inplace=True)\n",

545 | " df_csv.rename(columns=lambda x: x.replace(\" \", \"\"), inplace=True)\n",

546 | " df_csv = df_csv.apply(pd.to_numeric, errors='ignore')\n",

547 | " return df_csv\n",

548 | "\n",

549 | " @staticmethod\n",

550 | " def _load_data_from_bigquery(bq_project_id, bq_table_name):\n",

551 | " \"\"\"_load_data_from_bigquery load data from bigquery.\n",

552 | "\n",

553 | " Args:\n",

554 | " bq_project_id: bigquery project id.\n",

555 | " bq_table_name: bigquery table name\n",

556 | " \"\"\"\n",

557 | " auth.authenticate_user()\n",

558 | " client = bigquery.Client(project=bq_project_id)\n",

559 | " query = 'SELECT * FROM `' + bq_table_name + '`;'\n",

560 | " df_bq = client.query(query).to_dataframe()\n",

561 | " df_bq.replace(',', '', regex=True, inplace=True)\n",

562 | " df_bq.rename(columns=lambda x: x.replace(\" \", \"\"), inplace=True)\n",

563 | " df_bq = df_bq.apply(pd.to_numeric, errors='ignore')\n",

564 | " return df_bq\n",

565 | "\n",

566 | " def format_data(self):\n",

567 | " \"\"\"Formats the loaded data for causal impact analysis or experimental design.\n",

568 | "\n",

569 | " This method performs several data transformation steps:\n",

570 | " 1. Cleans column names by removing spaces from `date_col`, `pivot_col`, and `kpi_col`.\n",

571 | " 2. Converts the data to a wide format if specified by `data_type_selection`.\n",

572 | " 3. Drops columns specified in `exclude_cols`.\n",

573 | " 4. Converts the date column to datetime objects and sets it as the DataFrame index.\n",

574 | " 5. Reindexes the DataFrame to ensure a continuous date range from the minimum to maximum date.\n",

575 | " 6. Calculates `tick_count` for visualization purposes.\n",

576 | " 7. Provides visual feedback on the data formatting success or failure.\n",

577 | " 8. Displays an overview of the formatted data, including index, date range, and missing values.\n",

578 | " 9. Visualizes data trends (total and individual) and descriptive statistics.\n",

579 | "\n",

580 | " Raises:\n",

581 | " Exception: If any error occurs during data formatting, often due to\n",

582 | " mismatched data format selection (wide/narrow) or incorrect\n",

583 | " column names. Provides specific error messages to guide debugging.\n",

584 | " \"\"\"\n",

585 | " self.date_col_name = self.date_col.value.replace(' ', '')\n",

586 | " self.pivot_col_name = self.pivot_col.value.replace(' ', '')\n",

587 | " self.kpi_col_name = self.kpi_col.value.replace(' ', '')\n",

588 | "\n",

589 | " try:\n",

590 | " if self.data_type_selection.selected_index == 0:\n",

591 | " self.formatted_data = self.loaded_df.copy()\n",

592 | " elif self.data_type_selection.selected_index == 1:\n",

593 | " self.formatted_data = self._shape_wide(\n",

594 | " self.loaded_df,\n",

595 | " self.date_col_name,\n",

596 | " self.pivot_col_name,\n",

597 | " self.kpi_col_name,\n",

598 | " )\n",

599 | "\n",

600 | " self.formatted_data.drop(\n",

601 | " self.exclude_cols.value.replace(', ', ',').split(','),\n",

602 | " axis=1,\n",

603 | " errors='ignore',\n",

604 | " inplace=True,\n",

605 | " )\n",

606 | " self.formatted_data[self.date_col_name] = pd.to_datetime(\n",

607 | " self.formatted_data[self.date_col_name]\n",

608 | " )\n",

609 | " self.formatted_data = self.formatted_data.set_index(self.date_col_name)\n",

610 | " self.formatted_data = self.formatted_data.reindex(\n",

611 | " pd.date_range(\n",

612 | " start=self.formatted_data.index.min(),\n",

613 | " end=self.formatted_data.index.max(),\n",

614 | " name=self.formatted_data.index.name))\n",

615 | " self.tick_count = len(self.formatted_data.resample('M')) - 1\n",

616 | " self._apply_text_style(\n",

617 | " 'success',\n",

618 | " '\\nSuccess! The data was formatted for analysis.'\n",

619 | " )\n",

620 | " display(self.formatted_data.head(3))\n",

621 | " self._apply_text_style(\n",

622 | " 'failure',\n",

623 | " '\\nCheck! Here is an overview of the data.'\n",

624 | " )\n",

625 | " print(\n",

626 | " 'Index name:{} | The earliest date: {} | The latest date: {}'.format(\n",

627 | " self.formatted_data.index.name,\n",